- 1 Department of Social and Human Science, University of Bergamo, Bergamo, Italy

- 2 Department of Law, Economics and Human Sciences, University of Reggio Calabria, Reggio Calabria, Italy

Due to the COVID-19 pandemic, many university initial teacher education courses had to be adapted to remote delivery. Starting from specific topics of the training curriculum, this work focuses on adapting courses on evaluative knowledge and skills to an e-learning environment, and it examines the evaluative knowledge (terminology and concepts) and skills (design of paper-and-pencil assessment tools) of the student teachers who took the adapted courses. A comparative study was carried out of two adapted university degree courses in the area of evaluation (University of Bergamo and Mediterranean University of Reggio Calabria, Italy; initial training of future primary school teachers). The study involved 155 primary school student teachers and used a sequential mixed-methods design. The first, exploratory method collected quantitative data through an ad hoc questionnaire on student teachers’ knowledge; the second, confirmatory method gathered qualitative data through document analysis of the paper-and-pencil assessment tools designed by the student teachers, as evidence of their skills. The results highlight substantial similarities in knowledge (function of evaluation, difference between evaluation and assessment, object of school evaluation) and skills (ability to refer to real situations and promote situated knowledge), but also differences (construct of school “evaluation processes” and ability to provide different solutions for solving the task), and a specific difficulty in implementing “constructive alignment.”

Introduction

Assessment activities involve the entire education system, both as assessment of students’ learning and behavior in the classroom and as evaluation of schools’ teaching-learning interventions (Galliani, 2015; OECD, 2015). From a professionalization perspective (OECD, 2005; Darling-Hammond et al., 2017; Andrade and Heritage, 2018), teachers’ evaluative competences are described as the knowledge, skills, and attitudes needed to carry out educational interventions and assessment actions in the school context (Popham, 2004; Willis et al., 2013; Münster et al., 2017).

In initial teacher education curricula, these evaluative competences are related to the subject to be taught, the didactic knowledge of the discipline, assessment methods, and grading procedures, as well as to the ethics of evaluation (DeLuca et al., 2010; Ogan-Bekiroglu and Suzuk, 2014). Evaluative competences develop through the connection between different training activities, such as lectures, seminars, and exercises (DeLuca and Volante, 2016).

Due to the COVID-19 pandemic, many university initial teacher education courses adopted solutions to ensure the development of students’ competences, such as e-learning environments in which to adapt learning activities that were no longer feasible face-to-face (IAU-UNESCO, 2020; Marinoni and van’t Land, 2020; Gaebel et al., 2021). Starting from specific topics of the training curriculum, this work focuses on adapting courses on evaluative knowledge and skills to an e-learning environment, and it examines the evaluative knowledge (terminology and concepts) and skills (design of paper-and-pencil assessment tools) of the student teachers who took the adapted courses (Popham, 2004; Willis et al., 2013; Xu and Brown, 2016).

After a description of the two initial training curricula for primary school teachers at the University of Bergamo and the Mediterranean University of Reggio Calabria, Italy, the methodology and results of a comparative study conducted in February–March 2021 are presented.

Theoretical Framework

Perspective on Teacher Evaluation Competences

Over the years, scholars have proposed several definitions of teachers’ evaluative competencies: teachers’ skills “as evaluators” (Roeder, 1972), evaluation procedures and classroom assessment competences (Stiggins and Faires-Conklin, 1988), assessment literacy (Popham, 1995, 2004; Xu and Brown, 2016), and evaluation competencies and assessment capability (DeLuca et al., 2010, 2018). Various areas of competence have also been identified: preparation in using tests (Roeder, 1972); collecting data useful for decision-making (Stiggins and Faires-Conklin, 1988); creating evaluation tools and using those made by others (Popham, 1995); and reporting achievement, synthesizing assessments, item reliability, validity, etc. (DeLuca et al., 2010). The definition of teachers’ evaluative competence takes on different nuances according to the functions attributed to evaluating (Stiggins and Faires-Conklin, 1988): classroom decision-making; assessment as an interpersonal activity; providing a clear and stable target; tools to assess achievement and other traits; providing feedback; the meaning of quality assessment; and a focus on assessment policy. A teacher competent in evaluation is therefore expected not only to diagnose the strengths and weaknesses of individual students and groups, to define teaching interventions based on educational needs, and to communicate educational objectives and social expectations, but also to evaluate the effectiveness of teaching interventions (OECD, 2013).

From the perspective of teacher professionalization (Willis et al., 2013), teachers’ evaluation competencies are closely related to concepts, representations, and personal beliefs about evaluation (Münster et al., 2017), such as assessment and grading methods, feedback, self-assessment, peer evaluation, and the ethics of evaluation, but also to disciplinary knowledge and pedagogical content knowledge (Shulman, 1987; Xu and Brown, 2016).

Perspective on Teacher Training in Evaluation

Xu and Brown (2016, pp. 151–153) identified several subthemes that synthesize research on teacher training in evaluation (as “assessment literacy”): assessment courses, assessment training programs and resources, the relationship among assessment training, teacher conceptions of assessment, teacher assessment training needs, and self-reported efficacy. Although it is considered a problematic area of study (La Marca, 2006; DeLuca et al., 2018), teacher training in evaluation is of growing interest to decision makers (Stiggins and Faires-Conklin, 1988), since the logic of accountability calls for validated information on students’ learning levels and on teacher preparation (OECD, 2005; Darling-Hammond and Adamson, 2010; Darling-Hammond et al., 2017). DeLuca and Johnson (2017, p. 125) are confident that “across pre-service and in-service contexts of assessment education there are consistent results that effectively support teachers’ assessment capability” and that learning to assess involves the ability to set priorities with respect to the work context and to align them with professional development criteria.

Initial Teacher Training Curricula and Evaluation Competences

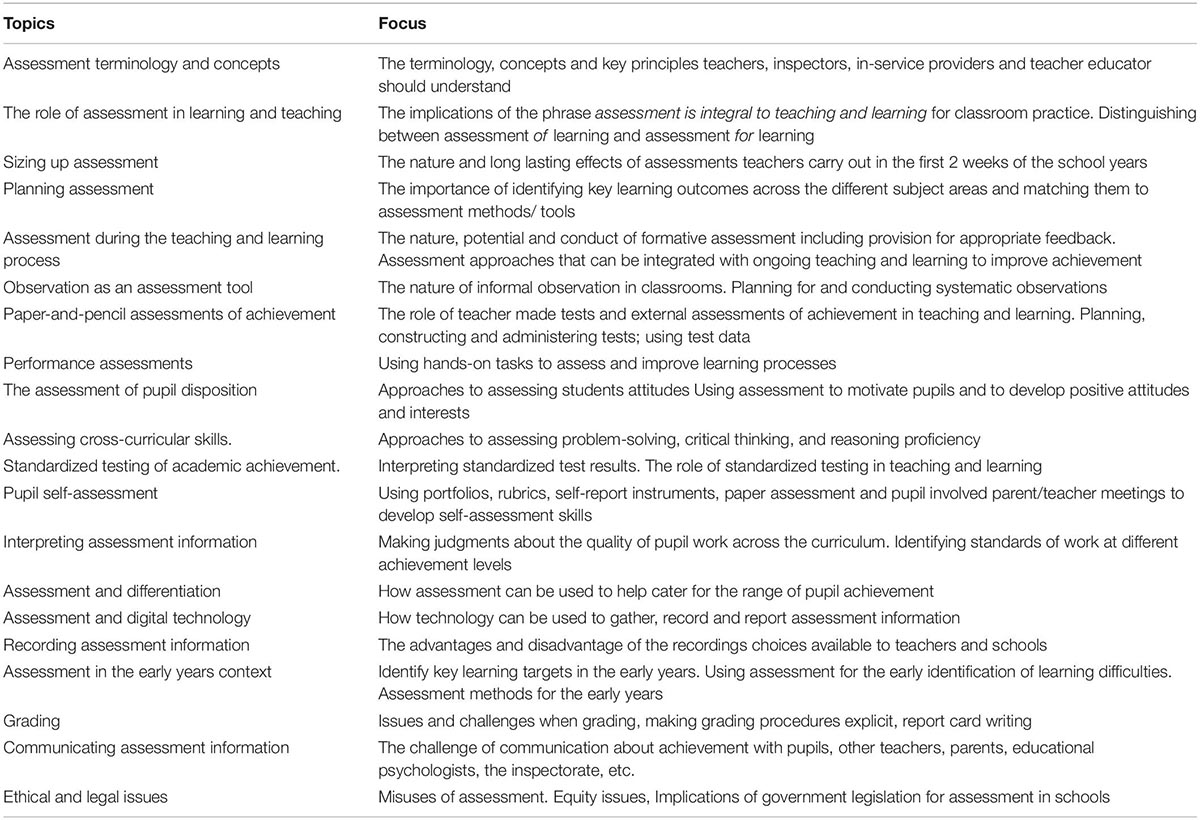

Research has provided a number of key elements for identifying teachers’ evaluation competences (Brookhart, 2011), which are useful for defining specific topics for professional development programs in evaluation and for pre-service training curricula (see Table 1; O’Leary, 2008, pp. 111–112).

Although training teachers in evaluation is recognized as a priority, given its link with the improvement of schools and of students’ learning, research on it has mainly been carried out from general perspectives, without connection to daily practice or standards (DeLuca et al., 2010).

Studies on initial training in evaluation have focused on curricula and delivery methods (content, teaching strategies, etc.). Some of them examined how specific evaluation skills are practiced in higher education and at university level (relationship with other disciplines; use of specific educational activities such as lectures, seminars, and laboratories; assessment and assessment tools; DeLuca et al., 2018). These studies have helped clarify the very construct of “assessment literacy” (DeLuca et al., 2010; Ogan-Bekiroglu and Suzuk, 2014; Pastore, 2020).

Effectiveness of Training Courses

Studies on the effectiveness of initial training courses in evaluation have taken different perspectives. Studies inspired by the “process-oriented” model (Grossman and Schoenfeld, 2005) focused on the evaluation of “profound knowledge of the subject,” on the assumption that those who know a subject can teach it and also know how to evaluate it. Shepard et al. (2005), from the opposite perspective, considered evaluation skills a specific instance of basic teaching skills such as planning and instruction. DeLuca’s investigations (DeLuca and Klinger, 2010; DeLuca and Bellara, 2013), however, found that such studies lacked objective evidence on the effectiveness of training courses and identified this lack as a contributing factor to the low assessment capability of beginning teachers.

The concept of “effectiveness” refers to the capacity of something to produce the desired results. The effectiveness of a training intervention is linked to the improvement in the knowledge, skills, and performance of the people involved, both in absolute terms and in terms of transfer, i.e., the ability to apply the knowledge received and the skills developed in real-life and work contexts (Basarab and Root, 1992). Such improvement can be estimated in several ways: by comparing learning before and after training, or by measuring it during training, immediately after training, or as a follow-up to the intervention. Kirkpatrick’s (1979) model, as updated by Lipowsky and Rzejak (2015), has been proposed as a descriptive model of the effectiveness of a training course. It identifies four levels: a. reaction (satisfaction with the training received); b. learning (information or procedures learned, but also changes in attitudes, beliefs, and levels of motivation); c. behavior (application of new knowledge in teaching practice); d. results (impact on the school organization, such as increases in student learning or motivation).

Effectiveness of Online Courses and Adaptation

The COVID-19 pandemic made it necessary to adapt degree courses (IAU-UNESCO, 2020; Marinoni and van’t Land, 2020; Gaebel et al., 2021), and both theoretical and operational studies were carried out on this adaptation (Rapanta et al., 2020; Agrati and Vinci, 2021).

Systematic studies of how teachers’ evaluation competences have been taught online are very few, whether in a “hybrid” mode that provides for face-to-face or remote activities (Allen et al., 2016; Perla, 2021) or in e-learning environments through specific resources (Vai and Sosulski, 2015; Poulin and Straut, 2016; Read et al., 2019) and e-tivities (Salmon, 2002). To investigate the development of the evaluative competencies (knowledge and skills) of student teachers enrolled in adapted university courses, the Hamtini model (Hamtini, 2008) can be used. An adaptation of Kirkpatrick’s model, it replaces the four levels (reaction, learning, behavior, and results) with three (interaction, learning, and results) (Hamtini, 2008).

Hypotheses

The broader aim of this study is to gather information, through a descriptive investigation, on the initial teacher training in evaluation provided at university. Taking specific topics of the training curriculum, “assessment terminology and concepts” and “paper-and-pencil assessments of achievement” (see Table 1, no. 11), as objects of analysis, the study aimed to determine the effect of adapting training courses on student teachers’ evaluation knowledge and skills, describing how teaching in the assessment area was adapted online during the COVID-19 emergency. The descriptive and investigative questions are:

• what knowledge (evaluation terminology and concepts) have student teachers gained?

• have student teachers been able to design assessment tools?

Context, Sample, and Method of Investigation

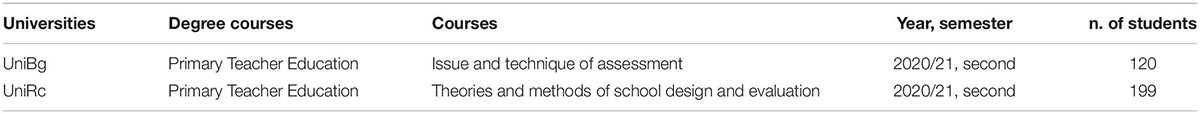

A comparative study was carried out of two university courses in the area of evaluation (Table 2), both part of degree programs for the initial training of future primary school teachers: one at the University of Bergamo and one at the Mediterranean University of Reggio Calabria, Italy. Both courses lasted 64 h and alternated online training activities: theoretical lectures (the basics of evaluation, evaluation terminology and concepts, etc.), thematic in-depth seminars (evaluation and assessment tools, skills assessment, etc.), and group laboratory activities (analysis of standardized tests, development of paper-and-pencil tools, etc.).

The study involved 155 primary school student teachers (mean age 27, mostly women) and was conducted in February–March 2021.

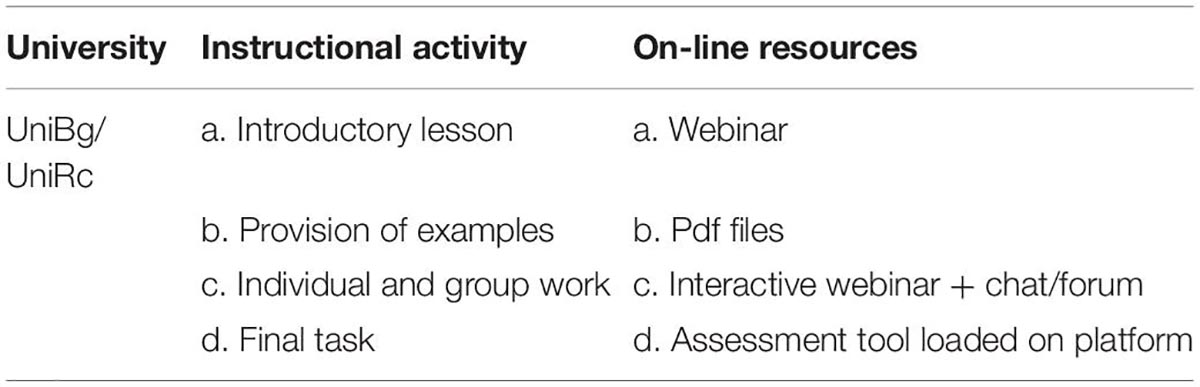

Table 3 compares the online resources used to convert specific instructional activities (webinars, PDF files, forums, etc.). Both courses were adapted using the same arrangement of online resources.

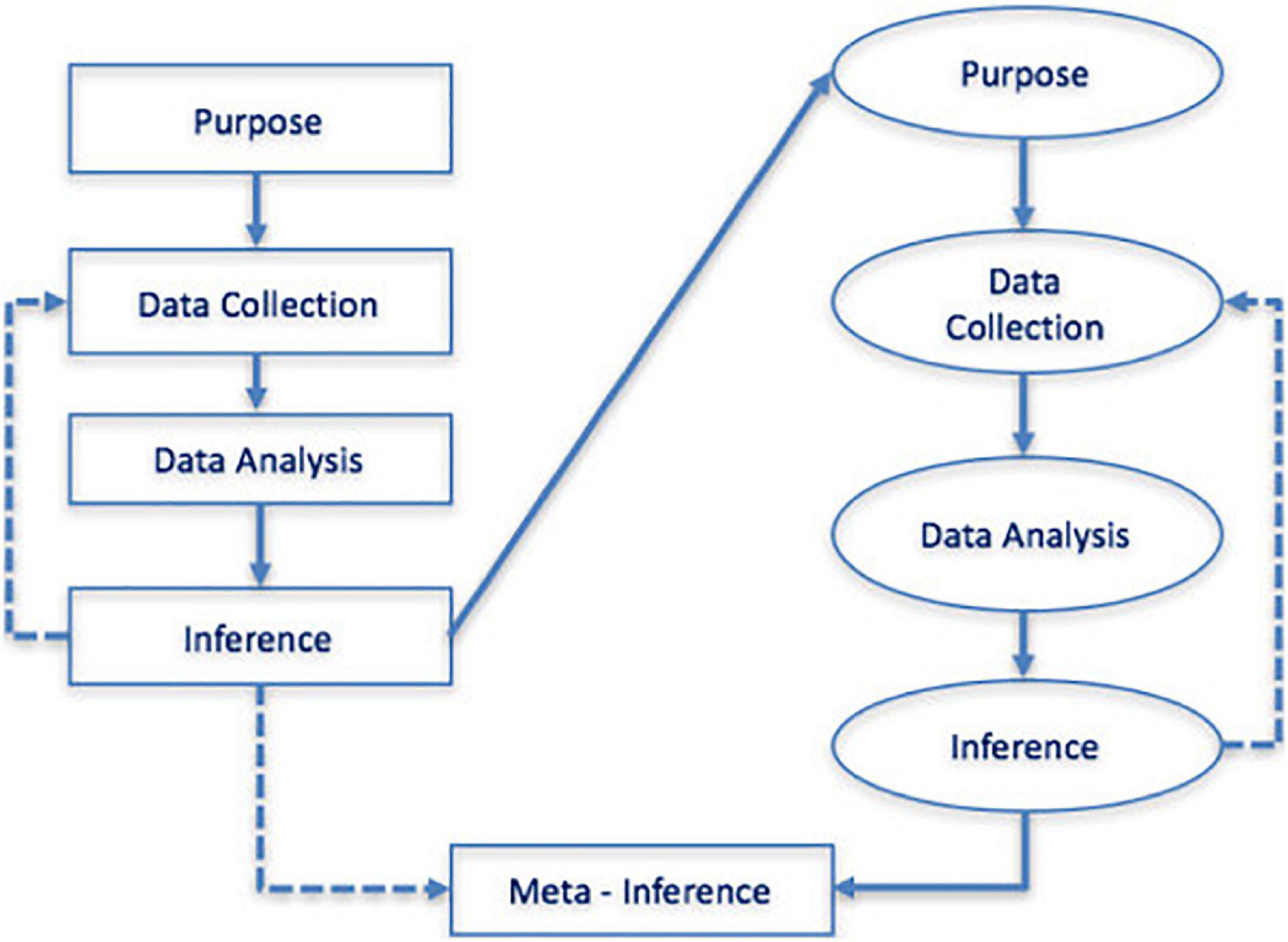

A sequential mixed-methods design was used (Tashakkori and Teddlie, 2003; Cameron, 2015). The first, exploratory method (quantitative data) produced early inferences that informed the second, confirmatory method (qualitative data). A final meta-inference was then drawn through triangulation (Figure 1). Following the Kirkpatrick model as adapted to e-learning environments (Hamtini, 2008), attention focused on student teachers’ learning, in terms of declarative-procedural knowledge (knowledge, 1) and skills (skills, 2).

Procedure and Tools

The first, exploratory method collected quantitative data through an ad hoc questionnaire on student teachers’ evaluative knowledge; the second, confirmatory method gathered qualitative data through document analysis of a paper-and-pencil assessment tool (in this case, the “authentic task”) to examine student teachers’ evaluative skills. All 155 student teachers involved (UniBg n = 81; UniRc n = 74) produced the paper-and-pencil assessment tool; 130 of them answered the knowledge questionnaire (UniBg n = 62; UniRc n = 68).

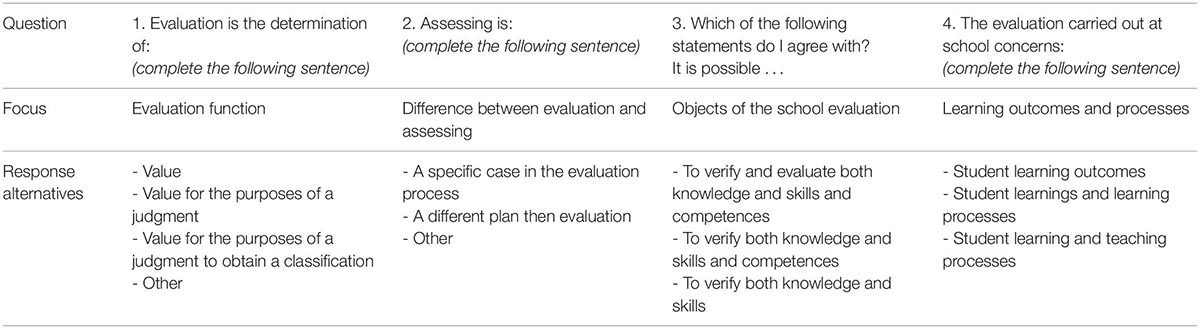

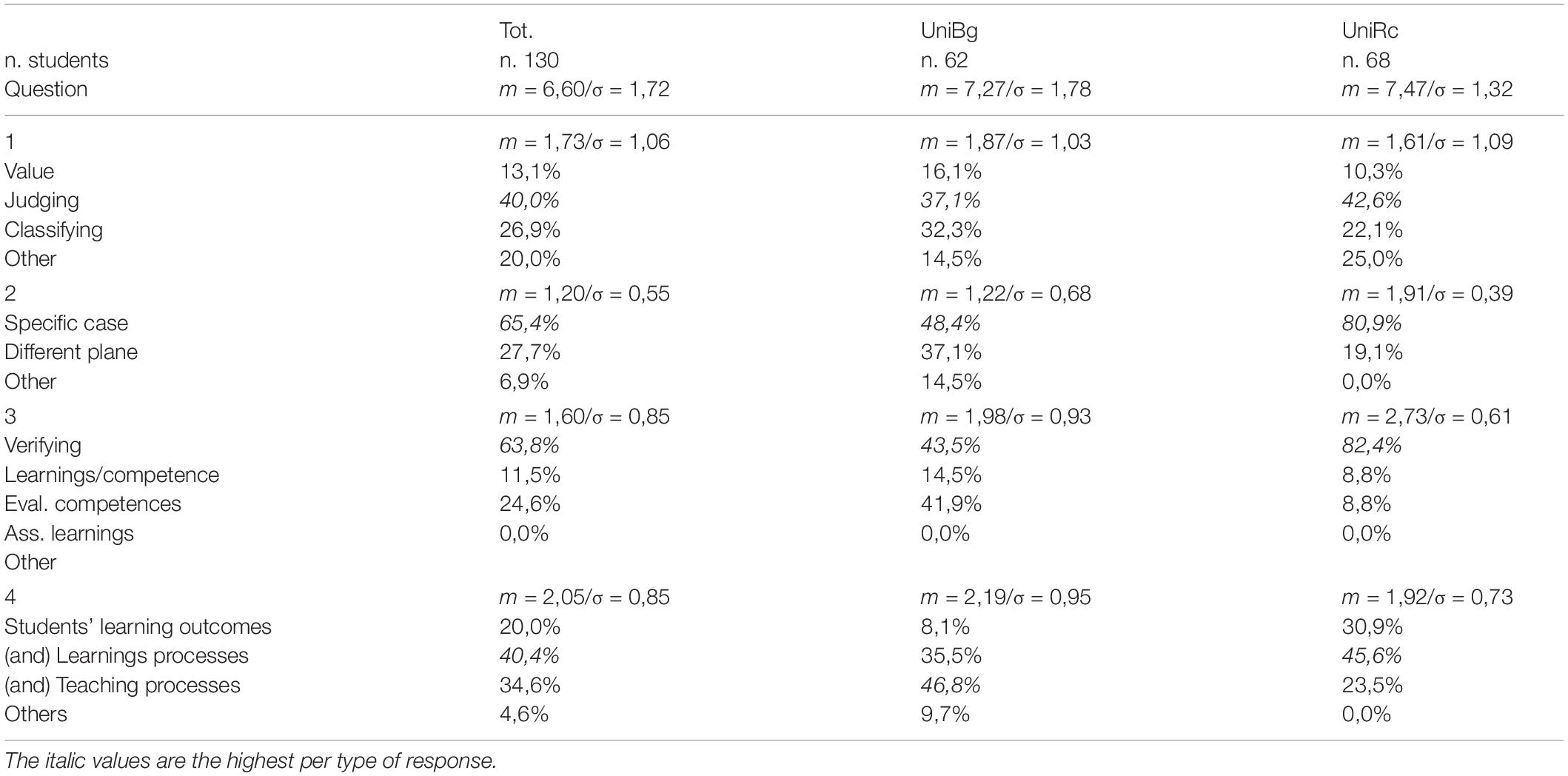

The ad hoc questionnaire was administered online through the university platform at the end of the courses. It consisted of four closed-ended items (Table 4) on specific terminology and concepts: the function of evaluation (question 1: value, judging, classifying), the difference between “evaluation” and “assessing” (question 2: specific case, different plan), the objects of school evaluation (question 3: verifying students’ knowledge and skills, evaluating competences, assessing learning), and learning outcomes and processes (question 4: assessing student outcomes, assessing teaching processes).

For the descriptive statistical analysis of the questionnaire data, the mean and standard deviation of the responses were calculated in a spreadsheet (Table 5).
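The per-item statistics described above can be sketched in Python as an alternative to a spreadsheet. The sketch assumes that each answer is coded as the number of the alternative chosen; the source does not state how alternatives were coded, and the responses below are invented for illustration, not the study’s data. It reports the percentage of respondents choosing each alternative together with the mean and sample standard deviation of the codes.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical responses to one closed-ended item, coded as the number of the
# alternative chosen (1, 2, 3). Invented for illustration, not the study's data.
responses = [1, 2, 1, 3, 1, 2, 1, 1, 3, 2]


def percentage_by_alternative(answers):
    """Return the percentage of respondents choosing each alternative."""
    counts = Counter(answers)
    n = len(answers)
    return {alt: round(100 * c / n, 1) for alt, c in sorted(counts.items())}


def mean_and_sd(answers):
    """Return the mean and sample standard deviation of the numeric codes."""
    return round(mean(answers), 2), round(stdev(answers), 2)


print(percentage_by_alternative(responses))  # {1: 50.0, 2: 30.0, 3: 20.0}
print(mean_and_sd(responses))                # (1.7, 0.82)
```

The source does not say whether the sample (n − 1) or population standard deviation was used; `stdev` is the sample version, and `statistics.pstdev` would give the population one.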

Student teachers’ skills (2) were inferred from document analysis of the paper-and-pencil assessment tools they developed in thematic workshops and uploaded to the platform at the end of the course. The paper-based assessment tool produced by the students was the “authentic task” (Comoglio, 2002; Herrington and Herrington, 2007; Herrington et al., 2014; Tessaro, 2014; Grion et al., 2017; Castoldi, 2018; Grion et al., 2019; Perla and Vinci, 2021), that is, “significant tasks, which allow the (school) student to experience and discover knowledge, to relate to it with a curious spirit, sharing the experience with others, and thus to acquire significant knowledge, that is, knowledge recognized as ‘important’ or necessary by the subject, in order to navigate within a problematic verification situation” (Grion et al., 2019, p. 94). The authentic-task template provided to the student teachers included the following elements: educational goals, focus competence (linked to the national curriculum), learning objective(s) (linked to a specific discipline), pupils’ age, operational task, expected product, implementation (times, spaces, work phases and activities, resources, methods and tools), evaluation rubric, self-assessment procedure, and peer-evaluation methods.

Given the complex and “open” nature of the tool, the following “relevance criteria” (De Ketele and Gerard, 2005) were identified for the documentary analysis of the authentic tasks, and hence for inferring student teachers’ ability to design paper-and-pencil assessment tools:

• (real situation) reference to a real or plausible situation/context, capable of promoting situated knowledge (rather than decontextualized knowledge, as usually happens in knowledge assessment tests);

• (different solution) possibility of different solutions for solving the task;

• (challenging) complex, dynamic, and combinatorial nature of the knowledge required to solve the task, such that the task is “challenging” for the school student who has to solve it;

• (transposition) transposition of the problem from the specialized language of a discipline to natural language;

• (consistency) consistency between the expected product and the key competence selected for the structuring of the task.

Measurement

The answers to the student teachers’ questionnaire were analyzed by calculating the mean for each alternative answer and the related standard deviation. Table 5 shows the data as percentages.

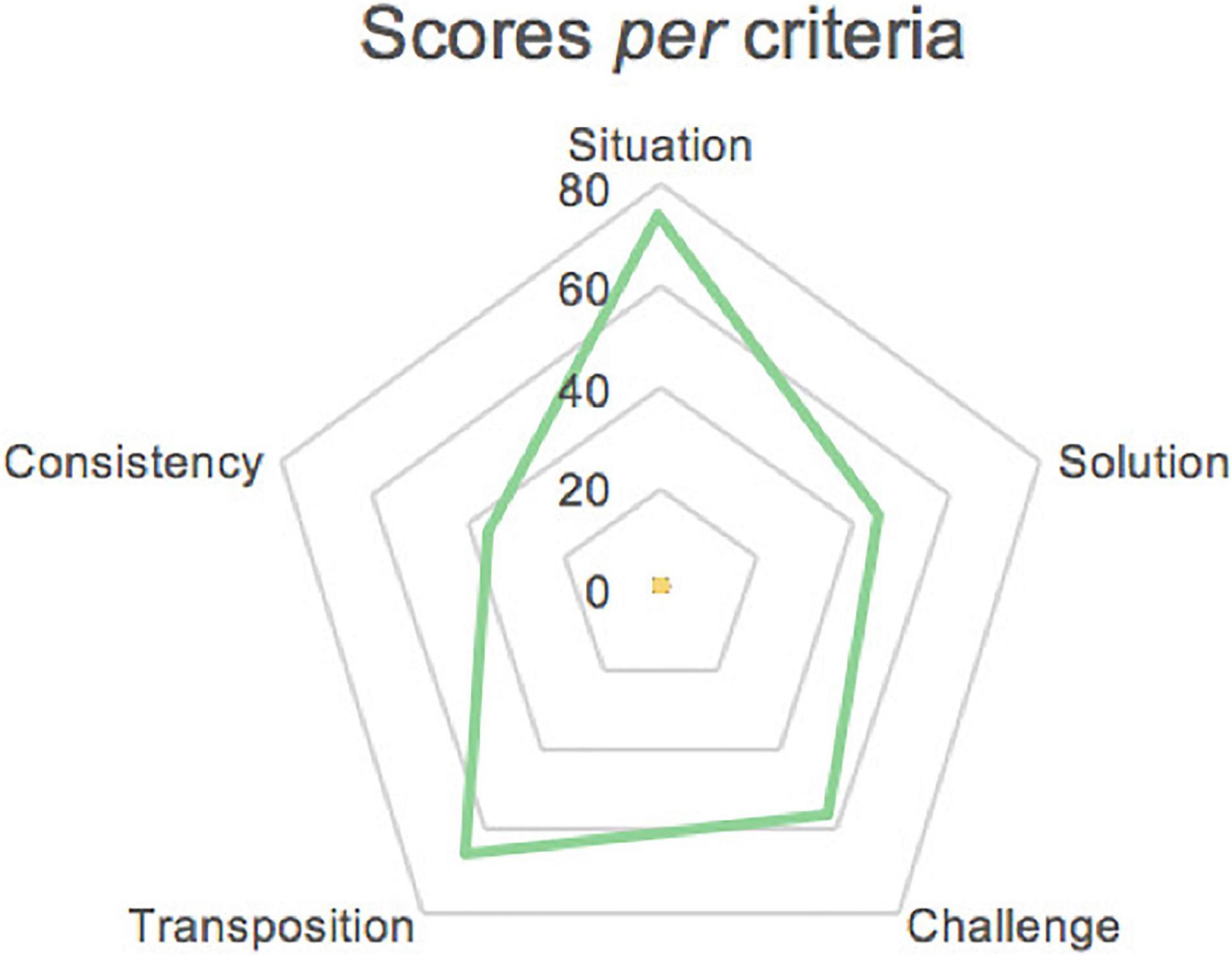

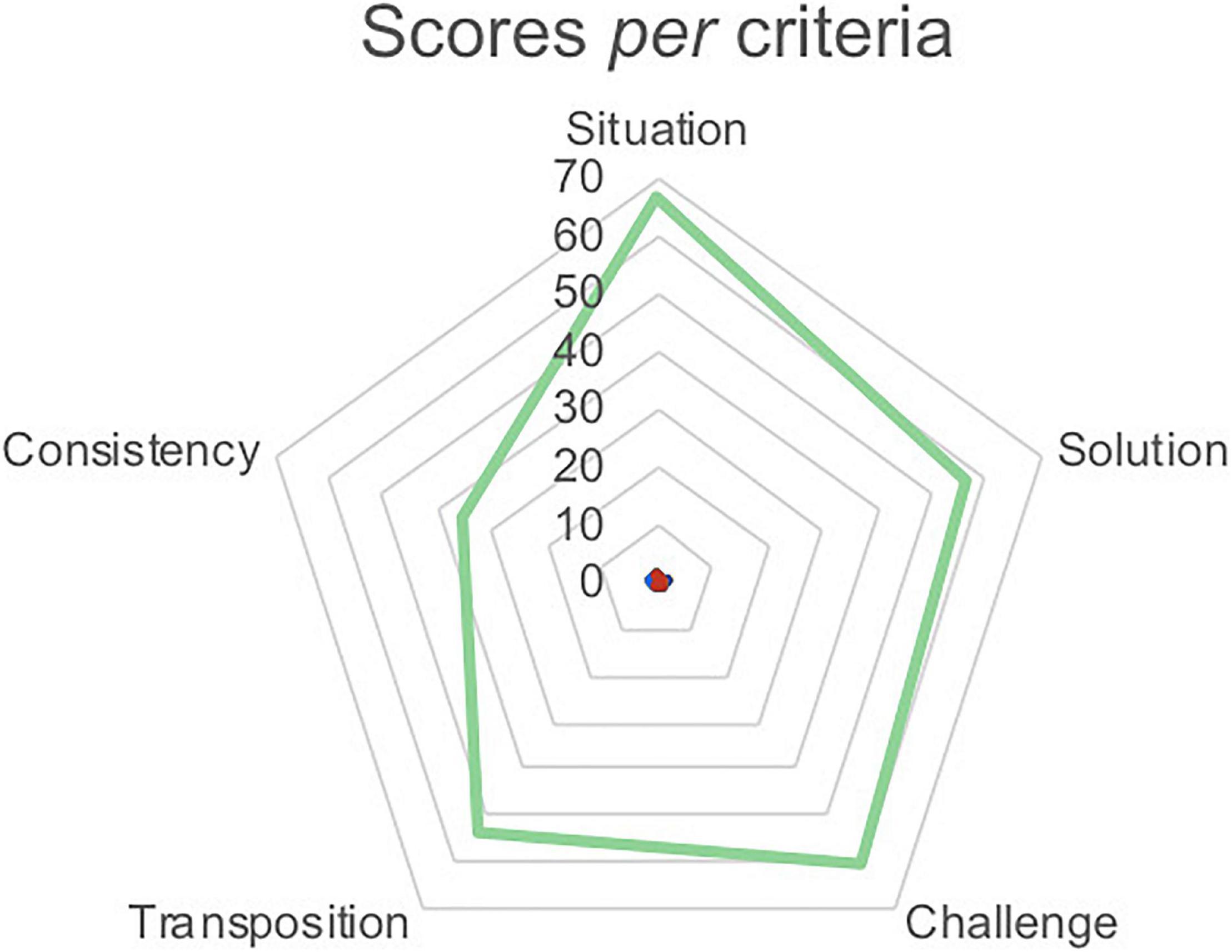

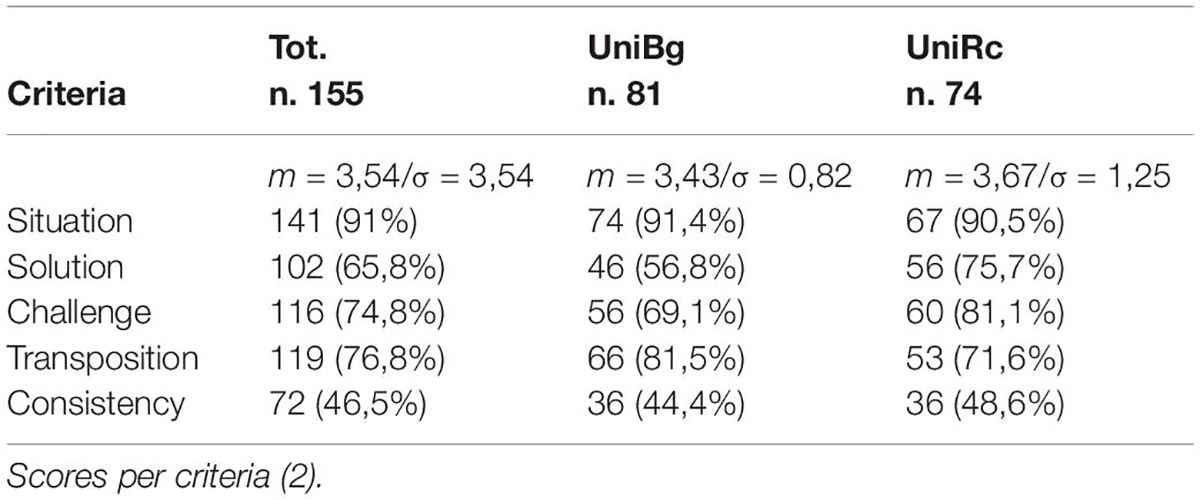

The documentary analysis of the authentic tasks also yielded quantitative data: average scores and related standard deviations were calculated for each of the five relevance criteria.

Figures 2 and 3 show the quantitative results of the document analysis of the authentic tasks for UniBg and UniRc, respectively, reporting the average scores obtained on the five relevance criteria.

The documentary analysis was conducted by assigning a score for each criterion and summing the scores obtained (Table 6).
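The scoring step can be sketched as follows. The sketch assumes that each of the five relevance criteria is scored 1 (met) or 0 (not met); the source does not specify the scale, and the four tasks below are hypothetical. Under this binary assumption, the mean score for a criterion equals the proportion of tasks meeting it, so criterion-level results can be read directly as percentages.

```python
from statistics import mean, stdev

# The five relevance criteria used in the documentary analysis.
CRITERIA = ("real_situation", "different_solution", "challenging",
            "transposition", "consistency")

# Hypothetical binary scores (1 = criterion met, 0 = not met), one tuple per
# authentic task, in the same order as CRITERIA. Not the study's data.
tasks = [
    (1, 1, 1, 1, 0),
    (1, 0, 1, 1, 1),
    (1, 1, 0, 0, 0),
    (0, 0, 1, 1, 0),
]


def total_score(task):
    """Sum of the criterion scores obtained by one task."""
    return sum(task)


def criterion_summary(group):
    """Per criterion: percentage of tasks meeting it, mean score and sample SD."""
    summary = {}
    for i, name in enumerate(CRITERIA):
        scores = [task[i] for task in group]
        summary[name] = {
            "percent_met": round(100 * sum(scores) / len(scores), 1),
            "mean": round(mean(scores), 2),
            "sd": round(stdev(scores), 2),
        }
    return summary


print([total_score(t) for t in tasks])          # [4, 4, 2, 2]
print(criterion_summary(tasks)["consistency"])
# {'percent_met': 25.0, 'mean': 0.25, 'sd': 0.5}
```

Running `criterion_summary` separately on each group’s tasks would produce per-group summaries of the kind reported for UniBg and UniRc; the cross-analysis with the questionnaire used for triangulation is outside this sketch.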

Triangulation (Salkind, 2010) was carried out by cross-analyzing the questionnaire results and the average scores of the authentic tasks (Table 6).

Results

Evaluation Terminology and Concepts

Regarding student teachers’ knowledge (1), Table 5 shows general homogeneity between Bergamo and Reggio Calabria, with one specific difference. Homogeneity is found for questions 1, 2, and 3. Student teachers from both Bergamo and Reggio Calabria:

– attribute to evaluation the function of expressing a value for the purpose of making a judgment (UniBg = 37.1%; UniRc = 42.6%), although dispersion is high in both groups (UniBg = 1.03; UniRc = 1.09);

– consider assessment a specific case of evaluation (UniBg = 48.4%; UniRc = 80.9%); dispersion is low in both groups, but this answer is more frequent among UniRc than UniBg student teachers (+32.5 percentage points);

– identify verifying both students’ learning and their skills as the object of school evaluation (UniBg = 43.5%; UniRc = 82.4%); here too, the answer is more frequent among UniRc student teachers (+38.9 percentage points).

Regarding knowledge of the evaluation processes carried out at school (question 4), the two groups differ. UniBg student teachers believe that school evaluation concerns both students’ learning processes and teachers’ teaching processes (46.8%), whereas UniRc student teachers mainly consider students’ learning outcomes and learning processes (45.6%). Note, however, that the gap between the answers to question 4 is not as wide as for the previous questions.
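Because the two groups differ in size (UniBg n = 62; UniRc n = 68), the gaps above are differences in percentage points, and a share for all 130 respondents can be obtained by weighting each group’s percentage by its number of respondents. The worked example below assumes that the Table 5 percentages are computed over all respondents in each group; it is an arithmetic illustration, not an analysis reported by the authors.

```latex
\begin{aligned}
\Delta_{Q2} &= p_{Rc} - p_{Bg} = 80.9 - 48.4 = 32.5 \ \text{percentage points} \\
p_{\text{pooled}} &= \frac{n_{Bg}\,p_{Bg} + n_{Rc}\,p_{Rc}}{n_{Bg} + n_{Rc}} \\
p_{\text{pooled},Q1} &= \frac{62 \times 37.1 + 68 \times 42.6}{130} = \frac{5197.0}{130} \approx 40.0\% \\
p_{\text{pooled},Q2} &= \frac{62 \times 48.4 + 68 \times 80.9}{130} = \frac{8502.0}{130} \approx 65.4\%
\end{aligned}
```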

Research question 1: What knowledge (evaluation terminology and concepts) have student teachers gained?

Roughly 40% of student teachers (37.1% at UniBg, 42.6% at UniRc) attribute to evaluation, in general, the function of judging events, things, objects, etc. A majority of the pooled sample consider assessment a specific case of evaluation, although at UniBg this answer falls just short of half (48.4%). A majority likewise consider students’ knowledge, skills, and competences as objects of school evaluation, although again fewer than half do so at UniBg (43.5%). Views differ, however, on the evaluation processes that take place at school: some consider only students’ learning processes, others also teachers’ teaching processes.

Further investigation would be needed to establish whether this difference in knowledge depends on the content delivered in each university course (beyond the similar didactic organization followed to adapt online delivery; Table 3) or on a kind of “local culture” of evaluation.

Design of Paper-and-Pencil Tool

The data from the document analysis of the paper-and-pencil assessment tool (the “authentic task”) are shown in Table 6. Examined against the relevance criteria described above, they yield the following findings.

Research question 2: Have student teachers been able to design assessment tools?

Almost all students (Tot. 91%; UniBg 91.4%; UniRc 90.5%) designed the authentic task with reference to a real situation capable of promoting situated knowledge, with no particular difference between the two groups. This may indicate widespread awareness among students of the need to refer to real or plausible contexts when designing an authentic task.

Some differences between the two groups emerge for the “different solution,” “challenging,” and “transposition” criteria. For the possibility of different solutions for solving the task (different solution), UniRc student teachers show a higher rate (75.7%) than UniBg (56.8%); the same holds for the complex, dynamic, and combinatorial nature of the knowledge required to solve the task, which makes it “challenging” for the school student who has to solve it (challenging; UniRc 81.1%; UniBg 69.1%). For the transposition of the problem from the formalized (specialized) language of a discipline to natural language (transposition), on the other hand, the rate is higher in the UniBg group (81.5%) than in UniRc (71.6%), although this gap is not large.

The criterion of “consistency” between the expected product and the key competence selected for structuring the task shows wide homogeneity between Bergamo (44.4%) and Reggio Calabria (48.6%), and in both groups the figure is markedly lower than for the other criteria. This suggests that it is difficult to design an authentic task that keeps the key competence to be promoted coherent with the final assessment product. It also points to some student teachers’ difficulty in implementing “constructive alignment” (Biggs, 2014, pp. 5–6).

Constructive alignment occurs when the learning activities that we ask students to engage in help them develop the knowledge, skills, and understandings intended for the unit and measured by our assessment (Tyler, 1949). “Alignment” refers to the teacher providing a learning environment that supports the learning activities appropriate to the intended learning outcomes: teaching methods and assessment tests must be aligned with the learning activities presupposed by those outcomes. “Constructive” refers to the fact that students construct meaning through relevant learning activities; knowing what the intended learning outcomes are, and at what level, they are more likely to feel motivated and interested in the content and activities the teacher has planned to facilitate their learning. Involving students also means having them reflect on their learning process and on their perceptions and opinions of the constructive alignment process (Serbati and Zaggia, 2012, p. 18).

Limitation and Prospect of the Study

Given the complexity and “openness” of authentic tasks, specific relevance criteria were identified for their analysis and validation. To validate a competence-based evaluative task, it was not possible to rely on the validity and reliability criteria (De Ketele and Roegiers, 1993; De Ketele and Gerard, 2005) designed for validating tests and/or describing a reference population (De Ketele and Dufays, 2003); instead, it was deemed appropriate to identify other documentary analysis criteria closely linked to the complex and situational nature of the authentic task, since the phenomenon under study results from a strong systematic effect combined with many individual or situational random factors.

At the methodological level, validating tests of competences, such as authentic tasks, is still a challenge. There is not enough scientific evidence to support the identification of effective documentary analysis criteria that are, on the one hand, capable of meeting quality standards and scientific rigor and, on the other, sensitive to the subjective, qualitative, and hard-to-standardize descriptive dimensions of a complex phenomenon such as competence.

The work also has some limitations.

The first critical issue concerns the terminology under study: a single Italian term (valutazione) is used indiscriminately for two distinct processes, “assessment” and “evaluation,” and students’ understanding and representations of these meanings deserve further investigation (Thomas, 1995). Besides the need to disambiguate the terminology in use, the variability of contextual conditions can also influence the results: although equivalent in content, the courses examined belong to two different geographical and cultural contexts.

The second critical point concerns the disciplinary context of the work: the participants were students in pedagogical courses on the subject of evaluation, so the content taught and the research’s field of investigation may have influenced each other. Future research with an experimental design, also involving non-education courses, could help determine whether some of the results, especially those on training evaluative skills, which are nowadays considered “soft skills” and described as “just-in-time” (EC, 2020), i.e., immediately applicable in multiple work contexts, hold regardless of the specific disciplinary context in which the work was carried out.

Conclusion for Educational Practice

The results show that the average levels of evaluative knowledge and skills (namely, the design of paper-and-pencil tools) of student teachers from both Bergamo and Reggio Calabria are more than satisfactory. Regarding the first research question (terminological knowledge and evaluation concepts), the students involved grasp the difference between “assessment” and “evaluation” and hold a broad view of school evaluation processes, addressed to pupils’ knowledge as well as to their skills and competences. Regarding the second research question (ability to design evaluation tools), a good level of performance was found in the products.

The design of the authentic task, which serves to make the application of evaluative criteria understood, aimed to promote evaluative literacy, an essential competence for young people’s future personal and professional lives (Sambell, 2011; Boud and Soler, 2016), yet one often neglected in school and university practice. Socio-constructivist contexts that activate interactive, cooperative teaching-learning processes (Grion et al., 2019) should be implemented. Furthermore, assessment models based on real-life performances and competences, which help students understand quality standards and compare assessment criteria (Tai et al., 2016, 2017), should be shared.

This experience responds to the need to train students, as future teachers, to take an active role and to reflect on their own learning in a more engaging way through one of the most important transversal skills for future personal and professional life: the ability to develop evaluative judgments, i.e., to create, use, and apply evaluative criteria to discriminate between objects or to make decisions about external situations or about oneself (Restiglian and Grion, 2019, p. 197). This moves beyond assessment-of-learning approaches, in which the student plays a passive role in the assessment process (Boud and Falchikov, 2007), toward a perspective in which students participate in designing the assessment process and become autonomous in evaluating their own learning (Boud et al., 2018). Such learning itineraries fall fully within the approaches of assessment for learning (Sambell et al., 2013; Grion and Serbati, 2017) and sustainable assessment (Boud and Soler, 2016).

This research also points to the importance of careful planning of the learning environment and targeted use of online technologies and resources when adapting a university course to remote mode, in order to support evaluative design practices at university. This suggests the need to train university teachers in “sophisticated” skills (Perla et al., 2019) that enable them to choose, use, and transform disciplinary content into “digitized disciplinary content,” and to design learning environments effectively by exploiting the potential of new technologies for teaching, thereby bridging the traditional gap between the world of education and real contexts.

The investigation also highlighted the role of documentation in developing students’ planning, evaluative, and reflective skills (Vinci, 2021), showing how a carefully structured documentary device has a structuring and transformative function at the cognitive and metacognitive levels and acts as scaffolding for more effective and lasting learning. Reflection on the documentary format also made the students, future pre-school and primary school teachers, aware of the essential link between planning, documentation, and school evaluation. The ad hoc documentary device for designing the authentic task allowed students to collect the information from which to design it, acting as a mediating element (Damiano, 2013) between the theoretical knowledge already acquired (through the introductory lessons on school assessment) and the operational skills required to structure the task, and hence between the knowledge acquired in the university course and the skills required in the professional field.

Fostering student teachers’ ability to analyze, design, and evaluate requires renewing teachers’ professional competences, in particular documentary competence (Perla, 2012, 2017, 2019). Hence the need to design specific methodological devices that support teachers in their evaluation and self-assessment actions, and to advance research on models for teachers’ professional development.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

LA wrote the sections “Introduction,” “Theoretical Framework,” “Hypotheses,” “Context, Sample, and Method of Investigation,” “Measurement,” and “Evaluation Terminology and Concepts.” VV wrote the sections “Procedure and Tools,” “Design of Paper-and-Pencil Tool,” “Limitation and Prospect of the Study,” and “Conclusion for Educational Practice.” Both authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Allen, E., Seaman, J., Poulin, R., and Straut, T. T. (2016). Online Report Card: Tracking Online Education in the United States. Needham, MA: Babson Survey Research Group and Quahog Research Group.

Agrati, L. S., and Vinci, V. (2021). “Virtual internship as mediatized experience. The educator’s training during COVID19 emergency,” in Bridges and Mediation in Higher Distance Education. Communications in Computer and Information Science, Vol. 1344, eds L. S. Agrati, D. Burgos, P. Ducange, P. Limone, L. Perla, P. Picerno, et al. (Cham: Springer), 1–14.

Andrade, H., and Heritage, M. (2018). Using Formative Assessment to Enhance Learning, Achievement, and Academic Self-Regulation. London: Routledge. doi: 10.4324/9781315623856

Basarab, D. J., and Root, D. K. (1992). The Training Evaluation Process: A Practical Approach to Evaluating Corporate Training Programs. Boston: Kluwer Academic. doi: 10.1007/978-94-011-2940-4

Biggs, J. (2014). Constructive alignment in university teaching. Herdsa Rev. Higher Educ. 1, 5–22.

Boud, D., Ajjawi, R., Dawson, P., and Tai, J. (eds) (2018). Developing Evaluative Judgement in Higher Education. Assessment for Knowing and Producing Quality Work. Abingdon: Routledge. doi: 10.4324/9781315109251

Boud, D., and Falchikov, N. (2007). Rethinking Assessment in Higher Education: Learning for the Longer Term. London: Routledge.

Boud, D., and Soler, R. (2016). Sustainable assessment revisited. Assess. Evalu. Higher Educ. 41, 400–413. doi: 10.1080/02602938.2015.1018133

Brookhart, S. (2011). Educational assessment knowledge and skills for teachers. Educ. Measure. Pract. 30, 3–12. doi: 10.1111/j.1745-3992.2010.00195.x

Cameron, R. (2015). “The emerging use of mixed methods in educational research,” in Meanings and Motivation in Education Research, eds M. Baguley, Y. S. Findlay, and M. C. Karby (New York: Routledge).

Damiano, E. (2013). La Mediazione Didattica. Per Una Teoria Dell’insegnamento. Milano: FrancoAngeli.

Darling-Hammond, L., and Adamson, F. (2010). Beyond Basic Skills: The Role Of Performance Assessment In Achieving 21st Century Standards Of Learning. Stanford, CA: Stanford University, Stanford Center for Opportunity Policy in Education.

Darling-Hammond, L., Hyler, M. E., and Gardner, M. (2017). Effective Teacher Professional Development. Palo Alto, CA: Learning Policy Institute. doi: 10.54300/122.311

De Ketele, J.-M., and Dufays, J.-L. (2003). “Vers de nouveaux modes d’évaluation des compétences,” in Enseigner le français, l’espagnol et l’italien. Les langues romanes à l’heure des compétences, eds L. Collès, J.-L. Dufays, and C. Maeder (Bruxelles-Paris: Éditions De Boeck Duculot), 171–182.

De Ketele, J.-M., and Gerard, F.-M. (2005). La validation des épreuves d’évaluation selon l’approche par les compétences. Mesure et Évaluation en Éducation 28, 1–26. doi: 10.7202/1087028ar

De Ketele, J.-M., and Roegiers, X. (1993). Méthodologie du Recueil d’Informations. Bruxelles: De Boeck Université.

DeLuca, C., and Bellara, A. (2013). The current state of assessment education: aligning policy, standards, and teacher education curriculum. J. Teach. Educ. 64, 356–372. doi: 10.1177/0022487113488144

DeLuca, C., and Johnson, S. (2017). Developing assessment capable teachers in this age of accountability. Assess. Educ. Principles Policy Pract. 24, 121–126. doi: 10.1080/0969594X.2017.1297010

DeLuca, C., and Klinger, D. A. (2010). Assessment literacy development: identifying gaps in teacher candidates’ learning. Assess. Educ. Principles Policy Pract. 17, 419–438. doi: 10.1080/0969594X.2010.516643

DeLuca, C., and Volante, L. (2016). Assessment for learning in teacher education programs: navigating the juxtaposition of theory and praxis. J. Int. Soc. Teach. Educ. 20, 19–31.

DeLuca, C., Klinger, D. A., Searle, M., and Shulha, L. M. (2010). Developing a curriculum for assessment education. Assess. Matters 2, 20–42. doi: 10.18296/am.0080

DeLuca, C., Schneider, C., Pozas, M., and Coombs, A. (2018). Exploring the Structure and Foundations of Assessment Literacy: A Cross-cultural Comparison of German and Canadian Student Teachers. Available online at: https://eera-ecer.de/ecer-programmes/conference/23/contribution/43815 (accessed September 15, 2021).

Gaebel, M., Zhang, T., Stoeber, H., and Morrisroe, A. (2021). Digitally Enhanced Learning And Teaching In European Higher Education Institutions. Brussels: European University Association.

Grion, V., and Serbati, A. (2017). Assessment for Learning in Higher Education. Nuove Prospettive E Pratiche Di Valutazione All’università. Lecce: Pensa MultiMedia.

Grion, V., Aquario, D., and Restiglian, E. (2017). Valutare. Sviluppi Teorici, Percorsi E Strumenti Per La Scuola E I Contesti Formativi. Padova: Cleup.

Grion, V., Aquario, D., and Restiglian, E. (2019). Valutare Nella Scuola E Nei Contesti Educativi. Padova: CLEUP.

Grossman, P., and Schoenfeld, A. (2005). “Teaching subject matter,” in Preparing Teachers for a Changing World: What Teachers Should Learn and Be Able To Do, eds L. Darling-Hammond and J. Bransford (San Francisco: Jossey-Bass), 201–231.

Hamtini, T. M. (2008). Evaluating e-learning programs: an adaptation of Kirkpatrick’s model to accommodate e-learning environments. J. Comput. Sci. 4, 693–698. doi: 10.3844/jcssp.2008.693.698

Herrington, J. A., and Herrington, A. J. (2007). “What is an authentic learning environment?,” in Online and Distance Learning: Concepts, Methodologies, Tools, and Applications, ed. L. A. Tomei (Hershey, PA: Information Science Reference), 68–77. doi: 10.4018/978-1-59904-935-9.ch008

Herrington, J., Reeves, T. C., and Oliver, R. (2014). “Authentic learning environments,” in Handbook of Research on Educational Communications and Technology, eds J. M. Spector, M. D. Merrill, J. Elen, and M. J. Bishop (New York: Springer-Verlag), 401–412. doi: 10.1007/978-1-4614-3185-5_32

IAU-UNESCO (2020). Regional/National Perspectives on the Impact of COVID-19 on Higher Education. Paris: UNESCO House.

La Marca, P. (2006). “Assessment literacy: building capacity for improving student learning,” in Paper Presented at the Council of Chief State School Officers (CCSSO) Annual Conference on Large-Scale Assessment, (San Francisco, CA).

Lipowsky, F., and Rzejak, D. (2015). Key features of effective professional development programmes for teachers. RicercAzione. 7, 27–51.

Marinoni, G., and van’t Land, H. (2020). The impact of COVID-19 on global higher education. Int. Higher Educ. 102, 7–9.

Münster, W., Schneider, C., and Bodensohn, R. (2017). Student teachers’ appraisal of the importance of assessment in teacher education and self-reports on the development of assessment competence. Assess. Educ. Principles Policy Pract. 24, 127–146. doi: 10.1080/0969594X.2017.1293002

O’Leary, M. (2008). Towards an agenda for professional development in assessment. J. In-Serv. Educ. 34, 109–114. doi: 10.1080/13674580701828252

OECD (2005). Teachers Matter: Attracting, Developing and Retaining Effective Teachers. Paris: OECD Publishing.

OECD (2013). “Student assessment: putting the learner at the centre,” in Synergies for Better Learning: An International Perspective on Evaluation and Assessment, eds D. Nusche, T. Radinger, and P. Santiago (Paris: OECD Publishing).

Ogan-Bekiroglu, F., and Suzuk, E. (2014). Pre-service teachers’ assessment literacy and its implementation into practice. Curric. J. 25, 344–371.

Pastore, S. (2020). Saper (Ben) Valutare. Repertori, Modelli E Istanze Formative Per L’assessment Literacy Degli Insegnanti. Milano: Mondadori.

Perla, L. (2017). “Documentazione = professionalizzazione? Scritture per la co-formazione in servizio degli insegnanti,” in La Professionalità degli Insegnanti. La Ricerca e le Pratiche, eds P. Magnoler, A. M. Notti, and L. Perla (Lecce: Pensa Multimedia), 69–87.

Perla, L. (2019). Valutare Per Valorizzare. La documentazione Per Il Miglioramento Di Scuola, Insegnanti, Studenti. Brescia: Morcelliana.

Perla, L. (2021). Forma e contenuto del giudizio scolastico. elementi per un dibattito. Nuova Second. Ricerca. 8, 121–130.

Perla, L., and Vinci, V. (2021). Videovalutare l’agire competente dello studente. Excell. Innov. Learn. Teach. 6, 119–135.

Perla, L., Agrati, L. S., and Vinci, V. (2019). “The ‘Sophisticated’ knowledge of e-teacher. Re-shape digital resources for online courses,” in Higher Education Learning Methodologies and Technologies Online. HELMeTO 2019. Communications in Computer and Information Science, Vol. 1091, eds D. Burgos, M. Cimitile, P. Ducange, R. Pecori, P. Picerno, P. Raviolo, et al. (Cham: Springer), 3–17.

Poulin, R., and Straut, T. (2016). WCET Distance Education Enrollment Report 2016. WICHE Cooperative for Educational Technologies. Available online at: http://wcet.wiche.edu/initiatives/research/WCET-Distance-Education-Enrollment-Re (accessed September 15, 2021).

Rapanta, C., Botturi, L., Goodyear, P., Guardia, L., and Koole, M. (2020). Online university teaching during and after the covid-19 crisis: refocusing teacher presence and learning activity. Postdigit. Sci. Educ. 2, 923–945. doi: 10.1007/s42438-020-00155-y

Read, M. F., Morel, G. M., Butcher, T., Jensen, A. E., and Lang, J. M. (2019). “Developing TPACK understanding through experiential faculty development,” in Handbook of Research on TPACK in the Digital Age, eds M. L. Niess, H. Gillow-Wiles, and C. Angeli (Hershey: IGI-Global), 224–256. doi: 10.4018/978-1-5225-7001-1.ch011

Restiglian, E., and Grion, V. (2019). Valutazione e feedback fra pari nella scuola: uno studio di caso nell’ambito del progetto GRiFoVA. Italian J. Educ. Res. XII, 195–221.

Salmon, G. (2002). E-Tivities: A Key to Active Online Learning. London: Routledge.

Sambell, K. (2011). Rethinking Feedback in Higher Education: An Assessment for Learning Perspective. Bristol: ESCalate.

Sambell, K., McDowell, L., and Montgomery, C. (2013). Assessment for Learning in Higher Education. London: Routledge. doi: 10.4324/9780203818268

Serbati, A., and Zaggia, C. (2012). Allineare le metodologie di insegnamento, apprendimento e valutazione ai learning outcomes: una proposta per i corsi di studio universitari. Giornale Italiano Della Ricerca Educ. 5, 11–26.

Shepard, L., Hammerness, K., Darling-Hammond, L., Rust, F., Baratz-Snowden, J., Gordon, E., et al. (2005). “Assessment,” in Preparing Teachers for a Changing World: What Teachers Should Learn and Be Able To Do, eds L. Darling-Hammond and J. Bransford (San Francisco: Jossey-Bass), 275–326.

Shulman, L. S. (1987). Knowledge and teaching: foundations of the new reform. Harvard Educ. Rev. 57, 1–22. doi: 10.17763/haer.57.1.j463w79r56455411

Stiggins, R. J., and Faires-Conklin, N. (1988). Teacher Training in Assessment. Portland, OR: Northwest Regional Educational Laboratory.

Tai, J. H. M., Canny, B. J., Haines, T. P., and Molloy, E. K. (2016). The role of peer-assisted learning in building evaluative judgement: opportunities in clinical medical education. Adv. Health Sci. Educ. 21, 659–676. doi: 10.1007/s10459-015-9659-0

Tai, J., Ajjawi, R., Boud, D., Dawson, P., and Panadero, E. (2017). Developing evaluative judgement: enabling students to make decisions about the quality of work. Higher Educ. 76, 467–481. doi: 10.1007/s10734-017-0220-3

Tashakkori, A., and Teddlie, C. (2003). Handbook of Mixed Methods in Social & Behavioral Research. Thousand Oaks, CA: Sage.

Tyler, R. W. (1949). Basic Principles of Curriculum and Instruction. Chicago: University of Chicago Press.

Vinci, V. (2021). La documentazione per la valutazione. Nuova Second. Ric. 9, 445–457.

Willis, J., Adie, L., and Klenowski, V. (2013). Conceptualizing teachers’ assessment literacies in an era of curriculum and assessment reform. Austr. Educ. Res. 40, 241–256. doi: 10.1007/s13384-013-0089-9

Keywords: evaluation competences, initial teacher training, online environment, adaptation of courses, effectiveness of training

Citation: Agrati LS and Vinci V (2022) Evaluative Knowledge and Skills of Student Teachers Within the Adapted Degree Courses. Front. Educ. 7:817963. doi: 10.3389/feduc.2022.817963

Received: 18 November 2021; Accepted: 02 June 2022;

Published: 07 July 2022.

Edited by:

Mandeep Bhullar, Bhutta College of Education, India

Reviewed by:

Luxin Yang, Beijing Foreign Studies University, China

Pargat Singh Garcha, GHG Khalsa College of Education Gurusar Sudhar, India

Copyright © 2022 Agrati and Vinci. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Laura Sara Agrati, laurasara.agrati@unibg.it
