
BRIEF RESEARCH REPORT article

Front. Educ., 08 September 2022

Sec. Language, Culture and Diversity

Volume 7 - 2022 | https://doi.org/10.3389/feduc.2022.817284

Each summer, students may lose some of the academic abilities they gained over the previous school year. English learners (ELs) may be at particular risk of losing English skills over the summer, but this group has been neglected in previous research. This study investigates the development of oral reading fluency (ORF) of ELs compared with native English speakers. Using the AIMSweb Reading Curriculum-Based Measurement (R-CBM) in a pre-post design, we assessed the reading fluency of N = 3,280 students [n = 363 (11.1%) ELs vs. n = 2,917 (88.9%) native speakers (NS)] in a school district in the Southeastern U.S. in May (end of 4th grade) before the summer break and in September (beginning of 5th grade) after it. Results showed that, on average, ELs performed 23.36 points below NS after the summer break. However, native English speakers and ELs lost ORF at similar rates over the summer (β = –0.02, p = 0.281). Contrary to our hypothesis, students who had been higher performing in the spring had larger reading performance losses over the summer (β = –0.45, p < 0.001). Future studies should assess the underlying individual student characteristics and learning mechanisms in more detail in order to develop evidence-based recommendations for tailored programs that can close the achievement gap between ELs and native English speakers.

Reading development among English Learners (ELs) and English native speakers (NS) has historically been addressed in education policy and research, although often not explicitly. The Equal Educational Opportunities Act (Equal Educational Opportunities Act [EEOA], 1974) prohibits discrimination against students and requires schools to remove language barriers for ELs (Parsi, 2016). The Elementary and Secondary Education Act (ESEA) of 1965 and, later, the Every Student Succeeds Act [ESSA] (2015) authorized funding for schools to support the academic growth of EL and low-income students. For instance, schools were mandated to provide language accommodations to non-English-speaking students to support reading growth and narrow reading achievement gaps (Lyons et al., 2017). However, despite school reforms, evidence shows little to no progress in narrowing existing achievement gaps (Bracey, 2002). Summer learning regression occurs when students lose, over the summer break, skills they gained during the previous academic year. Importantly, it is during these months that the reading achievement gap widens the most (Entwisle and Alexander, 1992; Allington and McGill-Franzen, 2003; Kim and Guryan, 2010). Many studies attribute achievement gaps and summer regression to contextual school-based factors and educational practices (Heck, 2007; Condron, 2009; Hanushek and Rivkin, 2009). With regard to the achievement gap between socioeconomic groups, research shows that during the academic year, low-income students make achievement gains at the same rate as their middle- and high-income peers (Cooper et al., 1996). However, research has not adequately considered the effects of summer regression for at-risk groups such as EL students (Allington and McGill-Franzen, 2003). In 2018, one in five families (21.9%) in the US spoke a language other than English at home, a percentage that has doubled since 1980 (Zeigler and Camarota, 2019).
Given the growth of ELs in schools nationwide alongside evidence of detrimental summer reading loss, research needs to compare the distributions of reading growth patterns among EL and NS students over the summer. The role of second language acquisition, and in particular ELs’ limited exposure to English during the summer months, has been minimally studied, although prior research indicates that the achievement gap between EL and NS students widens over the summer months (Alexander et al., 2001; Kim and Guryan, 2010).

The purpose of this study is to examine oral reading fluency (ORF) changes in a whole-population cohort of EL and NS students in one school district in the Southeastern US. We use regression analysis to assess changes in ORF, measured with reading curriculum-based measurements (R-CBM) from benchmark assessments at the end of fourth grade and the beginning of fifth grade. Prior research on summer reading loss and theories of second language acquisition inform our research questions and hypotheses: (1) Do EL and NS students lose ORF over the summer break? Based on the available research literature, we hypothesize that both EL and NS students will lose ORF over the summer. (2) Is the extent of potential summer losses the same or different for EL vs. NS students? Given ELs’ reduced exposure to English over the summer break, we hypothesize that EL students will lose more oral English reading fluency than their NS peers.

The data originate from a mid-sized school district in the southeastern United States. Over the past decade, the district has seen a steady increase in new EL students across the K-12 age span, similar to other cities in the region (see Zeigler and Camarota, 2019). The data include ORF benchmark scores from curriculum-based measurements (CBM) that are used district- and state-wide in the intermediate grades of elementary school. To analyze changes in reading fluency over the summer months, we used the spring benchmark of 4th grade and the following fall benchmark of 5th grade.

Overall, the dataset included 3,280 students’ AIMSweb assessments from May and September 2018. The data represent the whole population of students in the district who progressed from 4th to 5th grade and for whom both the spring and fall reading scores were available. Following the district’s data-sharing policies, no information about student socioeconomic status (SES) or other demographics was provided. However, district statistics indicated that EL students on average had a lower socioeconomic status than NS students. The study was carried out in accordance with the principles of the Declaration of Helsinki. The school district that provided the data shared only de-identified variables. Accordingly, the Institutional Review Board (IRB) of the University of Tennessee, Knoxville determined that the study was exempt from review (UTK IRB-19-05196).

The AIMSweb R-CBM is a widely used standardized K-12 screening assessment of ORF and comprehension (Kilgus et al., 2014). Curriculum-Based Measures (CBM) are administered frequently in progress monitoring and use curriculum-independent probes to meet reliability and validity standards (Reschly et al., 2009). The AIMSweb R-CBM was administered as a standardized ORF assessment that measures reading rate and accuracy, expressed as the number of words read correctly per minute (wcpm). The AIMSweb benchmark score reflects both rate and accuracy, because errors are counted and factored into the score as outlined in the AIMSweb Training Workbook. Teachers also note prosody, but it is not included in the score. The school district collected the data under very strict timing policies within 2-week testing windows, so the risk of confounding due to differences in the amount of instruction students had received during the school year was minimal. Teachers administering the test received professional training from the district during in-service days before the school year. Testing was conducted one-on-one during regular school hours. During the assessment, the student reads a level-based passage aloud for 1 min while the teacher records any errors (words that are mispronounced, substituted, omitted, or read out of sequence) that the student does not self-correct within 3 s (Kilgus et al., 2014). R-CBM probes are scored according to standard CBM criteria and serve as norm-referenced, standardized measures of reading achievement for elementary students (Reschly et al., 2009).
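The scoring rule described above can be sketched as follows, assuming the conventional CBM definition of wcpm as the number of words attempted during the 1-min probe minus the number of uncorrected errors. The record type `MarkedWord` and the error labels are hypothetical; this illustrates the rule and is not AIMSweb's scoring software.

```python
from dataclasses import dataclass
from typing import List, Optional

# Error categories named in the text (labels are illustrative).
ERROR_TYPES = {"mispronounced", "substituted", "omitted", "out_of_sequence"}
SELF_CORRECTION_WINDOW_S = 3.0  # self-corrections within 3 s are not errors


@dataclass
class MarkedWord:
    """One passage word up to where the student stopped (hypothetical record)."""
    error_type: Optional[str] = None           # None = read correctly
    corrected_after_s: Optional[float] = None  # seconds until self-correction


def counts_as_error(word: MarkedWord) -> bool:
    """True if the word is an error that was not self-corrected in time."""
    if word.error_type is None:
        return False
    if word.error_type not in ERROR_TYPES:
        raise ValueError(f"unknown error type: {word.error_type}")
    self_corrected = (word.corrected_after_s is not None
                      and word.corrected_after_s <= SELF_CORRECTION_WINDOW_S)
    return not self_corrected


def words_correct_per_minute(words_attempted: List[MarkedWord]) -> int:
    """wcpm for a 1-min probe: words attempted minus uncorrected errors."""
    errors = sum(counts_as_error(w) for w in words_attempted)
    return len(words_attempted) - errors


# Example: 98 words reached; the omission and the late (4.5 s) correction count.
probe = ([MarkedWord()] * 95
         + [MarkedWord("omitted"),
            MarkedWord("substituted", corrected_after_s=2.0),
            MarkedWord("mispronounced", corrected_after_s=4.5)])
print(words_correct_per_minute(probe))  # 96
```

Other edge cases follow AIMSweb's own scoring rules and are not modeled here.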

CBM are used to assess the development of basic English literacy skills such as phonological awareness, fluency, and reading comprehension (Sandberg Patton and Reschly, 2013). A critical feature of CBM is its step-by-step progress monitoring model, which is sensitive to students’ gains or losses from the end of one academic year to the beginning of the next (Good et al., 2012; Sandberg Patton and Reschly, 2013). For this purpose, raw scores are standardized against a large national norm sample for each assessment.
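The text does not describe how raw scores are converted into national norm-referenced ranks, and AIMSweb relies on its own published norm tables. Purely to illustrate what a norm-referenced percentile rank expresses, the sketch below uses one common definition (the percentage of norm-sample scores below a given score, with ties counted as half). The function name and the toy norm sample are hypothetical.

```python
from bisect import bisect_left, bisect_right
from typing import Sequence


def percentile_rank(score: float, norm_scores: Sequence[float]) -> float:
    """Percentile rank of `score` relative to a norm sample.

    Mid-rank definition: % of norm scores strictly below `score`
    plus half of the % exactly equal to it.
    """
    if not norm_scores:
        raise ValueError("norm sample must not be empty")
    ordered = sorted(norm_scores)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


toy_norms = [80, 90, 100, 110, 120]  # toy values, not AIMSweb norms
print(percentile_rank(100, toy_norms))  # 50.0
```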

In this study, AIMSweb R-CBM scores serve as repeated-measures dependent variables for growth in reading performance. Assessments were administered to all students within the assigned 2-week window at the end (i.e., May) and beginning (i.e., September) of the school year. Detailed reliability and validity data indicate good psychometric properties, and studies have confirmed the assessments’ predictive validity (AIMSweb Technical Manual, 2012).

Independent-samples t-tests were used to assess descriptive differences between the two groups. Normed spring and fall ORF scores were used to create a d-score for each student, calculated as the student’s fall score minus their spring score, so that the d-score reflects gains (positive values) vs. losses (negative values) on a continuous scale. To test our hypotheses, we first assessed reading fluency changes over the summer break by comparing mean spring and fall normed scores and national percentage ranks, using paired-samples t-tests within the EL and NS groups. Second, we ran linear regressions with fall normed scores and d-scores as dependent variables. Language group (EL vs. NS) was entered as a binary independent variable to test whether the extent of potential summer losses differed between the two groups, and spring scores were added as a covariate to control for baseline performance. The Type I error rate was set at 5% (two-sided).
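The d-score and the per-group descriptives can be sketched with Python's standard library, assuming per-student records of the form `(is_el, spring, fall)`. That record layout and the function names are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, Iterable, List, Tuple


def d_scores(spring: List[float], fall: List[float]) -> List[float]:
    """Per-student change score: fall minus spring (negative = summer loss)."""
    if len(spring) != len(fall):
        raise ValueError("spring and fall scores must be paired per student")
    return [f - s for s, f in zip(spring, fall)]


def describe(values: List[float]) -> Tuple[float, float, int]:
    """Mean, sample SD (n - 1 denominator), and n."""
    return mean(values), stdev(values), len(values)


def describe_by_group(
    records: Iterable[Tuple[bool, float, float]],
) -> Dict[str, Dict[str, Tuple[float, float, int]]]:
    """Spring, fall, and d-score descriptives for the EL and NS groups."""
    groups: Dict[str, Tuple[List[float], List[float]]] = {
        "EL": ([], []), "NS": ([], [])}
    for is_el, spring, fall in records:
        spring_list, fall_list = groups["EL" if is_el else "NS"]
        spring_list.append(spring)
        fall_list.append(fall)
    return {
        name: {
            "spring": describe(spr),
            "fall": describe(fal),
            "d": describe(d_scores(spr, fal)),
        }
        for name, (spr, fal) in groups.items()
    }
```

Each group needs at least two students for `stdev`; with the study's group sizes this is not a concern.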

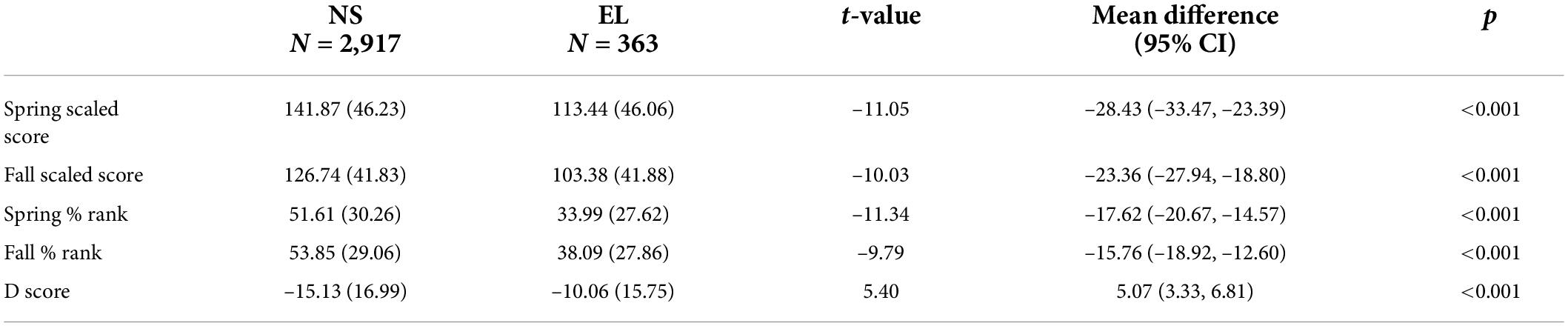

Descriptive statistics showed that, on average, EL students had lower reading scores than NS students in both spring and fall. Two independent-samples t-tests confirmed that in the May assessment there was a significant difference of 28.43 points in grade 4 reading scores between EL (M = 113.44, SD = 46.06) and NS students [M = 141.87, SD = 46.23; t(3,278) = –11.05, p < 0.001; see Table 1]. In the following grade 5 September assessment, the difference was smaller but still significant at 23.36 points [EL: M = 103.38, SD = 41.88; NS: M = 126.74, SD = 41.83; t(3,278) = –10.03, p < 0.001]. National percentage ranks showed the same group difference: ELs ranked far below average, at 33.99 in spring and 38.09 in fall, whereas NS students in our sample ranked slightly above the national average (i.e., 50), at 51.61 and 53.85, respectively. Because both the EL and the NS group gained in rank, the national percentage ranks also suggest that the students in our sample, on average, lost less reading fluency over the summer than the national comparison group. Interestingly, Table 1 also shows that EL students (M = –10.06, SD = 15.75) had, on average, smaller losses in ORF over the summer (i.e., less negative d-scores) than their NS peers [M = –15.13, SD = 16.99; t(3,278) = 5.40, p < 0.001]; mean difference = 5.07, 95% CI [3.33, 6.81].

Table 1. Mean score (standard deviation) comparison of oral reading fluency from native speakers (NS) and English learners (EL) standardized according to the national norm sample.
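As a worked check on the between-group comparisons, the sketch below recomputes Student's independent-samples t (equal variances assumed) from the means, SDs, and group sizes reported in the text (n = 363 ELs, n = 2,917 NS). The function name is hypothetical. With N = 3,280, the degrees of freedom for this test are n₁ + n₂ − 2 = 3,278.

```python
from math import sqrt
from typing import Tuple


def pooled_t(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> Tuple[float, int]:
    """Independent-samples t (pooled variance) from summary statistics.

    Returns (t, df) with df = n1 + n2 - 2.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Reported summary statistics: (EL mean, SD, n), then (NS mean, SD, n).
comparisons = {
    "spring": (113.44, 46.06, 363, 141.87, 46.23, 2917),
    "fall": (103.38, 41.88, 363, 126.74, 41.83, 2917),
    "d-score": (-10.06, 15.75, 363, -15.13, 16.99, 2917),
}
for label, stats in comparisons.items():
    t, df = pooled_t(*stats)
    print(f"{label}: t({df:,}) = {t:.2f}")
```

The recomputed statistics agree with the reported values (–11.05, –10.03, and 5.40) to two decimals.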

To test hypothesis 1, we compared spring and fall normed ORF scores and national percentage ranks using paired-samples t-tests within the EL and NS groups (in contrast to the between-group independent-samples t-tests in Table 1). According to their normed scores, both groups of students had significant losses over the summer [ELs: t(362) = 12.17, p < 0.001; NS: t(2,916) = 48.08, p < 0.001]. Thus, hypothesis 1 was confirmed. The mean d-scores (fall minus spring) likewise showed that both groups of students in our sample lost reading fluency over the summer break (ELs: –10.06; NS: –15.13).
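A paired-samples t-test on spring and fall scores is equivalent to a one-sample t-test on the d-scores, so the within-group statistics can be checked from the reported d-score means and SDs. In this minimal sketch (function name hypothetical), t = mean(d) / (SD(d) / √n) with df = n − 1; because d is defined as fall minus spring, the sign is negative for a loss.

```python
from math import sqrt
from typing import Tuple


def paired_t_from_d(mean_d: float, sd_d: float, n: int) -> Tuple[float, int]:
    """Paired-samples t from summary statistics of the d-scores.

    t = mean(d) / (sd(d) / sqrt(n)), df = n - 1.
    """
    return mean_d / (sd_d / sqrt(n)), n - 1


# Reported d-score summaries (mean, SD, n) for each group.
groups = {"EL": (-10.06, 15.75, 363), "NS": (-15.13, 16.99, 2917)}
for name, (m, sd, n) in groups.items():
    t, df = paired_t_from_d(m, sd, n)
    print(f"{name}: t({df:,}) = {t:.2f}")
```

The magnitudes (about 12.17 and 48.10) match the reported statistics up to rounding of the published means and SDs.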

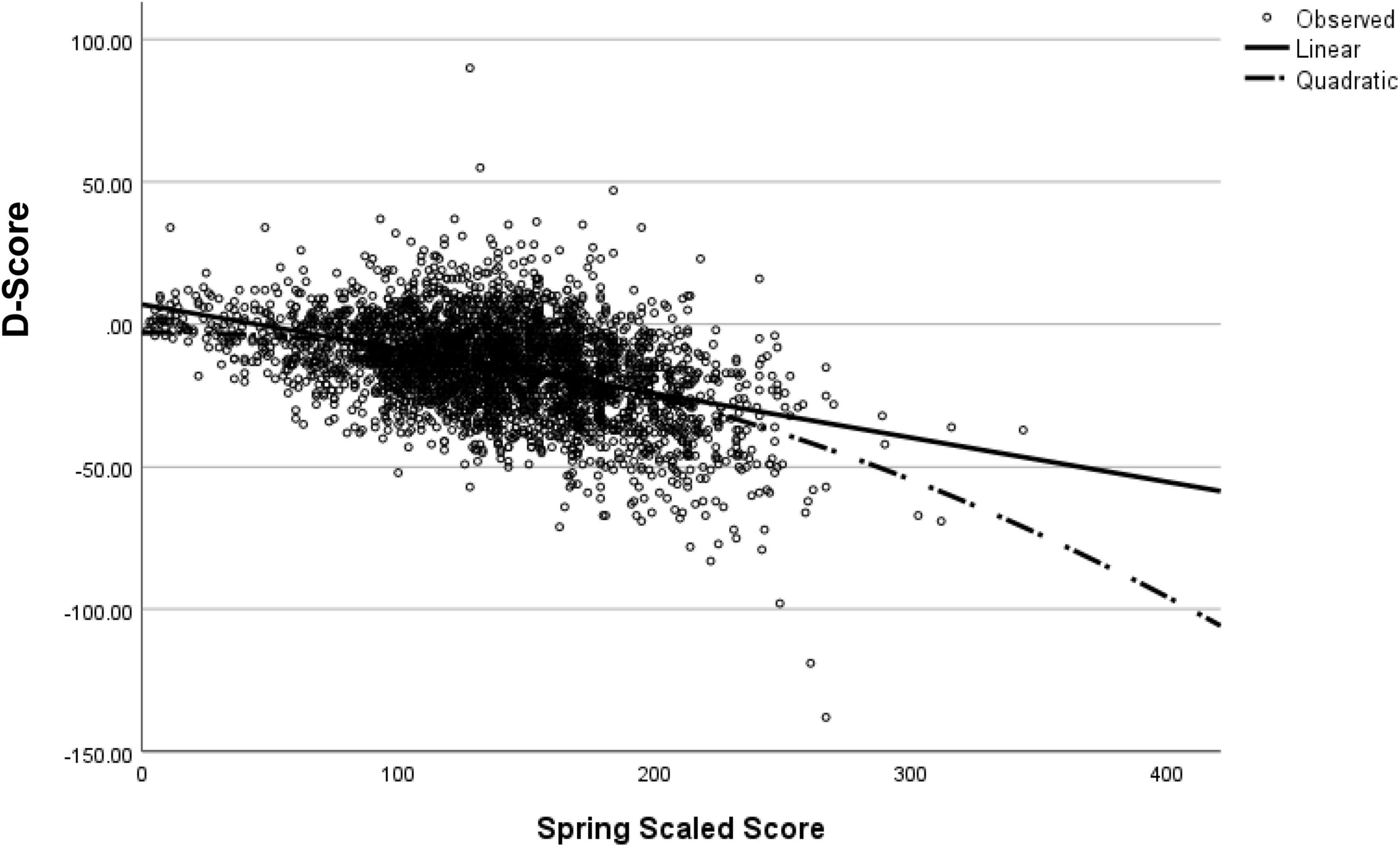

To test hypothesis 2, we calculated two linear regressions, one with the cross-sectional normed fall scores as the dependent variable and the other with d-scores as the dependent variable. The d-score reflects students’ individual gains or losses over the summer on a continuous scale, calculated as their fall score minus their spring score. Before running the planned regressions, we carried out a curve estimation analysis of the relationship between students’ spring scores and the two dependent variables (i.e., fall scores and d-scores). Spring performance explained most of the variance in fall performance (linear relationship: R² = 0.874, F = 22,646.16, p < 0.001; quadratic relationship: R² = 0.876, F = 11,555.02, p < 0.001) and a smaller share of the variance in growth (linear relationship: R² = 0.189, F = 765.96, p < 0.001; quadratic relationship: R² = 0.204, F = 419.57, p < 0.001). For both dependent variables, the quadratic model explained slightly more variance than the linear model, so the effect of spring scores was best described as a quadratic rather than a linear relationship (see Figure 1).

Figure 1. Scatter plot and curve estimation analysis showing the linear and quadratic relationship between spring performance and performance change over the summer (D-score).
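The reported curve estimation statistics can be cross-checked without the student-level data: for an overall regression F test with k predictors and N observations, F = (R²/k) / ((1 − R²)/(N − k − 1)), which can be solved for R². The sketch below assumes k = 1 for the linear model, k = 2 (spring and spring²) for the quadratic model, and N = 3,280; it checks consistency only and does not reproduce the curve estimation itself.

```python
def r_squared_from_f(f: float, n: int, k: int) -> float:
    """Solve F = (R²/k) / ((1 - R²)/(n - k - 1)) for R².

    Rearranging gives R² = k·F / (k·F + n - k - 1).
    """
    df_resid = n - k - 1
    return k * f / (k * f + df_resid)


N = 3280
# (outcome, model) -> (reported F, number of predictors k, reported R²)
reported = {
    ("fall", "linear"): (22646.16, 1, 0.874),
    ("fall", "quadratic"): (11555.02, 2, 0.876),
    ("d-score", "linear"): (765.96, 1, 0.189),
    ("d-score", "quadratic"): (419.57, 2, 0.204),
}
for (outcome, model), (f, k, r2_reported) in reported.items():
    r2 = r_squared_from_f(f, N, k)
    print(f"{outcome} / {model}: R² from F = {r2:.3f} (reported {r2_reported})")
```

All four recomputed values round to the reported R² values.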

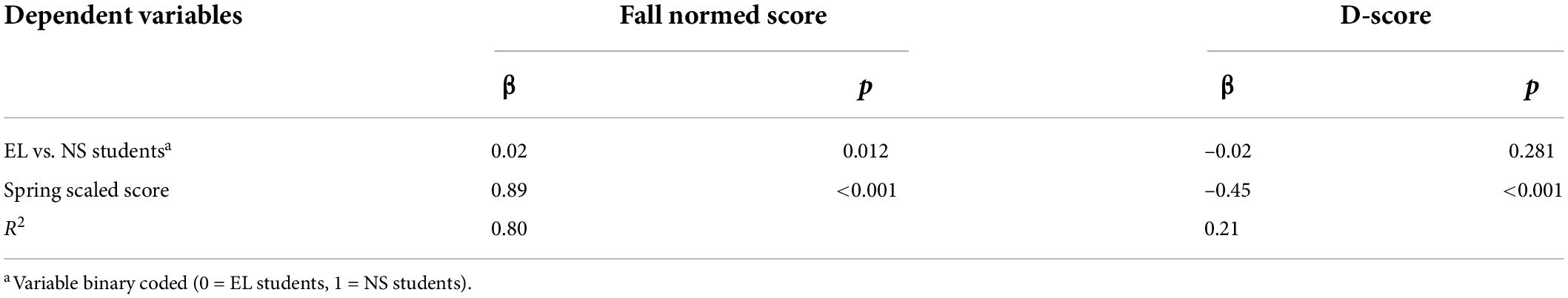

Specifically, the statistical association between spring and fall performance was not best captured by a straight line but by an inverse U-shaped (i.e., quadratic) curve, indicating a more complex relationship across both groups of students than the commonly expected pattern of ‘the better the baseline performance, the better the later performance’. The spring performance variable was therefore transformed into a quadratic term before inclusion in the main linear regression analyses. These analyses confirmed that EL students had lower fall reading scores than their NS peers (β = 0.02, p = 0.012). Students’ spring scores predicted their fall scores (β = 0.89, p < 0.001, R² = 0.80; see Table 2). The linear regression with d-scores (growth over the summer) as the dependent variable, however, showed no significant effect of students’ language group status (β = –0.02, p = 0.281), refuting hypothesis 2. Interestingly, students who had been higher performing in the spring had larger reading performance losses over the summer (β = –0.45, p < 0.001, R² = 0.21; Table 2).

Table 2. Linear regressions with normed fall scores and D-score as dependent variables including EL (English learners) vs. NS (native speakers) and spring scaled score as independent variables.

We performed two sets of sensitivity analyses. First, two univariate linear regressions predicting fall scores and performance growth without controlling for spring performance confirmed the direction of the previous results: EL students, on average, had lower fall reading scores than their NS peers (β = 0.17, p < 0.001) but less negative d-scores (β = –0.10, p < 0.001; i.e., smaller losses over the summer). Second, we repeated our main analyses within the group of students who had performed above +1 standard deviation (SD) in spring (n = 467) and confirmed the previous results: a significant positive effect of spring performance on fall performance and a negative effect on performance growth (d-scores) over the summer. However, there was no significant effect of EL status, most likely because only n = 12 EL students were in the high-performing group.
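The subgroup selection in the second sensitivity analysis can be sketched as below. The text does not say whether the +1 SD cutoff was based on the sample or on the national norms; this sketch assumes the sample mean and SD of spring scores, and the record keys `spring` and `is_el` are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple

Record = Dict[str, float]


def above_one_sd(records: List[Record],
                 key: str = "spring") -> Tuple[List[Record], float]:
    """Return records whose `key` score exceeds the sample mean + 1 SD, and the cutoff."""
    scores = [r[key] for r in records]
    cutoff = mean(scores) + stdev(scores)
    return [r for r in records if r[key] > cutoff], cutoff


def count_el(records: List[Record]) -> int:
    """Number of EL students (is_el stored as 0/1 or bool) in a subgroup."""
    return sum(1 for r in records if r["is_el"])
```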

This study provides new insights into the ORF development of EL and NS students over the summer. Descriptive, cross-sectional data showed that, on average, EL students performed below the level of their NS peers both before and after the summer break. Considering national percentage ranks that indicate substantial reading performance delays, it is important to support ELs in closing the gap to their NS peers. Our data replicate well-known and established findings, but it needs to be stressed that this was not the main aim of the current study. We confirmed hypothesis 1: Both groups of students lost oral English reading fluency between May and September. Prior literature on summer regression showed disparities in reading fluency loss between EL and NS students, with ELs dropping to lower scores from Spring to Fall benchmark assessments (Entwisle and Alexander, 1992; Alexander et al., 2001). Our new findings, however, do not replicate this earlier result, and we partly refuted our second hypothesis. When looking at d-scores to assess change, EL and NS students lost ORF at similar rates over the summer. In other words, even though EL and NS students both experienced summer loss to similar extents, the reading fluency gap remained. Interestingly, however, students who had been higher performing in the spring had more reading performance losses over the summer. These results suggest that ‘those who have more may have more to lose’ and raise the question of how to prevent all students from regressing in their ORF over the summer.

These findings may need to be interpreted within a broader framework that considers ecological and biopsychosocial aspects of development. Considering the role of language exposure in second language development, it seems counterintuitive that EL students would not lose more oral English skills over the summer than their NS peers, who are likely exposed to much more English, and more frequently, in their home environments. Studies have shown that many ELs converse with family and community members in a language other than English (Lawrence, 2012; Wood et al., 2018). Conversational English warrants further research as an influential factor in reading fluency growth (Ambridge and Lieven, 2011; Wood et al., 2018). Indeed, ELs often learn English vocabulary more rapidly than their NS peers during the school year, yet their use of conversational English declines over the summer, which significantly affects their English growth (Lawrence, 2012). Our results, however, indicate that this was not the case in our sample, suggesting that mechanisms associated with the immigrant paradox may be at play (Teruya and Bazargan-Hejazi, 2013; Zhang et al., 2021). To speculate, EL students in our sample may have had more cohesive and structured family environments over the summer than their NS peers, may have been exposed to healthy amounts of social interaction and communication, or may have regularly visited the local library. Our data cannot answer such questions, and more research is needed to better understand how families and the larger community can support summer learning (Borman and Dowling, 2006).

With 3,280 students, the present study’s dataset is large and a robust representation of the regional 4th and 5th grade population. However, the dataset lacks variables beyond the students’ EL status, such as gender, SES, and specific level of English proficiency. The R-CBM assessment only allows us to draw conclusions about ORF, not reading comprehension. While the dataset provides a longitudinal overview of students’ ORF development over one summer, additional data points would improve our understanding of long-term development and allow more sophisticated analyses such as growth curve modeling.

The current study contributes new evidence to our understanding of the development of EL and NS students’ reading fluency over the summer. The picture that emerged is complex and indicates that previous studies regarding the detrimental significance of the summer gap for EL students may need to be assessed more closely as part of rigorous, large-scale longitudinal studies. Future research should assess the underlying individual student characteristics and learning mechanisms in more detail to develop evidence-based recommendations for tailored programs that can close the achievement gap between EL students and native English speakers. While ELs in this study did not lose more ORF than their NS peers, they nevertheless performed substantially below the average population level at both timepoints.

The data analyzed in this study are subject to the following licenses/restrictions: the data were provided by a school district that restricts sharing of the dataset.

The studies involving human participants were reviewed and approved by IRB at UTK, and it was determined that the study was exempt from review. Written informed consent from the participants’ legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

NJ contributed to the conception and design of this report. CB accrued the data for analysis. JJ and NJ performed the statistical analyses. NJ and EF wrote the first draft of the manuscript. NJ, JJ, and EF wrote sections of the manuscript.

Publication costs were funded by the Biodiverse Anthropocenes program, University of Oulu, Academy of Finland Profi6 336449.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Alexander, K. L., Entwisle, D. R., and Olson, L. S. (2001). Schools, achievement, and inequality: A seasonal perspective. Educ. Eval. Policy Anal. 23, 171–191. doi: 10.3102/01623737023002171

Allington, R. L., and McGill-Franzen, A. (2003). The impact of summer setback on the reading achievement gap. Phi Delta Kappan 85, 68–75. doi: 10.1177/003172170308500119

Ambridge, B., and Lieven, E. V. M. (2011). Child language acquisition: Contrasting theoretical approaches. Cambridge: Cambridge University Press.

Borman, G. D., and Dowling, N. M. (2006). Longitudinal achievement effects of multiyear summer school: Evidence from the Teach Baltimore randomized field trial. Educ. Eval. Policy Anal. 28, 25–48. doi: 10.3102/01623737028001025

Bracey, G. W. (2002). What students do in the summer. Phi Delta Kappan 83, 497–498. doi: 10.1177/003172170208300706

Condron, D. J. (2009). Social class, school and non-school environments, and black/white inequalities in children’s learning. Am. Sociol. Rev. 74, 685–708. doi: 10.1177/000312240907400501

Cooper, H., Nye, B., Charlton, K., Lindsay, J., and Greathouse, S. (1996). The effects of summer vacation on achievement test scores: A narrative and meta-analytic review. Rev. Educ. Res. 66, 227–268. doi: 10.3102/00346543066003227

Entwisle, D. R., and Alexander, K. L. (1992). Summer setback: Race, poverty, school composition, and mathematics achievement in the first two years of school. Am. Sociol. Rev. 57, 72–84.

Every Student Succeeds Act (2015). Every Student Succeeds Act of 2015, Pub. L. No. 114-95, 129 Stat. 1802.

Good, R. H., Kaminski, R. A., Fien, H., Powell-Smith, K. A., and Cummings, K. D. (2012). “How progress monitoring research contributed to early intervention for and prevention of reading difficulty,” in A measure of success: The influence of curriculum-based measurement on education, eds C. A. Espin, K. L. McMaster, S. Rose, and M. M. Wayman (Minneapolis, MN: University of Minnesota Press), 113–124. doi: 10.5749/minnesota/9780816679706.003.0010

Hanushek, E. A., and Rivkin, S. G. (2009). Harming the best: How schools affect the black-white achievement gap. J. Policy Anal. Manag. 28, 366–393. doi: 10.1002/pam.20437

Heck, R. H. (2007). Examining the relationship between teacher quality as an organizational property of schools and students’ achievement and growth rates. Educ. Adm. Q. 43, 399–432. doi: 10.1177/0013161X07306452

Kilgus, S. P., Methe, S. A., Maggin, D. M., and Tomasula, J. L. (2014). Curriculum-based measurement of oral reading (R-CBM): A diagnostic test accuracy meta-analysis of evidence supporting use in universal screening. J. Sch. Psychol. 52, 377–405. doi: 10.1016/j.jsp.2014.06.002

Kim, J., and Guryan, J. (2010). The efficacy of a voluntary summer book reading intervention for low-income Latino children from language minority families. J. Educ. Psychol. 102, 20–31. doi: 10.1037/a0017270

Lawrence, J. F. (2012). English vocabulary trajectories of students whose parents speak a language other than English: Steep trajectories and sharp summer setback. Read. Writ. 25, 1113–1141. doi: 10.1007/s11145-011-9305-z

Lyons, S., Dadey, N., and Garibay-Mulattieri, K. (2017). Considering English language proficiency within systems of accountability under the Every Student Succeeds Act. Downers Grove, IL: Dover Corporation.

Parsi, A. (2016). ESSA and English language learners: Policy update. Arlington, VA: National Association of State Boards of Education.

Reschly, A. L., Busch, T. W., Betts, J., Deno, S. L., and Long, J. D. (2009). Curriculum-based measurement oral reading as an indicator of reading achievement: A meta-analysis of the correlational evidence. J. Sch. Psychol. 47, 427–469. doi: 10.1016/j.jsp.2009.07.001

Sandberg Patton, K. L., and Reschly, A. L. (2013). Using curriculum-based measurement to examine summer learning loss. Psychol. Sch. 50, 738–753. doi: 10.1002/pits.21704

Teruya, S. A., and Bazargan-Hejazi, S. (2013). The immigrant and Hispanic paradoxes: A systematic review of their predictions and effects. Hisp. J. Behav. Sci. 35, 486–509. doi: 10.1177/0739986313499004

Wood, C., Wofford, M. C., Gabas, C., and Petscher, Y. (2018). English narrative language growth across the school year: Young Spanish–English dual language learners. Commun. Disord. Q. 40, 28–39. doi: 10.1177/1525740118763063

Zeigler, K., and Camarota, S. A. (2019). 67.3 Million in the United States spoke a foreign language at home in 2018. Available Online at: https://cis.org/Report/673-Million-United-States-Spoke-Foreign-Language-Home-2018 (accessed October 29, 2019).

Keywords: summer regression, English learners (ELs), oral reading fluency (ORF), achievement gap, native English speakers

Citation: Jaekel N, Jaekel J, Fincher E and Brown CL (2022) Summer regression—the impact of summer on English learners’ reading development. Front. Educ. 7:817284. doi: 10.3389/feduc.2022.817284

Received: 17 November 2021; Accepted: 11 August 2022;

Published: 08 September 2022.

Edited by:

Julie Alonzo, University of Oregon, United States

Reviewed by:

Luke Duesbery, San Diego State University, United States

Copyright © 2022 Jaekel, Jaekel, Fincher and Brown. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nils Jaekel, nils.jaekel@oulu.fi
