- College of Computing, Fahad Bin Sultan University, Tabuk, Saudi Arabia

To evaluate and measure the effects of students’ preparation before studying an embedded system design course, a systematic literature analysis was conducted, spanning publications from 1992 to 2020. Evaluating such effects may also help to close the gap between academically taught abilities and industry-required skills. The goal of the review was to gather and evaluate all reputable and effective studies that have looked into students’ learning preparation issues and solutions in embedded system design courses. A further purpose of this work was to extract and discuss the key features and methodologies from the selected research publications in order to present researchers and practitioners with a clear set of recommendations and solutions. The findings revealed that no instrument has yet been developed to assess students’ readiness to take up an embedded system design course. Furthermore, the majority of the instruments offered lacked concept clarity.

1 Introduction

The major motivation for this study is the operational term “readiness to learn.” Various definitions of readiness have been proposed, demonstrating its significance in learning. Existing knowledge, skills, and capacities influence learners’ readiness to learn, and both the teacher and the student benefit from knowing where they stand in terms of readiness. However, scientists and scholars (Freeman et al., 2014; Flosason et al., 2015; Kay and Kibble, 2016) have yet to agree on a single definition of learning. Given this lack of consensus on the nature of learning, this article uses a general definition of learning that is compatible with the concepts that most educational professionals consider crucial to learning (Ibrahim et al., 2015; Ridwan et al., 2016; Ridwan et al., 2017; Hidayat et al., 2018; Ridwan et al., 2019). Learning is defined as the process of acquiring, improving, and developing one’s knowledge, skills, beliefs, and world views by combining cognitive, emotional, and environmental influences and experiences (Flosason et al., 2015).

The objective of this systematic literature review (SLR) is to identify the latest research that measures students’ readiness to learn embedded system design courses. To achieve this objective, the following research questions were developed:

Research question 1: What are the important body of knowledge (BOK) courses for undergraduate students to learn an embedded system design course?

Research question 2: What are the cognitive, affective, and psychomotor skills required to succeed in an embedded system design course?

Research question 3: How can the instrument’s reliability and validity be evaluated?

Research question 4: Are students ready to learn an embedded system design course?

Students’ readiness assessments aim to assess students’ knowledge and abilities based on the products of their participation in a task and completion of a task rather than their replies to a sequence of test items. The lack of such assessment among various published articles is considered a motivation for this research.

The remainder of this work is arranged as follows: Section 2 discusses the topic of learning readiness in depth. Section 3 outlines the prerequisites for taking an embedded system course. Section 4 discusses learning domains and their effects. Section 5 describes the research approach. Section 6 discusses the results of the systematic literature review. Section 7 highlights the limitations of the research. Section 8 presents the article’s conclusions.

2 Readiness for Learning

In recent years, there have been a limited number of research studies on learning readiness (Freeman et al., 2014; Flosason et al., 2015; Kay and Kibble, 2016). Although much of the research agrees that learning readiness is crucial, there is no agreement on what characterizes learning readiness and how it may be measured (Hidayat et al., 2018). According to Ibrahim et al. (2015) and Ridwan et al. (2019), preparedness for learning refers to a student’s capacity to demonstrate prior knowledge and abilities in order to be successful in his/her courses and meet their demands.

According to Ibrahim et al. (2015) and Ridwan et al. (2019), prior knowledge, abilities, and academic behavior are the keys to learning preparedness. On the other hand, Ridwan et al. (2017) stated that “readiness for learning” is conceptualized as a “developmental progression” reflecting a student’s ability to learn specific predetermined curriculum content. Ridwan et al. (2017) and Ridwan et al. (2019) suggest that “previous knowledge, skills, motivation, and intellectual ability” are all factors that influence this construct.

Prior knowledge and skills have been found to have a substantial impact on the quality of learning and student accomplishment (Ridwan et al., 2016; Ibrahim et al., 2014); thus, students who are academically equipped in terms of prior knowledge and skills should be able to succeed in the embedded system design course. In recent years, various research studies have focused on prior knowledge and its impact on learning readiness and performance (Ridwan et al., 2016; Shuell, 2016; Dilnot et al., 2017; Rickels, 2017). The constructivist approach to learning, which has been prominent in recent decades, is intimately linked to interest in the influence of prior knowledge.

According to Yueh (Conley and French, 2014), knowledge is defined as the amount of information that students learn from an instructor during a class session. Students can therefore gain more knowledge and skills from an expert or an instructor in a given field, which helps them improve their understanding of the subject matter (Safaralian, 2017). It is thus critical for an instructor to be familiar with the topic content and specified outcomes in order to improve students’ understanding of a subject. Instructors should also evaluate their teaching competence on a regular basis in terms of subject knowledge and teaching skills (Kagan and Moss, 2013; Caliskan et al., 2017; Jane Kay, 2018).

In fact, scholars from a variety of sectors of education concur that prior knowledge is one of the many aspects influencing students’ learning, emphasizing the need for subject-specific prior knowledge in the development of competences (Gillan et al., 2015). Furthermore, research into the relationship between prior knowledge and training success found that prior knowledge has strong predictive power (Hailikari et al., 2012; Smith et al., 2015; Bennett et al., 2016).

3 Requirements to Learn Embedded System Courses

Embedded systems are defined as “information processing systems embedded into a larger product that are generally invisible to users” (Yueh et al., 2012). Embedded systems are found in every area of our lives, ranging in complexity from a single device, such as a Personal Digital Assistant (PDA), to enormous weather forecasting systems. Furthermore, they have a wide range of applications, from everyday consumer electronics to industry automation equipment, from entertainment devices to academic equipment, and from medical instruments to aerospace and armament control systems (Adunola, 2011; Redding et al., 2011).

Consequently, embedded systems engineers must be able to address complicated, open, and undefined problems that require combining knowledge, skills, techniques, and tools from various fields in an interdisciplinary manner. Engineers must therefore build proficiency in both hardware/software and soft skills in order to deal with the complexity of integrating multicultural teams with diverse profiles in large-scale projects (Chang, 2010; Ganyaupfu, 2013).

Furthermore, embedded systems students must be prepared to excel in embedded systems technology for both study and professional advancement. Such readiness encompasses both hard and soft skills, which may represent a significant barrier in carrying out the teaching process at higher education institutions (Adunola, 2011).

3.1 Hardware and Software Skills

Hardware refers to the physical components of embedded systems that can be touched or seen, such as the CPU, motors, actuators, and other physical parts. Software skills, on the other hand, refer to the capacity to design and write programs in a variety of languages to solve technical challenges.

In fact, the simplest way to think of hard skills is as skills that can be demonstrated. Furthermore, embedded systems are typically designed to interact with the physical world via sensors and actuators (Stern, 2001; Adunola, 2011).

According to the ARTIST Guidelines for a Graduate Curriculum on Embedded Software and Systems (Abele et al., 2015), embedded system education should be multidisciplinary in nature and include several aspects of control and signal processing, computing theory, real-time processing, optimization, and evaluation, as well as systems architecture and engineering. Many universities, such as Ghent University (Schmidt-Atzert, 2004) and Universität Stuttgart (Schuler and Schuler, 2006), provide embedded system courses with the goal of addressing the practical difficulties that arise in the design and development of embedded systems, which include both software and hardware interfaces.

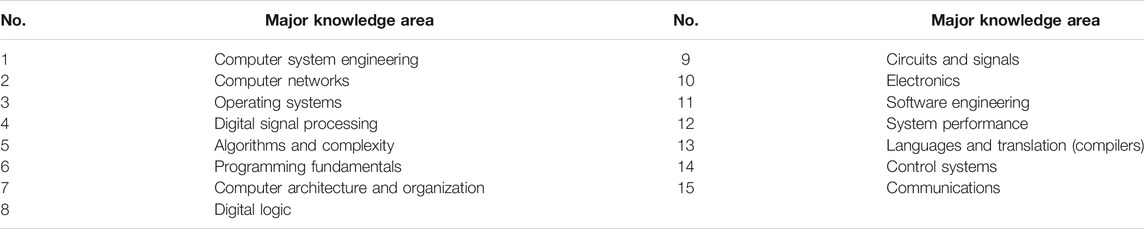

Meanwhile, Oakland University provides a Master of Science in Embedded Systems program (Marwedel, 2011), which requires 32 graduate credits, with 16 core requirements and at least 12 depth requirements. Others (Ilakkiya et al., 2016) created an embedded system engineering (ESE) curriculum for a 4-year undergraduate degree. The suggested curriculum defined a body of education knowledge for embedded system engineering comprising 15 knowledge categories, as shown in Table 1.

TABLE 1. Body of knowledge for the embedded system design course (Ilakkiya et al., 2016).

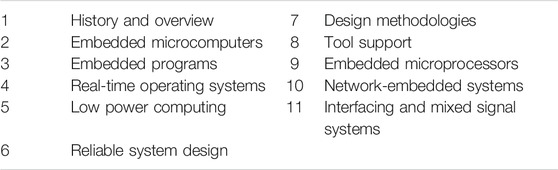

On the other hand, Dick (2016) created an integrated series of courses spanning early undergraduate to graduate course levels that were designed to link lower-order and higher-order cognition levels of Bloom’s taxonomy (Lima et al., 2014), which grouped learning into six cognitive levels: 1) knowledge; 2) comprehension; 3) application; 4) analysis; 5) synthesis; and 6) evaluation. Dick (2016) created a first-year course, a senior course, and a graduate course in embedded systems that covered the embedded system knowledge area (CE-ESY), which was one of the 18 accrediting areas and covered 11 knowledge units. In Table 2, the embedded system’s knowledge units are displayed.

TABLE 2. Knowledge area in an embedded system (Dick, 2016).

3.2 Soft Skills

Soft skills are nontechnical abilities that enable us to maintain self-control and have positive interactions with others. More specifically, soft skills can be characterized as “skills that go beyond academic success, such as positive values, leadership abilities, teamwork, communication skills, and lifelong learning” (Roselina, as cited in Lima et al., 2013).

Employees must be able to use suitable information, skills, and attitudes in every element of their lives in order to improve economic competitiveness in today’s knowledge-based economy (ARTIST network of excellence, 2005; John, 2016; Bertels et al., 2009; Haetzer et al., 2011). Furthermore, businesses and employers respond to economic needs by hiring workers who can communicate, think critically, and solve problems.

In summary, higher education must be redesigned to improve the employability of graduates (Catalog.oakland.edu, 2014). According to the National Graduate Employability Blueprint (Bloom, 1956; Seviora, 2005; Rover et al., 2008; Shakir, 2009; Alliance for Excellent Education, 2011), employers indicated that fresh graduates lacked effective communication and critical thinking abilities, had insufficient knowledge of their field, and had weak problem-solving skills.

Furthermore, the issue of unemployment and work preparedness is a global phenomenon that is primarily attributable to poor graduate quality (Sahlberg, 2006; Johanson, 2010; Saavedra and Opfer, 2012; Catalog.oakland.edu, 2014), rather than a lack of employment options. Consequently, several researchers have proposed that higher education institutions reform engineering education in order to keep up with industry expectations and bridge the gap between academic perceptions and the employability skills that industry expects of entry-level engineers (NGEM and Ministry of Higher Education, 2012; Hanapi and Nordin, 2014; Seetha, 2014; Alias, 2016; Rahmat et al., 2017; Ting et al., 2017; Fernandez-Chung and Yin Ching, 2018; Karzunina et al., 2018). Accordingly, the Ministry of Higher Education has mandated that institutions of higher learning include soft skills in their curricula.

3.3 Critical Thinking Skills

Critical thinking entails combining skills such as assessing arguments, inferring inductive or deductive conclusions, judging or evaluating, and making decisions or solving problems (Howieson et al., 2012). Indeed, these critical abilities are referred to by a variety of terms, including cognitive thinking, reflective judgment, and epistemological development (Itani and Srour, 2016). For example, intelligence, scientific problem-solving, meta-cognition, motivation to learn, and learning styles are all constructs and approaches that make up cognitive abilities.

Although each term has a different goal and application, cognitive abilities have been recognized as an important college student outcome because of their “applicability and value across a wide range of academic areas” (Itani and Srour, 2016). Many educators are interested in teaching critical thinking to their students (Howieson et al., 2012), and it is widely agreed that critical thinking is a vital issue in modern education.

3.4 Communication Skills

When hiring engineering graduates, employers tend to treat communication skills as one of the most significant qualifications (Srour et al., 2013). Despite this importance, several studies have highlighted employers’ growing concern about engineering graduates’ deteriorating communication skills (Yoder, 2011; Baytiyeh, 2012; AUB (American University of Beirut), 2013; Selinger et al., 2013; Crystal, 2016).

3.5 Team-Working Skills

Teamwork has evolved as one of the most important skills for job readiness in the 21st century (Md Zabit et al., 2018), making it a necessary practice in higher education, with lecturers frequently giving group assignments that need student collaboration (Pascarella and Terenzini, 2005).

3.6 Entrepreneurship Skills

The development of small-scale business management abilities through entrepreneurship education has been linked to improved living conditions. Furthermore, entrepreneurial education teaches students to work not only for a living but also for themselves. In this context, it has been reported that fresh university graduates enter the job market every year (King, Goodson, and Rohani).

3.7 Lifelong Learning Skills

The term “lifelong learning” comes from an article by Edgar Faure for UNESCO titled “Learning to Be” (Markes, 2006). Lifelong learning encompasses one’s activities from infancy through old age; as a result, everyone should be taught how to learn. In addition, lifelong learning refers to a learning process that can be continued in a variety of settings without returning to school.

4 Learning Domains

Bloom’s taxonomy, developed by Benjamin Bloom in the 1950s, includes various terms relating to learning objectives. The taxonomy opens the way for schools to standardize educational goals, curricula, and assessments, further structuring the range and depth of instructional activities and curricula available to students (Nair et al., 2009).

This taxonomy uses a hierarchical scale to indicate the level of competence necessary to achieve each measurable student outcome. Types of learning are classified into three domains, each with its own taxonomy: 1) cognitive, 2) psychomotor, and 3) affective (Lang et al., 1999). Bloom’s taxonomy has been widely recognized in the engineering community, with a worldwide consensus that engineering graduates should be capable of analysis, synthesis, and evaluation.

4.1 Cognitive Domain

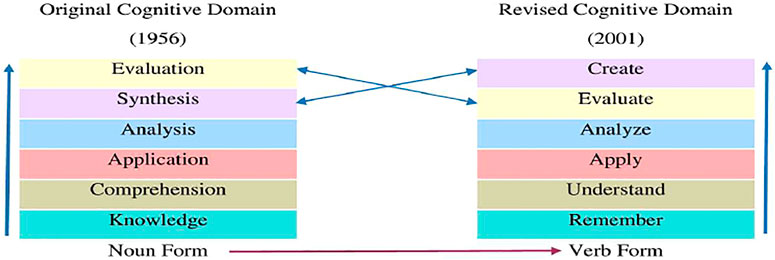

The cognitive domain focuses on how a student acquires and applies knowledge, which can range from simple recall of material to more complicated and abstract activities. This domain refers to a hierarchy of learning skills that allows instructors to measure students’ learning in a systematic manner. The cognitive domain can be split into six key categories: 1) knowledge, 2) comprehension, 3) application, 4) analysis, 5) synthesis, and 6) evaluation (Meier et al., 2000; Scott and Yates, 2002a; Scott and Yates, 2002b), which range from simple to complex and from concrete to abstract.

Lorin and Krathwohl (Martin et al., 2005) identified various flaws in the cognitive domain. They asserted that, compared to the other levels, the knowledge level has a dual character. As a result, the revised Bloom’s taxonomy cognitive domain was introduced, with some alterations to its six levels. The revised cognitive domain modernizes the taxonomy for use in the twenty-first century, emphasizing the active character of teaching and learning in students’ learning process rather than their behaviors. It also provides students with clear and well-defined objectives that allow them to connect their current learning to prior knowledge (Barton, 2007; Ahles and Bosworth, 2004). Figure 1 illustrates the differences between the original cognitive domain and the 2001 revised one.

4.2 Affective Domain

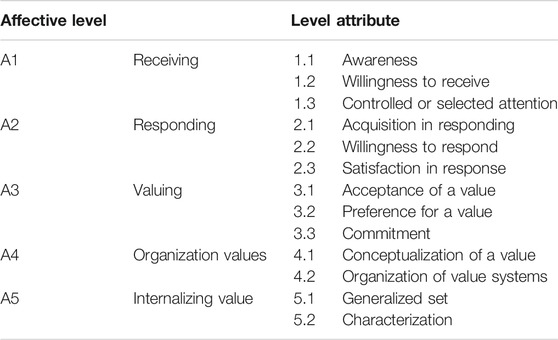

This domain is concerned with the emotional aspect of learning, and it encompasses everything from a basic willingness to receive information to beliefs, ideas, and attitudes. It also takes into account students’ knowledge of ethics, global awareness, and leadership. As illustrated in Table 3, the affective domain is divided into five categories: 1) receiving, 2) responding, 3) valuing, 4) organizing, and 5) characterizing.

In the context of engineering, Timothy (as cited in Nguyen, 2017) argued that “the affective domain is involved with how one confronts interpersonal issues in their professional work or the realm of professional ethics, where a clear relationship is vivid with the value system to which one must follow.” This idea is similar to ABET criterion (f), which states that students must “understand professional and ethical duties” (Faure, 1972).

Examples of affective learning include learners’ attitudes of awareness, interest, attention, concern, and responsibility; their ability to listen and respond in interactions with others; and their ability to demonstrate the attitudinal characteristics or values that are appropriate to the test situation and field of study. Krathwohl’s affective domain taxonomy (Qamar et al., 2016), which gives a set of criteria for classifying educational outcomes according to the complexity of thinking in the affective domain, is possibly the most well-known of all the affective taxonomies.

Again, the taxonomy is organized from the simplest to the most complicated feelings. Although it relates to emotions, this taxonomy can be used in conjunction with Bloom’s cognitive taxonomy (Yildirim and Baur, 2016). All learning areas and levels can benefit from Krathwohl’s taxonomy. It gives instructors a framework for creating a series of activities that will help students develop their personal relationships and value systems.

Future educators can instill accountability in their students and recognize that each learner is unique, which allows them to pinpoint each learner’s strengths and weaknesses and help students improve further. As with all taxonomies, when objectives are labeled using this domain, the learning objectives must specify a very clear instructional intention for growth in this area (Syed Ahmad and Hussin, 2017).

5 Research Methodology

The research methodology section focuses on the planning, execution, and reporting of the review. First, we formulate and define research questions about students’ learning preparedness for an embedded system design course. Second, we search several databases for relevant literature and extract pertinent facts. Finally, we prepare a report summarizing the findings of the systematic review. The SLR approach has the advantage of providing insights into a research problem and allowing a study to obtain information from a variety of sources (Kadir, 2016).

5.1 Planning the Review

This section explains how to apply the SLR used in this study (Lorin et al., 2000; Boyle et al., 2016; Hamari et al., 2016), including how to define research questions and how to define a search strategy.

The research questions emerged as a critical feature that must be well defined because they were used to gather data and determine the study’s final outcomes. The following research questions influenced the expected outcomes in this study:

RQ1. What are the key body of knowledge (BOK) courses for undergraduate students interested in learning the embedded system design?

RQ2. What cognitive, affective, and psychomotor skills are necessary for success in the embedded system design course?

RQ3. How can the instrument’s validity and reliability be assessed?

RQ4. Are students prepared to learn about the embedded system design?

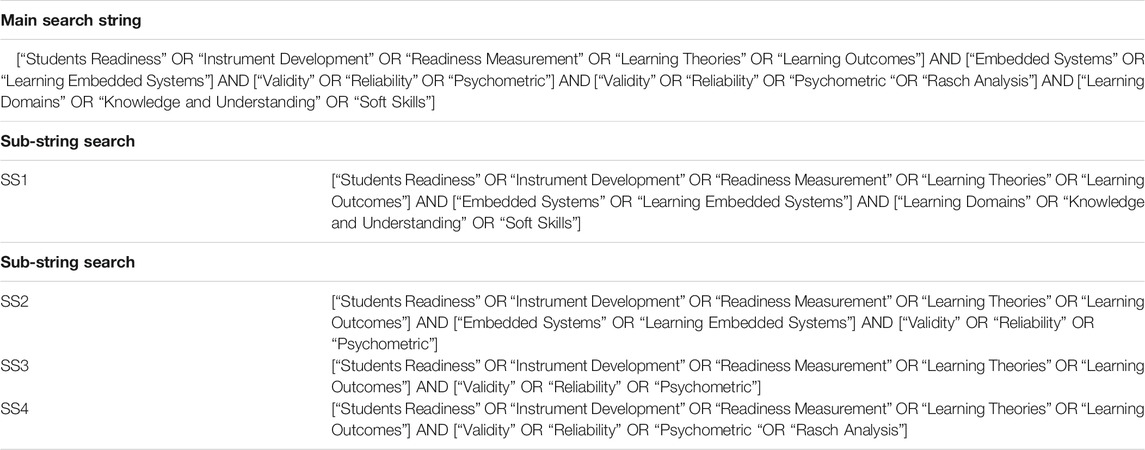

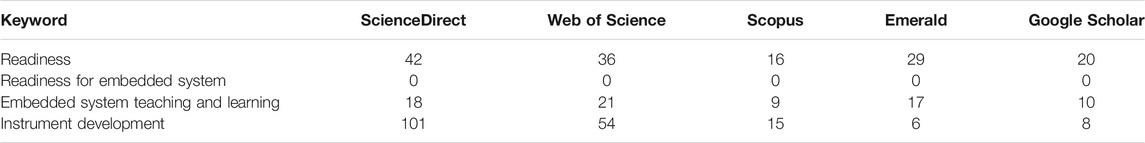

To answer RQ1 through RQ4, the authors determined the number of articles published in this specific area during the last two decades. The SLR allows the search approach to be stated explicitly, which aids in the discovery of relevant and useful articles. The outcomes of the search strategy are therefore heavily influenced by the choice of keywords and databases. In this study, articles on embedded systems and readiness, as well as on instrument development and validation, were searched for.

5.2 Defining Data Sources

ScienceDirect, Web of Science, Scopus, Emerald, and Google Scholar were used to search for relevant article titles in this study. These five databases were chosen for their relevance to electrical and electronics engineering, as well as engineering education disciplines, in order to ensure complete and inclusive data collection.

The Web of Science, established and maintained by Thomson Reuters, is the world’s largest online database, providing access to six databases (Krathwohl, 2002).

Scopus, on the other hand, is an Elsevier-owned online database that contains over 22,000 abstracts and articles from 5,000 publishers in the scientific, technological, medical, and social sciences fields (Ferri, 2015). Aside from that, Google Scholar is a free web search engine that indexes full texts or metadata of academic literature across a number of publishing formats and disciplines, with over 160 million pages indexed as of May 2014 (Chugh and Madhravani, 2016).

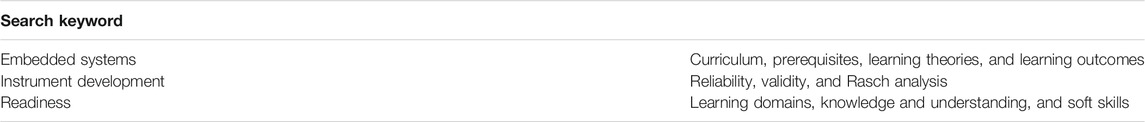

5.3 Defining Search Keywords

The study keywords were chosen based on the research questions (RQs). The chosen keywords were cross-checked against the study RQs to confirm that they matched the research objectives and expectations. As a result, the search keywords listed in Table 4 were used to find the relevant articles.

To identify the primary articles, the search phrase shown in Table 5 was run on the five selected web databases using Boolean operators. The OR operator was used to link synonyms, and the AND operator was used to chain the key phrases. During the search process, we also used stemming and wildcard search tips from another digital library (Norasiken et al., 2016; Sönmez, 2017; Smt and Philip, 2017).
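
The way OR and AND combine in such a search phrase can be sketched in a few lines of Python. The keyword groups below are hypothetical placeholders chosen for illustration; the study's actual keywords are those listed in Tables 4 and 5. The `*` wildcard is likewise an assumption, since wildcard syntax varies between databases.

```python
# Hypothetical keyword groups (NOT the study's actual keywords from Tables 4 and 5).
# Each inner list holds synonyms for one key concept.
keyword_groups = [
    ["embedded system", "embedded systems design"],
    ["readiness", "prepar*"],  # "*" is an assumed wildcard; syntax differs per database
    ["instrument development", "instrument validation"],
]


def format_term(term: str) -> str:
    """Quote multi-word phrases so they are searched as a unit."""
    return f'"{term}"' if " " in term else term


def build_search_string(groups: list[list[str]]) -> str:
    """Link synonyms with OR inside parentheses, then chain the groups with AND."""
    or_blocks = [
        "(" + " OR ".join(format_term(term) for term in group) + ")"
        for group in groups
    ]
    return " AND ".join(or_blocks)


search_string = build_search_string(keyword_groups)
print(search_string)
```

Grouping synonyms in parentheses before chaining with AND keeps operator precedence explicit, which matters because databases do not all evaluate mixed AND/OR expressions in the same order.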

5.4 Conducting the Review Process

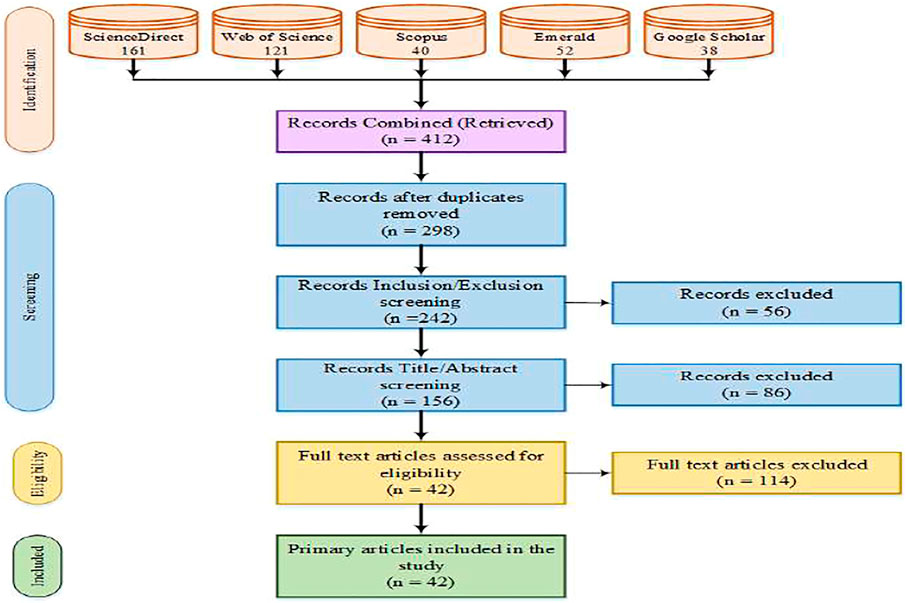

This section explains how the review was carried out. The review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) methodology shown in Figure 2. The procedure included conducting a pilot search, selecting studies, and assessing the quality of the results.

Based on the specified search string, a pilot search was conducted to find as many results as possible relevant to embedded systems and preparedness as well as instrument development and validation. As a consequence, 390 articles were initially identified from the selected databases, including 151 articles from ScienceDirect, 121 articles from Web of Science, 40 articles from Scopus, 52 articles from Emerald, and 38 articles from Google Scholar, as shown in Table 6. After the reference management tool was applied, a total of 92 duplicate studies were found and eliminated.

The primary articles for this study were then chosen by applying two-level inclusion and exclusion criteria to the remaining 298 articles, and 56 studies were discarded for failing to meet the criteria. Following that, 86 publications were rejected after their titles and abstracts were reviewed. After the full texts were reviewed, 114 of the remaining 156 articles were eliminated.

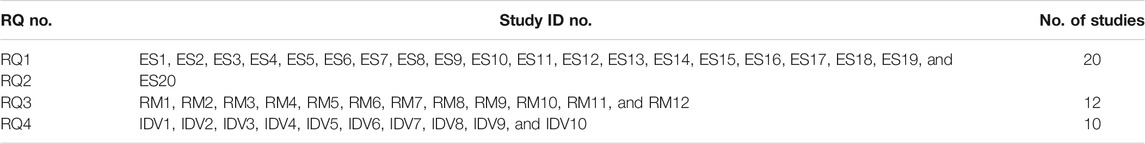

The remaining 42 articles were then divided into three categories based on the RQs, with 20 articles linked to embedded systems (ES), 12 articles linked to readiness measurements (RMs), and 10 articles associated with instrument development and validation (IDV). These remaining articles were included in the SLR as primary studies.
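
The screening arithmetic reported above can be checked with a short script. This is only a bookkeeping sketch: the counts are taken from the text, while the stage labels are paraphrases introduced here for readability.

```python
# Counts are taken from the text; stage labels are paraphrased for illustration.
identified = 390
removal_stages = [
    ("removed via reference management tool", 92),
    ("failed inclusion/exclusion criteria", 56),
    ("rejected on title and abstract", 86),
    ("rejected on full text", 114),
]

remaining = identified
trail = [remaining]  # number of articles left after each stage
for label, removed in removal_stages:
    remaining -= removed
    trail.append(remaining)
    print(f"{label}: -{removed} -> {remaining} remaining")

# Final categories of primary studies, as reported in the text.
categories = {"ES": 20, "RM": 12, "IDV": 10}
print("primary studies:", remaining, "| by category:", sum(categories.values()))
```

Each intermediate total in `trail` corresponds to a figure quoted in the text (298, 156, and 42), and the three category counts add up to the final number of primary studies.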

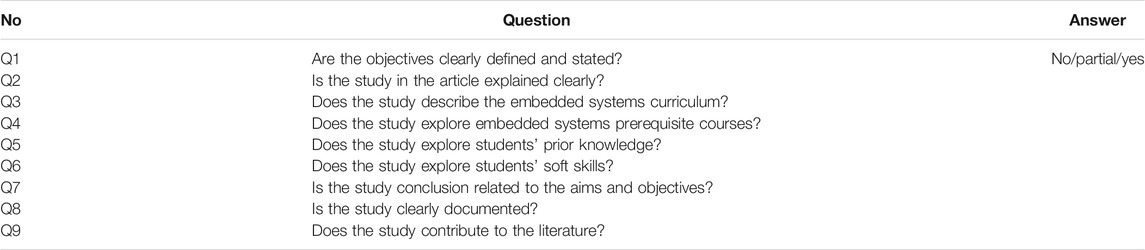

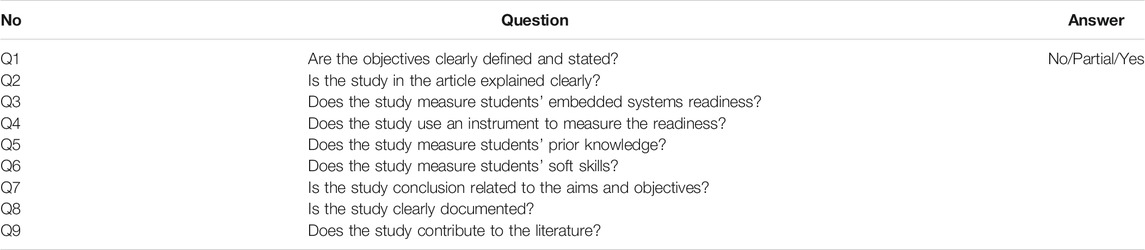

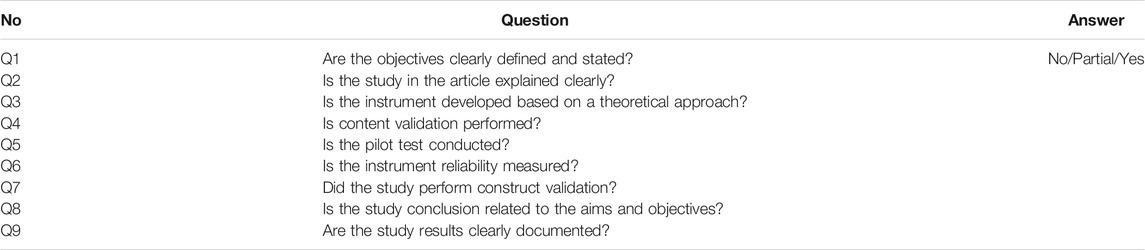

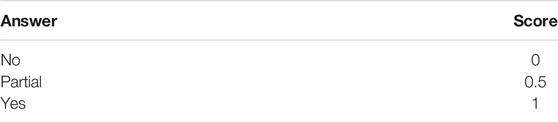

Quality assessment criteria were used in this study to examine the quality of the primary articles in order to eliminate biases and risks to validity in empirical investigations, as well as to serve as more accurate inclusion and exclusion criteria, as proposed by Kitchenham (2004). Accordingly, checklists of questions for the embedded system, readiness measurement, and instrument development and validation categories were created, as illustrated in Tables 7–9, respectively. Meanwhile, the answer scale illustrated in Table 10 was utilized to analyze and validate the selected primary articles, as indicated by Maiani de Mello et al. (2014).
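
A checklist-based quality assessment of this kind can be sketched as follows. The answer scale (yes = 1, partially = 0.5, no = 0), the cut-off of 0.5, the four-question checklist, and the study identifiers are all assumptions made for illustration; the scale actually used is the one given in Table 10, and the checklist questions are those in Tables 7–9.

```python
# Assumed three-point answer scale; the study's real scale is given in Table 10.
ANSWER_SCALE = {"yes": 1.0, "partially": 0.5, "no": 0.0}

# Hypothetical cut-off below which a study would be excluded.
QUALITY_THRESHOLD = 0.5


def quality_score(answers: list[str]) -> float:
    """Average the numeric value of each checklist answer (0.0 to 1.0)."""
    return sum(ANSWER_SCALE[a] for a in answers) / len(answers)


# Hypothetical answers to a four-question checklist for two made-up studies.
checklist_answers = {
    "study_A": ["yes", "yes", "partially", "yes"],
    "study_B": ["no", "partially", "no", "no"],
}

scores = {study: quality_score(ans) for study, ans in checklist_answers.items()}
accepted = [study for study, score in scores.items() if score >= QUALITY_THRESHOLD]

for study, score in scores.items():
    print(f"{study}: quality score = {score:.3f}")
print("accepted:", accepted)
```

Normalizing by the number of questions keeps scores comparable across the three checklists even if they differ in length.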

5.5 Data Synthesis

This section reports the performed review, which is the SLR’s final phase. The study’s findings are presented according to a predetermined review methodology. Using the data gathered, the primary studies were extracted throughout the review based on the established inclusion and exclusion criteria. In addition, descriptive statistical tools were used to present the results.

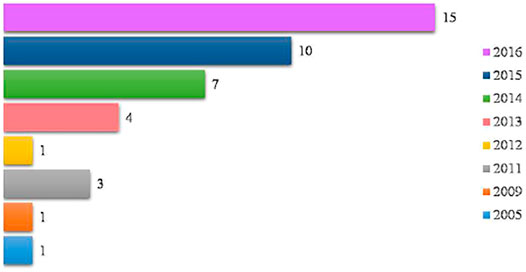

A total of 42 primary articles were chosen for the SLR based on the established selection criteria. Nonetheless, none of the source publications contained all three search terms of the study: embedded systems, instrument development, and readiness. Instrument development and readiness, on the other hand, were mentioned frequently in the articles chosen. As shown in Figure 3, ten of the research articles chosen were journal publications, and ten were conference articles. The most recent research studies, [ES1], [ES2], [ES3], [ES4], [ES5], [ES6], and [ES7], were published in 2016, while the oldest one, [ES20], was published in 2005. The primary studies and their publication years are displayed in Figure 4, which indicates an increasing trend in the number of publications.

5.6 Answers of Research Questions

The relevant articles recorded in the data extraction form were used to answer each RQ, as shown in Table 11.

RQ1: What are the essential body of knowledge (BOK) courses for undergraduate students interested in learning the embedded system design? This research question concerned the courses included in the body of knowledge for the embedded systems design course in electrical and electronics engineering, computer engineering, and computer science degrees. However, most of the reviewed articles either did not mention BOK courses or showed misconceptions about, or a weak understanding of, the concept.

RQ2: What cognitive, affective, and psychomotor skills are necessary for success in the embedded system design course? The focus of this research question was on both the hard and soft skills that students need to succeed in an embedded systems design course. In total, 3 of the 20 articles in the SLR, namely [ES15], [ES18], and [ES20], dealt with the development of embedded systems design course curricula. Of the remaining 17 research articles, nine ([ES2], [ES3], [ES4], [ES5], [ES8], [ES10], [ES16], [ES17], and [ES19]) focused on providing hands-on teaching materials for embedded system courses, while the other eight ([ES1], [ES6], [ES7], [ES9], [ES11], [ES12], [ES13], and [ES14]) measured the effect of implementing project-based learning (PBL) in the embedded systems design course. [ES15] evaluated master’s and degree embedded systems programs at three universities, namely the University of Eindhoven’s Master in Embedded Systems program, the University of Freiburg’s Master of Science in Embedded Systems Engineering program, the University of Pennsylvania’s Master of Science in Engineering in Embedded Systems program, and the University of Freiburg’s embedded systems engineering degree program. These articles presented an embedded system curriculum and teaching module that could be included in any degree or master’s program in electrical and electronics engineering, as well as computer science studies, at Berlin’s Humboldt University.

Following that, [ES20] describes a 98-credit-hour, 4-year undergraduate embedded systems engineering program at the University of Waterloo in Canada. It offered a curriculum that included solid mathematical foundations and an in-depth understanding of programming and engineering design, as well as hardware and software integration, which appears to be the primary application of embedded systems. The core courses covered the first 3 years of the curriculum, while the final year consisted of electives. A variety of laboratories and class projects were incorporated into the curriculum to give students a wide range of hands-on experience as well as the opportunity to apply fundamental design tools. Meanwhile, [ES18] is another study that presented an embedded systems curriculum.

[ES18] presented the difficulties that software-oriented students encountered while studying hardware courses, specifically microprocessors and embedded controllers, at the Institute of Informatics, Faculty of Natural Sciences and Mathematics at the Saints Cyril and Methodius University of Skopje in the Republic of Macedonia.

The study advocated changing the hardware course structure to mimic the software course structure that students are familiar with. It also proposed a teaching module on microprocessors and embedded controllers for the institution. The remaining articles were primarily descriptive and quantitative in nature: [ES1], [ES2], [ES3], [ES4], [ES5], [ES6], [ES7], [ES8], [ES9], [ES10], [ES11], [ES12], [ES13], [ES14], [ES16], [ES17], and [ES19] stressed the impact of PBL on embedded systems design in comparison to traditional methods.

RQ3: How can the instrument’s validity and reliability be assessed? This study looked into instrument validation through a four-part psychometric assessment: 1) content validity, 2) pilot test, 3) reliability, and 4) construct validity.

Content validity refers to the degree to which a designed instrument fully examines the construct of interest. The content validity index (CVI) was used to assess the instrument’s content validity in [IDV1].
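As an illustration of how a CVI is commonly computed, the following minimal sketch assumes that each expert rates every item on a 4-point relevance scale and that ratings of 3 or 4 count as relevant. The function names and the ratings are hypothetical and are not taken from [IDV1].

```python
from typing import Sequence


def item_cvi(ratings: Sequence[int], relevant_min: int = 3) -> float:
    """Item-level CVI: share of experts rating the item as relevant.

    Assumes a 1-4 relevance scale where ratings >= relevant_min count
    as relevant (an assumption of this sketch).
    """
    if not ratings:
        raise ValueError("at least one expert rating is required")
    return sum(r >= relevant_min for r in ratings) / len(ratings)


def scale_cvi_average(all_ratings: Sequence[Sequence[int]]) -> float:
    """Scale-level CVI computed as the mean of the item-level CVIs."""
    if not all_ratings:
        raise ValueError("at least one item is required")
    cvis = [item_cvi(item) for item in all_ratings]
    return sum(cvis) / len(cvis)


# Hypothetical ratings: one row per item, one column per expert.
example_ratings = [
    [4, 4, 3, 4, 3],  # all five experts rate the item as relevant
    [4, 2, 3, 4, 1],  # three of five experts rate it as relevant
    [2, 1, 2, 3, 2],  # one of five experts rates it as relevant
]

for number, item in enumerate(example_ratings, start=1):
    print(f"Item {number}: I-CVI = {item_cvi(item):.2f}")
print(f"Scale CVI (average) = {scale_cvi_average(example_ratings):.2f}")
```

Items with a low item-level CVI are the usual candidates for revision or removal. The cut-off applied in practice depends on the number of experts and is not specified here.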

A pilot test is an initial study designed to assess the produced instrument’s reliability, comprehensibility, relevance, and acceptability. According to the information gathered, none of the articles had conducted a pilot test study.

The reliability analysis was carried out as part of the primary investigations in [IDV1], [IDV2], [IDV3], [IDV4], [IDV5], [IDV6], [IDV7], [IDV8], [IDV9], and [IDV10] by using Cronbach’s alpha to analyze the internal consistency of the instruments.
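For readers unfamiliar with the statistic, the sketch below shows one common way to compute Cronbach's alpha from a respondents-by-items score table. It uses sample variances. The function name and the response data are hypothetical and do not come from any of the primary studies.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a table with one row per respondent.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    where k is the number of items.
    """
    if len(scores) < 2:
        raise ValueError("at least two respondents are required")
    k = len(scores[0])
    if k < 2:
        raise ValueError("at least two items are required")
    if any(len(row) != k for row in scores):
        raise ValueError("every respondent must answer every item")

    # Sample variance of each item (column) across respondents.
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point Likert responses: five respondents, four items.
responses = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 4, 5],
    [2, 2, 3, 2],
    [4, 4, 4, 3],
]
print(f"Cronbach's alpha = {cronbach_alpha(responses):.3f}")
```

Higher values indicate that the items vary together and therefore plausibly measure the same construct. The acceptance threshold is a convention chosen by each study.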

Construct validity is the degree to which a designed instrument measures what it is supposed to assess.

Construct validity was assessed using dimensionality analysis, item analysis, and the Wright map in [IDV6] and [IDV10]. Confirmatory factor analysis (CFA), principal component analysis (PCA), and item analysis were employed by [IDV2], [IDV8], and [IDV9], respectively. To assess construct validity, [IDV1] used item analysis and CFA, whereas [IDV3] used dimensionality analysis and differential item functioning (DIF).

In addition, [IDV4] used dimensionality and ANOVA analysis, whereas [IDV5] used dimensionality, CFA, and the Wright map. In [IDV7], item analysis, Wright maps, and Pearson’s correlations were also utilized.

RQ4: Are students prepared to take a course on the embedded system design? This topic centered on the description of a systematic method for creating an instrument that would be used to assess students’ readiness to take an embedded systems design course. According to the SLR, no studies found a link between preparedness and embedded systems design courses.

In total, 12 of the 42 primary articles in the SLR provided data on students’ readiness, with three studies, [RM1], [RM2], and [RM3], measuring students’ readiness to learn science courses at the tertiary level. Meanwhile, [RM4] and [RM5] supplied information on students’ readiness for mathematics in higher education, and [RM6], [RM7], and [RM8] provided information on high school students’ readiness for college.

Students who gained in-depth prior knowledge from earlier courses relating to the areas they sought to learn had higher grades in their target course, according to these research studies. [RM9], [RM10], [RM11], and [RM12] also included information on students’ readiness to accept mobile learning.

6 Systematic Literature Review Findings

This section provides an overview of the findings from the systematic literature review (SLR), including the study methodology, design, and process. The SLR has recently gained popularity as a respected research methodology (Ernst et al., 2015; Maiani de Mello et al., 2015; Mariano and Awazu, 2016; Reuters, 2016; Scopus Content Overview, 2016). It has been frequently used in medical research studies, with a variety of well-documented criteria supporting its use. Since 2004, a number of psychologists, engineers, and social scientists have also adopted this paradigm in their research. In addition, a number of prestigious publications have begun to produce special issues devoted to SLR articles (Ernst et al., 2015).

The SLR was chosen over a conventional literature review in this study because of its high scientific value: it relies on a sound, predefined search approach and on quality evaluation criteria, both of which are absent in conventional literature reviews, and it reduces the chance of missing an article (Scopus Content Overview, 2016).

Apart from that, Kitchenham (2004) gave the following three reasons for using a systematic literature review: 1) to summarize the existing evidence concerning a treatment or a technology, for example, to summarize the empirical evidence of both benefits and limitations of a specific agile method, 2) to identify any gaps in the current research in order to suggest areas for further investigation, and 3) to provide a framework or background to appropriately position a treatment or a technology.

According to the recommendations set by Kitchenham (2004), publications from various database sources were searched using predefined keywords to acquire appropriate research materials in order to answer the research questions (Soomro and Salleh, 2014).

The generic terms “readiness,” “embedded system education,” “instrument development,” “validity,” and “reliability” were found in all of the selected primary publications, according to the SLR findings. Only three studies, [ES15], [ES18], and [ES20], focused on the embedded system curriculum design. The majority of these publications focused on embedded system teaching and learning methodologies.

The authors’ findings revealed that no instrument has been developed yet to assess students’ readiness to take an embedded system design course. Furthermore, the majority of the instruments offered lacked concept clarity. They also lacked the foundational theory to build and assess their instruments as well as considerable psychometric examination of the instruments they had produced. In fact, all of the reviewed studies ignored pilot testing, while only one study emphasized content validity.

These instruments are used to assess students’ prior knowledge of the fundamentals of physics, mathematics, and chemistry in the science domain. The items in the instrument developed to assess student preparation for physics represent the science domain that deals with the facts about matter. Math instruments, on the other hand, tend to assess students’ prior knowledge of mathematical fundamentals.

The chemistry instrument is also designed to assess a student’s prior understanding of fundamental properties of matter and gases. Because these measurement instruments are not designed for the engineering domain, they cannot accurately and fully capture the need to assess students’ prior engineering design knowledge and skills. MeSRESD was created to assess students’ engineering preparedness, with items representing engineering fundamentals, analysis, and design activities as well as prior knowledge and skills, as recommended by ABET and MQA.

Researchers can use the findings of the SLR to determine the methodology and reporting standards employed in previous studies on the same or related themes. All researchers who collected and analyzed data in the primary studies listed in Table 11 used quantitative methodologies. However, to give a more comprehensive grasp of the research issue and to assure generalizability of the study findings to a larger population, this study was implemented utilizing a sequential exploratory mixed development technique (Kitchenham et al., 2009; Achimugu et al., 2014).

Furthermore, as indicated in Table 11, the majority of the proposed instruments lacked rigor in terms of reliability and validity methodologies as well as transparency in terms of analytical procedures and findings. As a result, content validity is assessed using the CVI and the content validity ratio (CVR) to ensure that the MeSRESD instrument is a trustworthy and valid instrument. To assess the consistency of the scales, the reliability alpha coefficient is calculated. Measurement instrument statistical analysis, item fit analysis, the Wright map, and differential item functioning were also used to determine construct validity.
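The CVR mentioned above can be illustrated with a short sketch based on the formula in Lawshe (1975), CVR = (n_e - N/2) / (N/2), where n_e is the number of experts who rate an item as essential and N is the panel size. The function name and the panel data are hypothetical and do not describe the MeSRESD panel.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Content validity ratio of one item, following Lawshe (1975).

    CVR = (n_e - N / 2) / (N / 2), ranging from -1 (no expert rates the
    item essential) to +1 (every expert rates the item essential).
    """
    if n_experts <= 0:
        raise ValueError("the panel must contain at least one expert")
    if not 0 <= n_essential <= n_experts:
        raise ValueError("n_essential must lie between 0 and n_experts")
    half_panel = n_experts / 2
    return (n_essential - half_panel) / half_panel


# Hypothetical panel of 10 experts; values are "essential" votes per item.
essential_votes = {"item_1": 10, "item_2": 8, "item_3": 5, "item_4": 2}

for item, votes in essential_votes.items():
    cvr = content_validity_ratio(votes, n_experts=10)
    print(f"{item}: CVR = {cvr:+.2f}")
```

A CVR of 0 means that exactly half of the panel considers the item essential. The minimum acceptable CVR depends on the panel size and is left out of this sketch.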

7 Instrument Development

One of the most important components of any research project is data collection, which assists researchers in answering their research questions. There are numerous data-gathering methods, such as quantitative and qualitative data collection methods, which are dependent on the research design used in the study. In quantitative investigations, questionnaires are the most often used data-gathering method (Kitchenham and Charters, 2007).

The Likert scale (Azhar et al., 2012), semantic differential (Marshall et al., 2015), visual analog, and numerical response formats (Mundy et al., 2015) are only a few of the instruments that have been employed in social science. The Likert scale used in this study is one of the most widely used instruments in social science research. The four essential processes in the construction of the MeSRESD instrument are 1) construct development, 2) scale format and item authoring, 3) pilot test, and 4) scale evaluation (Hu and Bai, 2014). An instrument can be developed either inductively or deductively (Nekkanti, 2016).

In the inductive instrument development method, the items are created first, and the instrument is then built from them. This strategy is typically employed when investigating a new phenomenon for which there is little or no existing theory. The deductive technique used in this study, on the other hand, begins with a theoretical definition of a construct, which then guides the development of items. This method necessitates a thorough awareness of the relevant literature as well as the phenomenon under investigation, and it helps to ensure the content adequacy of the final instrument (Rycroft et al., 2013).

The development of instruments has been thoroughly researched in the literature. Unfortunately, the majority of these investigations lacked reliability and validity, making it difficult to interpret research findings (Nekkanti, 2016).

The fundamental indicators of a measuring instrument’s quality are its reliability and validity. The goal of constructing and validating an instrument is to reduce measurement error to the greatest extent possible. As a result, a systematic literature study is carried out in order to locate, review, and critically evaluate existing methodologies for instrument creation and validation.

Item generation, content validity, pilot test, reliability analysis, and construct validity were all included in the authors’ list of development and validation techniques. Table 11 shows the 10 articles that have been chosen as primary studies. In their instrument to assess nurses’ compassion competency, Youngjin (Lawshe, 1975) used content validity and construct validity as well as item analysis and confirmatory factor analysis (CFA).

Construct validity was assessed using dimensionality analysis, item analysis, and the Wright map in Hesse-Biber (2016) and Creswell (2014). To assess construct validity, Lynn and Radford (2016) used dimensionality analysis and differential item functioning (DIF).

While Foryś and Gaca (2016) used dimensionality and ANOVA analysis, Takahashi and McDougal (2016) used dimensionality, CFA, and the Wright map. Item analysis, the Wright map, and Pearson’s correlations were also employed in Liu and Conrad (2016).

To summarize, none of the research studies cited previously gives a theoretical basis for the item creation of its instrument, and none of them conducted a pilot test analysis. However, as part of the primary studies, the reliability analysis was carried out using Cronbach’s alpha to analyze the internal consistency of the instruments. Furthermore, these studies offer no well-established framework to guide researchers through the many stages of instrument creation and validation.

In addition, none of the articles examined has highlighted how students’ attitudes influence their behavior, even though several studies on the adoption of various technologies have found a strong correlation between attitude and behavioral intention (Leedy and Ormrod, 2016).

SLRs have a number of goals, one of which is to identify research gaps and pave the path for future studies. As a result, the following points show present research gaps or future research opportunities:

1. None of the studies described previously provide a theoretical foundation for the development of their instruments.

2. None of the studies carried out a pilot test analysis.

3. In these studies, there is no well-established framework to guide researchers through many steps of instrument construction and validation.

4. None of the articles examined how students’ attitudes affect their behavior.

5. Social behavior variables should be included in the content validity analysis.

6. The findings of this research highlighted that the universities should reform their curricula to equip the students with the required BOK courses and improve the continuity between the courses.

7. This study also contributes to research by explaining in detail the process of instrument development.

8. The systematic approach to generate items and to assess the reliability and validity of the instrument is clearly elaborated.

9. The different types of prior knowledge influencing the readiness of students to learn the embedded systems design course should be explored by further research.

10. The study is only limited to the embedded system design course. Future research should involve different courses to provide findings that are more comprehensive.

8 Limitations

Because research is a never-ending process, this section outlines the limitations and constraints of the study that may have affected the overall findings, in the hope of encouraging other researchers to conduct additional research.

The study’s goal was to investigate which instruments have previously been utilized to assess students’ readiness to take up an embedded systems design course. This was accomplished by performing a comprehensive search of the ScienceDirect, Scopus, Web of Science, Emerald, and Google Scholar databases, which included only publications written in English. As a result, several articles relevant to this study may have been omitted due to this criterion.

Other flaws, such as bias in primary study selection, bias in study quality assessment, and imprecision in data extraction, could have influenced the SLR. To mitigate these risks, several sub-search strings were created and executed, and their results were logged separately.

The five databases described previously were chosen for their importance to electrical and electronics engineering as well as engineering education disciplines, in order to assure thorough and inclusive data collection. It is worth noting that these five databases are not comprehensive; therefore, the coverage of the study is necessarily limited.

In addition, only research publications written in English are included in the study. As a result, other important research publications may have been overlooked because they were published in a language other than English.

Because students are not machines who learn and teachers are not robots who teach, psychological and emotional preparedness to learn is essential. Future research should take such factors into account.

9 Conclusion

The goal of this research was to assess students’ readiness. A systematic literature review was conducted for this purpose, covering publications from 1992 to 2020, in order to assess and measure the effects of students’ preparedness before enrolling in embedded system design courses. Furthermore, evaluating such effects may help to close the gap between academically taught abilities and industry-required skills.

This analysis of the literature identified and compared numerous readiness measurement instruments that are used to assess the preparedness of embedded system design students. As a result, there is a requirement for more standard investigations using benchmark datasets. Nonetheless, the findings of the research show that the majority of the selected studies do not give theoretical evidence for the item creation of their instruments, and none of the studies have conducted pilot tests. Furthermore, there is no well-established framework to guide researchers through many stages of instrument creation and validation in the investigations.

RQ4 centered on the description of a systematic approach used to create an instrument that would be used to assess students’ readiness to take an embedded systems design course. None of the studies found a link between readiness and embedded systems design courses, according to the SLR.

Students who gained in-depth prior knowledge from earlier courses relating to the areas they sought to learn scored higher in their target course, according to these research studies. Furthermore, the authors observed during their search and review process that no research has been performed to investigate and examine the impact of preparation on the projected outcomes of the embedded system design course.

Researchers will be able to determine the methodology and reporting standards employed in previous studies of the same or related themes using the findings of a systematic literature review. The authors of this study stressed the need to assess learning readiness in order to close the gap between academically taught abilities and the skills sought by industry. Assessing students’ learning readiness and competence is one of the major challenges in meeting the significant and growing demand for qualified system engineers.

The generic terms “readiness,” “embedded system teaching,” “instrument development,” “validity,” and “reliability” were found in all selected source publications, according to the systematic literature review findings. The majority of these publications were about teaching and learning approaches for embedded systems. Only three research articles, on the other hand, focused on the embedded system curriculum design. The findings revealed that no instrument has been developed yet to assess students’ readiness to take up an embedded system design course. Furthermore, the majority of the instruments offered lacked concept clarity. They also lacked the foundational theory to build and assess their instruments as well as considerable psychometric examination of the instruments they had produced. In fact, in their reviews, all research studies ignored pilot testing, while only one study emphasized content validity.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abele, E., Metternich, J., Tisch, M., Chryssolouris, G., Sihn, W., ElMaraghy, H., et al. (2015). “Learning Factories for Research, Education, and Training” in Key Note Paper of the 5th International Conference on Learning Factories, 32. Bochum, Germany: Procedia CIRP, 1–6. In Press. doi:10.1016/j.procir.2015.02.187

Achimugu, P., Selamat, A., Ibrahim, R., and Mahrin, M. N. R. (2014). A Systematic Literature Review of Software Requirements Prioritization Research. Inf. Softw. Techn. 56 (6), 568–585. doi:10.1016/j.infsof.2014.02.001

Adunola, O. (2011). An Analysis of the Relationship between Class Size and Academic Performance of Students. Ogun State, Nigeria: Ego Booster Books.

Ahles, C. B., and Bosworth, C. C. (2004). The Perception and Reality of Student and Workplace Teams. Journalism Mass Commun. Educator 59, 41–59. doi:10.1177/107769580405900108

Alias, N. A. (2016). Student-Driven Learning Strategies for the 21st Century Classroom. Hershey, Pennsylvania: IGI Global. doi:10.4018/978-1-5225-1689-7

Alliance for Excellent Education (2011). A Time for Deeper Learning: Preparing Students for a Changing World. Educ. Dig. 77 (4), 43–49.

ARTIST network of excellence (2005). Guidelines for a Graduate Curriculum on Embedded Software and Systems. ACM Trans. Embedded Comput. Syst. 4 (3), 587–611. doi:10.1145/1086519.1086526

Azhar, D., Mendes, E., and Riddle, P. (2012). “A Systematic Review of Web Resource Estimation,” in Paper presented at the Proceedings of the 8th International Conference on Predictive Models in Software Engineering. doi:10.1145/2365324.2365332

Baytiyeh, H. (2012). Women Engineers in the Middle East from Enrollment to Career: A Case Study. http://www.asee.org/public/conferences/8/papers/3533/download.

Bennett, C., Ha, M. R., Bennett, J., and Czekanski, A. (2016). “Awareness of Self and the Engineering Field: Student Motivation, Assessment of ‘Fit’ and Preparedness for Engineering Education,” in Proc. 2016 Canadian Engineering Education Association (CEEA16) Conference, Halifax, Nova Scotia, Canada, June 19, 2016 - June 22, 2016.

Bertels, P., D'Haene, M., Degryse, T., and Stroobandt, D. (2009). Teaching Skills and Concepts for Embedded Systems Design. SIGBED Rev. 6 (1), 1–8. Article 4 (January 2009). doi:10.1145/1534480.1534484

Boyle, E. A., Hainey, T., Connolly, T. M., Gray, G., Earp, J., Ott, M., et al. (2016). An Update to the Systematic Literature Review of Empirical Evidence of the Impacts and Outcomes of Computer Games and Serious Games. Comput. Educ. 94, 178–192. doi:10.1016/j.compedu.2015.11.003

Caliskan, N., Kuzu, O., and Kuzu, Y. (2017). The Development of a Behavior Patterns Rating Scale for Preservice Teachers. Jel 6 (1), 130. doi:10.5539/jel.v6n1p130

Catalog.oakland.edu (2014). Program: Master of Science in Embedded Systems - Oakland University - Acalog ACMS™. [online] Available at:

Chang, C-S. (2010). The Effect of a Timed reading Activity on EFL Learners: Speed, Comprehension, and Perceptions. Reading a Foreign Lang. 22, 43–62.

Chugh, K. L., and Madhravani, B. (2016). On-Line Engineering Education with Emphasis on Application of Bloom’s Taxonomy. J. Eng. Educ. Transformations 30. doi:10.16920/jeet/2016/v0i0/85709

Conley, D. T., and French, E. M. (2014). Student Ownership of Learning as a Key Component of College Readiness. Am. Behav. Scientist 58 (8), 1018–1034. doi:10.1177/0002764213515232

Creswell, J. W. (2014). Research Design: Qualitative, Quantitative, and Mixed Method Approaches. 4th Ed. Thousand Oaks, CA: Sage Publications, Inc.

Dick, S. (2016). Embedded Systems Industry Focuses on IoT as the Future of Manufacturing. Dedham, Massachusetts, United States: Industrial Internet of Things and Industries.

Dilnot, J., Hamilton, L., Maughan, B., and Snowling, M. J. (2017). Child and Environmental Risk Factors Predicting Readiness for Learning in Children at High Risk of Dyslexia. Dev. Psychopathol 29 (1), 235–244. doi:10.1017/S0954579416000134

Ernst, N. A., Bellomo, S., Ipek, O., Nord, R. L., and Gorton, I. (2015). “Measure it? Manage it? Ignore it? Software Practitioners and Technical Debt,” in Proceedings of the 2015 10th Joint Meeting on Foundations of Software Engineering, 50–60. ACM. doi:10.1145/2786805.2786848

Faure, E. (1972). International Commission on the Development of Education. Washington, DC: The Bureau.

Fernandez-Chung, R. M., and Yin Ching, L. (2018). Phase III - Employability of Graduates in Malaysia: The Perceptions of Senior Management and Academic Staff in Selected Higher Education Institutions. Centre for Academic Partnerships and Engagement, University of Nottingham Malaysia.

Ferri, T. L. J. (2015). “Integrating Affective Engagement into Systems Engineering Education,” in 122nd ASEE Conference & Exposition, Seattle, WA, USA, June 14-17.

Flosason, T. O., McGee, H. M., and Diener-Ludwig, L. (2015). Evaluating Impact of Small-Group Discussion on Learning Utilizing a Classroom Response System. J. Behav. Educ. 24 (24), 317–337. doi:10.1007/s10864-015-9225-0

Foryś, I., and Gaca, R. (2016). Application of the Likert and Osgood Scales to Quantify the Qualitative Features of Real Estate Properties. Folia Oeconomica Stetinensia 16 (2), 7–16. doi:10.1515/foli-2016-0021

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active Learning Increases Student Performance in Science, Engineering, and Mathematics. Proc. Natl. Acad. Sci. U S A. 111 (23), 8410–8415. doi:10.1073/pnas.1319030111

Ganyaupfu, E. M. (2013). Factors Influencing Academic Achievement in Quantitative Courses Among Business Students of Private Higher Education Institutions. J. Educ. Pract. 4 (15), 57–65. doi:10.7176/JEP

Gillan, C. M., Otto, A. R., Phelps, E. A., and Daw, N. D. (2015). Model-based Learning Protects against Forming Habits. Cogn. Affect Behav. Neurosci. 15, 523–536. doi:10.3758/s13415-015-0347-6

Haetzer, B., Schley, G., Khaligh, R. S., and Radetzki, M. (2011). “Practical Embedded Systems Engineering Syllabus for Graduate Students with Multidisciplinary Backgrounds,” in WESE '11: Proceedings of the 6th Workshop on Embedded Systems Education, 1–8. doi:10.1145/2077370.2077371

Hailikari, T., Katajavuori, N., and Lindblom-Ylanne, S. (2012). The Relevance of Prior Knowledge in Learning and Instructional Design. Am. J. Pharm. Educ. 72 (5), 113. doi:10.5688/aj7205113

Hamari, J., Shernoff, D. J., Rowe, E., Coller, B., Asbell-Clarke, J., and Edwards, T. (2016). Challenging Games Help Students Learn: An Empirical Study on Engagement, Flow and Immersion in Game-Based Learning. Comput. Hum. Behav. 54, 170–179. doi:10.1016/j.chb.2015.07.045

Hanapi, Z., and Nordin, M. S. (2014). Unemployment Among Malaysia Graduates: Graduates'Attributes, Lecturers' Competency and Quality of Education. Proced. - Soc. Behav. Sci. 112, 1056–1063. doi:10.1016/j.sbspro.2014.01.1269

Hesse-Biber, S. (2016). Doing Interdisciplinary Mixed Methods Health Care Research: Working the Boundaries, Tensions and Synergistic Potential of Team-Based Research. Qual. Health Res. 26 (5), 649–658. doi:10.1177/1049732316634304

Hidayat, S., Hendrayana, A., and Pujiastuti, H. (2018). Identification of Readiness of Developing University to Apply Information and Communication Technology (ICT) in Teaching and Learning. SHS Web Conf. 42, 00117. doi:10.1051/shsconf/20184200117

Howieson, C., Mckechnie, J., and Semple, S. (2012). Working Pupils: Challenges and Potential. J. Educ. Work 25 (4), 423–442. doi:10.1080/13639080.2012.708723

Hu, Y., and Bai, Ga. (2014). A Systematic Literature Review of Cloud Computing in E-Health. Health Informatics-An Int. J. (Hiij) 3, 11–20. doi:10.5121/hiij.2014.3402

Ibrahim, I., Ali, R., Zulkefli, M., and Nazar, E. (2015). Embedded Systems Pedagogical Issue: Teaching Approaches, Students Readiness, and Design Challenges. Ajesa 3 (31), 1–10. doi:10.11648/j.ajesa.20150301.11

Ibrahim, I., Ali, R., Adam, M. Z., and Elfidel, N. (2014). “Embedded Systems Teaching Approaches & Challenges,” in 2014 IEEE 6th Conference on Engineering Education (ICEED), Kuala Lumpur, Malaysia, 9 - 10 December 2014. doi:10.1109/ICEED.2014.7194684

Ilakkiya, S. N., Ilamathi, M., Jayadharani, J., Jeniba, R. L., and Gokilavani, C. (2016). A Survey on Recent Trends and Applications in Embedded System. Int. J. Appl. Res. 2, 672–674.

Ridwan, I. I., Ali, R., and Adam, Z. (2017). “Rasch Model Validation of Instrument to Measure Students Readiness to Embedded Systems Design Course,” in 2nd International Higher Education Conference 2017 (IHEC). (Kuala Lumpur, Malaysia).

Ridwan, I. I., Kamilah, Bt., Radin Salim, H., Adam, Z., Mohd, I. I., and Nazar, E. F. (2019). “Development and Validation of Scale Using Rasch Analysis to Measure Students’ Entrepreneurship Readiness to Learn Embedded System Design Course,” in Procedia Computer Science of the 9th World Engineering Education Forum (WEEF-2019), Chennai, India, 13th-16th November, 2019.

Itani, M., and Srour, I. (2016). Engineering Students' Perceptions of Soft Skills, Industry Expectations, and Career Aspirations. J. Prof. Issues Eng. Educ. Pract. 142 (11-12), 04015005. doi:10.1061/(ASCE)EI.1943-5541.0000247

Jane Kay, L. (2018). School Readiness: A Culture of Compliance? PhD Thesis. (UK: the University of Sheffield).

Johanson, J. (2010). Cultivating Critical Thinking: An Interview with Stephen Brookfield. J. Develop. Educ. 33 (3), 26–30.

John, J. (2016). Embedded Systems Market. Zion Research, http://www.marketresearchstore.com/news/global-embedded-systems market-249.

Kadir, A. M. (2016). “Quality Improvement of Examination's Questions of Engineering Education According to Bloom's Taxonomy,” in The Sixth International Arab Conference on Quality Assurance in Higher Education (IACQA). Zarqa, Jordan: Zarqa University.

Kagan, S. (2013). “David, Goliath and the Ephemeral Parachute,” in Early Childhood and Compulsory Education: Reconceptualising the Relationship. Editor P. Moss (Abingdon, UK: Routledge).

Karzunina, D., West, J., Gabriel, M., Georgia, P., and Gordon, S. (2018). The Global Skills Gap in the 21st Century. QS Glob. Employer Surv. 2018.

Kay, D., and Kibble, J. (2016). Learning Theories 101: Application to Everyday Teaching and Scholarship. Adv. Physiol. Educ. 40 (1), 17–25. doi:10.1152/advan.00132.2015

King, F. J., Goodson, L., and Rohani, F. (2015). Higher Order Thinking Skills. Center for Advancement of Learning and Assessment (Retrieved March 7, 2015).

Kitchenham, B., and Charters, S. (2007). Guidelines for Performing Systematic Literature Reviews in Software Engineering. Durham, United Kingdom: University of Durham.

Kitchenham, B., Pearl Brereton, O., Budgen, D., Turner, M., Bailey, J., and Stephen, L. (2009). Systematic Literature Reviews in Software Engineering - A Systematic Literature Review. Inf. Softw. Techn. 51 (1), 7–15. doi:10.1016/j.infsof.2008.09.009

Krathwohl, D. R. (2002). A Revision of Bloom’s Taxonomy: An Overview. Theory into. Practice 41 (4), 212–218. doi:10.1207/s15430421tip4104_2

Lang, J. D., Cruse, S., McVey, F. D., and McMasters, J. (1999). Industry Expectations of New Engineers: a Survey to Assist Curriculum Designers. J. Eng. Educ. 88 (1), 43–51. doi:10.1002/j.2168-9830.1999.tb00410.x

Lawshe, C. (1975). A Quantitative Approach to Content Validity. Personnel Psychol. 28 (4), 563–575. doi:10.1111/j.1744-6570.1975.tb01393.x

Leedy, P. D., and Ormrod, J. E. (2016). Practical Research: Planning and Design. 11th Edition. New York, United States: Pearson.

Lima, R. M., Mesquita, D., and Flores, M. A. (2014). “Project Approaches in Interaction with Industry for the Development of Professional Competences,” in Industrial and Systems Engineering Research Conference (ISERC 2014), Montréal, Canada.

Lima, R. M., Mesquita, D., and Rocha, C. (2013). “Professionals’ Demands for Production Engineering: Analyzing Areas of Professional Practice and Transversal Competences,” in Paper presented at the International Conference on Production Research (ICPR 22) (Brazil: Foz do Iguassu), 1–7. 352a.

Liu, M., and Conrad, F. G. (2016). An Experiment Testing Six Formats of 101-point Rating Scales. Comput. Hum. Behav. 55, 364–371. doi:10.1016/j.chb.2015.09.036

Mundy, L. K., Romaniuk, H., Canterford, L., Hearps, S., Viner, R. M., Bayer, J. K., et al. (2015). Adrenarche and the Emotional and Behavioral Problems of Late Childhood. J. Adolesc. Heal. 57, 608–616. doi:10.1016/j.jadohealth.2015.09.001

Lorin, W. A., Krathwohl, D. R., Airasian, P. W., Cruikshank, K. A., Mayer, R. E., Pintrich, P. R., et al. (2000). A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives.

Lynn, S., and Radford, M. (2016). Research Methods in Library and Information Science. 6th Edition. Philadelphia, United States: Library and Information Science Text.

Maiani de Mello, R., da Silva, P. C., and Travassos, G. H. (2014). “Sampling Improvement in Software Engineering Surveys,” in Proceedings of the 8th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, 13. ACM. doi:10.1145/2652524.2652566

Maiani de Mello, R., Pedro Da Silva, C., and Travassos, G. H. (2015). Investigating Probabilistic Sampling Approaches for Large-Scale Surveys in Software Engineering. J. Softw. Eng. Res. Develop. 3 (1), 1. doi:10.1186/s40411-015-0023-0

Mariano, S., and Awazu, Y. (2016). Artifacts in Knowledge Management Research: a Systematic Literature Review and Future Research Directions. J. Knowledge Manage. 20 (6), 1333–1352. doi:10.1108/JKM-05-2016-0199

Markes, I. (2006). A Review of Literature on Employability Skill Needs in Engineering. Eur. J. Eng. Educ. 31 (6), 637–650. doi:10.1080/03043790600911704

Marshall, C., Brereton, P., and Kitchenham, B. (2015). Tools to Support Systematic Reviews in Software Engineering: a Cross-Domain Survey Using Semi-structured Interviews. EASE 26, 1–26. doi:10.1145/2745802.2745827

Martin, R., Maytham, B., Case, J., and Fraser, D. (2005). Engineering Graduates' Perceptions of How Well They Were Prepared for Work in Industry. Eur. J. Eng. Educ. 30 (2), 167–180. doi:10.1080/03043790500087571

Marwedel, P. (2011). Embedded System Design: Embedded Systems Foundations of Cyber-Physical Systems, 10. Cham, Switzerland: Springer Science & Business Media. doi:10.1007/978-94-007-0257-8_3

Md Zabit, M. N., Abdul Hadi, Z., Ismail, Z., and Zachariah, T. Z. (2018). A Brief History and Review of Critical Thinking Skills: Malaysian Approach. J. Teach. Educ. 08 (01), 153–164.

Meier, R. L., Williams, M. R., and Humphreys, M. A. (2000). Refocusing Our Efforts: Assessing Non-technical Competency Gaps. J. Eng. Educ. 89 (3), 377–385. doi:10.1002/j.2168-9830.2000.tb00539.x

Nair, C. S., Patil, A., and Mertova, P. (2009). Re-engineering Graduate Skills - a Case Study. Eur. J. Eng. Educ. 34 (2), 131–139. doi:10.1080/03043790902829281

Nekkanti, H. (2016). Surveys in Software Engineering: A Systematic Literature Review and Interview Study. Master Thesis.

NGEM, Ministry of Higher Education (2012). National Graduate Employability Blueprint 2012-2017. Putrajaya: University Putra Malaysia Press.

Nguyen, C. (2017). Entrepreneurial Intention of International Business Students in Viet Nam: a Survey of the Country Joining the Trans-Pacific Partnership. J. Innov. Entrep 6, 7. doi:10.1186/s13731-017-0066-z

Norasiken, B., Fesol, S. F. A., Salam, S., Osman, M., and Salim, F. (2016). Learning Style Approaches for Gen Y: An Assessment Conducted in a Malaysian Technical University. Pertanika J. Soc. Sci. Humanities 24 (4).

Pascarella, E. T., and Terenzini, P. T. (2005). How College Affects Students: A Third Decade of Research. San Francisco: Jossey-Bass.

Qamar, S. Z., Kamanathan, A., and Al-Rawahi, N. Z. (2016). “Teaching Product Design in Line with Bloom’s Taxonomy and ABET Student Outcomes,” in IEEE Global Engineering Education Conference (EDUCON), Abu Dhabi, UAE, 10-13 April.

Rahmat, N., Ayub, A. R., and Buntat, Y. (2017). “Employability Skills Constructs as Job Performance Predictors for Malaysian Polytechnic Graduates: A Qualitative Study”, in Malaysian Journal of Society and Space 12. Kuala Lumpur, Malaysia: Ministry of Higher education, 154–167.

Redding, P. M., Jones, E., and Laugharne, J. (2011). The Development of Academic Skills: An Investigation into the Mechanisms of Integration within and External to the Curriculum of First-Year Undergraduates. Western Avenue: Thesis submitted to the Cardiff School of Management University of Wales Institute Cardiff.

Reuters (2016). Thomson Reuters Corporation. https://www.thomsonreuters.com/en.html.

Rickels, H. A. (2017). Predicting College Readiness in STEM: A Longitudinal Study of Iowa Students (USA: University of Iowa). PhD Thesis.

Ridwan, I. I., Ali, R., Mohamed, I. I., Adam, M. Z., and ElFadil, N. (2016). “Rasch Measurement Analysis for Validation Instrument to Evaluate Students Technical Readiness for Embedded Systems,” in 2016 IEEE Region 10 Conference (TENCON2016)-Proceedings of the International Conference, 2117–2212. doi:10.1109/TENCON.2016.7848399

Rover, D. T., Mercado, R. A., Zhang, Z., Shelley, M. C., and Helvick, D. S. (2008). Reflections on Teaching and Learning in an Advanced Undergraduate Course in Embedded Systems. IEEE Trans. Educ. 51 (3), 400–412. doi:10.1109/TE.2008.921792

Rycroft, C., Fernandez, M., and Copley-Merriman, K. (2013). “Systematic Literature Reviews at the Heart of Health Technology Assessment: A Comparison across Markets,” in Presented at ISPOR 16th Annual European Congress. doi:10.1016/j.jval.2013.08.920

Saavedra, A. R., and Opfer, V. D. (2012). Learning 21st-century Skills Requires 21st-century Teaching. Phi Delta Kappan 94 (2), 8–13. doi:10.1177/003172171209400203

Safaralian, L. (2017). Bridging the Gap: A Design-Based Case Study of a Mathematics Skills Intervention (San Marcos, USA: California State University). Program. PhD. Thesis.

Sahlberg, P. (2006). Education Reform for Raising Economic Competitiveness. J. Educ. Change 7, 259–287. doi:10.1007/s10833-005-4884-6

Smt, S. J., and Philip, S. (2017). A Study on the Relationship between Affective Learning Outcome and Achievement in Physics of Secondary School Students. Quest Journals J. Res. Humanities Soc. Sci. 5 (1), 108–111. doi:10.13189/ujer.2017.051024

Schuler, H. (2006). “Work and Requirement Analysis,” in Textbook of Personal Psychology. Editor H. Schuler, 45–68.

Scott, G., and Yates, K. W. (2002). Using Successful Graduates to Improve the Quality of Undergraduate Engineering Programmes. Eur. J. Eng. Educ. 27 (4), 363–378. doi:10.1080/03043790210166666

Seetha, N. (2014). Are Soft Skills Important in the Workplace? – A Preliminary Investigation in Malaysia. Int. J. Acad. Res. Business Soc. Sci. 4 (4), 44–56. doi:10.6007/IJARBSS/v4-i4/751

Selinger, M., Sepulveda, A., and Buchan, J. (2013). Education and the Internet of Everything. Cisco.

Seviora, R. E. (2005). A Curriculum for Embedded System Engineering. ACM Trans. Embed. Comput. Syst. 4 (3), 569–586. doi:10.1145/1086519.1086525

Shakir, R. (2009). Soft Skills at the Malaysian Institutes of Higher Learning. Asia Pac. Educ. Rev. 10 (3), 309–315. doi:10.1007/s12564-009-9038-8

Shuell, T. (2016). Theories of Learning. Education.Com. Available from: http://www.education.com/reference/article/theories-of-learning/ (Retrieved December 06, 2016).

Smith, M. C., Rose, A. D., Ross-Gordon, J., and Smith, T. J. (2015). Adults’ Readiness to Learn as a Predictor of Literacy Skills. Northern Illinois University. https://static1.squarespace.com/static/51bb74b8e4b0139570ddf020/t/54da7802e4b08c6b90107b4f/1423603714198/Smith_Rose_Ross-Gordon_Smith_PIAAC.pdf (Retrieved on 10 12, 2017).

Sönmez, V. (2017). Association of Cognitive, Affective, Psychomotor and Intuitive Domains in Education, Sönmez Model. Universal J. Educ. Res. 5 (3), 347–356. doi:10.13189/ujer.2017.050307

Soomro, B., and Salleh, N. (2014). “A Systematic Review of the Effects of Team Climate on Software Team Productivity,” in Asia-Pacific World Congress on Computer Science and Engineering, Nadi, Fiji, 4-5 Nov. 2014, 1–7. doi:10.1109/APWCCSE.2014.7053876

Srour, I., Abdul-Malak, M.-A., Itani, M., Bakshan, A., and Sidani, Y. (2013). Career Planning and Progression for Engineering Management Graduates: An Exploratory Study. Eng. Manage. J. 25 (3), 85–100. doi:10.1080/10429247.2013.11431985

Stern, E. (2001). Intelligence, Knowledge, Transfer and Handling of Sign Systems, Perspectives of Intelligence Research, 163–203.

Syed Ahmad, T. S. A., and Hussin, A. A. (2017). Application of the Bloom’s Taxonomy in Online Instructional Games. Int. J. Acad. Res. Business Soc. Sci. 7 (4), 1009–1020. doi:10.6007/IJARBSS/v7-i4/2910

Takahashi, A., and McDougal, T. (2016). Collaborative Lesson Research: Maximizing the Impact of Lesson Study. ZDM Math. Educ. 48, 513. doi:10.1007/s11858-015-0752-x

Ting, S.-H., Marzuki, E., Chuah, K.-M., Misieng, J., and Jerome, C. (2017). Employers' Views on Importance of English Proficiency and Communication Skill for Employability in Malaysia. Indonesian J. Appl. Linguist. 7 (2), 315–327. doi:10.17509/ijal.v7i2.8132

Yildirim, S. G., and Baur, S. W. (2016). Development of Learning Taxonomy for an Undergraduate Course in Architectural Engineering Program. Journal of American Society for Engineering Education.

Yoder, B. L. (2011). Engineering by the Numbers. http://www.asee.org/papers-and-publications/publications/college-profiles/2011-profile-engineering-statistics.pdf.

Keywords: embedded system design, learning readiness, instruments, validity, design, students learning

Citation: Elfadil N and Ibrahim I (2022) Embedded System Design Student’s Learning Readiness Instruments: Systematic Literature Review. Front. Educ. 7:799683. doi: 10.3389/feduc.2022.799683

Received: 10 November 2021; Accepted: 03 January 2022;

Published: 18 February 2022.

Edited by:

Ibrahim Arpaci, Bandirma Onyedi Eylül University, Turkey

Reviewed by:

Mostafa Al-Emran, British University in Dubai, United Arab Emirates

Airil Haimi Mohd Adnan, MARA University of Technology, Malaysia

Hüseyin Kotaman, Harran University, Turkey

Copyright © 2022 Elfadil and Ibrahim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nazar Elfadil, nafdel@fbsu.edu.sa; Intisar Ibrahim, nfadel@fbsu.edu.sa
