ORIGINAL RESEARCH article

Front. Educ., 03 June 2022

Sec. Higher Education

Volume 7 - 2022 | https://doi.org/10.3389/feduc.2022.793151

Renzo Felipe Carranza Esteban1*, Oscar Mamani-Benito2, Fiorella Sarria-Arenaza3, Anahí Meza-Villafranca1, Ana Paula Alfaro1, Susana K. Lingan1

The objective of this study was to translate and validate the Online Homework Distraction Scale (OHDS) for Peruvian university students. Accordingly, an instrumental cross-sectional study was conducted with 811 university students of both sexes, aged between 16 and 39 (M = 20.96 years; SD = 4.42) and residing in the city of Lima. Content-based validity evidence was analyzed using Aiken’s V coefficient, evidence based on the internal structure through a confirmatory factor analysis, and evidence in relation to other variables through a correlation analysis. The reliability was calculated using the Omega coefficient. Expert opinions were favorable for all items (V > 0.70). The one-dimensional structure of the scale was confirmed, and it presented acceptable reliability (α > 0.70). Thus, the OHDS is a valid and reliable measure for university students.

In mid-March 2020, the impact of the COVID-19 pandemic forced most governments in the world to order the closure of educational institutions to prevent the proliferation of the virus from Wuhan (Hermann et al., 2021). Owing to this reality and with the date of returning to in-person classes remaining uncertain, both schools and universities found that continuing and completing study programs online was the only alternative (Muthuprasad et al., 2021).

In this new scenario, students had to face various challenges to take advantage of and manage their online learning process. One such challenge involved controlling online learning distractors, which had a greater impact at the university level (Schmidt, 2020). Distractors are defined as any stimulus that generates a shift in focus from study tasks or activities that are clearly related to learning to other activities or situations (Feng et al., 2019).

In relation to this phenomenon, the scientific literature provides a frame of reference to understand how the brain works when it comes to exercising control over attention, a cognitive function key in learning and carrying out online tasks. In this case, the theoretical model of dual attention processes shows that our attention capacities are limited (Corbetta and Shulman, 2002) because even though the brain focuses on an objective activity, its attention can get diverted to other focal points owing to internal or external stimuli. Therefore, trying to distribute attention between different activities can hinder and interfere with student learning and performance (Xu et al., 2020). Thus, the theories of divided attention and dual-task interference (Garcia, 1991) posit that when a student tries to focus on virtual classes and navigate social networks simultaneously, an interference can occur, impeding speed and accuracy in the learning process. This interference occurs because the capacity of the brain’s attentional division index, responsible for controlling the distribution of attentional resources dedicated to tasks and cognitive operations, is exceeded (Baddeley, 1996).

In this regard, it is important to differentiate between the types of distractors. Traditionally, researchers have discussed internal distractors (personal and family problems as well as psychological manifestations such as anxiety, demotivation, and fatigue) and external distractors (noise, lighting, and temperature) (Aagaard, 2015). However, with the development and inclusion of technologies in learning, a new taxonomy currently predominates, classifying distractors as conventional and unconventional (Xu, 2015). In this case, the conventional ones are stimuli that traditionally displaced the student’s attention in the time and learning context (noise, environmental factors, and personal and family problems, among others). Conversely, unconventional stimuli are more related to the use of new technologies (smartphones, social networks, the internet, and network games, among others) (Xu et al., 2016).

Currently, the research emphasizes that unconventional distractors have become more important in the context of virtual learning (Kolhar et al., 2021). The most common involve the use of music players, mobile devices, internet use, and social networks such as Facebook (Feng et al., 2019). These facts have been demonstrated even before the pandemic, as, for example, in the study conducted by Calderwood et al. (2014), who reported that university students who participated in a 3-h independent study session, on average, spent 73 min listening to music while studying. In addition, 35 distractions were noted during the course of the 3 h.

Based on this, serious reflection is necessary; although technology has resulted in great advances for the improvement of educational quality, its misuse has also affected university education. This is why many researchers claim that the role played by self-regulation (Hatlevik and Bjarnø, 2021) in virtual learning contexts (Berridi and Martínez, 2017), such as the one we are currently experiencing, is preponderant.

According to Magalhães et al. (2020), changes in traditional education have apparently led to a different way of performing and delivering academic assignments. Thus, the level of learning in a virtual context highly depends on the disposition and interest of the university students themselves, who are known to complete several activities simultaneously. This is a phenomenon widely documented through studies that describe how multitasking linked to the use of mobile phones makes learning difficult (Chen and Yan, 2016).

For this reason, unconventional means of distraction such as the problematic use of smartphones (Yang et al., 2019; Akinci, 2021), social networks (Ramos-Galarza et al., 2017; Hejab and Shaibani, 2020), and the internet (Aznar-Díaz et al., 2020) tend to have a more significant negative impact on academic performance (Gupta and Irwin, 2016). One of the most significant consequences of such distractors at the academic level is dilatory behavior, better known as academic procrastination, which involves postponing essential academic activities (Steel et al., 2018) despite being aware of the consequences. In this regard, studies affirm that the problematic use of cell phones stems from procrastination (Hong et al., 2021). They add that the prevalence of such behavior is increasing as a result of the presence of technological stimuli (video games, television, the Internet, videos, social networks, etc.) (Madhan et al., 2012), which are inevitably integral aspects of students’ daily lives.

Following this reasoning, a student’s vulnerability to online distractors and their repercussions can lead to a sense of dissatisfaction among students in terms of their university experiences. Such dissatisfaction is particularly experienced by students toward the end of the degree when they undertake self-assessments and believe that their academic, social, and emotional performance (Alvarez et al., 2015) levels could have been higher. At this stage, they realize that excessive use of digital avenues such as social networks for non-academic purposes has gradually affected their academic performance, social interactions, and lifestyle (Kolhar et al., 2021).

A theoretical perspective aimed at clarifying the relationship between online homework distractors, academic procrastination, and satisfaction with studies can be obtained by evaluating the findings of relevant research. For example, Svartdal et al. (2020) found that distractions in the university setting contribute to procrastination. This finding is consistent with the study by Steel et al. (2018), in which procrastinators scored higher on distraction scales. In fact, it has typically been inferred that students already susceptible to procrastination are significantly impacted by environments entailing many distractors, particularly the unconventional ones, which divert planned behavior and drive individuals toward more pleasurable activities (Svartdal et al., 2020). Such distractions can lead students to make extreme decisions such as dropping out; however, those who manage to make progress in their courses usually feel a certain level of dissatisfaction with their studies at the end of each academic period (Cieza et al., 2018).

Consequently, having recognized the importance of investigating distractors during the performance of online tasks, there is an urgent need for measurement instruments that allow researchers to evaluate the incidence of this phenomenon in the Peruvian university population. However, a review of the available scientific literature reveals that there are still no valid and reliable measures for this context despite the latent need to conduct research on the effect of certain distractors on academic performance (Durán-Aponte and Pujol, 2013; Ramos-Galarza et al., 2017; Mendoza, 2018; Guillén, 2019).

Given this gap in the literature, the researchers of the present study found it appropriate to examine a short and adaptable instrument within the Peruvian university population, since studies currently cannot be carried out in person and must rely on virtual resources owing to the social restrictions imposed by the government. In addition, the alternative of constructing a new instrument was ruled out because constructing a measure under the international standards for educational and psychological tests (American Educational Research Association, American Psychological Association, and National Council on Measurement in Education, 2014) requires resources and a lengthy schedule that exceed those presently available to the authors of this article.

Thus, one measure that addresses the aforementioned needs is the Online Homework Distraction Scale (OHDS), which, from its first version, takes into account conventional and unconventional distractors for the teacher population (Xu et al., 2016). Recently, however, Xu et al. (2020) re-examined its psychometric properties in the Chinese university student population, a group that is similar to the one that is intended to be studied herein.

Because of the need to evaluate online homework distractors in online education during the COVID-19 pandemic (Aguilera-Hermida, 2020), the present study aims to translate and validate the OHDS for Peruvian university students. In addition, owing to the relationship of online task distractors with study satisfaction and procrastination (Balkis and Duru, 2016, 2017), the latter two variables are proposed as contrast variables for the convergent validity analysis.

This is an instrumental study with an observational, cross-sectional design (Ato et al., 2013). Through intentional non-probabilistic sampling, 811 undergraduate university students participated voluntarily in this study, of whom 295 were male, 511 were female, and 5 preferred not to specify their gender; the average age was 20.96 years (SD = 4.423). All undergraduate students were included regardless of their specific academic year, university, or educational institution.

The current study was developed in two stages: the translation and the validation of the OHDS in Latin-American Spanish. Before starting, permission was obtained from the corresponding author of the original instrument via email. In addition, the study was reviewed by the Ethics Committee of Universidad Peruana Unión, and permission was obtained to begin with the corresponding procedure.

The translation process was performed based on the recommendations offered by Guillemin et al. (1993), with an initial translation by a group of translators, a back translation by other translators without knowledge of the original questionnaire, a review committee, and a focus group for pretesting.

The process began with the translation of the original instrument into Spanish; four translators to whom the authors had access were requested to participate. The translators were bilingual with an advanced level of English. The four translators were invited to participate to compare and assess the various translations in terms of the clarity of the criteria and to make any necessary adjustments to grammar.

Once an appropriate translation was discussed and chosen for each item, the scale was sent to three English translators with a university degree who had also been invited to participate in the study. All three translators spoke English and Spanish fluently. Each translator prepared an independent translation of the scale from Spanish to English. Subsequently, the researchers held a virtual meeting in which they reviewed the translation of the scale and prepared a consolidated version, taking the suggestions into consideration and incorporating the corresponding modifications.

A call was made for university students in Metropolitan Lima, and the meeting took place virtually. The main criterion for this activity was for the participants to be enrolled in a professional degree program. In total, 29 students from different programs and universities responded to the call (14 men and 15 women). These students were presented with the items translated into Spanish and invited to comment on them or indicate whether the meaning of the items was clear and if the vocabulary used was understandable. Their comments and suggestions were recorded and considered by the researchers to assess the relevance of the final translation.

The focus group suggested the following change: Mi mente se dispersa or Mi pensamiento divaga instead of Sueño despierto in Item 1.

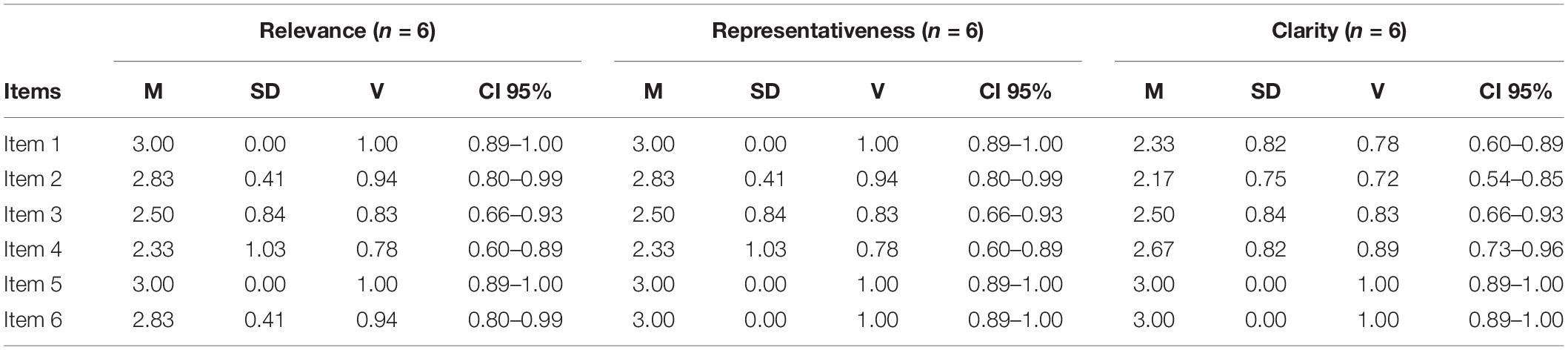

The validation of the scale comprised an expert review. To this end, six psychologists who were experienced in the educational field and university teaching (three of them held a master’s degree and the other three a doctorate) participated. In their review, the psychologists analyzed the content validity through the relevance, representativeness, and clarity of the items; the Aiken V value was calculated for each item. Table 1 shows the original version of the OHDS scale and the translated Peruvian version (distraction scale when performing tasks online).

Following the translation and validation of the scale by experts, an online form was designed in Google Forms wherein the informed consent, a sociodemographic record, and the statements of the scale were included. The first section of the form stated the research objective, that participation was anonymous and voluntary, and that the information collected was for research purposes only.

Academic procrastination was measured with the Academic Procrastination Scale (EPA), validated in the Peruvian context by Dominguez-Lara et al. (2014). This instrument has 12 items distributed in two dimensions: academic self-regulation and the delay of activities. The EPA has proven to be valid (CFI = 1.00, GFI = 0.97, RMSEA = 0.078) and reliable (α = 0.81).

In addition, ESE was measured with the Brief Study Satisfaction Scale, validated by Merino-Soto et al. (2017) in Peruvian university students. This instrument is composed of three items and has been reported to be valid (CFI = 0.92, GFI = 0.99, RMSR = 0.053) and reliable (α = 0.788).

This study was approved by the Research Ethics Committee of Universidad Peruana Unión with the approval number 2021-CEUPeU-0038.

First, content-based validity was analyzed through expert opinions: based on the scores assigned to the items, Aiken’s V coefficient (with values ≥ 0.70 considered significant) and its 95% confidence intervals (CI; Ventura-León, 2019) were calculated. Through this procedure, the judges’ opinions were quantified based on the analysis of the representativeness, relevance, and clarity of the test’s contents (Ventura-León, 2019). Second, the descriptive statistics of the OHDS items (mean, standard deviation, skewness, and kurtosis) were calculated. Third, a confirmatory factor analysis (CFA) was performed using the diagonally weighted least squares estimator with robust mean and variance corrections, given the ordinal nature of the items. Here, the goodness-of-fit of the model was determined through the comparative fit index (CFI) and the Tucker–Lewis index (TLI). In addition, the root mean square error of approximation (RMSEA), the root mean square residual, and the standardized root mean square residual (SRMR) were used, following the criteria suggested by Keith (2019).

As an indication of a good fit, CFI and TLI > 0.90 (Hu and Bentler, 1999) and RMSEA and SRMR < 0.080 (Keith, 2019) were considered. Fourth, validity based on the relationship with other variables (procrastination and satisfaction with studies) was analyzed using Pearson’s correlation coefficient. Finally, reliability was calculated through Cronbach’s alpha coefficient and its CI (Domínguez-Lara and Merino-Soto, 2015).

For the analyses, the statistical program FACTOR Analysis, version 10.9.02 (Lorenzo-Seva and Ferrando, 2007), was used to compute the descriptive statistics. Further, for the CFA, the RStudio program (version 4.0.2) and the lavaan package were used; to calculate the reliability, the statistical software SPSS, version 26.0, was used.

Table 2 shows the results of the assessment by six experts who analyzed the relevance, representativeness, and clarity of the items on the OHDS. The results indicate that all the items received a favorable assessment (V > 0.70). In particular, Items 1 and 5 were rated the most relevant (V = 1.00; 95% CI: 0.89 to 1.00), Items 1, 5, and 6 were the most representative (V = 1.00; 95% CI: 0.89 to 1.00), and Items 5 and 6 were the clearest (V = 1.00; 95% CI: 0.89 to 1.00). In addition, all the values of the lower limit of the 95% CI were appropriate, and all the values of the V coefficient were statistically significant. Thus, the OHDS reported evidence of content-based validity.

Table 2. Aiken’s V to assess the relevance, representativeness, and clarity of the items in the distraction scale when performing online tasks.
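As an illustration of how a coefficient of the kind reported in Table 2 can be obtained, the following minimal Python sketch computes Aiken’s V for one item together with a score-type confidence interval of the form commonly paired with it. The function name, the rating range (1 to 4), and the example ratings are assumptions made for illustration only; they are not the judges’ actual scores, and the study does not state which software was used for this step.

```python
import math


def aiken_v(ratings, lowest, highest, z=1.96):
    """Return (V, lower, upper) for one item rated by several judges.

    ratings : list of integer ratings, one per judge (hypothetical data)
    lowest  : lowest possible rating on the scale
    highest : highest possible rating on the scale
    z       : standard normal quantile (1.96 for a 95% interval)
    """
    n = len(ratings)                      # number of judges
    k = highest - lowest                  # number of steps on the rating scale
    s = sum(r - lowest for r in ratings)  # summed distance from the lowest rating
    v = s / (n * k)                       # Aiken's V, bounded between 0 and 1

    # Score-type confidence interval for V.
    nk = n * k
    root = z * math.sqrt(4 * nk * v * (1 - v) + z ** 2)
    denom = 2 * (nk + z ** 2)
    lower = (2 * nk * v + z ** 2 - root) / denom
    upper = (2 * nk * v + z ** 2 + root) / denom
    return v, lower, upper


# Hypothetical example: six judges rating one item on a 1-4 scale.
v, lower, upper = aiken_v([4, 4, 3, 4, 4, 3], lowest=1, highest=4)
print(f"V = {v:.2f}, 95% CI: {lower:.2f} to {upper:.2f}")
```

Under the criterion described above, an item would be retained when V is at least 0.70 and the lower limit of its interval is judged appropriate.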

Table 3 presents the descriptive statistics (mean, standard deviation, skewness, and kurtosis) of the OHDS. Item 1 has the highest average score (M = 3.29), and Item 6 reports the highest dispersion (SD = 1.09). The skewness and kurtosis values of the scale items do not exceed ± 1.5 (Pérez and Medrano, 2010).

To verify the validity based on the internal structure of the OHDS, a CFA was executed (Table 4). The original model did not present adequate fit indices. Therefore, guided by the modification indices, Item 5 was eliminated, yielding a satisfactory factor structure model (χ² = 29.100, df = 5, p < 0.001; CFI = 0.984; TLI = 0.968; RMSEA = 0.077; SRMR < 0.05).
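The reported RMSEA can be related to the chi-square statistic with a short calculation. The Python sketch below uses one common textbook form of the RMSEA, reads the reported chi-square as 29.100 with df = 5 and N = 811, and then checks the indices against the cutoffs stated in the analysis plan. The function names are hypothetical, the SRMR is entered as its reported upper bound (0.05) because the exact value is not given, and robust estimators may compute a scaled variant of the statistic, so this is an approximation rather than the authors’ exact procedure.

```python
import math


def rmsea(chi2, df, n):
    """Textbook RMSEA: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def fit_is_acceptable(cfi, tli, rmsea_value, srmr):
    """Apply the cutoffs named in the text: CFI, TLI > 0.90; RMSEA, SRMR < 0.080."""
    return cfi > 0.90 and tli > 0.90 and rmsea_value < 0.080 and srmr < 0.080


# Values taken from the reported model after removing Item 5.
value = rmsea(chi2=29.100, df=5, n=811)
print(f"RMSEA = {value:.3f}")

# SRMR is passed as 0.05, the reported upper bound (assumption: exact value unknown).
print(fit_is_acceptable(cfi=0.984, tli=0.968, rmsea_value=value, srmr=0.05))
```

With these inputs the computed RMSEA rounds to the reported 0.077, which lies just under the 0.080 cutoff.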

Regarding the relationship of the OHDS with other variables, the OHDS relates in a direct and statistically significant way to Academic procrastination (EPA) (r = 0.583, p < 0.01) and in an inverse and statistically significant way to Satisfaction with studies (ESE) (r = –0.383, p < 0.01). In addition, both present a small and medium effect size, respectively. The findings show evidence of validity (Table 5).

The internal consistency of the instrument was calculated using Cronbach’s alpha coefficient (α = 0.72; 95% CI: 0.68–0.75), revealing that the scale scores were reliable and consistent with each other.
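For readers unfamiliar with the coefficient, the following Python sketch shows how Cronbach’s alpha is computed from a respondents-by-items matrix of scores. The function name and the response data are hypothetical and serve only to illustrate the formula; the study itself reports using SPSS for this step, and the confidence interval is not reproduced here.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])  # number of items
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical responses: six students answering five items on a 1-5 scale.
responses = [
    [3, 4, 3, 2, 3],
    [2, 2, 1, 2, 2],
    [4, 5, 4, 4, 3],
    [1, 2, 2, 1, 1],
    [3, 3, 4, 3, 4],
    [5, 4, 5, 4, 5],
]
print(f"alpha = {cronbach_alpha(responses):.2f}")
```

Values of at least 0.70, such as the one reported above, are conventionally read as acceptable internal consistency.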

Given the current relevance of measuring distractions in the development of academic tasks in virtual teaching environments, the aim of the present study was to translate and validate the OHDS for Peruvian university students. Currently, there are few such studies in the national Latin-American context; therefore, pertinent and precise measurement instruments that have validity evidence constitute a necessary contribution.

Per the research objective herein, the results of the analysis procedures allow us to corroborate that the translated version of the OHDS shows evidence of validity based on the content, an adequate internal structure, evidence of validity based on the relationship with other variables, and internal consistency. The psychometric properties found herein are similar to those reported in the study of the original version of the instrument conducted with Chinese university students (Xu et al., 2020).

In terms of content-based validity, using the expert opinion technique, it was confirmed that the OHDS items, as translated into Latin-American Spanish, are representative, relevant, and clear; evidence was given on the adequate linguistic fit and psychological equivalence of the construct of interest. Therefore, the translated version of the OHDS constitutes a relevant instrument for the Peruvian context.

Regarding the validity based on the internal structure, a one-dimensional structure was verified with adequate fit indices based on a CFA. These findings are analogous to those reported in previous studies (Xu et al., 2020), providing evidence in support of technology-related and conventional distractions being empirically indistinguishable in the sample of Peruvian university students. However, in the current study, the model that achieved a better fit was produced by eliminating Item 5 (“I interrupt my online tasks to send or receive emails”), which could respond to cultural differences that affect how to characterize distracting stimuli related to the task of the participants of this study in contrast with the experience of Chinese students. Accordingly, considering the Peruvian context and the generational characteristics of the university students that participated in this study, sending or receiving emails is not a common practice that represents an important distracting stimulus because young Peruvians prefer instant messaging through social networks more than more traditional services such as email (Guillén, 2019).

Regarding the evidence of validity in relation to other variables, the OHDS is directly and significantly related to the EPA, a procrastination measure, and inversely and significantly related to the ESE, which measures satisfaction with studies. The relationships found herein are consistent with the findings of previous studies, which were mainly conducted with university students and analyzed the predictive power or the strength of the association between academic procrastination and variables related to the use of ICTs, which can be considered sources of distraction. Among these variables are the problematic use of smartphones (Yang et al., 2019; Akinci, 2021) as well as the use of or addiction to social media (Ramos-Galarza et al., 2017; Hejab and Shaibani, 2020) and the internet (Aznar-Díaz et al., 2020).

Finally, regarding the reliability of the instrument, from the perspective of internal consistency, the OHDS proves to be a reliable and precise measure, with results similar to those reported by Xu et al. (2020).

Regarding the limitations of the research, the most important one is related to the characteristics of the intentional sampling. Therefore, future studies should consider using representative samples through a probabilistic sampling that accounts for different cultural contexts. In addition, the data collection herein considered only the use of self-reporting measures, so the responses of the subjects might not accurately reflect their behaviors with respect to the variables that were evaluated. Given these limitations, future research should consider intercultural environments, since cultural differences may influence how students’ distractors manifest during the performance of academic activities in virtual learning environments; the ethnic diversity present in Peruvian universities is reflected in the cultural and linguistic plurality of the different indigenous and Amazonian communities. Furthermore, future research should investigate related contextual factors, including the area of training, self-regulation strategies, and a motivational classroom climate, such that clear and understandable indicators can be acquired for intervention in educational contexts. Moreover, it will be necessary for future instrumental studies to explore the invariance of the measure with respect to sociodemographic variables of interest, such as the sex or education level of the students. Lastly, the development of longitudinal research would be useful to assess the evolution of the relevance and representativeness of the items, considering the changes produced in the dynamics of social interaction by the use of technology, which may determine the appearance of new distracting stimuli related to the task.

The evidence obtained herein allows us to conclude that the OHDS has demonstrated adequate psychometric properties (validity based on the content, internal structure, and relationship with other variables) for Peruvian university students. Therefore, the scale represents an appropriate means to evaluate college students’ distraction when performing online tasks and can be used for research, evaluation, and professional intervention purposes in the field of education. The main implication of the present study is that its findings support the validity of the interpretations obtained with the OHDS for Peruvian university students, which will lead to progress in describing, understanding, and explaining the phenomenon of task distraction in online learning environments in this population.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

RC and OM-B conceived and designed the experiments, performed the experiments, analyzed and interpreted the data, and wrote the manuscript. FS-A, AM-V, AP, and SL contributed reagents, materials, analysis tools, or data and wrote the manuscript. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2022.793151/full#supplementary-material

Aagaard, J. (2015). Drawn to distraction: a qualitative study of off-task use of educational technology. Comput. Educ. 87, 90–97. doi: 10.1016/j.compedu.2015.03.010

Aguilera-Hermida, P. (2020). College students’ use and acceptance of emergency online learning due to COVID-19. Int. J. Educ. Res. Open 1:100011. doi: 10.1016/j.ijedro.2020.100011

Akinci, T. (2021). Determination of predictive relationships between problematic smartphone use, self-regulation, academic procrastination and academic stress through modelling. Int. J. Prog. Educ. 17, 35–53. doi: 10.29329/ijpe.2020.329.3

Alvarez, P., Lopez, D., and Perez-Jorge, D. (2015). El alumnado universitario y la planificación de su proyecto formativo y profesional. [University students and the planning of their education and professional project]. Actualidades Inves. Educ. 15, 1–24. scielo.sa.cr/pdf/aie/v15n1/a17v15n1.pdf

American Educational Research Association, American Psychological Association, and National Council on Measurement in Education. (2014). Standards for Educational and Psychological Testing. Washington, D.C.: AERA Publications Sales.

Ato, M., López, J., and Benavente, A. (2013). Un sistema de clasificación de los diseños de investigación en psicología [A classification system for research designs in psychology]. Anales Psicología 29, 1038–1059.

Aznar-Díaz, I., Romero-Rodríguez, J. M., García-González, A., and Ramírez-Montoya, M. S. (2020). Mexican and Spanish university students’ Internet addiction and academic procrastination: Correlation and potential factors. PLoS One 15:e0233655. doi: 10.1371/journal.pone.0233655

Baddeley, A. (1996). The fractionation of working memory. Proc. Natl. Acad. Sci. U.S.A. 93, 13468–13472. doi: 10.1073/pnas.93.24.13468

Balkis, M., and Duru, E. (2017). Gender differences in the relationship between academic procrastination, satisfaction with academic life and academic performance. Rev. Electron. Investig. Psicoeduc. Psicopedag. 15, 105–125. doi: 10.14204/ejrep.41.16042

Balkis, M., and Duru, E. (2016). Procrastination, self-regulation failure, academic life satisfaction, and affective well-being: underregulation or misregulation form. Eur. J. Psychol. Educ. 31, 439–459. doi: 10.1007/s10212-015-0266-5

Berridi, R., and Martínez, J. I. (2017). Estrategias de autorregulación en contextos virtuales de aprendizaje [Self-regulation strategies in virtual learning contexts]. Perf. Educ. 39, 89–93. doi: 10.22201/iisue.24486167e.2017.156.58285

Calderwood, C., Ackerman, P. L., and Conklin, E. M. (2014). What else do college students “do” while studying? An investigation of multitasking. Comput. Educ. 75, 19–29. doi: 10.1016/j.compedu.2014.02.004

Chen, Q., and Yan, Z. (2016). Does multitasking with mobile phones affect learning? A review. Comput. Hum. Behav. 54, 34–42. doi: 10.1016/j.chb.2015.07.047

Cieza, J., Castillo, A., Garay, F., and Poma, J. (2018). Satisfacción de los estudiantes de una facultad de medicina peruana. [Student satisfaction at a Peruvian medical school]. Revista Med. Herediana 29, 22–28. doi: 10.20453/rmh.v29i1.3257

Corbetta, M., and Shulman, G. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Dominguez-Lara, S., Villegas, G., and Centeno-Leyva, S. (2014). Procrastinación académica: Validación de una escala en una muestra de estudiantes de una universidad privada [Academic procrastination: validation of a scale in a sample of students at a private university]. Liberabit 20, 293–304.

Domínguez-Lara, S. A., and Merino-Soto, C. (2015). ¿Por qué es importante reportar los intervalos de confianza del coeficiente alfa de Cronbach? [Why is it important to report Cronbach’s alpha coefficient confidence intervals?] Revista Latinoamericana de Ciencias Sociales, Niñez y Juventud 13, 1326–1328.

Durán-Aponte, E., and Pujol, L. (2013). Manejo del tiempo académico en jóvenes que inician estudios en la Universidad Simón Bolívar [Academic time management in young people who begin their studies at Universidad Simón Bolívar]. Revista Latinoamericana de Ciencias Sociales, Niñez y Juventud 11, 93–108. doi: 10.11600/1692715x.1115080812

Feng, S., Wong, Y. K., Wong, L. Y., and Hossain, L. (2019). The Internet and Facebook usage on academic distraction of college students. Comput. Educ. 134, 41–49. doi: 10.1016/j.compedu.2019.02.005

Garcia, J. (1991). Paradigmas experimentales en las teorías de la automaticidad. [Experimental paradigms in the theories of automaticity]. Anales Psicología 7, 1–30. https://www.um.es/analesps/v07/v07_1/01-07_1.pdf

Guillemin, F., Bombardier, C., and Beaton, D. (1993). Cross-cultural adaptation of health-related quality of life measures: Literature review and proposed guidelines. J. Clin. Epidemiol. 46, 1417–1432. doi: 10.1016/0895-4356(93)90142-n

Guillén, O. B. (2019). Uso de redes sociales por estudiantes de pregrado de una facultad de medicina en Lima, Perú [Use of social networks by undergraduate students at a medical school in Lima, Peru]. Revista Med. Herediana 30, 94–99. doi: 10.20453/rmh.v30i2.3550

Gupta, N., and Irwin, J. D. (2016). In-class distractions: The role of Facebook and the primary learning task. Comput. Hum. Behav. 55, 1165–1178. doi: 10.1016/j.chb.2014.10.022

Hatlevik, O. E., and Bjarnø, V. (2021). Examining the relationship between resilience to digital distractions, ICT self-efficacy, motivation, approaches to studying, and time spent on individual studies. Teach. Teach. Educ. 102:103326. doi: 10.1016/j.tate.2021.103326

Hejab, M., and Shaibani, A. (2020). Academic procrastination among university students in Saudi Arabia and its association with social media addiction. Psychol. Educ. 57, 1118–1124. http://www.psychologyandeducation.net/

Hermann, M., Gutsfeld, R., Wirzberger, M., and Moeller, K. (2021). Evaluating students’ engagement with an online learning environment during and after COVID-19 related school closures: A survival analysis approach. Trends Neurosci. Educ. 25:100168. doi: 10.1016/j.tine.2021.100168

Hong, W., Liu, R., Ding, Y., Jiang, S., Yang, X., and Sheng, X. (2021). Academic procrastination precedes problematic mobile phone use in Chinese adolescents: a longitudinal mediation model of distraction cognitions. Addict. Behav. 121:106993. doi: 10.1016/j.addbeh.2021.106993

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equat. Modeling 6, 1–55. doi: 10.1080/10705519909540118

Keith, T. Z. (2019). Multiple Regression and Beyond: An Introduction to Multiple Regression and Structural Equation Modeling. Milton Park: Routledge.

Kolhar, M., Ahmed, R., and Alameen, A. (2021). Effect of social media use on learning, social interactions, and sleep duration among university students. Saudi J. Biol. Sci. 28, 2216–2222. doi: 10.1016/j.sjbs.2021.01.010

Lorenzo-Seva, U., and Ferrando, P. (2007). Factor: a Computer Program to Fit the Exploratory Factor Analysis Model. España: University Rovira y Virgili.

Madhan, B., Kumar, C. S., Naik, E. S., Panda, S., Gayathri, H., and Barik, A. K. (2012). Trait procrastination among dental students in India and its influence on academic performance. J. Dent. Educ. 76, 1393–1398. doi: 10.1002/j.0022-0337.2012.76.10.tb05397.x

Magalhães, P., Ferreira, D., Cunha, J., and Rosário, P. (2020). Online vs traditional homework: A systematic review on the benefits to students’ performance. Comput. Educ. 152:103869. doi: 10.1016/j.compedu.2020.103869

Mendoza, J. R. (2018). Uso excesivo de redes sociales de internet y rendimiento académico en estudiantes de cuarto año de la carrera de psicología UMSA [Excessive use of social networks and academic performance in fourth-year psychology students at UMSA]. Educ. Superior 5, 57–70.

Merino-Soto, C., Dominguez-Lara, S., and Fernández-Arata, M. (2017). Validación inicial de una Escala Breve de Satisfacción con los Estudios en estudiantes universitarios de Lima [Initial validation of a Brief Study Satisfaction Scale in university students in Lima]. Educación Médica 18, 74–77. doi: 10.1016/j.edumed.2016.06.016

Muthuprasad, T., Aiswarya, S., Aditya, K. S., and Jha, G. (2021). Students’ perception and preference for online education in India during COVID-19 pandemic. Soc. Sci. Hum. Open 3:100101. doi: 10.1016/j.ssaho.2020.100101

Pérez, E., and Medrano, L. (2010). Análisis factorial exploratorio: Bases conceptuales y metodológicas [Exploratory factor analysis: Conceptual and methodological basis]. Revista Argentina de Ciencias Del Comportamiento 2, 58–66.

Ramos-Galarza, C., Jadán-Guerrero, J., Paredes-Núñez, L., Bolaños-Pasquel, M., and Gómez-García, A. (2017). Procrastinación, adicción al internet y rendimiento académico de estudiantes universitarios ecuatorianos [Procrastination, Internet addiction, and academic performance of Ecuadorian university students]. Estudios Pedagogicos 43, 275–289. doi: 10.4067/S0718-07052017000300016

Schmidt, S. J. (2020). Distracted learning: Big problem and golden opportunity. J. Food Sci. Educ. 19, 278–291. doi: 10.1111/1541-4329.12206

Steel, P., Svartdal, F., Thundiyil, T., and Brothen, T. (2018). Examining procrastination across multiple goal stages: a longitudinal study of temporal motivation theory. Front. Psychol. 9:327. doi: 10.3389/fpsyg.2018.00327

Svartdal, F., Dahl, T. I., Gamst-Klaussen, T., Koppenborg, M., and Klingsieck, K. B. (2020). How study environments foster academic procrastination: overview and recommendations. Front. Psychol. 11:540910. doi: 10.3389/fpsyg.2020.540910

Ventura-León, J. (2019). De regreso a la validez basada en el contenido [Back to content-based validity]. Adicciones 1:1213. doi: 10.20882/adicciones.1213

Xu, J. (2015). Investigating factors that influence conventional distraction and tech-related distraction in math homework. Comput. Educ. 81, 304–314. doi: 10.1016/j.compedu.2014.10.024

Xu, J., Fan, X., and Du, J. (2016). A study of the validity and reliability of the Distraction Scale: A psychometric evaluation. Measurement 81, 36–42. doi: 10.1016/j.measurement.2015.12.002

Xu, J., Núñez, J. C., Cunha, J., and Rosário, P. (2020). Online homework distraction scale: A validation study. Psicothema 32, 469–475. doi: 10.7334/psicothema2020.60

Yang, Z., Asbury, K., and Griffiths, M. D. (2019). An exploration of problematic smartphone use among Chinese university students: Associations with academic anxiety, academic procrastination, self-regulation and subjective wellbeing. Int. J. Ment. Health Add. 17, 596–614. doi: 10.1007/s11469-018-9961-1

Keywords: translation, validation, distractors, university students, Peru

Citation: Carranza Esteban RF, Mamani-Benito O, Sarria-Arenaza F, Meza-Villafranca A, Paula Alfaro A and Lingan SK (2022) Translation and Validation of the Online Homework Distraction Scale for Peruvian University Students. Front. Educ. 7:793151. doi: 10.3389/feduc.2022.793151

Received: 11 October 2021; Accepted: 28 February 2022;

Published: 03 June 2022.

Edited by:

Hamid Sharif Nia, Mazandaran University of Medical Sciences, Iran

Reviewed by:

Chia-Lin Tsai, University of Northern Colorado, United States

Copyright © 2022 Carranza Esteban, Mamani-Benito, Sarria-Arenaza, Meza-Villafranca, Paula Alfaro and Lingan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Renzo Felipe Carranza Esteban, rcarranza@usil.edu.pe

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
