- Department of Education, University of Cyprus, Nicosia, Cyprus

The authors discuss the design and utilization of e-TPCK, a self-paced adaptive electronic learning environment designed to promote the development of teachers’ Technological Pedagogical Content Knowledge (TPCK). The system employs a technological solution that promotes teachers’ ongoing TPCK development by engaging them in personalized learning experiences using technology-infused design scenarios. Results from an experimental research study revealed that the experimental group outperformed the control group in developing TPCK on two design tasks, substantiating the claim that the use of technology can better facilitate the development of TPCK than traditional instruction. Furthermore, e-TPCK’s adaptive guidance using worked-out examples led to mathemagenic learning effects for novice student-teachers, while for the more experienced student-teachers learning with e-TPCK proved beneficial only at the beginning of their engagement with the system. Hence, the instructional techniques embedded in e-TPCK became less effective as student-teachers’ expertise increased, providing evidence for the expertise reversal effect. In conclusion, the findings of the research are encouraging for the advantages of personalized learning in teacher education and show that the design of adaptive learning technologies must account for learners’ prior knowledge and changing levels of expertise throughout the ongoing interaction with the computer system.

Introduction

In recent decades, teaching approaches in education have shifted from teacher-centered to learner-centered methods (Cuban, 1993; Barr and Tagg, 1995; Watson et al., 2012; Alamri et al., 2020). The acceptance of the constructivist view of learning led to the advocacy of the learner-centered approach in education as a more effective and successful instructional method in which instructional approaches and content are focused on the individual learner. Researchers and practitioners have praised the potential of the learner-centered instructional paradigm to address diverse learner needs and improve learner engagement and achievement. This paradigm shift led to a change in the roles of teachers and learners; teachers became designers and facilitators of learning rather than controllers of the learning process, while learners became not only active collaborators and participants in the construction of knowledge, but also autonomously responsible for their own learning (Barr and Tagg, 1995). However, the predominant teaching model in higher education can still be described as one that promotes the teacher as the center on the stage; someone who stands in front and teaches the content to the people sitting in rows one after the other. Instructors in higher education often rely on the “one-size-fits-all” model to deliver a standardized curriculum, assuming that all learners have similar characteristics without addressing their individual differences (Demski, 2012). Not every student is the same, and higher education institutions should be able to appeal to a wide range of students by providing them with the right learning environment to identify and develop their natural abilities. 
The “one-size-fits-all” approach to higher education has long been criticized as outdated, because it focuses on providing a curriculum that ends up figuring out who can pass certain subjects and who cannot, ignoring the fact that not every student has the same amount of knowledge in all subjects. Higher education can be described as a time-driven and place-dependent system that can lead to learning gaps that negatively impact the knowledge and skills learners need in today’s information age (Demski, 2012; Watson et al., 2012; Alamri et al., 2021). Therefore, higher education faculties and educators are under constant pressure to transform the learning experience by focusing on flexible learner-centered environments that can be personalized to meet the needs, interests, and preferences of each learner and foster the twenty-first century skills that are required for information age learners (Johnson et al., 2016; Alamri et al., 2021). Given today’s familiarity with e-learning platforms that promise personalized experiences, students should be allowed to expect some degree of personalization of their learning experience, forcing higher education to adapt to the rapidly changing expectations of students, the realities of society, and the future workplaces of its graduates (Walkington and Bernacki, 2020).

The personalized learning approach holds promise for tailoring instruction to individual learning trajectories and maximizing student motivation, satisfaction, engagement, and learning efficiency (Liu et al., 2006; Gómez et al., 2014). Specifically, the U.S. Department of Education, Office of Educational Technology (2016) defines personalized learning as “instruction in which the pace of learning and the instructional approach are optimized for the needs of each learner. Learning objectives, instructional approaches, and instructional content all may vary based on learner needs. In addition, learning activities are meaningful and relevant to learners, driven by their interests, and often self-initiated” (p. 7). Johnson et al. (2016) use the term personalized learning to refer to a variety of educational programs, learning experiences, instructional approaches, and academic support strategies designed to address the unique learning needs, interests, aspirations, or cultural backgrounds of individual students. Newman et al. (2013), on the other hand, define personalized learning as an educational method or process that relies on observations to develop tailored educational interventions for students that increase the likelihood of learning success. Although a clearly defined concept of personalized learning is still lacking (Schmid and Petko, 2019), current definitions of personalized learning adhere to the paradigm of learner-centered instruction to provide each student with exactly the type of learning experience they need at a given time and to ensure that their interests, preferences, expectations, learning deficits and needs are addressed while they take responsibility for their own learning progress (Benhamdi et al., 2017; Jung et al., 2019; Plass and Pawar, 2020). 
At the moment, personalized learning functions more as an umbrella term that describes a range of approaches and models, such as competency-based learning, self-paced instruction, differentiated instruction, individualized instruction, and adaptive learning (Newman et al., 2013; Schmid and Petko, 2019).

Although the use of technology is not mandatory, it greatly facilitates personalized learning. Especially in higher education where instructors address classes with high enrollment, any attempt to personalize learning without learning technologies would be very challenging. In some learning contexts, personalized learning can take the form of blended learning, where both online and face-to-face learning experiences are put into practice to teach students. For example, in a blended learning course, students may participate in a course taught by an instructor in a traditional classroom while completing the online components of the course outside of the traditional classroom. In this case, time spent in the classroom can either be replaced or supplemented by online learning experiences in which students learn the same material as in the classroom (Garrison and Kanuka, 2004). Online and face-to-face learning experiences thus coexist and function in parallel and complementary ways while increasing the effectiveness and efficiency of meaningful learning experiences.

The use of adaptive technologies for personalized learning is not new; computer-based adaptive learning has been around for more than half a century, beginning in the early 1970s with the advent of intelligent tutoring systems (Shemshack and Spector, 2020). In its simplest form, the computer program adapts the learning path based on a student’s responses. However, the growing interest in tailoring instruction to individual student needs has led to the development of new technologies that offer new opportunities for personalized learning (Johnson et al., 2016). More sophisticated adaptive learning technologies go beyond simply responding to learner responses. Adaptive learning technologies, whether computer-based or online, take a “sophisticated, data-driven, and in some cases, non-linear approach to instruction and remediation, adjusting to a learner’s interactions and demonstrated performance level and subsequently anticipating what types of content and resources learners need at a specific point in time to make progress” (Newman et al., 2013, p. 4). The success of any adaptive learning technology to create a didactically sound and flexible learning environment depends on accurately diagnosing the characteristics of a particular learner or group of learners when delivering content by collecting much more data from learners to better adapt to and support learners’ individual learning journeys (Shute and Zapata-Rivera, 2012). With the advent of big data, it is possible to capture and interpret learners’ individual characteristics and real-time state in all aspects of learning (Peng et al., 2019). 
These big data and learning analytics can include prior learning experiences and performance, learners’ self-expressed preferences for learning methods, analytical predictions of each learner’s likelihood of success through different learning methods, personality traits, affective states, cognitive types, interests, and so on (Shute and Zapata-Rivera, 2012; Zhu and Guan, 2013). Then, the compilation of information about the learner is used as the basis for prescribing optimal content and real-time individualized feedback, such as hints, explanations, hypertext links, practice tasks, encouragement, and metacognitive support (Snow, 1989; Park and Lee, 2004; Shute and Zapata-Rivera, 2012), all of which are effortlessly incorporated into the learning activity. Learners become part of the process of defining learning outcomes, pedagogies, and practices of the learning experience. Until recently, it did not seem possible to meet learners’ needs in this way. The operation of adaptive technologies can be better understood using the framework of Shute and Zapata-Rivera (2012). Their framework includes a four-process cycle that uses a learner model to connect the learner to appropriate educational materials or resources (e.g., other learners, learning objects, applications, and pedagogical agents). The four-process cycle refers to four distinct processes, namely: Capture, Analyze, Select, and Present. During the “Capture” process, the system first collects information (e.g., cognitive data such as answers to a test and/or non-cognitive data such as engagement) about the learner while interacting with the system. The collected information is used to update the internal models that the system maintains. Then, during the “Analyze” process, the system analyzes the information from the assessments and the resulting inferences about the skills contained in the learner model to decide what to do next. This decision is related to adapting and thus optimizing the learning experience. 
During the “Select” process, information is selected for a particular learner according to the current state of the learner model and the purpose of the system (e.g., the next learning object or test task). This process is often necessary to decide how and when to intervene. Then, during the “Present” process, depending on the results of the previous process, specific content is presented to the learner to optimize their learning path. This includes the appropriate use of media, devices, and technology to successfully deliver information to the learner.
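
The four-process cycle can be illustrated with a short Python sketch. It is only a minimal illustration under stated assumptions, not the implementation of any system discussed here: the `LearnerModel` class, the averaging rule used in the Analyze step, the 0.5 threshold, and the resource names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LearnerModel:
    """Hypothetical learner model: an estimated skill level in [0, 1]."""
    skill: float = 0.5
    history: list = field(default_factory=list)


def capture(model: LearnerModel, answer_correct: bool) -> None:
    # Capture: record evidence gathered while the learner interacts with the system.
    model.history.append(1.0 if answer_correct else 0.0)


def analyze(model: LearnerModel) -> None:
    # Analyze: update the inferred skill (assumed rule: mean of captured answers).
    if model.history:
        model.skill = sum(model.history) / len(model.history)


def select(model: LearnerModel) -> str:
    # Select: pick the next resource from the current state of the learner model.
    return "practice_task" if model.skill >= 0.5 else "worked_example"


def present(resource: str) -> str:
    # Present: deliver the selected resource (here, simply a message).
    return f"Next activity: {resource}"


def run_cycle(model: LearnerModel, answer_correct: bool) -> str:
    """Run one pass of Capture, Analyze, Select, and Present."""
    capture(model, answer_correct)
    analyze(model)
    return present(select(model))
```

Calling `run_cycle` repeatedly on the same `LearnerModel` shows how each new observation feeds back into the next selection.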

In response to the resulting increase in market demands for adaptive learning solutions, more and more entrepreneurial and innovative services and solutions are emerging that simultaneously challenge the “traditional” models and providers of education and learning services. The pressure that higher education institutions face today is likely to intensify in the coming years (Newman et al., 2013). Ironically, higher education, which conducts much of the research on learning sciences and effective teaching models, lags far behind K-12 and corporate markets in applying the lessons learned (Newman et al., 2013). Recently, however, interest has emerged among higher education educators in exploring the use of adaptive learning systems to address the individual learning needs and characteristics of students entering formal academic programs (Foshee et al., 2016). The increase in the number of research articles published in high-impact educational technology journals between 2007 and 2017 that address adaptive or technology-enhanced personalized learning indicates that it is gaining traction in higher education (Xie et al., 2019). The main obstacle is that scientific, data-driven approaches that enable effective personalization of learning have only recently become available. Adaptive learning to personalize student learning pathways within higher education is still in development and there is little evidence-based research on how to develop or implement it (Liu et al., 2006; Shemshack and Spector, 2020).

To this end, the authors herein aim to contribute to this line of research by discussing the design and utilization of e-TPCK, a self-paced adaptive electronic learning environment that was developed and used to support the development of student-teachers’ Technological Pedagogical Content Knowledge (TPCK) in a personalized way during their undergraduate studies. According to Angeli and Valanides (2009), TPCK forms a unique body of knowledge that is better understood in terms of competencies that teachers must develop to teach appropriately with technology. Specifically, according to Angeli and Valanides (2009), the construct of TPCK is defined in terms of the following five competencies: (a) identify topics to teach with technology that are not easily comprehensible to learners or difficult to teach, in ways that signify the added value of technological tools, (b) identify representations for transforming the content to be taught into forms that are understandable to learners and difficult to be aided by traditional means, (c) identify teaching approaches that are difficult or impossible to be implemented by traditional means, (d) select technological tools which incorporate inherent features to provide content transformations and support teaching approaches, and (e) infuse technology-enhanced learning activities in the classroom.

The e-TPCK system employs a technological solution that promotes the ongoing development of student-teachers’ TPCK by engaging them in personalized learning experiences using technology-enhanced design scenarios, taking into account their diverse needs, information processing limitations, and preferences. The system was first designed and developed, and then integrated as a learning tool in a face-to-face Instructional Technology course that aimed to teach pre-service teachers how to teach with technology. The authors, who were also the course instructors, used e-TPCK as a complement to the course to engage pre-service teachers in technology-enhanced design experiences. This type of engagement allowed pre-service teachers to successively refine their thinking about learning design with technology by making explicit the connections and interactions among content, pedagogy, technology, learners, and context.

Accordingly, the present study assumed an experimental research design in order to examine the extent to which learning with e-TPCK had a statistically significant effect on the development of TPCK between pre-service teachers who interacted with it to learn how to design technology-enhanced learning and those who did not use it as part of their learning during a 13-week semester course in educational technology.

Materials and Methods

Participants

The sample of the study consisted of an experimental and a control group of student-teachers taking a compulsory Instructional Technology course at the undergraduate level. In the study, one group constituted the experimental group that used e-TPCK while participating in the course, while the other group constituted the control group. One hundred and nineteen participants constituted the experimental group and used e-TPCK to learn about how to design technology-enhanced learning, while 87 participants constituted the control group and received the conventional treatment without the use of e-TPCK, one semester earlier. Participants in the control and experimental groups were first- and second-year student-teachers, who had basic computer skills but no experience in instructional design using technology. Both groups attended 13 weekly lectures and 13 weekly labs, used the same course materials and readings, had the same instructor for the lectures and the same teaching assistant for the labs, received the same pre-test and post-test, and were assessed in the same way. The only difference was that the experimental group was purposefully exposed to activities related to the design of technology-infused teaching and learning using the e-TPCK learning environment at home.

In contrast, the control group did not use e-TPCK during the semester. However, students in the control group had access to all learning materials that students in the experimental group accessed through e-TPCK. These were uploaded in an electronic classroom in Blackboard but without having the built-in adaptive feature of the e-TPCK system.

Description of the System

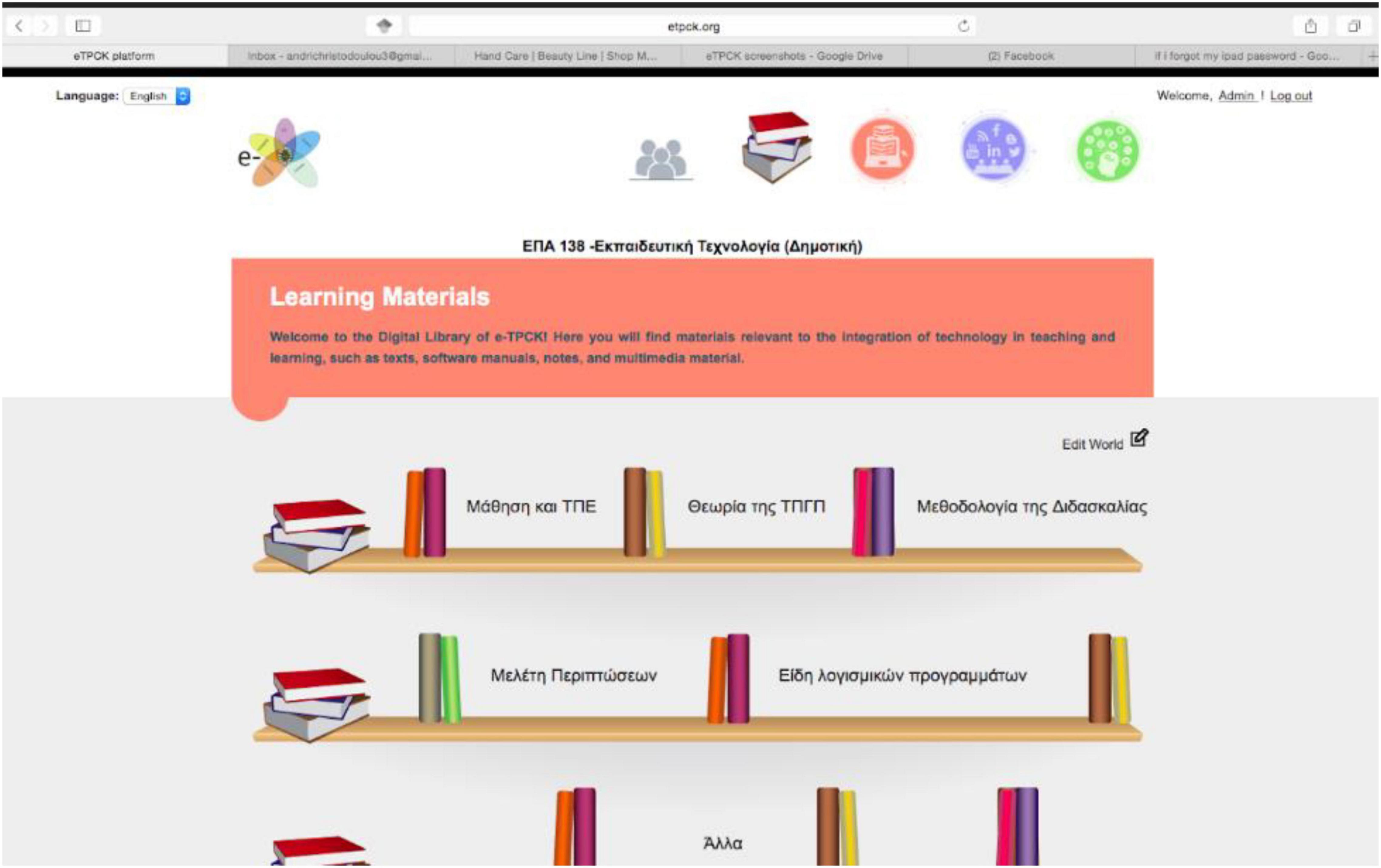

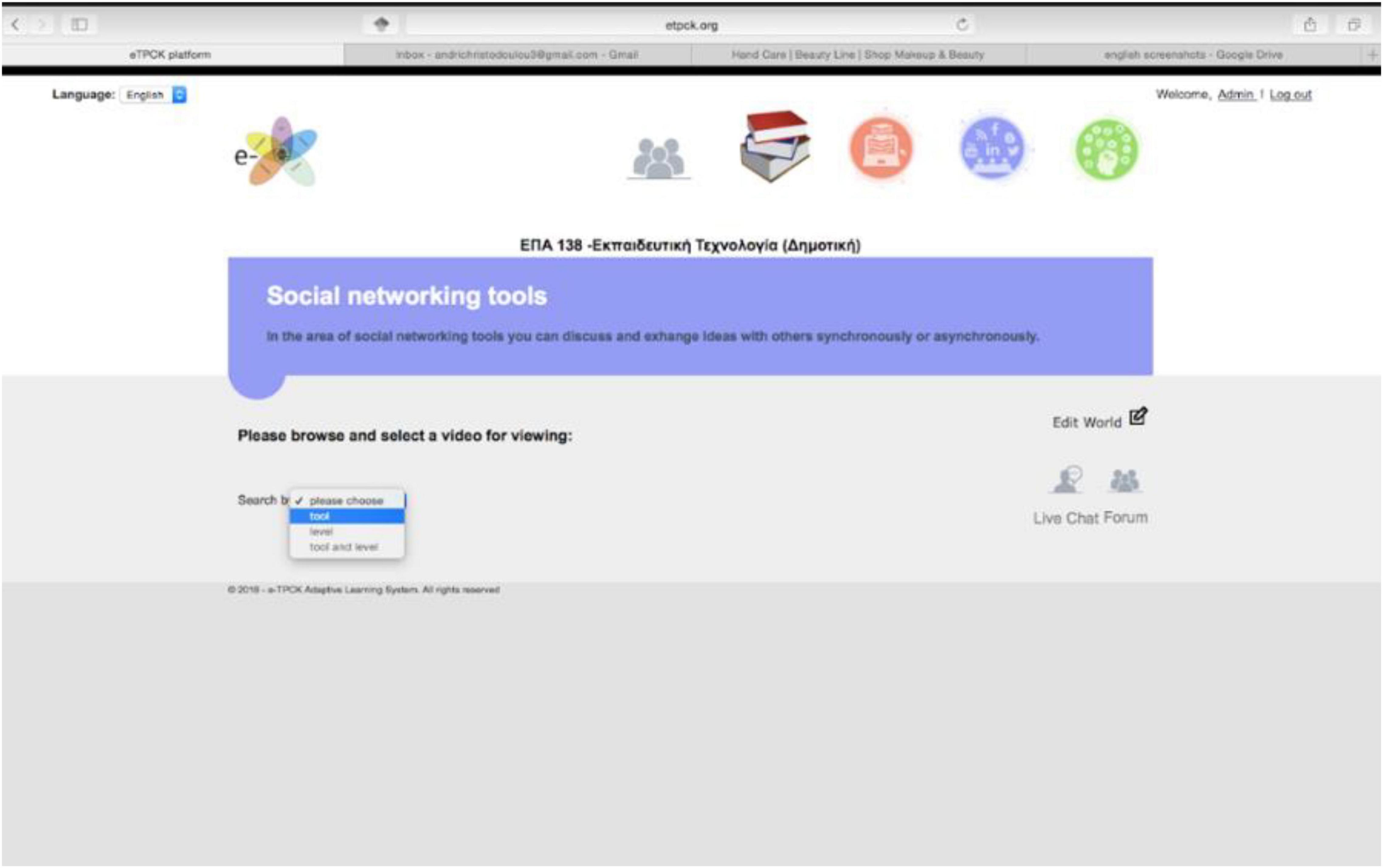

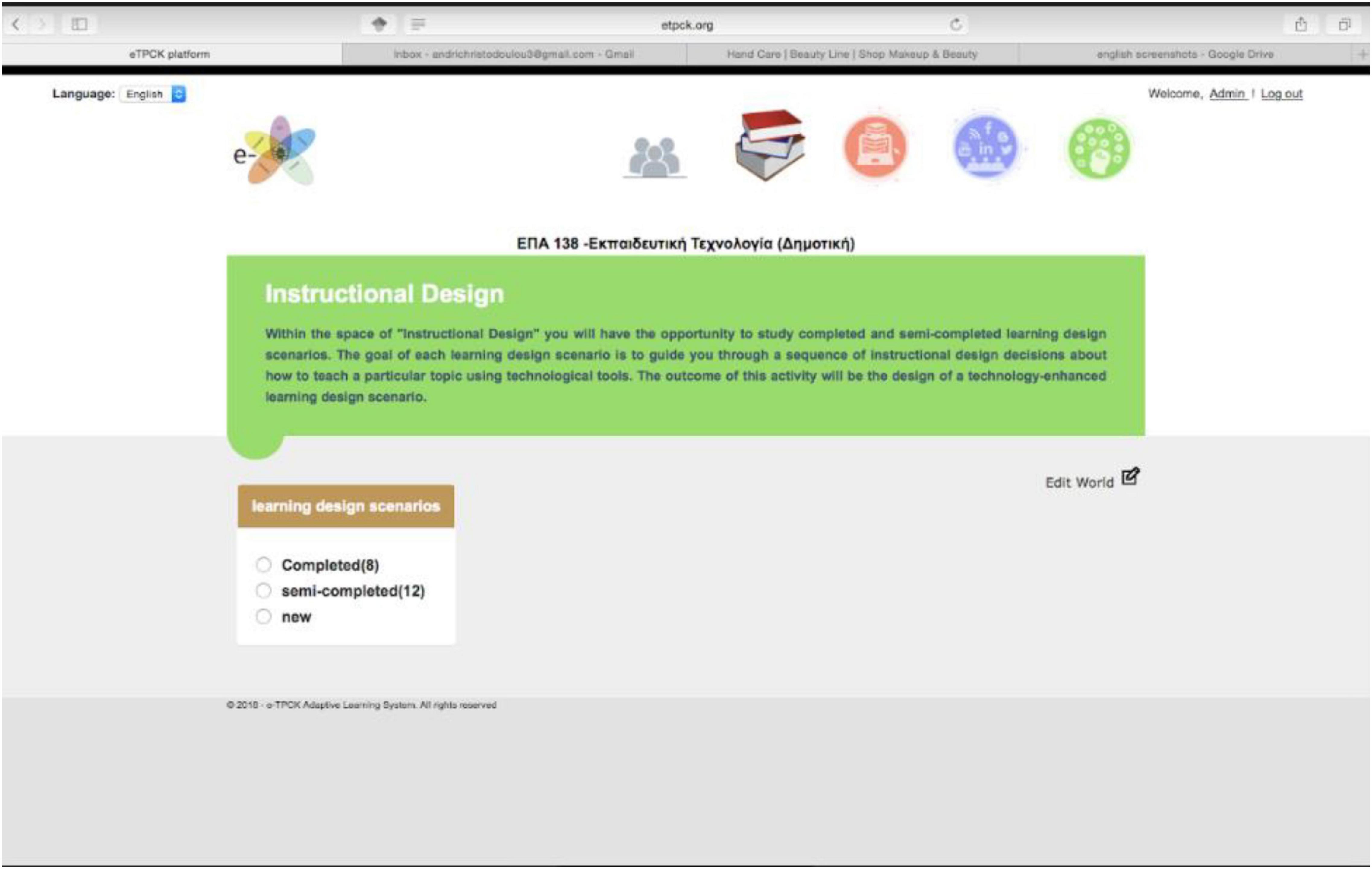

The design and development of e-TPCK was the result of three iterative cycles of a design-based research project. The design of the system incorporated aspects of approaches and theories such as learning-by-design, personalized and adaptive learning, cognitive load theory, scaffolding, and self-regulated learning. The e-TPCK system was not designed as an electronic learning system that simply delivered content to student-teachers. Rather, e-TPCK was a cognitive partner that supported student-teachers’ learning and enabled them to reach the next stages of their TPCK development in a self-paced manner while the system was personalizing the content to them in the form of technology-infused learning design scenarios. Essentially, e-TPCK consists of three main learning spaces, namely, (a) Learning Material (as shown in Figure 1), (b) Social Networking Tools (as shown in Figure 2), and (c) Instructional Design (as shown in Figure 3).

The study presented herein addresses the learning experiences of student-teachers in the Instructional Design space only. The Learning Material learning space was a digital library that contained information and materials to help student-teachers develop an understanding of instructional design and technology integration, while the Social Networking Tools learning space contained Web 2.0 technologies that enabled synchronous and/or asynchronous communication between student-teachers and/or the instructor to negotiate ideas about the design of technology-enhanced teaching and learning via chat or forum. The main learning space of the e-TPCK system was the Instructional Design space, where student-teachers learned, practiced, and gradually developed their TPCK. This learning space included three types of learning design scenarios with different levels of difficulty. The learning scenarios aimed to guide student-teachers through a sequence of instructional design decisions on how to teach a particular topic using technology. Specifically, e-TPCK included (a) completed (worked-out) learning design scenarios (difficulty level = 0) that represented sound examples of instructional design using technology for a specific content to be taught, (b) semi-completed learning design scenarios (difficulty level = 1–4) that lacked some phases in the sequence of learning activities and needed to be completed by student-teachers, and (c) new learning design scenarios (difficulty level = 5) that student-teachers had to develop on their own using the same format as in the completed design scenarios, and submit them in the system when finished for formal assessment by the instructors. The semi-completed learning design scenarios were ranked from simple (difficulty level = 1) to complex (difficulty level = 4), according to the number of phases missing from the sequence of learning activities. The more phases student-teachers had to complete, the more difficult the design task was. 
Accordingly, the easiest task was the completed design scenarios, while the new design scenarios were the most difficult design tasks for the student-teachers. Specifically, the structure of each technology-enhanced design scenario was as follows:

1. Rationale of topic selection and technology’s added value.

2. Subject-matter content description, including connections with the curriculum.

3. Learning objectives (lower-order learning objectives, higher-order learning objectives, and technology-related objectives).

4. Sequence of classroom activities:

Phase 1: Gain attention/attract student interest.

Phase 2: Identification/diagnosis of learners’ initial perceptions or misconceptions/alternative conceptions.

Phase 3: Destabilization of initial perceptions through the induction of cognitive conflict.

Phase 4: Construction of new knowledge and active engagement of learners in the knowledge construction process.

Phase 5: Application of new knowledge in a new context.

Phase 6: Revision and comparison with initial ideas.

5. Final student assessment.
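
The relationship between difficulty levels, scenario types, and the six phases listed above can be summarized in a short Python sketch. The class and function names are hypothetical, and the phase labels are shortened paraphrases. The text does not state which phases are missing at each semi-completed level, so the sketch stores the missing phases explicitly rather than deriving them from the difficulty level.

```python
from dataclasses import dataclass

# Shortened labels for the six phases in the sequence of classroom activities.
PHASES = (
    "Gain attention",
    "Diagnose initial perceptions",
    "Induce cognitive conflict",
    "Construct new knowledge",
    "Apply new knowledge",
    "Revise and compare with initial ideas",
)


def scenario_type(difficulty: int) -> str:
    """Map a difficulty level (0-5) to the scenario type described in the text."""
    if difficulty == 0:
        return "completed"
    if 1 <= difficulty <= 4:
        return "semi-completed"
    if difficulty == 5:
        return "new"
    raise ValueError("difficulty must be between 0 and 5")


@dataclass
class DesignScenario:
    topic: str
    difficulty: int
    missing_phases: tuple = ()  # 1-based phase numbers left for the learner

    def phases_to_complete(self) -> list:
        # Names of the phases the student-teacher still has to design.
        return [PHASES[i - 1] for i in self.missing_phases]
```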

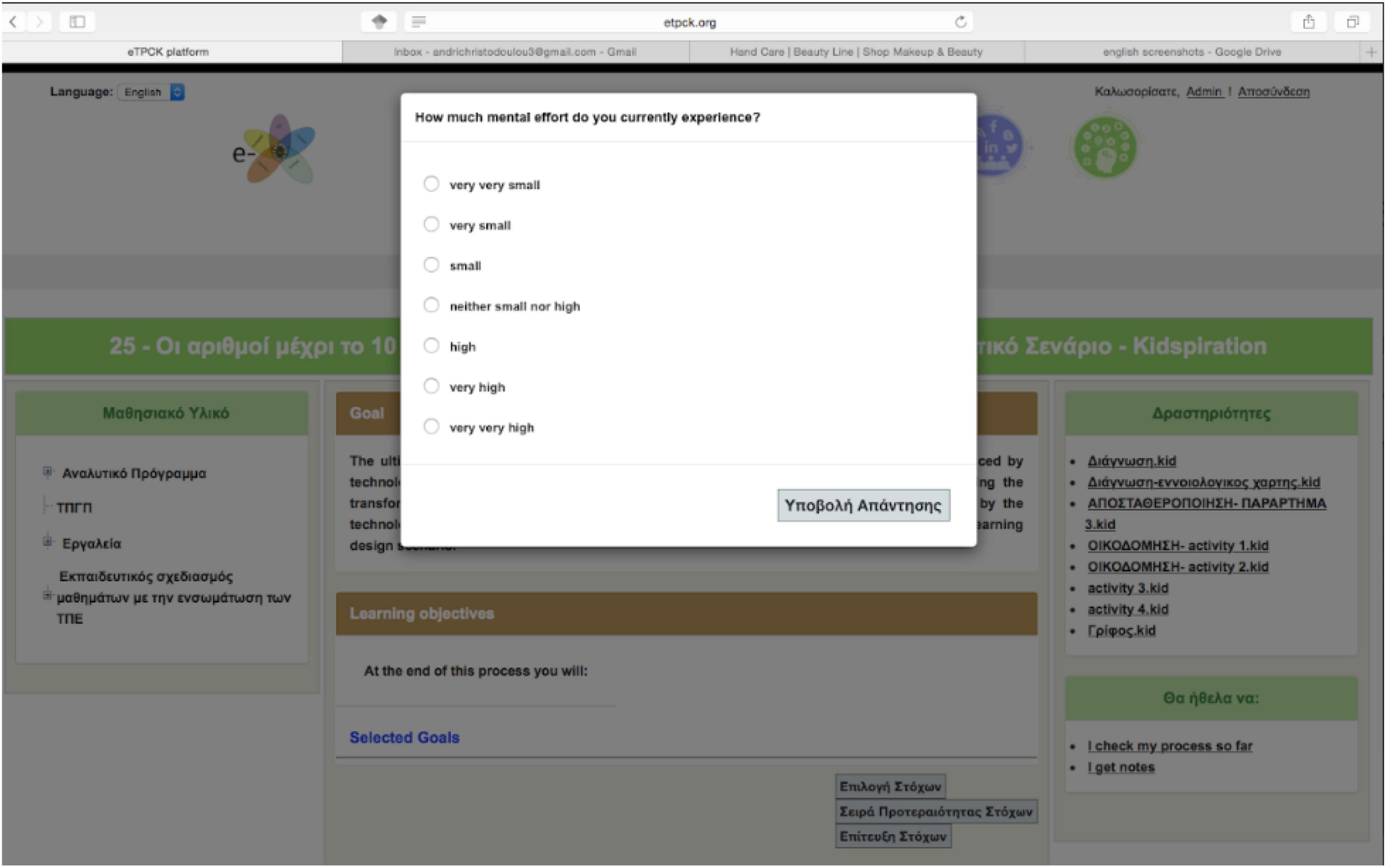

The e-TPCK system provided a personalized learning experience by adapting (a) the user’s learning path based on his/her subjective assessment of cognitive load, (b) the user’s preferences for technological tools, and (c) the difficulty level of the scenario. The adaptation strategy consisted of the following elements: (1) Adaptation Parameters, such as the learners’ perceived cognitive load, the choice of technological tools in the design scenario, and the difficulty level of the technology-enhanced design scenario as determined by the instructional designers of e-TPCK, (2) Adaptation Type, namely, the adaptation of the content, the flow of learning, and the sequence of activities, and (3) Adaptation Rules, such as conditional rules that assign and implement shared control between the system and the end user.

The system asked the student-teachers to choose the difficulty level of the learning design scenario in an attempt to adjust the content to their learning needs and preferences. The adaptation process was facilitated by the cognitive load monitor (CLM), as shown in Figure 4. Once users started with a completed or semi-completed design scenario, they had the opportunity to read the first six elements of the design scenario just before the sequence of learning activities began. At that point, the CLM was activated by asking users to indicate the level of cognitive effort they perceived. Student-teachers’ subjective ratings of perceived cognitive effort were measured using a 7-point Likert scale ranging from very very low to very very high cognitive effort. Depending on the users’ self-assessment of their mental effort, the system shared control with them by allowing them to choose the next step from a list of options provided by the system. Specifically, in cases where users had indicated a high level of cognitive effort, the system asked them if they wanted to proceed with a less difficult design scenario. If the users answered positively, the system gave them a less difficult design scenario with the same technological tool or less difficult design scenarios with different technological tools that had a lower level of difficulty. However, if the users answered negatively, they continued with the same design scenario. In the case where users indicated low cognitive effort, the system asked them if they would like a more difficult design scenario. If they answered positively, the system gave them a more difficult design scenario with the same technological tool or more difficult design scenarios with different technological tools that had a higher level of difficulty. However, if users answered negatively, they continued with the same design scenario. 
Essentially, e-TPCK involved instances of shared instructional control, where adaptive behavior was controlled by both the learner and the system.
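
The shared-control rule driven by the CLM can be sketched as follows. This is an illustrative reading of the description above rather than the system's actual code. The cut-offs for "high" and "low" effort on the 7-point scale, the one-level step, and the clamping to levels 0–4 are assumptions, since none of them is specified in the text.

```python
from typing import Optional

MIN_LEVEL, MAX_LEVEL = 0, 4  # assumed range for completed/semi-completed scenarios
HIGH_EFFORT = 5              # assumed cut-off: ratings 5-7 count as high effort
LOW_EFFORT = 3               # assumed cut-off: ratings 1-3 count as low effort


def clm_offer(effort: int) -> Optional[str]:
    """Return what the system offers for a 7-point effort rating, if anything."""
    if not 1 <= effort <= 7:
        raise ValueError("effort must be on the 1-7 scale")
    if effort >= HIGH_EFFORT:
        return "easier"
    if effort <= LOW_EFFORT:
        return "harder"
    return None


def next_difficulty(current: int, effort: int, accepts: bool) -> int:
    """Apply shared control: the system offers, the learner accepts or declines."""
    offer = clm_offer(effort)
    if offer is None or not accepts:
        return current  # learner continues with the same design scenario
    step = -1 if offer == "easier" else 1
    return max(MIN_LEVEL, min(MAX_LEVEL, current + step))
```

For example, a learner at level 2 who reports an effort of 6 and accepts the offer moves to level 1, while a learner who declines stays at level 2.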

If student-teachers chose to remain with the same completed or semi-completed design scenario, before continuing with e-TPCK, they needed to take a short test that allowed the system to provide them with adaptive feedback. If the users’ performance on the test was equal to or above 50%, they could proceed to the next part of the design scenario. If their performance was below 50%, they remained on the same page and were asked to read it again. On the left side of the user interface, the system displayed the description of the learning material and the learning objectives for each design scenario. Users could decide whether to revise or ignore this type of information. If users successfully completed all five tests of the first completed design scenario, they were allowed to proceed to the first semi-completed design scenario. The same procedure was followed for the first semi-completed design scenario. After successfully completing the five tests, users were allowed to proceed with developing a new design scenario from scratch. In both cases, if the users chose to continue with the same type of design scenario, i.e., completed or semi-completed, the system gave them the option to select a more difficult design scenario using the same or a different tool.
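
The test-based gating described above reduces to two simple checks, sketched here in Python. The 50% pass mark and the five tests per scenario come from the description. The function names are hypothetical.

```python
PASS_MARK = 50.0         # minimum test performance (percent) needed to proceed
TESTS_PER_SCENARIO = 5   # number of short tests in a design scenario


def may_proceed(score_percent: float) -> bool:
    """True if the learner can move to the next part of the design scenario."""
    return score_percent >= PASS_MARK


def scenario_completed(scores: list) -> bool:
    """True once all five tests of a scenario have been passed."""
    return len(scores) == TESTS_PER_SCENARIO and all(may_proceed(s) for s in scores)
```

A learner who scores below the pass mark on any test stays on the same page, so `scenario_completed` only becomes true after every test has eventually been passed.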

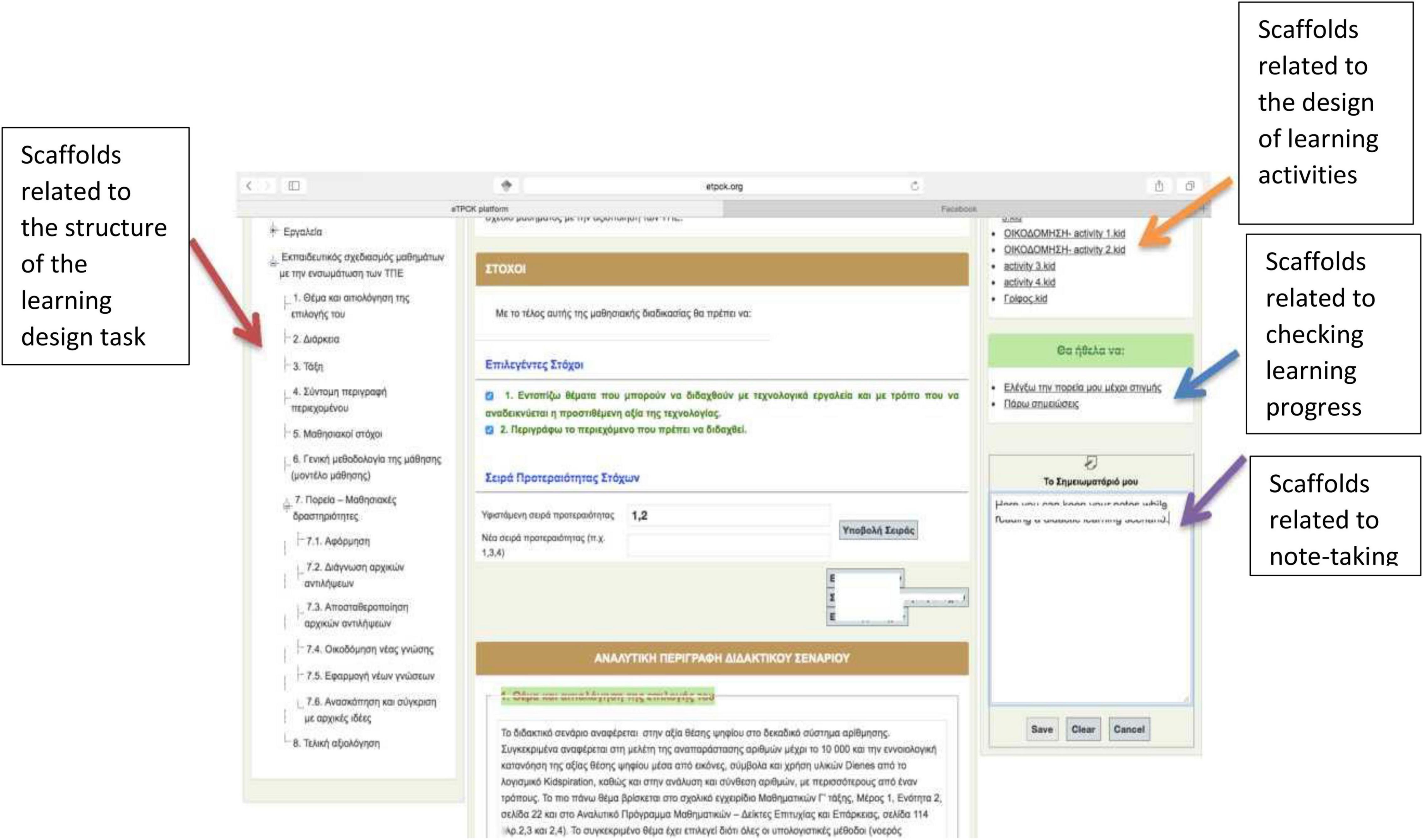

Scaffolds were an important feature in the design and development of e-TPCK, as shown in Figure 5.

Scaffolds are tools, strategies, or instructions that the system provided during the learning process to help learners reach higher levels of understanding that they could not achieve on their own (Hannafin et al., 1999; Saye and Brush, 2002; Azevedo and Hadwin, 2005). Student-teachers’ learning paths were scaffolded only for completed or semi-completed learning design scenarios, while all scaffolding was hidden for new scenarios to assess how well student-teachers could perform without any scaffolding. Scaffolds in e-TPCK provided cognitive, conceptual, metacognitive, procedural, or strategic support. For example, while completing the missing elements of a semi-completed design scenario, student-teachers encountered conceptual scaffolds in the form of pop-up windows to promote their understanding of the elements of constructivism as they appeared in the learning design scenarios. Student-teachers could simply close and ignore the pop-up windows if they found them unhelpful or distracting. Also, e-TPCK informed student-teachers about the general goal of each learning session within the Instructional Design learning space, and also assisted them in defining their own learning sub-goals. The presence of goals and sub-goals constituted metacognitive scaffolds that provided student-teachers with guidance about what to consider in terms of the task and the context of the task, and what to expect in terms of learning once the task was completed. This supported student-teachers’ monitoring and reflective processes by increasing their metacognitive awareness of the different aspects of their learning (the task, the context, the self in relation to the task). Another example of conceptual scaffolding was the information resources that were always available on the left side of the screen to help student-teachers fill in essential knowledge gaps between what they knew and what they needed to know in relation to the design task. 
In addition, previously visited pages or other sources of information were marked, facilitating student-teachers’ future access to the same information.

In general, the interface of the Instructional Design learning space prompted student-teachers to engage in planning, monitoring, controlling, and reflecting or adapting different strategic learning behaviors. Also, this learning space fostered a variety of self-regulated learning behaviors, including prior knowledge activation, goal setting, planning, evaluation of learning strategies, integrating information across representations, content evaluation, and note-taking.

Research Instruments

The following questionnaires were used to collect data from the participants of the study: (1) a demographics questionnaire, (2) a researcher-made pre-test that assessed pre-service teachers’ initial instructional design skills at the beginning of the semester, and (3) a post-test (same as pre-test) that was administered at the end of the semester to examine pre-service teachers’ final instructional design skills. Additionally, the researchers assessed students’ total TPCK by evaluating their performance on two design artifacts that student-teachers completed around the 8th and 13th weeks of the semester, respectively.

The demographics questionnaire was administered to both groups of participants during the first lab meeting of the semester. The questionnaire consisted of only a few questions for the purpose of collecting data related to students’ name, surname, age, and academic status (i.e., undergraduate student).

The pre-test consisted of 10 questions and assessed student-teachers’ skills on instructional design and it was administered at the beginning of the course. The same test was administered as post-test at the end of the semester. Student-teachers’ total score on the pre-test was regarded to be their initial instructional design competency, whereas their total score on the post-test at the end of the semester was regarded to be their final instructional design competency. All questions on the test were graded on a scale from 0 to 10, thus the maximum score on the test was 100 points.

In addition, the researchers assessed the student-teachers’ overall TPCK competency by assessing their design performance on two design artifacts that the student-teachers developed from scratch during the 8th and 13th weeks of the semester. The overall TPCK competency for the two design artifacts was assessed by the two course instructors using Angeli and Valanides’ (2009) five individual TPCK competencies. Interrater reliability for the first design artifact was determined to be r = 0.96, and r = 0.94 for the second design artifact. Each of the five TPCK competencies was rated on a 0–20-point scale. The sum of the five TPCK competencies resulted in the total TPCK competency. Thus, a total score of 100 points indicated an excellent overall TPCK score.
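
The scoring scheme for a design artifact can be expressed as a short Python sketch. The competency keys are hypothetical shorthand for the five competencies of Angeli and Valanides (2009). How the two raters' scores were combined is not stated in the text, so the sketch scores a single set of ratings.

```python
# Hypothetical short labels for the five TPCK competencies (a)-(e).
COMPETENCIES = (
    "topics",
    "representations",
    "teaching_approaches",
    "tools",
    "infusion",
)
MAX_PER_COMPETENCY = 20


def total_tpck(ratings: dict) -> int:
    """Sum five competency ratings (0-20 each) into a total TPCK score (0-100)."""
    if set(ratings) != set(COMPETENCIES):
        raise ValueError("ratings must cover exactly the five competencies")
    for name, value in ratings.items():
        if not 0 <= value <= MAX_PER_COMPETENCY:
            raise ValueError(f"{name} must be between 0 and {MAX_PER_COMPETENCY}")
    return sum(ratings.values())
```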

Research Procedures

At the beginning of the semester, student-teachers in the control and experimental groups took the pre-test consisting of 10 scenario-based questions that tested their initial competencies in instructional design with technology. The same test was administered at the end of the semester as a post-test to assess final instructional design competencies. In addition, the researchers assessed the student-teachers’ overall TPCK competency by assessing their design performance on the two design artifacts that the student-teachers developed from scratch during the 8th and 13th weeks of the course.

During the lectures, student-teachers learned about instructional design according to the TPCK competencies, the added value of technological affordances and how these connect to content and pedagogical transformation, learning theory and learning design. During lab time, student-teachers learned how to use various technological tools and were engaged in instructional design activities using these tools. All participants in the study were engaged in instructional design activities using technology that included completed and semi-completed learning design scenarios. However, only the experimental group had the opportunity to use the e-TPCK system at home.

Results

Pre-service Teachers’ Initial and Final Assessment of Instructional Design Knowledge

At the beginning of the semester, pre-service teachers in each group were administered a researcher-made pre-test to measure initial instructional design knowledge. The pre-test consisted of 10 questions and aimed at assessing students’ knowledge in terms of designing learning activities based on the constructivist learning model. Each question on the test was graded on a scale from 0 to 10; thus, the maximum score on the test was 100 points. At the end of the semester, the same test was administered as a post-test in order to determine learning gains between the two time points.

A number of statistical tests were conducted to analyze the data. The data met the assumptions of all statistical tests the authors of the study performed. First, a one-way between-subjects ANOVA was conducted to examine whether pre-service teachers’ initial performance on the instructional design knowledge test was different for the control and the experimental groups. The results determined that there was a statistically significant difference between the two groups, F(1,206) = 47.23, p < 0.01, as the experimental group (M = 57.24, SD = 15.19) performed much higher on the pre-test than the control group (M = 44.13, SD = 11.17).
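As a rough consistency check, the F ratio of a two-group one-way ANOVA can be recomputed from summary statistics alone. The sketch below is illustrative and is not the authors' analysis script: the function name is hypothetical, and the group sizes (89 and 119) are assumed from the novice and expert counts reported in this section.

```python
def one_way_f_from_summary(groups):
    """Return (F, df_between, df_within) from a list of (n, mean, sd) tuples."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    # Between-groups sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Within-groups sum of squares, rebuilt from each group's sample SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


control = (89, 44.13, 11.17)        # n assumed: 57 novices + 32 experts
experimental = (119, 57.24, 15.19)  # n assumed: 36 novices + 83 experts

f_ratio, df1, df2 = one_way_f_from_summary([control, experimental])
print(f"F({df1},{df2}) = {f_ratio:.2f}")
```

Under these assumptions the recomputed ratio comes out at roughly 47.2 with 1 and 206 degrees of freedom, which agrees with the reported value up to rounding of the summary statistics.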

Accordingly, in order to account for the initial differences between the two groups, the participants in the control and experimental conditions were further divided into two sub-groups according to their expertise level as assessed by the instructional design knowledge test. Pre-service teachers who scored between 0 and 49 points were assigned to the novices’ group, while those who scored between 50 and 100 points were assigned to the experts’ group. There were 57 novices and 32 experts in the control group, and 36 novices and 83 experts in the experimental group.
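The grouping rule can be written as a simple threshold on the pre-test score. This is a minimal sketch of the rule as described in the text; the function and constant names are hypothetical.

```python
EXPERT_MIN_SCORE = 50  # 0-49 -> novice, 50-100 -> expert, as described in the text


def expertise_level(pretest_score):
    """Classify a pre-test score (0-100) as 'novice' or 'expert'."""
    if not 0 <= pretest_score <= 100:
        raise ValueError("pre-test score must lie between 0 and 100")
    return "expert" if pretest_score >= EXPERT_MIN_SCORE else "novice"


# Subgroup sizes reported in the text.
subgroup_sizes = {
    ("control", "novice"): 57,
    ("control", "expert"): 32,
    ("experimental", "novice"): 36,
    ("experimental", "expert"): 83,
}
total_participants = sum(subgroup_sizes.values())
print(expertise_level(49), expertise_level(50), total_participants)
```

The four subgroup sizes sum to 208 participants, which is consistent with the error degrees of freedom reported for the ANOVAs in this section.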

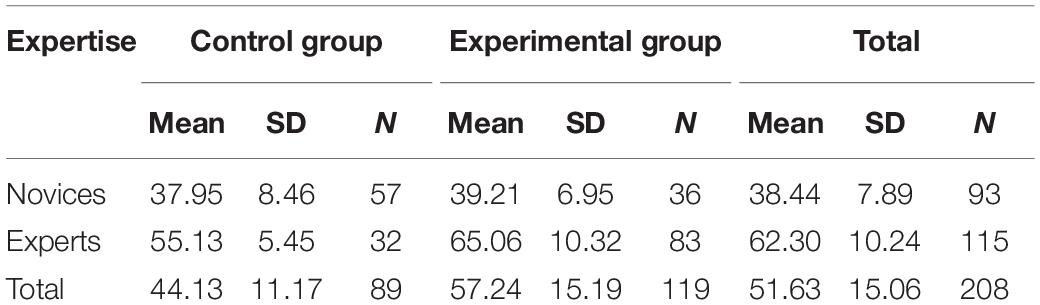

Table 1 shows the descriptive statistics of pre-service teachers’ performance on the pre-test, both in the control and experimental groups. Experts, in both groups, performed higher than the novices. Furthermore, all participants in the experimental group, novices and experts, performed better than their respective counterparts in the control group. The novice participants in the experimental group performed slightly better on the test than their counterparts in the control group, with a mean difference of 1.26 points (SD = 6.95), while the experts in the experimental group had a substantial mean difference of 9.93 points (SD = 10.32) from their counterparts in the control group.

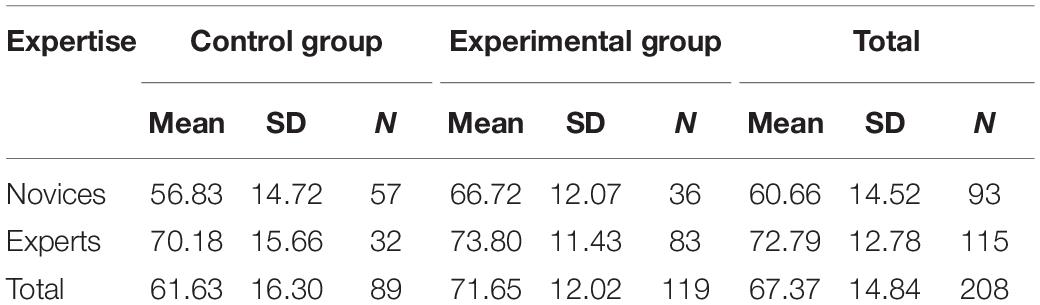

Table 2 presents the descriptive statistics of pre-service teachers’ performance on the post-test, per intervention group and level of expertise. Once again, experts in the control group (M = 70.18, SD = 15.66) and the experimental group (M = 73.80, SD = 11.43) scored higher on the post-test than novices in the corresponding groups (control: M = 56.83, SD = 14.72; experimental: M = 66.72, SD = 12.07). In addition, both novices and experts in the experimental group performed better than their counterparts in the control group. Specifically, novices and experts in the control group improved by 18.88 and 15.05 points on the post-test, respectively, while novices and experts in the experimental group scored 27.51 and 8.74 points higher on the post-test, respectively. Apparently, all participants performed better on the post-test following the teaching intervention; however, the novices in both groups showed greater improvement than the experts in each group. More importantly, the novices in the experimental group showed the greatest improvement of all. On the post-test, their mean difference from the novices in the control group widened to 9.89 points, whereas on the pre-test it was only 1.26 points. Experts in the experimental group, on the other hand, showed the opposite pattern. Although the mean difference between the experts in the experimental group and the experts in the control group reached 9.93 points on the pre-test, it narrowed to 3.62 points on the post-test.
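The arithmetic behind these comparisons can be traced with a few lines of code. The sketch below uses only the post-test means and gains quoted in this paragraph; the pre-test means are back-calculated as an inference and are not read from Table 1, and all variable names are hypothetical.

```python
# Post-test means and pre-to-post gains as reported in the text.
post_mean = {
    ("control", "novice"): 56.83,
    ("control", "expert"): 70.18,
    ("experimental", "novice"): 66.72,
    ("experimental", "expert"): 73.80,
}
gain = {
    ("control", "novice"): 18.88,
    ("control", "expert"): 15.05,
    ("experimental", "novice"): 27.51,
    ("experimental", "expert"): 8.74,
}

# Back-calculated pre-test means (an inference, not values taken from Table 1).
pre_mean = {key: post_mean[key] - gain[key] for key in post_mean}

# Experimental-minus-control gap at each time point, per expertise level.
gaps = {}
for level in ("novice", "expert"):
    pre_gap = pre_mean[("experimental", level)] - pre_mean[("control", level)]
    post_gap = post_mean[("experimental", level)] - post_mean[("control", level)]
    gaps[level] = (round(pre_gap, 2), round(post_gap, 2))
    print(f"{level}: pre-test gap {pre_gap:.2f}, post-test gap {post_gap:.2f}")
```

The recomputed gaps match the figures in the text: the novices' gap grows from 1.26 to 9.89 points, while the experts' gap shrinks from 9.93 to 3.62 points.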

A follow-up two-way ANOVA was conducted to determine statistically significant differences between the control and experimental groups. The results showed statistically significant main effects for “expertise” and “treatment condition” on students’ performance on the post-test, F(1,204) = 27.02, p < 0.001, partial η2 = 0.12 and F(1,204) = 11.80, p < 0.001, partial η2 = 0.06, respectively, in favor of the experimental group.
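For readers who want to relate the F ratios to the effect sizes, partial eta squared can be approximated from an F ratio and its degrees of freedom. This is a sketch using the standard relation; the source does not state how the effect sizes were computed, so recomputed values may differ slightly from those reported.

```python
def partial_eta_squared(f_ratio, df_effect, df_error):
    """Approximate partial eta squared from an F ratio and its degrees of freedom."""
    return (f_ratio * df_effect) / (f_ratio * df_effect + df_error)


eta_expertise = partial_eta_squared(27.02, 1, 204)
eta_condition = partial_eta_squared(11.80, 1, 204)
print(f"expertise: {eta_expertise:.3f}, treatment condition: {eta_condition:.3f}")
```

The value for expertise rounds to the reported 0.12. The value for treatment condition comes out near 0.055, close to, though not identical with, the reported 0.06.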

Pre-service Teachers’ Total Technological Pedagogical Content Knowledge Competency

Pre-service teachers’ total TPCK competency was measured on two different occasions using two design artifacts. For each design artifact, pre-service teachers had to identify topics to be taught with technology in ways that signify the added value of the tools, such as topics that learners cannot easily comprehend, or topics that teachers face difficulties in teaching effectively in class. The first design artifact was completed during the 8th week of the semester and the second on the 13th week of the semester. Hence, the overall elapsed time between the two design tasks was 5 weeks.

The total TPCK competency on the two design artifacts was measured by the two instructors using the five individual TPCK competencies reported by Angeli and Valanides (2009), namely: (a) Identification of appropriate topics to be taught with technology in ways that signify the added value of the tools, such as topics that students cannot easily understand, or content that teachers have difficulties in teaching effectively in class, (b) Identification of appropriate representations to transform content to be taught into forms that are comprehensible to students and difficult to be supported by traditional means, (c) Identification of teaching strategies difficult or impossible to be implemented by traditional means, (d) Selection of appropriate tools and effective pedagogical uses of their affordances, and (e) Identification of appropriate integration strategies, which are learner-centered and allow learners to express a point of view, observe, inquire, and problem solve.

The interrater reliability was calculated to be r = 0.96 for the first design artifact and r = 0.94 for the second design artifact. Each of the five TPCK competencies was graded on a scale from 0 to 20 points, for a total of 100 points denoting the total TPCK competency. Overall, the control and the experimental groups completed the same design tasks and followed the same instructions, guidelines, and assessment process. However, the experimental group had the opportunity, before and between the two design tasks, to further practice instructional design using the learning environment of e-TPCK.
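The scoring scheme amounts to summing five ratings, each bounded between 0 and 20. The following sketch illustrates that rule; the short competency labels and the example ratings are hypothetical and do not come from the study's data.

```python
# Short labels (hypothetical) for the five competencies (a)-(e) listed above.
COMPETENCIES = ("topics", "representations", "strategies", "tools", "integration")
MAX_PER_COMPETENCY = 20


def total_tpck(ratings):
    """Sum five 0-20 competency ratings into a total TPCK score out of 100."""
    missing = set(COMPETENCIES) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in COMPETENCIES:
        if not 0 <= ratings[name] <= MAX_PER_COMPETENCY:
            raise ValueError(f"{name} must be between 0 and {MAX_PER_COMPETENCY}")
    return sum(ratings[name] for name in COMPETENCIES)


# Made-up ratings for one design artifact, for illustration only.
example = {"topics": 15, "representations": 14, "strategies": 16,
           "tools": 13, "integration": 15}
print(total_tpck(example))
```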

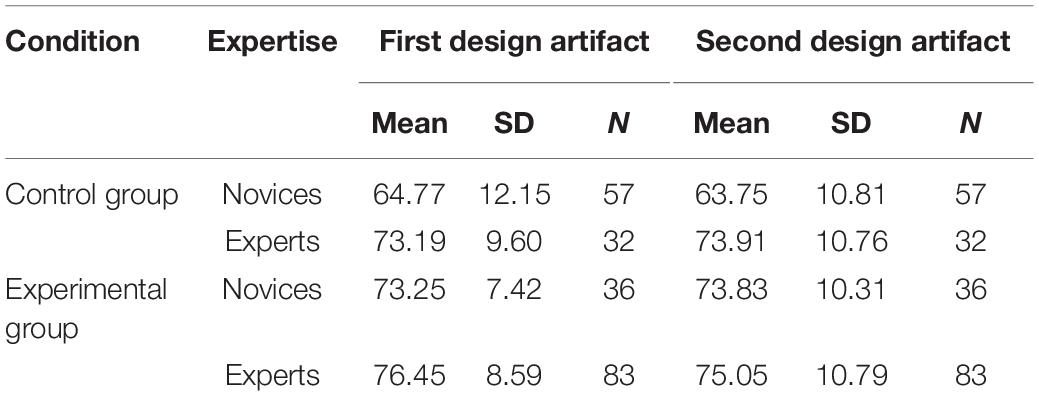

According to Table 3, all participants in the experimental group, both novices and experts, performed better than their counterparts in the control group on the first design artifact.

Novices in the experimental group attained a mean score of 73.25 points (SD = 7.42), performing considerably higher than their counterparts in the control group (M = 64.77, SD = 12.15), with a mean difference of 8.48 points. Experts in the experimental group also performed higher (M = 76.45, SD = 8.59) than the experts in the control group (M = 73.19, SD = 9.60), with a mean difference of 3.26 points. Experts in the control group attained a mean score 8.42 points higher than that of the novices in the control group, while the experts in the experimental group outscored the novices in the same group by a smaller margin (3.20 points). The most notable finding is that the novices in the experimental group performed better not only than their counterparts in the control group, but also than the experts in the control group. This shows that engagement with e-TPCK improved the novices’ performance on the first design artifact.

The results appear to be almost the same with regard to the second design artifact, with novices and experts in the experimental group attaining higher mean scores than their counterparts in the control group. Novices in the experimental group achieved a mean score of 73.83 (SD = 10.31), while novices in the control group performed 10.08 points lower (M = 63.75, SD = 10.81) on the second design task. The novices’ performance in the experimental group remained approximately the same as that on the first design task. Experts in the experimental group, on the other hand, attained a mean score of 75.05 points (SD = 10.79), surpassing their counterparts in the control group (M = 73.91, SD = 10.76) by 1.14 points. The experts in the control group performed minimally higher on the second design task (0.72 points) compared to their performance on the first design task. Experts in the experimental group, though, performed slightly below their mean score on the first design task (1.40 points). Hence, the difference between the experts’ mean scores in the control and experimental groups decreased slightly, by 2.12 points, on the second design task. Overall, novices and experts in both groups experienced minimal fluctuations in their performance on the second design task when compared to their performance on the first one.
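The differences quoted for the two design artifacts can be recomputed from the reported subgroup means. The sketch below uses only the means given in the text; the variable names are hypothetical.

```python
# (first artifact mean, second artifact mean) per subgroup, as reported in the text.
artifact_means = {
    ("control", "novice"): (64.77, 63.75),
    ("control", "expert"): (73.19, 73.91),
    ("experimental", "novice"): (73.25, 73.83),
    ("experimental", "expert"): (76.45, 75.05),
}

# Change from the first to the second artifact within each subgroup.
change = {
    key: round(second - first, 2)
    for key, (first, second) in artifact_means.items()
}

# Experimental-minus-control gap per expertise level, for each artifact.
condition_gap = {
    level: tuple(
        round(artifact_means[("experimental", level)][i]
              - artifact_means[("control", level)][i], 2)
        for i in (0, 1)
    )
    for level in ("novice", "expert")
}

for key, delta in change.items():
    print(key, f"{delta:+.2f}")
print(condition_gap)
```

On these figures the novices' gap between conditions moves from 8.48 to 10.08 points, while the experts' gap moves from 3.26 to 1.14 points, a decrease of 2.12 points.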

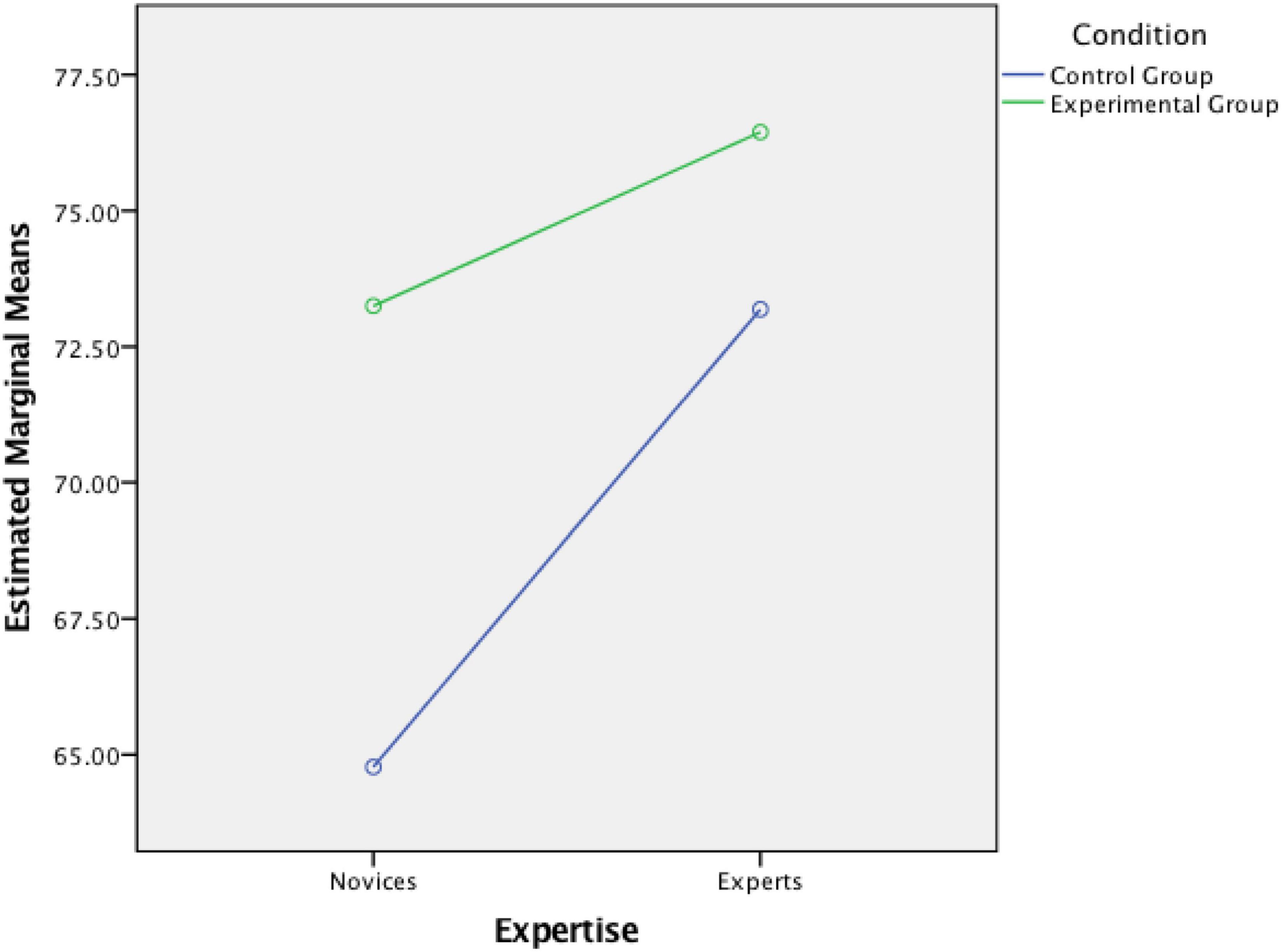

A two-way between-subjects repeated measures ANOVA was conducted to compare the main effects of “condition” (i.e., being in the control or the experimental group) and “expertise” as well as detect an interaction effect between these two factors on pre-service teachers’ performance on the two design artifacts. A statistically significant between-subjects interaction was found between expertise and condition variables, F(1,204) = 7.49, p < 0.05, partial η2 = 0.04, for the first design task. The nature of this interaction effect is shown in Figure 6. Simple main effects were also run. Both main effects of condition and expertise determined that there was a statistically significant difference in pre-service teachers’ performance between the two time points, yielding an F ratio of F(1,204) = 19.71, p < 0.05 and F(1,204) = 19.74, p < 0.05, respectively.

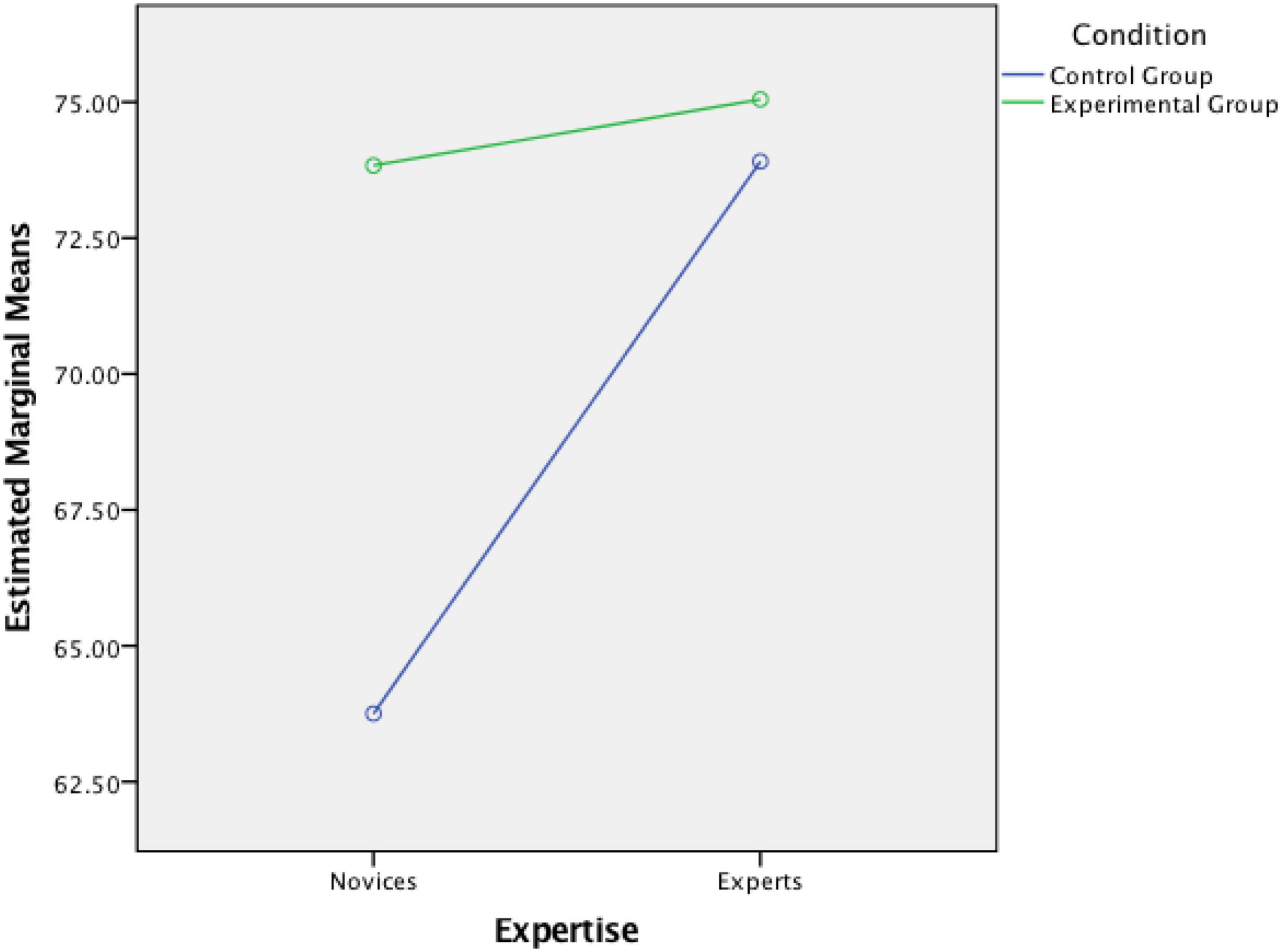

Accordingly, a statistically significant between-subjects interaction was also found between expertise and condition variables, F(1,204) = 6.53, p < 0.05, partial η2 = 0.08, for the second design task. The nature of this interaction effect is shown in Figure 7. Simple main effects were also run. Both main effects of condition and expertise determined that there was a statistically significant difference in pre-service teachers’ performance between the two time points, yielding an F ratio of F(1,204) = 18.61, p < 0.05 and F(1,204) = 18.74, p < 0.05, respectively.

These results show that learning with e-TPCK provided a more facilitating effect for novices in the experimental group that contributed to their high scores on the two design artifacts, fast approaching the performance of the experts in the experimental group and attaining almost the same performance as the experts in the control group. Evidently, the positive effect of e-TPCK, when integrated in students’ teaching and learning, was manifested mainly in the performance of the novices in the experimental group. On the contrary, the experts in the experimental group tended to score lower on the second design artifact, demonstrating that the additional instructional guidance they received by interacting with e-TPCK was not necessary or was even detrimental for them. This notable result can be explained through the concept of the expertise reversal effect. Although the advantages of individualized learner-tailored instruction are recognized, procedures and techniques designed to increase levels of instructional guidance were shown to be more effective for novice learners. However, the instructional guidance that is essential for novices may inhibit learning for more experienced learners, as the results of the present study indicated, by interfering with retrieval and application of their available knowledge structures (Kalyuga and Renkl, 2010). Therefore, unnecessary guidance should be removed as learners acquire higher levels of proficiency in a specific domain during the course of their learning with an adaptive computer system.

Discussion

Based on the results of the current study, the integration of adaptive e-learning systems in educational technology courses can foster the development of pre-service teachers’ TPCK faster than traditional instruction that depends solely on face-to-face meetings with no use of adaptive computer technology. The experimental group outperformed the control group in both design tasks, demonstrating the potential of e-TPCK to support student-teachers’ TPCK development in more efficient, effective, and individualized ways. What is more, e-TPCK provided a more facilitating learning effect for some students in the experimental group, whereas for others this was not the case. The instructional guidance that e-TPCK offered for TPCK development led to a mathemagenic effect for student-teachers who were regarded as novices in terms of their initial instructional design competency, whereas for student-teachers who were regarded as more experienced, it had more of a neutral effect and, in some cases, a mathemathantic effect. Although both experts and novices benefited from learning with e-TPCK during the initial weeks of the semester, later only the novices continued to experience learning gains, while the experts’ performance decreased, leading to an expertise reversal effect. Interestingly, the novelty effect did not affect the novices, as they continued to learn throughout the semester. In contrast, the novelty effect affected the more experienced student-teachers, as they found the system beneficial only during the first weeks of the semester but not throughout the entire semester.

The expertise reversal effect is a reversal in the relative effectiveness of instructional methods and procedures as levels of learner knowledge in a domain change (Kalyuga, 2007; Kalyuga and Renkl, 2010). Instructional techniques that assist novices or low-experienced learners can lose their effectiveness, or even have adverse effects, when learners acquire more expertise (Sweller et al., 2003; van Merrienboer and Ayres, 2005; Kalyuga, 2007; Kalyuga and Renkl, 2010). Hence, a learner’s level of expertise (one’s ability to perform fluently in a class of tasks) is a crucial factor determining what information is relevant for the learner and what instructional guidance is needed (Kalyuga, 2007).

The expertise reversal effect has been replicated in many research studies of different domains and with a large range of instructional means (e.g., tasks that require declarative and/or procedural knowledge in mathematics, science, engineering, programming, social psychology, etc.) and learners (from primary to higher education levels), either as a full reversal (a disordinal interaction with important differences for both novices and experts) or, more often, as a partial reversal (non-significant differences for novices and experts, but with a significant interaction), stressing the need to adjust instructional methods, procedures, and levels of instructional guidance as learners acquire higher levels of proficiency in a particular domain (Kalyuga, 2007). Appropriate instructional support should be provided to novice learners to build new knowledge structures in a relatively efficient way, while unnecessary guidance should be removed as the learners become more knowledgeable in a domain.

The design of e-TPCK attempted to provide adaptive scaffolding to student-teachers by personalizing the content to support their learning, acknowledging the advantages of individualized learner-tailored instruction. For both completed and semi-completed design scenarios, several levels of adaptive support were provided in terms of the degree of completeness and instructional hints. The fading from one phase to the next (from completed design scenarios to semi-completed or new design scenarios) was facilitated through the CLM. Based on learners’ subjective ratings of the cognitive effort they perceived for a design scenario, the system adapted the learning path of its users so as to reduce extraneous cognitive load and avoid wasting limited cognitive resources. Subsequently, the student-teachers’ learning path was adapted according to their preferences regarding the technology tools used in the design scenarios and/or the difficulty level of the design scenarios, by sharing control with them and giving them the opportunity to determine their next step in the learning process. Consequently, adaptive fading in e-TPCK was not based on the actual knowledge growth of each learner over time; instead, it was based primarily on the mental effort approach (Paas and Van Merrienboer, 1993) and then on learners’ preferences regarding the technological tools, so that all learners could work at their own pace and be gradually guided from the easiest to the most complex design task through the fading strategy. The adaptation strategy employed in the system thus constituted a more learner-controlled adaptation strategy and an alternative to system-controlled tailoring of instruction. However, as many researchers (Kalyuga, 2007; Sweller et al., 2011) argue, learner control is not always associated with positive learning outcomes; instead, this approach can be advantageous only if the learners have reached a level of expertise that allows them to select learning strategies appropriate to their cognitive states when determining their next step in the learning session. This significant conclusion calls for changes in the instructional design of e-TPCK that address learners’ knowledge base as the most important cognitive characteristic affecting learning and performance, so as to ensure that learners will not experience the expertise reversal effect. Kalyuga (2007) critiqued several projects in adaptive e-learning that focused primarily on technical issues of tailoring content to learner preferences, interests, selections, and history of previous online behavior, rather than taking into account fundamental cognitive characteristics such as the level of expertise of the learners. Therefore, e-TPCK should examine the employment of a hybrid approach to define the task selection rules for its users. Performance or level of expertise and cognitive load could be combined and measured at the same time while the CLM is being activated. This approach would strengthen the instructional design of e-TPCK, with presumable learning advantages for all student-teachers, novices and experts, in terms of TPCK development.
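One way to picture such a hybrid rule is through the relative efficiency measure of Paas and Van Merrienboer (1993), which combines standardized performance and standardized mental effort as E = (z_performance - z_effort) / sqrt(2). The sketch below is a hypothetical illustration and not the e-TPCK algorithm: the cut-off values, the use of the population standard deviation, the mapping to scenario types, and the sample data are all assumptions.

```python
from math import sqrt
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values across learners (population SD; assumes SD > 0)."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def efficiency(performance, effort):
    """Relative efficiency E = (z_performance - z_effort) / sqrt(2) per learner."""
    return [(zp - ze) / sqrt(2)
            for zp, ze in zip(z_scores(performance), z_scores(effort))]


def next_scenario(e, low=-0.5, high=0.5):
    """Hypothetical task-selection rule: more guidance when efficiency is low."""
    if e > high:
        return "new design scenario"
    if e < low:
        return "completed design scenario"
    return "semi-completed design scenario"


# Made-up data: performance scores (0-100) and effort ratings on a 9-point scale.
performance = [40, 55, 70, 85]
effort = [8, 6, 5, 2]

scores = efficiency(performance, effort)
recommendations = [next_scenario(e) for e in scores]
for e, rec in zip(scores, recommendations):
    print(f"E = {e:+.2f} -> {rec}")
```

A rule of this kind would withdraw guidance from learners who perform well with little effort, which is the adjustment the expertise reversal literature recommends; how the thresholds should be set is an open design question.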

Lastly, the authors would like to address three limitations of the study. The first one is related to the fact that the researchers also acted as the instructors of the course. To mitigate threats to the study’s internal validity, all research procedures for each of the 13 lectures and the 13 labs were written down and followed strictly. A second limitation is the technical errors students often had to face while learning with e-TPCK. While the system was well developed, the authors often received students’ complaints about technical errors that prevented them from advancing from one step to another. A third limitation is related to the instructional design of e-TPCK. As the system did not diagnose progress from one level of expertise to a higher one, it did not recommend a different set of scaffolds to the more expert learner. In the future, the authors could change the system’s algorithm to suggest a different set of scaffolds to learners according to their current level of expertise.

Conclusion

The results presented herein are encouraging and can serve as baseline data for future empirical studies on the integration of adaptive learning systems in teacher education or professional development courses for teachers. The results of the study provide insights for researchers or higher education instructors interested in implementing personalized learning with the use of adaptive technologies in their classrooms to support their students in the learning process. The results of the study can be used to refine the design of such environments, address new or emerging issues, support new theories or approaches to strengthen understanding of the research and practice of such adaptive personalized learning environments and guide further research and theory development.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alamri, H., Lowell, V., Watson, W., and Watson, S. L. (2020). Using personalized learning as an instructional approach to motivate learners in online higher education: learner self-determination and intrinsic motivation. J. Res. Technol. Educ. 52, 322–352. doi: 10.1080/15391523.2020.1728449

Alamri, H. A., Watson, S., and Watson, W. (2021). Learning technology models that support personalization within blended learning environments in higher education. TechTrends 65, 62–78. doi: 10.1007/s11528-020-00530-3

Angeli, C., and Valanides, N. (2009). Epistemological and methodological issues for the conceptualization, development, and assessment of ICT–TPCK: advances in Technological Pedagogical Content Knowledge (TPCK). Comput. Educ. 52, 154–168. doi: 10.1016/j.compedu.2008.07.006

Azevedo, R., and Hadwin, A. F. (2005). Scaffolding self-regulated learning and metacognition–Implications for the design of computer-based scaffolds. Instr. Sci. 33, 367–379. doi: 10.1007/s11251-005-1272-9

Barr, R. B., and Tagg, J. (1995). From teaching to learning—A new paradigm for undergraduate education. Change 27, 12–26. doi: 10.1080/00091383.1995.10544672

Benhamdi, S., Babouri, A., and Chiky, R. (2017). Personalized recommender system for e-Learning environment. Educ. Inf. Technol. 22, 1455–1477. doi: 10.1007/s10639-016-9504-y

Cuban, L. (1993). How Teachers Taught: Constancy and Change in American Classrooms, 1890-1990. New York, NY: Teachers College Press.

Foshee, C. M., Elliott, S. N., and Atkinson, R. K. (2016). Technology-enhanced learning in college mathematics remediation. Br. J. Educ. Technol. 47, 893–905. doi: 10.1111/bjet.12285

Garrison, D. R., and Kanuka, H. (2004). Blended learning: uncovering its transformative potential in higher education. Internet High. Educ. 7, 95–105. doi: 10.1016/j.iheduc.2004.02.001

Gómez, S., Zervas, P., Sampson, D. G., and Fabregat, R. (2014). Context-aware adaptive and personalized mobile learning delivery supported by UoLmP. J. King Saud Univ. Comput. Inf. Sci. 26, 47–61. doi: 10.1016/j.jksuci.2013.10.008

Hannafin, M., Land, S., and Oliver, K. (1999). “Open learning environments: foundations, methods, and models,” in Instructional-Design Theories and Models, ed. C. Reigeluth (New York, NY: Lawrence Erlbaum).

Johnson, L., Becker, S. A., Cummins, M., Estrada, V., Freeman, A., and Hall, C. (2016). NMC Horizon Report: 2016 Higher Education Edition. Austin, TX: The New Media Consortium.

Jung, E., Kim, D., Yoon, M., Park, S., and Oakley, B. (2019). The influence of instructional design on learner control, sense of achievement, and perceived effectiveness in a supersize MOOC course. Comput. Educ. 128, 377–388. doi: 10.1016/j.compedu.2018.10.001

Kalyuga, S. (2007). Expertise reversal effect and its implications for learner-tailored instruction. Educ. Psychol. Rev. 19, 509–539. doi: 10.1007/s10648-007-9054-3

Kalyuga, S., and Renkl, A. (2010). Expertise reversal effect and its instructional implications: introduction to the special issue. Instr. Sci. 38, 209–215. doi: 10.1007/s11251-009-9102-0

Liu, R., Qiao, X., and Liu, Y. (2006). A paradigm shift of learner-centered teaching style: Reality or illusion? J. Second Lang. Acquis. Teach. 13, 77–91.

Newman, A., Bryant, G., Stokes, P., and Squeo, T. (2013). Learning to Adapt: Understanding the Adaptive Learning Supplier Landscape. Available online at: http://edgrowthadvisors.com/wp-content/uploads/2013/04/Learning-to-Adapt_Report_Supplier-Landscape_Education-Growth-Advisors_April-2013.pdf (accessed July 15, 2021).

Paas, F. G., and Van Merrienboer, J. J. (1993). The efficiency of instructional conditions: an approach to combine mental effort and performance measures. Hum. Factors 35, 737–743. doi: 10.1177/001872089303500412

Park, O., and Lee, J. (2004). “Adaptive instructional systems,” in Handbook of Research on Educational Communications and Technology, 2nd Edn, ed. D. H. Jonassen (New York, NY: Lawrence Erlbaum Associates), 651–684.

Peng, H., Ma, S., and Spector, J. M. (2019). Personalized adaptive learning: an emerging pedagogical approach enabled by a smart learning environment. Smart Learn. Environ. 6:9.

Plass, J. L., and Pawar, S. (2020). Toward a taxonomy of adaptivity for learning. J. Res. Technol. Educ. 52, 275–300. doi: 10.1080/15391523.2020.1719943

Saye, J. W., and Brush, T. (2002). Scaffolding critical reasoning about history and social issues in multimedia-supported learning environments. Educ. Technol. Res. Dev. 50, 77–96. doi: 10.1007/bf02505026

Schmid, R., and Petko, D. (2019). Does the use of educational technology in personalized learning environments correlate with self-reported digital skills and beliefs of secondary-school students? Comput. Educ. 136, 75–86. doi: 10.1016/j.compedu.2019.03.006

Shemshack, A., and Spector, J. M. (2020). A systematic literature review of personalized learning terms. Smart Learn. Environ. 7:33.

Shute, V. J., and Zapata-Rivera, D. (2012). “Adaptive educational systems,” in Adaptive Technologies for Training and Education, eds P. Durlach and A. Lesgold. (Cambridge, MA: Cambridge University Press).

Snow, R. E. (1989). Toward assessment of cognitive and conative structures in learning. Educ. Res. 18, 8–14. doi: 10.3102/0013189X018009008

Sweller, J., Ayres, P. L., Kalyuga, S., and Chandler, P. A. (2003). The expertise reversal effect. Educ. Psychol. 55, 23–31. doi: 10.1207/s15326985ep3801_4

U.S. Department of Education, Office of Educational Technology (2016). Future Ready Learning: Reimagining the Role of Technology in Education 2016 National Education Technology Plan. Washington, D.C: U.S. Department of Education.

van Merrienboer, J. J., and Ayres, P. (2005). Research on cognitive load theory and its design implications for e-learning. Educ. Technol. Res. Dev. 53, 5–13. doi: 10.1007/bf02504793

Walkington, C., and Bernacki, M. L. (2020). Appraising research on personalized learning: definitions, theoretical alignment, advancements, and future directions. J. Res. Technol. Educ. 52, 235–252.

Watson, W. R., Watson, S. L., and Reigeluth, C. M. (2012). A systemic integration of technology for new-paradigm education. Educ. Technol. 52, 25–29.

Xie, H., Chu, H. C., Hwang, G. J., and Wang, C. C. (2019). Trends and development in technology-enhanced adaptive/personalized learning: a systematic review of journal publications from 2007 to 2017. Comput. Educ. 140:103599. doi: 10.1016/j.compedu.2019.103599

Keywords: adaptive learning, personalized learning, higher education, student-teachers, TPCK development

Citation: Christodoulou A and Angeli C (2022) Adaptive Learning Techniques for a Personalized Educational Software in Developing Teachers’ Technological Pedagogical Content Knowledge. Front. Educ. 7:789397. doi: 10.3389/feduc.2022.789397

Received: 04 October 2021; Accepted: 25 April 2022;

Published: 01 June 2022.

Edited by:

Christos Troussas, University of West Attica, Greece

Reviewed by:

Andreas Marougkas, University of West Attica, Greece

Anna Mavroudi, Norwegian University of Science and Technology Museum, Norway

Copyright © 2022 Christodoulou and Angeli. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Charoula Angeli, cangeli@ucy.ac.cy
