- ¹Department of Languages and International Culture, CREAD, IMT Atlantique, Brest, France

- ²CREAD, Faculty of Education and Sports Sciences, Université de Bretagne Occidentale, Brest, France

Self-regulated learning includes the cognitive, metacognitive, behavioral, motivational, and affective aspects of learning. The conceptualization of self and socially regulated learning has recently received much attention and peer assessment has been found to increase the use of metacognitive activity. The present exploratory qualitative study aimed to identify self-, co-, and socially shared regulatory processes in an oral English as a Foreign Language task. The regulatory activity deployed by 10 learners was studied within the context of a peer assessment task using an assessment form paired with video feedback in the context of an English language classroom at a French university. These interactions were filmed and discussed in individual self-confrontation interviews which were analyzed through inductive coding. Specific findings from the classroom setting shed light on existing gaps in the literature. First, students can gain confidence in their own skills through assessing their peers and activating regulatory processes both individually and as a group. Second, appropriate tools can increase co-regulated and socially regulated learning through the structuring of cooperative regulatory behaviors. Third, psychological safety appeared to be a propitious social context for supporting regulated learning (SRL, CoRL, and SSRL). We also shed light on the fact that adaptive regulatory strategies are present in oral (as well as written) English as a Foreign Language tasks. These results indicate the potential for learning situations based on video feedback used in conjunction with peer assessment and collaborative learning in order to develop regulatory behaviors in language learners.

Introduction

Over the past three decades, there has been a shift in learning theories toward a more constructivist approach. Much recent literature has focused on assessment for learning (Boud and Falchikov, 2007; Sambell et al., 2019) in which assessment is no longer considered an obstacle to be overcome at the end of a training course, but rather an opportunity to learn in and of itself (Race, 2009). Such an approach to learning means that education in the classroom and lifelong learning are no longer so clearly divided, and the skills acquired by learners through assessment for learning could also be applied beyond the classroom (Boud, 2014). Assessment for learning, in which learner agency plays a central role (Andrade and Brookhart, 2019), has become one of the leading currents in the education literature and provides opportunities for learners to become more aware of teacher expectations. It is argued that, through their active involvement in the learning process, learners learn to position themselves according to objectives and to engage in self-regulated learning (SRL) processes (Pintrich, 2004). A logical extension of this shift is that these pedagogical practices seem to offer a relevant context in which to study SRL, which has become an increasingly widely used framework for examining the specific processes underpinning learners’ behaviors and strategies (Pintrich and Zusho, 2002; Pintrich, 2004).

Self-regulated learning includes cognitive, metacognitive, behavioral, motivational, and affective components (Panadero, 2017) with regard to the attainment of specific learning goals (Schunk and Greene, 2018).

First, SRL has been conceptualized from a cyclical, or phases, view (Pintrich and Zusho, 2002; Zimmerman et al., 2011). It is argued that SRL components are activated through recursive cycles of forethought, performance and self-reflection (Zimmerman and Moylan, 2009). Early SRL research tended to focus on how SRL interventions could improve performance, whereas more recent studies focus on examining the processes self-regulated learners display through the strategies they use during the various phases of the SRL cycle, and how these can benefit the learners (Schunk and Greene, 2018). However, there is little research into how assessment for learning specifically affects different components of the SRL processes (Panadero et al., 2016), so further empirical studies are expected (Panadero et al., 2018).

Second, early SRL literature focused on SRL as an individual phenomenon, in which learners were still very much considered individual entities reaching out to other individuals for help-seeking purposes. It is only in the last 10–15 years that co-regulation (CoRL) and socially shared regulation of learning (SSRL) have begun to take center stage in the search for a more rounded approach to SRL processes in the highly social context of the classroom. The conceptualization of self-, co-, and socially regulated learning has received a great deal of attention in recent years (Greene and Azevedo, 2007; Järvelä and Hadwin, 2013; Panadero and Järvelä, 2015; Hadwin et al., 2018).

Socially shared regulation of learning refers to groups “regulating together as a collective” (Panadero and Järvelä, 2015, p. 192), in which regulations are supported or inhibited by interaction with others. Decisions are therefore made together and “emerge through a series of transactive exchanges amongst group members” (Hadwin et al., 2018, p. 86). It implies “jointly evoked regulative acts and jointly emerging perceptions” (Hadwin et al., 2018, p. 87), and “assumes reciprocity in regulatory actions between the group members” (Järvelä et al., 2019, p. 427). As such, SRL can become SSRL where learners’ regulatory activities are supported or constrained through interactions with others (Hadwin et al., 2018).

In co-regulation of learning, the regulatory actions are instead guided by specific members of the group (Panadero, 2017) and, in collaborative situations, provide “transitional, temporary shifts” in regulation. It can broadly be thought of as the “affordances and constraints stimulating appropriation of strategic planning, enactment, reflection, and adaptation” (Hadwin et al., 2018, p. 87).

From these definitions, we can see that, while co-regulatory actions tend to be unidirectional (i.e., finding support from a more skilled peer or using tools to enhance regulation), socially shared regulation is multidirectional, with many learners working together to determine goals and find solutions as a group.

It should be clearly noted here that there are competing interpretations of the concept of co-regulation in the literature, from the specific definitions above, to a more holistic concept of the learning situation itself, including “the structure of the teaching […] materials, artifacts and tools used for instruction, and—in particular—for assessment” (Allal, 2016, p. 263), essentially encompassing the three levels of regulation separated by Hadwin et al. (2018).

For the purposes of the present article, we will retain the clear distinction between SRL, CoRL, and SSRL in order to identify the specific focus of the regulatory activity in question.

Third, early SRL literature did not overlook the importance of context for the regulation of learning (Pintrich and Zusho, 2002) and, more recently, theoretical evolutions have led researchers (such as Ben-Eliyahu and Bernacki, 2015) to investigate self-regulated processes as a context-dependent phenomenon (i.e., a coupling between a student and a learning context) rather than an individual-dependent one. Thus, in the most influential research, “context” typically refers to regulation of one’s environment (Panadero and Järvelä, 2015). If learners are considered as embedded within contexts including material resources, pedagogical content, and help-giving or -seeking opportunities with peers, qualitative analysis could offer insight into students’ behaviors and thoughts within the learning task. Methodologies such as traditional and standardized self-report questionnaires are starting to be supplemented with think-aloud protocols, observations and interviews geared toward qualitative and grounded theory approaches (Hogenkamp et al., 2021).

Due to the importance of considering regulation of learning as a context-dependent phenomenon (Schunk and Greene, 2018), it is vital to examine as wide a variety of contexts as possible when studying it. Language classes in particular require learners to cooperate on a regular basis and, for utterances to be meaningful, communication is, of course, essential. The Common European Framework of Reference for Languages identifies language learners as social agents “co-constructing meaning in interaction” (Piccardo et al., 2018, p. 23) and “acting in the social world and exerting agency in the learning process” (idem, p. 26). One shortcoming that has been highlighted in the SSRL literature (Panadero and Järvelä, 2015) is that there is a lack of clarity regarding the reasoning behind the tasks chosen in the studies exploring these processes. Thus, the aim of this study was to explore the processes underpinning SRL, CoRL, and SSRL in closer detail in collaborative situations in a language classroom.

Where emphasis has been placed on studying SRL as a social process in learning contexts, it has been suggested that peer assessment, a specific instance of assessment for learning, be considered a critical resource for teachers and learners (Panadero et al., 2016). Many authors (Nicol and Macfarlane, 2006; Bijami et al., 2013) have posited that peer assessment offers ideal affordances for enhancing learners’ SRL, providing a scaffold for metacognitive activity and reflection on one’s own learning. Bijami et al. (2013) looked specifically at written peer assessment in the context of English as a Foreign Language (EFL) and found that it reinforced SRL, with students becoming less passive and less dependent on their teacher. Teng (2020) also highlights the importance of increased metacognition and regulatory skills for enhancing writing performance in EFL learners. However, peer assessment for oral skills in EFL has not been extensively studied, despite the fact that peer assessment often occurs naturally in the language classroom, with learners correcting and questioning one another when communication is unclear, ineffective or imprecise.

Despite all of the encouraging theoretical literature connecting peer assessment and SRL, “Empirical evidence of a connection between peer assessment as an instance of co-regulation and enhanced outcomes is scarce” (Panadero et al., 2016, p. 320). Our first area of focus therefore aims to examine the connection between peer assessment and CoRL, and how they relate to self-assessment and self-perception.

Through peer assessment, and the associated metacognitive monitoring activity, students develop awareness of their own competence, helping them to become better self-regulated learners (Pintrich, 2004; Bijami et al., 2013). Peer assessment can provide opportunities for learners to interact (Strijbos and Sluijsmans, 2010), as “[PA] is an inherently social process in which students, by assessing each other, learn with and from each other as peers” (van Gennip et al., 2010, p. 281). It is said to help learners deploy self-regulatory strategies supported by interactional and metacognitive processes (De Wever et al., 2011), and thus can be considered to be of formative value as it can provide “opportunities to close the gap” (Nicol and Macfarlane, 2006, p. 207) between where the student currently is in his/her learning, and where s/he needs to be, thanks to interactions with peers. Panadero et al. (2016) argue that this opportunity for closing the gap in one’s knowledge also has an impact in terms of affect, as students who know they will receive feedback with the aim of enhancing their learning will be “less likely to feel anxious or stressed during the performance, as they know there will be opportunities to improve” (Panadero et al., 2016, p. 316). Our second research focus thus aims to explore the connections between peer assessment and SSRL, and how they relate to the perception of social support.

Allal also highlighted the importance of the role of tools in regulating learning in “assuring linkages between the levels of regulation” and “amplifying the effects of interactive co-regulation” (Allal, 2016, p. 265). Our third area of focus will be to examine the role of tools (in this case video feedback and evaluation forms) in supporting SRL, CoRL, and SSRL.

In light of the literature described above, this qualitative exploratory study examines how learners engage in regulatory processes in the language classroom using peer assessment mediated by video feedback. The benefits of peer assessment have been clearly demonstrated in many contexts, including language learning, although for the majority, this has meant written production. We intend to extend the investigation of co- and socially shared regulatory strategies to an oral production task, not only to benefit from the social nature of linguistic competences deployed, but also for the processes that will occur due to peer assessment in interaction.

Our research focus will therefore be centered around:

(i) The role of peer assessment in promoting regulatory processes.

(ii) The importance of perceived social support in promoting regulatory processes.

(iii) The role of tools (in this case, video feedback and evaluation forms) in supporting regulatory processes.

Materials and Methods

Participants

Nineteen students (15 men and 4 women) aged 21–25 years participated in this study. Participants were first-year Master’s students on a Sports Management course at a French university. English is a compulsory subject for all university students in France and, as the students are non-specialists, they are not streamed for linguistic ability. Participants’ language levels were therefore highly heterogeneous.

Design

The learning situation for this qualitative exploratory study was designed in collaboration with the group’s English teacher in order to meet both the aims of the research study and the learning objectives of the class in question.

Prior to participation in the study, all students were presented with its aims, and completed a consent form confirming that they were willing participants and informing them that they could withdraw from the study at any time.

The Learning Situation

During a 90-min English class, students worked in groups of 4 (and one group of 3) to prepare a discussion on a theme provided by the teacher. The activity consisted of a role play in which learners used vocabulary and linguistic structures learnt in previous English classes.

In their groups of 4, two pairs (one after the other) were tasked with debating a theme according to a role given to them by their teacher at the start of the class. Each group of 4 worked independently and in parallel.

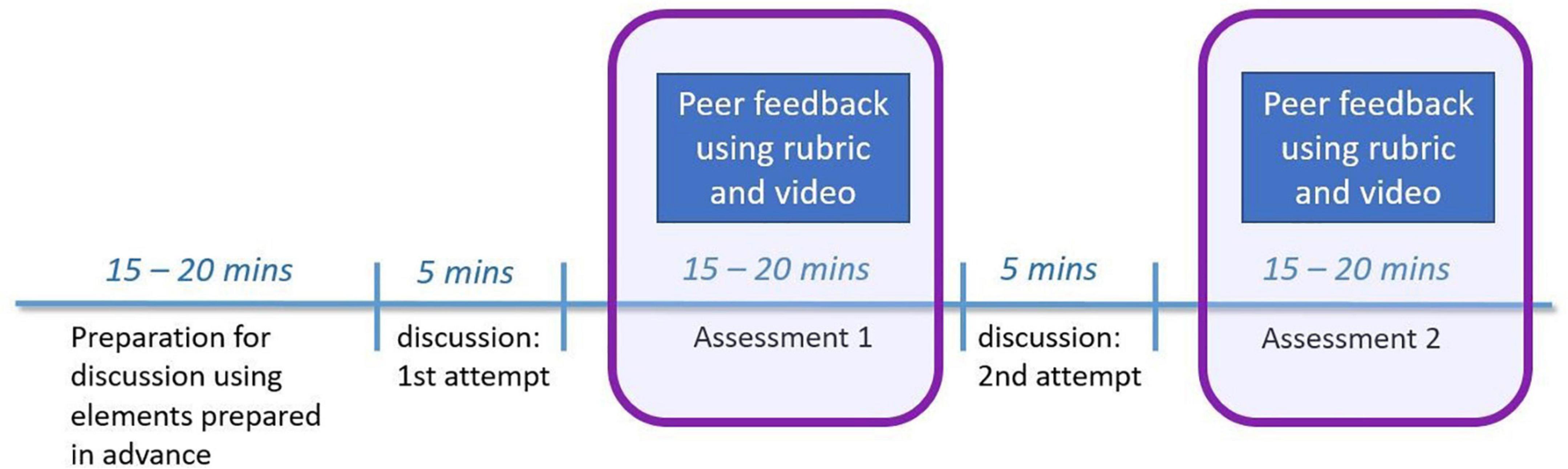

Students began by preparing the discussion based on the work they had prepared at home and during previous English lessons. They then took turns to present their discussions, alternating the roles of assessor and assessee. While the first pair role-played their discussion, the other pair of learners took on the role of assessors. Following the discussion, the assessors gave feedback to their peers. Each pair role-played their discussion twice in order to apply the improvements suggested by their student assessors during feedback and receive feedback on their improved discussions (Figure 1). The feedback sessions (2 for each pair, circled in Figure 1) were filmed by the researchers.

Learners were given three tools to assist them with the peer assessment process:

(1) An evaluation form,

(2) A tablet computer (iPad),

(3) An information sheet with guidelines for giving effective peer assessment feedback.

The three tools were presented to the class by the teacher at the beginning of the session at the same time as the instructions for the task.

The evaluation form (written in English) included criteria based on both linguistic accuracy and the form and content of the discussion.

The tablet computer was presented as a tool for filming the discussions, to be used in conjunction with the evaluation form in order to illustrate any aspect of the presentation identified by the assessors. No specific guidelines were given to the students as to how the tablets should be used and learners were free to use them as they saw fit.

The peer assessment guidelines (also written in English) were presented to the students by the teacher at the beginning of the session. Guidelines were distributed to students and were available throughout the activity. They were divided into two sections: “Providing effective feedback for your peers” and “Using feedback from your peers effectively.” The guidelines were based on recommendations from the peer assessment literature on aspects such as the use of examples, clarity in feedback, or giving feedback as a question to encourage reflection on the part of the assessees (Nicol and Macfarlane, 2006; Topping, 2009; Lu and Law, 2012; Gielen and De Wever, 2015).

Data Collection

The full learning situation was filmed for each group of students, in addition to the peer assessment feedback sessions (4 for each group). The feedback session videos were used to conduct self-confrontation interviews in the week following the learning activity. This interview format was chosen in order to help the participants to evoke the processes used during the learning situation. The self-confrontation interviews in this study were based on a procedure during which participants were confronted with a recording of their activity and were invited to describe and comment on it. These self-confrontation interviews were also filmed. Confronting participants with audio-visual stimuli aids in the recall of the elements used and perceived during the different stages of the learning situation (Theureau, 2006).

In such interviews, the interviewer aims to create a safe space, in which participants create a mental state that helps them to explain their actions thanks to specific cues relating to feelings (“how do you feel at this moment?”), perception (“what are you looking at here?”), focus (“what are you focusing on?”), objectives (“what are you trying to do here?”), and thoughts (“what are you thinking about?”) corresponding to their actions (Kermarrec et al., 2004). This type of interview enables participants to describe the dynamics of the activity as they experienced it.

At least one participant from each group of 3 or 4 working together in the learning situation was invited to participate in this study. Ten of the 19 students who took part in the activity volunteered to be interviewed individually. Both participants and interviewers could pause the video at any time in order to describe elements in more detail. The interviews were transcribed for these 10 students.

Data Analysis

Existing SSRL research methodology can trace students’ regulatory acts through observational, trace, and self-report data, but is generally unable to account for why individuals act in a particular way or how much the acts are socially shared (Järvelä et al., 2019). Furthermore, because much of the literature on SRL and peer assessment has relied on quantitative self-report questionnaires, leading voices in the field (Hadwin et al., 2018) have expressed a need for further investigation into learner intentionality and the transactive nature of regulatory behaviors, particularly regulation in interaction (CoRL and SSRL). An inductive analysis looking at the categories of activity in which this type of regulation occurs may contribute to understanding which social and learning challenges trigger strategic adaptations.

Data was analyzed in three phases during which the transcriptions of interviews were investigated using triangulated inductive content analysis.

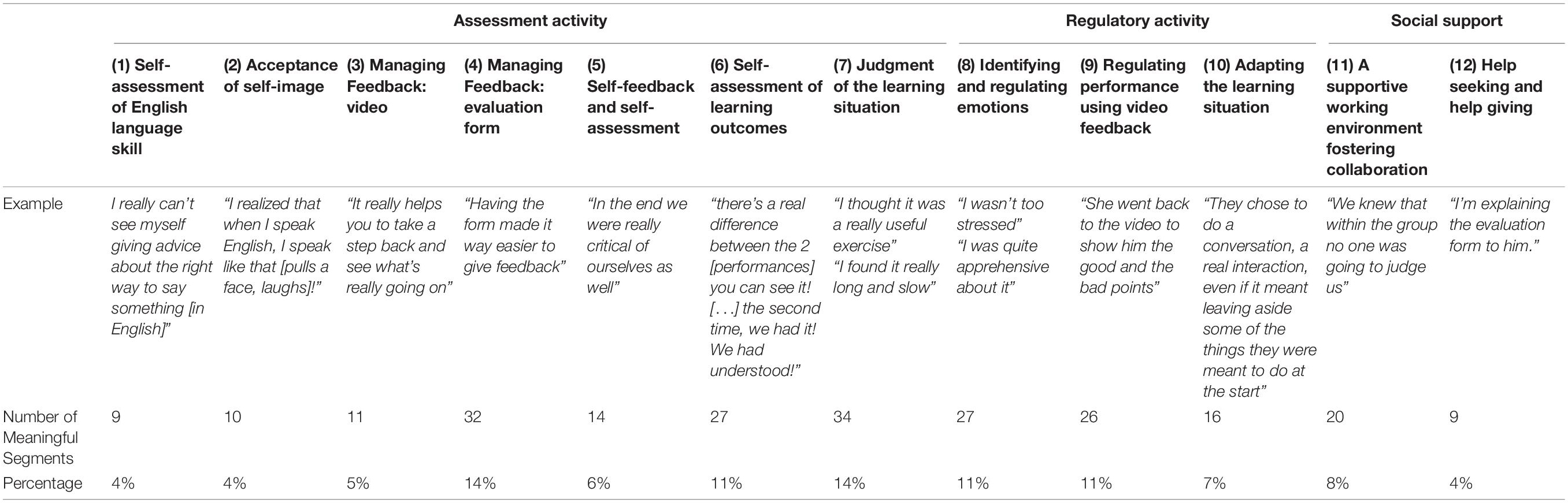

First, the transcribed interview data was read repeatedly and, through initial and focused inductive coding, it was broken down into meaningful segments. Meaningful segments are defined as units of distinct semantic significance. In this study, the sensitizing concepts (Charmaz, 2006; Hogenkamp et al., 2021) related to regulatory processes of learning. Two hundred and thirty-five meaningful segments were identified. These were progressively categorized by one researcher, grouping together segments with similar meanings in order to construct homogeneous categories (Corbin and Strauss, 1990). Twelve categories of regulatory processes emerged from the analysis.

The segments contained within these 12 categories were then checked by a second researcher, working alone, who validated or modified the choice of category to which the segments had been assigned. In a third round of validation, the first and second researchers worked together to reach agreement on the attribution of any remaining categories.

In the second level of the inductive analysis, the 12 categories of processes were grouped together into three more general categories of regulatory activity and collective strategy use on the part of both the assessors and the assessees. These three general categories brought together smaller categories around three distinct themes: Assessment activity, regulatory activity, and social support.

Again, the robustness of the categories was enhanced thanks to another round of validation between the two authors. Compatibility between the two researchers was high, with only four segments (1.7% of the total) identified as problematic in the final review and subsequently changing categories.
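The reassignment rate reported above can be checked with a short calculation. The following is a minimal sketch, assuming only the two figures given in the text (235 segments, 4 reassigned); the function and variable names are illustrative. It gives a simple percentage of retained assignments, not a chance-corrected agreement statistic, which would require the full coding data.

```python
TOTAL_SEGMENTS = 235  # meaningful segments reported in the study
REASSIGNED = 4        # segments that changed category in the final review


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Share of segments that changed category, and share that kept their category.
reassigned_share = percent(REASSIGNED, TOTAL_SEGMENTS)
retained_share = percent(TOTAL_SEGMENTS - REASSIGNED, TOTAL_SEGMENTS)

print(f"reassigned: {reassigned_share}%  retained: {retained_share}%")
```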

The data for this exploratory study was processed using inductive content analysis. This method was chosen as it offers a number of opportunities for the researchers in terms of credibility, originality, resonance and usefulness (Charmaz and Thornberg, 2020). Credibility refers to the researchers’ confidence in their systematic knowledge of the data. The credibility of the process is supported by the fact that the categorization was undertaken with knowledge of the field in question and while considering the existing literature on regulatory processes of learning. Originality can refer to the provision of new insights, or “providing a fresh conceptualization of a recognized problem” (Charmaz and Thornberg, 2020, p. 12). In terms of originality, this study aimed to identify occurrences of meaningful segments without limiting the researchers to categories already present in the literature, while at the same time being open to its related gaps. In addition, it aimed to offer an inclusive approach to the study of regulation of learning as suggested by the holistic, situated and constructivist theoretical direction the literature is currently taking. Resonance refers to the fact that researchers have tried to account for concepts that not only represent the participants of the present study, but may also provide insight that could be transferred to other contexts. Resonance here is reflected in the fact that although the self-confrontation interviews and inductive approach shed light on the participants’ experiences of the specific learning situation, the content analysis could also provide insight into processes at play in other similar collaborative learning situations. Finally, in terms of usefulness, the study contributes to new lines of research by bringing together the fields of SRL, CoRL, and SSRL in the context of English as a Foreign Language with a qualitative approach, with a view to contributing to future practical applications for peer assessment procedures.

Results

The three more general categories identified through the inductive content analysis related to: assessment activity, regulatory activity, and social support. The twelve more specific categories, underlined in the following sections, can be found in Table 1 along with an example of a typical meaningful segment.

Assessment Activity

Comments relating to assessment activity were further divided into 7 more specific categories and accounted for 58% of the 235 meaningful segments.

The majority of the students interviewed (n = 8) commented on the assessment of their own skills in English, and the importance of this self-assessment in the feedback they were able (or unable) to give in their role as assessor:

“You don’t feel very legitimate when you don’t have the vocabulary yourself. You feel like what you’re saying isn’t relevant”

Similar meaningful segments (n = 9; 4%) were classified in the category “Self-assessment of English language skill.”

As students came to regulate their own activity, the video led them to notice and comment not only on their communication skills, but also on their self-image more generally (n = 7). Some students experienced great difficulty watching themselves on film:

“I realize that when I speak English, I speak like that [pulls a face, laughs]!”

These comments tended to occur in the early stages of the interviews, and were reflected in comments students made prior to giving feedback to their peers (made in the initial videos of feedback activity). It would appear that, in such a task, learners feel a general need to comment on their own image, and to accept it in order to be able to move on with the exercise and comment on the performance of their peers.

Similar meaningful segments (n = 10; 4%) were classified in the category “Acceptance of self-image.”

Seven out of ten students identified the tablets or the videos as offering support in terms of managing their feedback activity and the role of assessor:

“they couldn’t agree and we thought they’d said more. F hadn’t written the same thing down. Thanks to the video we could go back and count [the number of expressions they’d used] […] it was useful to really know for sure.”

Similar meaningful segments (n = 11; 5%) were classified in the category “Managing feedback: video.”

However, all of the participants commented on the vital role of the evaluation form in helping them take on this role:

“It was much easier to give feedback with the sheet […] and have all the information to be able to tell them what they need to work on.”

Similar meaningful segments (n = 32; 14%) were classified in the category “Managing feedback: evaluation form.”

Nine out of ten students interviewed mentioned giving self-feedback or self-assessment during the task, despite only being asked to comment on peer activity. Seven out of ten students reported focusing their attention on themselves during video feedback, and comparing their own performance with that of their peers:

“I think it was more self-assessment really [rather than peer assessment]”

Similar meaningful segments (n = 14; 6%) were classified in the category “Self-feedback and self-assessment.”

In addition, learners were able to assess their own progress, recognizing that there had been a real change in their performance from one iteration to the next:

“It’s interesting to see that there’s a real difference between the two [performances] you can see it! […] the second time, we had it! We had understood!”

Similar meaningful segments (n = 27; 11%) were classified in the category “Self-assessment of learning outcomes.”

Finally, all but one (n = 9) of the participants assessed the learning situation itself. In particular they commented on the opportunity to act on the feedback they had received to rectify their errors and improve on their performance:

“I thought it was pretty good to go twice like that. That way, we learn straight away, and we can apply it while it’s still fresh. [often in English class] we don’t have particularly constructive feedback. And to have a second chance to take the feedback into account and try again on the same subject… it’s pretty good.”

Not all students were quite so enthusiastic about the learning situation, and some of these segments relating to assessment of the learning situation were more negative:

“When I was filming, I couldn’t hear very well. So I was much less focused and giving feedback was hard.”

Similar meaningful segments (n = 34; 14%) were classified in the category “Judgment of the learning situation.”

Regulatory Activity

Comments relating to regulatory activity were further divided into 3 more specific categories and accounted for 29% of the 235 meaningful segments.

The majority of the learners (n = 8) commented on the emotions they experienced during the unusual learning situation. Some were “quite apprehensive,” and others “not too stressed,” but by identifying and regulating their emotions, some were able to improve their performance.

“I knew that I would see myself [in the video] afterward, so I tried to control my gestures and manage my stress”

Both positive and negative meaningful segments (n = 27; 11%) were classified in the category “Identifying and Regulating emotions.”

The learners frequently commented on the added value of the video for regulating their overall performance, referring to specific added value:

“If we hadn’t had the tablet we would maybe have given more feedback on the English […] and here we also gave feedback on behavior. […] So it was more useful to have the video […] Here we also worked on our communication skills”

and to its usefulness for identifying specific elements requiring improvement:

“Thanks to the video we could […] see if we hadn’t managed to make ourselves understood on certain aspects, so we could try to improve on that the next time.”

Similar meaningful segments (n = 26; 11%) were classified in the category “Regulating performance using video feedback.”

Seven out of 10 participants commented on the way they had worked together to adapt the learning situation:

“We were all working together to work out a scenario”

often choosing to re-define the objectives of the task in order to optimize performance on certain aspects of the task that they, as a group, perceived as being more valuable:

“We didn’t think that 5 min was enough to really get into the discussion. We spoke for nearly 10 the second time. It was much more interesting that way. We were so frustrated the first time round. So, the second time, we chose to speak for longer!”

Similar meaningful segments (n = 16; 7%) were classified in the category “Adapting the learning situation.”

Social Support

Comments relating to social support were further divided into 2 more specific categories and accounted for just over 12% of the 235 meaningful segments.

Preserving a supportive atmosphere throughout the session was key for the participants and the majority of participants (n = 7) evoked its importance for effective collaboration:

“in our class it worked pretty well because… well we’re a pretty tightly knit group. We’re almost like a big group of friends.”

In fact, some of the learners found that the challenging learning situation itself actually helped them develop team spirit, allowing them to gel even further as a group:

“[It’s good because] it helped us create a sort of group solidarity.”

Assessors were also attentive to their role in creating an encouraging working environment:

“he said what needed to be improved, and then what was good, he was trying to talk us up a bit.”

In addition to individual regulatory strategies, participants exhibited and commented on strategies that offered insights into the way they worked together to determine their goals and performance strategies, demonstrating evidence of socially shared regulation of learning:

“we told them what they did wrong, and then the other person [assessor] would soften the blow a little, it’s … well it’s just helping each other out really.”

Meaningful segments relating to this activity (n = 20; 8%) were classified in the category “A supportive working environment fostering collaboration.”

Finally, the participants also engaged in typical co-regulatory help-giving and help-seeking strategies:

“Here I was asking how to say ‘advertising’ in English because I couldn’t even remember the word!”

Meaningful segments relating to this activity (n = 9; 4%) were classified in the category “Help seeking and help giving.”

Discussion

The present exploratory study aimed to identify regulatory processes used by participants in a learning situation designed to elicit cooperation through an oral peer assessment activity using digital tablets and an evaluation form to provide video feedback in a higher education language class setting. It was designed from a social regulation perspective in order to identify and categorize the various aspects of regulatory activity used by learners in the language classroom.

The study aimed to respond to calls from leading voices in the field of SRL (Panadero et al., 2016, p. 322; Hadwin et al., 2018) for “an increased research effort in exploring the effects of assessment for learning practices – and of […] peer assessment in particular.” Previous literature had also identified a number of recommendations for scaffolding feedback (Nicol and Macfarlane, 2006, p. 207), and formative peer assessment feedback in particular (Panadero et al., 2016, p. 320). The results of the present study led us to identify metacognitive and affective conditions along with a number of processes that were implemented by the learners when applying many of these recommendations (presented in the peer assessment guidelines document).

Assessment, Regulatory Processes and Social Support Foster Regulation of Learning in the Language Classroom

Findings from this study highlight the importance of three types of regulatory processes in a collaborative language learning situation using peer assessment supported by an evaluation form and video feedback.

In this section, we will address the findings in the results according to our three initial areas of focus, in light of the existing literature.

(iv) The role of peer assessment in promoting regulatory processes.

(v) The importance of perceived social support in promoting regulatory processes.

(vi) The role of tools (video feedback and evaluation forms) in supporting regulatory processes.

The Role of Peer Assessment in Promoting Regulatory Processes

Assessment was the activity represented by the largest number of meaningful segments in the learning context.

It would appear that, when asked to engage in peer assessment, the participants first needed to assess their own competence in order to take on the role of assessor. The literature highlights the importance of expertise and training in peer assessment (Gielen and De Wever, 2015). In our study, some participants suggested that a lack of legitimacy and experience led to self-regulatory difficulties despite the guidelines provided in the information sheet. However, one learner evoked prior experience in giving formative feedback, and how it affected his approach to the task:

“we’re used to doing it […] we’re more comfortable doing it than the two [other students].”

Such comments suggest that, given the opportunity to engage in collaborative peer assessment activities during their classes on a regular basis, students would indeed gain confidence and skill both in assessing and providing feedback to their peers (CoRL), and activating regulatory processes both individually (SRL) and as a group (SSRL). Such evidence of the importance of experience underlines the importance of the constructivist dimension to learning. It should also be noted that this study clearly reveals the self-assessment activity activated in the assessors, meaning that peer assessment is as useful in terms of SRL for the assessors as it is for the assessees. This finding has already been reported in the literature for online learning (Lu and Law, 2012), but is often overlooked in studies reporting correlations between peer assessment and regulation of learning.

By giving learners the opportunity to immediately engage with the feedback, we suggest that they are really able to see the benefits of acting on feedback and closing the gap between their performance and where they need to be (Nicol and Macfarlane, 2006).

“I felt great then [the second time], I think it really helps you to make progress quickly”

As a result, they may go on to engage with subsequent formative classroom feedback in a timely manner in order to help them “apply [it] to their future learning attempts” (Andrade and Brookhart, 2020, p. 370).

Similarly, the need to contextualize learners’ strategic behavior has been underlined in the literature. Hadwin et al. (2018, p. 100) highlight the risk of “not adequately contextualiz[ing] situated knowledge, beliefs and intent upon which students operate in regulating their learning.” The present exploratory study goes some way to examining learners’ regulation by specifically situating it within the iterative, collaborative task in question. It has been argued that peer assessment should be “practice-oriented” (Brown, 2004, p. 82), and the language classroom appears to be an ideal context for studying such collaborative tasks.

The Importance of Perceived Social Support in Promoting Regulatory Processes

Our results show that students naturally encouraged and praised each other. According to the literature (Brown, 2004), positive feedback on performance is just as important for group cooperation as tips for improvement. This supportive atmosphere seems to be vital for optimal collaborative activity. Hattie and Timperley (2007) also highlight the fact that feedback is more effective if the threats to self-esteem are perceived to be low.

Importantly, the learners repeatedly focused on the emotional requirements of the task, highlighting the significance of their strong group identity as a class. They repeatedly referred to their learning environment as a safe space, free of judgment, which allowed them to provide constructive feedback in a way that would be positively received by their peers (CoRL). We suggest that this strong team spirit could explain the variety of effective collaborations (SSRL) that went beyond the simple roles of assessor and assessee. Thus, CoRL and SSRL were deployed despite marked heterogeneity in the learners’ levels of (linguistic) expertise, which, in the literature, has been found to be potentially problematic for successful regulation of learning (Allal, 2016).

We also suggest that the social support and security offered by a group of this kind, which was particularly closely knit, are vital for an oral PA activity to function effectively.

“We’ve known each other for 3 years and we knew that we could express ourselves freely without the fear of criticism or any kind of bad feeling.”

The literature has identified the fact that all assessment, but particularly peer assessment, is an emotionally charged activity (Cheng and Tsai, 2012) which “can trigger powerful feelings” (Panadero, 2016, p. 26). Other studies have recognized that peer assessment requires, but can also enhance, feelings of psychological safety (van Gennip et al., 2010). As we have already noted, some participants specifically commented on the fact that the learning situation helped them to gel as a group.

All of these factors seem to suggest that, in the case of a particularly supportive learning environment, an activity based on oral skills, and a learning situation designed to facilitate interactions, learners may actively seek opportunities to interact with their peers in order to self-regulate in addition to regulating the behaviors of the group. In the words of one of the participants: “I prefer to have a conversation in order to learn.”

The Role of Tools (Video Feedback and Evaluation Forms) in Supporting Regulatory Processes

Our study appears to empirically support regulation as a socially dependent activity (Hadwin et al., 2018), with the use of both help-seeking and help-giving strategies, in addition to strategic discussions about goals and adaptation of content.

“They chose to do a conversation, a real interaction, even if it meant leaving aside some of the things they were meant to do at the start.”

These collective regulatory strategies characterize inter-individual collaborative activity, which is a key feature of SSRL in structuring the activity of the group (Hadwin et al., 2018).

This social activity also activates self-feedback and self-assessment and, in turn, complements intra-individual metacognitive activity (SRL). We would argue that, in addition to the role played by tools in assuring “linkages between the levels of regulation” (Allal, 2016, p. 265), these tools also “amplify the effects of interactive co-regulation” (idem) due to the communication and collaborations required in harmonizing the interpretation and use of the evaluation form and the tablet, and the organizational strategy for providing feedback within the group.

The self-feedback activity in which the participants engaged did indeed lead to adaptive self-regulatory strategies. This seems to confirm the deployment of the same metacognitive strategies orally as previously observed in written peer assessment tasks in the context of EFL (Teng, 2020). The added value of the tablet, and in particular its role in increasing self-assessment and monitoring strategies, was identified by many of the students in the task.

“It means you can see your gestures and hear your bad habits and fillers, all those things we do when we’re talking to someone. It’s a great help in correcting them. I think it helps.”

Indeed, the tablets appear to act as an effective tool for the “recording of traces of assessment that can be used for deferred regulation” (Allal, 2016, p. 265). Likewise, the use of the evaluation form embedded in the task enabled learners to benefit from help and information from the assessor (CoRL):

“When he had the evaluation form in front of him, he was really able to say ‘actually no, you didn’t put forward many arguments.’”

In support of Allal’s findings relating to deferred regulation, learners recognized the added value of the peer and video feedback from a metacognitive perspective:

“They didn’t agree. We thought they’d said more, and F hadn’t written down the same comments. So, the great thing about the video is that we can really go back and check […]. We hadn’t done it! […] It means you can really be sure about it and go back to it to give appropriate feedback.”

In addition, they also commented on their dependence on the evaluation form in developing their identity as assessors, highlighting the importance of multiple sources in managing their regulation in the learning environment (CoRL). This finding demonstrates that learners are interacting with the various affordances (both material and social) within their environment to coordinate their collaborative activity and assessment skills. The learners’ dependence on the tools as they learn to engage in peer assessment is to be expected, as it is a skill that requires practice, and drawing on the elements found in their environment can help them in structuring the feedback that they both give and receive (Gielen and De Wever, 2015). Such tools, along with training, can also help learners manage their feelings of illegitimacy (CoRL) as they develop in their roles as assessors (Panadero, 2016).

Contributions to Theoretical Development of Self-Regulated Learning

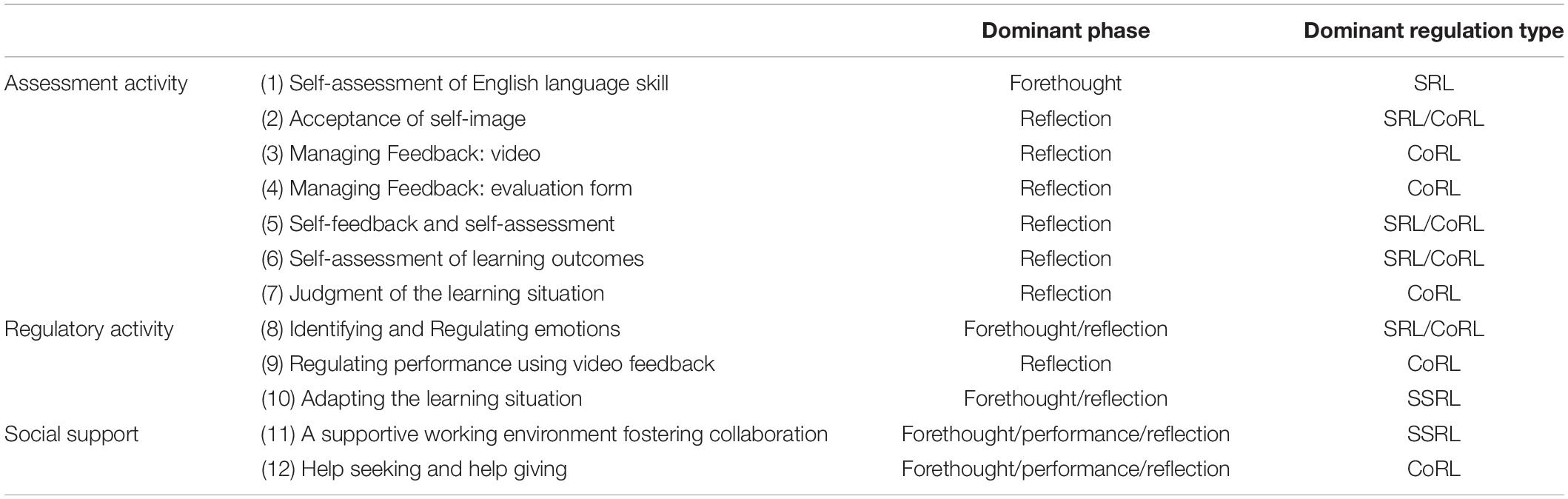

In addition to the discussion around the initial research foci, the results led us to further reflections in relation to the three types of regulation (SRL, CoRL, and SSRL, Hadwin et al., 2018) and their connection to the three cyclic phases (forethought, performance and reflection, Zimmerman and Moylan, 2009). This articulation of the two leading theoretical trends in regulation theory highlights the resonance of the findings. The dominant phases and regulation types to which the participants refer in the meaningful segments contained within each category are outlined in Table 2.

Phases of Regulation

Comments relating to forethought are present in categories accounting for around a third of segments. This phase only occurs alone in the category relating to self-assessment of English level, which many participants appeared to consider a prerequisite for the assessment activity. It relates particularly to self-assessment activity and comments about emotions. This phase seems to be relevant or necessary for establishing appropriate conditions for peer assessment and regulatory activity.

Comments relating to the performance phase are present in categories accounting for a minority of segments, and these categories always contain references to multiple phases of the regulatory cycle. This could be explained by the cognitive load of the multiple activities within the learning situation: the students had to collaborate, speak English, assess their peers, and use the evaluation form. It is therefore possible that their attention was focused on the elements relating to preparing and debriefing the activity more than performance itself.

As the task focused specifically on peer feedback with a view to adapting performance for a second iteration of the same task, it is perhaps unsurprising that the learners’ comments primarily focused on the reflection phase of the regulatory cycle. The reflection phase was present in categories accounting for the vast majority of the total segments. It regularly accounts for all of the segments in a given category, but is also found in categories containing references to other phases of regulation.

The predominance of the reflective phase offers support for the literature, suggesting that learners were indeed led to evaluate and adjust their own performance through the evaluation of their peers (peer assessment). In this way, the activity benefits the assessors themselves more than the assessees (Lu and Law, 2012), helping them to close the gap between performance and task achievement (Nicol and Macfarlane, 2006) by reflecting on the advice given and received during the peer assessment task (Gielen et al., 2010).

Types of Regulation

The dominance of different types of regulation was also examined in order to discuss the links between our results and one of the leading current theoretical trends in the field of regulation. According to Hadwin et al. (2018), there are three types of regulation: SRL, CoRL, and SSRL. These types of regulation were examined as follows:

(1) As SRL is a set of individual processes used by a learner relating to his/her own activity and resources, categories dominated by comments including the word “I” were considered as being predominantly SRL categories.

(2) As CoRL is a set of unidirectional processes primarily between two individuals with different levels of skill or knowledge, categories dominated by comments including the words “help,” “teacher,” “asked,” “advice,” or the name of another student were considered as being predominantly CoRL categories.

(3) As SSRL is a set of collective processes between equals, in which learners interact in order to establish objectives and share ideas for collaboration, categories dominated by comments including the words “we,” “we decided,” “we chose” were considered as being predominantly SSRL categories.
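The three rules above can be read as a simple keyword heuristic. The Python sketch below is an illustration only and is not the procedure used in the study, where comments were coded by the researchers. It assumes exact whole-word matching, treats collective “we” markers as indicating SSRL, and takes the dominant type of a category to be the type matched by the largest number of its comments. The function names `regulation_types` and `dominant_type` and the `peer_names` parameter are hypothetical.

```python
import re
from collections import Counter

# Marker words quoted in rules (1)-(3); exact whole-word matching is an assumption.
SRL_MARKERS = {"i"}
CORL_MARKERS = {"help", "teacher", "asked", "advice"}
SSRL_MARKERS = {"we"}  # also covers "we decided" and "we chose"


def regulation_types(comment, peer_names=()):
    """Return the set of regulation types whose markers appear in one comment."""
    # Lowercase and split on anything that is not an ASCII letter.
    tokens = set(re.findall(r"[a-z]+", comment.lower()))
    # Peer names are matched as single lowercase ASCII tokens (a simplification).
    names = {name.lower() for name in peer_names}
    found = set()
    if tokens & SRL_MARKERS:
        found.add("SRL")
    if tokens & (CORL_MARKERS | names):
        found.add("CoRL")
    if tokens & SSRL_MARKERS:
        found.add("SSRL")
    return found


def dominant_type(comments, peer_names=()):
    """Return the type matched by the most comments in a category, or None."""
    counts = Counter()
    for comment in comments:
        counts.update(regulation_types(comment, peer_names))
    if not counts:
        return None
    return counts.most_common(1)[0][0]
```

A rule of this kind is deliberately crude: a comment containing “asking” rather than “asked” matches no CoRL marker, and a single comment can match more than one type, so human judgment remains necessary when deciding which type dominates a category.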

Perhaps the most striking observation in this classification was that SSRL only occurred alone, and never in a category along with other types of regulatory activity (see Table 2). This type of regulation was dominant for the categories relating to the creation of a supportive working environment, and the adaptation of the learning environment. It would appear that the environment in which learners can experience psychological safety was constructed through SSRL activity. We argue that such feelings of psychological safety are explicitly achieved through co-construction, thanks to exchanges between learners in collaborative learning contexts (Hadwin et al., 2018).

Comments relating to CoRL were very common. This type of regulation frequently occurs with SRL, but also occurs alone (four categories). It should be noted that in the learners’ comments relating to the development of assessment activity, students evoked co-regulatory strategies more than any other type of regulation (CoRL present in 6 out of the 7 categories of assessment activity). We suggest that this reflects the learners’ engagement in a novel learning situation. Through CoRL, learners draw on their new role as assessor, or their peers as social resources (establishing roles as help-givers, or drawing on specific expertise in order to enhance their feelings of legitimacy in the role of assessor) to support their own learning.

Finally, comments relating to SRL are present in categories accounting for around a third of segments. SRL frequently occurs in categories with CoRL, and only one category (self-assessment of English level) contains this type of regulatory process alone.

The participants’ comments on the strategies they used in the learning activity also go some way to providing “data about intent, beliefs, and the transactivity of regulatory interactions,” identified by Hadwin et al. (2018, p. 99) as lacking in the SSRL literature. Across the three phases of regulation, learners in the present study expressed their individual intentions (SRL),

“I was trying to control my mannerisms and manage my stress”;

their co-regulated intentions (CoRL),

“he tried to talk us up a bit”;

and sometimes their socially regulated intentions (SSRL):

“We were all working together to work out a scenario.”

The learners’ beliefs were similarly reflected across the three forms of regulation: individual beliefs (SRL),

“I think that the second time round I was less hesitant and it was better organized”;

their co-regulated beliefs (CoRL),

“I think that if the feedback is positive, if someone says to you ‘you could improve this’ or ‘try doing that’, it encourages you to do it.”

and their socially regulated beliefs (SSRL):

“We knew that within the group no one was going to judge us, we all know how great the atmosphere is in our group”

Thus, our empirical findings led us to better distinguish the differences between SRL, CoRL and SSRL in a real classroom learning setting.

Limitations

Our study was undertaken in the specific setting of a French university. Due to the small number of participants, the results may not be generalizable across other populations.

The setup of the self-confrontation interviews could also be perceived as a potential shortcoming of the study. Despite carefully targeted questioning, participants may struggle to differentiate between the knowledge acquired since they took part in the activity, and the strategies and knowledge mobilized during the activity itself (Theureau, 2010). It is also possible that these characteristics could impact the learning experience overall; however, there is a gap in the literature regarding this issue.

A common charge against qualitative research is that its findings are not generalizable. Advocates of qualitative research argue that case study gives access to the inner lives of people, to the emergent properties of social interaction, and/or to the underlying mechanisms which generate human performance (Gomm et al., 2000). These types of close-contact case studies, while not necessarily generalizable, nonetheless provide insight into underlying components of interactions, including intentionality (Menn et al., 2019), which are unobservable and may be overlooked by self-report questionnaires. As mentioned previously, the inductive approach used in our study sheds light on the participants’ experiences of the learning situation, and therefore could also provide insight that could be transferred to other similar populations in similar situations (Gomm et al., 2000).

Future Directions

In addition to the identified potential of training for enhancing peer assessment, it might be pertinent in future to establish assessment criteria with the help of the learners to help them reflect on the requirements of the task (Fraile et al., 2017). This was indeed an aspect highlighted by the participants in the present study, who had suggestions for improving the assessment criteria:

“there was nothing [on the evaluation form] about how we were arguing, speaking with emotion for example.”

Drawing up assessment criteria with the learners has also been found to increase the reliability of peer assessment activity (Topping, 2009).

The issue of the language used to offer peer feedback for the task was also questioned and commented on by some of the participants. All learners gave feedback in French (their mother tongue), and all thought it best to do so, for different reasons:

“speaking French means stepping out of our role. Really coming back to being an assessor, and not continuing in character”;

“If I’d had to do it in English, I wouldn’t have been able to be so precise.”

The literature suggests that both effective SRL and peer assessment are dependent on the learner’s level of expertise (Panadero et al., 2019). The participants in this study had highly heterogeneous levels of English and therefore the choice was made to let them give feedback in their native language (French). One avenue for future research could be to investigate how the different types of regulation vary between learners with a more homogeneous level in EFL, with one group giving feedback in English, and a control group giving feedback in their native language, working on the same task.

In this study, participants deployed diverse regulatory strategies in order to benefit from the affordances offered by the classroom assessment situation. We therefore suggest that peer assessment in the language classroom enhanced by the use of video feedback is a promising avenue for fostering both self-feedback and group feedback activity and thus stimulating self-regulatory, co-regulatory and socially shared regulatory learning processes. However, the assessment situation must be organized in such a way as to guarantee psychological safety. This can be done in a number of ways including insisting on the formative nature of the activity, providing opportunities for learners to reflect and then act upon the advice that they receive, and by providing appropriate tools on which assessors can rely, in order to boost their confidence and support feelings of legitimacy.

Conclusion

In conclusion, our study reinforces a number of key findings supporting the relevance of the shift in the literature toward a more socio-constructivist approach. First, given the opportunity to engage in collaborative peer assessment activities during their classes on a regular basis, students should gain confidence and skill both in assessing their peers (CoRL), and activating regulatory processes both individually (SRL) and as a group (SSRL). When conducted iteratively, peer assessment also gives learners the opportunity to see the difference in their performance and understand where improvements can be made. Second, we find evidence to support the existing literature that tools can provide opportunities to enhance SRL, CoRL, and SSRL. Third, in addition to the existing literature which insists on the importance of psychological safety for effective peer assessment, it would appear that the construction of a learning environment in which learners can benefit from a supportive atmosphere can be fostered in part through peer assessment and through SSRL.

In addition to the research foci targeted by the study, we also find evidence that peer assessment provides essential support for learning in the reflection phase of regulation. Finally, thanks to the observation that SSRL is particularly relevant in processes around social support, we argue that it is a key element of constructing an effective collaborative working environment, and thus assert that regulation at all phases of the regulatory cycle is a socially dependent activity.

Specific findings from the classroom setting also shed light on existing gaps in the literature. We argue that adaptive regulatory strategies are also present in oral (as well as written) EFL tasks. As these tasks, and our findings, are highly contextualized, we hope that they will help to clarify some of the existing literature in terms of the role of co-regulatory and socially regulated behaviors in collaborative tasks. These results indicate the potential for learning situations based on video feedback used in conjunction with peer assessment and collaborative learning in order to develop regulatory behaviors in language learners. This exploratory study shows the scope that classroom-based interventions examining formative peer assessment and co-regulation can have, particularly regarding the co-construction of regulatory behaviors in collaborative learning tasks. Although the emergence of variations in types of regulatory processes according to phases of regulation warrants further investigation, our findings provide direction for recommendations for educators to foster co-regulatory processes in the classroom through the use of a structured learning situation comprising feedback rubrics, video feedback, and clearly defined peer assessment roles.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

Both authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

Funding

This research was undertaken in partnership with the AVISCo (Artéfacts Vidéo Intégrés aux Situations d’Apprentissage Collaboratif) project, funded by the CREAD (Center for Research on Education, Learning and Didactics) and the M@rsouin SIG of the Regional Council of Brittany (France).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Allal, L. (2016). “The co-regulation of student learning in an assessment for learning culture,” in Assessment for Learning: Meeting the Challenge of Implementation, Vol. 4, eds D. Laveault and L. Allal (Cham: Springer), doi: 10.1007/978-3-319-39211-0_15

Andrade, H., and Brookhart, S. (2019). Classroom assessment as the co-regulation of learning. Assess. Educ. Princ. Policy Pract. 27, 1–23.

Andrade, H. L., and Brookhart, S. M. (2020). Classroom assessment as the co-regulation of learning. Assess. Educ. Princ. Policy Pract. 27, 350–372. doi: 10.1080/0969594X.2019.1571992

Ben-Eliyahu, A., and Bernacki, M. L. (2015). Addressing complexities in self-regulated learning: a focus on contextual factors, contingencies, and dynamic relations. Metacogn. Learn. 10, 1–13. doi: 10.1007/s11409-015-9134-6

Bijami, M., Kashef, S. H., and Nejad, M. S. (2013). Peer feedback in learning english writing: advantages and disadvantages. J. Stud. Educ. 3, 91–97. doi: 10.5296/jse.v3i4.4314

Boud, D. (2014). “Shifting views of assessment: from ‘secret teachers’ business to sustaining learning’,” in Advances and Innovations in University Assessment and Feedback, eds N. Entwhistle, J. MacArthur, C. Kreber, and C. Anderson (Edinburgh: Edinburgh University Press), 13–31. doi: 10.3366/edinburgh/9780748694549.003.0002

Boud, D., and Falchikov, N. (2007). Rethinking Assessment in Higher Education: Learning for the Longer Term. Milton Park: Routledge.

Charmaz, K. (2006). Constructing Grounded Theory: A Practical Guide Through Qualitative Analysis. Thousand Oaks: Sage.

Charmaz, K., and Thornberg, R. (2020). The pursuit of quality in grounded theory. Qual. Res. Psychol. 18, 305–327. doi: 10.1080/14780887.2020.1780357

Cheng, K.-H., and Tsai, C.-C. (2012). Students’ interpersonal perspectives on, conceptions of and approaches to learning in online peer assessment. Australas. J. Educ. Technol. 28, 599–618. doi: 10.14742/ajet.830

Corbin, J., and Strauss, A. (1990). Grounded theory research: procedures, canons and evaluative criteria. Qual. Sociol. 13, 3–21. doi: 10.1007/bf00988593

De Wever, B., Van Keer, H., Schellens, T., and Valcke, M. (2011). Assessing collaboration in a wiki: the reliability of university students’ peer assessment. Internet High. Educ. 14, 201–206. doi: 10.1016/j.iheduc.2011.07.003

Fraile, J., Panadero, E., and Pardo, R. (2017). Co-creating rubrics: the effects on self-regulated learning, self-efficacy and performance of establishing assessment criteria with students. Stud. Educ. Eval. 53, 69–76. doi: 10.1016/j.stueduc.2017.03.003

Gielen, M., and De Wever, B. (2015). Structuring the peer assessment process: a multilevel approach for the impact on product improvement and peer feedback quality: structuring the peer assessment process. J. Comput. Assist. Learn. 31, 435–449. doi: 10.1111/jcal.12096

Gielen, S., Peeters, E., Dochy, F., Onghena, P., and Struyven, K. (2010). Improving the effectiveness of peer feedback for learning. Learn. Instr. 20, 304–315. doi: 10.1016/j.learninstruc.2009.08.007

Gomm, R., Hammersley, M., and Foster, P. (2000). Case Study Method: Key Issues, Key Texts. London. Thousand Oaks: Sage.

Greene, J., and Azevedo, R. (2007). A theoretical review of Winne and Hadwin’s model of self-regulated learning: new perspectives and directions. Rev. Educ. Res. 77, 334–372. doi: 10.3102/003465430303953

Hadwin, A., Järvelä, S., Miller, M., Schunk, D. H., and Greene, J. A. (2018). “Self-regulation, co-regulation, and shared regulation in collaborative learning environments,” in Handbook of Self-Regulated Learning and Performance, eds D. H. Schunk and B. Zimmerman (Milton Park: Routledge), 83–106. doi: 10.4324/9781315697048-6

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Hogenkamp, L., van Dijk, A. M., and Eysink, T. H. S. (2021). Analyzing socially shared regulation of learning during cooperative learning and the role of equal contribution: a grounded theory approach. Educ. Sci. 11:512. doi: 10.3390/educsci11090512

Järvelä, S., and Hadwin, A. F. (2013). New frontiers: regulating learning in CSCL. Educ. Psychol. 48, 25–39. doi: 10.1080/00461520.2012.748006

Järvelä, S., Järvenoja, H., and Malmberg, J. (2019). Capturing the dynamic and cyclical nature of regulation: methodological Progress in understanding socially shared regulation in learning. Int. J. Comput.-Support. Collab. Learn. 14, 425–441. doi: 10.1007/s11412-019-09313-2

Kermarrec, G., Todorovitch, J., and Fleming, D. (2004). An investigation of the self regulation components students employ in the physical education setting. J. Teach. Phys. Educ. 23, 123–142. doi: 10.1123/jtpe.23.2.123

Lu, J., and Law, N. (2012). Online peer assessment: effects of cognitive and affective feedback. Instr. Sci. 40, 257–275. doi: 10.1007/s11251-011-9177-2

Menn, M. L., Bossard, C., Travassos, B., Duarte, R., and Kermarrec, G. (2019). Handball goalkeeper intuitive decision-making: a naturalistic case study. J. Hum. Kinet. 70, 297–308. doi: 10.2478/hukin-2019-0042

Nicol, D. J., and Macfarlane, D. (2006). Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud. High. Educ. 31, 199–218. doi: 10.1080/03075070600572090

Panadero, E. (2016). “Is it safe? social, interpersonal, and human effects of peer assessment: a review and future directions,” in Handbook of Social and Human Conditions in Assessment, eds G. T. L. Brown and L. R. Harris (Milton Park: Routledge), 247–266. doi: 10.1186/s12913-016-1423-5

Panadero, E. (2017). A review of self-regulated learning: six models and four directions for research. Front. Psychol. 8:422. doi: 10.3389/fpsyg.2017.00422

Panadero, E., Andrade, H., and Brookhart, S. (2018). Fusing self-regulated learning and formative assessment: a roadmap of where we are, how we got here, and where we are going. Aust. Educ. Res. 45, 13–31. doi: 10.1007/s13384-018-0258-y

Panadero, E., and Järvelä, S. (2015). Socially shared regulation of learning: a review. Eur. Psychol. 20, 190–203. doi: 10.1027/1016-9040/a000226

Panadero, E., Jonsson, A., and Strijbos, J.-W. (2016). “Scaffolding self-regulated learning through self-assessment and peer assessment: guidelines for classroom implementation,” in Assessment for Learning: Meeting the Challenge of Implementation, Vol. 4, eds D. Laveault and L. Allal (New York, NY: Springer International Publishing), 311–326. doi: 10.1007/978-3-319-39211-0_18

Panadero, E., Lipnevich, A., and Broadbent, J. (2019). “Turning self-assessment into self-feedback,” in The Impact of Feedback in Higher Education, eds M. Henderson, R. Ajjawi, D. Boud, and E. Molloy (New York, NY: Springer International Publishing), 147–163. doi: 10.1007/978-3-030-25112-3_9

Piccardo, E., Goodier, T., and North, B. (2018). Common European Framework of Reference for Languages: Learning, Teaching, Assessment. Companion Volume with New Descriptors. Strasbourg: Council of Europe Publishing.

Pintrich, P. R. (2004). A conceptual framework for assessing motivation and self-regulated learning in college students. Educ. Psychol. Rev. 16, 385–407. doi: 10.1007/s10648-004-0006-x

Pintrich, P. R., and Zusho, A. (2002). “The development of academic self-regulation: the role of cognitive and motivational factors,” in Development of Achievement Motivation, eds A. Wigfield and J. S. Eccles (Cambridge, MA: Academic Press), 249–284. doi: 10.1016/B978-012750053-9/50012-7

Sambell, K., Brown, S., and Race, P. (2019). Assessment as a locus for engagement: priorities and practicalities. Italian J. Educ. Res. 22, 45–62.

Schunk, D. H., and Greene, J. A. (2018). “Historical, contemporary, and future perspectives on self-regulated learning and performance,” in Handbook of Self-Regulation of Learning and Performance, eds D. H. Schunk and B. Zimmerman (Milton Park: Routledge), 1–15. doi: 10.4324/9781315697048-1

Strijbos, J.-W., and Sluijsmans, D. (2010). Unravelling peer assessment: methodological, functional, and conceptual developments. Learn. Instr. 20, 265–269. doi: 10.1016/j.learninstruc.2009.08.002

Teng, M. F. (2020). The role of metacognitive knowledge and regulation in mediating university EFL learners’ writing performance. Innov. Lang. Learn. Teach. 14, 436–450. doi: 10.1080/17501229.2019.1615493

Theureau, J. (2010). Les entretiens d’autoconfrontation et de remise en situation par les traces matérielles et le programme de recherche « cours d’action ». Rev. d’Anthropol. des Connaiss. 2, 287–322. doi: 10.3917/rac.010.0287

van Gennip, N. A. E., Segers, M. S. R., and Tillema, H. H. (2010). Peer assessment as a collaborative learning activity: the role of interpersonal variables and conceptions. Learn. Instr. 20, 280–290. doi: 10.1016/j.learninstruc.2009.08.010

Zimmerman, B. J., Moylan, A., Hudesman, J., White, N., and Flugman, B. (2011). Enhancing self-reflection and mathematics achievement of at-risk urban technical college students. Psychol. Test Assess. Model. 53, 141–160.

Keywords: peer assessment (PA), self-regulated learning (SRL), socially-shared regulation of learning (SSRL), co-regulated learning (CoRL), collaborative learning, video feedback, English as a Foreign Language (EFL), higher education

Citation: Clayton Bernard R and Kermarrec G (2022) Peer Assessment and Video Feedback for Fostering Self, Co, and Shared Regulation of Learning in a Higher Education Language Classroom. Front. Educ. 7:732094. doi: 10.3389/feduc.2022.732094

Received: 28 June 2021; Accepted: 21 March 2022; Published: 15 April 2022.

Edited by: Heidi L. Andrade, University at Albany, United States

Reviewed by: Ana Couceiro Figueira, University of Coimbra, Portugal; Kelly Beekman, Fontys University of Applied Sciences, Netherlands

Copyright © 2022 Clayton Bernard and Kermarrec. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rebecca Clayton Bernard, rebecca.clayton@imt-atlantique.fr

†These authors have contributed equally to this work and share first authorship