- openHPI/Internet Technologies and Systems, Hasso Plattner Institute, Digital Engineering Faculty, University of Potsdam, Potsdam, Germany

About 15 years ago, the first Massive Open Online Courses (MOOCs) appeared and revolutionized online education with more interactive and engaging course designs. Yet, keeping learners motivated and ensuring high satisfaction is one of the challenges today's course designers face. To address this, many MOOC providers have employed gamification elements, which, however, only boost extrinsic motivation briefly and are limited to what the platform supports. In this article, we introduce and evaluate a gameful learning design we used in several iterations on computer science education courses. For each of the courses on the fundamentals of the Java programming language, we developed a self-contained, continuous story that accompanies learners through their learning journey and helps visualize key concepts. Furthermore, we share our approach to creating the surrounding story in our MOOCs and provide a guideline for educators to develop their own stories. Our data and the long-term evaluation spanning four Java courses between 2017 and 2021 indicate the openness of learners toward storified programming courses in general and highlight those elements that had the highest impact. While only a few learners did not like the story at all, most learners consumed the additional story elements we provided. However, learners' interest in influencing the story through majority voting was negligible and did not show a considerable positive impact, so we continued with a fixed story instead. We did not find evidence that learners just participated in the narrative because they worked on all materials. Instead, for 10–16% of learners, the story was their main course motivation. We also investigated differences in the presentation format and concluded that learners preferred several longer audio-book style videos over animated videos or different textual formats. Surprisingly, the availability of a coherent story embedding examples and providing a context for the practical programming exercises also led to a slightly higher ranking in the perceived quality of the learning material (by 4%). With our research in the context of storified MOOCs, we advance gameful learning designs, foster learner engagement and satisfaction in online courses, and help educators ease knowledge transfer for their learners.

1. Introduction

Massive Open Online Courses (MOOCs) have been a well-established tool for lifelong learning since their first appearance in 2008 (Downes, 2013). Initially, MOOCs were designed for high social interaction and incorporated interactive course structures, proving the connectivist learning theory (Hollands and Tirthali, 2014). In the following years, several other MOOC formats have evolved. They differ mainly in the learning designs1 and privacy settings or entry requirements.2 However, most courses since the big MOOC hype in 2012 (Pappano, 2012) follow a traditional teaching approach (xMOOC format) (Ng and Widom, 2014), which applies to all popular MOOC platforms. xMOOCs often lack interactive elements and suffer from low user engagement (Willems et al., 2014). Hence, a major challenge for course designers is to create exciting and interactive courses to improve user satisfaction and engagement. However, course teachers often struggle with selecting appealing learning materials and applying good learning designs for their online courses, as many have a traditional university teaching background or none at all. So, how to best support course instructors?

In a second hype, gamification was applied to MOOC platforms to improve user engagement. Many platforms introduced experience points and badges. However, this type of gamification mainly addresses extrinsic motivation and does not support the learning content. Hence, it did not stop learners from dropping out of the courses. But maybe there are other ways to use gameful designs?

In 2016, we started experimenting with gameful learning, focusing on supporting learners' understanding, engagement, and inner motivation to proceed with the learning materials. First, we conducted a user survey to better understand the learners' needs on our platform openHPI,3 where we provide courses about IT and digital literacy. After evaluating the survey results, we first applied gameful designs in our 2017 Java programming course using a coherent story that spanned all course weeks. We then revised the course and improved its content in 2018, again using a story to connect the course content. Both courses taught similar learning content and used the same characters, but the storytelling differed significantly between them. In both courses, we presented multiple story videos, story quizzes, and other gameful Easter eggs so that our learners could immerse themselves in and interact with the story. Following this initial idea, we continued telling the detective story in all our Java courses conducted since then, up to our latest Java course in 2021.

This article presents our design decisions, insights, learner feedback, and learner engagement with the storified courses. The article summarizes previous publications (Hagedorn et al., 2017, 2018, 2019, 2022) and expands on the results presented there. Additionally, we developed a guideline in this article, outlining what course instructors need to consider when designing course narratives. Following this guideline can help instructors make their courses more appealing, engaging, and motivating regardless of their teaching background. Our elaboration illustrates specific story design decisions and their effect on the course participants.

2. Background and related work

MOOCs allow for free, self-regulated learning. While they provide an excellent way for learners to increase their knowledge, they also pose considerable challenges: learners must keep up regularly over a certain period and constantly motivate themselves to continue the course. Making this as easy and enjoyable as possible for learners should be the goal when designing a course, which is why we started to evaluate gameful learning options. This section first describes the characteristics of MOOCs and our course vision in Section 2.1. We then highlight related work (see Section 2.2) and our research questions (see Section 2.3). Afterward, in Section 2.4, we present our platform and those of its key features that are relevant to the courses discussed in the following sections.

2.1. Problem statement and vision

Although cMOOCs were designed to be very interactive, learner interaction has drastically decreased since xMOOCs became the prevailing MOOC format. Nowadays, MOOCs4 are a well-established tool to teach a wide range of course topics to lifelong learners. A MOOC usually consists of multiple video lectures and quizzes for self-testing or learner assessment (Willems et al., 2013; Staubitz et al., 2016b). Sometimes, they also include written assignments, interactive exercises, and (team) peer assessments (Staubitz et al., 2019). Traditionally, learning materials are released on a weekly basis, with courses spanning from two to eight or even more weeks (Höfler et al., 2017). In recent years, a trend toward shorter course periods of 2 or 4 weeks has evolved (Serth et al., 2022a), along with more self-paced course offerings. A major advantage of MOOCs over other e-learning formats is the social atmosphere. Learners are encouraged to interact with the teaching team and other learners in a course forum, i.e., to discuss open questions and share further background knowledge. The forum allows learners to connect and create a sense of community (McMillan and Chavis, 1986), which increases learner engagement and lets learners receive fast feedback when struggling with the learning materials. However, only up to 15% (Serth et al., 2022b) of learners actively participate in forum discussions. Therefore, the course's interactivity is severely limited in this respect. With more self-paced courses, this problem becomes even more pronounced.

Hence, applying interactive course formats and elements in the correct context is becoming increasingly important, as it can support the learners' engagement and understanding (Hagedorn et al., 2017) and contribute to self-regulated learning. Using the course forum as the only interactive course element did not prove to be the best solution. Thus, course designers should strive to identify and apply elements to create more interactive course formats and foster low-barrier social interaction.

One option for more interactive courses is applying gameful learning in the course design. Gameful learning is an umbrella term for game-based learning and gamification and describes the use of games, game elements, or game mechanics in learning environments (Hagedorn et al., 2018). Thus, gameful learning can be applied in manifold ways to platforms, courses, and learning units. Our research goal is to identify methods to simply employ gameful learning without complex technical means, which we deem crucial to lowering the barrier of using gameful learning in MOOCs for teachers and learners. We strive to evaluate gameful learning methods to support both course content and learner interaction.

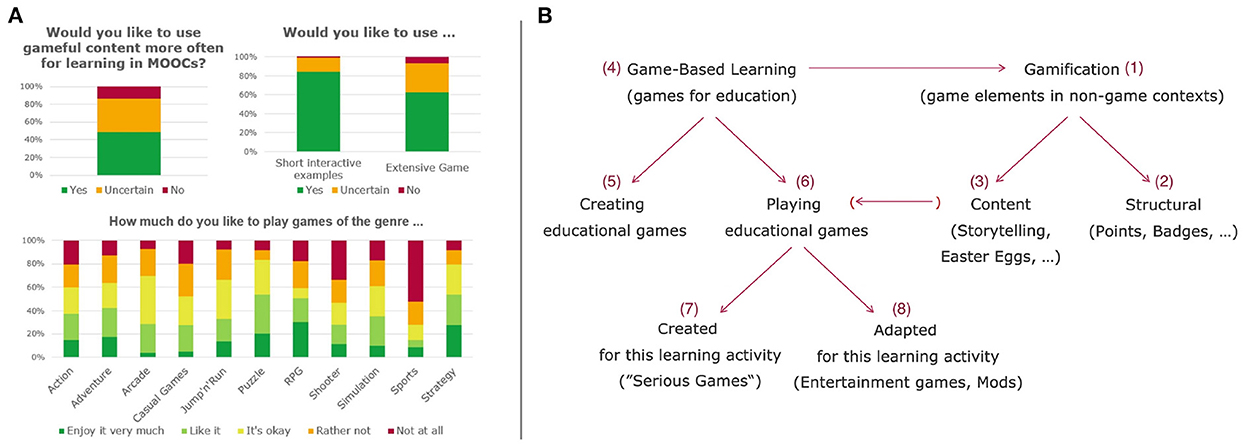

Using gameful learning in the context of course content is particularly useful for course topics that are difficult to master. When the learning goal is hard to achieve through videos and quizzes alone, learners need more hands-on exercises to deepen their knowledge by practically applying what they have learned. But even if the course content is easy to understand, applying the newly acquired knowledge directly in one global course context is more accessible than focusing on a new problem for each assignment. Our goal was to connect the course content with a coherent gameful element to apply a global course theme. We were uncertain whether a course-wide game or several small gameful elements would be more appropriate for immersion and participant motivation. Thus, we first gathered feedback from our learners in a platform-wide survey, which showed that they preferred several small gameful elements (see Figure 1A).

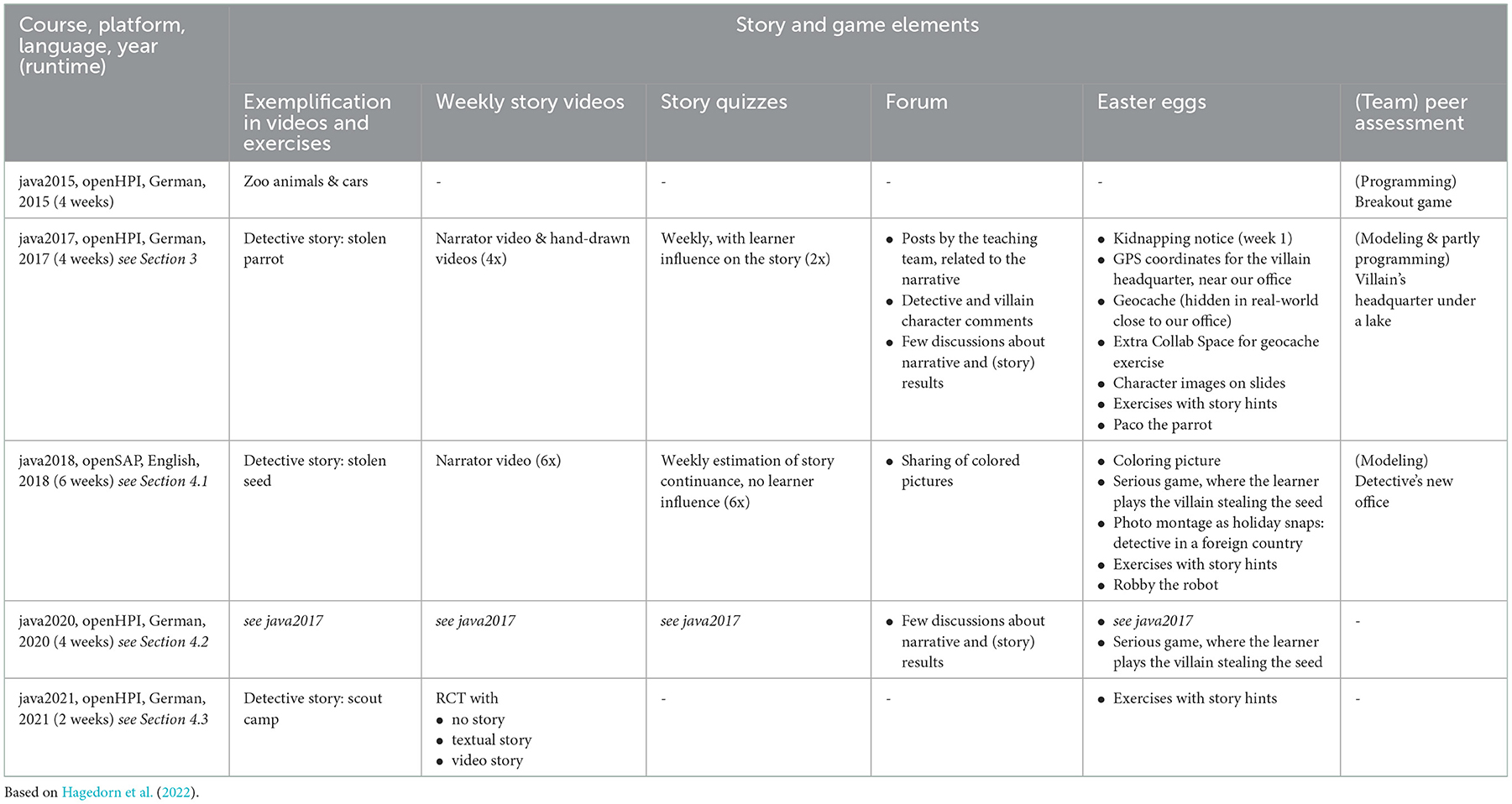

Figure 1. (A) Results from the survey conducted at the start of the experiment, showing learner preferences regarding gameful elements (Hagedorn et al., 2018). (B) Possibilities of how to apply gameful learning through different means of gamification and game-based learning (Hagedorn et al., 2018).

With this knowledge, we discussed various options for implementing gameful learning in a way that provides value to the learners. To get started, we looked for opportunities the platform already supports without developing additional features, which also makes later adoption by other teachers easier. We decided against multiple small games, which might be technically challenging, and instead chose to add a connecting story to our course. The course exercises should revolve around the narrative, providing learners with multiple small gameful elements rather than making the entire course a game.

2.2. Related work

In this section, we address the related work that influenced our experiment. We reduced the section content to the essential points affecting this article; more extensive elaborations can be found in Hagedorn et al. (2017, 2018) and Staubitz et al. (2017).

2.2.1. Getting the terms right

Many terms around games, game elements, and game mechanics exist in the learning context. Depending on the approach, methods and focus differ slightly, but transitions are seamless and sometimes almost impossible to distinguish. Figure 1B provides an overview of gameful learning, including different manifestations and overlaps of gamification and game-based learning.

Gamification (1, see Figure 1B) can be applied on the structural (2) and content (3) level (Kapp, 2012). The first gamifies structures of learning materials or platforms, e.g., by adding points or badges. On the other hand, content gamification (3) gamifies the content itself, e.g., by storytelling or adding Easter eggs (Farber, 2017). In contrast, game-based learning (4, GBL) describes using games for teaching (Prensky, 2001). Game-based learning can also be applied in different ways: either by designing (5) or by playing (6) a game (Papert, 1996). When playing a game (6), the game can be designed particularly for the learning context (7) or get adapted to suit it (8) (Van Eck, 2006). If a game is specifically designed for one learning context (7), it is usually called a serious game. However, applying content gamification to learning materials (3) could also be considered a game (Van Eck, 2015).

When thinking about gameful learning, traditional structural gamification (2) through points, badges, and leaderboards often comes to mind first. Yet, this represents the least favorable option in the learning context: when applied incorrectly, it can quickly make learners' intrinsic motivation vanish (Deci, 1971; Staubitz et al., 2017). Conversely, content gamification or playing games can increase the learners' curiosity and natural intrinsic motivation to learn new things and fully engage in the system to master it (Kapp, 2012; Juul, 2013). Hence, this is the preferred way of implementing gameful learning.

2.2.2. Gameful learning in MOOCs

Different implementation strategies of gameful learning have been applied to the MOOC context. For example, Höfler et al. (2017) used a narrative concept in their “Dr Internet” MOOC to examine and foster MOOC completion and motivation. When we started setting up our experiment, their results were not published yet; thus, they were not considered for our design concept. However, the two studies focus on different aspects. Although we also evaluate MOOC dropout and retention rates in this study, we go beyond them. We accepted that learners drop out of a course for several personal reasons; thus, achieving higher completion rates is not our primary goal. Instead, we strive for highly engaging and informative courses which best support our learners in achieving their personal learning goals, applying the learned skills and knowledge, getting connected to other learners, and keeping them motivated to join further courses.

In other examples of gameful MOOCs, courses were set up as full serious games (Thirouard et al., 2015) or included single interactive exercises, e.g., in interactive textbook format (Narr and Carmesin, 2018). As we found that our learners preferred short, interactive exercises over extensive games, we refrained from including obligatory game elements. Therefore, we decided to build our experiment on optional storified MOOC elements.

2.2.3. Game design

When storifying a MOOC, multiple game principles should be considered in addition to the general learning principles. In games and narratives, it is crucial to create likable characters, thus creating a connection between the learner and the story. This allows learners to identify with the characters and leads to better immersion, both of which usually lead to higher motivation (Novak, 2012). To create a lasting impression, protagonists should have names that are easy to remember and that may reflect significant character traits (Novak, 2012). Identification with female characters is usually possible for both genders, as long as the characters are pictured appropriately for the given tasks, whereas identification with male characters is harder for female players (Jäger, 2013).

Freedom of choice and interactivity are crucial aspects of differentiation when comparing games to non-interactive narratives. Such freedom in games allows players to live out their scientific curiosity (Novak, 2012). Even if a game is linear, the impression of non-linearity is achievable by giving players freedom of choice. Allowing players to decide in which order they work on different tasks also supports their satisfaction, which is usually a motivating factor for many players (Novak, 2012). Furthermore, interactivity is an important aspect of all types of MOOCs [23]. It can take place between learners and (a) other learners, (b) course resources, and (c) teachers (Höfler et al., 2017), and should be supported with tasks and tools.

Good stories should be interesting and engaging, offer story twists and some kind of mystery. The story must be comprehensible, logically structured, and consistent. It is important to consider and indirectly explain why a character behaves in a specific way, keeping in mind the character traits and background story (Novak, 2012). The same applies to the world behavior, e.g., physical rules that apply to the world.

In games, players tend to skim over narrative and descriptive text elements (Novak, 2012). The same applies to MOOCs, where learners tend to skim reading materials, whereas they spend much time on video content. Lengthy introductions add to the users' fatigue in playing (Novak, 2012) and learning contexts; thus, a story should be advanced by providing short videos or interactive elements.

2.3. Research questions

When starting our experiment, we wanted to identify which gameful methods are suitable in a MOOC, which, with regard to scalability, poses particular design challenges but also offers opportunities to involve the whole community. This implementation of gameful learning on openHPI should foster collaboration and improve the learners' understanding. By analyzing related work, we identified multiple options for applying gameful learning to our course regardless of the number of expected learners. We favored adding content gamification and decided to include narrative structures5 and Easter eggs6 as low-barrier methods. With those two game elements, we aim to enrich our course content with multiple interactive elements and evaluate the following research questions:

• RQ1.1: Is the application of a narrative perceived as a positive, negative or neutral course element?

• RQ1.2: Does a narrative have any influence on the learners' forum behavior?

• RQ1.3: Does story-relevant freedom of choice have any influence on the learner engagement?

• RQ1.4: Which percentage of learners is actively interested in the narrative?

We then build upon this foundation and analyze the effects of the applied designs on the students' motivation, particularly their course engagement and achievement:

• RQ2.1: How did learners engage with story videos compared to regular lecture videos?

• RQ2.2: How did learners respond to story quizzes?

• RQ2.3: How did the story and game elements influence the learning behavior and outcome?

• RQ2.4: How did learners perceive the story and game elements?

After validating the story effects in RQ2, we finally aim to compare different presentation styles:

• RQ3.1: What differences regarding learner preferences and behavior are observed when using different presentation styles (text and video) and scopes (number and duration of elements)?

• RQ3.2: What recommendations for using a story can be derived from the findings in RQ3.1?

2.4. Platform features and prerequisites

Since 2012, we have maintained our own MOOC platform, openHPI. Both the platform and the offered courses are developed by the Hasso Plattner Institute (HPI). In line with the on-site academic teaching program, the courses are mainly related to IT topics. In addition, we maintain separate instances of our software for partners and customers, e.g., openSAP is employed by SAP to provide mostly business-related courses.

Our platform offers the common MOOC feature set mentioned above (videos, quizzes, course forum) and additionally includes features like peer assessment, tools for collaboration, or a coding tool. One of our key platform features is a video streaming format with two video sources—one for the presenter and a second for the presented materials like slides or a computer screen.

2.4.1. Course structure

We offer courses with a length between 2 and 6 weeks. Our courses are conducted over a fixed period, with course weeks released every Wednesday. Usually, a course week consists of five to ten learning units. Each learning unit includes at least a video and a self-test. As a rule of thumb, videos should not exceed 10 min in length, and the self-tests aim to support learners in determining if they fully understood the content of the video. Learners can repeat them without limitations. A learning unit can include additional text pages and interactive exercises depending on the course content.

Primarily for programming courses, openHPI allows the integration of CodeOcean (Staubitz et al., 2016a), a programming platform developed in-house that provides a web-based coding environment. Using the tool, learners can start programming in their web browsers rather than installing an IDE first. Automatic testing and grading are conducted within CodeOcean, which allows adding numerous programming exercises to a course. We suggest providing one to three programming exercises for each learning unit in programming courses.

The MOOC platform openHPI further offers Peer Assessment (PA) exercises for both teams and single learners. In a PA, learners will work alone or in groups on an assignment, submit it, and afterward evaluate the submissions of other learners or teams. For team peer assessments, every team automatically gets assigned to a Collab Space to work on their group exercise. The Collab Space offers a private forum and different teamwork software to provide a positive work experience. Any learner who wants to team up with others can use the Collab Space, independent of a peer assessment. Usually, learners need to explicitly enroll for (team) peer exercises within a course, making them commit to working on the task.

During the course period, the teaching team supervises the courses, and learners can earn certificates. In the Java courses, we offer two different types of certificates to our learners: the Record of Achievement (RoA) and the Confirmation of Participation (CoP). The CoP is issued to all learners who visit at least 50% of all course materials. The RoA is issued to all learners who achieve at least 50% of all available course points. Self-tests do not count toward course points; only the weekly assignments, the final exam, and usually the interactive course exercises (such as programming assignments) do. The course forum is open for discussions during the course period. After the course end, learners can complete the course in a self-paced mode, but the teaching team no longer provides support. Also, earning a certificate is only possible to a limited extent.
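The two certificate rules can be written down as simple threshold checks. The following is a minimal sketch, not the platform's implementation; the class, method, and parameter names are hypothetical, and only the two 50% thresholds are taken from the description above.

```java
/** Sketch of the two certificate rules; all names are hypothetical. */
class CertificateRules {
    // Both certificates use a 50% threshold according to the text.
    static final double THRESHOLD = 0.5;

    /** CoP: at least 50% of all course materials were visited. */
    static boolean earnsCoP(int visitedItems, int totalItems) {
        return totalItems > 0 && (double) visitedItems / totalItems >= THRESHOLD;
    }

    /**
     * RoA: at least 50% of all available course points were achieved.
     * Self-test points are assumed to be excluded from both arguments already.
     */
    static boolean earnsRoA(double earnedPoints, double availablePoints) {
        return availablePoints > 0 && earnedPoints / availablePoints >= THRESHOLD;
    }

    public static void main(String[] args) {
        System.out.println(earnsCoP(30, 60));      // exactly half of the items visited
        System.out.println(earnsRoA(49.0, 100.0)); // just below half of the points
    }
}
```

Note that the two rules are independent: a learner can qualify for the CoP by visiting materials without earning any graded points, and vice versa.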

2.4.2. Learning analytics and research methodology

When we started the experiments and investigations in December 2016, our impression from forum participation, helpdesk requests, and similar sources was that our users often rejoin newer course iterations to see what has changed. We later validated this impression in user surveys and courses (Hagedorn et al., 2019). Most of our learners are between 30 and 50 years old; about 20% identify as female, and 80% identify as male (Staubitz et al., 2019).

In our courses, we measure different metrics. (1) Course enrollment numbers are recorded at course start, middle, and end. (2) The show rate is calculated from the enrollments at course-mid and course end, where all learners who visited at least one item are counted as a show. We assume that all learners who have started the course by the course-mid date have the opportunity to complete the course with a certificate. Therefore, (3) completion rates are calculated using course-mid shows. However, the show rate is not an adequate metric for (4) active learners: while no items are unlocked when enrolling before the course starts, learners who register after the course has started are automatically directed to the first learning item. Thus, all learners who enroll after the course start are counted as shows, regardless of their actual course activity. As a result, we count as active learners those who have earned at least one of the total points needed to achieve the graded RoA. In contrast, learners are considered dropouts if they had been a show but quit the course without returning until the course end (Teusner et al., 2017); hence, they receive no course certification.
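To make the distinction between shows, active learners, and the completion rate concrete, the definitions can be sketched in a few lines of Java. This is an illustration only: the `Learner` fields and all method names are hypothetical, and using certificate earners as the numerator of the completion rate is an assumption, since the text only fixes the denominator (course-mid shows).

```java
/** Sketch of the learner metrics; the data model and all names are hypothetical. */
class CourseMetrics {
    /** Illustrative per-learner record. */
    static final class Learner {
        final int visitedItems;            // number of course items visited
        final double gradedPoints;         // points that count toward the RoA
        final boolean startedByCourseMid;  // started the course by the course-mid date
        final boolean earnedCertificate;   // assumption: any certificate counts as completion

        Learner(int visitedItems, double gradedPoints,
                boolean startedByCourseMid, boolean earnedCertificate) {
            this.visitedItems = visitedItems;
            this.gradedPoints = gradedPoints;
            this.startedByCourseMid = startedByCourseMid;
            this.earnedCertificate = earnedCertificate;
        }
    }

    /** A show is a learner who visited at least one item. */
    static boolean isShow(Learner l) {
        return l.visitedItems >= 1;
    }

    /** An active learner earned at least one graded point. */
    static boolean isActive(Learner l) {
        return l.gradedPoints >= 1.0;
    }

    /** Completion rate relative to course-mid shows. */
    static double completionRate(Learner[] learners) {
        int midShows = 0;
        int completed = 0;
        for (Learner l : learners) {
            if (l.startedByCourseMid && isShow(l)) {
                midShows++;
                if (l.earnedCertificate) {
                    completed++;
                }
            }
        }
        return midShows == 0 ? 0.0 : (double) completed / midShows;
    }

    public static void main(String[] args) {
        Learner[] learners = {
            new Learner(10, 20.0, true, true),   // show, active, completed
            new Learner(1, 0.0, true, false),    // show, but not active
            new Learner(0, 0.0, true, false),    // enrolled, never showed up
            new Learner(5, 3.0, false, false)    // joined after course-mid
        };
        System.out.println(completionRate(learners));
    }
}
```

The second learner in the example illustrates why shows overestimate activity: a single automatic redirect to the first item is enough to count as a show, while no graded point was ever earned.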

We regularly collect feedback from our learners through our course start and end surveys, in which an average of 60–80% of learners active at the time participate. If needed, we conduct additional surveys in the course or platform-wide to gather data for our research evaluations.

3. From the first vision to a storified course

Building on the concepts mentioned in Section 2.2.3, we want to create stories suitable for use in MOOCs. For our first experiment, we selected a 4-week German-language beginner's Java course. To create a suitable story, we first considered our target audience, influencing the general course scope and content, the story, and proper characters. This section will present the course design, setup, and informal learner feedback of our first storified course. First, we describe in Section 3.1 how we developed our first story based on our ideas. We will then elaborate on how we applied the story in the course (Section 3.2) and which additional Easter eggs we added (Section 3.3). We conclude this section with details on the first learner feedback we received (see Section 3.4).

3.1. Developing our first story

Our Java programming courses are aimed at teenagers aged 14 and older as well as adults. In general, our courses are attended more often by people who identify as male. Still, the number of learners who identify as female should not be underestimated. In our opinion, female learners should be actively considered and supported, as we want to contribute to reducing the gender gap in IT. Therefore, the story needed to appeal to young and old learners regardless of gender. We decided on a detective setting, which we considered the most gender- and age-neutral. For such a story, we needed a detective, a case to solve, and a villain as a counterpart to create thrill. Participants had highlighted recurring parrot examples in a previous Java course; thus, we added a parrot to the new course as a recognizable Easter egg for returning learners and decided this character should have at least one appearance in the story. Further, we included a robot setting for the programming exercises, which gave us room to present many different object-oriented programming (OOP) concepts. We decided that the detective's antagonist should steal the parrot, and the robot would help the detective to get it back. The narrative was supposed to span all course weeks, revealing the case's solution at the course end. This design was supposed to encourage and motivate the learners to proceed with the course until the very last course item.

Story Concept: With this vague story idea, we started developing the course materials. We let the Easter eggs and the narrative evolve over the course's design phase (element overview can be found in Table 1). Hence, we always had our general story setting in mind when designing examples and exercises, but they did not follow a distinct predefined narrative; we mostly developed the narrative on top of the created materials at the end of the content design phase. We decided on this approach to ensure focus on creating suitable learning materials rather than a great narrative lacking good content examples. At the same time, we decided all learning materials should work without following the narrative, so learners who solely wanted to learn about the course's programming topic could skip the story, which fulfills the freedom of choice we aimed for. To avoid a bias on the learners' intrinsic motivation, we did not add extrinsic motivators like story-related badges and made the story videos optional, not counting toward course completion.

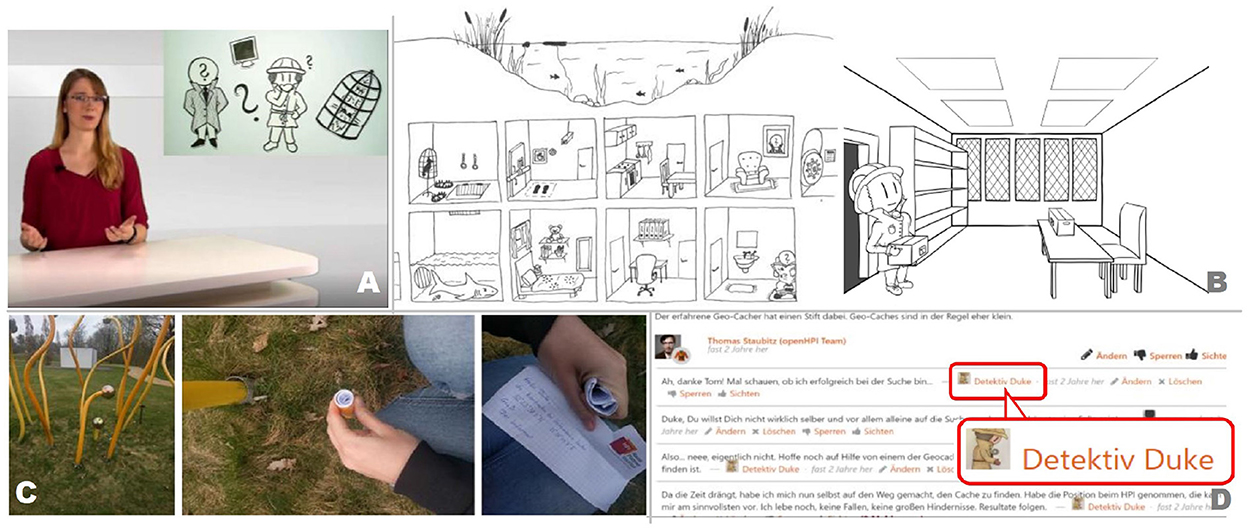

To make it easier for learners to identify with the characters, these were supposed to be neither clearly male nor clearly female. Thus, we chose names and drew their avatars in a way that they could be of either sex (see Figure 2A). In addition, the names were supposed to be simple and easy to remember; thus, alliterations and character traits were chosen, like “Paco the parrot,” “Robin the Robot,” or “Duke the detective.” The villain was supposed to be called “evil,” which led to the name “E. Vil;” hence, we came up with the gender-neutral first name “Eike.” Unfortunately, the nickname “Duke” is more often interpreted as a male name, which also applies to “Eike” and the robot's name “Robin” in German. Therefore, we added a second, explicitly female robot character and called her “Ronja.”

Figure 2. Different elements of the course story: (A) story video including presenter and animated video, (B) illustrations of peer assessment task, (C) geocache presentation, (D) story characters posting in the course forum (Hagedorn et al., 2019).

Story Outline: Our brainstorming throughout the content creation resulted in the following story outline: Detective Duke is an excellent and famous detective in Potsdam, Germany. One day, his everlasting nemesis, Eike Vil, steals his beloved parrot Paco and leaves only a ransom note. Eike wants to get the password for Duke's computer, which is where the story in the course begins. During the course, Duke tries to free Paco from Eike. First, Duke needs to find out where Eike is hiding Paco. Hints lead to Eike's headquarters somewhere around the HPI in Potsdam. To get into the headquarters safely, Duke builds a robot named Robin and sends him to the assumed location. Unfortunately, Duke does not yet know enough about secure programming; thus, Eike easily reprograms Robin to work for him instead. Duke builds another, now secure robot, Ronja, to get into the villain's headquarters. This time, Ronja and Duke make it through all traps, finally freeing Paco and Robin. With this finale, the course ends.

Repeatedly, the learning objectives influenced the story's flow. For example, we sought a practical example to illustrate why considering encapsulation7 is crucial in OOP. Typically, incorrect encapsulation allows access to parts of code that should not be externally modifiable. We exemplified this by having Eike steal Robin, so Duke has to build himself a new robot. If we had designed a fixed story in advance to which we would then adapt the learning content, we might not have been able to portray this vital concept well through the story.
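The encapsulation point can be illustrated with a small Java sketch. The classes below are hypothetical and are not taken from the course materials; they only mirror the story, with an exposed field standing in for Robin's insecure design and a private, final field for Ronja's secure one.

```java
/** Demo class first so the file can be run directly; all classes are hypothetical. */
class EncapsulationDemo {
    public static void main(String[] args) {
        UnsafeRobot robin = new UnsafeRobot();
        robin.owner = "Eike";                  // compiles: any outside code can "reprogram" Robin
        System.out.println(robin.owner);

        SecureRobot ronja = new SecureRobot("Duke");
        // ronja.owner = "Eike";               // would not compile: owner is private and final
        System.out.println(ronja.getOwner());
    }
}

/** Incorrect encapsulation: internal state is publicly writable. */
class UnsafeRobot {
    public String owner = "Duke";
}

/** Correct encapsulation: state is hidden and only readable through a getter. */
class SecureRobot {
    private final String owner;

    SecureRobot(String owner) {
        this.owner = owner;
    }

    String getOwner() {
        return owner;
    }
}
```

In this sketch, the compiler rather than a runtime check prevents the takeover: the commented-out assignment is rejected because `owner` is not accessible outside `SecureRobot`.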

3.2. Implementation ideas

To apply our narrative to the course, we needed to incorporate the story into the learning materials in an interactive and motivating way. As learners tend to skip over texts and know the concept of videos in our courses very well, we decided to create multiple story videos (see Table 1). These videos were intended to be skippable without influence on the course progress, so they could not be included in the lecture videos but were stored in separate course items. Adding too many of those videos would overextend the course syllabus, so we decided to add one or two videos for each course week whenever they were required most to advance the story. Generally, the course week start seemed to be a good place to advance the story for the rest of the week.

Story Participation: To meet the interactivity principle, we decided to allow learners to select from alternative options to advance the story. Participants could vote for their favored choice at the end of each course week. We wanted a majority vote to determine how the story continued the following week, so that all learners followed the same narrative throughout the course rather than each ending up with a different ending.

We offered two or three options to vote on each week, which adds up to at least 14 different narrative paths. However, it was not feasible to produce that many story paths, most of which would never be shown in our course; thus, we decided to follow one general narrative. Hence, all decisions led to the same result, which was merely reached in a different way. For example, when Robin was sent to the headquarters, he either got lost in the woods because the learners sent him to the wrong location, or he was caught in a trap at the correct headquarters location. Either way, Eike was able to steal and reprogram him. Thus, we produced six different story videos instead of 14 or more, and the narrative resulted in the same final ending for all decisions. The story videos for the following week were unlocked manually at the end of each week, after the story survey deadline had passed. At the same time, the survey results were presented in the course forum. With this approach, we tried to create a feeling of non-linearity, and thus more interactivity, although the story was mostly linear.

Telling the Story Through the Course: We wrote a story script for the narrative videos and drew the relevant characters by hand. Then, we recorded the videos with a document camera. The hand-drawn characters were cut into individually shaped elements and then moved around by hand under the document camera. After recording the presenter reading the script in the studio, we adjusted the movement speed of the visualized narrative to synchronize with the storyteller's speed. We chose this technical implementation because it required the least effort compared to real video animation. In addition, such a presentation is often used for explanatory videos; thus, the outcome seemed suitable. Visualizing the narrative helped improve the narrative flow, so we substantially improved the script during the video recording. At about 60 person-hours, creating the videos required a relatively high effort. Although we had access to professional studio equipment, none of us had an extensive video creation background; thus, we relied on basic video editing skills. Each story video was about 1–3 min long.

In addition to the story videos, we also decided to add programming exercises that advanced the story. However, the programming tasks needed to be solvable without explicit story knowledge. Hence, the exercise description provided all story information required to solve the task, and narrative elements were mainly used for setting an exercise context.

3.3. Adding more Easter eggs

Finally, we added multiple Easter eggs (see Table 1) to foster even more interaction and provide occasions to smile. First, a geocache was hidden close to the HPI. The geocache location was revealed in one of the programming exercises. We asked all interested learners (RQ1.4) to join a dedicated Collab Space to discuss and compare their findings. The information hidden in the geocache led to GPS coordinates of Eike's headquarters, pointing to a lake in front of our office. The coordinates suggested Eike could be part of the openHPI team.

In addition, Duke and Eike joined the forum discussions, e.g., in our introductory thread where all learners could introduce themselves (see Figure 2D). Initially, we wanted Eike to post mischief and Duke to ask the learners for more feedback; however, we reduced the number of forum posts during the course period as they received little feedback. We also considered making Paco the parrot a forum character who repeats user phrases and describes his surroundings. We did not implement this character as we feared that learners might accidentally feel fooled.

The course included a team peer exercise for modeling Eike's headquarters, which also served as an Easter egg. In particular, the image accompanying the exercise task was created as an Easter egg: the underwater headquarters showed multiple rooms in which, among others, Paco was kept (see Figure 2B). Last but not least, we added small character icons to our presentation slides; however, they did not have any specific meaning for the slide content type.

3.4. Conducting the course and receiving first learner feedback

Our German-language course on object-oriented programming in Java (java2017) was conducted from March to May 2017 as a 4-week workshop with additional time for a team peer exercise. By the end of the course, 10,402 participants had enrolled, of whom we consider 5,645 active learners. To complete this course, learners were required to solve several programming exercises (50% of available course points) and weekly assignments in the form of multiple-choice quizzes.

To advance the detective story throughout all course weeks, we added a story video at the beginning of each week. The story was supposed to increase exemplification, learner motivation, and interaction with the forum and Collab Spaces. The included hands-on programming exercises were occasionally connected to the coherent story. Most of the learning content videos also used examples related to the narrative.

At the course start, the learner feedback in the course forum regarding the story was mixed and somewhat skeptical. Nonetheless, some learners indicated that they were looking forward to the story's development and found a global course theme helpful for their learning experience. We also received feedback from learners recognizing Paco the parrot from the previous Java course on the platform. Some learners mentioned that they had missed the story votes and could not find the voting results in the course forum. A handful of learners in the course forum wanted to know whether their majority decision influenced the story, which we confirmed. During the course period, Eike and Duke actively participated in the forum discussions; they had proper names and profile pictures, which made it easy for the learners to identify them. We expected that the characters would encourage the learners to interact in the course forum and discuss the story twists with them. Despite our design, many learners did not understand that the characters were official course assets and started reporting them as fake accounts. Overall, we saw little feedback on the results; we managed to motivate 20–30 learners throughout the course to discuss the story with our characters and other learners, but most of those learners were active in the course forum anyway. This number is comparably low; accordingly, we feel that this attempt to promote social interaction has failed (RQ1.2).

Multiple clues and additional information were hidden in the programming exercises, either in the description or the exercise output. To distinguish between learners who passively consumed the story and those actively interested in the narrative, we implemented one programming exercise that invited interested learners to join a specific story-related Collab Space. This dedicated area was supposed to be used for discussing hints regarding the geocache exercise, leading to story-relevant GPS coordinates. We expected at least a handful of learners living close to the campus to search for the geocache. However, as none of the learners found the geocache, or at least none announced it, we made Duke visit the geocache after a couple of days and posted his findings in the Collab Space (see Figure 2C) to present the geocache information to all learners. Again, we did not see any learner interaction with the character in the Collab Space, so this approach did not prove effective for making learners contribute more to the course forum (RQ1.2). Instead, we saw learners complaining that they had to join the Collab Space to solve the exercise; they did not realize that they could complete the exercise with 100% without the optional Collab Space enrollment (RQ1.4).

Last but not least, the team peer exercise was connected to the narrative; the participants were asked to design the villain's headquarters. Again, we received sparse feedback about the story setting. However, we saw well-designed, complex PA results, which point to the conclusion that the headquarters setting helped learners become creative and engaged with the task.

In the course end survey, we received positive feedback about the story (RQ1.1). Some learners were additionally motivated to complete the course due to the narrative, while only a few stated that they felt the story was disruptive. However, a limiting aspect of the feedback in course end surveys is that we only receive comments from learners who stayed in the course up to this point, so it is possible that some learners quit the course because of the story. As the course completion rates are above the platform standard and within the normal bounds of programming courses, we do not consider the narrative to have negatively affected the course completion rates. We also received textual feedback, either in the course forum or in the free-text field of the course end survey, that many learners started liking the story after some time or felt the target audience would benefit from it, although they did not consider it a valuable asset for themselves. Thus, the experiment seemed to have an overall positive impact on exemplification and learner engagement.

4. Advancing our detective story

Following our first story implementation, we added the narration concept to further courses. In this section, we present three more Java courses that used the detective setting and elaborate on our design decisions. Specifically, we introduce an English Java course aimed at professional learners (see Section 4.1), describe changes for a rerun of the first storified Java course in Section 4.2, and explain how we continued the story for an advanced Java course in Section 4.3.

4.1. The second case of Detective Duke

After conducting our first storified Java course on openHPI, we were invited to re-implement this Java OOP course on openSAP. Despite addressing a different target group and language (English instead of German), we decided to keep and evolve our detective story (see Table 1). Our target group changed from life-long learners to learners from a professional background; thus, we wanted to create a more serious ambiance. This decision resulted in a different detective case and narration style. We also decided to extend our course to 6 weeks due to the large amount of learning content, giving us more time to advance the story. In general, the same characters were introduced. Solely for better readability in English, we renamed Eike to Eric. In addition, the robot Robin, who had a small cameo appearance, was renamed Robby.

For this second detective case, we decided to create a more sophisticated story script. In addition, we removed the alternative story paths to reduce our preparation effort, as we had not received much feedback about this element anyway. Thus, the story was less interactive but still spanned the whole course period. We decided to present the survey results differently, adding them to the video description below the preceding story videos. With this setup, we also wanted to verify whether learners would still be motivated to interact with weekly story quizzes if these did not influence the narrative (RQ1.3).

Story Concept: Our setting ideation process was slightly different than in our previous course. While on a business trip in Hong Kong, two teaching team members took a picture of a “Java Road,” which they wanted to integrate into the next course story. Thus, we agreed to make Duke travel to Hong Kong for his new case and send some sightseeing pictures to the learners as an Easter egg. Another aspect that influenced our story design was a serious game about Boolean algebra, a course topic that we had identified as challenging in our previous course. Thus, we created a new gameful element to see whether it had a positive influence with regard to our research questions (footnote 8). We needed to give the Boolean game a narrative that logically suited the course story at the specific learning content position, making one of our characters activate and deactivate different controls multiple times. We assumed the learning unit about Boolean algebra to be embedded at the end of the first or the beginning of the second course week. We further assumed that the story at this point would provide little opportunity to have Duke teach these topics in a way that would contribute to story suspense unless he tried to break into Eric's headquarters again. Accordingly, we decided to let the learner take an omniscient role in the game, learning more about the villain's background while making him break into a museum to steal a rarity. Now, we only needed to combine this with the goal of traveling to Hong Kong. The Hong Kong flag inspired us to make Eric steal a seed, so we just needed a reason why he would do that. Allowing him to exchange the seed for unlimited energy seemed a good goal for a super-villain.

Story Outline: We finally came up with the following course narrative: Duke receives a phone call from museum curator Muson. A special orchid seed has been stolen from his museum in Potsdam, Germany, and Muson needs Duke's help to get it back. After cleaning up the burglary mess in the museum, the curator spots a booking receipt from a spa in Hong Kong booked in Eric's name, so Duke receives the first clue about where to search for his everlasting nemesis. Shortly after, his old friend, the robot Robby, shows Duke a news article about the rare mineral Accunium, which offers almost unlimited energy capacities. 80% of the world's Accunium deposits are owned by the wealthy landowner Mr. Kwong, who offers 50% of his Accunium land to the person who brings him a rare orchid seed. Unsurprisingly, Mr. Kwong lives in Hong Kong; thus, Duke finally discovers Eric's motives and destination. Duke contacts Mr. Kwong, who teams up with Duke, and together they plan to arrest Eric and get back the seed. At the showdown, Eric is about to deliver the seed to Mr. Kwong when he is almost shot by some shady guys who are after the Accunium as well. When Duke saves Eric from being hit, Eric drops the seed and immediately disappears. Finally, Duke travels back home and returns the seed to the museum curator Muson without having been able to catch Eric.

Telling the Story Through the Course: When creating the story videos for this course, we dropped the visualization. The narration was performed in an audiobook style and thus appeared more professional, with more eloquent word choices and more vocal emphasis than in the previous course iteration. At 3–5 min, the story videos were also a bit longer now. We also discontinued the characters' forum posts and most Easter eggs (see Table 1) because of their perceived low influence on learner engagement and the high time effort needed to create them. Despite reducing the overall number of Easter eggs, we still included some of them: the robot Robby had a cameo appearance for the learners who had already taken part in the 2017 course on the openHPI platform. A coloring page of Duke's office in Hong Kong was provided along with the modeling task asking the learners to model Duke's new office. We added small icons to our presentation slides again. However, they represented different learning material categories this time, as we wanted to give them more meaning. Thus, we used visual cues instead of character icons, such as a hammer icon for new concepts or a console icon for coding examples. In the last course week, we added Duke's holiday snapshots from Hong Kong to the course materials.

The English-language course on object-oriented programming in Java (java2018) was conducted from June to July 2018 as a 6-week course on openSAP. By the end of the course, 21,693 participants had enrolled in the course, with 5,531 active learners. As in our previous course, learners were required to solve several programming exercises (50% of available course points) and weekly assignments in the form of multiple-choice quizzes to complete the course.

4.2. Conducting a rerun of our first storified course

From March to April 2020, we conducted a rerun of our first storified Java course from 2017. This time, 12,445 participants enrolled in the course, of whom 7,743 were active learners. We did not change the story, and most of the other course content was identical as well. As in the 2017 course, we used interactive story decisions by majority vote. However, we tried to improve the result presentation by placing a text item with the learners' latest voting results directly after each story quiz. The text item was updated daily until the quiz deadline and remained available afterward, whereas the quiz itself was disabled after the deadline. Thereby, we expected to make more learners aware of their impact on the story.

We also added some new lectures regarding coding conventions, excluded the PA, and adjusted the user surveys to our needs. Finally, we decided to add a translation of the game from the java2018 course even though it did not fit our initial story. We solved this problem by introducing it as a digression to one of Duke's other mysterious detective cases and received no negative feedback on this approach.

4.3. Detective Duke learns advanced Java concepts

In his newest case, we sent Detective Duke on a journey to learn advanced Java concepts. After identifying the O notation as one of the course's core concepts, we felt Big O was an appropriate name for a new character. We considered, for example, a little new assistant or a big, friendly bear that makes Duke exclaim “Oh” out loud when he first sees it. After 1.5 years of the pandemic, we felt the need to go on vacation, which is why we also put Duke in an equivalent setting. The recap of array lists from the java2017 beginner's course in the first course week also suited this idea, as we explained them using trains.

Story Outline: At the beginning of the course, Duke receives a call summoning him to a scout camp due to strange events. He makes his way there by train. At the camp, however, no one can tell who called him, and nothing weird has happened except for a friendly bear, called Big O, who visits the camp from time to time. So Duke decides to spend some vacation at the scout camp and actively assist the scouts. After successfully reading the trail, Duke finds the bear's hiding place, but the bear turns out to be Eike in a costume. Duke secretly watches him pack things into strange boxes. Since Eike needed some of Duke's detective equipment and wanted to steal it, he had called Duke and lured him to the scout camp. Finally, on the day of departure, both Eike and Duke are pleased that the other does not seem to have found out their respective secrets. When coming back home, Duke finds new clues related to Eike's strange boxes, so he leaves directly for his next case.

Telling the Story Through the Course: Story elements were still optional items. However, with this course, we introduced a randomized controlled trial (RCT) on the content level for the first time on our platform (see Table 1). We wanted to determine whether learners perceived video as the most appropriate presentation format. We also investigated to what extent the story items are valuable only at the beginning of a course week or several times within it, and how long the story elements should ideally be. We divided the learners into six groups (including a control group without a story) to obtain a suitable overview through different combinations. Creating several story variants took more time than producing only one story version for all learners. Therefore, we omitted Easter eggs in this course.
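How learners can be split into six equally sized groups is sketched below. This is not the platform's RCT feature, whose assignment procedure is not described here; the class `TrialAssignment`, the seed, and the group numbering (group 0 as control) are assumptions for illustration. The sketch shuffles the learner IDs with a seeded random generator and deals them out round-robin, so group sizes differ by at most one.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical sketch of a balanced random assignment, not the platform's code.
class TrialAssignment {
    static final int GROUPS = 6; // assumed numbering: group 0 is the control group

    /** Maps every learner ID to a group index in [0, GROUPS). */
    static Map<String, Integer> assign(List<String> learnerIds, long seed) {
        List<String> shuffled = new ArrayList<>(learnerIds);
        // A fixed seed makes the assignment reproducible.
        Collections.shuffle(shuffled, new Random(seed));
        Map<String, Integer> groupOf = new HashMap<>();
        for (int i = 0; i < shuffled.size(); i++) {
            groupOf.put(shuffled.get(i), i % GROUPS); // round-robin over shuffled IDs
        }
        return groupOf;
    }

    public static void main(String[] args) {
        List<String> ids = List.of("u1", "u2", "u3", "u4", "u5", "u6", "u7");
        assign(ids, 2021L).forEach((id, group) ->
                System.out.println(id + " -> group " + group));
    }
}
```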

The 2-week Java advanced course in German was offered in November 2021 on openHPI. With 4,461 learners, fewer participants enrolled than in the beginner courses, which conforms to our expectations.

5. Learner evaluation

This section focuses on the analysis of the collected data. First, limitations are highlighted in Section 5.1. Then, the data collection and preparation are described (see Section 5.2) before the results of two independent evaluations are discussed. The first evaluation in Section 5.3 focuses on the java2017 to java2020 courses, where no RCT was used. The second evaluation in Section 5.4 describes the early results of the RCT that was conducted in the java2021 course.

5.1. Limitations

Completion rates and learning outcomes are influenced by multiple factors. Although all Java courses are independent, we see specific patterns emerge when conducting a new course repetition on our platform. For example, a certain number of learners sign up for each new course iteration to see what has changed from previous courses. These learners do not intend to work through all of the course material. Therefore, they are more likely to skip familiar learning content, resulting in a lower percentage of viewed or completed learning units. We also have learners who want to revisit or improve their knowledge, as a repetition of familiar learning content often enhances the learning outcome compared to previous course participation. Additionally, those learners might already know questions from graded quizzes or look up correct answers from previous graded programming exercises. If the learning content has not changed, this behavior can improve overall completion rates or average scores in course reruns. Such bias is particularly possible for our java2020 course but could also have an influence on the other learning statistics of iterations after the first Java course in 2015—even for the java2018 course that we conducted on openSAP, but that was promoted to learners on our platform.

When starting our experiment series, the platform offered no possibility of using randomized controlled trials (RCTs) on the course content level. Hence, we decided to use the existing platform features for our initial gameful learning experiments and provide the storified content to all learners in the course. Although we could not compare different features with each other, we still got feedback from our learners that allowed us to shape and improve storification and other gameful elements. For example, we constantly improved how we presented the survey results to the learners. When we finally introduced content A/B tests in the Java course in 2021, the advanced concept course had a comparably small number of participants. Therefore, our test groups were relatively small. Hence, these results give us a first impression but are not necessarily generalizable.

5.2. Data collection and preparation

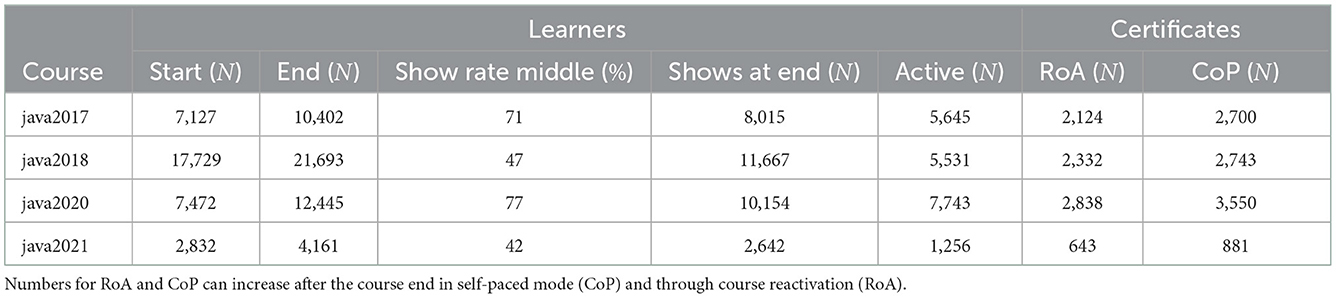

In a first step, we evaluated all three courses and compared the results. Table 2 gives an overview of the enrollment numbers of all three course iterations. As presented there, the number of CoPs awarded is closer to the number of active users we assumed with our metric than to the shows at course end, so using active users for the evaluation filters out many users who do not add value to our evaluation results. Initially, we wanted to focus our evaluation on the openHPI Java courses, as these form the basis for our research. In the end, however, it turned out that the audiences of all three courses are very similar in their behavior despite our expectation of different target groups. Hence, our presented results mostly focus on the 6-week java2018 course. With six course weeks and six story quizzes, we can evaluate much better whether learners engage with the detective story and related quizzes on an ongoing or one-time basis than with the 4-week courses, which include only two story quizzes.

Table 2. Overview of course enrollments, active users, and certificates of the three observed Java OOP Programming MOOC iterations until the end of the course period (Hagedorn et al., 2022).

On our platform, we have access to various learning analytic data. For example, the number of visits per learning item is available, in addition to the play, pause, and seek events for videos. For programming exercises, we record achieved points. For self-tests and weekly assignments, we document the start date and the points earned. However, we cannot tell to what extent learners engaged with text items, as there is no further interaction on those pages that could be measured.

The Top 5 performance calculation is also based on the quantiles of overall achieved course points for the best five percent of all course learners. Since the programming exercises can be solved as many times as desired during the course, the points achieved in our programming courses are usually relatively high.
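One way to derive such a cut-off is sketched below. The exact quantile procedure used on the platform is not stated here, so this is only an assumption: the hypothetical class `TopPerformers` takes the score of the learner ranked at the boundary of the best five percent (rounded up to at least one learner) and counts everyone at or above that score as a Top 5 performer. With many tied scores, which is likely when points are generally high, more than five percent of learners can reach the cut-off.

```java
import java.util.Arrays;

// Hypothetical sketch; the platform's actual quantile method is not known here.
class TopPerformers {

    /** Returns the lowest score that still belongs to the best topPercent of learners. */
    static double cutoff(double[] points, int topPercent) {
        if (points.length == 0 || topPercent < 1 || topPercent > 100) {
            throw new IllegalArgumentException("need data and a percentage in 1..100");
        }
        double[] sorted = points.clone();
        Arrays.sort(sorted); // ascending order
        // Number of learners in the top group, rounded up (integer ceiling).
        int k = (topPercent * sorted.length + 99) / 100;
        return sorted[sorted.length - k];
    }

    /** A learner counts as a top performer if they reach the cut-off score. */
    static boolean isTopPerformer(double learnerPoints, double[] allPoints, int topPercent) {
        return learnerPoints >= cutoff(allPoints, topPercent);
    }

    public static void main(String[] args) {
        double[] points = new double[100];
        for (int i = 0; i < points.length; i++) {
            points[i] = i + 1; // made-up scores 1..100
        }
        System.out.println("Top 5 cut-off: " + cutoff(points, 5));
    }
}
```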

Data on age and gender is only intermittently available, as this is an optional data field in the user profile on our platform. However, previous research on our platform has shown that the reduced data set can be generalized for all learners in the course, as the provided information on age and gender is representative (John and Meinel, 2020).

In a second step, we investigated the results from the java2021 course, which included a randomized controlled trial. So far, only the accumulated survey feedback and the usage statistics for storified items have been evaluated. We use this data to back up the results from our first evaluation, to support our storification guideline, and to identify future work.

5.3. Results on learner engagement and perception

In this section, we present the most important results of our first evaluation and thereby answer research questions 2.1–2.4. Despite the different target groups of the java2018 and the java2017/java2020 courses, we could see only a limited number of differences between the audiences; the trend of results was often very similar across all three observed courses for the active users. Hence, we only refer to the results of the java2018 course here, as they are overall more informative due to the longer course period. More details regarding the other courses can be found in Hagedorn et al. (2022); details regarding the course demographics are available in Serth et al. (2022b).

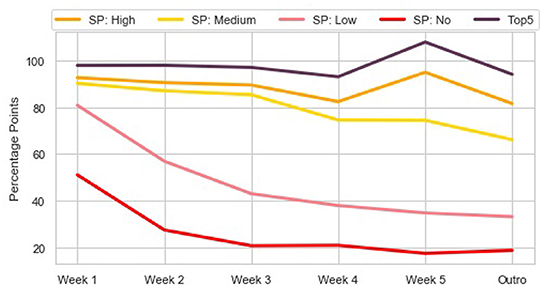

5.3.1. Engagement with story videos

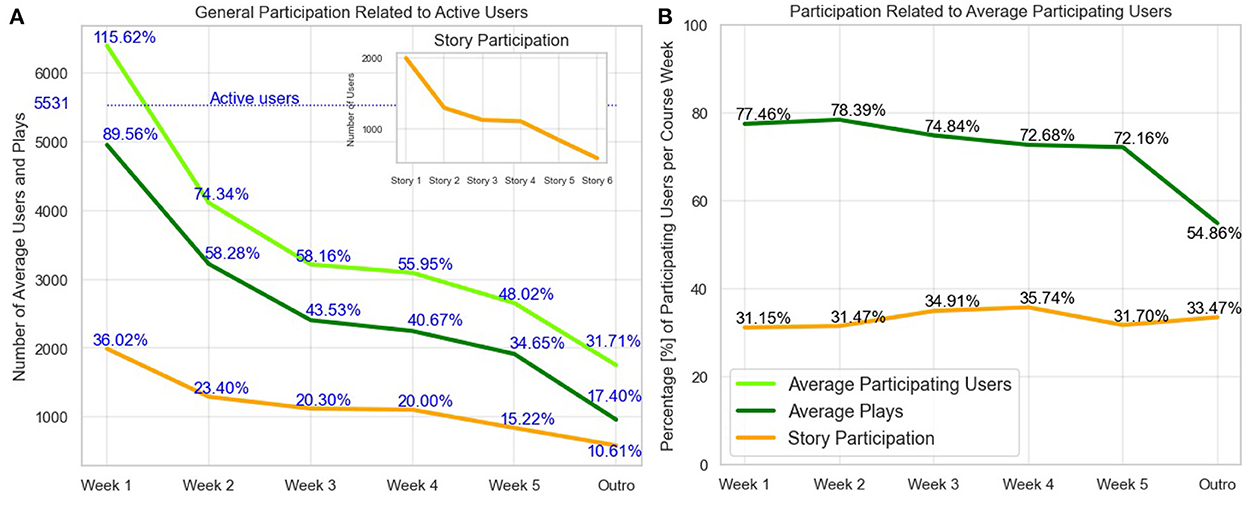

When looking into our data, we first wanted to identify how learners engaged with story quizzes compared to regular lecture videos. In general, by far the highest usage numbers can be observed in Week 1; these drop sharply in Week 2 and Week 3. In Week 3, only around half of the usage numbers from Week 1 remain, but afterward, the usage numbers drop much more slowly for the rest of the regular course weeks (see Figure 3A). Only the optional Outro section again has considerably fewer views. This dropout pattern can regularly be observed in all courses with a course length of four or more weeks (Teusner et al., 2017). Regarding the average video unit plays compared to story video plays, we identify a similar pattern (see Table 3), which leads to the conclusion that watching the story videos does not affect course completion rates and that the engagement neither increases nor decreases during the course period. However, relative to the average number of participating users, the usage rate of the story quizzes per course week is slightly more stable than that of the video plays, which decreases toward the end of the course (see Figure 3B).

Figure 3. Left (A): Average video plays, average participating users (not limited to active users but those who visited at least one course item), and story quiz participation per course week. The absolute numbers are set in relation to the active users identified at the course end (5,531), indicated in blue. As participating users did not necessarily achieve any course points, the percentage can be above 100%. Right (B): Average video plays and story quiz submissions related to the average number of participating users per course week.

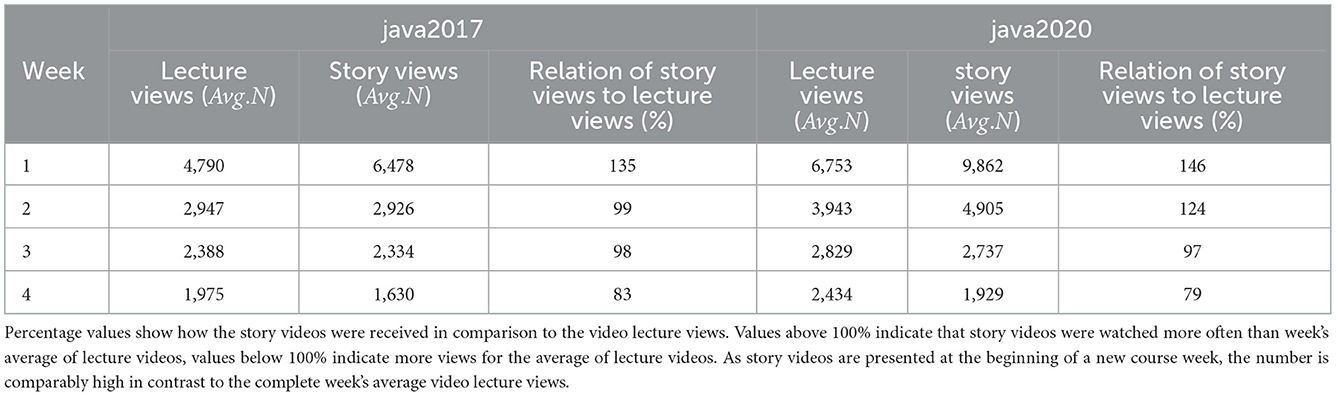

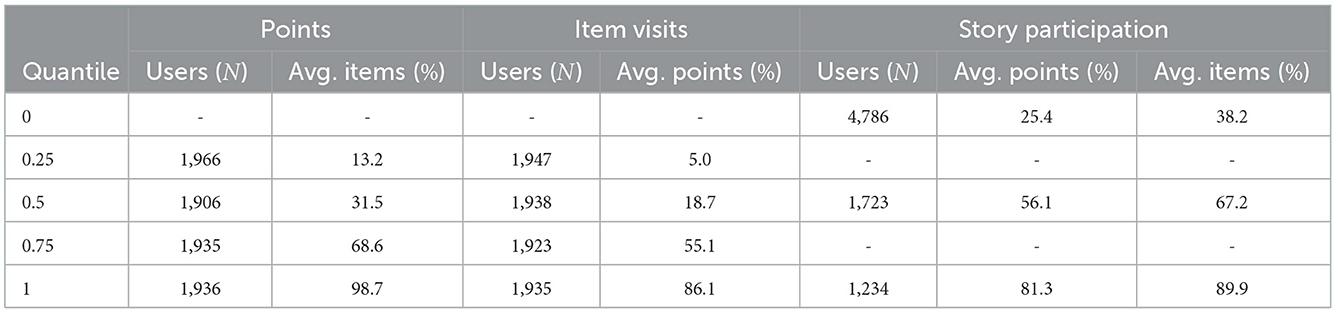

Table 3. Average number of video lecture views compared to story video views per course week in the java2017 and the java2020 course (Hagedorn et al., 2022).

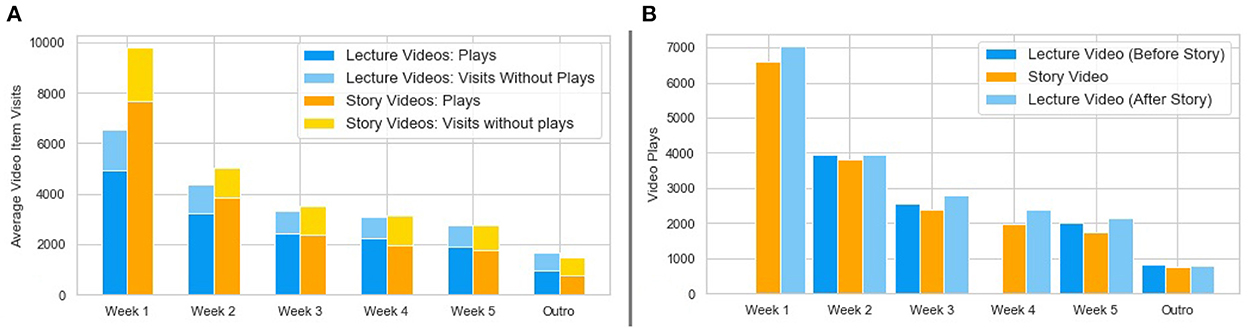

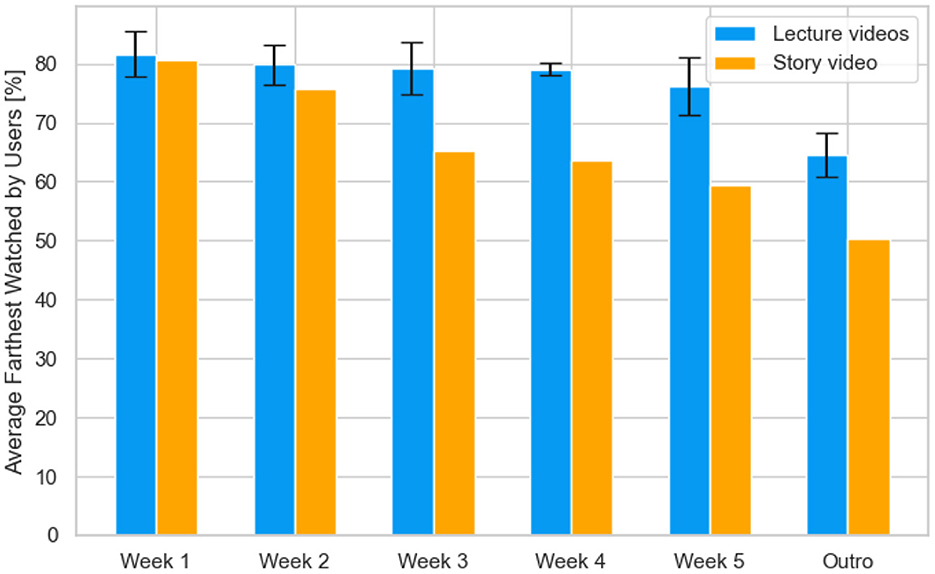

The share of skipped videos (visits without play) is slightly higher for story videos than for lecture videos (on average, 29.7% of lecture videos and 33.2% of story videos were skipped, see Figure 4A). Story videos also received somewhat fewer item visits than the preceding (7.3% fewer on average) and following (10.5% fewer on average) regular course items (see Figure 4B). When looking at how far a video has been watched, it becomes clear that the interest in the story videos decreased over the course weeks compared to the average of the lecture videos. While in Week 1 the numbers are quite similar, with 81.6% (lecture videos) and 80.1% (story videos), they start to differ much more from Week 2 onward, leading to a weekly average of farthest watched of 76% for lecture videos and 66% for story videos (see Figure 5). These numbers indicate that the optional story videos were a little less important to learners, were skipped slightly more often than regular learning videos, and were less often watched completely. Overall, we observed a slightly worse general usage pattern, with up to 10% less engagement with story videos than with regular lecture videos.
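The two metrics used in this comparison, the share of skipped visits and the farthest watched position, can be derived from video events roughly as follows. The event model is an assumption made for this sketch: each visit is represented only by the playback positions (in seconds) reported by its play, pause, and seek events, and a visit without any such position counts as skipped. The class `StoryVideoMetrics` and the sample values are hypothetical.

```java
import java.util.List;

// Hypothetical sketch; the platform's event schema is not reproduced here.
class StoryVideoMetrics {

    /** Share of visits (0..1) in which the video was never played. */
    static double skipRate(List<double[]> positionsPerVisit) {
        if (positionsPerVisit.isEmpty()) {
            throw new IllegalArgumentException("at least one visit is required");
        }
        long skipped = positionsPerVisit.stream()
                .filter(positions -> positions.length == 0)
                .count();
        return (double) skipped / positionsPerVisit.size();
    }

    /** Farthest watched position as a share (0..1) of the video duration. */
    static double farthestWatched(double[] positionsSeconds, double durationSeconds) {
        if (durationSeconds <= 0) {
            throw new IllegalArgumentException("duration must be positive");
        }
        double farthest = 0.0;
        for (double position : positionsSeconds) {
            farthest = Math.max(farthest, position);
        }
        return Math.min(farthest / durationSeconds, 1.0);
    }

    public static void main(String[] args) {
        // Made-up visits to a 90-second story video.
        List<double[]> visits = List.of(
                new double[0],            // visited but never played
                new double[] {10, 45},    // watched up to second 45
                new double[] {30});
        System.out.println("Skip rate: " + skipRate(visits));
        System.out.println("Farthest watched: " + farthestWatched(visits.get(1), 90));
    }
}
```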

Figure 4. Left (A): Average number of video plays and visits without video plays for lecture and story videos per course week. On average, 29.7% of all lecture videos and 33.2% of all story videos were skipped. Story video plays are an absolute number, whereas for the lecture video plays, mean and standard deviation per course week w_i, i ∈ {1, …, 6}, are as follows: w_1: 4,954 (SD 1,063), w_2: 3,223 (SD 460), w_3: 2,407 (SD 264), w_4: 2,249 (SD 136), w_5: 1,916 (SD 205), w_6: 962 (SD 150). Right (B): Video plays of video items directly before and directly after the story videos, listed per course week. On average, the story videos were played 7.3% less than those videos before the story (a recap video of the last week), and 10.5% less than those videos after the story (the week's first video lecture) (Hagedorn et al., 2022).

Figure 5. Percentages of farthest watch for the lecture videos week average (including standard deviation) compared to the weekly story video. On course average, story videos were watched 67.9% (SD 11.42%) of their total length, and lecture videos 77.25% (SD 6.3%) (Hagedorn et al., 2022).

We also observed that the story videos had fewer than 20% of the forward seeks of lecture videos, which is an expected behavioral pattern. While learners frequently seek within lecture videos to find specific information or to listen to a particular explanation again, our observation confirms that story videos are usually viewed only once and in one piece.

5.3.2. Response to story quizzes

The viewing pattern observed for the videos is also reflected in the general response behavior of the story quizzes. In the first course week, the number of responses is considerably high, then drops steeply in Week 2, and afterward decreases rather slowly. However, except for Week 5 with a drop to 36.64%, the ratio of answered story quizzes to average video lecture views rises steadily over the weeks from 38.09% in Week 1 up to the highest value of 59.46% in the optional Outro week.

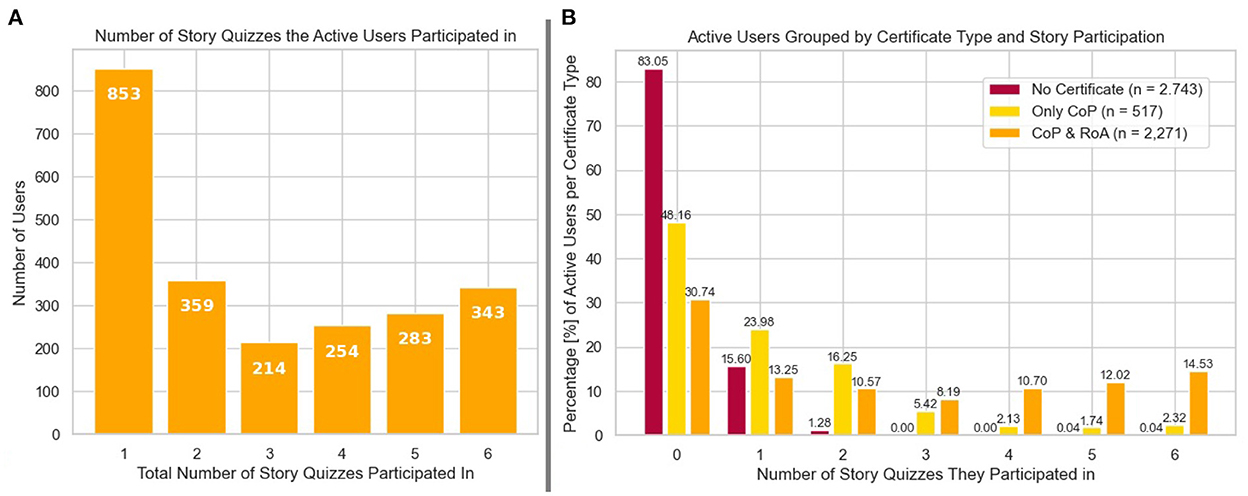

Next, we wanted to determine the total number of story quizzes the learners participated in. As depicted in Figure 6A, the largest group of learners (36.99%) participated in only one of the six story quizzes. The share of those who participated in two (15.57%) or three (9.28%) quizzes decreases continuously. After that, however, the share of learners who participated in four, five, or six quizzes (11.01%, 12.27%, and 14.88%, respectively) increases steadily. These numbers show that some learners were continuously interested in participating in the story. We did not find evidence that the learners' enrollment time has much impact on the response rate: even learners who enrolled after the course had started completed all story quizzes (10.5% of all learners who completed all quizzes); however, the number of late-enrolling users who completely skipped the story quizzes is 12% higher (19.2% of all learners who skipped the story quizzes).
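A distribution like the one in Figure 6A can be computed from per-learner answer counts as sketched below. The class `QuizParticipation` and the sample data are made up for illustration; the shares are calculated among learners who answered at least one story quiz, which is an assumption about how the reported percentages are based.

```java
import java.util.List;

// Hypothetical sketch with made-up data, not the evaluation script of the study.
class QuizParticipation {

    /** histogram[k] = number of learners who answered exactly k story quizzes. */
    static int[] histogram(List<Integer> answeredPerLearner, int totalQuizzes) {
        int[] histogram = new int[totalQuizzes + 1];
        for (int answered : answeredPerLearner) {
            if (answered < 0 || answered > totalQuizzes) {
                throw new IllegalArgumentException("invalid answer count: " + answered);
            }
            histogram[answered]++;
        }
        return histogram;
    }

    /** Percentage of participants (at least one answer) who answered exactly k quizzes. */
    static double shareOfParticipants(int[] histogram, int k) {
        int participants = 0;
        for (int i = 1; i < histogram.length; i++) {
            participants += histogram[i];
        }
        if (k < 1 || k >= histogram.length || participants == 0) {
            throw new IllegalArgumentException("no participants or invalid k");
        }
        return 100.0 * histogram[k] / participants;
    }

    public static void main(String[] args) {
        // Made-up answer counts for eight learners in a course with six story quizzes.
        int[] histogram = histogram(List.of(0, 0, 1, 1, 1, 2, 6, 6), 6);
        System.out.println("Answered one quiz: " + shareOfParticipants(histogram, 1) + "%");
    }
}
```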

Figure 6. Left (A): Number of story quiz answers (Hagedorn et al., 2022) for active users. 3,225 active users did not participate in any of the story quizzes. Right (B): Story quiz participation for active users, grouped by their achieved certificate type and the number of quizzes participated in.

Of course, these numbers are different in the java2017 and java2020 courses, as learners in these courses were able to submit the story quizzes only during the respective course week to influence the narrative. We observed course forum reports that multiple learners missed the deadline and wondered about the already closed survey, which is why we deactivated the story surveys after the deadline. Additionally, only two quizzes in total were available. Nevertheless, our data suggests that with more overall story quizzes, we would again see a similar pattern as in the java2018 story survey results: in java2018, with six story quizzes in six course weeks, 2,306 of 5,531 users (41.69%) participated in at least one story quiz, and 626 users (11.32%) had a high story quiz participation (five or six of six quizzes). In java2017 and java2020, with two story quizzes in four course weeks, 1,781 of 5,531 users (32.21%) and 2,957 of 7,743 users (38.19%), respectively, participated in at least one story quiz, and 709 (12.82%) and 1,234 (15.94%), respectively, participated in both story quizzes.

Hence, we notice high story interest for 11–16% of our learners and do not identify a difference in story quiz participation depending on the quizzes' influence on the narrative.
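The participation shares quoted above can be recomputed from the reported counts. The following minimal sketch only reuses the numbers given in the text; the dictionary keys, variable names, and the `percent` helper are illustrative and not part of the original analysis. It additionally assumes that the per-quiz distribution reported for java2018 refers to learners who answered at least one story quiz (the six percentages sum to 100%).

```python
# Counts as reported in the text; keys and names are illustrative only.
courses = {
    "java2018": {"active": 5531, "any_quiz": 2306, "high": 626},
    "java2017": {"active": 5531, "any_quiz": 1781, "high": 709},
    "java2020": {"active": 7743, "any_quiz": 2957, "high": 1234},
}


def percent(part: int, total: int) -> float:
    """Return part/total as a percentage rounded to two decimals."""
    return round(100 * part / total, 2)


shares = {
    name: {
        "any_quiz": percent(c["any_quiz"], c["active"]),
        "high": percent(c["high"], c["active"]),
    }
    for name, c in courses.items()
}

# java2018: share of participants by number of answered story quizzes (in %).
# Assumption: percentages are relative to learners with at least one answer.
java2018_distribution = {1: 36.99, 2: 15.57, 3: 9.28, 4: 11.01, 5: 12.27, 6: 14.88}
high_share = java2018_distribution[5] + java2018_distribution[6]
high_users = round(high_share / 100 * courses["java2018"]["any_quiz"])

for name, s in shares.items():
    print(f"{name}: at least one quiz {s['any_quiz']}%, high participation {s['high']}%")
print(f"java2018 high participation derived from the distribution: {high_users} users")
```

Under these assumptions, the recomputed shares agree with the reported percentages up to rounding in the last digit, and the high-participation shares of all three courses fall into the 11–16% range.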

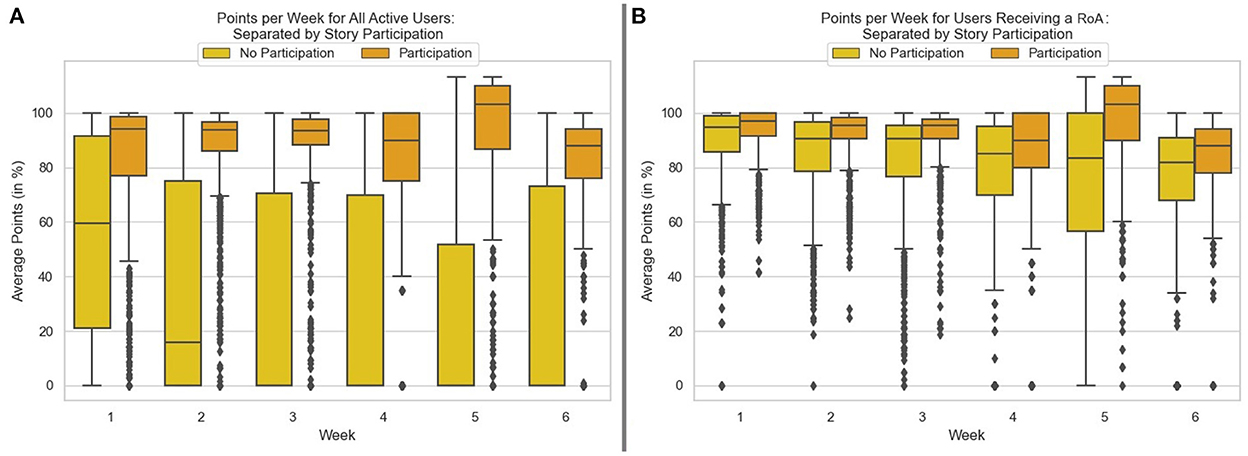

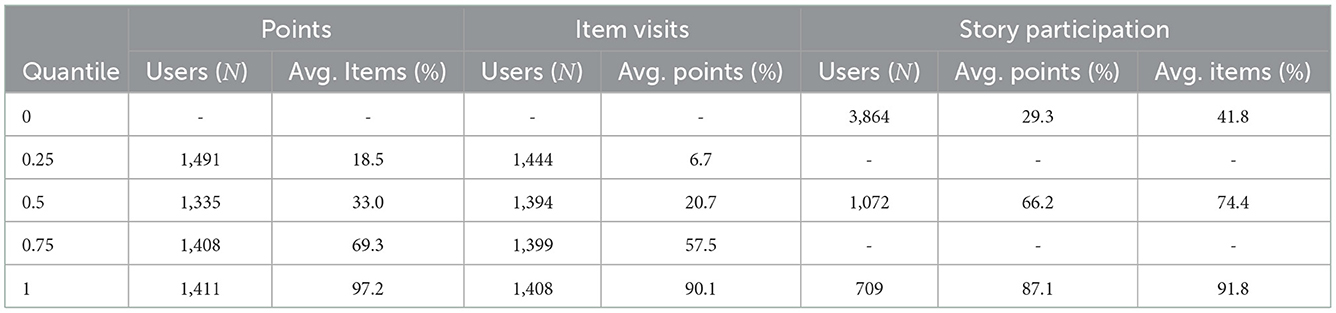

5.3.3. Learning behavior and outcome

Regarding how the story and game elements influenced the learning behavior and outcome, we first wanted to identify how learners who participated in the story performed in the course. To do this, we examined how many points per week (as a percentage of the total points achievable per week) were achieved by the learners, comparing this to how engaged the learners were with the story. As seen in Figure 7, learners who regularly engaged highly or moderately with the story performed much better on average than those who engaged with the story little or not at all. The curve of those who showed strong engagement with the story correlates clearly with the curves of the Top 5 performers. Figure 8 shows that learners who participated in the story quiz of a specific week achieved higher average points than learners who did not participate in it. A very similar pattern can be found in the other courses where only two story quizzes are included (see Tables 4, 5; for example, learners belonging to the 50%-quantile of item visits in java2017 achieved on average 20.7% of all points, whereas learners belonging to the 50%-quantile of story participants achieved on average 66.2% of all points). Figure 6B indicates that active users who achieved no certificate also participated much less in the story quizzes than those who received a Record of Achievement. Most of them already dropped out within the first two course weeks (about 88% with only one or two answered story quizzes). Also, users who only received a Confirmation of Participation participated less in the story quizzes than those who received a Record of Achievement.

Figure 7. Percentage of points achieved compared with story participation (SP) and Top 5 performance (Hagedorn et al., 2022). The story participation through the course is visualized for a high (5–6 quizzes), medium (3–4 quizzes), low (1–2 quizzes), and no participation (0 quizzes). In addition to all regular points, week 5 included a few optional bonus points counting toward the Record of Achievement.

Figure 8. Weekly performance for users participating in the story (orange) or skipping the story (yellow). Left (A): All active users in the course including users not receiving a course certificate; course dropouts lead to a median of 0 from week 3 onward. Right (B): Only those users that received a Record of Achievement (and a CoP simultaneously, similar to Figure 6B); median of users participating in the story is higher for all course weeks compared to no story participation.

Table 4. Quantiles for percentage of points and item visits compared to story participation in the java2017 course (Hagedorn et al., 2022).

Table 5. Quantiles for percentage of points and item visits compared to story participation in the java2020 course (Hagedorn et al., 2022).

From these numbers, we cannot conclude whether the story actually improved the learners' completion rates or whether we simply see the pattern that learners who do a lot in the course anyway achieve good results (Reich, 2020). For this reason, we attempted to find further clues as to whether learners participated in the story simply because they participated in all of the course materials offered or because they were explicitly interested in it, and how this influenced the learning outcome.

As the team peer assessment (PA) with a modeling task was optional, some deductions could be made about whether certain learners were simply working on all materials or were choosing selectively. Our analysis shows that all 357 learners who participated in the PA also scored high in the course. When it comes to participation in the story, however, the picture is entirely different: only 47% of the learners who participated in the PA also had a high level of participation in the story, and 18% did not participate in the story at all. These numbers show that PA participants who actively sign up for additional work in the course perform exceptionally well but do not use all of the story elements offered, which at least to some extent allows the conclusion that the story elements were not used only because “learners who do a lot do a lot,” but because learners were actively interested in them. We noticed that learners with high story participation had both the highest average item visits and the highest average points. This, in combination with the other results presented, may suggest that the story indeed improves the learning outcome for those learners who have been highly engaged with it; but causality is difficult to prove without a randomized controlled trial.

In addition to the story videos and quizzes, we added other game elements and Easter eggs that we hoped would generate different learning behaviors among learners, such as increased interaction in the forum or active engagement with the story elements. We already elaborated that the usage of forum characters and a special Collab Space did not work well. Identifying the interest of learners in the story by asking motivated story participants to join the Collab Space was a failure; accordingly, we were unable to obtain the expected data. Another Easter egg we provided was a set of coloring pictures of the modeling task. Some learners used this opportunity; e.g., 11 learners posted their colored images in a forum thread in the java2018 course. While these numbers are not outstandingly high, it was still surprising to see any pictures colored by the learners or their children at all.

In the end, however, we did not observe any change in learning behavior and outcome due to the additional game elements other than the story videos and quizzes, so we did not conduct an in-depth evaluation of this data.

5.3.4. Story feedback

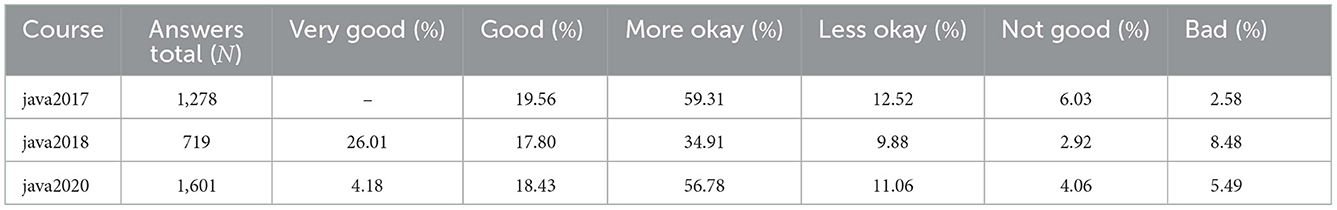

As part of a final course survey at the end of each course, we asked, among other things, for the learners' thoughts on the application of the story. The specific questions differed in each course as we gained insights from each previous iteration. For example, in the java2017 course, we asked how the learners liked the story around Detective Duke and to what extent it increased their course motivation. We assumed that the story was only an additional motivation, which is why we offered this as the highest motivation level in our survey. However, in the course rerun java2020, we added an answer option stating that the story was the main reason for the learners to stay in the course, and to our surprise, this was indeed selected (see Table 6; please note: the main reason is coded as very good, and the additional reason as good, whereas bad was considered to be distracting).

Table 6. Learner perception of story elements in all three evaluated Java courses; option “very good” was not available in java2017 (Hagedorn et al., 2022).

In contrast to the question posed in the java2017 course, we asked which elements of the java2018 course the learners found most helpful. This question was designed as a multiple-answer question where all options that applied could be ticked. 344 (25.73%) of the 1,337 learners who answered this survey selected the option that they perceived the story as very helpful, while 993 users did not select it.

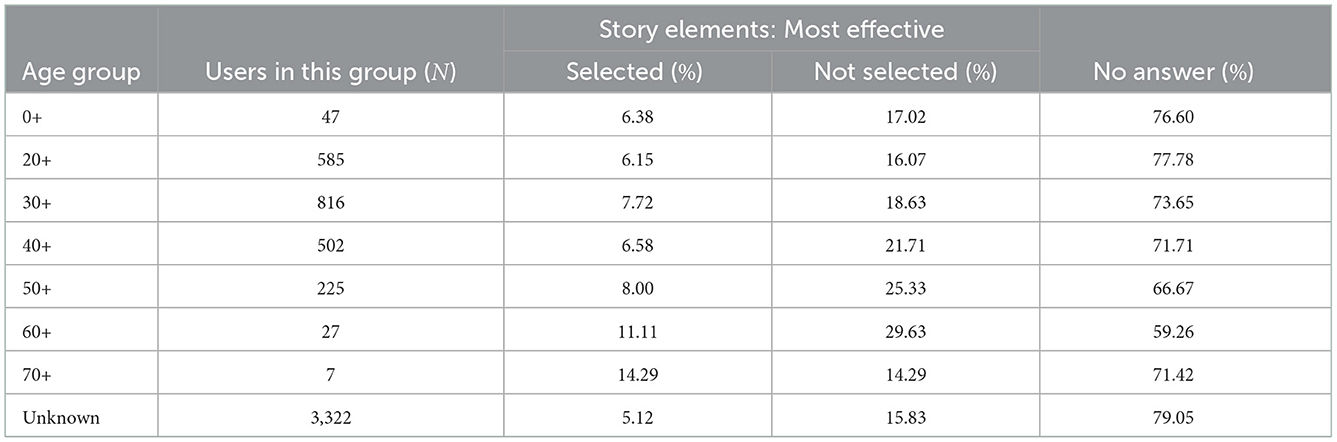

We wanted to determine whether a specific user group received the story particularly well or poorly. For this reason, we analyzed the data according to different age groups and gender. Contrary to the popular belief that a gameful approach is primarily appealing to a young male target group, we cannot see this in our data. Instead, both interest and disapproval are distributed across all age groups (see Table 7). Although our data suggests that both agreement and disagreement increase with age (while neutral feedback decreases), the number of people in the group is too small to make a general statement. Similarly, a relatively undifferentiated approval or disapproval in different age structures can also be seen in the data of the openHPI Java courses. Likewise, we did not find any notable differences in the gender distribution.

Table 7. Answers for each age group in the post course survey in the java2018 course with ratio of learners that selected the story elements as most effective compared to those that did not select this option (Hagedorn et al., 2022).

5.4. Insights from the first randomized controlled trial

A first investigation of the RCT with cumulative usage values and survey data in the java2021 course showed that the visits and interaction rates of story elements decrease more when offering more story elements (RQ3.1). More frequently, learners fast-forwarded longer videos and sought backward in shorter videos. In terms of story intervention length, several short texts were rated best, whereas long texts received the worst ratings; video ratings were in between, with short single videos scoring equally poorly as long texts. In contrast, texts—especially long ones—were skipped more often than videos. Several short texts were rated highest in terms of the extent of story interventions, i.e., the combination of length and number (RQ3.2). Learners indicated that they mostly envision two to three story interventions per week (equivalent to about 5% of the weekly course content), taking up a total of 11–15 min of learning time (equal to about 10% of the video material). Thus, assuming the video material represents about half the self-learning time (in addition to the quizzes and programming exercises), a story scope of about 5% of the overall course material is advisable.
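The recommended story scope follows from simple arithmetic on the reported survey values. The sketch below spells out that reasoning; the variable names and the `implied_weekly_minutes` helper are hypothetical, and the implied weekly video and self-learning times are derived from the stated ratios rather than reported in the evaluation.

```python
# Values taken from the text; names are illustrative only.
story_minutes_range = (11, 15)     # preferred weekly story time in minutes
story_share_of_video = 0.10        # story time is about 10% of the video material
video_share_of_learning = 0.50     # stated assumption: videos are about half of self-learning time

# Story share relative to the overall weekly course material.
story_share_of_course = story_share_of_video * video_share_of_learning


def implied_weekly_minutes(story_minutes: float) -> tuple[float, float]:
    """Derive the weekly video time and total self-learning time implied by the ratios above."""
    video_minutes = story_minutes / story_share_of_video
    total_minutes = video_minutes / video_share_of_learning
    return video_minutes, total_minutes


print(f"Story share of overall course material: {story_share_of_course:.0%}")
for minutes in story_minutes_range:
    video, total = implied_weekly_minutes(minutes)
    print(f"{minutes} min story -> about {video:.0f} min video, about {total:.0f} min self-learning")
```

Under these assumptions, 10% of the video material at half of the self-learning time yields the advised story scope of about 5% of the overall course material.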

Remarkably, the user group without story elements was particularly active in answering surveys. Toward the end of the course, groups with long story interventions (regardless of the amount) tended to be less motivated to complete the survey. In the survey, consistent examples were rated higher by learners without a story intervention than story content was rated by learners with a story. However, the expected impact of consistent examples on learning success, both for themselves personally and for fellow learners, was generally rated slightly lower by learners without a story than by learners who received a story intervention. Similarly, the desire for a story for future courses was expressed noticeably less by learners without a story intervention than by learners who received a story intervention, and the expected influence on motivation tended to be ranked in the lower end of the spectrum.

Our data indicated that several long videos were most favorable regarding overall ratings (RQ3.1). In terms of evaluating the content and narrative style, as well as in terms of thrill, curiosity about further story progress, and immersion, we received feedback that was often considerably more positive than for other intervention groups. These results indicate a positive influence of these factors on each other. In contrast, multiple long videos achieved only average results when evaluating the form of presentation and the expected impact of consistent examples for learning success. Learners with multiple long videos were also particularly likely to report having followed the interventions attentively, but the usage data confirm this only partially (RQ3.1). The learners with several long videos also expressed their desire for further storification and for linking consistent examples through a story particularly frequently, about as frequently as those learners who received short texts several times. In most evaluation aspects, multiple short texts scored best after multiple long videos, and in some cases even better than the videos, so the use of short texts can also be considered for storification.

In terms of preferred presentation format, animated videos were chosen most often (about 75%), followed by narrated videos (about 49%). A transcript accompanying the video was rated as having little relevance (about 12%). Learners who received no video intervention (i.e., text or no intervention at all) were considerably more open to a text format: A text without visualization was rated lower (27 vs. 10% for the respective test groups) than text with visualization (38 vs. 26%). This feedback is particularly noteworthy given that the text form with multiple long texts performed poorly in the evaluation, yet learners seem open to further text formats.

Our results also indicate that learners who did not receive a story intervention rated the informational quality of the course content about 4% lower than learners who received any story intervention (ranging from 3.73 to 5.59%, mean 4.22%, median 3.94%). If we can verify this result, using a story can substantially increase learner satisfaction. Investing in a narrative would thus positively impact learning content, regardless of how much effort course designers put into the story's presentation.

A detailed description of the RCT and evaluation of the data in terms of learning success and possible recommendations for action is beyond the scope of this article. This will be the subject of a detailed elaboration and is, therefore, part of Future Work.

6. Storification guideline

To develop your own course narrative, our guideline in the Supplementary material might help. The guideline outlines our approach to developing new stories. Although our description in this paper only focused on the courses related to Java, we applied narratives to two courses in a different context and worked on those together with external teaching teams after the second storified Java course. The additional experience with those external teaching teams helped us to refine and improve our guideline.