SYSTEMATIC REVIEW article

Front. Educ., 01 December 2021

Sec. Educational Psychology

Volume 6 - 2021 | https://doi.org/10.3389/feduc.2021.751168

This article is part of the Research Topic: Classroom Assessment as the Co-regulation of Learning

Current conceptions of assessment describe interactive, reciprocal processes of co-regulation of learning from multiple sources, including students, their teachers and peers, and technological tools. In this systematic review, we examine the research literature for support for the view of classroom assessment as a mechanism of the co-regulation of learning and motivation. Using an expanded framework of self-regulated learning to categorize 94 studies, we observe that there is support for most but not all elements of the framework but little research that represents the reciprocal nature of co-regulation. We highlight studies that enable students and teachers to use assessment to scaffold co-regulation. Concluding that the contemporary perspective on assessment as the co-regulation of learning is a useful development, we consider future directions for research that can address the limitations of the collection reviewed.

At its best, classroom assessment is not done to students, but with them. Informed by social-cognitive perspectives, current conceptions of assessment describe interactive processes of co-regulation of learning from multiple sources, including students themselves, their teachers and peers, and curricular materials, including technological tools (Allal, 2016, 2019; Adie et al., 2018; Andrade and Brookhart, 2019; Panadero et al., 2019). In this systematic review, we examine the research literature for support for the view of classroom assessment as a key mechanism of the co-regulation of learning and academic motivation.

Using an expanded framework of self-regulated learning to categorize 94 recent studies, we observe that there is ample evidence of a relationship between assessment and self-regulated learning, but less research on reciprocal co-regulation. We highlight studies that not only represent assessment in the service of individual student learning and self-regulation but also enable students to become effective co-regulators. Concluding that the contemporary perspective on assessment as the co-regulation of learning is a useful development for the field, we make recommendations for future directions for research.

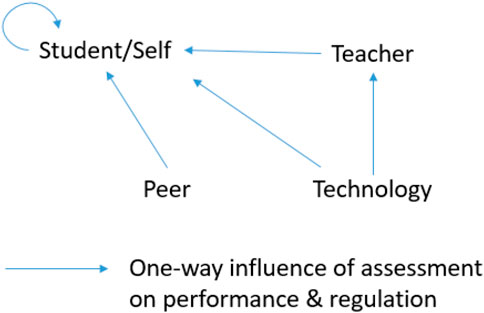

Current theories of formative assessment recognize that the agency for learning resides with the student (Andrade, 2010; Earl, 2013; Adie et al., 2018), and that successful students also use summative assessment as information for learning (Brookhart, 2001). These developments reflect cognitive and constructivist theories of learning, which emphasize the importance of student agency as a matter of the self-regulation of learning (SRL; Pintrich and Zusho, 2002; Zimmerman and Schunk, 2011). Modern assessment theory also draws on socio-cultural theories of learning, which describe how teachers and peers help learners understand the gap between where they are and where they need to be (Dann, 2014). Figure 1 is a simple representation of the view of classroom assessment as involving multiple sources acting together to influence student learning and self-regulation. This was the perspective we took in earlier scholarship on this subject (Andrade and Brookhart, 2016, 2019).

FIGURE 1. Classroom Assessment Involving Multiple Sources of Influence on Learning and Self-regulation.

Our thinking about the nature of co-regulation has evolved. The theoretical framework that informs this review recognizes the situated nature of learning and extends the notion of student as active participant in assessment to include teachers, peers, and adaptive technological programs as co-regulators, all of whom are affected by their participation in assessing student learning. The classic example of such mutual influence is of assessment-driven teacher-student interactions during which both parties receive feedback about the student’s learning and make adaptations to learning and instructional processes in response to it (e.g., Heritage, 2018). Another example comes from research that shows that peers learn from giving feedback as well as from receiving it (Gikandi and Morrow, 2016). Because self-regulatory processes often occur under the joint influence of students and other sources of regulation in the learning environment such as teachers, peers, interventions, curriculum materials, and assessment instruments, classroom assessment is a matter of mutual co-regulation (Volet et al., 2009; Allal, 2019).

We define co-regulated learning as the interactive, reciprocal influences of all sources of information about learning on each other. The word reciprocal is important here: Feedback is not simply done to students—it is more complicated and, happily, more interesting than that. Because assessment is transactional, we put heads on both ends of the arrows in Figure 2 to represent its interactive, reciprocal nature.

This definition of co-regulated learning (CoRL) differs from similar concepts such as Hadwin’s conception of co-regulation (Hadwin and Oshige, 2011; Hadwin et al., 2018), which refers to the transitional processes toward self-regulation, and Panadero and Järvelä's (2015, p. 9) socially shared regulation of learning, which they characterize as “the joint regulation of cognition, metacognition, motivation, emotion, and behavior”. Although related to our conception of co-regulated learning in many ways, the difference is that Panadero and Järvelä, as well as the scholarship they examined in their review, consider regulation of learning in the context of collaboration, with a focus on interpersonal interaction. Surprisingly, perhaps, we do not assume collaboration, or even interpersonal interactions, in the classroom context. Although interpersonal interaction via assessment is common, the interactions that co-regulate learning can also occur, for example, between one student and an adaptive testing program on a computer. The emphasis on reciprocity also distinguishes our definition from that of Panadero et al. (2019) which, although otherwise compatible with ours, seems to assume a one-way influence of assessment on students, e.g., “teacher and peer assessment co-regulates the acquisition of regulatory strategies and evaluative judgment to the assessee” (p. 24).

Zimmerman (1989) introduced the idea of reciprocal causality among personal, behavioral, and environmental influences on SRL. The reciprocity he described included other sources of regulation from the environment (e.g., the setting, instruction, peers) but referenced this reciprocity to the self. For example, students might learn from their environments that they are easily distracted by others, so they effect a change in the environment by finding a quiet place to study. Zimmerman’s article was ground-breaking because it considered social cognitive processes and included reciprocity, but its reference to learning still revolved around the self. In contrast, our definition of CoRL reflects more recent scholarship that challenges the assumption that self-regulation of learning is the ultimate goal of regulation by pointing out that co-regulation reflects the reality of educational contexts. Allal (2019), for example, argued that, “in classroom settings, the learner never moves out of the process of co-regulation into a state of fully autonomous self-regulation. Students do not become self-regulated learners; rather they learn to participate in increasingly complex and diversified forms of co-regulation” (p. 10). As we will reveal through this review, much of the research on classroom assessment focuses on SRL rather than CoRL. One exception is Yang (2019), who wrote of co-regulation as the ultimate goal, noting, “not everyone in the community needs to be highly metacognitive. Students can scaffold each other’s metacognitive development through collective work and modeling when conducting reflective assessment” (p. 5).

Our aims in this article are to use the systematic literature review method to update our review of research about the relationship of classroom assessment with self- and/or co-regulation, as well as to make more apparent the interactive, reciprocal nature of co-regulation. First, we define key terms and state assumptions. Then we organize the literature using a version of the Pintrich and Zusho (2002) theory of the phases and areas of the self-regulation of learning, expanded to include the co-regulation of learning, in order to demonstrate how classroom assessment is related to most or all aspects of the regulation of learning. Finally, we discuss how several studies point toward useful new directions for research on assessment-driven reciprocal co-regulation.

Classroom assessment (CA) is a process through which teachers and students gather, interpret, and use evidence of student learning “for a variety of purposes, including diagnosing student strengths and weaknesses, monitoring student progress toward meeting desired levels of proficiency, assigning grades, and providing feedback to parents” (McMillan, 2013, p. 4). Some of these purposes are formative, for example, monitoring progress to support student learning. Others are summative, such as certifying achievement at the end of a report period. Learning is central even for summative classroom assessment, because the ultimate purpose of schooling is for students to learn. This relationship to learning sets classroom assessment apart from some other educational evaluation and assessment programs, including the normative assessment of students' educational progress and the evaluation of educational materials and programs.

The classroom assessment process employs a variety of kinds of evidence, including evidence from classroom tests and quizzes, short- and long-term student performance assessment, informal observations, dialogue with students (classroom talk), student self- and peer assessment, and results from computer-based learning programs. Classroom assessment methods are more closely linked with students’ experience of instruction than many other educational assessment methods because the student is the learner as well as the examinee (Dorans, 2012; Kane, 2012). Thus have arisen the current perspectives that classroom assessment can best be understood in the context of how students learn (Bransford et al., 2000; Pellegrino et al., 2001).

Effective classroom assessment is used by teachers and students to articulate learning targets, collect feedback about where students are in relation to those targets, and prompt adjustments to instruction by teachers, as well as changes to learning processes and revision of work products by students. Drawing on Sadler (1989), Hattie and Timperley (2007) summarized this regulatory process in terms of three questions to be asked by students: Where am I going? How am I going? and Where to next?

Most theories of learning include a mechanism of regulation of learners' thinking, affect, and behavior: Behaviorism includes reinforcement, Piaget’s constructivism has equilibration, cognitive models refer to feedback devices, and social constructivist models include social mediation (Allal, 2010). Self-regulated learning occurs when learners set goals and then systematically carry out cognitive, affective, and behavioral practices and procedures that move them closer to those goals (Zimmerman and Schunk, 2011). Scholarship on self-regulation organizes cognitive, metacognitive, and motivational aspects into a general view of how learners understand and then pursue learning goals.

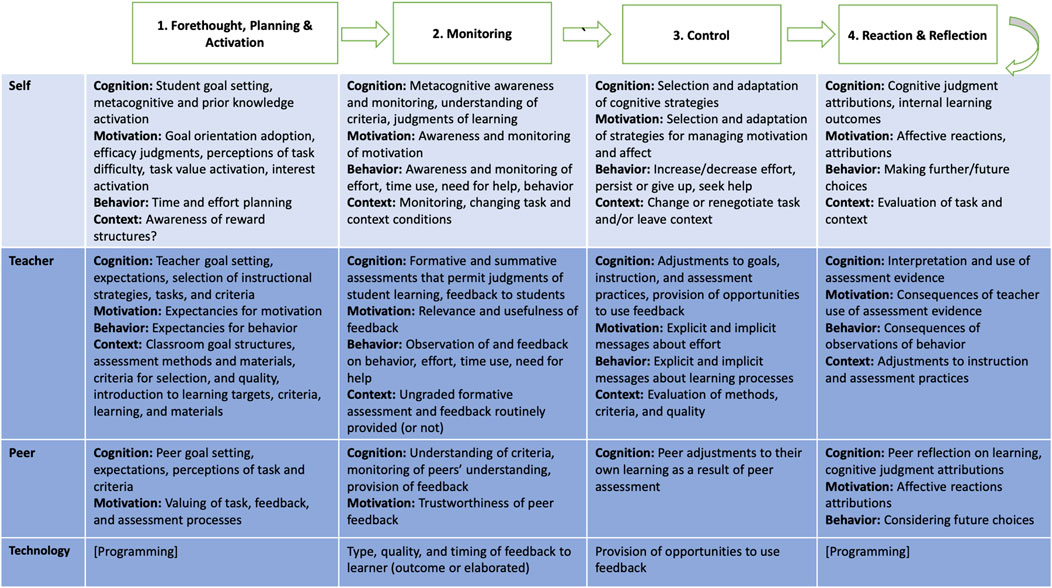

Many models represent SRL as unfolding in phases (Pintrich and Zusho, 2002; Wigfield et al., 2011; Winne, 2011) or as a cycle (Zimmerman, 2011). Phase views of SRL allow theorists to place cognitive, metacognitive, and motivational constructs into the sequence of events that occur as students self-regulate. A phase view of self-regulated learning affords a way to crosswalk the classroom assessment literature, which has mostly taken an event (Brookhart, 1997) or episode of learning (Wolf, 1993) point of view, and generally can be described as having three main phases, also cyclical in nature: 1) goal setting (where am I going?), 2) feedback (how am I going?), and 3) revision or adjustment (where to next?). For example, Pintrich and Zusho (2002) organized self-regulation into four phases and four areas. The four phases are 1) forethought, planning and activation, 2) monitoring, 3) control, and 4) reaction and reflection. The four areas that are regulated by learners as they move through the phases are 1) cognition, 2) motivation/affect, 3) behavior, and 4) context. Although the four phases represent a general sequence, there is no strong assumption that the phases are linear.

In order to represent the co-regulated nature of learning via classroom assessment (even metacognition is now seen as a socially mediated phenomenon; Cross, 2010), we expanded the Pintrich and Zusho (2002) framework to include multiple sources of influence in addition to the self: teachers, peers, and assessment tools, particularly technology-based tools (Figure 3). Although not explicitly represented in our two-dimensional figure, dynamic interactions between students, teachers, peers, and technologies are assumed.

FIGURE 3. Phases, Areas, and Sources of the Regulation of Learning. Note. Adapted from “The Development of Academic Self-Regulation: The Role of Cognitive and Motivational Factors” by P. Pintrich and A. Zusho, in A. Wigfield and J. S. Eccles (Eds.), Development of Achievement Motivation (p. 252), 2002, Academic Press. Copyright 2002 by Academic Press.

Some might urge us to go a step further and erase the distinctions between self- and other-regulation. Allal (2019), for example, recently maintained, “self-regulated learning does not exist as an independent entity. Even when students are working alone on a task, actively monitoring their own progress, using strategies to orient and adjust their progression, they are doing so in a context and with tools that are social and cultural constructions” (p. 10). We agree. Our review of recent literature, however, indicates that the distinction between the self and other sources of regulation is still the prevailing view. Because the majority of the studies in this review focus on SRL, we retain it as an entity worthy of study but also issue a call for more investigations of classroom assessment as co-regulation. Toward that end, we designed two research questions, the first of which reflects interest in classroom assessment involving multiple sources of influence on student learning and self-regulation (Figure 1). The second research question is informed by Figure 2, and guided a search for evidence of interactive, reciprocal co-regulation through classroom assessment.

1) Is there empirical evidence in support of the claim of classroom assessment as the regulation of learning by students, teachers, peers and assessment technologies in the areas of cognition, motivation, behavior, and context?

2) If so, does the research literature represent the interactive and reciprocal nature of co-regulation via assessment, as theorized?

In the remainder of this article, we bring together a collection of 94 studies to test a theory of classroom assessment as the co-regulation of learning. Our interpretation of the collection is based on a social-constructivist, transactional view of teaching and learning that makes the following assumptions (Vermunt and Verloop, 1999; Garrison and Archer, 2000; Pintrich, 2004):

1) Learners are active participants in the learning process.

2) Learners can regulate aspects of their own cognition, motivation, and behavior as well as some features of their environments.

3) Learning is also regulated by and co-regulated with others via transactions with teachers, peers, and curricular materials.

4) Regulatory activities are mediators between personal and contextual characteristics and achievement or performance.

5) Some type of goal, criterion, or standard is used to assess the success of the learning process.

6) Regulation via assessment is a matter of deliberate control intended to promote learning.

All literature searches were conducted using a title and abstract search procedure in the PsycINFO, Web of Science (WoS), and EBSCO (Academic Search Complete, Education Source, ERIC, Open Dissertations) databases. The time period was from 2016 to 2020 because our last review ended at 2016. All database searches were limited to peer-reviewed documents published in English. In order to capture classroom assessment operating as a mechanism of regulation of learning, we used the key terms in Table 1. Because we are interested in student learning in the classroom (face-to-face and online), studies were excluded if they focused on sports (not physical education), teacher development, or workforce training.

Searches with key terms in Table 1 were conducted from July 22, 2020, to August 5, 2020. Three rounds of screening were guided by two inclusion criteria: Each study must 1) address both classroom assessment and the regulation of learning, and 2) be empirical in nature and rigorous enough to produce credible interpretations of the results. A total of 94 studies were included in our review. Figure 4 summarizes the search and screening procedures.

In preparation for analysis, each study was classified on the basis of the following:

1) type of classroom assessment

2) author and year of publication

3) grade level of study participants (i.e., primary, middle school, high school, undergraduate, graduate)

4) number of participants

5) research design: The term “survey” was used to represent all forms of self-report instruments, including inventories and questionnaires.

6) aspects of regulation of learning (i.e., cognition, motivation/affect, behavior, context), phase (i.e., forethought, monitoring, control, reflection), and source (i.e., self, peer, teacher, technology)

7) brief summary of study findings

The studies were then coded in terms of the Area, Phase, and Source of regulation:

• Area: effects of (actual or perceived) CA on cognition, motivation, behavior, and context;

• Phase: effects of manipulations of forethought, monitoring, reflection, and combinations of them on regulation in one or more Areas; and

• Source: effects of teacher, self, peer, technology, and combinations on regulation in one or more Areas.

First, we identified the dependent variable (or equivalent, depending on study design) and coded that as the Area, or what was regulated. Most of the studies investigated the regulation of Cognition and/or Motivation, and a few investigated Behavior (e.g., choosing to do another essay). No studies were coded as Context. Then, we noted what was measured and/or manipulated (independent variable or equivalent, or correlates) and coded them according to Source and Phase. For example, if a study examined the effects of different types of teacher feedback on task performance (cognition) and motivation or motivated strategies (e.g., using the MSLQ), it would be coded as Cognition & Motivation: Teacher2. At least two coders coded 50% of the studies and discussed any differences until agreement was reached. The final collection of studies, their codes, and brief descriptions of each can be found in the Supplementary Materials.
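The coding scheme described above can be pictured as a small data structure. The following is only an illustrative sketch, not the authors' actual coding instrument: the class names, the `code()` helper, and the example record are hypothetical, and the Monitoring phase assigned to the example is an assumption made for illustration. Only the Area, Phase, and Source categories and the "Cognition & Motivation: Teacher" code format come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class Area(Enum):
    COGNITION = "Cognition"
    MOTIVATION = "Motivation"
    BEHAVIOR = "Behavior"
    CONTEXT = "Context"


class Phase(Enum):
    FORETHOUGHT = "Forethought"
    MONITORING = "Monitoring"
    CONTROL = "Control"
    REFLECTION = "Reflection"


class Source(Enum):
    SELF = "Self"
    PEER = "Peer"
    TEACHER = "Teacher"
    TECHNOLOGY = "Technology"


@dataclass(frozen=True)
class CodedStudy:
    """One study coded by Area (what was regulated), Phase, and Source."""
    label: str
    areas: frozenset
    phases: frozenset
    sources: frozenset

    def code(self) -> str:
        # Iterate over the enums so the parts appear in a fixed order.
        areas = " & ".join(a.value for a in Area if a in self.areas)
        sources = " & ".join(s.value for s in Source if s in self.sources)
        return f"{areas}: {sources}"


# Hypothetical record mirroring the teacher-feedback example in the text.
example = CodedStudy(
    label="hypothetical teacher-feedback study",
    areas=frozenset({Area.COGNITION, Area.MOTIVATION}),
    phases=frozenset({Phase.MONITORING}),  # assumed phase, for illustration
    sources=frozenset({Source.TEACHER}),
)
print(example.code())  # Cognition & Motivation: Teacher
```

Storing areas, phases, and sources as sets reflects the fact that a single study could receive more than one code on each dimension.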

Of the 94 studies in this collection, 41 were experiments or quasi-experimental, 24 were non-experimental (descriptive or correlational), 16 used qualitative methods, and two were meta-analyses (Figure 5). Of the 61 studies that used self-report surveys/questionnaires, 17 relied solely on surveys, while 44 employed surveys and additional quantitative and/or qualitative methods such as think-alouds, interviews, grades, or trace data.

Of the 94 studies in this collection, Cognition and Motivation were most studied, accounting for 84% of the studies (Figure 6). No studies were coded as having investigated Context. Behavior was only studied in combination with Cognition and/or Motivation (16%). In terms of phases, 52% of the studies focused on Monitoring, followed by 10% on Reflection, and 10% on Forethought and Monitoring in combination (Figure 7). As for sources (Figure 8), Teacher as the sole source represented the largest proportion of the studies (28%), followed closely by Self (24%), Peer (11%), and Technology (9%). Only 28% of the studies examined more than one source. This article focuses on the findings related to area: Findings regarding phases and sources will be reported elsewhere.
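As a quick arithmetic check on the source percentages reported above, the four single-source shares and the multiple-source share should together account for the whole collection. The short sketch below uses only the figures stated in the text; the variable names are ours.

```python
# Percentages of studies by source of regulation, as reported in the text.
single_source_pct = {"Teacher": 28, "Self": 24, "Peer": 11, "Technology": 9}
multi_source_pct = 28  # studies that examined more than one source

single_total = sum(single_source_pct.values())
print(single_total)                     # 72
print(single_total + multi_source_pct)  # 100

# Survey use: studies relying solely on surveys plus those combining
# surveys with other methods should equal the reported total of 61.
surveys_only, surveys_plus_other = 17, 44
print(surveys_only + surveys_plus_other)  # 61
```

The single-source categories cover 72% of the studies, which is consistent with the remaining 28% having examined more than one source.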

Is there Empirical Evidence in Support of the Claim of Classroom Assessment as the Regulation of Learning by Students, Teachers, Peers and Assessment Technologies in the Areas of Cognition, Motivation, Behavior, and Context?

As indicated by Figure 6, there is empirical evidence of classroom assessment, in its many forms, as regulating student cognition, motivation, and behavior but not context. Further, none of the studies had findings that contradicted the generalization that assessment is part of the regulation of learning. This is important because the studies were not true replications. Studies' designs, theoretical bases, and the constructs they investigated differed, so the argument that classroom assessment is part of the co-regulation of learning must be made on the basis of a body of somewhat disparate evidence. The fact that all roads seem to be leading to the same destination strengthens the argument. In the following sections we report on themes for each of the first three areas, and then speculate on the reasons for the lack of findings related to context.

Of the 60 studies focused on Cognition (including those coded as both Cognition and Motivation), two were meta-analyses (Panadero et al., 2017; Koenka et al., 2019), four used qualitative methods, four were case studies, six were descriptive, and 28 were experiments or quasi-experimental. Ten relied solely on self-report surveys/questionnaires, while five employed surveys and additional quantitative and/or qualitative methods such as think-alouds, interviews, grades, or trace data. The study by Panadero et al. (2020) is the only one that used a think-aloud protocol and conducted direct observation of behavior.

The collection reflects the full spectrum of classroom assessment practices, from summative to formative. Practices that were set in summative exams include post-exam reviews (or “wrappers”), item-level feedback, and error detection by students. Formative practices included teacher, peer and self-assessment, as well as comprehensive assessment-as-learning approaches that involved goal-setting, co-constructed criteria, portfolios, and feedback from the teacher, peers, and students themselves.

The measures of learning in the Cognition collection also represent a wide variety of PreK-20 curricula, such as performance on science exams (e.g., Andaya et al., 2017; others), writing skill (Farahian and Avarzamani, 2018), mathematical problem solving (Martin et al., 2017), reading comprehension (Schünemann et al., 2017), language learning (Saks and Leijen, 2019; Nederhand et al., 2020), project management (Ibarra-Sáiz et al., 2020), and discourse in the visual arts (Yang, 2019), to name just a few. Depending on the focus of the study, SRL was operationalized either narrowly, e.g., in terms of metacognitive accuracy regarding tests taken or problems solved (Callender et al., 2016; Nugteren et al., 2018; Nederhand et al., 2020), or broadly, to include person and strategic metacognitive awareness (Farahian and Avarzamani, 2018) or understanding and setting learning goals, adopting strategies to achieve goals, managing resources, extending effort, responding to feedback, and producing products (Xiao and Yang, 2019).

Some studies selected very specific self-regulatory moves, such as Fraile et al. (2017) who operationalized SRL in terms of learners comparing their own performance with an expert model, identifying successes and errors in their explanations, and asking questions about the meaning of the quality definitions on a rubric. Ibarra-Sáiz et al. (2020) also operationalized SRL rather idiosyncratically as analyzing one’s own and others' work, and learning from mistakes and how to help others. In contrast, the study by Trogden and Royal (2019) operationalized SRL more conventionally in terms of scores on the Metacognitive Awareness Inventory. Muñoz and Cruz (2016) examined individual students’ planning, monitoring and evaluating work on tasks done in small groups, while three studies examined self- and co-regulation in groups (Schünemann et al., 2017; Yang, 2019; Yang et al., 2020).

The studies in the Cognition collection generally support our claim that assessment is integral to the regulation of cognition and SRL. This is true of both types of studies that appear here most often: 1) exploratory, descriptive studies that examine the relationships between assessment, learning, and SRL, and 2) interventions that employ assessment to influence learning and/or SRL. For an example of the former, Yan (2020) modeled the characteristics of self-assessment practices at three SRL phases (preparatory, performance, and appraisal), as well as their relationships with academic achievement. The model suggested that for the graduate students in the sample, self-assessment was ongoing across the phases but less frequent during appraisal. Self-directed feedback seeking through monitoring at the performance phase was the strongest predictor of achievement, and a positive one. An example of an intervention that supports our claim is by Saks and Leijen (2019), who used digital learning diaries to guide undergraduate English language learners’ self-critical analysis of learning processes, which resulted in growth in description, evaluation, justification, dialogue, and transfer from the beginning to the end of their course.

The exception to the nearly uniform support for the notion of assessment as regulation is related to self-assessment item-level accuracy, which is generally low (e.g., Rivers et al., 2019) and difficult to improve, even with multiple forms of feedback (Raaijmakers et al., 2019). As a result, students tended to base task selection decisions on inaccurate self-assessments (Nugteren et al., 2018). However, the body of work in this area is infamously inconsistent. Drawing only on the studies in this Cognition collection, we see that exam-level accuracy improved with feedback and training in calibration that included psychological explanations of the phenomenon (Callender et al., 2016), and global and item-specific accuracy improved when children could compare test responses with a feedback standard and then restudy selections became more strategic (Van Loon and Roebers, 2017). Self-assessment scripts also increased accuracy (Zamora et al., 2018). In contrast, Maras et al. (2019) found no effect of accuracy feedback on judgments of accuracy by typical secondary students or students with Autism Spectrum Disorder.

It is tempting to propose that depth of processing might help explain the mixed results of calibration studies: If students simply estimate their grade and/or mental effort, which can require little mental processing, we might predict little improvement in accuracy as compared to when students are supported in thoughtfully reflecting on their grades or effort. The results of Nederhand et al. (2020) did not support that proposal, at least in their study. However, as argued elsewhere (Andrade, 2019), self-assessment accuracy might be the wrong problem to solve, given what we know about the small to medium effect sizes of self-assessment studies that do not focus on accuracy (Sitzmann and Ely, 2011; Brown and Harris, 2013; Li and Zhang, 2021). If strategic task selection is the goal, teach that. If content knowledge is the problem, teach that. If self-regulated learning is the objective, teach and assess SRL. A number of studies in the Cognition collection did just that.

While some studies assessed content learning (the traditional role of assessment), others required students to do tasks related to SRL which, since the tasks contributed to course grades (Colthorpe et al., 2018; Farahian and Avarzamani, 2018; Maras et al., 2019; Saks and Leijen, 2019; Trogden and Royal, 2019), effectively turned SRL into an assessment. Perhaps because “you get what you assess” (Resnick and Resnick, 1992, p. 59), the summative assessments were consistently associated with improvements in students' learning strategies, study behaviors, and metacognitive awareness, as well as content mastery.

There is also evidence that ungraded, formative feedback related to SRL is effective. Wallin and Adawi (2018) had four students use reflective diaries to probe SRL with some success. Muñoz and Cruz (2016) taught PreK teachers to provide feedback at the level of self-regulation, which resulted in more metacognition by the young children in their classrooms. Studies conducted in the computer-based Knowledge Forum context (Yang, 2019; Yang et al., 2020) explicitly scaffolded metacognition, self-, and co-regulation with reflective assessments, and saw increases in epistemic agency, goal-setting, planning and reflection. In these studies, SRL was not just the goal of assessment but also its object. We consider this a significant advancement of the field of classroom assessment (see also Bonner et al., 2021).

Fourteen of the 20 studies in the Motivation collection used self-report scales on a questionnaire to measure motivation constructs. Different instruments and different motivational constructs were used. Studies measured, for example, intrinsic and extrinsic motivation, goal orientations, self-efficacy, various kinds of self-regulation strategies, situational interest, and various kinds of attitudes, perceptions, and emotions. Three of the studies (Dolezal et al., 2018; Ghahari and Sedaghat, 2018; Cho, 2019) used student choice as an indicator of motivation. One of these studies used behavioral observation as an indicator (Cho, 2019): whether students chose to write a second essay. Two studies (Dolezal et al., 2018; Ghahari and Sedaghat, 2018) measured choice by survey: Both were studies of peer assessment and asked about what kinds of peer review students would prefer or what percent of exercises they would prefer to be peer reviewed. Three of the studies (Fletcher, 2016; Ismail et al., 2019; Schut et al., 2020) used thematic analysis of qualitative data to make inferences about student self-regulation, student agency, and whether students described an assessment method as motivating.

Twenty studies investigated the regulation of motivation. The studies in this group investigated activity in one or more of the phases of regulation from one or more sources, consistent with our focus on co-regulation and socio-cultural theories of learning, in which multiple sources interact to accomplish the regulation of learning. As with the cognition area, the findings from this set of studies of the regulation of motivation give initial support to the claim that classroom assessment is part of the co-regulation of learning. Classroom formative assessment processes help students know where they are going, where they are now, and where they go next in their learning. These three questions, sometimes called the formative learning cycle, are a practice-oriented rendering of the phases of regulation, in which students begin by setting a goal, then monitor and adjust their progress toward it, and finally reflect on the results.

Most of the studies investigated effects of various aspects of classroom assessment on motivation in general, or on self-regulation in general, not on motivation to do one particular task or assignment or to reach one particular learning goal. For example, the influence of portfolio use on motivation (e.g., Baas et al., 2020), the effect of written self-assessments on goal orientation and interest (Bernacki et al., 2016), the effects of self- and/or peer assessment on motivation (Meusen-Beekman et al., 2016; David et al., 2018), the effect of in-class diagnostic assessment and teacher scaffolding on motivation (Gan et al., 2019), the effect of metacognitive feedback using learning analytics on motivation (Karaoglan Yilmaz and Yilmaz, 2020), the effects of video and teacher feedback on motivation (Roure et al., 2019), the effects of teacher and peer feedback on motivation (Ruegg, 2018), the effects of emotional and motivational feedback on motivation (Sarsar, 2017), the effects of grades on motivation (Vaessen et al., 2017; Weidinger et al., 2017), and the effects of writing multiple choice questions and feedback on motivation (Yu et al., 2018) have been studied in this general way.

Studies of the regulation of motivation in general show that classroom assessment tools and processes from one or more sources (self, teacher, peer, technology), used during one or more phases of the formative learning cycle, are associated with increased motivation. Two important themes are evident. First, active student involvement in assessment scaffolds the regulation of motivation. Second, as expected in any study of motivation, students display individual differences in motivation.

We illustrate the first theme with a comparison of four studies: formative assessment strategies, especially those that involved students actively and used scaffolding, were associated with enhancement of a variety of motivational variables that are in play as students regulate their learning.

1) In a sample of Dutch students in 4th through 6th grade, Baas et al. (2020) found that experiencing scaffolding (e.g., “My teacher asks questions that help me gain understanding of the subject matter”) affected intrinsic regulation (intrinsically-motivated regulation, Deci and Ryan, 2000) beyond the effects of demographics like gender (girls were more intrinsically motivated than boys) and age (older students were less intrinsically motivated). Self-determination theory was the basis for this study and its measures.

2) In a sample of US students in 7th and 8th grade (Bernacki et al., 2016), students who wrote weekly self-evaluations carefully scaffolded with prompts that articulated goal development and a mastery orientation to learning had higher mastery goal orientations and greater situational interest in science topics compared with students who wrote weekly lesson summaries. Achievement goal theory was the basis for this study and its measures.

3) Using qualitative methods, Fletcher (2016) investigated the effects of formative assessment emphasizing student agency with students in years two, four, and six (ages 7, 9, and 11) in Australia. Students used planning templates organized according to Zimmerman’s three phases of self-regulation (forethought, performance, and self-reflection) to plan their writing. For all students, but especially those whom their teachers identified as low-achievers or with poor motivation, this process was related to greater motivation, persistence, effort, and pride in their work. Self-regulation theory was the basis for this study and its measures.

4) Using portfolios with 69 secondary students in an English writing classroom, Mak and Wong (2018) observed that students who engaged in the four phases of self-regulation, with their teacher’s support, actively monitored their goals and regulated their effort, as evidenced by one student’s remark, “the goals are like giving you a blueprint and direction and you’ll try to achieve your goals” (p. 58).

These four studies came from different countries, invoked different theories, and used different methods, yet all four showed that formative assessment practices scaffolding student involvement were associated with increased motivation.

Several studies support the second theme—that the development of motivation for learning and its use in regulating learning is not uniform among all students but displays individual differences (Weidinger et al., 2017). Studies identified several individual characteristics that partly account for those individual differences, including self-concept, achievement, and experience. Regarding self-concept and achievement, Vaessen et al. (2017) found that, for a sample of Dutch undergraduate students, most students valued graded frequent assessments as a study motivator. However, some students perceived either more positive (higher self-confidence and less stress) or negative (lower self-confidence and more stress) effects. Students with high grades perceived the effects of frequent graded assessments to be more positive, and students with lower grades perceived the effects to be more negative. That is, individual differences were operating. The assessments in the Vaessen study were graded (summative), which provided the opportunity for differences in self-concept in the face of evaluation to stand out and high achievers to benefit most.

In studies where assessments were formative, the effects of individual differences in achievement were sometimes the opposite. As reported above, in a study of formative assessment among younger students, Fletcher (2016) found that lower achievers became more motivated. Nikou and Economides (2016) also found greater increases in motivation for lower achievers in a secondary physics class. Yet Koenka et al. (2019) reported that students who were less academically successful were less motivated. Individual differences in self-concept and self-efficacy may be conditional on the summative/formative distinction, or at least the classroom consequences of this distinction.

Regarding individual differences and assessment experience, two different studies showed that perceptions of peer feedback changed as students got more experience with it. Joh (2019) found that for a sample of Korean college students, extroverts expected to get more from peer feedback than did introverts, but after experiencing peer feedback, these differences disappeared and positive perceptions developed. Ghahari and Sedaghat (2018) similarly found that college students' desire for peer comments on their writing grew as they experienced peer feedback, and that feelings of jealousy and resentment toward peers gradually decreased as desire to make progress in their writing increased with experience.

We can tentatively conclude, then, that studies in the motivation area support the theme that classroom assessment is part of the regulation of learning. Some of the studies in this area have demonstrated that classroom formative assessment strategies, when carefully applied, improve motivation. Others have demonstrated that even classroom summative (grading) strategies can improve motivation when they give students useful information. In other words, students can and do extract formative information for the regulation of motivation from many types of classroom assessment.

The 15 studies coded as Behavior measured both adaptive and maladaptive behaviors. Adaptive behaviors include revision, information seeking (Llorens et al., 2016), help seeking (Fletcher, 2018), analyzing and correcting errors, doing supplementary exercises, annotating text, selecting and using study strategies (Gezer-Templeton et al., 2017), and referring to standards (Lerdpornkulrat et al., 2019). A study by Chaktsiris and Southworth (2019) serves as a reminder that feedback does not always influence behavior as intended: They found that peer review led to little revision of student work from draft to final paper but students found the process useful in terms of the development of self-discipline that helped with time management and in overcoming anxiety.

Maladaptive (or unsanctioned) behaviors include cheating, not completing tasks, and avoiding help and feedback (Harris et al., 2018). When students in Harris et al.’s study recounted maladaptive behaviors related to assessment, they had either well-being goals or fear of recourse from the teacher, system, parents, or peers for poor performance.

The Behavior studies generally focused on behaviors as outcomes, rather than the object of regulation. No studies explicitly examined the co-regulation of behavior. Naturally, however, there are elements of co-regulation that could be studied. For example, this collection echoes scholarship that shows that behaviors such as revision, error correction, doing supplementary exercises, and making annotations depend on support from teachers and/or peers, and on having opportunities and time to take such actions (e.g., Carless, 2019).

Contextual factors have been shown to influence assessment beliefs and practices across micro, meso, and macro levels (Teasdale et al., 2000; Davison, 2004; Carless, 2011a; Lam, 2016; Ma, 2018; Vogt et al., 2020). However, none of the 94 studies explicitly studied the area of Context, which Pintrich and Zusho (2002) referred to in terms of academic tasks, reward structures, instructional methods, and instructor behaviors (Figure 3). Classroom context can mean many other things as well, such as the classroom climate, the mode of instruction (e.g., face to face or online), the type of program (e.g., Common Core State Standards, International Baccalaureate programs), and the local culture.

Regarding the intellectual, social, and emotional climate of the classroom, some research shows that many of the variables listed in the Context area in Figure 3 are at least partly determined by some of the variables listed in the Cognition or Motivation areas (Ames, 1992). When teachers select instructional and assessment strategies, share expectations for motivation, use formative assessment strategies, give feedback, and so on, they are creating classroom climates that are either evaluative (where students are focused on getting the right answer and sometimes on out-performing others) or learning-focused (where students are focused on learning and understanding). Thus, some of the studies in this review implicate context, but they did not specifically measure it, for example by using a classroom climate survey.

Given that self-regulatory skills are acquired through social interactions such as feedback (McInerney and King, 2018), socially mediated and culturally laden assessment practices are influences on all aspects of students' regulation of learning. This influence is well documented for attempts to implement Anglophone formative assessment in Confucian Heritage Culture settings (CHC, e.g., Hong Kong, China) (Carless, 2011a, Carless, 2011b; Chen et al., 2013; Lam, 2016; Cookson, 2017). For example, Zhu and Mok (2018) explained their null findings in terms of culture: “Hong Kong students are, in general, low in self-regulation compared with other countries (Organisation for Economic Co-operation and Development, 2014). As such, the sampling in this study might not have captured enough students with regulation capacities for relationships among variables to be manifested in the analyses” (p. 14). But classroom culture can trump national culture, as when students in a high school in Central China tended to consider test follow-up lessons helpful “when they were actively engaged (e.g., through thinking and reflecting) and less helpful when the teachers focused solely on the correct answers” (Xiao, 2017, p. 10). We know that culture influences assessment-related beliefs, practices, and outcomes, but the studies in this collection did not examine the ways in which classroom assessment is used (intentionally or not) to regulate culture.

Does the Research Literature Represent the Interactive and Reciprocal Nature of Co-regulation via Assessment, as Theorized?

The answer to this question is a qualified no. Most of the studies focused on individual students’ SRL but occasionally we saw a hint of reciprocity in co-regulation, such as when Martin et al. (2017) reported that teachers read students’ reflections, conferred with them for clarification, and then adjusted instruction as needed. Similarly, Crimmins et al. (2016) reported that the iterative, dialogic feedback processes they studied allowed three of twelve teachers to recognize the value of students’ feedback to them: “Feedback worked both ways, students want to share too” and “it is a great opportunity to receive direct feedback from students on our marking” (p. 147). In each case, feedback from teachers to students fed back (so to speak) feedback for the teachers: There are points on both ends of the arrow.

Studies of peer feedback are perhaps the most fertile ground for these hints of reciprocity in co-regulation because, by definition, students must interact with each other. It is not a long leap from student interaction to noticing students having an influence on each other (e.g., Hsia et al., 2016; Moneypenny et al., 2018). Crimmins et al. (2016) had an unexpected finding regarding peer co-regulation: Several focus group participants noted that class discussions based on feedback reflection created an opportunity for students to discuss their areas for improvement and support each other in addressing them by monitoring each other’s work, asking questions, and recommending strategies, which encouraged them to seek help. Gikandi and Morrow’s (2016) case study of 16 teacher education students intentionally focused on peer interactions and found that asynchronous discussions enabled students to review others’ thinking and compose ideas to offer feedback. Gikandi and Morrow reported that assessment guidelines and rubrics supported monitoring of peers' understandings and progress—a clear but rare reference to assessment-driven co-regulation between students. Similarly, without making reference to CoRL, Miihkinen and Virtanen (2018) observed that the use of a rubric in a peer assessment activity allowed undergraduate students to regulate their efforts to “put more emphasis on the most useful issues that can be taken into discussions to help others,” “really thought what kind of feedback would be useful and constructive,” and “prepare for the opponent work with care and to take part in discussions” (pp. 21-22).

Several studies have more strongly implied characteristics of co-regulation. Hawe and Dixon (2017), for example, described a comprehensive use of assessment that, by its nature, scaffolds the co-regulation of learning by teachers and students via the five assessment for learning (AfL) strategies:

• promotion of student understanding about the goal(s) of learning and what constitutes expected performance;

• the engineering of effective discussion and activities, including assessment tasks that elicit evidence of learning;

• generation of feedback (external and internal) that moves learning forward;

• activation of students as learning resources for one another including peer review and feedback;

• activation of student ownership over and responsibility for their learning (p. 2).

Hawe and Dixon’s qualitative analyses of interviews and classroom artifacts suggest that, “the full impact of AfL as a catalyst for self-regulated learning was realised in the cumulative and recursive effect these strategies had on students' thinking, actions and feelings” (p. 1). The abundant interactions they described about learning goals, discussions, activities, and feedback from multiple sources on evidence of learning might also be analyzed in terms of co-regulation, with or without an eye for effects on self-regulation.

A study by Schünemann et al. (2017) also lends itself to analysis in terms of co-regulation. They embedded peer feedback and SRL support into reciprocal teaching (RT) methods. RT is a natural context for co-regulation because it involves students working together in fixed small groups and giving each other both task-oriented and content- or performance-oriented feedback. Task-oriented feedback is a clear example of co-regulation, as described by Schünemann et al., because it involves “providing team members with feedback about the quality of their strategy-related behavior and stimulating them to correct and refine the administration of the respective comprehension strategy” (p. 402). Task-oriented feedback informed students about their strategy application and influenced knowledge of and decisions about strategy use, which is co-regulated learning.

Schünemann et al. (2017) note that their research and the field more broadly is now defining the role of the social context of SRL. Like Hadwin et al. (2011), however, they maintain that individual regulation is the ultimate goal, rather than co-regulation: “Characteristic for co-regulation is that it is always aimed at a transition towards individual self-regulation or the coordination of individual self-regulatory activities in a collaborative group environment” (p. 397). In contrast, Yang and others (Yang, 2019; Yang et al., 2020), who examined reflective assessment in the context of the web-based Knowledge Forum, take the stance that co-regulation itself is the aim:

Whereas prior research on reflective assessment has focused on individuals, reflective assessment in this study has a richer dimension, as it is extended to collaborative assessment in the context of a knowledge-building community. Not everyone in the community needs to be highly metacognitive. Students can scaffold each other’s metacognitive development through collective work and modelling when conducting reflective assessment. For example, they can help others to engage in metacognitive activities by asking questions, inviting explanations, or monitoring and reflecting on group growth in a community context (p. 4).

In Knowledge Forum, reflective assessment scaffolds co-regulation via analytic tools that provide evidence of how well students are doing in terms of learning processes and products. As a result, the low-achieving secondary visual arts students in Yang's (2019) study learned to reflect on their learning process and products, make further learning plans, and improve their collective ideas and knowledge. In the 2020 study, Yang et al. had undergraduate science students collectively use the analytic tools to develop “shared epistemic agency,” which is a “capacity that enables groups to deliberately carry out collaborative, knowledge-driven activities with the aim of creating shared knowledge objects” (p. 2). Assessment in Knowledge Forum is the most direct example in this collection of studies of the interactive, reciprocal co-regulation of learning symbolized in Figure 2. However, we acknowledge that it is difficult to distinguish between assessment and pedagogy in environments like Knowledge Forum. Whether or not it is important to distinguish between assessment and pedagogy is a debate (see Brown, 2021) we will not enter into here. In the interest of producing research that enriches the field, however, we encourage researchers to carefully define assessment in the context of their studies, and to ensure that assessment-related variables can be documented and measured.

This systematic review reveals that there is abundant empirical evidence in support of the claim of classroom assessment as the regulation of learning by students, teachers, peers and assessment technologies in the areas of cognition, motivation, and behavior but not context. The lack of studies of context is likely an artifact of our coding scheme. If context is defined as the academic tasks, reward structures, instructional methods, and instructor behaviors (Pintrich and Zusho, 2002), what is regulated as part of the assessment process that is not accounted for by the cognition, motivation, and behavior areas?

In contrast, this review highlights the lack of research on the interactive and reciprocal nature of co-regulation via assessment, as theorized. Although many believe that learning is situated in a complex environment with joint influences, the studies in this review tend to focus on one-way effects (as in Figure 1) from one source, often the teacher. Dawson et al. (2018) call that kind of assessment Feedback Mark 0, “Conventional–teachers provide comments without monitoring effects.” We propose more studies of what they describe as Feedback Mark 2: “Participatory–both students and teachers have the role of monitoring and responding to effects” (p. 2). As such studies emerge, we will better understand the distinctions between the types of regulation identified by Panadero and Järvelä (2015), with teacher-directed assessment as “an unbalanced regulation of learning usually known as co-regulation in which one or more group members regulate other member’s activity,” and student-centered assessment as “a more balanced approach… in which [the group members] jointly regulate their shared activity” (p. 10).

We believe that many contemporary studies of assessment and self-regulation could be studies of interactive, reciprocal co-regulation; it is just a matter of framing and data collection. Designing research related to co-regulation would mean collecting data on the effects of assessment processes on all parties, not on just individual students' learning and SRL, and making self- and co-regulation the object of classroom assessment, rather than just the measured outcome of a study.

The quality of research on assessment-driven co-regulation will be highest in studies that address four limitations of the extant research by doing the following: 1) collecting data on students’ goals and their effects on 2) the uptake of feedback, 3) the qualities of which must be examined and reported. The fourth limitation discussed below concerns the perennial problems associated with self-report data.

One problem to avoid in research on assessment-driven co-regulation is the assumption that regulation is always done in the service of the teacher’s goals. Boekaerts (1997) pointed out long ago that student self-regulation can be oriented toward very different goals: Growth goals related to learning and academic achievement, or well-being goals related to psychological and social safety. Interviews of students conducted by Harris et al. (2018) remind us that they “do not always act in the growth-oriented ways that educators envision”:

In real-world classroom situations, assessment processes can elicit behaviours that are more ego-protective than growth-oriented. Resistance to teacher expectations in assessment can arise from the individual’s need to protect his or her own identity or ego within the psychosocial context of the classroom. In addition, resistance can arise from strategic choices learners make to cope with competing demands on their time and resources. Thus, students may exercise their agency by not following assessment expectations or protocols (e.g. lying, cheating, or failing to give their best effort).... While the adaptive potential of student agency within assessment is widely discussed, examination of potentially maladaptive forms of assessment agency is largely missing from the literature. (p. 102)

Mistaking regulation motivated by well-being goals as something other than regulation is likely to be particularly consequential in studies of co-regulation, which often involve interactions between people. Researchers will have to be careful to collect data on students' goals, perhaps with interviews and focus groups.

A related limitation to address in future research is about the uptake of feedback, which is not always interpreted or used as intended (Brown et al., 2016; Moore and MacArthur, 2016). Winstone et al. (2017) proposed that, in addition to characteristics of the feedback itself, four recipience processes—self-appraisal, assessment literacy, goal setting and self-regulation, and engagement and motivation—affect students' proactive engagement with the feedback process. Feedback is feedback, not a mandate, and so it is not enough to ensure that feedback is accurately interpreted: We must understand how feedback interacts with students' individual and group goals in order to determine whether or not regulation has occurred.

It goes without saying that uptake of feedback is related to its quality, but the quality of feedback is rarely reported or even mentioned in the 94 studies in this collection, with the exceptions of Koenka et al. (2019), Panadero et al. (2020), and Schünemann et al. (2017). Feedback is a central concern of the sample, so the quality and characteristics of the feedback under investigation are important. This was recently demonstrated by Hattie et al. (2021), who found that “where to next” feedback led to the greatest gains from the first to the final submission of students' essays.

Finally, as we have already pointed out, this collection relies heavily on self-report surveys and questionnaires, some of questionable quality. Although self-report is a valid way to collect data in the social sciences (Chan, 2010), our concern is that an overreliance on self-report, unaided by other types of measures, will produce data that suffer from social response bias and/or survey-takers' misunderstandings of items. A few examples will illustrate the point. Fraile et al. (2017), who wisely used think-aloud protocols to triangulate their data, found that students who co-created rubrics had higher levels of learning self-regulation as measured through think-aloud protocols, whereas the results from the self-reported self-regulation and self-efficacy questionnaires did not show significant differences between groups, leaving them to speculate about the reasons for the different results. Another example is from the meta-analysis by Panadero et al. (2017), which revealed a larger effect of self-assessment when SRL was measured via qualitative data as compared to questionnaires.

Why did different measures produce conflicting results? Did students misunderstand the survey items, or did the qualitative methods elicit social response bias? We have some evidence both are in play. Fukuda et al. (2020) indicated that student-reported instances of formative assessment in their classes and SRL were inflated, likely due to misunderstandings of the survey questions and/or social response bias. Similarly, Gezer-Templeton et al. (2017) observed that when “students were asked to identify study strategies they used in a free-response format, rather than selecting study strategies from a checklist, student responses lacked standardization. For instance, there were students who attended review sessions and/or came to the office hours, but did not include these practices as study strategies in their [free-responses]” (p. 31). In this case, self-report data was shown to be inaccurate by comparison to behavioral data.

We suspect that misunderstanding of survey questions might be common, given some of the items used in surveys. For example, “I modify my learning methods to meet the needs of a certain subject” was used in a culture in which the authors acknowledged that teachers tended not to support SRL and where PISA results indicated that students had a low capacity for SRL (Zhu and Mok, 2018, p. 9): Would those students know what it means to modify their learning methods to meet the needs of a certain subject, especially pre-intervention? Questions like this one point to the need to collect evidence of the validity of surveys, as well as data related to actual student behaviors. Muñoz and Cruz (2016) videotaped teacher-student interactions in PreK classrooms. Not surprisingly, they were rewarded with very rich data about how teacher feedback on self-regulation promoted students' planning, monitoring, and evaluation of their own work.

Multiple measures and methods can also ameliorate the limitation of self-report surveys in capturing data on the frequency of assessment or SRL events, rather than their quality. Gašević et al. (2017) emphasize the importance of attention to quality of regulation: “learners do not increase their usage of a newly acquired learning strategy, but rather apply this strategy in a more effective manner. In other words, when a strategy is effectively applied, the quantity remains consistent while the quality of the learning product increases” (p. 209).

Even self-report measures can become richer sources of data when they are used more than once or twice during a study. SRL is not static, especially (and hopefully) in the presence of an intervention, so a score on a one-time, retrospective questionnaire cannot capture the dynamic nature of regulation. Findings from several studies in the collection point toward the need to measure regulation during different phases: the meta-analysis by Koenka et al. (2019) indicated that the effects of performance feedback on motivation might fluctuate based on the stage at which they are measured; Panadero et al. (2020) found that secondary students adjusted their self-assessment strategies and criteria to using feedback as their main strategies after receiving feedback; and Chien and colleagues’ quasi-experimental study (2020) with high school students showed no relationship between irrelevant feedback and students' performance in the beginning stage of peer-assessment, but a significantly negative relationship was found at a later stage.

Studies that draw on trace data (e.g., Llorens et al., 2016; Lin, 2018) avoid the problem of self-report and address but do not resolve the issue of quantity versus quality. Lin (2018) addressed the issue of performance quality by using software that counted the number of words in students' posts, a proxy for quality with some evidence of validity. Llorens et al. (2016) used trace data to document and provide feedback on students' self-regulation during reading. Given the complexity of investigating assessment as the co-regulation of learning, we recommend the use of multiple, rigorous measures, particularly those that capture student thinking, learning, and co-regulating as it occurs. Because large sample sizes are often out of reach, we encourage the use of single-subject research designs (Lobo et al., 2017).

With this review, we have made a case for regulation as the defining feature of classroom assessment, particularly formative assessment (Allal, 2019). In effect, this means that the formative learning cycle—the questions “Where am I going? Where am I now? Where to next?” (Sadler, 1989; Hattie and Timperley, 2007)—describes the regulation of learning in action during teaching and learning. Because classroom assessment involves sources in addition to the self (teachers, peers, classroom instructional and assessment materials), classroom assessment is central to the co-regulation of learning.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

HA and SB contributed to the conception and design of the review. EY conducted the search of the literature. HA, SB and EY coded the studies and wrote summaries. HA wrote the first draft of the manuscript. SB wrote sections of the manuscript. All authors contributed to manuscript revision and read and approved of the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2021.751168/full#supplementary-material

Adie, L. E., Willis, J., and Van der Kleij, F. M. (2018). Diverse Perspectives on Student Agency in Classroom Assessment. Aust. Educ. Res. 45 (1), 1–12. doi:10.1007/s13384-018-0262-2

Agricola, B. T., Prins, F. J., and Sluijsmans, D. M. A. (2020). Impact of Feedback Request Forms and Verbal Feedback on Higher Education Students' Feedback Perception, Self-Efficacy, and Motivation. Assess. Educ. Principles, Pol. Pract. 27 (1), 6–25. doi:10.1080/0969594X.2019.1688764

Allal, L. (2019). Assessment and the Co-regulation of Learning in the Classroom. Assess. Educ. Principles, Pol. Pract. 27 (4), 332–349. doi:10.1080/0969594X.2019.1609411

Allal, L. (2010). “Assessment and the Regulation of Learning,” in International Encyclopedia of Education. Editor E. B. P. Peterson (Elsevier), 3, 348–352. doi:10.1016/b978-0-08-044894-7.00362-6

Allal, L. (2016). “The Co-regulation of Student Learning in an Assessment for Learning Culture,” in Assessment for Learning: Meeting the Challenge of Implementation. Editors D. Laveault, and L. Allal (Springer), 4, 259–273. doi:10.1007/978-3-319-39211-0_15

Ames, C. (1992). Classrooms: Goals, Structures, and Student Motivation. J. Educ. Psychol. 84 (3), 261–271. doi:10.1037/0022-0663.84.3.261

Andaya, B., Andaya, L., Reyes, S. T., Diaz, R. E., and McDonald, K. K. (2017). The Demise of the Malay Entrepôt State, 1699-1819. J. Coll. Sci. Teach. 46 (4), 84–121. doi:10.1057/978-1-137-60515-3_4

Andrade, H., and Brookhart, S. M. (2016). “The Role of Classroom Assessment in Supporting Self-Regulated Learning,” in Assessment for Learning: Meeting the Challenge of Implementation. Editors D. Laveault, and L. Allal (Springer), 293–309. doi:10.1007/978-3-319-39211-0_17

Andrade, H. L. (2019). A Critical Review of Research on Student Self-Assessment. Front. Educ. 4. doi:10.3389/feduc.2019.00087

Andrade, H. L., and Brookhart, S. M. (2019). Classroom Assessment as the Co-regulation of Learning. Assess. Educ. Principles, Pol. Pract. 27 (4), 350–372. doi:10.1080/0969594X.2019.1571992

Andrade, H. (2010). “Students as the Definitive Source of Formative Assessment: Academic Self-Assessment and the Self-Regulation of Learning,” in Handbook of Formative Assessment. Editors H. Andrade, and G. Cizek (New York, NY: Routledge), 90–105.

Baas, D., Vermeulen, M., Castelijns, J., Martens, R., and Segers, M. (2020). Portfolios as a Tool for AfL and Student Motivation: Are They Related? Assess. Educ. Principles, Pol. Pract. 27 (4), 444–462. doi:10.1080/0969594X.2019.1653824

Bernacki, M., Nokes-Malach, T., Richey, J. E., and Belenky, D. M. (2016). Science Diaries: A Brief Writing Intervention to Improve Motivation to Learn Science. Educ. Psychol. 36 (1), 26–46. doi:10.1080/01443410.2014.895293

Boekaerts, M. (1997). Self-regulated Learning: A New Concept Embraced by Researchers, Policy Makers, Educators, Teachers, and Students. Learn. Instruction 7 (2), 161–186. doi:10.1016/S0959-4752(96)00015-1

Bonner, S., Chen, P., Jones, K., and Milonovich, B. (2021). Formative Assessment of Computational Thinking: Cognitive and Metacognitive Processes. Appl. Meas. Edu. 34 (1), 27–45. doi:10.1080/08957347.2020.1835912

Bransford, J. D., Brown, A. L., and Cocking, R. R. (2000). How People Learn: Brain, Mind, Experience, and School. Washington, DC: National Academies Press.

Braund, H., and DeLuca, C. (2018). Elementary Students as Active Agents in Their Learning: An Empirical Study of the Connections between Assessment Practices and Student Metacognition. Aust. Educ. Res. 45 (1), 65–85. doi:10.1007/s13384-018-0265-z

Brookhart, S. M. (1997). A Theoretical Framework for the Role of Classroom Assessment in Motivating Student Effort and Achievement. Appl. Meas. Edu. 10 (2), 161–180. doi:10.1207/s15324818ame1002_4

Brookhart, S. M. (2001). Successful Students' Formative and Summative Uses of Assessment Information. Assess. Educ. Principles, Pol. Pract. 8 (2), 153–169. doi:10.1080/09695940123775

Brown, G. (2021). Responding to Assessment for Learning. New Zealand Annual Review of Education 26, 18–28. doi:10.26686/nzaroe.v26.6854

Brown, G. T., Peterson, E. R., and Yao, E. S. (2016). Student Conceptions of Feedback: Impact on Self-Regulation, Self-Efficacy, and Academic Achievement. Br. J. Educ. Psychol. 86 (4), 606–629. doi:10.1111/bjep.12126

Brown, G. T., and Harris, L. R. (2013). “Student Self-Assessment,” in SAGE Handbook of Research on Classroom Assessment. Editor J. H. McMillan (Los Angeles: SAGE), 367–393.

Burke Moneypenny, D., Evans, M., and Kraha, A. (2018). Student Perceptions of and Attitudes toward Peer Review. Am. J. Distance Edu. 32 (4), 236–247. doi:10.1080/08923647.2018.1509425

Çakir, R., Korkmaz, Ö., Bacanak, A., and Arslan, Ö. (2016). An Exploration of the Relationship between Students’ Preferences for Formative Feedback and Self-Regulated Learning Skills. Malaysian Online J. Educ. Sci. 4 (4), 14–30.

Callender, A. A., Franco-Watkins, A. M., and Roberts, A. S. (2016). Improving Metacognition in the Classroom through Instruction, Training, and Feedback. Metacognition Learn. 11 (2), 215–235. doi:10.1007/s11409-015-9142-6

Carless, D. (2019). Feedback Loops and the Longer-Term: Towards Feedback Spirals. Assess. Eval. Higher Edu. 44 (5), 705–714. doi:10.1080/02602938.2018.1531108

Carless, D. R. (2011a). From Testing to Productive Student Learning: Implementing Formative Assessment in Confucian-Heritage Settings. New York, NY: Routledge. doi:10.4324/9780203128213

Carless, D. R. (2011b). “Reconfiguring Assessment to Promote Productive Student Learning,” in New Directions: Assessment and Evaluation – A Collection of Papers (East Asia). Editor P. Powell-Davies (London, United Kingdom: British Council), 51–56.

Chaktsiris, M. G., and Southworth, J. (2019). Thinking beyond Writing Development in Peer Review. Canadian Journal for the Scholarship of Teaching and Learning 10 (1). doi:10.5206/cjsotl-rcacea.2019.1.8005

Chan, D. (2010). “So Why Ask Me? Are Self-Report Data Really that Bad?” in Statistical and Methodological Myths and Urban Legends. Editors C. E. Lance, and R. J. Vandenberg (New York, NY: Routledge), 329–356.

Cheah, S., and Li, S. (2020). The Effect of Structured Feedback on Performance: The Role of Attitude and Perceived Usefulness. Sustainability 12 (5), 2101. doi:10.3390/su12052101

Chen, Q., Kettle, M., Klenowski, V., and May, L. (2013). Interpretations of Formative Assessment in the Teaching of English at Two Chinese Universities: A Sociocultural Perspective. Assess. Eval. Higher Edu. 38 (7), 831–846. doi:10.1080/02602938.2012.726963

Chien, S.-Y., Hwang, G.-J., and Jong, M. S.-Y. (2020). Effects of Peer Assessment within the Context of Spherical Video-Based Virtual Reality on EFL Students' English-Speaking Performance and Learning Perceptions. Comput. Edu. 146, 103751. doi:10.1016/j.compedu.2019.103751

Cho, K. W. (2019). Exploring the Dark Side of Exposure to Peer Excellence Among Traditional and Nontraditional College Students. Learn. Individual Differences 73, 52–58. doi:10.1016/j.lindif.2019.05.001

Colthorpe, K., Sharifirad, T., Ainscough, L., Anderson, S., and Zimbardi, K. (2018). Prompting Undergraduate Students' Metacognition of Learning: Implementing 'Meta-Learning' Assessment Tasks in the Biomedical Sciences. Assess. Eval. Higher Edu. 43 (2), 272–285. doi:10.1080/02602938.2017.1334872

Cookson, C. (2017). Voices from the East and West: Congruence on the Primary Purpose of Tutor Feedback in Higher Education. Assess. Eval. Higher Edu. 42 (7), 1168–1179. doi:10.1080/02602938.2016.1236184

Crimmins, G., Nash, G., Oprescu, F., Liebergreen, M., Turley, J., Bond, R., et al. (2016). A Written, Reflective and Dialogic Strategy for Assessment Feedback that Can Enhance Student/Teacher Relationships. Assess. Eval. Higher Edu. 41 (1), 141–153. doi:10.1080/02602938.2014.986644

Cross, J. (2010). Raising L2 Listeners' Metacognitive Awareness: a Sociocultural Theory Perspective. Lang. Awareness 19 (4), 281–297. doi:10.1080/09658416.2010.519033

Dann, R. (2014). Assessment as Learning: Blurring the Boundaries of Assessment and Learning for Theory, Policy and Practice. Assess. Educ. Principles, Pol. Pract. 21 (2), 149–166. doi:10.1080/0969594X.2014.898128

David, L. C. G., Marinas, F. S., and Torres, D. S. (2018). “The Impact of Final Term Exam Exemption Policy: Case Study at MAAP,” in IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Wollongong, NSW, December 4–7, 2018, 955. doi:10.1109/TALE.2018.8615448

Davison, C. (2004). The Contradictory Culture of Teacher-Based Assessment: ESL Teacher Assessment Practices in Australian and Hong Kong Secondary Schools. Lang. Test. 21 (3), 305–334. doi:10.1191/0265532204lt286oa

Dawson, P., Henderson, M., Ryan, T., Mahoney, P., Boud, D., Phillips, M., et al. (2018). “Technology and Feedback Design,” in Learning, Design and Technology: An International Compendium of Theory, Research, Practice and Policy. Editors J. M. Spector, B. B. Lockee, and M. D. Childress (Springer), 1–45. doi:10.1007/978-3-319-17727-4_124-1

Deci, E. L., and Ryan, R. M. (2000). The "What" and "Why" of Goal Pursuits: Human Needs and the Self-Determination of Behavior. Psychol. Inq. 11 (4), 227–268. doi:10.1207/S15327965PLI1104_01

Dolezal, D., Motschnig, R., and Pucher, R. (2018). “Peer Review as a Tool for Person-Centered Learning: Computer Science Education at Secondary School Level,” in Teaching and Learning in a Digital World: Proceedings of the 20th International Conference on Interactive Collaborative Learning. Editors M. E. Auer, D. Guralnick, and I. Simonics (Springer), Vol. 1, 468–478. doi:10.1007/978-3-319-73210-7_56

Dorans, N. J. (2012). The Contestant Perspective on Taking Tests: Emanations from the Statue within. Educ. Meas. Issues Pract. 31 (4), 20–37. doi:10.1111/j.1745-3992.2012.00250.x

Earl, L. (2013). Assessment as Learning: Using Classroom Assessment to Maximise Student Learning. 2nd ed. Thousand Oaks, CA: Corwin.

Farahian, M., and Avarzamani, F. (2018). The Impact of Portfolio on EFL Learners' Metacognition and Writing Performance. Cogent Edu. 5 (1), 1450918. doi:10.1080/2331186x.2018.1450918

Fletcher, A. K. (2016). Exceeding Expectations: Scaffolding Agentic Engagement through Assessment as Learning. Educ. Res. 58 (4), 400–419. doi:10.1080/00131881.2016.1235909

Fletcher, A. K. (2018). Help Seeking: Agentic Learners Initiating Feedback. Educ. Rev. 70 (4), 389–408. doi:10.1080/00131911.2017.1340871

Förster, M., Weiser, C., and Maur, A. (2018). How Feedback Provided by Voluntary Electronic Quizzes Affects Learning Outcomes of University Students in Large Classes. Comput. Edu. 121, 100–114. doi:10.1016/j.compedu.2018.02.012

Fraile, J., Panadero, E., and Pardo, R. (2017). Co-creating Rubrics: The Effects on Self-Regulated Learning, Self-Efficacy and Performance of Establishing Assessment Criteria with Students. Stud. Educ. Eval. 53, 69–76. doi:10.1016/j.stueduc.2017.03.003

Fukuda, S. T., Lander, B. W., and Pope, C. J. (2020). Formative Assessment for Learning How to Learn: Exploring University Student Learning Experiences. RELC J., 0033688220925927. doi:10.1177/0033688220925927

Gan, Z., He, J., and Liu, F. (2019). Understanding Classroom Assessment Practices and Learning Motivation in Secondary EFL Students. J. Asia TEFL 16 (3), 783–800. doi:10.18823/asiatefl.2019.16.3.2.783

Garrison, D. R., and Archer, W. (2000). A Transactional Perspective on Teaching and Learning: A Framework for Adult and Higher Education. United Kingdom: Emerald.

Gašević, D., Mirriahi, N., Dawson, S., and Joksimović, S. (2017). Effects of Instructional Conditions and Experience on the Adoption of a Learning Tool. Comput. Hum. Behav. 67, 207–220. doi:10.1016/j.chb.2016.10.026

Gezer-Templeton, P. G., Mayhew, E. J., Korte, D. S., and Schmidt, S. J. (2017). Use of Exam Wrappers to Enhance Students' Metacognitive Skills in a Large Introductory Food Science and Human Nutrition Course. J. Food Sci. Edu. 16 (1), 28–36. doi:10.1111/1541-4329.12103

Ghahari, S., and Sedaghat, M. (2018). Optimal Feedback Structure and Interactional Pattern in Formative Peer Practices: Students' Beliefs. System 74, 9–20. doi:10.1016/j.system.2018.02.003

Gikandi, J. W., and Morrow, D. (2016). Designing and Implementing Peer Formative Feedback within Online Learning Environments. Technol. Pedagogy Edu. 25 (2), 153–170. doi:10.1080/1475939X.2015.1058853

Guo, W., Lau, K. L., and Wei, J. (2019). Teacher Feedback and Students' Self-Regulated Learning in Mathematics: A Comparison between a High-Achieving and a Low-Achieving Secondary Schools. Stud. Educ. Eval. 63, 48–58. doi:10.1016/j.stueduc.2019.07.001

Guo, W., and Wei, J. (2019). Teacher Feedback and Students' Self-Regulated Learning in Mathematics: A Study of Chinese Secondary Students. Asia-Pacific Edu. Res. 28 (3), 265–275. doi:10.1007/s40299-019-00434-8

Hadwin, A. F., Järvelä, S., and Miller, M. (2011). “Self-regulated, Co-regulated, and Socially Shared Regulation of Learning,” in Handbook of Self-Regulation of Learning and Performance. Editors D. Schunk, and B. Zimmerman (New York, NY: Routledge), 65–84.

Hadwin, A., Järvelä, S., and Miller, M. (2018). “Self-regulation, Co-regulation, and Shared Regulation in Collaborative Learning Environments,” in Handbook of Self-Regulation of Learning and Performance. Editors D. Schunk, and J. Greene. 2nd ed. (New York, NY: Routledge), 83–106.

Hadwin, A., and Oshige, M. (2011). Self-Regulation, Coregulation, and Socially Shared Regulation: Exploring Perspectives of Social in Self-Regulated Learning Theory. Teach. Coll. Rec. 113 (2), 240–264.

Harris, L. R., Brown, G. T. L., and Dargusch, J. (2018). Not Playing the Game: Student Assessment Resistance as a Form of Agency. Aust. Educ. Res. 45 (1), 125–140. doi:10.1007/s13384-018-0264-0

Hattie, J., Crivelli, J., Van Gompel, K., West-Smith, P., and Wike, K. (2021). Feedback that Leads to Improvement in Student Essays: Testing the Hypothesis that “Where to Next” Feedback Is Most Powerful. Front. Educ. 6 (182), 645758. doi:10.3389/feduc.2021.645758

Hattie, J., and Timperley, H. (2007). The Power of Feedback. Rev. Educ. Res. 77 (1), 81–112. doi:10.3102/003465430298487

Hawe, E., and Dixon, H. (2017). Assessment for Learning: A Catalyst for Student Self-Regulation. Assess. Eval. Higher Edu. 42 (8), 1181–1192. doi:10.1080/02602938.2016.1236360

Heritage, M. (2018). Assessment for Learning as Support for Student Self-Regulation. Aust. Educ. Res. 45 (1), 51–63. doi:10.1007/s13384-018-0261-3

Hsia, L.-H., Huang, I., and Hwang, G.-J. (2016). A Web-Based Peer-Assessment Approach to Improving Junior High School Students' Performance, Self-Efficacy and Motivation in Performing Arts Courses. Br. J. Educ. Technol. 47 (4), 618–632. doi:10.1111/bjet.12248