- 1Department of Biology, University of Nebraska Omaha, Omaha, NE, United States

- 2Offices of Undergraduate and Graduate Medical Education, University of Nebraska Medical Center, Omaha, NE, United States

- 3STEM TRAIL Center, University of Nebraska Omaha, Omaha, NE, United States

Multiple meta-analyses and systematic reviews have been conducted to evaluate methodological rigor in research on the effect that mentoring has on the mentee. However, little reliable information exists regarding the effect of mentoring on the mentor. As such, we conducted a systematic review of the literature focused on such an effect (if any) within the fields of science, technology, engineering, and mathematics (STEM), aiming to better understand the quality of the research that has been conducted. We focused on undergraduate or post-secondary students as mentors for near-peers and/or youth. This review functions to identify commonalities and disparities of the mentoring program and research components and further promote methodological rigor on the subject by providing a more consistent description of the metrics utilized across studies. We analyzed articles from 2013 to 2020 to determine the features of undergraduate mentor programs and research, the methodological rigor of research applied, and compared them to prior research of this nature. In total, 80 eligible articles were identified through Cronbach’s UTOS framework and evaluated. Our key findings were that nearly all studies employed non-experimental designs, most with solely qualitative measurements and all lacked a full description of program components and/or experimental design, including theoretical framework. Overall, we identified the following best practice suggestions for future research on the effect of mentoring on mentors, specifically: the employment of longitudinal and exploratory mixed methods designs, utilizing sequential collection, and experimental descriptions nested within a theoretical framework.

Introduction

Programs in which undergraduates (UGs) provide mentoring are widespread within and outside of science, technology, engineering, and mathematics (STEM) disciplines. The effects of these programs are open to empirical analysis, but much of the existing research on mentoring focuses only on the impact of mentoring on mentees, objective data (e.g., exam scores, course grades, grade point average, etc.), or quantitative data (Crisp and Cruz, 2009; Gershenfeld, 2014), which ultimately limits the scope of understanding and application. Our present study is a systematic review to determine the methodological rigor of research measuring outcomes for UG mentors (i.e., the individuals doing the mentoring, as opposed to those benefiting from the mentoring, as is commonly reported in the literature). We reviewed studies published between 2013 and 2020, since Gershenfeld (2014) was the last review on this topic and would not have included articles that were in press during 2012 and published in 2013 at the time of its writing. In all, we identified 80 studies containing quantitative and/or qualitative insights from UG mentors.

Jacobi’s (1991) review of a decade (1980–1990) of mentoring research on mentor and mentee perspectives proposed a need for improved methodology and argued for the importance of situating mentoring programs and research within a theoretical base. Consequently, Jacobi (1991) put forward four major theoretical frameworks of mentoring programs: 1) involvement with learning, 2) academic and social integration, 3) social support, and 4) developmental support. Hannafin et al. (1997) indirectly extended this reasoning in arguing for the grounded theory design, namely that alignment of methods, theoretical or conceptual framework, and research is essential in understanding learning environments.

Nora and Crisp’s (2007) report on a survey of UG mentor perspectives and a corresponding literature review detailed the functional roles of mentors and prompted their assertion that mentoring programs and research continued to lack theoretical/conceptual bases. Nora and Crisp (2007) identified four major components that mentoring programs can utilize to provide a strong conceptual base, namely 1) education/career goal establishment and evaluation, 2) emotional and psychological support, 3) academic content knowledge support, and 4) presence of a role model. Two years later, Crisp and Cruz (2009) updated the review by Jacobi (1991), outlining a continued lack of methodological rigor in a wider body of mentoring research between 1990 and 2007.

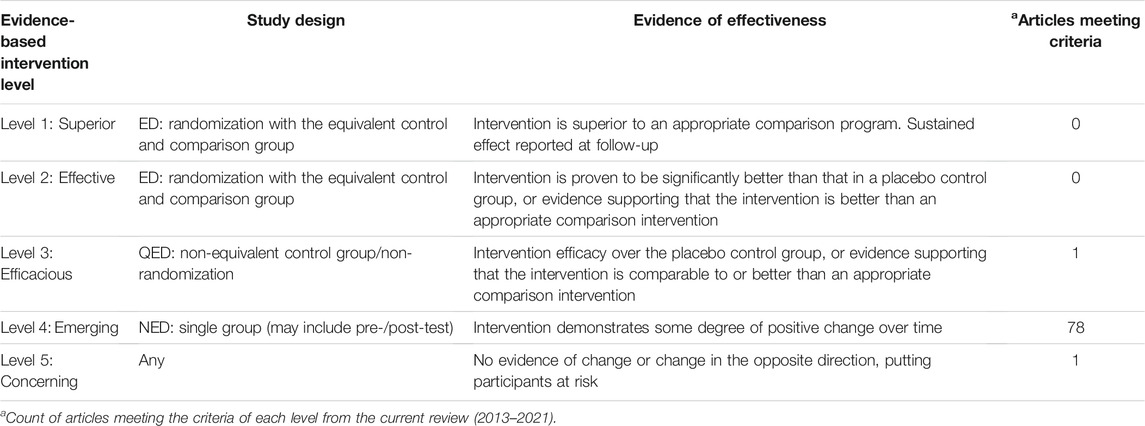

The last major review prior to this was conducted by Gershenfeld (2014) with the intention of extending the analysis of mentoring research to include published works between 2008 and 2012. Gershenfeld (2014) ultimately reported some improvement in the application of theoretical or conceptual frameworks but similarly outlined persistent methodological shortcomings. Of particular note, Gershenfeld (2014) identified some of what is termed “key mentoring program components” (Supplementary Tables S1, 2) and innovatively applied the Levels of Evidence-Based Intervention Effectiveness (LEBIE; shown in Table 1; Jackson, 2009) scale to evaluate methodological rigor.

TABLE 1. Levels of Evidence-Based Intervention Effectiveness scale (LEBIE). The LEBIE scale taken from Jackson (2009) and used by Gershenfeld (2014). ED, Experimental design; QED, Quasi-experimental design; NED, Non-experimental design.

However, Gershenfeld (2014) identified a skew in article rankings by the LEBIE scale, assigning only 3’s, 4’s, and 5’s (which are inferior scores, as 5 = concerning). They attributed this skew to the scale’s rankings tending toward typical quantitative studies, in which the presence of equivalent controls and randomization is more common. In isolation, this issue would be significant, but Gershenfeld (2014) employed other forms of evaluation to ensure appropriate analysis of qualitative and mixed-method study designs, a strategy that the present study adopts as well.

The aim of this study was to extend the analysis of research on the effect of mentoring on mentors beyond the last review of such literature (i.e., to the period covering 2013–2020). We aimed to address two key research questions:

1) Does the application of the LEBIE scale (Jackson, 2009) to evaluate mentoring research that contains mentor perspectives published between 2013 and 2020 mirror that shared by Gershenfeld (2014)? Or, did the field respond with more expansive mentoring evaluation practices after that publication?

2) What are the “key mentoring program components” (Gershenfeld, 2014), theoretical or conceptual frameworks (if provided), methods, and general findings of the mentoring literature? We sought to determine these components based upon the frameworks of Jacobi (1991), Hannafin et al. (1997), Nora and Crisp (2007), Crisp and Cruz (2009), and Gershenfeld (2014).

Ultimately, these results will allow for recommendations for future researchers to improve upon methodological rigor in research that studies the impact of mentoring on mentors.

Materials and Methods

The methods employed for this systematic review are consistent with the practices within the literature, namely those of Cronbach and Shapiro (1982) and Moher et al. (2009), and apply Cronbach’s units, treatments, outcomes, and study designs (UTOS) framework as follows. Our population of interest (Units) is UG mentors within STEM and peripheral fields. We focused on the provision of mentoring by UGs (Treatments) as an intervention, including but not limited to mentoring within peer-mentoring, service-learning, course-related, internship, and research programs. The Outcomes we were interested in for eligibility are those reported openly by or requiring insights from UG mentors on what effect the experience had on them. Due to the exploratory nature of this study and the widely variable outcomes measured, we did not further constrain this parameter. However, we did also identify and report on other subjective components (e.g., demographics, compensation, support, frequency, etc.). As one of our major goals was to identify methods employed, all Study Designs were eligible for review, so long as outcomes were reported in line with the aforementioned parameter.

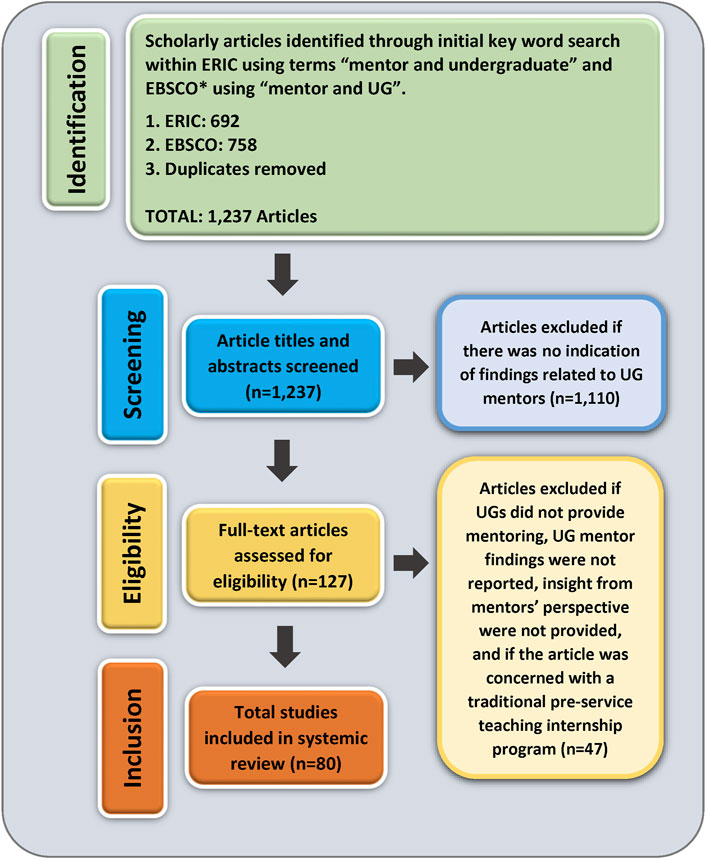

We completed a literature search within the Education Resources Information Center database (ERIC) and multiple databases within EBSCO (namely Academic Search Complete, Education Source, E-Journals, PsycARTICLES, PsycINFO, Psychology and Behavioral Sciences Collection, and Teacher Reference Center) using the respective search terms “mentor and undergraduate” in ERIC and “mentor and UG” in EBSCO. One set of search terms could not be used in both databases because ERIC produced only two search results with the latter and EBSCO produced thousands of unrelated results with the former. Our other search criteria restricted results to scholarly, peer-reviewed articles written in English and published between 2013 and 2020 (see Figure 1 for stepwise exclusion). We used a date range that ran from the year in which the Gershenfeld paper (i.e., the most recent review) would have been under review (i.e., 2013) through the final full year prior to submission (i.e., 2020). Therefore, this systematic review includes studies from 2013 to 2020, covering the entire ERIC database and multiple databases within EBSCO, and yielding 1,231 positive hits.
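The search criteria described above can be read as a simple record filter. The Python sketch below is illustrative only: the `Record` fields, the `meets_search_criteria` function, and the sample records are hypothetical and are not taken from the review, in which these limits were applied within the ERIC and EBSCO search tools.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One hypothetical database hit with the attributes the criteria rely on."""
    title: str
    year: int
    language: str
    peer_reviewed: bool
    scholarly: bool


def meets_search_criteria(rec: Record, first_year: int = 2013, last_year: int = 2020) -> bool:
    """Return True for scholarly, peer-reviewed, English articles in the date range."""
    return (
        rec.scholarly
        and rec.peer_reviewed
        and rec.language == "English"
        and first_year <= rec.year <= last_year
    )


# Invented records, used only to exercise each criterion once.
records = [
    Record("Hypothetical study A", 2012, "English", True, True),   # too early
    Record("Hypothetical study B", 2016, "English", True, True),   # kept
    Record("Hypothetical study C", 2019, "Spanish", True, True),   # not in English
    Record("Hypothetical study D", 2020, "English", False, True),  # not peer reviewed
]

kept = [rec.title for rec in records if meets_search_criteria(rec)]
print(kept)
```

Title and abstract screening against the Units, Treatments, and Outcomes parameters requires human judgment and is deliberately left out of this sketch.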

FIGURE 1. PRISMA flow diagram (Moher et al., 2009) for record identification, inclusion, and exclusion. *Databases included within EBSCO search: Academic Search Complete, Education Source, E-Journals, PsycARTICLES, PsycINFO, Psychology and Behavioral Sciences Collection, and Teacher Reference Center.

After the removal of duplicates, the article titles and abstracts were screened for any indication of findings related to UG mentors (e.g., title and/or abstract explicitly contain the words undergraduate/UG mentors and suggest or explicitly state something about mentor perspectives/insight), which would fulfill our Units parameter. Those included through this initial screening were reviewed in full for eligibility if the focus was on the provision of mentoring by UGs, findings were reported, and insights from the mentors’ perspective were provided (i.e., explicit statements and data were provided to demonstrate each), therefore fulfilling our Outcomes and Treatment parameters. Articles or programs pertaining to service-learning were included only if the service-learning involved provision of mentoring by UGs, and any articles or programs concerning traditional pre-service teaching internship programs (e.g., co-teaching within a classroom setting under the supervision of a certified teacher) were excluded, as such positions do not revolve around the adoption of a mentor role. While mentors may certainly serve as teachers and teachers may certainly serve as mentors, they are generally observed and/or measured as separate roles albeit closely related (Crisp and Cruz, 2009; Gershenfeld, 2014; Jacobi, 1991; Nelson et al., 2017; 2017; Nora and Crisp, 2007), prompting our decision to exclude pre-service programs in order to maintain focus on mentoring in alignment with our Treatment parameter.

Throughout each step of the review process, two authors (ASL and KLN) independently read and evaluated relevant articles/sections (e.g., abstract vs. methods vs. whole document), addressing any discrepancies prior to moving on. We routinely compared independent running documents containing all positive hits and subsequent inclusions of articles/extracted data (i.e., independent versions of Figure 1, Table 1, and Supplementary Tables) in a stepwise manner, while the third author (CEC) addressed any discrepancies not clearly resolved by the other two (e.g., whether certain language indicated a program to be a pre-service program). Nearly all inclusion criteria and data that we collected were concerned with the presence or absence of some attribute or the reporting of what is explicitly stated or not stated by article authors and were based on priorly established frameworks as discussed in our research questions. For this reason, many inconsistencies between reviewers could be attributed to one of the authors missing a qualifying article or not identifying data. However, some discrepancies did arise from unclear language or subjective interpretation (e.g., analytic logic, sequencing, and data priority in studies utilizing mixed methods design). Inconsistencies of the former type were resolved by comparing data sets and identifying where the criteria or data were located within articles/sections, while discrepancies of the latter type were resolved through discussion with the third author.
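As a rough illustration of the stepwise comparison between two reviewers’ running documents, the sketch below compares two sets of included article identifiers and separates agreements from one-sided inclusions. The function name and the article identifiers are hypothetical; the review itself does not describe any software used for this step.

```python
def compare_inclusions(reviewer_a: set[str], reviewer_b: set[str]) -> dict[str, set[str]]:
    """Split two reviewers' inclusion lists into agreements and one-sided inclusions."""
    return {
        "agreed": reviewer_a & reviewer_b,
        "only_a": reviewer_a - reviewer_b,  # possibly missed by reviewer B
        "only_b": reviewer_b - reviewer_a,  # possibly missed by reviewer A
    }


# Invented identifiers standing in for screened articles.
reviewer_a = {"article-01", "article-02", "article-03"}
reviewer_b = {"article-02", "article-03", "article-04"}

result = compare_inclusions(reviewer_a, reviewer_b)
to_recheck = sorted(result["only_a"] | result["only_b"])
print(to_recheck)
```

Items in `to_recheck` correspond to the first type of inconsistency described above (one reviewer missing an article or data point); disagreements rooted in subjective interpretation would still need discussion with a third reviewer.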

In total, there were 1,231 positive hits through the database query after duplicate removal. Of these, n = 80 met all of our inclusion criteria and were analyzed with the following evaluative tools. We used the LEBIE scale (Jackson, 2009) to examine methodological rigor (Table 1) in terms of study design (e.g., presence of equivalent vs. non-equivalent vs. no control group) and evidence of effectiveness (e.g., evidence that intervention results in some positive change over time or is better than or comparable to a control/placebo). To examine program and research functionality and qualities, we used Nora and Crisp’s (2007) conceptualization of core functional roles (e.g., assist with a course, provide peer-mentoring, service-learning, etc.) and Gershenfeld’s (2014) key mentoring program and research components (namely mentor and mentee demographics, compensation, frequency of mentoring, support, N = number of mentors, quantitative vs. qualitative vs. mixed methods, how data are collected, and major findings). In line with prior researchers from Jacobi (1991) and Hannafin et al. (1997) to Nora and Crisp (2007) and Gershenfeld (2014), we also identified theoretical/conceptual frameworks (if stated by authors).

Finally, for relevant studies, we examined characteristics deemed essential within the literature to mixed methods designs (Supplementary Table S3), including an explicit statement that mixed methods research is being utilized, rationale for using mixed methods research, integration of quantitative and qualitative data (merging, connecting, or building), analytic logic (independent or dependent), sequencing/timing (concurrent or sequential), and/or priority (quantitative, qualitative, or both; Creswell, 2013; Creswell and Plano Clark, 2017; Harrison et al., 2020; O’Cathain et al., 2008; Plano Clark and Ivankova, 2016). We took the former three from eligible studies (i.e., stated or not and what was stated) but interpreted the latter three for all but one. Ultimately, our results consist of LEBIE scale ratings, compiled qualitative data on program and research qualities, and reporting of relative proportions of qualities where possible. Of note, where we discuss proportions/percentages, the sample size (n) may not equal the total number of eligible studies (n = 80) because some qualities are not reported or present in certain studies (e.g., mixed methods design), and percentages may add up to more than 100% because certain studies report multiple elements within a given quality (e.g., different types of compensation given to different participants).

Results

Consistent with prior research, we have included many components of the articles we reviewed and the mentoring programs they analyzed (contained within the following table and supplemental materials). It is and always was our intention to compile this large amount of data in order to provide easy access to overview these studies for other mentoring researchers (we have grouped similar data together for this reason). However, our primary goal is to identify trends within mentoring programs and research approaches in addition to analyzing methodological rigor in studies on the subject in order to provide suggestions for improvement of future research. To this end, our results and discussion will be focused on our research questions to determine rigor (i.e., Table 1, Supplementary Tables S1, 2) and methodology (i.e., Supplementary Tables S2, 3).

Rigor in the Experimental Design for Mentoring Articles

Mirroring Gershenfeld’s (2014) review, we analyzed rigor by the LEBIE scale and components deemed essential to mentoring and mentoring research within the literature (Jacobi, 1991; Hannafin et al., 1997; Nora and Crisp, 2007; Crisp and Cruz, 2009). Our rankings by use of the LEBIE scale (Table 1) were consistent with Gershenfeld’s (2014) review (only Level 5s, 4s, and 3s are given) but clustered far more heavily at Level 4 (Gershenfeld assigned eleven Level 5s, four Level 4s, and three Level 3s). Of note, we only ranked one article as efficacious (Level 3) and one other as concerning (Level 5). For all remaining articles (78 of n = 80) included in this review, we assigned the rank of emerging (Level 4), with 11 containing some form of pre- and post-intervention measurement.
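To make the comparison of the two LEBIE distributions concrete, the following sketch converts the counts reported in the text into percentage shares per level. The counts and level labels come from the paragraph above; the variable and function names are illustrative assumptions, and no statistical test is implied.

```python
# Level labels as used in the text (Levels 1 and 2 were not assigned in either review).
LEVEL_LABELS = {3: "efficacious", 4: "emerging", 5: "concerning"}

# Counts of articles per LEBIE level, as reported in the text.
this_review = {3: 1, 4: 78, 5: 1}
gershenfeld_2014 = {3: 3, 4: 4, 5: 11}


def level_shares(counts: dict[int, int]) -> dict[int, float]:
    """Convert per-level counts into percentages of all ranked articles."""
    total = sum(counts.values())
    return {level: round(100 * n / total, 2) for level, n in counts.items()}


current_shares = level_shares(this_review)
prior_shares = level_shares(gershenfeld_2014)

for level in sorted(LEVEL_LABELS):
    print(
        f"Level {level} ({LEVEL_LABELS[level]}): "
        f"{current_shares[level]}% in this review vs. {prior_shares[level]}% in Gershenfeld (2014)"
    )
```

Expressed this way, Level 4 accounts for 97.5% of the articles ranked here, compared with roughly 22% of those ranked by Gershenfeld (2014).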

While reviewing articles for theoretical/conceptual frameworks (Supplementary Table S2), we recorded any that were explicitly stated (61.25%, n = 49) and also identified those that relate to at least one of the four major theoretical frameworks of mentoring programs put forward by Jacobi (1991; 45%, n = 36). For program functionality (Supplementary Table S1), our concern was with the type of mentoring (i.e., peer, near-peer, and youth), whether the authors considered other core functions (i.e., internship and service-learning), and which of Nora and Crisp’s (2007) four major components were present. We found that 65% (n = 52) of articles contained programs for peer mentoring, 22.5% (n = 18) for near-peer mentoring, 32.5% (n = 26) for youth mentoring, 22.5% (n = 18) for service-learning, and 2.5% (n = 2) for internships. Concerning Nora and Crisp’s (2007) four major components, our analysis found 45% (n = 36) of programs to be solely or primarily focused on academic content and knowledge support, 8.75% (n = 7) to include discussion and focus on all four components, and the remainder to be focused on other single components or combinations of at least two of the four.
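Because a single article can describe more than one type of program, the percentages above are not mutually exclusive and sum to more than 100%. The sketch below reproduces the reported percentages from the reported counts with n = 80 as the denominator; the dictionary and variable names are illustrative assumptions.

```python
TOTAL_ARTICLES = 80

# Number of articles reporting each program type, as given in the text.
# An article may appear under more than one category.
program_counts = {
    "peer mentoring": 52,
    "near-peer mentoring": 18,
    "youth mentoring": 26,
    "service-learning": 18,
    "internship": 2,
}

# Each percentage uses the full set of eligible articles as its denominator.
program_percentages = {
    label: 100 * count / TOTAL_ARTICLES for label, count in program_counts.items()
}

total_mentions = sum(program_counts.values())
combined_percentage = sum(program_percentages.values())

for label, pct in program_percentages.items():
    print(f"{label}: {pct}%")
print(f"{total_mentions} category mentions across {TOTAL_ARTICLES} articles")
```

The 116 category mentions across 80 articles confirm that at least some articles fall under several categories, which is why the shares should be read as independent proportions and not as parts of a whole.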

Type of Method for Data Collection Utilized

The majority (70%, n = 56; methods; Supplementary Table S2) of articles we reviewed employed qualitative methodologies, and a small minority employed quantitative methodologies (6.25%, n = 5) or were systematic reviews (3.75%, n = 3). Our inspection shows that the number of mentors or sample sizes (N; Supplementary Table S2) within the included studies is considerably variable, ranging from 1 to 1,972. Additionally, some articles did not report N at all or reported it vaguely (e.g., greater than 150). We found that a large portion of studies collected data (data collection; Supplementary Table S2) through self-report surveys (38.75%, n = 31), and of these many were Likert scale–based (18.75%, n = 15). A total of twelve articles (15%) used previously developed tools for quantitative measurements, and the remaining data collection methods consisted of varied combinations of interviews, document analysis, focus groups, observation, demographic information, general feedback or commentary, and questionnaires. While 9 studies (methods; Supplementary Table S2) did explicitly state the use of the mixed methods design, we analyzed another 7 that contained both quantitative and qualitative data collection as employing the mixed methods design (20%, n = 16 employed mixed methods design).

Key Qualities of Mixed Methods Research in Relevant Articles

All of the articles we identified as utilizing mixed methods designs explicitly stated the use of qualitative and quantitative measures, and just over half of these (56.25%, n = 9; Supplementary Table S3) also explicitly stated the utilization of mixed methods design. Fewer than half of these (37.5%, n = 6) articles state a mode of integration (all but one report integration by triangulation), and seven (43.75%) studies provide no evidence of combining quantitative and qualitative data sets. The outlier (Hastings and Sunderman, 2019) reports integration by using qualitative data to build on and support quantitative data and is the only article to include explicit details on analytic logic (dependent), sequencing/timing (quantitative prior to qualitative), and priority (quantitative, the only article with this priority). For the remaining articles, we interpreted that 68.75% (n = 11) had even priority between quantitative and qualitative data, 25% (n = 4) prioritized qualitative data, and all but one study (87.5%, n = 14) had independent analytic logic and concurrent sequencing/timing [McIntosh (2019) could not be interpreted due to a lack of methodological description]. Of the studies that did not explicitly state integration (62.5%, n = 10), one provided some discussion of using qualitative and quantitative data to build on each other (Pica and Fripp, 2020), and two discussed looking for common patterns in each (Köse and Johnson, 2016; Bonner et al., 2019).
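The percentages in this subsection use the 16 mixed methods articles as the denominator, not the full set of 80. As a hedged illustration, the sketch below recomputes each reported percentage from its count and flags any that do not match; the labels are shortened paraphrases of the text, and the helper name `percent` is an assumption.

```python
MIXED_METHODS_TOTAL = 16  # articles treated as employing a mixed methods design
ALL_ARTICLES = 80         # all eligible articles in the review


def percent(count: int, denominator: int) -> float:
    """Percentage of `denominator` represented by `count`, rounded to two decimals."""
    return round(100 * count / denominator, 2)


# (count, percentage as stated in the text) for each reported quality.
reported = {
    "explicitly stated mixed methods design": (9, 56.25),
    "stated a mode of integration": (6, 37.5),
    "no evidence of combining data sets": (7, 43.75),
    "even priority": (11, 68.75),
    "qualitative priority": (4, 25.0),
    "independent logic and concurrent timing": (14, 87.5),
    "did not explicitly state integration": (10, 62.5),
}

mismatches = {
    label: (count, stated)
    for label, (count, stated) in reported.items()
    if percent(count, MIXED_METHODS_TOTAL) != stated
}
print(mismatches)

# The same count gives a very different share against the full sample.
print(percent(9, MIXED_METHODS_TOTAL), percent(9, ALL_ARTICLES))
```

An empty `mismatches` dictionary indicates that every stated percentage agrees with its count at n = 16.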

Discussion

Present State of Research According to This Review

Our LEBIE scale rankings are consistent with but do not directly mirror those reported by Gershenfeld (2014), suggesting that mentoring research between 2013 and 2020 has, in general, responded with at least some more expansive mentoring evaluation practices after its publication. However, the proportion of articles explicitly stating the adoption of a theoretical or conceptual framework in our systematic review is smaller than previously reported, and the predominant function among Nora and Crisp’s (2007) four major components remains academic content and knowledge support, as previously reported (Gershenfeld, 2014). Considering best practice in mentoring programs and research (Jacobi, 1991; Hannafin et al., 1997; Nora and Crisp, 2007; Crisp and Cruz, 2009), we reason that a decrease in theoretical bases and a lack of change in functional grounding suggest a general decrease in methodological rigor that is not measured by the LEBIE scale.

Our analysis of article methodology is meant to augment these findings, as LEBIE scale rankings and functional component identification do not evaluate the full spectrum of methodological designs within the field. The vast majority of studies we have identified through this systematic review employ qualitative-only designs over singular and relatively short time periods, and most utilize self-report surveys (Likert scale or otherwise) developed for the sole purpose of evaluating the program of interest. Additionally, we observed that qualitative or quantitative measurements generally were not taken pre-/mid- and post-intervention.

In programs that have employed mixed methods research, we found that evidence of quantitative and qualitative data integration was lacking and that methodological description was often limited or not present. Curiously, we identified the article by Hastings and Sunderman (2019) as providing the most detailed methodological description; it employed an exploratory mixed methods design but used quantitative measurement for exploration and qualitative data for support. This is in opposition to recommendations in the literature for exploratory mixed methods studies (Creswell and Plano Clark, 2017; Harrison et al., 2020), in which qualitative then quantitative data are sequentially collected, and the latter depends on the former. Our systematic review suggests that there remains a lack of valid and reliable tools for quantitative measurement of the effect of mentoring on UG mentors and that leading exploration with qualitative measurements is more likely to provide progress toward the development of such tools (Creswell and Plano Clark, 2017; Harrison et al., 2020).

Ultimately, our analyses of UG mentor program components and function (Table 1 and Supplementary Tables S1) demonstrate even more variability than previously identified (Gershenfeld, 2014). Alongside the invariability of LEBIE scale (Table 2) rankings presently and previously (Gershenfeld, 2014), this reinforces the need for methodological rigor and evaluation appropriate to such a complex subject. Accordingly, our suggestions for future researchers on the effect of mentoring on UG mentors are that there is a need for studies with a longitudinal design (Plano Clark et al., 2015), of an exploratory nature (Gershenfeld, 2014), utilizing a sequential collection of qualitative and then quantitative data (Creswell and Plano Clark, 2017; Harrison et al., 2020). We recognize that research completed to analyze mentoring programs is often constrained by the variable nature of its components and participant characteristics. None of these suggestions should necessitate the application of all others, as the employment of even a single one would be beneficial to methodological rigor (e.g., well-established qualitative exploration to understand where quantitative measurements are most beneficial and appropriate).

Limitations

The limitations of this review include our bias in focusing solely on the effect of mentoring on mentors, to the exclusion of discussion of the effect on mentees. Debate on the latter effect has occurred and is ongoing at length elsewhere, and we, therefore, chose not to include it in this article. Another limitation of note is the scope of databases queried for this systematic review, namely the Education Resources Information Center database (ERIC) and multiple databases within EBSCO. These databases represent a sizeable group, with a focus that should include a representative sample of research relevant to this review. However, it is possible that articles meeting our inclusion criteria were missed if their publishing journals were not contained within the aforementioned databases.

Suggestions for Future Researchers

Collecting data over longer and multiple periods of time should provide more information on whether and/or what long term effects of mentoring can realistically be expected (Plano Clark et al., 2015; Nelson and Cutucache, 2017), while more rigorous quantitative data collection and analysis would provide studies with more generalizability (Kruger, 2003) and increased objectivity (Linn et al., 2015; Owen, 2017). Moreover, by employing exploratory and longitudinal mixed-method designs, methodological rigor can be improved (Creswell and Plano Clark, 2017; Harrison et al., 2020) and progress can be made toward the development of tools for valid and reliable quantitative measurement, hopefully creating a cycle of reciprocity.

We further assert that it is vital for studies on this topic to provide descriptions and explicit statements relating to their methodology, program, and participants. Many of the studies we identified in this systematic review did not share important details, requiring interpretation and a lot of time to properly evaluate and understand them. Providing information explicitly not only improves the ease of access for future researchers but is also valuable to methodological rigor by encouraging the adoption of theoretical/conceptual frameworks (Jacobi, 1991; Gershenfeld, 2014) and fleshing out mentor and program functionality (Nora and Crisp, 2007; Gershenfeld, 2014).

Author Contributions

AL, KN, and CC conceived the study idea. AL and KN collated and analyzed the articles for inclusion. AL, KN, and CC wrote and edited several drafts of the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

Thanks to the Department of Biology, the Honors Program, and the University of Nebraska at Omaha for providing the resources for this project.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2021.731657/full#supplementary-material

References

Abdolalizadeh, P., Pourhassan, S., Gandomkar, R., Heidari, F., and Sohrabpour, A. A. (2017). Dual Peer Mentoring Program for Undergraduate Medical Students: Exploring the Perceptions of Mentors and Mentees. Med. J. Islam Repub. Iran 31, 2. doi:10.18869/mjiri.31.2

Aderibigbe, S., Antiado, D., and Sta Anna, A. (2015). Issues in Peer Mentoring for Undergraduate Students in a Private University in the United Arab Emirates. Int. J. Evid. Based Coaching Mentoring 13 (2), 64–80. doi:10.24384/IJEBCM/13/2

Afghani, B., Santos, R., Angulo, M., and Muratori, W. (2013). A Novel Enrichment Program Using Cascading Mentorship to Increase Diversity in the Health Care Professions. Acad. Med. 88 (9), 1232–1238. doi:10.1097/ACM.0b013e31829ed47e

Anderson, M. K., Tenenbaum, L. S., Ramadorai, S. B., and Yourick, D. L. (2015). Near-peer Mentor Model: Synergy within Mentoring. Mentoring Tutoring: Partnership Learn. 23 (2), 116–132. doi:10.1080/13611267.2015.1049017

Athamanah, L. S., Fisher, M. H., Sung, C., and Han, J. E. (2020). The Experiences and Perceptions of College Peer Mentors Interacting with Students with Intellectual and Developmental Disabilities. Res. Pract. Persons Severe Disabilities 45 (4), 271–287. doi:10.1177/1540796920953826

Baroudi, S., and David, S. A. (2020). Nurturing Female Leadership Skills through Peer Mentoring Role: A Study Among Undergraduate Students in the United Arab Emirates. Higher Educ. Q. 74 (4), 458–474. doi:10.1111/hequ.12249

Bonner, H. J., Wong, K. S., Pedwell, R. K., and Rowland, S. L. (2019). A Short-Term Peer mentor/mentee Activity Develops Bachelor of Science Students' Career Management Skills. Mentoring Tutoring: Partnership Learn. 27 (5), 509–530. doi:10.1080/13611267.2019.1675849

Bunting, B., and Williams, D. (2017). Stories of Transformation: Using Personal Narrative to Explore Transformative Experience Among Undergraduate Peer Mentors. Mentoring Tutoring: Partnership Learn. 25 (2), 166–184. doi:10.1080/13611267.2017.1327691

Burton, L. J., Chester, A., Xenos, S., and Elgar, K. (2013). Peer Mentoring to Develop Psychological Literacy in First-Year and Graduating Students. Psychol. Learn. Teach. 12 (2), 136–146. doi:10.2304/plat.2013.12.2.136

Creswell, J. W., and Plano Clark, V. L. (2017). Designing and Conducting Mixed Methods Research. Thousand Oaks, CA: SAGE Publications, Inc.

Crisp, G., and Cruz, I. (2009). Mentoring College Students: A Critical Review of the Literature between 1990 and 2007. Res. High Educ. 50 (6), 525–545. doi:10.1007/s11162-009-9130-2

Cronbach, L. J., and Shapiro, K. (1982). Designing Evaluations of Educational and Social Programs. San Francisco: Jossey-Bass.

Cruz, P., and Diaz, A. (2020). Peer-to-Peer Leadership, Mentorship, and the Need for Spirituality from Latina Student Leaders' Perspective. New Dir. Stud. Leadersh. 2020 (166), 111–122. doi:10.1002/yd.20386

Cushing, D. F., and Love, E. W. (2013). Developing Cultural Responsiveness in Environmental Design Students through Digital Storytelling and Photovoice. J. Learn. Des. 6 (3). doi:10.5204/jld.v6i3.148

Cutright, T. J., and Evans, E. (2016). Year-Long Peer Mentoring Activity to Enhance the Retention of Freshmen STEM Students in a NSF Scholarship Program. Mentoring Tutoring: Partnership Learn. 24 (3), 201–212. doi:10.1080/13611267.2016.1222811

Daley, S., and Zeidan, P. (2020). Motivational Beliefs and Self-Perceptions of Undergraduates with Learning Disabilities: Using the Expectancy-Value Model to Investigate College-Going Trajectories. Learn. Disabilities: A Multidisciplinary J. 25 (2). doi:10.18666/LDMJ-2020-V25-I2-10391

Davis, S. A. (2017). "A Circular Council of People with Equal Ideas". J. Music Teach. Edu. 26 (2), 25–38. doi:10.1177/1057083716631387

de Oliveira, C. A., de França Carvalho, C. P., Céspedes, I. C., de Oliveira, F., and Le Sueur-Maluf, L. (2015). Peer Mentoring Program in an Interprofessional and Interdisciplinary Curriculum in Brazil. Anat. Sci. Educ. 8 (4), 338–347. doi:10.1002/ase.1534

Diaz, M. M., Ojukwu, K., Padilla, J., Steed, K., Schmalz, N., Tullis, A., et al. (2019). Who Is the Teacher and Who Is the Student? The Dual Service- and Engaged-Learning Pedagogical Model of Anatomy Academy. J. Med. Educ. Curric Dev. 6, 2382120519883271. doi:10.1177/2382120519883271

Douglass, A. G., Smith, D. L., and Smith, L. J. (2013). An Exploration of the Characteristics of Effective Undergraduate Peer-Mentoring Relationships. Mentoring Tutoring: Partnership Learn. 21 (2), 219–234. doi:10.1080/13611267.2013.813740

Draves, T. J. (2017). Collaborations that Promote Growth: Music Student Teachers as Peer Mentors. Music Edu. Res. 19 (3), 327–338. doi:10.1080/14613808.2016.1145646

Dunn, A. L., and Moore, L. L. (2020). Significant Learning of Peer-Mentors within a Leadership Living-Learning Community: A Basic Qualitative Study. J. Leadersh. Edu. 19 (2).

Everhard, C. J. (2016). Implementing a Student Peer-Mentoring Programme for Self-Access Language Learning. Sisal J. 6 (3), 300–312. doi:10.37237/060306

Finkel, L. (2017). Walking the Path Together from High School to STEM Majors and Careers: Utilizing Community Engagement and a Focus on Teaching to Increase Opportunities for URM Students. J. Sci. Educ. Technol. 26 (1), 116–126. doi:10.1007/s10956-016-9656-y

Fogg-Rogers, L., Lewis, F., and Edmonds, J. (2017). Paired Peer Learning through Engineering Education Outreach. Eur. J. Eng. Edu. 42 (1), 75–90. doi:10.1080/03043797.2016.1202906

Forrester, G., Kurth, J., Vincent, P., and Oliver, M. (2020). Schools as Community Assets: An Exploration of the Merits of an Asset-Based Community Development (ABCD) Approach. Educ. Rev. 72 (4), 443–458. doi:10.1080/00131911.2018.1529655

Fried, R., Karmali, S., Irwin, J., Gable, F., and Salmoni, A. (2018). Making the Grade: Mentors’ Perspectives of a Course-Based, Smart, Healthy Campus Pilot Project for Building Mental Health Resiliency through Mentorship and Physical Activity. Int. J. Evid. Based Coaching Mentoring 16 (2), 84–98. doi:10.24384/000566

Gershenfeld, S. (2014). A Review of Undergraduate Mentoring Programs. Rev. Educ. Res. 84 (3), 365–391. doi:10.3102/0034654313520512

Goodrich, A., Bucura, E., and Stauffer, S. (2018). Peer Mentoring in a University Music Methods Class. J. Music Teach. Edu. 27 (2), 23–38. doi:10.1177/1057083717731057

Grant, B. L., Liu, X., and Gardella, J. A. (2015). Supporting the Development of Science Communication Skills in STEM University Students: Understanding Their Learning Experiences as They Work in Middle and High School Classrooms. Int. J. Sci. Educ. B 5 (2), 139–160. doi:10.1080/21548455.2013.872313

Gunn, F., Lee, S. H., and Steed, M. (2017). Student Perceptions of Benefits and Challenges of Peer Mentoring Programs: Divergent Perspectives from Mentors and Mentees. Marketing Edu. Rev. 27 (1), 15–26. doi:10.1080/10528008.2016.1255560

Haddock, S., Weiler, L. M., Krafchick, J., Zimmerman, T., McLure, M., and Rudisill, S. (2013). Campus Corps Therapeutic Mentoring: Making a Difference for Mentors. J. Higher Edu. Outreach Engagement 17 (4), 225. Available at: http://files.eric.ed.gov/fulltext/EJ1018626.pdf.

Hannafin, M. J., Hannafin, K. M., Land, S. M., and Oliver, K. (1997). Grounded Practice and the Design of Constructivist Learning Environments. ETR&D 45 (3), 101–117. doi:10.1007/BF02299733

Haqqee, Z., Goff, L., Knorr, K., and Gill, M. B. (2020). The Impact of Program Structure and Goal Setting on Mentors' Perceptions of Peer Mentorship in Academia. Cjhe 50 (2), 24–38. doi:10.7202/1071393ar; doi:10.47678/cjhe.v50i2.188591

Harrison, R. L., Reilly, T. M., and Creswell, J. W. (2020). Methodological Rigor in Mixed Methods: An Application in Management Studies. J. Mixed Methods Res. 14 (4), 473–495. doi:10.1177/1558689819900585

Hastings, L. J., and Sunderman, H. M. (2019). Generativity and Socially Responsible Leadership Among College Student Leaders Who Mentor. J. Leadersh. Edu. 18, 1–19. doi:10.12806/V18/I3/R1

Hemmerich, A. L., Hoepner, J. K., and Samelson, V. M. (2015). Instructional Internships: Improving the Teaching and Learning Experience for Students, Interns, and Faculty. JoSoTL 15 (3), 104–132. doi:10.14434/josotl.v15i3.13090

Hryciw, D. H., Tangalakis, K., Supple, B., and Best, G. (2013). Evaluation of a Peer Mentoring Program for a Mature Cohort of First-Year Undergraduate Paramedic Students. Adv. Physiol. Educ. 37 (1), 80–84. doi:10.1152/advan.00129.2012

Huvard, H., Talbot, R. M., Mason, H., Thompson, A. N., Ferrara, M., and Wee, B. (2020). Science Identity and Metacognitive Development in Undergraduate Mentor-Teachers. IJ STEM Ed. 7 (1), 1–17. doi:10.1186/s40594-020-00231-6

Jackson, K. F. (2009). Building Cultural Competence: A Systematic Evaluation of the Effectiveness of Culturally Sensitive Interventions with Ethnic Minority Youth. Child. Youth Serv. Rev. 31 (11), 1192–1198. doi:10.1016/j.childyouth.2009.08.001

Jacobi, M. (1991). Mentoring and Undergraduate Academic Success: A Literature Review. Rev. Educ. Res. 61 (4), 505–532. doi:10.3102/00346543061004505

James, A. I. (2014). Cross-Age Mentoring to Support A-Level Pupils' Transition into Higher Education and Undergraduate Students' Employability. Psychol. Teach. Rev. 20 (2), 79–94.

James, A. I. (2019). University-school Mentoring to Support Transition into and Out of Higher Education. Psychol. Teach. Rev. 25 (2).

Karlin, B., Davis, N., and Matthew, R. (2013). GRASP: Testing an Integrated Approach to Sustainability Education. Spring: Journal of Sustainability Education.

Karp, T., and Maloney, P. (2013). Exciting Young Students in Grades K-8 about STEM through an Afterschool Robotics Challenge. Ajee 4 (1), 39–54. doi:10.19030/ajee.v4i1.7857

Keup, J. R. (2016). Peer Leadership as an Emerging High-Impact Practice: An Exploratory Study of the American Experience. Jsaa 4 (1). doi:10.14426/jsaa.v4i1.143

Köse, E., and Johnson, A. C. (2016). Women in Mathematics: A Nested Approach. Primus 26 (7), 676–693. doi:10.1080/10511970.2015.1132802

Kramer, D., Hillman, S. M., and Zavala, M. (2018). Developing a Culture of Caring and Support through a Peer Mentorship Program. J. Nurs. Educ. 57 (7), 430–435. doi:10.3928/01484834-20180618-09

Kruger, D. J. (2003). Integrating Quantitative and Qualitative Methods in Community Research. Community Psychol. 36, 18–19.

Lamb, P., and Aldous, D. (2014). The Role of E-Mentoring in Distinguishing Pedagogic Experiences of Gifted and Talented Pupils in Physical Education. Phys. Edu. Sport Pedagogy 19 (3), 301–319. doi:10.1080/17408989.2012.761682

Lee, J. J., Bell, L. F., and Shaulskiy, S. L. (2017). Exploring Mentors' Perceptions of Mentees and the Mentoring Relationship in a Multicultural Service-Learning Context. Active Learn. Higher Edu. 18 (3), 243–256. doi:10.1177/1469787417715203

Lim, J. H., MacLeod, B. P., Tkacik, P. T., and Dika, S. L. (2017). Peer Mentoring in Engineering: (Un)shared Experience of Undergraduate Peer Mentors and Mentees. Mentoring Tutoring: Partnership Learn. 25 (4), 395–416. doi:10.1080/13611267.2017.1403628

Linn, M. C., Palmer, E., Baranger, A., Gerard, E., and Stone, E. (2015). Undergraduate Research Experiences: Impacts and Opportunities. Science 347 (6222), 1261757. doi:10.1126/science.1261757

Masehela, L. M., and Mabika, M. (2017). An Assessment of the Impact of the Mentoring Programme on Student Performance. Jsaa 5 (2). doi:10.24085/jsaa.v5i2.2707

Matheson, D. W., Rempe, G., Saltis, M. N., and Nowag, A. D. (2020). Community Engagement: Mentor Beliefs across Training and Experience. Mentoring Tutoring: Partnership Learn. 28 (1), 26–43. doi:10.1080/13611267.2020.1736774

McIntosh, E. A. (2019). Working in Partnership: The Role of Peer Assisted Study Sessions in Engaging the Citizen Scholar. Active Learn. Higher Edu. 20 (3), 233–248. doi:10.1177/1469787417735608

Menard, E. A., and Rosen, R. (2016). Preservice Music Teacher Perceptions of Mentoring Young Composers. J. Music Teach. Edu. 25 (2), 66–80. doi:10.1177/1057083714552679

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., and the PRISMA Group (2009). Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Plos Med. 6 (7). doi:10.1371/journal.pmed.1000097

Monk, M., Baustian, M., Saari, C. R., Welsh, S., D’Elia, C., Powers, J., et al. (2014). EnvironMentors: Mentoring At-Risk High School Students through University Partnerships. Int. J. Environ. Sci. Educ. 9, 385–397. doi:10.12973/ijese.2014.223a

Murphy, J. A. (2016). Enhancing the Student Experience: A Case Study of a Library Peer Mentor Program. Coll. Undergraduate Libraries 23 (2), 151–167. doi:10.1080/10691316.2014.963777

Najmr, S., Chae, J., Greenberg, M. L., Bowman, C., Harkavy, I., and Maeyer, J. R. (2018). A Service-Learning Chemistry Course as a Model to Improve Undergraduate Scientific Communication Skills. J. Chem. Educ. 95 (4), 528–534. doi:10.1021/acs.jchemed.7b00679

Nelson, C. A., and Youngbull, N. R. (2015). Indigenous Knowledge Realized: Understanding the Role of Service Learning at the Intersection of Being a Mentor and a College-Going American Indian. Educ. 21 (2), 89–109. doi:10.37119/ojs2015.v21i2.268

Nelson, K. L., and Cutucache, C. E. (2017). How Do Former Undergraduate Mentors Evaluate Their Mentoring Experience 3-Years Post-Mentoring: A Phenomenological Study. Qualitative Report 22 (7), 2033.

Nora, A., and Crisp, G. (2007). Mentoring Students: Conceptualizing and Validating the Multi-Dimensions of a Support System. J. Coll. Student Retention: Res. Theor. Pract. 9 (3), 337–356. doi:10.2190/CS.9.3.e

O'Cathain, A., Murphy, E., and Nicholl, J. (2008). The Quality of Mixed Methods Studies in Health Services Research. J. Health Serv. Res. Pol. 13 (2), 92–98. doi:10.1258/jhsrp.2007.007074

Owen, L. (2017). Student Perceptions of Relevance in a Research Methods Course. Jarhe 9 (3), 394–406. doi:10.1108/jarhe-09-2016-0058

Packard, B. W., Marciano, V. N., Payne, J. M., Bledzki, L. A., and Woodard, C. T. (2014). Negotiating Peer Mentoring Roles in Undergraduate Research Lab Settings. Mentoring Tutoring: Partnership Learn. 22 (5), 433–445. doi:10.1080/13611267.2014.983327

Philipp, S., Tretter, T., and Rich, C. (2016). Research and Teaching: Development of Undergraduate Teaching Assistants as Effective Instructors in STEM Courses. J. Coll. Sci. Teach. 45 (3). doi:10.2505/4/jcst16_045_03_74

Pica, E., and Fripp, J. A. (2020). The Impact of Participating in a Juvenile Offender Mentorship Course on Students' Perceptions of the Legal System and Juvenile Offenders. J. Criminal Justice Edu. 31 (4), 609–618. doi:10.1080/10511253.2020.1831033

Plano Clark, V. L., Anderson, N., Wertz, J. A., Zhou, Y., Schumacher, K., and Miaskowski, C. (2015). Conceptualizing Longitudinal Mixed Methods Designs. J. Mixed Methods Res. 9 (4), 297–319. doi:10.1177/1558689814543563

Plano Clark, V. L., and Ivankova, N. V. (2016). Mixed Methods Research: A Guide to the Field. Thousand Oaks, CA: SAGE Publications, Inc. doi:10.4135/9781483398341

Rohatinsky, N., Harding, K., and Carriere, T. (2017). Nursing Student Peer Mentorship: A Review of the Literature. Mentoring Tutoring: Partnership Learn. 25 (1), 61–77. doi:10.1080/13611267.2017.1308098

Rompolski, K., and Dallaire, M. (2020). The Benefits of Near-Peer Teaching Assistants in the Anatomy and Physiology Lab: An Instructor and a Student's Perspective on a Novel Experience. HAPS Educator 24 (1), 82–94. doi:10.21692/haps.2020.003

Ross, L., and Bertucci, J. (2014). Perspectives on the Pathway to Paramedicine Programme. Med. Educ. 48 (11), 1113–1114. doi:10.1111/medu.12563

Roy, V., and Brown, P. A. (2016). Baccalaureate Accounting Student Mentors' Social Representations of Their Mentorship Experiences. cjsotl-rcacea 7 (1), 1–17. doi:10.5206/cjsotl-rcacea.2016.1.6

Ryan, S., Nauheimer, J. M., George, C., and Dague, E. (2017). "The Most Defining Experience": Undergraduate University Students' Experiences Mentoring Students with Intellectual and Developmental Disabilities. J. Postsecondary Edu. Disabil. 30, 283–298.

Santiago, J. A., Kim, M., Pasquini, E., and Roper, E. A. (2020). Kinesiology Students' Experiences in a Service-Learning Project for Children with Disabilities. Tpe 77 (2), 183–207. doi:10.18666/TPE-2020-V77-I2-9829

Schuetze, A., Claeys, L., Bustos Flores, B., and Sezech, S. (2015). La Clase Mágica as a Community Based Expansive Learning Approach to STEM Education. Ijree 2 (2), 27–45. doi:10.3224/ijree.v2i2.19545

Skjevik, E. P., Boudreau, J. D., Ringberg, U., Schei, E., Stenfors, T., Kvernenes, M., et al. (2020). Group Mentorship for Undergraduate Medical Students-A Systematic Review. Perspect. Med. Educ. 9 (5), 272–280. doi:10.1007/s40037-020-00610-3

Spaulding, D. T., Kennedy, J. A., Rózsavölgyi, A., and Colón, W. (2020b). Differences in Outcomes by Gender for Peer Mentors Participating in a STEM Persistence Program for First-Year Students. J. STEM Edu. 21 (1), 5–10.

Spaulding, D. T., Kennedy, J. A., Rózsavölgyi, A., and Colón, W. (2020a). Outcomes for Peer-Based Mentors in a University-Wide STEM Persistence Program: A Three-Year Analysis. J. Coll. Sci. Teach. 49 (4), 30–36.

Sweeney, A. B. (2018). Lab Mentors in a Two-Plus-Two Nursing Program: A Retrospective Evaluation. Teach. Learn. Nurs. 13 (3), 157–160. doi:10.1016/j.teln.2018.03.006

Tenenbaum, L. S., Anderson, M. K., Jett, M., and Yourick, D. L. (2014). An Innovative Near-Peer Mentoring Model for Undergraduate and Secondary Students: STEM Focus. Innov. High Educ. 39 (5), 375–385. doi:10.1007/s10755-014-9286-3

Thalluri, J., O'Flaherty, J. A., and Shepherd, P. L. (2014). Classmate Peer-Coaching: "A Study Buddy Support Scheme". J. Peer Learn. 7, 92–104. Available at: http://ro.uow.edu.au/ajpl/vol7/iss1/8.

Wallin, D., DeLathouwer, E., Adilman, J., Hoffart, J., and Prior-Hildebrandt, K. (2017). Undergraduate Peer Mentors as Teacher Leaders: Successful Starts. Int. J. Teach. Leadersh. 8 (1), 56–75.

Walsh, D., Veri, M., and Willard, J. (2015). Kinesiology Career Club: Undergraduate Student Mentors’ Perspectives on a Physical Activity-Based Teaching Personal and Social Responsibility Program. The Phys. Educator 72 (2), 317.

Ward, E. G., Thomas, E. E., and Disch, W. B. (2014). Mentor Service Themes Emergent in a Holistic, Undergraduate Peer-Mentoring Experience. J. Coll. Student Dev. 55 (6), 563–579. doi:10.1353/csd.2014.0058

Wasburn-Moses, L., Fry, J., and Sanders, K. (2014). The Impact of a Service-Learning Experience in Mentoring At-Risk Youth. J. Excell. Coll. Teach. 25 (1).

Weiler, L. M., Boat, A. A., and Haddock, S. A. (2019). Youth Risk and Mentoring Relationship Quality: The Moderating Effect of Program Experiences. Am. J. Community Psychol. 63 (1-2), 73–87. doi:10.1002/ajcp.12304

Wheat, L. S., Szepe, A., West, N. B., Riley, K. B., and Gibbons, M. M. (2019). Graduate and Undergraduate Student Development as a Result of Participation in a Grief Education Service Learning Course. J. Community Engagement Higher Edu. 11 (2), 31–45.

Won, M. R., and Choi, Y. J. (2017). Undergraduate Nursing Student Mentors' Experiences of Peer Mentoring in Korea: A Qualitative Analysis. Nurse Educ. Today 51, 8–14. doi:10.1016/j.nedt.2016.12.023

Wong, C., Stake-Doucet, N., Lombardo, C., Sanzone, L., and Tsimicalis, A. (2016). An Integrative Review of Peer Mentorship Programs for Undergraduate Nursing Students. J. Nurs. Educ. 55 (3), 141–149. doi:10.3928/01484834-20160216-04

Yilmaz, M., Ozcelik, S., Yilmazer, N., and Nekovei, R. (2013). Design-Oriented Enhanced Robotics Curriculum. IEEE Trans. Educ. 56 (1), 137–144. doi:10.1109/TE.2012.2220775

Zentz, S. E., Kurtz, C. P., and Alverson, E. M. (2014). Undergraduate Peer-Assisted Learning in the Clinical Setting. J. Nurs. Educ. 53 (3), S4–S10. doi:10.3928/01484834-20140211-01

Keywords: STEM education, UG mentoring, rigor, methods, systematic review

Citation: Leavitt AS, Nelson KL and Cutucache CE (2022) The Effect of Mentoring on Undergraduate Mentors: A Systematic Review of the Literature. Front. Educ. 6:731657. doi: 10.3389/feduc.2021.731657

Received: 27 June 2021; Accepted: 07 December 2021; Published: 31 January 2022.

Edited by:

Chi-Cheng Chang, National Taiwan Normal University, Taiwan

Reviewed by:

Olivera J. Đokić, University of Belgrade, Serbia

Jinny Han, Michigan State University, United States

Copyright © 2022 Leavitt, Nelson and Cutucache. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christine E. Cutucache, ccutucache@unomaha.edu
