- ¹Queens College and the Graduate Center, City University of New York, New York, NY, United States

- ²Ikerbasque, Basque Foundation for Science and Universidad de Deusto, Bilbao, Spain

The positive effect of feedback on students’ performance and learning is no longer disputed. For this reason, scholars have been developing models and theories that explain how feedback works and which variables may contribute to student engagement with it. Our aim in this review was to describe the most prominent models and theories, identified using a systematic, three-step approach. We selected 14 publications and described their definitions of feedback, their models and the models’ background, and the specific mechanisms underlying feedback processes. We concluded the review with eight main points drawn from our analysis of the models. The goal of this paper is to inform the field and to help both scholars and educators select appropriate models to frame their research and intervention development. In our complementary review (Panadero and Lipnevich, 2021), we further analyzed and compared the fourteen models with the goal of classifying and integrating their shared elements into a new comprehensive model.

A Review of Feedback Models and Theories: Descriptions, Definitions, Empirical Evidence, and Conclusions

For many decades, researchers and practitioners alike have examined how information presented to students about their performance on a task may affect their learning (Black and Wiliam, 1998; Lipnevich and Smith, 2018). The term feedback was appropriated into instructional contexts from industry (Wiliam, 2018), where the original definitions referred to feedback as information from an output that was looped back into the system. Over the years, definitions and theories of feedback have evolved, and scholars in the field continue to accumulate evidence attesting to feedback’s key role in student learning.

To provide the reader with a brief historical overview, we start in the early 20th century and consider Thorndike’s Law of Effect to mark the very inception of feedback research (Thorndike, 1927; Kluger and DeNisi, 1996). Skinner and behaviorism, with positive and negative reinforcement and punishment, can also be considered precursors to what the field currently views as instructional feedback (Wiliam, 2018). Further, the value of formative assessment, as it is now known, was first explicated by Benjamin Bloom in his seminal 1968 article, in which he described the benefits of offering students regular feedback on their learning through classroom formative assessments. Bloom described specific strategies teachers could use to implement formative assessments as part of regular classroom instruction, in order to improve student learning, to reduce gaps in the achievement of different subgroups of students, and to help teachers adjust their instruction (Bloom, 1971). Hence, extending the ideas of Scriven (1967), who proposed the dichotomy of formative and summative evaluation, Bloom deserves credit for introducing the concept of formative assessment (Guskey, 2018).

Importantly, the arrival of cognitive and constructivist theories began to change the general approach to feedback (Panadero et al., 2018), with researchers moving from a monolithic idea of feedback as “it is done to the students to change their behavior” to “it should give information to the students to process and construct knowledge.” Thus, in the late 1970s and most of the 1980s, there was a push to investigate the types of feedback that would be most beneficial to students’ learning. Even though the first publications were heavily influenced by behaviorism (e.g., Kulhavy, 1977), by the end of the 1980s there was a pedagogical push to turn feedback into opportunities for learning (e.g., Sadler, 1989).

It was in the 1990s that the “new” learning theories gained major traction in the psychological and educational literature on feedback. Around that time, cognitive models of feedback were developed, such as those by Butler and Winne (1995) and Kluger and DeNisi (1996). These theories focused on the cognitive processes central to the processing of feedback and also explored the mechanisms through which feedback affected those processes and students’ subsequent behavior. The key point in the development of the field, however, was Black and Wiliam’s (1998) publication of their thematic review, which accelerated and reshaped the field of formative assessment. The main message of their review still stands: across instructional settings, assessment should be used to provide information to both the learner and the teacher (or other instructional agent) about how to improve learning and teaching, with feedback being the main vehicle for achieving this. This idea may seem simple, but it is neither fully implemented nor sufficiently integrated with the summative functions of assessment (Panadero et al., 2018). In the two decades since Black and Wiliam’s (1998) publication, much progress has been made, with the classroom assessment literature being fused with learning theories such as self-regulated learning (Panadero et al., 2018), cognitive load (Sweller et al., 1998), and the control-value theory of achievement emotions (Goetz et al., 2018; Pekrun, 2007). In the current review, we do not delve into these voluminous strands of research, but we encourage the reader to explore them further.

As the field of formative assessment and feedback evolved, scholars devised feedback models to describe the processes and mechanisms of feedback. In general, these models have become both more comprehensive and more focused, depicting specific cognitive processes (Narciss and Huth, 2004), student responses to feedback (Lipnevich et al., 2016), the context (Evans, 2013), and pedagogical aspects of feedback (Carless and Boud, 2018; Hattie and Timperley, 2007; Nicol and Macfarlane-Dick, 2006). In contrast to the earlier conception, in which “feedback was done” to the student, in the most current models the learner is at the center of the feedback process and is an active agent who not only processes feedback but also responds to it, can generate it, and acquires feedback expertise to engage with it in more advanced ways (Shute, 2008; Stobart, 2018). Additionally, much is known about how to involve students in the creation of feedback, either as self-feedback (Andrade, 2018; Boud, 2000) or as peer feedback (Panadero et al., 2018; van Zundert et al., 2010), and about the key elements that influence students’ use of feedback (Jonsson and Panadero, 2018; Winstone et al., 2017). Given the ongoing proliferation of models, theories, and strands of research, now may be a critical moment to examine the most influential models and theories currently used by researchers and educators. Doing so allows us to consider how the models have evolved and what the main developments in our conceptions of feedback have been after decades of research. The current review does just that: through a rigorous multi-step process, we selected, described, and compared 14 prominent models and theories currently discussed and used by researchers in the field.

Our aim was to provide researchers with a guide for selecting the most suitable model to frame their studies, and to provide newcomers to the field with a starting point for exploring the key theoretical approaches and descriptions of feedback mechanisms. We worked on two reviews simultaneously. In the current one, we focus on describing the fourteen included models and draw conclusions about their definitions and supporting evidence. In the second review (Panadero and Lipnevich, 2021), we compare typologies of feedback and discuss elements of the included models, proposing an integrative model of feedback elements: the MISCA model (Message, Implementation, Students, Context, and Agents). To get the complete picture, we strongly recommend that the reader engage with both reviews, starting with the present one.

Methods

Selection of Relevant Publications

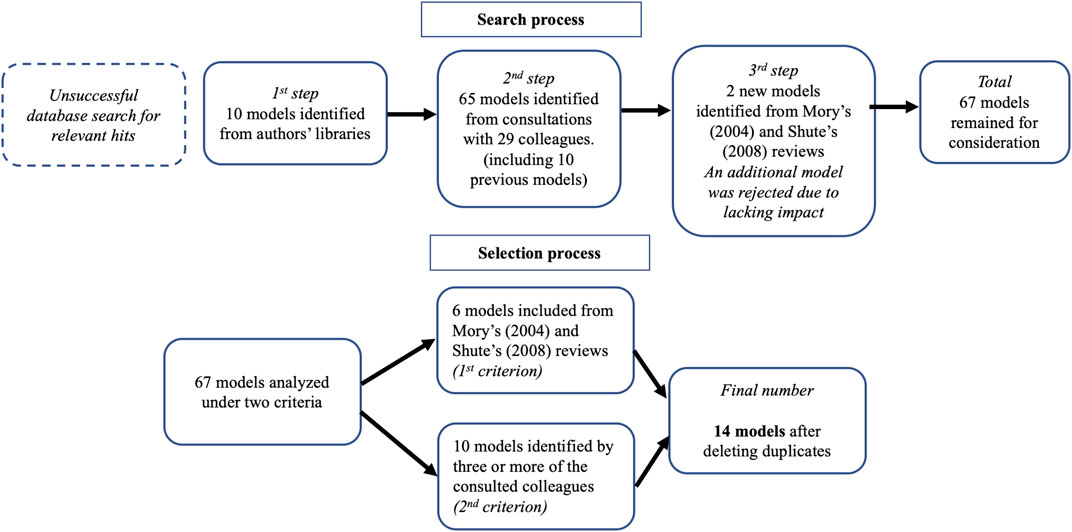

To select models for review, we searched the PsycINFO, ERIC, and Google Scholar databases. Unfortunately, this approach was not fruitful: searching for “feedback + model” and “feedback + model + education” in the title, abstract, or full text returned results from a mix of disciplines for the first combination and results focused on variables other than feedback (e.g., self-assessment, peer assessment) for the second. Therefore, we established a three-step search method. First, we used our own reference libraries to locate the feedback models we had previously identified. This exercise yielded ten models. Second, we consulted colleagues to get their opinion on models that they thought had an impact on the feedback literature. We sent emails to 38 colleagues asking which feedback models they knew of, whether they had developed any models themselves, and whether they would be available for further consultation. Our instructions intentionally included neither a strict definition nor an operationalization of what constituted a feedback model; this was done to allow for individual interpretation. To select the 38 colleagues, we first contacted all the first authors of the models we had already identified, the authors of the two existing reviews, and internationally reputed feedback researchers. We obtained 29 responses, but two of them did not provide enough information and were rejected. Through this procedure, we identified 65 models, including the ten models already selected from our reference libraries. Finally, we used two previous reviews of feedback models (Mory, 2004; Shute, 2008) to identify models that might not have been captured in the two previous steps, selecting two more. Importantly, one of the models included in Mory (2004) that had not been identified earlier had had very limited impact on the field: Clariana (1999) had been cited only 39 times since 1999, so this model was no longer considered for inclusion. The resulting number of feedback models was 67.

Acknowledging that so many models could not be meaningfully represented in our review, we set out to reduce the pool further. We used a combination of two inclusion criteria. First, we selected all but one of the models that had been included in the two previous feedback reviews (Mory, 2004; Shute, 2008). Applying this criterion produced a total of six models. Second, in addition to the previous criterion, we established a minimum threshold of three votes by the consulted colleagues for adding new models, which resulted in ten models. After deleting duplicates, a total of 14 models were included in this review (see Figure 1). The final list is: Ramaprasad (1983); Kulhavy and Stock (1989); Sadler (1989); Bangert-Drowns et al. (1991); Butler and Winne (1995); Kluger and DeNisi (1996); Tunstall and Gipps (1996); Mason and Bruning (2001); Narciss (2004, 2008); Nicol and Macfarlane-Dick (2006); Hattie and Timperley (2007); Evans (2013); Lipnevich et al. (2016); and Carless and Boud (2018).

The fourteen selected publications differ in nature. Some are meta-analytic reviews, others are narrative reviews, and several are empirical papers. Importantly, two of the papers present theoretical work without any empirical evidence and without describing any links among variables related to feedback (Ramaprasad, 1983; Sadler, 1989). Hence, we would like to alert the reader that some of the articles discussed in this review do not present anything that would fit the definition of a model. Traditionally, a model explains existing relations among components and is typically represented pictorially (Gall, Borg, and Gall, 1996). Some of the manuscripts that we included neither described relations nor offered graphical representations of feedback mechanisms. Our decision to include them was based exclusively on the criteria described above. For simplicity, we will refer to the fourteen scholarly contributions as models, but we will explain the type of each contribution in the upcoming sections.

Extracting, Coding, Analyzing Data and Consulting Models’ Authors

Data were extracted from the included publications for the following categories: 1) Reference, 2) Definition of feedback, 3) Theoretical framework, 4) Citations of previous models, 5) Areas of feedback covered, 6) Model description, 7) Empirical evidence supporting the model, 8) Pictorial representation, 9) References to formative assessment, 10) Notes by the first author, 11) Notes by the second author, and 12) Summaries of the model from other publications. We coded the sources descriptively for all categories except the definition of feedback, for which we copied the definitions verbatim from the original source. Both authors coded the articles, and there was complete agreement in the assignment of separate components to the aforementioned categories.

Finally, we contacted the authors of all the included publications except one (whose two authors were retired and could not be reached). Twelve authors replied (two from the same publication), but only seven agreed to and scheduled an interview (Boud, Carless, Kluger, Narciss, Ramaprasad, Sadler, and Winne). The interviews were recorded and helped us to better understand the models. Also, to ensure adequate interpretation of the models, we shared our descriptions with the authors, who returned them with their approval and, in some cases, suggestions for revisions.

We would like to alert the reader that the Methods section is shared with the second part of this review (Panadero and Lipnevich, 2021).

Models and Theories: Descriptions

In this section, we present descriptions of the models and theories included in the current systematic review. We do not claim to provide a comprehensive overview of each model or theory; rather, we give the reader a flavor of what they represent. Because most summaries were checked by the corresponding authors, we believe that our account is fairly accurate and unbiased, and in this section we try to withhold our own interpretations or opinions about the utility of each model. We follow this section with a detailed analysis of definitions, a summary of existing empirical evidence, and conclusions and recommendations. We would also like to direct the reader to the second review, in which we integrate the fourteen models into a comprehensive taxonomy (Panadero and Lipnevich, 2021).

Ramaprasad (1983): Clarifying the Purpose of Feedback From Outside the Field

Ramaprasad’s work has been crucial for the current conceptualization of feedback in educational settings: his paper on the definition of feedback has been cited over 1,500 times, most frequently in educational psychology and education.

Theoretical Framework

Ramaprasad’s seminal article was published in Behavioral Science (now called “Systems Research and Behavioral Science”), the official journal of the Society for General Systems Research (SGSR/ISSS). The paper does not cite a single educational source; instead, it draws upon management, behavioral, industrial/organizational (I/O), and social psychology.

Description

Ramaprasad’s article does not describe a model in its traditional sense. Rather, the author presents a theoretical overview of feedback, focusing on mechanisms, valence, and consequences of feedback for subsequent performance. His work is influential in education because of his definition of feedback, but it contains other concepts that have been somewhat overlooked by educators. We will concentrate on three main aspects of the paper.

1) The definition. Ramaprasad’s definition of feedback is very well-known and is referenced extensively. According to the author: “Feedback is information about the gap between the actual level and the reference level of a system parameter which is used to alter the gap in some way” (p. 4). There are three key points that need to be emphasized: 1) the focus of feedback may be any system parameter, 2) the necessary conditions for feedback are the existence of data on the reference level of the parameter, data on the actual level of the parameter, and a mechanism for comparing the two to generate information about the gap between the two levels, and 3) the information on the gap between the actual level and the reference level is considered to be feedback only when it is used to alter the gap.

2) Three key ideas. Because Ramaprasad comes from a different academic field, it is important to clarify the main ideas present in his definition. The first concerns the reference level, which is a representation of a position that can be used for assessing a product or performance. According to Ramaprasad, the reference level can vary along two continua, one bounded by explicit and implicit and the other by quantitative and qualitative. “When reference levels are implicit and/or qualitative, comparison and consequent feedback is rendered difficult. Despite the above fact, implicit and qualitative reference levels are extremely important in management and cannot be ignored.” (p. 6). In educational settings, this idea highlights the importance of explicit criteria and standards.

The second key idea is the comparison of reference and actual levels. Here, Ramaprasad states that “irrespective of the unit performing the comparison, a basic and obvious requirement is that the unit should have data on the reference level and the actual level of the system parameter it is comparing. In the absence of either comparison it is impossible” (p. 7). This aspect emphasizes the critical role of two of the key questions that Hattie and Timperley (2007) discussed in their work: “where am I going?” and “how am I going?”

Third, using information about the gap to alter the gap is an idea that is critical in instructional contexts. Ramaprasad maintains that information about the gap can be considered feedback only if it is used (even if the decision is to do nothing about the gap). If the information is simply stored and nothing happens with it, then it is just information and cannot be considered feedback. It is important to remember that Ramaprasad viewed feedback through the lens of the systems and management perspective. From the learning sciences perspective, if information gets stored in long-term memory, a change has already occurred and feedback has had an effect, even if no external changes have been observed. On the other hand, if the process and outcome of storing the information are interpreted as ignoring the feedback, then it has not had any effect.

3) Positive and negative feedback. Ramaprasad’s description of positive and negative feedback differs from the more common interpretation in terms of affective valence. From Ramaprasad’s perspective: “if the action triggered by feedback widens the gap between the reference and the actual levels of the system parameter...is called positive feedback; ...if the action reduces the gap between the two levels...is negative” (p. 9). Ramaprasad did acknowledge alternative interpretations of positive and negative feedback, in which valence is determined by the emotions triggered in the feedback receiver (e.g., positive for enjoyment and pride, negative for disappointment and anxiety) or is framed in terms of Skinner’s positive and negative reinforcement. In the context of education, we usually describe feedback as positive or negative depending on the emotions it elicits.
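Because Ramaprasad’s sign convention runs counter to everyday educational usage, a small worked example may help. The Python sketch below is our own illustration, not Ramaprasad’s formalism. It assumes a single numeric system parameter (a hypothetical essay score with a reference level of 9 and an actual level of 6), treats the gap as the absolute difference between the two levels, and labels an action positive if it widens that gap and negative if it narrows it. The names `Parameter` and `classify_action`, and the "no change" label for an unchanged gap, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Parameter:
    """One system parameter: a reference (target) level and an actual level.

    Hypothetical illustration; Ramaprasad (1983) defines no such data structure.
    """
    reference: float
    actual: float

    def gap(self) -> float:
        # Information about the gap: how far the actual level is from the reference level.
        return abs(self.reference - self.actual)


def classify_action(before: Parameter, after: Parameter) -> str:
    """Label the action that moved `before` to `after` in Ramaprasad's sense.

    "positive" = the action widened the gap; "negative" = the action reduced it.
    Returning "no change" for an unchanged gap is an assumption of this sketch.
    """
    if before.reference != after.reference:
        raise ValueError("the reference level must stay fixed for a valid comparison")
    if after.gap() > before.gap():
        return "positive"
    if after.gap() < before.gap():
        return "negative"
    return "no change"


if __name__ == "__main__":
    draft = Parameter(reference=9, actual=6)     # hypothetical essay score vs. target
    revision = Parameter(reference=9, actual=8)  # score after acting on the feedback
    print(draft.gap(), revision.gap())           # 3 1
    print(classify_action(draft, revision))      # negative
```

Under these assumptions, a revision that raises the score from 6 to 8 narrows the gap from 3 to 1 and therefore counts as negative feedback in Ramaprasad’s sense, even though a student would most likely experience the improvement as positive.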

Kulhavy and Stock (1989): A Model From Information Processing

Kulhavy and Stock’s (1989) seminal work introduced the idea of multiple feedback cycles, considered the types, form, and content of feedback, and explicitly equated feedback with general information. The authors considered feedback from the information-processing perspective, contrasting it with earlier studies that used the behaviorist approach as their foundation.

Theoretical Framework

According to the authors, many of the early studies conducted within the behaviorist perspective viewed feedback as corrective information that strengthened correct responses through reinforcement and weakened incorrect responses through punishment. This somewhat mechanistic perspective stressed the importance of minimizing errors, but it described neither how errors were corrected nor the means for correcting them. Feedback following an instructional response was viewed as fitting the sequence of events of Thorndike’s Law of Effect (Thorndike, 1927, 1997) and was construed as the driving force of human learning. The fact that a learner 1) received a task, 2) produced a response, and 3) received feedback indicating whether the answer was correct or not (punishment or reinforcement) provided a superficial parallel to the familiar sequence of 1) stimulus, 2) response, and 3) reinforcement. However, as Kulhavy and Stock (1989) noted, people engaged in instructional tasks were not under the powerful stimulus control found in the laboratory, and, with their constantly changing stimuli and responses, instructional settings bore very little resemblance to the typical operant setting. Hence, presenting corrective feedback after an incorrect response might have no effect on the learner. Consequently, within this tradition, the errors that students made were ignored, and instructors’ attention was directed to students’ correct responses only.

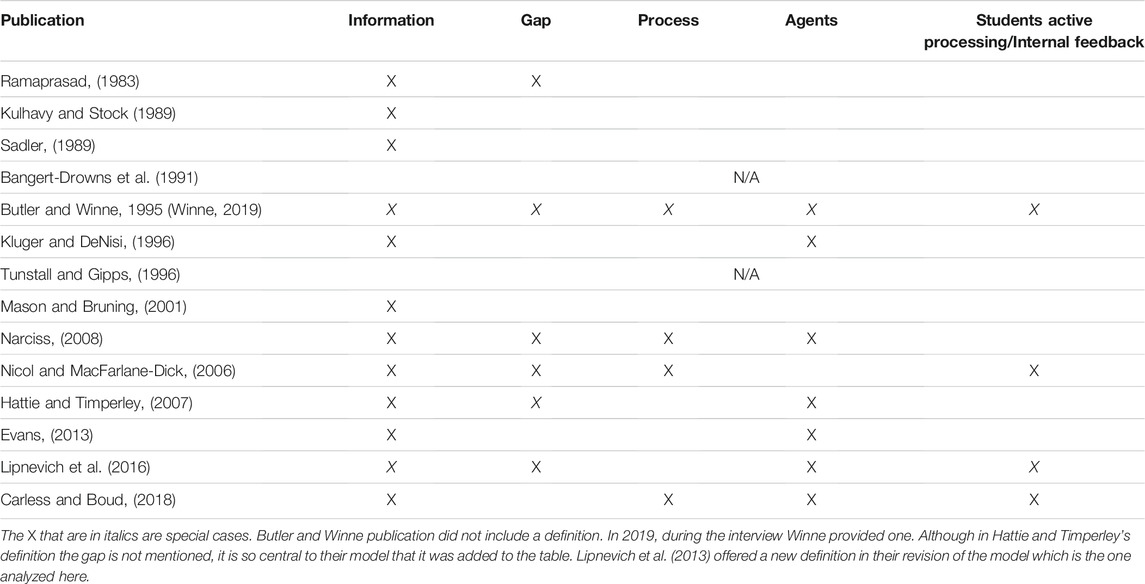

In contrast, from the information-processing perspective, errors were of central importance, as this approach described the exact mechanisms through which external feedback helped to correct mistakes in the products of a learning activity. Both direct and mediated feedback could be distinguished according to their content along two dimensions: verification and elaboration. Verification represents students’ evaluation of whether a particular feedback message matches their response, whereas elaboration can be classified according to the load, form, and type of information. Load is the amount of information provided in the feedback message, which can range from a letter grade to a detailed narrative account of students’ performance. Type of information is reflected in the dichotomy between process-related (descriptive) feedback and outcome-related (evaluative) feedback. Form is defined as the changes in stimulus structure between the instruction and the feedback message that a learner receives.

Description

Although Kulhavy and Stock (1989) did not provide a clear definition of feedback, they adhered to the one offered by Kulhavy (1977), who defined feedback as “any of the numerous procedures that are used to tell a learner if an instructional response is right or wrong” (Kulhavy, 1977, p. 211). In its simplest form, this means indicating whether a learner’s response to an instructional prompt was correct or not, whereas more complex forms of feedback include messages that provide the learner with additional information on what needs improvement (“correctional review”). Interestingly, the authors also noted that with increasing complexity, feedback would inevitably become indistinguishable from instruction.

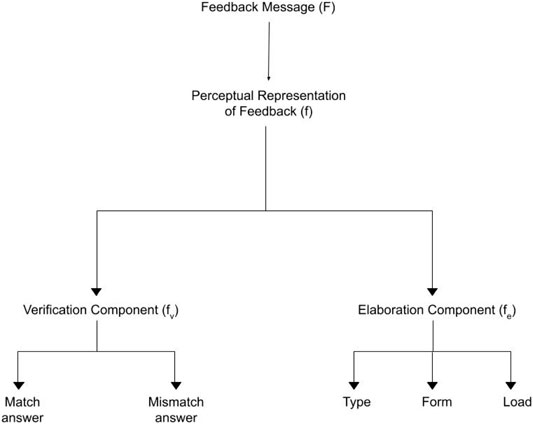

The central idea of Kulhavy and Stock’s model is response certitude (Figure 2), which the authors defined as the degree to which learners expect their response to be correct. This central tenet of the model is only indirectly related to the classification of the response as right or wrong, so the model is not limited to a basic error analysis; rather, it seeks to make predictions about student performance. In addition to response certitude, the authors discuss response durability, which is the likelihood that an instructional response will be available for the learner’s use at some later point in time. The key underlying premise of Kulhavy and Stock’s (1989) model is that certitude estimates and response durability are positively related, so in situations where feedback is unavailable, the magnitude of certitude increases, and the probability of selecting the same (often incorrect) response increases as well.

FIGURE 2. Kulhavy and Stock (1989) feedback components.

The model itself comprises three cycles, each of which includes an iteration loop (Figure 3). The first cycle depicts the instructional task demands, the second represents the feedback message, and the third represents the criterion task demand. In the first cycle, the perceived task demand is compared to the set of existing cognitive referents available to the learner. In the second cycle, the feedback message is compared to the cognitive referents retained from the initial cycle. These two cycles are followed by cycle three, in which the perceived stimulus is again the original task demand, now compared to the cognitive referents that have been modified by the feedback message.

FIGURE 3. Adapted from Kulhavy and Stock (1989) three-cycle feedback model.

Kulhavy and Stock (1989) also suggested that introducing a small delay between responses and feedback helped to eliminate proactive interference and thus increased the impact of error-correcting feedback.

Sadler (1989): Seminal Work for Formative Assessment

Sadler’s work has been foundational for the conceptualization of feedback, assessment criteria, and assessment philosophy. His seminal paper outlines a theory of formative assessment. Although Sadler does not describe an actual model of feedback, a significant portion of his paper is dedicated to feedback, with a focus on its formative features.

Theoretical Framework

Before developing and extending his own definition of feedback, Sadler referred to earlier writings on formative assessment and feedback, specifically those by Kulhavy (1977) and Kulik and Kulik (1988), as well as to much earlier work on Thorndike’s Law of Effect (Thorndike, 1913; Thorndike, 1927). These authors equated feedback with learners’ knowledge of results, whereas Sadler adopted a much broader view. His theoretical exploration built upon the definition of feedback offered by Ramaprasad (1983), which referred to feedback as it is found in many different contexts, not specifically in education. Sadler brought Ramaprasad’s definition into education and extended it to areas such as writing assessment and qualitative judgment, which are characterized by multidimensional criteria that cannot be evaluated as simply correct or incorrect (Sadler and Ramaprasad, October 2019, personal communication).

Description

Sadler referred to feedback as “a key element in formative assessment… usually defined in terms of information about how successfully something has been or is being done” (p. 120). Sadler applied Ramaprasad’s conceptualization of feedback to situations in which the teacher provides feedback and learners are the main actors who have to understand the feedback in order to improve their work. If information from the teacher is too complex, or if students lack the knowledge or opportunity to use the information, then this feedback is no more than “dangling data” and, hence, highly ineffective. For feedback to be effective, students should be familiar with the assessment criteria, should be able to monitor the quality of their work, and should have a wide repertoire of strategies from which they can draw to improve their work. Sadler emphasized the importance of continuous self-assessment, which he described as judgments of the quality of one’s own work at any given time.

Sadler also discussed three conditions that had to be satisfied for feedback to be effective. According to Sadler, the first condition concerned a standard toward which students aimed as they worked on the task at hand. The second condition required students to compare their actual level of performance with the standard, and the third emphasized student engagement in actions that eventually closed the gap. Sadler stressed that all three conditions had to be satisfied for any feedback to be effective.

Sadler made an interesting distinction that we did not encounter in the writings of any other scholars included in the current review. He contrasted feedback with self-monitoring, defining the former as information that came from an external source and the latter as self-generated by the learner. He further suggested that one of the key instructional goals was to move learners away from feedback and have them rely fully on self-monitoring.

Sadler also argued that students’ work was difficult to evaluate on a dichotomous scale of correct or incorrect; instead, effective evaluations resulted from “direct qualitative human judgments.” Consequently, Sadler broadened the definition of feedback as information about the quality of performance to include “…knowledge of the standard or goal, skills in making multicriterion comparisons, and the development of ways and means for reducing the discrepancy between what is produced and what is aimed for” (p. 142).

Sadler discussed a variety of tools and approaches that should help students with effective self-monitoring, addressing such topics as peer assessment, the use of exemplars, continuous assessment, Bloom’s taxonomy, grading on the curve, and curriculum structure. His primary focus was on the need to help students develop effective evaluative skills, so that they could transition from complete reliance on teacher-delivered evaluations to their own self-monitoring.

Bangert-Drowns et al. (1991): The First Attempt to Meta-Analyze the Effects of Feedback

Bangert-Drowns et al. (1991) are well known for a series of meta-analyses and empirical reviews that they published throughout the 1980s and into the mid-1990s on a range of topics (e.g., coaching for aptitude tests, computer-based education, frequent classroom testing). To our knowledge, this particular meta-analysis was the first to aggregate the results of empirical studies on the effects of feedback on meaningful educational outcomes.

Theoretical Framework

This meta-analysis is grounded in early feedback research, especially research conducted by Kulhavy and Stock (1989). The paper also draws upon behaviorist (Thorndike, 1913) and cognitive psychology (Shuell, 1986) ideas on how feedback may affect learning.

Description

In their paper, the authors did not present a clear definition of feedback. They discussed previous research on feedback going back to the first decade of the 20th century, without ever operationalizing feedback. Nevertheless, they presented a typology that characterized feedback along three main categories:

1. Intentionality: feedback can be intentional, that is, delivered via interpersonal action or through intervening agents such as computers, or informal, which is more incidental in nature.

2. Target: feedback can influence affective dimensions (for example, motivation), it can scaffold self-regulated learning, and it can signal whether the student has correctly applied concepts and procedures and retrieved the correct information.

3. Content: characterized by load, which is the total amount of information given in the feedback message, ranging from simple yes/no statements to extended explanations; form, defined as the structural similarity between information as presented in feedback compared to the instructional presentation; and type of information indicating whether feedback restated information from the original task, referred to information given elsewhere in the instruction, or provided new information.

Additionally, the authors presented a five-stage model describing the feedback process. The five stages were: 1) the learner’s initial state, defined by four elements (interest, goal orientation, self-efficacy, and prior knowledge); 2) a question (or task) that activates search and retrieval strategies; 3) the learner’s response to the question; 4) the learner’s evaluation of the response and its comparison to the information offered in the feedback; and 5) the learner’s subsequent adjustments, based on this evaluation, to their knowledge, self-efficacy, and interest.

Finally, in their meta-analytic review the authors examined additional moderators, including the “type of feedback” and the “timing of feedback.” Regarding the former, the researchers found that merely indicating the correctness of responses was less powerful than providing an explanation. As for the latter, the authors found superior effects of delayed feedback. All of the studies included in this meta-analysis had been published before 1990.

Butler and Winne (1995): Answers From Self-Regulated Learning Theory (SRL)

Butler and Winne’s (1995) model served a twofold purpose: it explained differential effects of feedback at the cognitive processing level and, at the same time, it represented a widely cited SRL model (for a comparison of SRL models, see Panadero, 2017).

Theoretical Framework

In their model, Butler and Winne (1995) attempted to explain how internal and external feedback influenced students’ learning. Their main theoretical lens was information processing, with their focus expanding to include motivational factors in later years (Winne and Hadwin, 2008). Most of the references the authors used came from cognitive psychology (e.g., Balzer et al., 1989; Borkowski, 1992), SRL theory (e.g., Zimmerman, 1989), and attribution theory (e.g., Schunk, 1982).

Description

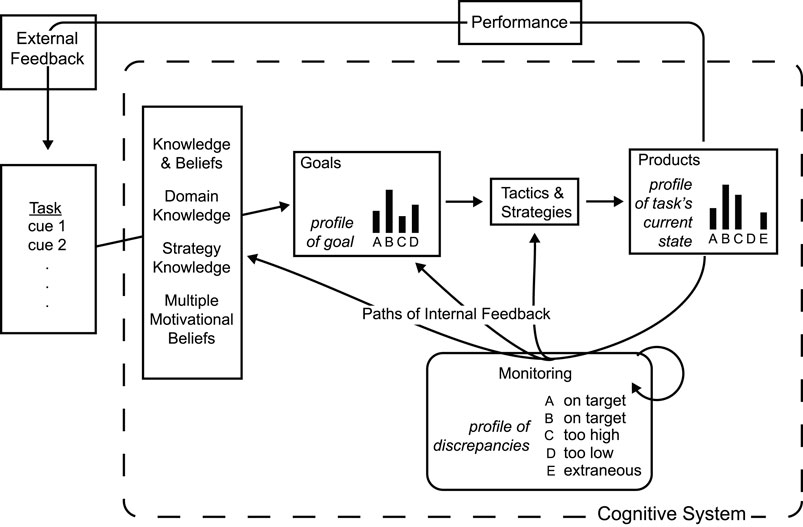

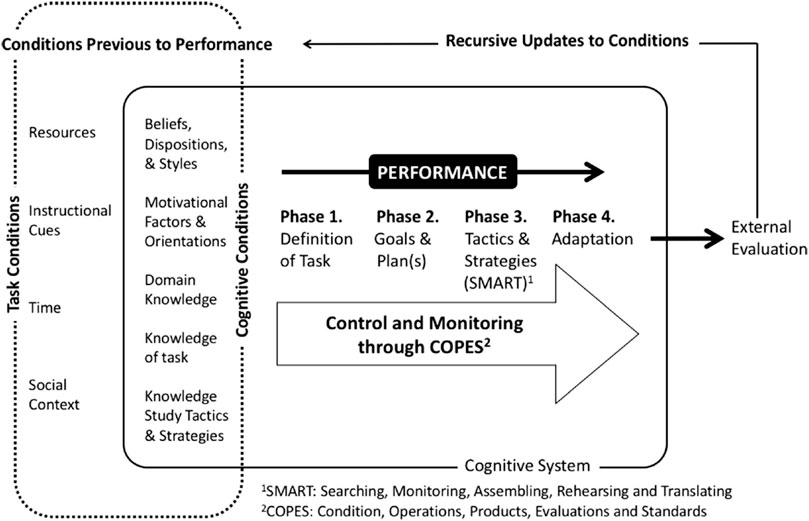

The authors did not include an explicit definition of feedback, but in the section “Four views on feedback…” the authors provided a broad description of the processes related to feedback. The model depicted mental processes that students activated when self-regulating during their execution of a task. Figure 4 is the original version, whereas Figure 5 represents the modified version of the model. Our subsequent discussion is based on the more current model depicted in Figure 5.

FIGURE 4. First version of Winne’s SRL model (extracted from Winne, 1996).

FIGURE 5. Modified version of Winne’s SRL model (extracted from Panadero et al., 2019).

According to this model, a number of antecedent variables affect students’ later performance. These are task conditions that are processed through the learner’s cognitive conditions, such as “domain knowledge” or “knowledge of study tactics and strategies,” along with motivational conditions. Once students begin their performance, four phases take place: 1) defining the task, 2) establishing goals and plans, 3) applying study tactics and strategies by searching, monitoring, assembling, rehearsing, and translating (SMART), and 4) adapting. Throughout the whole process, students monitor and control their progress. When they receive an external evaluation and the feedback that comes with it, their initial conditions get updated.

Butler and Winne (1995), drawing upon Carver and Scheier’s (1990) ideas, viewed feedback as an internal source, with students undergoing loops of feedback through monitoring and control. The loop related the learner’s interpretation of the product of monitoring (e.g., successful/unsuccessful, slow/fast, satisfying/disappointing) to their decision to maintain or adapt their thinking or actions in light of that product. Feedback was then the information learners perceived about aspects of thought (e.g., accuracy of beliefs or calibration) and performance (e.g., comparison to a standard or a norm). Feedback could also come from external sources when additional information was provided by an external agent. At any given point of the performance, students generated internal feedback by comparing the profile of their current state to their ideal profile of the goal. According to Butler and Winne (1995), this happened via self-assessment, by comparing different features of a task, and through learners’ active engagement with the task. With both internal and external feedback, learners could undergo small-scale adaptations, represented by basic modifications of their current performance, or large-scale adaptations that would subsequently affect their future performance on the task. This model, with some modifications, has been used in at least two other publications to anchor self-regulated learning and different assessment practices (Nicol and MacFarlane-Dick, 2006; Panadero et al., 2018).

Kluger and DeNisi (1996): An Ambitious Meta-Analysis Exploring Moderators of Feedback Interventions

Kluger and DeNisi’s (1996) paper is regarded as a seminal piece in the feedback research literature. It is frequently referenced to support a somewhat counter-intuitive finding: in one third of cases, feedback may have a negative effect on performance. The authors did a thorough job of screening 3000 publications on feedback and reducing them to a final set of 131. The number of moderators considered is also impressive and exceeds those examined in other meta-analyses. The paper quantitatively synthesized research on feedback interventions and proposed a new Feedback Intervention Theory with the goal of integrating multiple theoretical perspectives.

Theoretical Framework

This review is one of the most thorough syntheses of the psychological feedback literature. Kluger and DeNisi (1996) carefully summarized work on knowledge of results and knowledge of performance, and stressed the key relevance of Thorndike’s Law of Effect, cybernetics (Annett, 1969), goal setting theory (Locke and Latham, 1990), social cognitive theory (Bandura, 1991), learned helplessness (Mikulincer, 1994), and the multiple-cue probability learning paradigm (Balzer, Doherty, and O’Connor, 1989). Interestingly, this review did not reference Kulhavy and Stock (1989), Bangert-Drowns et al. (1991), Sadler (1989), or Ramaprasad (1983), authors whose work was central to the field of educational assessment. This suggests that, until recently, feedback research in psychology and education was conducted largely in parallel, despite a range of commonly shared ideas. For example, Kulhavy and Stock’s (1989) idea of “response certitude” clearly overlaps with “discrepancy,” a foundational idea of the Feedback Intervention Theory model.

Description

Kluger and DeNisi (1996) did not offer a clear definition of feedback but did define feedback interventions as “…actions taken by (an) external agent(s) to provide information regarding some aspect(s) of one’s task performance” (p. 225). The model has several pictorial representations (see Figure 6). Additional diagrams represented the effects of feedback interventions on more specific processes, such as attention and task-motivation processes.

FIGURE 6. A schematic overview of feedback intervention theory by Kluger and DeNisi (1996).

Their model focused on feedback that provided information about the discrepancy between the individual’s current level of performance and goals or standards. Kluger and DeNisi further proposed that individuals may have varying goals activated at the same time. For example, they could be comparing their performance to an external standard, to their own prior performance, performance of other reference groups, and their ideal goals. These discrepancies may be averaged or summed into an overall evaluation of feedback. The Feedback Intervention Theory also suggested that when the discrepancy between current and desired performance was established, the individual could: 1) choose to work harder, 2) lower the standard, 3) reject the feedback altogether, or 4) abandon their efforts to achieve the standard. Option selection depends upon how committed individuals are to the goal, whether the goal is clear, and how likely success will be if more effort is applied.

In the Feedback Intervention Theory, when individuals received feedback indicating that a goal had not been met, their attention could be focused on one of three levels: 1) the details of how to do the task, 2) the task as a whole, and 3) processes that the individual engages in while doing the task (meta-task processes). Kluger and DeNisi (1996) argued that individuals typically processed feedback at the task level, but that the feedback itself could influence the level at which it was received and attended to. Like many educational researchers, Kluger and DeNisi claimed that if a task was clear to the individual, receiving feedback containing too many task-specific details could be detrimental to performance.

The impact of Kluger and DeNisi’s work can be seen in much of the theoretical work that followed it (e.g., Hattie and Timperley’s (2007) model). The Feedback Intervention Theory model generally focused on feedback that communicated to individuals whether they were doing a particular task at an expected or desired level, thus assuming that individuals knew how to do the task. This is often not the case in educational settings, where the development of new skills is frequently the main goal. The authors acknowledged a limitation of the model: its breadth made the theory hardly falsifiable.

The main and most frequently cited finding of their meta-analysis was that feedback interventions increased individuals’ performance by 0.4 standard deviations. At the same time, results varied considerably, with one third of studies showing a negative influence on performance. Based on the results of their meta-analysis and close examination of moderators, Kluger and DeNisi demonstrated the utility of the Feedback Intervention Theory.

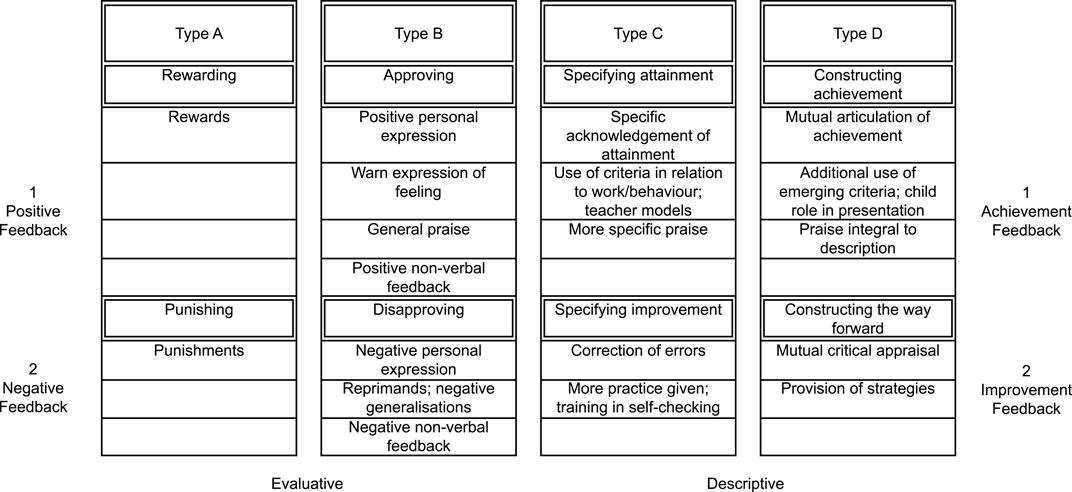

Tunstall and Gipps (1996): A Typology for Elementary School Students

This publication was included based on three votes cast by the consulted experts. Compared with the other models, its contribution may be more limited in scope. First, it is not a model that describes links and interactions but a typology: the authors’ primary goal was to categorize the different types of feedback they observed in classrooms. Second, its theoretical framework and links to the literature are rather limited. Third, the typology was developed based on a sample of 6- and 7-year-old students, so it may be applicable only to the early primary grades. We decided to keep this publication in the current review to be consistent with our specified inclusion criteria. It may be beneficial for researchers interested in the downward extension of feedback studies, as primary school samples are generally scarce in instructional feedback and assessment research (Lipnevich and Smith, 2018).

Theoretical Framework

The publication has a short theoretical introduction, with the authors referencing studies in early childhood education (e.g., Bennett and Kell, 1989), feedback (e.g., Brophy, 1981), and educational psychology (e.g., Dweck, 1986). The authors do not frame their study within any specific theoretical approach.

Description

Although this paper described a typology of feedback, the authors did not present a definition of feedback. Tunstall and Gipps (1996) conducted their study in six schools and selected 49 students for detailed examination. Based on document analyses, recordings of dialogues, and observations, they derived a typology that included five different types of feedback and their valence (Figure 7). These types were organized around two more general aims: feedback and socialization (i.e., Type S) and feedback in relationship to assessment. The former included four types that differed according to their purpose: classroom/individual management, performance orientation, mastery orientation, and learning orientation. Feedback in relationship to assessment was differentiated into feedback that is rewarding, approving, specifying attainment, and constructing achievement. The authors also included dimensions of positive and negative feedback, as well as achievement and improvement feedback. The typology appears to be highly descriptive, with multiple overlapping categories.

FIGURE 7. Feedback typology by Tunstall and Gipps (1996).

Mason and Bruning (2001): Considering Individual Differences in a Model for Computer Based Instruction

Mason and Bruning’s (2001) contribution is unique in that it is the first to introduce the role of individual differences in the context of computer-based instruction. The authors present a framework for decision making about feedback options in computerized instruction.

Theoretical Framework

The authors review literature on feedback in traditional instructional contexts, summarize research on computer-based education (e.g., Cohen, 1985), and discuss publications combining studies of both regular and computer-based instructional settings (e.g., Mory, 1994).

Description

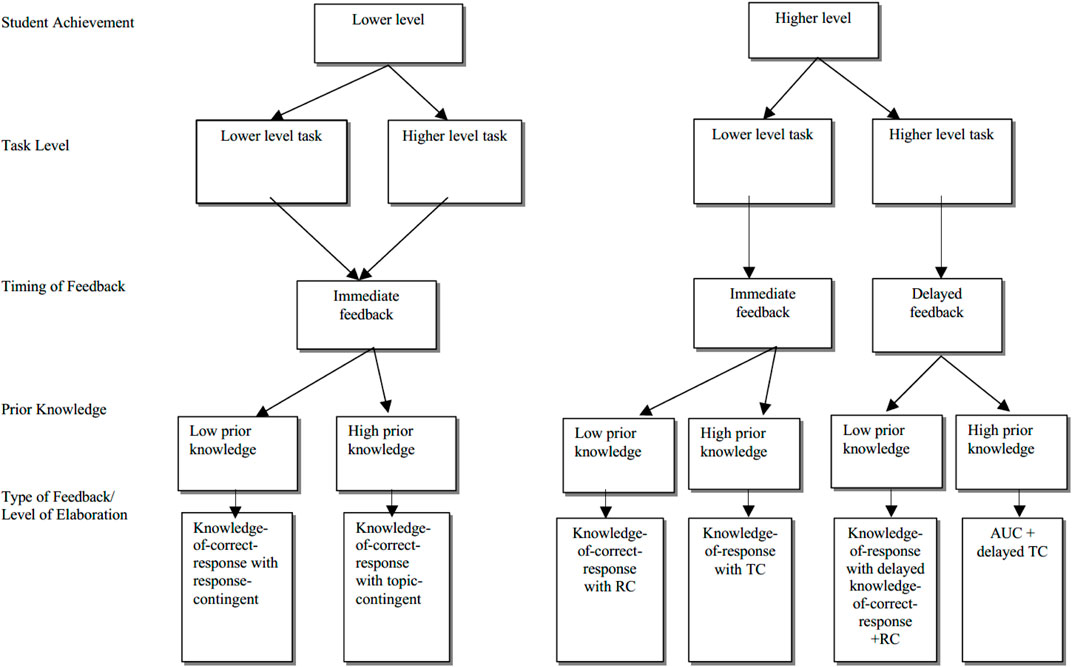

The authors provided the following definition of feedback: “In general terms, feedback is any message generated in response to a learner’s action” (p. 3). They started by describing eight types of feedback drawn from the literature, differentiated along two main dimensions, verification and elaboration: 1) No feedback, which presents only a performance score; 2) Knowledge of response, which communicates whether the answer was correct or incorrect; 3) Answer until correct, which provides verification but no elaboration; 4) Knowledge of correct response, which provides verification and knowledge of the correct answer; 5) Topic contingent, which delivers verification and elaboration regarding the topic; 6) Response contingent, which includes both verification and item-specific elaboration; 7) Bug related, which presents verification and addresses errors; and 8) Attribute isolation, which focuses the learner on key components. In this categorization, the authors considered the instructional context, and some of these types (e.g., “answer until correct”) are more common in computer-based instruction than others.

This paper’s main contribution is the model that differentiates among types of feedback based on learners’ characteristics, prior knowledge, and the timing of feedback. The pictorial representation is a flowchart that starts with the student’s achievement level and proceeds through the complexity of the task to the type of feedback (Figure 8). The model offers clear guidelines, grounded in previous empirical research, on how to deliver better feedback while considering a range of key variables, such as the student’s level of achievement and prior knowledge, as well as the timing of feedback. Interestingly, “attitude towards feedback” and “learner control,” two additional individual student characteristics that the authors explored in their introduction, were not incorporated into the model.

FIGURE 8. Mason and Bruning (2001) model.

Narciss and Huth (2004, 2008): An Ambitious Model Created for Computer Supported Learning

This model is probably the most ambitious of those described in this review. It explores both the reception and the processing of feedback. There are connections between this model and Butler and Winne’s; however, this model makes a range of unique contributions. It was first presented in two publications: one from 2004 and an updated and much more specific version from 2008. In later publications, Narciss introduced minor changes to the figures to make them easier to understand.

Theoretical Framework

The model is based upon the cybernetic paradigm from systems theory, while also incorporating “notions of competencies and models of self-regulated learning” (Narciss, 2017). The Interactive Tutoring Feedback model, also known as the interactive two-feedback-loops model, is steeped in the vast research base of the general feedback literature. The model represents the interacting processes and factors of the two feedback loops that may account for a large variety of feedback effects. The model also focuses on computer-supported learning, with a strong emphasis on tutoring systems that adapt feedback to students’ needs. Despite this strong focus on tutoring systems, the contentions of this model can be applied to face-to-face learning situations (Narciss, 2017).

Description

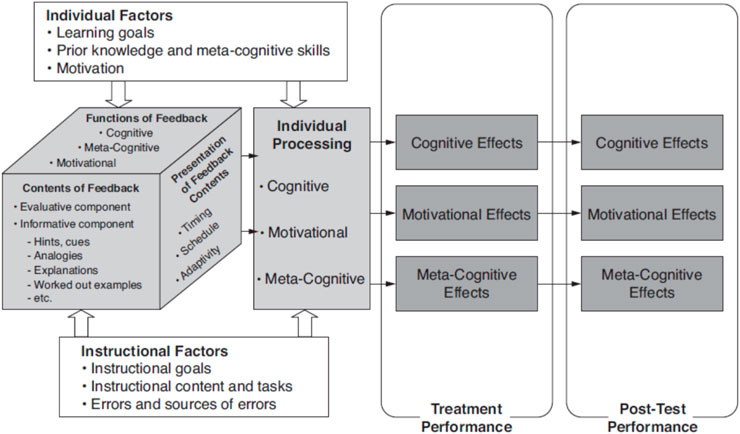

Narciss describes feedback as follows: “In instructional contexts the term feedback refers to all post-response information which informs the learner on his/her actual state of learning or performance in order to regulate the further process of learning in the direction of the learning standards strived for (e.g., Narciss, 2008; Shute, 2008). This notion of feedback can be traced back to early cybernetic views of feedback (e.g., Wiener, 1954) and emphasizes that a core aim of feedback in instructional contexts is to reduce gaps between current and desired states of learning (see also Ramaprasad, 1983; Sadler, 1989; Hattie, 2009).” (Narciss, 2017, p. 174). As can be seen, it is an ambitious definition that includes aspects of multiple theories.

The model presents factors and processes of both the external and the internal loop and shows how their potential interactions may influence the effects of feedback. When a student receives feedback, the characteristics of the feedback message alone do not explain the student’s response. Rather, feedback processing is an interactive process in which student and instructional characteristics jointly shape how the feedback is processed. Narciss presented three main components to be considered when designing feedback strategies (Figure 9): 1) characteristics of the feedback strategy (e.g., function, content, and presentation); 2) the learner’s individual factors (e.g., goals, motivation); and 3) instructional factors (e.g., goals, type of task). Hence, the model integrates multiple factors that influence whether and how feedback from an external source is processed effectively. Additionally, in 2013 and 2017, Narciss elaborated upon the individual and instructional factors and added specific conditions of the feedback source that are useful for designing efficient feedback strategies.

FIGURE 9. Narciss (2008) model of factors and effects of external feedback.

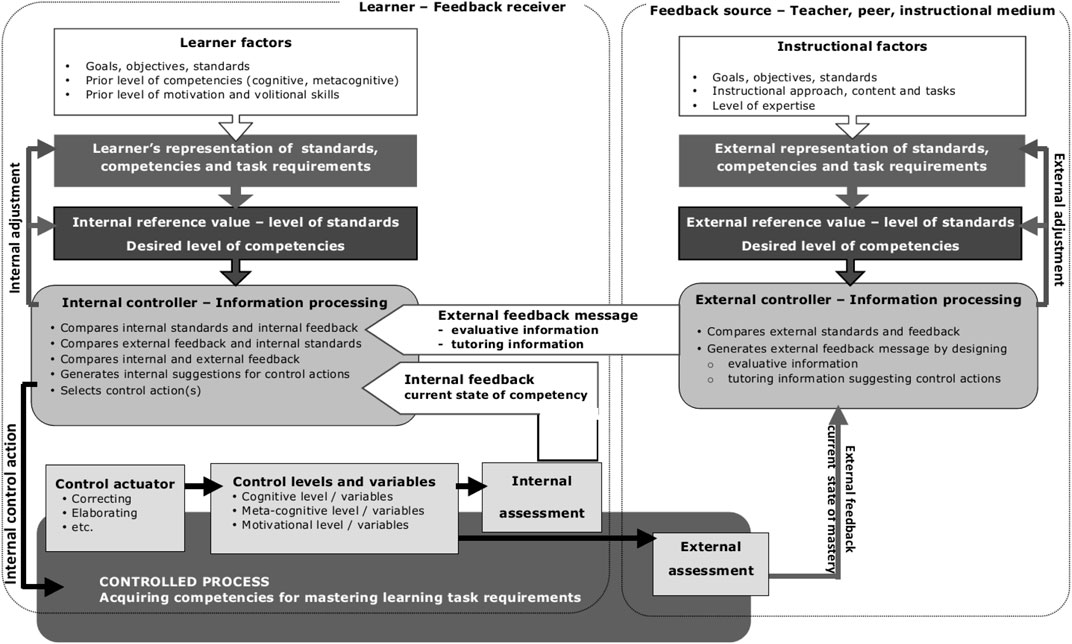

The model is represented in more detail in Figure 10. As one can see, learners’ engagement with feedback is influenced both by their own characteristics and by the teacher, peers, and the instructional medium. Narciss juxtaposes internal and external standards, competencies, and task requirements, as well as internal and external reference values. For example, an external controller compares external standards to the learner’s performance and communicates this information, as feedback, to the learner’s internal controller, which in turn generates internal feedback via self-assessment. This leads to different control actions, represented in the model by the control actuator and the controlled variables. Returning to Butler and Winne’s model, the interactive processes described in Narciss’ model explain, in a similar way, the small- and large-scale adaptations proposed by Butler and Winne.

FIGURE 10. Narciss (2013) feedback model explaining interactions.

Additionally, Narciss (2008) provided what is probably the most specific taxonomy of feedback among all the models included here, based on a multidimensional approach to describing the many ways feedback can be designed and provided. According to her typology, feedback can serve three functions: 1) cognitive (informative, completion, corrective, differentiation, restructuring); 2) metacognitive (informative, specification, corrective, guiding); and 3) motivational (incentive, task facilitation, self-efficacy enhancing, and reattribution). Feedback can also be classified by its content, which has an evaluative component and an informative component, with eight different categories (Narciss, 2008, Table 11.2, p. 135). Finally, the presentation of feedback can vary in timing, schedule, and adaptivity. This multidimensional classification, which also represents ways of designing feedback, is extremely detailed and stems from Narciss’ extensive work in this subfield.

Nicol and MacFarlane-Dick (2006): Connecting Formative Assessment With Self-Regulated Learning

This article has become one of the most important readings in the formative assessment literature. The article connects self-regulated learning theory, more specifically Winne’s model (Butler and Winne, 1995; Winne, 2011), with seven principles that are introduced as “good feedback practices.” Nicol and MacFarlane-Dick were among the first authors to provide specific connections between the two fields of self-regulated learning and formative assessment (Panadero et al., 2018).

Theoretical Framework

The theoretical framework of the paper draws upon the two fields of self-regulated learning and formative assessment, combining assessment literature (Sadler, 1998; Boud, 2000) with studies by self-regulated learning scholars (e.g., Pintrich, 1995; Zimmerman and Schunk, 2001).

Description

According to Nicol and MacFarlane-Dick, “Feedback is information about how the student’s present state (of learning and performance) relates to goals and standards. Students generate internal feedback as they monitor their engagement with learning activities and tasks and assess progress towards goals. Those more effective at self-regulation, however, produce better feedback or are abler to use the feedback they generate to achieve their desired goals (Butler and Winne, 1995)” (p. 200).

Additionally, they referred to the seminal work of Sadler (1989) and Black and Wiliam (1998) and emphasized the importance of the three conditions that must be explicated for students to benefit from feedback: 1) the desired performance; 2) the current performance; 3) how to close the gap between the two.

Their model is largely based on Winne’s model of self-regulated learning, and it describes how feedback interacts with each of the components of the model. For example, the authors suggested that comparisons of goals to outcomes generated internal feedback at the cognitive, motivational, and behavioral levels, and that this information prompted the student either to change the process or to continue as before. They emphasized that self-generated feedback about a potential discrepancy between the goal and the performance may result in revisions to the task or in changes to internal goals or strategies. The model also presented various sources of feedback, which could be the teacher, peers, or other means (e.g., a computer). Like Sadler (1989), Nicol and MacFarlane-Dick emphasized the importance of active engagement with feedback.

Their model can be categorized as instructional and pedagogical because it presented seven feedback principles that influence self-regulated learning. According to the authors, good feedback that may influence self-regulated learning:

1. helps clarify what good performance is (goals, criteria, expected standards);

2. facilitates the development of self-assessment (reflection) in learning;

3. delivers high quality information to students about their learning;

4. encourages teacher and peer dialogue around learning;

5. encourages positive motivational beliefs and self-esteem;

6. provides opportunities to close the gap between current and desired performance;

7. provides information to teachers that can be used to help shape teaching.

These principles are among the main instructional practices that the formative assessment literature has been emphasizing for years (e.g., Lipnevich and Smith, 2018; Black and Wiliam, 1998; Black et al., 2003; Dochy and McDowell, 1997). However, the clarity with which the feedback practices are presented in relationship to self-regulated learning (Figure 11) makes this model very accessible. Additionally, each principle is presented in detail, with a description of its empirical support and instructional recommendations.

FIGURE 11. Nicol and MacFarlane-Dick (2006) model.

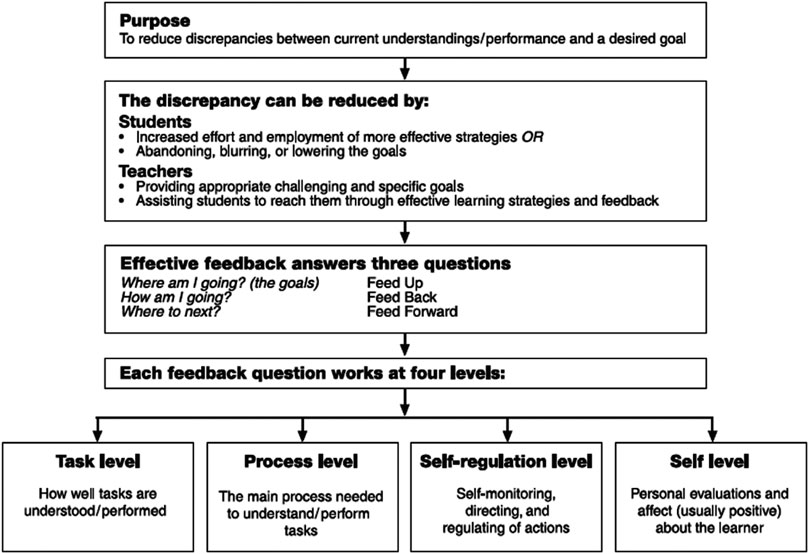

Hattie and Timperley (2007): A Typology Model Supported by Meta-Analytic Evidence

This is by far the most cited model of feedback, not only in terms of the number of citations (more than 14,000) but also in terms of expert selections (all consulted experts identified this model). It is also both a model and a typology, because it established instructional recommendations while linking them to four different types of feedback.

Theoretical Framework

The theoretical framework of this paper builds upon previous feedback reviews and meta-analyses, ideas presented in Hattie (1999), and general educational psychology literature (e.g., Deci and Ryan, 1985).

Description

The authors presented a simple definition that is applicable to a wide range of behaviors, contexts, and instructional situations: “... feedback is conceptualized as information provided by an agent (e.g., teacher, peer, book, parent, self, experience) regarding aspects of one’s performance or understanding.” (p. 81).

The model is based on the following proposition: feedback should serve the purpose of reducing the gap between the desired goal and the current performance. To this end, Hattie and Timperley (2007) proposed different ways in which students and teachers can reduce this gap (Figure 12). To be effective, feedback should answer three questions, each representing a type of feedback: Where am I going? (feed up); How am I going? (feed back); and Where to next? (feed forward). The authors claimed that the last type was the least frequently delivered yet had the greatest impact, and when the authors asked students what they meant by feedback, this was the type the students overwhelmingly desired (Hattie, personal communication, 30/11/2019). This, in itself, could be considered a typology differentiating feedback based on its context and content. However, the typology that resonated most with the field places feedback at four levels: task, process, self-regulation, and self. Most of the feedback given in instructional settings is at the task level (i.e., specific comments relating to the task itself) and the self level (i.e., personal comments), even though process feedback (i.e., comments on the processes needed to perform the task) and self-regulation feedback (i.e., higher-order comments relating to self-monitoring and the regulation of actions and affect) have more potential for improvement. The authors also noted that self-level feedback (e.g., generic, person-level praise) is almost never conducive to enhancing performance, regardless of its valence. Self-level feedback may interfere with task-, process-, or self-regulation-level feedback by drawing individuals’ attention away from those other types. This review tackles a range of additional topics, including feedback timing, the effects of positive and negative feedback, the teacher’s role in feedback, and feedback as part of a larger scheme of assessment.

FIGURE 12. Hattie and Timperley (2007) feedback model.

Importantly, in a personal communication (Hattie, personal communication, 30/11/2019), Hattie stated: “The BIG idea we missed in the earlier review was that we needed to conceptualize feedback more in terms of what is received as opposed to what is given.” This line of reasoning is prominently featured in Hattie’s recent work (Hattie and Clarke, 2019).

Evans (2013): Reviewing the Literature on Assessment Feedback in Higher Education

Evans’s (2013) publication presents a compelling review of the literature on feedback in higher education settings. The author showed an excellent understanding and pedagogical reading of the field, covering different areas of formative assessment ranging from lecturers’ instructional activities to peer and self-assessment.

Theoretical Framework

The primary purpose of this article was to review current literature focusing on feedback in the context of higher education. Evans described feedback from socio-constructivist, co-constructivist, and cognitivist perspectives, to name a few, and reviewed characteristics of feedback that were most pertinent in the context of higher education.

Description

Evans spent a substantial amount of time reviewing definitions of feedback. She proposed that “Assessment feedback therefore includes all feedback exchanges generated within assessment design, occurring within and beyond the immediate learning context, being overt or covert (actively and/or passively sought and/or received), and importantly, drawing from a range of sources.” (p. 71). Evans systematically reviewed principles of effective feedback and provided an excellent overview of the methodological approaches employed in feedback research.

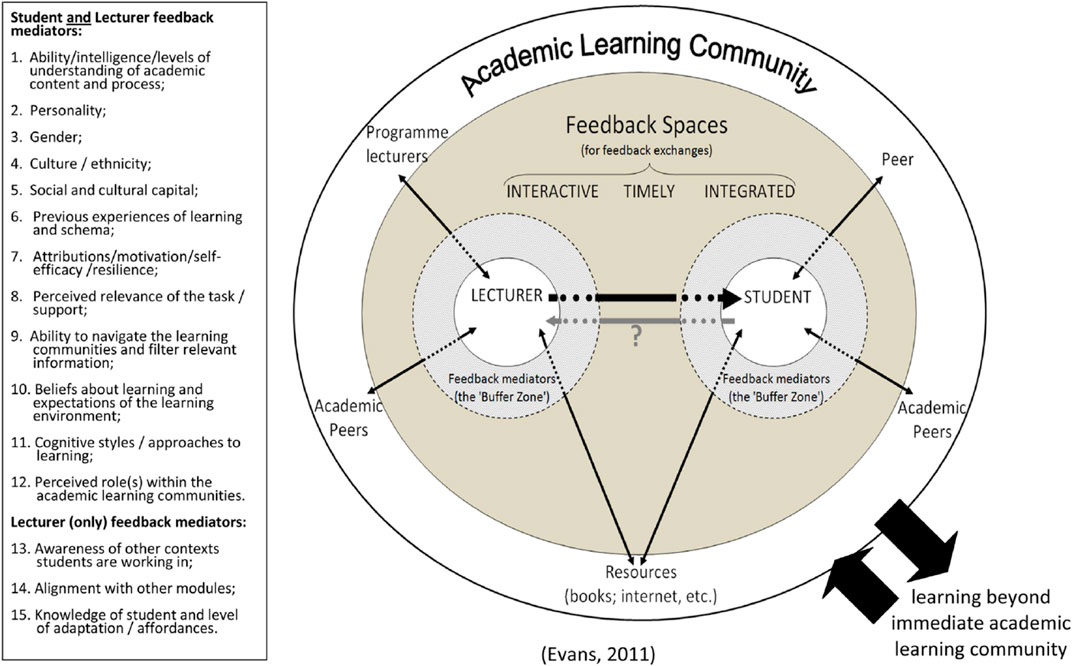

Towards the end of her review, Evans presented a model entitled “The feedback landscape,” depicted in Figure 13. The underlying idea of the model is the close interaction between students and lecturers. Evans suggested that feedback was moderated by 12 variables shared by feedback receivers and givers (e.g., ability, personality), with three additional mediators specific to lecturers (i.e., awareness of other contexts, alignment of other modules, and knowledge of students). Surrounding this interaction is the academic learning community (e.g., resources, academic peers), along with an emphasis on the temporal and spatial variability of mediators. Interestingly, most of these variables are not explored in detail in the review but are simply presented to the reader as part of the model. In general, presenting the model was not among the articulated goals of the manuscript, and its description is somewhat cursory.

FIGURE 13. Evans (2013) Feedback landscape model.

Nevertheless, the publication provided a remarkable amount of information about instructional applications of feedback. For example, the author presented a table with a list of key principles of effective feedback practice. For each principle, Evans provided a significant number of references. Additionally, she summarized these practices into “12 pragmatic actions”:

1. ensuring an appropriate range and choice of assessment opportunities throughout a program of study;

2. ensuring guidance about assessment is integrated into all teaching sessions;

3. ensuring all resources are available to students via virtual learning environments and other sources from the start of a program to enable students to take responsibility for organizing their own learning;

4. clarifying with students how all elements of assessment fit together and why they are relevant and valuable;

5. providing explicit guidance to students on the requirements of assessment;

6. clarifying with students the different forms and sources of feedback available including e-learning opportunities;

7. ensuring early opportunities for students to undertake assessment and obtain feedback;

8. clarifying the role of the student in the feedback process as an active participant and not as purely receiver of feedback and with sufficient knowledge to engage in feedback;

9. providing opportunities for students to work with assessment criteria and to work with examples of good work;

10. giving clear and focused feedback on how students can improve their work including signposting the most important areas to address;

11. ensuring support is in place to help students develop self-assessment skills including training in peer feedback possibilities including peer support groups;

12. ensuring training opportunities for staff to enhance shared understanding of assessment requirements.

She also provided multiple lists and tables containing recommendations for peer feedback (p. 92), the basics of the feedback landscape (p. 100), and potential avenues for future research (p. 107). These instructional recommendations are arguably more relevant than the model itself, because the model is not sufficiently developed in the text.

Lipnevich, Berg, and Smith (2016): Describing Student–Feedback Interaction

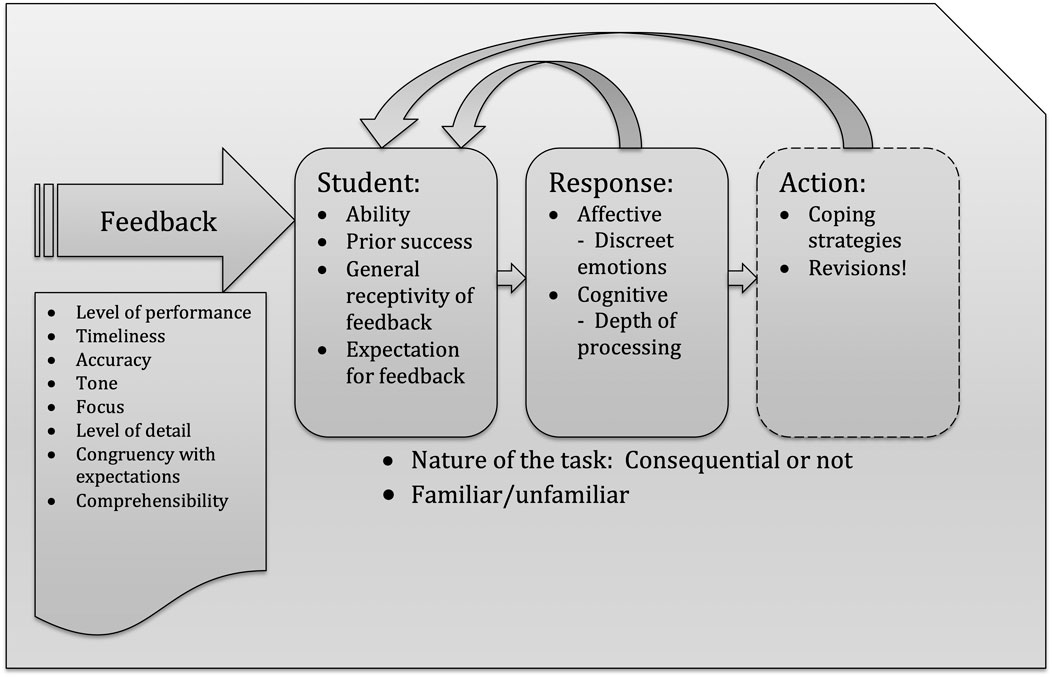

Lipnevich et al.’s (2016) model is one of the more recent models; it was first presented in a handbook chapter. The model, however, was selected by five experts as one of the models with which they are most familiar. It has recently been revised and expanded to incorporate empirical findings and recent developments in the field of feedback.

Theoretical Framework

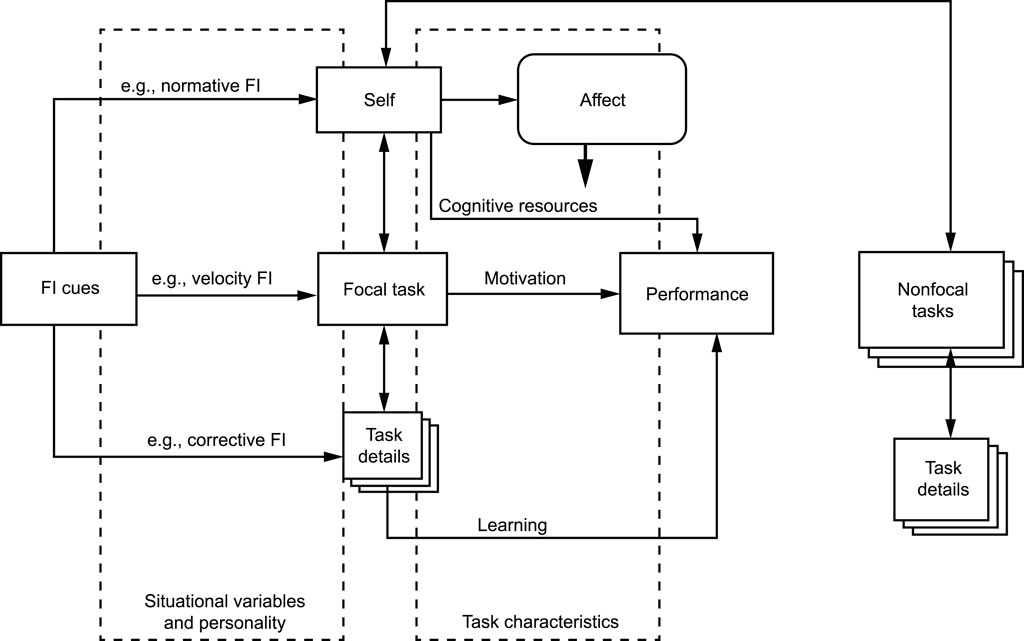

The authors ground their model in the literature on feedback, reporting a thorough review of the field. The definition that Lipnevich et al. (2016) used came from Shute (2008) who defined feedback as “information communicated to the learner that is intended to modify his or her thinking or behavior for the purpose of improving learning” (p. 154). Lipnevich et al. (2016) emphasized students’ affective responses and used Pekrun’s Control-Value theory of achievement emotions to frame their discussion. In their revision of the model, Lipnevich et al. (2013) proposed the following definition of feedback: “Instructional feedback is any information about a performance that learners can use to improve their performance or learning. Feedback might come from teachers, peers, or the task itself. It may include information on where the learner is, where the learner is going, or what steps should be taken and strategies employed to get there.”

Description

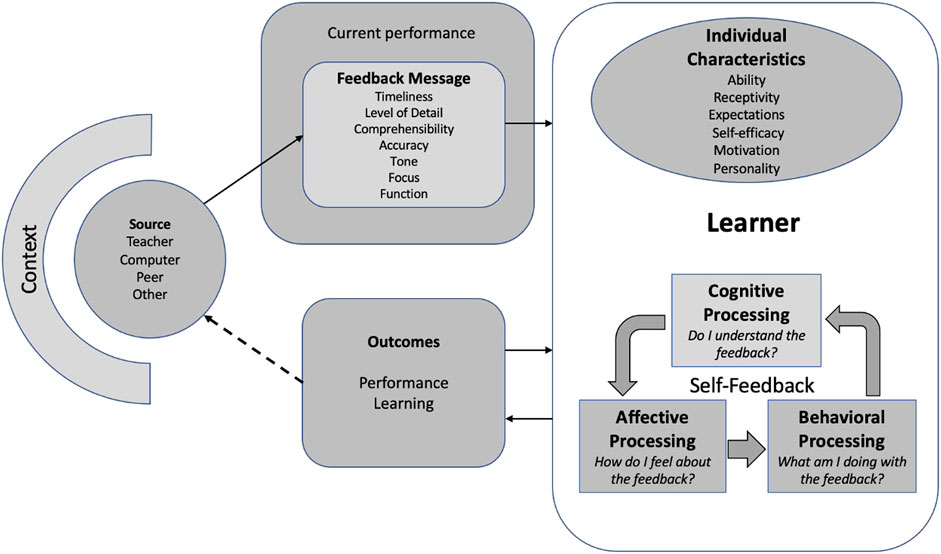

In their walk-through of the model, the authors emphasized that feedback is always received in context (Figure 14). The same type of feedback would be processed differently depending on the class, the academic domain, or the stakes of the task. Within this context, the feedback is delivered to the student. Students will inevitably vary in their personality, general cognitive ability, receptivity to feedback, prior knowledge, and motivation. The feedback itself may be detailed or sparse, and aligned or misaligned with the student's level of knowledge. It may be direct but delivered in a supportive fashion, or it may be unpleasantly critical. It may match what the student expects or fall far below or above those expectations. All these characteristics contribute to differences in how students process feedback. In their new version of the model, Lipnevich et al. (2013) included the source of feedback as a separate variable. The authors reported evidence that feedback from the teacher, a computer, a peer, or the task itself may be perceived very differently and may interact in different ways with student characteristics.

FIGURE 14. Lipnevich et al. (2016) feedback–student interaction model.

When students receive the feedback message, they produce cognitive and affective responses that are often tightly interdependent. The student may cognitively appraise the situation, deciding whether the task is interesting and important and whether they have control over the outcome, and judge whether the feedback is clear and understandable. That is, in reading through the feedback, students might be baffled by the comments or may fully comprehend them. These appraisals result in a range of emotions, which, in turn, lead to behavioral responses. Students may engage in adaptive or maladaptive behavioral responses, which will have a bearing on their performance on the task and, possibly, on their learning. In the revision of the model, the authors differentiated between learning and performance, discussing potential effects of feedback on short-term changes in performance on a task and on long-term transfer to subsequent tasks. The authors also showed that both the response to the feedback and the actions the student takes reflect who the student was, what the student knew and could do in this area, and how the student would respond in the next cycle of feedback. Lipnevich et al. (2016) described feedback as a conversation between the teacher and the student, and cautioned scholars and practitioners that every utterance either party makes would be highly consequential for future student-teacher interactions and for the student's learning progress.

The authors recently revised their model to further emphasize the three types of student processing: cognitive, affective, and behavioral¹. Figure 15 of the revised model shows that the message, student characteristics, and cognitive, affective, and behavioral responses contribute to an action that may alter student performance and learning¹. The authors emphasized three questions that are important for students' receptivity to feedback: Do I understand the feedback? How do I feel about the feedback? What am I going to do about the feedback? Importantly, the authors suggest that all feedback coming from any external source has to be internalized and converted into self- or inner feedback (Nicol, 2021; Panadero et al., 2019; Andrade, 2018). The efficiency of this internal feedback would vary depending on a variety of factors.

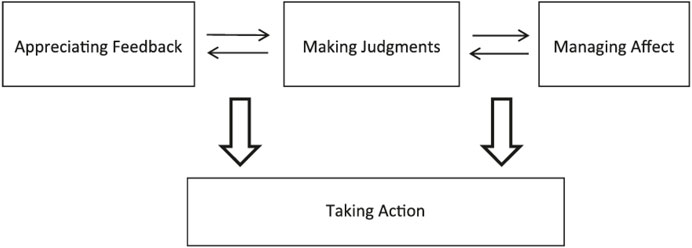

Carless and Boud (2018): A Proposal for Students’ Feedback Literacy

Three experts voted in favor of including Carless' work, but it was difficult to decide on a specific model because he had proposed four (Carless et al., 2011; Yang and Carless, 2013; Carless, 2018; Carless and Boud, 2018). The feedback literacy model included in the current review was suggested by the author as being both influential and well matched to the goals of the current paper. In the short time since its publication, this model has accumulated a substantial number of citations.

Theoretical Framework

The theoretical framework is based on social constructivist learning principles (e.g., Palincsar, 1998; Rust, O'Donovan and Price, 2005) and previous assessment research literature (e.g., Sadler, 1989; Price et al., 2011), with a clear grounding in higher education (31 of the 53 citations come from journals with "higher education" in the title, 18 of them from Assessment and Evaluation in Higher Education) and a few references to the general feedback literature (e.g., Hattie and Timperley, 2007; Lipnevich et al., 2016).

Description

Carless and Boud provided the following definition of feedback: “Building on previous definitions (Boud and Molloy, 2013; Carless, 2015), feedback is defined as a process through which learners make sense of information from various sources and use it to enhance their work or learning strategies. This definition goes beyond notions that feedback is principally about teachers informing students about strengths, weaknesses and how to improve, and highlights the centrality of the student role in sense-making and using comments to improve subsequent work.” (p. 1). The authors then continued by defining student feedback literacy “…as the understandings, capacities and dispositions needed to make sense of information and use it to enhance work or learning strategies.”

The model and its main propositions are straightforward (Figure 16). The model is composed of four interrelated elements. For students to develop feedback literacy, they need to 1) appreciate the value and processes of feedback, 2) make judgments about their own work and that of others, and 3) manage the affect that feedback can trigger in them; all of this leads toward 4) taking action in response to the feedback. However, the operationalization of these elements could have been explained in more detail, as the model does not make specific strategies explicit. Carless and Boud provided a few illustrative examples of activities that develop feedback literacy, including peer feedback and the analysis of exemplars, and described teachers' role in developing student feedback literacy. Some parts remain at a general level of description, and the paper is concisely packaged, with some concepts and practices mentioned but not described in detail.

FIGURE 16. Carless and Boud (2018) Feedback literacy model.

Feedback Models Comparison

In the upcoming sections, we compare the models, focusing on their definitions and the empirical evidence behind them. Importantly, the comparison continues in Panadero and Lipnevich (2021), where we synthesize typologies and models and propose a new integrative feedback model. Page limitations prevented us from combining both articles into one, but we hope the reader will find them useful.

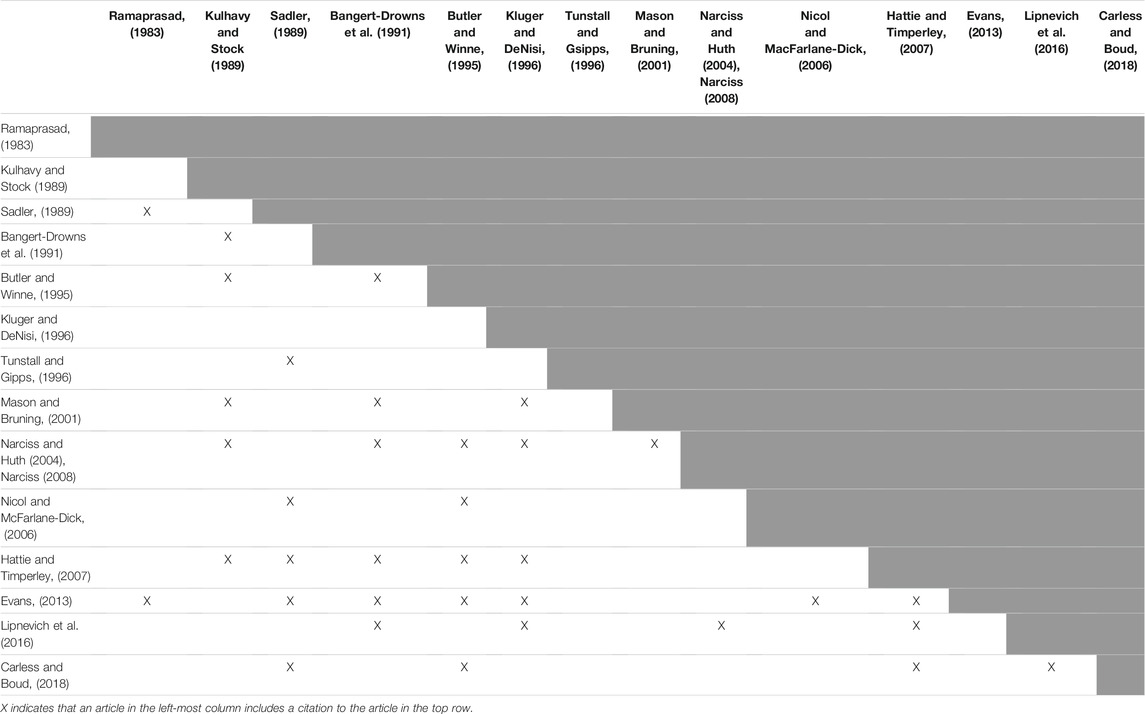

To give the reader an idea of the historical and temporal continuity of the publications, we developed Table 1, which shows cross-citations among the fourteen publications discussed in this review. There is clear evidence of cross-citation among the included publications. This is reassuring, because a number of fields suffer from isolated pockets of research that do not inform each other (Lipnevich and Roberts, 2014). An example of such isolation is the model by Kluger and DeNisi (1996), which came to us from the field of industrial-organizational psychology: the authors did not reference any of the models published before theirs, most of which were situated within the field of education. Also, the only publication that was voted to be included but had not been cited was that of Tunstall and Gipps (1996), possibly due to its narrow demographic focus (i.e., early elementary students). However, for most included models, cross-citation is high, and it is especially evident in the latest ones (e.g., Evans, 2013, cited seven previous models).

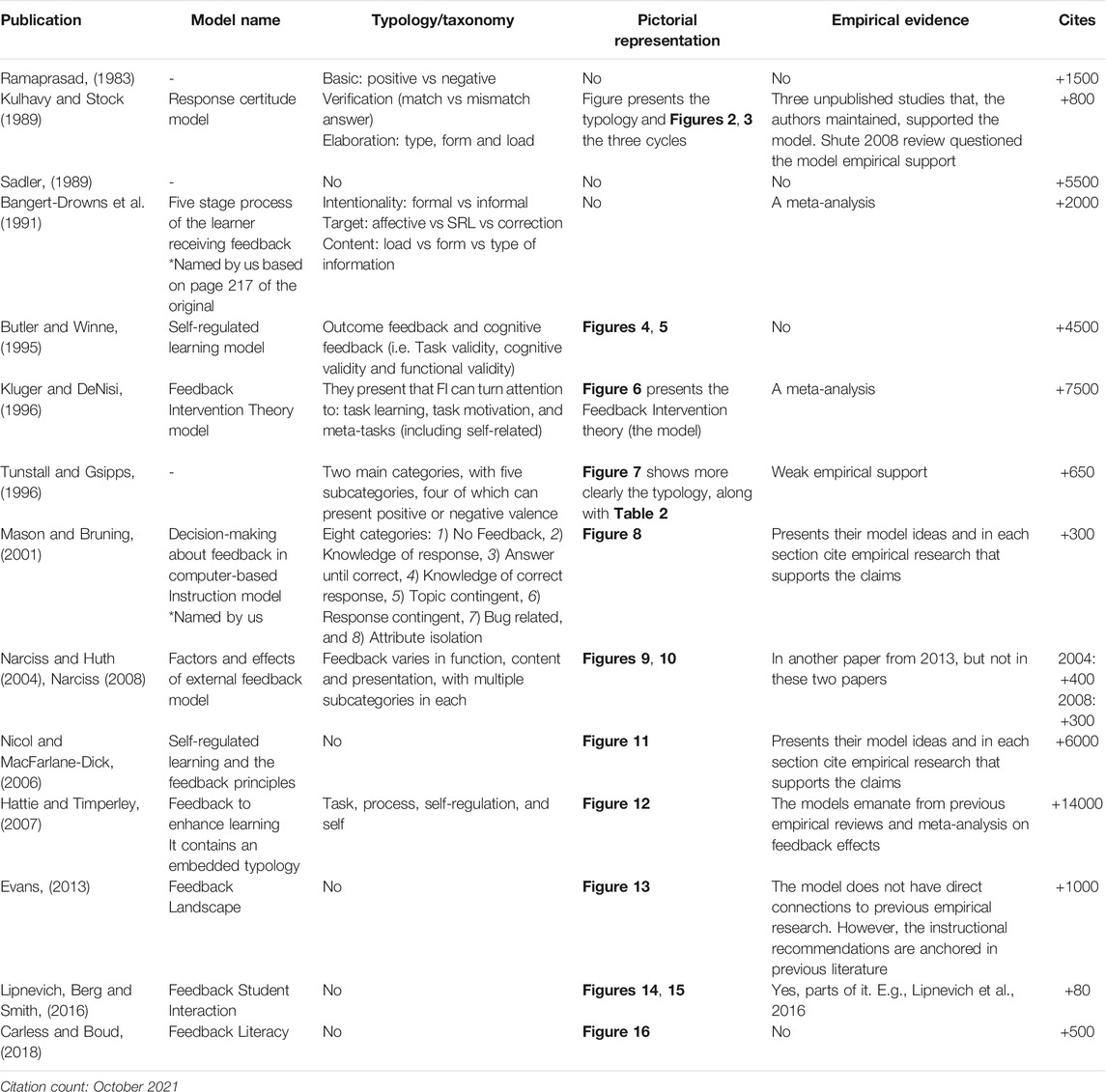

When it comes to the number of citations of the models (Table 2), it is clear that feedback models generate significant attention in educational research. Interestingly, this attention does not seem to depend on whether the models are definitional (e.g., Ramaprasad, Sadler), are based on meta-analyses (e.g., Bangert-Drowns et al., Hattie and Timperley), or link multiple subfields (e.g., Butler and Winne, Nicol and Macfarlane-Dick). Furthermore, the field is clearly evolving, with new models being developed that reflect the current focus of feedback research. We encourage researchers to frame their studies within currently available models to avoid a proliferation of redundant depictions of the feedback phenomenon. For example, the field of psychosocial skills research currently has 136 models and taxonomies discussed by researchers and practitioners (Berg et al., 2017). Obviously, it is not humanly possible to make sense of all of them, so the utility of proposing new models is very limited. Rather, validating existing models would be a more fruitful investment of researchers' time. In Panadero and Lipnevich (2021), we integrate the fourteen models, selecting their most prominent elements: Message, Implementation, Student, Context, and Agents (MISCA). We hope the reader will find it instrumental.

The Definitions of Feedback

The problem of defining feedback has occupied the minds of many feedback scholars, and it has been a contested area, especially where educational psychology and education meet. There are hundreds of definitions of educational feedback. This is not unique to feedback; it is common for other psychological and educational constructs, where a lack of agreement on definitions stifles scientific progress.

When it comes to feedback, there appear to be opposing camps: some researchers argue that feedback is information presented to a learner, whereas others view feedback as an interactive process of exchange between a student and an agent. There are also more extreme positions that describe feedback as the process in which students put the information to use. According to this view, information delivered to students cannot be regarded as feedback if it is not used (e.g., Boud and Molloy, 2013). However, there seems to be some common ground, with some educational psychologists emphasizing the importance of receptivity to feedback yet acknowledging that not acting on feedback may be a valid situational response to it (Lipnevich et al., 2016; Winstone et al., 2016; Hattie and Clarke, 2019; Jonsson and Panadero, 2018). This tension shows that how researchers define feedback directly influences how they operationalize their research. Therefore, it is crucial to explore how the models actually define feedback.

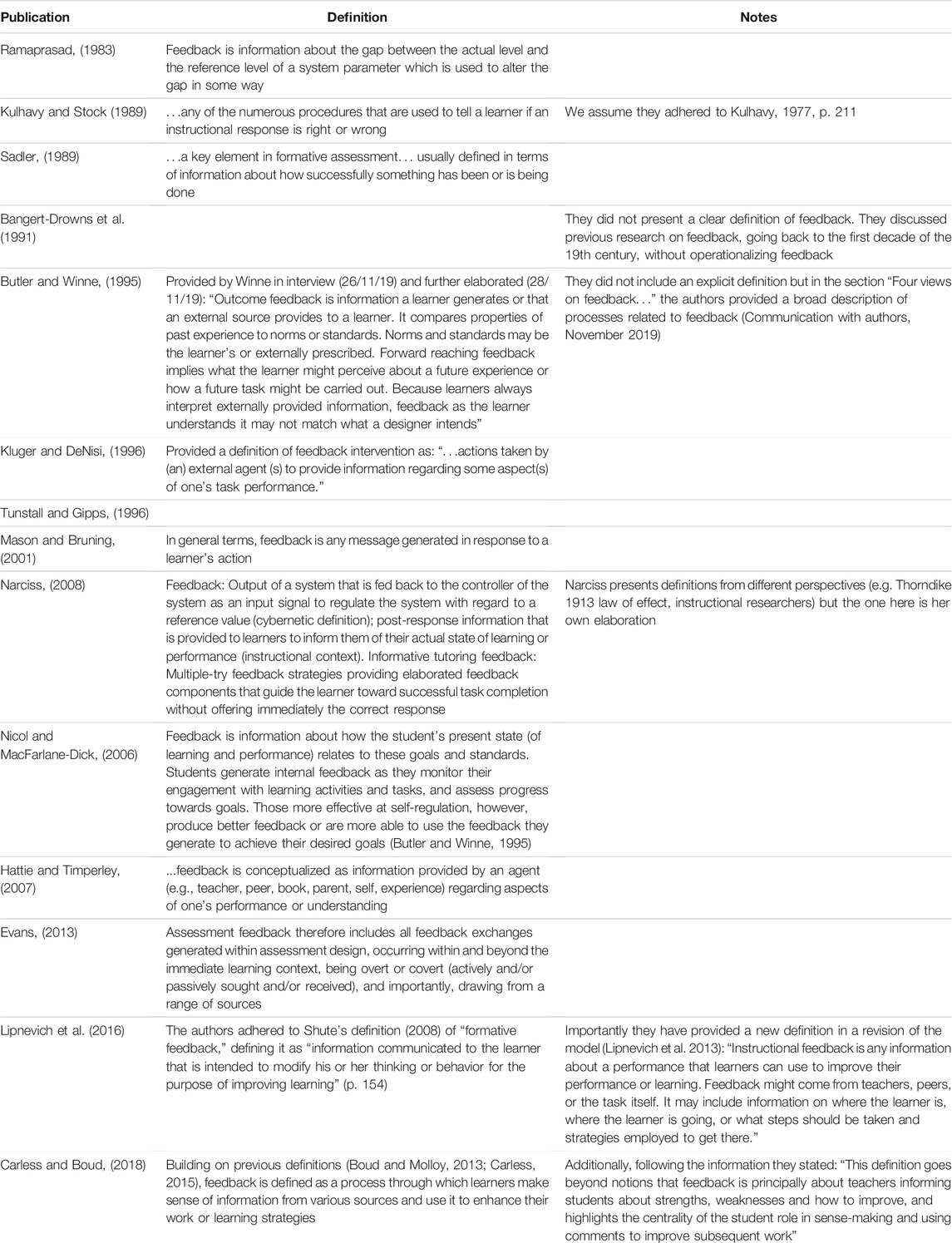

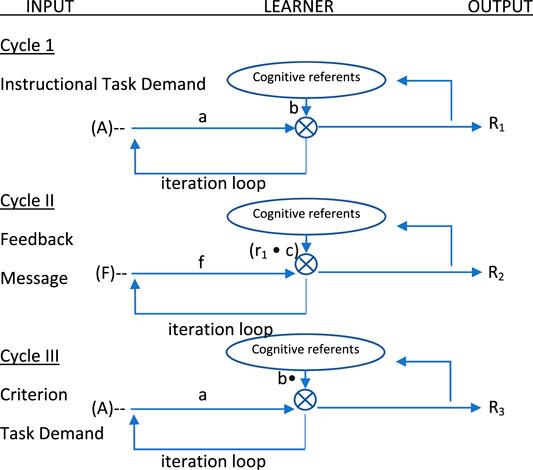

To achieve this goal, we searched the included publications for sentences or paragraphs that were clearly indicative of a definition (e.g., "feedback is…", "our definition of feedback is…"). We intentionally avoided borrowing definitions from the authors' subsequent or previous work and focused exclusively on what was presented in the publications included in this review. Table 3 contains all definitions, and Table 4 presents a comparison, which we develop further below. Four publications did not include definitions (Kulhavy and Stock, 1989; Bangert-Drowns et al., 1991; Butler and Winne, 1995; Tunstall and Gipps, 1996).

To offer the authors of the included models a chance to present their evolved ideas, in our interviews or email communication we asked whether they still agreed with their definitions or, if a definition was missing from the original manuscript, whether they wanted to provide one. In this way, we obtained a definition from Winne. Additionally, Lipnevich et al. (2016), who had used Shute's (2008) definition of feedback unaltered, put forward a new definition in their model revision (Lipnevich et al., 2013). After analyzing the definitions, we identified six elements that are present in multiple definitions across the included publications (Table 4). Our conclusions are anchored to them.

The first conclusion is that definitions of feedback are becoming more comprehensive, as more recent definitions include more elements than older ones. For example, whereas the chronologically first definition (Ramaprasad, 1983) included two of the six elements we identified, the latest included all six (Winne, 2019, personal communication with the authors). Table 4 shows the increase in the number of elements in the more recent models. This is an important reflection of the evolution and maturity of the feedback field, which now examines aspects such as feedback sources and the degree of student involvement in the feedback process. It appears that the expansion of the formative assessment field after the publication of Black and Wiliam (1998) played a major role in the development of definitions, and the models that followed this publication were more likely to refer to formative assessment. This, of course, makes sense. For formative assessment and assessment for learning theories (Wiliam, 2011), feedback has to aim at improving students' learning, help students process that feedback, and enable them to become active agents in the process. Hence, within the realm of formative assessment, the field moved from a static understanding, in which feedback is "done" to students (e.g., just indicating their level of performance), to our current understanding of the complex process that feedback involves.

A second conclusion comes from the analysis of the individual definitional elements. All of the definitions describe feedback as information that is exchanged or produced. This is a crucial component, because without information there is nothing to process and feedback simply cannot succeed. This information may range from detailed qualitative commentary to a single score, and it may be delivered face to face or as notes scribbled on the student's work. Information is the essence of feedback or, as many would maintain, is feedback.