ORIGINAL RESEARCH article

Front. Educ. , 20 April 2021

Sec. Digital Learning Innovations

Volume 6 - 2021 | https://doi.org/10.3389/feduc.2021.629220

This article is part of the Research Topic Technology-Assisted Learning: Honing Students’ Affective Outcomes

Helaine Mary Alessio1* Jeff D. Messinger2

The recent COVID-19 pandemic presented challenges to faculty (Fac) and students (Stu) to uphold academic integrity when many classes transitioned from traditional to remote. This study compared Fac and Stu perceptions surrounding academic integrity when using technology-assisted proctoring in online testing.

Methods: College Fac (N = 150) and Stu (N = 78) completed a survey about perceptions of academic integrity and use of proctoring software for online testing. Wilcoxon rank-sum tests were used to determine if there were differences in the distribution of agreement between students and faculty.

Results: Fac and Stu agreed that maintaining academic integrity was a priority (93 vs. 94%) and that it is easier to cheat in online tests (81 vs. 83%). Responses differed on whether online proctoring software was effective at preventing academic dishonesty (23% of Fac vs. 42% of Stu disagreed). 53% of Fac and 70% of Stu perceived that online proctoring was an invasion of privacy. Responses also differed on the importance of having a policy about proctoring online tests (49% of Fac vs. only 7% of Stu perceived it as important) and on whether cheating in an academic setting is likely associated with cheating in a work setting (78 vs. 51%). If given a choice, 46% of Fac and only 2% of Stu would choose to use proctoring software. Answers to open-ended questions identified feelings of stress and anxiety by Stu and concerns about privacy by Fac.

Conclusion: Fac and Stu had similar perceptions of the importance of academic integrity and ease of cheating in online tests. They differed in perception of proctoring software’s effectiveness in deterring cheating, choosing to give or take a proctored online test, and having a policy in place. Policies on technology-assisted online testing should be developed with faculty and student input to address student concerns of privacy, anxiety, and stress and uphold academic integrity.

A steady growth in technology-assisted learning was recently intensified by the COVID-19-initiated closing of many schools from kindergarten to colleges worldwide. This unexpected pandemic resulted in a rapid move from traditional face to face classroom to online teaching and testing that affected schools everywhere. The transition presented challenges to faculty and students for learning material and upholding academic integrity when assessing learning. This was especially evident in the absence of policies about educational tools including proctoring software associated with online teaching and testing. There is widespread concern that cheating has been made easier by advances in technology which provide a large number of innovative schemes to provide students with unauthorized assistance in ways that are difficult to detect (Newton, 2018; Ison, 2020). There are also growing concerns about the potential that proctoring software may be biased in its flagging of suspicious student identification and test taking behavior, violate one’s privacy, and convey a sense of mistrust to test takers (Beck, 2014). Adding to complexity, some self-report studies are inconclusive about whether cheating is actually on the rise (Curtis and Clare, 2017).

Cheating is big business, and a growing number of unscrupulous companies, with profits estimated to approach $100 billion globally (Clarke and Lancaster, 2013; Newton, 2015, 2018), provide dishonest services to students, including answer keys, previously written papers, and even people who are hired to take exams or write papers for fees (Allen and Seaman, 2015; Ison, 2020). With the availability of websites that provide a variety of nefarious services, the challenge of ensuring academic integrity is ever-increasing (Alessio and Wong, 2018). To address academic dishonesty in online tests, companies have developed proctoring software designed to identify test takers, monitor student behavior and detect cheating in online tests. Very few studies have directly observed cheating behavior; nevertheless, faculty are aware of the many ways that students cheat both in traditional and online classes. An indication of potential academic dishonesty may be obtained from indirect evidence. One study reported a mean grade disparity of 17 points and double the amount of allotted time to take the test in students who were not proctored compared with those who were proctored (Alessio et al., 2017). Alessio and Maurer (2018) examined the impact of an institutional decision to provide and support proctoring software to all departments in which online exams were used. Final grades were compared in 29 online courses representing 10 departments that were taught 1 year prior to and 1 year following the campus-wide adoption of video proctoring software. Results of this study showed that average course grade point averages were significantly reduced, with a 2.2% drop in GPA on a 4-point scale after the adoption of the proctoring software. While this study did not directly detect cheating and only compared final course grades that included test and assignment grades, the lower GPA across most courses following the campus-wide implementation of proctoring software suggested that grades differed depending on whether or not tests were proctored.

Not all studies have reported grade disparities when comparing proctored and non-proctored test results. Beck (2014) reported no significant difference between proctored and non-proctored tests using a statistical model to provide R² statistics for test scores to predict academic dishonesty. Measures of student characteristics such as major, grade point average, and class rank were used to predict examination scores. When comparing different classes, if no cheating occurs it was expected that the prediction model would have the same explanatory power in both classes, and conversely, if cheating occurred it was expected that the R² statistic would be relatively low because a large portion of the variation would be explained by cheating. Beck (2014) reported that only grade point average explained a greater degree of variation for test results in proctored vs. unproctored tests. This is in contrast to Harmon and Lambrinos (2008), who compared proctored and non-proctored test results using a similar statistical model and reported an R² value of 50% in the one proctored exam, which was much higher than the 15% R² value for the first three unproctored exams. In another study at a medium size Midwestern university, Kennedy et al. (2000) reported that 64% of faculty and 57% of students perceived it would be easier to cheat online compared with face to face classes. They also found that faculty who had experience teaching online tended to lessen their perception about the ease of cheating online. These results differ from a survey of faculty teaching online at a different university, located in the southern part of the United States, where the majority of faculty surveyed did not perceive there was a big difference in cheating between online and in-person (McNabb and Olmstead, 2009). Surveys of faculty and student perceptions of cheating in proctored compared with unproctored tests seem to be similar whether the test is online or in person (Watson and Sottile, 2010). The critical factor is whether or not the test is monitored.

There is currently a vigorous debate occurring on campuses all over the world about the appropriate use of technology-assisted proctoring software in online testing. Concerns about bias and surveillance associated with proctoring software that uses artificial intelligence to monitor and flag suspicious identification and behaviors while a student is taking a test have led some institutions to ban the use of proctoring in online testing (Supiano, 2020). There is also concern about the impact of remote testing on student affect due to feelings of intrusion and the discomfort of being watched by a remote proctor or video that uses artificial intelligence to look for suspicious actions. Kolski and Weible (2018) conducted an exploratory study that observed behaviors associated with anxiety during a virtually proctored online test and interviewed students after taking an online test. They reported higher than expected examples of test anxiety and left to right gazing behavior that could have been flagged as being suspicious behavior by proctoring software. Critics of virtual proctoring often refer to added stress, feelings of intrusion, and implicit bias in the practical work as well as the algorithms used by proctoring software. Test anxiety has been reported to increase in some, but not all, students, and interestingly, Stowell and Bennett (2010) reported lower test anxiety in students who took tests online vs. in a traditional classroom environment.

There is a need for a balanced approach by academic institutions to the students, faculty, and broader society to assure that all course platforms assign grades that accurately reflect how well students master course material while considering student and faculty perceptions of fairness and support in the testing environment. Rubin (2018) states that technology alone will not solve our academic integrity problems. The debate on how best to address upholding academic integrity in online testing includes using multiple modes of assessment, lowering the stakes of exams, spending extra time with struggling students, and using technological assistance where and when appropriate. Perceptions of both faculty and students about the challenges associated with academic integrity in online learning will eventually lead to ways to prevent academic dishonesty; however, many faculty have found that the process of deterring cheating takes an inordinate amount of time and effort on their part, and that students sometimes still cheat even after substantial efforts were made to prevent it (Supiano, 2020). This study compares perceptions of faculty and students on the importance of academic integrity, the use of proctoring software to deter cheating, and level of assurance when giving or taking a test online that uses proctoring software.

The surveys and experimental design in this study were reviewed and approved by the University’s Institutional Review Board. The survey included Likert scale questions and open-ended questions that inquired about faculty members’ and students’ perceptions of academic integrity in online testing (Supplementary Materials 1, 2). Survey questions were virtually identical except for one extra question specifically designed for faculty members. A random sample of 500 faculty and 1,000 students was invited to complete the survey, which was distributed and submitted anonymously online using Qualtrics (Provo, UT, United States). A total of 150 faculty and 78 students responded and submitted surveys. Not every faculty member and student answered all questions in the survey.

To analyze the difference between the distributions of student and faculty responses to each question, a Mann–Whitney–Wilcoxon test (also called the Wilcoxon rank-sum test) was used. The Wilcoxon rank-sum test is a non-parametric procedure that compares the location of the responses in the two groups, which makes it slightly different from a Chi-Square test for independence. Unlike the Chi-Square test for independence, which requires at least 80% of the table cells to have expected counts of five or more, the Wilcoxon rank-sum test requires only independent responses and data that are at least ordinal. Some questions had few responses in certain categories, hence the choice of the Wilcoxon rank-sum test over the Chi-Square test for independence. In the Wilcoxon rank-sum test, we test the following hypotheses:

H0: The responses for Faculty and Students have the same location/distribution.

HA: The responses for Faculty and Students have differing locations/distributions.

The test statistic W is the sum of the ranks for one of the groups minus an adjustment of m(m + 1)/2, where m is the sample size of the group whose ranks are being summed. Under the null hypothesis, we would expect the average rank in the two groups to be about the same. If the alternative hypothesis is correct, then the average ranks of the two groups would differ. To generate a p-value, we assume the null hypothesis and randomly sample many different permutations of the sample for each group. Specifically, we combine the ranks from both groups into one hat and randomly draw from that hat for group 1; the remaining ranks are assigned to group 2. This gives us a distribution of potential W’s with which to approximate the p-value of our observed W.
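The permutation procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' R code: the function names, the use of midranks for tied Likert responses, the two-sided comparison against the null mean m·n/2, and the number of permutations are all assumptions made for the example.

```python
import random

def midranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the current run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def w_statistic(group_ranks):
    """W = sum of the group's ranks minus m(m + 1)/2, as defined in the text."""
    m = len(group_ranks)
    return sum(group_ranks) - m * (m + 1) / 2

def permutation_rank_sum(group1, group2, n_perm=10000, seed=0):
    """Approximate a two-sided p-value for W by reshuffling the pooled ranks."""
    rng = random.Random(seed)
    pool = midranks(list(group1) + list(group2))
    m, n = len(group1), len(group2)
    observed = w_statistic(pool[:m])
    expected = m * n / 2  # mean of W when both groups share one distribution
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pool)                # put all ranks back "into one hat"
        w = w_statistic(pool[:m])        # first m draws play the role of group 1
        if abs(w - expected) >= abs(observed - expected):
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    return observed, (extreme + 1) / (n_perm + 1)
```

With Likert answers coded as integers (for example 1 = strongly disagree through 7 = strongly agree, a hypothetical coding), `permutation_rank_sum(faculty_codes, student_codes)` returns the observed W and its approximate p-value.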

Another non-parametric procedure that was applied was the Fisher Exact test. This is the non-parametric cousin to the Chi-Square test for Independence. The test specifically looks at the hypothesis of:

H0: the two variables are not associated.

HA: the two variables are associated.

Because the tables here are larger than 2 × 2, calculating the p-value exactly becomes more complex, but it can still be approximated via Monte Carlo simulation.
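As a rough illustration of the Monte Carlo approach for tables larger than 2 × 2, the sketch below shuffles one set of labels to generate random tables with the same row and column totals as the observed table, then counts how often a simulated table is no more probable than the observed one. The function names, simulation count, and tolerance are assumptions for this example. The article does not state which R call or settings were used, although R's `fisher.test` offers a `simulate.p.value` option that works on the same principle.

```python
import math
import random

def log_table_weight(table):
    """Log of 1 / prod(n_ij!). With row and column totals fixed, a table's
    probability under independence is proportional to this weight."""
    return -sum(math.lgamma(n + 1) for row in table for n in row)

def crosstab(row_labels, col_labels, n_rows, n_cols):
    """Count how many observations fall in each (row, column) cell."""
    table = [[0] * n_cols for _ in range(n_rows)]
    for r, c in zip(row_labels, col_labels):
        table[r][c] += 1
    return table

def fisher_monte_carlo(table, n_sim=5000, seed=0):
    """Approximate the Fisher Exact p-value for an r x c table of counts."""
    rng = random.Random(seed)
    n_rows, n_cols = len(table), len(table[0])
    # Expand the table into one (row, column) label pair per respondent.
    row_labels, col_labels = [], []
    for r, row in enumerate(table):
        for c, n in enumerate(row):
            row_labels += [r] * n
            col_labels += [c] * n
    observed = log_table_weight(table)
    tol = 1e-7  # guards against floating-point noise when weights tie
    as_extreme = 0
    for _ in range(n_sim):
        # Shuffling column labels keeps both sets of marginal totals fixed.
        rng.shuffle(col_labels)
        sim = crosstab(row_labels, col_labels, n_rows, n_cols)
        if log_table_weight(sim) <= observed + tol:
            as_extreme += 1
    return (as_extreme + 1) / (n_sim + 1)
```

Here each row of `table` would be a group (faculty or students) and each column a Likert response category; a small returned value indicates that group and response are associated.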

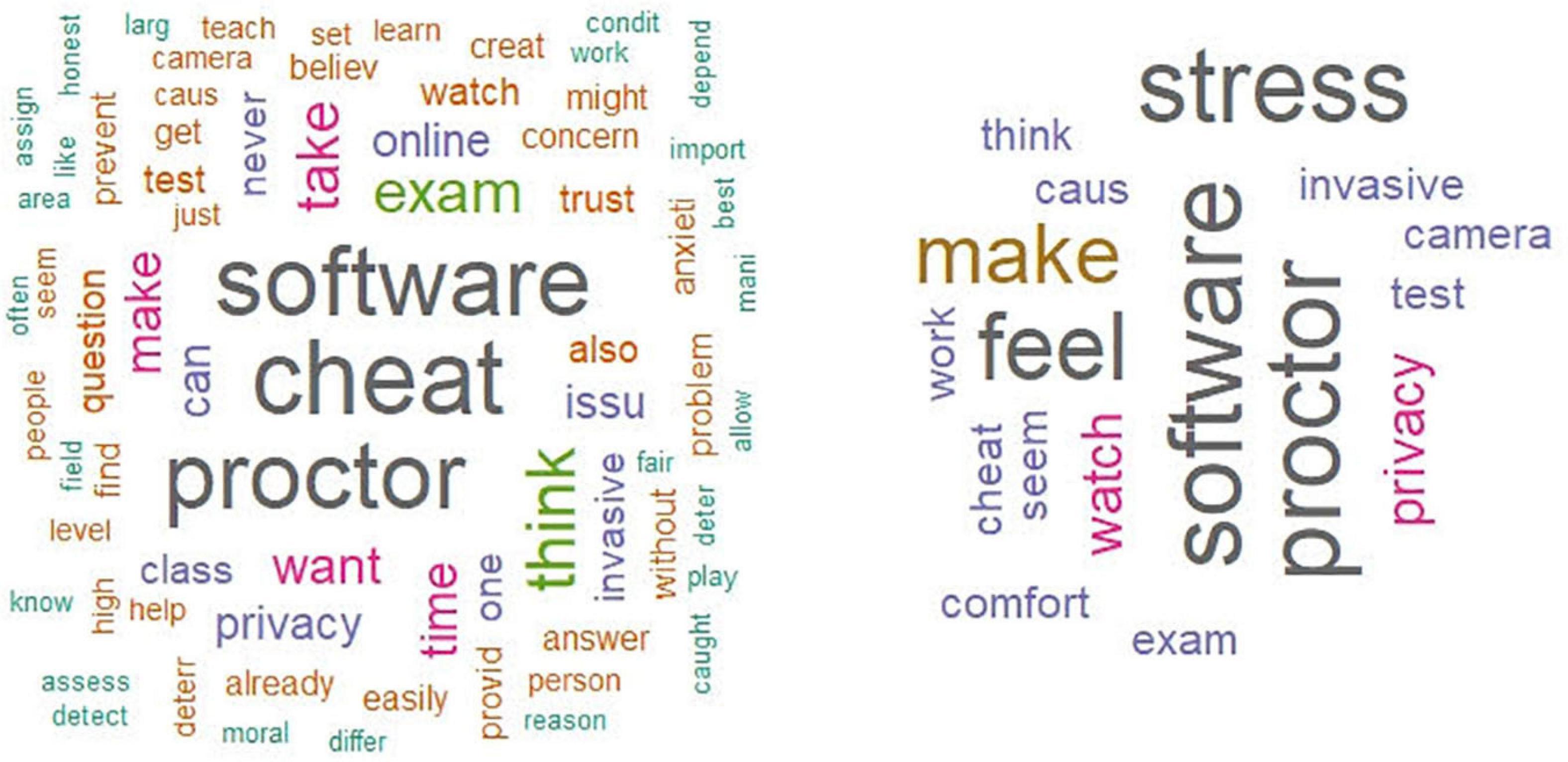

All of the Wilcoxon rank-sum and Fisher Exact tests were done using the statistical software R (R Core Team, 2020). Mosaic plots were constructed with the use of the tidyverse package in R (Wickham et al., 2019). Word clouds were constructed with the help of the wordcloud (Fellows, 2018), tm (Feinerer et al., 2008), SnowballC (Bouchet-Valat, 2020), and RColorBrewer (Neuwirth, 2014) packages.

The survey return rate for faculty was 30% and for students, 8%. The 150 faculty and 78 students who returned the surveys represented departments across five colleges at a large, public university in the Midwest, United States. Not all respondents answered all questions. Fisher Exact tests were run comparing faculty and student answers to the Likert scale questions. Wilcoxon rank-sum statistics and p-values for Likert scale questions are shown in Table 1. No differences in response between faculty and students occurred for several questions. When asked about their perceptions of their department’s priority, 93% of faculty and 94% of students somewhat agreed, agreed or strongly agreed that academic integrity is a high priority in their departments. When asked about perceptions about ease of cheating online, faculty and students somewhat agreed, agreed or strongly agreed to a similar extent that cheating online was easier, 81 and 83%, respectively. The most common reasons shared by faculty and students included access to notes and the internet when unproctored.

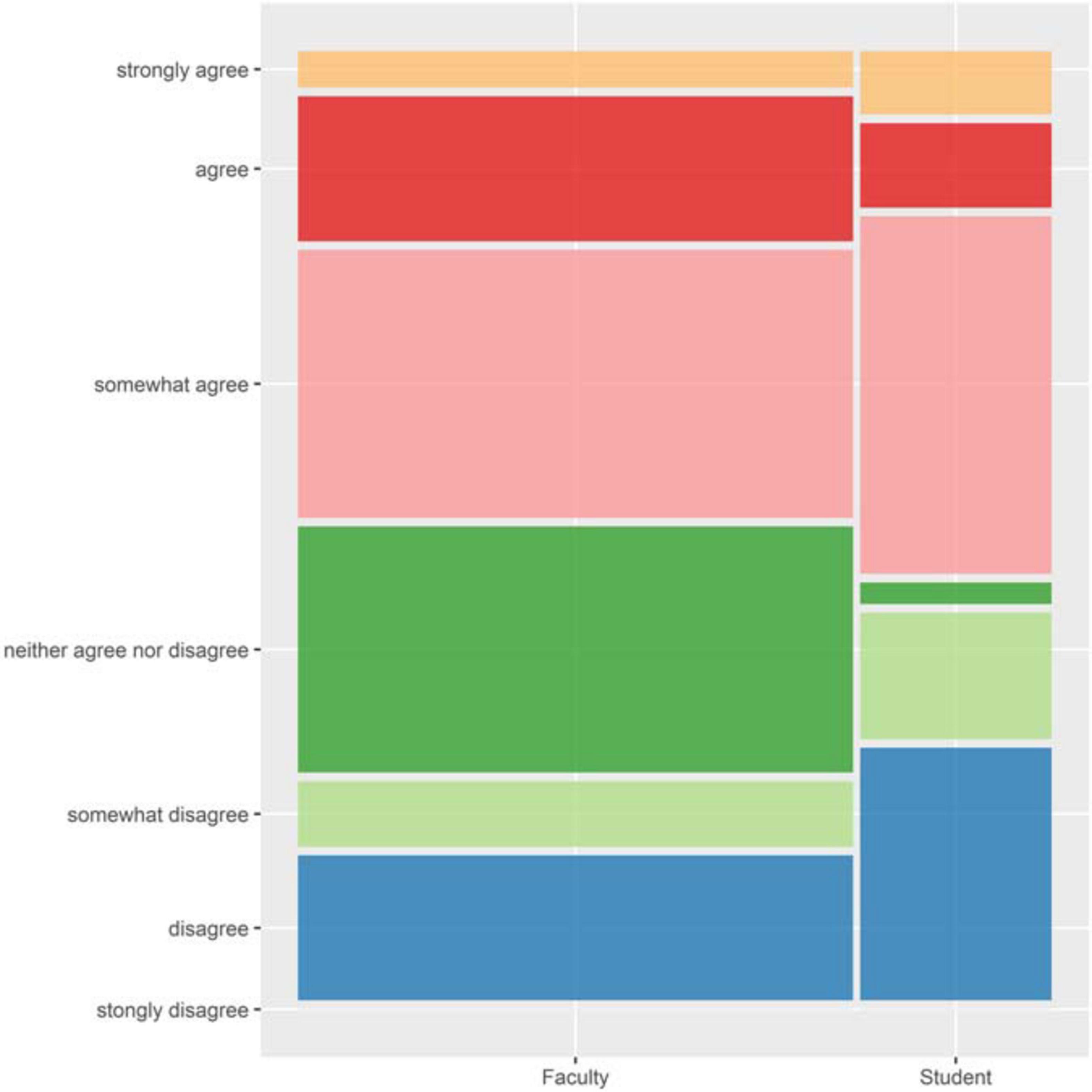

Differences in Fac and Stu responses are displayed as mosaic plots, in which the differing widths reflect the larger Fac sample size compared with Stu and the colors represent different levels of agreement or disagreement. The distribution of faculty and student answers to a question about the effectiveness of online proctoring software to prevent academic dishonesty did not differ (W = 2869, p > 0.05), though an association was found when a Fisher Exact test was run (p = 0.0013 < 0.05), with 23% of faculty disagreeing or somewhat disagreeing compared with 42% of students. On the other hand, 50% of faculty and 55% of students somewhat agreed, agreed, or strongly agreed that online proctoring was effective at preventing academic dishonesty (Figure 1).

Figure 1. Faculty and student responses to: Proctoring software in online tests is effective at preventing academic dishonesty.

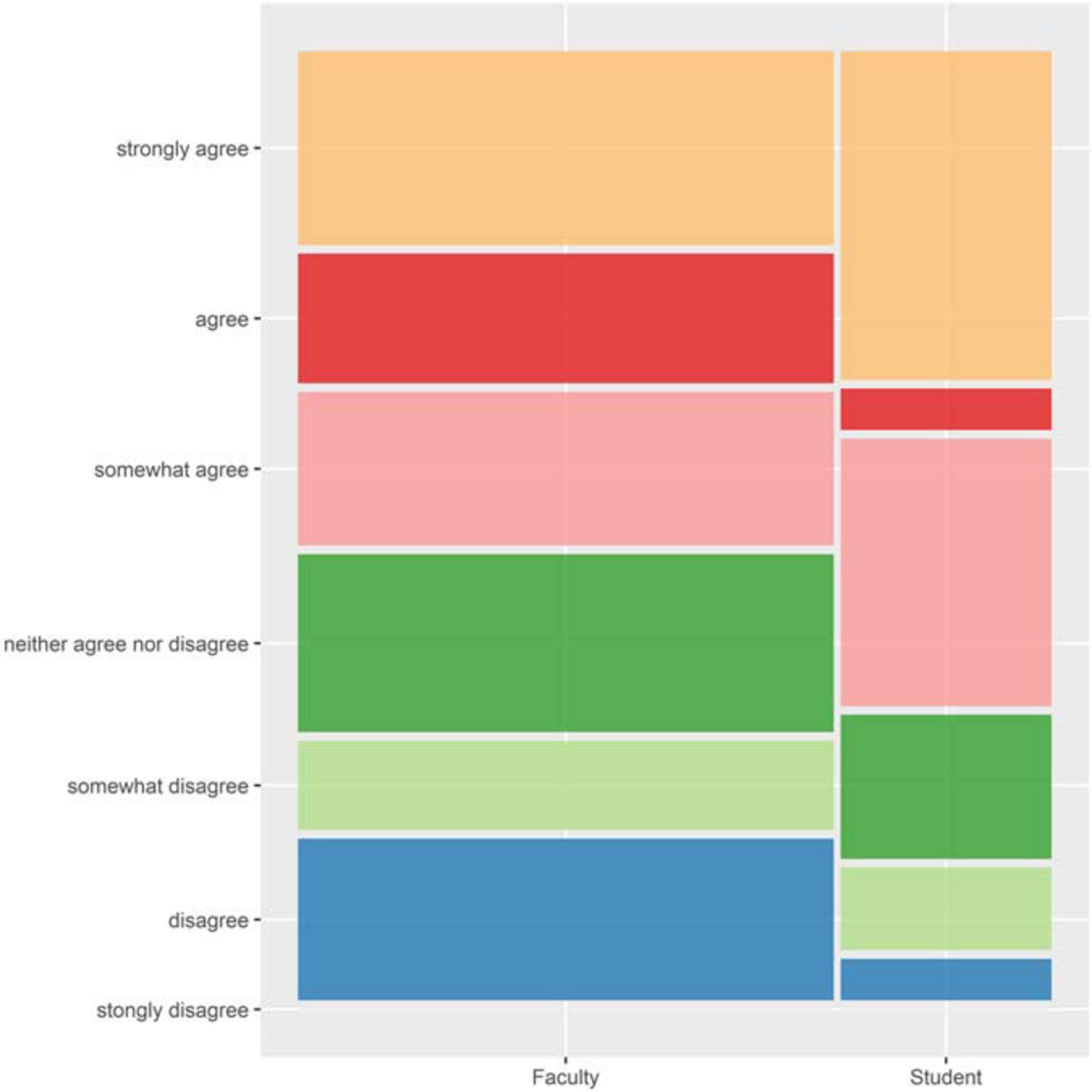

Fifty-three percent of faculty and 70% of students somewhat agreed, agreed or strongly agreed that the use of online proctoring software is an invasion of student privacy (Figure 2), a significant difference (W = 1939, p < 0.05). Descriptive comments touched on similar themes, with students using terms such as “watch,” “feel,” and “room” and faculty more often using terms such as “privacy,” “invasion,” and “space.”

Figure 2. Faculty and student responses to: Proctoring software in online tests is an invasion of student privacy.

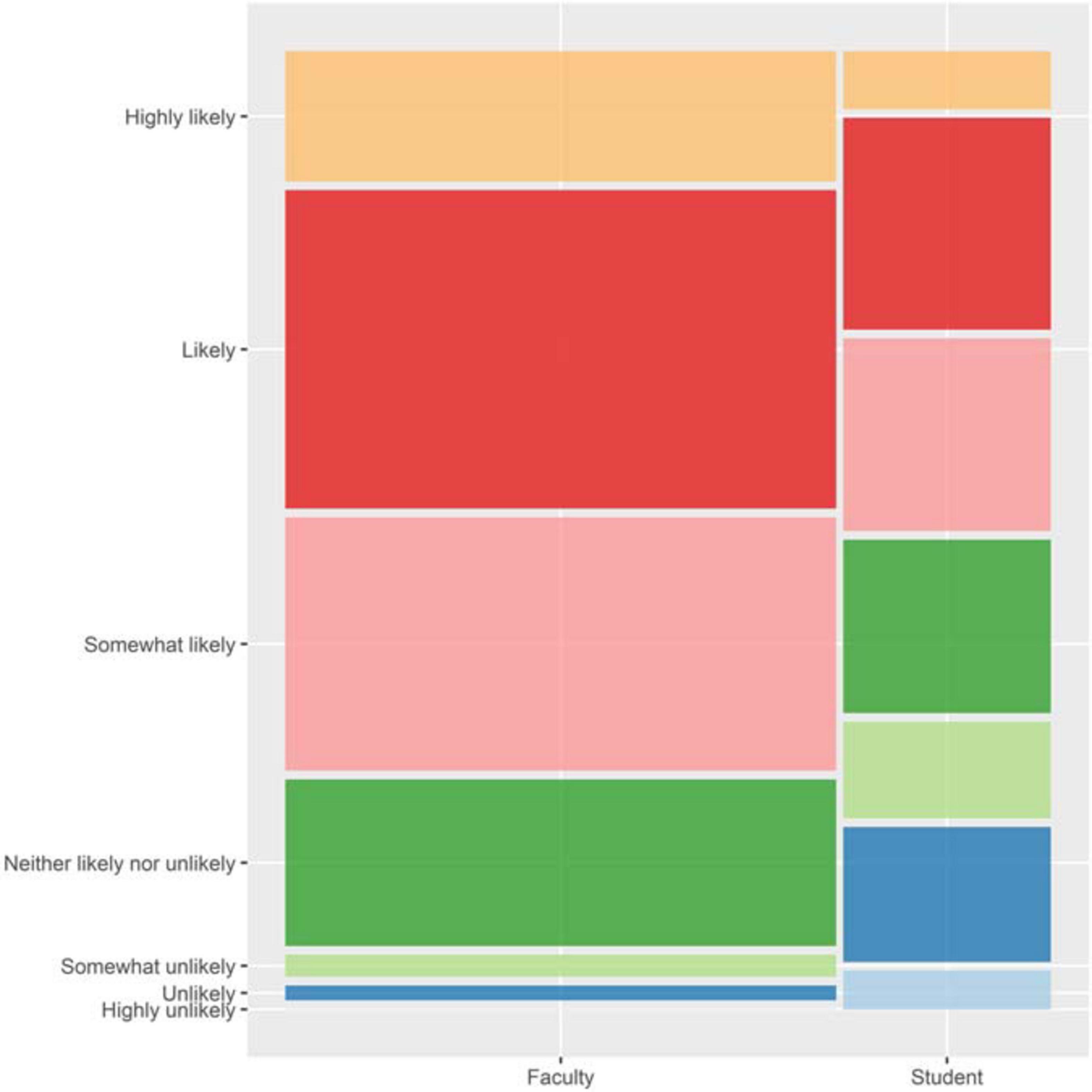

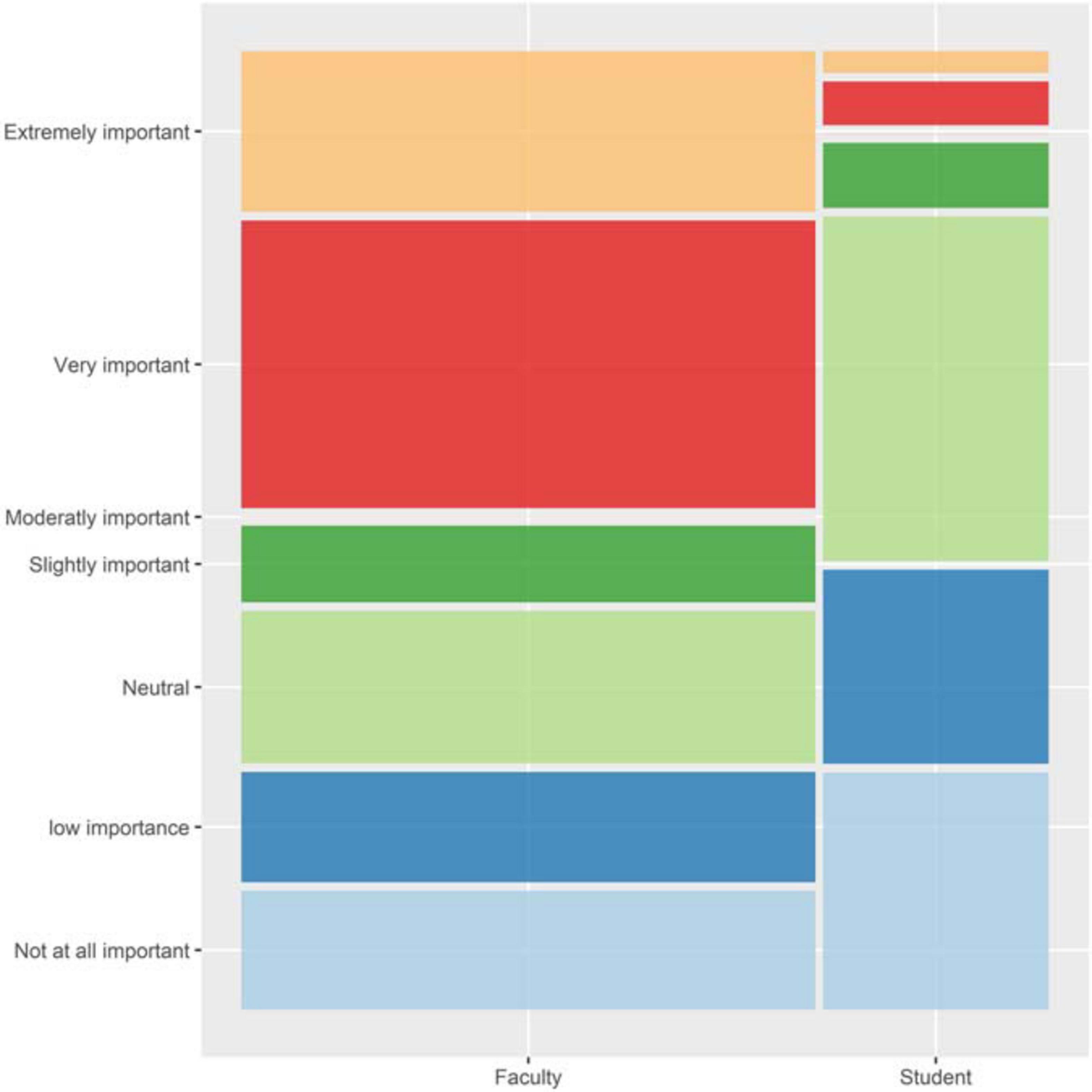

Another difference occurred when 78% of faculty compared with 51% of students somewhat agreed, agreed, or strongly agreed that those who cheat in an academic setting would likely cheat in a work setting (W = 3964, p < 0.05) (Figure 3). There was also significant disagreement (W = 3268, p < 0.05) in the importance of having a University policy in place for proctoring online tests with 50% of faculty and only 2% of students perceiving it to be very or extremely important (Figure 4). If faculty were given a choice to use proctoring software or students given a choice to take an exam with proctoring software, 46% of faculty were somewhat likely, likely or highly likely to choose proctoring software, compared with just 2% of students (W = 4531, p < 0.05). The wordcloud that emerged from qualitative answers included a high frequency of “stress,” “feel,” and “comfort” for students (Figure 5).

Figure 3. Faculty and student responses to: How likely is it that students who cheat in an academic setting would behave dishonestly in a work setting?

Figure 4. Faculty and student responses to: How important is it to you that a Department or University policy is in place for proctoring online tests that are given outside of a traditional classroom setting?

Figure 5. Wordcloud of Faculty (left) and Student (right) comments about the use of proctoring software, with frequency of terms directly proportional to font size.

While more instructors at every educational level were developing online courses and adding online components to face-to-face courses on an ever-broadening array of topics each semester (Kleinman, 2005), COVID-19 hastened the adoption of online teaching and testing when virtually all schools in countries throughout the world closed or transitioned to technology assisted teaching in 2020 due to the virus. This unexpected pandemic presented challenges to both faculty and students to adjust to a delivery of learning material and assessment of learning in technology assisted online teaching and testing. In the current study, faculty and students were asked to share their perceptions of academic integrity in their departments and their opinions about the effectiveness of proctoring software when taking online tests in deterring cheating and the extent they felt their privacy was impacted as a result of using proctoring software when taking online tests. Perceptions of academic integrity in technology-assisted testing were similar in some items and differed in others when comparing faculty and students’ responses. These differences were revealed in both quantitative and open-ended questions.

In a review of the recent debate on proctoring software use, Supiano (2020) explained how some faculty and students are concerned about the negative impact remote proctoring has on students’ affect due to feelings of intrusion and anxiety as students sense they are being watched by either a remote proctor or by a video with artificial intelligence monitoring software. Student perceptions in the current study aligned with this concern as only 2% of students indicated they would likely (somewhat-highly) prefer being proctored when taking an online test and used descriptors such as stress, feel, and comfort as reasons. Nearly half of all faculty respondents, on the other hand, indicated they would likely (somewhat-highly) use proctoring software in online exams, although they did acknowledge the invasiveness and privacy concerns of its use.

Some faculty do not relish the role of policing students when they take tests online. Nevertheless, academic integrity is critical to an institution’s reputation, as well as the expectation of workplaces and society that college graduates actually master the content and skills assessed in their program of study. Despite efforts to encourage honesty in all types of course assessments, higher education institutions face the same types of scandals and deceit that occur in the workplace and society (Boehm et al., 2009). One of the findings of this current study worth noting is the discrepancy between faculty and student perceptions that those who cheat in an academic setting would likely behave dishonestly in a work setting. While most (78%) faculty agreed (somewhat-strongly) that those who cheat in an academic setting would likely behave dishonestly in a work setting, only about half of the students surveyed felt the same way. A possible explanation is that some students may perceive cheating in college as a means to an end as they face high stakes when competing for admission to selective programs. Once students are accepted to those programs, cheating may be less likely to occur. Indirect support for this explanation comes from a result in Alessio et al. (2018): grade disparities and differences in the time used to take unproctored online tests were greater in students who were vying for admission into academic programs with a high grade point average restriction.

Faculty rely on their institution to provide policies and support for academic integrity in a variety of teaching and assessment settings, both traditional and remote. In the current study, faculty were asked whether or not they perceived their departments prioritized academic integrity. The clear majority of both faculty and students agreed or strongly agreed that their departments prioritized academic integrity. But only half of faculty respondents and hardly any (2%) students perceived having a policy in place as being important. In the absence of policies and educational tools associated with online teaching and testing, faculty are left to decide themselves how to assure that there is an even and just playing field for all students when they are being assessed.

Studies of faculty perceptions of academic dishonesty in online testing have provided mixed results. One survey of 1,967 college faculty and 178 administrators’ attitudes about online teaching reported that 60% of faculty believed that academic dishonesty is more common in online vs. traditional face to face courses. On the other hand, 86% of digital learning administrators believed that academic dishonesty happens in both online and face to face settings equally (Lederman, 2019). The majority of the 303 faculty and 656 students at a midsized public university perceived cheating and plagiarism as greater problems in online classes vs. traditional, face-to-face classes. However, perceptions about cheating online vs. face to face also depended on whether faculty had experience teaching online. The use of proctoring software in online testing has emerged as a hot button topic in higher education as faculty struggle with upholding academic integrity while resenting the role of policing students (Supiano, 2020). Faculty surveyed in this study agree with other reports of faculty being sensitive to the anxiety proctoring software may exacerbate when students take online tests (Kolski and Weible, 2018).

In conclusion, the majority of faculty surveyed in this study perceived that cheating was easier in online compared to face to face testing and that cheating in college may generalize to cheating in a workplace, with reasons including student characteristics and their ability to get away with cheating in college. Faculty supported having policies in place that prioritize academic integrity, especially in online learning and testing, and if given access to proctoring software, would use it. Also, this study found that students agreed with faculty on many matters of academic integrity, except notably their desire to take proctored tests online, whether cheating in class would be associated with dishonest behavior in the workplace, and the feelings of stress when taking proctored online exams. Faculty place a higher priority than students on the need for policies to provide guidance on using technology-assisted software to proctor online exams. They are sensitive to students’ privacy issues, and it is clear that a shared understanding of faculty and student perceptions could help set a tone of responsibility and agreement for how best to assure that proctoring online exams is implemented with fairness and concern for all.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Miami University Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

HA created and conducted the survey. JM provided statistical leadership and support for analyzing and interpreting the data. Both authors agree to be accountable for the content of this work.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2021.629220/full#supplementary-material

Alessio, H., and Wong, E. Y. W. (2018). Academic integrity and ethical behavior in college students-experiences from universities in China (Hong Kong SAR) and the US. J. Excell. Coll. Teach. 29, 1–8.

Alessio, H. M., Malay, N., Maurer, K., Bailer, A. J., and Rubin, B. (2017). Examining the effect of proctoring on online test scores. Online Learn. 21:1. doi: 10.24059/olj.v21i1.885

Alessio, H. M., Malay, N., Maurer, K., Bailer, A. J., and Rubin, B. (2018). Interaction of proctoring and student major on online test performance. Int. Rev. Res. Open Dis. Learn. 19:165–185.

Alessio, H. M., and Maurer, K. (2018). The impact of video proctoring in online courses. J. Excell. Coll. Teach. 29, 183–192.

Allen, I. E., and Seaman, J. (2015). Grade Level: Tracking Online Education in the United States. Dayton, OH: LLC.

Beck, V. (2014). Testing a model to predict online cheating—much ado about nothing. Act. Learn. High. Educ. 15, 65–75. doi: 10.1177/1469787413514646

Boehm, P. J., Justice, M., and Weeks, S. (2009). Promoting Academic Integrity in Higher Education. Livonia, MI: The Community College Enterprise. 45–61.

Bouchet-Valat, M. (2020). SnowballC: Snowball Stemmers Based on the C ‘libstemmer’ UTF-8 Library. R package version 0.7.0. https://CRAN.R-project.org/package=SnowballC.

Clarke, R., and Lancaster, T. (2013). “Commercial aspects of contract cheating,” in Proceedings of the 18th ACM Conference on Innovation and Technology in Computer Science Education, (New York, NY: ITiCSE’13; ACM), 219–224.

R Core Team (2020). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

Curtis, G. J., and Clare, J. (2017). How prevalent is contract cheating and to what extent are students repeat offenders? J. Acad. Ethics 2, 115–124. doi: 10.1007/s10805-017-9278-x

Feinerer, I., Hornik, K., and Meyer, D. (2008). Text mining infrastructure in R. J. Stat. Softw. 25, 1–54.

Fellows, I. (2018). Wordcloud: Word Clouds. R package version 2.6. https://CRAN.R-project.org/package=wordcloud.

Harmon, O. R., and Lambrinos, J. (2008). Are online exams an invitation to cheat? J. Econ. Educ. 39, 116–125. doi: 10.3200/jece.39.2.116-125

Ison, D. C. (2020). Detection of online contract cheating through stylometry: a pilot study. Online Learn. 24, 142–165. doi: 10.24059/olj.v24i2.2096

Kennedy, K., Nowak, S., Raghuraman, R., Thomas, J., and Davis, S. F. (2000). Academic dishonesty and distance learning: student and faculty views. Coll. Stud. J. 34, 309–315.

Kleinman, S. (2005). Strategies for encouraging active learning, interaction, and academic integrity in online courses. Commun. Teach. 19, 13–18. doi: 10.1080/1740462042000339212

Kolski, T., and Weible, J. (2018). Examining the relationship between student test anxiety and webcam based exam proctoring. Online J. Distance Learn. Adm. 21:15.

Lederman, D. (2019). Professors’ Slow Steady Acceptance of Online Learning: A Survey. Washington, DC: Inside Higher Education.

McNabb, L., and Olmstead, A. (2009). Communities of integrity in online courses: faculty member beliefs and strategies. Merlot J. Online Learn. Teach. 5, 208–221.

Neuwirth, E. (2014). RColorBrewer: ColorBrewer Palettes. R package version 1.1-2. https://CRAN.R-project.org/package=RColorBrewer.

Newton, P. (2018). How common is commercial contract cheating in higher education? Front. Educ. 3, 1–18. doi: 10.3389/feduc.2018.00067

Rubin, B. (2018). Designing systems for academic integrity in online courses. J. Excell. Coll. Teach. 29, 193–208.

Stowell, J. R., and Bennett, D. (2010). Effects of online testing on student exam performance and test anxiety. J. Educ. Comput. Res. 42, 161–171. doi: 10.2190/EC.42.2.b

Watson, G., and Sottile, J. (2010). Cheating in the digital age: do students cheat more in online courses. Online J. Distance Learn. Admin. 13:9.

Keywords: cheating, online, remote, proctoring, faculty perceptions

Citation: Alessio HM and Messinger JD (2021) Faculty and Student Perceptions of Academic Integrity in Technology-Assisted Learning and Testing. Front. Educ. 6:629220. doi: 10.3389/feduc.2021.629220

Received: 13 November 2020; Accepted: 23 March 2021;

Published: 20 April 2021.

Edited by:

Eva Yee Wah Wong, Hong Kong Baptist University, Hong Kong

Reviewed by:

Theresa Kwong, Hong Kong Baptist University, Hong Kong

Copyright © 2021 Alessio and Messinger. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Helaine Mary Alessio, YWxlc3NpaEBtaWFtaW9oLmVkdQ==

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
