ORIGINAL RESEARCH article

Front. Educ., 07 May 2021

Sec. Assessment, Testing and Applied Measurement

Volume 6 - 2021 | https://doi.org/10.3389/feduc.2021.616216

This article is part of the Research Topic Exploring Classroom Assessment Practices and Teacher Decision-making

Motivation is a prerequisite for students’ learning, and formative assessment has been suggested as a possible way of supporting students’ motivation. However, there is a lack of empirical evidence corroborating the hypothesis of large effects from formative assessment interventions on students’ autonomous forms of motivation and motivation in terms of behavioral engagement in learning activities. In addition, formative assessment practices that do have an impact on students’ motivation may place requirements on teachers beyond those of more traditional teaching practices. Such requirements include decisions teachers need to make in classroom practice. The requirements on teachers’ decision-making in formative assessment practices that have a positive impact on students’ autonomous forms of motivation and behavioral engagement have not been investigated. This study describes one teacher’s formative assessment practice during a sociology course in upper secondary school, and it identifies the requirements for the teacher’s decision-making. The teacher had participated in a professional development program about formative assessment just prior to this study. This study also investigated changes in the students’ motivation when the teacher implemented the formative assessment practice. The teacher’s practice was examined through observations, weekly teacher logs, the teacher’s teaching descriptions, and an interview with the teacher. Data on changes in the students’ type of motivation and engagement were collected in the teacher’s class and in five comparison classes through a questionnaire administered at the beginning and the end of the course. The students responded to the questionnaire items by indicating the extent to which they agreed with the statements on a scale from 1 to 7.
The teacher’s formative assessment practice focused on collecting information about the students’ knowledge and skills and then using this information to make decisions about subsequent instruction. Several types of decisions were identified, along with the knowledge and skills required to make them, which exceed those required in more traditional teaching practices. The students in the intervention teacher’s class increased their controlled and autonomous forms of motivation as well as their engagement in learning activities more than the students in the comparison classes.

Motivation is the driving force of human behavior and is a prerequisite for students’ learning. Students’ motivation to learn may be manifested through students’ behavioral engagement, which refers to how involved the student is in learning activities in terms of on-task attention and effort (Skinner et al., 2009). Research has consistently found student reports of higher levels of behavioral engagement to be associated with higher levels of achievement and less likelihood to drop out of school (Fredricks et al., 2004).

Students may also have different types of motivation. That is, they may be motivated for different reasons (Ryan and Deci, 2000). Students who have autonomous forms of motivation engage in learning activities either because they find them inherently interesting or fun, and feel competent and autonomous during the activities, or because they find it personally valuable to engage in the activities as a means to achieving positive outcomes. Students with controlled forms of motivation, on the other hand, experience the reasons for engaging as imposed on them. They may feel pressured to engage because of external rewards (such as being assigned stars or monetary rewards), to avoid discomfort or punishment (such as the teacher being angry or assigning extra homework), to avoid feeling guilty (e.g., to avoid the feeling of letting parents or the teacher down), or to attain ego enhancement or pride. Students’ type of motivation has consequences for their learning. Autonomous forms of motivation have been shown to be associated with greater engagement, but also with higher-quality learning and greater psychological well-being. The more-controlled forms of extrinsic motivation, on the other hand, have been shown to be associated with negative emotions and poorer coping with failures (Ryan and Deci, 2000). Successfully supporting student motivation is, however, not an easy task, and several studies have shown that student motivation often both decreases and becomes less autonomous throughout the school years (Winberg et al., 2019).

Formative assessment, which is a classroom practice that identifies students’ learning needs through assessments and then adapts the teaching and learning to these needs, has been suggested as a possible way of supporting student motivation (e.g., Clark, 2012). There is great variation in how scholars conceptualize formative assessment, and Stobart and Hopfenbeck (2014) describe some common conceptualizations. Formative assessment practices may be teacher-centered, student-centered, or a combination of these. Teacher-centered approaches to formative assessment focus on the teachers’ actions. In these practices teachers gather evidence of student learning, for example, through classroom dialogue or short written tests, and they adapt feedback or the subsequent learning activities to the information gathered from these assessments. In the student-centered approaches to formative assessment, the students are involved in peer assessment and peer feedback and/or self-assessment in order to take a more proactive role in the core formative assessment processes of identifying their learning needs and acting on this information to improve their learning. It may be noted, however, that although the teacher may be seen as the proactive agent in teacher-centered formative assessment practices, such practices may still have the students at the center in the sense that the focus is on identifying the students’ learning needs and adapting the classroom practices to these needs. The following definition by Black and Wiliam (2009) incorporates many of the meanings given to formative assessment:

Practice in a classroom is formative to the extent that evidence about student achievement is elicited, interpreted, and used by teachers, learners, or their peers, to make decisions about the next steps in instruction that are likely to be better, or better founded, than the decisions they would have taken in the absence of the evidence that was elicited (p. 9).

Researchers have provided different suggestions about how formative assessment may affect student motivation. Brookhart (2013) emphasizes the students as the main source and users of learning information in formative assessment, and points to the consistency between the formative assessment cycle [establishing where the learners are in their learning, establishing where they are going (the learning goals), and establishing what needs to be done to get there] (Wiliam and Thompson, 2008), and the phases of self-regulated learning (Zimmerman, 2000). Brookhart (2013) uses several motivation theories to discuss how the characteristics of assessment tasks, the classroom environments they are administered in, and teachers’ feedback may influence students’ motivation to use assessment information and engage in learning activities. Heritage and Wylie (2018) emphasize the inclusion of both teachers and students as active participants in the formative assessment processes. In these processes teachers and students notice the students’ learning, respond to this information by choosing learning tasks suitable for the students to take the next steps in their learning, and provide feedback that emphasizes evidence of students’ learning. They argue that such processes support the development of an identity as effective and capable learners. Such identity beliefs enhance students’ motivation to engage in learning activities. Shepard et al. (2018) argue that formative assessment practices in which feedback helps students to see what they have learned and how to improve may foster a learning orientation. Within such a learning orientation, students find it personally valuable to engage in learning activities, and they feel less controlled in their motivation by, for example, a need to please others or appear competent. Pat-El et al. (2012) argue that teacher feedback that helps students monitor their learning progress, and that provides support for how goals and criteria can be met, may enhance students’ satisfaction of the three psychological needs of competence, autonomy and relatedness. Pat-El et al. (2012) then draw on self-determination theory (Ryan and Deci, 2000), which posits that these psychological needs influence students’ autonomous forms of motivation. Their findings in a questionnaire study performed on one single occasion (Pat-El et al., 2012) indicated that competence and relatedness mediated an influence of feedback on autonomous motivation. In an intervention study by Hondrich et al. (2018), teachers implemented teacher-centered formative assessment practices involving the use of short written tasks to assess students’ conceptual understanding, feedback and adaptation of instruction. They found an indirect effect of formative assessment on autonomous motivation mediated by perceived competence (the other two psychological needs, autonomy and relatedness, were not included in the study). Thus, when discussing the possible mechanisms by which formative assessment may affect students’ engagement and type of motivation, some scholars focus on the students as the main proactive agents in the formative assessment processes (e.g., Brookhart, 2013), while others also focus on the teachers (e.g., Heritage and Wylie, 2018). Researchers also use different theories of motivation when discussing the nature of these possible mechanisms. Shepard et al. (2018) use learning orientation theory, while Pat-El et al. (2012) and Hondrich et al. (2018) draw on self-determination theory when discussing possible mechanisms for the effects of formative assessment on students’ type of motivation. When discussing the effects of formative assessment on students’ engagement, Heritage and Wylie (2018) use the notion of identity beliefs, while Brookhart (2013) uses several different motivation theories.

However, as yet there is no solid research base corroborating either the promising hypothesis of large effects from different approaches to formative assessment on student motivation, in terms of autonomous motivation or behavioral engagement in learning activities, or the mechanisms underlying such effects. Studies that investigate effects of formative assessment on motivation within an ecologically valid, regular classroom environment are scarce (Hondrich et al., 2018). Indeed, we performed a comprehensive literature search in the databases ERIC, APA PsycInfo, Academic Search Premier and SCOPUS, which returned 557 journal articles, but only 11 of them were empirical studies that examined the association between formative assessment and grade 1–12 students’ type of motivation or engagement in learning activities. In the database search we used the Boolean search command (motivation OR engagement) AND (“formative assessment” OR “assessment for learning” OR “self-assessment” OR “peer-assessment” OR “peer feedback”) AND (effect* OR impact OR influenc* OR affect* OR relation OR predict*) AND (school* OR grade* OR secondary OR primary OR elementary) in the title, abstract and keywords. We included a number of terms commonly used for formative assessment. The term “motivation” was used to include all articles that deal with the motivational terms investigated in our own study, and the term “engagement” was used as a complement to the term “motivation” to ensure that articles focusing on engagement were found. Since we were interested in empirical evidence for the association between formative assessment and motivational outcomes, a number of terms commonly used in such studies were used to limit the search to such studies. Finally, since the present study included upper-secondary school students, and university studies may differ in important aspects from compulsory school, we limited our search to journal articles including studies from school years 1–12. A number of terms (see above) were used to filter out studies involving higher education or out-of-school learning.
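The structure of the search command quoted above (four groups of alternatives joined by AND) can be sketched in a few lines of Python. This is only an illustration of how the string is composed. The names `TERM_GROUPS`, `format_term` and `build_query` are hypothetical, and the quoting rule (quote any term containing a space or hyphen) is an assumption inferred from the command as printed, not a rule of any particular database.

```python
# Term groups copied from the Boolean search command quoted in the text.
TERM_GROUPS = [
    ["motivation", "engagement"],
    ["formative assessment", "assessment for learning", "self-assessment",
     "peer-assessment", "peer feedback"],
    ["effect*", "impact", "influenc*", "affect*", "relation", "predict*"],
    ["school*", "grade*", "secondary", "primary", "elementary"],
]


def format_term(term: str) -> str:
    """Quote phrases (assumed: any term with a space or hyphen); leave other terms bare."""
    return f'"{term}"' if (" " in term or "-" in term) else term


def build_query(groups: list[list[str]]) -> str:
    """Join alternatives with OR inside each group, then join the groups with AND."""
    return " AND ".join(
        "(" + " OR ".join(format_term(term) for term in group) + ")"
        for group in groups
    )


query = build_query(TERM_GROUPS)
print(query)
```

Keeping the groups as data makes it easy to see which group serves which purpose: outcome terms, formative assessment terms, terms signalling empirical association, and school-level terms.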

In the literature search, we found a few studies that are based on questionnaire responses from students on one single occasion, and they have found associations between students’ autonomous motivation and different approaches to formative assessment. Pat-El et al. (2012) and Federici et al. (2016) found an association between students’ autonomous motivation and the students’ perceptions of teachers’ providing feedback that facilitates the monitoring of their learning progress and an understanding of how goals and criteria can be met (formative feedback). However, in the context of portfolio use, Baas et al. (2019) found an association between autonomous motivation and students’ perceptions that scaffolding is integrated in their classroom practice, but not between autonomous motivation and the monitoring of growth. Gan et al. (2019) found associations between students’ autonomous motivation and their perceptions of a daily classroom practice involving continuous informal assessments and dialogic feedback, and Zhang (2017) found associations between autonomous motivation and students’ perceptions of their possibilities to self-assess and take follow-up measures in the classroom practice. Thus, the first three studies focused on teacher-centered formative assessment. The studies by Pat-El et al. (2012) and Federici et al. (2016) both involved an emphasis on the teachers’ feedback, while the study by Baas et al. (2019) did so in the specific context of portfolio use. The fourth study (Zhang, 2017) focused on student-centered formative assessment practices in terms of self-assessment.

Intervention studies examining the effects on students’ type of motivation show mixed effects. Three intervention studies (1–6 months) were primarily teacher-centered and focused on both the collection of evidence of student learning and feedback. In these interventions, tests or assignments and educational materials were made available for the teachers or students to use. When teachers were not provided with information about how to best use information about students’ progress for learning purposes, the intervention did not have an effect on students’ autonomous motivation (Förster and Souvignier, 2014). When the teachers were provided with a short professional development course (13 h; Hondrich et al., 2018) or a digital formative assessment tool (Faber et al., 2017) to aid the formative processes of providing student assignments and feedback, then small effects were found on students’ self-reported autonomous motivation. Finally, in the study by Meusen-Beekman et al. (2016) the teachers were provided with information about a larger array of approaches to formative assessment. This information included how to establish and share assessment criteria with students, how to implement rich questioning and provide feedback, and how to support either peer assessment or self-assessment (two different intervention conditions). Both of these intervention conditions showed a nearly medium-size effect on autonomous motivation in comparison to a control group, and the teachers’ practices in the two conditions can be characterized as both teacher-centered and student-centered.

Three studies have investigated the association between formative assessment and students’ motivation in terms of engagement in learning activities. One study focused on teacher feedback (Federici et al., 2016), which can be considered a teacher-centered approach to formative assessment. The second study (Wong, 2017) involved a student-centered approach, and the formative assessment practice in the third study (Ghaffar et al., 2020) can be characterized as both teacher-centered and student-centered. Federici et al. (2016) analyzed questionnaire responses from students on one single occasion, and found an association between students’ perceptions of teachers’ formative feedback and students’ persistence when doing schoolwork. Wong (2017) found a medium-size effect on students’ self-reported engagement from an intervention in which the researcher taught self-assessment strategies to the students (thus no teacher professional development was necessary). In the study by Ghaffar et al. (2020) a teacher engaged her students in co-construction of writing rubrics together with both teacher feedback and peer feedback. The results indicated some positive outcomes for students’ autonomous motivation and engagement in learning activities in comparison with a control class during the two-month intervention using a writing assignment.

Thus, formative assessment may be carried out in a range of different ways, and the few existing studies investigating its effects on motivation have been built on formative assessment practices with different characteristics. Because formative assessment practices may have different characteristics, which in turn may affect students’ motivation differently, the available research base needs to be extended with more investigations into the effects of all of the main approaches to formative assessment (such as student self-assessment or more teacher-centered approaches where the teachers carry out the assessment and provide feedback) before more well-founded conclusions can be drawn regarding the effects of formative assessment on motivation. In particular, there exist only very few intervention studies investigating the effects of teacher-centered formative assessment practices on autonomous motivation, and we have not found any intervention studies investigating the effects of teacher-centered formative assessment practices on students’ behavioral engagement.

Studying the effects of different classroom practices on student outcomes such as motivation is important, for obvious reasons, but studying what is required of teachers to carry out these practices is also of significant value. Teachers need to master many different skills in order to carry out their teaching practices, and different practices may require a slightly different set of skills. However, regardless of the type of practice, the importance of teachers’ decision-making while planning and giving lessons has been recognized for a long time (for reviews, see for example Shavelson and Stern, 1981; Borko et al., 2008; Hamilton et al., 2009; Datnow and Hubbard, 2015; Mandinach and Schildkamp, 2020), and teachers’ decision-making may be regarded to be at “the heart of the teaching process” (Bishop, 1976, p. 42). Teachers’ decision-making is seldom straightforward, however. Teachers need to make judgments and decisions in a complex, uncertain environment, having limited time to process information (Borko et al., 2008) and, in general, having limited access to information. Teachers’ decisions about content, learning activities, and so forth are affected by a number of variables such as their knowledge, beliefs, and goals (Schoenfeld, 1998) that are shaped by the context in which they reside. The types of decisions teachers need to make, that is, the requirements on the teachers’ decision-making, depend on the type of classroom practice that is carried out. For example, if a classroom practice includes adapting teaching to students’ learning needs, then decisions about gathering information about these needs and how to adapt teaching to these needs have to be made. If a classroom practice does not have such an adaptation focus, then the teacher may not be required to make these kinds of decisions to the same extent. Furthermore, the knowledge and skills the teachers need to have to make decisions depend on the type of decisions they have to make. In the classroom practice with the adaptation focus, teachers need the knowledge and skills to successfully use assessment information to make decisions on teaching adaptations that fit different student learning needs.

Teachers’ teaching during lessons has been characterized as carrying out well-established routines (Shavelson and Stern, 1981). The routines include monitoring the classroom, and if the routine is judged to be proceeding as planned there is no need to deviate from the lesson plan. But if the teacher sees cues that the lesson is deviating too much from the plan, then the teacher has to decide whether other actions need to be taken. The main issue for many teachers in their monitoring seems to be the flow of the activity. That is, the decisions are most often based on the students’ behavior, such as their lack of involvement or other behavioral problems (Shavelson and Stern, 1981), and teachers seldom use continuous assessments of students’ learning as a source of information when deciding how to resolve pedagogical issues (Lloyd, 2019).

Practices such as formative assessment, in which teachers make decisions based on assessment of students’ subject matter knowledge, may require other types of teacher knowledge and skills than practices in which decisions are primarily based on teachers’ needs to cover the curriculum, their experiences with former students, current students’ prior learning, their intuition, and the behavior in the classroom. It has been argued that in formative assessment practices, teachers need to be skillful in a variety of ways: in gathering information about students’ subject matter knowledge, in interpreting the students’ responses in terms of learning needs, and in using these interpretations to adapt the classroom practice to improve the students’ learning (Brookhart, 2011; Means et al., 2011; Gummer and Mandinach, 2015; Datnow and Hubbard, 2016). Consequently, there have been calls for developing support for teachers on not only how to gather information about students’ learning, but also on how to interpret the collected information and how to use these interpretations for instructional purposes (Mandinach and Gummer, 2016). However, despite many professional development efforts aimed at building teachers’ capacity for using assessment data when making instructional decisions, many teachers often feel unprepared to do so (Datnow and Hubbard, 2016), and many professional development programs in formative assessment have failed to lead to substantially developed formative assessment practices (Schneider and Randel, 2010). If teachers are supposed to implement new practices, they need the knowledge and skills required to do so. If they do not already possess them, they need to be provided with sufficient support to acquire them. Teachers will not implement new practices they do not find viable to carry out. Insights into the skills necessary to carry out a new practice are therefore needed, both to provide teachers with the necessary support and to enable teachers to assess the viability of implementing the practice.

Therefore, studies that describe the decisions teachers are required to make, and the skills needed to make these decisions, in order for classroom practices to have a positive effect on students’ motivation would be of fundamental value. Some studies have explored the decisions teachers make in practices that include aspects of formative assessment. For example, Hoover and Abrams (2013) explored teachers’ reported use of summative assessments in formative ways. They found that most teachers reported using summative assessment data in order to change the pace of instruction, to regroup or remediate students as needed, or to provide instruction using different strategies. However, only a minority of the teachers made such decisions on a weekly basis, and the decisions were most often based on central tendency data, interpretation of results within the context of their teaching or validation of test items. Such instructional decisions would be informed by conclusions about students’ areas of weakness, but less by conclusions about students’ conceptual understanding (Oláh et al., 2010). However, in the literature search described in the section Formative Assessment as a Means of Supporting Students’ Motivation, we found no studies that describe teachers’ decision-making in daily formative assessment classroom practices that are empirically shown to have positive effects on students’ autonomous forms of motivation or behavioral engagement.

In the present study we analyze the characteristics of a teacher’s implemented teacher-centered formative assessment practice, including the practice’s requirements on the teacher’s decision-making. We also investigate the changes in the students’ motivation, both in terms of engagement in learning activities and in terms of the type of motivation. We ask the following RQs:

RQ1. What are the characteristics of this teacher-centered formative assessment practice, and what are the requirements on the teacher’s decision-making?

RQ2. Does the intervention class students’ behavioral engagement in learning activities increase in comparison with five comparison classes?

RQ3. Does the intervention class students’ type of motivation (autonomous and controlled forms of motivation) increase in comparison with the five comparison classes?
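One way to read RQ2 and RQ3 operationally is as a comparison of pre-to-post change between groups. The sketch below is an assumption-laden illustration, not the analysis used in this study: the response values are hypothetical (they are not data from the study), and the names `intervention`, `comparison` and `mean_change` are introduced only for this example. Each pair holds one student’s score on the 1–7 agreement scale at the beginning and at the end of the course.

```python
from statistics import mean

# Hypothetical (pre, post) scores on the 1-7 scale; NOT data from the study.
intervention = [(3.0, 4.5), (4.0, 5.0), (2.5, 4.0)]
comparison = [(3.5, 3.5), (4.0, 4.5), (3.0, 2.5)]


def mean_change(pairs: list[tuple[float, float]]) -> float:
    """Average post-minus-pre change across the students in one group."""
    return mean(post - pre for pre, post in pairs)


# A positive difference means the intervention group increased more
# than the comparison group over the same period.
difference = mean_change(intervention) - mean_change(comparison)
print(f"intervention change: {mean_change(intervention):.2f}")
print(f"comparison change:   {mean_change(comparison):.2f}")
print(f"difference:          {difference:.2f}")
```

Only students with responses on both occasions can contribute a pair, which is why attendance at both questionnaire administrations matters for the sample.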

The intervention teacher, Anna (fictitious name), with comprehensive university studies in all her teaching subjects and extensive teaching experience (>20 years), had participated in a professional development program in formative assessment the year before this intervention. During that year she worked at a different school from the one in which the intervention took place, and the principal at that school had decided that all teachers in the school had to attend the professional development program. Thus, she had not volunteered to participate in the program, nor had she been selected for the program based on any of her characteristics. At the beginning of the autumn term in 2016, she started teaching a course in sociology with 19 second-year students who were enrolled in the Child and Recreation Program at a Swedish upper secondary school. Inspired by the professional development program, Anna implemented a formative assessment practice in her class from October 2016 to May 2017. All 19 students in the intervention class were invited to take part in the study, and none of the students declined to participate. Twelve students attended class on both occasions when the student questionnaires were administered.

The students in the comparison classes were all taught by experienced teachers. These teachers had not participated in the professional development program in formative assessment and did not specifically aim to implement a formative assessment practice. The students in these classes were enrolled in the Building and Construction Program, the Industrial Technology Program, the Child and Recreation Program, and the Social Science Program (two classes). All programs are vocational programs except for the Social Science Program, which is an academic program. The comparison classes were chosen because the classes in these programs, despite program differences, did not differ much in overall academic achievement when they began their upper-secondary school studies (students enrolled in the Social Science Program had a slightly higher grade average from school year 9, which is the school year that precedes upper secondary school, than the students from the classes in the other three programs). In Sweden a number of courses are taken at the same time, but during the period when the intervention class took the sociology course none of the other classes took the exact same course. Therefore, type of motivation and behavioral engagement of the students in the comparison classes were measured in the courses most similar to the sociology course. These courses were a social science course for the two classes belonging to the Social Science Program, and a history course for the classes belonging to the Industrial Technology Program, the Building and Construction Program, and the other class in the Child and Recreation Program. All of these courses belong to the social science domain, and both the social science course and the sociology course include a historical perspective. The courses (including the sociology course taken by the intervention class) corresponded to five weeks of full-time studies, but since the students take several courses at the same time they lasted through the whole intervention period. Although program-specific courses differ between programs, the same academic course, for example in social science, does not differ between programs. Among the 121 students in the comparison classes, 72 agreed to participate and attended class on both occasions when the questionnaires were administered, so that they could complete them. Only three of the 121 students declined to participate. The participating students, in total 84, were 17–18 years of age and enrolled in the same upper secondary school. Among the students in the intervention group, 55% were girls and 86% had Swedish as their mother tongue. In the comparison group, 50% were girls and 88% had Swedish as their mother tongue.

The research project was conducted in accordance with Swedish laws as well as the guidelines and ethics codes from the Swedish Research Council that regulate and place ethical demands on the research process (http://www.codex.vr.se/en/). For the type of research conducted in this study, it is not necessary to apply for ethical evaluation to the Swedish Ethical Review Authority. Written consent was obtained from the teacher and the students.

For RQ1, multi-method triangulation was used with four qualitative methods: classroom observation, the teacher’s logs, the teacher’s teaching descriptions and a teacher interview. The aim of this triangulation was to develop a comprehensive view of the teacher’s formative assessment practice and the decisions she made when carrying out this practice. This type of multi-method triangulation is a way of enhancing internal validity of the qualitative data (Meijer et al., 2002). The intention was not to establish if the data from these methods would show the same results. Classroom observations can provide examples of how the teacher uses formative assessment in the classroom, but a single observation cannot show the variation of the practice over different lessons or how common an observed practice is. Teacher logs, teacher teaching descriptions and teacher interviews, on the other hand, may provide more information about how a classroom practice varies over lessons and how common certain aspects of the practice are. Therefore, the four methods were used to provide complementary data on the teacher’s formative assessment practice.

The teacher log was used over a period of 6 months, from November 2016 to April 2017. Anna was asked to make notes shortly after each lesson or series of lessons in the intervention class. The log was digital and used six questions to ask Anna for information about each teaching activity used during the lesson. The questions asked for a description of the implementation of the activity, information about whether the activity involved any pedagogical adjustments, the rationale behind the decision to choose each activity, an evaluation of the implementation, and an evaluation of the outcome of the activity. Finally, the log included an open question for further comments. During that period Anna wrote detailed notes answering the six questions in the teacher log twelve times. Some of the described lessons entailed more than one teaching activity, so the data consisted of written reports from 17 teaching activities.

Anna furthermore wrote teaching descriptions (5–10 pages) on three occasions: before, in the middle of, and at the end of the intervention. These descriptions aimed to capture her overall teaching design, how her teaching with respect to formative assessment changed over time, and her rationale for her teaching decisions.

One classroom observation was conducted in February 2018, just prior to the teacher interview, by the first author. The researcher used a protocol to keep notes of Anna’s teaching, aiming to observe what activities Anna implemented, how she introduced them, whether the students reacted as if they were used to the activity, and how the students engaged in that particular activity. The researcher furthermore spoke informally to the teacher before the observation, and information gained from this conversation was also included in the field notes.

The interview was conducted by the first author immediately after the classroom observation. It lasted about 1 h and was audio recorded. To begin with, Anna was asked to describe her teaching before the intervention, especially activities that she had changed or excluded when she planned for the intervention. She was thereafter invited to describe in detail each of the activities she had chosen to use during the intervention. Activities written down in the teacher log and noticed during the observation were also brought up during the interview to be described in more detail. She was then asked to describe her motives behind the decisions to change, exclude, or choose a particular activity. She was finally invited to elaborate on how she thought the activity could work as a part of a formative classroom practice and how she expected the activity to support her students’ learning.

To capture the characteristics of Anna’s formative assessment practice and the requirements from this practice on Anna’s decision making, the analysis was conducted in three steps. The analysis was made jointly by the first, third and fourth author, and decisions on categorizations were made in consensus.

The first step aimed to capture Anna’s classroom practice before and during the formative assessment intervention. This was done by analyzing the field notes from the classroom observation, the logbooks, the teacher’s teaching descriptions and the interview data to identify learning activities that were regularly implemented. In the second step, the definition by Black and Wiliam (2009, p. 9), quoted in the Formative Assessment as a Means of Supporting Students’ Motivation section, was used as a framework for examining which of the identified activities could be characterized as formative assessment. Thus, activities in which the teacher or students elicited evidence of student achievement, and used this information to make decisions on the next step in the teaching or learning practice, were categorized as formative assessment. In the final step of the analysis, the collected data were used to identify the types of decisions Anna’s practice required her to make, and the knowledge and skills needed to make these decisions. Table 1 provides examples of this analysis procedure.

For RQ2 and RQ3, a quasi-experimental design with intervention and comparison classes was used. The participating students completed a web questionnaire at the beginning and the end of the intervention. They did so during lesson time and on each occasion one of the authors was there to introduce the questionnaire and answer questions from the students if anything was unclear to them. This method of data collection will be further described in the following.

Measures of changes in student engagement and type of motivation in both the intervention class and comparison classes were obtained through a questionnaire administered before and after the intervention. All items measuring students’ engagement in learning activities were statements, and the students were asked to mark the extent to which they agreed with each statement on a scale from 1 (not at all) to 7 (fully agree). The items measuring students’ type of motivation were statements of reasons for working during lessons or for learning the course content. The students were asked to mark to what extent these reasons were important on a scale from 1 (not at all a reason) to 7 (really important reason). Five items measuring behavioral engagement were adapted from the behavioral engagement items of Skinner et al. (2009), and six items each measuring autonomous and controlled motivation were adapted from the Self-Regulation Questionnaire of Ryan and Connell (1989). The adaptations were made to suit the context of the participants, and before the study these adaptations were piloted with students in four other classes of the same age group to ensure that the questions were easy to understand. A list of all questionnaire items can be found in Appendix A. An example of a behavioral engagement item is: “I am always focused on what I’m supposed to do during lessons.” Examples of items measuring autonomous and controlled motivation are: “When I work during lessons with the tasks I have been assigned, I do it because I want to learn new things” and “When I try to learn the content of this course, I do it because it’s expected of me.” Cronbach’s alpha for each set of the items in spring/fall was 0.86/0.88 for behavioral engagement, 0.90/0.89 for autonomous motivation, and 0.74/0.78 for controlled motivation, indicating acceptable to good internal consistency of the scales.
To examine whether each scale was unidimensional, exploratory factor analysis was performed on each set of items for each time point. The extraction method was principal axis factoring, and a scale was deemed to be unidimensional if the scree plot had a sharp elbow after the first factor, if the eigenvalue of the second factor was <1, and if parallel analysis suggested that only one factor should be retained. Exploratory factor analysis was not performed on all items together for each time point because the low subject-to-item ratio (<5:1) would create a high risk of misclassifying items and of not finding the correct factor structure (Costello and Osborne, 2005). The mean of the items connected to a construct at each time point was used as a representation of students’ behavioral engagement, autonomous motivation, and controlled motivation at that time point.
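
As an illustration of the scale statistics described above, the following minimal sketch computes Cronbach’s alpha and per-student scale means for one set of items. It is not the authors’ analysis script: the function names `cronbach_alpha` and `scale_scores` and the example ratings are hypothetical, and the standard alpha formula based on sample variances is assumed.

```python
from statistics import mean, variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one scale.

    responses: one list per student, holding that student's ratings
    (e.g., 1-7) on each item of the scale. Hypothetical helper.
    """
    k = len(responses[0])                      # number of items in the scale
    items = list(zip(*responses))              # transpose: one tuple per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def scale_scores(responses):
    """Mean of the items for each student, used as the construct score."""
    return [mean(row) for row in responses]


# Made-up ratings from four students on a five-item scale (illustration only).
example = [
    [6, 5, 6, 7, 6],
    [3, 4, 3, 2, 3],
    [5, 5, 4, 5, 6],
    [2, 3, 2, 2, 1],
]
alpha = cronbach_alpha(example)
scores = scale_scores(example)
```

The sketch covers only internal consistency and scale means; the unidimensionality checks (scree plot, eigenvalues, parallel analysis) would require a factor-analysis routine and are not shown.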

To investigate the changes in students’ behavioral engagement (RQ2) and autonomous and controlled motivation (RQ3), mean differences in the responses to the questionnaire items pertaining to these constructs between fall and spring were calculated for both students in the intervention class and in the comparison classes. Students are nested within classes, and therefore it was not reasonable to treat the comparison classes as one group. Because of this, the change in each construct between fall and spring for the intervention class was compared with the same change in each of the comparison classes. Partially due to nesting of students within classes, the study lacks power and statistically significant differences in mean values (or variances) were not seen between groups. We therefore chose to indicate the size of the difference in changes between the intervention class and the comparison classes through calculation of Hedges’ g (Hedges, 1981). A commonly used interpretation of sizes of this type of effect measure suggested by Cohen (1988) is that 0.2, 0.5, and 0.8 indicate small, medium, and large effects, respectively.
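
The effect size calculation can be sketched as follows. This is an assumption-laden illustration rather than the authors’ procedure: it assumes that Hedges’ g is computed on per-student change scores (spring minus fall) for two independent classes, uses the pooled standard deviation, and applies the common approximation of the small-sample correction factor. The function name `hedges_g` and the example data are hypothetical.

```python
import math
from statistics import mean, variance


def hedges_g(group_a, group_b):
    """Hedges' g for two independent samples (here: change scores per student)."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled standard deviation from the two sample variances (n - 1 denominators).
    pooled_sd = math.sqrt(
        ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    )
    standardized_diff = (mean(group_a) - mean(group_b)) / pooled_sd
    # Approximate small-sample correction factor: 1 - 3 / (4 * df - 1).
    correction = 1 - 3 / (4 * df - 1)
    return standardized_diff * correction


# Made-up change scores (spring minus fall) for illustration only.
intervention_change = [1.0, 0.5, 0.0, 1.5]
comparison_change = [0.0, -0.5, 0.5, 0.0]
g = hedges_g(intervention_change, comparison_change)
```

With this setup, the function would be called once per comparison class, each time against the intervention class, which yields one effect size per comparison.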

Anna’s teaching before the professional development program can be characterized as traditional, as Anna described:

My lessons followed the same dramaturgy. I started by presenting the aim of the present lesson by writing on the smart board or presented as the first slide of my PowerPoint. Thereafter I gave a lecture for 20–30 minutes using my PowerPoint. Then, the students worked with assignments, individually or in groups. Sometimes we watched an educational film followed up by a whole class discussion. … I always tried to choose films, questions and tasks that I believed would be interesting for my students to work with.

Anna described that, while the students were working, she mainly supported those who asked for help. Anna expressed the challenges of providing support to 30 students in the classroom: “It is difficult to divide my time wisely … the students that are active and ask for help get more support than those not reaching out for me, and these students also need help.” When Anna was asked to describe her assessment practice, she described using written tests and reports mainly for summative purposes, that is, for grading the students.

Anna’s classroom practice entailed some recurrent decisions. For example, she had to decide how to present subject matter in a way that the students would understand and find engaging. Anna based these decisions on her general knowledge of teenagers’ interests. Before the intervention, Anna had decided to primarily help students who asked for support, which is a decision she questioned during the intervention since she knows that students who really need help don’t always ask for it. Since Anna’s assessment focus was on summative assessment, her assessment decisions pertained to these kinds of assessments, and she needed skills to assess students’ gained knowledge in relation to national standards and to decide on the assignment of grades to the students. Decisions rarely concerned how to gather and interpret information about the current students’ knowledge and skills in order to use this information to support their learning. Thus, she did not need skills to make such decisions. When she planned and carried out her teaching, judgments of what the students would understand were based on her knowledge about the content that had been included in prior courses the students had taken (and thus should have been learned) and on her experience of former students’ understanding of the content in the current course.

During the professional development program, Anna changed her view of teaching from a focus on how to teach for the students to be interested in learning the subject to a focus on the students’ actual learning, as Anna describes:

I used to aim for planning interesting lessons, my idea was that if students are interested they will be motivated … I was not particularly interested in each student’s learning besides at the end of a course, when I assessed their level of knowledge … However, I now realize that I gave my students assignments that were too difficult (even though interesting). They were not familiar with the essential concepts they needed to solve the tasks.

After the professional development program, Anna said she started to ask herself three questions: 1) What are the students’ knowledge and skills in relation to the learning goals at the beginning of a teaching and learning unit? 2) What are their knowledge and skills later on during learning sequences? 3) Based on the answers to 1) and 2), what would be the best teaching method to meet these learning needs? This change in view had important consequences for her practice, including her decision-making.

The analysis showed that Anna regularly gathered and interpreted information about the students’ learning needs and adapted feedback and learning activities to meet these needs. That is, she implemented a formative assessment practice that can be characterized as teacher-centered, focusing on information gathered by the teacher (and not by the students). Anna described that she now gathers information about two things: students’ prior knowledge and the knowledge they gain during lessons. These are presented below.

Anna described that her notion that some of her students are likely to have insufficient prior knowledge to fully understand the course made her change her way of introducing new courses. Now she always starts by gathering information about her students’ prior knowledge. She uses that information to plan her forthcoming lessons but also to act in a timely manner during the lesson itself. She mainly uses Google forms because, besides gathering information from all students, it compiles and presents the results straight away. Anna explains:

The digital tool is essential to be able to work with formative assessment. To gather information, using pen and paper and spend time compiling the answers would be too time consuming, and you would not be able to act during the lesson itself.

Anna described that she now collects information in two main ways. First, in the initial lesson she asks her students to rate their understanding of some main concepts in the subject matter domain in order to acquire indications of their familiarity with the learning content. For example, the students were to rate 19 concepts such as gender, intersectionality, social constructivism, socialization, and feminism, and for each concept they were to answer whether they “never heard of it,” “recognize it but can’t explain it,” “can explain it a little,” or have “a total understanding of it.” Anna described that since she felt she needed more information about students’ actual understanding of the concepts (not merely their rating), she decided to ask the students to write down explanations for some concepts of her choice at the end of such lessons. That information, which measures their understanding of these concepts, could be described as more accurate and more useful for making decisions about what to emphasize in her subsequent teaching. Second, as a complement to the information about the students’ perceived understanding of the subject matter, Anna uses the digital tool to administer questions measuring the actual extent (not only the perceived extent) to which they already possess the knowledge to be learned in the teaching and learning unit. For example, she poses the following question to her students: “Which of the following (parliament, municipality, county council, market, or other) decides on the following?” followed by a number of decisions made in society such as “It is forbidden to hit children in Sweden.” (See Table 1 for other examples). In these instances the students answered anonymously.

Directly after the students have answered her questions in either of these two ways, Anna and her students look at the results provided by Google Forms. Anna shares the diagrams that show the results on a group level with her students, and she clarifies the learning objectives of the teaching and learning unit. These results indicate, to both Anna and her students, part of the students’ current knowledge in relation to the learning goals. Anna points out the challenge that arises when it turns out that her students’ prior knowledge differs considerably:

There are situations when some students can’t identify the European countries using a map (that should have been learnt in middle school) when other students can account for the social, economic, political and cultural differences between Greece and Germany. … so where do I start, at middle school level, to include students with insufficient prior knowledge or should I start at the level where the students are expected to be?

Anna explained that she decided to aim to adjust her teaching on an individual level and, to be able to do so, she has changed her approach of having the students answer anonymously. As Anna said: “Now I often invite students to write their name, so that I, in peace and quiet after the lesson, can identify students who need extra support.” Anna described that, knowing who they are, she now actively approaches these students during lessons to provide support, even if they do not ask for help.

At the end of the teaching and learning unit (for example, one month later), she then sometimes again lets the students rate their understanding of subject matter concepts in order to support their awareness of their learning progress.

Gathering information about students’ prior knowledge entails a sequence of decisions. She needs to decide what prior knowledge is needed and what she can expect her students to know when they enroll in the course. Then she can decide, for example, which 19 concepts she should ask her students to rate and describe, that is, concepts that her students could be expected to already know together with concepts that are likely to be new. To make these decisions she needs to have extensive knowledge of the subject matter and sufficient knowledge about learning goals from her students’ prior courses.

Then, having information about students’ prior knowledge puts additional demands on her decision making. Based on that information, Anna decides how to adjust her teaching to fit the majority of her students. However, when Anna found that group level information is not enough to identify students who really need help (and may not ask for it) she decided to list the students’ names as well. To support these identified students she made the decision to approach these students intentionally during lessons even when they don’t ask for help.

Besides using gathered information to make decisions on planning future lessons, Anna takes the opportunity to provide timely feedback or instructions directly after the students answer her questions. The latter is a complex matter of instant decision making on what concepts to explain, what misunderstanding to challenge, what action will benefit students with insufficient prior knowledge the most, and so forth. This kind of decision-making puts great demands on her skills to quickly assess and choose information about her students’ shortcomings and provide feedback accordingly. Anna pointed out: “These decisions are made ‘on the fly’. However, I have been teaching for a fairly long time and have some experience to rely on. I mean, I know what students usually find difficult.”

Anna reported that she now uses questions during and at the end of lessons to gather information about what her students have learned during the lesson. Based on this information she decides what feedback to give the whole class or individual students, whether to focus more or less on certain content, and which learning activities would meet the class’s or individual students’ identified learning needs.

Anna furthermore describes how she tells her students that they will be requested to answer some questions after a learning activity. She thinks that if the students know in advance that they are expected to answer questions, they are given extra incentive to pay attention and to engage in their own learning; as Anna said:

At the beginning of a lesson, when I am going to give a lecture or show an educational movie … I tell my students that I am going to give them questions using Google forms afterwards, and that we will discuss them. This is a way of making them more focused, paying attention and providing them with an opportunity, and for me, to check whether they understand the important stuff. … if not they can ask me or, when I get the information that something was really tricky, I know what I need to explain again or I let them practice more.

The students’ answers to the questions, and their utterances in the discussion, provide Anna with information about the students’ understanding of the learning content included in the lecture or in the movie. Based on her interpretation of this information, she makes decisions on which parts of the content the students have not yet grasped and therefore need immediate further clarification or attention during the next lesson.

When the students work with tasks, Anna walks around in the classroom to help her students. As described above, Anna also did this before the implementation of her formative assessment practice, but she has now changed her way of providing support. Instead of immediately helping her students, she now first requires them to orally formulate what they have understood and what exactly their problem is. She then interprets their formulations and asks them to respond to her interpretation. She said that the decision to change her responses in this way is based on her belief that it would increase the validity of her interpretations of what the students have understood so far as well as their learning needs, which in turn provides her with a better foundation for her decisions on what feedback would be most beneficial for the students’ learning.

At the end of many lessons, Anna gives the students questions using Google forms in order to gather information about what they have learned from the lessons so far in the teaching and learning unit. For example, at the end of one lesson she returned to some of the concepts for which the students had rated their understanding earlier (e.g., social constructivism and feminism), and the students were now asked to “formulate a few sentences that show your understanding of these concepts.” In these cases the students also provided their names together with their answers. Based on her interpretation of the students’ learning needs, she then makes decisions about how to best support the students’ learning in the following lesson. Generally, when she judges that many students lack sufficient understanding, she will revisit the content with the whole class during the next lesson; if on the other hand only a few students lack sufficient understanding, she will work with them separately the following lesson.

Anna furthermore points out that there are situations when the information from the student is insufficient to even try to understand their difficulty and other supporting strategies are needed; Anna describes:

For example, students that are convinced that they will fail. Their answers don’t entail any information besides “I don’t know” and they do not seem to make an effort during the lessons. I have tried many different strategies to motivate them more, but the one that has been most successful is to divide the assignment into smaller and more defined parts. That will make them take one step at the time and I can provide timely and frequent feedback. This will make them feel competent, that they are able to complete one (or several) sub-tasks within a lesson.

Introducing sub-tasks to get the students to start working at all creates further possibilities for Anna to gain information about their learning needs. Anna pointed out that her aim is to prevent students from falling behind and that, besides making sure that all students really understand the key concepts in the course, she actively approaches students who have difficulties. This way of breaking down assignments into sub-tasks to overcome one difficulty at a time works for some of her other students as well. For example, if the assignment is to examine the political and cultural differences between Greece and Germany, the first easily solved sub-task could be to learn where these countries are on a map.

Anna’s decision to continuously gather and act on information from all students puts great demands on her decision-making, several of which have been accounted for earlier (see Gathering Information About Students’ Prior Knowledge section). Together with her decision to approach students whom she has identified as having difficulties (besides those who ask for help), she decided to base these interactions on formative assessment, that is, to gather information about and identify the difficulty before providing feedback. Furthermore, when Anna encountered students who were not active during lessons and were unwilling to share their difficulties, she went through a series of decisions about trying out, evaluating and discarding supporting strategies. Her latest decision, however, that of dividing assignments into more concrete sub-tasks, managed to get these students to engage and feel competent in finalizing tasks during the lesson.

Anna’s shift in focus from students’ learning outcome at the end of a course to her students’ learning process made her implement a formative assessment practice. The progress of her assessment practice could be described as moving from merely summative assessment, to adding formative assessment at a group level, and thereafter also adding formative assessment at the individual level. Thus, she added a formative aim to her assessment practice, resulting in additional requirements on her decision-making and her knowledge and skills. She shifted from mainly eliciting information about her students’ learning for grading purposes to using this information to adjust her teaching to fit her students’ level of prior knowledge and to support them to attain the learning goals. This additional aim required her to make decisions she did not have to make before, such as deciding what information would be useful for making instructional adjustments, when and how this information should be collected, and how to act on this information to support her students’ learning. For example, to gain extensive insight into her students’ prior knowledge and learning achievements during lessons, and the heterogeneity thereof, she had to make a series of decisions. She needed to decide what prior knowledge of subject matter concepts was important for the students to have for the learning practice to be as efficient as possible, and thereafter decide how to design introductory lessons to target the students’ lack of such knowledge. She had to decide when to intentionally approach students she identified as having specific learning needs, when to provide repeated instructions to smaller groups of students identified as falling behind, when to divide tasks into sub-tasks to fit students with low motivation and self-esteem, and so forth.
But she also had to decide how to give students opportunities to choose tasks based on personal interests in order to give them the independence they needed to aim for course content that suited their level of knowledge. These decisions require teacher knowledge and skills that go beyond familiarity with national standards and curricula. For example, Anna needed to gain insights into what prior subject matter knowledge the students could be expected to have, and would need to have, when they enrolled in her class. She furthermore needed skills to choose, elicit, interpret and act on information about students’ learning needs. Moreover, these skills included interpreting and acting instantly in order to provide timely feedback during the lessons. What is noteworthy is that Anna recognizes situations in which the information about her students’ knowledge and skills is insufficient and she needs to resort to supporting strategies, for example the design of sub-tasks, in the formative assessment process. Thus, the aim of using assessment for instructional purposes adds requirements of constant flexibility and of choosing or inventing strategies.

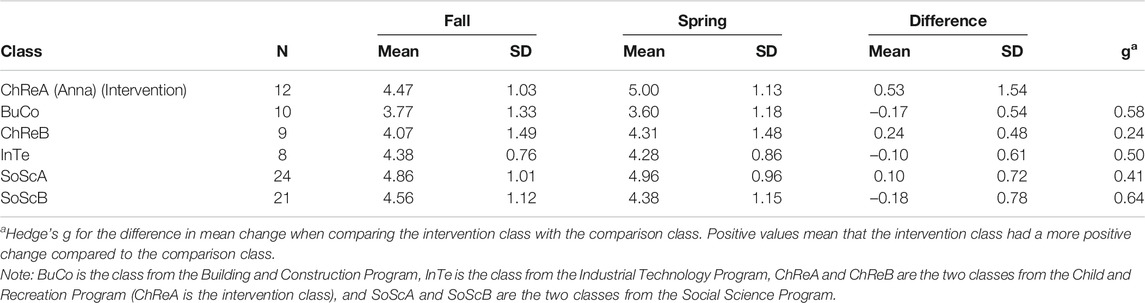

Students in the intervention class increased their behavioral engagement between fall and spring, and had a more positive change than all of the comparison classes (Table 2). Three of the comparison classes actually show a decrease in behavioral engagement. The size of the change in students’ behavioral engagement, as estimated by comparing the difference between fall and spring in the intervention class with the difference in each of the comparison classes, was between small and medium (from 0.24 to 0.64).

TABLE 2. Behavioral engagement in the intervention and comparison classes in the fall and spring and their difference.

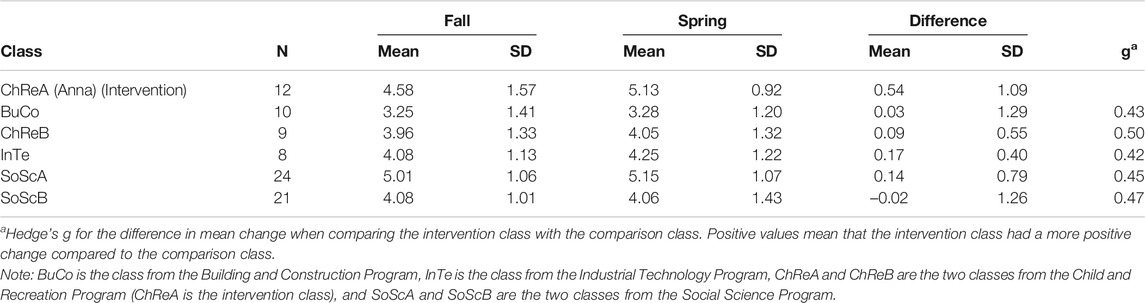

Table 3 shows that students in the intervention class increased their autonomous motivation between fall and spring, and had a more positive change than all of the comparison classes. The size of the change in students’ autonomous motivation, as estimated by comparing the difference between fall and spring in the intervention class with the difference in each of the comparison classes, was close to medium for all comparisons (from 0.42 to 0.50).

TABLE 3. Autonomous motivation in the intervention and comparison classes in the fall and spring and their difference.

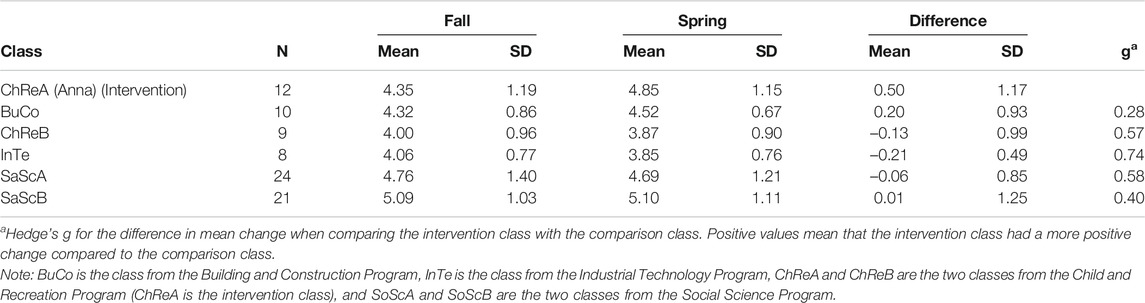

Table 4 shows that students in the intervention class also increased their controlled motivation between fall and spring, and did so more than all of the comparison classes. The size of the change in students’ controlled motivation, as estimated by comparing the difference between fall and spring in the intervention class with the difference in each of the comparison classes, was between small and large (from 0.28 to 0.74).

TABLE 4. Controlled motivation in the intervention and comparison classes in the fall and spring and their difference.

The implementation of the formative assessment practice had a profound influence on the decisions about teaching and learning that Anna had to make. The analysis of Anna’s implemented practice shows how such teacher-centered formative assessment puts further demands on teacher decision-making than more traditional teaching practices do. In both of these types of teaching practices teachers need to make decisions about how to present content, which tasks to use in learning activities and summative tests, what kind of feedback to give, and which grades to assign to students. However, in many traditional forms of teaching, information about students’ learning based on continuous assessments is not the focus when deciding on how to resolve pedagogical issues (Lloyd, 2019) or when monitoring the classroom practice (Shavelson and Stern, 1981). In contrast, the focus in Anna’s formative assessment practice is on making pedagogical decisions based on continuously gathered empirical evidence about her students’ learning. As a consequence, in line with arguments from several researchers (Brookhart, 2011; Means et al., 2011; Gummer and Mandinach, 2015; Datnow and Hubbard, 2016) and as empirically shown in the present study, the teacher in such a formative assessment practice also needs to make decisions about how to gather information about the students’ knowledge and skills during the teaching and learning units, what this information means in terms of learning needs, and how to use the conclusions about learning needs to adapt feedback and learning activities to these needs. However, formative assessment may be carried out in different ways (Stobart and Hopfenbeck, 2014). Some formative assessment practices may have a positive effect on students’ motivation while others may not, and the requirements of these different practices on teachers’ decision making may not be the same.
The present study exemplifies some of the decisions, and the skills used to make them, that are required in a formative assessment practice in which both students’ autonomous motivation and their behavioral engagement in learning activities increased. Studies that investigate effects of formative assessment on motivation within an ecologically valid, regular classroom environment are scarce (Hondrich et al., 2018), and we have not found any studies that have examined the requirements on teachers’ decision making in formative assessment practices that have been empirically shown to have an impact on students’ engagement or on autonomous and controlled motivation.

It should be noted that the formative assessment practice requires Anna to make some of the decisions under difficult conditions, and her disposition and skills need to afford her the ability to cope with making decisions under such conditions. These conditions are in many ways more difficult than those in, for example, practices in which the formative aspect is constituted only by formative use of summative assessment data. Instructional decisions based on summative assessment data are made much more infrequently and under less time pressure. That kind of data does not appear to inform the instructional decisions in the day-to-day practice (Oláh et al., 2010; Hoover and Abrams, 2013). In order to be able to adapt the teaching during a lesson, Anna needs to be able to develop or choose tasks that provide information about students’ conceptual understanding but do not take a long time for the students to answer and for Anna to assess. Moreover, because the formative assessment practice is founded on the idea of continuously adapting teaching and learning to all students’ learning needs, it is not sufficient to gather information only about a few students’ learning needs or to only adapt the teaching in coming lessons. Therefore, Anna needs to be able to administer the questions and collect and interpret the answers from all students even in the middle of lessons. Letting the individual students who raise their hands answer the questions would not suffice, and the use of an all-response system such as Google Forms allows her to see the responses from all students at the same time. When adaptations of teaching are made during the same lesson in which the assessment is done, Anna needs to make decisions both under time pressure and without knowing in advance exactly which learning needs the assessment will show.
In her formative assessment practice, Anna will, much more often than in her previous, more traditional way of teaching, make decisions on how to use the conclusions about all the students’ learning needs. This means that she is much more often required to make decisions about how to adapt teaching to a class of students that may have different learning needs, and that she must be able to individualize instruction and learning activities to these different needs when her interpretation of the assessment information suggests this to be most useful for the students’ learning. Whatever actions are taken, the decisions about actions need to be based on the identified learning needs and not on a predetermined plan for the teaching and learning unit. The latter, in contrast, would generally be a cornerstone of a more traditional teaching practice (Shavelson and Stern, 1981).

It should be noted that the additional decisions teachers need to make, and the skills required to make them, in the formative assessment practice in comparison with a more traditional way of teaching are by no means trivial. Thus, as is argued by, for example, Mandinach and Gummer (2016), in order to provide teachers with reasonable possibilities to implement this kind of practice, it would be important for teacher education and professional development programs to take into account the decisions and skills required to carry out this practice. In the present study we have identified some of the skills that may be useful to take into account when supporting pre- and in-service teachers in developing the skills necessary for implementing formative assessment that has a positive effect on motivation. For example, in line with the results of this study, our practical experience suggests that it may be crucial that professional development programs help teachers learn how to use assessment information to adapt their teaching to their students’ often different learning needs. In addition, the teachers may need assistance in finding ways to carry out formative assessment practices in the practicalities of disorderly classroom situations. To provide such assistance, professional development leaders may also need to collect evidence of the teachers’ difficulties and successes in the actual flow of their classroom activities.

However, using these additionally required skills in making the decisions and implementing this practice may pay off in terms of positive student outcomes. The students’ behavioral engagement and autonomous motivation increased in the intervention class both in absolute numbers and compared to all of the comparison classes. The changes compared to the comparison classes were mostly of medium size. Thus, the change in students’ autonomous motivation in the present study was higher than the changes in autonomous motivation coming from formative assessment implementations of teachers who did not receive comprehensive professional development support (e.g., Förster and Souvignier, 2014), and from interventions in which teachers were provided with a short professional development course (Hondrich et al., 2018) or a digital formative assessment tool (Faber et al., 2017) to aid the formative assessment processes of providing student assignments and feedback. The change in autonomous motivation was of a similar order of magnitude as when teachers were provided with information about how to implement a formative assessment practice that involved both teachers and students in the core processes of formative assessment (Meusen-Beekman et al., 2016). The change in students’ engagement was of a similar order of magnitude as when a researcher taught self-assessment strategies in a student-centered formative assessment practice (Wong, 2017).

In this study we also investigated the change in students’ controlled forms of motivation. This is not commonly done in existing studies of effects of formative assessment on students’ motivation. Interestingly, the results show that not only autonomous forms of motivation increased more in the intervention class than in the comparison classes. Controlled forms of motivation also increased in the intervention class both in absolute numbers and compared to all of the comparison classes. In comparison with the comparison classes, these students experienced both the autonomous reasons and the controlled reasons for engaging in learning to be more important after the formative assessment intervention than before. As a consequence, there was no shift away from more controlled forms of motivation toward more autonomous forms of motivation among the students. This shows the value of investigating changes of different types of motivation, not just of autonomous motivation. Any type of motivation may enhance students’ engagement in learning activities, but because autonomous motivation has been associated with more positive emotions and better learning strategies than controlled motivation (Ryan and Deci, 2000), it might have been even more valuable for the students if the increase in behavioral engagement and autonomous motivation had been achieved without the corresponding increase in controlled motivation.

The present study does not investigate the reasons for the change in students’ motivation. But the characteristics of the practice provide some indications of possible reasons. Anna began to require her students to orally formulate what they had understood and what exactly they perceived their problem to be before she provided them with help. She also started using Google Forms, which required all of her students (and not only a few students) to respond to her questions, and sometimes she informed her students that after a presentation of content or some other activity they would be given questions about the content. These activities may have given the students a direct incentive to engage in learning during these occasions, which may have affected their learning habits in general toward more engagement also in other learning activities. Anna’s more frequent assessments of her students’ knowledge and skills, followed by feedback and learning activities adapted to the information from the assessments, may have helped the students to acknowledge that they have learned and can meet goals and criteria. The feedback and learning activities adapted to information about students’ learning needs may also have increased students’ actual learning. In line with theorizing by, for example, Heritage and Wylie (2018), these experiences may have facilitated students’ development of an identity as effective and capable learners, and as a consequence enhanced students’ motivation to engage in learning activities. In line with the theorizing of Shepard et al. (2018), Anna’s formative assessment practices in which feedback helps students see what they have learned and how to improve may also have fostered a learning orientation in which students find it personally valuable to engage in learning activities and thus feel more autonomous in their motivation. Finally, in line with arguments by Hondrich et al. (2018) and Pat-El et al. (2012), Anna’s focus on gathering information about students’ knowledge and skills and providing feedback that both helps students monitor their learning progress and provides support for how goals and criteria can be met may have enhanced students’ satisfaction of the psychological need for competence, which according to self-determination theory (Ryan and Deci, 2000) influences students’ autonomous forms of motivation.

The formative assessment practice described in the present study is teacher-centered in the sense that the teacher is the main active agent in the core formative assessment processes. Formative assessment may also have other foci. For example, formative assessment may combine the characteristics of a teacher-centered approach with a practice in which the students are more proactive in the formative assessment processes. In such practices, the students would also be engaged in peer and self-assessment followed by adapting feedback and learning based on the identified learning needs. The teacher’s role is to support the students in these processes. This approach to formative assessment would require the teacher to be involved in even more types of decision making about teaching and learning, and would require even more skills than the practice analyzed in the present study. Such a practice may produce other effects on students’ engagement and type of motivation. The shift from a practice in which the teacher is seen as the agent responsible for most decisions about teaching and learning to a practice in which the responsibility for these decisions is more balanced between the teacher and the students might cause an increase in students’ engagement in learning activities (Brookhart, 2013; Heritage and Wylie, 2018), and in autonomous motivation without a similar increase in controlled motivation (Shepard et al., 2018). Future studies investigating this hypothesis would be a valuable contribution to research on the effects of formative assessment on motivation.

One limitation of this study is that only one teacher’s implementation of formative assessment was investigated. This is sufficient for identifying some of the decisions required to be made in teacher-centered formative assessment practices and the skills needed to make them. However, in the investigation of the changes in students’ motivation, this leaves some uncertainty about whether characteristics of the classroom practice other than formative assessment may have contributed to the positive changes in the students’ motivation. In addition, having only one intervention class also makes the study underpowered, which means that changes that are not very large will not be detected in significance analyses. This also makes it uncertain whether the results would be similar with other students and in other contexts. A second limitation of the study is that there is no analysis of the classroom practices in the comparison groups.

However, to avoid the risk of different changes in the intervention group and in the comparison groups on the outcome variables (behavioral engagement, and autonomous and controlled forms of motivation) not being due to the implemented formative assessment practice but to differences in prior academic achievement, the comparison classes were chosen based on the fact that classes in these programs, despite program differences, did not differ much regarding prior academic achievement. Furthermore, we have used both prequestionnaires and postquestionnaires to measure the changes on the outcome variables. In this way, the risk that students’ prior forms of motivation and behavioral engagement would influence the changes on the outcome variables is minimized.

Another possible threat to the validity of a conclusion that the change in students’ motivation is due to the formative assessment practice would be if those students in the intervention class who increased their engagement and motivation the most chose to participate in the questionnaire survey to a higher extent than other students, and if the opposite was true for the students in the comparison classes. However, since only three persons declined to participate, and they were spread over the classes, almost all of the non-participating students were those who happened to not be present on both occasions when the questionnaire was administered. Such non-participation could affect the mean values of the students’ answers on each questionnaire item because students who attend most classes might be overrepresented in our samples. However, such overrepresentation would be similarly distributed over all classes, and thus not affect the results of the study.

Another variable that could have had an influence on the results is the teaching practices in the comparison classes. If some of the comparison teachers had also implemented formative assessment practices, it would be difficult to draw any conclusions about the higher increase in motivation in the intervention class being due to the implemented formative assessment practice. However, none of the comparison teachers had participated in any professional development program in formative assessment, and they continued to teach in the ways they had taught before. This makes it highly unlikely that they would have engaged in formative assessment practices. Furthermore, the results show that the intervention group increased more than all of the comparison classes on all outcome variables. Thus, whatever the characteristics of the teaching in the comparison classes, none of them had the same influence on the outcome variables as the intervention teacher’s implemented formative assessment practice.

Another possibility is that the intervention teacher was especially proficient in enhancing students’ motivation in other ways than by the use of formative assessment, and that those ways are the reasons for the changes in motivation being more positive in the intervention class than in the comparison classes. This cannot be ruled out but may be less likely, since the intervention teacher was not selected for the study for any other reason than that she had participated in a professional development program in formative assessment, for which she had not volunteered and for which she was not selected based on any of her characteristics. She came from another school in which all teachers participated in that professional development program, so the reason for her participation was just that she happened to be at that school when the program was carried out.