CONCEPTUAL ANALYSIS article

Front. Educ., 23 December 2020

Sec. Leadership in Education

Volume 5 - 2020 | https://doi.org/10.3389/feduc.2020.583157

Arguments for and against the idea of evidence-based education have occupied the academic literature for decades. Those arguing in favor plead for greater rigor and clarity to determine “what works.” Those arguing against protest that education is a complex, social endeavor and that for epistemological, theoretical and political reasons it is not possible to state, with any useful degree of generalizable certainty, “what works.” While academics argue, policy and practice in Higher Education are beset with problems. Ineffective methods such as “Learning Styles” persist. Teaching quality and teacher performance are measured using subjective and potentially biased feedback. University educators have limited access to professional development, particularly for practical teaching skills. There is a huge volume of higher education research, but it is disconnected from educational practice. Change is needed. We propose a pragmatic model of Evidence-Based Higher Education, empowering educators and others to make judgements about the application of the most useful evidence, in a particular context, including pragmatic considerations of cost and other resources. Implications of the model include a need to emphasize pragmatic approaches to research in higher education, delivering results that are more obviously useful, and a pragmatic focus on practical teaching skills for the development of educators in Higher Education.

“….higher education practitioners….tend to dismiss educational research and still largely base decision-making on personal experiences and ‘arm chair’ analyses” (Locke, 2009).

Higher Education (HE) is important to society. It is how we train people for many important professional roles: our nurses, engineers, doctors, lawyers, midwives, teachers, dentists, accountants, veterinarians and many more. People with university degrees are more likely to be employed, and to earn a higher salary (OECD, 2018). HE is also big. UNESCO estimates that there are over 200 million students in HE worldwide. This number has doubled in the last 20 years (UNESCO, 2017), and is on course to double again in ~10 years (ICEF, 2018). Approximately one third of school leavers now enter HE (UNESCO, 2017). HE is also expensive. Much of the cost of HE is borne by the public, paid through taxes. In some countries the cost is increasingly borne directly by students, leading to questions over value for money (Jones et al., 2020), and there are problems with access to HE and with student dropout rates (OECD, 2018).

Given the size, impact, importance and cost of HE, it would be reasonable to assume that policies and practices in HE are the best available, based upon rigorous evidence. There have been repeated calls for education to become more evidence based (e.g., see Slavin, 1986; Hargreaves, 1997; Davies, 1999; Harden et al., 1999). However, many common practices and policies in HE do not appear to be evidence-based, for example:

Students are the major stakeholder in HE and so their feedback is important, but it has limited validity as a primary source of data upon which to base judgements about teaching quality. Student feedback has been repeatedly shown to be subject to bias, dependent upon factors such as teacher's gender, ethnic background, age, qualification status and “attractiveness.” It is also influenced by factors related to the subject being taught, such as perceived difficulty and expected grades (Basow and Martin, 2012; Boring et al., 2016; Fan et al., 2019; Murray et al., 2020).

Despite this, feedback from students is routinely used to evaluate programmes of study, educators and universities. In the United Kingdom the “National Student Survey” (NSS) of satisfaction is currently used to create league tables of universities and programmes. Student Evaluation of Teaching (SET) data are an established aspect of hiring, tenure and promotion decisions about academic staff in many countries (Fan et al., 2019; Murray et al., 2020). This simplistic use of student feedback is one example of attempts to use basic metrics to evaluate the complex, difficult enterprise of HE [e.g., see (Robertson et al., 2019)].

Furthermore, many effective teaching practices make use of the principle of Desirable Difficulty, wherein learning is facilitated by engaging students with tasks that are challenging (Bjork and Bjork, 2011). These principles inform many approaches to "Active Learning," which improves outcomes for students (Freeman et al., 2014), in particular for students from underrepresented groups (Theobald et al., 2020). However, Active Learning may initially be rated less favorably in student feedback: students prefer didactic lectures and report that they feel they have learned more from them than from Active Learning (Deslauriers et al., 2019).

Many ineffective teaching methods are commonly used in HE. A full review is outside the scope of this paper, but we include some common examples. One of these involves diagnosing the supposed "Learning Style" of a student, normally via a questionnaire, with the aim of identifying teaching and study methods to help that student. Popular examples are the "VAK"/"VARK" classifications, which define individuals as being one or more of "Visual, Auditory, Read/Write, or Kinaesthetic" learners. There are over 70 different Learning Style classification systems (Coffield et al., 2004). It has been repeatedly shown, over 15 years, that matching teaching to these narrow "Learning Styles" does not improve outcomes for students and potentially causes harm by, e.g., ignoring individual differences, pigeonholing learners into a supposed style, and wasting resources [for reviews see (Coffield et al., 2004; Pashler et al., 2008; Rohrer and Pashler, 2012; Aslaksen and Lorås, 2018)]. Despite this, multiple studies have shown that a majority of educators in HE believe that matching teaching to these Learning Styles will result in improved outcomes for learners [e.g., (Dandy and Bendersky, 2014; Newton and Miah, 2017; Piza et al., 2019)].

A related example is "Dale's Cone," which organizes learning activities into a hierarchy and makes unfounded numerical predictions about the amount of learning that will result. There is limited evidence to support the approach, but it is widely cited as effective (Masters, 2013, 2020).

Then there are teaching techniques which have been widely adopted without evidence of their effectiveness, and/or with limited considerations of cost. One example which has been used for many years is the flipped classroom wherein lectures and other didactic activities are undertaken outside class, with class then focused on higher order learning (King, 1993). Recent evidence now demonstrates that implementation of a flipped classroom results in only a modest improvement in learning (Strelan et al., 2020b) and student satisfaction (Strelan et al., 2020a) which may not be found in all contexts [e.g., (Chen et al., 2017)] and is associated with significant costs to implement (Spangler, 2014; Wang, 2017). Thus, many students have acted as guinea pigs, at cost, to trial a method without knowing whether or not it is effective. Similar arguments can be made for other methods such as discovery learning (Kirschner et al., 2006) and case-based learning (Thistlethwaite et al., 2012).

A related example, this time of a way of organizing teaching, is Bloom's taxonomy, a term which will be familiar to almost anyone involved in education. First published in 1956, its original intention was to define learning in a measurable, objective way (Bloom and Krathwohl, 1956). The taxonomy has been criticized for almost 50 years [e.g., (Pring, 1971; Sockett, 1971; Stedman, 1973; Ormell, 1974; Karpen et al., 2017; Dempster and Kirby, 2018)] and yet it remains a cornerstone of accreditation and practice in HE. The original publication ran to over 200 pages but is now commonly presented as a single hierarchy of verbs used to write learning outcomes. There is no consistency within HE regarding those verbs, and HE institutions rarely cite the evidence base for the list that they present, meaning that the definitions of a particular level of learning may be completely different between HE institutions (Newton et al., 2020).

Could we say that current teaching in HE uses "effective" methods? Multiple efforts from cognitive psychology have identified simple, generalizable, effective teaching and learning strategies. Many of the techniques are relatively straightforward for both educators and students to use and have been shown to be effective in practice [e.g., (Chandler and Sweller, 1991; Dunlosky et al., 2013; Weinstein et al., 2018)]. However, HE faculty display limited awareness of evidence-based practices (Henderson and Dancy, 2009). Those who are aware show limited use of them (Ebert-May et al., 2011; Henderson et al., 2011; Froyd et al., 2013), and may modify them so as to remove critical features (Dancy et al., 2016).

However, problems may arise when "effective" teaching methods are identified without a consideration of context. An exhaustive 2017 analysis identified variables associated with achievement in Higher Education (Schneider and Preckel, 2017). The list of variables came from meta-analyses going back decades and included many simple interventions. For example, positive effects were found for teacher clarity and enthusiasm, frequency and quality of feedback, frequent testing, peer assessment and teaching. Negative effects were found for, amongst others, the use of distracting or irrelevant "seductive details" in instructional materials, test anxiety, and the use of Problem Based Learning for basic knowledge acquisition. This meta-analytic approach produces outputs that are easy to understand, but it strips out local, contextual factors, making it difficult for educators to judge how such methods might be implemented in their own context. For example, what might be considered "seductive details" in one context might promote engagement and interest in another. Also, considerations of cost are normally absent from such analyses, which are also dogged by concerns about the underlying statistical methodology [e.g., see (Bergeron and Rivard, 2017; Simpson, 2017)].

The lack of a consistent pattern of awareness and use of effective teaching methods may compound the problems caused by inappropriate or simplistic methods of evaluating the quality of teaching. These can include the number of "contact hours," the staff-student ratio, class size and so on (Gibbs, 2010), as well as student feedback as described above. These sorts of metrics have some value, but their significance is difficult to judge in the absence of useful information about what makes teaching effective. As a simple example, students may be taught in small groups, with many contact hours and a good staff-student ratio. This may result in them giving positive feedback on their experience. Such a pattern would be rated highly using existing measures of teaching quality (Gibbs, 2010). However, if these students are being taught, and are learning, using ineffective methods, then the significance of these metrics as measures of teaching quality is undermined.

The term "Technology Enhanced Learning" (TEL) is widely accepted, as is evident in strategy and policy documents, job titles and departmental names, and continuing professional development programmes (Kirkwood and Price, 2014; Bayne, 2015). The term describes an outcome, not an object; that is, it asserts that technologies (generally digital technologies) enhance learning.

There is pressure on academic staff to adopt digital technologies for teaching and learning (Islam et al., 2015), and this pressure is reinforced by national professional standards. For example, the UK Professional Standards Framework (UKPSF) (Higher Education Academy, 2011) states that the "…use and value of appropriate learning technologies" (p. 3) must be demonstrated for educators to achieve accreditation at fellowship level (and above).

However, there is no comprehensive or consistent evidence that digital technologies, in and of themselves, will result in learning enhancement. A systematic review of the relevant evidence concluded that "…both computer-based and web-based eLearning is no better and no worse than traditional learning with regards to knowledge and skill acquisition" (Al-Shorbaji et al., 2015, p. xvi). Furthermore, the authors assert that effective use of digital technologies for learning depends on their successful integration with pedagogy and content knowledge. Reviews from other educational sectors and disciplines have reached similar conclusions [e.g., (Means et al., 2009; OECD, 2015b; Luckin, 2018)].

This is not to say that digital technologies are not beneficial to learning or higher education, but that learning is complex and situated, as the following statement makes clear:

“Technology cannot, in itself, improve learning. The context within which educational technology is used is crucial to its success or otherwise. Evidence clearly suggests that technology in education offers potential opportunities that will only be realized when technology design and use takes into account the context in which the technology is used to support learning” (Luckin, 2018, p. 21).

According to Blackboard Inc. “Today's digital natives hunger for new educational approaches” (Blackboard Inc., 2019). Similar arguments state that today's digital natives are learners in early adulthood who, “…enjoy mobile, virtual reality and augmented reality for learning, personal and professional experiences,” “…prefers on-demand learning (think Udemy) vs. formal education [and]…actually question if formal education is really worth it, or if it's a mistake” (Findley, 2018, para. 6). Recommendations include “getting ahead of the curve with the tools, technology, and platforms that they are interested in is crucial (think Snapchat)” (Findley, 2018, para. 15).

The term digital natives was popularized in 2001 by Marc Prensky, who proposed that digital natives are a new generation of students who are "native speakers" of the language of digital technologies such as computers and the internet (Prensky, 2001). Due to their exposure to, and use of, digital technologies throughout their lives, digital natives were said to have different preferences, needs and abilities (such as multitasking and technical skills). Prensky asserts that they think and process information differently, and considers whether "…our students' brains have physically changed - and are different from ours - as a result of how they grew up" (Prensky, 2001). The emergence of this new generation, he argued, has significant implications for higher education.

Older individuals who grew up without digital technologies were categorized as digital immigrants. It was argued that despite attempts to learn to adapt to the new digital world, digital immigrants would always retain some form of “accent”; outdated behaviors or characteristics, and would “struggle to teach a population that speaks an entirely new language” (Prensky, 2001, p. 2).

However, a comprehensive review of the literature by Bennett et al. reported that the concept of digital natives is not empirically or theoretically informed. They concluded that young people's use of, and capabilities with, digital technologies are diverse and more complex than the binary classification of natives and immigrants, and that there is no evidence of widespread dissatisfaction, or demands to radically transform higher education on the basis of generational differences (Bennett et al., 2008). Similarly, Jones and Shao reported that the global empirical evidence demonstrates young people's behaviors, interests, use and experiences with digital technologies are diverse, and do not conform with the idea of a single group or generation with common characteristics (Jones and Shao, 2011). More recently Kirschner and De Bruyckere have stated that:

“…there is quite a large body of evidence showing that the digital native does not exist nor that people, regardless of their age, can multitask. …[R]esearch shows that these learners may actually suffer if teaching and education plays to these alleged abilities to relate to, work with, and control their own learning with multimedia and in digitally pervasive environments” (Kirschner and De Bruyckere, 2017).

Nevertheless, the original Prensky article "Digital Natives, Digital Immigrants" (Prensky, 2001) has been cited over 29,000 times, over 4,500 of them since the beginning of 2019 alone (according to Google Scholar as of October 2020).

In summary then, these are just some examples of approaches to Higher Education policy and practice that are not evidence-based, but which are widespread, supporting Locke's observation of a disconnect between research, policy and practice in HE (Locke, 2009).

In 1996 David Sackett put forward a model that is now fundamental to practice in healthcare, in which decisions about patient care are based on three things: (1) the best available research evidence, (2) the values and wishes of the patient, and (3) the prior experience of the healthcare professional (Sackett et al., 1996). Also in 1996, David Hargreaves argued for essentially the same principle in education. His lecture "Teaching as a research-based profession; prospects and possibilities" stated:

“both education and medicine are profoundly people-centered professions. Neither believes that helping people is a matter of simple technical application but rather a highly skilled process in which a sophisticated judgement matches a professional decision to the unique needs of each client” (Hargreaves, 1997).

Hargreaves' lecture made repeated comparisons between education and medicine, urging education researchers to work on identifying and implementing methods which “work.” How have these calls for evidence-based practice fared? Medicine now has, amongst many initiatives, developed the Cochrane Collaboration, with tens of thousands of people systematically reviewing research evidence around the world (Allen and Richmond, 2011). As we will describe below, evidence-based approaches to education have not progressed as far, despite there being a significant volume of research evidence. There are philosophical objections to the very idea of an evidence-based approach (Biesta, 2007, 2010; Oancea and Pring, 2008; Wrigley, 2018) and many ineffective teaching methods persist, as we described above.

Here we propose that, with the application of a pragmatic approach, we might move beyond the controversy and focus on a model of evidence-based education that is useful for everyone involved in HE, allowing educators to utilize the vast volume of existing research evidence and other literature to make critical judgements about how to improve learning in their particular context.

Pragmatism is a philosophy, a research paradigm, and an approach to practice, all focused on an attempt to identify what is practically useful (James, 1907; Creswell, 2003; Ormerod, 2006; Duram, 2010; Feilzer, 2010). This single word, useful, is at the heart of pragmatism.

Pragmatism has a long history as a philosophy. It is perhaps best known through the early 20th century works of Charles Sanders Peirce, John Dewey and William James, whose ideas were then refined and developed by later 20th century scholars such as Richard Rorty and Hilary Putnam (Ormerod, 2006). Within the work of these philosophers, the pragmatic emphases on usefulness and practical outcomes are set against other philosophical approaches which prioritize metaphysical and epistemological considerations, as summed up in this quote from William James:

“It is astonishing to see how many philosophical disputes collapse into insignificance the moment you subject them to [a] simple test of tracing a concrete consequence.” (James, 1907 Lecture II).

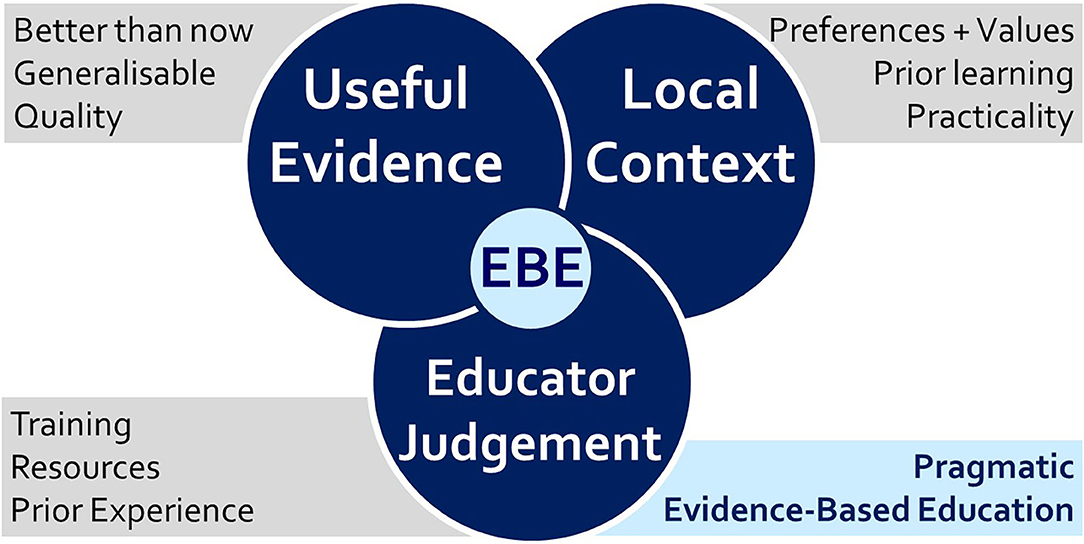

This distinction is also reflected in the definition of Pragmatism as a research paradigm, where the research question itself has primacy, rather than methodological or philosophical underpinnings of the research; these are simply means of answering the question and one chooses the best tools for the job (Creswell, 2003). Many researchers consider epistemological questions, particularly regarding what can be considered as “knowledge” or “truth,” to be a significant issue when undertaking research in education. These perspectives form a significant aspect of many objections to evidence-based education as we will describe below. Pragmatic research approaches place a reduced emphasis on this conflict, instead prioritizing the undertaking of research that is practically useful (Feilzer, 2010), valuing knowledge for its usefulness, and using it to address real world problems that affect people (Duram, 2010). Here we propose that these pragmatic approaches be extended into a model of evidence-based educational practice (Figure 1).

Figure 1. A Model of Pragmatic, Evidence-Based Education. A decision-making model for the application of research evidence to improving educational practice. The most useful research evidence is combined with practitioner judgement about how and why to apply it in a specific context, with specific questions for each aspect. At the intersection of these three factors is Pragmatic Evidence-Based Education (EBE).

The model is intended for educators and policymakers, to help them make the best use of existing education research evidence when making contextual decisions about local practice. We will work through each aspect of the model in turn and highlight some key questions and factors, along with a consideration of relevant criticism of evidence-based approaches and how these might be addressed by taking a pragmatic approach. In later sections we go on to describe the application of the model and some future directions.

When making decisions based on research evidence, a key question to consider is whether the findings of that research will apply in a new context; for example at a different university, for a different group of students. Key characteristics of the context would include the prior knowledge and skills of the learners, and the use to which any new learning will be put. The values, preferences and autonomy of learners and other stakeholders are an important aspect of the context. Physical and practical considerations would also feature here.

Evidence from the K-12 sector suggests that decisions made by educators about whether a piece of research is useful are already strongly influenced by the context in which that research was undertaken, that is, by how compatible it is with the context in which the educator might apply the findings (Neal et al., 2018). It seems clear that a study from a similar institution, with a group of demographically similar learners studying the same subject in the same way, will be more directly relevant. But such studies are likely to be rare, so how might we apply the findings of studies from other contexts?

Generalizability is fundamental to evidence-based clinical practice. Research evidence is organized into hierarchies wherein methods such as Randomized Controlled Trials (RCTs) and meta-analyses are prioritized, partly because the findings are more likely to be generalizable to other contexts. However, doubts over the generalizability of education research findings between contexts are central to many criticisms of evidence-based education. These doubts are captured in the following quote:

“Within the educational research community there is, therefore, a divide between, on the one hand, those who seem to aspire to making a science of educational research, hypothesizing the causes of events and thus of ‘what might work’, seeking to generalize, for example, about the ‘effective school’ or the ‘good teacher’ on the basis of unassailable observations, and, on the other hand, those who reject such a narrow conception, pointing to the fact that human beings are not like purely physical matter and cannot be understood or explained within such a positivist framework” (Oancea and Pring, 2008).

The final clause frames the argument from an epistemological perspective: any effort to identify generalizable research findings is seen as taking a positivist approach, searching for defined truths that are applicable in any context. A common argument against evidence-based education is then that such positivist approaches are not possible in a complex, social activity such as education (Pring, 2000; Badley, 2003; Hammersley, 2005; Biesta, 2007; Oancea and Pring, 2008; Howe, 2009; Wrigley, 2018).

We find much to agree with in this perspective, particularly the difficulty of taking simple generalizable findings and assuming they will work in any context. However, we also agree with Selwyn that those undertaking research have a responsibility, where possible and appropriate, to identify how their findings might be used by others, and how they might not (Selwyn, 2014). For us this is a fundamentally important issue. The underpinning epistemological debates are interesting and challenging, but, from a pragmatic perspective, they are less important to an educator or a university seeking to improve practice for their students. When considering how to improve education in their local context, any educator, student or policymaker must be able to turn to the research literature and ask: How has this been done before? What happened, to whom, and why? How much did it cost? Could we implement that here? What can I learn from what has been done before? How useful is this research in my context?

Our emphasis here is on the most useful, rather than the “best” or “most generalizable” evidence. How might an educator identify the most useful research evidence for them to apply, in their context? What questions would they need to ask? Much of this will be based on considerations of similarities between contexts and other variables in the evidence, as we explain in the section Applying the Model.

Practical evidence summaries, which provide practitioners with evidence-based information in a digestible format focused on practice, are currently limited (Cordingley, 2008), and some high-profile syntheses have met with considerable criticism from a methodological perspective, in part because they strip out all local context and focus entirely on generalizability (Bergeron and Rivard, 2017; Simpson, 2017, 2018).

In medicine there is an abundance of such syntheses. They are translated into a practical format in the form of clinical guidelines (Turner et al., 2008). These are recommendations, not rules, contributing to contextual decision making as part of a triad similar to that shown in Figure 1. There are at least two existing initiatives in education whose aim is broadly similar, and which already have a pragmatic aspect that could be adopted across HE.

The Best Evidence Medical Education (BEME) initiative was established in 1999 and argued for a move from opinion-based to evidence-based teaching (Harden et al., 1999). BEME reviews summarize current evidence on a topic in a way that is designed to help educators in practice. The BEME concept has two important pragmatic principles: the "best evidence" approach, and the use of the QUESTS dimensions to evaluate evidence.

Despite the title, the best evidence approach rejects the idea of evidence as a universal truth independent of the judgement of those in practice. It adopts a view of evidence as a continuum of quality, whereby different types of evidence can be considered. For example, "evidence from professional judgement" or "Evidence based on consensus views built on experience" are considered valid, although they are weighted less heavily than more obviously generalizable sources of evidence. This results in a variety of review types (e.g., scoping reviews, realist reviews, systematic reviews), all anchored on the same principle: how does this help educators to develop an evidence-based practice? In summary: is this useful for practice?

QUESTS (quality, utility, extent, strength, target, setting) dimensions are criteria used to evaluate the evidence which underpins a BEME review. They include some obviously pragmatic factors such as utility, defined as “the extent to which the method or intervention, as reported in the original research report can be transplanted to another situation without adaptation.” The “setting” dimension asks reviewers to consider the location in which research was undertaken (Harden et al., 1999).

The Education Endowment Foundation (EEF) takes a practical approach to the reporting of evidence, using visually appealing, short, structured summaries of evidence organized around practically focused points, with the aim of making the summaries as useful as possible. Unlike BEME, however, the EEF defines methodological criteria for the inclusion or exclusion of studies in its reviews, but considers the findings alongside cost and impact, thus building in a pragmatic aspect. EEF outputs are primarily focused on school-age learners, particularly those from disadvantaged backgrounds, but the findings can be useful at other levels. Similar summaries for HE could be useful.

The judgement aspect of the model is the exercise of judgement based upon experience. This is, by definition, a pragmatic action. The relevant experience would include the overall teaching experience of the person(s) making the judgement, along with experience of the particular features of the context and the research. Any training needs and other resource considerations would feature here.

Many arguments against evidence-based education are based on the idea that methods commonly associated with evidence-based approaches to medicine (e.g., RCTs, systematic review, meta-analysis), and their underlying philosophical assumptions “threaten to replace professional judgement” [e.g., see (Clegg, 2005; Biesta, 2007, 2010; Wrigley, 2018)].

For example, when considering "demands for evidence-based teaching," Wrigley writes:

“What now stands proxy for a breadth of evidence is statistical averaging. This mathematical abstraction neglects the contribution of the practitioner's accumulated experience, a sense of the students' needs and wishes, and an understanding of social and cultural context” (Wrigley, 2018).

The 1996 models of evidence-based practice described above rate practitioner judgement and patient/learner needs as fundamentally important, but this message has clearly been lost in the intervening years. A similar concern has been voiced in medicine, alongside concerns about an imbalance in the triad of inputs to decision-making, wherein "evidence" trumps the others through rigid and inflexible hierarchies (Greenhalgh et al., 2014). This has led to calls for the phrase "evidence-based" to be replaced with "evidence-informed" to reflect and restore the importance of practitioner judgement in decision-making.

Thus, one aspect of the case made here is a need to recognize the importance of judgement in models of evidence-based education that have existed for almost 25 years, and to reassure those who are hesitant about evidence-based education, that judgement is a cornerstone of the approach.

However, a truly pragmatic approach to evidence-based education requires a recognition of the practical, pragmatic judgements about evidence that go beyond whether or not it “works,” and which are an everyday fact of life for practitioners in HE. Issues such as cost, training and other resources need to be factored into judgements about the application of evidence to practice. Such considerations are commonly absent from research evidence about the effectiveness of new teaching methods, particularly regarding technological innovations (Kennedy et al., 2015). Educating educators about costing models would further facilitate a pragmatic approach (Maloney et al., 2019). Setting in place plans to evaluate the effectiveness of new innovations, with realistic expectations and plans to follow up [e.g. (Parsons, 2017, p. 31)] would then facilitate further judgement and review.

It is also important to consider who makes the judgements. Higher Education is a hierarchical field. Multiple stakeholders wield significant power: senior managers, governments, professional bodies and regulators often define the circumstances in which individual educators and students might (or might not) make judgements about their own practice. There may also be disagreement between stakeholders about what constitutes useful evidence. The model described in Figure 1 is not designed to completely change those imbalances, whether they exist for good or bad. They will likely persist, and there will always be disagreements over policy and practice. Instead, we hope to shift the nature of the discussion toward pragmatic, contextual considerations of useful evidence, and away from the circumstances that led to the examples described in the introduction. Application of the model should also facilitate a more transparent, and thus, we hope, more democratic, account of the evidence upon which decisions are based.

We now discuss the application of the pragmatic model shown in Figure 1, with examples of how it might be applied and suggestions for future directions to improve teaching practice in HE from a pragmatic perspective.

There are many situations where, as individual educators, we are asked to make decisions about our practice. For example, consider an educator based at a UK university who wants to improve the written feedback they give to their students. The model shown in Figure 1, along with the explanations above, gives that educator a structure for pragmatic decision-making based on useful evidence. This could include generalizable studies focused on improving feedback for learning, rather than just student satisfaction [e.g., (Hattie and Timperley, 2007; Shute, 2008)]. However, it would be reasonable to assume that an ethnographic study of perceptions of written feedback provided by educators at a similar UK Higher Education institution might also be useful [e.g., (Bailey and Garner, 2010)], in part because it was undertaken in a similar context. The ethnographic study might be less useful in a different context, for example to a clinical educator who wants to improve the verbal feedback given to students following bedside teaching encounters. That clinical educator might still find something useful in the generalizable studies, but could again supplement them with findings from a qualitative study from their own context [e.g., (Rizan et al., 2014)].

These two study designs, generalizable reviews and ethnographic investigation, would sit at opposite ends of a traditional hierarchy of evidence, in large part because of the perceived lack of generalizability of an ethnographic study. Yet when viewed contextually through a pragmatic lens, both may represent useful evidence for educators considering their specific question (how do I improve a certain type of feedback given to a particular group of students studying a specific subject?).

The model can also be applied to decision-making processes at the departmental or institutional level, resulting in pragmatic evidence-based policy. The balance of generalizable and contextual data is likely to vary depending on the level at which the model is being enacted. In all cases, the application of the evidence would require a pragmatic judgement: the availability of time and other resources to implement the recommendations from the evidence, the relevance of the evidence to the context, and so on. The model can also be used by learners themselves, to make decisions about how, when, why and what to study, and for the teaching of study skills to learners.

The decisions made using the model would need to be reviewed regularly, as the evidence base updates and the context shifts. An example of this, perhaps extreme but certainly relevant at the time of writing, is the sudden pivot to online learning made by the global Higher Education sector in response to the COVID-19 pandemic. There is an abundant evidence base regarding learning online and at a distance, but much of it was developed to optimize learning under planned circumstances, where students could choose to learn online or in a structured blended way; these are very different from the circumstances we find ourselves in late in 2020. A pragmatic application of the existing evidence to the new context can help us with this rapid change, and help us plan for what might become a “new normal” (Nordmann et al., 2020).

Applying a pragmatic approach would also help us make sense of the many new approaches and innovations that we are encouraged to apply. “Active Learning” is a method that is often advocated on the back of meta-analyses showing that it improves short-term outcomes and reduces failure rates (Freeman et al., 2014), and particularly benefits under-represented groups (Theobald et al., 2020). However, some studies report that students prefer didactic lectures to Active Learning, and feel that they learn more in those environments (Deslauriers et al., 2019). How might an educator apply these results in their own context, balancing the proposed benefits of Active Learning against respect for the values and preferences of their learners? The 2014 meta-analysis by Freeman et al. focuses on achievement in STEM and draws on dozens of studies from within that field, using a broad definition of Active Learning:

“Active learning engages students in the process of learning through activities and/or discussion in class, as opposed to passively listening to an expert. It emphasizes higher-order thinking and often involves group work.” (Freeman et al., 2014).

Analyzing the studies included in the meta-analysis reveals a number of different ways in which this approach has been implemented. For example, Crider applied a “hot seat” approach wherein students were asked to come to the front of a class and answer questions in front of their peers. Students performed better on questions relating to the material for which they had been in the “hot seat” (Crider, 2004). Although set in the context of astronomy education, this approach would probably generalize to other contexts, including those outside STEM. It would be cheap and easy to implement. However, when applying the model shown in Figure 1, an educator would consider more than just whether or not student test scores improved. They would also ask: would being brought to the front of the class to answer questions in front of their peers fit with the values and preferences of all their learners?

Other studies included by Freeman et al. are those by Beichner and colleagues, who have been developing a successful Active Learning approach to physics and other subjects since 1997 (Beichner, 1997). When considered as part of an academic meta-analysis, the positive effects on learning found by Beichner are substantial. However, a pragmatic perspective goes beyond the effect size: these Active Learning approaches often require significant investment in restructuring the physical classroom space and technological infrastructure, as well as training and support for staff. Funding issues may therefore be prohibitive for individual educators (Foote et al., 2016).

By pragmatically considering all aspects of the context and evidence, an individual educator may be able to take advantage of many of the benefits of Active Learning while maintaining student satisfaction and keeping costs down. For example, common interventions deployed in the studies analyzed by Freeman et al. (2014) include worksheets, clickers and quizzes, and many of these studies use such methods in common large-group settings such as lectures.

Lectures themselves are another area where a pragmatic consideration of the evidence might help move a debate forward. Scholars of learning and teaching have complained about the (in)effectiveness of lectures since at least the 1960s (French and Kennedy, 2017), and lectures are often the control condition against which new methods of teaching are compared [e.g., (Freeman et al., 2014; Theobald et al., 2020)]. Despite these concerns about their effectiveness, lectures remain a dominant teaching method around the world [e.g., (Stains et al., 2018)]. Pragmatic considerations are often missing from the academic debate about lecturing as a teaching method. French and Kennedy argue that, compared with the alternatives, lectures are a cost-effective method of delivering large-group teaching that can incorporate many evidence-based teaching practices at scale (French and Kennedy, 2017). This seems intuitively obvious. For example, salary costs for additional tutors are one of the main costs associated with small-group techniques such as Problem-based learning (Finucane et al., 2009). It seems likely to be simpler, and cheaper, for one educator to teach 300 students at the same time, in one room, for an hour, than for 30 educators to teach groups of ~10 using small-group methods, all in separate rooms and likely for longer than an hour. Alongside this basic cost is the opportunity cost (Maloney et al., 2019): the 29 other educators could instead spend that time writing feedback or developing authentic assessment items, doing other things that are useful for the students. The benefits of a small-group approach might instead be gained elsewhere, through online activities that are aligned to the lecture but which students complete in their own time.
Conversely, a poorly designed lecture might incur displacement costs in the form of the staff time needed to help students catch up, likely through less visible means such as informal emails and tutorials.
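The staff-time arithmetic behind this comparison can be made explicit. The following is a minimal worked sketch using the illustrative figures from the paragraph above (300 students, groups of ~10), and assuming, hypothetically, that each small-group session lasts t hours with t ≥ 1. It counts contact time only and ignores rooms, preparation and other costs.

$$
\begin{aligned}
\text{Lecture:}\quad & 1 \text{ educator} \times 1\,\text{h} = 1 \text{ staff-hour} \\
\text{Small groups:}\quad & \tfrac{300}{10} = 30 \text{ educators} \times t\,\text{h} = 30t \text{ staff-hours}, \qquad t \ge 1 \\
\text{Difference:}\quad & 30t - 1 \ \ge\ 29 \text{ staff-hours per teaching session}
\end{aligned}
$$

At t = 1 the difference is exactly the 29 educator-hours whose opportunity cost is described above; if small-group sessions ran for, say, 1.5 hours (a hypothetical figure), it would rise to 44 staff-hours per session.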

To be clear, we are not arguing for or against lectures as a teaching technique. Instead, we are arguing for the pragmatic perspective shown in Figure 1. A fundamental part of this model is that it allows educators and others to determine whether or not a specific piece of evidence is useful in a particular context, for a particular group of students, rather than reaching simple conclusions that one teaching method is good or bad. This needs to include operational, practical considerations in addition to academic metrics.

These are just a few examples of how the model could be used to integrate evidence and local context into a judgement about how best to apply research evidence to a teaching and learning situation. These same principles could be applied to many of the situations described in the introduction. For example, the context-less list of interventions described by Schneider and Preckel (2017) could be a starting place for a deeper consideration of the literature as applied to a particular context.

There is still a place for traditional notions of rigor in pragmatic research, but this rigor should be designed, applied and interpreted in ways that promote the usefulness of research findings. For example, when an educator is considering whether or not to use an unfamiliar teaching technique, traditional notions of rigor would suggest that well-conducted multisite research using multiple objective outcomes across large samples with high response rates would likely be more useful to that educator than a subjective self-report from a third party, or a low-response survey of stakeholder opinions at one site. However, a well-conducted qualitative study undertaken in the same context as the one the educator is considering could be more useful than a survey study from a different context.

Pragmatic research need not always develop new ideas and innovations; in fact, the evidence base could be considerably improved if more emphasis were placed on evaluating techniques once implemented. A 2015 report from the OECD showed that education accounted for 12% of public spending by member states. It tracked 450 innovations implemented over 6 years; only 10% were evaluated to determine whether they were effective (OECD, 2015a). These evaluations could include costs, usefulness, and the other pragmatic measures described above.

A challenge then is how we enact this pragmatic research and evaluation. This work can and should take place at all “levels” of a traditional hierarchy of evidence; Action Research, for example, is a commonly used tool for individual practitioners to evaluate the implementation of new innovations into their own practice, and is a methodology that fits well with the pragmatic approach (Greenwood, 2007; Ågerfalk, 2010).

Another pragmatic approach, which need not involve large-scale projects or university research centers, would be to establish research-practice partnership models that value and support high-quality practitioner-led research to promote a local evidence base (Wentworth et al., 2017). Such models have been advocated at other levels of education [e.g., (Tseng et al., 2017)].

Another, complementary approach would be to develop tools to appraise and categorize existing research outputs according to their pragmatic usefulness, in particular to allow an educator to determine “how useful is this research to my decision-making?” There is an abundance of traditional evidence available: ~10,000 education research papers per year. Tools such as the Medical Education Research Study Quality Instrument (MERSQI) have been designed to generate descriptive data about various quality measures relating to medical education research (Cook and Reed, 2015), but they are largely based on notions of rigor drawn from medicine and the basic sciences; they are not (yet) truly pragmatic. The development of tools for educators and other decision makers to make pragmatic judgements based on the evidence and the context could benefit all stakeholders in HE.

In 1967, Schwartz and Lellouch proposed differentiating between two types of clinical trials. “Explanatory” trials were aimed at advancing knowledge and “acquiring information” under ideal or laboratory conditions designed to test and reveal the effectiveness of a treatment. “Pragmatic” trials were aimed at generating information to “make a decision” under “normal” or practice-based conditions. They proposed many ways of planning for such approaches, for example by using one-tailed statistical tests in pragmatic trials where there was an obvious intent to test whether “Treatment A is better than Treatment B” (Schwartz and Lellouch, 1967). Since then, evidence-based medicine has embraced the use of “pragmatic trials,” and has developed standards for reporting them (Zwarenstein et al., 2008). This approach seems well-suited to many of the practical and funding challenges of education research (Sullivan, 2011) but has seen only limited implementation [e.g., see (Torgerson, 2009)].
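To illustrate what a one-tailed test changes, here is a minimal sketch comparing one-tailed and two-tailed p-values for the directional question “is teaching method A better than method B?”. It is not Schwartz and Lellouch's procedure: it is a simplified two-sample z-test that assumes two independent groups of equal size and a known, common standard deviation, and the exam-score figures are hypothetical. As a general caution, a one-tailed test is only defensible when the direction of interest is specified before the data are collected.

```python
from statistics import NormalDist


def z_test_p_values(mean_a, mean_b, sd, n_per_group):
    """Return (one_tailed, two_tailed) p-values for H1: A > B.

    Simplified two-sample z-test: assumes two independent groups of equal
    size and a known, common standard deviation (an illustrative assumption).
    """
    se = sd * (2 / n_per_group) ** 0.5  # standard error of the difference in means
    z = (mean_a - mean_b) / se
    std_normal = NormalDist()  # mean 0, sd 1
    one_tailed = 1 - std_normal.cdf(z)  # only "A better than B" counts as evidence
    two_tailed = 2 * (1 - std_normal.cdf(abs(z)))  # a difference in either direction counts
    return one_tailed, two_tailed


# Hypothetical exam scores (out of 100) for two teaching methods, 50 students each
one, two = z_test_p_values(mean_a=68.5, mean_b=65.0, sd=10.0, n_per_group=50)
print(f"one-tailed p = {one:.3f}, two-tailed p = {two:.3f}")
# prints: one-tailed p = 0.040, two-tailed p = 0.080
```

When the observed effect lies in the hypothesized direction, the one-tailed p-value is half the two-tailed value, so the same data can fall on different sides of a conventional 0.05 threshold. This is why the choice of test needs to be planned in advance rather than made after seeing the results.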

The value of pragmatic trials in education could be enhanced by developing ways to identify useful outcomes. Glasgow and Riley identify a number of “Pragmatic Measures” for healthcare research, as well as ways to identify them. Proposed criteria include that the measures be “important to stakeholders,” “low burden,” “actionable,” and “broadly applicable,” and that they can be benchmarked against established norms (Glasgow and Riley, 2013). It seems reasonable that these could be adapted to research and practice in education. Perhaps the most useful would be “important to stakeholders.” If researchers, funders and journal editors were to prioritize questions such as “who is this useful for, and why?”, this could facilitate the generation of pragmatic research findings and their translation into practice.

An increased emphasis on practitioner research in education, such as Professional/Practitioner Doctorates, could help facilitate the generation of research which is aimed at being directly useful to practice. Students on such doctorates are often already working as professionals, for example as teachers, or healthcare professionals, and so they bring with them the experience and service user perspectives that are a part of the model shown in Figure 1. The UK Quality Assurance Agency defines these degrees as being

“….designed to meet the needs of the various professions in which they are rooted, including: business, creative arts, education, engineering, law, nursing and psychology. They can advance professional practice or use practice as a legitimate research method” and “are normally located within the candidate's profession or practice…in both practice-based and professional doctorate settings, the candidate's research may result directly in organizational or policy-related change.” (QAA, 2015).

This emphasis on the advancement of professional practice means that research undertaken as part of a Professional Doctorate is, by its very nature, more often pragmatic in approach (Costley and Lester, 2012).

Perhaps most importantly, we need to prioritize practical, pragmatic teaching skills for educators. Teaching in HE is a multi-faceted activity requiring practical skills in communication, presentation, feedback, listening, technology, reflection and more. Effective, short, practical skills workshops are a feature of HE teacher training in some countries [e.g., (Steinert et al., 2006)], but in other countries these practical approaches are often overlooked in favor of a reflective approach (Henderson et al., 2011; Foxe et al., 2017). Professional development programmes focused on practical teaching skills are limited, both in their availability and in the amount of time that staff have available to dedicate to them (Jacob et al., 2015). Uptake of evidence-based approaches is further restrained by institutional cultures that are content with existing approaches (Henderson and Dancy, 2007).

In many countries there is no requirement to attain a formal teaching qualification, or any practical training, to become or remain a teacher in Higher Education (ICED, 2014). Amongst the qualifications that are available, there is no consistent requirement for the learning, or assessment, of practical teaching skills. Instead, the emphasis is on training to use new technologies (Jacob et al., 2015) or on reflective practice (Kandlbinder and Peseta, 2009), which is a major focus of faculty development for HE (Henderson et al., 2011). Teaching staff report engaging in reflective professional development programmes for a variety of reasons unrelated to the development of their teaching skills, for example in order to meet criteria for promotion (Spowart et al., 2016; Jacob et al., 2019), or as a result of institutional requirements driven by league tables (Spowart et al., 2019). The efficacy of such programmes varies widely (ICED, 2014; Jacob et al., 2019), and there is limited evidence that they contribute to the strategic priorities of higher education institutions (HEIs) (Bell and Brooks, 2016; Newton and Gravenor, 2020).

There is an abundance of academic literature on Higher Education, stretching back decades. We owe it to all involved in education to ensure that this literature can best inform innovation and improvement in future educational policy and practice, in a way that allows for professional judgement and a consideration of context. We also need to prioritize future research endeavors that are useful for the sector. This could be achieved by adopting principles of pragmatic, evidence-based higher education.

If HE faculty development were designed to be practically useful and visibly aligned with useful research evidence, this should cascade improvements to the other issues identified in the introduction. The collection of student feedback as a measure of teaching quality could be methodologically improved, and there would be less reliance on it as a metric: having qualified teachers who deploy evidence-based practical approaches would result in improved teaching (Steinert et al., 2006), and the number of truly “qualified” teachers at an HE provider could then serve as a metric for teaching quality.

HE teachers, and policymakers, who were trained and supported to take a critical, but pragmatic, perspective on research evidence and academic literature that was itself designed to be maximally useful might then be equipped to finally move us all past common but flawed concepts such as Learning Styles and Digital Natives, into an age where teaching policy and practice make the best use of evidence for the benefit of everyone.

• Faculty development programmes and credentials for educators in Higher Education should be practical and skills-based, including the skills needed to pragmatically appraise education research evidence.

• Establish pragmatic practical evidence summaries for use across international Higher Education, allowing adjustment for context.

• Foster more syntheses of existing primary research that answer questions that are useful for practice: what works, for whom, in what circumstances, and why? How much does it cost, what is it compared to, how practical is it to implement? How can these be developed internationally using collaborative groups of educators and researchers?

• Where a research study reports a positive outcome, make efforts to replicate the findings across multiple contexts.

• Increased funding for research into the effectiveness (or not) of learning and teaching approaches in HE.

• Requirements for accreditation, policy and practice to be grounded in existing research evidence.

PN conceived the work and developed the ideas through discussion with ADS and SB. PN wrote initial drafts. ADS and SB provided critical feedback and contributed further written sections. PN finalized the manuscript and revisions, which were edited and approved by ADS and SB.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors would like to thank Professor Andrew Kemp, Professor Michael Draper, and Dr. Nigel Francis for critical feedback on earlier drafts of this work.

Ågerfalk, P. J. (2010). Getting pragmatic. Eur. J. Info. Sys. 19, 251–256. doi: 10.1057/ejis.2010.22

Al-Shorbaji, N., Atun, R., Car, J., Majeed, A., and Wheeler, E. (2015). eLearning for Undergraduate Health Professional Education: A Systematic Review Informing a Radical Transformation of Health Workforce Development. London: Imperial College London.

Allen, C., and Richmond, K. (2011). The cochrane collaboration: International activity within cochrane review groups in the first decade of the twenty-first century. J. Evid. Based Med. 4, 2–7. doi: 10.1111/j.1756-5391.2011.01109.x

Aslaksen, K., and Lorås, H. (2018). The modality-specific learning style hypothesis: a mini-review. Front. Psychol. 9:1538. doi: 10.3389/fpsyg.2018.01538

Badley, G. (2003). The crisis in educational research: a pragmatic approach. Eur. Educ. Res. J. 2, 296–308. doi: 10.2304/eerj.2003.2.2.7

Bailey, R., and Garner, M. (2010). Is the feedback in higher education assessment worth the paper it is written on? Teachers' reflections on their practices. Teach. High. Educ. 15, 187–198. doi: 10.1080/13562511003620019

Basow, S. A., and Martin, J. L. (2012). “Bias in student evaluations,” in Effective Evaluation of Teaching, ed M. E. Kite (Society for the Teaching of Psychology), 40–49.

Bayne, S. (2015). What's the matter with ‘technology-enhanced learning’? Learn. Media Technol. 40, 5–20. doi: 10.1080/17439884.2014.915851

Beichner, R. (1997). “Evaluation of an integrated curriculum in Physics, Mathematics, Engineering, and Chemistry,” in American Physical Society, APS/AAPT Joint Meeting.

Bell, A. R., and Brooks, C. (2016). Is There a Magic Link between Research Activity, Professional Teaching Qualifications and Student Satisfaction? Rochester, NY: Social Science Research Network. Available online at: http://papers.ssrn.com/abstract=2712412 (accessed August 5, 2016).

Bennett, S., Maton, K., and Kervin, L. (2008). The ‘digital natives’ debate: a critical review of the evidence. Br. J. Educ. Technol. 39, 775–786. doi: 10.1111/j.1467-8535.2007.00793.x

Bergeron, P.-J., and Rivard, L. (2017). How to engage in pseudoscience with real data: a criticism of john hattie's arguments in visible learning from the perspective of a statistician. McGill J. Educ. 52, 237–246. doi: 10.7202/1040816ar

Biesta, G. (2007). Why “What Works” won't work: evidence-based practice and the democratic deficit in educational research. Educ. Theory 57, 1–22. doi: 10.1111/j.1741-5446.2006.00241.x

Biesta, G. J. J. (2010). Why what works still won't work: from evidence-based education to value-based education. Stud. Philos. Educ. 29, 491–503. doi: 10.1007/s11217-010-9191-x

Bjork, E. L., and Bjork, R. (2011). “Making things hard on yourself, but in a good way: creating desirable difficulties to enhance learning,” in Psychology and The Real World: Essays Illustrating Fundamental Contributions to Society, eds M. A. Gernsbacher, R. W. Pew, L. M. Hough, J. R. Pomerantz (Worth Publishers), 56–64.

Blackboard Inc. (2019). Blackboard Learn. Learning Management System. Available online at: https://sg.blackboard.com/learning-management-system/blackboard-learn.html (accessed August 29, 2019).

Bloom, B. S., Engelhart, M. D., Furst, E. J., and Krathwohl, D. R. (1956). “Taxonomy of educational objectives,” in The Classification of Educational Goals, Handbook I: Cognitive Domain. New York, NY: Longmans Green.

Boring, A. C., Ottoboni, K., and Stark, P. B. (2016). Student evaluations of teaching (Mostly) do not measure teaching effectiveness. ScienceOpen Res. 1–11. doi: 10.14293/S2199-1006.1.SOR-EDU.AETBZC.v1

Chandler, P., and Sweller, J. (1991). Cognitive load theory and the format of instruction. Cogn. Instr. 8, 293–332. doi: 10.1207/s1532690xci0804_2

Chen, F., Lui, A. M., and Martinelli, S. M. (2017). A systematic review of the effectiveness of flipped classrooms in medical education. Med. Educ. 51, 585–597. doi: 10.1111/medu.13272

Clegg, S. (2005). Evidence-based practice in educational research: a critical realist critique of systematic review. Br. J. Sociol. Educ. 26, 415–428. doi: 10.1080/01425690500128932

Coffield, F., Moseley, D., Hall, E., and Ecclestone, K. (2004). Learning Styles and Pedagogy in Post 16 Learning: A Systematic and Critical Review. The Learning and Skills Research Centre. Available online at: https://www.leerbeleving.nl/wp-content/uploads/2011/09/learning-styles.pdf (accessed July 26, 2015).

Cook, D. A., and Reed, D. A. (2015). Appraising the quality of medical education research methods: the medical education research study quality instrument and the newcastle–ottawa scale-education. Acad. Med. 90, 1067–1076. doi: 10.1097/ACM.0000000000000786

Cordingley, P. (2008). Research and evidence-informed practice: focusing on practice and practitioners. Cambridge J. Educ. 38, 37–52. doi: 10.1080/03057640801889964

Costley, C., and Lester, S. (2012). Work-based doctorates: professional extension at the highest levels. Stud. High. Educ. 37, 257–269. doi: 10.1080/03075079.2010.503344

Creswell, J. W. (2003). “Chapter 1: A framework for design,” in Research Design: Qualitative, Quantitative and Mixed Methods (Thousand Oaks, CA: SAGE Publications Ltd).

Crider, A. (2004). “Hot Seat” questioning: a technique to promote and evaluate student dialogue. Astronomy Educ. Rev. 3, 137–147. doi: 10.3847/AER2004020

Dancy, M., Henderson, C., and Turpen, C. (2016). How faculty learn about and implement research-based instructional strategies: the case of peer instruction. Phys. Rev. Phys. Educ. Res. 12:010110. doi: 10.1103/PhysRevPhysEducRes.12.010110

Dandy, K., and Bendersky, K. (2014). Student and faculty beliefs about learning in higher education: implications for teaching. Int. J. Teach. Learn. High. Educ. 26, 358–380.

Davies, P. (1999). What is evidence-based education? Br. J. Educ. Stud. 47, 108–121. doi: 10.1111/1467-8527.00106

Dempster, E. R., and Kirby, N. F. (2018). Inter-rater agreement in assigning cognitive demand to Life Sciences examination questions. Perspect. Educ. 36, 94–110. doi: 10.18820/2519593X/pie.v36i1.7

Deslauriers, L., McCarty, L. S., Miller, K., Callaghan, K., and Kestin, G. (2019). Measuring actual learning versus feeling of learning in response to being actively engaged in the classroom. Proc. Natl. Acad. Sci. U.S.A. 116, 19251–19257. doi: 10.1073/pnas.1821936116

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., and Willingham, D. T. (2013). Improving students' learning with effective learning techniques: promising directions from cognitive and educational psychology. Psychol. Sci. Public Interest 14, 4–58. doi: 10.1177/1529100612453266

Ebert-May, D., Derting, T. L., Hodder, J., Momsen, J. L., Long, T. M., and Jardeleza, S. E. (2011). What we say is not what we do: effective evaluation of faculty professional development programs. BioScience 61, 550–558. doi: 10.1525/bio.2011.61.7.9

Fan, Y., Shepherd, L. J., Slavich, E., Waters, D., Stone, M., Abel, R., et al. (2019). Gender and cultural bias in student evaluations: why representation matters. PLoS ONE 14:e0209749. doi: 10.1371/journal.pone.0209749

Feilzer, M. (2010). Doing mixed methods research pragmatically: implications for the rediscovery of pragmatism as a research paradigm. J. Mix. Methods Res. 4, 6–16. doi: 10.1177/1558689809349691

Findley, A. (2018). What is Human Systems Integration? Retrieved from: https://www.capturehighered.com/higher-ed/getting-know-generation-z/ (accessed December 10, 2020).

Finucane, P., Shannon, W., and McGrath, D. (2009). The financial costs of delivering problem-based learning in a new, graduate-entry medical programme. Med. Educ. 43, 594–598. doi: 10.1111/j.1365-2923.2009.03373.x

Foote, K., Knaub, A., Henderson, C., Dancy, M., and Beichner, R. J. (2016). Enabling and challenging factors in institutional reform: the case of SCALE-UP. Phys. Rev. Phys. Educ. Res. 12:010103. doi: 10.1103/PhysRevPhysEducRes.12.010103

Foxe, J. P., Frake-Mistak, M., and Popovic, C. (2017). The instructional skills workshop: a missed opportunity in the UK? Innov. Educ. Teach. Int. 54, 135–142. doi: 10.1080/14703297.2016.1257949

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acad. Sci. U.S.A. 111, 8410–8415. doi: 10.1073/pnas.1319030111

French, S., and Kennedy, G. (2017). Reassessing the value of university lectures. Teach. High. Educ. 22, 639–654. doi: 10.1080/13562517.2016.1273213

Froyd, J. E., Borrego, M., Cutler, S., Henderson, C., and Prince, M. J. (2013). Estimates of use of research-based instructional strategies in core electrical or computer engineering courses. IEEE Trans. Educ. 56, 393–399. doi: 10.1109/TE.2013.2244602

Gibbs, G. (2010). Dimensions of Quality. Higher Education Academy. Available online at: https://www.advance-he.ac.uk/knowledge-hub/dimensions-quality (accessed October 30, 2020).

Glasgow, R. E., and Riley, W. T. (2013). Pragmatic measures: what they are and why we need them. Am. J. Prev. Med. 45, 237–243. doi: 10.1016/j.amepre.2013.03.010

Greenhalgh, T., Howick, J., and Maskrey, N. (2014). Evidence based medicine: a movement in crisis? BMJ 348:g3725. doi: 10.1136/bmj.g3725

Hammersley, M. (2005). Is the evidence-based practice movement doing more good than harm? Reflections on Iain Chalmers' case for research-based policy making and practice. Evidence Policy 1, 85–100. doi: 10.1332/1744264052703203

Harden, R. M., Hart, I. R., Grant, J., and Buckley, G. (1999). BEME guide No. 1: best evidence medical education. Med. Teach. 21, 553–562. doi: 10.1080/01421599978960

Hargreaves, D. H. (1997). In defence of research for evidence-based teaching: a rejoinder to martyn hammersley. Br. Educ. Res. J. 23, 405–419. doi: 10.1080/0141192970230402

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Henderson, C., Beach, A., and Finkelstein, N. (2011). Facilitating change in undergraduate STEM instructional practices: an analytic review of the literature. J. Res. Sci. Teach. 48, 952–984. doi: 10.1002/tea.20439

Henderson, C., and Dancy, M. H. (2007). Barriers to the use of research-based instructional strategies: the influence of both individual and situational characteristics. Phys. Rev. ST Phys. Educ. Res. 3:020102. doi: 10.1103/PhysRevSTPER.3.020102

Henderson, C., and Dancy, M. H. (2009). Impact of physics education research on the teaching of introductory quantitative physics in the United States. Phys. Rev. ST Phys. Educ. Res. 5:020107. doi: 10.1103/PhysRevSTPER.5.020107

Higher Education Academy (2011). UK Professional Standards Framework (UKPSF). Higher Education Academy. Available online at: https://www.heacademy.ac.uk/ukpsf (accessed February 23, 2017).

Howe, K. R. (2009). Positivist dogmas, rhetoric, and the education science question. Educ. Res. 38, 428–440. doi: 10.3102/0013189X09342003

ICED (2014). The Preparation of University Teachers Internationally. International Consortium for Educational Development. Available online at: http://icedonline.net/iced-members-area/the-preparation-of-university-teachers-internationally/ (accessed October 25, 2018).

ICEF (2018). Study Projects Dramatic Growth for Global Higher Education Through 2040. ICEF Monitor - Market intelligence for international student recruitment. Available online at: https://monitor.icef.com/2018/10/study-projects-dramatic-growth-global-higher-education-2040/ (accessed July 12, 2019).

Islam, N., Beer, M., and Slack, F. (2015). E-learning challenges faced by academics in higher education: a literature review. J. Educ. Train. Stud. 3, 102–112. doi: 10.11114/jets.v3i5.947

Jacob, W. J., Xiong, W., and Ye, H. (2015). Professional development programmes at world-class universities. Palgrave Commun. 1:15002. doi: 10.1057/palcomms.2015.2

Jacob, W. J., Xiong, W., Ye, H., Wang, S., and Wang, X. (2019). Strategic best practices of flagship university professional development centers. Profession. Dev. Educ. 45, 801–813. doi: 10.1080/19415257.2018.1543722

James, W. (1907). Pragmatism. A New Name for Some Old Ways of Thinking. New York, NY: Hackett Classics.

Jones, C., and Shao, B. (2011). The Net Generation and Digital Natives - Implications for Higher Education. Higher Education Academy. Available online at: https://www.heacademy.ac.uk/knowledge-hub/net-generation-and-digital-natives-implications-higher-education (accessed August 29, 2019).

Jones, S., Vigurs, K., and Harris, D. (2020). Discursive framings of market-based education policy and their negotiation by students: the case of value for money in English universities. Oxford Rev. Educ. 46, 375–392. doi: 10.1080/03054985.2019.1708711

Kandlbinder, P., and Peseta, T. (2009). Key concepts in postgraduate certificates in higher education teaching and learning in Australasia and the United Kingdom. Int. J. Acad. Dev. 14, 19–31. doi: 10.1080/13601440802659247

Karpen, S. C., Welch, A. C., Cross, L. B., and LeBlanc, B. N. (2017). A multidisciplinary assessment of faculty accuracy and reliability with Bloom's taxonomy. Res. Pract. Assess. 12, 96–105. doi: 10.1016/j.cptl.2016.08.003

Kennedy, E., Laurillard, D., Horan, B., and Charlton, P. (2015). Making meaningful decisions about time, workload and pedagogy in the digital age: the course resource appraisal model. Distance Educ. 36, 177–195. doi: 10.1080/01587919.2015.1055920

King, A. (1993). From sage on the stage to guide on the side. College Teach. 41, 30–35. doi: 10.1080/87567555.1993.9926781

Kirkwood, A., and Price, L. (2014). Technology-enhanced learning and teaching in higher education: what is ‘enhanced’ and how do we know? A critical literature review. Learn. Media Technol. 39, 6–36. doi: 10.1080/17439884.2013.770404

Kirschner, P. A., and De Bruyckere, P. (2017). The myths of the digital native and the multitasker. Teach. Teach. Educ. 67, 135–142. doi: 10.1016/j.tate.2017.06.001

Kirschner, P. A., Sweller, J., and Clark, R. E. (2006). Why minimal guidance during instruction does not work: an analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educ. Psychol. 41, 75–86. doi: 10.1207/s15326985ep4102_1

Locke, W. (2009). Reconnecting the research–policy–practice nexus in higher education: ‘Evidence-Based Policy’ in practice in National and International contexts. High. Educ. Policy 22, 119–140. doi: 10.1057/hep.2008.3

Luckin, R. (2018). Enhancing Learning and Teaching with Technology: What the Research Says. London: UCL Institute of Education Press.

Maloney, S., Cook, D. A., Golub, R., Foo, J., Cleland, J., Rivers, G., et al. (2019). AMEE guide No. 123 – how to read studies of educational costs. Med. Teach. 41, 497–504. doi: 10.1080/0142159X.2018.1552784

Masters, K. (2013). Edgar Dale's pyramid of learning in medical education: a literature review. Med. Teach. 35, e1584–e1593. doi: 10.3109/0142159X.2013.800636

Masters, K. (2020). Edgar Dale's pyramid of learning in medical education: further expansion of the myth. Med. Educ. 54, 22–32. doi: 10.1111/medu.13813

Means, B., Toyama, Y., Murphy, R., Bakia, M., and Jones, K. (2009). Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies. US Department of Education. Available online at: https://eric.ed.gov/?id=ED505824 (accessed August 29, 2019).

Murray, D., Boothby, C., Zhao, H., Minik, V., Bérubé, N., Larivière, V., et al. (2020). Exploring the personal and professional factors associated with student evaluations of tenure-track faculty. PLoS ONE 15:e0233515. doi: 10.1371/journal.pone.0233515

Neal, J. W., Neal, Z. P., Lawlor, J. A., Mills, K. J., and McAlindon, K. (2018). What makes research useful for public school educators? Adm. Policy Ment. Health 45, 432–446. doi: 10.1007/s10488-017-0834-x

Newton, P., and Miah, M. (2017). Evidence-based higher education – is the learning styles ‘Myth’ important? Front. Psychol. 8:444. doi: 10.3389/fpsyg.2017.00444

Newton, P. M., Da Silva, A., and Peters, L. G. (2020). A pragmatic master list of action verbs for Bloom's taxonomy. Front. Educ. 5:107. doi: 10.3389/feduc.2020.00107

Newton, P. M., and Gravenor, M. B. (2020). A higher percentage of Higher Education Academy (HEA) qualifications among universities' staff does not appear to be positively associated with higher ratings of student satisfaction: a letter of concern in response to Nurunnabi et al. MethodsX 6 (2019) 788–799. MethodsX 7:100911. doi: 10.1016/j.mex.2020.100911

Nordmann, E., Horlin, C., Hutchison, J., Murray, J.-A., Robson, L., Seery, M. K., et al. (2020). Ten simple rules for supporting a temporary online pivot in higher education. PLoS Comput. Biol. 16:e1008242. doi: 10.1371/journal.pcbi.1008242

Oancea, A., and Pring, R. (2008). The importance of being thorough: on systematic accumulations of ‘What Works’ in education research. J. Philos. Educ. 42, 15–39. doi: 10.1111/j.1467-9752.2008.00633.x

OECD (2015a). Education Policy Outlook 2015: Making Reforms Happen. Available online at: http://www.oecd.org/edu/education-policy-outlook-2015-9789264225442-en.htm (accessed May 13, 2016).

OECD (2015b). Students, Computers and Learning: Making the Connection. Available online at: http://www.oecd.org/publications/students-computers-and-learning-9789264239555-en.htm (accessed August 29, 2019).

OECD (2018). Education at a Glance. Available online at: http://www.oecd.org/education/education-at-a-glance/ (accessed March 1, 2019).

Ormell, C. P. (1974). Bloom's taxonomy and the objectives of education. Educ. Res. 17, 3–18. doi: 10.1080/0013188740170101

Ormerod, R. (2006). The history and ideas of pragmatism. J. Oper. Res. Soc. 57, 892–909. doi: 10.1057/palgrave.jors.2602065

Parsons, D. (2017). Demystifying Evaluation: Practical Approaches for Researchers and Users. Bristol: Policy Press.

Pashler, H., McDaniel, M., Rohrer, D., and Bjork, R. (2008). Learning styles: concepts and evidence. Psychol. Sci. Public Interest 9, 105–119. doi: 10.1111/j.1539-6053.2009.01038.x

Piza, F., Kesselheim, J. C., Perzhinsky, J., Drowos, J., Gillis, R., Moscovici, K., et al. (2019). Awareness and usage of evidence-based learning strategies among health professions students and faculty. Med. Teach. 41, 1411–1418. doi: 10.1080/0142159X.2019.1645950

Prensky, M. (2001). Digital natives, digital immigrants part 1. Horizon. 9, 1–6. doi: 10.1108/10748120110424816

Pring, R. (1971). Bloom's taxonomy: a philosophical critique (2). Cambridge J. Educ. 1, 83–91. doi: 10.1080/0305764710010205

Pring, R. (2000). The ‘False Dualism’ of educational research. J. Philos. Educ. 34, 247–260. doi: 10.1111/1467-9752.00171

QAA (2015). QAA Doctoral Degree Characteristics Statement. Available online at: https://www.qaa.ac.uk/docs/qaa/quality-code/doctoral-degree-characteristics-statement-2020.pdf?sfvrsn=a3c5ca81_14 (accessed January 8, 2018).

Rizan, C., Elsey, C., Lemon, T., Grant, A., and Monrouxe, L. V. (2014). Feedback in action within bedside teaching encounters: a video ethnographic study. Med. Educ. 48, 902–920. doi: 10.1111/medu.12498

Robertson, A., Cleaver, E., and Smart, F. (2019). Beyond the Metrics: Identifying, Evidencing and Enhancing the Less Tangible Assets of Higher Education. QAA. Available online at: https://rke.abertay.ac.uk/en/publications/beyond-the-metrics-identifying-evidencing-and-enhancing-the-less- (accessed October 29, 2020).

Rohrer, D., and Pashler, H. (2012). Learning styles: where's the evidence? Med. Educ. 46, 634–635. doi: 10.1111/j.1365-2923.2012.04273.x

Sackett, D. L., Rosenberg, W. M. C., Gray, J. A. M., Haynes, R. B., and Richardson, W. S. (1996). Evidence based medicine: what it is and what it isn't. BMJ 312, 71–72. doi: 10.1136/bmj.312.7023.71

Schneider, M., and Preckel, F. (2017). Variables associated with achievement in higher education: a systematic review of meta-analyses. Psychol. Bull. 143, 565–600. doi: 10.1037/bul0000098

Schwartz, D., and Lellouch, J. (1967). Explanatory and pragmatic attitudes in therapeutical trials. J. Clin. Epidemiol. 62, 499–505. doi: 10.1016/j.jclinepi.2009.01.012

Selwyn, N. (2014). ‘So What?’ a question that every journal article needs to answer. Learn. Media Technol. 39, 1–5. doi: 10.1080/17439884.2013.848454

Shute, V. J. (2008). Focus on formative feedback. Rev. Educ. Res. 78, 153–189. doi: 10.3102/0034654307313795

Simpson, A. (2017). The misdirection of public policy: comparing and combining standardised effect sizes. J. Educ. Policy 32, 450–466. doi: 10.1080/02680939.2017.1280183

Simpson, A. (2018). Princesses are bigger than elephants: effect size as a category error in evidence-based education. Br. Educ. Res. J. 44, 897–913. doi: 10.1002/berj.3474

Slavin, R. E. (1986). Best-evidence synthesis: an alternative to meta-analytic and traditional reviews. Educ. Res. 15, 5–11. doi: 10.3102/0013189X015009005

Sockett, H. (1971). Bloom's Taxonomy: a philosophical critique (I). Cambridge J. Educ. 1, 16–25. doi: 10.1080/0305764710010103

Spangler, J. (2014). Costs related to a flipped classroom. Acad. Med. 89:1429. doi: 10.1097/ACM.0000000000000493

Spowart, L., Turner, R., Shenton, D., and Kneale, P. (2016). ‘But I've been teaching for 20 years…’: encouraging teaching accreditation for experienced staff working in higher education. Int. J. Acad. Dev. 21, 206–218. doi: 10.1080/1360144X.2015.1081595

Spowart, L., Winter, J., Turner, R., Burden, P., Botham, K. A., Muneer, R., et al. (2019). ‘Left with a title but nothing else’: the challenges of embedding professional recognition schemes for teachers within higher education institutions. High. Educ. Res. Dev. 38, 1299–1312. doi: 10.1080/07294360.2019.1616675

Stains, M., Harshman, J., Barker, M. K., Chasteen, S. V., Cole, R., DeChenne-Peters, S. E., et al. (2018). Anatomy of STEM teaching in North American universities. Science 359, 1468–1470. doi: 10.1126/science.aap8892

Stedman, C. H. (1973). An analysis of the assumptions underlying the taxonomy of educational objectives: cognitive domain. J. Res. Sci. Teach. 10, 235–241. doi: 10.1002/tea.3660100307

Steinert, Y., Mann, K., Centeno, A., Dolmans, D., Spencer, J., Gelula, M., et al. (2006). A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME guide no. 8. Med. Teach. 28, 497–526. doi: 10.1080/01421590600902976

Strelan, P., Osborn, A., and Palmer, E. (2020a). Student satisfaction with courses and instructors in a flipped classroom: a meta-analysis. J. Comput. Assist. Learn. 36, 295–314. doi: 10.1111/jcal.12421

Strelan, P., Osborn, A., and Palmer, E. (2020b). The flipped classroom: a meta-analysis of effects on student performance across disciplines and education levels. Educ. Res. Rev. 30:100314. doi: 10.1016/j.edurev.2020.100314

Sullivan, G. M. (2011). Deconstructing quality in education research. J. Grad. Med. Educ. 3, 121–124. doi: 10.4300/JGME-D-11-00083.1

Theobald, E. J., Hill, M. J., Tran, E., Agrawal, S., Arroyo, E. N., Behling, S., et al. (2020). Active learning narrows achievement gaps for underrepresented students in undergraduate science, technology, engineering, and math. Proc. Natl. Acad. Sci. U.S.A. 117, 6476–6483. doi: 10.1073/pnas.1916903117

Thistlethwaite, J. E., Davies, D., Ekeocha, S., Kidd, J. M., MacDougall, C., Matthews, P., et al. (2012). The effectiveness of case-based learning in health professional education. A BEME systematic review: BEME guide no. 23. Med. Teach. 34, e421–e444. doi: 10.3109/0142159X.2012.680939

Torgerson, C. J. (2009). Randomised controlled trials in education research: a case study of an individually randomised pragmatic trial. Education 3-13 37, 313–321. doi: 10.1080/03004270903099918

Tseng, V., Easton, J. Q., and Supplee, L. H. (2017). Research-practice partnerships: building two-way streets of engagement. Soc. Policy Rep. 30, 1–17. doi: 10.1002/j.2379-3988.2017.tb00089.x

Turner, T., Misso, M., Harris, C., and Green, S. (2008). Development of evidence-based clinical practice guidelines (CPGs): comparing approaches. Implement. Sci. 3:45. doi: 10.1186/1748-5908-3-45

UNESCO (2017). Six Ways to Ensure Higher Education Leaves No One Behind. Available online at: http://unesdoc.unesco.org/images/0024/002478/247862E.pdf (accessed March 18, 2018).

Wang, T. (2017). Overcoming barriers to ‘flip’: building teacher's capacity for the adoption of flipped classroom in Hong Kong secondary schools. Res. Pract. Technol. Enhanc. Learn. 12:6. doi: 10.1186/s41039-017-0047-7