- Educational Psychological Services, North Ayrshire Council, Irvine, United Kingdom

The current challenging economic climate demands, more than ever, value for money in service delivery. Every service is required to maximize positive outcomes in the most cost-effective way. To date, a smorgasbord of interventions has been designed to benefit society. Those worthy of attention have solid foundations in empirical research, offering service providers reassurance that positive outcomes are likely; many of these programmes lie within the field of education and everyday school practice. However, even these well-supported programmes often yield poor results because they are poorly implemented. Implementation science is the study of the components necessary to promote authentic adoption of evidence-based interventions, thereby increasing their effectiveness. Following a brief definition of key terms and theories, this article will discuss why implementation is not a straightforward process, drawing upon examples of evidence-based but poorly implemented school programmes. Having acknowledged how good implementation positively affects sustainability, we will then look at the growing number of frameworks for practice within this field. One such framework, the Core Components Model, will be used to facilitate discussion about the processes of successful design and evaluation. The article will go on to illustrate how the quality of implementation has directly affected the sustainability of the Incredible Years programmes and the Promoting Alternative Thinking Strategies (PATHS) curriculum. Then, by analyzing implementation science, some of the challenges currently faced within this field will be highlighted and areas for further research discussed. Finally, the article will consider the implications for educational psychologists (EPs) and will conclude that implementation science is crucial to the design and evaluation of interventions, and that the EP is in an ideal position to support sustainable positive change.

Introduction

Implementation science is the study of how evidence-based programmes can be embedded to maximize successful outcomes (Kelly and Perkins, 2012). It uses a systematic and scientific approach to identify the range of factors that are likely to facilitate the delivery of an intervention. By studying the success and failure of intervention adoption within various disciplines, this approach offers greater understanding of how accredited strategies can be successfully transferred to new contexts. Implementation science therefore bridges the gap between theory and effective practice (Fixsen et al., 2009b). Research in this field highlights the factors and variables central to the successful adoption and sustainability of programmes. Adopting new programmes necessitates change. Implementation science recognizes that people need to be ready for change and that creating optimal conditions for an intervention is crucial to its maintenance. Therefore, implementation science is fundamental to the design of successful interventions. In addition, both the intervention and its implementation need to be evaluated to fully understand outcomes and impacts (Kelly and Perkins, 2012). Although implementation science has been employed for some time in clinical, health and community settings, its application within the educational domain is still relatively new, and there are many areas for further research within this discipline (Lyon et al., 2018).

Definitions Within Implementation Science

An intervention is defined as “a specified set of activities designed to put into practice an activity of known dimensions” (Fixsen et al., 2005). When an intervention has been evaluated and found to yield the expected results, it can be considered effective within the targeted populations and settings. Fixsen et al. (2005) argue persuasively that, for interventions to be effective, programmes should be adopted with fidelity, as this supports sustainability. This means that the programme should have the same content, coverage, frequency and duration as the designers intended (Carroll et al., 2007).

Key to intervention design and evaluation are the core components, which are regarded as the essential aspects of the intervention without which the practice or programme will fail to be sustainable or effective (Fixsen et al., 2005).

The Underpinning Theory of Implementation Science

Personal readiness for change depends upon having the capability, opportunity and motivation to change behavior (Michie et al., 2009; Fallon et al., 2018). However, achieving organizational readiness for change is far more complicated. Ideally, individuals within an organization should feel committed to change and confident in their collective ability to change practices. This is considered critical to successfully implementing change within an organization (Armenakis et al., 1993; Weiner, 2009). Indeed, it has been suggested that failing to establish sufficient readiness for change may account for a significant proportion of unsuccessful large-scale change efforts (Kotter, 1996; Fallon et al., 2018).

Theories of organizational change illustrate the dynamic web of influences within a complex multilevel, multifaceted construct. A three-stage model has been described by Lewin (1951). Stage one attempts to unfreeze fixed mindsets and motivate individuals for change; stage two takes individuals through a transition that enables communication to identify new norms and attitudes; stage three is the embedding of these new ideas into practice. Implementation science describes similar phases in organizing change; these are discussed in the “Frameworks for Practice” section below.

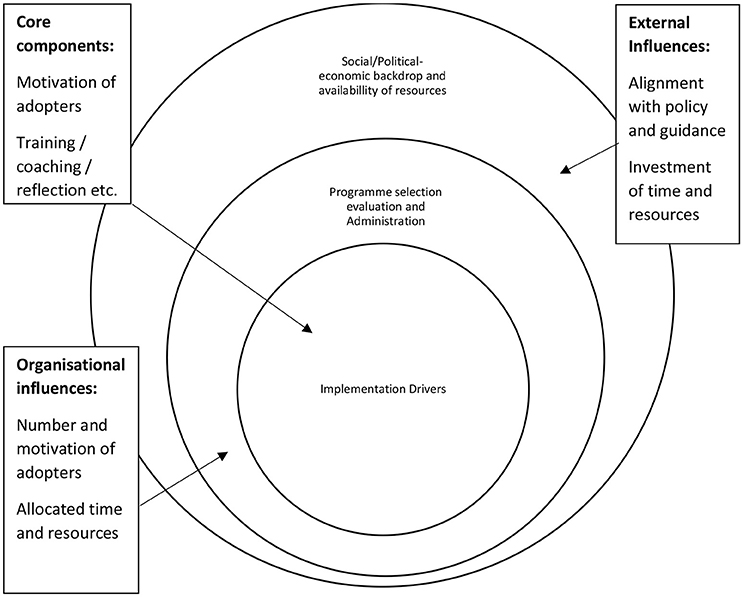

Senge (1990) states: “We tend to focus on snapshots of isolated parts of the system and wonder why our deepest problems never seem to get solved.” Implementation science therefore acknowledges the impact of systems and is consistent with ecological systems theory (Bronfenbrenner, 1979), a constructionist model which illustrates how complex organizational systems need to be aware of wider political, social and cultural influences. Bronfenbrenner's model highlights the need for a well-organized and consistent approach. The key internal components of the programme have to be compatible with external influences for full implementation to occur, as shown in Figure 1. Working across these systems with a collaborative focus is necessary for success (Maher et al., 2009).

For any intervention to be successfully embedded, socioeconomic and cultural environments need to be acknowledged, because their variables impact on implementation success. Individuals' readiness for change and group dynamics are enmeshed within their relevant influencing ecological systems. Therefore, when designing and evaluating school-based programmes, it is necessary to clearly understand community cultures and take them into account.

Poor Implementation

There is a tendency for schools to buy new intervention packs, marketed as solving all their problems, without reference to empirical evidence (Slavin, 2002). In addition, schools seldom ask why previously tried programmes have failed, although doing so would perhaps be more insightful and cost-effective. Furthermore, just as good interventions can be badly implemented, poor interventions can be implemented successfully. Consequently, a theory-based programme may be abandoned while poorly supported interventions run for years (Kelly and Perkins, 2012).

In essence, having theoretically sound programmes does not, in itself, ensure successful implementation.

One example is a study in Uganda where an empirically supported school-based AIDS education programme was found to be ineffective. Closer examination, using multiple methods, found that this was because it was poorly implemented. Key activities, including role-play, had not been given adequate time. This was due in part to a lack of facilities and in part to a lack of confidence in an intervention concerning such a controversial issue (Kinsman et al., 2001). Here, poor implementation resulted in time, money and resources being wasted.

Furthermore, in his review of 36 public programmes, Barnett (1995) found that the impacts of empirically based early childhood programmes were affected by the quality of implementation. Greenberg et al. (2005) also stated that “within-school” initiatives are often not implemented with the quality the programme designers initially intended, resulting in poor outcomes. It is therefore imperative that schools actively embrace implementation considerations when designing and evaluating initiatives. This is likely to be more cost-effective overall and more efficient in promoting positive change.

Frameworks for Practice

There are many frameworks, drawn from various disciplines (Birken et al., 2017). However, Tabak et al.'s (2012) review of 61 models and Meyers et al.'s (2012) synthesis of 25 frameworks both indicate that many share commonalities in their descriptions of the stages of implementation and of core components. One example is CASEL (2012), which offers 10 steps and six sustainability factors. Michie et al. (2011) identified 19 frameworks in their systematic enquiry into characterizing and designing behavior change interventions. From their findings, they developed the “Behavior Change Wheel,” which described the key factors of change as capability, opportunity and motivation. They suggested that the wheel can be used as a framework for identifying relevant interventions.

In addition, there is a conceptual framework for measuring five indexes of implementation fidelity (Carroll et al., 2007). Measuring fidelity is one way of evaluating implementation, a key process that is just as important as evaluating the programme itself (Fixsen et al., 2005). Initially, three indexes of fidelity were identified: exposure, adherence and quality of implementation (Klimes-Dougan et al., 2009). Mihalic (2012) later added the dimensions of participant responsiveness and programme differentiation. This framework is one of several that are useful when evaluating programme implementation fidelity. More recently, Rojas-Andrade and Bahamondes (2018) analyzed existing data on implementation and found that four fidelity dimensions (adherence, quality of intervention, exposure to intervention and receptiveness) were linked with outcomes 40% of the time, with the latter two having the strongest associations. Fidelity can also be assessed through fidelity observations (Pettigrew et al., 2013), perhaps using video (Johnson et al., 2010).

Greenberg et al. (2005) described three phases of implementation—pre-adoption, delivery, and post-adoption—and advised that they should be incorporated into intervention design. Alternatively, the Stages of Implementation Framework (Fixsen et al., 2005) describes six additive stages toward full implementation of programmes. These are:

• current situation exploration

• consideration of change, or installation phase

• preparation for change, or initial implementation phase

• full implementation, where change is being engaged in

• innovation, where, after the intervention has been practiced with pure fidelity, subtle adaptations are made to best fit the user

• maintenance of procedures to ensure sustainability

While the selection of implementation frameworks is often driven by previous exposure or convenience rather than theory (Birken et al., 2017), one framework, the Implementation Components Framework (Fixsen et al., 2009a), is based upon a synthesis of 377 implementation articles. It offers a conceptual model concerned with fundamental aspects necessary for implementation to be successful and identifies the key competency drivers, which are the mechanisms that underpin and therefore sustain implementation:

• staff selection

• pre-service/INSET training

• consultation and coaching

• staff performance evaluation

Furthermore, organization drivers are described as the mechanisms that sustain supportive system environments and facilitate implementation:

• decision support data systems

• facilitative administrative support

• systems interventions

The following sections examine each of these drivers in turn, as they offer valuable insight into how interventions should be designed and evaluated.

Staff Selection

Getting all staff on board and building a philosophy of joint working is paramount to the success of any new initiative (Maher et al., 2009). In their study of the Early Risers Prevention Programme, Klimes-Dougan et al. (2009) found that staff members' personal characteristics, rather than their prior experience, predicted the likelihood that an intervention would be implemented with fidelity. These characteristics included breadth of skill, openness, conscientiousness and level of commitment in the face of challenges. Staff selection is therefore the first key design consideration in any intervention; however, in real-world contexts this can be difficult, as it depends upon the availability of personnel.

In addition, it is essential to ensure that there are lead players within the organization to guide new interventions. Ideally, there should be a dedicated implementation team. In their analysis of implementation, Fixsen et al. (2001) found that designated teams led to an 80% success rate in implementation over a 3-year period, compared with 14% success over a 17-year period for programmes that did not have such teams. It must be noted that this comparison incorporated only two studies, as only two could be identified that used the same implementation measures. Nevertheless, such a large difference in results still makes a strong case for having dedicated implementation staff. This suggests that, without a key stakeholder within the organization who has decision-making authority and the ability to persuade others during implementation, interventions may fall by the wayside or become diluted.

Pre-service/INSET Training

Making a change in organizational practices necessitates training. One threat to effective training is the difficulty of predicting training needs. Therefore, good implementation practice suggests that, before any training, individuals should complete a pre-INSET questionnaire as a check for readiness. This gives the facilitator a benchmark of participants' current knowledge, skill and motivation, and allows truly relevant and differentiated sessions to be negotiated (Dunst and Trivette, 2009; Fallon et al., 2018). The process should become a partnership between all involved, as participants' ownership of training increases motivation (Gregson and Sturko, 2007).

In addition, instructors' characteristics have been found to be associated with the quality of overall implementation (Spoth et al., 2007), and recommendations have been made for enthusiastic and committed facilitators.

Consultation and Coaching

Modern-day practice requires staff to undertake continuous professional development to enhance their competencies. On-the-job coaching not only helps embed new practices in everyday procedures but also has the potential to promote a cycle of continuous development. Peer coaching facilitates the development of new school norms and offers the opportunity for sustainable ongoing practice (Joyce and Showers, 2002). In their meta-analysis of teacher training, Joyce and Showers (2002) found that only 5% of teachers put newly learnt strategies into practice after training alone. However, when initial training sessions were followed by coaching and on-the-job training, 95% of teachers used the newly learnt techniques. Coaching therefore has a substantial impact on the effectiveness of training and should be built into intervention design.

In addition, not only should the coach be proficient, but manuals and materials should also be available to further support new practices (Fixsen et al., 2013). In Dane and Schneider's (1998) meta-analysis, only 20% of programmes incorporated staff support and training together with materials into new interventions. This is therefore an area for development.

Staff Performance Evaluation

Once the new methods have been practiced, reflection on the process and discussion with other practitioners will help further embed new ideas. If participants have struggled to put concepts into practice, problem-solving discussions at this stage can help prevent the programme from being discontinued (Kelly, 2012). Feedback from these sessions can be used to enhance future training sessions; however, as the most successful interventions are those with the greatest fidelity, any adaptations should not interfere with the programme's core components.

Decision Support Data Systems

Continual monitoring of implementation helps ensure programme sustainability. Multiple methods should be used to draw together information from a variety of sources, including quality performance indicators, service user feedback and organizational fidelity measures (Fixsen et al., 2005). Durlak (2010) argues that implementation can be measured on a continuum from 0 to 100%. The five indexes of the implementation fidelity model outlined above (Carroll et al., 2007) could potentially be used for this purpose.

Facilitative Administrative Support

As the practices become embedded, the senior management team (SMT) within the school should ensure that administrative systems, including policies and procedures, are coherent with the new practices. Those systems can then inform and support the new practices.

Systems Interventions

This driver requires the organization to attend to national policy and other external systems and forces. A changing political climate influences the education system and will therefore directly affect schools' needs and priorities.

To sum up this section, these core components are fundamental considerations when designing and evaluating interventions. This article will now illustrate how the outcomes of evidence-based programmes correlate with implementation quality; examples of variation in implementation will also highlight associated issues.

Optimized Implementation?

Research into what works within schools is crucial, as it helps authorities and governments decide on the best ways to help communities. A programme should be both empirically based and successfully implemented. Mintra (2012) also states that, in addition to programme fidelity, good implementation relies upon building genuine and transparent partnerships. This is illustrated by the implementation of the “Incredible Years” programme.

“Incredible Years” (IY) is an evidence-based programme aimed at reducing children's aggression and behavioral problems (Webster-Stratton, 2012), yet the success of its implementation has varied. This variation has been attributed to differences in implementation fidelity. However, given the wide range of countries that have invested in IY, there has been a need to adapt the programme to meet cultural and contextual needs. As Ringwalt et al. (2003) state, adaptation is inevitable, and care should therefore be taken to ensure that the core components are not undermined. This has necessitated the development of guidelines that maximize fidelity while allowing flexibility (Reinke et al., 2011). This guidance sets out an eight-point process across the implementation phases and has supported successful implementation around the world, including in Knowsley Central Primary Support Centre in England (CAST, 2012) and the Children and Parents' Service Early Intervention in Manchester (CAPS, 2012).

Jaycox et al. (2006) found a similar theme regarding the balance between flexibility and fidelity when they examined three different intervention programmes delivered and evaluated within schools, all aimed at reducing dating violence in adolescence. Evaluation of each programme revealed a negotiation between real-world applicability and a tight research design, and the authors argued that optimum implementation requires flexibility within the constraints of the design.

Finally, Promoting Alternative Thinking Strategies (PATHS) (Greenberg and Kusche, 1996) is a “blueprint” programme developed to enhance social and emotional competencies in young children (Mihalic et al., 2001). Although PATHS has a sound evidence base, well-designed implementation is still critical to its success. Kam et al. (2003) evaluated implementation in a study of children with low academic achievement living in areas of high deprivation. Their results confirmed the complexity of implementation within the school context and suggested that strong leadership from the school principal and the quality of implementation were predictors of the programme's success in reducing child aggression. These findings again underline the importance of implementation fidelity with respect to programme dosage, quality of delivery, and support and commitment. Strikingly, backward trends in pro-social behavior were evident in two out of four establishments where the PATHS programme's implementation lacked sufficient integrity, even though anecdotal evidence suggested effective positive change (Kelly et al., 2012).

These studies highlight the necessity of implementation science considerations within programme design and evaluation. This article will now continue by acknowledging the threats and challenges associated with implementation science.

Challenges/Threats

Many interventions are implemented without acknowledging the role of implementation science. Leaders need to be aware of the importance of good implementation. This requires training, which is crucial, especially at this early stage in the development of implementation science, to raise awareness of its significance in programme design and evaluation. Raising awareness has far-reaching consequences; therefore, the new language associated with implementation science needs to be taught and embraced (Axford and Morpeth, 2012). Within education (as within other domains), if leaders do not regard implementation science as important and the language is not learnt, then dynamic initiatives are likely to fail (Bosworth et al., 2018).

Currently, little time is spent upon implementation (Sullivan et al., 2008); yet effective implementation is likely to take 2–4 years (Fixsen et al., 2009a), and it can take up to 20 years before initiatives are fully embedded into everyday practice (Ogden et al., 2012). However, within our current climate, there is pressure on many organizations, including schools, to make effective changes quickly. In a study of the effectiveness of cooperative learning in secondary schools, Topping et al. (2011) argue that this investment in time may make the cost-effectiveness of intervention questionable. This type of belief, which does not acknowledge the overall cost-effectiveness of these practices, may present barriers to promoting implementation science.

In addition, Carroll et al. (2007) found that the most common reason for deviations from fidelity was time restrictions. This could potentially be prevented if a programme's time commitment is made clear in the initial stages of design, ensuring that realistic goals are set for positive outcomes (Maher et al., 2009). Leaders and teachers need to recognize that it is far more effective to invest the necessary time in one initiative than to poorly implement a series of ineffective interventions over the same period.

A further challenge is recruiting the right staff through stringent selection procedures, a core component of implementation. Such staff may be more highly sought after and may merit higher salaries, partly because of the additional duties involved in serving on implementation teams or steering groups. Unions and contracts may therefore present barriers where staff responsibilities increase. Furthermore, any alteration to long-established systems within schools may be perceived negatively by staff or unions. Funds therefore need to be invested in each programme to cover these associated costs, and unfortunately economic pressures are ever-present. Other barriers may include existing policies and procedures, and local laws, which may not reflect the ethos of implementation science. For example, implementation is a process that can take many years (Fixsen et al., 2009a), yet the electoral cycle may lead politicians to be more interested in short-term than long-term impact. In such cases, it is necessary to disseminate implementation science to policymakers to encourage investment in a longer-term vision of embedded evidence-based interventions.

Furthermore, the reality for many organizations, including schools, is that it is not practically possible to recruit new staff who are open to innovative practices, or to create settings that facilitate optimum implementation. Implementation plans for real-world settings need to account for this. For instance, in Scotland there is a national teacher staffing crisis, in which rigorous staff selection is an unobtainable luxury: application pools are small and there are high numbers of unfilled vacancies (Hepburn, 2015).

While there are many challenges, addressing these issues at the beginning of the implementation process makes it more likely that interventions will be effective and, over the long term, more cost-effective.

Implementation science has been successfully employed in fields such as public health and medicine (Glasgow and Emmons, 2007; Rabin et al., 2010; Scheirer, 2013). However, within education it is a comparatively new science (Lyon et al., 2018), and as such there are many areas for further research at all levels, from global to individual. At the global level, one area for research is developing a greater understanding of the true relationships between core components. This may show whether the components are comprehensive and whether the core components framework needs to be redefined (Fixsen et al., 2009a). In addition, the model would benefit from further research into each aspect of the framework. Sullivan et al. (2008) argue that this would open “the black box” to give us greater understanding of why this approach works.

Furthermore, while organizations are increasingly trying to ensure that implementation is evaluated, they use different approaches to do so. One goal, therefore, is to establish a common approach to measuring implementation. A meta-analysis of approaches could then clarify how best to evaluate all its aspects.

In addition, descriptions of interventions and details of their components can be inconsistent, leaving aspects open to interpretation (Michie et al., 2009). As this threatens intervention fidelity, these authors argue for open access to detailed intervention protocols, although they acknowledge that intellectual property rights may prevent this from becoming regular practice. Further research is needed to address these issues of consistency. Lessons can also be learnt from other disciplines that have developed research literature to answer similar questions. For example, the RE-AIM extended CONSORT diagram, which was developed to translate research into practice by breaking down key factors at each stage of health implementation (Kessler and Glasgow, 2011), could inform implementation within the context of education.

The science of implementation is pertinent to many areas of the service sector, including education, health and social work. Findings about the conditions that promote sustainability are therefore likely to be transferable between disciplines. This offers considerable opportunities for collaborative working across domains and creates the potential for rapid advancement of the science of implementation.

Implications for Educational Psychologists

In an ideal world, whenever a theory is supported, its teachings would be transferred into practice to bring about positive change. However, a challenge faced by EPs is ensuring that the interventions schools adopt are effective. EPs have a role in developing clear and widely available information on how to assess interventions by their evidence base and dissemination capacity. This has been done in education (Education Endowment Foundation, 2018) and in health (The US National Cancer Institute, 2018). However, ensuring that evidence-based approaches are always used within education remains an ongoing goal (Kelly and Perkins, 2012). Recognizing that interventions need to be implemented properly gives EPs the opportunity not only to work in line with these principles but also to build capacity in others across an array of settings.

The role of the EP has moved from casework toward more effective systemic ways of working; therefore, the EP is in an exceptional position to:

• Work in collaboration with schools. Jaycox et al. (2006) describe how working in partnership with schools can be effective. They emphasize the importance of becoming familiar with the school staff, its cultures and context through regular contact.

• Jointly discuss options when selecting interventions, ensuring programmes are based on empirical evidence and meet genuine rather than merely perceived needs.

• Ensure staff readiness before implementation.

• Ensure the implementation is designed effectively within the school context.

• Help to measure and assess implementation.

• Undertake research to enhance our understanding of implementation science.

• Develop implementation standards within local authorities.

• Promote effective practice and raise awareness of implementation science.

• Create implementation steering groups which can ensure that implementation is monitored and evaluated throughout the process, and that integrity is maintained (Dane and Schneider, 1998).

In addition, the EP should be sensitive to individuals' workloads by asking school staff to perform only necessary tasks. Throughout the process of implementation, a great deal of ongoing monitoring must take place. Programme implementers should assess success throughout the implementation period and support it by adapting the programme, without compromising its core components, to meet the needs of the setting. Teachers therefore need to understand the importance of implementation monitoring, and everyone involved needs to be motivated to fully incorporate these functions into their workload. Furthermore, it may be difficult for an EP to ensure that teachers maintain fidelity, especially when there are competing job pressures. It is therefore of paramount importance that positive working relationships are maintained and that communication is ongoing. The EP should adopt a flexible and sensitive approach in order to yield the best outcomes.

A threat to any intervention is ignoring the whole system of which the school is a part. For example, if a teacher wants to implement new class behavior guidelines, the class rules must be in line with school and local authority policies and guidelines for this to be successful. Implementation science encourages us all to look at the wider multilevel influences at play. In addition, core implementation components must fit within the organizational components and other social, economic and political influences (Sullivan et al., 2008). If the relationships between these factors are poor, there is less chance of the intervention being implemented with pure fidelity. Here, again, the EP can play a pivotal role in helping the school to acknowledge all contextual factors.

Within every organization, there are many layers of staff, policies, systems and barriers, so promoting positive change requires a multifaceted approach. If teachers believe that an intervention is beneficial, they are more likely to implement it with fidelity (Datnow and Castellano, 2000; Waugh, 2000). Conversely, teachers who have experienced an evidence-based intervention that was implemented poorly, and therefore yielded poor outcomes, are unlikely to be motivated to implement the same intervention successfully. Because of such beliefs, poor implementation can reduce the potential for future implementation. In such cases, the EP may have to sensitively challenge the beliefs that have led evidence-based programmes to be perceived as ineffective. Schools and EPs should work together to design and evaluate initiatives, adhering properly to implementation guidance. Only then is there the best chance of supporting positive change and having maximum impact on the lives of children and families.

Finally, EPs are researchers and have much to offer the study of implementation science. Understanding the fundamentals of this approach and supporting other researchers offer additional opportunities to bring about positive change.

Conclusions

Implementation science offers a general approach for ensuring that programmes make sustainable positive differences. It acknowledges the interacting systems within which programmes operate and has the potential to significantly improve outcomes for individuals everywhere. Implementation science needs to be incorporated into the design and evaluation of every school programme to ensure effectiveness and sustainability. Many challenges remain; to embrace this approach successfully, players should concentrate on long-term gains rather than short-term fixes and invest the necessary funding, support and attention. The EP is in an ideal position to support the education system in using these principles and embracing new opportunities for joint working and cross-sector collaboration.

Author Contributions

The author confirms being the sole contributor of this work and approved it for publication.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Armenakis, A. A., Harris, S. G., and Mossholder, K. W. (1993). Creating readiness for organisational change. Hum. Relat. 46, 681–703. doi: 10.1177/001872679304600601

Axford, N., and Morpeth, L. (2012). “The common language service-development method: from strategy development to implementation of evidence-based practice,” in Handbook of Implementation Science for Psychology in Education, eds B. Kelly and D. Perkins (Cambridge: Cambridge University Press), 443–460.

Barnett, W. (1995). Long-term effects of early childhood programs on cognitive and school outcomes. Future Child 5, 25–50. doi: 10.2307/1602366

Birken, S. A., Powell, B. J., Shea, C. M., Haines, E. R., Kirk, M. A., Leeman, J., et al. (2017). Criteria for selecting implementation science theories and frameworks: results from an international survey. Implement. Sci. 12:124. doi: 10.1186/s13012-017-0656-y

Bosworth, K., Garcia, R., Judkins, M., and Saliba, M. (2018). The impact of leadership involvement in enhancing high school climate and reducing bullying: an exploratory study. J. Sch. Viol. 17, 354–366. doi: 10.1080/15388220.2017.1376208

Bronfenbrenner, U. (1979). The Ecology of Human Development: Experiments by Nature and Design. Cambridge, MA: Harvard University Press.

CAPS (2012). Available online at: https://incredibleyearsblog.wordpress.com/2015/05/01/children-and-parents-service-caps-early-years-social-and-emotional-wellbeing-awarded-best-practice-congratulations/

Carroll, C., Paterson, M., Wood, S., Booth, A., Rick, J., and Balain, S. (2007). A conceptual framework for implementation fidelity. Implement. Sci. 2:40. doi: 10.1186/1748-5908-2-40

CASEL (2012). Collaborative for Academic, Social and Emotional Learning. Available online at: www.casel.org

CAST (2012). Incredible Years. Available online at: http://www.incredibleyears.com/article/cast-study-the-incredible-years-basic-program-in-denmark-in-danish-af-foaeldreprogrammet-basic/

Dane, A., and Schneider, B. (1998). Program integrity in primary and early secondary prevention: are implementation effects out of control? Clin. Psychol. Rev. 18, 23–45. doi: 10.1016/S0272-7358(97)00043-3

Datnow, A., and Castellano, M. (2000). Teachers' responses to Success for All: how beliefs, experiences, and adaptations shape implementation. Am. Educ. Res. J. 37, 775–799. doi: 10.3102/00028312037003775

Dunst, C. J., and Trivette, C. M. (2009). Let's be PALS: an evidence-based approach to professional development. Infant Young Child. 22, 164–176. doi: 10.1097/IYC.0b013e3181abe169

Durlak, J. (2010). The importance of doing well in whatever you do: a commentary on the special section. Early Child. Res. Q. 25, 348–357. doi: 10.1016/j.ecresq.2010.03.003

Education Endowment Foundation (2018). Available online at: https://educationendowmentfoundation.org.uk/

Fallon, L. M., Cathcart, S. C., DeFouw, E. R., O'Keeffe, B. V., and Sugai, G. (2018). Promoting teachers' implementation of culturally and contextually relevant class-wide behavior plans. Psychol. Sch. 55, 278–294. doi: 10.1002/pits.22107

Fixsen, D. L., Blase, K. A., Timbers, G. D., and Wolf, M. M. (2001). “In search of program implementation: 792 replications of the Teaching-Family Model,” in Offender Rehabilitation in Practice: Implementing and Evaluating Effective Programs, eds G. A. Bernfeld, D. P. Farrington, and A. W. Leschied (New York, NY: John Wiley & Sons), 149–166.

Fixsen, D. L., Blase, K. A., Naoom, S. F., and Wallace, F. (2009a). Core implementation components. Res. Soc. Work Pract. 19:531. doi: 10.1177/1049731509335549

Fixsen, D. L., Blase, K. A., Naoom, S. F., Van Dyke, M., and Wallace, F. (2009b). Implementation: The Missing Link between Research and Practice. NIRN implementation brief, 1. Chapel Hill, NC: University of North Carolina at Chapel Hill.

Fixsen, D., Blase, K., Naoom, S., and Duda, M. (2013). Implementation Drivers: Assessing Best Practices. Chapel Hill, NC: University of North Carolina at Chapel Hill.

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., and Wallace, F. (2005). Implementation Research: A Synthesis of the Literature. Tampa, FL: University of South Florida.

Glasgow, R. E., and Emmons, K. M. (2007). How can we increase translation of research into practice? Types of evidence needed. Annu. Rev. Public Health 28, 413–433. doi: 10.1146/annurev.publhealth.28.021406.144145

Greenberg, M. T., Domitrovich, C. E., Graczyk, P. A., and Zins, J. E. (2005). The Study of Implementation in School-Based Preventive Interventions: Theory, Research and Practice (Unpublished Draft). Rockville, MD: U.S. Department of Health and Human Services.

Greenberg, M. T., and Kusche, C. A. (1996). The PATHS Project: Preventive Intervention for Children. Final Report to the National Institute of Mental Health, Grant (R01MH42131).

Gregson, J. A., and Sturko, P. A. (2007). Teachers as adult learners: re-conceptualizing professional development. J. Adult Educ. 36, 1–18.

Hepburn, H. (2015). Teacher Shortages: Act Now Before a 'Full-Blown Crisis' Emerges, Unions Warn. TES, 28 July 2015. Available online at: https://www.tes.com/news/teacher-shortages-act-now-full-blown-crisis-emerges-unions-warn.

Jaycox, L. H., McCaffrey, D. F., and Ocampo, B. W. (2006). Challenges in the evaluation and implementation of school-based prevention and intervention programs on sensitive topics. Am. J. Eval. 27, 320–336. doi: 10.1177/1098214006291010

Johnson, K. W., Ogilvie, K. A., Collins, D. A., Shamblen, S. R., Dirks, L. G., Ringwalt, C. L., et al. (2010). Studying implementation quality of a school-based prevention curriculum in frontier Alaska: application of video-recorded observations and expert panel judgment. Prevent. Sci. 11, 275–286. doi: 10.1007/s11121-010-0174-5

Joyce, B., and Showers, B. (2002). “Student Achievement through Staff Development,” in Designing Training and Peer Coaching: Our Needs for Learning, eds B. Joyce and B. Showers (Virginia: National College for School Leadership), 1–5.

Kam, C., Greenberg, M., and Walls, C. T. (2003). Examining the role of implementation quality in school-based prevention using the PATHS curriculum. Prev. Sci. 4, 55–63. doi: 10.1023/A:1021786811186

Kelly, B. (2012). Evidence-Based In-Service Training Methods and Approaches. MSc Educational Psychology Tutorial. Glasgow: University of Strathclyde.

Kelly, B., Edgerton, C., Robertson, E., and Neil, D. (2012). “The Preschool PATHS curriculum: using implementation science to increase effectiveness,” in SDEP Conference (Edinburgh: Heriot-Watt University).

Kelly, B., and Perkins, D. F. (2012). Handbook of Implementation Science for Psychology in Education. Cambridge: Cambridge University Press.

Kessler, R., and Glasgow, R. E. (2011). A proposal to speed translation of healthcare research into practice: dramatic change is needed. Am. J. Prev. Med. 40, 637–644. doi: 10.1016/j.amepre.2011.02.023

Kinsman, J., Nakiyingi, J., Kamali, A., Carpenter, L., Quigley, M., Pool, R., et al. (2001). Evaluation of a comprehensive school-based AIDS education programme in rural Masaka, Uganda. Health Educ. Res. 16, 85–100. doi: 10.1093/her/16.1.85

Klimes-Dougan, B., August, G., Lee, C.-Y. S., Realmuto, G. M., Bloomquist, M. L., Horowitz, J. L., et al. (2009). Practitioner and site characteristics that relate to fidelity of implementation: the early risers prevention program in a going-to-scale intervention trial. Prof. Psychol. Res. Pract. 40, 467–475. doi: 10.1037/a0014623

Lewin, K. (1951). Field Theory in Social Science: Selected Theoretical Papers. New York, NY: Harper.

Lyon, A. R., Cook, C. R., Brown, E. C., Locke, J., Davis, C., Ehrhart, M., et al. (2018). Assessing organizational implementation context in the education sector: confirmatory factor analysis of measures of implementation leadership, climate, and citizenship. Implement. Sci. 13:5. doi: 10.1186/s13012-017-0705-6

Maher, E. J., Jackson, L. J., Pecora, P. J., Schultz, D. J., Chandra, A., and Barnes-Proby, D. S. (2009). Overcoming challenges to implementing and evaluating evidence-based interventions in child welfare: a matter of necessity. Child. Youth Serv. Rev. 31, 555–562. doi: 10.1016/j.childyouth.2008.10.013

Meyers, D. C., Durlak, J. A., and Wandersman, A. (2012). The quality implementation framework: a synthesis of critical steps in the implementation process. Am. J. Community Psychol. 50, 462–480. doi: 10.1007/s10464-012-9522-x

Michie, S., Fixsen, D., Grimshaw, J. M., and Eccles, M. (2009). Specifying and reporting complex behaviour change interventions: the need for a scientific method. Implement. Sci. 4:40. doi: 10.1186/1748-5908-4-40

Michie, S., van Stralen, M. M., and West, R. (2011). The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement. Sci. 6:42. doi: 10.1186/1748-5908-6-42

Mihalic, S. (2012). Blueprints. Available online at: http://www.colorado.edu/cspv/blueprints/Fidelity.pdf

Mihalic, S., Irwin, K., Elliott, D., Fagan, A., and Hansen, D. (2001). Blueprints for Violence Prevention. Juvenile Justice Bulletin.

Mintra, D. (2012). “Increasing student voice in school reform: building partnerships, improving outcomes,” in Handbook of Implementation Science for Psychology in Education, eds B. Kelly and D. Perkins (Cambridge: Cambridge University Press), 21.

Ogden, T., Bjornebekk, G., Kjobli, J., Christiansen, T., Taraldsen, K., and Tollefsen, N. (2012). Measurement of implementation components ten years after a nationwide introduction of empirically supported programs: a pilot study. Implement. Sci. 7:49. doi: 10.1186/1748-5908-7-49

Pettigrew, J., Miller-Day, M., Shin, Y., Hecht, M. L., Krieger, J. L., and Graham, J. W. (2013). Describing teacher–student interactions: a qualitative assessment of teacher implementation of the 7th grade keepin' it REAL substance use intervention. Am. J. Community Psychol. 51, 43–56. doi: 10.1007/s10464-012-9539-1

Rabin, B. A., Glasgow, R. E., Kerner, J. F., Klump, M. P., and Brownson, R. C. (2010). Dissemination and implementation research on community-based cancer prevention: a systematic review. Am. J. Prev. Med. 38, 443–456. doi: 10.1016/j.amepre.2009.12.035

Reinke, W. M., Herman, K. C., and Newcomer, L. L. (2011). The Incredible Years Teacher Classroom Management Training: the methods and principles that support fidelity of training delivery. Sch. Psych. Rev. 40, 509–529.

Ringwalt, C. L., Ennett, S., Johnston, T., Rohrbach, L. A., Simons-Rudolph, A., Vincus, A., et al. (2003). Factors associated with fidelity to substance abuse prevention curriculum guides in the nation's middle schools. Health Educ. Behav. 30, 375–391. doi: 10.1177/1090198103030003010

Rojas-Andrade, R., and Bahamondes, L. L. (2018). Is implementation fidelity important? A systematic review on school-based mental health programs. Contemp. Sch. Psychol. 18, 1–12. doi: 10.1007/s40688-018-0175-0

Scheirer, M. A. (2013). Linking sustainability research to intervention types. Am. J. Public Health 103, e73–e80. doi: 10.2105/AJPH.2012.300976

Senge, P. M. (1990). The Fifth Discipline: The Art and Practice of the Learning Organisation. New York, NY: Doubleday Currency.

Slavin, R. (2002). Evidence-based educational policies: transforming educational practice and research. Educ. Res. 31, 15–21. doi: 10.3102/0013189X031007015

Spoth, R., Guyll, M., Lillehoj, C., and Redmond, C. (2007). PROSPER study of evidence-based intervention implementation quality by community–university partnerships. J. Community Psychol. 35, 981–999. doi: 10.1002/jcop.20207

Sullivan, G., Blevins, D., and Kauth, M. R. (2008). Translating clinical training into practice in complex mental health systems: towards opening the “Black Box” of implementation. Implement. Sci. 3:33. doi: 10.1186/1748-5908-3-33

Tabak, R. G., Khoong, E. C., Chambers, D. A., and Brownson, R. C. (2012). Bridging research and practice: models for dissemination and implementation research. Am. J. Prev. Med. 43, 337–350. doi: 10.1016/j.amepre.2012.05.024

The US National Cancer Institute (2018). Research-Tested Intervention Programs (RTIPs). Available online at: https://rtips.cancer.gov/rtips/searchResults.do (accessed 11/06/18).

Topping, K. J., Thurston, A., Tolmie, A., Christie, D., Murray, P., and Karagiannidou, E. (2011). Cooperative learning in science: intervention in the secondary school. Res. Sci. Technol. Educ. 29, 91–106. doi: 10.1080/02635143.2010.539972

Waugh, R. F. (2000). Towards a model of teacher receptivity to planned system-wide educational change in a centrally controlled system. J. Educ. Adm. 38, 350–367. doi: 10.1108/09578230010373615

Webster-Stratton, C. (2012). Incredible Years. Available online at: http://www.incredibleyears.com/

Keywords: fidelity, implementation science, readiness to change, education intervention, schools

Citation: Moir T (2018) Why Is Implementation Science Important for Intervention Design and Evaluation Within Educational Settings? Front. Educ. 3:61. doi: 10.3389/feduc.2018.00061

Received: 05 July 2017; Accepted: 04 July 2018;

Published: 25 July 2018.

Edited by:

Ulrich Dettweiler, University of Stavanger, Norway

Reviewed by:

Sharinaz Hassan, Curtin University, Australia

Renae L. Smith-Ray, Walgreens, United States

Copyright © 2018 Moir. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Taryn Moir, tarynmoir@north-ayrshire.gcsx.gov.uk
