- ¹Department of Curriculum, Teaching and Learning, Ontario Institute for Studies in Education, University of Toronto, Toronto, ON, Canada

- ²Lynch School of Education, Boston College, Chestnut Hill, MA, United States

This research paper presents the design of an active learning curriculum and corresponding software environment called CKBiology, reporting on its implementation in two sections of a Grade 12 Biology course across three design cycles. Guided by a theoretical framework called Knowledge Community and Inquiry (KCI), we employed a design-based research methodology in which we worked closely with a high school biology teacher and a team of technology developers to co-design, build, test, implement, and revise this curriculum within a blended learning context. We first present the results of a needs assessment and baseline analysis in which we identify the design constraints and challenges associated with infusing a “traditional” Grade 12 Biology course with a KCI curriculum. Next, we present the design narrative for CKBiology in which we respond to these constraints and challenges, detailing the activity sequences, pedagogical aspects, and technology elements used across three design iterations. Finally, we provide a qualitative analysis of student and teacher perspectives on aspects of the design, including activity elements as well as the CKBiology interface. Findings from this analysis are synthesized into design principles that may serve the wider community of active learning researchers and practitioners.

Introduction

In today's era of “alternative facts,” the importance of a scientifically literate citizenry cannot be overstated. Combating complex global problems such as climate change, new viral epidemics, economic disparity, and nuclear threats will require a sustained collaborative effort among knowledgeable scientists, engineers, politicians, and a scientifically literate public. Thus, producing graduates who are prepared for occupations in science, technology, engineering, and mathematics (STEM) has become a global priority (OECD, 2012). However, dropout rates for STEM programs at the post-secondary level remain high. For example, in the United States 48% of bachelor's degree students and 69% of associate's degree students who enter STEM programs never complete them, with approximately half of these students switching to a non-STEM major and the other half dropping out before earning a degree or certificate (U.S. Department of Education, 2013). In Ontario, Canada (the context of the present study), Computer Science and Physical Sciences are two of the three undergraduate programs with the lowest graduation rates, with 38.3% and 33.9% of students, respectively, failing to complete these degrees (MAESD, 2016).

One factor influencing students' performance in STEM courses is the instructional strategies that are employed. As Kober (2015) describes, “A single course with poorly designed instruction or curriculum can stop a student who was considering a science or engineering major in her tracks” (p. xi). Bloom (1984) has observed that nearly any means of instruction is superior to lecture, and yet lecture remains the approach that many undergraduate STEM courses retain. In order to learn science and engineering well, students need to be able to understand and apply the practices of the discipline, develop skills in problem solving, communication, and collaboration, and critically evaluate new information in the field (Kober, 2015). Scholars and educational leaders have called for new pedagogical approaches that better prepare students to face the complex challenges of an increasingly globalized, technology-driven knowledge economy (Tapscott and Williams, 2012; Pellegrino and Hilton, 2013; OECD, 2016).

In response to such calls for change, science educators have explored new modes of learning and instruction such as “flipped classrooms,” wherein students spend their homework time watching video lectures and reading texts so that classroom time can be devoted to more active forms of collaborative group work, inquiry, and problem solving (Bens, 2005; DeLozier and Rhodes, 2017). Referred to broadly as “Active Learning” (AL), these approaches have become increasingly prominent, resulting in professional societies (e.g., SALTISE.ca) and university-based centers that support the design of AL courses (e.g., Charles et al., 2011). Several studies have measured the benefits of AL (Dori and Belcher, 2005; Code et al., 2014), and evidence has begun to accumulate that AL methods achieve better educational outcomes than lecture-based approaches (Freeman et al., 2014; Waldrop, 2015).

However, despite these indications of its efficacy, AL remains largely ill-specified in its formulation (Ruiz-Primo et al., 2011; Brownell et al., 2013). For example, while particular group strategies are often invoked (e.g., cooperative learning, collaborative projects, or jigsaw groups), very little is known about the learning processes that occur within such methods, the materials or assessments they require, or the role of the instructor (Henderson and Dancy, 2007). What makes a collaborative group activity effective? When should it be used within the curriculum? How will students collaborate, and to what end? How should their progress, process, or products be assessed? Simply naming or broadly describing an AL approach does not provide sufficient information about the content, structure, or sequencing of the activities or interactions (amongst students, materials, instructors, and the classroom environment) that it entails. Additionally, most forms of AL employ some form of technology, leveraging student laptops, mobile phones, Smart boards, and a wide range of software applications and classroom management tools. These technologies can offer teachers new opportunities to increase the sophistication of interactions and ideas in their courses; however, their integration within the classroom adds a layer of complexity to the curriculum, making it challenging for teachers to enact or “orchestrate” any given design. Thus, technology can be both a means of achieving active learning and a barrier to implementing it.

To advance the study of AL, this paper offers a detailed account of a full-course AL curriculum and a custom-designed software environment called CKBiology. We describe a design-based research project implemented in two sections of a Grade 12 Biology course that comprised three iterative design cycles over the course of one academic year. We worked closely with a high school biology teacher and a team of technology developers to co-design, build, test, and enact this curriculum to address the following two research questions:

1. What are the design opportunities and constraints associated with infusing a traditional Grade 12 Biology course with active learning designs?

2. What forms of active learning can address those constraints and challenges, and what technology elements are needed to support them?

Theoretical Foundations

Active Learning (AL) is rooted in the theoretical perspective of social constructivism, which emphasizes the importance of social interactions, cultural tools and activities in shaping the learning and development of an individual (Woolfolk et al., 2009). Here, the learner is seen as playing an active role in constructing her own knowledge, building understandings, and making sense of information. This contrasts with instructionist theories of learning in which the learner is seen as a recipient of knowledge transmitted from an external authoritative source. Examples of AL include solving ill-structured problems, negotiating diverse ideas and perspectives, engaging in inquiry and critical thinking, and developing a sense of responsibility for one's learning. Ruiz-Primo et al. (2011) characterize AL using the following four attributes: (1) conceptually-oriented tasks, (2) collaborative learning activities, (3) technology elements, and (4) inquiry-based projects.

One topic of great relevance to AL, particularly in regard to the role of technology and classroom learning environments, is that of scripting and orchestration (Dillenbourg and Jermann, 2007; Kollar et al., 2007). Similar to a theatrical script, which specifies all aspects of a play (i.e., stage, props, lines, actions, and behaviors), a pedagogical script explicates a learning design in terms of the participants, roles, goals, groups, activities, materials, and logical conditions or determinants of activity boundaries (Fischer et al., 2013). Like its theatrical counterpart, a pedagogical script is only an abstract or idealized description until it is actually performed. Orchestration refers to the enactment of the script, binding it to the local context of learners, classrooms, curriculum and instructor, and giving it concrete form in terms of materials, activities and interactions amongst participants (Tchounikine, 2013). Pedagogical scripts are orchestrated in the classroom, online or across contexts (i.e., home, school, or mobile), with the “orchestrational load” shared by (1) the instructor, who can tell students what to do, pause activities to hold short discussions, or advance the lesson from one point in the script to another; (2) the materials, including text or other media, instructions, or interactive Web sites; (3) the technology environment, such as online portals, discussion forums, note sharing, wikis or Google Docs; and (4) the physical learning environment such as the classroom configuration, furniture, walls, or lighting (Slotta, 2010).

Studies have shown that AL can have a variety of positive effects on teaching and learning, including improvements in student affect and motivation (Dori and Belcher, 2005), student engagement (Fisher, 2010), group interactions (Mercier et al., 2016), shared responsibility for learning (Baepler and Walker, 2014), and student learning outcomes (Brooks, 2011). In the largest and most comprehensive meta-analysis to date, Freeman et al. (2014) analyzed 225 studies that reported data on student performance in undergraduate science, technology, engineering, and mathematics (STEM) courses under traditional lecturing vs. active learning approaches. Taking into account factors such as class size, discipline, student/instructor quality, and methodological rigor within the included studies, their findings indicated that average student performance on examinations and concept inventories increased by 0.47 SDs (i.e., around 6%) in AL sections, and that students in classes with traditional lectures were 1.5 times more likely to fail than were students in classes with AL (Freeman et al., 2014).

Classrooms as Learning Communities

One promising approach to the design of AL curricula is to consider the classroom as a learning community. For many years, theories of collaborative learning tended to focus on how participating in a group would affect an individual's performance (Stahl, 2015); however, in the late 1980s two programs of research emerged that focused on groups of learners at the community level: Fostering Communities of Learners (FCL; Brown and Campione, 1994) and Knowledge Building (KB; Scardamalia et al., 1989). Both of these research programs upheld the notion that the activities occurring in school classrooms should mirror those of authentic research communities, incorporating aspects of collective epistemology and community-level knowledge advancement (Brown, 1994; Scardamalia and Bereiter, 2006). The theoretical perspectives of FCL and KB are distinct with respect to the objectives of the community, the centrality of student-generated ideas, and the level of emphasis placed on prescribed learning goals and activity structures (Scardamalia and Bereiter, 2007; Carvalho, 2017). However, they share a commitment to helping students and teachers identify as a coherent learning community, and to the sharing of information and the dissemination of knowledge and practices (Slotta and Najafi, 2010).

The learning community approach has been defined as “a culture of learning in which everyone is involved in a collective effort of understanding” (Bielaczyc and Collins, 1999, p. 2). Students bring diverse interests and expertise to the classroom, and the teacher helps them to work collectively to advance knowledge, with all individual members benefiting along the way. However, scholars have noted that it is challenging for teachers or researchers to coordinate such an approach (Kling and Courtright, 2003; van Aalst and Chan, 2007). Slotta (2014) articulated four key challenges to this approach: (1) to establish an epistemological context such that each student understands the collective nature of the curriculum; (2) to ensure that community knowledge is accessible as a resource during student activities; (3) to ensure that scaffolded inquiry activities advance the community's progress as well as that of all individual learners; and (4) to foster productive discourse that helps individual students and the community to progress.

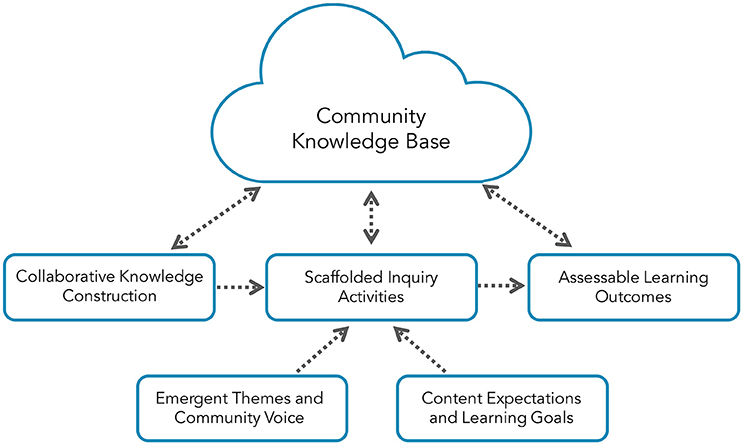

In response to the challenges of constructing effective learning communities, the Knowledge Community and Inquiry (KCI) model was developed to guide the design of collective inquiry curricula that integrate whole-class, small-group, and individual activities (Slotta and Peters, 2008; Slotta and Najafi, 2013). KCI provides structural requirements and design principles that call for (1) an epistemological orientation to help students understand the nature of science and learning communities, (2) a knowledge base that is indexed to the targeted science domain, (3) an inquiry script that specifies collective, collaborative, and individual activities in which students construct the knowledge base and then use it as a resource for subsequent inquiry, and (4) student outcomes that allow assessment of progress on targeted learning goals (see Figure 1). The model guides the design of activity sequences including individual, group (e.g., jigsaw), and whole-class activities (e.g., brainstorming, resource collecting), ensuring that all students progress on the learning goals.

To date, KCI curriculum designs have been enacted in elementary school, secondary school, and higher educational contexts. In elementary schools, work has included units in astronomy (Cober et al., 2013; Fong and Slotta, 2015), and ecology (Cober et al., 2013, 2015a). In secondary schools, KCI units have been designed on the topics of human disease (Peters and Slotta, 2010), climate change (Slotta and Najafi, 2013), evolution (Lui and Slotta, 2014), forces and motion (Tissenbaum et al., 2012), and literary studies (Carvalho and Hall, 2016). Recent work in secondary school contexts has extended beyond single curricular units to entail full course designs, including courses in Grade 12 Health Science (Serevetas, 2017) as well as the current work in Grade 12 Biology (Slotta and Acosta, 2017). Similarly, KCI research in higher educational contexts has included full course designs in pre-service teacher education (Slotta and Najafi, 2013), business and media (Ehrlick and Slotta, 2017), as well as a Massive Open Online Course for in-service teachers (Håklev and Slotta, 2017).

Methodology

Design-Based Research

This project employed a design-based research (DBR) methodology to support the creation and development of innovative learning environments through the parallel processes of design, evaluation, and theory-building (Brown, 1992; Collins, 1992; Edelson, 2002). DBR emerged in the early 1990s in response to the limitations researchers encountered with traditional psychological research methods, which required controlled experimentation and regarded cognition as something that “takes place inside the learner and only inside the learner” (Simon, 2001, p. 210). In contrast, DBR activities are situated in naturalistic contexts and focus on understanding the messiness of real-world practice (Barab and Squire, 2004; Bell, 2004). Within such complex environments, it would be difficult, if not impossible, to test the causal impact of specific independent variables on specific dependent variables using experimental designs (Barab, 2014). Consequently, DBR is not concerned with so-called “learning outcomes,” but rather with the design of innovations to transform “existing situations into preferred ones” (Stahl, 2015, p. 15). In this sense, DBR draws from an engineering ethos, wherein success is seldom defined by the ability to provide theoretical accounts of how the world operates, but rather by the development of solutions to problems that satisfy existing conditions and meet the stated design goals within prevailing constraints (Nathan and Sawyer, 2014).

DBR activities are inherently iterative, involving cycles of design, enactment, detailed study, and revision (Bell et al., 2004). What sets DBR apart from other forms of educational research is its commitment to the development of sustained innovations in education (Bereiter, 2002). Beyond merely understanding the usability or feasibility of new educational technologies, DBR researchers seek to understand how these technologies can be productively embedded into educational systems (e.g., curriculum designs, activity structures, pedagogical practices; Bell et al., 2004) as well as the relative improvability of these designs within such systems (Bereiter, 2002).

Co-design

The effectiveness of any research that is situated within a real classroom context is critically dependent upon the classroom teacher's understanding and enactment of the designed approaches and materials (Slotta and Peters, 2008). Studies on the adoption of educational innovations have shown that the level and nature of adoption is strongly influenced by teachers' interpretations of their classroom ecologies, including how they perceive the designs to align with their goals, teaching strategies, and learning expectations (Blumenfeld et al., 2000; Means et al., 2001; Roschelle et al., 2006). Furthermore, practitioners who adopt research-based approaches must be receptive to innovations and willing to experiment with unproven methods (Bereiter, 2002).

As such, researchers in the learning sciences have developed a collaborative approach to the design of educational innovations that are deeply situated within the context of real-world classrooms. In contrast to top-down approaches to educational reform, in which teachers are simply provided with an approach that they are expected to adopt, the co-design method engages teachers as active participants in the design process, positioning them as professional contributors to an interdisciplinary co-design team (Collins, 1992). Roschelle et al. (2006) define co-design as “a highly-facilitated, team-based process in which teachers, researchers, and developers work together in defined roles to design an educational innovation, realize the design in one or more prototypes, and evaluate each prototype's significance for addressing a concrete educational need” (p. 606).

The co-design approach offers several benefits, including providing teachers with a high level of ownership and agency over the designed innovation (Roschelle et al., 2006). Because teachers remain actively involved throughout the entire design process, they not only develop a strong understanding of the underlying research but also firmly believe in the curricular materials that are produced (Cober et al., 2015b). Consequently, co-design has the potential to transform teachers into advocates for innovation within their school districts (Penuel et al., 2007).

Participants and Sampling

The co-design team in this project included five members: one Grade 12 Biology teacher, two technology developers, and two researchers. A purposeful sampling approach was used to select the teacher participant, based upon her prior experience in KCI research as well as her availability to design and implement a KCI curriculum during the 2016–2017 academic year. This teacher holds a PhD in biological sciences and has been teaching at our study school since 2010. Student participants consisted of two sections of a Grade 12 Biology course (n = 29), both taught by the same co-design teacher. The student participants were an incidental sample, in that they happened to be those who were assigned to the classes of our co-design teacher. Student participants were high-achieving and culturally diverse, reflecting the overall population of the school.

Ethics Protocol

This study was carried out in accordance with the Canadian Tri-Council Policy Statement on Ethical Conduct for Research Involving Humans. The student participants in this study were between 16 and 18 years of age; however, the risk to the participants was low because they were simply participating in classroom activities that were co-designed and led by their teacher. The ethics protocol for this study was approved by the Social Sciences, Humanities, and Education Research Ethics Board at the University of Toronto. Before the research began, both classes were given an orientation session in which the general purpose of the study was explained, and a letter of information was provided to all participants and their parents/guardians. Additionally, a consent form was provided to students and their parents/guardians requesting permission for them to participate in video recorded and/or photographed classroom sessions. For collaborative activities, only groups for which all members returned signed consent forms were recorded and/or photographed. All subjects, as well as their parents/guardians, gave written informed consent to participate in the study in accordance with the Declaration of Helsinki.

Research Setting

This research was conducted at a university laboratory school in a large urban area. Activities took place within three settings: (1) at home (online) using the CKBiology platform, (2) in a traditional science classroom with a “bring your own device” (BYOD) policy, and (3) in a specially designed AL Classroom, constructed by the school with the explicit aim of fostering productive collaborations between students. The AL Classroom featured six large multi-touch displays positioned around the perimeter of the room, racks of portable whiteboards and markers, and flexible furniture (i.e., on casters) that enabled students to be grouped in a variety of configurations.

CKBiology Technology Environment

In order to support a KCI approach throughout this course, we developed a custom technology environment called CKBiology, adapting the more general “Common Knowledge” (CK) platform that was designed to support KCI in previous studies (Fong et al., 2015). CKBiology was designed in close collaboration with our co-design teacher and reflects the unique design constraints of her course structure, her students, and her school context. Accordingly, CKBiology is a bespoke technology that was custom tailored to support our KCI script, enabling the teacher to orchestrate our various curricular activities and configurations (e.g., grouping students, distributing materials and activities), providing information at-a-glance to students and teachers about progress within the community, and scaffolding students in specific activities within the various learning contexts.

One important feature of this environment was a layer of intelligence, implemented on the Web server, that was invisible to any user interface but supported the scripting and orchestration conditions of our design. We sought to track the progress of individual students and groups, as well as the community as a whole, providing valuable information that could serve as input into teacher decisions or be automatically processed on our server. For example, the tracking of student activities could be used to provide real-time feedback or displays of progress, which could inform students and teachers alike in their timing, assessment, and orchestration of the activities (e.g., by showing progress bars for students, groups, and the community). For each iteration of our curriculum, CKBiology was adapted to support our specific scripting and orchestration conditions. The software thus served both to implement our designs and to capture data for analysis, and can be seen as a product or outcome of this design-based research. While this software was developed for research purposes and was not intended to serve as a standalone product, the software repository has been made freely available on GitHub under an open-source MIT license to anyone who wishes to use, copy, expand, or adapt this software for their own purposes.
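To make the idea of multi-level progress tracking more concrete, the following Python sketch shows one way a server could aggregate completion data at the student, group, and community levels and render it as a simple text progress bar. It is not CKBiology's actual code: the `ProgressTracker` class, the `progress_bar` helper, and the assumption that progress is simply the fraction of a fixed task list each student has completed are hypothetical simplifications for illustration.

```python
from collections import defaultdict


class ProgressTracker:
    """Hypothetical tracker: aggregates task completion per student, group, and community."""

    def __init__(self, tasks, groups):
        self.tasks = set(tasks)            # tasks every student is expected to complete (assumption)
        self.groups = groups               # group name -> list of student ids
        self.completed = defaultdict(set)  # student id -> completed task ids

    def record(self, student, task):
        """Log a completed task; repeated submissions of the same task count only once."""
        if task not in self.tasks:
            raise ValueError(f"unknown task: {task}")
        self.completed[student].add(task)

    def student_progress(self, student):
        """Fraction of the task list this student has completed."""
        return len(self.completed[student]) / len(self.tasks)

    def group_progress(self, group):
        """Mean of the members' individual fractions."""
        members = self.groups[group]
        return sum(self.student_progress(s) for s in members) / len(members)

    def community_progress(self):
        """Mean of every student's individual fraction across all groups."""
        students = [s for members in self.groups.values() for s in members]
        return sum(self.student_progress(s) for s in students) / len(students)


def progress_bar(fraction, width=20):
    """Render a fraction in [0, 1] as a text bar of '#' (done) and '-' (remaining)."""
    filled = round(fraction * width)
    return "[" + "#" * filled + "-" * (width - filled) + "]"


tracker = ProgressTracker(["n1", "n2", "n3", "n4"], {"A": ["ana", "ben"], "B": ["cy"]})
tracker.record("ana", "n1")
tracker.record("ana", "n2")
tracker.record("ben", "n1")
for task in ["n1", "n2", "n3", "n4"]:
    tracker.record("cy", task)
print("Group A  ", progress_bar(tracker.group_progress("A")))
print("Community", progress_bar(tracker.community_progress()))
```

Averaging individual fractions, rather than pooling all completed tasks, gives each student equal weight in a group's or the community's bar; an actual deployment might weight activities differently or track richer events than task completion.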

Sources of Data and Approach to Analysis

In order to enhance the validity of findings throughout this project, data were triangulated from the following sources:

1. Design documents, including co-design meeting minutes, lesson planning documents, and software mockups;

2. Audio and video recordings, used to document small groups during in-class review sessions;

3. Researcher field notes, which provided a thick description of the research context/setting and curriculum enactment, including details surrounding the collaborative processes and interactions that occurred among individual students, groups, and the teacher;

4. Learning artifacts and data logs, including text-based notes, images, relationships between terms, review reports, and metadata captured by the CKBiology platform; and

5. Teacher interviews conducted at the end of each design cycle.

For each design cycle, findings from each of these data sources were synthesized into design recommendations to be incorporated into subsequent iterations of CKBiology. Specifically, we organized all of our enactment data according to the following three categories: (1) pedagogical challenges, (2) technological challenges, and (3) epistemological challenges. These categories were chosen because they mirrored the overarching design principles of the KCI model (Slotta, 2014). We then prioritized our findings from each of these categories using an informal scale ranging from “urgent” to “nice to have.” Working in consultation with the teacher, we negotiated which of these items we would address in the next design iteration and which items would or could be saved for future iterations.

Limitations

Overall, this research project was fairly context-specific, which makes it difficult to generalize findings to the broader population. In general, DBR addresses issues associated with replicability through the provision of detailed descriptions of the research context as well as an ongoing record of the design's history in the form of a “design narrative” (Cobb et al., 2003; Bell et al., 2004). A good design narrative provides an account of which design elements were intentional or accidental, successful or unsuccessful, explains why certain trade-offs were made, and provides justification as to why particular changes to the design over time were warranted (Bell et al., 2004). A strong design narrative allows others to judge the value of the design contribution and to connect its underlying ideas and findings to new contexts of innovation (Barab, 2014). Additionally, active involvement by the classroom teacher throughout the design process means that the designs are likely to be enacted faithfully, giving researchers confidence that any measures collected throughout the intervention will truly reflect the underlying theory (Slotta and Peters, 2008).

Needs Assessment

This section addresses our first research question: What are the design opportunities and constraints associated with infusing a traditional Grade 12 Biology course with AL designs?

Co-design Meetings

During the year leading up to our CKBiology implementation, we held a series of co-design meetings with our teacher participant to discuss the opportunities and constraints that existed at the course-level and the school-level which would guide our designs of an AL component for the following year's course. The school in which this work was situated offered full-year courses (as opposed to a semester system), which ran from September to mid-June. Our teacher had two sections of a Grade 12 Biology course for the 2016–2017 academic year, and wanted to separate the theoretical and practical (i.e., lab) portions of the course, such that September to April would be devoted to theory and April to June would be reserved for labs and experiments. In co-design, it is essential that designs accommodate all interests, so we agreed to this approach and suggested that our designs could fit within the earlier (theoretical) portions, readying students for the later lab-based activities. The teacher indicated that the school as a whole was seeking to promote inquiry-based approaches in many of their courses, but that such approaches were particularly challenging to implement in Grade 12 Biology, since it was notoriously content-heavy. To address the heavy content needs, we decided on an approach of developing a KCI component for homework and review activities that would complement the traditional instructional approaches used in class (e.g., lectures and worksheet activities). Our designs would also include a series of end-of-unit “review challenge” activities that would provide students with an opportunity for more creative, inquiry-oriented collaborations in a face-to-face context.

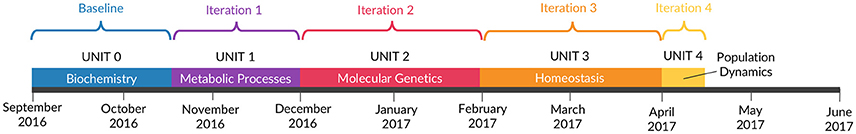

The Grade 12 Biology course was divided into five curricular units, as mandated by the Ontario Ministry of Education: (1) Biochemistry, (2) Metabolic Processes, (3) Molecular Genetics, (4) Homeostasis, and (5) Population Dynamics. Each unit spanned a period of ~6 weeks, with the exception of Unit 5 (Population Dynamics) which was only 2 weeks in duration. As shown in Figure 2, each of these units was treated as one design cycle, allowing us to evaluate and improve our designs from one unit to the next. In this paper, we report on results from the first four units only.

Baseline Observations: Biochemistry Unit

We collected baseline data in the form of lesson plans, researcher field notes, and teacher interviews during the Biochemistry Unit, the first of five units in the course. We have labeled this “Unit 0” so that the numbering of subsequent units would align with our numbered design iterations (e.g., Unit 1 for Iteration 1). Lessons were taught using a lecture-based format, with PowerPoints made available to students in advance of each lesson through the Moodle learning management system. Students were also given a paper booklet of handouts to help guide their note-taking for each lesson topic. These booklets were created by two teachers in the biology department, including our co-design teacher. Prior to each lecture, students were instructed to review the PowerPoints and arrive in class with the relevant pages of notes completed. The lectures served to reinforce concepts and provided an opportunity for students to ask the teacher about anything they did not understand.

In treating this unit as a baseline to inform the designs, our co-design teacher sought to try out the kinds of review activities that students would be performing in our subsequent KCI designs (i.e., a collective, learning community approach), but without the technology supports or structured materials. The purpose of this pilot effort was to inform our subsequent designs. The Unit 0 review activity included two parts, which took place over two class sessions. On the first day, students were given a printed copy of a research article on one of four topics related to biochemistry. Articles were distributed to students by the teacher based on physical proximity, such that students sitting close together received the same article. Students were free to choose their own seats upon entering the classroom, with most choosing to sit next to their friends. Each student was also given a paper handout containing a list of key terms and concepts they had learned throughout the unit. After reading their article independently, students were asked to highlight any terms or concepts from the list that applied to their article. Working in “article groups” of 3–4 students who had read the same article, students negotiated the relevance of the terms and concepts each had selected and generated a master list of terms with explanations justifying how each was applicable to the article. The master list generated by each group was collected by the teacher at the end of class. Prior to the second review period, the teacher made photocopies of each group's master list such that every group member received his/her own copy.

On the second day of review, students worked in jigsaw groups (i.e., with one representative from each of the previous article groups). The teacher assigned each of these groups an overarching theme (e.g., “matter and molecular interactions,” “form and function”) and asked them to identify cross-cutting “big ideas” that emerged across all four articles with respect to that theme. Groups were also given a paper handout with a series of questions/prompts for each article and a space to record their big ideas, along with their master lists of terms from the previous day. The teacher collected and assessed the “big ideas” handout at the end of the period.

Findings

The students in both class sections were high-achieving and performance-driven, reflecting the overall population of this school. Throughout the unit, the teacher reported that class time was mostly spent with her talking through the PowerPoints. While lecturing, she would assess students' understanding based on cues such as their facial expressions and the questions they asked aloud in class. For the review activities, the teacher indicated that there was a good mapping between the terms/vocabulary that students had learned throughout the unit and the terms included in the activity handouts. However, our field notes as well as the teacher interview revealed that students were unclear on the purpose of the review activities and, in particular, on how the “big ideas” they were describing would help them perform better on their unit test.

Throughout the review activities, the teacher walked around the room more or less at random to check on how students and groups were doing. According to the teacher, “I was just, like, walking around and checking on people like, ‘What are you doing?’ ‘Show me what you have done.’ ‘Please do your work.’ Stuff like that. And sometimes it worked, and sometimes it didn't work.” Researcher field notes indicated that, while students seemed engaged and on-task in their group discussions, they wrote very little on their handouts for submission. According to the teacher, “they did some work, but it wasn't magnificent work.”

As an outcome of our consultations and baseline observations, we identified the following opportunities and constraints to implementing our AL curriculum design within this Grade 12 Biology course:

Design Opportunities:

1. Adding a learning community “layer” onto the existing course structure—As part of their homework activities, there was an opportunity for students to work together to co-create a persistent, shared, community knowledge base which would later serve as a resource for their review activities. Engaging students as a learning community would require an explicit epistemic treatment such that they would view each other as collaborators rather than as independent learners working in parallel and competing for grades.

2. Supporting real-time formative feedback—There was an opportunity to support students and the teacher in tracking progress at various levels of granularity (i.e., as individuals, as small groups, and as a whole-class community). Giving the teacher an overview of the learning community's progress would enable her to make more informed decisions about when and where to intervene or provide assistance.

3. Designing conceptually rich and meaningful “review challenge” activities—There was an opportunity to design a “consequential task” (Brown and Campione, 1996) that would require students to draw from their community knowledge base in order to perform an engaging inquiry activity.

4. Active Learning Classroom & BYOD support—The school had recently completed construction of its own AL Classroom, which was available to be booked for our review challenge activities. Additionally, the school provided IT support for students to bring their own devices (BYOD) to class.

Design Constraints:

1. Course structure—Our designs were constrained to fit within the “theoretical” portion of the course only. With the exception of the review challenge activities, our designs would mostly be enacted by students outside of class time (i.e., for homework).

2. Curriculum expectations—Our designs had to conform to the content expectations of the Ontario Ministry of Education Grade 12 Biology (University Preparation) course.

3. Review challenge activities—Our review challenge designs were constrained to the (theoretical) material that students had already learned; there were limited opportunities to engage students in projects or labs in which they would learn or research a new topic.

4. CKBiology activities could not be for marks—To comply with our ethics protocol, students could not be directly evaluated on the work they completed as part of our design intervention. While this was not seen as a major issue at the outset (given the high performance of these students), it did become a factor, because this was a senior-year course and students had an extraordinary number of other activities and commitments (e.g., visiting universities).

Design Iterations: CKBiology and Active Learning

In this section, we respond to our second research question: What forms of active learning can address our constraints and challenges, and what technology elements are needed to support them? Given our co-design approach of adding AL designs as a culminating activity for each unit of the course, we report each iteration as a separate sub-section, summarizing what was learned and how it informed subsequent designs. In this way, we describe the complete arc of our design-based research, organized according to the temporal sequence in which it occurred. We close with a summary of the limitations of our study and potential applications of our findings to future work.

Iteration 1: Metabolic Processes Unit

In the Metabolic Processes Unit (hereafter “Unit 1”), we introduced KCI and the CKBiology platform for the “lessons” portion of the unit only, in part because we needed this iteration to inform the full feature set of CKBiology. Focusing our efforts on the “lessons” portion of this unit thus allowed us to carry our design and programming forward into Unit 2, where we added the review activities.

Design of Unit 1

At the beginning of the unit, we visited both class sections to orient students to KCI and CKBiology. After introductions, we began by discussing the idea of “Science 2.0,” explaining how the nature of science is changing and how large, collaborative research projects, facilitated by the social web, are becoming increasingly prevalent. Students were asked to imagine scientists working as collaborators across large distances and scales, rather than as isolated individuals working alone in a lab. Next, we introduced our research project and explained that students would have an opportunity to experience Science 2.0 as part of their school science activities. Throughout these activities, they would be asked to think of each other as collaborators rather than as independent, parallel learners competing for grades. At this time, students were informed that their participation in this research project would have no direct bearing on their grades, and that in choosing to participate they would be making a valuable contribution to CSCL research. Students were then introduced to the CKBiology platform: we demonstrated the lesson activities and other functionality of CKBiology on a display at the front of the room. The orientation session concluded with a question-and-answer period, during which students asked questions and offered comments related to CKBiology and the overall research project.

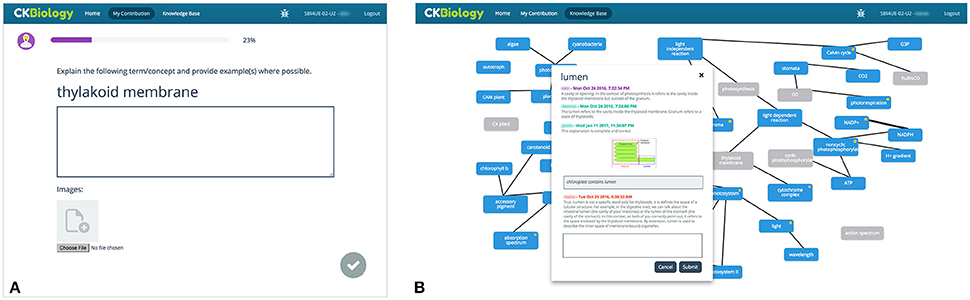

CKBiology activities were completed as part of students' homework and served as a complement to classroom lectures. There were two lesson topics in Unit 1 (photosynthesis and cellular respiration), which were taught over five class sessions. As in the previous unit, students were asked to view PowerPoints and complete the appropriate pages of notes/handouts before arriving in class. In class, lectures reinforced these concepts and gave students an opportunity to ask questions and clarify their understanding. Following each lecture, students logged on to CKBiology for homework, where they were assigned three different types of tasks. The first type of task was to explain a term or concept related to that day's lesson (see Figure 3A). The list of terms associated with a given lesson was established in advance by the co-design team, and the terms were divided evenly among the students in the class. Students' explanations of these terms were contributed to the community knowledge base as text-based notes with optional images (see Figure 3B). On average, students were assigned to explain three terms for each of the two lessons.

Figure 3. (A) CKBiology term explanation screen; (B) CKBiology Knowledge Base view, showing an open note for “lumen.” Within each note, the original explanation is shown with a purple heading, vetting is shown with a green heading, and additional comments are shown with a red heading.
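The text states only that terms were divided evenly among students; it does not describe how. As a minimal sketch, assuming a shuffled round-robin deal (the function `assign_terms` and its `seed` parameter are hypothetical, not CKBiology's code), an even split in which no two students' loads differ by more than one term could look like this:

```python
import random


def assign_terms(terms, students, seed=None):
    """Deal a lesson's terms to students so that loads differ by at most one.

    Hypothetical helper: terms are shuffled (reproducibly if `seed` is given)
    and then dealt round-robin, like cards, around the student list.
    """
    if not students:
        raise ValueError("at least one student is required")
    shuffled = list(terms)
    random.Random(seed).shuffle(shuffled)
    assignments = {student: [] for student in students}
    for i, term in enumerate(shuffled):
        assignments[students[i % len(students)]].append(term)
    return assignments
```

Shuffling before dealing is one way to avoid always giving the first terms on the co-design team's list to the same students; a fixed seed makes the allocation reproducible.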

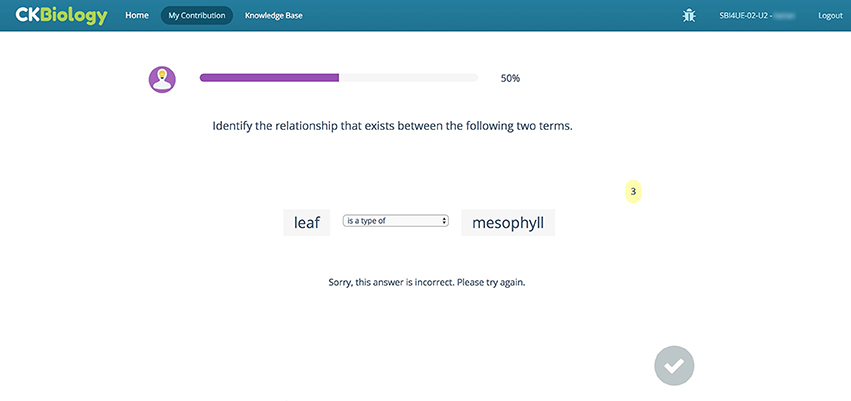

The second type of task was to identify relationships between terms or concepts in the knowledge base. Within the CKBiology interface, students were presented with two terms separated by a drop-down list of relationship types (see Figure 4). For this task, each pair of terms had a single “correct” relationship, established in advance by the co-design team and programmed into the software. If a student chose the correct relationship, they could advance to the next task, and a line would appear connecting the two terms in the knowledge base. The relationship would also appear as a sentence within each note involved in the relationship. For example, the sentence “chloroplast contains lumen” would appear in both the “chloroplast” note and the “lumen” note. If a student specified an incorrect relationship, a numeric counter would appear above their response indicating the number of attempts they had made. Since students could not advance until they had chosen the correct relationship, the counter was intended to discourage them from “gaming the system” by clicking through all possible answers without giving thoughtful consideration to each one. On average, students were assigned three or four relationships for each of the two lessons in Unit 1.

Figure 4. CKBiology relationships screen. A numerical counter (shown in yellow) indicates the number of attempts made at establishing a correct relationship.
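The relationship task behaves like a small state machine: a pre-programmed correct answer, an attempt counter, and, on success, a sentence written into both notes. The sketch below is hypothetical (the `RelationshipTask` class and the plain dict standing in for the knowledge base are illustrative assumptions, not CKBiology's implementation):

```python
from dataclasses import dataclass


@dataclass
class RelationshipTask:
    """One relationship task: two terms and a pre-programmed correct link."""

    source: str
    target: str
    correct: str       # relationship fixed in advance by the co-design team
    attempts: int = 0  # shown to the student as the numeric counter
    solved: bool = False

    def submit(self, choice, notes):
        """Check a drop-down choice; on success, write the sentence into both notes.

        `notes` maps a term to the list of relationship sentences in its note.
        Returns True once the task is solved, so the student may advance.
        """
        if self.solved:
            return True
        self.attempts += 1
        if choice != self.correct:
            return False
        self.solved = True
        sentence = f"{self.source} {self.correct} {self.target}"
        notes.setdefault(self.source, []).append(sentence)
        notes.setdefault(self.target, []).append(sentence)
        return True
```

The same success branch is where a concept-map edge between the two terms would be recorded; it is omitted here to keep the sketch short.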

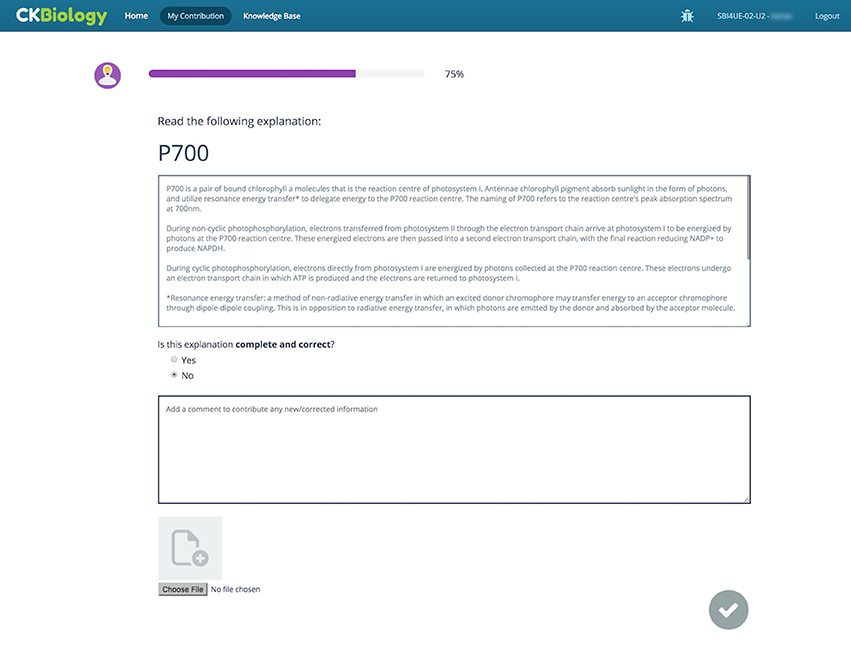

The third and final task was to peer review, or “vet,” the explanations submitted by other students. Students were presented with an anonymized note followed by the prompt: “Is this explanation complete and correct?” If the student responded “yes,” that student's name would be appended to the note along with the statement “This explanation is complete and correct.” If the student responded “no,” a text box and image uploader would appear beneath the original note, and the student would be asked to add any new ideas and/or corrected information (see Figure 5). Any additional information entered by the student would be appended to the original note along with the student's name. Subsequent vetting decisions performed on that note would be appended in the same fashion. On average, students were assigned four or five vets per lesson in Unit 1.

Within the knowledge base, a yellow dot was used to identify notes that had been deemed incomplete or incorrect as a result of student vetting. This yellow dot served as a cue to the teacher to take a closer look at these notes and potentially initiate a follow-up discussion to negotiate or improve upon these ideas as a class. As well, students and the teacher had the ability to comment upon any note within the knowledge base. Comments were appended to the note along with the commenter's name, and appeared below the rest of the note content.
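The vetting and commenting rules above amount to an append-only note with a derived flag. As a hedged sketch (the `Note` and `Vet` classes are hypothetical, and the rule that a single “no” vet triggers the yellow dot is an assumption, since the text does not say whether a later “yes” clears it):

```python
from dataclasses import dataclass, field

COMPLETE_TEXT = "This explanation is complete and correct."


@dataclass
class Vet:
    vetter: str
    complete: bool
    text: str


@dataclass
class Note:
    term: str
    author: str          # hidden from vetters, who see an anonymized note
    explanation: str
    vets: list = field(default_factory=list)
    comments: list = field(default_factory=list)

    def vet(self, vetter, complete, addition=""):
        """Append a vetting decision, with the vetter's name, below the note."""
        text = COMPLETE_TEXT if complete else addition
        self.vets.append(Vet(vetter, complete, text))

    def comment(self, name, text):
        """Comments appear below the rest of the note content."""
        self.comments.append((name, text))

    @property
    def flagged(self):
        """Yellow-dot cue (assumed rule): any vetter answered 'no'."""
        return any(not v.complete for v in self.vets)
```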

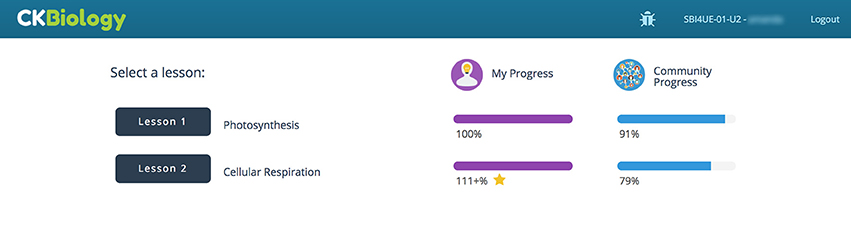

As students progressed through each of their assigned tasks, a progress bar at the top of their screen would indicate the percentage of work they had completed and the percentage of work that remained. Additionally, on their home screen (which showed information about all lessons and units) students could see their individual progress bar for each lesson as well as an overall progress bar for the whole learning community (see Figure 6). If a student saw that the progress level of the community was below 100%, they could choose to go “above-and-beyond” their own assigned tasks and make additional contributions to the knowledge base to boost community-level progress. Anyone going beyond their assigned tasks earned a gold star icon and additional progress points for that lesson. These additional contributions typically took the form of extra vetting tasks and did not detract from the assigned work of other students. Thus, no single student could dominate the knowledge base by populating an inordinate number of terms and relationships, and every student was still accountable for making their fair share of contributions.

Figure 6. CKBiology student home screen, showing individual progress bars (purple) and community-level progress bars (blue). Students who contribute more than the minimum requirement earn a gold star icon and additional progress points above 100%.
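The text does not give formulas for the progress bars. Assuming that progress is the ratio of completed to assigned tasks, that extra contributions count toward the community total, and that the community bar is capped at 100% (all assumptions; the function names are hypothetical), the calculations might be sketched as:

```python
def individual_progress(completed, assigned):
    """Percentage of a student's assigned tasks completed; may exceed 100."""
    return 100.0 * completed / assigned if assigned else 0.0


def has_gold_star(completed, assigned):
    """Gold star for going above-and-beyond the assigned tasks."""
    return completed > assigned


def community_progress(records):
    """Community bar for one lesson.

    `records` maps student -> (completed, assigned). Extra contributions
    count toward the total, which is capped at 100% (assumed rule).
    """
    done = sum(completed for completed, _ in records.values())
    total = sum(assigned for _, assigned in records.values())
    return min(100.0, 100.0 * done / total) if total else 0.0
```

Under these assumptions, a student who completes 9 of 6 assigned tasks shows 150% and a gold star, and the extra work partly offsets a classmate at 0%.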

The product of these homework activities was a shared community knowledge base that aggregated students' contributions in the form of a concept map for each lesson. After students completed their homework in CKBiology, the teacher could consult the knowledge base to assess their understanding and initiate a follow-up discussion in class if warranted. The teacher also had access to a dashboard that gave an overview of students' progress for each lesson. In cases where a student was not contributing their fair share to the knowledge base, the teacher would consult with the student and try to remedy the situation.

Enactment of Unit 1

Students completed their CKBiology homework on a regular basis throughout Unit 1. The average student progress across all lessons was 93% for both course sections, with many students choosing to go above-and-beyond their own assigned work. At the same time, an average of three students per class section did not make any contributions to the CKBiology knowledge base (i.e., their progress was at 0%) throughout Unit 1. For this design cycle, these missing contributions were left as gaps in the knowledge base.

Regarding the teacher's use of the knowledge base, she explained that she did not have time to refer to the knowledge base either in class or at home, but acknowledged that she wished she had: “I should have been using this more. I think it's really helpful when we're looking at different concepts to go in and check how much they're doing….and just point out to them, like, ‘there is issues with this and this and this’ and give them feedback. I did not have the time to actually go in and do that, which I think is a shame because I believe this is a great way to show them how things relate to each other and to also check their knowledge.”

The teacher also commented that since we did not design any review activities for this unit (i.e., a task in which students were asked to apply their knowledge base), students may have had difficulty seeing the relevance of their CKBiology work: “Just because [the knowledge base] exists that doesn't make it relevant to them, right?” When considering what form of review activities we should add in the next unit, the teacher commented that students would benefit from more structured review sessions rather than periods of free study: “If you give [students] review time in class they don't review. They just do their other homework and then they go home and then they stay up late at night the day before the test… and they pretend that that's enough to do well.” More generally, the teacher noted that these students tend to be resistant to pedagogical change: “When you ask them to do something different, they're very resistant. But I think they're coming around, or I feel that there has been a change or a turn on their perception and I think they're starting to see the value of what we're doing.”

Iteration 2: Molecular Genetics Unit

In the Molecular Genetics Unit (hereafter “Unit 2”), we maintained the same format and structure for the “lessons” portion of the unit, and introduced several new review activities in which students made constructive use of their knowledge base. Additionally, we introduced a group-formation tool as a component of the teacher dashboard to facilitate transitions between the “individual” and “small group” social planes. These improvements are described below.

Group Formation Tool

The group formation tool enabled the teacher to form groups of students “in the moment,” according to the following protocols:

• Group by progress—matches students with similar mean progress scores. Mean progress scores are calculated based on all lessons within a given unit.

• Jigsaw—shuffles previously existing groups such that each new group contains at least one representative from each of the previous groups.

• Random—distributes students into groups randomly.

• Manual mode—allows the teacher to modify any of the above groups, or to form groups by manually dragging-and-dropping student names into teams.
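The three automatic protocols listed above can be sketched as short functions; manual mode is a drag-and-drop interaction and is not modeled. This is a hypothetical sketch, not CKBiology's code: the function names are invented, “similar mean progress” is interpreted as contiguous bands of a ranked list, and the jigsaw creates as many new groups as the smallest previous group has present members, which guarantees one representative of every previous group in each new group. Absent students are excluded first, mirroring the tool's “absent” box.

```python
import random


def _deal(students, n_groups):
    """Deal students round-robin into n_groups groups."""
    groups = [[] for _ in range(n_groups)]
    for i, student in enumerate(students):
        groups[i % n_groups].append(student)
    return groups


def group_random(students, n_groups, absent=(), seed=None):
    """'Random' protocol: shuffle the present students, then deal them out."""
    present = [s for s in students if s not in absent]
    random.Random(seed).shuffle(present)
    return _deal(present, n_groups)


def group_by_progress(mean_progress, n_groups, absent=()):
    """'Group by progress': rank by mean unit progress, cut into contiguous bands."""
    ranked = sorted(
        (s for s in mean_progress if s not in absent),
        key=lambda s: mean_progress[s],
        reverse=True,
    )
    size, extra = divmod(len(ranked), n_groups)
    groups, start = [], 0
    for g in range(n_groups):
        end = start + size + (1 if g < extra else 0)
        groups.append(ranked[start:end])
        start = end
    return groups


def group_jigsaw(previous_groups, absent=()):
    """'Jigsaw': every new group gets at least one member of each previous group."""
    present = [[s for s in g if s not in absent] for g in previous_groups]
    n_groups = min(len(g) for g in present)
    if n_groups == 0:
        raise ValueError("a previous group has no members present")
    new_groups = [[] for _ in range(n_groups)]
    for group in present:
        for i, student in enumerate(group):
            new_groups[i % n_groups].append(student)
    return new_groups
```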

Although other grouping protocols could have been included, this initial set of protocols was chosen based on the teacher's input as to the kinds of groups she wished to form during the Unit 2 review activities. In subsequent iterations, we created additional grouping protocols based on the teacher's input for those activity designs (e.g., a group recommender, and a group-by-specialization protocol).

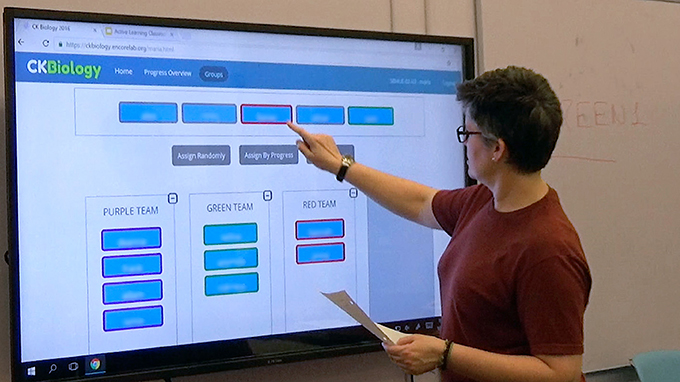

The interface for the group formation tool is presented in Figure 7. To use the tool, the teacher began by adding the desired number of teams or groups, which appeared as a series of empty boxes. After moving any absent students to the “absent” box, she either selected the desired grouping protocol using one of the on-screen buttons or populated each group manually by dragging and dropping student names. If adjustments were required, she could also modify group membership manually after applying a protocol.

Figure 7. Teacher using the CKBiology group formation tool on a multi-touch display within the Active Learning Classroom. Students' names have been blurred to preserve anonymity. Written informed consent was obtained from the depicted individual for the publication of this image.

Lessons

Once again, CKBiology activities were completed as part of students' homework and served as a complement to the traditional classroom lectures. There were five lesson topics in Unit 2, which were taught over nine class sessions. Before arriving to class, students were asked to view the PowerPoints and complete the appropriate pages of notes/handouts. In class, lectures served to reinforce these concepts and provided students with an opportunity to ask questions and clarify their understandings. Following each lecture, students logged on to CKBiology where they completed their explanation notes, relationships, and vetting. On average, students were assigned three explanations, three relationships, and seven vets for each lesson in Unit 2.

Review Activities

We designed four review activities for Unit 2. The goal of these review activities was for students to draw upon the knowledge base they had co-constructed throughout the unit, and to apply this knowledge to a new context of inquiry.

Review 1

The first review activity was completed individually. Within the CKBiology interface, students were asked to select a field of research from among four choices: (1) Cell biology, (2) Food science, (3) Pathology, and (4) Pharmacology. There was a maximum of four students per topic, with students receiving a notification if their chosen specialization was full. Students were then presented with a short article related to their chosen research field, and were instructed to “tag” any terms/concepts from the knowledge base that were relevant to the article. Next, students had to explain how each term/concept they had tagged was applicable within the context of their article. There was no minimum or maximum number of tags required for this activity, which was considered completed as long as students had provided explanations for all of the tags they had applied. The teacher's dashboard showed which students had completed the activity, were still in progress, or hadn't yet started.
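Two mechanics in this activity are rule-like: the four-student cap per research field and the three-state status shown on the teacher's dashboard. A minimal sketch follows; the function names are hypothetical, and treating a student with an open activity but no tags yet as “in progress” is an assumption the text does not settle.

```python
MAX_PER_FIELD = 4  # from the text: at most four students per research field


def choose_field(signups, student, field_name, max_per_field=MAX_PER_FIELD):
    """Try to enrol a student in a field; False means 'this specialization is full'."""
    members = signups.setdefault(field_name, [])
    if len(members) >= max_per_field:
        return False
    members.append(student)
    return True


def review1_status(tags):
    """Dashboard status for Review 1.

    `tags` is None if the student has not started; otherwise it maps each
    tagged term to the student's explanation. The activity counts as
    completed once at least one tag exists (assumed) and every tag has a
    non-empty explanation.
    """
    if tags is None:
        return "not started"
    if tags and all(explanation.strip() for explanation in tags.values()):
        return "completed"
    return "in progress"
```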

Review 2

For the second review activity, students were assigned to jigsaw groups containing one representative from each of the four fields of research. The CKBiology interface contained each of the four articles, as well as an aggregation of all of the tags that each student had applied. The color intensity of each tag varied from pale blue to dark blue, depending on how many of the four articles contained that tag. Clicking on each tag brought up a “cross-cutting ideas” screen that prompted students to “explain how this term/concept is common across all of these articles.” Beneath the text box appeared each of the explanations that individual group members had submitted in Review 1 (i.e., of how the tag was related to one specific article). Students were also given the option to remove a term/concept if no cross-cutting ideas could be identified.
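The tag shading can be read as a count of how many articles share a tag, mapped onto a pale-to-dark blue scale. In the sketch below, the two endpoint colors and the linear interpolation are assumptions (the text names only “pale blue” and “dark blue”), and `tag_counts` and `tag_color` are hypothetical names:

```python
from collections import Counter

PALE = (220, 235, 250)  # assumed "pale blue" (tag found in one article)
DARK = (8, 48, 107)     # assumed "dark blue" (tag found in all articles)


def tag_counts(tags_by_article):
    """Number of distinct articles in which each tag appears."""
    return Counter(tag for tags in tags_by_article.values() for tag in set(tags))


def tag_color(count, n_articles=4):
    """Interpolate linearly from PALE (1 article) to DARK (all articles)."""
    t = (count - 1) / (n_articles - 1) if n_articles > 1 else 1.0
    rgb = tuple(round(p + (d - p) * t) for p, d in zip(PALE, DARK))
    return "#{:02X}{:02X}{:02X}".format(*rgb)
```

Counting distinct articles (via `set`) means a tag applied twice to the same article does not appear more cross-cutting than it is.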

Review 3

The third review activity was a group challenge completed in the AL classroom. Students worked in groups of five, with all groups performing the same activity in parallel. The teacher created the groups with the group formation tool using the “assign randomly” protocol. The progress of each group was visible to students and the teacher on a “progress overview” screen at the front of the room. Tapping on a group's name allowed the teacher to see the responses that group had submitted so far, thereby informing her of which groups, if any, required her attention at a given moment. The premise of Review Activity 3 was that each group had been hired by a research funding agency to evaluate a research proposal and decide whether the proposed project was both possible and scientifically sound. As part of their evaluation, groups had to prepare a report in which they explained key elements of the research and commented on its plausibility. CKBiology scaffolded the creation of this report, with students responding to a series of questions and virtual analyses (e.g., gene sequencing, protein synthesis, PCR, plasmid cloning). Ten questions were displayed in turn on a large multi-touch screen, with responses entered using a shared wireless keyboard. Group members also used their own personal devices to consult the knowledge base and other online resources throughout this activity.

Review 4

In the final review activity, students were assigned to jigsaw groups consisting of at least one representative from each of the Review 3 groups. To begin, each group was given one of the 10 questions from the Review 3 activity, along with the three responses to that question submitted by the Review 3 groups. Their task was to discuss these responses and improve upon the ideas therein, arriving at a “best version” of the response to submit to the funding agency. Groups were also asked to tag concepts from the knowledge base that reviewers would need to understand in order to respond to that question. Once a group had submitted a best response with tags, it received another question, until all 10 questions had been reviewed by at least one group. The output of Review Activity 4 was a whole-class version of the review report, which consolidated students' ideas and informed a final discussion about whether the proposed research project should be funded.
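The hand-out rule (each group receives a new question on submission until every question has been reviewed once) is essentially a work queue. A hedged sketch, with the `QuestionDispatcher` class and its methods as hypothetical names; the text does not say what groups did after the queue emptied, so here they simply receive `None`:

```python
from collections import deque


class QuestionDispatcher:
    """Hands out Review 4 questions until each has been reviewed at least once."""

    def __init__(self, question_ids):
        self.total = set(question_ids)
        self.queue = deque(sorted(self.total))  # not yet handed to any group
        self.best = {}  # question -> (group, best response, tags)

    def next_question(self):
        """Next unassigned question, or None once every question is handed out."""
        return self.queue.popleft() if self.queue else None

    def submit(self, group, question, response, tags):
        """Record a group's best version and give the group its next question."""
        self.best[question] = (group, response, list(tags))
        return self.next_question()

    def all_reviewed(self):
        return self.total <= set(self.best)
```

For example, three groups start on questions 1 to 3; whichever group submits first picks up question 4, so faster groups naturally take on more questions.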

Enactment of Unit 2

Several pedagogical challenges arose during the enactment of Unit 2. First, the novelty of the CKBiology lesson activities seemed to have started to fade, and students simply weren't keeping up with their CKBiology homework. Second, while the teacher continued to activate lessons in CKBiology as the unit progressed, time constraints prevented her from engaging students in follow-up discussions in which gaps in the knowledge base would have been revealed and discussed. Without these discussions, there was little social pressure to motivate students to do their homework. Before the final lesson, the teacher explored the knowledge base on her own and noticed that students' progress was low. However, this observation occurred right before the winter break, at which point little could be done to catch up.

The “review challenge” activities were scheduled to occur on return from winter break. However, since these activities relied upon completed explanations in the knowledge base, they could not proceed as planned. Instead, students spent the first review day catching up on outstanding CKBiology homework. We had booked a total of 3 days in the AL Classroom (ALC) for the review activities, and for various reasons it was not possible to postpone or reschedule any of these sessions. We therefore simplified Review 1 and decided to skip Review 2 altogether.

Students were quite engaged in the Review 3 challenge activity. In one of the sessions the teacher commented, “This is the most lively I've seen this class all year!” However, students progressed through the activity much more slowly than anticipated, partly because the winter break had affected their memory of the material and partly because of how meticulous their responses were. The teacher stated that she didn't want to hurry students along just for the sake of reaching the end of the activity, given that they were so deeply engaged and having such rich discussions. Consequently, by the end of the second review day most groups had completed only two or three of the 10 questions. On the third and final review day, we decided to continue with the Review 3 activity, which left us no choice but to forgo Review 4. Despite the extra time allotted for Review 3, none of the groups finished; most ended the period on question six (of 10).

In a debrief interview following this unit, the teacher said she wanted her students to be more motivated to complete their CKBiology activities, acknowledging the drawback of not being able to assign grades to students' work. Therefore, on the unit test (whose design was solely under her control), she decided to include several questions modeled after the CKBiology review activities. For this unit, students were provided with a research proposal related to gene expression and alternative splicing in aging, and were asked to evaluate the proposal as well as to “tag” (using pencil and paper) and explain any concepts necessary for evaluating it. Including similar types of questions on the unit test meant that completing the CKBiology work would benefit students' test performance.

Iteration 3: Homeostasis Unit

In response to some of the pedagogical challenges that arose during Unit 2 (most notably, students not keeping up with their CKBiology homework), one of the changes implemented for the Homeostasis Unit (i.e., Unit 3) was to provide class time for students to complete their CKBiology work. While the activity structure for the “lessons” portion of the script remained the same, the CKBiology work now took place in the science classroom rather than at home. Within the classroom, the knowledge base was projected at the front of the room while students were working. This meant that students' contributions were physically prominent within the space, making knowledge gaps more public and also allowing for more frequent discussions about aspects of the knowledge base (e.g., when there were evident vetting disagreements).

With respect to the review activities, we wanted to establish a more meaningful connection between the readings in Review 1 and the subsequent review activities. We therefore replaced the research articles in Review 1 with medical case studies, which students would apply toward solving a series of medical problems, and changed Review 2 into a “specialist certification” activity. In Review 3, students worked in jigsaw groups containing one representative from each specialization, with each group acting as a medical clinic. These changes are elaborated below. Based on the timing issues we had experienced in the previous unit, we also shortened Review 3 considerably, from 10 questions to five, and eliminated the fourth review activity altogether.

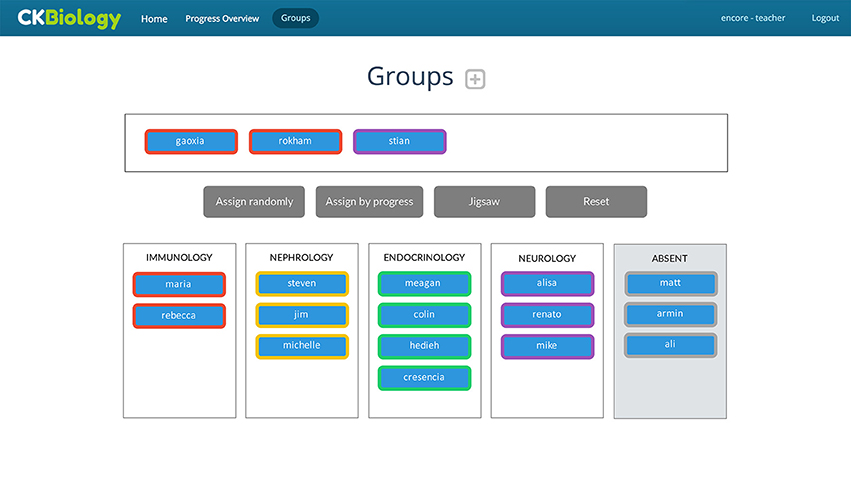

Several new technological features were added to Unit 3. First, we added a “specialization recommender” to Review 1, which made a recommendation to each student about which specialization they might choose (i.e., Immunology, Endocrinology, Nephrology, and Neurology), based on their contributions to the CKBiology knowledge base. We also enhanced the information provided on the teacher dashboard for Review 1, including the number of terms each student had tagged in addition to their level of completion. As well, complementing the student-facing recommender, we also added a teacher-facing recommender to the group formation tool for Review 2. Here, each specialization was assigned a color, and the names of students who had not chosen a specialization would appear with a colored outline corresponding to their recommended group. For example, Figure 8 shows that the students “gaoxia” and “rokham” are recommended for the “Immunology” group, and that “stian” is recommended for the “Neurology” group.

Figure 8. Teacher-facing specialization recommender. The colored ring around each student's name indicates their recommended group. Student names are pseudonyms.

A final technological design revision that was made in Unit 3 was the addition of a “call a conference” function. When students were working in their medical clinics (i.e., jigsaw groups) and a situation arose in which a particular specialist needed to consult with his/her fellow specialists, the “call a conference” button sent out a bat-signal-like alert to the other clinics, requesting the relevant specialists to convene in the designated conference area within the room. We did not put any restrictions on the number or frequency of conferences that could be called throughout Review 3.
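Functionally, “call a conference” broadcasts to the holder of one specialization in every other clinic. A minimal sketch, assuming clinics are stored as a mapping from clinic name to `{specialization: student}` (the `Alert` type, `call_conference` function, and message wording are all hypothetical); consistent with the text, it applies no limit on how often conferences are called:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    clinic: str
    student: str
    message: str


def call_conference(clinics, caller):
    """Alert the caller's fellow specialists in every other clinic.

    `clinics` maps a clinic name to {specialization: student}.
    """
    specialization = next(
        (spec for members in clinics.values()
         for spec, student in members.items() if student == caller),
        None,
    )
    if specialization is None:
        raise ValueError(f"{caller} is not a member of any clinic")
    message = f"{specialization} conference called by {caller}: please go to the conference area"
    return [
        Alert(name, members[specialization], message)
        for name, members in clinics.items()
        if members.get(specialization) not in (None, caller)
    ]
```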

Lessons

The activity structure for the “lessons” portion of Unit 3 was the same as in the previous two units, the only difference being that students now completed their CKBiology work in their classroom rather than at home. There were eight lesson topics in Unit 3, which were taught over 14 class sessions. In CKBiology, students were assigned an average of four to five explanations, five relationships, and 30 vets per lesson throughout Unit 3. (The unusually high number of vetting tasks was attributed to a software bug.)

Review Activities

There were three review activities for Unit 3:

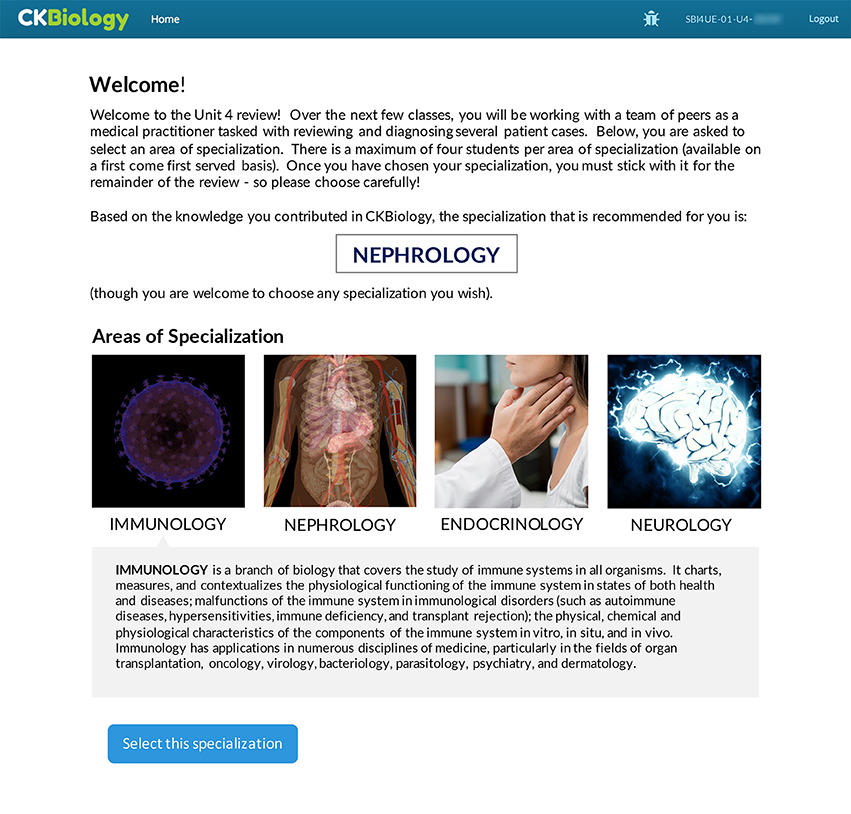

Review 1—Upon logging into CKBiology, students were asked to select an area of specialization from among four choices: (1) Immunology, (2) Endocrinology, (3) Nephrology, and (4) Neurology. As mentioned above, students were given a recommendation about which specialization would be well-suited to them. To generate this recommendation, we calculated a score for each specialization based on the student's contributions to the knowledge base. Accounting for a maximum of four students per specialization, we then recommended to each student the specialization for which they had the highest score among the groups that were not yet full. This recommendation (shown in Figure 9) was presented to students as entirely optional, and students were free to choose whichever specialization they wished. Once students had chosen a specialization, they were presented with a medical case study whose purpose was to introduce students to various symptoms, lab analyses, test results, and treatment options related to a disorder within their area of specialization. For example, students who had selected “endocrinology” were given a case study about Graves' Disease, and students who had selected “nephrology” were given a case study about Glomerulonephritis. Students were then instructed to tag their medical case study with terms/concepts from the knowledge base, and to provide explanations as to how these terms were applicable within the context of their case study.

Figure 9. Student-facing specialization recommender showing a recommendation for “Nephrology.” Beneath this recommendation, students could explore all four areas of specialization before making their final selection.

Review 2—The second review activity took place in the AL Classroom. Students worked within their specialist groups to solve a series of challenge questions related to their area of specialization. Questions were presented in CKBiology on a shared group display, and different group members entered responses using a wireless keyboard. Specialist groups also received a selection of paper handouts containing information on how to interpret various lab test results. For example, the Nephrology group was given handouts to help them interpret urinalysis and urine microscopy results. Likewise, the Neurology group was given handouts on how to interpret an EEG, the Endocrinology group received handouts on various blood tests, and the Immunology group was given handouts on autoantibodies. Specialist groups that successfully completed all of their challenge questions received “certification” in their area of specialization, which included a personalized paper certificate signed by their teacher.

Review 3—For the third review activity, students worked in jigsaw groups (i.e., “medical clinics”) containing one representative from each specialization. Playing the role of medical practitioners, students had to combine their diverse expertise to diagnose a virtual patient with ambiguous symptoms. This involved ordering the appropriate tests, explaining the reasoning behind their diagnosis, and identifying possible treatment options, thereby consolidating the knowledge they had acquired over the course of the unit. Students were guided through this activity by a series of five scaffolded questions in CKBiology. A “call a conference” button, which groups could use to request further information related to a particular specialization, was displayed next to each question. As in the previous unit, each group's progress was visible on a public display at the front of the ALC. The teacher could also view each group's responses in real time to judge when and where students would most benefit from her assistance.

Enactment of Unit 3

While completing the CKBiology work during class time reduced the time available for lecture, it had several benefits for the learning community. First, because the knowledge base was projected at the front of the classroom while students worked, any gaps or conflicts in it were made visible and salient. Consequently, discussions about the knowledge base occurred more frequently, whether initiated formally by the teacher or informally among peers as they worked. Second, student progress on the CKBiology lessons frequently exceeded 100%, with many students completing two or three times the work assigned to them (i.e., earning progress scores of 200–300%). The average student progress across all eight lessons in Unit 4 was 109.7%. This figure is particularly notable given the vetting bug noted above, which assigned students more items to vet than intended.
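Progress scores above 100% follow naturally if progress is treated as an uncapped ratio of completed to assigned work. The sketch below assumes exactly that; the function names and the idea of counting explanations, relationships, and vets as interchangeable "items" are illustrative assumptions, not a documented CKBiology formula.

```python
def progress_percent(completed, assigned):
    """Uncapped progress: completed items as a percentage of assigned items.

    Values above 100 mean the student did more than was assigned.
    """
    if assigned <= 0:
        raise ValueError("assigned must be positive")
    return 100.0 * completed / assigned


def mean_progress(per_lesson):
    """Unweighted mean of per-lesson progress percentages."""
    if not per_lesson:
        raise ValueError("need at least one lesson")
    return sum(per_lesson) / len(per_lesson)


# Hypothetical student: 10 items assigned, 25 completed -> 250% progress.
print(progress_percent(25, 10))  # 250.0
# Inflating the denominator (e.g., extra vets assigned by mistake) lowers the score.
print(progress_percent(25, 20))  # 125.0
```

Under this assumption, a bug that inflates the number of assigned vets enlarges the denominator, so an average above 100% is harder to reach than it would otherwise be.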

The teacher commented that the Unit 3 review activities seemed more cohesive than in previous units, and that the articles and case studies were more meaningfully connected. Regarding students' use of the specialization recommender, only 26.3% of students ended up choosing the specialization that was recommended to them. An additional 5.3% indicated that they would have chosen their recommended specialization had it not already filled up. The low uptake of recommendations may have been partly due to students completing Review 1 synchronously in class rather than asynchronously as homework, as originally planned. With all students working simultaneously, the system was generating recommendations at the same time as groups were filling up. Consequently, a student could be presented with a recommendation that, moments later, was no longer available.

Attendance for the review activities remained a challenge, and became particularly problematic when trying to form jigsaw groups of specialists. In some cases, there were specialists present for Review 3 who had been absent for Review 2 and had not yet earned their certification. In other cases, specialists who had earned their certification in Review 2 were absent for Review 3, leaving some groups without expertise in these specializations. These absences were handled in two ways. First, each medical clinic was provided with a folder containing all of the specialist resources that had been generated during Review 2, including the paper handouts for each specialization as well as access to the Review 2 reports in CKBiology. In this sense, the “knowledge” of that specialist was still present at the table, even if the person wasn't. Second, students could use the “call a conference” button if they needed further information related to a particular specialization. This “call a conference” functionality was used a total of six times across both class sections, with all specialist groups conferring at least once.

An additional design challenge that arose during the Unit 3 review activities concerned how group progress was measured and displayed. Technically, students could enter a single character as a response to a challenge question and then proceed to the next as if that response were complete (students could later go back and revise their responses). It was thus up to the teacher to identify such cases (e.g., using the “group report” function on her dashboard) and intervene when a particular answer was not up to par. For the purposes of the progress bar calculation, however, a single-character response was considered “complete,” and groups earned progress points for submitting such placeholder responses. Students quickly caught on and began entering single-character responses to the challenge questions, though not in order to earn 100% progress for little or no work. Instead, they did so to read all of the challenge questions ahead of time (i.e., to see where the activity was going) before going back and carefully considering each response. Consequently, several groups appeared to have earned 100% progress at the beginning of the activity, even though their responses were virtually empty.
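The "false progress" effect follows directly from a completion rule that treats any non-empty response as finished. A minimal sketch of such a rule, assuming (hypothetically) that a group's responses are stored as a list of strings with an empty string for each unanswered question; the function name `group_progress` is illustrative only.

```python
def group_progress(responses):
    """Percentage of challenge questions with a non-empty response.

    Mirrors the rule described above: any non-empty string, even a single
    character, counts as a completed answer. `responses` holds one string
    per question, with "" for questions not yet answered.
    """
    if not responses:
        return 0.0
    answered = sum(1 for r in responses if r)
    return 100.0 * answered / len(responses)


placeholders = ["x"] * 5                         # one character per question
one_real_answer = ["A full explanation", "", "", "", ""]
print(group_progress(placeholders))              # 100.0
print(group_progress(one_real_answer))           # 20.0
```

Five one-character placeholders yield the same 100% as five considered answers, which is why the progress bar alone could not distinguish a group previewing the questions from a group that had finished.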

This “false progress” made it challenging for the teacher to decide when and where to intervene. The teacher used the Reports screen on her dashboard to look at each group's responses, but she generally waited until a group claimed to be finished before reviewing them. According to the teacher: “What I did was like…when I would see that they were done…I would go and check [their answers]. ‘Ok…this is not great,’ ‘Mmm, this needs to be looked after…’ So then I would go back to them and say, ‘Listen people. Yes, you are on the right track, but you need to look at this and this and this,’ and ‘What about blablabla’ and ‘Did you consider blablabla.’ And that's how I used it.”

Overall, the enactment of Unit 3 was successful in that students co-constructed a quality knowledge base with many exceeding what was required of them, and then applied the knowledge base to a new context of inquiry (i.e., a medical case study). They were engaged in their review challenge activities, and completed everything within the time available. The teacher also noted that “The certificates were a big hit. Who knew? [laughs] If I had known this I would be giving them certificates every single class!” She also responded positively to the group formation tool: “It is so useful. I LOVED it. I thought that was fantastic… Because it makes it really easy to see what you're doing with your groups. It makes it really easy to see, for example, when we have the jigsaw, that you were actually jigsawing people properly…it's not something that I have to, you know, look at people or change them afterwards or whatever. Like I can really quickly do that and do it right. I thought it was great.”

Discussion

The sections above describe an uncommon opportunity to iteratively develop an active learning design over four distinct cycles during a single course offering. This opportunity arose from the cyclical nature of our course context, in which active learning elements occurred at the end of each curricular unit in the form of review activities. Because roughly a month separated iterations, we were able to examine each enactment, revise our designs, and develop the corresponding materials and technology environment (i.e., CKBiology). While this approach introduces the confound of having a single cohort of students engage with each successive iteration, it also allowed us to develop our designs in a single coherent context, building upon the knowledge and experience of community members. Future work will extend this research to four new school contexts through a comparative study of all participants, including the ways that teachers adopt and adapt our designs for their particular curricula, students, and schedules.

In response to our first research question (i.e., What are the design opportunities and constraints associated with infusing a traditional Grade 12 Biology course with active learning designs?), this work advanced a general active learning progression, as epitomized by the Unit 3 designs, wherein students worked as a community to explain, connect, and review all the salient concepts from the unit, and then used the resulting “knowledge base” as a resource for inquiry-oriented challenge activities. We employed a jigsaw group strategy for the review activities, first forming expert groups that completed an activity designed to deepen members' knowledge of their respective specializations, then regrouping students so that one member from each expert group was present in each general team. These teams were charged with creating reports and summaries and with applying their knowledge to contextually relevant challenges (e.g., reviewing grant proposals or making a medical diagnosis). Through three successive units (and one baseline unit), we progressively refined and adapted the review activities, adding new supports for student groups, for teacher and community awareness (i.e., of community progress), and for teacher orchestration.

In response to our second research question (i.e., What forms of active learning can address those constraints and challenges, and what technology elements are needed to support them?), this iterative design study advanced our understanding of the role of technologies in supporting students and teachers in AL. For students, we investigated and iteratively refined the role of progress bars for their individual, group, and community efforts (Acosta and Slotta, 2018). We also examined group process supports during review activities, including grouping strategies and a specialization recommender. In addition, we emphasized two forms of ambient technology in our AL classroom. The first was the concept network, shown on a central display, which indicated which terms had or had not yet been defined, whether and to what extent they had been vetted, and the relationships among them. This omnipresent display allowed the teacher to locate particular concepts or terms, touch them to reveal their definitions, and comment on relationships or conflicts in vetting. She could also spot gaps in the network and encourage greater progress. The second was the teacher dashboard, which was visible only to the teacher and was always available as a source of information about specific group products and productivity.

Throughout this effort, we were cognizant of several ongoing tensions that challenged our enactment. The first concerned the culture of assessment in the school, and students' felt need for grading and recognition of their contributions. Because our research ethics protocol disallowed assigning grades for participation, we were forced to focus on review activities that students perceived as supplementary. This perception was addressed by the teacher's decision to use our designs as the basis for part of her unit tests. Nevertheless, we recognize the general need for epistemological coherence within a learning community approach: students situated within an otherwise lecture- and test-based course will have difficulty identifying with and participating in any collective elements. Another challenge stemmed from the fact that this course was taught in the senior year of a university-preparatory program, when students have substantial extracurricular activities and commitments.