- Department of Organisational Research and Business Intelligence, University of South Africa, Pretoria, South Africa

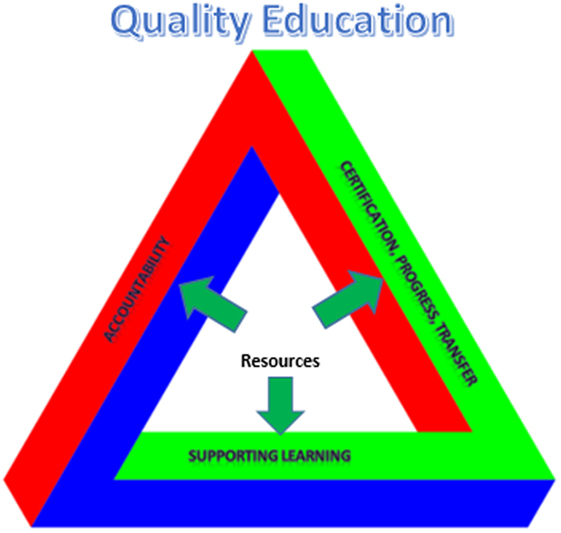

This article introduces the concept of the assessment purpose triangle to illustrate the balance of purposes that needs to be considered in assessment for educational quality. The triangle is a visual aid for stakeholders making value judgments about equitable resource allocation in an environment of scarcity. This article argues that each of the three basic purposes of assessment (assessment to support learning; assessment for accountability; and assessment for certification, progress, and transfer) needs to receive appropriate attention to support quality education. Accountability and certification, progress, and transfer are inherently high stakes due to systemic pressures such as institutional funding, accreditation with various bodies, legislative requirements, national and international competition, and public and media pressure. Resources are therefore likely to be diverted to these purposes from the lower-stakes assessment to support learning. This article illustrates the inherent dangers of over-emphasizing certain purposes to the detriment of others in any educational system. It also discusses the power inherent in assessment and argues for harnessing this power for quality education by catering for the three basic assessment purposes in an equitable manner. All actors in an educational system should be cognizant of the goals of the education system as a whole when developing and engaging with assessment. This article provides a conceptual tool for stakeholders in educational assessment to engage with these goals when considering the allocation of appropriate resources to each of the assessment purposes.

Introduction

Examining assessment in a vacuum ignores the complexity inherent in the realities of any educational system. This article therefore employs a systemic view of education, which includes the learners and teachers, schools, higher education institutions, accreditation bodies, government, and other stakeholders within a specific country or territory responsible for resource allocation and funding. In keeping with this systemic approach, this paper adopts the UNICEF definition of quality education (Adams, 2003), which encompasses quality learners, quality learning environments, quality content, quality processes, and quality outcomes combined.

Educational assessment can only truly benefit education if it is conceptualized as having the ultimate purpose of ensuring quality education. However, the waters of educational assessment are muddied by different classifications of assessment that focus on various levels of interrogation. The classification of assessment as either summative or formative leaves something to be desired, as the summative category gives little indication of how the data will be used (Newton, 2007). This has led to the interrogation of the purposes of assessment at a much more detailed level, such as assessment for: genuine improvement actions; instructional purposes; supporting conversations; professional development; encouraging self-directed learning; policy development and planning; meeting accountability demands; legitimizing actions; motivating students and staff; personnel decisions; student monitoring; placement; diagnosis; and resource allocation (Newton, 2007; Schildkamp and Kuiper, 2010). The intended use and audience(s) shape the design and implementation of any assessment, and complications arise when data are used for purposes or consumers other than those originally intended. This approach of differentiating very specific uses, while valuable when working in a particular topic area, is too detailed to help us conceptualize the role of assessment in establishing overall educational quality at a systemic level. Newton (2007) deals extensively with the importance of establishing clear, meaningful, and distinct groupings of assessment purposes. In line with Newton’s (2007) work, and with adaptation from the arguments of Brookhart (2001) and Black et al. (2003, 2010), this article employs a category grouping approach based on assessment purposes.

This article first examines the need for balance in fulfilling all the core purposes of educational assessment, which are grouped into three categories. Second, it examines the level of stakes associated with each of these purposes, since these stakes directly influence the investment in each type of assessment. Third, it contributes the assessment purpose triangle as a tool for engaging with the emphasis placed on, and the resources allocated to, the three categories of assessment purposes. Finally, it discusses the dangers inherent in over-emphasizing certain purposes to the detriment of the others in any education system. The author concludes that a quality education system requires provision for each of the three basic assessment purposes in an equitable, balanced manner. All stakeholders should be aware of these purposes when planning, providing input on, and implementing assessment. Even if stakeholders cannot directly influence resource allocation at their particular level within the system, the conceptualization of assessment purposes in this article will equip them to view assessment holistically, make informed contributions, and even shape how students frame assessment.

In considering the allocation of resources, the author argues that such apportionment should be equitable, though not necessarily equal. Equitable here means allocating resources according to the needs of the whole system, rather than in equal shares. Some educational systems may require more assessment to support learning, whereas others may require greater accountability; these needs must be determined by the unique dynamics of each system. This conceptualization of a limited pool of resources aims to sensitize decision-makers, planners, and implementers at various levels of the system to the fact that their particular needs and foci may affect the resources available for other assessment purposes throughout the system. In this way, the assessment purpose triangle provides a visual tool to help all stakeholders see the bigger educational picture and judge the urgency and importance of resource allocation according to the current needs of the educational system as a whole.

Fit for Purpose

For the purpose of this article, the author differentiates between three purposes: assessment to support learning; assessment for accountability; and assessment for certification, progress, and transfer. Conceptualizing the purpose of assessment as threefold provides a solid basis from which to interrogate assessment in support of quality education (Black, 1998; Newton, 2007; Schildkamp and Kuiper, 2010). This purpose-based grouping was proposed by Newton (2007) and is adapted in this article according to Brookhart (2001) and Black et al. (2003, 2010). Some functions are served exclusively by a single, purpose-developed assessment, but some assessments are designed to fulfill multiple purposes. Such multi-purpose designs increase the risk of fulfilling none of the roles appropriately, or of paying lip service to one purpose while actually focusing on another, although this outcome is not inevitable. The following paragraphs explore the definition and conceptualization of the purposes of assessment employed in this article.

Assessment to Support Learning

Assessment to support learning is often referred to as formative assessment. This article, however, follows Brookhart (2001) and Black et al. (2003, 2010), who emphasize the pedagogical role that summative assessment plays in supporting learning. Both students (Brookhart, 2001) and educators (Black et al., 2010) have judged summative assessment to serve not merely as a tool for reporting learners’ progress but also as a support for learning. Assessment to support learning refers to a forward-looking interaction between learning and assessment. This means using assessment data diagnostically to determine competence, gaps, and progress so that learners may adapt their learning strategies and teachers their teaching strategies (Black and Wiliam, 1988; Black, 1998). This role is usually, but not solely, associated with formative assessment. This type of assessment, whether formative or summative, may be a distinct event or integrated into teaching practice. It is employed to determine the degree of mastery attained to that point and to inform the learning required to move toward mastery. Formative assessment in particular is usually frequent and focuses on smaller units of instruction (Bloom et al., 1971; Newton, 2007).

Assessment for Accountability

Assessment for accountability is a function of the responsibility of educational institutions to the public and government for the funding received (Black, 1998; Pityana, 2017). This is mainly achieved by providing evidence that learning is being promoted, and the most viable way to do so on a wide scale is through aggregated learner results. International and national comparative and benchmark studies have also become popular as a means of providing accountability (Jansen, 2001; Howie, 2012; Archer and Howie, 2013).

Assessment for Certification, Progress, and Transfer

Assessment for certification, progress, and transfer needs to be served at both the institutional and the individual level. Programs and qualifications need to be certified and acknowledged by accreditation bodies to have value for further studies or employability (Altbach et al., 2009). The certification of an institution is therefore an acknowledgment by an accreditation body, such as a national education system or professional board, that a qualification meets the requirements set by that authority. At the individual level, certification is necessary to endorse the attainment of certain skills and knowledge, and it then serves as the entrance criterion for the next grade or level of learning. Assessment data are also required to attest to progress and to facilitate transfer between institutions, even where these are in different territories or countries. Such certification allows the receiving institution to decide whether previous learning will be recognized and credits transferred (Black, 1998; Garnett and Cavaye, 2015).

The Assessment Purpose Triangle

It is tempting to judge these purposes of assessment as inherently positive or negative, and even as independent of one another. In reality, each of the three basic purposes of assessment serves an essential role in a well-functioning education system and must be balanced against the others. Over-emphasis, under-emphasis, or absence of assessment for any of these purposes may negatively affect the overall quality of education. This paper does not prescribe a specific balance of resource allocation among the three purposes; rather, it aims to sensitize stakeholders at all levels to the considerations that each purpose entails. How resources are distributed will be determined by the needs of the specific education system and the level at which decisions must be made. By way of illustration, consider a government official who may overlook the value of formative assessment. With an understanding of the assessment purpose triangle, this stakeholder will be better equipped to make informed decisions, recognizing that allocating resources to assessment for accountability reduces the resources available for other assessment purposes. In the same manner, an educator may be sensitized to the importance of accountability practices and build time and resources into classroom planning to ensure that this requirement is met.

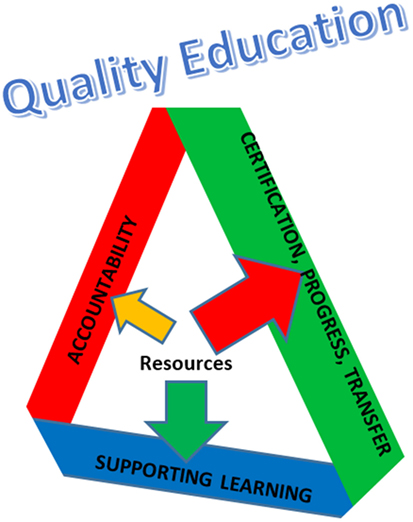

This required balance can be conceptualized as the assessment purpose triangle shown in Figure 1. The triangle is an adaptation of the project management Triple Constraint model, or Iron Triangle, which is built on the premise of resource scarcity (Atkinson, 1999). This article is therefore based on the premise that assessment for any purpose takes place in an environment of scarcity in which all resources must be shared. If external or internal motivators skew the emphasis toward one or two of the purposes of assessment, the remaining purpose(s) will have to sacrifice importance and resources. If this does not serve the current needs of the particular system, the affected purpose may be neglected to the detriment of overall systemic educational quality (Brown and Harris, 2016).

The assessment purpose triangle depicts each of the basic purposes of assessment on a separate side: assessment to support learning; assessment for accountability; and assessment for certification, progress, and transfer. This positioning is important because, while each purpose of assessment may contribute to the quality of education, the resources employed to operationalize these purposes (such as educator time, student time, marking load, administrative burden, and technology) belong to a shared pool. An over-emphasis on any one purpose will affect the other sides by diverting resources from one or both of the other essential assessment functions, thereby adversely influencing the quality of education. Such an outcome is not purposefully planned but arises from differential needs within the system (see Figure 2). Nevertheless, educational quality requires attention to each assessment purpose and an equitable distribution of assessment resources.

Achieving Appropriate Investment

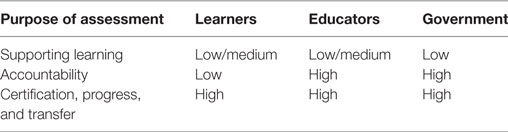

The three purposes of assessment discussed generally carry different levels of stakes for stakeholders. This paper highlights learners, educators, and government as the main stakeholders in educational assessment and summarizes the stakes traditionally associated with each across the three assessment purpose categories (see Table 1).

Each purpose of assessment is thus conventionally linked to a particular level of stakes. This is an important consideration when seeking an appropriate balance of assessment purposes in any educational system. Whenever the stakes are too high or too low, there is a risk of over-emphasis or under-emphasis and of skewing the importance attached to each assessment purpose. The following sections discuss the risks of such skewing in detail. Imbalance of assessment purposes does not always occur, but these sections show the threats and damage such imbalance can cause in an educational system.

Assessment to Support Learning

There is no doubt that concerted efforts are being directed toward improving the classroom assessment environment, and progress is being made (Black et al., 2010). Timely formative data are essential for continuing such informed improvements in education. Unfortunately, such data are often lacking.

The classroom is where the majority of regular assessments to support learning take place. It is mostly a low-stakes environment in which cheating, inadequate student and educator effort, classroom dynamics, and school complexities make for a less than optimal testing environment (Dorans, 2012; Wise and Smith, 2016; Brown, 2017). These complexities are amplified in cross-cultural environments, which accommodate a high level of diversity (Epstein et al., 2015), even where assessment is standardized. Where technology is implemented to improve psychometric accuracy, the novelty of the assessment approach may also adversely influence performance (Katz and Gorin, 2016).

The value and importance of valid, reliable assessment data for informing decision-making and planning monitoring are uncontested (William et al., 2004; DeLuca and Bellara, 2013; Epstein et al., 2015; Wise and Smith, 2016). Brown (2017), however, highlights the inconvenient truth that our assumption that assessment data are always trustworthy and subject to appropriate scrutiny is a fallacy. This basic assumption often remains unchallenged and leads to decisions based on data that have no more value than educators’ gut instinct or experience. This is a particular risk in the non-standardized, formatively oriented assessment environment of the individual classroom, which is mostly employed in support of learning.

An over-emphasis on assessment to support learning may lead to an over-dependence on norm referencing. As both formative and summative assessment usually take place in the classroom, the educator’s judgment is easily calibrated to the skill levels of the group, so that learner performance is compared to that of the other learners in the class or level. Learners in a particular school may, however, have started at a disadvantage, not having attained the quality standards required at that level of study. Without comparison to these standards and to the performance of learners in other institutions throughout the system, the learners’ attainment may be over-estimated, leaving them ill-equipped to compete with learners from other institutions.

Accountability

The monitoring culture in any country is influenced by its political environment. Accountability is necessary, particularly where the public purse is the largest funder of education in a country. Accountability, along with transparency, is an essential quality of ethical leadership for quality education (Pityana, 2017). Although accountability practices have had adverse effects, accountability remains an essential function of assessment, ensuring that national education departments can benchmark their country’s or territory’s education system against those of others. Without this information, national education systems lack a basis for evidence-based decision-making and for preparing students for the global labor market (Altbach et al., 2009).

High-stakes accountability practices have become popular in developing countries since the 1990 World Conference on Education for All in Jomtien (Howie, 2012). Whether one focuses on international comparative studies or on national assessments for accountability in the developing world, high-stakes accountability practices provide an avenue for examining the power and danger of assessment for accountability.

The following section focuses on the example of South Africa, which participated in a spate of high-stakes international comparative studies such as the Trends in International Mathematics and Science Study, the Progress in International Reading Literacy Study, the Second Information Technology in Education Study, and the Monitoring Learning Achievement study (Linn, 2000; Jansen, 2001; Archer, 2011; Archer and Brown, 2013). All these assessments reflected a shift not only toward government accountability but also toward global competitiveness and benchmarking (Altbach et al., 2009). South Africa did not perform favorably in these assessments, which drew negative public and media attention to the then Department of Education. Not surprisingly, shortly afterward the government announced a decision to participate in fewer international comparative studies, claiming that this would allow the interventions put in place to take full effect (Human Science Research Council, 2006).

South Africa’s Annual National Assessments (ANAs) were implemented by the new Department of Basic Education (DBE) in 2011 as an alternative accountability measure (Graven et al., 2013). These standardized national assessments examine languages, mathematics, literacy, and numeracy from Grades 1 to 9 (ages 6–16) (Department of Basic Education, 2017). The ANAs are touted as serving formative, teaching-for-learning purposes while also acting as summative accountability measures to examine whether schools are ensuring that learners meet the curriculum standards (Long, 2015; Govender, 2016).

It soon became apparent that the claim to support learning was merely symbolic: the ANAs took place before learners had an opportunity to complete the curriculum, while the administrative burden diverted school time from teaching and learning. In addition, teaching to the test was not only prevalent but encouraged by the Department of Basic Education, and children found the assessments exhausting, stressful, and disheartening (Weitz and Venkat, 2013; Spaull, 2014; Long, 2015; Govender, 2016). A labor dispute followed, with teacher unions and teachers refusing to administer the ANAs in 2016 (Mlambo, 2015).

Beyond these labor challenges, it became clear that the psychometric and comparative basis of the ANAs is questionable (Graven and Venkat, 2014; Long, 2015), with even the DBE stating that ANA results are not comparable across years. Yet this is precisely the purpose for which the data are primarily used (Spaull, 2013, 2014). The DBE explicitly uses these data to account for, and demonstrate “improvement” in, the quality of education and the fulfillment of the educational system’s responsibility toward the greater South African public. This is a clear example of the questionable validity, reliability, and generalizability of an assessment being ignored in favor of a political agenda, thereby subverting the aim of contributing to the quality of education.

A solitary focus on assessment for accountability risks an autopsy approach to assessment. Such assessments are mainly summative, usually assessing the attainment of certain standards at the end of an educational cycle. By the time any problems in attaining the required criteria are identified, the period in which the group of students could have received additional support to rectify them has passed. The group enters the next educational cycle without the prerequisite skills and knowledge for the new level, and resources must then be diverted from the new cycle of learning to address the gap. This may result in a cumulative educational backlog for students.

This difficulty is exacerbated by the nature of assessment for accountability, which generally cannot cover the whole curriculum and mostly produces aggregated, summative data. Such data often lack the diagnostic value required for intervention and improvement action, planning, and decision-making. An accountability measure can thus indicate that a problem exists, but will need to be supplemented with assessment to support learning before meaningful change can be effected.

Assessment for Certification, Progress, and Transfer

As Table 1 shows, the stakes of assessment for certification, progress, and transfer are high for learners, educators/institutions, and government (Altbach et al., 2009). Without appropriate certification, students’ access to the job market is limited. Institutions must attain accreditation for both the organization and its qualifications to secure funding and support from government and investors; students will not enroll in an institution that is not accredited, so the very survival of the organization would be threatened. At the governmental level, this type of assessment has global stakes, as the country needs to compete internationally and graduates must have the opportunity to seek employment globally (Altbach et al., 2009).

The motivation to manipulate the assessment system is thus great for all the stakeholders mentioned. At the same time, the role of educational institutions in preparing employable graduates to fulfill the needs of the labor market is receiving more scrutiny. Educational institutions are tasked with attaining and maintaining a balance between their academic purpose of producing well-rounded citizens and the more operational employability requirements of the labor market (McIlveen and Pensiero, 2008; Bridgstock, 2009; Altbeker and Storme, 2013; Archer and Chetty, 2013). However, the future world of work is unknown, and many futurist studies have turned to conjecture about what it will look like (Davis and Blass, 2007; Institute for the Future, 2011; UK Commission for Employment and Skills, 2014; Brynjolfsson and McAfee, 2015; Hodgson, 2016). Education is thus faced with producing employable graduates for a work environment that will have changed by the time they graduate, a goal that requires a high level of responsiveness. This employability is seen as a component of graduateness, yet another demand that educational institutions must meet (Knight and Yorke, 2003, 2004; Bridgstock, 2009; Altbeker and Storme, 2013). Efforts to increase employability are receiving global attention in education, with specific emphasis on transferable skills in addition to knowledge and field-specific skills (McCune et al., 2010; Archer and Chetty, 2013; Sawahel, 2014).

Finally, assessment for certification, progress, and transfer plays an essential role in any educational system in ensuring the employability and mobility of students and graduates (Altbach et al., 2009). Certification is often a requirement for applying for employment, while movement between territories, countries, and educational levels requires the transfer of credits and/or the reporting of progress for the recognition of prior learning (Garnett and Cavaye, 2015). An over-emphasis on assessment for certification, progress, and transfer may, however, detract from consideration of the realities of employability and the future of work. Institutions and educators may focus exclusively on meeting the general criteria and standards of accreditation bodies rather than on establishing transferable skills. The bureaucracy surrounding such standards and criteria also decreases the responsiveness of an education system to the changing demands of the world of work (Altbach et al., 2009).

Making Value Judgments

Assessment for any purpose takes place in an environment of scarcity. Educational stakeholders must make a value judgment about how to allocate assessment resources among the purposes of supporting learning; accountability; and certification, progress, and transfer. Two of these purposes (accountability; and certification, progress, and transfer) are inherently high stakes due to systemic pressures such as institutional funding, accreditation with various bodies, legislative requirements, national and international competition, and public and media pressure. As a result, the bulk of scarce resources is often invested in these purposes at the cost of assessment to support learning, and educational institutions and learners often lack the diagnostic data needed to make timely improvements and adjustments to ensure quality education. The assessment purpose triangle supports educational stakeholders by illustrating how competing assessment purposes demand varying levels of resources. Stakeholders who interrogate these demands have the opportunity to make informed decisions about the equitable allocation of resources to all three assessment purposes and so attain quality education through systemic change.

Conclusion

This article illustrates the power of assessment. In many cases, the stakes in assessment are so high that stakeholders subvert the original purpose of the assessment, knowingly or unknowingly, to avoid censure and negative consequences. While this article presents examples of the original purposes of assessment being skewed, it also illustrates what a powerful change agent assessment can be in education. However, the resources for assessment (educator and student time, motivation, effort, administrative load, infrastructure, and technological resources) are finite.

When we examine the quality of education, it seems obvious that student learning should be the main aim of a quality education system. In a complex world where large organizations, governments, and institutions are major features of education systems, it is easy to lose sight of this ultimate goal and to focus only on the task that you, as a cog in the system, have been assigned. This article argues that we cannot afford to lose this focus and that each of the purposes of assessment (assessment to support learning; assessment for accountability; and assessment for certification, progress, and transfer) needs to receive appropriate attention to support quality education.

The assessment purpose triangle serves as a reminder that all assessment purposes must be served to a lesser or greater degree, depending on the level of governance and practice within the education system on which we focus. Over-investing resources and stakes in any one of the purposes diverts them from the remaining two. Wherever there are high stakes, the motivation to manipulate the assessment increases, and there is therefore a serious risk of not fulfilling the original purpose.

The author introduced the concept of the assessment purpose triangle to illustrate the balance of purpose that needs to be achieved in assessment for educational quality. This provides a tool for stakeholders in educational assessment to engage with the allocation of appropriate resources to each of the three basic categories of assessment purposes, namely supporting learning; accountability; certification, progress, and transfer. The assessment purpose triangle also sensitizes users at all levels of the education system to the importance of each assessment purpose.

Thus, even if stakeholders such as teachers cannot directly affect how resources are allocated, they will be empowered to provide informed input in forums where resource allocation is discussed and consultation is held. In the same way, the assessment purpose triangle sensitizes government officials involved in resource allocation to the necessity of not over-emphasizing accountability practices to the detriment of assessment to support learning, since such inequitable allocation will, in time, adversely influence accountability scores. The assessment purpose triangle thus provides a tool for informed discussion and consultation, and for sensitizing stakeholders to the various purposes that assessment must serve in the drive for systemic quality of education, even if they are not directly responsible for a particular component of the triangle.

Author Contributions

The author confirms being the sole contributor of this work and approved it for publication.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer DAB and handling editor declared their shared affiliation, and the handling editor states that the process nevertheless met the standards of a fair and objective review.

References

Altbach, P. G., Reisberg, L., and Rumbley, L. E. (2009). Trends in Global Higher Education: Tracking an Academic Revolution. Paris: United Nations Educational, Scientific and Cultural Organization (UNESCO).

Altbeker, A., and Storme, E. (2013). Graduate Unemployment in South Africa: A Much Exaggerated Problem. Johannesburg: CDE Insight.

Archer, E. (2011). Bridging the Gap: Optimising a Feedback System for Monitoring Learner Performance. University of Pretoria. Available at: http://upetd.up.ac.za/thesis/available/etd-02022011-124942/

Archer, E., and Brown, G. T. L. (2013). Beyond rhetoric: leveraging learning from New Zealand’s assessment tools for teaching and learning for South Africa. Educ. Change 17, 131–147. doi:10.1080/16823206.2013.773932

Archer, E., and Chetty, Y. (2013). Graduate employability: conceptualisation and findings from the University of South Africa. Progressio 35, 136–167.

Archer, E., and Howie, S. (2013). “South Africa: optimising a feedback system for monitoring learner performance in primary schools,” in Educational Design Research – Part B: Illustrative Cases, eds T. Plomp, and N. Nieveen (Enschede: Netherlands Institute for Curriculum Development (SLO)), 71–93.

Atkinson, R. (1999). Project management: cost, time and quality, two best guesses and a phenomenon, its time to accept other success criteria. Int. J. Proj. Manage. 17, 337–342. doi:10.1016/S0263-7863(98)00069-6

Black, P. (1998). Testing, Friend or Foe? The Theory and Practice of Assessment and Testing. London: The Falmer Press.

Black, P., Harrison, C., Hodgen, J., Marshall, B., and Serret, N. (2010). Validity in teachers’ summative assessments. Assess. Educ. Princ. Pol. Pract. 17, 215–232. doi:10.1080/09695941003696016

Black, P., Harrison, C., Lee, C., Marshall, B., and Wiliam, D. (2003). Assessment for Learning – Putting It into Practice. Maidenhead, UK: Open University Press.

Black, P., and Wiliam, D. (1998). Inside the black box: raising standards through classroom assessment. Phi Delta Kappan 80, 139–148.

Bloom, B. S., Hastings, J. T., and Madaus, G. F. (1971). Handbook on Formative and Summative Evaluation of Student Learning. New York: McGraw-Hill.

Bridgstock, R. (2009). The graduate attributes we’ve overlooked: enhancing graduate employability through career management skills. High. Educ. Res. Dev. 28, 31–44. doi:10.1080/07294360802444347

Brookhart, S. M. (2001). Successful students’ formative and summative uses of assessment information. Assess. Educ. Princ. Pol. Pract. 8, 153–169. doi:10.1080/09695940123775

Brown, G. T. L. (2017). The future of assessment as a human and social endeavor: addressing the inconvenient truth of error. Front. Educ. 2:3. doi:10.3389/feduc.2017.00003

Brown, G. T. L., and Harris, L. R. (eds) (2016). Handbook of Human and Social Conditions in Assessment. Abingdon: Routledge.

Brynjolfsson, E., and McAfee, A. (2015). Will humans go the way of the horses? Labor in the second machine age. Foreign Aff. 94, 8–14.

Davis, A., and Blass, E. (2007). The future workplace: views from the floor. Futures 39, 38–52. doi:10.1016/j.futures.2006.03.003

DeLuca, C., and Bellara, A. (2013). The current state of assessment education: aligning policy, standards, and teacher education curriculum. J. Teach. Educ. 64, 356–372. doi:10.1177/0022487113488144

Department of Basic Education. (2017). Annual National Assessments (ANA). Available at: http://www.education.gov.za/Curriculum/AnnualNationalAssessments(ANA).aspx

Dorans, N. J. (2012). The contestant perspective on taking tests: emanations from the statue within. Educ. Meas. Issues Pract. 31, 20–37. doi:10.1111/j.1745-3992.2012.00250.x

Epstein, J., Santo, R. M., and Guillemin, F. (2015). A review of guidelines for cross-cultural adaptation of questionnaires could not bring out a consensus. J. Clin. Epidemiol. 68, 435–441. doi:10.1016/j.jclinepi.2014.11.021

Garnett, J., and Cavaye, A. (2015). Recognition of prior learning: opportunities and challenges for higher education. J. Work Appl. Manage. 7, 28–37. doi:10.1108/JWAM-10-2015-001

Govender, P. (2016). Poor Children ‘doomed’ in Early Grades. Mail and Guardian. Available at: https://mg.co.za/article/2016-05-20-00-poor-children-doomed-in-early-grades

Graven, M., and Venkat, H. (2014). Primary teachers’ experiences relating to the administration processes of high-stakes testing: the case of mathematics annual national assessments. Afr. J. Res. Math. Sci. Technol. Educ. 18, 299–310. doi:10.1080/10288457.2014.965406

Graven, M., Venkat, H., Westaway, L., and Tshesane, H. (2013). Place value without number sense: exploring the need for mental mathematical skills assessment within the annual national assessments. S. Afr. J. Child. Educ. 3, 131–143. doi:10.1177/008124630303300205

Hodgson, G. M. (2016). The future of work in the twenty-first century. J. Econ. Issues 50, 197–216. doi:10.1080/00213624.2016.1148469

Howie, S. (2012). High-stakes testing in South Africa: friend or foe? Assess. Educ. Princ. Pol. Pract. 19, 81–98. doi:10.1080/0969594X.2011.613369

Human Sciences Research Council. (2006). Mathematics and Science Achievement in South Africa, TIMSS 2003. Pretoria: HSRC Press.

Institute for the Future. (2011). Future Work Skills 2020. Palo Alto. Available at: http://apolloresearchinstitute.com/research-studies/workforce-preparedness/future-work-skills-2020

Jansen, J. D. (2001). On the politics of performance in South African Education: autonomy, accountability and assessment. Prospects 31, 553–564. doi:10.1007/BF03220039

Katz, I. R., and Gorin, J. S. (2016). “Computerising assessment: impacts on education stakeholders,” in Handbook of Human and Social Conditions in Assessment, eds G. T. L. Brown, and L. R. Harris (New York: Routledge), 472–489.

Knight, P., and Yorke, M. (2003). Assessment, Learning and Employability. Maidenhead: Open University Press.

Knight, P., and Yorke, M. (2004). Learning, Curriculum and Employability in Higher Education. London: Routledge Falmer.

Linn, R. L. (2000). Assessments and accountability. Educ. Res. 29, 4–16. doi:10.3102/0013189X029002004

Long, C. (2015). Beware the Tyrannies of the ANAs. Mail and Guardian. Available at: https://mg.co.za/article/2015-04-23-beware-the-tyrannies-of-the-anas

McCune, V., Hounsell, J., Christie, H., Cree, V. E., and Tett, L. (2010). Mature and younger students’ reasons for making the transition from further education into higher education. Teach. High. Educ. 15, 691–702. doi:10.1080/13562517.2010.507303

McIlveen, P., and Pensiero, D. (2008). Transition of graduates from backpack-to-briefcase: a case study. Educ. Train. 50, 489–499. doi:10.1108/00400910810901818

Mlambo, S. (2015). We Will Not Administer ANA. IOL. Available at: http://www.iol.co.za/dailynews/news/we-will-not-administer-ana-1951170

Newton, P. E. (2007). Clarifying the purposes of educational assessment. Assess. Educ. Princ. Pol. Pract. 14, 149–170. doi:10.1080/09695940701478321

Pityana, B. (2017). “Leadership and ethics in higher education: some perspectives from experience,” in Ethics in Higher Education: Values-Driven Leaders for the Future, 1st Edn, eds D. Singh, and C. Stückelberger (Geneva: Globethics.net), 133–161.

Schildkamp, K., and Kuiper, W. (2010). Data-informed curriculum reform: which data, what purposes, and promoting and hindering factors. Teach. Teach. Educ. 26, 482–496. doi:10.1016/j.tate.2009.06.007

Spaull, N. (2013). South Africa’s Education Crisis: The Quality of Education in South Africa 1994-2011. Johannesburg: Centre for Development and Enterprise.

Spaull, N. (2014). Assessment Results Don’t Add Up. Mail and Guardian. Available at: https://mg.co.za/article/2014-12-12-assessment-results-dont-add-up

UK Commission for Employment and Skills. (2014). “The future of work: jobs and skills in 2030,” in Evidence Report, Vol. 84. Available at: https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/303334/er84-the-future-of-work-evidence-report.pdf

Weitz, M., and Venkat, H. (2013). Assessing early number learning: how useful is the annual national assessment in numeracy? Perspect. Educ. 31, 49–65.

Wiliam, D., Lee, C., Harrison, C., and Black, P. (2004). Teachers developing assessment for learning: impact on student achievement. Assess. Educ. Princ. Pol. Pract. 11, 49–65. doi:10.1080/0969594042000208994

Keywords: assessment purposes triangle, assessment to support learning, assessment for accountability, assessment for certification, progress and transfer, quality education

Citation: Archer E (2017) The Assessment Purpose Triangle: Balancing the Purposes of Educational Assessment. Front. Educ. 2:41. doi: 10.3389/feduc.2017.00041

Received: 31 March 2017; Accepted: 25 July 2017;

Published: 08 August 2017

Edited by:

Jeffrey K. Smith, University of Otago, New Zealand

Reviewed by:

Chad W. Buckendahl, ACS Ventures, LLC, United States

David Alexander Berg, University of Otago, New Zealand

Copyright: © 2017 Archer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Elizabeth Archer, archee@unisa.ac.za

Elizabeth Archer