
ORIGINAL RESEARCH article

Front. Earth Sci., 20 March 2025

Sec. Economic Geology

Volume 13 - 2025 | https://doi.org/10.3389/feart.2025.1545002

This article is part of the Research Topic: Applications of Artificial Intelligence in Geoenergy.

In the context of geoscience and mineral exploration, accurate characterization of subsurface structures and their spatial variability is crucial for resource evaluation and geoenergy applications, such as hydrocarbon extraction and

The generation of stochastic fields has many applications in geosciences and reservoir management. Modeling these fields at the reservoir scale is an essential step in addressing uncertainty quantification or inverse problems in the subsurface. One classical approach is the family of Multiple Point Statistics (MPS) algorithms (Strebelle, 2002), which were designed for geostatistical simulation based on the spatial patterns in a training image. Many variants of MPS have been developed over time, such as direct sampling (Mariethoz et al., 2010) and cross-correlation-based methods (Tahmasebi and Sahimi, 2013). The trained non-parametric model can be used to generate realizations constrained to well and seismic data (Hashemi et al., 2014; Rezaee and Marcotte, 2017; Arpat and Caers, 2007; Tahmasebi et al., 2014). While MPS methods can reconstruct high-dimensional samples from low-dimensional inputs, as demonstrated by Comunian et al. (2012), Chen et al. (2018), Wang et al. (2022), and Guo et al. (2024), they suffer from limitations such as limited variability (Emery and Lantuéjoul, 2014) and an inability to model complex non-stationary patterns (Zhang T. et al., 2019).

Following the success of deep learning in computer vision, recently published work has considered deep generative models such as Generative Adversarial Networks (GANs) (Goodfellow et al., 2014) for the generation of stochastic fields. A key advantage of GAN-based approaches over MPS lies in their ability to generate samples with diverse spatial patterns and high-quality reproductions, owing to the adversarial learning strategy. GANs have been applied to a wide range of geoengineering challenges, including reconstructing the 3D structure of porous media (Mosser et al., 2017), parametrizing high-dimensional spatial permeability fields in the subsurface (Chan and Elsheikh, 2020), and performing geostatistical inversion on both 2D and 3D categorical datasets (Laloy et al., 2018).

GANs have also been used to generate geological realizations conditioned on hard data (e.g., point measurements at wells) and soft data (e.g., probability maps). Approaches for generating conditioned stochastic realizations can be classified into two categories: post-GANs and concurrent-GANs. In post-GANs approaches, a new optimization problem is solved after training the GAN, in which the latent space is searched for realizations that match the target data. For example, gradient descent was used by Dupont et al. (2018) and Zhang T. et al. (2019), Markov chain Monte Carlo sampling was used by Nesvold and Mukerji (2019) and Laloy et al. (2018), and Chan and Elsheikh (2019) trained an inference network that maps normally distributed random vectors to a distribution of latent vectors satisfying the required conditions. The main drawback of post-GANs approaches is the additional cost of solving this second optimization problem, which can be expensive. In addition, a different problem must be solved for every new set of observed data (e.g., each new condition).
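To make the post-GANs idea concrete, the sketch below searches a latent vector by gradient descent so that a generator's output matches hard data at a few well locations. The "generator" here is a hypothetical fixed linear map standing in for a trained GAN generator, chosen only so the gradient can be written by hand; it is an illustration of latent-space search in general, not the procedure of any cited work.

```python
import random

def generator(z, W):
    """Hypothetical stand-in for a trained generator: a fixed linear map R^k -> R^n."""
    return [sum(w_ij * z_j for w_ij, z_j in zip(row, z)) for row in W]

def match_hard_data(W, wells, values, k, steps=2000, lr=0.05, seed=0):
    """Gradient descent on z to minimise sum over wells of (G(z)[i] - v)^2."""
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(k)]  # random starting latent vector
    for _ in range(steps):
        x = generator(z, W)
        grad = [0.0] * k
        for i, v in zip(wells, values):
            r = x[i] - v  # misfit at well cell i
            for j in range(k):
                grad[j] += 2.0 * r * W[i][j]  # d(r^2)/dz_j for a linear generator
        z = [zj - lr * gj for zj, gj in zip(z, grad)]
    return z
```

Every new set of well observations requires rerunning `match_hard_data`, which is the per-condition cost that concurrent approaches avoid.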

In concurrent-GANs approaches, the training of the GAN is modified to pass the conditioning data to the generator network. After training, the generator can simulate realizations based on the input data without solving another optimization problem. Abdellatif et al. (2022) introduced conditional GANs to generate unrepresented global proportions of geological facies. Cycle-consistent GANs (Zhu et al., 2017) have been used for domain mapping, for example, between physical parameters and model state variables (Sun, 2018) and between seismic data and geological models (Mosser et al., 2018). A GAN model with a U-net architecture (Ronneberger et al., 2015) was used to map high-dimensional input to

Song et al. (2021b) used condition-based loss functions to condition facies on hard data and global features, and later extended the method to spatial probability maps (Song et al., 2021a). The pix2pix method (Isola et al., 2017) has been used for geophysical conditioning by adding losses for seismic and well-log conditions (Pan et al., 2021). However, condition-based losses require manually designed functions that compute the consistency between the generated samples and the target conditions (e.g., computing the facies frequencies of the generated realizations to mimic real probability maps (Song et al., 2021a)). The design of such functions is arbitrary, which is conceptually different from GANs, where learning is done implicitly from the training data through the joint training of the generator and the discriminator, which distinguishes good samples from bad ones. Moreover, including additional losses in GANs relies on careful weighting between the condition losses and the original GAN loss, which requires an extensive hyperparameter search (e.g., see Figure 6 in Song et al. (2021b)).
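The weighting issue can be illustrated with a minimal sketch of a condition-based loss of the kind described here: the facies frequency of a batch of generated binary realizations is compared with a target probability map and added to the adversarial loss with a hand-chosen weight. The function names, the mean-squared-error form, and the weight `lam` are illustrative assumptions, not the exact losses of Song et al. (2021a).

```python
def facies_frequency(batch):
    """Per-pixel frequency of the channel facies (value 1) over a batch of binary 2D realizations."""
    n = len(batch)
    rows, cols = len(batch[0]), len(batch[0][0])
    return [[sum(img[r][c] for img in batch) / n for c in range(cols)] for r in range(rows)]

def condition_loss(batch, prob_map):
    """Mean squared error between the batch facies frequency and the target probability map."""
    freq = facies_frequency(batch)
    diffs = [(f - p) ** 2 for fr, pr in zip(freq, prob_map) for f, p in zip(fr, pr)]
    return sum(diffs) / len(diffs)

def total_generator_loss(adv_loss, batch, prob_map, lam):
    """Adversarial loss plus a weighted condition loss; lam has to be tuned by hand."""
    return adv_loss + lam * condition_loss(batch, prob_map)
```

The scalar `lam` is exactly the kind of balancing weight whose value must be searched: too small and the condition is ignored, too large and the adversarial term that drives realism is swamped.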

In this work, we propose a concurrent-GANs approach for generating geological realizations conditioned on spatial maps that describe the distribution of facies proportions across the spatial domain. Notably, this method achieves effective conditioning without relying on explicit condition-based losses. Our approach demonstrates the ability to generate new realizations that align with spatial maps not seen during training, a critical capability for applications where the characteristics of real reservoirs deviate significantly from those of the training data. To incorporate a specific spatial configuration, we employed conditional GANs (cGANs) (Mirza and Osindero, 2014), using spatial maps as input conditions for the neural networks. By integrating the SPADE algorithm (Park et al., 2019), we enabled the generator to dynamically adapt its layers based on the spatial conditioning maps, allowing the GANs to implicitly learn the correlation between the input maps and the generated realizations. The training framework solves a single optimization problem, eliminating the need for designing condition-consistency loss functions or conducting extensive hyperparameter searches for balancing weights. Experimental results on 2D and 3D datasets demonstrate a strong correlation between the generated realizations and the target spatial maps, while also highlighting the model’s ability to generalize to unseen spatial configurations.

The rest of the paper is organized as follows: in Section 2, we discuss the algorithm of conditional GANs used in our experiments and we present the training datasets and the implementation details. In Section 3, the results of the experiments are shown. Finally, conclusions are provided in Section 4.

Generative adversarial networks (GANs) (Goodfellow et al., 2014) are trained to learn the underlying distribution of the training samples. A GAN consists of two convolutional neural networks: a generator

Given a spatial map
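For reference, the standard conditional GAN objective of Mirza and Osindero (2014) is written below with a spatial map as the condition. The symbols are notation assumed for this illustration: $x$ is a training realization, $m$ its spatial map, $z$ the noise vector, $G$ the generator, and $D$ the discriminator. This is the textbook form; the exact adversarial loss used in this work is not recoverable from this text and may be a different variant (e.g., a hinge or Wasserstein loss).

```latex
\min_G \max_D \;
\mathbb{E}_{(x,m)\sim p_{\text{data}}}\big[\log D(x \mid m)\big]
+ \mathbb{E}_{z\sim p_z,\; m\sim p_{\text{data}}}\big[\log\big(1 - D(G(z \mid m) \mid m)\big)\big]
```

Both networks see the same map $m$, so the discriminator can penalize realizations that are realistic in isolation but inconsistent with their map.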

To accommodate the spatial nature of the map, we follow the spatially adaptive de-normalization (SPADE) conditioning method developed by Park et al. (2019), in which a segmentation mask modulates the generator layers to generate natural images that follow the mask. In our work, we replace the categorical mask with a continuous map that represents the spatial proportions of the channels; these maps are calculated for each sample prior to training. The SPADE method operates as follows: for each layer

where the learnable parameters

The spatial proportions of the generated facies can be adjusted by modifying
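The SPADE modulation (Park et al., 2019) can be sketched as follows: activations are normalized per channel with batch statistics, then scaled and shifted by spatially varying maps gamma and beta computed from the conditioning map. In SPADE, gamma and beta are produced by small convolutional networks; here they are replaced by a hypothetical per-pixel affine function of the proportion map (parameters `a_gamma`, `b_gamma`, `a_beta`, `b_beta`) so that the sketch stays dependency-free and handles a single feature channel.

```python
import math

def spade(h, m, a_gamma, b_gamma, a_beta, b_beta, eps=1e-5):
    """SPADE-style modulation of one feature channel by a conditioning map.

    h: batch of N feature maps, each a list of H rows of W floats.
    m: batch of N conditioning maps with the same shape as h (e.g., channel proportions).
    gamma = a_gamma * m + b_gamma and beta = a_beta * m + b_beta are hypothetical
    per-pixel affine stand-ins for the convolutional layers of the original SPADE block.
    """
    values = [v for fmap in h for row in fmap for v in row]
    mu = sum(values) / len(values)  # batch-norm statistics over N, H and W
    var = sum((v - mu) ** 2 for v in values) / len(values)
    sd = math.sqrt(var + eps)
    out = []
    for fmap, cmap in zip(h, m):
        out.append([
            [(a_gamma * p + b_gamma) * (v - mu) / sd + (a_beta * p + b_beta)
             for v, p in zip(hrow, mrow)]
            for hrow, mrow in zip(fmap, cmap)
        ])
    return out
```

Because gamma and beta vary pixel by pixel with the map, changing the map changes where activations are amplified or shifted, which is how the conditioning steers the spatial layout of the generated facies. With a constant input feature map, the output is simply the beta map.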

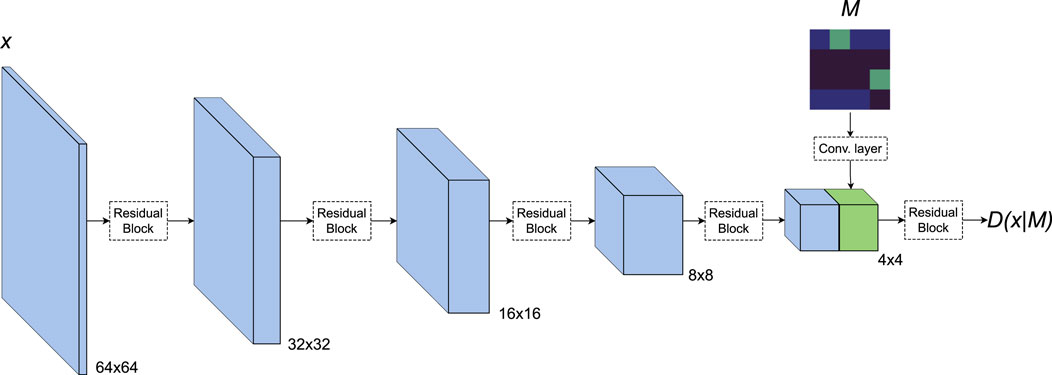

In the discriminator, features extracted from the map

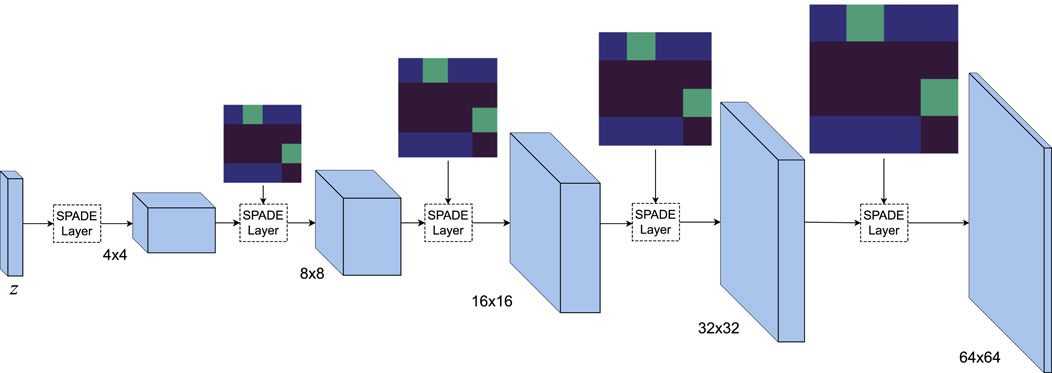

The generator and discriminator networks are built on the ResNet architecture (He et al., 2016), following a design similar to that of Gulrajani et al. (2017). The generator first maps a 128-dimensional noise vector to a hidden representation using a multilayer perceptron (MLP). This hidden representation is then reshaped to dimensions (512, 4, 4) and passed through a series of upsampling layers that progressively increase the spatial resolution while reducing the number of feature channels. Conversely, the discriminator performs downsampling, halving the spatial resolution and doubling the number of feature channels at each layer. The final output of the discriminator is a single scalar value representing the probability that the input image is real. We apply spectral normalization (Miyato et al., 2018) to the discriminator’s weights and use the self-attention mechanism (Zhang H. et al., 2019) in both the generator and discriminator at an intermediate layer of resolution
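The shape bookkeeping in this description can be traced with two small helpers. They assume each generator block doubles the spatial resolution and halves the channel count, and each discriminator block halves the resolution and doubles the channels starting from a hypothetical `base_channels`; the channel halving, the number of blocks, and the final resolution are assumptions, since the exact output size is not recoverable here. Passing `ndim=3` mimics the 3D variant.

```python
def generator_shapes(n_blocks, start_channels=512, start_size=4, ndim=2):
    """Feature shapes (C, *spatial) after the MLP reshape and after each upsampling block."""
    c, s = start_channels, start_size
    shapes = [(c,) + (s,) * ndim]
    for _ in range(n_blocks):
        c, s = max(c // 2, 1), s * 2  # assumed: halve channels, double resolution
        shapes.append((c,) + (s,) * ndim)
    return shapes

def discriminator_shapes(n_blocks, in_channels, in_size, base_channels=64, ndim=2):
    """Feature shapes after each downsampling block (halve resolution, double channels)."""
    shapes = [(in_channels,) + (in_size,) * ndim]
    c, s = base_channels, in_size
    for _ in range(n_blocks):
        s //= 2
        shapes.append((c,) + (s,) * ndim)
        c *= 2
    return shapes
```

Note that the MLP must output 512 × 4 × 4 = 8,192 values for the reshape to (512, 4, 4) to be valid.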

For the 3D model, the 3D convolution, 3D batch-normalization, and 3D upsampling operations are adopted from the PyTorch framework. In this case, the starting vector

Figure 1. Generator architecture: the stochastic input

Figure 2. Discriminator architecture: the conditioning map is passed to convolutional layers and the resulting features are concatenated with the input image features (i.e., blue and green features). The discriminator output is the conditional probability of

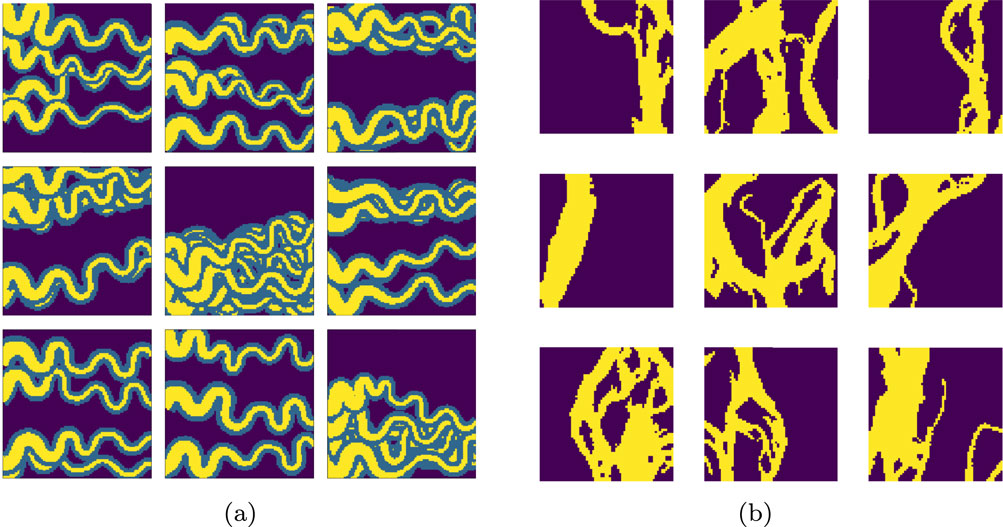

In our experiments, we evaluate our models on three datasets: (a) a 2D synthetic dataset with three facies (channels, levees, and background); (b) a 2D dataset of masks of the Brahmaputra river with binary facies (channels and background); and (c) a 3D synthetic dataset with binary facies (channels and background). Samples from the 2D datasets are shown in Figure 3. The non-stationarity in the datasets is due to variations in the channel proportions across the spatial domain. We describe the 2D datasets and the preprocessing steps below.

Figure 3. Representative samples from the 2D datasets used for training GAN models: (a) synthetic dataset and (b) real dataset. All images used to train the 2D models are of size

Samples of the first dataset (a) are generated using a geo-modeling tool that mimics the formation of depositional environments based on random walks (Massonnat, 2019). Horizontal and vertical flipping are applied to increase the sample size from 2,000 to 8,000; this also adds a new spatial configuration to the training set (i.e., a large channel proportion on the right side and a low one on the left side). The Brahmaputra river mask, dataset (b), is based on data from Schwenk et al. (2020). The large mask of size
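The fourfold increase from 2,000 to 8,000 samples is consistent with keeping each image plus its horizontal, vertical, and combined horizontal-and-vertical flips. A minimal sketch, assuming images are stored as nested lists of pixel rows; that the combined flip was included is inferred from the 4× count rather than stated in the text.

```python
def hflip(img):
    """Mirror an image left-right."""
    return [row[::-1] for row in img]

def vflip(img):
    """Mirror an image top-bottom."""
    return img[::-1]

def augment_with_flips(images):
    """Return each image with its horizontal, vertical, and combined flips (4x samples)."""
    out = []
    for img in images:
        out.extend([img, hflip(img), vflip(img), vflip(hflip(img))])
    return out
```

A left-heavy channel layout becomes right-heavy after a horizontal flip, which is how flipping introduces the additional spatial configuration. The proportion maps then have to be recomputed (or flipped identically) for the augmented samples.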

For each sample in the training set, the channel proportions map
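A proportion map of this kind can be computed by splitting each facies image into a coarse grid of equal blocks and taking the fraction of channel pixels in each block. The block-grid size `n_blocks` is a hypothetical parameter, since the grid used in this work is not recoverable from this text, and the image sides are required to be divisible by it. For the three-facies synthetic dataset, `channel_value` would be the code assigned to channels, which is also an assumption.

```python
def proportion_map(img, n_blocks, channel_value=1):
    """Fraction of channel pixels in each block of an n_blocks x n_blocks grid."""
    h, w = len(img), len(img[0])
    if h % n_blocks or w % n_blocks:
        raise ValueError("image sides must be divisible by n_blocks")
    bh, bw = h // n_blocks, w // n_blocks
    pmap = []
    for bi in range(n_blocks):
        row = []
        for bj in range(n_blocks):
            block = [img[r][c] for r in range(bi * bh, (bi + 1) * bh)
                     for c in range(bj * bw, (bj + 1) * bw)]
            row.append(sum(1 for v in block if v == channel_value) / len(block))
        pmap.append(row)
    return pmap
```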

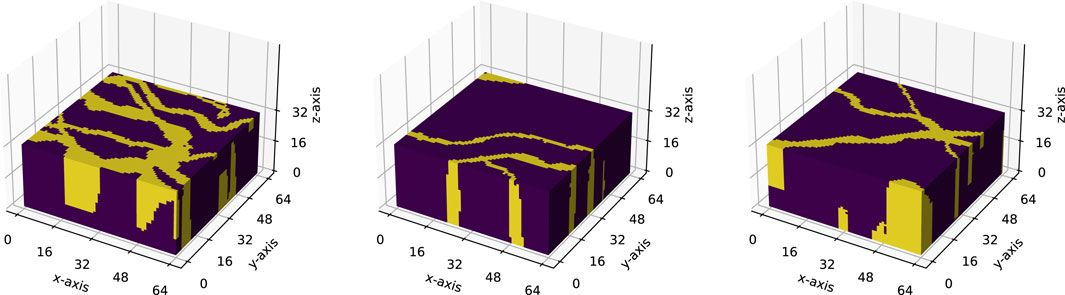

The 3D dataset (c) is based on data from Sun et al. (2023) and has been used to compare different GAN models (Sun et al., 2021). The original dataset is composed of 25 3D images, of size

Figure 4. Samples from the 3D training dataset, all images used to train the 3D models are of size

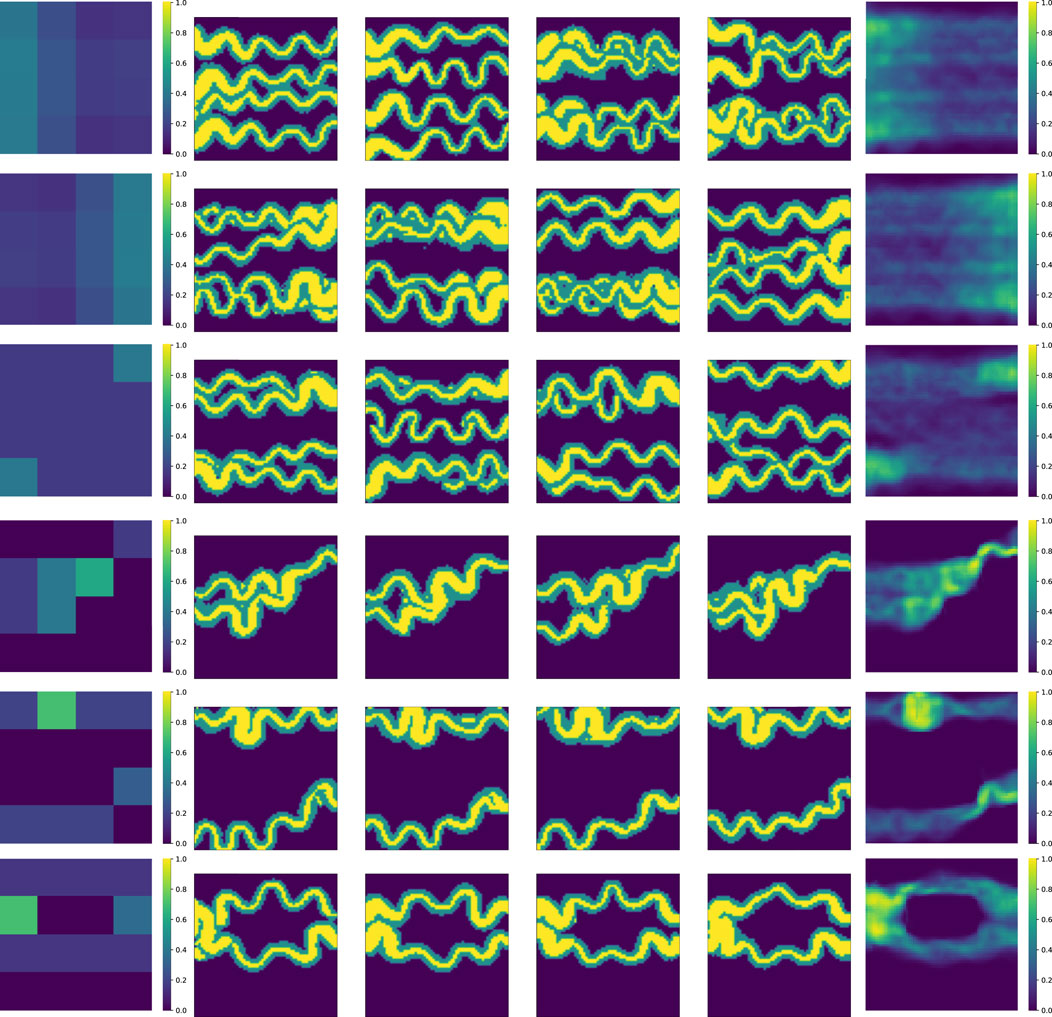

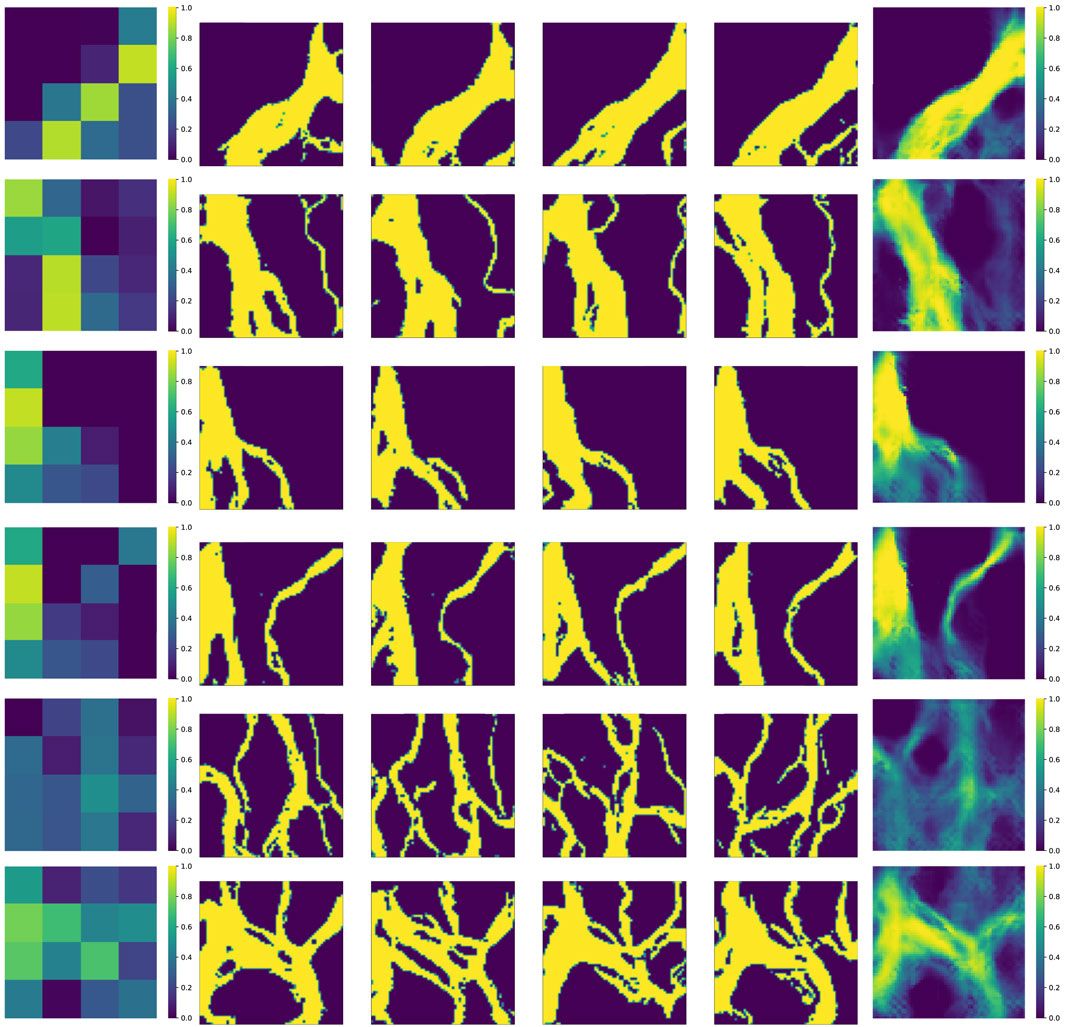

Results on the 2D synthetic dataset and the Brahmaputra river masks are shown in Figures 5 and 6, respectively. The leftmost column shows the conditioning maps

Figure 5. Generated non-stationary realizations on the synthetic dataset: the input conditioning maps are in the leftmost columns, the generated samples are in the middle columns, and the per-pixel mean maps are in the rightmost columns. The last four rows show generated samples for maps not seen during training.

Figure 6. Generated non-stationary realizations on the real masks of the Brahmaputra river: the input conditioning maps are in the leftmost columns, the generated samples are in the middle columns, and the per-pixel mean maps are in the rightmost columns. The last three rows show generated samples for maps not seen during training.

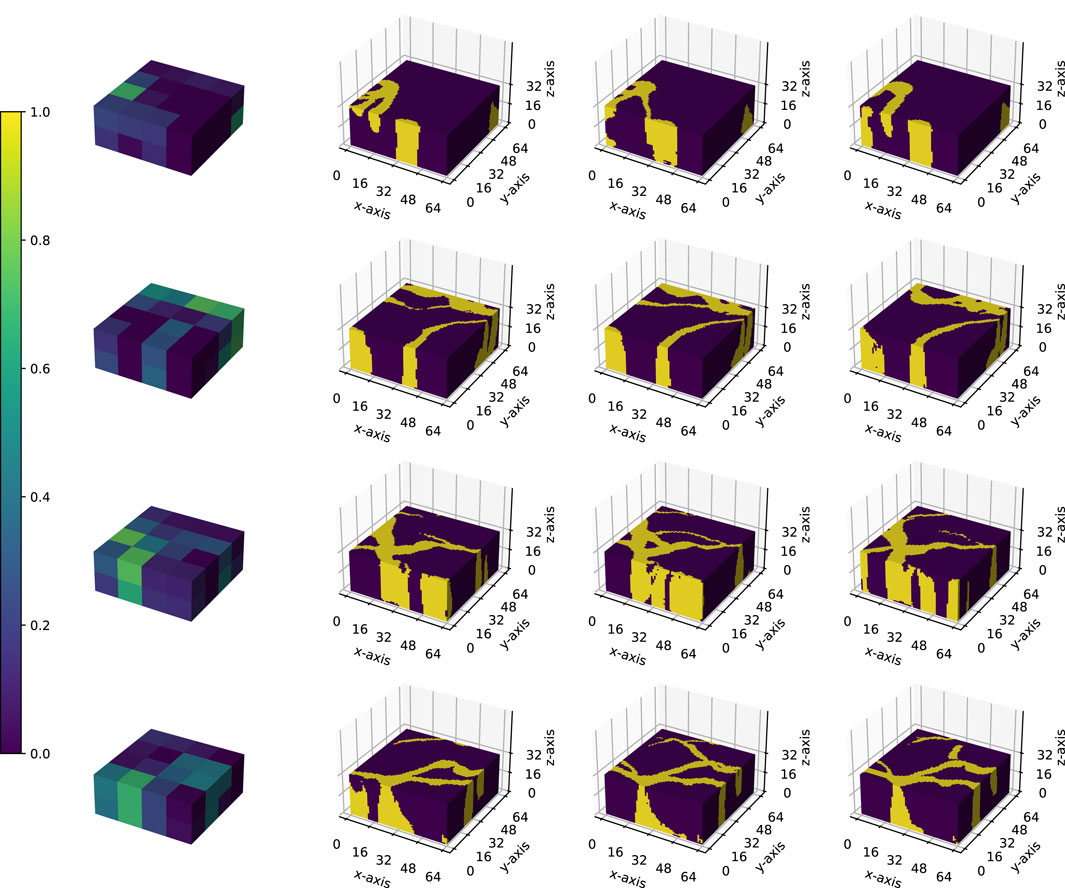

In Figure 7, we present the 3D conditional results: in each row, the first column shows the target conditional map

Figure 7. Conditional generated 3D samples. In each row, the first column shows the 3D target map

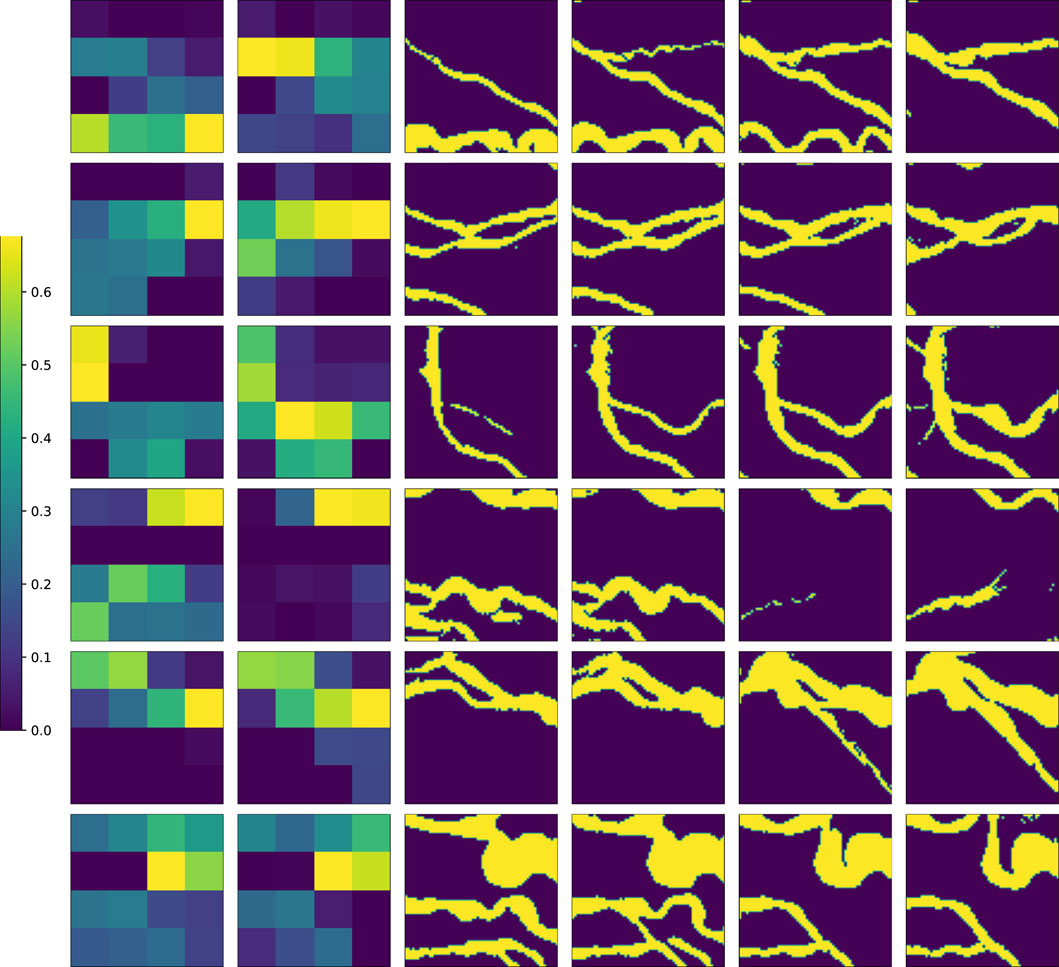

In Figure 8, we present more results of the 3D models at different cross sections. The first two columns show the two sections of the

Figure 8. Conditional generated 3D samples with target 3D maps: The first two columns represent the two layers of the

The results clearly demonstrate that the models successfully generalized to spatial configurations not present in the training datasets. Visually, the generated samples exhibit geological plausibility; for instance, channel connectivity is preserved across both datasets. Furthermore, in the case of the first dataset, the models consistently generated levees surrounding the channels, regardless of the channels’ locations. This indicates that the models did not simply memorize the training data but instead learned the underlying spatial relationships and patterns effectively.

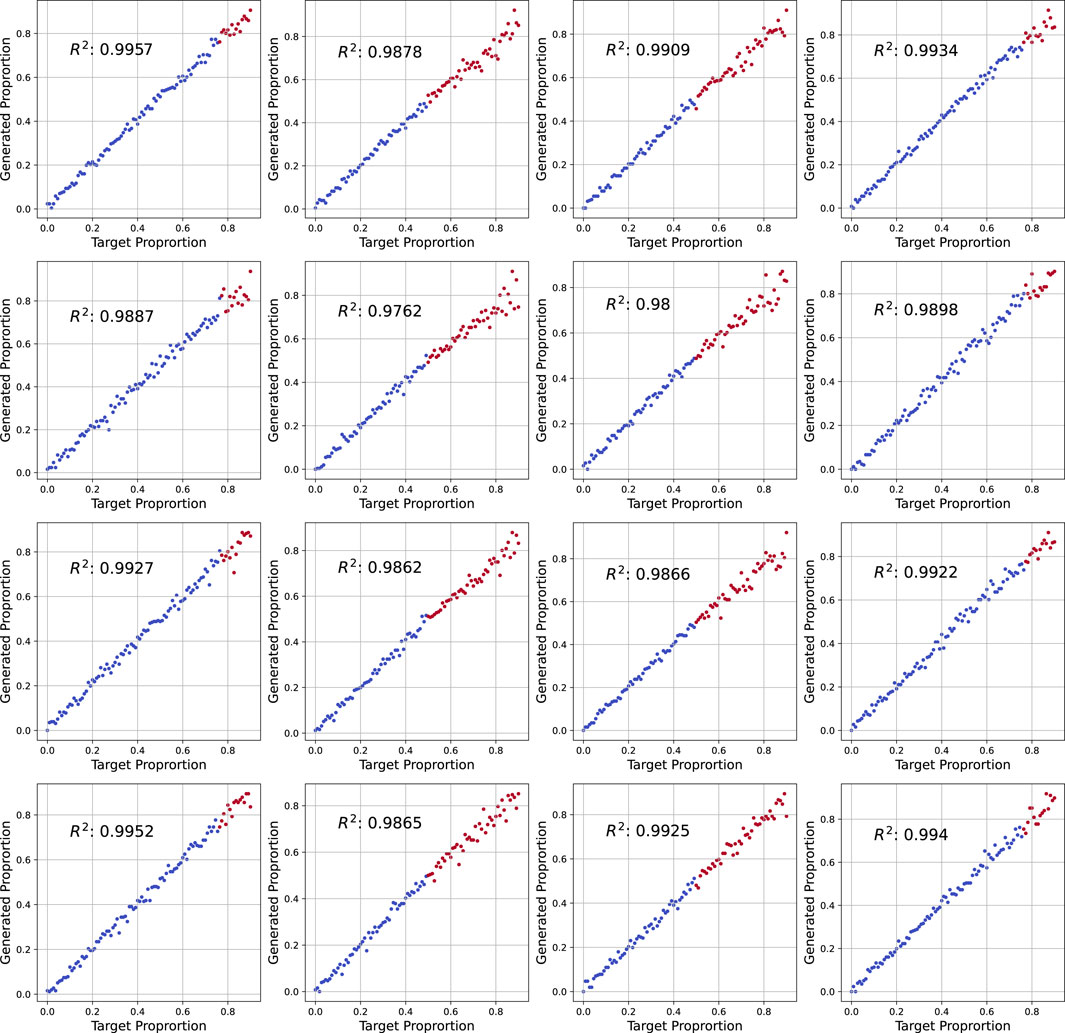

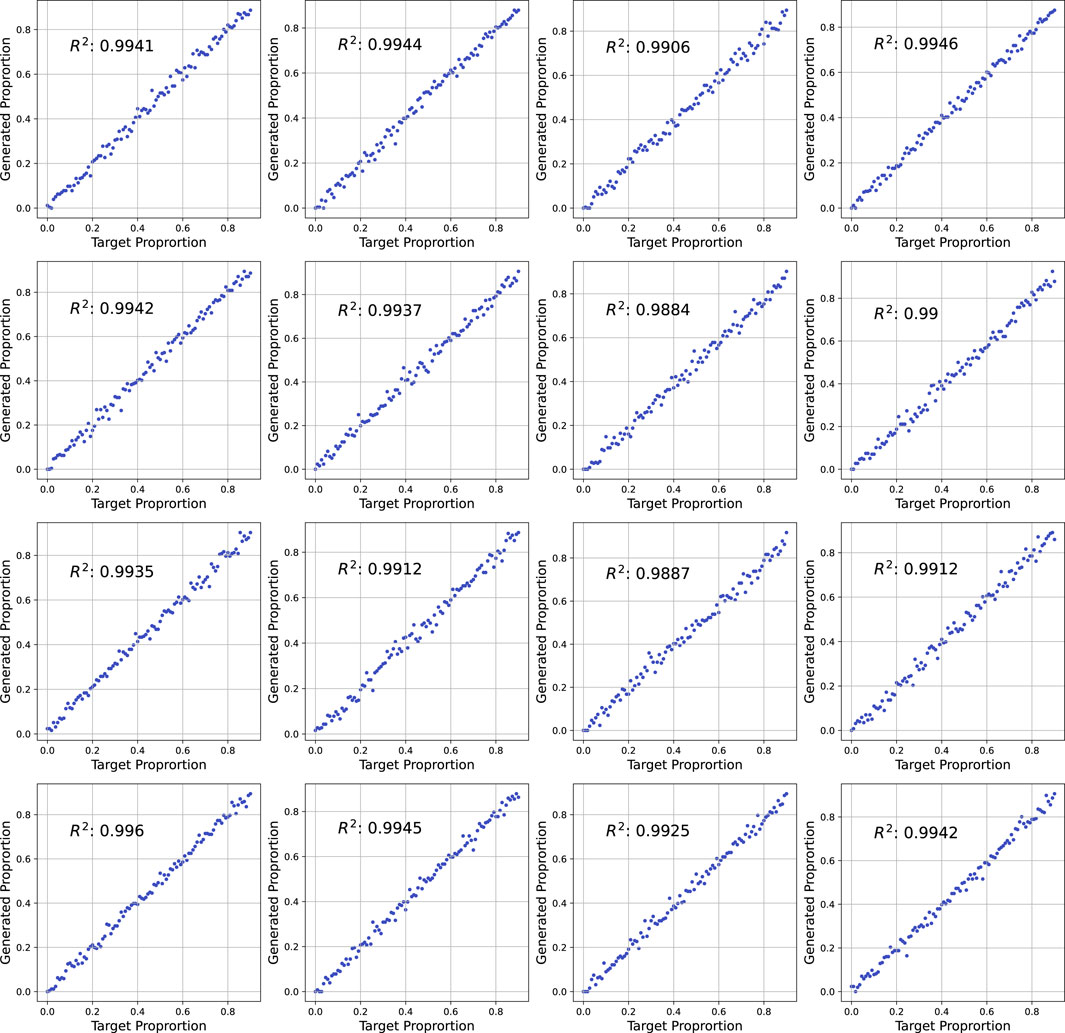

To quantify the correlation between the target proportion maps and the corresponding generated samples, Figures 9, 10 display

Figure 9. A

Figure 10. A

The results demonstrate a strong correlation between the target and generated proportions, with
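A scatter comparison of target versus generated proportions can be summarized numerically. The sketch below computes the Pearson correlation coefficient and a coefficient of determination about the identity line (generated = target) from paired per-section proportions; which statistic Figures 9 and 10 actually report is not recoverable from this text, so both are assumptions.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between paired target and generated proportions."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def r2_identity(targets, generated):
    """R^2 of generated values about the line generated = target (1.0 is a perfect match)."""
    mean_g = sum(generated) / len(generated)
    ss_res = sum((g - t) ** 2 for t, g in zip(targets, generated))
    ss_tot = sum((g - mean_g) ** 2 for g in generated)
    return 1.0 - ss_res / ss_tot
```

Pearson's r only measures linear association, so a model that systematically over-produces channels can still score r close to 1; R² about the identity line also penalizes such bias.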

The generalization capability of the GANs can be understood from two perspectives.

1. Local Generalization: The model can generalize to unseen proportions within individual sections of the grid, as illustrated by the red dots in Figure 9.

2. Global Generalization: The model can generate realistic samples for unseen non-stationary configurations across the entire image, as demonstrated in Figures 5, 6.

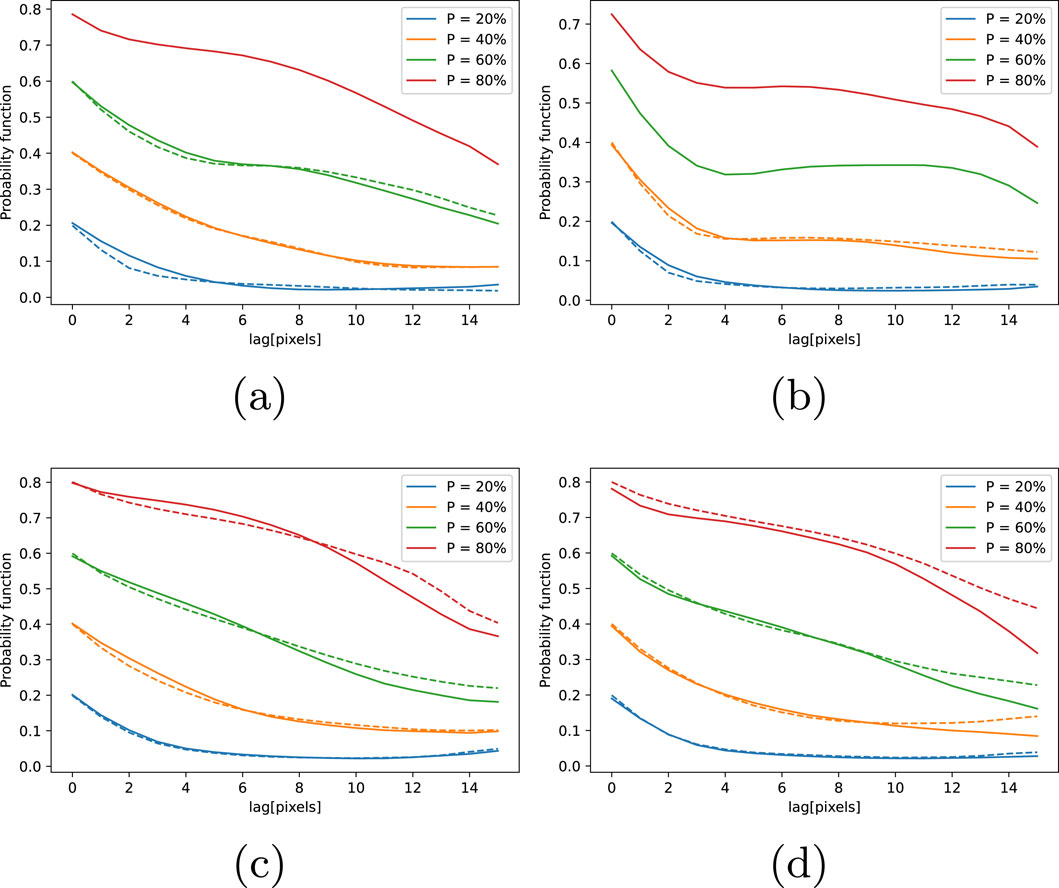

The two-point probability function quantifies the likelihood that two points, separated by a specified distance, belong to the same channel facies. This measure helps evaluate the spatial continuity and geological consistency of generated samples. In Figure 11, the function is calculated for two specific sections within the

Figure 11. Comparison of two-point probability functions for synthetic and real 2D datasets across distinct grid sections. Subplots (a, b) correspond to synthetic data, while (c, d) represent real binary masks. Dashed lines denote training sample functions at proportions P = {20%,40%,60%,80%}; solid lines show generated samples. The results highlight the alignment between generated and training samples and demonstrate the model’s generalization ability, particularly for unrepresented conditions.
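As defined above, the two-point probability at lag r is the probability that two points separated by r both belong to the channel facies. A minimal sketch for a binary image, restricted to horizontal lags and non-periodic boundaries (both assumptions), averaging over all valid pixel pairs; in Figure 11 the function is evaluated within grid sections, which corresponds to calling it on a sub-image.

```python
def two_point_probability(img, max_lag, channel_value=1):
    """S2(r) for r = 0..max_lag: fraction of horizontal pixel pairs at lag r that are both channel."""
    h, w = len(img), len(img[0])
    if max_lag >= w:
        raise ValueError("max_lag must be smaller than the image width")
    s2 = []
    for r in range(max_lag + 1):
        pairs = hits = 0
        for i in range(h):
            for j in range(w - r):
                pairs += 1
                if img[i][j] == channel_value and img[i][j + r] == channel_value:
                    hits += 1
        s2.append(hits / pairs)
    return s2
```

S2(0) equals the channel proportion, and for a stationary field S2(r) decays toward the squared proportion as r grows beyond the correlation length, so both the starting value and the decay rate are compared between training and generated samples.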

To further assess the trained models, a uniform flow simulation is performed on the training samples shown on the left side of Figure 3 and on the corresponding generated samples shown in the top row of Figure 5. We consider a uniform flow problem in which water is injected to displace a contaminant in a subsurface reservoir. Water is injected at the left boundary and fluid is produced from the right boundary, with no-flow boundary conditions imposed on the top and bottom sides. The problem formulation and settings are identical to those presented by Chan and Elsheikh (2020).

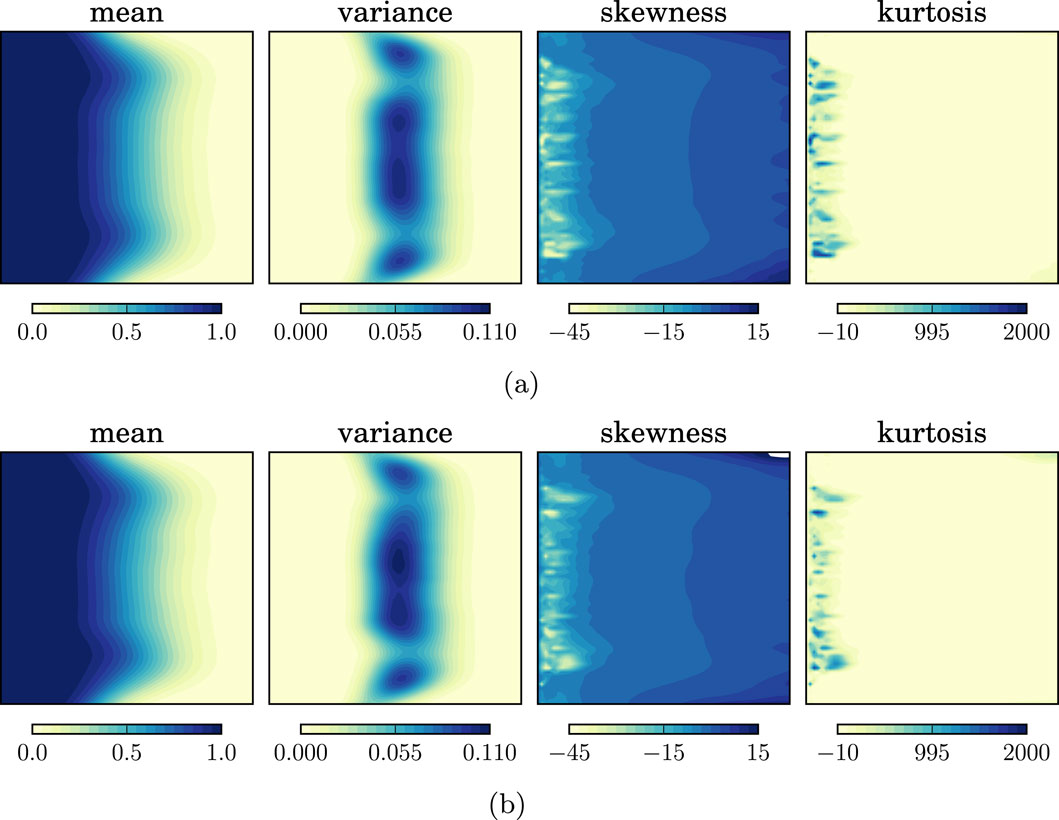

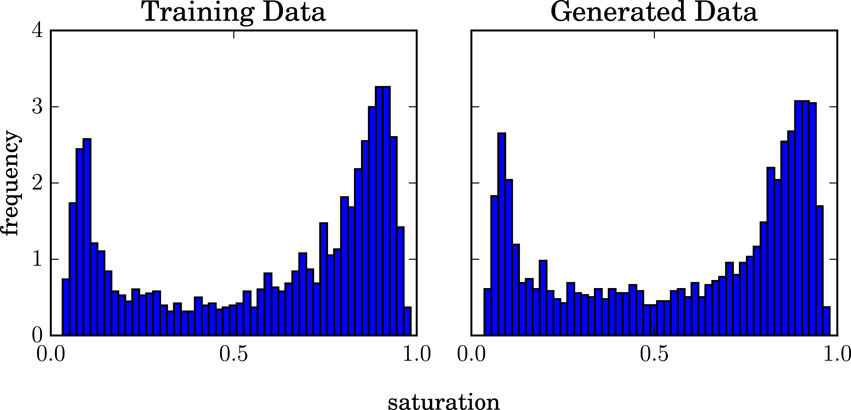

We performed a total of 4,000 flow simulations: 2,000 on the training samples and 2,000 on the GAN-generated samples. Flow statistics of the saturation map at

Figure 12. Saturation statistics of a uniform flow on training and generated samples at

Figure 13. Comparison between training and generated saturation histograms. The histograms display the distribution of saturation values at the spatial location in the domain where the saturation variance is highest, highlighting the range and frequency of saturation fluctuations at that point.
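The saturation statistics in Figures 12 and 13 amount to per-cell moments over an ensemble of simulated saturation maps, plus a histogram of the values at the cell with the highest variance. A minimal sketch, assuming each saturation map is a nested list of floats on the simulation grid and using the population variance (the estimator used in this work is not stated); the histogram bin count is a hypothetical choice.

```python
def ensemble_mean_var(maps):
    """Per-cell mean and population variance over an ensemble of 2D maps."""
    n = len(maps)
    h, w = len(maps[0]), len(maps[0][0])
    mean = [[sum(m[i][j] for m in maps) / n for j in range(w)] for i in range(h)]
    var = [[sum((m[i][j] - mean[i][j]) ** 2 for m in maps) / n for j in range(w)]
           for i in range(h)]
    return mean, var

def max_variance_cell(var):
    """(row, col) of the cell with the highest variance."""
    cells = ((i, j) for i in range(len(var)) for j in range(len(var[0])))
    return max(cells, key=lambda ij: var[ij[0]][ij[1]])

def histogram(values, n_bins=10, lo=0.0, hi=1.0):
    """Counts in n_bins equal bins on [lo, hi]; out-of-range values go to the end bins."""
    counts = [0] * n_bins
    for v in values:
        k = int((v - lo) / (hi - lo) * n_bins)
        counts[max(0, min(k, n_bins - 1))] += 1
    return counts
```

Running the same three functions on the training-based and generated ensembles and overlaying the two histograms at the selected cell reproduces the kind of comparison shown in Figure 13.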

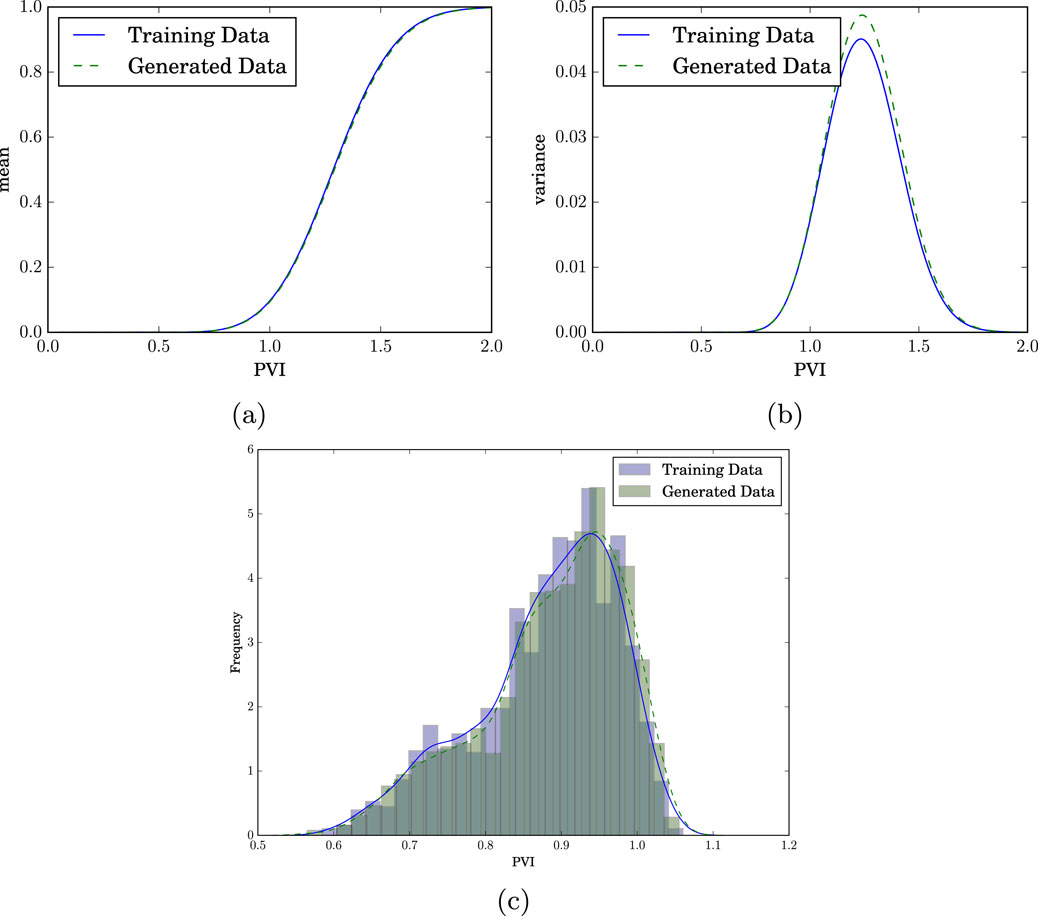

Production curve statistics are shown in Figure 14, where we calculated the mean and the variance of the production curves at different times. We also plotted the histogram of the water breakthrough time (i.e., the time at which the injected clean water reaches the production well, detected with a 1% threshold). The statistics calculated on the generated realizations show very good agreement with those of the training samples, which reflects the capability of the GAN models.

Figure 14. Production statistics comparison between training and generated data. (a) Mean production profiles for uniform flow, comparing training data (solid line) and GAN-generated samples (dashed line). (b) Variance distributions of production curves across both datasets. (c) Frequency distribution of water breakthrough times, quantified in pore volume injected (PVI), highlighting discrepancies in temporal dynamics.
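The breakthrough time can be extracted from a single production curve by finding the first time at which the produced water fraction reaches the 1% threshold. A minimal sketch, assuming the curve is available as paired lists of time in pore volumes injected (PVI) and produced water fraction; the linear interpolation between samples is an assumption.

```python
def breakthrough_time(times, water_frac, threshold=0.01):
    """First time (linearly interpolated) at which water_frac reaches threshold; None if never."""
    if water_frac[0] >= threshold:
        return times[0]
    for k in range(1, len(times)):
        if water_frac[k] >= threshold:
            t0, t1 = times[k - 1], times[k]
            f0, f1 = water_frac[k - 1], water_frac[k]
            # f0 < threshold <= f1, so f1 - f0 > 0
            return t0 + (threshold - f0) * (t1 - t0) / (f1 - f0)
    return None
```

Applying this to every simulated curve and histogramming the results gives a breakthrough-time distribution per ensemble for panel (c).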

This study demonstrates that GAN-based methods can effectively generate non-stationary stochastic realizations of geological facies, which are essential for advanced reservoir characterization in geoenergy applications. The conditioning algorithm allows the model to learn spatial correlations between target maps and generated realizations without solving optimization problems for new observed data or using arbitrary loss functions. Our models consistently produce geologically plausible 2D and 3D realizations, even for spatial configurations not encountered during training, using both synthetic and real geological datasets. This capability is particularly valuable for modeling complex mineral deposits, where understanding spatial variability is crucial for resource estimation and development planning. The method’s ability to handle non-stationary fields makes it especially suitable for characterizing heterogeneous geological formations typical of economic geology applications such as hydrocarbon extraction. Future work might include generating non-stationary data from stationary training sets and extending the generated field of view to infinite dimensions.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author. The code used to train the GAN models is available in the public repository https://github.com/ai4netzero/NonstationaryGANs.

AA: Conceptualization, Methodology, Software, Writing–original draft, Writing–review and editing. AE: Conceptualization, Funding acquisition, Methodology, Supervision, Validation, Writing–review and editing. DB: Data curation, Resources, Writing–review and editing. PB: Data curation, Resources, Writing–review and editing.

The author(s) declare that financial support was received for the research and/or publication of this article. This research was partially funded by the Engineering and Physical Sciences Research Council (EPSRC) [Grant No. EP/Y006143/1]. The first author acknowledges financial support from TotalEnergies for his PhD research at Heriot-Watt University. The funder was not involved in the study design, collection, analysis, interpretation of data, the writing of this article, or the decision to submit it for publication.

Authors DB and PB were employed by TotalEnergies.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdellatif, A., Elsheikh, A. H., Graham, G., Busby, D., and Berthet, P. (2022). Generating unrepresented proportions of geological facies using generative adversarial networks. Comput. and Geosciences 162, 105085. doi:10.1016/j.cageo.2022.105085

Arpat, G. B., and Caers, J. (2007). Conditional simulation with patterns. Math. Geol. 39, 177–203. doi:10.1007/s11004-006-9075-3

Brock, A., Donahue, J., and Simonyan, K. (2018). Large scale GAN training for high fidelity natural image synthesis. arXiv. doi:10.48550/arXiv.1809.11096

Chan, S., and Elsheikh, A. H. (2019). Parametric generation of conditional geological realizations using generative neural networks. Comput. Geosci. 23, 925–952. doi:10.1007/s10596-019-09850-7

Chan, S., and Elsheikh, A. H. (2020). Parametrization of stochastic inputs using generative adversarial networks with application in geology. Front. Water 2 (5). doi:10.3389/frwa.2020.00005

Chen, Q., Mariethoz, G., Liu, G., Comunian, A., and Ma, X. (2018). Locality-based 3-d multiple-point statistics reconstruction using 2-d geological cross sections. Hydrology Earth Syst. Sci. 22, 6547–6566. doi:10.5194/hess-22-6547-2018

Comunian, A., Renard, P., and Straubhaar, J. (2012). 3D multiple-point statistics simulation using 2D training images. Comput. and Geosciences 40, 49–65. doi:10.1016/j.cageo.2011.07.009

Dupont, E., Zhang, T., Tilke, P., Liang, L., and Bailey, W. (2018). Generating realistic geology conditioned on physical measurements with generative adversarial networks. arXiv. doi:10.48550/arXiv.1802.03065

Emery, X., and Lantuéjoul, C. (2014). Can a training image be a substitute for a random field model? Math. Geosci. 46, 133–147. doi:10.1007/s11004-013-9492-z

Fossum, K., Alyaev, S., and Elsheikh, A. H. (2024). Ensemble history-matching workflow using interpretable spade-gan geomodel. First Break 42, 57–63. doi:10.3997/1365-2397.fb2024014

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., et al. (2014). Generative adversarial nets. Adv. Neural Inf. Process. Syst., 2672–2680.

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., and Courville, A. C. (2017). “Improved training of wasserstein GANs,” in Advances in neural information processing systems, 5767–5777.

Guo, J., Zheng, Y., Liu, Z., Wang, X., Zhang, J., and Zhang, X. (2024). Pattern-based multiple-point geostatistics for 3d automatic geological modeling of borehole data. Nat. Resour. Res. 34, 149–169. doi:10.1007/s11053-024-10405-6

Hashemi, S., Javaherian, A., Ataee-pour, M., Tahmasebi, P., and Khoshdel, H. (2014). Channel characterization using multiple-point geostatistics, neural network, and modern analogy: a case study from a carbonate reservoir, southwest Iran. J. Appl. Geophys. 111, 47–58. doi:10.1016/j.jappgeo.2014.09.015

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778.

Isola, P., Zhu, J.-Y., Zhou, T., and Efros, A. A. (2017). “Image-to-image translation with conditional adversarial networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 1125–1134.

Laloy, E., Hérault, R., Jacques, D., and Linde, N. (2018). Training-image based geostatistical inversion using a spatial generative adversarial neural network. Water Resour. Res. 54, 381–406. doi:10.1002/2017wr022148

Mariethoz, G., Renard, P., and Straubhaar, J. (2010). The direct sampling method to perform multiple-point geostatistical simulations. Water Resour. Res. 46. doi:10.1029/2008WR007621

Massonnat, G. (2019). Random walk for simulation of geobodies: a new process-like methodology for reservoir modelling [software]. Pet. Geostat. 2019 2019, 1–5.

Mirza, M., and Osindero, S. (2014). Conditional generative adversarial nets. arXiv Prepr. doi:10.48550/arXiv.1411.1784

Miyato, T., Kataoka, T., Koyama, M., and Yoshida, Y. (2018). Spectral normalization for generative adversarial networks. arXiv. doi:10.48550/arXiv.1802.05957

Mosser, L., Dubrule, O., and Blunt, M. J. (2017). Reconstruction of three-dimensional porous media using generative adversarial neural networks. Phys. Rev. E 96, 043309. doi:10.1103/physreve.96.043309

Mosser, L., Kimman, W., Dramsch, J., Purves, S., De la Fuente Briceño, A., and Ganssle, G. (2018). Rapid seismic domain transfer: seismic velocity inversion and modeling using deep generative neural networks. 80th Eage Conf. Exhib. 2018 2018, 1–5. doi:10.3997/2214-4609.201800734

Nesvold, E., and Mukerji, T. (2019). Geomodeling using generative adversarial networks and a database of satellite imagery of modern river deltas. Pet. Geostat. 2019 2019, 1–5. doi:10.3997/2214-4609.201902196

Pan, W., Torres-Verdín, C., and Pyrcz, M. J. (2021). Stochastic pix2pix: a new machine learning method for geophysical and well conditioning of rule-based channel reservoir models. Nat. Resour. Res. 30, 1319–1345. doi:10.1007/s11053-020-09778-1

Park, T., Liu, M.-Y., Wang, T.-C., and Zhu, J.-Y. (2019). “Semantic image synthesis with spatially-adaptive normalization,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2337–2346.

Rezaee, H., and Marcotte, D. (2017). Integration of multiple soft data sets in mps thru multinomial logistic regression: a case study of gas hydrates. Stoch. Environ. Res. Risk Assess. 31, 1727–1745. doi:10.1007/s00477-016-1277-8

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-net: convolutional networks for biomedical image segmentation,” in International conference on Medical image computing and computer-assisted intervention (Springer), 234–241.

Schwenk, J., Piliouras, A., and Rowland, J. C. (2020). Determining flow directions in river channel networks using planform morphology and topology. Earth Surf. Dyn. 8, 87–102. doi:10.5194/esurf-8-87-2020

Song, S., Mukerji, T., and Hou, J. (2021a). Bridging the gap between geophysics and geology with generative adversarial networks. IEEE Trans. Geoscience Remote Sens. 60, 1–11. doi:10.1109/tgrs.2021.3066975

Song, S., Mukerji, T., and Hou, J. (2021b). GANSim: conditional facies simulation using an improved progressive growing of generative adversarial networks (GANs). Math. Geosci. 53, 1413–1444. doi:10.1007/s11004-021-09934-0

Strebelle, S. (2002). Conditional simulation of complex geological structures using multiple-point statistics. Math. Geol. 34, 1–21. doi:10.1023/a:1014009426274

Sun, A. Y. (2018). Discovering state-parameter mappings in subsurface models using generative adversarial networks. Geophys. Res. Lett. 45, 11–137. doi:10.1029/2018gl080404

Sun, C., Demyanov, V., and Arnold, D. (2021). Comparison of popular generative adversarial network flavours for fluvial reservoir modelling. 82nd EAGE Annu. Conf. and Exhib. 2021, 1–5. doi:10.3997/2214-4609.202113204

Sun, C., Demyanov, V., and Arnold, D. (2023). Gan river-i: a process-based low nt meandering reservoir model dataset for machine learning studies. Data Brief 46, 108785. doi:10.1016/j.dib.2022.108785

Tahmasebi, P., and Sahimi, M. (2013). Cross-correlation function for accurate reconstruction of heterogeneous media. Phys. Rev. Lett. 110, 078002. doi:10.1103/physrevlett.110.078002

Tahmasebi, P., Sahimi, M., and Caers, J. (2014). Ms-ccsim: accelerating pattern-based geostatistical simulation of categorical variables using a multi-scale search in fourier space. Comput. and Geosciences 67, 75–88. doi:10.1016/j.cageo.2014.03.009

Wang, L., Yin, Y., Zhang, C., Feng, W., Li, G., Chen, Q., et al. (2022). A mps-based novel method of reconstructing 3d reservoir models from 2d images using seismic constraints. J. Petroleum Sci. Eng. 209, 109974. doi:10.1016/j.petrol.2021.109974

Zhang, H., Goodfellow, I., Metaxas, D., and Odena, A. (2019a). “Self-attention generative adversarial networks,” in International conference on machine learning, 7354–7363.

Zhang, T., Tilke, P., Dupont, E., Zhu, L., Liang, L., and Bailey, W. (2019b). “Generating geologically realistic 3d reservoir facies models using deep learning of sedimentary architecture with generative adversarial networks,” in International petroleum technology conference (OnePetro).

Zhong, Z., Sun, A. Y., and Jeong, H. (2019). Predicting CO2 plume migration in heterogeneous formations using conditional deep convolutional generative adversarial network. Water Resour. Res. 55, 5830–5851. doi:10.1029/2018wr024592

Keywords: generative adversarial networks (GANs), non-stationary, multipoint geostatistics, soft conditioning data, geostatistical simulation

Citation: Abdellatif A, Elsheikh AH, Busby D and Berthet P (2025) Generation of non-stationary stochastic fields using generative adversarial networks. Front. Earth Sci. 13:1545002. doi: 10.3389/feart.2025.1545002

Received: 13 December 2024; Accepted: 28 February 2025;

Published: 20 March 2025.

Edited by:

Yin Yanshu, Yangtze University, China

Reviewed by:

Suihong Song, Stanford University, United States

Copyright © 2025 Abdellatif, Elsheikh, Busby and Berthet. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alhasan Abdellatif, aa2448@hw.ac.uk, alhasanabdellatif@gmail.com
