
ORIGINAL RESEARCH article

Front. Comput. Sci., 25 February 2025

Sec. Human-Media Interaction

Volume 7 - 2025 | https://doi.org/10.3389/fcomp.2025.1528455

Introduction: Generative artificial intelligence (AI) tools, such as ChatGPT, have gained significant traction in educational settings, offering novel opportunities for enhanced learning experiences. However, limited research has investigated how students perceive and accept these emerging technologies. This study addresses this gap by developing a scale to assess university students’ attitudes toward generative AI tools in education.

Methods: A three-stage process was employed to develop and validate the Generative AI Attitude Scale. Data were collected from 664 students from various faculties during the 2022–2023 academic year. Expert evaluations were conducted to establish face and content validity. An exploratory factor analysis (EFA) was performed on a subset of 400 participants, revealing a two-factor, 14-item structure that explained 78.440% of the variance. A subsequent confirmatory factor analysis (CFA) was conducted on a separate sample of 264 students to validate this structure, resulting in the removal of one item and a final 13-item scale.

Results: The 13-item scale demonstrated strong reliability, evidenced by a Cronbach’s alpha of 0.84 and a test–retest reliability of 0.90. Discriminative power was confirmed through corrected item-total correlations and through comparisons between the lower and upper percentile groups. These findings indicate that the scale effectively differentiates between students’ attitudes toward generative AI tools in educational contexts.

Discussion: The newly developed Generative AI Attitude Scale offers a valid and reliable instrument for measuring university students’ perspectives on integrating generative AI tools, such as ChatGPT, into educational environments. These results highlight the potential for more targeted research and informed implementation strategies to enhance learning outcomes through generative AI.

In today’s digital era, the relationship between humans and computers has become an inseparable part of modern life. Digital technology, in its many forms, has transformed how we communicate, work, and interact with the world around us. Human-computer interaction (HCI) lies at the heart of this transformation and permeates nearly every aspect of daily life. HCI has also become pivotal in education, reshaping how students and educators engage with learning material, communicate, and collaborate (Zawacki-Richter et al., 2019). The advent of generative artificial intelligence (AI) tools, such as ChatGPT, marks a further evolution in the educational landscape, promising to enhance personalized learning and foster student creativity (Marengo et al., 2023). Such tools, ChatGPT in particular, have become highly popular among students because of their versatility, and their adaptability across diverse tasks has attracted students seeking to enhance their learning experiences. Nonetheless, the rapid integration of these technologies raises pertinent concerns regarding usability, accessibility, and their potential effects on students’ cognitive processes and behaviors (Popenici and Kerr, 2017).

Integrating generative AI tools into HCI holds promise for reshaping education, enriching individualized learning, and nurturing creativity among students (Castelli and Manzoni, 2022; Harshvardhan et al., 2020; Sanchez-Lengeling and Aspuru-Guzik, 2018). Like other powerful tools in history, generative AI is expected to have measurable short-term effects and potentially transformative long-term effects (Lin, 2023). It has been estimated that 47% of learning management tools would be AI-enabled by 2024, indicating the increasing integration of AI in education (Ng et al., 2023). AI tools are increasingly regarded as a promising means of facilitating educational processes, with benefits for both educators and learners, including improved retention and more successful online training (Lukianets and Lukianets, 2023).

Yet it is crucial to address concerns about the usability and accessibility of these tools and their potential effects on how students think and behave (Baytak, 2023). Research on students’ perspectives on, and experiences with, these AI tools offers valuable insights that can guide their design and application, ultimately improving human-computer interaction in educational environments. This study explores university students’ perspectives on the use of generative AI tools, with particular emphasis on their implications for HCI in educational settings (Noy and Zhang, 2023; Wang et al., 2017). It recognizes the importance of understanding not merely the technological facet of AI tools but also how they fit into the educational ecosystem and influence students’ cognitive and creative dynamics (Chen et al., 2020). Understanding how students perceive these tools in terms of ease of use, accessibility, and usability is pivotal to crafting effective and engaging educational experiences (Chassignol et al., 2018; Hinojo-Lucena et al., 2019; MacDonald et al., 2022). The present study combines a comprehensive literature review with empirical methods to offer insights into the dynamic between students and generative AI technology. In particular, it centers on measuring attitude, recognizing its significance as both an outcome and a mediator in various technology acceptance models. To this end, the study aims to design and develop a valid and reliable scale that assesses students’ attitudes toward using generative AI in educational environments.

Generative AI technology has the potential to significantly enhance the personalisation of educational content and materials, including text, pictures, and videos. By utilising generative AI, educational systems can provide customised learning resources that cater to the unique characteristics of individual students, such as their learning needs, preferences, and pace (Hsu and Ching, 2023; Pataranutaporn et al., 2021). The technology also allows students to create learning materials themselves, leading to a more engaging and enjoyable learning experience. As a result, personalised instruction facilitated by generative AI, combined with students’ ability to generate content, enhances learners’ overall comprehension and higher-order thinking skills while increasing their satisfaction with the course (Elfeky, 2019; Zhang et al., 2020). Consequently, students feel empowered through self-directed knowledge generation when supported by an environment that integrates generative AI technology.

Generative AI is a potent resource for strengthening students’ writing proficiency. It provides draft texts and sample writings tailored to specific topics, which serve as a source of inspiration and guidance for students seeking to improve their writing. By offering tangible examples to learn from, this application of AI supports students, nurtures creativity, and encourages originality in their written expression. Its utility extends beyond guidance: exposure to a diverse array of system-generated text samples actively helps students refine their writing abilities. For educators, the tool offers an opportunity to provide personalized learning experiences that foster greater student engagement and skill development in writing. In the long run, generative AI can become an ally in the educational landscape, helping students craft their narratives with greater confidence and proficiency.

AI-powered generative technology has emerged as a transformative force in bolstering students’ academic performance, as highlighted in studies by Ahmad et al. (2023), Baidoo-Anu and Owusu Ansah (2023), and Yilmaz and Karaoglan Yilmaz (2023a). Educators leverage these tools adeptly to analyze homework and exam data, effectively identifying specific subjects or topics where students may benefit from additional support and guidance. This technology fosters self-learning skills among students, empowering them to navigate their educational journey with greater autonomy and efficacy. Furthermore, the timely feedback facilitated by generative AI plays a pivotal role in cultivating self-awareness and enabling self-assessment. Through automated feedback delivery via this technology, students gain rapid and precise insights into areas requiring their attention (Li et al., 2023; Rudolph et al., 2023). This not only expedites the feedback process but also enables students to strategically direct their efforts, thereby optimizing their learning experience.

Generative AI technology opens doors to improving learners’ creative capabilities. Using these tools, students can draw on their distinct ideas and viewpoints to craft learning materials and assignments that reflect their creativity (Ahmad et al., 2023; Yilmaz and Karaoglan Yilmaz, 2023b). The tools empower students by providing a platform for generating original content and expressing their imaginative thoughts more freely.

Generative AI technology plays a pivotal role in nurturing students’ analytical and critical thinking capabilities. When tasked with creating learning materials, students analyze various options and scenarios, thereby strengthening their analytical thinking skills (Chang and Kidman, 2023; Cooper, 2023; Lim et al., 2023). Furthermore, students can critically assess and appraise the content generated by generative AI, which contributes to the development of their critical thinking abilities. Generative AI technology also facilitates the enhancement of students’ problem-solving skills (Qadir, 2023; Yilmaz and Karaoglan Yilmaz, 2023a): using it, students can scrutinize given problems and generate diverse solutions, enriching their problem-solving proficiency. Consequently, the integration of generative AI technology supports students in acquiring a deeper understanding of the subject matter.

Generative AI technology holds the potential to be a valuable asset in enhancing students’ communication skills (Bozkurt et al., 2023; Yilmaz and Karaoglan Yilmaz, 2023b), enabling them to articulate topics and ideas more effectively. Moreover, it plays a role in bolstering self-confidence (Chong et al., 2023; Yilmaz and Karaoglan Yilmaz, 2023a) as students independently utilize generative AI to craft learning materials and assignments. This fosters a sense of ownership and autonomy in their learning journey, contributing significantly to their confidence and sense of control in the educational process. Overall, generative AI empowers students by enhancing their communication abilities and promoting self-assurance.

While generative AI technology has the potential to enrich students’ learning experiences and develop various skills, its misuse or improper use can give rise to challenges and concerns. It is therefore crucial to ensure that students direct and use the technology correctly. A significant concern is the protection of student privacy and security when implementing generative AI technology (Tredinnick and Laybats, 2023); hence, the ethical use of generative AI should be upheld, and measures must be taken to safeguard student privacy and security. Since generative AI models are trained on datasets, any errors or inaccuracies in those datasets directly affect the accuracy of the generated content, so generative AI systems may produce false or misleading information (Qadir, 2023). Unethical use of generative AI technology during homework or exams may encourage undesirable behaviors such as plagiarism and cheating (Crawford et al., 2023). Moreover, while generative AI enables efficiency, it may have a negative impact on students’ creativity (Bozkurt et al., 2023; Yilmaz and Karaoglan Yilmaz, 2023b): completing tasks easily and quickly with generative AI might hinder students from generating original ideas.

Moreover, dependence on generative AI technology may lead to technological addiction (Yu, 2023), potentially diminishing students’ ability to think critically and develop their own thoughts and ideas. Generative AI technology may also hinder the development of students’ communication and interaction skills (Yilmaz and Karaoglan Yilmaz, 2023a): students who rely on features such as autocomplete and word suggestions may engage less with peers on collaborative assignments, which could impede the development of essential social skills. Furthermore, generative AI technology may weaken students’ reading and writing skills, since students may copy pre-existing sentences produced by auto-completion and word suggestions instead of crafting original content. Consequently, their reading and writing skills may not develop adequately (Bozkurt et al., 2023).

Generative AI technology can also diminish students’ thinking skills. When the tool provides ready-made answers instead of students generating their own thoughts, their analytical and critical thinking skills may decline (Yilmaz and Karaoglan Yilmaz, 2023a). It can also reduce students’ motivation: students may rely on canned answers rather than invest effort in their assignments, which can result in a loss of motivation for their studies and subsequent academic underachievement (Yilmaz and Karaoglan Yilmaz, 2023b; You et al., 2023).

In this study, “attitude toward generative AI in education” is defined as the evaluative disposition of students toward the various applications and uses of generative AI tools within educational settings. This definition is grounded in established theories of attitude within educational and psychological research, which emphasize the evaluative nature of attitudes as encompassing cognitive, affective, and behavioral components (Eagly and Chaiken, 1993; Biddle, 2006; Kaya et al., 2022).

The definition adopted in this study draws on seminal work in the field of attitude theory. Eagly and Chaiken (1993) describe attitude as a psychological tendency that is expressed by evaluating a particular entity with some degree of favor or disfavor. This conceptualization is applied to the context of generative AI in education, where students’ attitudes reflect their evaluations of the utility, implications, and personal relevance of these tools. The focus on educational settings further narrows this definition to encompass how students perceive the integration of AI tools in their learning experiences, as informed by the broader literature on technology acceptance and educational innovation (Davis, 1989; Rogers, 2003).

Generative AI technology offers students many opportunities but also brings various disadvantages. Students’ experiences with generative AI, both positive and negative, contribute to the formation of their attitudes. The benefits students derive from generative AI technology largely depend on their attitudes toward it, making attitude a crucial aspect of the learning process.

Students’ attitudes toward education may directly affect their performance and success in the learning process (Mensah et al., 2013; Marengo and Pange, 2024). These attitudes can be categorised as positive or negative: positive attitudes reflect a favourable disposition toward learning, whereas negative attitudes reflect an unfavourable one (Korkmaz, 2012). Positive attitudes reflect openness, motivation, and active participation (Longobardi et al., 2021), and they contribute to students’ engagement, better understanding of information, and greater receptiveness to learning (van der Linden et al., 2012). Students’ positive attitudes toward learning materials facilitate effective learning (Mensah et al., 2013; Marengo and Pange, 2024). Negative attitudes, by contrast, manifest as indifference, lack of motivation, and passive behavior toward learning (Illarionova et al., 2021); they can create obstacles that hinder students’ learning progress (Mahanta, 2012), and negative attitudes toward learning materials can impede effective learning (Judi et al., 2011). While positive attitudes support students’ success, negative attitudes may lead to academic setbacks (Shui Ng and Yu, 2021). Therefore, fostering positive attitudes toward generative AI technology is crucial for students’ educational experiences.

Given the numerous advantages that generative AI offers, students may be expected to develop positive attitudes toward this technology. In particular, students with creative inclinations may perceive generative AI as a means of producing more original and distinctive work. However, some students may be concerned that generative AI could stifle their creativity and hinder the expression of their true creative capabilities. Furthermore, misuse of this technology can undermine the originality of assignments and adversely affect students’ learning. Hence, it is important to ascertain students’ attitudes toward generative AI technology.

The classification of attitudes toward generative AI in education requires a robust theoretical foundation that bridges classical attitude theory with contemporary technological contexts. Our framework integrates three key theoretical perspectives:

1. Tripartite model of attitudes (Eagly and Chaiken, 1993):

- Cognitive component: beliefs about AI’s educational utility.

- Affective component: emotional responses to AI integration.

- Behavioral component: intended usage patterns.

2. Technology acceptance variables (Davis, 1989; Venkatesh et al., 2023):

- Perceived usefulness in educational contexts

- Ease of use in learning environments

- Social influence factors

- Facilitating conditions

3. AI-specific attitude formation (Bozkurt et al., 2023; Yilmaz and Karaoglan Yilmaz, 2023a):

- Technological self-efficacy

- Ethical awareness

- Learning autonomy

- Creative empowerment

The classification of items as positive or negative attitudes follows specific criteria:

Positive attitudes:

- Enhancement of learning efficiency

- Support for skill development

- Facilitation of educational goals

- Promotion of learning autonomy

Negative attitudes:

- Concerns about dependency

- Ethical and privacy issues

- Potential learning impediments

- Impact on critical thinking development

This theoretical framework guided both item development and subsequent analysis, ensuring that the scale comprehensively captures the multifaceted nature of student attitudes toward generative AI in education.

Examining students’ attitudes toward generative AI technology is valuable because it offers insight into how students approach the technology and how it affects education. Such research can inform policies and strategies for the effective integration of generative AI technology into educational environments. Moreover, knowing students’ attitudes will aid in designing and implementing training programs tailored to their needs. Exploring these attitudes can also reveal critical considerations for the appropriate use of generative AI, enabling students to integrate and use it effectively. Research on students’ attitudes further plays a crucial role in formulating strategies for incorporating generative AI technology into teaching and learning processes. Knowing how well students can leverage this technology, the domains to which it applies, and its potential benefits enables educators to make informed decisions on matters such as educational material design and the assessment of student assignments.

Students’ attitudes toward generative AI technology also affect its sustainability in education. Positive attitudes foster future adoption and use of the technology, while negative attitudes may impede its effective use and integration into educational practices. While frameworks such as the Technology Acceptance Model, with its constructs of perceived usefulness and perceived ease of use, are critical to understanding user behavior, this research focuses specifically on attitude because of its overarching impact on user interactions with AI technologies. This focused approach allows a more in-depth exploration of how attitudes are formed and influenced in the context of AI. The reviewed literature highlights the need for tools that measure students’ attitudes toward generative AI technology, and this study aims to fill this gap by developing an attitude scale tailored explicitly to the educational use of generative AI; herein lies the novelty of the research. The resulting scale will facilitate further research on the educational use of generative AI and provide a foundation for future investigations. As a result, this research is anticipated to make valuable contributions for educators and researchers in the field.

The present study followed established procedures for developing and validating a scale to measure students’ attitudes toward using generative AI tools across various tasks. The development of the instrument followed a sequential exploratory mixed-methods approach: the initial, qualitative phase evaluated the face and content validity of the tool, while the subsequent, quantitative phase assessed the construct validity and reliability of the items. The process was thorough and iterative, comprising multiple stages of rigorous review and revision before the scale was administered to three distinct participant groups for further evaluation and validation. These stages are described below.

The process of generating scale items began with a comprehensive review of existing literature on the use of generative AI in educational contexts. The goal was to identify key constructs related to students’ attitudes toward generative AI tools. Studies that explored the intersection of digital technology, education, and attitude measurement were extensively reviewed (e.g., Hernández-Ramos et al., 2014; Küçük et al., 2014; Yavuz, 2006). From this literature, common themes and constructs were identified, which informed the initial pool of items. Specifically, the items were designed to capture both positive and negative attitudes toward generative AI, in line with established theoretical frameworks in attitude research (e.g., Edwards, 1957; Korkmaz, 2012). To ensure comprehensive coverage, the item pool included constructs related to perceived usefulness, ease of use, impact on learning outcomes, and ethical considerations.

The initial item pool consisted of 21 items, formatted as statements on a 5-point Likert scale, ranging from 1 (strongly disagree) to 5 (strongly agree). These items were crafted to reflect the dual dimensions of attitude: positive and negative. Items were phrased to ensure clarity, relevance, and alignment with the theoretical constructs identified in the literature.

The initial pool of 21 items was developed through a systematic process incorporating both classical and contemporary theoretical frameworks. While foundational methodological works (Edwards, 1957; Korkmaz, 2012) provided the basic structure for attitude scale development, recent studies on AI in education (Chen et al., 2023; Yilmaz and Karaoglan Yilmaz, 2023a; Bozkurt et al., 2023) informed the content and contextual relevance of the items.

The item generation process followed these steps:

1. Literature review phase:

- Analysis of traditional attitude measurement frameworks

- Review of recent generative AI studies in education (2021–2023)

- Examination of existing technology acceptance scales

2. Item development phase:

- Creation of initial item pool based on theoretical dimensions

- Review by methodology experts

- Integration of contemporary AI-specific considerations

The complete 21-item pool (see Appendix 2) was structured to capture:

a) Learning Enhancement Dimension (7 items)

b) Technological Efficacy Dimension (5 items)

c) Educational Impact Dimension (5 items)

d) Ethical Considerations Dimension (4 items)

To ensure the clarity and comprehensibility of the items, the initial item pool was reviewed by 15 university students with experience of using generative AI tools. Participants were asked to assess their understanding of each item and to discuss their recommendations, questions, and ideas about the items. Based on this feedback, items were revised for clarity, length, and language (including readability level and relevance), and items deemed complex, unclear, or redundant were eliminated from the pool. The result was an 18-item draft using a 5-point Likert scale ranging from “1: strongly disagree” to “5: strongly agree”.

At this stage, eight experts, four specialising in assessment and evaluation and four in educational technology, provided substantial feedback to support the scale’s validity. Construct, face, and content validity were assessed based on their opinions. In response to their feedback, four items were removed from the 18-item draft and two other items were adjusted to improve their alignment with the intended constructs. Consequently, following the expert reviewers’ recommendations, the generative AI attitude scale for educational purposes was refined to 14 items. Furthermore, to enhance the linguistic quality of the scale, two linguists, proficient in English and Turkish respectively, conducted a thorough linguistic assessment, which led to revisions ensuring linguistic accuracy and clarity.

Following the revisions, a pilot study with 14 students (8 males and 6 females) was conducted to gather participant feedback and to measure the time required to complete the questionnaire. To check the clarity of the items and instructions, these students also took part in a focus group discussion. Their ideas and suggestions led to adjustments that clarified the instructions and eliminated ambiguous or unclear language in the scale items. The time needed to complete the questionnaire was estimated from the students’ average completion time. Importantly, this pilot group was excluded from the primary data set.

With these revisions, the 14-item scale reached its final form and was prepared for implementation in the current study. The final version of the scale is available in two languages (Turkish and English) in Appendix 1. Using the participant groups detailed in Table 1, we conducted an exploratory factor analysis (EFA) and a confirmatory factor analysis (CFA). The following sections present the data analysis procedures and the results of these analyses.

The primary objective of this study was to construct a tool to assess users’ attitudes toward generative AI in the context of education. Therefore, purposive sampling was used to include participants with experience of generative AI tools and applications, specifically within an educational setting. In line with this aim, data were collected in the fall and spring semesters of the 2022–2023 academic year across the departments of nine faculties (Forestry; Economics and Administrative Sciences; Engineering, Architecture and Design; Letters; Education; Science; Islamic Sciences; Health Sciences; and Sports Sciences), three vocational schools, and a graduate school of a state university in Turkey. Throughout data collection, the research adhered strictly to ethical and professional standards, and all participants were asked to provide informed consent.

The research sought to include participants from diverse departments within the university, because this can offer a more comprehensive understanding of students’ attitudes toward generative AI in the context of education. Moreover, participant diversity strengthens the robustness of the findings, as it helps mitigate the potential influence of department-specific factors and yields a more representative sample of students with a variety of perspectives and experiences.

Three distinct participant groups were involved in the study. The first group comprised 400 undergraduate students (217 females and 183 males), whose data were used for the EFA. The second group comprised 264 undergraduate students (137 females and 127 males), whose data were used for the CFA. The data from both groups, a total of 664 students, were used to calculate reliability coefficients (Cronbach’s alpha) and to perform item analyses. DeVon et al. (2007) recommend using different samples for EFA and CFA; following this recommendation, the two analyses were performed on distinct participant groups.
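The reliability and item statistics mentioned above can be illustrated with a short sketch. The Python code below is a minimal illustration, not the authors’ analysis script: it computes Cronbach’s alpha as α = k/(k-1) · (1 - Σ item variances / total-score variance), the corrected item-total correlation (each item correlated with the sum of the remaining items), and the mean item scores of upper and lower groups ranked by total score. The function names, the toy responses, and the 27% group size are assumptions chosen for illustration; the text does not state the percentile cut-off used. The sketch also assumes all items are scored in the same direction, that is, any negatively worded items have already been reverse-scored.

```python
from math import sqrt
from statistics import mean, pvariance


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def cronbach_alpha(responses):
    """responses: one row per respondent, each row holding k item scores.
    Population variances are used; the n/(n-1) factor cancels in the ratio."""
    k = len(responses[0])
    items = list(zip(*responses))               # one tuple of scores per item
    totals = [sum(row) for row in responses]    # total score per respondent
    item_var = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_var / pvariance(totals))


def corrected_item_total(responses):
    """Correlate each item with the total of the *other* items (item removed)."""
    totals = [sum(row) for row in responses]
    return [
        pearson_r(col, [t - v for t, v in zip(totals, col)])
        for col in zip(*responses)
    ]


def upper_lower_means(responses, fraction=0.27):
    """Per-item (upper-group mean, lower-group mean), groups ranked by total score.
    The 27% default is an assumed convention, not a value stated in the study."""
    g = max(1, round(len(responses) * fraction))
    ranked = sorted(responses, key=sum)
    low, high = ranked[:g], ranked[-g:]
    return [(mean(hi), mean(lo)) for hi, lo in zip(zip(*high), zip(*low))]


# Hypothetical toy data: 5 respondents x 3 items on a 1-5 scale.
toy = [[4, 5, 4], [2, 2, 3], [5, 5, 5], [3, 3, 2], [1, 2, 1]]
print(round(cronbach_alpha(toy), 3))
print([round(r, 2) for r in corrected_item_total(toy)])
print(upper_lower_means(toy))
```

With real data, items showing low corrected item-total correlations, or little difference between upper- and lower-group means, would be the ones flagged as poorly discriminating.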

As an additional check on the reliability of the proposed instrument, a test–retest analysis was conducted using data from a separate group of participants. A test–retest analysis examines the consistency and stability of a measurement by administering the same test to the same individuals on two occasions and calculating the correlation between their scores (DeVon et al., 2007). The third participant group initially comprised 27 undergraduate students, but data from two students were excluded because they did not attend both sessions, leaving 25 students (13 females and 12 males). This group completed the scale on two occasions, three weeks apart. The outcomes of the test–retest reliability analysis are reported in the “Results” section. Table 1 details the scale’s implementation across the participant groups and offers a statistical analysis of the data collected from these groups.
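A test–retest coefficient of this kind is a Pearson correlation between the two administrations, computed only for participants present at both sessions, which mirrors the exclusion of the two absent students described above. The sketch below assumes scores are stored in dictionaries keyed by a hypothetical participant ID; the function names and data are illustrative, not the study’s code.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def retest_reliability(time1, time2):
    """time1, time2: {participant_id: total scale score} for each session.
    Returns (n_paired, r) using only participants who attended both sessions."""
    paired = sorted(set(time1) & set(time2))
    r = pearson_r([time1[p] for p in paired], [time2[p] for p in paired])
    return len(paired), r


# Hypothetical scores; "s4" missed the second session and is dropped.
first = {"s1": 50, "s2": 40, "s3": 45, "s4": 30}
second = {"s1": 52, "s2": 41, "s3": 44}
print(retest_reliability(first, second))
```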

The demographic analysis yielded the following age distributions for the three groups. In the first group (n = 400), participants’ ages ranged from 18 to 27 years (M = 21.3, SD = 2.4). The second group (n = 264) showed a similar age distribution, with ages ranging from 19 to 28 years (M = 22.1, SD = 2.6). The third group, used for test–retest reliability (n = 25), comprised students aged 20–26 years (M = 21.8, SD = 1.9).

The age distribution analysis revealed that 68.5% of participants were between 20 and 24 years old, the typical age range for undergraduate students. This demographic profile is particularly relevant because this age group has grown up with digital technologies and may hold different attitudes toward generative AI than other age groups. The relatively homogeneous age distribution also helps control for potential age-related confounding in attitudes toward generative AI technologies.

To examine potential age effects, we conducted additional statistical tests of whether age influenced attitudes toward generative AI. Correlations between age and the positive and negative attitude subscales were not significant (r = 0.12, p > 0.05 for positive attitudes; r = 0.09, p > 0.05 for negative attitudes), suggesting that, within our sample, age was not a determining factor in shaping attitudes toward generative AI in educational contexts.

Table 2 provides a comprehensive overview of the demographic characteristics across all participant groups, illustrating the distribution of gender, age, and academic affiliations in the study sample.

The psychometric properties of the proposed scale were evaluated through a series of statistical analyses. The first step was an Exploratory Factor Analysis (EFA) of data from a participant group of 400 individuals (Cattell, 1978; Comrey and Lee, 1992; Cokluk et al., 2012; Hair et al., 1979; Kline, 1994). EFA is a widely used technique for uncovering the underlying constructs or dimensions that account for the correlations among observed variables; in scale development, it plays a critical role in establishing the structure of the construct being measured. Before the EFA, the Kaiser-Meyer-Olkin (KMO) measure and Bartlett’s test of sphericity were computed to assess the dataset’s suitability for factor analysis. The dataset met the criteria, with a KMO value above 0.60 and a statistically significant Bartlett’s test (Buyukozturk, 2010).
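Both suitability checks can be computed directly from the item correlation matrix. The NumPy sketch below is a minimal illustration, assuming a respondents × items array of numeric responses: the overall KMO compares squared correlations with squared anti-image (partial) correlations taken from the inverse correlation matrix, and Bartlett’s χ2 uses p(p − 1)/2 degrees of freedom. A p-value could be obtained from the χ2 distribution (for example with `scipy.stats.chi2.sf`), which is left out here to keep the block NumPy-only.

```python
import numpy as np


def bartlett_sphericity(data):
    """Bartlett's test that the item correlation matrix is an identity matrix.

    data: array of shape (n_respondents, p_items). Returns (chi_square, df).
    """
    n, p = data.shape
    R = np.corrcoef(data, rowvar=False)
    _, log_det = np.linalg.slogdet(R)  # log|R|, numerically safer than log(det(R))
    chi_square = -(n - 1 - (2 * p + 5) / 6) * log_det
    df = p * (p - 1) // 2
    return chi_square, df


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    R = np.corrcoef(data, rowvar=False)
    R_inv = np.linalg.inv(R)
    # Anti-image (partial) correlations derived from the inverse correlation matrix
    scale = np.sqrt(np.outer(np.diag(R_inv), np.diag(R_inv)))
    partial = -R_inv / scale
    off_diag = ~np.eye(R.shape[0], dtype=bool)  # ignore the diagonal of both matrices
    r2 = np.sum(R[off_diag] ** 2)
    q2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + q2)
```

With 14 items, Bartlett’s test has 14 × 13 / 2 = 91 degrees of freedom, which matches the χ2(91) reported for this scale.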

Various factor extraction techniques outlined by Tabachnick and Fidell (2007) can be used in an EFA. Principal component analysis (PCA), which some authors regard as psychometrically stronger than other methods, may be preferred (Soomro et al., 2018; Stevens, 1996), and, following the recommendations of Akbulut (2010), PCA was used as the extraction technique in the present study. For an item to be retained, its loading on the relevant factor must be at least 0.30 (Pallant, 2005). The communalities (h2) of the measured variables were also examined, because a low communality signals that an item should be excluded from the measurement tool. Following Tabachnick and Fidell (2007), a cutoff value of 0.20 was used for communalities in the present study.
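The extraction and screening steps can be sketched in NumPy: principal-component loadings from the correlation matrix, an orthogonal varimax rotation, and the 0.30 loading and 0.20 communality cutoffs. This is an illustrative sketch, not the SPSS routine used in the study. The varimax function is the standard SVD-based algorithm; unlike the SPSS default, it applies no Kaiser normalization, so loadings may differ slightly from SPSS output. All function names are hypothetical.

```python
import numpy as np


def pca_loadings(data, n_factors):
    """Unrotated principal-component loadings: eigenvectors scaled by sqrt(eigenvalue)."""
    R = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(R)             # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_factors]    # keep the largest components
    return eigvecs[:, order] * np.sqrt(eigvals[order]), eigvals[order]


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation of a (p_items x k_factors) loading matrix."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Gradient of the varimax criterion (gamma = 1)
        grad = loadings.T @ (rotated ** 3 - rotated @ np.diag(np.sum(rotated ** 2, axis=0)) / p)
        u, s, vt = np.linalg.svd(grad)
        rotation = u @ vt                            # nearest orthogonal matrix
        new_criterion = np.sum(s)
        if new_criterion < criterion * (1 + tol):
            break                                    # converged
        criterion = new_criterion
    return loadings @ rotation


def screen_items(rotated, min_loading=0.30, min_communality=0.20):
    """Keep items whose largest |loading| and communality (h2) meet the cutoffs."""
    h2 = np.sum(rotated ** 2, axis=1)                # communality of each item
    max_load = np.max(np.abs(rotated), axis=1)
    keep = (max_load >= min_loading) & (h2 >= min_communality)
    return keep, h2
```

Because varimax is an orthogonal rotation, each item’s communality is the same before and after rotation; only the distribution of variance across factors changes.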

Through CFA, we aimed to validate the EFA results and test the theoretically constructed model. The χ2 statistic was significant, which, taken alone, indicates that the data do not fit the model exactly; because χ2 is highly sensitive to sample size, however, the adequacy of the measurement model was evaluated with several additional fit indices, as recommended in the literature (Byrne, 2010; Hu and Bentler, 1999; Kline, 2005). Alongside the chi-square goodness-of-fit test, which assesses how closely the model reproduces the observed data, these indices were the **Goodness of Fit Index (GFI)**, **Standardized Root Mean Square Residual (SRMR)**, **Tucker-Lewis Index (TLI)**, **Incremental Fit Index (IFI)**, **Normed Fit Index (NFI)**, **Comparative Fit Index (CFI)**, and **Root Mean Square Error of Approximation (RMSEA)**. Together, they indicate whether the measurement model adequately aligns with the collected data.
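Several of these indices are simple functions of the model χ2, its degrees of freedom, the sample size and, for incremental indices, the χ2 of an independence (baseline) model. The sketch below uses the common textbook formulas; it assumes the N − 1 convention in RMSEA (as in AMOS), and other programs may use N instead. It is not a replacement for the AMOS output.

```python
import math


def rmsea(chi2, df, n):
    """Root mean square error of approximation (point estimate, N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m, df_m, chi2_b, df_b):
    """Comparative fit index: tested model (m) versus independence/baseline model (b)."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


def tli(chi2_m, df_m, chi2_b, df_b):
    """Tucker-Lewis index (non-normed fit index)."""
    ratio_b, ratio_m = chi2_b / df_b, chi2_m / df_m
    return (ratio_b - ratio_m) / (ratio_b - 1.0)
```

Since RMSEA = sqrt((χ2/df − 1)/(N − 1)), it depends only on χ2/df and N: the reported χ2/df = 2.126 with N = 264 gives sqrt(1.126/263) ≈ 0.065, consistent with the RMSEA reported for the CFA sample.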

The reliability of the educational generative AI attitude scale was assessed with Cronbach’s alpha and test–retest approaches, which evaluate the internal consistency and the stability of the scale, respectively. To examine the discriminative power of the items, corrected item-total correlations were calculated, and the lower 27% and upper 27% of respondents were compared. EFA, test–retest reliability, Cronbach’s alpha, concordance validity, and item analyses were performed in SPSS 24.0, and the CFA was carried out in AMOS 22.0.

The EFA was conducted on data from the first group of participants. Beforehand, the KMO measure of sampling adequacy was used to check whether the sample size was adequate, and Bartlett’s test of sphericity was used to assess whether the data set was appropriate for factor analysis. The KMO value was 0.923, and Bartlett’s test of sphericity was statistically significant (χ2(91) = 5788.732, p < 0.001), confirming that the dataset was suitable for EFA. Principal component analysis with varimax rotation was then performed to identify the underlying factor pattern of the scale. The EFA results are displayed in Table 3.

Table 3 shows that each of the 14 items has a factor loading above 0.30, satisfying the minimum criterion for inclusion, and that the communality of every item meets the 0.20 threshold. The first dimension, labelled positive attitude, comprises eight items with factor loadings ranging from 0.874 to 0.925 and has a sum of squared loadings (eigenvalue) of 6.489, about 46.35% of the variance. The second dimension, labelled negative attitude, comprises six items with factor loadings ranging from 0.768 to 0.901 and has an eigenvalue of 4.493, about 32.09% of the variance. Together, the two factors explain 78.440% of the total variance, indicating that the scale accounts well for the measured attribute. The EFA thus yielded a 14-item, two-factor structure.
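The values 6.489 and 4.493 reconcile with the 78.440% total only when read as eigenvalues (sums of squared loadings): in a PCA of a correlation matrix each standardized item contributes a variance of 1, so the total variance of 14 items is 14. The short sketch below shows the conversion; small discrepancies in the last decimal reflect rounding of the reported eigenvalues.

```python
def percent_variance(eigenvalues, n_items):
    """Percent of total variance per component in a PCA of a correlation matrix.

    Each standardized item has variance 1, so the total variance equals n_items.
    """
    return [100 * ev / n_items for ev in eigenvalues]


shares = percent_variance([6.489, 4.493], n_items=14)
print([round(s, 2) for s in shares], round(sum(shares), 2))
```

This gives roughly 46.35% and 32.09% for the two factors and about 78.44% in total.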

To validate our two-factor solution and address the potential for a simpler structure, we conducted a comparative analysis exploring a one-factor solution. The analysis revealed several key findings:

1. One-Factor Solution Analysis

• The single-factor solution explained 52.34% of total variance

• Factor loadings ranged from 0.45 to 0.78

• Goodness of fit indices: χ2/df = 4.326, CFI = 0.86, GFI = 0.82, RMSEA = 0.089

2. Comparative Model Fit: Two-Factor Solution

• Explained variance: 78.440%

• Factor loadings: 0.768 to 0.925

• Fit indices: χ2/df = 2.126, CFI = 0.98, GFI = 0.93, RMSEA = 0.065

The two-factor solution demonstrated superior fit across multiple criteria:

• Higher explained variance (∆26.1%)

• Better fit indices (∆CFI = 0.12, ∆GFI = 0.11)

• Lower RMSEA (∆0.024)

• More theoretically meaningful structure
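The differences listed above can be reproduced from the reported indices. In the sketch below the values are copied from the one- and two-factor summaries, and each gain is signed so that a positive number always favours the two-factor model; this matters because lower values are better for χ2/df and RMSEA but higher values are better for the other indices. The dictionary keys are illustrative names.

```python
# Values as reported above for the one- and two-factor solutions
ONE_FACTOR = {"variance": 52.34, "chi2_df": 4.326, "CFI": 0.86, "GFI": 0.82, "RMSEA": 0.089}
TWO_FACTOR = {"variance": 78.440, "chi2_df": 2.126, "CFI": 0.98, "GFI": 0.93, "RMSEA": 0.065}

# Indices where a smaller value indicates better fit
LOWER_IS_BETTER = {"chi2_df", "RMSEA"}


def compare_fit(baseline, candidate, lower_is_better=LOWER_IS_BETTER):
    """Improvement of `candidate` over `baseline` per index (positive = candidate fits better)."""
    gains = {}
    for key, base_value in baseline.items():
        diff = candidate[key] - base_value
        gains[key] = round(-diff if key in lower_is_better else diff, 3)
    return gains


print(compare_fit(ONE_FACTOR, TWO_FACTOR))
```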

The comparison confirms that positive and negative attitudes toward generative AI represent distinct constructs rather than opposite ends of a single continuum. This finding aligns with attitude theory literature suggesting that positive and negative evaluations can coexist and operate independently (Eagly and Chaiken, 1993). The superior fit of the two-factor model supports our theoretical framework that students can simultaneously hold both positive expectations and concerns about generative AI in education.

The data from the second group were used to confirm the two-factor, 14-item structure obtained from the EFA. The CFA yielded the following fit indices: χ2/df = 2.126, CFI = 0.98, GFI = 0.93, IFI = 0.98, TLI = 0.98, RMSEA = 0.065, and SRMR = 0.0367. Table 4 compares these values with the criteria for acceptable and excellent fit commonly used to judge whether a tested model is adequately compatible with the data (Hooper et al., 2008; Hu and Bentler, 1999; Kline, 2005; Tabachnick and Fidell, 2001). The findings indicate that the two-factor model tested in the CFA shows a satisfactory goodness of fit.

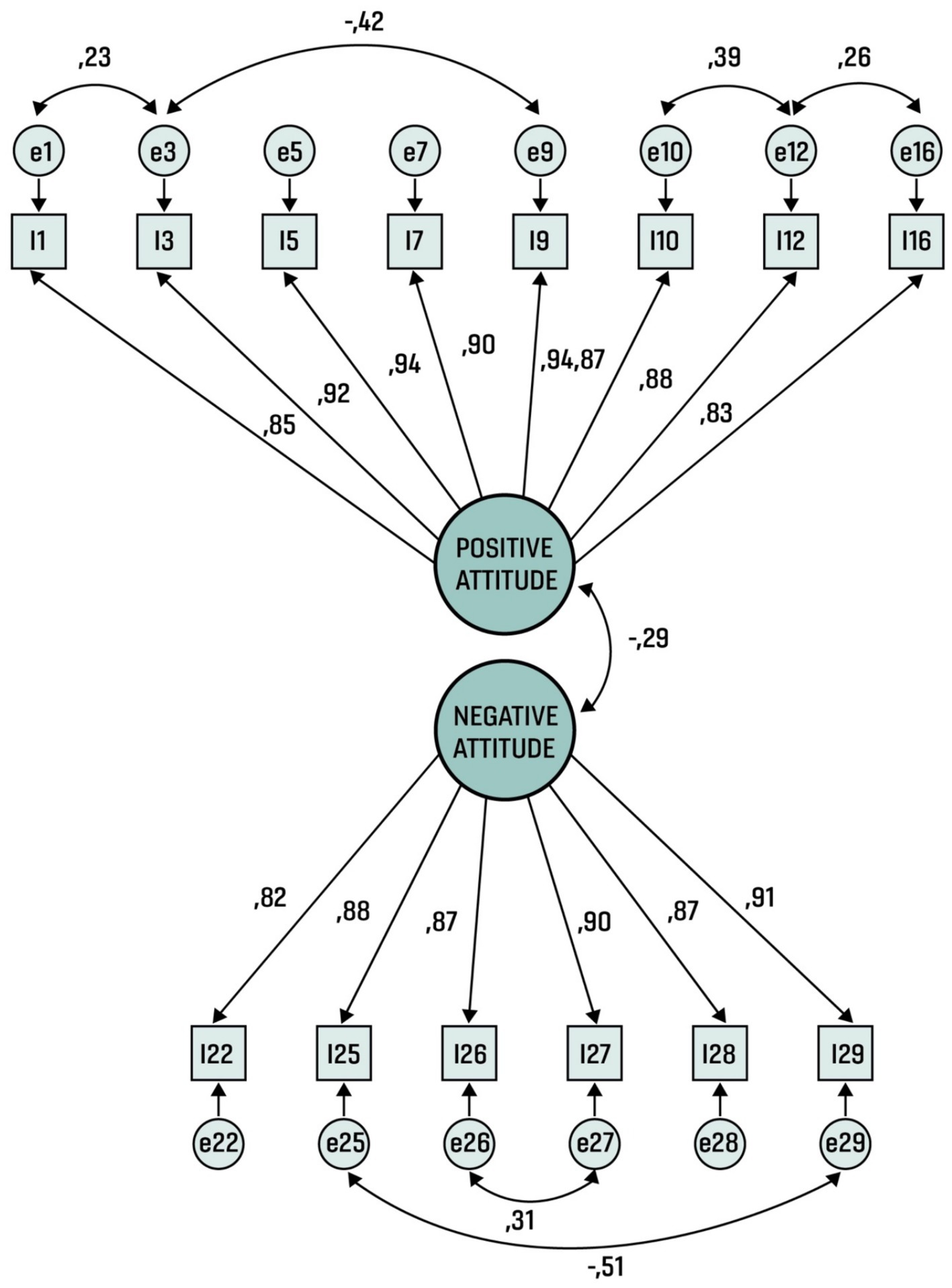

Figure 1 illustrates the standardized factor loadings of the two-factor model from the CFA, which range from 0.83 to 0.94 for the positive attitude dimension and from 0.82 to 0.91 for the negative attitude dimension.

Figure 1. Standardized solutions for the two-factor model of the generative AI attitude scale for educational purposes.

The two-factor structure revealed through our analyses warrants deeper theoretical interpretation. Each factor encompasses distinct but interrelated dimensions of students’ attitudes toward generative AI in educational contexts:

1. Positive attitude factor analysis: The positive attitude dimension (Items 1–8) revealed four distinct theoretical subdimensions:

a) Perceived educational utility (Items 1, 3, 4)

• Learning efficiency enhancement

• Educational benefit perception

• Recommendation potential

• Factor loadings ranging from 0.874 to 0.925, indicating strong construct validity

b) Cognitive development support (Items 2, 5)

• Creative thinking enhancement

• Problem-solving facilitation

• Demonstrated high internal consistency (α = 0.97)

c) Engagement motivation (Items 6, 7)

• Interest in educational applications

• Excitement about technology integration

• Strong inter-item correlations (r = 0.82)

d) Technology appreciation (Item 8)

• Recognition of potential and features

• High factor loading (0.898)

2. Negative attitude factor analysis: The negative attitude dimension (Items 10–13) revealed three distinct concern categories:

a) Future impact concerns (Items 10, 13)

• Apprehension about long-term implications

• Societal impact considerations

• Factor loadings of 0.900 and 0.859, respectively

b) Learning process concerns (Item 11)

• Impact on cognitive skill development

• Educational effectiveness concerns

• Strong correlation with overall negative dimension (r = 0.87)

c) Reliability and trust issues (Item 12)

• Accuracy concerns

• Trust in AI-generated content

• High factor loading (0.901)

This dimensional analysis reveals that students’ attitudes toward generative AI are multifaceted, encompassing both practical educational considerations and broader societal concerns. The positive dimension primarily focuses on immediate educational benefits and learning enhancement, while the negative dimension addresses broader implications for personal development and societal impact.

The strong factor loadings and internal consistency measures within each dimension suggest that these constructs are robust and theoretically meaningful. This multidimensional structure aligns with previous research on technology acceptance in education (Davis, 1989; Rogers, 2003) while extending it to the specific context of generative AI applications.

Corrected item-total correlations were calculated to examine the discriminative power of the scale items and their ability to predict the total score. The upper 27% and lower 27% of participants were compared, and the findings from the item analysis are reported in Table 5.

The t-values for the difference between the upper 27% and lower 27% of participants (Table 5) range from −18.965 to −24.514 for the positive attitude dimension and from −6.667 to −13.375 for the negative attitude dimension. All of these t-values are statistically significant, indicating that the items discriminate well between high- and low-scoring participants (Erkuş and Terhorst, 2012).
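The extreme-groups comparison can be sketched in a few lines: rank respondents by total score, take the lowest and highest 27%, and run an independent-samples t-test on each item. The sketch assumes Student’s pooled-variance t computed as lower-group mean minus upper-group mean, which yields negative values for discriminating items like those in Table 5; ties at the cut point are broken by original order, an arbitrary choice. Function names are hypothetical.

```python
import math
from statistics import mean, variance


def extreme_groups(totals, fraction=0.27):
    """Indices of the lowest and highest `fraction` of respondents by total score.

    Ties at the cut point are broken by original order (an arbitrary choice).
    """
    k = round(len(totals) * fraction)
    if k < 2:
        raise ValueError("each extreme group needs at least 2 respondents")
    order = sorted(range(len(totals)), key=lambda i: totals[i])
    return order[:k], order[-k:]


def pooled_t(a, b):
    """Independent-samples Student's t (pooled variance) for mean(a) - mean(b)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / na + 1 / nb))


def item_discrimination(responses, item):
    """t for one item, lower 27% minus upper 27% (negative when the item discriminates)."""
    totals = [sum(row) for row in responses]
    lower, upper = extreme_groups(totals)
    return pooled_t([responses[i][item] for i in lower],
                    [responses[i][item] for i in upper])
```

SPSS also reports a Welch (unequal-variances) version of this test; the pooled form is used here only for brevity.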

As Table 5 shows, the item-total correlations range from 0.522 to 0.619 for the positive attitude dimension and from 0.273 to 0.438 for the negative dimension. Items with item-total correlations of 0.30 and above are considered to have adequate discriminative power (Buyukozturk, 2010; Erkuş and Terhorst, 2012). Item 9 had an item-total correlation below 0.30 and was therefore excluded from the scale.
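A corrected item-total correlation correlates each item with the sum of the remaining items, so the item does not inflate its own correlation. The sketch below assumes a list of respondent rows containing only the items of one sub-dimension, since the correlations above are reported per dimension, and flags items under the 0.30 cutoff. Names are illustrative.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def corrected_item_total(responses):
    """Correlate each item with the total of the *other* items in the same rows."""
    n_items = len(responses[0])
    totals = [sum(row) for row in responses]
    return [pearson_r([row[j] for row in responses],
                      [total - row[j] for row, total in zip(responses, totals)])
            for j in range(n_items)]


def low_discrimination(correlations, cutoff=0.30):
    """Zero-based indices of items whose corrected item-total r is below the cutoff."""
    return [j for j, r in enumerate(correlations) if r < cutoff]
```

For example, in a toy three-item dimension where the third item varies independently of the other two, `low_discrimination(corrected_item_total(rows))` returns `[2]`.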

The eliminated Item 9 (‘Generative AI applications can pose risks to the protection of personal data’) was initially included to assess concerns about data privacy in the negative attitude dimension. The decision to remove this item was based on multiple methodological considerations:

1. Statistical justification

• The item showed a low item-total correlation (0.273), well below the established threshold of 0.30

• Its communality value (h2 = 0.60) was notably lower than other items in the negative attitude dimension

• The item demonstrated weak factor loading patterns in both EFA and CFA analyses

2. Conceptual analysis

• While data privacy represents a legitimate concern, the item’s focus appeared too specific compared to the broader attitudinal constructs measured by other items

• Factor analysis revealed that this item did not align consistently with either the positive or negative attitude dimensions

• The content partially overlapped with Item 13, which more comprehensively addresses general concerns about AI applications

3. Impact on scale reliability

• Removal of Item 9 improved the overall Cronbach’s alpha from 0.82 to 0.84

• The scale’s test–retest reliability improved after item removal

• The remaining 13 items demonstrated stronger internal consistency
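The effect of dropping an item on internal consistency is usually checked with “Cronbach’s alpha if item deleted.” The sketch below computes alpha as k/(k − 1) · (1 − Σ item variances / total-score variance) using sample variances, then recomputes it with each item left out in turn. It is an illustrative reimplementation, not the SPSS RELIABILITY output used in the study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondent rows (one column per item)."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_if_item_deleted(responses):
    """Alpha recomputed with each item left out in turn (index j = item j removed)."""
    k = len(responses[0])
    return [cronbach_alpha([[v for i, v in enumerate(row) if i != j] for row in responses])
            for j in range(k)]
```

An item whose removal raises alpha above the full-scale value, as Item 9 did here, is a candidate for deletion, provided the content it covers is retained elsewhere.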

The removal of this item strengthened the scale’s psychometric properties while maintaining comprehensive coverage of the construct through the remaining items addressing technological concerns and potential risks. This decision aligns with established scale development procedures (DeVon et al., 2007) that prioritize both statistical robustness and conceptual clarity.

The analyses were then repeated on the remaining 13 items, which were renumbered; the results are presented in Table 6.

As indicated in Table 6, the item-total correlations range from 0.576 to 0.684 for the positive attitude dimension and from 0.344 to 0.440 for the negative dimension. Since items with item-total correlations of 0.30 and above are considered to have satisfactory discriminative power (Buyukozturk, 2010; Erkuş and Terhorst, 2012), all items in the scale meet this criterion.

Cronbach’s alpha and test–retest methods were used to assess the scale’s reliability. Reliability coefficients of 0.70 and above are generally considered indicators of reliable measurement (Fraenkel et al., 2011). As shown in Table 7, the calculated coefficients exceed this threshold, demonstrating that the scale has satisfactory reliability.

The relationship between the two sub-dimensions of the scale was explored, and the results are displayed in Table 8.

Table 8 shows that the correlation between the two sub-dimensions of the scale is −0.172. The correlations of the positive attitude and negative attitude sub-dimensions with the total scale score are 0.774 and 0.490, respectively, both statistically significant at the 0.01 level.

The generative AI attitude scale for educational purposes consists of 13 items rated on a five-point response format ranging from “strongly disagree” to “strongly agree,” so the total score can range from 13 to 65. Items in the negative attitude sub-dimension are reverse-scored: the total score is computed by adding the positive attitude item scores to the reversed negative attitude item scores. Higher scores therefore indicate a more positive attitude toward generative AI for educational purposes.
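The scoring rule can be expressed as a short function. On a 1–5 format, reverse-scoring maps a response x to 6 − x, so the total spans 13 to 65. The positions of the negative attitude items in the final 13-item form are an assumption here: for illustration only, the sketch treats items 9–13 (zero-based indices 8–12) as the negative items, consistent with eight positive items followed by the remaining negative ones, but the published item order should be checked before use.

```python
# Hypothetical: items 9-13 (zero-based 8-12) treated as the negative-attitude items
NEGATIVE_ITEMS = frozenset(range(8, 13))


def score_scale(responses, negative_items=NEGATIVE_ITEMS, low=1, high=5):
    """Total score on the 13-item scale, reverse-coding the negative-attitude items."""
    if len(responses) != 13:
        raise ValueError("expected 13 item responses")
    total = 0
    for index, value in enumerate(responses):
        if not low <= value <= high:
            raise ValueError(f"response {value} outside {low}-{high}")
        # Reverse-code on a 1-5 scale: 1<->5, 2<->4, 3 stays 3
        total += (low + high - value) if index in negative_items else value
    return total
```

A respondent who strongly agrees with every positive item and strongly disagrees with every negative item receives the maximum score of 65.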

Attitudes toward generative AI tools and applications in educational contexts are significant for both students and teachers, particularly given that rapidly developing generative AI technology has the potential to affect many aspects of our lives. Positive attitudes toward these tools and applications can enable students to engage actively with the technology, acquire new skills, and keep abreast of current advancements (Marengo et al., 2023). The literature currently lacks measurement tools for assessing students’ attitudes toward generative AI tools and applications. In response to this gap, our study aimed to develop a generative AI attitude scale designed specifically for educational contexts, thereby contributing to the field, providing insight into student attitudes toward generative AI, and broadening the depth and diversity of research in this area.

Our findings contribute significantly to the emerging discourse on generative AI in education by providing empirical validation of theoretical constructs previously discussed in the literature. The two-factor structure identified in our study aligns with but also extends previous research in several key ways:

1. Attitude formation and technology acceptance: Our results support Dwivedi et al.’s (2023) framework on technology acceptance, while specifically identifying how generative AI attitudes differ from general technology attitudes. The strong positive factor loadings (ranging from 0.874 to 0.925) for educational utility items echo Yilmaz and Karaoglan Yilmaz’s (2023a) findings on AI’s perceived benefits in educational contexts, but provide more granular insights into specific educational applications.

2. Educational impact dimensions: The positive attitude dimension’s focus on learning efficiency and creative thinking development validates Ahmad et al.’s (2023) theoretical propositions about AI’s educational potential. However, our findings extend this work by quantifying the strength of these relationships through robust psychometric validation.

3. Concerns and challenges: The negative attitude dimension empirically supports concerns identified by Crawford et al. (2023) regarding ethical implications and Bozkurt et al.’s (2023) findings on potential negative impacts on creativity. Our scale uniquely demonstrates how these concerns manifest in measurable attitudinal constructs.

4. Theoretical integration: The dual-factor structure aligns with established technology acceptance models (Davis, 1989) while incorporating unique aspects of generative AI, particularly in:

• The coexistence of enthusiasm and apprehension.

• The specific role of creativity and problem-solving expectations.

• The integration of ethical considerations with practical utility.

5. Practical applications: Our findings support but also refine Marengo et al.’s (2023) recommendations for AI integration in higher education by:

• Providing validated measures for assessing student readiness.

• Identifying specific areas of student concern requiring attention.

• Offering empirical support for differentiated implementation strategies.

This empirical validation of theoretical constructs provides a foundation for future research while offering practical insights for educational implementation. The robust psychometric properties of our scale (Cronbach’s alpha = 0.84) support its use in informing educational policy and practice in the rapidly evolving landscape of generative AI integration.

The present research followed standard scale development procedures and used EFA and CFA to assess construct validity (Gunuc and Kuzu, 2015; Soomro et al., 2018). The EFA yielded a two-factor, 14-item structure, with factors labelled positive attitude and negative attitude, that explained 78.440% of the total variance. The CFA then verified the measurement model: all item factor loadings exceeded the 0.30 cutoff, and the fit indices were acceptable. One item showed a low item-total correlation (<0.30) and was removed, yielding a final 13-item scale and further supporting the construct validity of the educational generative AI attitude scale. Internal consistency, assessed with Cronbach’s alpha, was 0.84, indicating high reliability.

The identified factors of the proposed scale capture diverse dimensions of students’ attitudes toward generative AI tools. These dimensions could potentially encompass aspects such as perceived usefulness, ease of use, perceived impact on learning outcomes, perceived barriers, and ethical considerations. The nuanced understanding of these dimensions could aid in tailoring interventions to address specific concerns or capitalize on strengths in promoting the effective integration of generative AI technologies into educational environments.

Test–retest reliability analysis yielded a coefficient of 0.90, well above the 0.70 level that the literature considers reliable, which further supports the high reliability of the scale. Item analysis using corrected item-total correlations was conducted to assess the predictive and discriminative power of the items (Carpenter, 2018). Comparisons between the upper and lower 27% of participants showed that all items had satisfactory discriminative power. Taken together, these results indicate that the developed instrument is valid and reliable for assessing students’ attitudes toward generative AI.

Although the validity and reliability testing of the scale followed a systematic and methodical approach, this study has several limitations that should be considered when interpreting and generalising the results. First, the sample comprised university students from various faculties and grade levels at a single state university, which may limit the generalizability of the findings; future studies could include participants from multiple universities and aim for a larger, more representative sample to enhance external validity. Second, a substantial proportion of participants reported using ChatGPT as their generative AI application; future research could use the developed scale to compare attitudes toward different generative AI applications. Third, the study focused on the short-term attitudes of university students who had experience with generative AI tools. To capture potential variation in attitudes across student populations, future research could include other groups, such as adult learners and vocational and technical education students, and consider variables such as age group, socio-cultural background, and education level.

The long-term effects of such tools on students’ learning outcomes, critical thinking skills, and motivation should also be considered for a more comprehensive analysis. To address this, future research could implement longitudinal studies to explore the relationship between student attitudes and learning outcomes over an extended period.

In conclusion, this study contributes a validated scale that may be used to encapsulate students’ attitudes toward generative AI tools in educational contexts. The nuanced insights obtained provide a foundation for informed decision-making in designing and implementing these technologies, ultimately enhancing human-computer interaction and educational experiences.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

AM: Formal analysis, Supervision, Validation, Writing – original draft, Writing – review & editing. FK-Y: Methodology, Writing – original draft, Writing – review & editing. RY: Conceptualization, Resources, Visualization, Writing – original draft, Writing – review & editing. MC: Conceptualization, Writing – original draft, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Gen AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2025.1528455/full#supplementary-material

Ahmad, N., Murugesan, S., and Kshetri, N. (2023). Generative artificial intelligence and the education sector. Computer 56, 72–76. doi: 10.1109/MC.2023.3263576

Akbulut, Y. (2010). Sosyal bilimlerde SPSS uygulamaları [SPSS applications in social sciences]. İstanbul: Ideal Publications.

Baidoo-Anu, D., and Owusu Ansah, L. (2023). Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. Available at: https://ssrn.com/abstract=4337484

Baytak, A. (2023). The acceptance and diffusion of generative artificial intelligence in education: a literature review. Curr. Perspect. Educ. Res. 6, 7–18. doi: 10.46303/cuper.2023.2

Bozkurt, A., Xiao, J., Lambert, S., Pazurek, A., Crompton, H., Koseoglu, S., et al. (2023). Speculative futures on ChatGPT and generative artificial intelligence (AI): a collective reflection from the educational landscape. Asian J. Dist. Educ. 18, 53–130. doi: 10.55982/openpraxis.15.4.609

Buyukozturk, S. (2010). Sosyal bilimler için veri analizi el kitabı [The data analysis handbook for social sciences]. Ankara: Pegem Publications.

Byrne, B. M. (2010). Structural equation modeling with AMOS: Basic concepts, applications and programming. New York, NY: Taylor & Francis.

Carpenter, S. (2018). Ten steps in scale development and reporting: a guide for researchers. Commun. Methods Meas. 12, 25–44. doi: 10.1080/19312458.2017.1396583

Castelli, M., and Manzoni, L. (2022). Generative models in artificial intelligence and their applications. Appl. Sci. 12:4127. doi: 10.3390/app12094127

Cattell, R. B. (1978). The scientific use of factor analysis in behavioral and life sciences. New York, NY: Plenum.

Chang, C. H., and Kidman, G. (2023). The rise of generative artificial intelligence (AI) language models-challenges and opportunities for geographical and environmental education. Int. Res. Geogr. Environ. Educ. 32, 85–89. doi: 10.1080/10382046.2023.2194036

Chassignol, M., Khoroshavin, A., Klímová, A., and Bilyatdinova, A. (2018). Artificial intelligence trends in education: a narrative overview. Proc. Comput. Sci. 136, 16–24. doi: 10.1016/j.procs.2018.08.233

Chen, B., and Zhu, X. (2023). Integrating generative AI in knowledge building. Comput. Educ. Artif. Intell. 5:100184. doi: 10.1016/j.caeai.2023.100184

Chen, L., Chen, P., and Lin, Z. (2020). Artificial intelligence in education: a review. IEEE Access 8, 75264–75278. doi: 10.1109/access.2020.2988510

Chong, L., Raina, A., Goucher-Lambert, K., Kotovsky, K., and Cagan, J. (2023). The evolution and impact of human confidence in artificial intelligence and in themselves on AI-assisted decision-making in design. J. Mech. Des. 145:031401. doi: 10.1115/1.4055123

Cokluk, O., Sekercioglu, G., and Buyukozturk, S. (2012). Sosyal bilimler için çok değişkenli istatistik: SPSS ve LISREL uygulamaları [multivariate statistics for the social sciences: SPSS and LISREL applications]. Ankara: Pegem Publication.

Cooper, G. (2023). Examining science education in ChatGPT: an exploratory study of generative artificial intelligence. J. Sci. Educ. Technol. 32, 444–452. doi: 10.1007/s10956-023-10039-y

Crawford, J., Cowling, M., and Allen, K. A. (2023). Leadership is needed for ethical ChatGPT: character, assessment, and learning using artificial intelligence (AI). J. Univ. Teach. Learn. Pract. 20, 1–7. doi: 10.53761/1.20.01.01

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. doi: 10.2307/249008

DeVon, H. A., Block, M. E., Moyle-Wright, P., Ernst, D. M., Hayden, S. J., Lazzara, D. J., et al. (2007). A psychometric toolbox for testing validity and reliability. J. Nurs. Scholarsh. 39, 155–164. doi: 10.1111/j.1547-5069.2007.00161.x

Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., et al. (2023). “So what if ChatGPT wrote it?” multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 71:102642. doi: 10.1016/j.ijinfomgt.2023.102642

Eagly, A. H., and Chaiken, S. (1993). The psychology of attitudes. Fort Worth, TX: Harcourt Brace Jovanovich College Publishers.

Elfeky, A. I. M. (2019). The effect of personal learning environments on participants’ higher order thinking skills and satisfaction. Innov. Educ. Teach. Int. 56, 505–516. doi: 10.1080/14703297.2018.1534601

Erkuş, H., and Terhorst, P. (2012). Variety of urban tourism development trajectories: Antalya, Amsterdam and Liverpool compared. Eur. Plan. Stud. 20, 665–683. doi: 10.1080/09654313.2012.665037

Gunuc, S., and Kuzu, A. (2015). Student engagement scale: development, reliability and validity. Assess. Eval. High. Educ. 40, 587–610. doi: 10.1080/02602938.2014.938019

Hair, J. F., Anderson, R. E., Tatham, R. L., and Grablowsky, B. J. (1979). Multivariate data analysis. Tulsa, OK: Pipe Books.

Harshvardhan, G. M., Gourisaria, M. K., Pandey, M., and Rautaray, S. S. (2020). A comprehensive survey and analysis of generative models in machine learning. Comput Sci Rev 38:100285. doi: 10.1016/j.cosrev.2020.100285

Hernández-Ramos, J. P., Martínez-Abad, F., Peñalvo, F. J. G., García, M. E. H., and Rodríguez-Conde, M. J. (2014). Teachers’ attitude regarding the use of ICT. A factor reliability and validity study. Comput. Hum. Behav. 31, 509–516. doi: 10.1016/j.chb.2013.04.039

Hinojo-Lucena, F., Díaz, I., Cáceres-Reche, M., and Rodríguez, J. (2019). Artificial intelligence in higher education: a bibliometric study on its impact in the scientific literature. Educ. Sci. 9:51. doi: 10.3390/educsci9010051

Hooper, D., Coughlan, J., and Mullen, M. R. (2008). Structural equation modelling: guidelines for determining model fit. Electron. J. Bus. Res. Methods 6, 53–60.

Hsu, Y. C., and Ching, Y. H. (2023). Generative artificial intelligence in education, Part One: The Dynamic Frontier. TechTrends 67, 603–607. doi: 10.1007/s11528-023-00863-9

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structural analysis: conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55. doi: 10.1080/10705519909540118

Illarionova, L. P., Karzhanova, N. V., Ishmuradova, A. M., Nazarenko, S. V., Korzhuev, A. V., and Ryazanova, E. L. (2021). Student attitude to distance education: pros and cons. Cypriot J. Educ. Sci. 16, 1319–1327. doi: 10.18844/cjes.v16i3.5857

Judi, H. M., Ashaari, N. S., Mohamed, H., and Wook, T. M. T. (2011). Students profile based on attitude towards statistics. Procedia Soc. Behav. Sci. 18, 266–272. doi: 10.1016/j.sbspro.2011.05.038

Kaya, F., Aydin, F., Schepman, A., Rodway, P., Yetişensoy, O., and Demir Kaya, M. (2022). The roles of personality traits, AI anxiety, and demographic factors in attitudes toward artificial intelligence. Int. J. Hum. Comput. Interact. 40, 497–514. doi: 10.1080/10447318.2022.2151730

Kline, R. B. (2005). Principles and practice of structural equation modeling. New York, NY: Guilford.

Korkmaz, O. (2012). A validity and reliability study of the online cooperative learning attitude scale (OCLAS). Comput. Educ. 59, 1162–1169. doi: 10.1016/j.compedu.2012.05.021

Küçük, S., Yilmaz, R., Baydas, Ö., and Göktas, Y. (2014). Augmented reality applications attitude scale in secondary schools: validity and reliability study. Educ. Sci. 39, 383–392. doi: 10.15390/EB.2014.3590

Li, Y., Sha, L., Yan, L., Lin, J., Raković, M., Galbraith, K., et al. (2023). Can large language models write reflectively. Comput. Educ. Artif. Intell. 4:100140. doi: 10.1016/j.caeai.2023.100140

Lim, W. M., Gunasekara, A., Pallant, J. L., Pallant, J. I., and Pechenkina, E. (2023). Generative AI and the future of education: Ragnarök or reformation? A paradoxical perspective from management educators. Int. J. Manag. Educ. 21:100790. doi: 10.1016/j.ijme.2023.100790

Lin, Z. (2023). Why and how to embrace AI such as ChatGPT in your academic life. R. Soc. Open Sci. 10:230658. doi: 10.1098/rsos.230658

Longobardi, C., Settanni, M., Lin, S., and Fabris, M. A. (2021). Student–teacher relationship quality and prosocial behaviour: The mediating role of academic achievement and a positive attitude towards school. Br. J. Educ. Psychol. 91, 547–562. doi: 10.1111/bjep.12378

Lukianets, H., and Lukianets, T. (2023). Promises and perils of AI use on the tertiary educational level. Grail Sci. 25, 306–311. doi: 10.36074/grail-of-science.17.03.2023.053

MacDonald, C. M., Rose, E. J., and Putnam, C. (2022). How, why, and with whom do user experience (UX) practitioners communicate? Implications for HCI education. Int. J. Hum. Comput. Interact. 38, 1422–1439. doi: 10.1080/10447318.2021.2002050

Mahanta, D. (2012). Achievement in mathematics: effect of gender and positive/negative attitude of students. Int. J. Theor. Appl. Sci. 4, 157–163.

Marengo, A., Pagano, A., Soomro, K., and Pange, J. (2023). The educational value of artificial intelligence in higher education: a ten-year systematic literature review. Interact. Technol. Smart Educ. 21, 625–644. doi: 10.1108/ITSE-11-2023-0218

Marengo, A., and Pange, J. (2024). Envisioning general AI in higher education: transforming learning paradigms and pedagogies. Lect. Notes Netw. Syst. 1150, 330–344. doi: 10.1007/978-3-031-72430-5_28

Mensah, J. K., Okyere, M., and Kuranchie, A. (2013). Student attitude towards mathematics and performance: does the teacher attitude matter. J. Educ. Pract. 4, 132–139.

Ng, D., Leung, J., Su, J., Ng, R., and Chu, S. (2023). Teachers’ AI digital competencies and twenty-first century skills in the post-pandemic world. Educ. Technol. Res. Dev. 71, 137–161. doi: 10.1007/s11423-023-10203-6

Noy, S., and Zhang, W. (2023). Experimental evidence on the productivity effects of generative artificial intelligence. Working paper, MIT, March 2, 2023. Available at: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4375283

Pallant, J. (2005). SPSS survival manual: a step by step guide to data analysis using SPSS for Windows. Sydney: Allen & Unwin.

Pataranutaporn, P., Danry, V., Leong, J., Punpongsanon, P., Novy, D., Maes, P., et al. (2021). AI-generated characters for supporting personalized learning and well-being. Nat. Mach. Intell. 3, 1013–1022. doi: 10.1038/s42256-021-00417-9

Popenici, S., and Kerr, S. (2017). Exploring the impact of artificial intelligence on teaching and learning in higher education. Res. Pract. Technol. Enhanc. Learn. 12:22. doi: 10.1186/s41039-017-0062-8

Qadir, J. (2023). Engineering education in the era of ChatGPT: promise and pitfalls of generative AI for education. In 2023 IEEE Global Engineering Education Conference (EDUCON), 1–9. IEEE.

Rogers, E. M. (2003). Diffusion of innovations. 5th Edn. New York, NY: Free Press.

Rudolph, J., Tan, S., and Tan, S. (2023). ChatGPT: bullshit spewer or the end of traditional assessments in higher education? J. Appl. Learn. Teach. 6, 1–22. doi: 10.37074/jalt.2023.6.1.23

Sanchez-Lengeling, B., and Aspuru-Guzik, A. (2018). Inverse molecular design using machine learning: generative models for matter engineering. Science 361, 360–365. doi: 10.1126/science.aat2663

Shui Ng, W., and Yu, G. (2021). Students’ attitude to peer assessment process: a critical factor for success. Interact. Learn. Environ. 31, 2967–2985. doi: 10.1080/10494820.2021.1916762

Soomro, K. A., Kale, U., Curtis, R., Akcaoglu, M., and Bernstein, M. (2018). Development of an instrument to measure Faculty’s information and communication technology access (FICTA). Educ. Inf. Technol. 23, 253–269. doi: 10.1007/s10639-017-9599-9

Tabachnick, B. G., and Fidell, L. S. (2001). Using multivariate statistics. 4th Edn. Boston, MA: Allyn & Bacon, Inc.

Tabachnick, B. G., and Fidell, L. S. (2007). Using multivariate statistics. 5th Edn. Allyn & Bacon/Pearson Education.

Tredinnick, L., and Laybats, C. (2023). The dangers of generative artificial intelligence. Bus. Inf. Rev. 40, 46–48. doi: 10.1177/02663821231183756

van der Linden, W., Bakx, A., Ros, A., Beijaard, D., and Vermeulen, M. (2012). Student teachers’ development of a positive attitude towards research and research knowledge and skills. Eur. J. Teach. Educ. 35, 401–419. doi: 10.1080/02619768.2011.643401

Venkatesh, V., Thong, J. Y. L., and Xu, X. (2023). Unified theory of acceptance and use of technology: a synthesis and the road ahead. J. Assoc. Inf. Syst. 24, 621–652.

Wang, K., Gou, C., Duan, Y., Lin, Y., Zheng, X., and Wang, F. Y. (2017). Generative adversarial networks: introduction and outlook. IEEE/CAA J. Autom. Sinica 4, 588–598. doi: 10.1109/JAS.2017.7510583

Yilmaz, R., and Karaoglan Yilmaz, F. G. (2023a). The effect of generative artificial intelligence (AI)-based tool use on students’ computational thinking skills, programming self-efficacy and motivation. Comput. Educ. Artif. Intell. 4:100147. doi: 10.1016/j.caeai.2023.100147

Yilmaz, R., and Karaoglan Yilmaz, F. G. (2023b). Augmented intelligence in programming learning: examining student views on the use of ChatGPT for programming learning. Comput. Hum. Behav. Artif. Hum. 1:100005. doi: 10.1016/j.chbah.2023.100005

You, Y., Chen, Y., You, Y., Zhang, Q., and Cao, Q. (2023). Evolutionary game analysis of artificial intelligence such as the generative pre-trained transformer in future education. Sustainability 15:9355. doi: 10.3390/su15129355

Yu, Y. (2023). Discussion on the reform of higher legal education in China based on the application and limitation of artificial intelligence in law represented by ChatGPT. J. Educ. Human. Soc. Sci. 14, 220–228. doi: 10.54097/ehss.v14i.8840

Zawacki-Richter, O., Marín, V., Bond, M., and Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education: where are the educators? Int. J. Educ. Technol. High. Educ. 16, 1–22. doi: 10.1186/s41239-019-0171-0

Keywords: generative artificial intelligence, human-computer interaction, student attitudes, educational technology, AI attitude

Citation: Marengo A, Karaoglan-Yilmaz FG, Yılmaz R and Ceylan M (2025) Development and validation of generative artificial intelligence attitude scale for students. Front. Comput. Sci. 7:1528455. doi: 10.3389/fcomp.2025.1528455

Received: 14 November 2024; Accepted: 10 February 2025;

Published: 25 February 2025.

Edited by:

Md Atiqur Rahman Ahad, University of East London, United Kingdom

Reviewed by:

Elizabeth A. Boyle, University of the West of Scotland, United Kingdom

Copyright © 2025 Marengo, Karaoglan-Yilmaz, Yılmaz and Ceylan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Agostino Marengo, agostino.marengo@unifg.it

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
