94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

REVIEW article

Front. Comput. Sci., 09 April 2025

Sec. Computer Vision

Volume 7 - 2025 | https://doi.org/10.3389/fcomp.2025.1437664

This article is part of the Research TopicFoundation Models for Healthcare: Innovations in Generative AI, Computer Vision, Language Models, and Multimodal SystemsView all 7 articles

Significant advancements in object detection have transformed our understanding of everyday applications. These developments have been successfully deployed in real-world scenarios, such as vision surveillance systems and autonomous vehicles. Object recognition technologies have evolved from traditional methods to sophisticated, modern approaches. Contemporary object detection systems, leveraging high accuracy and promising results, can identify objects of interest in images and videos. The ability of Convolutional Neural Networks (CNNs) to emulate human-like vision has garnered considerable attention. This study provides a comprehensive review and evaluation of CNN-based object detection techniques, emphasizing the advancements in deep learning that have significantly improved model performance. It analyzes 1-stage, 2-stage, and hybrid approaches for object recognition, localization, classification, and identification, focusing on CNN architecture, backbone design, and loss functions. The findings reveal that while 2-stage and hybrid methods achieve superior accuracy and detection precision, 1-stage methods offer faster processing and lower computational complexity, making them advantageous in specific real-time applications.

Access to information occurs through a diverse array of channels, encompassing both traditional and digital sources. Traditional sources include newspapers, magazines, television, radio, books, libraries, and billboards, while digital sources comprise websites, blogs, social media platforms, mobile applications, streaming services, and search engines. When individuals encounter visual stimuli such as advertisements or traffic signs, the ability to accurately identify the objects depicted and extract pertinent information is crucial. Effective information extraction guides individuals along appropriate pathways and mitigates the risks associated with confusion or misinformation that may lead to erroneous conclusions. Consequently, meticulous and precise extraction of information from images is of paramount importance in ensuring informed decision-making (Ardia et al., 2020).

Image detection represents an advanced computational technology that processes visual data to identify and locate specific objects within images. This methodology differs from image classification, which categorizes entire images without delineating object locations. Image detection focuses on recognizing the presence and spatial positioning of objects, often utilizing bounding boxes to indicate their locations within a given frame. The significance of image detection spans multiple domains due to its ability to automate and enhance processes that previously relied on human visual assessment. For instance, image detection improves operational efficiency in manufacturing quality control by enabling the rapid and accurate inspection of numerous components. In autonomous driving applications, it is essential for detecting pedestrians, traffic signs, and other vehicles, thereby ensuring safety on roadways (Dong et al., 2018). In healthcare settings, image detection is critical for identifying tumors or abnormalities in medical imaging, leading to improved diagnostic accuracy and timely interventions. Furthermore, surveillance systems leverage image detection technologies to monitor environments for unauthorized access or suspicious activities, thereby enhancing security measures. Therefore, image detection provides vital insights that enable systems to respond appropriately to their visual contexts, thereby highlighting its crucial role in contemporary technological applications and societal functions (Hammoudeh et al., 2022). This technology uses advanced machine learning and deep learning algorithms to improve safety across various domains by accurately detecting objects and their environments (Guan, 2017).

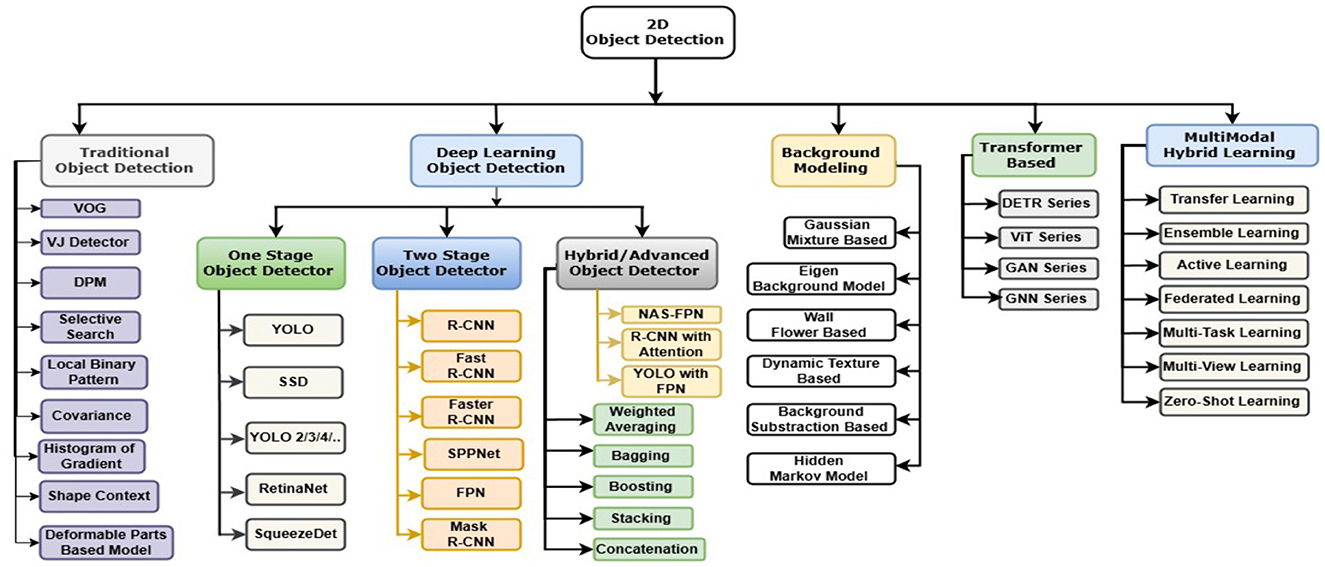

For a comprehensive understanding of visual data, the classification of images and object detection methodologies constitute critical paradigms in computer vision. The precise identification and spatial localization of objects within digital images or video streams enable a nuanced understanding of content across diverse computational applications. This fundamental interpretive framework encompasses multifaceted computational processes, including but not limited to object trajectory analysis, pose estimation, instance-level object segmentation, and sophisticated inventory management strategies (Dong et al., 2018; Hammoudeh et al., 2022; Dundar et al., 2016). Traditional object detection methodologies are characterized by their ability to operate without necessary historical training data, rendering them predominantly unsupervised. Seminal approaches such as the Viola-Jones algorithm (Viola and Jones, 2001; Li et al., 2012), the Scale-Invariant Feature Transform (SIFT) (Lowe, 1999), and histogram-based techniques (Freeman and Roth, 1995; Dalal and Triggs, 2005) exemplify this methodological category. Contemporary research, however, demonstrates the exponential efficacy of supervised learning paradigms leveraging sophisticated deep learning architectures, which have become predominant in real-world computational scenarios. Within this context, machine learning and advanced artificial intelligence techniques are strategically deployed to comprehend and interpret visual information (Hammoudeh et al., 2022). These sophisticated computational tools enable precise object localization and identification, finding critical applications in multidimensional domains such as intelligent traffic monitoring systems, comprehensive surveillance and navigation frameworks, and advanced biometric recognition technologies in smartphones and autonomous vehicular systems (Guan, 2017; Tang et al., 2023; He et al., 2015a). Figure 1 provides a schematic representation of the intricate object detection and classification methodological landscape.

The paradigmatic evolution of object detection methodologies is significantly driven by the sophisticated integration of advanced deep learning architectures with supervised learning algorithmic frameworks. This intricate symbiosis represents a pivotal mechanism for optimizing object detection methodologies, substantially augmenting the computational capacity to precisely identify and spatially localize objects within digital imagery and video streams. Contemporary deep learning neural networks, exemplified by Convolutional Neural Networks (CNN) (Sun, 2024), Region-based Convolutional Neural Networks (R-CNN) (Girshick et al., 2014a), You Only Look Once (YOLO) (Redmon et al., 2016), and Residual Networks (ResNet) (He et al., 2016b,a), have made transformative contributions to the field of computer vision. These advanced computational models demonstrate exceptional performance by strategically integrating multi-scale feature representations (Zhang et al., 2019) and iteratively refining candidate object bounding box delineations (Yao et al., 2022) during object identification processes. Neural network algorithmic frameworks, which systematically build upon established architectural paradigms (Dundar et al., 2016) and advanced learning systems, have initiated a global transformation in computational object detection capabilities (Hammoudeh et al., 2022; Guo et al., 2019). Despite these remarkable advancements, significant computational challenges remain in fully recognizing objects across heterogeneous imaging contexts, which include varying illumination conditions, diminutive object dimensions, partial occlusions, diverse viewing angles, complex poses, and varying spatial configurations. Consequently, the scholarly discourse has increasingly focused on object localization methodologies, with researchers actively seeking innovative solutions to address these inherent computational complexities (Guan, 2017). The primary scholarly objective is to achieve unprecedented accuracy in object identification through cutting-edge computational tools. To enable a comprehensive understanding, the conventional object detection framework can be systematically outlined in three fundamental computational stages: the strategic identification of salient informative regions, sophisticated feature extraction mechanisms, and subsequent probabilistic classification processes. This modular computational architecture significantly enhances object recognition capabilities by employing a rigorous, multi-stage approach to identifying and taxonomically classifying objects within digital imagery (Girshick et al., 2014b).

The computational process of object detection involves a sophisticated, multi-staged methodological framework, with each stage playing a crucial role in the precise identification and spatial localization of objects in digital imagery. Informative region selection represents the first computational phase, strategically focusing on identifying spatially salient regions with a high probabilistic likelihood of containing target objects. This critical stage is enabled through advanced computational methodologies such as selective search algorithms and Region Proposal Networks (RPNs), which generate candidate regions through a comprehensive analysis of image chromatic intensities, textural characteristics, and spatial configurations (Girshick et al., 2014a,b). Bounding box representations are systematically employed to outline these potential object zones, providing a precise cartographic representation of the object's spatial positioning within the digital image. The subsequent phase, feature extraction, involves the sophisticated retrieval and transformation of relevant computational data from the selected bounding box regions. Convolutional Neural Networks (CNNs) function as computational transformative mechanisms, converting raw image features into standardized representational matrices that enable the extraction of intricate spatial and hierarchical characteristics essential for effective object detection. Established computational techniques, including Scale-Invariant Feature Transform (SIFT) (Lowe, 1999), HOG (Dalal and Triggs, 2005), and Haar-like feature extraction methodologies (Cristianini and Shawe-Taylor, 2000), are strategically deployed during this computational phase to enhance feature representation and discriminative capabilities. The conclusive stage, Classification, involves the probabilistic assignment of taxonomical class labels to candidate object regions predicated upon the extracted computational attributes. This process entails identifying specific object categories such as anthropomorphic entities, vehicular structures, arboreal organisms, and urban infrastructural elements. The classification mechanism is realized through sophisticated classifiers embedded within fully connected neural network architectures, which leverage the extracted computational characteristics as input to determine the most probable taxonomical designation for each detected object. Canonical classification algorithms, including Support Vector Machines (SVM) (Cristianini and Shawe-Taylor, 2000; Awad et al., 2015), AdaBoost ensemble learning frameworks (Freund et al., 1999), and Deformable Part-based Model (DPM) networks (Viola and Jones, 2001), represent pivotal computational paradigms employed in this sophisticated classificatory process. These meticulously orchestrated computational stages collectively constitute a comprehensive and robust methodological framework for executing sophisticated object detection across diverse computational and visual analysis applications (Chhabra et al., 2024).

This observation highlights the critical need for improvement to increase the effectiveness of real-time object detection systems. Solutions tailored for hardware compatibility rely on discriminant feature descriptors that involve minimal computational overhead and shallow, easily trainable architectures. This is achieved by adopting a pragmatic methodology grounded in reality. However, these techniques may become less reliable when recognizing and predicting essential items is crucial. Finding the right balance between accuracy and efficiency remains essential for successfully using these methods. Deep learning approaches have seen significant advancements through a result-driven focus. In this study, we focus on object recognition methods using CNNs, which are renowned for their ability to replicate human visual intelligence. We examine one-stage, two-stage, and hybrid approaches to image recognition, localization, classification, and identification to gain a better understanding of the methodologies used by CNN-based object detection systems. We illustrate the benefits of two-stage and hybrid methods regarding accuracy and detection precision while acknowledging the effectiveness of one-stage methods concerning processing speed and computational simplicity. This analysis considers the architecture, backbone structure, and performance metrics of these approaches, emphasizing the need to strike a balance between accuracy, efficiency, and resource usage. Our review aims to facilitate informed decisions when designing and implementing CNN-based object identification systems (Zhao et al., 2024; Aggarwal and Kumar, 2021).

This study focuses on a brief discussion of object detection techniques based on CNN. It begins with key milestones illustrating the developmental process, then dives into several deep-learning object detection techniques utilizing a variety of benchmark datasets. The study explores the hierarchical growth of CNN-based detection strategies, focusing on the architecture of deep-learning CNN models for both one-stage and two-stage object detection. Additionally, it compares approaches based on computational cost, time efficiency, accuracy, algorithmic adaptability, and significance within and across detection stages for both CNN-based generic and salient object detection architectures. The “Challenges and Future Opportunities” section discusses potential ways to overcome the existing obstacles in object detection, while the 'Conclusion and Future Works' section summarizes the study's conclusions and provides guidance for future research directions aimed at advancing CNN-based object detection methodologies.

Detecting objects in images is a crucial step in the transition from hand-crafted templates to advanced deep learning models. Initially, there was a template-matching approach where image patches were compared to predefined templates. Subsequently, the idea of designing manual features emerged, such as edges, colors, and textures, which were developed for object identification. After this period, the use of statistical methods for object recognition gained popularity, which proved valuable in certain applications as well (Zou et al., 2023). HOG (Dalal and Triggs, 2005), and SIFT (Lowe, 1999) were prominent during this time. HOG divides an image into blocks to calculate gradients, subsequently combining these blocks with adjacent ones to produce gradient orientation histograms that capture light and maintain invariance over broader areas. While this method is effective for low-cost geometric modifications and varying lighting, it is less effective in identifying small objects or multiple objects within the same image. On the other hand, SIFT examines an object's surrounding areas and spatial context, using edge detection or Laplacian filtering to identify unique key points. The construction of SIFT descriptors relies on histogram computation, with the Gaussian window defining core regions, while key-point matching is performed using Euclidean distance. SIFT provides robustness by carefully selecting key points that generate descriptors, but it is susceptible to issues such as occlusion and background clutter. Therefore, it must be used with caution.

The early manual methods that relied on designed elements such as colors and textures were constrained and rigid; therefore, improvements were required. Furthermore, obstacles to accurate object identification within images include overfitting, which arises from issues with training data for algorithms, such as large datasets and computational resources mentioned by Chen et al. (2017). Deep learning became more famous for overcoming these limitations after 2006 (Zou et al., 2023; Elgendy, 2020), as it fully harnesses the extensive learning capacity of a network structure. Thus, after 2010, there was a revolution in deep learning methods, marking a pivotal shift toward robust convolutional neural networks (CNNs). CNNs utilize deep learning-enabled features to learn complex patterns from massive data, delivering significant accuracy directly. The techniques employed, such as AlexNet (Krizhevsky et al., 2017), GoogleNet (Szegedy et al., 2016), and VGGNet (Simonyan and Zisserman, 2014), in the two-stage detectors, such as R-CNN (Girshick et al., 2014a), Fast R-CNN (Girshick, 2015), and Faster R-CNN (Ren et al., 2015), were enhanced to improve accuracy and performance, making them possible to work in real-time applications. The milestone chart shown in Figure 2 presents the development year alongside the corresponding architecture name. As deep learning approaches expand their applicability in the real world, they diversify techniques that address real challenges without being constrained by their previous limitations. These diverse fields include autonomous vehicles, robotics, medical imaging, security, and more. Advanced deep learning methods revolutionize object detection techniques, ushering in a new era of possibilities. However, some challenges remain to unlock its full potential (Elgendy, 2020).

Deep Neural Networks (DNNs) are computational models inspired by the human brain, characterized by multiple interconnected layers that excel at capturing complex data patterns. These hidden layers enable hierarchical learning of intricate relationships within data, making DNNs powerful tools for addressing diverse and challenging tasks. Neurons in these layers dynamically adjust weights and biases during training to improve feature abstraction. Discrepancies between actual and predicted values are minimized through gradient descent optimization, ensuring improved performance (Krizhevsky et al., 2012).

Various types of DNNs have been developed for specific applications: Feedforward Neural Networks (FNN) are commonly utilized for identification and recognition tasks (Ben Braiek and Khomh, 2023); Convolutional Neural Networks (CNN) excel at image processing tasks (Sun, 2024); Recurrent Neural Networks (RNNs) are primarily used for time series data (Girshick et al., 2014a); Long Short-Term Memory (LSTM) networks address issues related to vanishing gradients in longer sequences (Hochreiter and Schmidhuber, 1997); and Transformer Networks have gained prominence in natural language processing tasks.

CNNs specifically target image recognition and processing. In this context, models are trained using labeled datasets, enabling them to effectively extract relevant information from test images. The feature map technique highlights detected features and serves as input for subsequent layers that progressively build a hierarchical image representation. The fully connected layer then integrates features from earlier layers, mapping them to specific classes and playing a crucial role in image classification (Elgendy, 2020; Zou et al., 2023).

Region-based CNNs (R-CNN) (Girshick et al., 2014a) combine the strengths of CNNs with region-based approaches, significantly enhancing object detection accuracy. Initially, R-CNN segments an image into multiple proposals that may contain objects. A CNN then processes these regions for feature extraction and classification, further refining the final classification through this process. Key variants include Fast R-CNN (Girshick, 2015), which improves processing speed by sharing convolutional features across regions, and Faster R-CNN (Ren et al., 2018), which directly generates proposals to enhance both speed and accuracy. Additionally, Mask R-CNN (He et al., 2017) extends this framework to predict object masks for segmentation tasks. LSTM networks (Hochreiter and Schmidhuber, 1997) enhance learning dependencies within sequential data, thereby improving performance on time series tasks through a network-controlling mechanism that regulates information flow (Amjoud and Amrouch, 2023).

In the realm of object detection, both generic object detection (Girshick et al., 2014b) and salient object detection (Liu et al., 2015; Vig et al., 2014) methodologies aim to identify and understand objects within images. Generic object detection relies on deep learning models to meticulously detect objects of varying sizes when trained on labeled datasets; this includes applications such as pedestrian and traffic sign recognition in autonomous vehicles. Conversely, salient object detection mimics human attention by prioritizing visually distinct objects based on contrast and spatial arrangement attributes. This approach enhances tasks such as robust vision, image compression, and segmentation by emphasizing captivating elements within an image. Implementing these complex algorithms requires extensive training on large datasets to continually improve object detection capabilities. Consequently, deep learning methodologies have revolutionized image editing techniques and significantly impacted various fields reliant on image classification and object detection (Zhao et al., 2019).

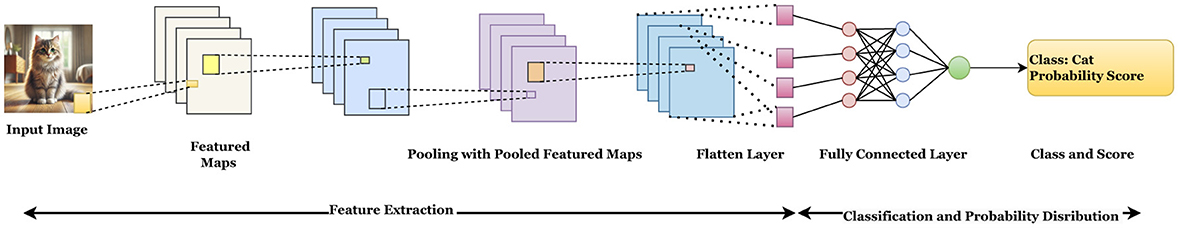

CNNs represent a powerful deep learning architecture commonly used in various domains, including artificial intelligence, natural language processing, computer vision, and autonomous vehicles. As a dependable foundation for image recognition and analysis, CNNs utilize a structured layer arrangement that collaboratively processes and extracts meaningful information from input data, particularly images. As illustrated in Figure 3, the architecture encompasses several key components: the input layer, pooling layers, convolutional layers, and fully connected layers, each playing a role in extracting abstract features from the image while facilitating sophisticated data interpretation and analysis. This intricate interplay of layers enhances the model's capability to identify complex patterns, positioning CNNs at the forefront of technological advancements in visual recognition tasks.

Figure 3. Convolution neural network basic architecture (Buckner, 2019).

The input layer is the gateway that receives raw data, establishing the foundation for subsequent network processing. The input data can encompass various categories based on availability and specific requirements. This may include image data in a 3D map format with pixel values indicating width and height, time series data such as stock market values in a 2D format corresponding to time steps, or textual data fed into the network for desired outputs. Pre-processing steps, including normalization, are crucial for preparing the data to ensure it aligns with the network's processing capabilities. The primary role of the input layer is to enable meaningful insights derived from the transfer of data during the input stage.

The convolutional layer is a fundamental component where most computations occur. It employs small grids, filters, or kernels to detect specific patterns such as lines, curves, or shapes within the receptive field. This multi-layered architecture of convolutional layers progressively interprets the visual information embedded in raw image data. To detect complex patterns and objects, each successive layer extracts feature maps that inform the deeper layers of the network.

Pooling layers generate summary statistics for adjacent layers by downsampling data while retaining essential information. This process enhances object detection capabilities by providing invariance to rotations and translations. Additionally, pooling reduces memory consumption, manages computational costs and weights, and mitigates overfitting. The most common pooling methods include max pooling and average pooling. In max pooling, the highest value within a specified region of the input feature is selected as the output for that region, thereby emphasizing prominent features. Conversely, average pooling calculates the average value from a specific region of the input feature map to produce a smoother representation of features within that region. This approach aids in locating objects in images while considering their overall appearance.

Activation layers are critical components that enable CNNs to learn non-linear transformations of complex patterns for object detection tasks. These layers capture subtle relationships between features, leading to advanced models with enhanced generalization capabilities. As illustrated in Table 1, popular activation functions include ReLU (Fukushima, 1975), Tanh (Hereman and Malfliet, 2005), Leaky ReLU (Bai, 2022), ELU (Clevert et al., 2015), Sigmoid (Ramachandran et al., 2017), and SELU (Zhu et al., 2023), each with unique characteristics (Nwankpa et al., 2018). By leveraging diverse activation functions, CNNs can effectively address more challenging object detection tasks while retaining resilience and adaptability. These activation layers are essential for enhancing the network's ability to recognize intricate patterns and generate accurate predictions, ultimately improving performance in object detection by modeling and interpreting complex relationships within the data.

Fully Connected Layers (FCL) are integral components of CNNs, designed to connect neurons across different layers. Comprising neurons, weights, and biases, these dense layers serve as essential mechanisms that transform extracted information into a format that can be meaningfully interpreted. The FCL facilitates complex information sharing by integrating features according to the specific nature of the task, whether classification or regression. Ultimately, a single neuron representing the expected output emerges as the final result of a fully connected layer. The structure and functionality of FCLs are illustrated in Figure 3. Fully connected layers enhance the network's ability to comprehend intricate patterns by acting as a bridge between feature extraction and decision-making. The close interconnectivity of neurons within these layers enables CNNs to excel in object detection tasks, effectively synthesizing information from various features to inform predictions (Alzubaidi et al., 2021).

The backbone architecture of a neural network is its fundamental structure, forming the basis for models, particularly in deep learning applications utilized for tasks such as image processing. The core of CNNs is composed of layers designed for hierarchical feature extraction. These layers may include pooling, normalization, and convolutions. The backbone architecture captures precise representations of incoming data as it moves through the system, enhancing the network's capability to understand and handle complex information. CNNs employ several well-known backbone networks, including AlexNet (Krizhevsky et al., 2017), VGGNet (Simonyan and Zisserman, 2014), ResNet (Residual Networks) (Choi et al., 2018), InceptionNet(GoogLeNet) (Szegedy et al., 2016), MobileNet (Sandler et al., 2018), and DenseNet (Huang et al., 2017).

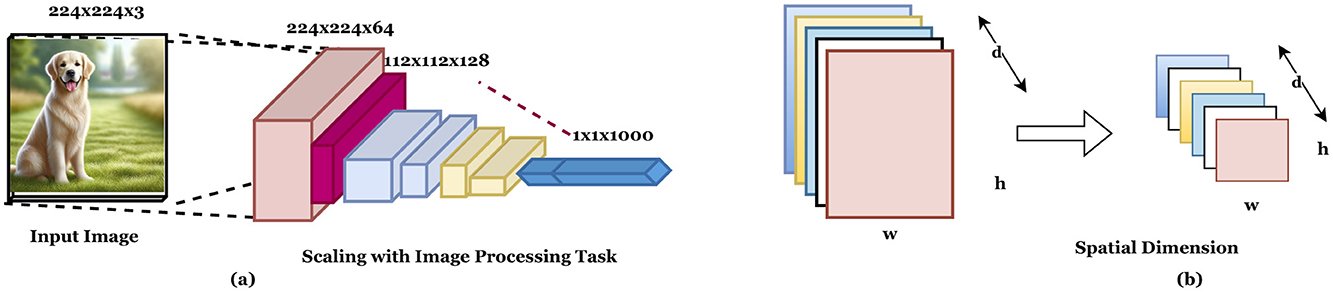

Simonyan and Zisserman (2014) proposed the VGG architecture by significantly enhancing traditional CNN models. This refined design achieved an impressive top-5 accuracy of 92.7% on the widely recognized ImageNet benchmark dataset, demonstrating its effectiveness in large-scale image classification tasks. A general diagram is shown in Figure 4. A key innovation of the VGG architecture is the consistent use of 3x3 convolutional filters throughout the network, reducing the overall parameter count and ensuring architectural simplicity and coherence while maintaining the ability to capture intricate features. The authors presented two variants of this architecture, namely VGG16 and VGG19, which comprise 16 and 19 layers of deep neural networks, respectively (Nash et al., 2018).

Figure 4. Key components of deep learning architectures: (a) the VGG architecture (Grimaldi et al., 2018) and (b) Global Average Pooling (Zhang et al., 2020). These architectures are fundamental to the design and implementation of deep neural networks in computer vision and image recognition, balancing readability with the technical details essential for understanding their significance.

A groundbreaking deep learning architecture, InceptionNet, also known as GoogLeNet, was introduced by Szegedy et al. (2016). This architecture addressed a critical bottleneck in traditional models by allowing images of varying resolutions to be fed directly into the network without extensive preprocessing. Designed with computational efficiency in mind, InceptionNet achieves superior performance in image classification tasks while optimizing resource utilization. A defining feature of this architecture is the introduction of inception modules, which integrate multi-scale convolutions within a single layer and concatenate their outputs. This approach facilitates the effective capture of local and global features, enhancing the network's ability to learn complex representations. Furthermore, compared to conventional deep neural networks, InceptionNet significantly reduces the number of parameters while maintaining state-of-the-art accuracy, making it both innovative and efficient.

The Residual Network (ResNet) architecture revolutionized deep learning by addressing the challenges associated with training complex neural networks. ResNet incorporates residual connections, also known as skip connections, which allow the direct flow of information and gradients between layers. This innovative approach effectively mitigates the vanishing gradient problem, a common issue in deep networks, and facilitates training exceptionally deep architectures comprising hundreds or even thousands of layers. ResNet models, such as ResNet-50, ResNet-101, and ResNet-152, are available in varying depths, with the numbers indicating the total layers in the network. These architectures have demonstrated state-of-the-art performance across various computer vision tasks, including image classification, object detection, and segmentation, establishing ResNet as a foundational model in deep learning research (Choi et al., 2018).

The output layer is the final decision-making component in object detection with CNNs, providing results after thorough data processing and analysis. It adapts to the specific requirements of regression or classification tasks. In classification scenarios, for instance, the output layer may consist of individual neurons corresponding to different classes, estimating the probability that an input belongs to each category. Conversely, in regression tasks, a single neuron may represent the predicted value or utilize an activation function to convey the learned prediction, with evaluation metrics such as mean squared error or absolute error assessing accuracy. As a critical element of the neural network architecture, the output layer embodies the system's capability to accurately detect and classify objects based on predefined criteria, ensuring optimal performance in object detection tasks and reflecting the complexity of the problems addressed.

Generic object detection techniques are recognized for their adaptability and versatility in the realm of CNN-based object detection. These methods can detect and classify a wide range of objects in images, including those that do not fit into predefined categories. They effectively identify and classify objects in complex visual environments by generating bounding boxes that outline object locations and provide confidence scores for their detection. A key feature of generic object detection is its ability to function without prior knowledge of specific object categories, relying instead on universal attributes such as color, shape, texture, and edge patterns to facilitate detection. The field includes various methodologies tailored to different requirements and scenarios, integrating advanced strategies to address varying challenges. These techniques achieve accurate and comprehensive object detection through innovative algorithms and robust feature extraction processes, even in intricate and unstructured visual data. As the field evolves, researchers continue to explore novel approaches to feature extraction and detection strategies, enhancing the efficiency, accuracy, and applicability of generic object detection techniques in real-world applications.

The One-Stage framework represents a regression-based approach designed to prioritize speed by predicting object attributes directly, eliminating the need for a separate region proposal step. This architecture aims to simultaneously predict object locations and bounding boxes in a single forward pass through the network, making it particularly suitable for real-time applications. However, the challenge of accurately detecting objects in a single pass often hinders its ability to achieve the same level of precision as more complex frameworks. Prominent implementations of the One-Stage framework include Single Shot Multibox Detectors (SSD) (Erhan et al., 2014; Van de Sande et al., 2011), You Only Look Once (YOLO) (Redmon et al., 2016), AttentionNet (Yoo et al., 2015), G-CNN (Najibi et al., 2016), and Differentiable Single Shot Detector (DSSD) (Fu et al., 2017). These models have garnered widespread popularity due to their ability to streamline object detection tasks and deliver rapid inference times, making them ideal for scenarios where computational efficiency is critical. Despite their speed advantages, substantial research is underway to enhance their accuracy while maintaining efficiency. As a result, the One-Stage framework remains an active and significant area of exploration in CNN-based object detection, offering both practical applications and opportunities for further innovation.

The Two-Stage framework employs a region-based approach, operating in two distinct stages, making it particularly effective for accurately handling objects with complex shapes and varying poses. In the first stage, the framework identifies potential object regions within the image. The second stage refines the bounding boxes and classifies the objects within these proposed regions. Compared to the One-Stage framework, the Two-Stage approach consistently achieves higher accuracy, although it comes at the cost of increased computational complexity and longer inference times. Prominent implementations of the Two-Stage framework include R-CNN (Girshick et al., 2014a), SPPnet (He et al., 2015a), Fast R-CNN (Girshick, 2015), Faster R-CNN (Ren et al., 2015), R-FCN (Dai et al., 2016), and Mask R-CNN (He et al., 2017). The evolution of this framework has been marked by several key innovations. R-CNN introduced the concept of a region proposal phase, enabling the detection of potential object locations, while SPPnet incorporated spatial pyramid pooling to effectively handle objects of varying scales. Faster R-CNN enhanced this approach by introducing the Region Proposal Network (RPN), which generates region proposals more efficiently. Fast R-CNN improved upon R-CNN by sharing convolutional features across proposals, which significantly reduces computational overhead. R-FCN introduced position-sensitive score maps, allowing for more precise localization and classification, while Mask R-CNN extended Faster R-CNN by adding instance segmentation capabilities. Despite its superior accuracy, the Two-Stage framework's computational demands and extended processing time render it less suitable for real-time applications. However, it remains a pivotal area of research in object detection using CNNs. Ongoing advancements aim to balance the framework's high accuracy with the need for improved computational efficiency, ensuring its continued relevance in academic and applied settings (Shah and Tembhurne, 2023). The simple classification diagram is shown in Figure 5.

Figure 5. Classification of object detection techniques. The chart offers a comprehensive overview of various object detection techniques in 2D computer vision, detailing the different algorithms and models within each category.

The goal of the hybrid approach is to balance speed, computational complexity, and accuracy by combining elements of both one-stage and two-stage frameworks. This strategy enables the creation of object detection systems that leverage the advantages of both methods, maximizing their respective strengths. NAS-FPN (Ghiasi et al., 2019) is a well-known hybrid approach that uses multi-scale representations to enhance object detection at various scales. Other implementations of the hybrid approach use the focal loss technique to address class imbalance issues commonly encountered in object detection applications, such as Mask R-CNN with attention and YOLO with FPN. Several R-CNN variants also adopt a hybrid approach to enhance performance, integrating components of both one-stage and two-stage frameworks. The capability of hybrid approaches to capitalize on the benefits of both frameworks while addressing their limitations has contributed to their increasing popularity in recent years.

The objects in the image can be recognized quickly and efficiently using single-stage object detection techniques. Single-stage detection methods, such as SSD (Elgendy, 2020) and the YOLO series, predict an object's approximate bounding box in a single neural network run, enabling quick and effective object recognition. Although this comes at the expense of lower accuracy rates, these methods demonstrate excellent reliability compared to two-stage detectors. Typically, greater accuracy is achieved in identifying larger items compared to smaller and closely spaced objects. Later iterations of YOLO (Redmon et al., 2016), such as YOLOv2 (Redmon and Farhadi, 2017), YOLOv3 (Redmon and Farhadi, 2018), and others, have successfully incorporated deeper neural networks to enhance performance. This modification has yielded more effective results while maintaining strong accuracy and low processing complexity. An in-depth examination of the YOLO and SSD architectures, along with their comparative outcomes across various datasets, will illuminate their advantages and disadvantages. These investigations contribute to a better understanding of the practical performance of these one-stage detection methods. Single-stage detection methods hold promise in applications requiring fast response times as they provide a trade-off between speed and accuracy. Further improvements in these methods will lead to even higher efficiency and accuracy.

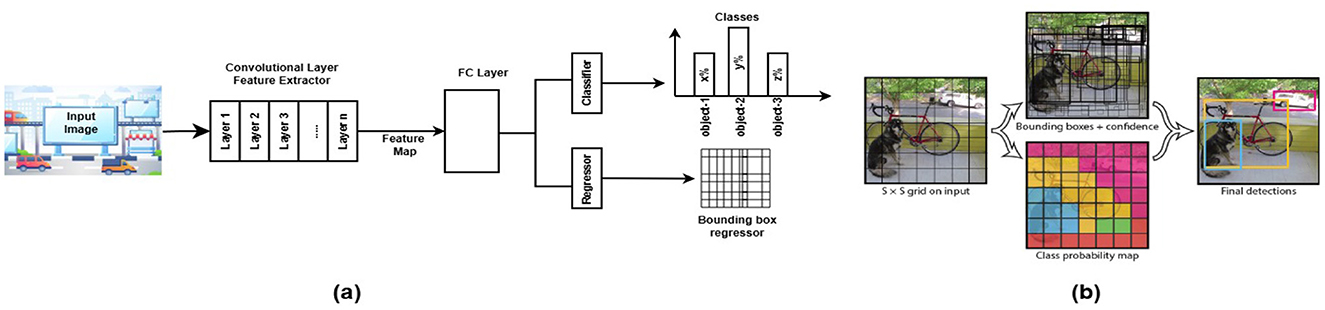

Redmon et al. (2016) proposed a unique and improved single-stage detector called YOLO for object detection and image verification. YOLO enhances object recognition by combining high-level feature mapping with a reliable evaluation of various item categories, resulting in precise predictions represented in bounding boxes, as shown in Figure 6a. This innovative design, shown in Figure 6b, divides the input image into SxS cells using a grid-based method, with each cell providing bounding box features and essential confidence scores. The probability of an object's presence and the accuracy of the bounding box location are carefully combined to yield the confidence score. Pr (project)=1 indicates the target object's presence, while Pr(object)=0 indicates its absence. YOLO ensures substantial accuracy in object localization evaluations using the Intersection over Union (IoU) metric, a crucial measure of alignment between the actual and predicted boxes. The method of calculating confidence involves a rigorous multiplication of dimensions (x, y, w, h), which represent the size and position of the bounding box. This illustrates YOLO's commitment to precision and effectiveness in recognizing object tasks (Redmon et al., 2016).

Figure 6. General block diagram of the one-stage (regression based) networks object detection model. It provides how detections are formed by merging bounding box predictions with class probabilities. (a) Highlights the core modules of single-stage approach and (b) data flow of theYOLO V1 object detection framework (Redmon et al., 2016).

Redmon and Farhadi (2017) developed YOLOv2 in 2017 based on the foundation of YOLOv1. The authors aim to enhance both the speed and accuracy of object detection in a real-time system. The concept of anchor boxes and predefined boxes of varying sizes and aspect ratios is introduced to better estimate the location of objects in the image. Additionally, it randomly resizes input images during the training phase to improve the network's capacity to detect objects at different scales. In contrast to YOLOv1, which relies solely on down-sampling to bolster the high-resolution classifier for small object detection, YOLOv2 utilizes multiple layers to transmit high-resolution features to the detector.

With the release of YOLOv3 (Redmon and Farhadi, 2018), real-time object identification has significantly advanced, showcasing impressive improvements in both speed and accuracy. To improve feature extraction performance and mitigate deterioration in deeper neural networks, a robust Darknet-53 architecture is implemented, consisting of 53 convolutional layers with residual connections. The multi-class probabilistic classifier, featuring independent classes and pyramidal forecasts, integrated into YOLOv3's innovative design, revolutionizes object recognition precision. It improves bounding box creation by applying distance penalties using an aggregated intersection over union technique, further improving the model's ability to accurately locate objects within images. Due to its speed and accuracy, YOLOv3 is one of the top choices for real-time object detection applications, such as detection systems and automated vehicles. It can identify objects at large scales and aspect ratios with minimal processing overhead, making it an effective tool for resource-constrained object detection tasks. Its versatile detection capabilities and economical approach enhance its usability.

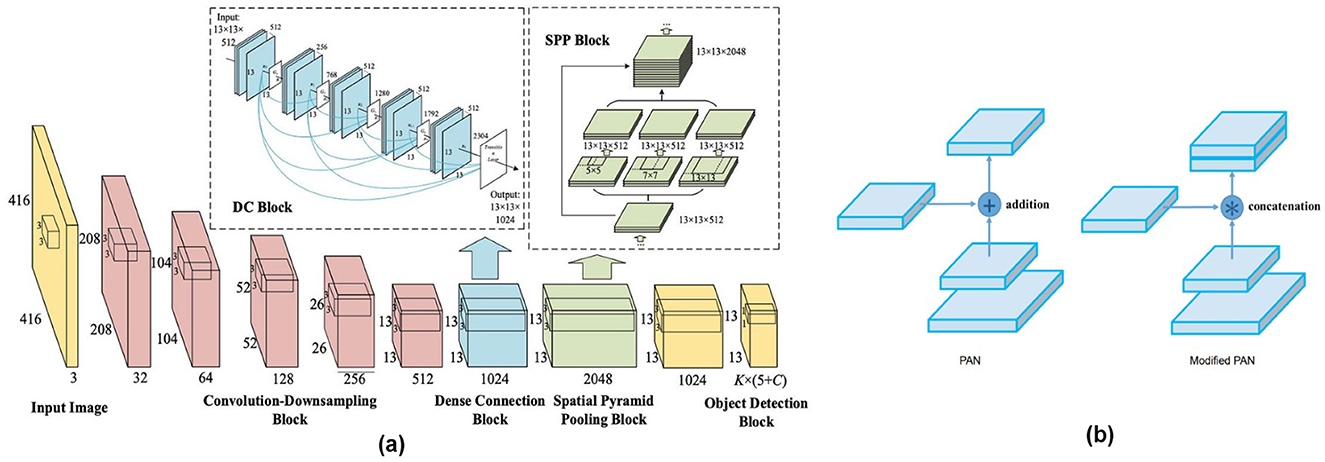

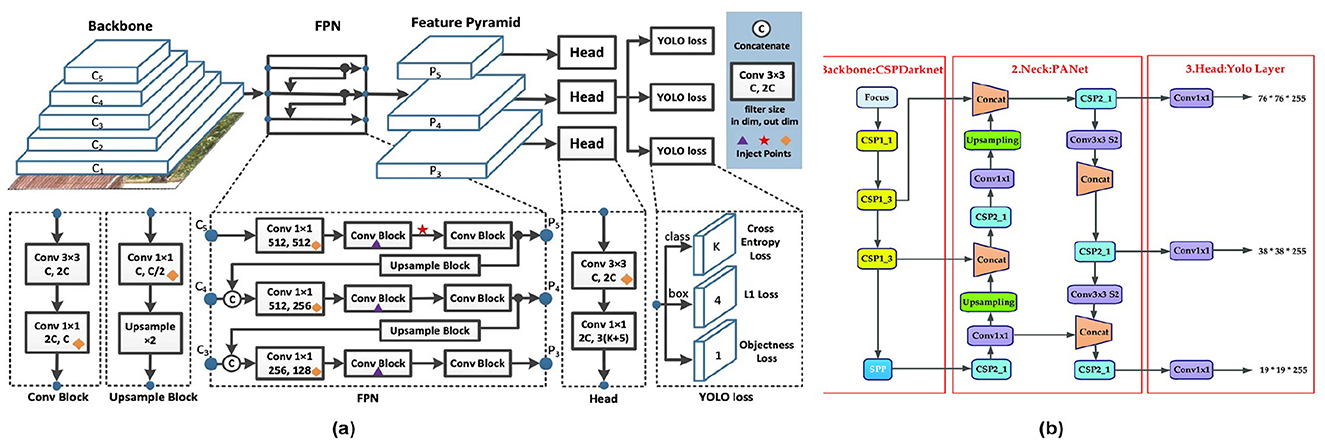

Several significant enhancements are included in YOLOv4 (Bochkovskiy et al., 2020) compared to the earlier version for improving object detection performance. First, CSPDarknet53, a more effective backbone, extracts rich features while maintaining a lower computational load. Second, a smoother activation function ensures improved gradient flow and training stability. Thirdly, by focusing on key points and combining data from different sizes, the Spatial Attention Module and Path Aggregation Network improve feature representation. In addition, different anchor boxes and optimized loss functions address variations in object size and enhance localization; a focus method prioritizes high-confidence objects during inference, improving real-time performance. Figure 7 is the representation of DC-SPP in YOLOv4. It highlights its use of spatial pyramid pooling with dilated convolutions to enhance receptive fields and capture multi-scale features for robust object detection. The main enhancements of YOLOv5's (Jocher et al., 2021) small object detection are responsible for its success. Initially, prioritizing areas with high feature values, the layer-focusing technique enables the model to efficiently allocate processing power and provide sharper, more accurate detection, especially for smaller objects. Second, the model's ability to identify and locate small objects, which may appear dim or fuzzy at a single scale, is improved by the "Multi-Scale Feature Fusion" technique, which effectively merges information from various feature maps generated at multiple scales. These improvements highlight YOLOv5's dedication to addressing the difficulties related to recognizing small objects in the object detection domain, significantly improving its overall accuracy, particularly in small object identification. Figure 8a presents the PP-YOLO object detection network, visually illustrating its architecture and showcasing key components such as the backbone, feature pyramid, detection head, and post-processing steps for efficient object detection. Figure 8b presents the architecture of the YOLOv5 object detection model.

Figure 7. Architectural components of the YOLOv4 object detection model (a) General representation of DC-SPP used in YOLOv4 Model (Huang et al., 2020) and (b) Path aggregation network of YOLOv4 (Bochkovskiy et al., 2020).

Figure 8. Architecture of the YOLOv5 and higher object detection model (a) Graphical depiction of the PP-YOLO object detection network (Long et al., 2020) and (b) Frameworks description of YOLOv5 (Jocher et al., 2021).

The new versions of the object detection model, YOLOv6 (Li et al., 2022) and YOLOv7 (Wang et al., 2023), not only predict objects but also estimate their poses. One of the key features of YOLOv6 and YOLOv7 is their ability to forecast an object's presence and pose. This means that the models provide detailed information about the detected objects, making them essential for applications that require a thorough understanding of the spatial orientation of objects within an image. In particular, YOLOv7 incorporates advanced techniques such as position encoding, level smoothing, and data augmentation. These improvements result in more accurate and versatile object identification systems by improving the models' ability to manage real-world data, reducing noise, and enhancing spatial understanding.

YOLOv8 (Reis et al., 2023) primarily focuses on pose estimation through image segmentation. Higher versions of YOLO represent a continual development process, offering improved accuracy, better identification of small objects, enhanced pose detection, and more accurate detection of cropped images. The table below summarizes the different versions of YOLO, the architecture used, the techniques implemented during development by the respective authors, and their performance evaluated on various standard datasets. Detection accuracy, computational time, and complexity with resources are the key factors distinguishing the different YOLO versions.

YOLOv9 (Wang and Liao, 2024) introduced feedback initialization, attention-based modules, and improved feature pyramids, enhancing the detection of small and distant objects while optimizing multi-scale feature learning for faster, more robust inference. Building on this foundation, YOLOv10 (Wang et al., 2024) incorporated dynamic task prioritization and transformer-based feature extraction, improving the management of complex object interactions and strengthening robustness in challenging scenarios such as occlusions. YOLOv11 (Jocher and Qiu, 2024) further advanced the detection pipeline by implementing cross-domain learning, refining loss functions for better localization, and applying knowledge distillation techniques, enabling efficient training with limited data. Additionally, YOLOv11 achieved state-of-the-art performance with reduced computational overhead, making it highly effective for edge and real-time applications. Collectively, these developments illustrate a trajectory of innovation focused on enhanced feature extraction, robustness, and computational efficiency, positioning YOLOv11 as a versatile model for diverse detection tasks. Table 2 provides a comprehensive overview of various YOLO versions, detailing their advancements in one-stage object detection techniques. It highlights key aspects such as backbone architecture, loss functions, datasets used, and accuracy metrics across different iterations from YOLOv1 to YOLOv10.

Liu et al. (2016) introduced the SSD concept in 2015, utilizing a CNN as the backbone architecture for object detection. SSD enables fast object classification and localization in a single forward path. It employs a set of predefined boxes called “anchor boxes” with different sizes and aspect ratios to detect objects at different locations in an image. The network attempts to predict offsets and adjusts these anchor boxes to accurately fix detected objects according to their size and location using the deep learning techniques. For each object class, the anchor box receives a confidence score to indicate the likelihood of the presence of an object within the box. SSD architecture is divided into two major parts: firstly, the backbone model, a pre-trained classification model, which is a feature map extractor, and secondly, the SSD head, which is moved to the top of the backbone model. This SSD head will provide the bounding box as output over any detected object, resulting in a fast and efficient object detection model. Compared to YOLO, where the object detection method is used to run on different layers at different scales, SS and D run only on the top layers. Similarly, relying on the COCO7 dataset, the tiny SSD has performed better with reliability than the tiny SSD (Womg et al., 2018).

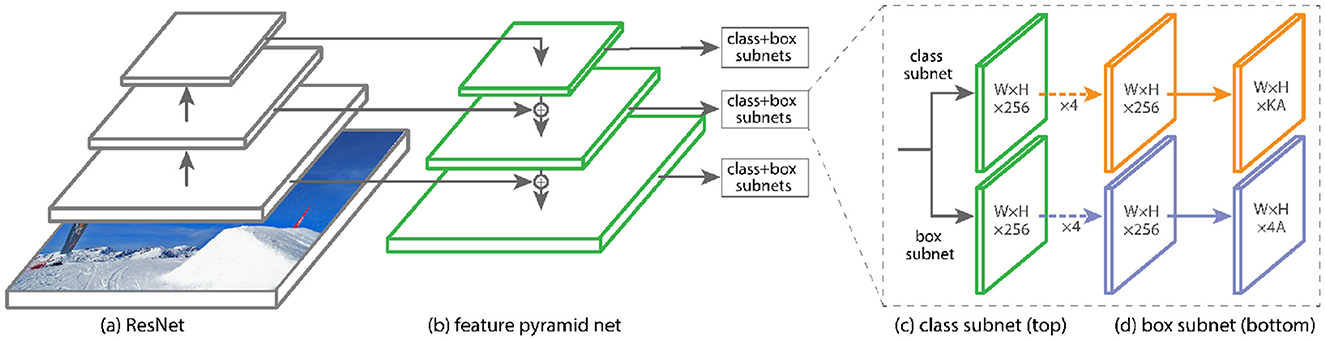

Lin et al. (2017b) revolutionized object detection by introducing a groundbreaking concept that enhances both accuracy and efficiency through a novel loss function. Rather than using the traditional cross-entropy loss, they proposed the Focal Loss function, which is specifically designed to address the challenges associated with class imbalance during training (Lin et al., 2017b). This innovation allows the single-stage RetinaNet object detector to achieve exceptional accuracy, particularly for small and densely packed objects in images. The model employs a robust backbone network architecture along with two specialized subnetworks, which operate seamlessly at multiple scales to detect objects with precision. The backbone processes input images of varying sizes to compute convolutional feature maps, while the subnetworks manage object classification: one is embedded within the backbone for feature extraction, and the other focuses on the bounding boxes of detected objects. Collectively, these components synergistically improve detection performance within this single-stage framework.

RetinaNet incorporates two pivotal upgrades: Focal Loss and Feature Pyramid Networks (FPN), which redefine its capabilities. Focal loss mitigates the impact of class imbalance caused by the prevalence of background classes or numerous anchor boxes, effectively diminishing the loss contributions of easy-to-classify samples while focusing on more complex cases. Meanwhile, by leveraging a multi-scale feature extraction strategy, FPN enables RetinaNet to excel across varying object scales. Constructing an image pyramid captures critical features at different layers, allowing for precise detection of objects regardless of size. However, the convolutional process within the CNN architecture naturally reduces feature map sizes at deeper layers, forming a hierarchical, pyramid-like structure that is ideal for multi-scale detection. Figure 9 provides a detailed illustration of the RetinaNet architecture, showcasing its innovative design.

Figure 9. Object detection using RetinaNet (Lin et al., 2017b).

In addition to YOLO, SSD, and RetinaNet, other similar one-stage architectures are used in object detection. Popular examples include SqueezeDet (Wu et al., 2017), DSSD (Fu et al., 2017), DenseNet (Huang et al., 2017), and CornerNet (Law and Deng, 2018). SqueezeNet enhances accuracy in large object detections with its fire module backbone architecture but is limited on mobile platforms. Deconvolutional layer SSD (DSSD) is more efficient for dense and smaller objects in images, utilizing multi-scale predictions for higher accuracy results. However, it consumes significant memory and has slower performance. DenseNet SSD incorporates the feature reuse concept within the SSD framework, serving as a balance between accuracy and resource utilization. CornerNet represents a unique style of object detection, identifying objects through keypoint estimation techniques that can accommodate rotated objects in images; nonetheless, its computational complexity is excessively high. PAA-SSD is the latest model that focuses on one specific type of system, integrating with various SSD model-based platforms to improve accuracy through the probabilistic anchor assignment technique. The results depend on the dataset used and the chosen backbone architecture. Additionally, it may increase the computational complexity of training based on the chosen platform, necessitating careful assignment. Table 3 illustrates different one-stage object detection techniques along with a comparative analysis. The variation in datasets for various object detections, taking into account not only size but also purpose, is considered for the results obtained.

Two-stage or region-based deep learning approaches rank among the most prominent models for achieving accurate object detection in images. These methods excel by employing a two-step process. The Region Proposal Network (RPN) focuses solely on areas containing objects, thereby avoiding an exhaustive search across the entire image. Unlike brute-force methods, RPNs enhance detection accuracy by training on data relevant to object-specific regions, facilitating precise and efficient classification. This method is especially beneficial for real-time applications, as RPNs identify potential object regions, allowing the classification stage to refine bounding boxes for accurate localization. Both stages present opportunities for customization, with advanced network architectures designed to meet the specific requirements of RPN and classification. This section summarizes and analyzes popular two-stage object detection models, comparing them across factors such as speed, accuracy, computational complexity, and advancements proposed by various researchers.

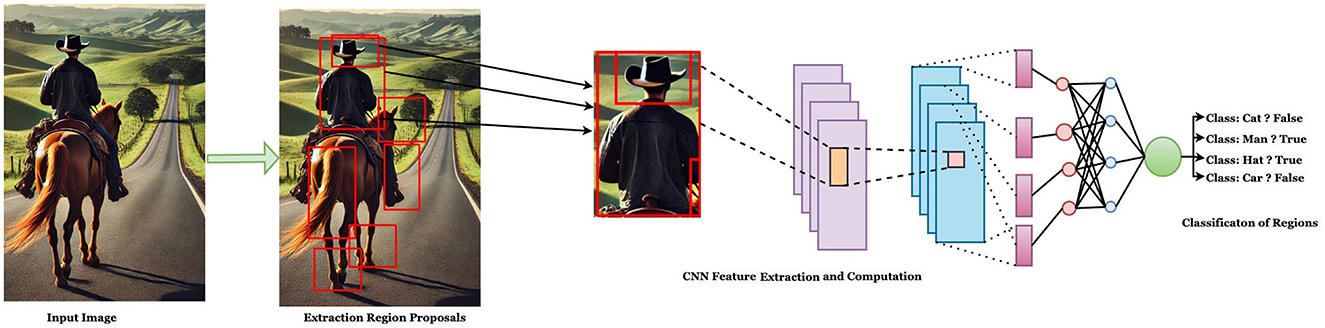

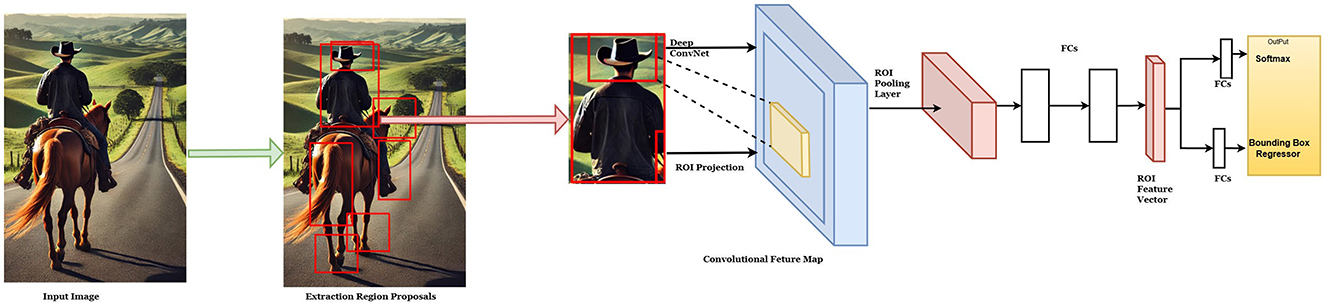

In 2014, Girshick et al. (2014b) introduced a seminal approach to object detection by incorporating CNNs to enhance detection accuracy and improve bounding box quality through deep feature extraction. This method achieved a significant milestone, attaining a mean Average Precision (mAP) of 53.4%, a remarkable improvement over contemporaneous models. The model was trained on the PASCAL VOC 2012 benchmark dataset, setting a new standard for object detection tasks. A simplified representation of the RCNN process is illustrated in Figure 10. The RCNN process comprises two primary stages: region proposal and feature extraction with classification. In the region proposal stage, the entire image is scanned using a selective search algorithm, which evaluates features such as color, texture, position, and location to generate candidate regions likely to contain objects. These candidate regions are resized to conform to the input dimensions the applied CNN requires.

Figure 10. Region-based convolutional neural network (Girshick et al., 2014b).

In the feature extraction and classification stage, the resized regions are processed using a pre-trained CNN model to extract high-level features such as color, shapes, textures, and edges. The extracted features are then input into two distinct support vector machines (SVMs): one for object classification and the other for bounding box refinement. The classification SVM predicts the object class (e.g., car, airplane, chair, person, cat), while the bounding box refinement SVM fine-tunes the proposed bounding box to ensure better localization of the detected object. The SVM assigns a score to each class through non-maximum suppression while maintaining the Intersection over Union (IoU) below a predefined threshold, further enhancing detection precision.

RCNN pioneered object detection by leveraging deep neural networks to extract hierarchical features from images, capturing multi-scale information across layers for precise detection. The model classifies objects and generates bounding boxes around detected regions. However, RCNN also has notable limitations. The fully connected layers in the CNN necessitate resizing images to a fixed size of 277 × 277, which increases computational overhead. The selective search algorithm generates thousands of potential regions, resulting in inefficiencies and high computation time. Additionally, processing these regions individually leads to redundant computations, and the SVM-based classification introduces bottlenecks that hinder speed and optimization. These challenges limit RCNN's performance in real-time applications, complex image backgrounds, and small object detection.

To address these issues, several advancements have been proposed. For instance, Zhang et al. (2015) tackled inaccurate localization in RCNN by introducing three key improvements: (1) Bayesian optimization to refine bounding boxes by evaluating classification scores and localization accuracy; (2) structured loss to penalize inaccuracies in predicted bounding boxes; and (3) class-specific CNNs to enhance accuracy for diverse object categories (Zhang et al., 2015). Furthermore, adopting superpixel classification can refine object boundaries and improve efficiency in handling complex scenes and small objects. However, careful implementation is needed to mitigate potential segmentation inaccuracies.

The fixed filter sizes used in CNN training, along with challenges such as object rotation, deformation, and pose variation, were addressed by Ouyang et al. using deformation-constrained pooling layers. Their approach used guided deformable filters to adaptively adjust shape and size, predicting offset values for local object alignment while applying geometric penalties to promote meaningful deformations. This method, integrated into the DeepID-Net, demonstrated improved accuracy on the ISVRC 2014 dataset (Ouyang et al., 2015).

The limitations of anchor-based bounding boxes, which affect small object detection and computational efficiency, were mitigated by the DeepBox anchor-free design proposed by Zhang et al. This method detects objects without predefined anchors, facilitating better localization of small objects with flexible bounding box shapes and orientations, although it remains sensitive to hyperparameter tuning. Additionally, Pinheiro et al. (2016) introduced SharpMask for refining critical regions, providing superior performance in managing overlapping objects and complex scenes, albeit at the expense of increased computational requirements due to the attention mechanism. Understanding these advancements and their trade-offs provides valuable insights into the suitability of various RCNN-based models for specific tasks. Models such as SPPNet (He et al., 2015a) and Fast RCNN (Girshick, 2015) build upon the foundation of RCNN, delivering significant improvements in efficiency and performance, which will be explored in subsequent sections.

In the context of RCNN, the CNN model requires input images to be of a fixed size, creating challenges when handling images of varying dimensions. This necessitates resizing, which can lead to information loss, reduced accuracy, and increased computational overhead during the scaling process. The Spatial Pyramid Pooling Network (SPPNet) was introduced to address this limitation, enabling the processing of variable-sized input images. SPPNet employs a spatial pyramid pooling mechanism that divides the input image into pyramids of subregions, extracts features from each subregion, and pools them into a fixed-size representation that is independent of the original image dimensions. This approach enhances the model's flexibility and scale invariance. Furthermore, SPPNet allows feature extraction from multiple convolutional layers at different resolutions, facilitating improved object localization and mitigating the resolution reduction issue inherent in RCNN. By integrating multi-scale feature extraction and enabling efficient handling of variable image sizes, SPPNet significantly improves object detection models' flexibility, scalability, and robustness. It is particularly effective in managing diverse image sizes and complex backgrounds, making it a valuable advancement over traditional RCNN methods. Figure 11 illustrates pivotal architectures in object detection. Fast R-CNN enhances region-based convolutional networks by integrating RoI pooling and shared convolutional features, while SPPNet introduces spatial pyramid pooling to efficiently handle input images of varying sizes (Kaur and Singh, 2023).

Figure 11. (a) Fast R-CNN architecture (Girshick, 2015) with RoI pooling for object detection. (b) Spatial Pyramid Pooling Network (SPPNet)(He et al., 2015a) for fixed-length feature representation, enabling multi-scale feature extraction.

Lazebnik et al. (2006) introduced the groundbreaking concept of SPM for object detection, which captures the spatial information of an image by dividing it into multiple subregions and extracting features at various scales. This innovative approach enables the representation of spatial relationships within the image, enhancing feature extraction and localization. Building on this concept, SPPNet incorporates several key advancements. SPPNet efficiently handles images of various sizes, providing scale-invariant detection capabilities. Leveraging multi-scale features extracted from different subregions significantly improves localization accuracy and enhances overall detection performance. These features collectively make SPPNet a robust and accurate model for object detection, particularly in scenarios involving diverse image sizes and complex spatial structures.

The primary limitation of R-CNN lies in its slow processing speed and high computational cost, primarily due to its dependence on selective search for region proposals. Addressing this issue, Girshick (2015) introduced Fast R-CNN, a model that significantly enhances detection speed while maintaining high accuracy. In Fast R-CNN, the RPN directly generates region proposals from image features, eliminating the inefficiency of exhaustive region searches and reducing computational overhead. This approach accelerates the process and ensures accurate object detection, as presented in Figure 11a.

Fast R-CNN employs a multi-task learning strategy, jointly training the RPN and the classifier to optimize region proposal generation and object detection in a unified pipeline. This integrated framework improves accuracy compared to traditional pipeline-based methods. To further enhance efficiency, Fast R-CNN leverages pre-trained CNN models, such as VGG or ResNet, which are trained on large-scale datasets such as ImageNet. These pre-trained networks capture hierarchical features, ranging from low-level patterns to high-level abstractions, enabling precise analysis of specific regions. The challenge of processing variable-sized regions of interest in fully connected layers, prevalent in R-CNN, is addressed in Fast R-CNN by introducing ROI pooling. ROI pooling divides each ROI into fixed-size subgrids, extracting uniformly sized features for the fully connected layers, thus ensuring consistency and improving detection accuracy.

For region proposal generation, ROI pooling utilizes features extracted from the RPN, avoiding external algorithms such as selective search. The softmax layer classifies objects within the image by predicting the probability of each object class, resulting in a K+1-dimensional vector for K object classes, with the additional dimension representing the background category. The class with the highest probability is assigned to the detected object. The bounding box regression branch also employs linear regression to refine the predicted bounding box coordinates. Offset values, derived from ROI-pooled features, are added to the initial ROI coordinates to improve bounding box precision, ensuring accurate localization of objects. Fast R-CNN represents a significant advancement over its predecessor, achieving superior speed and accuracy in object detection tasks (Girshick, 2015).

The multi-task loss L of Fast R-CNN is jointly expressed with the two output layers, specifically training for classification and bounding box regression for each labeled ROI. For the trained model, the discrete probability distribution p, computed by a softmax over K+1 categories per ROI from a fully connected layer, is defined by

the output bounding box regression offset is given by

Where K is the object class indexed by k. The Iverson bracket indicator function [u≥1] is employed to omit all background RoIs (Girshick et al., 2014b).

in which Lcls(p, u) is log loss for true class u.

The second task loss, LLOC. The bounding-box regression targets for the class (u, v) = (vx, vy, vw, vh) and the predicted tuple tu = (tux, tuy, tuw, tuh). For the bounding box regression, we use the loss

in which

Here the L1 loss is less sensitive to the outliers than the L2 loss used in R-CNN and SSPNet.

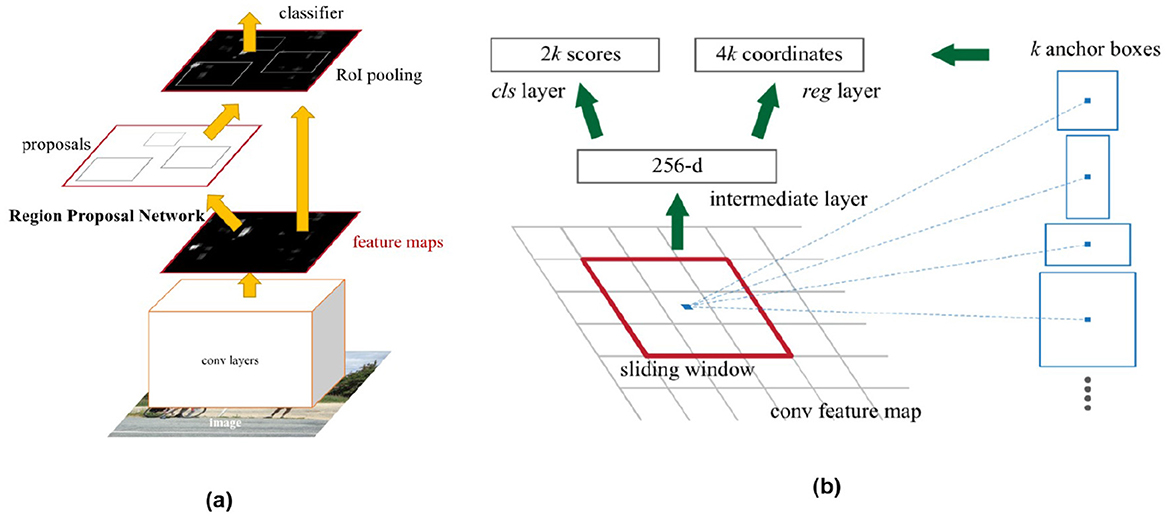

Although Fast R-CNN improves detection speed and accuracy compared to its predecessor, it still has limitations in terms of optimization and efficiency. Fast R-CNN relies on pre-trained feature extraction and external ROI pooling mechanisms that utilize fixed-size features and a softmax bounding box classifier. This dependence on external algorithms for region proposals can introduce inefficiencies, including slower processing and potential inaccuracies. Additionally, the requirement for separate stages in the training process decreases overall efficiency. Faster R-CNN was introduced to address these issues by integrating RPN with the CNN architecture into a unified framework. This design eliminates the dependence on external algorithms, resulting in significantly higher speeds than Fast R-CNN. The model trains the entire pipeline jointly, enhancing efficiency, accuracy, and effectiveness. Furthermore, Faster R-CNN is better equipped to handle diverse datasets, improving its performance in real-time applications (Ren et al., 2015).

In the architecture of Faster R-CNN, the RPN slides a small spatial window over the feature map, connecting to an n×n spatial region. For instance, with VGG16, a low-dimensional vector of size 512 is extracted within the sliding window and passed to two fully connected (FC) layers: one for box classification (cls) and another for box regression (reg). This architecture incorporates an n×n convolutional layer connected to two 1 × 1 convolutional layers, as depicted in the corresponding (Figure 12).

Figure 12. (a) Faster R-CNN (Ren et al., 2016) and (b) Region Proposal Network (RPN) (Ren et al., 2016). The diagram effectively illustrates the architecture of the Faster R-CNN model, highlighting the interactions of RPN and other essential components.

Bounding box regression is achieved by refining the proposals in relation to the reference boxes. The model utilizes anchors of three different scales and three aspect ratios, which improve detection for objects of various sizes and shapes. The loss function in Faster R-CNN is similar to that of Fast R-CNN, maintaining a balance between classification accuracy and bounding box regression.

The loss function is given by,

Where

Pi: Predicted probability of anchor box i containing an object (foreground).

ti: Predicted bounding box coordinates (4 values: x, y, width, height) for anchor box i.

p*i: Ground truth label for anchor box i (1 for foreground, 0 for background).

t*i: Ground truth bounding box coordinates for the object associated with positive (foreground) anchor box i.

Ncls: Number of anchor boxes per image in the mini-batch during training.

Nreg: Number of positive (foreground) anchor boxes in the image.

λ: Hyperparameter balancing the importance of classification and regression tasks.

: Classification loss for anchor box i, often binary cross-entropy.

: Regression loss for the predicted bounding box of anchor box i, often Smooth L1 loss.

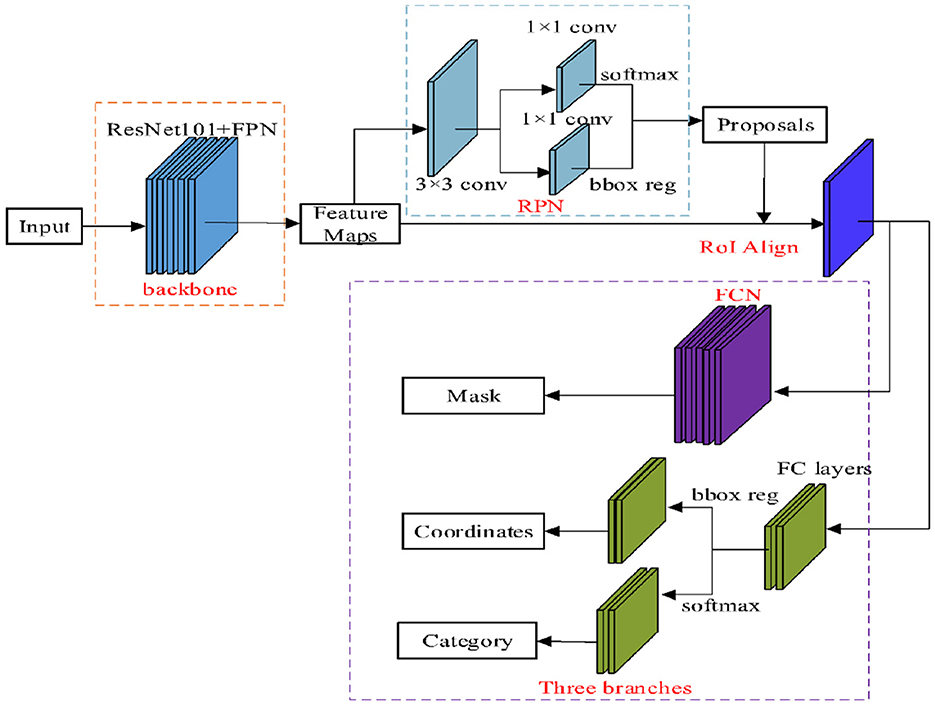

Mask R-CNN is a robust deep learning framework for object detection and instance segmentation. During object detection, it identifies and localizes objects within an image while incorporating instance-level context, which enables precise recognition of what the objects are and their locations. In the segmentation phase, Mask R-CNN goes beyond bounding boxes to create pixel-level masks for individual objects, providing superior precision. It can accurately segment various objects, such as cars, cats, bicycles, or billboards, even in challenging conditions such as partial occlusion or shadowed regions.

Mask R-CNN addresses key limitations of Faster R-CNN, such as the inability to segment individual objects within the same class or differentiate between multiple instances (e.g., distinguishing people in a crowd). It also reduces the computational overhead of storing intermediate features, thereby improving efficiency. By incorporating fine-tuning mechanisms, Mask R-CNN enhances detection accuracy and optimization, making it a robust solution for tasks such as autonomous navigation. Additionally, its end-to-end network training provides better optimization and performance compared to Fast and Faster R-CNN, establishing it as a versatile and reliable tool for image analysis.

Introduced by He et al. (2017), Mask R-CNN enhances the capabilities of Faster R-CNN by adding instance segmentation at the pixel level. Its innovation lies in integrating a mask prediction branch alongside the bounding box classifier, addressing the spatial limitations of Faster R-CNN's bilinear interpolation with a novel sampling technique that preserves spatial information. By combining region proposal and classification, this unified network architecture improves training efficiency by eliminating intermediate feature storage and optimizing the entire network through end-to-end learning. This architecture is depicted in Figure 13.

Figure 13. Architecture of mask R-CNN for instance object detection (He et al., 2017).

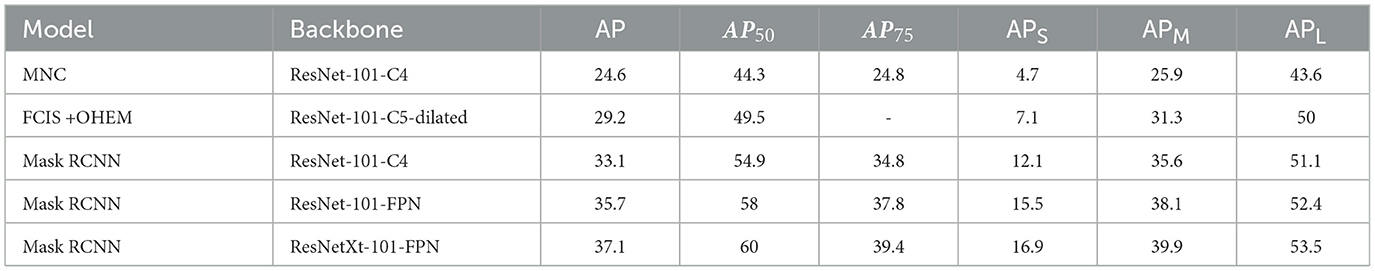

The core of Mask R-CNN is built upon Faster R-CNN, incorporating additional components such as ROI Align, shared feature pooling, and a mask prediction branch. Key architectural elements include the backbone feature extractor, RPN, shared pooling layers, detection and segmentation branches, and a multi-task loss function for joint optimization. Table 4 summarizes the performance of various models, with segmentation showing notable results. The findings demonstrate significant improvements in AP across different backbone architectures, highlighting the effectiveness of Mask R-CNN in this domain.

Table 4. Mask R-CNN performance for instance segmentation (He et al., 2017).

The foundational architecture of Mask R-CNN is Faster R-CNN. The in-depth architectural components of Mask R-CNN include the backbone feature extractor, RPN, shared feature pooling, detection, segmentation, and the calculation of the training and loss functions. ROI Align, shared features, and end-to-end training are additional components of Mask R-CNN compared to Faster R-CNN.

The backbone extractor, typically ResNet (50/101) or a VGG variant, captures complex features to enhance detection accuracy. Feature maps derived from this backbone provide rich semantic information about the input image. Anchors within the RPN are adapted to the objects' shape and size, and convolutional layers predict the presence of objects and bounding boxes. Non-maximum suppression (NMS) ensures efficient processing by suppressing redundant regions and selecting high-confidence proposals. Shared feature pooling, specifically ROI Align, preserves spatial information while resizing features for consistent mask prediction. For each ROI, the classification branch predicts object classes using fully connected layers and a softmax activation function, while the bounding box regression branch refines localization. The mask branch generates binary masks for ROIs, and skip connections enhance the network's ability to capture object shapes and extents. A multi-task loss function optimizes classification, bounding box regression, and mask prediction simultaneously, enabling robust performance through end-to-end training. Despite its computational complexity and high hardware requirements, Mask R-CNN remains a state-of-the-art tool for computer vision applications (He et al., 2017).

The concept of Feature Pyramid Networks (FPN) was developed by researchers (Lin et al., 2017a) to address the challenges associated with traditional object detection methodologies. Specifically, FPN aims to resolve two primary issues: the loss of spatial information due to down-sampling and the limited semantic information that can hinder accurate object detection. Furthermore, FPN partially mitigates the limitations inherent in Mask R-CNN, which employs traditional CNNs that often experience reduced spatial resolution, complicating the precise localization of small objects within images. FPN integrates bottom-up and top-down pathways to produce multi-scale feature maps while maintaining semantic information. This architecture enhances detection capabilities for small objects across various resolutions and sizes. The semantic gap in feature maps derived from different levels in Mask R-CNN can significantly degrade detection accuracy, particularly in cluttered scenes. To address this, FPN employs lateral connections that bridge this gap by injecting high-level semantic information from deeper layers into the feature maps. This ensures accurate object identification along with location and class information (Chhabra et al., 2023).

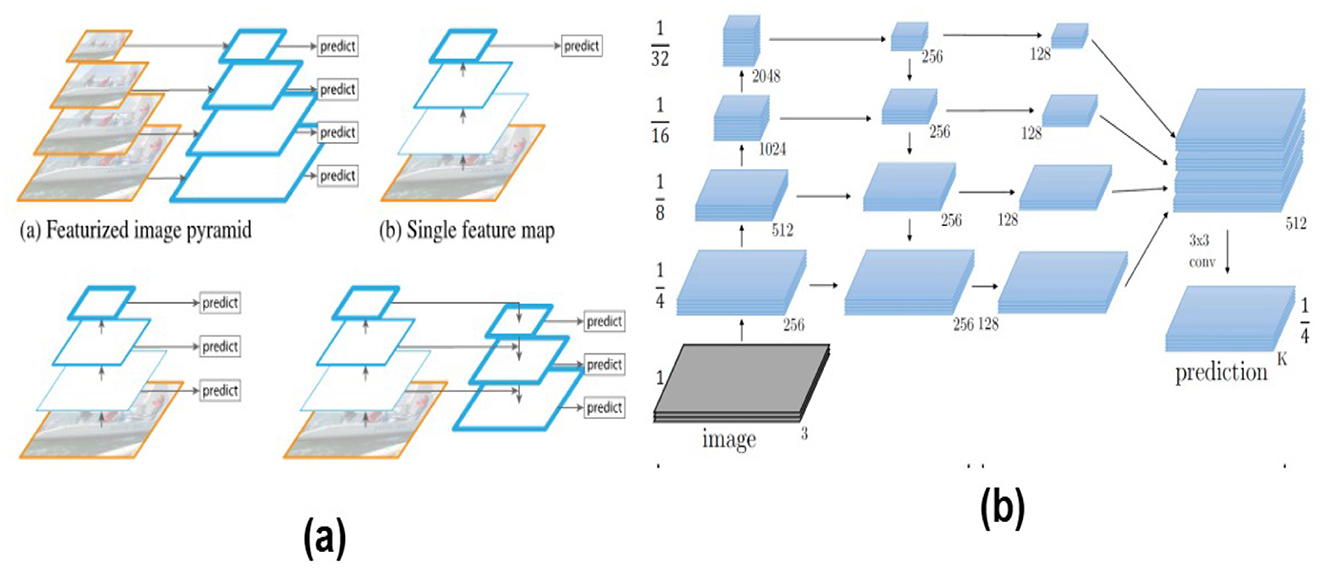

A notable advantage of FPN is its ability to reuse features computed within the CNN backbone, which minimizes computational overhead. This resource-efficient design enables the construction of multi-scale features without creating pyramids from scratch, thereby enhancing computational efficiency. As a result, FPN demonstrates improved accuracy while maintaining low computational complexity across diverse applications. It excels at detecting small objects of varying sizes with high accuracy and efficiency. During the detection process, FPN effectively integrates both bottom-up and top-down approaches. The bottom-up pathway captures fine spatial details with high semantic value using existing convolutional networks, although it may lack semantic richness and exhibit lower resolution. Conversely, the top-down pathway begins with the deepest feature map and progressively upsamples it while merging it with shallower maps through lateral connections. This synthesis results in a comprehensive representation that combines high-level semantic information with preserved low-level spatial details. Figure 14 presents how features are extracted at multiple scales, resulting in a feature pyramid that captures information at different levels of detail and abstraction.

Figure 14. (a) Featured image pyramids. Lin et al. (2017a) fundamental idea behind FPNs. (b) Feature Pyramid Network. Lin et al. (2017a) that shows how the network takes an input image and generates a single feature map for key concepts and architectural details of FPNs.

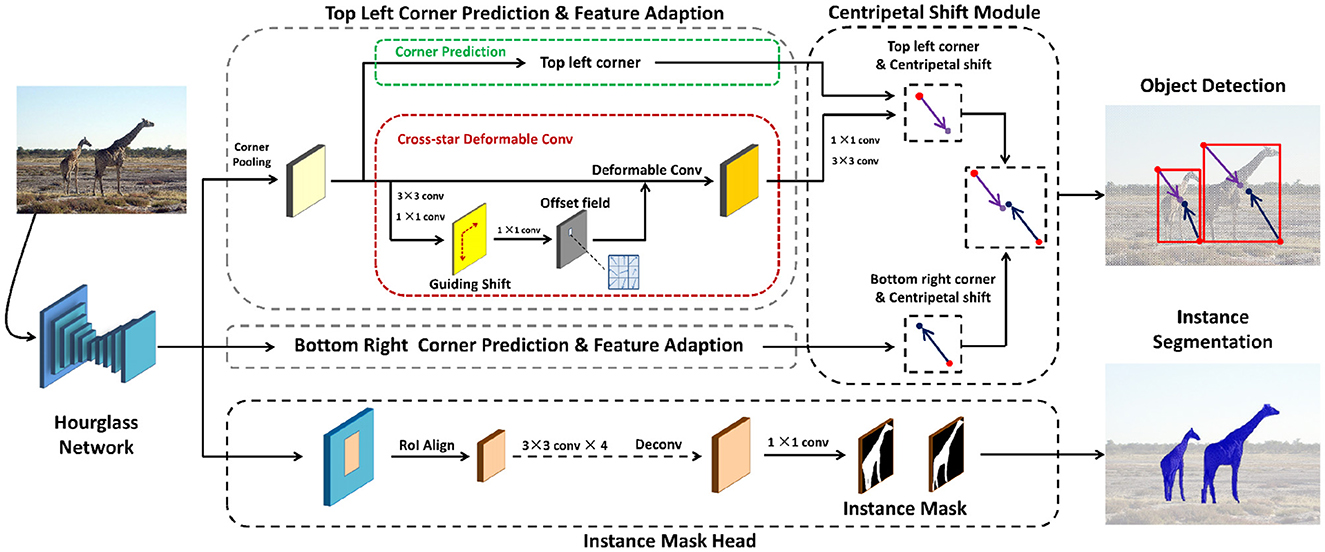

CentripetalNet demonstrates higher prediction accuracy than the bounding box approach in FPN. It achieves fine-grained localization of potential objects within an image and delivers superior performance in challenging scenarios, such as dense or crowded scenes and partially visible objects. The architecture of CentripetalNet, illustrated in Figure 15, leverages key points for object detection. Kivee (Dong et al., 2020) developed CentripetalNet to pursue high-quality keypoint pairs for object detection, addressing issues related to inaccurate keypoint matching and limited feature integration, which often result in the loss of spatial context and essential information for effective object detection.

Figure 15. Architecture of CentripetalNet for instance object detection (Dong et al., 2020).

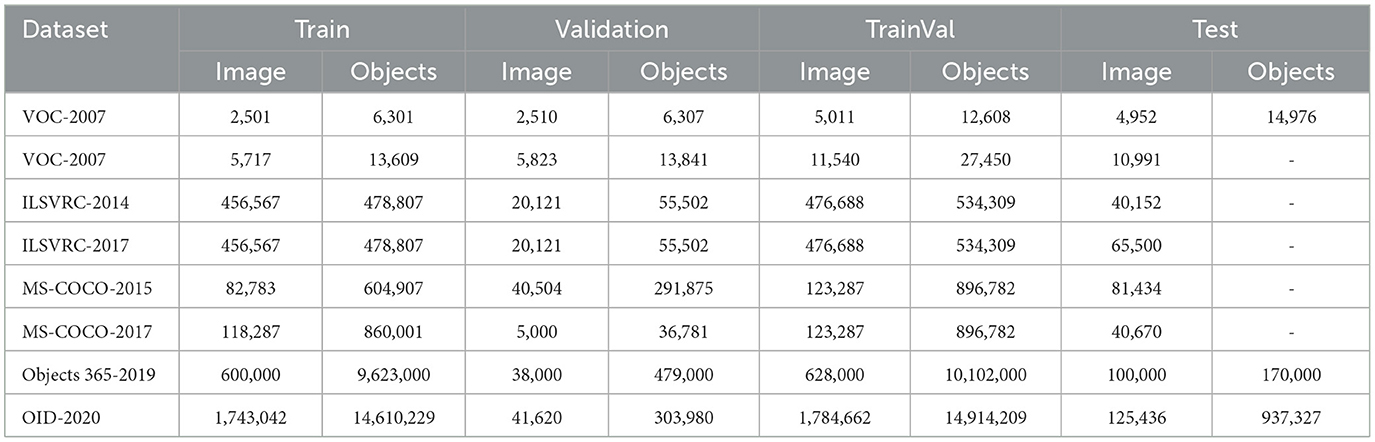

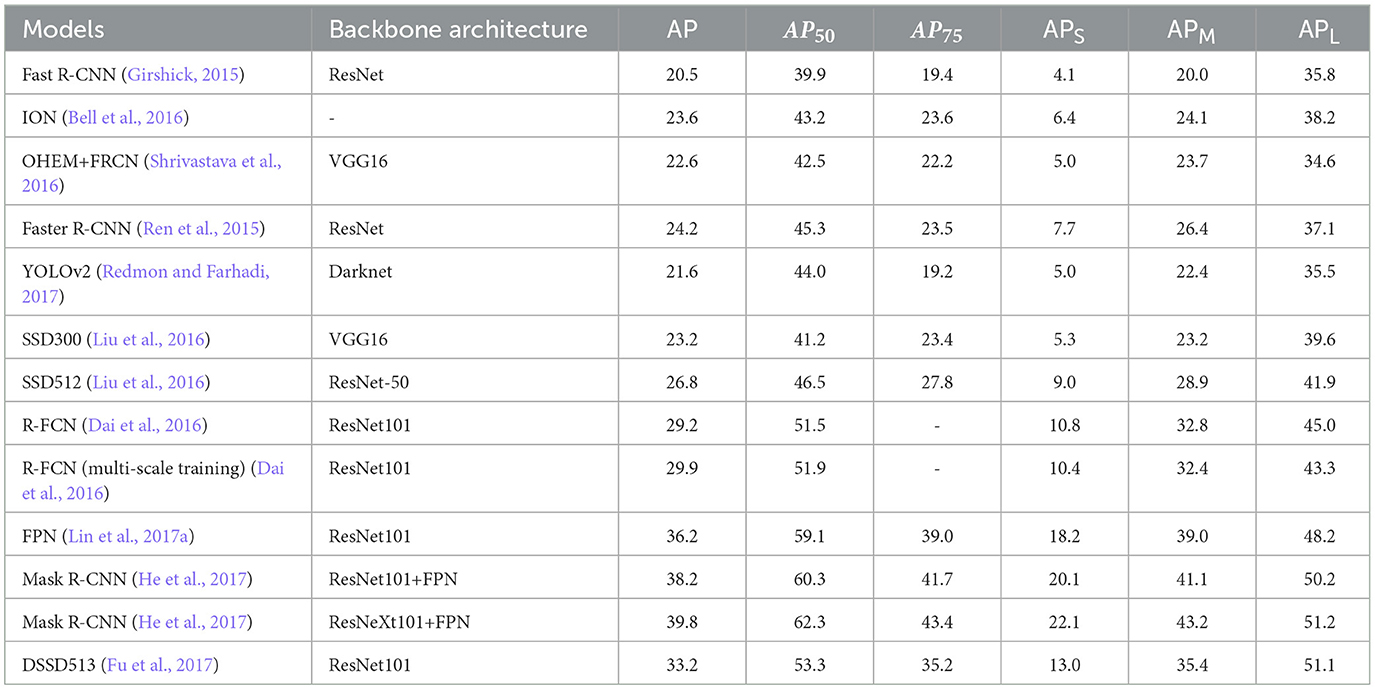

This recent object detection approach relies on key points instead of bounding boxes, predicting primary objects based on the location and relationships of corner key points. Initially, the model predicts the corner key points associated with each object and utilizes a shift vector, known as the centripetal shift, to guide these points toward the object's center. To pair corresponding key points within the same object, it employs predicted shift values in a process called shift matching, which is particularly useful when the points are initially scattered. Corner pooling extracts features from the area surrounding each corner point with sufficient precision to represent them as detected objects. Finally, deformable convolutions are employed to refine the exact shape of the object in real-time. Table 5 provides a comparative evaluation of object detection performance on the MS-COCO test-dev dataset, focusing on various detection methodologies and their performance metrics. Key indicators, including Average Precision (AP), AP at different Intersection over Union (IoU) thresholds (AP50, AP75), and performance across small, medium, and large object scales, are presented. The findings indicate that multi-scale approaches, particularly those employing Centernet511 and CetripetalNet, exhibit enhanced performance across all assessed metrics, highlighting their efficacy in object detection tasks.

Table 5. Comparison of object detection performance on the MS-COCO test-dev dataset for various methods, highlighting metrics such as Average Precision (AP), AP50, AP75, and performance across small (APS), medium (APM), and large (APL) object scales for single-scale and multi-scale evaluations (Dong et al., 2020).

Cao et al. (2020) introduced an aggregation network for object detection that significantly enhances accuracy while maintaining high processing speed, making it suitable for real-time applications. D2Det addresses the limitations of traditional methods by employing a dual-path aggregator that integrates high spatial detail from low-resolution features with rich semantic information from high-resolution features. This design balances the trade-off between accuracy and classification efficiency. D2Det selectively applies deformable convolutions at specific stages to improve feature learning, optimizing the balance between system performance and computational efficiency. Furthermore, lightweight layers ensure faster processing speeds, making the architecture highly suitable for real-time tasks. The simplified yet advanced design of D2Det has led to its adoption in various real-time domains, demonstrating robust performance and scalability.