- 1 Ultraleap Ltd., Bristol, United Kingdom

- 2 Research and Development Department, Idener Research and Development Agrupación de Interés Económico, Seville, Spain

- 3 University College London, London, United Kingdom

Ultrasound-based mid-air haptic feedback has been shown to be an effective way to receive in-vehicle information while reducing driver distraction. An important feature of communication between a driver and a car is receiving notifications (e.g., a warning alert). However, current configurations (a haptic device on the center console that delivers palmar feedback) are not suitable for receiving notifications, as they force the driver to take their hands off the wheel and their eyes off the road. In this paper, we propose “knuckles notifications,” a novel system that provides mid-air haptic notifications on the driver's dorsal hand while they hold the steering wheel. We conducted a series of exploratory studies with engineers and UX designers to understand the perceptual space of the dorsal hand and to design sensations associated with four in-car notifications (incoming call, incoming text message, navigation alert, and driver assistant warning). We evaluated our system with driver participants and demonstrated that knuckles notifications were easily recognized (94% success rate) without affecting the driving task, and that the mid-air sensations were not masked by background vibration simulating car movement.

1 Introduction

Research studies have shown the potential of delivering mid-air haptic feedback (onto the driver's palm and fingers) within in-vehicle driving tasks and infotainment applications [e.g., music, temperature, navigation map, and phone calls (Young et al., 2020)], while reducing visual demand for safe driving (Harrington et al., 2018; Shakeri et al., 2018; Hafizi et al., 2023; Spakov et al., 2022) and improving task performance (Brown et al., 2020).

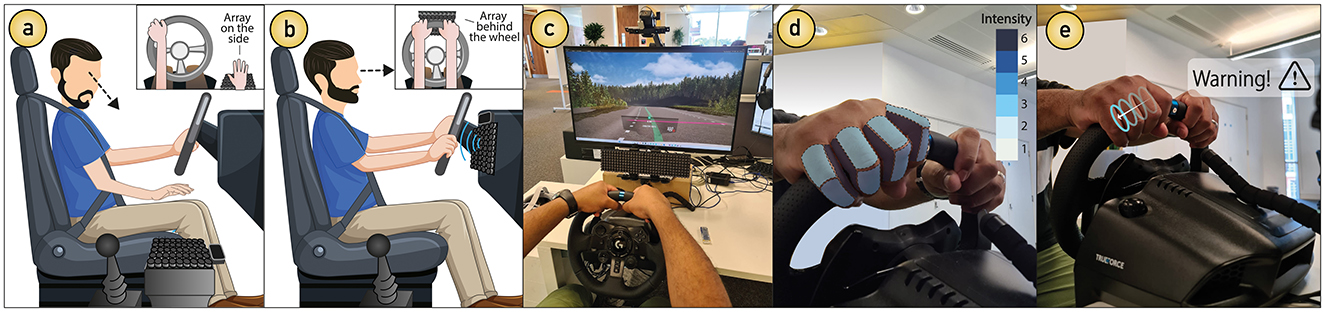

However, all systems using mid-air haptic feedback for in-vehicle applications proposed to date are used for active interaction requiring the driver to take their hand off-the-wheel to receive tactile sensations on the palm (i.e., fingers extended). To be specific, the typical configuration for placing the mid-air haptic device [a phased array of ultrasonic transducers (Carter et al., 2013)] in a car or driving simulator is on the center console near the gear stick (Shakeri et al., 2018; Large et al., 2019; Harrington et al., 2018; Young et al., 2020; Brown et al., 2020; Korres et al., 2020; Georgiou et al., 2017; Roider and Raab, 2018; Brown et al., 2022; Spakov et al., 2022), forcing the driver to place their hand above the device (see Figure 1A). Depending on the scenario, such configurations can be unsuitable for receiving in-car notifications, namely, an alert, typically unexpected, to notify the driver of a new message, an update, or a car warning. This would imply that every time the driver receives a haptic notification, they must release the wheel, place their hand above the device, keep it in a certain position while feeling, and then place it back onto the wheel.

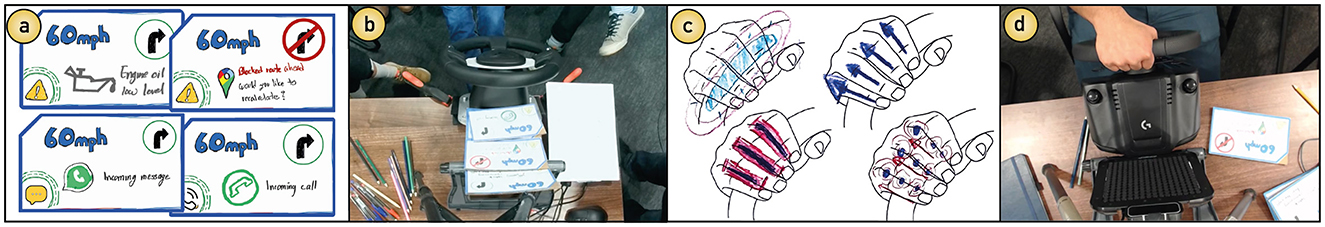

Figure 1. Traditional configurations for providing mid-air haptics in in-vehicle interfaces (haptic device on the side) force the driver into hands-off-the-wheel and eyes-off-the-road actions (A). Providing mid-air haptics on the driver's dorsal hand (by placing the haptic device behind the wheel) promotes a hands-on-the-wheel and eyes-on-the-road paradigm (B). We implemented our knuckles notifications system in a driving simulation task (C) and designed different sensations based on an intensity mapping of the dorsal hand while holding a steering wheel (D) to provide drivers with meaningful notifications while driving (E).

Furthermore, while gestural interaction is often suggested as a way to alleviate visual demand (Pitts et al., 2012), studies also show that gestural control is not a completely eyes-free interaction technique, as it still requires rapid eye glances for hand-eye coordination (Bach et al., 2008). This means that the user sporadically has to look at where their hand is, which may also produce eyes-off-the-road actions. Research on interaction design suggests that in-vehicle interfaces should support an “eyes-on-the-road, hands-on-the-wheel” paradigm to ensure driver safety (Angelini et al., 2014; González et al., 2007; Meschtscherjakov, 2017), and the mid-air haptic systems found in the literature do not seem to support this important principle.

While vibrotactile feedback embedded in the steering wheel has been used to deliver notifications (Noubissie Tientcheu et al., 2022; Murer et al., 2012; Shakeri et al., 2016), studies suggest that vibrotactile notifications can be masked by natural car movement (Ryu et al., 2010; Petermeijer et al., 2016). Moreover, vibrotactile systems also occlude a critical part of the driving task: feeling the natural vibrations transmitted from the tires on the road through the steering wheel (Adams, 1983). While ultrasonic mid-air haptics could overcome masking limitations in noisy vibration environments (Spakov et al., 2022), current palmar-feedback configurations seem unsuitable for notifications during driving because they require the driver to take their hands off the wheel. However, providing mid-air haptic feedback on other parts of the hand, or placing the haptic device in different locations in the car, has not been explored for in-vehicle interfaces.

In this paper, we present knuckles notifications, a system that provides mid-air haptic feedback on the driver's dorsal hand, aiming to support hands-on-the-wheel notifications for driving applications (see Figures 1B–E). Since ultrasonic stimulation has been shown to be perceived as less intense on the dorsal hand than on the palm (Salagean et al., 2022; Rakkolainen et al., 2019), we first conducted a series of exploratory studies to understand the perceptual space of the dorsal hand (particularly when holding a steering wheel) and the types of stimuli that could be suitable for in-vehicle notifications.

From this exploration, we found that when ultrasound stimulation is provided on the dorsal hand while the user is holding a steering wheel (in a natural closed-hand pose), the sensation on the knuckles area (between the fingers and proximal phalanges) feels stronger than when (1) the hand is open or (2) the hand is not holding any object. Indeed, the perceived intensity when holding the wheel was comparable to that felt on the palm. This could be caused by the ultrasound waves being trapped and bouncing between the fingers and the wheel.

With this configuration and perceptual space identified, we then designed four haptic sensations associated with four in-car notifications: incoming call, incoming text message, navigation alert, and driving assistant warning. We evaluated our system through a user study with 20 drivers to explore whether users can identify the different notifications while driving, and compared our system with and without background vibration on the wheel (simulating the road). Our results show that participants easily recognized the knuckles notifications, with a success rate of 94%, without affecting the driving task, as path deviation (a proxy for driver distraction) was not significantly affected while participants felt and identified the notifications. Additionally, this success rate was not affected by background vibration on the steering wheel, indicating that the sensations overcame masking effects. Thus, the main contributions of our paper are as follows: (1) we characterize the sensation space on the dorsal hand for mid-air haptic feedback; (2) we provide a novel in-vehicle haptic notification technique that avoids hands-off-the-wheel and eyes-off-the-road actions; (3) we demonstrate a configuration (hand position and haptic device location) that results in stronger mid-air haptic sensations on the dorsal hand; (4) we demonstrate through a user evaluation that our system is not affected by masking effects produced by car vibrations; (5) we discuss application examples in which our approach can be useful and provide guidelines for future work.

It is worth noting that we do not attempt to demonstrate that knuckles notifications can replace, or are superior to, alternative and simpler types of notifications (e.g., auditory or visual), which might be cheaper and require fewer resources. Rather, we aim to explore the feasibility of mid-air haptics (which research studies suggest may be safer) for potential future applications in cars. With our explorations and the results presented in this paper, we aim to foster wider research on the use of mid-air haptics for in-vehicle interactions that builds on our insights and goes beyond the scope of the present paper.

2 Related work

2.1 Mid-air haptic feedback on the dorsal hand

While recent works have explored ultrasound haptic stimulation on different body parts [e.g., the mouth (Vito et al., 2019; Jingu et al., 2021; Shen et al., 2022) and face (Gil et al., 2018; Lan et al., 2024)], in most cases, research has focused on stimulating the user's palm (glabrous skin), which has higher tactile sensitivity than the hairy skin (Pont et al., 1997). Studies suggest that “modulated signals at ~200 Hz can only be felt by the palm of a hand. The dorsal side of the hand and all other human body areas are numb to it” (Rakkolainen et al., 2019). This is mainly because vibrotactile perception relies on Pacinian corpuscles, which are densely distributed in the glabrous (hairless) skin of the palm (Johansson and Vallbo, 1979). Despite this, some studies have explored tactile stimulation on the dorsal hand.

For example, Salagean et al. (2022) compared ultrasonic stimulation applied to the palm and the dorsal hand in an experiment replicating the rubber hand illusion. They concluded that ultrasonic stimulation is perceived as less intense on the dorsal surface than on the palmar surface. This suggests that the relative orientation between the hand and the haptic display affects ultrasound tactile feedback (Sand et al., 2015). Pittera et al. (2022) explored the acoustic streaming and acoustic radiation pressure phenomena associated with high-pressure focal points targeting different parts of the forearm. They confirmed that users could perceive acoustic streaming effects on hairy skin and that such stimulation can convey affective touch. Spelmezan et al. (2016) proposed a system that can steer and focus ultrasound through the hand, so that stimulation focused on the center of the palm passes through the hand and is felt on the dorsal hand. To the best of our knowledge, there are no studies involving ultrasound mid-air haptics on the dorsal surface of the hand near the fingers, or while holding an object.

While mid-air haptics has barely been explored for the dorsal hand, driving offers a good scenario for exploring haptics on this area, since the driver's palm is not exposed (i.e., their hands must be on the wheel), which leaves the dorsal hand more accessible. While other parts of the body are potentially useful, particularly for driving (e.g., face, neck), the effects of ultrasound exposure on the human or animal face and eyes are still not fully understood (Battista, 2022). This requires further research (e.g., on safety and ethics), which is beyond the scope of the present paper. Instead, we follow a safer approach of using the driver's hand, as the hands are usually the body part people use most to interact with technology.

2.2 Mid-air haptic feedback in cars

The automotive industry is introducing relevant multisensory innovations (haptics, head-up displays, etc.), among which mid-air haptics, and touchless systems in general, are gaining increasing attention. However, academic research on these automotive applications is very limited. We therefore see an opportunity to support, through research, the possible adoption of haptics inside cars and to take advantage of the suggested benefits that mid-air haptics offers for driving, such as reduced driver distraction. Mid-air haptics technology (Harrington et al., 2018) uses ultrasound to create sensations of touch on the driver's hand and fingers, even in the absence of any physical buttons or knobs. Studies show the potential of using mid-air haptic feedback within driving tasks to reduce visual demand for safe driving and to improve task performance.

For example, Harrington et al. (2018) compared a traditional touchscreen with a virtual mid-air gesture interface in a driving simulator and found that combining gestures with mid-air haptic feedback reduces the number of long glances and mean off-the-road glance time. Large et al. (2019) found that apart from reducing visual demand, ultrasound feedback also increases performance (shorter interaction times, the highest number of correct responses and least “overshoots”) while producing the lowest levels of workload (highest performance, lowest frustration). Korres et al. (2020) found that augmenting a virtual touchscreen with mid-air haptic feedback improved driving task performance related to spatial deviation and the number of off-road glances. Shakeri et al. (2018) showed that ultrasound feedback reduces eyes-off-the-road time compared with visual feedback whilst not compromising driving performance or mental demand and thus can increase driving safety. Recent investigations (Fink et al., 2023) have demonstrated that drivers navigate faster by combining gestural-audio techniques with mid-air haptic feedback. Spakov et al. (2022) studied mid-air haptic shape recognition in both a simulator and test-track environment and found that ultrasound mid-air haptic output can remain an efficient feedback source even in noisy vibration environments (i.e., on the road), thus drivers can focus their attention more toward the primary task and yet still interact with the onboard UI.

While mid-air haptic technology is not yet commercially available in the automotive industry, it is anticipated that mid-air haptic systems will find their way into commercial vehicles, and they have been suggested as the missing piece of the puzzle in the automotive human-machine interfaces (HMI) of the future. Some OEMs are embracing novel technologies such as mid-air haptics in order to differentiate themselves from their competition (Ultraleap, 2022).

However, we argue that the suggested safety benefits of mid-air haptics might lose their value in a notification use case (i.e., when the driver must take their hands off the wheel). No study, whether in a real car or a driving simulator, has used mid-air haptics for in-car notifications, as all prior studies share the same setup: a mid-air haptic display to the side of the driver, on the center console or near the gear stick, such that the haptic stimulus arrives at the driver's palm. We therefore see an opportunity to explore a different configuration that could be useful for driver notifications.

3 Knuckles notifications approach

The perceived strength of mid-air haptics, as with all vibrotactile stimuli, is not constant across the hand surface (Lundström, 1985). Therefore, before designing knuckles notification patterns, we began by characterizing the sensation space on the dorsal hand while holding a steering wheel. To do so, we first conducted an exploratory user study aiming to (1) obtain a hand intensity mapping that identifies the areas of the dorsal hand where the haptic sensation is perceived as more intense, and (2) explore the perceptual space of stimuli on the dorsal hand (i.e., proximity thresholds and sense of direction). Then, we organized an exploratory workshop with individuals actively engaged in haptics and user experience (UX) to conceptualize tactile sensations that could be linked to in-vehicle notifications. The intensity mapping and perceptual space derived from the exploratory user study provided a framework to guide participants' creative designs. We then implemented the designed sensations in a driving simulator task and evaluated our system through a user study with driver participants to explore whether the designed sensations are recognized and correctly associated with in-vehicle notifications while driving. See Figure 2 for an overview of our procedure.

3.1 Exploration study

To obtain a more precise perceptual intensity mapping, we recruited participants who had used or worked with mid-air haptic technology before and were therefore familiar with factors that affect perceived intensity, such as hand position, device distance, and device working area. Twelve participants from our team's network took part in this study (two females, mean age = 34.6 years, SD = 7.8). The local ethics committee approved this study.

As shown in Figure 3, participants held a LOGITECH G923 steering wheel to adopt different hand poses. To provide mid-air haptic feedback, we used an Ultraleap STRATOS Explore Development Kit hardware platform (256-transducer array board, control board, and frame structure), which operates at 40 kHz. The haptic stimuli were created using Feellustrator (Seifi et al., 2023b), a graphical design tool for quickly creating and editing ultrasound mid-air haptic patterns. More information about the tool is available in Seifi et al. (2023a).

Figure 3. Setup of the exploration study for the palm and dorsal hand: bare hand (A) and hand-on-the-wheel poses (B, C).

While we were primarily interested in the perceptual space of the dorsal hand, we also explored the palm to compare both areas of the hand. We also considered both bare-hand and hand-on-the-wheel poses. For the bare-hand pose, participants placed their hand above the Stratos device at a distance of 20 cm (see Figure 3A). For the hand-on-the-wheel pose, the Stratos device was placed behind the steering wheel to direct the stimulus toward the dorsal hand while the participant held the wheel (see Figures 3B, C). With this setup, we explored the hand's intensity mapping and perceptual space.

3.1.1 Hand intensity mapping

The haptic stimulus was a 1 cm diameter circle (the minimum size in the Feellustrator software before rendering intensity decreases) traced out by a focal point moving around it at 8 m/s (spatiotemporal modulation). The stimulus was placed at a fixed position 20 cm above the haptic display, since that is the optimal focusing distance (Wojna et al., 2023a,b). We presented the mid-air haptic stimulus in the three configurations shown in Figure 4: palm, dorsal hand, and hand-on-the-wheel. For each configuration, we considered both extended and closed fingers, resulting in six hand poses in total. Participants were asked to freely move their hand and feel the stimulus on different areas of their palm and dorsal hand while adopting the different hand poses (they could move the hand even while holding the wheel). Participants then identified the areas where they felt the stimulus and rated its intensity, using empty stencils of the hand poses on which they could annotate, color, and highlight to report their own intensity perception.
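The stimulus parameters above imply a repetition rate for the spatiotemporally modulated circle: a focal point travelling at 8 m/s around a 1 cm diameter circle completes one lap every π × 1 cm / (8 m/s), about 3.9 ms. The Python sketch below computes that rate and samples focal point positions for one lap. The function names and the 40 kHz update rate used in the example are illustrative assumptions, not Feellustrator or STRATOS parameters.

```python
import math

def stm_repetition_rate_hz(diameter_m: float, speed_m_s: float) -> float:
    """Laps per second for a focal point tracing a circle at constant speed."""
    circumference_m = math.pi * diameter_m
    return speed_m_s / circumference_m

def circle_focal_points(diameter_m: float, speed_m_s: float,
                        update_rate_hz: float, height_m: float = 0.20):
    """Focal point (x, y, z) positions, in metres, for one lap of the circle.

    update_rate_hz is a hypothetical device update rate chosen for illustration.
    height_m is the 20 cm focusing distance above the array used in the study.
    """
    radius_m = diameter_m / 2
    lap_period_s = math.pi * diameter_m / speed_m_s
    n_points = max(1, round(lap_period_s * update_rate_hz))
    points = []
    for i in range(n_points):
        theta = 2 * math.pi * i / n_points  # evenly spaced angles around the lap
        points.append((radius_m * math.cos(theta), radius_m * math.sin(theta), height_m))
    return points

rate = stm_repetition_rate_hz(0.01, 8.0)
print(f"Repetition rate: {rate:.1f} Hz")  # Repetition rate: 254.6 Hz
```

The resulting rate of roughly 255 Hz is how often each skin point under the circle is revisited by the focal point.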

Figure 4. Perceptual intensity mapping of different locations and hand poses—the palm, dorsal hand, and hand-on-the-wheel configurations with both extended and closed fingers. Six areas were found to be perceived with different intensities. The darker the color, the more intense the sensation was perceived.

Participants could mark as many areas of differing intensity as they wished.

Overall, we found commonalities in the identified areas and intensity ratings across participants, who consistently reported six areas with different perceived intensities on their stencil annotations. These stencils were then processed and converted into an intensity scale from 1 to 6 for each participant and each hand pose. The data were tabulated, linearly normalized, averaged, and rounded to the nearest integer for clarity, resulting in Figure 4.
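The aggregation pipeline can be sketched as follows. The paper does not specify the normalization, so this sketch assumes each participant's ratings for one hand pose are min-max rescaled onto the 1 to 6 scale, then averaged per area across participants and rounded half up. The area names and ratings in the example are hypothetical.

```python
import math
from statistics import mean

def rescale_linear(ratings: dict, lo: float = 1.0, hi: float = 6.0) -> dict:
    """Linearly map one participant's area ratings onto [lo, hi] (assumed min-max)."""
    r_min, r_max = min(ratings.values()), max(ratings.values())
    if r_max == r_min:
        # All areas rated equally: no spread to stretch, so use the scale midpoint.
        return {area: (lo + hi) / 2 for area in ratings}
    scale = (hi - lo) / (r_max - r_min)
    return {area: lo + (r - r_min) * scale for area, r in ratings.items()}

def round_half_up(x: float) -> int:
    """Round to the nearest integer, with .5 rounding up (Python's round() rounds to even)."""
    return int(math.floor(x + 0.5))

def intensity_map(per_participant: list, lo: float = 1.0, hi: float = 6.0) -> dict:
    """Average rescaled ratings per area across participants, then round.

    Assumes every participant rated the same set of areas for this hand pose.
    """
    rescaled = [rescale_linear(p, lo, hi) for p in per_participant]
    areas = rescaled[0].keys()
    return {area: round_half_up(mean(p[area] for p in rescaled)) for area in areas}

# Hypothetical ratings from two participants for one hand pose.
example = [
    {"center_palm": 2, "knuckles": 6, "fingertips": 4},
    {"center_palm": 1, "knuckles": 5, "fingertips": 3},
]
print(intensity_map(example))
```

Min-max rescaling removes differences in how broadly individual participants used the scale before averaging; other linear normalizations would be equally consistent with the description above.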

As expected, participants reported higher intensity of the stimulus on the palm compared with the dorsal hand. Yet, we found that the center of the palm was reported with low intensity. This result was interesting since most applications of mid-air haptics in cars are usually directed to the center of the user's palm (Large et al., 2019; Young et al., 2020; Georgiou et al., 2017; Shakeri et al., 2018). Instead, we found that our stimulus was perceived as more intense on the top part of the palm (inter-digital area) and the proximal phalanges.

For the dorsal hand, participants consistently reported the knuckles and proximal phalanges as the areas where the stimulus felt most intense. Interestingly, participants' reports indicate that the sensations on the back of the hand remain strong even when holding the steering wheel. We also noted that for all hand configurations (palm, dorsal hand, and hand-on-the-wheel), the stimulus intensity was perceived as higher, particularly between the fingers, when the hand was closed. We also found this interesting, since current in-vehicle interfaces using mid-air haptics usually require the driver to make open-palm gestures (Large et al., 2019; Harrington et al., 2018). However, the sensation is still strong when the fingers are separated, as long as the hand holds the wheel. Indeed, the intensity felt on the dorsal hand when holding the wheel, with the fingers either closed or separated, is comparable to the highest intensity reported on the palm.

We believe this is produced by an acoustic caustic effect, whereby ultrasound is refocused by the concave cusp formed by the closed fingers, or by the open fingers holding the wheel (Kulowski, 2018). An acoustic caustic is a physical phenomenon in which curved surfaces concentrate sound at a specific location, such as a point focus. At audible frequencies, such caustics are commonly found in large historical interiors formed by curved surfaces, such as the whispering gallery of St Paul's Cathedral in London, where sound “slides” along a concave wall or is concentrated at a distant location in the room. At ultrasonic frequencies, with much smaller wavelengths, a similar effect can occur at concave cusps, such as those formed at the interdigital folds between fingers. Although the density of mechanoreceptors between the fingers and knuckles is significantly lower than in the palm (Johansson and Flanagan, 2009), the sound concentration produced by this caustic effect (due to the closure of the fingers and the object being held) makes this area an excellent target for mid-air haptics that could be suitable for delivering notifications. However, participants reported that hand grip strongly affects how intensely a stimulus is perceived. For example, holding the wheel firmly drastically reduces the perceived stimulation intensity. This could be due to the grip pressure masking the vibrotactile effect of modulated ultrasound.
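The wavelength argument can be made concrete with λ = c / f. Assuming a speed of sound in air of 343 m/s (dry air at about 20 °C), the 40 kHz carrier of the STRATOS array has a wavelength of roughly 8.6 mm, on the same scale as the folds between fingers, whereas a 1 kHz tone has a wavelength of about 34 cm, on the scale of architectural features. This minimal sketch only illustrates that scale difference; it does not model the caustic itself.

```python
# Assumed speed of sound in dry air at roughly 20 degrees Celsius.
SPEED_OF_SOUND_AIR_M_S = 343.0

def wavelength_m(freq_hz: float, c: float = SPEED_OF_SOUND_AIR_M_S) -> float:
    """Acoustic wavelength lambda = c / f, in metres."""
    return c / freq_hz

for label, freq in [("1 kHz tone", 1_000.0), ("40 kHz carrier", 40_000.0)]:
    print(f"{label}: wavelength = {wavelength_m(freq) * 1000:.1f} mm")
```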

3.1.2 Perceptual space

Having identified where on the hand stimuli are felt most intensely, understanding the perceptual space of mid-air haptics on the dorsal side of a hand holding a steering wheel can help us design knuckles notifications that are clearly perceivable and sufficiently distinguishable in their spatial and temporal characteristics. For example, how close can two stimuli be in space and/or time before they are perceived as one? How far must a stimulus travel at constant speed before it is perceived as moving rather than stationary? How many directions of tactile motion (horizontal, vertical, diagonal) can users reliably distinguish? What is the shortest perceptible pulse duration? Answers to these questions provide parameter thresholds that each define a region of the design space; the intersection of these regions is the perceptual space in which good knuckles notifications can be designed. Note that a recent study has explored the distinguishability of mid-air haptic tactons on the palm (Lim et al., 2024).

To simplify our task, we limit our exploration of the perceptual space to just two dimensions, namely (1) proximity thresholds: how close two stimuli presented at different locations can be before they are perceived as a single point, and (2) sense of direction: whether participants can perceive a stimulus in motion and its direction. These are sufficient to design and test a small set of knuckles notifications.

All stimuli were presented on the participants' dorsal hand while they held the wheel with closed fingers, using a natural (non-clenched) grip. Participants performed three repetitions of each exploration task.

Proximity thresholds: Two sequential blinking circles (1 cm in diameter) were presented on the participant's dorsal hand, separated by 6, 5, 4, 3, 2, or 1 cm. Participants reported whether they felt one or two circles during the stimulus presentation. Results consistently showed that the minimum distance at which the stimuli were perceived as separate in space was 2 cm (3 and 2 cm yielded 100% and 97% success rates, respectively). Shorter distances were consistently perceived as a single blinking stimulus (below 63% success rate).

Sense of direction: A circle moving along a straight horizontal line of 6, 5, 4, 3, 2, or 1 cm was presented on participants' dorsal hand. The stimulus moved in one of two directions, left to right or right to left (a single direction per trial). Participants first reported whether the stimulus was static or moving; if they felt it moving, they then reported the direction of movement. Results showed that participants could reliably perceive the stimulus motion and its direction for lengths of 4 cm and above (98% success rate). Shorter stimuli were mostly perceived as static or blinking (below 64% success rate).
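Both explorations reduce to finding the smallest stimulus level (separation or path length) that is still reliably perceived. The paper does not state a decision criterion, so the sketch below assumes a hypothetical 75% success criterion and requires every larger tested level to meet it as well. In the example, sub-threshold levels are entered at the upper bounds reported above (63% and 64%); the true rates were lower, which does not change the result.

```python
from typing import Dict, Optional

def smallest_reliable_level(success_by_level: Dict[float, float],
                            criterion: float = 0.75) -> Optional[float]:
    """Smallest tested level at and above which every level meets the criterion.

    success_by_level maps a stimulus level (cm) to the proportion of correct
    responses. The 0.75 criterion is an assumption, not taken from the study.
    Returns None if even the largest tested level fails the criterion.
    """
    threshold = None
    # Walk from the largest level downward and stop at the first failure.
    for level in sorted(success_by_level, reverse=True):
        if success_by_level[level] >= criterion:
            threshold = level
        else:
            break
    return threshold

# Reported rates; 1 cm (proximity) and 3 cm (direction) use the stated upper bounds.
proximity = {3: 1.00, 2: 0.97, 1: 0.63}
direction = {4: 0.98, 3: 0.64}
print(smallest_reliable_level(proximity), smallest_reliable_level(direction))
```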

In summary, this exploratory study yielded relevant considerations for designing mid-air haptic notifications on the dorsal hand. First, we identified the areas and hand positions where a mid-air haptic stimulus is perceived as more intense (the knuckles area between the fingers and proximal phalanges). Most importantly, we found that the perceived intensity is not reduced, and is in fact intensified, when the hand is holding a steering wheel.

We then determined that, if notifications include multiple stimuli at different locations, these should be separated by at least 2 cm so that they are not perceived as merged. We also concluded that stimuli requiring users to differentiate the horizontal direction of motion should not be shorter than 4 cm. Finally, we found that grip strength when holding the wheel affects perceived intensity, so any designed sensation will be subject to this limitation. Users should therefore be advised not to clench their fist and instead adopt a natural grip.
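The two spatial thresholds can be encoded as design rules for candidate sensations. The sketch below is a hypothetical checker (the `Sensation` class and `violations` function are illustrative, not part of Feellustrator): it flags distinct stimulus locations closer than 2 cm and direction-dependent motion paths shorter than 4 cm. It does not model grip strength, which the study identified as a separate limitation.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

MIN_SEPARATION_CM = 2.0     # proximity-threshold result
MIN_MOTION_LENGTH_CM = 4.0  # sense-of-direction result

@dataclass
class Sensation:
    name: str
    # Centres (x, y), in cm, of distinct stimulus locations presented in sequence.
    locations_cm: List[Tuple[float, float]] = field(default_factory=list)
    # Length, in cm, of a straight motion path whose direction must be felt; 0 if static.
    motion_length_cm: float = 0.0

def violations(s: Sensation) -> List[str]:
    """Return a description of every perceptual-threshold rule the sensation breaks."""
    problems = []
    pts = s.locations_cm
    for i in range(len(pts)):
        for j in range(i + 1, len(pts)):
            d = math.dist(pts[i], pts[j])
            if d < MIN_SEPARATION_CM:
                problems.append(f"{s.name}: locations {i} and {j} are {d:.1f} cm apart")
    if 0 < s.motion_length_cm < MIN_MOTION_LENGTH_CM:
        problems.append(f"{s.name}: {s.motion_length_cm} cm path is too short to convey direction")
    return problems

print(violations(Sensation("two blinks", [(0.0, 0.0), (1.0, 0.0)])))
print(violations(Sensation("sweep", motion_length_cm=6.0)))
```

Treating each threshold as an independent rule mirrors the idea that the usable design space is the intersection of the regions each threshold allows.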

3.2 Exploration workshop

We recruited 15 new participants (two females, mean age = 37.2 years, SD = 7.6) from our team's network to design in-vehicle mid-air haptic notifications. Participants were mostly engineers with backgrounds including haptics, HCI, safety, interaction design, UX, and perceptual science. Ten of them had worked on automotive applications within their department.

At the beginning of the workshop, participants were introduced to the results of the previous study (intensity mapping and perceptual space values) so that they could apply the identified considerations in their designs. They were then split into three groups of five and asked to design sets of four sensations matching four notification types: incoming phone call, incoming message (e.g., WhatsApp, Messenger), navigation notification (e.g., recomputing route, road disruption), and intelligent driving assistance warning (e.g., “car overtaking on the left,” “a short distance from the car ahead”). These notification types are commonly used for in-vehicle infotainment features (Brown et al., 2020; Ferwerda et al., 2022).

Participants were told that the designed stimuli should be focused on the available parts of the hand when holding a steering wheel (e.g., the fingers on the dorsal hand, the lower palm, thenar and hypothenar areas). We asked participants to focus on single-hand notifications.

To further help the participants design these tactile notifications, we applied participatory design principles such as body-storming, whereby the groups were encouraged to enact and embody the application and the received notifications using a set of props and software tools (Schleicher et al., 2010). Each group was given a LOGITECH G923 steering wheel (to play with different hand positions) and a set of four cards with different examples representing the four notification types to help them imagine a driving scenario (see Figures 5A, B). Participants also used empty stencils showing the target areas in which they could make annotations using colored pencils to design potential sensation patterns (see Figure 5C). In each group, participants first individually designed as many sensations as they wished for each of the four notifications, and then collectively chose the best five sets (one from each participant).

Figure 5. (A) Cards that participants used to represent the notification types (incoming call, incoming message, navigation alert, and driving assistant warning), (B) setup for each group to ideate the sensations, (C) example of stencils that participants used to annotate the potential sensations, (D) setup to translate the annotations into mid-air haptic sensations using the Feellustrator tool (Seifi et al., 2023b).

Then, participants translated their designs into mid-air haptic sensations. To do so, each group used an Ultraleap STRATOS device and a laptop running the Feellustrator tool (see Figure 5D), so that they could modulate the stimulus properties (spatial pattern, movement direction, frequency, intensity waveform, and duration) while feeling the stimuli on the hand and holding the steering wheel. For example, participants could design a larger or smaller circle, shape it into an ellipse, make it move in space, or make it blink in time. At least one participant per group was familiar with the Feellustrator tool. Participants were allowed to edit and redesign their stimuli to ensure they suited each notification type. Participants then collectively chose the best three sets of sensations (one from each group).

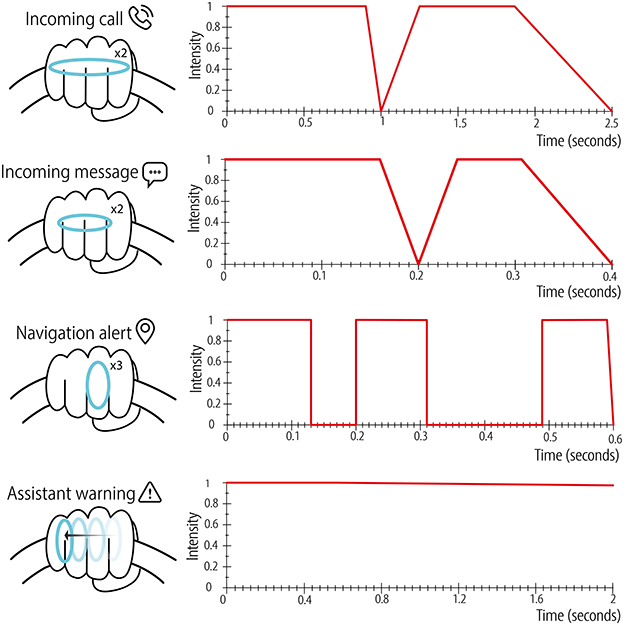

After all groups had felt the top three sets of sensations, all 15 participants collectively evaluated the semantic association of each set with the target notifications by rating the stimuli designed by every group, including their own (e.g., “The stimulus matches the ‘incoming call’ notification”), on a scale from 1 (strongly disagree) to 7 (strongly agree). The set with the highest ratings was selected as the final one. The resulting set of four sensations, shown in Figure 6, primarily targets the knuckles area covering the proximal phalanges, which is also where sensations are felt most intensely (supporting the results from the previous study). Crucially, when sensations included pulses, participants chose to present them at the same position rather than at two or more different positions, to avoid them being perceived as a single point due to their proximity. When sensations included motion, the stimulus length was above 4 cm to allow the user to perceive the direction of movement. Thus, the selected knuckles notifications were modulated either in time or in space, but not both.

Figure 6. Resulting sensations designed by participants for the four notification types, and the intensity-time relation for each sensation. The circle rendered to create the sensations was 1 cm thick. Note that the timescale on the x-axis differs for each notification, as the notifications have different durations.

More specifically, for the incoming call notification, participants designed a tactile sensation shaped like an elongated ellipse spanning all four fingers, pulsing twice and lasting 2.5 s in total. For the incoming message, they used a less elongated ellipse covering only the middle and ring fingers, pulsing twice and lasting 0.4 s in total. For the navigation alert, the stimulus was a vertically elongated ellipse covering only the middle finger, pulsing three times and lasting 0.6 s in total. For the driving assistance warning, a similar stimulus scanned along the four knuckles, from index to pinky, lasting 0.6 s in total. As shown in Figure 6, the groups chose four patterns that differ markedly in space and time, likely as a way of making them easy to distinguish.
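The four designs can be summarized as a small data structure. The sketch below is a minimal Python illustration and not the authors' implementation. It assumes the pulsed patterns alternate equal-length on and off segments, starting and ending with "on", and that the moving warning has constant intensity, sampled at a 1 ms step. The actual intensity-time profiles are those plotted in Figure 6. `Notification`, `envelope`, and `count_pulses` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Notification:
    name: str
    region: str           # fingers/knuckles covered (informal label)
    pulses: int           # number of pulses (1 for the moving pattern)
    duration_s: float     # total duration, as described in the text
    moving: bool = False  # True if the ellipse scans index -> pinky


NOTIFICATIONS = [
    Notification("incoming call", "all four fingers", 2, 2.5),
    Notification("incoming message", "middle + ring", 2, 0.4),
    Notification("navigation alert", "middle", 3, 0.6),
    Notification("driving assistance warning", "index -> pinky", 1, 0.6, moving=True),
]


def envelope(n: Notification, dt: float = 0.001) -> list[float]:
    """On/off intensity envelope sampled every dt seconds.

    Assumption: pulsed patterns use 2 * pulses - 1 equal segments
    (on, off, on, ...); the moving pattern stays on for its duration.
    """
    n_samples = round(n.duration_s / dt)
    if n.moving:
        return [1.0] * n_samples
    n_segments = 2 * n.pulses - 1
    samples = []
    for i in range(n_samples):
        segment = min(int(i * n_segments / n_samples), n_segments - 1)
        samples.append(1.0 if segment % 2 == 0 else 0.0)
    return samples


def count_pulses(samples: list[float]) -> int:
    """Count rising edges, including a pulse that starts at t = 0."""
    previous, rises = 0.0, 0
    for s in samples:
        if s > 0 and previous == 0:
            rises += 1
        previous = s
    return rises


for n in NOTIFICATIONS:
    print(n.name, count_pulses(envelope(n)), len(envelope(n)))
```

Encoding the designs this way makes the contrast visible: the patterns differ in covered region, pulse count, and duration, rather than in intensity.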

4 Evaluation user study

In this section, we evaluate the designed notification set. Note that the tested notifications (shown in Figure 6) are not meant to be identified by intensity changes. Indeed, all notifications were projected onto the areas with the highest reported intensity (score of 6) in the exploratory study, that is, the knuckles area (covering the fingers and proximal phalanges).

We integrated the notification set into a driving task using the Unity template Test Track driving simulator from Volvo (Volvo, 2021). We then conducted a within-subjects user study to explore whether the designed sensations are distinguishable from each other and correctly associated with each notification type when (1) driving is the primary task and (2) background vibration on the wheel simulates road movement.

4.1 Participants

Sixteen new participants were recruited for the evaluation study (two female; mean age = 35.3 years, SD = 8.9). They were required to be current drivers with a valid driving license. All participants gave written consent to participate. Participants were recruited from our work network and therefore had some previous experience with mid-air haptics and touchless technology in general. All ethical and experimental procedures and protocols were approved by the Research Ethics Committee of Ultraleap. The study was performed in line with the Data Protection Act 2018 (DPA 2018) and the General Data Protection Regulation (GDPR) as it applies in the UK.

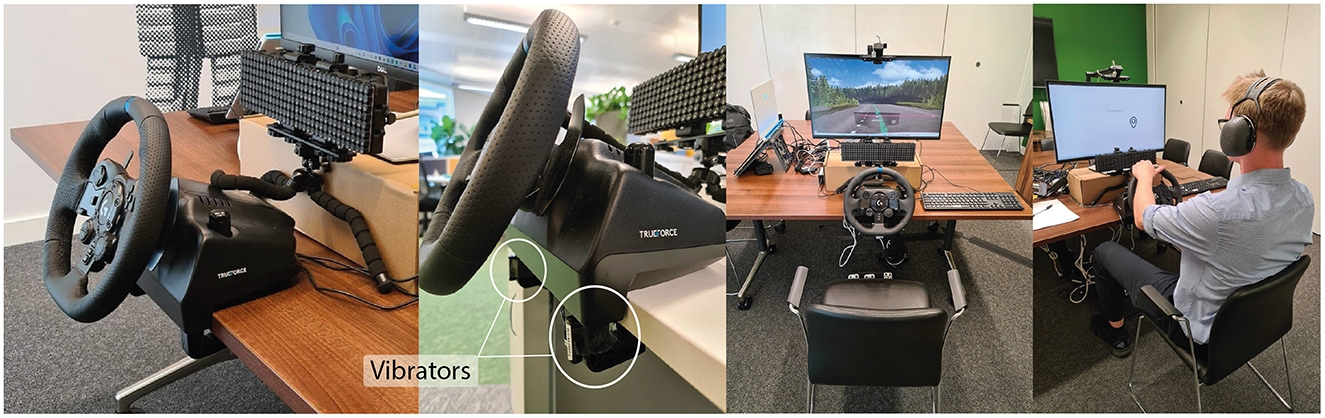

4.2 Experimental setup

Participants sat in the driving simulator seat in front of a LOGITECH G923 steering wheel and a 27-inch DELL screen, as shown in Figure 7. The mid-air haptic sensations were delivered by a custom mid-air haptic array (32x8 transducers) placed 20 cm behind the steering wheel. The array was positioned to provide haptic feedback onto the participants' dorsal hand while they held the wheel. A Leap Motion Stereo IR170 camera tracked the user's hands. Participants wore 3M Peltor X5 ear defenders to block any additional noise from the haptic device.
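A phased array creates a tactile focal point by driving each transducer with a phase delay, so that all wavefronts arrive at the focus in phase. The sketch below computes those phases for a 32x8 grid and is a textbook illustration, not the firmware of the custom array used in the study. It makes three assumptions: 40 kHz transducers (the frequency commonly used by ultrasonic mid-air haptic devices, but not stated for this array), a speed of sound of 343 m/s, and a uniform pitch derived from the 35 x 10 cm array size given in the limitations section. The focal coordinates and function names are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C
FREQUENCY = 40_000.0    # Hz; assumed carrier frequency of the transducers
WAVENUMBER = 2 * np.pi * FREQUENCY / SPEED_OF_SOUND  # rad/m


def transducer_grid(nx=32, ny=8, width=0.35, height=0.10):
    """Transducer centers (m) of an nx-by-ny grid in the array plane z = 0.

    Assumes the transducers tile the stated outer dimensions uniformly.
    """
    pitch_x, pitch_y = width / nx, height / ny
    xs = (np.arange(nx) - (nx - 1) / 2) * pitch_x
    ys = (np.arange(ny) - (ny - 1) / 2) * pitch_y
    gx, gy = np.meshgrid(xs, ys, indexing="ij")
    return np.stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)], axis=1)


def focusing_phases(positions, focus):
    """Emission phase (rad, in [0, 2*pi)) per transducer so that every wave
    arrives at `focus` in phase: phi_i = -k * |p_i - focus| (mod 2*pi)."""
    distances = np.linalg.norm(positions - np.asarray(focus, dtype=float), axis=1)
    return np.mod(-WAVENUMBER * distances, 2 * np.pi)


positions = transducer_grid()
# Hypothetical focal point 20 cm in front of the array center.
focus = (0.0, 0.0, 0.20)
phases = focusing_phases(positions, focus)
```

Moving the focal point along the knuckles amounts to recomputing these phases for a sequence of focus positions. Commercial devices typically also modulate the focal point so that the skin can perceive it.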

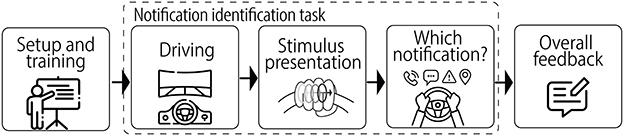

4.3 Procedure

Participants drove on a countryside road (no traffic, lights, or pedestrians) using the driving simulator. They were asked to avoid crashing or leaving the road as far as possible, in order to keep their attention on the driving task. The road included a green guide line indicating the target path (see Figure 7). The transmission was automatic, so participants only used the steering wheel and accelerator (no gear shifting was required) and kept their hands on the wheel throughout the task. Participants were asked to adopt a natural grip.

Participants then performed an identification task. One of the four sensations (see Figure 6) was presented, and participants verbally reported the notification type it corresponded to (incoming call, incoming text message, navigation alert, or driving assistance warning) to the experimenter, who took notes. Each of the four sensations was presented four times in a counterbalanced order. This was repeated in three conditions (driving + vibration, driving + no-vibration, and no-driving), resulting in 48 trials in total per participant (4 sensations x 4 repetitions x 3 conditions).
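One way to build such a 48-trial schedule is sketched below. Condition order rotates across participants following a cyclic Latin square. Within each condition, the 16 trials (4 sensations x 4 repetitions) are shuffled with a per-participant seed, so the schedule is reproducible. The text does not specify the counterbalancing scheme, so this design is an assumption, and `trial_schedule` is a hypothetical helper.

```python
import random

SENSATIONS = ["incoming call", "incoming message",
              "navigation alert", "driving assistance warning"]
CONDITIONS = ["driving + vibration", "driving + no-vibration", "no-driving"]
REPETITIONS = 4


def trial_schedule(participant_id: int) -> list[tuple[str, str]]:
    """Return (condition, sensation) pairs for one participant.

    Condition order follows a cyclic Latin square (rotated by participant),
    and sensation order within each condition is shuffled reproducibly.
    """
    rng = random.Random(participant_id)
    shift = participant_id % len(CONDITIONS)
    condition_order = CONDITIONS[shift:] + CONDITIONS[:shift]
    schedule = []
    for condition in condition_order:
        block = SENSATIONS * REPETITIONS  # 16 trials per condition
        rng.shuffle(block)
        schedule.extend((condition, s) for s in block)
    return schedule


print(len(trial_schedule(0)))  # 48
```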

In the driving conditions, participants used the Volvo driving simulator. In the no-driving condition, they only held the wheel while the screen was black. In the vibration condition, the steering wheel vibrated throughout the task; in the no-vibration condition, no vibration was provided. Participants drove at a maximum speed of 50 km/h.

Before the notification identification task, participants went through a training stage to familiarize themselves with the four sensations and learn their association with the respective notifications. In each training trial, the correct notification was shown as visual feedback (an icon on the screen). Training was repeated until participants achieved at least an 80% identification success rate for each notification. Finally, at the end of the study, participants were asked to provide qualitative feedback about their overall experience. The full study lasted about 30 min on average. Figure 8 illustrates the procedure of a single trial.

4.4 Background vibration

Background vibration was provided by two actuators from Actronika's HapCoil actuator system, attached one on each side of the steering wheel (see Figure 7). These actuators vibrated throughout the notification identification task. We used a Ricker wavelet (20 ms, 500 points), also known as the Mexican hat wavelet, which is often used to simulate car engine vibrations (Van den Ende et al., 2023; Bajwa et al., 2020). The parameters for each actuator were as follows: actuator 1, effect power = 10%, sampling frequency = 16.6 kHz, effect duration = 0.3 s; actuator 2, effect power = 10%, sampling frequency = 10 kHz, effect duration = 0.5 s. These values were determined in a pilot study with five drivers, to obtain a vibration strong enough, but not overpowering, to simulate what is felt on the wheel while driving on a normal road.
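The Ricker (Mexican hat) wavelet is the negative, normalized second derivative of a Gaussian. The NumPy sketch below generates a 500-point wavelet using the same formula and normalization as SciPy's former `scipy.signal.ricker` function (deprecated in recent SciPy releases). It then scales the peak to a 10% effect power. The width parameter and the linear mapping from effect power to amplitude are assumptions. The text also does not describe how the 20 ms and 500-point settings map onto each actuator's sampling frequency, or how the actuator software repeats the wavelet. `ricker` and `actuator_waveform` are hypothetical names.

```python
import numpy as np


def ricker(points: int, width: float) -> np.ndarray:
    """Ricker (Mexican hat) wavelet sampled at `points` samples.

    psi(t) = A * (1 - t^2 / a^2) * exp(-t^2 / (2 a^2)), with
    A = 2 / (sqrt(3 a) * pi^(1/4)) and t in samples from the center.
    """
    a = float(width)
    amplitude = 2.0 / (np.sqrt(3.0 * a) * np.pi ** 0.25)
    t = np.arange(points) - (points - 1.0) / 2.0
    return amplitude * (1.0 - t**2 / a**2) * np.exp(-t**2 / (2.0 * a**2))


def actuator_waveform(points=500, width=40.0, effect_power=0.10):
    """Peak-normalized wavelet scaled by a fractional effect power.

    `width` (in samples) and the linear power-to-amplitude mapping are
    hypothetical choices for illustration.
    """
    w = ricker(points, width)
    return effect_power * w / np.max(np.abs(w))


wave = actuator_waveform()
```

The wavelet's positive central lobe and negative side lobes produce a short, zero-mean jolt. Repeating it is one plausible way to approximate engine and road vibration.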

4.5 Measures

During the notification identification task, we recorded two dependent variables. The first, user response (explicit), measured participants' success rate in correctly identifying the notification type while driving. The second, path deviation (implicit), measured how far the participants' car deviated from the target path while they received the haptic sensations. Path deviation served as a proxy measure of participants' distraction from the driving task, following previous studies that used deviation from the lane as a metric of driving performance in automotive applications (Kim et al., 2020; Spakov et al., 2023; Roider et al., 2017).

4.6 Quantitative results

We analyzed the user response (whether the stimulus participants perceived matched the stimulus actually played) in three conditions: driving + vibration, driving + no-vibration, and no-driving. We analyzed participants' path deviation (the deviation from the target line in the driving path) in three time conditions: before, during, and after stimulation.
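Path deviation can be computed as the shortest distance from the car's position to the target line. The sketch below assumes that the green guide line is available as a 2D polyline of ground-plane points in meters. It computes the point-to-polyline distance by projecting onto each segment. The text does not describe how the simulator exported or measured deviation, so this is an illustrative assumption, and the function names are hypothetical.

```python
import math

Point = tuple[float, float]


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the segment ab."""
    (ax, ay), (bx, by), (px, py) = a, b, p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:  # degenerate segment
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment's line and clamp to the endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def path_deviation(car: Point, path: list[Point]) -> float:
    """Shortest distance (m) from the car to a polyline target path."""
    return min(point_segment_distance(car, a, b) for a, b in zip(path, path[1:]))


# Car 0.3 m to the side of a straight stretch of a hypothetical path.
print(path_deviation((5.0, 0.3), [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]))
```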

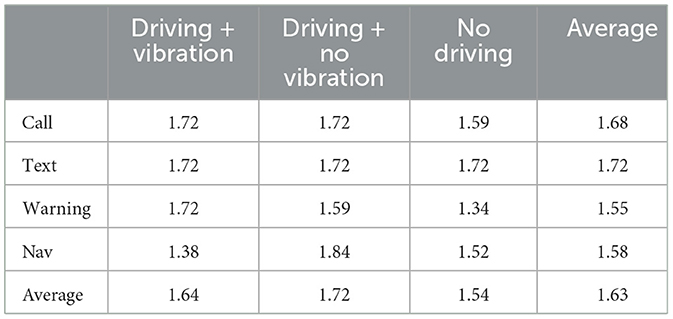

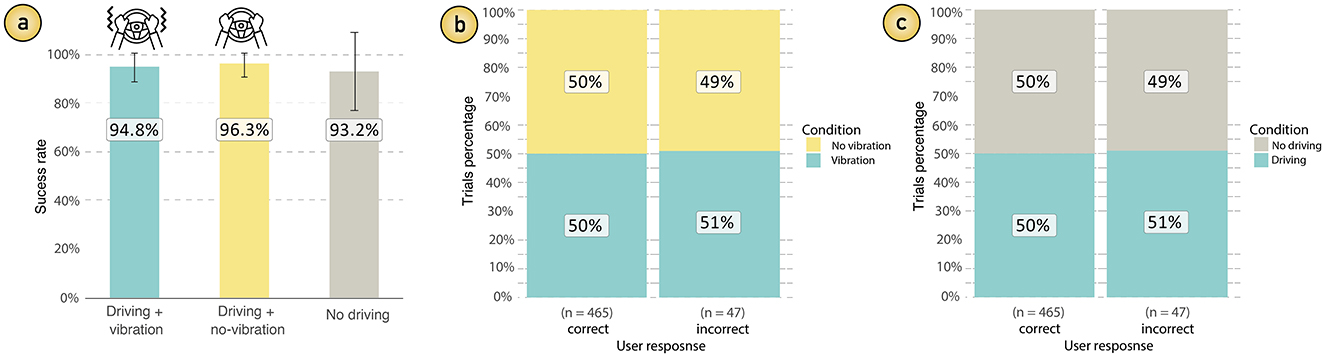

4.6.1 User response

Overall, participants achieved a high success rate in each condition: 94.8% for driving + vibration, 96.3% for driving + no-vibration, and 93.2% for no-driving (see Figure 9A). This suggests that participants were able to correctly identify the presented notification in all conditions. Further insights can be obtained from the confusion matrices in Table 1 and the information content per trial (or mutual information) in Table 2. The latter was calculated as $H = \log_2 N + (1 - P_e)\log_2(1 - P_e) + P_e\log_2\left(\frac{P_e}{N - 1}\right)$, where N = 4 and $P_e$ is the probability of error (i.e., the chance of choosing an incorrect response). H values ranged from 1.54 to 1.72 bits, out of a maximum of $\log_2 4 = 2$ bits per trial. This indicates that users distinguished notifications accurately and consistently across conditions, suggesting minimal impact from driving or vibration.
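The information per trial can be checked directly from the success rates. Assume errors are spread evenly over the N - 1 wrong alternatives. Then H = log2 N + (1 - Pe) log2(1 - Pe) + Pe log2(Pe / (N - 1)), where 0 log 0 is taken as 0. The sketch below implements this expression. Applied to the aggregate success rates, it gives roughly 1.62, 1.71, and 1.53 bits, close to the range reported for Table 2. `bits_per_trial` is a hypothetical name.

```python
import math


def bits_per_trial(n_alternatives: int, p_correct: float) -> float:
    """Information transferred per trial (bits) for an N-alternative task,
    assuming errors are spread evenly over the N - 1 wrong alternatives."""
    p_error = 1.0 - p_correct
    bits = math.log2(n_alternatives)
    if p_correct > 0:  # convention: 0 * log 0 = 0
        bits += p_correct * math.log2(p_correct)
    if p_error > 0:
        bits += p_error * math.log2(p_error / (n_alternatives - 1))
    return bits


for label, rate in [("driving + vibration", 0.948),
                    ("driving + no-vibration", 0.963),
                    ("no-driving", 0.932)]:
    print(f"{label}: {bits_per_trial(4, rate):.2f} bits")
```

Two limiting cases make the scale intuitive. Perfect identification yields the 2-bit maximum, and chance performance (25% correct) yields 0 bits.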

Figure 9. Success rate in each condition tested: driving + vibration, driving + no-vibration, and no-driving (A). Chi-squared test results for the comparison of the different conditions: driving vs. no-driving (B), and vibration vs. no-vibration (C).

We further explored whether user response was affected by the driving task or the wheel vibration. As user response is binary (correct or incorrect), we used a chi-square test of independence for each comparison (driving vs. no-driving and vibration vs. no-vibration). The test determined whether there was a significant association between each experimental condition and user response. The contingency table for each comparison had no cell value below 5, which supports using the chi-square test rather than Fisher's exact test (Bower, 2003; McCrum-Gardner, 2008).

The results showed no statistically significant association between user response and either the driving condition [χ2(1) = 0.023427, p = 0.87] or the vibration condition [χ2(1) = 0.023427, p = 0.87]. Figures 9B, C plot the proportions for the different conditions and show no clear relationship for either vibration vs. no-vibration or driving vs. no-driving. This suggests that participants' performance was independent of both the driving task and the presence of vibration on the steering wheel.
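For a 2 x 2 table with one degree of freedom, the Pearson chi-square statistic and its p-value can be computed without a statistics package, because P(χ²₁ > x) = erfc(sqrt(x / 2)). The sketch below does this and also reports the smallest expected count, the quantity usually checked against the threshold of 5. It omits Yates' continuity correction. SciPy's `scipy.stats.chi2_contingency`, for example, applies that correction by default to 2 x 2 tables, and the text does not state whether a correction was used. The counts in the usage line are hypothetical, not the study data.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2 x 2 table.

    `table` is [[a, b], [c, d]], e.g. rows = conditions and
    columns = (correct, incorrect). Returns (chi2, p_value, min_expected).
    No continuity correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    min_expected = math.inf
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            min_expected = min(min_expected, expected)
            chi2 += (table[i][j] - expected) ** 2 / expected
    # Survival function of the chi-square distribution with 1 dof.
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value, min_expected


# Hypothetical counts: rows = driving / no-driving, cols = correct / incorrect.
chi2, p, min_expected = chi_square_2x2([[480, 32], [240, 16]])
```

In this hypothetical table, both rows have the same proportion of correct answers, so the statistic is 0 and p = 1, the "no association" extreme.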

4.6.2 Path deviation

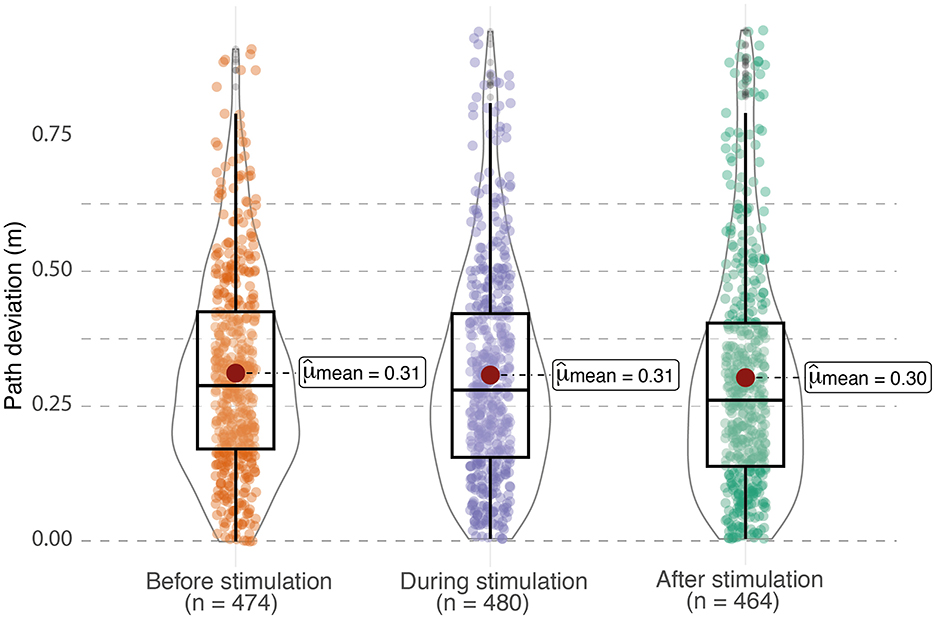

We split the “path deviation” (PD) data into three blocks: before, during, and after stimulus presentation. The “before” and “after” blocks each contain 2 s of PD data, while the “during” block contains the data corresponding to the length of the presented notification. Extreme outliers were filtered out (118 of the 1,536 data points collected in total, 7.6% of the data). Figure 10 shows the PD data grouped by time condition after outlier filtering.
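The blocking step can be sketched as follows. Given a time-stamped deviation trace and a stimulus onset and duration, the code averages the deviation over the 2 s before the stimulus, during the stimulus, and over the 2 s after it. The text does not define "extreme outliers", so the filter below uses a common convention as an assumption: values more than 3 x IQR beyond the quartiles. The function names and the synthetic trace are hypothetical.

```python
import statistics


def window_means(times, deviations, onset, duration, margin=2.0):
    """Mean path deviation before, during, and after a stimulus (s, m)."""
    windows = {
        "before": (onset - margin, onset),
        "during": (onset, onset + duration),
        "after": (onset + duration, onset + duration + margin),
    }
    means = {}
    for name, (start, end) in windows.items():
        values = [d for t, d in zip(times, deviations) if start <= t < end]
        means[name] = statistics.fmean(values) if values else float("nan")
    return means


def remove_extreme_outliers(values, k=3.0):
    """Keep values inside [Q1 - k*IQR, Q3 + k*IQR] (k = 3 for 'extreme')."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


# Synthetic trace: 0.25 s samples, stimulus from 2.0 s to 3.0 s.
times = [i * 0.25 for i in range(24)]
trace = [0.3 if t < 2.0 else 0.5 if t < 3.0 else 0.2 for t in times]
means = window_means(times, trace, onset=2.0, duration=1.0)
```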

Figure 10. Box plot of path deviation results grouped by time condition (before, during, and after haptic stimulation) after removing extreme outliers from the dataset.

Overall, participants deviated by about 30 cm from the target path in all time conditions: before (mean = 0.31 m, SD = 0.18 m), during (mean = 0.31 m, SD = 0.19 m), and after (mean = 0.30 m, SD = 0.21 m) stimulus presentation. We ran a Friedman test to assess whether PD differed significantly across the three time conditions.

The omnibus test (Pohlert, 2014) indicated a significant difference, χ2(3) = 3.24, p = 0.03. However, pairwise comparisons with Bonferroni correction showed no significant differences: before-during (p = 0.23), before-after (p = 0.39), and during-after (p = 0.056). Therefore, we found no evidence that PD differed between any pair of time conditions, which suggests that stimulus presentation did not affect the driving task.
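The Friedman test ranks each subject's values across the k conditions and compares the rank sums R_j using Q = 12 / (n k (k + 1)) * sum_j R_j^2 - 3 n (k + 1). Q is referred to a chi-square distribution with k - 1 degrees of freedom. The sketch below computes Q for k = 3 without a tie correction, using the closed-form tail exp(-Q / 2) for two degrees of freedom. It also applies a Bonferroni adjustment to a list of pairwise p-values. This is a minimal illustration, not the R implementation cited above (Pohlert, 2014). The text does not name the pairwise test, so none is implemented here, and the function names are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks 1..k within one subject, giving ties the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_k3(rows):
    """Friedman statistic Q and p-value for rows of k = 3 repeated measures.

    Each row holds one subject's values (e.g., before, during, after).
    No tie correction is applied to Q. With k - 1 = 2 degrees of freedom,
    the chi-square tail probability has the closed form exp(-Q / 2).
    """
    n, k = len(rows), 3
    if any(len(row) != k for row in rows):
        raise ValueError("each row must contain exactly three values")
    rank_sums = [0.0] * k
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return q, math.exp(-q / 2.0)


def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

A significant omnibus result alongside non-significant Bonferroni-corrected pairwise tests, as reported above, is possible. The correction makes each pairwise test more conservative than the omnibus test.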

4.7 Qualitative results

At the end of the study, participants gave feedback about their overall experience using the knuckles notifications technique. Descriptions and some of the comments we considered most relevant are given in the Appendix.

To better understand and draw insights from user feedback, we transformed the qualitative data (user comments) into quantitative insights through a structured analysis using tools available in the scikit-learn Python library. This process allowed us to identify major themes within the feedback and quantify their occurrence, giving a clearer picture of user experiences and challenges.

We began by using natural language processing (NLP) techniques to analyze and group the user comments. Each comment was first converted into a numerical representation using TF-IDF (term frequency-inverse document frequency), via the TfidfVectorizer class from scikit-learn. This approach highlights the most distinctive words in each comment while down-weighting common, less informative words.

Next, we applied the k-means clustering algorithm (KMeans) to group comments into distinct clusters based on their similarity. We then manually inspected each cluster to identify its common thread and named it according to its theme. This analysis resulted in five distinct clusters, each representing a different focus within the user feedback:

- Cluster 1: Comments related to hand positioning and targeting issues with the haptic feedback system (four comments).
- Cluster 2: Feedback focusing on specific difficulties in differentiating sensations, especially during training and recognition tasks (six comments).
- Cluster 3: Comments highlighting the ease of use and general identifiability of the haptic notifications (seven comments).
- Cluster 4: General positive feedback about the overall user experience and perceived usefulness of the system in real-world driving (12 comments).
- Cluster 5: Feedback on external factors, such as the impact of external vibrations and comparisons to familiar devices (five comments).
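The TF-IDF and k-means steps described above map onto two scikit-learn classes, `TfidfVectorizer` and `KMeans`. The sketch below runs the pipeline on placeholder comments, not the participants' actual feedback. The English stop-word list, `n_init`, and `random_state` values are assumptions, because the text does not report them. The resulting labels are arbitrary integers; naming each cluster's theme remains a manual step.

```python
from collections import Counter

from sklearn.cluster import KMeans
from sklearn.feature_extraction.text import TfidfVectorizer


def cluster_comments(comments, n_clusters, seed=0):
    """Vectorize comments with TF-IDF and group them with k-means.

    Returns one integer cluster label per comment.
    """
    vectorizer = TfidfVectorizer(stop_words="english")
    features = vectorizer.fit_transform(comments)  # sparse, L2-normalized rows
    model = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed)
    return model.fit_predict(features)


# Placeholder comments standing in for the real feedback.
comments = [
    "hand position on the wheel missed the sensation",
    "hard to feel it with my hand position on the wheel",
    "notifications were easy to identify",
    "easy to identify each notification",
]
labels = cluster_comments(comments, n_clusters=2)
print(Counter(labels))  # number of comments per cluster
```

With a real corpus, the number of clusters (five here) is itself a modeling choice. Inspecting the top-weighted TF-IDF terms per cluster is one common aid for naming the themes.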

The distribution of these clusters shows that the largest proportion of comments (Cluster 4) focused on the positive aspects and perceived usefulness of the system. In contrast, the smallest number of comments (Cluster 1) mentioned hand positioning and targeting concerns.

In summary, qualitative feedback suggests that knuckles notifications could be a good option for receiving haptic notifications while driving, as participants found them enjoyable, easily identifiable, and familiar. Participants also gave useful suggestions on how to reduce missed sensations caused by hand position on the steering wheel. Implementing knuckles notifications in a real car is crucial to validate these insights. This will require further safety considerations; nevertheless, we see these results as a starting point toward a real-life setting.

5 Discussion

Overall, our results provide a variety of insights. First, we showed that mid-air haptic feedback can be clearly felt on the dorsal hand while holding a steering wheel. However, grip strength affects the felt intensity, which was heavily reduced when participants clenched their fist. Drivers could adopt a relaxed grip while holding the steering wheel, but in situations requiring a strong grip (e.g., when reacting quickly), notifications would be affected.

Furthermore, our intensity mapping showed where on the hand ultrasound stimulation is felt most intensely. While our intention was to find target locations on the dorsal hand, our mapping also showed that mid-air haptics is felt as more intense on the top part of the palm (inter-digital area) and the proximal phalanges. This contrasts with most studies using ultrasound stimulation for in-car applications (Large et al., 2019; Young et al., 2020; Georgiou et al., 2017; Shakeri et al., 2018), in which stimuli are typically directed to the center of the palm (reported with low felt intensity in our study). A mapping of perceived ultrasound intensity over the hand (both the palm and the dorsal side) has not been explored to date. We therefore provide relevant insights that other researchers could consider in the future, for example, directing ultrasound stimuli to the top part of the palm rather than its center.

Our results also open up opportunities to further understand the observed acoustic caustic effect. Usually, researchers direct ultrasound to the bare hand, as the original purpose of mid-air haptic applications is to provide unencumbered interaction (not holding any objects). Here, however, we have provided insights into a different approach in which the user could hold different objects. For example, previous research has explored how to effectively combine tangibles and ultrasound mid-air haptics by using acoustically transparent surfaces (Howard et al., 2023). Our results suggest that acoustically transparent objects might not be necessary, as long as the hand position (e.g., finger aperture and grip strength) allows the acoustic caustic effect to produce a tactile sensation.

Moreover, we give some insights for designing further knuckles notifications. These insights cover maximizing felt intensity, preventing two stimuli presented simultaneously at different locations from being perceived as a single point (a separation above 2 cm), and allowing the perception of motion and direction of a moving point (a path length above 4 cm). These considerations could be used for designing other notification types (e.g., fan speed, turn signals, etc.).
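These two thresholds can be encoded as simple checks when authoring new patterns. The sketch below is a hypothetical helper, not part of any existing tool. Points are given in centimeters on the dorsal-hand plane, and the 2 cm and 4 cm values are the thresholds stated above. The data layout, the treatment of values exactly at the threshold as violations, and the function names are illustrative assumptions.

```python
import math
from itertools import combinations

MIN_SEPARATION_CM = 2.0   # simultaneous points closer than this may merge
MIN_PATH_LENGTH_CM = 4.0  # shorter motions may not convey direction


def path_length(points):
    """Total length of a polyline given as (x, y) points in cm."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def check_pattern(simultaneous_points=(), motion_path=()):
    """Return a list of human-readable rule violations (empty if none)."""
    problems = []
    for a, b in combinations(simultaneous_points, 2):
        if math.dist(a, b) <= MIN_SEPARATION_CM:
            problems.append(f"points {a} and {b} are within {MIN_SEPARATION_CM} cm")
    if motion_path and path_length(motion_path) <= MIN_PATH_LENGTH_CM:
        problems.append(f"motion path is not longer than {MIN_PATH_LENGTH_CM} cm")
    return problems


# Hypothetical 6 cm sweep from index to pinky knuckle.
print(check_pattern(motion_path=[(0.0, 0.0), (6.0, 0.0)]))  # []
```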

Our study with drivers showed that knuckles notifications yielded a high success rate in identifying the notification associated with each sensation. Our results also suggest that participants' ability to identify the notification was independent of both the driving task and the presence of vibration on the steering wheel [confirming previous findings (Spakov et al., 2022)]. Moreover, path deviation was not significantly affected while participants were feeling and identifying the notifications.

These results were supported by participants' qualitative feedback, which suggested that the sensations were clear and strong enough to perceive and differentiate the notifications. Participants found some sensations similar. However, the stimulus properties (e.g., frequency, duration), which they often associated with familiar events (e.g., an old phone ringing, a smartwatch vibration), helped them distinguish between the notifications.

Participants also shared positive thoughts about using knuckles notifications in real-life settings. However, since our study was done in an in-lab driving simulator using a LOGITECH interface, our results should be taken as a first step toward using mid-air haptics on the dorsal hand in a real car during actual driving. Some participants confirmed this: they found unfamiliar the hand position they had to adopt to better feel the sensations (top of the wheel). This issue could be addressed by expanding the array of transducers to cover a larger area around the wheel. For example, we argue that the mid-air haptic display hardware could be reshaped to cover the recommended “9 and 3” hand position on the steering wheel.

In summary, prior mid-air haptic systems for in-vehicle interfaces are constrained by palmar feedback that requires hands-off-the-wheel actions. Unlike them, we provide an alternative technique for receiving mid-air haptic notifications that promotes a hands-on-the-wheel and eyes-on-the-road paradigm. Our results also provide a novel characterization of the perceptual space of the dorsal hand, which can be useful for practitioners interested in designing mid-air haptic sensations for this area.

However, it is worth highlighting that our studies do not aim to demonstrate that knuckles notifications can replace typical auditory or visual notifications, which can be simpler to implement in a car. Instead, we aimed to explore the feasibility of using mid-air haptics on the dorsal hand for driving, a scenario where stimulating the palm could promote an unsafe interaction (e.g., hands-off-the-wheel). Mid-air haptics has been suggested to promote safe driving, and we wanted to address the requirement for palmar feedback in previous studies. With our results, we aim to foster further research beyond the scope of the present paper. Please refer to our “limitations” section for future research directions. Next, we present some application examples in which knuckles notifications could be useful.

6 Application examples

6.1 Privacy for head-up displays

Knuckles notifications could be integrated into head-up display (HUD) technology, which is increasingly popular in the automotive industry (Pauzie, 2015). HUDs project visual information directly onto the windshield, in the driver's field of view and merged with the traffic scene [as opposed to typical central-console head-down interaction (Liu and Wen, 2004)]. This feature is considered desirable because (i) it enables an eyes-on-the-road paradigm, thus promoting driving safety (Ma et al., 2021), and (ii) it maintains higher situational awareness, contributing to better performance in autonomous driving (Stojmenova Pečečnik et al., 2023).

Although standard notifications through dashboard lights or HUD icons with sounds seem much simpler, HUDs could compromise the driver's privacy, as information can be seen by others in the car (Roesner et al., 2014). This is especially relevant for emerging full-windshield augmented reality head-up displays (AR-HUDs; Charissis, 2014; Zhang et al., 2021). Receiving notifications that only the recipient can understand would therefore help preserve the driver's privacy. Future research could explore integrating knuckles notifications into AR-HUD interactions. For example, if the driver receives a knuckles notification of an incoming video call, they can decide whether to answer depending on the tactile pattern associated with the caller.

6.2 Haptic feedback while holding objects

We see our study as an initial step toward using ultrasound-based haptic feedback while holding objects in different contexts. For example, in virtual reality, users are not limited to holding controllers; they also hold other objects, such as pens (Drey et al., 2020), brushes (Fender et al., 2023), smartphones (Kyian and Teather, 2021), tablets (Montano-Murillo et al., 2020), and books (Cardoso and Ribeiro, 2021), among many others.

Our system is limited by hand grip and by specific areas of the dorsal hand (to preserve stimulus intensity), and future research could explore ways to overcome these limitations. For example, objects could be designed with grooves that trap the ultrasound between the hand and the object, so that haptic sensations projected onto the dorsal hand also propagate to the palm. This could be achieved by better understanding and characterizing the acoustic caustic effect observed in our system.

6.3 Stimulus design based on intensity mapping

Perceptual studies provide relevant parameters for improving mid-air haptic intensity, for example, focal point movement speed (Frier et al., 2018) or lateral modulation parameters (Takahashi et al., 2018). However, researchers typically use a constant intensity to render a given sensation and do not vary it according to the area being stimulated. Yet the perceived strength of mid-air haptic stimulation is not constant across the surface of the hand (Lundström, 1985).

As shown in our intensity mapping (see Figure 4), perceived intensity differs depending on the stimulated area. This suggests that the sensation felt might not match the sensation that was designed. We argue that once the perceptual intensities across the hand are identified, the stimulus pressure could be dynamically compensated so that the sensation felt matches the sensation designed. This would involve increasing the pressure on areas with lower sensitivity and decreasing it on areas with higher sensitivity. Future research could explore how to predict the felt intensity as a stimulus travels across different areas of the hand (either the palm or the dorsal hand) and then apply a correction factor.
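A minimal version of such a correction factor is sketched below. Given a relative sensitivity for each hand region, for instance normalized scores from an intensity map, it scales the emitted output by the inverse of that sensitivity. It then applies one common scale factor so that no region exceeds the hardware maximum. The region names and sensitivity values are hypothetical placeholders, not measurements from this study. The linear relation between output and perceived intensity is itself an assumption; real perception is unlikely to be linear.

```python
def compensated_gains(sensitivity: dict[str, float],
                      max_gain: float = 1.0) -> dict[str, float]:
    """Per-region output gains that equalize perceived intensity.

    Assumes perceived intensity ~ gain * sensitivity (a linear model).
    Raw gains are 1 / sensitivity; all gains are then scaled by one common
    factor so the largest equals `max_gain`, preserving the equalization.
    """
    if any(s <= 0 for s in sensitivity.values()):
        raise ValueError("sensitivities must be positive")
    raw = {region: 1.0 / s for region, s in sensitivity.items()}
    scale = max_gain / max(raw.values())
    return {region: g * scale for region, g in raw.items()}


# Hypothetical relative sensitivities (1.0 = most intense region).
sensitivity = {"knuckles": 1.0, "proximal phalanges": 0.9, "back of hand": 0.5}
gains = compensated_gains(sensitivity)
```

Scaling all gains by a common factor, rather than clipping each one, keeps the perceived intensities equal across regions. The cost is that the overall intensity is limited by the least sensitive region.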

7 Limitations and future work

The perceptual space of the dorsal hand was obtained only for horizontal movements of the haptic stimulus. A more detailed exploration that also includes vertical movements is required to better understand hand perception and thus improve the design of future haptic notifications.

Our traffic scene was simple (no pedestrians, signs, or other cars). Since driving is itself a demanding task, future work should test knuckles notifications on a more complex road with a higher level of distraction (e.g., a busy city). A more controlled driving task could also be used, such as the standard lane change task with its standard metric, the standard deviation of lateral position (SDLP; Häuslschmid et al., 2015).
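SDLP is the standard deviation of the vehicle's lateral position over a driving segment. The sketch below computes it with Python's `statistics` module, assuming lateral offsets from the lane center or target line in meters, sampled at a fixed rate. Studies differ on whether they use the sample or population standard deviation, so the sample estimator here is an assumption. For contrast, the sketch includes a mean absolute offset, which is closer in spirit to the path deviation reported in this study. Both function names are hypothetical.

```python
import statistics


def sdlp(lateral_positions_m):
    """Standard deviation of lateral position (m), sample estimator."""
    if len(lateral_positions_m) < 2:
        raise ValueError("need at least two samples")
    return statistics.stdev(lateral_positions_m)


def mean_absolute_offset(lateral_positions_m):
    """Mean absolute distance from the target line (m), for comparison."""
    return statistics.fmean(abs(x) for x in lateral_positions_m)


# A car holding a steady 0.3 m offset has zero SDLP but a 0.3 m mean offset.
steady = [0.3] * 10
print(sdlp(steady), mean_absolute_offset(steady))
```

The two metrics capture different behavior. SDLP measures weaving around the driver's own average position, while a mean offset measures how far the car sits from the target line.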

We used a constant vibration to simulate car movement, whereas real driving usually involves stronger vibration at times. Future work should therefore include irregular or intermittent vibrations and, ideally, a real car and road setting.

We only designed notifications for a single hand; two-hand notifications still need to be explored. Future work could focus on switching between hands, choosing whichever hand is better positioned to be seen by the tracking camera and is not occluded, so that it can perceive the haptic sensation.

We used “path deviation” as a measure of driver distraction, assuming that deviating from the target path would indicate that participants found the notification identification distracting. However, this measure does not assess how much drivers take their eyes off the road, so future work including eye tracking is needed. Furthermore, the impact of other distractions in the car (e.g., a radio playing or air conditioning blowing) should be considered as well.

The path deviation measure alone may not capture the full spectrum of driving safety. More advanced metrics, such as safety time margins and reaction time analyses, could provide deeper insights. Further studies incorporating a broader range of driving performance metrics are necessary to substantiate and refine our findings on safety implications.

For our user study, we used a 35 x 10 cm mid-air haptic array consisting of 32x8 transducers, which may be too large to embed in the dashboard of a traditional car. A modular configuration (e.g., multiple segments) could therefore be used in future work. Moreover, we used custom camera-based hand tracking (a Leap Motion Stereo IR170 camera), so occlusion can affect the performance of our system. A multiple-camera setup that increases visibility and tracking accuracy could address this limitation.

A direct comparison between knuckles notifications and traditional configurations (e.g., palmar feedback) is still needed to determine whether dorsal feedback can outperform current palmar feedback systems in reducing driver distraction. Furthermore, a comparison with simpler sensory cues, such as audio notifications, should be considered.

Finally, a direct comparison between the knuckles notifications approach and other haptic feedback systems is needed. In particular, a comparison with traditional vibrotactile steering wheel notifications will be crucial to explore the benefits and drawbacks of mid-air haptics for in-car notifications.

8 Conclusion

We introduced knuckles notifications, a novel system that provides ultrasound-based mid-air haptic feedback on the driver's dorsal hand. We conducted a series of exploratory studies to understand the perceptual space of the dorsal hand while holding a steering wheel (stimulus intensity, target areas, hand positions, proximity thresholds, and sense of direction). We then designed four sensations focused on the knuckles area (covering the fingers and proximal phalanges) and associated them with in-car notifications (incoming call, incoming message, navigation alert, and driving assistant warning). Through a user evaluation with drivers in a driving simulator, we demonstrated that knuckles notifications are suitable for driving: they are not affected by masking from car vibration and do not produce significant distraction. We also discuss applications of knuckles notifications, including privacy while driving, mid-air haptics while holding other objects, and sensation design.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation. Please contact the corresponding author to request the data.

Ethics statement

The studies involving humans were approved by Research Ethics Committee from Ultraleap under Application No. PROJ-MEX-FLF-001. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

RMon: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Validation, Writing – original draft, Writing – review & editing. RMor: Conceptualization, Writing – review & editing. DP: Formal analysis, Methodology, Writing – review & editing. WF: Conceptualization, Formal analysis, Methodology, Writing – review & editing. OG: Formal analysis, Investigation, Methodology, Writing – review & editing. PC: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by UKRI Future Leaders Fellowship grant (Reference: MR/V025511/1) and the European Union's Horizon 2020 research and innovation programme under grant agreement no. 101017746 (project TOUCHLESS).

Conflict of interest

RMon, WF, OG, and PC were employed by Ultraleap Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2024.1455201/full#supplementary-material

References

Angelini, L., Carrino, F., Carrino, S., Caon, M., Khaled, O. A., Baumgartner, J., et al. (2014). “Gesturing on the steering wheel: a user-elicited taxonomy,” in Proceedings of the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI '14 (New York, NY: Association for Computing Machinery), 1–8.

Bach, K. M., Jæger, M. G., Skov, M. B., and Thomassen, N. G. (2008). “You can touch, but you can't look: interacting with in-vehicle systems,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI '08 (New York, NY: Association for Computing Machinery), 1139–1148.

Bajwa, R., Coleri, E., Rajagopal, R., Varaiya, P., and Flores, C. (2020). Pavement performance assessment using a cost-effective wireless accelerometer system. Comput. Aid. Civ. Infrastruct. Eng. 35, 1009–1022. doi: 10.1111/mice.12544

Bower, K. M. (2003). “When to use Fisher's exact test,” in American Society for Quality, Six Sigma Forum Magazine, Vol. 2 (Milwaukee, WI: American Society for Quality), 35–37.

Brown, E., Large, D. R., Limerick, H., Frier, W., and Burnett, G. (2022). “Augmenting automotive gesture infotainment interfaces through mid-air haptic icon design,” in Ultrasound Mid-Air Haptics for Touchless Interfaces, eds. O. Georgiou, W. Frier, C. Pacchierotti, and T. Hoshi (Berlin: Springer), 119–145.

Brown, E., Large, D. R., Limerick, H., and Burnett, G. (2020). "UltraHapticons: 'haptifying' drivers' mental models to transform automotive mid-air haptic gesture infotainment interfaces," in 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI '20 (New York, NY: Association for Computing Machinery), 54–57.

Cardoso, J., and Ribeiro, J. (2021). “VR book: a tangible interface for smartphone-based virtual reality,” in MobiQuitous 2020—17th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, MobiQuitous '20 (New York, NY: Association for Computing Machinery), 48–58.

Carter, T., Seah, S. A., Long, B., Drinkwater, B., and Subramanian, S. (2013). "UltraHaptics: multi-point mid-air haptic feedback for touch surfaces," in Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology, UIST '13 (New York, NY: Association for Computing Machinery), 505–514.

Charissis, V. (2014). “Enhancing human responses through augmented reality head-up display in vehicular environment,” in 2014 11th International Conference and Expo on Emerging Technologies for a Smarter World (CEWIT) (New York, NY: IEEE), 1–6.

Drey, T., Gugenheimer, J., Karlbauer, J., Milo, M., and Rukzio, E. (2020). "VRSketchIn: exploring the design space of pen and tablet interaction for 3D sketching in virtual reality," in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, CHI '20 (New York, NY: Association for Computing Machinery), 1–14.

Fender, A. R., Roberts, T., Luong, T., and Holz, C. (2023). "InfinitePaint: painting in virtual reality with passive haptics using wet brushes and a physical proxy canvas," in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, CHI '23 (New York, NY: Association for Computing Machinery).

Ferwerda, B., Kiunsi, D. R., and Tkalcic, M. (2022). “Too much of a good thing: When in-car driver assistance notifications become too much,” in AutomotiveUI '22 (New York, NY: Association for Computing Machinery), 79–82.

Fink, P. D. S., Dimitrov, V., Yasuda, H., Chen, T. L., Corey, R. R., Giudice, N. A., et al. (2023). "Autonomous is not enough: Designing multisensory mid-air gestures for vehicle interactions among people with visual impairments," in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, CHI '23 (New York, NY: Association for Computing Machinery).

Frier, W., Ablart, D., Chilles, J., Long, B., Giordano, M., Obrist, M., et al. (2018). “Using spatiotemporal modulation to draw tactile patterns in mid-air,” in International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (Berlin: Springer), 270–281.

Georgiou, O., Biscione, V., Harwood, A., Griffiths, D., Giordano, M., Long, B., et al. (2017). “Haptic in-vehicle gesture controls,” in Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct, AutomotiveUI '17 (New York, NY: Association for Computing Machinery), 233–238.

Gil, H., Son, H., Kim, J. R., and Oakley, I. (2018). "Whiskers: exploring the use of ultrasonic haptic cues on the face," in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI '18 (New York, NY: Association for Computing Machinery), 1–13.

González, I. E., Wobbrock, J. O., Chau, D. H., Faulring, A., and Myers, B. A. (2007). “Eyes on the road, hands on the wheel: thumb-based interaction techniques for input on steering wheels,” in Proceedings of Graphics Interface 2007 (New York, NY: Association for Computing Machinery), 95–102.

Hafizi, A., Henderson, J., Neshati, A., Zhou, W., Lank, E., and Vogel, D. (2023). "In-vehicle performance and distraction for midair and touch directional gestures," in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, CHI '23 (New York, NY: Association for Computing Machinery).

Harrington, K., Large, D. R., Burnett, G., and Georgiou, O. (2018). “Exploring the use of mid-air ultrasonic feedback to enhance automotive user interfaces,” in Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI '18 (New York, NY: Association for Computing Machinery), 11–20.

Häuslschmid, R., Menrad, B., and Butz, A. (2015). “Freehand vs. micro gestures in the car: driving performance and user experience,” in 2015 IEEE Symposium on 3D User Interfaces (3DUI) (Arles: IEEE), 159–160.

Howard, T., Gicquel, G., Pacchierotti, C., and Marchal, M. (2023). Can we effectively combine tangibles and ultrasound mid-air haptics? A study of acoustically transparent tangible surfaces. IEEE Trans. Hapt. 2023:3267096. doi: 10.1109/toh.2023.3267096

Jingu, A., Kamigaki, T., Fujiwara, M., Makino, Y., and Shinoda, H. (2021). "LipNotif: use of lips as a non-contact tactile notification interface based on ultrasonic tactile presentation," in The 34th Annual ACM Symposium on User Interface Software and Technology, UIST '21 (New York, NY: Association for Computing Machinery), 13–23.

Johansson, R. S., and Flanagan, J. R. (2009). Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat. Rev. Neurosci. 10, 345–359. doi: 10.1038/nrn2621

Johansson, R. S., and Vallbo, A. B. (1979). Tactile sensibility in the human hand: relative and absolute densities of four types of mechanoreceptive units in glabrous skin. J. Physiol. 286, 283–300.

Kim, M., Seong, E., Jwa, Y., Lee, J., and Kim, S. (2020). A cascaded multimodal natural user interface to reduce driver distraction. IEEE Access 8, 112969–112984. doi: 10.1109/ACCESS.2020.3002775

Korres, G., Chehabeddine, S., and Eid, M. (2020). Mid-air tactile feedback co-located with virtual touchscreen improves dual-task performance. IEEE Trans. Hapt. 13, 825–830. doi: 10.1109/TOH.2020.2972537

Kulowski, A. (2018). The caustic in the acoustics of historic interiors. Appl. Acoust. 133, 82–90. doi: 10.1016/j.apacoust.2017.12.008

Kyian, S., and Teather, R. (2021). "Selection performance using a smartphone in VR with redirected input," in Proceedings of the 2021 ACM Symposium on Spatial User Interaction, SUI '21 (New York, NY: Association for Computing Machinery).

Lan, R., Sun, X., Wang, Q., and Liu, B. (2024). "Ultrasonic mid-air haptics on the face: effects of lateral modulation frequency and amplitude on users' responses," in Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, CHI '24 (New York, NY: Association for Computing Machinery).

Large, D. R., Harrington, K., Burnett, G., and Georgiou, O. (2019). Feel the noise: mid-air ultrasound haptics as a novel human-vehicle interaction paradigm. Appl. Ergon. 81:102909. doi: 10.1016/j.apergo.2019.102909

Lim, C., Park, G., and Seifi, H. (2024). "Designing distinguishable mid-air ultrasound tactons with temporal parameters," in Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, CHI '24 (New York, NY: Association for Computing Machinery).

Liu, Y.-C., and Wen, M.-H. (2004). Comparison of head-up display (HUD) vs. head-down display (HDD): driving performance of commercial vehicle operators in Taiwan. Int. J. Hum. Comput. Stud. 61, 679–697. doi: 10.1016/j.ijhcs.2004.06.002

Lundström, R. (1985). Vibration Exposure of the Glabrous Skin of the Human Hand (Ph.D. thesis). Umeå universitet, Umeå, Sweden.

Ma, X., Jia, M., Hong, Z., Kwok, A. P. K., and Yan, M. (2021). Does augmented-reality head-up display help? A preliminary study on driving performance through a VR-simulated eye movement analysis. IEEE Access 9, 129951–129964. doi: 10.1109/ACCESS.2021.3112240

McCrum-Gardner, E. (2008). Which is the correct statistical test to use? Br. J. Oral Maxillof. Surg. 46, 38–41. doi: 10.1016/j.bjoms.2007.09.002

Meschtscherjakov, A. (2017). The Steering Wheel: A Design Space Exploration (Cham: Springer International Publishing), 349–373.

Montano-Murillo, R. A., Nguyen, C., Kazi, R. H., Subramanian, S., DiVerdi, S., and Martinez-Plasencia, D. (2020). “Slicing-volume: hybrid 3D/2D multi-target selection technique for dense virtual environments,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Atlanta, GA: IEEE), 53–62.

Murer, M., Wilfinger, D., Meschtscherjakov, A., Osswald, S., and Tscheligi, M. (2012). “Exploring the back of the steering wheel: text input with hands on the wheel and eyes on the road,” in Proceedings of the 4th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI '12 (New York, NY: Association for Computing Machinery), 117–120.

Noubissie Tientcheu, S. I., Du, S., and Djouani, K. (2022). Review on haptic assistive driving systems based on drivers' steering-wheel operating behaviour. Electronics 11:2102. doi: 10.3390/electronics11132102

Pauzie, A. (2015). “Head up display in automotive: a new reality for the driver,” in Design, User Experience, and Usability: Interactive Experience Design, ed. A. Marcus (Cham: Springer International Publishing), 505–516.

Petermeijer, S. M., de Winter, J. C. F., and Bengler, K. J. (2016). Vibrotactile displays: a survey with a view on highly automated driving. IEEE Trans. Intell. Transport. Syst. 17, 897–907. doi: 10.1109/TITS.2015.2494873

Pittera, D., Georgiou, O., Abdouni, A., and Frier, W. (2022). “I can feel it coming in the hairs tonight”: characterising mid-air haptics on the hairy parts of the skin. IEEE Trans. Hapt. 15, 188–199. doi: 10.1109/TOH.2021.3110722

Pitts, M. J., Skrypchuk, L., Wellings, T., Attridge, A., and Williams, M. A. (2012). Evaluating user response to in-car haptic feedback touchscreens using the lane change test. Adv. Hum. Comp. Int. 2012:598739. doi: 10.1155/2012/598739

Pohlert, T. (2014). The pairwise multiple comparison of mean ranks package (PMCMR). R Package 27:9. doi: 10.32614/CRAN.package.PMCMR

Pont, S. C., Kappers, A. M., and Koenderink, J. J. (1997). Haptic curvature discrimination at several regions of the hand. Percept. Psychophys. 59, 1225–1240.

Rakkolainen, I., Sand, A., and Raisamo, R. (2019). “A survey of mid-air ultrasonic tactile feedback,” in 2019 IEEE International Symposium on Multimedia (ISM) (San Diego, CA: IEEE), 94–944.

Roesner, F., Kohno, T., and Molnar, D. (2014). Security and privacy for augmented reality systems. Commun. ACM 57, 88–96. doi: 10.1145/2580723.2580730

Roider, F., and Raab, K. (2018). “Implementation and evaluation of peripheral light feedback for mid-air gesture interaction in the car,” in 2018 14th International Conference on Intelligent Environments (IE) (Rome: IEEE), 87–90.

Roider, F., Rümelin, S., Pfleging, B., and Gross, T. (2017). “The effects of situational demands on gaze, speech and gesture input in the vehicle,” in Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI '17 (New York, NY: Association for Computing Machinery), 94–102.

Ryu, J., Chun, J., Park, G., Choi, S., and Han, S. H. (2010). Vibrotactile feedback for information delivery in the vehicle. IEEE Trans. Hapt. 3, 138–149. doi: 10.1109/TOH.2010.1

Salagean, A., Hadnett-Hunter, J., Finnegan, D. J., De Sousa, A. A., and Proulx, M. J. (2022). A virtual reality application of the rubber hand illusion induced by ultrasonic mid-air haptic stimulation. ACM Trans. Appl. Percept. 19:3487563. doi: 10.1145/3487563

Sand, A., Rakkolainen, I., Isokoski, P., Raisamo, R., and Palovuori, K. (2015). “Light-weight immaterial particle displays with mid-air tactile feedback,” in 2015 IEEE International Symposium on Haptic, Audio and Visual Environments and Games (HAVE) (Ottawa, ON: IEEE), 1–5.

Schleicher, D., Jones, P., and Kachur, O. (2010). Bodystorming as embodied designing. Interactions 17, 47–51. doi: 10.1145/1865245.1865256

Seifi, H., Chew, S., Nascè, A. J., Lowther, W. E., Frier, W., and Hornbæk, K. (2023a). Feellustrator—Hasti Seifi—hastiseifi.com. Available at: https://hastiseifi.com/research/feellustrator/ (accessed July 07, 2023).

Seifi, H., Chew, S., Nascè, A. J., Lowther, W. E., Frier, W., and Hornbæk, K. (2023b). "Feellustrator: a design tool for ultrasound mid-air haptics," in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, CHI '23 (New York, NY: Association for Computing Machinery).

Shakeri, G., Ng, A., Williamson, J. H., and Brewster, S. A. (2016). "Evaluation of haptic patterns on a steering wheel," in Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI '16 (New York, NY: Association for Computing Machinery), 129–136.

Shakeri, G., Williamson, J. H., and Brewster, S. (2018). “May the force be with you: ultrasound haptic feedback for mid-air gesture interaction in cars,” in Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI '18 (New York, NY: Association for Computing Machinery), 1–10.

Shen, V., Shultz, C., and Harrison, C. (2022). "Mouth haptics in VR using a headset ultrasound phased array," in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, CHI '22 (New York, NY: Association for Computing Machinery).

Spakov, O., Farooq, A., Venesvirta, H., Hippula, A., Surakka, V., and Raisamo, R. (2022). "Ultrasound feedback for mid-air gesture interaction in vibrating environment," in Human Interaction and Emerging Technologies: Artificial Intelligence and Future Applications (Orlando, FL: AHFE International).

Spakov, O., Venesvirta, H., Lylykangas, J., Farooq, A., Raisamo, R., and Surakka, V. (2023). “Multimodal gaze-based interaction in cars: are mid-air gestures with haptic feedback safer than buttons?” in Design, User Experience, and Usability: 12th International Conference, DUXU 2023, Held as Part of the 25th HCI International Conference, HCII 2023, Copenhagen, Denmark, July 23–28, 2023, Proceedings, Part III (Berlin; Heidelberg: Springer-Verlag), 333–352.

Spelmezan, D., González, R. M., and Subramanian, S. (2016). "SkinHaptics: ultrasound focused in the hand creates tactile sensations," in 2016 IEEE Haptics Symposium (HAPTICS) (Philadelphia, PA: IEEE), 98–105.