- ¹Department of Computer Science, Kennesaw State University, Kennesaw, GA, United States

- ²Department of Software Engineering and Game Development, Kennesaw State University, Kennesaw, GA, United States

- ³TCL Rayneo XR, Shenzhen, China

Background: The ability to maintain attention is crucial for success in many areas of life, including academic pursuits, career advancement, and social interactions. Attention deficits, as seen in attention deficit disorder (ADD), commonly co-occur with autism spectrum disorder (ASD) and can pose challenges for affected individuals, impacting their social interactions and learning abilities. Virtual reality (VR) has emerged as a promising tool for attention training: its ability to create personalized virtual worlds provides a conducive platform for attention-focused interventions. Physiological data can further support the development and refinement of attention-training techniques.

Methods: In our preliminary study, we developed a functional prototype of an attention therapy system. In the current phase, the objective is to create a framework called VR-PDA (Virtual Reality Physiological Data Analysis) that uses physiological data to track and improve attention. Four training strategies (noise, score, object opacity, and red vignette) are implemented in this framework. The primary goal is to combine VR technology with physiological data analysis to enhance attentional capabilities.

Results: Our data analysis indicated that reinforcement training strategies are crucial for improving attention in individuals with ASD, whereas they are not significant for non-autistic individuals. Among the strategies employed, the noise strategy showed the greatest efficacy in training attention among individuals with ASD. For non-ASD individuals, by contrast, none of the training strategies proved effective in enhancing attention; the no-training condition showed the most improvement. The total gazing time feature indicated benefits for participants both with and without ASD.

Discussion: The results consistently demonstrated favorable outcomes for both groups, indicating an enhanced level of attentiveness. These findings provide valuable insights into the effectiveness of different attention-training strategies and highlight the potential of VR and physiological data in attention training programs for individuals with ASD, opening new avenues for further research.

1. Introduction

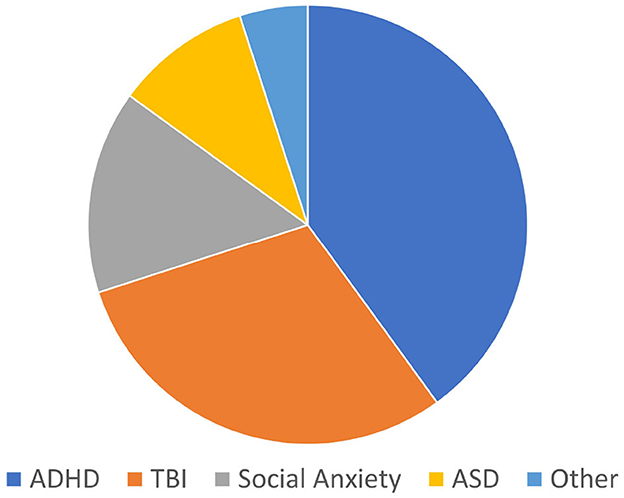

Autism spectrum disorder (ASD), a group of neurodevelopmental conditions, is characterized by difficulties in social interaction and communication (Hodges et al., 2020). Although attention issues are frequently observed in individuals with ASD, they are not among the primary diagnostic criteria for the condition. Notably, during tactile activities such as finger painting and arts and crafts (Hatch-Rasmussen, 2022), people with ASD are more susceptible to visual distractions (Poole et al., 2018). These distractions may impair their ability to concentrate on such tasks, reducing their engagement and overall experience. Our review of the literature revealed that a substantial number of studies have concentrated on attention improvement in individuals with attention deficit hyperactivity disorder (ADHD) and traumatic brain injury (TBI). Figure 1 illustrates, as percentages, the distribution of studies on enhancing attention across different disorders. The pie chart also shows that attention improvement in individuals with ASD requires further investigation and development. Medications such as selective serotonin reuptake inhibitors (SSRIs) and treatments such as behavioral management therapy and cognitive behavioral therapy are available for ASD, but their effectiveness varies from person to person because people with ASD have distinctive strengths and face unique challenges (CDC, 2022). These approaches may not benefit all autistic people and may even have negative effects. Handling the various difficulties caused by ASD effectively therefore requires a tailored and thorough approach that combines behavioral treatments, which shape behavior through positive reinforcement; therapeutic supports, which aim to improve communication skills; and educational initiatives, which provide specialized instruction.
This need opens the door to emerging technologies such as virtual reality (VR), which has the potential to help autistic people with attention difficulties.

Through visual and auditory stimuli, VR environments can be designed to capture and maintain the attention of individuals with ASD (Tarng et al., 2022), promoting engagement and facilitating learning. Moreover, physiological data such as heart rate (HR), electrodermal activity (EDA) (Thomas et al., 2011), and eye data (Arslanyilmaz and Sullins, 2021) show a significant connection with attention processes. These physiological parameters serve as measurable indicators of a person's arousal and engagement. Incorporating physiological monitoring into VR interventions for individuals with ASD can therefore yield valuable insights into attention.

To our knowledge, previous studies have primarily examined the effectiveness of VR training in improving behavior and cognitive function (Shema-Shiratzky et al., 2018). Limited research has explored VR-based attention training coupled with physiological data specifically for individuals with autism. The objective of this research is to establish and evaluate a VR-PDA (Virtual Reality Physiological Data Analysis) framework intended to improve attentional skills in individuals with ASD. The framework builds on the preliminary phase of the study, which involved developing a methodology (Zhang et al., 2020) and creating a functional prototype. In addition, an adaptive virtual environments therapy system (AVET) that uses eye-gaze methodology as part of the intervention was developed previously (Li et al., 2023).

In this study, we conducted three analyses to examine the effects of the training on attentional states in individuals with ASD and without ASD (NONASD): (1) we analyzed and compared the physiological data to identify patterns or correlations that indicate increased or decreased attention; (2) we investigated whether physiological data are connected to attentional states in individuals with ASD and NONASD; and (3) we examined whether physiological data gathered during VR-based attention training can serve as potential biomarkers for assessing changes in attention levels.

The findings reveal that the noise strategy is particularly effective in enhancing attention among individuals with ASD. Conversely, for individuals without ASD (NONASD), the absence of specific training appears to yield more beneficial outcomes. These results suggest that physiological markers could serve as indicators for assessing attentional improvements or deficits, not only in individuals with ASD but potentially in other populations as well. Such insights contribute to a more comprehensive understanding of attention dynamics within these distinct groups.

The remainder of this paper is organized as follows. Section 2 describes the materials and methods, Section 3 presents and analyzes the experimental results, and Section 4 discusses the research.

2. Materials and methods

2.1. Participants

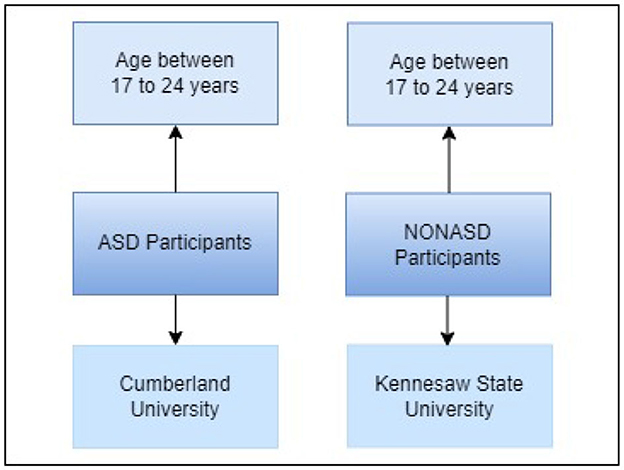

The study involved a total of 50 participants aged 17 to 24 years, divided into two groups of 25: the ASD group, consisting of students from Cumberland University, and the NONASD group, consisting of students from Kennesaw State University. ASD students were recruited with the assistance of Cumberland professors, while NONASD students were recruited through daily newsletters and pinboards. Participants received coupons as compensation for their involvement. All participants provided written informed consent before inclusion, and the study was approved by the review committees of both Kennesaw State University and Cumberland. Figure 2 presents an overview of the distribution of recruited participants. Each group of 25 was further divided into five subgroups of five: four subgroups were each assigned one training strategy, and the fifth served as a control group without any training. Heart rate, electrodermal activity (EDA), and eye data were collected from all recruited students in both groups and analyzed. However, because the eye data from some ASD participants were unreliable, only 16 participants from the ASD group were included in the eye data analysis.

2.2. Equipment

The complete setup includes an Alienware desktop; an HTC VIVE Pro (Wolfartsberger, 2019) head-mounted display (HMD) that renders realistic visual images (Iwamoto et al., 1994), with eye tracking accurate to 0.5°–1.1° and a trackable field of view of 110°; SteamVR software; and an Empatica E4 wristband (McCarthy et al., 2016) with a sampling rate of 64 Hz (64 samples per second) for heart rate and 1 Hz (one sample per second) for EDA. During the experiment, the participant sits comfortably in a chair wearing the VR headset, which is connected to the Alienware workstation. The VIVE HMD captures behavioral eye data (Lohr et al., 2019), such as pupil information and gaze direction. The sensors of the wristband, worn on the participant's wrist, capture and record heart rate and EDA in real time. Once the room is set up and SteamVR is running, SRanipal (Adhanom et al., 2020) is enabled before the VR classroom is launched. The data are retrieved after the experiment is completed and analyzed offline. Figure 3 shows the complete experimental setup.

2.3. Virtual-reality classroom design

VR makes it possible to engage with and immerse oneself in a computer-generated environment that aims to be realistic and to give the user a sense of presence (Rohani and Puthusserypady, 2015). Our VR classroom is designed to resemble an actual classroom, complete with student desks, a map, a clock, a teacher's desk, a virtual teacher, a chalkboard, and a large window overlooking a playground and trees. The VR animations are produced in the Unity game engine, version 2021.3.2f1 (Hellum et al., 2023). The classes delivered in VR consist primarily of TEDx talks focusing on personal life incidents, each lasting 15 min. The class comprises three sessions: Session 1 serves as the baseline session, Session 2 as the reinforcement session, and Session 3 as the performance session. In Session 2, we used four distinct strategies (score, red vignette, noise, and object opacity) as positive reinforcement, negative reinforcement, positive punishment, and negative punishment, respectively. To keep each participant at ease throughout the study, the maximum audio volume and the opacity of the red vignette are adjusted. As soon as the VR class begins and the participant is listening to the lecture, the HMD begins recording real-time eye behavior and the wristband begins recording heart rate and electrodermal activity.

2.4. Strategies

As previously discussed, the training session incorporates four strategies (score, red vignette, noise, and object opacity), which are implemented within the VR user interface as audio and visual cues that respond to whether the participant is actively listening to the class.

• Scoring: The scoring strategy serves as a positive reinforcement mechanism, whereby the participant's score increases when actively listening to the class, remaining unchanged otherwise.

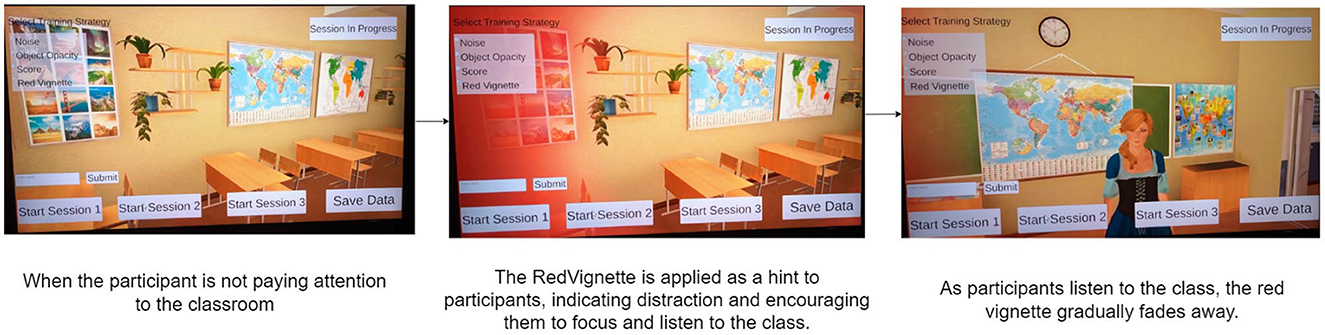

• Vignette: The red vignette strategy operates as a negative reinforcement approach, gradually increasing the opacity of the vignette when the participant is not attentive, providing a visual indication of their disengagement. Conversely, when the participant listens attentively, the vignette opacity gradually decreases.

• Noise: The noise strategy acts as a positive punishment technique, triggering an increase in auditory noise when the participant becomes distracted from the class, which progressively diminishes as they refocus their attention.

• Opacity: The object opacity strategy functions as a negative punishment mechanism, causing objects of distraction, other than the teacher, to gradually disappear or reduce in opacity when the participant fixates their gaze on them.

Figure 4 illustrates the red vignette strategy and how it guides the participant to be attentive. Throughout the study, care is taken to maintain user comfort while the strategies are applied.
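The cue dynamics described in this section can be sketched as a per-frame update. This is a minimal illustration, not the VR-PDA implementation: the class and function names, the linear `rate`, and the caps `max_opacity` and `max_volume` (standing in for the per-participant comfort limits mentioned in Section 2.3) are hypothetical. For brevity all four cues are updated together, although each participant experiences only one strategy, and detecting attentiveness or distractor fixation is left to the caller.

```python
from dataclasses import dataclass


@dataclass
class FeedbackState:
    """Cue levels for the four strategies (all values are illustrative)."""
    score: int = 0                   # Scoring: positive reinforcement
    vignette_opacity: float = 0.0    # Red vignette: 0 = invisible
    noise_volume: float = 0.0        # Noise: 0 = silent
    distractor_opacity: float = 1.0  # Object opacity: 1 = fully visible


def update(state: FeedbackState, attentive: bool, gazing_at_distractor: bool,
           dt: float, rate: float = 0.5,
           max_opacity: float = 0.6, max_volume: float = 0.8) -> FeedbackState:
    """Advance every cue by one frame lasting dt seconds.

    rate, max_opacity and max_volume are hypothetical parameters; the two
    caps play the role of per-participant comfort limits.
    """
    step = rate * dt
    if attentive:
        state.score += 1  # the score only grows while the participant listens
        state.vignette_opacity = max(0.0, state.vignette_opacity - step)
        state.noise_volume = max(0.0, state.noise_volume - step)
    else:
        state.vignette_opacity = min(max_opacity, state.vignette_opacity + step)
        state.noise_volume = min(max_volume, state.noise_volume + step)
    if gazing_at_distractor:
        # Distracting objects fade while the participant fixates on them.
        state.distractor_opacity = max(0.0, state.distractor_opacity - step)
    return state
```

Clamping with `min`/`max` keeps the vignette and noise within the comfort caps no matter how long the participant stays inattentive.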

2.5. Procedure

All participants receive a clear and comprehensive explanation of the experiment along with detailed instructions. Participants then complete a pre-questionnaire on basic demographic information, and the E4 wristband, equipped with sensors, is securely attached to each participant's wrist. Next, participants engage with the VR classroom, following the instructions provided. The session lasts approximately 15 min. Upon completion, participants complete a post-questionnaire giving feedback on the game and their overall experience.

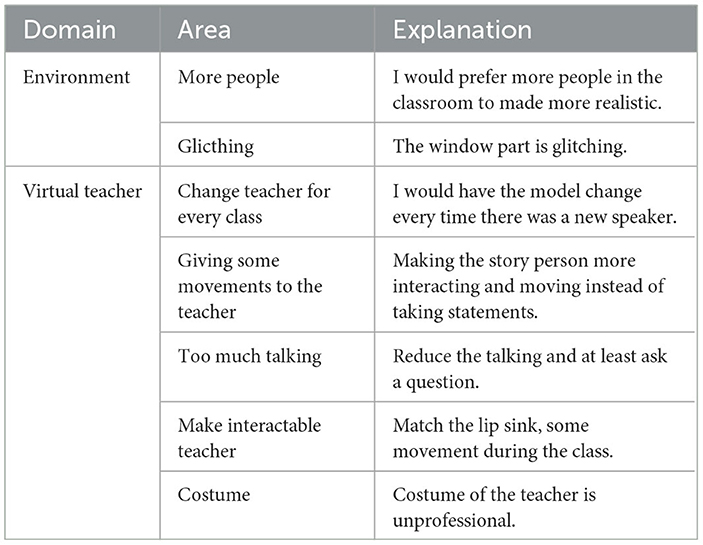

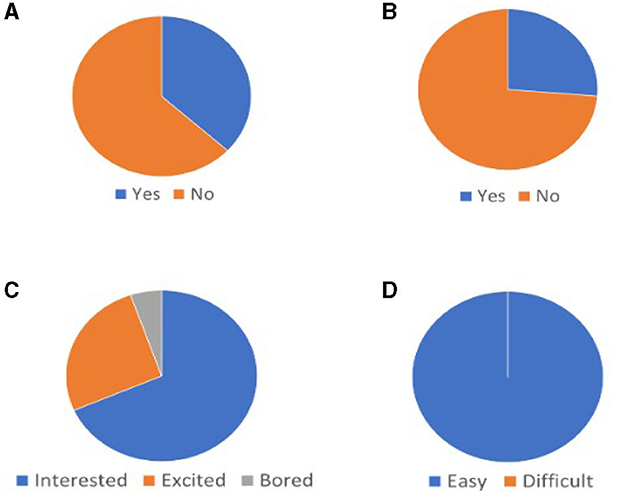

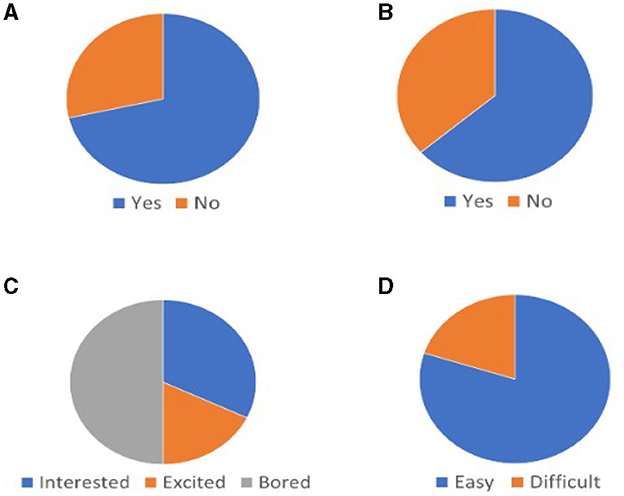

2.6. Data collection

Participants completed questionnaires at the beginning and end of the experiment to report their responses and experiences. Pre-questionnaire data are shown in Figures 5A, B for NONASD participants and in Figures 6A, B for ASD participants; post-questionnaire data are shown in Figures 5C, D for NONASD participants and in Figures 6C, D for ASD participants. The pre-questionnaire data show that individuals with autism experience more distractions in the classroom (20 of 25 participants are distracted very often) than non-autistic students (8 of 25 participants are easily distracted), highlighting the importance of attention for autistic participants. Autistic participants also had a higher level of familiarity with VR (16 participants were familiar with VR), indicating some prior experience with VR. In the post-questionnaire, non-autistic participants reported feeling interested and excited while listening to the class, with only 1 participant feeling bored. Among ASD participants, 14 felt bored, 9 felt interested, and 5 felt excited. All non-autistic participants found the VR classroom easy to understand, while 5 of 25 ASD participants found it difficult. Participants' suggested modifications for improving the experience are documented in Table 1.

Figure 5. Pre- and post-questionnaire data collected from NONASD participants. (A) Participants who feel distracted in class. (B) Participants who have used VR before. (C) Participants' emotions while listening in the VR classroom. (D) Participants' level of understanding of the VR classroom.

Figure 6. Pre- and post-questionnaire data collected from ASD participants. (A) Participants who feel distracted in class. (B) Participants who have used VR before. (C) Participants' emotions while listening in the VR classroom. (D) Participants' level of understanding of the VR classroom.

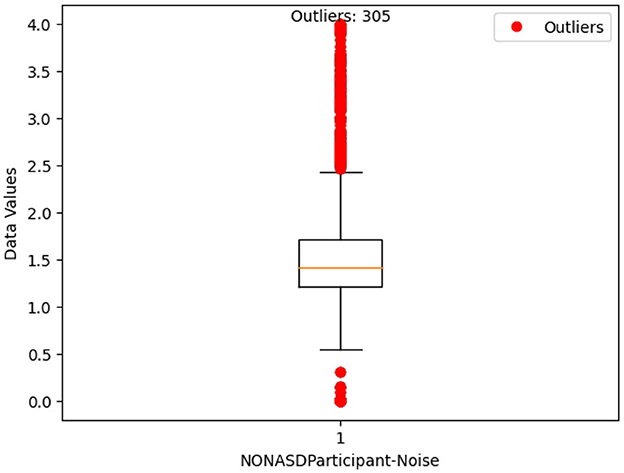

2.7. Data preprocessing

The retrieved data correspond to a 15-min trial, which we divided into three sessions of 5 min each. For the analysis, we used the session 1 and session 3 data for each participant, computing eye, heart rate, and skin conductance features. To facilitate comparison, these features are visualized in bar plots, with session 1 shown in red and session 3 in blue. This analysis was performed for both ASD and NONASD participants. Data cleaning was then applied to the physiological data, chiefly by identifying and handling outliers, that is, data points that deviate significantly from the majority of the dataset. Figure 7 shows that most of one participant's skin conductance data points lie between 1.2 and 1.9 μS. Outliers appear at about 4 μS at the upper end and 0 to 0.5 μS at the lower end; these values fall outside the range covered by the box plot, signifying their extreme nature relative to the remaining data. Outliers were removed to obtain a refined dataset that better represents the overall distribution of the data, enabling more precise analysis and more reliable conclusions.
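The session split and outlier removal described above can be sketched with NumPy. The sketch assumes timestamps in seconds from the start of the trial and uses Tukey's 1.5 × IQR rule, the usual default for box-plot whiskers; the paragraph does not state which threshold was used, so `k` and the function names are assumptions.

```python
import numpy as np


def split_sessions(t_s, values, session_s=300.0, n_sessions=3):
    """Split a recording into consecutive sessions by timestamp (seconds).

    With the defaults, a 15-min trial becomes three 5-min sessions;
    samples at or beyond n_sessions * session_s are dropped.
    """
    t = np.asarray(t_s, dtype=float)
    x = np.asarray(values, dtype=float)
    idx = np.floor(t / session_s).astype(int)
    return [x[idx == k] for k in range(n_sessions)]


def remove_iqr_outliers(values, k=1.5):
    """Keep points inside the box-plot whiskers [Q1 - k*IQR, Q3 + k*IQR]."""
    x = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    mask = (x >= q1 - k * iqr) & (x <= q3 + k * iqr)
    return x[mask]
```

For example, applied to the illustrative values `[1.2, 1.3, 1.5, 1.6, 1.8, 1.9, 4.0, 0.2]`, the filter keeps the six values from 1.2 to 1.9 and drops 4.0 and 0.2.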

2.8. Extracted features

After the trial, the physiological data are obtained from the Empatica wristband and the head-mounted display application. The heart rate, measured in Hz, is extracted using photoplethysmography, while electrodermal activity is measured in microsiemens (μS).

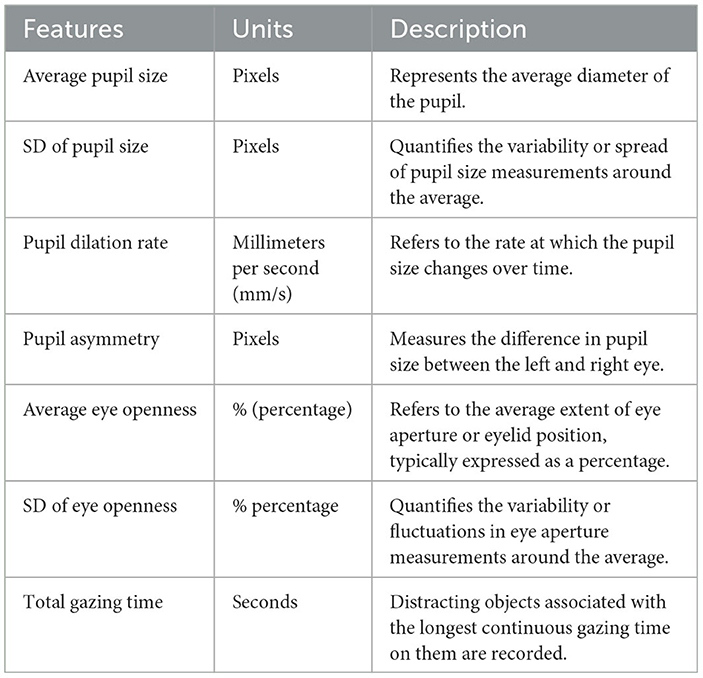

2.8.1. Eye data features

From the eye data, we derive the average pupil size using Equation (1), the standard deviation of pupil size using Equation (2), the pupil dilation rate using Equation (3), the pupil asymmetry using Equation (4), the average eye openness using Equation (5), and the standard deviation of eye openness using Equation (6), each for both the left and right eyes, as well as the total gazing time on objects using Equation (7). These interrelated features are significant for attentional states (Pomplun and Sunkara, 2003; Laeng et al., 2010; Binda et al., 2013) and provide insight into an individual's attentional focus and cognitive engagement. Table 2 briefly explains each feature.

• Average Pupil Size (APS):

• Standard Deviation of Pupil Size (SDPS):

• Pupil Dilation Rate (PDR):

• Pupil Asymmetry (PA):

• Average Eye Openness (AEO):

• Standard Deviation of Eye Openness (SDEO):

• Total Gazing Time on Objects (TGT):
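Because Equations (1) to (7) are not reproduced here, the sketch below uses common readings of the feature names; it is an illustration under assumptions, not the authors' formulas. In particular, PDR (mean absolute change in pupil diameter per second), PA (mean absolute left-right diameter difference), and TGT (number of gaze samples on objects of interest divided by the sampling rate) are hypothetical definitions, the function names are invented, and invalid eye-tracker samples are assumed to have been removed beforehand.

```python
import numpy as np


def per_eye_features(pupil_mm, openness, fs):
    """APS, SDPS, PDR, AEO and SDEO for one eye.

    pupil_mm: pupil diameters (mm); openness: eye-openness values in [0, 1];
    fs: eye-tracker sampling rate in Hz. Invalid samples are assumed removed.
    """
    p = np.asarray(pupil_mm, dtype=float)
    o = np.asarray(openness, dtype=float)
    return {
        "APS": float(p.mean()),
        "SDPS": float(p.std()),  # population SD (ddof=0)
        # Assumed definition: mean absolute change in diameter per second.
        "PDR": float(np.mean(np.abs(np.diff(p))) * fs),
        "AEO": float(o.mean()),
        "SDEO": float(o.std()),
    }


def pupil_asymmetry(left_mm, right_mm):
    """Assumed definition: mean absolute left-right diameter difference (mm)."""
    left = np.asarray(left_mm, dtype=float)
    right = np.asarray(right_mm, dtype=float)
    return float(np.mean(np.abs(left - right)))


def total_gazing_time(gaze_targets, objects_of_interest, fs):
    """Seconds of gaze on the given objects (one object label per gaze sample)."""
    hits = sum(1 for name in gaze_targets if name in objects_of_interest)
    return hits / fs
```

Calling `per_eye_features` once per eye yields the left and right versions of the first six features.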

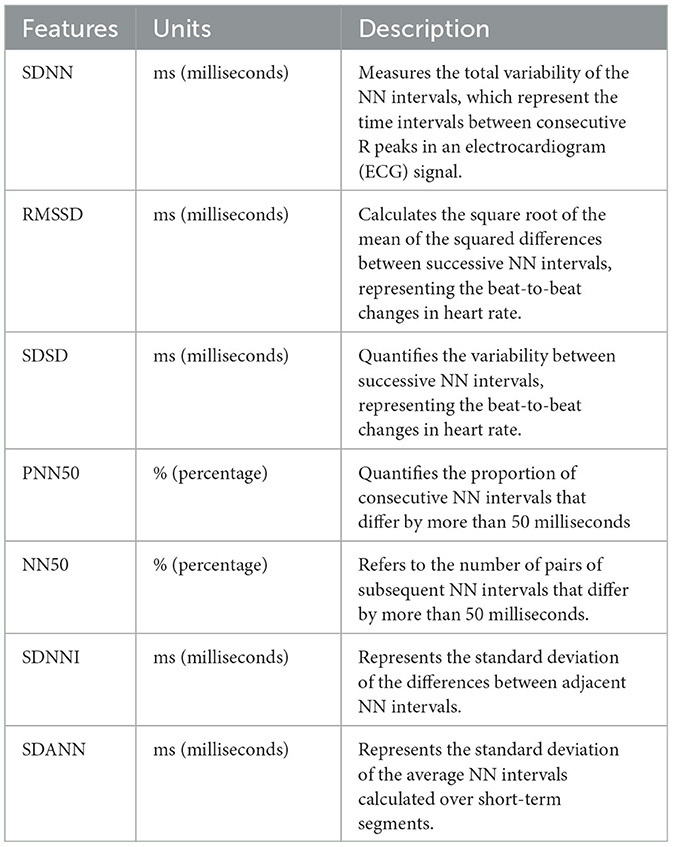

2.8.2. Time-domain features

From the heart rate data, we derived time-domain features that characterize the heart rate signal: SDNN (Standard Deviation of NN Intervals) using Equation (8), RMSSD (Root Mean Square of Successive Differences) using Equation (9), SDSD (Standard Deviation of Successive Differences) using Equation (10), PNN50 (Percentage of successive NN intervals that differ by more than 50 ms) using Equation (11), NN50 (Number of successive NN intervals that differ by more than 50 ms) using Equation (12), SDNNI (Standard Deviation of Normal-to-Normal Intervals) using Equation (13), and SDANN (Standard Deviation of Average NN Intervals) using Equation (14). These features capture the temporal aspects of heart rate fluctuations and are widely used in research and clinical applications. An increase in the values of time-domain features suggests an enhancement in attentional abilities (Hansen et al., 2003; Forte et al., 2019; Pelaez et al., 2019; Tung, 2019). Analyzing these features reveals the overall variability, regularity, and patterns of the heart rate signal. Table 3 provides a concise explanation of each time-domain feature. In the formulas below, N denotes the total number of NN intervals and M the total number of average NN intervals.

• SDNN (Standard Deviation of NN Intervals):

• RMSSD (Root Mean Square of Successive Differences):

• SDSD (Standard Deviation of Successive Differences):

• PNN50 (Percentage of successive NN intervals that differ by more than 50 ms):

• NN50 (Number of successive NN intervals that differ by more than 50 ms):

• SDNNI (Standard Deviation of Normal-to-Normal Intervals):

• SDANN (Standard Deviation of Average NN Intervals):
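The sketch below implements the conventional HRV definitions of these features, which may differ in detail from Equations (8) to (14): SDNNI is taken as the mean of per-segment SDNN values, SDANN as the standard deviation of per-segment mean NN intervals, and pNN50 as a percentage of the successive differences. The function name and the segment length `segment_s` are hypothetical; the conventional 5-min segments would leave only one segment in a 5-min session, so a shorter default is assumed here.

```python
import numpy as np


def time_domain_features(rr_ms, segment_s=60.0):
    """Common time-domain HRV features from NN (RR) intervals in milliseconds."""
    rr = np.asarray(rr_ms, dtype=float)
    diff = np.diff(rr)                              # successive differences
    nn50 = int(np.sum(np.abs(diff) > 50.0))
    feats = {
        "SDNN": float(np.std(rr, ddof=1)),
        "RMSSD": float(np.sqrt(np.mean(diff ** 2))),
        "SDSD": float(np.std(diff, ddof=1)),
        "NN50": nn50,
        "pNN50": 100.0 * nn50 / len(diff),          # % of successive differences
    }
    # Assign each beat to a segment by its onset time (seconds from start).
    onset_s = (np.cumsum(rr) - rr) / 1000.0
    seg = np.floor(onset_s / segment_s).astype(int)
    means, sds = [], []
    for k in np.unique(seg):
        part = rr[seg == k]
        if len(part) >= 2:                          # SD needs at least two beats
            means.append(part.mean())
            sds.append(part.std(ddof=1))
    feats["SDANN"] = float(np.std(means, ddof=1)) if len(means) >= 2 else float("nan")
    feats["SDNNI"] = float(np.mean(sds)) if sds else float("nan")
    return feats
```

For the illustrative intervals `[800, 850, 790, 860, 800]` ms, three of the four successive differences exceed 50 ms, so NN50 is 3 and pNN50 is 75%.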

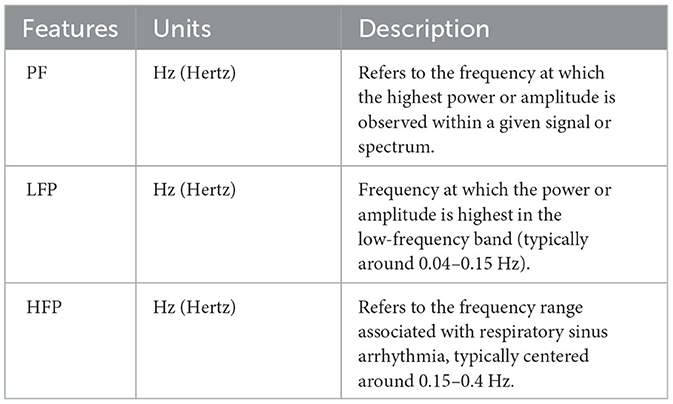

2.8.3. Frequency-domain features

Frequency-domain features are also derived from the heart rate data; they offer insight into the spectral characteristics of the heart rate signal. The derived features include Peak Frequency (PF), calculated using Equation (15); Low-Frequency Power (LFP), obtained using Equation (16); and High-Frequency Power (HFP), calculated using Equation (17). These features describe the distribution of power across frequency bands, providing information about the autonomic control of the heart rate. According to Li and Sullivan (2016) and Lansbergen et al. (2011), an increase in these features is associated with increased attention. Table 4 provides an overview of each frequency-domain feature.

• Peak Frequency (PF):

• Low-Frequency Power (LFP):

• High-Frequency Power (HFP):
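A minimal sketch of the frequency-domain features, assuming the standard HRV bands (LF 0.04 to 0.15 Hz, HF 0.15 to 0.40 Hz), linear resampling of the RR series at 4 Hz, and a one-sided FFT periodogram. Peak frequency is taken here as the frequency with the highest power between 0.04 and 0.40 Hz. Equations (15) to (17) may define these features differently, so these choices and the function names are assumptions.

```python
import numpy as np

LF_BAND = (0.04, 0.15)  # Hz
HF_BAND = (0.15, 0.40)  # Hz


def resample_rr(rr_ms, fs=4.0):
    """Linearly interpolate irregular RR intervals (ms) onto an even grid."""
    rr = np.asarray(rr_ms, dtype=float)
    beat_t = np.cumsum(rr) / 1000.0                 # time of each beat (s)
    grid = np.arange(beat_t[0], beat_t[-1], 1.0 / fs)
    return np.interp(grid, beat_t, rr)


def frequency_features(rr_even_ms, fs=4.0):
    """PF (Hz), LFP and HFP (ms^2) from an evenly sampled RR series."""
    x = np.asarray(rr_even_ms, dtype=float)
    x = x - x.mean()                                # remove the DC component
    n = len(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * n)    # ms^2 per Hz
    if n % 2 == 0:
        psd[1:-1] *= 2                              # fold in negative frequencies
    else:
        psd[1:] *= 2
    df = fs / n

    def band_power(lo, hi):
        mask = (freqs >= lo) & (freqs < hi)
        return float(psd[mask].sum() * df)

    band = (freqs >= LF_BAND[0]) & (freqs < HF_BAND[1])
    pf = float(freqs[band][np.argmax(psd[band])])
    return {"PF": pf, "LFP": band_power(*LF_BAND), "HFP": band_power(*HF_BAND)}
```

As a check, a synthetic 5-min RR series oscillating at 0.25 Hz with an amplitude of 50 ms has HF power 50²/2 = 1250 ms², essentially zero LF power, and a peak frequency of 0.25 Hz.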

2.8.4. Electrodermal activity features

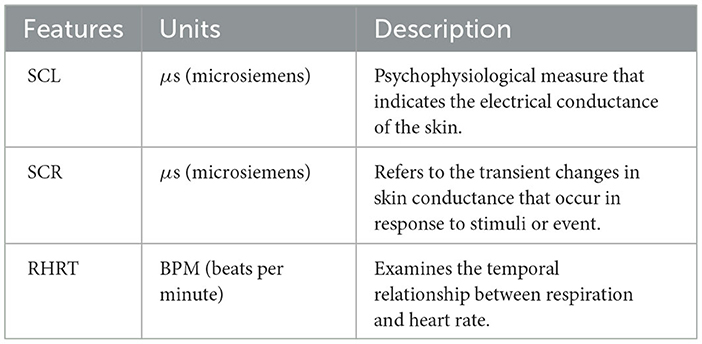

EDA features capture physiological changes in the electrical conductance of the skin, which is primarily influenced by sweat gland activity. These features provide insight into the autonomic nervous system's response to emotional and cognitive stimuli. The skin conductance level (SCL), quantified using Equation (18), describes the baseline electrical conductance of the skin. The skin conductance response (SCR), determined using Equation (19), captures transient changes in conductance in response to stimuli. The Rise Time of the Skin Conductance Response (RHRT), assessed through Equation (20), measures how long the skin conductance response takes to increase from the baseline level after the onset of a stimulus. An increase in these values is associated with an increase in attention (Frith, 1983; Barry et al., 2009). Table 5 presents a concise description of each EDA feature.

• Skin Conductance Level (SCL):

• Skin Conductance Response (SCR):

• Recovery Half-Recovery Time (RHRT):
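A simple trough-to-peak sketch of the EDA features, assuming SCL is the mean conductance, an SCR is any rise of at least `min_amplitude` microsiemens, and rise time is the onset-to-peak interval described for RHRT above. The threshold value and function name are hypothetical, and dedicated EDA pipelines usually separate tonic and phasic components first, which this sketch does not do. The default `fs` follows the 1 Hz EDA rate stated in Section 2.2.

```python
import numpy as np


def eda_features(eda_us, fs=1.0, min_amplitude=0.05):
    """SCL, SCR count and mean amplitude, and mean rise time from raw EDA (uS)."""
    x = np.asarray(eda_us, dtype=float)
    d = np.diff(x)                      # d[i] = x[i+1] - x[i]
    responses = []                      # (onset_index, peak_index) pairs
    onset = None
    for i, step in enumerate(d):
        if step > 0 and onset is None:
            onset = i                   # conductance starts rising at sample i
        elif step <= 0 and onset is not None:
            if x[i] - x[onset] >= min_amplitude:   # sample i is the local peak
                responses.append((onset, i))
            onset = None
    if onset is not None and x[-1] - x[onset] >= min_amplitude:
        responses.append((onset, len(x) - 1))      # rise still running at the end
    amps = [x[p] - x[o] for o, p in responses]
    rises = [(p - o) / fs for o, p in responses]
    return {
        "SCL": float(x.mean()),
        "SCR_count": len(responses),
        "SCR_amplitude": float(np.mean(amps)) if amps else 0.0,
        "rise_time_s": float(np.mean(rises)) if rises else float("nan"),
    }
```

Rises smaller than the threshold are ignored, so slow drift and sensor noise do not count as responses.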

3. Results

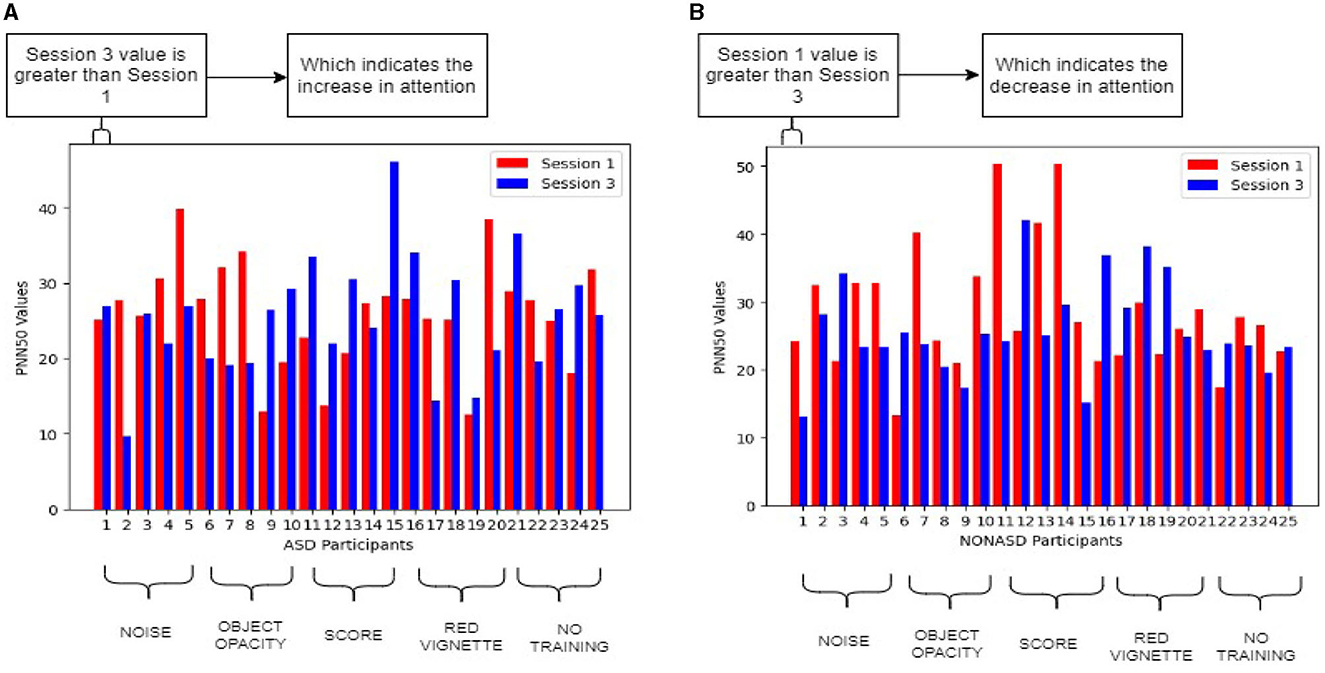

In this study, we analyzed the features derived from the data collected for all participants during session 1 and session 3. These features were calculated to assess the changes in participants' attention before and after the reinforcement training provided in the second session. To visually represent the impact of the training on attention, we utilized bar graphs. These graphs provide a clear indication of the extent to which participants' attention increased or decreased following the reinforcement training.

3.1. Analyzing heart rate and electrodermal activity data

A total of 13 features were derived from the heart rate and EDA data. These features were analyzed for each participant using the data from session 1 and session 3 to assess changes in feature values before and after the reinforcement session. Figure 8 shows the PNN50 values for all ASD and NONASD participants, with the 25 participants on the x-axis and the PNN50 values on the y-axis; session 1 values are shown in red and session 3 values in blue. Figure 8 also shows the allocation of participants to strategies: the first five participants were assigned to the noise strategy, the next five to object opacity, the next five to the scoring strategy, the next five to the red vignette strategy, and the last five formed the control group with no specific training. Attention changes in all thirteen features were compared in the same way for both the ASD and NONASD groups.

Figure 8. PNN50 feature comparison for ASD and NONASD participants between baseline session and performance session to evaluate the reinforcement effect in the second session. (A) PNN50 feature comparison for ASD participants. (B) PNN50 feature comparison for NONASD participants.

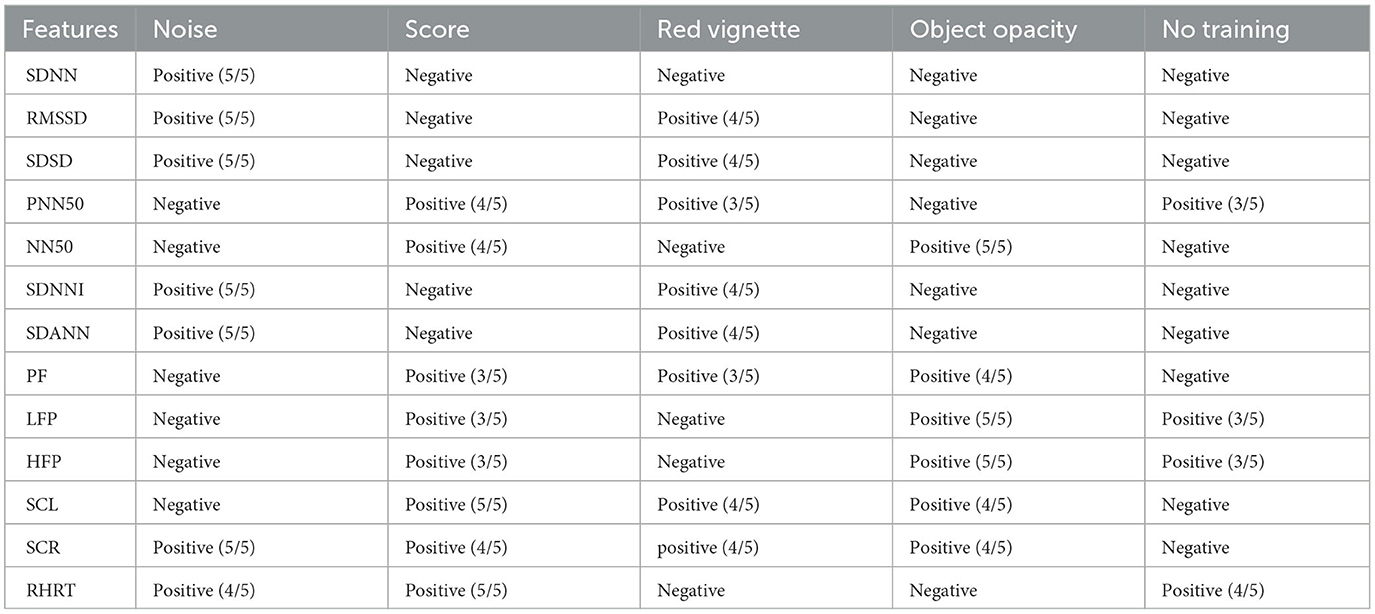

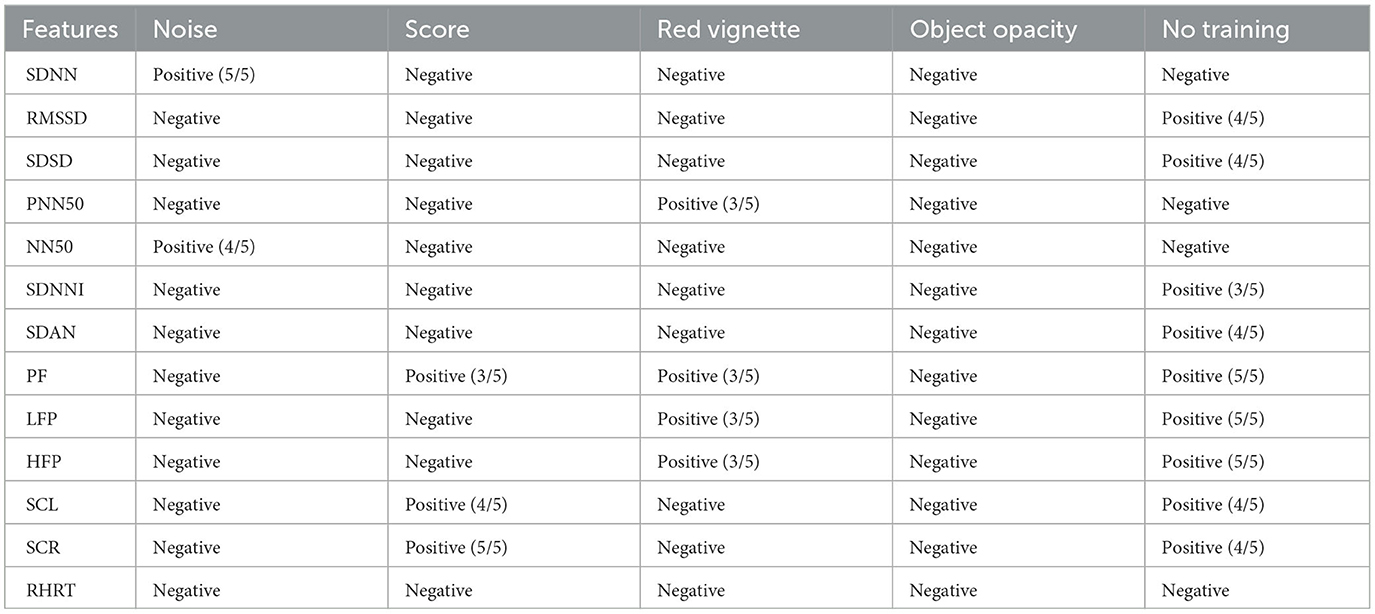

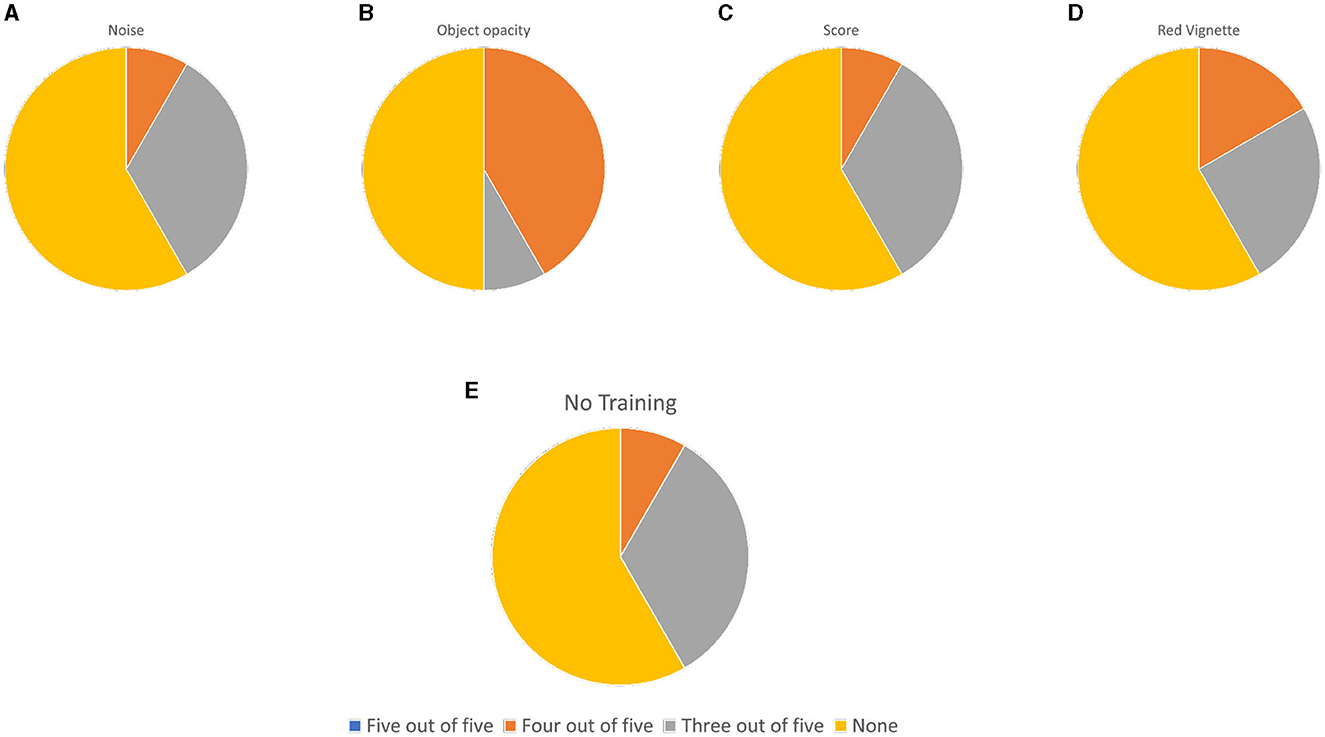

Tables 6, 7 summarize the features that showed increased attention for ASD and NONASD participants, respectively. A strategy is marked positive for a feature when it indicates increased attention and negative when it indicates decreased attention. Specifically, a strategy is considered positive for a feature if more than half of its participants have a higher value in session 3 than in session 1. For the PNN50 feature in Table 6, more than half of the participants in the noise strategy did not show higher session 3 values, so the noise strategy is marked negative; the same holds for the object opacity strategy. More than half of the participants showed higher session 3 values for the score (four participants), red vignette (three participants), and no-training (three participants) conditions, so these are marked positive. The remaining features and strategies were evaluated in the same manner.
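The majority rule above can be expressed in a few lines. The function names and the nested dictionary layout are hypothetical; a value that does not change counts as not improved, matching the "higher value in session 3" wording.

```python
def strategy_effect(session1, session3):
    """'positive' if more than half of the participants improved, else 'negative'.

    session1, session3: one feature value per participant, in the same order.
    A higher session 3 value is treated as improved attention.
    """
    if len(session1) != len(session3) or len(session1) == 0:
        raise ValueError("need one session 1 and one session 3 value per participant")
    improved = sum(1 for s1, s3 in zip(session1, session3) if s3 > s1)
    return "positive" if 2 * improved > len(session1) else "negative"


def effect_table(data):
    """data: {strategy: {feature: (session1_values, session3_values)}}."""
    return {
        strategy: {feat: strategy_effect(*pair) for feat, pair in feats.items()}
        for strategy, feats in data.items()
    }
```

Because the rule compares the count of improved participants with half the group size, it applies unchanged to subgroups of unequal size, such as the ASD eye-data subgroups in Section 3.2.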

Table 6. Strategies with positive and negative effects on each feature for ASD participants.

Table 7. Strategies with positive and negative effects on each feature for NONASD participants.

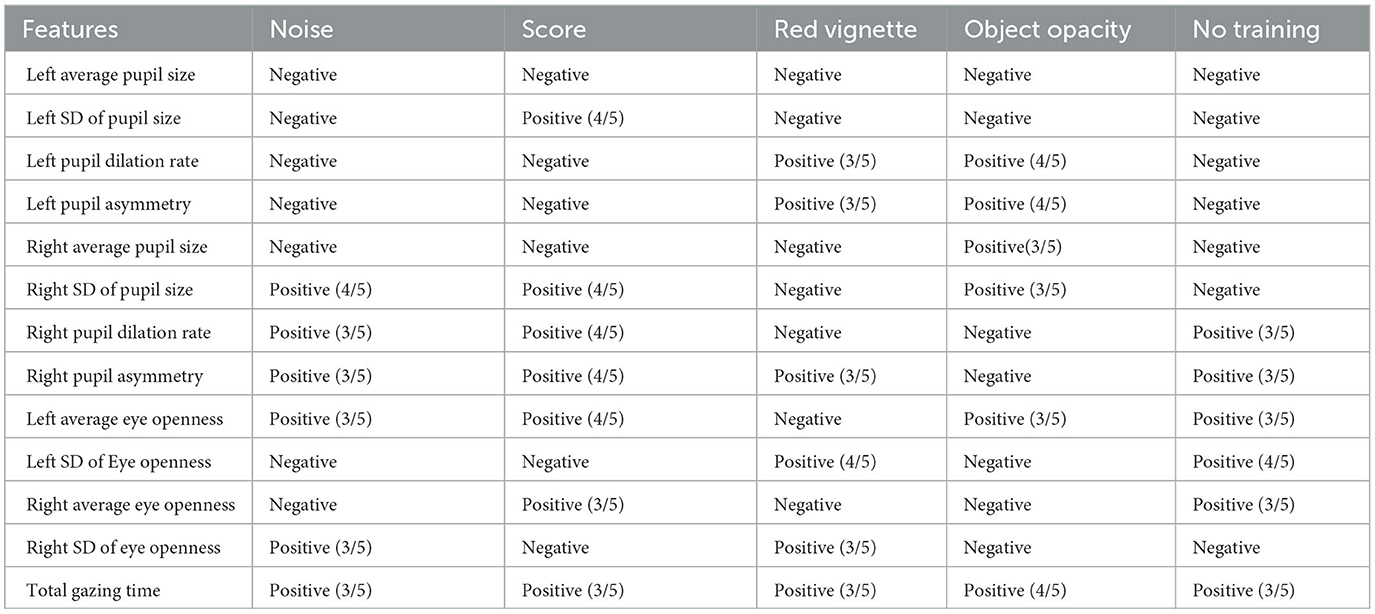

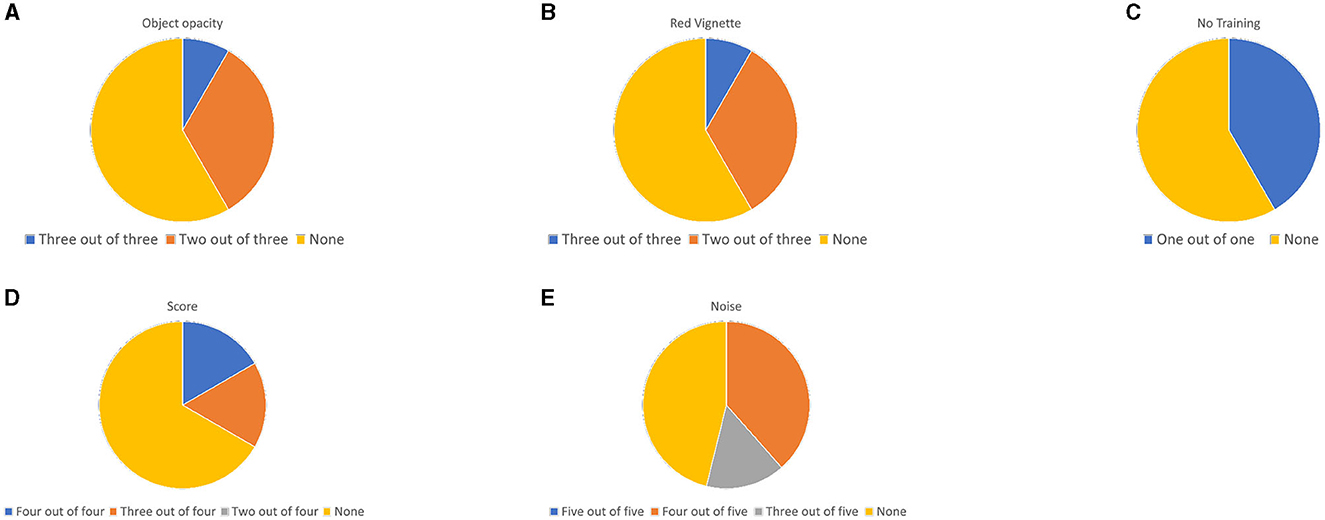

For each strategy, the results across all features are summarized in a pie chart showing how many participants exhibited positive or negative changes in attention. Blue denotes features for which all five participants showed a positive effect, orange denotes four participants, gray denotes three participants, and yellow denotes a negative outcome. Figures 9, 10 present these pie charts. For example, in Figure 9A, all five participants in the noise strategy showed a positive effect for 6 features (SDNN, RMSSD, SDSD, SDNNI, SDANN, SCR), and four participants showed a positive effect for one additional feature (RHRT). No positive effect was observed for the remaining 6 features (PNN50, NN50, PF, LFP, HFP, SCL). These results indicate that the noise strategy yields the most notable enhancement in attention for individuals with ASD, followed closely by the scoring strategy, which also shows positive effects. Conversely, for individuals without ASD, the no-training condition emerges as the most effective for improving attention.
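Tallying the pie-chart slices from per-feature counts of improved participants can be sketched as follows. The function name is hypothetical, and the color map simply restates the legend described above for five-participant groups.

```python
from collections import Counter

# Legend described in the text for groups of five participants.
SLICE_COLORS = {"5/5": "blue", "4/5": "orange", "3/5": "gray", "negative": "yellow"}


def pie_slices(improved_counts, group_size):
    """Count features per slice of one strategy's pie chart.

    improved_counts: {feature: number of participants whose session 3 value
    exceeds their session 1 value}. A feature is positive when more than half
    of the group improved, and its slice is labeled by that count; otherwise
    it falls in the single 'negative' slice.
    """
    slices = Counter()
    for feature, k in improved_counts.items():
        if not 0 <= k <= group_size:
            raise ValueError(f"{feature}: count {k} outside 0..{group_size}")
        slices[f"{k}/{group_size}" if 2 * k > group_size else "negative"] += 1
    return slices
```

For the Figure 9A pattern (six features improved in all five participants, RHRT in four, and six features negative), the tally is 6 blue, 1 orange, and 6 yellow features.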

Figure 9. Improved and reduced attention among ASD participants for each strategy, analyzed from heart rate and skin data. (A) Noise, (B) Object opacity, (C) Score, (D) Red vignette, and (E) No training.

Figure 10. Improved and reduced attention among NONASD participants for each strategy, analyzed from heart rate and skin data. (A) Noise, (B) Object opacity, (C) Score, (D) Red vignette, and (E) No training.

3.2. Analyzing eye data

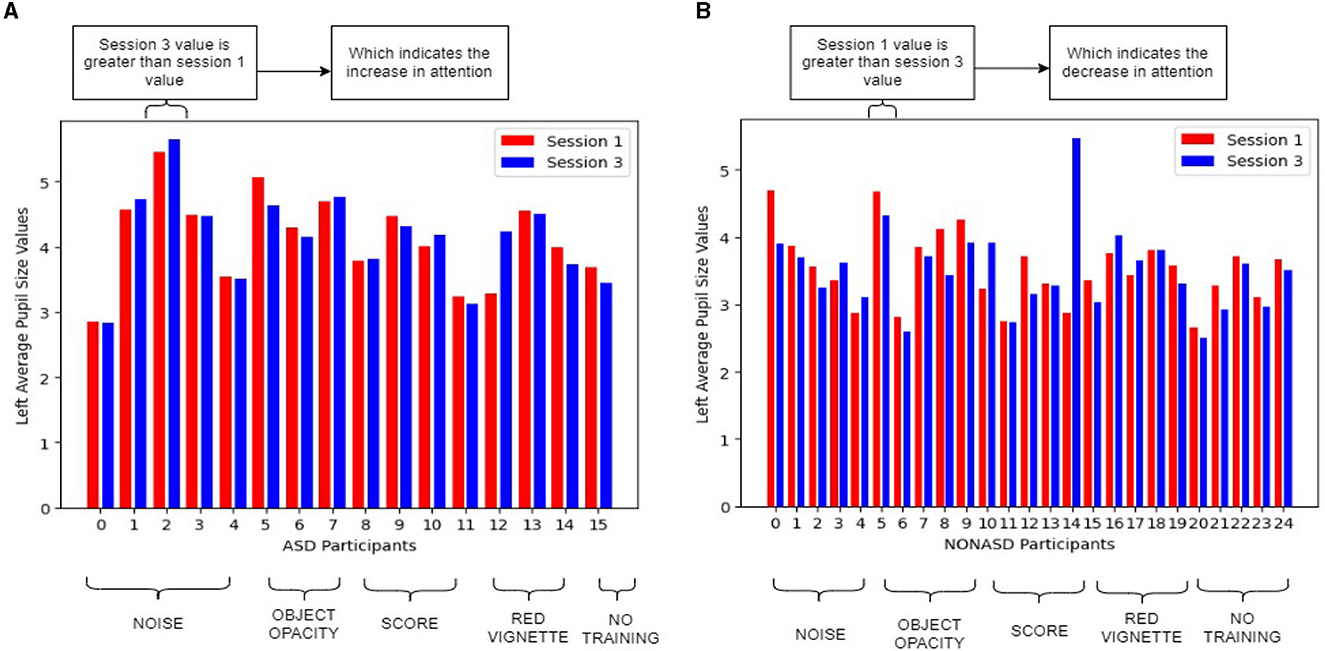

As stated in Section 2.1, 16 participants from the ASD group and 25 participants from the NONASD group are included in the eye data analysis. To analyze attention patterns, we created bar graphs for all 13 eye data features, covering both the left and right eyes. Figure 11 shows the bar graph of the left average pupil size feature for ASD and NONASD participants, with participants on the x-axis and feature values on the y-axis. In Figure 11A, the first five bars correspond to participants in the noise strategy, the next three to the object opacity strategy, the next four to the scoring strategy, the next three to the red vignette strategy, and the final bar to the no-training group. In Figure 11B, consecutive sets of five bars represent noise, object opacity, score, red vignette, and no training, respectively. Red bars represent session 1 data and blue bars represent session 3 data, giving a clear representation of attention changes across all participants in each group.

Figure 11. Left Average Pupil Size feature comparison for ASD and NONASD participants between baseline session and performance session to evaluate the reinforcement effect in the second session. (A) Left Average Pupil Size feature comparison for ASD participants. (B) Left Average Pupil Size feature comparison for NONASD participants.

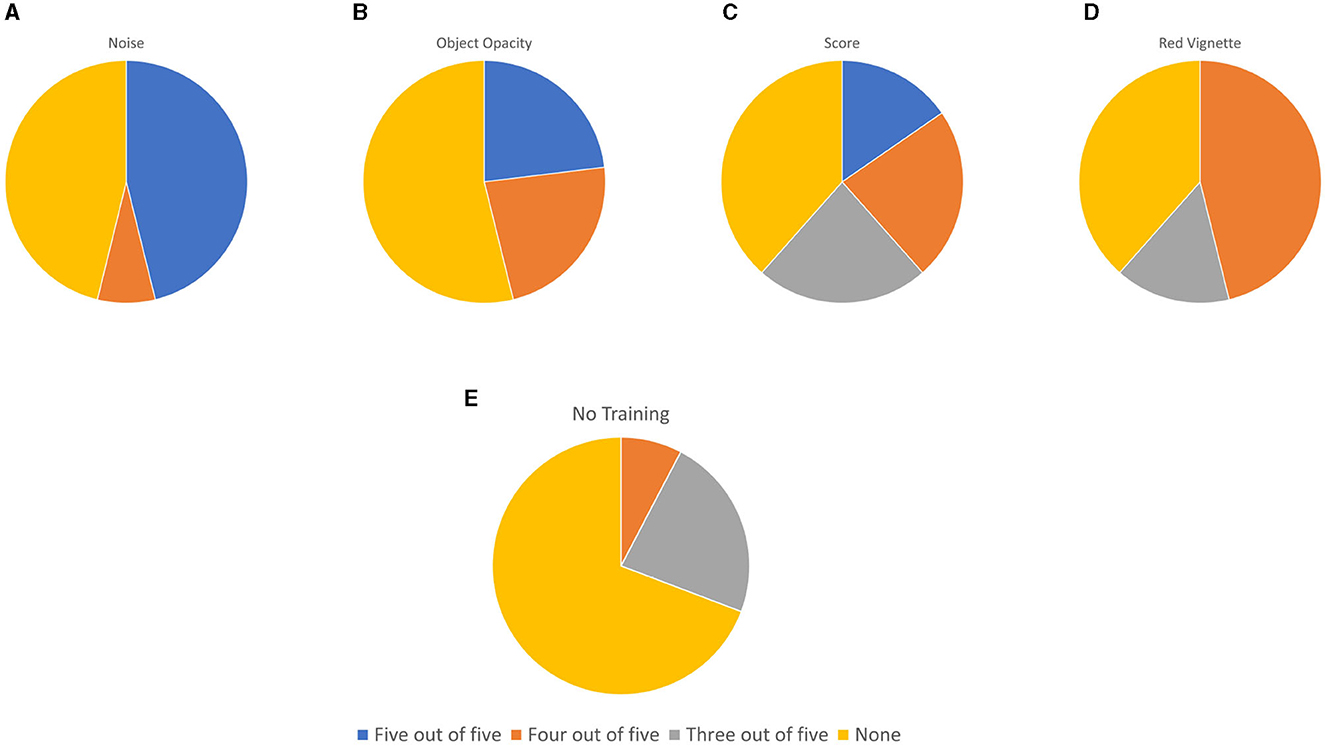

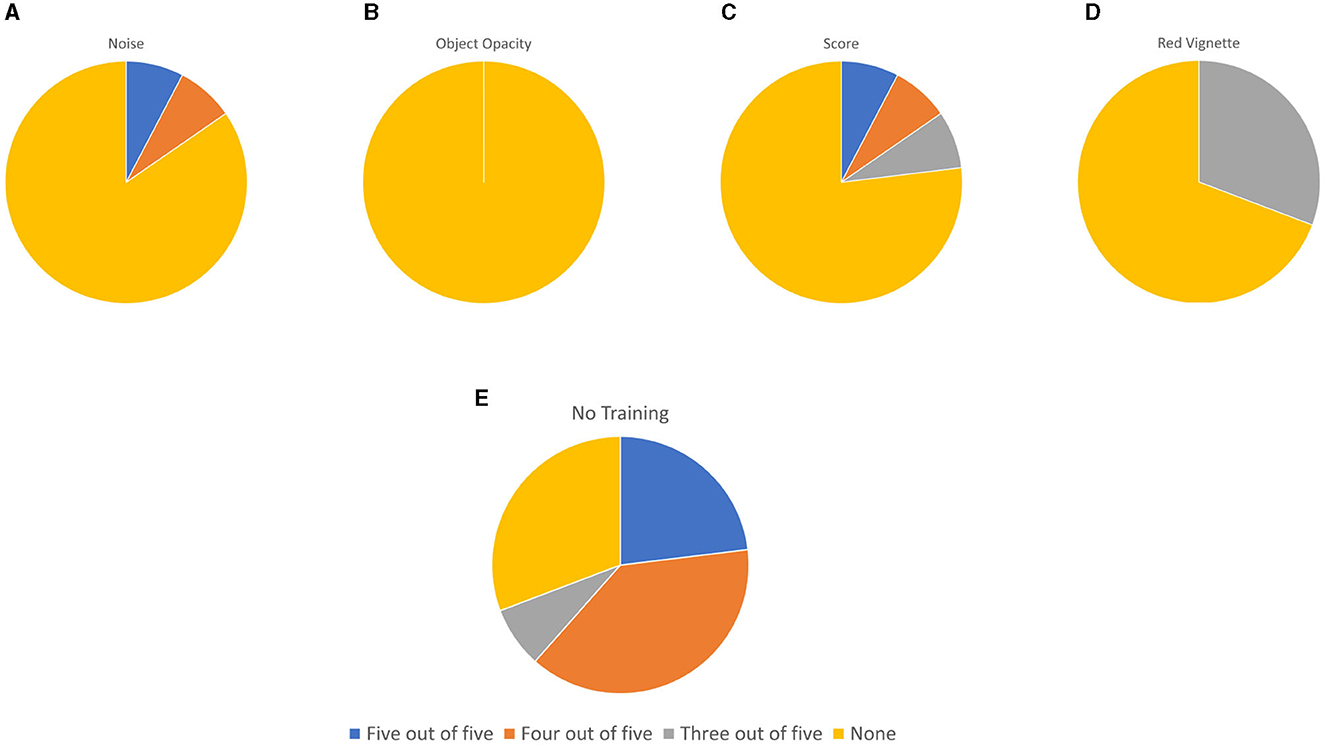

The values from the bar graphs are analyzed and compared, and the results are presented in Table 8 for NONASD participants and Table 9 for ASD participants. Although the number of participants varies across strategies in Table 9, the rule used for the heart rate and EDA data still applies: a strategy is considered positive when more than half of its participants show improved attention. For easier interpretation, these results are also shown as pie charts in Figures 12, 13 for ASD and NONASD participants, respectively. Blue, orange, and gray slices show the numbers of participants with positive results, and yellow slices denote negative effects.

Table 8. Strategies with positive and negative effects on each eye feature for NONASD participants.

Table 9. Strategies with positive and negative effects on each eye feature for ASD participants.

Figure 12. Improved and reduced attention among ASD participants for each strategy, analyzed from eye data. (A) Noise, (B) Object opacity, (C) Score, (D) Red vignette, and (E) No training.

Figure 13. Improved and reduced attention among NONASD participants for each strategy, analyzed from eye data. (A) Noise, (B) Object opacity, (C) Score, (D) Red vignette, and (E) No training.

Figure 12 shows that the noise strategy yielded the most favorable outcomes for training attention in ASD participants, followed by the object opacity and red vignette strategies, which showed some positive results. However, the attention training results from eye data for ASD participants are less promising than those from the heart rate and EDA data. Including five participants for each strategy may yield more positive results. Figure 13 shows the results for NONASD participants; no strategy showed an overall increase in attention. A positive observation concerns the total gazing time on objects: this feature consistently yielded positive results for participants both with and without ASD, indicating an improvement in attention. In conclusion, while the noise strategy demonstrated a positive effect for individuals with ASD, the unequal numbers of participants across the other strategies require further consideration before any definitive conclusions are drawn, and careful assessment and analysis of the data will be necessary to ensure accurate and reliable findings for the remaining strategies.

4. Discussion

Attention development falls within the neurophysiological domain (Lindsay, 2020). Supporting attention in autistic individuals is a complex but achievable task (Khosla, 2017). Strategies used to enhance attention in ASD include selecting engaging activities, modeling tasks, and facilitating smooth transitions. Activities such as finger painting, animal walking, and mirror play have also shown promise in promoting attention development (Vishnu Priya, 2020). Additionally, factors such as eye contact and sensory integration play a significant role in enhancing attention (Karim and Mohammed, 2015). Autistic people may benefit from these techniques, but the techniques require substantial family involvement and time. The limitations of these traditional approaches have led to the emergence of innovative technological tools in neurophysiological research.

Neurophysiological research has seen remarkable advances in the exploration of human cognition and brain function. Alongside these advances, the combination of non-invasive mobile EEG recording and signal processing devices has further enriched the understanding of human cognitive processes (Pei et al., 2022; Vortmann et al., 2022). Non-invasive mobile EEG has proven to be a valuable tool in brain research. It offers real-time monitoring of brain activity in a relatively inexpensive, portable, and user-friendly manner (Lau-Zhu et al., 2019). This approach allows researchers to record brain signals outside the lab, enabling studies in naturalistic environments and promoting inclusivity in research participation (Yang et al., 2020). Using sophisticated signal processing devices, researchers can extract meaningful information from EEG data, enhancing data quality and facilitating offline analysis (Jebelli et al., 2018).

Non-invasive mobile EEG is considered preferable to traditional EEG because it is relatively inexpensive, portable, and user-friendly while still offering real-time monitoring of brain activity (Lau-Zhu et al., 2019). More broadly, non-invasive methods have become a preferred approach in research because they prioritize participant safety, comfort, ethics, and accessibility. This shift from traditional invasive techniques to non-invasive alternatives protects participant wellbeing while still yielding valuable data. In various fields, including VR research, non-invasive methods allow reliable and meaningful data to be collected without physical intrusion (Yang et al., 2020). By prioritizing participants' safety, comfort, and ethical treatment, these methods have significantly advanced our understanding of human behavior and physiological responses. They have also fostered broader participation in research studies. They have provided novel insights into cognitive processes, including attention training, memory, and learning, opening new avenues for brain-computer interface (BCI) applications.

BCIs have been used for attention training and have proven effective in enhancing human attention and cognitive processes (deBettencourt et al., 2015; Thibault et al., 2016). BCIs play a significant role in the investigation of human attention (Katona, 2014), memory, and, indirectly, the learning process. They offer assistance and forecasting abilities that improve the effectiveness of human learning (Katona and Kővári, 2018a,b). Jiang et al. (2011) demonstrated a cost-effective BCI that translates attention states into game control, with promising implications for training individuals affected by ADHD. Kiran (2020) investigated the impact of three types of background music on attention in typically developing and ADHD subjects. The study used EEG signals recorded during a Tetris game and found significant effects of music on children's attention. To overcome the limitations of traditional cognitive training, fMRI-based neurofeedback has been explored, and a brain-wave-based neurofeedback BCI system for attention training has been developed (Abiri et al., 2018). A drawback of the fMRI approach, however, is that participants must remain motionless inside the MRI scanner during training sessions. The integration of BCI and eye-tracking technology can be used to study information processing and even to examine complex cognitive processes (Cipresso et al., 2011) such as programming. Eye tracking reveals cognitive insights such as visual attention, gaze patterns, and mental workload (Katona et al., 2020; Katona, 2021, 2022). Eye movement parameters provide particularly rich information. These parameters allow researchers to identify situations in which Language-Integrated Query (LINQ) query syntax reduces mental strain (Katona et al., 2020; Katona, 2021). They also help identify more developer-friendly algorithm description tools (Katona, 2022) and measure source code readability and quality objectively (Katona, 2021).
EEG signals are efficient for analyzing neurological data and are cost-effective (Patel et al., 2023). In addition, virtual reality (VR), augmented reality (AR), and mixed reality (MR) technologies are valuable tools for enhancing attention.

These technologies have been shown to enhance creative thinking, communication, and problem-solving ability. They also provide immersive three-dimensional spatial experiences, fostering novel approaches to human-computer interaction (Papanastasiou et al., 2018). An overview by Goharinejad et al. (2022) assessed studies on the applications of VR, AR, and MR technologies for children with ADHD. It found that these technologies hold promise for improving the diagnosis and management of ADHD in this group. Fridhi et al. (2018) reviewed the applications of VR and AR in psychiatry and neuropsychology, with a particular focus on ASD. Related reviews address communication disability and neurodevelopmental disorders (Bailey et al., 2021) and ASD specifically (Erb, 2023). Sarker et al. (2021) proposed an interactive multi-staged game module using AR and VR technologies to improve joint attention in autistic children. Embracing a learning process and gaining a deeper understanding of individual needs is crucial. There is no one-size-fits-all method. However, emerging technologies such as VR combined with physiological data (Tan et al., 2019; Kim et al., 2021) have shown promising results in enhancing attention and engagement in autistic children.

Immersive VR applications have shown promising potential for developing vision screening approaches. Conducting functional vision screenings to assess eye coordination is time-consuming and resource-intensive (Beauchamp et al., 2010). Early uses of VR to better understand vision posed challenges for several research teams (Lambooij et al., 2009; Bennett et al., 2019; Grassini and Laumann, 2021). However, studies have shown that eye-tracking (ET)-based immersive VR software can complement current vision screening methods and offer potential solutions (Wijkmark et al., 2021). According to Smith et al. (2020), VR-based therapies improved attention and task performance more than conventional activities. The virtual environments lab at the University of Southern California (USC) launched a research program (Rizzo et al., 2000). The program aimed to develop VR applications for the investigation, evaluation, and rehabilitation of cognitive and functional processes. A later review (Lopez et al., 2016) compared initial VR studies with traditional tools for assessing divided attention. It concluded that VR appears to be a useful tool in this field when used in conjunction with more conventional tests.

Research has demonstrated the positive impact of VR technology on attentional development in children with autism. Emerging evidence shows that VR experiences are more engaging than traditional 2D computer monitors (Li et al., 2020). The immersive and interactive nature of VR provides a controlled and engaging environment that facilitates children's focus and active participation (Araiza-Alba et al., 2021). Given the recognized significance of VR in attention training, numerous studies have explored its efficacy in enhancing attentional abilities among individuals with diverse disorders. Cho et al. (2002) developed an attention enhancement system (AES) capable of improving the attention span of children and adolescents with ADHD. One drawback is that the recruited participants were not officially diagnosed with ADHD, so the approach was primarily designed for people who are impulsive and inattentive. Another study aimed to improve joint attention skills in children with autism. It used an interactive VR system designed specifically for practicing gaze sharing and gaze following (Amat et al., 2021). The findings revealed positive effects on the targeted skills, with a few limitations such as a small sample size and the absence of a control group.

A number of studies have shown substantial interest in VR driving simulations for attention training. However, there is a lack of research focused specifically on improving attention in individuals with autism by integrating physiological data. To address this gap, our laboratory has conducted exploratory research to design and develop a unique attention training system specifically for autistic individuals. To improve training and learning outcomes, the system blends VR with reinforcement training and includes four different feedback techniques. Notably, the system uses auditory and visual cues as rewards and penalties. The VR scenarios incorporate tracking and dynamic adaptation throughout training sessions.
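The reward-and-penalty loop described above can be sketched as a per-tick update. This minimal sketch is an assumption, not the authors' code. A hypothetical `attending` flag (for example, whether the gaze is on the classroom target) drives a cue intensity between 0 and 1. The cue for the selected strategy ramps up while attention is lost and ramps down once it returns. The score strategy additionally adds or deducts points. The mapping from intensity to noise volume, vignette strength or object opacity is left unspecified. The step size of 0.1 is also an illustrative choice.

```python
from dataclasses import dataclass

# The four feedback strategies named in the study.
STRATEGIES = ("noise", "score", "object_opacity", "red_vignette")


@dataclass
class FeedbackState:
    strategy: str
    intensity: float = 0.0  # 0 = no penalty cue, 1 = strongest cue (hypothetical scale)
    score: int = 0


def update_feedback(state: FeedbackState, attending: bool, step: float = 0.1) -> FeedbackState:
    """Advance a hypothetical reward/penalty loop by one tick (mutates `state`)."""
    if state.strategy not in STRATEGIES:
        raise ValueError(f"unknown strategy: {state.strategy}")
    if attending:
        # Attention recovered: fade the penalty cue and reward the participant.
        state.intensity = max(0.0, state.intensity - step)
        if state.strategy == "score":
            state.score += 1
    else:
        # Attention lost: strengthen the penalty cue, capped at 1.
        state.intensity = min(1.0, state.intensity + step)
        if state.strategy == "score":
            state.score -= 1
    return state


# Usage: three distracted ticks followed by one attentive tick.
state = FeedbackState("noise")
for attending in (False, False, False, True):
    update_feedback(state, attending)
print(round(state.intensity, 2))  # 0.2
```

Ramping the cue gradually, rather than switching it on and off, is one possible way to realize the dynamic adaptation mentioned above. A real system would likely also smooth the `attending` signal so that brief glances away do not trigger penalties.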

In this study, we report the complete statistical analysis and comparison of physiological data between ASD and NONASD participants for four strategies: score, noise, red vignette, and object opacity. These strategies form part of the virtual classroom reinforcement training. Based on these analyses, we describe how VR-PDA helps children with ASD improve their attention. This project aims to provide an innovative and adaptive way to address attention-related issues in the ASD population by leveraging the potential of VR and physiological data.

In summary, this paper provides a comprehensive evaluation of the VR-PDA framework, examining the relationship between physiological data and attention. The study included 25 participants, both with and without ASD, who were assigned to different training strategies or to a no-training group. The heart rate and electrodermal activity (EDA) results indicate that reinforcement strategies are effective in enhancing attention among individuals with ASD. The score strategy was the most successful. Conversely, the no-training condition did not yield any noticeable improvement in attention for ASD participants. The eye data analysis provides some evidence of attention improvement, although weaker than that from the heart rate and EDA data. Neither ASD nor NONASD participants demonstrated significant attention enhancement under either the strategies or the no-training condition. In conclusion, the reinforcement training strategies employed in this study successfully redirected the attention of participants with ASD back to the classroom when they were distracted. NONASD participants, by contrast, maintained consistent attention levels without the need for specific strategies.

This study has significant potential to help people with autism, especially by enhancing their attention. Using VR as a tool for attention training is an innovative and adaptable method of treating ADD related to ASD. Because VR can alter virtual settings, it enables individualized attention-focused interventions. These interventions can have major effects on many spheres of life, including academic, social, and workplace success. The impact of this research also extends beyond autism and attention training. The results may be applicable to other engineering domains, where VR and physiological data analysis can be instrumental in developing and enhancing training techniques. The insights from this research could lead to attention-training systems tailored to specific tasks and environments. Examples include aviation (Ziv, 2016), engineering (Naveh and Erez, 2004), and sports (Memmert, 2007; Memmert et al., 2009), where sustained attention is crucial for optimal performance. Moreover, such systems may influence a person's self-confidence and self-efficacy. Previous studies have shown that interventions such as software development courses can positively affect programming self-efficacy (Kovari and Katona, 2023). Similarly, VR-based attention training may give individuals a sense of empowerment and increased confidence in their ability to focus and perform tasks effectively. This aspect can be critical to the overall success of attention training programs, as improved self-confidence can have far-reaching effects on many aspects of an individual's life.

In future work, all ASD participants will be included in the eye data analysis. Participants' feedback from the post-questionnaire will also be used to enhance the gaming experience. Additionally, future research will explore personalized VR-based attention training programs that leverage transfer learning with pre-trained convolutional neural networks (CNNs) and incorporate a real-time feedback system. These advancements aim to further enhance attention abilities in individuals with ASD. Overall, the study offers insight into the efficacy of various attention-training techniques. It also opens new research and development opportunities in attention training and its applications in many fields. Its effects could extend beyond autism, potentially benefiting millions of people by enhancing their attention, self-confidence, and overall quality of life.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

CM, YL, and JH: conception and design of the work. YL and BS: data collection. BS, YL, and JH: data analysis and interpretation and drafting the article. SJ and CM: critical revision of the article. All authors contributed to the article and approved the submitted version.

Funding

This work was partly supported by the National Science Foundation (NSF) under Grants CNS-2244450 and IIS-1850438.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abiri, R., Borhani, S., Zhao, X., and Jiang, Y. (2018). “Real-time neurofeedback for attention training: Brainwave-based brain computer interface,” in Organization for Human Brain Mapping 2017 (Vancouver, BC).

Adhanom, B. I., Lee, S. C., Folmer, E., and MacNeilage, P. (2020). “Gazemetrics: an open-source tool for measuring the data quality of HMD-based eye trackers,” in ACM Symposium on Eye Tracking Research and Applications, 1–5. doi: 10.1145/3379156.3391374

Amat, A. Z., Zhao, H., Swanson, A., Weitlauf, A. S., Warren, Z., and Sarkar, N. (2021). Design of an interactive virtual reality system, InViRS, for joint attention practice in autistic children. IEEE Trans. Neural Syst. Rehabil. Eng. 29, 1866–1876. doi: 10.1109/TNSRE.2021.3108351

Araiza-Alba, P., Keane, T., Chen, W. S., and Kaufman, J. (2021). Immersive virtual reality as a tool to learn problem-solving skills. Comput. Educ. 164, 104121. doi: 10.1016/j.compedu.2020.104121

Arslanyilmaz, A., and Sullins, J. (2021). Eye-gaze data to measure students' attention to and comprehension of computational thinking concepts. Int. J. Child Comput. Interact. 100414. doi: 10.1016/j.ijcci.2021.100414

Bailey, B., Bryant, L., and Hemsley, B. (2021). Virtual reality and augmented reality for children, adolescents, and adults with communication disability and neurodevelopmental disorders: a systematic review. Rev. J. Autism Dev. Disord. 9, 160–183. doi: 10.1007/s40489-020-00230-x

Barry, R. J., Clarke, A. R., Johnstone, S. J., McCarthy, R., and Selikowitz, M. (2009). Electroencephalogram θ/β ratio and arousal in attention-deficit/hyperactivity disorder: evidence of independent processes. Biol. Psychiatry 66, 398–401. doi: 10.1016/j.biopsych.2009.04.027

Beauchamp, G. R., Ellepola, C., and Beauchamp, C. L. (2010). Evidence-based medicine: the value of vision screening. Am. Orthop. J. 60, 23–27. doi: 10.3368/aoj.60.1.23

Bennett, C. R., Bex, P. J., Bauer, C. M., and Merabet, L. B. (2019). The assessment of visual function and functional vision. Semin. Pediatr. Neurol. 31, 30–40. doi: 10.1016/j.spen.2019.05.006

Binda, P., Pereverzeva, M., and Murray, S. O. (2013). Attention to bright surfaces enhances the pupillary light reflex. J. Neurosci. 33, 2199–2204. doi: 10.1523/JNEUROSCI.3440-12.2013

Cho, B., Lee, J., Ku, J., Jang, D., Kim, J., Kim, I., et al. (2002). “Attention enhancement system using virtual reality and EEG biofeedback,” in Proceedings IEEE Virtual Reality 2002, 156–163. doi: 10.1109/VR.2002.996518

Cipresso, P., Meriggi, P., Carelli, L., Solca, F., Meazzi, D., Poletti, B., et al. (2011). “The combined use of brain computer interface and eye-tracking technology for cognitive assessment in amyotrophic lateral sclerosis,” in 2011 5th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth) and Workshops (IEEE), 320–324.

deBettencourt, M. T., Cohen, J. D., Lee, R. F., Norman, K. A., and Turk-Browne, N. B. (2015). Closed-loop training of attention with real-time brain imaging. Nat. Neurosci. 18, 470–475. doi: 10.1038/nn.3940

Erb, E. M. (2023). Using virtual and augmented reality to teach children on the autism spectrum with intellectual disabilities: a scoping review. J. Autism Dev. Disord. 52, 4692–4707.

Forte, G., Favieri, F., and Casagrande, M. (2019). Heart rate variability and cognitive function: a systematic review. Front. Neurosci. 13, 710. doi: 10.3389/fnins.2019.00710

Fridhi, A., Benzarti, F., Frihida, A., and Amiri, H. (2018). Application of virtual reality and augmented reality in psychiatry and neuropsychology, in particular in the case of autistic spectrum disorder (ASD). Neurophysiology 50, 222–228. doi: 10.1007/s11062-018-9741-3

Frith, C. (1983). The skin conductance orienting response as an index of attention. Biol. Psychol. 17, 27–39. doi: 10.1016/0301-0511(83)90064-9

Goharinejad, S., Goharinejad, S., Hajesmaeel-Gohari, S., and Bahaadinbeigy, K. (2022). The usefulness of virtual, augmented, and mixed reality technologies in the diagnosis and treatment of attention deficit hyperactivity disorder in children: an overview of relevant studies. BMC Psychiatry 22, 4. doi: 10.1186/s12888-021-03632-1

Grassini, S., and Laumann, K. (2021). “Immersive visual technologies and human health,” in European Conference on Cognitive Ergonomics 2021 (ACM). doi: 10.1145/3452853.3452856

Hansen, A. L., Johnsen, B. H., and Thayer, J. F. (2003). Vagal influence on working memory and attention. Int. J. Psychophysiol. 48, 263–274. doi: 10.1016/S0167-8760(03)00073-4

Hatch-Rasmussen, C. (2022). Sensory Integration in Autism Spectrum Disorders. Autism Research Institute.

Hellum, O., Steele, C., and Xiao, Y. (2023). Sonia: an immersive customizable virtual reality system for the education and exploration of brain networks. arXiv preprint arXiv:2301.09772. doi: 10.48550/ARXIV.2301.09772

Hodges, H., Fealko, C., and Soares, N. (2020). Autism spectrum disorder: definition, epidemiology, causes, and clinical evaluation. Transl. Pediatr. 9, S55–S65. doi: 10.21037/tp.2019.09.09

Iwamoto, K., Katsumata, S., and Tanie, K. (1994). “An eye movement tracking type head mounted display for virtual reality system: evaluation experiments of a prototype system,” in Proceedings of IEEE International Conference on Systems, Man and Cybernetics, 1, 13–18. doi: 10.1109/ICSMC.1994.399804

Jebelli, H., Hwang, S., and Lee, S. (2018). EEG signal-processing framework to obtain high-quality brain waves from an off-the-shelf wearable EEG device. J. Comput. Civil Eng. 32. doi: 10.1061/(ASCE)CP.1943-5487.0000719

Jiang, L., Guan, C., Zhang, H., Wang, C., and Jiang, B. (2011). “Brain computer interface based 3d game for attention training and rehabilitation,” in 2011 6th IEEE Conference on Industrial Electronics and Applications (IEEE), 124–127. doi: 10.1109/iciea.2011.5975562

Karim, A. E. A., and Mohammed, A. H. (2015). Effectiveness of sensory integration program in motor skills in children with autism. Egypt. J. Med. Hum. Genet. 16, 375–380. doi: 10.1016/j.ejmhg.2014.12.008

Katona, J. (2014). “Examination and comparison of the EEG based attention test with CPT and t.o.v.a.,” in 2014 IEEE 15th International Symposium on Computational Intelligence and Informatics (CINTI) (IEEE), 117–120. doi: 10.1109/cinti.2014.7028659

Katona, J. (2021). Clean and dirty code comprehension by eye-tracking based evaluation using GP3 eye tracker. Acta Polytech. Hung. 18, 79–99. doi: 10.12700/APH.18.1.2021.1.6

Katona, J. (2022). Measuring cognition load using eye-tracking parameters based on algorithm description tools. Sensors 22, 912. doi: 10.3390/s22030912

Katona, J., and Kővári, A. (2018a). The evaluation of BCI and PEBL-based attention tests. Acta Polytech. Hung. 15. doi: 10.12700/aph.15.3.2018.3.13

Katona, J., and Kővári, A. (2018b). Examining the learning efficiency by a brain-computer interface system. Acta Polytech. Hung. 15. doi: 10.12700/APH.15.3.2018.3.14

Katona, J., Kovari, A., Heldal, I., Costescu, C., Rosan, A., Demeter, R., et al. (2020). “Using eye-tracking to examine query syntax and method syntax comprehension in LINQ,” in 2020 11th IEEE International Conference on Cognitive Infocommunications (CogInfoCom) (IEEE).

Kim, S. J., Laine, T. H., and Suk, H. J. (2021). Presence effects in virtual reality based on user characteristics: attention, enjoyment, and memory. Electronics 10, 1051. doi: 10.3390/electronics10091051

Kiran, F. (2020). Exploring effects of background music in a serious game on attention by means of EEG signals in children. doi: 10.31390/gradschool_theses.5151

Kovari, A., and Katona, J. (2023). Effect of software development course on programming self-efficacy. Educ. Inform. Technol. 28, 10937–10963. doi: 10.1007/s10639-023-11617-8

Laeng, B., Ørbo, M., Holmlund, T., and Miozzo, M. (2010). Pupillary stroop effects. Cogn. Process. 12, 13–21. doi: 10.1007/s10339-010-0370-z

Lambooij, M., Fortuin, M., Heynderickx, I., and IJsselsteijn, W. (2009). Visual discomfort and visual fatigue of stereoscopic displays: a review. J. Imaging Sci. Technol. 53, 30201–1–30201–14. doi: 10.2352/J.ImagingSci.Technol.2009.53.3.030201

Lansbergen, M. M., Arns, M., van Dongen-Boomsma, M., Spronk, D., and Buitelaar, J. K. (2011). The increase in theta/beta ratio on resting-state EEG in boys with attention-deficit/hyperactivity disorder is mediated by slow alpha peak frequency. Prog. Neuropsychopharmacol. Biol. Psychiatry 35, 47–52. doi: 10.1016/j.pnpbp.2010.08.004

Lau-Zhu, A., Lau, M. P., and McLoughlin, G. (2019). Mobile EEG in research on neurodevelopmental disorders: Opportunities and challenges. Dev. Cogn. Neurosci. 36, 100635. doi: 10.1016/j.dcn.2019.100635

Li, D., and Sullivan, W. C. (2016). Impact of views to school landscapes on recovery from stress and mental fatigue. Landsc. Urban Plan. 148, 149–158. doi: 10.1016/j.landurbplan.2015.12.015

Li, G., Anguera, J. A., Javed, S. V., Khan, M. A., Wang, G., and Gazzaley, A. (2020). Enhanced attention using head-mounted virtual reality. J. Cogn. Neurosci. 32, 1438–1454. doi: 10.1162/jocn_a_01560

Li, Y. J., Ramalakshmi, P. A., Mei, C., and Jung, S. (2023). “Use of eye behavior with visual distraction for attention training in VR,” in 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (IEEE), 30–35. doi: 10.1109/vrw58643.2023.00013

Lindsay, G. W. (2020). Attention in psychology, neuroscience, and machine learning. Front. Comput. Neurosci. 14, 29. doi: 10.3389/fncom.2020.00029

Lohr, D. J., Friedman, L., and Komogortsev, O. V. (2019). Evaluating the data quality of eye tracking signals from a virtual reality system: case study using SMI's eye-tracking HTC vive. doi: 10.48550/ARXIV.1912.02083

Lopez, M. C., Deliens, G., and Cleeremans, A. (2016). Ecological assessment of divided attention: what about the current tools and the relevancy of virtual reality. Revue Neurol. 172, 270–280. doi: 10.1016/j.neurol.2016.01.399

McCarthy, C., Pradhan, N., Redpath, C., and Adler, A. (2016). “Validation of the empatica e4 wristband,” in 2016 IEEE EMBS International Student Conference (ISC) (IEEE), 1–4. doi: 10.1109/embsisc.2016.7508621

Memmert, D. (2007). Can creativity be improved by an attention-broadening training program? an exploratory study focusing on team sports. Creat. Res. J. 19, 281–291. doi: 10.1080/10400410701397420

Memmert, D., Simons, D. J., and Grimme, T. (2009). The relationship between visual attention and expertise in sports. Psychol. Sport Exerc. 10, 146–151. doi: 10.1016/j.psychsport.2008.06.002

Naveh, E., and Erez, M. (2004). Innovation and attention to detail in the quality improvement paradigm. Manage. Sci. 50, 1576–1586. doi: 10.1287/mnsc.1040.0272

Papanastasiou, G., Drigas, A., Skianis, C., Lytras, M., and Papanastasiou, E. (2018). Virtual and augmented reality effects on k-12, higher and tertiary education students' twenty-first century skills. Virt. Real. 23, 425–436. doi: 10.1007/s10055-018-0363-2

Patel, M., Bhatt, H., Munshi, M., Pandya, S., Jain, S., Thakkar, P., and Yoon, S. (2023). CNN-FEBAC: a framework for attention measurement of autistic individuals. Biomed. Signal Process. Control 105018. doi: 10.1016/j.bspc.2023.105018

Pei, X., Xu, G., Zhou, Y., Tao, L., Cui, X., Wang, Z., et al. (2022). A simultaneous electroencephalography and eye-tracking dataset in elite athletes during alertness and concentration tasks. Sci. Data 9. doi: 10.1038/s41597-022-01575-0

Pelaez, M. D. C., Albalate, M. T. L., Sanz, A. H., Valles, M. A., and Gil, E. (2019). Photoplethysmographic waveform versus heart rate variability to identify low-stress states: attention test. IEEE J. Biomed. Health Inform. 23, 1940–1951. doi: 10.1109/JBHI.2018.2882142

Pomplun, M., and Sunkara, S. (2003). “Pupil dilation as an indicator of cognitive workload in human-computer interaction,” in Proceedings of the International Conference on HCI.

Poole, D., Gowen, E., Warren, P. A., and Poliakoff, E. (2018). Visual-tactile selective attention in autism spectrum condition: an increased influence of visual distractors. J. Exp. Psychol. Gen. 147, 1309–1324. doi: 10.1037/xge0000425

Rizzo, A., Buckwalter, J., Bowerly, T., Zaag, C. V. D., Humphrey, L., Neumann, U., et al. (2000). The virtual classroom: a virtual reality environment for the assessment and rehabilitation of attention deficits. CyberPsychol. Behav. 3, 483–499. doi: 10.1089/10949310050078940

Rohani, D. A., and Puthusserypady, S. (2015). BCI inside a virtual reality classroom: a potential training tool for attention. EPJ Nonlinear Biomed. Phys. 3. doi: 10.1140/epjnbp/s40366-015-0027-z

Sarker, S., Linkon, A. H. M., Bappy, F. H., Rabbi, M. F., and Nahid, M. M. H. (2021). Improving joint attention in children with autism: a VR-AR enabled game approach. Int. J. Eng. Adv. Technol. 10, 77–80. doi: 10.35940/ijeat.C2201.0210321

Shema-Shiratzky, S., Brozgol, M., Cornejo-Thumm, P., Geva-Dayan, K., Rotstein, M., Leitner, Y., et al. (2018). Virtual reality training to enhance behavior and cognitive function among children with attention-deficit/hyperactivity disorder: brief report. Dev. Neurorehabil. 22, 431–436. doi: 10.1080/17518423.2018.1476602

Smith, V., Warty, R. R., Sursas, J. A., Payne, O., Nair, A., Krishnan, S., et al. (2020). The effectiveness of virtual reality in managing acute pain and anxiety for medical inpatients: systematic review. J. Med. Internet Res. 22, e17980. doi: 10.2196/17980

Tan, Y., Zhu, D., Gao, H., Lin, T.-W., Wu, H.-K., Yeh, S.-C., et al. (2019). “Virtual classroom: an ADHD assessment and diagnosis system based on virtual reality,” in 2019 IEEE International Conference on Industrial Cyber Physical Systems (ICPS) (IEEE), 203–208.

Tarng, W., Pan, I.-C., and Ou, K.-L. (2022). Effectiveness of virtual reality on attention training for elementary school students. Systems 10, 104. doi: 10.3390/systems10040104

Thibault, R. T., Lifshitz, M., and Raz, A. (2016). The self-regulating brain and neurofeedback: experimental science and clinical promise. Cortex 74, 247–261. doi: 10.1016/j.cortex.2015.10.024

Thomas, J. H. P., Whitfield, M. F., Oberlander, T. F., Synnes, A. R., and Grunau, R. E. (2011). Focused attention, heart rate deceleration, and cognitive development in preterm and full-term infants. Dev. Psychobiol. 54, 383–400. doi: 10.1002/dev.20597

Tung, Y.-H. (2019). Effects of mindfulness on heart rate variability. doi: 10.18178/ijpmbs.8.4.132-137

Vortmann, L.-M., Ceh, S., and Putze, F. (2022). Multimodal EEG and eye tracking feature fusion approaches for attention classification in hybrid BCIs. Front. Comput. Sci. 4, 780580. doi: 10.3389/fcomp.2022.780580

Wijkmark, C. H., Metallinou, M. M., and Heldal, I. (2021). Remote virtual simulation for incident commanders—cognitive aspects. Appl. Sci. 11, 6434. doi: 10.3390/app11146434

Wolfartsberger, J. (2019). Analyzing the potential of virtual reality for engineering design review. Automat. Construct. 104, 27–37. doi: 10.1016/j.autcon.2019.03.018

Yang, B., Li, X., Hou, Y., Meier, A., Cheng, X., Choi, J.-H., et al. (2020). Non-invasive (non-contact) measurements of human thermal physiology signals and thermal comfort/discomfort poses—A review. Energy Build. 224, 110261. doi: 10.1016/j.enbuild.2020.110261

Zhang, J., Mullikin, M., Li, Y., and Mei, C. (2020). “A methodology of eye gazing attention determination for VR training,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (IEEE), 138–141. doi: 10.1109/vrw50115.2020.00029

Keywords: attention, autism spectrum disorder, attention deficit disorder, virtual reality, physiological data

Citation: Sanku BS, Li YJ, Jung S, Mei C and He JS (2023) Enhancing attention in autism spectrum disorder: comparative analysis of virtual reality-based training programs using physiological data. Front. Comput. Sci. 5:1250652. doi: 10.3389/fcomp.2023.1250652

Received: 07 July 2023; Accepted: 06 September 2023;

Published: 28 September 2023.

Edited by:

Santiago Perez-Lloret, National Scientific and Technical Research Council (CONICET), Argentina

Reviewed by:

Jozsef Katona, University of Dunaújváros, Hungary

Francisco Capani, Universidad Abierta Interamericana, Argentina

Copyright © 2023 Sanku, Li, Jung, Mei and He. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yi (Joy) Li, joy.li@kennesaw.edu

Bhavya Sri Sanku, Yi (Joy) Li, Sungchul Jung, Chao Mei³ and Jing (Selena) He