- 1Department of Electrical and Software Engineering/Hotchkiss Brain Institute, University of Calgary, Calgary, AB, Canada

- 2Department of Electrical Engineering, Stanford University, Stanford, CA, United States

- 3Department of Computer Science, University of Oxford, Oxford, United Kingdom

- 4Department of Electrical and Computer Engineering, University of Alberta, Edmonton, AB, Canada

- 5Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology, Cambridge, MA, United States

- 6Department of Electrical and Computer Engineering, University of Toronto, Toronto, ON, Canada

- 7Research and Innovation Center, Óbuda University, Budapest, Hungary

- 8Department of Electronic Engineering, Tsinghua University, Beijing, China

- 9Systems Research Institute, Polish Academy of Sciences, Warsaw, Poland

Cognitive computers (κC) are intelligent processors advanced from data and information processing to autonomous knowledge learning and intelligence generation. This work presents a retrospective and prospective review of the odyssey toward κC empowered by transdisciplinary basic research and engineering advances. A wide range of fundamental theories and innovative technologies for κC is explored, and a set of underpinning intelligent mathematics (IM) is created. The architectures of κC for cognitive computing and Autonomous Intelligence Generation (AIG) are designed as a brain-inspired cognitive engine. Applications of κC in autonomous AI (AAI) are demonstrated by pilot projects. This work reveals that AIG will no longer be a privilege restricted only to humans via the odyssey to κC toward training-free and self-inferencing computers.

1. Introduction

A spectrum of basic research and disruptive technologies [Newton, 1729; Boole, 1854; Russell, 1903; Hoare, 1969; Kline, 1972; Aristotle (384 BC−322 BC), 1989; Zadeh, 1997; Wilson and Frank, 1999; Bender, 2000; Timothy, 2008; Widrow, 2022] has triggered the emergence of Cognitive Computers (κC) (Wang, 2012e), which has paved the way to autonomous artificial intelligence (AAI) (Wang, 2022g) and machine intelligence generation (Birattari and Kacprzyk, 2001; Kacprzyk and Yager, 2001; Pedrycz and Gomide, 2007; Rudas and Fodor, 2008; Siddiqi and Pizer, 2008; Wang et al., 2009, 2016b, 2017, 2018; Cios et al., 2012; Berwick et al., 2013; Wang, 2016a, 2021a; Plataniotis, 2022; Huang et al., 2023). The odyssey to κC is inspired by the brain (Siddiqi and Pizer, 2008; Wang et al., 2009, 2016b, 2018; Wang, 2021a) and enabled by intelligent mathematics (IM) (Wang, 2008c, 2012a,b, 2020, 2022c, 2023). It was initiated by fundamental questions about what classic AI cannot do and how κC will enable AAI theories and technologies (Birattari and Kacprzyk, 2001; Kacprzyk and Yager, 2001; Pedrycz and Gomide, 2007; Rudas and Fodor, 2008; Siddiqi and Pizer, 2008; Wang et al., 2009, 2016b, 2017, 2018; Cios et al., 2012; Wang, 2012a, 2016a, 2020, 2021a, 2022c,g; Berwick et al., 2013; Plataniotis, 2022; Huang et al., 2023) that go beyond classical pretrained AI based on data convolution and preprogrammed computing underpinned by pre-deterministic run-time behaviors.

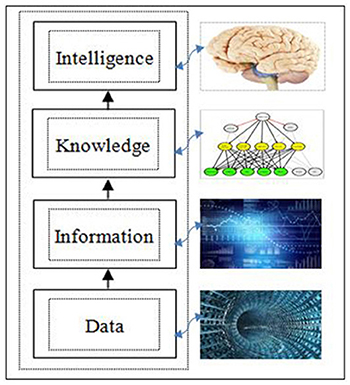

Although it is well recognized that data, information, knowledge, and intelligence are the fundamental cognitive objects in the brain (Boole, 1854; Wilson and Frank, 1999; Wang et al., 2009) and AAI systems, as illustrated in Figure 1, there is a lack of formal studies on their transition and aggregation. The contemporary and traditional perceptions, metaphors, and relationships of the basic cognitive objects in the brain and intelligent systems are analyzed in this section. The taxonomy of cognitive objects in the brain may be formally classified into four forms according to the hierarchical cognitive framework of the brain (CFB) (Wang, 2003, 2022b; Wang et al., 2006, 2021a).

Definition 1. The hierarchy of cognitive objects (HCO) represented in the brain is a four-tuple encompassing the categories of data (𝔻), information, knowledge, and intelligence from the bottom up, with increasing intelligence power as well as complexity:

where the symbols denote sets of objects (O), quantities (Q), signals/events (S), concepts (C), and behaviors (B), respectively.

It is noteworthy in Equation (1) that the essences of HCO are embodied by distinguished properties quantified by different cognitive units. The unit of data is a scalar quantity implied by its physical unit (pyu) on a corresponding quantification scale. The bit, the unit of information, is a scalar binary digit introduced by Shannon (1948). The bir, the unit of knowledge, is a binary relation discovered by Wang in 2018 (Wang, 2016c). It leads to the highest level of cognitive units, the bip, which denotes a binary process of knowledge-based behaviors triggered by the information of an event (Wang, 2008c).

Example 1. The inductive and hierarchical aggregation from data, information, and knowledge to intelligence in the brain may be illustrated by the cognitive model of an arbitrary AND-gate as follows (Wang, 2018):

Given an AND-gate with input size |I| = n = 10,000 pins and one output |O| = 1, its cognitive complexities at different levels according to HCO are extremely different:

1) Data: $2^{10,000}$ bits ⇐ super big!

2) Information: 10,000 bits

3) Knowledge: 2 rules

4) Intelligence: ∀ n, O = f(n) = AND(n)

// It becomes a generic logical function learned by κC, independent of n

The example reveals that if a machine tries to learn how an AND-gate behaves at the data level, it faces an extremely complex problem that easily becomes intractable. However, human brains may quickly reduce the problem to a general and simple logic function at the intelligence level. This is the fundamental difference between current data-training-based machine learning technologies and the natural intelligence of the brain underpinning κC.
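The contrast in Example 1 can be made concrete with a minimal Python sketch. The function names below are illustrative assumptions, not part of the source: the data-level view enumerates every input combination, whose count grows as 2^n, while the intelligence-level view is a single function that works for any n.

```python
from itertools import product


def and_gate(inputs):
    """Intelligence level: one generic rule, independent of the pin count n."""
    return all(inputs)


def truth_table(n):
    """Data level: enumerate every input combination of an n-pin gate."""
    return [(bits, and_gate(bits)) for bits in product((False, True), repeat=n)]


def data_level_rows(n):
    """Number of input combinations that a data-level learner must cover."""
    return 2 ** n


# A 3-pin gate is still easy to enumerate ...
small_table = truth_table(3)

# ... but a 10,000-pin gate is not: the row count alone has thousands of digits.
rows_for_10000_pins = data_level_rows(10_000)
digits_in_row_count = len(str(rows_for_10000_pins))

# The generic function handles the same gate directly.
output_all_high = and_gate([True] * 10_000)
output_one_low = and_gate([True] * 9_999 + [False])
```

Enumerating `truth_table(10_000)` is infeasible, whereas `and_gate` evaluates the same gate in time linear in n, which is the reduction the example attributes to the intelligence level.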

The odyssey of computing theories and technologies from Babbage (1822)'s mechanical machines, Turing (1950)'s extended finite state machines (FSM), von Neumann (1946)'s stored-program-controlled computers, to regressive and convolutional neural-networks-driven AI systems (McCarthy et al., 1955), has led to the emergence of κC for autonomous intelligence generation (Wang, 2012e). κC manipulates cognitive entities as hyperstructures (ℍ) (Wang, 2008c, 2009a) beyond classic discrete objects in a set of real numbers (ℝ). ℍ reflects the latest discovery on the essence of knowledge and intelligence: their target objects lie outside the domain of ℝ, which challenges the processing power of traditional mathematical means and computing models used for manipulating data and information.

Definition 2. A cognitive computer (κC) is a fully intelligent computer, aggregated from data and information processing, to provide a cognitive engine for empirical knowledge acquisition and autonomous intelligence generation driven by IM.

κC is a disruptive technology for AAI that implements brain-inspired computers powered by non-preprogramed computing and non-pretrained AI based on the latest breakthroughs in cognitive computing theories (Wang, 2009b, 2011, 2012c) and intelligent mathematics (Wang, 2021b). The philosophy of κC rests on the observation that it is impossible either to preprogram all future behaviors demanded by unpredicted run-time conditions or to pretrain for all application scenarios in real time in the infinite discourse of ℍ.

This study presents a retrospective and prospective review of the odyssey toward contemporary κC driven by transdisciplinary basic research and technology advances. A comprehensive scope of philosophical and theoretical perspectives on κC as well as transdisciplinary theories and IM for κC will be explored in Section 2. The architecture and functions of κC will be modeled in Section 3 as brain-inspired autonomous systems. Applications of κC will be demonstrated in Section 4 via experiments on machine knowledge learning for enabling autonomous machine intelligence generation.

2. Transdisciplinary discoveries on the theoretical foundations of κC

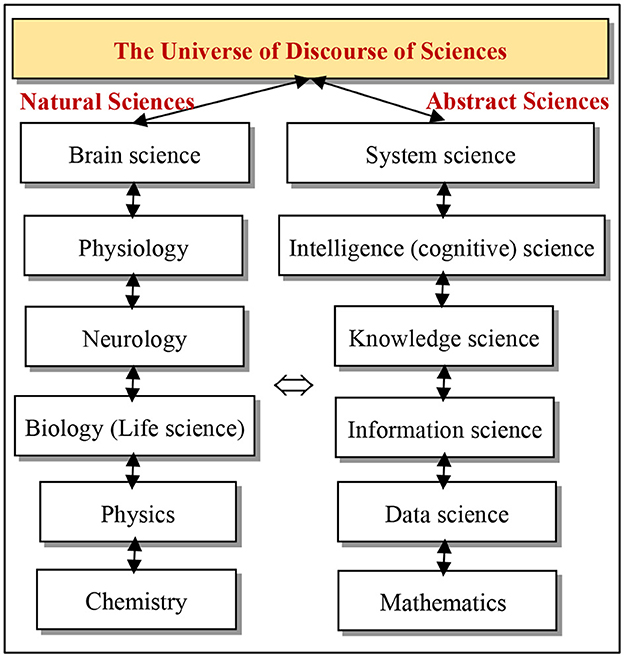

The universe of discourse of contemporary sciences is a dual of natural and abstract sciences as shown in Figure 2. In recent decades, a number of scientific disciplines have emerged as abstract sciences such as data, information, knowledge, and intelligence sciences from the bottom up. They have not only been triggered by the information revolution and the rise of computational intelligence but also by the deepened understanding of the insights and generic principles of the natural science counterparts, in formal studies (Wang, 2003, 2008c, 2022b; Wang et al., 2006, 2021a).

The convergence of brain science (Wilson and Frank, 1999; Wang et al., 2006), intelligence science (Boole, 1854; Wang, 2009a; Wang et al., 2016b, 2017; Widrow, 2022), computer science (von Neumann, 1946; Turing, 1950; Wang, 2009b), and intelligent mathematics (Wang, 2008c, 2012a,b, 2020, 2022c) has led to the emergence of κC (Wang, 2009b, 2012e), underpinned by the synergy of abstract sciences (Wang, 2022b). This section provides a transdisciplinary review and analysis of the theoretical foundations, key discoveries, and technical breakthroughs toward realizing κC across the brain, computer, software, data, information, knowledge, intelligence, cybernetics, and mathematical sciences.

2.1. Brain science

The brain, as the most mature intelligent organ (Wilson and Frank, 1999; Wang et al., 2006), is an ideal reference model for revealing the theoretical foundations of AI and what AI may or may not do, as constrained by the nature and the expressive power of current mathematical means for computational implementations.

Definition 3. Brain science studies the neurological and physiological structures of the brain and the mechanisms of intelligence generation on brain structures.

It is revealed in cognitive informatics (Wang, 2003) that the brain and natural intelligence may be explained by a hierarchical framework, which maps the brain through embodiments at neurological, physiological, cognitive, and logical layers from bottom-up induction and top-down deduction. A rigorous study of the cognitive foundations of natural intelligence may shed light on the general mechanisms of all forms of intelligence toward pervasive brain-inspired systems (BIS) (Wang et al., 2016b, 2018).

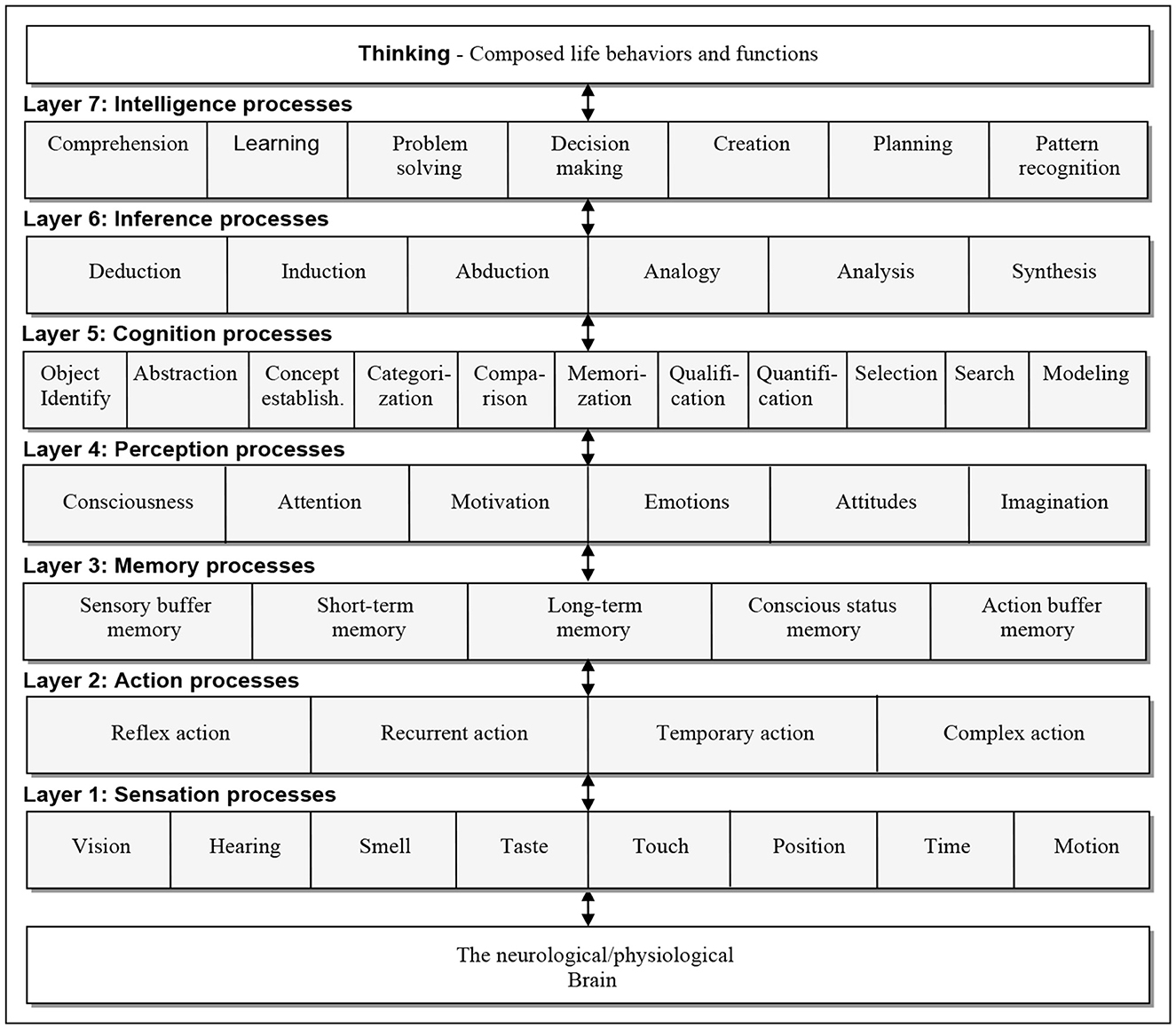

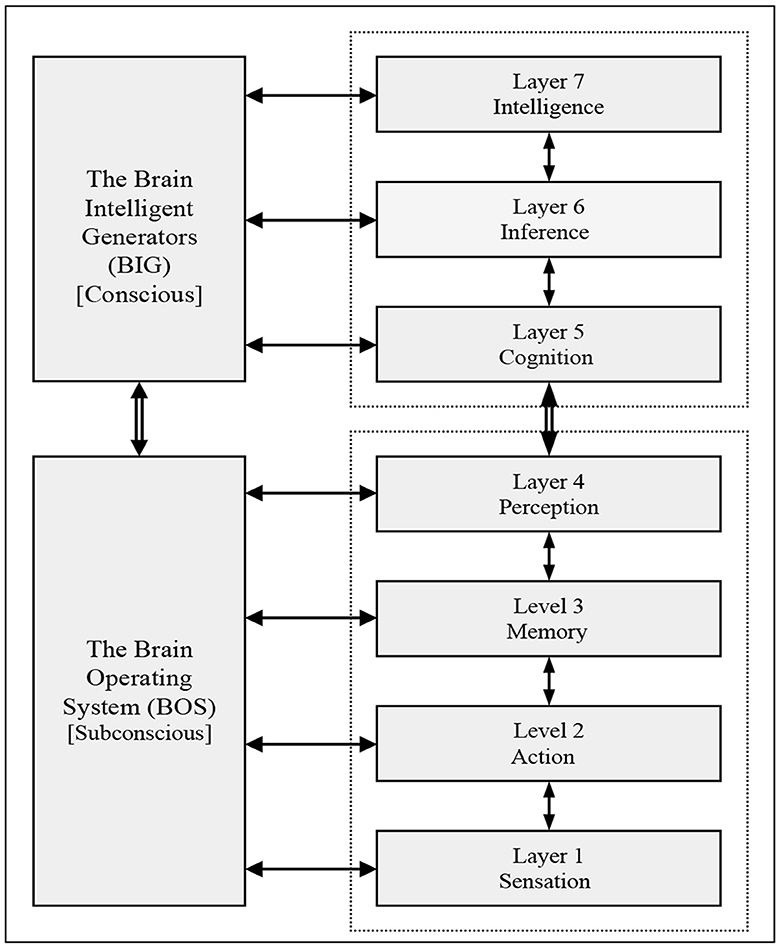

A layered reference model of the brain (LRMB) (Wang et al., 2006) is introduced in Figure 3 as an overarching architecture of the brain's mechanisms. The four lower layers of LRMB, namely sensation, action, memory, and perception, are classified as subconscious mental functions of the brain, which are identified as the Brain's Operating System (BOS). In contrast, the three higher layers, namely cognition, inference, and intelligence, are classified as conscious mental functions of the brain, known as the Brain's Intelligence Generator (BIG), built on BOS.

Figure 3. Layered reference model of the brain (LRMB) (Wang et al., 2006).

LRMB reveals that the brain can be formally embodied by 52 cognitive processes (Wang et al., 2006) at seven recursive layers from the bottom up. In this view, any complex mental process or intelligent behavior is a temporary composition of fundamental processes of LRMB at run-time. Based on LRMB, the nature of intelligence may be rigorously reduced to lower-layer cognitive objects such as data, information, and knowledge in the following subsections. The framework of brain science and its functional explanations in cognitive science will be further explained in Figures 7 and 8 in Sections 3 and 4.

According to LRMB, the theoretical bottleneck and technical challenges toward κC are the lack of a fully autonomous intelligent engine at the top layer constrained by current pretrained AI and preprogramed computing technologies (Wang, 2009b, 2021b). Therefore, from the brain science point of view, κC is a brain-inspired intelligent computer for autonomous intelligence generation (AIG) dependent on an intelligent operating system, as designed in Section 4.

2.2. Computer science

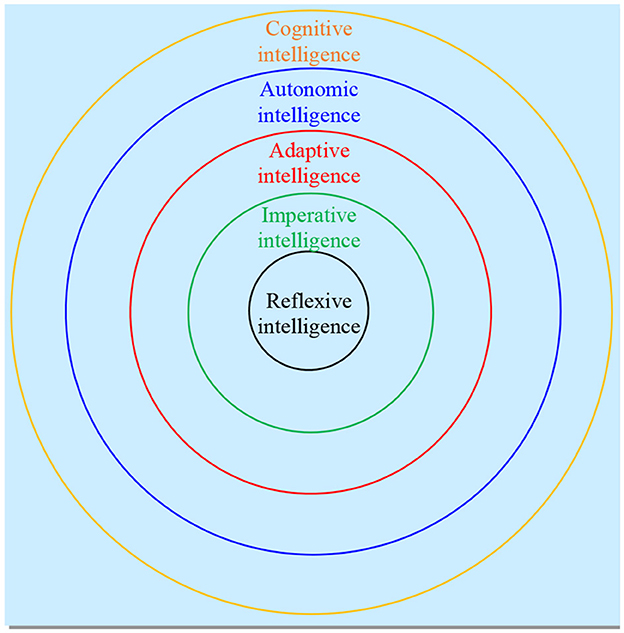

A computer is a general programable machine for realizing computational intelligence (Wang, 2008c, 2009a). The odyssey toward κC has gone through mechanical (Charles Babbage) (Babbage, 1822), analog, digital, and then quantum (David, 1985) computers. Computing theories and models have been focused on information processing by various means and architectures (Lewis and Papadimitriou, 1998; Wang, 2008f). However, they are still stuck at low levels of reflexive, imperative, and adaptive intelligence according to the hierarchical intelligence model (HIM), as shown in Figure 5.

Definition 4. Computer science studies the architectures and functions of general instructive processors, their denotational and operational mathematical models, programming languages, algorithmic frameworks, and run-time problem solutions interacting with the external environment.

A fundamental theory of computer science is built on the mathematical models of finite state machines (FSM) (Lewis and Papadimitriou, 1998) and Turing machines (Turing, 1950) as event-driven behavioral generators between the internal memory space and the external port (interface) space of users or devices (Wang, 2008f).

Definition 5. FSM is a universal function generator. Its structure Θ(FSM) is a quin-tuple and its function F(FSM) is a sequence of state transitions of event-driven functions to realize programable intelligence in the external space:

where S is a finite set of predefined states of the FSM, Σ a set of events, s the initial state (s ∈ S), H the set of final states and δ the state transition function. In Equation (2), the big-R calculus is a general recursive mathematical expression for denoting recurring structures or iterative behaviors (Wang, 2008d), which is introduced in real-time process algebra (RTPA) (Wang, 2002, 2008a).
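The five components named in Definition 5 map directly onto a small data structure. The following Python sketch is an illustration only: the class, the field names, and the parity-checking machine are assumptions made for this example, and the big-R recursion of RTPA is stood in for by an ordinary loop over events.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable


@dataclass(frozen=True)
class FSM:
    states: FrozenSet[str]            # S: finite set of predefined states
    events: FrozenSet[str]            # Σ: set of events
    initial: str                      # s: initial state, s ∈ S
    finals: FrozenSet[str]            # H: set of final states
    delta: Callable[[str, str], str]  # δ: state transition function

    def run(self, inputs: Iterable[str]) -> str:
        """Apply δ once per event and return the state reached."""
        state = self.initial
        for event in inputs:
            if event not in self.events:
                raise ValueError(f"unknown event: {event!r}")
            state = self.delta(state, event)
        return state

    def accepts(self, inputs: Iterable[str]) -> bool:
        """True if the event sequence ends in one of the final states H."""
        return self.run(inputs) in self.finals


# Hypothetical example machine: accepts sequences with an even number of "1" events.
TRANSITIONS = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}

parity = FSM(
    states=frozenset({"even", "odd"}),
    events=frozenset({"0", "1"}),
    initial="even",
    finals=frozenset({"even"}),
    delta=lambda state, event: TRANSITIONS[(state, event)],
)
```

Every behavior of this machine is fixed in `TRANSITIONS` before it runs, which illustrates the preprogramed character of FSM-based computing that the section contrasts with κC.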

For processing complex mathematical and language expressions, Alan Turing extended an FSM to a more sophisticated computing model known as the Turing machine (Turing, 1950) by enhanced recursive functions. Both the Turing machine and an FSM have provided a foundation for enabling programable intelligence in computer science.

However, κC is indispensable for unleashing computational power beyond the first generation of preprogramed (imperative) computers and the second generation of pretrained (data-aggregated) AI computers, toward the third generation of autonomous intelligent computers (Wang, 2012e). The architecture and functions of κC will be elaborated in Section 3.

2.3. Software science

Software is a form of abstract computational intelligence that is generated by instructive behaviors as a chain of embedded functions on executable computing structures as typed tuples (Wang, 2008a, 2014b). The latest advances in computer science, intelligence science, and intelligent mathematics have triggered the emergence of software science (Wang, 2014b, 2021d).

Definition 6. The general mathematical model of software (GMMS) ℘ is a dispatch structure ↪ with finite sets of embedded relational processes Pi driven by certain events (E):

where the abstract entities of software are represented by sets of structure models (SM), process models (PMs), and environmental events (E).

GMMS (Wang, 2014b) reveals that software is not only an interactive dispatch structure at the top level driven by trigger, timing, and interrupt events (E), but also a set of embedded relational processes at the lower level of subsystems and components.
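The two-level view of GMMS, an event-driven dispatch structure on top of embedded processes that act on structure models, can be sketched in Python as follows. This is a loose illustration rather than the formal model: the event names, the process functions, and the dictionary-based structure model are all hypothetical.

```python
from typing import Callable, Dict, Iterable, List

# A structure model (SM) is represented here as a plain dictionary of fields.
StructureModel = Dict[str, int]
# A process model (PM) is a function that transforms the structure model in place.
Process = Callable[[StructureModel], None]


def increment(sm: StructureModel) -> None:
    """Lower-level process: advance the counter."""
    sm["count"] += 1


def reset(sm: StructureModel) -> None:
    """Lower-level process: clear the counter."""
    sm["count"] = 0


# Top level: each event (E) dispatches a finite sequence of embedded processes.
DISPATCH: Dict[str, List[Process]] = {
    "tick": [increment],
    "interrupt": [reset, increment],
}


def dispatch(event: str, sm: StructureModel) -> None:
    """Run the processes bound to one event; unknown events are ignored."""
    for process in DISPATCH.get(event, []):
        process(sm)


def run(events: Iterable[str], sm: StructureModel) -> StructureModel:
    """Drive the software model with a stream of environmental events."""
    for event in events:
        dispatch(event, sm)
    return sm


final_model = run(["tick", "tick", "interrupt", "tick"], {"count": 0})
```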

Definition 7. Software science studies the formal properties and mathematical models of software, general methodologies for rigorous and efficient software development, and coherent theories and laws underpinning software behaviors and software engineering practices.

The discipline of software science (Hoare, 1969, 1994; Wang, 2014b, 2021d) encompasses theories and methodologies, denotational mathematics, system software, fundamental algorithms, organizational theories, cognitive complexity, and intelligent behavior generation theories. The pinnacle of software science is the set of fundamental theories of formal methods (Hoare, 1969, 1994; Hoare et al., 1987; Wang, 2008a, 2014b, 2021d) and platforms of system software including operating systems, compilers, database systems, and networked interconnections (Wang, 2008f). Hoare has revealed that software is a mathematical entity (Hoare, 1969) that may be formally denoted by a sequential behavioral process known as the unified process theory in formal methods (Hoare, 1994).

A set of 30+ laws of programming has been created by Hoare et al. (1987). Real-time process algebra (RTPA) (Wang, 2002, 2008e) was then introduced, which extends Hoare's sequential process theory to a system of IM for formally denoting system and human cognitive, inference, and behavioral processes as a general theory toward software science (Wang, 2014b). Based on RTPA, a comprehensive set of mathematical laws and associated theorems on software structures, behaviors, and processes has been formally established (Wang, 2008b).

Software engineering is applied software science. It is one of the most complicated branches of engineering because its objects are highly abstract and intangible. It encompasses system modeling, architecture, development methodologies, programming technologies, and development platforms. It also covers heuristic principles, tools/environments, best practices, case studies, experiments, trials, and performance benchmarking.

2.4. Data science

Data are an abstract representation of quantities or properties of real-world entities and abstract objects against a specific quantification scale. Data are the most fundamental and pervasive cognitive objects in the brain: they link real-world entities and attributes to mental abstractions and are represented in the brain by its sensory, qualification, and abstraction processes for eliciting real-world attributes. The cognitive processes for data elicitation according to the LRMB model involve sensation, observation, abstraction, and measurement. Data manipulations, however, depend on qualification, quantification, measurement, statistics, and computing.

Definition 8. Data with respect to a quantity X against a measuring scale 𝔖, D(X, 𝔖), is yielded via a quantification process Γ𝔖(X) that results in a real number in unit [𝔖], denoted by an integer part and a decimal part, where the latter is given by the remainder:

where 𝔖 is represented by typical types encompassing bits, natural numbers (ℕ), integers (ℤ), real numbers (ℝ), and fuzzy numbers (𝔽) in type theories (Wang, 2002, 2014b).
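One way to read the quantification process in Definition 8 is as a division of the quantity by the unit of the scale, keeping the whole number of units and the remainder separately. The Python sketch below follows that reading. It is an assumption about the intent of the definition, and the function name `quantify` is hypothetical. Exact rational arithmetic is used so that the remainder is not blurred by floating-point rounding.

```python
from fractions import Fraction
from typing import Tuple


def quantify(x: Fraction, unit: Fraction) -> Tuple[int, Fraction]:
    """Split quantity x into whole units of the scale and a remainder.

    Returns (integer_part, remainder) such that
    x == integer_part * unit + remainder and 0 <= remainder < unit.
    """
    if unit <= 0:
        raise ValueError("the unit of the measuring scale must be positive")
    integer_part, remainder = divmod(x, unit)
    return int(integer_part), remainder


# 3.5 measured on a scale with unit 1: three whole units, remainder 1/2.
whole_a, rest_a = quantify(Fraction(7, 2), Fraction(1))

# 1.7 measured on a scale with unit 0.5: three whole units, remainder 1/5.
whole_b, rest_b = quantify(Fraction(17, 10), Fraction(1, 2))
```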

Definition 9. Data science studies the basic properties of data encompassing their forms, types, properties, domains, representations, and formal mathematical models. It also studies data manipulation methodologies for data generation, acquisition, storage, retrieval, transformation, sharing, protection, and information aggregation.

In data science, big data are large-scale and heterogeneous data in terms of quantity, complexity, storage, retrieval, semantics, cognition, distribution, and maintenance in all branches of abstract sciences (Wang and Peng, 2017) across Section 2.

Definition 10. The mathematical model of big data Θ is a two-dimensional n × m matrix where each row is denoted by a structure model (|SM) called a typed tuple; while each column is clustered by a field with a certain type (|Tj):

where each cell of big data formally denotes a certain object $d_{ij}|T_j$ in type $|T_j$.

Example 2. A formal big data system (BDS) of a social network, Θ(BDS), with a million users and seven data fields can be rigorously modeled by Equation (6), according to Definition 10.

In Example 2, row zero θ0 in the mathematical model of BDS, Θ (BDS), is a special typed tuple called the schema of the BDS, which specifies the structure and constraint of each data field of the BDS. It is noteworthy that the type suffixes | T adopted in the BDS model allow arbitrary type and media of big data to be rigorously specified in order to meet the requirements for accommodating widely heterogeneous data in the BDS, which will be specifically embodied by a certain type in applications.
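The role of the schema row θ0 can be illustrated with a small Python sketch in which the schema is a tuple of typed fields and every data row is checked against it. The seven field names, their types, and the sample rows are hypothetical, chosen only to match the count of seven data fields in Example 2.

```python
from typing import Any, List, Tuple

# Row zero (the schema): one (field name, type) pair per column.
SCHEMA: Tuple[Tuple[str, type], ...] = (
    ("user_id", int),
    ("name", str),
    ("age", int),
    ("country", str),
    ("joined", str),
    ("followers", int),
    ("verified", bool),
)


def conforms(row: Tuple[Any, ...]) -> bool:
    """True if the row is a typed tuple matching the schema column by column."""
    return len(row) == len(SCHEMA) and all(
        type(cell) is column_type for cell, (_, column_type) in zip(row, SCHEMA)
    )


def column(rows: List[Tuple[Any, ...]], field_name: str) -> List[Any]:
    """Extract one typed column (field) from the matrix of rows."""
    index = [name for name, _ in SCHEMA].index(field_name)
    return [row[index] for row in rows]


# Two sample rows standing in for the million users of Example 2.
bds = [
    (1, "Ada", 36, "UK", "2020-01-15", 1200, True),
    (2, "Lin", 29, "CN", "2021-07-03", 85, False),
]

ill_typed_row = (3, "Bo", "41", "CA", "2022-02-02", 10, False)  # age given as text
```

The check uses `type(cell) is column_type` rather than `isinstance` so that a Boolean is not silently accepted in an integer column.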

The fundamental theory of data science may be explained by a set of rigorous data manipulations on Θ by Big Data Algebra (BDA) (Wang, 2016b). BDA provides a set of 11 algebraic operators in the categories of architectural modeling, analytic, and synthetic operations on the formal model of big data structure Θ(BDA). In BDA, the modeling operators include schema specification and BDS initialization. The analytic operators encompass those of system assignment, retrieval, selection, time-stamp, and differentiation. The synthetic operators encompass those of induction, deduction, integration, and equilibrium detection.

BDA creates an algebraic system for rigorously manipulating big data systems Θ(BDS) of intricate big datasets. It provides an efficient means for BDS modeling, design, specification, analysis, synthesis, refinement, validation, and complexity reduction as a general theory of data science (Wang and Peng, 2017).

2.5. Information science

Information is a general form of abstract objects and the signaling means for communication, interaction, and coordination, which are represented and transmitted by symbolical, mathematical, communication, computing, and cognitive systems. The source of information is sensory stimuli elicited from data by qualification or quantification. Any product and/or process of human mental activities results in information as a generic product of human thinking and reasoning that lead to knowledge. Information is the second-level cognitive object according to the CFB model (Figure 1), which embodies the semantics of data collected from the real world or yielded by human cognitive processes.

Definition 11. Information science studies forms, properties, and representations of information, as well as mathematical models and rules for information manipulations such as its generation, acquisition, storage, retrieval, transmission, sharing, protection, and knowledge aggregation.

In classic information theory (Shannon, 1948), information is treated as a probabilistic measure of the properties of messages, signals, and their transmissions. It has focused on information transmission rather than the cognitive mechanisms of information and its manipulations. The measure of the quantity of information is highly dependent on the receiver's subjective judgment on probable properties of signals in the message as Shannon recognized.

Definition 12. The Shannon information of an n-sign system, Is, is determined by a weighted sum of the probabilities pi and unexpectedness Ii of each sign in an information system:

It is noteworthy that Shannon information measures the characteristic information of channel properties, which is not proportional to the size of a message transmitted in the channel. Therefore, classic information science has focused on symbolic information rather than the denotational and cognitive properties of information as given in Definitions 13 and 14.
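A short numerical sketch makes the weighted sum in Definition 12 concrete. It assumes the standard Shannon form, in which the unexpectedness of a sign is I_i = log2(1/p_i) and the information of the system is the sum of p_i · I_i in bits. The function names are illustrative.

```python
import math
from typing import Sequence


def unexpectedness(p: float) -> float:
    """Unexpectedness of a sign with probability p, in bits: log2(1/p)."""
    return -math.log2(p)


def shannon_information(probabilities: Sequence[float]) -> float:
    """Probability-weighted sum of the unexpectedness of each sign, in bits."""
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Signs with zero probability contribute nothing to the sum.
    return sum(p * unexpectedness(p) for p in probabilities if p > 0)


fair_coin = shannon_information([0.5, 0.5])
four_equal_signs = shannon_information([0.25, 0.25, 0.25, 0.25])
biased_coin = shannon_information([0.9, 0.1])
certain_sign = shannon_information([1.0])
```

The result depends only on the probability distribution of the signs and not on how many signs a message contains, which is the sense in which the measure is not proportional to message size.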

The second generation of computational information science tends to model information as a deterministic abstract entity for data, messages, signals, memory, and knowledge representation and storage, rather than a probable property of communication channels as in the classic information theory. This notion reflects the computational theories and practices across computer science, software science, the IT industry, and everyday life (Turing, 1950; Wang, 2014b).

Definition 13. The computational information, Ic, in contemporary information science is a normalized size of symbolic information of abstract object Ok represented in a k-based measure scale Sk:

where Ic is deterministic and independent from Shannon's probabilistic information. In other words, the unit bit in computational information has been extended from Shannon's characteristic information of signals to the quantity of symbolic information in computer science.

However, it has been argued in cognitive information science that computational information as given in Definition 13 may still not represent the overall properties of generic information, particularly its semantic aspect (Wang, 2003). This leads to the third generation of information science, which models the essence of information as universal abstract artifacts and their symbolic representations that can be acquired, memorized, and processed by human brains or computing systems. Theories of the third generation of information science are represented by cognitive informatics (CI) (Wang, 2003) as a transdisciplinary enquiry into the internal information processing mechanisms and processes of the brain (Wang et al., 2006).

Definition 14. Cognitive information, Ici = (Iκ, IΩ), is a 2D hyperstructure that represents a pair of characteristic information (Iκ) and semantic information (IΩ) on an arbitrary base β ∈ ℕ that determines the number of representative variables κ = |v1, v2, ..., vκ|:

where Iκ is determined by the symbolic size of a certain problem dimension β, while IΩ is generated by a normalized denotational space

The cognitive information model provided in Definition 14 reveals that the generalized semantic information is an inherent property of any system by both Iκ and IΩ. The measure of cognitive information is compatible with, but more general than, those of classic and computational information.

Theorem 1. The framework of contemporary information science is constrained by the following relations among Is, Ic, and Ici:

where ⊏ denotes a dimensional enclosure in a hyperstructure.

Proof. Theorem 1 holds directly based on Definitions 12–14:

where the classic Shannon information Is and computational information Ic become special cases of the characteristic information Iκ in cognitive information science. ■

Contemporary and generalized information science explains that interpreted data result in information, while comprehended information generates knowledge.

2.6. Knowledge science

Knowledge is modeled as a set of conceptual relations acquired and comprehended by the brain embodied as a concept network (Cios et al., 2012; Wang, 2012e, 2016c). Knowledge is the third level of cognitive objects according to CFB as learned and comprehended information. Knowledge is perceived as a creative mental product generated by the brain embodied by concept networks and behavioral processes. The former represents the form of to-be knowledge, while the latter embodies the form of to-do knowledge that leads to the generation of human intelligence as elaborated in Section 2.7.

In traditional epistemology, knowledge is perceived as a justified belief (Wilson and Frank, 1999). However, this perception is too narrow and subjective, and may not capture the essence of knowledge as a high-level cognitive entity aggregated from data and information.

Definition 15. Knowledge science studies the nature of human knowledge, principles, and formal models of knowledge representation, as well as theories for knowledge manipulations such as creation, generation, acquisition, composition, memorization, retrieval, and repository in knowledge engineering.

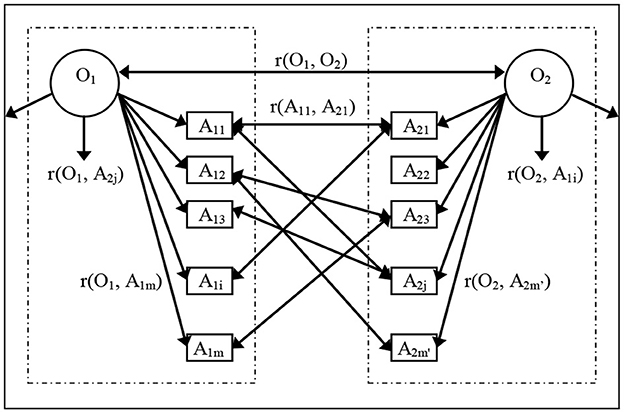

The neurological foundation of knowledge may be explained by the synaptic connections among neurons representing the clusters of objects and attributes as shown in Figure 4. The dynamic neural cluster (DNC) indicates that knowledge is not only retained in neurons as individual objects (O) or attributes (A), but also dynamically represented by the newly created synaptic connections modeled by relations such as r(O, A), r(A, O), r(O, O), and r(A, A) in Figure 4. This leads to the development of the formal cognitive model of knowledge (Wang, 2007).

Definition 16. The Object-Attribute-Relation (OAR) model (Wang, 2007) of knowledge in the brain is represented by a triple of neurological clusters:

where O is a set of objects identified by unique symbolic names, A the set of attributes for characterizing an object, and R the set of relations between the objects and attributes, i.e., R = O × A.
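The OAR triple can be sketched as a small Python data structure in which knowledge grows by adding relations rather than by filling a fixed container. The class and method names are hypothetical. As a simplification, the sketch stores only the object-attribute pairs that have actually been learned, which form a subset of O × A.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class OAR:
    objects: Set[str] = field(default_factory=set)                 # O
    attributes: Set[str] = field(default_factory=set)              # A
    relations: Set[Tuple[str, str]] = field(default_factory=set)   # learned pairs in O × A

    def learn(self, obj: str, attribute: str) -> None:
        """Acquire one relation, creating the object or attribute if new."""
        self.objects.add(obj)
        self.attributes.add(attribute)
        self.relations.add((obj, attribute))

    def attributes_of(self, obj: str) -> Set[str]:
        """All attributes related to an object."""
        return {a for o, a in self.relations if o == obj}

    def objects_with(self, attribute: str) -> Set[str]:
        """All objects related to an attribute."""
        return {o for o, a in self.relations if a == attribute}


memory = OAR()
memory.learn("apple", "red")
memory.learn("apple", "fruit")
memory.learn("cherry", "red")
```

Each call to `learn` adds a new connection without changing what is already stored, loosely mirroring the newly created synaptic connections described for the dynamic neural cluster.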

The OAR model reveals the nature and essence of knowledge and its neurological foundations. The OAR model may be adopted to explain a wide range of human information processing mechanisms and cognitive processes. It is different from the classic container metaphor of knowledge that failed to explain the dynamics of knowledge establishment and growth. The OAR model enables formal explanations of the structural model of human knowledge in the following mathematical model and theorem. Then, the semantical and functional theories of knowledge will be rigorously elaborated in concept algebra and semantic algebra in Section 2.9.

Definition 17. The mathematical model of knowledge is a Cartesian product of power sets of formal concepts:

where the newly acquired concept Ci is mapped onto those already existing.

Equation (13) has led to one of the most fundamental discoveries in knowledge science (Wang, 2016c) as follows.

Theorem 2. The basic unit of knowledge is a pairwise conceptual relation, or shortly, a binary relation [bir].

Proof. According to Definition 17, knowledge as a mapping between two Cartesian products of concepts results in a deterministic set of relations. Within this set, any complex relationship can be reduced to the basic unit of knowledge, the bir, between a pair of individual concepts. ■

The formal theory of knowledge science is underpinned by concept algebra (Wang, 2010) and semantic algebra (Wang, 2013) in IM. It is recognized that concepts are the core model of human knowledge that carry stable semantics in expression, thinking, learning, reasoning, comprehension, and entity denotation. Although words in natural languages are often ambiguous and polymorphic, concepts elicited from them are not only unique in expressions but also neutral across different languages. Therefore, concept algebra is adopted to rigorously manipulate formal concepts and their algebraic operations for knowledge representation and semantic analyses. A set of algebraic operators of concept algebra has been created in Wang and Valipour (2016) and Valipour and Wang (2016), which enables a wide range of applications in cognitive informatics, cognitive linguistics, and cognitive machine learning (Wang et al., 2011).

In knowledge science, semantics is the carrier of language expressions and perceptions. In formal semantics, a comprehension of an expression is composed of the integrated meaning of a sentence's concepts and their relations. Semantic algebra is created for rigorous semantics manipulations in knowledge science by a set of rigorous relational, reproductive, and compositional operators (Wang, 2013). On the basis of semantic algebra, semantic expressions may not only be deductively analyzed based on their syntactic structures from the top down, but also be synthetically composed by the algebraic semantic operators from the bottom up. Semantic algebra has enabled a wide range of applications in cognitive informatics, cognitive linguistics, cognitive computing, machine learning, cognitive robots, as well as natural language analysis, synthesis, and comprehension (Wang et al., 2016a).

2.7. Intelligence science and AI

Intelligence science is the paramount level of abstract sciences according to the CFB model in Figure 1.

Definition 18. Intelligence ï is either an internal inferential thread triggered by a cause ci that results in inductive knowledge Ki or an interactive behavioral process Bi corresponding to an external or internal stimulus (event) ei:

where the former is called inferential intelligence and the latter behavioral intelligence.

It is revealed that human and machine intelligence are dually aggregated from (sensory | data), (neural signaling | information), and (semantic networks | knowledge), to (autonomous behaviors | intelligence) in a recursive framework (Wang et al., 2021a). In each pair of intelligent layers, the former is naturally embodied in neural structures of the brain, while the latter is represented in abstract (mathematical) forms in autonomous AI. Therefore, natural intelligence (NI) and AI are equivalent counterparts in philosophy and IM, which share a unified theoretical foundation in intelligence science as illustrated by LRMB in Section 2.1.

Definition 19. Intelligence science studies the general form of abstract intelligence (αI) overarching natural and artificial intelligence and their theoretical foundations, which enable machines to generate advanced intelligence compatible with both human inferential and behavioral intelligence.

A Hierarchical Intelligence Model (HIM) (Wang et al., 2021a) in intelligence science is introduced to explain the levels and categories of natural and machine intelligence as shown in Figure 5. HIM explains why fully autonomous intelligent systems have rarely been developed in the past 60+ years: almost all such systems are constrained by the natural bottleneck of adaptive (L3-deterministic) intelligence underpinned by reflexive (L1-pretrained and data-driven) and imperative (L2-preprogrammed) intelligence.

According to HIM, the maturity levels of both human and machine intelligence are aggregated across the levels of reflexive, imperative, adaptive, autonomous, and cognitive intelligence from the bottom up in line with LRMB (Wang et al., 2006) in brain science. HIM indicates that current AI technologies are still immature toward achieving human-like autonomous and conscious intelligence because the inherent problems they face lie outside the domain of reflexive and regressive machine intelligence.

Definition 20. The general pattern of abstract intelligence (αI) is a cognitive function fi that enables an event ei|T in the universe of discourse of abstract intelligence, , to trigger a causal behavior BT(i) |PM as a functional process:

where denotes the 5D behavioral space of humans and intelligent systems constrained by the subject (J), object (O), behaviors (B), space (S), and time (T). The symbol ↪ denotes the dispatching operator, |T the type of events classified as trigger, timing, or interrupt requests, and |PM the type suffix of a process model for a certain behavior (Wang, 2002).
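The dispatching pattern of Definition 20 may be pictured as a lookup from typed events to behavior processes. The following Python sketch is illustrative only and is not RTPA: the names `EventType`, `Event`, and `Dispatcher`, as well as the sample event and behavior, are hypothetical. It merely shows an event classified as trigger, timing, or interrupt being dispatched to the process bound to it.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict


class EventType(Enum):
    """The three event types named in Definition 20."""
    TRIGGER = "trigger"
    TIMING = "timing"
    INTERRUPT = "interrupt"


@dataclass(frozen=True)
class Event:
    """A typed event e_i|T (hypothetical representation)."""
    name: str
    type: EventType


class Dispatcher:
    """Maps each typed event to one behavior process (an assumption of this sketch)."""

    def __init__(self) -> None:
        self._table: Dict[Event, Callable[[], str]] = {}

    def bind(self, event: Event, process: Callable[[], str]) -> None:
        """Associate an event with the behavior process it should trigger."""
        self._table[event] = process

    def dispatch(self, event: Event) -> str:
        """Run the process bound to the event, or report that none is bound."""
        process = self._table.get(event)
        if process is None:
            return f"no behavior bound to {event.name}"
        return process()


# Usage: an interrupt-type event dispatches a (hypothetical) stopping behavior.
dispatcher = Dispatcher()
obstacle = Event("obstacle_detected", EventType.INTERRUPT)
dispatcher.bind(obstacle, lambda: "stop_motors")
result = dispatcher.dispatch(obstacle)
```

A table-driven design is chosen here because it keeps the event classification separate from the behaviors themselves; other realizations of the dispatching operator are equally possible.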

The advances of intelligence science have provided primitive theories for the odyssey toward κC powered by IM, particularly Real-Time Process Algebra (RTPA) (Wang, 2002) and Inference Algebra (Wang, 2011, 2012c) inspired by Newton (1729), Boole (1854), Russell (1903), Hoare (1969), Kline (1972), Aristotle (384 BC−322 BC) (1989), Zadeh (1997), Bender (2000), Timothy (2008), and Widrow (2022).

2.8. Cybernetics 2.0

Norbert Wiener invented the word cybernetics and wrote a book by that name on the subject of feedback and control in the human body (Wiener, 1948). Widrow's new book (Widrow, 2022), “Cybernetics 2.0: a General Theory of Adaptivity and Homeostasis in the Brain and in the Body”, follows in Wiener's footsteps. It differs in that it introduces learning algorithms, which did not exist in Wiener's day, to Wiener's subject. Our current knowledge of biologically plausible networks for information flow is still limited. Most research focuses on neurons, but there is a world of complexity due to glial cells such as astrocytes, which surround and densely pack the neurons and provide the energy for synaptic events (Salmon et al., 2023).

Through synapses of neurons in the brain, information is carried between neurotransmitters and neuroreceptors. A synapse is the coupling device from one neuron to another. The strength of the coupling, the “weight”, is proportional to the number of receptors. Their number can increase (upregulation) or decrease (downregulation). A mystery in neuroscience is: what is nature's algorithm for controlling upregulation and downregulation? Start with Hebbian learning and generalize it to cover downregulation as well as upregulation, and inhibitory as well as excitatory synapses. What results is a surprise: an unsupervised form of the LMS (least mean square) algorithm of Widrow and Hoff. The Hebbian-LMS algorithm encompasses Hebb's learning rule (fire together, wire together), and introduces homeostasis into the equation. A neuron's “normal” firing rate is set by homeostasis. Physical evidence supports Hebbian-LMS as being nature's learning rule. LMS binds nature's learning to learning in artificial neural networks.
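The idea of an unsupervised LMS rule with a homeostatic set point may be made concrete with a minimal Python sketch. It assumes one commonly cited form of the update, in which the error is the difference between a sigmoid of the neuron's weighted sum and a scaled copy of that sum, and the weights move along the input by that error. The choice of `tanh` as the sigmoid, the values of `mu` and `gamma`, and the two input patterns are assumptions made for illustration and are not taken from this article.

```python
import math
from typing import List, Sequence


def hebbian_lms_step(weights: List[float], x: Sequence[float],
                     mu: float = 0.05, gamma: float = 0.5) -> float:
    """Apply one unsupervised update in place and return the error used."""
    s = sum(w * xi for w, xi in zip(weights, x))   # weighted sum of inputs
    error = math.tanh(s) - gamma * s               # no external target is needed
    for i, xi in enumerate(x):
        weights[i] += 2.0 * mu * error * xi        # LMS-style weight correction
    return error


def train(weights: List[float], patterns: Sequence[Sequence[float]],
          epochs: int = 2000, mu: float = 0.05, gamma: float = 0.5) -> List[float]:
    """Cycle through the patterns repeatedly, updating the weights each time."""
    for _ in range(epochs):
        for x in patterns:
            hebbian_lms_step(weights, x, mu, gamma)
    return weights


# Two illustrative input patterns and small initial weights (all assumed values).
patterns = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]]
weights = train([0.1, -0.1, 0.05], patterns)
sums = [sum(w * xi for w, xi in zip(weights, x)) for x in patterns]
```

In this sketch each weighted sum settles where `tanh(s)` equals `gamma * s`, so that the error vanishes without any teacher signal. That equilibrium plays the role of the homeostatic set point described above: a pattern that initially excites the neuron is driven to the positive equilibrium, and one that initially inhibits it is driven to the negative equilibrium.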

The Hebbian-LMS algorithm is key to the control of all the organs of the body, where hormones bind to hormone receptors and there is upregulation and downregulation in the control process. Hebbian-LMS theory has a wide range of applications, explaining observed physiological phenomena regarding, for example, pain and pleasure, opioid addiction and withdrawal, blood pressure, blood salinity, kidney function, and thermoregulation of the body's temperature. Upregulation and downregulation are even involved in viral infection and cancer. Countless variables are controlled by the same algorithm for modulating adaptivity and learning with billions of synapses in the brain.

2.9. Mathematical science and contemporary intelligent mathematics

Mathematics is the most fundamental branch of abstract sciences for manipulating contemporary entities toward κC. The history of sciences and engineering has revealed that the kernel of human knowledge has been elicited and archived in generic mathematical forms (Wang, 2020, 2021c, 2022c) as evidenced in the transdisciplinary reviews of the previous subsections. Therefore, the maturity of any scientific discipline is dependent on the readiness of its mathematical means.

Definition 21. Intelligent Mathematics (IM) is a category of contemporary mathematics that deals with complex mathematical entities in the domain of hyperstructures (ℍ), beyond those of ℝ and 𝔹, by a series of embedded functions and processes in order to formalize rigorous expressions, inferences, and computational intelligence.

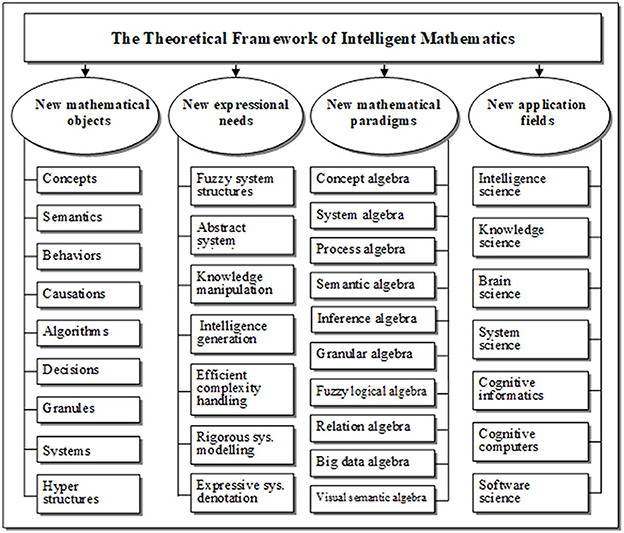

An interesting discovery in IM (Wang, 2020) is that almost all Abstract Objects (AO) in modern sciences, such as data, information, knowledge, and intelligence as illustrated in Figure 6, cannot be rigorously modeled and manipulated by pure numbers (Wang, 2008c, 2012b, 2023). In order to deal with this denotational crisis of mathematics (Wang, 2022c), the mathematical means for manipulating AO must be adapted to allow κCs to sufficiently handle the nonnumerical hyperstructures of AO for autonomous knowledge and intelligence processing.

The theoretical framework of IM is described in Figure 6, which explains which AO has triggered the development of which IM paradigm. It also describes the applications of each contemporary IM in κC for meeting the indispensable demands in symbiotic and autonomous human–machine interactions.

Although classic mathematics has provided a wide range of abstract means for manipulating abstract numbers dominated in ℝ, it has been fundamentally challenged by the demands in knowledge and intelligence sciences where the cognitive entities are no longer pure numbers. These contemporary demands have triggered the emergence of IM, which provides a family of advanced denotational means for extending classic numerical analytic mathematics in ℝ to more general categories of abstract entities in ℍ as explained in Figure 6. IM has extended traditional Boolean algebra (as well as bivalent logic) on 𝔹 and analytic algebra on ℝ to more complex and multi-dimensional hyperstructures (ℍ) in order to enable humans and/or intelligent machines to manipulate compatible knowledge and symbiotic intelligent behaviors across platforms via κC for efficiently processing the nonnumerical cognitive entities characterized by ℍ.

The framework of IM demonstrates a set of the most important basic research on the theoretical foundations of κC. The design and implementation of κC have been enabled by IM and related theoretical and technical advances as reviewed in previous subsections. IM has shed light on the systematic solving of challenging natural/artificial intelligence problems by a rigorous and generic methodology. A pinnacle of IM is the recently presented proofs of two of the world's top-10 hardest problems in number theory, the Goldbach conjecture (Wang, 2022d,f,h) and the Twin-Prime conjecture (Wang, 2022e,i). IM has led to unprecedented theories and methodologies for implementing κC toward autonomous intelligence generation.

3. The architecture and functions of κC for autonomous intelligence generation

The transdisciplinary synergy of basic research and technical innovations in Section 2 has provided a theoretical foundation and rational roadmap for the development of κC.

The odyssey toward κC indicates that the counterpart of human intelligence may not be the classic form of special-purpose AI, but rather a more generic κC-enabled autonomous intelligence inspired by the brain on a symbiotic man-machine computing platform. The key transdisciplinary discoveries and mathematical breakthroughs in Section 2 have enabled the invention of κC and its development. They have also demonstrated the indispensable value of the basic research carried out by the International Institute of Cognitive Informatics and Cognitive Computing (I2CICC) in the past two decades (Wang et al., 2021b).

This section presents the architectural and functional framework of κC for the next-generation autonomous intelligent computers. The brain-inspired architecture and the hierarchical intelligent functions of κC will be formally described and elaborated. It leads to a demonstration of how κC works for autonomous knowledge learning toward machine intelligence generation among other AAI functions modeled in Figure 3.

3.1. Architecture of κC

κC is designed as a BIS for advancing classic computers from data processors to the next generation of knowledge and intelligence processors mimicking the brain. It studies natural intelligence models of AAI as a cognitive system in one direction, and the formal models of the brain simulated by computational intelligence in the other direction.

Definition 22. κC is a brain-equivalent intelligent computer that implements autonomous computational intelligence enabled by IM. Let κ0 through κ4 be the hierarchical layers of cognitive objects of data (), information (), knowledge (), and intelligence (), the autonomous generation of intelligence by a κC is formally modeled by a recursive structure:

where each cognitive layer (κk) is enabled by its lower adjacent layer (κk−1) till the cognitive hierarchy is terminated at the bottom sensorial layer (κ0), which is embodied by n+1 dimensional data, as acquired by abstraction and quantification. The type-suffix |T is adopted to denote the semantic category of a variable x by x|T. Typical types for modeling intelligent behaviors of κC have been given in Definition 22.

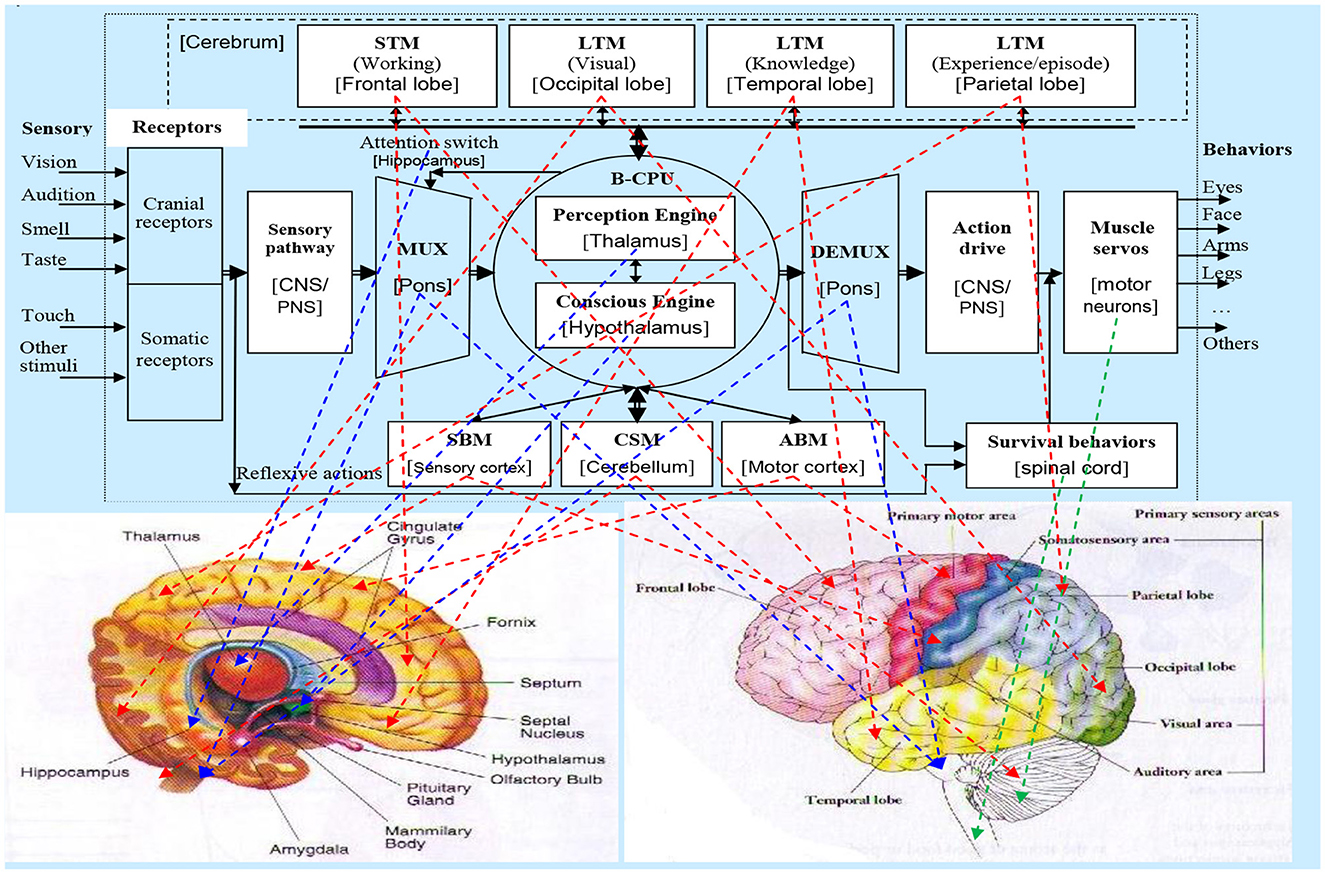

The architectural model of κC is illustrated in Figure 7 as a brain-inspired computer. In the κC architecture, each component has a corresponding organ in the brain that shares similar functions. This design reflects the essence of BIS synergized in Section 2. The kernel of κC is embodied by the inference and conscious engines equivalent to the thalamus and the hypothalamus of the brain (Wang, 2012d), respectively, according to the LRMB model as shown in Figure 3. It is noteworthy that the cognitive memories in the brain encompass short-term memory (STM), long-term memory (LTM), sensory buffer memory (SBM), action buffer memory (ABM), and conscious status memory (CSM) as shown in Figure 7 (Wang, 2012d), which play important roles in supporting intelligence generation and operation. However, they are not the kernel organs of natural intelligence, but rather buffers and storage in the brain. Therefore, all memory structures may be unified in κC as a general memory space that may be partitioned into special sections.

3.2. Functions of κC

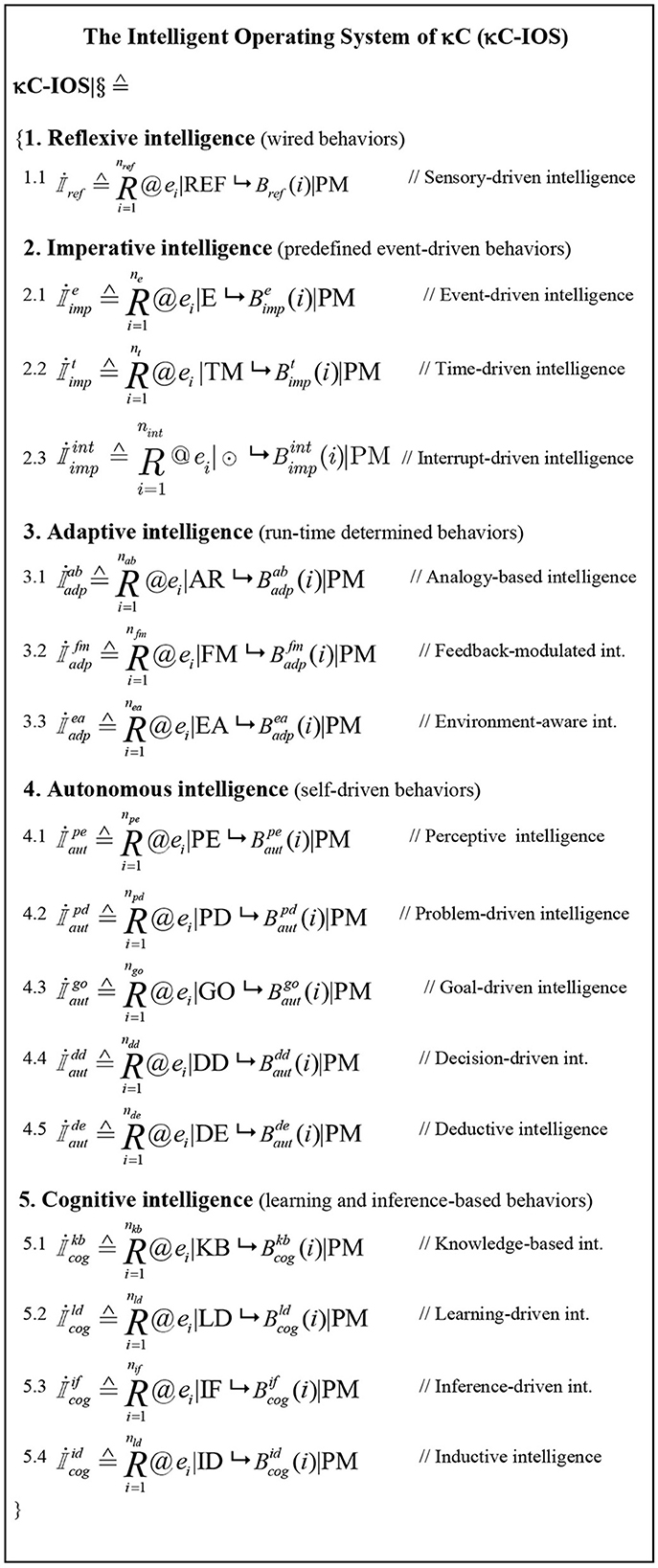

The functional model of κC, as shown in Figure 8, is designed based on the HIM model (Figure 5) of intelligence science (Wang, 2012d). Adopting HIM as a blueprint for the behavior model of κC, an Intelligent Operating System (IOS) of κC, κC-IOS, is designed as shown in Figure 9. κC-IOS provides a rigorous Mathematical Framework of Hierarchical Intelligence (MFHI) for κC. The Intelligent Behavioral Model of κC configured by the MFHI framework forms a theoretical foundation for explaining what the spectrum of natural intelligence is, and how it is generated by κC in computational intelligence.

The κC-IOS model explains why the current level of machine intelligence has been stuck at the adaptive level (L3) for over 60 years. It also elaborates why fully autonomous intelligence could not be implemented by current pretrained AI technologies in real-time applications.

In the framework of κC-IOS, the inference engine of κC for intelligent behavior generation is implemented from the bottom up, encompassing: L1) the reflexive (sensory-driven) intelligence; L2) the imperative intelligence; L3) the adaptive intelligence; L4) the autonomous intelligence; and L5) the cognitive intelligence. κC-IOS enables κC to think (the inferential intelligence) and act (the behavioral intelligence) as defined in the mathematical model of intelligence (Equation 14) toward AAI by mimicking the brain beyond classical preprogrammed computers or pretrained special-purpose AI technologies.

Each form of the 16 intelligence functions compatible between the brain and κC is coupled by the generic pattern of intelligence as an event-triggered dispatching mechanism (Wang, 2002) formulated in Definition 20. The mathematical models developed in Figure 9 reveal that intelligent computers may be extended from the narrow sense in Section 2.7 to a broad sense of κC for autonomous intelligence generation. The intelligent behaviors of κC are aggregated from the bottom up as shown in Figure 9, particularly those at Level 5 such as knowledge-based, learning-driven, inference-driven, and inductive intelligence, in order to enable the highest level of system intelligence mimicking human brains. Therefore, κC will overcome the classical constraints inherent in the pretrained and preprogrammed computing approaches for implementing the expected intelligent computers.

4. Paradigms of κC for autonomous knowledge learning and machine intelligence generation

The fundamental AAI kernel of κC has been implemented based on the design in Figures 8, 9 (Wang, 2012e). κC is driven by a set of autonomous algorithms formally described in RTPA (Wang, 2002), which are autonomously executed by κC for enabling AAI (non-pretrained or non-preprogrammed) intelligence.

One of the key breakthroughs of κC is autonomous knowledge learning enabled by Level-5 cognitive intelligence according to Figure 5 (Valipour and Wang, 2017; Wang, 2022a). According to HIM, the abilities for knowledge learning and reasoning are an indispensable foundation for intelligence generation by κC. In other words, traditional data-driven AI may not necessarily lead to generic and autonomous machine intelligence, because it is trained for specific purposes. This observation reveals a fundamental difference between κC and classical data-regressive AI technologies.

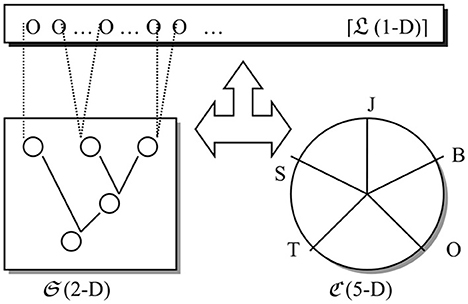

The cognitive informatics model of language comprehension is a cognitive process according to the LRMB and OAR models of internal knowledge representation (Wang, 2007; Wang et al., 2011, 2016a). A rigorous description of the cognitive process of comprehension is given in Wang (2009b, 2021b). The cognitive model between a language expression and its syntaxes and semantics may be explained as shown in Figure 10, known as the universal language comprehension (ULC) model (Wang and Berwick, 2012). Figure 10 explains that linguistic analyses are a deductive process that maps the 1-D language expression L into a 5-D semantic space C via 2-D syntactical analyses S, where C is determined by the dimensions of the subject (J), the object (O), the action (A), the space (S), and the time point (T).

The ULC model indicates that syntactic analysis is a coherent part of semantic comprehension in formal language processing and machine knowledge learning by κC. It is found that the semantics of a sentence in a natural language expression may be comprehended by κC iff: (a) the parts of speech in the given sentence are analytically identified; (b) all parts of speech of the sentence are reduced to the terminals W0, each of which is a predefined real-world entity, a primitive abstract concept, or a derived behavior; and (c) the individual semantics of each part of speech and their logical relations are synthesized into a coherent structure.
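The three conditions above can be illustrated by a toy Python sketch. It is not the language comprehension process of κC: the lexicon `LEXICON`, the stop-word list, the class `Semantics5D`, and the function `comprehend` are hypothetical, and the semantic role of each word is fixed by the lexicon rather than derived by syntactic analysis. The sketch only shows that comprehension succeeds when every content word reduces to a predefined terminal and the five dimensions (J, O, A, S, T) are each filled exactly once, and fails otherwise.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical terminal lexicon: word -> (semantic dimension, terminal identifier).
LEXICON: Dict[str, Tuple[str, str]] = {
    "robot": ("J", "entity:robot"),
    "box": ("O", "entity:box"),
    "moves": ("A", "behavior:move"),
    "lab": ("S", "place:lab"),
    "noon": ("T", "time:12:00"),
}
STOP_WORDS = {"the", "a", "in", "at"}  # function words ignored by this toy


@dataclass(frozen=True)
class Semantics5D:
    """A point in the 5-D semantic space (J, O, A, S, T)."""
    subject: str
    object: str
    action: str
    space: str
    time: str


def comprehend(sentence: str) -> Optional[Semantics5D]:
    """Return the 5-D semantics of a sentence, or None if it cannot be comprehended."""
    slots: Dict[str, str] = {}
    for word in sentence.lower().rstrip(".").split():
        if word in STOP_WORDS:
            continue
        entry = LEXICON.get(word)          # (a) identify the word's category
        if entry is None:                  # (b) fails: not reducible to a terminal
            return None
        dimension, terminal = entry
        if dimension in slots:             # two fillers for one dimension
            return None
        slots[dimension] = terminal
    if set(slots) != {"J", "O", "A", "S", "T"}:   # (c) fails: structure incomplete
        return None
    return Semantics5D(slots["J"], slots["O"], slots["A"], slots["S"], slots["T"])


understood = comprehend("The robot moves the box in the lab at noon.")
not_understood = comprehend("The robot paints the box in the lab at noon.")
```

Here `understood` holds a complete 5-D structure, while `not_understood` is `None` because "paints" has no terminal in the assumed lexicon.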

According to the ULC theory, the methodology for knowledge learning by κC is a semantic cognition process enabled by a language comprehension engine (LCE) (Wang, 2016c). LCE is implemented in a closed loop of syntactic analysis, semantic deduction, and semantic synthesis for enabling the entire process of semantic comprehension by κC. LCE is supported by a cognitive knowledge base (CKB) (Wang, 2014a). In CKB, machine knowledge is rigorously represented by a cognitive mapping between a newly acquired concept against known ones in the CKB of κC based on Definition 17.
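The notion of mapping a newly acquired concept against known ones can be pictured with a toy Python sketch. It does not implement CKB or the operators of concept algebra: the class `ToyKnowledgeBase` is hypothetical, each concept is reduced to a plain set of attribute names, and the Jaccard overlap of attribute sets is used purely as a stand-in for a quantitative semantic relation.

```python
from typing import Dict, FrozenSet, List, Tuple


class ToyKnowledgeBase:
    """Stores concepts as attribute sets and weighted links between them."""

    def __init__(self) -> None:
        self.concepts: Dict[str, FrozenSet[str]] = {}
        self.relations: Dict[Tuple[str, str], float] = {}

    def learn(self, name: str, attributes: List[str]) -> Dict[str, float]:
        """Add a concept and return its weighted links to already known concepts."""
        new_attrs = frozenset(attributes)
        links: Dict[str, float] = {}
        for known, attrs in self.concepts.items():
            union = new_attrs | attrs
            # Jaccard overlap: shared attributes divided by all attributes (stand-in measure).
            weight = len(new_attrs & attrs) / len(union) if union else 0.0
            if weight > 0.0:
                links[known] = weight
                self.relations[(name, known)] = weight
        self.concepts[name] = new_attrs
        return links


kb = ToyKnowledgeBase()
first = kb.learn("pen", ["tool", "writes", "ink"])
second = kb.learn("pencil", ["tool", "writes", "graphite"])
third = kb.learn("apple", ["fruit"])
```

In this example "pencil" is linked to "pen" with weight 0.5 (two shared attributes out of four distinct ones), whereas "apple" shares no attributes with either and therefore acquires no links.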

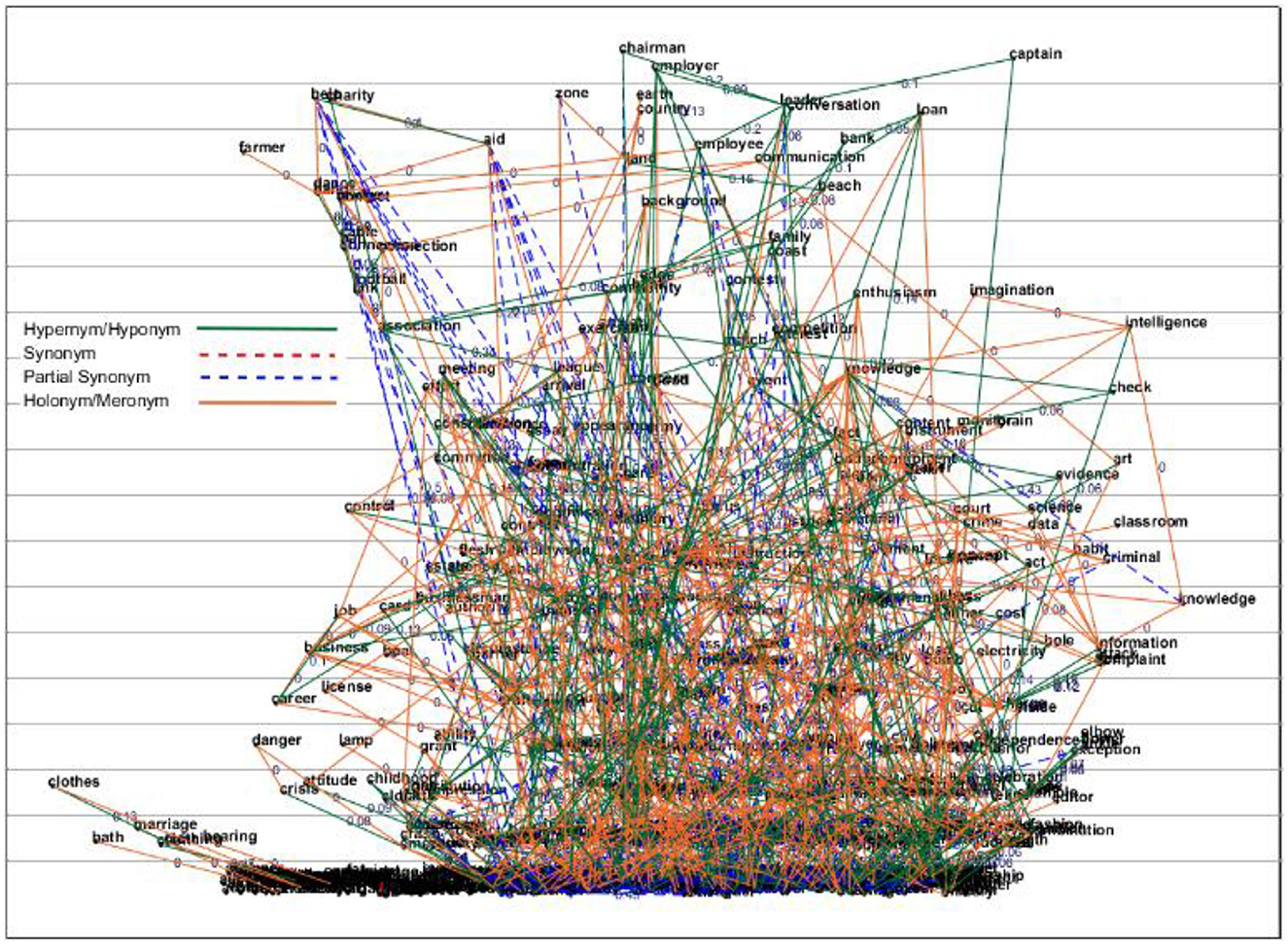

An experiment for autonomous κC learning has been conducted in a pilot project of autonomous (unsupervised) knowledge learning and semantic cognition driven by concept algebra (Wang, 2010, 2014a), and semantic algebra (Wang, 2013). In autonomous knowledge learning, κC adopts a relational neural cluster structure for knowledge representation in CKB as illustrated in Figure 4. The set of knowledge acquired by κC for the first 600+ frequently used concepts in English is shown in Figure 11 (Wang and Valipour, 2016; Valipour and Wang, 2017; Wang et al., 2017). It only takes the κC less than a minute to quantitatively acquire such scope of knowledge represented by a network of quantitative semantic relations, which would normally require several months by traditional qualitative ontology-extraction in forms of non-quantitative or subjective knowledge graphs without adopting rigorous IM underpinned by concept algebra (Wang, 2010) and semantic algebra (Wang, 2013).

More significantly, in the experiment the accuracy of semantic analyses by κC has not only surpassed that of normal readers but also outperformed the expert linguists who composed typical English dictionaries, which is enabled by κC's rigorous and quantitative semantic cognition power. Applications of autonomous knowledge learning based on concept algebra and semantic algebra are described in Wang (2007, 2014a, 2016c, 2018) and Wang et al. (2011).

The unprecedented impact of κC on autonomous knowledge learning toward AIG, among other cognitive functions as shown in Section 3.2, is its capability to directly transfer learnt knowledge to peers based on the unified knowledge representation models (OAR) and manipulation mechanisms as shown in Figures 4, 11. Therefore, κC may avoid the redundancy of human learning where similar knowledge must be repetitively relearned by individuals. The direct shareability of machine knowledge learning results enabled by κC will thus be an indispensable intelligent platform for assisting human learning based on symbiotic knowledge bases. It will unleash the power of κC to exponentially expand machine learning for enabling downloadable knowledge and intelligence for a wide range of novel applications.

It is noteworthy through this joint work that contemporary intelligent mathematics has played an indispensable role in abstract science in general, and in the odyssey of intelligence science toward κC in particular. A wide range of applications of κC is expected to demonstrate how κC will realize machine-enabled knowledge processing and autonomous intelligence generation, which would significantly change our sciences, engineering, and everyday life.

5. Conclusion

This work has presented a retrospective review and prospective outlook on cognitive computers (κC) inspired by the brain and powered by novel intelligent mathematics (IM). It has revealed the shift in the paradigm of computing theories and technologies underpinned by basic research in autonomous AI (AAI) driven by IM. It has been discovered that the structures, mechanisms, and inference engines of κCs are fundamentally different from those of the classical pre-programmed von Neumann computers and pre-trained neural networks, because neither of them is adequate for real-time and run-time autonomous intelligence generation, which is not merely regressive and data-driven according to basic research in brain and intelligence sciences. Powered by κC and IM, intelligence generation is no longer a gifted privilege of humans, because machine intelligence may surpass natural intelligence generation in more aspects according to the Layered Reference Model of the Brain (LRMB), via not only machine learning but also machine thinking, inference, and creativity.

This paper has formally elaborated the latest basic research of the I2CICC initiative in brain, intelligence, knowledge, and computer sciences underpinned by IM. The transdisciplinary studies have led to the emergence of κC for autonomous intelligence generation by non-pretrained and non-preprogrammed AAI technologies. A set of novel theories and intelligent mathematical means has been created. The architectures and functions of κC for cognitive computing and autonomous intelligence generation have been elaborated, which are evidenced by experiments on autonomous knowledge learning by κC. The basic research and technical innovations will unleash κC to augment human intelligence in a pervasive scope and at an accelerating pace toward the next generation of symbiotic human-robot societies.

Author contributions

BW, WP, RB, KP, IR, and JL: concepts, related work, discussions, and reviews. CH: concepts and related work. JK: concepts, survey, related work, discussions, and reviews. All authors contributed to the article and approved the submitted version.

Funding

This work was supported in part by NSERC, DRDC, the IEEE Autonomous System Initiative (ASI), the IEEE CI TC-SW, IEEE SMC TC-BCS, the IEEE CS TC-LSC, and the Intelligent Mathematics Initiative of the International Institute of Cognitive Informatics and Cognitive Computing (I2CICC).

Acknowledgments

The authors would like to thank the reviewers and Professor Kaleem Siddiqi, EiC of FCS, for their professional insights and inspiring comments.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bender, E. A. (2000). Mathematical Methods in Artificial Intelligence. Los Alamitos, CA: IEEE CS Press.

Berwick, R. C., Friederici, A. D., Chomsky, N., and Bolhuis, J. J. (2013). Evolution, brain, and the nature of language. Trends Cogn. Sci. 17, 89–98. doi: 10.1016/j.tics.2012.12.002

Birattari, M., and Kacprzyk, J. (2001). Tuning Metaheuristics: A Machine Learning Perspective. Berlin: Springer.

Cios, K. J., Pedrycz, W., and Swiniarski, R. W. (2012). Data Mining Methods for Knowledge Discovery. New York: Springer Science & Business Media.

David, D. (1985). Quantum theory, the Church–Turing principle and the universal quantum computer. Proc. R. Soc. Lond. A 400, 97–117. doi: 10.1098/rspa.1985.0070

Hoare, C. A. R. (1969). An axiomatic basis for computer programming. Commun. ACM 12, 576–580. doi: 10.1145/363235.363259

Hoare, C. A. R. (1994). Unified Theories of Programming. Oxford University Computing Laboratory. Available online at: ftp://ftp.comlab.ox.ac (accessed January 26, 2023).

Hoare, C. A. R., Hayes, I. J., Jifeng, H., Morgan, C. C., Roscoe, A. W., Sanders, J. W., et al. (1987). Laws of programming. Commun. ACM 30, 672–686. doi: 10.1145/27651.27653

Huang, D., Gao, F., Tao, X., Du, Q., and Lu, J. (2023). Toward semantic communications: deep learning-based image semantic coding. IEEE J. Selected Areas Commun. 1, 55–71. doi: 10.1109/JSAC.2022.3221999

Kacprzyk, J., and Yager, R. R. (2001). Linguistic summaries of data using fuzzy logic. Int. J. General Syst. 30, 133–154. doi: 10.1080/03081070108960702

Kline, M. (1972). Mathematical Thought from Ancient to Modern Times. New York: Oxford University Press.

Lewis, H. R., and Papadimitriou, C. H. (1998). Elements of the Theory of Computation, 2nd Edn., Englewood Cliffs, NJ: Prentice Hall.

McCarthy, J., Minsky, M., Rochester, N., and Shannon, C. (1955). A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence (Hanover), 1–4.

Newton, I. (1729). The Principia: The Mathematical Principles of Natural Philosophy. London: Benjamin Motte.

Pedrycz, W., and Gomide, F. (2007). Fuzzy Systems Engineering: Toward Human-Centric Computing. Hoboken, NJ: John Wiley.

Plataniotis, K. N. (2022). “Are you surprised? The role of contextual surprise in designing autonomous systems (keynote),” in IEEE 21st Int'l Conf. on Cognitive Informatics and Cognitive Computing (ICCI*CC'22) (Canada: Univ. of Toronto), 6–8.

Rudas, I. J., and Fodor, J. (2008). Intelligent systems. Int. J. Comput. Commun. Control 3, 132–138.

Salmon, C. K., Syed, T. A., Kacerovsky, J. B., Alivodej, N., Schober, A. L., Sloan, T. F. W., et al. (2023). Organizing principles of astrocytic nanoarchitecture in the mouse cerebral cortex. Curr. Biol. 33, 957–972. doi: 10.1016/j.cub.2023.01.043

Shannon, C. E. (1948). A mathematical theory of communication. Bell Syst. Tech. J. 27, 379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x

Siddiqi, K., and Pizer, S. (2008). Medial Representations: Mathematics, Algorithms and Applications. Berlin: Springer Science & Business Media.

Turing, A. M. (1950). Computing machinery and intelligence. Mind 59, 433–460. doi: 10.1093/mind/LIX.236.433

Valipour, M., and Wang, Y. (2016). Formal properties and mathematical rules of concept algebra for cognitive machine learning (II). J. Adv. Math. Appl. 5, 69–86. doi: 10.1166/jama.2016.1092

Valipour, M., and Wang, Y. (2017). “Building semantic hierarchies of formal concepts by deep cognitive machine learning,” in 16th IEEE International Conference on Cognitive Informatics and Cognitive Computing (ICCI*CC 2017) (Univ. of Oxford, UK, IEEE CS Press), 51–58.

von Neumann, J. (1946). The principles of large-scale computing machines. Ann. Hist. Comput. 3, 263–273. doi: 10.1109/MAHC.1981.10025

Wang, Y. (2002). The real-time process algebra (RTPA). Ann. Softw. Eng. 14, 235–274. doi: 10.1023/A:1020561826073

Wang, Y. (2003). On cognitive informatics. Brain Mind Transdiscip. J. Neurosci. Neurophil. 4, 151–167. doi: 10.1023/A:1025401527570

Wang, Y. (2007). The OAR model of neural informatics for internal knowledge representation in the brain. Int'l J. Cogn. Inform. Nat. Intel. 1, 66–77. doi: 10.4018/jcini.2007070105

Wang, Y. (2008a). Deductive semantics of RTPA. Int. J. Cogn. Inform. Nat. Intel. 2, 95–121. doi: 10.4018/jcini.2008040106

Wang, Y. (2008b). Mathematical laws of software. Trans. Comput. Sci. 2, 46–83. doi: 10.1007/978-3-540-87563-5_4

Wang, Y. (2008c). On contemporary denotational mathematics for computational intelligence. Trans. Comput. Sci. 2, 6–29. doi: 10.1007/978-3-540-87563-5_2

Wang, Y. (2008d). On the big-R notation for describing iterative and recursive behaviors. Int'l J. Cogn. Inform. Nat. Intel. 2, 17–28. doi: 10.4018/jcini.2008010102

Wang, Y. (2008e). RTPA: a denotational mathematics for manipulating intelligent and computational behaviors. Int. J. Cogn. Inform. Nat. Intel. 2, 44–62. doi: 10.4018/jcini.2008040103

Wang, Y. (2008f). Software Engineering Foundations: A Software Science Perspective. New York: Auerbach Publications.

Wang, Y. (2009a). On abstract intelligence: toward a unified theory of natural, artificial, machinable, and computational intelligence. Int. J. Softw. Sci. Comput. Intel. 1, 1–17. doi: 10.4018/jssci.2009010101

Wang, Y. (2009b). On cognitive computing. Int. J. Softw. Sci. Comput. Intel. 1, 1–15. doi: 10.4018/jssci.2009070101

Wang, Y. (2010). On concept algebra for computing with words (CWW). Int. J. Semantic Comput. 4, 331–356. doi: 10.1142/S1793351X10001061

Wang, Y. (2011). Inference algebra (IA): a denotational mathematics for cognitive computing and machine reasoning (I). Int. J. Cogn. Inform. Nat. Intel. 5, 61–82. doi: 10.4018/jcini.2011100105

Wang, Y. (2012a). Editorial: contemporary mathematics as a metamethodology of science, engineering, society, and humanity. J. Adv. Math. Appl. 1, 1–3. doi: 10.1166/jama.2012.1001

Wang, Y. (2012b). In search of denotational mathematics: novel mathematical means for contemporary intelligence, brain, and knowledge sciences. J. Adv. Math. Appl. 1, 4–25. doi: 10.1166/jama.2012.1002

Wang, Y. (2012c). Inference algebra (IA): a denotational mathematics for cognitive computing and machine reasoning (II). Int. J. Cogn. Inform. Nat. Intel. 6, 21–47. doi: 10.4018/jcini.2012010102

Wang, Y. (2012d). On abstract intelligence and brain informatics: mapping cognitive functions of the brain onto its neural structures. Int. J. Cogn. Inform. Nat. Intel. 6, 54–80. doi: 10.4018/jcini.2012100103

Wang, Y. (2012e). On denotational mathematics foundations for the next generation of computers: cognitive computers for knowledge processing. J. Adv. Math. Appl. 1, 118–129. doi: 10.1166/jama.2012.1009

Wang, Y. (2013). On semantic algebra: a denotational mathematics for cognitive linguistics, machine learning, and cognitive computing. J. Adv. Math. Appl. 2, 145–161. doi: 10.1166/jama.2013.1039

Wang, Y. (2014a). On a novel cognitive knowledge base (CKB) for cognitive robots and machine learning. Int. J. Softw. Sci. Comput. Intel. 6, 42–64. doi: 10.4018/ijssci.2014040103

Wang, Y. (2014b). Software science: on general mathematical models and formal properties of software. J. Adv. Math. Appl. 3, 130–147. doi: 10.1166/jama.2014.1060

Wang, Y. (2016a). “Cognitive soft computing: philosophical, mathematical, and theoretical foundations of cognitive robotics (keynote),” in 6th World Conference on Soft Computing (WConSC'16) (UC Berkeley, CA: Springer), 3.

Wang, Y. (2016b). Big data algebra (BDA): a denotational mathematical structure for big data science and engineering. J. Adv. Math. Appl. 5, 3–25. doi: 10.1166/jama.2016.1096

Wang, Y. (2016c). On cognitive foundations and mathematical theories of knowledge science. Int. J. Cogn. Inform. Nat. Intel. 10, 1–24. doi: 10.4018/IJCINI.2016040101

Wang, Y. (2018). “Cognitive machine learning and reasoning by cognitive robots (keynote),” in 3rd International Conference on Intelligence and Interactive Systems and Applications (IIAS'18) (Hong Kong), 3.

Wang, Y. (2020). “Intelligent mathematics (IM): indispensable mathematical means for general AI, autonomous systems, deep knowledge learning, cognitive robots, and intelligence science (keynote)”, in IEEE 19th Int'l Conf. on Cognitive Informatics and Cognitive Computing (Beijing: Tsinghua Univ.), 5.

Wang, Y. (2021a). “Advances in intelligence mathematics (IM) following Lotfi Zadeh's vision on fuzzy logic and semantic computing (keynote),” in 2021 Meeting of North America Fuzzy Information Processing Society (NAFIPS'21) (Purdue Univ), 7.

Wang, Y. (2021b). “On intelligent mathematics (IM): what's missing in general AI and cognitive computing?” in 4th International Conference on Physics.

Wang, Y. (2021c). “On intelligent mathematics for AI (keynote),” in Int'l Conf. on Frontiers of Mathematics and Artificial Intelligence (Beijing, Shanghai) (IISA'22), 1–2.

Wang, Y. (2021d). On the frontiers of software science and software engineering. Front. Comput. Sci. 3, 766053. doi: 10.3389/fcomp.2021.766053

Wang, Y. (2022a). “From data-aggregative learning to cognitive knowledge learning enabled by autonomous AI theories and intelligent mathematics (keynote),” in 2022 Future Technologies Conference (FTC'22) (Vancouver), 1–3.

Wang, Y. (2022b). “On abstract sciences: from data, information, knowledge to intelligence sciences,” in IEEE 21st Int'l Conference on Cognitive Informatics and Cognitive Computing (Univ. of Toronto, IEEE CS Press), 45–55.

Wang, Y. (2022c). “On intelligent mathematics underpinning contemporary abstract sciences and autonomous AI (keynote),” in IEEE 21st Int'l Conf. on Cognitive Informatics and Cognitive Computing (ICCI*CC'22) (Canada: Univ. of Toronto), 6–8.

Wang, Y. (2022d). “On the Goldbach theorem: a formal proof of Goldbach conjecture by the theory of mirror prime decomposition (keynote),” in 2022 International Workshop on AI and Computational Intelligence (IWAICI'22) (Chongqing CUST), 1–3.

Wang, Y. (2022e). “On the latest proof of twin-prime (TP) conjecture: a discovery of TP ⊂ MP (mirror primes) ⊂ P x P in the hyperspace (keynote),” in 7th MCSI Int'l Conference on Mathematics and Computers in Sciences and Industry (MCSI'22) (Athens), 2.1–2.3.

Wang, Y. (2022f). “On the recent proof of Goldbach conjecture: from Euclidean prime factorization to mirror prime decompositions of even integers in number theory (keynote),” in 7th MCSI Int'l Conference on Mathematics and Computers in Sciences and Industry (MCSI'22) (Athens), 1.1–1.3.

Wang, Y. (2022g). “What can't AI do? The emergence of autonomous AI (AAI) beyond data convolution and pretrained learning (keynote),” in 7th Int'l Conference on Intelligent, Interactive Systems and Applications (IISA'22) (Shanghai), 1–2.

Wang, Y. (2022h). A proof of Goldbach conjecture by mirror-prime decomposition. WSEAS Trans. Math. 21, 563–571. doi: 10.37394/23206.2022.21.63

Wang, Y. (2022i). A proof of the twin prime conjecture in the P x P space. WSEAS Trans. Math. 21, 585–593. doi: 10.37394/23206.2022.21.66

Wang, Y. (2023). “Basic research on machine vision underpinned by image frame algebra (VFA) and visual semantic algebra (VSA) (keynote),” in 7th Int'l Conference on Machine Vision and Information Technology (CMVIT'23) (Xiamen), 1–4.

Wang, Y., and Berwick, R. C. (2012). Towards a formal framework of cognitive linguistics. J. Adv. Math. Appl. 1, 250–263. doi: 10.1166/jama.2012.1019

Wang, Y., Karray, F., Kwong, S., Plataniotis, K. N., Leung, H., Hou, M., et al. (2021a). On the philosophical, cognitive and mathematical foundations of symbiotic autonomous systems. Phil. Trans. R. Soc. A 379, 20200362. doi: 10.1098/rsta.2020.0362

Wang, Y., Kinsner, W., Anderson, J. A., Zhang, D., Yao, Y., Sheu, P., et al. (2009). A doctrine of cognitive informatics. Fund. Inform. 90, 203–228. doi: 10.3233/FI-2009-0015

Wang, Y., Lu, J., Gavrilova, M., Rodolfo, F., and Kacprzyk, J. (2018). “Brain-inspired systems (BIS): cognitive foundations and applications,” in 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (IEEE Press), 991–996.

Wang, Y., and Peng, J. (2017). On the cognitive and theoretical foundations of big data science and engineering. J. New Math. Nat. Comput. 13, 101–117. doi: 10.1142/S1793005717400026

Wang, Y., Tian, Y., Gavrilova, M. L., and Ruhe, G. (2011). A formal knowledge representation system (FKRS) for the intelligent knowledge base of a cognitive learning engine. Int. J. Softw. Sci. Comput. Intel. 3, 1–17. doi: 10.4018/jssci.2011100101

Wang, Y., and Valipour, M. (2016). Formal properties and mathematical rules of concept algebra for cognitive machine learning (I). J. Adv. Math. Appl. 5, 53–68. doi: 10.1166/jama.2016.1091

Wang, Y., Valipour, M., and Zatarain, O. A. (2016a). Quantitative semantic analysis and comprehension by cognitive machine learning. Int. J. Cogn. Inform. Nat. Intel. 10, 14–28. doi: 10.4018/IJCINI.2016070102

Wang, Y., Wang, Y., Patel, S., and Patel, D. (2006). A layered reference model of the brain (LRMB). IEEE Trans. Syst. Man Cybern. 36, 124–133. doi: 10.1109/TSMCC.2006.871126

Wang, Y., Widrow, B., Pedrycz, W., Berwick, R. C., Soda, P., Kwong, S., et al. (2021b). “IEEE ICCI*CC series in year 20: latest advances in cognitive informatics and cognitive computing towards general AI (plenary panel report-I),” in IEEE 20th International Conference on Cognitive Informatics and Cognitive Computing (ICCI*CC'21) (Banff: IEEE CS Press), 253–263.

Wang, Y., Widrow, B., Zadeh, L. A., Howard, N., Wood, S., Bhavsar, V. C., et al. (2016b). Cognitive intelligence: deep learning, thinking, and reasoning with brain-inspired systems. Int. J. Cogn. Inform. Nat. Intel. 10, 1–21. doi: 10.4018/IJCINI.2016100101

Wang, Y., Zadeh, L. A., Widrow, B., Howard, N., Beaufays, F., Baciu, G., et al. (2017). Abstract intelligence: embodying and enabling cognitive systems by mathematical engineering. Int. J. Cogn. Inform. Nat. Intel. 11, 1–15. doi: 10.4018/IJCINI.2017010101

Widrow, B. (2022). Cybernetics 2.0: Adaptivity and Homeostasis in the Brain and the Body. Berlin: Springer, 410.

Wiener, N. (1948). Cybernetics: Or Control and Communication in the Animal and the Machine. Cambridge: MIT Press.

Wilson, R. A., and Frank, C. K. (1999). The MIT Encyclopedia of the Cognitive Sciences. Cambridge: MIT Press.

Keywords: cognitive computers (κC), intelligent science, knowledge science, intelligent mathematics, brain-inspired systems (BIS), autonomous intelligence generation (AIG), autonomous AI (AAI), experiments

Citation: Wang Y, Widrow B, Hoare CAR, Pedrycz W, Berwick RC, Plataniotis KN, Rudas IJ, Lu J and Kacprzyk J (2023) The odyssey to next-generation computers: cognitive computers (κC) inspired by the brain and powered by intelligent mathematics. Front. Comput. Sci. 5:1152592. doi: 10.3389/fcomp.2023.1152592

Received: 28 January 2023; Accepted: 31 March 2023;

Published: 19 May 2023.

Edited by:

Kaleem Siddiqi, McGill University, Canada

Reviewed by:

Boris Kovalerchuk, Central Washington University, United States

Jun Peng, Chongqing University of Science and Technology, China

George Baciu, Hong Kong Polytechnic University, Hong Kong SAR, China

Copyright © 2023 Wang, Widrow, Hoare, Pedrycz, Berwick, Plataniotis, Rudas, Lu and Kacprzyk. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yingxu Wang, yingxu@ucalgary.ca