
ORIGINAL RESEARCH article

Front. Comput. Sci., 09 October 2023

Sec. Human-Media Interaction

Volume 5 - 2023 | https://doi.org/10.3389/fcomp.2023.1150348

Tianyi Wang1,2*, Shima Okada2

Online live-streaming has become an essential segment of the music industry in the post-COVID-19 era. However, methods to enhance interaction between musicians and listeners at online concerts have yet to be adequately researched. In this pilot study, we propose Heart Fire, a smartwatch-based system that promotes musician-listener interaction by visualizing listeners' mental states. The listeners' heart rates are first measured using a Galaxy smartwatch and then converted, using Azure Kinect and TouchDesigner, into a real-time animation of a burning flame whose intensity depends on the heart rate. The feasibility of the proposed system was confirmed in an experiment involving ten subjects. Each subject selected two types of music: cheerful and relaxing. The BPM and energy of each song were measured, and each subject's heart rate was monitored. Subsequently, each subject answered a questionnaire about the emotions they experienced. The results demonstrated that the proposed system can visualize audience responses to music in real time.

The strict quarantine and social-distancing restrictions of the COVID-19 pandemic significantly altered people's lifestyles across the globe. People spent more time at home because they worked and studied online. Fink et al. (2021) ranked the daily domestic activities performed by people during the lockdown and found that listening to music ranked third, just behind staying up-to-date with the outside world and domestic chores. This is unsurprising, because people use music as an effective tool to cope with various forms of psychological distress such as anxiety, depression, loneliness, stress, and poor sleep quality (Brooks et al., 2020; Dagnino et al., 2020; Dawel et al., 2020; Franceschini et al., 2020), all of which were exacerbated by the pandemic. Indeed, studies have demonstrated that listening to music aids relaxation, improves mood, reduces negative emotions, abates loneliness, and relieves daily stress (Krause et al., 2021; Vidas et al., 2021).

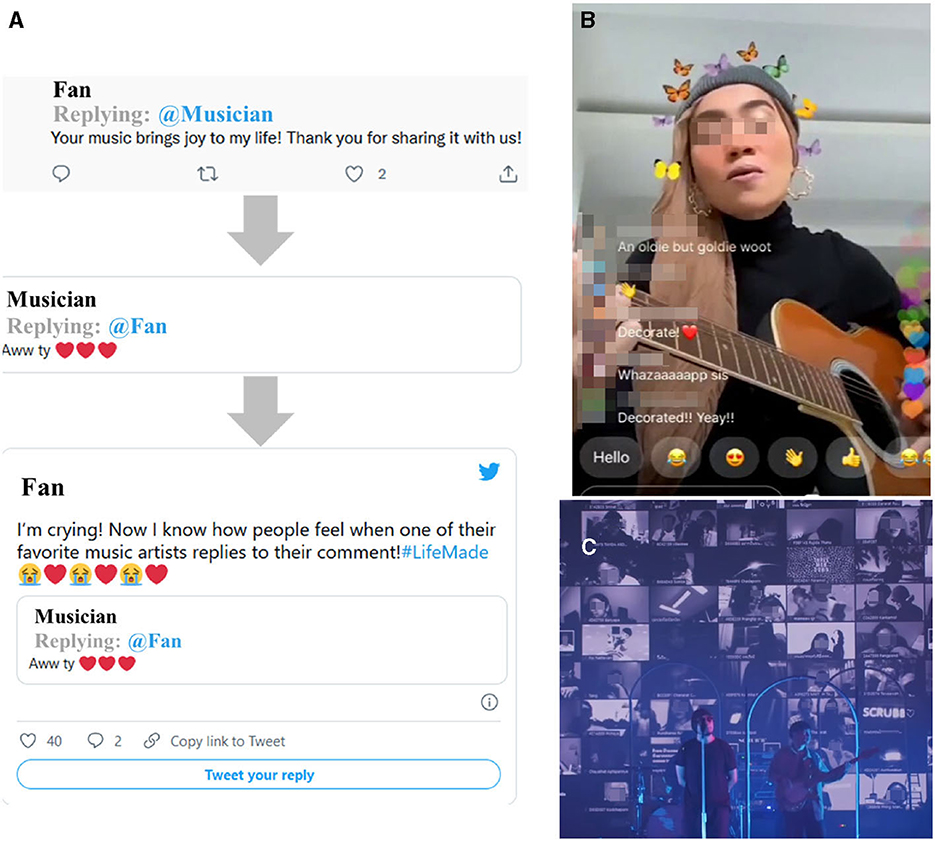

To prevent the transmission of infection, artists began performing music differently, primarily via online live-streaming. A 2021 study reported that 70% of artists had live-streamed since the COVID-19 outbreak, with 40% live-streaming once a month or more, and over 80% were willing to make live-streaming a permanent part of their performance plans even after in-person events resumed. Similarly, over 70% of music listeners have reportedly watched a live-stream at home, and 60% planned to keep watching live-streams even after in-person concerts resumed (Statista Research Department, 2021a,b). Thus, online live-streaming of concerts can be expected to become an integral part of the music industry (Onderdijk et al., 2023). Besides performances, interactions between musicians and listeners are also increasingly conducted online. Figure 1 depicts examples of musician-listener interactions on online platforms. For instance, social media apps such as Twitter (Figure 1A) allow music fans and artists to communicate with each other (Shaw, 2020). Moreover, popular live-streaming apps (Figure 1B) enable audiences to comment and send emoticons during a musical performance, which the artist can notice in real time (Basba, 2020), and some artists use online video chat software to organize online concerts (Figure 1C) (Scrubb, 2020).

Figure 1. Examples of online musician-listener interaction. (A) Interaction through Twitter. (B) Interaction through a live-streaming app. (C) Interaction through video chat during a performance.

Although audience members can turn on their cameras and display their faces in such a setup, it is difficult for artists to read the atmosphere because of the overabundance of faces on their screens. Moreover, concert-goers usually clap and wave their hands in time with the rhythm of a song, sing along, and cheer excitedly and loudly, which in turn inspires the performing artists. Musicians may even improvise based on these responses. Such real-time, dynamic interaction is lacking in online music performances; thus, online concerts do not have the same bonding capabilities as physical ones, because musicians who cannot experience the listeners' responses may lose their enthusiasm.

In this context, several studies on improving musician-listener interaction during musical performances offer promising directions for online concerts. For example, Yang et al. (2017) proposed a smart light stick whose colors change when the stick is shaken at a particular frequency. Similar light-based interactions enabled by wireless motion sensors were proposed in other studies (Freeman, 2005; Feldmeier and Paradiso, 2007; Young, 2015). Furthermore, since the beginning of the smartphone era in 2007, musician-listener interaction has increasingly been carried out on smartphone-based systems through sound, text, and control interfaces (Dahl et al., 2011; Freeman et al., 2015; Schnell et al., 2015). Hödl et al. (2020) established that using a smartphone as a technical device can inspire creative work in artists and enhance their interaction with listeners. An interesting example of such an application was proposed by Freeman et al. (2015), who designed a smartphone app on which audience members could draw using brushes of different shapes, colors, sizes, and borders during musical events. These artworks were visible to the musician during the concert. However, Hödl et al. (2012) argued that using smartphones during a musical performance may also be distracting, because audience members must operate the device, whether by typing or by handling it in particular ways, to convey their responses to the performer.

Alternatively, musician-listener interaction might be improved by using physiological data as a metric of listeners' mental states, which can then serve as feedback for the performer. Heart rate variability (HRV) has previously been used to evaluate the effect of music on the human nervous system. Wu and Chang (2021) used an electrocardiogram (ECG) sensor to monitor listeners' emotions and found that listeners' emotional states were reflected in HRV indicators, which changed in response to musical tempo; that is, faster music increased sympathetic nervous system activity. Similar results were reported by Bernardi et al. (2006), where listening to music resulted in a higher heart rate than silence. Interestingly, in a recent study by Hirata et al. (2021), HRV was analyzed as a metric of mental state while listening to music on different devices, for example, stationary speakers, headphones, and smartphone speakers. The authors found that live-streamed music performances led to a shorter R–R interval than attending an on-site concert. Because a shorter R–R interval corresponds to a faster heart rate, this suggested that online audiences experienced higher levels of stress than audiences in the more relaxed atmosphere of a live concert and, consequently, felt less emotionally engaged during live-streamed events. However, the study analyzed mental state retrospectively rather than continuously in real time; thus, it does not capture the participants' real-time state of mind.

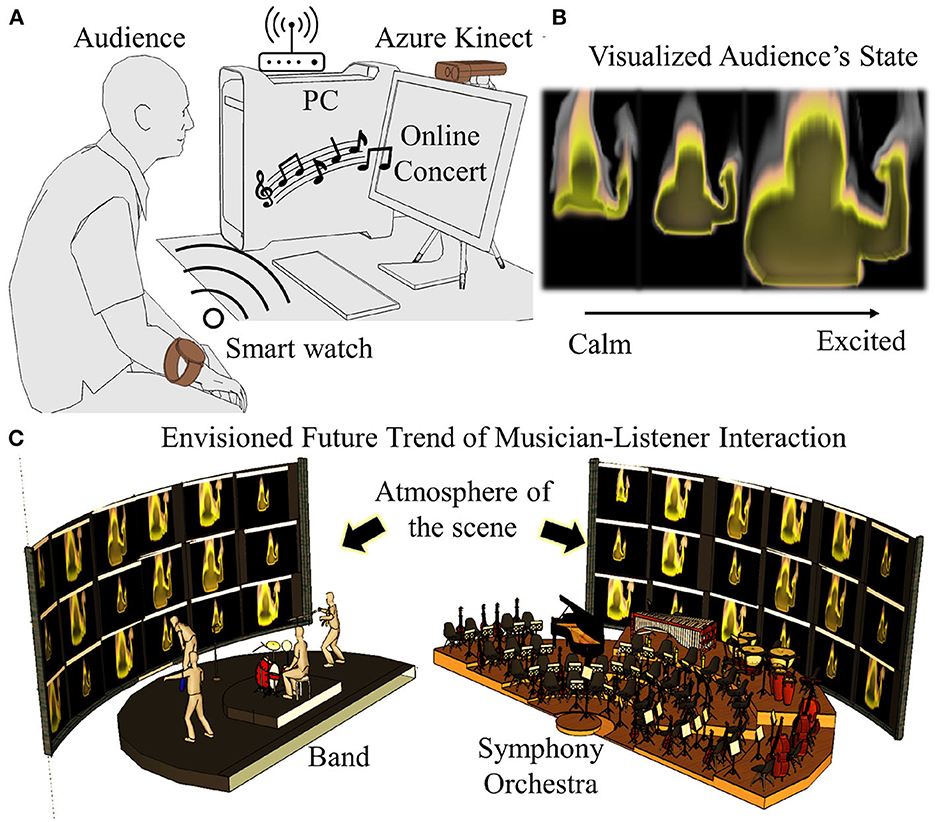

Therefore, a real-time and user-friendly method to visualize the mental states of online concert attendees is needed. In this study, we propose Heart Fire, a smartwatch-based system capable of visualizing the mental states (for example, calm or excited) of people attending online concerts. Figure 2A illustrates the proposed system. The audience member's heart rate is detected using a smartwatch and transmitted to a local computer via Wi-Fi, where it is processed in TouchDesigner and visualized together with the user's image captured by Azure Kinect. We selected a “burning fire” as the visual effect. This notion was inspired by a poem from the Tang Dynasty in China, “忧喜皆心火 (yōu xǐ jiē xīn huǒ),” which translates literally as: Emotions such as worries and joys are all fires of the heart. Similarly, the Japanese idiom “心火 (しんか, shinka; literally, heart fire)” refers to a range of emotions. The output of Heart Fire is depicted in Figure 2B. When the audience member is calm, the visualized fire burns slowly, whereas when the audience member is excited, the fire burns intensely. We believe that the proposed system will inspire novel musician-listener interaction during online performances, in which musicians can read the atmosphere directly from the visualized heart fire, as shown in Figure 2C.

Figure 2. Concept of proposed system. (A) Set-up for the proposed system. (B) Sample of visualized audience's state. (C) Future trend of musician-listener interaction during online performance.

The remainder of this paper is organized as follows. In Section 2, the details of the proposed system are presented in addition to an experiment conducted to evaluate its performance. The results are presented in Section 3. Social aspects and technical notes are discussed in Section 4. Finally, the paper is concluded in Section 5.

Briefly, the system consists of two parts: a smartwatch that records the real-time heart rate (Section 2.1) and a local computer equipped with Azure Kinect and TouchDesigner that processes the recorded heart rate signal and converts it into a visualization (Section 2.2).

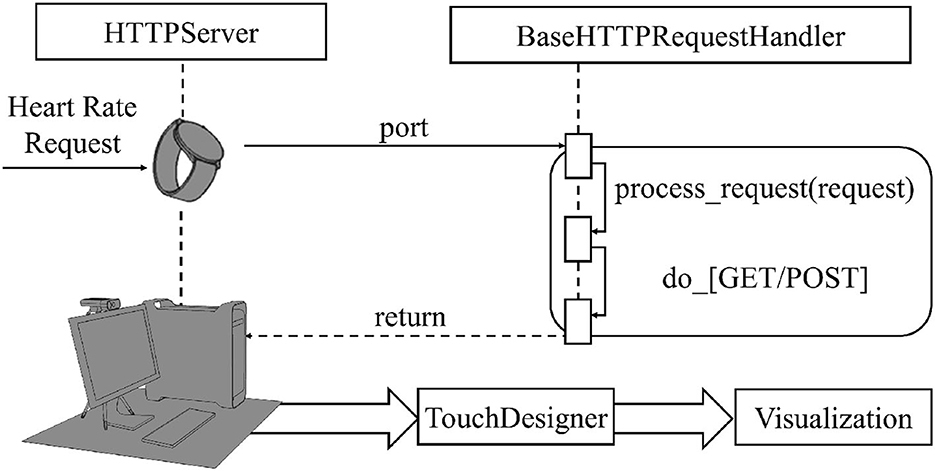

Figure 3 depicts an overview of the proposed system. In this study, we used a Samsung Galaxy Watch with a 42-mm body (GW42) to record the heart rate. The GW42 is a Tizen-based wearable device with a dual-core Exynos 9110 processor (1.15 GHz) and 4 GB of NAND memory. In addition to a heart rate sensor, the GW42 is equipped with a gyroscope, an accelerometer, a barometer, and an ambient light sensor (SAMSUNG Galaxy, 2021). To transfer the heart rate data from the GW42 to a local computer, we wrote a Python program adapted from Loic2665 (2020). Heart rate data are read and written using Python's BaseHTTPRequestHandler; thus, the IP address and port number of the local computer are required to transmit the heart rate data.
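
For readers reproducing the receiving side, the sketch below shows how a local PC might accept heart-rate samples with Python's standard `http.server.BaseHTTPRequestHandler`. It is not the authors' program or the Loic2665 (2020) code: the POST payload format (a JSON object with a hypothetical `hr` key, or a bare number), port 8000, and the names `HeartRateHandler`, `parse_heart_rate`, and `latest_hr` are assumptions for illustration.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Most recent heart-rate sample (bpm), read by the visualization side.
latest_hr = {"bpm": None}
_lock = threading.Lock()


def parse_heart_rate(body: bytes) -> float:
    """Parse a payload such as b'{"hr": 72}' or b'72' (hypothetical formats)."""
    data = json.loads(body.decode("utf-8"))
    if isinstance(data, dict):
        return float(data["hr"])  # "hr" is an assumed key name
    return float(data)


class HeartRateHandler(BaseHTTPRequestHandler):
    """Accepts POST requests from the watch and stores the latest value."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            bpm = parse_heart_rate(body)
        except (ValueError, KeyError, TypeError):
            self.send_response(400)  # malformed payload
            self.end_headers()
            return
        with _lock:
            latest_hr["bpm"] = bpm
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(b"ok")

    def log_message(self, format, *args):
        pass  # suppress per-request logging (two requests per second at 500 ms)


if __name__ == "__main__":
    # The watch app must be given this PC's IP address and this port number.
    HTTPServer(("0.0.0.0", 8000), HeartRateHandler).serve_forever()
```

Keeping only the most recent sample in memory fits a real-time display; any logging for later analysis would be a separate design decision.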

Figure 3. Overview of proposed system. A BaseHTTPRequestHandler reads and writes the heart rate received from the smartwatch and passes the data to the PC. The heart rate data are then transferred to TouchDesigner for further processing and visualization.

Figure 4 depicts the GW42 application we developed to record heart rate data, which we named Heart Fire. The app was built on Tizen (an open-source, standards-based software platform), and a shortcut to it can be placed on the smartwatch home screen after installation (Figure 4A). When the icon is tapped, the application interface is displayed, and users can enter the IP address and port number of their computers (Figure 4B). Delay denotes the heart rate sampling interval; for instance, a 500-ms delay means that the heart rate is sampled twice per second (2 Hz). Users can change this setting; in this study, we set the delay to 500 ms, considering both the smartwatch memory capacity and the average duration of songs.
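
The delay setting reduces to simple arithmetic: the sampling frequency is 1000 / delay (in ms), and the number of samples per song is the song length divided by the delay. The sketch below also shows how a sender might post each sample to the IP address and port entered in the app. The real app runs on Tizen rather than Python, so this is only an illustration; the JSON payload with an `hr` key and all function names are hypothetical.

```python
import json
import time
import urllib.request


def sampling_frequency_hz(delay_ms: float) -> float:
    """A 500-ms delay corresponds to two samples per second (2 Hz)."""
    return 1000.0 / delay_ms


def samples_per_song(delay_ms: float, duration_s: float) -> int:
    """Number of heart-rate samples recorded over a song of the given length."""
    return int(duration_s * 1000.0 // delay_ms)


def build_request(ip: str, port: int, bpm: float) -> urllib.request.Request:
    """Build one POST request carrying a single sample (hypothetical format)."""
    body = json.dumps({"hr": bpm}).encode("utf-8")
    return urllib.request.Request(
        f"http://{ip}:{port}/",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def stream(ip, port, read_hr, delay_ms=500, n_samples=10):
    """Send n_samples readings from read_hr(), one every delay_ms milliseconds."""
    for _ in range(n_samples):
        req = build_request(ip, port, read_hr())
        with urllib.request.urlopen(req, timeout=2) as resp:
            resp.read()
        time.sleep(delay_ms / 1000.0)
```

For example, `samples_per_song(500, 200)` gives 400 samples for a 3 min 20 s song at the 500-ms delay used in this study.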

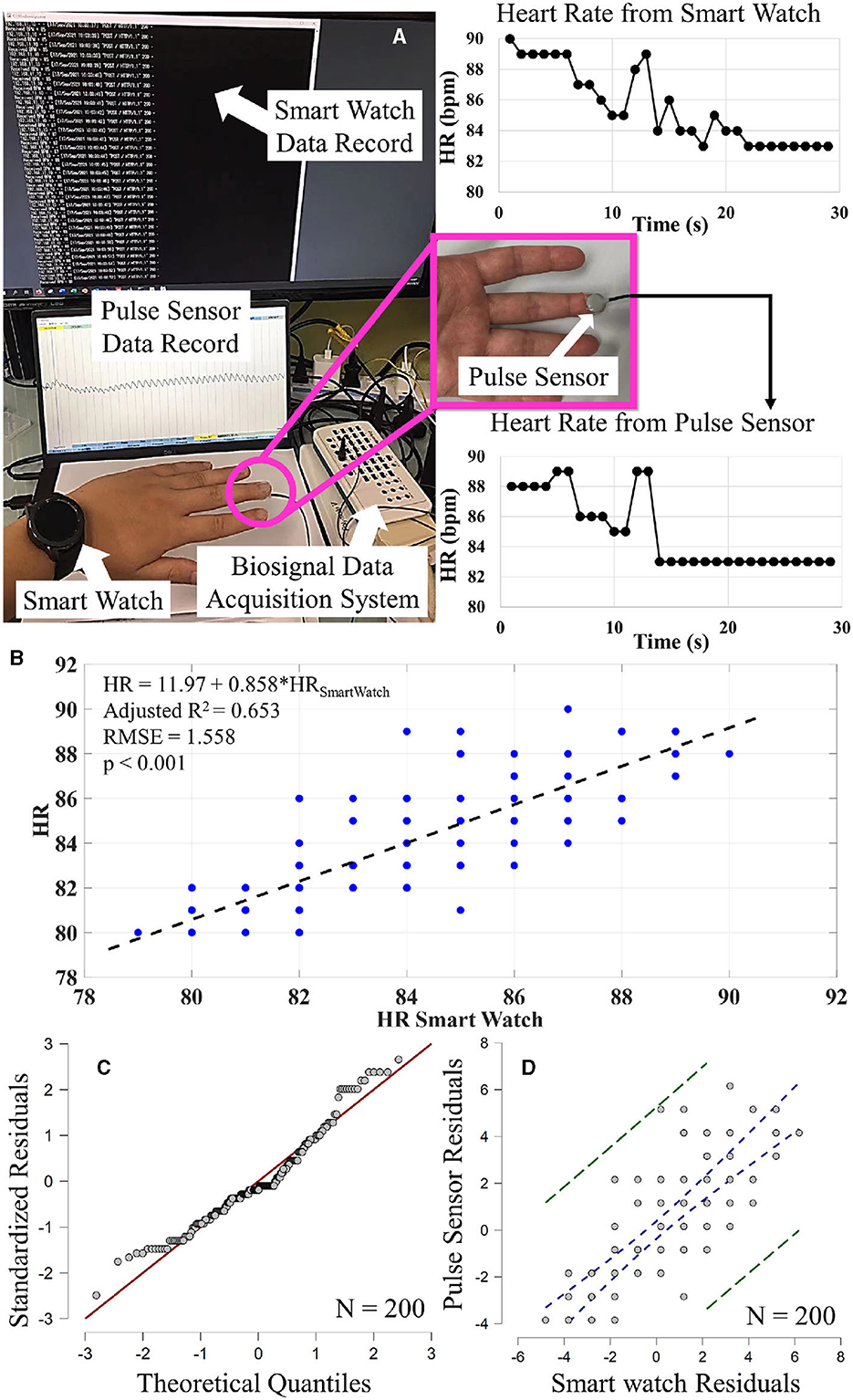

To confirm the reliability of the recorded heart rate data, a cross-check experiment was performed to compare the heart rate recorded using the smartwatch with that recorded using a gold-standard reference (Yoshihi et al., 2021), as shown in Figure 5A. A subject wore the GW42, and a heart rate sensor [AP-C030 (a), Polymate V AP5148; sampling frequency 100 Hz] was attached to the tip of his middle finger. We asked the subject to listen to a piece of music (3 min 20 s long) and recorded his heart rate with both devices simultaneously. The smartwatch heart rate can be read directly from the device. The heart rate sensor data were analyzed using a peak-analysis script written in MATLAB (R2022b) to calculate the heart rate (Wang et al., 2019).
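
The reference heart rate follows from the interval between successive pulse peaks: HR (bpm) = 60 / mean inter-beat interval (s). The authors' MATLAB peak-analysis script is not reproduced here; the pure-Python sketch below is a simplified stand-in that assumes a clean pulse waveform sampled at 100 Hz, a mean-value threshold, and a refractory period derived from a hypothetical 180-bpm ceiling. Real finger-pulse recordings would normally be filtered first.

```python
import math


def detect_peaks(signal, fs, max_bpm=180.0):
    """Return indices of pulse peaks using a simple local-maximum rule.

    Peaks must exceed the signal mean and be at least one minimum
    beat interval (60 / max_bpm seconds) apart.
    """
    refractory = int(fs * 60.0 / max_bpm)
    threshold = sum(signal) / len(signal)
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i] >= signal[i - 1] and signal[i] > signal[i + 1]
        if not is_local_max or signal[i] <= threshold:
            continue
        if peaks and i - peaks[-1] < refractory:
            # Two candidates too close together: keep the taller one.
            if signal[i] > signal[peaks[-1]]:
                peaks[-1] = i
            continue
        peaks.append(i)
    return peaks


def heart_rate_bpm(peaks, fs):
    """Mean heart rate from peak indices: 60 / mean inter-beat interval."""
    if len(peaks) < 2:
        raise ValueError("need at least two peaks")
    intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


if __name__ == "__main__":
    fs = 100  # Hz, matching the reference sensor's sampling frequency
    # Synthetic 1.2-Hz (72-bpm) pulse wave, 30 s long, for illustration only.
    pulse = [math.sin(2 * math.pi * 1.2 * n / fs) for n in range(30 * fs)]
    print(round(heart_rate_bpm(detect_peaks(pulse, fs), fs), 1))
```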

Figure 5. Recorded heart rates during the cross-check experiment. (A) Image of cross-check experiment. (B) Linear regression. (C) Q–Q plot of standardized residuals. (D) Partial regression plot.

The heart rates during a 30-s interval of the experiment are plotted on the right-hand side of Figure 5A as an example. Linear regression was performed using the statistical software JASP (Jeffreys's Amazing Statistics Program; version 0.17.2.1, The Netherlands). During the cross-check experiment, the heart rate was recorded 200 times. Figure 5B illustrates the results of the linear regression, which reveals that the smartwatch reading significantly predicts the reference heart rate (HR), F(1,198) = 375.61, p < 0.001, adjusted R² = 0.653, using the following regression equation:

where HR denotes the heart rate recorded using the gold standard, and HR_SmartWatch denotes the heart rate recorded using the smartwatch. The Q–Q plot (Figure 5C) shows that the standardized residuals lie close to the diagonal, supporting the assumption that the residuals are normally distributed. Figure 5D illustrates the partial regression plot; the blue dashed lines represent the 95% confidence intervals, and the green dashed lines represent the 95% prediction intervals. These results support the reliability of the heart rates measured by the proposed smartwatch system. Details of the linear regression can be found in the Supplementary material.
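
The regression statistics reported above (F and adjusted R²) can be recomputed for any paired recording with ordinary least squares. The sketch below is a plain-Python illustration of the standard textbook formulas, not JASP's implementation; `x` would hold the smartwatch readings and `y` the reference readings.

```python
def simple_ols(x, y):
    """Ordinary least squares for y = b0 + b1 * x, with summary statistics."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("x and y must have the same length (at least 3)")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx                       # slope
    b0 = my - b1 * mx                    # intercept
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - my) ** 2 for yi in y)
    r2 = 1.0 - sse / sst
    df_resid = n - 2                     # one predictor plus an intercept
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / df_resid
    f_stat = r2 / ((1.0 - r2) / df_resid)  # F(1, n - 2)
    return {"intercept": b0, "slope": b1, "r2": r2,
            "adj_r2": adj_r2, "f": f_stat, "df": (1, df_resid)}
```

As a consistency check, 200 samples give df = (1, 198), and inverting the adjusted-R² formula for an adjusted R² of 0.653 yields R² ≈ 0.655 and F ≈ 375, in line with the reported F(1,198) = 375.61.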

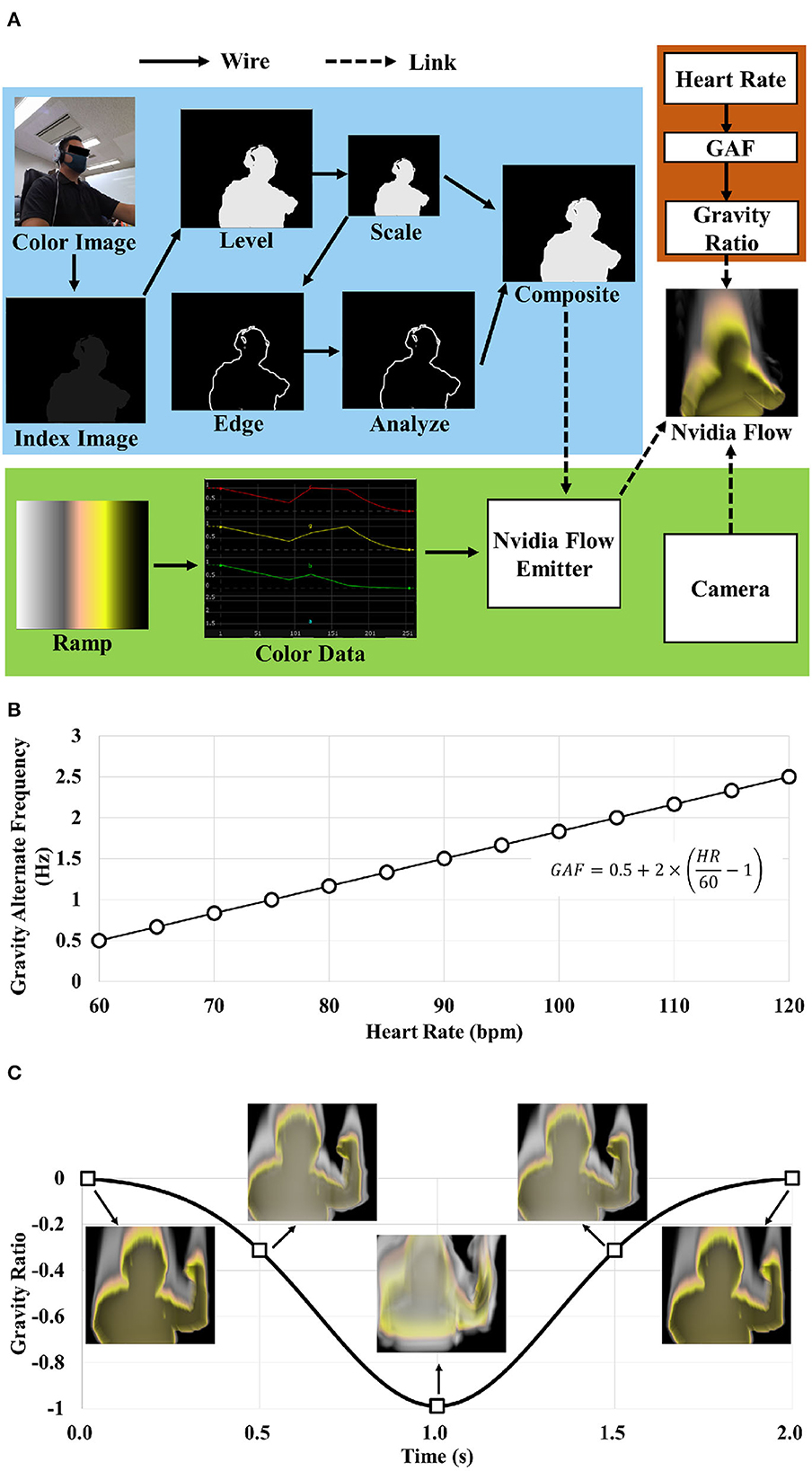

Figure 6 depicts an overview of the heart rate visualization. In this study, we used Azure Kinect (Developer Kit, Microsoft, USA) and TouchDesigner (version 099, 64-Bit, 2021.14360) to implement the Heart Fire visualization. The Azure Kinect includes an RGB camera and an infrared (IR) depth camera, and it has been reported to be more accurate than other commercial cameras (Albert et al., 2020). TouchDesigner is a node-based visual programming environment that software designers, artists, and creative coders use to create real-time interactive multimedia content (Sargeant et al., 2017; TouchDesigner, 2017). Figure 6A depicts the steps of the visualization process in TouchDesigner. In the figure, each colored square represents a node, and the arrows indicate the direction of data flow. The solid arrows represent wires, which connect the output of one node to the inputs of other nodes, and the dashed arrows represent links, which typically pass parameter values from one node to another. In this study, the TouchDesigner nodes fall into three main categories according to their functions. The nodes in the blue square perform human detection using Azure Kinect. The four nodes in the green square configure the visualization settings. The nodes in the red square import and process the heart rate data recorded using the smartwatch and convert it into a parameter used for the visualization.

Figure 6. Converting heart rate to visualized media. (A) Concept of visualization process. Nodes in blue square are used for human detection, nodes in green square are used for visualization setting, nodes in red square are used to import and process heart rate data. (B) Heart rate and gravity alternate frequency. (C) Example of gravity ratio (60 bpm, GAF = 0.5 Hz).

First, the body index image from Azure Kinect was used to separate the user's image from the background. Then, the brightness, gamma, and contrast of the video were adjusted to clarify the user's silhouette. Next, the image was resized, and the edges of the user's silhouette were detected. The rescaled image and its edges were then combined into a single image and used as one of the visualization parameters. The ramp node, which can create horizontal, vertical, radial, and circular ramps, was used with color data to simulate the appearance of fire, and the camera node was used to set a proper viewing angle. Finally, the heart rate recorded in real time was used to animate the burning fire. In Heart Fire, the intensity of the fire increases with the heart rate. Intensity, rather than size (e.g., fire size > 1), was chosen as the variable property representing the mental state because of the limited size of the window that displays the user's video. NVIDIA Flow, originally designed for volumetric, fluid-based simulation of burning gases, was used to control the intensity of the flame by adjusting the gravity of the fire effect along the Y-axis (vertical direction). To this end, a Gaussian curve with a maximum value of 1 was repeated at different frequencies to modulate the gravity; this repetition frequency is called the Gravity Alternate Frequency (GAF). Figure 6B illustrates the GAF with respect to the heart rate. Assuming that the heart rate of a healthy person usually ranges from 60 to 120 beats per minute (bpm), the GAF is obtained by substituting the heart rate into the following equation:

When the heart rate lies between 60 and 120 bpm, the GAF lies between 0.5 and 2.5 Hz. Higher heart rates are visualized as more intensely burning fire. Figure 6C depicts an example of the temporal variation of the gravity ratio corresponding to a heart rate of 60 bpm and a GAF of 0.5 Hz. Details of each node can be found in the Supplementary material.
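
Because Eq. 2 itself is not reproduced in this text, the sketch below uses a hypothetical stand-in: a linear interpolation that only matches the stated endpoints (60 bpm to 0.5 Hz, 120 bpm to 2.5 Hz), clamped outside that range. It then drives a gravity ratio shaped as a Gaussian pulse with a peak value of 1 that repeats at the GAF, which is one plausible reading of the Gaussian-curve description. The pulse width `sigma` is an assumed value, and none of these names are TouchDesigner or NVIDIA Flow parameters.

```python
import math

HR_MIN, HR_MAX = 60.0, 120.0   # assumed healthy heart-rate range (bpm)
GAF_MIN, GAF_MAX = 0.5, 2.5    # GAF range stated in the text (Hz)


def hr_to_gaf(hr_bpm: float) -> float:
    """Hypothetical linear stand-in for Eq. 2, clamped to [GAF_MIN, GAF_MAX]."""
    hr = min(max(hr_bpm, HR_MIN), HR_MAX)
    return GAF_MIN + (hr - HR_MIN) * (GAF_MAX - GAF_MIN) / (HR_MAX - HR_MIN)


def gravity_ratio(t_s: float, gaf_hz: float, sigma: float = 0.1) -> float:
    """Gaussian pulse (peak value 1) repeated every 1 / gaf_hz seconds.

    sigma is the pulse width as a fraction of one period (assumed value).
    """
    phase = (t_s * gaf_hz) % 1.0  # position within the current period, 0..1
    return math.exp(-((phase - 0.5) ** 2) / (2.0 * sigma ** 2))
```

At 60 bpm this produces one pulse every 2 s (0.5 Hz), as in the Figure 6C example; at 120 bpm the pulses arrive five times as often.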

The feasibility of the proposed system was verified experimentally. Ten healthy subjects recruited from the university participated in the experiment [eight males and two females; age (mean ± standard deviation): 25 ± 4 years], and the contents of the study were explained to them orally before the experiment. No data containing information that could identify any individual were used in this research.

We asked each subject to choose two pieces of music: one that cheers them up (Type 1) and another that relaxes them (Type 2). The beats per minute (BPM) and energy of each piece were then obtained from the music analysis website Musicstax (Musicstax, 2021). BPM is the tempo of a track in beats per minute, and energy is a measure of how intense a track sounds, based on its dynamic range, loudness, timbre, onset rate, and general entropy; 0% indicates low energy, and 100% indicates high energy.

During the experiment, each subject sat still at a desk wearing a smartwatch and watched and listened to their chosen music on YouTube through over-ear headphones (Beats Pro; frequency response, 20–20,000 Hz; sound pressure level, 115 dB). Participants first listened to the relaxing music, followed by a 10-min break, and then listened to the cheerful music. Heart rate was first recorded for 1 min before the music was played (Pre) and then for the duration of the chosen song (Music).

Because previous research has shown that music-evoked emotions affect the regulation of heart activity (Koelsch and Jäncke, 2015), we formulated the following hypothesis:

• Hypothesis: Subjects' heart rates are higher when listening to Type 1 (cheerful) music than when listening to Type 2 (relaxing) music; accordingly, GAF is expected to be higher for Type 1 music than for Type 2 music.

After listening to each music type, subjects completed a Semantic Differential scale (SDs)-based survey recording the emotions they experienced. The SDs-based technique has been used as an effective approach for analyzing affective meaning and music preference since the 1970s (Albert, 1978; Kennedy, 2010; Miller, 2021). The SDs consist of eight factors designed to capture listeners' attitudes toward a particular piece of music (Takeshi et al., 2001): Bright-Dark, Heavy-Light, Hard-Soft, Peaceful-Anxious, Clear-Muddy, Smooth-Crisp, Intense-Gentle, and Thick-Thin. Subjects rated each pair of adjectives on a 5-point bipolar scale (±2: strong, ±1: slight, 0: neutral), with negative scores leaning toward the first adjective of the pair and positive scores toward the second.

We used JASP (version 0.17.2.1, The Netherlands) to perform the statistical analysis. The Shapiro–Wilk and Levene's tests were used to examine normality and equality of variances, respectively (see Supplementary material).

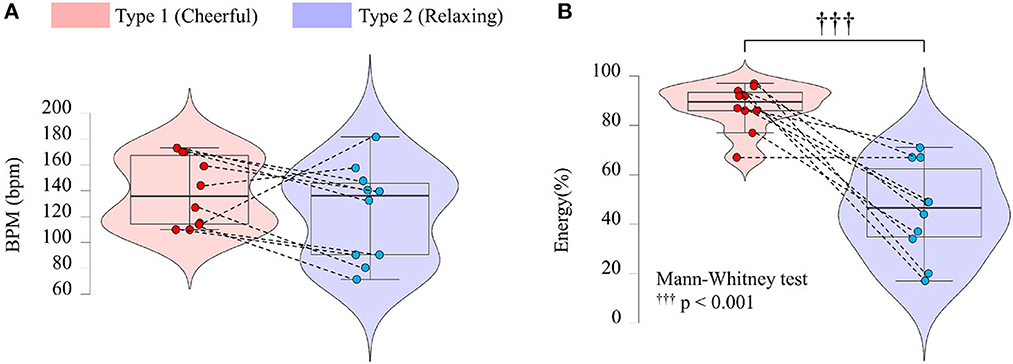

As shown in Figure 7A, most participants chose a Type 1 piece with a higher BPM than their Type 2 piece. Nevertheless, a Mann–Whitney U-test did not reveal a significant difference in BPM between Type 1 (Mdn = 135.5, SD = 26.9) and Type 2 (Mdn = 138.5, SD = 37.2) songs, U = 62, p = 0.38. The rank–biserial correlation indicated a small effect size (0.24).

Figure 7. Characteristics of the two types of selected music. Red data correspond to Type 1 music, and blue data correspond to Type 2 music. The violin plots in (A, B) represent the music BPM and energy, respectively. The Mann–Whitney test was performed for energy; ††† indicates p < 0.001.

As depicted in Figure 7B, a Mann–Whitney U-test revealed that Type 1 (Mdn = 89.5, SD = 9.3) music had significantly higher energy than Type 2 (Mdn = 46.5, SD = 19.1) music, U = 98, p < 0.001. The rank–biserial correlation indicated a large effect size (0.96).
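
The rank–biserial correlations reported for the Mann–Whitney tests follow directly from U: r = 2U / (n₁n₂) − 1 for groups of sizes n₁ and n₂. With n₁ = n₂ = 10, U = 62 gives 0.24 and U = 98 gives 0.96, matching the values above. The sketch below computes U by pairwise comparison (ties count one half) and derives r; it is a plain-Python illustration, not JASP's code, and it does not compute p-values.

```python
def mann_whitney_u(a, b):
    """U for sample a: count of (a_i, b_j) pairs with a_i > b_j; ties count 0.5."""
    u = 0.0
    for ai in a:
        for bj in b:
            if ai > bj:
                u += 1.0
            elif ai == bj:
                u += 0.5
    return u


def rank_biserial_from_u(u, n1, n2):
    """+1 if every value in a exceeds every value in b, -1 if the reverse."""
    return 2.0 * u / (n1 * n2) - 1.0
```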

Table 1 summarizes the SDs results. On average, similar emotions were reported for both music types on four scales (Bright-Dark, Hard-Soft, Peaceful-Anxious, Clear-Muddy): subjects felt relatively bright (both < 0), soft (both > 0), peaceful (both < 0), and clear (both < 0). The only significant difference was found on the Intense-Gentle scale, where a Wilcoxon signed-rank test revealed greater emotional intensity during Type 1 (Mdn = −1.5, SD = 0.9) than during Type 2 (Mdn = 2.0, SD = 0.7) music, W = 0, p = 0.005. The rank–biserial correlation indicated a large effect (−1).
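
For the paired SDs ratings, the Wilcoxon statistic W is the sum of the ranks of the positive differences, and the matched-pairs rank–biserial correlation is (W⁺ − W⁻) / (W⁺ + W⁻). When W = 0, every non-zero difference is negative, so r = −1, as reported. The sketch below assumes differences are taken as Type 1 minus Type 2, drops zero differences (one common convention), and assigns average ranks to ties; it is illustrative and omits p-values.

```python
def average_ranks(values):
    """1-based ranks in which tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, rank_biserial) for paired samples x and y."""
    diffs = [xi - yi for xi, yi in zip(x, y) if xi != yi]  # drop zero differences
    if not diffs:
        raise ValueError("all differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum((r for r, d in zip(ranks, diffs) if d > 0), 0.0)
    w_minus = sum((r for r, d in zip(ranks, diffs) if d < 0), 0.0)
    r_rb = (w_plus - w_minus) / (w_plus + w_minus)
    return w_plus, w_minus, r_rb
```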

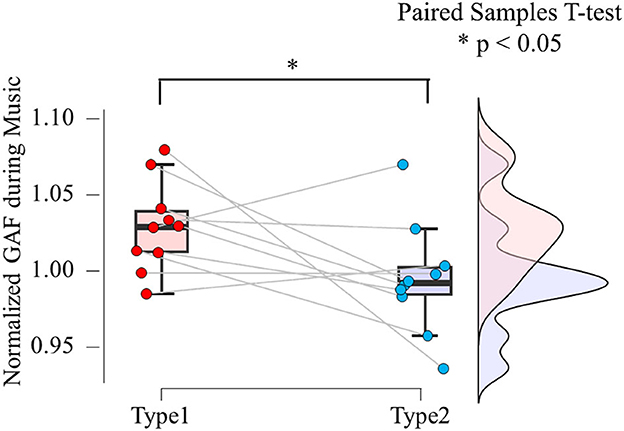

GAFs were calculated using Eq. 2, and the GAF data were normalized by the average Pre-GAF. As hypothesized, a paired-samples t-test (Figure 8) revealed a significantly greater GAF during Type 1 (M = 1.029, SD = 0.03) than during Type 2 (M = 0.995, SD = 0.04) music listening, t(9) = 2.06, p = 0.04 (one-tailed, consistent with the directional hypothesis). Cohen's d indicated a medium effect (0.65).
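
The paired-samples statistics can be sketched as follows: each subject's mean GAF during a song is divided by that subject's mean Pre-GAF (a per-subject normalization assumed here, since the text does not specify the exact procedure), and the t statistic and Cohen's d are computed from the per-subject differences. For paired data, d = mean(diff) / SD(diff) and t = d·√n, so t(9) = 2.06 with n = 10 corresponds to d ≈ 0.65, consistent with the reported effect size. p-values are omitted to keep the example dependency-free.

```python
import math
import statistics


def normalize_gaf(music_gaf, pre_gaf):
    """Mean GAF during the song divided by the mean Pre-GAF (assumed scheme)."""
    return statistics.mean(music_gaf) / statistics.mean(pre_gaf)


def paired_t_and_d(a, b):
    """Paired-samples t statistic, degrees of freedom, and Cohen's d (d_z)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [ai - bi for ai, bi in zip(a, b)]
    n = len(diffs)
    sd = statistics.stdev(diffs)       # sample SD (n - 1 denominator)
    d = statistics.mean(diffs) / sd    # standardized mean difference
    t = d * math.sqrt(n)               # equivalently mean / (sd / sqrt(n))
    return t, n - 1, d
```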

Figure 8. Normalized GAF during music listening. Red data correspond to Type 1 music, and blue data correspond to Type 2 music. A paired-samples t-test was performed; * indicates p < 0.05.

In this exploratory study, we introduced a novel concept: a smartwatch-based interaction system that connects musicians and listeners through a real-time animation visually representing the audience's heartbeats. To our knowledge, this is the first effort to visualize online musician-listener dynamics via a wearable device application.

Contrary to our expectations, BPM did not differ significantly between the two music types. However, Figure 7A reveals a trend: for most listeners, the cheerful piece had a higher BPM than the relaxing piece. Because listeners selected the pieces themselves, their subjective choices may account for the lack of a significant difference. In contrast, a significant difference was found for energy: as expected, relaxing music had lower energy than cheerful music.

The relationship between music and heart rate is linked to changes in the brain structures that regulate heart activity (Koelsch, 2014). Specifically, cardiovascular afferent neurons provide the autonomic nervous system with information about blood pressure and the heart's mechanical and chemical conditions (McGrath et al., 2005). Conversely, emotions influence heart activity through various pathways that transmit information to the cardiac nerve plexus, including blood pressure, blood gases, and autonomic and endocrine routes (Koelsch et al., 2013). Through these mechanisms, music-induced emotions affect heart regulation, heart rate, and heart rate variability (Koelsch and Jäncke, 2015). Armour and Ardell (2004) concluded that heart rate regulation involves an intricate network of reflex-like circuits incorporating brainstem structures and intrathoracic cardiac ganglia, which are in turn influenced by cortical forebrain structures associated with emotion. The SDs-based surveys revealed that listeners responded differently to the two music types: cheerful music evoked intense emotions, whereas relaxing music evoked gentle emotions. Participants had higher heart rates while listening to cheerful music, which induced a strong emotional response (i.e., intensity), than while listening to relaxing music, which was associated with a weaker emotional response (i.e., gentleness). Numerous studies have also reported that music with high emotional intensity is associated with a faster heart rate than music with a calming effect (Bernardi et al., 2006; Etzel et al., 2006; Krabs et al., 2015).

Researchers have proposed that cheerful music triggers heightened emotional arousal, which in turn influences the activity of forebrain structures such as the hypothalamus, amygdala, insular cortex, and orbitofrontal cortex (Baumgartner et al., 2006; Koelsch, 2014; Koelsch and Skouras, 2014). Our experimental findings confirm that the proposed Heart Fire architecture functions as intended, translating the audience's heart rates into visual feedback. The normalized GAF differed significantly between the two music types: as expected, cheerful songs elicited a higher GAF than relaxing songs, producing a faster animation for more upbeat music. By visually representing the audience's heartbeats, the proposed system offers a potential way for musicians to guide and interact with their audiences based on the audience's emotional responses to the music, thereby fostering a stronger connection between performers and their audiences.

Building on these experimental findings, we next discuss two aspects: the social implications for musician-listener interaction at online live-streamed concerts, and technical considerations regarding hardware and software.

The inherently social nature of music has been widely acknowledged across historical eras (DeWoskin, 1982; Rowell, 2015) and cultural boundaries (Boer and Abubakar, 2014; Trehub et al., 2015). Across diverse societies, music has served as a dynamic conduit for establishing and reinforcing social connections among individuals (Demos et al., 2012; Boer and Abubakar, 2014; Volpe et al., 2016). As music events shift from physical spaces to the digital realm (Statista Research Department, 2021a,b; Vandenberg et al., 2021), a critical question arises: how can the essential interaction between musicians and listeners be preserved so that these social bonds endure? Previous studies have addressed this challenge in diverse ways. For instance, Onderdijk et al. (2021b) enabled listeners to vote for the final song they wanted to hear in a live-streamed concert, but found that the voting mechanism alone did not increase listeners' feeling of agency. This shortcoming was attributed to the one-off nature of the exercise: voting was only enabled during the last part of the concert. This suggests that efforts to foster a sense of agency in online concert attendees are ineffective unless they are implemented continuously. In our study, we visualized listeners' heart rates as an animated burning fire updated at a sampling frequency of 2 Hz. This approach gives musicians continuous awareness of the audience's heartbeats and could help them increase audience engagement and tailor their performances to listeners' emotional states. For example, a musician who observes a rapidly burning fire might transition to a slower-tempo piece to foster a calmer, more immersive musical experience.
Such impromptu adaptations to the setlist are not merely performative; they follow the listeners' emotional currents and deepen the listeners' sense of participation by weaving their involvement into the fabric of the performance. Looking ahead, we envision collaborative engagement between the audience and the performers on stage. This might take the form of an interactive music television concept offering an experience that is distinct with each occurrence, an embodiment of both musical individuality and collective unity.

Recent studies highlight the potential of visualized musician-listener interaction to foster a more profound social bond. Virtual reality (VR) or 360° monitors can amplify the immersive experience for listeners, fostering a stronger sense of connection (Elsey et al., 2019; Dekker et al., 2021). Compared with viewers of standard YouTube live streams, VR-equipped listeners reported a heightened sense of physical presence and connection with the artist (Onderdijk et al., 2021b). Survey data also support a link between a sense of participation and enjoyment: Deng and Pan (2023) found that a greater sense of participation in a virtual music event was positively correlated with enjoyment. Additionally, Onderdijk et al. (2023) found that audience members wished to interact not only with other audience members to foster social anchoring but, predominantly, to share the experience and a sense of connectedness with the artists. The Heart Fire concept aligns well with this finding and could help reshape the listener-musician relationship. Notably, LED sticks have been used not only for interaction during performances but also as post-event social connectors: Yang et al. (2017) proposed an LED stick with which audience members who shook their sticks at similar frequencies, indicating similar levels of enthusiasm, could then add each other as friends. Applying this social function to our system would enable users to connect with others who experience similar emotions, or whose animated fires burn with similar intensity, while listening to a particular song, thus strengthening social bonds.
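
The proposed social feature could be prototyped by pairing listeners whose fires burn at similar rates, analogous to matching similar shaking frequencies in the LED-stick study. The sketch below is purely hypothetical and is not part of the implemented system: it takes each listener's current GAF and returns the pairs whose values differ by no more than a tolerance `tol`, here an arbitrary 0.1 Hz.

```python
from itertools import combinations


def similar_fire_pairs(gaf_by_listener, tol=0.1):
    """Return listener pairs whose current GAF values differ by at most tol (Hz)."""
    pairs = []
    for a, b in combinations(sorted(gaf_by_listener), 2):
        if abs(gaf_by_listener[a] - gaf_by_listener[b]) <= tol:
            pairs.append((a, b))
    return pairs
```

Comparing GAF averaged over a whole song, rather than a single snapshot, would likely give more stable matches.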

Several methods exist for measuring emotions during musical performances, each with its own advantages and limitations. For instance, prior research established the feasibility of studying listeners' emotional responses through facial expressions (Kayser et al., 2022). However, facial expression analysis is sensitive to lighting conditions and to obstruction by hair or spectacles. Moreover, not all emotions manifest as distinct facial expressions. Facial electromyography (fEMG) has also been used to gauge audience emotions during live-streamed events (Livingstone et al., 2009). In this method, listeners' engagement with music is reflected in the activation of emotion-related muscles, for instance, the frowning-related corrugator supercilii muscles during a sad song and the smiling-related zygomaticus major muscles during a cheerful one. However, fEMG poses challenges. It requires attaching surface electrodes to the listener's face, which can disrupt the experience, and interpreting fEMG signals demands intricate data processing to categorize mental states. This complexity may discourage musicians from attempting to gauge audience emotions. Consequently, these methods have drawbacks that may limit their acceptability and practicality.

In contrast, the present study employed a simpler approach: measuring heart rate with a Samsung Galaxy Watch. This method has several advantages over facial-expression analysis and fEMG, because smartwatches are non-invasive, easy to use, and inexpensive. Moreover, it requires no calibration and, unlike camera-based analysis, is unaffected by lighting conditions or occlusion, although wrist-worn optical sensors can still be disturbed by vigorous arm movement. Other smartwatches, such as the Apple Watch or Fitbit, Garmin, or Xiaomi Mi Band devices, could serve the same purpose (Dijkstra-Soudarissanane et al., 2021). However, further investigation is needed to determine whether these devices can transmit heart rate directly to software such as TouchDesigner without routing data through online servers or the cloud. This question also bears on privacy.
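In Heart Fire, the flame's intensity depends on the listener's heart rate, and the animation itself is produced in TouchDesigner. The exact mapping is not specified here. The following Python sketch therefore assumes a linear map between a resting and a maximum heart rate, with exponential smoothing so that single noisy readings do not make the flame jump. The class `FlameIntensity` and its parameters `resting_bpm`, `max_bpm`, and `alpha` are hypothetical, and the default values are illustrative rather than taken from the study.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FlameIntensity:
    """Turn a stream of heart-rate readings (bpm) into a flame intensity in [0, 1].

    Hypothetical mapping: resting_bpm, max_bpm and alpha are illustrative
    defaults, not values from the Heart Fire study.
    """

    resting_bpm: float = 60.0
    max_bpm: float = 120.0
    alpha: float = 0.3  # weight of the newest reading, 0 < alpha <= 1
    _smoothed: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, bpm: float) -> float:
        # Exponential moving average of the incoming heart rate.
        if self._smoothed is None:
            self._smoothed = bpm
        else:
            self._smoothed = self.alpha * bpm + (1.0 - self.alpha) * self._smoothed
        # Linear map from [resting_bpm, max_bpm] onto [0, 1], clamped at both ends.
        level = (self._smoothed - self.resting_bpm) / (self.max_bpm - self.resting_bpm)
        return min(1.0, max(0.0, level))
```

Clamping keeps the flame within a fixed range even when a reading is implausible. Calibrating `resting_bpm` for each listener would make flames more comparable across listeners.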

In our study, listeners' heart rate data were transmitted directly to a local PC, without involving a cloud service or server. The system operates in real time and does not retain data. To further protect privacy, the TouchDesigner visualization obscures identifiable body features, so third parties cannot determine a listener's identity from the heart rate or the processed images.
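The local-only, no-retention data path described above could look roughly like the following Python sketch. The UDP transport, the plain-integer payload format, and the names `LatestHeartRate` and `receive_once` are assumptions for illustration, and the study's actual watch-to-PC pipeline may differ. Two properties carry the privacy argument: only the latest reading is held in memory, and datagrams from non-private (internet) addresses are dropped.

```python
import ipaddress
import socket
from typing import Optional


class LatestHeartRate:
    """Holds only the most recent reading; no history, nothing written to disk."""

    def __init__(self) -> None:
        self.bpm: Optional[int] = None

    def handle(self, payload: bytes) -> None:
        # Assumed payload format: a plain ASCII integer such as b"72".
        text = payload.decode("ascii", errors="ignore").strip()
        if text.isdigit():
            self.bpm = int(text)  # overwrite: the previous value is discarded


def receive_once(sock: socket.socket, sink: LatestHeartRate) -> bool:
    """Read one datagram; accept it only from a private (LAN or loopback) address."""
    payload, (host, _port) = sock.recvfrom(64)
    if not ipaddress.ip_address(host).is_private:
        return False  # drop anything that did not originate on the local network
    sink.handle(payload)
    return True
```

In use, a UDP socket would be bound to the PC's LAN interface on a port of your choosing, for example with `sock.bind(("0.0.0.0", 5005))`. `receive_once` would then be called in a loop, and the visualization would read `sink.bpm`.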

Furthermore, the equipment used in this study is easy to use and could be extended to other applications. Azure Kinect has been applied in media (Dijkstra-Soudarissanane et al., 2021), healthcare (Uhlár et al., 2021; Yeung et al., 2021), and robotics (Lee et al., 2020). Although this study used only its depth sensor to identify human silhouettes, its RGB camera and microphone array leave room to extend the architecture. Ease of use matters because musicians tend to gravitate toward familiar tools rather than adopt new platforms (Onderdijk et al., 2021a). TouchDesigner simplifies Azure Kinect integration and so reduces the steep learning curve and technical knowledge that new tools usually demand. Because its node-based interface lets users design visualizations without writing code, TouchDesigner is an accessible alternative to conventional programming languages. Its free non-commercial license also makes it attractive to musicians on a tight budget.
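Using only the depth sensor, one simple way to separate a listener's silhouette from the background is to keep the pixels that fall within a depth band. The following pure-Python sketch assumes a depth frame given in millimetres, with 0 marking pixels that have no valid reading, as in Azure Kinect depth images. The band limits and the function name `silhouette_mask` are illustrative. This is not necessarily how the TouchDesigner network in this study extracts silhouettes.

```python
from typing import List

DepthFrame = List[List[int]]  # depth in millimetres; 0 means "no valid reading"


def silhouette_mask(depth: DepthFrame, near_mm: int = 500, far_mm: int = 2500) -> List[List[int]]:
    """Binary mask: 1 where depth lies in [near_mm, far_mm] (assumed person), else 0.

    Assumes the listener is the only object inside this depth band; the band
    limits are illustrative. Invalid pixels (0) fall below near_mm and are dropped.
    """
    return [[1 if near_mm <= d <= far_mm else 0 for d in row] for row in depth]
```

A mask like this contains no facial detail, which is consistent with the privacy point made earlier. It could then serve as the emitter shape for the flame effect.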

Real-time visual effects present another challenge. Common video-conferencing platforms such as Zoom and Skype, although used for online music events, are inadequate for synchronized performances because of their latency (Onderdijk et al., 2021a). Optimal latency typically ranges between 20 and 40 ms (Optimum, 2023). TouchDesigner meets this requirement, with a frame time of 26.8 ms (about 37 frames per second) for real-time visualization. Frame time could be reduced further by optimizing the TouchDesigner network, for example by using Null TOP nodes to streamline updates. However, high-quality animation may require a high-performance PC with a GPU of at least 1 GB, preferably an NVIDIA graphics card, for the Heart Fire effect.
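The arithmetic behind comparing the 26.8 ms frame time with the 20-40 ms latency range is shown below. One caveat applies: frame time measures only the visualization stage, not end-to-end network latency, so the comparison shows only that rendering alone fits within the budget. The function names and the 40 ms default budget (the upper end of the cited range) are illustrative choices.

```python
def frame_rate_hz(frame_time_ms: float) -> float:
    """Frames per second implied by a per-frame processing time in milliseconds."""
    if frame_time_ms <= 0:
        raise ValueError("frame time must be positive")
    return 1000.0 / frame_time_ms


def headroom_ms(frame_time_ms: float, budget_ms: float = 40.0) -> float:
    """Time left before the latency budget is exceeded (negative means over budget)."""
    return budget_ms - frame_time_ms
```

With the reported frame time, `frame_rate_hz(26.8)` is about 37.3 frames per second and `headroom_ms(26.8)` is about 13.2 ms. That headroom is what remains for network transport and any further processing.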

Nevertheless, this study has certain limitations. Because of its exploratory nature, only ten subjects took part in the experiment, so the results must be interpreted with caution. Moreover, the subjects selected the songs themselves, and the variation in song type and length complicated the analysis. Self-selected music was nevertheless preferred because it is considered effective in eliciting emotions (Vidas et al., 2021). In addition, we used pre-recorded music from YouTube rather than live-streamed material from an online concert. The COVID-19 restrictions in place at the time made organizing a live concert difficult. As the world recovers from the pandemic (Center on Budget and Policy Priorities, 2023; World Health Organization, 2023), we plan to organize an online music concert in which the system is used with real musicians. This will let us gather feedback from musicians and examine the future trends in musician-listener interaction illustrated in Figure 2. It will also let us evaluate how well the system enhances interaction between musicians and their audience.

Online live-streamed concerts are a popular way to attend musical events and a rapidly developing area of the music industry. However, the lack of atmosphere and of interaction between musicians and listeners limits the viability of this type of concert. In this pilot study, we developed a smartwatch-based interactive system for online music that provides real-time visual feedback as a burning-fire effect driven by the listener's heart rate. We believe the system is promising for musician-listener interaction during online performances, because it can present emotional responses based on heart rate variations during different types of music. The study is limited by its small sample size and self-selected music, but it lays the groundwork for further research on improving musician-listener interaction during online performances. Future studies could combine the system with virtual reality and other multimedia tools to create truly immersive online music experiences.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The requirement for ethical approval for the studies involving humans was waived by the Ethical Committee of Ritsumeikan University. Ethical review and approval were not required for this study on human participants in accordance with the local legislation and institutional requirements, because the data used in this study cannot be used to identify individuals. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants' legal guardians/next of kin because the contents of the study were explained orally.

TW composed the first draft of the manuscript, wrote the revised manuscript, contributed to the concept of the study, construction of the system, experimental design, data analysis, and the compilation of the results and discussion. SO provided the experimental devices and revised the manuscript. All authors have approved the submitted version.

This study was partly supported by Research Progressing Project Ritsumeikan University (B21-0132).

We would like to thank Editage (www.editage.com) for English language editing. We thank the reviewers for their thoughtful consideration of our manuscript. A preprint of this article is available on TechRxiv.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2023.1150348/full#supplementary-material

Albert, J. A., Owolabi, V., Gebel, A., Brahms, C. M., Granacher, U., Arnrich, B., et al. (2020). Evaluation of the pose tracking performance of the Azure Kinect and Kinect v2 for gait analysis in comparison with a gold standard: a pilot study. Sensors 20, 1–22. doi: 10.3390/s20185104

Albert, W. G. (1978). Dimensionality of perceived violence in rock music: musical intensity and lyrical violence content. Pop. Music Soc. 6, 27–38. doi: 10.1080/03007767808591107

Armour, J. A., and Ardell, J. L. (2004). Basic and Clinical Neurocardiology. Oxford: Oxford University Press.

Basbas, F. J. (2020). Here is the Future of Music: Monetized Livestreams. 10 Years Bandwag. Available online at: https://www.bandwagon.asia/articles/here-is-the-future-of-music-monetized-livestreams-covid-19 (accessed July 24, 2023).

Baumgartner, T., Lutz, K., Schmidt, C. F., and Jäncke, L. (2006). The emotional power of music: how music enhances the feeling of affective pictures. Brain Res. 1075, 151–164. doi: 10.1016/j.brainres.2005.12.065

Bernardi, L., Porta, C., and Sleight, P. (2006). Cardiovascular, cerebrovascular, and respiratory changes induced by different types of music in musicians and non-musicians: the importance of silence. Heart 92, 445–452. doi: 10.1136/hrt.2005.064600

Boer, D., and Abubakar, A. (2014). Music listening in families and peer groups: benefits for young people's social cohesion and emotional well-being across four cultures. Front. Psychol. 5, 392. doi: 10.3389/fpsyg.2014.00392

Brooks, S. K., Webster, R. K., Smith, L. E., Woodland, L., Wessely, S., Greenberg, N., et al. (2020). The psychological impact of quarantine and how to reduce it: rapid review of the evidence. Lancet 395, 912–920. doi: 10.1016/S0140-6736(20)30460-8

Center on Budget and Policy Priorities (2023). Chart Book: Tracking the Recovery From the Pandemic Recession. Cent. Budget Policy Priorities. Available online at: https://www.cbpp.org/research/economy/tracking-the-recovery-from-the-pandemic-recession (accessed September 11, 2023).

Dagnino, P., Anguita, V., Escobar, K., and Cifuentes, S. (2020). Psychological effects of social isolation due to quarantine in chile: an exploratory study. Front. Psychiatry 11, 591142. doi: 10.3389/fpsyt.2020.591142

Dahl, L., Herrera, J., and Wilkerson, C. (2011). “TweetDreams: making music with the audience and the world using real-time Twitter data,” in International Conference on New Interfaces for Musical Expression (Oslo: NIME), 272–275. Available online at: https://ccrma.stanford.edu/~lukedahl/pdfs/Dahl,Herrera,Wilkerson-TweetDreams-Nime2011.pdf

Dawel, A., Shou, Y., Smithson, M., Cherbuin, N., Banfield, M., Calear, A. L., et al. (2020). The effect of COVID-19 on mental health and wellbeing in a representative sample of Australian adults. Front. Psychiatry 11, 579985. doi: 10.3389/fpsyt.2020.579985

Dekker, A., Wenzlaff, F., Biedermann, S. V., Briken, P., and Fuss, J. (2021). VR porn as “empathy machine”? Perception of self and others in virtual reality pornography. J. Sex Res. 58, 273–278. doi: 10.1080/00224499.2020.1856316

Demos, A. P., Chaffin, R., Begosh, K. T., Daniels, J. R., and Marsh, K. L. (2012). Rocking to the beat: effects of music and partner's movements on spontaneous interpersonal coordination. J. Exp. Psychol. Gen. 141, 49. doi: 10.1037/a0023843

Deng, J., and Pan, Y. (2023). Evaluating influencing factors of audiences' attitudes toward virtual concerts: evidence from China. Behav. Sci. 13, 478. doi: 10.3390/bs13060478

DeWoskin, K. J. (1982). A Song for One or Two: Music and the Concept of Art in Early China. Number 42. Ann Arbor, MI: Center for Chinese Studies, University of Michigan Ann Arbor. doi: 10.3998/mpub.19278

Dijkstra-Soudarissanane, S., Gunkel, S., Gabriel, A., Fermoselle, L., ter Haar, F., and Niamut, O. (2021). “XR carousel: a visualization tool for volumetric video,” in Proceedings of the 12th ACM Multimedia Systems Conference (New York, NY: ACM), 339–343. doi: 10.1145/3458305.3478436

Elsey, J. W., van Andel, K., Kater, R. B., Reints, I. M., and Spiering, M. (2019). The impact of virtual reality versus 2D pornography on sexual arousal and presence. Comput. Hum. Behav. 97, 35–43. doi: 10.1016/j.chb.2019.02.031

Etzel, J. A., Johnsen, E. L., Dickerson, J., Tranel, D., and Adolphs, R. (2006). Cardiovascular and respiratory responses during musical mood induction. Int. J. Psychophysiol. 61, 57–69. doi: 10.1016/j.ijpsycho.2005.10.025

Feldmeier, M., and Paradiso, J. A. (2007). An interactive music environment for large groups with giveaway wireless motion sensors. Comput. Music J. 31, 50–67. doi: 10.1162/comj.2007.31.1.50

Fink, L. K., Warrenburg, L. A., Howlin, C., Randall, W. M., Hansen, N. C., Fuhrmann, M. W., et al. (2021). Viral tunes: changes in musical behaviours and interest in coronamusic predict socio-emotional coping during COVID-19 lockdown. Humanit. Soc. Sci. Commun. 8, 1–11. doi: 10.1057/s41599-021-00858-y

Franceschini, C., Musetti, A., Zenesini, C., Palagini, L., Scarpelli, S., Quattropani, M. C. G., et al. (2020). Poor sleep quality and its consequences on mental health during the COVID-19 lockdown in Italy. Front. Psychol. 11, 1–15. doi: 10.3389/fpsyg.2020.574475

Freeman, J. (2005). “Large audience participation, technology, and orchestral performance,” in International Computer Music Conference, ICMC 2005 (Barcelona: Michigan Publishing), 757–760. Available online at: https://api.semanticscholar.org/CorpusID:5814099

Freeman, J., Xie, S., Tsuchiya, T., Shen, W., Chen, Y. L., Weitzner, N., et al. (2015). Using massMobile, a flexible, scalable, rapid prototyping audience participation framework, in large-scale live musical performances. Digit. Creat. 26, 228–244. doi: 10.1080/14626268.2015.1057345

Hirata, Y., Okamoto, M., and Miura, M. (2021). “Why is live streaming less impressive? Measurement of stress evaluation based on heart rate variability when listening to music,” in The Japanese Society for Music Perception and Cognition, 25–30. Available online at: http://jsmpc.org/archives/meetings/2021a/

Hödl, O., Bartmann, C., Kayali, F., Löw, C., and Purgathofer, P. (2020). Large-scale audience participation in live music using smartphones. J. New Music Res. 49, 192–207. doi: 10.1080/09298215.2020.1722181

Hödl, O., Kayali, F., and Fitzpatrick, G. (2012). “Designing interactive audience participation using smart phones in a musical performance,” in ICMC 2012: Non-Cochlear Sound - Proceedings of the International Computer Music Conference 2012 (Ljubljana: International Computer Music Association), 236–241. Available online at: https://hdl.handle.net/2027/spo.bbp2372.2012.042

Kayser, D., Egermann, H., and Barraclough, N. E. (2022). Audience facial expressions detected by automated face analysis software reflect emotions in music. Behav. Res. Methods 54, 1493–1507. doi: 10.3758/s13428-021-01678-3

Kennedy, S. W. (2010). An Exploration of Differences in Response to Music Related to Levels of Psychological Health in Adolescents [PhD thesis]. Toronto, ON: University of Toronto.

Koelsch, S. (2014). Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 15, 170–180. doi: 10.1038/nrn3666

Koelsch, S., and Jäncke, L. (2015). Music and the heart. Eur. Heart J. 36, 3043–3049. doi: 10.1093/eurheartj/ehv430

Koelsch, S., and Skouras, S. (2014). Functional centrality of amygdala, striatum and hypothalamus in a “small-world” network underlying joy: an fMRI study with music. Hum. Brain Mapp. 35, 3485–3498. doi: 10.1002/hbm.22416

Koelsch, S., Skouras, S., and Jentschke, S. (2013). Neural correlates of emotional personality: a structural and functional magnetic resonance imaging study. PLoS ONE 8, e77196. doi: 10.1371/journal.pone.0077196

Krabs, R. U., Enk, R., Teich, N., and Koelsch, S. (2015). Autonomic effects of music in health and Crohn's disease: the impact of isochronicity, emotional valence, and tempo. PLoS ONE 10, e0126224. doi: 10.1371/journal.pone.0126224

Krause, A. E., Dimmock, J., Rebar, A. L., and Jackson, B. (2021). Music listening predicted improved life satisfaction in university students during early stages of the COVID-19 pandemic. Front. Psychol. 11, 631033. doi: 10.3389/fpsyg.2020.631033

Lee, T. E., Tremblay, J., To, T., Cheng, J., Mosier, T., Kroemer, O., et al. (2020). “Camera-to-robot pose estimation from a single image,” in 2020 IEEE International Conference on Robotics and Automation (ICRA) (Paris: IEEE), 9426–9432. Available online at: https://ieeexplore.ieee.org/document/9196596

Livingstone, S. R., Thompson, W. F., and Russo, F. A. (2009). Facial expressions and emotional singing: a study of perception and production with motion capture and electromyography. Music Percept. 26, 475–488. doi: 10.1525/mp.2009.26.5.475

Loic2665 (2020). HeartRateToWeb. GitHub. Available online at: https://github.com/loic2665/HeartRateToWeb (accessed September 2, 2021).

McGrath, M. F., de Bold, M. L. K., and de Bold, A. J. (2005). The endocrine function of the heart. Trends Endocrinol. Metab. 16, 469–477. doi: 10.1016/j.tem.2005.10.007

Miller, R. (2021). The semantic differential in the study of musical perception: a theoretical overview. Vis. Res. Music Educ. 16, 11.

Musicstax (2021). Musicstax - Music Analysis. Musicstax. Available online at: https://musicstax.com/ (accessed September 2, 2021).

Onderdijk, K. E., Acar, F., and Van Dyck, E. (2021a). Impact of lockdown measures on joint music making: playing online and physically together. Front. Psychol. 12, 642713. doi: 10.3389/fpsyg.2021.642713

Onderdijk, K. E., Bouckaert, L., Van Dyck, E., and Maes, P. J. (2023). Concert experiences in virtual reality environments. Virtual Real. 27, 2383–2396. doi: 10.1007/s10055-023-00814-y

Onderdijk, K. E., Swarbrick, D., Van Kerrebroeck, B., Mantei, M., Vuoskoski, J. K., Maes, P. J., et al. (2021b). Livestream experiments: the role of COVID-19, agency, presence, and social context in facilitating social connectedness. Front. Psychol. 12, 647929. doi: 10.3389/fpsyg.2021.647929

Optimum (2023). What Is Latency? Optimum. Available online at: https://www.optimum.com/articles/internet/what-is-latency (accessed July 25, 2023).

Rowell, L. (2015). Music and Musical thought in Early India. Chicago, IL: University of Chicago Press.

SAMSUNG Galaxy (2021). Galaxy Watch. SAMSUNG. Available online at: https://www.samsung.com/jp/watches/ (accessed September 22, 2021).

Sargeant, B., Mueller, F. F., and Dwyer, J. (2017). “Using HTC vive and TouchDesigner to projection-map moving objects in 3D space: a playful participatory artwork,” in Extended Abstracts Publication of the Annual Symposium on Computer-Human Interaction in Play CHI PLAY'17 Extended Abstracts (New York, NY: Association for Computing Machinery), 1–11. doi: 10.1145/3130859.3131427

Schnell, N., Bevilacqua, F., Robaszkiewicz, S., and Schwarz, D. (2015). “Collective sound checks: exploring intertwined sonic and social affordances of mobile web applications,” in TEI 2015 - Proceedings of the 9th International Conference on Tangible, Embedded, and Embodied Interaction (New York, NY: ACM), 685–690. doi: 10.1145/2677199.2688808

Scrubb (2020). Online Music Festival Top Hits Thailand. YouTube. Available online at: https://www.youtube.com/watch?v=IcWQe1c1JhY (accessed July 24, 2023).

Shaw, A. (2020). Why Fan Interaction is so Important for Your Online Strategy. Moon Jelly. Available online at: https://moonjelly.agency/why-fan-interaction-is-so-important-for-your-online-strategy/ (accessed July 24, 2023).

Statista Research Department (2021a). Attitudes toward Livestreaming Concerts and Music Events among Fans Worldwide as of August 2020. Technical report. New York, NY: Statista Research Department.

Statista Research Department (2021b). Musicians Livestreaming Performances during the COVID-19 Pandemic as of March 2021. Technical report. New York, NY: Statista Research Department.

Takeshi, I., Yoshinobu, K., and Yasuo, N. (2001). Music Database Retrieval System with Sensitivity Words Using Music Sensitivity Space [in Japanese]. Trans. Inform. Process. Soc. Japan 42, 3201–3212.

TouchDesigner (2017). TouchDesigner. TouchDesigner. Available online at: https://docs.derivative.ca/TouchDesigner (accessed September 23, 2021).

Trehub, S. E., Becker, J., and Morley, I. (2015). Cross-cultural perspectives on music and musicality. Philos. Trans. R. Soc. B Biol. Sci. 370, 20140096. doi: 10.1098/rstb.2014.0096

Uhlár, Á., Ambrus, M., Kékesi, M., Fodor, E., Grand, L., Szathmáry, G., et al. (2021). Kinect Azure based accurate measurement of dynamic valgus position of the knee – a corrigible predisposing factor of osteoarthritis. Appl. Sci. 11, 5536. doi: 10.3390/app11125536

Vandenberg, F., Berghman, M., and Schaap, J. (2021). The ‘lonely raver’: music livestreams during COVID-19 as a hotline to collective consciousness? Eur. Soc. 23, S141–S152. doi: 10.1080/14616696.2020.1818271

Vidas, D., Larwood, J. L., Nelson, N. L., and Dingle, G. A. (2021). Music listening as a strategy for managing COVID-19 stress in first-year university students. Front. Psychol. 12, 647065. doi: 10.3389/fpsyg.2021.647065

Volpe, G., D'Ausilio, A., Badino, L., Camurri, A., and Fadiga, L. (2016). Measuring social interaction in music ensembles. Phil. Trans. R. Soc. B Biol. Sci. 371, 20150377. doi: 10.1098/rstb.2015.0377

Wang, T., Jeong, H., Okada, S., and Ohno, Y. (2019). “Noninvasive measurement for emotional arousal during acupuncture using thermal image,” in 2019 IEEE 1st Global Conference on Life Sciences and Technologies (LifeTech) (Osaka: IEEE), 63–64. Available online at: https://ieeexplore.ieee.org/document/8883962

World Health Organization (2023). New Survey Results Show Health Systems Starting to Recover from Pandemic. Geneva: WHO.

Wu, M. H., and Chang, T. C. (2021). Evaluation of effect of music on human nervous system by heart rate variability analysis using ECG sensor. Sens. Mater. 33, 739–753. doi: 10.18494/SAM.2021.3040

Yang, J., Bai, Y., and Cho, J. (2017). “Smart light stick: an interactive system for pop concert,” in 2017 5th International Conference on Mechatronics, Materials, Chemistry and Computer Engineering (ICMMCCE 2017) (Chongqing: Atlantis Press), 596–600. Available online at: https://www.atlantis-press.com/proceedings/icmmcce-17/25882490

Yeung, L. F., Yang, Z., Cheng, K. C. C., Du, D., and Tong, R. K. Y. (2021). Effects of camera viewing angles on tracking kinematic gait patterns using Azure Kinect, Kinect v2 and Orbbec Astra Pro v2. Gait Posture 87, 19–26. doi: 10.1016/j.gaitpost.2021.04.005

Yoshihi, M., Okada, S., Wang, T., Kitajima, T., and Makikawa, M. (2021). Estimating sleep stages using a head acceleration sensor. Sensors 21, 1–19. doi: 10.3390/s21030952

Young, M. (2015). Concert LED Wristbands. Trendhunter. Available online at: https://www.trendhunter.com/trends/led-wristband (accessed November 18, 2021).

Keywords: heart rate, musician-listener interaction, online live-streaming, smart watch, visualization

Citation: Wang T and Okada S (2023) Heart fire for online live-streamed concerts: a pilot study of a smartwatch-based musician-listener interaction system. Front. Comput. Sci. 5:1150348. doi: 10.3389/fcomp.2023.1150348

Received: 24 January 2023; Accepted: 19 September 2023;

Published: 09 October 2023.

Edited by:

Marie Hattingh, University of Pretoria, South Africa

Reviewed by:

Linda Marshall, University of Pretoria, South Africa

Copyright © 2023 Wang and Okada. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tianyi Wang, o.tenichi.2z@kyoto-u.ac.jp
