ORIGINAL RESEARCH article

Front. Comput. Sci., 08 January 2024

Sec. Computer Vision

Volume 5 - 2023 | https://doi.org/10.3389/fcomp.2023.1142621

This article is part of the Research Topic: Perceptual Organization in Computer and Biological Vision

Classical good continuation for image curves is based on 2D position and orientation. It is supported by the columnar organization of cortex, by psychophysical experiments, and by rich models of (differential) geometry. Here, we extend good continuation to stereo by introducing a neurogeometric model to abstract cortical organization. Our model clarifies which aspects of the projected scene geometry are relevant to neural connections. The model utilizes parameterizations that integrate spatial and orientation disparities, and provides insight into the psychophysics of stereo by yielding a well-defined 3D association field. In sum, the model illustrates how good continuation in the (3D) world generalizes good continuation in the (2D) plane.

Binocular vision is the ability of the visual system to provide information about the three-dimensional environment starting from two-dimensional retinal images. Disparities are among the main cues for depth perception and stereo vision but, in order to extract them, the brain needs to determine which features coming from the right eye correspond to those from the left eye, and which do not. This generates a coupling problem, which is usually referred to as the stereo correspondence problem. Viewed in the large, stereo correspondence must be consistent with stereo perception more generally, and knowing the relevant features is key for both issues. In this paper we develop an approach to stereo based on the functional organization of the visual cortex, and we identify the geometric features extracted by the binocular cells. This model extends the notion of good continuation from planar curves to 3D spatial curves. A simple example demonstrates its application in computing stereo correspondence.

Good continuation in the plane (retinotopic coordinates) is one of the foundational principles of Gestalt perceptual organization. It enjoys an extensive history (Wagemans et al., 2012). It is supported by psychophysical investigations (e.g., Field et al., 1993; Geisler et al., 2001; Elder and Goldberg, 2002; Hess et al., 2003; Lawlor and Zucker, 2013), which reveal connections to contour statistics; it is supported by physiology (orientation selectivity), which reveals the role for long-range horizontal connections (Bosking et al., 1997); and it is supported by computational modeling (Ben-Shahar and Zucker, 2004; Sarti et al., 2007), which reveals a key role for geometry. The notion of orientation underlies all three of these aspects: neurons in visual cortex are selective for orientations, pairs of dots in grouping experiments indicate an orientation, and edge elements in natural images are oriented and related to image statistics. Orientation in space involves two angles, which we shall exploit. Nevertheless, good continuation in depth is much less well-developed than good continuation in the plane, despite having comparable historical origins, as Koffka (1963, p. 161-162) observed:

...a perspective drawing, even when viewed monocularly, does not give the same vivid impression of depth as the same drawing if viewed through a stereoscope with binocular parallax... for in the stereoscope the tri-dimensional force of the parallax co-operates with the other tri-dimensional forces of organization; instead of conflict between forces, stereoscopic vision introduces mutual reinforcement.

Our specific goal in this paper is to develop a neurogeometrical model of stereo vision, based on the functionality of binocular cells. The main application will be a good continuation model in three dimensions that is analogous to the models of contour organization in two dimensions. We will develop ad hoc mathematical instruments, supported by a number of neural and psychophysical investigations (Malach et al., 1993; Uttal, 2013; Deas and Wilcox, 2014, 2015; Khuu et al., 2016; Scholl et al., 2022).

Although only one dimension higher than contours in the plane, contours extending in depth raise subtle new issues; this is why a geometric model can be instructive. First among the issues is the choice of coordinates which, of course, requires a mathematical framework for specifying them. In the plane, position and orientation are natural; smoothness is captured by curvature or the relationship between nearby orientations along a contour. For stereo, there is monocular structure in the left eye and in the right. Spatial disparity is a standard variable relating them, and it is well-known that primate visual systems represent this variable directly (Poggio, 1995). Spatial disparity is clearly a potential coordinate. However, other physiological aspects are less clear. The columnar architecture so powerful for contour organization in the plane is not only monocular: the presence of columns for spatial disparity of binocular cells has been experimentally described in V2 (Ts'o et al., 2009). However, orientation disparity does not seem to be coded in the cortex (see next section). Nevertheless, orientation-selective cells provide the input for stereo so, at a minimum, both position disparity and orientation – one orientation for the right eye and (possibly) another for the left – should be involved. While it is traditional to assume only “like” orientations are matched (Hubel and Wiesel, 1962; Nelson et al., 1977; Marr and Poggio, 1979; Bridge and Cumming, 2001; Chang et al., 2020), our sensitivity to orientation disparity questions this, making orientation disparity another putative variable. We shall show that orientations do play a deep role in stereo, but that it is not necessarily efficient to represent them as a disparity. Furthermore, there is a debate in stereo psychophysics about orientation: since its physiological realization could be confounded with disparity gradients (Mitchison and McKee, 1990; Cagenello and Rogers, 1993), orientation may be redundant. 
This is not the case, since it is the orientation of the “gradient” that matters. Thus we provide a representation of the geometry of spatial disparity and orientation in support of using good continuation in a manner that both incorporates the biological “givens” and provides a rigorous foundation for the correspondence problem. As has been the case with curve organization, we further believe that our modeling will illuminate the underlying functional architecture for stereo.

Hubel and Wiesel reported disparity-tuned neurons in early, classic work (Hubel and Wiesel, 1970). They observed that single units could be driven from both eyes and that it was possible to plot separate receptive fields (RF) for each eye. We emphasize that these monocular receptive fields are tuned to orientation (Cumming and DeAngelis, 2001; Parker et al., 2016), and a review of neural models can be found in Read (2015).

The classical model for expressing the left/right-eye receptive field combination is the binocular energy model (BEM), first introduced in Anzai et al. (1999b). It encodes disparities through the receptive profiles of simple cells, raising the possibility of both position and phase disparities (Jaeger and Ranu, 2015). However, Read and Cumming (2007), building upon Anzai et al. (1999a), showed that phase disparity neurons tend to be strongly activated by false correspondence pairs. Other approaches are based on the statistics of natural images (Burge and Geisler, 2014; Jaini and Burge, 2017; Burge, 2020) utilized in an optimal fashion; these lead to more refined receptive field models. Nevertheless, the orientation differences between the two eyes (Nelson et al., 1977), or orientation disparity, should not be neglected. Although there were attempts to incorporate it in energy models (Bridge et al., 2001), they are limited. The geometrical model we will present incorporates orientation differences directly.

Many other mathematical models for stereo vision based on neural models have been developed. Some claim (e.g., Marr and Poggio, 1979) that orientations should match between the two eyes, although small differences are allowed. This, of course, assumes the structure is fronto-parallel. Subsequently, Jones and Malik (1991) used a set of linear filters tuned to different orientations (and scales) but their algorithm was not built on a neurophysiological basis. Alibhai and Zucker (2000), Li and Zucker (2003), and Zucker (2014) built a more biologically-inspired model that addressed the connections between neurons. Their differential-geometry model employed position, orientations and curvatures in 2D retinal planes, modeling binocular neurons with orientations given by tangent vectors of Frenet geometry. Our results here are related, although the geometry is deeper (we develop this below). A more recent work, based on differential and Riemannian geometry, is developed in Neilson et al. (2018). Before specifying these results, however, we introduce the specific type of geometry that we shall be using. It follows directly from the columnar organization often seen in predators and primates.

We propose a sub-Riemannian model for the cortical-inspired geometry underlying stereo vision based on the encoding of positional disparities and orientation differences in the information coming from the two eyes. We build on neuromathematical models, starting from the work of Koenderink and van Doorn (1987) and Hoffman (1989), with particular emphasis on the neurogeometry of monocular simple cells (Petitot and Tondut, 1999; Citti and Sarti, 2006; Sarti et al., 2007; Petitot, 2008; Sanguinetti et al., 2010; Sarti and Citti, 2015; Baspinar et al., 2020).

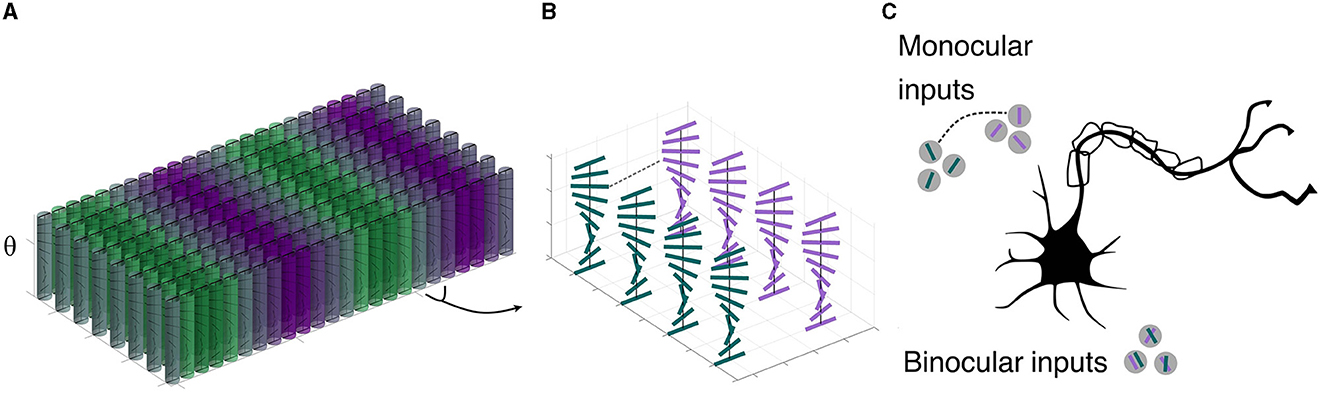

To motivate our mathematical approach, it is instructive to build on an abstraction of visual cortex. We start with monocular information, segregated into ocular dominance bands (LeVay et al., 1975) in layer 4; these neurons have processes that extend into the superficial layers. We cartoon this in Figure 1, which shows an array of orientation hypercolumns arranged over retinotopic position. It is colored by dominant eye inputs; the binocularly-driven cells tend to be closer to the ocular dominance boundaries, while the monocular cells are toward the centers. A zoom emphasizes the orientation distribution along a few of the columns near each position; horizontal connections (not shown) effect the interactions between these units. This raises the basic question in this paper: what is the nature of the interaction among groups of cells representing different orientations at nearby positions and innervated by inputs from the left and right eyes? The physiology suggests (Figure 1C) the answer lies in the interactions among both monocular and binocular cells; our model specifies this interaction, starting from the monocular ones and building analogously into a columnar organization.

Figure 1. Cartoon of visual cortex, V1, superficial layers. (A) Macroscopic organization: A number of (abstracted) orientation hypercolumns, colored by left-eye (green)/right-eye (purple) dominant inputs. The color grading emphasizes that at the center of the ocular dominance bands the cells are strongly monocular, while at the boundaries they become binocularly-driven. (B) A zoom in to a few orientation columns showing left and right monocular cells at the border of ocular dominance bands. Cells in these nearby columns will provide the anatomical substrate for our model. (C) More recent work shows that both monocular and binocular inputs matter to these cells (redrawn from Scholl et al., 2022, using data from ferret). This more advanced wiring suggests the connection structures in our model.

Since much of the paper is technical, we here specify, informally, the main ingredients of the model and the results. We first list several of the key points, then illustrate them directly.

• Stereo geometry enjoys a mathematical structure that is a formal extension of plane curve geometry. In the plane, points belonging to a curve are described by an orientation at a position, and these are naturally represented as elements (orientation, position) of columns. In our model, these become abstract fibers. The collection of fibers across position is a fiber bundle. Elements of the (monocular) fiber can be thought of as neurons.

• Our geometrical model is based on tangents and curvatures. Tangents naturally relate to orientation selectivity, and are commonly identified with “edge” elements in the world. We shall occasionally invoke this relationship, for intuition and convenience, but some caution is required. While edge elements comprising, e.g., a smooth bounding contour are tangents, the converse is not necessarily true (e.g., elongated attached highlights or hair textures). Instead, our model should be viewed as specifying the constraints relevant to understanding neural circuitry; see Section 1.3.

• To elaborate the previous point: the tangents in our model need not be edges in the world; they are neural responses. The constraints in our model can be used to determine whether these responses should be considered as “edges.” This is why the model is built from the geometry of idealized space curves: to support such inferences.

• For stereo, we shall need fibers that are a “product” of the left and right-eye monocular columns. This is the reason why we choose position, positional disparity and orientations from the left and right eyes respectively, as the natural variables that describe the stereo fiber over each position. We stress that these fibers are not necessarily explicit in the cortical architecture.

• Curvature provides a kind of “glue” to enable transitions from points on fibers to nearby points on nearby fibers. These transitions specify “integral curves” through the stereo fiber bundle.

• The integral curve viewpoint provides a direction of information flow (information diffuses through the bundle) thereby suggesting underlying circuits.

• The integral curves formalize association field models. Their parameters describe the spray of curves that is well in accordance with 3D curves as studied in psychophysical experiments in Hess and Field (1995), Hess et al. (1997), and Khuu et al. (2016).

• Our formal theory addresses several conjectures in the literature. The first is the identity hypothesis (Kellman et al., 2005a,b) and the organization of units for curve interpolation (cf. Anderson et al., 2002); we show how tangents are natural “units” and how they can be organized. The second concerns the nature of the organization (Li and Zucker, 2006), where we resolve a conjecture regarding the interpolating object (see Proposition 3.2 below).

• Our formal theory provides a new framework for specifying the correspondence problem, by illustrating how good continuation in the 3-D world generalizes good continuation in the 2-D plane. This is the point where consistent binocular-binocular interactions are most important.

• Our formal theory has direct implications for understanding torsional eye movements. It suggests, in particular, that the rotational component is not simply a consequence of development, but that it helps to undo inappropriate orientation disparity changes induced by eye movements. This role for Listing's Law will be treated in a companion paper (in preparation); see also the excellent paper (Schreiber et al., 2008).

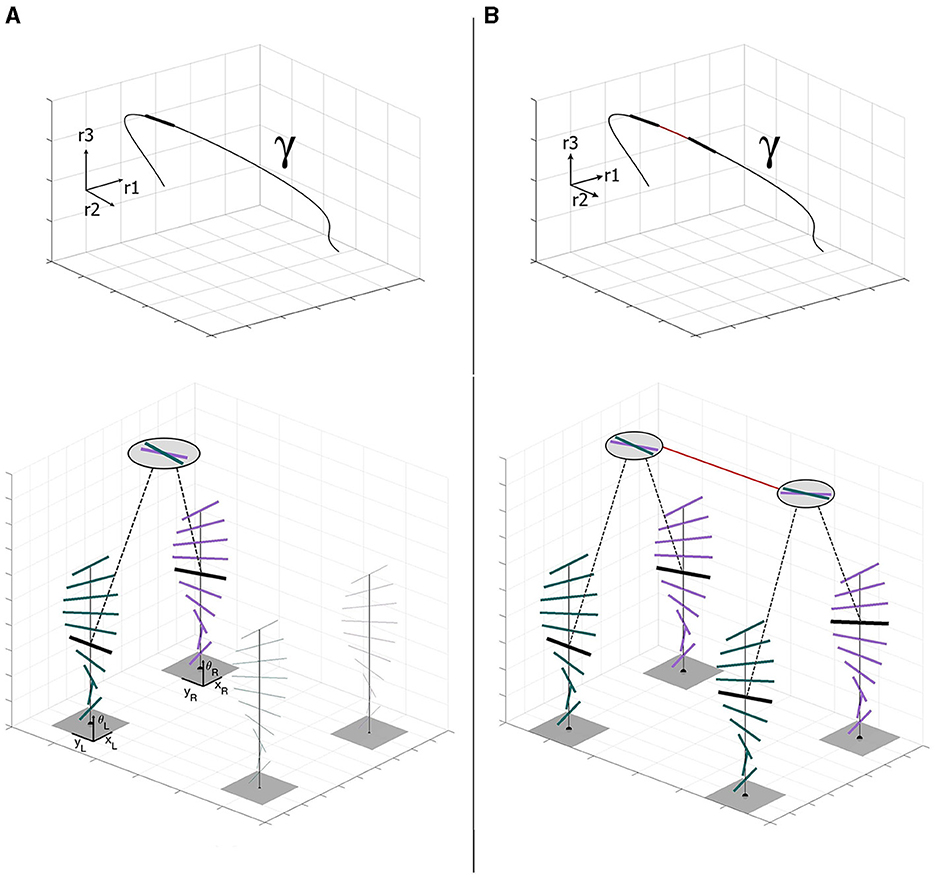

We now illustrate these ideas (Figure 2). Consider a three-dimensional stimulus as a space curve γ: ℝ → ℝ³, with a unit-length tangent at the point of fixation. Since the tangent is the derivative of a curve, the binocular cells naturally encode the unit tangent direction to the spatial 3D stimulus γ. This space tangent projects to a tangent orientation in the left eye¹, and perhaps the same or a different orientation in the right eye. A nearby space tangent projects to another pair of monocular tangents, illustrated as activity in neighboring columns. Note how connections between the binocular neurons support consistency along the space curve. It is this consistency relationship that we capture with our model of the stereo association field.

Figure 2. (A) Stereo projection of the highlighted tangent vector to the stimulus γ ∈ ℝ³ in the left-eye innervated and right-eye innervated monocular orientation columns (each short line denotes a neuron by its orientation preference). Joint activity across the eyes, which denotes the space tangent, is illustrated by the binocular neuron (circle). Note the two similar but distinct monocular orientations. Connections from the actively stimulated monocular neurons to the binocular neuron are shown as dashed lines. (B) Stereo projection of a consecutive pair of tangents to the stimulus γ ∈ ℝ³ in the left and right retinal columns. Each space tangent projects to a different pair of monocular columns because of the spatial disparity. Consistency in the responses of these four columns corresponds to consistency between the space tangents attached to nearby positions along γ. This consistency is realized through the binocular neural connection (solid line).

Since a space curve lives in 3D, two angles are required to specify its tangent at a point. In other words, monocular tangent angles span a circle in the plane; space tangent angles span a 2-sphere in 3D. In terms of the projections into the left eye and the right eye, the space tangent can be described by the parameters n = (θ, ϕ) of 𝕊² (Figure 3A). Thus, we can suitably describe the space of stereo cells – the full set of space tangents at any position in the 3D world – as the manifold of positions and orientations ℝ³ ⋊ 𝕊². Moving from one position in space to another, and changing the tangent orientation to the one at the new position, amounts to what is called a group action on the appropriate manifold. We informally introduce these notions in the next subsection; a more extensive introduction to these ideas is in Appendix A (Supplementary material).

Figure 3. (A) The full geometry of stereo. Note how the stereo correspondence problem allows one to establish the relationship between the 3D tangent point (P, θ, ϕ) and the projections pL and pR, the disparity and the orientations θL and θR. (B) Main result of the paper. The three-dimensional space curve γ is enveloped by the 3D association field centered at a point. Formally, this association field is a fan of integral curves in the sub-Riemannian geometry computed entirely within the columnar architecture (it is specifically described by Equation (36) with varying c1 and c2 in ℝ, but that will take some work to develop).

We live in a 3D world in which distances are familiar; that is, a space of points with a Euclidean distance function defined between any pair of them. Apart from practical considerations we can move in any direction we would like. Cars, however, have much more restricted movement capabilities. They can move forward or backward, but not sideways. To move in a different direction, cars must turn their wheels. Here is the basic analogy: in cortical space information can move to a new retinotopic position in a tangent direction, or it can move up or down a column (orientation fiber) to change direction. Moving in this fashion, from an orientation at a position to another orientation at a nearby position, is clearly more limited than arbitrary movements in Euclidean space. Euclidean geometry, as above, is an example of a Riemannian geometry; the limitations involved in moving through a cortical columnar space specify a sub-Riemannian geometry (Citti and Sarti, 2014; Citti et al., 2015; Sarti et al., 2019). Just as cars can move along roads that are mostly smooth, excitatory neurons mainly connect to similarly “like” (in orientation) excitatory neurons. This chain of neurons indicates a path through sub-Riemannian space (Agrachev et al., 2019); the fan of such paths is the cortical connectivity which can be considered the neural correlate of association fields. Again, for more information please consult Appendix A (Supplementary material).
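The car analogy can be made concrete with a minimal sketch. It assumes the standard planar "unicycle" kinematics in position-orientation space (x, y, θ): position advances only along the current orientation, and orientation changes at a turning rate k. Holding k constant along each path is a simplification chosen here for illustration, and the function names `integral_curve` and `fan` are hypothetical; this is not the 3D model developed later in the paper.

```python
import math


def integral_curve(x0, y0, theta0, k, length, steps=50):
    """Sample a path with x' = cos(theta), y' = sin(theta), theta' = k.

    k is an assumed constant turning rate (the curvature of the planar path).
    The closed-form solution is a circular arc, or a straight line when k = 0.
    """
    path = []
    for i in range(steps + 1):
        s = length * i / steps
        theta = theta0 + k * s
        if abs(k) < 1e-12:
            # No turning: move straight along the initial orientation.
            x = x0 + s * math.cos(theta0)
            y = y0 + s * math.sin(theta0)
        else:
            x = x0 + (math.sin(theta) - math.sin(theta0)) / k
            y = y0 - (math.cos(theta) - math.cos(theta0)) / k
        path.append((x, y, theta))
    return path


def fan(x0, y0, theta0, curvatures, length):
    """One path per turning rate: a fan of admissible paths from one oriented point."""
    return {k: integral_curve(x0, y0, theta0, k, length) for k in curvatures}


association_fan = fan(0.0, 0.0, 0.0, [-1.0, -0.5, 0.0, 0.5, 1.0], length=1.0)
for k, path in association_fan.items():
    x, y, theta = path[-1]
    print(f"k={k:+.1f}: end point ({x:.3f}, {y:.3f}), end orientation {theta:.3f}")
```

Sideways motion is never generated: every sampled point is reached by moving along the current orientation or by changing it, which is the restriction the analogy describes.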

Moving now out to the world, we must be able to move between all points. Repeating the above metaphor more technically, we equip ℝ³ ⋊ 𝕊² with a group action of the three-dimensional Euclidean group of rigid motions SE(3). Notice, importantly, that this group is now acting on the product space of positions and orientations. A bit more is required, though, since the geometry of stereo vision is not solved only with these pointwise and directional arguments. As we showed in Figure 2 there is the need to take into account the relationships between nearby tangents; in geometric language this involves a suitable type of connection. It is therefore natural to look at integral curves of the sub-Riemannian structure, which encode in their coefficients the fundamental concept of 3D curvature and torsion. An example of this is shown in Figure 3B. Notice how the 3D association field envelops a space curve, in the same way that a 2D association field envelops a planar curve. This figure illustrates, in a basic way, the fundamental result in this paper.

There are many different ways to approach mathematical modeling in vision. One could, for example, ask what is the best an ideal observer could do for the stereo problem working directly on image data (Burge and Geisler, 2014; Burge, 2020). This requires specifying the task, e.g., disparity at a point; a database of images on which the estimation is to be carried out; and a specification of the output. The approach is fundamentally statistical, and has been successful at predicting discrimination thresholds and optimal receptive field designs for patches of natural images. We seek to go the next step – to specify the relationship between receptive fields; i.e., between neurons. Note that the complexity multiplies enormously. At the behavioral level this raises the question of grouping, or determining the combinations of disparities, or Gabor patch samples, that belong together. The complexity arises because this must be evaluated over all possible arrangements of patches, be they along curves, or surfaces, or combinations thereof. In effect, the output specification is pushed toward co-occurrence phenomena, and these toward neural connections.

Our working hypothesis is that there is a deep functional relationship between structure in the brain and structure in the world, and that geometry is the right language with which to capture this relationship, especially as regards connectivity between neurons and their functionality. The neuro-geometric approach is precisely this: an attempt to capture how the structure of cortical connectivity (and other functional properties) is reflected in the phenomena of visual perception.

At first blush this might seem completely unrelated to the statistics of natural images, and how these could be informative of neural connections, but we believe that there is a fundamental relationship. Consider, to start, the distribution of oriented edge elements in a small patch. Pairwise edge statistics are well-studied (August and Zucker, 2000; Geisler et al., 2001; Elder and Goldberg, 2002; Sanguinetti et al., 2010), and indicate how orientation changes are distributed over (spatially) nearby edge elements. Co-linear and co-circular patterns emerge from these studies, as well as in third-order statistics of edges (Lawlor and Zucker, 2013). Interestingly, in Singh and Fulvio (2007) and Geisler and Perry (2009) deviation from co-circular behavior emerges.² In particular, Geisler and Perry (2009) propose a parabolic model to explain this statistical evidence, which is consistent with previous results if we consider a composition of the joint action of cocircularity and parallelism cues (as found to factor, for example, in Elder and Goldberg, 2002). To elaborate, it begins by following either a co-circular or linear term, followed by the composition with another circular or linear term. The outcome of this process is described as a spline-like behavior that can approximate a parabola. In Sanguinetti et al. (2010), it has been shown that the histogram of the co-occurrence of edges in a natural image provides the same probability kernel we could find with geometric analysis instruments. As a result, statistical measurements are integrated into the geometric approach.
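As a small illustration of the co-circular relationship between two planar edge elements, the sketch below uses the elementary fact that two tangents to the same circle make equal and opposite angles with the chord joining them, so the predicted orientation at the second point is 2α − θ1 (modulo π), where α is the direction of the chord. The function names are hypothetical, and the sketch is not the statistical model of any of the cited studies.

```python
import math


def cocircular_orientation(p1, theta1, p2):
    """Orientation at p2 (mod pi) that is co-circular with orientation theta1 at p1."""
    alpha = math.atan2(p2[1] - p1[1], p2[0] - p1[0])  # direction of the chord p1 -> p2
    return (2.0 * alpha - theta1) % math.pi


def cocircularity_deviation(p1, theta1, p2, theta2):
    """Smallest angular difference (in [0, pi/2]) between theta2 and the co-circular prediction."""
    diff = (theta2 - cocircular_orientation(p1, theta1, p2)) % math.pi
    return min(diff, math.pi - diff)


# A horizontal edge at the origin and a second edge element up and to the right:
# the circle tangent to the x-axis at the origin through (1, 1) is vertical at (1, 1).
predicted = cocircular_orientation((0.0, 0.0), 0.0, (1.0, 1.0))
print(f"predicted orientation at (1, 1): {predicted:.4f} rad")
print("deviation of a 60 degree edge:",
      cocircularity_deviation((0.0, 0.0), 0.0, (1.0, 1.0), math.radians(60)))
```

Collinearity is the special case in which the chord direction equals θ1, so the predicted orientation is again θ1.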

The geometric analysis that we shall use is continuous mathematics, and is essentially differential (Tu, 2011). This has important implications. First, the relationships that matter are those over small neighborhoods, not over “long” distances. Thus at a point there is an orientation (tangent) and a curvature. These barely change as one moves a tiny distance from the point. Thus we are not considering (in this paper) what happens behind (relatively) large occluders, when longer distances devoid of intermediate structure separate structure (Singh and Fulvio, 2005; Fulvio et al., 2008). Such problems are important but are outside the scope of this paper. Second, because the mathematics is continuous, we shall not consider sampling issues (Warren et al., 2002). To the extent that it matters, we shall assume discrete entities are sufficiently densely distributed that they function as if they were continuous (Zucker and Davis, 1988). In this sense our analysis is restricted to early vision. It does not necessarily account for the full range of cognitive tasks, which may well invoke higher-order computations over longer distances and even richer abstractions.

It has been observed that edge statistics for curves in the world depart from co-circularity. To quote Geisler and Perry (2009): “Except for a direction of zero, where the orientation difference is consistent with a collinear relationship, the highest-likelihood orientation differences are less than those predicted by a co-circular relationship.” We believe this has to do with the notion of curvature used: whether it is purely local, or an estimate over distance. In summary of the geometric approach, we explain this as follows. Our constraints can be used in two rather different ways. First, from a computational perspective, one can “integrate” the local constraints into more global objects. This is the approach used in the example section of this paper, and could give rise to an “average” curvature over some distance. The second approach is more distributed, and may be closer to a neurobiological implementation (Ben-Shahar and Zucker, 2004). In this second approach (not developed in this paper, but see Li and Zucker (2006) and Figure 6), the local computations overlap to enforce consistency (the scale of such computations would be a small factor larger than that indicated in Figure 2B). This scale corresponds to the extent of biological “long range horizontal connections” but is smaller than many of the occluders used in psychophysical experiments. In other words, to emphasize this distinction, in the former case the use of integral curves may be closer to the parabolic relations observed in scene statistics (Geisler and Perry, 2009).³ Our use of the term “co-circularity” is in the latter sense.

The paper is organized as follows: in Section 2, we describe the geometrical and neuro-mathematical background underlying the problem of stereo vision. In particular, we review the standard stereo triangulation technique to relate the coordinate system of one retina with the other, and put them together in order to reconstruct the three-dimensional space. Then, we briefly review the classical neurogeometry of monocular simple cells selective for orientation and the underlying connections. The generalization of approximate co-circularity for stereo is also introduced. In Section 3, starting from binocular receptive profiles, we introduce the neuro-mathematical model for binocular cells. First we present the cortical fiber bundle of binocular cells. We then give the differential interpretation of the binocular profiles in terms of the neurogeometry of the simple cells, and we show how this is well in accordance with the results of the stereo triangulation. Then, we give a mathematical definition of the manifold ℝ³ ⋊ 𝕊² with the sub-Riemannian structure. Finally, we study the integral curves and the suitable change of variables that allow us to switch our analysis from cortical to external space. In Section 4 we proceed to the validation of our geometry with respect to psychophysical experiments. We combine information about the psychophysics of 3D perception and formal conjectures; it is here that we formulate a 3D association field analogous to the 2D association field. At the end, we show an example of a representation of a stimulus (from image planes to the full 3D and orientation geometry) and how our integral curves properly connect corresponding points. This illustrates the use of our model as a basis for solving the correspondence problem.⁴

In this subsection, we briefly recall the geometrical configuration underlying 3D vision, to define the variables that we use in the rest of the paper, mainly following Faugeras (1993, Ch. 6). For a complete historical background see Howard and Rogers (1995) and Howard (2012).

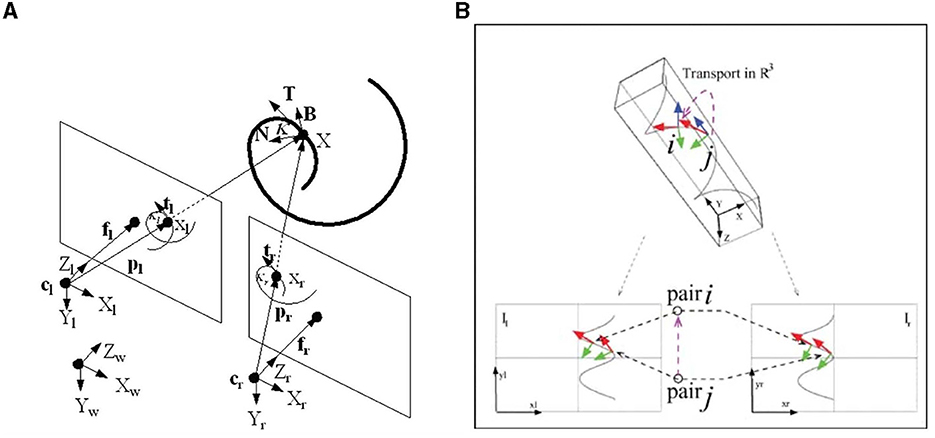

We consider the global reference system $(O, i, j, k)$ in $\mathbb{R}^3$, with $O = (0, 0, 0)$, and coordinates $(r_1, r_2, r_3)$. We introduce the optical centers $C_L = (-c, 0, 0)$ and $C_R = (c, 0, 0)$, with $c$ a positive real number, and we define two reference systems, $(C_L, i_L, j_L)$ and $(C_R, i_R, j_R)$, for the retinal planes, with coordinates $(x_L, y)$ and $(x_R, y)$, respectively. In the global system we suppose the retinal planes to be parallel and to have equation $r_3 = f$, with $f$ denoting the focal length. This geometrical set-up is shown in Figure 4.

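Remark 2.1. If we know the coordinates $(r_1, r_2, r_3)$ of a point in $\mathbb{R}^3$, then it is easy to project it onto the two retinal planes via perspective projection, given the coordinate $c$ of the optical centers and the focal length $f$. This computation defines two projective maps $\Pi_L$ and $\Pi_R$, respectively, for the left and right retinal planes:

$$
\Pi_L(r_1, r_2, r_3) = \left( \frac{f(r_1 + c)}{r_3}, \frac{f r_2}{r_3} \right), \qquad
\Pi_R(r_1, r_2, r_3) = \left( \frac{f(r_1 - c)}{r_3}, \frac{f r_2}{r_3} \right). \tag{1}
$$

Proof. A point on the left retinal plane of local coordinates $(x_L, y)$ has global coordinates $Q_L = (-c + x_L, y, f)$, and it corresponds to a point $Q = (r_1, r_2, r_3)$ in the Euclidean $\mathbb{R}^3$ such that $C_L$, $Q_L$ and $Q$ are aligned. This means that the vectors $Q_L - C_L = (x_L, y, f)$ and $Q - C_L = (r_1 + c, r_2, r_3)$ are parallel, obtaining the following relationships:

$$
x_L = \frac{f(r_1 + c)}{r_3}, \qquad y = \frac{f r_2}{r_3}.
$$

Analogously, considering $Q_R$ and $C_R$, we get:

$$
x_R = \frac{f(r_1 - c)}{r_3}, \qquad y = \frac{f r_2}{r_3}.
$$

□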

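In a standard way, the horizontal disparity is defined as the difference between retinal coordinates

$$
d := \frac{x_L - x_R}{2},
$$

up to a scalar factor. Moreover, it is also possible to define the coordinate $x$ as the average of the two retinal coordinates, $x := \frac{x_L + x_R}{2}$, leading to the following change of variables:

$$
\begin{cases}
x = \dfrac{f r_1}{r_3} \\[2mm]
y = \dfrac{f r_2}{r_3} \\[2mm]
d = \dfrac{f c}{r_3}
\end{cases}
\qquad \Longleftrightarrow \qquad
\begin{cases}
r_1 = \dfrac{c\, x}{d} \\[2mm]
r_2 = \dfrac{c\, y}{d} \\[2mm]
r_3 = \dfrac{c\, f}{d}
\end{cases}
$$

where the set of coordinates $(x, y, d)$ is known as cyclopean coordinates (Julesz, 1971).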
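The projection and the cyclopean change of variables can be checked numerically with a short sketch. It assumes the set-up described above (optical centers at (−c, 0, 0) and (c, 0, 0), retinal planes r3 = f) and takes the scalar factor in the disparity to be 1/2, which is an assumption made here; the function names and the numerical values of c, f and the test point are hypothetical.

```python
def project(point, c, f):
    """Perspective projection of a 3D point onto the left and right retinal planes.

    Assumes optical centers at (-c, 0, 0) and (c, 0, 0), parallel retinal
    planes r3 = f, and a point in front of the eyes (r3 > 0).
    """
    r1, r2, r3 = point
    x_left = f * (r1 + c) / r3
    x_right = f * (r1 - c) / r3
    y = f * r2 / r3
    return x_left, x_right, y


def to_cyclopean(x_left, x_right, y):
    """Cyclopean coordinates (x, y, d); the factor 1/2 in d is an assumed normalization."""
    x = 0.5 * (x_left + x_right)
    d = 0.5 * (x_left - x_right)
    return x, y, d


def triangulate(x, y, d, c, f):
    """Recover (r1, r2, r3) from cyclopean coordinates; requires d != 0."""
    return c * x / d, c * y / d, c * f / d


c, f = 0.03, 1.0                 # illustrative half-baseline and focal length
q = (0.3, -0.2, 2.0)             # illustrative 3D point
x_left, x_right, y = project(q, c, f)
x, y, d = to_cyclopean(x_left, x_right, y)
print("retinal coordinates:", x_left, x_right, y)
print("cyclopean coordinates:", x, y, d)
print("reconstructed point:", triangulate(x, y, d, c, f))
```

Because d is inversely proportional to r3, the reconstruction becomes ill-conditioned for distant points, where the disparity approaches zero.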

Corresponding points in the retinal planes allow us to project back into ℝ³. Analogous reasoning applies to the tangent structure: if we have tangent vectors of corresponding curves in the retinal planes, it is possible to project back and recover an estimate of the 3D tangent vector. Let us recall here this result; a detailed explanation can be found in Faugeras (1993).

Remark 2.2. Let $\gamma_L$ and $\gamma_R$ be corresponding left and right retinal curves; i.e., perspective projections of a curve $\gamma$ in $\mathbb{R}^3$ through optical centers $C_L$ and $C_R$ with focal length $f$. Knowing the left and right retinal tangent structures, it is possible to recover the direction of the tangent vector $\dot{\gamma}$.

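Proof. Starting from a curve $\gamma = (\gamma_1, \gamma_2, \gamma_3)$ in $\mathbb{R}^3$, we project it in the two retinal planes obtaining $\gamma_L = \Pi_L(\gamma)$ and $\gamma_R = \Pi_R(\gamma)$ from Equation (1). The retinal tangent vectors are obtained through the Jacobian matrix⁵ of the left and right retinal projections, $\dot{\gamma}_L = J_{\Pi_L}(\gamma)\,\dot{\gamma}$ and $\dot{\gamma}_R = J_{\Pi_R}(\gamma)\,\dot{\gamma}$, where

$$
J_{\Pi_L}(\gamma) = \frac{f}{\gamma_3^2}
\begin{pmatrix}
\gamma_3 & 0 & -(\gamma_1 + c) \\
0 & \gamma_3 & -\gamma_2
\end{pmatrix},
\qquad
J_{\Pi_R}(\gamma) = \frac{f}{\gamma_3^2}
\begin{pmatrix}
\gamma_3 & 0 & -(\gamma_1 - c) \\
0 & \gamma_3 & -\gamma_2
\end{pmatrix}.
$$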

Extending the tangent vectors and the points into ℝ3, we get , and , and , with the projection matrix . The same reasoning holds for the right structure, with projection matrix .

Then UtR × UtL is a vector parallel to the tangent vector :

□
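The tangent recovery in Remark 2.2 can be illustrated numerically. The sketch below uses the standard back-projection argument (each retinal tangent line, together with its optical center, spans a plane containing the 3D tangent, so the 3D tangent is parallel to the cross product of the two plane normals). It assumes parallel retinal planes as in the set-up above; the helper names are hypothetical, and the construction may differ in normalization from the projection matrices used in the proof.

```python
import math

# Sketch under stated assumptions; function names are hypothetical.

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def retinal_tangent(q, dq, center_x, f):
    """Push the 3D velocity dq at point q through the Jacobian of the
    projection with optical center (center_x, 0, 0)."""
    r1, r2, r3 = q
    tx = f * dq[0] / r3 - f * (r1 - center_x) * dq[2] / r3 ** 2
    ty = f * dq[1] / r3 - f * r2 * dq[2] / r3 ** 2
    return tx, ty


def recover_tangent_direction(x_left, x_right, y, t_left, t_right, f):
    """Unit vector parallel to the 3D tangent (sign is not determined)."""
    # Normal of the plane through C_L containing the left retinal tangent line.
    n_left = cross((x_left, y, f), (t_left[0], t_left[1], 0.0))
    # Same construction for the right eye.
    n_right = cross((x_right, y, f), (t_right[0], t_right[1], 0.0))
    v = cross(n_right, n_left)
    norm = math.sqrt(sum(comp * comp for comp in v))
    if norm == 0.0:
        # Degenerate case: the two planes coincide (tangent in the epipolar plane).
        raise ValueError("tangent direction is not recoverable at this point")
    return tuple(comp / norm for comp in v)
```

The direction is only defined up to sign, and the construction fails when the 3D tangent lies in the plane through both optical centers and the point, since the two back-projected planes then coincide.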

Although this section has been based on the geometry of space curves and their projections, we observe that related geometric approaches have been developed for planar patches and surfaces; see, e.g., Li and Zucker, 2008; Oluk et al., 2022 and references therein.

We now provide background on the geometric modeling of the monocular system, and good continuation in the plane. Our goal is to illustrate the role of sub-Riemannian geometry in the monocular system, which will serve as the basis for generalization to the stereo system.

We model the activation map of a cortical neuron's receptive field (RF) by its receptive profile (RP) φ. A classical example is the receptive profiles of simple cells in V1, centered at position (x, y) and orientation θ, modeled (e.g., in Daugman, 1985; Jones and Palmer, 1987; Barbieri et al., 2014b) as a bank of Gabor filters φ{x, y, θ}. RPs are mathematical models of receptive fields; they are operators which act on a visual stimulus.

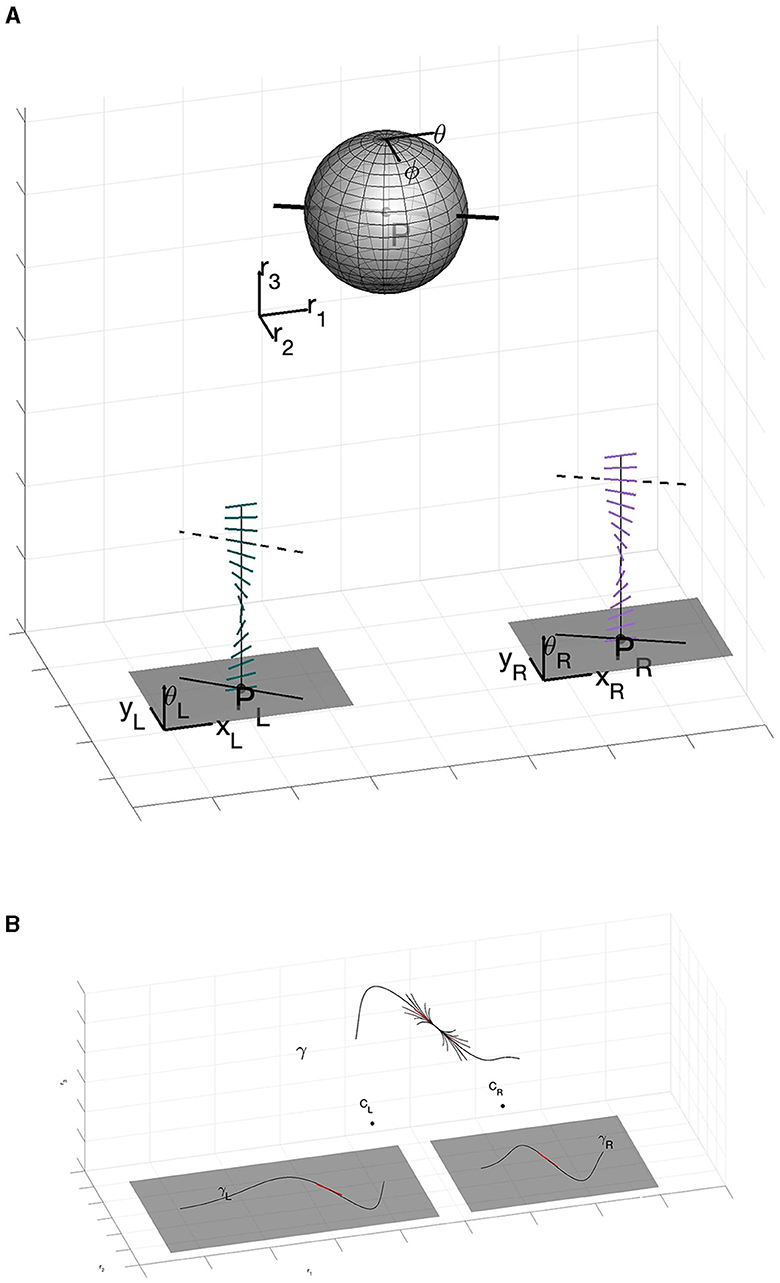

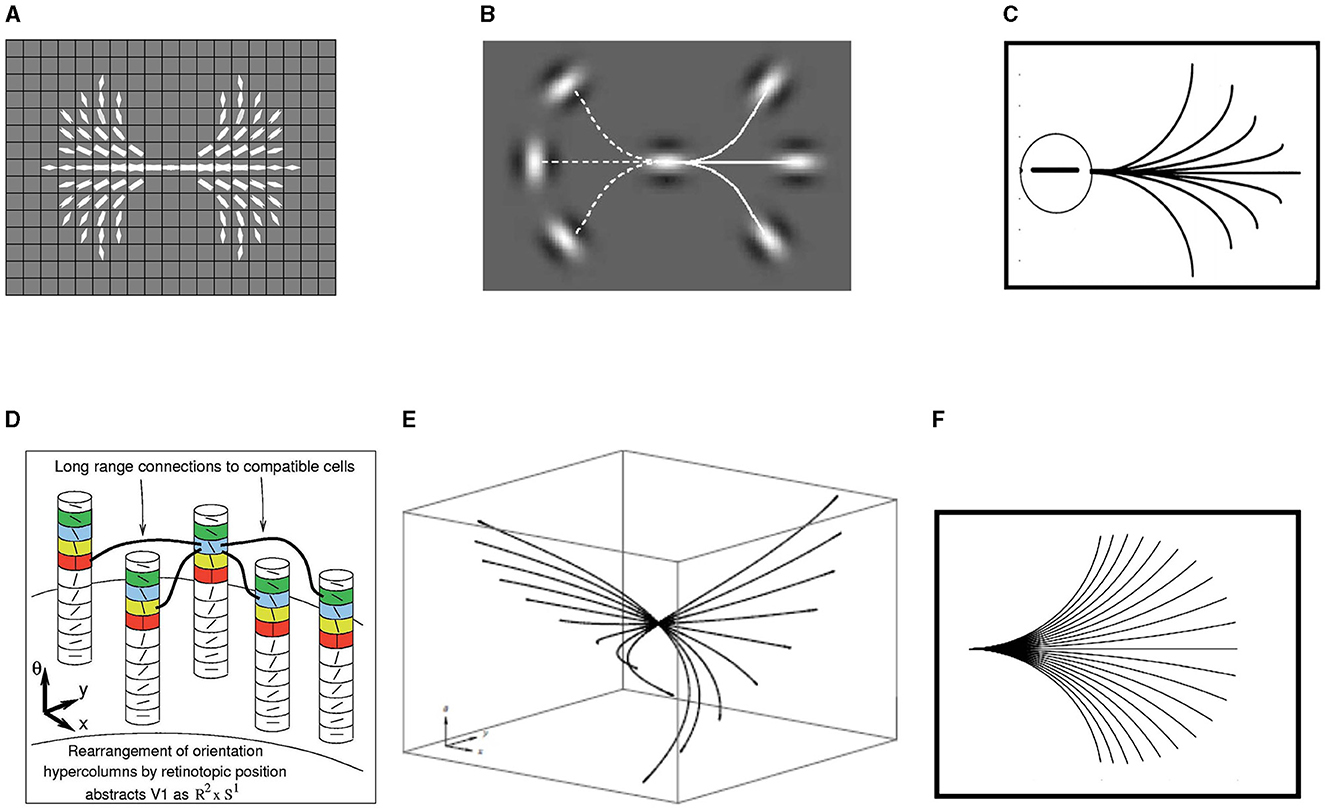

Formally, it is possible to abstract the primary visual cortex as ℝ2 × 𝕊1, or position-orientation space, thereby naturally encoding the Hubel/Wiesel hypercolumnar structure (Hubel and Wiesel, 1962). An example of this structure is displayed in Figure 5D from Ben-Shahar and Zucker (2004).

Figure 5. (A) Examples of the compatibilities around the central point of the image, derived from planar co-circularity. Brightness encodes compatibility values. Figure adapted from Ben-Shahar and Zucker (2004). (B) Starting from the central initial oriented point, the solid line indicates a configuration between the patches where the association exists while the dashed line indicates a configuration where it does not. Figure adapted from Field et al. (1993). (C) Association field of Field, Hayes, and Hess. Figure adapted from Field et al. (1993). (D) Orientation columns of cells in (x, y, θ) coordinates. Long-range horizontal connections between cells relate an orientation signal at position (x, y, θ) to another orientation at (x′, y′, θ′). Figure adapted from Ben-Shahar and Zucker (2004). (E) Horizontal integral curves in ℝ2 × 𝕊1 generated by the sub-Riemannian model (Citti and Sarti, 2006). (F) Projection of the fan of the integral curves in the (x, y) plane. Figure adapted from Citti and Sarti (2006).

Following the model of Citti and Sarti (2006), the set of simple cells' RPs can be obtained via translation by a vector (x, y)T and rotation by an angle θ of a unique “mother” profile φ0(ξ, η):

This RP is a Gabor function with even real part and odd imaginary part (Figure 7A). Translations and rotations can be expressed as:

where T(x, y, θ) denotes the action of the group of rotations and translations SE(2) on ℝ2. This group operation associates to every point (ξ, η) a new point , according to the law . Hence, a general RP can be expressed as

and this represents the action of the group SE(2) on the set of receptive profiles.
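To make the group action concrete, here is a minimal sketch of how a bank of receptive profiles could be generated from a single mother profile by the SE(2) action. The specific Gabor form, its parameters `sigma` and `freq`, and all function names are assumptions made for illustration; only the structure (rotate, translate, then evaluate the mother profile at the pulled-back point) follows the text.

```python
import cmath
import math

# Sketch under stated assumptions; the Gabor parameters are illustrative.

def se2_act(x, y, theta, xi, eta):
    """Rotate (xi, eta) by theta, then translate by (x, y)."""
    c, s = math.cos(theta), math.sin(theta)
    return x + c * xi - s * eta, y + s * xi + c * eta


def se2_act_inverse(x, y, theta, xi_t, eta_t):
    """Inverse action: undo the translation, then rotate by -theta."""
    c, s = math.cos(theta), math.sin(theta)
    dx, dy = xi_t - x, eta_t - y
    return c * dx + s * dy, -s * dx + c * dy


def mother_gabor(xi, eta, sigma=1.0, freq=2.0):
    """Assumed mother profile: Gaussian envelope times a complex wave along eta.
    Its real part is even and its imaginary part is odd in eta."""
    envelope = math.exp(-(xi * xi + eta * eta) / (2.0 * sigma ** 2))
    return envelope * cmath.exp(1j * freq * eta)


def receptive_profile(x, y, theta, xi, eta):
    """Profile centered at (x, y) with orientation theta, evaluated at (xi, eta)."""
    return mother_gabor(*se2_act_inverse(x, y, theta, xi, eta))
```

Evaluating `receptive_profile` at its own center returns the peak value of the mother profile, which is one quick way to confirm that the profile has been moved rather than deformed.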

The retinal plane is identified with the ℝ2 plane, whose coordinates are (x, y). When a visual stimulus of intensity I(x, y) activates the retinal layer, the neurons centered at every point (x, y) produce an output O(x, y, θ), modeled as the integral of the signal I with the set of Gabor filters:

where the function I represents the retinal image.

For (x, y) fixed, we will denote the point of maximal response:

We will then say that the point (x, y) is lifted to the point . This is extremely important conceptually to understand our geometry: it illustrates how an image point, evaluated against a simple cell RP, is lifted to a “cortical” point by introducing the orientation explicitly. If all the points of the image are lifted in the same way, the level lines of the 2D image I are lifted to new curves in the 3D cortical space .

We shall now recall a model of the long range connectivity which allows propagation of the visual signal from one cell in a column to another cell in a nearby column. This is formalized as a set of directions for moving in the cortical space , in the sense of vector fields. This is important because it will be necessary to move within this space, across both positions and orientations.

To begin, the right-hand side of Equation (11) expresses the integral of the signal against the real and imaginary parts of the Gabor filter. The two families of cells have different shapes, hence they detect (or play a role in detecting) different features. Since the odd-symmetry cells suggest boundary detection, we concentrate on them, but this is a mathematical simplification. The output of a simple cell can then be locally approximated as O(x, y, θ) = −X3,p(Iσ)(x, y), where p = (x, y, θ) ∈ SE(2), Iσ is a smoothed version of I, obtained by convolving it with a Gaussian kernel, and

is the directional derivative in the direction . From now on, we will denote (by a slight abuse of notation) to remind the reader familiar with the language of 1-forms the correspondence of these quantities, and the relation with the Hodge star operator.6
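The lifting step can be sketched with the directional-derivative approximation of the odd simple cell output. The code below assumes that X3 differentiates along (−sin θ, cos θ), which is the usual convention in the Citti and Sarti model but is an assumption here, and it selects the orientation by maximizing the magnitude of the response over a sampled set of angles. Function names and the angular sampling are illustrative.

```python
import math

# Sketch under stated assumptions: X3 = -sin(theta) d/dx + cos(theta) d/dy.

def x3_derivative(grad, theta):
    """Directional derivative of the smoothed image along (-sin theta, cos theta)."""
    gx, gy = grad
    return -math.sin(theta) * gx + math.cos(theta) * gy


def simple_cell_output(grad, theta):
    """Local approximation O(x, y, theta) = -X3(I_sigma)(x, y)."""
    return -x3_derivative(grad, theta)


def lift_orientation(grad, n_angles=180):
    """Return the sampled theta in [0, pi) with maximal response magnitude."""
    best = max(range(n_angles),
               key=lambda i: abs(simple_cell_output(grad, math.pi * i / n_angles)))
    return math.pi * best / n_angles


# Example: an edge whose level lines have direction alpha = pi/6.
# The image I = tanh(-sin(alpha) x + cos(alpha) y) has gradient
# (-sin(alpha), cos(alpha)) at the origin.
alpha = math.pi / 6
theta_bar = lift_orientation((-math.sin(alpha), math.cos(alpha)))
```

In this example the selected orientation coincides with the direction of the level line through the point, which is the sense in which an image point is lifted to a point (x, y, θ) of the cortical space.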

Now, think of vector fields as defining a coordinate system at each point in cortical space. Then, in addition to above, the vector fields orthogonal to X3,p are:

and they define a 2-dimensional admissible tangent bundle7 to ℝ2 × 𝕊1. One can define a scalar product on this space by imposing the orthonormality of X1,p and X2,p: this determines a sub-Riemannian structure on ℝ2 × 𝕊1.

The visual signal propagates, in an anisotropic way, along cortical connectivity and connects more strongly cells with comparable orientations. This propagation has been expressed by the geometry just developed and 2-dimensional contour integration. This is the neural explanation of the Gestalt law of good continuation (Koffka, 1963; Kohler, 1967). It can be directly expressed as co-circularity in the plane (Parent and Zucker, 1989), to describe the consistency and the compatibility of neighboring oriented points, in accordance with specific values of curvature. An example of these compatibilities can be found in Figure 5A. It is complemented by psychophysical experiments, e.g., Uttal, 1983; Smits and Vos, 1987; Ivry et al., 1989. In particular, Field et al. (1993) describe the association rules for 2-dimensional contour integration, introducing the concept of association fields. A representation of these connections can be found in Figures 5B, C. Note that this is equivalent to the union (over curvature) in Parent and Zucker (1989). Neurophysiological studies (Blasdel, 1992; Malach et al., 1993; Bosking et al., 1997; Schmidt et al., 1997; Hess et al., 2014) suggest that the cortical correlate of the association field is the long-range horizontal connectivity among cells of similar (but not necessarily identical) orientation preference.

Based on these findings, Citti and Sarti (2006) modeled cortical propagation as propagation along integral curves of the vector fields X1 and X2, namely curves γ:[0, T]⊂ℝ → ℝ2 × 𝕊1 described by the following differential equation:

γ′(t) = X1(γ(t)) + k X2(γ(t)),

obtained by varying the parameter k ∈ ℝ. (k plays the role of a curvature.) An example of these curves is in Figure 5E. Their 2D projection is a close approximation of the association fields (Figure 5F).
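For constant k these integral curves have a closed form, which makes the role of k as a curvature explicit. The sketch below assumes the standard choice X1 = cos θ ∂x + sin θ ∂y and X2 = ∂θ from the Citti and Sarti model, so that the system reads x′ = cos θ, y′ = sin θ, θ′ = k; the function name is hypothetical.

```python
import math

# Sketch under stated assumptions: x' = cos(theta), y' = sin(theta), theta' = k.

def se2_integral_curve(x0, y0, theta0, k, t):
    """Point reached at time t along the integral curve of X1 + k X2."""
    if abs(k) < 1e-12:
        # Zero curvature: straight line in the direction theta0.
        return x0 + t * math.cos(theta0), y0 + t * math.sin(theta0), theta0
    theta = theta0 + k * t
    x = x0 + (math.sin(theta) - math.sin(theta0)) / k
    y = y0 - (math.cos(theta) - math.cos(theta0)) / k
    return x, y, theta


# Fan of curves from the origin with horizontal initial orientation.
fan = {k: [se2_integral_curve(0.0, 0.0, 0.0, k, 0.1 * i) for i in range(11)]
       for k in (-2.0, -1.0, 0.0, 1.0, 2.0)}
```

The projection onto the (x, y) plane is an arc of a circle of radius 1/|k| tangent to the initial orientation, so sweeping k produces the fan shown in Figure 5F.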

A related model has been proposed by Duits et al. (2013). They study the geodesics of the sub-Riemannian structure to take into account all appropriate end-conditions of association fields.

The concept of co-circularity in ℝ2 has been developed by observing that a bidimensional curve γ can be locally approximated at 0 via the osculating circle.8 Alibhai and Zucker (2000), Li and Zucker (2003), and Li and Zucker (2006) generalize this concept with the Frenet differential geometry of a three dimensional curve.

While in the two-dimensional case the approximation of the curve using the Frenet 2D basis causes the curvature to appear in the coefficient of the Taylor series development (1st order), in the three-dimensional case the coefficients involve both the curvature and torsion. So, in Alibhai and Zucker (2000) the authors propose heuristically to generalize the osculating circle for space curves with an osculating helix, with a preference for r3-helices to improve stability in terms of camera calibration. In this way the orientation disparity is encoded in the behavior of the helix in the 3D space: there is no difference in orientation in the retinal planes if the helix is confined to be in the fronto-parallel plane (the helix becomes a circle); otherwise moving along the 3D curves the retinal projections have different orientations.

Li and Zucker (2003, 2006) observe that, by introducing the curvature variable as a feature in the two monocular structures, and assuming correspondence, it is possible to reconstruct the 3D Frenet geometry of the curve, starting from the two-dimensional Frenet geometry, up to the torsion parameter. In particular, they prove:

Proposition 2.1. Given two perspective views of a 3D space curve with full calibration, the normal N and curvature k at a curve space point are uniquely determined from the positions, tangents, and curvatures of its projections in two images. Thus the Frenet frame {T, N, B} and curvature k at the space point can be uniquely determined.

Hence, using the knowledge of the Frenet basis together with the fundamental addition of the curvature variable, Zucker et al. applied the concept of transport. This allowed moving the 3D Frenet frame in a consistent way with the corresponding 2D Frenet structures of the left and right retinal planes, to establish stereo correspondence between pairs of (left and right) pairs of tangents (see Figure 6B).

Figure 6. (A) Geometrical setup of Proposition 2.1. The 3D spiral curve projects onto the left and right retinal planes together with its Frenet structure. (B) Stereo correspondence between pairs of (left-right) pairs of tangents. Both figures are taken from Li and Zucker (2006).

Remark 2.3. The model that we propose in this paper is related to, but differs from, what has just been stated. In particular, to remain directly compatible with the previous neuro-geometric model, we will work only with the monocular variables of position and orientation. Rather than using curvature directly, we shall assume that these variables are encoded within the connections; mathematically they appear as parameters. A theoretical result of our model is that the heuristic assumption regarding the r3-helix can now be established rigorously.

Let us also mention the paper of Abbasi-Sureshjani et al. (2017), where the curvature was considered as an independent variable and helices were obtained in the 2D space.

Here, we do not want to directly impose a co-circularity property: our aim is to model the behavior of binocular cells and to deduce properties of propagation, which will ultimately induce a geometry of 3D good continuation laws.

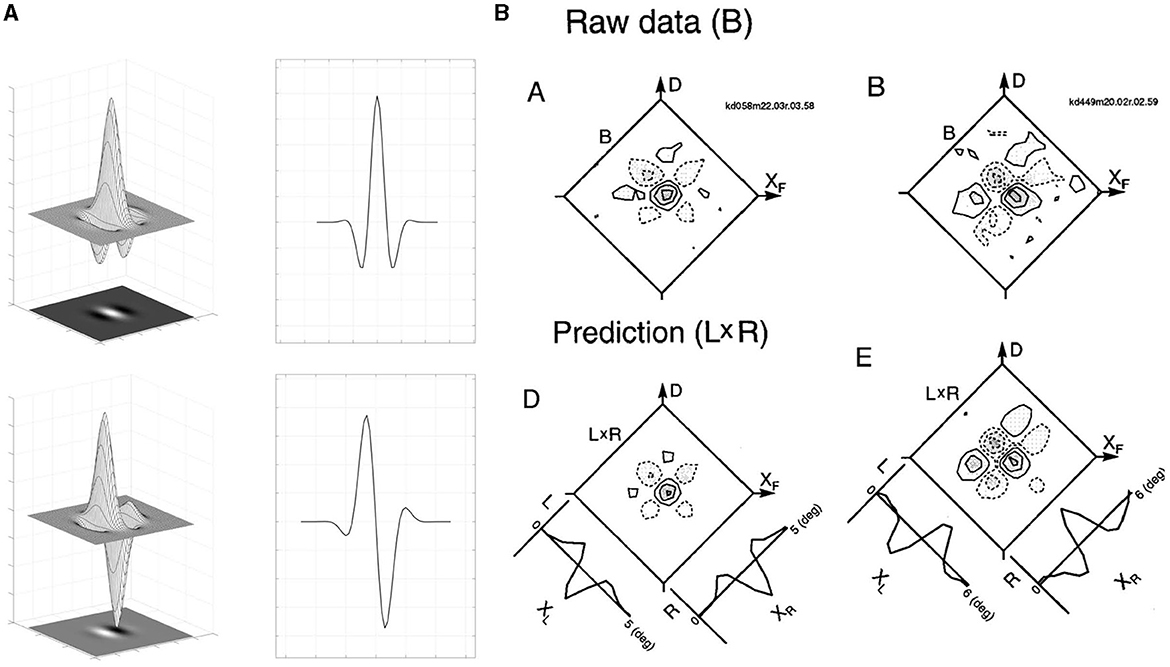

Binocular neurons receive inputs from both the left and right eyes. To facilitate calculations, we assume these inputs are first combined in simple cells in the primary visual cortex, a widely studied approach (Anzai et al., 1999b; Cumming and DeAngelis, 2001; Menz and Freeman, 2004; Kato et al., 2016). It provides a first approximation in which binocular RPs are described as the product of monocular RPs; see Figure 7. This model is clearly an oversimplification, in several senses. First, it leaves out the more refined receptive fields discussed in Section 1.3. Second, it leaves out the role for complex cells (Sasaki et al., 2010). Third, it leaves out different ways to get the position and orientation information, such as eye fixations (Intoy et al., 2021). And fourth, it avoids the delicate question of whether the max operation over a column (Equation 12) truly captures a tangent element. Nevertheless, since our focus is geometric, it does capture all of the necessary ingredients and simplifies computations.

Figure 7. (A) Even (top) and odd (bottom) part of Gabor function: the surface of the two-dimensional filters, their common bi-dimensional representation and a mono-dimensional section. (B) Comparisons between binocular interaction RPs and the product of left and right eye RPs, where left and right RPs are shown in image (A). Binocular interaction RPs (Raw data) of a cell is shown on the top row. Contour plots for the product of left and right eye RPs (L × R) are shown in the bottom row along with 1-dimensional profiles of the left (L) and right (R) eye RPs. Figure adapted from Anzai et al. (1999b).

This binocular model allows us to define disparity and frontoparallel coordinates as

x = (xL + xR)/2, y = y, d = (xL − xR)/2 (16)

perfectly in accordance with the introduction of cyclopean coordinates in (4). In this way (x, y, d) correspond to the neural correlate of (r1, r2, r3), via the change of variables (5).

The hypercolumnar structure of monocular simple cells (orientation selective) has been described as a jet fiber bundle in the works of Petitot and Tondut (1999), among many others. We concentrate on the fiber bundle ℝ2 × 𝕊1, with fiber 𝕊1; see, e.g., Ben-Shahar and Zucker (2004).

In our setting, the binocular structure is based on monocular ones; recall the example illustrations from the Introduction. In particular, for each cell on the left eye there is an entire fiber of cells on the right, and vice versa, for each cell on the right there is an entire fiber of cells on the left. This implies that the binocular space is equipped with a symmetry that involves the left and right structures, allowing us to use the cyclopean coordinates (x, y, d) defined in (16).

Hence, we define the cyclopean retina , identified with ℝ2, endowed with coordinates (x, y). The structure of the fiber is , with coordinates . The total space is defined in a trivial way, , and the projection is the trivial projection π(x, y, d, θL, θR) = (x, y). The preimage of the projection , for every , is isomorphic to the fiber , and the local trivialization property is naturally satisfied.

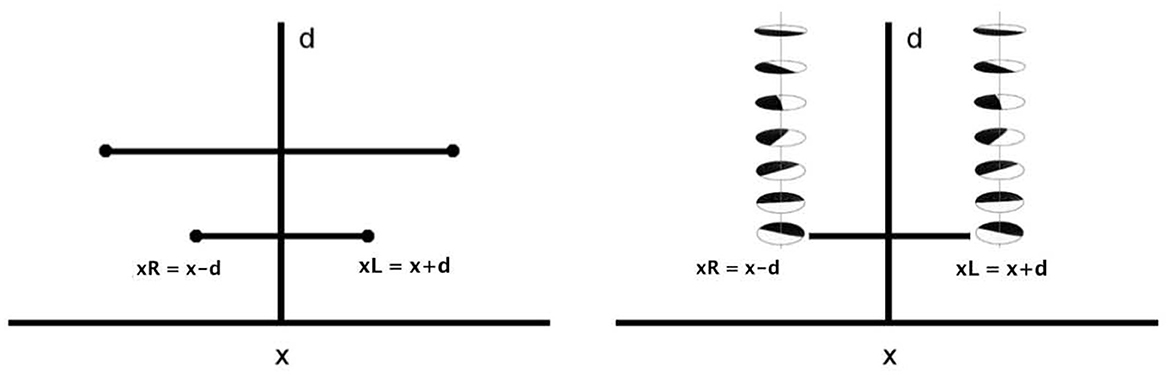

A schematic representation can be found in Figure 8. The base has been depicted as 1-dimensional, considering the restriction of the cyclopean retina on the coordinate x. The left image displays only the disparity component of the fiber , encoding the relationships between left and right retinal coordinates. The right image shows the presence of the left and right monodimensional orientational fibers.

Figure 8. Left: schematic representation of the fiber bundle in two dimensions, with relationships between left and right retinal coordinates. Right: representation of the selection of a whole fiber of left and right simple cells, for every x and for every d.

To simplify calculations, as stated in the Introduction, we follow the classical binocular energy model (Anzai et al., 1999b) for binocular RPs. The basic idea is that a binocular neuron receives input from each eye: if the sum OL + OR of the inputs from the left and right eyes is positive, the firing rate of the binocular neuron is proportional to the square of the sum, and it vanishes if the sum of the inputs is negative:

OB = (Pos(OL + OR))², (17)

with Pos(x) = max{x, 0} and OB the binocular output.

If OL + OR > 0, then the output of the binocular simple cell can be explicitly written as OB = OL² + OR² + 2OLOR. The first two terms represent responses due to monocular stimulation, while the third term 2OLOR can be interpreted as the binocular interaction term.

The activity of a cell is then measured from the output and will be strongest at points that have a higher probability of matching each other. The maximum value over d of this quantity is the extracted disparity.
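The energy model and the disparity read-out just described can be summarized in a few lines. This is a minimal sketch: the monocular outputs are taken as given numbers, and the candidate list passed to `extract_disparity` is a hypothetical stand-in for the responses of cells tuned to different disparities.

```python
# Sketch under stated assumptions; names and the candidate format are hypothetical.

def pos(value):
    """Half-wave rectification, Pos(x) = max{x, 0}."""
    return max(value, 0.0)


def binocular_energy(o_left, o_right):
    """Binocular output: squared, rectified sum of the monocular outputs."""
    return pos(o_left + o_right) ** 2


def interaction_term(o_left, o_right):
    """Binocular interaction term 2 * O_L * O_R of the expanded square."""
    return 2.0 * o_left * o_right


def extract_disparity(candidates):
    """Given (d, O_L, O_R) triples, return the d with maximal binocular output."""
    best = max(candidates, key=lambda item: binocular_energy(item[1], item[2]))
    return best[0]


# Three candidate disparities with illustrative monocular outputs.
d_hat = extract_disparity([(-1, 0.1, -0.3), (0, 0.5, 0.25), (1, 0.2, 0.1)])
```

When the rectified sum is positive, `binocular_energy` equals the two monocular energies plus `interaction_term`, which is the decomposition used in the text to isolate the binocular contribution.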

It is worth noting that neurophysiological computations of binocular profiles displayed in Figure 7B assume the mono-dimensionality of the monocular receptive profile, ignoring information about orientation of monocular simple cells. However, this information will be needed to encode different types of orientation disparity.

Remark 3.1 (Orientation matters). Bridge et al. (2001) investigated the response of binocular neurons to orientation disparity by extending the energy model of Anzai, Ohzawa, and Freeman to incorporate binocular differences in receptive-field orientation. More recently, the difference between orientations in the receptive fields of the two eyes has been confirmed (Sasaki et al., 2010).

The binocular energy model is a type of minimal model. It serves as a starting point, allowing the combination of monocular inputs, but it is not sufficient to solve the stereo-matching problem.

Remark 3.2 (Connections). Samonds et al. (2013) and Parker et al. (2016) argue that, in addition to the neural mechanisms that couple characteristics (such as signals, stimuli, or particular features) relating the left and right monocular structures, there must be a system of connections between binocular cells, which characterizes the processing mechanism of stereo vision.

It is possible to write the interaction term OLOR coming from (17), in terms of the left and right receptive profiles:

If we fix , we derive the expression of the binocular profiles φL,R = φ{θR, xR, y} φ{θL, xL, y} as the product of the monocular left and right profiles. This is in accordance with the measured profiles of Figure 7B.

Proposition 3.1. The binocular interaction term can be associated with the cross product of the left and right directions defined through (13), namely and of monocular simple cells:

Proof. The idea is that the binocular output is the combined result of the left and right actions of monocular cells, thus identifying a direction in the space of cyclopean coordinates. The detailed proof of this proposition can be found in Appendix B (Supplementary material). □

To better understand the geometrical idea behind Proposition 3.1, we recall that the retinal coordinates can be expressed in terms of cyclopean coordinates (4) as xR = x − d and xL = x + d, and so we can write and in the 3D space of coordinates (x, y, d) as:

We define as the natural direction characterizing the binocular structure:

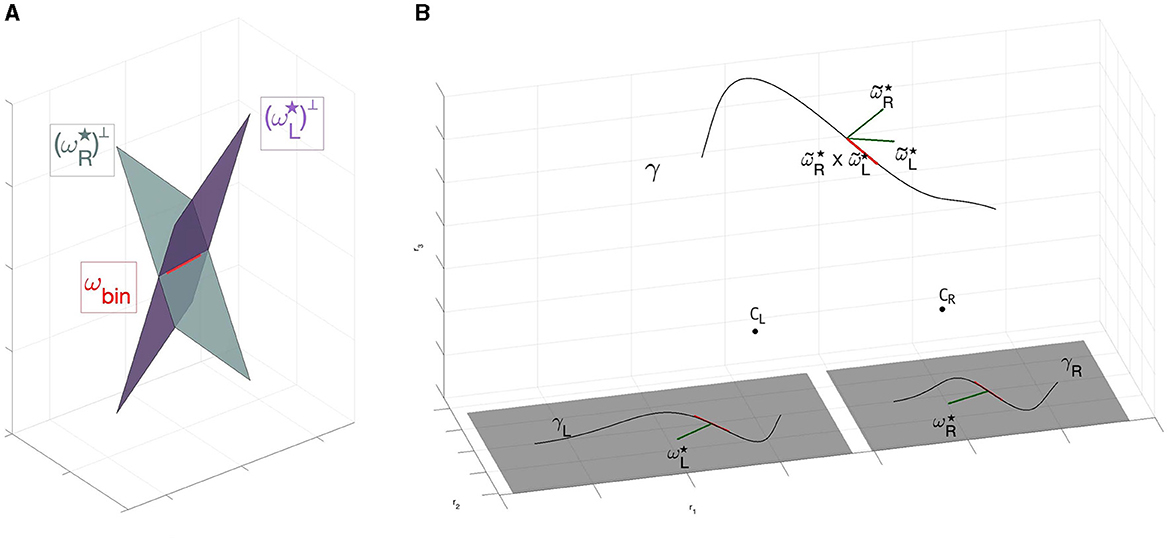

Remark 3.3. The vector ωbin of Equation (21) can be interpreted as the intersection of the orthogonal spaces defined with respect to and when expressed in cyclopean coordinates (x, y, d). More precisely, if

then

The result of the intersection of these monocular structures identifies a direction, as shown in Figure 9A.

Figure 9. (A) Direction detected by ωbin through the intersection of left and right planes generated by and . Red vector corresponds to the associated 2-form ωbin. (B) Three dimensional reconstruction of the space from retinal planes. The 1- forms and are identified with the normal to the curves γL and γR. Their three dimensional counterpart and identify the tangent vector to the curve γ:ℝ → ℝ3 by the cross product .

We earlier showed that the result of the action of a monocular odd simple cell is to select directions for the propagation of information. We now combine these, for the two eyes, to show that in the three-dimensional case the binocular neural mechanisms also lead to a direction. We will see in the next sections that this direction is the direction of the tangent vector to the 3D stimulus, provided points are corresponding.

We consider the direction characterizing the binocular structure ωbin defined in (21) and we show that it can be associated with the 3D tangent vector to the 3D curve. The idea is that this tangent vector is orthogonal both to and to , and therefore it has the direction of the vector product .

Precisely, we consider the normalized tangent vectors tL and tR on the retinal planes

to the points (xR, y) and (xL, y) respectively. Taking into account that f is the focal coordinate of the retinal planes in ℝ3, then we associate to these points the correspondents in ℝ3, namely , . Applying Equation (7), it is possible to derive the tangent vector of the three dimensional contour:

and the tangent direction is recovered by

If we define

and the corresponding 2 form , using the change of variables (16) we observe that:

up to a scalar factor. See Appendix C (Supplementary material) for explicit computation.

In this way, the disparity binocular cells couple in a natural way positions, identified with points in ℝ3, and orientations in 𝕊2, identified with three-dimensional unit tangent vectors. As already observed in Remark 3.2, the geometry of stereo vision is not resolved by these pointwise and directional arguments alone: one must also take into account a suitable type of connections. Alibhai and Zucker (2000) and Li and Zucker (2003, 2006) proposed a model that considered the curvature of monocular structures as an additional variable. Instead, we propose to consider simple monocular cells selective for orientation, and to insert the notion of curvature directly into the definition of connection. It is therefore natural to introduce the perceptual space via the manifold ℝ3 ⋊ 𝕊2, in line with the theoretical toolbox proposed in Miolane and Pennec (2016) to generalize 2D neurogeometry to 3D images, and to adapt this framework to our problem, looking for appropriate curves.

We now derive the objects in Figure 3A. We have clarified (end of Section 3.5) that binocular cells are parameterized by points in ℝ3 and orientations in 𝕊2. An element ξ of the space ℝ3 ⋊ 𝕊2 is defined by a point p = (p1, p2, p3) in ℝ3 and a unit vector n ∈ 𝕊2. Since 𝕊2 has topological dimension 2, we introduce the classical spherical coordinates (θ, φ) such that n can be parameterized as:

n = (cosθ sinφ, sinθ sinφ, cosφ)T

with θ ∈ [0, 2π] and φ ∈ (0, π). The ambiguity that arises from using a single local coordinate chart is overcome by the introduction of a second chart, covering the singular points.

Translations and rotations are expressed using the group law of the three-dimensional special Euclidean group SE(3), defining the group action

with (p, n) ∈ ℝ3 ⋊ 𝕊2 and (q, R) ∈ SE(3), where R ∈ SO(3) is a three-dimensional rotation and q ∈ ℝ3 a translation.

The emergence of a privileged direction in ℝ3 (associated with the tangent vector to the stimulus) is the reason why we endow ℝ3 ⋊ 𝕊2 with a sub-Riemannian structure that favors the direction in 3D identified by ωbin.

Formally, we consider admissible movements in ℝ3 ⋊ 𝕊2 described by vector fields:

with ξ ∈ ℝ3 ⋊ 𝕊2 for φ ≠ 0, φ ≠ π. The admissible tangent space9 at a point ξ

encodes the coupling between position and orientations, as remarked by Duits and Franken (2011). In particular, the vector field identifies the privileged direction in ℝ3, while Yθ and Yφ allow changing this direction, involving just orientation variables of 𝕊2. The vector fields and their commutators generate the tangent space of ℝ3 ⋊ 𝕊2 in a point, allowing to connect every point of the manifold using privileged directions (Hörmander condition). Furthermore, it is possible to define a sub-Riemannian structure by choosing a scalar product on the admissible tangent bundle : the simplest choice is to declare the vector fields orthonormal, considering on 𝕊2 the distance inherited from the immersion in ℝ3 with the Euclidean metric.

We have already expressed the change of variables from (x, y, d) to (r1, r2, r3) in Equation (5). However, the cortical coordinates also contain the angular variables θR and θL, which call for the introduction of the spherical coordinates θ, φ.

To identify a change of variable among these variables, we first introduce the function :

where the retinal right angle and the retinal left angle are obtained considering Equation (6).

Analogously, it is possible to define the change of variable :

where the angles and are obtained considering that and .

The connectivity of the space is described by admissible curves of the vector fields spanning . In particular, a curve Γ:[0, T] → ℝ3 ⋊ 𝕊2 is said to be admissible10 if:

where a, b, c are sufficiently smooth functions on [0, T]. We will consider a particular case of these admissible curves, namely constant coefficient integral curves with a(t) = 1, since the vector field multiplied by a represents the tangent direction of the 3D stimulus (and so it never vanishes):

with c1 and c2 varying in ℝ.

These curves can be thought of in terms of trajectories in ℝ3 describing a movement in the direction, which can eventually change according to and . An example of the fan of integral curves was shown in the Introduction in Figure 3B.

It is worth noting that in the case described by coefficients c1 and c2 equal to zero, the 3D trajectories would be straight lines in ℝ3; by varying the coefficients c1 and c2 in ℝ, we allow the integral curves to follow curved trajectories, twisting and bending in all space directions.
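A fan of such trajectories can be generated by direct numerical integration. The sketch below assumes the spherical parameterization n = (cos θ sin φ, sin θ sin φ, cos φ) for the spatial direction, and assumes that the angular fields are (1/sin φ) ∂θ and ∂φ; the 1/sin φ normalization and its sign are chosen here so that the helix torsion agrees with τ = c1 cotan φ0 as stated in Proposition 3.2, and may differ from the paper's convention. A fixed-step Runge-Kutta scheme replaces an adaptive solver to keep the example dependency-free.

```python
import math

# Sketch under stated assumptions; state = (r1, r2, r3, theta, phi).

def vector_field(state, c1, c2):
    """Right-hand side of the constant-coefficient system (valid for sin(phi) != 0)."""
    _, _, _, theta, phi = state
    s = math.sin(phi)
    return (math.cos(theta) * s,   # r1' : spatial motion along n
            math.sin(theta) * s,   # r2'
            math.cos(phi),         # r3'
            c1 / s,                # theta' (assumed normalization)
            c2)                    # phi'


def _shift(state, slope, step):
    """Return state + step * slope, componentwise."""
    return tuple(s + step * k for s, k in zip(state, slope))


def integrate_curve(state0, c1, c2, t_end, n_steps=1000):
    """Classical fourth-order Runge-Kutta integration; returns the list of states."""
    h = t_end / n_steps
    state = tuple(state0)
    path = [state]
    for _ in range(n_steps):
        k1 = vector_field(state, c1, c2)
        k2 = vector_field(_shift(state, k1, 0.5 * h), c1, c2)
        k3 = vector_field(_shift(state, k2, 0.5 * h), c1, c2)
        k4 = vector_field(_shift(state, k3, h), c1, c2)
        state = tuple(s + h * (a + 2.0 * b + 2.0 * c + d) / 6.0
                      for s, a, b, c, d in zip(state, k1, k2, k3, k4))
        path.append(state)
    return path
```

With c1 = c2 = 0 the integrator returns a straight segment along the initial direction, and with φ = π/2 and c2 = 0 the trajectory stays in a fronto-parallel plane, which matches the qualitative description above.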

Formally, the amount of “twisting and bending” in space is measured by introducing the notions of curvature and torsion. We then investigate how these measurements are encoded in the parameters of the family of integral curves, and what constraints have to be imposed to obtain different types of curves.

Remark 3.4. The 3D projection of the integral curves (36) will be denoted γ and satisfy . Classical instruments of differential geometry let us compute the curvature and the torsion of the curve γ(t):

Using the explicit expression of the vector fields Yθ and Yφ in Equation (36), we get

from which it follows that:

Proposition 3.2. By varying the parameters c1 and c2 in (39) where we explicitly find solutions of (36), we have:

1. If φ = π/2 then k = |c1|, τ = 0, and so the family of curves (36) are circles of radius 1/|c1| on the fronto-parallel plane r3 = const.

2. If φ = φ0, with φ0 ≠ π/2, then k = |c1| and τ = c1cotanφ0, and so the family of curves (36) are r3-helices.

3. If θ = θ0 then k = |c2|, τ = 0, and so the family of curves (36) are circles of radius 1/|c2| in the osculating planes.

Proof. The computation follows immediately from the computed curvature and torsion of (39) and classical results of differential geometry. □
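The helix case of Proposition 3.2 can be checked with the classical formulas k = |γ′ × γ″| / |γ′|³ and τ = (γ′ × γ″) · γ‴ / |γ′ × γ″|². The sketch below assumes φ is held at φ0 (so c2 = 0) and that θ advances at the constant rate c1/sin φ0, which is an assumed normalization chosen to agree with τ = c1 cotan φ0; function names are hypothetical.

```python
import math

# Sketch under stated assumptions: phi fixed at phi0, theta' = c1 / sin(phi0).

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    """Euclidean scalar product of two 3-vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def helix_derivatives(c1, phi0, theta):
    """First three derivatives of the spatial curve at angle theta."""
    s, co = math.sin(phi0), math.cos(phi0)
    omega = c1 / s
    d1 = (math.cos(theta) * s, math.sin(theta) * s, co)
    d2 = (-omega * s * math.sin(theta), omega * s * math.cos(theta), 0.0)
    d3 = (-omega ** 2 * s * math.cos(theta), -omega ** 2 * s * math.sin(theta), 0.0)
    return d1, d2, d3


def curvature_torsion(d1, d2, d3):
    """Curvature and torsion from the first three derivatives of a space curve."""
    b = cross(d1, d2)
    b_sq = dot(b, b)
    speed = math.sqrt(dot(d1, d1))
    return math.sqrt(b_sq) / speed ** 3, dot(b, d3) / b_sq


k_helix, tau_helix = curvature_torsion(*helix_derivatives(0.8, math.pi / 3, 0.4))
k_circle, tau_circle = curvature_torsion(*helix_derivatives(0.8, math.pi / 2, 0.4))
```

Under these assumptions the curvature equals |c1| for every φ0, while the torsion vanishes only at φ0 = π/2, where the helix degenerates to a fronto-parallel circle.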

Remark 3.5. If we know the value of the curvature k, and we have one free parameter, c2, in the definition of the integral curves (36), then we are in the setting of Proposition 2.1. In fact, the coefficient c1 is obtained by imposing , and in particular the component that remains to be determined is the torsion.

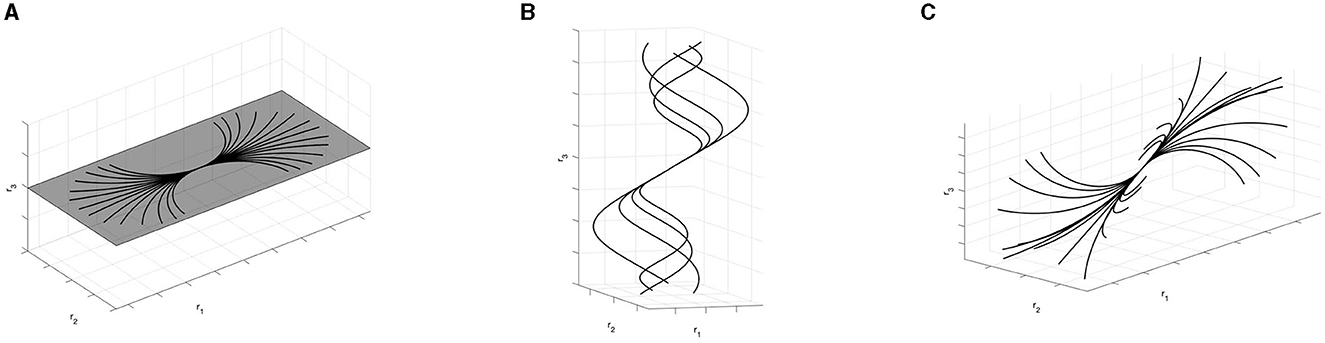

Examples of particular cases of the integral curves (36) according to Proposition 3.2 and Remark 3.5 are visualized in Figure 10.

Figure 10. Examples of integral curves obtained varying parameters c1 and c2. (A) Arc of circles for φ = π/2. (B) r3-helices for φ = π/3. (C) Family of curves with constant curvature k and varying torsion parameter.

Our sub-Riemannian model enjoys some consistency with the biological and psychophysical literature. We here describe some initial connections.

The foundation for building our sub-Riemannian model of stereo was a model of curve continuation, motivated by the orientation columns at each position. The connections between cells in nearby columns were, in turn, a geometric model of long-range horizontal connections in visual cortex (Bosking et al., 1997). In the Introduction we cartooned aspects of the cortical architecture that support binocular processing. Although the inputs are organized into ocular dominance bands, there is no direct evidence for “stereo columns” in V1 analogous to the monocular orientation columns. But such columns are not strictly necessary for our model. Rather, what is central is how information propagates. We showed in Figure 1C that there is evidence of long-range connections between binocular cells, and our model informs, abstractly, what information could propagate along these connections. Although less extensive than in the monocular case, some measurements are beginning to emerge that are informative.

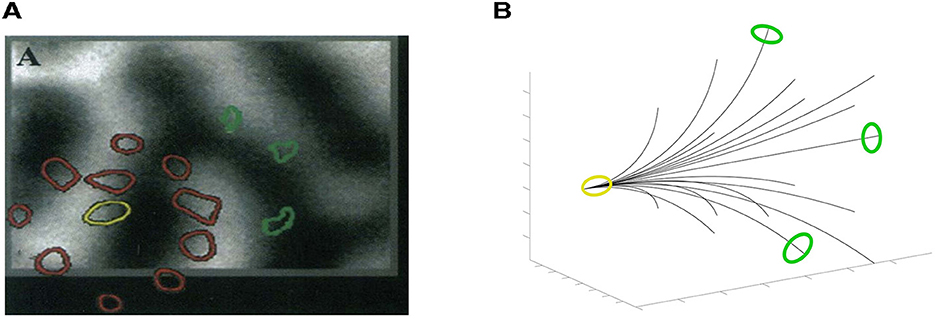

The Grinvald group first established the presence of long-range connections between binocular cells (Malach et al., 1993) (see also Figure 11A), using biocytin. This is a molecule that is taken up by neurons, propagates directly along neuronal processes and is deposited at excitatory synapses. These results were refined, more recently, by the Fitzpatrick group (Scholl et al., 2022), using in vivo calcium imaging. As shown in Figure 1C the authors demonstrated both the monocular and the binocular inputs for stereo, and (not shown) the dependence on orientations.

Figure 11. (A) A biocytin injection superimposed on a map of ocular dominance columns, image result from the work in Malach et al. (1993). Binocular zones are in the middle of monocular zones (coded in black and white). Starting from the injection site (yellow circle in the center of a binocular zone) the patches' propagation (red corresponds to dense while green to sparsely labeled) tends to avoid highly monocular sites, bypassing the centers of ocular dominance columns, and are located in binocular zones. (B) 3D interpretation of the physiological image (A).

More precisely, Malach et al. (1993) showed selective anisotropic connectivity among binocular regions: the biocytin tracer does not spread uniformly, but rather is highly directional with distance from the injection point. (This was the case with monocular biocytin injections as well.) Putting this together with Scholl et al. (2022), we interpret the anisotropy as being related to (binocular) orientation, which is what the integral curves of our vector fields suggest. Our 3D association fields are strongly directional, and information propagates preferentially in the direction of (the starting point of) the curve. An example can be seen in Figure 11B, where the fan of integral curves (36) is represented, superimposed with colored patches, following the experiment proposed in Malach et al. (1993). We look forward to more detailed experiments along these lines.

In this section, we show that the connections described by the integral curves in our model can be related to the geometric relationships from psychophysical experiments on perceptual organization of oriented elements in ℝ3; in other words, that our connections serve as a generalization of the concept of an association field in 3D.

The perception of continuity between two elements of position-orientation in ℝ3 has been studied experimentally. To start, Kellman, Garrigan, and Shipley (Kellman et al., 2005a,b) introduce 3D relatability, as a way to extend to 3D the experiments of Field, Hayes and Hess (Field et al., 1993) in 2D.

Particularly, in a system of 3D Cartesian coordinates, it is possible to introduce oriented edges E at the application point and with an orientation identified with the angles θ and φ. This orientation can be read, in our case, through the direction expressed by (cosθsinφ, sinθsinφ, cosφ)T. For an initial edge E0, with application point on the origin of the coordinate system (0, 0, 0)T and orientation lying on the r1-axis, described by θ = 0, φ = π/2, the range of possible orientations (θ, φ)11 for 3D-relatable edges with E0 is given by:

The bound on these equations identified with the quantity incorporates the 90 degree constraint in three dimensions, while the bounds defined by the inverse of the tangent express the absolute orientation difference between the reference edge E0 and an edge positioned at the arbitrary oriented point E(r1, r2, r3) so that its linear extension intersects E0; see Kellman et al. (2005a,b) for further details.

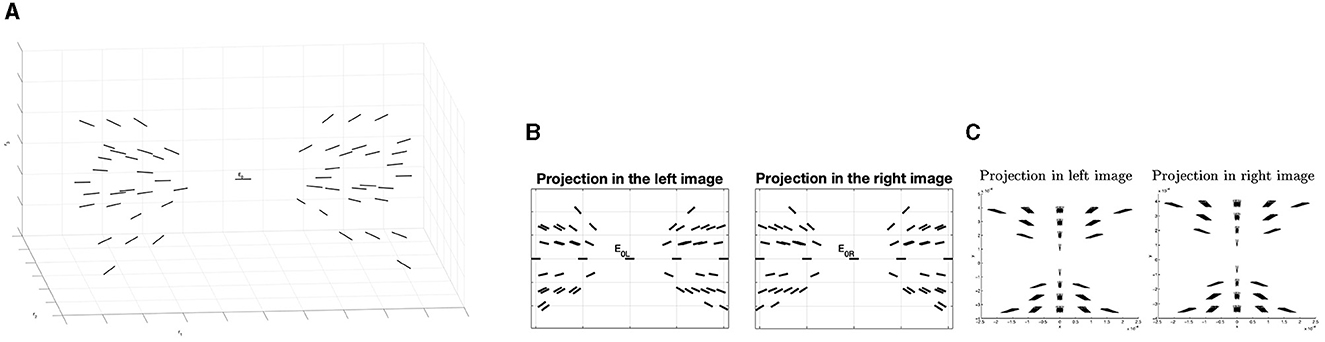

Numerical simulations allow us to visually represent an example of the 3D positions and orientations that meet the 3D relatability criteria. Starting from an initial edge E0 with endpoints in and orientation on the e1- axis, we represent for an arbitrary point the limit of the relatable orientation (θ, φ). Results are shown in Figure 12A.

Figure 12. (A) Example of the fan of the 3D relatable edges with initial point E0. (B) Example of 3D association field in the two left and right retinal planes, generated with the geometry of 3D relatability. (C) Example of 3D compatibility field of Alibhai and Zucker (2000).

Remark 4.1. By projecting on the retinal planes of the 3D fan of relatable points, it is possible to notice that these projections are in accordance with the notion of 3D compatibility field in Alibhai and Zucker (2000) (see Figures 12B, C).

Psychophysical studies (Hess and Field, 1995; Hess et al., 1997; Deas and Wilcox, 2015) have investigated the properties of the curves that are suitable for connecting these relatable points. These curves are well-described as smooth and monotonic. In particular, using non-oriented contour elements, Hess et al. (1997) indicate that contour elements can be effectively grouped based primarily on their good continuation in depth. This is confirmed by the more recent work of Deas and Wilcox (2015) who, in addition, observe that detection of contours defined by regular depth continuity is faster than detection of discontinuous contours. All these results support the existence of depth grouping operations, arguing for the extension of the Gestalt principles of continuity and smoothness to three-dimensional space. Finally, on the relationship of the three-dimensional curves to two-dimensional association fields, see Kellman et al. (2005b) and Khuu et al. (2016). These authors have assumed that the strength of the relatable edges in the planes co-planar with E0 must meet the relations of the two-dimensional association fields of Field et al. (1993).

To model the associations underlying the 3D perceptual organization discussed in the previous paragraph, we consider again the constant coefficient family of integral curves studied in (36):

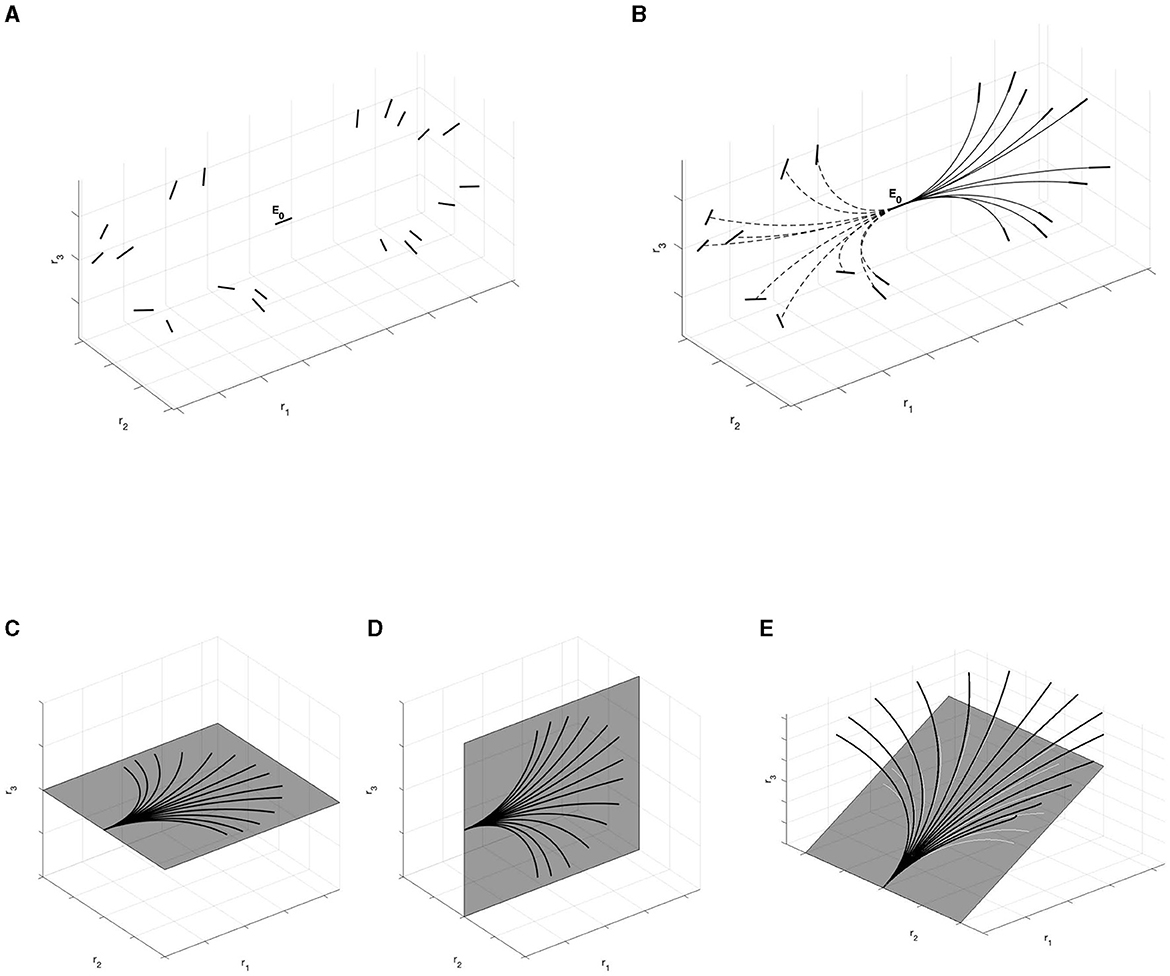

Importantly, these curves locally connect the association fan generated by the geometry of 3D relatability. In particular, Figure 13B shows the family of horizontal curves connecting the initial point E0 with 3D relatable edges (Figure 13A). These curves are computed using the MATLAB solver ode45.
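As a rough illustration of how such a fan can be traced numerically, the following Python sketch integrates a simplified system with a fixed-step fourth-order Runge-Kutta scheme (ode45 is itself an adaptive Runge-Kutta solver). It assumes that the position advances along the direction (cos θ sin φ, sin θ sin φ, cos φ)T while θ and φ change at constant rates. The names `theta_rate` and `phi_rate` are hypothetical stand-ins: they may differ from the coefficients c1 and c2 of (36) by a normalization that is not reproduced here.

```python
import math

def direction(theta, phi):
    """Unit vector (cos θ sin φ, sin θ sin φ, cos φ) for the angles (θ, φ)."""
    return (math.cos(theta) * math.sin(phi),
            math.sin(theta) * math.sin(phi),
            math.cos(phi))

def velocity(state, theta_rate, phi_rate):
    """Right-hand side of the assumed system; state = (r1, r2, r3, θ, φ)."""
    d = direction(state[3], state[4])
    return (d[0], d[1], d[2], theta_rate, phi_rate)

def rk4_step(state, h, theta_rate, phi_rate):
    """One classical Runge-Kutta step of size h."""
    def shifted(k, scale):
        return tuple(s + scale * ki for s, ki in zip(state, k))
    k1 = velocity(state, theta_rate, phi_rate)
    k2 = velocity(shifted(k1, h / 2), theta_rate, phi_rate)
    k3 = velocity(shifted(k2, h / 2), theta_rate, phi_rate)
    k4 = velocity(shifted(k3, h), theta_rate, phi_rate)
    return tuple(s + h / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def integrate_curve(theta_rate, phi_rate, length=1.0, steps=200,
                    start=(0.0, 0.0, 0.0, 0.0, math.pi / 2)):
    """Trace one curve from the initial edge E0 (origin, θ = 0, φ = π/2)."""
    h = length / steps
    state = tuple(start)
    path = [state]
    for _ in range(steps):
        state = rk4_step(state, h, theta_rate, phi_rate)
        path.append(state)
    return path

def fan(rates, length=1.0):
    """One curve for every combination of the given angular rates."""
    return [integrate_curve(a, b, length) for a in rates for b in rates]
```

Under these assumptions, `integrate_curve(0.5, 0.0, length=2.0)` stays in the r1-r2 plane and follows an arc of a circle of radius 2, and `fan([-0.5, 0.0, 0.5])` returns nine curves leaving E0.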

Figure 13. (A) 3D relatable edges displayed on the right of the initial edge E0. Unrelatable 3D edges displayed on the left. (B) Horizontal integral curves with filled lines connect 3D relatable edges with initial point E0. Horizontal integral curves with dotted lines do not connect 3D unrelatable edges. (C) Restriction of the fan of the integral curves on the e1-e2 plane. (D) Restriction of the fan of the integral curves on the e1-e3 plane. (E) Restriction of the fan at φ = φ0. These curves (black lines) are not planar curves but helices. However, their projection (white lines) on the coplanar plane with initial edge satisfies the bidimensional constraints.

In analogy with the experiment of Field, Hayes, and Hess (Field et al., 1993), we represent non-relatable edges to the left of the starting point E0 and 3D relatable edges to its right. Filled lines of the integral curves thus indicate the correlation between the central horizontal element E0 and the elements on its right, while dotted lines connect E0 with elements not correlated with it, shown on the left part of the image.

Restricting the curves to the neighborhood of planes co-planar with an arbitrary edge E, we have different cases. First, on the r1-r2 plane (fronto-parallel) and the r1-r3 plane we have arcs of circles, as proved in Proposition 3.2. Furthermore, for an arbitrary plane in ℝ3 containing an edge E, we observe that the curves generated with fixed angle φ are helices, and locally they satisfy the bidimensional constraint in the plane. Examples can be found in Figures 13C–E. In particular, the curves displayed in Figures 13C, D are in good accordance with the curves of the Citti-Sarti model, depicted in Figure 5.
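As a worked illustration of the fixed-φ case, suppose (this is an assumption, since (36) is not restated here) that a unit-speed curve moves along the direction (cos θ sin φ, sin θ sin φ, cos φ)T with φ held at a value φ0 in (0, π) and θ growing at a constant rate a. The symbol a is hypothetical and may differ from the coefficient c1 by a normalization. Starting from the origin with θ(0) = 0, direct integration gives a circular helix with constant curvature and torsion:

```latex
\begin{aligned}
\dot r(s) &= \bigl(\cos(as)\sin\varphi_0,\ \sin(as)\sin\varphi_0,\ \cos\varphi_0\bigr)^T,\\
r(s) &= \Bigl(\tfrac{\sin\varphi_0}{a}\sin(as),\ \tfrac{\sin\varphi_0}{a}\bigl(1-\cos(as)\bigr),\ s\cos\varphi_0\Bigr)^T,\\
\kappa &= |a|\sin\varphi_0, \qquad \tau = a\cos\varphi_0 .
\end{aligned}
```

For φ0 = π/2 the torsion vanishes and the curve reduces to an arc of a circle of radius 1/|a| in the r1-r2 plane. For other values of φ0, its projection onto the r1-r2 plane is still a circular arc, of radius sin φ0/|a|.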

One final connection with the psychophysical literature concerns how depth discrimination thresholds increase exponentially with distance (Burge, 2020 and references therein). This is related to how the fan of integral curves “spreads out” with distance (Figures 11, 12), which is also exponential. These notions are developed more fully in Bolelli et al. (2023a).

Although the goal of this paper is not to solve the stereo correspondence problem, we can show how our geometry is helpful in understanding how to match left and right points and features. These ideas are developed more fully in Bolelli (2023).

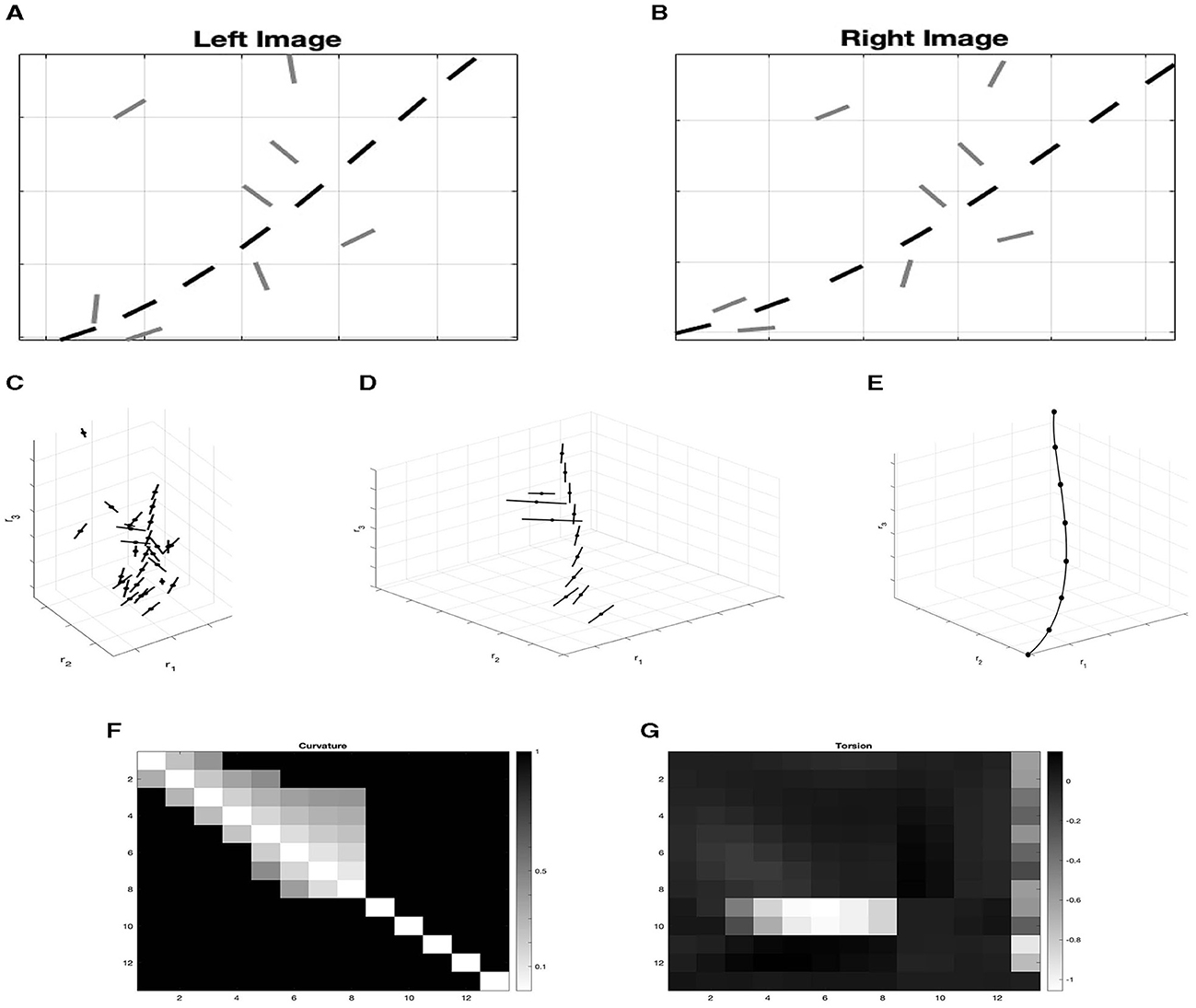

Inspired by Hess and Field (1995), we consider a path stimulus γ interpreted as a contour, embedded in a background of randomly oriented elements: left and right retinal visual stimuli are depicted in Figure 14A. We perform an initial, simplified lift of the retinal images to a set Ω ⊂ ℝ3 ⋊ 𝕊2. This set contains all the possible corresponding points, obtained by coupling left and right points which share the same y retinal coordinate, see Figure 14B. The set Ω contains false matches, namely points that do not belong to the original stimulus. It is the task of correspondence to eliminate these false matches.
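The coupling step can be sketched in a few lines of Python. The sketch below handles positions only: it pairs every left point with every right point that shares the same y retinal coordinate and records a horizontal disparity. The function name, the tolerance `tol`, and the sign convention for the disparity are assumptions made for illustration. Orientations and the reconstruction of 3D coordinates are left out.

```python
def candidate_matches(left_points, right_points, tol=1e-9):
    """Pair left and right retinal points (x, y) that share the same y coordinate.

    Returns one dictionary per candidate pair. The result contains the true
    correspondences together with false matches, which later stages must remove.
    """
    candidates = []
    for i, (xl, yl) in enumerate(left_points):
        for j, (xr, yr) in enumerate(right_points):
            if abs(yl - yr) <= tol:
                candidates.append({
                    "left": i,                # index of the left point
                    "right": j,               # index of the right point
                    "y": yl,                  # shared vertical coordinate
                    "disparity": xl - xr,     # assumed sign convention
                })
    return candidates
```

With two points on one row and a third on another row in each eye, the function returns five candidates: four on the shared row, of which at most two can be true correspondences, and one on the other row.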

Figure 14. (A) Left and right retinal images of the set Ω. Black points are the projections of the points of the curve γ, while gray points are background random noise. (B) Lifting of the two left and right retinal images of image (A) in the space of position and orientation ℝ3 × 𝕊2. (C) Selection of lifted points according to the binocular output. (D) Points of the stimulus γ connected by integral curves (36). (E, F) Matrices M whose element Mij represents the value of curvature/torsion for every pair of points ξi, ξj. The first eight points correspond to points of the curve γ while the others are random noise. (E) Curvature matrix. (F) Torsion matrix.

We compute for every lifted point the binocular output OB of Equation (17). This output can be seen as a probability measure that gives information on the correspondence of the pair of left and right points. We then simply evaluate which points have the highest probability of being in correspondence, applying a process of suppression of the non-maximal pairs over the fiber of disparity. In this way, noise points are removed (Figure 14C). We now directly exploit the Gestalt rule of good continuation by filtering out any pair of elements with high curvature. This qualitative rule could be quantitatively modeled by considering the statistics of the distribution of curvature and torsion in natural 3D images (Geisler and Perry, 2009). The remaining noise elements are orthogonal to the directions of the elements of the curve that we would like to reconstruct. Calculating numerically the coefficients c1 and c2 of the integral curves (36) that connect all the remaining pairs of points, we can obtain for every pair the value of curvature and torsion using (39).
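The suppression step can be illustrated with a minimal Python sketch. It assumes that each candidate pair already carries a `score` standing in for the binocular output OB, and it models the fiber of disparity as the set of candidates that share the same left point. Both the grouping rule and the dictionary keys are hypothetical simplifications, not the procedure used to produce Figure 14C.

```python
def suppress_non_maximal(candidates):
    """Keep, for each left point, only the candidate with the highest score.

    Each candidate is a dictionary with the keys "left", "right" and "score".
    Candidates sharing a left point are treated as one fiber of disparity.
    """
    best = {}
    for candidate in candidates:
        key = candidate["left"]
        if key not in best or candidate["score"] > best[key]["score"]:
            best[key] = candidate
    # Return the survivors ordered by left point index.
    return sorted(best.values(), key=lambda c: c["left"])
```

A symmetric variant could also group candidates by right point and keep only the pairs that survive in both directions.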

Figures 14E, F show matrices M representing the values of curvature or torsion for every pair of points ξi, ξj in the element Mij. In particular, we observe that random points are characterized by very high curvature and deviating torsion. So, by discarding these high values, we select only the three-dimensional points of the curve γ, which are well-connected by the integral curves, as shown in Figure 14D. This is in accordance with the idea developed in Alibhai and Zucker (2000); Li and Zucker (2003, 2006), where curvature and torsion provide constraints for reconstruction in 3D.
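The selection by thresholding can be sketched as follows, assuming the curvature and torsion matrices have already been computed. A point is kept when it has at least one other point to which it connects with low curvature and low absolute torsion. The function name and the two thresholds are hypothetical; the text does not specify numerical cut-offs.

```python
def select_well_connected(curvature, torsion, max_curvature, max_torsion):
    """Return indices of points that have at least one smooth connection.

    curvature[i][j] and torsion[i][j] hold the values for the integral curve
    joining points i and j. Diagonal entries are ignored.
    """
    n = len(curvature)
    kept = []
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if (curvature[i][j] <= max_curvature
                    and abs(torsion[i][j]) <= max_torsion):
                kept.append(i)
                break  # one smooth connection is enough to keep point i
    return kept
```

A stricter rule could require two smooth neighbors for interior points of a contour, which would make the selection less sensitive to an accidental alignment between a curve point and a noise point.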

In this artificial example we assumed that local edge elements have already been detected. Our goal was simply proof-of-concept. To apply this approach to realistic images, of course, stages of edge detection would have to be adopted, for which there is a huge literature well outside the scope of our theoretical study.

Understanding good continuation in depth, like good continuation for planar contours, can benefit from basic physiological constraints, from psychophysical performance measures, and from mathematical modeling. In particular, good continuation in the plane is supported by orientation selectivity and cortical architecture (orientation columns), by association field grouping performance, and by geometric modeling. We showed that the same should be true for good continuation in depth. However, while the psychophysical data may be comparable, the physiological data are weaker and the geometry of continuation is not as well-understood. In this paper, we introduced the neuro-geometry of stereo vision to fill this gap. It is strongly motivated by an analogical extension to 3D of 2D geometry, while respecting the psychophysics. In the end, it allowed us to be precise about the type of geometry that is relevant for understanding stereo abstractly, and concretely was highly informative toward the physiology. Although a “stereo columnar architecture” is not obvious from the anatomy, it is well-formed computationally.

Technically, we proposed a sub-Riemannian model on the space of position and orientation ℝ3 ⋊ 𝕊2 for the description of the perceptual space of the neural cells involved. This geometrical structure favors the tangent direction of a 3D curve stimulus. The integral curves of the sub-Riemannian structure encode the notions of curvature and torsion within their coefficients, and are introduced to describe the connections between elements. This model can be seen as an extension in the three-dimensional scene of the 2-dimensional association field. In particular, the integral curves of the sub-Riemannian structure of the 3D space of position-orientation are exactly those that locally correspond to psychophysical association fields.

Although the goal of this paper is not to solve the stereo correspondence problem, we have seen how the geometry we propose is a good starting point to understand how to match left and right points and features. We used binocular receptive fields to prioritize orientation preferences and orientation differences under the assumption that neuronal circuitry has developed to facilitate the interpolation of contours in 3D space. On the other hand, the neurogeometrical method has been coupled with probabilistic methods, for example in Sanguinetti et al. (2010) and Sarti and Citti (2015). There, the authors studied an analogous problem for the generation of perceptual units in monocular vision: they introduced stochastic differential equations, analogous to the integral curves of vector fields, and used their probability density as a kernel able to generate monocular perceptual units. In Montobbio et al. (2019), the probability kernel is built directly, starting from the receptive fields. A future development of the model will consist in adapting the technique of Sarti and Citti (2015) to find the probability of co-occurrence between two elements, and to individuate percepts in 3D space. Individuation of percepts through harmonic analysis on the sub-Riemannian structure has been proposed in the past, both for 2D spatial stimuli (Sarti and Citti, 2015) and for 2D + time spatio-temporal stimuli (Barbieri et al., 2014a). It would be interesting to develop a similar analysis and extend it to stereo vision.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. MB, GC, and AS were supported by EU Project, GHAIA, Geometric and Harmonic Analysis with Interdisciplinary Applications, H2020-MSCA-RISE-2017. SZ was supported in part by US NIH EY031059 and by US NSF CRCNS 1822598.

The current article is part of the first named author's Ph.D. thesis (Bolelli, 2023) and a preprint version is available at Bolelli et al. (2023b).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2023.1142621/full#supplementary-material

1. ^We are here being loose with language. By a tangent orientation in the left eye, we mean the orientation of a left-eye innervated column in V1.

2. ^Of course we need to take into account the difficulty of measurements of coupled position-orientation variables for small difference of angle and position. This is due to the well-known intrinsic uncertainty of measurement in the non-commutative group of position and orientation (Barbieri et al., 2012).

3. ^The crucial point is that the curves demonstrate locally quadratic (not linear) behavior.

4. ^Portions of this material were presented at Bolelli et al. (2023a).

5. ^The Jacobian matrix (JΠ)p evaluated at point p represents how to project displacement vectors (in the sense of derivatives or velocities or directions). In detail, if is the displacement vector in ℝ3, then the matrix product is another displacement vector, but in ℝ2. In other words, the Jacobian matrix is the differential of Π at every point where Π is differentiable; common notation includes JΠ or DΠ.

6. ^The purpose of introducing this notation is also to motivate an implication of the mathematical model in Citti and Sarti (2006); see Appendix B.2.1 (Supplementary material) for explanation.

7. ^As defined in Appendix A3 (Supplementary material).

8. ^Locally, a curve can be approximated by its osculating circle and, at a slightly larger scale, by the integral (parabolic) curve through the first two Taylor terms. The first approximation is co-circularity; the second is a parabolic curve. The second is an accurate model over large distances; see discussion in Section 1.3. However, since in this paper we are working over small distances and with cortical sampling (Figure 2), there is essentially no difference between them; see Figure 22.4 in Zucker (2006) and Sanguinetti et al. (2010) for a direct comparison.

9. ^See Appendix A (Supplementary material) for the definition of admissible tangent space.

10. ^Sometimes the term horizontal is preferred.

11. ^The angle φ here has been modified to be compatible with our set of coordinates. The relationship between the angle in works (Kellman et al., 2005a,b) can be expressed as : .