SYSTEMATIC REVIEW article

Front. Comput. Sci. , 27 April 2023

Sec. Human-Media Interaction

Volume 5 - 2023 | https://doi.org/10.3389/fcomp.2023.1085539

This article is part of the Research Topic New Advances and Novel Applications of Music Technologies for Health, Well-Being, and Inclusion.

The field of tactile augmentation has progressed greatly over the past 27 years and currently constitutes an emerging area of research, bridging topics ranging from neuroscience to robotics. One particular area of interest is studying the usage of tactile augmentation to provide inclusive musical experiences for deaf or hard-of-hearing individuals. This article details a scoping review that investigates and organizes tactile displays used for the augmentation of music from the field of hearing assistive devices, documented in 63 scientific publications. The focus is on the hardware, software, mapping, and evaluation of these displays, to identify established methods and techniques, as well as potential gaps in the literature. To achieve this purpose, a catalog of devices was created from the available literature indexed in the Scopus® database. We set up a list of 12 descriptors belonging to physical, auditory, perceptual, purpose and evaluation domains; each tactile display identified was categorized based on those. The frequency of use among these descriptors was analyzed, as well as the eventual relationships between them. Results indicate that the field is relatively new, with 80% of the literature indexed being published after 2009. Moreover, most of the research is conducted in laboratories, with limited industry reach. Most of the studies have low reliability due to small sample sizes, and sometimes low validity due to limited access to the targeted population (e.g., evaluating systems designed for cochlear implant users, on normal hearing individuals). When it comes to the tactile displays, the results show that the hand area is targeted by the majority of the systems, probably due to the higher sensitivity afforded by it, and that there are only a couple of popular mapping systems used by the majority of researchers.
Additional aspects of the displays were investigated, including the historical distribution of various characteristics (e.g., number of actuators, or actuators type) as well as the sonic material used as input. Finally, a discussion of the current state of the tactile augmentation of music is presented, as well as suggestions for potential future research.

This section presents the rationale and objectives that form the basis for the scoping review detailed in the current paper. Subsequently, the field of vibrotactile augmentation is introduced along with a collection of relevant definitions.

This article presents a scoping review of vibrotactile displays in the field of hearing assistive devices documented in 63 scientific publications. The main goal is to present a window into the relatively new research field of Tactile Music Augmentation—a research area that has particular applications for deaf or hard-of-hearing (D/HOH) individuals (Sorgini et al., 2018). The articles included are described in terms of hardware, software, mapping, and evaluation of the displays, to provide pointers toward common methods and techniques, as well as potential shortcomings and opportunities. While the primary motivation for the current work is to aid hearing impaired individuals, the majority of the technology analyzed is designed for average users. However, there is no evidence of a difference between the haptic sense of populations with hearing loss and those with normal hearing, as the most significant differences (if any) are typically thought to be perceptual, as demonstrated by Rouger et al. (2007). Thus, we believe that the devices intended for the general public have the potential to help D/HOH individuals just as well, and we have included them in our analysis. The Scopus®1 database was queried and the eligible articles were dissected to create Table 1.

To provide an overview of the research field of vibrotactile augmentation of sound, we sought to meet the following research objectives:

• Identify the state-of-the-art and understand how research efforts have changed over the years.

• Understand the most successful and promising strategies for augmenting music with tactile stimulation.

• Identify gaps in current research.

• Provide a starting point with a strong foundation for designers, researchers, and practitioners in the field of vibrotactile augmentation.

By reaching these goals, this review will address the following research questions: “What are the most successful applications of vibrotactile augmentation?,” “What is the historical distribution?” and “What are the most popular actuators, processing techniques, and mappings?”

More specifically, this review will summarize research findings published over 27 years, in order to learn which type of actuators, body areas, mappings, processing techniques, and evaluation practices are most common, and how these factors have evolved over the years. In addition to this, such an examination could identify potential relations between different system components (e.g., type of actuators and type of signal processing used). This would not imply that such correlations are the most successful, but it will suggest starting points for new vibrotactile augmentation applications.

This article accompanies previous reviews in the field of vibrotactile augmentation, and should be seen as complementary. A review covering a similar sample of literature was documented by Remache-Vinueza et al. (2021), who examined the methods and technologies used in the tactile rendering of music. Their work focuses on music through the touch modality in the general sense and encompasses literature covering use cases extending past vibrotactile augmentation of music; the authors thus analyze works from a different perspective than this article. Other similar work includes a review of haptic wearables (Shull and Damian, 2015), a review of wearable haptic systems for the hands (Pacchierotti et al., 2017), as well as a review of clinically validated devices that use haptic stimulation to enhance auditory perception in listeners with hearing impairment (Fletcher, 2021). In addition to these publications, the field of Musical Haptics is discussed extensively in Papetti and Saitis (2018). Any researcher or designer interested in the field of vibrotactile augmentation is strongly encouraged to study these publications as well.

Research on cutaneous augmentation has been conducted since the beginning of the twentieth century, focusing on thresholds of sensitivity by using tuning forks (Rydel and Seiffer, 1903). This primitive approach was abandoned in the follow-up work in favor of electronic transducers, and by 1935, it was known that the peak skin sensitivity is somewhere in the range of 200–250 Hz (Setzepfand, 1935). In 1954, A. Wilska mapped the threshold for 35 areas spread across the entire body (Wilska, 1954).

Music is complex and has been written and performed with respect to the hearing capabilities of humans. While vibrotactile augmentation can manipulate percepts, it is important to outline the musical dimensions that can be perceived through tactile stimulation alone, to better understand and evaluate the effect of multisensory integration. Rhythm (the temporal relationship of events in a musical context) is arguably the first aspect to be discussed, as it is fairly well transmitted through tactile channels (Jiam and Limb, 2019). Substantial research has been dedicated to understanding vibrotactile rhythm, investigating its impact on music aesthetics (Swerdfeger et al., 2009; Hove et al., 2019), and, with regard to D/HOH individuals, its enhancement properties (Gilmore and Russo, 2020; Aker et al., 2022) as well as its interaction with the auditory counterpart (Lauzon et al., 2020). When it comes to pitch, i.e., the perceived (vibrotactile) frequency, the bandwidth is very limited compared to the ear, but this does not mean that humans cannot perceive pitch differences, only that the just noticeable difference between intervals must be larger, and the range is bound within 20 Hz–1,000 Hz (Chafe, 1993). However, this has not stopped researchers from exploring the potential of vibrotactile pitch with respect to music, either in isolation (Morley and Rowe, 1990), or more commonly in context through Pitch Ranking or Melodic Contour Identification tasks (Hopkins et al., 2021). The last musical dimension that researchers focus on in terms of its vibrotactile properties is timbre, i.e., the tempo-spectral characteristics of the stimulation that translate into the perceived quality of the sound, anecdotally referred to as the color of sound or tone color. As with pitch discrimination, spectral content discrimination is inferior to its auditory counterpart, but there is evidence that humans can identify different spectral characteristics as discrete sensations (Russo, 2019).

Using tactile stimulation to augment auditory signals was first explored as hearing assistive devices focusing on improving speech perception for the D/HOH communities. The first commercial device that promised such results was called Tickle Talker and was developed in 1985 by Cochlear Pty. Ltd under the supervision of G. M. Clark—inventor of the cochlear implant (CI) (Cowan et al., 1990). The Tickle Talker was a multichannel electrotactile speech processor that presented speech as a pattern of electrical sensations on 4 fingers. The stimuli presented were processed similarly to the one for early-day CIs. Several other devices emerged in the mid-late 90s that explored the possibilities of using tactile stimulation to enhance speech for the hearing impaired; these will be discussed further in the current article.

What the early devices had in common with modern ones is the fundamental principle they rely on: multisensory integration, pioneered by B. Stein & A. Meredith (Stein et al., 1993). This mechanism links auditory and tactile sensations and describes how humans form a coherent, valid, and robust perception of reality by processing sensory stimuli from multiple modalities (Stein et al., 1993). The classical rules for multisensory integration demand that enhancement occurs only for stimuli that are temporally coincident and propose that enhancement is strongest for those stimuli that individually are least effective (Stein et al., 1993). This is especially useful for CI users, who have been shown to be better multisensory integrators (Rouger et al., 2007).

For this integration to occur, the input from various sensors must eventually converge on the same neurons. In the specific case of auditory-somatosensory stimuli, recent studies demonstrate that multisensory integration can, in fact, occur at very early stages of cognition, resulting in supra-additive integration of touch and hearing (Foxe et al., 2002; Kayser et al., 2005). This translates to a lower level of robust synergy between the two sensory apparatuses that can be exploited to synthesize experiences impossible to achieve by unisensory means. Furthermore, research on auditory-tactile interactions has shown that tactile stimulus can influence the auditory stimulus and vice versa (Ro et al., 2009; Okazaki et al., 2012, 2013; Aker et al., 2022). It can therefore be observed that auditory and haptic stimuli are capable of modifying or altering the perception of each other when presented in unison, as described by Young et al. (2016) and Aker et al. (2022), and studied extensively with respect to music experiences (Russo, 2019).

Positive results from the speech experiments, as well as advancement in transducer technology, inspired researchers to explore the benefits of vibrotactile feedback in a musical context. Most of the works fall into two categories: Musical Haptics, which focuses mainly on the augmentation of musical instruments, as presented by Papetti and Saitis (2018), and vibrotactile augmentation of music listening, generally aimed at D/HOH. The focus of this article will be on the latter. A common system architecture can be seen in Figure 1, with large variability for each step, depending on the goal. For example, in DeGuglielmo et al. (2021) a 4 actuator system is used to enhance music discrimination in a live concert scenario by creating a custom mapping scheme between the incoming signal and the frequency and amplitude of the transducers. A contrasting goal is presented by Reed et al. (2021), where phoneme identification in speech is improved by using a total of 24 actuators. These two examples have different objectives, thus the systems have different requirements, but the overall architecture of both follows the one shown in Figure 1. Throughout the article, each block will be analyzed in detail, and each system description can be seen in Table 1.

Currently, research into tactile displays is expanding from speech to various aspects of music enhancement, although the field of research is still relatively new; for example, over 80% of the articles included in this review are newer than 2009. Nevertheless, the technology is slowly coming out of research laboratories and into consumers' hands, with bands such as Coldplay offering SubPacs2 for their D/HOH concert audience.

Before presenting the objectives, it is worth introducing some general terminology and describing the interpretation used throughout this article. The first clarification is with respect to how words such as tactile, vibrotactile and haptic are used in this article:

• Haptics refer to “the sensory inputs arising from receptors in skin, muscles, tendons, and joints that are used to derive information about the properties of objects as they are manipulated,” explains (Jones, 2009). It is worth highlighting that haptic sensations involve tactile systems and proprioceptive sensory mechanisms.

• Tactile refers to the ability of the skin to sense various stimulations, such as physical changes (mechanoreceptors), temperature (thermoreceptors), or pain (nociceptors), according to Marzvanyan and Alhawaj (2019). There are six different types of mechanoreceptors in the skin, each with an individual actuation range and frequency, and together they respond to physical changes, including touch, pressure, vibration, and stretch.

• Vibrotactile refers to the stimulation presented on the skin that is produced by oscillating devices. The authors of Marzvanyan and Alhawaj (2019) present evidence that two types of mechanoreceptors respond to vibrotactile stimulation; namely, Pacinian receptors and, to a lesser extent, Meissner corpuscles. These receptors have been frequently analyzed and characterized in terms of their frequency and amplitude characteristics, but it is not excluded that there are other mechanoreceptors responsible for tactile percepts.

These definitions should provide a basic understanding of the taxonomy necessary to interpret this article; nevertheless, an individual study is recommended for a better understanding of the field of physiology and neuroscience. Furthermore, the authors recommend choosing the most descriptive terminology when discussing augmentation in order to avoid potential confusion (e.g., the use of vibrotactile augmentation instead of haptic augmentation is preferred when describing a system that involves vibrations). Finally, the term augmentation will be used to mean the process of increasing the cognitive, perceptual, or emotional value or quality of the listening experience. Throughout this article, augmentation generally involves the usage of dedicated, specialized hardware (HW) and software (SW) systems.

Tactile augmentation of music is a fairly new multidisciplinary research field; therefore, it is paramount to achieve consensus on terminology and definitions.

The methodology used to select and analyze the literature will be presented in the following section, starting with the system used to include articles and followed by an explanation of the process used to extract data from the article pool. No a priori review protocol was applied throughout the data collection method. The section ends by presenting known limitations in the methods described. The entire review process followed the PRISMA-ScR checklist and structure (Tricco et al., 2018).

The Scopus® database was queried due to the high Scientific Journal Rankings required for inclusion, as well as its significance for the topic. The inclusion selection was a 4-step process:

Step 1 included deciding upon the selection of keywords (below), designed to cover various aspects of tactile music augmentation.

1. Audio-haptic sensory substitution

2. Audio-tactile sensory substitution

3. Cochlear implant music

4. Cochlear implant (vibro)tactile

5. Cochlear implant haptic (display)

6. Electro-haptic stimulation

7. Hearing impaired music augmentation

8. Hearing impaired (vibro)tactile

9. (Vibro)tactile music

10. (Vibro)tactile display

11. (Vibro)tactile augmentation

12. (Vibro)tactile audio feedback.

As of 23.02.2022, a total of 3,555 articles were found that contain at least one of the items in the list above in their title, abstract, or keywords section. There was no discrimination between the types of documents that were included, but due to the database's inclusion criteria, PhD theses and other potentially non-peer-reviewed works are not present.
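The keyword search of Step 1 can be pictured as a single boolean query over title, abstract, and keywords. The sketch below is only an illustration of that idea and is not the authors' actual search string: the expansion of "(Vibro)tactile" into two variants, the helper names `expand_optional` and `build_query`, and the use of the Scopus `TITLE-ABS-KEY` field with quoted phrases are all assumptions.

```python
import re

# The 12 keyword phrases listed in Step 1.
KEYWORDS = [
    "Audio-haptic sensory substitution",
    "Audio-tactile sensory substitution",
    "Cochlear implant music",
    "Cochlear implant (vibro)tactile",
    "Cochlear implant haptic (display)",
    "Electro-haptic stimulation",
    "Hearing impaired music augmentation",
    "Hearing impaired (vibro)tactile",
    "(Vibro)tactile music",
    "(Vibro)tactile display",
    "(Vibro)tactile augmentation",
    "(Vibro)tactile audio feedback",
]


def expand_optional(phrase):
    """Return both readings of a phrase that has one optional '(...)' part."""
    match = re.search(r"\(([^)]*)\)", phrase)
    if match is None:
        return [phrase]
    with_part = phrase[:match.start()] + match.group(1) + phrase[match.end():]
    without_part = phrase[:match.start()] + phrase[match.end():]
    # Collapse any double or trailing spaces left by dropping the optional part.
    return [" ".join(with_part.split()), " ".join(without_part.split())]


def build_query(keywords):
    """Join all phrase variants with OR (assumed query style, for illustration)."""
    phrases = [p.lower() for k in keywords for p in expand_optional(k)]
    return " OR ".join(f'TITLE-ABS-KEY("{p}")' for p in phrases)


QUERY = build_query(KEYWORDS)
```

Whether optional parts were searched as separate phrases or with wildcards is not stated in the article, so the resulting hit count would not necessarily match the one reported above.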

Step 2 was a selection based on the title alone. Throughout steps 2–4, the eligibility criteria were as follows:

1. Written in English

2. Reporting primary research

3. Must describe devices that are, or could be used for tactile augmentation of music for D/HOH*

4. Must be designed for an audience (as opposed to a performer).

*A device that can be used for tactile augmentation of music for the hearing impaired (as item 3 describes) can be a system that was designed for laboratory studies that focused on the augmentation of speech and not music. Furthermore, a system for musical augmentation of normal hearing people could be used for D/HOH individuals since the tactile receptors do not differ depending on hearing capabilities; moreover, there is evidence that the perception of congruent tactile stimulation is elevated in CI users (Rouger et al., 2007). Since the focus is on the technological aspects of these tactile displays and not on their efficiency, it was deemed relevant to include vibrotactile systems that are designed for music augmentation for normal hearing individuals as well.

The selection process was completed with the inclusion of 144 articles for further investigation.

Step 3 represented the reading of abstracts and evaluating their relevance; 102 articles were selected according to the eligibility criteria.

Multiple articles from the same authors describing the same setups were included only once, selecting the most recent publication and excluding the older ones. This would be the case where the authors evaluated multiple hypotheses using the same HW/SW setup.

Step 4 consisted of reading the articles selected in Step 3; the same inclusion criteria were used. This step coincided with data extraction, but articles that had been wrongfully included based on the abstract alone were discarded. The entire inclusion process resulted in 63 articles that were used to construct this review.
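The four counts reported across Steps 1 to 4 form a screening funnel. The short sketch below only restates those counts and derives the share retained at each step; the stage labels and the `funnel` helper are illustrative names, not part of the original method.

```python
# Article counts reported for each step of the inclusion process.
STAGES = [
    ("Step 1: keyword search", 3555),
    ("Step 2: title screening", 144),
    ("Step 3: abstract screening", 102),
    ("Step 4: full-text reading", 63),
]


def funnel(stages):
    """Return (name, count, share kept relative to the previous step)."""
    rows = []
    previous = None
    for name, count in stages:
        kept = None if previous is None else count / previous
        rows.append((name, count, kept))
        previous = count
    return rows


for name, count, kept in funnel(STAGES):
    share = "" if kept is None else f" ({kept:.1%} of previous step)"
    print(f"{name}: {count}{share}")
```

Overall, 63 of the 3,555 initial hits (about 1.8%) reached the final pool, with the title screening in Step 2 removing by far the largest share.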

The relevant articles were studied and a selection of descriptors was noted for each system presented; as mentioned in Section 1.1, the focus is on hardware, software and evaluation practices, making the analysis an agnostic process with respect to their applications, target groups, or success. If the article discussed more than one system, all relevant ones were included and analyzed. The features used to analyze and compare the systems were:

1. Purpose of the display represents the end goal of the device, irrespective of the eventual evaluation conducted. All documented purposes for each display were included. A common purpose would be “Speech Enhancement.”

2. Listening situation refers to the context where the display has been used. A frequent situation is the “Laboratory Study.”

3. Number of actuators indicates the total number of actuators in the display, regardless of their type.

4. Actuators type enumerates the type of actuators used, from a hardware perspective. For example, eccentric rotating mass (ERM) vibration motors are one of the most widely used haptic technologies.

5. Signals used to feed the actuators represents the type of audio material used to excite the transducers. In the case of ERMs, most displays will indicate a “sine wave,” unless otherwise noted. This is due to the hardware nature of the actuator, capable of producing only sinusoidal oscillations, while the actual signal used is a DC voltage. Some ERM systems can reproduce harmonically complex signals as well, but the cases are few and far between.

6. Type of signal processing (generally called DSP) enumerates the processes applied to an audio input signal to extract relevant information or prepare it for the tactile display. Fundamental frequency (F0) extraction is a common signal processing technique used for tactile displays.

7. Mapping scheme describes the features from the auditory input that are mapped to the tactile output.

8. Area of the body actuated presents where the actuators are placed on the human body (e.g., hands and chest). Throughout this article, “hand” is used for a combination of 2 or more sub-regions (e.g., palm and fingers).

9. Whether it is a wearable device or not (binary Yes/No)

10. Evaluation measurement presents the measurement criteria assessed in the evaluation (if applicable). Most items in this descriptor column have been grouped into a meta-category; for example “vocal pitch accuracy” seen in Shin et al. (2020) and “pitch estimation accuracy” from Fletcher (2021) have been grouped into “Music Listening Performance.”

11. Evaluation population describes the hearing characteristics of the individuals participating in the evaluation (if applicable).

12. Number of participants presents the total number of participants in the evaluation described by the previous two items.

These features were chosen in order to create an objective and complete characterization of each system while allowing a high degree of comparability. Furthermore, features regarding evaluation were included to better understand the research field as a whole. A detailed explanation for some relevant categories can be found in Section 3.1. Based on these features, Table 1 was constructed that contains all the systems analyzed.
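Item 6 in the list above names fundamental frequency (F0) extraction as a common processing step. As a rough illustration of what such a step can look like, the sketch below estimates F0 with a plain autocorrelation peak search. This is one of several possible techniques and is not taken from any of the reviewed systems; the function name, the default 20 Hz to 1,000 Hz search range (borrowed from the vibrotactile range quoted earlier), and the synthetic test tone are assumptions.

```python
import numpy as np


def estimate_f0(frame, sample_rate, f_min=20.0, f_max=1000.0):
    """Estimate the fundamental frequency (Hz) of a mono frame by autocorrelation."""
    x = np.asarray(frame, dtype=float)
    x = x - x.mean()  # remove any DC offset
    # Autocorrelation for non-negative lags only.
    acf = np.correlate(x, x, mode="full")[len(x) - 1:]
    # Search only lags that correspond to the assumed frequency range.
    lag_min = max(1, int(sample_rate / f_max))
    lag_max = min(int(sample_rate / f_min), len(x) - 1)
    best_lag = lag_min + int(np.argmax(acf[lag_min:lag_max + 1]))
    return sample_rate / best_lag


# Synthetic test tone (not data from the review): 200 Hz sine, 0.1 s at 8 kHz.
SAMPLE_RATE = 8000
t = np.arange(800) / SAMPLE_RATE
tone = np.sin(2 * np.pi * 200.0 * t)
f0 = estimate_f0(tone, SAMPLE_RATE)
```

Real music signals are polyphonic and noisy, so a practical system would likely need framing, voicing detection, and a more robust estimator than this single-peak search.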

To ensure that the inclusion process was feasible, we imposed several constraints on the process, which may have influenced some aspects of the review. First, only one database was used to browse for articles, by only one author. Although querying several databases is recommended, the Scopus® database already provided a large sample of publications, and includes many if not all the relevant journal publishers (e.g., IEEE). Furthermore, using the articles indexed in an academic database results in the inevitable exclusion of artistic work that might have limited exposure but a potentially valuable contribution. Since music is inherently an artistic expression and not an academic work, this limitation could greatly impact the results of the analysis presented in this article. Similarly, articles written in languages other than English were excluded, resulting in many works not having a chance of inclusion.

This section provides a thematic analysis of the systems included in the reviewing process. The data is first presented as a table of characteristics that describes each system and its usage, and it will be succeeded by a graphical representation of the most important findings.

Due to the large variation in the hardware design and evaluation methods used in the literature presented in Table 1, some categories contain meta-descriptors that encapsulate similar features. This section will identify and clarify these situations, as well as provide additional explanations that allow readers an easier interpretation of Table 1.

The first notion worth explaining is the usage of the “N/A” acronym. Most of the time it should be understood as “Not Available” (information that is not present in the cited literature), but in some situations “Not Applicable” is the better interpretation. One example can be encountered in the “Nr. part.” category, where “N/A” generally means “not applicable” if the tactile display has not been evaluated at all. A more detailed version of the table, as well as the analysis software, can be found at Tactile Displays Review Repository.3

Second, the columns “Evaluation Population” and “Nr. Part.” are linked, and the number of participants separated by commas represents each population described in the previous category. For example, in Sharp et al. (2020), Evaluation Population contains: Normal Hearing, Hearing aid (HA) Users, CI Users, D/HOH while “Nr. Part.” contains 10, 2, 6, 2; this should be read as 10 normal hearing participants, 2 hearing aid users, 6 cochlear implant users, and 2 deaf or hard-of-hearing individuals.
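The pairing rule for these two columns can be expressed as a position-wise match between two comma-separated cells. The sketch below assumes the cells are stored as plain strings in that form; the function name and the string encoding are illustrative and may differ from the format used in the authors' repository.

```python
def pair_population_counts(populations, counts):
    """Match each evaluation population with its participant count by position."""
    pops = [p.strip() for p in populations.split(",")]
    nums = [int(n) for n in counts.split(",")]
    if len(pops) != len(nums):
        raise ValueError("the two columns do not list the same number of groups")
    return dict(zip(pops, nums))


# The Sharp et al. (2020) row quoted in the text above.
row = pair_population_counts(
    "Normal Hearing, Hearing aid (HA) Users, CI Users, D/HOH",
    "10, 2, 6, 2",
)
total_participants = sum(row.values())
```

Rows where either cell is “N/A” would need separate handling before such parsing, since they carry no numeric count.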

Third, in the “Mapping” column, several items are labeled “Complex Mapping”; this label encapsulates all the more elaborate mappings, as well as mappings that have been specially designed for the tactile display in question.

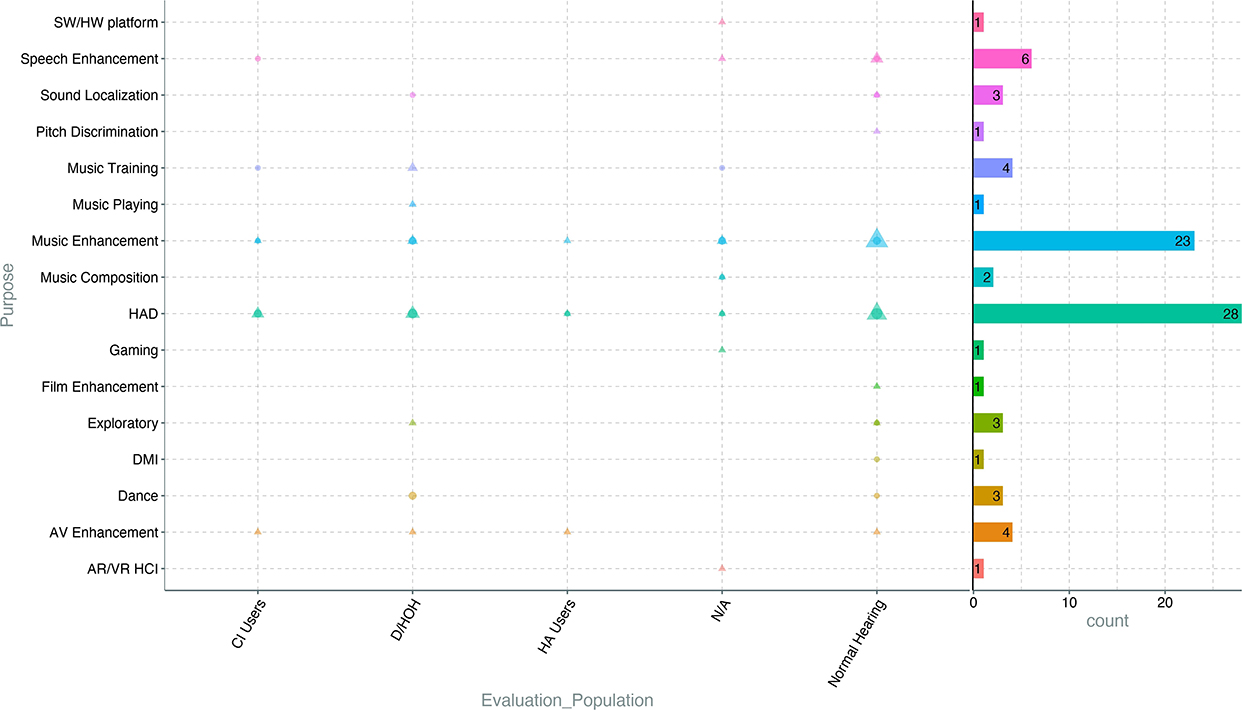

Fourth, the tactile displays analyzed usually fall under more than one category, with respect to those mentioned in Section 2.2. For example, Figure 10 shows the purpose of devices, where several tactile displays fall under HAD as a secondary purpose, on top of their main goal (e.g., Music Enhancement). This means that the analyzed items in most of the figures presented in Section 3.2 are not exclusive, resulting in a greater total number of data points than the number of displays included.

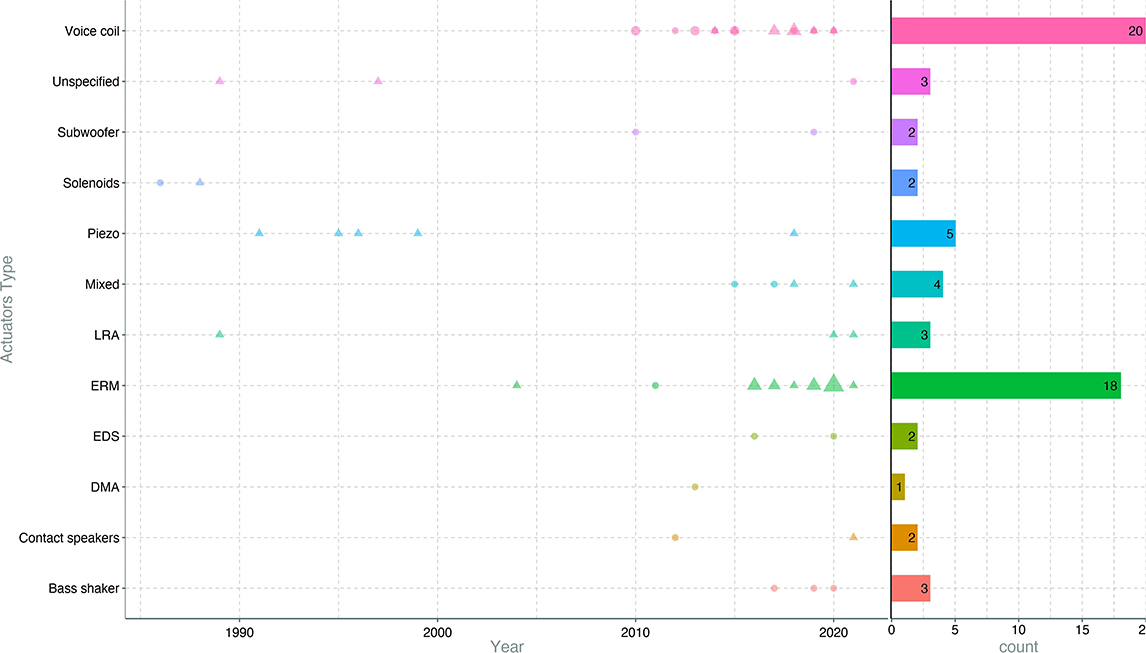

Next, a brief description of the actuator types encountered in the review and shown in Figure 4 is presented below. It is worth noting that the list ignores auxiliary systems necessary to operate these actuators (converters, amplifiers, etc.), and describes them based on their practical tactile applications:

• Voice Coil actuators get their name from the most common application: moving the paper cone in a speaker and are also known as non-commutated DC linear actuators. They consist of a permanent magnetic element (sometimes replaced by an electromagnet with the same role) and a suspended coil attached to a mobile mass. A variation in this architecture exists, where the permanent magnet is the moving mass, and the coil is static. The current from an amplifier flowing through the coil creates an electromagnetic field that interacts with the permanent magnet, moving the mass (or the paper cone) accordingly. Voice coil actuators come in various sizes and available forces, have a relatively wide frequency response (in the kHz range), and provide high acceleration.

• Subwoofers are a type of voice coil actuator optimized to reproduce sound at low frequencies, commonly below 80 Hz. Their construction and size vary radically depending on the application, but they share the properties described above. One drawback of subwoofers is that they usually require generous amplification to operate. Furthermore, they are optimized for sound reproduction, therefore their tactile characteristics are usually a byproduct of high amplitude playback.

• Solenoids are somewhat similar to voice coils, but instead of providing a permanent magnet interacting with an electromagnet, they have a coil creating a magnetic field in order to move a ferrous shaft. Solenoids are generally used to open or close locks, valves, or to apply a constant force on a surface and are not necessarily suitable for oscillating behavior, resulting in a limited frequency response.

• Piezo-actuators are mechanisms that vibrate based on the change in the shape of a piezoelectric material and belong to the category of “resonating actuators”, which have an efficient operating frequency embedded in their mechanical design. Because of that, piezo-actuators have limited frequency response (generally within 80% of the resonant frequency), but they can be designed to be tiny or in complex shapes, as opposed to the ones presented above. The tactile feedback produced by piezo-actuators is relatively modest, for a given current.

• Linear Resonant Actuators (LRA) are mass-spring systems that employ a suspended mass attached to an electromagnetic coil that vibrates in a linear fashion due to the interaction with the permanently magnetized enclosure. Being a resonant system, they need to be driven with signals close to the peak frequency response, similar to piezo-actuators and ERMs described below. Some advanced LRAs have auto-resonance systems that detect the optimal frequency for producing highest amplitude tactile feedback, trading tactile frequency accuracy for perceived intensity.

• Electro-dynamic shakers (EDS) is an industrial name given to vibration systems with excellent frequency response characteristics, generally necessary in vibration analysis and acoustics industries. They come in two categories, voice coils and electro-hydraulic shakers, but the systems described in Table 1 only use the former type. These can be seen as similar to the bass shakers described below, but with much better frequency response as well as a much higher cost.

• Eccentric Rotating Mass (ERMs) are another type of resonating actuators that operate by attaching an unbalanced mass to the shaft of a DC motor. Rotating the mass produces vibrations of different frequencies and amplitudes, typically linked to the amount of current fed to the motor. These types of actuators are very popular due to their low cost and relatively strong vibration force, but they respond slower than other resonators to a change in the current (a lag of 40–80 ms is commonly expected). Another limitation of ERMs, as well as LRAs and piezo-actuators, is that the frequency and amplitude reproduced are correlated due to their resonating design, and thus they are generally suggested when limited tactile frequency information is necessary.

• Dual-Mode Actuators (DMAs) are relatively new types of actuator that are similar to LRAs, but are designed to operate at two different frequencies simultaneously, usually out of phase with each other. Due to their novelty, the amount of variation and experimentation with them is limited, but Hwang et al. (2013) provides evidence that DMAs outperform LRAs in tactile displays in music as well as HCI applications.

• Contact speakers are a sub-category of voice coils that are primarily designed to excite hard surfaces in order to produce sound. They work by moving a suspended coil that has a shaft with a contact surface at the end. The contact surface is usually glued or screwed onto the desired surface, thus vibrating as the coil oscillates. Contact speakers vary largely in size and power requirements, but generally provide a wide frequency response. In the context of tactile stimulation, they usually have a poor low-frequency representation (below 100 Hz) and are always producing sound (sometimes very loud), due to their focus on the auditory reproduction.

• Bass shakers are another sub-category of voice coils that are grouped by marketed applications rather than physical properties. They work by having a large mass attached to the moving coil, usually in a protective enclosure. These devices are suggested to be used in an audio listening scenario (be it films or games) and should be attached to seating furniture (sofa, chairs, etc). Some vendors provide mounting hardware for drum stools or vibrating platforms designed for stage musicians that complement in-ear headphones to monitor band activity. Bass shakers are generally large, heavy, and require abundant power to operate, but provide a relatively good frequency response up to approx. 350 Hz.
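For the ERM entry in the list above, the coupling between vibration frequency and amplitude can be illustrated with the textbook model of a rotating unbalanced mass, whose force amplitude is F = m·r·ω², with ω = 2πf. The sketch below is an idealized rigid-body approximation that ignores the motor dynamics, the mounting, and the coupling to the skin; the mass and eccentricity values are hypothetical and do not describe any device from Table 1.

```python
import math


def erm_force_newtons(mass_kg, eccentricity_m, frequency_hz):
    """Peak force of an ideal rotating unbalanced mass: F = m * r * omega^2."""
    omega = 2.0 * math.pi * frequency_hz  # angular speed in rad/s
    return mass_kg * eccentricity_m * omega ** 2


# Hypothetical motor: 0.5 g eccentric mass, 1.5 mm offset from the shaft axis.
MASS_KG = 0.0005
ECCENTRICITY_M = 0.0015

force_at_100_hz = erm_force_newtons(MASS_KG, ECCENTRICITY_M, 100.0)
force_at_200_hz = erm_force_newtons(MASS_KG, ECCENTRICITY_M, 200.0)
ratio = force_at_200_hz / force_at_100_hz  # doubling the speed quadruples the force
```

In this model the force grows with the square of the rotation frequency, so the two quantities cannot be set independently, which is consistent with the limitation described for ERMs.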

Besides Table 1, plots and histograms highlighting the most interesting relationships between characteristics are discussed.

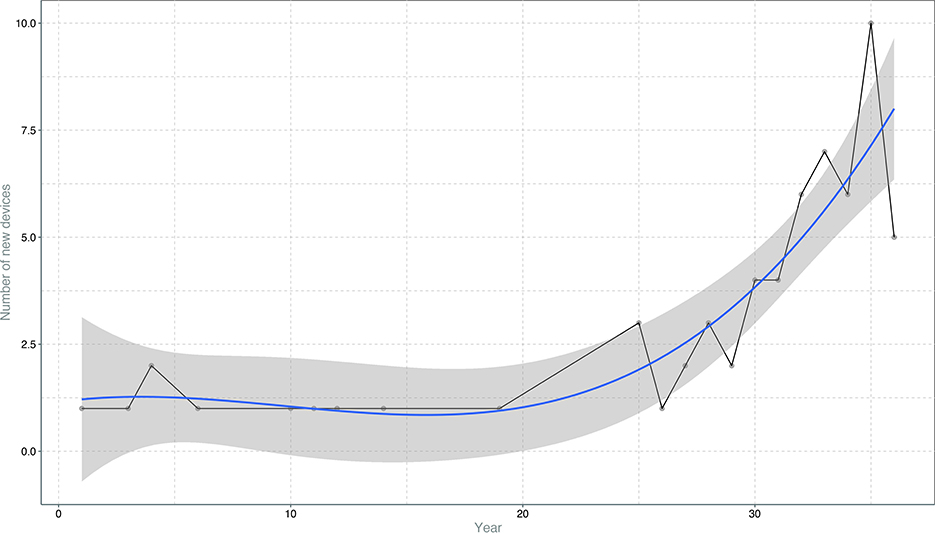

When doing the literature search for scientific publications that included the phrases described in section 2.1, we found that 84% of tactile displays have been introduced after 2010, as shown in Figure 2. This is strong evidence that interest in integrating tactile stimulation into the music-listening activities is blooming.

Figure 2. Distribution of the number of new systems described in publications every year; black line is the empirical value, the blue line is the predicted value and the grey area represents the 95% confidence interval for the estimated number of studies. The prediction model uses a cubic regression that was chosen due to the lowest AIC and BIC scores (as described by Burnham and Anderson, 2004) when compared to other models of orders 1–5.
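
The model selection mentioned in the caption (comparing polynomial regressions of orders 1–5 by AIC and BIC) can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used for the review: it assumes Gaussian errors, uses the least-squares forms AIC = n·ln(RSS/n) + 2k and BIC = n·ln(RSS/n) + k·ln(n), picks the lowest AIC with BIC breaking ties, and runs on synthetic data. All function names are hypothetical, and the x-values are assumed to be rescaled to roughly [-1, 1] (e.g., centered years) to keep the normal equations well-conditioned.

```python
import math

def polyfit(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (pure Python)."""
    n = degree + 1
    # Build the (n x n) normal-equation system A c = b.
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    # Back-substitution.
    coeffs = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(a[row][k] * coeffs[k] for k in range(row + 1, n))
        coeffs[row] = (b[row] - tail) / a[row][row]
    return coeffs  # coeffs[i] multiplies x**i

def information_criteria(xs, ys, degree):
    """Return (AIC, BIC) for a Gaussian-error polynomial model of the given degree."""
    coeffs = polyfit(xs, ys, degree)
    rss = sum((y - sum(c * x ** i for i, c in enumerate(coeffs))) ** 2
              for x, y in zip(xs, ys))
    n = len(xs)
    k = degree + 2  # polynomial coefficients plus the error variance
    log_term = n * math.log(rss / n)  # requires rss > 0, i.e. noisy data
    return log_term + 2 * k, log_term + k * math.log(n)

def best_order(xs, ys, orders=range(1, 6)):
    """Pick the polynomial order with the lowest AIC (BIC breaks ties)."""
    return min(orders, key=lambda d: information_criteria(xs, ys, d))

# Synthetic demo data (NOT from the review): a cubic trend plus small alternating noise.
xs = [-1 + 2 * i / 29 for i in range(30)]
ys = [2 * x ** 3 - x + 0.05 * (-1) ** i for i, x in enumerate(xs)]
print("selected order:", best_order(xs, ys))
```

Higher orders always reduce the residual error, so the penalty terms are what prevent the most flexible model from being selected by default.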

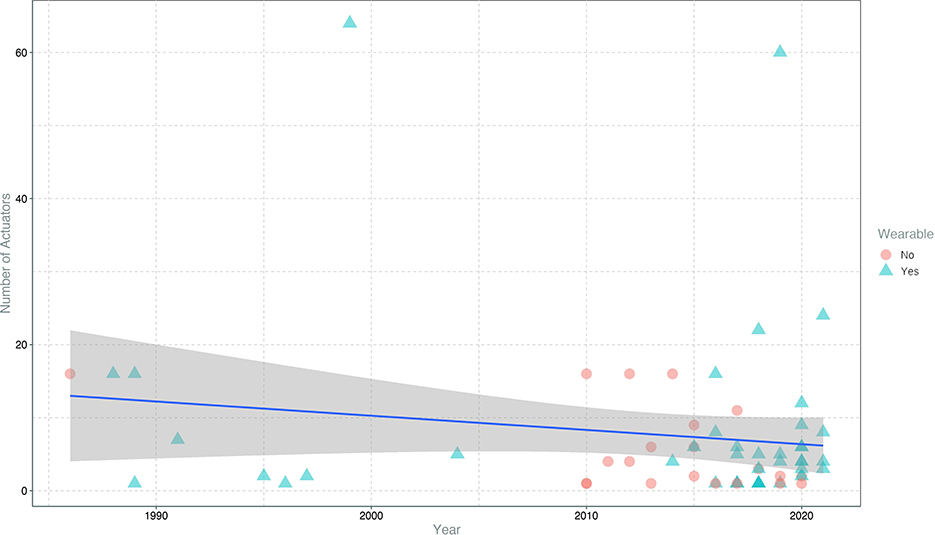

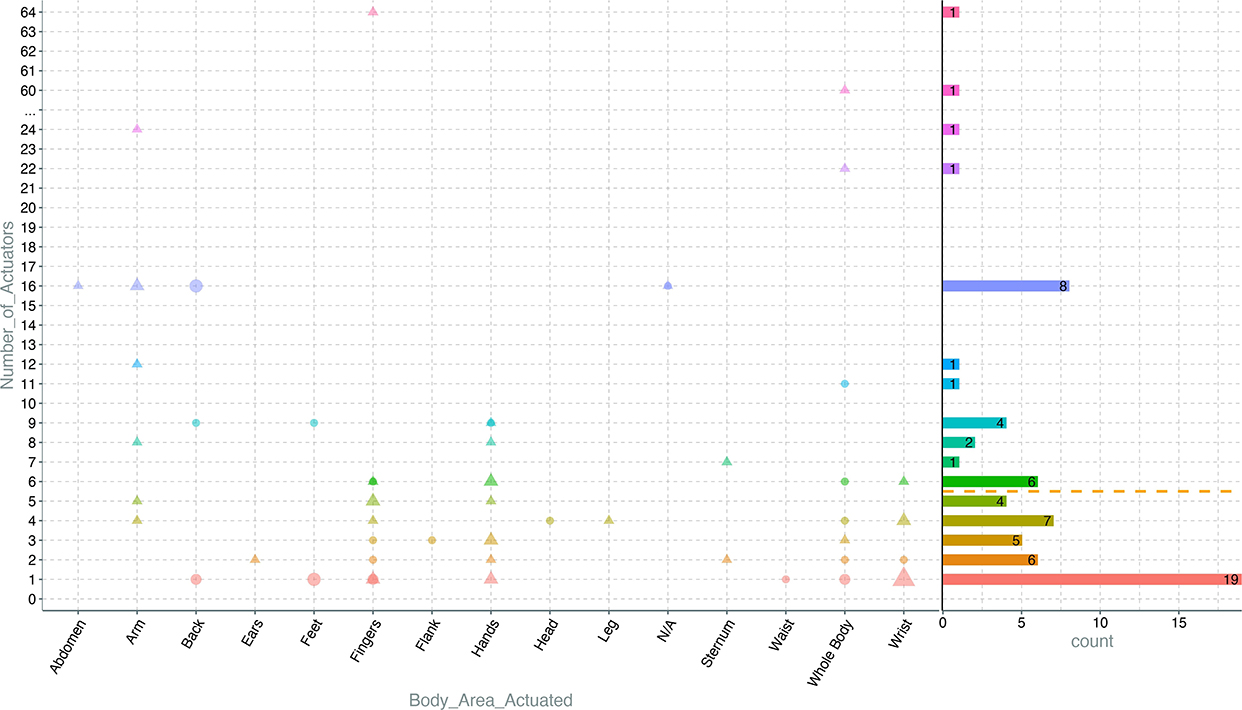

One of the main descriptors of a system is the number of actuators it uses, and it could easily be (wrongly) assumed that advances in transducer technology would encourage researchers to use more actuators in their studies. As shown in Figure 3, the average number of actuators decreases slightly over the years, and the prediction model (blue line) suggests that this number will not increase in the near future. Apart from the occasional outliers with more than 60 actuators, most systems use fewer than 20 transducers and more than half use fewer than the average of 8.

Figure 3. Distribution of the number of actuators in a device over years; dashed orange line is the mean over years, the blue line is predicted value, and the grey area represents the 95% confidence interval for the estimated number of actuators; a blue triangle indicates a wearable device, and a red circle indicates a fixed device. The prediction model uses a linear regression that was chosen due to the lowest AIC and BIC scores (as described by Burnham and Anderson, 2004) when compared to other models of orders 1–5.

Plotting the distribution of actuators over time in Figure 4 shows that voice coils have been used since the 2010s and are generally preferred for applications that require higher frequency and amplitude accuracy. The drawbacks of this type of actuator are that they are generally larger and more expensive, and that they require a more complex Digital-to-Analog Converter (DAC) to operate since they use bipolar signals. Including subwoofers, contact speakers and bass shakers in the “voice coil” category would create a cluster representing 34%, signifying their importance. Another popular choice is eccentric rotating mass (ERM) actuators, which are smaller, cheaper, and simpler to operate than voice coils, but provide a limited frequency response and couple the amplitude to the frequency. It is also interesting to observe that older systems used piezo actuators and solenoids, technologies that have a very limited application range with small amplitude or small frequency response. Lastly, it is important to highlight that one category of electro-dynamic systems is never used for tactile augmentation: electro-hydraulic shakers. These devices can provide large displacement and could be deployed to actuate very large surfaces, but have a limited frequency response, generally below 200 Hz.

Figure 4. Distribution of types of actuators used over time; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the number of similar devices.

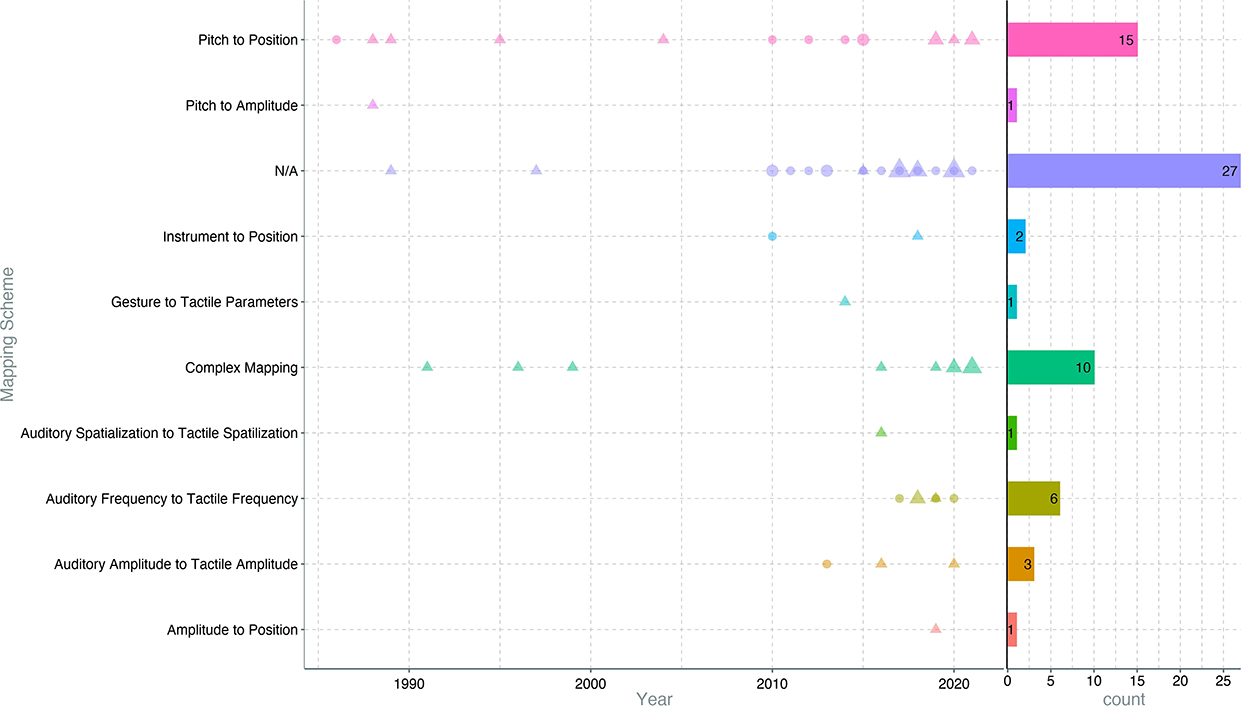

Figure 5 shows that before 1990 mapping schemes were rather simple (e.g., mapping pitch-to-position or amplitude). Tactile frequency has only recently been brought into discussion, probably because of the high computational power required for the analysis stage, but also because of recent advancements in voice coil actuators. This technical progress allowed researchers to explore tactile frequency as a method of encoding auditory frequency. The pitch-to-position mapping (the idea behind cochlear implants) has been in use the longest, probably because the creator of the CI and the Tickle Talker used the same mapping for both. This could have inspired further research to produce incremental improvements in these systems, rather than revolutionary approaches, as can be seen in the work of Karam and Fels (2008) and Nanayakkara et al. (2012). Around 2015, new mappings start to be explored, and the popularity of “Pitch-to-position” starts decreasing.
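
To make the pitch-to-position idea concrete, the sketch below assigns an input frequency to one actuator of a linear array by splitting a frequency range into logarithmically spaced bands. It is a hypothetical illustration only: the function name, the 8-actuator array and the 100–4000 Hz range are assumptions, not parameters reported by any of the reviewed systems.

```python
import math

def pitch_to_position(frequency_hz: float, n_actuators: int = 8,
                      f_min: float = 100.0, f_max: float = 4000.0) -> int:
    """Return the index (0 = lowest band) of the actuator that should vibrate."""
    # Clamp to the supported range so out-of-range pitches use the edge actuators.
    f = min(max(frequency_hz, f_min), f_max)
    # Position of f on a logarithmic axis, normalised to [0, 1].
    position = math.log(f / f_min) / math.log(f_max / f_min)
    # Scale to actuator indices; the top edge of the range maps to the last actuator.
    return min(int(position * n_actuators), n_actuators - 1)

# Example: which actuator would respond to a few (assumed) pitches.
for pitch in (110.0, 440.0, 1760.0):
    print(f"{pitch:7.1f} Hz -> actuator {pitch_to_position(pitch)}")
```

In such a scheme the vibration frequency of each actuator can stay fixed, since pitch is conveyed by where on the body the stimulation occurs rather than by how fast the actuator vibrates.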

Figure 5. Distribution of mapping schemes used over time; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the number of similar devices.

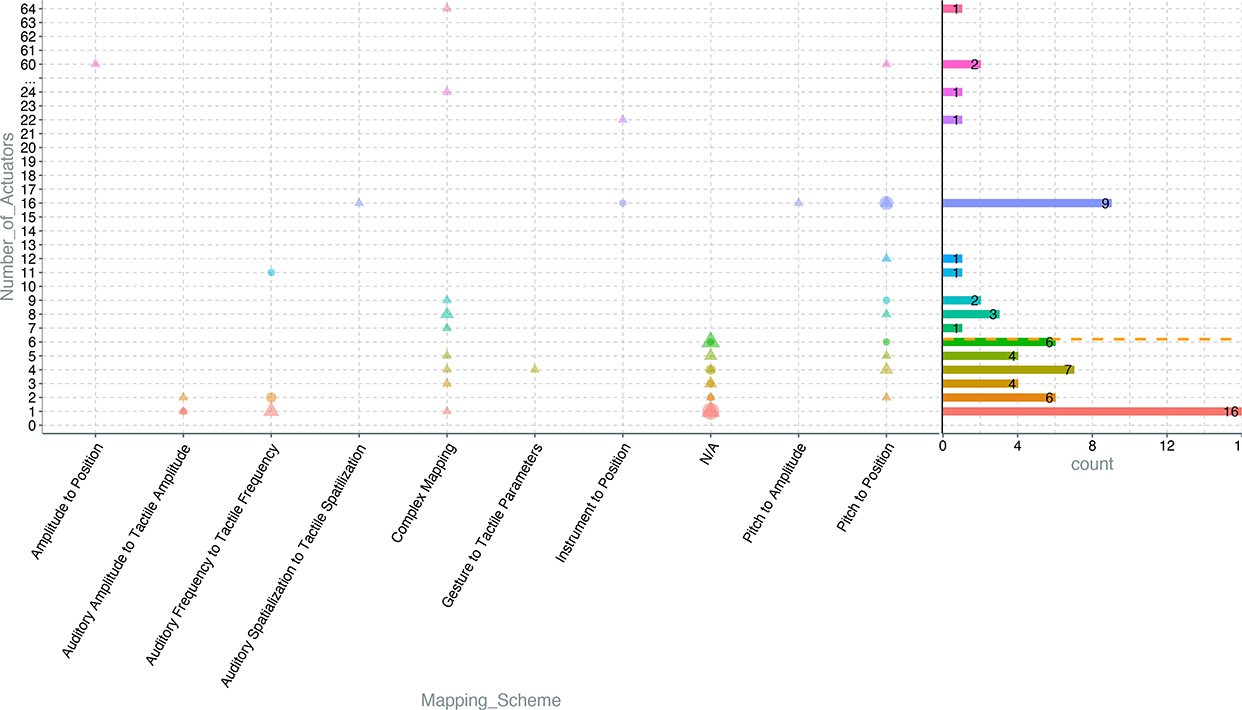

Looking at the relationship between the mapping schemes and the number of actuators in Figure 6, it is worth highlighting that only one mapping scheme utilizes tactile frequency, in combination with voice coil actuators. Furthermore, something-to-position mapping is popular, taking advantage of the larger surface area the body can afford. This hypothesis is reinforced by Figure 12, which shows that areas of high sensitivity are excited with a small number of actuators, probably constrained by the actuator size.

Figure 6. Distribution of the number of actuators for each mapping scheme; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the amount of similar devices. The dashed orange line represents the mean.

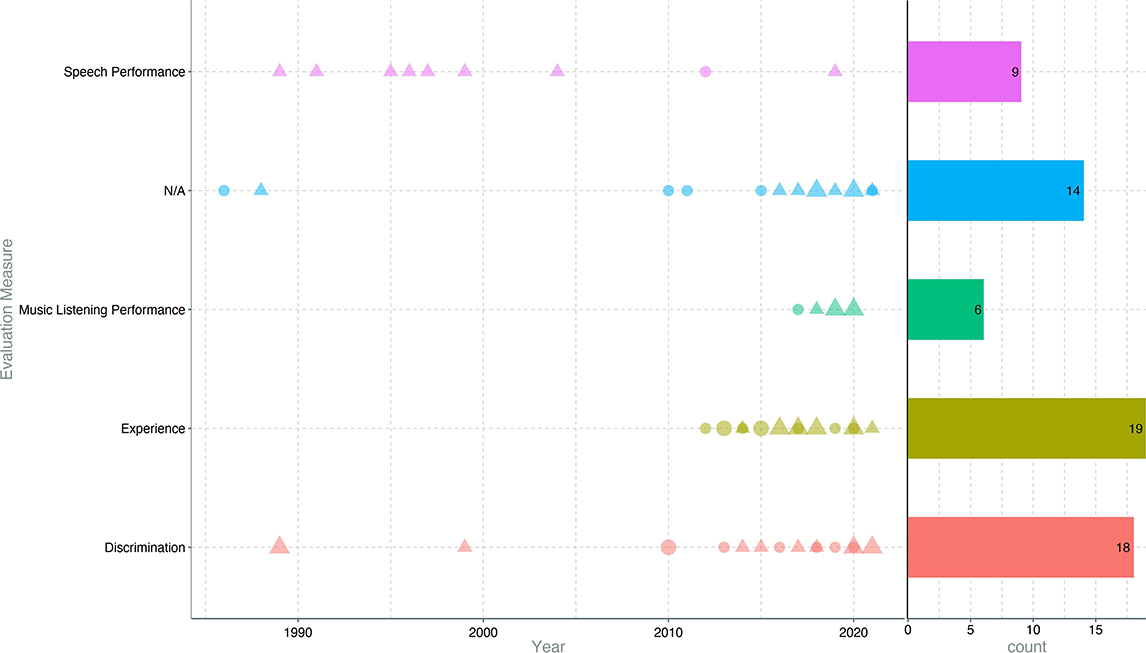

Figure 7 shows that speech research was the main focus before 2013, while music has been the topic of more investigation since. This can be explained by the advancement in hearing aid and CI technology that solved the speech intelligibility problem to a satisfactory degree. Nevertheless, the need for tactile augmentation remains when music is played for D/HOH or CI users, due to the problems that all hearing assistive devices have with multi-stream, complex signals, as well as with timbre and melody recognition and sound localization in the case of CIs. Simultaneously, subjective experiences of users have become a topic of interest in the second decade of the century, as seen in the work of Mirzaei et al. (2020) or DeGuglielmo et al. (2021), even though tactile augmentation research has been exploring that topic for more than 27 years. A further look at the plot shows a large amount of research on users' experiences between 2000 and 2010. At the same time, there is still interest in finding the limits of the physiological and cognitive systems involved in tactile perception and integration, as evidenced by the large number of studies involving discrimination tasks conducted in the past decade. Figure 8, an extended version of Figure 7, reinforces the fact that speech enhancement has been a focus since the beginning of tactile augmentation. Although interest in it has decreased in recent years, there are still researchers working on it. The second wave of tactile augmentation research started around 2010 and focused mostly on music, but new technologies and media consumption methods show interest in tactile stimulation as well (e.g., gaming and enhancement of film and AV).

Figure 7. Distribution of evaluation measurements over years; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the number of similar devices.

Figure 8. Distribution of devices' purpose over years; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the number of similar devices; items on Y axis are not exclusive.

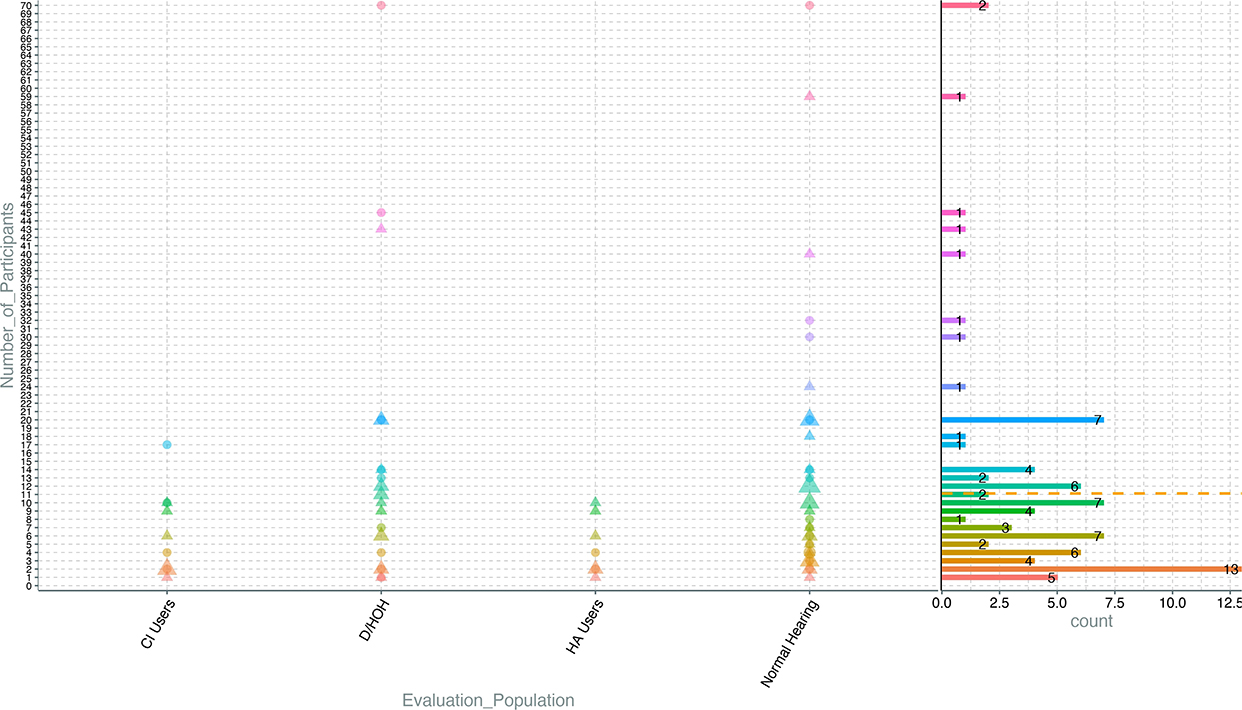

The overall number of participants is very small, with a mean of 11, resulting in generally low-reliability studies, as displayed in Figure 9. Furthermore, we can see that the studies with hearing aid and CI users are mostly below this average. This is a strong indicator that most of the research is preliminary and underlines the necessity for studies to adhere to clinical guidelines (longitudinal, more participants, better control), as well as the need for replication of prior studies. Nevertheless, CI users are mostly evaluating systems focusing on music and speech, while HA users only focus on music, as seen in Figure 10. This could be explained by the fact that the speech needs of HA users are fulfilled by the current state of the art in HA technology. However, it could be argued that tactile augmentation could be interesting for normal hearing and hearing aid users as well; this can be seen in the push for multimodal cinema experiences. Nevertheless, it cannot be overlooked that devices aimed at groups with a particular set of requirements (CI and HA users) are evaluated using a normal hearing population, sometimes exclusively. This practice, while common, might result in studies with lower validity, because the requirements for the target population are not met.
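
One way to see why small samples limit reliability is through the standard error of a sample mean, which shrinks only with the square root of the number of participants. The snippet below is a generic statistical illustration, not an analysis of the reviewed studies; the standard deviation of 1.0 and the function name are assumed for the example.

```python
import math

def standard_error(sample_sd: float, n: int) -> float:
    """Standard error of a sample mean: sd / sqrt(n)."""
    return sample_sd / math.sqrt(n)

# Hypothetical measurement with a sample standard deviation of 1.0.
for n in (11, 44, 176):
    print(f"n = {n:>3}: standard error = {standard_error(1.0, n):.3f}")
```

Halving the uncertainty of an estimate obtained with 11 participants would therefore require roughly four times as many.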

Figure 9. Distribution of the number of participants for each of the populations evaluated; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the number of similar devices. D/HOH represents persons suffering from a hearing disability, but without any hearing assistive device. The dashed orange line represents the mean.

Figure 10. Distribution of the purpose for each evaluation population; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the number of similar devices. D/HOH represents persons suffering from a hearing disability, but without any hearing assistive device; items on Y axis are not exclusive.

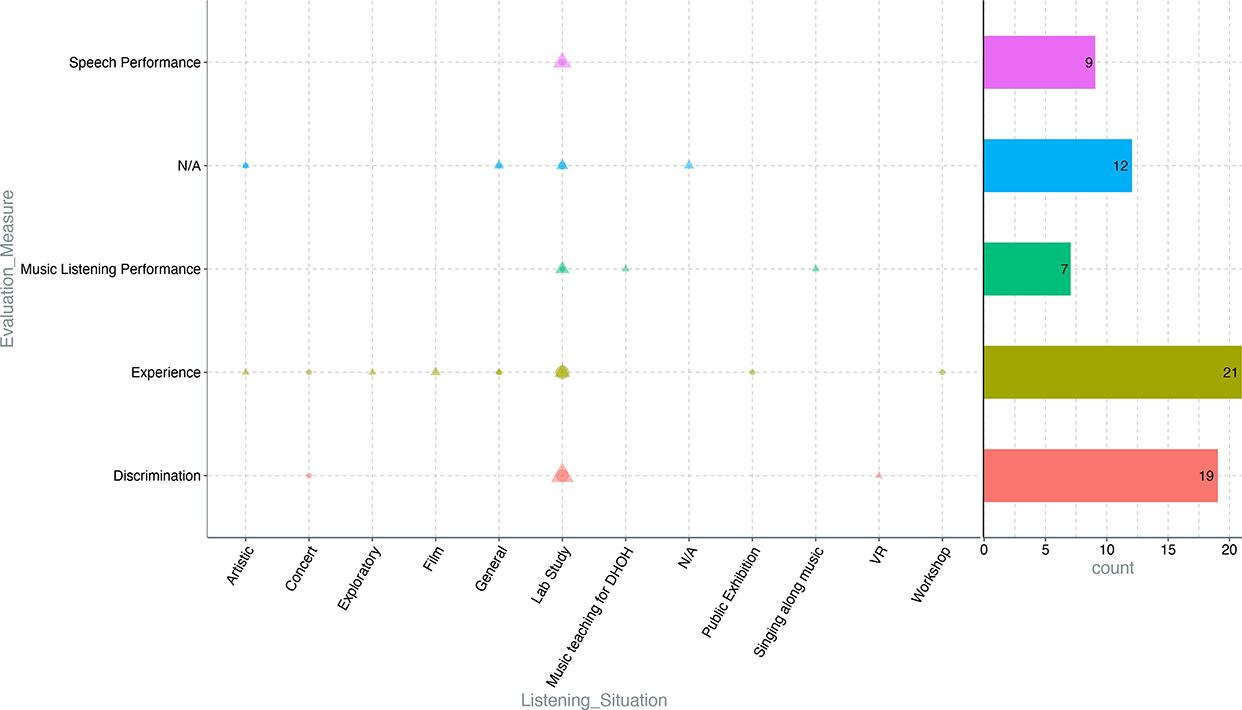

Figure 11 presents the relationship between listening situations and evaluation measures. On the one hand, the large number of discrimination studies further highlights the incipient state of the research field, which looks to outline the “playing field” by testing thresholds and just-noticeable differences. On the other hand, studies focusing on music are increasingly frequent, indicating that researchers bring forward new systems and ideas that have a more applied research angle to them. This is also supported by the large emphasis on the users' experience, which is evaluated in various listening situations, not only in research laboratories.

Figure 11. Distribution of the evaluation measure goals for each listening situation; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the number of similar devices; items on X axis are not exclusive.

Figure 12 shows that systems targeting the fingers use few actuators, usually one actuator per finger. Similarly, for the wrists, it seems that a small number of actuators is preferred, probably because of physical limitations. On the other hand, when the whole body is used, the number of actuators increases; the same is true for arms. This indicates that the size of the actuator, as well as the spatial resolution of the skin, is a constraining factor in designing complex systems, and advancements in actuator technologies will allow designers to insert more actuators aiming at the high sensitivity areas (hands, wrist, etc.). It is known that there is a positive correlation between 2-point discrimination and the contact size of the actuator, so small actuators would require a smaller space between them.

Figure 12. Distribution of number of actuators over the body area they actuate; a triangle indicates a wearable device, a circle indicates a fixed device and the size of the shape represents the number of similar devices. The dashed orange line represents the mean.

The wrist and fingers are used with mappings that require greater accuracy in terms of frequency, while mappings that rely on simpler encoding (such as amplitude and custom encoding) are generally used with a higher number of actuators, as shown in Figure 12; a potential explanation for this is that the lower physiological accuracy in certain areas is compensated for by a higher number of actuators. Going back to the number of actuators in areas that are using “auditory frequency to tactile frequency” mapping, it seems that one or two actuators are sufficient for this type of mapping.

In this scoping review, we identified 63 primary articles that describe unique vibrotactile displays used for audio augmentation, published from 1986 to 2021. Within this specific research pool, our findings highlight that most of the work in the field of vibrotactile augmentation of sound can be categorized as preliminary, missing the large-scale studies usually associated with clinical research. This conclusion is supported by the low reliability of the evaluations presented, which stems from a low number of participants, as well as the occasional low validity of said evaluations. The latter is evidenced by experiments conducted with poorly sampled individuals; for example, tactile displays designed for D/HOH are evaluated on normal hearing users. Finally, it should be underlined that much of the available literature covers research conducted under laboratory conditions and not in ecologically valid environments. This is at odds with the idea that most long-term benefits are obtained when participants use hearing assistive devices in daily life scenarios. For these reasons we see a gap in the evaluation and experimental protocols of most included studies, and we suggest that researchers start focusing on larger scale, longitudinal studies that are more akin to clinical ones when evaluating their audio augmenting tactile displays.

Looking at the hardware aspect, the majority of included studies present tactile displays designed for some regions of the hand. This is expected as hands provide the highest fine motor skills as well as very good spatio-temporal resolution, but it might not be the most practical for devices that are designed to be used extensively, especially when daily activities are to be executed while using the tactile displays. Furthermore, other areas could present different advantages in terms of duration of stimulation or intensity tolerance for use cases where the finest discrimination properties are not vital. Therefore, we see a great opportunity to branch out and encourage designers and researchers to create displays that afford similar perceptual characteristics and are to be sensed by different body regions. Furthermore, versatile designs are strongly encouraged (in terms of mode of interaction, HW/SW and mapping), in order to be adaptable to the inter-user needs; this is especially important for CI users, where the variation in hearing abilities is largest.

On a similar note, our findings indicate that researchers present a large variety of designs in terms of type of actuators, mapping, signal processing, etc., which further confirms the exploratory phase of the entire research field, characteristic of an early development stage. There seems to be a consensus on the upper limit of the number of actuators necessary for vibrotactile augmentation, although more than half of the displays identified use fewer than the average number of transducers, with the mode being 1 actuator. This could be attributed to cost reduction strategies, but since most of the devices are researched almost exclusively in laboratories and are generally far from commercialization, we are confident in suggesting that a high number of actuators might not provide substantial benefits to tactile augmentation of audio. With this in mind, we must emphasize the importance of the mapping strategies used, both in the time/frequency domain and in the psychoperceptual space, in order to design the best tactile displays for vibrotactile augmentation. Our research pool shows that almost half of the devices studied do not employ any form of mapping between the auditory signal and tactile stimulation, while the mode is pitch-to-position, a mapping scheme introduced with the very first audio-tactile augmentation device. We see great potential for exploration into creative mapping schemes that could have roots in the most commonly encountered ones, as well as radical new ideas that could be generic or case-specific (e.g., bespoke for concert or film scenarios). These new mappings should be carefully designed and evaluated primarily with respect to the target group's hearing profiles (CI, HA, etc.) as well as the signal processing used and the eventual acoustic stimulation; if possible, all these aspects should be co-created involving the end users, in order to produce a coherent multisensory experience.

This is the first scoping review focusing on the technological aspect behind the vibrotactile augmentation of music. Although mainly concerned with the hardware and software characteristics of the tactile displays described in Table 1, this article has addressed some elements of the dissemination and evaluation of the devices described. Nevertheless, the scoping nature of this review rules out a detailed description of the implementation of each study or an evaluation of the quality and effectiveness of the included tactile displays. Therefore, it is impossible to recommend specific techniques or strategies that would predict better music perception, training, or adjacent metrics for D/HOH people and CI users.

While a comprehensive search has been conducted on one of the most relevant databases, this process was carried out by a single reviewer and there was no forward-citation search on the included studies. Furthermore, there was no review of the reference list of included articles or a manual search protocol to scan relevant journals, as it was concluded that most of the articles are indexed by Scopus®. This resulted in the exclusion of any gray literature, as the process of searching for relevant unpublished material was of considerable difficulty.

The purpose of this scoping review was to investigate and report the current technological state in the field of vibrotactile augmentation, viewed from the perspective of music enhancement for hearing impaired users. A total of 3555 articles were considered for eligibility from the Scopus® database, resulting in the inclusion of 63 studies. The vibrotactile devices in each article were analyzed according to a pre-defined set of characteristics, focusing on hardware and software elements, as well as the evaluation and experiment design, regardless of the hearing profile of their users. The evidence gathered indicates that this research field is in an early phase, characterized by an exploratory approach and preliminary results. A secondary objective of this article was to identify the gaps and trends in the literature that can guide researchers and designers in their practice, and a list of suggestions and recommendations has been presented, based on graphical representations of the statistical analysis. The data and the system used to synthesize the review are publicly accessible, and we recommend that readers explore them and generate their own graphs and interpretations.

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://github.com/razvysme/TactileDisplaysReview.

RP, NN, and SS contributed to the conception and design of the study, including the selection of databases to be queried. RP browsed the database and organized the data collected, performed the statistical analysis, and wrote the first draft of the manuscript. All authors contributed to the manuscript revision, read, and approved the submitted version.

This research was funded by the NordForsk Nordic University Hub through the Nordic Sound and Music project (number 86892), as well as the Nordic Sound and Music Computing Network and Aalborg University.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aker, S. C., Innes-Brown, H., Faulkner, K. F., Vatti, M., and Marozeau, J. (2022). Effect of audio-tactile congruence on vibrotactile music enhancement. J. Acoust. Soc. Am. 152, 3396–3409. doi: 10.1121/10.0016444

Araujo, F. A., Brasil, F. L., Santos, A. C. L., Junior, L. D. S. B., Dutra, S. P. F., and Batista, C. E. C. F. (2017). Auris system: providing vibrotactile feedback for hearing impaired population. Biomed. Res. Int. 2017, 2181380. doi: 10.1155/2017/2181380

Armitage, J., and Ng, K. (2016). “Feeling sound: exploring a haptic-audio relationship,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Plymouth), Vol. 9617, 146–152.

Baijal, A., Kim, J., Branje, C., Russo, F. A., and Fels, D. (2012). “Composing vibrotactile music: a multi-sensory experience with the emoti-chair,” in Haptics Symposium 2012, HAPTICS 2012-Proceedings (Vancouver, BC), 509–515.

Bothe, H.-H., Mohamad, A., and Jensen, M. (2004). “Flipps: fingertip stimulation for improved speechreading,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Vol. 3118 (Berlin), 1152–1159.

Branje, C., Maksimowski, M., Karam, M., Fels, D., and Russo, F. A. (2010). “Vibrotactile display of music on the human back,” in 3rd International Conference on Advances in Computer-Human Interactions, ACHI 2010 (St. Maarten), 154–159.

Branje, C., Nespoli, G., Russo, F. A., and Fels, D. (2014). The effect of vibrotactile stimulation on the emotional response to horror films. Comput. Entertainment 11, 703. doi: 10.1145/2543698.2543703

Burnham, K. P., and Anderson, D. R. (2004). Multimodel inference: understanding AIC and BIC in model selection. Sociol. Methods Res. 33, 644. doi: 10.1177/0049124104268644

Chafe, C. (1993). “Tactile audio feedback,” in Proceedings of the 19th International Computer Music Conference (ICMC 1993) Tokyo.

Cieśla, K., Wolak, T., Lorens, A., Heimler, B., Skaryński, H., and Amedi, A. (2019). Immediate improvement of speech-in-noise perception through multisensory stimulation via an auditory to tactile sensory substitution. Restor. Neurol Neurosci. 37, 155–166. doi: 10.3233/RNN-190898

Cowan, R. S. C., Sarant, J. Z., Galvin, K. L., Alcantara, J. I., Blamey, P. J., and Clark, G. M. (1990). The tickle talker: a speech perception aid for profoundly hearing impaired children. Aust. J. Audiol. 9.

DeGuglielmo, N., Lobo, C., Moriarty, E. J., Ma, G., and Dow, D. E. (2021). “Haptic vibrations for hearing impaired to experience aspects of live music,” in Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST, 403 LNICST; Sugimoto.

Egloff, D., Wanderley, M., and Frissen, I. (2018). Haptic display of melodic intervals for musical applications. IEEE Haptics Sympos. 2018, 284–289. doi: 10.1109/HAPTICS.2018.8357189

Engebretson, A., and O'Connell, M. (1986). Implementation of a microprocessor-based tactile hearing prosthesis. IEEE Trans. Biomed. Eng. 33, 712–716. doi: 10.1109/TBME.1986.325762

Fletcher, M. (2021). Using haptic stimulation to enhance auditory perception in hearing-impaired listeners. Expert Rev. Med. Devices 18, 63–74. doi: 10.1080/17434440.2021.1863782

Fletcher, M., Thini, N., and Perry, S. (2020). Enhanced pitch discrimination for cochlear implant users with a new haptic neuroprosthetic. Sci. Rep. 10, 10354. doi: 10.1038/s41598-020-67140-0

Fletcher, M., and Zgheib, J. (2020). Haptic sound-localisation for use in cochlear implant and hearing-aid users. Sci. Rep. 10, 14171. doi: 10.1038/s41598-020-70379-2

Florian, H., Mocanu, A., Vlasin, C., Machado, J., Carvalho, V., Soares, F., et al. (2017). Deaf people feeling music rhythm by using a sensing and actuating device. Sens. Actuators A Phys. 267, 431–442. doi: 10.1016/j.sna.2017.10.034

Foxe, J., Wylie, G., Martinez, A., Schroeder, C., Javitt, D., Guilfoyle, D., et al. (2002). Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J. Neurophysiol. 88, 540–543. doi: 10.1152/jn.2002.88.1.540

Frid, E., and Lindetorp, H. (2020). “Haptic music-exploring whole-body vibrations and tactile sound for a multisensory music installation,” in Proceedings of the Sound and Music Computing Conferences 2020-June (Turin), 68–75.

Gilmore, S. A., and Russo, F. A. (2020). Neural and behavioral evidence for vibrotactile beat perception and bimodal enhancement. J. Cogn. Neurosci. 33, 1673. doi: 10.1162/jocn_a_01673

Giulia, C., Chiara, D., and Esmailbeigi, H. (2019). “Glos: Glove for speech recognition,” in Conference proceedings : ... Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Annual Conference, 2019 (Berlin: IEEE), 3319–3322.

Hashizume, S., Sakamoto, S., Suzuki, K., and Ochiai, Y. (2018). “Livejacket: wearable music experience device with multiple speakers,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Vol. 10921 LNCS (Frankfurt), 359–371.

Hayes, L. (2015). “Skin music, An audio-haptic composition for ears and body,” in C and C 2015-Proceedings of the 2015 ACM SIGCHI Conference on Creativity and Cognition (Glasgow), 359–360.

Haynes, A., Lawry, J., Kent, C., and Rossiter, J. (2021). Feelmusic: enriching our emotive experience of music through audio-tactile mappings. Multimodal Technol. Interact. 5, 29. doi: 10.3390/mti5060029

Hopkins, C., Maté-Cid, S., Fulford, R., Seiffert, G., and Ginsborg, J. (2016). Vibrotactile presentation of musical notes to the glabrous skin for adults with normal hearing or a hearing impairment: thresholds, dynamic range and high-frequency perception. PLoS ONE 11, e0155807. doi: 10.1371/journal.pone.0155807

Hopkins, C., Maté-Cid, S., Fulford, R., Seiffert, G., and Ginsborg, J. (2021). Perception and learning of relative pitch by musicians using the vibrotactile mode. Musicae Scientiae 27, 3–26. doi: 10.1177/10298649211015278

Hove, M., Martinez, S., and Stupacher, J. (2019). Feel the bass: music presented to tactile and auditory modalities increases aesthetic appreciation and body movement. J. Exp. Psychol. Gen. 149, 1137–1147. doi: 10.1037/xge0000708

Huang, J., Lu, T., Sheffield, B., and Zeng, F.-G. (2018). Electro-tactile stimulation enhances cochlear-implant melody recognition: effects of rhythm and musical training. Ear. Hear. 41, 106–113. doi: 10.1097/AUD.0000000000000749

Huang, J., Sheffield, B., Lin, P., and Zeng, F.-G. (2017). Electro-tactile stimulation enhances cochlear implant speech recognition in noise. Sci. Rep. 7, 2196. doi: 10.1038/s41598-017-02429-1

Hwang, I., Lee, H., and Choi, S. (2013). Real-time dual-band haptic music player for mobile devices. IEEE Trans. Haptics 6, 340–351. doi: 10.1109/TOH.2013.7

Jack, R., Mcpherson, A., and Stockman, T. (2015). “Designing tactile musical devices with and for deaf users: a case study,” in Proceedings of the International Conference on the Multimedia Experience of Music; Treviso.

Jiam, N. T., and Limb, C. J. (2019). Rhythm processing in cochlear implant–mediated music perception. Ann. N. Y. Acad. Sci. 1453, 22–28. doi: 10.1111/nyas.14130

Kanebako, J., and Minamizawa, K. (2018). Vibgrip++: haptic device allows feeling the music for hearing impaired people. Lecture Notes Electr. Eng. 432, 449–452. doi: 10.1007/978-981-10-4157-0_75

Karam, M., Branje, C., Nespoli, G., Thompson, N., Russo, F., and Fels, D. (2010). “The emoti-chair: an interactive tactile music exhibit,” in Conference on Human Factors in Computing Systems-Proceedings (Atlanta, GA), 3069–3074.

Karam, M., and Fels, D. (2008). “Designing a model human cochlea: issues and challenges in crossmodal audio-haptic displays,” in Proceedings of the 2008 Ambi-Sys Workshop on Haptic User Interfaces in Ambient Media Systems, HAS 2008; Brussels.

Kayser, C., Petkov, C., Augath, M., and Logothetis, N. (2005). Integration of touch and sound in auditory cortex. Neuron 48, 373–384. doi: 10.1016/j.neuron.2005.09.018

Knutzen, H., Kvifte, T., and Wanderley, M. M. (2014). “Vibrotactile feedback for an open air music controller,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Marseille), Vol. 8905.

Lauzon, A. P., Russo, F. A., and Harris, L. R. (2020). The influence of rhythm on detection of auditory and vibrotactile asynchrony. Exp. Brain Res. 238, 825–832. doi: 10.1007/s00221-019-05720-x

Lofelt (2017). Basslet. Available online at: https://eu.lofelt.com/ (accessed on June 30, 2018).

Lucía, M., Revuelta, P., García, Á., Ruiz, B., Vergaz, R., Cerdán, V., et al. (2020). Vibrotactile captioning of musical effects in audio-visual media as an alternative for deaf and hard of hearing people: an EEG study. IEEE Access 8, 190873–190881. doi: 10.1109/ACCESS.2020.3032229

Luo, X., and Hayes, L. (2019). Vibrotactile stimulation based on the fundamental frequency can improve melodic contour identification of normal-hearing listeners with a 4-channel cochlear implant simulation. Front. Neurosci. 13, 1145. doi: 10.3389/fnins.2019.01145

Marzvanyan, A., and Alhawaj, A. F. (2019). Physiology, Sensory Receptors. Tampa, FL: StatPearls Publishing LLC.

Mazzoni, A., and Bryan-Kinns, N. (2016). Mood glove: a haptic wearable prototype system to enhance mood music in film. Entertain Comput. 17, 9–17. doi: 10.1016/j.entcom.2016.06.002

Milnes, P., Stevens, J., Brown, B., Summers, I., and Cooper, P. (1996). “Use of micro-controller in a tactile aid for the hearing impaired,” in Annual International Conference of the IEEE Engineering in Medicine and Biology-Proceedings (Amsterdam: IEEE), 413–414.

Mirzaei, M., Kán, P., and Kaufmann, H. (2020). Earvr: using ear haptics in virtual reality for deaf and hard-of-hearing people. IEEE Trans. Vis. Comput. Graph. 26, 2084–2093. doi: 10.1109/TVCG.2020.2973441

Miyamoto, R., Robbins, A., Osberger, M., Todd, S., Riley, A., and Kirk, K. (1995). Comparison of multichannel tactile aids and multichannel cochlear implants in children with profound hearing impairments. Am. J. Otol. 16, 8–13.

Morley, J. W., and Rowe, M. J. (1990). Perceived pitch of vibrotactile stimuli: effects of vibration amplitude, and implications for vibration frequency coding. J. Physiol. 431, 8336. doi: 10.1113/jphysiol.1990.sp018336

Nakada, K., Onishi, J., and Sakajiri, M. (2018). “An interactive musical scale presentation system via tactile sense through haptic actuator,” in HAVE 2018—IEEE International Symposium on Haptic, Audio-Visual Environments and Games, Proceedings (Dalian: IEEE), 13–16.

Nanayakkara, S., Wyse, L., Ong, S., and Taylor, E. (2013). Enhancing musical experience for the hearing-impaired using visual and haptic displays. Human Comput. Interact. 28, 115–160. doi: 10.1080/07370024.2012.697006

Nanayakkara, S., Wyse, L., and Taylor, E. (2012). “The haptic chair as a speech training aid for the deaf,” in Proceedings of the 24th Australian Computer-Human Interaction Conference, OzCHI 2012 (Melbourne, VIC), 405–410.

Okazaki, R., Hachisu, T., Sato, M., Fukushima, S., Hayward, V., and Kajimoto, H. (2013). Judged consonance of tactile and auditory frequencies. World Haptics Conf. 2013, 663–666. doi: 10.1109/WHC.2013.6548487

Okazaki, R., Kajimoto, H., and Hayward, V. (2012). “Vibrotactile stimulation can affect auditory loudness: a pilot study,” in International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (Kyoto), 103–108.

Osberger, M., Robbins, A., Todd, S., and Brown, C. (1991). Initial findings with a wearable multichannel vibrotactile aid. Am. J. Otol. 12, 179–182.

Ozioko, O., Taube, W., Hersh, M., and Dahiya, R. (2017). “Smartfingerbraille: a tactile sensing and actuation based communication glove for deafblind people,” in IEEE International Symposium on Industrial Electronics (Edinburgh: IEEE), 2014–2018.

Pacchierotti, C., Sinclair, S., Solazzi, M., Frisoli, A., Hayward, V., and Prattichizzo, D. (2017). Wearable haptic systems for the fingertip and the hand: taxonomy, review, and perspectives. IEEE Trans. Haptics 10, 9006. doi: 10.1109/TOH.2017.2689006

Pavlidou, A., and Lo, B. (2021). “Artificial ear-a wearable device for the hearing impaired,” in 2021 IEEE 17th International Conference on Wearable and Implantable Body Sensor Networks, BSN 2021, 2021-January (Athens: IEEE).

Petry, B., Illandara, T., Elvitigala, D., and Nanayakkara, S. (2018). “Supporting rhythm activities of deaf children using music-sensory-substitution systems,” in Conference on Human Factors in Computing Systems-Proceedings, 2018-April; Montreal, QC.

Petry, B., Illandara, T., and Nanayakkara, S. (2016). “Muss-bits: sensor-display blocks for deaf people to explore musical sounds,” in Proceedings of the 28th Australian Computer-Human Interaction Conference, OzCHI 2016 (Launceston, TAS), 72–80.

Pezent, E., Cambio, B., and O'Malley, M. (2021). Syntacts: open-source software and hardware for audio-controlled haptics. IEEE Trans. Haptics 14, 225–233. doi: 10.1109/TOH.2020.3002696

Pezent, E., O'Malley, M., Israr, A., Samad, M., Robinson, S., Agarwal, P., et al. (2020). “Explorations of wrist haptic feedback for AR/VR interactions with Tasbi,” in Conference on Human Factors in Computing Systems-Proceedings; Hawaii.

Reed, C. M., Tan, H. Z., Jiao, Y., Perez, Z. D., and Wilson, E. C. (2021). Identification of words and phrases through a phonemic-based haptic display. ACM Trans. Appl Percept. 18, 8725. doi: 10.1145/3458725

Remache-Vinueza, B., Trujillo-León, A., Zapata, M., Sarmiento-Ortiz, F., and Vidal-Verdú, F. (2021). Audio-tactile rendering: a review on technology and methods to convey musical information through the sense of touch. Sensors 21, 6575. doi: 10.3390/s21196575

Ro, T., Hsu, J., Yasar, N., Elmore, C., and Beauchamp, M. (2009). Sound enhances touch perception. Exp. Brain Res. 195, 135–143. doi: 10.1007/s00221-009-1759-8

Rouger, J., Lagleyre, S., Fraysse, B., Deneve, S., Deguine, O., and Barone, P. (2007). Evidence that cochlear-implanted deaf patients are better multisensory integrators. Proc. Natl. Acad. Sci. U.S.A. 104, 7295–7300. doi: 10.1073/pnas.0609419104

Russo, F. A. (2019). “Multisensory processing in music,” in The Oxford Handbook of Music and the Brain; Oxford.

Rydel, A., and Seiffer, W. (1903). Untersuchungen über das vibrationsgefühl oder die sog. knochensensibilität (pallästhesie). Archiv für Psychiatrie und Nervenkrankheiten 37, 8367. doi: 10.1007/BF02228367

Sakuragi, R., Ikeno, S., Okazaki, R., and Kajimoto, H. (2015). “Collarbeat: whole body vibrotactile presentation via the collarbone to enrich music listening experience,” in International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, ICAT-EGVE 2015 (Kyoto), 141–146.

Setzepfand, W. (1935). Zur frequenzabhängigkeit der vibrationsempfindung des menschen. Z. Biol. 96, 236–240.

Sharp, A., Bacon, B., and Champoux, F. (2020). Enhanced tactile identification of musical emotion in the deaf. Exp. Brain Res. 238, 1229–1236. doi: 10.1007/s00221-020-05789-9

Shin, S., Oh, C., and Shin, H. (2020). “Tactile tone system: a wearable device to assist accuracy of vocal pitch in cochlear implant users,” in ASSETS 2020-22nd International ACM SIGACCESS Conference on Computers and Accessibility; New York, NY.

Shull, P. B., and Damian, D. D. (2015). Haptic wearables as sensory replacement, sensory augmentation and trainer-a review. J. Neuroeng. Rehabil. 12, 59. doi: 10.1186/s12984-015-0055-z

Sorgini, F., Caliò, R., Carrozza, M. C., and Oddo, C. M. (2018). Haptic-assistive technologies for audition and vision sensory disabilities. Disabil. Rehabil. Assist. Technol. 13, 394–421. doi: 10.1080/17483107.2017.1385100

Spens, K.-E., Huss, C., Dahlqvist, M., and Agelfors, E. (1997). A hand held two-channel vibro-tactile speech communication aid for the deaf: characteristics and results. Scand. Audiol. Suppl. 26, 7–13.

Stein, B., Stein, P., and Meredith, M. (1993). The Merging of the Senses. A Bradford Book. Cambridge, MA: MIT Press.

Swerdfeger, B. A., Fernquist, J., Hazelton, T. W., and MacLean, K. E. (2009). “Exploring melodic variance in rhythmic haptic stimulus design,” in Proceedings-Graphics Interface; Kelowna, BC.

Tessendorf, B., Roggen, D., Spuhler, M., Stiefmeier, T., Tröster, G., Grämer, T., et al. (2011). “Design of a bilateral vibrotactile feedback system for lateralization,” in ASSETS'11: Proceedings of the 13th International ACM SIGACCESS Conference on Computers and Accessibility (Dundee), 233–234.

Tranchant, P., Shiell, M., Giordano, M., Nadeau, A., Peretz, I., and Zatorre, R. (2017). Feeling the beat: Bouncing synchronization to vibrotactile music in hearing and early deaf people. Front. Neurosci. 11, 507. doi: 10.3389/fnins.2017.00507

Tricco, A. C., Lillie, E., Zarin, W., O'Brien, K. K., Colquhoun, H., Levac, D., et al. (2018). PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann. Internal Med. 169, 467–473. doi: 10.7326/M18-0850

Trivedi, U., Alqasemi, R., and Dubey, R. (2019). “Wearable musical haptic sleeves for people with hearing impairment,” in ACM International Conference Proceeding Series (New York, NY), 146–151.

Turchet, L., West, T., and Wanderley, M. M. (2020). Touching the audience: musical haptic wearables for augmented and participatory live music performances. Pers. Ubiquitous Comput. 25, 749–769. doi: 10.1007/s00779-020-01395-2

Vallgårda, A., Boer, L., and Cahill, B. (2017). The hedonic haptic player. Int. J. Design 11, 17–33.

Wada, C., Shoji, H., and Ifukube, T. (1999). Development and evaluation of a tactile display for a tactile vocoder. Technol. Disabil. 11, 151–159. doi: 10.3233/TAD-1999-11305

Weisenberger, J., and Broadstone, S. (1989). Evaluation of two multichannel tactile aids for the hearing impaired. J. Acoust. Soc. Am. 86, 1764–1775. doi: 10.1121/1.398608

West, T., Bachmayer, A., Bhagwati, S., Berzowska, J., and Wanderley, M. (2019). “The design of the body:suit:score, a full-body vibrotactile musical score,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Orlando, FL), Vol. 11570 LNCS, 70–89.

Wilska, A. (1954). On the vibrational sensitivity in different regions of the body surface. Acta Physiol. Scand. 31, 284–289. doi: 10.1111/j.1748-1716.1954.tb01139.x

Yeung, E., Boothroyd, A., and Redmond, C. (1988). A wearable multichannel tactile display of voice fundamental frequency. Ear Hear. 9, 342–350. doi: 10.1097/00003446-198812000-00011

Young, G., Murphy, D., and Weeter, J. (2013). “Audio-tactile glove,” in DAFx 2013-16th International Conference on Digital Audio Effects; Maynooth.

Young, G., Murphy, D., and Weeter, J. (2015). “Vibrotactile discrimination of pure and complex waveforms,” in Proceedings of the 12th International Conference in Sound and Music Computing, SMC 2015 (Maynooth), 359–362.

Keywords: vibrotactile displays, vibrotactile music, hearing assistive devices, cochlear implant music, multisensory augmentation

Citation: Paisa R, Nilsson NC and Serafin S (2023) Tactile displays for auditory augmentation–A scoping review and reflections on music applications for hearing impaired users. Front. Comput. Sci. 5:1085539. doi: 10.3389/fcomp.2023.1085539

Received: 01 November 2022; Accepted: 23 March 2023; Published: 27 April 2023.

Edited by:

Kjetil Falkenberg, Royal Institute of Technology, Sweden

Reviewed by:

Rumi Hiraga, National University Corporation Tsukuba University of Technology, Japan

Copyright © 2023 Paisa, Nilsson and Serafin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Razvan Paisa, rpa@create.aau.dk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
