PERSPECTIVE article

Front. Comput. Sci., 09 November 2022

Sec. Human-Media Interaction

Volume 4 - 2022 | https://doi.org/10.3389/fcomp.2022.991180

This article is part of the Research Topic: Perspectives in Human-Media Interaction 2022

Deaf and hearing people can encounter challenges when communicating with one another in everyday situations. Although problems in verbal communication are often seen as the main cause, such challenges may also result from sensory differences between deaf and hearing people and their impact on individual understandings of the world. That is, these challenges arise from a sensory gap. Proposals for innovative communication technologies to address this have been met with criticism by the deaf community. They are mostly designed to enhance deaf people's understanding of the verbal cues that hearing people rely on, but omit many critical sensory signals that deaf people rely on to understand (others in) their environment and to which hearing people are not tuned. In this perspective paper, sensory augmentation, i.e., technologically extending people's sensory capabilities, is put forward as a way to bridge this sensory gap: (1) by tuning to the signals that deaf people rely on more strongly but that are commonly missed by hearing people, and vice versa, and (2) by sensory augmentations that enable deaf and hearing people to sense signals that neither person is normally able to sense. Usability and user-acceptance challenges, however, lie ahead of realizing the alleged potential of sensory augmentation for bridging the sensory gap between deaf and hearing people. Addressing these requires a novel approach to how such technologies are designed. We contend this requires a situated design approach.

In 2021 the World Health Organization estimated that “466 million people worldwide – 5.5% of the population – have disabling hearing loss, and this number is expected to rise to 1 in 4 by 2050”1. This may have broad social (World Health Organization, 2019), cultural (Friedner and Kusters, 2020), and economic consequences (Rodenburg, 2016), such as exclusion, stigmatization, and unequal opportunities.

However, these consequences are not directly produced by deafness or hearing loss; rather, they (partially) result from the limited interaction between hearing people and deaf2 people. Deaf people cannot hear what others are saying, and most hearing people are not able to communicate using sign language. This can hinder the (spontaneous) interchange of verbal expressions between them, resulting in barriers between deaf and hearing communities (Friedner and Kusters, 2020). However, such misunderstandings between hearing and deaf people result not only from problems in their verbal communication but also from the differences in the way deaf and hearing people perceive the world around them (Hummels and Van Dijk, 2015): there exists a sensory gap between them. Language translation applications are often presented as a solution to the verbal misunderstandings between deaf and hearing people, but technologies that address sensory gaps are scarce. In this paper, we offer a perspective on how technology can be designed to bridge this sensory gap.

According to the concept of the Umwelt (Von Uexküll, 2013), individuals build up a personal understanding of the world based on sensory interaction with the world around them. Hence, sensory variations between individuals may lead to differences in sense-making of the world (Thompson and Stapleton, 2009). Causes of such sensory variations can range from the natural shapes of ears and curvature of the eyes to trauma resulting in a severed cochlear nerve. In humans, most sensory variations do not hinder interaction between them since spontaneously generated cues by individuals sufficiently support mutual understanding (Trujillo and Holler, 2021). However, when those cues are lacking or differ too much due to larger sensory differences between people, such as between deaf and hearing people (for example, when visual cues from a hearing person are not sufficient to compensate for the lack of hearing), the sensory differences and their impact on their personal understanding of the world may significantly hinder the sharing of meanings (Hummels and Van Dijk, 2015). We call this a sensory gap.

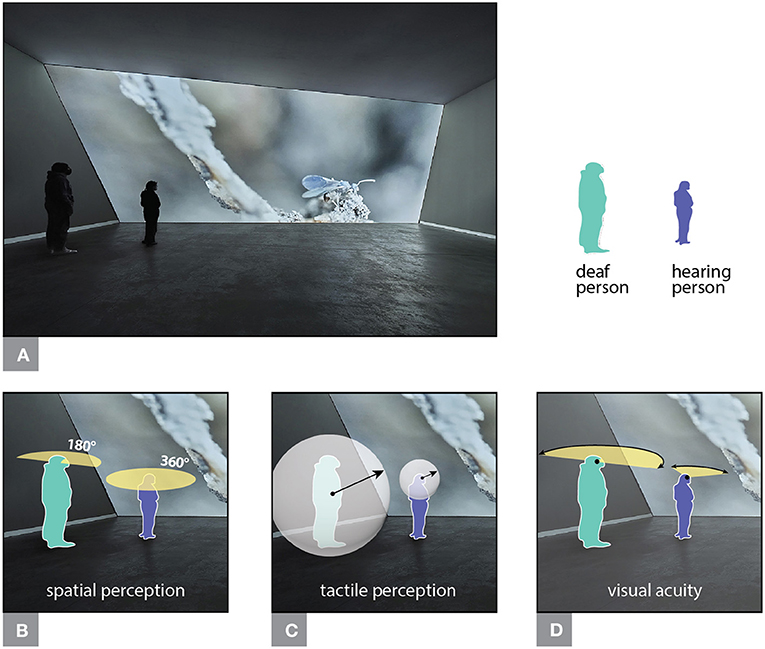

To illustrate, Figure 1A shows a museum space in which two people watch an audio-visual artwork. If one person is deaf while the other can hear, their perception of the artwork will differ. Figures 1B–D illustrate a sensory gap based on differences in spatial perception, tactile perception, and visual acuity, respectively. Even if both visitors are able to use sign language, the verbal communication this makes possible would not fully compensate for the fact that the two visitors experienced the artwork differently: one visitor may, for instance, refer to stimuli that the other did not sense. In this way, sign language will never (fully) compensate for the difference in sensory experience, not even if a kind of sign language is used that is specifically developed for the communication of sensuous qualities, such as Adamorobe Sign Language (Kusters, 2020).

Figure 1. (A) A sensory gap occurs in a museum space in which two people watch an audio-visual artwork (see note a); (B) The hearing person (purple) perceives the surrounding space based on auditory cues while the deaf person (green) relies on visual cues that are in view of them (Rosen, 2012; Friedner and Kusters, 2020); (C) The deaf person has a heightened susceptibility to sound and other vibrations as opposed to the hearing person (Goldreich and Kanics, 2003); (D) The deaf person has enhanced peripheral vision and faster visual detection of changes and movements than the hearing person (Dye and Bavelier, 2013). Note a: “nu en later.” n.d. De Pont museum. Accessed April 9, 2022. https://depont.nl/tentoonstellingen/nu-en-later/maya-watanabe-liminal.

Most innovative technologies developed to support the communication between deaf and hearing people are designed to compensate for the lack of verbal communication, without further attention to the sensory variations between them. Typical examples of such technologies include transcription of speech and relevant sounds through closed captioning (Lartz et al., 2008), and an application that translates written text into vibrotactile stimuli that, after a few weeks of training, can help users understand a foreign language3. Despite their potential for supporting communication between deaf and hearing people, such technological solutions have had varying degrees of success in usage. In fact, they are often not well received by the deaf community. This is precisely because those innovative technologies concentrate on verbal communication but do not bridge the sensory gap between interlocutors (Friedner and Kusters, 2020).

Sensory augmentation technology, a specific type of human enhancement technology (De Boeck and Vaes, 2021), however, may be more suitable to help bridge this sensory gap (van Dartel and de Rooij, 2019).

Sensory augmentation is a technology that is designed to translate signals from the environment that cannot (sufficiently) be sensed with the biological senses, but which can be sensed (better) by artificial sensors (e.g., electromagnetism), to stimuli that can be sensed with the biological senses (e.g., tactile vibrations) (de Rooij et al., 2018a). This, with the intent to make formerly inaccessible signals accessible for further processing using existing pathways through which sensory information is accessed (Deroy and Auvray, 2012). Examples of sensory augmentation devices include the feelSpace belt that translates magnetic north sensor data to vibration motors worn around the waist (König et al., 2016), Neil Harbisson's device enabling the detection of colors beyond the normal human vision wavelengths (Leenes et al., 2017) and the “X-Ray Vision” device that enables users to recognize objects through walls (Avery et al., 2009; Raisamo et al., 2019).
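The translation step described above can be illustrated with a minimal sketch, loosely modeled on a compass belt such as the feelSpace belt. It is not the device's actual firmware: the function name, the motor count of 16, and the motor layout (motor 0 at the wearer's front, indices increasing clockwise) are assumptions made for illustration only.

```python
def motor_for_north(heading_deg: float, n_motors: int = 16) -> int:
    """Return the index of the belt motor that currently points north.

    heading_deg: compass heading of the wearer's front, in degrees
                 clockwise from magnetic north (hypothetical sensor output).
    n_motors:    number of evenly spaced motors around the waist (assumed).
    Motor 0 sits at the wearer's front; indices increase clockwise.
    """
    # Relative to the wearer's front, north lies at minus the heading.
    bearing_to_north = (-heading_deg) % 360.0
    # Each motor covers an equal angular sector centred on its position.
    sector = 360.0 / n_motors
    return int((bearing_to_north + sector / 2) // sector) % n_motors


if __name__ == "__main__":
    for heading in (0, 90, 180, 270):
        print(heading, "->", motor_for_north(heading))
```

In this sketch, a wearer facing east (heading 90) would feel the vibration on their left side, so turning the body changes which motor is active. That coupling between movement and stimulus is what the sensorimotor account of sensory augmentation relies on.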

Sensory augmentation technology has been shown to hold potential for broad applicability (van Dartel and de Rooij, 2019). This potential includes bridging the sensory gap between deaf and hearing people (Caldwell et al., 2017). Using such technology may lead to an extension of human perceptual capabilities and provide “access to new features of the world” (Auvray and Myin, 2009). This may help to understand previously incomprehensible signals from the environment, including stimuli and cues generated by other people. Some developers of sensory augmentation devices even claim their users “see with their skin” (White et al., 1970) or “see with the brain” (Bach-y-Rita et al., 2003). Despite this exciting prospect, most devices fail to be adopted. This could be explained by the fact that most such devices are designed based on the assumption that using them equals perception through a normal sensory modality, which may be an inaccurate starting point for design (Deroy and Auvray, 2012).

Generally speaking, designers of sensory augmentation devices aspire to a perceptual-level integration of such technology, meaning that the sensory apparatus of the person using the device learns to make sense of the constant and consequent changes in stimuli as a result of the user's movements, e.g., the “direct” translation of environmental information to a blind person using a white cane (Kristjánsson et al., 2016). This would result in an immediate and effortless perceptual experience (Auvray and Myin, 2009). The theory of sensorimotor contingencies (O'Regan, 2011) supports this thought by suggesting that there is a reciprocal relationship between sensory input and the investigative movements of body parts. Also, Kaspar et al. (2014, p. 1) claim that, by acting in the world, bodily movements and “associated sensory stimulations are tied together” and form the basis of sensory experience and awareness. Mastering artificial sensorimotor contingencies may lead to changes in, or optimally to augmentation of, sensory perception. However, learning to understand and use sensory augmentation technology takes considerable effort to concentrate on a new stimulus and interpret its correlations to movement (Tapson et al., 2008). This may, so the argument goes, over time and under lab conditions, lead to less cognitive-level processing and more perceptual integration. But empirical evidence supporting this is lacking (Schumann and O'Regan, 2017; Witzel et al., 2021). Instead, most evidence of effects of integration of artificial stimuli through sensory augmentation suggests this is more likely to happen at a cognitive level, requiring effort to recognize and interpret symbolic information and to derive meaning (Deroy and Auvray, 2012). As a result, according to Rizza et al. (2018), most tactile speech aids fail since there is no perceptual-level integration and they turn out to be used cognitively and with effort.

Sensory augmentation could help people to make sense of their environment in new and unexpected ways. Emerging evidence suggests that sensory augmentation can help bridge the sensory gap between deaf and hearing people specifically. The versatile extrasensory transducer (VEST), an interface that translates audio signals captured with a microphone to tactile stimuli actuated by a matrix of vibration motors placed on the skin, can be used to train people to recognize digits based on the vibration patterns sensed on their skin (Novich and Eagleman, 2014). Another example is a device that translates intonation during speech into pressure patterns on the skin (Boothroyd, 1985); and the Flutter dress that gives vibrotactile cues indicating “the direction of critical information of the local sound topology” (Profita, 2014, p. 332). This early work suggests that sensory augmentation can help address at least some aspects of the sensory gap that stem from an inability to hear sound.
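As a rough illustration of the audio-to-tactile translation that devices of this kind perform, the sketch below maps sound energy in a set of frequency bands to drive levels for a row of vibration motors. It is not the VEST algorithm: the function name, the one-band-per-motor assignment, the peak normalization, and the 0 to 255 drive range are all assumptions made for illustration, and the band energies are assumed to have been computed elsewhere from the microphone signal.

```python
def energies_to_intensities(band_energies, max_level=255):
    """Map per-band audio energies to vibration motor drive levels.

    band_energies: non-negative energy per frequency band, one band per
                   motor (hypothetical input, e.g. from a filter bank).
    max_level:     highest drive level a motor accepts (assumed 8-bit PWM).
    Returns a list of integer drive levels, one per motor.
    """
    peak = max(band_energies, default=0.0)
    if peak <= 0:
        # Silence (or no bands): keep every motor off.
        return [0] * len(band_energies)
    # Scale so the loudest band drives its motor at full strength.
    return [round(max_level * energy / peak) for energy in band_energies]


if __name__ == "__main__":
    print(energies_to_intensities([0.0, 1.0, 4.0]))
```

Normalizing to the loudest band is only one possible design choice. It keeps the pattern across motors stable when overall loudness changes, but it also discards absolute loudness, which is an example of the information loss discussed later in this paper.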

Such sensory augmentation technology can complement hearing people in conversation with deaf people, for instance by tuning them to the signals that deaf people have come to rely on more strongly than hearing people. For example, and referring back to the examples in Figure 1, hearing people could use sensory augmentations that support sensing changes in or beyond their field of view or support a heightened tactile sensitivity that could trigger them more intensely when being unexpectedly touched. Besides supporting hearing or deaf people in conversation with each other, devices could also be designed to support both by translating one person's sensory triggers into stimuli that fit the sensory apparatus of the other person, generating stimuli the other person can learn to interpret.

When the potential of sensory augmentation is approached through experimentation with new sensory stimuli, rather than through compensation for impairments, this technology could be used to bridge the sensory gap beyond merely bridging the differences between deaf and hearing individuals. This could be done by providing sensory augmentations that enable deaf and hearing people to sense signals that neither person is able to sense with their biological senses (Berta, 2020). For example, the artwork in Figure 1 could hypothetically be experienced as more aesthetically pleasing, e.g., as having a more balanced composition (McManus et al., 2011), with an augmentation that extends color vision to sensing infrared and ultraviolet light (De Boeck and Vaes, 2021) than without. It is conceivable that well-chosen augmentations can enable a shared sensory experience between deaf and hearing people that bypasses the potential challenges they run into during an encounter.

Here, sensory augmentation is intended as a cognitive extension that makes use of stimuli available that are otherwise missed and can, therefore, enable deaf and hearing people to collaboratively make sense of the signals generated by the technology. However, designing usable and acceptable sensory augmentation technology is challenging in itself (Kärcher et al., 2012; Kristjánsson et al., 2016). These challenges need to be understood and overcome to realize the potential of sensory augmentation for bridging the sensory gap between deaf and hearing people.

Although a number of sensory augmentation applications exist, they are not (yet) well accepted in, nor widely used by, the deaf community (Ladner, 2012; Lu, 2017). Besides the lack of expected perceptual integration and the effortful use mentioned before, which in their own right may cause usability issues, adoption of this technology is stymied further by the functional and social disturbances these applications cause when used in everyday situations, and thus by the usability and user-acceptance problems that flow from these disturbances.

Firstly, the current state-of-the-art sensory augmentation devices suffer from fundamental usability problems (Kristjánsson et al., 2016). Sensory augmentation devices are too often developed and tested in the lab, where environmental conditions can easily be controlled (van Dartel and de Rooij, 2019). However, when devices are deployed in a richer use-context, the lack of control over these environmental conditions reduces the signal-to-noise ratio (Meijer, 1992): many more signals from the environment may trigger the actuators of a sensory augmentation device than it was originally designed for, introducing noise, and many more signals can stimulate the (other) senses, reducing the salience of the stimulus actuated by the device. This makes it harder to learn to make sense of the world through a sensory augmentation device designed in the lab for use outside the lab. Other common usability problems relate to information loss from translating artificial sensor data into stimuli that trigger the biological senses and the corresponding risk of information overload, e.g., “sign gloves” or cochlear implants have limited usability due to challenges of translating the richness of a visual sign language4 (Grieve-Smith, 2016).

Secondly, adoption of sensory augmentation devices is also stymied by user-acceptance problems. Take the sensory augmentation applications that enable the translation of manual articulations into audible or readable text (Mills, 2011; Erard, 2017) or to cochlear implants (Valente, 2011), for example. The sensory-equipped “gloves” or “blind canes” that typically provide sensory input to these sensory augmentation applications also (unintentionally) make a user's sensory differences visible to bystanders, which might be unwanted and cause an emotional distance between the user and the device. Moreover, where sensory augmentation applications provide input to cochlear implants, such implants merely help deaf people understand spoken language: they do not help in, e.g., understanding music (due to limitations in pitch) or emotional aspects of spoken language (Caldwell et al., 2017). User-acceptance of applications that enable the translation of manual articulations to cochlear implants is also hindered by social disturbances. From the perspective of deaf people these implants can for instance be regarded as an attempt to “cure” deafness, and are “often perceived as a threat to deaf communities and ways of life” (Friedner and Kusters, 2020, p. 40).

To help realize the potential of sensory augmentation, its usability and user-acceptance problems need to be addressed. Others have suggested that such limitations can partially be addressed by providing long training sessions (Kärcher et al., 2012), minimizing interference with other perceptual functions (Kristjánsson et al., 2016), or by giving increased attention to the device's aesthetics (Meijer, 1992). Although these factors can surely help, they do not sufficiently take into account the broader use-context (van Dartel and de Rooij, 2019), and they especially do not take into account the use-context that is specific to the encounters between deaf and hearing people. For example, they do not consider (unpredictable) environmental factors that can influence effective use: deaf people, having enhanced visual attention, get more easily distracted by changes in their visual periphery (Bavelier et al., 2006), and hearing people may get distracted by reactions of bystanders or disturbing background noise5. Nor do they prevent the device from emphasizing the deaf person's sensory differences (Friedner and Kusters, 2020), or offer opportunities to improve communication6 rather than to merely improve how well hearing people can understand deaf people.

Our perspective on this is that a fundamentally different design approach needs to be developed and used to overcome the usability and user-acceptance problems of sensory augmentation devices, namely a situated design approach.

Most sensory augmentation applications that aim to bridge the sensory gap have been developed under lab conditions, instead of under real-life conditions. It is our conjecture that this is at the root of the usability and user-acceptance problems typical for existing sensory augmentation applications that aim to close the sensory gap. Therefore, we propose a situated design approach. This is an approach in which design processes rely on “actions” in the design context, instead of on predefined “plans” (Suchman, 1987; Simonsen et al., 2014). Such situated actions are typically determined by specific circumstances of the design context. Hence, real-life conditions, including any functional and social disturbances, inform the design process rather than challenge its blueprint.

However, situated design of possible configurations of sensory augmentation technology (configurations that bridge the sensory gap between deaf and hearing people in everyday situations while avoiding usability and acceptance issues) faces two challenges: relevant situated actions require (1) technological innovation, and (2) a suitable design context.

Currently, no sensors and actuators are specifically designed for sensory augmentation7. Moreover, existing applications of sensory augmentation technology suffer from technological limitations, such as limitations of the bandwidth of sensory input and of the resolution of actuators, that may not measure up to the sensitivity of the human sense the information is transmitted to (Kristjánsson et al., 2016). Rather than planning for such innovation, however, a situated design approach demands that the technological innovation required to facilitate the design process is also, as much as possible, informed by the design context.

Design methods for sensory augmentation usually rely on unusual equipment (de Rooij et al., 2018b) and abstract tasks in lab situations (Mahatody et al., 2010). The domain of human-centered design offers design methods such as personas and focus groups, but they are specifically used for inquiry about the context of use (Sleeswijk-Visser, 2009). However, actual use or application of technology “in the wild” is hard to predict. Moreover, since “knowledge is always partial and for this reason situated” (Haraway, 1988; Simonsen et al., 2014, p. 144), the context of use should be adopted as the primary starting point for design, as so-called situated design methods do (Simonsen et al., 2014). Otherwise, practical factors in that context and subjective experiences from the actual context of use will not be included in the design process.

Even so, since a plan cannot exactly determine the resulting action and is not as strict as following a set of rules, the design process should take place in specific situations and be performed from embedded positions. To borrow an example from Suchman (1987): you can plan a canoe trip, but you do not know in advance where and for how long you will want to pause to recover from the strong current you encountered along the way. Therefore, in contrast to a predetermined design process, we propose to let the design process be guided by user actions influenced by the specific constraints and possibilities in that situation (Suchman, 1987; Witter and Calvi, 2017): an active design process in which one design action in the context of use leads to another in order to iteratively arrive at a design that is directly shaped by the context of use (Simonsen et al., 2014, p. 282).

Therefore, a viable situated design approach should firstly aim to reveal in which contexts a sensory gap occurs and/or seems to be an issue. Subsequent use of provided sensory augmentation technology in real-life situations will reveal specific needs or opportunities that arise from that situation in order to bridge the gap. The museum (Figure 1), for instance, is a potentially useful environment where shared experiences can arise and where they could be supported through the application of sensory augmentation. Moreover, museum visitors actively seek new experiences that forge meaningful connections to their (social) environments, and there is a growing tendency within the museum sector to offer room for experimentation centered around more (sensory) inclusive experiences (Guggenheim, 2015). The Van Abbemuseum in Eindhoven, for example, has conducted experiments regarding the inclusion of deaf and blind people over recent years. One of these included a tangible painting presented to offer a new shared experience between blind and sighted people8. Undertaking situated actions in a museum context where users “make and test” sensory augmentation experiences can arguably make an essential contribution to the user-friendliness and acceptance of sensory augmentation applications that support the communication between deaf and hearing people.

This contribution may not only help examine the potential and limitations of sensory augmentation in relation to communication between deaf and hearing people, but may also equip designers of sensory augmentation applications at large with tools and methodology that allow the design context to inform their design. Our first frontier, however, is to unlock the potential of sensory augmentation for communication between deaf and hearing people by taking a situated design approach to bridging the sensory gap between them.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

MW developed the theory and wrote the manuscript in collaboration with AR, MD, and EK. All authors contributed to the article and approved the submitted version.

This publication is part of the project – “Gesitueerde ontwerpmethoden voor het ontwerpen van sensory augmentation-toepassingen voor doven” with project number 023.017.033 of the research programme “Promotiebeurs voor leraren – PvL 2021-1” which is partly financed by the Dutch Research Council (NWO).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. ^“Hearing Loss,” World Health Organization (World Health Organization), accessed April 11, 2022, https://www.who.int/health-topics/hearing-loss#tab=tab_2.

2. ^Although the definition of deafness covers a wide range of hearing loss, we, for the sake of clarity, focus on the societal division between people who are profoundly deaf, who only hear sound of 80 dB or higher, and fully hearing people, who have a hearing threshold of 20 dB or lower (6). In this paper, the term deaf refers to people who are profoundly deaf.

3. ^“Eindhoven Students Reveal Smart Sleeve That Lets You Feel and Understand Any Foreign Language.” n.d. Accessed February 21, 2022. https://www.tue.nl/en/our-university/departments/wiskunde-en-informatica/de-faculteit/news-and-events/news-overview/23-11-2021-eindhoven-students-reveal-smart-sleeve-that-lets-you-feel-and-understand-any-foreign-language/.

4. ^“Why the Signing Gloves Hype Needs to Stop.” 2016. Audio Accessibility (blog). May 1, 2016. https://audio-accessibility.com/news/2016/05/signing-gloves-hype-needs-stop/.

5. ^“Gallaudet Finds Deaf People Don't See Better, They See Differently.” n.d. WAMU (blog). Accessed June 13, 2022. https://wamu.org/story/11/06/28/gallaudet_finds_deaf_people_dont_see_better_they_see_differently/.

6. ^“Tech voor doven: wat vinden doven er eigenlijk zelf van?” n.d. NRC. Accessed April 10, 2022. https://www.nrc.nl/nieuws/2016/10/10/niemand-gaat-met-zon-ding-over-straat-lopen-4680266-a1525832.

7. ^re:MakingSense symposium on sensory augmentation. 2021. Scott Novich at Re:Making Sense Online Symposium, Session #01. https://www.youtube.com/watch?v=Ia35tM7sNZs.

8. ^“Smartify | Lichamelijke Ontmoetingen Tour.” n.d. Smartify. Accessed June 21, 2022. https://smartify.org/nl/tours/bodily-encounters-tour.

Auvray, M., and Myin, E. (2009). Perception with compensatory devices: from sensory substitution to sensorimotor extension. Cogn. Sci. 33, 1036–1058. doi: 10.1111/j.1551-6709.2009.01040.x

Avery, B., Christian, S., and Thomas, B. H. (2009). “Improving Spatial Perception for Augmented Reality X-Ray Vision.” in 2009 IEEE Virtual Reality Conference. Lafayette, LA: IEEE. 79–82.

Bach-y-Rita, P., Tyler, M. E., and Kaczmarek, K. A. (2003). Seeing with the brain. Int. J. Hum. Comput. Interact. 2, 285–295. doi: 10.1207/S15327590IJHC1502_6

Bavelier, D., Dye, M. W. G., and Hauser, P. C. (2006). Do Deaf Individuals See Better? Trends Cogn. Sci. 10, 512–18. doi: 10.1016/j.tics.2006.09.006

Boothroyd, A. (1985). “A Wearable Tactile Intonation Display for the Deaf.” in IEEE Transactions on Acoustics, Speech, and Signal Processing.

Caldwell, M. T., Jiam, N. T., and Limb, C. J. (2017). Assessment and improvement of sound quality in cochlear implant users. Laryngoscope Investig. Otolaryngol. 2, 119–24. doi: 10.1002/lio2.71

De Boeck, M., and Vaes, K. (2021). Structuring human augmentation within product design. Proc. Des. Soc. 1, 2731–40. doi: 10.1017/pds.2021.534

de Rooij, A., Schraffenberger, H., and Bontje, M. (2018a). “Augmented Metacognition: Exploring Pupil Dilation Sonification to Elicit Metacognitive Awareness.” in Proceedings of the Twelfth International Conference on Tangible, Embedded, and Embodied Interaction, Stockholm. 237–44.

de Rooij, A., van Dartel, M., Ruhl, A., Schraffenberger, H., van Melick, B., Bontje, M., et al. (2018b). “Sensory Augmentation: Toward a Dialogue Between the Arts and Sciences.” in Interactivity, Game Creation, Design, Learning, and Innovation, eds Brooks, A. L., Brooks, E., and Vidakis, N., 229:213–23. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering. Cham: Springer International Publishing.

Deroy, O., and Auvray, M. (2012). Reading the World through the Skin and Ears: a new perspective on sensory substitution. Front. Psychol. 3, 457. doi: 10.3389/fpsyg.2012.00457

Dye, M. W. G., and Bavelier, D. (2013). “Visual Attention in Deaf Humans: A Neuroplasticity Perspective.” in Deafness. Springer. p. 237–63.

Erard, M. (2017). “Why Sign-Language Gloves Don't Help Deaf People.” The Atlantic. Available online at: https://www.theatlantic.com/technology/archive/2017/11/why-sign-language-gloves-dont-help-deaf-people/545441/ (accessed April 3, 2022).

Friedner, M., and Kusters, A. (2020). “Deaf Anthropology.” Ann. Rev. Anthropol. 49, 31–47. doi: 10.1146/annurev-anthro-010220-034545

Goldreich, D., and Kanics, I. M. (2003). Tactile acuity is enhanced in blindness. J. Neurosci. 23, 3439–45. doi: 10.1523/JNEUROSCI.23-08-03439.2003

Grieve-Smith, A. (2016). “Ten Reasons Why Sign-to-Speech Is Not Going to Be Practical Any Time Soon.” Technology and Language (blog). Available online at: https://grieve-smith.com/blog/2016/04/ten-reasons-why-sign-to-speech-is-not-going-to-be-practical-any-time-soon/ (accessed April 3, 2022).

Guggenheim (2015). Educators from the Guggenheim, the Met, and MoMA Discuss Access at Museums. The Guggenheim Museums and Foundation. Available online at: https://www.guggenheim.org/blogs/checklist/educators-from-the-guggenheim-the-met-and-moma-discuss-access-at-museums (accessed July 7, 2022).

Haraway, D. (1988). Situated knowledges: the science question in feminism and the privilege of partial perspective. Feminist Stud. 14, 575–99. doi: 10.2307/3178066

Hummels, C., and Van Dijk, J. (2015). “Seven Principles to Design for Embodied Sensemaking.” in Proceedings of the Ninth International Conference on Tangible, Embedded, and Embodied Interaction, Stanford, CA. 21–28.

Kärcher, S. M., Fenzlaff, S., Hartmann, D., Nagel, S. K., and König, P. (2012). Sensory augmentation for the blind. Front. Hum. Neurosci. 6, 37. doi: 10.3389/fnhum.2012.00037

Kaspar, K., König, S., Schwandt, J., and König, P. (2014). The experience of new sensorimotor contingencies by sensory augmentation. Conscious. Cogn. 28, 47–63. doi: 10.1016/j.concog.2014.06.006

Keller, K. (2018). Could This Futuristic Vest Give Us a Sixth Sense? Smithsonian Magazine. Available online at: https://www.smithsonianmag.com/innovation/could-this-futuristic-vest-give-us-sixth-sense-180968852/ (accessed August 31, 2022).

König, S. U., Schumann, F., Keyser, J., Goeke, C., Krause, C., Wache, S., et al. (2016). Learning new sensorimotor contingencies: effects of long-term use of sensory augmentation on the brain and conscious perception. PLoS ONE 11, e0166647. doi: 10.1371/journal.pone.0166647

Kristjánsson, Á., Moldoveanu, A., Jóhannesson, Ó. I., Balan, O., Spagnol, S., Valgeirsdóttir, V. V., et al. (2016). Designing sensory-substitution devices: principles, pitfalls and potential. Restor. Neurol. Neurosci. 34, 769–87. doi: 10.3233/RNN-160647

Kusters, A. (2020). One village, two sign languages: qualia, intergenerational relationships and the language ideological assemblage in Adamorobe, Ghana. J. Linguist. Anthropol. 30, 48–67. doi: 10.1111/jola.12254

Ladner, R. E. (2012). Communication technologies for people with sensory disabilities. Proc. IEEE 100, 957–973. doi: 10.1109/JPROC.2011.2182090

Lartz, M. N., Stoner, J. B., and Stout, L. J. (2008). Perspectives of assistive technology from deaf students at a hearing university. Assist. Technol. Outcomes Benefits 5, 72–91.

Leenes, R., Palmerini, E., Koops, B. J., Bertolini, A., Salvini, P., and Lucivero, F. (2017). Regulatory challenges of robotics: some guidelines for addressing legal and ethical issues. Law Innov. Technol. 9, 1–44. doi: 10.1080/17579961.2017.1304921

Lu, A. (2017). “Deaf People Don't Need New Communication Tools — Everyone Else Does.” Medium (blog). Available online at: https://medium.com/@alexijie/deaf-people-dont-need-new-communication-tools-everyone-else-does-df83b5eb28e7 (accessed April 3, 2022).

Mahatody, T., Sagar, M., and Kolski, C. (2010). State of the art on the cognitive walkthrough method, its variants and evolutions. Int. J. Hum. Comput. Interact. 26, 741–785. doi: 10.1080/10447311003781409

McManus, I. C., Stöver, K., and Kim, D. (2011). Arnheim's gestalt theory of visual balance: examining the compositional structure of art photographs and abstract images. Iperception 2, 615–47. doi: 10.1068/i0445aap

Meijer, P. B. L. (1992). An experimental system for auditory image representations. IEEE Trans. Biomed. Eng. 39, 112–21. doi: 10.1109/10.121642

Mills, M. (2011). On disability and cybernetics: Helen Keller, Norbert Wiener, and the hearing glove. Differences 22, 74–111. doi: 10.1215/10407391-1428852

Novich, S. D., and Eagleman, D. M. (2014). “[D79] A vibrotactile sensory substitution device for the deaf and profoundly hearing impaired,” in 2014 IEEE Haptics Symposium (HAPTICS), Houston, TX: IEEE. p. 1–1.

O'Regan, J. K. (2011). Why Red Doesn't Sound Like a Bell: Understanding the Feel of Consciousness. New York: Oxford University Press, Inc.

Profita, H. P. (2014). “Smart garments: an on-body interface for sensory augmentation and substitution,” in Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication, Seattle, WA: ACM. p. 331–36.

Raisamo, R., Rakkolainen, I., Majaranta, P., Salminen, K., Rantala, J., and Farooq, A. (2019). Human augmentation: past, present and future. Int. J. Hum. Comput. Stud. 131, 131–143. doi: 10.1016/j.ijhcs.2019.05.008

Rizza, A., Terekhov, A. V., Montone, G., Olivetti-Belardinelli, M., and O'Regan, J. K. (2018). Why early tactile speech aids may have failed: no perceptual integration of tactile and auditory signals. Front. Psychol. 9, 767. doi: 10.3389/fpsyg.2018.00767

Rosen, R. S. (2012). Sensory orientations and sensory designs in the American deafworld. Senses Soc. 7, 366–73. doi: 10.2752/174589312X13394219653725

Schumann, F., and O'Regan, J. K. (2017). Sensory augmentation: Integration of an auditory compass signal into human perception of space. Sci. Rep. 7, 42197. doi: 10.1038/srep42197

Simonsen, J., Svabo, C., Strandvad, S. M., Samson, K., and Hertzum, M. (2014). Situated Design Methods. Cambridge, MA: MIT Press.

Sleeswijk-Visser, F. (2009). Bringing the everyday life of people into design (Doctoral dissertation). Delft University of Technology, Delft, Netherlands. Available online at: http://resolver.tudelft.nl/uuid:3360bfaa-dc94-496b-b6f0-6c87b333246c

Suchman, L. A. (1987). Plans and Situated Actions: The Problem of Human-Machine Communication. New York: Cambridge University Press.

Tapson, J., Diaz, J., Sander, D., Gurari, N., Chicca, E., Pouliquen, P., et al. (2008). “The feeling of color: A haptic feedback device for the visually disabled,” in 2008 IEEE Biomedical Circuits and Systems Conference. p. 381–384.

Thompson, E., and Stapleton, M. (2009). Making sense of sense-making: reflections on enactive and extended mind theories. Topoi 28, 23–30. doi: 10.1007/s11245-008-9043-2

Trujillo, J. P., and Holler, J. (2021). The kinematics of social action: visual signals provide cues for what interlocutors do in conversation. Brain Sci. 11, 996. doi: 10.3390/brainsci11080996

Valente, J. M. (2011). Cyborgization: deaf education for young children in the cochlear implantation era. Qual. Inq. 17, 639–52. doi: 10.1177/1077800411414006

van Dartel, M., and de Rooij, A. (2019). “The Innovation Potential of Sensory Augmentation for Public Space,” in International Symposium on Electronic Art, Gwangju.

Von Uexküll, J. (2013). A Foray into the Worlds of Animals and Humans: With a Theory of Meaning. Minneapolis, MN: U of Minnesota Press.

White, B. W., Saunders, F. A., Scadden, L., Bach-y-Rita, P., and Collins, C. C. (1970). Seeing with the skin. Percept. Psychophys. 7, 23–27.

Witter, M., and Calvi, L. (2017). “Enabling Augmented Sense-Making (and Pure Experience) with Wearable Technology,” in International Conference on Intelligent Technologies for Interactive Entertainment. Funchal: Springer. p. 136–41.

Witzel, C., Lübbert, A., Schumann, F., Hanneton, S., and O'Regan, J. K. (2021). Can Perception be Extended to a “Feel of North?” Tests of Automaticity With the NaviEar. doi: 10.31234/osf.io/2yk8b

World Health Organization (2019). Deafness and Hearing Loss. World Health Organization. Available online at: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss (accessed December 16, 2020).

Keywords: deaf-hearing communication, sensory augmentation technology, shared experiences, situated design, sensory gap, usability and acceptance

Citation: Witter M, de Rooij A, van Dartel M and Krahmer E (2022) Bridging a sensory gap between deaf and hearing people–A plea for a situated design approach to sensory augmentation. Front. Comput. Sci. 4:991180. doi: 10.3389/fcomp.2022.991180

Received: 11 July 2022; Accepted: 17 October 2022; Published: 09 November 2022.

Edited by:

Anton Nijholt, University of Twente, Netherlands

Reviewed by:

Frank Schumann, École Normale Supérieure, France

Copyright © 2022 Witter, de Rooij, van Dartel and Krahmer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michel Witter

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
