
METHODS article

Front. Comput. Sci., 22 September 2022

Sec. Digital Education

Volume 4 - 2022 | https://doi.org/10.3389/fcomp.2022.959351

This article is part of the Research Topic Re-inventing Project-Based Learning: Hackathons, Datathons, Devcamps as Learning Expeditions.

In this article, we argue that game jam formats are uniquely suited to engaging participants in learning about artificial intelligence (AI) as a design material because of four factors that are characteristic of game jams: 1) Game jams provide an opportunity for hands-on, interactive prototyping, 2) Game jams encourage playful participation, 3) Game jams encourage creative combinations of AI and game development, and 4) Game jams offer understandable goals and evaluation metrics for AI. We support the argument with an interview study conducted with three AI experts who had all organized game jams focused on using AI in game development. Based on a thematic analysis of the expert interviews and a theoretical background of Schön's work on educating the reflective practitioner, we identified the four abovementioned factors as well as four recommendations for structuring and planning an AI-focused game jam: 1) Aligning repertoires, 2) Supporting playful participation, 3) Supporting ideation, and 4) Facilitating evaluation and reflection. Our contribution is motivated by recent discourse in related literature on the general challenges of teaching AI and recommendations for addressing them, as well as by the long and intertwined history of games and AI. The article presents an initial discussion of the value of game jam formats for learning about AI and of which factors need to be considered in regard to this specific learning goal.

Recent studies have demonstrated that practitioners and designers have difficulties understanding what artificial intelligence (AI) “can do” and the different capabilities encompassed by this umbrella term (Dove et al., 2017; Long and Magerko, 2020; Yang et al., 2020; Jin et al., 2021). As AI technology advances and takes on prominent roles in our everyday lives, it is increasingly important for people to understand how AI works in order to manage AI technology effectively (Abdul et al., 2018; Došilović et al., 2018) and to understand, weigh, and mitigate the risks associated with using and developing (with) AI (Bender et al., 2021).

Prior research has demonstrated several issues that arise when people who use AI technology do not understand it (Abdul et al., 2018; Došilović et al., 2018). In outlining a research agenda for Hybrid Intelligence (HI), i.e., systems where people and AI technology cooperate to achieve shared goals, Akata et al. (2020) explain that there can even be potentially catastrophic consequences if people “overestimate the range of expertise of an automated system and deploy it for tasks at which it is not competent” (Akata et al., 2020). Additionally, the use of the terms artificial intelligence and machine learning (ML), a popular subcategory of AI, has recently been criticized for discouraging “attempts to understand or engage those technologies” (Tucker, 2022). In vernacular use, these words instead work more to “obfuscate, alienate, and glamorize” (Tucker, 2022). Hence, learning how to design AI systems that are useful, valuable, and understandable for the people using them is not a trivial task.

Wollowski et al. (2016) explored how educators and practitioners generally approach teaching AI: while practitioners suggest systems engineering as a major learning outcome, educators frame “toy problems” such as puzzles and games as a “good way to give a concise introduction to basic AI topics, in particular to students who by and large have only a year or two of software development expertise” (Wollowski et al., 2016). An important aspect for practitioners is the “ability to take perspective of AI tools and techniques,” whereas educators “take a broader perspective, focusing on historical, ethical and philosophical issues” (Wollowski et al., 2016).

We propose game jams as one approach that offers “toy problems” while also presenting unique opportunities for evaluating deployments of AI in terms of human experience rather than arbitrary performance metrics. During game jams, participants can use AI technology as a design material in a safe setting that encourages playful ideation and the exploration of AI in experimental game prototypes. Game jams thus offer unique opportunities for rapidly materializing otherwise abstract algorithms and their consequences.

Game jams are short, accelerated design events, often lasting between 48 h and a week, in which participants team up (sometimes with people they do not already know) to generate game ideas and to design, play-test, and prototype digital or analog games that creatively address a theme revealed at the beginning of the game jam; the final game prototypes are shared and presented at the end of the game jam (Falk et al., 2021). Game jams are sometimes organized as competitions, where the game that best or most creatively addresses the theme wins a prize. The deliberately short time frame is meant to encourage fast design decisions and thereby creativity (Kultima, 2015; Falk et al., 2021). As argued by Falk Olesen and Halskov, game jams share many similarities with other formats such as hackathons and design sprints, and comparisons can therefore be fruitful for research (Falk Olesen and Halskov, 2020). Whereas hackathons may tend to induce a work-oriented and competitive state in participants, game jams can also inspire a play state (Grace, 2016). Participation in game jams can thus be described as a constructive form of play or ludic craft (Goddard et al., 2014). Play holds much potential for learning, not only for children but also for adults (Diaz-Varela and Wright, 2019), and has been suggested by Kolb and Kolb to be one of the highest forms of experiential learning (Kolb and Kolb, 2010). In the words of Dewey, there “is no contrast between doing things for utility and fun” (Dewey, 1997), nor between process and outcome in a truly educative experience (Kolb and Kolb, 2010).

In recent years, game jams have attracted attention as settings in which participants can learn playfully. Consistent with this, participants often report that learning is their main motivation for attending game jams (Preston et al., 2012; Arya et al., 2013; Fowler et al., 2013; Alencar and Gama, 2018; Meriläinen, 2019; Gama, 2020; Aurava et al., 2021). Despite this, there is limited research on learning through game jam participation (Meriläinen, 2019). Additionally, there is little research on how to organize game jams and on how game jams are “most meaningfully and best pedagogically carried out” in education (Murray et al., 2017). More research is needed on how game jams can facilitate learning and how they may be organized to optimize participants' learning experiences. Murray et al. (2017) described ‘academic’ game jams as deliberately organized for learning experiences: participants generate knowledge by undergoing design processes in which they externalize their thinking into interactive prototypes, and when presenting these objects, they explain and argue for the thinking that led to them.

In this article, we present expert insights into how learning can happen during game jams, drawing especially on Donald Schön's descriptions of a designer's reflective conversation with the materials at hand to describe how game jams can facilitate learning about AI by materializing the abstract. Schön's reflective practice model aims to capture the kind of intuitive knowledge that is developed through practical experience and that can be difficult to express (Schon, 1984). We draw on five key concepts from Schön's work: repertoire, inquiry, framing, reflection-in-action, and reflection-on-action. These are elaborated in Section 3.2.1.

Based on the expert insights, we argue that game jams can be organized to support learning how to design with AI technology because of four key factors:

1. Game jams provide an opportunity for hands-on, interactive prototyping.

2. Game jams encourage playful participation.

3. Game jams encourage creative combinations of AI and game development.

4. Game jams offer understandable goals and evaluation metrics for AI.

Our contribution is twofold. First, we present the results of an analysis of an expert interview study on the specific topic of designing AI-based games during game jams. We support the expert insights with a theoretical framing, based on Schön's notion of reflection, of how game jams may be understood as a method for learning, specifically in the context of materializing abstract knowledge and thinking into concrete, interactive projects, where both process and product contribute to knowledge generation. Second, we discuss opportunities and challenges of organizing game jams to support participants' learning of AI specifically, as well as knowledge acquisition in game jams in general. We hope that these contributions will be helpful for educators who wish to organize AI-focused game jams as part of their teaching, and for designers and developers who wish to engage with AI as a design material in the context of a game jam.

The article is structured as follows: we first present related work on learning AI concepts, on the connection between games (development) and AI, and on game jams and learning. We then present our methods for collecting and analyzing empirical data and for combining it with theoretical foundations to arrive at the four key factors. We present these factors in turn and then provide practical recommendations for structuring and planning an AI-focused game jam as a learning experience. Finally, we discuss key questions for further research.

In this section, we first describe existing work on the peculiarities and challenges of teaching AI in general, and on how games and game development have been used to teach AI concepts. Next, we discuss how game jams can be viewed as settings for knowledge-generating design processes and relate this to learning and teaching theory.

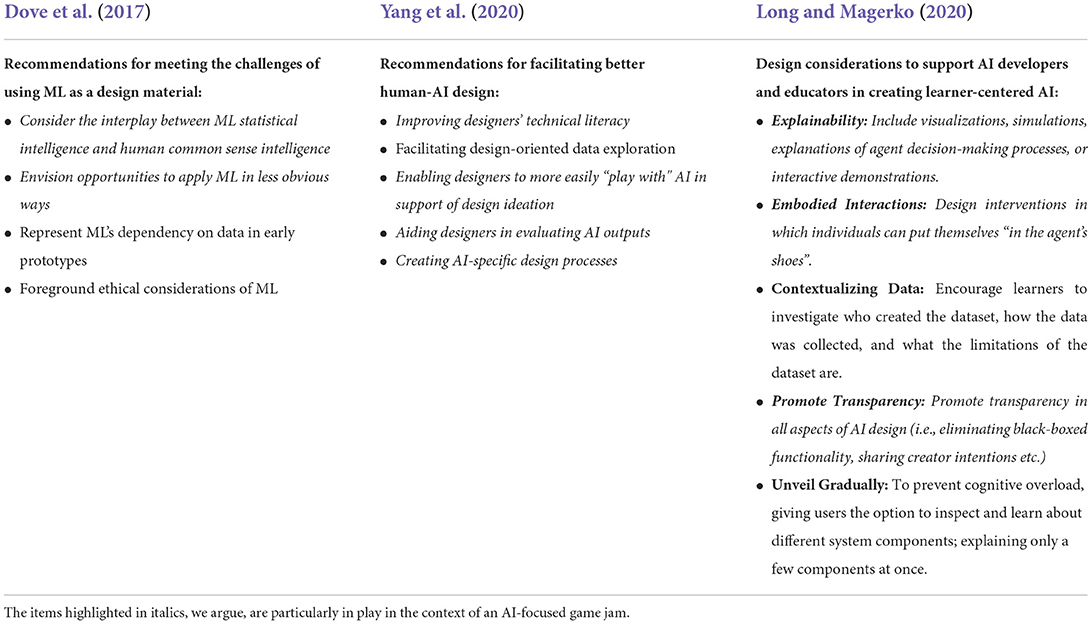

It is well recognized that grasping, understanding, and using AI is an open problem for many practitioners due to its intangible nature, its obscure “black-box” inner workings (Long and Magerko, 2020), and the uncertainty of its capabilities and the complexity of its output (Yang et al., 2020). In game design specifically, research has found that novices generally find it difficult to learn AI fundamentals such as game theory, machine learning, and decision trees (Giannakos et al., 2020). Here, we present three recent pieces of work that have used a mix of empirical research and literature reviews to identify and categorize why people can find it challenging to learn and work with AI. Each of these articles identifies not only challenges but also recommendations for foci and opportunities for facilitating AI literacy, which makes them highly relevant for synthesizing the major challenges in the area. Furthermore, they focus on creating with AI, i.e., how we might develop AI literacy to the point where AI can be used as a generative design material by designers and developers. The recommendations of each article, summarized in Table 1, will scaffold the analysis in this article by providing theoretical and empirical rationale for why each of the factors we present is relevant to learning AI by participating in game jams.

Table 1. An overview of recommendations and considerations for facilitating the use and knowledge of ML and AI (slightly condensed for an overview).

Dove et al. (2017) identified three major challenges of using ML as a design material based on qualitative inquiries with user experience (UX) designers. The three main challenges were Difficulties in understanding ML and its capabilities, Challenges with ML as a design material (particularly difficulty in prototyping with ML), and Challenges with the purposeful use of ML. The participating designers highlighted that the main issue is that we are only just beginning to grasp and envision the possibilities of ML while often not really understanding how and why these technologies work. In response to the identified challenges, the authors invite the community to investigate further how we might

• Consider the interplay between ML statistical intelligence and human common sense intelligence.

• Envision opportunities to apply ML in less obvious ways.

• Represent ML's dependency on data in early prototypes.

• Foreground ethical considerations of ML.

Based on the literature and a synthesis of their own experiences teaching AI design, Yang et al. (2020) defined two sources of challenges that are distinctive to designers working with AI: 1) uncertainty surrounding AI's capabilities, and 2) AI's output complexity. Each of these sources gives rise to a subset of challenges, such as envisioning novel AI “things” for a given UX problem, prototyping and testing, crafting thoughtful interactions, and collaborating with AI engineers. Based on a literature review, the authors identify five broad themes in human-computer interaction (HCI) work on how to facilitate the design of human-AI interaction:

• Improving designers' technical literacy.

• Facilitating design-oriented data exploration.

• Enabling designers to more easily “play with” AI in support of design ideation, so as to gain a felt sense of what AI can do.

• Aiding designers in evaluating AI outputs.

• Creating AI-specific design processes.

Finally, based on the literature, Long and Magerko (2020) presented 17 competencies that make up AI literacy, focusing on what non-technical learners should know about AI. The competencies are followed by several suggested design considerations to support AI developers and educators in creating learner-centered AI:

• Explainability: Include visualizations, simulations, explanations of agent decision-making processes, or interactive demonstrations.

• Embodied Interactions: Design interventions in which individuals can put themselves “in the agent's shoes”.

• Contextualizing Data: Encourage learners to investigate who created the dataset, how the data was collected, and what the limitations of the dataset are.

• Promote Transparency: Promote transparency in all aspects of AI design (e.g., eliminating black-boxed functionality and sharing creator intentions).

• Unveil Gradually: To prevent cognitive overload, give users the option to inspect and learn about different system components; explaining only a few components at once.

While it is clear that there are many complex challenges in this domain, it is worth noting that there are clear overlaps in “what can be done.” We argue that several of these recommendations and considerations can be accommodated by an appropriately designed game jam, and refer back to these claims in the analysis part of the article.

Games and AI are two areas of computer science with a long and closely connected history. This connection can be traced back to Alan Turing's proposed Turing test, originally called the imitation game (Turing, 1950), a kind of ‘game of deceit’ between an AI and a human (Hingston et al., 2006). For a visual timeline of some milestones in the historical development of AI and games, see, for example, Xia et al. (2020), who, however, do not include the Turing test in their overview. Hingston and colleagues (Hingston et al., 2006) explain this intertwined development with the observation that key skills used when playing non-trivial games, such as “the ability to plan strategies and reason about the environment, other agents, and the effects of one's own actions on these elements, as well as to adapt to changes” (Hingston et al., 2006), are key skills in AI programming and research as well.

Because of this close connection between game-playing skills and AI programming and research skills, games have often been used to teach students about AI (Hingston et al., 2006; McGovern et al., 2011), as it “seems especially fitting then, to use games to teach students about aspects of intelligence and how it may be artificially simulated” (Hingston et al., 2006). One way of teaching AI with games is to let students play games in which they can manipulate in-game factors and immediately see the effects, as in the game Spacewar presented by McGovern et al. (2011). Today, a number of such games can support AI and ML education; see, e.g., the recent review of 17 such games by Giannakos and colleagues (Giannakos et al., 2020). Learners can also engage with AI by developing games themselves. Hingston et al. (2006) explain how this enables learning on two levels: through programming the game itself and through playing it. According to Hingston et al. (2006), this has several benefits: it engages learners in the learning experience, promotes “higher order thinking skills and mental models, and encourages metacognition and reflection as they (the students) endeavor to improve their programming and their results in the game.”

The success of game-based teaching of AI, however, relies on several factors, such as “game literacy of students, the technological skills of teachers, class schedule restrictions, the computers available and their specifications, and the available bandwith” (Giannakos et al., 2020). In this context, Treanor et al. (2015) have contributed by identifying and describing nine game design patterns for AI game mechanics, which they argue can be generative in the ideation phases of designing AI-based games. Treanor et al. (2015) further argue that seriously considering the strengths and limitations of AI techniques can serve as a foundation for new kinds of games. Beyond game design itself, designing AI-based games in novel ways can also open “up new research questions, as players begin to interact with software in novel ways. Developing AI-based games also pushes us to tackle existing research problems from a new, practical perspective” (Treanor et al., 2015).

Motivated by the history of AI and game design, we focus on game jams for continuing this fruitful combination in a setting that in recent years has shown promising results in terms of learning.

The potential for facilitating and supporting reflective practice via game jams in an educational context is acknowledged by several researchers (e.g., Murray et al., 2017; Lassheikki, 2019; Meriläinen, 2019; Aurava et al., 2021). The topic of learning in game jams is also connected to established and well-researched pedagogical traditions: informal learning, collaborative learning, learning by doing, game-making pedagogy (Merilainen et al., 2020), and project-based learning (Hrehovcsik et al., 2016). As Merilainen et al. (2020) argue, learning in game jams is therefore not radically different from more established forms of learning and teaching; rather, it is a new form of doing. Game jam participants also seem to acknowledge the format as a setting not only for developing games but also for learning. Several studies have confirmed learning as a common motivation for attending game jams (see, e.g., Arya et al., 2013; Fowler et al., 2013; Alencar and Gama, 2018; Meriläinen, 2019; Gama, 2020). These studies have indicated that several different skills can be learned in game jam settings, such as programming, computing, STEM (Science, Technology, Engineering, Mathematics) skills, graphics creation, game design, problem solving, and creativity, to mention a few (Meriläinen, 2019; Merilainen et al., 2020). Murray et al. argue that learning in game jams also reaches beyond skills and knowledge directly related to designing and developing a game: “[…]the process can promote broader design thinking skills and encourage a better appreciation of the typical understand-create-deliver flow process, which may be found in many different contexts. Other advantages can include the encouragement of critical thinking skills, the ability to safely tinker and experiment, and the empowerment to fail and start over” (Murray et al., 2017).

Though game jams are often commended as a safe space for taking risks and failing, thereby leading to more experimentation (Kultima, 2015), learning from failure has to be facilitated and cannot be assumed: “Why does failure undermine learning? Failure is ego threatening, which causes people to tune out [...] Whether people learn from failure depends not only on whether the failure is attention grabbing but also on people's motivation to attend to it” (Eskreis-Winkler and Fishbach, 2019). As Eskreis-Winkler and Fishbach argue, although failure is widely celebrated as a teachable moment, it does not necessarily lead to learning. Therefore, organizers of game jams in education have to be aware of this and create setups that account for failure being both a potential teachable moment and a potential threat to learning.

As pointed out by Merilainen et al. (2020) and Murray et al. (2017), though the potential for learning in game jams has been increasingly recognized by formal education institutions, the formats are often not organized as integral parts of education. Instead, game jams are often perceived as fun, extra-curricular hobby activities.

Some resources and guides describe how to plan a game jam in general, for example one that could be attended by middle or high school students, but they often do not emphasize game jams as an integral educational activity (Aurava et al., 2021). One example is The Game Jam Guide developed by Cornish et al. (2017). The guide covers several concrete activities that can be planned as part of a general game jam in order to facilitate the students' game jam experience and engage them with real-world issues; however, it does not go into detail on how to deeply engage participants in learning and reflection in the specific context of AI (Cornish et al., 2017). One example of a game jam conducted as an integral part of education is the Pocket Game Jam, whose main purpose was to introduce students to Pocket Code, an “easy-to-learn visual programming language” (Petri et al., 2015). However, this pilot study does not explore in detail how game jams support participants' learning and reflection. Though some contributions explore the benefits of incorporating game jams into education, there is little research on how game jams can act as a vehicle for learning beyond the skills directly related to game making. One notable, more recent exception is Aurava et al. (2021), who draw on their own experiences with organizing game jams in education. Our study differs from their contribution in that it focuses on game jams as settings for acquiring knowledge of particularly difficult and abstract concepts, such as those found within the field of AI.

Framing game jams as only fun, extra-curricular hobby activities misses a profound opportunity for organizing game jams that specifically aim at enhancing the participants' learning experiences as they engage in reflective design processes. Furthermore, though learning is a common motivator for participants, assessing learning as a consequence of participating in a game jam can be problematic, perhaps especially since game jams are often not integral to formal education. By considering more systematic approaches to organizing game jams in order to specifically support learning and reflection, we may at the same time move toward addressing the issue of assessing learning in this context as well.

We use a combination of a theoretical framework and expert interviews to form the argument of how game jams offer valuable settings for learning how to design with AI. This section describes how the empirical data was collected and analyzed.

We found only five online descriptions of game jams that were organized with the specific purpose of incorporating AI techniques into the game prototypes. While this resulted in a focused albeit narrow data collection, we emphasize that, for the purpose of our research, the data are suitable for beginning a discussion of the value of game jams in learning about AI and of how game jams may be organized to support this specific learning goal.

We focused on identifying the few game jams that specifically engaged participants in incorporating AI in their game prototypes and that were described online. We contacted the organizers of five such game jams, and four organizers from three of these game jams responded. The four organizers first responded to an online survey, and three of them then participated in semi-structured interviews in which they elaborated on their survey answers. The survey and interviews were structured around the following open-ended questions, which allowed room for longer answers (Audenhove and Donders, 2019):

1. Which game jam(s) involving AI have you organized?

2. Why do you organize game jams involving AI? In other words, what benefits does a game jam format bring to participants when working with AI?

3. Do you think that there is something specific about participating in a game jam and developing games that involve AI, which can help participants gain a better understanding of AI concepts? Why or why not?

4. If you think participation in game jams and developing games can help participants' understanding of AI concepts, how did you notice or observe a better understanding from the participants of AI concepts?

5. What do you believe needs to be in a game jam to support participants in developing games involving AI?

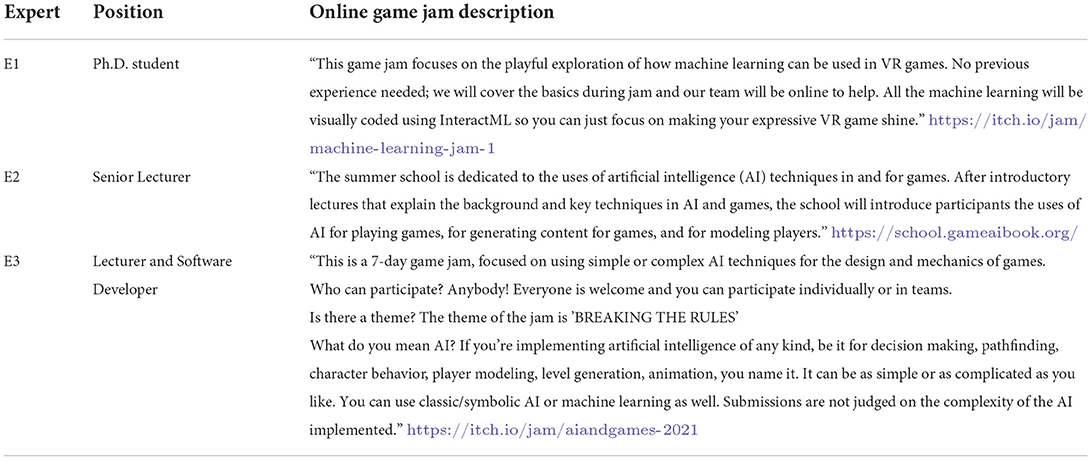

The interviews provided more depth than the survey alone, so our analysis focuses on the interviews with the three organizers. Table 2 presents an overview of the three organizers, their experience with AI, and the game jams they organized. All organizers were informed about the purpose of the research before they consented to participate in both the survey and the recorded interview sessions. The three interviewed organizers can be regarded as experts, and we interviewed them because of their exclusive process knowledge (Audenhove and Donders, 2019) of how to practically organize and run a game jam that specifically engaged participants in designing AI-based games. Furthermore, all three interviewed organizers had academic backgrounds in computer science, focusing on AI and ML, as teachers and researchers, which enabled them to contribute explanatory knowledge (Audenhove and Donders, 2019), in addition to practical experience, about how well suited a game jam format is for learning about and designing with AI. The three interviews were conducted during spring 2022, lasted between 31 and 48 min, and were transcribed by the authors for thematic analysis. For clarity, quotes in this article are not reproduced verbatim.

Table 2. An overview of the position of the interviewed experts and a description of the game jams they organized.

The analysis was performed collaboratively by the authors. We first conducted a thematic analysis (TA) of the transcribed interviews, following the six phases described by Braun and Clarke (2012). We were mainly driven by the insights identified in the data set, though our identification and generation of codes and themes were also informed by how we theoretically frame knowledge generation in game jams based on Schön's notion of reflection, which we introduce in Section 3.2.1. TA can be performed as such a combination of inductive and deductive approaches, as the method acknowledges that it “is impossible to be purely inductive, as we always bring something to the data when we analyze it, and we rarely completely ignore the semantic content of the data when we code for a particular theoretical construct” (Braun and Clarke, 2012).

After familiarizing ourselves with the data (phase one), we generated initial codes describing features of the data that were relevant to our research focus (phase two). Next, we iterated our analysis and shifted from generating codes to generating themes, which, like codes, represent patterns in the data set but at a broader level (phase three). We continuously reviewed the themes and discussed new insights about them (phase four). In preparation for writing up the analysis (phase six), we clarified the resulting themes by defining and naming them (phase five). Especially in phases four and five, we returned to how Schön's theory might support our findings and thereby deepen the analysis. For phases two to five, we used the collaboration platform Miro to visually structure the codes and support the generation of themes. This resulted in four themes:

1. Hands-on, Interactive Prototyping

2. Playful Participation

3. Creative Combinations of AI and Game Development

4. Understandable Goals and Evaluation Metrics for AI

The themes share the characteristic that they are all factors that make game jams uniquely suited for learning about AI as a design material.

Whereas the related studies in Section 2 inform our motivation for focusing on AI and game jams, the theoretical framework of Schön's notion of reflection informs in detail how we understand knowledge generation in game jams. This framework works as a scaffold for the results of our analysis and for discussing knowledge generation of abstract concepts in game jams where participants work with AI. This is in line with the approach taken by Kim and Lim (2021), who develop a concept by first outlining the theory in which they ground it, namely Schön's notion of knowing-in-action and reflective practice. While we share this approach, as well as Schön's influential theoretical foundation, we additionally seek to evaluate the notion of game jams as a knowledge-generating design process by adding empirical insights from practitioners, before discussing how game jams may be set up to support learners, focusing on designers and developers.

Schön's notion of reflection has been discussed critically (Erlandson and Beach, 2008; Hébert, 2015). Although some aspects of it may be problematic in that it, to a certain degree, presumes a Cartesian duality of mind and body, Schön's concept of reflection is still widely used in both design and education. It offers a vocabulary for beginning to articulate how, for example, tacit knowledge influences and drives activity. John Dewey is often credited with founding the model of reflective practice, particularly following his contribution in How We Think (Dewey, 1997). While Dewey's model of reflective practice has been criticized for an over-reliance on rationalism (Hébert, 2015), Schön's model aims at capturing the kind of intuitive knowledge that is cultivated through experience and that can be difficult to articulate (Schon, 1984). In the following sections, we draw especially on five key concepts from Schön's work, which we briefly introduce here:

• A person develops a repertoire of expectations, images, and techniques as they experience “many variations of a small number of types of cases” (Schon, 1984), which enables them to ‘practice' their practice.

• A design situation is generally approached through a kind of inquiry, i.e., a process of actions that are performed as experiments (Schon, 1984).

• Framing is how a person defines which design problem to engage with and which constraints it consists of (Schon, 1984).

• The process of reflection-in-action is central to how “practitioners sometimes deal well with situations of uncertainty, instability, uniqueness, and value conflict” (Schon, 1984). Especially when an otherwise intuitive action leads to an unexpected or surprising result, we respond in the moment by reflection-in-action.

• After an action has been performed, the person undertaking that action may reflect-on-action, not in order to change the overall situation (as reflection-on-action happens after the fact), but in order to learn and, e.g., further develop their repertoire (Schon, 1984).

While each concept characterizes a certain phenomenon or quality, the concepts are somewhat intertwined, and together they constitute a vocabulary for discussing how game jams can be knowledge-generating while themselves being reflective design processes.

In this section, we present the results of our thematic analysis of the expert interviews, as well as how the four factors identified relate to a theoretical foundation of didactics and design theory. The section revolves around the experts' insights on what game jam formats offer, covering questions two to four described in Section 3.1.

In their argument for integrating game jams and other hackathon-like activities into scholarly meetings such as conferences and seminars, Cook et al. wrote in 2015: “While the output of a game jam is unlikely to be polished or properly tested, it has the advantage of existing” (Cook et al., 2015). When people participate in a game jam, they create something tangible: a prototype.

Prototyping has been identified as one of the key challenges for teaching AI and ML because of the immaterial nature of the technology. This has led to recommendations such as representing ML's dependency on data in early prototypes and including visualizations, simulations, explanations of agent decision-making processes, or interactive demonstrations (Dove et al., 2017; Long and Magerko, 2020), Table 1. Prototypes serve as interactive demonstrations and embodied interactions (Long and Magerko, 2020), through which the learner gets a tangible demonstration of what AI does. In addition, the game jam forces participants to rapidly improve their technical literacy (Yang et al., 2020). This was also highlighted by the experts:

“Making something always helps, right? So like, there's nothing more to it. It's not rocket science. Making something, writing something, see [...] it fail. See how other people do it, the same problem, [...] opens up creativity and opens up understanding” (E2).

When designers engage with a design situation, they depend on inquiry activities such as sketching, prototyping, and mock-ups, i.e., objects and activities that externalize the participants' inquiry (Dix and Gongora, 2011) and that ultimately manifest the participants' reflection-in-action.

Reflection-in-action describes how a designer cognitively brings their awareness to their otherwise intuitive actions while performing them, e.g., because something unexpected happened (Schon, 1984). This may cause them to notice, think about, observe, or reflect on something about the ongoing action (Schön, 1992a). In other words, through this in-the-moment awareness the designer constructs a new understanding, which simultaneously informs their decisions about how to act in situ. This is closely connected to inquiry; in a game jam, reflection-in-action happens both when working with the material and because of the social setting:

“The other thing is also explaining to other people, either in their own team or elsewhere, why things are failing, it helps with reflection, right? Reflecting on what you're doing or your practices, having those practices questioned, all these things. So there's the two things, the practical aspect, and the social aspects, right? I think that with those two, people will improve their understanding [of AI]” (E2).

While participation in game jams can be framed as a reflective process, the game prototypes developed in a game jam can also be framed as knowledge-generating.

We argue that the game prototypes developed during a game jam can be framed as thoughtful objects, or "objects to think with" (Mor, 2013). Game prototypes can thus be more than "just" entertaining outcomes; they can contain arguments, knowledge, and articulations of this knowledge (see, e.g., Dunne, 2008; Dunne and Raby, 2013):

“[ed. There] was a lot of creativity in terms of how the AI is applied. (...) And people were experimenting. One of my favorites, you're playing tic tac toe against a robot, where they trained a machine learning agent for the animated arm that plays tic tac toe against you, but also for the bot” (E3).

In this quote, E3 describes how creating and training a robot arm becomes a vessel for thinking about machine learning and about intelligence that might enhance gameplay. The prototype itself, the arm, articulates a reflection the game developer has made about how such an arm might behave.

Galey and Ruecker (2010) draw parallels between the fields of design and book history, as the two fields share the process of creating artifacts worthy of analysis. In their words: "We recognize that digital artifacts have meaning, not just utility, and may constitute original contributions to knowledge in their own right. The consequence of this argument is that digital artifacts themselves–not just their surrogate project reports–should stand as peer-reviewable forms of research, worthy of professional credit and contestable as forms of argument" (Galey and Ruecker, 2010). Extending their argument to the context of game jams, we suggest that the game prototypes developed there can likewise be framed as forms of argument. Because the prototypes are visual, and sometimes tangible, we argue that they provide a unique form of situational backtalk (Schön, 1992a,b) to the designer as well as to the community. E3 explains that AI (mis)behaving in unexpected ways can become the main feature of the game itself:

“There was one, the whole game is built around you wandering around nightclubs trying to have a fun night because you forgot your purse, so you have no money. And so they're figuring out ways to sneak into nightclubs and steal people's drinks and everything else, but had all the characters moving around and doing stuff. And there was just characters dancing on the dance floor in some ridiculous and sometimes lewd ways. And actually the game came more from the humor of the characters doing something they shouldn't, or the player accidentally creating a scenario that causes things to kick off in an unexpected way” (E3).

In summary, the opportunity to create hands-on, interactive prototypes of AI constitutes the first factor that makes game jams uniquely suited to materializing AI technology.

The short time frame helps make the game jam a kind of temporary playful space, which Kennedy describes as valuable because “temporary differences and real-world problems can be marked off for a special time and place of playful creative design practice. [...] all game jams share this playful temporary suspension within a tightly rule bound structure and enjoy the bracketing off of differences” (Kennedy, 2018). Game jams are often valued for the kind of playful participation that can lead to the development of both innovative and conventional games (Goddard et al., 2014). In the words of E3, game jams can be used as:

“an opportunity just to have a bit of fun. And potentially, particularly also, for some of the community already had said, I'd really just like to have the opportunity to waste a week on trying to figure out how to do this [ed. design AI-based games], because they've never tried it before.” (E3).

Participating in a game jam can be described as a kind of inquiry, which is how a design situation is generally approached (Schon, 1984). This inquiry is conducted from an experimental, what-if perspective, because the design process takes place in the span between what exists and what could exist (Hansen and Halskov, 2014). The purpose of the designer's inquiry is to transform the uncertain design situation (Hansen and Dalsgaard, 2012) by identifying and articulating its uncertain elements (Dalsgaard, 2014).

The time-constrained format, and thereby the dedicated time that participants invest during the game jam, can be framed as an extrinsic motivational constraint, i.e., participants are motivated to finish a playable prototype within the time frame (Reng et al., 2013). In explaining why he organizes game jams for teaching AI, E2 emphasized how these extrinsic motivational constraints engage participants in a shared design activity at the same time:

“they offer great networking, great activation more than anything, basically you have to do something, you're engaged because everybody else around you is engaged. There's this kind of [a] herd mentality that, ‘we need to finish' - sometimes unhealthy... overextending, over-scoping, competition and things like that. Those are the negative aspects for jams in general. But the emotional outcomes of jams, strong emotions, strong collaborations that might emerge, strong motivation to continue” (E2).

Intrinsic motivations for participating in game jams often include learning more about game development itself, as well as experiences of enthusiasm, fun, the general joy of participating, and social aspects (Reng et al., 2013). From this perspective, a game jam open to voluntary participation may attract an audience that is intrinsically motivated:

“Just by framing it all as a game jam, you are already attracting people that know what a game jam is, that might want to make games, right? They have a playful gameful creative taste” (E1).

Regardless of whether it is extrinsically or intrinsically motivated, inquiry in game jams is to a high degree characterized by experimentation, partly because the game jam can be described as a ludic (i.e., playful) learning space, which is about gaining the courage to fail (Kolb and Kolb, 2010). In addition to letting participants have fun and experiment, game jams can work as safe spaces that enable participants to take risks during their inquiry, especially because of the short time frame, an aspect for which game jams have often been commended (Falk et al., 2021). E3 remarked that the participants take several kinds of risks during the game jam:

“It was interesting to see people fight with technical challenges, but also fighting with design challenges, and sometimes just leaning into the dumb weird thing that they've created and said, Yeah, I'm just going to make that. I'm just going to make a game that makes me laugh for five minutes” (E3).

Beyond serving as safe spaces for taking creative risks, game jams bring participants together in the same activity at the same time and place, and this social aspect can be beneficial for learning:

“It's this opportunity to take a bit of a creative risk, and hopefully be around people who can give you a bit of advice or support where necessary” (E3).

Because of their focus on playful participation, we argue that game jams are uniquely suited for learning about AI: they provide an arena in which designers can more easily “play with” AI (Yang et al., 2020), and for creating embodied interactions, i.e., designing interventions in which individuals can put themselves “in the agent's shoes” (Long and Magerko, 2020), Table 1.

Combinational creativity, i.e., novelty in the form of “unfamiliar combinations of familiar ideas” (Boden, 2004), has been highlighted as an important aspect of how creativity can be supported in game jams (Falk et al., 2021), and Section 2.2 outlined how the combination of AI and games has proved valuable in educational settings.

While it can be difficult for novices to combine AI and games in creative ways, E3 did observe creativity in the AI-based games developed:

“Some of them were really creative, people were trying different things. One of the things I was kind of thinking is we're going to get a certain number of top down shooters, or first person shooters or platformers, with simple non player characters. One of the things we did see through a lot of the submissions was a lot of creativity in terms of how the AI is applied” (E3).

Based on the experts' experiences and knowledge, game jams are valuable for envisioning opportunities to apply ML in less obvious ways, as highlighted by Dove et al. (2017), and for enabling designers to more easily “play with” AI in support of design ideation (Yang et al., 2020), Table 1. The development of a lasting artifact, i.e., the resulting game prototype, may also play a positive role in engaging participants in a state of creative flow and in developing learner interest and self-efficacy (Long et al., 2021).

During the game jam, AI or ML can be used directly as a design material:

“sometimes [people] were like, I'm just gonna focus all of it on this one thing. And then build the game around this one mechanic. There was one that did just Boids flocking algorithms, because they were like, I want to learn how to do flocking algorithms and then built a space shooter over it.” (E3).

Here, the participants used a specific AI algorithm as a creativity constraint, similar to how an architect might use a particular building material as both a constraint and a source of inspiration for ideation.

The specific topic of AI and game development can be described as a kind of thematic framing (Schön, 1992a) to which the participants adhere. Where the purpose of the inquiry is to transform a wicked design situation (i.e., one that cannot be definitively described or trivially solved; see, e.g., Rittel and Webber, 1973) into a better one, the transformation process itself can be seen as a framing of the constraints of which the design situation consists (Dalsgaard, 2014). Framing is a selection among these constraints, and through it the designer can transform an uncertain design situation into one that entails at least temporary certainty, until a new framing of the design situation is perhaps undertaken. Framing is thus the way in which the designer perceives the design situation, and it shapes how the design situation should be approached (Schön, 1992a).

While the most obvious application of AI in game design might be the design of non-player characters, E3 explained that this is only one of many ways in which AI is used in game design. They described how, while organizing an AI-focused game jam, they had made a substantial effort to communicate the various ways in which AI could be applied, because an AI-focused game jam may, before it even begins, create certain expectations in terms of technicality and complexity:

“Other jams don't have the same level of technical expectation, or potential complexity to them, which was certainly something that we struggled with. (...) I tried to go out of my way to express all the different ways I could think of off the top of my head, that you could tackle it. Is it a character or is it just for the decision making of an opponent” (E3).

In summary, we argue that because of the particular constraints and framings inherent in game jams, they offer unique opportunities to apply AI in creative and novel ways. The game format may even encourage deployments of AI that motivate designers and developers who would otherwise not find AI technology inherently interesting.

Artificial intelligence is sometimes (not unjustly) described as “a shiny new hammer looking for nails” (Vardi, 2021). A central challenge of building and applying AI is how to evaluate its output (cf. Yang et al., 2020), which is especially pertinent when we apply AI to problems that are not well defined and understood. If we do not understand the problems we attempt to solve with AI, it is easy to rely on arbitrary, narrow metrics as measures of success (Raji et al., 2021). This is one of the main challenges and discussions in AI today, often framed through Goodhart's law, i.e., that when a measure becomes a target, it ceases to be a good measure: “Current AI approaches have weaponized Goodhart's law by centering on optimizing a particular measure as a target. This poses a grand contradiction within AI design and ethics: optimizing metrics results in far from optimal outcomes” (Thomas and Uminsky, 2022).

We argue that game jams provide understandable goals, and therefore evaluation metrics, for sandbox deployments of AI. Rather than improving algorithms against arbitrary metrics, games provide an arena in which AI can provide value along much more varied dimensions than speed, accuracy, or performance. E3 explains, for instance, that deploying AI in strategy games reflects a more “classical” view of AI, whereas first-person shooters involve a theatrical design of their characters:

“in pure, just artificial intelligence, that kind of classical theoretical underpinnings of both symbolic AI and machine learning, (...) strategy games deal (...) with complex decisions, decision spaces with a high branching factor, and often very long term reward structures. (...) But if we were to look at game AI design, non-player character design, (...) has a much greater alignment with theatrical productions than a traditional game design ethos, because we're actually more interested in the theatrical blocking of the experience”1.

E1, who uses game jams for data collection in their research on machine learning, describes how the focus on games allows them to examine various constructs of evaluation:

“Because this is focused on games, we are also trying to understand: How do they evaluate their own evaluation in terms of player experience? So, enjoyment, game field, these are constructs that we're trying to assess” (E1).

The aim of a good game is not that its non-player characters or its inherent intelligence should be as fast, smart, or strong as possible, but rather that the game provides a good experience. A non-player character that consistently outperforms the player would disrupt the optimal experience of flow (Csikszentmihalyi and Csikzentmihaly, 1990), which requires that the game be neither too easy nor too difficult, but rather “just right” for keeping the player engaged.

Non-player characters are only one of many applications of AI (as described in Section 4.3). A game is designed with its own inherent rules, aesthetics, and laws to which AI should adhere, and which a game designer must consider when applying and designing AI. The goal of a good game is a good player experience. The use of AI in game design encourages the game designer to constantly evaluate their AI design, not in terms of how well it performs, but in terms of how good an experience it creates. By its very nature, a game forces the developer to consider and cater to the user who is going to interact with it to a much higher degree than many applications of AI in business and research.

Therefore, game jams provide a unique opportunity not only to aid designers in evaluating AI outputs (Yang et al., 2020) but also to think critically about what and how to evaluate, thereby considering the interplay between ML statistical intelligence and human common-sense intelligence (Dove et al., 2017) and enhancing explainability and promoting transparency in a learner-centered way, in line with the design considerations presented by Long and Magerko (2020), Table 1.

The distinction between reflection-in-action (refer to Section 4.1.1) and reflection-on-action lies in when a designer's reflection occurs. Reflection-on-action describes the designer's reflective awareness arising after the action has occurred, so that the action itself cannot be informed by this after-the-fact reflective process (Schön, 1992a). Hence, while reflection-on-action does not impact the design situation, this reflective awareness impacts the designers themselves and has the potential to expand their repertoire. This is one of the defining aspects of a game jam in a learning context, as reflection-on-action can contribute to participants' knowledge generation and experience.

All experts highlighted the continuous dialogue with other game jam participants, as well as the final demonstration of games, as important events for reflection on knowledge generation. The game jam thus provides opportunities for active reflection-on-action:

“We had a lot of discussion in the server afterwards or during the jam where people were talking about, ‘Oh, this thing [I'm not] sure how to get this to work', or ‘I've got this happening. But now these characters are doing this. And I don't know why'. And sometimes people were able to give guidance or advice or ask them, ‘have you tried doing this?' (...) We've tried to encourage people, particularly given the length of the jam, (...) ‘Push your updates, let us know how you're doing. Let us see what you're creating', to try and reinforce that sense of community. And just give them an idea of we're all invested to see how everyone else does” (E3).

The game jam and the final demonstration of games, we argue, are unique opportunities for peer review and discussion, and therefore for individual as well as social reflection-on-action. From a didactic perspective, this is also termed postproblem reflection: “As the students evaluate their own performance and that of their peers, they reflect on the effectiveness of their self-directed learning and collaborative problem solving. Such assessment is important for developing higher order thinking skills” (Resnick, 1987; Hmelo and Ferrari, 1997).

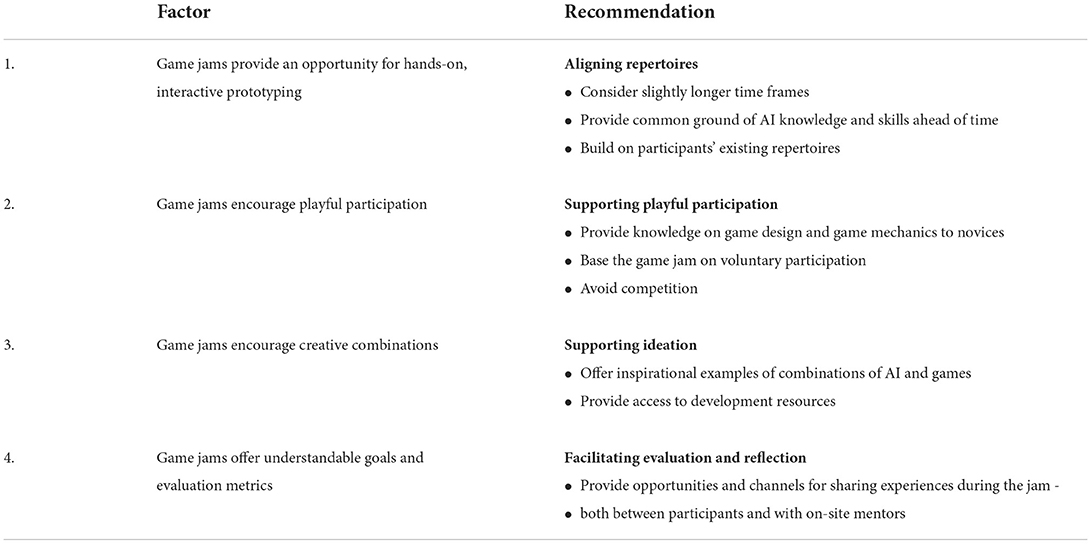

While we believe game jams provide a unique arena for materializing AI techniques and technology, there are critical perspectives and recommendations to be discussed. In this section, we present the expert organizers' recommendations and advice on how to structure AI-focused or AI-involving game jams to best support their participants, addressing question five in Section 3.1. The themes of this section correspond to the four factors presented in the previous section, in that each factor gave rise to one or more critical perspectives: critical in the sense of nuanced or opposing perspectives, but also in the sense of important aspects to be aware of when organizing AI-focused game jams. The factors and their corresponding recommendations are shown in Table 3.

Table 3. The four factors and corresponding recommendations for structuring and organizing an AI-focused game jam.

While the short time frame of a game jam offers advantages in terms of quickly activating participants in hands-on experiences with AI, it naturally poses limitations as well. First and foremost, there are limits to the depth of learning anyone can achieve within such a short span:

“I think the good thing about the game jam is that you have— potentially—people motivated, you have—potentially—people that have arranged their schedule around the game jam. And you have focused, intensive working time, this is an advantage. The disadvantage is that this very short period of time, so you are limited in what they can learn, and they can produce and your results are going to be skewed by their limited learning” (E1).

Participants are often motivated by the extrinsic factors of a game jam, such as the limited time frame (Reng et al., 2013). However, the time frame must be considered carefully, especially in learning situations, as a short game jam may be detrimental to learning. Two of the experts (E2 and E3) had arranged game jams slightly longer than the default 48 h (Goddard et al., 2014) because they believed this facilitated a deeper learning experience, e.g.:

“I don't think typical [i.e., 48-72 hours] game jams are good for many getting started with AI. Hence we opted for a longer time-frame of one week” (E3).

According to E2, AI-focused game jams have a relatively high entry requirement: participants need experience in game development to do something interesting with AI during a game jam, and learners who are less game-engine savvy may end up spending too much time on implementing the game rather than on developing and engaging with the AI. This prerequisite is highly relevant in relation to Schön's notion of repertoire.

A designer's repertoire refers to the past experiences and knowledge that the designer draws on to develop a preliminary understanding of the design situation, that is, the overall setting and context of the design process. This repertoire is then the point of departure for the designer's inquiry (Löwgren and Stolterman, 2004; refer to Section 4.2.1 about inquiry), which in a game jam takes place during the participants' time-constrained and focused engagement. In a design process, the early phases can be framed as a form of matching process between the repertoire and the design situation. During this matching process, the designer's repertoire is challenged and thereby developed and expanded (Dalsgaard, 2014). By participating in game jams, participants can develop their repertoire with experiences of design activities grounded in both thinking and doing.

Both E1 and E2 reflected that, to best prepare participants both to learn new material and to develop a playable game prototype within the game jam time frame, some prerequisites must be in place before the game jam, as complete novices in AI may need more time to grasp AI concepts before they can move beyond designing simple game mechanics to designing more complex game prototypes. This points back to the challenges of learning AI in general, as outlined in Section 2.1. Therefore, in order to set up game jams as AI-specific design processes, as recommended by Yang et al. (2020), Table 1, there needs to be some common ground on the overall topic of AI before the game jam starts, so that during the game jam participants can concentrate on combining what they have learned (as elaborated in Section 4.3). In other words, the participants' AI design repertoire needs to be developed to a certain state before the game jam.

Although participants generally commend game jams as learning experiences, the timing of when to introduce materials that participants should engage with during the game jam, such as learning material about AI techniques, is also important. E1 explains that when he started organizing game jams in order to teach AI techniques he was:

“fairly frustrated [...]. What I found is there's just not enough time for them to learn [...] They are just in two, three days, we just have enough time to do an interaction [...] But that's not a game. Right? [...] I think game jams are really good once people know what they're doing. And then you can have fascinating outputs, like some really popular games, they had their first version in a game jam. But this usually comes out of developers [...] that is very experienced with the topic, very experienced with the engine, very experienced, have already fiddled a little bit, and then goes to a game jam is like ‘I have a few ideas. Let's try them out' ” (E1).

E2 organized a game jam as part of a summer school, where the participants were introduced to learning material on certain AI topics before the game jam:

“We had the school that was running for five days, and it was very top down. And the idea was to make it a bit more bottom up (...) So all the things that they have heard from a lecture that was super, super high level, then they actually go into doing it, and they have no idea. So they go on Google, and they figure out, you know, how they actually do it, and they see their first approaches fail, and then they learn how to do it” (E2).

However, E1 found that when teaching participants about AI, it is important to find a balance between teaching theory and practice in a tutorial before a game jam:

“we found mixed reception to a long tutorial at the beginning of the game jam, because people want to start doing things. We found better reception when we do a short theoretical tutorial, followed by practical exercises that they do follow along. But we always find in the end, it is limited how much can they take in. And this is also a fine balance, because how much of the functionality of the tool can we take in and how much of the theoretical basic understanding of machine learning principles can they take in and we need them both right? We need them to understand how it works. And also, it's very, very, very important for them to not be frustrated.” (E1).

Different levels of expertise can also facilitate knowledge transfer. E1 described their own participation in various game jams:

“[Participating in] Nordic Game Jam, I did team up with these really experienced developers from Germany that had their own company. And in a very short amount of time, I learned a lot about coding standards and methodology to approach tasks and work that (...) I absorb into my regular work that I did not expect to get out of that game jam” (E1).

Depending on the goal of a specific game jam, it is highly relevant to consider participants' existing repertoires and how to align them in a meaningful way. Teams may be formed based on repertoires so that knowledge and tasks can be distributed among participants and no team is hindered by a lack of specific expertise.

To summarize, in order to align the repertoires of participants in an AI-focused game jam so that they can take full advantage of the jam time for hands-on experiences, we recommend considering time frames slightly longer than 48 h, providing common ground of basic AI knowledge and skills ahead of the jam, and building on participants' existing repertoires.

Not all participants will be intrinsically motivated to join a game jam, which is important to consider when organizing an AI-focused game jam as part of a curriculum for teaching AI:

“If you're not intrinsically motivated to do something, it's really not a productive or fruitful idea to force you to do so. Because you don't intrinsically want to be there” (E1).

This points back to the notion of design repertoire, which concerns not only prior knowledge and understanding of AI but also, particularly relevant for game jam participation, participants' prior knowledge of game mechanics and game genres. Game jam participants who already understand a lot about games will likely have a large advantage both in what they can accomplish in the time given and in how creatively they can think about applying AI and AI technology in game design. Therefore, it may be worth introducing basic knowledge of game design and game mechanics to some participants ahead of the game jam itself.

From the general perspective of supporting playful learning, Whitton (2018) highlights three aspects: playful tools, playful techniques, and playful tactics. While it is beyond the scope of this article to relay comprehensive didactics of playful learning, we refer to Whitton (2018) for practical advice, as well as to Goddard et al. (2014), who provide a set of highly pragmatic “guidelines for game jams to facilitate ludic craft in its playful and gameful forms.”

For game jams specifically, we highlight the importance of basing them on voluntary participation. E1 had taken part in a mandatory game jam during their PhD education and distinctly remembered that not all participants were equally willing to commit their time to it. The game jam was seen as somewhat of a waste of time by those PhD students who would rather spend time writing articles or on other more directly productive activities. E2 echoed this perspective and highlighted that the game jam will not work well if participation feels like work:

“Because then it becomes work. Right? You know, school, if you make school feel like work, it's not going to be as pleasant and a game jam is definitely not school, right [...] it should be explored bottom up, a process of exploring and failing and learning and, and doing something in the end, it should be like ‘I did something!’ as the internal emotion. And you need to celebrate that” (E2).

In order to support playfulness, E2 warned against making the game jam competitive:

“Competition crops up. And we want to squash this as soon as possible, because you're not there to, like you have two days! It's not about, you know, making the biggest team or, whatever. We had some good results, even without the competition. So we have a popular vote instead, which is basically the jammers voting for each other. And we never specify (...) the criteria, etc. So the lecturers cannot evaluate it. Nobody else, if you haven't participated in the jam, you're not voting. It's that simple” (E2).

This finding is mirrored by academic work on the educational merit of game jams, which has also highlighted that non-competitive atmospheres lower the barriers to participation (Aurava et al., 2021).

In summary, we recommend that AI-focused game jams support playful participation in three ways: providing novices with initial knowledge of game design and game mechanics, basing participation on voluntary enrollment, and avoiding competition.

Generating ideas for AI-based games can be difficult:

“AI is not something that is really embraced in a lot of indie projects, because it's got a lot of trappings. And there's a lot of issues with it. And particularly when we talk about game AI, that's this intersection of game design and artificial intelligence, where suddenly we have additional problems that you wouldn't have thought of otherwise” (E3).

E3 in particular recommended providing inspirational examples of how AI can be, and is, used in games, as a way to support participants' ideation of creative combinations of AI and game design. Framing is contingent on the participants' repertoire, i.e., their prior knowledge of AI and game jams. Novices in particular find it difficult to combine AI and games. One way E3 described of accommodating this was to provide participants with ready-made examples of how to combine AI techniques and game mechanics in order to ‘onboard’ them:

“To provide a set of resources that allow for people to come in and engage with the material that perhaps previously they haven't been able to do. And onboard them. And a lot of the technology aspects that are that probably with for a few people if they can get that helping hand particularly in the first day of the jam, just to say, hey, I can actually get this to work” (E3)².

E2 provided references to open-source AI libraries and resources rather than ready-made examples. However, E2 found that most participants did not use them:

“There's a number of libraries that are open source and to a different degree of quality, that someone could just plug into and do something within the first, let's say, a couple of hours. So having these available to you just as ideas as springboards comes to mind. Honestly, that said, most people didn't use them. So almost all the teams I know that did something that was cool, basically made their own game, or made their own platform. So it's not mandatory, but it always helps when you're always designing for the lowest common denominator, right?” (E2).

Previous research on supporting creativity during game jams has suggested that organizers may encourage combinational creativity by “suggesting that participants combine specific game genres or game mechanics that the participants could either select from a list prepared by the organizers or discern themselves by analyzing and discussing one or more such examples” (Falk et al., 2021).

In summary, to support participants' ideation and framing of AI-based games, we recommend offering several inspirational examples of combinations of AI and games, as well as access to development resources.

As described in Section 4.4, game jams can offer understandable goals and evaluation metrics for AI. One way of supporting this was to have participants share their progress, challenges, and ideas during and after the game jam. All experts mentioned using a Discord channel to facilitate this ongoing evaluation and reflection between participants and organizers.

In E1's game jam, participants could contact the organizers on Discord if they had questions during the game jam. These interactions were also used as data for E1's research:

“In the discord server, they usually ask questions, we answer them, we do get this interaction as a data set as a data point as well. We try to also when they need help, and the text chat isn't enough. We might jump with them on a call. We record the call and we try to you know, do some sort of the short interview unstructured one” (E1).

In the case of E2's game jam, there was an emphasis on having the participants collaborate and network with each other during the game jam:

“It was more about the jam organizers or me the jam organizer and the helpers to make sure that everybody was collaborating and networking etc. And so the discord was running from the beginning. And we had a room that was brainstorming ideas and stuff like that.” (E2).

In addition to facilitating communication between participants and organizers during the game jam, E2 recommended showcasing the games in an Expo setting after the game jam. E2 described this as an effective, visual way of communicating what participants produced during the game jam:

“[games] are much more fun to show in an Expo setting. (....) When you finish a hackathon you submit it somewhere on GitHub, and, you know, who cares? (...) most of the games were broken, but at least they were able to play and see, you know, see how things changed as they went. (...) [games] work better as dissemination material. And also just to get other people excited, 'cause you can, like you see Mario fall down, you see the pace increase or whatever it is that you're working on.” (E2).

In terms of evaluation, E2 described how it mattered less whether participants improved their AI literacy than whether they had a good experience that gave them the confidence to continue exploring AI:

“how much people improve their AI literacy over the course of a game jam? Honestly, I don't care. Like, fine. Yes, it improved by 5%. I've never been a believer in pretest, post test, kind of educational games. So for me, it's more about the experience, the positive emotions, the fact that this was a, you know, a good networking slash pleasurable experience makes people return and do things more. It's not about they learned all they could get, they didn't, I can tell you, they spent two days. What they did was more love and more understanding about the powers and failures of AI” (E2).

In sum, to facilitate evaluation and reflection, we recommend giving participants opportunities and channels for sharing experiences and challenges during the game jam. These should allow sharing both with each other and with organizers or other on-site mentors. An open, supportive, and collaborative environment among all participants may help alleviate the challenges that combining AI and game design inherently entails.

The purpose of this article is to discuss why the game jam format is particularly suited for learning about AI as a design material, especially for designers and developers. It also offers an initial discussion of how educators, among others, may organize a game jam to support this.

The empirical study is based on interviews with only three experts, which may limit the range of perspectives represented. We do not consider this to undermine the soundness of the findings, since the goal of the study was to open up avenues of opportunity rather than to exhaust all potential challenges. We nonetheless acknowledge that additional perspectives could elaborate and refine the findings.

Furthermore, this article synthesizes perspectives, experiences, and theories. The arguments should be tested and substantiated empirically, for example through formal studies of AI-focused game jams in practice. During the interviews with the experts, we identified several interesting topics and open questions for future research.

As the experts repeatedly stressed, and as the research literature also reflects (see, e.g., Reng et al., 2013; Kultima, 2015), voluntary participation driven by intrinsic motivation is an important factor for a successful game jam experience. This can make it challenging to incorporate game jams into the curriculum in formal education. If students are required to participate, the intrinsic motivation that accompanies voluntary participation may be absent. The question, then, is whether teachers should incorporate the format into their teaching, and if so, how best to do it. One organizational initiative that may help alleviate this challenge is to dial down any competitive aspects (E2). Another is to ensure that students feel that any kind of participation is accepted and sufficient. This includes participation that does not result in particularly advanced, or even functioning, prototypes. That is, students' prototypes should not be evaluated or graded on functionality. The game jam is more a way of creating excitement about experimenting, and hence learning about the topic, and hopefully of motivating students to keep learning after the game jam. However, how best to combine intrinsically motivated inquiry with a formal education setting remains an open question.

E1's game jam had two purposes: letting participants use an ML tool, and researching “how designers or programmers would use this tool to create [VR] experiences” (E1):

“what if we try to attract designers in a game jam, where we actually teach them machine learning and that's actually our target audience. You're a non expert machine learning user. We have a new tool, a new methodology that can teach you machine learning. You can experiment with it. We collect data It's a win situation.” (E1)

For E1, using a game jam in this way served as an alternative to more long-term and expensive research methods:

“it became clear that it was important to research how designers or programmers would use this tool to create experiences (...) the ideal option would be to have designers using our tool long term, but that's really difficult to do from a methodology perspective, and really difficult in terms of recruitment, and could be potentially very expensive” (E1).

We agree that exploring game jams, and other short-term design processes such as hackathons, as research methods has potential. These formats engage participants in nearly complete design processes in terms of design activities and decision making. For hackathons, it has even been argued that the design activity is more authentic than in studies conducted as controlled experiments, despite the tight time limit. One reason given is that participants' self-motivation and personal interest drive the design activity (Flus and Hurst, 2021). Combining this with the argument that game jams can offer understandable goals and evaluation metrics for AI, we suggest that future research explore the methodological validity and potential of game jams as research methods.

An important perspective raised by E3 is the question of who has access to technical AI resources:

“there's a challenge in trying to communicate the opportunities or the ideas with which you can explore applications, particularly of machine learning and games, because we're still in this kind of gestation, or phase of that body of work, because almost all of this is happening in big triple A studios, often behind closed doors. And so for smaller, independent developers, they don't have that level of engagement and interaction with it. And anything we can do to bridge that is a big concern, both previously, and I think certainly going forward and future jams, it's something we're going to be thinking about a lot” (E3).

This question touches on several aspects. For one, technologies, standards, and practices in corporate game development can be shrouded by a culture of secrecy and the use of non-disclosure agreements (NDAs) (O'Donnell, 2014): “More than discouraging regular testing, developing games ‘the old way’ [i.e., upholding a culture of secrecy and the use of NDAs] discourages exploration and experimentation, which is crucial for developers to push the quality of a game forward” (O'Donnell, 2014).

In game development, as in most other areas that develop and use AI, those with access to resources have more possibilities. In AI research, unpublished source code, “black-boxed” datasets, and sensitivity to varying training conditions have contributed to a general “reproducibility crisis,” similar to those witnessed in other fields such as medicine and physics (Hutson, 2018). By increasing technical literacy and leveraging the so-called network effect, AI-focused game jams may contribute to the democratization of AI (Sciforce, 2020).

In this article, we presented an argument for game jams as a unique opportunity for materializing AI in a way that facilitates learning to use AI technology as a design material. We highlighted some of the primary challenges and recommendations for teaching AI identified in previous literature. These include prototyping (Dove et al., 2017), improving technical literacy (Yang et al., 2020), enabling designers to more easily “play with” AI (Yang et al., 2020), and creating interactive demonstrations (Long and Magerko, 2020). We based our argument on interviews with three experts experienced in participating in and organizing AI-focused game jams, and on the theoretical background of Schön's work. We argued that game jams can meet many of the outlined challenges of materializing AI by 1) Providing an opportunity for hands-on, interactive prototyping, 2) Encouraging playful participation, 3) Encouraging creative combinations of AI and game development, and 4) Offering understandable goals and evaluation metrics for AI. The experts also raised several critical perspectives, resulting in four recommendations: 1) aligning repertoires, 2) supporting playful participation, 3) supporting ideation, and 4) facilitating evaluation and reflection. Finally, we discussed research directions from the perspective of using game jams for learning. We intend to explore these directions in future research. We hope that the perspectives provided will be useful for educators, designers, and developers exploring novel ways of materializing AI in practice.