REVIEW article

Front. Comput. Sci., 11 January 2022

Sec. Human-Media Interaction

Volume 3 - 2021 | https://doi.org/10.3389/fcomp.2021.733531

This article is part of the Research Topic 2022 Computer Science – Editor’s Pick.

Bhanuka Mahanama¹, Yasith Jayawardana¹, Sundararaman Rengarajan², Gavindya Jayawardena¹, Leanne Chukoskie²,³, Joseph Snider⁴, Sampath Jayarathna¹*

Our subjective visual experiences involve a complex interaction between our eyes, our brain, and the surrounding world. This interaction gives us the sense of sight, color, stereopsis, distance, pattern recognition, motor coordination, and more. Gaze-aware technology is becoming increasingly ubiquitous, and it can track gaze and pupil measures with varying degrees of fidelity. A review of the various gaze measures is therefore increasingly relevant, especially because our ability to make sense of these signals depends on different spatio-temporal sampling capacities. In this paper, we selectively review prior work on eye movements and pupil measures. We first describe the main oculomotor events studied in the literature and the characteristics of these events that different measures exploit. Next, we review various eye movement and pupil measures from prior literature. Finally, we discuss our observations based on applications of these measures, the benefits and practical challenges involving these measures, and our recommendations for future eye-tracking research directions.

The five primary senses provide humans with a rich perceptual experience of the world, with vision as the dominant sense. Early studies of visual perception (Dodge, 1900; Buswell, 1935; Yarbus, 1967) and its physiological underpinnings (Hubel and Wiesel, 1979; Hubel, 1995) have provided a foundation for subtler and more sophisticated studies of the visual system and its dynamic interaction with the environment via the oculomotor system. The oculomotor system both maintains visual stability and controls gaze-orienting movements (Goldberg et al., 1991; Land and Furneaux, 1997). It comprises the efferent limb of the visual system and the vestibular system (Wade and Jones, 1997). The efferent limb is responsible for maintaining eye position and executing eye movements. The vestibular system, on the other hand, provides the brain with information about motion, head position, and spatial orientation. This information in turn supports motor functions such as balance, stability during movement, and posture (Goldberg and Fernandez, 1984; Wade and Jones, 1997; Day and Fitzpatrick, 2005). Hearing and touch can also affect eye movements (Eberhard et al., 1995; Maier and Groh, 2009). There are five distinct types of eye movement. Two are gaze-stabilizing movements: the vestibulo-ocular reflex (VOR) and optokinetic nystagmus (OKN). Three are gaze-orienting movements: saccades, smooth pursuit, and vergence (Duchowski, 2017; Hejtmancik et al., 2017). In this review, we focus on gaze-orienting eye movements, which place the high-resolution fovea on selected objects of interest.

The fovea is a specialized high-acuity region of the central retina, approximately 1–2 mm in diameter (Dodge, 1903). It provides exceptionally detailed input from a small region of the visual field (Koster, 1895), approximately the size of a quarter held at arm’s length (Pumphrey, 1948; Hejtmancik et al., 2017). The role of gaze-orienting movements is to direct the fovea toward objects of interest. We perceive a stable world with uniform clarity because our visual and oculomotor systems work together seamlessly, and this allows us to engage with a complex and dynamic environment.

Recent advances in computing, such as computer vision (Krafka et al., 2016; Lee et al., 2020) and image processing (Pan et al., 2017; Mahanama et al., 2020; Ansari et al., 2021), have led to hardware and software that can extract these oculomotor events and their measurable properties. Eye-tracking devices range from commodity hardware (Mania et al., 2021) capable of extracting a few measures, to research-grade eye trackers paired with sophisticated software capable of extracting a variety of advanced measures. As a result, eye movement and pupillometry measures have the potential for wide adoption in both applications and research. An aggregate body of knowledge on eye movement and pupillometry measures is needed to provide a) a taxonomy of measures linking various oculomotor events, and b) a quick reference guide to eye-tracking and pupillometry measures. For application-oriented eye-tracking literature, the interested reader is referred to Duchowski (2002), a breadth-first survey of eye-tracking applications.

In this paper, we address this need by reviewing a selection of relevant prior research on gaze-orienting eye movements, the periods of visual stability between these movements, and pupil measures (see Figure 1). First, we describe the main oculomotor events and their measurable properties, and we introduce common eye movement analysis methods. Next, we review various eye movement and pupil measures. We then discuss how these measures are applied in domains including, but not limited to, neuroscience, human-computer interaction, and psychology, and we analyze their strengths and weaknesses. The paper concludes with a discussion of applications, recent developments, limitations, and practical challenges involving these measures, and our recommendations for future eye-tracking research directions.

This section describes oculomotor events that function as the basis for several eye movement and pupil measures. These events are: 1) fixations and saccades, 2) smooth pursuit, 3) fixational eye movements (tremors, microsaccades, drifts), 4) blinks, and 5) ocular vergence.

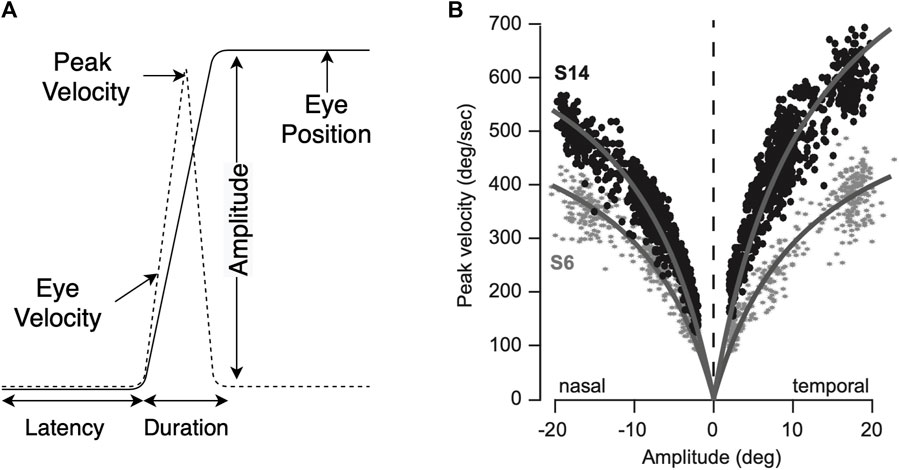

Eye movement information can be interpreted as a sequence of fixations and saccades. A fixation is a period during which our gaze remains at a particular location. A saccade, on the other hand, is a rapid eye movement between two consecutive fixations. Typical humans perform 3–5 saccades per second, but this rate varies with current perceptual and cognitive demands (Fischer and Weber, 1993). Fixations and saccades are the primary means of interacting with and perceiving the visual world. Our visual perceptual processes unfold during fixations, and saccades guide the fovea to selected regions of the visual field. We are effectively blind during saccades (Burr et al., 1994), which helps our perception of the world remain relatively stable during saccadic reorientation. Saccadic eye movements are brief. They have a reliable amplitude-velocity relationship known as the main sequence (see Figure 2) (Bahill et al., 1975; Termsarasab et al., 2015). Under the main sequence, peak saccade velocity increases linearly with saccade amplitude up to about 15°–20°. This relationship, however, varies with age and in certain disorders (Choi et al., 2014; Reppert et al., 2015).

FIGURE 2. Saccade amplitude and peak velocity (A), main sequence (B) (Reppert et al., 2015).

Saccades are inhibited during engaged visual attention on stationary stimuli, and as a result, a (nearly) steady central fixation is obtained (Fischer and Breitmeyer, 1987). Previous studies (Schiller et al., 1980; Wang et al., 2015) have shown that saccade preparation processes can be analyzed via pupil size. In particular, Schiller et al. (1980) showed that distinct neural preparatory signals in the superior colliculus (SC) and the frontal eye field (FEF) are vital for saccade preparation. Wang et al. (2015) showed that the SC is associated with the pupil control circuit. Cortical processing is associated with saccade latency, with shorter latency indicating advanced motor preparation (Connolly et al., 2005). Consistent with this, Jainta et al. (2011) showed a negative correlation between saccade latency and pupil size prior to a saccade. Thus, measures such as pupil diameter during fixations, fixation duration, saccade rate, saccade accuracy, and saccade latency together provide important clues to the underlying deployment of visual attention.

Several eye-tracking technologies exist (Young and Sheena, 1975) that measure ocular features over time and transform them into a stream of gaze positions. These streams can be analyzed in different ways to identify periods of fixations and saccades. Salvucci and Goldberg (2000) describe five algorithms for this identification:

- Velocity Threshold Identification (I-VT)
- Hidden Markov Model Identification (I-HMM)
- Dispersion Threshold Identification (I-DT)
- Minimum Spanning Tree Identification (I-MST)
- Area-of-Interest Identification (I-AOI)

I-VT and I-HMM are velocity-based algorithms. In I-VT, consecutive points are classified as fixation or saccade points based on their point-to-point velocities (Findlay et al., 1995). I-HMM, on the other hand, uses a two-state Hidden Markov Model whose hidden states represent the velocity distributions of saccade and fixation points. I-HMM performs a more robust identification than fixed-threshold methods like I-VT (Salvucci and Goldberg, 2000). It uses a probabilistic model rather than a fixed velocity threshold, which allows more freedom in classifying points.

I-DT and I-MST are dispersion-based algorithms that use a moving window to calculate the dispersion of points. In I-DT, points are classified as fixations or saccades depending on whether the dispersion is below or above a threshold (Widdel, 1984). In I-MST, a minimum spanning tree is constructed from the gaze points. Edges whose lengths exceed a predefined ratio are labeled as saccades, and the clusters of points separated by these saccade edges are labeled as fixations (Salvucci and Goldberg, 2000).

I-AOI is an area-based algorithm that only identifies fixations within specified target areas (AOIs) (Salvucci and Goldberg, 2000). If a point falls within a target area, it is labeled as a fixation point; otherwise, it is labeled as a saccade point. Consecutive fixation points are then grouped together, and groups that do not span a minimum duration are re-labeled as saccade points.

A systematic evaluation of the performance of these algorithms is available in Komogortsev et al. (2010).
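The velocity- and dispersion-based approaches above can be sketched in plain Python. This is a minimal illustration under stated assumptions. It is not Salvucci and Goldberg's reference implementation.

- The function names `ivt`, `group_fixations`, and `idt` are hypothetical.
- Gaze samples are assumed to arrive as parallel lists of x and y positions (in degrees) with timestamps in seconds.
- Dispersion is taken as (max x - min x) + (max y - min y), which is one common definition.
- Threshold values would have to be tuned for the tracker and the task.

```python
import math


def ivt(xs, ys, ts, velocity_threshold):
    """I-VT sketch: label each sample 'fix' or 'sac' by point-to-point velocity.

    velocity_threshold is in position units per second (e.g., deg/s); its
    value is an assumption to be tuned per setup.
    """
    if not xs:
        return []
    labels = []
    for i in range(len(xs) - 1):
        speed = math.hypot(xs[i + 1] - xs[i], ys[i + 1] - ys[i]) / (ts[i + 1] - ts[i])
        labels.append("fix" if speed < velocity_threshold else "sac")
    labels.append(labels[-1] if labels else "fix")  # last sample inherits its neighbor's label
    return labels


def group_fixations(labels, xs, ys):
    """Collapse runs of consecutive 'fix' samples into (first, last, cx, cy) tuples."""
    fixations, start = [], None
    for i, label in enumerate(labels + ["sac"]):  # sentinel closes a trailing run
        if label == "fix" and start is None:
            start = i
        elif label != "fix" and start is not None:
            n = i - start
            fixations.append((start, i - 1, sum(xs[start:i]) / n, sum(ys[start:i]) / n))
            start = None
    return fixations


def idt(xs, ys, ts, dispersion_threshold, min_duration):
    """I-DT sketch: grow a window while its dispersion stays under the threshold."""

    def dispersion(a, b):  # samples a..b inclusive
        wx, wy = xs[a:b + 1], ys[a:b + 1]
        return (max(wx) - min(wx)) + (max(wy) - min(wy))

    fixations, i, n = [], 0, len(xs)
    while i < n:
        j = i
        while j < n and ts[j] - ts[i] < min_duration:  # window must span min_duration
            j += 1
        if j >= n:
            break  # not enough data left for another fixation
        if dispersion(i, j) <= dispersion_threshold:
            while j + 1 < n and dispersion(i, j + 1) <= dispersion_threshold:
                j += 1  # expand until the next sample would break the threshold
            m = j - i + 1
            fixations.append((i, j, sum(xs[i:j + 1]) / m, sum(ys[i:j + 1]) / m))
            i = j + 1
        else:
            i += 1  # drop the first sample of the window and try again
    return fixations


# Hypothetical 100 Hz trace: fixation at 0 deg, a 10-degree saccade, fixation at 10 deg.
ts = [k * 0.01 for k in range(25)]
xs = [0.0] * 10 + [2.5, 5.0, 7.5] + [10.0] * 12
ys = [0.0] * 25
print(group_fixations(ivt(xs, ys, ts, 100.0), xs, ys))  # [(0, 8, 0.0, 0.0), (13, 24, 10.0, 0.0)]
print(idt(xs, ys, ts, 1.0, 0.05))                      # [(0, 9, 0.0, 0.0), (13, 24, 10.0, 0.0)]
```

The two methods disagree on the boundary sample at index 9. I-VT labels the sample before a fast jump as saccadic, while I-DT keeps it in the fixation window because the sample has not moved yet.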

Smooth pursuit is a different gaze-orienting movement, deployed to keep a moving object in foveal vision (Carl and Gellman, 1987; Barnes, 2008). Smooth pursuit eye movements are generally made when tracking an object moving in the visual environment (Carl and Gellman, 1987; Barnes and Asselman, 1991). A typical smooth pursuit movement is initiated by a saccadic eye movement that orients the eyes to the tracked object. The pursuit system then matches eye velocity to target velocity (Robinson, 1965; Barnes and Asselman, 1991). This smooth movement is punctuated by additional saccades that eliminate the retinal error between the current gaze position and the target. The smooth pursuit system has a functional architecture very similar to that of the saccadic system (Lisberger et al., 1987). However, smooth pursuit has a lower latency (100–125 ms) than saccades (200–250 ms) (Meyer et al., 1985; Krauzlis, 2004). Because of this underlying similarity, metrics used to characterize saccades can also be used to characterize smooth pursuit behavior (Lisberger et al., 1987). According to a classic model of smooth pursuit behavior (Robinson et al., 1986), there are three aspects of pursuit to characterize: onset, offset, and motor learning. Pursuit onset is the response time of the pursuit system to a target that moves for a certain period of time. Because it occurs after the target starts to move, this delay reflects the response of the pursuit system to target motion while the eyes were still (Jiang, 1996). Pursuit offset is the time the pursuit system takes to turn off when the target stops moving. When the target is seen to stop, pursuit is turned off and replaced by fixation (Robinson et al., 1986). The model also incorporated and simulated motor learning (motor plasticity), a gradual process that makes small, adaptive steps in a consistent direction.

The process of visual exploration is characterized by alternating fixations and saccades (Salvucci and Goldberg, 2000). However, a fixation does not imply a stationary eye. Our eyes are continually in (involuntary) motion, even during fixation (Adler and Fliegelman, 1934; Ratliff and Riggs, 1950; Ditchburn and Ginsborg, 1953). These fixational eye movements fall into three classes: 1) tremors, 2) microsaccades, and 3) drifts (Martinez-Conde et al., 2004; Rucci and Poletti, 2015).

A tremor (or ocular micro-tremor, or physiological nystagmus) is an aperiodic, wavelike eye movement with a high-frequency (

A microsaccade (or flick, or flicker, or fixational saccade) is a small, fast, jerk-like eye movement that occurs during voluntary fixation (Ditchburn and Ginsborg, 1953; Martinez-Conde et al., 2004). Microsaccades occur at a typical rate of 1–3 Hz, abruptly shifting the line of sight by a small amount (Ditchburn and Ginsborg, 1953). The average size of a microsaccade is about 6′ of arc, i.e., the size of a thumb-tack head held 2.5 m away from the eye (Steinman et al., 1973). The dynamics of microsaccades vary with stimuli and viewing task. For instance, the difficulty of a task can be discerned from the number of microsaccades that occur (Otero-Millan et al., 2008) and from their magnitude (Krejtz et al., 2018). Microsaccades also have spatio-temporal properties comparable to those of saccades (Zuber et al., 1965; Otero-Millan et al., 2008). For instance, microsaccades lie on the saccadic main sequence (Zuber et al., 1965), and saccades and microsaccades have equivalent refractory periods (Otero-Millan et al., 2008). Moreover, microsaccades as small as 9′ generate a field potential over the occipital cortex and the mid-central scalp sites 100–140 ms after movement onset. This potential resembles the visual lambda response evoked by saccades (Dimigen et al., 2009). It is increasingly accepted that microsaccades play an important role in modulating attentional and perceptual processes (Hafed et al., 2015).

A drift (or slow drift) is a low-frequency eye movement that occurs during the intervals between microsaccades and saccades (Steinman et al., 1973). During a drift, the retinal image of a fixated object moves across the photoreceptors (Ratliff and Riggs, 1950). Drifts have a compensatory role in maintaining accurate visual fixation. They occur either in the absence of microsaccades or when compensation by microsaccades is inadequate (Ratliff and Riggs, 1950). The average size of a drift is about 6′ of arc, with an average velocity of about 1′ of arc per second (Ratliff and Riggs, 1950; Ditchburn and Ginsborg, 1953; Cornsweet, 1956; Nachmias, 1961).

A blink is the closing and reopening of the eyelids. Although blinks are a primitive event, they are widely used in eye-tracking measures. A blink that originates from a voluntary action is a voluntary blink, or wink (Blount, 1927). Involuntary blinks are of two types. Reflexive blinks are evoked by external stimuli as a form of protection. Spontaneous blinks are any involuntary blinks that are not reflexive (Valls-Sole, 2019). Voluntary blinks (winks) are not commonly adopted as a metric, despite their use as a form of interaction (Noronha et al., 2017). In contrast, involuntary blinks indicate the state of an individual (Stern et al., 1984) or a reflex action to a stimulus (Valls-Sole, 2019). Of the involuntary blinks, spontaneous blinks are the type most commonly used as a metric, because they correlate with one’s internal state (Shin et al., 2015; Maffei and Angrilli, 2019).

Up to this point, the movements described are all conjugate, or “yoked,” eye movements, meaning that both eyes move in the same direction to fixate an object. However, we can also fixate objects in different depth planes. In that case, binocular vision is maintained by opposite movements of the two eyes. These simultaneous, opposing movements of the eyes are known as ocular vergence (Holmqvist et al., 2011). Vergence movements can occur in either direction, resulting in convergence or divergence. Shifting focus from far to near triggers convergent movements, and shifting from near to far triggers divergent movements. Because screen-based eye tracking is ubiquitous, most of the literature concerns conjugate eye movements within a single depth plane.
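A small worked sketch makes the convergence/divergence geometry concrete. It assumes a target straight ahead of the viewer and symmetric fixation. Under those assumptions, the vergence angle for an interpupillary distance p and a target distance d is θ = 2·arctan(p / 2d). The function name and the 6.3 cm interpupillary distance are illustrative assumptions, not values from the studies cited above.

```python
import math


def vergence_angle_deg(ipd_cm, distance_cm):
    """Vergence angle in degrees for a target straight ahead (symmetric fixation assumed)."""
    return math.degrees(2 * math.atan(ipd_cm / (2 * distance_cm)))


# Hypothetical interpupillary distance of 6.3 cm.
near = vergence_angle_deg(6.3, 40)   # about 9.0 degrees at 40 cm
far = vergence_angle_deg(6.3, 600)   # about 0.6 degrees at 6 m
print(f"far-to-near refocus requires about {near - far:.1f} degrees of convergence")
```

For small angles, θ is roughly proportional to 1/d. As a result, most of the vergence change occurs for targets within a meter or two of the viewer.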

Eye movements are the result of complex cognitive processes involving, at the very least, target selection, movement planning, and execution. Analysis of eye movements can reveal objective and quantifiable information about the quality, predictability, and consistency of these covert processes (Van der Stigchel et al., 2007). Table 1 lists the eye movement measures. Holmqvist et al. (2011) and Young and Sheena (1975) discuss several eye movement measures, measurement techniques, and key considerations for eye movement research. In this section, we introduce several eye movement analysis techniques from the literature.

AOI analysis is a technique for analyzing eye movements by assigning them to specific areas (or regions) of the visual scene (Holmqvist et al., 2011; Hessels et al., 2016). Measures obtained across the entire scene are not localized. AOI analysis instead provides semantically localized measures, which are particularly useful for attention-based research such as user interaction, marketing, and psychology research (Hessels et al., 2016). Defining the shape and bounds of an AOI can be difficult (Hessels et al., 2016). Ideally, each AOI should have the same shape and bounds as the actual object. In practice, defining arbitrarily shaped (and sized) AOIs in eye-tracking software is difficult. AOIs are therefore most commonly defined using simple shapes such as rectangles and ellipses (Holmqvist et al., 2011). Recent advances in computer vision have produced models that automatically and reliably identify real-world objects in visual scenes (Ren et al., 2015; Redmon et al., 2016). These models make it possible to identify AOIs in real-world images almost as readily as with predefined stimuli presented on a computer screen (Zhang et al., 2018; Jayawardena and Jayarathna, 2021).
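A minimal sketch of AOI assignment uses the simple shapes mentioned above, rectangles and ellipses. The following are assumptions made for illustration:

- the class names and the `(x, y, duration_ms)` fixation format;
- the pixel coordinates;
- the rule that a fixation inside overlapping AOIs counts toward the first AOI listed.

Eye-tracking software packages handle these details in their own ways.

```python
from dataclasses import dataclass


@dataclass
class RectAOI:
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x, y):
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


@dataclass
class EllipseAOI:
    name: str
    cx: float
    cy: float
    rx: float
    ry: float

    def contains(self, x, y):
        return ((x - self.cx) / self.rx) ** 2 + ((y - self.cy) / self.ry) ** 2 <= 1.0


def aoi_measures(fixations, aois):
    """Fixation count and total dwell time per AOI; the first matching AOI wins."""
    names = [a.name for a in aois] + ["outside"]
    stats = {name: {"count": 0, "dwell_ms": 0.0} for name in names}
    for x, y, duration_ms in fixations:
        name = next((a.name for a in aois if a.contains(x, y)), "outside")
        stats[name]["count"] += 1
        stats[name]["dwell_ms"] += duration_ms
    return stats


# Hypothetical screen layout (pixels) and fixations.
aois = [RectAOI("headline", 0, 0, 800, 100), EllipseAOI("logo", 900, 50, 60, 40)]
fixations = [(100, 50, 250), (400, 80, 180), (910, 60, 300), (500, 500, 220)]
print(aoi_measures(fixations, aois))
```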

Heat map analysis is a technique for analyzing the spatial distribution of eye movements across the visual scene. It can be applied to the eye movements of individual participants and to the aggregated eye movements of multiple participants. In general, heat maps are represented using Gaussian Mixture Models (GMMs) that indicate the frequency (or probability) of fixations at each location. Heat map-based metrics generally measure the overlap between two GMMs, which indicates how similar the fixated regions of an image are. Heat map analysis does not capture the order of visitation (Grindinger et al., 2010); it analyzes only the spatial distribution of fixations. To visualize a heat map, a color-coded spatial distribution of fixations is overlaid on the stimuli that participants looked at. The color represents the quantity of fixations at each point. Heat map analysis is particularly useful for identifying which areas of the stimuli received more (or less) visual attention. Driving research comparing different groups and conditions is one example (Snider et al., 2021).
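The sketch below builds a heat map as a duration-weighted sum of isotropic Gaussians centered on fixations. This is equivalent to a Gaussian mixture with one equal-width component per fixation. The sketch then compares two maps. Histogram intersection serves here as one possible overlap measure. This choice is an assumption and is not necessarily the metric used in the cited studies. The grid size, `sigma`, and fixation data are hypothetical.

```python
import math


def heat_map(fixations, width, height, sigma):
    """Duration-weighted sum of isotropic Gaussians on a width x height grid.

    fixations: list of (x, y, duration) in grid-cell units. The map is
    normalized to sum to 1 so that maps from different viewers are comparable.
    """
    grid = [[0.0] * width for _ in range(height)]
    for fx, fy, dur in fixations:
        for r in range(height):
            for c in range(width):
                d2 = (c - fx) ** 2 + (r - fy) ** 2
                grid[r][c] += dur * math.exp(-d2 / (2 * sigma ** 2))
    total = sum(sum(row) for row in grid)
    return [[v / total for v in row] for row in grid]


def overlap(map_a, map_b):
    """Histogram intersection: 1.0 for identical normalized maps, near 0 for disjoint ones."""
    return sum(min(a, b) for row_a, row_b in zip(map_a, map_b) for a, b in zip(row_a, row_b))


viewer_1 = heat_map([(5, 5, 300), (15, 5, 200)], 20, 20, sigma=2.0)
viewer_2 = heat_map([(5, 5, 250), (15, 6, 250)], 20, 20, sigma=2.0)
viewer_3 = heat_map([(5, 15, 300)], 20, 20, sigma=2.0)
print(overlap(viewer_1, viewer_2) > overlap(viewer_1, viewer_3))  # True
```

As the text notes, reordering a viewer's fixations leaves the map unchanged, so this comparison ignores the order of visitation.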

A scan path is a sequence of fixations and saccades that describes the pattern of eye movements during a task (Salvucci and Goldberg, 2000). Scan paths can be quite complex, with frequent revisits and overlapping saccades. In general, a scan path is visualized as a sequence of connected nodes and edges displayed over an image of the visual scene (Heminghous and Duchowski, 2006; Goldberg and Helfman, 2010). Each node is a fixation centroid, and each edge is a saccade between successive fixations. The diameter of each node is proportional to the fixation duration (Goldberg and Helfman, 2010). Scan path analysis has been widely used to model the dynamics of eye movements during visual search (Walker-Smith et al., 1977; Horley et al., 2003). It has also been used in areas such as biometric identification (Holland and Komogortsev, 2011). In cognitive neuroscience, Parkhurst et al. (2002) and van Zoest et al. (2004) showed that stimulus-driven, bottom-up attention dominates during the early phases of viewing. In contrast, Nyström and Holmqvist (2008) showed that top-down cognitive processes guide fixation selection throughout the course of viewing. Viewers eventually fixated on meaningful stimuli, regardless of whether those stimuli were obscured or reduced in contrast.
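Following the visualization convention described above, an ordered fixation list can be turned into scan path nodes and edges. The function names, the radius scale factor, and the distance-based revisit count are hypothetical simplifications for illustration. Published scan path methods define revisits and comparisons more carefully.

```python
import math


def scan_path(fixations, radius_per_ms=0.1):
    """Build a drawable scan path from ordered fixations [(x, y, duration_ms), ...].

    Nodes sit at fixation centroids with a radius proportional to duration
    (radius_per_ms is an arbitrary, hypothetical scale). Edges join successive
    fixations and carry the saccade length in the same units as x and y.
    """
    nodes = [{"x": x, "y": y, "radius": dur * radius_per_ms} for x, y, dur in fixations]
    edges = [
        {"from": i, "to": i + 1, "length": math.hypot(b[0] - a[0], b[1] - a[1])}
        for i, (a, b) in enumerate(zip(fixations, fixations[1:]))
    ]
    return nodes, edges


def revisits(fixations, radius):
    """Count fixations landing within `radius` of any earlier fixation."""
    count = 0
    for i, (x, y, _) in enumerate(fixations):
        if any(math.hypot(x - px, y - py) <= radius for px, py, _ in fixations[:i]):
            count += 1
    return count


path = [(100, 100, 200), (400, 500, 350), (110, 100, 150)]  # hypothetical pixels / ms
nodes, edges = scan_path(path)
print(nodes, edges, revisits(path, radius=30), sep="\n")
```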

In this section, we discuss several metrics relevant to oculomotor behavior (Komogortsev et al., 2013). These metrics are derived from fixations, saccades, smooth pursuit, blinks, vergence, and the visual search paradigm.

Fixation-based measures are widely used in eye-tracking research. Here, fixations are first identified using algorithms such as I-VT, I-HMM (velocity-based), I-DT, I-MST (dispersion-based), and I-AOI (area-based) (Salvucci and Goldberg, 2000). This information is then used to obtain different fixation measures, as described below.

Fixation count is the number of fixations identified within a given time period. Fixations can be counted either over the entire stimulus or within a single AOI (Holmqvist et al., 2011). Fixation count has been used to determine semantic importance (Buswell, 1935; Yarbus, 1967), the efficiency and difficulty of search (Goldberg and Kotval, 1999; Jacob and Karn, 2003), neurological dysfunctions (Brutten and Janssen, 1979; Coeckelbergh et al., 2002), and the impact of prior experience (Schoonahd et al., 1973; Megaw, 1979; Megaw and Richardson, 1979).

Fixation duration is the length of a period during which the eyes stay still in one position (Salvucci and Goldberg, 2000). Fixations generally last around 200–300 ms, and longer fixations indicate deeper cognitive processing (Rayner, 1978; Salthouse and Ellis, 1980). However, fixations can also last several seconds (Young and Sheena, 1975; Karsh and Breitenbach, 1983) or be as short as 30–40 ms (Rayner, 1978; Rayner, 1979). Furthermore, the distribution of fixation durations is typically positively skewed rather than Gaussian (Velichkovsky et al., 2000; Staub and Benatar, 2013). The average fixation duration is often used as a baseline against which fixation durations under different experimental conditions are compared (Salthouse and Ellis, 1980; Pavlović and Jensen, 2009). Comparing average fixation duration across AOIs shows which areas were looked at for longer than others. AOIs that held the gaze longer have a higher average fixation duration (Goldberg and Kotval, 1999; Pavlović and Jensen, 2009).
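The positive skew and per-AOI averages described above can be computed with the Python standard library alone. In this sketch the durations are made-up values. Population skewness (the third standardized moment) is only one of several skewness estimators.

```python
import statistics


def duration_summary(durations_ms):
    """Mean, median and population skewness of fixation durations (needs non-zero spread)."""
    mean = statistics.fmean(durations_ms)
    sd = statistics.pstdev(durations_ms)
    skew = sum((d - mean) ** 3 for d in durations_ms) / (len(durations_ms) * sd ** 3)
    return {"mean": mean, "median": statistics.median(durations_ms), "skewness": skew}


def mean_duration_by_aoi(fixations):
    """Average fixation duration per AOI from (aoi_name, duration_ms) pairs."""
    groups = {}
    for aoi, duration in fixations:
        groups.setdefault(aoi, []).append(duration)
    return {aoi: statistics.fmean(ds) for aoi, ds in groups.items()}


# Made-up durations with one long fixation: the mean exceeds the median (right skew).
summary = duration_summary([180, 200, 210, 230, 250, 260, 300, 1200])
print(summary["mean"], summary["median"], summary["skewness"] > 0)  # 353.75 240.0 True
print(mean_duration_by_aoi([("text", 220), ("text", 260), ("image", 400)]))
```

When fixation durations are skewed like this, reporting the median alongside the mean helps keep a few long fixations from dominating a baseline.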

The amplitude of a saccade (see Figure 2) is the distance the eye travels during the saccade. It is measured either in degrees of visual angle or in pixels. It can be approximated via the Euclidean distance between fixation points (Megaw and Richardson, 1979; Holmqvist et al., 2011). Saccade amplitudes depend on the nature of the visual task. For instance, in reading tasks, saccade amplitudes are limited to ≈2°, i.e., 7–8 letters in a standard font size (Rayner, 1978). They are further limited when reading aloud (Rayner et al., 2012). Furthermore, saccade amplitudes tend to decrease with increasing task difficulty (Zelinsky and Sheinberg, 1997; Phillips and Edelman, 2008) and with increasing cognitive load (Ceder, 1977; May et al., 1990).
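Converting a pixel distance to degrees of visual angle requires the screen geometry and the viewing distance. The sketch below assumes the saccade is roughly centered on the line of sight. It also uses hypothetical setup values: a 53 cm wide, 1920 px display viewed from 60 cm. For saccades far from the screen center, this centered formula slightly overestimates the angle.

```python
import math


def pixels_to_degrees(distance_px, screen_width_cm, screen_width_px, viewing_distance_cm):
    """Visual angle (degrees) subtended by an on-screen distance centered on the line of sight."""
    size_cm = distance_px * screen_width_cm / screen_width_px
    return math.degrees(2 * math.atan(size_cm / (2 * viewing_distance_cm)))


def saccade_amplitude_deg(fix_a, fix_b, **setup):
    """Approximate amplitude from the Euclidean distance between two fixation centroids (px)."""
    return pixels_to_degrees(math.dist(fix_a, fix_b), **setup)


SETUP = {"screen_width_cm": 53.0, "screen_width_px": 1920, "viewing_distance_cm": 60.0}  # hypothetical
print(round(saccade_amplitude_deg((960, 540), (1036, 540), **SETUP), 2))  # 2.0
```

With this setup, a reading-sized saccade of about 2° spans only about 76 px. This shows why pixel-based amplitudes are hard to compare across setups unless they are converted.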

The direction (or orientation, or trajectory) of a saccade or a sequence of saccades is another useful descriptive measure. It can be represented as an absolute, relative, or discretized value. Absolute saccade direction is calculated using the coordinates of consecutive fixations. Relative saccade direction is the difference between the absolute directions of two consecutive saccades. Discretized saccade directions are obtained by binning the absolute saccade direction into predefined angular segments (e.g., compass-like directions). In visual search studies, researchers have used different representations of saccade direction to analyze how visual conditions affect eye movement behavior. For instance, absolute saccade directions were used to analyze the effects of target predictability (Walker et al., 2006) and visual orientation (Foulsham et al., 2008) on eye movements. Similarly, discretized saccade directions were used to compare the visual search strategies of different subjects (Ponsoda et al., 1995; Gbadamosi and Zangemeister, 2001).
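The three representations above can be sketched as follows. The sketch assumes fixation centroids in a y-up coordinate frame, with 0° pointing right. For screen coordinates where y grows downward, the y difference would need to be negated first. The eight compass sectors are one hypothetical binning choice.

```python
import math

COMPASS = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]  # eight 45-degree sectors


def absolute_directions(centroids):
    """Direction in degrees [0, 360) of each saccade between consecutive fixation centroids."""
    return [math.degrees(math.atan2(y1 - y0, x1 - x0)) % 360
            for (x0, y0), (x1, y1) in zip(centroids, centroids[1:])]


def relative_directions(abs_dirs):
    """Change in direction between consecutive saccades, wrapped to (-180, 180]."""
    rel = []
    for a, b in zip(abs_dirs, abs_dirs[1:]):
        d = (b - a) % 360
        rel.append(d - 360 if d > 180 else d)
    return rel


def discretized_directions(abs_dirs):
    """Bin absolute directions into compass sectors centered on E, NE, N, ..."""
    return [COMPASS[int(((a + 22.5) % 360) // 45)] for a in abs_dirs]


centroids = [(0, 0), (10, 0), (10, 10), (0, 10)]  # right, then up, then left
dirs = absolute_directions(centroids)
print(dirs, relative_directions(dirs), discretized_directions(dirs))
```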

Saccade velocity is calculated by taking the first derivative of the time series of gaze positions. Average saccadic velocity is the mean of the velocities over the duration of a saccade. Peak saccadic velocity is the highest velocity reached during the saccade (Holmqvist et al., 2011). For a given amplitude, saccade velocity has been found to decrease with tiredness (Becker and Fuchs, 1969; McGregor and Stern, 1996) and sleep deprivation (Russo et al., 2003). It also decreases in conditions such as Alzheimer’s disease (Boxer et al., 2012) and AIDS (Castello et al., 1998). In contrast, saccade velocity has been found to increase with task difficulty (Galley, 1993), the intrinsic value of visual information (Xu-Wilson et al., 2009), and task experience (McGregor and Stern, 1996). Many studies of the neurological and behavioral effects of drugs and alcohol (Lehtinen et al., 1979; Griffiths et al., 1984; Abel and Hertle, 1988) have used peak saccade velocity as an oculomotor measure.
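The following sketch computes saccade velocity under two assumptions: gaze positions are in degrees with timestamps in seconds, and saccade boundaries are already known (for example, from I-VT). The central difference used here is one simple numerical derivative. Real data are usually filtered first, because differentiation amplifies measurement noise. The sample trace is synthetic.

```python
import math


def sample_velocities(xs, ys, ts):
    """Speed (deg/s) at each interior sample via a central difference of position."""
    return [math.hypot(xs[i + 1] - xs[i - 1], ys[i + 1] - ys[i - 1]) / (ts[i + 1] - ts[i - 1])
            for i in range(1, len(xs) - 1)]


def saccade_velocity_stats(xs, ys, ts, start, end):
    """Average and peak velocity over samples start..end (inclusive) of one saccade.

    Indices refer to the original samples and must satisfy 1 <= start <= end <= len(xs) - 2,
    because the central difference is undefined at the first and last sample.
    """
    v = sample_velocities(xs, ys, ts)[start - 1:end]
    return sum(v) / len(v), max(v)


# Synthetic 1000 Hz trace of a 2-degree horizontal saccade.
ts = [i / 1000 for i in range(11)]
xs = [0.0, 0.0, 0.1, 0.4, 0.9, 1.4, 1.7, 1.9, 2.0, 2.0, 2.0]
ys = [0.0] * 11
avg_v, peak_v = saccade_velocity_stats(xs, ys, ts, 2, 7)
print(f"average {avg_v:.0f} deg/s, peak {peak_v:.0f} deg/s")
```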

Saccadic latency is the time between the onset of a stimulus and the initiation of the saccade (Andersson et al., 2010). In practice, two main factors affect the measured saccadic latency: the sampling frequency of the setup and the saccade detection time. The sampling frequency is the rate at which the eye tracker records samples; the higher the sampling frequency, the smaller the timing error it introduces. The saccade detection time is the point at which the device detects a saccade. This happens when the eye velocity reaches the qualifying threshold or otherwise satisfies the criteria of the saccade detection algorithm. In a study with young and older participants, Warren et al. (2013) observed that saccadic latencies increased significantly in older participants as stimulus size decreased. Further, comparative studies have examined healthy subjects and subjects with Parkinson’s disease, with and without medication. In these studies, saccadic latency shows potential as a biomarker for the disease (Michell et al., 2006). A similar study of participants with amblyopia found that the interocular difference in saccadic latency correlates with the difference in Snellen acuity (McKee et al., 2016).
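Measuring the same hypothetical latency at two sampling rates illustrates the effect of sampling frequency. The function assumes a precomputed velocity trace and a simple velocity-threshold detector. The 30 deg/s threshold and the 203 ms "true" latency are illustrative assumptions.

```python
def saccadic_latency_ms(velocities, sample_rate_hz, stimulus_onset_idx, velocity_threshold):
    """Time from stimulus onset to the first sample whose velocity reaches the threshold.

    Returns None when no saccade is detected. Because onset can only be located
    at a sample, the estimate can lag the true onset by up to one sample period
    (1000 / sample_rate_hz ms).
    """
    for i in range(stimulus_onset_idx, len(velocities)):
        if velocities[i] >= velocity_threshold:
            return (i - stimulus_onset_idx) * 1000.0 / sample_rate_hz
    return None


TRUE_LATENCY_MS = 203.0  # hypothetical saccade onset after the stimulus
for rate in (1000, 60):
    # Synthetic one-second velocity trace: 0 deg/s before saccade onset, 300 deg/s after.
    trace = [300.0 if k * 1000.0 / rate >= TRUE_LATENCY_MS else 0.0 for k in range(rate)]
    print(rate, "Hz:", saccadic_latency_ms(trace, rate, 0, velocity_threshold=30.0), "ms")
```

At 1000 Hz the sketch recovers 203 ms. At 60 Hz it reports about 216.7 ms, because the first sample at or after the true onset arrives almost a full 16.7 ms sampling step late.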

Saccade rate (or saccade frequency) is the number of saccadic eye movements per unit time (Ohtani, 1971). For static stimuli, the saccade rate is similar to the fixation rate. For dynamically moving stimuli, however, the saccade rate is a measure of catch-up saccades generated during smooth pursuit (Holmqvist et al., 2011). The saccade rate decreases with increasing task difficulty (Nakayama et al., 2002) and fatigue level (Van Orden et al., 2000). Moreover, subjects with neurological disorders exhibit higher saccadic rates during smooth pursuit (O’Driscoll and Callahan, 2008; van Tricht et al., 2010).

Saccade gain (or saccade accuracy) is the ratio between the initial saccade amplitude and the target amplitude (Coëffé and O’Regan, 1987). The target amplitude is the Euclidean distance between the two stimuli between which the saccade occurred. This measure indicates how accurately a saccadic movement landed on the target stimulus (Ettinger et al., 2002; Holmqvist et al., 2011). A saccade with a gain greater than 1.0 is called an overshoot, or hypermetric. A saccade with a gain less than 1.0 is called an undershoot, or hypometric. Saccadic gain is probabilistic at the individual level. Lisi et al. (2019) demonstrate that biased saccadic gains reflect an individualized, probabilistic control strategy that adapts to different environmental conditions. Saccade gain is commonly used in neurological studies (Ettinger et al., 2002; Holmqvist et al., 2011). For instance, Crevits et al. (2003) used saccade gain to quantify the effects of severe sleep deprivation.
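Saccade gain follows directly from its definition. The sketch assumes 2-D positions in degrees. The function names and the optional `tolerance` band for labelling a saccade "accurate" are hypothetical additions, not part of the definition above.

```python
import math


def saccade_gain(saccade_start, landing, stimulus_a, stimulus_b):
    """Initial saccade amplitude divided by target amplitude (distance between the stimuli)."""
    return math.dist(saccade_start, landing) / math.dist(stimulus_a, stimulus_b)


def classify_gain(gain, tolerance=0.0):
    """'hypermetric' (overshoot) above 1.0, 'hypometric' (undershoot) below 1.0."""
    if gain > 1.0 + tolerance:
        return "hypermetric"
    if gain < 1.0 - tolerance:
        return "hypometric"
    return "accurate"


# Stimuli 10 degrees apart; one saccade falls short, another overshoots.
undershoot = saccade_gain((0, 0), (9, 0), (0, 0), (10, 0))     # 0.9
overshoot = saccade_gain((0, 0), (10.5, 0), (0, 0), (10, 0))   # 1.05
print(classify_gain(undershoot), classify_gain(overshoot))     # hypometric hypermetric
```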

Smooth pursuit direction (or smooth pursuit trajectory) indicates the direction of a smooth pursuit movement as the eyes follow a moving stimulus (Holmqvist et al., 2011). The ability to pursue a moving object varies with its direction of motion. Rottach et al. (1996) showed that smooth pursuit gain is higher during horizontal pursuit than during vertical pursuit. This difference in gain may be attributed to the fact that most real-world objects move horizontally rather than vertically (Collewijn and Tamminga, 1984).

Smooth pursuit velocity is the first derivative of gaze position during a smooth pursuit. Smooth pursuit velocity is low compared to saccade velocity, typically around 20°/s–40°/s (Young, 1971). However, higher peak smooth pursuit velocities have been observed when participants are specifically trained to follow moving stimuli or are presented with accelerating stimuli (Meyer et al., 1985). For example, Barmack (1970) observed peak smooth pursuit velocities of 100°/s in typical participants presented with accelerating stimuli. Bahill and LaRitz (1984) observed peak smooth pursuit velocities of 130°/s in trained baseball players. As the velocity of moving stimuli increases, catch-up saccades become more frequent to compensate for the retinal offset (De Brouwer et al., 2002).

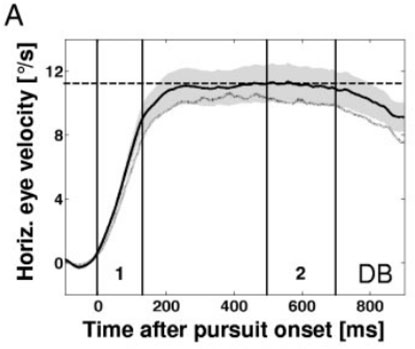

The second derivative of the gaze position trace gives the acceleration of a smooth pursuit eye movement. This acceleration is maintained until the eye velocity (smooth pursuit velocity) matches the visual target’s velocity (Kao and Morrow, 1994). Acceleration is typically examined as part of determining smooth pursuit onset (see Figure 3). Smooth pursuit acceleration has been used to analyze how visual cues (Ladda et al., 2007) and prior knowledge of a visual target’s trajectory (Kao and Morrow, 1994) affect eye movements. Smooth pursuit acceleration is higher when a visual target’s motion is unpredictable.
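Velocity and acceleration are the first and second derivatives of the position trace. For uniformly sampled data, both can be approximated with central differences. The sketch assumes a 1-D horizontal trace in degrees and a fixed sampling interval `dt`. Real traces would normally be smoothed first, since each differentiation amplifies noise. The synthetic trace below accelerates at a constant 100 deg/s^2.

```python
def pursuit_kinematics(positions_deg, dt):
    """First and second central differences of a uniformly sampled 1-D gaze trace.

    Returns (velocity in deg/s, acceleration in deg/s^2); both lists align with
    samples 1..n-2 of the input.
    """
    p = positions_deg
    velocity = [(p[i + 1] - p[i - 1]) / (2 * dt) for i in range(1, len(p) - 1)]
    acceleration = [(p[i + 1] - 2 * p[i] + p[i - 1]) / dt ** 2 for i in range(1, len(p) - 1)]
    return velocity, acceleration


# Synthetic 500 Hz trace with constant 100 deg/s^2 acceleration: x(t) = 0.5 * a * t^2.
dt = 0.002
trace = [0.5 * 100.0 * (i * dt) ** 2 for i in range(50)]
vel, acc = pursuit_kinematics(trace, dt)
print(round(vel[9], 3), round(acc[9], 3))  # 2.0 100.0  (velocity at t = 20 ms)
```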

FIGURE 3. Eye velocity (plotted line) and visual target velocity (dotted line) over time (x-axis), aligned to pursuit onset (0 ms). Smooth pursuit acceleration seen from 0 to 140 ms. Smooth pursuit latency seen from origin to 0 ms (Spering and Gegenfurtner, 2007).

Smooth pursuit latency is the delay between when a target object starts to move (i.e., target onset) and when the pursuit begins (i.e., smooth pursuit onset) (Holmqvist et al., 2011). When the direction and velocity of the target object are not predictable, smooth pursuit latency ranges from 100 to 200 ms (Burke and Barnes, 2006). Some paradigms, such as step-ramp (Rashbass, 1961), allow for anticipation. In these paradigms, smooth pursuit latency may drop to 0 ms when the target’s direction and velocity are predictable, or below 0 ms if pursuit starts before target motion (Burke and Barnes, 2006; de Hemptinne et al., 2006). If the luminance of the moving object is the same as the background, the smooth pursuit latency may be prolonged by

Fixations are maintained more accurately on stationary targets than on moving targets. During smooth pursuit, both the eye and the target are in motion, and a lag between their positions is expected (Dell’Osso et al., 1992). This error is known as retinal position error, and is formally defined as the difference between eye and target positions measured during fixations.

Smooth pursuit gain (or smooth pursuit accuracy) is the ratio between smooth pursuit velocity and target velocity (Zackon and Sharpe, 1987; Holmqvist et al., 2011). Typically, smooth pursuit gain is lower than 1.0, and it tends to fall even lower when the target velocity is high (Zackon and Sharpe, 1987). Moreover, smooth pursuit gain is modulated by on-line gain control (Robinson, 1965; Churchland and Lisberger, 2002). Smooth pursuit gain decreases under conditions that distract the user’s attention (Březinová and Kendell, 1977), and it is higher when tracking horizontal motion than when tracking vertical motion (Rottach et al., 1996). Smooth pursuit gain has also been used in neurological research. For instance, O’Driscoll and Callahan (2008) analyzed the smooth pursuit gain of individuals diagnosed with schizophrenia, and observed low smooth pursuit gain in this population. Smooth pursuit accuracy can also be quantified by the root mean squared distance between the target position and the gaze position (θ) over the n data samples of the experiment.
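Both quantities in the paragraph above can be sketched as follows, assuming equal-length, time-aligned one-dimensional traces (velocities in °/s, positions in degrees). Taking the ratio of mean velocities over one pursuit segment is one convention and an assumption here; other conventions exist, and samples containing catch-up saccades are usually removed before computing gain, a step omitted in this sketch. The RMS function computes sqrt((1/n) Σ (θ_target,i − θ_gaze,i)²) over the n samples.

```python
import math
from typing import Sequence


def pursuit_gain(eye_velocity: Sequence[float], target_velocity: Sequence[float]) -> float:
    """Ratio of mean eye velocity to mean target velocity over one pursuit segment."""
    if len(eye_velocity) != len(target_velocity) or not eye_velocity:
        raise ValueError("need equal-length, non-empty velocity traces")
    mean_eye = sum(eye_velocity) / len(eye_velocity)
    mean_target = sum(target_velocity) / len(target_velocity)
    return mean_eye / mean_target


def rms_position_error(gaze: Sequence[float], target: Sequence[float]) -> float:
    """Root mean squared distance between target and gaze positions over n samples."""
    if len(gaze) != len(target) or not gaze:
        raise ValueError("need equal-length, non-empty position traces")
    n = len(gaze)
    return math.sqrt(sum((t - g) ** 2 for g, t in zip(gaze, target)) / n)
```

For example, an eye moving at a mean of 18°/s behind a 20°/s target has a gain of 0.9, consistent with the typical sub-unity values described above.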

Blink rate (or spontaneous blink rate, or blink frequency) is typically measured in blinks per minute. Some studies instead measure the time between blinks (the blink interval) (Shin et al., 2015). Early studies (Peterson and Allison, 1931; Newhall, 1932) showed that blink rate is subject to factors such as lighting, time of day (fatigue), temperature, wind, age, and sex. Moreover, while blinks are predominantly involuntary, they are inhibited during engaged visual attention to minimize blink-induced interruptions to visual information (Ranti et al., 2020). More recent studies examine whether a standard spontaneous eye blink rate exists more broadly, in healthy and non-healthy individuals under single (Doughty, 2002; Doughty and Naase, 2006; Ranti et al., 2020) and multiple (Doughty, 2001; Doughty, 2019) experimental conditions. Their results show that there is no common value for blink rate, as it depends on experimental conditions. Thus, a standard measure for blink rate remains elusive despite the vast body of studies. Many studies on cognition adopt eye blink rate as a metric due to its relative ease of detection and its ability to indicate aspects of one’s internal state. In a study of blink rate during a Stroop task, researchers found that blink rate increases during the Stroop task compared with a resting baseline (Oh et al., 2012). Further, studies involving movie watching found blink rate to be a reliable biomarker for assessing concentration level (Shin et al., 2015; Maffei and Angrilli, 2019). Studies of dopamine processes also use blink rate as a marker of dopamine function. A review of studies on cognitive dopamine functions and their relationship with blink rate indicates varying results on blink rate in dopaminergic studies, including studies of primates (Jongkees and Colzato, 2016).
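Once blink onsets have been detected, both forms of the measure reduce to simple arithmetic. This sketch assumes blink onset times in seconds are already available from the eye tracker or an EOG pipeline (detection is not shown), and the function names are illustrative.

```python
from typing import List, Sequence


def blink_rate_per_minute(blink_onsets_s: Sequence[float], duration_s: float) -> float:
    """Number of blinks divided by the recording duration in minutes."""
    return len(blink_onsets_s) / (duration_s / 60.0)


def blink_intervals(blink_onsets_s: Sequence[float]) -> List[float]:
    """Time between consecutive blink onsets (inter-blink intervals), in seconds."""
    ordered = sorted(blink_onsets_s)
    return [later - earlier for earlier, later in zip(ordered, ordered[1:])]
```

Reporting the intervals rather than a single rate preserves their distribution, which can matter given how strongly blink rate depends on experimental conditions.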

Blink amplitude is the distance traveled by the upper eyelid (its downward displacement) during a blink (Stevenson et al., 1986). It is expressed relative to the distance the eyelid travels in a complete blink. This measure can be obtained using a video-based eye tracker (Riggs et al., 1987) or electrooculography (Morris and Miller, 1996). Blink amplitude is often used in research on fatigue measurement (Morris and Miller, 1996; Galley et al., 2004) and task difficulty (Cardona et al., 2011; Chu et al., 2014). Cardona et al. (2011) assessed the characteristics of blink behavior during visual tasks requiring prolonged periods of demanding activity, and noted a larger percentage of incomplete blinks. Another study involving fatigued pilots in a flight simulator showed that blink amplitude was a promising predictor of fatigue level (Morris and Miller, 1996).
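A minimal sketch of relative blink amplitude and of the share of incomplete blinks follows. It assumes eyelid travel has already been extracted per blink (from video or EOG) in any consistent unit; the 0.9 cut-off separating complete from incomplete blinks is a hypothetical parameter, not a value taken from the cited studies.

```python
from typing import Sequence


def relative_blink_amplitude(lid_travel: float, complete_blink_travel: float) -> float:
    """Eyelid travel in one blink as a fraction of the travel in a complete blink."""
    return lid_travel / complete_blink_travel


def incomplete_blink_fraction(amplitudes: Sequence[float], complete_threshold: float = 0.9) -> float:
    """Share of blinks whose relative amplitude is below a hypothetical cut-off."""
    if not amplitudes:
        raise ValueError("no blinks")
    return sum(1 for a in amplitudes if a < complete_threshold) / len(amplitudes)
```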

Visual search behavior combines instances of saccades, fixations and possibly also smooth pursuit. The methods used to analyze such sequences of behavior are described below.

Vector-based and string-editing approaches have been developed to compute the similarity of scan paths (Jarodzka et al., 2010; De Bruin et al., 2013). In particular, De Bruin et al. (2013) introduced three metrics: 1) the Saccade Length Index (SLI) and 2) the Saccade Deviation Index (SDI), both to assist in faster analysis of eye tracking data, and 3) Benchmark Deviation Vectors (BDV), to highlight repetitive path deviation in eye tracking data. The SLI is the sum of the lengths of all saccades during the experiment, that is, the sum over all n saccades of the distance between each saccade’s starting position s and ending position e.
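Written out, SLI = Σ ‖eᵢ − sᵢ‖ for i = 1, …, n. The sketch below assumes saccades are given as pairs of 2D screen coordinates and that "distance" means Euclidean distance; both are assumptions about representation rather than details from De Bruin et al. (2013).

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def saccade_length_index(saccades: Sequence[Tuple[Point, Point]]) -> float:
    """Sum of Euclidean saccade lengths ||e_i - s_i|| over all n saccades."""
    return sum(math.dist(start, end) for start, end in saccades)


# Hypothetical saccades as ((start_x, start_y), (end_x, end_y)) in screen pixels.
example = [((0.0, 0.0), (3.0, 4.0)), ((3.0, 4.0), (3.0, 10.0))]
sli = saccade_length_index(example)  # 5 + 6
```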

Jarodzka et al. (2010), on the other hand, proposed representing scan paths as geometric vectors, and simplifying scan paths by clustering consecutive saccades whose directions are at most Tϕ radians apart.
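The direction-based simplification step can be sketched as follows. This is an illustrative reading, not Jarodzka et al.'s implementation: saccades are assumed to be 2D displacement vectors, each new saccade is compared with the running merged vector, and merging is done by vector addition.

```python
import math
from typing import List, Tuple

Vector = Tuple[float, float]


def angle_between(u: Vector, v: Vector) -> float:
    """Unsigned angle in radians between two 2D vectors."""
    dot = u[0] * v[0] + u[1] * v[1]
    cross = u[0] * v[1] - u[1] * v[0]
    return abs(math.atan2(cross, dot))


def simplify_by_direction(saccades: List[Vector], t_phi: float) -> List[Vector]:
    """Merge consecutive saccade vectors whose directions differ by at most t_phi radians."""
    if not saccades:
        return []
    merged = [saccades[0]]
    for vec in saccades[1:]:
        last = merged[-1]
        if angle_between(last, vec) <= t_phi:
            merged[-1] = (last[0] + vec[0], last[1] + vec[1])  # sum the displacements
        else:
            merged.append(vec)
    return merged
```

Comparing against the running merged vector, rather than only the immediately preceding saccade, is a design choice: it prevents a slow drift of small angular steps from merging into one long curved segment.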

Time to First Fixation on an AOI (or time to first hit) is the time from stimulus onset to the first fixation inside a particular AOI (Holmqvist et al., 2011). This measure may be useful for both bottom-up, stimulus-driven searches (e.g., a flashy company label) and top-down, attention-driven searches (e.g., when respondents actively decide to focus on certain elements or aspects of a website or picture). It is particularly useful for user interface evaluation as a measure of visual search efficiency (Jacob and Karn, 2003); for instance, it has been used to evaluate the visual search efficiency of web pages (Ellis et al., 1998; Bojko, 2006). It is also influenced by prior knowledge, such as domain expertise. For instance, studies show that when analyzing medical images, expert radiologists exhibit a shorter Time to First Fixation on AOIs (lesions, tumors, etc.) than novice radiologists (Krupinski, 1996; Venjakob et al., 2012; Donovan and Litchfield, 2013).
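Given a list of detected fixations, the measure is the onset of the earliest fixation that lands inside the AOI. This sketch assumes rectangular AOIs in screen pixels and fixation onsets already expressed relative to stimulus onset; the type aliases and function names are illustrative.

```python
from typing import Optional, Sequence, Tuple

Fixation = Tuple[float, float, float]    # (onset in s after stimulus onset, x, y)
AOI = Tuple[float, float, float, float]  # (left, top, right, bottom) in screen pixels


def in_aoi(x: float, y: float, aoi: AOI) -> bool:
    left, top, right, bottom = aoi
    return left <= x <= right and top <= y <= bottom


def time_to_first_fixation(fixations: Sequence[Fixation], aoi: AOI) -> Optional[float]:
    """Onset of the earliest fixation inside the AOI, or None if it is never fixated."""
    for onset, x, y in sorted(fixations):
        if in_aoi(x, y, aoi):
            return onset
    return None
```

Returning `None` for never-fixated AOIs keeps those trials distinguishable from very fast hits; how to aggregate them (exclusion, censoring) is an analysis decision.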

Revisit (or re-fixation, or recheck) count indicates how often the gaze returned to a particular AOI. It can be used to distinguish between AOIs that were frequently revisited and AOIs that were not. A participant may be drawn back to a particular AOI for different reasons, such as its semantic importance (Guo et al., 2016), to refresh memory (Meghanathan et al., 2019), or for confirmatory purposes (Mello-Thoms et al., 2005). The emotion perceived through visual stimuli also affects the likelihood of subsequent revisits, and thereby the revisit count (Motoki et al., 2021); yet this perceived emotion is difficult to interpret purely through revisit count. Revisits are particularly common in social scenes, where observers look back and forth between interacting characters to assess their interaction (Birmingham et al., 2008). Overall, revisit count is indicative of user interest in an AOI, and can be used to optimize user experiences.
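One way to compute the count is to segment the fixation sequence into visits, where a visit is a maximal run of consecutive fixations on the same AOI, and subtract one from the number of visits per AOI. The sketch assumes each fixation has already been labeled with its AOI, with `None` for fixations outside every AOI.

```python
from itertools import groupby
from typing import Dict, Hashable, Optional, Sequence


def revisit_counts(fixation_aois: Sequence[Optional[Hashable]]) -> Dict[Hashable, int]:
    """Revisits per AOI: number of separate visits minus one (None = outside every AOI)."""
    visits: Dict[Hashable, int] = {}
    for aoi, _run in groupby(fixation_aois):  # each run of identical labels is one visit
        if aoi is None:
            continue
        visits[aoi] = visits.get(aoi, 0) + 1
    return {aoi: count - 1 for aoi, count in visits.items()}
```

For example, the label sequence eyes, eyes, mouth, eyes, (outside), mouth, eyes contains three visits to the eyes and two to the mouth, giving two and one revisits respectively.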

Dwell time is the interval between one’s gaze entering an AOI and subsequently exiting it (Holmqvist et al., 2011). This includes the time spent on all fixations, saccades, and revisits during that visit (Tullis and Albert, 2013). For a typical English reading task, a lower dwell time (e.g.,

The gaze transition matrix is an adaptation of the transition matrix of Markov models to eye movement analysis. In a Markov model, a transition matrix (or probability matrix, or stochastic matrix, or substitution matrix) is a square matrix in which each entry represents the transition probability from one state to another. This concept was first applied to eye movement analysis by Ponsoda et al. (1995) to model the transition of saccade direction during visual search. Similarly, Bednarik et al. (2005) used this concept to model the transition of gaze position among AOIs and to study the correlation between task performance and search pattern. The gaze transition matrix is calculated from the number of transitions from the Xth AOI to the Yth AOI. Based on the gaze transition matrix, several measures have been introduced to quantify different aspects of visual search.
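The counting and row normalization can be sketched as below. It assumes AOIs are indexed 0 to s−1 and that consecutive fixations within the same AOI count as self-transitions (some studies collapse these first). Rows with no outgoing transitions are left as zeros here; the entropy measures discussed below handle such rows differently.

```python
from typing import List, Sequence


def transition_counts(aoi_sequence: Sequence[int], n_aois: int) -> List[List[int]]:
    """counts[x][y] = number of transitions from AOI x to AOI y."""
    counts = [[0] * n_aois for _ in range(n_aois)]
    for src, dst in zip(aoi_sequence, aoi_sequence[1:]):
        counts[src][dst] += 1
    return counts


def transition_probabilities(counts: Sequence[Sequence[int]]) -> List[List[float]]:
    """Row-normalise counts into transition probabilities (empty rows stay all-zero)."""
    probs = []
    for row in counts:
        total = sum(row)
        probs.append([c / total for c in row] if total else [0.0] * len(row))
    return probs
```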

Goldberg and Kotval (1999) defined transition matrix density (i.e., fraction of non-zero entries in the transition matrix) in order to analyze the efficiency of visual search. Here, a lower transition matrix density indicates an efficient and directed search, whereas a dense transition matrix indicates a random search.
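As defined above, density is simply the fraction of non-zero cells. The sketch works on either a count matrix or a probability matrix, since zero entries coincide in both; the function name is illustrative.

```python
from typing import Sequence


def transition_matrix_density(matrix: Sequence[Sequence[float]]) -> float:
    """Fraction of cells in the transition matrix that are non-zero."""
    cells = [value for row in matrix for value in row]
    if not cells:
        raise ValueError("empty matrix")
    return sum(1 for value in cells if value != 0) / len(cells)
```

A 3 × 3 matrix with three non-zero transitions has density 1/3; a participant who visits every AOI from every other AOI approaches a density of 1.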

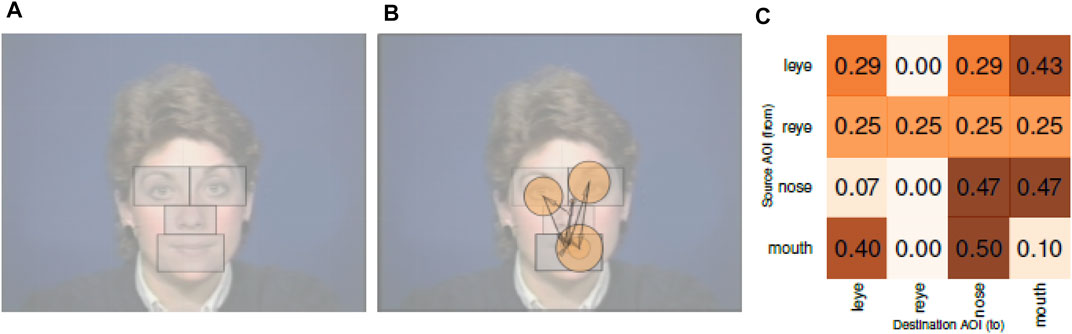

When comparing gaze transition matrices, Vandeberg et al. (2013) performed an element-wise comparison of transition probabilities by modelling eye movement transitions as a multi-level Hidden Markov Model. Similarly, Jayawardena et al. (2020) utilized gaze transition matrices to analyze the probabilities of transition of gaze between four AOIs. Figure 4A shows the AOIs used in this study. Figure 4B shows a sample scanpath of a participant, and Figure 4C shows its corresponding gaze transition matrix. Here, each cell in the matrix represents the probability of gaze transition from one AOI to another.

FIGURE 4. AOIs, Scanpaths, and Gaze Transition Matrices from Jayawardena et al. (2020). (A) AOI on face stimuli. (B) Scanpath with fixations on the AOIs. (C) Gaze transition matrix.

Gaze transition entropy is a measure of predictability in AOI transitions and of the overall distribution of eye movements over stimuli (Krejtz et al., 2014; Krejtz et al., 2015). Compared to transition matrix density, gaze transition entropy is a histogram-based estimate, which, in effect, takes AOI size into account. The concept of entropy used here is that of information theory: it describes the amount of information required to generate a particular sequence, as a measure of its uncertainty. Krejtz et al. (2014) and Krejtz et al. (2015) compute gaze transition entropy by first modelling eye movements between AOIs as a first-order Markov chain, and then computing its Shannon entropy (Shannon, 1948). They obtain two forms of entropy: 1) transition entropy Ht (calculated from individual subjects’ transition matrices) and 2) stationary entropy Hs (calculated from individual subjects’ stationary distributions).

Ht indicates the predictability of gaze transitions; a high Ht (every transition probability in a row approaching the uniform value 1/s) implies low predictability, whereas a low Ht (∀ij | pij → {0, 1}) implies high predictability. It is calculated by normalizing the transition matrix row-wise, replacing zero-sum rows with the uniform transition probability 1/s (where s is the number of AOIs), and computing its Shannon entropy. Hs, on the other hand, indicates the distribution of visual attention (Krejtz et al., 2014); a high Hs indicates that visual attention is equally distributed across all AOIs, whereas a low Hs indicates that visual attention is directed towards certain AOIs. It is estimated via eigen-analysis, assuming that the Markov chain is in a steady state where the transition probabilities converge. In one study, Krejtz et al. (2015) showed that participants who reported high curiosity while viewing artwork yielded a significantly lower Ht (i.e., more predictable transitions) than others. Moreover, participants who reported high appreciation of the artwork yielded a significantly lower Hs (i.e., more directed visual attention) than others. In another study, Krejtz et al. (2015) showed that participants who reportedly recognized a given artwork yielded a significantly higher Ht and Hs (i.e., less predictable gaze) than others. Jayawardena et al. (2020) performed entropy-based eye movement analysis on neurotypical and ADHD-diagnosed subjects during an audiovisual speech-in-noise task. They found that ADHD-diagnosed participants made unpredictable gaze transitions (i.e., high entropy) at different levels of task difficulty, whereas neurotypical participants made gaze transitions from any AOI to the mouth region (i.e., low entropy) regardless of task difficulty. These findings suggest that Ht and Hs are potential indicators of curiosity, interest, picture familiarity, and task difficulty.
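The two entropies are commonly written as Ht = −Σᵢ πᵢ Σⱼ pᵢⱼ log₂ pᵢⱼ and Hs = −Σᵢ πᵢ log₂ πᵢ, where π is the stationary distribution of the row-normalized matrix. The sketch below follows the steps described above, with one swap that should be noted: instead of an explicit eigendecomposition, it finds π by power iteration on the lazy chain (P + I)/2, which has the same stationary distribution but avoids oscillation on periodic chains. For reducible chains the stationary distribution is not unique, and the result then depends on the uniform starting vector.

```python
import math
from typing import List, Sequence, Tuple


def normalize_rows(counts: Sequence[Sequence[float]]) -> List[List[float]]:
    """Row-normalise transition counts; rows with no transitions become uniform (1/s)."""
    s = len(counts)
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total for c in row] if total > 0 else [1.0 / s] * s)
    return matrix


def stationary_distribution(p: List[List[float]], max_iter: int = 100_000,
                            tol: float = 1e-13) -> List[float]:
    """Power iteration on the lazy chain (P + I) / 2, which shares P's stationary distribution."""
    s = len(p)
    pi = [1.0 / s] * s
    for _ in range(max_iter):
        nxt = [0.5 * pi[j] + 0.5 * sum(pi[i] * p[i][j] for i in range(s)) for j in range(s)]
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    return pi


def gaze_transition_entropies(counts: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Return (Ht, Hs) in bits for a square AOI transition-count matrix."""
    p = normalize_rows(counts)
    pi = stationary_distribution(p)
    h_s = -sum(x * math.log2(x) for x in pi if x > 0)
    h_t = -sum(pi[i] * sum(x * math.log2(x) for x in p[i] if x > 0) for i in range(len(p)))
    return h_t, h_s
```

A strictly cyclic scanpath over three AOIs (0 → 1 → 2 → 0 …) gives Ht = 0 (fully predictable transitions) and Hs = log₂ 3 (attention spread evenly), which matches the interpretation given above.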

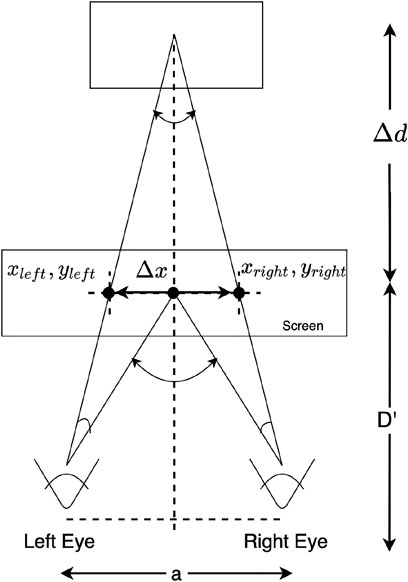

Due to the association of ocular vergence with binocular vision, the binocular gaze data can be used to measure ocular vergence. The gaze vergence can be estimated (see Figure 5) using the distance between the individual gaze positions for each eye, the distance from the user to the screen, and the interocular distance (Daugherty et al., 2010; Wang et al., 2012). A common application of the ocular vergence is assessing stereoscopic perception (Essig et al., 2004; Daugherty et al., 2010; Wang et al., 2012).

FIGURE 5. Ocular vergence (Daugherty et al., 2010; Wang et al., 2012).

These studies use ocular vergence to assess the user’s depth perception under different stimulus conditions. Further, ocular vergence has also been used to estimate 3D gaze positions (Mlot et al., 2016) from the 2D positions provided by eye trackers.

The perceived depth (Δd) can be computed using the distance between gaze positions (Δx), the distance between the left and right eye (a), and the distance between the user and the screen (D′).
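Under a simplified geometry (an assumption: the eye baseline is parallel to the screen, which is at distance D′, and Δx is measured as the right-eye minus left-eye on-screen gaze position), similar triangles give Δx / a = Δd / (D′ + Δd), so Δd = D′ Δx / (a − Δx). This is a reconstruction under those assumptions and may differ in form from the exact expressions used by Daugherty et al. (2010) or Wang et al. (2012). With this sign convention, positive Δx (uncrossed disparity) places the gaze point behind the screen and negative Δx (crossed disparity) in front of it.

```python
def perceived_depth(delta_x: float, eye_separation: float, screen_distance: float) -> float:
    """Depth of the binocular gaze point relative to the screen plane.

    delta_x: right-eye minus left-eye on-screen gaze x (same length unit as the others).
    Positive result = behind the screen, negative = in front of it.
    """
    if delta_x >= eye_separation:
        raise ValueError("gaze lines do not converge when delta_x >= eye separation")
    return screen_distance * delta_x / (eye_separation - delta_x)
```

For instance, with a = 6.5 cm and D′ = 60 cm, an uncrossed disparity of 1.3 cm corresponds to a point 15 cm behind the screen.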

Pupil measures (see Table 1 for a list of pupil measures) capture fluctuations in the pupil’s size and orientation to produce measurements that provide insights into one’s internal state. The pupil diameter is the result of tonic and phasic pupillary responses of the eye (Sun et al., 1983; Wass et al., 2015; Peysakhovich et al., 2017).

The tonic component refers to pupil diameter changes caused by slow contractions, while the phasic component refers to quick or transient contractions. Most metrics use pupil dilation as the primary input for determining the tonic and phasic components.

The most basic metric used in pupillometry is the average pupil diameter (Gray et al., 1993; Joshi et al., 2016). This measure captures both the tonic and the phasic components of pupil dilation. However, averaging can smooth out some of the phasic and transient features. An alternative that overcomes this drawback is to use the change in pupil dilation relative to a baseline. The baseline is assumed to correspond to the tonic component, while the relative change corresponds to the phasic component. The baseline can be derived either during the study or in a controlled environment.
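The baseline approach can be sketched as follows. It assumes the baseline is the mean diameter over a chosen baseline window (for example, samples recorded before stimulus onset; the window choice is an assumption) and shows both subtractive correction and the proportional variant that divides by the baseline.

```python
from statistics import mean
from typing import List, Sequence


def baseline_correct(trial: Sequence[float], baseline: Sequence[float],
                     relative: bool = False) -> List[float]:
    """Change in pupil diameter relative to the mean of a baseline window.

    relative=False: subtractive correction (same unit as the input, e.g. mm).
    relative=True:  proportional change, (x - baseline) / baseline.
    """
    base = mean(baseline)
    if relative:
        return [(x - base) / base for x in trial]
    return [x - base for x in trial]
```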

Index of Cognitive Activity (ICA), introduced by Marshall (2002), is an instantaneous measure of pupil diameter fluctuation. Marshall (2000) and Marshall (2007) describe the methodology for computing the ICA in an experimental setting, including the selection of parameters when performing experiments. As a preprocessing step, the process first eliminates the pupil signal regions corresponding to blinks, either by removing them or by replacing them through interpolation. The signal is then decomposed to the desired level with a wavelet transform to capture abrupt changes in the pupil signal. Finally, the decomposed signal is subjected to thresholding, which converts the decomposed signal coefficients into a binary vector of the same size; the thresholding stage acts as a de-noising stage here.
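The interpolation option of the preprocessing step can be sketched with linear interpolation. This is a generic approach, not Marshall's proprietary procedure: it assumes blink samples are reported as missing (`None`) or non-positive values, a common eye-tracker convention that may not hold for every device, and `margin` is a hypothetical parameter that widens each gap to remove the partial-occlusion samples around a blink.

```python
from typing import List, Optional, Sequence


def interpolate_blinks(pupil: Sequence[Optional[float]], margin: int = 0) -> List[float]:
    """Linearly interpolate pupil samples lost to blinks (None or <= 0 values)."""
    n = len(pupil)
    bad = [p is None or p <= 0 for p in pupil]
    widened = list(bad)
    for i in range(n):
        if bad[i]:  # widen every gap by `margin` samples on each side
            for j in range(max(0, i - margin), min(n, i + margin + 1)):
                widened[j] = True
    valid = [i for i in range(n) if not widened[i]]
    if not valid:
        raise ValueError("no valid pupil samples")
    out: List[float] = []
    k = 0  # position in `valid` of the first valid index >= i
    for i in range(n):
        while k < len(valid) and valid[k] < i:
            k += 1
        if not widened[i]:
            out.append(float(pupil[i]))
        elif k == 0:              # gap at the start: hold the first valid value
            out.append(float(pupil[valid[0]]))
        elif k == len(valid):     # gap at the end: hold the last valid value
            out.append(float(pupil[valid[-1]]))
        else:                     # gap in the middle: straight line between neighbours
            lo, hi = valid[k - 1], valid[k]
            frac = (i - lo) / (hi - lo)
            out.append(pupil[lo] + frac * (pupil[hi] - pupil[lo]))
    return out
```

At a sampling rate of f Hz, a margin of round(0.2 · f) samples would correspond to the 200 ms window that Duchowski et al. (2018) discarded around blinks for IPA.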

Index of Pupillary Activity (IPA), introduced by Duchowski et al. (2018), is a metric inspired by ICA, with a similar underlying concept. Studies on ICA do not fully disclose its internals for intellectual property reasons, whereas IPA discloses the internals of its process.

IPA computation starts by discarding pupil signals around the blinks identified in the experiment; Duchowski et al. (2018) used a window of 200 ms in either direction. The pupil dilation signal x(t) then undergoes a two-level Symlet-16 discrete wavelet decomposition using a mother wavelet function ψj,k(t). Wavelet analysis of x(t) yields a dyadic series representation, which is followed by a multi-resolution analysis of the original signal x(t). A level is arbitrarily selected from the multi-resolution decomposition to produce a smoother approximation of x(t). Finally, the wavelet modulus maxima coefficients are thresholded using the universal threshold

$$\lambda_{\text{univ}} = \hat{\sigma}\sqrt{2\log n},$$

where σ̂ is the standard deviation of the noise and n is the number of samples in the signal being thresholded.

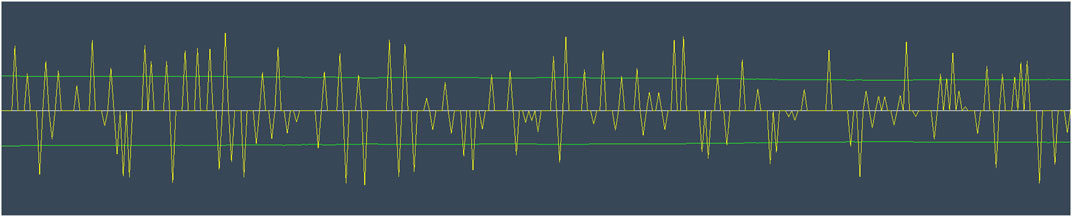
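The IPA pipeline described above can be approximated in a few lines, but only as a rough, self-contained sketch with several stated substitutions: it swaps the Symlet-16 wavelet for the Haar wavelet so that no wavelet library is needed, assumes blinks have already been removed or interpolated, estimates σ̂ as the standard deviation of the modulus-maxima vector, and uses the natural logarithm in λ_univ. It is not Duchowski et al.'s reference implementation, and its values should not be compared with published IPA figures.

```python
import math
from typing import List, Sequence, Tuple


def haar_dwt_step(x: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One level of the orthonormal Haar DWT: (approximation, detail) coefficients."""
    x = list(x)
    if len(x) % 2:
        x.append(x[-1])  # pad odd-length input by repeating the last sample
    r2 = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / r2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / r2 for i in range(0, len(x), 2)]
    return approx, detail


def modulus_maxima(coeffs: Sequence[float]) -> List[float]:
    """Keep |c| where it is a local maximum of |coeffs| (strict on at least one side)."""
    m = [abs(c) for c in coeffs]
    out = [0.0] * len(m)
    for i, v in enumerate(m):
        left = m[i - 1] if i > 0 else v
        right = m[i + 1] if i < len(m) - 1 else v
        if (left < v >= right) or (left <= v > right):
            out[i] = v
    return out


def ipa_sketch(pupil: Sequence[float], duration_s: float) -> float:
    """IPA-style count of large level-2 wavelet modulus maxima per second."""
    if len(pupil) < 4:
        raise ValueError("need at least 4 samples for a two-level decomposition")
    approx1, _detail1 = haar_dwt_step(pupil)
    _approx2, detail2 = haar_dwt_step(approx1)
    maxima = modulus_maxima(detail2)
    n = len(maxima)
    mu = sum(maxima) / n
    sigma = math.sqrt(sum((v - mu) ** 2 for v in maxima) / n)  # assumed noise estimate
    threshold = sigma * math.sqrt(2.0 * math.log(n))           # universal threshold
    count = sum(1 for v in maxima if v > threshold)             # hard-threshold survivors
    return count / duration_s
```

A constant pupil trace yields 0, while each abrupt change that survives the threshold adds one event to the per-second count.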

Low/High Index of Pupillary Activity (LHIPA), introduced by Duchowski et al. (2020), is a variation of the IPA measure described above. Its computation is the same as that of IPA except for the counting step: instead of counting threshold remnants as in IPA (Duchowski et al., 2018), LHIPA counts the modulus maxima of the low- and high-frequency bands contained in the wavelet (see Figure 6).

FIGURE 6. Visualization of the processed pupil diameter signal (yellow) and the threshold used for calculating the Low/High Index of Pupillary Activity (green) (Duchowski et al., 2020).

Using the Discrete Wavelet Transform to analyze the pupil diameter signal at multiple levels of resolution, the wavelet coefficients are found by:

where gₖ is the one-dimensional high-pass wavelet filter. Level j is chosen arbitrarily to select either high- or low-frequency wavelet coefficients. For the high-frequency component, they chose j = 1, and for the low-frequency component, they chose j = ½ log₂(n), the mid-level frequency octave, where log₂(n) is the number of octaves. Thus, the low frequency/high frequency ratio is:

The pupillary response increases as cognitive load increases. Since LHIPA is a ratio, an increase in the pupillary response is reflected as a decrease in the LHIPA reading. The authors demonstrate the metric’s applicability in a series of three experiments assessing the relationship between task difficulty and the corresponding IPA and LHIPA measures. LHIPA distinguished difficult tasks from easy or baseline tasks throughout the study, whereas IPA did so in only one experiment. The experiments also revealed that LHIPA reflected cognitive load earlier than IPA, suggesting a faster response owing to the ratio built into the measure’s computation.

Eye movement and pupil measures have been applied in disciplines including, but not limited to, neuroscience (Hessels and Hooge, 2019), psychology (Mele and Federici, 2012), and human computer interaction (Duchowski, 2002). In this section, we collectively summarize the literature of eye movement and pupillometry applications under different domains, discuss the limitations that we observe, and by doing so, establish a vision for implementing eye movements and pupil measures in these application environments.

In eye-tracking neuroscience, researchers have jointly analyzed neuronal activity and oculomotor activity to study the physiological organization of the visual system and its effect on cognition and behavior. Studies of neuronal activity during phenomena such as attention (Blair et al., 2009; Kimble et al., 2010), scene perception (Duc et al., 2008), inattentional blindness (Simons and Chabris, 1999), visual engagement (Catherine and James, 2001), and covert attention processing (i.e., visually fixating on one location while diverting attention to another) (Posner et al., 1980) have revealed important insights into cognition and behavior.

For instance, studies on covert attention processing show that attention cannot be inferred solely from whether an object was looked at (Hafed and Clark, 2002; Ebitz and Moore, 2019), and that doing so would lead to false positives (Posner et al., 1980). Similarly, studies on scene perception show that information is processed in two forms: top-down (based on semantic importance) and bottom-up (based on visual significance such as color, brightness, etc.) (Duc et al., 2008). Moreover, studies on visual attention show that attention is divided among AOIs through a sustained cognitive state, from which relevant visual objects become available to influence behavior (Duncan et al., 1994).

Studies have also revealed that pupillary activity is correlated with cognitive load (Hess and Polt, 1964; Hyönä et al., 1995), and also with neural gain (Eldar et al., 2013) and cortical activity (Reimer et al., 2016). While cognitive load can be inferred from pupillary activity, studies show that such inference becomes challenging in fast-paced cognitive tasks (Wierda et al., 2012), temporally overlapping cognitive tasks (Wierda et al., 2012), and in surroundings with varying ambient luminance (Zénon, 2017). Cherng et al. (2020) showed that pupil diameter is regulated by sympathetic activation (arousal-induced pupil dilation) and parasympathetic inhibition (saccade-induced pupil dilation), both of which are affected by ambient luminance. Wierda et al. (2012) showed that a deconvolved pupil response signal is indicative of cognitive load, with high temporal resolution. However, Zénon (2017) showed that this method does not account for low-frequency pupil fluctuations and inter-individual variability of pupil responses, and instead proposed using auto-regressive models with exogenous inputs (Cho et al., 2018) to analyze pupillary activity. Similarly, Watson and Yellott (2012) proposed a generalized formula to analyze pupillary activity, which accounts for ambient luminance, the size of the adapting field, the age of the observer, and whether both pupils are adapted.

Researchers in eye-tracking neuroscience have used various measures to study neurodevelopmental and other neurological disorders. Some of these studies are based exclusively on fixation measures. For instance, He et al. (2019) examined fixation count and fixation duration in preschoolers with Autism Spectrum Disorder (ASD), and found that preschoolers with ASD had atypical gaze patterns in a facial emotion expression task compared to typically developing children. Moreover, they found that deficits in recognizing emotions from facial expressions in ASD correlated with the social interaction and development quotients. In contrast, some studies are based exclusively on saccadic measures. For instance, MacAskill et al. (2002) found that saccade amplitude was modified during adaptation of memory-guided saccades, and that this adaptive ability was impaired in individuals with Parkinson’s disease (PD). Barbosa et al. (2019) identified that saccadic direction errors are associated with impulsive-compulsive behaviors in individuals with PD, who had difficulty suppressing automatic saccades to a given target. Patel et al. (2012) showed that saccade latency is correlated with the severity of Huntington’s disease (HD), and suggested that it could serve as a biomarker of disease severity in HD. In another study, Jensen et al. (2019) found that reduced saccade velocity is a key indicator of progressive supranuclear palsy and other midbrain disorders. Biscaldi et al. (1998) found that individuals with dyslexia had a significantly higher regressive saccade rate in a sequential-target task, and Termsarasab et al. (2015) stated that abnormalities in saccade gain could aid the diagnosis of hyperkinetic and hypokinetic movement disorders. Finally, Fukushima et al. (2013) used a memory-based smooth pursuit task to examine working memory for smooth pursuit direction in individuals with PD.

Certain studies performed their analysis using visual search measures. Rutherford and Towns (2008), for instance, demonstrated scan path similarities and differences during emotion perception between typical individuals and individuals with ASD. Similarly, Bours et al. (2018) demonstrated that individuals with ASD had increased time to first fixation on the eyes of fearful faces during an emotion recognition task. Duret et al. (1999) showed that refixation strategies in macular disorders were dependent on the location of the target relative to the scotoma, the spatial characteristics of the disease, and the duration of the disorder. Guillon et al. (2015) showed that gaze transition matrices could potentially reveal new visual scanning strategies followed by individuals with ASD. Moreover, Wainstein et al. (2017) showed that pupil diameter could be a biomarker of ADHD based on the results of a visuo-spatial working memory task.

In HCI research, eye tracking has been used to evaluate the usability of human-computer interfaces (both hardware and software). Here, the primary eye movements analyzed are saccades, fixations, smooth pursuits, compensatory movements, vergence, micro-saccades, and nystagmus (Goldberg and Wichansky, 2003). For instance, Farbos et al. (2000) and Dobson (1977) attempted to correlate eye tracking measures with software usability metrics such as time taken, completion rate, and other global metrics. Similarly, Du and MacDonald (2014) analyzed how visual saliency is affected by icon size. In both scenarios, eye movements were recorded while users navigated human-computer interfaces, and were subsequently analyzed using fixation and scan-path measures.

Pupil diameter measures are widely used to assess the cognitive load of users interacting with human-computer interfaces. For instance, Bailey and Iqbal (2008) used pupil diameter measures to demonstrate that cognitive load varies while completing a goal-directed task. Adamczyk and Bailey (2004) used pupil diameter measures to demonstrate that the disruption caused by user interface interruptions (e.g., notifications) is more pronounced during periods of high cognitive load. Iqbal et al. (2004) used pupil diameter measures to show that difficult tasks demand longer processing time, induce higher subjective ratings of cognitive load, and reliably evoke greater pupillary responses at salient subtasks. In addition to pupil diameter measures, studies such as Chen and Epps (2014) have also used blink rate measures to analyze how cognitive load (Sweller, 2011) and perceptual load (Macdonald and Lavie, 2011) vary across different tasks. They claim that pupil diameter indicates cognitive load well for tasks with low perceptual load, and that blink rate indicates perceptual load better than cognitive load.

In psychology research, eye tracking has been used to understand eye movements and visual cognition during naturalistic interactions such as reading, driving, and speaking. Rayner (2012), for instance, analyzed eye movements during English reading, and observed a mean fixation duration of 200–250 ms and a mean saccade amplitude of 7–9 letters. They also observed different eye movement patterns when reading silently versus aloud, and correlations of text complexity with both fixation duration (positive) and saccade length (negative). Three paradigms are commonly used to analyze eye movements during reading tasks: the moving window, which selects a few characters before and after the fixated word (McConkie and Rayner, 1975); the foveal mask, which masks a region around the fixated word (Bertera and Rayner, 2000); and the boundary paradigm, which creates pre-defined boundaries (i.e., AOIs) to classify fixations (Rayner, 1975). Over time, these paradigms have been extended to tasks such as scene perception. Recarte and Nunes (2000) and Stapel et al. (2020), for instance, used the boundary method to analyze eye movements during driving tasks and thereby assess the effect of driver awareness on gaze behavior. In particular, Recarte and Nunes (2000) observed that distracted drivers had longer fixation durations, larger pupil dilations, and lower fixation counts in the mirror/speedometer AOIs compared to control subjects.

In psycholinguistic research, pupil measures have been used to analyze the cognitive load of participants in tasks such as simultaneous interpretation (Russell, 2005), speech shadowing (Marslen-Wilson, 1985), and lexical translation. For instance, Seeber and Kerzel (2012) measured the pupil diameter during simultaneous interpretation tasks, and observed a larger average pupil diameter (indicating a higher cognitive load) when translating between verb-final and verb-initial languages, compared to translating between languages of the same type. Moreover, Hyönä et al. (1995) measured the pupil diameter during simultaneous interpretation, speech shadowing, and lexical translation tasks. They observed a larger average pupil diameter (indicating a higher cognitive load) during simultaneous interpretation than speech shadowing, and also momentary variations in pupil diameter (corresponding to spikes in cognitive load) during lexical translation.

Moreover, studies in marketing and behavioral finance have used eye-tracking and pupillometric measures to understand the relationship between presented information and the decision-making process. For instance, Rubaltelli et al. (2016) used pupil diameter to understand investor decision-making, while Ceravolo et al. (2019) and Hüsser and Wirth (2014) used dwell time. Further, studies in marketing have identified relationships between fixations on different sections of a product description and the consumer decision process (Ares et al., 2013; Menon et al., 2016). The utility of eye-tracking in marketing extends beyond product descriptions to advertisements, brands, choice, and search patterns (Wedel and Pieters, 2008).

Among the recent developments in eye tracking and pupil measures, the introduction of a series of pupillometry-based measures (ICA, IPA, and LHIPA) (Marshall, 2002; Duchowski et al., 2018; Duchowski et al., 2020) to assess the cognitive load is noteworthy. These measures, in general, compute the cognitive load by processing the pupil dilation as a signal, and thus require frequent and precise measurements of pupil dilation to function. We ascribe the success of these measures to the technological advancements in pupillometric devices, which led to higher sampling rates and more accurate measurement of pupil dilation than possible before. In the future, we anticipate the continued development of measures that exploit pupil dilation signals (El Haj and Moustafa, 2021; Maier and Grueschow, 2021).

Another noteworthy development is the emergence of commodity camera-based eye-tracking systems (Sewell and Komogortsev, 2010; Krafka et al., 2016; Semmelmann and Weigelt, 2018; Mahanama et al., 2020) that further democratize eye-tracking research and interaction. Since these systems require no dedicated or specialized hardware, one can explore eye-tracking at a significantly lower cost than otherwise possible. Yet the lack of specialized hardware, such as IR illumination or capture, restricts these systems to eye tracking and rules out pupillometry. The relatively low sampling rates of commodity cameras may also degrade the quality of eye-tracking measures. Overall, eye tracking on commodity hardware provides a cost-effective means of incorporating other eye-tracking measures, although its quality is heavily device-dependent. In the future, we anticipate cameras with higher sampling frequencies (Wang et al., 2021) and resolutions, which, in turn, would bridge the gap between specialized eye trackers and commodity camera-based eye tracking systems.

One of the major limitations we observed is that most studies use measures derived from a single oculomotor event rather than combinations of multiple events. For instance, studies of fixational eye movements generally use only fixation-related measures, despite the possible utility of saccadic information. This limitation is exacerbated by confusion over the definitions of fixations and saccades (Hessels et al., 2018). Further, most studies rely on first-order statistical features (e.g., histogram-based features such as the min, max, mean, median, standard deviation, and skewness) or trend analysis (e.g., trajectory-based features such as the sharpest decrease and sharpest increase between consecutive samples) rather than more advanced measures. We also found some measures to be popular in specific domains despite their potential applicability to others; for instance, pupillary measures are extensively studied in neuroscience research (Schwalm and Jubal, 2017; Schwiedrzik and Sudmann, 2020) but less so in psychology research. One plausible reason is the lack of literature that aggregates eye movement and pupillometric measures to help researchers identify additional measures; with this paper, we attempt to fill that gap. Another reason could be the computational limitations of eye-tracking hardware and software. For instance, micro-saccadic measures often require high-frequency eye trackers (≥300 Hz) (Krejtz et al., 2018) that are relatively expensive, thereby imposing hardware-level restrictions. Likewise, intellectual property restrictions (Marshall, 2000) and the lack of public implementations (i.e., code libraries) of eye-tracking solutions (Duchowski et al., 2018; Duchowski et al., 2020) impose software-level restrictions. Both create entry barriers that keep researchers from using the full range of applicable eye movement and pupillometry measures. Even though we suggest alternatives to patented measures in this paper, the lack of code libraries remains unaddressed.
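To make the distinction between first-order (histogram-based) and trajectory-based features concrete, the following minimal Python sketch computes both using only the standard library. The function names, the choice of estimators (sample standard deviation, population Fisher-Pearson skewness), and the example values are our own illustrative assumptions rather than an implementation from any of the cited studies.

```python
import statistics
from typing import Dict, Sequence


def first_order_features(values: Sequence[float]) -> Dict[str, float]:
    """Histogram-style summary of one measure, e.g. fixation durations (ms).

    Needs at least two values. "sd" is the sample standard deviation and
    "skewness" is the population (Fisher-Pearson g1) estimator; both are
    illustrative choices, and other estimators are equally common.
    """
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n  # 2nd central moment
    m3 = sum((v - mean) ** 3 for v in values) / n  # 3rd central moment
    return {
        "min": min(values),
        "max": max(values),
        "mean": mean,
        "median": statistics.median(values),
        "sd": statistics.stdev(values),
        "skewness": m3 / m2 ** 1.5 if m2 > 0 else 0.0,
    }


def trajectory_features(series: Sequence[float]) -> Dict[str, float]:
    """Largest rise and fall between consecutive samples, e.g. pupil diameter (mm).

    Needs at least two samples; "sharpest_decrease" is the most negative step.
    """
    steps = [b - a for a, b in zip(series, series[1:])]
    return {"sharpest_increase": max(steps), "sharpest_decrease": min(steps)}


# Hypothetical inputs, for illustration only.
fixation_durations_ms = [180, 220, 250, 300, 650]
pupil_diameter_mm = [3.1, 3.2, 3.0, 3.4, 3.3]
print(first_order_features(fixation_durations_ms))
print(trajectory_features(pupil_diameter_mm))
```

Because both functions return plain dictionaries, features computed for different oculomotor events (for example, fixation durations and saccade amplitudes) can be merged into a single feature vector, which is one simple way to move beyond single-event measures.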

Another limitation is that eye-tracking and pupillometric measures remain relatively unexplored in Extended Reality (XR) environments (Rappa et al., 2019; Renner and Pfeiffer, 2017; Clay et al., 2019; Mutasim et al., 2020; Heilmann and Witte, 2021), such as Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR). These extended realities are broadly useful for simulating real-world scenarios, often eliminating the need for complex experimental environments (e.g., studying driver behavior in VR (Bozkir et al., 2019)). Further, the extensive control over the environment given to the experiment designer makes it possible to simulate complex and rare real-world events. However, XR scenarios require additional hardware and software to perform eye tracking. This poses entry barriers for practitioners in the form of budgetary constraints (e.g., the cost of additional hardware and software), knowledge constraints (e.g., experience with XR toolsets), and experimental-design challenges (e.g., structuring the experiment, and simulating and integrating modalities such as touch or haptics). We suspect these factors collectively contribute to the relatively small number of exploratory studies in XR eye-tracking research. Given that this technology is becoming more commonplace (e.g., Microsoft HoloLens 2¹ eye-tracking optics, HTC VIVE Pro Eye², Varjo VR eye tracking³), XR offers an excellent opportunity for future studies.

In this paper, we identified, discussed, and reviewed existing measures in eye tracking and pupillometry. We classified these measures by the underlying mechanism of vision into eye movement, blink, and pupil-based measures. For each, we provided an overview of the measure, the underlying eye mechanism it exploits, and how it can be measured. We also identified a selection of studies that use each type of measure and documented their findings.

This survey aims to help researchers in two ways. First, because eye-tracking and pupillometric measures are used across a broad range of domains, the implications of measures and results from other domains can easily be overlooked. Our study addresses this issue by listing applications and their indications alongside each measure. Second, we believe the body of knowledge in this study will help researchers choose appropriate measures for future studies. Researchers could adapt our taxonomy to classify eye-tracking and pupil measures by eye mechanism and vice versa, and use the presented classification to identify which eye mechanisms or measures have been exploited in prior research. Finally, we expect this review to serve as a reference for researchers exploring eye-tracking techniques based on eye movements and pupil measures.

BM: Planned the structure and content of the paper, carried out the review for the survey, organized the entire survey, and contributed to writing the manuscript. YJ: Planned the structure and content of the paper, carried out the review for the survey, organized the entire survey, and contributed to writing the manuscript. SR: Planned the structure and content of the paper, proofread, and contributed to writing the manuscript. GJ: Contributed to writing the pupillometry measures. LC: Proofreading and supervision. JS: Proofreading and supervision. SJ: Idea formation, proofreading, and research supervision.

This work is supported in part by the U.S. National Science Foundation grant CAREER IIS-2045523. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect those of the sponsors.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We thank the reviewers whose comments/suggestions helped improve and clarify this manuscript.

1 https://www.microsoft.com/en-us/hololens/hardware

2 https://www.vive.com/us/product/vive-pro-eye/overview/

3 https://varjo.com/use-center/get-to-know-your-headset/eye-tracking/

Abel, L. A., and Hertle, R. W. (1988). “Effects of Psychoactive Drugs on Ocular Motor Behavior,” in Neuropsychology of Eye Movements (Hillsdale, NJ: Lawrence Erlbaum Associates), 81–114.

Adamczyk, P. D., and Bailey, B. P. (2004). “If Not Now, When? The Effects of Interruption at Different Moments within Task Execution,” in Proceedings of the SIGCHI conference on Human factors in computing systems, 271–278.

Adler, F. H., and Fliegelman, M. (1934). Influence of Fixation on the Visual Acuity. Arch. Ophthalmol. 12, 475–483. doi:10.1001/archopht.1934.00830170013002

Anderson, N. C., Bischof, W. F., Foulsham, T., and Kingstone, A. (2020). Turning the (Virtual) World Around: Patterns in Saccade Direction Vary with Picture Orientation and Shape in Virtual Reality. J. Vis. 20, 21. doi:10.1167/jov.20.8.21

Andersson, R., Nyström, M., and Holmqvist, K. (2010). Sampling Frequency and Eye-Tracking Measures: How Speed Affects Durations, Latencies, and More. J. Eye Move. Res. 3. doi:10.16910/jemr.3.3.6

Ansari, M. F., Kasprowski, P., and Obetkal, M. (2021). Gaze Tracking Using an Unmodified Web Camera and Convolutional Neural Network. Appl. Sci. 11, 9068. doi:10.3390/app11199068

Anson, E. R., Bigelow, R. T., Carey, J. P., Xue, Q.-L., Studenski, S., Schubert, M. C., et al. (2016). Aging Increases Compensatory Saccade Amplitude in the Video Head Impulse Test. Front. Neurol. 7, 113. doi:10.3389/fneur.2016.00113

Ares, G., Giménez, A., Bruzzone, F., Vidal, L., Antúnez, L., and Maiche, A. (2013). Consumer Visual Processing of Food Labels: Results from an Eye-Tracking Study. J. Sens Stud. 28, 138–153. doi:10.1111/joss.12031

Bahill, A. T., Clark, M. R., and Stark, L. (1975). The Main Sequence, a Tool for Studying Human Eye Movements. Math. Biosci. 24, 191–204. doi:10.1016/0025-5564(75)90075-9

Bahill, A. T., and Laritz, T. (1984). Why Can’t Batters Keep Their Eyes on the Ball. Am. Scientist 72, 249–253.

Bailey, B. P., and Iqbal, S. T. (2008). Understanding Changes in Mental Workload during Execution of Goal-Directed Tasks and its Application for Interruption Management. ACM Trans. Comput.-Hum. Interact. 14, 1–28. doi:10.1145/1314683.1314689

Barbosa, P., Kaski, D., Castro, P., Lees, A. J., Warner, T. T., and Djamshidian, A. (2019). Saccadic Direction Errors Are Associated with Impulsive Compulsive Behaviours in Parkinson's Disease Patients. J. Parkinsons Dis. 9, 625–630. doi:10.3233/jpd-181460

Barmack, N. H. (1970). Modification of Eye Movements by Instantaneous Changes in the Velocity of Visual Targets. Vis. Res. 10, 1431–1441. doi:10.1016/0042-6989(70)90093-3

Barnes, G. R., and Asselman, P. T. (1991). The Mechanism of Prediction in Human Smooth Pursuit Eye Movements. J. Physiol. 439, 439–461. doi:10.1113/jphysiol.1991.sp018675

Barnes, G. R. (2008). Cognitive Processes Involved in Smooth Pursuit Eye Movements. Brain Cogn. 68, 309–326. doi:10.1016/j.bandc.2008.08.020

Bartels, M., and Marshall, S. P. (2012). “Measuring Cognitive Workload across Different Eye Tracking Hardware Platforms,” in Proceedings of the symposium on eye tracking research and applications, 161–164. doi:10.1145/2168556.2168582

Becker, W., and Fuchs, A. F. (1969). Further Properties of the Human Saccadic System: Eye Movements and Correction Saccades with and without Visual Fixation Points. Vis. Res. 9, 1247–1258. doi:10.1016/0042-6989(69)90112-6

Bednarik, R., Myller, N., Sutinen, E., and Tukiainen, M. (2005). “Applying Eye-Movement Tracking to Program Visualization,” in 2005 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC’05) (IEEE), 302–304.

Bertera, J. H., and Rayner, K. (2000). Eye Movements and the Span of the Effective Stimulus in Visual Search. Perception & Psychophysics 62, 576–585. doi:10.3758/bf03212109

Birmingham, E., Bischof, W. F., and Kingstone, A. (2008). Social Attention and Real-World Scenes: The Roles of Action, Competition and Social Content. Q. J. Exp. Psychol. 61, 986–998. doi:10.1080/17470210701410375

Biscaldi, M., Gezeck, S., and Stuhr, V. (1998). Poor Saccadic Control Correlates with Dyslexia. Neuropsychologia 36, 1189–1202. doi:10.1016/s0028-3932(97)00170-x

Blair, M. R., Watson, M. R., Walshe, R. C., and Maj, F. (2009). Extremely Selective Attention: Eye-Tracking Studies of the Dynamic Allocation of Attention to Stimulus Features in Categorization. J. Exp. Psychol. Learn. Mem. Cogn. 35, 1196–1206. doi:10.1037/a0016272

Blount, W. P. (1927). Studies of the Movements of the Eyelids of Animals: Blinking. Exp. Physiol. 18, 111–125. doi:10.1113/expphysiol.1927.sp000426

Bojko, A. (2006). Using Eye Tracking to Compare Web Page Designs: A Case Study. J. Usability Stud. 1, 112–120.

Bours, C. C. A. H., Bakker-Huvenaars, M. J., Tramper, J., Bielczyk, N., Scheepers, F., Nijhof, K. S., et al. (2018). Emotional Face Recognition in Male Adolescents with Autism Spectrum Disorder or Disruptive Behavior Disorder: an Eye-Tracking Study. Eur. Child. Adolesc. Psychiatry 27, 1143–1157. doi:10.1007/s00787-018-1174-4

Boxer, A. L., Garbutt, S., Seeley, W. W., Jafari, A., Heuer, H. W., Mirsky, J., et al. (2012). Saccade Abnormalities in Autopsy-Confirmed Frontotemporal Lobar Degeneration and Alzheimer Disease. Arch. Neurol. 69, 509–517. doi:10.1001/archneurol.2011.1021

Bozkir, E., Geisler, D., and Kasneci, E. (2019). “Person Independent, Privacy Preserving, and Real Time Assessment of Cognitive Load Using Eye Tracking in a Virtual Reality Setup,” in 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (IEEE), 1834–1837. doi:10.1109/vr.2019.8797758

Braun, D. I., Pracejus, L., and Gegenfurtner, K. R. (2006). Motion Aftereffect Elicits Smooth Pursuit Eye Movements. J. Vis. 6, 1. doi:10.1167/6.7.1

Březinová, V., and Kendell, R. (1977). Smooth Pursuit Eye Movements of Schizophrenics and Normal People under Stress. Br. J. Psychiatry 130, 59–63.

Brutten, G. J., and Janssen, P. (1979). An Eye-Marking Investigation of Anticipated and Observed Stuttering. J. Speech Lang. Hear. Res. 22, 20–28. doi:10.1044/jshr.2201.20

Buonocore, A., Chen, C.-Y., Tian, X., Idrees, S., Münch, T. A., and Hafed, Z. M. (2017). Alteration of the Microsaccadic Velocity-Amplitude Main Sequence Relationship after Visual Transients: Implications for Models of Saccade Control. J. Neurophysiol. 117, 1894–1910. doi:10.1152/jn.00811.2016

Buonocore, A., McIntosh, R. D., and Melcher, D. (2016). Beyond the point of No Return: Effects of Visual Distractors on Saccade Amplitude and Velocity. J. Neurophysiol. 115, 752–762. doi:10.1152/jn.00939.2015

Burke, M. R., and Barnes, G. R. (2006). Quantitative Differences in Smooth Pursuit and Saccadic Eye Movements. Exp. Brain Res. 175, 596–608. doi:10.1007/s00221-006-0576-6

Burr, D. C., Morrone, M. C., and Ross, J. (1994). Selective Suppression of the Magnocellular Visual Pathway during Saccadic Eye Movements. Nature 371, 511–513. doi:10.1038/371511a0

Buswell, G. T. (1935). How People Look at Pictures: A Study of the Psychology and Perception in Art. Chicago: Univ. Chicago Press.

Cardona, G., García, C., Serés, C., Vilaseca, M., and Gispets, J. (2011). Blink Rate, Blink Amplitude, and Tear Film Integrity during Dynamic Visual Display Terminal Tasks. Curr. Eye Res. 36, 190–197. doi:10.3109/02713683.2010.544442

Carl, J. R., and Gellman, R. S. (1987). Human Smooth Pursuit: Stimulus-dependent Responses. J. Neurophysiol. 57, 1446–1463. doi:10.1152/jn.1987.57.5.1446

Castello, E., Baroni, N., and Pallestrini, E. (1998). Neurotological and Auditory Brain Stem Response Findings in Human Immunodeficiency Virus-Positive Patients without Neurologic Manifestations. Ann. Otol Rhinol Laryngol. 107, 1054–1060. doi:10.1177/000348949810701210

Catherine, L., and James, M. (2001). Educating Children with Autism. Washington, DC: National Academies Press.

Ceder, A. (1977). Drivers' Eye Movements as Related to Attention in Simulated Traffic Flow Conditions. Hum. Factors 19, 571–581. doi:10.1177/001872087701900606

Ceravolo, M. G., Farina, V., Fattobene, L., Leonelli, L., and Raggetti, G. (2019). Presentational Format and Financial Consumers’ Behaviour: an Eye-Tracking Study. Int. J. Bank Marketing 37 (3), 821–837. doi:10.1108/ijbm-02-2018-0041

Chen, S., and Epps, J. (2014). Using Task-Induced Pupil Diameter and Blink Rate to Infer Cognitive Load. Human-Computer Interaction 29, 390–413. doi:10.1080/07370024.2014.892428

Cherng, Y. G., Baird, T., Chen, J. T., and Wang, C. A. (2020). Background Luminance Effects on Pupil Size Associated with Emotion and Saccade Preparation. Sci. Rep. 10, 1–11. doi:10.1038/s41598-020-72954-z