- ¹Media Laboratory, Massachusetts Institute of Technology, Cambridge, MA, United States

- ²Connected Future Labs, New York, NY, United States

Accessibility, adaptability, and transparency of Brain-Computer Interface (BCI) tools and the data they collect will likely impact how we collectively navigate a new digital age. This discussion reviews some of the diverse and transdisciplinary applications of BCI technology and draws speculative inferences about the ways in which BCI tools, combined with machine learning (ML) algorithms, may shape the future. BCIs come with substantial ethical and risk considerations, and it is argued that open-source principles may help us navigate complex dilemmas by encouraging experimentation and making developments public as we build safeguards into this new paradigm. Bringing open-source principles of adaptability and transparency to BCI tools can help democratize the technology, permitting more voices to contribute to the conversation of what a BCI-driven future should look like. Open-source BCI tools and access to raw data, in contrast to black-box algorithms and limited access to summary data, are critical facets enabling artists, DIYers, researchers, and other domain experts to participate in the conversation about how to study and augment human consciousness. Looking forward to a future in which augmented and virtual reality become integral parts of daily life, BCIs will likely play an increasingly important role in creating closed-loop feedback for generative content. Brain-computer interfaces are uniquely situated to provide artificial intelligence (AI) algorithms the necessary data for determining the decoding and timing of content delivery. The extent to which these algorithms are open-source may be critical for examining them for integrity, implicit bias, and conflicts of interest.

Introduction

Brain-computer interfaces (BCIs) are poised to transform the nature of human consciousness in the 21st century. In this context, we adopt the operational definition of being conscious as having an experience: the subjective phenomenon of “what it's like” to see an image, hear a sound, conceive a thought, or be aware of an emotion (Sandberg et al., 2010; Faivre et al., 2015; Koch et al., 2016). We speculate, based on prior research, that closed-loop systems combining stimuli (mixed reality), sensing (BCI), and predictive algorithms (AI and its subsets, machine learning and deep learning) will increasingly be able to alter subjective experience in a manner that is tightly coupled with changes in emotion regulation (Lorenzetti et al., 2018; Montana et al., 2020) and with cognitive augmentations such as attention improvement (Wang et al., 2019) and episodic memory enhancement (Burke et al., 2015). These transformations may not only make humanity more productive and efficient, but also potentially more expressive, understanding, and empathetic.

We can begin by classifying BCIs into three main groups: (1) invasive approaches (Wolpaw et al., 2000), (2) partially invasive approaches, and (3) non-invasive approaches. An invasive approach requires electrodes to be physically implanted into the brain's gray matter by neurosurgery, making it possible to measure local field potentials. A partially invasive approach (e.g., electrocorticography, ECoG) places electrodes inside the skull yet outside the gray matter. Non-invasive approaches (e.g., electroencephalography, EEG, and functional magnetic resonance imaging, fMRI) are the most frequently used signal-capturing methods. An EEG system is placed outside of the skull on the scalp and records the brain activity inside of the skull and on the surface of the brain membranes. EEG and fMRI give different perspectives and enable us to “look” inside the brain (Kropotov, 2010). It is worth noting that invasive and partially invasive approaches are prone to scar-tissue formation, are difficult to operate, and are expensive. Although EEG signals can be prone to noise and signal distortion, they are easily measured and have good temporal resolution. This, and the fact that fMRI devices are expensive and cumbersome to operate, makes EEG the most widely used method for recording brain activity in BCI systems. EEG-based devices directly measure electrical potentials produced by the brain's neural synaptic activities.

While the use of EEG was originally limited to medical imaging research labs, more compact and affordable EEG systems have opened up opportunities for other applications to be explored. In this paper, we discuss some of the ways that these new technologies have been applied in areas ranging from the arts, self-improvement and rehabilitation to gaming and augmented reality. While consumer electronics devices have increased the accessibility to BCI technologies, we also discuss some of the ways in which these same devices limit their adaptability beyond pre-defined use-cases as well as the transparency of the data and algorithms. For the context of this discussion, we use BCI technology to describe devices that measure a broad array of biometric signals, not only directly from the central nervous system (CNS), but also from the peripheral nervous system (PNS). Because changes in cognitive and emotional states engage sympathetic and parasympathetic responses of the PNS, changes in heart rate, electrodermal activity, and other biometric signals can provide a detailed window into brain activity (Picard, 1995).

Our discussion briefly reviews the evolution of BCI devices with examples of how they have been applied outside of traditional research settings. Within a transdisciplinary context, including neuroscience, computer science, health, philosophy, art, and a rapidly developing technology landscape, we review specific ways in which limitations to the adaptability and transparency of BCI technology can have implications for applications both within and outside research contexts. We examine how applying open-source principles may help to democratize the technology and overcome some of these limitations, both for traditional research as well as alternative uses. Looking forward to a future in which BCIs are likely to become increasingly integrated into our everyday lives, we believe that it will be important to involve more voices from across traditional disciplinary divides contributing to the conversation about what our future should look like and how BCI devices and data should contribute to our lives. From public BCI art to hackathons and K-12 education, it will likely be critical to be asking more questions, new types of questions from different perspectives, and starting at a younger age, to ensure that BCI technology will serve society at large.

As BCIs move beyond siloed research labs toward new and more diverse use cases, the accessibility, adaptability, and transparency of BCI tools and data will significantly impact how we collectively navigate this new digital age. Technology and the self are becoming increasingly coupled, allowing us to learn faster, create new ways to express ourselves and share information like never before. The extent to which these technologies are open and accessible for more people to engage with them and examine their integrity may shape the nature of our consciousness and the future of humanity.

Change in Perspective

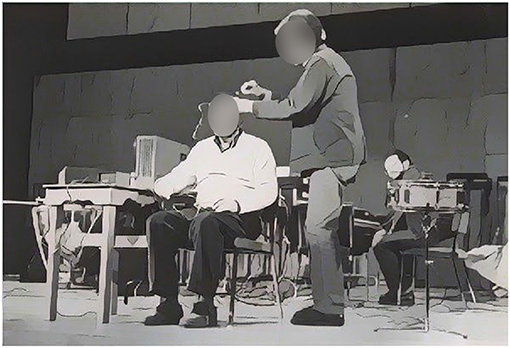

Artists and do-it-yourself-ers (DIYers) have been exploring novel BCI applications since before BCI was an acronym. Artists and DIYers often adopt new technologies to modify their original condition and purpose beyond the “intended” use. Going back 60 years, artists proposed novel experiments such as the sonification of alpha waves to excavate untapped musical imaginations or subconscious musicality. There was especially a great interest by composers in the use of feedback, both acoustic and electronic, as a fundamental musical process. In 1965, physicist Edmond Dewan and composer Alvin Lucier collaborated on Music for the Solo Performer, shown in Figure 1. This piece is generally considered to be the first musical work to use brain waves and directly translate them into musical sound. Lucier's work remains a pioneering and important piece of 20th-century music, as well as one of the touchstones of early “live” electronic music. Classic feedback pieces such as David Behrman's WaveTrain (Behrman, 1998), Max Neuhaus' Public Supply (Max, 1977), and Terry Riley's tape delay feedback (Sitsky, 2002) were also created in this new wave of exploration. These early artists were often found building BCIs and synthesizers from scratch using basic electrical components (resistors, capacitors, amplifiers, etc.), which gave them a very high degree of flexibility to create and adapt their circuits to do strange and wonderful things but also posed a high barrier of entry to create and use the technology.

Figure 1. Physicist Edmond Dewan and composer Alvin Lucier collaborated on Music for the Solo Performer.

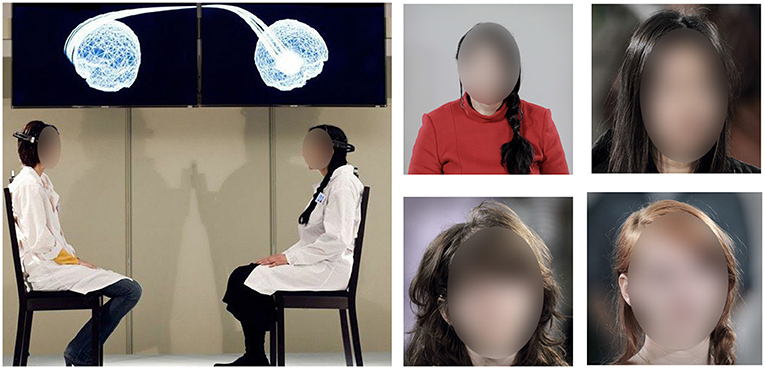

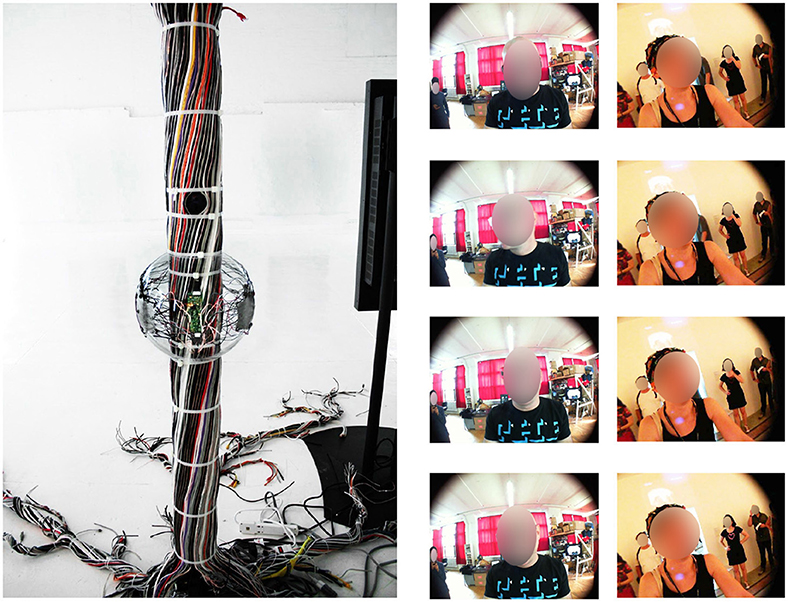

The explosion of consumer electronics during the 1980's and 1990's resulted in substantial moves in the BCI space in the late 2000's and early 2010's. NeuroSky, Emotiv, and Zeo Sleep Coach, among others, developed consumer EEG devices, while BodyMedia, Polar, Fitbit, and others produced devices that measured signals from the autonomic nervous system (Nijboer et al., 2011). This increase in the accessibility of BCI technologies has been accompanied by a commensurate blossoming of BCI-driven art. Emotiv's EPOC headset has been used in a number of artistic contexts, including Measuring the Magic of Mutual Gaze (Abramovic et al., 2014), a durational performance art piece by Marina Abramović that used the Emotiv EPOC to visualize and neuro-contextualize the synchrony of two people engaged in mutual gaze (as shown in Figure 2). Noor: A Brain Opera (Pearlman, 2016) turned brainwave data into an immersive audio-visual experience in which the internal state of the performers drives the operatic performance. Vessels (Leslie, 2020) is a brain-body performance piece that combines flute improvisation with live, sonified brain and body data from a Muse headband. Polar handheld devices similarly became the springboard for a number of artworks, including Pulse Spiral (Lozano-Hemmer, 2008) and Emergence (Montgomery et al., 2011; shown in Figure 3), which measure viewers' heartbeats to reflect on the nature of their internal state and the human-computer interface.

Figure 2. Measuring the magic of mutual gaze & the artist is present. Left: Measuring the magic of mutual gaze at the garage museum for contemporary art, Moscow in 2011. Photograph by Maxim Lubimov © Garage center for contemporary culture. Video: www.youtube.com/watch?v=Ut9oPo8sLJw&t=73s Right: Marina Abramović, the artist is present, performance, 3 months, the museum of modern art, New York, NY (2010), photography by Marco Anelli. Courtesy of the Marina Abramović Archives.

Figure 3. Emergence installation at open house gallery, New York City (left) and digital memories triggered by successive heartbeats and uploaded to Flickr (right). Left image by Sean Montgomery. Right images by Emergence courtesy of Sean Montgomery. Web: http://produceconsumerobot.com/emergence/; Leonardo Electronic Almanac Vol 18 No 5 p 6–9.

While this consumer electronics explosion made BCI technology more accessible, the resulting devices (including the combination of hardware, firmware, and required software) often place limits on the adaptability and extensibility of the device. In many cases raw data is made completely inaccessible or is only offered at high price points, tending to confine the resulting artistic applications into more pre-defined use-cases.

Around the same time as the advent of consumer-grade BCI devices, the DIY maker movement began to take root in the 2000's, in part to break down the dichotomy between accessibility and flexibility/extensibility. One of the movement's cornerstones was born when a group of Italian postgraduate students and a lecturer at the Interaction Design Institute in Ivrea created the first version of what would go on to become the Arduino project (Banzi et al., 2015). The OpenEEG project (Griffith, 2006) quickly became an early go-to open-source circuit for everything from academic tutorials and clothing that lights up with brain activity to increase the expressiveness of the wearer (Montgomery, 2010) to adaptive drone piloting (Ossmann et al., 2014), but the volume-pricing of consumer electronics quickly became an attractive opportunity for artists and DIYers alike (Montgomery and Laefsky, 2011) to find “alternative uses” for the devices. Hacking of the Mattel MindFlex (How to Hack Toy EEGs | Frontier Nerds, 2010) to extract derivative EEG power-band data and of the Zeo Sleep Coach to extract raw EEG data (Dan, 2011) enabled a multiplicity of art installations such as Telephone Rewired, using rhythmic visual and audio patterns to alter endogenous brain oscillations and create an immersive aesthetic experience and altered subjective state (Produce Consume Robot and LoVid, 2013) and Teletron by the band Apples in Stereo, an instrument which allows the user to play an analog synth completely through brain activity (Schneider, 2010). These projects leveraged the wearability of consumer-grade EEG devices to break down barriers between artistic expression and scientific research.

In the 2010's, Pulse Sensor, OpenBCI, EmotiBit, and other fully open-source products with full data access further broke down the barriers to accessibility and extensibility, giving artists and creative technologists, as well as researchers and educators, access to high-quality BCI platforms beyond the confines of specific intended uses (Hoffman and Bast, 2017; Montgomery, 2018a; Gupta et al., 2020; Masui et al., 2020; Vujic et al., 2020). Developers have long been fascinated with the possibility of enhancing the gaming experience via BCIs (Lécuyer et al., 2008). Games tailored to the user's affective state (immersion, flow, frustration, surprise, etc.), with the famous World of Warcraft as one example, allow an avatar's appearance to reflect the gamer's cognitive state instead of being controlled through the keyboard (Nijholt et al., 2009). It is not unrealistic to believe that the first mass application of non-medical BCIs will be in the gaming and entertainment field. Standalone examples already have a market, and extensions to console games are likely to follow soon (Nijboer et al., 2011). Other projects, like Emotional Beasts, allowed the exploration of the user's self-expression in VR space by artistically transforming the appearance of the avatar based on the user's affective state, thereby pulling the avatar design away from the uncanny valley problem and making it more expressive and more relatable (Bernal and Maes, 2017). Through the use of VR headsets that have been altered to accommodate physiological sensors (Bernal et al., 2018), the PhysioHMD system collects and integrates physiological data to enable the perception of human affect. Bernal et al. showed how the PhysioHMD system can be used to help develop personalized phobia treatment by creating a closed-loop experience. The images (insect sprites) spawned through the particle system can be modified (speed, size, rate of spawn, movement) in the Unity inspector to increase or decrease the arousal level of the user.
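As a minimal sketch of how such a closed loop might adjust stimulus parameters automatically, the Python example below applies a simple proportional rule: when a normalized arousal estimate falls below a target, the sprite parameters are scaled up, and when it rises above the target they are scaled down. This is an illustration only and not the PhysioHMD implementation, which runs in Unity. The names `SpawnParams` and `update_spawn`, the gain, the clamping limits, and the arousal readings are all assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class SpawnParams:
    """Hypothetical stimulus settings, loosely mirroring the knobs named in the text."""
    speed: float = 1.0       # sprite movement speed (arbitrary units)
    size: float = 1.0        # sprite scale
    spawn_rate: float = 5.0  # sprites per second


def clamp(value, low, high):
    """Keep a parameter inside a safe range."""
    return max(low, min(high, value))


def update_spawn(params, arousal, target, gain=0.5):
    """Proportional step: intensify stimuli when arousal is below target, soften when above.

    `arousal` and `target` are assumed to be normalized to the range 0..1.
    """
    error = target - arousal      # positive means the user is under-aroused
    factor = 1.0 + gain * error   # scale every knob by the same factor
    return SpawnParams(
        speed=clamp(params.speed * factor, 0.1, 5.0),
        size=clamp(params.size * factor, 0.1, 5.0),
        spawn_rate=clamp(params.spawn_rate * factor, 0.5, 50.0),
    )


if __name__ == "__main__":
    params = SpawnParams()
    # Simulated arousal readings, e.g. derived from skin conductance (made-up values).
    for arousal in [0.2, 0.3, 0.6, 0.8]:
        params = update_spawn(params, arousal, target=0.5)
        print(f"arousal={arousal:.1f} -> spawn_rate={params.spawn_rate:.2f}")
```

In a therapeutic setting the target and limits would presumably be set by a clinician; the proportional rule is only one of many possible control strategies.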

As we look forward to the 2020's and beyond, it's reasonable to expect that BCI technology will become a greater part of everyday life for humanity and that these technologies may integrate with and potentially change aspects of human cognition. The extent to which artists and makers are enabled to be a part of the conversation about what this future should look like and where there are potential dystopian hazards, may play a pivotal role in shaping that future (Flisfeder, 2018; Montgomery, 2018b). The level of engagement and dialog will depend on the accessibility made possible through volume production of consumer electronics, the adaptability and flexibility made possible through open-source technology, and granular access to raw data that allows for going beyond pre-baked intended uses to ask new questions about brains, computers and the interfaces that increasingly connect them.

My Data, Their Data?

Looking Behind the Curtain

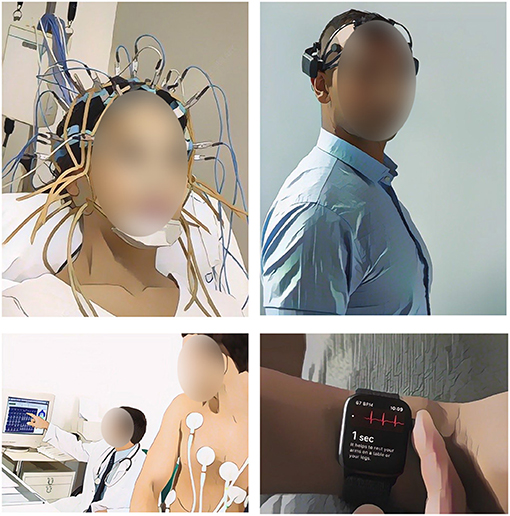

As the availability of consumer-grade BCI and biometric technologies has grown, the application of these technologies in research, serious games, and rehabilitation has surged (View Research, 2019). Instead of costing tens of thousands of U.S. dollars and requiring participants to wear saline-soaked headgear entangled with dozens of wires, it is now possible to get meaningful BCI data from devices costing less than US$1,000 in wireless and relatively easy-to-wear form factors, as shown in Figure 4. The result has vastly opened up possibilities for more people around the world to ask more and new types of questions. For rehabilitation, that means it is easier to bring devices into people's homes or care facilities, allowing many more people to be served and new treatments and methodologies to be explored (Sung et al., 2012). Bringing consumer BCI technologies into the workplace has led to rapid development ranging from serious games to flight simulators and warehouse safety training (Marshall et al., 2013; Huang et al., 2020), and research labs can now ask new questions about the neuroscience of interpersonal and classroom dynamics (Dikker et al., 2017, 2019a).

Figure 4. Consumer-grade BCI devices substantially improved the comfort and wearability, permitting devices to be used in new contexts and new types of questions to be asked. Top left, a standard wet EEG system, earlier systems were very susceptible to movement artifacts. Top right, a wireless headset by Emotiv, still uses wet electrodes but the setup is much quicker and wearability much improved compared to earlier systems. Bottom left, ECG setup found in most clinics, the setups commonly use 10 sticky electrodes to monitor cardiac activity. Bottom right, today's wrist-worn smartwatches can report user's ECG from the press of a button.

With these benefits of consumer-grade BCI devices, however, has also come a challenge of transparency. Most of the consumer-electronics world, including BCI devices, lives and dies by a closed-source ethos. While patents can serve as a mechanism to maintain transparency as well as a competitive advantage, it is often difficult or impossible to patent BCI circuits and algorithms because they are either not sufficiently novel or can be modified slightly to avoid infringement claims. Omitting specific details (e.g., filter frequencies and other signal conditioning), meanwhile, both makes it harder for others to simply copy the product and limits the ways the end-user can utilize and interpret the data. The patent system is also rather slow and somewhat overrun, so in a fast-moving technology landscape the competitive advantage of a patent might be largely irrelevant by the time the patent is actually granted. In addition to limiting access to raw data and only providing end-users with summary statistics, companies commonly maintain a competitive advantage by creating a moat of closed-source trade secrets and datasets that prevents competitors from moving into the space. Essential to the world of science, on the other hand, is the principle of reproducibility, and in a number of areas the scientific method and the closed-source veil stand on opposite sides of the table in advancing human knowledge (McNutt, 2014; Höller et al., 2017).

One way closed-source/closed-data ecosystems limit research is in the scope of questions that are possible to ask. For example, when examining EEG data, if only power-band statistics (alpha, beta, gamma, etc.) are made available (as is the case, for example, with the standard Emotiv license), much of the information about synchrony in the brain is lost. Specifically, it is impossible to investigate whether two regions of the brain are exhibiting coherence or phase-locking with one another. There is wide consensus among neuroscientists (Uhlhaas et al., 2009) that synchrony is important in determining the efficacy of neuronal communication, plasticity, and learning, and possibly even for governing aspects of consciousness (Buzsaki, 2006). For example, the phase-locking of EEG oscillations has been shown to increase between different regions of the medial-temporal lobe during successful memory formation (Miltner et al., 1999; Fries, 2015), suggesting an important role in memory encoding or selective attention (Fries, 2015). Similarly, increased coherence has been associated with memory retrieval (Kaplan et al., 2014; Meyer et al., 2015) and the binding together of multi-modal representations spanning different areas of the brain (Gray et al., 1989; Llinás et al., 1998; Engel et al., 2001). In the context of this research, it is likely that timing and synchrony in the brain are important for some of the most interesting cognitive functions, including memory encoding and retrieval, associative learning, and attention. However, when EEG data is reduced to power-band statistics, the phase relationships and cycle-by-cycle timing information are inherently lost. In doing so, it is possible that some of the most important information about the operation of the brain and how it relates to cognition may be lost in an irreversible way.
Similarly, as we increasingly apply machine learning to EEG data, if only power-band data or other potentially impoverished derivative metrics are used as inputs, this may fundamentally limit the effectiveness of the resulting machine learning models. In some cases the resulting models may lack the statistical power to make reliable predictions, and in other cases the models may overfit the power-band data and be unable to adequately generalize and replicate the results in other contexts. As the field of neuroscience continues working to understand which parameters of brain function are most important in driving cognitive processes, having access to the raw EEG data will likely be critical to unlock the true potential of this technology.
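To make the loss of phase information concrete, the following Python sketch constructs two synthetic 10 Hz signals with identical power. One holds a constant phase lag relative to a reference and the other has a phase that jumps randomly every cycle. A phase-locking value (PLV), estimated here by per-cycle complex demodulation, separates the two cases even though the power statistic cannot. The sampling rate, window length, and signals are all assumptions chosen for illustration, and mean squared amplitude stands in for band power. Real EEG analyses would typically use band-pass filtering and a Hilbert or wavelet transform instead.

```python
import cmath
import math
import random

FS = 250     # sampling rate in Hz (assumed)
F0 = 10.0    # alpha-band test frequency in Hz
WIN = 25     # samples per window, exactly one 10 Hz cycle at 250 Hz
N_WIN = 200  # number of windows (20 s of synthetic data)


def make_signal(phase_per_window):
    """Unit-amplitude 10 Hz sinusoid whose phase offset is set window by window."""
    signal = []
    for w, phase in enumerate(phase_per_window):
        for k in range(WIN):
            t = (w * WIN + k) / FS
            signal.append(math.sin(2 * math.pi * F0 * t + phase))
    return signal


def power(signal):
    """Mean squared amplitude, used here as a stand-in for alpha band power."""
    return sum(v * v for v in signal) / len(signal)


def estimate_phases(signal):
    """Estimate one phase per window by complex demodulation at F0."""
    phases = []
    for w in range(len(signal) // WIN):
        z = 0j
        for k in range(WIN):
            n = w * WIN + k
            z += signal[n] * cmath.exp(-2j * math.pi * F0 * n / FS)
        phases.append(cmath.phase(z))
    return phases


def phase_locking_value(sig_a, sig_b):
    """PLV = |mean(exp(i * phase difference))|, from 0 (no locking) to 1 (perfect)."""
    diffs = [a - b for a, b in zip(estimate_phases(sig_a), estimate_phases(sig_b))]
    return abs(sum(cmath.exp(1j * d) for d in diffs) / len(diffs))


random.seed(0)
reference = make_signal([0.0] * N_WIN)
locked = make_signal([0.8] * N_WIN)  # constant lag relative to the reference
drifting = make_signal([random.uniform(-math.pi, math.pi) for _ in range(N_WIN)])

for name, sig in [("locked", locked), ("drifting", drifting)]:
    print(f"{name}: power={power(sig):.3f}, PLV={phase_locking_value(reference, sig):.3f}")
```

Both signals report the same power, so a device that exposes only power-band summaries would present them as equivalent, while the PLV computed from the raw samples is near 1 for the locked signal and much lower for the drifting one.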

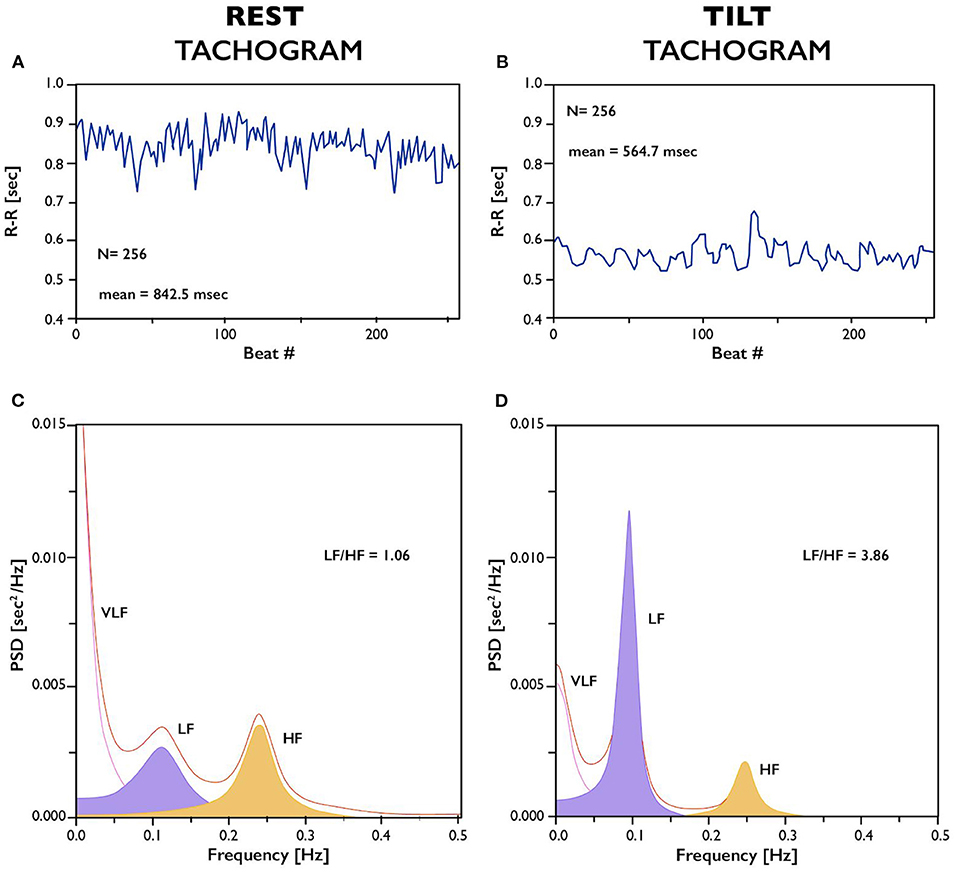

Further limitations can arise when deriving metrics from data preprocessed with closed-source algorithms. For example, using heart rate (HR) data from common wrist-worn devices (e.g., FitBit or Apple Watch) to calculate pulse rate variability (PRV) can be highly problematic. Similar to heart rate variability (HRV), PRV is a metric that is particularly sensitive to the sympathetic/parasympathetic axis of the autonomic nervous system (Berntson et al., 1997). By looking at the relationship between fast and slow changes in HR, it is possible to mathematically relate heart rate changes to levels of epinephrine governing sympathetic “fight or flight” responses and levels of acetylcholine governing parasympathetic “rest and digest” responses (Appelhans and Luecken, 2006). However, PRV calculations require long periods (typically 10+ min) of clean data to accurately estimate the low frequency (LF) and high frequency (HF) components used to assess the sympathetic/parasympathetic ratio. While measuring photoplethysmography (PPG) from the wrist is convenient, it can be subject to substantial movement artifacts depending on a host of factors (Biswas et al., 2019). To deal with movement-related noise, consumer-grade devices typically employ heavy-handed smoothing and interpolation in order to give consumers a “best guess” HR value even when the signal quality is low. While this interpolation can improve the HR estimation and the consumer experience overall, as is illustrated in Figure 5, the variability estimates derived from the resulting data can be very substantially distorted (Morelli et al., 2018). Without access to the original raw PPG data, researchers are simply stuck with the HR estimates coming off the device, with no path by which to improve the HR detection as new algorithms are developed, for example, based on recent research using a sensor fusion approach combining PPG and accelerometer data (Kos et al., 2017; Biswas et al., 2019).
In contrast, open-source products like OpenBCI (Murphy and Russomanno, 2013), Pulse Sensor (WorldFamousElectronicst, 2011) and EmotiBit (https://www.emotibit.com/, Montgomery 2020) provide access to the raw data as well as the electrical specifications and source code to understand the data collection and derivatives.

Figure 5. Illustrative example of heart rate variability derivation and how smoothing heart rate data can lead to the spurious detection of changes in the sympathetic/parasympathetic nervous system. Figure plots are based on data presented in Electrophysiology (1996). (A) shows the raw tachogram fluctuations in heart rate during supine rest and (C) shows the derived power spectral density (PSD) of the supine rest heart rate data, used to calculate the VLF, LF, and HF frequency bands that can be used to assess autonomic nervous system balance. (B,D) show the raw heart rate tachogram and derived PSD plot after a 90 degree head-up tilt, a physiological perturbation that increases the sympathetic nervous system response. Smoothing or interpolation algorithms acting on the raw rest tachogram data can potentially generate tachogram data similar to the tilt condition in (B), leading to a spurious shift in the observed LF/HF ratio. In the context of wearable consumer devices with potential data gaps and heavy-handed closed-source smoothing/interpolation algorithms, it is thereby possible to misinterpret smoothed or interpolated data as a shift in the sympathetic/parasympathetic nervous system response, even when no such shift has occurred.
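The effect illustrated in Figure 5 can be reproduced with a small synthetic example. The Python sketch below builds an evenly sampled tachogram with equal-amplitude LF (0.10 Hz) and HF (0.25 Hz) modulation, applies a plain moving average as a stand-in for vendor smoothing, and compares the LF/HF power ratio before and after. The 4 Hz resampling rate, the 2 s smoothing window, and the signal itself are assumptions made for illustration. Actual device algorithms are closed-source and may behave quite differently.

```python
import cmath
import math

FS = 4.0    # resampling rate of the tachogram in Hz (assumed)
N = 1200    # 5 minutes of evenly resampled RR intervals
SMOOTH = 8  # moving-average length in samples (2 s), a made-up vendor setting


def synthetic_rr(n_samples):
    """RR intervals in seconds with equal LF (0.10 Hz) and HF (0.25 Hz) modulation."""
    return [
        0.8
        + 0.03 * math.sin(2 * math.pi * 0.10 * i / FS)
        + 0.03 * math.sin(2 * math.pi * 0.25 * i / FS)
        for i in range(n_samples)
    ]


def moving_average(values, length):
    """Plain boxcar smoother; returns len(values) - length + 1 samples."""
    return [
        sum(values[i:i + length]) / length
        for i in range(len(values) - length + 1)
    ]


def band_power(values, f_low, f_high):
    """Sum of squared DFT magnitudes for bins with f_low <= f < f_high."""
    n = len(values)
    total = 0.0
    for k in range(1, n // 2):
        freq = k * FS / n
        if f_low <= freq < f_high:
            coeff = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                        for i, v in enumerate(values))
            total += abs(coeff) ** 2
    return total


def lf_hf_ratio(values):
    """Ratio of LF (0.04-0.15 Hz) to HF (0.15-0.40 Hz) power."""
    return band_power(values, 0.04, 0.15) / band_power(values, 0.15, 0.40)


extended = synthetic_rr(N + SMOOTH - 1)
smoothed = moving_average(extended, SMOOTH)  # exactly N samples after smoothing
raw = extended[:N]

ratio_raw = lf_hf_ratio(raw)
ratio_smoothed = lf_hf_ratio(smoothed)
print(f"LF/HF raw:      {ratio_raw:.2f}")
print(f"LF/HF smoothed: {ratio_smoothed:.2f}")
```

Because the moving average attenuates the faster HF oscillation more than the slower LF one, the LF/HF ratio of the smoothed series comes out roughly twice that of the raw series, even though the underlying physiology in this toy example has not changed.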

Worse than the data distortion itself is that the algorithms performing the interpolation on consumer-grade devices are closed-source, and it is often unclear when data is being interpolated and when it is faithfully reflecting the physiological activity of the wearer. As a result, it can be very difficult to assess when the data distortion may be leading to interpretations that are overstated, understated, or even opposite to the truth in any given experimental paradigm. Potentially even more problematic for use in scientific contexts is that the algorithms deriving biometrics can change without notice any time the company chooses to push new code onto the device, phone, or remote servers. These quickly evolving algorithms are especially difficult to disclose and protect with patents, and algorithm changes with different firmware and software releases are thus always a potential caveat when a result fails to replicate from one study to the next, or even when an effect shows up, disappears, or changes in the midst of a single study (Shcherbina et al., 2017). When it comes to developing new therapeutic approaches, training protocols, and serious game applications, these kinds of errors can have real-world consequences that can potentially affect people's lives.

When technological tools are developed out in the open, anyone can verify if a vendor is actively pursuing accurate validation metrics, appropriately managing security and privacy, or handling issues in a timely and professional manner. The ability to examine the process followed and the source code developed makes it so that anyone can perform an independent audit. This is true not only for the code itself, but also the methods and testing processes used in the development and the history of changes. The transparency of open-source tools and the access to raw data is similarly important for BCI applications in research as it is for gaming, therapy, and rehabilitation. As state-of-the-art technology endeavors to make sense of signals from the body, it is often critical to understand important details of how the data was collected, conditioned, and transformed into derivative metrics. Particularly as derivative metrics become building blocks and inputs for downstream analysis and machine learning algorithms, transparency becomes essential for the ability to replicate and understand results and unlock the mysterious inner workings of the human brain.

Looking Into the Black Box

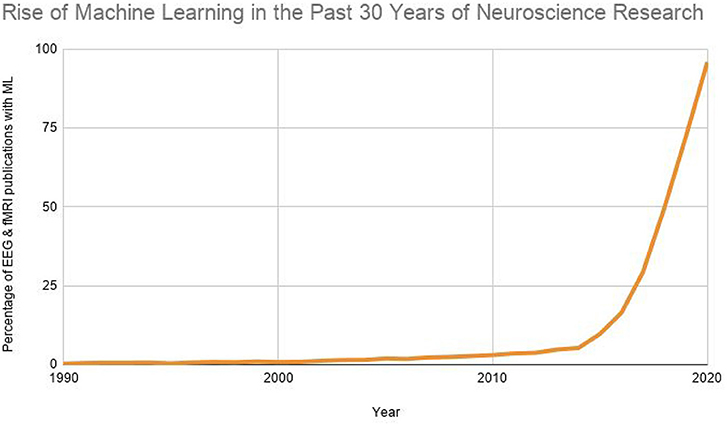

Stepping forward from simple derivative metrics, the recent wave of machine learning commonly utilizes deep neural network models, which often behave like black boxes because the relationship between the input and output can be difficult to ascertain. When we look at the number of journal articles about ML in neuroscience, we find that its adoption has grown continuously over the last 30 years (Figure 6). This rise occurred because neuroscience has experienced a revolution in the volume of data that researchers are able to gather: the number of neurons that can be recorded from, and with it the size of the datasets, is rising rapidly. Researchers increasingly need machine learning methods to wrangle this data and gain insight into it. Deep learning, a subset of ML, has given researchers methods for relating high-dimensional neural data to high-dimensional behavior. In addition to their ability to model complex, intelligent behaviors, deep neural networks (DNNs) excel at predicting neural responses to novel sensory stimuli with accuracy beyond any other currently available model type. DNNs can have millions of parameters, which are required to capture the domain knowledge needed for successful task performance. These parameters are not meant to capture which features of neural activity relate to which features of behavior, but rather which features of neural activity carry information about the behavior or sensory stimuli. If these models aren't made transparent so that BCI experts can interpret the model's decisions based on key model features, it can be challenging to predict their reliability and transferability to new contexts. Current state-of-the-art applications of multi-class EEG classification range from detection of epilepsy (Acharya et al., 2018), to cognitive-workload recognition (Almogbel et al., 2018), to bullying incident identification within an immersive environment (Baltatzis et al., 2017).
However, relatively little work in the field of AI or BCI has been done to analyze the interpretability of such models. We define interpretability as the degree to which humans can consistently predict the model's decision (Kim et al., 2016).

Figure 6. Here we plot the proportion of neuroscience papers that have used machine learning over the last three decades. That is, we calculate the number of papers involving both neuroscience and machine learning, normalized by the total number of neuroscience papers. Neuroscience papers were identified using a search for “EEG + fMRI” on semantic scholar. Papers involving neuroscience and machine learning were identified with a search for “machine learning” and “EEG + fMRI” on semantic scholar.

It is in this context that the concepts of explainability and interpretability have taken on new urgency. They will likely only become more important moving forward as discussions around artificial intelligence, data privacy, and ethics continue. The open-source community's recent efforts to support methods and applications that can lead to better trust in AI systems are already producing results. Two open-source projects, publicly available on GitHub, decompose a neural network's output prediction on specific inputs: LIME (Ribeiro et al., 2016) and SHAP (Lundberg and Lee, 2017). Both provide novel techniques that explain a classifier's predictions in an interpretable and reliable manner, for example by learning an interpretable model locally around the prediction. These techniques produce “visual explanations” for decisions from a large class of Convolutional Neural Network-based models, making them more transparent and accessible to a human expert by comparing the amount and degree of overlap between identified inputs.
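The idea of learning an interpretable model locally around a prediction can be sketched in a few lines of Python. The example below is not the API of the LIME or SHAP packages. It is a simplified illustration of the local-surrogate idea: perturb the input, query the opaque model, weight each perturbed sample by its proximity to the original input, and fit a weighted linear model whose coefficients serve as the explanation. The `black_box` model, its two normalized features (`alpha_power`, `heart_rate`), and all numeric settings are hypothetical.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque classifier: returns a probability from two features."""
    alpha_power, heart_rate = x
    return 1.0 / (1.0 + math.exp(-(4.0 * alpha_power - 0.5 * heart_rate)))


def solve(a, b):
    """Solve the small linear system a @ x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def explain_locally(model, x0, n_samples=500, scale=0.3, kernel_width=0.3, seed=0):
    """Fit a proximity-weighted linear surrogate around x0.

    Returns [intercept, w1, w2, ...], where each w is a local feature weight.
    """
    rng = random.Random(seed)
    dim = len(x0)
    xtwx = [[0.0] * (dim + 1) for _ in range(dim + 1)]
    xtwy = [0.0] * (dim + 1)
    for _ in range(n_samples):
        delta = [rng.gauss(0.0, scale) for _ in range(dim)]
        y = model([x + d for x, d in zip(x0, delta)])
        # Samples close to x0 count more than distant ones.
        weight = math.exp(-sum(d * d for d in delta) / kernel_width ** 2)
        row = [1.0] + delta
        for i in range(dim + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(dim + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    return solve(xtwx, xtwy)


intercept, w_alpha, w_hr = explain_locally(black_box, [0.2, 0.5])
print(f"local weights: alpha_power={w_alpha:+.2f}, heart_rate={w_hr:+.2f}")
```

The sign and relative size of the fitted weights indicate which inputs push this particular prediction up or down near the chosen point. A domain expert can then check whether that pattern is physiologically plausible.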

Leveraging the open-source community can help improve trust by ensuring that any BCI-AI effort meets standards of safety and transparency. The community can include domain experts and perform routine checks of the codebase. Beyond transparency into the code alone, as artificial neural network (ANN) models continue to increase in complexity, having tools to visualize and understand the key relationships within the models will be important for knowing when the data and decisions can be trusted and used in research and real-life applications. A closed approach to sophisticated BCI systems can lead to inadequate feature design choices that are not relevant to the current needs of the community and society. Such features can be harmful to the system; for example, if a medical system's patient diagnostic function has poor accuracy due to lack of testing, the result will be more human intervention and, ultimately, less trust.

New Realities and Augmented Cognition

Closed Loops

Looking forward to a future in which augmented and virtual reality become an integral part of daily life, BCIs will likely play an increasingly important role in creating closed-loop feedback for generative content. As one physical reality transforms into a multiplicity of mixed realities, brain-computer interfaces will be uniquely situated to provide necessary feature selections to determine the decoding and timing of content delivery.

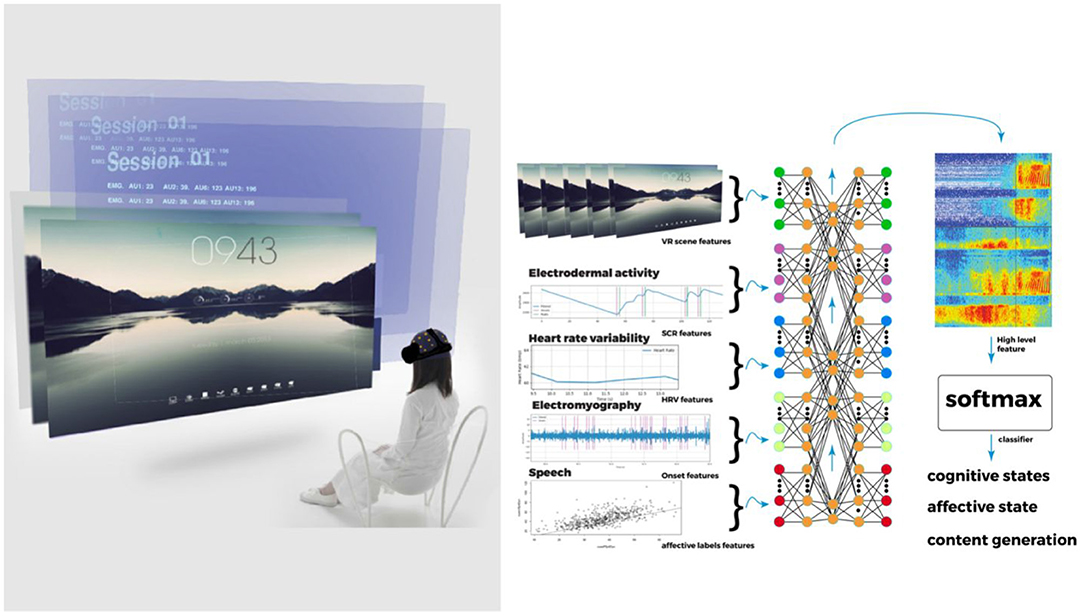

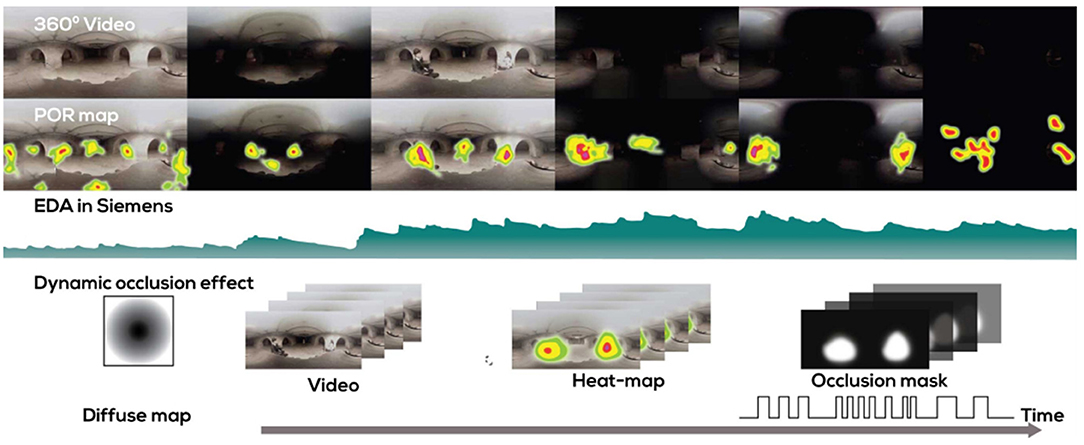

A closed-loop system that monitors the user's reactions to the content of a virtual environment enables the generation of personalized virtual reality experiences. Demonstrations like those shown by Bernal et al. in Figure 7 use arousal levels to provide real-time, reliable information about the user's reception of the content, which can help the system adapt the content seamlessly (Bernal et al., 2018). In a 360 video player demo, they used gaze data and Skin Conductance Response (SCR) data to increase the user's arousal level by modulating the occlusion of a shader superimposed on the 360 scene in order to create a fear of the unknown. Figure 8 shows how the demo takes standard footage of people in a basement and adds a pulsating shadow effect, producing a more dramatic 360 video similar to those seen in horror movies. To direct the user's focus to the people within the video, a surface shader is modulated dynamically to occlude areas that are not of interest to the user, with those locations informed by the point of regard (POR) data from the gaze tracking system. The detected SCR peak values are used to pulse the occlusion shader.
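The loop described here, in which SCR peaks pulse an occlusion shader and gaze determines where the occlusion applies, can be sketched as follows. This is an illustrative approximation only, not the implementation used by Bernal et al.; the function names, the peak criterion (`min_rise`), and the envelope parameters (`base`, `boost`, `decay`) are all assumptions chosen for clarity.

```python
def detect_scr_peaks(signal, min_rise=0.05):
    """Indices of local maxima that rise at least `min_rise` above the preceding trough."""
    peaks = []
    trough = signal[0]
    for i in range(1, len(signal) - 1):
        trough = min(trough, signal[i])
        is_local_max = signal[i - 1] < signal[i] >= signal[i + 1]
        if is_local_max and signal[i] - trough >= min_rise:
            peaks.append(i)
            trough = signal[i]  # the next peak must rise from a new trough
    return peaks


def occlusion_envelope(n_frames, peaks, base=0.2, boost=0.6, decay=0.5):
    """Per-frame shader opacity in [0, 1]: a base level plus a decaying pulse at each peak."""
    peak_set = set(peaks)
    level, envelope = 0.0, []
    for frame in range(n_frames):
        level *= decay              # the previous pulse fades each frame
        if frame in peak_set:
            level = boost           # a new SCR peak restarts the pulse
        envelope.append(min(1.0, base + level))
    return envelope


def pixel_opacity(pixel, gaze, radius, opacity):
    """Leave the region around the point of regard clear; occlude everything else."""
    dx, dy = pixel[0] - gaze[0], pixel[1] - gaze[1]
    return 0.0 if dx * dx + dy * dy <= radius * radius else opacity


# Toy skin-conductance trace (arbitrary units), one sample per video frame.
scr = [0.0, 0.01, 0.2, 0.1, 0.05, 0.07, 0.06, 0.3, 0.1]
peaks = detect_scr_peaks(scr)
envelope = occlusion_envelope(len(scr), peaks)
for frame, opacity in enumerate(envelope):
    print(frame, round(opacity, 3), pixel_opacity((50, 50), (10, 10), 20, opacity))
```

A real system would run peak detection on a streaming, filtered signal and pass the opacity to the rendering engine as a shader parameter; the sketch only shows how the sensing and rendering sides of the loop connect.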

Figure 7. An example of a closed-loop setup used in a lab setting. Physiological signals are recorded using a set of electrodes positioned over the visual cortex while stimuli are displayed on a VR headset. Currently, the system supports deep learning model inference for high-level cognitive states.

Figure 8. A 360 video scene is manipulated using the user's gaze data and skin conductance response data to increase the user's arousal level. Arousal peaks trigger darker, flashing moments that make the scene more dramatic, similar to those seen in horror movies. To direct the user's focus to the people within the video, the system dynamically modulates a surface shader that occludes the locations that the gaze tracking data indicate are not of interest to the user.

Sourina et al. proposed a real-time approach to feature extraction for EEG-enabled applications, including serious games, emotional avatars, music therapy, music performance, and storytelling, in which the emotional states of the users are mapped onto avatars. The Haptek ActiveX control provides functions and commands to change the six facial expressions of 3D avatars: fear, frustration, sadness, happiness, pleasant, and satisfaction. In the application, the user's emotions are recognized from EEG and visualized in real time on the user's avatar with the Haptek system. For the music therapy application, the music selection and duration are adjusted based on the current emotional state of the user wearing the BCI, as identified by the system (Sourina et al., 2011).

Scenarios of this kind can have serious consequences when proper guidelines are not followed. Recently, a BCI start-up came under scrutiny over tests on Chinese schoolchildren after the Wall Street Journal released a video stating that teachers at the school know exactly when students are and are not paying attention (Wall Street Journal, 2019). In the video, children are shown wearing an EEG headband during class, with an LED on the forehead that changes color based on the child's attention level. At the time, there were no privacy laws regulating this type of collaboration between private schools and companies. Even though the start-up reports that all parties involved had consented to taking part in the test, concerns about whether the data were adequately secured and about potential future implications for the children led to a ban on the device's use in the classroom and the creation of a new law (Chinese Primary School Halts Trial of Device That Monitors Pupils Brainwaves, 2019; Primary School in China Suspends Use of BrainCo Brainwave Tracking Headband, 2019).

New Realities

The importance of open-source transparency will likely expand substantially as AIs increasingly become BCI-driven coprocessors for the human mind. Whether algorithms are designed to help homeostatically regulate stress, or to monitor engagement and optimize learning or improve safety, these reality-selection algorithms will likely be critical to examine for integrity, implicit bias, and conflicts of interest. As AIs take on an adjunct homunculus role, their ability to make sense of BCI data and be creatively applied to novel applications will depend on the adaptability, extensibility, and transparency of the BCI tools on which they are built. All this comes with great possibilities to fundamentally transform human consciousness in addition to extreme risks and concerns, and open source may be one of the key factors that helps us navigate this conversation as we build safeguards into this new paradigm.

Issues surrounding algorithm integrity, and examples of how open-source code can mitigate them, can be drawn from the cyber-security sector. For example, the WannaCry ransomware targeted a vulnerability in the closed-source Windows operating system (Petrenko et al., 2018) that had existed for over a decade and only came to worldwide attention after the WannaCry crypto-worm infected ~300,000 computers worldwide, including those of the U.K.'s National Health Service, which the attack cost nearly £100 M in canceled appointments and cleanup. In contrast, the Heartbleed security bug in the open-source OpenSSL cryptography library was discovered and fixed in just over 2 years. While it can be hard to compare any two vulnerabilities, data have suggested that defects in open-source projects are found and fixed more rapidly than those in closed-source projects (Paulson et al., 2004). The integrity and security of cognition-augmenting and reality-selection algorithms are very likely to present a host of cyber-attack opportunities for anyone from lone-wolf hackers hawking their wares to state actors creating individually targeted propaganda. Open-source public review of algorithm integrity may be a way to catch these vulnerabilities in a timely manner.

Implicit bias has been documented in machine learning algorithms that govern everything from filtering job applicants to home loan approval (O'Neil, 2016; Buolamwini and Gebru, 2018). The implicit bias embodied in these algorithms and models often ends up reflecting and reinforcing the racial and gender inequities present in our society. As we diversify into a multiplicity of virtual and mixed realities, both the risk of exacerbating implicit bias and the opportunity to mitigate it will be great. For example, Mel Slater and his students have demonstrated that embodying light-skinned participants in a dark-skinned virtual body significantly reduces implicit racial bias against dark-skinned people, in contrast to embodiment in light-skinned or purple-skinned avatars, or having no virtual body at all (Peck et al., 2013). Virtual reality is a persuasive tool for potentially placing people into a body associated with a different stereotype, particularly of race or gender, by modifying the form of their body image. This is accomplished with a setup referred to as 'virtual embodiment'. The participants wear a wide field-of-view head-mounted display and, when they look down toward themselves in VR, they see a programmed virtual body (VB) substituting for their own real body. They also see this body when looking at their (geometrically accurate) reflection in a virtual mirror. These kinds of virtual reality experiences have the potential to increase empathy and understanding. We speculate based on prior research that as BCIs combined with machine learning become increasingly capable of detecting biometric patterns associated with complex cognition such as implicit bias and empathy (Hasler et al., 2017; Levsen and Bartholow, 2018; Luo et al., 2018; Katsumi et al., 2020; Patané et al., 2020), feedback loops between BCIs and virtual reality content will be positioned to either diminish or amplify these internal states. Open-source algorithms and models that can be audited may be an important tool to ensure that implicit bias is mitigated and empathy is enhanced as we multiply reality.

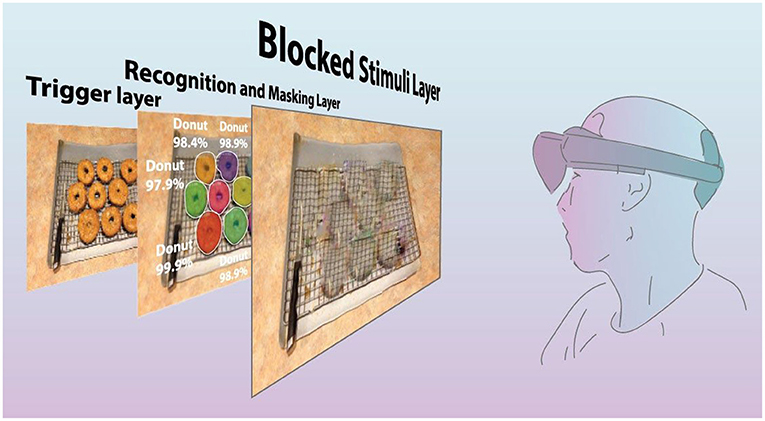

Koutsouleris et al. (2020) reported that AI algorithms were able to predict whether people would have a psychotic episode using a combination of clinical, brain imaging, and genetic data. The positive potential of algorithms that could help to intervene in or otherwise avert a catastrophic life event for millions of people worldwide cannot be overstated. Looking at an example with a less devastating but potentially broader impact, research has shown that struggles with obesity are driven, in part, by food stimuli that hijack the decision-making centers in the brain to create an overwhelming compulsion to eat (Stice et al., 2009; Cobb et al., 2015; Mejova et al., 2015). To short-circuit this stimulus-response behavior, we can easily imagine how a closed-loop augmented reality system that occludes specific food stimuli, like donuts, could be a great aid to those in the process of rebuilding their relationship with food, as shown in Figure 9. And yet this technology also presents a clear risk that mistakes and conflicts of interest will have dire consequences. The landscape of BCI-driven reality selection is rife with both utopian and dystopian possibilities. Relapse into addiction, for example, is known to be triggered by specific associative stimuli like a cigarette or a lighter (Shiffman, 2005; Leventhal et al., 2008), and susceptibility is very likely to be greater under certain neurological states (Potvin et al., 2015; Witteman et al., 2015). It is easy to imagine a utopian world with reduced substance abuse rates, achieved simply by detecting susceptible states and using augmented reality to block trigger stimuli, thereby enabling people to be productive members of society. Conversely, it is equally easy to imagine the dystopian world that might follow if Purdue Pharma [maker of the highly addictive opiate OxyContin, which drove opiate addiction rates to all-time highs in the United States (Knisely et al., 2008)] were generating BCI-driven targeted advertising into our reality feeds. We speculate that possible futures of BCI-driven reality selection range from greater safety, health, wellness, rates of learning, and creativity to security risks, manipulated addiction, misinformation, and brainwashing. Transparency may be a critical principle to help unlock the utopian visions of our future and steer clear of the most dystopian ones.

Figure 9. Diagram showing a closed-loop scenario in which stimuli are removed from the user's perception. The trigger layer shows a stimulus that the user intends to avoid. The recognition layer is computed on the user's device and masks the stimuli to be blocked. The blocked stimuli layer is what the user perceives when a trigger stimulus is shown.
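The three layers in this scenario can be illustrated with a small sketch. Everything here is hypothetical: the recognizer is stubbed out as a list of labeled bounding boxes (a real system would obtain these from an on-device detector), the frame is a tiny grid of grayscale values, and the function names are invented for illustration. The sketch only shows how a recognition-layer mask turns the trigger layer into the blocked stimuli layer.

```python
def build_mask(height, width, detections, blocked_labels):
    """Recognition layer: mark every pixel inside a detection whose label is blocked.
    Each detection is (label, (top, left, bottom, right)) with exclusive bottom/right."""
    mask = [[False] * width for _ in range(height)]
    for label, (top, left, bottom, right) in detections:
        if label not in blocked_labels:
            continue
        for y in range(max(0, top), min(height, bottom)):
            for x in range(max(0, left), min(width, right)):
                mask[y][x] = True
    return mask


def apply_mask(frame, mask, fill=0):
    """Blocked stimuli layer: replace masked pixels with a neutral fill value."""
    return [
        [fill if masked else pixel for pixel, masked in zip(row, mask_row)]
        for row, mask_row in zip(frame, mask)
    ]


# Trigger layer: a 4x4 frame in which every pixel has brightness 9.
frame = [[9] * 4 for _ in range(4)]

# Stubbed recognizer output (assumed format): the user has asked to block donuts only.
detections = [("donut", (1, 1, 3, 3)), ("apple", (0, 0, 1, 1))]
mask = build_mask(4, 4, detections, blocked_labels={"donut"})
perceived = apply_mask(frame, mask)

for row in perceived:
    print(row)
```

Keeping the block list and the masking step on the user's device, as the caption describes, means the choice of what is removed stays under the user's control and can be inspected, which is the property that open-source review is meant to protect.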

Closing Topics

As we have discussed in this paper, BCIs are moving beyond siloed research labs to explore new use-cases ranging from the arts and rehabilitation to gaming and augmented reality. The accessibility, adaptability, and transparency of BCI tools and data will significantly impact how we collectively navigate this new digital age. It is essential that we build technologies that are not only affordable but can also be used for diverse applications while delivering transparency of the data and algorithms employed. Open-source principles would enable BCI technologies to be explored from different perspectives and for novel applications, with confidence that the data are relevant and accurately reflect underlying physiological changes. Enabling BCI technologies to be used in the broadest possible range of applications will ensure that more voices can understand, utilize, and validate the integrity of these signals as we shape a BCI-augmented future.

Both art and technology aim to reshape the world we exist in, re-envisioning what we perceive as real and understanding nature's own limits. For decades, industry has been inspiring new technologies (3D printing, e-paper, satellites) and presenting critical reflections like Black Mirror's “The Entire History of You” that give us mental frameworks to rethink our relationship with technology. As we move into a future that increasingly merges technology with humanity, the artist's role must be that of an active partner in preparing the direction of research and facilitating synergy between science and technology as a vital means for understanding the world.

We speculate that augmented cognition driven by BCI technology may be poised to transform humanity at a level on par with or exceeding that of the written word. The possibilities to learn faster through dynamic material that is individually tuned to each person's psychophysiology, to develop strategies for enhanced creativity, and perhaps even to bootstrap our biology into new forms of distributed or collective consciousness may have profound implications for the ability of humanity to understand the universe and its place therein. And yet, as with any powerful tool, the potential benefits come with potential risks. Whether it is the possibility of a future in which we can create reality filters based on physiological responses or read the cataloged memories of alleged criminals (Flisfeder, 2018), there are very real risks as we look forward into a world powered by BCI-driven augmented cognition. Despite these risks, the benefits are too profound and the advantages too immediate to imagine a reverse course. If someone can save themselves or a family member from addiction or a psychotic break (Koutsouleris et al., 2020), or if a driver or pilot can be safer (Healey and Picard, 2005; Zepf et al., 2019), or if a day-trader can be the smartest person in the room, the unrelenting force of progress will likely overpower any attempts to halt it outright. Instead, we may consider building a future based on open-source principles, including adaptability, extensibility, and transparency, so as to democratize the conversation with different perspectives, deliver the openness needed to conduct replicable science, and understand the algorithms and models that will play increasingly important roles in creating our realities and world views.

Setting up the technical as well as ethical and societal norms of a BCI-driven future will require a diversity of transdisciplinary perspectives. Sitting at the nexus of biology, electrical engineering, and computer science, BCIs are transdisciplinary by nature, and they also present an opportunity to bring in perspectives ranging from psychology, health, and physical education to history, philosophy, and the arts. Bringing together a diversity of ideas and viewpoints can help ensure that this transformative technology is set up not only to serve the most privileged members of society, but also to enable individual ingenuity to solve issues that transcend physical, economic, and cultural boundaries. Even more than the sum of siloed individual perspectives, transdisciplinary conversations that explore the intersections between different points of view can multiply the information and ideas available to imagine our future realities (Nijholt et al., 2018; Dikker et al., 2019b). Those conversations might become more common and start at a younger age if BCIs are brought into K-12 project-based curricula and into hackathons that promote diverse teams including artists and philosophers as well as engineers and scientists. By building BCI tools that are adaptable and transparent as well as accessible, the resulting applications and conversations can go beyond preconceived use-cases to explore the widest scope of possibilities that BCIs may unlock for the future. Greater openness may require some reframing of the solution space in the context of principles including adaptability, extensibility, and transparency. Developing BCI tools that are adaptable and extensible can democratize the development of new ideas and applications beyond the use-cases developed in the boardroom. Cultivating more transparency, with greater access to raw data, source code, and visibility into black-box models, can facilitate the creation of replicable scientific knowledge and build trust that future realities will be secure and serve the interests of all.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author Contributions

GB and SM conceived of the presented idea, drafted the manuscript, and designed the figures. All authors discussed the results and commented on the manuscript.

Conflict of Interest

SM was employed by the company Connected Future Labs.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Acharya, U. R., Oh, S. L., Hagiwara, Y., Tan, J. H., and Adeli, H. (2018). Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput. Biol. Med. 100, 270–278. doi: 10.1016/j.compbiomed.2017.09.017

Almogbel, M. A., Dang, A. H., and Kameyama, W. (2018). “EEG-signals based cognitive workload detection of vehicle driver using deep learning,” in 2018 20th International Conference on Advanced Communication Technology (ICACT) (IEEE) (Chuncheon), 256–259. doi: 10.23919/ICACT.2018.8323716

Appelhans, B. M., and Luecken, L. J. (2006). Heart rate variability as an index of regulated emotional responding. Rev. Gen. Psychol. 10, 229–240. doi: 10.1037/1089-2680.10.3.229

Baltatzis, V., Bintsi, K.-M., Apostolidis, G. K., and Hadjileontiadis, L. J. (2017). Bullying incidences identification within an immersive environment using HD EEG-based analysis: a swarm decomposition and deep learning approach. Sci. Rep. 7, 1–8. doi: 10.1038/s41598-017-17562-0

Banzi, M., Cuartielles, D., Igoe, T., Martino, G., and Mellis, D. (2015). Arduino: Open-Source Project Official Website. Arduino.

Bernal, G., and Maes, P. (2017). “Emotional beasts: visually expressing emotions through avatars in VR,” in Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems CHI EA'17 (New York, NY: ACM), 2395–2402. doi: 10.1145/3027063.3053207

Bernal, G., Yang, T., Jain, A., and Maes, P. (2018). “PhysioHMD: a conformable, modular toolkit for collecting physiological data from head-mounted displays,” in Proceedings of the 2018 ACM International Symposium on Wearable Computers ISWC'18 (New York, NY: Association for Computing Machinery), 160–167. doi: 10.1145/3267242.3267268

Berntson, G. G., Bigger, J. T., Eckberg, D. L., Grossman, P., Kaufmann, P. G., Malik, M., et al. (1997). Heart rate variability: origins, methods, and interpretive caveats. Psychophysiology 34, 623–648. doi: 10.1111/j.1469-8986.1997.tb02140.x

Biswas, D., Simões-Capela, N., Hoof, C. V., and Helleputte, N. V. (2019). Heart rate estimation from wrist-worn photoplethysmography: a review. IEEE Sens. J. 19, 6560–6570. doi: 10.1109/JSEN.2019.2914166

Buolamwini, J., and Gebru, T. (2018). “Gender shades: intersectional accuracy disparities in commercial gender classification,” in Conference on Fairness, Accountability and Transparency (PMLR) (New York NY), 77–91. Available online at: http://proceedings.mlr.press/v81/buolamwini18a.html (accessed January 22, 2021).

Burke, J. F., Merkow, M. B., Jacobs, J., Kahana, M. J., and Zaghloul, K. A. (2015). Brain computer interface to enhance episodic memory in human participants. Front. Hum. Neurosci. 8:1055. doi: 10.3389/fnhum.2014.01055

Buzsaki, G. (2006). Rhythms of the Brain. Oxford: Oxford University Press. doi: 10.1093/acprof:oso/9780195301069.001.0001

Chinese Primary School Halts Trial of Device That Monitors Pupils Brainwaves. (2019). The Guardian. Available online at: http://www.theguardian.com/world/2019/nov/01/chinese-primary-school-halts-trial-of-device-that-monitors-pupils-brainwaves (accessed January 22, 2021).

Cobb, L. K., Appel, L. J., Franco, M., Jones-Smith, J. C., Nur, A., and Anderson, C. A. (2015). The relationship of the local food environment with obesity: a systematic review of methods, study quality, and results. Obesity 23, 1331–1344. doi: 10.1002/oby.21118

Dan, C. (2011). ZeoScope. Available online at: https://github.com/dancodru/ZeoScope (accessed January 17, 2021).

Dikker, S., Michalareas, G., Oostrik, M., Serafimaki, A., Kahraman, H. M., Struiksma, M. E., et al. (2019a). Crowdsourcing neuroscience: inter-brain coupling during face-to-face interactions outside the laboratory. NeuroImage 227:117436. doi: 10.1016/j.neuroimage.2020.117436

Dikker, S., Montgomery, S., and Tunca, S. (2019b). “Using synchrony-based neurofeedback in search of human connectedness,” in Brain Art, eds A. Nijholt (Cham: Springer), 161–206. doi: 10.1007/978-3-030-14323-7_6

Dikker, S., Wan, L., Davidesco, I., Kaggen, L., Oostrik, M., McClintock, J., et al. (2017). Brain-to-brain synchrony tracks real-world dynamic group interactions in the classroom. Curr. Biol. 27, 1375–1380. doi: 10.1016/j.cub.2017.04.002

Electrophysiology, T. F. (1996). Heart rate variability: standards of measurement, physiological interpretation, and clinical use. Circulation 93, 1043–1065. doi: 10.1161/01.CIR.93.5.1043

Engel, A. K., Fries, P., and Singer, W. (2001). Dynamic predictions: oscillations and synchrony in top–down processing. Nat. Rev. Neurosci. 2, 704–716. doi: 10.1038/35094565

Faivre, N., Salomon, R., and Blanke, O. (2015). Visual consciousness and bodily self-consciousness. Curr. Opin. Neurol. 28, 23–28. doi: 10.1097/WCO.0000000000000160

Flisfeder, M. (2018). Black Mirror, “playtest,” and the crises of the present. Black Mirror Crit. Media Theory 141:141. doi: 10.1177/1461444810365313

Fries, P. (2015). Rhythms for cognition: communication through coherence. Neuron 88, 220–235. doi: 10.1016/j.neuron.2015.09.034

Gray, C. M., König, P., Engel, A. K., and Singer, W. (1989). Oscillatory responses in cat visual cortex exhibit inter-columnar synchronization which reflects global stimulus properties. Nature 338, 334–337. doi: 10.1038/338334a0

Gupta, K., Hajika, R., Pai, Y. S., Duenser, A., Lochner, M., and Billinghurst, M. (2020). “Measuring human trust in a virtual assistant using physiological sensing in virtual reality,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Atlanta, GA), 756–765. doi: 10.1109/VR46266.2020.00099

Hasler, B. S., Spanlang, B., and Slater, M. (2017). Virtual race transformation reverses racial in-group bias. PLoS ONE 12:e0174965. doi: 10.1371/journal.pone.0174965

Healey, J. A., and Picard, R. W. (2005). Detecting stress during real-world driving tasks using physiological sensors. IEEE Trans. Intell. Transp. Syst. 6, 156–166. doi: 10.1109/TITS.2005.848368

Hoffman, C., and Bast, G. (2017). Cosa Mentale, Draw With Two Brains Connected to One Hand | Mentalista. Available online at: https://mentalista.fr/en/cosamentale (accessed October 31, 2020).

Höller, Y., Uhl, A., Bathke, A., Thomschewski, A., Butz, K., Nardone, R., et al. (2017). Reliability of EEG measures of interaction: a paradigm shift is needed to fight the reproducibility crisis. Front. Hum. Neurosci. 11:441. doi: 10.3389/fnhum.2017.00441

How to Hack Toy EEGs | Frontier Nerds (2010). Available online at: http://www.frontiernerds.com/brain-hack (accessed January 17, 2021).

Huang, D., Wang, X., Li, J., and Tang, W. (2020). “Virtual reality for training and fitness assessments for construction safety,” in 2020 International Conference on Cyberworlds (CW) (Caen), 172–179. doi: 10.1109/CW49994.2020.00036

Kaplan, R., Bush, D., Bonnefond, M., Bandettini, P. A., Barnes, G. R., Doeller, C. F., et al. (2014). Medial prefrontal theta phase coupling during spatial memory retrieval. Hippocampus 24, 656–665. doi: 10.1002/hipo.22255

Katsumi, Y., Dolcos, F., Moore, M., Bartholow, B. D., Fabiani, M., and Dolcos, S. (2020). Electrophysiological correlates of racial in-group bias in observing nonverbal social encounters. J. Cogn. Neurosci. 32, 167–186. doi: 10.1162/jocn_a_01475

Kim, B., Khanna, R., and Koyejo, O. O. (2016). “Examples are not enough, learn to criticize! criticism for interpretability,” in Advances in Neural Information Processing Systems, ed N. Thakor (Springer), 2280–2288.

Knisely, J. S., Wunsch, M. J., Cropsey, K. L., and Campbell, E. D. (2008). Prescription opioid misuse index: a brief questionnaire to assess misuse. J. Subst. Abuse Treat. 35, 380–386. doi: 10.1016/j.jsat.2008.02.001

Koch, C., Massimini, M., Boly, M., and Tononi, G. (2016). Neural correlates of consciousness: progress and problems. Nat. Rev. Neurosci. 17, 307–321. doi: 10.1038/nrn.2016.22

Kos, M., Li, X., Khaghani-Far, I., Gordon, C. M., Pavel, M., and Jimison, H. B. (2017). Can accelerometry data improve estimates of heart rate variability from wrist pulse PPG sensors? Conf. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Annu. Conf. 2017, 1587–1590. doi: 10.1109/EMBC.2017.8037141

Koutsouleris, N., Dwyer, D. B., Degenhardt, F., Maj, C., Urquijo-Castro, M. F., Sanfelici, R., et al. (2020). Multimodal machine learning workflows for prediction of psychosis in patients with clinical high-risk syndromes and recent-onset depression. JAMA Psychiatry 78, 195–209. doi: 10.1001/jamapsychiatry.2020.3604

Kropotov, J. D. (2010). Quantitative EEG, Event-Related Potentials and Neurotherapy. Academic Press.

Lécuyer, A., Lotte, F., Reilly, R. B., Leeb, R., Hirose, M., and Slater, M. (2008). Brain-computer interfaces, virtual reality, and videogames. Computer 41, 66–72. doi: 10.1109/MC.2008.410

Leslie, G. (2020). Inner rhythms: vessels as a sustained brain-body performance practice. Leonardo, 1–8. doi: 10.1162/leon_a_01963

Leventhal, A. M., Waters, A. J., Breitmeyer, B. G., Miller, E. K., Tapia, E., and Li, Y. (2008). Subliminal processing of smoking-related and affective stimuli in tobacco addiction. Exp. Clin. Psychopharmacol. 16, 301–312. doi: 10.1037/a0012640

Levsen, M. P., and Bartholow, B. D. (2018). Neural and behavioral effects of regulating emotional responses to errors during an implicit racial bias task. Cogn. Affect. Behav. Neurosci. 18, 1283–1297. doi: 10.3758/s13415-018-0639-8

Llinás, R., Ribary, U., Contreras, D., and Pedroarena, C. (1998). The neuronal basis for consciousness. Philos. Trans. R. Soc. B Biol. Sci. 353, 1841–1849. doi: 10.1098/rstb.1998.0336

Lorenzetti, V., Melo, B., Basílio, R., Suo, C., Yücel, M., Tierra-Criollo, C. J., et al. (2018). Emotion regulation using virtual environments and real-time fMRI neurofeedback. Front. Neurol. 9:390. doi: 10.3389/fneur.2018.00390

Lundberg, S. M., and Lee, S.-I. (2017). A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 30, 4765–4774.

Luo, S., Han, X., Du, N., and Han, S. (2018). Physical coldness enhances racial in-group bias in empathy: electrophysiological evidence. Neuropsychologia 116, 117–125. doi: 10.1016/j.neuropsychologia.2017.05.002

Marshall, D., Coyle, D., Wilson, S., and Callaghan, M. (2013). Games, gameplay, and BCI: the state of the art. IEEE Trans. Comput. Intell. AI Games 5, 82–99. doi: 10.1109/TCIAIG.2013.2263555

Masui, K., Nagasawa, T., and Tsumura, N. (2020). “Continuous estimation of emotional change using multimodal affective responses,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 290–291. doi: 10.1109/CVPRW50498.2020.00153

Mejova, Y., Haddadi, H., Noulas, A., and Weber, I. (2015). “Foodporn: obesity patterns in culinary interactions,” in Proceedings of the 5th International Conference on Digital Health (New York, NY), 51–58. doi: 10.1145/2750511.2750524

Meyer, L., Grigutsch, M., Schmuck, N., Gaston, P., and Friederici, A. D. (2015). Frontal-posterior theta oscillations reflect memory retrieval during sentence comprehension. Cortex J. Devoted Study Nerv. Syst. Behav. 71, 205–218. doi: 10.1016/j.cortex.2015.06.027

Miltner, W. H., Braun, C., Arnold, M., Witte, H., and Taub, E. (1999). Coherence of gamma-band EEG activity as a basis for associative learning. Nature 397, 434–436. doi: 10.1038/17126

Montana, J. I., Matamala-Gomez, M., Maisto, M., Mavrodiev, P. A., Cavalera, C. M., Diana, B., et al. (2020). The benefits of emotion regulation interventions in virtual reality for the improvement of wellbeing in adults and older adults: a systematic review. J. Clin. Med. 9:500. doi: 10.3390/jcm9020500

Montgomery, S. (2018a). Hive Mind, Don't Speak | Neural. Winter. Available online at: http://neural.it/2018/05/hive-mind-dont-speak/ (accessed November 13, 2020).

Montgomery, S. (2018b). Synergy in Art and Science Could Save the World. San Francisco, CA. Available online at: https://www.youtube.com/watch?v=OXFxVWI6QNU (accessed January 17, 2021).

Montgomery, S., and Laefsky, I. (2011). Bio-Sensing: hacking the doors of perception. Make Mag. 26, 104–111.

Montgomery, S., Rioja, D., and Bagdatli, M. (2011). “Emergence,” in ISEA2011: 17th International Symposium on Electronic Art (Istanbul). Available online at: https://isea-archives.siggraph.org/art-events/sean-montgomery-diego-rioja-mustafa-bagdatli-emergence/ (accessed January 17, 2021).

Montgomery, S. M. (2010). “Measuring biological signals: concepts and practice,” in Proceedings of the Fourth International Conference on Tangible, Embedded, and Embodied Interaction Tei'10 (New York, NY: Association for Computing Machinery), 337–340. doi: 10.1145/1709886.1709969

Morelli, D., Bartoloni, L., Colombo, M., Plans, D., and Clifton, D. A. (2018). Profiling the propagation of error from PPG to HRV features in a wearable physiological-monitoring device. Healthc. Technol. Lett. 5, 59–64. doi: 10.1049/htl.2017.0039

Murphy, J., and Russomanno, C. (2013). OpenBCI. Wikipedia. Available online at: https://openbci.com/ (accessed October 31, 2020).

Nijboer, F., Allison, B. Z., Dunne, S., Bos, D. P.-O., Nijholt, A., and Haselager, P. (2011). “A preliminary survey on the perception of marketability of brain-computer interfaces and initial development of a repository of BCI companies,” in Proceedings 5th International Brain-Computer Interface Conference (BCI 2011) (Verlag der Technischen Universität Graz), 344–347.

Nijholt, A., Bos, D. P.-O., and Reuderink, B. (2009). Turning shortcomings into challenges: brain–computer interfaces for games. Entertain. Comput. 1, 85–94. doi: 10.1016/j.entcom.2009.09.007

Nijholt, A., Jacob, R. J., Andujar, M., Yuksel, B. F., and Leslie, G. (2018). “Brain-computer interfaces for artistic expression,” in Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (Montreal, QC), 1–7. doi: 10.1145/3170427.3170618

O'Neil, C. (2016). Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. Crown.

Ossmann, R., Parker, S., Thaller, D., Pecyna, K., García-Soler, A., Morales, B., et al. (2014). AsTeRICS, a flexible AT construction set. Int. J. Adapt. Control Signal Process. 28, 1475–1503. doi: 10.1002/acs.2496

Patané, I., Lelgouarch, A., Banakou, D., Verdelet, G., Desoche, C., Koun, E., et al. (2020). Exploring the effect of cooperation in reducing implicit racial bias and its relationship with dispositional empathy and political attitudes. Front. Psychol. 11:510787. doi: 10.3389/fpsyg.2020.510787

Paulson, J. W., Succi, G., and Eberlein, A. (2004). An empirical study of open-source and closed-source software products. IEEE Trans. Softw. Eng. 30, 246–256. doi: 10.1109/TSE.2004.1274044

Peck, T. C., Seinfeld, S., Aglioti, S. M., and Slater, M. (2013). Putting yourself in the skin of a black avatar reduces implicit racial bias. Conscious. Cogn. 22, 779–787. doi: 10.1016/j.concog.2013.04.016

Petrenko, A. S., Petrenko, S. A., Makoveichuk, K. A., and Chetyrbok, P. V. (2018). “Protection model of PCS of subway from attacks type «wanna cry», «petya» and «bad rabbit» IoT,” in 2018 IEEE Conference of Russian Young Researchers in Electrical and Electronic Engineering (EIConRus) (IEEE), 945–949.

Picard, R. W. (1995). Affective Computing-MIT Media Laboratory Perceptual Computing Section Technical Report No. 321. Camb.

Potvin, S., Tikàsz, A., Dinh-Williams, L. L.-A., Bourque, J., and Mendrek, A. (2015). Cigarette cravings, impulsivity, and the brain. Front. Psychiatry 6:125. doi: 10.3389/fpsyt.2015.00125

Primary School in China Suspends Use of BrainCo Brainwave Tracking Headband (2019). Glob. Shak. Available online at: https://globalshakers.com/primary-school-in-china-suspends-use-of-brainco-brainwave-tracking-headband/ (accessed January 22, 2021).

Produce Consume Robot LoVid. (2013). Telephone Rewired at Pocket Gallery. Available online at: https://www.harvestworks.org/telephone-rewired/ (accessed January 17, 2021).

Ribeiro, M. T., Singh, S., and Guestrin, C. (2016). “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. Available online at: http://arxiv.org/abs/1602.04938 (accessed January 21, 2021).

Sandberg, K., Timmermans, B., Overgaard, M., and Cleeremans, A. (2010). Measuring consciousness: is one measure better than the other? Conscious. Cogn. 19, 1069–1078. doi: 10.1016/j.concog.2009.12.013

Schneider, R. (2010). Mindflex Hack Turns Brain Waves Into Music. Wired. Available online at: https://www.wired.com/2010/10/robert-schneider-teletron/ (accessed January 21, 2021).

Shcherbina, A., Mattsson, C. M., Waggott, D., Salisbury, H., Christle, J. W., Hastie, T., et al. (2017). Accuracy in wrist-worn, sensor-based measurements of heart rate and energy expenditure in a diverse cohort. J. Pers. Med. 7:3. doi: 10.3390/jpm7020003

Shiffman, S. (2005). Dynamic influences on smoking relapse process. J. Pers. 73, 1715–1748. doi: 10.1111/j.0022-3506.2005.00364.x

Sitsky, L. (2002). Music of the Twentieth-Century Avant-Garde: A Biocritical Sourcebook. ABC-CLIO.

Sourina, O., Liu, Y., Wang, Q., and Nguyen, M. K. (2011). “EEG-based personalized digital experience,” in International Conference on Universal Access in Human-Computer Interaction (Orlando, FL: Springer), 591–599. doi: 10.1007/978-3-642-21663-3_64

Stice, E., Spoor, S., Ng, J., and Zald, D. H. (2009). Relation of obesity to consummatory and anticipatory food reward. Physiol. Behav. 97, 551–560. doi: 10.1016/j.physbeh.2009.03.020

Sung, Y., Cho, K., and Um, K. (2012). A development architecture for serious games using BCI (brain computer interface) sensors. Sensors 12, 15671–15688. doi: 10.3390/s121115671

Uhlhaas, P., Pipa, G., Lima, B., Melloni, L., Neuenschwander, S., Nikolić, D., et al. (2009). Neural synchrony in cortical networks: history, concept and current status. Front. Integr. Neurosci. 3:17. doi: 10.3389/neuro.07.017.2009

Grand View Research (2019). Brain Computer Interface Market Size Report, 2020–2027. Available online at: https://www.grandviewresearch.com/industry-analysis/brain-computer-interfaces-market (accessed January 22, 2021).

Vujic, A., Tong, S., Picard, R., and Maes, P. (2020). “Going with our guts: potentials of wearable electrogastrography (EGG) for affect detection,” in Proceedings of the 2020 International Conference on Multimodal Interaction (Netherlands), 260–268. doi: 10.1145/3382507.3418882

Wall Street Journal (2019). How China Is Using Artificial Intelligence in Classrooms | WSJ. Available online at: https://www.youtube.com/watch?v=JMLsHI8aV0g (accessed January 22, 2021).

Wang, J., Wang, W., and Hou, Z.-G. (2019). Toward improving engagement in neural rehabilitation: attention enhancement based on brain–computer interface and audiovisual feedback. IEEE Trans. Cogn. Dev. Syst. 12, 787–796. doi: 10.1109/TCDS.2019.2959055

Witteman, J., Post, H., Tarvainen, M., de Bruijn, A., Perna, E. D. S. F., Ramaekers, J. G., et al. (2015). Cue reactivity and its relation to craving and relapse in alcohol dependence: a combined laboratory and field study. Psychopharmacology 232, 3685–3696. doi: 10.1007/s00213-015-4027-6

Wolpaw, J. R., Birbaumer, N., Heetderks, W. J., McFarland, D. J., Peckham, P. H., Schalk, G., et al. (2000). Brain-computer interface technology: a review of the first international meeting. IEEE Trans. Rehabil. Eng. 8, 164–173. doi: 10.1109/TRE.2000.847807

WorldFamousElectronics (2011). Pulse Sensor. Available online at: https://github.com/WorldFamousElectronics/PulseSensorStarterProject (accessed January 23, 2021).

Keywords: augmentation, art, closed-loop systems, virtual realities, open-source, brain computer interface

Citation: Bernal G, Montgomery SM and Maes P (2021) Brain-Computer Interfaces, Open-Source, and Democratizing the Future of Augmented Consciousness. Front. Comput. Sci. 3:661300. doi: 10.3389/fcomp.2021.661300

Received: 30 January 2021; Accepted: 16 March 2021;

Published: 14 April 2021.

Edited by:

Anton Nijholt, University of Twente, Netherlands

Reviewed by:

Elizabeth A. Boyle, University of the West of Scotland, United Kingdom

Dulce Fernandes Mota, Instituto Superior de Engenharia do Porto (ISEP), Portugal

Copyright © 2021 Bernal, Montgomery and Maes. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guillermo Bernal, gbernal@mit.edu

†These authors have contributed equally to this work
