ORIGINAL RESEARCH article

Front. Comput. Sci., 08 April 2021

Sec. Human-Media Interaction

Volume 3 - 2021 | https://doi.org/10.3389/fcomp.2021.624694

This article is part of the Research Topic Alzheimer's Dementia Recognition through Spontaneous Speech

We introduce a novel audio processing architecture, the Open Voice Brain Model (OVBM), which improves detection accuracy for longitudinal discrimination of Alzheimer's (AD) from spontaneous speech. We also outline the OVBM design methodology that led us to this architecture, which in general can incorporate multimodal biomarkers and simultaneously target several diseases and other AI tasks. Key to our methodology is the use of multiple biomarkers that complement each other; when two of them uniquely identify different subjects in a target disease, we say they are orthogonal. We illustrate the OVBM design methodology by introducing sixteen biomarkers, three of which are orthogonal, demonstrating simultaneous above state-of-the-art discrimination for two apparently unrelated diseases, AD and COVID-19. Depending on the context, throughout the paper we use OVBM interchangeably to refer to the specific architecture or to the broader design methodology. Inspired by research conducted at the MIT Center for Brain Minds and Machines (CBMM), OVBM combines biomarker implementations of the four modules of intelligence: the brain OS chunks and overlaps audio samples and aggregates biomarker features from the sensory stream and cognitive core, creating a multi-modal graph neural network of symbolic compositional models for the target task. In this paper we apply the OVBM design methodology to the automated diagnosis of Alzheimer's Dementia (AD) patients, achieving above state-of-the-art accuracy of 93.8% using only raw audio, while extracting a personalized subject saliency map designed to longitudinally track relative disease progression using multiple biomarkers, 16 in the reported AD task. The ultimate aim is to help medical practice by detecting onset and treatment impact so that intervention options can be longitudinally tested.
Using the OVBM design methodology, we introduce a novel lung and respiratory tract biomarker created using 200,000+ cough samples to pre-train a model discriminating cough cultural origin. Transfer learning is subsequently used to incorporate features from this model into various other biomarker-based OVBM architectures. This biomarker yields consistent improvements in AD detection in all the starting OVBM biomarker architecture combinations we tried. This cough dataset sets a new benchmark as the largest audio health dataset, with 30,000+ subjects participating in April 2020, demonstrating for the first time cough cultural bias.

Since 2001, the overall mortality for Alzheimer's Dementia (AD) has been increasing year-on-year. Between 2000 and 2020, deaths resulting from stroke, HIV and heart disease decreased while reported deaths from AD increased by about 150% (Alzheimer's Association, 2020). Currently no treatments are available to cure AD; however, if it is detected early on, treatments may greatly slow and eventually possibly even halt further deterioration (Briggs et al., 2016).

Current methods for diagnosing AD often include neuroimaging such as MRI (Fuller et al., 2019), PET scans of the brain (Ding et al., 2019), or invasive lumbar puncture to test cerebrospinal fluid (Shaw et al., 2009). These diagnostics are far too expensive for large-scale testing and are usually used once family members or caregivers detect late-stage symptoms, when the disease is too advanced for onset treatment. On top of the throughput limitations, recent studies on the success of the most widely used form of diagnostic, PET amyloid brain scans, have shown that expert doctors in AD currently misdiagnose patients in about 83% of cases and change their management and treatment of patients nearly 70% of the time (James et al., 2020). This is mainly caused by the lack of longitudinal explainability of these scans. As a result, it is hard to track the effectiveness of treatments and even harder to evaluate personalized treatments tailored to specific onset populations of AD (Maclin et al., 2019). AI in general suffers from similar issues: it operates somewhat as a black box and does not offer explainable results linked to the specific causes of each individual subject (Holzinger et al., 2019).

Based on the above findings, our research aims to find AD diagnostic methods achieving the following four warrants:

1. Onset Detection: detection needs to occur as soon as the first signs emerge, or sooner even if only probabilistic metrics can be provided. Preclinical AD diagnosis and subsequent treatment may offer the best chances at delaying the effects of dementia (Briggs et al., 2016). Therapeutic significance may require establishing subclassifications within AD (Briggs et al., 2016). Evidence that there are early signs of AD onset in the human body comes from recent research on blood plasma phosphorylated-tau isoform diagnostic biomarkers, which demonstrates chemical traces of dementia, and of AD in particular, decades in advance of clinical diagnosis (Barthélemy et al., 2020; Palmqvist et al., 2020). These are encouraging findings, and hopefully there are also early onset signs in free-speech audio signals. In fact, preclinical AD is often linked to mood changes: in cognitively normal adults onset AD includes depression (Babulal et al., 2016), while apathy and anxiety have been linked to some cognitive decline (Bidzan and Bidzan, 2014). Both of these may be detectable in preclinical AD using existing sentiment analysis techniques (Zunic et al., 2020).

2. Minimal Cost: we need a method that has very few side effects, so that a person can perform the test periodically, and very low variable costs, to allow broad pre-screening possibilities. Our suggestion is to develop methods that can run on smart speakers and mobile phones (Subirana et al., 2017b) at essentially no cost while respecting user privacy (Subirana et al., 2020a). There is no medically approved system allowing preclinical AD diagnosis at scale. There are different approaches to measure AD disease onset and progression, but all rely on expensive human assessments and/or medical procedures. We demonstrate our approach using only free speech, but the approach can also include multi-modal data if available, including MRI images (Altinkaya et al., 2020) and EEG recordings (Cassani et al., 2018).

3. Longitudinal tracking: the method should include some form of AD degree metric, especially to evaluate improvements resulting from medical interventions. The finer the disease progression increments that can be measured, the more useful they will be. Ideally, adaptive clinical trials would be supported (Coffey and Kairalla, 2008).

4. Explainability: the results need to have some form of explainability, if possible including the ability to diagnose other types of dementia and health conditions. Most importantly, the approach needs to be approved for broad use by the medical community.

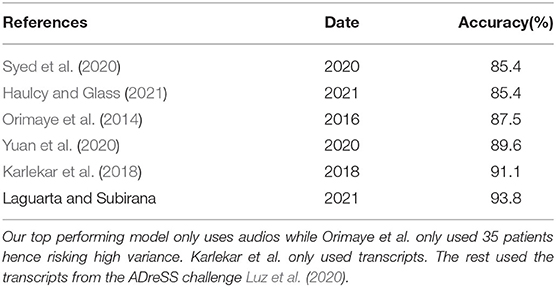

Our approach is enabled by and improves upon advances in deep learning on acoustic signals to detect discriminating features between AD and non-AD subjects. It aims to address the warrants above, including explainability, which has been challenging for previous approaches. While research in AD detection from speech has been ongoing for several years, most approaches did not surpass the 90% detection mark, as shown in Table 1. These approaches use black-box deep learning algorithms providing little to no explainability as to what led to the model's decision, making them hard for clinicians to use and hence slowing adoption by the healthcare system. In the Petti et al. (2020) review of the literature on AD speech detection, about two thirds of the papers reviewed use Neural Nets or Support Vector Machines, while the rest focus on Decision Trees and Naïve Bayes. Neural Nets seem to achieve the highest detection accuracy on average. Previous work, however, draws very little inspiration from the different stages of human intelligence and at most focuses solely on modeling a small part of the brain, as shown in Nassif et al. (2019), de la Fuente Garcia et al. (2020), and Petti et al. (2020).

Table 1. A review of other AD diagnostic algorithms on the same dataset from Lyu (2018).

Combining independent biomarkers with recent advances in our understanding of the four modules of the human brain as researched at MIT's Center for Brain Minds and Machines (CBMM) (CBM, 2020), we introduce a novel multi-modal processing framework, the MIT CBMM Open Voice Brain Model (OVBM). The approach described in this paper aims to overcome limitations of previous approaches in two ways. First, the model is trained on large speech datasets and uses transfer learning, so that the accurately learned features improve AD detection accuracy even if the sample of AD patients is not large. Second, it provides an explainable output in the form of a saliency chart that may be used to track the evolution of AD biomarkers.

The use of independent biomarkers in the CBMM Open Voice Brain Model makes it possible to study the value of each of them simply by contrasting results with and without one of the biomarkers. We illustrate this point with a biomarker focused on cough discrimination (Subirana et al., 2020b) and one focused on wake words (Subirana, 2020). We feel this is an original contribution of our work, grounded in the connection between respiratory conditions and Alzheimer's.

Furthermore, we also show that our framework lets us apply the same biomarker models for audio detection of multiple diseases, and explore whether there may be common biomarkers between AD and other diseases. To that end, the OVBM framework we introduce may be extended to various other tasks such as speech segmentation and transcription. It has already been shown to detect COVID-19 from a forced-cough recording with high sensitivity, including 100% asymptomatic detection (Laguarta et al., 2020). Here we demonstrate it in the individualized and explainable diagnosis of Alzheimer's Dementia (AD) patients, where, as shown in Table 1, we achieve above state-of-the-art accuracy of 93.8% (Pulido et al., 2020) using only raw audio as input, while extracting for each subject a saliency map with the relative disease progression of 16 biomarkers. Even with expensive CT scans, to date experts cannot create consistent biomarkers, as described in James et al. (2020), Henriksen et al. (2014), and Morsy and Trippier (2019), even when including emotional biomarkers, unlike our approach, which automatically develops them from free speech. Experts point at this lack of biomarkers as the reason why no new drug has been introduced in the last 16 years despite AD (Zetterberg, 2019) being the sixth leading cause of death in the United States (Alzheimer's Association, 2019), and one of the leading unavoidable causes for loss of healthy life.

We found that cough features, in particular, are very useful biomarker enablers, as shown in several experiments reported in this paper, and that the same biomarkers could be used for COVID-19 and AD detection. Our emphasis on detecting relevant biomarkers corresponding to the different stages of disease onset led us to build ten sub-models using four datasets. To do so, over 200,000 cough samples were crowdsourced to pre-train a model discriminating English from Catalan coughs, and then transfer learning was leveraged to exploit the resulting features by integrating them into an OVBM brain model, showing improvements in AD detection no matter what transfer learning strategy was used. This COVID-19 cough dataset, which we created with approval from MIT's IRB (2004000133), sets a new benchmark as the largest audio health dataset, with over 30,000 subjects participating in less than four weeks in April 2020.

In the next section we present a literature review with evidence in favor of our choice of four biomarkers. In section 3, we present the different components of the Open Voice Brain Model AD detector. In sections 4 to 7, we introduce the 16 biomarkers with results and a novel personalized AD biomarker comparative saliency map. We conclude in section 8 with a brief summary and implications for future research.

Our choice of biomarkers is informed by, and consistent with, the vast literature resulting from AD research, as we discuss next.

Preclinical AD is often linked to mood changes. In cognitively normal adults these include depression (Babulal et al., 2016), while apathy and anxiety have been linked to some cognitive decline (Bidzan and Bidzan, 2014). Sentiment biomarker. Clinical evidence supports the importance of sentiments in AD early diagnosis (Costa et al., 2017; Galvin et al., 2020), and different clinical settings emphasize different sentiments, such as doubt or frustration (Baldwin and Farias, 2009).

One of the main early-stage AD biomarkers is memory loss (Chertkow and Bub, 1990), which occurs both at a conceptual level as well as at a muscular level (Wirths and Bayer, 2008) and is different from memory forgetting in healthy humans (Cano-Cordoba et al., 2017; Subirana et al., 2017a). A prominent symptom of early stage AD is malfunctioning of different parts of memory depending on the particular patient (Small et al., 2000), possibly affecting one or more of its subcomponents including primary or working memory, remote memory, and semantic memory. The underlying causes of these memory symptoms may be linked to neuropathological changes, such as tangles and plaques, initially affecting selected areas of the brain like the hippocampi or the temporal and frontal lobes, and gradually expanding beyond these (Morris and Kopelman, 1986). Memory biomarker.

The human cough is already used to diagnose several diseases using audio recognition (Abeyratne et al., 2013; Pramono et al., 2016) as it provides information corresponding to biomarkers in the lungs and respiratory tract (Bennett et al., 2010). People with chronic lung disorders are more than twice as likely to have AD (Dodd, 2015), therefore we hypothesize features extracted from a cough classifier could be valuable for AD diagnosis.

There is extensive research on cough-based diagnosis of respiratory diseases but, to our knowledge, no one had applied it to discriminate other, apparently unrelated, diseases like Alzheimer's. Our findings are consistent with the notion that AD patients cough differently and that cough-based features can help AD diagnosis; they are also consistent with the notion that cough features may help detect the onset of the disease. The lack of longitudinal datasets prevents us from exploring this point, but the available data do allow us to demonstrate the diagnostic power of cough-based features, to the point where without these features we would not have surpassed state-of-the-art performance.

The respiratory tract is often involved in the fatal outcome of AD. We introduce two biomarkers focused on the respiratory tract that may help discriminate between early and late stage AD. We have not found research indicating how early changes in the tract may be detected, but given its importance in the disease outcome it may be early on. This could also explain the success of many speech-based AD discrimination approaches, some of which have been applied to early stages of FTD. Significant research in AD such as Heckman et al. (2017) has proven that the disease impacts motor neurons. In other diseases where motor neurons are affected, like Parkinson's, vocal cords have proven to be among the first muscles affected (Holmes et al., 2000).

Dementia in general has been linked to increased deaths from pneumonia (Wise, 2016; Manabe et al., 2019) and COVID-19 (Azarpazhooh et al., 2020; Hariyanto et al., 2020), possibly linked to specific genes (Kuo et al., 2020). COVID-19 deaths are more likely with Alzheimer's than with Parkinson's disease (Yu et al., 2020). This different respiratory response depending on the type of dementia suggests that related audio features, such as coughs, may be useful not only to discriminate dementia subjects from others but also to discriminate specific types of dementia.

We contend there is correlation, rather than causality, between our two respiratory tract biomarkers and Alzheimer's, but further elucidation is necessary, as it is in many other areas of AD research and more broadly in science in general (Pearl and Mackenzie, 2018). Some causal link may exist due to the simultaneous role of substance P in Alzheimer's (Severini et al., 2016) and in cough (Sekizawa et al., 1996). The existence of spontaneous cough per se may not be enough to predict onset risk, but in combination with other health parameters it may contribute to an accurate risk predictor (Song et al., 2011). Our biomarker suggestion is based on “forced coughs” which, to our knowledge, have not been studied in connection with Alzheimer's. We feel they may be an early indication of future respiratory tract conditions that will show in the form of spontaneous coughs. In patients with late-onset Alzheimer's Disease (LOAD), a unique delayed cough response has been reported in COVID-19 infected subjects (Isaia et al., 2020; Guinjoan, 2021). Dysphagia and aspiration pneumonia continue to be the two most serious conditions in late stage AD, with the latter being the most common cause of death of AD patients (Kalia, 2003), suggesting substance P induced early signs in the respiratory tract may already be present in forced coughs, perhaps even unavoidably.

What seems unquestionable is the connection between speech and orofacial apraxia and Alzheimer's, and it has been suggested that it, alone, can be a good metric for longitudinal assessment (Cera et al., 2013). Various forms of apraxia have been linked to AD progression in different parts of the brain (Giannakopoulos et al., 1998). Nevertheless, given the difficulty in estimating speech and orofacial apraxia, these figures are not part of common Clinical Dementia Rating scales (Folstein et al., 1975; Hughes et al., 1982; Clark and Ewbank, 1996; Lambon Ralph et al., 2003). However, all these studies reveal difficulties in establishing an objective, accurate, and personalized scale that can track each patient independently from the others (Olde Rikkert et al., 2011). The lack of metrics also spans other related indicators such as quality of life estimations (Bowling et al., 2015). There are no reliable biomarkers for other neurodegenerative disorders either (Johnen and Bertoux, 2019).

Recent research has demonstrated that apraxia screening can also predict dementia disease progression (Pawlowski et al., 2019), especially as a way to predict AD in early stage FTD subjects, a population that we are particularly interested in targeting with our biomarkers. For the Behavioral Variant of Fronto Temporal Dementia (bvFTD), the second most common cognitive disorder caused by neurodegeneration in patients under 65, little tonal modulation and buccofacial apraxia are targeted by our biomarkers and are established diagnostic domains (Johnen and Bertoux, 2019). We hope that our research can help establish reliable biomarkers for disease progression that can also distinguish at onset between the different possible diagnoses. The exact connection between buccofacial apraxia and dementia has not been as well-documented as that of other forms of apraxia. Recent results show that buccofacial apraxia may be present in up to fifty percent of dementia patients with no association to oropharyngeal dysphagia (Michel et al., 2020). Oropharyngeal dysphagia, on the other hand, has been linked to dementia, in some studies in over fifty percent of the cases, appearing, in particular, in late stages of FTD and in early stages of AD (Alagiakrishnan et al., 2013).

According to the NIH's National Institute of Neurological Disorders and Stroke information page on apraxia, the most common form of apraxia is orofacial apraxia, which causes the inability to carry out facial movements on request, such as coughing. Cough reflex sensitivity and urge-to-cough deterioration have been shown to help distinguish AD from dementia with Lewy Bodies and control groups (Ebihara et al., 2020). The impairment of cough in the elderly is linked to dementia (Won et al., 2018).

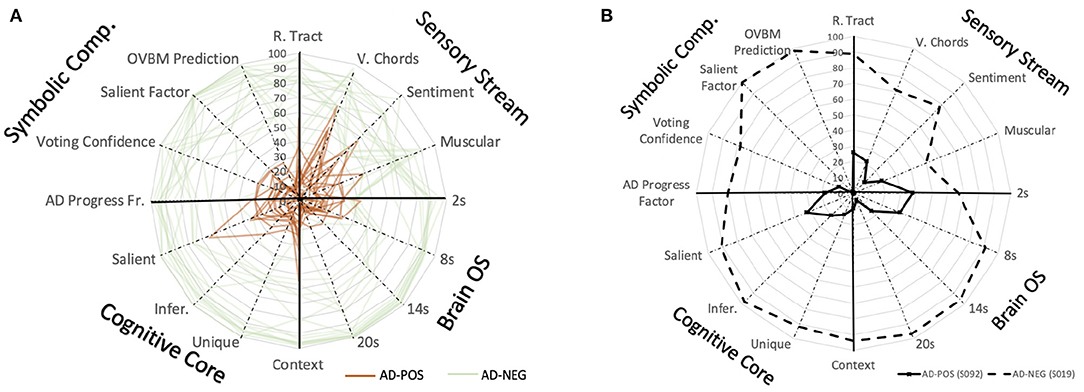

The OVBM architecture shown in Figure 1 frames a four-unit system to test biomarker combinations and provides the basis for an explainable diagnostic framework for a target task such as AD discrimination. The Sensory Stream is responsible for pre-training models on large speech datasets to extract features of individual physical biomarkers. The Brain OS splits audio into overlapping chunks and leverages transfer learning strategies to fine-tune the biomarker models to the smaller target dataset. For longitudinal diagnosis, it includes a round-robin five stage graph neural network that marks salient events in continuous speech. The Cognitive Core incorporates medical knowledge specific to the target task to train cognitive biomarker feature extractors. The Symbolic Compositional Models unit combines fine-tuned biomarker models into a graph neural network. Its predictions on individual audio chunks are fed into an aggregating engine subsequently reaching a final diagnostic plus a patient saliency map. To enable doctors to gain insight into the specific condition of a given patient, one of the novelties of our approach is that the outputs at each unique module are extracted to create a visualization in the form of a health diagnostic saliency map showing the impact of the selected biomarkers. This saliency map may be used to longitudinally track and visualize disease progression.

Next, we review each of the four OVBM modules in the context of AD, introducing 16 biomarkers and gradually explaining the partial GNN architecture shown in Figure 2. To be able to compare models, our baselines and 8 of the biomarkers are based on the ResNet50 CNN due to its state-of-the-art performance on medical speech recognition tasks (Ghoniem, 2019). All audio samples are processed with the MFCC package published by Lyons et al. (2020), and padded accordingly. We operate on Mel Frequency Cepstral Coefficients (MFCC), instead of spectrograms (Lee et al., 2009), because of their resemblance to how the human cochlea captures sound (Krijnders and t Holt, 2017). All audio data uses the same MFCC parameters (Window Length: 20 ms, Window Step: 10 ms, Cepstrum Dimension: 200, Number of Filters: 200, FFT Size: 2,048, Sample rate: 16,000). All datasets follow a 70/30 train-test split and models are trained with an Adam optimizer (Kingma and Ba, 2014).
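To make the MFCC parameters above concrete, the following minimal sketch computes how many analysis frames a clip yields at a 20 ms window, 10 ms step, and 16,000 Hz sample rate. The function name `frame_count` is hypothetical, and the sketch assumes the final partial frame is zero-padded rather than dropped; the original implementation may differ.

```python
import math

# MFCC framing parameters as stated in the text.
SAMPLE_RATE = 16_000   # samples per second
WIN_LEN_S = 0.020      # 20 ms analysis window
WIN_STEP_S = 0.010     # 10 ms step between windows
NUM_CEPSTRA = 200      # cepstrum dimension


def frame_count(num_samples, sample_rate=SAMPLE_RATE,
                win_len_s=WIN_LEN_S, win_step_s=WIN_STEP_S):
    """Number of frames for a clip, assuming the last partial frame is padded."""
    win = round(win_len_s * sample_rate)    # 320 samples per window
    step = round(win_step_s * sample_rate)  # 160 samples per step
    if num_samples <= win:
        return 1
    return 1 + math.ceil((num_samples - win) / step)


# A 4 s chunk (64,000 samples) gives a 399 x 200 MFCC matrix under these assumptions.
frames = frame_count(4 * SAMPLE_RATE)
mfcc_shape = (frames, NUM_CEPSTRA)
```

Under these assumptions, each 4 s chunk becomes an image-like matrix of 399 frames by 200 coefficients, which is the kind of input a CNN such as ResNet50 consumes.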

The dataset from DementiaBank, ADReSS (Luz et al., 2020), is used for training the OVBM framework and fine-tuning all biomarker models on AD detection. This is the largest publicly available dataset, consisting of subject recordings in full enhanced audio and short normalized sub-chunks, along with the recording transcriptions from 78 AD and 78 non-AD patients. The patient age and gender distribution is balanced and equal for AD and non-AD patients. Because this study focuses purely on audio processing, we only use the full enhanced audio and patient metadata, excluding transcripts from any processing. This is worth noting given the poor audio quality of some of the recordings.

We have selected four biomarkers inspired by previous medical community choices (Chertkow and Bub, 1990; Wirths and Bayer, 2008; Dodd, 2015; Heckman et al., 2017; Galvin et al., 2020), as reviewed next.

We follow memory decay models from Subirana et al. (2017a) and Cano-Cordoba et al. (2017) to capture this muscular metric by degrading input signals for all train and test sets with the Poisson mask shown in Equation (1), a commonly occurring distribution in nature (Reed and Hughes, 2002). We use as a Poisson function a mask with input MFCC image = Ix, output mask = M(Ix), λ = 1, and k = each value in Ix.
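Equation (1) is not reproduced in this text. One plausible reading of the description, offered only as an assumption, is that each MFCC value k is mapped through the standard Poisson probability mass function, λ^k e^(-λ) / k!, with the factorial generalized by the gamma function for non-integer values. The sketch below computes mask values under that reading; the function name `poisson_mask` is hypothetical, inputs are assumed non-negative, and how the mask is combined with the input signal is not specified here.

```python
import math


def poisson_mask(image, lam=1.0):
    """Map each value k of a 2-D list to lam**k * exp(-lam) / Gamma(k + 1).

    Assumption: values are non-negative, so Gamma(k + 1) is well defined
    and equals k! for integer k.
    """
    return [
        [(lam ** k) * math.exp(-lam) / math.gamma(k + 1.0) for k in row]
        for row in image
    ]


# Toy 1 x 3 "image" with integer values, lambda = 1 as in the text.
toy_mask = poisson_mask([[0.0, 1.0, 2.0]], lam=1.0)
```

With λ = 1, values of 0 and 1 both map to e^(-1), and larger values are attenuated progressively, which matches the idea of degrading the input signal.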

As shown in Table 2, this Poisson biomarker brings a unique improvement to each model except for Cough, consistent with both inherently capturing similar features containing muscular degradation.

We have developed a vocal cord biomarker to incorporate in OVBM architectures. We trained a Wake Word (WW) model to learn vocal cord features on LibriSpeech, an audiobook dataset with ≈1,000 h of speech from Panayotov et al. (2015), by creating a balanced sample set of 2 s audio chunks, half containing the word “Them” and half without. A ResNet50 (He et al., 2016) is trained for binary classification of “Them” on 3 s audio chunks (lr: 0.001, val_acc: 89%).

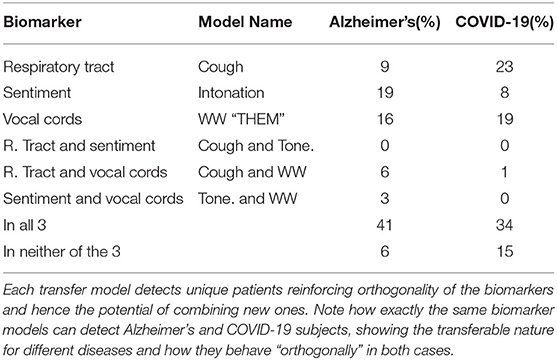

As illustrated in Table 3 and Figure 4, this vocal cord model contributes unique features: without any fine-tuning to the AD task it performs as well as the baseline ResNet50 fully trained on AD, and it significantly beats the baseline when fully fine-tuned.

Table 3. To illustrate the complementary nature of the biomarkers we show the unique AD patients detected by each individual biomarker model with only the final classification layer fine-tuned on the target disease, Alzheimer's and COVID-19 in this case.
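The notion of unique detections behind Table 3 can be expressed as a set difference: a subject counts as unique to a biomarker if that biomarker detects the subject and no other biomarker does. The sketch below is illustrative only; the function name and the subject identifiers are hypothetical and are not taken from the study data.

```python
def unique_detections(detected_by):
    """For each biomarker, return the subjects detected by it and by no other."""
    unique = {}
    for name, subjects in detected_by.items():
        # Union of everything the *other* biomarkers detect.
        others = set().union(
            *(s for other, s in detected_by.items() if other != name)
        )
        unique[name] = set(subjects) - others
    return unique


# Hypothetical example: three biomarkers and made-up subject IDs.
example = {
    "cough": {"s1", "s2"},
    "wake_word": {"s2", "s3"},
    "sentiment": {"s2"},
}
result = unique_detections(example)
```

In this toy example the cough and wake word models each contribute a subject that the others miss, which is the sense in which two biomarkers are called orthogonal in the abstract.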

We train a Sentiment Speech classifier model to learn intonation features on RAVDESS, an emotional speech dataset by Livingstone and Russo (2018) of actors speaking in eight different emotional states. A ResNet50 (He et al., 2016) is trained on categorical classification of eight corresponding intonations such as calm, happy, or disgust (lr: 0.0001, val_acc on 8 classes: 71%).

As illustrated by Table 3 and Figure 4, this biomarker captures unique features for AD detection, and when only fine-tuning its final five layers outperforms a fully trained ResNet50 on AD detection.

We use the cough dataset collected through MIT Open Voice for COVID-19 detection (Subirana et al., 2020b), strip all labels except the spoken language of the person coughing (English, Catalan), and split the audio into 6 s chunks. A ResNet50 (He et al., 2016) is trained on binary classification (input: MFCC of 6 s audio chunks (1 cough); output: English/Catalan; lr: 0.0001, val_acc: 86%).

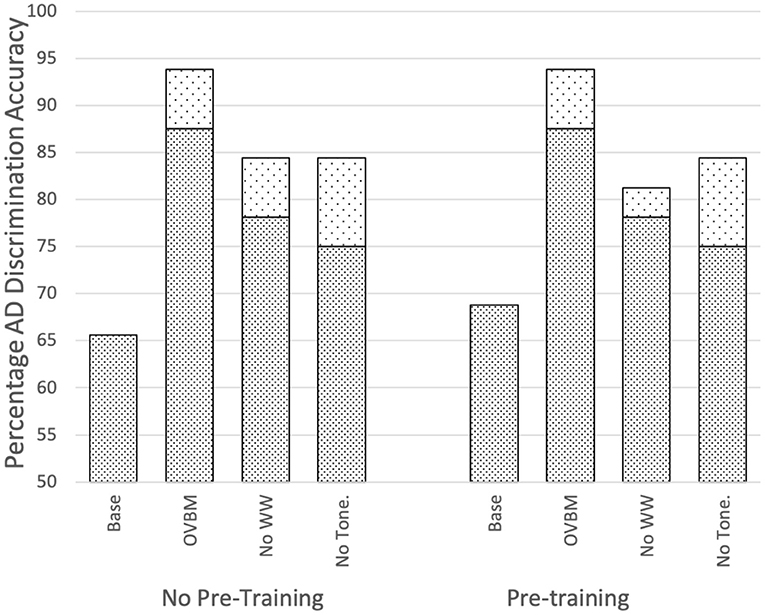

Figure 4 and Table 3 show that the features extracted by this cough model are valuable for the task of AD detection, capturing a unique set of samples and improving performance. Further, Figure 3 validates its impact on various OVBM architectures, including the top performing multi-modal model, justifying the relevance of this novel biomarker.

Figure 3. Impact of sensory stream biomarkers on OVBM performance by removing transferred knowledge one at a time. Top dotted sections of bars indicate there is always performance gain from the cough biomarker. Baselines are the OVBM trained on AD without any transfer learning. In the other bars, a biomarker is removed and replaced with an AD pre-trained ResNet50, hence removing the transferred knowledge but conserving computational power, showing complementarities since all are needed for maximum results.

The Brain OS is responsible for capturing learned features from the individual biomarker models in the Sensory Stream and Cognitive Core, and for integrating them into an OVBM architecture, with the aim of training the ensemble for a target task, in this case AD detection.

To make the most out of the short patient recordings, we split each patient recording into overlapping audio chunks (0–4, 2–6, 4–8 s). Once the best pre-trained biomarker models in the sensory stream and cognitive modules are selected, we concatenate their outputs and pass them through a 1,024-neuron densely connected neural network layer with ReLU activation. We also incorporate metadata such as gender at this point. We test three Brain OS transfer learning strategies: (1) CNNs are used as fixed feature extractors without any fine-tuning; (2) CNNs are fine-tuned by training all layers; (3) only the final layers of the CNN are fine-tuned.
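The overlapping chunking described above (0–4, 2–6, 4–8 s) can be sketched as a sliding window with a 4 s length and a 2 s hop. The function name is hypothetical, and the sketch assumes that trailing audio shorter than a full chunk is dropped, whereas the original pipeline may pad it instead.

```python
def overlapping_chunks(duration_s, chunk_s=4.0, hop_s=2.0):
    """Return (start, end) times in seconds of overlapping chunks.

    Assumption: a trailing segment shorter than chunk_s is dropped.
    """
    chunks = []
    start = 0.0
    while start + chunk_s <= duration_s:
        chunks.append((start, start + chunk_s))
        start += hop_s
    return chunks


# An 8 s recording yields the three chunks named in the text.
chunks_8s = overlapping_chunks(8.0)
```

A 50% overlap roughly doubles the number of training examples obtained from each short recording compared with non-overlapping chunks.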

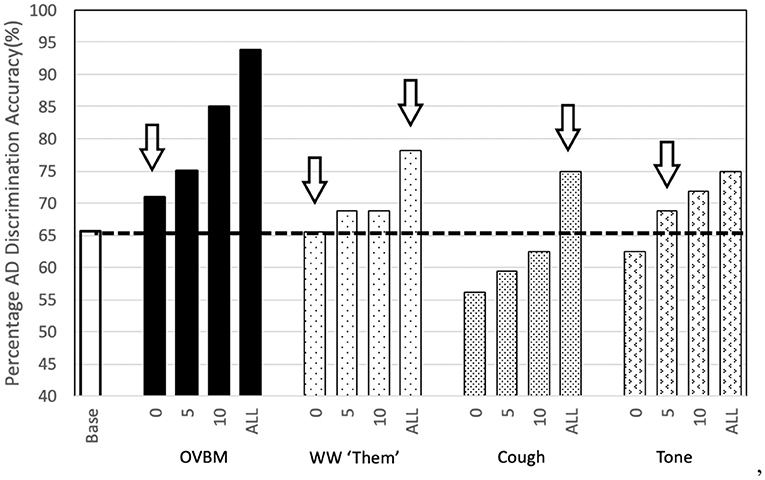

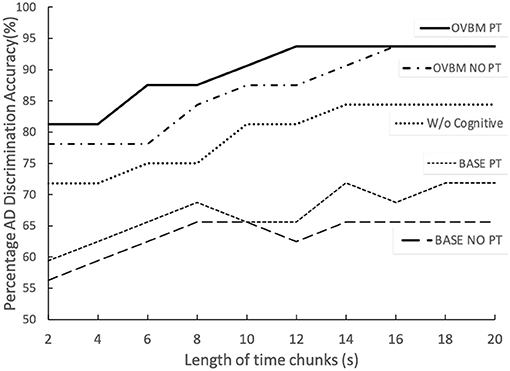

From Figure 5, it is evident that AD detection improves as chunk length increases, consistent with the fact that attention-marking has more per-chunk information to formulate a better AD prediction. From this attention-marking index (quantity of information required in a chunk for a confident diagnosis) we select chunk sizes 2, 8, 14, and 20 s, shown in Figure 7, as the Brain OS biomarkers, establishing individual AD progression. In terms of transfer learning strategies, Figure 4 shows that fine-tuning all layers always leads to better results; however, for most models almost no fine-tuning is required to beat the baseline.

Figure 4. Sensory Stream Saliency Bar Chart: To illustrate the potential of our approach we show the strength of the simplest transfer models we tried. The numbers 0-5-10-ALL on the x-axis labels refer to the number of convolution layers trained after transfer learning in addition to the final dense layer. Perhaps the most surprising finding is that the simple wake word model trained to find the word “Them” is as powerful as the baseline. If we let the model fine-tune the last few (0-5-10) layers, then it goes well beyond it. Our novel Cough database, inspired by the effect of AD on the respiratory tract, also shows surprising results, even without any adaptation at all. If we allow fine-tuning of the whole model, its validation accuracy improves ≈10 percentage points with respect to the baseline. Baseline is the same OVBM architecture trained on AD without any transfer learning of features.
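The 0-5-10-ALL labels can be read as a choice of how many trailing convolution layers are unfrozen after transfer learning. The following minimal sketch expresses that choice as a list of per-layer flags; it assumes layers are indexed from input to output, and the function name and the layer count passed in are hypothetical rather than taken from the authors' code.

```python
def trainable_flags(num_conv_layers, n_finetune):
    """Return one boolean per conv layer: True if the layer is fine-tuned.

    n_finetune is 0, 5, 10, or the string "ALL"; only the last n layers
    (closest to the output) are unfrozen. The final dense layer is assumed
    to be trained in every case and is not included in the list.
    """
    if n_finetune == "ALL":
        n = num_conv_layers
    else:
        n = min(int(n_finetune), num_conv_layers)
    first_trainable = num_conv_layers - n
    return [i >= first_trainable for i in range(num_conv_layers)]


# Hypothetical backbone with 49 convolution layers, fine-tuning the last 5.
flags = trainable_flags(49, 5)
```

In a deep learning framework these flags would typically be applied to each layer's trainable attribute before compiling the model.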

Neuropsychological tests are a common screening tool for AD (Baldwin and Farias, 2009). These tests, among others, evaluate a patient's ability to remember uncommon words, contextualize, infer actions, and detect saliency (Baldwin and Farias, 2009; Costa et al., 2017). In the case of this AD dataset, all patients are asked to describe the Cookie Theft picture created by Goodglass et al. (1983), where a set of words such as “kitchen” (context), “tipping” (unique), “jar” (inferred), and “overflow” (salient), are used to capture four cognitive biomarkers. To keep the richness of speech, we train four wake word models from LibriSpeech (Panayotov et al., 2015) with ResNet50s following the same approach as Biomarker 2. The four chosen cognitive biomarkers aim to detect patients' ability on: context, uniqueness, inference, and saliency.

We could show the same saliency bar chart in Figure 4 and a uniqueness table such as Table 3 to illustrate the impact of each cognitive biomarker. Instead in Figure 5, we show the impact of removing the cognitive core on the top OVBM performance which drops ≈10%, validating the relevance of the cognitive core biomarkers.

Figure 5. The two top lines illustrate the full OVBM performance, with its biomarker feature models, as a function of chunk size. PT refers to individually fine-tuning each biomarker model for AD before re-training the whole OVBM. The middle line shows the OVBM without the cognitive core, illustrating how it boosts performance by about 10% across the board. Baseline PT is the OVBM architecture with each ResNet50 inside individually trained on AD before retraining them together in the OVBM architecture.

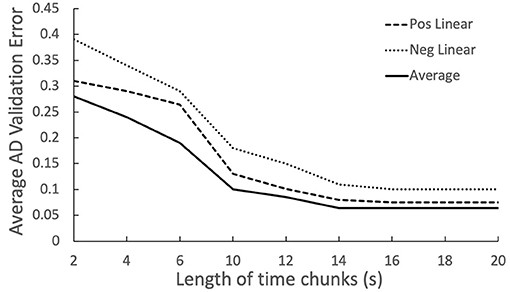

This module fine-tunes previous modules' outputs into a graph neural network. Predictions on individual audio chunks for one subject are aggregated and fed into competing models to reach a final diagnostic. We tested the model with various BERT configurations and found no improvement in detection accuracy. In the AD implementation, given we had at most 39 overlapping chunks, three simple aggregation metrics are compared: averaging, linear positive (more weight given to later chunks), and linear negative (more weight given to earlier chunks).
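The three aggregation metrics compared above can be sketched as weighted means over per-chunk predictions. The exact weights are not given in the text, so this sketch assumes linearly increasing weights 1..n for the positive scheme and n..1 for the negative scheme; the function name is hypothetical.

```python
def aggregate(chunk_scores, mode="average"):
    """Combine per-chunk prediction scores into a single subject score."""
    n = len(chunk_scores)
    if n == 0:
        raise ValueError("at least one chunk score is required")
    if mode == "average":
        weights = [1.0] * n
    elif mode == "linear_positive":   # later chunks weigh more
        weights = [float(i + 1) for i in range(n)]
    elif mode == "linear_negative":   # earlier chunks weigh more
        weights = [float(n - i) for i in range(n)]
    else:
        raise ValueError(f"unknown mode: {mode}")
    total = sum(weights)
    return sum(w * s for w, s in zip(weights, chunk_scores)) / total


# Toy scores where only the last chunk signals the positive class.
scores = [0.0, 0.0, 1.0]
avg = aggregate(scores, "average")
pos = aggregate(scores, "linear_positive")
neg = aggregate(scores, "linear_negative")
```

In this toy case the positive scheme gives the highest subject score, since the informative chunk comes last.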

In Figure 6, averaging proves to be the most effective, while positive linear outperforming negative linear indicates that the later audio chunks are more informative than the earlier ones. Figure 7 includes four biomarkers derived from combining chunk predictions from biomarker models of the three other modules (Cummings, 2019). With more data and longitudinal recordings, the OVBM GNN may incorporate other biomarkers.

Figure 6. Relation between chunk size and AD discrimination error, showing increased importance of the later chunks.

Figure 7. (A) Saliency map to study the explainable AD evolution for all the patients in the study based on the predictions of individual biomarker models. BrainOS (2, 8, 14, 20) show the model prediction at different chunk sizes. This map could be used to longitudinally monitor subjects where a lower score on the biomarkers may indicate a more progressed AD subject. (B) Saliency map comparing AD+ subject S092 with a solid line and AD- subject S019 represented with a dashed line.
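One way to picture how such a saliency map could be assembled from individual biomarker predictions is sketched here. It is only an illustration under stated assumptions: the helper names are hypothetical, the per-biomarker score is assumed to be a plain mean over chunk predictions, and the numbers are toy values rather than results from the study.

```python
from statistics import mean
from typing import Dict, List


def saliency_profile(chunk_predictions: Dict[str, List[float]]) -> Dict[str, float]:
    """Collapse per-chunk predictions into one score per biomarker.

    Biomarkers with no chunk predictions are skipped.
    """
    return {name: mean(preds) for name, preds in chunk_predictions.items() if preds}


def profile_difference(a: Dict[str, float], b: Dict[str, float]) -> Dict[str, float]:
    """Per-biomarker difference (a - b) over the biomarkers both profiles share."""
    shared = a.keys() & b.keys()
    return {name: a[name] - b[name] for name in sorted(shared)}


if __name__ == "__main__":
    # Toy values for two hypothetical subjects (not data from the paper).
    subject_a = saliency_profile({
        "context": [0.8, 0.7, 0.9],
        "uniqueness": [0.6, 0.7],
        "inference": [0.9, 0.8],
        "saliency": [0.7, 0.9],
    })
    subject_b = saliency_profile({
        "context": [0.3, 0.4, 0.2],
        "uniqueness": [0.5, 0.3],
        "inference": [0.4, 0.2],
        "saliency": [0.3, 0.5],
    })
    for name, delta in profile_difference(subject_a, subject_b).items():
        print(f"{name:<11} {delta:+.2f}")
```

Recomputing the same profile for one subject across recording sessions would give the kind of longitudinal comparison the caption describes, where a falling score on a biomarker may indicate progression.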

We conclude by providing a few insights further supporting our OVBM brain-inspired model for audio health diagnostics as presented above. We have demonstrated the success of the OVBM framework, one that can incorporate GNNs (Wu et al., 2020), setting a new benchmark for state-of-the-art accuracy in AD classification despite incorporating only audio signals. Future work may improve this benchmark by also incorporating into the OVBM longitudinal GNN natural language biomarkers obtained with NLP classifiers, or by using multi-modal graph neural networks that incorporate non-audio diagnostic tools (Parisot et al., 2018).

One of the most promising insights of all is the discovery of cough as a new biomarker (Figure 3), one that improves any of the intermediate models tested and that validates OVBM as a framework on which medical experts can hypothesize and test out existing and novel biomarkers. We are the first to report that cough biomarkers have information related to gender and culture, and are also the first to demonstrate how they improve simultaneous AD classification as illustrated in the saliency charts (Figure 4) as well as that of other apparently unrelated conditions.

Another promising finding is the model's explainability, introducing the biomarker AD saliency map tool, which offers novel methods to evaluate patients longitudinally on a set of physical and neuropsychological biomarkers as shown in Figure 7. In future research, longitudinal data may be collected to properly test the onset potential of OVBM GNN discrimination in continuous speech. We hope our approach brings AI health diagnostic experts closer to the medical community and accelerates research for treatments by providing longitudinal and explainable tracking metrics that can help adaptive clinical trials of urgently needed innovative interventions succeed.

The data analyzed in this study is subject to the following licenses/restrictions: in order to gain access to the datasets used in the paper, researchers must become a member of DementiaBank. Requests to access these datasets should be directed to https://dementia.talkbank.org/.

JL wrote the code. BS designed the longitudinal biomarker Open Voice Brain Model (OVBM) and the saliency map. All authors contributed to the analysis of the results and the article.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abeyratne, U. R., Swarnkar, V., Setyati, A., and Triasih, R. (2013). Cough sound analysis can rapidly diagnose childhood pneumonia. Ann. Biomed. Eng. 41, 2448–2462. doi: 10.1007/s10439-013-0836-0

Alagiakrishnan, K., Bhanji, R. A., and Kurian, M. (2013). Evaluation and management of oropharyngeal dysphagia in different types of dementia: a systematic review. Arch. Gerontol. Geriatr. 56, 1–9. doi: 10.1016/j.archger.2012.04.011

Altinkaya, E., Polat, K., and Barakli, B. (2020). Detection of Alzheimer's disease and dementia states based on deep learning from MRI images: a comprehensive review. J. Instit. Electron. Comput. 1, 39–53. doi: 10.33969/JIEC.2019.11005

Alzheimer's Association (2019). 2019 Alzheimer's disease facts and figures. Alzheimer's Dement. 15, 321–387. doi: 10.1016/j.jalz.2019.01.010

Alzheimer's Association (2020). Alzheimer's disease facts and figures [published online ahead of print, 2020 mar 10]. Alzheimers Dement. 1–70. doi: 10.1002/alz.12068

Azarpazhooh, M. R., Amiri, A., Morovatdar, N., Steinwender, S., Ardani, A. R., Yassi, N., et al. (2020). Correlations between covid-19 and burden of dementia: an ecological study and review of literature. J. Neurol. Sci. 416:117013. doi: 10.1016/j.jns.2020.117013

Babulal, G. M., Ghoshal, N., Head, D., Vernon, E. K., Holtzman, D. M., Benzinger, T. L., et al. (2016). Mood changes in cognitively normal older adults are linked to Alzheimer disease biomarker levels. Am. J. Geriatr. Psychiatry 24, 1095–1104. doi: 10.1016/j.jagp.2016.04.004

Baldwin, S., and Farias, S. T. (2009). Unit 10.3: Assessment of cognitive impairments in the diagnosis of Alzheimer's disease. Curr. Protoc. Neurosci. 10:Unit10-3. doi: 10.1002/0471142301.ns1003s49

Barthélemy, N. R., Horie, K., Sato, C., and Bateman, R. J. (2020). Blood plasma phosphorylated-tau isoforms track CNS change in Alzheimer's disease. J. Exp. Med. 217:e20200861. doi: 10.1084/jem.20200861

Bennett, W. D., Daviskas, E., Hasani, A., Mortensen, J., Fleming, J., and Scheuch, G. (2010). Mucociliary and cough clearance as a biomarker for therapeutic development. J. Aerosol Med. Pulmon. Drug Deliv. 23, 261–272. doi: 10.1089/jamp.2010.0823

Bidzan, M., and Bidzan, L. (2014). Neurobehavioral manifestation in early period of Alzheimer disease and vascular dementia. Psychiatr. Polska 48, 319–330.

Bowling, A., Rowe, G., Adams, S., Sands, P., Samsi, K., Crane, M., et al. (2015). Quality of life in dementia: a systematically conducted narrative review of dementia-specific measurement scales. Aging Ment. Health 19, 13–31. doi: 10.1080/13607863.2014.915923

Briggs, R., Kennelly, S. P., and O'Neill, D. (2016). Drug treatments in Alzheimer's disease. Clin. Med. 16:247. doi: 10.7861/clinmedicine.16-3-247

Cano-Cordoba, F., Sarma, S., and Subirana, B. (2017). Theory of Intelligence With Forgetting: Mathematical Theorems Explaining Human Universal Forgetting Using “Forgetting Neural Networks”. Technical Report 71, MIT Center for Brains, Minds and Machines (CBMM).

Cassani, R., Estarellas, M., San-Martin, R., Fraga, F. J., and Falk, T. H. (2018). Systematic review on resting-state EEG for Alzheimer's disease diagnosis and progression assessment. Dis. Mark. 2018:5174815. doi: 10.1155/2018/5174815

Cera, M. L., Ortiz, K. Z., Bertolucci, P. H. F., and Minett, T. S. C. (2013). Speech and orofacial apraxias in Alzheimer's disease. Int. Psychogeriatr. 25, 1679–1685. doi: 10.1017/S1041610213000781

Chertkow, H., and Bub, D. (1990). Semantic memory loss in dementia of Alzheimer's type: what do various measures measure? Brain 113, 397–417. doi: 10.1093/brain/113.2.397

Clark, C. M., and Ewbank, D. C. (1996). Performance of the dementia severity rating scale: a caregiver questionnaire for rating severity in Alzheimer disease. Alzheimer Dis. Assoc. Disord. 10, 31–39. doi: 10.1097/00002093-199603000-00006

Coffey, C. S., and Kairalla, J. A. (2008). Adaptive clinical trials. Drugs R & D 9, 229–242. doi: 10.2165/00126839-200809040-00003

Costa, A., Bak, T., Caffarra, P., Caltagirone, C., Ceccaldi, M., Collette, F., et al. (2017). The need for harmonisation and innovation of neuropsychological assessment in neurodegenerative dementias in Europe: consensus document of the joint program for neurodegenerative diseases working group. Alzheimer's Res. Ther. 9:27. doi: 10.1186/s13195-017-0254-x

Cummings, L. (2019). Describing the cookie theft picture: Sources of breakdown in Alzheimer's dementia. Pragmat. Soc. 10, 153–176. doi: 10.1075/ps.17011.cum

de la Fuente Garcia, S., Ritchie, C., and Luz, S. (2020). Artificial intelligence, speech, and language processing approaches to monitoring Alzheimer's disease: a systematic review. J. Alzheimer's Dis. 78, 1547–1574. doi: 10.3233/JAD-200888

Ding, Y., Sohn, J. H., Kawczynski, M. G., Trivedi, H., Harnish, R., Jenkins, N. W., et al. (2019). A deep learning model to predict a diagnosis of Alzheimer disease by using 18F-FDG pet of the brain. Radiology 290, 456–464. doi: 10.1148/radiol.2018180958

Dodd, J. W. (2015). Lung disease as a determinant of cognitive decline and dementia. Alzheimer's Res. Ther. 7:32. doi: 10.1186/s13195-015-0116-3

Ebihara, T., Gui, P., Ooyama, C., Kozaki, K., and Ebihara, S. (2020). Cough reflex sensitivity and urge-to-cough deterioration in dementia with Lewy bodies. ERJ Open Res. 6, 108–2019. doi: 10.1183/23120541.00108-2019

Folstein, M. F., Folstein, S. E., and McHugh, P. R. (1975). “Mini-mental state”: a practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198. doi: 10.1016/0022-3956(75)90026-6

Fuller, S. J., Carrigan, N., Sohrabi, H. R., and Martins, R. N. (2019). “Current and developing methods for diagnosing Alzheimer's disease,” in Neurodegeneration and Alzheimer's Disease: The Role of Diabetes, Genetics, Hormones, and Lifestyle, eds R. N. Martins, C. S. Brennan, W. M. A. D. Binosha Fernando, M. A. Brennan, S. J. Fuller (John Wiley & Sons Ltd.), 43–87. doi: 10.1002/9781119356752.ch3

Galvin, J., Tariot, P., Parker, M. W., and Jicha, G. (2020). Screen and Intervene: The Importance of Early Detection and Treatment of Alzheimer's Disease. The Medical Roundtable General Medicine Edition.

Ghoniem, R. M. (2019). “Deep genetic algorithm-based voice pathology diagnostic system,” in Natural Language Processing and Information Systems, eds E. Métais, F. Meziane, S. Vadera, V. Sugumaran, and M. Saraee (Cham: Springer International Publishing), 220–233. doi: 10.1007/978-3-030-23281-8_18

Giannakopoulos, P., Duc, M., Gold, G., Hof, P. R., Michel, J.-P., and Bouras, C. (1998). Pathologic correlates of apraxia in Alzheimer disease. Arch. Neurol. 55, 689–695. doi: 10.1001/archneur.55.5.689

Goodglass, H., Kaplan, E., and Barressi, B. (1983). Cookie Theft Picture. Boston Diagnostic Aphasia Examination. Philadelphia, PA: Lea & Febiger.

Guinjoan, S. M. (2021). Expert opinion in Alzheimer disease: the silent scream of patients and their family during coronavirus disease 2019 (covid-19) pandemic. Pers. Med. Psychiatry 2021:100071. doi: 10.1016/j.pmip.2021.100071

Hariyanto, T. I., Putri, C., Situmeang, R. F. V., and Kurniawan, A. (2020). Dementia is a predictor for mortality outcome from coronavirus disease 2019 (COVID-19) infection. Eur. Arch. Psychiatry Clin. Neurosci. 26, 1–3. doi: 10.1007/s00406-020-01205-z

Haulcy, R., and Glass, J. (2021). Classifying Alzheimer's disease using audio and text-based representations of speech. Front. Psychol. 11:3833. doi: 10.3389/fpsyg.2020.624137

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Las Vegas, NV), 770–778. doi: 10.1109/CVPR.2016.90

Heckman, P. R., Blokland, A., and Prickaerts, J. (2017). “From age-related cognitive decline to Alzheimer's disease: a translational overview of the potential role for phosphodiesterases,” in Phosphodiesterases: CNS Functions and Diseases eds H. T. Zhang, Y. Xu, J. O'Donnell (Springer), 135–168. doi: 10.1007/978-3-319-58811-7_6

Henriksen, K., O'Bryant, S., Hampel, H., Trojanowski, J., Montine, T., Jeromin, A., et al. (2014). The future of blood-based biomarkers for Alzheimer's disease. Alzheimer's Dement. 10, 115–131. doi: 10.1016/j.jalz.2013.01.013

Holmes, R., Oates, J., Phyland, D., and Hughes, A. (2000). Voice characteristics in the progression of Parkinson's disease. Int. J. Lang. Commun. Disord. 35, 407–418. doi: 10.1080/136828200410654

Holzinger, A., Langs, G., Denk, H., Zatloukal, K., and Müller, H. (2019). Causability and explainability of artificial intelligence in medicine. Wiley Interdisc. Rev. Data Mining Knowl. Discov. 9:e1312. doi: 10.1002/widm.1312

Hughes, C. P., Berg, L., Danziger, W., Coben, L. A., and Martin, R. L. (1982). A new clinical scale for the staging of dementia. Br. J. Psychiatry 140, 566–572. doi: 10.1192/bjp.140.6.566

Isaia, G., Marinello, R., Tibaldi, V., Tamone, C., and Bo, M. (2020). Atypical presentation of covid-19 in an older adult with severe Alzheimer disease. Am. J. Geriatr. Psychiatry 28, 790–791. doi: 10.1016/j.jagp.2020.04.018

James, H. J., Van Houtven, C. H., Lippmann, S., Burke, J. R., Shepherd-Banigan, M., Belanger, E., et al. (2020). How accurately do patients and their care partners report results of amyloid-β pet scans for Alzheimer's disease assessment? J. Alzheimer's Dis. 74, 625–636. doi: 10.3233/JAD-190922

Johnen, A., and Bertoux, M. (2019). Psychological and cognitive markers of behavioral variant frontotemporal dementia-a clinical neuropsychologist's view on diagnostic criteria and beyond. Front. Neurol. 10:594. doi: 10.3389/fneur.2019.00594

Kalia, M. (2003). Dysphagia and aspiration pneumonia in patients with Alzheimer's disease. Metabolism 52, 36–38. doi: 10.1016/S0026-0495(03)00300-7

Karlekar, S., Niu, T., and Bansal, M. (2018). Detecting linguistic characteristics of Alzheimer's dementia by interpreting neural models. arXiv preprint arXiv:1804.06440. doi: 10.18653/v1/N18-2110

Kingma, D. P., and Ba, J. (2014). Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980. Available online at: https://arxiv.org/abs/1412.6980

Krijnders, J., and t Holt, G. (2017). Tone-fit and MFCC scene classification compared to human recognition. Energy 400:500. Available online at: https://www.researchgate.net/publication/255823915_Tonefit_and_MFCC_Scene_Classification_compared_to_Human_Recognition

Kuo, C.-L., Pilling, L. C., Atkins, J. L., Kuchel, G. A., and Melzer, D. (2020). APOE e2 and aging-related outcomes in 379,000 UK biobank participants. Aging 12, 12222–12233. doi: 10.18632/aging.103405

Laguarta, J., Hueto, F., and Subirana, B. (2020). Covid-19 artificial intelligence diagnosis using only cough recordings. IEEE Open J. Eng. Med. Biol. 1, 275–281. doi: 10.1109/OJEMB.2020.3026928

Lambon Ralph, M. A., Patterson, K., Graham, N., Dawson, K., and Hodges, J. R. (2003). Homogeneity and heterogeneity in mild cognitive impairment and Alzheimer's disease: a cross-sectional and longitudinal study of 55 cases. Brain 126, 2350–2362. doi: 10.1093/brain/awg236

Lee, H., Pham, P., Largman, Y., and Ng, A. Y. (2009). “Unsupervised feature learning for audio classification using convolutional deep belief networks,” in Advances in Neural Information Processing Systems 22, eds Y. Bengio, D. Schuurmans, J. D. Lafferty, C. K. I. Williams, and A. Culotta (Curran Associates, Inc.), 1096–1104.

Livingstone, S. R., and Russo, F. A. (2018). The Ryerson audio-visual database of emotional speech and song (RAVDESS): a dynamic, multimodal set of facial and vocal expressions in North American English. PLoS ONE 13:e0196391. doi: 10.1371/journal.pone.0196391

Luz, S., Haider, F., de la Fuente, S., Fromm, D., and MacWhinney, B. (2020). “Alzheimer's dementia recognition through spontaneous speech: the ADReSS Challenge,” in Proceedings of INTERSPEECH 2020 (Shanghai). doi: 10.21437/Interspeech.2020-2571

Lyons, J., Wang, D. Y.-B., Shteingart, H., Mavrinac, E., Gaurkar, Y., Watcharawisetkul, W., et al. (2020). jameslyons/python_speech_features: release v0.6.1.

Lyu, G. (2018). “A review of Alzheimer's disease classification using neuropsychological data and machine learning,” in 2018 11th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI) Beijing, 1–5. doi: 10.1109/CISP-BMEI.2018.8633126

Maclin, J. M. A., Wang, T., and Xiao, S. (2019). Biomarkers for the diagnosis of Alzheimer's disease, dementia lewy body, frontotemporal dementia and vascular dementia. Gen. Psychiatry 32:e100054. doi: 10.1136/gpsych-2019-100054

Manabe, T., Fujikura, Y., Mizukami, K., Akatsu, H., and Kudo, K. (2019). Pneumonia-associated death in patients with dementia: a systematic review and meta-analysis. PLoS ONE 14:e0213825. doi: 10.1371/journal.pone.0213825

Michel, A., Verin, E., Hansen, K., Chassagne, P., and Roca, F. (2020). Buccofacial apraxia, oropharyngeal dysphagia, and dementia severity in community-dwelling elderly patients. J. Geriatr. Psychiatry Neurol. 34, 150–155. doi: 10.1177/0891988720915519

Morris, R. G., and Kopelman, M. D. (1986). The memory deficits in Alzheimer-type dementia: a review. Q. J. Exp. Psychol. 38, 575–602. doi: 10.1080/14640748608401615

Morsy, A., and Trippier, P. (2019). Current and emerging pharmacological targets for the treatment of Alzheimer's disease. J. Alzheimer's Dis. 72, 1–33. doi: 10.3233/JAD-190744

Nassif, A. B., Shahin, I., Attili, I., Azzeh, M., and Shaalan, K. (2019). Speech recognition using deep neural networks: a systematic review. IEEE Access 7, 19143–19165. doi: 10.1109/ACCESS.2019.2896880

Olde Rikkert, M. G., Tona, K. D., Janssen, L., Burns, A., Lobo, A., Robert, P., et al. (2011). Validity, reliability, and feasibility of clinical staging scales in dementia: a systematic review. Am. J. Alzheimer's Dis. Other Dement. 26, 357–365. doi: 10.1177/1533317511418954

Orimaye, S. O., Wong, J. S.-M., and Golden, K. J. (2014). “Learning predictive linguistic features for Alzheimer's disease and related dementias using verbal utterances,” in Proceedings of the Workshop on Computational Linguistics and Clinical Psychology: From Linguistic Signal to Clinical Reality (Baltimore, MD), 78–87. doi: 10.3115/v1/W14-3210

Palmqvist, S., Janelidze, S., Quiroz, Y. T., Zetterberg, H., Lopera, F., Stomrud, E., et al. (2020). Discriminative accuracy of plasma phospho-tau217 for Alzheimer disease vs. other neurodegenerative disorders. JAMA 324, 772–781. doi: 10.1001/jama.2020.12134

Panayotov, V., Chen, G., Povey, D., and Khudanpur, S. (2015). “Librispeech: an ASR corpus based on public domain audio books,” in 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (South Brisbane, QLD), 5206–5210. doi: 10.1109/ICASSP.2015.7178964

Parisot, S., Ktena, S. I., Ferrante, E., Lee, M., Guerrero, R., Glocker, B., et al. (2018). Disease prediction using graph convolutional networks: application to autism spectrum disorder and Alzheimer's disease. Med. image Anal. 48, 117–130. doi: 10.1016/j.media.2018.06.001

Pawlowski, M., Joksch, V., Wiendl, H., Meuth, S. G., Duning, T., and Johnen, A. (2019). Apraxia screening predicts Alzheimer pathology in frontotemporal dementia. J. Neurol. Neurosurg. Psychiatry 90, 562–569. doi: 10.1136/jnnp-2018-318470

Pearl, J., and Mackenzie, D. (2018). The Book of Why: The New Science of Cause and Effect. Basic books.

Petti, U., Baker, S., and Korhonen, A. (2020). A systematic literature review of automatic Alzheimer's disease detection from speech and language. J. Am. Med. Inform. Assoc. 27, 1784–1797. doi: 10.1093/jamia/ocaa174

Pramono, R. X. A., Imtiaz, S. A., and Rodriguez-Villegas, E. (2016). A cough-based algorithm for automatic diagnosis of pertussis. PLoS ONE 11:e0162128. doi: 10.1371/journal.pone.0162128

Pulido, M. L. B., Hernández, J. B. A., Ballester, M. Á. F., González, C. M. T., Mekyska, J., and Smékal, Z. (2020). Alzheimer's disease and automatic speech analysis: a review. Expert Syst. Appl. 2020:113213. doi: 10.1016/j.eswa.2020.113213

Reed, W. J., and Hughes, B. D. (2002). From gene families and genera to incomes and internet file sizes: Why power laws are so common in nature. Phys. Rev. E 66:067103. doi: 10.1103/PhysRevE.66.067103

Sekizawa, K., Jia, Y. X., Ebihara, T., Hirose, Y., Hirayama, Y., and Sasaki, H. (1996). Role of substance p in cough. Pulmon. Pharmacol. 9, 323–328. doi: 10.1006/pulp.1996.0042

Severini, C., Petrella, C., and Calissano, P. (2016). Substance p and Alzheimer's disease: emerging novel roles. Curr. Alzheimer Res. 13, 964–972. doi: 10.2174/1567205013666160401114039

Shaw, L. M., Vanderstichele, H., Knapik-Czajka, M., Clark, C. M., Aisen, P. S., Petersen, R. C., et al. (2009). Cerebrospinal fluid biomarker signature in Alzheimer's disease neuroimaging initiative subjects. Ann. Neurol. 65, 403–413. doi: 10.1002/ana.21610

Small, B. J., Fratiglioni, L., Viitanen, M., Winblad, B., and Bäckman, L. (2000). The course of cognitive impairment in preclinical Alzheimer disease: three-and 6-year follow-up of a population-based sample. Arch. Neurol. 57, 839–844. doi: 10.1001/archneur.57.6.839

Song, X., Mitnitski, A., and Rockwood, K. (2011). Nontraditional risk factors combine to predict Alzheimer disease and dementia. Neurology 77, 227–234. doi: 10.1212/WNL.0b013e318225c6bc

Subirana, B. (2020). Call for a wake standard for artificial intelligence. Commun. ACM 63, 32–35. doi: 10.1145/3402193

Subirana, B., Bagiati, A., and Sarma, S. (2017a). On the Forgetting of College Academics: at “Ebbinghaus Speed”? Technical Report 68, MIT Center for Brains, Minds and Machines (CBMM). doi: 10.21125/edulearn.2017.0672

Subirana, B., Bivings, R., and Sarma, S. (2020a). “Wake neutrality of artificial intelligence devices,” in Algorithms and Law, eds M. Ebers and S. Navas (Cambridge University Press). doi: 10.1017/9781108347846.010

Subirana, B., Hueto, F., Rajasekaran, P., Laguarta, J., Puig, S., Malvehy, J., et al. (2020b). Hi Sigma, do I have the coronavirus?: call for a new artificial intelligence approach to support health care professionals dealing with the COVID-19 pandemic. arXiv preprint arXiv:2004.06510.

Subirana, B., Sarma, S., Cantwell, R., Stine, J., Taylor, M., Jacobs, K., et al. (2017b). Time to Talk: The Future for Brands is Conversational. Technical report, MIT Auto-ID Laboratory and Cap Gemini.

Syed, M. S. S., Syed, Z. S., Lech, M., and Pirogova, E. (2020). “Automated screening for Alzheimer's dementia through spontaneous Speech,” in INTERSPEECH 2020 Conference (Shanghai), 2222–2226. doi: 10.21437/Interspeech.2020-3158

The Center for Brains Minds & Machines. (2020). Modules. Available online at: https://cbmm.mit.edu/research/modules (accessed April 14, 2020).

Wirths, O., and Bayer, T. (2008). Motor impairment in Alzheimer's disease and transgenic Alzheimer's disease mouse models. Genes Brain Behav. 7, 1–5. doi: 10.1111/j.1601-183X.2007.00373.x

Wise, J. (2016). Dementia and flu are blamed for increase in deaths in 2015 in England and wales. BMJ 353:i2022. doi: 10.1136/bmj.i2022

Won, H.-K., Yoon, S.-J., and Song, W.-J. (2018). The double-sidedness of cough in the elderly. Respir. Physiol. Neurobiol. 257, 65–69. doi: 10.1016/j.resp.2018.01.009

Wu, Z., Pan, S., Chen, F., Long, G., Zhang, C., and Philip, S. Y. (2020). A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 32, 4–24. doi: 10.1109/TNNLS.2020.2978386

Yu, Y., Travaglio, M., Popovic, R., Leal, N. S., and Martins, L. M. (2020). Alzheimer's and Parkinson's diseases predict different COVID-19 outcomes, a UK biobank study. medRxiv. 1–16. doi: 10.1101/2020.11.05.20226605

Yuan, J., Bian, Y., Cai, X., Huang, J., Ye, Z., and Church, K. (2020). “Disfluencies and fine-tuning pre-trained language models for detection of Alzheimer's disease,” in INTERSPEECH 2020 Conference (Shanghai), 2162–2166. doi: 10.21437/Interspeech.2020-2516

Zetterberg, H. (2019). Blood-based biomarkers for Alzheimer's disease–an update. J. Neurosci. Methods 319, 2–6. doi: 10.1016/j.jneumeth.2018.10.025

Keywords: multimodal deep learning, transfer learning, explainable speech recognition, brain model, graph neural-networks, AI diagnostics

Citation: Laguarta J and Subirana B (2021) Longitudinal Speech Biomarkers for Automated Alzheimer's Detection. Front. Comput. Sci. 3:624694. doi: 10.3389/fcomp.2021.624694

Received: 01 November 2020; Accepted: 25 February 2021; Published: 08 April 2021.

Edited by:

Saturnino Luz, University of Edinburgh, United Kingdom

Reviewed by:

Mary Jean Amon, University of Central Florida, United States

Copyright © 2021 Laguarta and Subirana. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Brian Subirana, subirana@mit.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
