- ¹Sussex Computer-Human Interaction Lab, Department of Informatics, School of Engineering and Informatics, University of Sussex, Brighton, United Kingdom

- ²Department of Management Sciences, Faculty of Engineering, University of Waterloo, Waterloo, ON, Canada

- ³Department of Computer Science, University College London, London, United Kingdom

We explored the potential of haptics for improving science communication, and found that mid-air haptic interaction supports public engagement with science in three relevant themes. While science instruction often focuses on the cognitive domain of acquiring new knowledge, in science communication the primary goal is to produce personal responses, such as awareness, enjoyment, or interest in science. Science communicators seek novel ways of communicating with the public, often using new technologies to produce personal responses. Thus, we explored how mid-air haptics technology could play a role in communicating scientific concepts. We prototyped six mid-air haptic probes for three thematic areas (particle physics, quantum mechanics, and cell biology) and conducted three qualitative focus group sessions with domain expert science communicators. Participants highlighted the value of the dynamic features of mid-air haptics, its ability to produce shared experiences, and its flexibility in communicating scientific concepts through metaphors and stories. We discuss how mid-air haptics can complement existing approaches to science communication, for example multimedia experiences or live exhibits, by helping to create enjoyment or interest in a way that generalizes to any field of science.

1. Introduction

Without appropriate science communication, science and technological advances may be feared and opposed by the public. In 2008, protests broke out against the launch of the Large Hadron Collider (LHC) at the European Organization for Nuclear Research (CERN) (Courvoisier et al., 2013), out of fear that it would destroy the Earth. Another example of societal fear and the impact of science communication on public health is the “Chernobyl syndrome” and its debated effects on induced abortions (Auvinen et al., 2001). A further conflict, between religion and science on the matter of creation, led to a ban on teaching evolution in the USA until 1968, with the Scopes (monkey) trial exemplifying the impact of science communication on education (Holloway, 2016).

1.1. Technology Enhanced Science Communication

New multimodal technologies developed within the Human-Computer Interaction (HCI) community may facilitate the dialog between science communicators and the public, by supporting positive personal responses to science. While science instruction often focuses on the cognitive domain of acquiring new knowledge (Bloom et al., 1956), in science communication the primary goal is to produce “one or more of the following personal responses to science: Awareness, Enjoyment, Interest, Opinion forming, and Understanding” (Burns et al., 2003), also known as the AEIOU model. Science communication is not an offshoot of general communication or media theory (Burns et al., 2003), nor is it just the dissemination of scientific results to the peer community or the teaching of scientific skills and concepts to children. Rather, science communication is thought of as a broad spectrum, ranging from the more informal style of public engagement to the more formal science education (Burns et al., 2003).

However, producing personal responses is a challenge when communicating phenomena that are imperceptible to humans, such as atomic structure, or the electromagnetic nature of sunlight. Multimodal interfaces are often used to convey these complex and often invisible scientific concepts (Furió et al., 2017). The sense of touch could add to these, helping people perceive and interact in ways other senses cannot (Lederman and Klatzky, 2009). Touch feedback has been shown to influence our behavior (Gueguen, 2004), and emotions (Obrist et al., 2015).

Physical models are often used to enable people to touch static representations of otherwise untouchable things, such as galaxies. For example, Clements et al. (2017) published the “Cosmic Sculpture” which transforms the map of the cosmic microwave background radiation into a scaled 3D model. The “Tactile Universe” (Bonne et al., 2018) creates 3D models of galaxies, used to engage visually impaired children in astronomy. Both of these projects were developed for public engagement, with the aim to engage interested publics of science festivals in conversations, or to engage underserved audiences, such as visually impaired students. Physical models, using commercially available 3D printers, have the advantage of high resolution (0.2–0.025 mm), allowing a detailed exploration of fine features. However, they are limited in presenting dynamic concepts or internal structure of variable density.

More recently, Augmented Reality (AR) has been used to address the limitations of static tactile probes. For example, “HOBIT” (Furió et al., 2017) was built and evaluated in the context of light interferometry. Here, physical (3D printed) equivalents of the optical apparatus have been augmented with digital content, e.g., equations or animations of wave properties. The studies on HOBIT highlight benefits of augmented reality, such as affordability, lower time consumption, or safety compared to live demos; while the augmented information can also enhance learner performance (Furió et al., 2017). The multisensory nature of augmented reality has benefits compared to stand alone 3D printed probes, but it does not provide dynamic physical effects.

While haptic technology, to the best of our knowledge, has not been used to support science communication, haptics has been used in science instruction (see Zacharia, 2015, for a review). Researchers have proposed using force feedback “Phantom” devices, which use a stylus to provide force feedback across six degrees of freedom (Jones et al., 2006a). Jones et al. (2003, 2006b) demonstrated the positive impact of the Phantom on students' understanding of viruses at the nanoscale, as well as how scientific apparatus, e.g., Atomic Force Microscopes function. On the other hand, the “Novint Falcon” force feedback system showed little evidence of positive impact on learners' understanding, when learning about concepts of sinking and floating (Chen et al., 2014). Force feedback seems to be better suited than static tactile probes for representing elastic, or magnetic forces, as well as conveying structural properties, such as density or stiffness. However, just like with 3D probes, communication of dynamic processes remains a limitation.

In addition, users interact through a probe, and do not gain direct tactile experiences. Haptics has also shown promise in instruction for younger children. There is evidence that tactile feedback on a table can improve reading outcomes (Yannier et al., 2015), and that 3D physical mixed-reality interfaces can improve interest and learning (Yannier et al., 2016). Researchers have also developed a set of lower cost “DIY” force-feedback devices for extending science instruction from university education to high school. Force-feedback “paddle” devices were initially developed as low-cost options to teach dynamics and controls (Richard et al., 1997; Rose et al., 2014). The Hapkit (Richard et al., 1997) has since evolved into a lower-cost, 3D-printable, composable platform for instruction in other domains (Orta Martinez et al., 2016). When a sandbox-style software was added, the Hapkit was shown to render haptics adequately for education with an impact on student problem-solving strategies and curiosity (Minaker et al., 2016), and scaffold sense-making with high-school students learning mathematical concepts (Davis et al., 2017). The Haply (Gallacher et al., 2016) is another DIY platform, primarily a 2-DoF one, used for VR and haptic prototyping, and adapted for education and hobbyists.

1.2. Opportunity for Mid-Air Haptics Technology in Science Communication

In this paper, we explored the potential of haptics for improving informal science communication, testing the suitability of ultrasonic mid-air haptic technology. Mid-air haptics describes the technological solution of generating tactile sensations on a user's skin, in mid-air, without any attachment on the user's body. One way to achieve this is through the application of focused ultrasound, as first described by Iwamoto et al. (2008), and commercialized by Ultraleap Limited (formerly known as Ultrahaptics) in 2013. A phased array of ultrasonic transducers is used to focus acoustic radiation pressure onto the user's palms and fingertips (see Figure 1, left). Modulating the focal points at a frequency within the range to which the cutaneous mechanoreceptors found in humans respond (~5–400 Hz) (Mahns et al., 2006) causes a localized tactile sensation to be perceived by the user.
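The focusing principle can be illustrated with a short sketch. It is not the vendor's implementation: the array geometry (16 × 16 transducers at 10.5 mm pitch), the 40 kHz carrier, the speed of sound, and all function names are assumptions chosen for illustration. Each transducer is given a phase offset equal to its propagation delay to the focal point, so that all waves arrive in phase there, and the output is then amplitude-modulated at a tactile frequency.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s in air at room temperature (assumed)
CARRIER_HZ = 40_000.0    # ultrasonic carrier frequency (assumed)
PITCH_M = 0.0105         # spacing between transducers (assumed)


def transducer_positions(n=16, pitch=PITCH_M):
    """Centres of an n x n grid of transducers in the z = 0 plane."""
    offset = (n - 1) * pitch / 2.0
    return [(i * pitch - offset, j * pitch - offset, 0.0)
            for i in range(n) for j in range(n)]


def focusing_phases(focus, positions):
    """Phase offset (radians) per transducer so all waves arrive in phase at `focus`."""
    k = 2.0 * math.pi * CARRIER_HZ / SPEED_OF_SOUND  # wavenumber
    # Advance each emitter by its propagation phase, wrapped to [0, 2*pi).
    return [(k * math.dist(p, focus)) % (2.0 * math.pi) for p in positions]


def am_envelope(t, mod_hz=200.0):
    """Amplitude-modulation envelope in [0, 1] at the tactile modulation frequency."""
    return 0.5 * (1.0 - math.cos(2.0 * math.pi * mod_hz * t))


# Example: focus 20 cm above the centre of the array.
phases = focusing_phases((0.0, 0.0, 0.2), transducer_positions())
```

Moving the focal point only requires recomputing the phase list, which is why the sensation can be repositioned quickly without any moving parts.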

Figure 1. Ultrasonic mid-air haptic technology (left) enables the creation of tactile sensations without attachments to the hand. We developed six mid-air haptic probes (right) of science concepts from particle physics, quantum mechanics, and cell biology, and then ran workshops with science communicators from each of those three scientific fields (middle).

Spatial and temporal discrimination studies were among the early main focuses of research on the perception of mid-air haptic sensations. Alexander et al. (2011) showed that users were able to discriminate the number of sensations between 0 and 4 focal points with an average accuracy of 87.3%, in the context of a mobile TV device augmented with mid-air haptics. Alongside the system description of the Ultraleap mid-air haptic display, Carter et al. (2013) also performed experiments on the spatial resolution of perceived focal points. Results showed a minimum required separation distance of 5 cm between two focal points of identical modulation frequency, and 3 cm if the modulation frequency differed. Although these values are relatively high compared to vibro-tactile stimuli, results also showed improvements in discriminating focal points with training. Indeed, Wilson et al. (2014) further studied the localization of static tactile points in mid-air and found an average error of 8.5 mm in locating targets, where the localization errors were typically 3 mm larger in the longitudinal axis of the hand. Although spatial resolution is not as detailed as for digitally fabricated probes, a <1 cm resolution of tactile features in mid-air is a promising property of the technology in the context of science communication.

With regard to temporal resolution, Wilson et al. (2014) also studied the perception of apparent movement (Geldard and Sherrick, 1972) of mid-air haptic stimulation, by investigating correlations between number of points, point duration, point separation, and directionality. Results showed that a higher number of points and a longer point duration improved the reported quality of movement, which generally scored higher in the transverse direction than along the longitudinal axis. Pittera et al. (2019a) also studied the illusion of movement using mid-air touch, stimulating both hands synchronously, such that the simulated movement is located in the intermediate space, unlike Wilson et al. (2014), where tactile movement was simulated on the body.

With the use of multipoint and spatiotemporal modulation techniques, it is possible to create more advanced tactile sensations, such as lines, circles, animations, and even 3D geometric shapes (Carter et al., 2013; Long et al., 2014). Hence, ultrasonic mid-air haptic technology is being explored in more and more application areas, such as art (Vi et al., 2017), multimedia (Ablart et al., 2017), or virtual reality (Georgiou et al., 2018; Pittera et al., 2019b). For example, Ablart et al. (2017) showed the positive effect of movie experiences augmented with mid-air haptics on user experience and engagement in the context of human-media interaction. However, we are unaware of any empirical research on the potential of mid-air haptics in science communication, either in scientific public engagement or in science education.

For this reason, we created six prototypes, demonstrating science concepts using ultrasonic mid-air haptic sensations (see Figure 1), an emerging type of haptic technology (Carter et al., 2013). We took these prototypes to 11 science communicators for feedback during three qualitative focus group sessions, themed around particle physics, quantum physics, and cell biology. The science communicators could experience mid-air haptic sensations of selected scientific phenomena from their respective field (two per field). The discussions during the workshops were transcribed and analyzed following an open coding approach. In contrast to the six hypothesized advantages of the technology, we identified three main themes that focus group participants expressed as valuable. Science communicators highlighted the value of tangible and dynamic sensations combined. Moreover, participants implied that the ability to easily share the tactile experience between users was important to science communicators, alongside the potential for the communicator to flexibly create a story around the sensation. In other words, a single sensation can be described as an atomic nucleus, a brain cell, or a distant star, which helps science communicators to intertwine the technology with the use of metaphors and other tools of storytelling. Overall, our qualitative analysis suggests that mid-air haptics may have the greatest impact on the hedonic dimensions of the AEIOU framework: enjoyment and interest.

In summary, the key contributions of this paper are: (1) a characterization of mid-air haptic technology as a novel tool for science communication; (2) a design-driven exploration of the properties of mid-air haptic sensations and interaction techniques, explored in three scientific disciplines with six mid-air haptic experience prototypes; and (3) a discussion on opportunities and challenges for mid-air haptic technology within the AEIOU framework of science communication.

2. Materials and Methods

To guide the design process for mid-air haptic probes (see Figure 2) we considered different features of other tangible modalities and discuss them in relation to mid-air haptic properties.

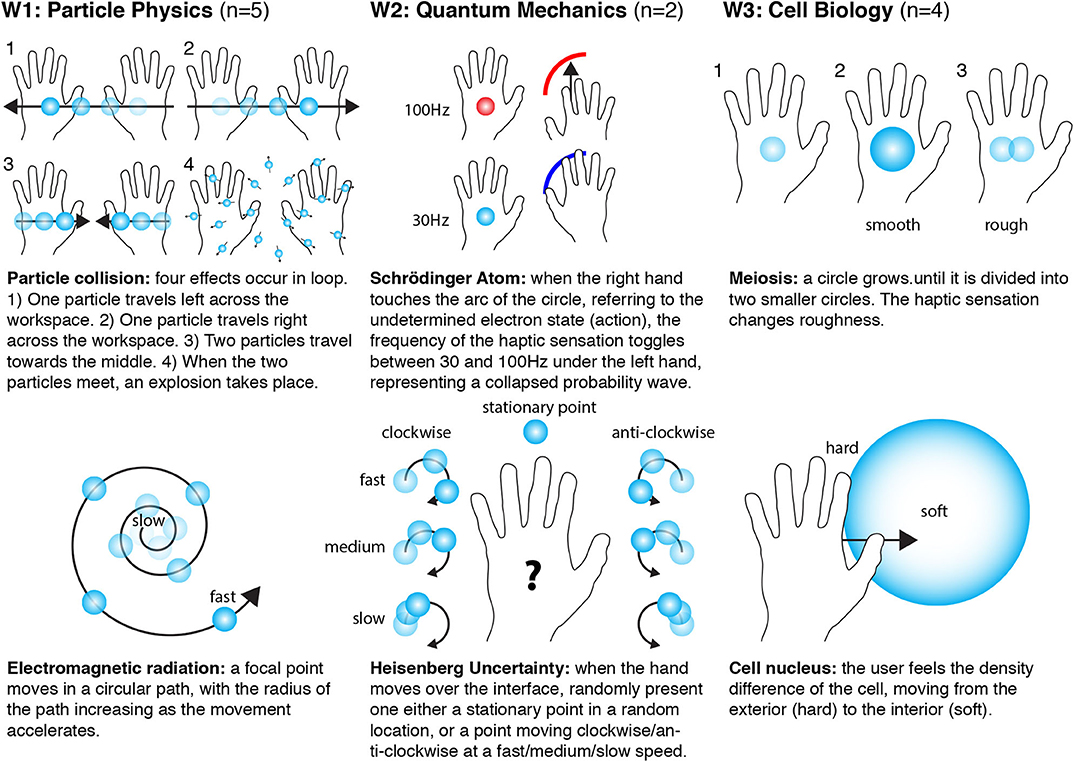

Figure 2. Design of the six mid-air haptic probes used in the three workshops. In W1, we presented two designs representing concepts in particle physics: particle collision and electromagnetic radiation. In W2, we presented two designs representing concepts in quantum mechanics: the Schrödinger Atom and Heisenberg Uncertainty. In W3, we presented two designs representing concepts in Cell Biology: Meiosis and a Cell Nucleus.

2.1. Hypotheses: Relevant Design Properties of Mid-Air Haptics

As with augmented reality, interactive 3D graphics, physical models, and force feedback controllers, emerging haptic technologies should be able to accommodate a combination of design features relevant in science communication. Our hypothesis is that mid-air haptics could serve as a new technological solution within this design space, with six specific properties of haptic interaction being valuable to different extents. In this section, we introduce the haptic probes by first specifying our hypotheses, then describing their associated rationales.

H.1 (3D): ultrasonic mid-air haptic interfaces can display volumetric sensations in 3D space (Long et al., 2014) and the movement of focal points remains stable during user interaction, unlike levitated tangible pixels.

H.2 (stability): Location and apparent movement of focal points are programmable and undisturbed (Wilson et al., 2014).

H.3 (dynamicity): The force exerted by the touch of the user does not restrict any moving components of the haptic system.

H.4–H.5 (interactivity and structure): Integrated hand tracking also allows interactive and structural haptic sensations.

H.6 (augmentation): Covering the haptic display with an acoustically transparent projection screen (Carter et al., 2013), it is also possible to augment the tactile sensations with visualizations.

We further hypothesize that dynamic, interactive, and structural design features of mid-air haptics are the most characteristic of this technology, since three-dimensional and augmented tangible probes have already been addressed. Below, we further rationalize this hypothesis, in a heuristic presentation of the set of six features. This approach motivated the choice of concepts and implementation of mid-air haptic probes, as described in the following sections.

2.1.1. Feature 1: 3-Dimensionality

Visualizations, augmented or physical representations are primarily depicted as 3D objects, the natural appearance for many phenomena. Interactive, 3D graphics, such as found on the PHET simulations website (PHET, 2018), are a good example of this feature. In contrast, tangible UIs can only display pseudo 3D shapes, such as the “inFORM” shape changing display (Follmer et al., 2013).

2.1.1.1. Design rationale

Although mid-air haptics is capable of producing volumetric sensations, and it is a relevant feature, we decided to develop only 2D haptic probes for our exploratory study. We expected 3D sensations would create additional confusion when participants interact with the device. Therefore, we did not specifically address hypothesis H.1.

2.1.2. Feature 2: Stability

Another design feature is to create tactile representations that do not collapse as a result of tactile exploration. Whilst a 3D printed galaxy (Bonne et al., 2018) remains stable during tactile interaction, acoustically levitated tangible bits are fragile to touch (Seah et al., 2014). These can only act as visual displays, despite the use of tangible pixels.

2.1.2.1. Design rationale

Mid-air haptic sensations are stable by nature, and tactile interaction does not influence the properties of the haptic feedback. Hence, stability is a constant variable in our haptic probe designs and we did not evaluate its explicit value in science communication, leaving hypothesis H.2 unaddressed.

2.1.3. Feature 3: Augmentation

With the development of technologies like augmented reality, visually and physically augmented science representations are explored in conjunction with tangible or tactile information. For example, in the case of “HOBIT” (Furió et al., 2017), visual depictions of light rays are augmented with animations of the underlying wave phenomena, text, and equations. In another project, tangible probes equipped with RFID tags are also able to layer information, for example by associating vibrations with a map displaying pollution in countries of the world (Stusak and Aslan, 2014).

2.1.3.1. Design rationale

We were interested in exploring the potential of unimodal mid-air haptics, hence we decided not to augment the haptic probes with other sensory information, leaving hypothesis H.6 untested.

2.1.4. Feature 4: Dynamicity

Most implementations of communicating scientific phenomena require representation of movement. Such dynamic systems can be easily visualized through animations. However, during tactile interaction it is a key requirement to maintain an undisturbed movement, even after the user has touched the probe. Dynamic physical bar charts (Taher et al., 2015) may support movement during interaction, provided the actuators exert greater forces than the user. However, tactile probes, such as an elastic spring (often used at schools to illustrate transverse wave propagation), will be disturbed when people touch them.

2.1.4.1. Design rationale

We focused on designing dynamic haptic probes, used during the particle physics workshop (W1). We implemented electromagnetic radiation with the representation of a swirling haptic particle (see Figure 2, W1). The radius of the orbit grew over time from 1 to 4 cm, in 8 s, while the angular velocity of the haptic particle increased (from 2π to 4π rad s⁻¹). The acceleration of the haptic particle (associated with electric charge) was noticeable, and the radial expansion (associated with radiation) correlated to the acceleration. The focal point was created using amplitude modulation (AM) of ultrasound (Carter et al., 2013), at 200 Hz.
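The trajectory of the swirling particle can be sketched as follows. The start and end values are taken from the description above; the linear ramps for radius and angular velocity, and the function name, are assumptions, since the interpolation used in the actual probe is not stated.

```python
import math

R_START_CM, R_END_CM = 1.0, 4.0                 # orbit radius (from the text)
W_START, W_END = 2.0 * math.pi, 4.0 * math.pi   # angular velocity in rad/s (from the text)
DURATION_S = 8.0                                # duration of the expansion (from the text)


def orbit_state(t):
    """Return (x_cm, y_cm, radius_cm, omega) of the focal point at time t.

    Radius and angular velocity are assumed to ramp linearly over DURATION_S.
    """
    u = min(max(t / DURATION_S, 0.0), 1.0)      # normalised progress in [0, 1]
    radius = R_START_CM + (R_END_CM - R_START_CM) * u
    omega = W_START + (W_END - W_START) * u
    tc = u * DURATION_S                         # time clamped to the ramp
    # The angle is the time integral of the linearly increasing angular velocity.
    theta = W_START * tc + 0.5 * (W_END - W_START) * tc * tc / DURATION_S
    return radius * math.cos(theta), radius * math.sin(theta), radius, omega


# Sample the path at 100 Hz for rendering or plotting.
path = [orbit_state(i / 100.0)[:2] for i in range(801)]
```

Under the linear-ramp assumption the mean angular velocity is 3π rad/s, so the particle completes 12 revolutions during the 8 s expansion.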

The particle collision (see Figure 2, W1) involved two ultrasound emitters, one for each hand. A haptic impulse was displayed from one board to the other with a delay of 200 ms to create an illusion of movement (Pittera et al., 2019a). The representation involved a movement from left to right and back with a delay of 1 s (representing, respectively, the clockwise and anticlockwise particle streams). After three cycles, we simulated particle collision with a “sparkly” sensation under both palms. The moving points were displayed using AM at 200 Hz and the sparkling feeling was created using spatiotemporal modulation (STM) of ultrasound at 30 Hz. Both probes addressed hypothesis H.3 on dynamic haptic sensations and their value in science communication.
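One possible reading of this timing is sketched below as an event schedule. The 200 ms inter-board delay, the 1 s pause, the three cycles, and the modulation rates come from the description; the interpretation that each cycle is a left-to-right sweep followed 1 s later by a return sweep, the gap between cycles, and all names are assumptions.

```python
INTER_BOARD_DELAY_S = 0.2   # delay between the two boards (from the text)
PAUSE_S = 1.0               # delay before the return sweep (from the text)
CYCLES = 3                  # cycles before the collision (from the text)


def collision_schedule(cycle_gap_s=PAUSE_S):
    """List of (time_s, board, modulation, rate_hz) events in time order.

    The gap between consecutive cycles is not given in the text; it is
    assumed here to equal the 1 s pause.
    """
    events, t = [], 0.0
    for _ in range(CYCLES):
        # Left-to-right sweep: one impulse per board, 200 ms apart.
        events.append((t, "left", "AM", 200))
        events.append((t + INTER_BOARD_DELAY_S, "right", "AM", 200))
        t += INTER_BOARD_DELAY_S + PAUSE_S
        # Return sweep, right-to-left.
        events.append((t, "right", "AM", 200))
        events.append((t + INTER_BOARD_DELAY_S, "left", "AM", 200))
        t += INTER_BOARD_DELAY_S + cycle_gap_s
    # Collision: the "sparkly" STM sensation under both palms at once.
    events.append((t, "left", "STM", 30))
    events.append((t, "right", "STM", 30))
    return events


for time_s, board, modulation, rate_hz in collision_schedule():
    print(f"{time_s:5.1f} s  {board:<5}  {modulation} at {rate_hz} Hz")
```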

2.1.5. Feature 5: Interactivity

Interaction is key to communicate causal relations between input and output. Movement of the pointer on a graphical representation can change colors, or induce dynamic animations. We see many examples of this on PHET simulations (PHET, 2018). Shape changing displays can produce variable stiffness based on user input (Follmer et al., 2013) and thus enable interactive experiences. However, physical representations, such as the “Cosmic Sculpture” (Clements et al., 2017), do not change upon interaction.

2.1.5.1. Design rationale

We focused on interactivity of haptic probes in the quantum mechanics workshop (see Figure 2, W2). For this, the hand tracking capability of mid-air haptics was crucial. Using algorithms described by Long et al. (2014), runtime modifications of the ultrasound stimulus are computed based on hand location to simulate the intended surface between the hand and the virtual object. This is true for both 2D and 3D objects. While a user is unable to enclose a 3D shape in a traditional sense, a 3D object, such as a sphere or pyramid can be explored from all sides using the palm and fingertips.

To convey the concept of Heisenberg uncertainty, we used two states: (1) a fixed point representing the position of the particle; or (2) an orbiting point representing the momentum of the particle. When a user moved their hand over the interface, they were randomly assigned to one of the two conditions. To signify the momentum condition, the direction (clockwise vs. anti-clockwise) and speed (ranging from slow to fast) of the orbiting point were randomized.

We chose this design in order to emphasize the importance of changing direction and speed every time the participant interacts with the probe, avoiding semi-conclusive statements (e.g., it's moving). The changing properties of movement highlighted the quantities relevant to identifying velocity and momentum. We used AM at 200 Hz and full intensity. The circle's size ranged from 1π to 4π cm and the speed ranged from −10 to 10 rad s⁻¹. To trigger the random display of either case, we used the Leap Motion sensor to track the user's hand. The haptic probe for representing an atom (see Figure 2, W2) was similarly interactive. When the participant touched the arc, representing the electron cloud, the frequency of the haptic feedback changed from 100 to 30 Hz. These probes were designed to aid the focus group evaluation of hypothesis H.4 on interactivity.
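The selection logic of the Heisenberg probe can be sketched as below. The two states and the ±10 rad/s speed range follow the description; the equal odds between states, the minimum speed (added so that an orbit is never mistaken for a fixed point), the sign convention, and the function name are assumptions. Hand detection is left out, and the function stands for what would run each time the tracker reports a hand entering the interaction zone.

```python
import random

MAX_SPEED = 10.0   # rad/s, upper bound of the range given in the text
MIN_SPEED = 1.0    # rad/s, assumed lower bound so the orbit is perceptible


def heisenberg_sensation(rng=random):
    """Pick the sensation shown when a hand enters the interaction zone."""
    if rng.random() < 0.5:   # equal odds between the two states (assumed)
        # Position state: a fixed focal point, no movement information.
        return {"state": "position", "omega": 0.0}
    # Momentum state: random speed and direction on every presentation.
    speed = rng.uniform(MIN_SPEED, MAX_SPEED)
    direction = rng.choice(["clockwise", "anticlockwise"])
    # Positive angular velocity means anticlockwise (convention assumed here).
    omega = speed if direction == "anticlockwise" else -speed
    return {"state": "momentum", "omega": omega, "direction": direction}


# Each new hand presentation draws an independent sensation.
trial = heisenberg_sensation(random.Random(42))
```

Passing a seeded `random.Random` instance makes a session reproducible, which can help when the same sequence needs to be replayed for several participants.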

2.1.6. Feature 6: Structure

Encoding structural information, such as density, is often desirable. Natural phenomena frequently impose boundary conditions, which highlight structural differences in objects. For example, the crust of a planet is distinguished from its core; or the cell membrane from its nucleus. Digital fabrication techniques allow representation of structural information, through distinct internal and external material properties (Torres et al., 2015). However, 3D printed probes allow only surface exploration or deformation. Users are unable to push their fingers through a solid spherical membrane, to find fluid state materials in the interior, without damaging the representation. Force feedback controllers on the other hand have the potential to provide structural information (Minogue et al., 2016).

2.1.6.1. Design rationale

We focused on conveying structural information in the cell biology workshop (W3). In our example, we associated chromosome number with the frequency (perceived texture) of the haptic feedback. The cell was depicted as a circle displayed above the transducer at 20 Hz frequency. Over 2 s, the shape increased in radius from 2.5 to 5 cm, eventually splitting into two independent smaller shapes (see Figure 2, W3). During the process, we also increased the frequency of the haptic feedback from 20 to 80 Hz, simulating the change in chromosome number (through change in perceived texture) and therefore implying meiosis and not mitosis.
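As a rough sketch, the meiosis probe can be expressed as a function returning the shapes to render at a given time. The radius and frequency ranges and the 2 s duration are from the description; the linear ramps, the size and placement of the two daughter shapes, and the function name are illustrative guesses.

```python
GROW_S = 2.0                 # growth duration (from the text)
R0_CM, R1_CM = 2.5, 5.0      # parent radius range (from the text)
F0_HZ, F1_HZ = 20.0, 80.0    # modulation frequency range (from the text)


def meiosis_frame(t):
    """Circles to render at time t, as (centre_x_cm, radius_cm, frequency_hz)."""
    if t < GROW_S:
        u = max(t, 0.0) / GROW_S
        radius = R0_CM + (R1_CM - R0_CM) * u   # linear growth (assumed)
        freq = F0_HZ + (F1_HZ - F0_HZ) * u     # linear frequency ramp (assumed)
        return [(0.0, radius, freq)]
    # After the split: two independent daughter shapes. Their radius and
    # horizontal offset are guesses, not values from the text.
    daughter_r = R1_CM / 2.0
    return [(-daughter_r, daughter_r, F1_HZ), (daughter_r, daughter_r, F1_HZ)]


frames = [meiosis_frame(i / 10.0) for i in range(31)]   # 3 s sampled at 10 Hz
```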

The second concept of cell biology highlighted the structure of a cell in a simplified form, concentrating on two aspects: the cell membrane and the cell nucleus. We represented the cell as a disc, where the user's hand was tracked with the Leap Motion. On the edges of the disc, haptic feedback at 80 Hz created a more solid (hard) sensation than in the interior of the disc (see Figure 2, W3). By reducing the frequency of the haptic feedback to 10 Hz in the middle of the shape, a distinct nucleus (soft) could be felt. These probes depicted structural information, addressing the claims of hypothesis H.5 on the value of representing structure through the haptic sense.
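The mapping from tracked hand position to feedback frequency might look like the sketch below. Only the 80 Hz membrane and 10 Hz nucleus values come from the description; the disc radius, membrane width, nucleus radius, the intermediate frequency for the rest of the interior, and the function name are assumptions.

```python
import math

MEMBRANE_HZ = 80.0   # edge of the disc, felt as "hard" (from the text)
NUCLEUS_HZ = 10.0    # middle of the disc, felt as "soft" (from the text)


def cell_feedback_hz(x_cm, y_cm, disc_r=4.0, membrane_w=0.5,
                     nucleus_r=1.0, interior_hz=40.0):
    """Modulation frequency for a hand position relative to the disc centre.

    Returns None when the hand is outside the cell. All geometric defaults
    and the interior frequency are assumed values.
    """
    r = math.hypot(x_cm, y_cm)
    if r > disc_r:
        return None            # outside the disc: no feedback
    if r >= disc_r - membrane_w:
        return MEMBRANE_HZ     # outer ring: the cell membrane
    if r <= nucleus_r:
        return NUCLEUS_HZ      # centre: the nucleus
    return interior_hz         # remaining interior (assumed value)


# Sweep the hand from the centre outwards along the x axis.
profile = [cell_feedback_hz(x / 2.0, 0.0) for x in range(0, 11)]
```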

As highlighted through these rationales in this heuristic approach of designing haptic probes for science communication, we believe that dynamic, interactive, and structural design features are the most characteristic of this technology. In the following section, we describe how these haptic probes were used to collect qualitative data during three focus groups of science communicators at the research workshops organized.

2.2. Materials: Mid-Air Haptics Probes for Three Fields of Science

Considering the rationale presented in the previous section, we designed and implemented six mid-air haptic probes (i.e., demonstrations of using mid-air haptics for conveying specific scientific concepts). These haptic probes were used to facilitate a dialog between science communicators, who are also domain experts in three different fields of science: particle physics, quantum mechanics, and cell biology. For every discipline we organized a workshop, where two concepts were represented (see an overview in Figure 2). A video of the demonstrations can be viewed at https://youtu.be/QOnOWobSoBI.

We used a haptic device manufactured by Ultraleap Limited, which generates the tactile sensations using ultrasound (Carter et al., 2013) (see Figure 1, left). The integrated hand tracking system enables the design of interactive and structural haptic probes, while the high refresh rate of the device enables dynamic haptics. Haptic probes were created during a rapid prototyping design process, with multiple iterations, involving two co-authors. Their combined expertise is in theoretical physics and HCI (mid-air haptics experience design). The sensations were rendered with both amplitude modulation (AM) (Carter et al., 2013) and spatiotemporal modulation (STM) (Frier et al., 2018), as outlined in the previous section (see Design Rationales).

We chose three scientific fields based on two criteria. First, we wanted concepts discussed by disciplines that are invisible to the unaided human eye; second, disciplines that are likely to have a societal impact. We decided on particle and nuclear physics, which can cause fear in the public, as cited in the introduction (Perucchi and Domenighetti, 1991; SwissInfo, 2008), quantum technology, which is believed to be living its second revolution and playing an essential role in future technologies (High-Level Steering Committee, 2017), and cell biology, which is the basis of talking about cancer research.

2.3. Participants: Science Communicators

Four overlapping groups are identified in the research field and practice of science communication. These are: (1) scientists in academia, industry, or government; (2) mediators, such as journalists, science communicators or teachers; (3) policy or decision makers in government or research councils; and (4) the lay public (Burns et al., 2003). We deliberately chose to run this exploratory, qualitative study with science communicators who are also active researchers, forming an overlap between scientists and mediators. Participant groups such as teachers, the lay public, or policy makers are valuable in evaluating the user experience of the technology, or its benefits in learning, but are not aware of the objectives of science communication. We recruited eleven participants of this description to carry out three consecutive workshops (Ws). W1 had five participants, W2 had two, and W3 had four. W1 took place at the end of an outreach event at a school; W2 and W3 were held at our research laboratory. All participants were new to mid-air haptic technology.

2.4. Procedure: Collecting Data in Three Workshops

Each of the three workshops lasted for 2 h and consisted of four main phases described below. Ethics approval was obtained and consent forms were collected.

2.4.1. Phase 1 (15 min)

After welcoming participants, we asked each of them to experience three to four sample mid-air haptic sensations. These were displayed using the “Ultrahaptics Sensation Editor” and the device described above. Sample sensations included a static focal point, an orbiting focal point, a circle growing and shrinking in size, and a vertical sheet.

2.4.2. Phase 2 (45 min)

Following the familiarization phase, we showed two haptic probes to participants. They were instructed to feel the tactile feedback, describe the sensation and make associations to a scientific concept. Participants were encouraged to have dialogs among themselves and the researchers. While researcher 1 controlled the device, researcher 2 instructed, guided, and observed the participants. If participants could not describe what they felt, hints and guiding questions were given by the researchers.

2.4.3. Phase 3 (30 min)

Once the haptic probes were explored, we asked participants to describe their ideas on new implementations, based on their interaction with mid-air haptics, and its characteristic properties that may differ from technologies they had experiences with, such as 3D printing, physical toys, or virtual reality.

2.4.4. Phase 4 (30 min)

We concluded with a guided discussion. The participants were asked thematic questions in a semi-structured group interview. They were prompted to respond to three key questions facilitated by a moderator to ensure that each participant was able to express their opinion: Q1: How well did the demos resemble the scientific concept conveyed, and how difficult was it to interpret the haptic sensations? Q2: What are the benefits of mid-air haptics (if any), and considering its properties what does it offer in contrast to other technological solutions they use in science communication? Q3: What new ideas of demos do participants have based on the features of the technology, and what are their challenges in communicating science?

The same researchers who designed the prototypes led the workshops. While one researcher controlled the apparatus, the other researcher facilitated the discussion and exploration of the mid-air haptic probes, following the procedure described above. All four phases of the workshops were audio recorded, resulting in 6 h of audio material. We transcribed the data and extracted relevant feedback following an open coding approach (Braun and Clarke, 2006). The transcripts were coded by three of the co-authors independently, then synthesized, resulting in three themes.

3. Results

Qualitative analysis of the transcripts revealed three significant themes. The first of these themes supports hypothesis H.3 on the value of dynamic haptics in science communication. However, we found no qualitative evidence supporting hypotheses H.4 and H.5 on the value of interactivity and structural information. Instead, participants pointed to the value of sharing experiences and of creating stories with the haptic sensations. Results are discussed below and exemplified through participant quotes.

3.1. Theme 1: “I can feel it moving”: Mid-Air Haptics Support Dynamic Tactile Experiences With Low Level-of-Detail

Across all three workshops (W1, W2, W3), the biggest “wow” factor and uniquely quoted feature of mid-air haptic sensations was its dynamicity. One participant, P2 in W2, explained how this dynamic feature of mid-air haptics could really make a difference in communicating science:

P2:W2: “One of the things that we struggle to communicate [in quantum mechanics] is that you can have the probability oscillating backwards and forwards. I think this [mid-air haptics] has a really cool potential to show that because, sort of, whilst you can't see it, you're feeling the evolution of probability… you're feeling that probability before you've actually measured it.”

Participants were generally fascinated by the dynamicity of mid-air haptics and described this technology's ability to represent sensations that are moving and changing (e.g., P1:W1: “acceleration and creating waves,” P2:W2: “opening and closing 'till you have an oscillation,” P3:W3: “it is going really fast and then it slows down”). Participants volunteered various scenarios to apply the newly discovered dynamic features of mid-air haptics, such as for representing DNA models, hydrogen molecules, or the Higgs boson.

Participants in the particle physics workshop (W1) appreciated the temporal variations that the dynamic representation of mid-air haptics affords in comparison to 3D-printed objects: P1:W1: “something that could demonstrate waves in a tactile way is very good”; P5:W1: “it's the dynamics of the haptics… 3D printing is too static, and in physics almost everything is time variant.” Variations over time were also discussed in the cell biology workshop, and described with the example of cell forming and firing: P1:W3: “Try to imagine you're a cell and you get a noisy signal. [Mid-air haptics] is actually a really good sort of depiction of a noisy signal because it's, I guess, harder to distinguish compared to, for example, a sound.”

This quote highlights that the unique characteristic of mid-air haptics (being an invisible, non-contact tactile sensation) can be an advantage in science communication. The ability to represent dynamic depictions comes with the tradeoff of a lower “level-of-detail.” This design consideration was further discussed by participants in the quantum mechanics workshop (W2) when they compared mid-air haptic sensations to 3D printed models, praising the dynamic characteristics of mid-air haptics but noting that it does not have the level of detail that 3D printed objects have.

P1:W2: “We've got some 3D printed models, really nice proteins that we printed. I mean, the advantage of those is the level of detail… you know, you're turning them around in your hands and looking at them is as close to what these proteins look like in our bodies. But obviously they don't move. [With mid-air haptics] we can show that dynamics much better. That's the big advantage.”

While the low level-of-detail was a disadvantage for some concepts, it could be an advantage for others, as exemplified by the cell forming example above (P1:W3). Throughout the initial explorations (phases 1 and 2) in the workshops, various participants also suggested adding visual (W2, W3) and/or sound (W1, W3) features to strengthen the tactile sensation. However, in the following discussion (phases 3 and 4), participants increasingly decided against adding graphics and sound. Participants appreciated the fact that a user needs to focus and thus learn to listen to their hand (e.g., P2:W1: “I was listening… it's like tracing your hand to kind of get into the right kind of sensitivity”). This new sensation created excitement, and was considered a unique feature to engage people and boost interest, two main aims of science communication.

P1:W2: “With outreach stuff, it's always great to have a tool that is portraying something simple and fundamental. For example, our microscope, when we've got a camera looking at some leaf cells, there's so much we can say about it, as little or as much as we want. Whereas with VR, you put it on and they're watching this video, and like there's only so much really, you can say with it. I find it much more limiting.”

Because mid-air haptics is more abstract and suggestive than 3D models and images, it requires participants to be more focused and listen to their hand. Mid-air haptics has a lower level-of-detail and might require additional feedback to handle complex scenarios. However, we found this combination of dynamic and abstract characteristics encouraged discussion and supported flexible narratives of core concepts, leading to Themes 2 and 3.

3.2. Theme 2: “Hazard a Guess”: Shared Experiences Led to Divergent Interpretations and Discussion

In all three workshops, mid-air haptics acted as a catalyst for co-discovery. Participants instinctively took turns exploring each tactile sensation, starting by describing the sensation (during phase 1) and then guessing the scientific concept we tried to convey (in phase 2, facilitated by the researcher who led all three workshops). The ability to just move the hand above the ultrasound array, then quickly withdraw when someone else wanted to feel the sensation, was considered a useful feature to engage audiences at science fairs and public engagement events. P1:W2: “People can just rotate around quickly and have a feel.”

Mid-air haptics supports easy turn-taking between participants, like 3D printed objects but unlike VR headsets. While VR headsets support dynamic phenomena and have a high level-of-detail, they lead to much more individual experiences. In two of the three workshops, participants compared mid-air haptics to VR technology, a recent addition to their science communication tools. One participant, P1 in W2, described his experience with VR as follows, indicating the benefit of mid-air haptics for having a shared experience:

P1:W2: “VR was cool but it feels limited, because it's one person at a time. This is still one person at a time, but they can be shared quite easily. With VR, someone's got the headset on and they have to kind of describe what they're looking at. If you've got a group, it doesn't work as well.”

When participants felt the same haptic effect, they would often talk about it and interpret it differently. For example, in W3, P2 felt one sensation like it was “growing,” while P4 described it like a “flower opening”; in W2, P1 felt a “wave from bottom left to top right,” while P2 talked about “dragging the ball around.” These divergent interpretations were due to the low level-of-fidelity: P2:W1: “That's kind of like random almost tickling sensation.” And yet, in the end, diverging interpretations resulted in a resolution. Guessing what the scientific representation was became a guessing game, a riddle, directed by the facilitator. The following exchange between participants in W3 demonstrates the process of co-discovering meiosis (a type of cell division) shown in Figure 2.

P2:W3: “Does someone else want to have a go?”

P3:W3: “You don't want to hazard a guess?” (laugh)

P2:W3: “Well, is there something growing?”

Facilitator: “Yes.”

P4:W3: “What's growing?”

Facilitator: “Can you notice something after it's growing maybe?”

P2:W3: “It's not like a flower opening or something” (pause)

P3:W3: (jumps in) “Cell division or something.”

During these exchanges, the facilitator was able to maneuver the discussion using comments and questions. Participants were visibly excited about and engaged with the scientific concept illustrated by quick exchanges between participants, laughter, as well as thinking pauses. This flexible discussion, involving multiple participants guessing and interpreting the mid-air haptic effects, meant the facilitator could really guide the exploration and tell a story.

3.3. Theme 3: “Take them on a Journey”: Many Stories With One Mid-Air Haptic Sensation

Science communicators came to realize that they are able to tell multiple stories using these dynamic, abstract tactile sensations. A single mid-air haptic focal point could form a representation for an atomic nucleus, a brain cell, or a distant star. It is in the hands of the science communicators to tell and vary the story depending on the audience, as the following quote exemplifies: P1:W1: “You got so much control over the sensation, you can really take them on a journey.”

The discussion across all workshops (phases 3 and 4) highlighted the science-agnostic potential of mid-air haptics. In other words, due to its dynamic features and its lower level-of-detail compared with, for example, 3D models, this tool leaves more freedom to the facilitator to guide the stories to be told about scientific phenomena.

P3:W3: “Sciences shouldn't be thought as independent but using each other's toys. This technology is a very nice way to unite sciences.”

All participants, especially in W3, mentioned the potential of different narratives for different contexts. Science fair demonstrations need to be fast, intuitive, and engaging (P3:W3: “you're trying to get through a lot of people very quickly”). In a school setting, a demonstration can be more complex, as the slower pace allows the teacher or science communicator to tell a story to engage and draw in the students.

P4:W3: “You need to keep it simple [at fairs].”

P3:W3: “Anything that we spent ages with, like the cell division, is a really cool idea, but it's probably going to be better for smaller groups in schools. There, you've got the time to process it and tell them to really think about what they're feeling.”

P2:W3: “Yes, tie it into a bigger lesson.”

P4:W3: “Yes. Tie it in with the concept, maybe some other props, and then have this tech, and turn it into a big session rather than a science fair stand.”

In both contexts, at science fairs and in schools, the unique tactile characteristics of mid-air haptics can engage users and create interest, two key objectives of science communication. Moreover, mid-air haptics has the potential to turn this interest into understanding in smaller group settings where a facilitator can go from simple to complex concepts. In such contexts, more details can be added, both with respect to the story the facilitator tells, and the experiences they provide. For example, mid-air sensations can be complemented with visual animations, sounds, and other props.

Finally, participants in the cell biology workshop commented that this novel, tactile experience could also engage less-interested groups, such as older children (e.g., P3:W3: “We do activities where we look at viruses using balloon models, but it is mainly for young children and their parents. For the odd older child, [mid-air haptics] might be a nice way of engaging them”). P2 in W3 also mentioned the opportunity to attract new audiences, such as technology savvy adults, who otherwise would just walk by. P2:W3: “They might not normally be interested in biology, but they've come to look at the tech. But then, you're telling them more about the science.” Again, participants emphasized the relevance of being able to frame a story for different audiences, even if it starts with the tool that conveys the concept. Through new tools, such as mid-air haptic technology, they can pursue their science communication objectives: most notably creating interest and engagement, perhaps increasing awareness, and, in specific contexts, possibly fostering understanding.

4. Discussion

We explored the possible use of mid-air haptic technology in science communication for the first time. Our findings highlight the opportunity of taking advantage of dynamic haptics and shareable experiences mid-air haptics affords. This novel tool also allows for flexible approaches of storytelling, taking into account the interaction setting, such as who is involved, and where science communication takes place. Here, we discuss the possible implications, opportunities and limitations, and future research directions.

4.1. Talking About Science Through Mid-Air Haptics

In our workshops, participants described mid-air haptic sensations with words such as “pulsing” (P1:W3) and “rain” (P3:W3), and emphasized the sensation of movement and change. While those descriptions are in line with prior work on how people talk about tactile experiences (Obrist et al., 2013), in our exploration people were able to connect those descriptors to a specific scientific concept. The fact that people deconstructed the sensation is part of what led to engaging discussions. Our findings highlight that the dialog around the haptic probes naturally resulted in a co-discovery process. This shared exploration of scientific phenomena contributed to the enjoyment of mid-air haptics technology for public engagement.

4.2. Mid-Air Haptics Produces Enjoyment and Interest

From the findings of Themes 2 and 3, we believe mid-air haptics may contribute the most to enjoyment and interest, the two hedonic dimensions of the five objectives of science communication described in the AEIOU framework (e.g., P1:W2: “it is really fun to play with”). Mid-air haptics might engage new, wider audiences, who otherwise would not be interested in science. Participants said that the technology could engage older children and parents, as well as tech savvy people. The ability to create shared experiences and motivate co-discovery promotes an environment for interpretation, which may contribute to greater enjoyment and engagement of the public. Previously it has been shown that augmenting abstract art (Vi et al., 2017) and multimedia content (Ablart et al., 2017) with mid-air haptic sensations can indeed increase levels of enjoyment, which are measurable through physiological markers. However, the terms enjoyment and interest are notably used as umbrella terms in science communication, with many granular dimensions characteristic of each of these experiences. Therefore, in a follow-up study, we are examining target and perceived affective descriptors elicited by this technology in contrast to other communication modalities.

4.3. Story-Like and Metaphor-Based Haptic Design Tools for Science Communication

One of the branches of science communication research argues about the role of metaphors, rhetorical tools, humor, and storytelling in engaging with the public. Metaphor is a vital tool of science communicators. As Kendall-Taylor and Haydon put it, “An Explanatory Metaphor helps people organize information into a clearer picture in their minds, making them more productive and thoughtful consumers of scientific information” (Kendall-Taylor and Haydon, 2016). Another contemporary approach to humanizing science is through storytelling (Joubert et al., 2019). Through stories, students can relate more to either the concept, or the scientist. Even though recall might not be improved, humorous stories provide a hook, grab attention, and create excitement and enjoyment amongst the audience (Frisch and Saunders, 2010). Using narratives allows for “emotification,” “personification,” and “fictionification,” which in turn contributes to mental processes at multiple levels, such as motivation, or transfer to long term memory (Dahlstrom, 2014).

With the aid of sensory technologies, communicators may be able to expand explanatory metaphors with sensory metaphors and augment their narrative. To this end, a major challenge is the complexity of content creation with mid-air haptics. Currently, the complexity of development means that science communicators are likely to outsource development to hapticians. One possible way to overcome this challenge is to create toolkits and user interfaces, which make the content creation effortless. Toolkits are very important in order to reduce the complexity of a specific application area, addressed by an emerging technology, opening it up for new content creators (Ledo et al., 2018). Research and development of mid-air touch specific toolkits may follow a similar trend to that of the development of computer aided design toolkits for digital fabrication by non-experts. However, from our findings we can see that any toolkit development in the context of science communication should take a “metaphor-based” approach (Seifi et al., 2015), so that science communicators can easily design new probes, ideally in real-time.
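To make the idea of a “metaphor-based” toolkit more concrete, the following is a minimal sketch of how descriptors that participants used (such as “pulsing” or “growing”) might be mapped to the path of a single focal point. Everything in it is an assumption made for illustration: the function names, the parameter values, and the circular path are hypothetical and do not correspond to the probes used in the workshops or to any vendor API.

```python
import math
from typing import Callable, Dict, Tuple

# Hypothetical vocabulary: each metaphor maps elapsed time (s) to
# (radius in metres, normalized intensity in [0, 1]).
Metaphor = Callable[[float], Tuple[float, float]]


def pulsing(t: float) -> Tuple[float, float]:
    """Fixed 2 cm circle whose intensity rises and falls once per second."""
    return 0.02, 0.5 * (1.0 + math.sin(2.0 * math.pi * t))


def growing(t: float) -> Tuple[float, float]:
    """Circle expanding from 1 cm to 4 cm over 3 s, then holding its size."""
    progress = min(max(t / 3.0, 0.0), 1.0)
    return 0.01 + 0.03 * progress, 1.0


METAPHORS: Dict[str, Metaphor] = {"pulsing": pulsing, "growing": growing}


def focal_point(name: str, t: float, draw_rate_hz: float = 50.0) -> Tuple[float, float, float]:
    """Return (x, y, intensity) of the focal point at time t.

    The point retraces a circle draw_rate_hz times per second; the chosen
    metaphor decides how the circle's radius and intensity change over time.
    """
    radius, intensity = METAPHORS[name](t)
    angle = 2.0 * math.pi * draw_rate_hz * t
    return radius * math.cos(angle), radius * math.sin(angle), intensity


if __name__ == "__main__":
    # Sample the "growing" metaphor once per second.
    for second in range(4):
        print(second, focal_point("growing", float(second)))
```

In such a design, a science communicator would select or combine words from the vocabulary rather than set device parameters directly, and the same “growing” pattern could accompany a story about a dividing cell or an expanding star.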

4.4. Generalizability of the Technology

Our work has explored three different fields of science, which are example demonstrations of the potential generalization of mid-air haptics for science communication. This included particle physics, quantum mechanics, and cell biology; however, the field of interest could easily be expanded to astronomy or environmental sciences, as well as many more. The analysis of qualitative data indicates further transferability of the technology and mid-air haptic sensations to other disciplines. Theme 1 described in section 3.1 highlights the dynamic features of mid-air haptics and its generalizability to other scientific concepts: P1:W1: “something that could demonstrate waves in a tactile way is very good,” where we note that waves are a universal phenomenon, appearing in acoustics, optics, ocean waves, and much more. The trade-off of a “lower level-of-detail” allows mid-air haptic sensations to be applied and explored in different disciplines, especially through different science stories described in Theme 3. The haptic probe illustrating a cell structure could be used to tell the story of galaxy formations, with the cell nucleus playing the role of the galactic nucleus and the cell membrane playing the role of stars toward the edge of the galaxy. Hence a story of “scales” from microscopic to cosmic may be recited with the aid of mid-air haptics.

Two recently published case studies illustrate the generalizability of the technology in science communication through metaphorical experiences. Trotta et al. (2020) exhibited a multisensory installation of dark matter, where the interested public was able to perceive cosmological particles in an inflatable planetarium. The exhibit was hosted on multiple occasions, where visitors' sense of touch was stimulated using mid-air haptics, integrated with other sensory stimuli and a 2 min long narrative. O'Conaill et al. (2020) integrated mid-air haptics with cinema experiences on the topics of oceanography, renewable energy, and environmental science. In this case study, the aim was to create more immersive experiences for sensory impaired audiences, by associating haptic sensations with either visual or auditory content from the short documentary. This work also outlines research questions, such as whether haptics should be associated with visual information or auditory stimuli when engaging sensory impaired audiences. Both the dark matter and oceanography projects, to which the corresponding author of this work also contributed, show a potential to generalize the technology in science communication beyond the currently presented haptic probes.

With regard to informal vs. formal learning environments, mid-air haptic sensations have opportunities both in the classroom and in museums. In informal learning environments, a platform for co-discovery may be an attractive communication tool, where families and small groups can collectively interpret the exhibit. To set the narrative where facilitation is missing, multisensory integration may provide the missing context. However, this may prove counterproductive, since the low level-of-detail of unimodal haptic sensation creates the utility of ambiguous representations. Ambiguity could be an advantage when facilitating engagement in more formal environments, such as a school lesson. As we saw in Theme 2, a small group of students may start guessing the intended interpretation, if that is guided by a teacher or other facilitator. In formal learning environments, there is typically more time to also combine various teaching probes, such as 3D printed models for higher details and mid-air haptic technology for more immersive learning experiences. Mid-air haptics offers a tool to create dynamic and complementary experiences, in addition to static digital fabrication (Bonne et al., 2018) and the isolating side-effect of VR (Furió et al., 2017).

4.5. Concluding Remarks

We synthesized three commonly recurring themes based on the focus groups, consisting of science communicators discussing opportunities and challenges of mid-air haptics in public engagement. These themes give a broad response to the direction in which further research should explore the value of mid-air haptics in science communication. We have not carried out a direct comparison of mid-air haptics, 3D printed probes, or VR tools; therefore, we cannot state with certainty how these communication tools would perform in competing conditions. However, we worked with expert participants, who were familiar with using VR and tangible probes during their science communication activities. Thus participants were able to evaluate mid-air haptics in the context of their experiences of these alternative technologies, despite the lack of direct comparison. Regardless, further research validating these assumptions by comparison studies would be necessary to draw any explicit conclusions.

Counter to expectations set out in the hypotheses, analysis of the qualitative results suggested three opportunistic themes. First, the ability to create dynamic tactile sensations was highlighted as an outstandingly relevant property of mid-air haptic sensations, in contrast to five other hypothesized significant properties. Second, it was implied that the shared experiences which the technology affords, by allowing multiple users to engage almost simultaneously, are a relevant opportunity at fast paced public engagement events. This theme signifies a contrast to more isolating experiences, such as VR (Furió et al., 2017), or in the words of a participant: P1:W2: “VR was cool but it feels limited, because it's one person at a time. This is still one person at a time, but they can be shared quite easily. With VR, someone's got the headset on and they have to kind of describe what they're looking at. If you've got a group, it doesn't work as well.” Third, the characteristic sensation of mid-air touch, in contrast to physical touch, may pose an opportunity in storytelling and adapting the same probes to the expectations of various audiences.

We found one of the greatest challenges noted by science communicators to be the level of concentration and potentially long exploration time required to make sense of the haptic sensation. This challenge initiated conversations on whether mid-air haptics is better suited for informal learning environments, or in a formal setting. In either case, the emphasis shifted toward the hedonic, or affective domains of the learning process, that is the enjoyment and interest dimensions within the AEIOU framework of science communication.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by Sciences & Technology Cross-Schools Research Ethics Committee (SCITEC). The participants provided their written informed consent to participate in this study. Written informed consent was obtained from Daniel Hajas for the publication of any potentially identifiable images or data included in this article.

Author Contributions

DH identified the potential research area in the intersection of mid-air haptics and science communication, facilitated the focus groups with the science communicators, studied the literature to help characterize the design properties of mid-air haptics in science communication, and did the preliminary analysis. DA supported the haptic probe development by programming and also writing the technical reports in the article. OS had a major role in synthesizing the findings into the three themes, shaping the discussion presented in this article, and also created the figures. MO supervised the overall project and also had a key role in the analysis of data. All authors contributed to the article and approved the submitted version.

Funding

For equipment and funding support, we would like to thank Ultraleap Ltd, and the European Research Council, European Union's Horizon 2020 programme (grant no. 638605). OS was supported by the Natural Sciences and Engineering Research Council of Canada (NSERC).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank the science communicators who took part in our study. We also thank Dario Pittera, Joseph Tu, and Marisa Benjamin for their help with the video production in various stages, and for designing earlier versions of the static illustrations. Thanks also go to Dmitrijs Dmitrenko for proofreading and commenting on earlier versions of this manuscript. The authors also appreciate the support by Robert Cobden with programming tasks. Finally, we would like to thank the reviewers for their valuable comments.

References

Ablart, D., Velasco, C., and Obrist, M. (2017). “Integrating mid-air haptics into movie experiences,” in Proceedings of the 2017 ACM International Conference on Interactive Experiences for TV and Online Video, TVX '17 (Hilversum: ACM), 77–84. doi: 10.1145/3077548.3077551

Alexander, J., Marshall, M., and Subramanian, S. (2011). “Adding haptic feedback to mobile TV,” in Proceedings of CHI'11 (Vancouver, BC), 1975–1980. doi: 10.1145/1979742.1979899

Auvinen, A., Vahteristo, M., Arvela, H., Suomela, M., Rahola, T., Hakama, M., et al. (2001). Chernobyl fallout and outcome of pregnancy in Finland. Environ. Health Perspect. 109, 179–185. doi: 10.1289/ehp.01109179

Bloom, B. S., Engelhart, M. B., Furst, E. J., Hill, W. H., and Krathwohl, D. R. (1956). Taxonomy of Educational Objectives. The Classification of Educational Goals. Handbook 1: Cognitive Domain (New York, NY: Longmans Green).

Bonne, N., Gupta, J., Krawczyk, C., and Masters, K. (2018). Tactile universe makes outreach feel good. Astron. Geophys. 59, 1.30–1.33. doi: 10.1093/astrogeo/aty028

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Burns, T. W., O'Connor, D. J., and Stocklmayer, S. M. (2003). Science communication: a contemporary definition. Public Understand. Sci. 12, 183–202. doi: 10.1177/09636625030122004

Carter, T., Seah, S. A., Long, B., Drinkwater, B., and Subramanian, S. (2013). “Ultrahaptics: multi-point mid-air haptic feedback for touch surfaces,” in Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology, UIST '13 (St. Andrews: ACM), 505–514. doi: 10.1145/2501988.2502018

Chen, S. T., Borland, D., Russo, M., Grady, R., and Minogue, J. (2014). “Aspect sinking and floating: an interactive playable simulation for teaching buoyancy concepts,” in Proceedings of the First ACM SIGCHI Annual Symposium on Computer-human Interaction in Play, CHI PLAY '14 (Toronto, ON), 327–330. doi: 10.1145/2658537.2662978

Clements, D. L., Sato, S., and Portela Fonseca, A. (2017). Cosmic sculpture: a new way to visualise the cosmic microwave background. Eur. J. Phys. 38:015601. doi: 10.1088/0143-0807/38/1/015601

Courvoisier, N., Clémence, A., and Green, E. G. T. (2013). Man-made black holes and big bangs: diffusion and integration of scientific information into everyday thinking. Public Understand. Sci. 22, 287–303. doi: 10.1177/0963662511405877

Dahlstrom, M. F. (2014). Using narratives and storytelling to communicate science with nonexpert audiences. Proc. Natl. Acad. Sci. U.S.A. 111, 13614–13620. doi: 10.1073/pnas.1320645111

Davis, R. L., Orta Martinez, M., Schneider, O., MacLean, K. E., Okamura, A. M., and Blikstein, P. (2017). “The haptic bridge: towards a theory for haptic-supported learning,” in Proceedings of the 2017 Conference on Interaction Design and Children, IDC '17 (Stanford, CA: ACM), 51–60. doi: 10.1145/3078072.3079755

Follmer, S., Leithinger, D., Olwal, A., Hogge, A., and Ishii, H. (2013). “Inform: dynamic physical affordances and constraints through shape and object actuation,” in Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology, UIST '13 (St. Andrews: ACM), 417–426. doi: 10.1145/2501988.2502032

Frier, W., Ablart, D., Chilles, J., Long, B., Giordano, M., Obrist, M., et al. (2018). “Using spatiotemporal modulation to draw tactile patterns in mid-air,” in Haptics: Science, Technology, and Applications, eds D. Prattichizzo, H. Shinoda, H. Z. Tan, E. Ruffaldi, and A. Frisoli (Pisa: Springer International Publishing), 270–281. doi: 10.1007/978-3-319-93445-7_24

Frisch, J., and Saunders, G. (2010). Using stories in an introductory college biology course. J. Biol. Educ. 2008, 164–169. doi: 10.1080/00219266.2008.9656135

Furió, D., Fleck, S., Bousquet, B., Guillet, J.-P., Canioni, L., and Hachet, M. (2017). “Hobit: hybrid optical bench for innovative teaching,” in Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, CHI '17 (Denver, CO: ACM), 949–959. doi: 10.1145/3025453.3025789

Gallacher, C., Mohtat, A., and Ding, S. (2016). Toward Open-Source Portable Haptic Displays With Visual-Force-Tactile Feedback Colocation (Philadelphia, PA: IEEE).

Geldard, F., and Sherrick, C. (1972). The cutaneous “rabbit”: a perceptual illusion. Science 178, 178–179. doi: 10.1126/science.178.4057.178

Georgiou, O., Jeffrey, C., Chen, Z., Tong, B. X., Chan, S. H., Yang, B., et al. (2018). “Touchless haptic feedback for VR rhythm games,” in 2018 IEEE Conference on Virtual Reality and 3D User Interfaces, VR 2018 (Tuebingen/Reutlingen), 553–554. doi: 10.1109/VR.2018.8446619

Gueguen, N. (2004). Nonverbal encouragement of participation in a course: the effect of touching. Soc. Psychol. Educ. 7, 89–98. doi: 10.1023/B:SPOE.0000010691.30834.14

High-Level Steering Committee, E. (2017). Quantum Technologies Flagship Final Report. Brussels: European Commission.

Holloway, R. (2016). Chapter 38: Angry Religions: Audible Studios on Brilliance Audio (Grand Haven, MI: Audible Studios on Brilliance Audio).

Iwamoto, T., Tatezono, M., and Shinoda, H. (2008). “Non-contact method for producing tactile sensation using airborne ultrasound,” in Haptics: Perception, Devices and Scenarios. EuroHaptics 2008. Lecture Notes in Computer Science, Vol. 5024, ed M. Ferre (Berlin; Heidelberg: Springer), 504–513. doi: 10.1007/978-3-540-69057-3_64

Jones, G., Andre, T., Superfine, R., and Taylor, R. (2003). Learning at the nanoscale: the impact of students' use of remote microscopy on concepts of viruses, scale, and microscopy. J. Res. Sci. Teach. 40, 303–322. doi: 10.1002/tea.10078

Jones, M. G., Minogue, J., Oppewal, T. P., Cook, M., and Broadwell, B. (2006a). Visualizing without vision at the microscale: students with visual impairments explore cells with touch. J. Sci. Educ. Technol. 15, 345–351. doi: 10.1007/s10956-006-9022-6

Jones, M. G., Minogue, J., Tretter, T. R., Negishi, A., and Taylor, R. (2006b). Haptic augmentation of science instruction: does touch matter? Sci. Educ. 90, 111–123. doi: 10.1002/sce.20086

Joubert, M., Davis, L., and Metcalfe, J. (2019). Storytelling: the soul of science communication. J. Sci. Commun. 18:5. doi: 10.22323/2.18050501

Kendall-Taylor, N., and Haydon, A. (2016). Using metaphor to translate the science of resilience and developmental outcomes. Public Understand. Sci. 25, 576–587. doi: 10.1177/0963662514564918

Lederman, S. J., and Klatzky, R. L. (2009). Haptic perception: a tutorial. Atten. Percept. Psychophys. 71, 1439–1459. doi: 10.3758/APP.71.7.1439

Ledo, D., Houben, S., Vermeulen, J., Marquardt, N., Oehlberg, L., and Greenberg, S. (2018). “Evaluation strategies for HCI toolkit research,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI '18 (Montreal, QC: ACM), 36:1–36:17. doi: 10.1145/3173574.3173610

Long, B., Seah, S. A., Carter, T., and Subramanian, S. (2014). Rendering volumetric haptic shapes in mid-air using ultrasound. ACM Trans. Graph. 33, 1–10. doi: 10.1145/2661229.2661257

Mahns, D. A., Perkins, N. M., Sahai, V., Robinson, L., and Rowe, M. J. (2006). Vibrotactile frequency discrimination in human hairy skin. J. Neurophysiol. 95, 1442–1450. doi: 10.1152/jn.00483.2005

Minaker, G., Schneider, O., Davis, R., and MacLean, K. E. (2016). “HandsOn: enabling embodied, creative STEM e-learning with programming-free force feedback,” in Haptics: Perception, Devices, Control, and Applications: 10th International Conference, EuroHaptics 2016, Proceedings, Part II, eds F. Bello, H. Kajimoto, and Y. Visell (London; Cham: Springer International Publishing), 427–437. doi: 10.1007/978-3-319-42324-1_42

Minogue, J., Borland, D., Russo, M., and Chen, S. T. (2016). “Tracing the development of a haptically-enhanced simulation for teaching phase change,” in Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts, CHI PLAY Companion '16 (Austin, TX: ACM), 213–219. doi: 10.1145/2968120.2987723

Obrist, M., Seah, S. A., and Subramanian, S. (2013). “Talking about tactile experiences,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems–CHI '13 (Paris: ACM Press), 1659. doi: 10.1145/2470654.2466220

Obrist, M., Subramanian, S., Gatti, E., Long, B., and Carter, T. (2015). “Emotions mediated through mid-air haptics,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI '15 (Seoul: ACM), 2053–2062. doi: 10.1145/2702123.2702361

O'Conaill, B., Provan, J., Schubel, J., Hajas, D., Obrist, M., and Corenthy, L. (2020). “Improving immersive experiences for visitors with sensory impairments to the aquarium of the Pacific,” in Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI20) (Honolulu, HI). doi: 10.1145/3334480.3375214

Orta Martinez, M., Morimoto, T. K., Taylor, A. T., Barron, A. C., Pultorak, J. D. A., Wang, J., et al. (2016). “3-D printed haptic devices for educational applications,” in 2016 IEEE Haptics Symposium (HAPTICS) (Philadelphia, PA: IEEE), 126–133. doi: 10.1109/HAPTICS.2016.7463166

Perucchi, M., and Domenighetti, G. (1991). The Chernobyl accident and induced abortions: only one-way information. Scand. J. Work Environ. Health 16, 443–444. doi: 10.5271/sjweh.1761

Pittera, D., Ablart, D., and Obrist, M. (2019a). Creating an illusion of movement between the hands using mid-air touch. IEEE Trans. Hapt. 12, 615–623. doi: 10.1109/TOH.2019.2897303

Pittera, D., Gatti, E., and Obrist, M. (2019b). “I'm sensing in the rain: spatial incongruity in visual-tactile mid-air stimulation can elicit ownership in VR users,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, CHI '19 (Glasgow, UK: ACM), 132:1–132:15. doi: 10.1145/3290605.3300362

Richard, C., Okamura, A. M., and Cutkosky, M. R. (1997). “Getting a feel for dynamics: using haptic interface kits for teaching dynamics and controls,” in 1997 ASME IMECE 6th Annual Symposium on Haptic Interfaces (Dallas, TX).

Rose, C. G., French, J. A., and O'Malley, M. K. (2014). “Design and characterization of a haptic paddle for dynamics education,” in 2014 IEEE Haptics Symposium (HAPTICS) (Houston, TX: IEEE), 265–270. doi: 10.1109/HAPTICS.2014.6775465

Seah, S., Drinkwater, B., Carter, T., Malkin, R., and Subramanian, S. (2014). Dexterous ultrasonic levitation of millimeter-sized objects in air. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 61, 1233–1236. doi: 10.1109/TUFFC.2014.3022

Seifi, H., Zhang, K., and Maclean, K. (2015). “VibViz: organizing, visualizing and navigating vibration libraries,” in 2015 IEEE World Haptics Conference (WHC) (Chicago, IL), 254–259. doi: 10.1109/WHC.2015.7177722

Stusak, S., and Aslan, A. (2014). “Beyond physical bar charts–an exploration of designing physical visualizations,” in Conference on Human Factors in Computing Systems–Proceedings (Toronto, ON). doi: 10.1145/2559206.2581311

Taher, F., Hardy, J., Karnik, A., Weichel, C., Jansen, Y., Hornbæk, K., et al. (2015). “Exploring interactions with physically dynamic bar charts,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI '15 (Seoul: ACM), 3237–3246. doi: 10.1145/2702123.2702604

Torres, C., Campbell, T., Kumar, N., and Paulos, E. (2015). “Hapticprint: designing feel aesthetics for digital fabrication,” in Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, UIST '15 (Charlotte, NC: ACM), 583–591. doi: 10.1145/2807442.2807492

Trotta, R., Hajas, D., Camargo-Molina, E., Cobden, R., Maggioni, E., and Obrist, M. (2020). Communicating cosmology with multisensory metaphorical experiences. J. Sci. Commun. 19:N01. doi: 10.22323/2.19020801

Vi, C., Ablart, D., Gatti, E., Velasco, C., and Obrist, M. (2017). Not just seeing, but also feeling art: mid-air haptic experiences integrated in a multisensory art exhibition. Int. J. Hum. Comput. Stud. 108, 1–14. doi: 10.1016/j.ijhcs.2017.06.004

Wilson, G., Carter, T., Subramanian, S., and Brewster, S. (2014). “Perception of ultrasonic haptic feedback on the hand: localisation and apparent motion,” in ACM CHI, Vol. 14 (Toronto, ON). doi: 10.1145/2556288.2557033

Yannier, N., Hudson, S. E., Wiese, E. S., and Koedinger, K. R. (2016). Adding physical objects to an interactive game improves learning and enjoyment: evidence from EarthShake. ACM Trans. Comput. Hum. Interact. 23:26. doi: 10.1145/2934668

Yannier, N., Israr, A., Lehman, J. F., and Klatzky, R. L. (2015). “FeelSleeve: haptic feedback to enhance early reading,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (Seoul: ACM), 1015–1024. doi: 10.1145/2702123.2702396

Keywords: mid-air haptics, science communication, touch, public engagement, tactile experiences

Citation: Hajas D, Ablart D, Schneider O and Obrist M (2020) I can feel it moving: Science Communicators Talking About the Potential of Mid-Air Haptics. Front. Comput. Sci. 2:534974. doi: 10.3389/fcomp.2020.534974

Received: 14 February 2020; Accepted: 22 October 2020; Published: 20 November 2020.

Edited by:

Anton Nijholt, University of Twente, Netherlands

Reviewed by:

Laurent Moccozet, Université de Genève, Switzerland

Nicola Bruno, University of Parma, Italy

Copyright © 2020 Hajas, Ablart, Schneider and Obrist. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Daniel Hajas, dh256@sussex.ac.uk
