- ¹School of Design and Informatics, Abertay University, Dundee, United Kingdom

- ²School of Applied Sciences, Abertay University, Dundee, United Kingdom

The air traffic control industry is highly regulated, with stringent processes and procedures to ensure that IP (Intellectual Property) and workplaces are kept secure. The training of air traffic controllers (ATCs) and other roles relating to air traffic services is a lengthy and expensive process. The rate at which trainees can be trained is projected to fall significantly short of the demand for staff to work in the air traffic industry. This paper focuses on two prototype mobile training applications: Location Indicators (LI) and the Aircraft Control Positions Operator (ACPO) Starter Pack. LI and the ACPO Starter Pack have been produced to explore how air traffic control training could be improved and supported using digital applications. Each application explores a key learning area for trainees in the air traffic control industry and presents an alternative to the equivalent training that is currently in use. Both prototypes were designed to provide a succinct user experience alongside gamified elements to improve engagement. As part of this paper, usability testing was undertaken with LI and the ACPO Starter Pack. A total of nine usability tests were undertaken at four different locations. The participants came from differing demographics, had varying experience with the current training and spent differing amounts of time with both applications. The System Usability Scale (SUS) was adapted and used to quantify participants' reactions to the usability of each application. Usability scores for both applications were collected and then averaged to produce an overall score for each application. We conclude from both the usability scores and the qualitative feedback that digital applications have the potential to engage future trainees in the air traffic services industry.

Introduction

It can take 3 years to train ATCs (Humphreys, 2017; Noyes, 2018). Rigorous pre-testing means only 1% of potential applicants go on to the training phase (BBC, 2018a,b). Experienced ATCs tend to act as teachers (National Research Council, 1997), which further contributes to a shortage of ATCs. With experienced ATCs being assigned to teaching roles, ATCs retiring at a younger age (Federal Aviation Administration, 2017), a low pass rate among ATC applicants and the number of flights expected to rise by an extra 500,000 per year by 2030 (a pre-Covid-19 projection), there is an increased demand to both recruit and train ATCs (BBC, 2018a,b). Digital alternatives to training can provide learning which is flexible, with less dependency on instructors (Pollard and Hillage, 2011). Issues pertaining to training time, the costs associated with training and the effectiveness of training could all be alleviated through the widespread adoption of digital training (Strother, 2002).

Training ATCs is not only lengthy but also costly, with each trainee costing ~£600,000 to train (Savill, 2009). This training process has been identified as “no longer engaging the current generation of trainees” (Humphreys, 2017). With the ever-increasing demand for ATCs, and with current training needing to adapt to meet the learning expectations of the newer generation of air traffic controller trainees, the disruption of current air traffic controller training is desperately needed. Whilst not specific to air traffic controller training, the so-called “millennial” generation is frequently found to prefer learning via technology (Oblinger, 2003). Millennials are defined as individuals born between 1979 and 1994 (Myers and Sadaghiani, 2010), and they were projected to make up 35% of the workforce by 2020 (ManpowerGroup, 2016).

To address these problems, aeronautical companies have tried to recruit more individuals to apply to be ATCs (BBC, 2018b). For example, one air traffic service company has created mini-games to determine whether an individual has the correct cognitive skills to become an ATC (NATS(a), n.d.). By playing these mini-games, individuals can quickly identify whether they have the required skill set to be an ATC. The FAA is also looking to recruit, with thousands of ATCs expected to be trained over the next decade to ensure safe and efficient air travel (Lane, 2018). Whilst recruitment is critical for air traffic organisations around the world, their approach to technology is hampering validation rates.

New technologies and procedures are difficult to develop due to the large number of development steps needed before they can become operational within an air traffic company (Haissig, 2013). These include the creation of new procedures, terminology, and a lengthy testing phase because of the safety critical nature of the industry. Technology is widely underutilised in the air traffic services industry, with the lack of technology adoption not only impacting recruitment and validation, but also safety (The Economist, 2016). Furthermore, supporting ATC trainees by providing self-paced digital learning tools can negate the need for trainees to make their own training tools and provide learning which is consistent and effective.

A large number of companies at present are using one or several digital products to improve their business, whether through tools that provide a better customer experience or through data analysis (Schwertner, 2017). Digital transformation is difficult, with Forbes finding that only 7% of 274 executives felt that they had “successfully used digital technologies to evolve their organization into truly digital businesses” (McKendrick, 2015). Whilst digital transformation is a challenge for businesses, it can be a powerful method to improve a company's productivity (Matt et al., 2015). By providing more effective training, costs can be decreased by reducing training time.

E-learning can reduce costs and foster behaviour change if it is well received. Engaging e-learning content can be produced by utilising gamification techniques (Seixas et al., 2016) and applying formal user experience design practice (Lain and Aston, 2004). Gamification is a term used to describe the application of gaming techniques to non-gaming applications (Thom et al., 2012). The primary goal of gamification is typically to help engage and motivate individuals to complete tasks. Techniques such as scoring systems, leaderboards, achievements, and rewards further engage the user with the material. In training, the quiz is the most easily gamified interactive element, with point rewards serving to motivate the player and the quizzes delivering the primary learning the player undertakes. By utilising gamification, both engagement and learning retention can be dramatically increased (Burke, 2014; Darejeh and Salim, 2016). Gamification techniques enhance self-learning tools, with gamified workplaces being “self-improving, self-learning entities” (Oprescu et al., 2014).

UX (User Experience) is a modern field of design and is deeply associated with other areas of design and science such as HCI (Human Computer Interaction) and Ergonomics (Law et al., 2009). UX focuses on the accomplishment of a user's tasks. Buley (2013) explains that “good” UX reduces the friction between the task and the tool or application. Focusing on UX design often increases engagement, resulting in an increase in the time the user interacts with the application (Hart et al., 2012). Better user experiences are typically more engaging, and younger generations are more receptive to activities such as video games and other digital media (Metz, 2011). By creating training applications which possess a high-quality user experience, we can engage individuals with their given training. With a younger generation of ATC trainees and a large amount of crucial information needing to be learned and retained, UX practice can play a key part in creating effective training programs.

This research is based on a joint academic-industry project to explore how ATC training could be improved by taking advantage of UX principles and gamification in digitalisation of training. The intention of the project was to conceptualise and prototype a range of potential solutions to assist with initial training of new recruits through to up-skilling packages for experienced staff. Thirty different concepts were created to explore different ways to improve training. These included an interactive application to teach ATCs visual scanning patterns, a learning tool to help in radar scenarios and an application to assist in the learning of the phonetic alphabet. From this pool, two digital prototypes were produced and then tested to determine if gamified and UX centric digital training applications would be beneficial to the air traffic industry. Data gathered from the usability tests will give us an insight into the utility of the app in providing flexible, portable and engaging learning tools.

Literature Review

In this literature review we examine the existing literature on UX, gamification, and usability. We also explore ATC training and existing digital training applications for employees of air traffic control companies.

User Experience (UX) and Usability

From a survey of 275 researchers and practitioners working within industry and academia, Law et al. (2009) concluded that the definition of User Experience is dynamic and very much dependent on the context, and that UX has origins in HCI (Human-Computer Interaction) and UCD (User-Centered Design). Don Norman coined the term User Experience (UX), defined as “a philosophy based on the needs and interest of the user, with an emphasis on making products usable and understandable.” UCD is ultimately for the benefit of the user, making experiences more pleasurable and usable (Norman, 1988).

McCarthy and Wright argue that “we don't just use technology, we live it” and that interacting with technology “involves us emotionally, intellectually, and sensually” (McCarthy and Wright, 2004). Interacting with technology is not a passive experience; it engages users on an emotional and intellectual level. They further state that those “who design, use, and evaluate interactive systems need to be able to understand and analyse people's felt experience with technology.” Understanding an individual's experience with an application is therefore paramount to building something which is usable. By conducting usability tests, we can gain an insight into the emotions our participants feel when interacting with the applications produced.

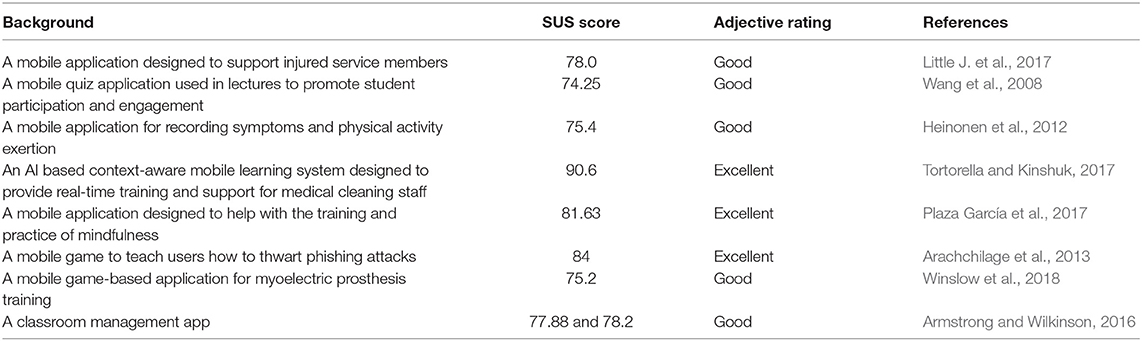

Usability, “the capability to be used by humans easily and effectively” (Shackel and Richardson, 1991), can be measured in respect of ergonomics and user comfort, effectiveness, learnability, flexibility, and attitude. Dowell and Long define usability as “the level of behavioural resource costs demanded of the user,” where the process of work involves both physical and mental behaviours and working is a cost on those behaviours (Dowell and Long, 1998). In a long-term study using computer games, usability defects negatively correlated with user engagement measured by playtime and playability (Febretti and Garzotto, 2009). The study of usability and user experience focuses on creating experiences and services more suited to the user. How the user thinks and feels is at the centre of user experience and is related to the core usability factors previously mentioned. Focusing on UCD, usability and UX principles when designing experiences is important to engage users. Usability has been assessed via a questionnaire with responses scored on the System Usability Scale (SUS) (Brooke, 1996). This results in a score between 0 and 100, with score ranges linked to usability adjectives, e.g., poor through to excellent. Table 1 outlines studies focusing on mobile training applications that have used SUS to assess the usability of the applications.
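To make the 0 to 100 range concrete, the following minimal sketch implements the standard SUS scoring rule from Brooke (1996): ten items rated 1 to 5, with odd-numbered items contributing (rating − 1), even-numbered items contributing (5 − rating), and the sum multiplied by 2.5. This is an illustration only. The SUS used in this study was adapted, so the function names `sus_score` and `mean_sus` and the unmodified ten-item form are assumptions and may not match the questionnaire administered here.

```python
from statistics import mean


def sus_score(responses):
    """Return the standard SUS score (0-100) for one participant.

    `responses` holds ten ratings in questionnaire order, each an
    integer from 1 (strongly disagree) to 5 (strongly agree).
    Assumes the unmodified ten-item SUS, not the adapted version.
    """
    if len(responses) != 10:
        raise ValueError("SUS expects exactly ten item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: rating - 1.
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: 5 - rating.
            total += 5 - rating
    # The raw total ranges from 0 to 40; scale it to 0-100.
    return total * 2.5


def mean_sus(all_responses):
    """Average the per-participant SUS scores for one application."""
    return mean(sus_score(r) for r in all_responses)


if __name__ == "__main__":
    neutral = [3] * 10                       # every item rated 3
    best = [5, 1, 5, 1, 5, 1, 5, 1, 5, 1]    # most favourable answers
    print(sus_score(neutral))                # 50.0
    print(sus_score(best))                   # 100.0
    print(mean_sus([neutral, best]))         # 75.0
```

Averaging per-participant scores, as `mean_sus` does, mirrors the approach described in the abstract of producing one overall score per application.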

Air Traffic Controller Training

ATC training initially involves external training (also described as college-based training) in which learning content is presented through practical and theory-based classes. After a year to 18 months, trainees are then sent to do operational training. Finally, after operational training, trainees are given their ATC license and can begin operating as an ATC (NATS(c), n.d.).

Cordeil et al. (2016) outline the challenges of air traffic workers and digital immersive solutions to support air traffic staff. Air traffic staff operate in an incredibly complex environment, having to manage and analyse a large amount of critical data in real-time. They suggest potential immersive solutions such as Augmented Paper Strips to enhance the traditional stripboard method of tracking flights as well as a VR (Virtual Reality) immersive environment designed for long distance collaboration between air traffic controller experts (Cordeil et al., 2016).

Perry et al. (2017) also frame the challenge of ATC training as the application of complex information at a fast rate. Their hypothesis states that a digital learning tool may increase student learning and success rates. The paper outlines a digital tool called “Swivl” which allows students to refer back to simulations and videos they had previously seen in a classroom setting (Perry et al., 2017). In an associated research article, Little A. et al. (2017) outline that trainees in the field of air traffic control have no way to learn what is taught via simulations outside of the classroom.

In 1997, a panel was formed by the FAA (Federal Aviation Administration) to investigate Human Factors in Air Traffic Control. The panel outlined problems with ATC training, including the fact that many trained ATCs do not have sufficient time to teach trainees or receive refresher training themselves. In its report, Flight to the Future: Human Factors in Air Traffic Control, the panel recommended using computer simulations to improve controller training (National Research Council, 1997).

The current problems with ATC training have existed for a long period of time. These challenges include the complexity of the required learning for ATCs, full-time controllers being utilized as educators and the difficult environment in which ATCs need to work. Reviewing the research suggests that ATC training consists of the use of computer simulation in combination with procedure-based traditional teaching programmes. Digital learning tools are less utilised, especially within self-learning scenarios. Research suggests that digital learning can strengthen a student's learning experience and enhance student engagement (Sousa et al., 2017). The current state of air traffic controller training consists of very few digital tools and digitally focused training programs, yet both have the ability to facilitate learning. In the forthcoming sections, we highlight two applications that have been created to evaluate the use of digital tools for learning.

Methodology

The two prototypes have been designed with an emphasis on producing a succinct user experience alongside gamified elements to improve and measure engagement. The questions the two prototypes address are:

• What elements of the applications contribute to higher or lower usability scores?

• What changes can be made to both applications to improve their usability?

• Are both applications perceived to be fit for purpose to train the air traffic services workforce?

The study began with an investigation of the needs of the target users. By shadowing staff members working at an air traffic services organisation, we came to understand the challenges the target users faced. Initial concepts were influenced by the problems encountered by staff members. Workshops were undertaken to gain further insight into users' needs. The workshops found that training is often a stressful experience for trainees and that there is room for improvement regarding the self-learning material given to trainee ATCs. Training material, for example, is often cumbersome, with trainees given large books to review and comprehend. After conducting a game jam (Kultima, 2015) to explore preferred digital platforms/products, e.g., PC, VR, and Augmented Reality (AR) applications, mobile applications were identified as the platform of choice. Two concepts, Location Indicators (LI) and the Aircraft Control Positions Operator (ACPO) Starter Pack, were prototyped and evaluated with respect to UX and usability.

Development Process

LI and the ACPO Starter Pack were designed using an agile approach allowing for iterative development. Both app designs were created through a UCD approach. Agile is an iterative approach to software development with the aim of delivering quality software in smaller increments (Fowler and Highsmith, 2001). By delivering functional software to the customer in a shorter time, the direction of a software project can change frequently. This is especially important in the case of game projects due to the complex nature of the medium. Game developers must balance the user needs and development complexity with good “game feel” (Swink, 2009). Utilising a specific agile approach, such as Scrum, has become a common approach in the games industry. Scrum is an agile methodology for software development which utilises sprints to produce smaller increments of work (Schwaber and Beedle, 2002). Sprints typically involve a set number of tasks to be completed in a short space of time, usually a week or 2 weeks. This approach is very similar to a “lifecycle model for interaction design” (Preece et al., 2002).

1. Identify needs/establish requirements

2. (Re)design

3. Build an interactive version

4. Evaluate

This is a repeating process which focuses on user needs, creating prototypes frequently and then redesigning and reiterating based on user evaluation. This model is similar to an agile approach when developing prototypes, and for the development of both LI and the ACPO Starter Pack, this process was used to focus on meeting user needs.

Both LI and the ACPO Starter Pack were developed iteratively in short increments, taking into account feedback from trainee ATCs. This is effective because, as Abras et al. (2004) argue, it “is only through feedback collected in an interactive iterative process involving users that products can be refined.” The involvement of the trainee/employee is central to facilitating a UCD approach. Abras et al. (2004) define UCD as a “broad term to describe design processes in which end-users influence how a design takes shape.” The prototypes of both the ACPO Starter Pack and LI allowed the usability study to be built into the process, which is therefore a key pillar of the research in this paper. The creation of prototypes is necessary, as Preece et al. (2002) point out in Interaction Design: Beyond Human-Computer Interaction: “For users to evaluate the design of an interactive product effectively, designers must prototype their ideas.”

Rams outlines ten principles which were considered central when designing the two applications (de Jong et al., 2017). The Location Indicators design has been made as simple as possible, with the core interactions being to swipe left and right. This design decision was made in line with Rams' principle that “good design is as little design as possible” (Lovell, 2011). This is echoed in the aesthetics of the application, with very little colour being used and minimal screen clutter. This provides contrast when green and red are used as the main indicators of whether the player did or did not remember the location. Another of Rams' principles is that “Good design makes a product useful,” which is echoed in his work as a designer who valued “honest design” focused on simplicity (de Jong et al., 2017). This is a core principle in the design of LI and the ACPO Starter Pack.

Design of Location Indicators Mobile Application

LI focuses on teaching ICAO (International Civil Aviation Organization) Codes. ICAO Codes are four-letter codes that are designated to each airport around the world. ICAO Codes are referenced when ATCs and pilots communicate with each other via radio to signify the airports they are departing from or arriving at. ICAO Codes are classified geographically, with certain letters signifying different regions, countries and continents. The first letter identifies the continent, the second letter identifies the country and the remaining two letters identify the airport. Larger countries such as the USA, Australia, and Canada are the exception to this rule and have only one letter to represent the country. In some instances, the third letter may identify a certain region in a country. For example, the ICAO Code EGBB signifies Birmingham Airport. The “E” represents the continent, Europe, the “G” represents the country, the United Kingdom, and the first “B” represents a certain region within the country. Airports and airfields which are in relatively close proximity to Birmingham, such as Derby and Leicester, also have the letter “B” as their third letter. This convention is also present with airfields located in London and Scotland, with Scottish airfields being represented with “P” as the third letter and London airfields being represented with an “L” as the third letter. It is important to note that this convention is not followed comprehensively throughout ICAO Codes and is merely a guide (Benedikz, 2019). This demonstrates the complexity of learning these codes for any prospective ATC.
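The letter-position convention described above can be sketched in a few lines of Python. This is an illustrative sketch rather than part of LI: the names `IcaoParts`, `split_icao` and `group_by_third_letter` are hypothetical, the split applies only the general rule (it does not handle the single-letter exception for larger countries), and the third-letter grouping is, as noted, only a guide.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class IcaoParts:
    """The positional parts of a four-letter ICAO code (general rule only)."""
    code: str
    region_letter: str    # first letter, e.g. "E" for Europe
    country_letter: str   # second letter, e.g. "G" for the United Kingdom
    airport_letters: str  # remaining two letters identifying the airport


def split_icao(code):
    """Split a code such as 'EGBB' by letter position.

    Assumes a code made of exactly four ASCII letters; the
    single-letter country prefixes of larger countries are not modelled.
    """
    normalised = code.strip().upper()
    if len(normalised) != 4 or not (normalised.isascii() and normalised.isalpha()):
        raise ValueError(f"expected four letters, got {code!r}")
    return IcaoParts(normalised, normalised[0], normalised[1], normalised[2:])


def group_by_third_letter(codes):
    """Group codes by their third letter, the informal regional hint."""
    groups = defaultdict(list)
    for code in codes:
        parts = split_icao(code)
        groups[parts.airport_letters[0]].append(parts.code)
    return dict(groups)


if __name__ == "__main__":
    print(split_icao("EGBB"))
    # EGBB is the example from the text; the other codes are illustrative.
    print(group_by_third_letter(["EGBB", "EGPH", "EGLL"]))
```

Grouping by the third letter gives a rough approximation of the regional sets a trainee might study together, although real sector lists would need to come from an authoritative source.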

Learning ICAO Codes is required for ATCs and is a challenging aspect of initial ATC training. Trainees employ various training methods to learn and retain these codes. The main method is group learning, in which trainees test their fellow trainees' knowledge of ICAO codes using flashcards. Trainees use this method consistently, but anecdotal evidence suggests that it may cause issues due to misinformation. Flashcards are typically self-made by trainees, and there is the opportunity for flashcards to be made inaccurately, with spelling or letter placement errors or with ICAO codes being assigned to the wrong airport.

Due to the large number of airports and other aeronautical facilities that possess ICAO codes, it is impossible for a single individual to learn all the codes that exist globally. ATCs are regularly assigned to different sectors of airspace and are therefore required to learn the ICAO codes for all the airfields and aviation facilities in that sector (NATS(b), n.d.). This creates confusion because trainees are unsure whether they are required to learn certain codes and their locations. To learn different regions, trainees often make flashcards for the airports and aviation facilities in their assigned sector. Flashcards are cumbersome to carry around; even if the trainee is learning codes from a single region, there are still many flashcards to carry. A portable learning tool flexible enough to provide sector-specific training to trainees seemed to be an obvious potential solution.

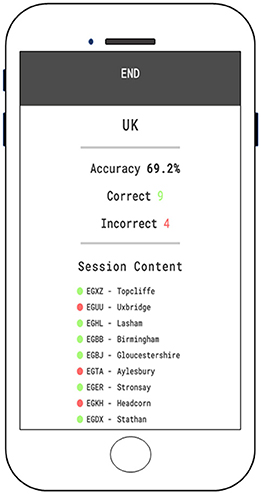

LI was designed to be a portable, flexible alternative which guaranteed consistency (Wobbrock et al., 2009). LI was designed to mimic a trainee using flashcards to learn different ICAO codes and their corresponding locations, thus providing familiarity with the learning method. LI further utilises gamification by applying a scoring system to each play session, which allows instant feedback to be provided to the user. The feedback shows the user immediately which codes they got wrong. This should motivate LI users, as they are able to identify which codes they need to study. The user experience is designed to be succinct and allows for play sessions with differing codes and for differing amounts of time.

LI has been created primarily for iOS devices, but due to the technical simplicity of the application and its creation within the game engine Unity (Unity, n.d.), the application can be played on Android devices or ported to other platforms. Unity is an incredibly popular game engine that has an extensive knowledge base, contributed to by both industry developers and the creators of the game engine. It is a fast and flexible tool which allows for the development of mobile prototypes and smaller scale software projects due to its component system. Whilst standard libraries could have been used, Unity is widely used by the games industry and is more user-friendly by comparison. Due to its usability, Unity is suitable for both game designers and developers. For creating a refined user experience via rapid prototyping, as well as having the ability to pivot to different platforms, Unity was the clear choice of software with which to create LI. Because LI is playable on smartphones, trainees have a portable training tool that can fit into their pocket and be played anywhere and at any time.

It is essential that ATCs continue to retain the knowledge of ICAO codes. LI is designed to function as a retention tool for ATCs. ATCs are obligated to retain the knowledge of the ICAO codes of the airports within the sectors they are assigned to. This knowledge retention process is supported by the different regions the user can choose from within LI. Users can choose different regions which contain ICAO codes from a specific sector. LI provides the option to use LI for differing amounts of time. This means users can use any incidental time they may have working to retain knowledge of specific ICAO codes. The usage of mobile phones is widespread with 85% of people owning mobile phones (Deloitte, 2017). LI takes advantage of this by providing a mobile experience that is portable and allows users to play for differing amounts of time.

User Flow

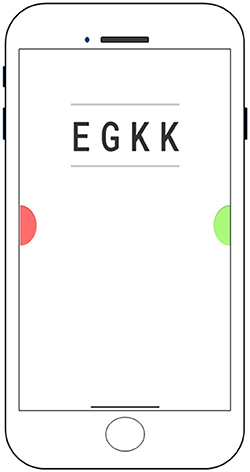

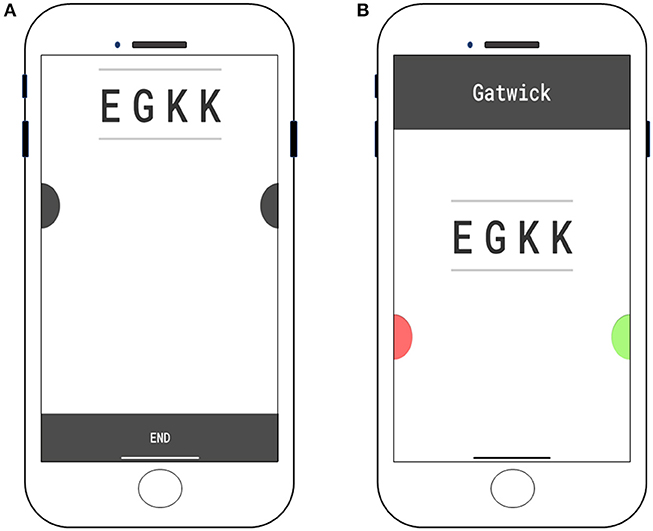

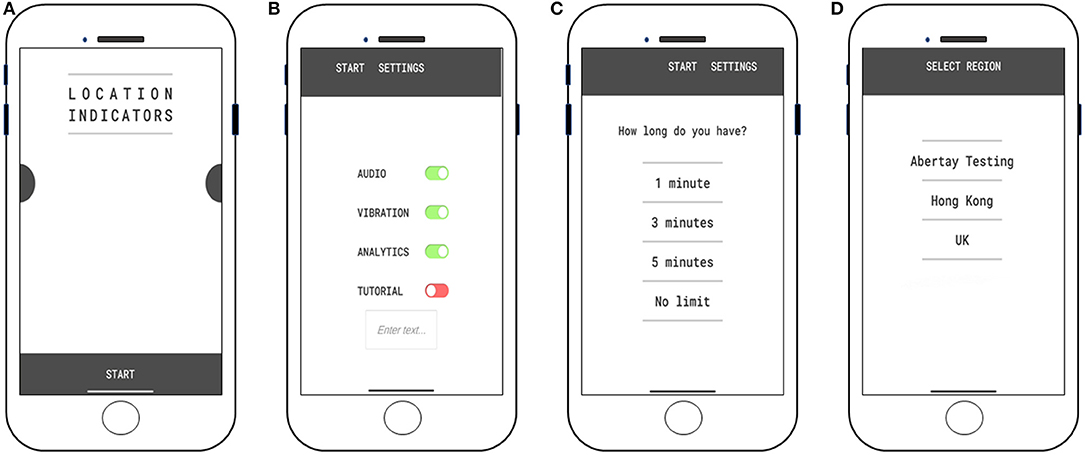

LI first leads the user through an onboarding and tutorial section in which users are introduced to the game's interactions. Once the tutorial is completed, the game puts the player into the title screen (see Figure 1A) wherein they can start the game by pressing START or go into the settings page (see Figure 1B) by swiping left. In the settings menu the player can turn the audio and vibration on and off and can also turn the tutorial back on. By turning the tutorial back on, the player can replay the tutorial when they next start the game.

Figure 1. (A) Title screen of Location Indicators. (B) Settings screen of Location Indicators. (C) “Play time” screen of Location Indicators. (D) “Select region” screen of Location Indicators.

Users can start interacting with the gameplay by defining how long they want to play for (see Figure 1C). Users can either play the game for 1, 3 or 5 min sessions or request to play without any limit by selecting “no limit.” After selecting their desired playtime, they have a selection of regions (see Figure 1D) to choose from, defined by the database manager. Once the user has defined their play time and region, they are taken into the game. Users are presented with ICAO codes in the middle of their screen (see Figure 2) and can either swipe up to end their session (see Figure 3A) or swipe down to reveal the answer (see Figure 3B). After revealing the answer, the player can swipe left to mark that they do remember the code (see Figure 4) or swipe right to signify that they don't remember the code (see Figure 5). After the time the user defined in the “play time” screen has run out, or the user manually ends their session by swiping up and pressing the end button, they are shown the results of their session (see Figure 6). The results display which codes the user remembered or did not remember, as well as their accuracy displayed as a percentage. After seeing their results, the user can press END and return to the title screen, go into another game or enter the settings menu. The whole experience is designed to be as simple as possible, with the interactions and gameplay itself being very simple to learn. LI seeks to provide a more effective training tool for trainee ATCs to learn ICAO codes.
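The results screen described above reduces to a simple tally. The sketch below is a hypothetical Python illustration, not the Unity implementation of LI: the function name `summarise_session`, the `(code, direction)` input format and the choice to report 0% for an empty session are all assumptions. The swipe meanings (left for remembered, right for not remembered) follow the description in the text.

```python
# Swipe meanings as described in the text: left = remembered,
# right = not remembered. The data format is an assumption.
SWIPE_MEANS_REMEMBERED = {"left": True, "right": False}


def summarise_session(swipes):
    """Tally one play session.

    `swipes` is a list of (icao_code, direction) pairs recorded after
    each answer was revealed. Returns the remembered codes, the codes
    not remembered, and the accuracy as a percentage.
    """
    remembered, not_remembered = [], []
    for code, direction in swipes:
        if direction not in SWIPE_MEANS_REMEMBERED:
            raise ValueError(f"unknown swipe direction: {direction!r}")
        if SWIPE_MEANS_REMEMBERED[direction]:
            remembered.append(code)
        else:
            not_remembered.append(code)

    answered = len(remembered) + len(not_remembered)
    # An empty session is reported as 0% rather than dividing by zero.
    accuracy = 100.0 * len(remembered) / answered if answered else 0.0
    return {
        "remembered": remembered,
        "not_remembered": not_remembered,
        "accuracy_percent": accuracy,
    }


if __name__ == "__main__":
    session = [("EGBB", "left"), ("EGPH", "right"),
               ("EGLL", "left"), ("EGBG", "left")]
    print(summarise_session(session)["accuracy_percent"])  # 75.0
```

Keeping the list of codes not remembered alongside the percentage reflects the design intent stated earlier, namely that users can see immediately which codes they need to study.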

Design of the ACPO Starter Pack Mobile Application

Aircraft Control Positions Operators (ACPOs) are individuals who act as “pseudo-pilots” within simulators. An ACPO's job is to assist in the training of ATCs within simulators. ACPOs give trainee ATCs the opportunity to gain experience interacting with individuals who possess the same knowledge as pilots. ACPOs are therefore required to act in the simulators the same way that pilots would in the real world. ACPOs should give trainee ATCs the same responses that pilots would when communicating. As ATC training simulates high-risk scenarios, ACPOs need to act appropriately in response to those scenarios. Working with ACPOs, ATCs have someone to send commands to and communicate with, which is the core aspect of an ATC's job.

ACPOs are required to learn specific aspects of what a pilot is required to learn. ACPOs learn the basic requirements of their job by reading and referencing a document called the ACPO Starter Pack. The ACPO Starter Pack comes in the form of a document, with the majority of the content being written word with very few visuals. The current ACPO Starter Pack is seen as non-engaging and hard to digest, therefore a digital gamified alternative was identified as a potentially better tool to engage ACPOs.

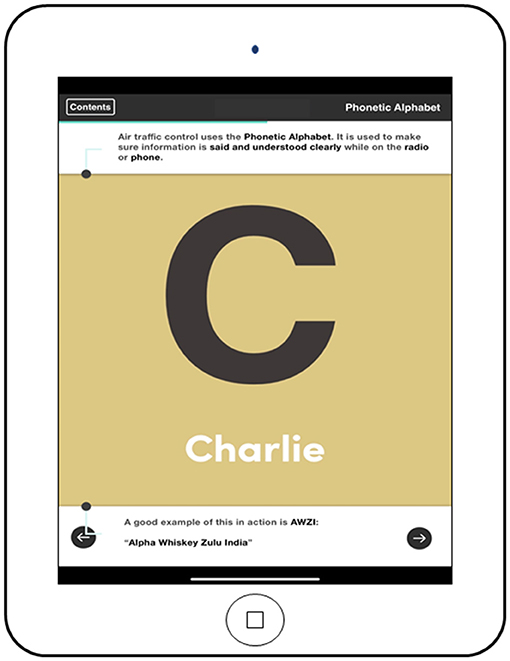

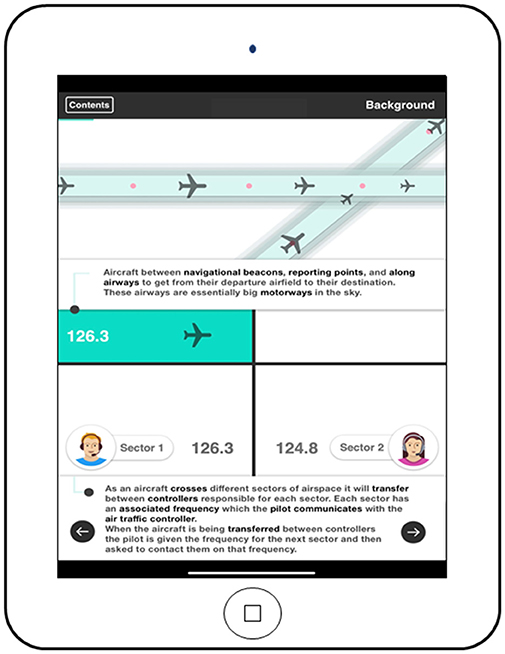

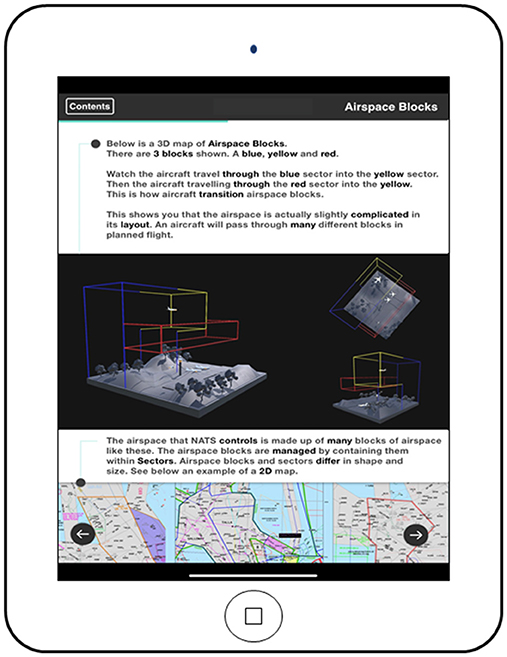

The ACPO Starter Pack is a digitised version of the existing starter pack. The ACPO Starter Pack is a mobile application that has been developed for Apple iPad devices. The app contains information regarding the usage of the Phonetic Alphabet (see Figure 7), background information on how aircraft navigate the skies, what happens when aircraft travel across different sectors (see Figure 8) and how airspace blocks work (see Figure 9).

Each page covers a different topic and contains text on relevant subject areas as well as images and animations. The ACPO Starter Pack presents a steep learning challenge to trainees due to the complex information that they are required to learn. The ACPO Starter Pack application is therefore designed with a focus on the user's experience, with animations and visuals being utilised to explain complex concepts. Users can navigate each screen via two arrow icons at the bottom of the screen, which go forward or back a page when pressed. Users can also select the “Contents” button, which brings them to the contents page at the beginning, where they can select any page to go to.

The decision to include relevant animations, images, icons and other resources as alternatives to the standard material was made mainly to present the learning content in a more engaging way, but also to break up blocks of text. To ensure the material was accurate and consistent with the learning content in the traditional training, an expert in the company that the research team is collaborating with reviewed the material. Material was iterated on to ensure that it was equivalent to the content of the traditional training tools. The prototype was created over a period of ~3 months and iterated on during and after informal user tests with trainers over several more months. Design decisions were again influenced by the works of Dieter Rams and his ten principles for good design previously referenced in the “Development Process” section.

The ACPO Starter Pack was created in the software program Principle. Principle is a design tool which facilitates the creation of interactive user interfaces, allowing for the quick creation of multi-screen apps. Principle is incredibly lightweight, allowing for rapid prototyping, the implementation of new content and the alteration of existing content based on user feedback. It allows for instant deployment to iOS devices and can be shared with multiple stakeholders quickly for review. Using Principle allowed us to garner feedback by quickly presenting the ACPO Starter Pack to testing participants on iOS devices.

The ACPO Starter Pack has been designed to be a more engaging alternative to the existing paper-based starter pack. The application was also designed with younger trainees in mind, who may prefer digital technology over more traditional methods, and aims to engage and teach more effectively by using animations and images to make complex concepts easier to learn.

Design of Usability Study

The study was designed to obtain both qualitative and quantitative data focused on the usability of the two applications. Qualitative data was gathered from participants to support the quantitative usability scores and to identify potential changes to both applications that would improve their usability. Feedback on the usability of each application was recorded via a SUS questionnaire, recorded observations during usability tests and individual conversations with the research team. One usability test session included a group discussion. By gathering quantitative and qualitative data we can produce thematic analyses for each usability session to gain an understanding of the overall usability of each application.

A total of nine usability test sessions have been undertaken at four different locations. Four sessions had five participants, four sessions had four participants, and one session had three participants, giving thirty-nine participants in total. Participants were either undergraduates or working within the ATC sector. The undergraduate participants comprised 100% of six of the usability sessions, accounting for 25 of the 39 participants. Sixteen of the 25 undergraduates who participated in the usability testing were enrolled in a game design and production course. Three of the participants were enrolled in a computer games technology course, one participant was enrolled in a health course and one participant was enrolled in a business course, with the rest of the student participants enrolled in computing based courses. The participants who were employed within the aeronautical industry had experience ranging from 4 months to 22 years and accounted for 14 of the 39 participants. The aeronautical industry employees represented a group of individuals who have previously undertaken self-learning with the traditional training equivalents of the two applications. The undergraduate students represent individuals who would be prospective applicants to work in the air traffic services industry; this user group was necessary to test due to the wide demographic of potential ATC trainees. Having qualitative results regarding the usability of the two applications from both user groups was extremely valuable. The undergraduate students had no prior knowledge of air traffic control training, except for one participant who had experience in air traffic services before enrolling (see Table A1 in the Supplementary Material).
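The participant counts reported above can be cross-checked with a few lines of arithmetic. The sketch below is only a consistency check on the figures stated in this paragraph; the variable names are illustrative, and the number of students on computing based courses is derived as a remainder rather than reported directly.

```python
# Session sizes as reported: {participants per session: number of sessions}
session_sizes = {5: 4, 4: 4, 3: 1}

total_sessions = sum(session_sizes.values())
total_participants = sum(size * count for size, count in session_sizes.items())

# The two user groups as reported in the text
undergraduates = 25
industry_staff = 14

# Undergraduate course breakdown; "computing" is the unreported remainder
reported_courses = {
    "game design and production": 16,
    "computer games technology": 3,
    "health": 1,
    "business": 1,
}
computing_remainder = undergraduates - sum(reported_courses.values())

print(total_sessions, total_participants, computing_remainder)
```

Under these figures, the session sizes and the two user groups both sum to the same total, which is the check the sketch is meant to make visible.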

Usability testing sessions began with a brief introduction to the project and the two applications, in which the researcher briefed participants on the subject matter. No information was given about the design of the applications or how to operate them. Participants were given 5–10 min of “playtime” with an application, in which they were encouraged to explore it and to experience all of its content. With regard to playtime, the participants in five of the usability sessions were individuals who had spent a considerable amount of time with the applications as part of a longitudinal study. After interacting with an application, participants were given a usability questionnaire to fill out and told not to discuss any of their initial feelings about the two applications. We purposefully instructed participants not to share their thoughts with other participants or with the researchers present, to avoid influencing other participants' opinions. After the usability questionnaires were completed, the researcher gathered additional feedback from participants through conversation. This process was then repeated with the other application. For the participants who had spent a considerable amount of time with the two applications as part of a longitudinal study, usability questionnaires were given out at the end of their time using both applications. These users also gave feedback on a one to one basis at the end of their time using both applications.

Participants were given two copies of the usability questionnaire, one for the ACPO Starter Pack and one for LI. The two copies were identical and asked the same questions. The questionnaire asked participants for demographic data (age and name), with length of employment, course and year of study being gathered in the one to one or group discussion sessions between participants and the researcher. The questionnaire comprised ten statements, each rated on a Likert scale from 1 to 5. The odd numbered statements were positive attitude statements whilst the even numbered statements were negative attitude statements. Calculating the SUS score involves subtracting 1 from the user's answers to the odd numbered statements and subtracting the user's answers to the even numbered statements from 5. After this process, each response ranges from 0 to 4; adding the ten responses and multiplying the sum by 2.5 gives a SUS score between 0 and 100 for each participant, and these scores were averaged to give our average SUS scores (Usability.gov, n.d.). The results of each usability assessment allowed a usability score to be associated with each participant for each application. With this quantitative data we can produce a succinct, aggregate usability value for each application. The SUS is a globally recognised usability scale which provides a reliable method of assessing usability whilst being scalable and easy to use (Brooke, 1996). The SUS is beneficial to studies with smaller sample sizes, which makes it ideal for this study (Usability.gov, n.d.).
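The scoring procedure described above can be sketched in a few lines of Python. This is a minimal illustration of standard SUS scoring (Brooke, 1996), not the authors' own analysis code, and it does not account for any adaptations made to the questionnaire in this study; the function names `sus_score` and `mean_sus` and the example responses are hypothetical.

```python
from statistics import mean


def sus_score(responses):
    """Return the SUS score (0-100) for one completed ten-item questionnaire.

    `responses` holds the ten Likert answers (1-5) in statement order.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for number, answer in enumerate(responses, start=1):
        if number % 2 == 1:
            total += answer - 1   # odd (positive) statements: answer minus 1
        else:
            total += 5 - answer   # even (negative) statements: 5 minus answer
    return total * 2.5            # scale the 0-40 sum to 0-100


def mean_sus(questionnaires):
    """Average the per-participant SUS scores for one application."""
    return mean(sus_score(q) for q in questionnaires)


# Hypothetical answers from one participant, not data from this study
example = [4, 2, 4, 2, 5, 1, 4, 2, 4, 2]
print(sus_score(example))
```

Validating the input up front keeps a mistyped or incomplete questionnaire from silently skewing the average for an application.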

Participants were given open-ended questions as the final section of the usability questionnaires. These open-ended questions served to generate more qualitative data on the usability and design of each application and to gather potential design changes to both applications. The answers to these open-ended questions also fed into the thematic analysis, alongside the feedback collected from participants after they had completed the usability questionnaire. Qualitative data gathered from discussion between researchers and participants, as well as from the usability questionnaires, supports the usability scores and gives more insight into them.

After completing the usability questionnaires, participants were interviewed within a group or on a one to one basis with the researcher. Questions were asked in order to gain more informal qualitative feedback on the design and usability of the two applications. Participants were given prompts so that qualitative feedback could be collected for thematic analysis. Participants were encouraged to suggest any potential changes to the two applications to improve their usability. Participants' feedback was gathered and contributed to a thematic analysis.

By undertaking these usability test sessions, we measured the engagement users had with each application and evaluated the overall design of each application from a user experience perspective. We can also conclude how “fit for purpose” both applications are for the training of air traffic service staff, and we gained feedback from participants on how to improve the usability of each application. We can then reflect upon the usability tests to conclude what elements make for an engaging digital training application for the air traffic industry.

Results

Quantitative Analysis

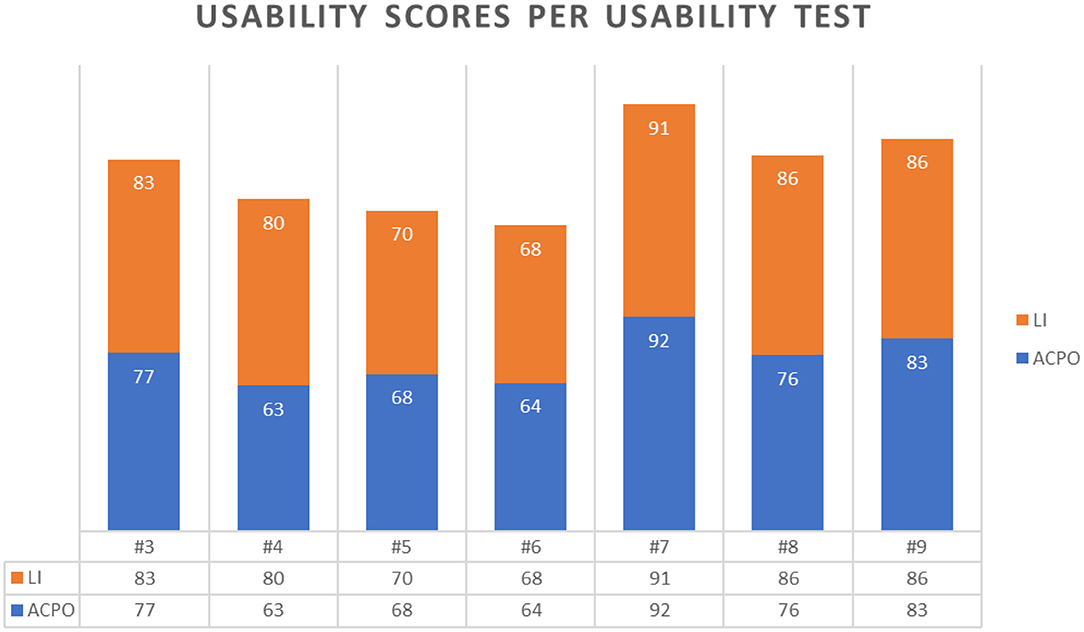

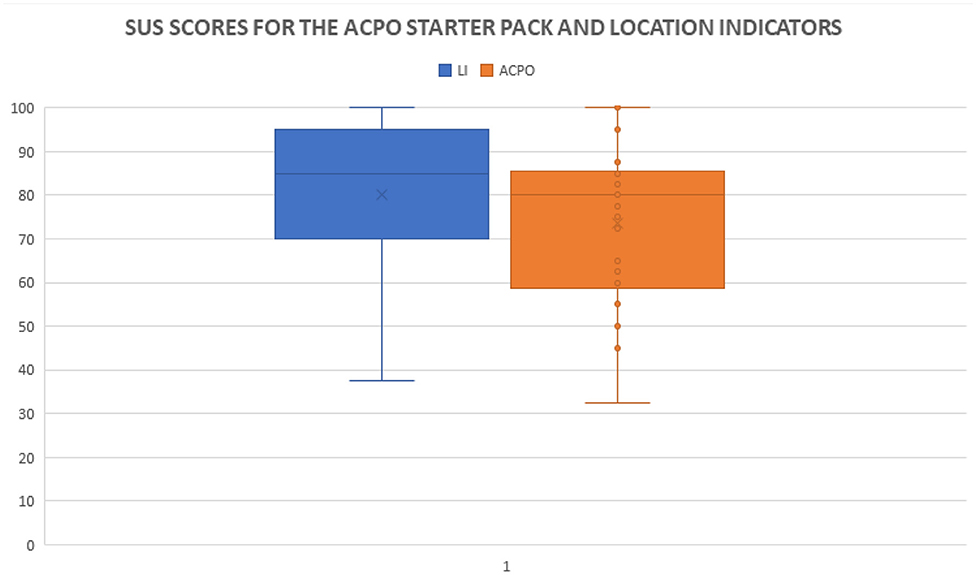

Usability assessments were used to quantify participants' reactions to the usability of each application based on SUS methodology. Usability scores were averaged over seven of the nine usability sessions (n = 39, see Figure 10); two usability testing sessions were disregarded due to a logistical error. LI achieved an average usability score of 80 and the ACPO Starter Pack achieved an average usability score of 74 (see Figure 11 for SUS score variability depicted using boxplots). On the SUS scale, this is defined as “Good” with a letter grade of B (Usability.gov, n.d.).

A thematic analysis (see Appendix in Supplementary Material) was undertaken based on the qualitative feedback received via the open-ended questions on the usability questionnaires and the discussions between researcher and participants. A thematic analysis has been undertaken for all nine of the usability testing sessions. The common thematic trends seen through all usability testing sessions were identified and are discussed in the following sections.

Qualitative Analysis

Location Indicators: Themed Feedback

Unclear aim

When first presented with LI, participants were unsure of the application's aim. This misunderstanding of the purpose of the application directly impacted the way in which users initially attempted to interact with it. Many participants were confused about what they needed to do when using the application, with one participant thinking that they needed to state whether the code and the location matched on a true or false basis. “Is this for true or false?” was a common piece of feedback throughout usability sessions. One participant commented that “I was thinking I had to type something in” and many participants wrongly assumed the LI application was a “testing” tool rather than a tool for “learning” and “assessment.” This meant that participants felt that “people will cheat themselves” as they only had to tell the game whether they remembered a code or not. This extended to participants feeling they needed more explanation, with one participant saying “generally good application, needs more explanation at the start though” and participants regularly asking “What do I need to do?” when first presented with LI.

Simplicity

Simplicity was a core theme throughout the feedback received. Participants commented on the colour choices of red and green for remembering and not remembering as a good visual design decision. A total of seven participants remarked that simplicity was LI's key value. Participants particularly liked the simplicity of the cards which made it easier for them to focus on the task at hand.

Fit for purpose and well-designed learning tool

Participants were favourable toward LI and spoke positively of the design of the application. Participants stated that the LI app was an accessible, simple tool, expressing that “the app would work well for the intended purpose” and that the application was “quick to pick up when you have some spare time.” Many participants commented they had gained additional knowledge of ICAO codes even after using the application briefly, with one participant stating that “With no previous knowledge I was quickly able to recognise some of the names as they appeared more frequently.” Participants felt that LI was fit for purpose at teaching trainees and could be used in a real-world capacity. Participants felt that the application was very easy to use and that it would be suitable for trainees of a younger age. Some participants expressed a mixed response toward LI as an effective learning tool. This was because they did not feel LI was optimal for reinforcing their knowledge, due to the random nature of the codes appearing.

Inappropriate codes being displayed

In the usability sessions that included experienced air traffic staff, many participants commented on the codes implemented within LI. There were multiple suggestions from participants that LI should contain more commonly known codes. Many ICAO codes that were implemented within the application were deemed too specific and were only required knowledge when trainees undertake sector-specific training. Participants felt that low scores could potentially discourage further use of LI. Participants expressed that there should be a stronger focus on prioritising major airports as well as codes for sectors that the trainees would be managing in the future.

Participants expressed negativity toward the codes being repeated throughout a game session and specifically that too many uncommon ICAO codes were repeated with one participant stating that there was “too much repetition”. Many participants highlighted the typos seen within LI. Participants felt that “map information would be beneficial” with other participants echoing the use of maps to enhance learning. Participants felt that this would help guide the learning process and make the information less abstract in nature. Many participants expressed that they didn't like “The fact that all location indicators are given at once” with this being one participant's least favourite element of the game. One participant commented that “Not knowing how large the list of Location Indicators could be, I felt discouraged.”

The ACPO Starter Pack: Themed Feedback

Presentation of information

A large amount of the feedback was focused on the fact that participants felt that the application was busy, with one participant saying that “It (ACPO Starter Pack) felt rushed, too much use of graphics and text.” Participants felt that the application contained too many images, gifs, and videos per page, with some pages having too much on-screen movement. This made it hard for participants to concentrate on the learning content as “There is so much information condensed into a short experience.” This made pages too cluttered leading to participants feeling unfocused, unable to digest information and in one case one participant almost felt motion sick. Participants found it hard to focus on certain pages and would even just skip pages without reading through the contents. Participants did this as they were not able to focus on certain pages or felt uncomfortable doing so. The combination of horizontal and vertical animation was a pain point for a few participants as they felt it was very distracting and uncomfortable. Participants felt that various images which were in LI were unnecessary and gave the user no educational benefit.

Improvement over existing training

Participants generally held a positive feeling toward the two applications throughout the usability sessions. Participants indicated that they particularly felt that the ACPO Starter Pack was easy to understand and had a good layout with a clean design. The level of engagement was commented on, with one participant saying that the ACPO Starter Pack was “Dynamic and subsequently more engaging.” Participants felt that the application would be very useful; this was particularly highlighted by participants experienced in air traffic controller training. Participants regularly stated that the ACPO Starter Pack was a significant improvement over current training materials. Participants with experience in undertaking ATC training consistently commented that the ACPO Starter Pack on iPad was a “huge upgrade” to the existing training equivalent.

Participants commented on the use of visuals as one of the main highlights of the ACPO Starter Pack. The simplification of complex concepts via the use of simple language as well as visual media was commented on several times by participants. A number of participants who had spent a considerable amount of time with the application felt that there wasn't much of a difference between the paper-based training and the digital version, stating that it “Felt like the paper learning just stuck onto an app.” These comments are possibly due to the core content of the existing ACPO Starter Pack being identical to the content of the mobile application.

Interaction with application

Participants frequently commented on the interactivity of the ACPO Starter Pack, focusing on the sound and sub-pages within the application. The comments focused on the inconsistency regarding which elements of the ACPO Starter Pack can be interacted with and which cannot. Participants are quoted as saying “some maps could not be clicked on” and “Some links aren't clear you can touch to see other stuff.” Participants also commented that some buttons were not working and that other elements within the application were not interactive when they should have been, with one noting that “Some elements should interact, such as the phonetic alphabet.” This was commented upon by various participants in the usability sessions consisting of users who had used the two applications for a longer period of time. One participant commented that the perceived lack of interactivity “made it difficult to retain info.” One participant echoed previous feedback by commenting that “It could be more interactive to make it feel more as an actual app rather than a textbook.” The level of interactivity itself was the reason why comparisons were drawn between a textbook and the application.

Sound was described as jarring because audio cues were used inconsistently within the application. Audio was also considered unnecessary, as it was not perceived to enhance learning in any way.

Many participants commented on the issues they had with navigating throughout the application. Participants stated that the navigation of content could be improved, as the forward arrow button offered an overly linear way of moving through content (only allowing forwards or backwards navigation). Participants said that navigation would be improved if a contents page could be accessed to directly access certain sections. Some participants disagreed and felt that the ACPO Starter Pack was “very easy to navigate.”

Many participants suggested the use of a play button to play and pause certain animations with one participant stating that “The gifs [videos] were a bit much—I'd have liked them to play once and then need to be tapped to play again.” One participant agreed about the animations being distracting suggesting “the animations could be paused/ played.”

The ACPO Starter Pack vs. Location Indicators

The final usability results suggest that there is no clear preference from users regarding the two apps. The averaged usability scores are similar, at 80 and 74, and the qualitative feedback shows participants having a positive reaction to both LI and the ACPO Starter Pack. Clear trends were easily identifiable in the qualitative feedback, and the changes participants would like to see are apparent. The authors believe that further research would be beneficial to explore the use of UX methodology vs. the use of gamification principles. This further work would benefit the future design of training applications and other e-learning content.

Discussion

The ACPO Starter Pack had an average usability score of 74 whilst LI had an average usability score of 80. This is in line with other studies that indicate well-designed mobile applications are effective learning tools (Sánchez et al., 2008; Su et al., 2009; Sánchez and Espinoza, 2011; Tsuei et al., 2013; Ahmad and Azahari, 2015; Armstrong and Wilkinson, 2016). The SUS scores seen in Table 1 ranged from 74.25 to 90.6 for an average of 79.4.

Participants found the lack of progression an issue, as they had no method of assessing their own learning. Participants suggested the use of summaries and evaluation elements such as quizzes to evaluate their knowledge of the content as well as reinforce their own learning. Other studies have utilised gamification mechanisms such as quizzes, leader boards, badges, achievements and avatars (Gordillo et al., 2013; Su and Cheng, 2014; Ngan et al., 2016; Pechenkina et al., 2017) and have produced satisfactory results with respect to usability. The Zeigarnik effect states that unfinished tasks are far better remembered than finished tasks (Zeigarnik, 1938) whilst Nunes and Drèze (2006) explain “a phenomenon whereby people provided with artificial advancement toward a goal exhibit greater persistence toward reaching the goal.” Both Nunes and Drèze (2006) and Zeigarnik (1938) address the motivation of individuals and suggest that when there exists a semblance of progression, there is an increase in engagement. A progression tracker is an application of the Zeigarnik effect, where unfinished tasks are displayed to users in order to motivate them. Whilst functioning as an e-book may provide some value as a learning tool, implementing evaluation elements and breaking the content up into sections may produce an engaging flow and ensure trainees are learning the contents of the ACPO Starter Pack.

Participants initially found LI's interactivity unintuitive. Participants stated that swiping should be reversed due to their exposure to mobile dating apps (David and Cambre, 2016). This is an example of an “affordance” which Norman (1988) outlines as “the perceived and actual properties of the thing, primarily those fundamental properties that determine just how the thing could possibly be used.” In layman's terms, the design of objects influences the perceived interaction (Norman, 1988). Referred to as “Swiping ambiguity” by Budiu and Nielsen (2010), issues with the swiping interaction are common for iPad apps and websites, where the swipe interaction is both inconsistent and confusing (Budiu and Nielsen, 2010). Miller et al. (2017) conducted a usability study into an iPad health app for the vulnerable population. They found that participants had little to no problems interacting with the application. They explained this was due to closely considering their users and the fact they possessed “low computer literacy.” They purposefully created a simple interface, using large buttons for navigation which the user tapped (Miller et al., 2017). Despite LI being designed with simplicity in mind, Miller et al. (2017) present an even simpler interaction solution which caused few usability issues for users. A study into gestured interaction design saw that people commonly use tapping or swiping. The same study saw 70% of participants using tapping gestures and 45% using swiping gestures (Leitão, 2012). Whilst swiping is natural to most users, tapping is even more natural, and swiping carries significant ambiguity.

Participants were also unsure of what they were signifying when interacting with LI and what the application wanted them to indicate. In LI, users are presented with ICAO codes; after they reveal the answer, the user then needs to state whether they “remember” or “don't remember” the code. Several participants were not initially aware of how the application worked, with many feeling that this was not naturally obvious to users. Participants initially thought they had to tell the game whether it was true or false that the ICAO code designated the displayed location. Participants expressed that being quizzed by choosing from multiple locations was a preferred method of learning. Studies that focus on the effectiveness of digital flashcard mobile applications present different methods of interacting with flashcards digitally. Previous studies have simply used digital buttons to reveal the answer and to signify whether the user remembered or did not remember the answer. A previous study used large buttons that simply say “check,” “correct,” and “incorrect” (Edge et al., 2012). Whilst text labels are theoretically not suited to a universal audience, another study uses buttons with ticks and crosses inside them, coloured red and green, for a more universal approach (Edge et al., 2011). Both studies reported study participants having no problems interacting with the applications (Edge et al., 2011, 2012). A study which used the commercial application Study Blue also reported no problems, with the application presenting flashcards which users tapped on to reveal the answer. Users of Study Blue swipe the card left or right to signify whether they know the answer or not (Burgess and Murray, 2014). This is also seen in the popular self-learning application Quizlet, which uses the exact same interaction technique as Study Blue. Quizlet itself has proven to be an effective learning tool (Barr, 2016).

It is clear that the design of LI's core interaction is overly confusing, with alternative interaction methods existing that present more usable interaction techniques. Participants repeatedly felt that they were cheating themselves due to LI relying on the user's honesty. As there was nothing stopping users from marking codes as “remembered”, participants felt that they could easily cheat. This feeling of cheating may be due to the fact that participants had no motivation to actively learn the codes as part of the usability sessions. Future work will look at longer term testing which will assess participants' motivation to learn. There is a strong case based on qualitative data that an alternative method of evaluation is needed for LI.

Reviewing the qualitative feedback from participants of other usability studies, issues pertaining to the presentation of information and navigation were common, with the presentation of text (its colour, size and amount) being commented upon. This was also seen in the feedback the ACPO Starter Pack received. One participant is quoted as saying “The text content needs a huge improvement to be easier to understand.” The findings of this study and other relevant studies show that how text is presented is a major factor in the usability of mobile applications. Participants from the other studies reacted negatively to the lack of navigation buttons for “faster learning of the application” (Hashim et al., 2011) and were annoyed that they could not access certain content. Users of the ACPO Starter Pack regularly commented that they weren't able to access the content of the sub-pages. This was because the interaction needed to access the sub-pages was unclear. Presenting information in a clear and obvious way is particularly important on mobile devices due to their small screen size. The way in which users navigate mobile learning applications should be closely considered.

The qualitative data highlights that the core mechanic for LI is not obvious or effective and needs to change. As one participant commented, offering multiple locations to choose from in order to progress through LI is one option. Several participants believed the game was asking whether it was true or false that the code and location matched; this is also a possible solution. Mobile phones now commonly use on-screen keyboards, so the application could easily require users to type in a code or location when either a location or code is presented to them. Expanding on this, the game could also ask users to provide the remaining letter of an unfinished location or code. The usage of maps and other imagery could also be explored, with the real-world locations making the learning of ICAO codes less abstract; this has proven to lead to positive outcomes (Zbick et al., 2015).
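To make the multiple-choice alternative concrete, the sketch below shows one way such a question could be generated and checked. It is purely illustrative and is not part of the LI prototype: the function names `make_question` and `is_correct` are hypothetical, and the small set of UK location indicators is a sample chosen for the example rather than the code list used in LI.

```python
import random

# Illustrative sample of ICAO location indicators (assumption: not LI's actual list)
LOCATIONS = {
    "EGLL": "London Heathrow",
    "EGKK": "London Gatwick",
    "EGCC": "Manchester",
    "EGPH": "Edinburgh",
    "EGPF": "Glasgow",
    "EGPD": "Aberdeen",
}


def make_question(code, locations, rng, n_options=4):
    """Build a multiple-choice question for one ICAO code.

    Returns the code, a shuffled list of candidate locations and the answer.
    """
    correct = locations[code]
    distractors = [name for other, name in locations.items() if other != code]
    options = rng.sample(distractors, n_options - 1) + [correct]
    rng.shuffle(options)
    return {"code": code, "options": options, "answer": correct}


def is_correct(question, chosen):
    """The application, not the user, decides whether the answer was right."""
    return chosen == question["answer"]


question = make_question("EGPH", LOCATIONS, random.Random(0))
print(question["code"], question["options"])
```

Because the application checks the answer itself, this style of question would not rely on the user's honesty in the way the “remember” or “don't remember” swipe does.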

The following guidelines are recommended for the design of mobile learning applications for ATCs, based on the findings of the study.

ATCs and other workers in the aeronautical industry have jobs that require extensive training in order to undertake work which is safety critical. ATCs have diverse backgrounds, with anyone with five GCSEs able to become a trainee ATC (BBC, 2018a,b). As trainees coming into the aeronautical industry are of different ages and demographics, they also have varying degrees of experience using technology. Any technology designed for trainee ATCs needs to accommodate individuals who have little experience of using technology in their day to day lives. To accommodate this, the user experience of mobile applications produced for trainee ATCs needs to be considered. Interfaces need to be designed with icons, colours, and other elements targeting a wide audience. Interactions need to be as simple and user friendly as possible. Simplicity should be targeted throughout any application design; in the qualitative feedback received, simplicity was highlighted consistently as one of LI's most admired elements. Feedback from the qualitative analysis also provided evidence that the applications produced are not suited to every individual's learning style, with some testing participants wanting more interactivity when using the ACPO Starter Pack and other individuals wanting a more tailored experience for LI. With the diverse backgrounds of ATC trainees and individuals wanting differing self-learning experiences, creating applications that are flexible and contain different learning modalities is key. Due to the critical role that air traffic service industry personnel undertake, self-learning tools should also be kept up to date with the latest information. Most importantly, information should always be accurate. Inaccurate information would render any learning material unusable and, if used, could pose a real danger. Lastly, one of the most common pieces of feedback received focused on the unclear purpose of LI. The expected learning outcomes and core learning are never disclosed to the user. Not only was the aim of the application unclear to participants, but how they were meant to interact with it was also often confusing. Because of this, we recommend that any future learning application presents its purpose and how to interact with it, either in an information menu or through a tutorial or onboarding process.

Conclusions and Further Work

Our work has focused on producing two mobile applications for the aeronautical industry. These mobile applications serve as an alternative to existing training undertaken in the aeronautical industry. By undertaking usability testing sessions with various participants of differing demographics and experience with ATC training, we have assessed the design and usability of the two mobile applications produced. We have seen that approval rating is high from the quantitative usability results whilst qualitative results suggest that there are clear improvements to be considered for future versions of both applications.

In the methodology section of this paper, we defined three questions.

• What elements of the applications contribute to higher or lower usability scores?

• What changes can be done to both applications to improve their usability?

• Are both applications perceived to be fit for purpose to train the aeronautical workforce?

Both LI and the ACPO Starter Pack achieved usability scores which, based on the literature, can be considered good to excellent ratings. The feedback gathered from the usability sessions provides a clear pathway for further work to improve the usability of both mobile applications.

By analysing the qualitative data taken from participants, there are clear elements that contribute to lower and higher usability scores for both apps. Simplicity was a common comment with five out of the nine usability sessions consisting of multiple participants mentioning simplicity as their favourite element of the LI app. Another element that participants reacted well to was the gamification implemented in LI via the feedback given after every play session.

In terms of the elements that contribute to lower usability scores for LI, the core interaction of swiping was a contentious theme of feedback. Participants regularly mentioned that they had problems interacting with LI, commenting that swiping felt “clunky” and “fiddly [sic].” LI also received persistent comments on its purpose, with participants unsure what the aim of LI was. Participants often commented that “people will cheat themselves.” For the ACPO Starter Pack, participants reacted well to the use of visuals, with one participant commenting that “The use of animation is a definite advantage over a regular textbook/worksheet.” Many comments from participants focused on how “digestible” the content of the ACPO Starter Pack was, with one participant saying that the ACPO Starter Pack “Made some aspects easier to understand.” Participants consistently had issues when navigating the ACPO Starter Pack as it was not clear that certain sub-pages could be accessed. One participant commented that “An icon to represent clickable content would be beneficial.” Participants also had problems with the amount of content viewable at any one time, commenting that the ACPO Starter Pack felt “busy.”

This paper contributes to the research area of digital training and digital transformation. The research has focused on the aeronautical industry, proposing and testing the use of digital apps to improve current air traffic controller training. We present two mobile learning applications which demonstrate that mobile applications designed through a user-centered design approach can be received well by a wide audience. This audience includes experienced ATC staff, ATC trainees, and individuals with no experience of ATC or ACPO training. By considering this wide audience, we have shown that self-learning in the air traffic services industry can, in principle, be innovated through mobile learning applications. The study is beneficial for the air traffic services industry because it considers and evaluates the use of mobile applications within that industry, and it implies that mobile technology can be beneficial for ATC trainees. ATCs are drawn from a wide range of individuals with no particular demographic, and this study shows participants from varying demographics responding positively to the two applications. This is especially noteworthy given the wide demographic of individuals who could apply to be an ATC, with only basic qualifications needed (BBC, 2018a,b). As far as we are aware, this paper is unique in presenting a usability study of digital apps for the aeronautical industry.

Future work is already being undertaken by the authors to explore the effectiveness of both applications as learning tools. A study is underway to compare the learning effectiveness of the existing training with that of LI and the ACPO Starter Pack, building directly on the results of this paper. One group is tasked with learning via the existing paper-based training, while the other group uses the two applications to learn identical content. At the end of the study, each group will complete the same assessment, and the average learning scores of the two groups will then be compared. The aim is to evaluate how effective each approach is for learning retention; this could only be undertaken once a successful design had demonstrated high user engagement with digital tools.

Data Availability Statement

All datasets generated for this study are included in the article/Supplementary Material.

Ethics Statement

The study involving human participants was reviewed and approved by the Abertay University School of Design and Informatics Research Ethics Committee, under whose auspices it was conducted, having passed the ethics process. The University of Abertay Dundee also approved the consent form given to all participants. The participants provided their written informed consent to participate in this study.

Author Contributions

PS was the Research Associate on the project. ID was the project lead and the primary academic supervisor and contributed to the overall project development. RF was the knowledge base support and contributed to the project development. ID, RF, and KS-B assisted in academic matters including the structure and writing of the paper.

Funding

This research was funded by a Knowledge Transfer Partnership (KTP). The KTP was part-funded by Innovate UK on behalf of the UK government.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The author would like to thank all the participants of the study as well as Steve Graham, Andrea Kirkhope, Robert Becker, Simon Messer, and Melanie Hayes. As this was a Knowledge Transfer Partnership project, Andrea Kirkhope acted as primary company supervisor and provided assistance relating to the partner aeronautical company, including organising usability tests with colleagues at that company. Robert Becker conducted two usability sessions on behalf of the author. Steve Graham was the company sponsor. All authors contributed to the article and approved the submitted version.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2020.00022/full#supplementary-material

References

Abras, C., Maloney-Krichmar, D., and Preece, J. (2004). “User-centered design,” in Berkshire Encyclopedia of Human-computer Interaction, Vol. 1, ed W. S. Bainbridge (Great Barrington: Berkshire Publishing Group), 763–768.

Ahmad, W. F. W., and Azahari, I. N. N. (2015). Visual Approach of a Mobile Application for Autistic Children: Little Routine. Los Angeles, CA: HCI, 436–442.

Arachchilage, N. A. G., Love, S., and Maple, C. (2013). Can a mobile game teach computer users to thwart phishing attacks? Int. J. Infonomics 6, 720–730. doi: 10.20533/iji.1742.4712.2013.0083

Armstrong, P., and Wilkinson, B. (2016). “Preliminary usability testing of ClaMApp: a classroom management app for tablets,” in OzCHI '16: Proceedings of the 28th Australian Conference on Computer-Human Interaction (Launceston), 654–656.

Barr, B. W. B. (2016). Checking the effectiveness of Quizlet® as a tool for vocabulary learning. The Center for ELF J. 1, 36–48. doi: 10.15045/ELF_0020104

BBC (2018a). Call for Students as Air Traffic Service Demand Rises. Available online at: https://www.bbc.co.uk/news/uk-wales-45187872 (accessed December 6, 2019).

BBC (2018b). Hundreds More Air Traffic Controllers Needed. Available online at: https://www.bbc.co.uk/news/uk-scotland-scotland-business-45057103 (accessed November 25, 2019).

Benedikz, R. (2019). ICAO CODES. Available online at: https://www.key.aero/article/icao-codes (accessed December 16, 2019).

Brooke, J. (1996). “SUS - a quick and dirty usability scale,” in Usability Evaluation in Industry, eds P. W. Jordan, B. Thomas, B. A. Weerdmeester and I. L. McClelland (London: Taylor and Francis).

Budiu, R., and Nielsen, J. (2010). Usability of iPad Apps and Websites. Fremont, CA: Nielsen Norman Group.

Buley, L. (2013). The User Experience Team of One: A Research and Design Survival Guide. New York, NY: Rosenfeld Media.

Burgess, S. R., and Murray, A. B. (2014). Use of traditional and smartphone app flashcards in an introductory psychology class. J. Instr. Pedagogies 13, 1–7. Available online at: https://eric.ed.gov/?id=EJ1060082 (accessed June 24, 2020).

Burke, B. (2014). Gamify: How Gamification Motivates People to Do Extraordinary Things. New York, NY: Bibliomotion Inc.

Cordeil, M., Dwyer, T., and Hurter, C. (2016). Immersive Solutions for Future Air. Niagara Falls, NY: ACM.

Darejeh, A., and Salim, S. S. (2016). Gamification solutions to enhance software user engagement—a systematic review. Int. J. Hum. Comput. Inter. 32, 613–642. doi: 10.1080/10447318.2016.1183330

David, G., and Cambre, C. (2016). Screened intimacies: tinder and the swipe logic. Soc. Media Soc. 2, 1–11. doi: 10.1177/2056305116641976

de Jong, C., Klemp, K., Mattie, E., and Goodwin, D. (2017). Ten Principles for Good Design: Dieter Rams: The Jorrit Maan Collection. Munich: Prestel.

Deloitte (2017). UK Public Are ‘Glued To Smartphones’ as Device Adoption Reaches New Heights. Available online at: https://www2.deloitte.com/uk/en/pages/press-releases/articles/uk-public-glued-to-smartphones.html (accessed January 30, 2020).

Dowell, J., and Long, J. (1998). Target Paper: Conception of the cognitive. Ergonomics 41, 126–139. doi: 10.1080/001401398187125

Edge, D., Searle, E., Chiu, K., Zhao, J., and Landay, J. A. (2011). “MicroMandarin: mobile language learning in context,” in CHI 2011 Conference on Human Factors in Information Systems (Vancouver, BC: ACM).

Edge, D., Whitney, M., Landay, J., and Fitchett, S. (2012). “MemReflex: adaptive flashcards for mobile microlearning,” in MobileHCI 2012 Conference on Human Computer Interaction with Mobile Devices and Services (San Francisco, CA: MobileHCI), 431–440.

Febretti, A., and Garzotto, F. (2009). “Usability, playability, and long-term engagement in computer games,” in Conference: Proceedings of the 27th International Conference on Human Factors in Computing Systems, CHI 2009, Extended Abstracts Volume (Boston, MA).

Federal Aviation Administration (2017). Retirement. Available online at: https://www.faa.gov/jobs/employment_information/benefits/csrs/ (accessed December 6, 2019).

Fowler, M., and Highsmith, J. (2001). Manifesto for Agile Software Development. Available online at: https://agilemanifesto.org/ (accessed April 1, 2020).

Gordillo, A., Gallego, D., Barra, E., and Quemada, J. (2013). “The city as a learning gamified platform,” in 2013 IEEE Frontiers in Education Conference (FIE) (Oklahoma City, OK), 372–378. doi: 10.1109/FIE.2013.6684850

Haissig, C. (2013). “Air traffic management modernization: promise and challenges,” in Encyclopedia of Systems and Control, eds J. Baillieul and T. Samad (London: Springer), 1–7.

Hart, J., Sutcliffe, A., and De Angeli, A. (2012). “Using affect to evaluate user engagement,” in CHI EA: Conference on Human Factors in Computing Systems (New York, NY), 1811–1834.

Hashim, A. S., Ahmad, W. F. W., and Ahmad, R. (2011). “Mobile learning course content application as a revision tool: the effectiveness and usability,” in International Conference on Pattern Analysis and Intelligence Robotics (Putrajaya), 184–187.

Heinonen, R., Luoto, R., Lindfors, P., and Nygård, C.-H. (2012). Usability and feasibility of mobile phone diaries in an experimental physical exercise study. Telemed. e-Health 18, 115–119. doi: 10.1089/tmj.2011.0087

Lain, D., and Aston, J. (2004). Literature Review of Evidence on e-Learning. Brighton: Institute for Employment Studies.

Lane, K. (2018). Air Traffic Controllers Overcome Complexities, Challenges. Available online at: https://news.erau.edu/headlines/air-traffic-controllers-overcome-complexities-challenges (accessed April 20, 2020).

Law, L.-C. E., Roto, V., Hassenzahl, M., Vermeeren, A. P. O. S., and Kort, J. (2009). Understanding, Scoping and Defining User Experience: A Survey Approach. Boston, MA: ACM.

Leitão, R. (2012). Creating Mobile Gesture-based Interaction Design Patterns for Older Adults: a study of tap and swipe gestures with Portuguese seniors (Master thesis).

Little, A., Cook, B., Hughes, H., and Wilkerson, K. (2017). Utilizing Guided Simulation in Conjunction with Digital Learning. Daytona Beach, FL: Embry-Riddle Aeronautical University.

Little, J. R., Pavliscsak, H. H., Cooper, M., Goldstein, L., Tong, J., and Fonda, S. J. (2017). Usability of a Mobile Application for Patients Rehabilitating in their Community. J. Mobile Tech. Med. 6, 14–24. doi: 10.7309/jmtm.6.3.4

Matt, C., Hess, T., and Benlian, A. (2015). Digital transformation strategies. Bus. Inform. Syst. Eng. 57, 339–343. doi: 10.1007/s12599-015-0401-5

McKendrick, J. (2015). Why Digital Transformation is So Difficult, and 8 Ways to Make It Happen. Available online at: https://www.forbes.com/sites/joemckendrick/2015/12/29/eight-ways-to-make-digital-transformation-stick/#26fdc32c756a (accessed February 11, 2020).

Metz, S. (2011). Motivating the Millennials. Sci. Teach. 78:6. Available online at: https://www.questia.com/read/1G1-267811175/motivating-the-millennials (accessed June 24, 2020).

Miller, D. P. Jr., Weaver, K. E., Case, L. D., Babcock, D., Lawler, D., Denizard-Thompson, N., et al. (2017). Usability of a novel mobile health iPad app by vulnerable populations. JMIR Mhealth Uhealth. 5:e43. doi: 10.2196/mhealth.7268

Myers, K., and Sadaghiani, K. (2010). Millennials in the workplace: a communication perspective. J. Bus. Psychol. 25, 225–238. doi: 10.1007/s10869-010-9172-7

National Research Council (1997). Flight to the Future: Human Factors in Air Traffic Control. Washington, DC: National Academy Press.

NATS(a) (n.d.). Careers. Available online at: https://www.nats.aero/careers/trainee-air-traffic-controllers/#games (accessed March 26, 2020).

NATS(b) (n.d.). Introduction to Airspace. Available online at: https://www.nats.aero/ae-home/introduction-to-airspace/ (accessed January 31, 2020).

NATS(c) (n.d.). Training to Be an Air Traffic Controller. Available online at: https://www.nats.aero/careers/trainee-air-traffic-controllers/ (accessed February 7, 2020).

Ngan, H. Y., Lifanova, A., Jarke, J., and Broer, J. (2016). “Refugees welcome: supporting informal language learning and integration with a gamified mobile application,” in European Conference on Technology Enhanced Learning (Lyon) 521–524.

Noyes, D. (2018). Could Air Traffic Controller Shortage Have Impact on Safety? Available online at: https://abc7news.com/travel/could-air-traffic-controller-shortage-have-impact-on-safety/4468996/ (accessed December 6, 2019).

Nunes, J., and Drèze, X. (2006). The endowed progress effect: how artificial. J. Cons. Res. 32, 504–512. doi: 10.1086/500480

Oblinger, D. (2003). Boomers, Gen-Xers, and Millennials: Understanding the “New Students”, Vol. 500. Educause, 36–47. Available online at: https://www.naspa.org/images/uploads/main/OblingerD_2003_Boomers_gen-Xers_and_millennials_Understanding_the_new_students.pdf (accessed June 24, 2020).

Oprescu, F., Jones, C., and Katsikitis, M. (2014). I PLAY AT WORK—ten principles for transforming work processes through gamification. Front. Psychol. 5:14. doi: 10.3389/fpsyg.2014.00014

Pechenkina, E., Laurence, D., Oates, G., Eldridge, D., and Hunter, D. (2017). Using a gamified mobile app to increase student engagement, retention and academic achievement. Int. J. Educ. Technol. Higher Educ. 14:31. doi: 10.1186/s41239-017-0069-7

Perry, J., Luedtke, J., Little, A., Cook, B., and Hughes, H. (2017). Incorporating Digital Learning Tools in Conjunction with Air Traffic. Daytona Beach, FL: Embry-Riddle Aeronautical University.