- 1Ophthalmic Center, The Second Affiliated Hospital, Jiangxi Medical College, Nanchang University, Nanchang, China

- 2Center of Ophthalmic, Heyou Hospital, Foshan, China

- 3School of Mathematics and Computer Sciences, Nanchang University, Nanchang, China

- 4Nanchang University, Nanchang, China

Aim: This study aimed to predict the formation of an opaque bubble layer (OBL) during femtosecond laser small-incision lenticule extraction (SMILE) surgery using deep learning.

Methods: This cross-sectional, retrospective study was conducted at a university hospital. Images from surgical videos were randomly divided into training (3,271 patches, 73.64%), validation (704 patches, 15.85%), and internal test (467 patches, 10.51%) sets. An artificial intelligence (AI) model was developed using a SENet-based residual regression deep neural network. Model performance was assessed using the mean absolute error (EMA), Pearson’s correlation coefficient (r), and the coefficient of determination (R2).

Results: Four types of deep neural network models were established. The modified deep residual neural network with channel attention, built on the PyTorch framework, showed the best predictive performance: the predicted OBL areas correlated well with the Photoshop-based measurements (EMA = 0.253, r = 0.831, R2 = 0.676). The ResNet50 (EMA = 0.259, r = 0.798, R2 = 0.631) and Vgg19 (EMA = 0.31, r = 0.758, R2 = 0.559) models also performed satisfactorily, whereas the U-net model (EMA = 0.605, r = 0.331, R2 = 0.171) performed worst.

Conclusion: Using a panoramic corneal image obtained before the SMILE laser scan, we developed a deep residual neural network model to predict OBL formation during SMILE surgery. The model showed strong predictive performance, suggesting potential for broad clinical application.

Introduction

Small-incision lenticule extraction (SMILE) is a widely used and effective procedure for treating myopia and astigmatism (Ang et al., 2021). Compared with other keratorefractive procedures, SMILE offers high predictability, precision, and safety (Palme et al., 2022; Wu et al., 2014; Sekundo et al., 2011; Reinstein et al., 2014; Kobashi et al., 2017). A common intraoperative complication of SMILE is the opaque bubble layer (OBL). It arises because the femtosecond laser emits pulses on the femtosecond (10⁻¹⁵ s) timescale at a wavelength of 1053 nm, which cause photodisruption of the stroma and generate gas bubbles (carbon dioxide and water) (Moshirfar et al., 2021; Mrochen et al., 2010). The bubbles then coalesce to form an opaque area. An OBL causes adhesion between the cap and the lenticule, hampering lenticule dissection. In severe cases, it may lead to complications such as epithelial breakthrough and cap perforation (Sahay et al., 2021; dos Santos et al., 2016; Son et al., 2017), which markedly affect the patient’s postoperative vision (Aristeidou et al., 2015). Reducing OBL formation during SMILE therefore has the potential to reduce complications and improve postoperative visual quality, with significant practical implications.

In recent years, artificial intelligence (AI) has significantly advanced ophthalmology, creating new opportunities and challenges for the diagnosis, treatment, and management of ocular disease (Nuzzi et al., 2021). Using deep learning (DL) and machine learning, AI systems can rapidly and accurately detect lesions in fundus color photographs, optical coherence tomography (OCT), and fundus fluorescein angiography (FFA) images, thereby assisting clinicians in early disease screening and diagnosis (Schmidt-Erfurth et al., 2018), and they can also predict the risk of glaucoma (Li et al., 2020; Xiong et al., 2022). In cataract surgery, combining AI with patient data and surgical videos holds considerable promise for transforming surgical practice (Al Hajj et al., 2019).

We investigated the value of DL techniques for predicting OBL formation in SMILE surgery. Specifically, we attempted to predict OBL formation during femtosecond laser SMILE surgery from a panoramic image of the cornea acquired before laser scanning, and compared the predictions with the actual measured OBL area.

Materials and Methods

Research object

This cross-sectional retrospective study enrolled patients who underwent SMILE at the Ophthalmology Center of the Second Affiliated Hospital of Nanchang University between June 2021 and October 2022. All patients underwent SMILE to treat myopia and astigmatism. The procedures were performed by two experienced surgeons with 10 and 20 years of refractive surgery experience, respectively, covering thousands of photorefractive keratectomy, femtosecond laser-assisted in situ keratomileusis (FS-LASIK), and SMILE procedures.

This study followed the Declaration of Helsinki, was registered at ClinicalTrials.gov (identifier NCT06577012), and was approved by the ethics committee of the Second Affiliated Hospital of Nanchang University (2024086).

Patients were included if they were aged ≥18 years; had a preoperative spherical equivalent of ≥−10.0 dioptres (D) and a corrected distance visual acuity of ≥16/20; had stable refraction (change of <0.50 D per year over the past 2 years); and had not worn contact lenses in the previous 2 weeks. Patients were excluded if they had ocular diseases other than myopia and astigmatism (including keratoconus, severe corneal disease, severe dry eye, uncontrolled glaucoma, cataract seriously affecting vision, or a history of ocular trauma), a history of ocular surgery, or systemic diseases such as psychiatric disorders, severe hyperthyroidism, systemic connective tissue diseases, or autoimmune diseases.

Data collection

Data collection involved capturing screenshots of the SMILE surgical video showing panoramic views of the cornea and of the posterior lenticule cut scan. The OBL area was also measured in each operated eye (Son et al., 2017). Immediately after the side-cut was completed, the video was paused and the frame was captured in BMP format using the “screen capture” option. The BMP file was then imported into Adobe Photoshop (PS) 2020 (Adobe Systems, San Jose, CA), where the “Elliptical Marquee Tool” was used to select the entire corneal area. The mean luminosity and its standard deviation were recorded, and the percentage of pixels brighter than a threshold of mean brightness plus two standard deviations was recorded as the OBL area (Son et al., 2017; Yang et al., 2023). Each measurement was checked, verified, and recorded by a senior surgeon to exclude overestimation or underestimation by the PS software.
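The thresholding rule above can be sketched in NumPy. This is a hypothetical stand-in for the Photoshop workflow, not the authors' procedure: it assumes a single-channel (grayscale/luminosity) image, approximates the Elliptical Marquee selection with an axis-aligned ellipse, and uses the illustrative helper names `elliptical_mask` and `obl_area_percent`.

```python
import numpy as np


def elliptical_mask(shape, center, axes):
    """Boolean mask of an axis-aligned ellipse (stand-in for the Elliptical Marquee selection)."""
    rows, cols = np.ogrid[:shape[0], :shape[1]]
    cy, cx = center
    ay, ax = axes  # semi-axes in pixels (vertical, horizontal)
    return ((rows - cy) / ay) ** 2 + ((cols - cx) / ax) ** 2 <= 1.0


def obl_area_percent(gray, mask, k=2.0):
    """Percentage of selected pixels brighter than mean + k * SD of the selection."""
    values = gray[mask].astype(np.float64)
    threshold = values.mean() + k * values.std()
    return 100.0 * np.count_nonzero(values > threshold) / values.size
```

In practice the ellipse would be placed by hand over the cornea, as in the manual selection step described above, and the result reviewed by a surgeon.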

Data set building

All operations involved in building the data set were performed by professionally trained technicians.

Image preprocessing

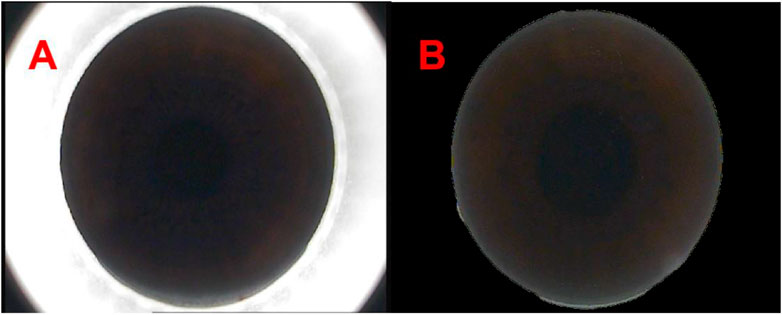

Because the images captured from the VisuMax storage system (Carl Zeiss, Oberkochen, Germany) contain surgical instruments, we first used OpenCV for Python (https://pypi.org/project/opencv-python/) to remove the instruments around the cornea. The image was then binarised to separate the cornea from the instruments, and the corneal region was identified by labelling the largest connected region. The corneal size was estimated by ellipse fitting. A mask image was then generated from the fitted corneal dimensions, and a bitwise AND operation (cv2.bitwise_and()) was applied to obtain a complete corneal image with all surrounding pixels set to zero (Figure 1).

Figure 1. Panoramic view of the cornea processed in Python. (A) Panoramic view of the cornea captured from the VisuMax storage system (Carl Zeiss, Germany); (B) the same view after processing in Python.

Data partitioning

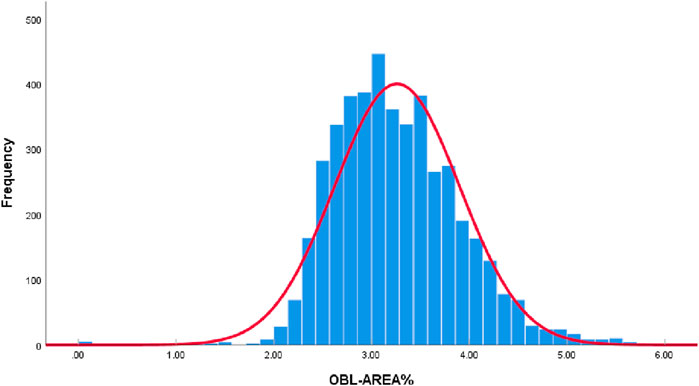

All collected OBL area data were plotted as a histogram. The Kolmogorov-Smirnov test indicated that the data did not follow a normal distribution (P < 0.05). However, given the large sample size (4,442 eyes) and the approximately bell-shaped histogram of OBL areas (Figure 2), we treated the data as approximately normally distributed (Vetter, 2017; Muntaner et al., 1996).
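A minimal sketch of such a normality check, assuming SciPy is available and that the OBL areas were compared against a normal distribution with the sample's own mean and SD (the software and parameters used are not reported). Estimating the parameters from the same data makes the classical Kolmogorov-Smirnov p-value conservative; the Lilliefors variant corrects for this. The helper name `ks_normality` is hypothetical.

```python
import numpy as np
from scipy import stats


def ks_normality(values):
    """One-sample Kolmogorov-Smirnov test against N(sample mean, sample SD)."""
    x = np.asarray(values, dtype=float)
    return stats.kstest(x, "norm", args=(x.mean(), x.std(ddof=1)))
```

With thousands of samples, even small departures from normality yield P < 0.05, which is why the histogram shape is often inspected alongside the test.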

Based on the proportion of the panoramic corneal view occupied by the OBL, we divided the data into three categories, <3%, 3%–4%, and >4% (Figure 3), comprising 1,619, 2,126, and 697 images, respectively. Because most OBL area values fell within the 3%–4% range, this subset was randomly partitioned into training (60%), validation (20%), and test (20%) sets. The remaining data were partitioned in proportions of 80%, 10%, and 10%.

Figure 3. OBL area measured in the operated eye. (A–C) OBLs generated during SMILE surgery, captured by the VisuMax storage system, after processing with PS software and checking by senior surgeons: (A) OBL area <3%; (B) OBL area 3%–4%; (C) OBL area >4%.

The training set comprised 60% of the OBL data falling within the range of 3%–4% and 80% of the data outside this range (<3% and >4%), totalling 3,271 patches. These datasets were used to train the DL network to predict the OBL percentage area.

The validation set included 20% of the OBL data within the 3%–4% range and 10% of the data outside it, amounting to 704 patches. These patches were used to monitor network training in real time and to adjust the network hyperparameters.

The test set comprised 20% of the OBL data within the 3%–4% range and 10% of the data outside it, totalling 467 patches. Key evaluation indicators, namely the mean absolute error (EMA), Pearson correlation coefficient (r), and coefficient of determination (R2), were calculated from the test-set predictions (Table 1).
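The bin-dependent partitioning rule can be sketched in plain Python. The function name, the boundary handling (3% and 4% counted in the middle bin), the rounding, and the fixed seed are assumptions; the sketch illustrates the splitting logic only and is not expected to reproduce the exact set sizes reported above.

```python
import random


def stratified_split(items, obl_percent, seed=0):
    """Split items into train/val/test with per-bin ratios keyed on OBL area (%)."""
    ratios = {"<3%": (0.8, 0.1), "3-4%": (0.6, 0.2), ">4%": (0.8, 0.1)}  # (train, val); rest -> test
    bins = {key: [] for key in ratios}
    for item, pct in zip(items, obl_percent):
        key = "<3%" if pct < 3 else ("3-4%" if pct <= 4 else ">4%")
        bins[key].append(item)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for key, members in bins.items():
        rng.shuffle(members)  # random assignment within each bin
        n_train = round(ratios[key][0] * len(members))
        n_val = round(ratios[key][1] * len(members))
        train += members[:n_train]
        val += members[n_train:n_train + n_val]
        test += members[n_train + n_val:]
    return train, val, test
```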

Residual regression deep neural network based on SENet

Predicting the area of the OBL formed during SMILE from an RGB image of the eye can be framed as a regression problem. We therefore designed a deep neural network combining the Squeeze-and-Excitation Network (SENet) and ResNet architectures to solve this regression task.

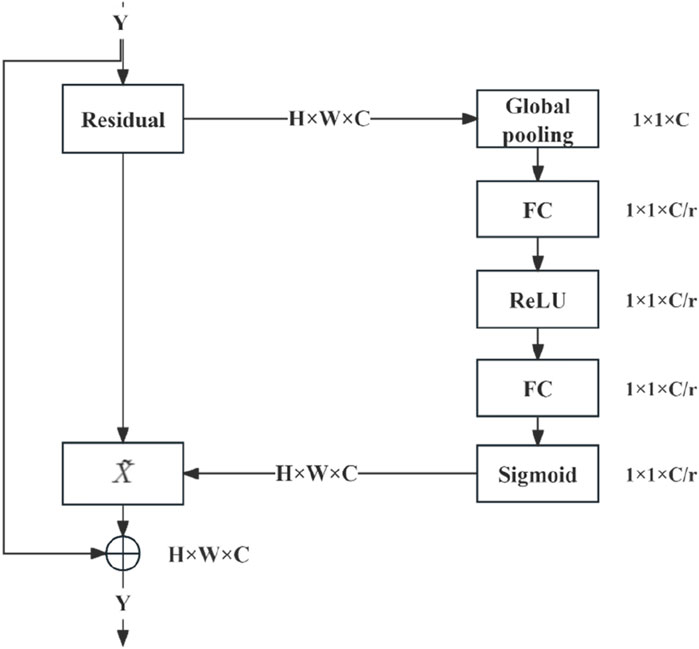

SENet (Hu et al., 2020) is an influential architecture that introduced a feature-recalibration strategy based on channel attention. It learns an importance weight for each channel and uses these weights to amplify the features most relevant to the task while suppressing less informative ones.
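A minimal PyTorch sketch of a squeeze-and-excitation block in the form described by Hu et al. The reduction ratio of 16 is the default from the SENet paper and is an assumption here, as this hyperparameter is not reported; the class name `SEBlock` is illustrative.

```python
import torch
from torch import nn


class SEBlock(nn.Module):
    """Squeeze-and-excitation: learn one weight per channel and rescale the feature map."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        # Squeeze: global average pooling summarises each channel as a single number.
        self.squeeze = nn.AdaptiveAvgPool2d(1)
        # Excitation: bottleneck MLP producing a weight in (0, 1) for every channel.
        self.excite = nn.Sequential(
            nn.Linear(channels, channels // reduction),
            nn.ReLU(inplace=True),
            nn.Linear(channels // reduction, channels),
            nn.Sigmoid(),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        n, c, _, _ = x.shape
        weights = self.excite(self.squeeze(x).view(n, c)).view(n, c, 1, 1)
        # Scale: informative channels keep more of their signal, others are suppressed.
        return x * weights
```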

Residual networks, devised by He et al. (2016) at Microsoft Research, addressed the “degradation” problem by introducing the “shortcut” or “skip connection” mechanism. These connections alleviate the difficulties encountered when training very deep neural networks.

In the present study, the SENet module was integrated into a deep residual network. To limit the number of model parameters, we used global average pooling (GAP), as in the original architectures, to process the features extracted by the convolutional layers. In addition, the loss function was changed to the mean squared error (MSE) to better suit the regression prediction task.

SENet module

Combining SENet with the deep residual network module (Figure 4) involves applying a reweighting operation to the feature maps at the end of each residual block, consisting of GAP, fully connected layers, a ReLU activation, and a sigmoid function. These operations compute a weight for each channel, and the feature maps are then multiplied channel-wise by these weights. SENet was combined with the deep residual network by inserting it into the residual module after the last block in the module.

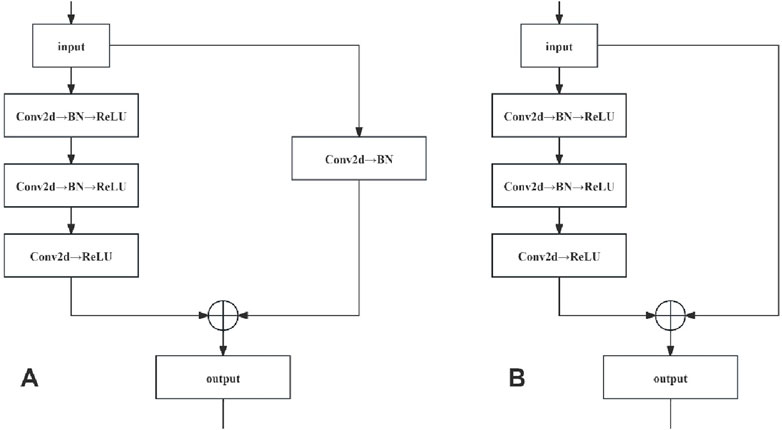

Residual network

Residual networks were constructed by stacking convolutional (Conv) blocks and identity blocks. Because the input and output dimensions of a Conv block differ, Conv blocks were used to change the dimensions of the network. Identity blocks, by contrast, keep the input and output dimensions the same and were used to increase network depth. The structures of the Conv and identity blocks are shown in Figure 5.

The Conv block has a two-branch structure. The input is fed into both branches: the first applies three convolution layers to produce a feature map, and the second applies a single convolution layer to produce another feature map. The two feature maps are added, and a ReLU operation (i.e., setting negative values to 0) is applied to the sum to produce the Conv block output. The identity block has only one convolutional branch: the input passes through three convolution layers that do not change its dimensions, the result is added directly to the input (residual connection), and a ReLU operation applied to the sum gives the identity block output.
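The two block types can be sketched as a single PyTorch module that switches between a projection shortcut (Conv block) and an identity shortcut (identity block). Several details are assumptions not stated in the text: the 1×1, 3×3, 1×1 bottleneck layout of ResNet50, batch normalisation after each convolution, and placement of the SE module on the convolutional branch before the addition (the standard SE-ResNet arrangement). The names `SE`, `conv_bn`, and `Bottleneck` are hypothetical.

```python
import torch
from torch import nn


class SE(nn.Module):
    """Channel attention: GAP -> FC -> ReLU -> FC -> sigmoid, then channel-wise scaling."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        self.gate = nn.Sequential(
            nn.AdaptiveAvgPool2d(1), nn.Flatten(),
            nn.Linear(channels, channels // reduction), nn.ReLU(inplace=True),
            nn.Linear(channels // reduction, channels), nn.Sigmoid(),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x * self.gate(x).view(x.size(0), -1, 1, 1)


def conv_bn(c_in: int, c_out: int, k: int, stride: int = 1, relu: bool = True) -> nn.Sequential:
    """Convolution + batch norm (+ ReLU); padding keeps spatial size when stride is 1."""
    layers = [nn.Conv2d(c_in, c_out, k, stride, padding=k // 2, bias=False), nn.BatchNorm2d(c_out)]
    if relu:
        layers.append(nn.ReLU(inplace=True))
    return nn.Sequential(*layers)


class Bottleneck(nn.Module):
    """Conv block if the shape changes (projection shortcut), identity block otherwise."""

    def __init__(self, c_in: int, c_mid: int, c_out: int, stride: int = 1):
        super().__init__()
        # First branch: three convolutions (1x1, 3x3, 1x1), then SE channel reweighting.
        self.main = nn.Sequential(
            conv_bn(c_in, c_mid, 1),
            conv_bn(c_mid, c_mid, 3, stride),
            conv_bn(c_mid, c_out, 1, relu=False),
            SE(c_out),
        )
        if stride != 1 or c_in != c_out:
            # Conv block: a single convolution on the second branch matches the new dimensions.
            self.shortcut = conv_bn(c_in, c_out, 1, stride, relu=False)
        else:
            # Identity block: the input is added unchanged.
            self.shortcut = nn.Identity()
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Add the two branches, then apply ReLU to the sum.
        return self.relu(self.main(x) + self.shortcut(x))
```

A stage of the network would then be one `Bottleneck` with a changed shape followed by several identity-shaped ones, for example `Bottleneck(64, 64, 256, stride=2)` then `Bottleneck(256, 64, 256)`.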

Loss functions and optimizer

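In this study, the mean squared error (MSE) loss, E_MS, was chosen as the loss function (Equation 1):

$$E_{MS} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2 \qquad (1)$$

where $n$ is the number of samples, $y_i$ is the measured OBL area, and $\hat{y}_i$ is the predicted OBL area of sample $i$.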

Stochastic gradient descent (SGD) is an optimisation algorithm commonly used for training DL models on large datasets. At each step, it estimates the gradient of the loss function from a randomly selected sample (in practice, usually a mini-batch of samples) and updates the weights in the direction of the negative gradient to reduce the loss. Repeating these updates iteratively drives the weights towards values that minimise the loss function.
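To make the update rule concrete, the following NumPy sketch fits a toy linear model by mini-batch SGD on the MSE loss. It illustrates the optimiser only: the linear model, the learning rate, and the function name `sgd_linear_regression` are illustrative assumptions, and only the default batch size of 48 mirrors the study's setting.

```python
import numpy as np


def sgd_linear_regression(X, y, lr=0.05, batch_size=48, epochs=20, seed=0):
    """Fit y ~ X @ w + b by mini-batch SGD on the MSE loss; returns (w, b, loss per epoch)."""
    rng = np.random.default_rng(seed)
    w, b = np.zeros(X.shape[1]), 0.0
    history = []
    for _ in range(epochs):
        order = rng.permutation(len(X))  # reshuffle the samples every epoch
        for start in range(0, len(X), batch_size):
            idx = order[start:start + batch_size]
            err = X[idx] @ w + b - y[idx]             # prediction minus target
            grad_w = 2 * X[idx].T @ err / len(idx)   # d(MSE)/dw on this mini-batch
            grad_b = 2 * err.mean()                  # d(MSE)/db on this mini-batch
            w -= lr * grad_w                         # step against the gradient
            b -= lr * grad_b
        history.append(np.mean((X @ w + b - y) ** 2))  # full-data MSE after the epoch
    return w, b, history
```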

Evaluation indexes

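In this study, EMA, Pearson’s correlation coefficient r, and the coefficient of determination R2 were chosen as the evaluation indices, calculated as follows (Equations 2–4):

$$E_{MA} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \qquad (2)$$

$$r = \frac{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)\left(\hat{y}_i - \bar{\hat{y}}\right)}{\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}\,\sqrt{\sum_{i=1}^{n}\left(\hat{y}_i - \bar{\hat{y}}\right)^2}} \qquad (3)$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \qquad (4)$$

where $n$ is the number of samples, $y_i$ and $\hat{y}_i$ are the measured and predicted OBL areas of sample $i$, and $\bar{y}$ and $\bar{\hat{y}}$ are their respective means.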
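The three indices can be computed with NumPy as below, assuming R2 is defined as one minus the ratio of the residual to the total sum of squares (the convention also used by scikit-learn's `r2_score`). The helper name `regression_metrics` is hypothetical.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """Return (E_MA, r, R2) for measured vs predicted OBL areas."""
    y = np.asarray(y_true, dtype=float)
    p = np.asarray(y_pred, dtype=float)
    mae = np.mean(np.abs(y - p))                                   # mean absolute error
    r = np.corrcoef(y, p)[0, 1]                                    # Pearson correlation
    r2 = 1.0 - np.sum((y - p) ** 2) / np.sum((y - y.mean()) ** 2)  # coefficient of determination
    return mae, r, r2
```

Under this definition R2 is not simply r²: r ignores systematic offset and scale errors, whereas R2 penalises them, so predictions shifted by a constant give r = 1 but R2 < 1. R2 of a predictor can never exceed r², which is consistent with the reported R2 = 0.676 lying slightly below 0.831² ≈ 0.69.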

Experimental environment

The DL regression model was implemented in PyTorch and run on a server equipped with two NVIDIA GeForce RTX 3090 GPUs. The software environment was PyTorch 1.10.0, CUDA 11.1, and Ubuntu 18.04. The server had 128 GB of RAM, and each GPU had 24 GB of graphics memory.

Input images were normalised by scaling pixel values to the range [0, 1]. A modified deep residual neural network with channel attention was trained, with dropout applied in the fully connected layer to reduce overfitting. Training used a batch size of 48 for 200 epochs, with an initial SGD learning rate of 10⁻⁴ that was reduced to one-tenth every 50 epochs. During training, Pearson’s correlation coefficient r was recorded on the validation set, and the model with the highest r was retained. Finally, each model was evaluated on the test set using all evaluation metrics to verify its predictive performance.
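A PyTorch sketch of this training configuration: SGD with an initial learning rate of 10⁻⁴, batch size 48 (set in the DataLoader), 200 epochs, step decay, dropout before the output layer, and checkpoint selection by the highest validation r. The dropout rate of 0.5, the GAP-based head, reading the decay as a ×0.1 step every 50 epochs, and the names `make_head` and `train` are assumptions; in the study the backbone would be the SE-ResNet described above.

```python
import numpy as np
import torch
from torch import nn


def make_head(in_features: int, p_drop: float = 0.5) -> nn.Module:
    """Regression head: global average pooling -> dropout -> a single linear output."""
    return nn.Sequential(nn.AdaptiveAvgPool2d(1), nn.Flatten(),
                         nn.Dropout(p_drop), nn.Linear(in_features, 1))


def train(model, train_loader, val_loader, epochs=200, lr=1e-4, device="cpu"):
    """Train with SGD + MSE; keep the weights with the highest validation Pearson r."""
    model.to(device)
    criterion = nn.MSELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    # Assumption: the decay is a x0.1 step applied every 50 epochs.
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=50, gamma=0.1)
    best_r, best_state = -np.inf, None
    for _ in range(epochs):
        model.train()
        for images, targets in train_loader:
            optimizer.zero_grad()
            preds = model(images.to(device)).squeeze(1)
            loss = criterion(preds, targets.to(device).float())
            loss.backward()
            optimizer.step()
        scheduler.step()
        model.eval()
        preds, trues = [], []
        with torch.no_grad():
            for images, targets in val_loader:
                preds.append(model(images.to(device)).squeeze(1).cpu())
                trues.append(targets.float())
        r = np.corrcoef(torch.cat(preds).numpy(), torch.cat(trues).numpy())[0, 1]
        r = float(np.nan_to_num(r, nan=-1.0))  # constant predictions give NaN; treat as worst
        if r > best_r:
            best_r = r
            best_state = {k: v.detach().cpu().clone() for k, v in model.state_dict().items()}
    model.load_state_dict(best_state)
    return best_r
```

For example, `train(model, DataLoader(train_set, batch_size=48, shuffle=True), DataLoader(val_set, batch_size=48))`, where `train_set` and `val_set` are hypothetical datasets yielding (image, OBL area) pairs.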

Surgical procedure

Each patient received 0.3% gatifloxacin eye gel for 3 days before surgery as prophylaxis against infection. Immediately before surgery, a nurse instilled 0.5% procaine hydrochloride eye drops, one drop every 5 min, followed by two consecutive drops, to provide topical anaesthesia to the operated eye.

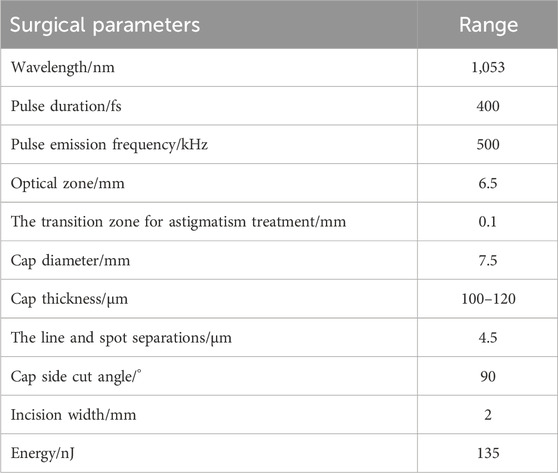

A VisuMax femtosecond laser system (Carl Zeiss) was used with a pulse energy of 135 nJ and a spot spacing of 4.5 μm. The corneal cap thickness was set at 100–120 μm, with a cap diameter of 7.5 mm. The femtosecond laser scanning sequence was: posterior lenticule surface, lenticule side-cut, anterior lenticule surface, and cap side-cut. The cap side-cut was set at 2 mm, at the 12 o’clock position and at an angle of 90°. The transition zone for astigmatism treatment was 0.1 mm (Table 2). Holding the eye steady with microtoothed forceps in the left hand, the surgeon used a lenticule separator in the right hand to dissect the anterior plane of the lenticule, followed by dissection of the posterior plane and extraction of the lenticule.

From the first postoperative day, all patients used 0.3% gatifloxacin eye gel (four times daily for 1 week), 0.1% fluorometholone eye drops (four times daily for 4 weeks, tapered weekly), and sodium hyaluronate eye drops (four times daily for 4 weeks).

Statistical methods

IBM SPSS Statistics for Windows version 26.0 (IBM Corp., Armonk, NY, USA) was used to process the general information. Microsoft Excel 2018 (Microsoft Corp., Redmond, WA) was used to organise and tabulate the data, and GraphPad Prism 9.0 (GraphPad Inc., La Jolla, CA) was used for data analysis. All measurements are expressed as mean ± standard deviation.

In this study, EMA, r, and R2 were used as the model evaluation indices. Because EMA depends on the scale of the data, it has no fixed range and was interpreted only as the average absolute difference between predicted and measured OBL areas. For Pearson’s correlation, r = 0.8–1.0 was defined as a very strong correlation, r = 0.6–0.8 as strong, r = 0.4–0.6 as moderate, r = 0.2–0.4 as weak, and r = 0.0–0.2 as poor or absent. R2 reflects the goodness-of-fit of the model and typically ranges from 0 to 1; R2 > 0.4 was taken to indicate a reliable fit.
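The interpretation rules above can be written as two small helpers. Applied to the reported test-set values, they place the proposed model (r = 0.831) in the very strong band, ResNet50 (0.798) and Vgg19 (0.758) in the strong band, and U-net (0.331) in the weak band. Assigning boundary values such as exactly 0.8 to the higher band, and using |r|, are assumptions, since the stated ranges overlap at their endpoints; the helper names are hypothetical.

```python
def describe_correlation(r: float) -> str:
    """Map |r| onto the correlation-strength bands used in this study."""
    a = abs(r)
    if a >= 0.8:
        return "very strong"
    if a >= 0.6:
        return "strong"
    if a >= 0.4:
        return "moderate"
    if a >= 0.2:
        return "weak"
    return "poor or none"


def fit_is_reliable(r2: float) -> bool:
    """Goodness-of-fit criterion used in this study: R2 > 0.4."""
    return r2 > 0.4
```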

Results

General information and DL model prediction performance

This study included 2,265 patients (4,442 eyes) with a mean age of 21.88 ± 5.32 years, of whom 68.12% were male. The mean measured OBL area was 3.26% ± 0.64%.

The proposed algorithm was validated on the test set and compared with classical network architectures (U-net, Vgg19, and ResNet50) to evaluate its predictive performance (Table 3). Our method and the ResNet50 model are described in detail in the Materials and Methods section. The U-net network comprises four levels, in which the image is downsampled and upsampled four times each. The numbers of convolution kernels at the four levels are 64, 128, 256, and 512, respectively. Each downsampling step uses max pooling, and each upsampling step uses deconvolution followed by a ReLU activation. The VGG19 model has 19 weight layers in total, comprising 16 convolutional layers and 3 fully connected layers. The convolutional part is divided into five blocks containing 2, 2, 4, 4, and 4 convolutional layers, respectively. The blocks are connected by max pooling and followed by three fully connected layers, and the result is output through a softmax activation function.

As shown in Table 3, ResNet50 achieved a smaller EMA and larger R2 and r than U-net and Vgg19. Our proposed method improved on ResNet50, slightly reducing the EMA and increasing R2 and r. However, omitting GAP from the model substantially reduced the model size (MB). Overall, the method proposed in this study achieved the best predictive performance.

Visual analysis of model predictions

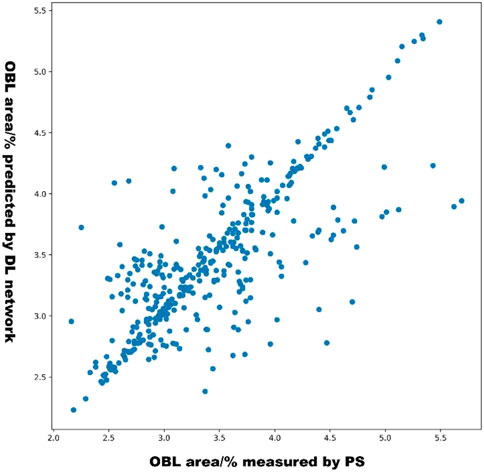

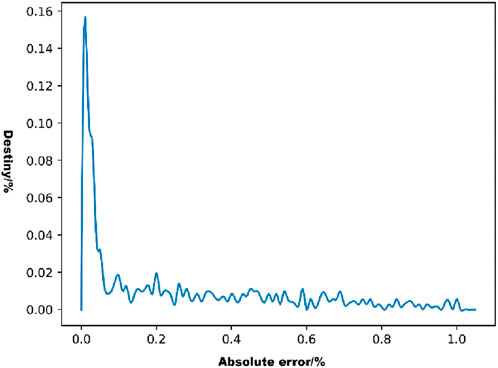

The scatterplot in Figure 6 shows the distribution of the OBL predictions of our model versus the OBL measurements. These data correlated well (r = 0.831).

The distribution of the absolute prediction errors is shown as a density plot in Figure 7. Most absolute errors were below 0.2%, indicating a small error range.

Discussion

In this study, we trained and evaluated four DL models to predict the intraoperative OBL area from a panoramic corneal image obtained before SMILE laser scanning. The best-performing model, identified on the internal test set, was a deep neural network combining channel attention with residual modules (EMA = 0.253, r = 0.831, and R2 = 0.676), and it showed good fit and predictive performance. The DL model developed here could help surgeons anticipate the likely OBL area preoperatively, allowing surgical parameters to be adjusted to mitigate OBL formation, for example by reducing the laser energy, replacing the suction ring, or modifying the corneal cap thickness (Yang et al., 2023; Wu et al., 2020). Such adjustments could reduce adverse effects on surgical outcomes and postoperative visual recovery, with significant practical implications.

An OBL is an intraoperative complication of SMILE. Its formation is mainly related to the excessive accumulation of interlaminar corneal gas generated during photorupture of the corneal stroma by the femtosecond laser, particularly when a posterior lenticule is scanned. In previous studies, Liu et al. (2014), Courtin et al. (2015), He et al. (2022), and others have investigated the risk factors for OBL in FS-LASIK and have reported that higher myopia, greater central corneal thickness, and larger corneal diameter were associated with OBL formation. Ma et al. (2018) and Son et al. (2017) suggested that the central corneal thickness and residual stromal thickness are significant independent risk factors for OBL formation, with a higher likelihood of OBL occurrence in eyes in which a thinner lenticule is created during the SMILE procedure.

To date, AI has shown considerable potential in the screening, diagnosis, progression prediction, and supportive treatment of ocular diseases (Xiong et al., 2022; Wan et al., 2023; Lin et al., 2019). The widespread application of AI is expected to transform ophthalmology; however, to our knowledge, AI algorithms had not previously been used to predict OBL formation during SMILE. Our methodology integrates several disciplines, including the physical characteristics of femtosecond lasers, the mechanisms of OBL formation, computer image processing, and DL algorithms. The prediction is made by extracting informative features from a panoramic corneal image captured by the femtosecond laser system. This study describes the algorithm implementation and experimental results in detail and shows that our AI prediction model achieves high accuracy and superior performance compared with traditional multiple linear regression models.

This study had several limitations. The DL model focused specifically on predicting the extent of OBL formation during SMILE; it did not predict the specific region or quadrant in which the OBL occurs, nor the relative weights of the factors influencing OBL formation. Given the complexity and variety of SMILE complications in clinical practice, building an AI model that predicts all intra- and postoperative complications would require lengthy data accumulation. The training and test data were relatively limited, highlighting the need to collect more extensive test data and to establish a robust data platform for testing the system. In addition, the training, validation, and test sets all came from a single hospital, which may limit the accuracy of the model on data from other centres. Future research should gather data from diverse regions and hospitals for further testing and refinement.

Conclusion

In this study, we constructed a DL network that combines residual convolutional modules with channel attention to extract image features and outputs regression predictions through a fully connected layer. Evaluated on real datasets, the model showed a higher statistical correlation and a lower EMA than classical network architectures.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by The Second Affiliated Hospital of Nanchang University Medical Research Ethics Committee Review Documents. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

ZZ: Data curation, Formal Analysis, Investigation, Resources, Software, Supervision, Validation, Writing–original draft. XZ: Data curation, Software, Visualization, Writing–original draft. QW: Investigation, Supervision, Writing–original draft. JXi: Conceptualization, Methodology, Writing–original draft. JXu: Formal Analysis, Supervision, Writing–original draft. KY: Formal Analysis, Supervision, Writing–original draft. ZG: Data curation, Software, Writing–original draft. SX: Data curation, Software, Writing–original draft. MW: Conceptualization, Methodology, Software, Visualization, Writing–review and editing. YY: Conceptualization, Funding acquisition, Project administration, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

We are grateful for the cooperation of the participants.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

OBL, Opaque bubble layer; SMILE, Small incision lenticule extraction; AI, Artificial intelligence; DL, Deep learning; EMA, Mean absolute error; r, Pearson’s correlation coefficient; R2, Determination coefficient; PS, Adobe Photoshop; D, Dioptre.

References

Al Hajj, H., Lamard, M., Conze, P.-H., Roychowdhury, S., Hu, X., Maršalkaitė, G., et al. (2019). CATARACTS: challenge on automatic tool annotation for cataRACT surgery. Med. Image Anal. 52, 24–41. doi:10.1016/j.media.2018.11.008

Ang, M., Gatinel, D., Reinstein, D. Z., Mertens, E., Alió Del Barrio, J. L., and Alió, J. L. (2021). Refractive surgery beyond 2020. Eye (Lond). 35 (2), 362–382. doi:10.1038/s41433-020-1096-5

Aristeidou, A., Taniguchi, E. V., Tsatsos, M., Muller, R., McAlinden, C., Pineda, R., et al. (2015). The evolution of corneal and refractive surgery with the femtosecond laser. Eye Vis. (Lond). 2, 12. doi:10.1186/s40662-015-0022-6

Courtin, R., Saad, A., Guilbert, E., Grise-Dulac, A., and Gatinel, D. (2015). Opaque bubble layer risk factors in femtosecond laser-assisted LASIK. J. Refract Surg. 31 (9), 608–612. doi:10.3928/1081597X-20150820-06

dos Santos, A. M., Torricelli, A. A. M., Marino, G. K., Garcia, R., Netto, M. V., Bechara, S. J., et al. (2016). Femtosecond laser-assisted LASIK flap complications. J. Refract Surg. 32 (1), 52–59. doi:10.3928/1081597X-20151119-01

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in 29th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, Nevada, 26 June – 1 July 2016. Available at: https://d.wanfangdata.com.cn/conference/ChZDb25mZXJlbmNlTmV3UzIwMjQwMTA5EiA0OGMyZTUwMjkwODc3NGI0ZTRkNjA4MTQwYWI2Zjk4MxoId2ppYjhyY24=.

He, X., Li, S.-M., Zhai, C., Zhang, L., Wang, Y., Song, X., et al. (2022). Flap-making patterns and corneal characteristics influence opaque bubble layer occurrence in femtosecond laser-assisted laser in situ keratomileusis. BMC Ophthalmol. 22 (1), 300. doi:10.1186/s12886-022-02524-6

Hu, J., Shen, L., Albanie, S., Sun, G., and Wu, E. (2020). Squeeze-and-Excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. 42 (8), 2011–2023. doi:10.1109/TPAMI.2019.2913372

Kobashi, H., Kamiya, K., and Shimizu, K. (2017). Dry eye after small incision lenticule extraction and femtosecond laser-assisted LASIK: meta-analysis. Cornea 36 (1), 85–91. doi:10.1097/ICO.0000000000000999

Li, F., Song, D., Chen, H., Xiong, J., Li, X., Zhong, H., et al. (2020). Development and clinical deployment of a smartphone-based visual field deep learning system for glaucoma detection. NPJ Digit. Med. 3, 123. doi:10.1038/s41746-020-00329-9

Lin, H., Li, R., Liu, Z., Chen, J., Yang, Y., Chen, H., et al. (2019). Diagnostic efficacy and therapeutic decision-making capacity of an artificial intelligence platform for childhood cataracts in eye clinics: a multicentre randomized controlled trial. EClinicalMedicine 9, 52–59. doi:10.1016/j.eclinm.2019.03.001

Liu, C.-H., Sun, C.-C., Hui-Kang Ma, D., Chien-Chieh Huang, J., Liu, C.-F., Chen, H. F., et al. (2014). Opaque bubble layer: incidence, risk factors, and clinical relevance. J. Cataract. Refract Surg. 40 (3), 435–440. doi:10.1016/j.jcrs.2013.08.055

Ma, J., Wang, Y., Li, L., and Zhang, J. (2018). Corneal thickness, residual stromal thickness, and its effect on opaque bubble layer in small-incision lenticule extraction. Int. Ophthalmol. 38 (5), 2013–2020. doi:10.1007/s10792-017-0692-2

Moshirfar, M., Tukan, A. N., Bundogji, N., Liu, H. Y., McCabe, S. E., Ronquillo, Y. C., et al. (2021). Ectasia after corneal refractive surgery: a systematic review. Ophthalmol. Ther. 10 (4), 753–776. doi:10.1007/s40123-021-00383-w

Mrochen, M., Wüllner, C., Krause, J., Klafke, M., Donitzky, C., and Seiler, T. (2010). Technical aspects of the WaveLight FS200 femtosecond laser. J. Refract Surg. 26 (10), S833–S840. doi:10.3928/1081597X-20100921-12

Muntaner, C., Nieto, F. J., and O'Campo, P. (1996). The Bell Curve: on race, social class, and epidemiologic research. Am. J. Epidemiol. 144 (6), 531–536. doi:10.1093/oxfordjournals.aje.a008962

Nuzzi, R., Boscia, G., Marolo, P., and Ricardi, F. (2021). The impact of artificial intelligence and deep learning in eye diseases: a review. Front. Med. 8, 710329. doi:10.3389/fmed.2021.710329

Palme, C., Mulrine, F., McNeely, R. N., Steger, B., Naroo, S. A., and Moore, J. E. (2022). Assessment of the correlation of the tear breakup time with quality of vision and dry eye symptoms after SMILE surgery. Int. Ophthalmol. 42 (3), 1013–1020. doi:10.1007/s10792-021-02086-4

Reinstein, D. Z., Archer, T. J., and Gobbe, M. (2014). Small incision lenticule extraction (SMILE) history, fundamentals of a new refractive surgery technique and clinical outcomes. Eye Vis. (Lond). 1, 3. doi:10.1186/s40662-014-0003-1

Sahay, P., Bafna, R. K., Reddy, J. C., Vajpayee, R. B., and Sharma, N. (2021). Complications of laser-assisted in situ keratomileusis. Indian J. Ophthalmol. 69 (7), 1658–1669. doi:10.4103/ijo.IJO_1872_20

Schmidt-Erfurth, U., Sadeghipour, A., Gerendas, B. S., Waldstein, S. M., and Bogunović, H. (2018). Artificial intelligence in retina. Prog. Retin Eye Res. 67, 1–29. doi:10.1016/j.preteyeres.2018.07.004

Sekundo, W., Kunert, K. S., and Blum, M. (2011). Small incision corneal refractive surgery using the small incision lenticule extraction (SMILE) procedure for the correction of myopia and myopic astigmatism: results of a 6 month prospective study. Br. J. Ophthalmol. 95 (3), 335–339. doi:10.1136/bjo.2009.174284

Son, G., Lee, J., Jang, C., Choi, K. Y., Cho, B. J., and Lim, T. H. (2017). Possible risk factors and clinical effects of opaque bubble layer in small incision lenticule extraction (SMILE). J. Refract Surg. 33 (1), 24–29. doi:10.3928/1081597X-20161006-06

Vetter, T. R. (2017). Fundamentals of research data and variables: the devil is in the details. Anesth. Analg. 125 (4), 1375–1380. doi:10.1213/ANE.0000000000002370

Wan, Q., Yue, S., Tang, J., Wei, R., Tang, J., Ma, K., et al. (2023). Prediction of early visual outcome of small-incision lenticule extraction (SMILE) based on deep learning. Ophthalmol. Ther. 12 (2), 1263–1279. doi:10.1007/s40123-023-00680-6

Wu, D., Li, B., Huang, M., and Fang, X. (2020). Influence of cap thickness on opaque bubble layer formation in SMILE: 110 versus 140 µm. J. Refract Surg. 36 (9), 592–596. doi:10.3928/1081597X-20200720-02

Wu, D., Wang, Y., Zhang, L., Wei, S., and Tang, X. (2014). Corneal biomechanical effects: small-incision lenticule extraction versus femtosecond laser-assisted laser in situ keratomileusis. J. Cataract. Refract Surg. 40 (6), 954–962. doi:10.1016/j.jcrs.2013.07.056

Xiong, J., Li, F., Song, D., Tang, G., He, J., Gao, K., et al. (2022). Multimodal machine learning using visual fields and peripapillary circular OCT scans in detection of glaucomatous optic neuropathy. Ophthalmology 129 (2), 171–180. doi:10.1016/j.ophtha.2021.07.032

Keywords: deep learning, opaque bubble layer, small-incision lenticule extraction, artificial intelligence, complication

Citation: Zhu Z, Zhang X, Wang Q, Xiong J, Xu J, Yu K, Guo Z, Xu S, Wang M and Yu Y (2024) Predicting an opaque bubble layer during small-incision lenticule extraction surgery based on deep learning. Front. Cell Dev. Biol. 12:1487482. doi: 10.3389/fcell.2024.1487482

Received: 28 August 2024; Accepted: 14 October 2024;

Published: 30 October 2024.

Edited by: Weihua Yang, Jinan University, China

Reviewed by: Dongqing Yuan, Nanjing Medical University, China; Yufeng Ye, Affiliated Eye Hospital to Wenzhou Medical University, China

Copyright © 2024 Zhu, Zhang, Wang, Xiong, Xu, Yu, Guo, Xu, Wang and Yu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mingyan Wang, bWluZ3lhbndAMTI2LmNvbQ==; Yifeng Yu, MTcxMDE4MTcwQHFxLmNvbQ==

†These authors have contributed equally to this work
