- Software Engineering Research Center, International Institute of Information Technology Hyderabad, Hyderabad, India

Introduction: Gathering requirements for developing virtual reality (VR) software products is a labor-intensive process. It requires detailed elicitation of scene flow, articles in the scene, action responses, custom behaviors, and timeline of events. The slightest change in requirements will escalate the design and development costs. While most VR practitioners depend on conventional software engineering (SE) requirement-gathering techniques, there is a need for novel methods to streamline VR software development. With severe software platform fragmentation and hardware volatility, VR practitioners need assistance specifying non-volatile requirements for a minimum viable VR software product.

Methods: To address this gap, we present virtual reality requirement specification tool (VReqST), a model-based requirement specification tool for developing virtual reality software products.

Results: Using VReqST, requirement analysts can specify the requirements for both simple and complex multi-scene VR software products and virtual environments (VEs).

Discussion: VReqST is customizable and can express custom requirements for new locomotion, colocation, and teleportation algorithms, among others. We worked with industry VR practitioners to encourage adoption and gather feedback. Based on their input, we revised VReqST to include the desired features and gathered their observations on its overall impact in practice.

1 Introduction

Virtual reality (VR) technology has the potential to revolutionize the future of work. Several large companies, including Meta, NVIDIA, Microsoft, Apple, and SAP, have already created proofs of concept using immersive solutions for remote collaborative work and complex task completion (Gramlich, 2022). These enterprise VR solutions have become essential in industries such as healthcare, manufacturing, training, IT, energy, and retail. They provide domain practitioners with immersive experiences that help them comprehend complex activities through simulation. In the post-pandemic era, enterprises increasingly embrace immersive solutions to enhance productivity and foster innovation. This trend has also given rise to the “metaverse,” three-dimensional virtual experience software that allows people to socialize, learn, collaborate, implement, work, play, and experience emotions virtually using real-world metaphors. Despite these advantages, the overall process of developing enterprise VR software is plagued by significant technological and practitioner challenges. Recent studies by Anwar et al. (2024) have also examined the quality of experience in adapting, augmenting, and producing standards for immersive environments. VR software product development has been observed to employ different practices from traditional software product development (Karre et al., 2019), resulting in the following challenges.

The aforementioned challenges can be alleviated by obtaining clear and comprehensive specifications, which prevents rework and reduces development cost. Proficient requirement engineering methodologies have been noted to enhance software quality (Filho and Kochan, 2001). However, eliciting requirements for virtual reality is perceived as arduous and requires meticulous documentation (Karre et al., 2023). Figure 1 shows an example VR bowling alley game (Kandhari, 2023) in the UNITY editor, illustrating the underlying details required to elicit and specify requirements for VR software. Articles such as pins, balls, gutters, scoreboards, light sources, and walls populate the three-dimensional scene. Each article holds distinct VR-specific properties such as layout information, scale, material, gravity, mass, audio, ray-casting, collision behavior, rigidity, light sources, motion, rotation, occlusion, angular drag, kinematic properties, interpolation, wobble effect, VR participant locomotion, environment depth, scene rendering, synchronous and asynchronous task-action responses of VR participants, and the control flow and data flow of overall events. Compared with conventional two-dimensional or three-dimensional graphics applications, the requirements for VR software are significantly more complex. Consequently, the requirement engineering process for VR scenes becomes multi-faceted and intricate. To facilitate requirement engineering for VR products, we introduce a model-based tool called the virtual reality requirement specification tool (VReqST). The tool adopts a model-based template approach: it provides a structured framework for capturing and organizing requirements for the VR technology domain. It is designed to assist the VR community in specifying requirements for the creation of enterprise VR software.
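To make the bowling-alley example concrete, the following Python sketch shows one way an analyst could record a subset of the per-article properties listed above as structured data. The `Article` class, its field names, the units, and the sanity checks in `validate` are hypothetical illustrations; they are not VReqST's format or any engine's API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vector3 = Tuple[float, float, float]


@dataclass
class Article:
    """Hypothetical record of the VR-specific properties of one scene article."""
    name: str
    position: Vector3 = (0.0, 0.0, 0.0)   # layout information (metres, assumed)
    rotation: Vector3 = (0.0, 0.0, 0.0)   # Euler angles in degrees (assumed)
    scale: Vector3 = (1.0, 1.0, 1.0)
    material: str = "default"
    mass_kg: float = 1.0
    use_gravity: bool = True
    is_kinematic: bool = False            # True: moved by scripts, not by physics forces
    angular_drag: float = 0.05
    audio_on_collision: Optional[str] = None
    collides_with: Tuple[str, ...] = ()

    def validate(self) -> List[str]:
        """Return specification problems; an empty list means none were found."""
        problems = []
        if self.mass_kg <= 0:
            problems.append(f"{self.name}: mass must be positive")
        if any(s <= 0 for s in self.scale):
            problems.append(f"{self.name}: scale components must be positive")
        if self.angular_drag < 0:
            problems.append(f"{self.name}: angular drag cannot be negative")
        return problems


pin = Article(name="pin_1", position=(0.0, 0.0, 18.0), mass_kg=1.5,
              material="maple", audio_on_collision="pin_strike.wav",
              collides_with=("ball", "pin_2"))
bad_ball = Article(name="ball", mass_kg=-7.0)

print(pin.validate())       # []
print(bad_ball.validate())  # ['ball: mass must be positive']
```

Recording properties as typed fields, rather than as free text, is what lets simple consistency checks like these run before any scene is built.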

2 Materials and methods

2.1 Virtual reality domain model template

Why is a model-based understanding of the VR technology domain required? A virtual reality software system strives to induce targeted behavior in an organism (predominantly a human or an animal) using artificial sensory stimulation (LaValle, 2020). VR hardware and software have evolved independently, causing disparity in the overall evolution of VR as a domain. Consequently, it has become difficult for VR practitioners to build portable, cross-platform VR products. Several attempts have been made to standardize VR as a domain through standards such as the Virtual Reality Modeling Language (VRML) by W3C (1997), COLLAborative Design Activity (COLLADA) by Sony Computer Entertainment Inc. (2004), O3D by Google Inc. (2010), Filmbox (FBX), GL Transmission Format (glTF), and OpenXR. These standards have failed due to the lack of interoperability support between VR software and VR hardware (Brennesholtz, 2017). The following are detailed insights into these prominent standards and the reasons for their adoption failure.

VRML (W3C, 1997) is a file format for describing three-dimensional (3D) interactive vector graphics, designed particularly for the web. It allows the creation of 3D scenes and objects to be viewed in a web browser or a standalone VRML browser, called a “player.” VRML files can be created and edited using various 3D modeling software and can include textures, lighting, physics properties, animations, and behaviors. VRML has been replaced by X3D (ISO/IEC 19775-1) as the standard for 3D web graphics.

O3D was a web-based 3D graphics application programming interface (API) developed by Google as an alternative to Flash and other 3D technologies. It was designed to enable the creation and display of interactive 3D graphics in web browsers without the need for additional plugins or software. O3D provided a JavaScript API for creating and manipulating 3D scenes, objects, and animations and supported features such as lighting, texturing, and physics. O3D was intended to make it easier for web developers to create and share 3D content and to provide a more immersive and interactive web experience for users.

COLLADA is a royalty-free, XML-based file format for 3D digital assets. Originally developed by Sony Computer Entertainment, it is now maintained by the Khronos Group, an industry consortium focused on creating open standards for 3D graphics, and is used for exchanging 3D models and scenes between applications and platforms. COLLADA supports a wide range of 3D modeling features, including geometry, textures, lighting, animations, and physics. It is designed to be platform-independent and can be used to exchange 3D assets across operating systems, hardware platforms, and software applications. COLLADA files can be exported and imported by a variety of 3D modeling tools, game engines, and other software programs, making it a popular choice for interoperability in the 3D graphics industry. COLLADA is often used to create and share 3D models and scenes for real-time 3D applications, such as games, simulations, and virtual reality experiences, and in the film and television industry for 3D visual effects and animations.

Despite initial interest and support, all these standards failed to gain widespread adoption due to several challenges, as discussed below:

On the other end, formats such as FBX and glTF have gained wide popularity, FBX as a proprietary format and glTF as an open one.

FBX is a proprietary file format for exchanging 3D digital assets between applications and platforms. Originally developed by Kaydara and now owned by Autodesk Inc., it is used to exchange 3D models, animations, and other assets among 3D modeling tools, game engines, and other software programs. FBX supports a wide range of 3D modeling features, including geometry, textures, lighting, animations, and physics. It is designed to be platform-independent, allowing 3D assets to be exchanged across operating systems, hardware platforms, and software applications. It is often used to create and share 3D models and scenes for real-time 3D applications, such as games, simulations, and virtual reality experiences, as well as for 3D visual effects and animations in the film and television industry. Because the format is proprietary, it is not open to the public, and users must have a license to use the FBX software development kit and related tools for working with it. Nevertheless, FBX is widely supported by 3D modeling tools and game engines and remains a popular choice for interoperability in the 3D graphics industry.

glTF, on the other hand, is an open, royalty-free file format for 3D digital assets. It was developed by the Khronos Group, an industry consortium focused on the creation of open standards for 3D graphics, and is used for exchanging 3D models and scenes between different applications and platforms. glTF is designed to be lightweight and efficient, with a focus on real-time 3D applications, such as games, simulations, and virtual reality experiences. It supports a wide range of 3D modeling features, including geometry, textures, lighting, animations, and physics, and is designed to be platform-independent and easy to use. glTF is an open standard, which means that it is freely available to the public and can be used and implemented by anyone. It is supported by a wide range of 3D modeling tools, game engines, and other software programs and is becoming an increasingly popular choice for interoperability in the 3D graphics industry. glTF is often compared to other 3D file formats, such as COLLADA and FBX, which offer similar capabilities. However, glTF has several advantages over these formats, including its lightweight and efficient design, its focus on real-time 3D applications, and its open and royalty-free status. As a result, glTF is gaining popularity as a modern and flexible file format for exchanging 3D assets between different applications and platforms.
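As a small illustration of glTF's lightweight JSON structure, the sketch below builds a minimal glTF 2.0 document containing one scene with one node. The top-level properties (`asset`, `scene`, `scenes`, `nodes`) follow the glTF 2.0 specification; the node name and translation values are arbitrary examples, and meshes, materials, and binary buffers are deliberately omitted.

```python
import json


def minimal_gltf(node_name, translation):
    """Build a minimal glTF 2.0 JSON document: one scene containing one node."""
    return {
        "asset": {"version": "2.0"},          # the only required top-level property
        "scene": 0,                           # index of the default scene
        "scenes": [{"nodes": [0]}],           # root nodes of scene 0
        "nodes": [{"name": node_name, "translation": list(translation)}],
    }


doc = minimal_gltf("bowling_ball", (0.0, 0.11, -2.0))
text = json.dumps(doc, indent=2)
print(text)
```

A real asset would add `meshes`, `accessors`, `bufferViews`, and `buffers` so that the node can reference geometry; the scene-graph skeleton stays the same.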

Nevertheless, whether proprietary (FBX) or open and royalty-free (glTF), these formats have not become widely accepted VR standards, because the meta-model of the virtual reality technology domain underlying them remains volatile. To see why, consider a human with little or no awareness of the interference (LaValle, 2020). Through a software system, such a human experiences a real-world environment simulated as an immersive three-dimensional (3D) environment, primarily using 3D computer-generated graphics. Human sensory aspects such as auditory, haptic (through force), olfactory, and visual perception, human motor and proprioceptive (body position and movement) capacities, and cognitive capacities are all engaged through this system. Immersion and presence are ideal states of a VR software system but are not necessary prerequisites for engagement and interaction. Despite increases in the computational power of VR hardware, VR software systems still do not adequately achieve realism. Because VR hardware and VR software have evolved independently, the domain suffers from severe platform fragmentation, and these challenges persist with the FBX and glTF standards. Recently, attempts have been made to support interoperability between VR software and hardware using the OpenXR standard APIs (Group, 2019).

OpenXR is an open, royalty-free standard for virtual reality and augmented reality devices, developed by the Khronos Group, an industry consortium focused on creating open standards for 3D graphics. It provides a common, cross-platform API for accessing and controlling VR and AR devices. It was designed to simplify the development and deployment of VR and AR applications through a single, unified API, so that developers can target a wide range of devices and platforms without writing custom integration code for each one. Because it is an open standard, anyone can implement and use it. It is supported by a wide range of VR and AR device manufacturers, software companies, and other organizations and is an increasingly popular choice for cross-platform development in the VR and AR industry. OpenXR 1.0 was released in 2019, and the specification continues to evolve; it is already used by developers and organizations for cross-platform VR and AR development.

2.2 Attributes for VR software development

Overall, the available standards are either in draft or require more comprehensive usage and feedback within the VR practitioner community. Various commercial VR providers have proposed and practiced variants of the VR conceptual model. These models are open-ended and do not provide the interoperability needed to meet the necessities of a bare-minimum VR software system. At a minimum, a VR software system should depict components such as scenes, scene objects, cameras, action responses, and behavior outcomes. However, the existing conceptual models do not provide a common control and data flow for constructing a bare-minimum VR software system. We therefore address the problem by formulating the following research question:

RQ: What constitutes a meta-model of a bare minimum VR software system?

Conceptualizing a meta-model for VR systems requires a thorough understanding of VR as a domain, in addition to understanding VR systems in application domains such as healthcare and banking. We first attempted, unsuccessfully, to identify what constitutes a bare-minimum VR software system through a systematic literature review. The results were obsolete and no longer significant for comprehending contemporary VR software systems. Most of the primary and secondary studies, such as those by Levy and Bjelland (1994) and Sherman and Craig (2003), offered only superficial information on the workings of a typical VR software system. Most secondary studies present VR from an application point of view, with no precise details on the underlying aspects of VR as a domain. We found studies that explain VR as a software system applied in fields such as education, tourism, simulation, healthcare, and design, but without underlying information about the constructs of a bare-minimum VR software system. Given the limited academic literature, we adopted the Socio-Technical Grounded Theory (STGT) approach (Hoda, 2021) to investigate the components of bare-minimum VR software systems.

STGT is a modern version of traditional sociological Grounded Theory methodology, specifically designed for software engineering and other socio-technical domains. It is based on over 15 years of experience and combines socio-technical principles with grounded theory methods to explore the intersection of technology and society. STGT is intended to provide increased clarity and flexibility in its methodological steps and procedures (Hoda, 2021). It is an iterative and incremental research method using available resources with abductive reasoning for theory development. As per the STGT approach, the following particulars represent the boundaries of our work:

Below are the detailed steps that are required to be applied to conduct the STGT in practice:

Based on the STGT approach, we defined our research context as follows.

Research context: The proprietary standards failed to create cross-platform support for VR software, driving the VR technology domain into a platform-dependent system.

We relied on the following resources to gather the data needed to establish the theory for constructing a meta-model for VR software systems: informal interviews, reviewing VR SDKs, and reviewing VR standards. These are detailed below to understand the criteria of our data collection approach.

2.2.1 Informal interviews

We conducted informal interviews with developer communities of UNITY Technologies, Epic Games, Khronos Group (OpenXR), and the VR/AR Association (UK/APAC) for nearly 6 months (October 2021 to April 2022). These interviews aimed to understand practitioners’ perspectives of a bare-minimum VR software system in practice. Participants with a minimum of 5 years of VR development experience were considered for interviews. In total, 39 VR practitioners participated. The following questions were the basis for the informal interviews.

2.2.2 VR SDK selection

Our interactions with VR practitioners led us to review widely used VR SDKs such as UNITY3D (2022), Unreal Game Engine (2022), CryEngine Cry (2021), AframeJS (2022), and Amazon Lumberyard (2022). We examined SDK source code, underlying classes, and white papers; all other non-technical proprietary materials were excluded from examination.

2.2.3 VR standards’ selection

Interactions with VR practitioners led us to explore VR standards in detail. We considered prior standards—VRML (W3C, 1997; X3D, 1997), WebXR, and O3D (Google Inc., 2010), and prevailing standards—OpenXR (Group, 2019) and IEEE VR/AR Working Group for our study. Additionally, we examined peer-reviewed publications to understand the components of a meta-model for a bare-minimum VR software system.

We used the open coding method (Strauss and Corbin, 1967) to annotate the interview transcripts, VR standard documentation, and VR SDK documentation with essential details. Our annotation criterion was the presence of elements that explain the constructs of a bare-minimum VR software system and depict the control and data flow among them. These annotations are linked to generalized codes that carry the same meaning as our annotation criteria, and the codes are further generalized into common labeled concepts, whose labels the researcher is free to define. The concepts are then grouped into categories that serve the goal of our study. Figure 2 shows a few examples of open codes linked to concepts and categories, giving an overall understanding of a bare-minimum VR software system.
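The annotation-to-category progression of open coding can be sketched as a simple roll-up. In the Python sketch below, the excerpts, codes, concepts, and category are invented placeholders (they are not taken from our transcripts or from Figure 2); the point is only the structure, in which each annotated excerpt maps to a code, each code to a concept, and each concept to a category.

```python
from collections import defaultdict

# Hypothetical annotations: (source, annotated excerpt, code). Illustrative only.
annotations = [
    ("interview", "each level loads its own set of objects", "scene holds objects"),
    ("SDK docs", "objects are placed inside a scene", "scene holds objects"),
    ("standard", "an action is bound to a controller input", "input triggers action"),
    ("interview", "audio plays when the ball hits the wall", "event triggers response"),
]
code_to_concept = {
    "scene holds objects": "Scene",
    "input triggers action": "Action-Response",
    "event triggers response": "Action-Response",
}
concept_to_category = {
    "Scene": "Bare-minimum VR software system",
    "Action-Response": "Bare-minimum VR software system",
}


def build_hierarchy(annotations, code_to_concept, concept_to_category):
    """Roll annotated excerpts up into codes, then concepts, then categories."""
    tree = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))
    for source, excerpt, code in annotations:
        concept = code_to_concept[code]
        category = concept_to_category[concept]
        tree[category][concept][code].append((source, excerpt))
    return tree


tree = build_hierarchy(annotations, code_to_concept, concept_to_category)
for category, concepts in tree.items():
    print(category)
    for concept, codes in concepts.items():
        print(f"  {concept}: {sum(len(v) for v in codes.values())} annotations")
```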

Based on the codes generated through open coding, we used abductive reasoning (Hoda, 2021) to theorize a meta-model for a bare-minimum VR software system. Abductive reasoning helps researchers analyze data through different means, such as hunches, clues, metaphors or analogies, symptoms, patterns, and explanations, opening various avenues for creative thinking and theory development. The following data points and observations capture the bare-minimum concepts found to constitute a VR software system. These concepts are the building blocks of a meta-model understanding of a VR software system.

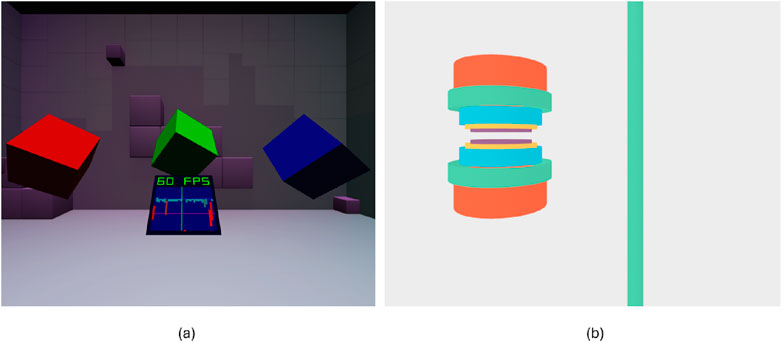

The realization of the above concepts varies across VR standards and VR SDKs according to their levels of abstraction and nomenclature. Nevertheless, the concepts described above represent the bare-minimum set needed to construct a VR software system, and they can be observed consistently, in different forms, across VR standards and VR SDKs. To understand how SDKs perceive the same underlying meta-model elements differently, we present examples using WebXR APIs and AFrameJS VR scenes. Figure 3A shows a WebXR scene presenting a no-audio 3D environment with a centered view-source. The scene features cube articles placed at a particular height, which change color when clicked but otherwise exhibit no interaction. Figure 3B shows an AFrameJS scene presenting a no-audio 3D environment with a centered view-source. In this scene, articles of varying sizes are placed at a distance and change their dimensions in response to any external intervention, with no other actions taking place. The two scenes are built using different VR SDKs, which work under different meta-model concepts and code templates. The WebXR-based scene is obsolete, as prevailing browsers no longer support this standard, and the AFrameJS-based scene is supported only by JavaScript-based browsers (Chrome, Firefox, etc.). Both scenes are built for the web and are not compatible with high-end head-mounted devices. Because one of the SDKs, WebXR, is now deprecated, the portability of all WebXR scenes is limited, which contributes to platform fragmentation. Such gaps could be avoided if the scenes were built on a shared underlying meta-model of VR. Considering these observations, we describe our steps toward developing a role-based model template that represents a shared meta-model for a VR software system.

Figure 3. Example VR scenes built using WebXR APIs and AFrameJS. (A) A WebXR scene with a centered view-source. (B) An AFrameJS scene with a centered view-source.
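The following Python sketch illustrates the idea of a shared meta-model with SDK-specific renderings. A single, SDK-neutral description of articles is rendered as A-Frame markup (`<a-scene>`, `<a-box>`, and `<a-sphere>` with their standard `position`, `color`, `width`/`height`/`depth`, and `radius` attributes) and, for contrast, as a hypothetical engine-neutral dictionary. The `MetaArticle` class and both exporter functions are assumptions for illustration; they are not part of WebXR, A-Frame, or VReqST.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class MetaArticle:
    """SDK-neutral article description (hypothetical meta-model element)."""
    kind: str                               # "box" or "sphere"
    position: Tuple[float, float, float]    # x, y, z in metres
    color: str                              # CSS-style colour string
    size: float                             # edge length (box) or radius (sphere)


def to_aframe(articles: List[MetaArticle]) -> str:
    """Render the articles as A-Frame primitives inside an <a-scene>."""
    lines = ["<a-scene>"]
    for a in articles:
        pos = " ".join(f"{v:g}" for v in a.position)
        if a.kind == "box":
            geom = f'width="{a.size:g}" height="{a.size:g}" depth="{a.size:g}"'
        elif a.kind == "sphere":
            geom = f'radius="{a.size:g}"'
        else:
            raise ValueError(f"unsupported article kind: {a.kind}")
        lines.append(f'  <a-{a.kind} position="{pos}" color="{a.color}" {geom}></a-{a.kind}>')
    lines.append("</a-scene>")
    return "\n".join(lines)


def to_neutral_dict(articles: List[MetaArticle]) -> List[Dict[str, object]]:
    """Render the same articles for a second, hypothetical target."""
    return [{"type": a.kind, "position": list(a.position),
             "color": a.color, "size": a.size} for a in articles]


scene = [MetaArticle("box", (-1, 0.5, -3), "#4CC3D9", 1),
         MetaArticle("sphere", (0, 1.25, -5), "#EF2D5E", 1.25)]
print(to_aframe(scene))
print(to_neutral_dict(scene))
```

Supporting another SDK would mean adding one more exporter over the same `MetaArticle` list, leaving the scene description itself untouched.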

2.3 Virtual reality software system meta-model

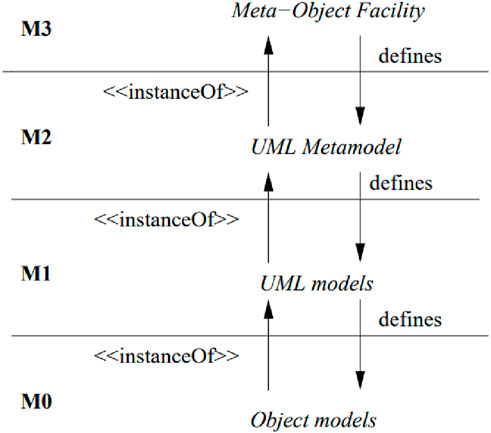

The Unified Modeling Language (UML) is a general-purpose, programming-language-independent modeling language for specifying, visualizing, constructing, and documenting systems. It is an Object Management Group (OMG) standard for object-oriented modeling. The UML infrastructure is defined as a four-layer architecture, as shown in Figure 4.

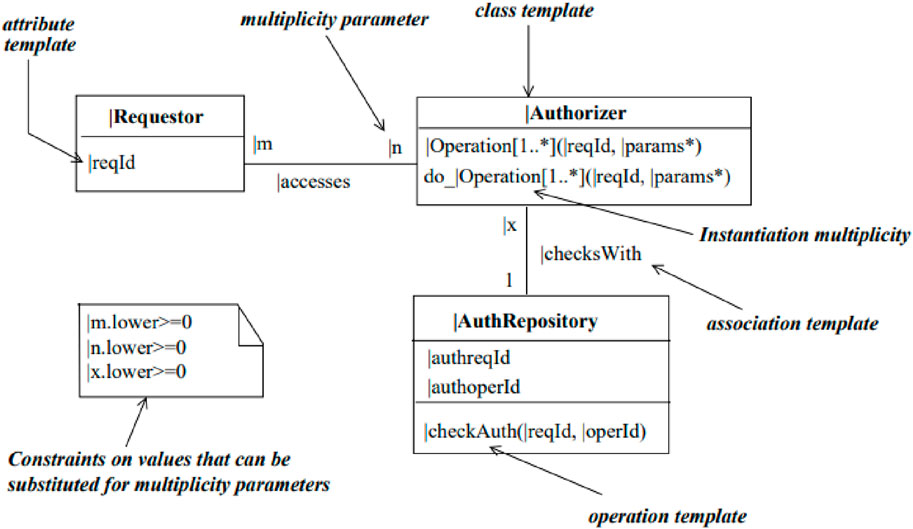

The UML was designed primarily as a notation for modeling a single application, and using it to model application families is problematic. The role-based meta-modeling language (RBML), proposed by Kim et al. (2003), is a UML-based language extension that supports rigorous specification of patterns that characterize a family of design models. Because RBML uses UML syntax, UML tools can be used to create RBML specifications. An RBML specification consists of a set of role models that describe pattern properties from different perspectives, with additional details. This work uses a variant of RBML that facilitates generating compliant models from pattern descriptions: template diagrams, rather than role models, are used to specify families of models, and UML models are obtained from template diagrams by binding the template parameters to actual values.
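The binding step can be sketched as follows. In this hypothetical Python example, a template class has a parameterized name and parameterized attributes (the `|` prefix mirrors RBML's notation for roles and is an assumption here), and binding every parameter to an actual value yields a concrete class model; an incomplete binding is rejected. This illustrates the concept only and is not an RBML tool.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ClassTemplate:
    """A template class whose name and attribute names are template parameters."""
    name_param: str                 # e.g. "|Article"
    attribute_params: List[str]     # e.g. ["|Position", "|Mass"]

    def bind(self, binding: Dict[str, str]) -> Dict[str, object]:
        """Bind every parameter to an actual value, yielding a concrete class model."""
        params = [self.name_param, *self.attribute_params]
        missing = [p for p in params if p not in binding]
        if missing:
            raise KeyError(f"unbound template parameters: {missing}")
        return {"class": binding[self.name_param],
                "attributes": [binding[p] for p in self.attribute_params]}


article_template = ClassTemplate("|Article", ["|Position", "|Mass"])
ball_model = article_template.bind(
    {"|Article": "SoccerBall", "|Position": "kickOffSpot", "|Mass": "weightKg"})
print(ball_model)
```

Different bindings of the same template produce different but structurally compliant models, which is what lets one template describe a family of models.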

A role represents a specific set of responsibilities, behaviors, or functions within a system or model. It defines expected behaviors and interactions without specifying the concrete implementation. Roles are typically more abstract and focus on the purpose or function an entity fulfills in a given context. In contrast, a template is a predefined structure or pattern that can be reused and instantiated multiple times. It provides a blueprint for creating consistent instances of a particular element or component. Templates are more concrete and focus on the structure and attributes of an entity. In practice, both can be used as part of a meta-model: roles define the abstract behaviors and interactions between entities, and templates provide concrete implementations or structures for those roles. For example, a Developer role represents the responsibilities and interactions of a developer in the process, and a DeveloperTemplate defines the specific attributes and structure of a developer entity in the system. This combination allows the model to represent both the dynamic and behavioral aspects (with roles) and the static and structural aspects (with templates) of the system. Because our work focuses on illustrating the static and structural aspects of a VR software system, we use the notion of templates to describe the meta-model of the VR technology domain.
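The Developer/DeveloperTemplate example above can be sketched in Python, using `typing.Protocol` to stand in for the role (required behavior only) and a dataclass to stand in for the template (concrete attributes and structure). The names, attributes, and requirement identifiers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


class Developer(Protocol):
    """Role: the behavior a developer must provide, with no implementation."""
    def implement(self, requirement_id: str) -> str: ...


@dataclass
class DeveloperTemplate:
    """Template: the concrete attributes and structure of a developer entity."""
    name: str
    skills: List[str] = field(default_factory=list)
    assigned: List[str] = field(default_factory=list)

    def implement(self, requirement_id: str) -> str:
        self.assigned.append(requirement_id)
        return f"{self.name} implemented {requirement_id}"


def deliver(dev: Developer, requirement_id: str) -> str:
    # Any object that structurally satisfies the Developer role is accepted.
    return dev.implement(requirement_id)


alice = DeveloperTemplate("Alice", skills=["Unity", "C#"])
print(deliver(alice, "REQ-7"))  # Alice implemented REQ-7
```

The role constrains only what must be done; the template fixes how an entity is structured, which is the static aspect our meta-model focuses on.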

Figure 5 illustrates an example of a class diagram using RBML specifications to describe a meta-model system that authorizes a requestor by identifying the desired operation from the authorizing repository. Here,

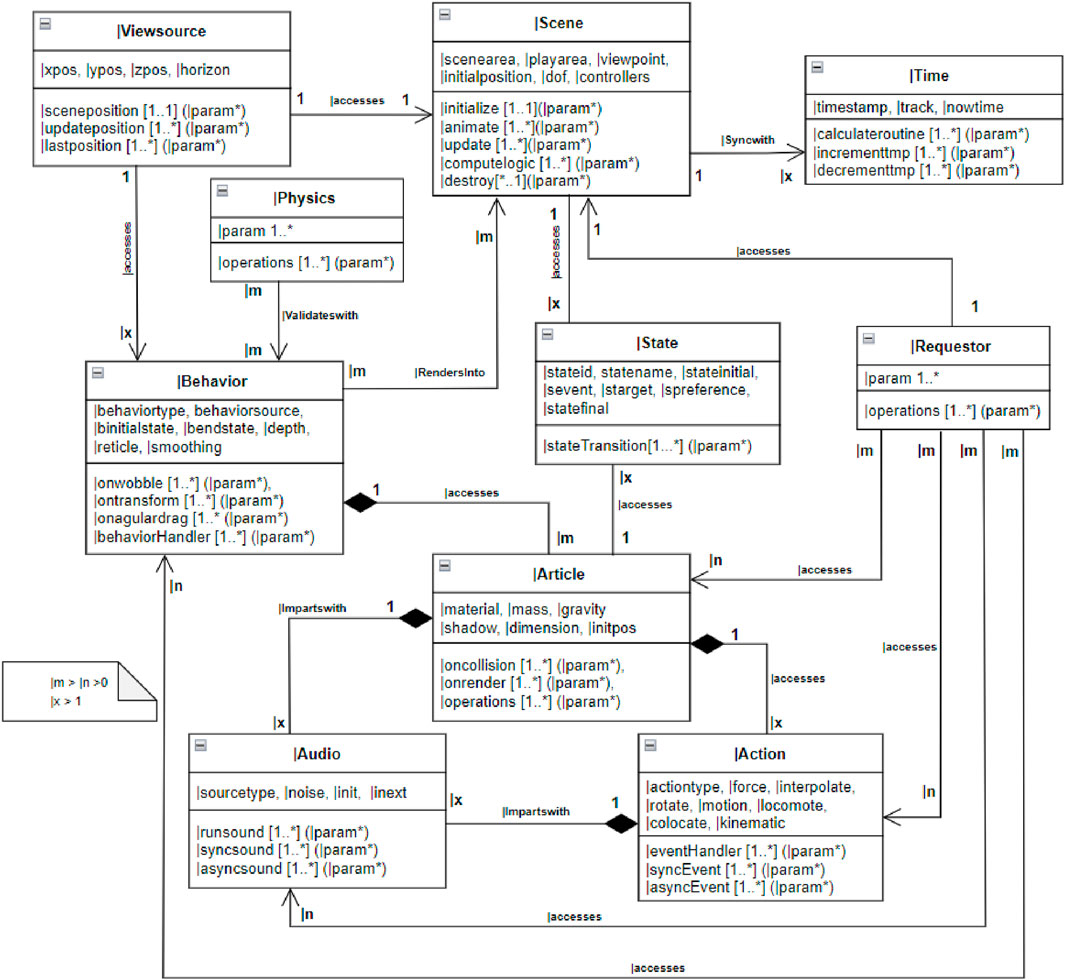

Based on role-based meta-modeling language, we present a role-based model template to illustrate meta-model for Virtual Reality as the technology domain. Figure 6 presents the role-based model template class diagram of a bare-minimum VR software system. Template elements are marked with the “

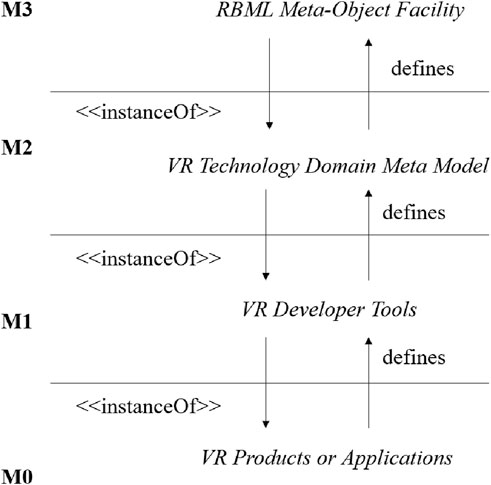

Figure 7 illustrates the UML four-layered meta-model architecture, deduced by considering the model template for the VR technology domain in Figure 6.

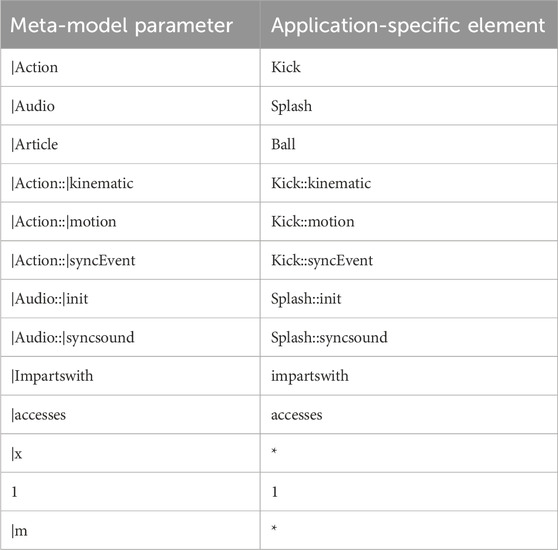

To understand how the VR meta-model is instantiated into a VR application, we present an example that binds the meta-model to a virtual reality soccer training application developed by Rezzil Inc. Figure 8 shows a VR scene in which the soccer trainee is required to perform a task called “Kick.” Table 1 provides the bindings for a test case that verifies the action:kick and the response audio:splash associated with article:ball. Such bindings can be used as part of a novel VR test case generator tool: the table correlates the meta-model attributes with the application-specific attributes a test-case generator would need for a VR soccer training application instance. Using this test-case application specification, a custom test-case generator tool can be developed to generate test cases for game-like VR applications.

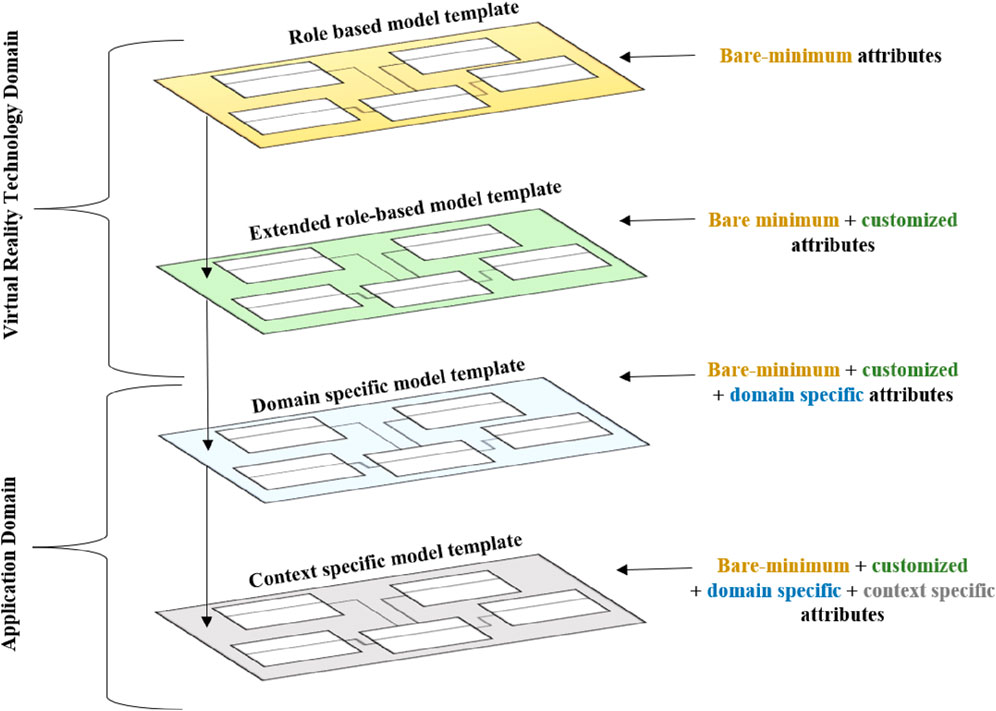
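A test-case generator of the kind described above might consume the bindings roughly as in the sketch below. The `Binding` class, the three required meta-attributes (`article`, `action`, `response`), and the wording of the generated steps are assumptions for illustration and do not reproduce the columns of Table 1.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Binding:
    meta_attribute: str   # meta-model element, e.g. "action"
    app_value: str        # application-specific value, e.g. "kick"


def generate_test_case(test_id: str, bindings: List[Binding]) -> Dict[str, str]:
    """Turn meta-model-to-application bindings into a readable test case."""
    b = {x.meta_attribute: x.app_value for x in bindings}
    for required in ("article", "action", "response"):
        if required not in b:
            raise ValueError(f"missing binding for '{required}'")
    return {
        "id": test_id,
        "precondition": f"article '{b['article']}' is present in the scene",
        "step": f"participant performs action '{b['action']}' on '{b['article']}'",
        "expected": f"response '{b['response']}' is triggered",
    }


tc = generate_test_case("TC-01", [Binding("article", "ball"),
                                  Binding("action", "kick"),
                                  Binding("response", "audio:splash")])
for key, value in tc.items():
    print(f"{key}: {value}")
```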

2.4 Extending VR meta-model to domain-specific and context-specific applications

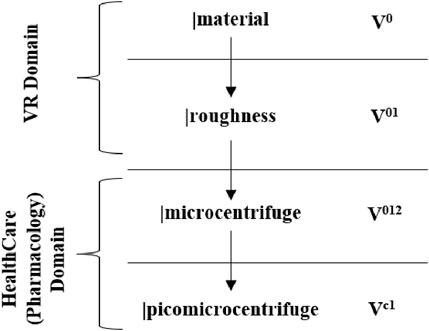

The role-based model template for a bare-minimum VR software system can be extended to an application centered on a domain or to a context within a given domain. In the context of software applications, a domain refers to the specific field or area of expertise that the software program is designed to serve. It represents the concepts, processes, and rules that define the problem space the software program aims to solve. Application domains vary widely, ranging from healthcare and finance to gaming and social media. Domains are often associated with specific industries or business processes and may involve specialized terminology and workflows. Software developers use domain models to represent these domains and create tailored software solutions to meet their needs. By understanding the domain, developers can design context-specific applications and effectively address the challenges users face in that field. As shown in Figure 9, we can visualize the top–down stack of layers using the VR model template for domain- and context-specific applications by extending underlying attributes from bare-minimum attributes to domain- and context-specific attributes.

For better illustration, let us consider that

For example,

For example, consider the pharmacology domain in healthcare, where a domain-specific practitioner (pharmacologist) uses various chemicals to develop, identify, and test the drugs to cure, treat, and prevent diseases. A microcentrifuge is one of the many apparatus they rely on to spin down and separate chemical particles in small volumes of liquid samples. Consider a pharmacology domain-specific VR scene developed to train pharmacologists; a pre-curated domain-specific article, such as

Figure 10 illustrates this example by presenting the model attributes in layers. This figure shows that

Figure 10. Visualizing example of extending the VR meta-model to the healthcare (Pharmacology) application domain.

Figure 11 illustrates an example of a sphere game object. In Figure 11i, the sphere shows bare-minimum properties, including dimension, color, and illumination. In Figure 11ii, the sphere shows variations in the roughness property, which is not a bare-minimum attribute. In Figure 11iii, the sphere illustrates domain-specific game objects, such as a volleyball and a basketball, with variations in both sphere dimension and roughness. In Figure 11iv, a context-specific game object is shown, representing the various balls used in the game of cricket. Thus, a role-based model template for a VR software system can be extended into a customizable, VR technology domain-centered model template, and further into a domain-specific application and a context-specific application within a given application domain.

Figure 11. Visualizing an example of extending the VR meta-model to a sphere game object (these images are AI-generated). (i) A sphere with bare-minimum properties. (ii) A sphere with variations of the roughness property. (iii) Domain-specific game objects: a volleyball and a basketball. (iv) Context-specific game objects: types of cricket balls.
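The progression in Figure 11 from bare-minimum to domain- and context-specific articles maps naturally onto inheritance. In the hypothetical Python sketch below, each subclass adds only the attributes introduced at its layer; the field names, value ranges, and example values are illustrative assumptions rather than part of the VR meta-model.

```python
from dataclasses import dataclass


@dataclass
class Sphere:
    """Bare-minimum attributes (Figure 11i)."""
    radius_m: float
    color: str
    illumination: float = 1.0     # assumed 0..1 light-response factor


@dataclass
class RoughSphere(Sphere):
    """Adds roughness, a non-bare-minimum attribute (Figure 11ii)."""
    roughness: float = 0.5        # assumed scale: 0 = smooth, 1 = fully rough


@dataclass
class SportsBall(RoughSphere):
    """Domain-specific article, e.g. a volleyball or basketball (Figure 11iii)."""
    sport: str = "generic"


@dataclass
class CricketBall(SportsBall):
    """Context-specific article within the game of cricket (Figure 11iv)."""
    sport: str = "cricket"        # narrows the inherited default
    ball_type: str = "red leather"


# Illustrative values only.
basketball = SportsBall(radius_m=0.12, color="orange", roughness=0.8, sport="basketball")
white_ball = CricketBall(radius_m=0.036, color="white", roughness=0.3, ball_type="white leather")
print(basketball)
print(white_ball)
```

Because every layer is still a `Sphere`, tooling written against the bare-minimum attributes keeps working on domain- and context-specific articles.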

2.5 Model-based requirement specification for virtual reality software

2.5.1 VR authoring tools

An authoring tool is a software application or platform that enables users to create content in a cohesive and interactive format, including text, code, graphics, audio, and video. These tools simplify content creation, making it accessible even to people without advanced technical skills. In the virtual reality technology domain, VR authoring tools are explicitly created to develop interactive and immersive VR content without requiring extensive programming knowledge. They offer an intuitive user interface, integration of multimedia assets, support for a wide range of VR devices, collaborative features across various VR developer engines, and integration with external software, such as learning management systems that host VR content. Some VR authoring tools cater to different levels of expertise, offering pre-made interactions and scenes for non-specialists and customizable content for specialists. Unfortunately, authoring capabilities are rarely an integral feature of VR developer tools. Because of serious platform fragmentation within the VR technology domain, individual VR practitioners develop custom plugins to modify and distribute VR content across distinct VR developer engines. Examples of VR authoring tools include AirVu Authoring Tool, LearnBrite, Storyflow, and CenarioVR. These tools cater to industries such as education, healthcare, manufacturing, and customer service, predominantly providing customizable solutions for developing immersive VR training modules. It is rare to find a VR authoring tool that conventional VR practitioners can use as a general-purpose tool, primarily because the artifacts generated by such tools may not work across different VR developer engines or support multiple VR devices.

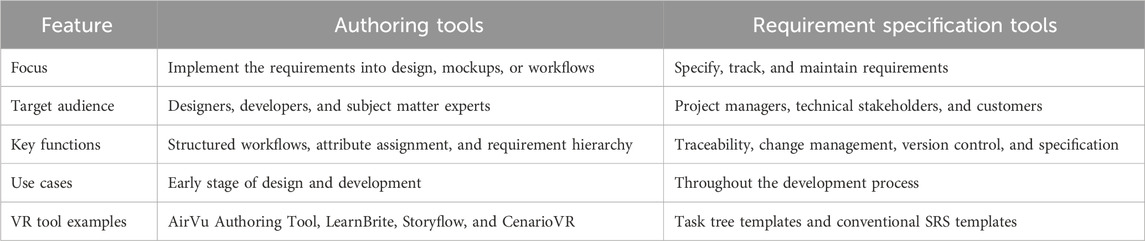

2.5.2 VR requirement specification tool

Requirement specification tools help manage, organize, and document software requirements throughout the development of software products. Their primary goal is to ensure that all stakeholders in a software project clearly understand the requirements and that the final product aligns with the initial vision. Such tools are expected to support requirement creation, traceability, collaboration, change management, prioritization, and version control of requirements, among other features. In the virtual reality technology domain, no dedicated requirement specification tool meets these domain-specific needs. Lacking VR-centered requirement specification tools, the VR community relies heavily on conventional approaches such as software requirement specification templates and task tree-based requirement specification templates.
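As a rough illustration of two of these features, traceability and version control, the hypothetical Python sketch below keeps a revision history per requirement and answers which requirements are affected when a traced scene element changes. The `Requirement` class, the priority scale, and the `article:`/`action:` identifier style are assumptions, not the data model of any particular tool.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Requirement:
    """Hypothetical requirement record with trace links and a revision history."""
    req_id: str
    text: str
    priority: int = 3                                     # 1 = highest (assumed scale)
    traces_to: Set[str] = field(default_factory=set)      # ids of scene elements
    history: List[str] = field(default_factory=list)      # earlier texts, oldest first

    @property
    def version(self) -> int:
        return len(self.history) + 1

    def revise(self, new_text: str) -> None:
        """Keep the current text in the history before replacing it."""
        self.history.append(self.text)
        self.text = new_text


def impacted_by(element_id: str, requirements: List[Requirement]) -> List[str]:
    """Traceability query: ids of requirements that trace to a changed element."""
    return sorted(r.req_id for r in requirements if element_id in r.traces_to)


r1 = Requirement("R1", "Kicking the ball plays a splash sound",
                 traces_to={"article:ball", "action:kick"})
r2 = Requirement("R2", "The scoreboard updates after each roll",
                 priority=2, traces_to={"article:scoreboard"})
r1.revise("Kicking the ball plays a splash sound within 100 ms")
print(r1.version, impacted_by("article:ball", [r1, r2]))
```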

Table 2 illustrates the differences between authoring tools and requirement specification tools. In brief, authoring tools create and organize content based on requirements, while requirement specification tools manage and track requirements throughout development. Furthermore, authoring tools focus on clarity and conciseness, whereas requirement specification tools take a more comprehensive approach to managing requirements. Because the VR technology domain needs such a comprehensive approach, we developed a model-based requirement specification tool. In the following subsections, we provide more details about this tool, which helps requirement analysts specify requirements with higher precision and clarity.

2.5.3 Why use a model-based requirement specification tool for VR?

From our previous systematic literature review on requirement engineering methods for VR products in practice (Karre et al., 2024), we observed that most VR practitioners relied on approaches such as conventional functional systematic specification documentation, a common scene definition framework, and mental model techniques to specify requirements for VR products. The review also showed that, because VR is not yet well understood as a domain within the VR community, novel tools are rarely practiced or adopted. We therefore envisioned a tool built on the foundation of a meta-model of the VR technology domain. The practical benefits of model-based tools (Gonzalez-Perez and Henderson-Sellers, 2008) motivated us to build a model-based requirement specification tool for VR software. These benefits are as follows:

2.6 VReqST—to specify VR requirements

VReqST (“virtual reality requirement specification tool”) is developed for requirement analysts in the VR community to specify requirements using a role-based model template of the VR software system (Karre et al., 2022). VReqST helps requirement analysts describe scene properties, article properties, and the action responses between these articles in a given scene, along with their state changes and custom behaviors (such as algorithms and logic interpretation) that are to be executed in a defined timeline. Our first iteration of VReqST was oriented toward a formal specification language with pre-defined code constructs. We exchanged these early ideas with requirement analysts from the VR community through various platforms. Based on their feedback, we simplified the design into a model template tool instead of evolving it into a formal specification language. As a result, VReqST behaves as an informal specification language. In the following subsections, we present the model templates, their underlying validators, and the architecture, overview, and workflow of VReqST.

2.7 Model template and its validator

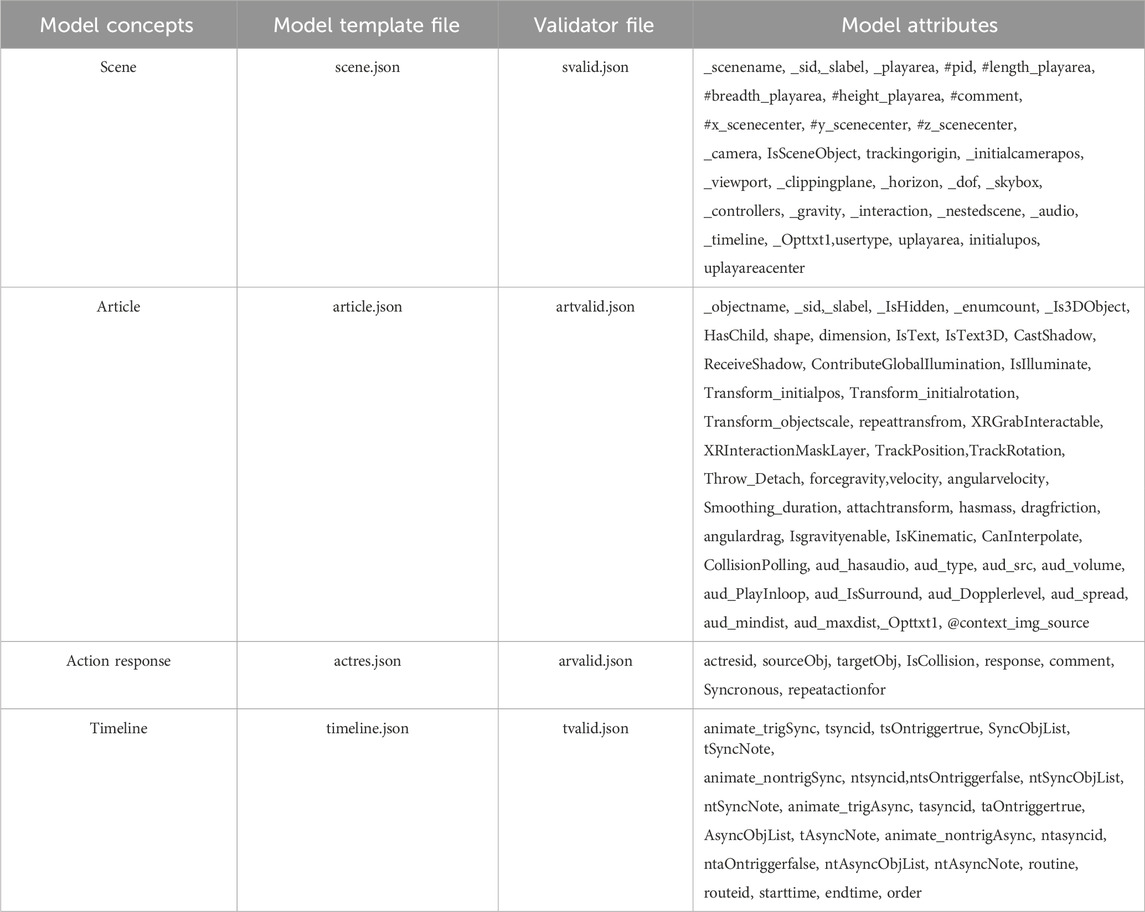

As mentioned in the previous section, model templates are extensions of the model concepts defined in the role-based model template for VR software systems (Karre et al., 2022). These model concepts are scenes, articles, action responses, behaviors, and timelines. Each model concept has model attributes that act as its properties; Table 3 maps the model concepts to their model attributes. In VReqST, each model concept is represented as a model template file in JavaScript Object Notation (JSON) format. Each model template file contains the minimum set of model attributes required to capture requirements for VR software products, as shown in Table 3. For example, the model template file of the “Article” model concept contains a model attribute called “IsKinematic,” a physics property of the article that can execute motion when an external force is applied. The scope of this attribute is Boolean, i.e., it can only hold the value “1” or “0,” where “1” signifies that the article holds the kinematic physics property and “0” signifies that it does not.

All such validation rules for model attributes for a given model template are defined as part of the underlying validator. Each model template file (scene, article, action response, and timeline) has a distinct validator file to validate the datatype and scope of the model attributes, as shown in Figure 13. These validator files are also represented in the JSON format. The model template files and their corresponding validator files are available as part of our documentation (Karre, 2024a). Table 3 provides details of the default naming convention for the model template file and validator files for the respective model concept. The requirement analysts can rely on VReqST documentation to specify requirements in the model template file while eliciting requirements from their stakeholders. These model template files will aid requirement analysts in probing the requirements more accurately.
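A minimal Python sketch of the datatype-and-scope check described above. The validator layout (a `type` and an optional `allowed` list per attribute), the `name` and `mass` attributes, and the integer encoding of the Boolean `IsKinematic` scope are assumptions for illustration; the actual validator files in the VReqST documentation (Karre, 2024a) may be organized differently.

```python
import json

# Hypothetical validator file for the article model template: each attribute
# declares its expected datatype and, optionally, the set of allowed values.
ARTICLE_VALIDATOR = json.loads("""
{
  "IsKinematic": {"type": "int", "allowed": [0, 1]},
  "name": {"type": "str"},
  "mass": {"type": "float"}
}
""")

TYPES = {"int": int, "float": (int, float), "str": str}


def validate(template: dict, validator: dict) -> list[str]:
    """Return a list of error messages; an empty list means the template is valid."""
    errors = []
    for attr, rule in validator.items():
        if attr not in template:
            errors.append(f"missing attribute: {attr}")
            continue
        value = template[attr]
        expected = TYPES[rule["type"]]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            errors.append(f"{attr}: expected {rule['type']}, got {type(value).__name__}")
        elif "allowed" in rule and value not in rule["allowed"]:
            errors.append(f"{attr}: value {value!r} outside scope {rule['allowed']}")
    return errors


article = {"name": "Ball", "IsKinematic": 1, "mass": 7.2}
print(validate(article, ARTICLE_VALIDATOR))                       # []
print(validate({**article, "IsKinematic": 2}, ARTICLE_VALIDATOR))  # ['IsKinematic: value 2 outside scope [0, 1]']
```

Keeping the rules in data rather than code mirrors the separation between template and validator files, so a new attribute only needs a new validator entry.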

2.8 VReqST overview

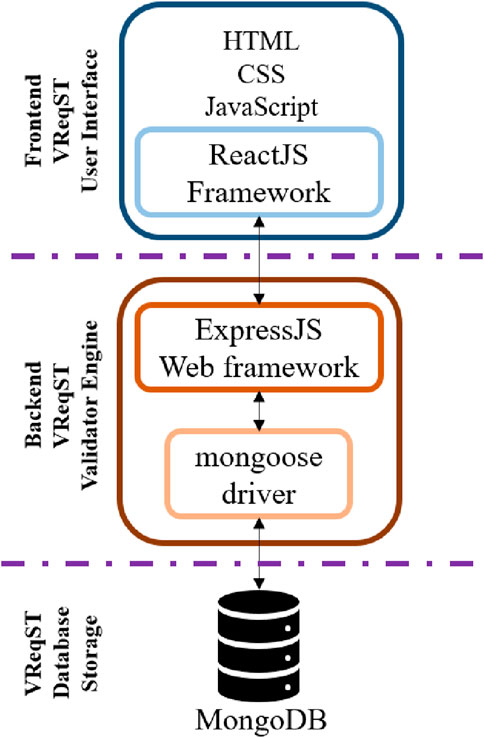

VReqST is a web-based tool built on the MongoDB–ExpressJS–ReactJS–NodeJS (MERN) technology stack: ReactJS renders the client-side web pages, ExpressJS and NodeJS provide the server-side setup, and MongoDB stores the data. Figure 12 illustrates the architecture of the VReqST tool. The source code, tool demo, documentation, and sample requirement specification examples are available as part of our resources (Karre, 2024a). Requirement analysts of VR products are the target users of VReqST. This web-based tool is designed to help requirement analysts capture requirements quickly and refer to them easily. In turn, this eases the work of VR designers and developers by streamlining the development of VR software products and enabling swift traceability to the original requirements.

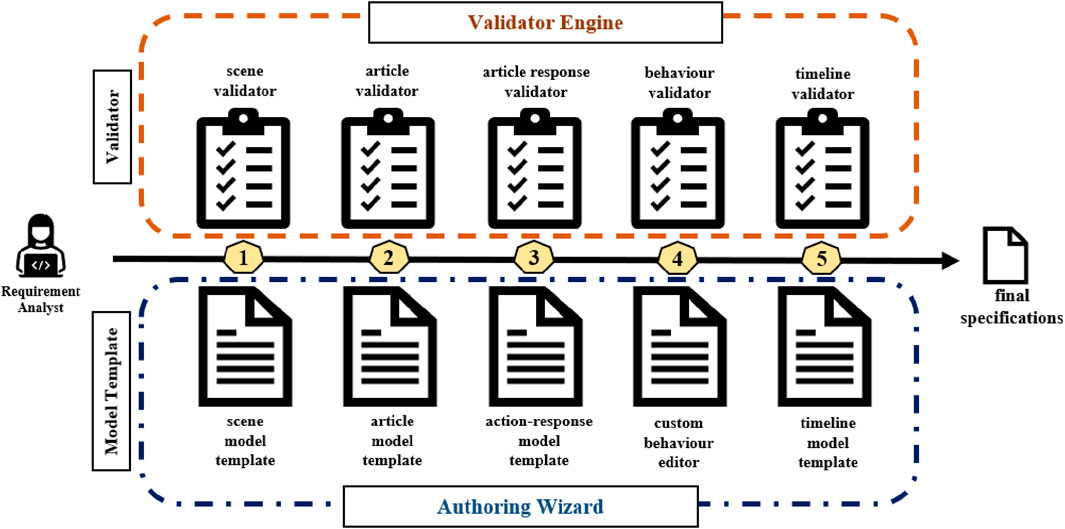

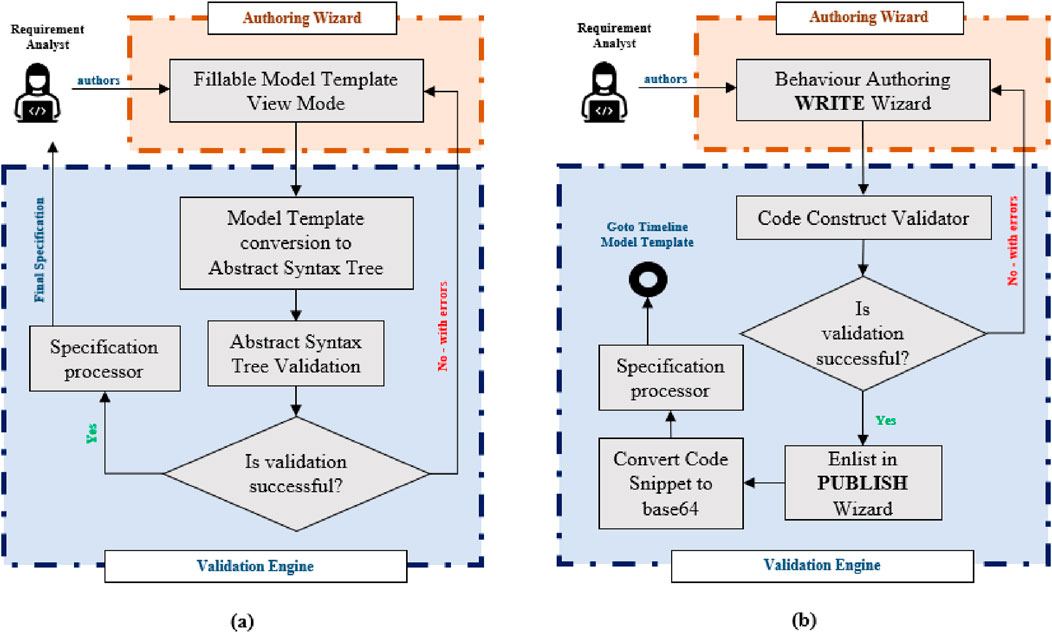

As shown in Figure 13, VReqST has two sections: the authoring wizard and the validator engine. Requirement analysts interact only with the authoring wizard while specifying VR software products, whereas the validator engine runs in the background. Requirement analysts start with scene-related details; that is, the scene model template is the first stage of the VR requirement specification. Once the required model attributes of the scene model template are composed, the specification is validated using the scene validator. Upon successful validation, the requirement analyst can compose the article model template, the second stage. All dependent scene model attributes are carried forward to the article model template, and the article model attributes are validated using the article validator. Upon successful validation, the scene- and article-related model attributes are carried forward to the third stage, action response. Here, the requirement analyst must define all possible action responses between the articles listed in the article model template, along with each article's initial, final, and transition states. After the action response model template is complete, all scene, article, and action response attributes are carried into the custom behavior editor, the fourth stage. The custom behavior editor helps requirement analysts define behaviors using logic and conditional constructs. For example, scoring criteria for VR games, or custom algorithms such as co-location or locomotion for VR simulation scenes, can be codified at this stage.

The codified constructs are validated using the behavior validator. After authoring behaviors, requirement analysts present the overall specification in a timeline, the fifth and final stage, where the attributes defined in all previous stages can be arranged as synchronous and asynchronous events. A timeline validator validates the attributes associated with the timeline model template. After all stages are complete, a final specification file combining all the model attributes is published, and designers and developers can use it to initiate VR software product development. All stages in VReqST are linear and cannot be skipped: the specification always proceeds from scene to article, action response, behavior, and timeline. For example, articles cannot be specified before the scene information has been specified. Analysts can, however, navigate back to an earlier stage once its validation is complete. We describe the detailed validation workflow of VReqST in the following subsection.
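The linear, gated workflow described above can be sketched as a small state machine. This is an illustrative Python sketch under stated assumptions, not VReqST's implementation (which is a MERN web application); the class name `SpecificationWizard`, the stage keys, and the per-stage validator callables are hypothetical.

```python
from typing import Callable

STAGES = ["scene", "article", "action_response", "behavior", "timeline"]


class SpecificationWizard:
    """Hypothetical model of the five-stage authoring flow: each stage must pass
    its validator before the next one opens, and validated stages stay revisitable."""

    def __init__(self, validators: dict[str, Callable[[dict, dict], bool]]):
        self.validators = validators
        self.specs: dict[str, dict] = {}  # validated content per stage
        self.current = 0                  # index of the next stage to author

    def submit(self, stage: str, content: dict) -> None:
        index = STAGES.index(stage)
        if index > self.current:
            raise ValueError(f"cannot author '{stage}' before '{STAGES[self.current]}'")
        # Attributes from all earlier validated stages are carried forward as context.
        context = {s: self.specs[s] for s in STAGES[:index]}
        if not self.validators[stage](content, context):
            raise ValueError(f"validation failed for stage '{stage}'")
        self.specs[stage] = content
        self.current = max(self.current, index + 1)

    def publish(self) -> dict:
        if self.current < len(STAGES):
            raise ValueError("all five stages must be validated before publishing")
        return dict(self.specs)  # combined final specification


def accept_all(content: dict, context: dict) -> bool:
    return True  # placeholder validator for the demonstration


wizard = SpecificationWizard({stage: accept_all for stage in STAGES})
wizard.submit("scene", {"name": "Bowling alley"})
try:
    wizard.submit("action_response", {"ball": "hits pin"})
except ValueError as err:
    print(err)  # cannot author 'action_response' before 'article'
```

A fuller version would also re-validate later stages whenever an earlier stage is edited, since carried-forward attributes may change.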

2.9 VReqST validation workflow

Figure 14 illustrates an overall validation workflow of specifications of the model template using the respective validator. This section is divided into two parts: validation of model template files and validation of the custom behavior editor.

Figure 14. (A) Validation workflow of a model template in the VReqST tool. (B) Custom behavior editor in the VReqST tool.

2.9.1 Model template validation

Figure 14A provides detailed steps for validating a model template file. VReqST has four model templates (scene, article, action response, and timeline), and the validation steps apply to each of them. The steps for validating a model template file are as follows.

2.9.2 Custom behavior editor validation

Figure 14B provides detailed steps for validating the custom behavior editor. As shown in Figure 13, stages 1, 2, 3, and 5 are fillable model template files validated against their respective validator files. Stage 4 of the authoring wizard, however, contains a custom behavior editor in which requirement analysts write custom behaviors for articles and their action responses. These behaviors are business rules that state the expected output for a corresponding input; they are use-case-based and vary from product to product. The steps involved in validating the behaviors are as follows.

2.10 Understanding behavior and state in VReqST

For example, to author a “Behavior,” assume that we are building a tic-tac-toe game in VR with two articles, circle (O) and cross (X). If three of the same article form a straight line (vertical, horizontal, or diagonal), the player with that article is deemed the winner. To author this behavior in stage 4, we use the code snippet below with a CASE condition. Everything in bold is a pre-defined code construct from behave.json and is validated against it; the text in italics consists of articles from article.json; and the rest is free-form text, which is not validated.

```
CASE # Circle OR Cross = true #
    C1: forms straight vertical line = player:winner;
    C2: forms straight horizontal line = player:winner;
    C3: forms straight diagonal line = player:winner;
    C4: player: next_step;
!
```
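To make the intent of conditions C1 to C3 concrete, the following Python sketch shows one way a developer might implement the win condition on a 3 × 3 board. The board representation (rows holding "O", "X", or None) and the function name `winner` are assumptions; in an actual VR build, this logic would live inside the game engine rather than in Python.

```python
from typing import Optional

Board = list[list[Optional[str]]]  # 3 x 3 grid holding "O", "X", or None


def winner(board: Board) -> Optional[str]:
    """Return "O" or "X" if that article forms a straight line (C1 to C3),
    otherwise None, meaning play continues (C4: next step)."""
    lines = []
    lines.extend(board)                                                # horizontal (C2)
    lines.extend([[board[r][c] for r in range(3)] for c in range(3)])  # vertical (C1)
    lines.append([board[i][i] for i in range(3)])                      # diagonal (C3)
    lines.append([board[i][2 - i] for i in range(3)])                  # anti-diagonal (C3)
    for line in lines:
        if line[0] is not None and line.count(line[0]) == 3:
            return line[0]
    return None


board = [["X", "O", None],
         ["X", "O", None],
         [None, "O", "X"]]
print(winner(board))  # O
```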

Requirement analysts can specify behaviors using built-in code constructs. These constructs do not follow existing programming standards; they are custom-made for VReqST and follow a simplified built-in syntax that is easy for non-developers to use. Based on feedback from the VR community, and considering that requirement analysts are not full-scale developers, we designed the behavior editor as a low-code tool.
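The split between validated constructs and unvalidated free-form text can be sketched as follows. The regular expressions standing in for behave.json and the article set standing in for article.json are assumptions inferred from the tic-tac-toe snippet; VReqST's actual behavior grammar is defined in its own files.

```python
import re

# Hypothetical stand-ins for behave.json (constructs) and article.json (articles).
ARTICLES = {"Circle", "Cross"}
CASE_HEADER = re.compile(r"^CASE\s+#\s*(?P<condition>.+?)\s*#$")
CLAUSE = re.compile(r"^C\d+:\s*(?P<body>.+);$")
END = "!"


def validate_case_block(text: str) -> list[str]:
    """Check the CASE ... ! skeleton and the article names in the CASE condition.
    The free-form text inside each clause is deliberately left unvalidated."""
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    header = CASE_HEADER.match(lines[0]) if lines else None
    if header is None:
        return ["block must start with 'CASE # <condition> #'"]
    errors = []
    # Capitalized words other than OR/AND are treated as article references (a simplification).
    for word in re.findall(r"[A-Za-z_]+", header["condition"]):
        if word[0].isupper() and word not in {"OR", "AND"} and word not in ARTICLES:
            errors.append(f"unknown article in condition: {word}")
    for number, line in enumerate(lines[1:-1], start=2):
        if not CLAUSE.match(line):
            errors.append(f"line {number}: expected 'C<n>: ... ;'")
    if lines[-1] != END:
        errors.append("block must end with '!'")
    return errors


snippet = """
CASE # Circle OR Cross = true #
C1: forms straight vertical line = player:winner;
C2: forms straight horizontal line = player:winner;
C3: forms straight diagonal line = player:winner;
C4: player: next_step;
!
"""
print(validate_case_block(snippet))                             # []
print(validate_case_block(snippet.replace("Cross", "Square")))  # ['unknown article in condition: Square']
```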

Similarly, a “State” transition can be specified by defining state parameters, such as the initial and final states, together with transition parameters, such as the event and target. For example, to describe the state transition of a sunrise in VReqST, the requirement is that while the sun rises, the sun rays should cast shadows and the snow should turn to water within a specified time.

Listing 1 Example: State template.

```json
{
  "state": [
    {
      "id": "sunrise2013",
      "name": "Sun Rise in New York",
      "initial": "dark horizon",
      "transition": [
        {
          "object": ["Sun", "trees"],
          "event": "rising",
          "target": "sun ray cast shadow on all assets from east to west"
        },
        {
          "object": ["Sun", "Snow"],
          "event": "rising",
          "target": "turn the snow into water gradually after 35 seconds"
        }
      ],
      "final": "Display sunrise with cast shadow on trees and snow converted to water after 50 seconds"
    }
  ]
}
```
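Because the state template is plain JSON, its structure can be checked mechanically. The Python sketch below assumes that every state needs `id`, `initial`, `transition`, and `final`, that every transition needs `object`, `event`, and `target` (as in Listing 1), and that transition objects should be declared articles; VReqST's own validator may enforce more or different rules.

```python
import json

REQUIRED_STATE_KEYS = {"id", "initial", "transition", "final"}
REQUIRED_TRANSITION_KEYS = {"object", "event", "target"}


def check_states(document: str, known_articles: set[str]) -> list[str]:
    """Return structural problems found in a state template (empty list if none)."""
    problems = []
    for state in json.loads(document)["state"]:
        sid = state.get("id", "<no id>")
        missing = REQUIRED_STATE_KEYS - set(state)
        if missing:
            problems.append(f"{sid}: missing {sorted(missing)}")
        for i, transition in enumerate(state.get("transition", [])):
            gaps = REQUIRED_TRANSITION_KEYS - set(transition)
            if gaps:
                problems.append(f"{sid}: transition {i} missing {sorted(gaps)}")
            # Objects named in a transition should be articles declared elsewhere.
            for obj in transition.get("object", []):
                if obj not in known_articles:
                    problems.append(f"{sid}: transition {i} references unknown article {obj!r}")
    return problems


# Abridged version of Listing 1 (target and final texts shortened).
listing_1 = json.dumps({"state": [{
    "id": "sunrise2013", "name": "Sun Rise in New York", "initial": "dark horizon",
    "transition": [
        {"object": ["Sun", "trees"], "event": "rising", "target": "cast shadows east to west"},
        {"object": ["Sun", "Snow"], "event": "rising", "target": "turn snow into water"},
    ],
    "final": "sunrise with shadows; snow converted to water"}]})
print(check_states(listing_1, {"Sun", "trees", "Snow"}))  # []
print(check_states(listing_1, {"Sun", "trees"}))          # ["sunrise2013: transition 1 references unknown article 'Snow'"]
```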

2.11 VReqST features

The following are a few significant features of the VReqST tool that requirement analysts can adopt, depending on the sophistication of their VR software product:

2.11.1 Example specifications

We used VReqST to specify requirements for two VR applications to understand its capabilities and shortcomings: a VR bowling alley game and a VR virtual art gallery that uses a limitless locomotion algorithm called PragPal (Mittal et al., 2022). We developed these VR scenes using the UNITY game engine (version 2022.2.16), compatible with the Oculus Meta Quest 2 head-mounted device.

2.11.2 VR bowling alley game

A VR bowling alley game simulates a facility where bowling is played. It is a multiplayer game that follows standard bowling rules and is played within a 30 ft (length) × 30 ft (breadth) × 30 ft (height) virtual play area. The game contains a bowling ball, ten pins, a pinsetter that sets the pins in a frame, an alley lane, and a gutter that acts as a boundary for the alley lane. A control desk registers parties and bowlers and assigns parties to game lanes. Each player in a party plays ten games, and the scores are displayed on the scoreboard. The player in a party with the highest score across all ten games wins. We authored the VR bowling alley game specifications and shared them with a VR developer to design and develop the game. The final working game (Kandhari, 2023) and the sample specifications are available as part of our resources (Karre, 2024b).

2.11.3 VR virtual art gallery

The VR virtual art gallery is an endless corridor formed by two parallel walls. End users walk forward through the gallery to explore the art exhibits on these walls. Under the influence of an underlying locomotion algorithm, the user's path becomes virtually limitless within a limited physical space; in other words, within a fixed physical play area, the user can navigate freely in a virtual play area. We authored the requirement specifications of this virtual art gallery and its limitless locomotion algorithm using VReqST to study how well algorithms can be specified in the behavior stage. The final working application (Mittal et al., 2022) and the sample specifications are available as part of our resources (Karre, 2024b). This exercise served as a unit test of VReqST and demonstrated its ability to specify custom features and algorithms for a given VR scene.

3 Results

3.1 Validating VReqST in practice

How do we validate a requirement specification method? Software requirements are not static; they evolve throughout the software development lifecycle. As a project progresses, new requirements may arise from changing user needs, technological advancements, and stakeholder feedback, so software development teams must be agile and adaptable in accommodating them. At the same time, requirement specifications are vital in ensuring that software products meet the desired functionality, performance, and quality standards throughout this evolution. They serve as a guiding framework for the development team: they capture the software's intended behavior and enable practitioners to make informed design decisions and build solutions that align with the specified requirements. By adhering to these specifications, teams can create reliable, efficient products that meet their users' expectations. The consistency, completeness, and correctness of a requirement (R) specification (S) can only be validated and verified in the context of a given domain (D) (Gervasi and Nuseibeh, 2000). As shown in Equation 4, given the assumption that the machine will perform as instructed by the specification and that our model of the domain faithfully predicts how the real world will behave (Gervasi and Nuseibeh, 2000), the domain model and the specification together must entail the requirements:

$$D, S \vdash R \tag{4}$$
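For small propositional models, the entailment relation of Equation 4, D, S ⊢ R, can be checked by enumerating every truth assignment. The Python sketch below does this for a hypothetical grab interaction; the propositions and the formulas D, S, and R in it are illustrative inventions rather than VReqST artifacts, and realistic VR requirements would need richer logics and tools.

```python
from itertools import product
from typing import Callable

World = dict[str, bool]
Formula = Callable[[World], bool]


def entails(variables: list[str], premises: list[Formula], conclusion: Formula) -> bool:
    """True if every truth assignment satisfying all premises also satisfies the conclusion."""
    for values in product([False, True], repeat=len(variables)):
        world = dict(zip(variables, values))
        if all(p(world) for p in premises) and not conclusion(world):
            return False  # counterexample: D and S hold but R does not
    return True


VARS = ["trigger_pressed", "hand_on_ball", "ball_held"]
# D (domain): the ball can only be held while the hand touches it.
D = lambda w: not w["ball_held"] or w["hand_on_ball"]
# S (specification): the ball is held exactly when the trigger is pressed on contact.
S = lambda w: w["ball_held"] == (w["trigger_pressed"] and w["hand_on_ball"])
# S_weak (a flawed specification): the ball is held whenever the hand touches it.
S_weak = lambda w: w["ball_held"] == w["hand_on_ball"]
# R (requirement): the ball is never held unless the trigger is pressed.
R = lambda w: not w["ball_held"] or w["trigger_pressed"]

print(entails(VARS, [D, S], R))       # True
print(entails(VARS, [D, S_weak], R))  # False
```

The weaker specification fails because a hand touching the ball without pressing the trigger satisfies both D and S_weak but violates R.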

These observations hold true for VR software systems and the VR practitioner community as well. The critical difference, however, is that the evolution of VR requirements is very difficult to track and supervise without a structured approach to comprehending VR as a technology domain. VReqST aims to address this gap: it is developed to specify, maintain, track, and manage the evolution of VR software product requirements more precisely. With this motivation, we reached out to the VR community to understand how VReqST can influence this evolution and help ease overall VR product development. In the following subsections, we present our attempts to validate the adoption and impact of VReqST in practice.

3.2 VR community adoption and feedback

We reached out to the VR community in industry through various channels, such as LinkedIn, VR/AR meetups, online VR summits, Discord XR Connect, the Khronos Group, Deloitte Digital, and UNITY Unite Expo (2022 and 2023), to promote VReqST for validation, adoption, and feedback. We defined our evaluation study's objectives to be specific, measurable, achievable, relevant, and time-bound, which gave our validation a clear direction and helped us improve the tool incrementally for wider adoption. Of the more than 500 practitioners we reached, 101 agreed to deploy the tool in-house for validation, and 53 of these actively participated in iterations and helped us revise and improve the tool over time. These participants include business analysts, VR product managers, VR program managers, VR quality engineers, and VR developers. This multi-year empirical study was conducted between October 2022 and January 2024. The steps followed by the practitioners who implemented and validated VReqST in practice are as follows.

In the following subsections, we present a detailed overview of our study setup, the feedback received in three iterations, and the details of the impact survey.

3.3 VReqST validation study setup

The following steps are involved in our validation study setup with VR community practitioners in respective iterations.

3.3.1 Iteration 1

3.3.2 Iteration 2

3.3.3 Iteration 3

In the following sub-section, we present detailed observations shared by VR practitioners during each iteration, along with the implementation information related to those enhancements in detail.

3.4 VReqST impact and experience survey

After a thorough review of iterations and implementing feedback on VReqST, we reached out to the VR practitioners to share their experiences on using VReqST and the impact it has made on their day-to-day activities. The following are the questions posed to VR practitioners to help us understand their experiences and the impact of VReqST on their VR product development journey in contrast to their regular processes.

1. Is the VReqST tool compatible with the existing tools that you use for your overall VR software product development?

2. Is the overall user-interface and design of the VReqST tool intuitive and user-friendly for your team members?

3. Does the VReqST tool allow you to specify requirements for necessary features in your VR software to meet your business needs?

4. With whom and how frequently did you use the VReqST tool while specifying or explaining the requirements to your target users (developers or stakeholders)?

5. Are you able to completely specify and track the requirements associated with your feature in a given VR scene?

6. Does the tool allow you to correctly specify the requirement as per the conventional standards of the VR technology domain?

7. Is the VReqST tool’s performance and stability under different conditions and workloads acceptable for your day-to-day business?

8. Do you believe the VReqST tool is extendable to meet your current product needs and adaptable to future needs, considering updates in the VR technology domain?

Overall, our validation study and the impact and experience survey of VReqST focus on the quality attributes listed below, in the order shown. We evaluated the benefits of VReqST using these attributes and share detailed observations from VR practitioners for each of them to better quantify the results.

4 Discussion

4.1 Observations—validation study

While releasing incremental versions of VReqST over three iterations, we gathered feedback on the tool through informal interviews with VR practitioners. We summarize the observations gathered across all iterations using an “Abductive Reasoning” approach and present them below in two categories: merits and enhancements.

4.1.1 Merits

4.1.2 Enhancements

In addition to these consolidated observations, we present below the detailed feedback gathered during each iteration, along with the corresponding revisions to the VReqST tool. We shared the revised tool during each iteration and incorporated these changes accordingly.

Iteration 1: The detailed changes conducted as part of our iteration 1 are listed below. We included all the required changes in the form of figures as part of Supplementary Material.

Iteration 2: The detailed changes conducted as part of our iteration 2 are listed below. We included all the required changes in the form of figures as part of Supplementary Material.

Iteration 3: The detailed changes conducted as part of our iteration 3 are listed below. We included all the required changes in the form of figures as part of Supplementary Material.

After incorporating thorough feedback from the VR community, we updated the VReqST tool in three iterations and released it as an open-source tool. Beyond this feedback, we surveyed the participants to understand the impact and overall experience of using VReqST in practice, as detailed in the following subsection.

4.2 Observations—impact and experience survey

Between December 2023 and January 2024, we invited the 53 VR practitioners who participated in the iterative validation study to take part in an impact and experience survey about their teams' overall experience with VReqST and with using its specifications in practice. Of these 53 practitioners, 26 responded (a response rate of about 49%). The detailed observations from the survey are provided in the following sections.

4.2.1 Demography of participants

Most organizations that participated in this impact and experience survey have been building enterprise VR products for approximately 6 years on average, ranging from a minimum of 2 years to more than 20 years, and are based in the US, the UK, India, China, Europe, and Australia. The 26 participants include engineering managers, engineering directors in various capacities, product managers, technical architects, and software engineers who work in XR, an emerging technology domain. The participants primarily build VR products in the following domains: healthcare, defense, simulation, digital twins, interior design, inner environment design, industrial layout, corporate education, compliance training, entertainment, gaming, drug discovery, medical protocol design, gaming content, cinema, retail marketing, marketing campaigns, authoring tools, metaverse, fashion, ads, wearables, financial analytics, tourism, consulting, enterprise banking, and recreational tours. These practitioners are from Deloitte Digital, Plug XR, Cornerstone On Demand, SAP-XR, Samsung Studios, SkillSoft, UNITY Developer Group, ThoughtWorks Dev Group, and the Khronos Dev Community, and many other open-source VR practitioners also provided valuable feedback.

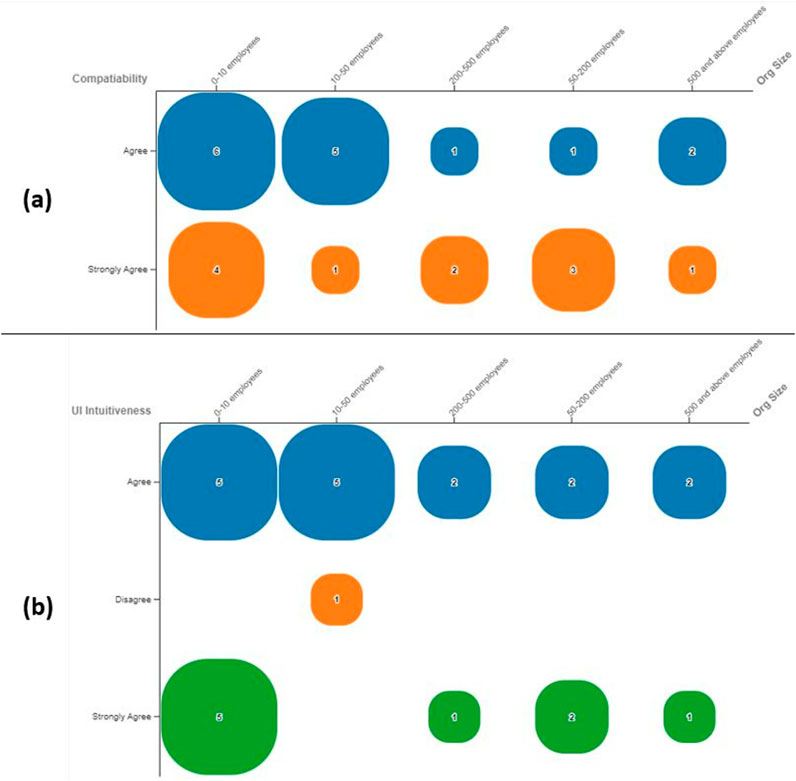

4.2.2 Compatibility

Figure 15A shows that most participants find the VReqST tool compatible with their existing processes and easy to integrate. In particular, small organizations (fewer than 200 employees) found VReqST highly compatible with their regular VR product development processes.

Figure 15. (A) Compatibility of VReqST with existing processes, grouped by organization size. (B) Ease of UI/UX of VReqST in practice, grouped by organization size.

4.2.3 Ease of use (UI/UX)

Figure 15B shows that most participants find the VReqST tool intuitive, with a user interface and overall user experience that are manageable in practice. Small organizations (fewer than 200 employees) found VReqST's UI design highly consistent and easy to use in practice.

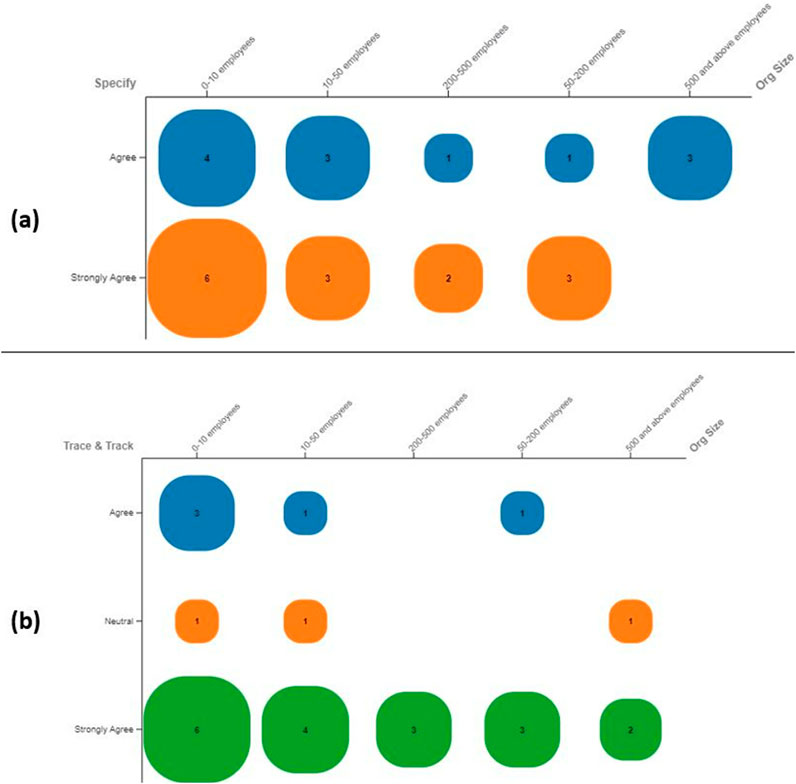

4.2.4 Frequency of use

Figure 16A shows that most participants who used VReqST frequently or occasionally to author requirement specifications for their VR products reported that VReqST can specify requirements in detail, irrespective of organization size. In almost all cases, participants rated VReqST as either highly capable and easy to use for specifying requirements or at least manageable compared with their conventional approaches.

Figure 16. (A) Ability to specify VReqST specifications by frequency of usage across organizations. (B) Ability to track VReqST requirements by frequency of usage across different classes of organizations.

4.2.5 Feature tracking

Figure 16B shows that most participants who used VReqST frequently or occasionally to author requirement specifications for their VR products reported that VReqST makes it easy to track and trace requirements, irrespective of organization size. In almost all cases, they found VReqST highly capable of tracing requirements to the overall features across the requirement specifications of VR products, especially compared with conventional approaches.

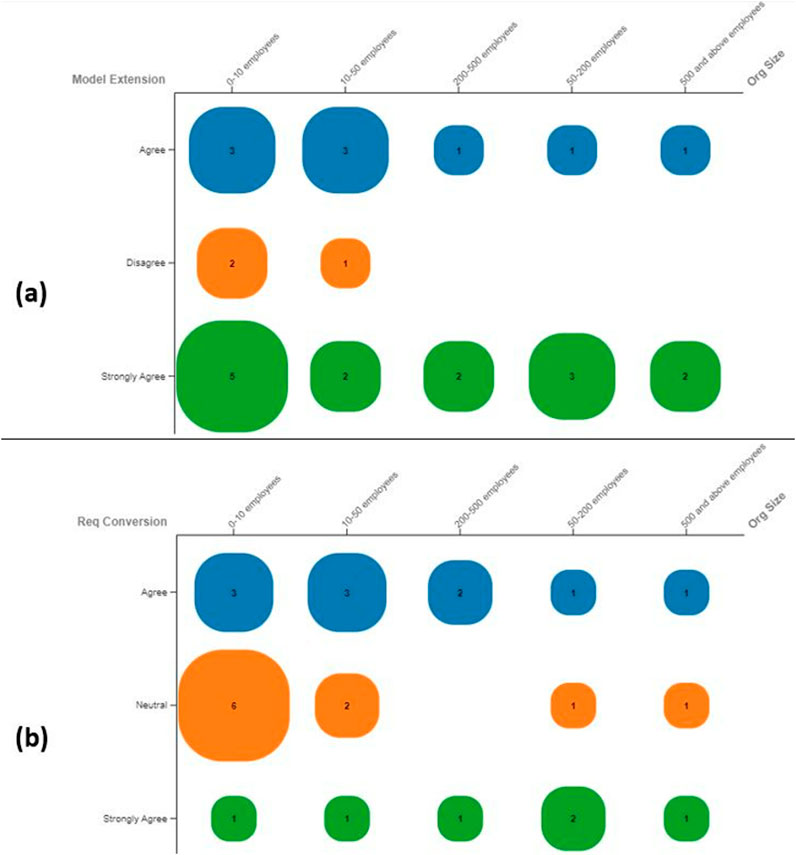

4.2.6 Extendability

Figures 17A, B show that most participants who used VReqST to author requirement specifications for their VR products found it highly extendable and adaptable in practice. It can be extended to accommodate new model attributes because the underlying model validator can be customized for domain- or context-specific applications. Most small and mid-range organizations working on context-specific VR products considered VReqST a better alternative for specifying requirements to meet their business goals.

Figure 17. (A) Ability to extend VReqST to domain-specific applications, grouped by the ability to specify requirements based on conventional standards across different classes of organizations. (B) Ability to convert conventional specifications to VReqST specifications.

4.2.7 Additional observations

Along with the survey questionnaire, we asked the survey participants to share their additional insights on adopting and using VReqST. The following subsection illustrates detailed responses in participants’ words, which we have coded into specific themes for better illustration.

4.3 VReqST extensions in VR product development

Having worked with VReqST, VR community practitioners suggested the following future extensions to the tool to ease overall VR software product development. We detail them as follows.

4.3.1 Code generation

VReqST-based requirement specifications can be extended further to drive model-driven code generation tools for scenes and three-dimensional articles. Irrespective of the VR technology stack, VReqST specifications can be used to develop new tools that generate alternate versions of code snippets for behaviors on demand during scene play. Extending VReqST toward automated scene generation and run-time behavior generation is one of its critical future directions.
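The following Python sketch illustrates the code-generation idea by rendering a Unity C# script stub from one article entry of a specification. The JSON fields (`name`, `mass`), the class layout, and `generate_stub` are hypothetical; only the Unity names in the emitted C# (`MonoBehaviour`, `RequireComponent`, `Rigidbody`, `GetComponent`, `mass`, `Awake`) are real APIs. A production generator would need the full VReqST schema and a proper template engine.

```python
import json

# C# template for a generated component; doubled braces are literal braces for str.format.
TEMPLATE = """using UnityEngine;

// Generated from the requirement specification of article: {name}
[RequireComponent(typeof(Rigidbody))]
public class {class_name} : MonoBehaviour
{{
    void Awake()
    {{
        GetComponent<Rigidbody>().mass = {mass}f;
    }}
}}
"""


def generate_stub(article_json: str) -> str:
    """Render a C# MonoBehaviour stub for one article (hypothetical schema)."""
    article = json.loads(article_json)
    class_name = "".join(part.capitalize() for part in article["name"].split())
    return TEMPLATE.format(name=article["name"], class_name=class_name, mass=article["mass"])


print(generate_stub('{"name": "bowling pin", "mass": 1.5}'))
```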

4.3.2 Test case generation

As VReqST captures requirements in detail, automated generation of test cases for scene dynamics, article properties, action responses, behaviors, and the timeline of events can be readily explored to assess the quality of a delivered VR scene. A novel, customizable software testing framework could be developed as an external wrapper around VReqST to generate test cases on demand at run time and thereby help improve the quality and performance of the VR scene.
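One way to prototype this idea is to turn each specified action response into a Given/When/Then test description. The `ActionResponse` fields and the bowling entries below are hypothetical stand-ins for VReqST's action response template, not its actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionResponse:
    source: str          # article that performs the action
    action: str          # what it does
    target: str          # article acted upon
    expected_state: str  # state the target should reach


def generate_test_cases(responses: list[ActionResponse]) -> list[str]:
    """Turn each specified action response into a Given/When/Then test description."""
    cases = []
    for n, r in enumerate(responses, start=1):
        cases.append(
            f"TC{n:03d}: Given {r.source} and {r.target} in the scene, "
            f"when {r.source} {r.action} {r.target}, "
            f"then {r.target} is '{r.expected_state}'"
        )
    return cases


bowling = [
    ActionResponse("ball", "hits", "pin", "knocked down"),
    ActionResponse("pinsetter", "resets", "pin", "standing"),
]
for case in generate_test_cases(bowling):
    print(case)
```

Generating descriptions rather than executable tests keeps the sketch engine-neutral; an engine-specific harness would map each case to concrete scene setup and assertions.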

4.3.3 Risk and dependencies

With detailed VReqST requirements, requirement analysts can assess the risks of prioritizing or extending new features and understand how articles and their action responses depend on each other. These dependencies help requirement analysts estimate the consequences of the continuous evolution of a VR feature and contribute toward a higher-quality VR product.
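The dependency analysis described above can be sketched as a reachability query over a graph whose nodes are articles and whose edges are action responses. The edge list (loosely based on the bowling alley example) and the function name `affected_by` are hypothetical; the sketch only shows how a change to one article could be traced to every article it may affect.

```python
from collections import deque


def affected_by(change: str, edges: list[tuple[str, str]]) -> set[str]:
    """Return every article reachable from `change` via action-response edges
    (source article -> article it acts on), excluding `change` itself."""
    graph: dict[str, list[str]] = {}
    for source, target in edges:
        graph.setdefault(source, []).append(target)
    seen, queue = {change}, deque([change])
    while queue:  # breadth-first search
        for nxt in graph.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - {change}


# Hypothetical action responses from the bowling alley example.
edges = [("player", "ball"), ("ball", "pin"), ("pin", "scoreboard"),
         ("pinsetter", "pin"), ("control desk", "scoreboard")]
print(sorted(affected_by("ball", edges)))  # ['pin', 'scoreboard']
```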

4.3.4 Traceability

Because VReqST requirement specifications establish relationships among VR concepts such as scenes, articles, action responses, and timelines, changes made to one concept are reflected across the others. These specifications support design traceability, helping stakeholders understand the rationale behind design decisions. New tools can be developed alongside VReqST to handle code traceability, aiding the understanding of code segments and assisting with debugging. Such tools can also support test traceability to help testers identify gaps in test-case coverage.

4.3.5 Maintenance and portability

With sufficiently detailed requirements, the challenges of VR platform portability, i.e., porting VR scenes developed with one technology stack to another, can be mitigated to some extent. As VReqST can distinguish platform-independent requirements from platform-dependent ones, VR developers can maintain platform-independent source code separately from the regular code branch. This practice may improve the maintainability and versioning of VR source code.

4.3.6 New types of requirements

The current version of VReqST does not support security, privacy, and usability requirements. The VR community recommends adding customizable security, privacy, and usability requirements to VReqST as part of a new model template. These requirement types are non-generic and domain-centered, so they may vary from application to application. Because VReqST is customizable, domain-specific requirement analysts can accommodate such requirement types by composing a custom model template alongside the bare-minimum model template.

4.4 Proof of concept: specifying requirements for depression detection application using VR

4.4.1 Background

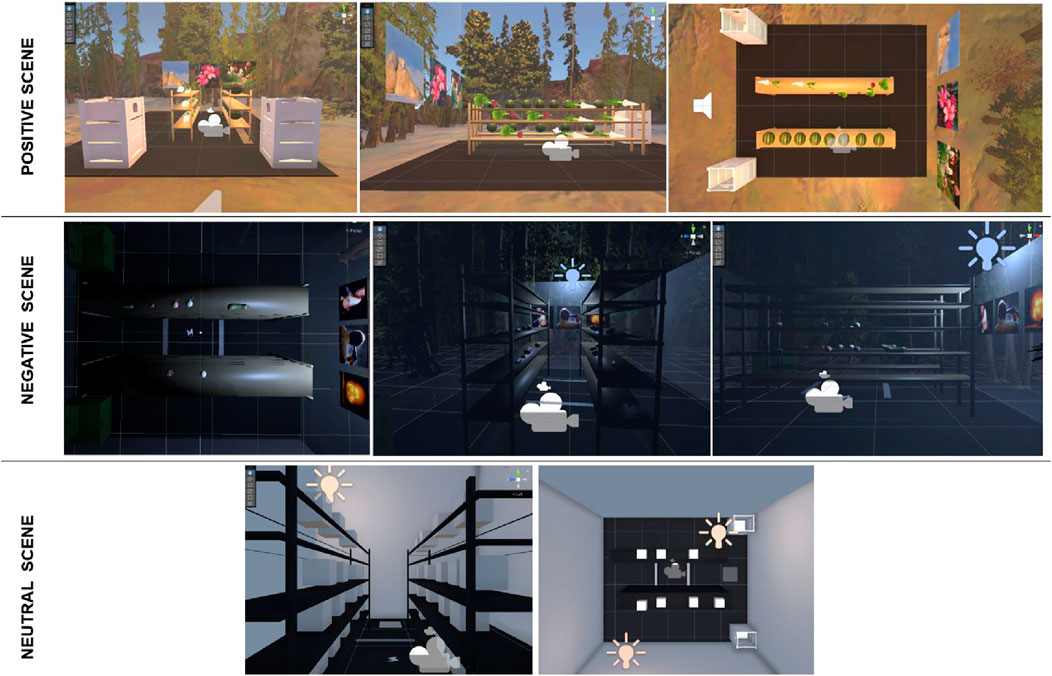

Between January 2023 and October 2023, researchers from the Software Engineering Research Center and the Cognitive Science Laboratory at IIIT Hyderabad developed multiple virtual reality scenes for depression detection and intervention. These VR scenes are used as a medium to evoke positive, negative, and neutral emotions through positive, negative, and neutral environments, respectively. Figure 18 illustrates the positive, negative, and neutral scenes in top and side views. The requirement specifications of these VR scenes were first authored using VReqST, then revised and finalized for design and development. These VR scenes are developed in UNITY Game Engine (Unreal Game Engine, 2022) and are visualized using HTC Vive Pro 2. This headset has a dual RGB low persistence LCD screen with a resolution of 2,448

4.4.2 Experiment

The three VR scenes are designed to evoke positive, negative, and neutral emotions in participants as they walk through the virtual environment. While a participant walks under the influence of a VR scene, their gait pattern is captured using VelGmat (Wani et al., 2022), a Velostat-based gait mat that records gait pressure. Because each scene evokes a different emotion, the gait patterns recorded under the positive, negative, and neutral scenes are expected to reveal the participant's level of depression. Each participant walks around the environment twice: once clockwise around the right shelf and once anticlockwise around the left shelf. In each round, on the way back, the participant picks up the specified object and places it in the respective crate.
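Because VReqST captures a timeline of events, the walking task can be read as an ordered event list. The following is a minimal sketch under that reading; `TimelineEvent`, `walking_task_timeline`, and the action and target names are hypothetical and do not reflect VReqST's actual timeline vocabulary.

```python
from typing import NamedTuple, Optional


class TimelineEvent(NamedTuple):
    """One step of the walking task; field names are illustrative assumptions."""
    action: str
    target: str
    direction: Optional[str] = None


def walking_task_timeline():
    """Build the ordered event list for both rounds of the walking task."""
    events = []
    rounds = (("right_shelf", "clockwise"), ("left_shelf", "anticlockwise"))
    for shelf, direction in rounds:
        events.append(TimelineEvent("walk_around", shelf, direction))
        # On the way back, the participant picks up and places the specified object.
        events.append(TimelineEvent("pick_up", "specified_object"))
        events.append(TimelineEvent("place_in", "respective_crate"))
    return events


for step, event in enumerate(walking_task_timeline(), start=1):
    print(step, event.action, event.target, event.direction or "")
```

Keeping the rounds as data makes it straightforward to check that the specified order (right shelf first, then left shelf) is preserved in the built scene.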

4.4.3 VR scene requirements

All three environments have a 10 × 2 foot aisle with six-foot-high shelves on both sides, two feet apart. Two similar aisles on the right and left allow participants to circle the right and left shelves clockwise and anticlockwise, respectively. The walking area is separated from the respective terrain by a glass wall. The right aisle has an opaque wall with images of positive and negative environments. Both shelves are stacked with four objects on three different levels. The aisles on the sides have crates in the corners.
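To illustrate how these shared scene requirements might be captured in a machine-readable form, here is a hedged sketch that encodes the layout as a JSON-serializable dictionary and checks one consistency rule implied by the text: the central aisle runs between the two shelves, so its width should equal the shelf spacing. The key names and `check_layout` are assumptions for illustration, not the schema VReqST actually emits.

```python
import json

# Hypothetical JSON-style encoding of the layout shared by all three scenes.
# Key names are assumptions; dimensions are in feet, as stated in the text.
scene_layout = {
    "central_aisle": {"length_ft": 10, "width_ft": 2},
    "shelves": {
        "count": 2,
        "height_ft": 6,
        "spacing_ft": 2,  # shelves on both sides of the aisle, two feet apart
        "objects": 4,     # "four objects on three different levels"
        "levels": 3,
    },
    "side_aisles": ["left", "right"],
    "terrain_separator": "glass_wall",
    "crate_location": "side_aisle_corners",
}


def check_layout(layout):
    """Return a list of inconsistencies between related dimensions."""
    problems = []
    aisle, shelves = layout["central_aisle"], layout["shelves"]
    # The aisle lies between the two shelves, so its width should match their spacing.
    if aisle["width_ft"] != shelves["spacing_ft"]:
        problems.append("aisle width differs from shelf spacing")
    for key in ("length_ft", "width_ft"):
        if aisle[key] <= 0:
            problems.append(f"non-positive aisle {key}")
    if shelves["height_ft"] <= 0:
        problems.append("non-positive shelf height")
    return problems


print(check_layout(scene_layout))  # []
print(json.dumps(scene_layout, indent=2))
```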

4.5 Future work

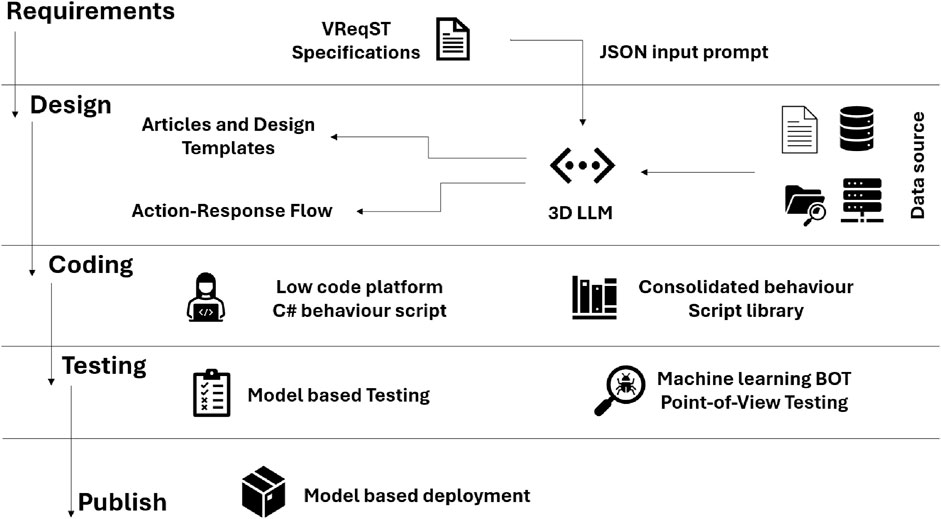

Model-driven development (MDD) is a software engineering methodology that emphasizes the creation and use of domain models, i.e., conceptual models representing various aspects of a software system. These models serve as blueprints that guide development from design to code generation. MDD abstracts technical aspects such as logic, data models, and user interfaces into visual representations that can be easily manipulated. Its ultimate goals are to enhance productivity, improve software quality, and facilitate collaboration among developers of varying skill levels. For virtual reality software products, our role-based model template for the VR technology domain can serve as the backbone of a model-driven development approach built on domain-specific large language models and generative AI.

Using this role-based model template, we developed VReqST, an open-source requirement specification tool that captures the bare-minimum concepts of the VR technology domain. It generates precise and clear specifications that can serve as input to our MDD pipeline for virtual reality. Figure 19 describes this development pipeline for virtual reality software. During the requirement phase, VReqST is used to specify requirements in JSON format, which are then used as input to a three-dimensional large language model (3-D LLM) specific to the VR domain. In the design phase, the 3-D LLM is trained on 3-D object data sources that provide data as point clouds, voxels, or meshes, and generates the desired articles, mock design templates for the terrain and three-dimensional environment, and the respective action-response flow.
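Since the pipeline's input is a JSON specification, a natural first step is to check that the specification contains every requirement kind before it reaches any downstream stage. This is a minimal sketch assuming hypothetical top-level section names that mirror the requirement kinds this paper lists (scene, articles, action responses, custom behaviors, and timeline); `load_spec` and `REQUIRED_SECTIONS` are illustrative names, not part of VReqST.

```python
import json

# Hypothetical section names mirroring the requirement kinds named in the paper.
REQUIRED_SECTIONS = ("scene", "articles", "action_responses", "behaviors", "timeline")


def load_spec(text):
    """Parse a JSON requirement spec and report any missing top-level sections."""
    spec = json.loads(text)
    if not isinstance(spec, dict):
        raise ValueError("spec must be a JSON object")
    missing = [section for section in REQUIRED_SECTIONS if section not in spec]
    return spec, missing


example = '{"scene": {"name": "positive"}, "articles": [], "timeline": []}'
spec, missing = load_spec(example)
print(missing)  # ['action_responses', 'behaviors']
```

Rejecting incomplete specifications early avoids spending generation effort on a scene whose behaviors or action responses were never specified.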

The output of the 3-D LLM can be used as input to a low-code platform that generates behavior scripts for specific VR game engines and builds a consolidated behavior script library for future reference. A model-based testing protocol can be formulated for these behavior scripts based on the generated code snippets, and the overall 3-D run-through can be evaluated by a machine learning bot through point-of-view testing. The tested artifact can then be deployed using a model-based deployment approach, in which changes to the model (scene, article, action response, behavior, and timeline artifacts) are deployed to production asynchronously and incrementally. Despite the many automated processes in the proposed MDD pipeline, it remains partially a human-in-the-loop process: human validation is required at a few stages to verify the exactness and quality of the resulting VR scene. Executing this pipeline at a large scale remains a hypothesis that requires substantial further validation.
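The incremental, human-validated deployment step can be sketched as follows, assuming each model artifact is a JSON-serializable value keyed by a name. Artifacts are fingerprinted with SHA-256 over canonical JSON, only new or changed artifacts are selected, and an `approve` callback stands in for the human-in-the-loop check. All names and example values here are hypothetical; this is one possible change-detection scheme, not the pipeline's confirmed design.

```python
import hashlib
import json


def fingerprint(artifact):
    """Stable hash of an artifact, computed over canonical JSON with sorted keys."""
    canonical = json.dumps(artifact, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_artifacts(deployed, current):
    """Names of artifacts that are new or differ from the deployed model."""
    return sorted(
        name
        for name, artifact in current.items()
        if name not in deployed or fingerprint(deployed[name]) != fingerprint(artifact)
    )


def plan_deployment(deployed, current, approve):
    """Return the changed artifacts that a human reviewer approved.

    `approve` is a placeholder for the human-in-the-loop validation step.
    """
    return [name for name in changed_artifacts(deployed, current) if approve(name)]


deployed = {"scene": {"terrain": "meadow"}, "timeline": [1, 2]}
current = {"scene": {"terrain": "desert"}, "timeline": [1, 2], "behavior": {"door": "open"}}
print(changed_artifacts(deployed, current))  # ['behavior', 'scene']
print(plan_deployment(deployed, current, approve=lambda name: name != "behavior"))  # ['scene']
```

Unchanged artifacts (here, `timeline`) are skipped entirely, which is what makes the deployment incremental. The sketch does not handle artifacts removed from the model; a fuller version would also report those.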

5 Related work

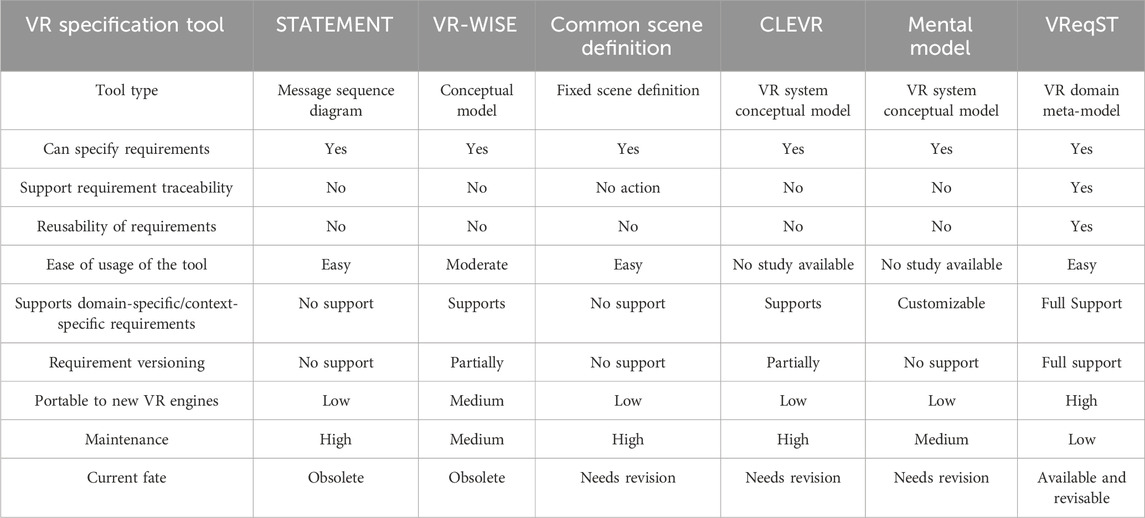

Although VR applications have received much attention in practice, VR as a domain still requires wider adoption; platform fragmentation and hardware dependencies are causing serious adoption issues. Early studies on requirement specification in VR are either human-centered or process-oriented. The VR community widely practices functional software requirement specification documentation, unified modeling language/legacy software requirement specification documentation (Ragkhitwetsagul et al., 2022), templatized specifications (Okere et al., 2016), and task tree documentation (Colombo et al., 2020). These approaches do not facilitate detailed, VR-centered specifications, and they depend on the requirement analyst's ability to articulate the requirements effectively; thus, they are subjective and arbitrary in practice. Other approaches, such as common scene definition (Belfore et al., 2005), conceptual VR prototyping (Manninen, 2002), and the mental model technique (Mohd Muhaiyuddin and Awang Rambli, 2014), are distinct requirement specification methods that have been applied to sample VR scenes and to the study of spatial presence. They are specific to simulation-based VR applications and do not extend to other VR applications. Kim et al. (1998) were the first to explore a process-oriented approach to requirement engineering in VR. Early tools like STATEMENT (Kim et al., 1998) based on POSTECH and message sequence diagrams (MSDs) paved the way for VR requirement engineering automation. Pellens et al. (2005) proposed VR-WISE for modeling the static part of VR, from conceptual specification to code generation. Seo and Kim (2002) proposed CLEVR, a model that considers functions, behavior, hierarchical modeling, user task and interaction modeling, and composition reuse in early VR systems. Souza et al. (2018) proposed a scene-graph-based approach for requirement specification and test automation of VR products.
Our previous systematic literature review on the requirement engineering practices of VR software products (Karre et al., 2024) also shows that almost all requirement specification approaches are either template-based or manual in practice. Most of these tools and approaches are obsolete and require a generalized revision to align with the contemporary state of the art in VR as a technology domain. Amid these challenges, we conceived and developed VReqST, a model-based tool for specifying the requirements of VR products, to address the observed gaps and for the VR community to adopt in practice. Table 4 illustrates the unique contributions of VReqST in comparison with existing VR requirement specification tools.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

Ethics statement

Written informed consent from participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

SK: conceptualization, data curation, formal analysis, funding acquisition, investigation, methodology, project administration, resources, software, supervision, validation, visualization, writing–original draft, and writing–review and editing. YR: conceptualization, data curation, formal analysis, funding acquisition, investigation, methodology, project administration, resources, software, supervision, validation, visualization, writing–review and editing, and writing–original draft.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This effort was carried out at the Design Innovation Center, IIIT Hyderabad, India, partially funded by the Ministry of Education, Government of India.

Acknowledgments

The authors thank the VR practitioners from SAP-XR, Deloitte Digital Laboratories, ThoughtWorks, Samsung Studios, UNITY Dev Group, and Khronos Dev Community for participating in the empirical study and sharing their insights.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Generative AI was used in the creation of this manuscript. We acknowledge that the image in Figure 11 (iv) was generated by OpenAI’s DALL-E 2 on December 15, 2023, for reference purposes only.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2024.1471579/full#supplementary-material

References

Anwar, M. S., Choi, A., Ahmad, S., Aurangzeb, K., Laghari, A. A., Gadekallu, T. R., et al. (2024). A moving metaverse: QoE challenges and standards requirements for immersive media consumption in autonomous vehicles. Appl. Soft Comput. 159, 111577. doi:10.1016/j.asoc.2024.111577

Arshad, H., Shaheen, S., Khan, J., Anwar, M., Khursheed, K., and Alhussein, M. (2023). A novel hybrid requirement’s prioritization approach based on critical software project factors. Cognition Technol. Work 25, 305–324. doi:10.1007/s10111-023-00729-3

Belfore, L. A., Krishnan, P. V., and Baydogan, E. (2005). “Common scene definition framework for constructing virtual worlds,” in Proceedings of the 37th Conference on Winter Simulation (Winter Simulation Conference), WSC’05, Orlando, FL, December 4, 2005, 1985–1992. doi:10.1109/wsc.2005.1574477

Colombo, C., Blas, N. D., Gkolias, I., Lanzi, P. L., Loiacono, D., and Stella, E. (2020). An educational experience to raise awareness about space debris. IEEE Access 8, 85162–85178. doi:10.1109/ACCESS.2020.2992327

Filho, O. V. S., and Kochan, K. G. (2001). “The importance of requirements engineering for software quality,” in Proceedings of the World Multiconference on Systemics, Cybernetics and Informatics: Information Systems Development - Volume I (ISAS-SCI ’01) (IIIS), Orlando, FL, July 22–25, 2001, 529–532.

Gervasi, V., and Nuseibeh, B. (2000). “Lightweight validation of natural language requirements: a case study,” in Proceedings Fourth International Conference on Requirements Engineering, ICRE 2000 (Cat. No.98TB100219) (Schaumburg, IL), 140–148. doi:10.1109/ICRE.2000.855601

Gonzalez-Perez, C., and Henderson-Sellers, B. (2008). Metamodelling for software engineering. Wiley Publishing.

Hoda, R. (2021). Socio-technical grounded theory for software engineering. IEEE Trans. Softw. Eng. 48, 3808–3832. doi:10.1109/TSE.2021.3106280

[Dataset] Karre, S. A. (2024a). VReqST - Online documentation for virtual reality requirement analysts.

Karre, S. A., Mittal, R., and Reddy, R. (2023). “Requirements elicitation for virtual reality products - a mapping study,” in Proceedings of the 16th Innovations in Software Engineering Conference ISEC’23, Allahabad, India, February 23–25, 2023 (New York, NY, USA: Association for Computing Machinery). doi:10.1145/3578527.3578536

Karre, S. A., Neeraj, M., and Reddy, Y. R. (2019). “Is virtual reality product development different? An empirical study on VR product development practices,” in Proceedings of the 12th Innovations on Software Engineering Conference (ISEC), Pune, India, February 14–16, 2019 (New York, NY: ACM). doi:10.1145/3299771.3299772

Karre, S. A., Pareek, V., Mittal, R., and Reddy, Y. R. (2022). “A role based model template for specifying virtual reality software,” in Proceedings of the International Workshop on Virtual and Augmented Reality Software Engineering, in conjunction with Automated Software Engineering (ASE 2022), Oakland Center, MI, October 10–14, 2022 (New York, NY: ACM). doi:10.1145/3551349.3560514

Karre, S. A., Reddy, Y. R., and Mittal, R. (2024). RE methods for virtual reality software product development: a mapping study. ACM Trans. Softw. Eng. Methodol. 33, 1–31. doi:10.1145/3649595

Kim, D., France, R. B., Ghosh, S., and Song, E. (2003). “A role-based metamodeling approach to specifying design patterns,” in 27th International Computer Software and Applications Conference (COMPSAC 2003): Design and Assessment of Trustworthy Software-Based Systems, Dallas, TX, 3–6 November 2003 (IEEE Computer Society), 452. doi:10.1109/CMPSAC.2003.1245379

Kim, G. J., Kang, K. C., Kim, H., and Lee, J. (1998). “Software engineering of virtual worlds,” in Proceedings of the ACM Symposium on Virtual Reality Software and Technology VRST ’98, Taipei, Taiwan, November 2–5, 1998 (New York, NY, USA: Association for Computing Machinery), 131–138. doi:10.1145/293701.293718

Levy, J. R., and Bjelland, H. (1994). Create your own virtual reality system. United States: McGraw-Hill, Inc.