- 1 Industrial Systems Institute, Athena Research Center, Patras, Greece

- 2 Department of Electrical and Computer Engineering, University of Patras, Patras, Greece

- 3 Chair of Computational Modeling and Simulation, School of Engineering and Design, Technical University of Munich, Munich, Germany

- 4 Department of Electrical and Computer Engineering, University of Western Macedonia, Kozani, Greece

- 5 K3Y Ltd., Sofia, Bulgaria

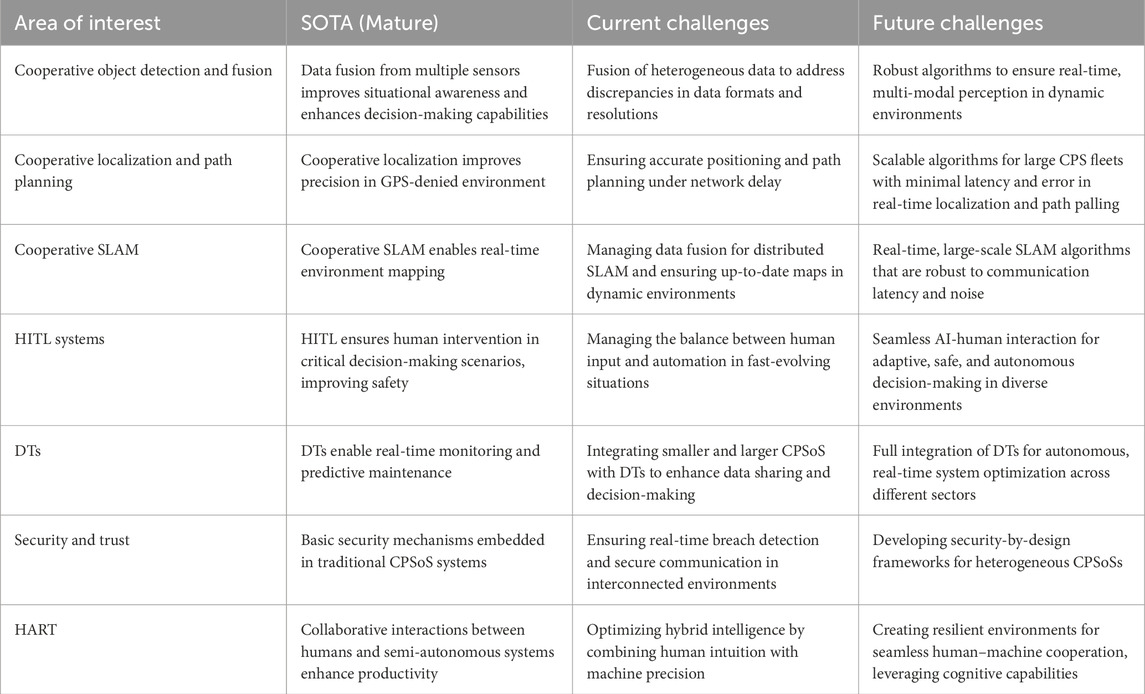

Cyber–physical systems (CPSs) are evolving from individual systems to collectives of systems that collaborate to achieve highly complex goals, realizing a cyber–physical system of systems (CPSoSs) approach. They are heterogeneous systems comprising various autonomous CPSs, each with unique performance capabilities, priorities, and pursued goals. In practice, there are significant challenges in the applicability and usability of CPSoSs that need to be addressed. The decentralization of CPSoSs assigns tasks to individual CPSs within the system of systems. All CPSs should harmonically pursue system-based achievements and collaborate to make system-of-system-based decisions and implement the CPSoS functionality. The automotive domain is transitioning to the system of systems approach, aiming to provide a series of emergent functionalities like traffic management, collaborative car fleet management, or large-scale automotive adaptation to the physical environment, thus providing significant environmental benefits and achieving significant societal impact. Similarly, large infrastructure domains are evolving into global, highly integrated cyber–physical systems of systems, covering all parts of the value chain. This survey provides a comprehensive review of current best practices in connected cyber–physical systems and investigates a dual-layer architecture entailing perception and behavioral components. The presented perception layer entails object detection, cooperative scene analysis, cooperative localization and path planning, and human-centric perception. The behavioral layer focuses on human-in-the-loop (HITL)-centric decision making and control, where the output of the perception layer assists the human operator in making decisions while monitoring the operator’s state. Finally, an extended overview of digital twin (DT) paradigms is provided so as to simulate, realize, and optimize large-scale CPSoS ecosystems.

1 Introduction

In the past few years, there has been a significant investment in cyber–physical systems of systems (CPSoSs) in various domains, like automotive, industrial manufacturing, railways, aerospace, smart buildings, logistics, energy, and industrial processes, all of which have a significant impact on the economy and society. The automotive domain is transitioning to the system of systems approach, aiming to provide a series of emergent functionalities like traffic management, collaborative car fleet management, or large-scale automotive adaptation to the physical environment, thus providing significant environmental benefits (e.g., air pollution reduction) and achieving significant societal impact.

Similarly, large infrastructure domains, like industrial manufacturing (Nota et al., 2020), are evolving into global, highly integrated CPSoSs that go beyond pure production and cover all parts of the value chain, including research, design, and service provision. This novel approach can enable a high level of flexibility, allowing for rapid adaptation to customer requirements, a high degree of product customization, and improved industrial sustainability. Furthermore, achieving collective behavior in CPSoS-based solutions for large-scale control processes will help citizens improve their quality of life through smart, safe, and secure cities, energy-efficient buildings, and green infrastructures (i.e., lighting, water, and waste management), as well as smart devices and services for smart home functionality, home monitoring, health services, and assisted living.

However, in practice, there are significant challenges in the applicability and usability of CPSoSs that need to be addressed to take full advantage of the CPSoS benefits and sustain/extend their growth. The fact that even a small CPSoS (e.g., a connected car) consists of several subsystems and executes thousands of lines of code highlights the complexity of the system-of-systems solution and the extremely elaborate CPSoS orchestration required, highlighting the need for an approach beyond traditional control and management centers (Engell et al., 2015). Given this complexity, having a centralized authority that handles all CPSoS processes, subsystems, and control loops seems to be challenging to capture and implement, thus pointing to the need for a different design, control, and management approach. The decentralization of CPSoS processes and overall functionality by assigning tasks to individual cyber–physical systems (CPSs) within the system of systems can be a reasonable solution. However, the collaborative mechanisms between CPSs (that constitute the CPSoS behavior) remain a point of research since appropriate tools and methodologies are needed to ensure that the expected system-of-systems functional requirements are met (the CPSoS operates as it should be) and that non-functional requirements are fulfilled (the CPSoS remains resilient, safe, and efficient). Another critical challenge in effectively developing CPSoSs is the need for integrating social and human factors into the design process of CPSs so that the cyber, physical, and human layers can be efficiently coordinated and operated (Zhou et al., 2020). Compared with traditional CPSs, cyber–physical–social systems (CPSSs) regard humans as an important factor of the system and, therefore, incorporate human-in-the-loop (HITL) mechanisms into system operations so as to increase the trustworthiness of the overall CPSoS. 
In short, creating an intelligent CPSoS environment relies on both modern technology and the natural resources provided by its inhabitants. Specifically, both “things” (objects and devices) and “humans” are essential for making smart environments even smarter. People benefit from smart services made possible by today’s technology while simultaneously contributing to the enhancement of business intelligence. In this context, CPSSs, as the human-in-the-loop counterpart of CPSs, can be used to gather information from humans and provide services with user awareness, creating a more responsive and personalized intelligent environment.

To address the complex challenges in CPSoSs, researchers have proposed a two-layer architecture consisting of a perception layer and a behavioral layer. This approach serves as a foundation for advancing CPSoS research and development across multiple critical areas. The proposed architecture aims to enhance functionality, reliability, adaptability, and the overall situational awareness (Chen and Barnes, 2014) of CPSoSs in various domains, from automotive and industrial manufacturing to smart cities and healthcare. Situational awareness refers to the collective understanding of an environment shared among multiple agents or entities—whether human or machine—who work together to achieve a common goal. More specifically, this concept of collective understanding emphasizes that situational awareness is not confined to individual knowledge but is distributed across team members. By cooperating, participants can better understand complex environments, adapt to dynamic changes, and respond more efficiently to evolving situations. As such, by focusing on these two interconnected layers, researchers can tackle the intricate issues of system integration, human–machine interaction, and real-time decision-making that are essential for the next generation of CPSoSs. The perception layer focuses on enhancing situational awareness through sophisticated algorithms for object detection, cooperative scene analysis, cooperative localization, and path planning. Research in this layer aims to develop effective perception mechanisms that are foundational for achieving higher levels of autonomy and reliability in CPSoSs. These advancements will enable CPSoSs to interact more intelligently with their environment and make informed decisions based on comprehensive situational awareness. Additionally, the behavioral layer focuses on integrating human operators into CPSoSs, recognizing the crucial role of human knowledge, senses, and expertise in ensuring operational excellence. 
This layer introduces the HITL approach, which allows continuous interaction between humans and CPSoS control loops. It addresses applications in which humans directly control CPSoS functionality, systems passively monitor human actions and biometrics, and hybrid combinations of both. The behavioral layer explores advanced Human-Machine Interfaces (HMI), including speech recognition, gesture recognition, and extended reality technologies, to enhance situational awareness and enable seamless human–system interaction. Furthermore, it investigates the prediction of operator intentions to improve collaboration between humans and CPSoSs, particularly in industrial scenarios where safety and efficiency are paramount. By integrating human expertise and intuition alongside automated processes, this layer aims to create a true human–machine symbiosis, vital for maintaining system flexibility and responsiveness in dynamic environments and unforeseen events. Key research directions within this two-layer framework include decentralized control and management, human-in-the-loop integration, data analytics and cognitive computing, real-time processing and decision making, and collaborative mechanisms between individual CPSs. These areas of study aim to develop more intelligent, responsive, and human-centric systems that can adapt to the complex demands of our interconnected world.

In addition to these approaches, digital twins (DTs) are an emerging technology that assists in addressing the challenges within CPSoSs (Mylonas et al., 2021). DTs create accurate virtual replicas of physical systems, allowing for continuous observation of system performance, real-time data integration, and predictive maintenance, thus improving the reliability and efficiency of CPSoSs (Tao et al., 2019). DTs facilitate better decision-making by providing a comprehensive view of the entire system and enabling the simulation of various scenarios for proactive planning and response (Wang et al., 2023b). Furthermore, the integration of multiple CPSoSs through DTs can significantly enhance task performance. By enabling seamless communication and coordination among different CPSoSs, DTs ensure that each subsystem can efficiently share data and resources, leading to improved overall system performance. For example, in smart city environments, integrating transportation systems, energy grids, and public safety networks through DTs can optimize urban operations, reduce response times in emergencies, and enhance the overall quality of life for residents (Jafari et al., 2023). By integrating DTs into the CPSoS framework, systems can achieve higher efficiency, reliability, and adaptability. This synergy between DTs and CPSoSs leads to smarter, more resilient, and more efficient systems across various domains, providing robust solutions to complex challenges and contributing to the overall improvement of system performance and human wellbeing.

The remainder of this survey is organized as follows. In Section 2, we present the related work and outline the contributions of this study. Section 3 describes the conceptual architecture of CPSs. Section 4 delves into the perception layer, summarizing relevant works and providing detailed insights. Section 5 focuses on the behavioral layer, offering a comprehensive summary of pertinent research. Section 6 discusses the role of digital twins in optimizing the CPSoS ecosystem. Section 7 identifies open research questions, while Section 8 highlights key lessons learned. In Section 9, we provide a detailed discussion of various aspects of the study. Finally, Section 10 concludes the survey.

2 Related work and contribution

Many recent review papers discuss how CPSs are utilized in emerging applications. Chen (2017) conducted an extensive literature review on CPS applications. Sadiku et al. (2017) provided a brief introduction to CPSs, their applications, and challenges. Yilma et al. (2021) presented the state-of-the-art (SoA) perspectives on CPSs regarding definitions, underlying principles, and application areas. Other survey papers focus on more specific areas. Haque et al. (2014) presented a survey of CPSs in healthcare applications, characterizing and classifying the different components and methods that are required for the application of CPSs in healthcare, while Oliveira et al. (2021) presented the use of CPSs in the chemical industry. On the other hand, architecture and CPS characteristics are also common issues that are discussed in many survey papers. Hu et al. (2012) reviewed previous works on CPS architecture and introduced the main challenges, which are real-time control, security assurance, and integration mechanisms. CPS characteristics (like generic architecture, design principles, modeling, dependability, and implementation) and their application domains are also presented by Liu and Wang (2020). Lozano and Vijayan (2020) presented the current state-of-the-art, intrinsic features (like autonomy, stability, robustness, efficiency, scalability, safe trustworthiness, reliable consistency, and accurate high precision), design methodologies, applications, and challenges for CPSs. Liu et al. (2017b) discussed the development of CPSs from the perspectives of the system model, information processing technology, and software design. Oliveira et al. (2021) discussed the use of artificial intelligence to confer cognition to the system. Topics such as control and optimization architectures and digital twins are presented as components of the CPSs. Al-Mhiqani et al. (2018) investigated the current threats to CPSs (e.g., the type of attack, impact, intention, and incident categories). 
Leitão et al. (2022) provided an analysis of the main aspects, challenges, and research opportunities to be considered for implementing collective intelligence in industrial CPSs. Estrada-Jimenez et al. (2023) explored the concept of smart manufacturing, focusing on self-organization and its potential to manage the complexity and dynamism of manufacturing environments. They presented a systematic literature review to summarize current technologies, implementation strategies, and future research directions in the field. Pulikottil et al. (2023) explored the integration of multi-agent systems in cyber–physical production systems for smart manufacturing, offering a thorough review and SWOT analysis validated by industry experts to assess their potential benefits and challenges. Hamzah et al. (2023) provided a comprehensive overview of CPSs across 14 critical domains, including transportation, healthcare, and manufacturing, highlighting their integration into modern society and their role in advancing the fourth industrial revolution. Additionally, DT-based survey works focus on realizing automotive CPSs (Xie et al., 2022a) that achieve high adaptability with a short development cycle, low complexity, and high scalability to meet various design requirements during the development process; on how the interconnection between different components in CPSs and DTs affects the smart manufacturing domain (Tao et al., 2019); and on the potential of DTs as a means to reinforce and secure CPSs and Industry 4.0 in general (Lampropoulos and Siakas, 2023).

In this survey, we focus on the CPS architecture and its modules that are used to increase the situational awareness of the CPSoS users. Considering the importance of human integration in CPSs, we include the HITL component and human–machine interaction to realize the CPSS paradigm. Additionally, we emphasize the critical role of DTs in optimizing CPSoS ecosystems. The main contributions of this paper can be summarized as follows:

3 Conceptual architecture

3.1 CPSoSs are heterogeneous systems

They consist of various autonomous CPSs, each with unique performance capabilities, criticality levels, priorities, and pursued goals. CPSs, in general, are self-organized and, on several occasions, may have conflicting goals, thus competing for access to common resources. However, from a CPSoS perspective, all CPSs must also harmonically pursue system-based achievements and collaborate to make system-of-system-based decisions and implement the CPSoS behavior. Considering that a CPSoS consists of many CPSs, finding a methodology to achieve such an equilibrium in a decentralized way is not an easy task. The issue becomes more complex when we also consider the amount of data to be exchanged between CPSs and the processing of those data. Data collection and data analytics need to be refined in such a way that only the important information is extracted and forwarded to other CPSs and the overall system. Furthermore, mechanisms to handle large amounts of data in a distributed way are needed to extract cognitive patterns and detect abnormalities. Thus, local data classification, labeling, and refinement mechanisms should be implemented in each CPS to offload the complexity and communication overhead at the system-of-systems level (Atat et al., 2018).

In the above-described setup, we cannot overlook the fact that CPSoSs depend on humans since humans are part of the CPSoS functionality and services, interact with the CPSs, and contribute to the CPSoS behavior. Operators and managers play a key role in the operation of CPSoSs and make many important decisions, while in several cases, human CPS users are the key players in the CPSoS main role (thus forming cyber–physical human systems). Thus, we need to structure a close symbiosis between computer-based systems and human operators/users and constantly enhance human situational awareness as well as devise a collaborative mechanism for handling CPSoS decisions, forcing the CPSoSs to comply with human guidelines and reactions. Novel approaches to human–machine interfaces that employ eXtended Reality (XR) principles need to be devised to help humans gain fast and easy-to-grasp insights into CPSoS processes while also enabling their seamless integration into CPSoS operations.

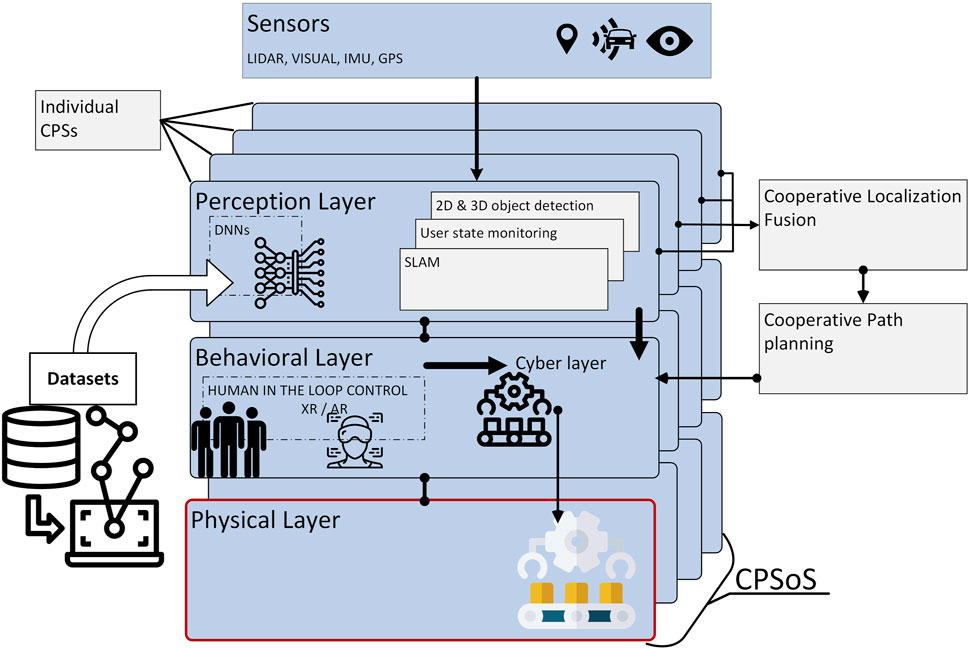

As shown in Figure 1, it is assumed that a CPSoS consists of interconnected CPSs, each acting independently while also collectively functioning as part of the CPSoS. We also assume that each individual CPS is composed of a perception module and a behavioral or decision-making module while bearing actuation capacities represented by the physical layer; this configuration facilitates the coordination with the other connected CPSs toward the collective implementation of a common goal or mission. A key aspect of the proposed architecture is the integration of various sensors, including redundant, complementary, and cooperative sensors across nodes. More specifically, incorporating redundant sensors in interconnected CPSs is necessary to improve reliability, fault tolerance, and system safety. Redundant sensors provide backup in the event of failure of primary sensors, ensuring continued operation without disruption and maintaining the integrity and reliability of the CPS (Bhattacharya et al., 2023). Additionally, the use of complementary sensors in heterogeneous systems allows for cross-verification of data, better coverage of sensor limitations, and improved decision-making in dynamic environments (Alsamhi et al., 2024). For example, combining visual and depth sensors in autonomous vehicles helps enhance object detection, environmental mapping, and path planning. The diverse data from complementary sensors can be fused to produce more accurate and comprehensive situational awareness. Finally, cooperative sensors on individual CPSs work together to improve the accuracy and robustness of measurements and operations. These sensors share information and collaborate to address limitations inherent in individual sensors. For instance, in robotics, multiple sensors like cameras, inertial measurement units (IMUs), and proximity sensors can cooperate to provide accurate localization and object detection (Zhang and Singh, 2015).
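The role of redundant sensors described above can be illustrated with a minimal sketch. It assumes that several sensors measure the same quantity, that each reports a value together with a noise variance, and that a failed sensor reports `None`; the function name `fuse_redundant` and the inverse-variance weighting rule are illustrative assumptions, not a method taken from the cited works.

```python
from typing import Optional, Sequence, Tuple


def fuse_redundant(
    readings: Sequence[Tuple[Optional[float], float]]
) -> Tuple[float, float]:
    """Fuse redundant readings of one quantity by inverse-variance weighting.

    Each item is (value, variance); value is None when that sensor has failed.
    Returns (fused value, fused variance). Raises if every sensor has failed.
    """
    # Keep only the sensors that are still delivering measurements.
    valid = [(v, var) for v, var in readings if v is not None]
    if not valid:
        raise RuntimeError("all redundant sensors failed")
    # More precise sensors (smaller variance) receive larger weights.
    weights = [1.0 / var for _, var in valid]
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, valid)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Two healthy sensors: the estimate is more precise than either one alone.
    print(fuse_redundant([(10.0, 1.0), (12.0, 1.0)]))
    # Primary sensor failed: the backup alone keeps the system running.
    print(fuse_redundant([(None, 1.0), (12.0, 4.0)]))
```

When one sensor drops out, the function falls back to the remaining ones, which mirrors the backup behavior attributed to redundant sensors; with all sensors healthy, the fused variance is lower than that of any single sensor.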

The aggregation of the individual perception modules formulates the perception layer of the CPSoS, while the sensor, behavioral, and physical layers represent the summation of sensing, decision making, and actuation capabilities of the CPSoS. The perception layer can also be envisioned as a cognitive engine that employs appropriate algorithmic approaches for effective scene understanding, a task predominately accomplished today by deep neural network architectures. To this end, data collection and annotation are crucial for the training of AI models to undertake such tasks. This review paper sheds light upon all of the aforementioned aspects of interconnected CPSs.

4 Perception layer

4.1 Cooperative scene analysis

4.1.1 Background on object detection from 2D and 3D data

Object detection has evolved considerably since the appearance of deep convolutional neural networks (Zhao et al., 2019b). Nowadays, there are two main branches of proposed techniques, namely, two-stage and single-stage detectors.

In the first branch, two-stage object detectors generate region proposals, which are subsequently classified into the categories determined by the application at hand (e.g., vehicles, cyclists, and pedestrians in the case of autonomous driving). Some important, representative, high-performance examples of this first branch are R-CNN (Girshick et al., 2014), Fast R-CNN (Girshick, 2015), Faster R-CNN (Ren et al., 2016), spatial pyramid pooling net (He et al., 2015), region-based fully convolutional network (R-FCN) (Dai et al., 2016), feature pyramid network (FPN) (Lin et al., 2017), and Mask R-CNN (He et al., 2017). In the second branch, object detection is cast as a single-stage, regression-like task that aims to directly provide both the locations and the categories of the detected objects. Notable examples here are Single Shot MultiBox Detector (SSD) (Liu et al., 2016), SqueezeDet (Wu et al., 2017), YOLO (Redmon et al., 2016), YOLOv3 (Redmon and Farhadi, 2018), and EfficientDet (Tan et al., 2020). A recent review on object detection using deep learning (Zhao et al., 2019b) provides detailed insight into the aforementioned approaches to object detection.

Object detection in LiDAR point clouds is a three-dimensional problem where the sampled points are not uniformly distributed over the objects in the scene and do not directly correspond to a Cartesian grid. Three-dimensional object detection is dominantly performed using 3D convolutional networks due to the irregularity and lack of apparent structure in the point cloud. Several transformations take place to match the point cloud to feature maps that are forwarded into deep networks. Commendable detection outcomes have appeared in the literature as early as 2016. Li et al. (2016a) projected the 3D points onto a 2D map and employed 2D fully convolutional networks to successfully detect cars in a LiDAR point cloud, reaching an accuracy of 71.0% for car detection of moderate difficulty. A follow-up paper by Li (2017) proposed 3D fully convolutional networks, reporting an accuracy of 75.3% for car detection of moderate difficulty. However, since dense 3D fully convolutional networks demonstrate high execution times, Yan et al. (2018) investigated an improved sparse convolution method for such networks, which significantly increases the speed of both training and inference. According to KITTI benchmarks, the reported accuracy reaches 78.6% for car detection of moderate difficulty. To revisit 2D convolutions in 3D object detection, Lang et al. (2019) proposed a novel encoder called PointPillars that utilizes PointNets to learn a representation of point clouds organized in vertical columns (pillars) and subsequently employs a series of 2D convolutions. PointPillars reported an accuracy of 77.28% in the same category. Shi et al. (2019) proposed PointRCNN for 3D object detection from raw point clouds. They devised a two-stage approach where the first stage generates bottom-up 3D proposals and the second stage refines these proposals in the canonical coordinates to obtain the final detection results, reporting an accuracy of 78.70%. 
An extended variation of PointRCNN is the part-aware and aggregation neural network (Part-
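The organization of a point cloud into vertical columns (pillars), mentioned above for PointPillars, can be sketched as follows. This is only an illustration of the grouping step under simple assumptions: the function name `group_into_pillars` and the 0.5 m cell size are hypothetical, and the learned PointNet encoding and the subsequent 2D convolutions of the actual method are not reproduced.

```python
import numpy as np


def group_into_pillars(points: np.ndarray, cell: float = 0.5) -> dict:
    """Group 3D points (N x 3 array of x, y, z) into vertical pillars.

    A pillar is identified by the integer index of its x-y grid cell; the z
    coordinate is ignored for grouping, so each pillar spans the full height.
    """
    # Integer x-y cell index of every point (floor handles negative coordinates).
    cells = np.floor(points[:, :2] / cell).astype(int).tolist()
    pillars = {}
    for idx, point in zip(map(tuple, cells), points):
        pillars.setdefault(idx, []).append(point)
    # Stack the points of each pillar into one (M x 3) array.
    return {idx: np.stack(pts) for idx, pts in pillars.items()}


if __name__ == "__main__":
    cloud = np.array([
        [0.1, 0.1, 0.0],
        [0.2, 0.3, 1.5],   # same x-y cell as the first point, different height
        [0.7, 0.1, 0.2],
        [-0.1, 0.1, 0.0],
    ])
    for index, pts in group_into_pillars(cloud).items():
        print(index, pts.shape)
```

Because each pillar is indexed only by its x-y cell, the resulting structure maps naturally onto a 2D grid, which is what makes 2D convolutions applicable in pillar-based detectors.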

4.1.2 Cooperative object detection and fusion

Object detection from the single point of view of a single agent is vulnerable to a series of sensor limitations that can significantly affect the outcome. These limitations include occlusion, limited field-of-view, and low point density at distant regions. The transition from isolated CPSs to CPSoSs, enabling the collaboration among various agents, offers the opportunity to tackle such problems. Chen et al. (2019b) proposed a cooperative sensing scheme where each CPS combines its sensing data with those of other connected vehicles to help enhance perception. Furthermore, to tackle the increased amount of data, the authors propose a sparse point-cloud object detection method. It is important to highlight that the agents share on-board V2V information and fuse these data locally. Feature-level fusion is examined in a follow-up work by Chen et al. (2019a). The authors propose the F-Cooper framework, a method that improves the autonomous vehicle’s detection precision without introducing much computational overhead. This framework aims to utilize the capacity of feature maps, especially for 3D LiDAR data generated by autonomous vehicles, as the feature maps are otherwise used for object detection only by single vehicles. F-Cooper is an end-to-end 3D object detection system with feature-level fusion supporting voxel feature fusion and spatial feature fusion. Voxel feature fusion achieves almost the same detection precision improvement compared to the raw-data level fusion solution, which offers the ability to dynamically adjust the size of feature maps to be transmitted. A unique characteristic of F-Cooper is that it can be deployed and executed on in-vehicle and roadside edge systems. Hurl et al. (2020) proposed TruPercept to tackle malicious attacks against cooperative perception systems. In their trust scheme, each agent reevaluates the detections originating from its neighboring agents using data from its position and perspective. Arnold et al.
(2020) proposed a central system that fuses data from multiple infrastructure sensors, facilitating the management of both sensor and processing costs through shared resources while addressing evaluations of pose sensor configurations, the number of sensors, and the sensor field-of-view. The authors deploy VoxelNet (Zhou and Tuzel, 2018) and claim to have reached an average precision score of

4.2 Cooperative localization, cooperative path planning, and SLAM

Unmanned vehicles, whether ground (UGV), aerial (UAV), or underwater (UUV), are prominent CPSoSs. Typical examples include autonomous vehicles and robots operating in a variety of challenging civilian and military tasks. At the same time, the prototyping of 5G and V2X (e.g., V2V and V2I) communication protocols enables the close collaboration of vehicles to address their individual or collective goals. Autonomous vehicles with inter-communication and networking abilities are known as connected and automated vehicles (CAVs) and are part of the more general concept of connected CPSoSs. The main focus of CAV-related technologies is to improve the safety, security, and energy efficiency of (cooperative or not) autonomous driving through strict control of the vehicle’s position and motion (Montanaro et al., 2018). At a higher level, CAVs have the potential to further enhance the overall performance of the transportation sector.

Perception and scene analysis abilities are fundamental for a vehicle’s reliable operation. Computer vision-based object detection and tracking should be seen as a first (though necessary) pre-processing step, feeding more sophisticated operational modules of vehicles (Eskandarian et al., 2021). It is imperative for these modules to have accurate knowledge of both the vehicle’s own position and that of its neighbors (vehicles, pedestrians, or static landmarks) in order to efficiently design future motion actions, i.e., to determine the best possible velocity, acceleration, yaw rate, etc. These motion actions primarily aim at, for example, keeping safe inter-vehicular distances and eco-friendly driving through reduced gas emissions. The above challenges can be addressed in the context of localization, SLAM, and path planning, which are discussed below.

4.2.1 Cooperative localization

The localization module is responsible for providing absolute position information to the vehicles. Global Navigation Satellite Systems (GNSSs), like GPS, Beidou, Glonass, etc., are usually exploited for that purpose. The GPS sensor is currently employed as the most common commercial device. It is straightforward to couple or fuse GPS information with IMU readings (Noureldin et al., 2013) to design a complete inertial navigation system (INS) providing positioning, velocity, and timing (PVT) solutions. The IMU sensor consists of gyroscopes and accelerometers for measuring the yaw rate and acceleration (in

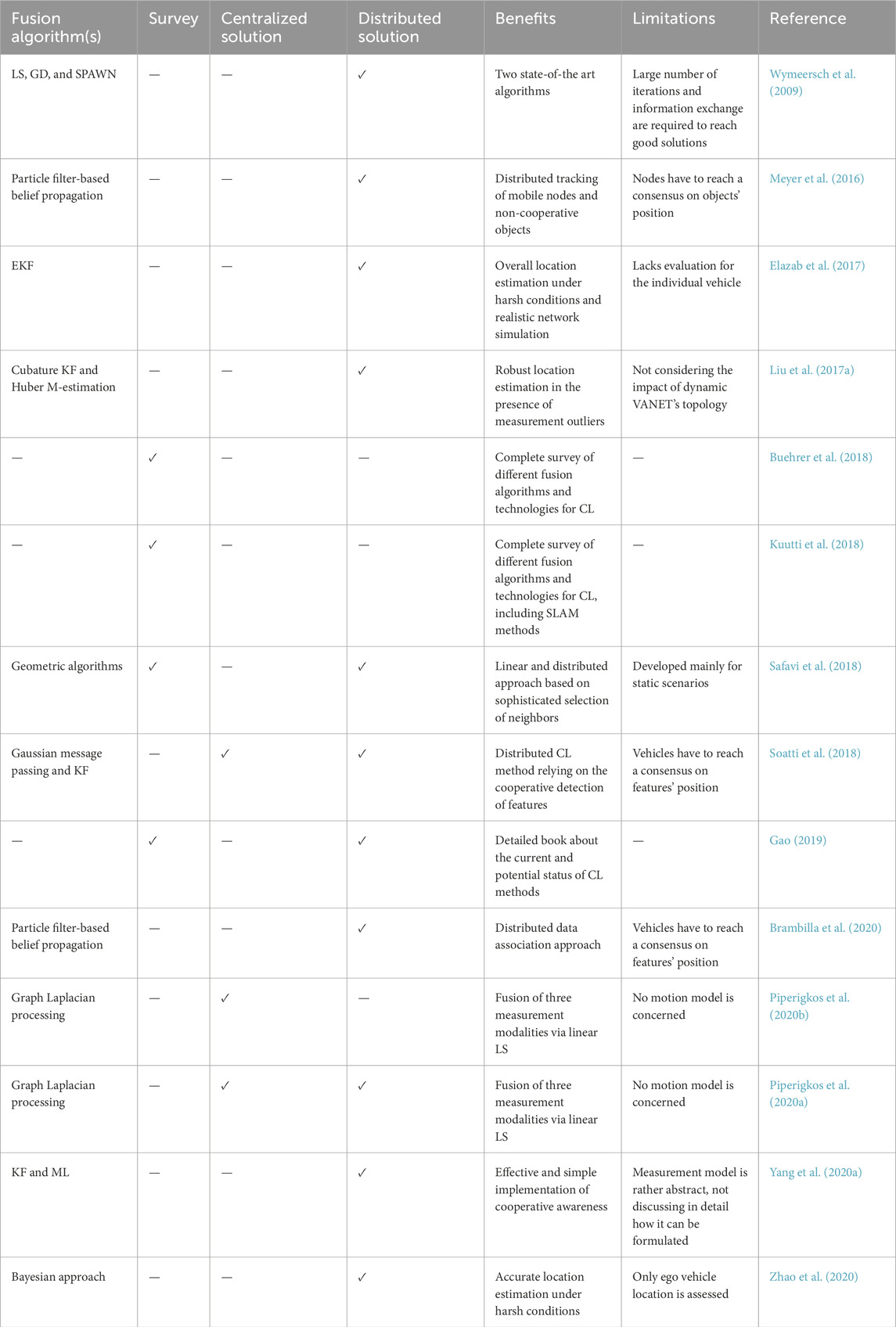

There are many existing works (Kuutti et al., 2018; Buehrer et al., 2018; Wymeersch et al., 2009; Safavi et al., 2018; Gao, 2019) that survey related aspects, challenges, and algorithms of cooperative localization (CL). For example, Kuutti et al. (2018) provided an overview of current trends and future applications of localization (not only CL) in autonomous vehicle environments. The discussed techniques are mainly distinguished on the basis of the utilized sensor. Ranging measurements like relative distance and angle can also be extracted through the V2V abilities of CL. Common ranging techniques include time of arrival (TOA), angle of arrival (AOA), time difference of arrival (TDOA), and received signal strength (RSS). The works of Buehrer et al. (2018) and Wymeersch et al. (2009) delve into detailed mathematical modeling of CL tasks. More specifically, Buehrer et al. (2018) exploit various criteria to categorize related algorithms:

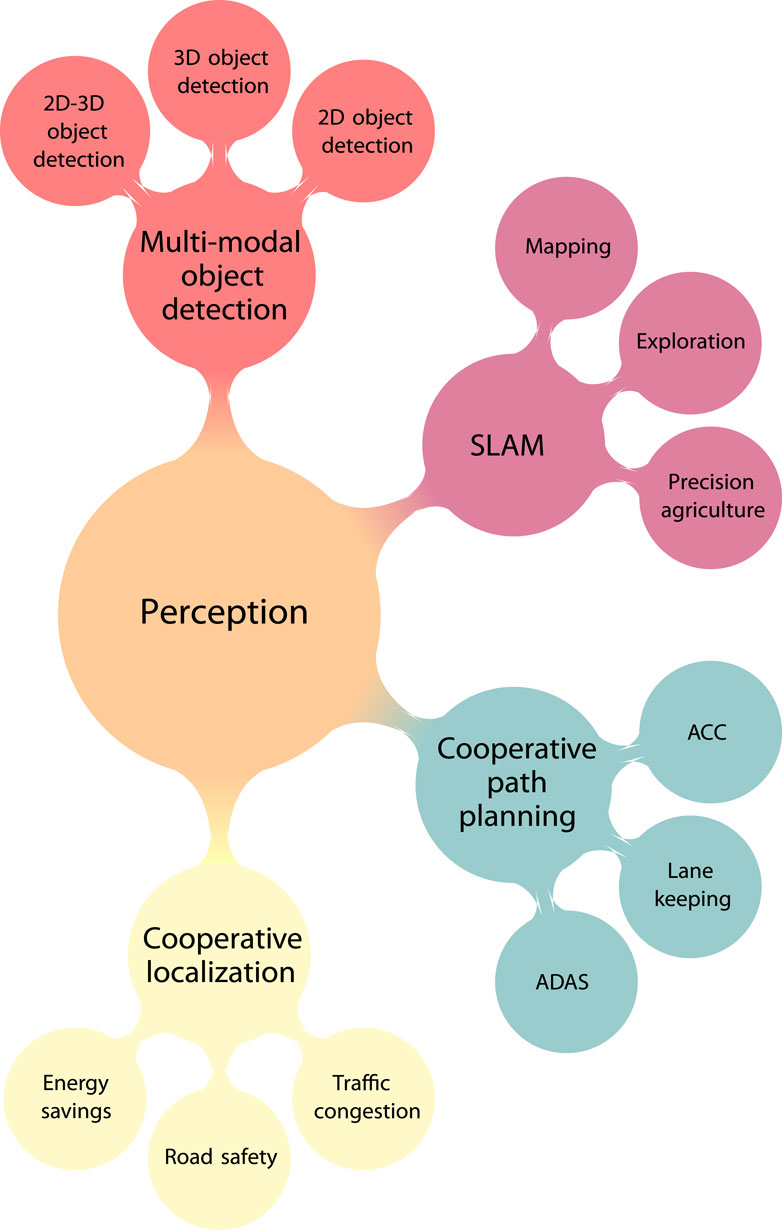
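Two of the ranging techniques listed above, TOA and RSS, can be made concrete with a short sketch. It assumes perfectly synchronized clocks for TOA and a log-distance path-loss model for RSS; the function names, the reference distance, and the path-loss exponent are illustrative assumptions and are not taken from the surveyed works.

```python
SPEED_OF_LIGHT = 299_792_458.0  # propagation speed of the radio signal, in m/s


def toa_distance(t_tx: float, t_rx: float) -> float:
    """Range from time of arrival, assuming synchronized transmitter/receiver clocks."""
    return SPEED_OF_LIGHT * (t_rx - t_tx)


def rss_distance(
    rss_dbm: float,
    rss_ref_dbm: float,
    path_loss_exp: float = 2.0,
    d_ref: float = 1.0,
) -> float:
    """Range from received signal strength under a log-distance path-loss model.

    rss_ref_dbm is the power received at the reference distance d_ref (meters);
    path_loss_exp is the environment-dependent path-loss exponent (assumed known).
    """
    return d_ref * 10 ** ((rss_ref_dbm - rss_dbm) / (10.0 * path_loss_exp))


if __name__ == "__main__":
    # A signal that travels for one microsecond covers roughly 300 m.
    print(toa_distance(0.0, 1e-6))
    # A 20 dB drop relative to the 1 m reference, with exponent 2, gives 10 m.
    print(rss_distance(rss_dbm=-60.0, rss_ref_dbm=-40.0))
```

In practice, clock offsets, multipath, and shadowing corrupt both kinds of range estimates, which is one reason the CL algorithms discussed in this section fuse many such measurements rather than trusting any single one.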

Wymeersch et al. (2009) formulated a distributed gradient descent (GD) algorithm as a least-squares (LS) solution and the Bayesian factor graph approach of the sum–product algorithm over wireless networks (SPAWN). In general, distributed and tracking/Bayesian algorithms are more attractive for performing CL. An overview of distributed localization algorithms in IoT is also given by Safavi et al. (2018). Additionally, the authors discuss the proposed distributed geometric framework of DILOC, as well as the extended versions of DLRE and DILAND, which facilitate the design of a linear localization algorithm. These methods require the vehicle to be inside the convex hull formed by three neighboring anchors (nodes with known and fully accurate positions) and to compute its barycentric coordinates with respect to its neighbors. However, major challenges are related to mobile scenarios due to varying topologies, as well as to how feasible the presence of anchors will be in automotive applications. An interesting approach is discussed by Meyer et al. (2016), where mobile agents, in general, try to cooperatively estimate their position and track non-cooperative objects. The authors developed a distributed particle filter-based belief propagation approach with message passing, although they consider the presence of anchor nodes. Furthermore, the computational and communication overhead may be a serious limitation toward real-time implementation. Soatti et al. (2018) proposed a novel distributed technique to improve the stand-alone GNSS accuracy of vehicles. Once again, non-cooperative objects or features (e.g., trees and pedestrians) are exploited in order to improve location accuracy. Features are cooperatively detected by vehicles using their onboard sensors (e.g., LiDAR), where a perfect association is assumed. These vehicle-to-feature measurements are fused with GNSS in the context of a Bayesian message-passing approach and a Kalman filter (KF). 
Experimental evaluation was performed using the SUMO simulator; however, the number of detected features, as well as the communication overhead, should be taken into serious account. The work of Brambilla et al. (2020) extends that of Soatti et al. (2018) by proposing a distributed data association framework for features and vehicles. Data association was based on belief propagation. Validation was performed in realistic urban traffic conditions. The main aspect of Brambilla et al. (2020) and Soatti et al. (2018) is that vehicles must reach a consensus about feature states in order to improve their location. Graph Laplacian CL has been introduced in Piperigkos et al. (2020b) and Piperigkos et al. (2020a). Centralized or distributed Laplacian localization formulates an LS optimization problem, which fuses the heterogeneous inter-vehicular measurements along with the V2V connectivity topology through the linear Laplacian operator. EKF- and KF-based solutions have been proposed for addressing CL in tunnels (Elazab et al., 2017; Yang et al., 2020a), where the GPS signal may be blocked. A distributed robust cubature KF enhanced by Huber M-estimation is presented by Liu et al. (2017a). The method is used to tackle the challenges of data fusion in the presence of outliers. Pseudo-range measurements from satellites are also considered during the fusion process. Zhao et al. (2020) developed a distributed Bayesian CL method for localizing vehicles in the presence of non-line-of-sight range measurements and spoofed vehicles. They focused primarily on ego vehicle location estimation and abnormal vehicle detection rates. Potential applications of localization in various domains like wireless sensor networks (WSNs), intelligent transportation systems (ITS), and robotics are demonstrated in Figure 2. Table 1 summarizes the above-mentioned works.
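To make the convex hull condition mentioned above for DILOC-type methods more concrete, the following minimal sketch computes the barycentric coordinates of a 2D point with respect to three anchors and checks whether the point lies inside their convex hull. It is an illustration only, not the DILOC algorithm itself: the coordinates are computed here from known positions, whereas a real node would not know its own position, and all function names are hypothetical.

```python
def signed_area(a, b, c):
    """Signed area of triangle (a, b, c); positive if counter-clockwise."""
    return 0.5 * ((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def barycentric_coordinates(p, anchors):
    """Weights (w_a, w_b, w_c) with p = w_a*a + w_b*b + w_c*c and sum 1."""
    a, b, c = anchors
    total = signed_area(a, b, c)
    if abs(total) < 1e-12:
        raise ValueError("anchors are collinear; the triangle is degenerate")
    # Each weight is the area of the sub-triangle opposite the anchor,
    # divided by the area of the whole anchor triangle.
    return (signed_area(p, b, c) / total,
            signed_area(a, p, c) / total,
            signed_area(a, b, p) / total)


def inside_convex_hull(weights, tol=1e-12):
    """The point is inside the hull iff no weight is negative."""
    return all(w >= -tol for w in weights)


def reconstruct(weights, anchors):
    """Recover the position as the weighted combination of the anchors."""
    return (sum(w * q[0] for w, q in zip(weights, anchors)),
            sum(w * q[1] for w, q in zip(weights, anchors)))
```

For anchors at (0, 0), (4, 0), and (0, 4), the point (1, 1) yields non-negative weights that sum to one, while a point such as (5, 5) produces a negative weight and therefore violates the hull condition.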

4.2.2 SLAM

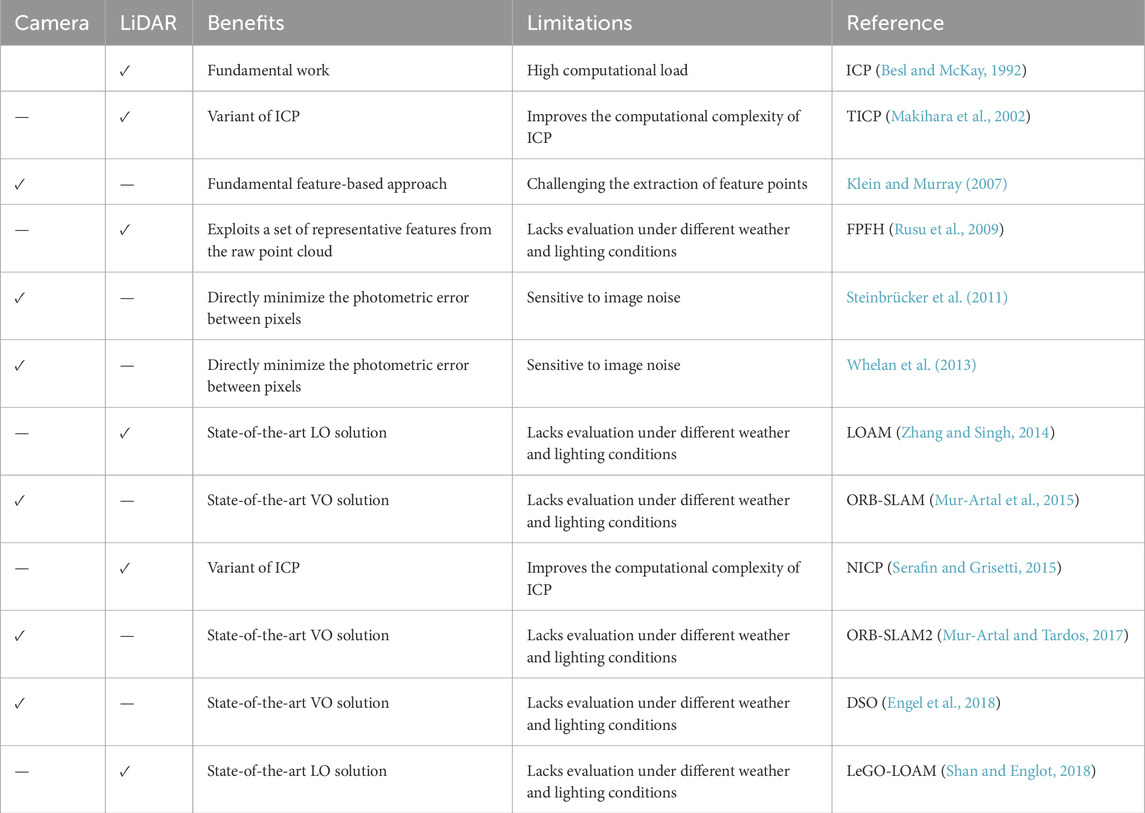

Simultaneous localization and mapping (SLAM) is a task closely related to localization. It refers to the problem of mapping an environment using measurements from sensors (e.g., a camera or LiDAR) onboard the vehicle or robot while at the same time estimating the position of that sensor relative to the map. Although SLAM can appear to be quite an abstract problem when stated in this way, robust and efficient solutions to it are critical to enabling the next wave of intelligent mobile devices. SLAM, in its general form, tries to estimate over a time period the poses of the vehicle/sensor and the positions of the map landmarks, given control input measurements provided by odometry sensors onboard the vehicle and measurements with respect to landmarks. Therefore, there are mainly two subsystems: the front-end, which detects the landmarks of the map and correlates them with the poses, and the back-end, which casts an optimization problem in order to estimate the poses and the locations of the landmarks. SLAM techniques can be divided into visual odometry (VO) and LiDAR odometry (LO) solutions, reflecting whether a camera or a LiDAR is the main sensor exploited:

Cooperative SLAM approaches are, in general, more immature with respect to CL since they are usually applied in indoor experimental environments with small-scale robots. A thorough overview of multiple-robot SLAM methods is provided by Saeedi et al. (2016), focusing mainly on agents equipped with cameras or 2D LiDARs (Mourikis and Roumeliotis, 2006; Zhou and Roumeliotis, 2008; Estrada et al., 2005). In addition, cooperative SLAM approaches using 3D LiDAR sensors are discussed by Kurazume et al. (2017), Michael et al. (2012), and Nagatani et al. (2011). Table 2 summarizes the above mentioned SLAM-based methods.
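The SLAM back-end described above, which casts pose and landmark estimation as an optimization problem, can be sketched with a deliberately simplified one-dimensional linear example. The setup is an assumption made for illustration (a single landmark, scalar poses, signed landmark offsets, and a prior fixing the first pose at zero); real back-ends solve nonlinear, sparse problems in 2D or 3D. The helper names are hypothetical.

```python
def solve_least_squares(A, b):
    """Minimize ||A x - b||^2 via the normal equations and Gaussian elimination."""
    n = len(A[0])
    # Augmented system [A^T A | A^T b].
    M = [[sum(row[i] * row[j] for row in A) for j in range(n)]
         + [sum(row[i] * bi for row, bi in zip(A, b))] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]  # partial pivoting
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def slam_1d(odometry, landmark_offsets):
    """Estimate poses x_0..x_n and one landmark l on a line.

    odometry[i] measures x_{i+1} - x_i; landmark_offsets[i] measures l - x_i.
    Returns [x_0, ..., x_n, l].
    """
    n_poses = len(odometry) + 1
    rows, rhs = [], []

    def add_row(entries, value):
        row = [0.0] * (n_poses + 1)
        for index, coeff in entries:
            row[index] = coeff
        rows.append(row)
        rhs.append(value)

    add_row([(0, 1.0)], 0.0)  # prior: anchor the first pose at the origin
    for i, u in enumerate(odometry):
        add_row([(i, -1.0), (i + 1, 1.0)], u)
    for i, z in enumerate(landmark_offsets):
        add_row([(i, -1.0), (n_poses, 1.0)], z)
    return solve_least_squares(rows, rhs)
```

With noise-free odometry `[1.0, 1.0]` and landmark offsets `[5.0, 4.0, 3.0]`, the estimate recovers poses 0, 1, 2 and a landmark at 5; with noisy inputs, the same call returns the least-squares compromise between odometry and landmark measurements.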

4.2.3 Cooperative path planning

Connected advanced driver assistance systems (ADASs) help reduce road fatalities and have received considerable attention in the research and industrial communities (Uhlemann, 2016). Recently, there has been a shift of focus from individual drive-assist technologies like power steering, anti-lock braking systems (ABS), electronic stability control (ESC), and adaptive cruise control (ACC) to features with a higher level of autonomy like collision avoidance, crash mitigation, autonomous driving, and platooning. In particular, grouping vehicles into platoons (Halder et al., 2020; Wang et al., 2019a) has received considerable interest since it seems to be a promising strategy for efficient traffic management and road transportation, offering several benefits in highway and urban driving scenarios related to road safety, highway utility, and fuel economy.

To maintain the cooperative motion of vehicles in a platoon, the vehicles exchange their information with the neighbors using V2V and V2I (Hobert et al., 2015). The advances in V2X communication technology (Hobert et al., 2015; Wang et al., 2019b) enable multiple automated vehicles to communicate with one another, exchanging sensor data, vehicle control parameters, and visually detected objects facilitating the so-called 4D cooperative awareness (e.g., identification/detection of occluded pedestrians, cyclists, or vehicles).

Several works have been proposed for tackling the problems of cooperative path planning. Many of them focus on providing spacing policy schemes using both centralized and decentralized model predictive controllers. However, very few take into account the effect of network delays, which are inevitable and can significantly deteriorate the performance of distributed controllers.

Viana et al. (2019) presented a unified approach to cooperative path planning using nonlinear model predictive control with soft constraints at the planning layer. The framework additionally accounts for the planned trajectories of other cooperating vehicles, ensuring collision avoidance requirements. Similarly, a multi-vehicle cooperative control system is proposed by Bai et al. (2023) and Kuriki and Namerikawa (2015) with a decentralized control structure, allowing each automated vehicle to conduct path planning and motion control separately. Halder et al. (2020) presented a robust decentralized state-feedback controller in the discrete-time domain for vehicle platoons, considering identical vehicle dynamics with undirected topologies. An extensive study of its performance under random packet drop scenarios is also provided, highlighting its robustness in such conditions. Viana and Aouf (2018) extended decentralized MPC schemes to incorporate the predicted trajectories of human-driven vehicles. Such solutions are expected to enable the co-existence of vehicles supporting various levels of autonomy, ranging from L0 (manual operation) to L5 (fully autonomous operation) (Taeihagh and Lim, 2019). Furthermore, a distributed motion planning approach based on the artificial potential field is proposed by Xie et al. (2022b); its innovation lies in an effective mechanism for safe autonomous overtaking when the platoon consists of both autonomous and human-operated vehicles.
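As background for the artificial potential field idea mentioned above, the following minimal sketch computes the classic textbook force acting on a vehicle: an attractive term pulling toward the goal plus a repulsive term from each obstacle inside an influence radius. This is a generic formulation assumed for illustration and is not the specific mechanism of Xie et al. (2022b); the gains `k_att` and `k_rep` and the `influence` radius are hypothetical parameters.

```python
import math


def apf_force(position, goal, obstacles, k_att=1.0, k_rep=1.0, influence=5.0):
    """Return the 2D force (fx, fy) from a quadratic attractive potential
    and inverse-distance repulsive potentials."""
    # Attractive force: negative gradient of 0.5 * k_att * |p - goal|^2.
    fx = -k_att * (position[0] - goal[0])
    fy = -k_att * (position[1] - goal[1])
    for ox, oy in obstacles:
        dx, dy = position[0] - ox, position[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < influence:
            # Repulsive force: negative gradient of
            # 0.5 * k_rep * (1/d - 1/influence)^2, pointing away from the obstacle.
            magnitude = k_rep * (1.0 / d - 1.0 / influence) / d ** 2
            fx += magnitude * dx / d
            fy += magnitude * dy / d
    return fx, fy
```

A planner would typically follow this force with small steps; obstacles farther away than `influence` contribute nothing, which keeps the computation local to each vehicle.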

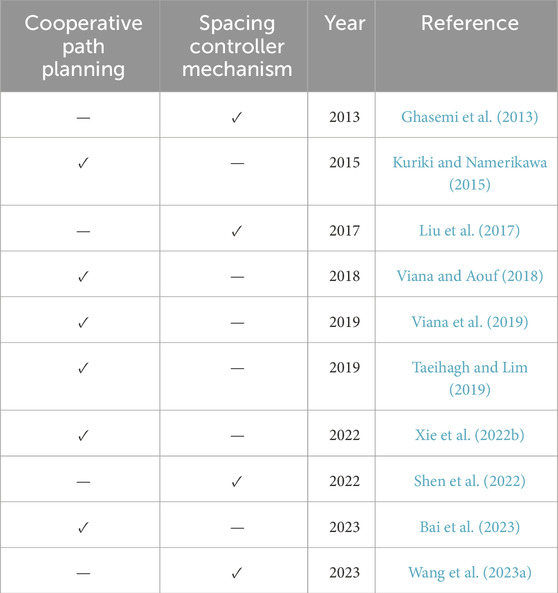

In addition to the cooperative path planning mechanisms, spacing policies and controllers have also received increased interest in ensuring collision avoidance by regulating the speeds of the vehicles forming a platoon. Two different types of spacing policies can be found in the literature, i.e., the constant-spacing policy (Liu et al., 2017; Shen et al., 2022) and the constant-time-headway spacing policy (i.e., maintaining a time gap between vehicles in a platoon, resulting in spaces that increase with velocity) (Wang et al., 2023a). In both categories, most works use a one-directional control strategy, in which the vehicle controller processes only the measurements that are received from leading vehicles. In contrast, a bidirectional platoon control scheme takes into consideration the state of the vehicles both in front and behind (see Ghasemi et al., 2013). In most of the cooperative platooning approaches, the vehicle platoons are formulated as double-integrator systems that deploy decentralized bidirectional control strategies similar to mass–spring–damper systems. This model is widely deployed since it is capable of characterizing the interaction of the vehicles with uncertain environments and, thus, is more efficient in stabilizing the vehicle platoon system in the presence of modeling errors and measurement noise. However, it should be noted that the effect of network delays on the performance of such systems has not been extensively studied. Time delays, including sensor detection delay, braking delay, and fuel delay, are not only inevitable but are also expected to significantly deteriorate the performance of the distributed controllers. Table 3 summarizes the above-mentioned path planning-based methods.
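The two spacing policies and the mass–spring–damper view of bidirectional control described above can be sketched as follows. This is a minimal simulation under stated assumptions: vehicles are ideal double integrators, the leader drives at constant speed, there are no communication or actuation delays, and the gains `k` and `c`, the standstill gap, and the headway are hypothetical values.

```python
def constant_spacing_gap(speed, standstill_gap=5.0):
    """Constant-spacing policy: the desired gap does not depend on speed."""
    return standstill_gap


def constant_time_headway_gap(speed, standstill_gap=5.0, headway=1.0):
    """Constant-time-headway policy: the desired gap grows with speed."""
    return standstill_gap + headway * speed


def simulate_platoon(n_vehicles=4, desired_gap=15.0, leader_speed=20.0,
                     k=0.5, c=1.0, dt=0.05, duration=200.0):
    """Bidirectional spring-damper control of followers behind a leader.

    Returns the final inter-vehicle gaps (front to back).
    """
    x = [-10.0 * i for i in range(n_vehicles)]  # initial gaps of 10 m
    v = [leader_speed] * n_vehicles
    for _ in range(round(duration / dt)):
        acc = [0.0] * n_vehicles  # the leader (index 0) keeps constant speed
        for i in range(1, n_vehicles):
            # Spring and damper toward the vehicle in front.
            a = k * ((x[i - 1] - x[i]) - desired_gap) + c * (v[i - 1] - v[i])
            if i + 1 < n_vehicles:
                # Spring and damper toward the vehicle behind.
                a -= k * ((x[i] - x[i + 1]) - desired_gap) + c * (v[i] - v[i + 1])
            acc[i] = a
        for i in range(n_vehicles):  # semi-implicit Euler integration
            v[i] += acc[i] * dt
            x[i] += v[i] * dt
    return [x[i - 1] - x[i] for i in range(1, n_vehicles)]
```

Dropping the rear term turns the law into the one-directional strategy; adding a delay to the neighbor states before they enter the control law is one simple way to explore the delay sensitivity noted in the text.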

4.3 Human-centric perception

Humans, as a part of a CPSoS, play an important role in the functionality of the system. Their role in such complicated systems is vital since they react to and collaborate with the machines, providing them with useful feedback and affecting the way that these systems work. Humans can provide valuable input in either an active (on purpose) or a passive (without consideration) way (Figure 3). For example, an input such as a gesture or voice can be used as an order or command to control the operation of a system via an HMI. On the other hand, pose estimation or biometrics, like heart rate, could be taken into account by a decision component, resulting in a corresponding change in the system’s functionality for security reasons (e.g., when a user’s fatigue has been detected).

The following sections present some human-related inputs (e.g., behavior and characteristics) that can be beneficially used in CPSoSs according to the literature.

Regarding the face of a human as a biometric, the related tasks can be face detection (Claudi et al., 2013; Isern et al., 2020; Chen et al., 2016; Galbally et al., 2019), face alignment (Kaburlasos et al., 2020a; Kaburlasos et al., 2020b), face recognition (Makovetskii et al., 2020), face tracking (Li et al., 2016b; Pereira Passarinho et al., 2015), face classification/verification (Arvanitis et al., 2016), and facial landmark extraction (Jeong et al., 2018; Eskimez et al., 2020; Lee et al., 2020). Fingerprint (Okpara and Bekaroo, 2017; Valdes-Ramirez et al., 2019; Preciozzi et al., 2020), palmprint (Wang et al., 2006), and iris/gaze (Vicente et al., 2015; Lai et al., 2015) are mainly used for user identification tasks due to their uniqueness for each person. EEG (Laghari et al., 2018; Pandey et al., 2020; Lou et al., 2017), EGG, respiration (Jiang et al., 2020; Saatci and Saatci, 2021; Meng et al., 2020), and heart rate (Rao et al., 2012; Prado et al., 2018; Majumder et al., 2019) are used for monitoring the user’s state. Although these signals can provide valuable information, they are difficult to use in real applications because capturing them requires special wearable devices.

The choice of which specific biometric to utilize depends on the use case scenario, including factors such as the availability and feasibility of using a sensor (e.g., whether it will be placed in a stationary location or worn constantly during the operation), the special power consumption needs of each sensor, the accuracy, and the latency. One other important issue that needs to be taken under serious consideration before the use of a biometric in real systems is the privacy and security of these sensitive data since they must be protected via encoding in order to be anonymously stored or used.

Generally, person identification is a more complicated and challenging problem than face identification since face identification is applied in a more controlled environment (e.g., a smaller captured frame is used, and the user has to remove glasses, hats, and other accessories to be identified). On the other hand, person identification has to deal with more complex issues like different points of view, light and weather conditions, different camera resolutions, types of clothes, and a large variety of background contexts. Person identification has shown great usability in applications related to CPSoSs, mostly for security purposes. Its utility is especially evident when it is applied “in the wild” and in uncontrolled environments where other biometrics cannot be used due to technical constraints. Nowadays, approaches usually use deep networks to achieve reliable and accurate results.

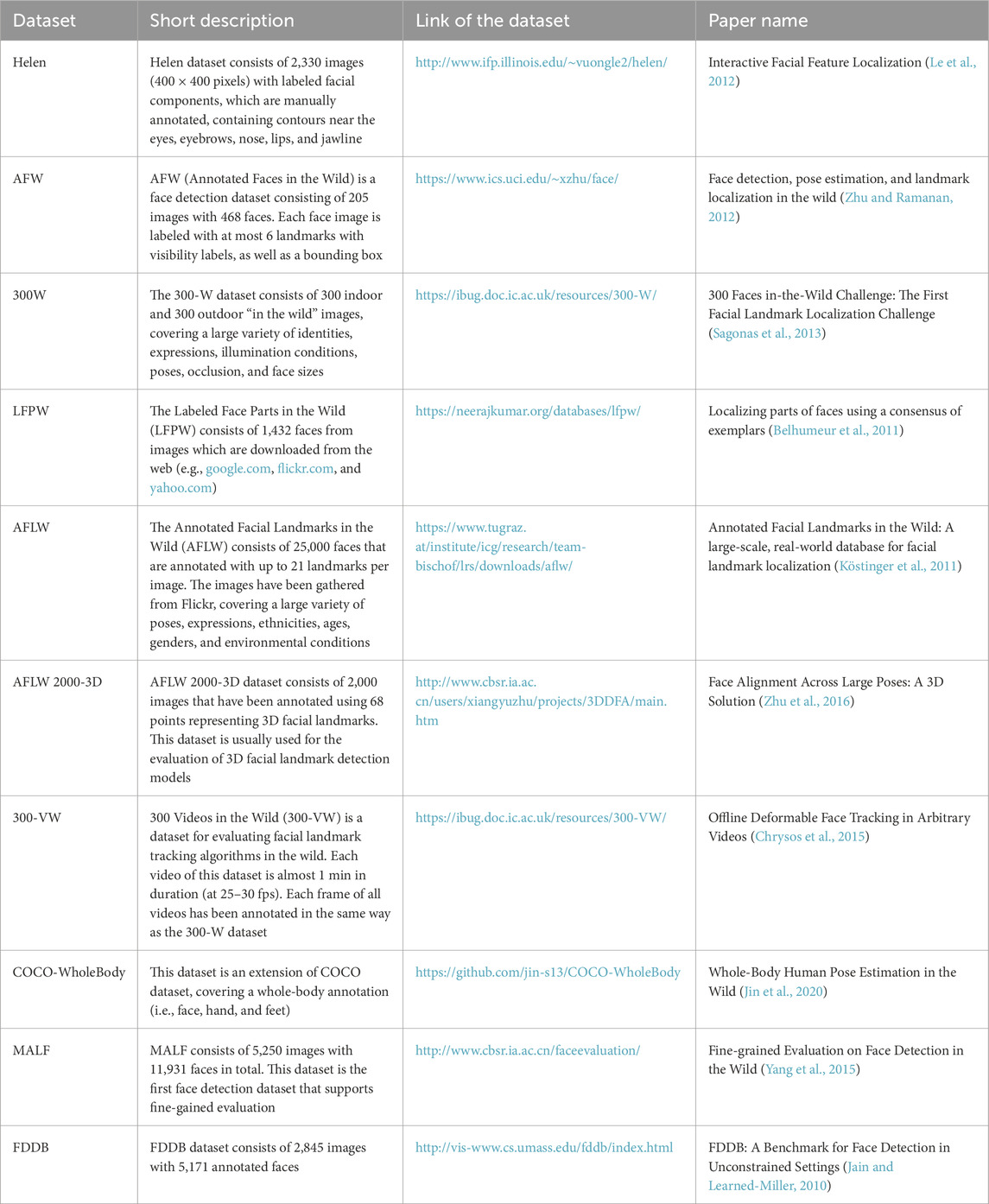

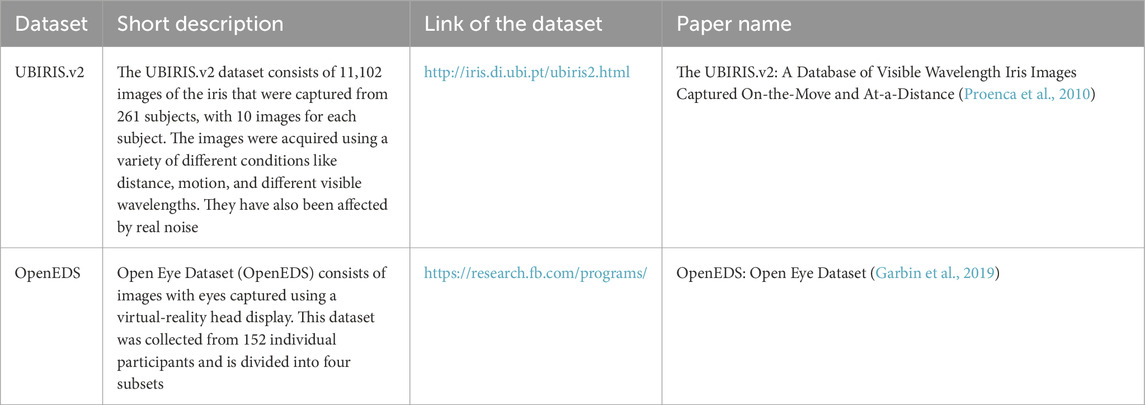

Li et al. (2020a) proposed an additive distance constraint approach with similar label loss to learn highly discriminative features for person re-identification. Ye and Yuen (2020) proposed a deep model (PurifyNet) to address the issue of the person re-identification task with label noise, which has limited annotated samples for each identity. Li et al. (2020b) used an unsupervised re-identification deep learning approach capable of incrementally discovering discriminative information from automatically generated person tracklet data. Table 4 and Table 5 summarize relevant datasets for the face recognition, detection, and facial landmark extraction problems.

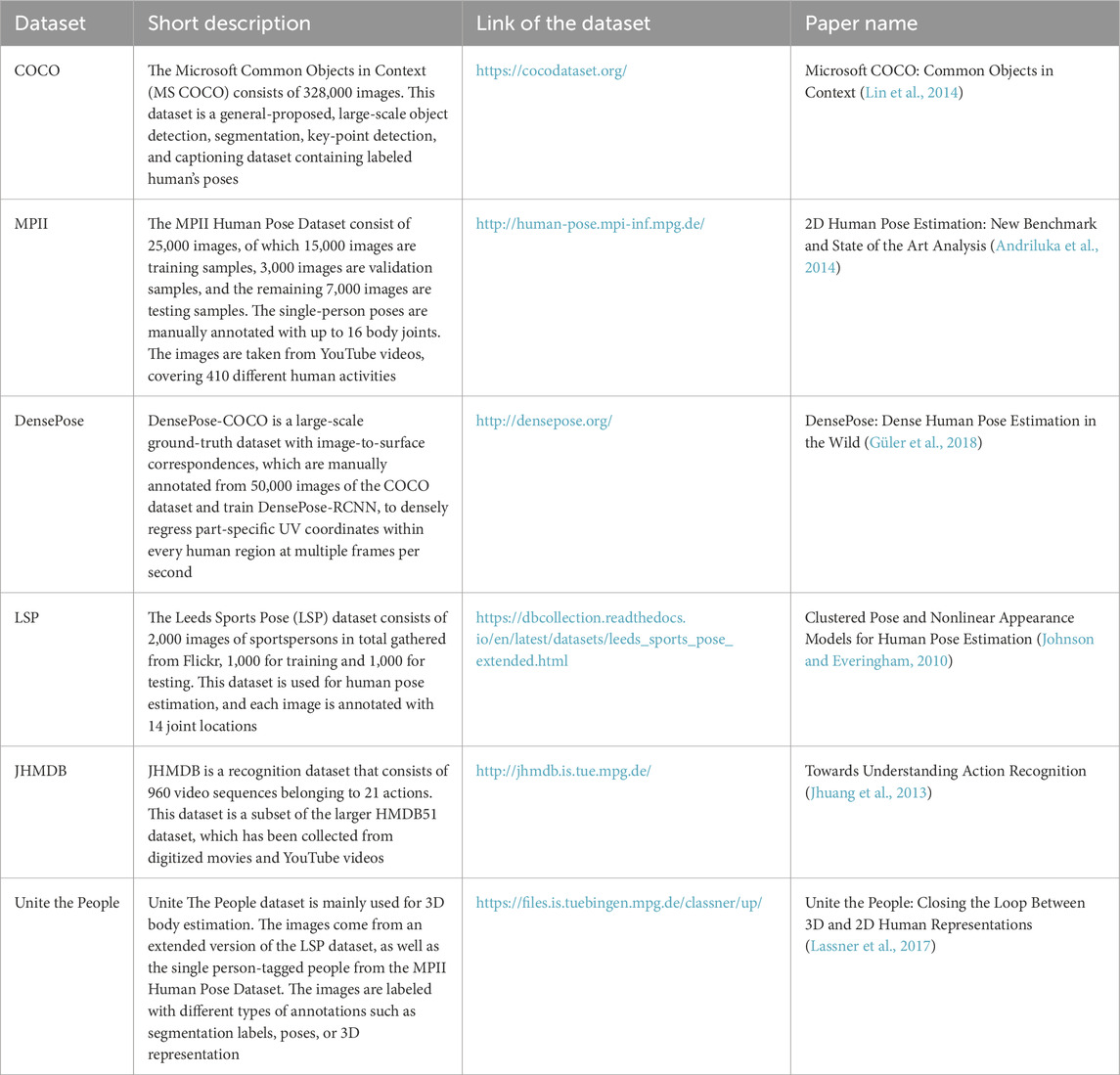

Human pose estimation and action recognition have been proved to be particularly valuable tasks in modern video-captured applications related to CPSoSs. They can be utilized in a variety of fields such as ergonomics assessment, safe training of new operators, fatigue and drowsiness detection of the user, HMIs, and the prediction of an operator’s next action for avoiding accidents by changing the operation of a machine. They are also useful for monitoring dangerous movements in insecure workspace areas.

A restriction that can negatively affect and obstruct the quality of the results of these tasks is the limited coverage area of the camera. Nevertheless, this limitation can be overcome using new types of sensors and tools like IMUs and whole-body tracking systems (e.g., SmartsuitPro and Xsens) (Hu et al., 2017).

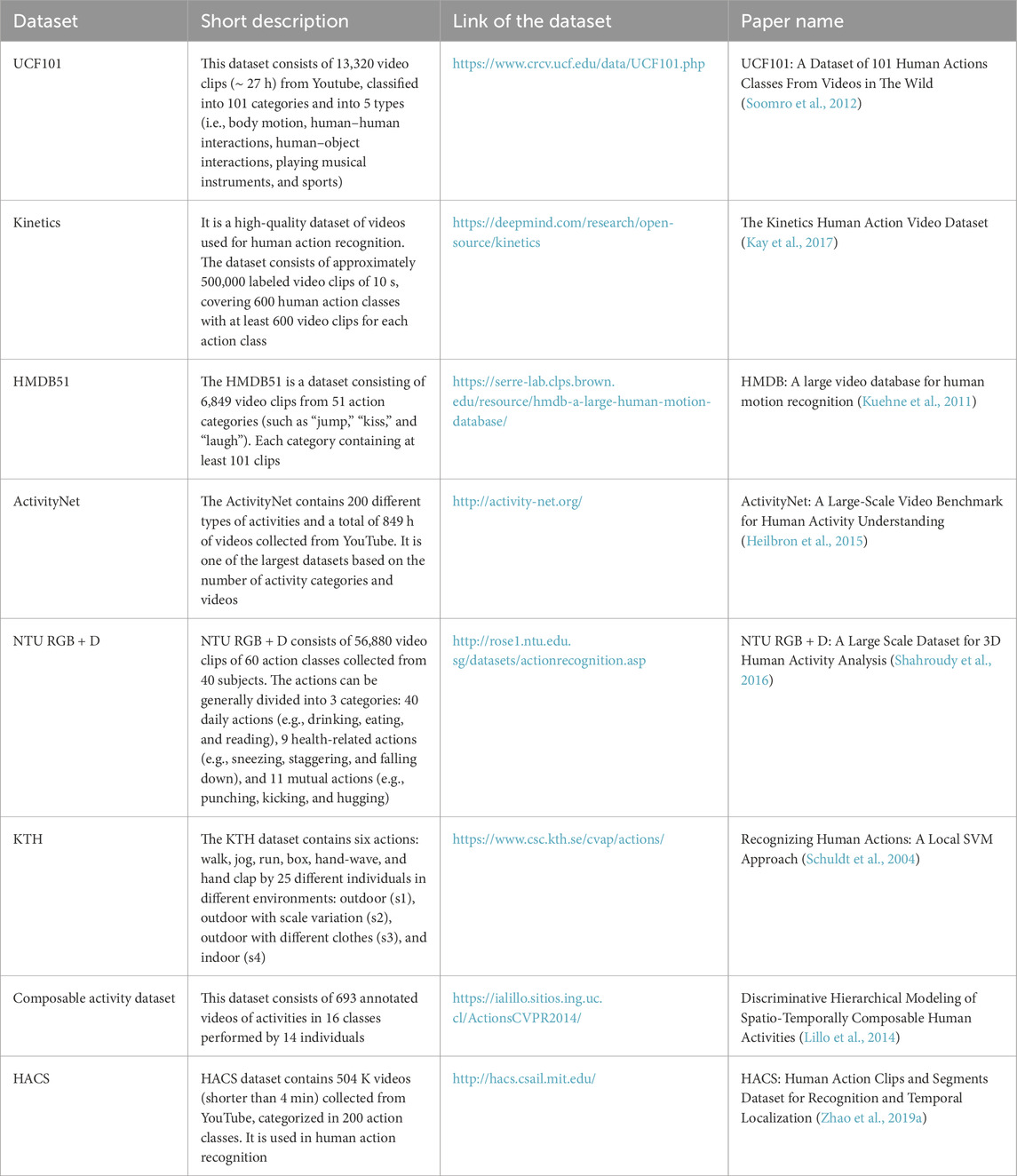

Islam et al. (2019) presented an approach that exploits visual cues from human pose to solve industrial scenarios for safety applications in CPSs. El-Ghaish et al. (2018) integrated three modalities (i.e., 3D skeletons, body part images, and motion history images) into a hybrid deep learning architecture for human action recognition. Nikolov et al. (2018) proposed a skeleton-based approach utilizing spatio-temporal information and CNNs for the classification of human activities. Deniz et al. (2020) presented an indoor monitoring reconfigurable CPS that uses embedded local nodes (Nvidia Jetson TX2), proposing learning architectures to address human action recognition. Table 6 and Table 7 summarize relevant datasets for the pose estimation and action recognition problem.

Hand gesture recognition can be a very useful tool for interactions with machines or subsystems in CPSoSs (Horváth and Erdős, 2017), particularly in applications where the user is not allowed to have physical hand contact with a machine due to security reasons. This task mainly consists of three sequential steps, which are hand detection, hand tracking, and gesture recognition. Gesture recognition can be performed on either a single image (i.e., static gesture recognition) or a sequence of images (i.e., dynamic gesture recognition). The first strategy looks more like a retrieval problem where the gesture in the image has to be matched with a known predefined gesture from a dataset of gestures. The second is a more complicated problem, but it is more useful since it can cover the requirements of a wider variety of real-life use cases (Choi and Kim, 2017). Gesture recognition is a very common task in human–computer interaction. Nonetheless, the recognition of complex patterns demands accurate sensors and sufficient computational power (Grützmacher et al., 2016). Additionally, visual computing plays an important role in CPSoSs, especially in those applications where the visual gesture recognition system relies on multi-sensor measurements (Posada et al., 2015; Aviles-Arriaga et al., 2006).
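The view of static gesture recognition as a retrieval problem, as described above, can be sketched as a nearest-template lookup. The feature representation (five finger-extension values between 0 and 1), the template set, and the rejection threshold are all hypothetical and serve only to illustrate the matching step; a real system would obtain features from the preceding hand detection and tracking stages.

```python
import math

# Hypothetical gesture templates: one extension value per finger
# (thumb, index, middle, ring, little), 0 = folded, 1 = extended.
GESTURE_TEMPLATES = {
    "fist": [0.0, 0.0, 0.0, 0.0, 0.0],
    "open_palm": [1.0, 1.0, 1.0, 1.0, 1.0],
    "point": [0.0, 1.0, 0.0, 0.0, 0.0],
}


def euclidean_distance(a, b):
    """Euclidean distance between two equally long feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def recognize_static_gesture(features, templates=GESTURE_TEMPLATES,
                             max_distance=0.5):
    """Return the label of the closest template, or None if nothing is
    close enough to count as a known gesture."""
    best_label = min(templates,
                     key=lambda label: euclidean_distance(features, templates[label]))
    if euclidean_distance(features, templates[best_label]) > max_distance:
        return None  # reject ambiguous or unknown hand shapes
    return best_label
```

The rejection threshold matters in safety-relevant settings, since executing a command for an ambiguous hand shape is usually worse than ignoring it.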

Horváth and Erdős (2017) presented a control interface for cyber–physical systems that interprets and executes commands in a human–robot shared workspace using a gesture recognition approach. Lou et al. (2016) addressed the problem of personalized gesture recognition for cyber–physical environments, proposing an event-driven service-oriented framework. In other gesture recognition applications, a body-worn setup has been proposed, which supplements the omnipresent 3 DoF motion sensors with a set of ultrasound transceivers (Putz et al., 2020). Table 8 summarizes relevant datasets for the hand and gesture recognition problems.

Speech recognition is a sub-category of a more generic research area related to the domain of natural language processing (NLP). The main objective of speech recognition is to automatically translate the content of the entire speech (or the most significant part of it) into text or other forms that computers can recognize. Since the recording and processing of speech do not require a special sensor, but just a simple audio recorder, this information is easy to acquire and use. Additionally, speech can be applied without any physical contact, making it an ideal signal for HMI applications.

Speech recognition can be utilized in smart input systems (Wang, 2020; Han et al., 2016), automatic transcription systems (Chaloupka et al., 2012; Chaloupka et al., 2015), smart voice assistants (Subhash et al., 2020), computer-assisted speech (Tejedor-García et al., 2020), rehabilitation (Aishwarya et al., 2018; Mariya Celin et al., 2019), and language teaching.

Similar to the face recognition task that focuses on the recognition of an individual human using facial information, the speaker recognition task tries to achieve the same goal using the vocal tone information of the subject. Speaker recognition is one of the most basic components for human identification, which has various applications in many CPSoSs. Additionally, fusion schemes can be used combining both speaker recognition and face recognition for more secure integrations (Zhang and Tao, 2020).

A speaker recognition system consists of three separate parts, namely, the speech acquisition module, the feature extraction and selection module, and finally the pattern matching and classification module. In CPSoSs, the implementation of an automatic speech recognition system relies on a voice user interface so that humans can interact with robots or other CPS components. This type of interface cannot replace the classical GUIs, but it can complement them by providing, in some cases, a more efficient way of interaction.
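The three-module structure described above (acquisition, feature extraction, and pattern matching) can be sketched end to end with a toy example. Everything here is an assumption made for illustration: the acquisition step is replaced by a synthetic tone with noise, the features are averaged log-magnitude DFT bins rather than MFCCs, and the matcher is a simple nearest-template rule instead of a trained classifier.

```python
import cmath
import math
import random


def acquire_speech(pitch_hz, seed, n_samples=512, sample_rate=8000):
    """Acquisition stand-in: a synthetic tone plus Gaussian noise."""
    rng = random.Random(seed)
    return [math.sin(2 * math.pi * pitch_hz * n / sample_rate)
            + 0.05 * rng.gauss(0.0, 1.0) for n in range(n_samples)]


def extract_features(signal, frame_len=128, n_bins=16):
    """Average log-magnitude of the first n_bins DFT bins over all frames.

    This is a simplified spectral feature, not an MFCC implementation.
    """
    frames = [signal[i:i + frame_len]
              for i in range(0, len(signal) - frame_len + 1, frame_len)]
    features = [0.0] * n_bins
    for frame in frames:
        for k in range(n_bins):
            coeff = sum(x * cmath.exp(-2j * math.pi * k * n / frame_len)
                        for n, x in enumerate(frame))
            features[k] += math.log(abs(coeff) + 1e-9) / len(frames)
    return features


def match_speaker(features, enrolled):
    """Pattern matching: return the enrolled speaker with the closest template."""
    return min(enrolled, key=lambda name: math.dist(features, enrolled[name]))


# Enrollment with two hypothetical speakers that differ in pitch.
ENROLLED = {
    "alice": extract_features(acquire_speech(250.0, seed=1)),
    "bob": extract_features(acquire_speech(500.0, seed=2)),
}
```

Calling `match_speaker(extract_features(acquire_speech(250.0, seed=7)), ENROLLED)` assigns a new utterance with the first speaker's pitch to that speaker; swapping in MFCC features and a neural classifier would follow the same three-stage layout.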

Kozhirbayev et al. (2018) developed a technique to train a neural network (NN) on the extracted mel-frequency cepstral coefficient (MFCC) features from audio samples to increase the recognition accuracy of the short utterance speaker recognition system. Wang et al. (2020) tried to improve the robustness of speaker identification using a stacked sparse denoising auto-encoder. Table 9 summarizes relevant datasets for the speech recognition problem.

5 Behavioral layer

In each CPSoS, human knowledge, senses, and expertise constitute important sources of information that can be taken into account to assure its operational excellence. However, a substantial concern that needs to be addressed at an early stage of CPSoS evolution is determining how these abstract human features can be made accessible and understandable to the system.

A way to integrate the human as a separate component into a CPSoS is by introducing an anthropocentric mechanism, which is known in the literature as the HITL approach (Gaham et al., 2015; Hadorn et al., 2016). This mechanism allows a direct way for humans to continuously interact with the CPSoSs’ control loops in both directions of the system (i.e., taking and giving inputs).

Although common CPSoSs are human-centered systems (where the human constitutes an essential part of the system), in many real cases, these systems still consider humans as external and unpredictable elements without taking their importance into deeper consideration. The central vision of researchers and engineers is to create a human–machine symbiosis, integrating humans as holistic beings within CPSoSs. To this end, CPSoSs have to support a tight bond with the human element through HITL controls, taking into account human features like intentions, psychological and cognitive states, emotions, and actions, all of which can be deduced through sensor data and signal processing approaches.

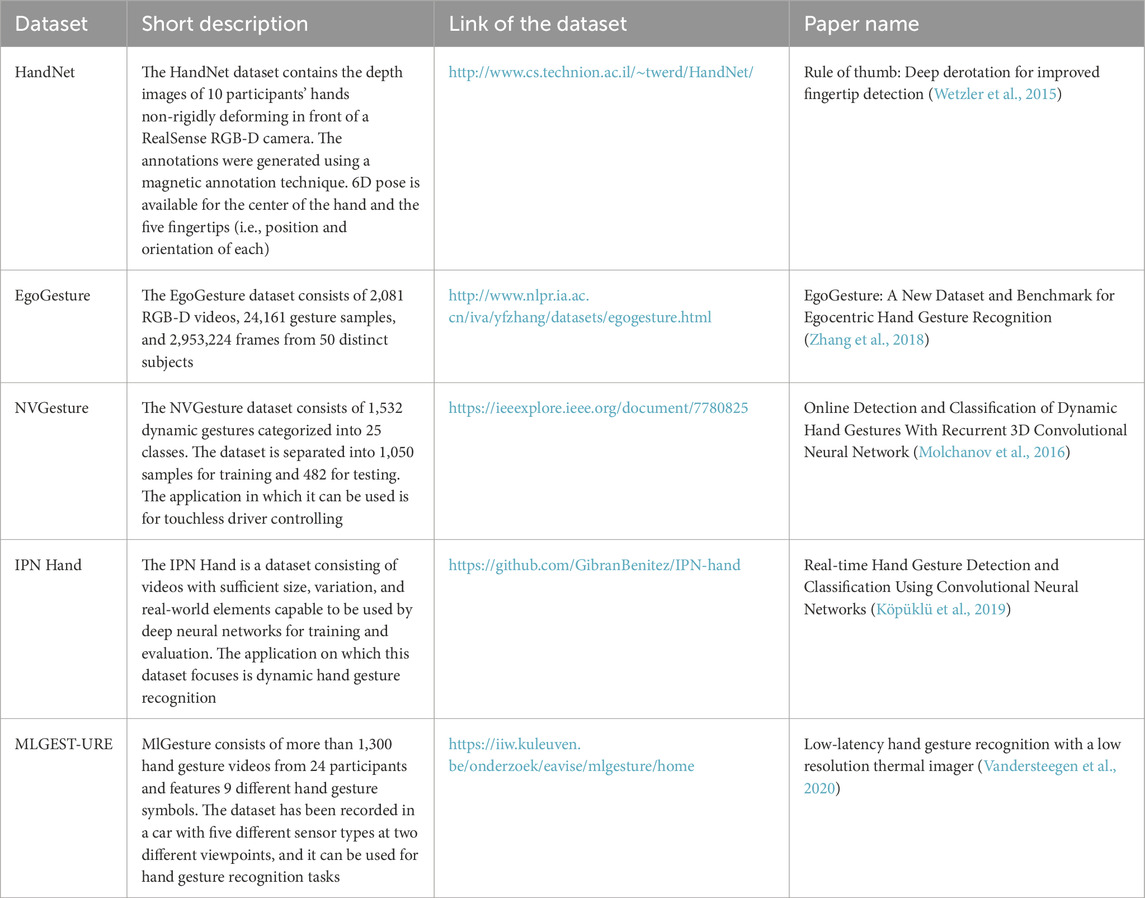

HITL systems integrate human feedback into the control loop of a system, allowing humans to interact with and influence automated processes in real time. In self-driving vehicles, haptic teleoperation enables remote operators to control the vehicle with the sensation of touch. For instance, when the vehicle encounters an abnormal situation, a human operator can take over using HMI haptic feedback to feel the road conditions and obstacles, ensuring safe navigation and improving response times and overall safety (Kuru, 2021). Additionally, HITL telemanipulation of unmanned aerial vehicles (UAVs) allows operators to control drones remotely while receiving haptic feedback about the drone’s interactions with its environment. For instance, while an operator controls the UAV through a haptic interface, the haptic feedback conveys sensations of wind resistance, surface textures, and physical interactions with obstacles (Zhang et al., 2021). Integrating haptic feedback into HITL operations enhances human perception by providing a multisensory experience. This integration improves the realism of virtual environments and the accuracy and efficiency of tasks requiring fine motor skills and precise control. Haptic feedback bridges the gap between the virtual and physical worlds, allowing users to interact with digital systems more naturally and intuitively. Thus, the concept of human–machine symbiosis refers to the synergistic relationship between humans and machines, where both entities work together to achieve common goals. This cooperation ensures that the unique strengths of humans (such as intuition, creativity, and decision-making) complement the capabilities of machines (such as speed, accuracy, and endurance). By creating environments where humans and machines work together, the overall system performance can be enhanced, leading to increased productivity and innovation. The key components of the behavioral layer are summarized in Figure 4.

System designers who develop new generations of CPSoSs have to understand which features differentiate them from traditional CPSs. One of these features is the HITL mechanism, which allows CPSoSs to take advantage of unique human characteristics in which humans are superior to machines. Technology is not yet mature enough to integrate these human-oriented characteristics into machines and robots. As a result, the HITL approach is essential to serve the initial goals of a CPSoS. These characteristics, as proposed in the literature (Sowe et al., 2016), are presented below:

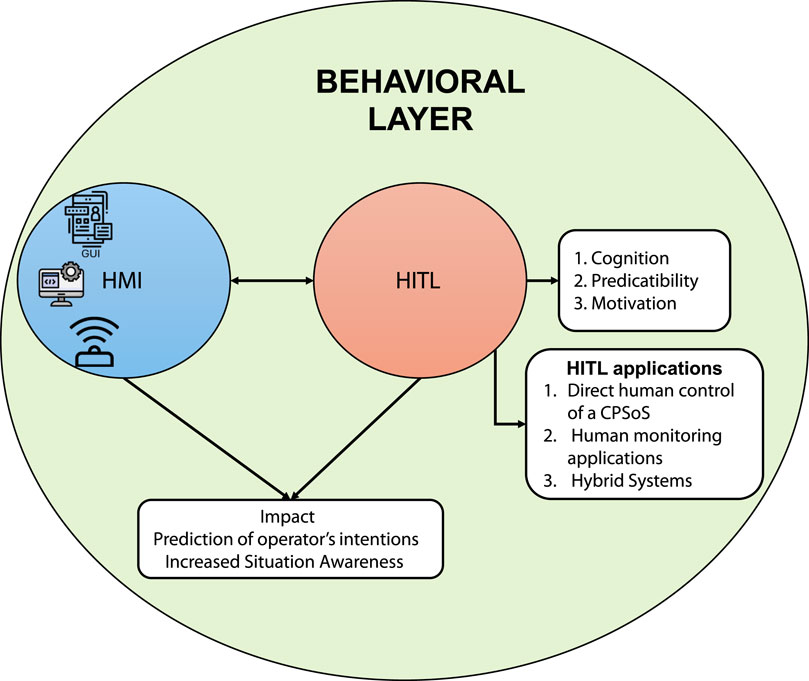

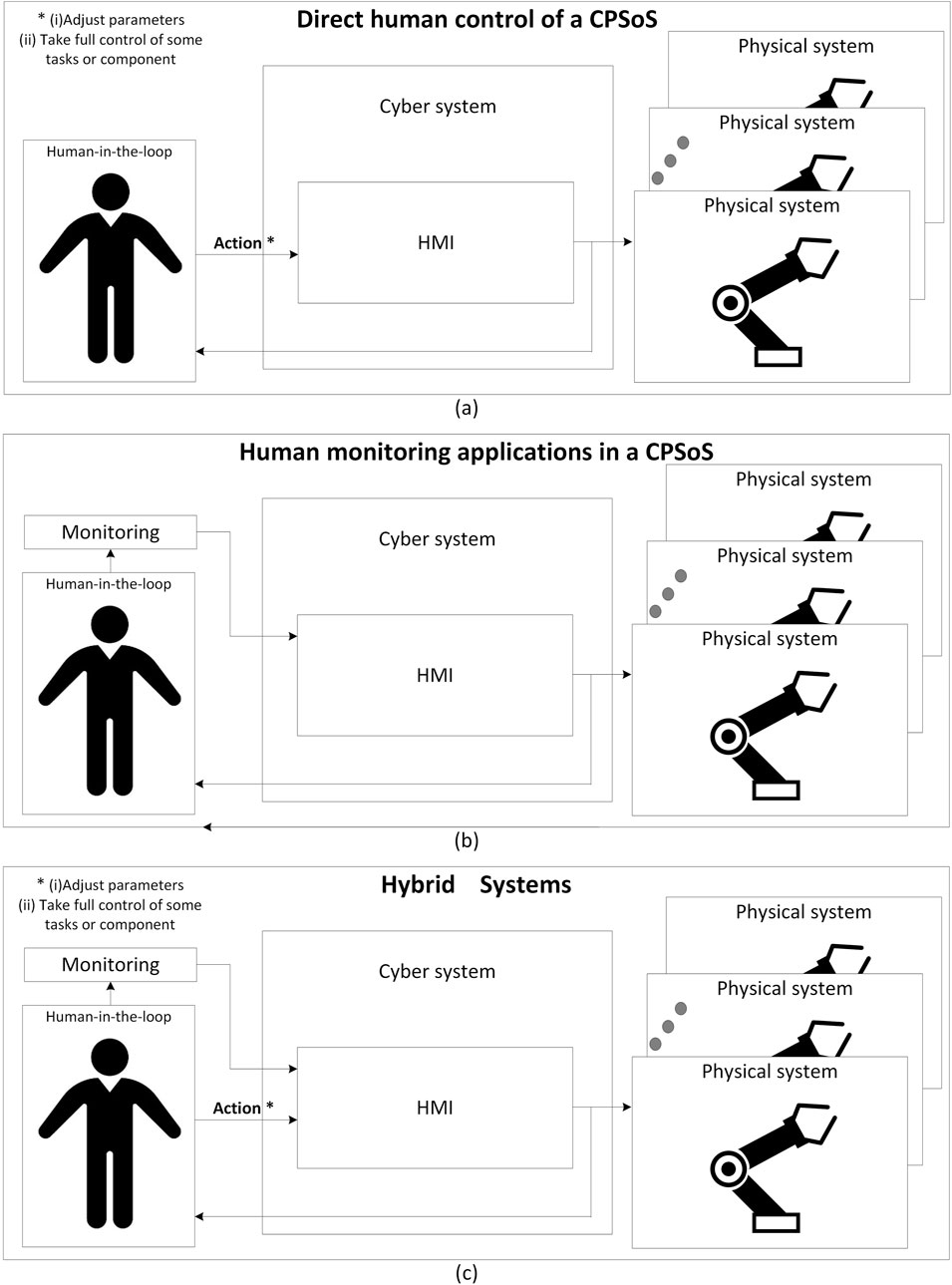

The HITL applications can be separated into three main categories with respect to the type of input that humans provide:

1. Applications in which the human plays a leading role and directly controls the functionality of the CPSoS as an operator (Figure 5A).

2. Applications where the system passively (Figure 5B) monitors humans (e.g., biometrics, pose) and then makes decisions for appropriate actions.

3. Hybrid combination of the two types mentioned above (Figure 5C).

Figure 5. HITL applications with respect to the type of input that humans provide. (A) Applications in which the human plays a leading role in the CPSoS. (B) Applications where the system passively monitors humans and then makes decisions for appropriate actions. (C) Hybrid combination of the two types mentioned above.

5.1 Direct human control of a CPSoS

The applications in this category can be separated into two sub-categories according to the degree of CPS autonomy. In the first sub-category, operators supervise a process that is close to an autonomous task. The system has complete control of its actions, and the user is responsible for adjusting some parameters that may affect the functionality of the system when required for external reasons. An example of such a scenario is when an operator sets new values for specific parameters on an industrial machine in order to change the operation of the assembly line (e.g., for a new product).

In the second sub-category, the operator plays a more active role in the process by directly controlling several tasks and setting explicit commands for the operation of the machines or robots. An example of this scenario is when an expert operator has to remotely take complete control of a robotic arm for repair purposes.

5.2 Human monitoring applications

The applications of this category are represented by systems that passively monitor humans’ actions, behavior, and biometrics. The acquired data can be used to make appropriate decisions. Based on the type of the system reaction, the applications can be separated into two types, namely, open-loop and closed-loop systems.

Open-loop systems continuously monitor humans and visualize (e.g., via smart glasses) or send a report with relevant results, which may be helpful or interesting for the operator. The system does not take any further action in this case. The presented results can cover (i) the first level of information, (ii) the second level of information, and (iii) KPIs. First-level information includes measurements that are usually received directly from the sensors (e.g., heart rate, respiration rate, and eye blinking). The second level is obtained by appropriately processing the first-level information and corresponds to a higher contextual meaning (e.g., drowsiness, awareness, and anxiety level).

Closed-loop systems use the received information from the sensors and the processing results to take action. For example, in an automotive use case, if critical drowsiness of a driver is detected, the car could take complete control of the vehicle or appropriately inform the driver of his/her condition.
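The distinction between open-loop and closed-loop monitoring described above can be sketched as follows. The sensor fields, the thresholds, and the action names are hypothetical and chosen only to show how first-level measurements are turned into second-level information and, in the closed-loop case, into an action.

```python
from dataclasses import dataclass


@dataclass
class FirstLevelSample:
    """First-level information: values received directly from sensors."""
    heart_rate_bpm: float
    eye_closed_fraction: float  # share of the time window with eyes closed


def infer_drowsiness(sample: FirstLevelSample) -> str:
    """Second-level information derived from first-level measurements.

    The thresholds are illustrative assumptions, not validated values.
    """
    if sample.eye_closed_fraction >= 0.30:
        return "critical"
    if sample.eye_closed_fraction >= 0.15 or sample.heart_rate_bpm < 55.0:
        return "elevated"
    return "normal"


def open_loop_step(sample: FirstLevelSample) -> dict:
    """Open loop: only report the results; no action is taken."""
    return {"heart_rate_bpm": sample.heart_rate_bpm,
            "drowsiness": infer_drowsiness(sample)}


def closed_loop_step(sample: FirstLevelSample) -> str:
    """Closed loop: map the inferred state to a system action."""
    actions = {"critical": "take_control",
               "elevated": "warn_driver",
               "normal": "no_action"}
    return actions[infer_drowsiness(sample)]
```

Both steps share the same inference function, so a system can start as an open-loop reporter and later be extended to closed-loop operation without changing the sensing pipeline.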

5.3 Hybrid systems

In manufacturing, for example, a system monitors the operator’s actions during collaboration with a robot and can provide appropriate guided instructions. However, the level of detail of the guided assistance can be modified according to the personalized preferences of the user (which may be related to his/her level of experience, for instance). Hybrid systems take human-centric sensing information as feedback to perform an open- or closed-loop action, but they additionally take into account direct human inputs and preferences.

Humans play an essential role in CPSoSs. Their contribution can be summarized into three categories: (i) for data acquisition, (ii) for inference related to their state, and (iii) for the actuation of an action to complete a task of their own or to collaborate with other components of the system (Nunes et al., 2015).

1. Data acquisition:

2. State inference:

3. Actuation:

The HMI is the medium that is utilized for direct communication between humans and machines, facilitating their physical interaction (Ajoudani et al., 2018). In the literature, when there are multiple human users or systems of machines instead of a separate individual machine, HMIs have also been referred to as cooperative or collaborative HMIs (CHMIs). Nevertheless, for simplicity, they will be referred to as HMIs since the way they function and their primary features remain the same regardless of the number of humans or machines.

Typically, the classical HMI system comprises some standard hardware components, like a screen and keyboard, along with software featuring specialized functionalities, effectively performing as a graphical user interface (GUI). All sensors and wearable devices connected with humans or other components of the CPSoS are also part of an HMI. The HMI has pervasive usage in CPSoSs, allowing each part of the CPSoS to directly interact with a human and vice versa, creating a synergy loop between CPSs and humans. In the future, HMIs are also expected to foster social cohesion between humans and machines. Gorecky et al. (2014) suggested that the primary representatives of HMI tools in CPSoSs, which are mainly used for the communication between humans and machines, are automatic speech recognition, gesture recognition, and extended reality, which can be represented by augmented or virtual reality. In such implementations, a touch screen can allow human operators to pass messages to machines. Singh and Mahmoud (2017) presented a framework that is capable of visually acquiring information from HMIs in order to detect and prevent HITL errors in the control rooms of nuclear power plants. Intelligent and adaptable CPSoSs expect the automation systems to be decentralized and to support “Plug-and-Produce” features. Consequently, the HMIs have to dynamically update and adapt display screens and support elements, like IO fields and buttons, to facilitate the work of the operators (Shakil and Zoitl, 2020). Wang et al. (2019a) proposed a graphical HMI mechanism for intelligent and connected in-vehicle systems in order to offer a better experience to automotive users. Pedersen et al. (2017) presented a way of connecting an HMI with a software model of an embedded control system and thermodynamic models in a hybrid co-simulation.

Naujoks et al. (2017) presented an HMI for cooperative automated driving. The cooperative perception extends the capabilities of the automated vehicles by performing tactical and strategic maneuvers independently of any driver’s intervention (e.g., avoiding obstacles). The goal of the papers presented by Kharchenko et al. (2014), Orekhov et al. (2016a), and Orekhov et al. (2016b) is to increase the drivers’ awareness through the development and implementation of cooperative HMIs for ITSs based on cloud computing, providing measurements of the vehicle’s and driver’s state in real time. Johannsen (1997) dealt with HMIs for cooperative supervision and control by different human users (e.g., in control rooms or group meetings). The application in various domains (i.e., the cement industry and chemical and power plants) has shown that several persons from different classes (e.g., operators, maintenance personnel, and engineers) need to cooperate.

By integrating haptic technologies into HMIs, human perception and interaction can be significantly enhanced, leading to more effective and efficient HITL operations. Haptic teleoperation allows operators to control remote or virtual systems with the sensation of touch, providing real-time tactile feedback from the remote environment. This feedback increases the operator’s situational awareness and precision by mimicking the sense of touch, thereby enhancing the user’s understanding and control (Cheng et al., 2022). In automotive applications, for instance, haptic feedback in steering wheels or pedals can alert drivers to hazards or guide them through automated driving tasks, thus improving response times and safety (Quintal and Lima, 2021). Additionally, techniques like haptic physical coupling can create a physical connection between the user and the system, enabling more intuitive and direct manipulation of virtual objects or remote devices. This can simulate the weight, texture, and resistance of objects in virtual environments, which is particularly beneficial in training simulations and remote manipulations, where direct human intervention is risky or impossible. For instance, it can be used in simulations for hazardous environments, such as nuclear plants or space missions. Operators can train with realistic tactile feedback, preparing them for real-world scenarios without the associated risks (Laffan et al., 2020). Incorporating haptic feedback into HITL operations can also improve human perception by providing a multisensory experience. This integration not only enhances the realism of virtual environments but also improves the accuracy and efficiency of tasks that require fine motor skills and precise control (Sagaya Aurelia, 2019).

Cooperative HMIs can be used as a standalone GUI in aircraft guidance applications, displaying data and other types of graphical information (e.g., routes, route attributes, airspaces, and flight plan tracks). This real-time information enables collaborative decision-making among all parties involved, such as the crew (the pilot and the copilot), who have to cooperate continuously with each other or interact with air traffic controllers. HMIs can assist users in operational services, such as air traffic flow and capacity management, flight planning, and airspace management. Kraft et al. (2019) and Kraft et al. (2020) investigated the type of information that should be provided to drivers via HMIs in merging or left-turn situations to support cooperative driving, in which road users facilitate each other’s goal achievement. Cooperation between road users utilizing V2X communication has the potential to make road traffic safer and more efficient (Fank et al., 2021). The exchange of information enables the cooperative orchestration of critical traffic situations, such as truck overtaking maneuvers on freeways.

In addition to cooperative decision-making facilitated by HMIs, the integration of predictive capabilities and situational awareness is paramount in enhancing the functionality and safety of CPSoSs. Prediction of operator’s intentions and situation awareness are critical aspects of integrating human capabilities within CPSoSs. By understanding and anticipating human actions and maintaining a high level of awareness, these systems can significantly enhance operational safety, efficiency, and resilience. These concepts further solidify the human–machine symbiosis, ensuring that CPSoSs can effectively adapt to dynamic and unpredictable environments.

Predicting the operator’s intentions can improve the effectiveness of collaboration between CPSoSs and humans. An accurate prediction can be essential, especially in industrial scenarios where the resilience and safety of all CPSoS components mainly depend on the mutual understanding between humans and CPSoSs. It is therefore necessary to design and develop reliable, robust, and accurate human behavior modeling techniques capable of predicting human actions or behavior.

On the one hand, operators are mainly responsible for their own safety when they share a working environment with a cobot and perform collaborative tasks. On the other hand, CPSoSs must have intelligent components that can identify, understand, and even predict operators’ intentions with the primary goal of protecting them from severe injury. A continuous video-capturing component can be used by the prediction system to detect, track, and recognize human gestures or postures, and an artificial intelligence component can be used to predict human intentions. The system can then respond when unexpected human operations are detected or specific human activity patterns are predicted (Zanchettin et al., 2019). Meanwhile, the cobot can perform other tasks (Garcia et al., 2019). Many different approaches to predicting the operator’s intentions have been presented in the literature. Examples include a framework for the prediction of human intentions from RGBD data (Casalino et al., 2018), a sparse Bayesian learning-based predictor of the future desired human position (Li et al., 2019), a temporal CNN with a convolution operator for human trajectory prediction (Zhao and Oh, 2021), a system that detects human intentions through a recursive Bayesian classifier exploiting head and hand tracking data (Casalino et al., 2018), and a human intention inference system that uses an expectation-maximization algorithm with online model learning (Ravichandar and Dani, 2017).
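To illustrate the recursive Bayesian idea mentioned above, the following minimal Python sketch updates a belief over candidate operator intentions as new observations arrive. It is an illustration under stated assumptions, not the method of any cited work: the intention labels, the likelihood values, and the function names `bayes_update` and `predict_intention` are hypothetical, and in practice the likelihoods would come from a model learned on tracking data.

```python
from typing import Dict, Iterable

Belief = Dict[str, float]


def bayes_update(prior: Belief, likelihood: Belief) -> Belief:
    """One recursive Bayes step: posterior is proportional to likelihood * prior."""
    unnormalized = {k: prior[k] * likelihood[k] for k in prior}
    total = sum(unnormalized.values())
    if total == 0.0:
        # The observation is impossible under every hypothesis; keep the prior.
        return dict(prior)
    return {k: v / total for k, v in unnormalized.items()}


def predict_intention(prior: Belief, observations: Iterable[Belief]) -> Belief:
    """Fold a sequence of per-frame likelihoods into a single belief."""
    belief = dict(prior)
    for likelihood in observations:
        belief = bayes_update(belief, likelihood)
    return belief


# Hypothetical example: two candidate intentions, two consecutive frames
# whose (assumed) likelihoods favor "reach_part".
prior = {"reach_part": 0.5, "reach_tool": 0.5}
frames = [
    {"reach_part": 0.8, "reach_tool": 0.2},
    {"reach_part": 0.8, "reach_tool": 0.2},
]
belief = predict_intention(prior, frames)
most_likely = max(belief, key=belief.get)
```

Because each posterior becomes the prior for the next frame, the belief sharpens as consistent evidence accumulates, which is what lets a cobot commit to a safe response before the human motion is complete.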

Awareness: In HMIs, situational awareness describes how well operators, drivers, or users understand the current situation, which they need in order to perform tasks successfully (Endsley, 1995). According to the definition provided by Vidulich et al. (1994), situational awareness entails four specific requirements:

1. to easily receive information from the environment.

2. to integrate this information with relevant internal knowledge, creating a mental model of the current situation.

3. to use this model to direct further perceptual exploration in a continual perceptual cycle.

4. to anticipate future events.

Taking these four requirements into account, situational awareness is defined as the continuous extraction of environmental information, integrating this information with previous knowledge to form a coherent mental picture and using that picture in directing perception and anticipating future events. The system will be able to monitor and understand the user’s state (e.g., fatigue and cognitive level) to produce personalized alarms, warnings, information, and suggestions to the users. A situational awareness application could also provide

Situation awareness is essential in cases where a user must intervene in operations and co-operations with highly automated systems in order to correct failed autonomous decisions in CPSoSs (Horváth, 2020). It is also an effective method to keep the mechanical parts of a system and its operators secure and safe; it can be classified into two groups, human and computer awareness (Yang et al., 2018). Moreover, situational awareness for security reasons is critical since it can be used to inform the user about a cyber-attack that takes place in real time (Joo et al., 2018).

6 Role of digital twins in optimizing CPSoS ecosystems

Building upon the previously described perception and behavioral layers of the CPSoS general architecture, DTs emerge as a crucial technology that realizes and optimizes this framework. The perception layer’s role in enhancing situational awareness through object detection, cooperative scene analysis, and effective path planning and the behavioral layer’s focus on integrating human operators and supporting HITL interactions are both significantly augmented by the capabilities of DTs. DTs provide a dynamic and real-time simulation of physical systems, creating accurate virtual replicas that enable continuous monitoring and data integration across both layers. More specifically, DTs in the perception layer offer a comprehensive view of the environment by synchronizing data from various sensors and subsystems, ensuring more precise and reliable situational awareness. This integration allows for improved responsiveness to environmental changes and anomalies, enhancing the autonomy and reliability of CPSoSs. In the behavioral layer, DTs facilitate seamless human-machine interfaces by delivering real-time feedback and predictive insights. This supports the HITL approach by allowing human operators to interact with the system using real-time simulations and up-to-date information. Advanced HMIs such as gesture recognition and eXtended Reality technologies are further empowered by DTs, making interactions more intuitive and efficient. Moreover, the predictive capabilities of DTs help anticipate operator intentions, improving the collaborative efforts between humans and the system, especially in critical scenarios where safety and efficiency are paramount.

As such, by integrating DT frameworks into the proposed two-layered architecture, the CPSoS ecosystem is not only optimized but also becomes more resilient and adaptive to the complex demands of various domains, including automotive, industrial manufacturing, and smart cities. This synergy between DTs and the CPSoS architecture leads to smarter systems capable of addressing modern challenges with greater efficiency. In the following sections, we present how DTs can be employed to realize indicative large-scale CPSoSs such as smart cities, transportation systems, and aerial traffic monitoring.

6.1 Digital twins in prominent examples of CPSoSs

DTs will play a pivotal role in optimizing large CPSoSs by creating virtual replicas of physical systems, allowing for real-time monitoring, simulation, and predictive maintenance. This capability is particularly important for large CPSoSs, where the integration of numerous interconnected subsystems demands precise coordination and management. DTs enhance the functionality and efficiency of these complex systems by providing a unified platform for data integration, analysis, and visualization. By enabling continuous feedback loops between the physical and digital realms, DTs improve decision-making processes, enhance system reliability, and optimize operational performance across diverse domains, including but not limited to smart cities, intelligent transportation systems, and aerial traffic monitoring (Mylonas et al., 2021; Kušić et al., 2023; Wang et al., 2021).
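The continuous feedback loop between the physical and digital realms can be sketched as follows. This is a minimal illustration under stated assumptions, not a description of any cited DT platform: the class `DigitalTwin`, the exponential smoothing factor `alpha`, and the residual `threshold` are hypothetical choices, and a real twin would track a far richer state than one scalar.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DigitalTwin:
    """Toy twin of one scalar quantity (e.g., a temperature); hypothetical."""

    alpha: float = 0.3        # smoothing factor for state updates (assumed)
    threshold: float = 5.0    # residual above which a reading is flagged (assumed)
    state: Optional[float] = None
    step: int = 0
    anomalies: List[int] = field(default_factory=list)

    def ingest(self, measurement: float) -> bool:
        """Synchronize with one sensor reading; return True if it looks anomalous."""
        index = self.step
        self.step += 1
        if self.state is None:
            # The first reading initializes the virtual replica.
            self.state = measurement
            return False
        residual = measurement - self.state
        if abs(residual) > self.threshold:
            # Large mismatch between twin and reality: flag it, do not absorb it.
            self.anomalies.append(index)
            return True
        # Normal reading: move the twin's state toward the measurement.
        self.state += self.alpha * residual
        return False


# Hypothetical stream of readings; the third one deviates strongly.
twin = DigitalTwin(alpha=0.5, threshold=5.0)
flags = [twin.ingest(x) for x in [20.0, 21.0, 35.0, 20.5]]
```

The design choice here is that anomalous readings are reported but not merged into the twin's state, so a single faulty sensor value does not corrupt the virtual replica used for downstream decisions.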

6.1.1 DTs and smart cities

The management and development of smart cities can be revolutionized by DTs as they provide detailed digital replicas of urban environments. These digital models integrate various data sources to deliver real-time insights and simulations, enhancing urban planning, infrastructure maintenance, and environmental monitoring. More specifically, DTs enable city planners to test different scenarios and make data-driven decisions, optimizing the layout and functionality of urban spaces. By modeling traffic flows, optimizing traffic light timings, and reducing congestion, DTs improve urban mobility and air quality. For example, DTs can analyze data from traffic cameras, sensors, and GPS to provide real-time traffic management solutions, facilitating efficient and sustainable urban traffic control (Schwarz and Wang, 2022). Furthermore, DTs allow for the detailed simulation of urban infrastructure, helping planners optimize the layout and design of utilities such as water, electricity, and waste management systems. By providing a virtual model of the city’s infrastructure, DTs enable predictive maintenance and efficient resource allocation, reducing operational costs and improving service delivery (Broo and Schooling, 2023). Additionally, DTs play a crucial role in enhancing public safety and emergency response (Aluvalu et al., 2023). By integrating data from surveillance systems, emergency services, and environmental sensors, DTs provide real-time situational awareness, enabling faster and more coordinated responses to emergencies. For instance, DTs can simulate natural disaster scenarios and help plan effective response strategies, thereby improving the efficiency and effectiveness of emergency management.

Another important aspect is that DTs support smart building management by monitoring energy consumption, predicting maintenance needs, and improving overall building efficiency. By providing a virtual model of buildings, DTs help optimize heating, ventilation, and air conditioning (HVAC) systems, lighting, and other building services, contributing to energy savings and improved occupant comfort (Eneyew et al., 2022). Finally, DTs may be utilized for environmental monitoring by integrating data from air quality sensors, weather stations, and other environmental monitoring tools (Purcell et al., 2023). They provide real-time insights into environmental conditions, helping cities monitor pollution levels, manage natural resources, and implement sustainability initiatives. For instance, DTs can help track and manage water quality in urban water systems, ensuring safe and clean water for residents. Overall, DTs enhance the functionality and efficiency of smart cities by providing a unified platform for data integration, analysis, and visualization. This technology enables cities to become more resilient, sustainable, and responsive to the needs of their residents.
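As a concrete illustration of the traffic light timing use case mentioned above, the following Python sketch splits a fixed signal cycle among approaches in proportion to the demand a city-scale DT might estimate. It is a deliberately simple heuristic under stated assumptions and is not drawn from the cited works: the function name `allocate_green`, the phase names, and the default cycle and minimum green durations are hypothetical, and lost time for yellow and all-red intervals is ignored.

```python
from typing import Dict


def allocate_green(
    demand: Dict[str, float],
    cycle_s: float = 90.0,
    min_green_s: float = 10.0,
) -> Dict[str, float]:
    """Split a signal cycle among phases in proportion to estimated demand.

    Every phase first receives `min_green_s`; the remaining time is shared
    proportionally to each phase's demand (e.g., queued vehicles).
    """
    if not demand:
        raise ValueError("at least one phase is required")
    if any(d < 0 for d in demand.values()):
        raise ValueError("demand values must be non-negative")
    spare = cycle_s - len(demand) * min_green_s
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(demand.values())
    if total == 0:
        # No demand information: fall back to an even split.
        return {phase: cycle_s / len(demand) for phase in demand}
    return {
        phase: min_green_s + spare * d / total
        for phase, d in demand.items()
    }


# Hypothetical demand estimates (vehicles queued per approach).
plan = allocate_green(
    {"north_south": 30.0, "east_west": 10.0},
    cycle_s=60.0,
    min_green_s=10.0,
)
```

In a DT setting, such a candidate plan would typically be evaluated in the virtual replica first, and only deployed to the physical intersection if the simulated congestion improves.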

6.1.2 DTs and intelligent transportation systems