94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Robot. AI, 23 May 2022

Sec. Human-Robot Interaction

Volume 9 - 2022 | https://doi.org/10.3389/frobt.2022.855819

This article is part of the Research TopicHuman-Robot Interaction for Children with Special NeedsView all 7 articles

A correction has been applied to this article in:

Corrigendum: A Music-Therapy Robotic Platform for Children With Autism: A Pilot Study

Children with Autism Spectrum Disorder (ASD) experience deficits in verbal and nonverbal communication skills including motor control, turn-taking, and emotion recognition. Innovative technology, such as socially assistive robots, has shown to be a viable method for Autism therapy. This paper presents a novel robot-based music-therapy platform for modeling and improving the social responses and behaviors of children with ASD. Our autonomous social interactive system consists of three modules. Module one provides an autonomous initiative positioning system for the robot, NAO, to properly localize and play the instrument (Xylophone) using the robot’s arms. Module two allows NAO to play customized songs composed by individuals. Module three provides a real-life music therapy experience to the users. We adopted Short-time Fourier Transform and Levenshtein distance to fulfill the design requirements: 1) “music detection” and 2) “smart scoring and feedback”, which allows NAO to understand music and provide additional practice and oral feedback to the users as applicable. We designed and implemented six Human-Robot-Interaction (HRI) sessions including four intervention sessions. Nine children with ASD and seven Typically Developing participated in a total of fifty HRI experimental sessions. Using our platform, we collected and analyzed data on social behavioral changes and emotion recognition using Electrodermal Activity (EDA) signals. The results of our experiments demonstrate most of the participants were able to complete motor control tasks with 70% accuracy. Six out of the nine ASD participants showed stable turn-taking behavior when playing music. The results of automated emotion classification using Support Vector Machines illustrates that emotional arousal in the ASD group can be detected and well recognized via EDA bio-signals. In summary, the results of our data analyses, including emotion classification using EDA signals, indicate that the proposed robot-music based therapy platform is an attractive and promising assistive tool to facilitate the improvement of fine motor control and turn-taking skills in children with ASD.

Throughout history, music has been used medicinally due to its notable impact on the mental and physical health of its listeners. This practice became so popular over time that it ultimately transitioned into its own type of therapy called music therapy. The interactive nature of music therapy has also made it especially well-suited for children. Children’s music therapy is performed either in a one-on-one session or a group session and by listening, singing, playing instruments, and moving, patients can acquire new knowledge and skills in a meaningful way. It has previously been shown to help children with communication, attention, and motivation problems as well as behavioral issues Cibrian et al. (2020); Gifford et al. (2011). While music therapy is extremely versatile and has a broad range of applications, previous research studies have found that using music as an assistive method is especially useful for children with autism Cibrian et al. (2020); Mössler et al. (2019); Dellatan (2003); Brownell (2002); Starr and Zenker (1998). This interest in music therapy as a treatment for children with autism has even dated as far back as the early 20th century. In fact, in the 1940s, music therapy was used at psychiatric hospitals, institutions, and even schools for children with autism. Due to the fact both the diagnostic criteria for autism and music therapy as a profession were only just emerging at this time, no official documentation or research about this early work exists. In the next decade, the apparent unusual musical abilities of children with autism intrigued many music therapists Warwick and Alvin (1991). By the end of the 1960s, music therapists started delineating goals and objectives for autism therapy Reschke-Hernández (2011). Since the beginning of the 1970s and onward, theoretically grounded music therapists have been working toward a more clearly defined approach to improve the lives of individuals with autism spectrum disorders (ASD). However, for decades, music therapists have not used a consistent assessment method when working with individuals with ASD. A lack of a quality universal assessment tool has caused therapy to drift towards non-goal driven treatment and has reduced the capacity to study the efficacy of the treatment in a scientific setting Thaut and Clair (2000).

Humanoid socially assistive robots are ideal to address some of these concerns Scassellati et al. (2012); Diehl et al. (2012). Precise programming can be implemented to ensure the robots deliver therapy in a consistent assessment method each session. Social robots are also unique in their prior success in working with children with ASD Boucenna et al. (2014b); Pennisi et al. (2016). Previous studies have found children with autism have less interest in communicating with humans due to how easily these interactions can become overwhelming Marinoiu et al. (2018); Di Nuovo et al. (2018); Richardson et al. (2018); Feng et al. (2013) and instead are more willing to interact with humanoid social robots in daily life due to their relatively still faces and less intimidating characteristics. Some research has found that children with ASD speak more while interacting with a non-humanoid robot compared to regular human-human interactions Kim et al. (2013). A ball-like robot called Sphero has been used to examine different play patterns and emotional responses of children with ASD Boccanfuso et al. (2016); Boucenna et al. (2014a). Joint attention with body movement has also been tested and evaluated using a robot called NAO from Anzalone et al. (2014). Music interaction has been recently introduced in Human-Robot-Interaction (HRI) as well Taheri et al. (2019). The NAO robot has been widely used in this field such as music and dance imitation Beer et al. (2016) and body gesture imitation Guedjou et al. (2017); Zheng et al. (2015); Boucenna et al. (2016). To expand upon the aforementioned implications of socially assistive robotics and music, the scientific question of this research is whether music-therapy delivered by a socially assistive robot is engaging and effective enough to serve as a viable treatment option for children with ASD. The main contribution of this paper is as follows. First, we propose a fully autonomous assistive robot-based music intervention platform for children with autism. Second, we designed HRI sessions and conducted studies based on the current platform, where motor control and turn-taking skills were practiced by a group of children with ASD. Third, we utilized and integrated machine learning techniques into our music-therapy HRI sessions to recognize and classify event-based emotions expressed by the study participants.

The remainder of this paper is organized as follows. Section 2 presents related works concerning human-robot interaction in multiple intervention methods. Section 3 elaborates on the experiment design process including the details of the hardware and HRI sessions. We present our music therapy platform in Section 4 and the experimental results in Section 5. Finally, Section 6 concludes the paper with remarks for future work.

Music is an effective method to involve children with autism in rhythmic and non-verbal communication and has often been used in therapeutic sessions with children who suffer from mental and behavioral disabilities LaGasse et al. (2019); Boso et al. (2007); Roper (2003). Nowadays, at least 12% of all treatments for individuals with autism consist of music-based therapies Bhat and Srinivasan (2013). Specifically, playing music to children with ASD in therapy sessions has shown a positive impact on improving social communication skills LaGasse et al. (2019); Lim and Draper (2011). Many studies have utilized both recorded and live music in interventional sessions for single and multiple participants Dvir et al. (2020); Bhat and Srinivasan (2013); Corbett et al. (2008). Different social skills have been targeted and reported (i.e., eye-gaze attention, joint attention and turn-taking activities) in music-based therapy sessions Stephens (2008); Kim et al. (2008). Improving gross and fine motor skills for children with ASD through music interventions is noticeably absent in this field of studies Bhat and Srinivasan (2013) and thus is one of the core features in the proposed study. Socially assistive robots are becoming increasingly popular as interventions for youth with autism. Previous studies have focused on eye contact and joint attention Mihalache et al. (2020); Mavadati et al. (2014); Feng et al. (2013), showing that the pattern of gaze perception in the ASD group is similar to Typically Developing (TD) children as well as the fact eye contact skills can be significantly improved after interventional sessions. These findings also provide strong evidence that ASD children are more inclined to engage with humanoid robots in various types of social activities, especially if the robots are socially intelligent Anzalone et al. (2015). Other researchers have begun to use robots to conduct music-based therapy sessions. In such studies, children with autism are asked to imitate music based on the Wizard of Oz and Applied Behavior Analysis (ABA) models using humanoid robots in interventional sessions to practice eye-gaze and joint attention skills Askari et al. (2018); Taheri et al. (2015, 2016).

However, past research has often had certain disadvantages, such as a lack of an automated system in human-robot interaction. This research attempts to address these shortcomings with our proposed platform. In addition, music can be used as a unique window into the world of autism as a growing body of evidence suggests that individuals with ASD are able to understand simple and complex emotions in childhood using music-based therapy sessions Molnar-Szakacs and Heaton (2012). Despite the obvious advantages of using music-therapy to study emotion comprehension in children with ASD, limited research has been found, especially studies utilizing physiological signals for emotion recognition in ASD and TD children Feng et al. (2018). The current study attempted to address this gap by using electrodermal activity (EDA) as a measure of emotional arousal to better understand and explore the relationship between activities and emotion changes in children with ASD. To this end, the current research presents an automated music-based social robot platform with an activity-based emotion recognition system. The purpose of this platform is to provide a possible solution for assisting children with autism and help improve their motor and turn-taking skills. Furthermore, by using bio-signals with Complex-Morlet (C-Morlet) wavelet feature extraction Feng et al. (2018), emotion classification and emotion fluctuation can be analyzed based on different activities. TD children are included as a control group to compare the intervention results with the ASD group.

Nine high functioning ASD participants (average age: 11.73, std: 3.11) and seven TD participants (average age: 10.22, std: 2.06) were recruited for this study. Only one girl was included in the ASD group, which according to Loomes et al. (2017), is under the estimated ratio of 3:1 for males and females with ASD. ASD participants who had participated in previous unrelated research studies in the past were invited to return for this study. Participants were selected from this pool and the community with help from the University of Denver Psychology department. Six out of nine ASD participants had previous human-robot-interaction with another social robot named ZENO Mihalache et al. (2020), and three out of the nine kids from the ASD group had previous music experience on instruments other than the Xylophone (saxophone and violin). All children in the ASD group had a previous diagnosis of ASD in accordance with diagnostic criteria outlined in Association (2000), including an ADOS report on record. Additionally, their parents completed the Social Responsiveness Scale (SRS) Constantino and Gruber (2012) for their children. The SRS provides a quantitative measure of traits associated with autism among children and adolescents between four and eighteen year-of-age. All ARS T-scores in our sample were above 65. Unlike the ASD group, TD kids had no experience with NAO or other robots prior to this study. Most of them, on the other hand, did participate in music lessons previously. Experimental protocols were approved by the Unversity of Denver Institutional Review Board.

NAO, a humanoid robot merchandised by SoftBank Group Corporation, was selected for the current research. NAO is 58 cm (23 inches) tall and has 25 degrees of freedom, allowing him to perform most human body movements. According to the official Aldebaran manufacturer documentation, NAO’s microphones have a sensitivity of 20mV/Pa±3 dB at 1 kHz and an input frequency range of 150Hz-12 kHz. Data is recorded as a 16 bit, 48000Hz, four-channel wave file which meets the requirements for designing the online feedback audio score system. NAO’s computer vision module includes face and shape recognition units. By using the vision feature of the robot, NAO can see an instrument with its lower camera and implement an eye-hand self-initiative system that allows the robot to micro-adjust the coordination of its arm joints in real-time, which is very useful to correct cases of improper positioning prior to engaging with the Xylophone.

NOA’s arms have a length of approximately 31 cm. Position feedback sensors are equipped in each of the robot’s joints to obtain real-time localization information. Each robot arm has five degrees of freedom and is equipped with sensors to measure the position of joint movement. To determine the position of the Xylophone and the mallets’ heads, the robot analyzed images from the lower monocular camera located in its head, which has a diagonal field of view of 73°. By using these dimensions, properly sized instruments can be selected and more accessories can be built.

In order to have a well-functioning toy-size humanoid robot play music for children with autism, some necessary accessories needed to be made before the robot was capable of completing this task. All accessories will be discussed in the following paragraphs.

In this system, due to the length of NAO’s outstretched arms, a Sonor Toy Sound SM Soprano Glockenspiel with 11 sound bars 2 cm in width was selected and utilized in this research. The instrument is 31cm × 9.5cm × 4cm, including the resonating body. The smallest sound bar is playable in an area of 2.8cm × 2cm, the largest in an area of 4.8cm × 2 cm. The instrument is diatonically tuned in C major/A minor. The 11 bars of the Xylophone represent 11 different notes, or frequencies, that cover one and a half octave scales, from C6 to F7. The Xylophone, also known as the marimba or the glockenspiel, is categorized as a percussion instrument that consists of a set of metal/wooden bars that are struck with mallets to produce delicate musical tones. Much like the keyboard or drums, to play the Xylophone properly, a unique and specific technique needs to be applied. A precise striking movement is required to produce a beautiful note, an action perfect for practicing motor control, and the melody played by the user can support learning emotions through music.

For the mallets, we used the pair that came with the Xylophone, but added a modified 3D-printed gripper that allowed the robot hands to hold them properly (see Figure 1). The mallets are approximately 21 cm in length and include a head with a 0.8 cm radius. Compared to other designs, the mallet gripper we added encourages a natural holding position and allows the robot to properly model how participants should hold the mallet stick.

Using carefully measured dimensions, a wooden base was designed and laser cut to hold the Xylophone at the proper height for the robot to play in a crouching position. In this position, the robot could easily be fixed in a location and have the same height as the participants, making it more natural for the robot to teach activities (see Figure 2).

One Q-sensor Kappas et al. (2013) was used in this study. Participants were required to wear this device during each session. EDA signal (frequency rate 32Hz) was collected from the Q-sensor attached to the wrists (wrist side varied and was determined by the participants). Due to the fact participants often required breaks during sessions, two to 3 seperated EDA files were recorded. These files were annotated by comparing the time stamps with the videos manually.

All experiment sessions were held in an 11ft × 9.5ft × 10 ft room with six HD surveillance cameras installed in the corners, on the sidewalls, and on the ceiling of the room (see Figure 3). The observation room was located behind a one-way mirror and participants were positioned with their backs toward this portion of the room to avoid distractions. During experiment sessions, an external, hand-held audio recorder was set in front of participants to collect high-quality audio to use for future research.

Utilizing a miniature microphone attached to the ceiling camera, real-time video and audio were broadcasted to the observation room during sessions, allowing researchers to observe and record throughout the sessions. Parents or caregivers in the observation room could also watch and call off sessions in the case of an emergency.

For each participant in the ASD group, six total sessions were delivered, including one baseline session, four interventional sessions, and one exit session. Only the baseline and exit sessions were required for the TD group. Baseline and exit sessions contained two activities: 1) music practice and 2) music gameplay. Intervention sessions contained three parts: S1) warm-up; S2) single activity practice (with a color hint); and S3) music gameplay. Every session lasted for a total of 30–60 min depending on the difficulty of each session and the performance of individuals. Typically, each participant had baseline and exit session lengths that were comparable. For intervention sessions, the duration gradually increased in accordance with the increasing difficulty of subsequent sessions. Additionally, a user-customized song was used in each interventional session to have participants involved in multiple repetitive activities. The single activity practice was based on music practice from the baseline/exit session. In each interventional session, the single activity practice only had one type of music practice. For instance, single-note play was delivered in the first intervention session. For the next intervention session, the single activity practice increased in difficulty to multiple notes. The level of difficulty for music play was gradually increased across sessions and all music activities were designed to elicit an emotional reaction.

To aid in desired music-based social interaction, NAO delivered verbal and visual cues indicating when the participant should take action. A verbal cue consisted of the robot prompting the participant with “Now, you shall play right after my eye flashes.” Participants could also reference NAO’s eyes changing color as a cue to start playing. After the cues, participants were then given five to 10 s to replicate NAO’s strikes on the Xylophone. The start of each session was considered a warmup and participants were allowed to imitate NAO freely without accuracy feedback. The purpose of having a warm-up section was to have participants focus on practicing motor control skills while also refreshing their memory on previous activities. Following the warmup, structured activities would begin with the goal of the participant ultimately completing a full song. Different from the warmup section, notes played during this section in the correct sequence were considered to be a good-count strike and notes incorrectly played were tracked for feedback and additional training purposes. To learn a song, NAO gradually introduced elements of the song, starting with a single note and color hint. Subsequently, further notes were introduced, then the participants were asked to play half the song, and then finally play the full song after they perfected all previous tasks. Once the music practice was completed, a freshly designed music game containing three novel entertaining game modes was presented to the participants. Participants could then communicate with the robot regarding which mode to play with. Figure 4 shows the complete interaction process in our HRI study.

The laboratory where these experiments took place is 300-square feet and has environmental controls that allowed the temperature and humidity of the testing area to be kept consistent. For experimental purposes in the laboratory, the ambient temperature and humidity were kept constant to guarantee the proper functionality of the Q-sensor as well as the comfort of subjects in light clothing. To obtain appropriate results and ensure the best possible function of the device, the sensor was cleaned before each usage. Annotators were designated to determine the temporal relation between the video frames and the recorded EDA sequences of every participant. To do this, annotators went through video files of every session, frame by frame, and designated the initiation and conclusion of an emotion. Corresponding sequences of EDA signals were then identified and utilized to create a dataset for every perceived emotion.

Figure 5 shows the above-described procedure diagrammatically. Due to the fact that it is hard to conclude these emotions with specific facial expressions, event numbers will be used in the following analysis section representing emotion comparison. The rest of the emotion labels represent the dominant feeling of each music activity according to the observations of both annotators and the research assistant who ran the experiment sessions.

Mode 1): The robot randomly picks a song from its song bank and plays it for participants. After each song, participants were asked to identify a feeling they ascribed to the music to find out whether music emotion can be recognized.

Mode 2): A sequence of melodies were randomly generated by the robot with a consonance (happy or comfortable feeling) or dissonance (sad or uncomfortable feeling) style. Participants were asked to express their emotions verbally and a playback was required afterwards.

Mode 3): Participants have 5 seconds of free play then challenge the robot to imitate what was just played. After the robot was done playing, the participant rated the performance, providing a reversed therapy-like experience for all human volunteers.

There was no limit on how many trials or modes each individual could play for each session, but each mode had to be played at least once in a single session. The only difference between the baseline and exit sessions was the song that was used in them. In the baseline session, “Twinkle, Twinkle, Little Star” was used as an entry-level song for every participant. For the exit session, participants were allowed to select a song of their choosing so that they would be more motivated to learn the music. In turn, this made the exit session more difficult than the baseline session. By using the Module-Based Acoustic Music Interactive System, inputting multiple songs became possible and less time-consuming. More than 10 songs were collected in the song bank including “Can” by Offenbach, “Shake It Off” by Taylor Swift, the “SpongeBob SquarePants” theme from the animated show SpongeBob SquarePants, and “You Are My Sunshine” by Johnny Cash. Music styles covered kid’s songs, classic, pop, ACG (Anime, Comic and Games), and folk, highlighting the versatile nature of this platform. In addition to increasing the flexibility of the platform, having the ability to utilize varying music styles allows the robot to accommodate a diverse range of personal preferences, further motivating users to learn and improve their performance.

In this section, a novel, module-based robot-music therapy system will be presented. For this system to be successful, several tasks had to be accomplished: 1) allow the robot to play a sequence of notes or melody fluently; 2) allow the robot to play notes accurately; 3) allow the robot to adapt to multiple songs easily; 4) allow the robot to be able to have social communication with participants; 5) allow the robot to be able to deliver learning and therapy experiences to participants; and 6) allow the robot to have fast responses and accurate decision-making. To accomplish these tasks, a module-based acoustic music interactive system was designed. Three modules were built in this intelligent system: Module 1: eye-hand self-calibration micro-adjustment; Module 2: joint trajectory generator; and Module 3: real-time performance scoring feedback (see Figure 6).

Knowledge about the parameters of the robot’s kinematic model was essential for programming tasks requiring high precision, such as playing the Xylophone. While the kinematic structure was known because of the construction plan, errors could occur because of factors such as imperfect manufacturing. After multiple rounds of testing, it was determined that the targeted angle chain of arms never equals the returned chain. Therefore, we used a calibration method to eliminate this error.

To play the Xylophone, the robot had to be able to adjust its motions according to the estimated relative position of the Xylophone and the heads of the mallets it was holding. To estimate the poses adopted in this paper, we used a color-based technique.

The main idea in object tracking is that, based on the RGB color of the center blue bar, given a hypothesis about the Xylophone’s position, one can project the contour of the Xylophone’s model into the camera image and compare them to an observed contour. In this way, it is possible to estimate the likelihood of the position hypothesis. Using this method, the robot can track the Xylophone with extremely low cost in real-time (see Figure 7).

FIGURE 7. Color detection from NAO’s bottom camera: (A) single blue color detection (B) full instrument color detection (C) color based edge detection.

Our system parsed a list of hexadecimal numbers (from one to b) to obtain the sequence of notes to play. The system converted the notes into a joint trajectory using the striking configurations obtained from inverse kinematics as control points. The timestamps for the control points are defined by the user to meet the experiment requirement. The trajectory was then computed by the manufacturer-provided API, using Bezier curve Han et al. (2008) interpolation in the joint space, and then sent to the robot controller for execution. This process allowed the robot to play in-time with songs.

Two core features were designed to complete the task in the proposed scoring system: 1) music detection and 2) intelligent scoring feedback. These two functions could provide a real-life interaction experience using a music therapy scenario to teach participants social skills.

Music, from a science and technology perspective, is a combination of time and frequency. To make the robot detect a sequence of frequencies, we adopted the Short-time Fourier transform (STFT) for its audio feedback system. Doing so allowed the robot to be able to understand the music played by users and provide proper feedback as a music instructor.

The STFT is a Fourier-related transform used to determine the sinusoidal frequency and phase content of local sections of a signal as it changes over time. In practice, the procedure for computing STFTs is to divide a longer time signal into shorter segments of equal length and then separately compute the Fourier transform for each shorter segment. Doing so reveals the Fourier spectrum on each shorter segment. The changing spectra can then be plotted as a function of time. In the case of discrete-time, data to be transformed can be broken up into chunks of frames that usually overlap each other to reduce artifacts at boundaries. Each chunk is Fourier transformed. The complex results are then added to a matrix that records magnitude and phase for each point in time and frequency (see Figure 8). This can be expressed as:

likewise, with signal x [n] and window w [n]. In this case, m is discrete and ω is continuous, but in most applications, the STFT is performed on a computer using the Fast Fourier Transform, so both variables are discrete and quantized. The magnitude squared of the STFT yields the spectrogram representation of the Power Spectral Density of the function:

After the robot detects the notes from user input, a list of hexadecimal numbers are returned. This list is used for two purposes: 1) to compare with the target list for scoring and sending feedback to the user and 2) to create a new input for having robot playback in the game session.

To compare the detected and target notes, we used Levenshtein distance, an algorithm that is typically used in information theory linguistics. This algorithm is a string metric for measuring the difference between two sequences.

In our case, the Levenshtein distance between two string-like hex-decimal numbers a, b (of length |a| and |b| respectively) is given by leva,b (|a|, |b|),

where

Based on the real-life situation, we defined a likelihood margin for determining whether the result is good or bad:

where, if the likelihood is more than 66% (not including single note practice since, in that case, it is only correct or incorrect), the system will consider it to be a good result. This result is then passed to the accuracy calculation system to have the robot decide whether it needs to add additional practice trials (e.g., six correct out of a total of 10 trials).

Nine ASD and seven TD participants completed the study over the course of 8 months. All ASD participants completed all six sessions (baseline, intervention, and exit) while all the TD subjects completed the required baseline and exit sessions. Conducting a Wizard of Oz experiment, a well-trained researcher was involved in the baseline and exit sessions in order for there to be high-quality observations and performance evaluations. With well-designed, fully automated intervention sessions, NAO was able to initiate music-based therapy activities with participants.

Since the music detection method was sensitive to the audio input, a clear and long-lasting sound from the Xylophone was required. As seen in Figure 9, it is evident that most of the children were able to strike the Xylophone properly after one or two sessions (the average accuracy is improved over sessions). Notice that participants 101 and 102 had significant improvement during intervention sessions. Some of the participants started at a higher accuracy rate and sustained a rate above 80% for the duration of the study. Even with some oscillation, participants with this type of accuracy rate were considered to have consistent motor control performance.

Figure 10 shows the accuracy of the first music therapy activity that was part of the intervention sessions across all participants. As described in the previous section, the difficulty level of this activity was designed to increase across sessions. With this reasoning, the accuracy of participant performance was expected to decrease or remain consistent. This activity required participants to be able to concentrate, use joint attention skills during the robot therapy stage, and respond properly afterwards. As seen in Figure 10, most of the participants were able to complete single/multiple notes practice with an average of a 77.36%/69.38% accuracy rate although, even with color hints, the pitch difference of notes was still a primary challenge. Due to the difficulty of sessions 4 and 5, a worse performance, when compared to the previous two sessions, was acceptable. However, more than half of the participants consistently showed a high accuracy performance or an improved performance than previous sessions.

The American Psychological Association VandenBos (2007) defines turn-taking as, “in social interactions, alternating behavior between two or more individuals, such as the exchange of speaking turns between people in conversation or the back-and-forth grooming behavior that occurs among some nonhuman animals. Basic turn-taking skills are essential for effective communication and good interpersonal relations, and their development may be a focus of clinical intervention for children with certain disorders (e.g., Autism).” Learning how to play one’s favorite song can be a motivation that helps ASD participants understand and learn turn-taking skills. In our evaluation system, the annotators scored turn-taking based on how well kids were able to follow directions and display appropriate behavior during music-play interaction. In other words, participants needed to listen to the robot’s instructions and then play, and vice versa. Any actions not following the interaction routine were considered as less or incomplete turn-taking. Measurements are described as follows: measuring turn-taking behavior can be subjective, so to quantify this, a grading system was designed. Four different behaviors were defined in the grading system: 1) “well-done”, this level is considered good behavior where the participant should be able to finish listening to the instructions from NAO, start playback after receiving the command, and wait for the result without interrupting (3 points for each “well-done”); 2) “light-interrupt”, in this level, the participant may exhibit slight impatience, such as not waiting for the proper moment to play or not paying attention to the result (2 points given in this level); 3) “heavy-interrupt”, more interruptions may accrue in this level and the participant may interrupt the conversation, but is still willing to play back to the robot (1 point for this level); and 4) “indifferent”, participant shows little interest in music activities including, but not limited to, not following behaviors, not being willing to play, not listening to the robot or playing irrelevant music or notes (score of 0 points). The higher the score, the better turn-taking behavior the participant demonstrated. All scores were normalized into percentages due to the difference of total “conversation” numbers. Figure 11 shows the total results among all participants. Combining the report from video annotators, six out of nine participants exhibited stable, positive behavior when playing music, especially after the first few sessions. Improved learn-and-play turn-taking rotation was demonstrated over time. Three participants demonstrated a significant increase in performance, suggesting turn-taking skills were taught in this activity. Note that two participants (103 and 107) had a difficult time playing the Xylophone and following turn-taking cues given by the robot.

Since we developed our emotion classification method based on the time-frequency analysis of EDA signals, the main properties of The Continuous Wavelet Transforms (CWT), assuming that it is a C-Morlet wavelet, is presented here. Then, the pre-processing steps, as well as the wavelet-based feature extraction scheme, are discussed. Finally, we briefly review the characteristics of the SVM, the classifier used in our approach.

EDA signals were collected in this study. By using the annotation and analysis method from our previous study Feng et al. (2018), we were able to produce a music-event-based emotion classification result that is presented below. To determine the emotions of the ASD group, multiple comparisons were made after annotating the videos. Note that on average, 21% of emotions were not clear during the annotation stage. It is necessary to have them as unclear rather than label them with specific categories.

During the first part of the annotation, it was not obvious to conclude the facial expression changes from different activities, however, in S1 participants showed more “calm” for most of the time as the dominant emotion due to the simplicity of completing the task. In S2, the annotator could not have a precise conclusion by reading the facial expression from participants as well. Most of the participants intended to play music and complete the task, however, feelings of “frustration” were often evoked in this group. A very similar feeling can be found in S3 when compared to S1. In S3, all the activities were designed to create a role changing environment for users in order to stimulate a different emotion. Most of the children showed “happy” emotion during the music game section. We noticed that different activities can result in a change in emotional arousal. As mentioned above, the warm-up section and single activity practice section use the same activity with different intensity levels. The gameplay section has the lowest difficulty and is purposely designed to be more relaxing.

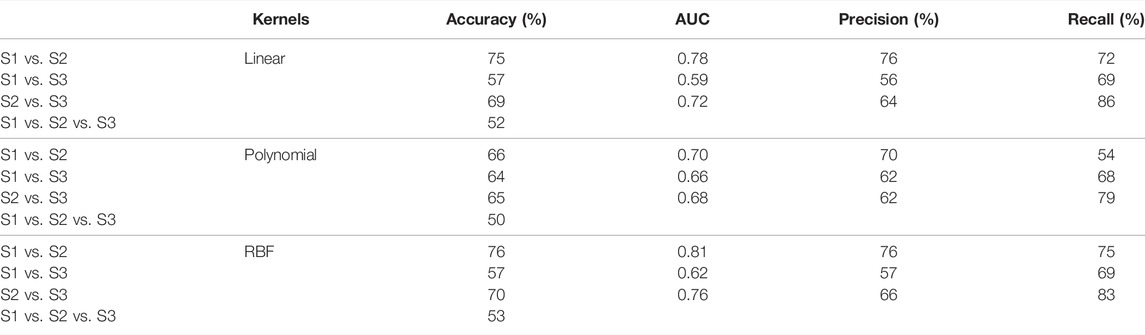

In the first part of the analysis, EDA signals were segmented into small event-based pieces according to the number of “conversations” in each section. One “conversation” was defined by three movements: 1) robot/participant demonstrates the note(s) to play; 2) robot/participant repeats the note(s); and 3) robot/participant presents the result. Each segmentation lasts about 45 s. The CWT of the data, assuming the use of the C-Morlet wavelet function, was used inside a frequency range of (0.5, 50)Hz. A Support Vector Machines (SVM) classifier was then employed to classify “conversation” segmentation among three sections using the wavelet-based features. Table 1 shows the classification accuracy for the SVM classifier with different kernel functions. As seen in this table, emotion arousal change between warmup (S1) and music practice (S2) and S2 and music game (S3) sections can be classified using a wavelet-based feature extraction SVM classifier with an average accuracy of 76 and 70%, respectively. With the highest percentage of accuracy for S1 and S3 being 64%, fewer emotion changes between the S1 and S3 sections may be indicated.

TABLE 1. Emotion change in different events using wavelet-based feature extraction under SVM classifier.

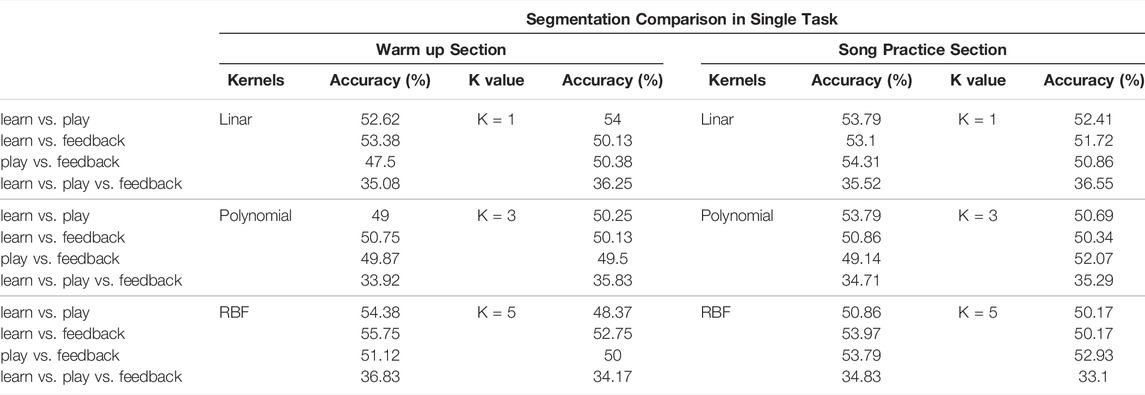

In the second part of the analysis, EDA signals were segmented into small event-based pieces according to the number of “conversations” in each section as mentioned previously. In order to discover the emotion fluctuation inside one task, each “conversation” section was carefully divided into three segments, as described before. Again, each segmentation lasts about 45 s. Each segment lasts about 10–20 s. Table 2 shows the full result of emotion fluctuation in the warm-up (S1) and music practice (S2) sections from the intervention session. Notice that all of the segments cannot be appropriately classified using the existing method. Both SVM and KNN show stable results. This may suggest that the ASD group has less emotion fluctuation or arousal change once the task starts, despite varying activities in it. Stable emotion arousal in a single task could also benefit from the proper activity content, including robot agents playing music and language used during the conversation. Friendly voice feedback was based on the performance delivered to participants who were well prepared and stored in memory. Favorable feedback occurred while receiving correct input and in the case of incorrect playing, participants were given encouragement. Since emotion fluctuation can affect learning progress, less arousal change indicates the design of intervention sessions that are robust.

TABLE 2. Emotion change classification performance in single event with segmentation using both SVM and KNN classifier.

Cross-section comparison is also presented below. Since each “conversation” contains three segments, it is necessary to have specific segments from one task to compare with the other task it corresponded to. Table 3 shows the classification rate in children learn, children play, and robot feedback across warm-up (S1) and music practice (S2) sessions. By using RBF kernel, wavelet-based SVM classification rate had an accuracy of 80% for all three comparisons. This result also matches the result from Table 1.

TABLE 3. Classification rate in children learn, children play and robot feedback across warm up (S1) and music practice (S2) sessions.

The types of activities and procedures between the baseline and exit session for both groups were the same. Using the “conversation” concept above, each were segmented. Comparing with target and control groups using the same classifier, an accuracy rate of 80% for detecting different groups was found (see Table 3). Video annotators also reported “unclear” in reading facial expressions from the ASD group. Taken together, these results suggest that even with the same activities, TD and ASD groups display different bio-reactions. It has also been reported that a significant improvement of music performance was shown in the ASD group (see Table 4), although both groups had similar performance at their baseline sessions. Furthermore, the TD group was found to be more motivated to improve and ultimately perfect their performance, even when they made mistakes.

When comparing emotion patterns from baseline and exit sessions between TD and ASD groups in Table 3, differences can be found. This may suggest that we have discovered a potential way of using biosignals to help diagnose autism at an early age. According to annotators and observers, TD participants showed a strong passion for this research. Excitement, stress, and disappointment were easy to recognize and label when watching the recorded videos. On the other hand, limited facial expression changes were detected in the ASD group. This makes it challenging to determine whether the ASD participants had different feelings or had the same feelings but different biosignal activities compared to the TD group.

As shown by others LaGasse et al. (2019); Lim and Draper (2011) as well as this study, playing music to children with ASD in therapy sessions has a positive impact on improving their social communication skills. Compared to the state of the art Dvir et al. (2020); Bhat and Srinivasan (2013); Corbett et al. (2008), where they utilized both recorded and live music in interventional sessions for single and multiple participants, our proposed robot-based music-therapy platform is a promising intervention tool in improving social behaviors, such as motor control and turn-taking skills. As our human robot interaction studies (over 200 sessions were conducted with children), most of the participants were able to complete motor control tasks with 70% accuracy and six out of nine participants demonstrated stable turn-taking behavior when playing music. The emotion classification SVM classifier presented illustrated that emotion arousal from the ASD group can be detected and well recognized via EDA signals.

The automated music detection system created a self-adjusting environment for participants in early sessions. Most of the ASD participants began to develop the strike movement in the initial two intervention sessions; some even mastered the motor ability throughout the very first warm-up event. The robot was able to provide verbal directions and demonstrations to participants by providing voice command input as applicable, however, the majority of the participants did not request this feedback, and instead just focused on playing with NAO. This finding suggests that the young ASD population can learn fine motor control ability from specific, well-designed activities.

The purpose of using a music therapy scenario as the main activity in the current research was to create an opportunity to practice a natural turn-taking behavior during social interaction. Observing all experimental sessions, six out of nine participants exhibited proper turn-taking after one or two sessions, suggesting the practice helped improve this behavior. Specifically, participant 107 significantly improved in the last few sessions when comparing results of the baseline and final session. Participant 109 had trouble focusing on listening to the robot most of the time, however, with prompting from the researcher, they performed better at the music turn-taking activity for a short period of time. For practicing turn-taking skills, fun, motivating activities, such as the described music therapy sessions incorporating individual song preference, should be designed for children with autism.

During the latter half of the sessions, participants started to recognize their favorite songs. Even though the difficulty for playing proper notes was much higher, over half of the participants became more focused in the activities. Upon observations, it became clear that older participants spent more time interacting with the activities during the song practice session compared to younger participants, especially during the half/whole song play sessions. This could be for several reasons. First, the more complex the music, the more challenging it is, and the more concentration participants need to be successful. Older individuals may be more willing to accept the challenge and are better able to enjoy the sense of accomplishment they receive from their verbal feedback at the end of each session. Prior music knowledge could also be another reason for this result as older participants may have had more opportunities to learn music at school.

The game section of each session reflected the highest interest level, not only because it was relaxing and fun to play, but also because it was an opportunity for the participants to challenge the robot to mirror their free play. This exciting phenomenon could be seen as a game of “revenge.” Participant 106 exhibited this behavior by spending a significant amount of time in free play game mode. According to the session executioner and video annotators, this participant, the only girl, showed very high level of involvement for all the activities, including free play. Based on the conversation and music performance with the robot, participant 106 showed a strong interest in challenging the robot in a friendly way. High levels of engagement further supports the idea that the proposed robot-based music-therapy platform is a viable interventional tool. Additionally, these findings also highlight the need for fun and motivating activities, such as music games, to be incorporated into interventions designed for children with autism.

Conducting emotion studies with children with autism can be difficult and bio-signals provide a possible way to work around the unique challenges of this population. The event-based emotion classification method adopted in this research suggests that not only are physiological signals a good tool to detect emotion in ASD populations, but it is also possible to recognize and categorize emotions using this technique. The same activity with different intensities can cause emotion change in the arousal dimension, although, for the ASD group, it is difficult to label emotions based on facial expression changes in the video annotation phase. Fewer emotion fluctuations in a particular activity, as seen in Table 2, suggests that a mild, friendly, game-like therapy system may encourage better social content learning for children with autism, even when there are repetitive movements. These well-designed activities could provide a relaxed learning environment that helps participants focus on learning music content with proper communication behaviors. This may explain the improvement in music play performance during the song practice (S2) section of intervention sessions, as seen in Figure 10.

There were several limitations of this study that should be considered. As is the case with most research studies, a larger sample size is needed to better understand the impact of this therapy on children with ASD. The ASD group in this paper also only included one female. Future work should include more females to better portray and understand an already underrepresented portion of this population of interest. In addition, improving skills as complex as turn-taking for example, likely requires more sessions over a longer period of time to better enhance the treatment and resulting behavioral changes. Music practice can also be tedious if it is the only activity in an existing interaction system and may have influenced the degree of interaction in which participants engaged with the robot. Future research could include more activities or multiple instruments, as opposed to just one, to further diversify sessions. The choice of instrument, and its resulting limitations, may also have an impact. The Xylophone, for example, is somewhat static and future research could modify or incorporate a different instrument that can produce more melodies, accommodate more complex songs, and portray a wider range of musical emotion and expression. Finally, another limitation of this study was the type of communication the robot and participant engaged in. Verbal communication was not rich between participants and NAO, and instead, most of the time, participants could follow the instructions from the robot without asking it for help. While this was likely not an issue for the specific non-verbal behavioral goals of this intervention, future work should expand upon this study by incorporating other elements of behavior that often have unique deficits in this population, including speech.

In summary, this paper presented a novel robot-based music-therapy platform to model and improve social behaviors in children with ASD. In addition to the novel platform introduced, this study also incorporated emotion recognition and classification utilizing EDA physiological signals of arousal. Results of this study are consistent with findings in the literature for TD and ASD children and suggest that the proposed platform is a viable tool to facilitate the improvement of fine motor and turn-taking skills in children with ASD.

The datasets presented in this article are not readily available because the data is just for research purposes for the authors of this paper and cannot be shared with others. Requests to access the datasets should be directed to; bW1haG9vckBkdS5lZHU=.

The studies involving human participants were reviewed and approved by the University of Denver, IRB. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

HF was the primary author of the paper, implemented the algorithm, collected and analyzed data. MHM was the advisor and the lead principal investigator on the project. He read and revised the paper. FD was the research coordinator who help in designing the study and recruiting participants for the study. She also edited and revised the paper.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

This research was conducted at the University of Denver (DU) as HF’s Ph.D. dissertation. The research was partially supported by the Autism gifts given to the DU by several family members. We would like to thank the families who donated to DU in support of this research as well as those families and their children who participated in this research. The publication cost was covered by the University of Denver (50%) and MOE Project of Humanities and Social Sciences (21YJCZH029) (50%).

Anzalone, S. M., Boucenna, S., Ivaldi, S., and Chetouani, M. (2015). Evaluating the Engagement with Social Robots. Int J Soc Robotics 7, 465–478. doi:10.1007/s12369-015-0298-7

Anzalone, S. M., Tilmont, E., Boucenna, S., Xavier, J., Jouen, A.-L., and Bodeau, N. (2014). How Children with Autism Spectrum Disorder Behave and Explore the 4-dimensional (Spatial 3d+ Time) Environment during a Joint Attention Induction Task with a Robot. Res. Autism Spectr. Disord. 8, 814–826. doi:10.1016/j.rasd.2014.03.002

Askari, F., Feng, H., Sweeny, T. D., and Mahoor, M. H. (2018). “A Pilot Study on Facial Expression Recognition Ability of Autistic Children Using Ryan, a Rear-Projected Humanoid Robot,” in 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN IEEE), 790–795. doi:10.1109/roman.2018.8525825

Association, A. P. (2000). Diagnostic and Statistical Manual of Mental Disorders. Dsm-iv 272, 828–829. doi:10.1001/jama.1994.03520100096046

Beer, J. M., Boren, M., and Liles, K. R. (2016). “Robot Assisted Music Therapy a Case Study with Children Diagnosed with Autism,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (IEEE), 419–420. doi:10.1109/hri.2016.7451785

Bhat, A. N., and Srinivasan, S. (2013). A Review of “Music and Movement” Therapies for Children with Autism: Embodied Interventions for Multisystem Development. Front. Integr. Neurosci. 7, 22. doi:10.3389/fnint.2013.00022

Boccanfuso, L., Barney, E., Foster, C., Ahn, Y. A., Chawarska, K., Scassellati, B., et al. (2016). “Emotional Robot to Examine Different Play Patterns and Affective Responses of Children with and without Asd,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (IEEE), 19–26. doi:10.1109/hri.2016.7451729

Boso, M., Emanuele, E., Minazzi, V., Abbamonte, M., and Politi, P. (2007). Effect of Long-Term Interactive Music Therapy on Behavior Profile and Musical Skills in Young Adults with Severe Autism. J. Altern. complementary Med. 13, 709–712. doi:10.1089/acm.2006.6334

Boucenna, S., Anzalone, S., Tilmont, E., Cohen, D., and Chetouani, M. (2014a). Learning of Social Signatures through Imitation Game between a Robot and a Human Partner. IEEE Trans. Aut. Ment. Dev. 6, 213–225. doi:10.1109/tamd.2014.2319861

Boucenna, S., Cohen, D., Meltzoff, A. N., Gaussier, P., and Chetouani, M. (2016). Robots Learn to Recognize Individuals from Imitative Encounters with People and Avatars. Sci. Rep. 6, 1–10. doi:10.1038/srep19908

Boucenna, S., Narzisi, A., Tilmont, E., Muratori, F., Pioggia, G., Cohen, D., et al. (2014b). Interactive Technologies for Autistic Children: A Review. Cogn. Comput. 6, 722–740. doi:10.1007/s12559-014-9276-x

Brownell, M. D. (2002). Musically Adapted Social Stories to Modify Behaviors in Students with Autism: Four Case Studies. J. music Ther. 39, 117–144. doi:10.1093/jmt/39.2.117

Cibrian, F. L., Madrigal, M., Avelais, M., and Tentori, M. (2020). Supporting Coordination of Children with Asd Using Neurological Music Therapy: A Pilot Randomized Control Trial Comparing an Elastic Touch-Display with Tambourines. Res. Dev. Disabil. 106, 103741. doi:10.1016/j.ridd.2020.103741

Constantino, J. N., and Gruber, C. P. (2012). Social Responsiveness Scale: SRS-2. Torrance, CA: Western psychological services.

Corbett, B. A., Shickman, K., and Ferrer, E. (2008). Brief Report: the Effects of Tomatis Sound Therapy on Language in Children with Autism. J. autism Dev. Disord. 38, 562–566. doi:10.1007/s10803-007-0413-1

Dellatan, A. K. (2003). The Use of Music with Chronic Food Refusal: A Case Study. Music Ther. Perspect. 21, 105–109. doi:10.1093/mtp/21.2.105

Di Nuovo, A., Conti, D., Trubia, G., Buono, S., and Di Nuovo, S. (2018). Deep Learning Systems for Estimating Visual Attention in Robot-Assisted Therapy of Children with Autism and Intellectual Disability. Robotics 7, 25. doi:10.3390/robotics7020025

Diehl, J. J., Schmitt, L. M., Villano, M., and Crowell, C. R. (2012). The Clinical Use of Robots for Individuals with Autism Spectrum Disorders: A Critical Review. Res. autism Spectr. Disord. 6, 249–262. doi:10.1016/j.rasd.2011.05.006

Dvir, T., Lotan, N., Viderman, R., and Elefant, C. (2020). The Body Communicates: Movement Synchrony during Music Therapy with Children Diagnosed with Asd. Arts Psychotherapy 69, 101658. doi:10.1016/j.aip.2020.101658

Feng, H., Golshan, H. M., and Mahoor, M. H. (2018). A Wavelet-Based Approach to Emotion Classification Using Eda Signals. Expert Syst. Appl. 112, 77–86. doi:10.1016/j.eswa.2018.06.014

Feng, H., Gutierrez, A., Zhang, J., and Mahoor, M. H. (2013). “Can Nao Robot Improve Eye-Gaze Attention of Children with High Functioning Autism? in,” in 2013 IEEE International Conference on Healthcare Informatics (IEEE), 484.

Gifford, T., Srinivasan, S., Kaur, M., Dotov, D., Wanamaker, C., Dressler, G., et al. (2011). Using Robots to Teach Musical Rhythms to Typically Developing Children and Children with Autism. University of Connecticut.

Guedjou, H., Boucenna, S., Xavier, J., Cohen, D., and Chetouani, M. (2017). “The Influence of Individual Social Traits on Robot Learning in a Human-Robot Interaction,” in 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN IEEE), 256–262. doi:10.1109/roman.2017.8172311

Han, X.-A., Ma, Y., and Huang, X. (2008). A Novel Generalization of Bézier Curve and Surface. J. Comput. Appl. Math. 217, 180–193. doi:10.1016/j.cam.2007.06.027

Kappas, A., Küster, D., Basedow, C., and Dente, P. (2013). “A Validation Study of the Affectiva Q-Sensor in Different Social Laboratory Situations,” in 53rd Annual Meeting of the Society for Psychophysiological Research, Florence, Italy.39

Kim, E. S., Berkovits, L. D., Bernier, E. P., Leyzberg, D., Shic, F., Paul, R., et al. (2013). Social Robots as Embedded Reinforcers of Social Behavior in Children with Autism. J. autism Dev. Disord. 43, 1038–1049. doi:10.1007/s10803-012-1645-2

Kim, J., Wigram, T., and Gold, C. (2008). The Effects of Improvisational Music Therapy on Joint Attention Behaviors in Autistic Children: a Randomized Controlled Study. J. autism Dev. Disord. 38, 1758. doi:10.1007/s10803-008-0566-6

LaGasse, A. B., Manning, R. C., Crasta, J. E., Gavin, W. J., and Davies, P. L. (2019). Assessing the Impact of Music Therapy on Sensory Gating and Attention in Children with Autism: a Pilot and Feasibility Study. J. music Ther. 56, 287–314. doi:10.1093/jmt/thz008

Lim, H. A., and Draper, E. (2011). The Effects of Music Therapy Incorporated with Applied Behavior Analysis Verbal Behavior Approach for Children with Autism Spectrum Disorders. J. music Ther. 48, 532–550. doi:10.1093/jmt/48.4.532

Loomes, R., Hull, L., and Mandy, W. P. L. (2017). What Is the Male-To-Female Ratio in Autism Spectrum Disorder? a Systematic Review and Meta-Analysis. J. Am. Acad. Child Adolesc. Psychiatry 56, 466–474. doi:10.1016/j.jaac.2017.03.013

Marinoiu, E., Zanfir, M., Olaru, V., and Sminchisescu, C. (2018). “3d Human Sensing, Action and Emotion Recognition in Robot Assisted Therapy of Children with Autism,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2158–2167. doi:10.1109/cvpr.2018.00230

Mavadati, S. M., Feng, H., Gutierrez, A., and Mahoor, M. H. (2014). “Comparing the Gaze Responses of Children with Autism and Typically Developed Individuals in Human-Robot Interaction,” in 2014 IEEE-RAS International Conference on Humanoid Robots (IEEE), 1128–1133. doi:10.1109/humanoids.2014.7041510

Mihalache, D., Feng, H., Askari, F., Sokol-Hessner, P., Moody, E. J., Mahoor, M. H., et al. (2020). Perceiving Gaze from Head and Eye Rotations: An Integrative Challenge for Children and Adults. Dev. Sci. 23, e12886. doi:10.1111/desc.12886

Molnar-Szakacs, I., and Heaton, P. (2012). Music: a Unique Window into the World of Autism. Ann. N. Y. Acad. Sci. 1252, 318–324. doi:10.1111/j.1749-6632.2012.06465.x

Mössler, K., Gold, C., Aßmus, J., Schumacher, K., Calvet, C., Reimer, S., et al. (2019). The Therapeutic Relationship as Predictor of Change in Music Therapy with Young Children with Autism Spectrum Disorder. J. autism Dev. Disord. 49, 2795–2809. doi:10.1007/s10803-017-3306-y

Pennisi, P., Tonacci, A., Tartarisco, G., Billeci, L., Ruta, L., Gangemi, S., et al. (2016). Autism and Social Robotics: A Systematic Review. Autism Res. 9, 165–183. doi:10.1002/aur.1527

Reschke-Hernández, A. E. (2011). History of Music Therapy Treatment Interventions for Children with Autism. J. Music Ther. 48, 169–207. doi:10.1093/jmt/48.2.169

Richardson, K., Coeckelbergh, M., Wakunuma, K., Billing, E., Ziemke, T., Gomez, P., et al. (2018). Robot Enhanced Therapy for Children with Autism (Dream): A Social Model of Autism. IEEE Technol. Soc. Mag. 37, 30–39. doi:10.1109/mts.2018.2795096

Scassellati, B., Admoni, H., and Matarić, M. (2012). Robots for Use in Autism Research. Annu. Rev. Biomed. Eng. 14. doi:10.1146/annurev-bioeng-071811-150036

Starr, E., and Zenker, K. (1998). Understanding Autism in the Context of Music Therapy: Bridging Theory and Practice. Can. J. Music Ther.

Stephens, C. E. (2008). Spontaneous Imitation by Children with Autism during a Repetitive Musical Play Routine. Autism 12, 645–671. doi:10.1177/1362361308097117

Taheri, A., Alemi, M., Meghdari, A., Pouretemad, H., Basiri, N. M., and Poorgoldooz, P. (2015). “Impact of Humanoid Social Robots on Treatment of a Pair of Iranian Autistic Twins,” in International Conference on Social Robotics (Springer), 623–632. doi:10.1007/978-3-319-25554-5_62

Taheri, A., Meghdari, A., Alemi, M., Pouretemad, H., Poorgoldooz, P., and Roohbakhsh, M. (2016). “Social Robots and Teaching Music to Autistic Children: Myth or Reality?,” in International Conference on Social Robotics (Springer), 541–550. doi:10.1007/978-3-319-47437-3_53

Taheri, A., Meghdari, A., Alemi, M., and Pouretemad, H. (2019). Teaching Music to Children with Autism: a Social Robotics Challenge. Sci. Iran. 26, 40–58.

Thaut, M. H., and Clair, A. A. (2000). A Scientific Model of Music in Therapy and Medicine. IMR Press.

VandenBos, G. (2007). American Psychological Association Dictionary. Washington, DC: American Psychological Association.

Keywords: social robotics, autism, music therapy, turn-taking, motor control, emotion classification

Citation: Feng H, Mahoor MH and Dino F (2022) A Music-Therapy Robotic Platform for Children With Autism: A Pilot Study. Front. Robot. AI 9:855819. doi: 10.3389/frobt.2022.855819

Received: 16 January 2022; Accepted: 29 April 2022;

Published: 23 May 2022.

Edited by:

Adham Atyabi, University of Colorado Colorado Springs, United StatesReviewed by:

Sofiane Boucenna, UMR8051 Equipes Traitement de l'Information et Systèmes (ETIS), FranceCopyright © 2022 Feng, Mahoor and Dino. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohammad H. Mahoor, bW1haG9vckBkdS5lZHU=

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.