- Department of Decision Systems and Robotics, Faculty of Electronics, Telecommunications and Informatics, Gdańsk University of Technology, Gdańsk, Poland

A cybernetic approach to modeling artificial emotion through the use of different theories of psychology is considered in this paper, presenting a review of twelve proposed solutions: ActAffAct, FLAME, EMA, ParleE, FearNot!, FAtiMA, WASABI, Cathexis, KARO, MAMID, FCM, and xEmotion. The main motivation for this study is founded on the hypothesis that emotions can play a definite utility role of scheduling variables in the construction of intelligent autonomous systems, agents, and mobile robots. In this review, we also include an innovative and panoptical, comprehensive system, referred to as the Intelligent System of Decision-making (ISD), which has been employed in practical applications of various autonomous units, and which applies xEmotion, taking into consideration the personal aspects of emotions, affects (short-term emotions), and mood (principally, long-term emotions).

1. Introduction

Computational intelligence has been developing for many years. Recently, some engineering systems may even have a neuron count corresponding to the capacity of a cat brain (meaning the usage of 1.6 billion virtual neurons connected by means of 9 trillion synapses, which follow the neurological construction of a cat brain) (Miłkowski, 2015). Despite the fact that there is no unique definition of awareness, there are works that describe certain autonomous robots passing the test of self-awareness (Hodson, 2015), which is a moot point. On the one hand, a simple experiment in which a robot recognizes itself in a simplistic situation (in a mirror) does not prove any kind of awareness. On the other hand, leaving aside intelligence, in some aspects and experimental settings, the currently developed machines (such as industrial robots and mechatronic systems) are even more efficient than humans, given their repeatability and precision. Nevertheless, there is still a huge gap in intelligence between the implemented computer systems and the majority of animals, let alone humans.

One of the major differences between a human and a humanoid robot lies in the feeling and expressing of emotions. For this reason, robots appear heartless to people. To reduce this impression, a considerable number of robot-oriented projects have begun to take into account the handling of emotions, especially by simply simulating them (without any internal effects). Several projects address the issue of expressing emotions by a robot, e.g., Kismet, Mexia, iCube, Emys, etc. [Breazeal (Ferrell) and Velasquez, 1998; Esau et al., 2003; Metta et al., 2011]. However, the crux of the problem lies not in acting out the emotions, but in the feeling and functioning of emotions.

A dual problem is connected with the issue of recognizing emotions manifested by a human. There are several research studies concerning the recognition of facial expressions, the temperature of the human body, or body language. However, there are no useful signs of mathematical modeling and practical simulation of human emotions. Perhaps knowledge of how human emotions arise, evolve, and vary is still not sufficient to carry out such a study.

Accepting a certain predominance of the system of needs, the mechanism of emotions constitutes a second human motivation system. This mechanism is the result of evolution (Tooby and Cosmides, 1990). Emotions evolved quite early, as a reaction to more and more varied problems and threats from the environment. Despite various theories, there is currently a belief that not only humans but also apes have mechanisms of emotions. The principal problem in proving this theory results from difficulties in the precise determination of the animals’ emotional states. According to the latest achievements in the field of biology and neuroscience, not only mammals (dogs, cats, rodents) have emotions but also birds (Fraser and Bugnyar, 2010), …, and even bees (Bateson et al., 2011) express emotions. The studies of apes, as the animals closest to humans in the biological sense, are particularly important. Gorillas are able to use elements of human language and can even recognize and verbalize their emotional state (McGraw, 1985; Goodall, 1986).

Basically, emotions in robotics and artificial intelligence are designed to create a social behavior of robots, and even make them somehow similar to people. The aims of Human–Machine Interaction can also be recalled here. On the other hand, by incorporating a computational model of emotions as part of a behavioral system, we can shape a counterpart for a model of the environment. An agent with reactive emotions is able to make sophisticated decisions, despite the lack of a full-size model of a complex environment. Such emotions can provide the agent with important information about the environment, as is the case with the human pattern.

1.1. Arguments for the Research

The main reason for developing a computational system of emotions is the lack of top-down approaches in the available autonomous robotics’ results. Moreover, there is no system which would model human psychology suitably for the purpose of autonomous agents (nor in a general sense). There are behavior-based robotic systems (BBR), which focus on agents that are able to exhibit complex behaviors despite simple models of environment, which act mostly by correcting their operations according to the signals gained from their sensors (Arkin, 1998). Such BBR systems can use emotions as internal variables, which drive their external actions. Therefore, the idea of computational emotions is applied here as a means to promote acting (in a virtual or laboratory world) of an independent agent, who is able to adapt to the changing environment.

Computational modeling of emotion in itself can lead to effective mechanisms, which may have impact on various developments in psychology, artificial intelligence, industry control and robotics, as well as in haptic systems and human–system/robot interaction. It is a truism to say that such mechanisms can be practically utilized to show the deficiency of existing theories, weak points of implementations, and incorrect assumptions. Certainly, unusually detailed implementational studies have to be performed to show where those flaws in theory are. On the other hand, putting into practice a workable computational system can also demonstrate which parts of psychological theories are coherent and reassure the appropriateness of the undertaken directions of further studies. Created complex cognitive architectures based on the computational model of emotion can, for instance, be used to rationalize the quest for the role of emotion in human cognition and behavior.

Adapting the emotional systems to complex control applications may lead to more intelligent, flexible, and capable systems. Emotions can supply data for the method of interrupting normal behavior, for introducing competing goals (specially selected, and profitable), and for implementing a generalized form of the so-called scheduling variable control (SVC), leading to more effective reactive behavior. Social emotions, such as anger or guilt, may minimize conflicts between virtual agents in multiagent systems. The computational models of emotions are not new in AI, but they are still underestimated, and most researchers focus their attention rather on the bottom-up models of human thinking, such as deep learning/neural networks and data mining.

1.2. Purpose and Structure of the Article

In this article, we try to answer the following question: what are the types of computational models of emotion, and to what extent do they reflect the psychological theories of emotion?

First, the paper presents a short introduction to psychological theories of emotions by showing different definitions of emotion, as well as the processes of their creation, or triggering. In addition, the parameters of emotion, such as duration, intensity, and color, are introduced.

In the main part of the paper, we review a selection of recently developed computational systems of emotions. It is worth noting that this branch of robotics and cognitive systems is currently developing poorly, although many computational systems of emotions have been created in the last 15 years.

2. Emotion in Psychology

The physiological approach postulates that emotions have evolved from the process of homeostasis. Taking into account cybernetic achievements, the process of emotion is a homeostat. It is a subsystem designed to “maintain the functional balance of the autonomic system” (Mazur, 1976) by counter-action of the information and energy flow that reduce the possibility of environmental impact. In other words, emotions should customize the behavior of a human, agent, or robot, to better respond to the stimuli from the surrounding environment. It is observed that once the system has to process too much information, the role of emotions overpowers the process of selection of a suitable reaction. Moreover, there are theories that treat emotions (such as pain, relaxation, security, leisure, and health) as (self-regulatory) homeostatic (Craig, 2003; Jasielska and Jarymowicz, 2013).

There are several psychological theories, which provide a certain definition of emotion. According to Mayers (2010) “emotions are our body’s adaptive response.” Lazarus and Lazarus (1994) claim that “emotions are organized psycho-physiological reactions to news about ongoing relationships with the environment.” A similar viewpoint is represented by Frijda (1994): “Emotions (…) are, first and foremost, modes of relating to the environment: states of readiness for engaging, or not engaging, in interaction with that environment.” Finally, there is also a definition derived from evolutionary considerations, presented by Plutchik (2001), saying that “Emotion is a complex chain of loosely connected events which begins with a stimulus and includes feelings, psychological changes, impulses to action and specific, goal-directed behavior.”

Besides the challenge of defining emotion, there are also theories relating to the process of the creation or triggering of emotion. Psychologists mostly support one of two main streams: somatic and appraisal. Appraisal theory suggests that before the occurrence of emotion, there are certain cognitive processes that analyze stimuli (Frijda, 1986; Lazarus, 1991). In such a way, the emotions are related to a certain history of a human (agent or robot). The relation to the history should follow the process of recognition (since the objects and their relations to the agent’s emotion should be first recognized). Thus, the appraisal theory postulates a certain priority of cognitive processes over emotions. On the other hand, the somatic theory holds that emotions are primary to cognitive processes (Zajonc et al., 1989; Murphy and Zajonc, 1994). Prior to analyzing a perceived object, and even before recording any impressions, the (human) brain is able to immediately invoke an emotion associated with this object.

Plutchik’s approach to the problem of modeling emotions shows that both the above-mentioned theories can be correct. From the evolutionary point of view, the appraisal theory need not be the only selection. Emotions should allow an agent to take immediate actions (such as escaping from a predator) right after certain stimuli show up, without cognitive processes (Izard, 1972). Moreover, there are certain experiments that show the occurrence of emotion in early stages of human life. These kinds of emotions are based on neuronal or sensorimotor processes evolving in species (Plutchik, 1994).

There are a number of parameters that can characterize an emotional state. A group of similar emotions can be attributed to a definite color and labeled as joy, happiness, or ecstasy. This type of grouping can also be interpreted as generalization of emotions, known in psychology as the concept of basic emotions (Arnold, 1960; Izard, 1977; Plutchik, 1980). Recognition of such an emotional color allows us to model and predict the evolution of the emotional reaction of a human.

There are also dimensional models of emotions in psychology (Russell, 1980; Posner et al., 2005; Lövheim, 2012), which use a parameter referred to as an emotional intensity, known also as stimulation, energy, or degree of activation. Emotional intensity describes how much the emotion affects the agent’s behavior. Emotions of the same color are thus divided into several states based on intensity.
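The division of one emotional color into intensity-based states can be illustrated with a minimal sketch. The labels follow the example group mentioned above (joy, happiness, ecstasy); their ordering by intensity, the thresholds, and the names `JOY_BANDS` and `state_for` are assumptions made only for illustration.

```python
from bisect import bisect_right

# Lower bounds of the intensity bands for one emotional color.
# The labels come from the example group in the text; the thresholds
# and the ordering by intensity are illustrative assumptions.
JOY_BANDS = [(0.0, "joy"), (0.4, "happiness"), (0.8, "ecstasy")]


def state_for(intensity, bands=JOY_BANDS):
    """Return the named state of a color for an intensity in [0, 1]."""
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must lie in [0, 1]")
    lower_bounds = [lower for lower, _ in bands]
    # bisect_right locates the last band whose lower bound is <= intensity.
    return bands[bisect_right(lower_bounds, intensity) - 1][1]
```

A continuous intensity value is thus reduced to a small set of named states, which is one possible reading of how a dimensional description and a label-based description can coexist.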

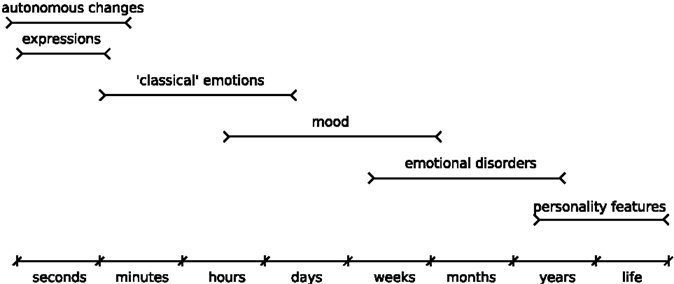

An important parameter concerns the duration of emotions. This may range from a few seconds to several weeks, sometimes even months. Emotional states which take longer than a few months are rather personality traits or emotional disorders (Figure 1). Consequently, due to the time duration, emotions can be divided into (Biddle et al., 2000; Oatley et al., 2012):

• autonomous changes: very short (in seconds), spontaneous physical feelings (Ekman, 2009), connected with the somatic theory of emotions, dependent on certain stimuli, without recognition of situation, object, or event (such as the fear of something in darkness);

• expressions: short (in seconds), associated with objects and based on the appraisal theory (Lazarus, 1991) of emotions (e.g., calmness associated with the places or objects from childhood);

• “classical” emotions: lasting longer, consciously observed, verbalized (named), and associated with both theories, possibly combined motivational factors, and related to objects (such as the commonly known emotions: joy, happiness, anger, etc.);

• mood: long (lasting days or months), consciously perceived, of intensity smaller than that of the “classical” emotions, and characterized by slow, positive, or negative changes (Batson, 1990);

• emotional disorders: kind of depression, phobia, mania, fixation, etc.;

• personality features: emotions based on personality, such as shyness and neuroticism.

Figure 1. Classification of emotions, based on duration time (Oatley et al., 2012).

3. Classification of Computational Models of Emotions

In general, most systems can be classified according to

• psychological theory (under which they were created), such as:

    ◦ evolutionary (LeDoux and Phelps, 1993; Damasio, 1994),

    ◦ somatic (Zajonc et al., 1989; Damasio, 1994), and

    ◦ cognitive appraisal (Frijda, 1986; Collins et al., 1988; Lazarus and Lazarus, 1994; Scherer et al., 2010);

• components involved in the formation of emotion (Scherer et al., 2010), such as:

    ◦ cognitive (external environment),

    ◦ physiological (internal, biological/mechanical environment), and

    ◦ motivational (internal psychological environment);

• phases involved in the emotional process (Scherer et al., 2010), such as:

    ◦ low-level evaluation of emotions – creation of primal emotions, based on simple stimuli,

    ◦ high-level evaluation of emotions – creation of secondary emotions, based on emotional memory,

    ◦ modification of the priorities of the objectives/needs,

    ◦ implementation of the agent’s actions,

    ◦ planning behavior,

    ◦ deployment behavior, and

    ◦ communication;

• the applied description of emotions, such as:

    ◦ digital/linguistic/binary (only labels),

    ◦ sharp – label and valence (discrete or continuous), and

    ◦ fuzzy.

Further arguments on the computational models of emotions can be found in the literature (Sloman, 2001; Breazeal, 2003; Arbib and Fellous, 2004; Ziemke and Lowe, 2009). As will be shown hereinafter, each of the exemplary systems described below in Section 4 can be (freely) attributed to a subset of the above characterizations.
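As a rough illustration, the classification axes listed above can be treated as a small record type. The sketch below covers only two of the four axes (components and description of emotions) and fills them in for four of the reviewed systems exactly as this review attributes them; the type and function names (`ModelProfile`, `names_with`, and so on) are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Component(Enum):
    COGNITIVE = "cognitive"
    PHYSIOLOGICAL = "physiological"
    MOTIVATIONAL = "motivational"


class Description(Enum):
    LABELS = "labels only"
    SHARP = "label and valence"
    FUZZY = "fuzzy"


@dataclass(frozen=True)
class ModelProfile:
    """One reviewed system placed on two of the classification axes."""
    name: str
    components: frozenset
    description: Description


COG_MOT = frozenset({Component.COGNITIVE, Component.MOTIVATIONAL})

# Attributions as stated in the per-system summaries of this review.
PROFILES = [
    ModelProfile("ActAffAct", COG_MOT, Description.LABELS),
    ModelProfile("FLAME", frozenset({Component.COGNITIVE}), Description.FUZZY),
    ModelProfile("EMA", COG_MOT, Description.SHARP),
    ModelProfile("ParleE", COG_MOT, Description.SHARP),
]


def names_with(profiles, description):
    """List the systems that use the given description of emotions."""
    return [p.name for p in profiles if p.description is description]
```

The theory and phase axes could be added as further fields in the same way; they are left out here to keep the sketch short.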

4. A Selective Review of the Existing Proposals for Computational–Emotion Systems

The theory of cognitive appraisal is one of the most common ideas used in the design of computational systems of emotions (Gratch and Marsella, 2004). According to this theory, the emotions are created based on the appraisal of currently perceived objects, situations, or events. Appraisal is created on the basis of the relationship between perceived elements and the individual beliefs, desires, and intentions (BDI) of the agent (Lazarus, 1991). This relationship is known as the personal–environmental relationship. Such systems do not take into account the possibility of the occurrence of emotions before recognition of an object, event, or situation. It is clear that this type of recognition requires a lot of computing power, and therefore the emotions generated by those systems do not occur in real time, which contradicts the general idea of emotions (as well as the SVC approach).

In the literature, one can find many works concerning the issue of modeling human emotions considered here: CBI (Marsella, 2003), ACRES (Swagerman, 1987), Will (Moffat and Frijda, 1994), EMILE (Gratch, 2000), TABASCO (Staller and Petta, 2001), ActAffAct (Rank and Petta, 2007), EM (Reilly, 1996), FLAME (El-Nasr et al., 2000), EMA (Gratch and Marsella, 2004), ParleE (Bui et al., 2002), FearNot! (Dias, 2005), Thespian (Mei et al., 2006), PEACTIDM (Marinier et al., 2009), WASABI (Becker-Asano, 2008), AR (Elliott, 1992), CyberCafe (Rousseau, 1996), Silas (Blumberg, 1996), Cathexis (Velásquez and Maes, 1997), OZ (Reilly and Bates, 1992), MAMID (Hudlicka, 2005), CRIBB (Davis and Lewis, 2003), and Affect and Emotions (Schneider and Adamy, 2014). However, some of them are either only loosely connected with the psychological aspects of emotions (e.g., ACRES) or far from a strict mathematical formulation of the issue (FAtiMA Modular, MAMID). Moreover, in general, most psychologists shun attempts to relate their considerations to a mathematical synthesis of emotional phenomena.

To give a taste of these specific issues, several selected computational systems of emotion are presented in the following.

4.1. ActAffAct

Acting Affectively affecting Acting (ActAffAct) is an emotional architecture for agents operating as actors. It is intended to give greater credibility to the characters in computer games, chatterbots, or other virtual characters (Rank and Petta, 2005, 2007). The system is based on the scheme of the emotional valence from the appraisal theory of emotion. As a result, new events, objects, or actions are evaluated in terms of the goals, standards, and tastes of the considered agent. For example, the agent can feel joy after disarming a bomb, but before that, it may feel hope and fear. The functioning of the system can be described as a transition between four phases: perception, appraisal, decision-making (relational action tendency – RAT), and execution. The perception phase relies on the translation of external information to an intelligible form for cognitive appraisal. Emotions are created based on appraisal, so that the RAT tries to reconfigure the currently executed actions and behaviors. In fact, the reported application based on ActAffAct concerns a simple scenario with several virtual characters and objects, where the agents, in accordance with experienced emotions, choose the appropriate current target, and then a suitable response. Thus, ActAffAct is a decision-making system based on a current situation rather than on a general goal.
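The four-phase cycle described above can be sketched as a chain of small functions. This is only an illustration built around the bomb example from the text; the event encoding (`"kind:target"` strings), the appraisal rules, the tendency names, and all function names are assumptions, not the actual ActAffAct implementation.

```python
def perceive(raw):
    """Perception: translate raw input into a form the appraisal can use."""
    kind, _, target = raw.partition(":")
    return {"kind": kind, "target": target}


def appraise(event, goals):
    """Appraisal: evaluate the event against the agent's goals (labels only)."""
    if event["target"] not in goals:
        return set()
    if event["kind"] == "attempt":   # outcome still open
        return {"hope", "fear"}
    if event["kind"] == "success":   # goal achieved
        return {"joy"}
    return set()


def relational_action_tendency(emotions):
    """Decision-making (RAT): choose a tendency that reconfigures behavior."""
    if "fear" in emotions:
        return "proceed_carefully"
    if "joy" in emotions:
        return "celebrate"
    return "continue"


def execute(tendency, log):
    """Execution: here the chosen tendency is only recorded."""
    log.append(tendency)


def cycle(raw, goals, log):
    """Run one perception, appraisal, decision, execution pass."""
    emotions = appraise(perceive(raw), goals)
    execute(relational_action_tendency(emotions), log)
    return emotions
```

Calling `cycle("attempt:disarm_bomb", {"disarm_bomb"}, log)` followed by `cycle("success:disarm_bomb", {"disarm_bomb"}, log)` reproduces the sequence from the example: hope and fear first, joy afterwards. Each decision depends only on the current event, which mirrors the situation-driven character of the system noted above.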

In view of Section 3, ActAffAct reflects emotions solely as labels. The system is based on the above-mentioned BDI model; in other words, the actions of the agent are based on its pre-programmed beliefs (we can thus talk about the personality of criminals, for instance). ActAffAct covers all phases involved in the emotional process. Appraisal, that is, the formation of emotions, uses the cognitive and motivational components (Scherer et al., 2010).

4.2. Fuzzy Logic Adaptive Model of Emotions

FLAME is a computational system based on a model of emotions by Collins et al. (1988). It takes into account an emotional evaluation of events (El-Nasr et al., 2000). During the occurrence of a new event, FLAME assesses an emotional value of the event in relation to the objectives of the agent. In particular, FLAME takes into account which objectives are fulfilled (at any level, even partially) by the occurrence of the event and calculates an event impact. Then, the system determines its own assessment, based on the significance of certain targets (the importance of goals). These data are an input to a fuzzy system (Mamdani and Assilian, 1975), which calculates desirability of events. Based on the appraisal of the events, emotions are created according to the fuzzy desirability and several other rules as presented in Collins et al. (1988). For example, the emotion of joy is the effect of a desired event, whereas sadness is generated when the agent experiences an adverse event.
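The step from event impact and goal importance to a fuzzy desirability can be illustrated with a minimal Mamdani-style sketch (min for AND, max aggregation, discrete centroid defuzzification). The membership functions, the three rules, the single-goal simplification, and the value ranges are all assumptions chosen for illustration; they are not taken from the FLAME implementation.

```python
def tri(x, a, b, c):
    """Triangular membership: 0 at a and c, 1 at the peak b (a < b < c)."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


# Assumed output fuzzy sets over the desirability universe [-1, 1].
OUTPUT_SETS = {
    "undesired": (-2.0, -1.0, 0.0),
    "neutral": (-1.0, 0.0, 1.0),
    "desired": (0.0, 1.0, 2.0),
}
UNIVERSE = [-1.0 + 2.0 * i / 200 for i in range(201)]


def desirability(impact, importance):
    """Mamdani inference for impact in [-1, 1] and importance in [0, 1]."""
    positive = tri(impact, 0.0, 1.0, 2.0)
    negative = tri(impact, -2.0, -1.0, 0.0)
    high = tri(importance, 0.0, 1.0, 2.0)
    low = tri(importance, -1.0, 0.0, 1.0)
    # Rule strengths; AND is implemented as min.
    strengths = {
        "desired": min(positive, high),     # positive impact on an important goal
        "undesired": min(negative, high),   # negative impact on an important goal
        "neutral": low,                     # unimportant goal
    }
    # Clip each output set by its rule strength, aggregate with max,
    # then defuzzify with a discrete centroid.
    num = den = 0.0
    for y in UNIVERSE:
        mu = max(min(s, tri(y, *OUTPUT_SETS[n])) for n, s in strengths.items())
        num += y * mu
        den += mu
    return num / den if den > 0.0 else 0.0


def emotion_for(d, eps=1e-6):
    """Joy for a desired event, sadness for an adverse one, as in the text."""
    if d > eps:
        return "joy"
    if d < -eps:
        return "sadness"
    return None
```

With these assumed sets, an event that fully satisfies an important goal yields a clearly positive desirability and hence joy, while the same event for an unimportant goal stays near zero.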

Due to the fuzziness of the system, emotions appear in groups. For example, sadness is accompanied by shame, anger, and fear. For this reason, emotions need to be filtered. In the FLAME system, filtering is performed using simple rules (e.g., a greater joy may exclude sadness). In addition, FLAME has a built-in interruption system, based on motivational elements (such as needs). As a result, the emotion-driven behavior of the FLAME agent can be overpowered by the effects of motivations (needs).
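The filtering rule and the motivational interruption can be sketched as follows. The list of opposed pairs, the threshold for an urgent need, and the function names are hypothetical; the sketch only mirrors the two ideas stated above, namely that a greater joy may exclude sadness and that needs can overpower emotion-driven behavior.

```python
# Assumed list of mutually exclusive emotion pairs.
OPPOSED = [("joy", "sadness")]


def filter_emotions(intensities, opposed=OPPOSED):
    """Keep only the stronger emotion of each opposed pair."""
    kept = dict(intensities)
    for a, b in opposed:
        if a in kept and b in kept and kept[a] != kept[b]:
            weaker = a if kept[a] < kept[b] else b
            del kept[weaker]
    return kept


def select_driver(emotions, needs, need_threshold=0.8):
    """Let an urgent need interrupt emotion-driven behavior."""
    urgent = {name: level for name, level in needs.items() if level >= need_threshold}
    if urgent:
        return ("need", max(urgent, key=urgent.get))
    if emotions:
        return ("emotion", max(emotions, key=emotions.get))
    return ("none", None)
```

For instance, `filter_emotions({"joy": 0.7, "sadness": 0.3})` keeps only joy, and `select_driver({"joy": 0.7}, {"hunger": 0.9})` hands control to the need rather than to the emotion.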

The agent’s behavior is determined by the filtered emotions processed using a fuzzy method. For example, if the anger is high and the agent’s dish was taken away, then the behavior is barking. The system can learn by one of four implemented methods:

• conditioning: linking the object with emotion,

• reinforcement learning: changing the assessment of the impact of events on the realization of the objectives,

• probabilistic approach to learning the patterns of behavior, and

• heuristic learning which leads to gratifying behavior.

FLAME was used as a driver for a pet in virtual simulations. The resulting pet action consisted of the voice actions: barking, growling, sniffing, etc. (expressing emotions posing a feedback to the owner), and other actions, such as looking, running, jumping, and playing. Emotions created by FLAME greatly improved the pets’ behavior and their appearance/credibility.

In view of Section 3, the FLAME system is based on the theory of cognitive appraisal. In the formation of emotions, only the cognitive components are involved. Despite the factual presence of motivation components, they do not affect the emotions. Emotions serve only to select the agent’s behavior but cannot modify its goals/needs. Fuzzy emotions used in FLAME are definitely much more intuitive and allow for easier inference.

4.3. Emotion and Adaptation

EMA is a complex emotional system which has many appraisal variables (Gratch and Marsella, 2004, 2005). Among them, we distinguish (Gratch and Marsella, 2004):

• relevancy – whether the event needs attention or action,

• desirability – if the event coincides with the goals of the agent,

• causality – whether the agent is responsible for the event,

• opportunity – how likely the event was,

• predictability – whether the event was predictable based on past history,

• urgency – whether a delayed response matters,

• commitment – to what elements of personality and motivation the agent refers the event,

• controllability – whether the agent can affect the event,

• self-variation – whether the event can change itself,

• power – power which is required to control the event, and

• adaptability – whether the agent will be able to cope with the consequences of the event.

Emotions are generated using a mapping algorithm based on the model of Elliott (1992). They take into account the variables mentioned earlier in the context of certain perspectives. For example, hope comes from the belief that something good might happen (desirability > 0 and likelihood < 1). Each of the 24 emotions has its individual intensity.
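A mapping of this kind can be sketched as a simple threshold rule. Only the hope condition is taken from the text; reading its second quantity as the likelihood of the event, the three remaining labels (filled in by symmetry), and the intensity formula |desirability| × likelihood are assumptions made for illustration, as is the function name `map_emotion`.

```python
def map_emotion(desirability, likelihood):
    """Map two appraisal variables to an (emotion label, intensity) pair.

    desirability is in [-1, 1] and likelihood in [0, 1]. Only the hope
    rule comes from the text; the other rules and the intensity formula
    are illustrative assumptions.
    """
    if not 0.0 <= likelihood <= 1.0:
        raise ValueError("likelihood must lie in [0, 1]")
    if desirability == 0:
        return None
    certain = likelihood == 1.0
    if desirability > 0:
        label = "joy" if certain else "hope"
    else:
        label = "distress" if certain else "fear"
    return label, abs(desirability) * likelihood
```

Under these assumptions, `map_emotion(0.8, 0.5)` yields hope, because the event is desirable but not yet certain.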

Coping strategies, used for solving problems, are clearly determined by emotions (Lazarus and Lazarus, 1994). Such strategies, however, work in the direction opposite to that of cognitive appraisal (which constructs the emotions). In fact, a coping strategy has to determine the cause of the emotions and modify the whole BDI mechanism, if necessary. Among them, we distinguish (Gratch and Marsella, 2005):

• actions, selected for implementation,

• plans, forming intentions to carry out acts,

• searching for support (e.g., seeking help),

• procrastination, waiting for an external event, designed to change the current circumstances,

• positive reinterpretation, giving a positive side effect for negative actions,

• acceptance,

• denial, reducing the likelihood of adverse effects,

• mental disengagement from the desired state,

• shifting responsibility (blame, pride, contribution) for the effects of actions to certain factors (rather external), and

• attenuating information or consulting other sources.

In EMA, inferences and beliefs are derived from post hoc analysis. EMA can run with a set of actions and a set of recipes for combining these actions, or with a set of simple responses of the emotion-action type. This idea was partly implemented using SOAR (State, Operator, and Result) – a cognitive architecture similar to expert systems (Laird et al., 1987; Newell, 1994; Laird, 2012), in a very simplistic scenario that describes the agent dealing with an angry bird (which can attack or fly away). However, this experiment proves only the implemented small part of the concept, rather than the entire developed concept of EMA.

In view of Section 3, the EMA system has extensive possibilities for the interpretation of external stimuli. The agent’s emotions are sharp. In the process of creating emotions, motivational and cognitive components are involved. EMA also considers the planning and implementation of its behavior.

4.4. ParleE

ParleE is a computational model of emotions prepared for a virtual agent used for communication purposes in a multiagent environment (Bui et al., 2002). The model is based on the OCC (Ortony, Clore, and Collins) theory of Collins et al. (1988) and a model of personality (Rousseau, 1996). Detected events are evaluated based on previously learned behavior using a probabilistic algorithm. The system consists of five basic blocks. A block called the Emotion Appraisal Component evaluates events in terms of the agent’s personality, taking into account its plans and the models of other agents. After this evaluation, the block generates a vector of emotions. A working plan (an algorithm of actions to achieve a certain purpose) is generated by a Scheduler block, also with the use of the models of the other agents. This block calculates the probability of achieving the goals, which is then used to create the vector of emotions. The remaining blocks (the emotion component, the emotion decay component, and the models of other agents) modify the vector of emotions by taking into account further aspects, such as motivational factors, personality, or the behavior of other agents (in terms of the gathered information). The system takes into account ten emotions of varied intensity, created on the basis of the rules of the OCC theory.

ParleE was implemented as a conversational agent, Obie, which communicates with its interlocutor via a textual interface and 3D facial expressions. The scenario presented by the authors, which uses three variants of the agent’s personality (sensitive, neutral, and optimistic), can be described as: “Obie goes to the shop and buys bread. He brings the bread home. The user eats his bread” (Bui et al., 2002). The three realizations of Obie react in different emotional ways: the sensitive one becomes really angry, the neutral one is just angry, and the optimistic one ignores the events and remains happy.

In view of Section 3, ParleE uses the sharp form of emotion descriptions, which are built of components characterized as both motivational and cognitive. In addition, ParleE takes into account the models of its own personality and of other agents’ behavior (which is a rare feature).

4.5. FearNot!

FearNot! is a PC application that supports the removal of violence from schools (Aylett et al., 2005; Dias, 2005; Dias and Paiva, 2005). It simulates situations in which the user sympathizes with a victim. To achieve such a sensitive goal, this application must be credible both in terms of behavior and emotions. Emotions in the FearNot! system have six attributes:

• name/label,

• valence (negative or positive),

• source (objects associated with each emotion),

• cause,

• intensity (sharp value which decreases with the passage of time), and

• time of occurrence.

FearNot! takes into account the emotional parameters of the agent: its mood and sensitivity. When the agent is more energized, it feels emotions more intensely. The mood of the agent increases or decreases the possibility of positive or negative emotions (Picard and Picard, 1997).
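The six attributes and the two emotional parameters can be sketched as a small data structure. The field names follow the list above; the exponential form of the decay, the decay rate, and the additive way in which mood shifts the intensity of positive and negative emotions are assumptions made only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Emotion:
    """The six attributes listed in the text."""
    name: str
    valence: int        # +1 for positive, -1 for negative
    source: str         # object associated with the emotion
    cause: str
    intensity: float    # sharp value at the time of occurrence
    time: float         # time of occurrence, in seconds

    def intensity_at(self, now, decay_rate=0.1):
        """Intensity after decay; the exponential form is an assumption."""
        elapsed = max(0.0, now - self.time)
        return self.intensity * math.exp(-decay_rate * elapsed)


def mood_adjusted(base_intensity, valence, mood, weight=0.2):
    """Shift intensity toward emotions that match the mood sign.

    mood lies in [-1, 1]; the additive form and the weight are assumed.
    """
    adjusted = base_intensity + weight * mood * valence
    return min(1.0, max(0.0, adjusted))
```

A good mood (`mood > 0`) raises the intensity of a positive emotion and lowers that of a negative one, which is one simple way to express that mood increases or decreases the possibility of positive or negative emotions.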

Emotions are created based on cognitive appraisal of the current situation of the agent, performed using the OCC model. It is also related to the agent’s plans. Implementation of plans also reflects on emotions (as it can generate hope, fear, or satisfaction). Emotions associated with perceived objects (that are the sources of emotions) indicate which objects require the attention of the agent. This feature is also a part of the agent’s plans.

Executive strategies are divided into policies of coping with emotions and solving problems. The first type of strategy is intended to change the interpretation of the currently perceived stimuli and other conditions (which resulted in feeling emotions). It embraces such strategies as acceptance of an adverse reaction, wishful thinking, planning or execution of a certain action.

FearNot! is a story-telling application with an anti-bullying message to children. The story in this application arises as the interaction between the user and the virtual agent (which affect each other). This approach is called the emergent narrative. In such a way, the users of FearNot! can learn about violence (Figueiredo et al., 2006).

In view of Section 3, FearNot! represents the most comprehensive approach to computational systems of emotion. Emotions, described using sophisticated structures, are conditioned by cognitive, motivational, and reactive elements. Changing the interpretation of emotions is possible. The main objective of emotions is to control (in the SVC sense) the behavior of the agent and its communication with others.

4.6. FAtiMA Modular

Fearnot AffecTIve Mind Architecture (FAtiMA) is an emotional architecture designed to control an autonomous virtual agent. It is an upgraded version of the FearNot! system of emotion (Dias et al., 2014). The FAtiMA phases of the processing cycle may be enumerated as:

• perception,

• appraisal,

• goal-based planning (similar to BDI), and

• execution of actions.

The specified phases are quite similar to the universal model of a BDI agent, described by Reisenzein et al. (2013).

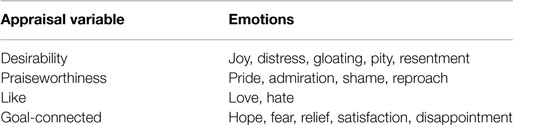

The appraisal process in FAtiMA is founded on the theory of Scherer et al. (2010), which can be generally described as follows. The appraisal process is incremental and continuous. It is (basically) sequential as the applied appraisal components have their own order. The process generates certain appraisal (emotional) variables, which are important tools in decision-making. The FAtiMA system also takes into account the OCC model, where the appraisal variables can have a definite impact on different emotions as indicated in Table 1. Clearly, as a developed version of FearNot!, FAtiMA is also founded on the story-telling paradigm (Battaglino et al., 2014).

Table 1. Referencing the emotions to the appraisal variables, based on OCC (Dias et al., 2014).

In summary – the FAtiMA system presents a quite coherent continuous-time concept of emotions, in which emotions are created using the OCC reasoner and the Scherer theory of appraisal. It thus apparently relies on the appraisal theory of emotions. The emotional state of the agent is, however, related also to other factors, including external stimuli, which is clearly attributed to the somatic theory of emotions. Cognitive and motivational elements are involved in the process of creating emotions. Emotions generated by FAtiMA can be treated as a sheer emotional expression or a desired reaction of an agent (which should be perceived by humans as natural).

4.7. WASABI

WASABI (Affect Simulation Architecture for Believable Interactivity) is another computational system of emotion, in which emotions are modeled in a continuous three-dimensional space (Becker-Asano, 2008, 2014; Becker-Asano and Wachsmuth, 2010), described as a pleasure-arousal-dominance (PAD) space (Russell and Mehrabian, 1977). Areas in the PAD space are labeled with the primary emotions. The secondary emotions (e.g., relief and hope) are formed on the basis of higher cognitive processes.

The WASABI system consists of two parallel processes (sub-modules): emotional and cognitive. The emotional process creates a vector of emotions on the basis of relevant pulses, or triggers, received from the ambient environment and from the cognitive module, which generates signals of secondary emotions. These signals result from the assumed BDI model and an Adaptive Control of Thought-Rational (ACT-R) architecture. The cognitive module is also responsible for deciding on the desired action and passing it on for execution. A third module, connecting emotion and action, is intended to generate a speech signal that takes the current emotions into account.

The WASABI module of emotions can be used for emulating the emotions of MAX, a virtual tour agent in the virtual museum guide scenario. This approach allows the guide to have its own human-like emotions, which affect its current conversations conducted as “small talk.” For example, MAX analyzes the environment in the context of human skin color, and every new person triggers a positive emotional impulse in its PAD space, which results in the “emotion-colored” responses of the agent. This simulation was presented at several public events (Becker-Asano, 2008).

In summary, and in view of Subsection 3 – the WASABI system does not rely only on the appraisal of the situation. In addition to receiving external stimuli, the system can change its emotions by itself, due to the passage of time. Moreover, the continuous-time nature of emotions means that at any moment of time the agent has some emotional state. Thus, both the cognitive elements and time are involved in the process of creating emotions. However, emotions can only modify the way of speaking, but not the behavior of the agent.
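The interplay of external impulses and the passage of time in a PAD space can be sketched as follows. This is a simplified illustration and not the WASABI dynamics: the anchor coordinates in `PRIMARY`, the exponential relaxation in `decay`, and the `rate` parameter are all assumptions made for the example.

```python
import math

# Hypothetical PAD anchor points for a few primary emotions (assumed values).
PRIMARY = {
    "happy": (0.8, 0.5, 0.5),
    "angry": (-0.8, 0.8, 0.8),
    "fearful": (-0.8, 0.8, -0.8),
    "bored": (0.0, -0.8, 0.0),
}


def apply_impulse(state, impulse):
    """Add an emotional impulse to the PAD state, clipped to [-1, 1]."""
    return tuple(max(-1.0, min(1.0, s + i)) for s, i in zip(state, impulse))


def decay(state, dt, rate=0.5):
    """Let the state relax toward neutral (0, 0, 0) as time passes."""
    factor = math.exp(-rate * dt)
    return tuple(s * factor for s in state)


def nearest_label(state):
    """Return the primary emotion whose anchor is closest to the state."""
    return min(PRIMARY, key=lambda name: math.dist(PRIMARY[name], state))
```

A positive impulse (for instance, a newly detected visitor) moves the state toward the "happy" region, and repeated calls to `decay` bring it back toward neutral when nothing happens.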

4.8. Cathexis

Cathexis was the first system to take into account the hypothesis of somatic markers (Damasio, 1994). This hypothesis states that decisions taken in circumstances similar to previous ones induce a somatic marker (as the outcome can be potentially beneficial or harmful). This is used for making prompt decisions on future events, in the case of possible gain or danger (threat). The Cathexis system consists of five modules: perception, action, behavior, emotions, and motivation (Velásquez and Maes, 1997; Velásquez, 1999).

The perception module is used for receiving and processing sensory information, which later reaches the modules of behavior and emotion. The motivation module consists of four needs: energy/battery, temperature (at which it is driven), fatigue (amount of consumed energy), and interest. The emotional module, created on the basis of different theories of emotion, includes basic emotions (anger, fear, sadness, joy, disgust, and surprise) and mixtures of them [e.g., according to the theory of Plutchik (1994), joy and fear give rise to a sense of guilt, …]. Basic emotions emerge as a response to various stimuli from the environment. The emotional module also takes into account secondary emotions, linked to objects (Damasio, 1994; Ledoux, 1998). The behavior module generates the most suitable reactions based on the emotion and motivation of the agent. The reactions are then implemented by the action module.

The Cathexis system was implemented in Yuppy, “an Emotional Pet Robot” (Velásquez, 1998), whose needs, formulated as recharging, temperature, fatigue, and curiosity, represent readings from different sensors. Emotions and needs definitely influence the behavior of Yuppy. In practice, Cathexis chooses one behavior (for instance, “search for bone”) for a given set of these two factors.

In view of Subsection 3, the Cathexis system is based on evolutionary, cognitive appraisal, and somatic theories. Cognitive, physiological, and motivational components are involved in creating emotions. The system plans and implements its behavior in relation to both low-level and high-level emotions, described as discrete.

4.9. KARO

KARO is a blend of modal logic, dynamic logic, and additional operators of motivation (Meyer et al., 1999; Meyer, 2006). It models emotions in a logical language, where one can distinguish operators of knowledge, beliefs, actions, abilities, and desires. The system uses four free-floating (not connected to external objects) emotions, associated with certain attitudes:

• happiness, triggered by achieving goals,

• sadness, triggered by failing goals,

• anger, triggered by being frustrated by the active plan, and

• fear, triggered by goal conflicts or danger.

The KARO system uses a BDI-like cognitive notion of emotion, which can be used in practical implementations. However, it is only a theoretical description of emotional agents, with its emphasis on the dynamics of the mental states of the agent and the effects of its actions.
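The four triggering conditions listed above can be written down as a small rule table. KARO itself is formulated in modal and dynamic logic, so the propositional sketch below is only a loose illustration; the function name and its boolean parameters are assumptions introduced here.

```python
def karo_emotions(goal_achieved=False, goal_failed=False,
                  plan_frustrated=False, conflict_or_danger=False):
    """Return the free-floating emotions triggered by the given conditions."""
    rules = [
        ("happiness", goal_achieved),      # a goal has been achieved
        ("sadness", goal_failed),          # a goal has failed
        ("anger", plan_frustrated),        # the active plan is frustrated
        ("fear", conflict_or_danger),      # goal conflict or danger
    ]
    return [emotion for emotion, triggered in rules if triggered]
```

For example, `karo_emotions(goal_failed=True, conflict_or_danger=True)` yields both sadness and fear, which the agent could then use to adjust its priorities.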

An implementation of KARO was assessed by running it on the iCAT platform (which combines the functions of a humanoid and a cat robot and is able to present facial expressions).

In view of Subsection 3, KARO uses certain cognitive and motivational components in the task of creating emotions. The KARO system evaluates emotions (as labels) and modifies the agent’s priorities. Admittedly, the system is not based on the available evolutionary, somatic, or cognitive appraisal theories; however, it implements some elements from the OCC theory.

4.10. MAMID

MAMID implements an extended processing cycle (Hudlicka, 2004, 2005, 2008; Reisenzein et al., 2013). From the universal model of the BDI-agent point of view, the MAMID system adds attention (filtration and selection processes), and expectations as additional phases. It is also supported by long-term memory of beliefs and rules. MAMID is highly parameterized and enables manipulation of its architecture topology. It appears to be quite universal in terms of possible applications.

Emotions in MAMID are created using external data, internal interpretations, desires, priorities, and individual characteristics. Emotions have their own valence and affective states (one of the four basic emotions: fear, anger, sadness, and joy). The system can represent the specificity of the human/individual differences/personality (such as attention speed or aggressiveness), emotions, memory, and certain cognitive parameters, which are all used to select the most suitable reaction.

MAMID was evaluated as the model of decision-making for an agent (with a few different personality types) in a certain experimental scenario. The goal of the agent was to remain calm in several different situations (such as when facing a destroyed bridge and a hostile crowd). Different emotions were triggered depending on the actual situation and the agent’s personality.

In summary, the MAMID system implements the appraisal theory of emotion. It uses cognitive and motivational elements to create emotions, which are sharp. Unfortunately, there are only four distinct emotions.

4.11. Emotion Forecasting Using FCM

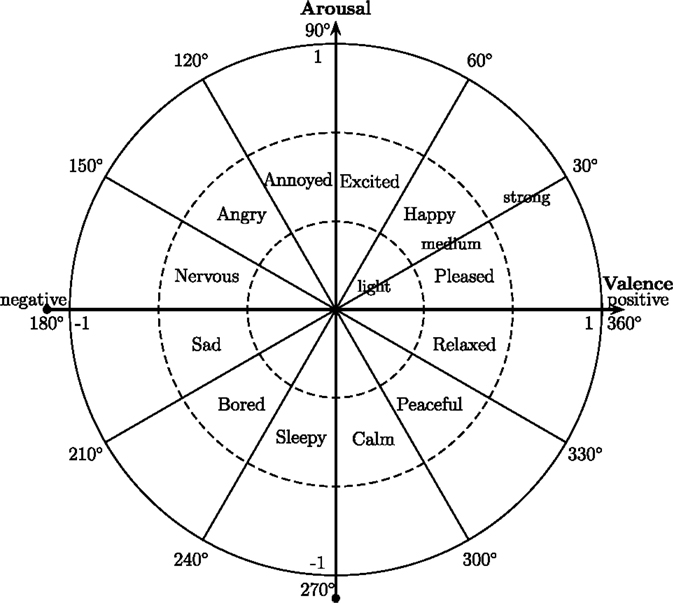

Salmeron (2012) suggests using Fuzzy Cognitive Maps (FCM) as a tool for predicting human emotions that are modeled on the concept of Thayer (1989), which takes into account both the arousal (the level of the agent’s excitation/boredom) and the valence (positive or negative in general terms). The states/values of arousal and valence can be interpreted as the vertical and horizontal coordinates of the current point/status on a circle of emotion shown in Figure 2. The model is constructed using information from the analysis of mood and bio-psychology concepts. Emotions in this model may take one of twelve values: excited, happy, pleased, relaxed, peaceful, calm, sleepy, bored, sad, nervous, angry, and annoyed. In addition, Salmeron (2012) evaluates every emotion on a three-level scale: light, medium, and strong. As a result, the model has 36 possible states of emotion.

Figure 2. Circle of emotion, arousal, and valence (Thayer, 1989; Salmeron, 2012).
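The mapping from a (valence, arousal) point to one of the 36 labeled states can be sketched as below. The geometry is an assumption made for illustration: twelve equal 30-degree sectors taken clockwise from the positive-arousal axis in the order the labels are listed above, and three intensity bands obtained by splitting the unit radius into thirds. The actual sector boundaries of Figure 2 may differ.

```python
import math

# Assumed clockwise order around the circle, starting at the positive-arousal axis.
LABELS = ["excited", "happy", "pleased", "relaxed", "peaceful", "calm",
          "sleepy", "bored", "sad", "nervous", "angry", "annoyed"]
LEVELS = ["light", "medium", "strong"]


def classify(valence, arousal):
    """Map (valence, arousal) in [-1, 1]^2 to an (emotion, intensity) pair."""
    # Angle measured clockwise from the positive-arousal (vertical) axis.
    angle = math.atan2(valence, arousal) % (2 * math.pi)
    sector = int(angle // (math.pi / 6)) % 12   # twelve 30-degree sectors
    radius = min(1.0, math.hypot(valence, arousal))
    level = min(2, int(radius * 3))             # thirds of the unit radius
    return LABELS[sector], LEVELS[level]
```

Under these assumptions, a strongly positive valence with slightly positive arousal, `classify(0.9, 0.1)`, falls into the "pleased" sector at the "strong" level.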

Fuzzy Cognitive Maps collect and represent the knowledge necessary for a specific fuzzy-neural system of inference (FNIS) about emotion. There are four different stimuli inputs in the input layer responsible for “noise,” “high temperature,” “scare waiting time,” and “few people waiting.” The specific elements in the hidden layer are described as “waiting” and “anxiety,” which in turn generate the system’s emotional outputs: valence and arousal. The transitions between the layers of the network are weighted, and the system is always able to generate a certain emotion.
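A layered, feed-forward reading of this map can be sketched as follows. The concept names come from the description above, but every weight value and the use of `tanh` as the squashing function are assumptions chosen for illustration; they are not taken from Salmeron (2012), and a full FCM would typically iterate its concept activations until they settle.

```python
import math

INPUTS = ["noise", "high temperature", "scare waiting time", "few people waiting"]

# Hypothetical weights from the input concepts to the hidden concepts.
W_HIDDEN = {
    "waiting": [0.0, 0.0, 0.8, -0.6],
    "anxiety": [0.7, 0.5, 0.4, 0.0],
}
# Hypothetical weights from the hidden concepts to the emotional outputs.
W_OUTPUT = {
    "valence": {"waiting": -0.6, "anxiety": -0.8},
    "arousal": {"waiting": 0.2, "anxiety": 0.9},
}


def infer(stimuli):
    """Propagate stimulus activations through the hidden layer to the outputs."""
    hidden = {
        name: math.tanh(sum(w * stimuli[key] for w, key in zip(weights, INPUTS)))
        for name, weights in W_HIDDEN.items()
    }
    return {
        out: math.tanh(sum(w * hidden[h] for h, w in weights.items()))
        for out, weights in W_OUTPUT.items()
    }
```

With these assumed weights, a noisy and hot environment produces negative valence and positive arousal; the resulting pair could then be mapped to one of the labeled ranges on the circle of emotion.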

The described system does not fully use the fuzzy model of emotions, as the emotions are represented only by labels assigned to the specific 36 ranges of emotional variables (derived from the arousal and valence). This model can be classified into the appraisal theory of emotion. The components involved in the derivation of emotions can be classified as cognitive (inputs to FCM/FNIS) and motivational (treating the waiting and anxiety in the hidden FNIS layer as a mixture of mood and belief).

4.12. xEmotion

The concept of ISD, the Intelligent System of Decision-making (Kowalczuk and Czubenko, 2010b, 2011), allows an agent to construct individual emotions in a subsystem referred to as xEmotion (Kowalczuk and Czubenko, 2013), in response to observations and interactions.

The xEmotion subsystem is based on several different theories of emotion, also including the time division. First of all, it implements the somatic theory of emotions (Zajonc et al., 1989; Damasio, 1994), as a concept of pre-emotions. Pre-emotions are the rawest form of emotions, which are connected to impressions (basic features perceived by the agent), such as pain and rapid movement. They can also appear as a result of a sub-conscious reaction (such as crying, i.e., the impression of tears). The second component of xEmotion is sub-emotions: emotions connected to certain objects or situations, which have an important, informative aspect to the agent. They are evidently connected to the appraisal theory of emotion. However, the appraisal of sub-emotions takes place only in the case of a strong emotional state (in contrast to the classical appraisal approach). This state is remembered/associated with certain perceived objects (in the current scene and at the time of the strong emotion), and the next occurrence of such an object (in the future) will trigger a certain sub-emotional state. Sub-emotions decay with the passage of time (based on a forgetting curve).
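The storing and fading of sub-emotions can be sketched as follows. The class names, the storing `threshold`, and the exponential form of the forgetting curve with a `stability` constant are assumptions made for this example; the source only states that storing requires a strong emotional state and that decay follows a forgetting curve.

```python
import math
from dataclasses import dataclass


@dataclass
class SubEmotion:
    label: str        # emotion associated with the object
    strength: float   # intensity at the moment of storing
    stored_at: float  # time stamp of storing


class SubEmotionMemory:
    def __init__(self, threshold=0.7, stability=10.0):
        self.threshold = threshold   # assumed minimum intensity for storing
        self.stability = stability   # assumed time constant of forgetting
        self.items = {}

    def observe(self, obj, label, intensity, now):
        # Associate the object with the emotion only in a strong emotional state.
        if intensity >= self.threshold:
            self.items[obj] = SubEmotion(label, intensity, now)

    def recall(self, obj, now):
        # A later occurrence of the object triggers the decayed sub-emotion.
        item = self.items.get(obj)
        if item is None:
            return None
        elapsed = now - item.stored_at
        return item.label, item.strength * math.exp(-elapsed / self.stability)
```

An object seen during a weak emotional state leaves no trace, whereas one seen during strong fear returns a fear sub-emotion whose intensity shrinks the longer the object has been absent.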

These two elements can modify the actual emotional state of the agent, called a classical emotion, which is also influenced by the lapse of time and a global rate of fulfillment of the agent’s needs (besides the emotional components). It is clear and natural that the agent’s emotion is often in its neutral or quasi-neutral states, where it evolves with the passage of time. On the other hand, the system of emotion is also affected by the basic motivational system of the ISD-based agent, which is a system of the agent’s needs. The ISD need system is similar to Beliefs, Desires, Intentions (BDI); however, it focuses on long-term goals. Mood (a long-term emotion) is automatically generated from the classical emotion using a quasi-derivative mechanism.
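One possible reading of a quasi-derivative is a smoothed finite difference of the classical emotion. The sketch below follows that reading only as an assumption: the scalar emotion value, the `MoodFilter` class, and the `smoothing` coefficient are introduced here and do not reproduce the actual ISD formula.

```python
class MoodFilter:
    """Mood as a smoothed rate of change of a scalar classical emotion."""

    def __init__(self, smoothing=0.8):
        self.smoothing = smoothing   # assumed; closer to 1 gives a slower mood
        self.prev_emotion = None
        self.mood = 0.0

    def update(self, emotion, dt=1.0):
        # Blend the previous mood with the latest finite-difference slope.
        if self.prev_emotion is not None:
            rate = (emotion - self.prev_emotion) / dt
            self.mood = self.smoothing * self.mood + (1 - self.smoothing) * rate
        self.prev_emotion = emotion
        return self.mood
```

A steadily improving emotion yields a positive mood that persists for a while after the emotion stops changing, which matches the idea of mood as a slower, longer-term quantity.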

There are 24 possible emotions in the xEmotion subsystem of ISD. They are based on the Plutchik concept of emotions (Plutchik, 2001) and fuzzy logic (Zadeh, 1965). However, only the sub-emotions and the classical emotion may take on one of the 24 labeled/linguistic emotions, modeled as fuzzy two-dimensional variables. The applied pre-emotions, in view of their simplicity, can assume only one of eight primal emotions (representing only sections/colors on a wheel/rainbow of emotion), also modeled with the use of the fuzzy description.

Thus, emotions in the ISD system have their specific purposes. Their main role is to narrow the possibilities of choosing the agent’s reactions (but not to decide which particular reaction is to be implemented). This means using emotion as a generalized scheduling variable for controlling the agent, according to the SVC scheme. In such a way, the current operating point of the whole ISD system can be suitably adapted. This is not the only function of emotion. The (derivative) mood adjusts the value of the fuzzy coefficients of the needs subsystem, allowing the agent’s needs to reach the state of satisfaction at a faster or slower rate.
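The narrowing role of emotion can be sketched as a two-stage selection: the current emotion first restricts the set of admissible reactions, and a separate criterion then picks one of them. The reaction names, the emotion labels, and the `utility` scores below are hypothetical and serve only to illustrate the scheduling idea.

```python
# Hypothetical reactions and the emotions under which each is admissible.
REACTIONS = {
    "flee": {"fear"},
    "explore": {"joy", "neutral"},
    "rest": {"neutral", "sadness"},
    "recharge": {"neutral", "fear", "joy", "sadness"},
}


def available_reactions(emotion):
    """Emotion acts as a scheduling variable: it only narrows the choice."""
    return sorted(r for r, emotions in REACTIONS.items() if emotion in emotions)


def choose(emotion, utility):
    """Pick the best admissible reaction according to a separate criterion."""
    candidates = available_reactions(emotion)
    return max(candidates, key=lambda r: utility.get(r, 0.0))
```

In a state of fear, "explore" is not admissible however high its utility, so `choose("fear", {"flee": 0.2, "recharge": 0.9, "explore": 1.0})` selects "recharge".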

The ISD concept was initially applied as a “thinking” engine, which controls a simple mobile robot using a restricted Maslow’s hierarchy of needs, as well as in governing an interactive avatar DictoBot (Kowalczuk and Czubenko, 2010a) and automatic autonomous driver xDriver (Czubenko et al., 2015) [see also Kowalczuk et al. (2016) for the development of the agent’s memory itself].

In summary, xEmotion, as a part of the ISD decision-making concept, is an emotional system based on the appraisal and somatic approaches to emotions. It has some minor elements taken from the evolutionary theory of emotions, especially the application of emotion as a self-defense mechanism. Cognitive (sub-emotions), physiological (pre-emotions), and motivational (needs) components are involved in creating emotions. This system changes its operating point, and thus also its behavior/reaction – taking into account (inner) qualia and classical emotions, also interpreted using fuzzy logic.

5. Comparison of the Discussed Systems

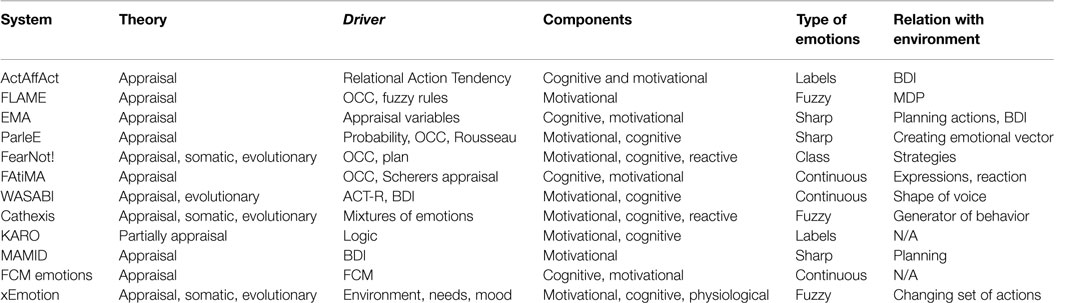

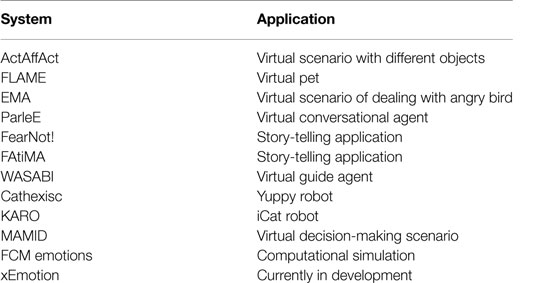

A short comparison of the research results concerning computational models of emotion is presented in Table 2. Moreover, the applications of the presented systems are briefly described in Table 3. Clearly, it is difficult to compare various systems of emotion due to the lack of a common, sufficiently universal, and comprehensive measure. All of the discussed models and systems, however, have some impact on the IT domain under consideration. Their impact on the humanities is unfortunately low. The main conclusion, which can be drawn from the presented overview, is as follows: almost all of the computational models of emotions employ the theory of appraisal as their scientific background. This is a bit controversial. There are also other theories that address the creation of emotions, but unfortunately, they are not in use as the foundation of computational emotions. Another aspect of the proposed systems of emotions is that both sharp and fuzzy logic representations can be effectively used in such systems.

Unlike most of the developed models, the xEmotion system is based on evolutionary, somatic, and appraisal theories. It is a combination of approaches leading to one coherent concept of emotion. The emotions are created based on cognitive (appraisal of events and objects), physiological (needs connected to the environmental aspects), and motivational (needs and emotions themselves) components. All phases mentioned by Scherer et al. (2010) are involved in xEmotion. The xEmotion system also creates emotion based on impressions/sensations and stimuli. That makes the system even more effective in hazardous or other special situations requiring a rapid response. Similar to Cathexis, xEmotion employs the fuzzy representation of data in several applications, including modeling of emotions, which is characteristic of the human way of understanding the world. Note that the xEmotion system optimizes and constructs a single, limited set of actually available reactions. This system, therefore, does not directly determine the behavior of the controlled (managed) agent; it only allows the agent to choose (later) one appropriate response from this set. One may see this approach as a clear improvement over the other proposed models under discussion (see Table 2).

Overall, it is very difficult to definitively determine what are the advantages and disadvantages of the applied systems of computational emotions. In some applications, some of these schemes work better or worse than others. Of course, certain concepts of use are very simple. For example, it is typical to use such a system to modify only an emotional reaction, such as facial expressions or tone of voice (then a chosen emotion implements the system reaction). Some basic criteria for the selection of a computational system of emotions are given in Table 2.

When deciding to implement one of the presented systems, it is important to clarify the expectations that we have in relation to the intended computational system of emotions. In simple mobile robotics, models using sharp emotions that directly induce (previously implemented) behavior appear to be the most suitable. In this case, one needs to take into account the components that affect the formation of emotions. Certainly, the appraisal analysis of stimuli from the environment should be carried out in real time. This means that Cathexis and xEmotion (both using simplifying fuzzy emotions), or EMA may be recommended for simple mobile robots. On the other hand, robots with greater computing power can be designed using systems based on fuzzy representation of emotions and having the ability to indirectly shape the agent’s behavior, such as xEmotion, FLAME, and EMA.

6. Conclusion

In this article, a variety of computational models of emotion designed to reflect the principal aspects of psychological theory have been described. Affective computation can lead to effective control and decision-making mechanisms in various branches of science and technology. The created emotional architecture can be used to rationalize the quest for the role of emotion in human cognition and behavior. From the control theory viewpoint, computational models of emotion can play an important scheduling control role in designing autonomous systems and agents. In this way, adapting the emotional systems to robot applications may lead to more flexible behavior and rapid response of this type of artificial creatures and agents.

With the creation and development of various humanoid robots, such as ASIMO and Valkyrie (Kowalczuk and Czubenko, 2015), robotics may also utilize the discussed computational models of emotions to make human–system interaction more user-friendly. Such an aspiration can be noticed in several robotic programs, such as the FLASH robot (Dziergwa et al., 2013), which is able to manifest different emotions by changing the poses of its “face.” Due to the above, one can easily anticipate a bright future ahead for emotional robots.

Author Contributions

ZK: the concept of the paper, selecting the material, supervision, and final preparation of the paper. MC: principal contribution to reviewing material and the performed implementational research (behind the paper).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Arbib, M. A., and Fellous, J. M. (2004). Emotions: from brain to robot. Trends Cogn. Sci. 8, 554–561. doi: 10.1016/j.tics.2004.10.004

Aylett, R. S., Louchart, S., Dias, J., Paiva, A., and Vala, M. (2005). “FearNot! – an experiment in emergent narrative,” in Intelligent Virtual Agents, Vol. 3661, eds T. Panayiotopoulos, J. Gratch, R. Aylett, D. Ballin, P. Olivier, and T. Rist (Berlin Heidelberg: Springer), 305–316.

Bateson, M., Desire, S., Gartside, S. E., and Wright, G. A. (2011). Agitated honeybees exhibit pessimistic cognitive biases. Curr. Biol. 21, 1070–1073. doi:10.1016/j.cub.2011.05.017

Batson, C. D. (1990). “Affect and altruism,” in Affect and Social Behavior, eds B. S. Moore and A. M. Isen (Cambridge: Cambridge University Press), 89–126.

Battaglino, C., Damiano, R., and Lombardo, V. (2014). “Moral values in narrative characters: an experiment in the generation of moral emotions,” in Interactive Storytelling, Volume 8832 of Lecture Notes in Computer Science, eds A. Mitchell, C. Fernández-Vara, and D. Thue (Singapore: Springer International Publishing), 212–215.

Becker-Asano, C. (2008). Wasabi: Affect Simulation for Agents With Believable Interactivity. Ph.D. thesis, Faculty of Technology, University of Bielefeld, Bielefeld.

Becker-Asano, C. (2014). “Wasabi for Affect Simulation in Human-computer Interaction,” in Proceedings of the International Workshop on Emotion Representations and Modelling for HCI Systems (Istanbul: ACM), 1–10.

Becker-Asano, C., and Wachsmuth, I. (2010). “Wasabi as a Case Study of how misattribution of emotion can be modelled computationally,” in A Blueprint for Affective Computing: a Sourcebook and Manual, eds K. R. Scherer, T. Banziger, and E. Roesch (Oxford: Oxford University Press, Inc.), 179–193.

Biddle, S., Fox, K. R., and Boutcher, S. H. (2000). Physical Activity and Psychological Well-Being. London: Psychology Press.

Blumberg, B. M. (1996). Old Tricks, New Dogs: Ethology and Interactive Creatures. Ph.D. thesis, Massachusetts Institute of Technology, Cambridge, MA.

Breazeal, C. (2003). Emotion and sociable humanoid robots. Int. J. Hum. Comput. Stud. 59, 119–155. doi:10.1016/S1071-5819(03)00018-1

Breazeal (Ferrell), C., and Velasquez, J. (1998). “Toward teaching a robot ‘infant’ using emotive communication acts,” in Simulation of Adaptive Behavior workshop on Socially Situated Intelligence, eds B. Edmonds and K. Dautenhahn (Zurich: CPM), 25–40.

Bui, T., Heylen, D., Poel, M., and Nijholt, A. (2002). “ParleE: an adaptive plan based event appraisal model of emotions,” in KI 2002: Advances in Artificial Intelligence, eds M. Jarke, G. Lakemeyer, and J. Koehler (Berlin Heidelberg: Springer), 129–143.

Collins, A., Ortony, A., and Clore, G. L. (1988). The Cognitive Structure of Emotions. Cambridge: Cambridge University Press.

Craig, A. D. (2003). A new view of pain as a homeostatic emotion. Trends Neurosci. 26, 303–307. doi:10.1016/S0166-2236(03)00123-1

Czubenko, M., Kowalczuk, Z., and Ordys, A. (2015). Autonomous driver based on intelligent system of decision-making. Cognit. Comput. 7, 569–581. doi:10.1007/s12559-015-9320-5

Damasio, A. (1994). Descartes’ Error: Emotion, Reason, and the Human Brain. New York: Grosset/Putnam.

Davis, D. N., and Lewis, S. C. (2003). Computational models of emotion for autonomy and reasoning. Informatica 27, 157–164.

Dias, J. A. (2005). Fearnot!: Creating Emotional Autonomous Synthetic Characters for Emphatic Interactions. Ph.D. thesis, Instituto Superior Técnico, Lisbon.

Dias, J., Mascarenhas, S., and Paiva, A. (2014). “Fatima modular: towards an agent architecture with a generic appraisal framework,” in Emotion Modeling, Volume 8750 of Lecture Notes in Computer Science, eds T. Bosse, J. Broekens, J. Dias, and J. van der Zwaan (Switzerland: Springer), 44–56.

Dias, J. A., and Paiva, A. (2005). “Feeling and reasoning: a computational model for emotional characters,” in Progress in Artificial Intelligence Lecture Notes in Computer Science, Proceedings of the 12th Portuguese Conference on Progress in Artificial Intelligence (Berlin; Heidelberg: Springer-Verlag), 127–140.

Dziergwa, M., Frontkiewicz, M., Kaczmarek, P., Kedzierski, J., and Zagdańska, M. (2013). “Study of a social robot’s appearance using interviews and a mobile eye-tracking device,” in Social Robotics, Volume 8239 of Lecture Notes in Computer Science, eds G. Herrmann, M. J. Pearson, A. Lenz, P. Bremner, A. Spiers, and U. Leonards (Cham: Springer International Publishing), 170–179.

Ekman, P. (2009). Telling Lies. Clues to Deceit in the Marketplace, Politics and Marriage. New York, NY: W. W. Norton & Company.

Elliott, C. D. (1992). The Affective Reasoner: A Process Model of Emotions in a Multi-Agent System. Ph.D. thesis, Northwestern University, Chicago.

El-Nasr, M. S., Yen, J., and Ioerger, T. R. (2000). Flame – fuzzy logic adaptive model of emotions. Auton. Agents Multi Agent Syst. 3, 219–257. doi:10.1023/A:1010030809960

Esau, N., Kleinjohann, B., Kleinjohann, L., and Stichling, D. (2003). “Mexi: machine with emotionally extended intelligence,” in International Conference on Hybrid and Intelligent Systems (Melbourne: IEEE), 961–970.

Figueiredo, R., Dias, J., and Paiva, A. (2006). “Shaping emergent narratives for a pedagogical application,” in Narrative and Interactive Learning Environments (Milton Park: Taylor & Francis Group), 27–36.

Fraser, O. N., and Bugnyar, T. (2010). Do ravens show consolation? Responses to distressed others. PLoS ONE 5:e10605. doi:10.1371/journal.pone.0010605

Frijda, N. H. (1994). “The lex talionis: on vengeance,” in Emotions: Essays on Emotion Theory, eds S. H. M. van Goozen, N. E. V. de Poll, and J. A. Sergeant (Hillsdale, NJ: Lawrence Erlbaum Associates), 352.

Goodall, J. (1986). The Chimpanzees of Gombe. Patterns of Behavior. Cambridge, MA: The Belknap Press of Harvard University Press.

Gratch, J. (2000). “Émile: marshalling passions in training and education,” in Proceedings 4th International Conference on Autonomous Agents (Barcelona: ACM), 1–8.

Gratch, J., and Marsella, S. (2004). A domain-independent framework for modeling emotion. Cogn. Syst. Res. 5, 269–306. doi:10.1016/j.cogsys.2004.02.002

Gratch, J., and Marsella, S. (2005). Evaluating a computational model of emotion. Auton. Agent. Multi Agent Syst. 11, 23–43. doi:10.1007/s10458-005-1081-1

Hudlicka, E. (2004). “Beyond cognition: modeling emotion in cognitive architectures,” in Proceedings of the International Conference on Cognitive Modelling, (Pittsburgh: ICCM), 118–123.

Hudlicka, E. (2005). “A computational model of emotion and personality: applications to psychotherapy research and practice,” in Proceedings of the 10th Annual Cyber Therapy Conference: A Decade of Virtual Reality (Basel: ACTC), 1–7.

Hudlicka, E. (2008). “Modeling the mechanisms of emotion effects on cognition,” in AAAI Fall Symposium: Biologically Inspired Cognitive Architectures (Menlo Park, CA: AAAI Press), 82–86.

Izard, C. E. (1972). Patterns of Emotions: A New Analysis of Anxiety and Depression. New York, NY: Academic Press.

Jasielska, D., and Jarymowicz, M. (2013). Emocje pozytywne o genezie refleksyjnej i ich subiektywne znaczenie a poczucie szczęścia. Psychologia Społeczna 3, 290–301.

Kowalczuk, Z., and Czubenko, M. (2010a). “Dictobot – an autonomous agent with the ability to communicate,” in Technologie Informacyjne (Gdańsk: Wydawnictwo Politechniki Gdańskiej), 87–92.

Kowalczuk, Z., and Czubenko, M. (2010b). “Model of human psychology for controlling autonomous robots,” in 15th International Conference on Methods and Models in Automation and Robotics (Międzyzdroje: IEEE), 31–36.

Kowalczuk, Z., and Czubenko, M. (2011). Intelligent decision-making system for autonomous robots. Int. J. Appl. Math. Comput. Sci. 21, 621–635. doi:10.2478/v10006-011-0053-7

Kowalczuk, Z., and Czubenko, M. (2013). Xemotion – Obliczeniowy Model Emocji Dedykowany Dla Inteligentnych Systemów Decyzyjnych, in Polish (Computational model of emotions dedicated to intelligent decision systems). Pomiary Automatyka Robotyka 17, 60–65.

Kowalczuk, Z., and Czubenko, M. (2015). Przegląd robotów humanoidalnych, in Polish (Overview of humanoid robots). Pomiary Automatyka Robotyka 19, 67–75.

Kowalczuk, Z., Czubenko, M., and Jędruch, W. (2016). “Learning processes in autonomous agents using an intelligent system of decision-making,” in Advanced and Intelligent Computations in Diagnosis and Control (Advances in Intelligent Systems and Computing), Vol. 386, ed. Z. Kowalczuk (Cham; Heidelberg; New York; London: Springer), 301–315.

Laird, J. E., Newell, A., and Rosenbloom, P. S. (1987). SOAR: an architecture for general intelligence. Artif. Intell. 33, 1–64. doi:10.1016/0004-3702(87)90050-6

Lazarus, R. S., and Lazarus, B. N. (1994). Passion and Reason: Making Sense of Our Emotions. New York, NY: Oxford University Press.

Ledoux, J. (1998). The Emotional Brain: The Mysterious Underpinnings of Emotional Life. New York, NY: Simon & Schuster.

LeDoux, J. E., and Phelps, E. A. (1993). “Chapter 10: Emotional networks in the brain,” in Handbook of Emotions, eds M. Lewis, J. M. Haviland-Jones and L. F. Barrett (New York, NY: Guilford Press), 159–180.

Lövheim, H. (2012). A new three-dimensional model for emotions and monoamine neurotransmitters. Med. Hypotheses 78, 341–348. doi:10.1016/j.mehy.2011.11.016

Mamdani, E. H., and Assilian, S. (1975). An experiment in linguistic synthesis with a fuzzy logic controller. Int. J. Man Mach. Stud. 7, 1–13. doi:10.1016/S0020-7373(75)80002-2

Marinier, R. P., Laird, J. E., and Lewis, R. L. (2009). A computational unification of cognitive behavior and emotion. Cogn. Syst. Res. 10, 48–69. doi:10.1016/j.cogsys.2008.03.004

Marsella, S. C. (2003). “Interactive pedagogical drama: Carmen’s bright ideas assessed,” in Intelligent Virtual Agents, Volume 2792 of Lecture Notes in Computer Science, eds T. Rist, R. S. Aylett, D. Ballin and J. Rickel (Berlin; Heidelberg: Springer), 1–4.

Mayers, D. (ed.) (2010). “Emotions, stress, and health,” in Psychology (New York: Worth Publishers), 497–527.

Mei, S., Marsella, S. C., and Pynadath, D. V. (2006). “Thespian: modeling socially normative behavior in a decision-theoretic framework,” in Proceedings of the 6th International Conference on Intelligent Virtual Agents (Marina Del Rey: Springer Berlin Heidelberg), 369–382.

Metta, G., Natale, L., Nori, F., and Sandini, G. (2011). “The Icub Project: an open source platform for research in embodied cognition,” in IEEE Workshop on Advanced Robotics and its Social Impacts (Half-Moon Bay, CA: IEEE), 24–26.

Meyer, J.-J. C., van der Hoek, W., and van Linder, B. (1999). A logical approach to the dynamics of commitments. Artif. Intell. 113, 1–40. doi:10.1016/S0004-3702(99)00061-2

Meyer, J.-J. C. (2006). Reasoning about emotional agents. Int. J. Intell. Syst. 21, 601–619. doi:10.1002/int.20150

Miłkowski, M. (2015). Explanatory completeness and idealization in large brain simulations: a mechanistic perspective. Synthese. 1–22. doi:10.1007/s11229-015-0731-3

Moffat, D., and Frijda, N. H. (1994). “Where there’s a will there’s an agent,” in Proceedings of the Workshop on Agent Theories, Architectures, and Languages on Intelligent Agents, Volume 890 of Lecture Notes in Computer Science, eds M. J. Wooldridge and N. R. Jennings (New York: Springer-Verlag), 245–260.

Murphy, S. T., and Zajonc, R. B. (1994). Afekt, Poznanie I świadomość: Rola Afektywnych Bodźców Poprzedzających Przy Optymalnych I Suboptymalnych Ekspozycjach. Przegląd Psychologiczny 37, 261–299.

Oatley, K., Keltner, D., and Jenkins, J. M. (2012). Understanding Emotions, 2nd Edn. Malden, MA: Blackwell Publishing.

Plutchik, R. (1980). “A general psychoevolutionary theory of emotion,” in Emotion: Theory, Research, and Experience, Vol. 1, eds R. Plutchik and H. Kellerman (New York: Academic Press), 3–33.

Plutchik, R. (1994). The Psychology and Biology of Emotion. New York, NY: Harper Collins College Publishers.

Posner, J., Russell, J. A., and Peterson, B. S. (2005). The circumplex model of affect: an integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 17, 715–734. doi:10.1017/S0954579405050340

Rank, S., and Petta, P. (2005). “Appraisal for a character-based story-world,” in Intelligent Virtual Agents, Volume 3661 of Lecture Notes in Computer Science, eds T. Panayiotopoulos, J. Gratch, R. Aylett, D. Ballin, P. Olivier and T. Rist (Kos: Springer), 495–496.

Rank, S., and Petta, P. (2007). “From ActAffAct to BehBehBeh: increasing affective detail in a story-world,” in Virtual Storytelling. Using Virtual Reality Technologies for Storytelling, Volume 4871 of Lecture Notes in Computer Science, eds M. Cavazza and S. Donikian (Berlin; Heidelberg: Springer), 206–209.

Reilly, W. S. N., and Bates, J. (1992). Building Emotional Agents. Technical Report. Pittsburgh, PA: Carnegie Mellon University.

Reilly, W. S. N. (1996). Believable Social and Emotional Agents. Ph.D. thesis, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA.

Reisenzein, R., Hudlicka, E., Dastani, M., Gratch, J., Hindriks, K., Lorini, E., et al. (2013). Computational modeling of emotion: toward improving the inter- and intradisciplinary exchange. IEEE Trans. Affect. Comput. 4, 246–266. doi:10.1109/T-AFFC.2013.14

Rousseau, D. (1996). “Personality in computer characters,” in AAAI Workshop on Entertainment and AI/A-Life (Portland, OR: The AAAI Press), 38–43.

Russell, J. A., and Mehrabian, A. (1977). Evidence for a three-factor theory of emotions. J. Res. Pers. 11, 273–294. doi:10.1016/0092-6566(77)90037-X

Russell, J. A. (1980). A circumplex model of affect. J. Pers. Soc. Psychol. 39, 1161–1178. doi:10.1037/h0077714

Salmeron, J. L. (2012). Fuzzy cognitive maps for artificial emotions forecasting. Appl. Soft Comput. 12, 3704–3710. doi:10.1016/j.asoc.2012.01.015

Scherer, K. R., Bänziger, T., and Roesch, E. (2010). A Blueprint for Affective Computing: A Sourcebook and Manual. New York, NY: Oxford University Press, Inc.

Schneider, M., and Adamy, J. (2014). “Towards modelling affect and emotions in autonomous agents with recurrent fuzzy systems,” in IEEE International Conference on Systems, Man and Cybernetics (SMC) (San Diego, CA: IEEE), 31–38.

Staller, A., and Petta, P. (2001). Introducing emotions into the computational study of social norms: a first evaluation. J. Artif. Soc. Soc. Simul. 4, 1–11.

Swagerman, J. (1987). The Artificial Concern Realization System ACRES: A Computer Model of Emotion. Ph.D. thesis, University of Amsterdam, Amsterdam.

Tooby, J., and Cosmides, L. (1990). The past explains the present: emotional adaptations and the structure of ancestral environments. Ethol. Sociobiol. 11, 375–424. doi:10.1016/0162-3095(90)90017-Z

Velásquez, J. D. (1998). “When robots weep: emotional memories and decision-making,” in American Association for Artificial Intelligence Proceedings (AAAI Press), 70–75.

Velásquez, J. D. (1999). “An emotion-based approach to robotics,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, Volume 1 (Kyongju: IEEE), 235–240.

Velásquez, J. D., and Maes, P. (1997). “Cathexis: a computational model of emotions,” in Proceedings of the First International Conference on Autonomous Agents, AGENTS ’97 (New York, NY: ACM), 518–519.

Zajonc, R. B., Murphy, S. T., and Inglehart, M. (1989). Feeling and facial efference: implications of the vascular theory of emotion. Psychol. Rev. 96, 395–416. doi:10.1037/0033-295X.96.3.395

Keywords: emotions, decision-making systems, cognitive modeling, human mind, computational models, fuzzy approach, intelligent systems, autonomous agents

Citation: Kowalczuk Z and Czubenko M (2016) Computational Approaches to Modeling Artificial Emotion – An Overview of the Proposed Solutions. Front. Robot. AI 3:21. doi: 10.3389/frobt.2016.00021

Received: 18 November 2015; Accepted: 01 April 2016; Published: 19 April 2016

Edited by:

Michael Wibral, Goethe University, Germany

Reviewed by:

Danko Nikolic, Max Planck Institute for Brain Research, Germany

Elena Bellodi, University of Ferrara, Italy

Jose L. Salmeron, University Pablo de Olavide, Spain

Copyright: © 2016 Kowalczuk and Czubenko. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zdzisław Kowalczuk, kova@pg.gda.pl

Zdzisław Kowalczuk and Michał Czubenko