OPINION article

Front. Res. Metr. Anal., 28 March 2022

Sec. Research Assessment

Volume 7 - 2022 | https://doi.org/10.3389/frma.2022.850333

This article is part of the Research Topic Quality and Quantity in Research Assessment: Examining the Merits of Metrics

“It will never be possible to harmoniously implement open science without a universal consensus on a new way of evaluating research and researchers.”

Bernard Rentier

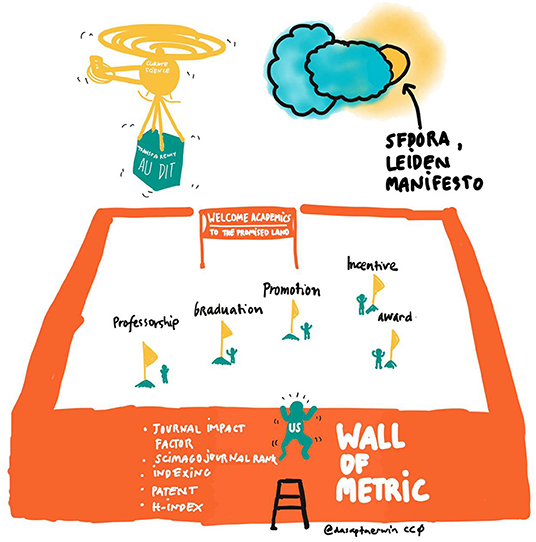

The conventional assessment of scientists relies on a set of metrics that are mostly based on the production of scientific articles and their citations. These metrics are primarily established at the journal level (e.g., the Journal Impact Factor), the article level (e.g., times cited), and the author level (e.g., h-index; Figure 1). They form the basis of criteria that have been widely used to measure the reputation of institutions, authors, and research groups. By relying mostly on citations (Langfeldt et al., 2021), however, they are inherently flawed in that they provide only a limited picture of scholarly production. Indeed, citations only count document use within scholarly works and thus provide a very limited view of the use and impact of an article. They reveal only the superficial dimensions of research's impact on society. Even within academia, citations are limited since the link they express carries no qualitative value (Tennant et al., 2019). As an example, one article could be cited for the robustness of the presented work while another could be cited for its main limitation (Aksnes et al., 2019). As such, two articles could be cited the same number of times for very different reasons, and relying on citations to evaluate scientific work therefore displays obvious limitations (Tahamtan et al., 2016). Beyond this issue, however, the conventional assessment of scientists clearly benefits some scientists more than others and does not reflect or encourage the dissemination of knowledge back to the public that is ultimately paying scientists. This is visible in the Earth and natural sciences, which have been organized to solve local community problems in dealing with the Earth system, such as groundwater hazards (Irawan et al., 2021; Dwivedi et al., 2022). Sadly, the results of the conducted research rarely reach the public, and dissemination often relies on volunteer efforts from scientists.
These efforts bear close to no weight in current scientific evaluation practices. The problem is even more present for scientists from the Global South and/or non-English-speaking countries. They carry the heavier burden of producing bilingual materials: (i) peer-reviewed articles in indexed, reputable journals written in high-standard English to satisfy current assessment methods and (ii) community outreach and engagement in the local language to fulfil their responsibility to society (Irawan et al., 2021). However, the latter activity frequently falls to the bottom of their list given the already high workload necessary to publish peer-reviewed articles. This can be clearly observed by looking at the campaigns launched by Asian and African universities showcasing their achievements in the World-Class University game. All publications are strongly encouraged to be written in English, and assessments follow those typically drafted by, and beneficial for, western, educated, industrialized, rich, and democratic nations (Gadd, 2021).
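To make the limits of an author-level metric concrete, the following minimal sketch computes the h-index (the largest number h such that h of an author's papers have at least h citations each) for two citation records. The two records and the function name `h_index` are hypothetical and chosen only for illustration; they are not data from this article.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical citation counts per paper (illustrative only).
author_a = [10, 9, 8, 3, 3]
author_b = [250, 40, 3, 3, 3]

for name, record in (("A", author_a), ("B", author_b)):
    print(f"Author {name}: h-index = {h_index(record)}, total citations = {sum(record)}")
```

Both hypothetical authors obtain the same h-index despite very different citation records, and neither number says anything about why the papers were cited or whether the work reached the public.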

Figure 1. Sketchnote of the “Wall of Metric” by Dasapta Erwin Irawan, showcasing the small playground of researchers/scientists that is filled with self-centered indicators.

The limitations of traditional research assessment have been thoroughly demonstrated by scholars advocating for Open Science. They argue that our focus on citations and articles is both unfair and an incentive for quantity over quality. Open Science is not a unified ideology but a diverse set of principles, practices, and goals (Besançon et al., 2021). Equity is often stated as a core aim of Open Science practice, but making things “open” will not necessarily ensure equity (Fecher and Friesike, 2014). Indeed, many factors like region, gender, discipline, and access to resources will continue to shape the possibilities of participation in an Open Science world (Davies et al., 2021). The public has been connecting Open Science only with the Open Access (OA) journal publishing system based on article processing charges (APCs). Moreover, OA journals with APCs (which are mostly run by for-profit publishers) have often been set as the standard of quality in OA publishing (especially in Southeast Asia, but also in other regions). The question of “how” to fairly evaluate researchers from an Open Science perspective, however, remains a hot topic. Indeed, although Open Science aims to make all stages of the research process (including evaluation) open and transparent, its limited role so far reflects a challenge of practical implementation.

Beyond the limitations of the adopted metric-based evaluation of scientists, academia faces important and rising challenges that research assessment methods should consider.

(i) First, a significant barrier to greater engagement among scientists and researchers with stakeholders and community members is the persistent academic standard that productivity and impact be judged primarily by the production of journal articles. Incentives for researchers to share their findings more broadly with policymakers and industry, or to involve society in the process of research, are generally quite limited and often not provided by funding agencies. Some funding programs include dissemination among their evaluation criteria; others do not. There is a strong reason for this difference: not all frontier science is easily accessible (or immediately relevant) to the public.

(ii) For science communication to be successful, professionals are required. Often it would be too much (if not impossible) to ask fundamental science researchers to engage in science communication—at least with a significant level of success. One could ask whether the role of science communicators deserves to become more established, but who should be in charge of such coordination and funding?

(iii) Funding agencies carry out some communication activities, but not in a structured manner that provides regular updates on specific topics (choices for featured stories often fall on hot topics like global warming). This lack of a clear dissemination strategy results in many research findings and data remaining undelivered and untranslated, and therefore inaccessible to policymakers, stakeholders, and the general public (Tennant and Wien, 2020).

(iv) The time, effort, and costs of publishing datasets, engaging the public, and communicating findings are proportionally greater for small projects, institutions, or research teams, putting even greater demands on these groups to achieve integrated, open, and networked science.

(v) A solution to this would be the use of alternative metrics (e.g., altmetrics; Pourret et al., 2020), or social metrics derived from research data dissemination and social outreach. The Metric Tide Report (https://responsiblemetrics.org/the-metric-tide/) makes some proposals for alternatives to the impact factor.

(vi) Aside from the challenges mentioned above, another critically important, yet often disregarded, factor is the need for diversity in team composition. Representation among different genders, backgrounds, nationalities, and career stages can expand perspectives in a project (Nielsen et al., 2017). The system is currently designed in such a way that it recognizes only one type of excellent scientist (the one with a high publication rate). Early-career and promising scientists who do not recognize themselves in that profile might leave academia for that reason, leading to a loss of talent. A better definition of the various profiles and career paths of scientists will attract more diverse talents, as proposed in The Netherlands (https://recognitionrewards.nl/). Room is now being created for academics to include what they feel their strengths are and to focus on what matters most in their field. Academics will be given more opportunity to present their quality, content, academic integrity, creativity, and contributions to society (European Commission, Directorate General for Research Innovation, 2021). This report was followed by the European Open Science Conference held in Paris in early February 2022 and the Paris Call on research assessment (OSEC, 2022; http://www.ouvrirlascience.fr/paris-call-on-research-assessment/).

The future decision to introduce new ways of recognizing and rewarding academics does not mean that the quality of research will be lower. On the contrary, it is a positive choice for more team science: to promote multidisciplinarity, where one team member can be good at research, another at making an impact, and yet another at teaching. The team will benefit collectively (see, in geochemistry, Riches, 2022).

Local scientists and non-scientists can be great assets to projects, bringing valuable contextual information. However, even when researchers wish to engage communities and stakeholders, the approach taken can thwart community engagement efforts. Further, non-scientists are often dismissed by researchers, leading to disengagement by individuals who may bring great value to an effort. One factor that the science community must take into consideration is the collaboration between professional scientists and society. Such collaboration is a two-way process, which will empower non-scientists to play a role in research activity and produce improvements and discoveries that benefit both parties (Ignat et al., 2018). Scientific journals and databases are less accessible to the general public, and outreach is an effort to “translate,” simplify, and convey new scientific knowledge to the wider public. Science communication in multiple non-English languages is also crucial for effective dissemination of scientific ideas (Márquez and Porras, 2020). However, journalists, who have taken on the role of science dissemination, have a different educational background than scientists, resulting in difficult communication between them and potentially in misinformation of the public. Therefore, we need a shift in the roles of both scientists and journalists.

Finally, the COVID-19 pandemic, which limited physical interactions, has shown that conducting research in a traditional closed mode (with articles published behind paywalls) also limits the collaboration and effectiveness of research development. But beyond this, it highlighted the crucial importance of fast knowledge dissemination (sometimes at the risk of misinterpretation) and of community-provided peer review outside of traditional publishing and reviewing models, neither of which is usually rewarded or considered by the traditional scientific evaluation paradigm (Besançon et al., 2020). Peer reviewers should not act as gatekeepers of science; instead, they could take on the role of nurturers of science.

Overall, the limitations of scientific evaluation are apparent in all of the aforementioned challenges. None of these challenges are new, and Open Science advocates have long argued for a radical change in scientific evaluation. How scientific assessment should be undertaken in the future, however, remains disputed.

Currently, several initiatives within a cross-stakeholder global movement aim to ensure that we responsibly and appropriately assess the common values of higher education (scholarship) for society, e.g., the Leiden Manifesto (http://www.leidenmanifesto.org/), the San Francisco Declaration on Research Assessment (DORA; https://sfdora.org/), and proposals to replace the Journal Impact Factor. To date (March 2, 2022), 21,303 individuals and organizations from 156 countries have signed DORA. Despite the strong campaign by Open Science advocates, monitoring the institutions that sign these manifestos is difficult because the Leiden Manifesto and DORA are based on self-assessment. Especially in some countries where the rate of institutional endorsement is low, there have been several high-level debates over which path to choose for national-level research assessments. As signatories of DORA ourselves, we advocate for removing journal impact factors from research assessment (Pourret, 2021). However, although many people would agree with the principles presented by DORA, publishing in top journals and projecting it as one's achievement is still part of the daily conversation of academia (e.g., Nature Index). Efforts to track researchers' publications in top journals and to identify highly cited researchers have been used as an explicit race campaign at national or international levels, for example in Indonesia (Kemenristek/BRIN, 2020). In most assessment sheets in Indonesia, a community engagement activity, such as disaster preparedness coaching for junior high students living on the footslopes of the active Mount Agung in Bali (Saepuloh et al., 2018), will only be worth a tick mark. However, more researchers are also being exposed to the principles of collaboration instead of competition (Pelupessy, 2017), which would bring a fresh voice to the research ecosystem.
The pandemic has presumably helped scientists collaborate more across the world, but we are also running into the digital divide (Irawan et al., 2020). In-person meetings have always been seen as the best method to communicate with each other, but virtual conferences have shown undeniable advantages (including accessibility), and we are convinced that the future of events will be hybrid (Pourret and Irawan, 2022).

More intentional engagement with local stakeholders, community members, and educators at the outset of a research effort has the potential to lead to more integrated, coordinated, and impactful Open Science (Goldman et al., 2021). It might also help increase scientific literacy (Garrison et al., 2021). During project inception and development, researchers should build in ways to involve stakeholders and society, ranging from defining the scope and priorities of a research question based on community expertise to engaging the public in citizen science data collection. The American Geophysical Union's Thriving Earth Exchange provides a way for scientists to connect with communities seeking science support to resolve challenges that require the expertise of biogeochemistry (Dwivedi et al., 2022). Existing citizen science projects can provide ready-made infrastructure for engaging members of the public in data collection; thousands of such projects are listed at scistarter.org. Alternatively, researchers may create their own citizen science project leveraging existing infrastructure. In the context of reciprocity within Citizen Science, research libraries play a key role in supporting or engaging in projects, building skills for engagement, adopting toolkits or models, and promoting a positive attitude toward Citizen Science, thus increasing the Public Understanding of Science (Overgaard and Kaarsted, 2018). Including social scientists, who have skills and experience in engaging groups, can increase the chances that projects in Earth and natural sciences are designed with human dimensions and applications in mind and that their products will be used by non-scientist audiences. With such engagement, we could expect a more fluid relationship between the stakeholders of science.
Publishing in languages other than English will help: Pourret and Irawan (2022) point out the language barrier that non-English-speaking countries face in understanding English-written scholarly outputs. Creating secondary, non-conventional outputs (e.g., a YouTube video or conversational podcast) to translate a paper written in English would, in a way, help more people to understand the content of the paper. One of several initiatives that further call for diversifying language in scientific publications is the Helsinki Initiative (https://www.helsinki-initiative.org/; Henry et al., 2021). It supports the dissemination of research output while encouraging locally relevant research and the use of local languages.

Because much of the work carried out in the field of earth and natural sciences addresses issues intersecting with the environment, climate change, and biodiversity, these scientific disciplines are frequently covered in the news media. The benefits of such coverage are many, including a more informed public and demonstration of the return on public funds invested in the research. However, it is necessary to provide incentives for scientists to engage with the news media (Besançon et al., 2021) and to translate their work through less traditional mechanisms such as social media, blog posts, or videos. For that we need a dedicated structure that makes the process simple and easy.

As an example, the Danish Bibliometric Research Indicator (BFI) provides an overview of Danish research production. The BFI was introduced in 2009 to report the number of peer-reviewed publications of each type that universities have produced (Deutz et al., 2021). The BFI model measures the degree of prestige of publications and publication channels, and indicates that quantitative analyses should never stand alone but be supplemented by qualitative analyses. Indeed, even experienced researchers from the developed world publish in predatory journals, mainly for the same reasons as researchers from developing countries: lack of awareness, the speed and ease of the publication process, and a chance to get rejected work published. On the other hand, Open Access potential and larger readership outreach were also motives for publishing in open access journals with quick acceptance rates (Shaghaei et al., 2018). Predatory journals (see the discussion on their definition in Grudniewicz et al., 2019) are thus another threat that takes advantage of our assessment system, especially in the Global South. Although they are sometimes seen as a solution for wide dissemination of new research results, the COVID-19 pandemic has shown that preprints are actually more beneficial, as they can also gather feedback (Fraser et al., 2021). Preprints are not always considered scientific output, but they are here to stay and are valid scientific resources that deserve to be seen as scientific productions under specific conditions (Lanati et al., 2021). Beyond the early and wide dissemination of scientific advances through preprints, the pandemic has also shown a need to recognize scientific communication and peer review as embedded parts of science implementation (e.g., outreach on COVID vaccination). Attitudes toward open peer review, open data, and the use of preprints influence scientists' engagement with those practices.
Further research is needed to determine optimal ways of improving researchers' attitudes toward Open Science and their uptake of its practices (Baždarić et al., 2021). While open peer review could help ensure that credit is given where due, there are very few incentives for scientists to engage in thorough reviewing of their peers' work (Armani et al., 2021). Moreover, the unseen work of early career researchers should be valued: many of them ghostwrite peer reviews in place of their principal investigator or mentor and are not duly rewarded for their work (McDowell et al., 2019).

Publishing a paper is one big effort, but creating engagement and tracking the impact of it is another huge effort that most researchers take for granted (Pourret et al., 2020). While increasing citations is one goal that people frequently mention when they are promoting their paper, creating engagement has more benefits than just adding some new citations to your portfolio (Ross-Hellauer et al., 2020).

Diversity, equity, and inclusion are key components of Open Science. By achieving them, we can hope to reach true Open Access to scientific resources, one that encompasses both (i) open access to the files (uploading them to a public repository) and (ii) open access to the contents (including language). Until we decide to move away from profit-driven, journal-based criteria to evaluate researchers, it is likely that high author-levied publication costs will continue to maintain inequities to the disadvantage of researchers from non-English-speaking and least developed countries. As quoted from Bernard Rentier, “the universal consensus should focus on the research itself, not where it was published.”

OP: conceptualization, writing—original draft, writing—review, and editing. DEI: visualization, writing—original draft, writing—review, and editing. NS, EMvR, and LB: writing—original draft, writing—review, and editing. All authors contributed to the article and approved the submitted version.

This research was partly funded by the Science & Impacts grant awarded to the project Open Science in Earth Sciences by Ambassade de France en Indonésie.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We deeply acknowledge Claudia Jesus-Rydin for her feedback on an early version of this article and Victor Venema for comments on the preprint.

Aksnes, D. W., Langfeldt, L., and Wouters, P. (2019). Citations, citation indicators, and research quality: an overview of basic concepts and theories. SAGE Open 9:2158244019829575. doi: 10.1177/2158244019829575

Armani, A. M., Jackson, C., Searles, T. A., and Wade, J. (2021). The need to recognize and reward academic service. Nat. Rev. Mater. 6, 960–962. doi: 10.1038/s41578-021-00383-z

Baždarić, K., Vrkić, I., Arh, E., Mavrinac, M., Gligora Marković, M., Bilić-Zulle, L., et al. (2021). Attitudes and practices of open data, preprinting, and peer-review—a cross sectional study on Croatian scientists. PLoS ONE 16:e0244529. doi: 10.1371/journal.pone.0244529

Besançon, L., Peiffer-Smadja, N., Segalas, C., Jiang, H., Masuzzo, P., Smout, C., et al. (2021). Open science saves lives: lessons from the COVID-19 pandemic. BMC Med. Res. Methodol. 21:117. doi: 10.1186/s12874-021-01304-y

Besançon, L., Rönnberg, N., Löwgren, J., Tennant, J. P., and Cooper, M. (2020). Open up: a survey on open and non-anonymized peer reviewing. Res. Integr. Peer Rev. 5:8. doi: 10.1186/s41073-020-00094-z

Davies, S. W., Putnam, H. M., Ainsworth, T., Baum, J. K., Bove, C. B., Crosby, S. C., et al. (2021). Promoting inclusive metrics of success and impact to dismantle a discriminatory reward system in science. PLoS Biol. 19:e3001282. doi: 10.1371/journal.pbio.3001282

Deutz, D. B., Drachen, T. M., Drongstrup, D., Opstrup, N., and Wien, C. (2021). Quantitative quality: a study on how performance-based measures may change the publication patterns of Danish researchers. Scientometrics 126, 3303–3320. doi: 10.1007/s11192-021-03881-7

Dwivedi, A. D., Santos, A., Barnard, M., Crimmins, T., Malhotra, A., Rod, K., et al. (2022). Biogeosciences perspectives on Integrated, Coordinated, Open, Networked (ICON) science. Earth Space Sci [Preprint]. doi: 10.1029/2021EA002119

European Commission, Directorate General for Research Innovation. (2021). Towards a Reform of the Research Assessment System: Scoping Report. Publications Office. doi: 10.2777/707440

Fecher, B., and Friesike, S. (2014). “Open science: one term, five schools of thought,” in Opening Science, eds Bartling, S., and Friesike, S. (Cham: Springer) 17–47. doi: 10.1007/978-3-319-00026-8_2

Fraser, N., Brierley, L., Dey, G., Polka, J. K., Pálfy, M., Nanni, F., et al. (2021). The evolving role of preprints in the dissemination of COVID-19 research and their impact on the science communication landscape. PLoS Biol. 19:e3000959. doi: 10.1371/journal.pbio.3000959

Gadd, E. (2021). Mis-measuring our universities: why global university rankings don't add up. Front. Res. Metr. Anal. 6:680023. doi: 10.3389/frma.2021.680023

Garrison, H., Agostinho, M., Alvarez, L., Bekaert, S., Bengtsson, L., Broglio, E., et al. (2021). Involving society in science. EMBO Rep. 22:e54000. doi: 10.15252/embr.202154000

Goldman, A. E., Emani, S. R., Pérez-Angel, L. C., Rodríguez-Ramos, J. A., and Stegen, J. C. (2021). Integrated, Coordinated, Open, and Networked (ICON) science to advance the geosciences: introduction and synthesis of a special collection of commentary articles. Earth Space Sci. Open Arch [Preprint]. 28. doi: 10.1002/essoar.10508554.2

Grudniewicz, A., Moher, D., Cobey, K. D., Bryson, G. L., Cukier, S., Allen, K., et al. (2019). Predatory journals: no definition, no defence. Nature 576, 210–212. doi: 10.1038/d41586-019-03759-y

Henry, K. R., Virk, R. K., DeMarchi, L., and Sears, H. (2021). A call to diversify the lingua franca of academic STEM communities. J. Sci. Policy Govern [Preprint]. 18. doi: 10.38126/JSPG180303

Ignat, T., Ayris, P., Labastida i Juan, I., Reilly, S., Dorch, B., Kaarsted, T., et al. (2018). Merry work: libraries and citizen science. Insights 31:35. doi: 10.1629/uksg.431

Irawan, D. E., Abraham, J., Tennant, J. P., and Pourret, O. (2021). The need for a new set of measures to assess the impact of research in earth sciences in Indonesia. Eur. Sci. Edit. 47:e59032. doi: 10.3897/ese.2021.e59032

Irawan, D. E., Hedding, D., and Pourret, O. (2020). How Open Science May Help Us During and After the Pandemic. Available online at: https://blogs.egu.eu/geolog/2020/11/25/how-open-science-may-help-us-during-and-after-the-pandemic/ (accessed March 02, 2022).

Kemenristek/BRIN (2020). Kemristek/BRIN Umumkan 500 Peneliti Terbaik Indonesia Berdasarkan Jurnal Internasional dan Nasional - The Ministry of Research and Technology/BRIN announces Indonesia's 500 Best Researchers. Available online at: https://www.brin.go.id/kemenristek-brin-umumkan-500-peneliti-terbaik-indonesia-berdasarkan-jurnal-internasional-dan-nasional (accessed March 02, 2022).

Lanati, A., Pourret, O., Jackson, C., and Besançon, L. (2021). Research funding bodies need to follow scientific evidence: preprints are here to stay. OSF [Preprint]. doi: 10.31219/osf.io/k54pe

Langfeldt, L., Reymert, I., and Aksnes, D. W. (2021). The role of metrics in peer assessments. Res. Eval. 30, 112–126. doi: 10.1093/reseval/rvaa032

Márquez, M. C., and Porras, A. M. (2020). Science communication in multiple languages is critical to its effectiveness. Front. Commun. 5:31. doi: 10.3389/fcomm.2020.00031

McDowell, G. S., Knutsen, J. D., Graham, J. M., Oelker, S. K., and Lijek, R. S. (2019). Research culture: co-reviewing and ghostwriting by early-career researchers in the peer review of manuscripts. Elife 8:e48425. doi: 10.7554/eLife.48425

Nielsen, M. W., Alegria, S., Börjeson, L., Etzkowitz, H., Falk-Krzesinski, H. J., Joshi, A., et al. (2017). Opinion: gender diversity leads to better science. Proc. Natl. Acad. Sci. U.S.A. 114:1740. doi: 10.1073/pnas.1700616114

Overgaard, A. K., and Kaarsted, T. (2018). A new trend in media and library collaboration within citizen science? The case of ‘a healthier Funen’. LIBER Q. 28, 1–17. doi: 10.18352/lq.10248

Pelupessy, D. (2017). Indonesia Ingin Jadi No. 1 di ASEAN, Tapi Dalam Dunia Ilmu Pengetahuan Kolaborasi Lebih Penting - Indonesia to Become No 1 in ASEAN, but Collaboration Is More Important in Science. Available online at: https://theconversation.com/indonesia-ingin-jadi-no-1-di-asean-tapi-dalam-dunia-ilmu-pengetahuan-kolaborasi-lebih-penting-83984 (accessed March 02, 2022).

Pourret, O. (2021). Comment la science ouverte peut faire évoluer les méthodes d'évaluation de la recherche. Available online at: https://theconversation.com/comment-la-science-ouverte-peut-faire-evoluer-les-methodes-devaluation-de-la-recherche-169071 (accessed March 02, 2022).

Pourret, O., and Irawan, D. E. (2022). Open access in geochemistry from preprints to data sharing: past, present, and future. Publications 10:3. doi: 10.3390/publications10010003

Pourret, O., Suzuki, K., and Takahashi, Y. (2020). Our study is published, but the journey is not finished! Elements 16, 229–230. doi: 10.2138/gselements.16.4.229

Riches, A. (2022). Where do geochemistry teams start and end? Professional and technical support is vital to us all! OSF [Preprint]. doi: 10.31219/osf.io/h27dx

Ross-Hellauer, T., Tennant, J. P., Banelyte, V., Gorogh, E., Luzi, D., Kraker, P., et al. (2020). Ten simple rules for innovative dissemination of research. PLoS Comput. Biol. 16:e1007704. doi: 10.1371/journal.pcbi.1007704

Saepuloh, A., Sucipta, I. G. B. E., Darajat, F. I., and Nugraha, Y. A. (2018). Pembuatan Sistem dan Pendampingan Siaga Bencana Gunung Agung di SMPN 3 Bebandem dan SMPN 5 Kubu, Karangasem, Bali. Zenodo [Preprint]. doi: 10.5281/zenodo.5817231

Shaghaei, N., Wien, C., Holck, J. P., Thiesen, A. L., Ellegaard, O., Vlachos, E., et al. (2018). Being a deliberate prey of a predator: researchers' thoughts after having published in predatory journal. LIBER Q. 28, 1–17. doi: 10.18352/lq.10259

Tahamtan, I., Safipour Afshar, A., and Ahamdzadeh, K. (2016). Factors affecting number of citations: a comprehensive review of the literature. Scientometrics 107, 1195–1225. doi: 10.1007/s11192-016-1889-2

Tennant, J., and Wien, C. (2020). Fixing the Crisis State of Scientific Evaluation. SocArXiv. doi: 10.31235/osf.io/f4zk9

Keywords: Open Science, research assessment, inclusive metrics, award, h-index

Citation: Pourret O, Irawan DE, Shaghaei N, Rijsingen EMv and Besançon L (2022) Toward More Inclusive Metrics and Open Science to Measure Research Assessment in Earth and Natural Sciences. Front. Res. Metr. Anal. 7:850333. doi: 10.3389/frma.2022.850333

Received: 07 January 2022; Accepted: 03 March 2022;

Published: 28 March 2022.

Edited by:

Maziar Montazerian, Federal University of Campina Grande, Brazil

Reviewed by:

Adriana Bankston, University of California Office of the President, United States

Copyright © 2022 Pourret, Irawan, Shaghaei, Rijsingen and Besançon. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Olivier Pourret

†ORCID: Olivier Pourret orcid.org/0000-0001-6181-6079

Dasapta Erwin Irawan orcid.org/0000-0002-1526-0863

Najmeh Shaghaei orcid.org/0000-0002-7884-8576

Elenora M. van Rijsingen orcid.org/0000-0001-7330-5903

Lonni Besançon orcid.org/0000-0002-7207-1276
