ORIGINAL RESEARCH article

Front. Res. Metr. Anal., 24 April 2018

Sec. Research Assessment

Volume 3 - 2018 | https://doi.org/10.3389/frma.2018.00015

This article is part of the Research Topic Fostering Scientific Capacities: Towards a Global Scientific System

Research activities are subject to constant processes of evaluation, which increasingly include the use of bibliometric indicators to support decision-making. This paper presents a model for the individual evaluation of different facets of researchers' work and discusses the interest in using “control” parameters to identify deviations suggesting inappropriate conduct. The proposed model is illustrated through an empirical example that analyzes the activity of Spanish researchers in the area of Library and Information Science. There are important differences among the most productive authors, and in many cases, there is no association between the degree of participation in high-impact journals and citation indicators. The control indicators help to identify behaviors such as excessive self-citation, endogamy with regard to the principal journal of publication, and co-dependence with other investigators. We have identified different roles related to the concept of leadership, measured by means of the participation as first or corresponding author, prestige, direct contribution, active direction, supervision, or management.

Research activities are subject to constant processes of evaluation. The use of bibliometric indicators as a basis to support these processes is increasingly commonplace in different institutions (Åström et al., 2011; Gumpenberger et al., 2012), and there is abundant literature regarding the use of bibliometric indicators at a micro-level, particularly with regard to the citation and impact of publications (Waltman, 2016). However, few studies have proposed evaluation methodology allowing a comprehensive characterization of the different dimensions of researchers' work. Thus, it is necessary to launch empirical studies that deepen the analysis into the relevance and significance of different bibliometric indicators that can be used at the level of an individual researcher to evaluate their performance. Exploratory studies may also gauge the interest in identifying and measuring negative aspects associated with research or in capturing concepts considered to be relevant in evaluation processes, such as leadership, internationalization, or excellence.

Recent contributions related to the use of bibliometric indicators for researchers' evaluation include a study by Franceschet (2009), which uses a sample of 13 computer science scholars in the Department of Mathematics and Computer Science of Udine University (Italy) and proposes a model of evaluation based on 13 bibliometric indicators, grouped into four indexes that measure productivity, absolute impact, relative impact of the scholar, and enduring impact over time. For their part, Costas et al. (2010a) analyze the activity of 1064 investigators from the Spanish National Research Council, grouping them according to their professional status and topic area (Natural Resources, Biology & Biomedicine, Materials Science). The study proposes a model of research performance evaluation based on nine variables across three dimensions: impact, journal quality, and production. Abramo and d'Angelo (2011) describe bibliometric methods to conduct a large-scale comparative evaluation of individual investigators' research performance, based on eight productivity and average impact indicators; to illustrate the proposed methodology, they document a comparative evaluation of 11 researchers in Molecular Biology at the University of Rome Tor Vergata. Wildgaard (2015) analyzes the activity of 512 researchers in Astronomy, Environmental Science, Philosophy and Public Health, synthesizing 17 publication-based, citation-based and hybrid indicators to rate the productivity and effect of the scholar (age, citations and the order of researchers) using a single number. At a more theoretical level, Wildgaard et al. (2014) review the bibliometric indicators that can be employed to evaluate individual scientists, identifying 108 that they classify according to the dimension addressed and the complexity associated with collecting the data necessary for calculation; the authors call for new studies to identify and promote the most useful indicators for end users. 
Wildgaard (2016) proposes a seven-stage cluster methodology for evaluating researchers that takes into account investigators' disciplinary and seniority levels. Finally, Gorraiz and Gumpenberger (2015) and Gorraiz et al. (2016) describe processes of individual evaluation as a tool for making decisions and advising researchers in the University of Vienna.

The aim of this study is to present a model of individual evaluation, based on bibliometric indicators, for the main dimensions of research activity and to illustrate the methodology through an empirical example evaluating a research community in a specific discipline and geographic context.

Specific aims are:

- To comparatively analyze individual indicators for evaluating the most productive authors according to the reference values for the discipline as a whole.

- To gauge the interest in using “control” indicators to identify negative qualities associated with research activity.

- To integrate in the proposed evaluation model indicators that measure three prominent features that evaluation processes assess due to their relevance in galvanizing research: excellence, internationalization, and leadership.

For the analysis, we took as a reference the activity of Spanish researchers in the area of Library and Information Science. We first identified all documents coauthored by at least one investigator from a Spanish institution (country field = Spain) between 2001 and 2015 in journals included in the category Information Science & Library Science (IS&LS) in the Social Sciences Citation Index (SSCI) database of the Web of Science (WoS). Next, we downloaded the recovered bibliographic records to check the standardized author signatures and calculate the indicators that could not be obtained directly from the WoS.

We performed a specific analysis focusing on the 24 most productive authors identified, calculating the following indicators.

- Scientific activity:

◦ Number of documents published and indexed on the WoS.

◦ Number of articles and percentage of this document type with regard to the total documents published.

- Collaboration:

◦ Average author signatures per article.

◦ Percentage of articles produced in international collaboration.

- Impact indicators:

◦ Number of citations received.

◦ Average citations per article.

◦ H-index (the largest number h such that h articles have each been cited at least h times).

◦ Mean percentile in subject area.

◦ Mean normalized citation impact.

- Visibility indicators:

◦ Percentage of articles published in journals ranked in the first (Q1) and second (Q2) quartile according to their impact factor in Journal Citation Reports (JCR).
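As an illustration only, the indicators listed above could be computed from a list of article records along the following lines. This is a minimal sketch: the record fields (`citations`, `quartile`, `international`, `n_authors`) and the sample values are hypothetical, and it does not cover the mean percentile or the mean normalized citation impact, which depend on field baselines supplied by the WoS.

```python
from statistics import mean


def h_index(citations):
    """Largest h such that h articles have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def share(flags):
    """Percentage of True values in an iterable of booleans."""
    flags = list(flags)
    return 100.0 * sum(flags) / len(flags) if flags else 0.0


# Hypothetical article records for one author (illustrative values only).
articles = [
    {"citations": 10, "quartile": "Q1", "international": True, "n_authors": 3},
    {"citations": 4, "quartile": "Q2", "international": False, "n_authors": 2},
    {"citations": 3, "quartile": "Q3", "international": False, "n_authors": 4},
    {"citations": 0, "quartile": "Q4", "international": True, "n_authors": 3},
]

cites = [a["citations"] for a in articles]
profile = {
    "n_articles": len(articles),
    "total_citations": sum(cites),
    "citations_per_article": mean(cites),
    "h_index": h_index(cites),
    "authors_per_article": mean(a["n_authors"] for a in articles),
    "pct_international": share(a["international"] for a in articles),
    "pct_q1_q2": share(a["quartile"] in ("Q1", "Q2") for a in articles),
}
print(profile)
```

Keeping each indicator as a separate key makes it straightforward to set an author's profile against the reference values for the field as a whole.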

We selected these indicators from the numerous potential measures that could have been used due to their ease of calculation, accessibility and reproducibility or because they are standardized indicators that can be consulted through the WoS by non-bibliometricians. The indicators also measure the main dimensions of research activity—including collaboration, an aspect that is not covered in the proposed evaluation methodologies that we identified during our literature review. With regard to scientific activity, the quantification of the number of documents published can help to determine the degree of researchers' contributions to advancing knowledge in a particular discipline or topic. The focus on the number of articles responds to the fact that this document type constitutes the preferred medium for disseminating original research work. The collaboration indicators are intended to capture the extent to which cooperative practices (particularly at an international level) are integrated in research collaborations, reflecting their increasingly acknowledged importance in advancing knowledge. The impact indicators based on citations aim to measure the repercussions of the studies and visibility indicators aim to measure the importance of the media through which they have been disseminated, understood as the degree to which researchers' publications are concentrated in journals occupying the highest positions in impact ratings. Although the individual evaluation of researchers by means of bibliometric indicators is an increasingly widespread practice, and (in Spain and elsewhere) it is often researchers themselves who are expected to provide the indicators, the Leiden Manifesto for research metrics signals that no single indicator or combination of measures can replace the qualitative evaluations performed by subject area experts (Hicks et al., 2015). 
Moreover, the ideal situation would be for expert bibliometricians to supervise the adequate and transparent use of these measures in evaluation processes (Gorraiz and Gumpenberger, 2015; Gorraiz et al., 2016).

We obtained the following reference indicators, calculating the mean value for each study variable (scientific activity, collaboration, and impact) for the body of documents in the analyzed area; these served as reference values to evaluate the extent to which the researchers followed the overall patterns of research activity.

- Average papers per year and per author.

- Average number of coauthors per article.

- Percentage of articles produced in international collaboration.

- Average citations per article.

- Percentage of articles published in the upper half of the JCR ranking (Q1 and Q2) in the WoS category IS&LS.

Finally, we obtained the following “control” indicators in order to determine their utility in identifying deviations that could indicate negative conduct, and we analyzed the leadership in relation to research activity as measured through author order (i.e., evaluating investigators' participation as first or corresponding author).

- Percentage of self-citation (references to one's own work in subsequent articles).

- Percentage of articles published in Spanish journals.

- Percentage of articles published in the journal in which the author has published the most papers.

- Percentage of articles co-signed with the principal or top collaborator. The principal collaborator or top collaborator is the author with whom a researcher collaborates most regularly, that is, the author with whom a researcher has coauthored the most papers.

- Percentage of articles signed as first author.

- Percentage of articles signed as corresponding author.

All of the indicators mentioned, with the exception of the total number of documents published, were calculated only for the document type “article”. Where indicators followed a skewed distribution, we estimated the median values.
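The control and leadership indicators described above can be sketched as simple percentages over an author's articles. The example below is an assumption-laden illustration rather than the procedure used in the study: the record fields (`journal`, `journal_country`, `authors`, `corresponding`, `refs`, `self_refs`) and the author labels are hypothetical, and the self-citation rate is taken as self-references divided by all references across the author's articles.

```python
from collections import Counter


def pct(part, whole):
    """Percentage rounded to two decimals; 0.0 when the denominator is zero."""
    return round(100.0 * part / whole, 2) if whole else 0.0


def control_indicators(author, articles):
    """Control and leadership indicators for one author (hypothetical schema)."""
    n = len(articles)
    journals = Counter(a["journal"] for a in articles)
    # Count, for each coauthor, the number of articles shared with `author`.
    coauthors = Counter(c for a in articles for c in a["authors"] if c != author)
    top_collab, top_collab_n = coauthors.most_common(1)[0] if coauthors else (None, 0)
    top_journal_n = journals.most_common(1)[0][1] if journals else 0
    return {
        "pct_self_citation": pct(sum(a["self_refs"] for a in articles),
                                 sum(a["refs"] for a in articles)),
        "pct_spanish_journals": pct(
            sum(a["journal_country"] == "Spain" for a in articles), n),
        "pct_top_journal": pct(top_journal_n, n),
        "top_collaborator": top_collab,
        "pct_with_top_collaborator": pct(top_collab_n, n),
        "pct_first_author": pct(sum(a["authors"][0] == author for a in articles), n),
        "pct_corresponding": pct(sum(a["corresponding"] == author for a in articles), n),
    }


# Hypothetical records: author "A" with coauthors "B" and "C".
articles = [
    {"journal": "J1", "journal_country": "Spain", "authors": ["A", "B"],
     "corresponding": "A", "refs": 20, "self_refs": 5},
    {"journal": "J1", "journal_country": "Spain", "authors": ["B", "A", "C"],
     "corresponding": "B", "refs": 30, "self_refs": 3},
    {"journal": "J2", "journal_country": "Netherlands", "authors": ["A", "C"],
     "corresponding": "A", "refs": 25, "self_refs": 2},
    {"journal": "J3", "journal_country": "USA", "authors": ["B", "A"],
     "corresponding": "A", "refs": 25, "self_refs": 0},
]

result = control_indicators("A", articles)
print(result)
```

In this toy data set, author "A" self-cites in 10% of references and shares 75% of articles with the top collaborator "B".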

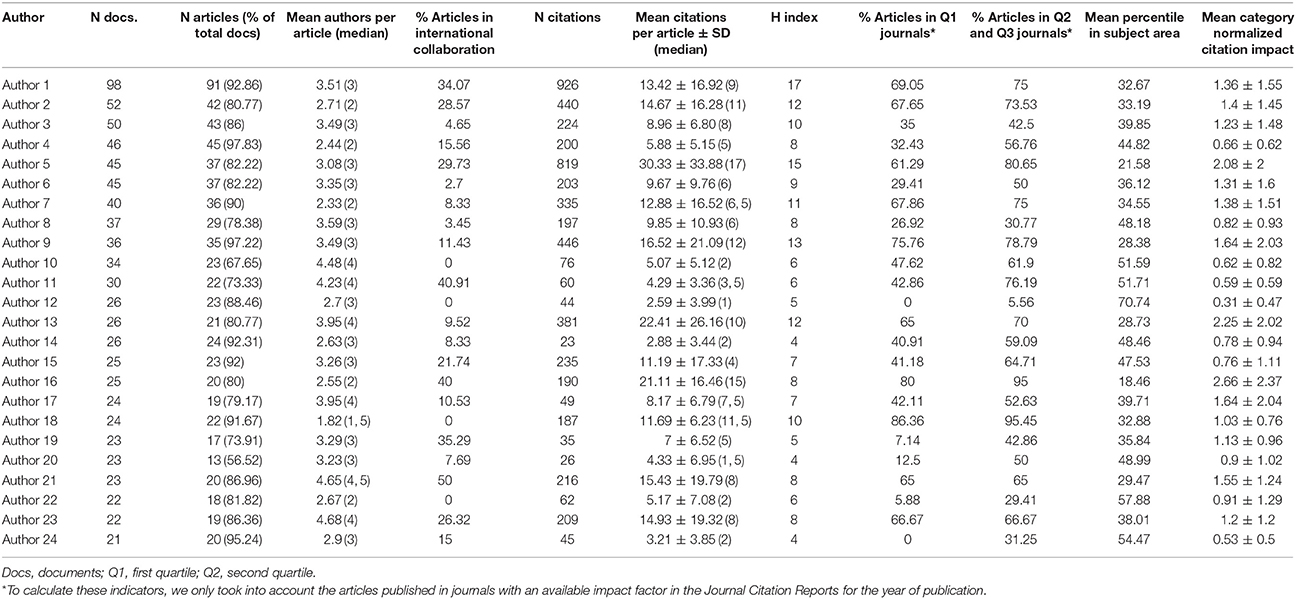

We analyzed 2972 documents (2329 articles), signed by 4104 authors, among whom there were 24 authors with an elevated scientific production (>20 documents). Most of these authors had published a high percentage of their work in the form of articles (22 of the 24 top authors published 73.33–97.83% of their contributions to the field using this document type). The two exceptions, authors 20 and 10, published only 56.52 and 67.65%, respectively, of their work in the form of articles (Table 1).

Table 1. Indicators of scientific activity, collaboration and impact for top-producing Spanish authors in the Web of Science category “Information Science and Library Science” (2001–2015).

The most productive authors present important differences in terms of collaboration indicators: some show mean values for authors per paper that are ostensibly higher than the reference value for the field as a whole (mean 2.8 authors/paper, median 3). A few investigators also stand out for their frequent international collaborations, which stand in contrast to other authors whose scientific production is exclusively undertaken in collaborations with other Spanish researchers (Table 1).

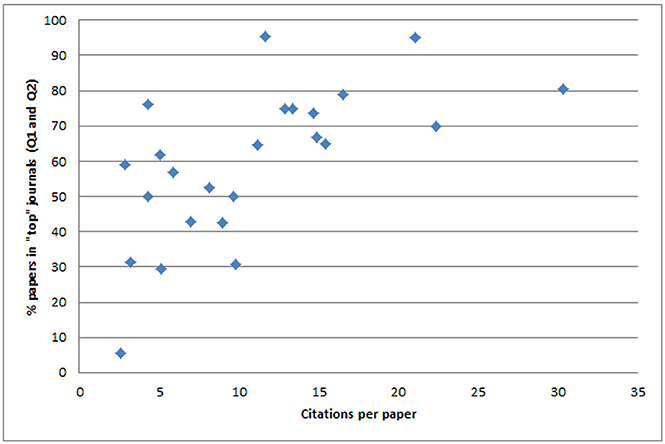

The impact indicators based on the number of citations received show the most significant variations among the highly productive authors, with some authors presenting a high degree of citation (e.g., authors 1, 5, 9, 2, 13, and 7 have received the most citations for their work, and authors 5, 13, and 16 particularly stand out, garnering mean citation rates for each article that are well above the rest). The high productivity of other researchers contrasts with their low values of citation (this is the case of authors 12 and 14, who show the lowest mean values for citations per article). Although the researchers who publish more frequently in Q1 and Q2 journals present higher citation values, it is noteworthy that most researchers showing low or moderate mean citations per paper also published a high percentage of their articles in Q1 and Q2 journals (9 of the 12 investigators showing low citations per paper published between 43 and 76% of their work in Q1 and Q2 journals; Figure 1).

Figure 1. Relationship between citation degree and publication in top (Q1 and Q2) journals among Spanish researchers in the Web of Science category “Information Science and Library Science” (2001–2015).

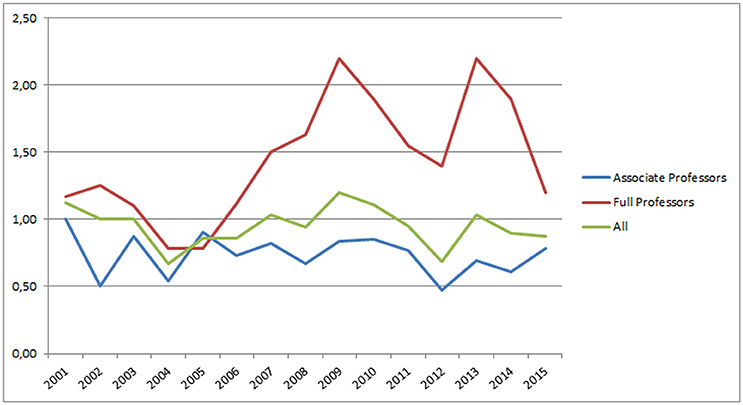

It is difficult to establish an “optimal” reference value with regard to investigators' scientific activity in a discipline, given the heterogeneity of the authors who contribute to a given field. To address this problem, and as an example of one solution that could be implemented for the group under study, we selected the documents published by full and associate professors at universities in the fields of IS&LS. These were identified through the directories of 12 Spanish universities that award Bachelor's degrees in IS&LS; for each year in the study period, we calculated the average number of articles per author in each of these specific professional categories. In that sense, the average number of articles published per year oscillated between 0.67 and 1.2 (mean 0.92), with higher productivity and more peaks in scientific production among the full professors compared to associate professors (Figure 2).

Figure 2. Evolution of scientific activity (average articles per year) of associate professors and full professors in the Web of Science category “Information Science and Library Science” (2001–2015).

The average number of authors per article in the body of analyzed documents (2.8) did not show significant changes in the study period (2.9 in 2001–2005; 2.7 in 2006–2010, and 2.8 in 2011–2015). We did observe a higher degree of collaboration among the articles published in foreign, English-language journals (mean 2.93 authors per article, median 3) compared to Spanish journals (2.44 authors per article, median 2). Of the 24 most productive authors, 16 presented above-average values for collaboration in their work; the other 8 presented collaboration values that were lower but still very near the mean. Four authors (10, 11, 21, and 23), however, showed very high mean levels of collaboration, with 4.23–4.68 authors per article—much higher than in the discipline and the topic area as a whole (Table 1).

With regard to international collaboration, 21.14% of the articles had the participation of at least one Spanish institution as well as one or more institutions from another country. Just 9 of the 24 most productive authors signed at least 20% of their papers in international collaboration, while the rest showed values that were below the average for the field and even (for authors 10, 12, 18, and 22) null participation in internationally produced papers (Table 1).

In terms of impact indicators, the average citations per article (±standard deviation) for the body of documents analyzed was 5.36 (±10.65, median 3). About a third of the articles (33.70%, n = 699) were published in Q1 journals with the highest JCR impact factor; 18.71% (n = 388), in Q2 journals; 34.96% (n = 725), in Q3 journals; and 12.63% (n = 262), in Q4 journals. With regard to citation indicators, only 16 of the 24 top producers received above-average levels of citations per paper compared to the field as a whole. Despite their prolific production, eight authors did not reach the reference values for the field (Table 1).
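The quartile percentages reported above can be checked with a few lines of arithmetic. The four counts sum to 2074 rather than to the 2329 articles analyzed, so the percentages appear to be computed over the articles that have a JCR quartile assignment. This reading is inferred from the figures and is not stated in the text.

```python
# Quartile counts as reported in the paragraph above.
quartile_counts = {"Q1": 699, "Q2": 388, "Q3": 725, "Q4": 262}

# Assumed denominator: the articles with a quartile assignment.
total = sum(quartile_counts.values())

shares = {q: round(100 * n / total, 2) for q, n in quartile_counts.items()}
upper_half = round(100 * (quartile_counts["Q1"] + quartile_counts["Q2"]) / total, 2)

print(total)       # 2074
print(shares)      # {'Q1': 33.7, 'Q2': 18.71, 'Q3': 34.96, 'Q4': 12.63}
print(upper_half)  # 52.41
```

Under this assumption, the reference value for publication in the upper half of the JCR ranking (Q1 and Q2) works out to about 52.41%.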

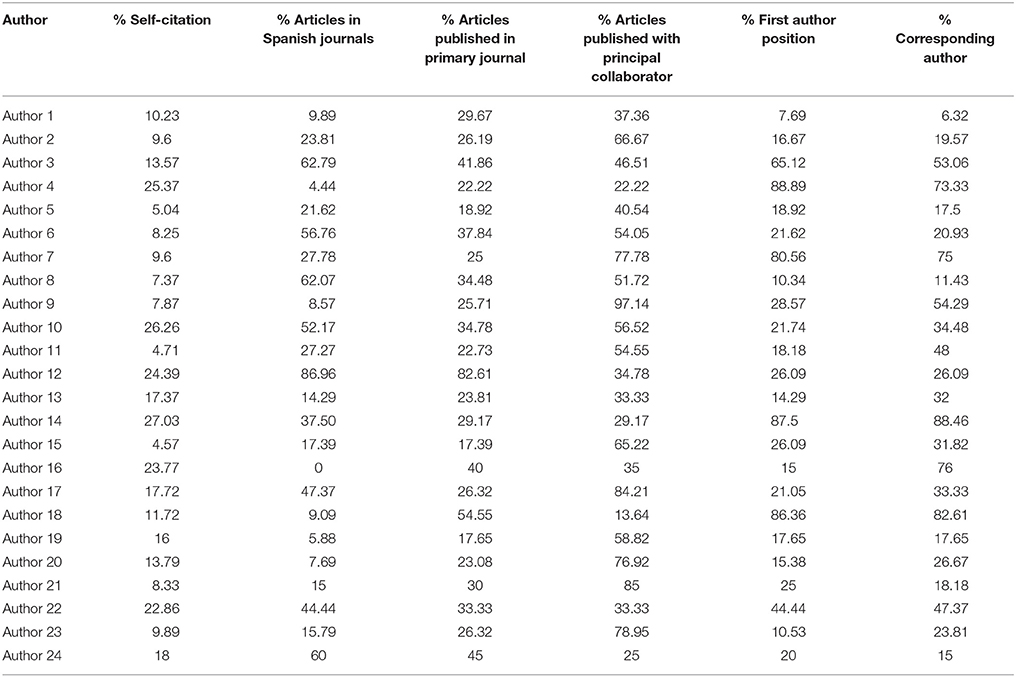

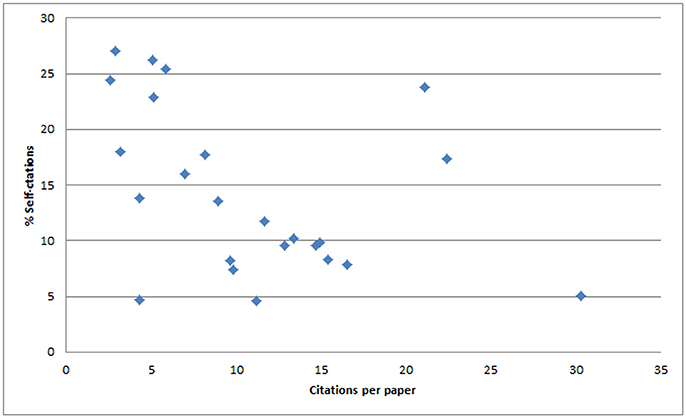

With regard to the control indicators (Table 2), some investigators, such as authors 14, 10, and 4, show rates of self-citation above 25% (27.03, 26.26, and 25.37%, respectively), that is, more than one in four bibliographic references cited in their papers are references to studies they participated in as authors or coauthors. Three other authors (authors 12, 16, and 22) also show very high rates of self-citation (>20%). The remaining 18 authors present low or moderate values in this parameter (4.57–18%).

Table 2. Control indicators for top-producing Spanish authors in the Web of Science category “Information Science and Library Science” (2001–2015).

Moreover, we observed that the higher the citation indicators are for the authors, both in absolute (N citations) and in relative (citations per paper) terms, the lower the degree of self-citation. In other words, the highest levels of self-citation are concentrated among the authors who receive the fewest citations from others (Figure 3).

Figure 3. Relationship between external citation and self-citation in the scientific production of Spanish researchers in the Web of Science category “Information Science and Library Science” (2001–2015).

Six of the most productive authors (authors 12, 3, 8, 24, 6, and 10) published over half of their papers in Spanish journals, reflecting a clear national orientation with regard to the dissemination of their research activities. The rest of the authors are characterized by a prominent international projection with regard to their chosen media of dissemination, with values of participation in Spanish journals that in some cases hover at about 10% (authors 1, 19, 20, 18, 9, and 4). The authors that publish most frequently in Spanish journals are those that also present greater endogamy, that is, with the highest proportions of articles published in a single journal (Table 2).

Another aspect to highlight is the pronounced co-dependence between some authors who have signed most or a great proportion of their articles together. This is the case, for example, of author 9, who coauthored 97.14% of his total papers with author 1; author 21, coauthoring 85% of articles with author 1; author 17, coauthoring 84.21% of papers with author 3; and author 23, coauthoring 78.95% of articles with author 1 (Table 2).

The analysis of author order and participation as corresponding author has revealed the existence of different author groups that respond to similar patterns. We classified these groups into the following:

- Type I. Authors characterized by low representation (<12% of the documents) as first and corresponding authors. This is the case of authors 1 and 8, who figure as first authors in 7.69 and 10.34% of the cases, and as corresponding authors for 6.32 and 11.43% of their papers, respectively.

- Type II. Authors showing high values, generally above 50% in the articles in which they contributed, as both first and corresponding authors (authors 3, 4, 7, 14, 18, and 22).

- Type III. Authors with moderate but still important participation as both first and corresponding authors, in 15% to 30% of their articles (authors 2, 5, 6, 12, 15, 19, 21, and 24).

- Type IV. Authors who stand out for their disproportionate representation as corresponding authors (33–76%) compared to their participation as first authors (15–30%). This is the case for authors 9, 10, 11, 13, 16, 17, 20, and 23.
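The four profiles above can be expressed as a rule-based classifier. The sketch below reads its thresholds directly off the ranges quoted in the list; how values in the gaps between those ranges should be handled (for example, 12–15% or 30–50% as first author) is not specified, so the function returns "unclassified" for them. That fallback, the function name, and the order of the checks are assumptions made for illustration.

```python
def classify_authorship(pct_first, pct_corresponding):
    """Assign one of the four authorship profiles described above.

    Inputs are percentages of an author's articles signed as first author
    and as corresponding author. Values falling between the quoted ranges
    are returned as "unclassified" (an assumption of this sketch).
    """
    if pct_first < 12 and pct_corresponding < 12:
        return "Type I"
    if pct_first > 50 and pct_corresponding > 50:
        return "Type II"
    if 15 <= pct_first <= 30:
        if 15 <= pct_corresponding <= 30:
            return "Type III"
        if 33 <= pct_corresponding <= 76:
            return "Type IV"
    return "unclassified"


# Authors 1 and 8, using the percentages quoted for Type I.
print(classify_authorship(7.69, 6.32))    # Type I
print(classify_authorship(10.34, 11.43))  # Type I

# Hypothetical values illustrating the remaining branches.
print(classify_authorship(60.0, 55.0))    # Type II
print(classify_authorship(20.0, 25.0))    # Type III
print(classify_authorship(20.0, 50.0))    # Type IV
print(classify_authorship(40.0, 40.0))    # unclassified
```

Because Type II is described as "generally above 50%", a strict cutoff may misplace borderline authors, so the output is best treated as a first pass to be reviewed by hand.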

Finally, with regard to the control and leadership indicators, there were some very noteworthy distortions among investigators with intermediate rankings in terms of production. There were some authors with very low values or a total absence of first-position or corresponding authorships. This is the case, for example, of author 25, who despite signing 20 papers (reflecting high levels of scientific production) did not participate as the lead or corresponding author in any of them. We also observed authors with disproportionately high values for certain variables, for example authors who published nearly all work in a single journal (e.g., author 36, who published 75% of her articles in a journal in which she was a member of the editorial board).

Evaluating researchers is a complex process, and it is difficult—if not impossible—to accurately capture all the dimensions of research through the use of a single or limited number of indicators. To identify the most prominent features of a researcher's activity during an evaluation process, it is essential to generate multivariate profiles that quantify and characterize the different facets of the research that is disseminated through scientific publications (Gorraiz and Gumpenberger, 2015).

Evaluation processes demand that researchers demonstrate their degree of scientific activity, indicating in some cases the minimum number of documents that they need to have published to obtain a positive accreditation or evaluation. The problem resides in developing clear criteria, objectives, and reference thresholds for establishing acceptable and excellent levels of production. The research community within any discipline or area of knowledge is characterized by great heterogeneity, for instance in terms of professional categories or age groups (Costas and Bordons, 2005); transience in contributions to the field (due to isolated collaborations with experts in a particular methodology, multidisciplinary participation, or other reasons; Gordon, 2007; Amez, 2012); and natural variations in researchers' levels of scientific production (Costas et al., 2010a; Frixione et al., 2016). The specific analysis undertaken on a narrowly defined group of researchers in one area of knowledge and similar professional and academic status (Spanish university professors and associate professors in IS&LS) allowed us to develop a frame of reference for scientific activity among these two research communities. With regard to their average level of scientific activity (0.92 articles/year), it is conspicuous that only 65% of the full professors, and 37% of the associate professors, surpass the mean value. Future lines of research that may be of interest to shed light on the contours of scientific activity include the factors associated with high research productivity, barriers that constrain or even prevent some researchers from publishing (Baccini et al., 2014; Horodnic and Zait, 2015), and the characteristics and contributions of less productive researchers in terms of visibility and impact on a given research topic.

Collaboration is an inherent feature of research, holding great value due to the numerous positive factors associated with it (González-Alcaide and Gómez-Ferri, 2014), but interpreting it during an evaluation process is problematic. First of all, it is difficult to measure individual contributions to research carried out in collaboration, and secondly, different studies have reported the inflationary tendencies of collaboration indexes (Cronin, 2001). Thus, a number of challenges need to be addressed before integrating scientific collaboration as a variable in research evaluation processes (Cronin and Weaver, 1995; González-Alcaide and Gómez-Ferri, 2014). These include further in-depth analysis into the processes that scientists use to attribute merit through publications in different disciplines and particularly the clarification of specific aspects such as author order, the role of the corresponding author, and other elements such as the acknowledgments. A frame of reference should also be established in order to avoid conflicts derived from the dichotomy of the rise in collective knowledge production, on the one hand, and the prevalence of rewards and recognitions for individual researchers, on the other. The international collaboration rate we observed in the present study (21%) represents a significant increase relative to a previous study analyzing Library and Information Science publications by Spanish authors up to 2009 (Ardanuy, 2012), and it is slightly higher than the overall rates observed at an international level (Han et al., 2014), with the exception of a few countries such as China (48%) (Jabeen et al., 2017). 
That said, the low to absent levels of international collaboration observed among many of the most productive authors reflect the dichotomous nature of the researchers studied: some show a markedly national orientation, while others, mainly those whose work is concentrated on performing bibliometric studies, show considerable international projection. The overall coauthorship index obtained from the set of analyzed documents (2.8) is slightly above that observed among Brazilian researchers in the area of Library and Information Science in 2010–2012 (2.2) (Hilário and Grácio, 2017), among papers published in Ibero-American Library and Information Science journals in 2006–2014 (2.24) (Maz-Machado et al., 2015), and among the 15 core Library and Information Science journals in 2000–2011 (2.11) (Han et al., 2014). However, the coauthorship index pertaining to most of the top-producing authors is far higher, suggesting an association between productivity and degree of collaboration, albeit without excluding the potential presence of inflated coauthorship values in some cases. With regard to the relationship between the degree of collaboration and citation, our results corroborate those published by Levitt and Thelwall (2009), who analyzed collaboration among 35 influential information scientists. These authors determined that collaboration is not an indispensable requisite for obtaining a high degree of citation, as some of the authors with the highest degree of collaboration also presented the lowest degree of citation. In a more recent study, Levitt and Thelwall (2016) reported that researchers who worked in groups of 2 or 3 were generally the most productive, in terms of producing the most papers and citations. Similarly, Bu et al. (2018a) concluded that papers written by large groups of researchers tend to have a lower impact, a result that justifies the interest in measuring these variables as control indicators during individual researcher evaluation processes.
Moreover, these findings would help support arguments to limit the number of authors established in the regulatory provisions for accreditation and academic promotion.

With regard to impact and visibility-based indicators, one of the most notable observations in our study is that some authors, despite showing prominent levels of participation in high-impact journals (Q1 and Q2), present low degrees of citation. This fact calls into question the mechanical use of journal impact indicators as a mechanism for evaluating researcher performance (Bordons et al., 2002; Leydesdorff et al., 2011). However, this finding should be tested in other disciplines and beyond the small group of highly productive authors. Some authors may be guided by strategies based on academic profitability (in which case, publication in journals topping the impact rankings becomes an unavoidable objective) rather than by other criteria such as the appropriateness of the medium in terms of topic focus or language. It would also be of interest to study the individual-level factors associated with rising citation degrees (Aksnes et al., 2013). The H-index is a widely used indicator for evaluating individual researchers' activity, even though a large body of literature is critical of this generalized application and despite the existing consensus that the measure is only valid for evaluating senior researchers, who present more homogeneous features and similar patterns of publication (Vinkler, 2007; Amez, 2012; Waltman, 2016). In our study, the H-index is strongly correlated with the total number of citations and the citations per paper, which probably reflects the homogeneity of the most productive investigators in a given area of knowledge. In any case, it is worth highlighting that for more heterogeneous samples, it is more appropriate to use other indicators that consider aspects like researchers' field or lifetime publication, or that assign a higher relative weight to highly cited papers (Vinkler, 2007; Havemann and Larsen, 2015).

Our so-called “control” indicators use bibliometric indicators to capture some negative aspects associated with citation practices and dissemination of research results: high levels of self-citation, excessive concentration of publications in a single journal, and dependence on other investigators in publishing.

Self-citation is a common phenomenon in the process of disseminating scientific knowledge (Glänzel et al., 2006; Shah et al., 2015). In Library and Information Science, several papers offer evidence that about half the papers published in specialized journals contain at least one instance of self-citation (Dimitroff and Arlitsch, 1995; Davarpanah and Aslekia, 2008). Although modest levels of self-citation may simply be a reflection of researchers building on previous findings, in excess, self-citation may reflect preconceived citations that entail less plurality in research perspectives on a topic or an intent to manipulate impact-based indicators for one's own benefit in individual research evaluation processes. At the individual level, Anwar and Jan (2017) have analyzed the scientific activity of the 21 most highly cited Pakistani researchers in the area of Library and Information Science, determining that 11.74% of the citations were self-citations, and although most of the authors presented values similar to or below that average, three showed high levels of self-citation (31–54%), which is consistent with the observations from the present study. With regard to the most productive Spanish authors in the area analyzed, the most significant aspects to emerge include the identification of many more authors who self-cite excessively (6 of 24 present self-citation rates of above 20%) as well as a negative correlation between self-citation and external citation. That is, the authors who most frequently cite themselves are those who receive the fewest citations (in both absolute and relative terms). As overall citation values rise, self-citation decreases. This phenomenon has also been reported by other studies in different topic areas (Costas et al., 2010b), including Library and Information Science, where one study observed a negative correlation between impact factor and the share of self-citations (Shah et al., 2015).
This suggests that self-citation should be taken into account in researcher evaluation processes, as it may cause an important distortion of the bibliometric indicators at this level (Aksnes, 2003). In that sense, Seeber et al. (in press) have reported that in Italy, the introduction of a regulation linking career advancement to the number of citations received has resulted in a pronounced increase in self-citations among scientists. This explanation may be at the root of the high levels of self-citation observed among some of the authors analyzed in our study, given that in Spain, too, academic promotions for researchers have been closely tied to bibliometric citation indicators. Excessive self-citation has also been associated with other rather unethical practices, including hyperauthorship or collaborative works irrespective of co-authorship (Shah et al., 2015).

Other ethically questionable aspects related to citation practices are more difficult to analyze at a bibliometric level and merit specific studies using other methods. Issues include directed or imposed citation, for example when senior researchers or directors are systematically cited by their students or subordinates, when journal editors or peer reviewers propose their own work as references for papers they are reviewing, or when authors or even journals reciprocally cite each other (Esfe et al., 2015; Thombs et al., 2015).

The percentage of papers published in journals from Spain versus other countries reveals the duality of Spanish authors' publication patterns in the social sciences and some other disciplines, as pointed out elsewhere (González Alcaide et al., 2006; Fernández-Quijada et al., 2013), and as occurs in other non-Anglophone countries such as China (Tucker et al., 2011). This duality could partly reflect differences in thematic focus, as some topics in a given discipline have a greater international projection compared to others that are more concerned with the national sphere. Without a doubt, scientific policies have also had an impact, as the norms and regulations around research evaluation processes value papers published in journals that rank high in terms of relative quality indexes, and these are generally international journals in English; this factor is given more weight than other considerations that could influence the final assessment (González-Alcaide et al., 2012; López-Navarro et al., 2015a,b).

With regard to analyzing collaborative ties with other investigators and the concept of the principal or top collaborator described in this study, Bu et al. have introduced the concepts of “persistence in maintaining collaborative relationships” (Bu et al., 2018a) and “stability of scientific collaboration” (Bu et al., 2018b) as measures of the continuity and the absence of fluctuations in the number of collaborations maintained between investigators over time. Remarkably, 54% of the most productive Spanish investigators that we analyzed in the area of Library and Information Science share over half of their bylines with their top collaborator. This figure is far higher than that observed in other disciplines, where only 9–20% of the authors publish more than 50% of their papers with their top collaborator (Petersen, 2015). These high rates of collaboration may reflect a certain degree of co-dependence. However, this co-dependence should not necessarily be perceived as negative, and in fact it may even be positive. Indeed, Petersen's (2015) analysis, like those of Abbasi and Altmann (2011) and de Montjoye et al. (2014) before it, concluded that extremely strong collaborations have a significant positive impact on productivity and citations. Other authors, including Bu et al. (2018a), take the opposite position, arguing that persistent scientific collaboration does not always result in high-quality papers. These apparently contradictory results make it necessary to generate further scientific evidence with regard to this collaboration variable. Moreover, in relation to science policies, this tight cooperation introduces the need to maintain the ties, as problems may occur due to changes in the workplace, retirement, or other reasons that interrupt the collaboration (Silva et al., 2014).
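The top-collaborator share discussed above can be sketched as follows. This is a minimal Python illustration that assumes each paper is available as a list of author names; the function name and the sample bylines are hypothetical, and author-name disambiguation, which any real analysis would require, is not handled.

```python
from collections import Counter


def top_collaborator_share(author, papers):
    """Return (top collaborator, share of the author's papers signed with them).

    `papers` is a list of bylines, each a list of author names.
    """
    # Keep only the author's own papers; sets guard against repeated names.
    own = [set(byline) for byline in papers if author in byline]
    if not own:
        return None, 0.0
    counts = Counter(name for byline in own for name in byline if name != author)
    if not counts:
        return None, 0.0  # the author only has single-author papers
    partner, shared = counts.most_common(1)[0]
    return partner, shared / len(own)


# Invented bylines, for illustration only.
bylines = [
    ["Author A", "Author B"],
    ["Author A", "Author B", "Author C"],
    ["Author A", "Author B"],
    ["Author A"],
    ["Author B", "Author C"],
]
partner, share = top_collaborator_share("Author A", bylines)
co_dependent = share > 0.5  # the "over half of their bylines" criterion
```

Here "Author A" signs three of four papers with "Author B", giving a share of 0.75, above the 50% level used in the comparison with Petersen (2015).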

Employing control indicators could help discourage deviations or negative behaviors among researchers, although it is necessary to establish the conditions that enable their use. These analyses generally require costly bibliometric studies or reference values/thresholds that illustrate the degree to which researchers exceed the typical values of the discipline or topic area. Moreover, any decision made could be associated with modifications in the future conduct of the researchers under evaluation, as some aspects such as signature order or position are easy for unscrupulous researchers to manipulate, to the detriment of collaborators with less influence or academic standing (Michels and Schmoch, 2014; Bloch and Schneider, 2016).

The concept of leadership may be delineated at a bibliometric level through the study of signature order and participation as corresponding author. With few exceptions (for example, where authors sign in alphabetic order), research communities in different disciplines understand that the greatest contribution in the development of a paper corresponds to the first author, and subsequent authors have a decreasing stake in the work. The corresponding author also assumes a greater role in the dissemination of research results (Kosmulski, 2012). Thus, a more frequent presence as first or corresponding author confers greater leadership in the research activities. In contrast, absence in these roles could be associated with subordination, a passive or secondary role, or a merely honorific presence. In some areas, including biomedical research, the last author position is also highly valued and corresponds to the function of direction or supervision of the research (Baerlocher et al., 2007; Bhandari et al., 2014). This convention is not followed in areas with less collaboration, including the area analyzed in the present paper, Social Sciences.

The analysis of signature order and the participation as corresponding authors among the most productive authors in IS&LS reveals the existence of different patterns that clearly respond to different forms of organization and roles performed by investigators. These variations can inform different models of leadership. First of all, there would be a model of “prestige” leadership (type I), with highly productive authors who nevertheless show low values with regard to position as first or corresponding author. These authors probably play a primary role as relevant authors due to their prestige, influence or authority in the field, and they may also perform other, more specific functions, such as mobilizing resources for research, or conceptualizing and planning the lines of investigation or research questions. Voluntarily or otherwise, they will have minimized their role as active researchers due to their work dynamic or the demands of the professional role(s) they fulfill.

On the opposite end of the spectrum are the authors characterized by their participatory leadership (type II), who have played an important role in developing their research work, particularly with regard to the performance of field work, experiments, or drafting of manuscripts. As a result of this work, they occupy the role of first or corresponding author in a large part of the papers to which they contribute. This model of leadership may respond to different motivations: they may be investigators at the beginning or middle of their career (Costas and Bordons, 2011), or in some cases individual work may carry particular weight in their output, as suggested by the fact that these authors are among those participating in the papers with the lowest mean number of authors.

An intermediary point between the two leadership types described above would be “active leadership” (type III), wherein authors combine an active role conducting some studies as first authors while also playing a directive or supervisory role in other cases; these authors also appear frequently as corresponding authors.

Finally, there are authors who present higher values as corresponding authors than as first authors; these may fall into the category of “directive, supervisory or managing leadership” (type IV), as they probably prefer to perform these functions, assuming the responsibility for the content and the role assigned to the final author in some disciplines (Baerlocher et al., 2007; Bhandari et al., 2014).
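The four leadership types described above could be operationalized, under stated assumptions, from two shares per author: the proportion of papers signed as first author and the proportion signed as corresponding author. The sketch below is one possible reading of the typology, not the procedure used in this study; the cut-offs `low` and `high` are hypothetical, since the text defines the types qualitatively.

```python
def leadership_type(first_share, corresponding_share, low=0.25, high=0.60):
    """Assign one of the four leadership types from two shares in [0, 1].

    `low` and `high` are illustrative cut-offs, not values from the study.
    """
    if first_share < low and corresponding_share < low:
        # Highly productive but rarely first or corresponding author.
        return "type I (prestige)"
    if first_share >= high and corresponding_share >= high:
        # First or corresponding author in a large part of their papers.
        return "type II (participatory)"
    if corresponding_share > first_share:
        # More often corresponding author than first author.
        return "type IV (directive, supervisory or managing)"
    # Intermediate profile combining both roles.
    return "type III (active)"


# Invented profiles, for illustration only.
profiles = {
    "Author A": (0.10, 0.15),
    "Author B": (0.70, 0.80),
    "Author C": (0.30, 0.55),
    "Author D": (0.45, 0.40),
}
labels = {name: leadership_type(f, c) for name, (f, c) in profiles.items()}
```

The order of the rules matters: an author with low values on both shares is assigned to type I even if the corresponding-author share is slightly higher than the first-author share. Any real application would need cut-offs calibrated against the discipline's typical values.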

With regard to the aspects discussed above, it would be desirable to establish uniform, consensus-based criteria for author order and the responsibility of the corresponding author. This would avoid conflicts between collaborators when determining author presentation as well as abuse or impositions from senior investigators. Such criteria would also favor subsequent interpretation at a bibliometric level of the significance of these bibliographic characteristics (Burrows and Moore, 2011; Mattsson et al., 2011).

The main limitations of the present study reside in its focus on a narrowly defined research community within a specific discipline and geographic area and the lack of consideration for papers published in multidisciplinary journals or journals with other specializations. Moreover, we did not consider papers that were not indexed in the Web of Science. Nevertheless, our study can serve as an example of how to use individual bibliometric indicators and control indicators in research evaluation processes. We also describe some relevant characteristics in terms of research behavior among the most productive authors in the area under analysis. Future studies should confirm the transferability of these findings to other topic areas or disciplines.

The main conclusions emerging from the study are the following:

- There are important differences among the top-producing authors in the field analyzed in terms of the degree of both citation and collaboration as well as in other variables, such as participation in high-impact journals. This last variable does not always correspond to a high degree of citation, calling into question the use of journal impact ranking as a measure of researcher performance.

- The use of control indicators allows the identification of behaviors in need of correction among some investigators, for example an excessive degree of self-citation, endogamy with regard to the primary journal of publication, and an excessive dependence on other investigators.

- We have identified different patterns or roles associated with the concept of leadership in scientific publications, as measured by participation as first and/or corresponding author. These patterns probably reflect different ways of working and organizing research; our study developed four typologies of research leadership: “prestige leadership,” “participatory leadership,” “active leadership,” and “directive, supervisory or managing leadership.”

GG and JG conceived the study; GG and JG developed the methodology; GG performed the formal analysis; and GG and JG drafted and reviewed the manuscript.

This work has been supported by the Consellería de Educación, Investigación, Cultura y Deporte, Generalitat Valenciana (BEST 2016/153).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbasi, A., and Altmann, J. (2011). “On the correlation between research performance and social network analysis measures applied to research collaboration networks,” in Proceedings of the 44th Hawaii International Conference on System Sciences (HICSS-44) (Koloa; Kauai, HI: IEEE Computer Society Press).

Abramo, G., and d'Angelo, C. A. (2011). National-scale research performance assessment at the individual level. Scientometrics 86, 347–364. doi: 10.1007/s11192-010-0297-2

Aksnes, D. W. (2003). A macro-study of self-citations. Scientometrics 56, 235–246. doi: 10.1023/A:1021919228368

Aksnes, D. W., Rørstad, K., Piro, F. N., and Sivertsen, G. (2013). Are mobile researchers more productive and cited than non-mobile researchers? A large-scale study of Norwegian scientists. Res. Eval. 22, 215–223. doi: 10.1093/reseval/rvt012

Amez, L. (2012). Citation measures at the micro level: influence of publication age, field, and uncitedness. J. Am. Soc. Inf. Sci. Technol. 63, 1459–1465. doi: 10.1002/asi.22687

Anwar, M. A., and Jan, S. U. (2017). Research output of the Pakistani Library and Information Science authors: a bibliometric evaluation of their impact. J. Inf. Sci. Theory Pract. 5, 48–61. doi: 10.1633/JISTaP.2017.5.2.4

Ardanuy, J. (2012). Scientific collaboration in library and information science viewed through the web of knowledge: the Spanish case. Scientometrics 90, 877–890. doi: 10.1007/s11192-011-0552-1

Åström, F., Hansson, J., and Olsson, M. (2011). Bibliometrics and the Changing Role of the University Libraries. Available online at: http://www.diva-portal.org/smash/get/diva2:461857/FULLTEXT01.pdf

Baccini, A., Barabesi, L., Cioni, M., and Pisani, C. (2014). Crossing the hurdle: the determinants of individual scientific performance. Scientometrics 101, 2035–2062. doi: 10.1007/s11192-014-1395-3

Baerlocher, M. O., Newton, M., Gautam, T., Tomlinson, G., and Detsky, A. S. (2007). The meaning of author order in medical research. J. Invest. Med. 55, 174–180. doi: 10.2310/6650.2007.06044

Bhandari, M., Guyatt, G. H., Kulkarni, A. V., Devereaux, P. J., Leece, P., Bajammal, S., et al. (2014). Perceptions of authors' contributions are influenced by both byline order and designation of corresponding author. J. Clin. Epidemiol. 67, 1049–1054. doi: 10.1016/j.jclinepi.2014.04.006

Bloch, C., and Schneider, J. W. (2016). Performance-based funding models and researcher behavior: an analysis of the influence of the Norwegian publication indicator at the individual level. Res. Eval. 25, 371–382. doi: 10.1093/reseval/rvv047

Bordons, M., Fernandez, M. T., and Gómez, I. (2002). Advantages and limitations in the use of impact factor measures for the assessment of research performance. Scientometrics 53, 195–206. doi: 10.1023/A:1014800407876

Bu, Y., Ding, Y., Liang, X., and Murray, D. S. (2018a). Understanding persistent scientific collaboration. J. Assoc. Inf. Sci. Technol. 69, 438–448. doi: 10.1002/asi.23966

Bu, Y., Murray, D. S., Ding, Y., Huang, Y., and Zhao, Y. (2018b). Measuring the stability of scientific collaboration. Scientometrics 114, 463–479. doi: 10.1007/s11192-017-2599-0

Burrows, S., and Moore, M. (2011). Trends in Authorship Order in Biomedical Research Publications. Faculty Research, Publications, and Presentations. Paper 1. Available online at: http://scholarlyrepository.miami.edu/health_informatics_research/1

Costas, R., and Bordons, M. (2005). Some results in the area of natural resources at the Spanish CSIC. Res. Eval. 14, 110–120. doi: 10.3152/147154405781776238

Costas, R., and Bordons, M. (2011). Do age and professional rank influence the order of authorship in scientific publications? Some evidence from a micro-level perspective. Scientometrics 88, 145–161. doi: 10.1007/s11192-011-0368-z

Costas, R., van Leeuwen, T. N., and Bordons, M. (2010a). A bibliometric classificatory approach for the study and assessment of research performance at the individual level: the effects of age on productivity and impact. J. Am. Soc. Inf. Sci. Technol. 61, 1564–1581. doi: 10.1002/asi.21348

Costas, R., van Leeuwen, T. N., and Bordons, M. (2010b). Self-citations at the meso and individual levels: effects of different calculation methods. Scientometrics 82, 517–537. doi: 10.1007/s11192-010-0187-7

Cronin, B. (2001). Hyperauthorship: a postmodern perversion or evidence of a structural shift in scholarly communication practices? J. Am. Soc. Inf. Sci. Technol. 52, 558–569. doi: 10.1002/asi.1097

Cronin, B., and Weaver, S. (1995). The praxis of acknowledgement: from bibliometrics to influmetrics. Revista Española de Documentación Científica 18, 172–177. doi: 10.3989/redc.1995.v18.i2.654

Davarpanah, M. R., and Aslekia, S. (2008). A scientometric analysis of international LIS journals: productivity and characteristics. Scientometrics 77, 21–39. doi: 10.1007/s11192-007-1803-z

de Montjoye, Y. A., Stopczynski, A., Shmueli, E., Pentland, A., and Lehmann, S. (2014). The strength of the strongest ties in collaborative problem solving. Sci. Rep. 4:5277. doi: 10.1038/srep05277

Dimitroff, A., and Arlitsch, K. (1995). Self-citations in the Library and Information Science literature. J. Doc. 51, 44–56. doi: 10.1108/eb026942

Esfe, M. H., Wongwises, S., Asadi, A., Karimipour, A., and Akbari, M. (2015). Mandatory and self-citation; types, reasons, their benefits and disadvantages. Sci. Eng. Ethics 21, 1581–1585. doi: 10.1007/s11948-014-9598-9

Fernández-Quijada, D., Masip, P., and Bergillos, I. (2013). El precio de la internacionalidad: la dualidad en los patrones de publicación de los investigadores españoles en comunicación. Revista Española de Documentación Científica 36:e010. doi: 10.3989/redc.2013.2.936

Franceschet, M. (2009). A cluster analysis of scholar and journal bibliometric indicators. J. Am. Soc. Inf. Sci. Technol. 60, 1950–1964. doi: 10.1002/asi.21152

Frixione, E., Ruiz-Zamarripa, L., and Hernández, G. (2016). Assessing individual intellectual output in scientific research: Mexico's National System for evaluating scholars performance in the Humanities and the Behavioral Sciences. PLoS ONE 11:e0155732. doi: 10.1371/journal.pone.0155732

Glänzel, W., Debackere, K., Thijs, B., and Schubert, A. (2006). A concise review on the role of author self-citations in information science, bibliometrics and science policy. Scientometrics 67, 263–277. doi: 10.1007/s11192-006-0098-9

González-Alcaide, G., and Gómez-Ferri, J. (2014). La colaboración científica: principales líneas de investigación y retos de futuro. Revista Española de Documentación Científica 37:e062. doi: 10.3989/redc.2014.4.1186

González-Alcaide, G., Valderrama-Zurián, J. C., and Aleixandre-Benavent, R. (2012). The impact factor in non-English-speaking countries. Scientometrics 92, 297–311. doi: 10.1007/s11192-012-0692-y

González Alcaide, G., Valderrama Zurián, J. C., Aleixandre Benavent, R., Alonso Arroyo, A., de Granda Orive, J. I., and Villanueva Serrano, S. (2006). Redes de coautoría y colaboración de las instituciones españolas en la producción científica sobre drogodependencias en biomedicina 1999-2004. Trastor. Adict. 8, 78–114. doi: 10.1016/S1575-0973(06)75110-8

Gordon, A. (2007). Transient and continuant authors in a research field: the case of terrorism. Scientometrics 72, 213–224. doi: 10.1007/s11192-007-1714-z

Gorraiz, J., and Gumpenberger, C. (2015). A flexible bibliometric approach for the assessment of professorial appointments. Scientometrics 105, 1699–1719. doi: 10.1007/s11192-015-1703-6

Gorraiz, J., Wieland, M., and Gumpenberger, C. (2016). Individual bibliometric assessment at University of Vienna: from numbers to multidimensional profiles. El Profesional de la Información 25, 901–914. doi: 10.3145/epi.2016.nov.07

Gumpenberger, C., Wieland, M., and Gorraiz, J. (2012). Bibliometric practices and activities at the University of Vienna. Libr. Manage. 33, 174–183. doi: 10.1108/01435121211217199

Han, P., Shi, J., Li, X., Wang, D., Shen, S., and Su, X. (2014). International collaboration in LIS: global trends and networks at the country and institution level. Scientometrics 98, 53–72. doi: 10.1007/s11192-013-1146-x

Havemann, F., and Larsen, B. (2015). Bibliometric indicators of young authors in astrophysics: can later stars be predicted? Scientometrics 102, 1413–1434. doi: 10.1007/s11192-014-1476-3

Hicks, D., Wouters, P., de Rijcke, S., and Rafols, I. (2015). The Leiden Manifesto for research metrics. Nature 520, 429–431. doi: 10.1038/520429a

Hilário, C. M., and Grácio, M. C. C. (2017). Scientific collaboration in Brazilian researchers: a comparative study in the information science, mathematics and dentistry fields. Scientometrics 113, 929–950. doi: 10.1007/s11192-017-2498-4

Horodnic, I. A., and Zait, A. (2015). Motivation and research productivity in a university system undergoing transition. Res. Eval. 24, 282–292. doi: 10.1093/reseval/rvv010

Jabeen, M., Imran, M., Badar, K., Rafiq, M., Jabeen, M., and Yun, L. (2017). Scientific collaboration of Library & Information Science research in China (2012-2013). Malays. J. Libr. Inf. Sci. 22, 67–83. doi: 10.22452/mjlis.vol22no2.5

Kosmulski, M. (2012). The order in the lists of authors in multi-author papers revisited. J. Informetr. 6, 639–644. doi: 10.1016/j.joi.2012.06.006

Levitt, J. M., and Thelwall, M. (2009). Citation levels and collaboration within Library and Information Science. J. Am. Soc. Inf. Sci. Technol. 60, 434–442. doi: 10.1002/asi.21000

Levitt, J. M., and Thelwall, M. (2016). Long term productivity and collaboration in information science. Scientometrics 108, 1103–1117. doi: 10.1007/s11192-016-2061-8

Leydesdorff, L., Bornmann, L., Mutz, R., and Opthof, T. (2011). Turning the tables on citation analysis one more time: principles for comparing sets of documents. J. Am. Soc. Inf. Sci. Technol. 62, 1370–1381. doi: 10.1002/asi.21534

López-Navarro, I., Moreno, A. I., Burgess, S., Sachdev, I., and Rey-Rocha, J. (2015a). Why publish in English versus Spanish?: towards a framework for the study of researchers' motivations. Revista Española de Documentación Científica 38:e073. doi: 10.3989/redc.2015.1.1148

López-Navarro, I., Moreno, A. I., Quintanilla, M. A., and Rey-Rocha, J. (2015b). Why do I publish research articles in English instead of my own language? Differences in Spanish researchers' motivations across scientific domains. Scientometrics 103, 939–976. doi: 10.1007/s11192-015-1570-1

Mattsson, P., Sundberg, C. J., and Laget, P. (2011). Is correspondence reflected in the author position? A bibliometric study of the relation between corresponding author and byline position. Scientometrics 87, 99–105. doi: 10.1007/s11192-010-0310-9

Maz-Machado, A., Jiménez-Fanjul, N., and Madrid, M. J. (2015). Collaboration in the Iberoamerican Journals in the Category Information & Library Science in WOS. Library Philosophy and Practice. Available online at: https://digitalcommons.unl.edu/libphilprac/1270/

Michels, C., and Schmoch, U. (2014). Impact of bibliometric studies on the publication behavior of authors. Scientometrics 98, 369–385. doi: 10.1007/s11192-013-1015-7

Petersen, A. M. (2015). Quantifying the impact of weak, strong and super ties in scientific careers. Proc. Natl. Acad. Sci. U.S.A. 112, E4671–E4680. doi: 10.1073/pnas.1501444112

Seeber, M., Cattaneo, M., Meoli, M., and Malighetti, P. (in press). Self-citations as strategic response to the use of metrics for career decisions. Res. Policy. doi: 10.1016/j.respol.2017.12.004

Shah, T. A., Gul, S., and Gaur, R. C. (2015). Authors self-citation behaviour in the field of Library and Information Science. Aslib J. Inf. Manage. 67:45868. doi: 10.1108/AJIM-10-2014-0134

Silva, T. H. P., Moro, M. M., Silva, A. P. C., Meira, W., and Laender, A. H. F. (2014). “Community-based endogamy as an influence indicator,” in Proceedings of the 14th ACM/IEEE-CS Joint Conference on Digital Libraries (London; Piscataway, NJ: IEEE Press).

Thombs, B. D., Levis, A. W., Razykov, I., Syamchandra, A., Leentjens, A. F., Levenson, J., et al. (2015). Potentially coercive self-citation by peer reviewers: a cross-sectional study. J. Psychosom. Res. 78, 1–6. doi: 10.1016/j.jpsychores.2014.09.015

Tucker, J. D., Chang, H., Brandt, A., Gao, X., Lin, M., Luo, J., et al. (2011). An empirical analysis of overlap publication in Chinese language and English research manuscripts. PLoS ONE 6:e22149. doi: 10.1371/journal.pone.0022149

Vinkler, P. (2007). Eminence of scientists in the light of the h-index and other scientometric indicators. J. Inf. Sci. 33, 481–491. doi: 10.1177/0165551506072165

Waltman, L. (2016). A review of the literature on citation impact indicators. J. Informetr. 10, 365–391. doi: 10.1016/j.joi.2016.02.007

Wildgaard, L. (2015). A comparison of 17 author-level bibliometric indicators for researchers in astronomy, environmental science, philosophy and public health in web of science and google scholar. Scientometrics 104, 873–906. doi: 10.1007/s11192-015-1608-4

Wildgaard, L. (2016). A critical cluster analysis of 44 indicators of author-level performance. J. Informetr. 10, 1055–1078. doi: 10.1016/j.joi.2016.09.003

Keywords: scientific publications, academic promotion, scientific performance, bibliometric indicators, individual evaluation, control parameters, library and Information science, Spain

Citation: González Alcaide G and Gorraiz JI (2018) Assessment of Researchers Through Bibliometric Indicators: The Area of Information and Library Science in Spain as a Case Study (2001–2015). Front. Res. Metr. Anal. 3:15. doi: 10.3389/frma.2018.00015

Received: 21 November 2017; Accepted: 05 April 2018;

Published: 24 April 2018.

Edited by:

Zaida Chinchilla-Rodríguez, Consejo Superior de Investigaciones Científicas (CSIC), Spain

Reviewed by:

Marc J. J. Luwel, University Leiden, Netherlands

Copyright © 2018 González Alcaide and Gorraiz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Juan Ignacio Gorraiz, juan.gorraiz@univie.ac.at

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
