- University of Sheffield, Sheffield, United Kingdom

Background: There is a growing interest in using and analyzing altmetric data for quantifying the impact of research, especially societal impact (Bornmann, 2014, Thelwall et al., 2016, Haunschild and Bornmann, 2017). This study therefore aimed to explore the usefulness of Altmetric.com data as a means of identifying and categorizing the policy impact of research articles from a single center (the University of Sheffield).

Method: This study included only published research articles from authors at the University of Sheffield that were indexed in the Altmetric.com database. Altmetric data on policy impact were sourced from Altmetric.com following a data request and included citations up until February 2017. Supplementary Altmetric.com data, including news media, blogs, Mendeley saves, and Wikipedia citations, were also gathered.

Results: Altmetric.com data did enable the identification of policy documents that cited relevant articles. In total, 1,463 pieces of published research from authors at the University of Sheffield were found to be cited by between 1 and 13 policy documents. 21 research articles (1%) were listed as being cited in five or more policy documents; 21 (1%) in four policy documents; 50 (3%) in three documents; 186 (13%) in two documents; and 1,185 (81%) in one document. Of those 1,463 outputs, 1,449 (99%) were journal articles, 13 were books, and 1 was a book chapter (less than 1%). The time lag from the publication of the research to its citation in policy documents ranged from 3 months to 31 years. Analysis of the 92 research articles cited in three or more policy documents indicated that the research topics with the greatest policy impact were medicine, dentistry, and health, followed by social science and pure science. The Altmetric.com data enabled an in-depth assessment of the 21 research articles cited in five or more policy documents. However, errors of attribution and designation were found in the Altmetric.com data. These findings might be generalizable to other institutions similar in organizational structure to The University of Sheffield.

Conclusion: Within the limitations of the current text-mining system, Altmetric.com can offer important and highly accessible data on the policy impact of an organization’s published research articles, but caution must be exercised when seeking to use these data, especially in terms of providing evidence of policy impact.

Introduction

Altmetrics have only been in existence since 2010 and are already starting to highlight useful pieces of information on how a research output is communicated and shared on the web. Despite the use of the word “metrics” within altmetrics, which implies an exact number of something, altmetrics are not yet an exact science, but they do provide a useful indicator of research interest. Since 2010, there have been a few leaders in altmetric data analytics and support, with Altmetric.com, ImpactStory, Plum Analytics, and GrowKudos leading the way. For the purpose of this paper, we capitalize Altmetric.com when referring to the company and use lower-case altmetric when referring to the process.

Citation analysis and peer review are still the principal approaches taken in measuring impact (Booth, 2016), but they fail to take into account that research is being communicated, shared, downloaded, and saved to reference management tools across the web. These traditional metrics have also focused on journals and authors, and not article-level outputs. Even before the first mention of the term altmetric, Neylon and Wu (2009) pointed out the need for “sophisticated metrics to ask sophisticated questions about different aspects of scientific impact and we need further research into both the most effective measurement techniques and the most effective uses of these in policy and decision making.” Altmetrics attempt to do this by tracking outputs beyond established metrics to explore social communication such as blogs and social media coverage, citations in Wikipedia, and reference management saves in Mendeley (Fenner, 2013). Altmetrics can also assess whether a research output has had some form of broader impact that is “good for teaching” (Bornmann, 2015).

Altmetrics are constantly adapting to new and evolving data sources. Robinson-Garcia et al. (2015) found that, “there is an important demand for altmetrics to develop universal and scalable methodologies for assessing societal impact of research.” Much of the data Altmetric.com trawl through comes from social media, which is not exclusive to the research community. Yet, at present there is evidence to suggest that rather than build a bridge between the research community and society at large, social media has instead helped open new channels for informal discussions among researchers (Sugimoto et al., 2016).

Assessing the influence of research has become increasingly important for impact and assessment across all higher education and research-active centers. Work carried out by Thelwall and Kousha (2015a,b) and Kousha and Thelwall (2015) explored different types of web indicators for research evaluation. The UK has the Research Excellence Framework (REF), which will next take place in 2021 and will assess the impact of research beyond academic citations. A definition of that broader impact is summed up by Wilsdon et al. (2015) as, “research that has a societal impact when auditable or recorded influence is achieved upon non-academic organization(s) or actor(s) in a sector outside the university sector itself.” In relation to metrics and how they are captured to show impact, Wilsdon et al. (2015) state that societal impacts need to be demonstrated rather than assumed. Evidence of external impacts can take the form of references to, citations of, or discussion of a person, their work, or research results. Altmetric.com offers a useful way of extracting impact evidence from a variety of sources that go beyond these traditional metrics. These extend to traditional and social media coverage, reference management saves, and citations from non-traditional sources such as Wikipedia and policy documents. We are most interested in the latter as a new area of impact research.

Altmetric.com Data and Policy Impact

Recently the question has been asked whether altmetrics can measure research impact on policy (Bornmann et al., 2016; Haunschild and Bornmann, 2017). Waltman and Costas (2014) reflected: “altmetrics opens the door to a broader interpretation of the concept of impact and to more diverse forms of impact analysis.” Some of these forms of impact, such as public engagement and changes to practice in society, might benefit from the development of altmetrics (Khazragui and Hudson, 2015). With a growing pool of research in this area, attention is moving toward the evolution of altmetric data relating to policy documents, and what policy makers could or should do with those data (Didegah et al., 2014). Funders should have a natural interest in such impact: if research they have supported is referenced as part of the evidence supporting a national and/or international clinical guideline, for example, then it is an indication that this research is likely to be influencing policy (Kryl et al., 2012). Until recently it was almost impossible to discover whether a piece of research had been cited in a policy document other than by manually sifting through potentially relevant documents, through direct communication with the policy makers, or by pure chance. However, in 2014, Altmetric.com announced that they had added the ability to track policy documents that cited a research output (Lui, 2014). Funders saw the importance of this development, with The Wellcome Trust taking an immediate keen interest in the development of altmetrics relating to the policy sphere (Haustein et al., 2016).

Altmetric.com now has a growing list of policy documents that it trawls for citations. This includes, but is not limited to, the UK National Institute for Health and Care Excellence (NICE), the World Health Organization (WHO), and the European Food Safety Authority. Altmetric.com is able to do this by processing each policy document and extracting text to search for possible citations. The Altmetric.com “scraper” evaluates the text in the policy document and determines whether it contains the data needed to make a positive match with a research output. Any references that are detected are checked in the PubMed and CrossRef databases to determine whether or not they are actual scholarly citations tied to actual research articles. If a match is made between a policy document and a research paper in the form of a citation, then it is added to the Altmetric.com details badge, which contributes to the paper’s Altmetric.com Score. Altmetric.com scrapes data within policy documents going back to 1928 and as a result is able to provide a long tail of research influence in newly published policy. For example, a paper could be published in a journal in 2005 and then get cited in a 2017 policy document; using Altmetric.com data we can now see this policy impact. It is important to note that the results of this research are influenced by the coverage limitations of Altmetric.com. At present, only data on the policy documents and subsequent citations are presented in this database. The full extent of research cited in global policy documents is not yet known.
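The matching step described above can be illustrated with a minimal sketch. This is not Altmetric.com’s actual scraper: it assumes that references carry a DOI, it uses a loose regular expression to find them, and it checks them against a small in-memory index (`KNOWN_OUTPUTS`, a hypothetical stand-in for a lookup in a bibliographic database such as PubMed or CrossRef). All identifiers and text in the example are invented.

```python
import re

# Hypothetical index of known research outputs keyed by DOI. A real pipeline
# would query a bibliographic database instead of a hard-coded dictionary.
KNOWN_OUTPUTS = {
    "10.1000/example.2005.001": "Example article published in 2005",
}

# Loose DOI pattern: "10.", a 4-9 digit registrant code, "/", then a suffix.
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/[^\s\"<>]+", re.IGNORECASE)


def extract_dois(policy_text):
    """Return the distinct DOIs found in the text, in order of appearance."""
    seen = []
    for match in DOI_PATTERN.findall(policy_text):
        doi = match.rstrip(".,;)").lower()  # drop trailing punctuation
        if doi not in seen:
            seen.append(doi)
    return seen


def match_citations(policy_text, index=KNOWN_OUTPUTS):
    """Keep only the DOIs that resolve to a known research output."""
    return {doi: index[doi] for doi in extract_dois(policy_text) if doi in index}


# Invented policy text with one resolvable and one unresolvable reference.
policy_text = (
    "Guidance issued in 2017. References: Smith J. (2005) Example article. "
    "doi:10.1000/example.2005.001. See also doi:10.9999/unknown.123."
)
matches = match_citations(policy_text)
```

In this sketch only the first DOI is retained, because the second does not resolve to a known output. References cited without any identifier would be missed entirely, which is one way in which coverage limitations can arise.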

Research Questions

Given the massive scale of the available data, it was necessary to create a reasonably sized and coherent sample for the analysis. Previously, other authors have explored the usefulness and viability of Altmetric.com policy documents data by identifying the proportion of publications within a single database [Web of Science (WoS)] that were cited in policy-related documents (0.5%) (Haunschild and Bornmann, 2017). For this study, the authors chose to limit the sample to a single center, their own institution, the University of Sheffield. This provided a sizeable but manageable, cross-disciplinary sample, the findings from which could be considered to be generalizable to very many other, similar institutions. No previous work has been published on the altmetrics for policy impact of research publications from a single institution.

As with other UK higher education institutions, The University of Sheffield is increasingly interested in the impact of its research, especially given the power of the periodic REF assessment to reward institutions based on the quality and impact of their research outputs. Citations in policy documents are, therefore, of interest to universities as they are a potential indicator of societal impact (Haunschild and Bornmann, 2017). Altmetric.com provides the facility to explore data back to 1928 and so not only helps identify research that has recently been published and is having an influence on policy, but also permits an exploration of this influence in the past. We, therefore, also wanted to look at research published by The University of Sheffield from previous years that was still having some influence in new policy documents, which the Altmetric.com data were able to highlight. The authors also had access to The University of Sheffield’s Altmetric.com institutional account, which permitted an exploration of the degree to which this organization’s research was being cited in policy.

The interest in altmetrics and policy impact is, therefore, growing. This paper seeks to contribute to the research in this area by addressing the following questions:

• First, how useful are Altmetric.com data for identifying and categorizing policy documents that cite the research of a particular institution?

• Second, how useful are Altmetric.com data for determining issues such as:

• the time lag to “policy citation and possible impact”;

• the disciplinary and geographical spread of policy impact; and

• the scale of research papers’ “impact” in terms of the number of times they are cited within particular policy documents?

• Finally, how do the Altmetric.com data for policy impact compare with other Altmetric.com data, such as Tweets and Mendeley Saves?

Materials and Methods

This study only included published research articles from authors currently at the University of Sheffield and indexed in the Altmetric.com database. These data were retrieved for The University of Sheffield using our Altmetric.com institutional account up until February 21, 2017. Altmetric.com updates their database in real time as the platform carries out regular crawls for fresh data across the web. We made a data request to Altmetric.com asking for research that had a Sheffield-affiliated author, past or present. This returned 1,463 records of our publications that had at least one policy document citation.

These data were downloaded into Excel® spreadsheets to facilitate analysis. The Altmetric.com data set included the following data for each research article: title; journal; authors at The University of Sheffield; their department; unique identifier (including DOI); overall Altmetric.com score; numbers of policy documents; news stories; blog posts; tweets; Facebook posts; Wikipedia entries; Reddit posts; and Mendeley readers. Each entry also had a URL for the Altmetric.com page for the research article, which in turn provided links to the citing “policy documents.” This facilitated further analysis: a verification of the numbers of policy documents listed as citing the research article and an assessment of how many times the research article was cited within a document. This latter score provides greater depth and context to the citation metric (Carroll, 2016). The analysis of the available data principally consisted of the tabulation and discussion of descriptive statistics and frequencies, e.g., the reporting of numbers of relevant research articles cited in policy documents; the categorization of these articles by academic faculty or field; the numbers and sources of policy documents.

Results

Identification and Categorizing of Relevant Policy Documents by Altmetric.com

Details of the Total Sample

Altmetric.com did enable an assessment of whether a relevant research article had an impact on policy documents (i.e., had been cited within policy documents) and, if so, how many such documents. In total, 1,463 published research articles from authors currently at The University of Sheffield were found to be cited in between 1 and 13 policy documents. Twenty-one research articles were listed as being cited in five or more policy documents; 21 research articles in four policy documents; 50 in three documents; 186 in two documents; and 1,185 in one document.
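As a check on the tabulation, the counts above can be converted into percentage shares of the sample. The short sketch below uses only the figures reported in the text; the variable names are ours.

```python
# Counts reported in the text: number of research articles by the number of
# policy documents listed as citing them.
distribution = {
    "five or more": 21,
    "four": 21,
    "three": 50,
    "two": 186,
    "one": 1185,
}

# Total sample size and each category's share, rounded to a whole percent.
total = sum(distribution.values())
shares = {label: round(100 * count / total) for label, count in distribution.items()}

# Articles cited in three or more policy documents (used in the faculty analysis).
three_or_more = (
    distribution["five or more"] + distribution["four"] + distribution["three"]
)
```

The categories sum to the full sample of 1,463, and the three highest categories together give the 92 articles analyzed by faculty.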

At present Altmetric.com tracks 96,550 research outputs at the University of Sheffield, with 1,463 of them being cited by at least one policy document. This means that 1.52% of Sheffield research, across all disciplines, is cited by at least one policy document. This compares relatively well with the overall impact of research on policy according to previous research based on data from papers indexed in WoS, which was just 0.5% (n = 11,254,636) (Haunschild and Bornmann, 2017), and 1.2% of published climate change research (n = 191,276) (Bornmann et al., 2016).

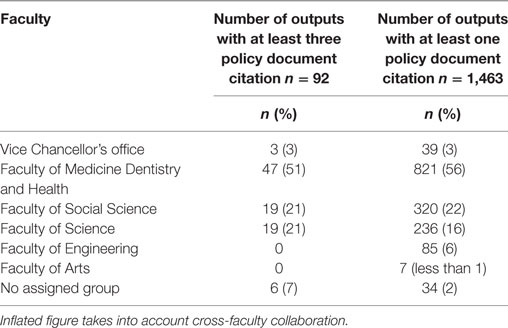

Policy Documents by Faculty

We looked at the Altmetric.com data to see which research disciplines received the most policy document citations. Medicine and health research have potentially wide sweeping impacts on a global scale and, therefore, it was no surprise that these disciplines received the most policy citations. Policy documents are core to health research being designed and implemented for future reform. Analysis of the 92 University of Sheffield research articles listed as being cited in three or more policy documents indicated that the research topics with the greatest policy impact are medicine, dentistry, and health, followed by social science and pure science. There were several entries from the 1,463 records that did not contain any faculty or departmental data. Rather than assume that these belong to a single faculty, we decided to not assign them to a group. For the 1,463 research articles cited at least once and the 92 cited at least three times in policy documents, see Table 1.

The 0 values indicate that none of the publications cited in three or more policy documents assigned by Altmetric.com were produced from the faculties of Engineering or Arts, compared with 85 and 7, respectively, out of the total of 1,463 publications with at least one policy document citation. Work from these faculties is clearly rarely cited in the policy documents.

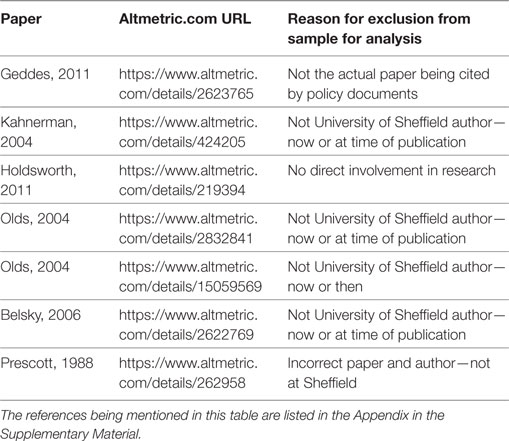

Errors

An in-depth assessment was made of the 21 research articles that were listed by Altmetric.com as being cited in five or more policy documents and having one or more authors who are currently at the University of Sheffield. From this sample of 21, 4 were research articles without an author at Sheffield (at the time of publication or now); 2 were outright errors (the identified paper was not actually the paper being cited in the policy documents or was another paper entirely); and 1 research article actually only listed the Sheffield “author” in a mass of names belonging to a related “collaboration group,” but who had no specific involvement with the research article. For the “excluded” research articles, see Table 2.

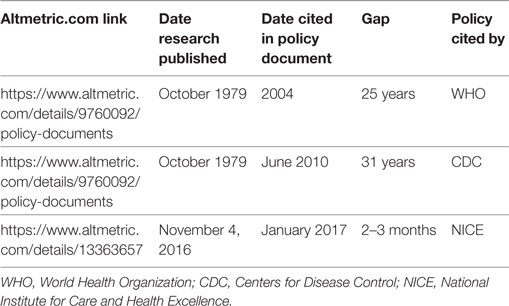

Time Lags from Publication to Citation in a Policy Document

We wanted to see whether research conducted at The University of Sheffield was limited in its citation lifespan within policy documents. Access to the Altmetric.com data allowed us to look back to 1928 to see how far back a piece of research could exist but still be cited in policy. The earliest piece of research in our sample of 1,463 records was published in 1979. We wanted to explore the time lag between a piece of research being published and being cited in policy. We found that one paper was recorded in the data as being published in 1965, but that was incorrect on closer inspection of the Altmetric.com record. We also found this to be the case for several other papers that were recorded as being published pre-1979. All of these papers were actually published post-2000 after cross-checking the records. The earliest paper to be cited in policy was from 1979 and had a time lag of 31 years to its first citation in a policy document, and the shortest time between research publication and policy citation was about 3 months (see Table 3). Time lapses between research publication and policy vary and are complicated, with one estimate being an average of 17 years (Morris et al., 2011). There have been calls to accelerate policy impact of relevant research (Hanney et al., 2015). As seen from our sample and other published research, the vast majority of published research will never feature in policy documents and despatches. It is important to remember that not all research is published in a policy context. Altmetric.com’s continual trawling for fresh policy sources and documents can aid the process for discovering the minority that are.
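A time lag of this kind can be computed directly once a publication date and a first policy citation date are available for each record. The sketch below is illustrative only: the function name, the record identifiers, and the dates are invented and are not records from our data set.

```python
from datetime import date


def lag_in_months(published, first_policy_citation):
    """Whole months between publication and first citation in a policy document."""
    months = (first_policy_citation.year - published.year) * 12
    months += first_policy_citation.month - published.month
    if first_policy_citation.day < published.day:
        months -= 1  # the final month is not yet complete
    return months


# Invented records: (identifier, publication date, first policy citation date).
records = [
    ("paper-a", date(2005, 3, 10), date(2017, 2, 1)),
    ("paper-b", date(2016, 1, 15), date(2016, 4, 20)),
]
lags = {pid: lag_in_months(pub, cited) for pid, pub, cited in records}
```

Because recorded publication years can themselves be wrong, as noted above, any lag computed this way is only as reliable as the dates that are fed in.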

Table 3. Time lags from publication to citation in a policy document (papers not included in our sample of 14).

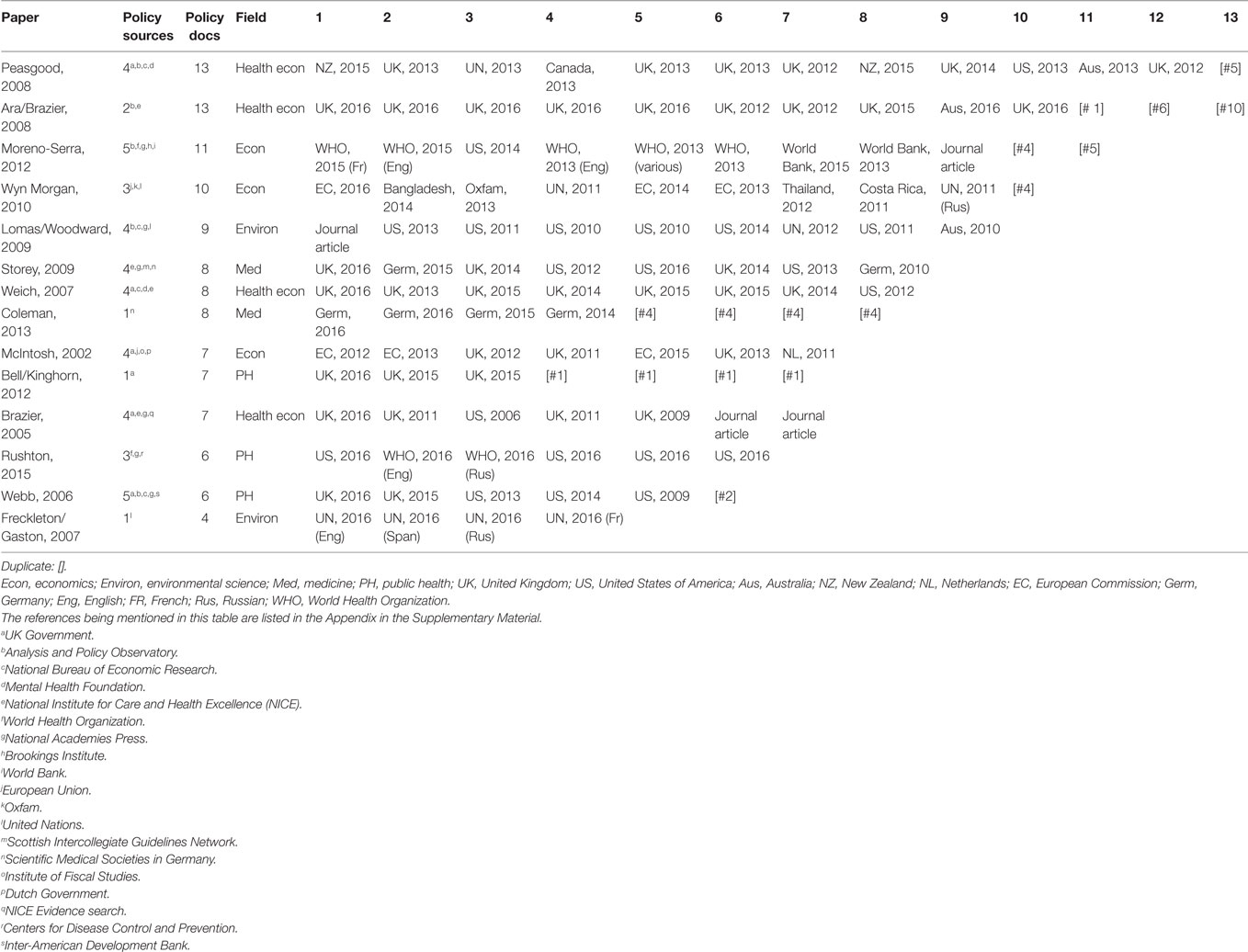

Details of the Most Frequently Cited Research Articles

Numbers of Policy Documents

The total number of relevant research articles with useable data from this sample of research papers cited five or more times in policy documents, therefore, was 14/21 (67% of the total). The data relating to these 14 research articles and their policy documents are presented in Table 4. In 4 of these 14 papers, the “Sheffield author” was not actually at Sheffield when they published the paper (McIntosh, 2002; Scott Weich, 2007; Wyn Morgan, 2010; Moreno-Serra, 2012). Altmetric.com was, therefore, useful at identifying and assigning papers to Sheffield even if an author was not at Sheffield at the time of publication.

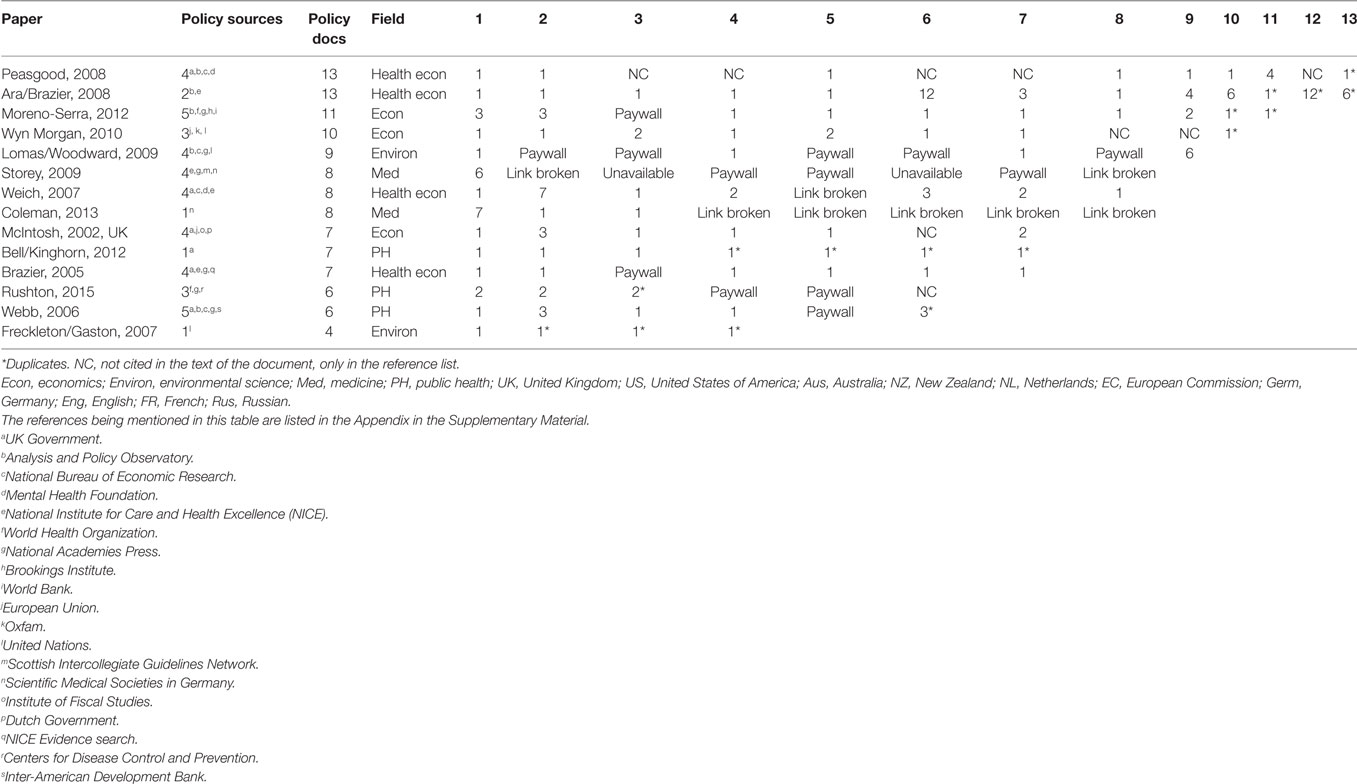

Table 4. Research articles and the policy documents listed as citing them: country and year (language) information.

There is a clear distinction between policy sources and actual policy documents: a research article might be cited by the UK Government (UK Gov1), for example, as the “policy source,” but appear in multiple reports or documents related to that source. A typical example can be seen from the paper by Ara and Brazier (2008) (see Table 4). This research article was cited by two policy sources: the UK National Institute for Health and Care Excellence (NICE), an independent organization that produces guidance for the National Health Service in England and Wales2, and the Australian Analysis and Policy Observatory (APO), a not-for-profit organization that makes available information to promote evidence-based policy and practice.3 The research article was cited in 12 “policy documents” sourced from NICE and one document sourced from the APO. It should be noted that two of these documents were duplicates (leaving 11 unique documents), and that some of the documents were actually separate sections of a single policy document or piece of guidance, e.g., appendices. Indeed, it can be seen from Table 4 that 9% (16/175) of the “identified” policy documents were duplicates, although it should be noted that only two of the 14 research articles in this sample had even a moderate proportion of duplicate policy documents (Coleman, 2013; Bell and Kinghorn, 2012): the vast majority had one or none.
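Duplicate listings of this kind can be flagged semi-automatically before manual checking. The following is a minimal sketch that assumes each listed policy document is available as a (source, title) pair and treats two entries as duplicates when their source and normalized title agree. The helper names and the example listing are hypothetical, and this is not how Altmetric.com itself handles duplicates.

```python
from collections import Counter


def normalise(title):
    """Lower-case a title and collapse punctuation and whitespace."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in title)
    return " ".join(cleaned.split())


def split_duplicates(documents):
    """Return (unique, duplicates), keyed on (source, normalised title)."""
    seen = Counter()
    unique, duplicates = [], []
    for source, title in documents:
        key = (source, normalise(title))
        seen[key] += 1
        # The first occurrence is kept as unique; later ones are duplicates.
        (unique if seen[key] == 1 else duplicates).append((source, title))
    return unique, duplicates


# Invented listing of policy documents citing one research article.
documents = [
    ("NICE", "Guideline A: full guidance"),
    ("NICE", "Guideline A - Full Guidance"),
    ("NICE", "Guideline A: appendices"),
    ("APO", "Report B"),
]
unique, duplicates = split_duplicates(documents)
```

Note that separate sections of one piece of guidance, such as the appendices entry here, are still counted as distinct documents under this rule; deciding whether they should be merged remains a manual judgment.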

Disciplinary and Geographical Spread

The research articles focused on a narrow field of disciplines: health economics (four articles); economics and public health (both three articles); medicine and environmental science (two articles each) (see Table 4). We were very interested to explore the global impact of University of Sheffield research in policy documents and it was relatively easy to assess the national and international policy impact of research articles using Altmetric.com. This sample of 14 research articles with authors from the University of Sheffield in the UK was associated with a total of 175 “policy documents.” Including duplicates, 107 (61%) of these documents were “national,” i.e., from the UK, and 68 (39%) were “international,” i.e., from countries other than the UK or from international bodies, such as the United Nations or the WHO. It should be noted, however, that these “Sheffield” authors shared authorship with non-UK authors in 5/14 research articles (Rushton, 2015; Coleman, 2013; Wyn Morgan, 2010; Lomas/Woodward, 2009; Storey, 2009) and that the proportion of international policy documents is much higher in these cases: 31/34 policy documents (91%) were international for these five research articles (excluding duplicates and journal articles erroneously designated as “policy documents”). This compares with 46% (28/61) of international policy documents for the 9/14 research articles with only UK authorship. This is consistent with other findings on international co-authorship and impact (Narin et al., 1991). In the field of health economics, which is less generalizable to non-UK contexts, the four research articles all had UK only authorship, and 25/34 (74%) of non-duplicate citing “policy documents” were also from the UK (i.e., national).
In the three more general economics research articles, by contrast, even with almost exclusive UK authorship (only the Wyn Morgan, 2010 paper had an “international author,” from Italy), only 3/24 (13%) were “national” policy documents, so 21/24 (87%) were “international.” The topic therefore appears to influence the national or international nature of policy impact more than other factors. However, it is important to remember that this is only a small sample.

Weight of Impact: Citation Counts in the Policy Documents

From our sample we looked at the total policy document citation count per research paper. Twenty-three out of the 175 policy documents (13%) were not accessible via the Altmetric.com pages, either because the link was broken, the document was currently unavailable, or because the full document itself was behind a paywall. Where non-duplicate documents could be accessed, an assessment was made of the number of citations of the relevant research article within each policy document (see Table 5). This was possible for 75 out of 117 documents from this sample (64%). The majority of these research articles were cited only a single time or not at all (only appearing in a reference list) in the various “policy documents”: 53/75 (71%). In this sample, the research articles are cited twice in 8/75 (11%) and three or more times in 14/75 (19%). This suggests that impact generally is very limited, but is perhaps consistent with known figures for academic research impact (Carroll, 2016).

Table 5. Research articles and the policy documents listed as citing them: Number of times each was cited in each policy document.

Altmetric.com Supplementary Data

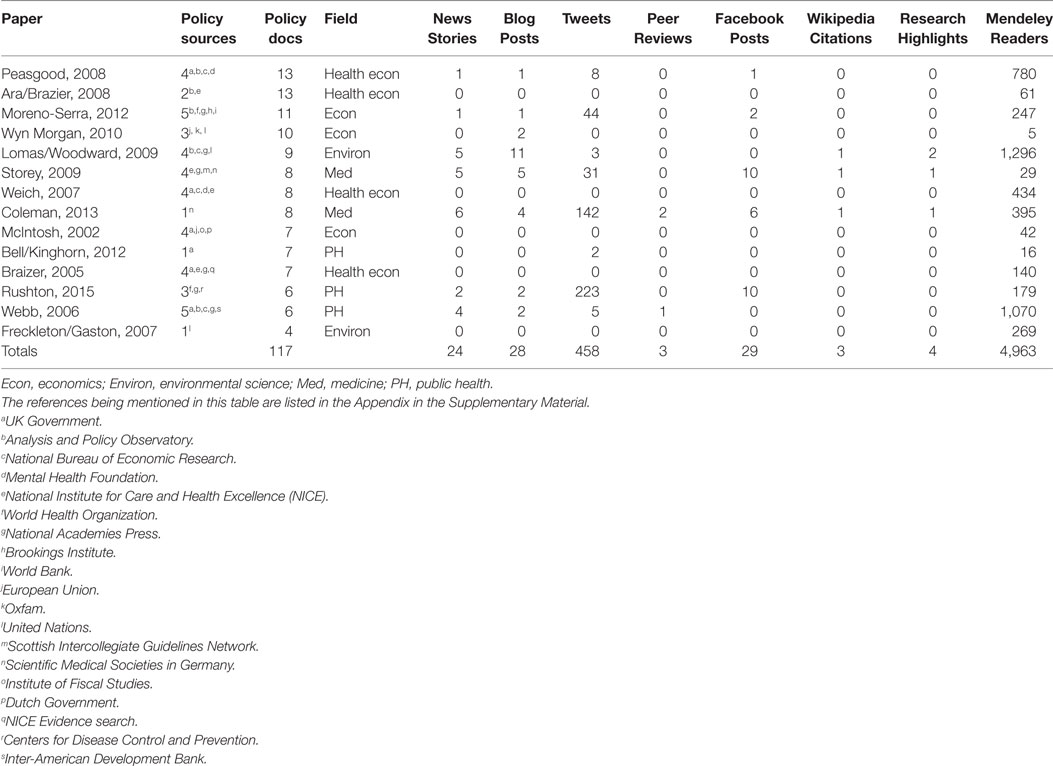

We also explored supplementary Altmetric.com data (see Table 6) to investigate whether there was any relationship between the attention that research receives across the web and citation in policy documents. We looked at individual Altmetric.com data counts rather than the total Altmetric.com Score. The best Altmetric.com performers were Mendeley saves and Tweets, while some of the research outputs received some media attention. It is important to note that not all Altmetric.com sources receive the same weighting, with news carrying the highest weight in the Altmetric.com score: for example, news scores 8 points, blogs 5, Twitter 1, and policy documents 3 (per source).4 Mendeley had by far the most attention, with the 14 most frequently cited papers saved 4,963 times in Mendeley users’ reference management databases, although just three papers (Lomas/Woodward, 2009; Peasgood, 2008; Webb, 2006) accounted for about 40% of those Mendeley saves. Twitter accounted for 458 Tweets, but again the majority of these Tweets resulted from just two papers (Rushton, 2015; Coleman, 2013). Coleman, 2013, Lomas/Woodward, 2009, Storey, 2009, and Webb, 2006 received coverage in 20 of the 24 news articles that were captured in the data, while Coleman, 2013, Lomas/Woodward, 2009, and Storey, 2009 also account for over two-thirds of the blog posts. Of the two most-cited pieces of research in policy, Peasgood, 2008 received a great deal of attention in Mendeley, but very little elsewhere. Brazier and Ara’s work was picked up by Mendeley users but received no other coverage. Coleman, 2013 was the only research article to receive a score in each of the columns, while Brazier/Ara, 2008, Freckleton/Gaston, 2007, Weich, 2007, Brazier, 2005, and McIntosh, 2002 received no Altmetric.com attention other than policy document citations.
Work by Cadwallader (2016) looked at selected Altmetric.com data from some departments at The University of Cambridge and found that “sustained news and blog attention could be a key indicator for identifying those papers likely to make it into policy.”
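To illustrate how differently the sources are weighted, the per-source points quoted above can be applied to a set of counts. This is only a rough sketch: it assumes a simple weighted sum, whereas the published Altmetric.com score applies further adjustments that are not modeled here. The function name and the example counts are hypothetical.

```python
# Per-source weights as quoted in the text. The real Altmetric.com score is
# not a plain weighted sum, so this is an illustration, not a reimplementation.
WEIGHTS = {"news": 8, "blogs": 5, "policy": 3, "twitter": 1}


def weighted_attention(counts, weights=WEIGHTS):
    """Sum counts multiplied by weights, ignoring sources without a quoted weight."""
    return sum(weights[source] * n for source, n in counts.items() if source in weights)


# Invented counts for a single research article.
counts = {"news": 2, "blogs": 1, "policy": 5, "twitter": 10, "mendeley": 40}
score = weighted_attention(counts)
```

Under these assumptions, two news stories contribute more than ten Tweets, and a source with no quoted weight (Mendeley saves in this sketch) contributes nothing, which is why we report individual counts rather than relying on the total score alone.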

Table 6. Altmetric.com supplementary data.

Discussion

Identification of Relevant Policy Documents by Altmetric.com

The Altmetric.com data did permit the quick and easy identification of research publications from an institution and the identification of policy documents that cited those publications. However, the system and data are not without errors or limitations.

Altmetric.com had problems in the accurate identification of some papers: one-third (7/21) of the sample of publications that appeared to be cited five or more times in policy documents were not by current or previous Sheffield authors at all. It is not entirely clear how research articles were identified as having an author who was currently at The University of Sheffield, when none of the listed authors was actually employed at the institution; or how citations of one research article came to be attributed to another (another paper with the same or a very similar title, from the same year, but with different authorship: e.g., Geddes, 2011 and Prescott, 1988, see Table 2). We, therefore, looked at the published research and the authors and their affiliations at the time of publishing, with most being based in the United States. In the case of Prescott, where we have a notable academic of the same surname at Sheffield presently, we looked at the publication date and discipline and compared it to research by the Sheffield-based author. We found that there was not only a change in discipline but also a notable gap between the publications of about 20 years. Exploring the issue further we contacted our research department to find out why the data were incorrect. We found that data such as academics’ names, departments, and faculties are all held within institutional systems and fed to Altmetric.com as a way of connecting the Altmetric.com data with academics’ profiles. These data, which are hosted by Symplectic Elements, are updated by institutions and any changes within the institution are reflected by the Altmetric.com database. In the case of incorrect authors, we found that three of them had been linked to Sheffield academics after three researchers based at our institution had incorrectly claimed the publications as their own, before rejecting them later.
One was rejected by a Sheffield author but remained in the system, while another had pulled the wrong paper through into Altmetric.com from the PubMed ID provided by Symplectic Elements. Other incorrect attributions to Sheffield authors were more understandable: research articles identified as being authored by Holdsworth were actually authored by others, although the name of this academic does appear elsewhere in the article. However, this still represents an issue with the software as the Sheffield author did not actually appear in the author list of the paper.

The result was that one-third of the sample of 21 research articles subjected to in-depth analysis was incorrectly identified (false positives). This has implications for the utility of data from Altmetric.com, as it suggests that perhaps as much as one-third of such a data set might consist of erroneous attributions (e.g., 487 of this sample of 1,463 research articles with policy citations), which can only be verified by checking each individual research article and its Altmetric.com data. As noted above, the research publications, authors, departments, and institutions that Altmetric.com trawls for information have also been found to be inaccurate, for example, an author at an institution accepting publications that are not their own via in-house research databases, such as Symplectic Elements. The origin of these inaccuracies is not always easy to identify. We can only assume that such examples are genuine mistakes by authors, although we cannot rule out gaming or foul play in some instances.
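Because this extrapolation rests on only 21 checked articles, the one-third figure is itself uncertain. A minimal sketch of that uncertainty is given below using a Wilson score interval for a proportion. This is our illustrative choice rather than part of the original analysis, and it assumes the 21 articles behave like a random sample of the data set, which they are not (they were the most frequently cited articles).

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z = 1.96 for roughly 95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Figures from the text: 7 of the 21 checked articles were false positives.
low, high = wilson_interval(7, 21)

# Scale the interval to the full sample of 1,463 research articles.
sample_size = 1463
low_count = round(low * sample_size)
high_count = round(high * sample_size)
```

Under these assumptions the plausible share of erroneous attributions spans a wide range either side of one-third, which reinforces the point that each record needs to be verified individually.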

The policy document citation and supplementary data are only as good as what goes into the system at the institution and Altmetric.com databases. Policy documents are being added to the Altmetric.com database all of the time, so it should follow that policy citations will increase, while other Altmetric.com data such as news coverage and social media can only be accurate if there is some kind of unique identifier tied to the research output. If a paper is discussed across the media, but there is no linking to any of its unique identifiers within that communication, it does not get picked-up by Altmetric.com. The Altmetric.com score for our sample is quite likely to be higher than we have reported.

The two papers that received the highest number of policy document citations (Peasgood, 2008; Brazier/Ara, 2008) received almost no media attention according to Altmetric.com. Peasgood, 2008 received notable attention via the 780 Mendeley users who had saved a copy of the paper to their database but very little other attention. Brazier/Ara, 2008 received less Mendeley attention with 61 saves and no other social or traditional media attention. This may suggest three things. First, these are niche pieces of research that are not news or social media-friendly. Second, it may be a function of date: these papers were published at a time when social media was still quite embryonic, and the research would have been shared more had it been published today. Third, the research has been shared but without any corresponding unique IDs attached, which would mean Altmetric.com would fail to pick it up. The latter is much less likely due to the number of Mendeley Saves that have been recorded in the system.

While acknowledging that this case study is based on a small sample, the in-depth analysis indicates that the number of policy documents identified by Altmetric.com is also likely to be an over-estimation of the true number of “policy documents” citing a research article. It is apparent from Table 4 that, based on this sample, up to 14% of research articles might have between 20 and 50% of duplicate “policy documents” in their assigned numbers. It is also noteworthy that not all documents identified from “policy sources” should be considered “policy documents” under any conventional definition. Four citing “policy documents” in this sample were clearly standard peer-reviewed journal articles, but were categorized as policy documents citing the research articles by Moreno-Serra, 2012 (Hendriks et al., 2014), Lomas/Woodward, 2009 (MacDicken, 2015), and Brazier, 2005 (Armstrong et al., 2013; Holmes et al., 2014). The quality of ascribed policy sources might potentially be an issue of concern, much like that of predatory journals, where argument can ensue as to the authenticity and credibility of a journal (Cartwright, 2016). Altmetric.com will need to ensure that it maintains a quality system that only uses legitimate policy sources. Users of Altmetric.com can suggest policy sources, so it is essential that a peer review process of such sources is employed.
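A duplicate check of the kind described above can be sketched as follows. This is a minimal illustration and not the procedure used in this study, where documents were checked by hand: it assumes each citing policy document is available as a record with a title and year (hypothetical field names), and treats records with the same normalized title and year as duplicates. The example records are invented.

```python
import re
from collections import defaultdict

def normalise_title(title):
    """Lower-case and collapse punctuation so near-identical titles compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def duplicate_share(policy_docs):
    """Return (number of unique documents, share of records that are duplicates)."""
    if not policy_docs:
        return 0, 0.0
    groups = defaultdict(list)
    for doc in policy_docs:
        groups[(normalise_title(doc["title"]), doc["year"])].append(doc)
    unique = len(groups)
    return unique, 1 - unique / len(policy_docs)

# Hypothetical records for one research article
records = [
    {"title": "Guidance on Health Technology Appraisal", "year": 2013},
    {"title": "Guidance on health technology appraisal.", "year": 2013},
    {"title": "National Forest Strategy", "year": 2015},
    {"title": "Public Health Outcomes Review", "year": 2014},
    {"title": "Public health outcomes review", "year": 2014},
]

unique, share = duplicate_share(records)
print(f"{unique} unique documents; {share:.0%} of records are duplicates")
```

Title matching of this kind only flags candidates. It would not detect a journal article misclassified as a policy document, which still requires inspecting each citing document.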

Time Lags from Publication to Citation

The earliest policy document in the sample of 14 research articles was from 2006, but only 3 of the 175 policy documents identified by Altmetric.com in this sample were from before 2010, even though 5 of the 14 research articles were published between 2002 and 2007 [McIntosh, 2002 (economics), Brazier, 2005, Weich, 2007 (both health economics), Webb, 2006 (public health), and Freckleton/Gaston, 2007 (environmental science)]. It is not clear if this reflects a natural time lag to policy impact for research of this type, or the current limitations of the Altmetric.com search. The “policy impact” of some research articles was very rapid: the six papers by Wyn Morgan, 2010 and Moreno-Serra, 2012 (both economics); Storey, 2009 and Coleman, 2013 (both medicine); Lomas/Woodward, 2009 (environmental science); and Rushton, 2015 (public health) all appeared in policy documents the year after they were published. Interestingly, all of these “early” policy documents were international and five of the six papers were international collaborations (Narin et al., 1991). This suggests that, in some disciplines, international authorship might facilitate and speed up international policy impact.

Disciplinary and Geographical Spread

It is perhaps not surprising that the majority of the research with greatest policy impact had been undertaken within the fields of economics and health (see Tables 1 and 4): alongside education, these are arguably the two major areas of policy development nationally and internationally (Haunschild and Bornmann, 2017), with the environment being another focus of policy work (Bornmann et al., 2016). It is, therefore, no surprise to see the relative numbers of research articles, categorized by Faculty, with medicine and health far outstripping any other discipline.

Conclusion

Altmetric.com data can be used for assessing policy impact, but there are clearly still problems with how the software identifies and attributes research papers (based on this data set, perhaps as much as 33% of any sample could be erroneously attributed to an institution or an author). Further work is needed to investigate how Altmetric.com identifies and attributes authors and “policy documents” (some of which might not be policy documents at all, e.g., journal articles). Once it has been ascertained that an author at your institution has authored a paper that has been cited in policy, it is essential to double-check each citing policy document to ensure that the citation is correct. Therefore, while useful, caution must be exercised when seeking to use these data, especially in terms of providing evidence of policy impact.

Author Contributions

AT came up with the research question, contacted Altmetric to obtain the data, and liaised with Altmetric over further correspondence. AT wrote the majority of the introduction and analyzed the extended set of data and additional altmetric data. CC wrote the abstract and most of the methods, and carried out the analysis for the 21 publications. AT and CC wrote the results and conclusion. AT managed the references and the majority of citations.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Funding

The authors would like to thank Altmetric who assisted us and provided us with the data and supported us with any questions relating to the data.

Supplementary Material

The Supplementary Material for this article can be found online at http://www.frontiersin.org/articles/10.3389/frma.2017.00009/full#supplementary-material.

Footnotes

- ^http://www.gov.uk.

- ^https://www.nice.org.uk/.

- ^http://apo.org.au/.

- ^For details of how the overall score is weighted, see: https://help.altmetric.com/support/solutions/articles/6000060969-how-is-the-altmetric-attention-score-calculated- (Accessed 8th November 2017).

References

Armstrong, N., Wolff, R., van Mastrigt, G., Martinez, N., Hernandez, A. V., Misso, K., et al. (2013). A systematic review and cost-effectiveness analysis of specialist services and adrenaline auto-injectors in anaphylaxis. Health Technol. Assess. 17, 1–117, v–vi. doi:10.3310/hta17170

Booth, A. (2016). “Metrics of the trade,” in Altmetrics – A Practical Guide for Librarians, Researchers and Academics, ed. A. Tattersall (London: Facet Publishing), 21–47. Available at: http://www.facetpublishing.co.uk/title.php?id=300105

Bornmann, L. (2014). Validity of altmetrics data for measuring societal impact: A study using data from Altmetric and F1000Prime. J. Inform. 8, 935–950. doi:10.1016/j.joi.2014.09.007

Bornmann, L. (2015). Usefulness of altmetrics for measuring the broader impact of research: a case study using data from PLOS and F1000Prime. Aslib J. Inform. Manage. 67, 305–319. doi:10.1108/09574090910954864

Bornmann, L., Haunschild, R., and Marx, W. (2016). Policy documents as sources for measuring societal impact: how often is climate change research mentioned in policy-related documents? Scientometrics 109, 1477–1495. doi:10.1007/s11192-016-2115-y

Cadwallader, L. (2016). “Papers, policy documents and patterns of attention: insights from a proof-of-concept study,” in Altmetric Blog. Available at: https://www.altmetric.com/blog/policy-attention-patterns-grant-report/

Carroll, C. (2016). Measuring academic research impact: creating a citation profile using the conceptual framework for implementation fidelity as a case study. Scientometrics 109, 1329–1340. doi:10.1007/s11192-016-2085-0

Cartwright, V. A. (2016). Authors beware! The rise of the predatory publisher. Clin. Exp. Ophthalmol. 44, 666–668. doi:10.1111/ceo.12836

Didegah, F., Bowman, T. D., and Holmberg, K. (2014). “Increasing our understanding of altmetrics: identifying factors that are driving both citation and altmetric counts,” in iConference (Philadelphia), 1–8.

Fenner, M. (2013). What can article-level metrics do for you? PLoS Biol. 11:1–4. doi:10.1371/journal.pbio.1001687

Hanney, S. R., Castle-Clarke, S., Grant, J., Guthrie, S., Henshall, C., Mestre-Ferrandiz, J., et al. (2015). How long does biomedical research take? Studying the time taken between biomedical and health research and its translation into products, policy, and practice. Health Res. Policy Syst. 13, 1. doi:10.1186/1478-4505-13-1

Haunschild, R., and Bornmann, L. (2017). How many scientific papers are mentioned in policy-related documents? An empirical investigation using web of science and altmetric data. Scientometrics 110, 1209–1216. doi:10.1007/s11192-016-2237-2

Haustein, S., Bowman, T. D., and Costas, R. (2016). “Interpreting ‘altmetrics’: viewing acts on social media through the lens of citation and social theories,” in Theories of Informetrics and Scholarly Communication, ed. C. R. Sugimoto (de Gruyter), 372–406. Available at: http://arxiv.org/abs/1502.05701

Hendriks, M. E., Wit, F. W., Akande, T. M., Kramer, B., Osagbemi, G. K., Tanovic, Z., et al. (2014). Effect of health insurance and facility quality improvement on blood pressure in adults with hypertension in Nigeria: a population-based study. JAMA Intern. Med. 174, 555–563. doi:10.1001/jamainternmed.2013.14458

Holmes, M., Rathbone, J., Littlewood, C., Rawdin, A., Stevenson, M., Stevens, J., et al. (2014). Routine echocardiography in the management of stroke and transient ischaemic attack: a systematic review and economic evaluation. Health Technol. Assess. 18, 1–176. doi:10.3310/hta18160

Khazragui, H., and Hudson, J. (2015). Measuring the benefits of university research: impact and the REF in the UK. Res. Eval. 24, 51–62. doi:10.1093/reseval/rvu028

Kousha, K., and Thelwall, M. (2015). Web indicators for research evaluation, part 3: books and non-standard outputs. El Profesional de La Información 24, 724–736. doi:10.3145/epi.2015.nov.04

Kryl, D., Allen, L., Dolby, K., Sherbon, B., and Viney, I. (2012). Tracking the impact of research on policy and practice: investigating the feasibility of using citations in clinical guidelines for research evaluation. BMJ Open 2, e000897. doi:10.1136/bmjopen-2012-000897

Lui, J. (2014). New Source Alert: Policy Documents. Altmetric Blog. Available at: https://www.altmetric.com/blog/new-source-alert-policy-documents/

MacDicken, K. (2015). Editorial: introduction to the changes in global forest resources from 1990 to 2015. For. Ecol. Manage. 352, 1–2. doi:10.1016/j.foreco.2015.06.018

Morris, Z. S., Wooding, S., and Grant, J. (2011). The answer is 17 years, what is the question: understanding time lags in translational research. J. R. Soc. Med. 104, 510–520. doi:10.1258/jrsm.2011.110180

Narin, F., Stevens, K., and Whitlow, E. S. (1991). Scientific co-operation in europe and the citation of multinationally authored papers. Scientometrics 21, 313–323. doi:10.1007/BF02093973

Neylon, C., and Wu, S. (2009). Article-level metrics and the evolution of scientific impact. PLoS Biol. 7:e1000242. doi:10.1371/journal.pbio.1000242

Robinson-Garcia, N., Van Leeuwen, T. N., and Rafols, I. (2015). Using Almetrics for Contextualised Mapping of Societal Impact: From Hits to Networks. SSRN Preprints, 1–18. Available at: https://ssrn.com/abstract=2932944.

Sugimoto, C. R., Work, S., Larivière, V., and Haustein, S. (2016). Scholarly Use of Social Media and Altmetrics: A Review of the Literature. Jasist, 1991. Available at: http://arxiv.org/abs/1608.08112

Thelwall, M., and Kousha, K. (2015a). Web indicators for research evaluation. Part 1: citations and links to academic articles from the web. El Profesional de La Información 24, 587–606. doi:10.3145/epi.2015.sep.08

Thelwall, M., and Kousha, K. (2015b). Web indicators for research evaluation. Part 2: social media metrics. El Profesional de La Información 24, 607–620. doi:10.3145/epi.2015.sep.09

Thelwall, M., Kousha, K., Dinsmore, A., and Dolby, K. (2016). Alternative metric indicators for funding scheme evaluations. Aslib J. Inf. Manag. 68, 2–18. doi:10.1108/AJIM-09-2015-0146

Waltman, L., and Costas, R. (2014). F1000 recommendations as a potential new data source for research evaluation: a comparison with citations. J. Assoc. Inform. Sci. Technol. 65, 433–445. doi:10.1002/asi.23040

Keywords: altmetrics, policy making, policy research, research impact, scholarly communication, metrics, research metrics

Citation: Tattersall A and Carroll C (2018) What Can Altmetric.com Tell Us About Policy Citations of Research? An Analysis of Altmetric.com Data for Research Articles from the University of Sheffield. Front. Res. Metr. Anal. 2:9. doi: 10.3389/frma.2017.00009

Received: 27 July 2017; Accepted: 28 November 2017;

Published: 08 January 2018

Edited by:

Kim Holmberg, University of Turku, Finland

Reviewed by:

Zohreh Zahedi, Leiden University, Netherlands

Han Woo Park, Yeungnam University, South Korea

Copyright: © 2018 Tattersall and Carroll. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Andy Tattersall, a.tattersall@sheffield.ac.uk
