- 1Laboratory of Applied Psychology and Intervention, Department of Human and Social Sciences, University of Salento, Lecce, Italy

- 2Center for Mind/Brain Sciences – CIMeC, University of Trento, Rovereto, Italy

- 3Laboratory of Applied Psychology and Intervention, Department of Medicine, University of Salento, Lecce, Italy

- 4Department of Psychology and Milan Center for Neuroscience (NeuroMi), University of Milano-Bicocca, Milan, Italy

Introduction: This study aimed to establish normative data for the Self-Administered Tasks Uncovering Risk of Neurodegeneration (SATURN), a brief computer-based test for global cognitive assessment through accuracy and response times on tasks related to memory, attention, temporal orientation, visuo-constructional abilities, math (calculation), executive functions, and reading speed.

Methods: A sample of 323 Italian individuals with Montreal Cognitive Assessment (MoCA) equivalent score ≥1 (180 females; average age: 61.33 years; average education: 11.32 years), stratified by age, education, and sex, completed SATURN using PsychoPy, and a paper-and-pencil protocol consisting of Mini-Mental State Examination (MMSE) and MoCA. Data analyses included: (i) correlations between the total accuracy scores of SATURN and those of MMSE and MoCA; (ii) multiple regressions to determine the impact of sex, age, and education, along with the computation of adjusted scores; (iii) the calculation of inner and outer tolerance limits, equivalent scores, and the development of correction grids.

Results: The mean total time on tasks was 6.72 ± 3.24 min. Age and education significantly influence the SATURN total accuracy, while sex influences the total time on tasks. Specific sociodemographic characteristics influence subdomain accuracies and times on task differently. For the adjusted SATURN total score, the outer limit corresponds to 16.56 out of 29.00 (cut-off), while the inner limit is 18.57. SATURN significantly correlates with MMSE and MoCA.

Discussion: In conclusion, SATURN is the first open-source digital tool for initial cognitive assessment in Italy, showing potential for self-administration in primary care, and remote administration. Future studies need to assess its sensitivity and specificity in detecting pathological cognitive decline.

1 Introduction

Cognitive decline becomes more common as individuals age. The prevalence of cognitive difficulties in Europe varies from 13.0 to 50.5%, as assessed across various cognitive abilities (Barbosa et al., 2021). Notably, neurological disorders, including dementia, rank as the second leading cause of death and the primary cause of disability worldwide, with projections indicating that they will contribute to more than half of the economic burden of disability by 2050 (Feigin et al., 2020).

Situations that may impair cognitive performance in aged adults are primarily related, but not limited, to conditions directly affecting cognition, such as dementia (Jack et al., 2018), Mild Cognitive Impairment (MCI, Petersen et al., 2018), stroke (Huang et al., 2023), traumatic brain injuries (Torregrossa et al., 2023), or instances where treatment for an illness might influence cognitive abilities (e.g., chemotherapy, Cheung et al., 2012). The measurement of cognitive performance is typically conducted through a neuropsychological assessment, which usually involves different levels of investigation, from screening or case finding (first level) to diagnostic and differential evaluation (second level).

An approach that can be used to distinguish pathological from non-pathological changes in cognitive functions is based on the normal distribution of cognitive performance in a neurologically healthy sample (without evident signs of ongoing pathology) (Capitani and Laiacona, 2017). Based on this approach, an individual’s performance is compared to that of a healthy sample with the same characteristics that could influence performance, like age and education (Perry et al., 2017).

Brief screening measures of general cognitive status (e.g., Mini-Mental State Examination, MMSE, Folstein et al., 1975; Montreal Cognitive Assessment, MoCA, Nasreddine et al., 2005) have been developed in response to the need for early detection of cognitive deterioration. Such screening tests are expected to be relatively quick, easy to administer, score, and interpret, and could be carried out by professionals other than neuropsychologists, such as general practitioners, nurses, or trained staff. For example, regular cognitive screening is a recommended measure for the prevention of Alzheimer’s Disease for individuals over 50 years old (Alzheimer’s Association, 2019). However, despite the crucial role general practitioners play in detecting early signs of cognitive decline in older adults, only 16% of those in need receive regular cognitive function screenings (Alzheimer’s Association, 2019). The limited use of cognitive screening in these contexts is due to several factors: the nature of primary care settings (such as unsuitable office environments and limited time for visits), physicians’ specific competencies (including a lack of training in neuropsychological assessment, scoring, and interpretation), and contingent issues like the need for social distancing due to infectious risks, such as those associated with COVID-19 (Staffaroni et al., 2020).

Several systematic reviews have reported a remarkable increase in new technology-based cognitive assessment tools worldwide (Chan et al., 2021; Giaquinto et al., 2022; Pieri et al., 2023; Thabtah et al., 2020; Tsoy et al., 2021). Technological advancements could present an opportunity for overcoming these issues through self-administration, self-scoring, and reduced time involvement for professionals (Isaacson and Saif, 2020). Thus, their use in primary care seems appropriate (Sabbagh et al., 2020). Although some studies show that digital tools outperform traditional paper-and-pencil methods in distinguishing MCI and dementia from controls, most digital tools lack clinical, multilingual, and cross-cultural validation studies. These tools often have small sample sizes, are typically administered to highly educated individuals, and rarely fully leverage the advantages of digital formats over paper-and-pencil methods, such as the precise measurement of test completion times (Giaquinto et al., 2022). Indeed, some of these tools include tasks with time limits, but all of them can record the time taken to finish (response time), which reflects the duration of relevant cognitive operations, along with the time required for selecting and executing the response. The speed score may be a very important measure for detecting age-related variation in behavior in healthy aging and preclinical phases in dementing disorders (for a meta-analysis on AD disorder see Bäckman et al., 2005). Numerous studies suggest that normal aging gradually decreases processing speed, starting from early middle age (e.g., Zimprich and Mascherek, 2010). Processing speed declines as people grow older with whichever type of processing speed task is used: psychometric (e.g., Hedden and Gabrieli, 2004), cognitive-experimental (e.g., Der and Deary, 2006), or psychophysical (e.g., Gregory et al., 2008). 
Notably, the psychometric approach includes both cognitive tests with time limits (number of correct responses within predetermined time limits, e.g., the Attentive Matrices or the Wechsler Digit Symbol-Coding and Symbol Search) and tests that assess processing speed by measuring the time to complete tasks (e.g., the Trail Making Test-A). Both types of cognitive tests have been applied in clinical settings to assess processing speed in both normal and neurologically impaired populations, alongside other cognitive functions.

Therefore, considering the usefulness of easily administered and applied screening tools that are self-administered and have automatic corrections, along with the importance of having not only accuracy measures but also response time measures, digital tools undoubtedly meet these needs. Among at least 30 touchscreen tools available in 22 countries, only a few of them are available in multiple languages and culturally validated in different populations, and none of them has yet been validated in Italian (Giaquinto et al., 2022).

Recently, the Self-Administered Tasks Uncovering Risk of Neurodegeneration (SATURN) (Bissig et al., 2020) has been developed and validated in clinical settings in the United States. This freely accessible electronic screening tool proved comparable to the MoCA in identifying cognitive impairment. SATURN was originally introduced to assess the risk of cognitive symptoms that may indicate a potential neurodegenerative disorder. In the original study (Bissig et al., 2020), it was tested on patients from a dementia clinic who had Alzheimer’s Disease, other forms of dementia, or other neurological conditions such as Multiple Sclerosis, Essential Tremor, or Parkinson’s disease. Like the MMSE and MoCA, it can be useful in various conditions where cognitive symptoms emerge in domains such as attention, memory, orientation, calculation, visuo-constructional abilities, and executive functions, which are characteristic of pathological cognitive aging. SATURN relies solely on visual stimuli, requiring some reading ability, thus overcoming potential language barriers between examiners and patients, or prevalent hearing impairments in the target population. It also reduces hardware and software demand for remote use, such as eliminating the need for speaker volume calibration and multimodal stimuli for time synchronization. SATURN is self-administered, automatically scored, and open source for professionals and researchers, and it was comparable to MoCA, for example, in detecting dementia from controls. Its sensitivity and specificity in the USA population were 0.92 and 0.88, respectively (Bissig et al., 2020). Thanks to its open-source nature, which facilitates its dissemination, it has already been adapted into other languages (e.g., Korean, Vietnamese, simplified Chinese, Italian, and Greek), although clinical or normative validation data for the tool outside of the USA have not yet been published.

This study aims to establish normative data for the Italian version of SATURN, using a sample of individuals aged 50–80 years, stratified by age, sex, and education. Conducting a normative study on SATURN in the Italian context would enhance knowledge of the tool’s psychometric characteristics, facilitate its multilingual and cross-cultural dissemination, provide insights into its performance among individuals with lower education levels than those in the original sample, and offer an estimate of administration times that were not included in the original study. Several innovative aspects distinguish it from the validation of the original version. In the Italian version, we analyzed both time on task and accuracy for each task and cognitive subdomain (represented by an average of tasks exploring each specific cognitive subdomain). The total time on tasks for the entire test was also computed. A standardization methodology outlined by Capitani and Laiacona (2017), which is the accepted standard for validating neuropsychological tests in Italy, was applied to the accuracy and time on task data. Another noteworthy feature is the inclusion of a preliminary assessment of visual acuity and color perception, aimed at identifying potential visual impairments that could impact task performance or render the test inapplicable. Additionally, to facilitate users, an automatic scoring function has been implemented. After completing the digital test, users can remotely download a report containing raw, adjusted, and equivalent scores for each variable. A local version of the tool is accessible via PsychoPy and can also be remotely administered through Pavlovia. It is openly shared through a specified link (see Methods) and allows for future software updates to be downloaded.

2 Methods

2.1 Subjects

A total of 330 subjects living in the region of Puglia (Italy) were recruited. A balanced sampling method based on age, education, and sex was used. Each administrator was assigned a specific age and education group, with the request to carry out a balanced number of assessments for each sex. Two recruitment strategies were employed in two different phases of the research. The recruitment strategy was initially conducted directly, using flyers posted in pharmacies, doctors’ offices, and at events promoting cognitive prevention. Subsequently, a snowball sampling method was employed to specifically target and complete the necessary strata of the population. Inclusion criteria were: (i) age between 50 and 80 years; (ii) absence of self-reported diagnosis of neurologic diseases (e.g., MCI, any kind of dementia, stroke, traumatic brain injuries); (iii) MoCA equivalent score ≥1. Participants with other well-known risk factors for dementia (e.g., alcohol abuse, hypertension, diabetes, history of depression, etc.), as identified by Livingston et al. (2020), but without a diagnosis of dementia, were included to ensure the representation of the general population. We excluded from analysis: (i) participants (n = 3) who achieved an age- and education-adjusted MoCA equivalent score equal to 0 [according to the normative Italian data by Santangelo et al., 2015], to reduce probability of enrolling individuals with possible diffuse cognitive impairment, as it has been done in previous normative studies on Italian neuropsychological tests (e.g., Conti et al., 2015; Santangelo et al., 2015); (ii) participants (n = 2) whose total completion time on SATURN was higher than three standard deviations from the sample mean, as outliers due to unintentional interruptions during administration; (iii) participants (n = 2) with 0 years of formal education, as extremely rare cases that cannot be considered in the age-education classification used in this study.

All participants took part in the study on a voluntary basis after having provided their written informed consent and without receiving any reward. This enrolment procedure resulted in a sample of 323 neurologically healthy Italian subjects (180 females and 143 males), representing all levels of formal education based on the three-level coding system from the International Standard Classification of Education (ISCED) of the United Nations Educational, Scientific and Cultural Organization (UNESCO), as suggested by Boccardi et al. (2022): compulsory (primary and secondary) education; upper-secondary education; and post-secondary education. In Italy, the first level corresponds to less than 9 years of education, the second level from 9 to 13 years, and the third level more than 13 years of education. The mean age of the whole sample was 61.33 ± 8.75 years, mean formal education was 11.32 ± 4.59 years. The distribution of the sample for age and education is reported in Table 1.

Table 1. Demographic distribution of the sample in terms of age (50–59, 60–69, 70–80 years old), years of education (<9, 9–13, >13 years), and sex (male, female).

2.2 Procedure

Participants were briefed about the study and signed an informed consent form. Data collection took place in a quiet environment where the participant was alone with the experimenter conducting the evaluation. The research protocol consisted of paper-and-pencil tests and digital tests administered via a laptop with mouse input (hardware) using PsychoPy software version 2022.2.2. The paper-and-pencil protocol was administered by the experimenter, while the digital one was self-administered. Only in cases where participants were unable to use the computer, the experimenter could offer limited assistance with entering responses, without influencing reading, comprehension of the text, or completion of the tests in any way. The protocol was the same and in the same order for all participants. The administration occurred in a single session and by a single experimenter. The digital and paper-and-pencil protocols were administered by psychologists who were adequately trained. The administration lasted approximately 30 min. Data collection took place from April 2023 to December 2023. The study received approval from the National Ethics Committee for experiments of public research institutions (NEC) and other national public entities (CEN) during the session held on April 4, 2023, Project Code: PNRR-MAD-2022-12376781.

2.3 Measures

2.3.1 Paper-and-pencil protocol

The Mini-Mental State Examination (MMSE, Folstein et al., 1975), administered orally, is a widely used tool assessing cognitive functions in individuals with suspected cognitive impairment. It evaluates various cognitive areas, including temporal and spatial orientation, short-term memory, attention, and calculation ability. It comprises a series of questions and tasks, with a maximum score of 30 points, where lower scores indicate greater cognitive impairment. In the updated normative study that enrolled people from the South of Italy (Carpinelli Mazzi et al., 2020), the multiple linear regression showed a significant effect of age and education on the raw score, but not for sex, and the cut-off score fixed to 24.9/30 led to detect 44 out of 47 Alzheimer’s Disease patients.

The Montreal Cognitive Assessment (MoCA, Nasreddine et al., 2005) is a cognitive screening tool developed to detect MCI. It assesses multiple cognitive domains, including memory, language, executive functions, visuospatial abilities, calculation, abstraction, attention, concentration, and orientation. Like the MMSE, it consists of various questions and tasks with a maximum score of 30 points, where lower scores indicate cognitive issues. In the Italian normative study considered here (Santangelo et al., 2015), the multiple linear regression showed a significant influence of age and education on the raw score, but not for sex. The inferential cut-off score was fixed at 15.5. The correlation between MoCA and MMSE-adjusted scores was statistically significant and medium positive (r = 0.43; p < 0.001).

2.3.2 Digital protocol

The SATURN (Bissig et al., 2020) is a self-administered digital test designed to assess global cognitive functioning and detect cognitive decline. Originally consisting of 20 brief cognitive tasks, it assessed a range of cognitive functions such as memory, attention, temporal orientation, visuo-constructional abilities, math (calculation), and executive functions within a time limit of 15 min (the program ends when one completes any tasks started within 15 min). In the American validation study, the total accuracy and the reading time (based on the duration participants spent reading verbal instructions for specific tasks, excluding any figures) were computed. Recently, the SATURN was adapted to test its remote, completely unsupervised delivery and usability in a relatively large group of healthy older adults recruited online (Tagliabue et al., 2023). The Italian version of SATURN was translated and adapted from its original English counterpart. SATURN primarily relies on visual tasks (see Tagliabue et al., 2023). The components requiring translation, apart from the instructions, were the attention, incidental memory, and recall items. For these items, direct translation of the English words was not employed. Instead, word selection was based on the Lexvar database (Barca et al., 2001; Barca et al., 2002) using the following procedure. First, the word database was ranked by familiarity (i.e., the extent to which a word is encountered in daily life, provided within the Lexvar) and frequency (Bertinetto et al., 2005). Only words of 4, 5, or 6 letters in length were considered. Subsequently, a composite score was calculated by averaging the z-transformed scores for familiarity and frequency for each word. Words falling within the third and fourth quartiles of this composite score distribution were then randomly selected to be used in the SATURN items. Efforts were made to balance word length (4, 5, and 6 letters) within tasks.
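The word-selection procedure described above can be illustrated with a minimal Python sketch. It is not the authors' code: the function names, the toy lexicon, and its familiarity and frequency values are hypothetical, and two details are assumptions, namely that z-scores are computed on the length-filtered pool and that "third and fourth quartiles" means a composite score at or above the median.

```python
import random
from statistics import mean, median, pstdev


def z_scores(values):
    """Standardize a list of numbers (population standard deviation)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def select_words(lexicon, n_per_length, seed=0):
    """Pick words from the upper half of a familiarity/frequency composite.

    lexicon: list of (word, familiarity, frequency) tuples (hypothetical format).
    Returns the chosen words, the composite score per word, and the cutoff.
    """
    # Keep only 4-, 5-, and 6-letter words.
    pool = [row for row in lexicon if 4 <= len(row[0]) <= 6]
    z_fam = z_scores([fam for _, fam, _ in pool])
    z_freq = z_scores([freq for _, _, freq in pool])
    # Composite score: mean of the two z-transformed variables.
    composite = {w: (zf + zq) / 2 for (w, _, _), zf, zq in zip(pool, z_fam, z_freq)}
    # Assumption: quartiles 3 and 4 correspond to scores at or above the median.
    cutoff = median(composite.values())
    eligible = [w for w in composite if composite[w] >= cutoff]
    rng = random.Random(seed)
    chosen = []
    # Draw the same number of words per length to balance word length.
    for length in (4, 5, 6):
        candidates = [w for w in eligible if len(w) == length]
        chosen += rng.sample(candidates, min(n_per_length, len(candidates)))
    return chosen, composite, cutoff


# Toy lexicon with made-up familiarity and frequency values (illustration only).
TOY_LEXICON = [
    ("casa", 6.8, 900), ("mare", 6.5, 400), ("orzo", 3.0, 10),
    ("libro", 6.6, 500), ("porta", 6.4, 450), ("ghisa", 2.5, 5),
    ("strada", 6.3, 480), ("scuola", 6.7, 600), ("usbergo", 1.5, 1),
]

chosen, composite, cutoff = select_words(TOY_LEXICON, n_per_length=1)
print(chosen)
```

Fixing the random seed makes the draw reproducible, which may be useful when a word list has to be documented or regenerated.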
The instructions were freely translated from English into Italian, with an emphasis on ensuring clarity and comprehensibility for the target population. The adapted version of SATURN was pilot-tested in a laboratory setting with older adult participants, who completed the SATURN test independently on a tablet and provided verbal feedback on the translation and usability. The Italian version was implemented using PsychoPy® (v2022.2.2) (Peirce et al., 2019) and can be translated into JavaScript, and hosted on Pavlovia1 for remote administration. PsychoPy and Pavlovia are products of Open Science Tools Ltd2, available for free or with a small fee, respectively. For consistent online unsupervised administration, the Italian SATURN differs from the original American version in certain aspects: the introductory sentence (“Click on the square to proceed” instead of “Close your eyes”), the instruction for the incidental memory task (“What shape had been selected?” instead of “What phrase was read at the start?”), and the removal of the spatial orientation question, which could not be automatically scored. The final Italian version comprises 19 tasks with a maximum total score of 29. Description of each task is reported in Table 2. In the present validation study, given the program’s valuable capability to record the time (expressed in seconds) spent on each task (hereafter referred to as ‘time on task’), we decided to analyze both accuracy and time on task for each cognitive subdomain. Each subdomain is represented by an average of accuracies and times on tasks exploring that specific subdomain. Additionally, we examined the SATURN total accuracy and total time spent on tasks by summing the accuracy scores and the time spent on each of the 19 tasks that constitute the test. Similarly to the American version, a reading time determined by the duration participants spent reading verbal instructions for specific tasks (excluding any figures) was also calculated. 
Moreover, in the Italian PsychoPy® version used in this study, task administration is preceded by a check of visual acuity and color perception, aimed at verifying that the conditions required for the examination are met. SATURN demonstrated excellent feasibility features such as cross-platform portability, satisfactory user experience, and clarity of instructions (for further details on the remote online adaptation, see Tagliabue et al., 2023). An updated version of the Italian SATURN is publicly available at: https://osf.io/cdmt3/?view_only=44c8bc4977274e02969a2d7b80362caf.

Table 2. Subdomains assessed with the SATURN, the tasks used to assess each subdomain, and the instruction/description of each task.

2.4 Statistical analysis

Statistical analysis followed the methodology outlined by Capitani and Laiacona (2017), which is the accepted standard for validating neuropsychological tests in Italy. Multiple regression analyses were conducted to determine the impact of demographic variables (sex, age, and education) on participants’ SATURN total accuracy and total time spent on tasks, as well as accuracy and time spent on tasks in each subdomain, and on the reading time for task instructions. Sex, age, and education were included in a multiple linear regression analysis to account for their potential overlapping effects. The results of these analyses were used to generate correction factors for each subject in the sample, which were applied to adjust raw scores. Adjusted scores were then ranked and categorized into a five-point interval scale (Equivalent Score, ES), ranging from 0 to 4, with specific criteria for each category. To identify normal and abnormal scores, non-parametric procedures (Capitani and Laiacona, 2017) were employed to calculate outer and inner tolerance limits, based on the distribution of scores within the normal population. Confidence intervals were set at 95%. Correction formulas and grids were provided to adjust raw scores based on demographic variables, such as age and education level. Correlations were calculated using Pearson’s r, and significance was set at p < 0.05. Analyses were run using JASP (JASP Team, 2024).
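The logic of a regression-based adjustment can be sketched as follows. This is a minimal illustration under stated assumptions, not the correction formulas of this study: the coefficients `b_age` and `b_edu` are hypothetical placeholders, the predictors are assumed to enter linearly and untransformed, and sex is omitted for brevity. Only the sample means for age and education are taken from the Subjects section.

```python
# Sample means reported in the Subjects section.
SAMPLE_MEAN_AGE = 61.33
SAMPLE_MEAN_EDU = 11.32


def adjusted_score(raw, age, education, b_age, b_edu,
                   mean_age=SAMPLE_MEAN_AGE, mean_edu=SAMPLE_MEAN_EDU):
    """Remove the estimated demographic effect from a raw score.

    b_age and b_edu are unstandardized regression coefficients. The values
    passed in the example below are hypothetical, not those estimated here.
    The adjustment subtracts each coefficient times the deviation of the
    individual's predictor from the sample mean.
    """
    return raw - b_age * (age - mean_age) - b_edu * (education - mean_edu)


# Hypothetical coefficients: accuracy decreases with age, increases with education.
example = adjusted_score(raw=20, age=71.33, education=6.32, b_age=-0.10, b_edu=0.20)
print(round(example, 2))
```

With these illustrative coefficients, an older and less educated individual receives a positive correction, while an individual whose age and education equal the sample means keeps the raw score unchanged.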

3 Results

SATURN mean total accuracy was 24.02 ± 3.25, ranging from 14 to 29, while mean total time on tasks was 403.09 ± 194.50 s (6.72 ± 3.24 min), ranging from 76.82 to 1172.15 s (i.e., 1.28–19.54 min). Table 3 reports descriptive statistics for demographic characteristics (age, education), raw and adjusted scores of the MMSE and MoCA, and SATURN total and subdomain accuracies and times on tasks. Descriptively, participants aged 70–80 years had lower SATURN total accuracy than those aged 50–59 years, and the least educated subjects had the lowest total accuracy. The distribution of SATURN mean total accuracy as a function of age and education is reported in Table 4. The mean distribution of accuracy for each subdomain, as well as the total and subdomain times on tasks, is reported in Supplementary Table S1.

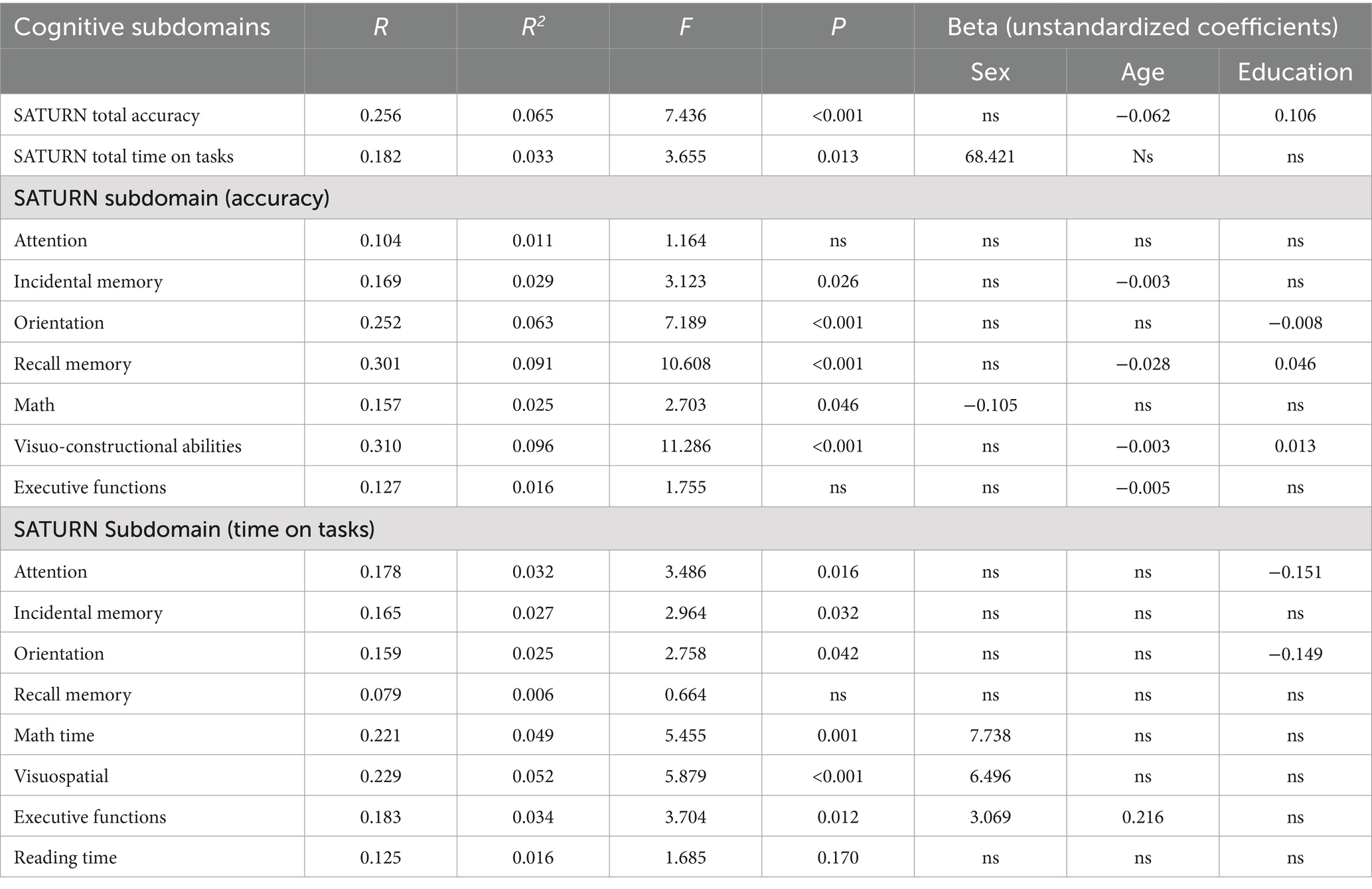

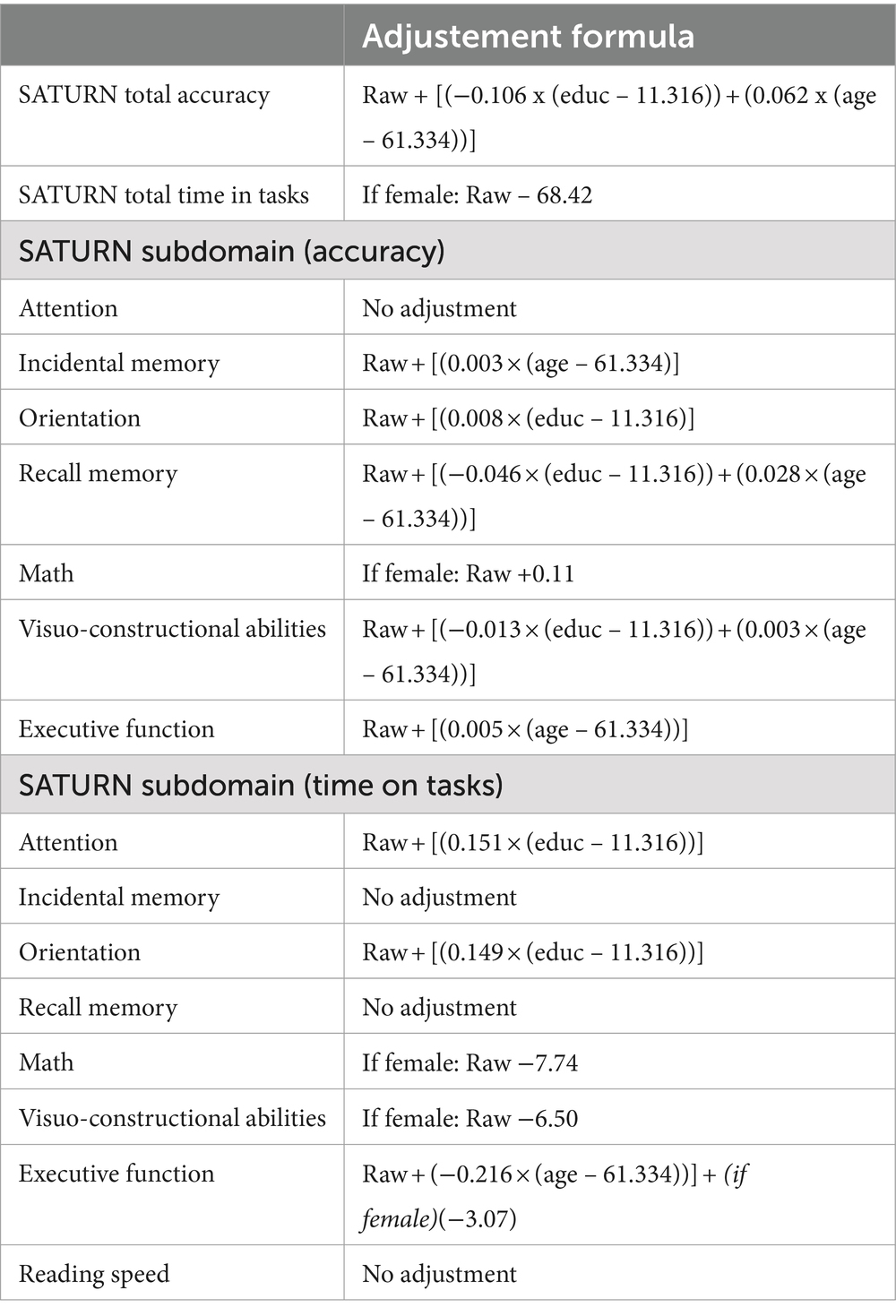

A series of multiple linear regression models revealed the influence (or lack thereof) of sociodemographic variables (sex, age, education) on total and subdomain accuracies and times on task. A significant effect of age and education emerged for SATURN total accuracy, while sex significantly influences the total time on tasks. Subdomain accuracies and times are influenced differently by sociodemographic characteristics. A significant effect of sex emerged for math accuracy and time on tasks in the subdomains of math, visuo-constructional abilities, and executive functions. A significant effect of age emerged for incidental memory accuracy, recall memory accuracy, visuo-constructional abilities accuracy, executive functions accuracy and executive functions time. A significant effect of education emerged for orientation accuracy, recall memory accuracy, visuo-constructional abilities accuracy, attention time and incidental memory time (Table 5). We derived the formulas from multiple linear regression models to calculate the adjusted scores for SATURN total and subdomain accuracies and times on tasks, based on age, sex, and education (Table 6).

Table 5. Multiple linear regression and beta unstandardized coefficients for the SATURN total accuracy, total time on tasks, and accuracy and time on tasks on each cognitive subdomain.

Table 6. Correction formulas for SATURN total and subdomains accuracies and time on tasks, according to sex, age and education.

Considering a sample of 323 subjects, outer and inner non-parametric tolerance limits are defined by the values corresponding to the 10th and 24th worst observations, respectively. The outer limit for adjusted SATURN total accuracy corresponds to 16.56, while the inner limit corresponds to 18.57. The outer limit for adjusted SATURN total time on tasks corresponds to 832.83 s, while the inner limit corresponds to 706.56 s. The outer tolerance limit corresponds to the cut-off point, and scores falling beneath it (or above it, if we consider times) may be seen as abnormal since it also encompasses the value of the 10th lowest observation (Capitani and Laiacona, 2017). An adjusted score higher (or lower, if we consider times) than the inner tolerance limit indicates a performance that we are 95% confident could come from the highest 95% of the distribution of the healthy population. Scores between the outer and inner limits correspond to 4.33% of our sample and are associated with borderline performance. Table 7 displays the inner and outer non-parametric tolerance limits, ES, the number of individuals within each ES (density), and the total frequency of individuals from 0 to 1, 2, 3, and 4 for the total and each subdomain score and time. For attention accuracy, we reported only the inner and outer tolerance limits because of a ceiling effect: most individuals reached the inner tolerance score, making it impossible to distinguish different equivalent scores.
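The ranks that define the two limits can be reproduced with a standard order-statistic argument based on the binomial distribution. The sketch below is an assumption about how such ranks are usually derived, not a transcription of the cited procedure or of the software used in this study; the function names are hypothetical. With X ~ Binomial(n, 0.05), the outer rank is the largest r for which P(X ≥ r) ≥ 0.95, and the inner rank is the smallest r for which P(X ≤ r − 1) ≥ 0.95.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def tolerance_ranks(n, p=0.05, confidence=0.95):
    """Ranks (counted from the worst observation) of the outer and inner limits.

    Outer: largest r such that, with the given confidence, at most a
    proportion p of the population scores worse than the r-th observation.
    Inner: smallest r such that, with the given confidence, at least a
    proportion p of the population scores worse than the r-th observation.
    """
    outer = 0
    for r in range(1, n + 1):
        if 1 - binom_cdf(r - 1, n, p) >= confidence:
            outer = r
        else:
            break
    inner = next(r for r in range(1, n + 1) if binom_cdf(r - 1, n, p) >= confidence)
    return outer, inner


outer_rank, inner_rank = tolerance_ranks(323)
print(outer_rank, inner_rank)  # 10 24

# Share of the sample lying between the two ranks.
print(round(100 * (inner_rank - outer_rank) / 323, 2))  # 4.33
```

For n = 323 this derivation returns the 10th and 24th worst observations, and the 14 observations between the two ranks account for 4.33% of the sample, in agreement with the values reported above.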

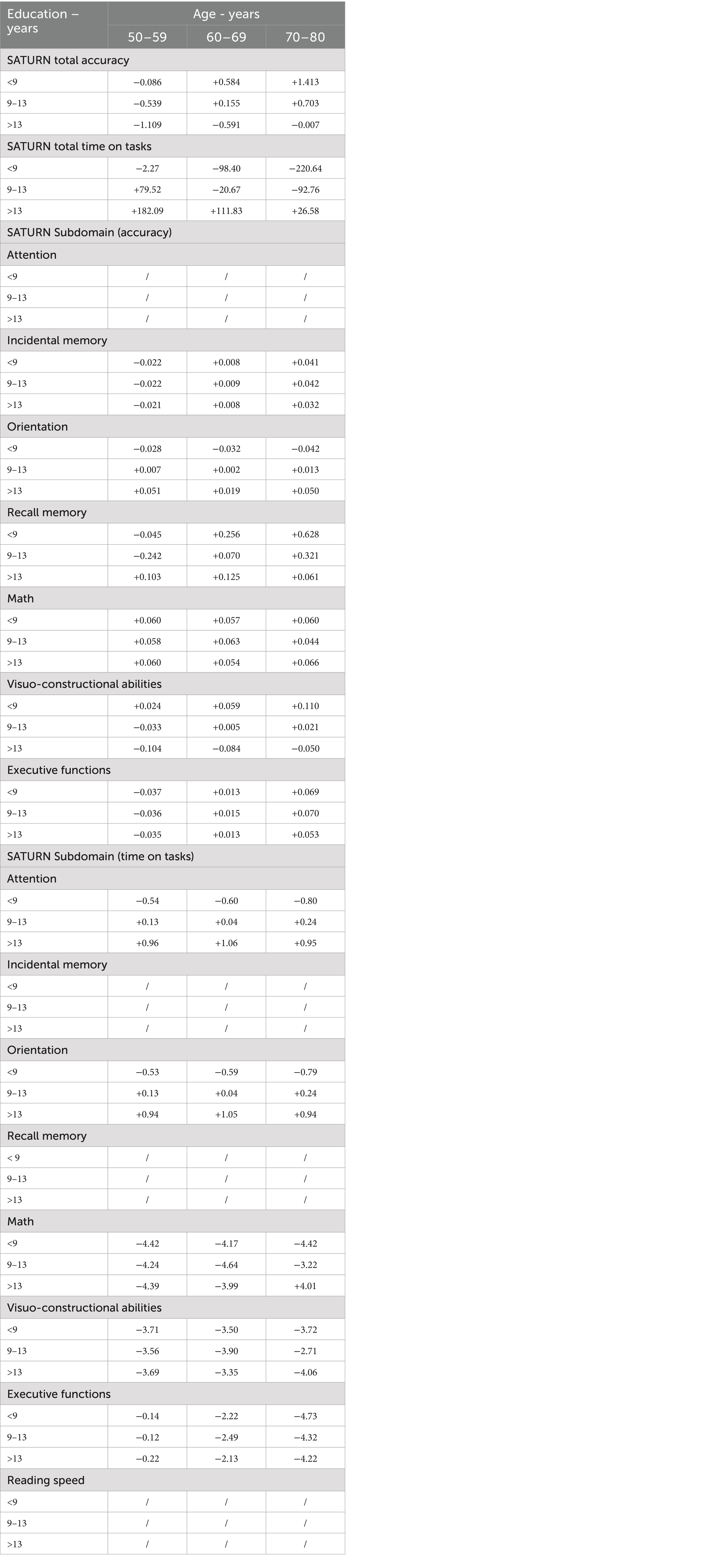

A correction grid was devised to accommodate the raw scores of tested individuals based on demographic factors. This grid was tailored for common combinations of age (grouped in 10-year increments) and educational attainment. In cases where the correction grid does not cover specific demographic profiles, regression equations can be employed to estimate adjusted scores (Table 8).

Table 8. Correction grid for SATURN total and subdomain accuracies and time on tasks, according to age and education.

The correlation between the adjusted SATURN total accuracy and MMSE adjusted scores was statistically significant, positive, and small (r = 0.19, p < 0.001). The correlation between the adjusted SATURN total accuracy and MoCA adjusted scores was statistically significant, positive, and stronger (r = 0.30, p < 0.001), similar to that between MoCA and MMSE (r = 0.31, p < 0.001).

A total of 86 (26.6%) individuals received assistance during the completion of SATURN because of a self-reported lack of experience in using computers. They were older [Age help, yes: 67.98 ± 9.09; no: 58.92 ± 7.26, t(321) = −9.234, p < 0.001] and less educated [Education help, yes: 8.41 ± 3.30; no: 12.37 ± 4.56, t(321) = 7.420, p < 0.001] than people who did not require assistance, with no significant sex difference (χ² = 0.074; p = 0.785). To test the influence of receiving assistance on SATURN total accuracy, total time on tasks, and reading speed, we performed a series of multiple linear regression models considering sex, age, education, and assistance received as factors. Results confirmed the influencing factors reported in Table 5, and receiving assistance did not affect any outcome (Supplementary Table S2).

4 Discussion

This research is the first effort to provide normative data stratified by age, education, and sex for the Italian version of SATURN, the first open-source digital tool for the initial assessment of cognitive functions to be introduced in the Italian context. The distinctive features of the tool, such as self-administration, automatic correction, and instant report generation, offer fundamental advantages that traditional paper-and-pencil methods cannot provide and could facilitate its application in primary care settings (Staffaroni et al., 2020). The availability of the same tool in different languages facilitates both the development of research in different countries and the clinical use of the tool with diverse populations (Canevelli et al., 2022; Giaquinto et al., 2022).

Compared to the original study in the USA (Bissig et al., 2020) and the previous online one (Tagliabue et al., 2023), the average education level of the sample was lower (USA: 16.3 ± 2.5; Tagliabue et al., 2023: 15.7 ± 3.1; this study: 11.3 ± 4.6), but in line with the demographic characteristics of Southern Italy (Caffò et al., 2016; Giaquinto et al., 2023). Probably for this reason, the average raw score is lower in this study (USA: 27.1 ± 2.6 out of 30; Tagliabue et al., 2023: 27.0 ± 1.7 out of 29; this study: 24.0 ± 3.3 out of 29). The current study provides normative data for SATURN along with cutoff values for detecting potential cognitive decline. The cutoff value (16.56 out of 29) derived in this study is much lower than the one reported by Bissig et al. (2020) (21.00 out of 30). However, the influence of age and education on total raw accuracy is in line with correlations reported in Tagliabue et al. (2023), and with multiple regressions reported in MMSE (Carpinelli Mazzi et al., 2020) and MoCA (Santangelo et al., 2015) Italian normative studies. The Italian version of SATURN significantly correlates with MMSE and MoCA (positive correlations), ranging from small to moderate, respectively. The correlation between SATURN and MoCA in the original study was significantly higher (r = 0.90; p < 0.001), but this was consistent with a sample composed of both patients and controls. In fact, the correlation between SATURN, MoCA, and MMSE reflects the moderate positive correlation between MoCA and MMSE, after adjusting for age and education, observed in previous studies on normative Italian samples (e.g., Santangelo et al., 2015: r = 0.43, p < 0.001; Conti et al., 2015: r = 0.32, p < 0.001). These results demonstrate the convergent validity of SATURN on normative samples. Further studies exploring the convergent validity of SATURN on clinical samples are needed. 
Although a small correlation is not ideal for tests that aim to measure the same function, it remains unclear whether overlapping with the aforementioned screening tests is advantageous, given that they are known to have some severe limitations (Carnero-Pardo, 2014; Moafmashhadi and Koski, 2013). Moreover, SATURN may tap more into cognitive functions (e.g., attentional and executive functions) that are barely touched by the MMSE, which is known to rely strongly on verbal abilities. Finally, the fact that MMSE and MoCA are bounded scores, with many participants reaching ceiling performance in a healthy population, may deflate the correlation because of a restriction-of-range artifact. Nonetheless, the significant correlation among these tests is consistent with the idea that they offer an assessment of overall cognitive functioning through a multi-domain approach, although they evaluate different cognitive domains, with only partial overlap (Ciesielska et al., 2016; Tagliabue et al., 2023).
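
The ceiling argument above can be illustrated with a small simulation. The following is a minimal sketch on simulated data only, not on the data of this study: two noisy tests are generated from one shared latent ability, and the second test is then capped at a maximum score. The function names (`pearson_r`, `simulate`), the sample size, the noise level, and the ceiling value are all illustrative assumptions.

```python
import random


def pearson_r(x, y):
    """Pearson correlation computed from scratch (no external libraries)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def simulate(n=5000, ceiling=0.0, seed=1):
    """Simulate two noisy tests of one latent ability; cap the second at `ceiling`.

    All parameters are hypothetical and chosen only for illustration.
    """
    rng = random.Random(seed)
    test_a, test_b, test_b_capped = [], [], []
    for _ in range(n):
        ability = rng.gauss(0.0, 1.0)          # shared latent cognitive level
        a = ability + rng.gauss(0.0, 1.0)      # unbounded test
        b = ability + rng.gauss(0.0, 1.0)      # second test before the ceiling
        test_a.append(a)
        test_b.append(b)
        test_b_capped.append(min(b, ceiling))  # bounded score: high performers pile up
    return pearson_r(test_a, test_b), pearson_r(test_a, test_b_capped)


r_uncapped, r_capped = simulate()
print(f"r without ceiling: {r_uncapped:.2f}")
print(f"r with ceiling:    {r_capped:.2f}")
```

Under these assumptions, the correlation computed with the capped scores is lower than the one computed with the uncapped scores, even though both tests depend on the same latent ability. The size of the drop depends on how many simulated participants reach the ceiling.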

As was done for the Italian normative validation of the MoCA (Santangelo et al., 2015), normative scores were also calculated for all cognitive subdomains assessed by SATURN. This was done to obtain a more precise understanding of the cognitive domains that might drive an overall performance below the norm and to guide second-level evaluation more accurately. Age and years of education influence performance in visuo-constructional tasks in SATURN, as well as in MoCA; some differences emerged regarding executive functions, which are influenced by age and education in MoCA but only by age in SATURN, and orientation, which is influenced by age and education in MoCA but only by education in SATURN. Age also influences incidental and recall memory in SATURN, consistent with MoCA. Females performed worse than males in math (calculation), and sex emerged as an influential variable for this subdomain. The current results are consistent with previous findings on male vs. female differences in math abilities across the lifespan (Kaufman et al., 2009), although such differences are more frequently studied in young adult samples of college students.

This study leverages the capabilities of digital tools to provide normative values for times on task, which are automatically calculated. These values include the total and subdomain times spent on tasks, as well as the time taken to read the verbal instructions. Measuring time on tasks can help assess the possible presence of cognitive slowing, in accordance with the processing-speed theory (Salthouse, 1996, 2000). Clinically, detecting individuals at risk for dementing disorders early on is essential for enhancing treatment effectiveness and guiding other clinical and social decisions (Liss et al., 2021). The utility of considering the temporal parameter in executing cognitive tasks for detecting patients with MCI or those at greater risk of developing dementia has been demonstrated in various contexts. Psychometric tests, such as the WAIS digit-symbol test (see the meta-analysis by Bäckman et al., 2005), and experimental tests measuring reaction times (e.g., Schmidt et al., 2020) have yielded significant findings. Additionally, ecological studies, including those measuring response times in online surveys (e.g., Schneider et al., 2023) or gait speed to detect motor decline (e.g., Hackett et al., 2018; Grande et al., 2019), have also provided valuable insights. Some digital tools represent an effort in this direction (Giaquinto et al., 2022). For example, CognICA (Kalafatis et al., 2021) is a 5-min, self-administered tool that assesses Information Processing Speed, a key indicator of cognitive impairment, using a language-independent task. An AI algorithm analyzes accuracy, response speed, and age to estimate the likelihood of impairment by comparing the patient’s performance to that of healthy and impaired individuals. This highlights the potential of digital tools and machine learning in advancing neuropsychological assessments (Battista et al., 2020).
This study provides SATURN normative values for time on task and reading times, which could be tested as discriminant variables for distinguishing individuals with cognitive decline from controls, either alone or in combination with accuracy, taking age and education level into account. Future studies are needed to explore these possibilities.

4.1 Limitations

It is important to consider certain limitations when interpreting the results. The sampling and recruitment methods, while effective for obtaining a stratified sample, may be susceptible to selection and response bias toward individuals who frequently visit pharmacies, doctors’ offices, and awareness events. Additionally, snowball sampling could have produced a homogeneous sample. Furthermore, because participants resided in a single region of Southern Italy, the results may not generalize to residents of the northern part of the country, as is the case, for example, with the normative sample of the MMSE (e.g., Carpinelli Mazzi et al., 2020). The inclusion criteria do not allow us to definitively exclude the possibility that individuals with various types of cognitive deficits are present in the normative sample. However, this is also due to the limitations of existing paper-and-pencil screening tools, which do not achieve perfect sensitivity and specificity, and we do not know to what extent these limitations are also present in the Italian version of SATURN. Future clinical validity studies will need to assess the discriminative capacity of SATURN. Results also showed that older and less educated individuals without computer experience more often required assistance in submitting answers while completing SATURN. Although this did not affect the final results, it could represent a limitation for the remote application of SATURN in certain populations. Finally, although we believe that completion times for the individual SATURN tasks and for the overall test are clinically useful measures of processing speed, they may include some noise.

5 Conclusion

Future studies need to evaluate the sensitivity and specificity of the Italian version of SATURN in identifying cases of pathological cognitive decline. A feasibility study is also required to test its application in clinical practice, particularly in primary care settings. Preliminary results from this study offer encouraging prospects, as performance was not significantly influenced by the minimal assistance that some participants required for submitting answers, especially those with no prior computer experience. Additionally, the total administration time of the tool is less than 10 min for the majority of healthy people, which is extremely advantageous considering the number of measures provided with automatic scoring and reporting. The tool can operate on iOS, Windows, and Android platforms, thanks to the flexibility of PsychoPy, which simplifies its application (Giaquinto et al., 2022).

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: the datasets analyzed for this study and SATURN materials can be found in the OSF repository at: https://osf.io/cdmt3/?view_only=44c8bc4977274e02969a2d7b80362caf.

Ethics statement

The studies involving humans were approved by the National Ethics Committee for experiments of public research institutions (NEC) and other national public entities (CEN) during the session held on April 4, 2023, Project Code: PNRR-MAD-2022-12376781. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

FG: Conceptualization, Data curation, Formal analysis, Investigation, Visualization, Writing – original draft, Writing – review & editing. SA: Data curation, Software, Writing – original draft, Writing – review & editing. GL: Investigation, Writing – original draft, Writing – review & editing. DR: Formal analysis, Methodology, Supervision, Writing – original draft, Writing – review & editing. PA: Conceptualization, Data curation, Formal analysis, Funding acquisition, Methodology, Project administration, Resources, Supervision, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. Funded by the European Union – Next Generation EU – PNRR – M6C2 – Investment 2.1 Enhancing and strengthening biomedical research of the National Health Service (NHS). Project Code: PNRR-MAD-2022-12375822 - Title: “From prevention to the etiopathogenetic and pathophysiological mechanisms of dementia: a paradigm shift in the biological continuum of cognitive decline. The PREV-ITA-DEM study” CUP: F85E22001040006.

Acknowledgments

We express our gratitude to Andrioli Matteo, Bocco Stefano, Cazzato Claudia Vittoria, Centonze Mirko, Corlianò Luisa, De Cicco Elisa, Delli Santi Alessia, Festa Noemi, Fioro Carmela Giorgia, Giovinazzo Manola, Martella Eliana, Marzio Federica, Mastrorilli Matteo, Musarò Giulia, Pellerano Giulia Letizia, Pichierri Sabrina, Rossetti Erika, Santoiemma Lucia, Scalese Greta, Scozzi Sara, Secondo Miriana, Sgobio Eliana, and Sperti Valentina for their important contributions to data collection.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2024.1456619/full#supplementary-material

References

Alzheimer’s Association (2019). 2019 Alzheimer's disease facts and figures. Alzheimers Dement. 15, 321–387. doi: 10.1016/j.jalz.2019.01.010

Bäckman, L., Jones, S., Berger, A. K., Laukka, E. J., and Small, B. J. (2005). Cognitive impairment in preclinical Alzheimer's disease: a meta-analysis. Neuropsychology 19, 520–531. doi: 10.1037/0894-4105.19.4.520

Barbosa, R., Midão, L., Almada, M., and Costa, E. (2021). Cognitive performance in older adults across Europe based on the SHARE database. Aging Neuropsychol. Cogn. 28, 584–599. doi: 10.1080/13825585.2020.1799927

Barca, L., Burani, C., and Arduino, L. S. (2001). Lexical and sublexical variables: Norms for 626 Italian nouns. Database: lexvar.xls.

Barca, L., Burani, C., and Arduino, L. S. (2002). Word naming times and psycholinguistic norms for Italian nouns. Behav. Res. Methods Instrum. Comput. 34, 424–434. doi: 10.3758/BF03195471

Battista, P., Salvatore, C., Berlingeri, M., Cerasa, A., and Castiglioni, I. (2020). Artificial intelligence and neuropsychological measures: the case of Alzheimer’s disease. Neurosci. Biobehav. Rev. 114, 211–228. doi: 10.1016/j.neubiorev.2020.04.026

Bertinetto, P. M., Burani, C., Laudanna, A., Marconi, L., Ratti, D., Rolando, C., and Thornton, A. M. (2005). Corpus e Lessico di Frequenza dell’Italiano Scritto (CoLFIS). Available at: http://www.istc.cnr.it/grouppage/colfisEng (Accessed March 15, 2022).

Bissig, D., Kaye, J., and Erten-Lyons, D. (2020). Validation of SATURN, a free, electronic, self-administered cognitive screening test. Alzheimers Dement TRCI 6:e12116. doi: 10.1002/trc2.12116

Boccardi, M., Monsch, A. U., Ferrari, C., Altomare, D., Berres, M., Bos, I., et al. (2022). Harmonizing neuropsychological assessment for mild neurocognitive disorders in Europe. Alzheimers Dement. 18, 29–42. doi: 10.1002/alz.12365

Caffò, A. O., Lopez, A., Spano, G., Saracino, G., Stasolla, F., Ciriello, G., et al. (2016). The role of pre-morbid intelligence and cognitive reserve in predicting cognitive efficiency in a sample of Italian elderly. Aging Clin. Exp. Res. 28, 1203–1210. doi: 10.1007/s40520-016-0580-z

Canevelli, M., Cova, I., Remoli, G., Bacigalupo, I., Salvi, E., Maestri, G., et al. (2022). A nationwide survey of Italian centers for cognitive disorders and dementia on the provision of care for international migrants. Eur. J. Neurol. 29, 1892–1902. doi: 10.1111/ene.15297

Capitani, E., and Laiacona, M. (2017). Outer and inner tolerance limits: their usefulness for the construction of norms and the standardization of neuropsychological tests. Clin. Neuropsychol. 31, 1219–1230. doi: 10.1080/13854046.2017.1334830

Carnero-Pardo, C. (2014). Should the mini-mental state examination be retired? Neurología 29, 473–481. doi: 10.1016/j.nrleng.2013.07.005

Carpinelli Mazzi, M., Iavarone, A., Russo, G., Musella, C., Milan, G., D’Anna, F., et al. (2020). Mini-mental state examination: new normative values on subjects in southern Italy. Aging Clin. Exp. Res. 32, 699–702. doi: 10.1007/s40520-019-01250-2

Chan, J. Y., Yau, S. T., Kwok, T. C., and Tsoi, K. K. (2021). Diagnostic performance of digital cognitive tests for the identification of MCI and dementia: a systematic review. Ageing Res. Rev. 72:101506. doi: 10.1016/j.arr.2021.101506

Cheung, Y. T., Tan, E. H. J., and Chan, A. (2012). An evaluation on the neuropsychological tests used in the assessment of postchemotherapy cognitive changes in breast cancer survivors. Supportive Care Cancer 20, 1361–1375. doi: 10.1007/s00520-012-1445-4

Ciesielska, N., Sokołowski, R., Mazur, E., Podhorecka, M., Polak-Szabela, A., and Kędziora-Kornatowska, K. (2016). Is the Montreal cognitive assessment (MoCA) test better suited than the Mini-mental state examination (MMSE) in mild cognitive impairment (MCI) detection among people aged over 60? Meta-analysis. Psychiatr. Pol. 50, 1039–1052. doi: 10.12740/PP/45368

Conti, S., Bonazzi, S., Laiacona, M., Masina, M., and Coralli, M. V. (2015). Montreal cognitive assessment (MoCA)-Italian version: regression based norms and equivalent scores. Neurol. Sci. 36, 209–214. doi: 10.1007/s10072-014-1921-3

Der, G., and Deary, I. J. (2006). Age and sex differences in reaction time in adulthood: results from the United Kingdom health and lifestyle survey. Psychol. Aging 21, 62–73. doi: 10.1037/0882-7974.21.1.62

Feigin, V. L., Vos, T., Nichols, E., Owolabi, M. O., Carroll, W. M., Dichgans, M., et al. (2020). The global burden of neurological disorders: translating evidence into policy. Lancet Neurol. 19, 255–265. doi: 10.1016/S1474-4422(19)30411-9

Folstein, M. F., Folstein, S. E., and McHugh, P. R. (1975). “Mini-mental state”: a practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198. doi: 10.1016/0022-3956(75)90026-6

Giaquinto, F., Battista, P., and Angelelli, P. (2022). Touchscreen cognitive tools for mild cognitive impairment and dementia used in primary care across diverse cultural and literacy populations: a systematic review. J. Alzheimers Dis. 90, 1359–1380. doi: 10.3233/JAD-220547

Giaquinto, F., Tosi, G., Abbatantuono, C., Pepe, I., Iaia, M., Macchitella, L., et al. (2023). The indirect effect of cognitive reserve on the relationship between age and cognition in pathological ageing: a cross-sectional retrospective study in an unselected and consecutively enrolled sample. J. Neuropsychol. 17, 477–490. doi: 10.1111/jnp.12323

Grande, G., Triolo, F., Nuara, A., Welmer, A. K., Fratiglioni, L., and Vetrano, D. L. (2019). Measuring gait speed to better identify prodromal dementia. Exp. Gerontol. 124:110625. doi: 10.1016/j.exger.2019.05.014

Gregory, T., Nettelbeck, T., Howard, S., and Wilson, C. (2008). Inspection time: a biomarker for cognitive decline. Intelligence 36, 664–671. doi: 10.1016/j.intell.2008.03.005

Hackett, R. A., Davies-Kershaw, H., Cadar, D., Orrell, M., and Steptoe, A. (2018). Walking speed, cognitive function, and dementia risk in the English longitudinal study of ageing. J. Am. Geriatr. Soc. 66, 1670–1675. doi: 10.1111/jgs.15312

Hedden, T., and Gabrieli, J. D. (2004). Insights into the ageing mind: a view from cognitive neuroscience. Nat. Rev. Neurosci. 5, 87–96. doi: 10.1038/nrn1323

Huang, Y., Wang, Q., Zou, P., He, G., Zeng, Y., and Yang, J. (2023). Prevalence and factors influencing cognitive impairment among the older adult stroke survivors: a cross-sectional study. Front. Public Health 11:1254126. doi: 10.3389/fpubh.2023.1254126

Isaacson, R. S., and Saif, N. (2020). A missed opportunity for dementia prevention? Current challenges for early detection and modern-day solutions. J. Prev. Alz. Dis. 7, 1–293. doi: 10.14283/jpad.2020.23

Jack, C. R., Bennett, D. A., Blennow, K., Carrillo, M. C., Dunn, B., Haeberlein, S. B., et al. (2018). NIA-AA research framework: toward a biological definition of Alzheimer’s disease. Alzheimers Dement. 14, 535–562. doi: 10.1016/j.jalz.2018.02.018

Kalafatis, C., Modarres, M. H., Apostolou, P., Marefat, H., Khanbagi, M., Karimi, H., et al. (2021). Validity and cultural generalisability of a 5-minute AI-based, computerised cognitive assessment in mild cognitive impairment and Alzheimer’s dementia. Front. Psych. 12:706695. doi: 10.3389/fpsyt.2021.706695

Kaufman, A. S., Kaufman, J. C., Liu, X., and Johnson, C. K. (2009). How do educational attainment and gender relate to fluid intelligence, crystallized intelligence, and academic skills at ages 22–90 years? Arch. Clin. Neuropsychol. 24, 153–163. doi: 10.1093/arclin/acp015

Liss, J. L., Seleri Assunção, S., Cummings, J., Atri, A., Geldmacher, D. S., Candela, S. F., et al. (2021). Practical recommendations for timely, accurate diagnosis of symptomatic Alzheimer’s disease (MCI and dementia) in primary care: a review and synthesis. J. Intern. Med. 290, 310–334. doi: 10.1111/joim.13244

Livingston, G., Huntley, J., Sommerlad, A., Ames, D., Ballard, C., Banerjee, S., et al. (2020). Dementia prevention, intervention, and care: 2020 report of the lancet commission. Lancet 396, 413–446. doi: 10.1016/S0140-6736(20)30367-6

Moafmashhadi, P., and Koski, L. (2013). Limitations for interpreting failure on individual subtests of the Montreal cognitive assessment. J. Geriatr. Psychiatry Neurol. 26, 19–28. doi: 10.1177/0891988712473802

Nasreddine, Z. S., Phillips, N. A., Bédirian, V., Charbonneau, S., Whitehead, V., Collin, I., et al. (2005). The Montreal cognitive assessment, MoCA: a brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 53, 695–699. doi: 10.1111/j.1532-5415.2005.53221.x

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., et al. (2019). PsychoPy2: experiments in behavior made easy. Behav. Res. Methods 51, 195–203. doi: 10.3758/s13428-018-01193-y

Perry, A., Wen, W., Kochan, N. A., Thalamuthu, A., Sachdev, P. S., and Breakspear, M. (2017). The independent influences of age and education on functional brain networks and cognition in healthy older adults. Hum. Brain Mapp. 38, 5094–5114. doi: 10.1002/hbm.23717

Petersen, R. C., Lopez, O., Armstrong, M. J., Getchius, T. S., Ganguli, M., Gloss, D., et al. (2018). Practice guideline update summary: mild cognitive impairment: report of the guideline development, dissemination, and implementation Subcommittee of the American Academy of neurology. Neurology 90, 126–135. doi: 10.1212/WNL.0000000000004826

Pieri, L., Tosi, G., and Romano, D. (2023). Virtual reality technology in neuropsychological testing: a systematic review. J. Neuropsychol. 17, 382–399. doi: 10.1111/jnp.12304

Sabbagh, M. N., Boada, M., Borson, S., Chilukuri, M., Dubois, B., Ingram, J., et al. (2020). Early detection of mild cognitive impairment (MCI) in primary care. J. Prev Alzheimers Dis. 7, 165–170. doi: 10.14283/jpad.2020.21

Salthouse, T. A. (1996). The processing-speed theory of adult age differences in cognition. Psychol. Rev. 103, 403–428. doi: 10.1037/0033-295X.103.3.403

Salthouse, T. A. (2000). Aging and measures of processing speed. Biol. Psychol. 54, 35–54. doi: 10.1016/S0301-0511(00)00052-1

Santangelo, G., Siciliano, M., Pedone, R., Vitale, C., Falco, F., Bisogno, R., et al. (2015). Normative data for the Montreal cognitive assessment in an Italian population sample. Neurol. Sci. 36, 585–591. doi: 10.1007/s10072-014-1995-y

Schmidt, G. J., Boechat, Y. E. M., van Duinkerken, E., Schmidt, J. J., Moreira, T. B., Nicaretta, D. H., et al. (2020). Detection of cognitive dysfunction in elderly with a low educational level using a reaction-time attention task. J. Alzheimers Dis. 78, 1197–1205. doi: 10.3233/JAD-200881

Schneider, S., Junghaenel, D. U., Meijer, E., Stone, A. A., Orriens, B., Jin, H., et al. (2023). Using item response times in online questionnaires to detect mild cognitive impairment. J. Gerontol. B Psychol. Sci. Soc. Sci. 78, 1278–1283. doi: 10.1093/geronb/gbad043

Staffaroni, A. M., Tsoy, E., Taylor, J., Boxer, A. L., and Possin, K. L. (2020). Digital cognitive assessments for dementia: digital assessments may enhance the efficiency of evaluations in neurology and other clinics. Pract. Neurol. 2020, 24–45

Tagliabue, C. F., Bissig, D., Kaye, J., Mazza, V., and Assecondi, S. (2023). Feasibility of remote unsupervised cognitive screening with SATURN in older adults. J. Appl. Gerontol. 42, 1903–1910. doi: 10.1177/07334648231166894

Thabtah, F., Peebles, D., Retzler, J., and Hathurusingha, C. (2020). Dementia medical screening using mobile applications: a systematic review with a new mapping model. J. Biomed. Inform. 111:103573. doi: 10.1016/j.jbi.2020.103573

Torregrossa, W., Torrisi, M., De Luca, R., Casella, C., Rifici, C., Bonanno, M., et al. (2023). Neuropsychological assessment in patients with traumatic brain injury: a comprehensive review with clinical recommendations. Biomedicines 11:1991. doi: 10.3390/biomedicines11071991

Tsoy, E., Zygouris, S., and Possin, K. L. (2021). Current state of self-administered brief computerized cognitive assessments for detection of cognitive disorders in older adults: a systematic review. J. Prev Alzheimers Dis. 8, 267–276. doi: 10.14283/jpad.2021.11

Keywords: cognitive assessment, self-administered cognitive test, computer based test, aging, normative data

Citation: Giaquinto F, Assecondi S, Leccese G, Romano DL and Angelelli P (2024) Normative study of SATURN: a digital, self-administered, open-source cognitive assessment tool for Italians aged 50–80. Front. Psychol. 15:1456619. doi: 10.3389/fpsyg.2024.1456619

Edited by:

Scott Sperling, Cleveland Clinic, United States

Reviewed by:

Francesca Conca, University Institute of Higher Studies in Pavia, Italy

Samantha Stern, University College London Hospitals NHS Foundation Trust, United Kingdom

Copyright © 2024 Giaquinto, Assecondi, Leccese, Romano and Angelelli. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Francesco Giaquinto, francesco.giaquinto@unisalento.it
