- 1 Research Organization of Open Innovation and Collaboration, Ritsumeikan University, Osaka, Japan

- 2 Japan Society for the Promotion of Science, Tokyo, Japan

- 3 College of Comprehensive Psychology, Ritsumeikan University, Osaka, Japan

Estimating the time until an impending collision (time-to-collision, TTC) with approaching or looming individuals and maintaining a comfortable distance from others (interpersonal distance, IPD) are commonly required in daily life and contribute to survival and social goals. Despite accumulating evidence that facial expressions and gaze direction interactively influence face processing, it remains unclear how these facial features affect the spatiotemporal processing of looming faces. Based on the shared signal hypothesis, according to which fear signals the presence of threats in the environment when coupled with averted gaze, we examined whether facial expressions (fearful vs. neutral) and gaze direction (direct vs. averted) interact in judgments of TTC and IPD for looming faces. Experiment 1 demonstrated that TTC estimates were reduced for fearful faces compared to neutral ones only when the concomitant gaze was averted. In Experiment 2, the emotion-gaze interaction was not observed in IPD regulation, which is arguably sensitive to affective responses to faces. The results suggest that fearful-averted faces modulate the cognitive extrapolation of looming motion by communicating environmental threats rather than by altering subjective fear or the perceived emotional intensity of faces. This TTC-specific effect may reflect an enhanced defensive response to unseen threats implied by looming fearful-averted faces. Our findings provide insight into how the visual system processes facial features to ensure bodily safety and comfortable interpersonal communication in dynamic environments.

1 Introduction

Accurate perception of approaching or looming objects is crucial for organisms living in a dynamic three-dimensional environment. Looming stimuli expand on the retina as their distance from the observer decreases, which induces a reliable perception of approach. Previous studies have shown that the human visual system is highly sensitive to looming stimuli and prioritizes their processing (e.g., Cappe et al., 2012; Finlayson et al., 2012), yielding cognitive consequences such as attentional capture (Franconeri and Simons, 2003; Abrams and Christ, 2005). Looming, the specific visual pattern of expansion, increases neural activation in the visuomotor cortex (Peron and Gabbiani, 2009; Billington et al., 2011) and superior temporal sulcus (Tyll et al., 2013), which may underlie faster detection of and motor responses to looming stimuli (Ball and Tronick, 1971; Yonas et al., 1979; Walker-Andrews and Lennon, 1985; Náñez and Yonas, 1994; Shirai and Yamaguchi, 2004; Lewkowicz, 2008). Avoiding collisions and maintaining an appropriate distance from others are commonly required in daily life and necessitate accurate spatiotemporal processing of looming individuals. Nevertheless, studies on looming perception in interpersonal situations are scarce, and it is not fully understood how the visual system monitors approaching others and what factors affect such processing.

In face-to-face interpersonal situations, the facial cues of an interaction partner are likely to influence the processing of looming others. Facial expressions that signal the expressor’s internal state are particularly important cues not only for social interactions with others but also for visual face processing. In particular, negative facial expressions are recognized more accurately (Macrae et al., 2002) and perceived as closer than neutral expressions (Kim and Son, 2015), indicating their substantial impact on visual cognition. Furthermore, negative facial expressions serve as social threat cues that increase amygdala activity (Morris et al., 1996), which in turn triggers socioaffective responses such as avoidance behavior (Fox et al., 2007; Roelofs et al., 2010) and a greater interpersonal distance (Ruggiero et al., 2017, 2021; Silvestri et al., 2022). Thus, it is plausible to predict that negative facial expressions affect the spatiotemporal processing of looming faces.

Another factor that affects the processing of looming faces is gaze direction, an important cue for inferring the attentional focus of an interaction partner (for reviews, see de C Hamilton, 2016; Hadders-Algra, 2022) that also modulates the interpretation of emotional facial expressions (e.g., Sander et al., 2007). The effect of gaze direction on facial expression processing has also been found in face preference in newborns (Rigato et al., 2011), indicating an adaptive role of the emotion-gaze interaction early in life. The shared signal hypothesis posits that gaze direction differentially affects the perceived threat posed by negative expressions such as fear and anger, and that specific emotion-gaze pairs that convey a clear threat are particularly salient (Adams and Kleck, 2003, 2005). Specifically, fear indicates that the expressor perceives a threat, which, when combined with an averted gaze, signals an unseen threat in the environment. In contrast, a direct gaze obscures the source of the threat and further communicates that the expressor is afraid of the observer. Anger, on the other hand, indicates aggression by the expressor and, when combined with direct gaze, suggests that the threat is directed at the observer; when combined with averted gaze, the target of the threat becomes ambiguous. In line with this, the specific emotion-gaze pairs (i.e., fear-averted and anger-direct) have been shown to elicit greater neuronal activity in the amygdala (Adams et al., 2003; Sato et al., 2004; N’Diaye et al., 2009). It has also been found that angry and happy faces with direct gaze and fearful faces with averted gaze are detected more accurately than the switched combinations, which helps observers orient their attention to environmental threats or rewards (Hietanen et al., 2008; Milders et al., 2011; de C Hamilton, 2016; McCrackin and Itier, 2019). 
These emotion-gaze pairs also affect the detection of emotion (Adams and Kleck, 2003, 2005), approach-avoidance tendencies (Roelofs et al., 2010), time perception (Doi and Shinohara, 2009; Kliegl et al., 2015), and gaze-cued attention (McCrackin and Itier, 2018a,b). Therefore, based on the shared signal hypothesis, the emotion-gaze interaction can be expected to affect the processing of looming faces.

When others are approaching, a crucial function of the visual system is to accurately estimate the time until impending collision, time-to-collision (TTC), based on visual inputs. Pioneering research theorized that TTC is estimated based on the visual variable tau, defined as the ratio of the retinal angle of a looming target to its instantaneous rate of optical expansion (tau theory, Lee, 1976; for a review, see Regan and Gray, 2000). However, subsequent studies have revealed that TTC estimates involve cognitive motion extrapolation (DeLucia and Liddell, 1998), decrease under a high cognitive load (Baurès et al., 2010; McGuire et al., 2021), and are affected by target size (DeLucia, 2004), initial distance (Yan et al., 2011), and velocity (Gray and Regan, 1998). Moreover, threatening targets (e.g., spiders, snakes, and frontal attacks) are estimated to arrive earlier than non-threatening targets (Brendel et al., 2012; Vagnoni et al., 2012), indicating an emotional impact. Furthermore, TTC estimates can decrease when participants’ movements are restricted, which constitutes a threatening situation given the difficulty of avoiding collisions (Neuhoff et al., 2012; Vagnoni et al., 2017). Despite accumulating findings suggesting that TTC estimation is not determined purely by physical optics, but also involves the processing of stimulus properties and threats in targets and the viewing environment, it remains unclear which factors affect the TTC estimation of looming people.
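To make the tau variable concrete, the following Python sketch (an illustration under stated assumptions, not part of the study) computes tau = θ / (dθ/dt) for a hypothetical object approaching at a constant speed, estimating dθ/dt with a central finite difference. For small visual angles, tau approximately equals distance divided by speed, that is, the true TTC; for the larger angle subtended by a 21-cm object at 90 cm, it slightly overestimates the true value of 0.6 s.

```python
import math

def visual_angle(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (radians) subtended by an object of size_cm at distance_cm."""
    return 2.0 * math.atan(size_cm / (2.0 * distance_cm))

def simulated_tau(size_cm: float, distance_cm: float, speed_cm_s: float,
                  dt: float = 1e-4) -> float:
    """Lee's tau: the visual angle divided by its rate of expansion.

    The rate of expansion is approximated with a central finite difference
    around the object's current distance (constant approach speed assumed).
    """
    before = visual_angle(size_cm, distance_cm + speed_cm_s * dt / 2)
    after = visual_angle(size_cm, distance_cm - speed_cm_s * dt / 2)
    theta = visual_angle(size_cm, distance_cm)
    theta_dot = (after - before) / dt
    return theta / theta_dot

# Hypothetical example: an object 90 cm away approaching at 150 cm/s,
# so the true time-to-collision is 90 / 150 = 0.6 s.
print(round(simulated_tau(21.0, 90.0, 150.0), 3))  # 0.605 (large visual angle)
print(round(simulated_tau(1.0, 90.0, 150.0), 3))   # 0.6 (small visual angle)
```

The small-angle case shows why tau is treated as an optical proxy for TTC, while the large-angle case shows that the equivalence is only approximate.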

Aside from TTC estimation, which serves survival goals, maintaining an appropriate interpersonal distance (IPD) from approaching others is crucial for comfortable social interactions. In social psychology, IPD is considered to reflect personal space, the area within which individuals experience discomfort when others intrude (e.g., Hayduk, 1983). It has been suggested that individuals automatically adjust IPD based on the discomfort they experience and that IPD is regulated by an arousal-sensitive process involving the amygdala (e.g., Kennedy et al., 2009). Studies using a stop-distance task, which measures the point at which observers begin to feel discomfort with approaching targets (e.g., Iachini et al., 2016; Silvestri et al., 2022), have shown that negative facial expressions and direct gaze of target individuals increase the preferred IPD (Bailenson et al., 2001; Asada et al., 2016; Ruggiero et al., 2017, 2021; Cartaud et al., 2018; Silvestri et al., 2022). Although both negative facial expressions and direct gaze are known to increase IPD, it is unclear whether these facial features interact in regulating IPD when one is approached by others.

For our aim of examining TTC estimation and IPD regulation towards looming faces, it is useful to distinguish the spatial regions associated with these judgments. Based on the finding that people with restricted body movements estimate shorter TTCs for looming objects (Neuhoff et al., 2012; Vagnoni et al., 2017), reduced TTC estimates have been argued to reflect an enlargement of peripersonal space (PPS), the surrounding region that serves as a spatial buffer for immediate actions (Rizzolatti et al., 1981, 1997; Di Pellegrino et al., 1997). In contrast, preferred IPD is associated with a safety zone maintained for comfortable interpersonal interactions, that is, a social space that differs from PPS as an action space (for a review, see Coello and Cartaud, 2021). Recent studies have shown that both PPS and preferred IPD are altered by social relationships and emotions toward interaction partners (Iachini et al., 2016; Patané et al., 2017), and that manipulations of reachability can alter preferred IPD (Quesque et al., 2017; D'Angelo et al., 2019), albeit with individual differences (Candini et al., 2019), suggesting that PPS and social IPD are functionally dissociable but share common spatial representations. Thus, although TTC estimates and preferred IPD are both spatiotemporal judgments about approaching others, they are considered to relate to different spatial concepts.

Previous studies have failed to demonstrate effects of angry facial expressions on TTC estimates (Brendel et al., 2012; DeLucia et al., 2014). Note that Brendel et al. (2012) found a reliable increase in TTC estimates only for friendly faces relative to baseline empty faces, and observed an effect of threatening stimuli on TTC estimates when data from face and non-face images were pooled. Based on their results, it has been suggested that TTC modulation requires unequivocal threats (i.e., predators) that elicit high arousal, rather than implicit threats conveyed by facial expressions, which elicit less arousal (Wangelin et al., 2012). However, direct gaze by itself can affect visual cognition, because it accompanies aggressive displays (Hinde and Rowell, 1962; Ioannou et al., 2014) and increases arousal, leading to cognitive consequences (Nichols and Champness, 1971; Mason et al., 2004; Senju and Hasegawa, 2005; Hietanen et al., 2008; Conty et al., 2010; Akechi et al., 2013; for a review, see Emery, 2000). The lack of an effect of angry facial expressions in previous studies may therefore be attributable to the confounding effect of the direct gaze that accompanied both the angry and the neutral faces. For angry faces, which convey threat together with direct gaze, the effects of facial expression and direct gaze are inseparable, making any emotion-gaze interaction elusive. We therefore used faces expressing fear as negative stimuli to examine the emotion-gaze interaction.

Accordingly, the current study aimed to investigate whether fearful facial expressions and gaze direction interact in their effects on TTC estimation for, and preferred IPD from, looming faces. Experiment 1 involved a TTC estimation task, which required participants to estimate the collision time after the looming faces disappeared and thus involved spatiotemporal extrapolation of the looming motion. Experiment 2 involved an IPD task, which required participants to continuously observe looming faces and indicate when they felt discomfort. Based on previous findings, we hypothesized that fearful facial expressions would reduce TTC estimates and increase preferred IPD for looming faces. The shared signal hypothesis further predicts that averted gaze enhances the influence of fearful facial expressions on the spatiotemporal processing of looming faces. In contrast, any effect of direct gaze by itself was expected to appear only for neutral faces, because direct gaze would reduce the threat conveyed by fearful faces.

2 Experiment 1

2.1 Method

2.1.1 Participants

Sample size was determined using G*Power software (version 3.1.9.6; Faul et al., 2009), assuming an effect size (ηp² = 0.4) reported in a previous study that explored the effect of facial expressions on TTC estimates (Brendel et al., 2012). The power analysis indicated that 15 participants were needed to detect an interaction effect (α = 0.05, power = 0.8; ANOVA: fixed effects, special, main effects and interactions). Therefore, in Experiment 1, we collected data from 15 students (10 females; M = 20.6 years, SD = 1.8). All participants reported normal or corrected-to-normal visual acuity and were naïve to the purpose of the study. In accordance with the Declaration of Helsinki, the participants signed a written consent form approved by the Ethics Review Committee for Research Involving Human Subjects of Ritsumeikan University.
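For readers reproducing the power analysis, G*Power's "ANOVA: fixed effects, special" procedure takes Cohen's f rather than ηp². The sketch below shows the standard conversion f = √(ηp² / (1 − ηp²)) and the noncentrality parameter λ = f²N of the fixed-effects F test. The numerator degrees of freedom and number of groups the authors entered are not reported, so the final power computation is left to G*Power rather than guessed here.

```python
import math

def cohens_f_from_partial_eta2(eta_p2):
    """Convert partial eta squared to Cohen's f: f = sqrt(eta_p2 / (1 - eta_p2))."""
    if not 0.0 <= eta_p2 < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(eta_p2 / (1.0 - eta_p2))

def noncentrality(f, n_total):
    """Noncentrality parameter lambda = f^2 * N of a fixed-effects ANOVA F test."""
    return f * f * n_total

f = cohens_f_from_partial_eta2(0.4)  # about 0.816
lam = noncentrality(f, 15)           # about 10.0 for N = 15
```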

2.1.2 Apparatus and stimuli

Stimuli were generated using PsychoPy3 software (version 2021.1.4; Peirce et al., 2019) and presented against a black background on a 26-inch LCD screen (60 Hz, 1,920 × 1,080 pixels; XL2411P; BenQ Desktop, Taipei, Taiwan) at a viewing distance of approximately 57 cm. Participants viewed the monitor monocularly with their self-reported dominant eye while standing, to promote veridical perception of the simulated approach. The height of the monitor was adjusted to each participant’s eye level at the beginning of the experiment. Participants were required to remain standing and keep their heads still throughout the experiment to ensure constant viewing conditions.

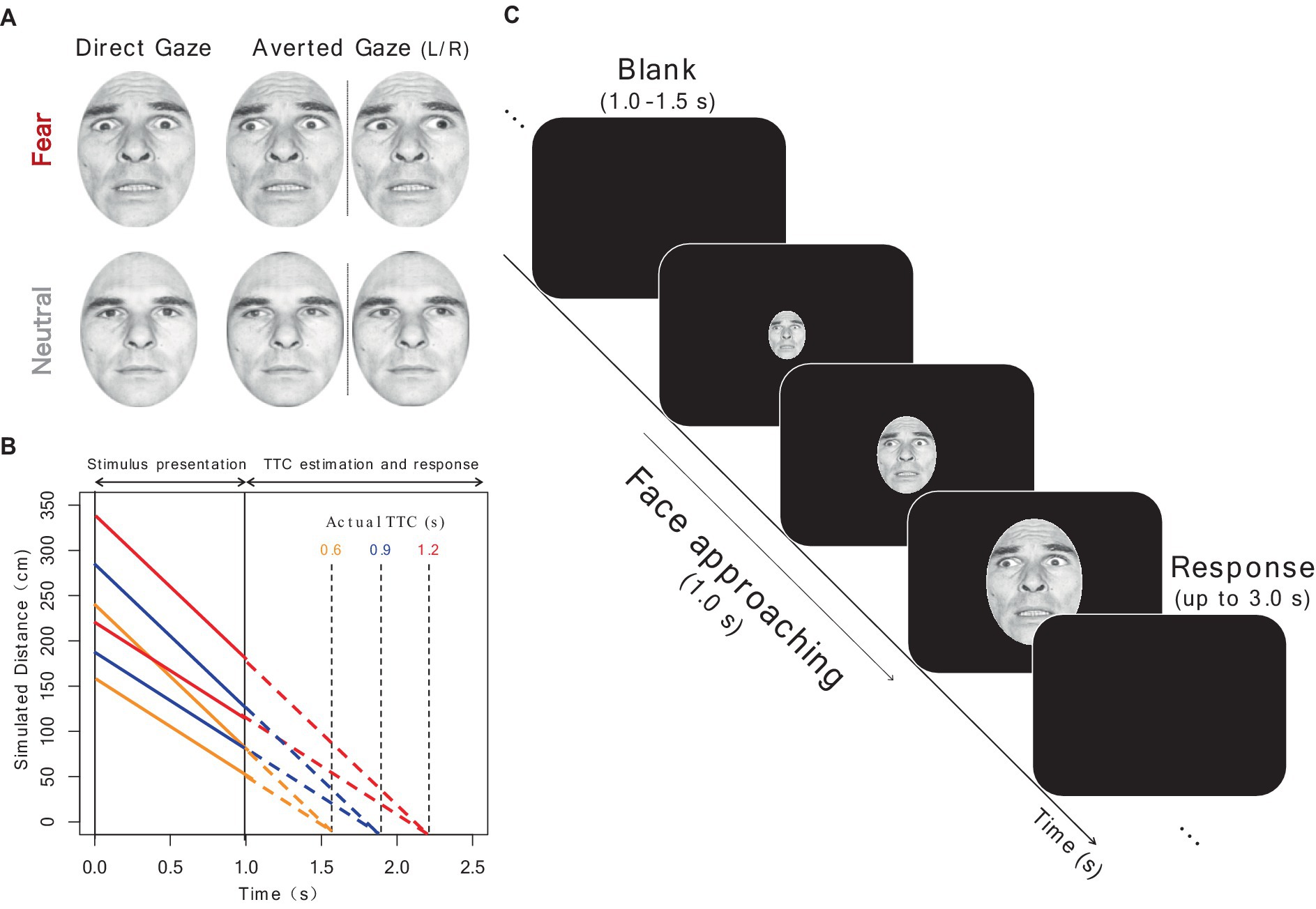

Figure 1 shows the face stimuli and procedures used in Experiment 1. The stimuli consisted of seven adult faces (four females and three males) selected from the Pictures of Facial Affect (Ekman and Friesen, 1976), each of which posed both neutral and fearful facial expressions.1 The gaze direction of each face was manipulated (direct gaze: 0°; averted gaze: 30° leftward or rightward) using the Adobe Photoshop neural filter (version 22.5.1; Adobe, Mountain View, CA, United States). Each image was then masked with an oval (260 × 347 pixels), with size and luminance held constant. In total, there were 28 face stimuli (7 faces × 2 facial expressions × 2 gaze directions).

Figure 1. Schematic of experimental procedure. (A) Examples of fearful and neutral face stimuli with direct and averted gaze. Copyright is available in Ekman and Friesen (1976). Consulting Psychologists Press, Palo Alto, CA. (B) Timeline of the approach of face stimuli. The simulated distance to the face (y-axis) is shown as a function of the time after stimulus onset (x-axis). Time during stimulus presentation and time after its disappearance are depicted by solid and dotted lines, respectively. Each actual TTC condition is indicated in a different color. (C) Timeline of a trial. After a blank, a face presented at the center of the screen expanded gradually over 1 s and then disappeared. Participants were required to extrapolate the motion and to press a key at the moment they thought the face would have collided with them. Source: “Pictures of Facial Affect (POFA)” © Paul Ekman 1993, https://www.paulekman.com/. Used with permission.

We adopted three TTC conditions (actual TTC was simulated to be 0.6, 0.9, and 1.2 s), based on a previous study that investigated the effect of facial expressions on TTC (Brendel et al., 2012). To simulate looming motion, the visual angle of the face stimulus was increased over 1 s (60 frames), after which the face disappeared. In each frame, the visual angle was computed from one of the three TTC conditions, with actual TTC referring to the simulated time remaining until collision at stimulus offset. If a face with a vertical length of S moves toward the observer from a distance D at a constant velocity v, the subtended visual angle θ at time point t should follow:

$$\theta(t) = 2\arctan\left(\frac{S}{2(D - vt)}\right)$$

In the experiment, the visual angle was simulated assuming a vertical face height of 21 cm. The velocity of the stimulus was randomly varied (100 or 150 cm/s) to prevent participants from relying on a simple heuristic (Schiff et al., 1992), which resulted in different initial and final distances.
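The stimulus geometry can be checked numerically. The sketch below assumes θ(t) = 2·arctan(S / (2(D − vt))) with S = 21 cm and an initial distance D = v(1 s + TTC), so that the face would reach the observer TTC seconds after it disappears; it also assumes that the 60 frames sample onset and offset inclusively, since the exact frame timing is not specified. With TTC = 0.6 s and v = 150 cm/s, the first and last angles match the 5.01° and 13.31° reported for the rating-task stimuli, and the mean of these two values matches the 9.16° used for the static stimuli.

```python
import math

FACE_HEIGHT_CM = 21.0   # simulated vertical face height S
DURATION_S = 1.0        # duration of the visible approach
N_FRAMES = 60           # 1 s at 60 Hz

def visual_angle_deg(t, ttc, speed, size=FACE_HEIGHT_CM, duration=DURATION_S):
    """Visual angle (deg) at time t (s) after onset: theta(t) = 2*atan(S / (2(D - vt)))."""
    # Assumption: the face would reach the observer `ttc` seconds after offset,
    # so the initial distance is D = speed * (duration + ttc).
    initial_distance = speed * (duration + ttc)
    distance = initial_distance - speed * t
    return math.degrees(2.0 * math.atan(size / (2.0 * distance)))

def frame_angles(ttc, speed, n_frames=N_FRAMES, duration=DURATION_S):
    """Angles for n_frames samples spaced evenly from onset to offset, inclusive (assumed timing)."""
    step = duration / (n_frames - 1)
    return [visual_angle_deg(k * step, ttc, speed, duration=duration)
            for k in range(n_frames)]

angles = frame_angles(ttc=0.6, speed=150.0)
print(round(angles[0], 2), round(angles[-1], 2))   # 5.01 13.31
print(round((angles[0] + angles[-1]) / 2, 2))      # 9.16
```

Because the angle grows nonlinearly as the face nears the observer, the time-averaged angle over the approach is smaller than this midpoint of the initial and final sizes.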

2.1.3 Procedure

Participants completed a TTC estimation task followed by two subjective rating tasks. In the TTC estimation task, a face stimulus was presented at the center of the monitor and increased in size over 1 s, consistent with one of the three TTC conditions. Participants were instructed to mentally extrapolate the approach of the face at a constant rate after its disappearance and to press a key at the moment they judged that the face would have collided with them (prediction-motion task; DeLucia et al., 2014). Responses were accepted for up to 3 s after the stimulus disappeared, and the keypress latency in each trial was recorded as the estimated TTC. The next trial started automatically after a random intertrial interval of 1–1.5 s. Each of the 12 experimental conditions (2 emotions × 2 gaze directions × 3 TTC conditions) was repeated 14 times (each of the seven faces presented twice), for a total of 168 trials. The experiment was divided into four blocks, each including two catch trials with TTCs of 0.5 and 1.5 s. Trial order was randomized across participants and blocks.
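The trial structure described above can be sketched as follows. The sketch is hypothetical in several respects that the text does not specify: the approach velocity is drawn independently on each trial, the 168 main trials are shuffled and split evenly into four blocks of 42, and the face, emotion, and gaze of the catch trials are left unspecified (None).

```python
import itertools
import random

FACES = range(7)                      # seven face identities
EMOTIONS = ("fearful", "neutral")
GAZES = ("direct", "averted")
TTCS = (0.6, 0.9, 1.2)                # actual TTC conditions (s)
SPEEDS = (100.0, 150.0)               # approach velocities (cm/s)
CATCH_TTCS = (0.5, 1.5)               # catch-trial TTCs (s), one of each per block

def build_session(n_blocks=4, reps_per_face=2, seed=None):
    """Return a list of blocks, each a shuffled list of trial dictionaries."""
    rng = random.Random(seed)
    # 7 faces x 2 emotions x 2 gazes x 3 TTCs x 2 repetitions = 168 main trials.
    main_trials = [
        {"face": face, "emotion": emo, "gaze": gaze, "ttc": ttc,
         "speed": rng.choice(SPEEDS), "catch": False}
        for face, emo, gaze, ttc in itertools.product(FACES, EMOTIONS, GAZES, TTCS)
        for _ in range(reps_per_face)
    ]
    rng.shuffle(main_trials)
    per_block = len(main_trials) // n_blocks
    blocks = []
    for b in range(n_blocks):
        block = main_trials[b * per_block:(b + 1) * per_block]
        # Catch trials: face, emotion, and gaze are not specified in the text.
        block += [{"face": None, "emotion": None, "gaze": None, "ttc": ttc,
                   "speed": rng.choice(SPEEDS), "catch": True}
                  for ttc in CATCH_TTCS]
        rng.shuffle(block)
        blocks.append(block)
    return blocks
```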

After the TTC estimation task, participants completed two subjective rating tasks using the same face stimuli as in the TTC task. Participants observed a face stimulus and then rated their subjective fear (“How much did you fear the face?”) and the perceived intensity of the fear expressed by the stimulus (“How strongly did the face express fear?”) on a 7-point Likert scale (1, not at all; 7, very much). The rating tasks for subjective fear and emotional intensity were conducted in separate blocks in a fixed order. Given that looming motion signals potential threat, we examined the possible influence of dynamic presentation on the ratings of the face stimuli. Thus, in the rating tasks, the 28 face stimuli (7 faces × 2 facial expressions × 2 gaze directions) were presented in both looming and static forms, resulting in 56 trials. The stimuli in the looming condition were identical to those with a simulated TTC of 0.6 s and a velocity of 150 cm/s in the TTC task; consequently, they expanded from 5.01° to 13.31° over 1 s. In the static condition, the stimuli were presented for 1 s at a visual angle of 9.16°, corresponding to the average size in the looming condition. The stimuli were presented in randomized order. To prevent participants from guessing the purpose of the TTC estimation task, the rating tasks were administered after the TTC task.

2.1.4 Data analysis

Individual data of subjective ratings and TTC estimates were analyzed with linear mixed models using the lme4 and lmerTest packages in R software (version 3.6.2; R Development Core Team, Vienna, Austria). The initial model included all possible fixed effects and by-participant random effects. Backward elimination was then performed using the step function of the lmerTest package to identify the model that best explained the data. Backward elimination was applied only to random effects, because in balanced factorial designs a full-factorial analysis of variance provides test statistics for all fixed effects (Barr et al., 2013; Matuschek et al., 2017). Based on the selected model, F-tests of the fixed effects were conducted using the Anova function of the car package. Since our primary aim was to investigate the interaction effect of facial expressions and gaze direction, we planned multiple comparisons across the four emotion-gaze conditions using the difflsmeans function of the lmerTest package, with p values adjusted using the Holm method. Effect sizes were obtained using the F_to_eta2 and t_to_d functions in the effectsize package.
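Holm's step-down adjustment multiplies the k-th smallest of m p values by (m − k + 1), caps the result at 1, and enforces monotonicity across the ordered values. The Python sketch below is intended to mirror R's p.adjust(method = "holm"); it illustrates the adjustment step only and is not the lmerTest or difflsmeans code used in the study.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The smallest p is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[i])
        # Adjusted values may not decrease as raw p values increase.
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted
```

With the six pairwise comparisons reported in Section 2.2.1, an unadjusted two-tailed p of roughly 0.005 for t(2377) = 2.80 becomes roughly 0.031 after multiplication by 6, in line with the reported adjusted value.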

2.2 Results

2.2.1 Effects of emotion and gaze direction on TTC estimation

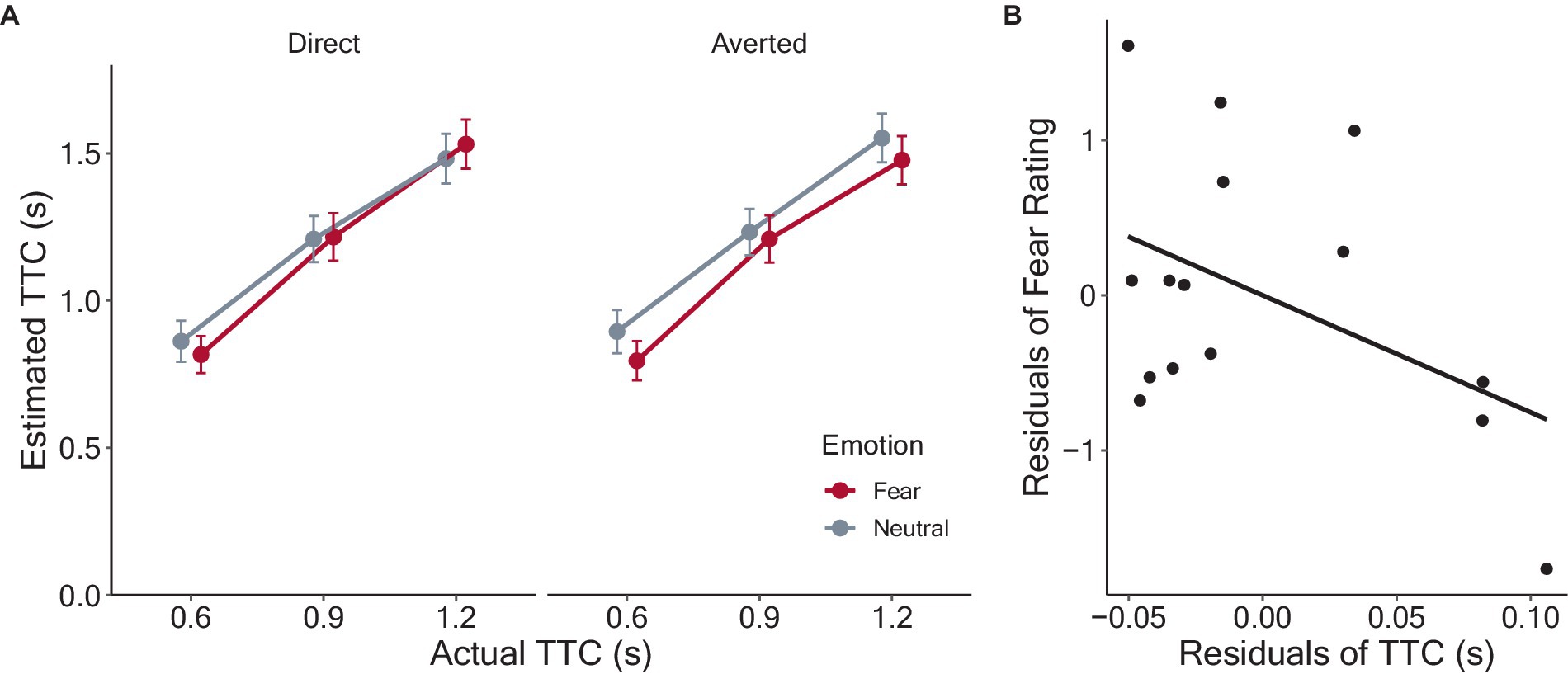

Figure 2A shows the estimated TTC, which increased monotonically as a function of actual TTC. Trials in which no response was made within the time limit (2.3% of all trials) were excluded from the analyses. After backward elimination, the model included the main effects of emotion, gaze direction, and actual TTC and their interactions as fixed effects, and by-participant random intercepts and random slopes for approach velocity as random effects. The least-squares means of the TTC estimates for each condition were calculated, and the results are shown in Figure 2. The analysis revealed a significant main effect of emotion (F(1, 2,378) = 3.91, p = 0.049, ηp² = 0.002), with smaller TTC estimates for fearful (M ± SD = 1.17 ± 0.62) than for neutral faces (1.20 ± 0.62). The main effect of actual TTC was also significant (F(1, 2,377) = 808.78, p < 0.001, ηp² = 0.25), with TTC estimates increasing from the 0.6-s (0.84 ± 0.50) to the 0.9-s (1.22 ± 0.57) and 1.2-s (1.51 ± 0.59) conditions. The main effect of gaze direction was not significant (averted: 1.19 ± 0.62; direct: 1.18 ± 0.62; F(1, 2,377) = 2.04, p = 0.153, ηp² < 0.001). The interaction between emotion and gaze direction was significant (F(1, 2,377) = 3.98, p = 0.046, ηp² = 0.002), whereas the other two-way interactions were not (emotion × TTC: F(1, 2,377) = 0.95, p = 0.386, ηp² < 0.001; gaze direction × TTC: F(1, 2,377) = 0.65, p = 0.524, ηp² < 0.001). The three-way interaction among emotion, gaze direction, and TTC was not significant (F(1, 2,377) = 0.54, p = 0.586, ηp² < 0.001).
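The reported effect sizes are consistent with the usual conversions ηp² = F·df_effect / (F·df_effect + df_error) and d = 2t / √df_error, which, as far as we understand, correspond to the defaults of the effectsize functions F_to_eta2 and t_to_d. The sketch below reproduces several of the values reported in this section from their test statistics.

```python
import math

def partial_eta2_from_f(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)

def cohens_d_from_t(t_value, df_error):
    """Cohen's d approximated from a t statistic as 2t / sqrt(df)."""
    return 2.0 * t_value / math.sqrt(df_error)

print(round(partial_eta2_from_f(3.91, 1, 2378), 3))    # 0.002 (emotion)
print(round(partial_eta2_from_f(808.78, 1, 2377), 2))  # 0.25 (actual TTC)
print(round(cohens_d_from_t(2.80, 2377), 2))           # 0.11 (fearful- vs. neutral-averted)
```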

Figure 2. Results of the TTC estimation task. (A) Estimated TTC as a function of actual TTC in each condition. Each data point refers to the between-participant mean, with error bars showing 95% confidence intervals. Fear and neutral conditions are depicted in different colors. The dotted lines indicate veridical judgments of 0.6, 0.9, and 1.2 s. (B) Scatter plot showing the relationship between the residuals of TTC estimates and fear ratings.

Given the significant interaction between emotion and gaze direction, we conducted multiple comparisons to examine in more detail how these factors affected the TTC estimates. The mean TTC estimates (± SD) were 1.16 ± 0.615 for the fearful-averted, 1.19 ± 0.621 for the fearful-direct, 1.22 ± 0.623 for the neutral-averted, and 1.18 ± 0.612 for the neutral-direct condition. The estimated TTC was significantly shorter for the fearful-averted than for the neutral-averted condition (t(2377) = 2.80, p = 0.031, d = 0.11), whereas no other pairwise difference was significant (Fearful-averted vs. Fearful-direct: t(2377) = 0.40, p = 0.690, d = 0.02; Fearful-averted vs. Neutral-direct: t(2377) = 0.39, p = 0.697, d = 0.02; Fearful-direct vs. Neutral-averted: t(2377) = 0.01, p = 0.993, d = 0.002; Fearful-direct vs. Neutral-direct: t(2377) = 0.01, p = 0.064, d < 0.001; Neutral-averted vs. Neutral-direct: t(2377) = 2.42, p = 0.078, d = 0.10). Together, these analyses showed that fearful facial expressions led to shorter TTC estimates for approaching faces than neutral ones, but only when the gaze was averted from the observer.

As previous studies have reported that people who have a greater fear of targets show greater reductions in the TTC estimates (Vagnoni et al., 2012, 2015, 2017), we examined whether TTC estimates were associated with participants’ reported fear ratings of the face stimuli. Following previous studies, to distinguish the effect of facial expressions from the variance related to individual differences, the individual means for fearful faces were regressed on those for neutral faces for both fear ratings and TTC estimates, and the residuals were obtained. The relationships between the residuals of the TTC estimates and fear ratings are shown in Figure 2B. A negative correlation between the residuals was observed, but it was not significant (r = −0.45, p = 0.09).
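The residual approach can be sketched in plain Python: across participants, fearful-face means are regressed on neutral-face means by ordinary least squares, separately for fear ratings and TTC estimates, and the two sets of residuals are then correlated. The function names are hypothetical, and the p value, which requires a t distribution with n − 2 degrees of freedom from a statistics library, is omitted.

```python
import math

def ols_residuals(x, y):
    """Residuals of y after an ordinary least-squares regression on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / math.sqrt(var_a * var_b)

def t_from_r(r, n):
    """t statistic (df = n - 2) for testing a Pearson correlation against zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)

def residual_correlation(rating_fearful, rating_neutral, ttc_fearful, ttc_neutral):
    """Correlate fear-specific residuals of ratings and TTC (one mean per participant)."""
    rating_resid = ols_residuals(rating_neutral, rating_fearful)
    ttc_resid = ols_residuals(ttc_neutral, ttc_fearful)
    r = pearson_r(rating_resid, ttc_resid)
    return r, t_from_r(r, len(rating_resid))
```

For example, r = −0.45 with n = 15 gives t(13) ≈ −1.82.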

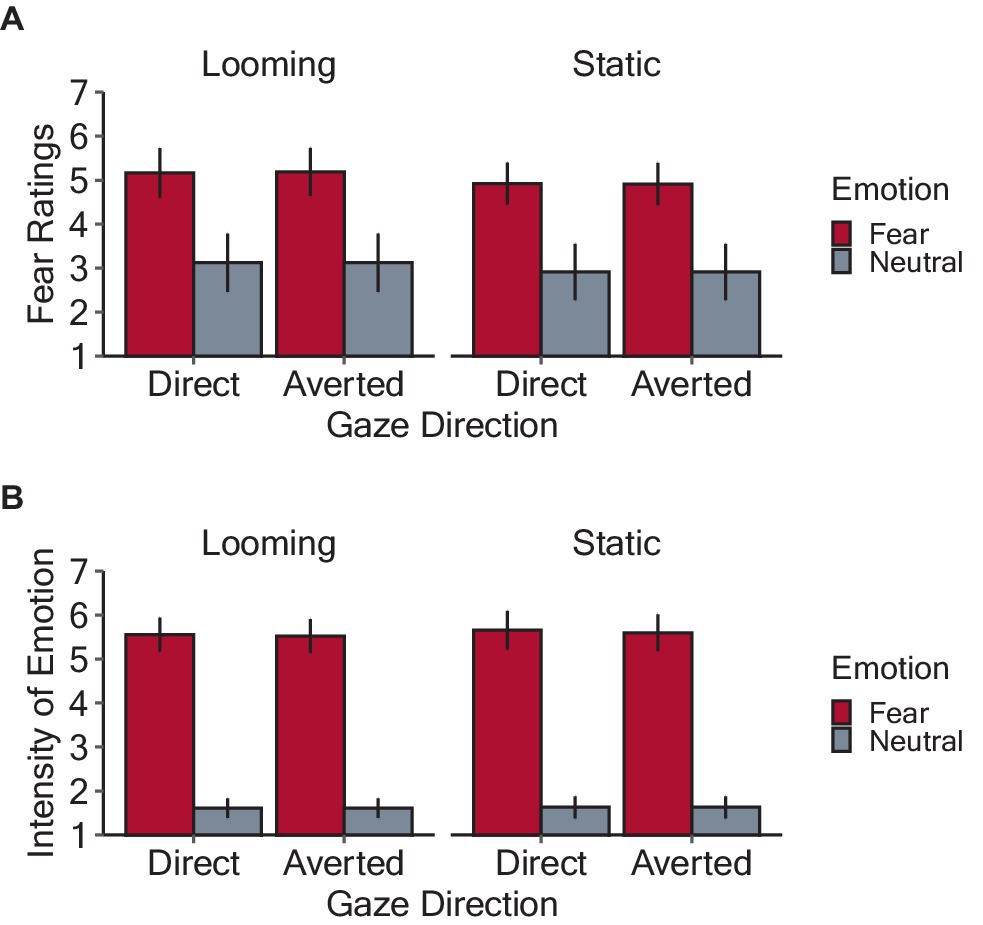

2.2.2 Rating tasks: subjective fear and emotional intensity of the face stimuli

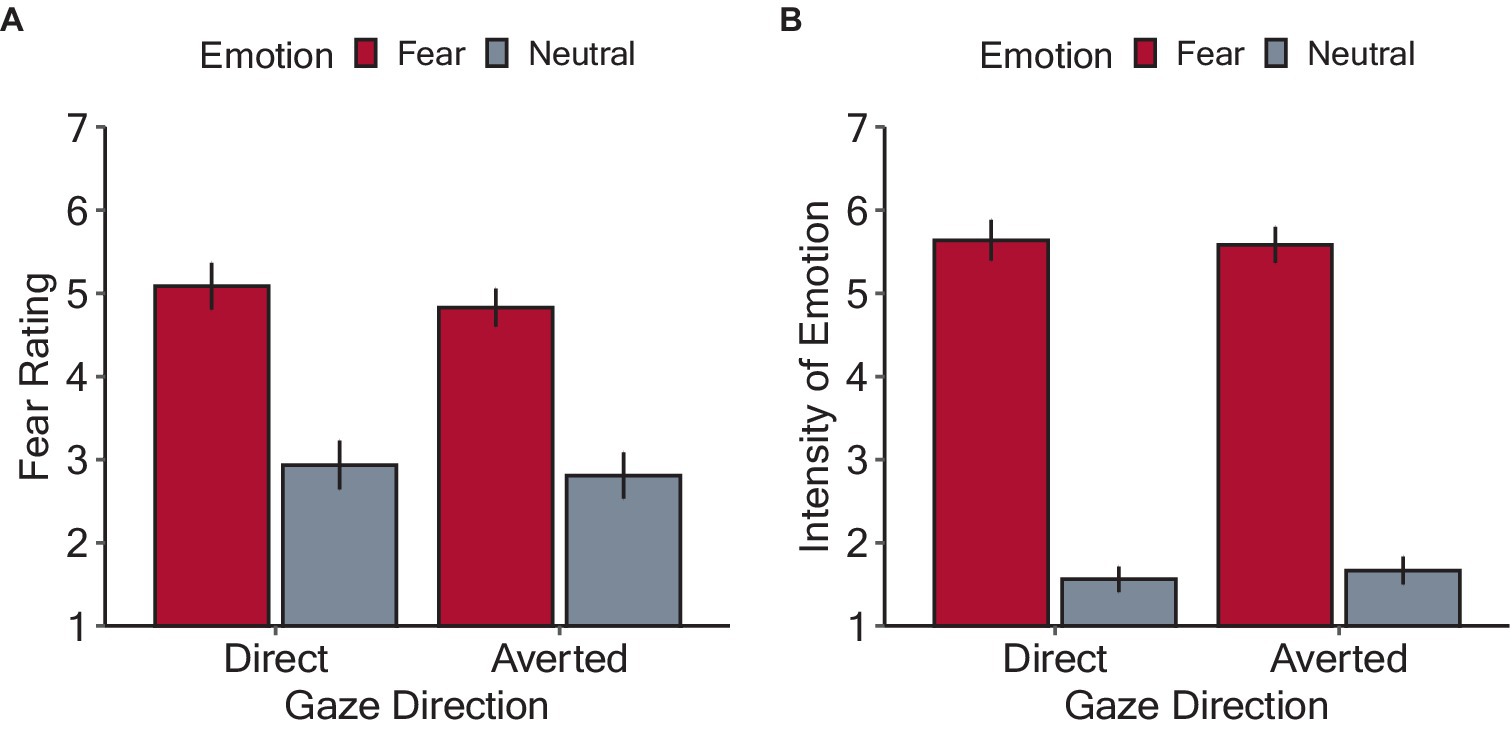

Figure 3A shows the mean ratings of the subjective fear induced by the stimuli. The model included the main effects of emotion, gaze direction, stimulus motion, and their interactions as fixed effects, as well as by-participant random intercepts. There were significant main effects of emotion (F(1, 802) = 371.46, p < 0.001, ηp² = 0.32), with higher scores for fearful (5.05 ± 1.34) than neutral (3.02 ± 1.63) faces, and of stimulus motion (F(1, 802) = 4.97, p = 0.026, ηp² = 0.006), with higher scores for looming (4.11 ± 1.76) than static (3.88 ± 1.85) faces. However, the main effect of gaze direction was not significant (averted: 3.04 ± 1.80; direct: 3.95 ± 1.81, F(1, 802) = 0.001, p = 0.97, ηp² < 0.001). None of the interactions among these three factors were significant (three-way interaction: F(1, 802) = 0.006, p = 0.94, ηp² < 0.001; emotion and gaze direction: F(1, 802) = 0.001, p = 0.97, ηp² < 0.001; emotion and stimulus motion: F(1, 802) = 0.06, p = 0.81, ηp² < 0.001; gaze direction and stimulus motion: F(1, 802) = 0.005, p = 0.94, ηp² < 0.001).

Figure 3. Results of the rating tasks in Experiment 1. (A) Mean fear rating of each experimental condition. (B) Mean rating for the perceived intensity of fear exhibited by the face stimuli. The bars denote the mean values with error bars showing 95% confidence intervals.

Figure 3B shows the mean ratings of the perceived intensity of the fear exhibited by the face stimuli in each condition. The selected model included the fixed effects of facial expression, gaze direction, stimulus motion, and all possible interactions, as well as by-participant random intercepts. There was a significant main effect of emotion (F(1, 802) = 2442.26, p < 0.001, ηp² = 0.75), with higher scores for fearful (5.58 ± 1.27) than neutral (1.62 ± 0.995) faces. However, the main effects of gaze direction (averted: 3.59 ± 2.28; direct: 3.46 ± 2.29, F(1, 802) = 0.07, p = 0.79, ηp² < 0.001) and stimulus motion (looming: 3.50 ± 2.24; static: 3.55 ± 2.33, F(1, 802) = 0.42, p = 0.52, ηp² < 0.001) were not significant. There were no significant interactions (three-way interaction: F(1, 802) = 0.006, p = 0.94, ηp² < 0.001; emotion and gaze direction: F(1, 802) = 0.07, p = 0.79, ηp² < 0.001; emotion and stimulus motion: F(1, 802) = 0.18, p = 0.67, ηp² < 0.001; gaze direction and stimulus motion: F(1, 802) = 0.005, p = 0.94, ηp² < 0.001).

These results indicate that the emotional valence of the face stimuli was successfully manipulated. Looming faces induced more fear than static faces, whereas the perceived intensity of the emotional expressions did not differ between looming and static faces. Given that looming motion serves as a warning signal of a possible collision (e.g., Neuhoff, 2001; Bach et al., 2008; Cappe et al., 2012), it is plausible that such a looming bias emerged for fear ratings but not for the perception of facial expressions.

2.3 Discussion

Experiment 1 examined whether fearful facial expressions interacted with gaze direction in TTC estimates for looming faces. Fearful faces were judged to arrive earlier than neutral faces only when their gaze was averted, indicating that the effect of fear on TTC estimates depended on the concomitant gaze. This result is consistent with previous findings that negative stimuli can reduce TTC estimates (e.g., Vagnoni et al., 2012), as well as with the prediction of the shared signal hypothesis that fearful facial expressions signal clear threats when coupled with averted gaze. It thus provides new evidence that TTC estimation is susceptible to negative facial features.

Subjective ratings showed that fearful faces were perceived as expressing more intense fear and induced more fear than neutral faces, confirming that fear valence was successfully manipulated. However, unlike TTC estimates, the ratings varied with facial expression alone, regardless of gaze direction. For emotion intensity judgments (N’Diaye et al., 2009) and emotion discrimination (Graham and LaBar, 2007), gaze effects have been shown to occur only when the emotional expression is ambiguous. Considering that our fearful faces clearly expressed fear, as indicated by the rating tasks, the absence of a gaze effect might be due to the unambiguous emotion in the stimuli, at least for consciously reported emotions.

In addition, given the non-significant correlation between subjective fear and TTC reduction, it is unlikely that fear of the faces directly affected the TTC estimates. What factor, then, could have reduced the TTC estimates for looming faces? According to previous studies, fearful faces are not dangerous per se but imply the existence of an impending peril, which, when coupled with averted gaze, signals an environmental threat (e.g., Sander et al., 2007; Adams et al., 2012). Thus, one plausible explanation is that the TTC estimates were reduced by threats in the viewing environment implied by the fearful-averted faces. Indeed, visual processing can change in threatening viewing contexts (Righart and de Gelder, 2008; Stefanucci et al., 2012; Wieser and Brosch, 2012; Tabor et al., 2015; Stolz et al., 2019), and TTC estimates have been shown to decrease in threatening situations (Neuhoff et al., 2012; Vagnoni et al., 2017). Against this background, it is plausible that environmental threats signaled by fearful-averted faces, rather than specific fear of the looming stimuli (Brendel et al., 2012; Vagnoni et al., 2012; DeLucia et al., 2014), reduced the TTC estimates in this experiment.

However, the reduced TTC estimates might reflect affective responses to the faces rather than visual processing of the looming motion. A potential confound is discomfort with looming faces, because negative facial expressions and direct gaze have been shown to increase the preferred IPD from others (Bailenson et al., 2001; Asada et al., 2016; Ruggiero et al., 2017; Cartaud et al., 2018; Ruggiero et al., 2021; Silvestri et al., 2022), which appears to be adjusted according to the degree of discomfort experienced (e.g., Kennedy et al., 2009). Therefore, using the same face stimuli, Experiment 2 examined the emotion-gaze interaction in a preferred IPD task, in which participants were asked to respond as soon as they felt discomfort while viewing looming faces (e.g., Iachini et al., 2016; Silvestri et al., 2022). If the result of the TTC estimation task was due to increased discomfort with the fearful-averted faces, a similar interaction should be observed in the affective judgments of IPD from looming faces.

3 Experiment 2

3.1 Method

3.1.1 Participants

A different group of 15 students (six females; age M = 21.9 years, SD = 2.43) with normal or corrected-to-normal vision participated in the experiment. Since there was no prior assumption about the effect size of the emotion-gaze interaction on preferred IPD judgments, we decided to collect the same sample size as in Experiment 1. All participants were naïve to the purpose of the experiment. All participants provided written informed consent in accordance with the Declaration of Helsinki, and the study was approved by the Ethics Review Committee for Research Involving Human Subjects of Ritsumeikan University.

3.1.2 Apparatus, stimuli, and procedure

The same apparatus and stimuli used in Experiment 1 were employed in Experiment 2, except that the visual angle increased from 3° to 36° over 3 s, simulating a face approaching the observer from 390 cm to 30 cm at a constant speed. Participants performed the preferred IPD task (e.g., Silvestri et al., 2022), in which they were asked to press a key when they felt that the faces violated their personal space. They were instructed to respond as soon as the distance between them and the face stimuli made them feel uncomfortable. Unlike Experiment 1, in which faces disappeared during the trial, in Experiment 2 the stimulus was presented until a response and disappeared either after the response or when no keypress was detected within 4 s of stimulus onset. A shorter response time therefore indicated that the face caused discomfort at a greater distance from the observer. Each of the four experimental conditions (2 emotions × 2 gaze directions) was presented 21 times, for a total of 84 trials (divided into two blocks). Trial order was randomized across participants and blocks.
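The simulated approach described above can be reconstructed from basic viewing geometry. The sketch below rests on assumptions that are not stated in the text: the face is treated as a rigid object of fixed width W whose full visual angle is θ = 2·atan(W / 2D), W is chosen so that θ = 3° at D = 390 cm, and distance decreases linearly over the 3-s approach (120 cm/s). All constant and function names are hypothetical. Besides the visual angle at each time point, the sketch converts a response time into the simulated distance at the moment of the keypress, ignoring motor latency.

```python
import math

# Hypothetical reconstruction of the Experiment 2 looming profile (assumptions, not the authors' code).
START_CM = 390.0      # simulated starting distance
END_CM = 30.0         # simulated distance at the end of the approach
DURATION_S = 3.0      # duration of the approach
SPEED_CM_S = (START_CM - END_CM) / DURATION_S  # constant approach speed: 120 cm/s

# Assumed face width, chosen so that the face subtends 3 deg at 390 cm.
FACE_WIDTH_CM = 2.0 * START_CM * math.tan(math.radians(3.0) / 2.0)


def distance_at(t_s: float) -> float:
    """Simulated viewing distance (cm) t_s seconds after stimulus onset."""
    t = min(max(t_s, 0.0), DURATION_S)  # clamp to the approach interval
    return START_CM - SPEED_CM_S * t


def visual_angle_deg(t_s: float) -> float:
    """Full visual angle (deg) of a rigid face of width FACE_WIDTH_CM at time t_s."""
    d = distance_at(t_s)
    return math.degrees(2.0 * math.atan(FACE_WIDTH_CM / (2.0 * d)))


if __name__ == "__main__":
    for t in (0.0, 1.0, 2.0, 3.0):
        print(f"t={t:.1f} s  D={distance_at(t):6.1f} cm  angle={visual_angle_deg(t):5.2f} deg")
    # Map mean response times onto the simulated distance at keypress.
    for label, rt in (("fearful", 2.34), ("neutral", 2.42)):
        print(f"{label}: {distance_at(rt):.1f} cm")
```

Under these assumptions the face subtends about 37.6° at 30 cm, close to but not exactly the reported 36°, so the actual stimulus software may have defined stimulus size slightly differently. The mean response times reported for Experiment 2 (2.34 s for fearful and 2.42 s for neutral faces) would correspond to simulated distances of roughly 109 cm and 100 cm, respectively.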

Participants then completed two blocks of the subjective rating tasks, identical to those used in Experiment 1. Given that stimulus motion did not interact with emotion or gaze direction in Experiment 1, only the looming condition was examined here. As before, the rating tasks were announced only after the main task to prevent participants from inferring the purpose of the study.

3.2 Results

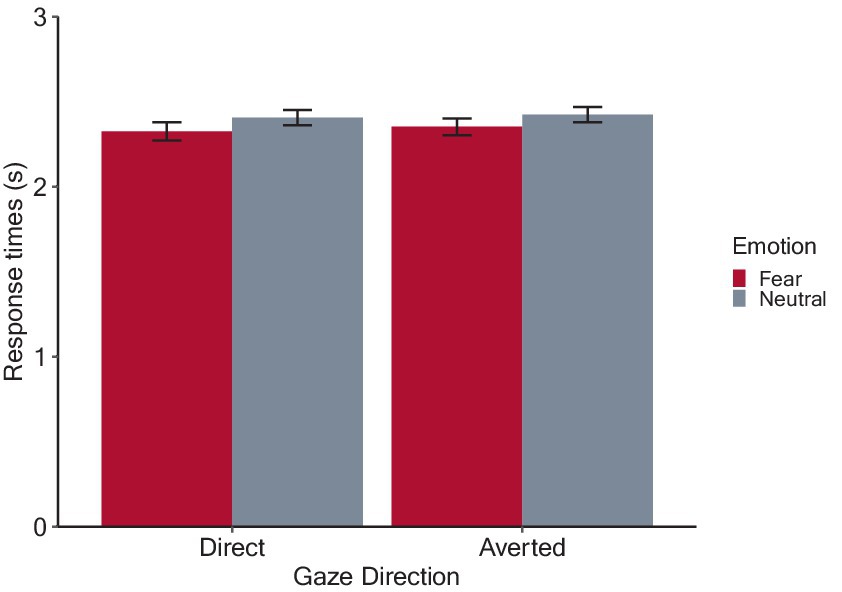

3.2.1 Effects of emotion and gaze direction on the preferred IPD

Figure 4 shows the estimated mean response times (in seconds) from stimulus onset for each condition. After backward elimination, the final linear mixed model included the main effects of emotion and gaze direction and their interaction as fixed effects, and by-participant random intercepts. The analysis revealed a significant main effect of emotion (F(1, 1,276) = 24.88, p < 0.001, ηp² = 0.02), with shorter response times for the fearful (2.34 ± 0.47) than for the neutral (2.42 ± 0.41) condition. Neither the main effect of gaze direction (averted: 2.39 ± 0.44; direct: 2.37 ± 0.46; F(1, 1,276) = 1.19, p = 0.275, ηp² < 0.001) nor the interaction between emotion and gaze direction (F(1, 1,276) = 0.32, p = 0.570, ηp² < 0.001) was significant. Thus, fearful facial expressions shortened response times from the onset of looming faces, indicating that participants maintained a greater IPD from fearful than from neutral faces, and this emotional effect did not depend on gaze direction.

Figure 4. Results of the preferred interpersonal distance (IPD) judgment task. The bars denote mean response times with error bars showing 95% confidence intervals. Shorter response times indicate that participants experienced discomfort at a greater simulated IPD from looming faces.
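The effect sizes reported in these results can be recovered from each F statistic and its degrees of freedom, which offers a quick consistency check on the reported values. The sketch below uses the standard identity ηp² = F·df_effect / (F·df_effect + df_error). For the p-value it relies on an approximation that is an assumption here, not the authors' procedure: with one numerator degree of freedom, F equals t², and with hundreds of error degrees of freedom the t distribution is close to the standard normal, so p ≈ erfc(√(F/2)). An exact value would use the survival function of the F distribution, for example `scipy.stats.f.sf(F, 1, df_error)`. The function names are hypothetical.

```python
import math


def partial_eta_squared(f_value: float, df_effect: int, df_error: float) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def approx_p_one_df(f_value: float) -> float:
    """Approximate two-sided p for F(1, df_error) when df_error is large.

    With one numerator df, F = t**2; for large df_error, t is close to a
    standard normal z, and P(|z| > sqrt(F)) = erfc(sqrt(F / 2)).
    """
    return math.erfc(math.sqrt(f_value / 2.0))


if __name__ == "__main__":
    # Main effect of emotion on response times: F(1, 1,276) = 24.88
    print(round(partial_eta_squared(24.88, 1, 1276), 3), approx_p_one_df(24.88))
```

For example, the main effect of emotion on response times, F(1, 1,276) = 24.88, gives ηp² ≈ 0.019, which rounds to the reported 0.02, and an approximate p far below 0.001.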

3.2.2 Rating tasks

Figure 5 shows the mean subjective fear ratings and the perceived intensity of fear expressed by the face stimuli. For subjective fear ratings, a linear mixed model with by-participant random intercepts revealed a significant main effect of emotion (F(1, 402) = 362.72, p < 0.001, ηp² = 0.47), with higher scores for fearful (4.91 ± 1.04) than for neutral (2.88 ± 0.99) faces. The main effect of gaze direction was not significant (averted: 3.80 ± 1.34; direct: 4.00 ± 1.54; F(1, 402) = 3.03, p = 0.083, ηp² = 0.007), nor was the interaction between emotion and gaze direction (F(1, 402) = 0.37, p = 0.543, ηp² < 0.001). For the perceived intensity of fear expressed by the face stimuli, a linear mixed model with by-participant random intercepts revealed a significant main effect of emotion (F(1, 402) = 1955.74, p < 0.001, ηp² = 0.83), with higher scores for fearful (5.61 ± 0.77) than for neutral (1.61 ± 0.35) faces. The main effect of gaze direction was not significant (averted: 3.62 ± 2.97; direct: 3.60 ± 2.17; F(1, 402) = 0.07, p = 0.79, ηp² < 0.001), nor was the interaction between emotion and gaze direction (F(1, 402) = 0.80, p = 0.371, ηp² = 0.002). These results indicate that the emotions expressed by the face stimuli were successfully manipulated and were robust to gaze direction.

Figure 5. Results of the rating tasks in Experiment 2. (A) Mean fear rating for each experimental condition. (B) Mean perceived intensity of the fear expressed by the face stimuli. The bars denote mean values with error bars showing 95% confidence intervals.

3.3 Discussion

The results of the rating tasks were highly consistent with those of Experiment 1. Faces with fearful expressions were rated as expressing and inducing more fear than neutral ones, indicating that the fear manipulation was successful. Again, the ratings were not modulated by gaze direction, replicating Experiment 1. In the IPD task, participants responded earlier to fearful than to neutral faces, indicating an increased preferred IPD from fearful faces, consistent with previous findings on the effects of negative facial expressions (Ruggiero et al., 2017, 2021; Cartaud et al., 2018; Silvestri et al., 2022). However, there was neither a gaze effect nor an emotion-gaze interaction, which is inconsistent with reports that direct gaze can also increase preferred IPD (e.g., Bailenson et al., 2001). The consistent results for IPD, which is thought to be sensitive to affective responses to the target, and for the rating tasks, which assess conscious emotions toward the faces, suggest that fearful facial expressions altered emotional responses to the faces, leading participants to maintain a greater distance from looming faces expressing fear. On the other hand, given that no emotion-gaze interaction was found in either the IPD or the rating tasks, it is unlikely that gaze direction affected the emotional processing of the looming faces.

The lack of an effect of direct gaze on IPD could be at least partly due to the study methodology. While a direct gaze effect on IPD was evident in previous experiments involving real dyads or realistic virtual avatars, no such effect was found in settings using printed pictures of faces (Sicorello et al., 2019). Less realistic faces with direct gaze have been shown to evoke weaker neural responses in the amygdala, whereas responses to facial expressions do not depend on facial realism (Pönkänen et al., 2011; Kätsyri et al., 2020). Moreover, direct gaze enhances the skin conductance response (SCR), a reliable measure of arousal (e.g., Nichols and Champness, 1971; Strom and Buck, 1979; Pönkänen and Hietanen, 2012), albeit only when participants are engaged in a demanding task (Conty et al., 2010). Considering the nature of our task, which required only a simple keypress when discomfort was felt in response to grayscale faces, direct gaze might have elicited only negligible increases in arousal, which is responsible for regulating preferred IPD (Kennedy et al., 2009). However, using the same task as ours, Silvestri et al. (2022) found that adult participants maintained a greater IPD from angry faces than from happy and neutral faces, with no change in SCR across facial emotions. This suggests that preferred IPD may be susceptible to facial emotion independently of physiological emotional responses.

Ambiguity in the interpretation of direct gaze might also have attenuated the gaze effect, given that direct gaze may be associated with both hostility and intimacy (e.g., Argyle et al., 1986; Akechi et al., 2013; Hessels et al., 2017; Cui et al., 2019), which predict an increase and a decrease in IPD, respectively. Additionally, the effect of gaze on face processing is inversely related to the intensity of facial expressions (Graham and LaBar, 2007; N’diaye et al., 2009; Caruana et al., 2019), whereas the processing of emotions seems to be preconscious (Öhman et al., 2001; Jiang et al., 2009; Stins et al., 2011; for a review, see Frischen et al., 2008). Given the results of the rating tasks, which repeatedly indicated that our stimuli expressed unambiguous fear, gaze direction may have had little effect on the affective processing of the fearful faces in this study. Taken together, Experiment 2 showed that the preferred IPD from looming faces was increased by clearly expressed fear but not by gaze direction.

This study was limited to a situation in which participants passively observed looming faces, leaving the emotion-gaze interaction in IPD regulation during active approach unclear. Preferred IPD is known to be greater when people are passively approached by others than when they actively approach others (Iachini et al., 2016). Whether people prefer to maintain a smaller IPD from others in situations where threats are implied is an intriguing hypothesis for future research. Additionally, because the sample size was calculated based on the effect size of facial expressions on TTC estimates in a previous report (Brendel et al., 2012), the test of the interaction effect on preferred IPD may have been underpowered, and its null result should be interpreted with caution. Moreover, although we analyzed data from a design planned for ANOVA using mixed models, Monte Carlo simulation has been suggested as an effective method for sample size calculation for mixed models (Green and MacLeod, 2016); this is another limitation of the present research that future studies should take into account.
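To illustrate what a simulation-based power analysis involves, a minimal Monte Carlo sketch is shown below. It is a deliberate simplification: instead of refitting a linear mixed model on each simulated data set, it tests the per-participant emotion × gaze interaction contrast with a one-sample t-test. The interaction effect, the trial-level noise (`trial_sd`), and the between-participant variability of the interaction (`slope_sd`) are hypothetical values that would have to come from pilot data; `t_crit = 2.145` is the two-tailed 5% critical t value for 14 degrees of freedom, matching 15 participants. The function name is also hypothetical.

```python
import math
import random
import statistics


def simulate_power(interaction_effect: float, n_participants: int = 15,
                   n_trials: int = 21, trial_sd: float = 0.4,
                   slope_sd: float = 0.05, n_sims: int = 1000,
                   t_crit: float = 2.145, seed: int = 1) -> float:
    """Monte Carlo power for the emotion x gaze interaction (hypothetical parameters).

    interaction_effect: response-time shortening (s) specific to fearful-averted faces.
    t_crit: two-tailed 5% critical t for df = n_participants - 1 (2.145 for df = 14).
    Participant intercepts and the grand mean cancel in the interaction
    contrast, so they are omitted from the simulation.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        contrasts = []
        for _ in range(n_participants):
            # Participant-specific size of the interaction (random slope).
            effect = interaction_effect + rng.gauss(0.0, slope_sd)
            cell_means = {}
            for emotion in ("fear", "neutral"):
                for gaze in ("averted", "direct"):
                    # Only the fearful-averted cell carries the effect.
                    shift = -effect if (emotion, gaze) == ("fear", "averted") else 0.0
                    trials = [shift + rng.gauss(0.0, trial_sd) for _ in range(n_trials)]
                    cell_means[emotion, gaze] = statistics.fmean(trials)
            # Double difference: (fear - neutral | averted) - (fear - neutral | direct).
            contrasts.append(
                (cell_means["fear", "averted"] - cell_means["neutral", "averted"])
                - (cell_means["fear", "direct"] - cell_means["neutral", "direct"])
            )
        # One-sample t-test of the contrasts against zero.
        mean = statistics.fmean(contrasts)
        sd = statistics.stdev(contrasts)
        t_value = mean / (sd / math.sqrt(n_participants))
        if abs(t_value) > t_crit:
            hits += 1
    return hits / n_sims
```

Calling `simulate_power` over a grid of `n_participants` values (adjusting `t_crit` to the corresponding degrees of freedom) would give a rough power curve for the interaction; a full analysis would refit the mixed model on each simulated data set rather than relying on the per-participant contrast.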

4 General discussion

We investigated the interaction between fearful facial expressions and gaze direction in TTC estimation and preferred IPD judgments for looming faces, based on the shared signal hypothesis, according to which fear signals the existence of environmental threats when coupled with averted gaze (Adams and Kleck, 2003, 2005); accordingly, we expected fearful-averted faces to affect TTC estimates most strongly. Experiment 1 demonstrated the hypothesized emotion-gaze interaction on TTC estimates: fearful faces led to shorter TTC estimates than neutral faces only when the gaze was averted from participants, and this effect disappeared when the gaze was directed toward them. In Experiment 2, participants displayed a greater preferred IPD for fearful than for neutral faces, regardless of gaze direction. Considering that IPD is adjusted according to the degree of discomfort and is regulated by an automatic, arousal-sensitive process (e.g., Kennedy et al., 2009), the emotion-gaze interaction on TTC estimates observed here is unlikely to be due to affective processes such as increased arousal, discomfort, or avoidant responses to the looming faces themselves. Additionally, the interaction between fearful facial expressions and gaze direction was observed in the TTC task, in which the looming stimuli disappeared during the trial, but not in the IPD task, in which the stimuli were presented until a response. Given these different stimulus presentation procedures, our results suggest that integrated facial cues affect the cognitive extrapolation of unseen looming motion, which is required only in the TTC estimation task (DeLucia and Liddell, 1998; DeLucia, 2004). Taken together, these results indicate that fearful facial expressions interact with gaze direction in the spatiotemporal judgment of TTC, but not in the socioaffective judgment of preferred IPD from looming faces.

To the best of our knowledge, this is the first observation of an underestimation of TTC caused by fearful facial expressions, although friendly faces have been reported to increase TTC estimates compared with baseline empty faces (Brendel et al., 2012). Previous TTC studies that did not detect such an effect used only direct gaze stimuli (Brendel et al., 2012; DeLucia et al., 2014), which may have confounded the effects of facial expressions and direct gaze. Thus, the effects of facial expressions and of the emotion-gaze interaction remained uncertain. In contrast, the current study separated the effects of negative facial expressions from those of direct gaze by examining fearful facial expressions, which should have a stronger influence when combined with averted gaze. Given that fearful faces, unlike the dangerous animals and angry confederates used in previous studies, are not themselves threatening targets, the observed reduction in TTC estimates for fearful-averted faces is unlikely to be due to increased fear of the stimuli, as suggested by the rating tasks. Based on previous studies showing that fearful faces coupled with averted gaze signal the existence of threats in the surrounding environment (Adams and Kleck, 2003, 2005; Adams et al., 2012), our results may be explained in terms of the environmental threats signaled by the faces, in line with findings that TTC estimates decrease in situations where defensive actions are restricted (Neuhoff et al., 2012; Vagnoni et al., 2017). Thus, our findings suggest that TTC estimation is susceptible to environmental threats implied by a combination of facial features, in addition to fear of the targets themselves (Brendel et al., 2012; Vagnoni et al., 2012; DeLucia et al., 2014). Note that the reduced TTC estimates in the averted gaze condition can hardly be explained by changes in arousal or face processing due to direct gaze (e.g., Nichols and Champness, 1971; Strom and Buck, 1979; Pönkänen and Hietanen, 2012).
Given the known beneficial role of mutual gaze in collision avoidance (Nummenmaa et al., 2009; Narang et al., 2016), averted gaze, which indicates that the expressor is unaware of the observer, might have increased the predicted likelihood of a collision with looming faces and thereby affected TTC estimates. However, if the attentional focus inferred from gaze direction affected TTC estimation, a similar effect should also have appeared in the neutral expression condition.

The TTC task in Experiment 1 may have involved representations of faces in considerable proximity to observers or even colliding with them, whereas in Experiment 2 the stimuli were stopped before intruding into participants’ personal space. The TTC-specific modulation may therefore be related to the intrusion of faces into peripersonal space (PPS; Rizzolatti et al., 1981; Di Pellegrino et al., 1997; Rizzolatti et al., 1997) and to an enlargement of PPS to prepare defensive responses to looming faces that pose a threatening context (Neuhoff et al., 2012; Vagnoni et al., 2017). The boundary of PPS is known to be modulated by threats related to the viewing situation (Lourenco et al., 2011; Sambo and Iannetti, 2013; Bufacchi and Iannetti, 2018; Serino, 2019), and the defensive function of PPS is enhanced by fearful faces presented nearby (Ellena et al., 2020, 2021). Given these findings, one possible speculation is that in the TTC estimation task, the representational intrusion of faces into participants’ PPS enhanced defensive responses to the environmental threats implied by fearful-averted faces.

Given the similarities between PPS and IPD (Iachini et al., 2016; Ruggiero et al., 2017; Patané et al., 2017; Quesque et al., 2017; D'Angelo et al., 2019), one might expect the effects observed in TTC estimation to also appear in IPD regulation. Although we demonstrated that the emotion-gaze interaction affects TTC estimates but not preferred IPD, we did not examine whether these two spatiotemporal judgments covary within individuals. Future studies could address the intriguing possibility that PPS and IPD respond differently to face-induced threats. Another limitation of this study is that possible gender effects were not examined. Emotional facial expressions have been found to shrink the preferred IPD of male more than female participants (Silvestri et al., 2022), raising the question of whether the emotion-gaze interaction in the processing of looming faces shows gender differences. A preliminary post-hoc analysis of the effect of participant gender on preferred IPD showed that female participants (2.09 ± 0.34 s) responded faster than male participants (2.56 ± 0.40 s), without any interaction with emotion or gaze direction (data not shown). This is consistent with the finding that females perceive approaching stimuli as more intrusive than males do (Wabnegger et al., 2016), but inconsistent with Silvestri et al. (2022), who reported faster response times for males than for females. Moreover, when participants passively observe approaching others, judgments of both reachable and comfortable distances are larger for male than for female confederates (Iachini et al., 2016). Given this effect of stimulus gender on judgments related to PPS and IPD, stimulus gender could have affected TTC estimates and preferred IPD; however, our post-hoc analyses found no effect of stimulus gender in either task (data not shown), possibly owing to the grayscale, cropped face stimuli and the modest sample size.
Therefore, any influences of participant or stimulus gender were not crucial for interpreting the present results. However, future studies could include these factors as possible moderators of the emotion-gaze interaction in the processing of looming faces.

There were some potential confounders in this study, none of which, we believe, undermines the results. First, since tau theory originally postulates that TTC estimates depend on the rate of optical expansion, the decrease in TTC estimates could have been related to perceptual modulations. Because threatening targets and viewing situations can cause observers to overestimate size and underestimate distance (e.g., van Ulzen et al., 2008; Stefanucci et al., 2012; Vasey et al., 2012; Leibovich et al., 2016), the fearful-averted faces may have been perceived as larger or closer than they actually were, both of which would predict decreased TTC estimates. Such perceptual modulations would also be expected in the IPD task, where visual input provided until a response would have yielded continuous perception of the looming faces, but this was not the case. Thus, the effect of the emotion-gaze interaction on TTC estimates is unlikely to be due to ongoing changes in visual perception. Second, the stimulus duration differed between tasks: the stimuli were presented for 1 s and then disappeared in Experiment 1, whereas in Experiment 2 they were displayed for longer (2.4 s on average) until a response. The shorter presentation and smaller optical size in the TTC task may have reduced emotional discriminability, which underlies the gaze effect in emotion judgment tasks (Graham and LaBar, 2007; N’diaye et al., 2009). However, given the rapid processing of facial expressions observed in electroencephalography studies (Eimer and Holmes, 2002; Schyns et al., 2007; Poncet et al., 2019), a duration of 1 s seems sufficient for recognizing facial expressions, as indicated by our rating tasks. Additionally, the simulated velocities of the looming stimuli were similar in the two experiments, precluding any influence of motion velocity.
Third, the perceived duration of angry faces is known to be lengthened by increased arousal (e.g., Doi and Shinohara, 2009; Gil and Droit-Volet, 2012; Kliegl et al., 2015). If the perceived duration of the fearful-averted faces had been lengthened, such an effect might also have been observed in preferred IPD judgments, which are sensitive to arousal. In addition, a longer perceived duration should result in a lower rate of optical expansion per unit time, which would predict increased rather than decreased TTC estimates. Finally, the stimuli were faces of a race different from that of our Japanese participants. Although facial ethnicity is known to influence the emotional processing of facial expressions (for a review, see Elfenbein and Ambady, 2002), the rating tasks confirmed accurate emotion perception in both experiments. Therefore, the other-race effect is unlikely to be crucial to our finding of the emotion-gaze interaction on TTC estimates. Nevertheless, other-race faces with direct gaze can increase amygdala responses more than own-race faces (Richeson et al., 2008). Moreover, amygdala responses to fearful expressions have been shown to be larger for averted gaze in own-race faces but for direct gaze in other-race faces (Hadjikhani et al., 2008; Adams et al., 2010). The fearful-averted bias for own-race faces can also disappear in Japanese participants (Adams et al., 2010), likely because direct gaze is perceived as intrusive in Asian cultures (Graham and LaBar, 2012; Akechi et al., 2013). Since all participants in this study were Japanese, the fearful-direct faces among the other-race stimuli might have increased amygdala activity. However, our results did not show a specific effect of fearful-direct faces, even for preferred IPD, which involves amygdala activity (Kennedy et al., 2009).
Future studies could examine the mechanism of the observed emotion-gaze interaction in more detail through inter-racial experiments using brain imaging techniques and physiological measures.
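Tau theory, invoked above as a possible source of perceptual confounds, treats the ratio of the current visual angle θ to its rate of expansion dθ/dt as an optical estimate of TTC: τ = θ / (dθ/dt). The following sketch illustrates this definition for a hypothetical rigid face approaching at constant speed. The geometry (a face width subtending 3° at 390 cm and an approach speed of 120 cm/s) is borrowed from the simulated approach of Experiment 2 as an assumption and is not the TTC stimulus of Experiment 1; all names are hypothetical. The derivative is estimated with a central difference, and τ is compared with the physical TTC, D/v.

```python
import math

# Hypothetical geometry borrowed from Experiment 2 (assumptions, not the authors' code).
START_CM = 390.0
SPEED_CM_S = 120.0
FACE_WIDTH_CM = 2.0 * START_CM * math.tan(math.radians(3.0) / 2.0)


def distance_at(t_s: float) -> float:
    """Simulated distance (cm) of the face t_s seconds after onset."""
    return START_CM - SPEED_CM_S * t_s


def visual_angle_rad(t_s: float) -> float:
    """Full visual angle (rad) subtended by the face at time t_s."""
    return 2.0 * math.atan(FACE_WIDTH_CM / (2.0 * distance_at(t_s)))


def tau(t_s: float, dt: float = 1e-4) -> float:
    """Optical TTC estimate: theta divided by its central-difference rate of change."""
    rate = (visual_angle_rad(t_s + dt) - visual_angle_rad(t_s - dt)) / (2.0 * dt)
    return visual_angle_rad(t_s) / rate


def physical_ttc(t_s: float) -> float:
    """Time remaining until the face would reach the eye (distance / speed)."""
    return distance_at(t_s) / SPEED_CM_S


if __name__ == "__main__":
    for t in (0.0, 1.0, 2.0, 3.0):
        print(f"t={t:.1f} s  tau={tau(t):.3f} s  D/v={physical_ttc(t):.3f} s")
```

Under these assumptions, τ exceeds D/v by less than 0.1% at the starting distance and by roughly 8% at 30 cm, because the small-angle approximation under which τ equals D/v becomes less accurate as the face fills more of the visual field.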

The current study demonstrated that fearful facial expressions and gaze direction interact in TTC estimation, but not in preferred IPD from looming faces, providing insight into how the visual system processes and monitors looming motion to ensure bodily safety and comfortable interpersonal communication. Our findings show that TTC estimation is susceptible to a specific combination of facial features. TTC-specific modulation by fearful-averted faces can be interpreted in terms of environment-related threats rather than affective responses to the faces themselves. Reduced TTC estimates in situations in which a threat is implied could reflect an adaptive bias of the visual system that provides a safety margin for avoiding collisions. On the other hand, the finding that direct gaze regulates the effect of fearful facial expressions suggests that mutual gaze may prevent emotional cues from biasing the veridical perception of looming motion. This is consistent with the known benefit of mutual gaze in collision avoidance (Nummenmaa et al., 2009; Narang et al., 2016), reconciling adaptive bias with the beneficial role of mutual gaze in interpersonal communication. This study has two further limitations: we investigated only fearful facial expressions, and we did not directly measure face-induced environmental threats. Future studies should examine other facial expressions and use physiological measures and behavioral tasks related to defensive responses to unseen threats to better understand the characteristics and mechanisms of emotion-gaze interactions in the perceptual, cognitive, and emotional processing of looming individuals.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethics Review Committee for Research Involving Human Subjects, Ritsumeikan University. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

DY: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Writing – original draft, Writing – review & editing. MN: Conceptualization, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was supported by a grant from the Japan Society for the Promotion of Science (grant numbers: 21K20308, 22KJ3024).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. Note that all participants were Japanese, whereas the face stimuli were Caucasian. While an own-race advantage has been shown in face perception (e.g., Elfenbein and Ambady, 2002), it has also been reported that Asian participants show low accuracy when categorizing fearful expressions and tend to misclassify Asian facial expressions as sad (Jack et al., 2009; Ma et al., 2022). Given our primary aim of examining the combination of fearful expressions and averted gaze, a well-validated dataset was used to ensure veridical perception of fear.

References

Abrams, R. A., and Christ, S. E. (2005). The onset of receding motion captures attention: comment on Franconeri and Simons (2003). Percept. Psychophys. 67, 219–223. doi: 10.3758/bf03206486

Adams, R. B. Jr., Franklin, R. G. Jr., Kveraga, K., Ambady, N., Kleck, R. E., Whalen, P. J., et al. (2012). Amygdala responses to averted vs direct gaze fear vary as a function of presentation speed. Soc. Cogn. Affect. Neurosci. 7, 568–577. doi: 10.1093/scan/nsr038

Adams, R. B. Jr., Franklin, R. G. Jr., Rule, N. O., Freeman, J. B., Kveraga, K., Hadjikhani, N., et al. (2010). Culture, gaze and the neural processing of fear expressions. Soc. Cogn. Affect. Neurosci. 5, 340–348. doi: 10.1093/scan/nsp047

Adams, R. B. Jr., Gordon, H. L., Baird, A. A., Ambady, N., and Kleck, R. E. (2003). Effects of gaze on amygdala sensitivity to anger and fear faces. Science 300:1536. doi: 10.1126/science.1082244

Adams, R. B. Jr., and Kleck, R. E. (2003). Perceived gaze direction and the processing of facial display of emotion. Psychol. Sci. 14, 644–647. doi: 10.1046/j.0956-7976.2003.psci_1479.x

Adams, R. B. Jr., and Kleck, R. E. (2005). Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion 5, 3–11. doi: 10.1037/1528-3542.5.1.3

Akechi, H., Senju, A., Uibo, H., Kikuchi, Y., Hasegawa, T., and Hietanen, J. K. (2013). Attention to eye contact in the west and east: autonomic responses and evaluative ratings. PLoS One 8:e59312. doi: 10.1371/journal.pone.0059312

Argyle, M., Henderson, M., Bond, M., Iizuka, Y., and Contarello, A. (1986). Cross-cultural variations in relationship rules. Int. J. Psychol. 21, 287–315. doi: 10.1080/00207598608247591

Asada, K., Tojo, Y., Osanai, H., Saito, A., Hasegawa, T., and Kumagaya, S. (2016). Reduced personal space in individuals with autism spectrum disorder. PLoS One 11:e0146306. doi: 10.1371/journal.pone.0146306

Bach, D. R., Schächinger, H., Neuhoff, J. G., Esposito, F., Di Salle, F., Lehmann, C., et al. (2008). Rising sound intensity: an intrinsic warning cue activating the amygdala. Cereb. Cortex 18, 145–150. doi: 10.1093/cercor/bhm040

Bailenson, J. N., Blascovich, J., Beall, A. C., and Loomis, J. M. (2001). Equilibrium theory revisited: mutual gaze and personal space in virtual environments. Presence Teleop. Virt. 10, 583–598. doi: 10.1162/105474601753272844

Ball, W., and Tronick, E. (1971). Infant responses to impending collision: optical and real. Science 171, 818–820. doi: 10.1126/science.171.3973.818

Barr, D. J., Levy, R., Scheepers, C., and Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: keep it maximal. J. Mem. Lang. 68, 255–278. doi: 10.1016/j.jml.2012.11.001

Baurès, R., Oberfeld, D., and Hecht, H. (2010). Judging the contact-times of multiple objects: evidence for asymmetric interference. Acta Psychol. 134, 363–371. doi: 10.1016/j.actpsy.2010.03.009

Billington, J., Wilkie, R. M., Field, D. T., and Wann, J. P. (2011). Neural processing of imminent collision in humans. Proc. R. Soc. B Biol. Sci. 278, 1476–1481. doi: 10.1098/rspb.2010.1895

Brendel, E., DeLucia, P. R., Hecht, H., Stacy, R. L., and Larsen, J. T. (2012). Threatening pictures induce shortened time-to-contact estimates. Atten. Percept. Psychophys. 74, 979–987. doi: 10.3758/s13414-012-0285-0

Bufacchi, R. J., and Iannetti, G. D. (2018). An action field theory of peripersonal space. Trends Cogn. Sci. 22, 1076–1090. doi: 10.1016/j.tics.2018.09.004

Candini, M., Giuberti, V., Santelli, E., di Pellegrino, G., and Frassinetti, F. (2019). When social and action spaces diverge: a study in children with typical development and autism. Autism 23, 1687–1698. doi: 10.1177/1362361318822504

Cappe, C., Thelen, A., Romei, V., Thut, G., and Murray, M. M. (2012). Looming signals reveal synergistic principles of multisensory integration. J. Neurosci. 32, 1171–1182. doi: 10.1523/JNEUROSCI.5517-11.2012

Cartaud, A., Ruggiero, G., Ott, L., Iachini, T., and Coello, Y. (2018). Physiological response to facial expressions in peripersonal space determines interpersonal distance in a social interaction context. Front. Psychol. 9:657. doi: 10.3389/fpsyg.2018.00657

Caruana, N., Inkley, C., Zein, M. E., and Seymour, K. (2019). No influence of eye gaze on emotional face processing in the absence of conscious awareness. Sci. Rep. 9:16198. doi: 10.1038/s41598-019-52728-y

Coello, Y., and Cartaud, A. (2021). The interrelation between peripersonal action space and interpersonal social space: psychophysiological evidence and clinical implications. Front. Hum. Neurosci. 15:636124. doi: 10.3389/fnhum.2021.636124

Conty, L., Russo, M., Loehr, V., Hugueville, L., Barbu, S., Huguet, P., et al. (2010). The mere perception of eye contact increases arousal during a word-spelling task. Soc. Neurosci. 5, 171–186. doi: 10.1080/17470910903227507

Cui, M., Zhu, M., Lu, X., and Zhu, L. (2019). Implicit perceptions of closeness from the direct eye gaze. Front. Psychol. 9:2673. doi: 10.3389/fpsyg.2018.02673

D'Angelo, M., di Pellegrino, G., and Frassinetti, F. (2019). The illusion of having a tall or short body differently modulates interpersonal and peripersonal space. Behav. Brain Res. 375:112146. doi: 10.1016/j.bbr.2019.112146

de C Hamilton, A. F. (2016). Gazing at me: the importance of social meaning in understanding direct-gaze cues. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 371:20150080. doi: 10.1098/rstb.2015.0080

DeLucia, P. R. (2004). Time-to-contact judgments of an approaching object that is partially concealed by an occluder. J. Exp. Psychol. Hum. Percept. Perform. 30, 287–304. doi: 10.1037/0096-1523.30.2.287

DeLucia, P. R., Brendel, E., Hecht, H., Stacy, R. L., and Larsen, J. T. (2014). Threatening scenes but not threatening faces shorten time-to-contact estimates. Atten. Percept. Psychophys. 76, 1698–1708. doi: 10.3758/s13414-014-0681-8

DeLucia, P. R., and Liddell, G. W. (1998). Cognitive motion extrapolation and cognitive clocking in prediction motion task. J. Exp. Psychol. Hum. Percept. Perform. 24, 901–914. doi: 10.1037/0096-1523.24.3.901

Di Pellegrino, G., Làdavas, E., and Farnè, A. (1997). Seeing where your hands are. Nature 388:730. doi: 10.1038/41921

Doi, H., and Shinohara, K. (2009). The perceived duration of emotional face is influenced by the gaze direction. Neurosci. Lett. 457, 97–100. doi: 10.1016/j.neulet.2009.04.004

Eimer, M., and Holmes, A. (2002). An ERP study on the time course of emotional face processing. Neuroreport 13, 427–431. doi: 10.1097/00001756-200203250-00013

Ekman, P., and Friesen, W. V. (1976). Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press.

Elfenbein, H. A., and Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol. Bull. 128, 203–235. doi: 10.1037/0033-2909.128.2.203

Ellena, G., Starita, F., Haggard, P., and Làdavas, E. (2020). The spatial logic of fear. Cognition 203:104336. doi: 10.1016/j.cognition.2020.104336

Ellena, G., Starita, F., Haggard, P., Romei, V., and Làdavas, E. (2021). Fearful faces modulate spatial processing in peripersonal space: an ERP study. Neuropsychologia 156:107827. doi: 10.1016/j.neuropsychologia.2021.107827

Emery, N. J. (2000). The eyes have it: the neuroethology, function and evolution of social gaze. Neurosci. Biobehav. Rev. 24, 581–604. doi: 10.1016/S0149-7634(00)00025-7

Faul, F., Erdfelder, E., Buchner, A., and Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Finlayson, N. J., Remington, R. W., and Grove, P. M. (2012). The role of presentation method and depth singletons in visual search for objects moving in depth. J. Vis. 12:13. doi: 10.1167/12.8.13

Fox, E., Mathews, A., Calder, A., and Yiend, J. (2007). Anxiety and sensitivity to gaze direction in emotionally expressive faces. Emotion 7, 478–486. doi: 10.1037/1528-3542.7.3.478

Franconeri, S. L., and Simons, D. J. (2003). Moving and looming stimuli capture attention. Percept. Psychophys. 65, 999–1010. doi: 10.3758/BF03194829

Frischen, A., Eastwood, J. D., and Smilek, D. (2008). Visual search for faces with emotional expressions. Psychol. Bull. 134, 662–676. doi: 10.1037/0033-2909.134.5.662

Gil, S., and Droit-Volet, S. (2012). Emotional time distortions: the fundamental role of arousal. Cognit. Emot. 26, 847–862. doi: 10.1080/02699931.2011.625401

Graham, R., and LaBar, K. S. (2007). Garner interference reveals dependencies between emotional expression and gaze in face perception. Emotion 7, 296–313. doi: 10.1037/1528-3542.7.2.296

Graham, R., and LaBar, K. S. (2012). Neurocognitive mechanisms of gaze-expression interactions in face processing and social attention. Neuropsychologia 50, 553–566. doi: 10.1016/j.neuropsychologia.2012.01.019

Gray, R., and Regan, D. (1998). Accuracy of estimating time to collision using binocular and monocular information. Vis. Res. 38, 499–512. doi: 10.1016/S0042-6989(97)00230-7

Green, P., and MacLeod, C. J. (2016). SIMR: an R package for power analysis of generalized linear mixed models by simulation. Methods Ecol. Evol. 7, 493–498. doi: 10.1111/2041-210X.12504

Hadders-Algra, M. (2022). Human face and gaze perception is highly context specific and involves bottom-up and top-down neural processing. Neurosci. Biobehav. Rev. 132, 304–323. doi: 10.1016/j.neubiorev.2021.11.042

Hadjikhani, N., Hoge, R., Snyder, J., and de Gelder, B. (2008). Pointing with the eyes: the role of gaze in communicating danger. Brain Cogn. 68, 1–8. doi: 10.1016/j.bandc.2008.01.008

Hayduk, L. A. (1983). Personal space: where we now stand. Psychol. Bull. 94, 293–335. doi: 10.1037/0033-2909.94.2.293

Hessels, R. S., Cornelissen, T. H. W., Hooge, I. T. C., and Kemner, C. (2017). Gaze behavior to faces during dyadic interaction. Can. J. Exp. Psychol. 71, 226–242. doi: 10.1037/cep0000113

Hietanen, J. K., Leppänen, J. M., Peltola, M. J., Linna-aho, K., and Ruuhiala, H. J. (2008). Seeing direct and averted gaze activates the approach–avoidance motivational brain systems. Neuropsychologia 46, 2423–2430. doi: 10.1016/j.neuropsychologia.2008.02.029

Hinde, R. A., and Rowell, T. E. (1962). Communication by posture and facial expression in the rhesus monkey. Proc. Zool. Soc. London 138, 1–21. doi: 10.1111/j.1469-7998.1962.tb05684.x

Iachini, T., Coello, Y., Frassinetti, F., Senese, V. P., Galante, F., and Ruggiero, G. (2016). Peripersonal and interpersonal space in virtual and real environments: effects of gender and age. J. Environ. Psychol. 45, 154–164. doi: 10.1016/j.jenvp.2016.01.004

Ioannou, S., Morris, P., Mercer, H., Baker, M., Gallese, V., and Reddy, V. (2014). Proximity and gaze influences facial temperature: a thermal infrared imaging study. Front. Psychol. 5:845. doi: 10.3389/fpsyg.2014.00845

Jack, R. E., Blais, C., Scheepers, C., Schyns, P. G., and Caldara, R. (2009). Cultural confusions show that facial expressions are not universal. Curr. Biol. 19, 1543–1548. doi: 10.1016/j.cub.2009.07.051

Jiang, Y., Shannon, R. W., Vizueta, N., Bernat, E. M., Patrick, C. J., and He, S. (2009). Dynamics of processing invisible faces in the brain: automatic neural encoding of facial expression information. NeuroImage 44, 1171–1177. doi: 10.1016/j.neuroimage.2008.09.038

Kätsyri, J., de Gelder, B., and de Borst, A. W. (2020). Amygdala responds to direct gaze in real but not in computer-generated faces. NeuroImage 204:116216. doi: 10.1016/j.neuroimage.2019.116216

Kennedy, D. P., Gläscher, J., Tyszka, J. M., and Adolphs, R. (2009). Personal space regulation by the human amygdala. Nat. Neurosci. 12, 1226–1227. doi: 10.1038/nn.2381

Kim, N. G., and Son, H. (2015). How facial expressions of emotion affect distance perception. Front. Psychol. 6:1825. doi: 10.3389/fpsyg.2015.01825

Kliegl, K. M., Limbrecht-Ecklundt, K., Dürr, L., Traue, H. C., and Huckauf, A. (2015). The complex duration perception of emotional faces: effects of face direction. Front. Psychol. 6:262. doi: 10.3389/fpsyg.2015.00262

Lee, D. N. (1976). A theory of visual control of braking based on information about time-to-collision. Perception 5, 437–459. doi: 10.1068/p050437

Leibovich, T., Cohen, N., and Henik, A. (2016). Itsy bitsy spider? Valence and self-relevance predict size estimation. Biol. Psychol. 121, 138–145. doi: 10.1016/j.biopsycho.2016.01.009

Lewkowicz, D. J. (2008). Perception of dynamic and static audiovisual sequences in 3- and 4-month-old infants. Child Dev. 79, 1538–1554. doi: 10.1111/j.1467-8624.2008.01204.x

Lourenco, S. F., Longo, M. R., and Pathman, T. (2011). Near space and its relation to claustrophobic fear. Cognition 119, 448–453. doi: 10.1016/j.cognition.2011.02.009

Macrae, C. N., Hood, B. M., Milne, A. B., Rowe, A. C., and Mason, M. F. (2002). Are you looking at me? Eye gaze and person perception. Psychol. Sci. 13, 460–464. doi: 10.1111/1467-9280.00481

Mason, M. F., Hood, B. M., and Macrae, C. N. (2004). Look into my eyes: gaze direction and person memory. Memory 12, 637–643. doi: 10.1080/09658210344000152

Matuschek, H., Kliegl, R., Vasishth, S., Baayen, H., and Bates, D. (2017). Balancing type I error and power in linear mixed models. J. Mem. Lang. 94, 305–315. doi: 10.1016/j.jml.2017.01.001

Ma, X., Fu, M., Zhang, X., Song, X., Becker, B., Wu, R., et al. (2022). Own race eye-gaze bias for all emotional faces but accuracy bias only for sad expressions. Front. Neurosci. 16:852484. doi: 10.3389/fnins.2022.852484

McCrackin, S. D., and Itier, R. J. (2018a). Both fearful and happy expressions interact with gaze direction by 200 ms SOA to speed attention orienting. Vis. Cogn. 26, 231–252. doi: 10.1080/13506285.2017.1420118

McCrackin, S. D., and Itier, R. J. (2018b). Individual differences in the emotional modulation of gaze-cuing. Cognit. Emot. 33, 768–800. doi: 10.1080/02699931.2018.1495618

McCrackin, S. D., and Itier, R. J. (2019). Perceived gaze direction differentially affects discrimination of facial emotion, attention, and gender–an ERP study. Front. Neurosci. 13:517. doi: 10.3389/fnins.2019.00517

McGuire, A., Ciersdorff, A., Gillath, O., and Vitevitch, M. (2021). Effects of cognitive load and type of object on the visual looming bias. Atten. Percept. Psychophys. 83, 1508–1517. doi: 10.3758/s13414-021-02271-8

Milders, M., Hietanen, J. K., Leppänen, J. M., and Braun, M. (2011). Detection of emotional faces is modulated by the direction of eye gaze. Emotion 11, 1456–1461. doi: 10.1037/a0022901

Morris, J. S., Frith, C. D., Perrett, D. I., Rowland, D., Young, A. W., Calder, A. J., et al. (1996). A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383, 812–815. doi: 10.1038/383812a0

N’Diaye, K., Sander, D., and Vuilleumier, P. (2009). Self-relevance processing in the human amygdala: gaze direction, facial expression, and emotion intensity. Emotion 9, 798–806. doi: 10.1037/a0017845

Náñez, J. Sr., and Yonas, A. (1994). Effects of luminance and texture motion on infant defensive reactions to optical collision. Infant Behav. Dev. 17, 165–174. doi: 10.1016/0163-6383(94)90052-3

Narang, S., Best, A., Randhavane, T., Shapiro, A., and Manocha, D. (2016). PedVR: simulating gaze-based interactions between a real user and virtual crowds. Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, 91–100. doi: 10.1145/2993369.2993378

Neuhoff, J. G. (2001). An adaptive bias in the perception of looming auditory motion. Ecol. Psychol. 13, 87–110. doi: 10.1207/S15326969ECO1302_2

Neuhoff, J. G., Long, K. L., and Worthington, R. C. (2012). Strength and physical fitness predict the perception of looming sounds. Evol. Hum. Behav. 33, 318–322. doi: 10.1016/j.evolhumbehav.2011.11.001

Nichols, K. A., and Champness, B. G. (1971). Eye gaze and the GSR. J. Exp. Soc. Psychol. 7, 623–626. doi: 10.1016/0022-1031(71)90024-2

Nummenmaa, L., Hyönä, J., and Hietanen, J. K. (2009). I'll walk this way: eyes reveal the direction of locomotion and make passersby look and go the other way. Psychol. Sci. 20, 1454–1458. doi: 10.1111/j.1467-9280.2009.02464.x

Öhman, A., Lundqvist, D., and Esteves, F. (2001). The face in the crowd revisited: a threat advantage with schematic stimuli. J. Pers. Soc. Psychol. 80, 381–396. doi: 10.1037/0022-3514.80.3.381

Patané, I., Farnè, A., and Frassinetti, F. (2017). Cooperative tool-use reveals peripersonal and interpersonal spaces are dissociable. Cognition 166, 13–22. doi: 10.1016/j.cognition.2017.04.013

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., et al. (2019). PsychoPy2: experiments in behavior made easy. Behav. Res. Methods 51, 195–203. doi: 10.3758/s13428-018-01193-y

Peron, S., and Gabbiani, F. (2009). Spike frequency adaptation mediates looming stimulus selectivity in a collision-detecting neuron. Nat. Neurosci. 12, 318–326. doi: 10.1038/nn.2259

Poncet, F., Baudouin, J. Y., Dzhelyova, M. P., Rossion, B., and Leleu, A. (2019). Rapid and automatic discrimination between facial expressions in the human brain. Neuropsychologia 129, 47–55. doi: 10.1016/j.neuropsychologia.2019.03.006

Pönkänen, L. M., and Hietanen, J. K. (2012). Eye contact with neutral and smiling faces: effects on autonomic responses and frontal EEG asymmetry. Front. Hum. Neurosci. 6:122. doi: 10.3389/fnhum.2012.00122

Pönkänen, L. M., Peltola, M. J., and Hietanen, J. K. (2011). The observer observed: frontal EEG asymmetry and autonomic responses differentiate between another person’s direct and averted gaze when the face is seen live. Int. J. Psychophysiol. 82, 180–187. doi: 10.1016/j.ijpsycho.2011.08.006

Quesque, F., Ruggiero, G., Mouta, S., Santos, J., Iachini, T., and Coello, Y. (2017). Keeping you at arm's length: modifying peripersonal space influences interpersonal distance. Psychol. Res. 81, 709–720. doi: 10.1007/s00426-016-0782-1

Regan, D., and Gray, R. (2000). Visually guided collision avoidance and collision achievement. Trends Cogn. Sci. 4, 99–107. doi: 10.1016/S1364-6613(99)01442-4

Richeson, J. A., Todd, A. R., Trawalter, S., and Baird, A. A. (2008). Eye-gaze direction modulates race-related amygdala activity. Group Process. Intergroup Relat. 11, 233–246. doi: 10.1177/1368430207088040

Rigato, S., Menon, E., Johnson, M. H., and Farroni, T. (2011). The interaction between gaze direction and facial expressions in newborns. Eur. J. Dev. Psychol. 8, 624–636. doi: 10.1080/17405629.2011.602239

Righart, R., and de Gelder, B. (2008). Rapid influence of emotional scenes on encoding of facial expressions: an ERP study. Soc. Cogn. Affect. Neurosci. 3, 270–278. doi: 10.1093/scan/nsn021

Rizzolatti, G., Fadiga, L., Fogassi, L., and Gallese, V. (1997). The space around us. Science 277, 190–191. doi: 10.1126/science.277.5323.190

Rizzolatti, G., Scandolara, C., Matelli, M., and Gentilucci, M. (1981). Afferent properties of periarcuate neurons in macaque monkeys. Behav. Brain Res. 2, 147–163. doi: 10.1016/0166-4328(81)90053-X

Roelofs, K., Hagenaars, M. A., and Stins, J. (2010). Facing freeze: social threat induces bodily freeze in humans. Psychol. Sci. 21, 1575–1581. doi: 10.1177/0956797610384746

Ruggiero, G., Frassinetti, F., Coello, Y., Rapuano, M., di Cola, A. S., and Iachini, T. (2017). The effect of facial expressions on peripersonal and interpersonal spaces. Psychol. Res. 81, 1232–1240. doi: 10.1007/s00426-016-0806-x

Ruggiero, G., Rapuano, M., Cartaud, A., Coello, Y., and Iachini, T. (2021). Defensive functions provoke similar psychophysiological reactions in reaching and comfort spaces. Sci. Rep. 11:5170. doi: 10.1038/s41598-021-83988-2

Sambo, C. F., and Iannetti, G. D. (2013). Better safe than sorry? The safety margin surrounding the body is increased by anxiety. J. Neurosci. 33, 14225–14230. doi: 10.1523/JNEUROSCI.0706-13.2013

Sander, D., Grandjean, D., Kaiser, S., Wehrle, T., and Scherer, K. R. (2007). Interaction effects of perceived gaze direction and dynamic facial expression: evidence for appraisal theories of emotion. Eur. J. Cogn. Psychol. 19, 470–480. doi: 10.1080/09541440600757426

Sato, W., Yoshikawa, S., Kochiyama, T., and Matsumura, M. (2004). The amygdala processes the emotional significance of facial expressions: an fMRI investigation using the interaction between expression and face direction. NeuroImage 22, 1006–1013. doi: 10.1016/j.neuroimage.2004.02.030

Schiff, W., Oldak, R., and Shah, V. (1992). Aging persons' estimates of vehicular motion. Psychol. Aging 7, 518–525. doi: 10.1037/0882-7974.7.4.518

Schyns, P. G., Petro, L. S., and Smith, M. L. (2007). Dynamics of visual information integration in the brain for categorizing facial expressions. Curr. Biol. 17, 1580–1585. doi: 10.1016/j.cub.2007.08.048

Senju, A., and Hasegawa, T. (2005). Direct gaze captures visuospatial attention. Vis. Cogn. 12, 127–144. doi: 10.1080/13506280444000157

Serino, A. (2019). Peripersonal space (PPS) as a multisensory interface between the individual and the environment, defining the space of the self. Neurosci. Biobehav. Rev. 99, 138–159. doi: 10.1016/j.neubiorev.2019.01.016

Shirai, N., and Yamaguchi, M. K. (2004). Asymmetry in the perception of motion-in-depth. Vis. Res. 44, 1003–1011. doi: 10.1016/j.visres.2003.07.012