- 1Department of Clinical and Experimental Sciences, University of Brescia, Brescia, Italy

- 2IRCCS Neuromed, Pozzilli, Italy

Introduction: The widespread use of surgical masks during the COVID-19 pandemic has posed challenges in interpreting facial emotions. As the mouth is known to play a crucial role in decoding emotional expressions, its covering is likely to affect this process. Recent evidence suggests that facial expressions impact behavioral responses only when their emotional content is relevant to subjects’ goals. Thus, this study investigates whether and how masked emotional faces alter such a phenomenon.

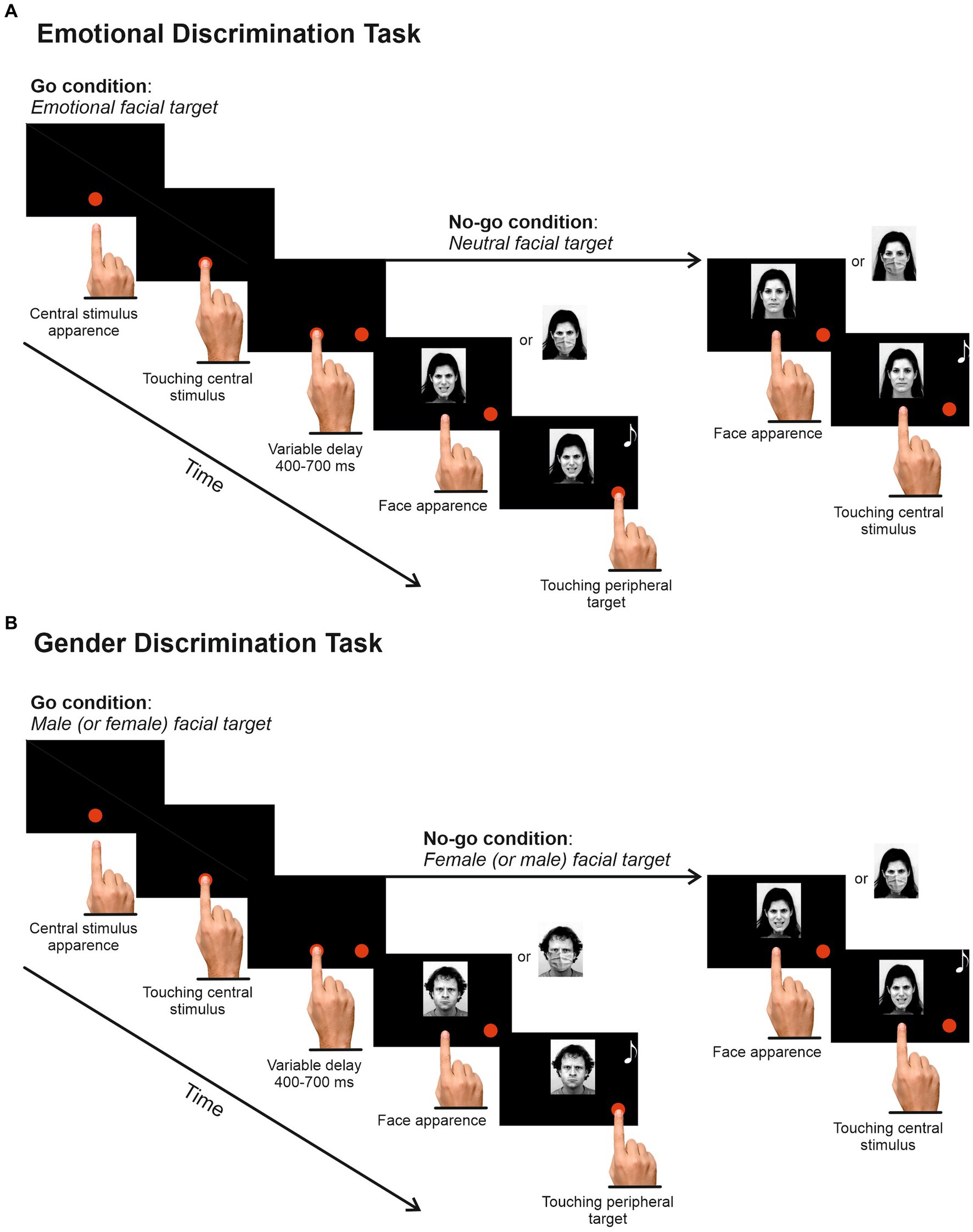

Methods: Forty participants completed two reaching versions of the Go/No-go task in a counterbalanced fashion. In the Emotional Discrimination Task (EDT), participants were required to respond to angry, fearful, or happy expressions by performing a reaching movement and withholding it when a neutral face was presented. In the Gender Discrimination Task (GDT), the same images were shown, but participants had to respond according to the poser’s gender. The face stimuli were presented in two conditions: covered by a surgical mask (masked) or without any covering (unmasked).

Results: Consistent with previous studies, valence influenced behavioral control in the EDT but not in the GDT. Nevertheless, responses to facial emotions in the EDT exhibited significant differences between unmasked and masked conditions. In the former, angry expressions led to a slowdown in participants’ responses. Conversely, in the masked condition, behavioral reactions were impacted by fearful and, to a greater extent, by happy expressions. Responses to fearful faces were slower, and those to happy faces exhibited increased variability in the masked condition compared to the unmasked condition. Furthermore, response accuracy to masked happy faces dramatically declined compared to the unmasked condition and other masked emotions.

Discussion: In sum, our findings indicate that surgical masks disrupt reactions to emotional expressions, leading people to react less accurately and with heightened variability to happy expressions, provided that the emotional dimension is relevant to people’s goals.

1 Introduction

Facial expressions represent a form of non-verbal communication that can complement and enhance verbal communication, providing important cues about the emotional states, needs, and intentions of others, enabling us to manage and coordinate our social interactions. Thus, the ability to perceive and interpret facial expressions is a crucial aspect of social cognition, allowing individuals to respond appropriately in social contexts.

While there is evidence indicating that emotional stimuli affect motor behavior, consensus on the underlying mechanisms remains elusive (Mancini et al., 2020; Montalti and Mirabella, 2023). The prevailing theoretical framework for interpreting the effect of emotional stimuli on behavioral reactions is the motivational model (Bradley et al., 2001; Lang and Bradley, 2010). This model is built on the notion that, unlike most stimuli, emotional ones cannot be filtered by selective attention, and thus, they trigger automatic responses promoting our survival (Lang et al., 2000; Vuilleumier, 2005). In contrast, the appraisal theories of emotion (Moors and Fischer, 2019; Scherer and Moors, 2019) argue against the existence of attentional bias toward emotionally laden stimuli and assert that behavioral reactions to such stimuli vary depending on the situational context and the individual’s specific goals. In this context, a specific stimulus does not possess an inherently negative or positive value in absolute terms. The following example clarifies the concept. Suppose a normal person encounters a spider. She/he will likely have a fearful reaction, aiming to avoid it. Conversely, an entomologist, actively seeking spiders for his/her studies, will react joyfully, entailing an approach-oriented behavior. The appraisal theories received strong support from a recent series of studies that employed an innovative Go/No-go paradigm developed by Mirabella and colleagues (Mirabella, 2018; Mancini et al., 2020, 2022; Calbi et al., 2022; Mirabella et al., 2023; Montalti and Mirabella, 2023). In this experimental design, the same group of participants is engaged in two versions of the Go/No-go task, differing only in the instruction given to participants. In the emotional version (the Emotional Discrimination Task, EDT), participants have to move when they see an emotional face and withhold the response when it is neutral, or vice versa. 
In the control version, participants are instructed to respond according to other features of the images, such as the poser’s gender (Mirabella, 2018; Mancini et al., 2020, 2022; Mirabella et al., 2023; Montalti and Mirabella, 2023) or the t-shirt’s color (Calbi et al., 2022). Overall, Mirabella and colleagues’ findings showed that emotional valence exerts a significant influence on motor planning (Mirabella, 2018; Mancini et al., 2020; Mirabella et al., 2023; Montalti and Mirabella, 2023) and inhibitory control (Calbi et al., 2022; Mancini et al., 2022), provided that this stimulus dimension is relevant to the task instructions, i.e., in the EDT. Their findings suggest that threatening stimuli, such as angry and fearful expressions, hold subjects’ attention more strongly than happy faces, but only when the emotional connotation of the stimuli aligns with the participants’ goals. This phenomenon was named the task-relevance effect.

Over the past three years, the outbreak of the COVID-19 pandemic has necessitated the widespread use of surgical masks. This has posed a novel challenge for recognizing others’ expressions, as the masks cover the mouth region, one of the most relevant features for identifying emotional expressions (Engbert and Kliegl, 2003; Smith et al., 2005; Eisenbarth and Alpers, 2011; Bodenschatz et al., 2019). It is generally acknowledged that the mouth mainly contributes to the recognition of happiness, disgust, and surprise, while the eyes are deemed more relevant for detecting sadness and anger (Schurgin et al., 2014). The literature is discordant on the recognition of fearful expressions. Some studies argue for a substantial role of the eyes (Whalen et al., 2001; Morris et al., 2002; Adolphs et al., 2005), while others maintain that mouth and eyes contribute equally to the identification of fear (Eisenbarth and Alpers, 2011). Several previous studies have examined the impact of surgical masks on the identification of emotional faces and found that, as expected, healthy people show no impairment in recognizing anger but are impaired in recognizing happiness (Calbi et al., 2021; Marini et al., 2021; Grahlow et al., 2022; Proverbio and Cerri, 2022; Rinck et al., 2022; Ventura et al., 2023). Nevertheless, this evidence does not offer insights into how we respond to emotional faces, as the visual signals used for guiding motor actions are processed differently from those involved in perception (Goodale, 2014). To fill this gap, in the current study, we assessed the impact of masks on behavioral reactions elicited by three emotional facial expressions (angry, happy, and fearful faces). We chose these emotional expressions because we had studied them previously using the above-described paradigm. Our primary objective was to assess whether the task-relevance effect observed in unmasked faces persisted in the presence of surgical masks. 
In other words, we wondered whether healthy persons, in addition to their ability to recognize emotional expressions explicitly, were still able to implicitly use visual information to respond appropriately to such stimuli.

2 Materials and methods

2.1 Participants

Forty healthy volunteers participated in the present study (20 females; mean ± standard deviation age = 24.5 ± 3.6 years, range = 19.7–32.9). The sample size was determined in advance with G*Power 3.1.9.4 (Faul et al., 2009) for a repeated-measures ANOVA. The input parameters for this calculation were taken from previously published data (Mirabella, 2018; Mancini et al., 2020; Montalti and Mirabella, 2023; effect size = 0.15, alpha = 0.05, power = 0.80, number of measures = 12, correlation among repeated measures r = 0.5, and non-sphericity correction ε = 0.8). The estimated minimum sample size was 37. All participants had normal or corrected-to-normal visual acuity and were naïve about the study’s purpose. None of the participants had a history of psychiatric or neurological disorders.

Before the experimental session, we assessed the presence of alexithymia, a condition characterized by difficulty or inability to identify, express, and communicate one’s own emotions. It also makes it difficult for people to understand and respond to other people’s emotions, leading to difficulties in social relationships. Consequently, we considered alexithymia an exclusion criterion. As a screening tool, we administered the Italian version of the Toronto Alexithymia Scale (TAS-20; Bagby et al., 1994; Bressi et al., 1996). This scale consists of 20 statements, and participants were required to indicate their level of agreement using a 5-point Likert scale. Participants were included in the study only if their TAS-20 total score was below the alexithymia threshold, i.e., 61.

The study received approval from the local ethical committee “ASST Spedali Civili” di Brescia (protocol number 4452) and was conducted in accordance with the principles outlined in the Declaration of Helsinki (2013). Before the study’s commencement, all participants provided written informed consent to participate.

2.2 Stimuli

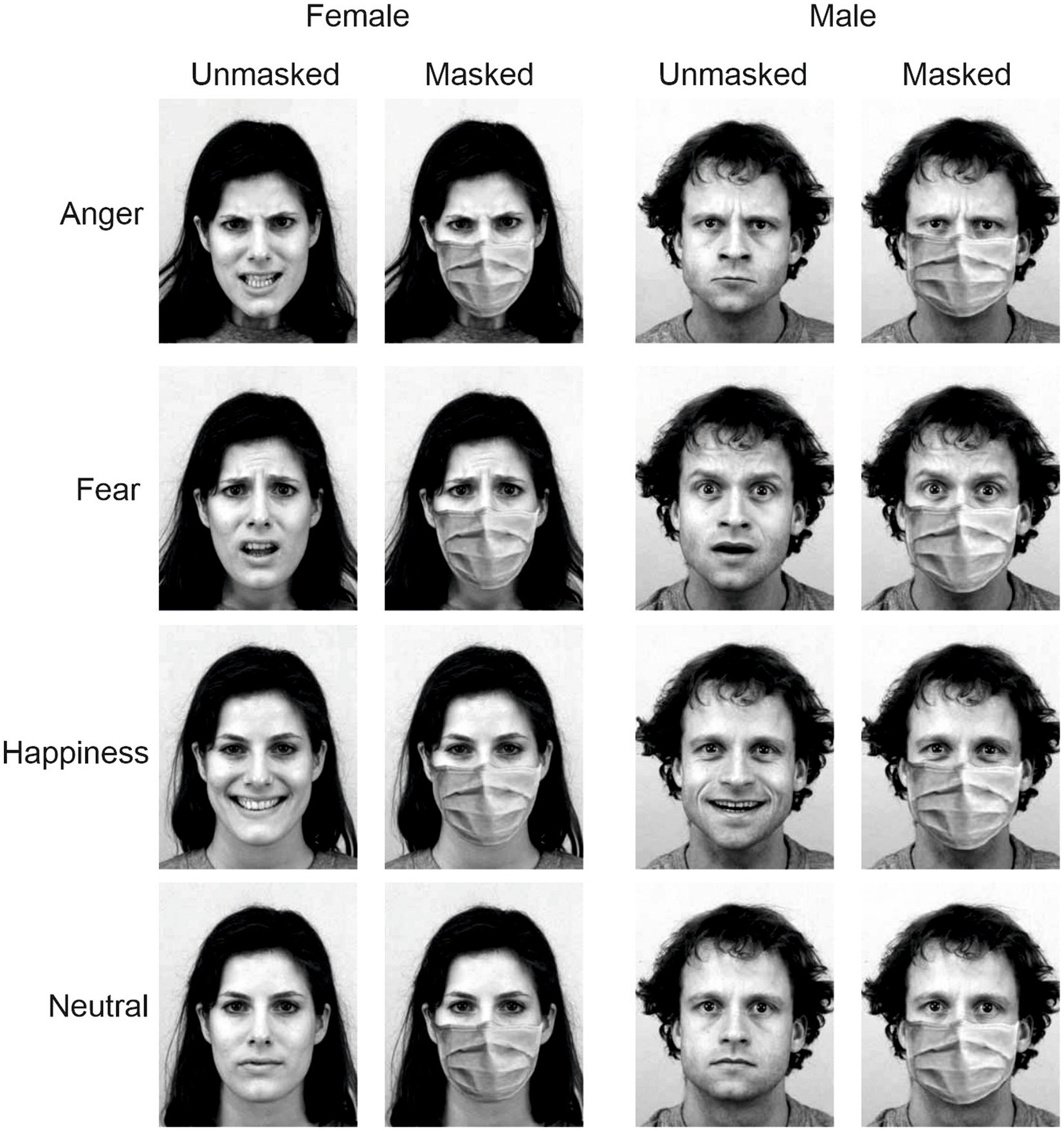

Stimuli were angry, fearful, happy, and neutral facial expressions posed by eight actors (four females) taken from the Karolinska Directed Emotional Faces (KDEF; Lundqvist et al., 1998). Using Photoshop software (version 2020, Adobe Systems Inc., San Jose, United States), we added a surgical mask to each stimulus to obtain a masked and an unmasked version of each stimulus. Thus, we created 64 different stimuli (Figure 1).
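The count of 64 stimuli follows from fully crossing the three factors described above (8 actors × 4 expressions × 2 mask conditions). The short sketch below simply enumerates that design; the actor labels are hypothetical placeholders and do not correspond to KDEF identifiers.

```python
from itertools import product

# Factor levels as described in the text. The actor labels are hypothetical
# placeholders (four female, four male), not actual KDEF identifiers.
ACTORS = [f"F{i}" for i in range(1, 5)] + [f"M{i}" for i in range(1, 5)]
EXPRESSIONS = ["angry", "fearful", "happy", "neutral"]
MASK_CONDITIONS = ["masked", "unmasked"]


def build_stimulus_set():
    """Return one (actor, expression, mask) tuple per stimulus."""
    return list(product(ACTORS, EXPRESSIONS, MASK_CONDITIONS))


stimuli = build_stimulus_set()
print(len(stimuli))  # 8 actors x 4 expressions x 2 mask conditions
```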

Figure 1. Examples of the experimental stimuli depicting angry, fearful, happy, and neutral facial expressions of a man and a woman. Stimuli were taken from the Karolinska Directed Emotional Faces (KDEF; Lundqvist et al., 1998; https://kdef.se/home/aboutKDEF). Pictures were modified by adding a surgical mask to obtain a masked and an unmasked version of each stimulus. Stimuli were reproduced and adapted with permission (see https://kdef.se/faq/using-and-publishing-kdef-and-akdef).

At the end of the experimental sessions, two questionnaires were administered to evaluate the arousal and valence of each stimulus. In the arousal questionnaire, participants had to evaluate each stimulus using a 7-point Likert scale ranging from “1 = not at all arousing” to “7 = very much arousing.” The valence questionnaire had a similar structure, but the Likert scale ranged from “1 = negative” to “7 = positive.” The middle point, i.e., 4, was labeled as neutral.

For both valence and arousal and each participant, we calculated the average ratings of each experimental condition. Such a procedure was conducted separately for each emotion and face with and without surgical masks. Although both arousal and valence ratings did not follow a normal distribution according to the Shapiro–Wilk test, we opted to use parametric ANOVA as our sample size was larger than 30 participants (Fagerland, 2012). This large sample size satisfies the requirements of the central limit theorem, making the parametric approach robust even in cases of deviations from the normal distribution (Rasch and Guiard, 2004). Both ANOVAs had two within-participant factors: Emotion (four levels: anger, fear, happiness, and neutral) and Mask (two levels: masked and unmasked).

The analysis of valence ratings revealed a significant main effect of Emotion (F(1.81, 70.43) = 597.68, p < 0.001, η²ₚ = 0.94). Post-hoc comparison with Bonferroni correction indicated that all emotions differed from one another (all ps < 0.0001). In particular, happy facial expressions (5.81 ± 1.06) were rated significantly more positive-valenced than angry (1.80 ± 0.86), fearful (2.35 ± 0.91), and neutral expressions (3.81 ± 0.72). Furthermore, neutral faces were judged more positive than angry and fearful expressions, while fearful expressions were considered more positive than angry ones. There was also a significant main effect of Mask (F(1, 39) = 40.66, p < 0.001, η²ₚ = 0.51), indicating that unmasked faces (3.52 ± 1.97) were rated more positive in valence than masked faces (3.36 ± 1.59). Finally, the ANOVA showed a significant interaction effect between Emotion and Mask (F(2.41, 93.85) = 65.08, p < 0.001, η²ₚ = 0.62). Post-hoc comparison with Bonferroni correction revealed that unmasked happy faces were rated more positively (6.29 ± 0.86) than masked ones (5.32 ± 1.03; p < 0.0001). Also, it revealed that within both masked and unmasked conditions, all emotions differed from each other (all ps < 0.0001). In both cases, these differences followed the same pattern observed in the main effect of Emotion.

The analysis of arousal ratings showed a significant main effect of Emotion (F(1.87, 72.82) = 79.67, p < 0.001, η²ₚ = 0.67). Post-hoc comparisons with Bonferroni correction revealed that neutral faces (2.12 ± 1.21) were rated less arousing compared to happy (3.69 ± 1.67), fearful (4.49 ± 1.47), and angry expressions (4.39 ± 1.48; all ps < 0.0001). Furthermore, happy expressions were rated significantly less arousing than both angry and fearful faces (ps < 0.0001), while angry and fearful faces did not differ significantly from each other (p = 1.00). We also found a main effect of Mask (F(1, 39) = 76.17, p < 0.001, η²ₚ = 0.66) because masked faces (3.32 ± 1.64) were perceived as less arousing than unmasked ones (4.03 ± 1.77). Finally, the analysis unveiled a significant interaction between Emotion and Mask (F(2.76, 107.64) = 21.01, p < 0.001, η²ₚ = 0.35). Post-hoc comparison with Bonferroni correction showed that the mask affected all emotions but the neutral ones. In fact, masked emotional expressions were rated less arousing than their unmasked counterparts (ps < 0.0001). Furthermore, within the masked expressions, all emotions differed (ps < 0.0001) except anger and fear. In contrast, within the unmasked faces, only neutral expressions differed from all other emotions (all ps < 0.0001).

2.3 Experimental apparatus and procedure

Participants were tested in a dimly lit and soundproofed room, using a 17-inch PC monitor where visual stimuli were displayed. The PC monitor was linked to a touch screen (MicroTouch; sampling rate 200 Hz) for monitoring touch position. The timing of the stimulus presentation was synchronized with the monitor’s refresh rate. Behavioral responses and stimulus presentation were controlled by Cortex, a non-commercial software package developed at NIH.1 In one experimental session, participants performed two different versions of the Go/No-go task in a counterbalanced fashion. In the EDT (Figure 2A), participants were instructed to reach a central red dot (2.43 cd/m², diameter 2.8 cm or 4 dva) appearing two centimeters below the center of the screen, with the index finger of their dominant hand, previously assessed using the Italian version of the Edinburgh Handedness test (Oldfield, 1971). When participants touched the central dot, a peripheral target appeared on the right side of the screen at an eccentricity of 8 cm or 11.3 dva. Participants were instructed to hold the central dot for a variable time (400–700 ms) until it disappeared and a picture of a face (go-stimulus) appeared above it. When the face had an emotional connotation (go trials; 66%), participants had to reach the peripheral target as quickly and accurately as possible, holding it for a variable time (300–400 ms). Conversely, when the face displayed a neutral expression (no-go trials; 34%), they were instructed to remain still and hold the index finger on the central position for a random time (400–800 ms).

Figure 2. Experimental Design. (A) Emotional Discrimination Task. Each trial started with the appearance of a red dot at the center of the screen. Participants were instructed to touch and hold it for a random delay of 400–700 ms. Then, a peripheral red dot appeared on the right side of the screen, followed by an image depicting a face with angry, fearful, happy, or neutral expressions. Participants had to reach and hold the peripheral target when the face expressed an emotion (67%) and refrain from moving when the face had a neutral expression (33%). (B) The Gender Discrimination Task had the same structure, but participants had to respond or refrain from responding according to the poser’s gender. Half of the participants were instructed to respond to the male target and to withhold their response to the female target, and vice versa for the other half. The task order was counterbalanced across participants, and the experimental conditions were randomized in each task. Stimuli were reproduced and adapted with permission (see https://kdef.se/faq/using-and-publishing-kdef-and-akdef).

For both go and no-go trials, the correct execution was signaled by acoustic feedback, followed by an intertrial interval lasting 800 ms. During this period, the screen was black. In the go trials, participants had a maximum time of 500 ms to respond. However, we allowed participants an extra 100 ms to avoid cutting the right tail of the reaction time (RT) distribution. The upper RT limit was set to discourage participants from slowing their movements to make stopping easier. When the participants’ response was between 500 and 600 ms, the go trial was signaled as an error and aborted (overtime-reaching trials, see Mirabella et al., 2006). Nevertheless, overtime-reaching trials were included in the analyses, accounting for 5.95% of the total go trials. Overall, the EDT had 432 trials that were given in two blocks to allow rest if requested. Experimental conditions were randomized and balanced across blocks.

The Gender Discrimination Task (GDT; Figure 2B) was identical to the EDT except that participants were instructed to respond according to the posers’ gender, i.e., half of the participants had to respond to the presentation of female faces and to withhold their response to male faces, and vice versa for the other half. The GDT also had 432 trials delivered in two blocks (34% no-go trials). The experimental conditions were randomized and balanced. The overtime-reaching trials accounted for 3.17% of the total go trials.

2.4 Statistical analyses

The impact of emotions on behavioral responses was investigated by analyzing three indices, calculated separately for each participant and condition, i.e., RTs and movement times (MTs) of correct go trials and omission error rates (OERs). RTs are defined as the time between the go-signal and the movement onset. The mean RT was calculated after excluding go trials with an RT more than three standard deviations above or below the mean. This procedure resulted in excluding 0.53% of trials in the EDT and 0.53% in the GDT. MTs are measured as the time between the movement onset and the moment when participants touch the peripheral target. Finally, omission errors are instances in which participants did not respond to the go-signal and kept touching the central stimulus. OERs were computed as the ratio between the number of errors in a given condition and the overall number of trials in the same condition, multiplied by 100.
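The two computations described above (the three-standard-deviation trimming of RTs and the OER percentage) can be sketched as follows. This is only an illustrative sketch, not the authors' analysis script: the function names are hypothetical, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not specify which estimator was used.

```python
from statistics import mean, stdev


def trimmed_mean_rt(rts, n_sd=3.0):
    """Mean RT after dropping trials more than n_sd SDs from the mean.

    Returns (trimmed_mean, number_of_excluded_trials).
    Assumption: the SD is the sample SD (n - 1 denominator).
    """
    m, sd = mean(rts), stdev(rts)
    kept = [rt for rt in rts if abs(rt - m) <= n_sd * sd]
    return mean(kept), len(rts) - len(kept)


def omission_error_rate(n_omissions, n_trials):
    """OER: omission errors in a condition / trials in that condition x 100."""
    if n_trials <= 0:
        raise ValueError("n_trials must be positive")
    return 100.0 * n_omissions / n_trials


# Made-up RTs (ms) for one participant and condition, with one extreme trial.
example_rts = [350, 360, 370, 380, 390] * 4 + [900]
mean_rt, n_excluded = trimmed_mean_rt(example_rts)
print(mean_rt, n_excluded)
print(omission_error_rate(3, 24))
```

In this made-up example, the single 900 ms trial lies more than three standard deviations above the mean and is dropped before averaging.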

As the analyses of arousal ratings revealed significant differences within the masked condition, we included arousal as a between-participant factor to rule out the possibility that this dimension, rather than valence, could explain the effect. To this aim, we exploited the Delta Arousal index, which was computed using the Revised Standardized Difference Test (RSDT; Crawford and Garthwaite, 2005). The RSDT allowed us to evaluate whether the standardized difference between the ratings of one individual significantly deviated from the average difference of all the other participants, considered as the control group. Since the RSDT compares only two variables, whereas we had three emotions, we opted to collapse anger and fear ratings, as their arousal did not differ in the masked and unmasked conditions. We then converted the resulting z-values into percentiles to create two equally sized subgroups, i.e., participants with a Delta Arousal ranging from the 30th to the 70th percentile were categorized as the “low arousal” subgroup (10 females; age = 23.9 ± 3.4 years, range = 19.7–32.9), while those outside this range were assigned to the “high arousal” subgroup (10 females; age = 25.2 ± 3.7 years, range = 20.3–31.9).

Separately for RTs, MTs, and OERs, we performed a four-way mixed-design ANOVA [within-participants factors: Emotion (three levels: anger, fear, and happiness); Task (two levels: EDT and GDT); Mask (two levels: masked and unmasked); between-participants factor: Delta Arousal (two levels: high and low)]. Additionally, for each behavioral parameter, we performed a control analysis for the GDT go trials, as in these trials, neutral facial expressions were also shown. We used three-way mixed-design ANOVAs [within-participants factors: Emotion (four levels: anger, fear, happiness, and neutral); Mask (two levels: masked and unmasked); between-participants factor: Delta Arousal (two levels: high and low)].2 The Shapiro–Wilk tests showed that RTs and MTs were normally distributed, while the OERs were not. However, we also employed parametric ANOVA for OERs because we had a large sample (Fagerland, 2012).

Post-hoc tests were Bonferroni-corrected, and we reported the effect sizes as partial eta-squared (η²ₚ) or Cohen’s d. Finally, we computed the Bayes Factors to quantify the strength of evidence for the null hypothesis (BF10; Jarosz and Wiley, 2014), setting the prior odds to 0.707 (R package BayesFactor; Morey and Rouder, 2018). Values of BF10 > 3 and > 10 indicate moderate and strong support for the alternative hypothesis, respectively. Values of BF10 < 0.1 and < 0.33 indicate strong and substantial support for the null hypothesis, respectively, and values 0.33 < BF10 < 3 are inconclusive for either hypothesis. All statistical analyses were performed using R, version 4.2.3 (R Core Team, 2020).
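For illustration, the BF10 interpretation thresholds listed above can be expressed as a small lookup function. This is a sketch rather than part of the original analysis pipeline (which was run in R): the function name is hypothetical, and assigning the exact boundary values (e.g., BF10 = 3 or BF10 = 0.33) to the inconclusive band is an assumption, since the text does not say how boundaries are handled.

```python
def interpret_bf10(bf10):
    """Map a BF10 value onto the evidence categories used in the text.

    Assumption: values exactly on a boundary (0.33 or 3) are treated as
    inconclusive; the text leaves boundary handling unspecified.
    """
    if bf10 <= 0:
        raise ValueError("BF10 must be positive")
    if bf10 > 10:
        return "strong support for the alternative hypothesis"
    if bf10 > 3:
        return "moderate support for the alternative hypothesis"
    if bf10 < 0.1:
        return "strong support for the null hypothesis"
    if bf10 < 0.33:
        return "substantial support for the null hypothesis"
    return "inconclusive"


# Made-up BF10 values, one per evidence category.
for value in (25.0, 4.2, 1.0, 0.2, 0.05):
    print(value, "->", interpret_bf10(value))
```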

3 Results

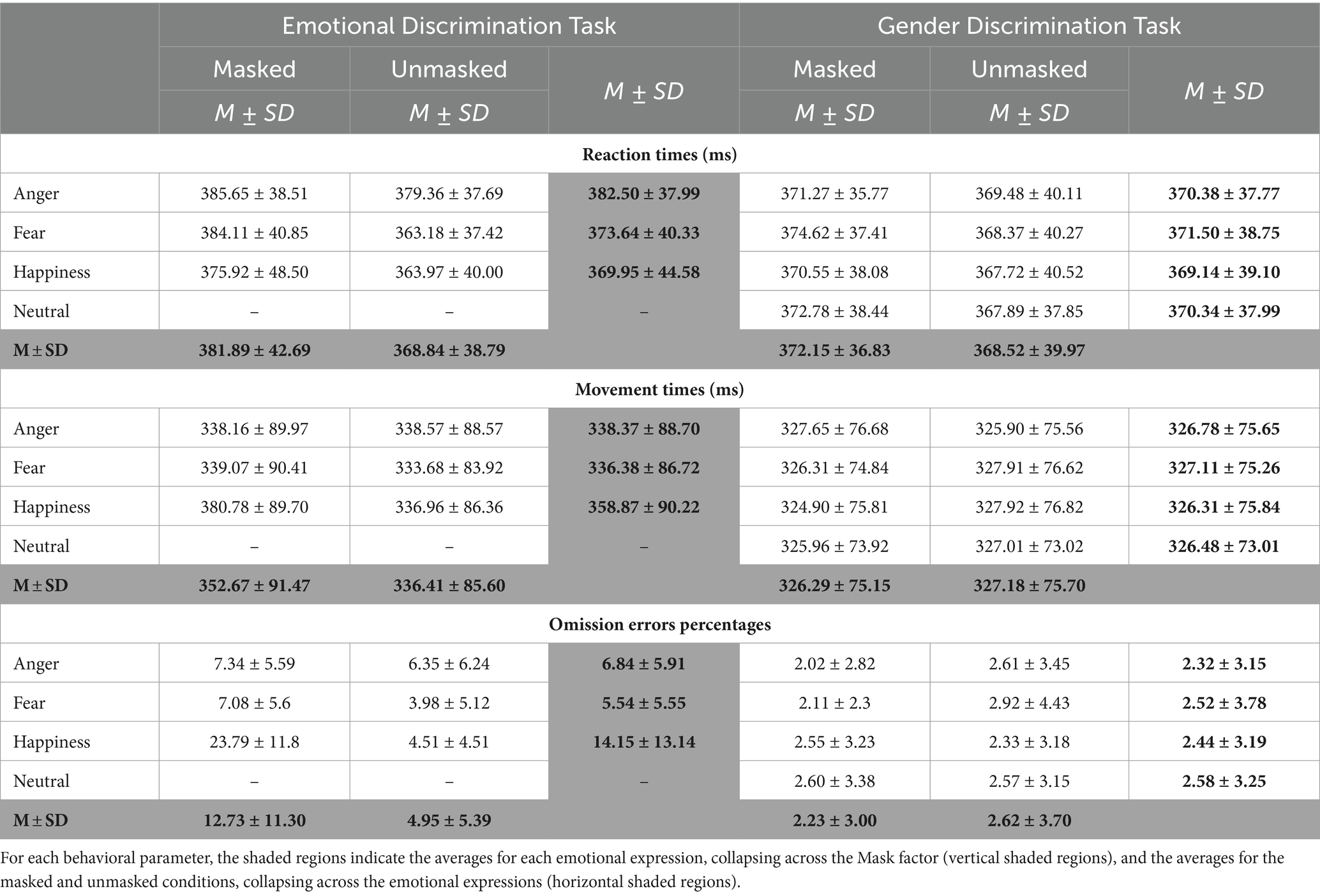

Table 1 reports the means and standard deviations for each experimental condition. The results of the three behavioral indices, i.e., RTs, MTs, and OERs, will be presented in single subsections.

Table 1. Means (M) and standard deviations (SD) of Go trial reaction times, movement times, and omission error percentages.

3.1 Reaction times

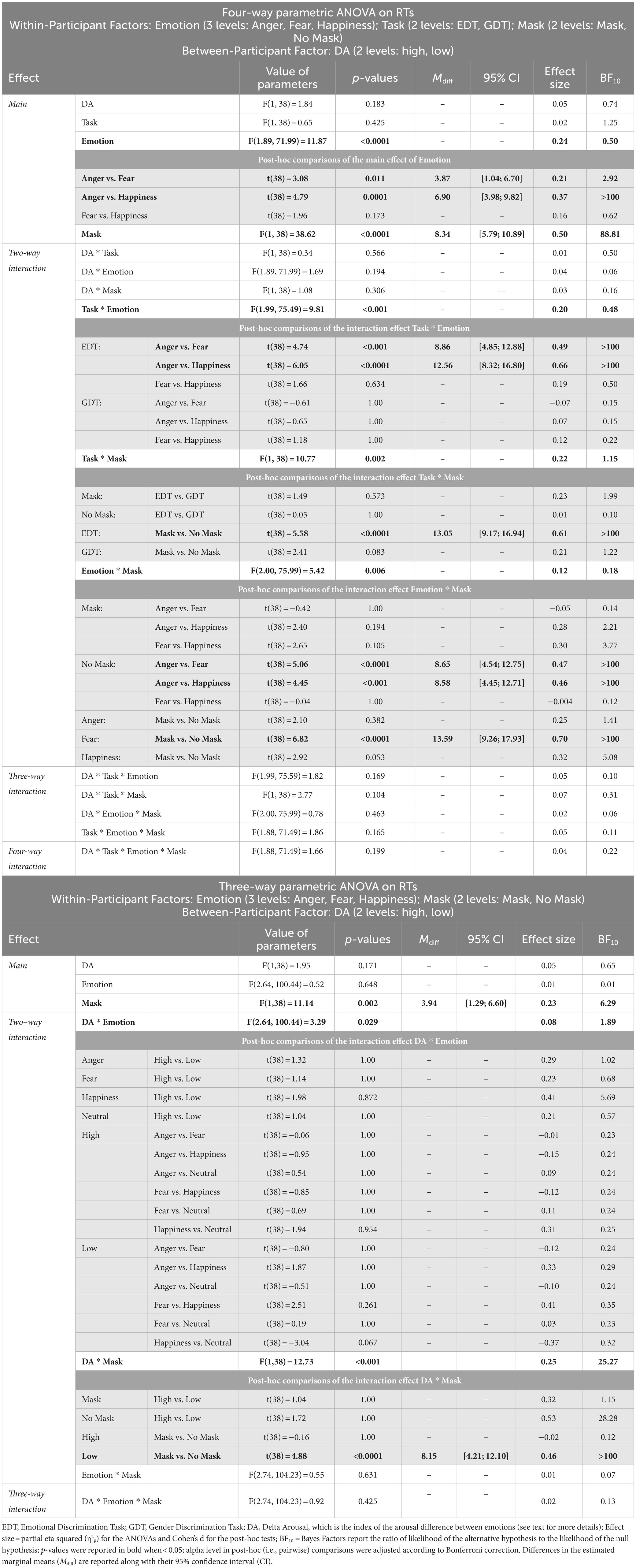

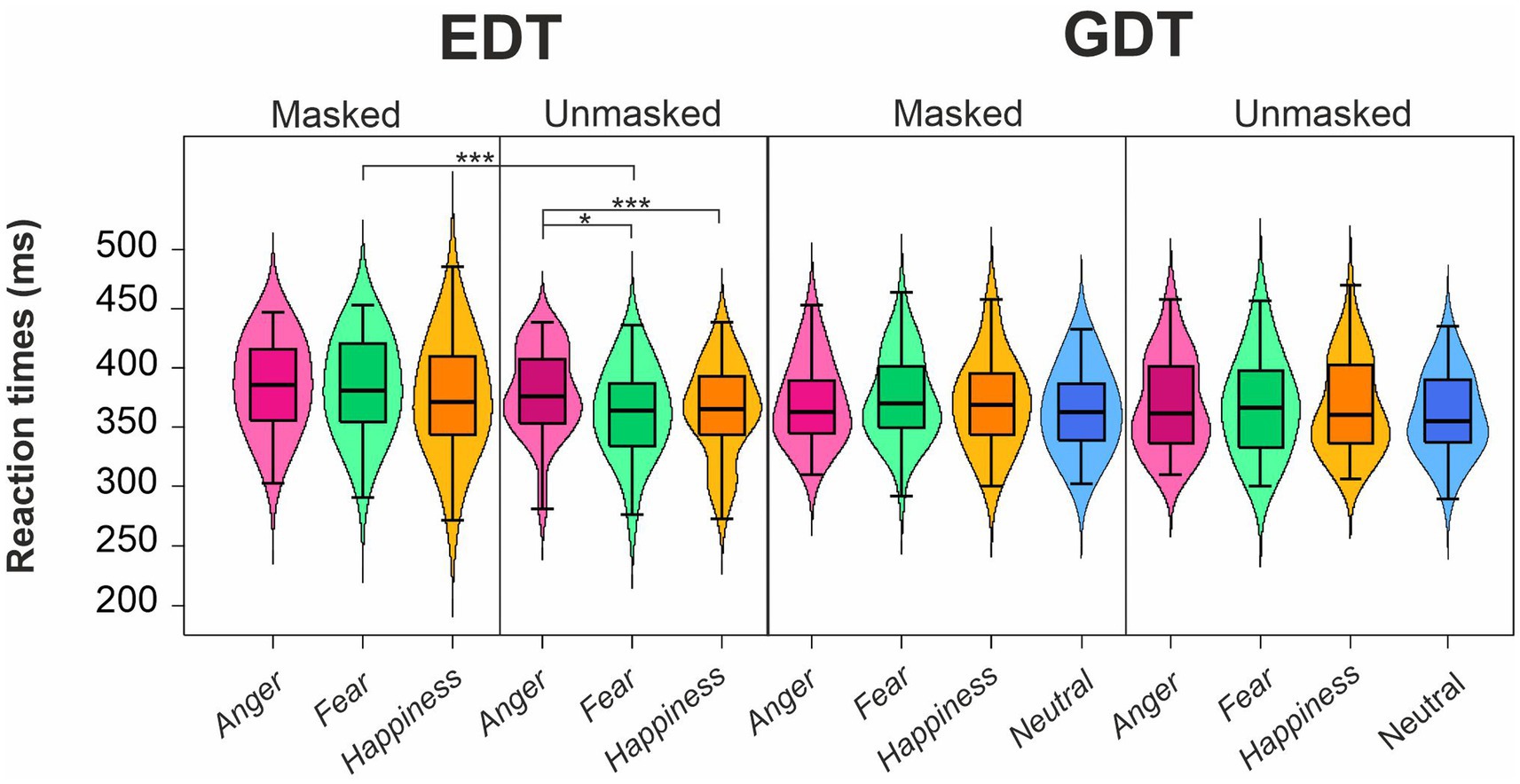

The four-way ANOVA on mean RTs of go trials (Figure 3; Table 2) revealed two main effects. First, the factor Emotion showed a main effect as participants’ RTs were longer for angry (376.44 ± 38.25 ms) than fearful (372.57 ± 39.43 ms) and happy faces (369.54 ± 41.80 ms). Second, we found an effect of Mask demonstrating that participants took longer to respond to masked (377.02 ± 40.08 ms) than unmasked facial expressions (368.68 ± 39.30 ms).

Figure 3. Effects of emotional facial expressions on reaction times in the Emotional (EDT) and Gender Discrimination Task (GDT). Boxplots are reported inside violin plots, which depict kernel probability density. The black line of the boxplot represents the median of the data, and the lower and the upper box’s boundaries indicate the first and third quartiles, respectively. The violin plot width shows the data frequency. Black dots represent outliers. * p < 0.05, *** p < 0.0001.

Both main effects were qualified by the interaction Emotion*Mask. Post-hoc comparisons indicated that participants displayed significantly longer RTs for angry unmasked expressions (374.42 ± 38.99 ms) than fearful (365.77 ± 38.71 ms) and happy unmasked faces (365.85 ± 40.05 ms). In addition, masked fearful expressions (379.37 ± 39.21 ms) elicited longer RTs than unmasked fearful expressions (365.77 ± 38.71 ms). Crucially, we also found an interaction Emotion*Task. Post-hoc comparisons indicated that in the EDT, RTs to angry faces (382.50 ± 37.99 ms) were longer than those to fearful (373.64 ± 40.33 ms) and happy expressions (369.95 ± 44.58 ms), whereas no such difference was observed in the GDT. Finally, there was also an interaction Task*Mask. Post-hoc comparisons revealed that during EDT, participants had longer RTs for masked (381.89 ± 42.69 ms) than unmasked faces (368.84 ± 38.79 ms).

The control three-way ANOVA on mean RTs of go trials in the GDT showed a main effect of Mask, as participants responded more slowly to masked (372.31 ± 37.12 ms) than to unmasked expressions (368.37 ± 39.33 ms). We also found two interactions involving the factor Delta Arousal. The first one was Delta Arousal*Mask. Post-hoc comparisons indicated that participants of the Low Delta Arousal subgroup were slower for masked (366.38 ± 32.03 ms) than unmasked faces (358.22 ± 34.51 ms). The second interaction was Delta Arousal*Emotion. However, no comparison survived Bonferroni correction.

3.2 Movement times

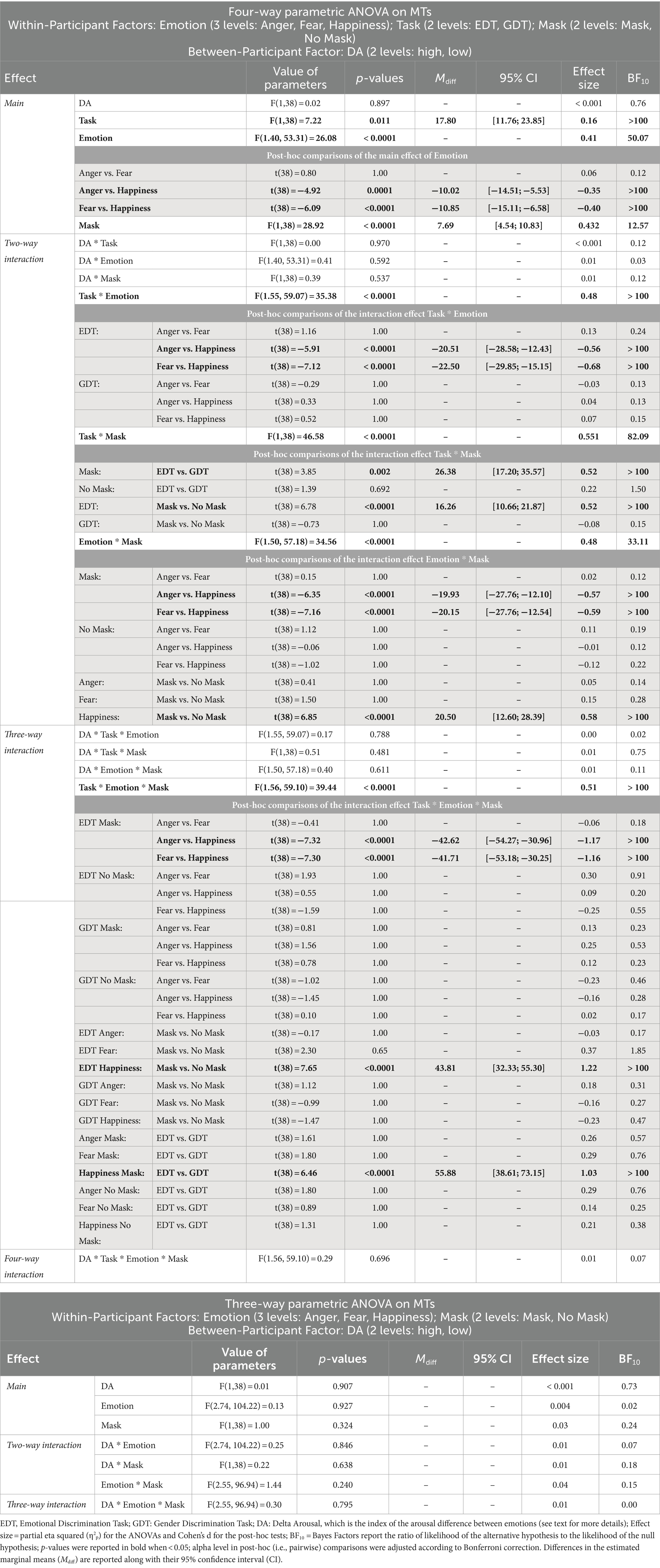

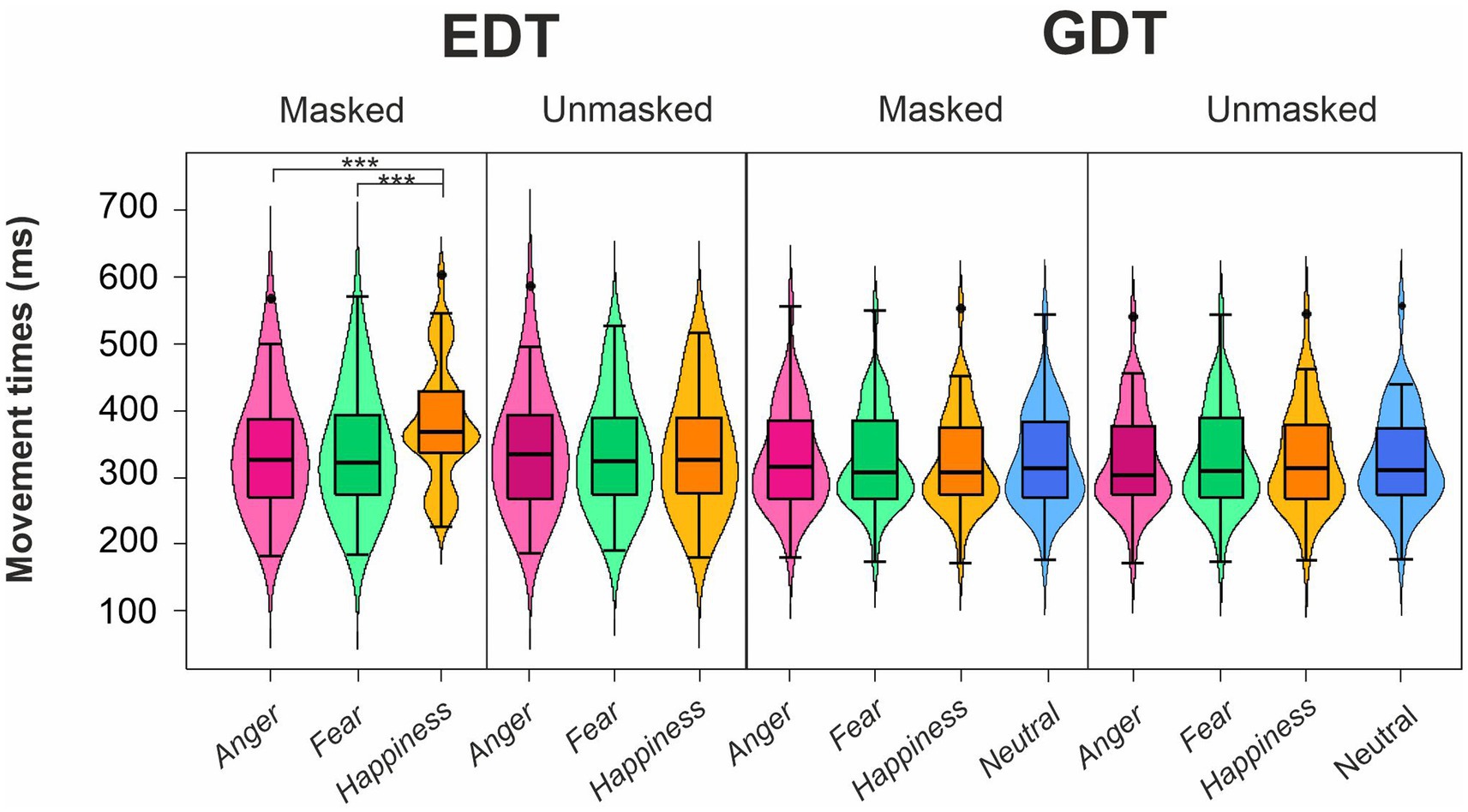

The four-way ANOVA on mean MTs of go trials (Figure 4; Table 3) revealed three main effects. First, the main effect of Task showed that participants had longer MTs in the EDT (344.54 ± 88.77 ms) than in the GDT (326.74 ± 75.27 ms). Second, the main effect of Emotion was due to the fact that MTs for happy expressions (342.59 ± 84.67 ms) were longer than those for angry (332.57 ± 82.38 ms) and fearful expressions (331.75 ± 81.07 ms). Third, the main effect of Mask revealed that masked faces (339.48 ± 84.57 ms) had significantly longer MTs than unmasked faces (331.79 ± 80.76 ms).

Figure 4. Effects of emotional facial expressions on movement times in the Emotional (EDT) and Gender Discrimination Task (GDT). All conventions are as in Figure 3.

The interactions qualified the main effects. The interaction Task*Emotion revealed that in the EDT, happy faces (358.87 ± 90.22 ms) elicited longer MTs than angry (338.37 ± 88.70 ms) and fearful faces (336.38 ± 86.72 ms). The interaction Task*Mask was due to two effects. First, in the EDT, participants showed longer MTs in response to masked (352.67 ± 91.47 ms) than to unmasked expressions (336.41 ± 85.60 ms). Second, considering only the masked version of the faces, participants had longer MTs in the EDT (352.67 ± 91.47 ms) than the GDT (326.29 ± 75.15 ms). The interaction Emotion*Mask was also due to two effects. First, in the masked condition, participants had longer MTs to happy (352.84 ± 87.17 ms) than to angry (332.91 ± 83.23 ms) and fearful expressions (332.69 ± 82.72 ms). Second, MTs for happy masked faces (352.84 ± 87.17 ms) were longer than happy unmasked faces (332.35 ± 81.34 ms).

All these effects were further qualified by the triple interaction Emotion*Task*Mask. Post-hoc comparisons unveiled that only in the masked conditions of the EDT, happy expressions (380.78 ± 89.70 ms) elicited longer MTs than angry (338.17 ± 89.97 ms) and fearful faces (339.07 ± 90.41 ms). In addition, happy masked expressions had longer MTs in the EDT than in the GDT (324.90 ± 75.81 ms). Finally, in the EDT, happy masked faces (380.78 ± 89.70 ms) had longer MTs than happy unmasked expressions (336.97 ± 86.36 ms).

The control ANOVA on MTs in the GDT did not yield significant effects.

3.3 Omission error rates

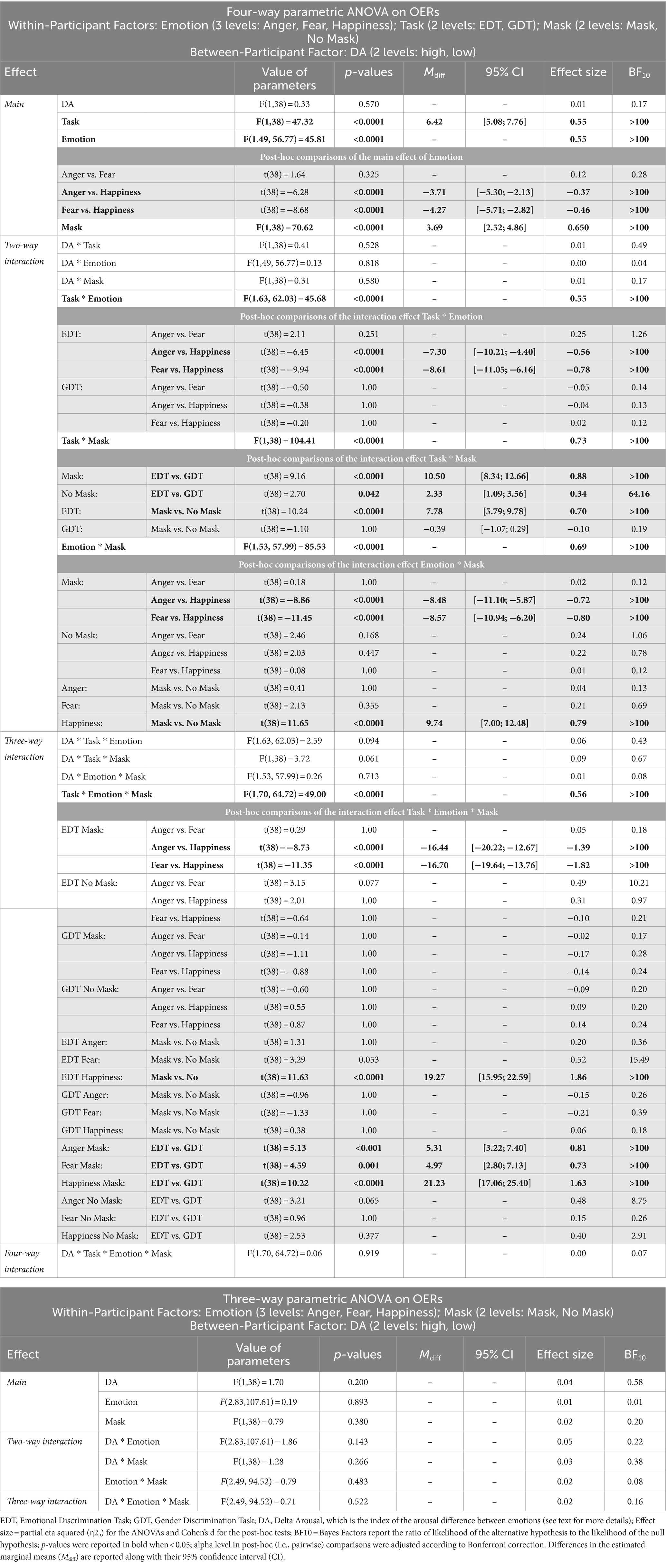

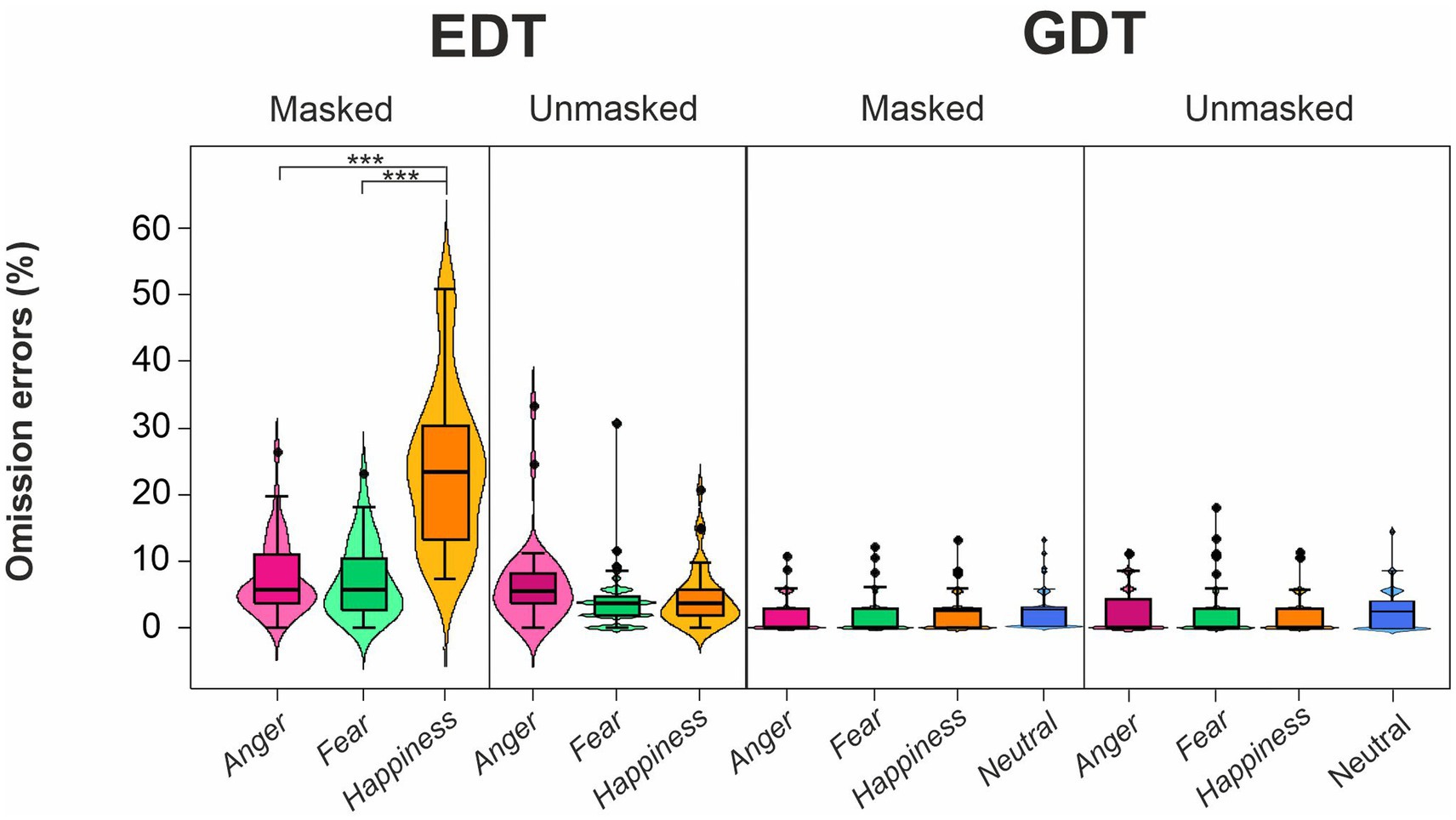

The four-way ANOVA on mean OERs (Figure 5; Table 4) unveiled three main effects, i.e., Task, Emotion, and Mask. The main effect of Task was due to higher OERs in the EDT (8.84 ± 9.66) than in the GDT (2.43 ± 3.37). The main effect of Emotion indicated that participants had higher OERs for happy (8.29 ± 11.20) than angry (4.58 ± 5.23) and fearful faces (4.03 ± 4.97). Finally, the main effect of Mask showed that participants had a higher OER in the masked (7.48 ± 9.79) than in the unmasked condition (3.79 ± 4.76).

Figure 5. Effects of emotional facial expressions on omission error rates in the Emotional (EDT) and Gender Discrimination Task (GDT). All conventions are as in Figure 3.

These main effects were qualified by two-way interactions, i.e., Task*Emotion, Task*Mask, and Emotion*Mask. Post-hoc comparisons for the Task*Emotion interaction revealed that in the EDT, the OERs were higher for happy (14.15 ± 13.14) than angry (6.84 ± 5.91) and fearful faces (5.54 ± 5.55). The Task*Mask interaction was due to two effects. First, in the EDT, the OERs were higher for masked (12.73 ± 11.30) than unmasked expressions (4.95 ± 5.39). Second, participants had higher OERs in the EDT than the GDT both in the masked condition (EDT: 12.73 ± 11.30; GDT: 2.23 ± 3.00) and in the unmasked condition (EDT: 4.95 ± 5.39; GDT: 2.62 ± 3.70). The Emotion*Mask interaction was also due to two effects. First, in the masked condition, the OERs were higher for happy (13.17 ± 13.71) than angry (4.68 ± 5.15) and fearful faces (4.60 ± 5.11). Second, participants had higher OERs to happy masked than happy unmasked expressions (3.42 ± 4.03).

The triple interaction Emotion*Task*Mask further explained the results. The post-hoc analysis revealed that in the EDT, participants made more OERs in response to masked happy (23.78 ± 11.80) than masked angry (7.34 ± 5.59) and masked fearful faces (7.08 ± 5.60). In addition, in the EDT, the OERs were higher for happy masked faces than for happy unmasked faces (4.51 ± 4.51). Finally, participants had higher OERs in the EDT than GDT for each masked emotion (anger: 2.02 ± 2.82; fear: 2.11 ± 3.00; happiness: 2.55 ± 3.23).

The control ANOVA on the OERs in the GDT did not yield significant effects.

4 Discussion

For the first time, we investigated the impact of surgical masks employed during the COVID-19 pandemic on the phenomenon of task-relevance of facial emotional stimuli. In our experiment, we gave the same participants two tasks in which the same stimuli, consisting of a pseudorandom mix of masked and unmasked facial expressions, were shown. In one task, the EDT, participants had to respond according to the stimuli’s valence, whereas in the other, the GDT, they had to respond according to the posers’ gender. We found that responses to emotional stimuli were influenced only in the EDT, i.e., when the emotional content of the stimuli was relevant, but not in the GDT, i.e., when valence was task-irrelevant. Thus, in line with our previous evidence (Mirabella, 2018; Mancini et al., 2020, 2022; Calbi et al., 2022; Mirabella et al., 2023; Montalti and Mirabella, 2023), we showed that emotional content affected participants’ behavioral reactions only when it was relevant for providing the correct responses. However, responses to facial emotions in the EDT differed markedly between unmasked and masked conditions. On the one hand, as previously shown (Mancini et al., 2020; Mirabella et al., 2023), participants responded more slowly to unmasked angry faces than to unmasked fearful and happy faces in the EDT. On the other hand, surgical masks left responses to angry faces unaltered with respect to the unmasked version of the stimuli but impacted fearful and, more prominently, happy expressions. In particular, the RTs to masked fearful faces increased, and those to masked happy faces became more variable, so the difference between these three facial emotions observed in the unmasked condition disappeared. In addition, masked happy faces elicited longer MTs and higher OERs than masked fearful and angry faces.

Importantly, our results depend not on the stimuli’s arousal but on their valence. Furthermore, significant results have large effect sizes and high BF10 values, whereas key non-significant results have BF10 values supporting the null hypotheses. Thus, our findings are statistically robust.

4.1 The impact of task-relevance on unmasked faces

As expected, in the unmasked condition, we found that the task-relevance of angry faces matters. In fact, participants showed higher RTs to angry than to happy and fearful unmasked faces in the EDT, whereas no differences in RTs between the three emotional expressions or between emotional and neutral faces appeared in the GDT. This evidence aligns with previous results (Mancini et al., 2020; Mirabella et al., 2023), suggesting that angry faces hold participants’ attention more strongly than happy and fearful faces. However, there are also a few important differences from previous research. First, in this experiment, fearful unmasked faces did not elicit longer RTs than happy unmasked faces. Second, the OERs of angry unmasked faces were nominally, but not significantly, higher than those of happy and fearful unmasked faces (Table 1), as in Mancini et al. (2020). Third, in contrast to the findings of Mancini et al. (2020), our results revealed that angry faces did not significantly lengthen the MTs compared with other emotional faces. We suggest that such differences stem from the greater cognitive demands of the current task compared with prior ones. Our rationale is based on the outcomes we previously observed. In Mirabella (2018), we presented fearful and happy faces, along with neutral expressions, in the EDT. We found that the RTs for the former stimuli were approximately 15 ms longer than those for the latter. Additionally, we observed significantly higher OERs for fearful faces than for happy faces. In Mancini et al.’s (2020) study, we increased the task difficulty by including three emotional expressions, i.e., angry, fearful, and happy faces, along with a neutral one. Under these conditions, the difference in RTs in the EDT between fearful and happy faces remained significant but decreased to 9.5 ms, and the OERs were no longer different between the two emotional expressions.
In the current study, we dramatically heightened cognitive demands by presenting the masked and unmasked versions of three emotional expressions along with the masked and unmasked versions of the neutral stimuli. In such a context, it is highly probable that only the most salient emotional expression, i.e., the angry unmasked face, can hold participants’ attention, prolonging the responses. This aligns well with the notion that the task-relevance phenomenon is contingent on the automatic allocation of attention to threatening stimuli in the EDT, driven by the implicit need to assess whether these stimuli may pose a potential threat (Mirabella, 2018; Mancini et al., 2020, 2022; Mirabella et al., 2023; Montalti and Mirabella, 2023). However, in situations where attentional resources are more extensively utilized, a shift in cognitive strategy becomes imperative. This adjustment is necessary as these resources are essential for responding accurately to the various stimuli presented in the task.

4.2 The impact of the surgical mask on behavioral reactions to emotional expressions

The surgical mask exhibited several effects in the EDT; yet, it did not impact responses in the GDT, except for one aspect unrelated to valence, as discussed in Section 4.3. Essentially, masks selectively and profoundly influenced experimental conditions where the stimuli’s valence was essential for giving the correct response, as opposed to when participants based their responses on the posers’ gender. When participants responded to the masked stimuli, they had longer RTs and MTs and higher OERs than for unmasked stimuli. However, these effects varied widely across emotional expressions. First, reactions to angry expressions remained unaffected, aligning with evidence indicating that surgical masks did not hinder the recognition of angry faces (Grahlow et al., 2022; Proverbio and Cerri, 2022; Rinck et al., 2022; Gil and Le Bigot, 2023; Proverbio et al., 2023). Second, fearful masked faces increased the RTs with respect to unmasked stimuli, while they had no impact on MTs and OERs. This suggests that, in line with Eisenbarth and Alpers (2011), the mouth, not just the eyes, plays a significant role in fear decoding. This finding contrasts with other studies suggesting that the eyes have a prominent role in fear recognition (Whalen et al., 2001; Morris et al., 2002; Adolphs et al., 2005). Third, the presence of masks had a pronounced impact on happy expressions. Surgical masks resulted in highly variable RTs for happy expressions, nullifying the significant differences between the three facial emotions shown in the unmasked condition. Additionally, they substantially increased OERs and MTs compared to fearful and angry faces. We propose that both effects can be attributed to the covering of the mouth, which specifically interferes with processing information related to happiness.
The increased difficulty in recognizing masked happy expressions is well known (Marini et al., 2021; Grahlow et al., 2022; Proverbio and Cerri, 2022; Rinck et al., 2022; Ventura et al., 2023). However, here, we demonstrated for the first time that this effect also occurs when visual signals are employed to guide motor actions. Furthermore, we showed that even MTs are affected. This is noteworthy, considering that modulations in MTs are seldom observed, given that participants typically respond using keypresses or saccadic movements. However, unlike saccadic eye movements, which, once initiated, cannot be corrected, reaching arm movements are not ballistic. Hence, MTs can be affected by the experimental context, as a few other studies have previously shown (Coombes et al., 2005; Hälbig et al., 2011; Esteves et al., 2016; Mancini et al., 2020). The lengthening of MTs was likely because participants in the presence of a happy masked face were uncertain about the valence of the expression and thus paid attention to the faces’ valence even during the execution of the reaching arm movement.

4.3 The influence of arousal on behavioral reactions

As it is well known that arousal impacts response modulation (Lundqvist et al., 2014), we incorporated the rating of this dimension of emotional stimuli into our analyses. Consistent with our prior investigations (Mirabella, 2018; Mancini et al., 2020, 2022; Calbi et al., 2022; Mirabella et al., 2023; Montalti and Mirabella, 2023), arousal never showed main effects or interaction with stimuli valence. Consequently, we can affirm that all our outcomes are due solely to the valence of facial expressions. In just one instance, we found that behavioral performance was influenced by arousal. The analyses of the RTs in GDT revealed that participants with lower Delta Arousal exhibited slower responses to masked stimuli compared to unmasked stimuli, in contrast to those with higher Delta Arousal. This finding suggests that individuals perceiving masked stimuli as more arousing displayed heightened reactivity to them.

5 Conclusion

This study contributes to understanding how surgical masks, commonly used during the COVID-19 pandemic, impact our reactions to facial emotions, highlighting the interplay between task demands, stimuli valence, and stimuli arousal. We found that surgical masks selectively impact behavioral performance to emotional stimuli in the EDT, when participants were instructed to respond according to the emotional content of the images. Conversely, there was no effect of valence in the GDT. This evidence provides further support for the phenomenon of task-relevance, indicating that responses to emotional stimuli are not automatic, as suggested by the motivational model (Bradley et al., 2001), but are appraised according to the contextual situation and people’s goals, in accordance with the appraisal theories of emotions (Moors and Fischer, 2019; Scherer and Moors, 2019). Notably, the effect of valence on participant responses in the EDT was strikingly different when unmasked rather than masked emotional stimuli were presented. In line with previous findings (Mancini et al., 2020; Mirabella et al., 2023), in the unmasked condition, participants’ attention was held longer by angry than by happy or fearful expressions, leading to slower responses to such stimuli. However, masks had a pronounced impact on those emotions whose decoding relies to a certain extent on mouth visibility, i.e., fearful and happy expressions. The influence of surgical masks was particularly pronounced on happy expressions, introducing variability in RTs and increasing OERs and MTs. This evidence suggests that when facing masked people, observers are less capable of adequately reacting to happiness.

Data availability statement

The datasets presented in this study can be found in the Open Science Framework at https://osf.io/pvut6/.

Ethics statement

The studies involving humans were approved by Local ethical committee “ASST Spedali Civili” di Brescia (protocol number 4452). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

MM: Data curation, Formal analysis, Investigation, Project administration, Writing – original draft. GM: Conceptualization, Funding acquisition, Methodology, Resources, Software, Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by (i) the Department of Clinical and Experimental Sciences of the University of Brescia under the Project “Departments of Excellence 2023–2027” (IN2DEPT Innovative and Integrative Department Platforms) and (ii) PRIN PNNR P2022KAZ45 both awarded to GM by the Italian Ministry of University and Research (MIUR).

Acknowledgments

The authors would like to thank Filippo C. Baresani Varini for his help in data collection and support throughout this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. https://www.nimh.nih.gov/research/research-conducted-at-nimh/research-areas/clinics-and-labs/ln/shn/software-projects.shtml (Accessed February 21, 2024).

2. We did not use the valence ratings to compute an index similar to the one used for arousal because valence is binary, encompassing either positively or negatively valenced stimuli. Nonetheless, studies have indicated that anger influences action planning differently from fear. This does not come as a surprise, as angry faces convey different social signals compared to fearful faces. Unlike fear, anger poses a direct threat to the observer, prompting immediate action. Consequently, combining behavioral indexes for fearful and angry faces may introduce a potential confounding factor. To avoid this, we considered the three emotional expressions as different levels of the factor Emotions.

References

Adolphs, R., Gosselin, F., Buchanan, T. W., Tranel, D., Schyns, P., and Damasio, A. R. (2005). A mechanism for impaired fear recognition after amygdala damage. Nature 433, 68–72. doi: 10.1038/nature03086

Bagby, R. M., Parker, J. D., and Taylor, G. J. (1994). The twenty-item Toronto alexithymia scale--I. Item selection and cross-validation of the factor structure. J. Psychosom. Res. 38, 23–32. doi: 10.1016/0022-3999(94)90005-1

Bodenschatz, C. M., Kersting, A., and Suslow, T. (2019). Effects of briefly presented masked emotional facial expressions on gaze behavior: an eye-tracking study. Psychol. Rep. 122, 1432–1448. doi: 10.1177/0033294118789041

Bradley, M. M., Codispoti, M., Cuthbert, B. N., and Lang, P. J. (2001). Emotion and motivation I: defensive and appetitive reactions in picture processing. Emotion 1, 276–298. doi: 10.1037/1528-3542.1.3.276

Bressi, C., Taylor, G., Parker, J., Bressi, S., Brambilla, V., Aguglia, E., et al. (1996). Cross validation of the factor structure of the 20-item Toronto alexithymia scale: an Italian multicenter study. J. Psychosom. Res. 41, 551–559. doi: 10.1016/s0022-3999(96)00228-0

Calbi, M., Langiulli, N., Ferroni, F., Montalti, M., Kolesnikov, A., Gallese, V., et al. (2021). The consequences of COVID-19 on social interactions: an online study on face covering. Sci. Rep. 11:2601. doi: 10.1038/s41598-021-81780-w

Calbi, M., Montalti, M., Pederzani, C., Arcuri, E., Umiltà, M. A., Gallese, V., et al. (2022). Emotional body postures affect inhibitory control only when task-relevant. Front. Psychol. 13:1035328. doi: 10.3389/fpsyg.2022.1035328

Coombes, S. A., Janelle, C. M., and Duley, A. R. (2005). Emotion and motor control: movement attributes following affective picture processing. J. Mot. Behav. 37, 425–436. doi: 10.3200/jmbr.37.6.425-436

Crawford, J. R., and Garthwaite, P. H. (2005). Evaluation of criteria for classical dissociations in single-case studies by Monte Carlo simulation. Neuropsychology 19, 664–678. doi: 10.1037/0894-4105.19.5.664

Eisenbarth, H., and Alpers, G. W. (2011). Happy mouth and sad eyes: scanning emotional facial expressions. Emotion 11, 860–865. doi: 10.1037/a0022758

Engbert, R., and Kliegl, R. (2003). Microsaccades uncover the orientation of covert attention. Vis. Res. 43, 1035–1045. doi: 10.1016/s0042-6989(03)00084-1

Esteves, P. O., Oliveira, L. A., Nogueira-Campos, A. A., Saunier, G., Pozzo, T., Oliveira, J. M., et al. (2016). Motor planning of goal-directed action is tuned by the emotional valence of the stimulus: a kinematic study. Sci. Rep. 6:28780. doi: 10.1038/srep28780

Fagerland, M. W. (2012). T-tests, non-parametric tests, and large studies—a paradox of statistical practice? BMC Med. Res. Methodol. 12:78. doi: 10.1186/1471-2288-12-78

Faul, F., Erdfelder, E., Buchner, A., and Lang, A. G. (2009). Statistical power analyses using G*power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/brm.41.4.1149

Gil, S., and Le Bigot, L. (2023). Emotional face recognition when a colored mask is worn: a cross-sectional study. Sci. Rep. 13:174. doi: 10.1038/s41598-022-27049-2

Goodale, M. A. (2014). How (and why) the visual control of action differs from visual perception. Proc. Biol. Sci. 281:20140337. doi: 10.1098/rspb.2014.0337

Grahlow, M., Rupp, C. I., and Derntl, B. (2022). The impact of face masks on emotion recognition performance and perception of threat. PLoS One 17:e0262840. doi: 10.1371/journal.pone.0262840

Hälbig, T. D., Borod, J. C., Frisina, P. G., Tse, W., Voustianiouk, A., Olanow, C. W., et al. (2011). Emotional processing affects movement speed. J. Neural Transm. (Vienna) 118, 1319–1322. doi: 10.1007/s00702-011-0627-4

Jarosz, A. F., and Wiley, J. (2014). What are the odds? A practical guide to computing and reporting bayes factors. J. Probl. Solving 7, 2–7. doi: 10.7771/1932-6246.1167

Lang, P. J., and Bradley, M. M. (2010). Emotion and the motivational brain. Biol. Psychol. 84, 437–450. doi: 10.1016/j.biopsycho.2009.10.007

Lang, P. J., Davis, M., and Ohman, A. (2000). Fear and anxiety: animal models and human cognitive psychophysiology. J. Affect. Disord. 61, 137–159. doi: 10.1016/s0165-0327(00)00343-8

Lundqvist, D., Flykt, A., and Öhman, A. (1998). Karolinska directed emotional faces. Psyc TESTS Dataset 91. doi: 10.1037/t27732-000

Lundqvist, D., Juth, P., and Öhman, A. (2014). Using facial emotional stimuli in visual search experiments: the arousal factor explains contradictory results. Cognit. Emot. 28, 1012–1029. doi: 10.1080/02699931.2013.867479

Mancini, C., Falciati, L., Maioli, C., and Mirabella, G. (2020). Threatening facial expressions impact goal-directed actions only if task-relevant. Brain Sci. 10:794. doi: 10.3390/brainsci10110794

Mancini, C., Falciati, L., Maioli, C., and Mirabella, G. (2022). Happy facial expressions impair inhibitory control with respect to fearful facial expressions but only when task-relevant. Emotion 22, 142–152. doi: 10.1037/emo0001058

Marini, M., Ansani, A., Paglieri, F., Caruana, F., and Viola, M. (2021). The impact of facemasks on emotion recognition, trust attribution and re-identification. Sci. Rep. 11:5577. doi: 10.1038/s41598-021-84806-5

Mirabella, G. (2018). The weight of emotions in decision-making: how fearful and happy facial stimuli modulate action readiness of goal-directed actions. Front. Psychol. 9:1334. doi: 10.3389/fpsyg.2018.01334

Mirabella, G., Grassi, M., Mezzarobba, S., and Bernardis, P. (2023). Angry and happy expressions affect forward gait initiation only when task relevant. Emotion 23, 387–399. doi: 10.1037/emo0001112

Mirabella, G., Pani, P., Pare, M., and Ferraina, S. (2006). Inhibitory control of reaching movements in humans. Exp. Brain Res. 174, 240–255. doi: 10.1007/s00221-006-0456-0

Montalti, M., and Mirabella, G. (2023). Unveiling the influence of task-relevance of emotional faces on behavioral reactions in a multi-face context using a novel flanker-go/no-go task. Sci. Rep. 13:20183. doi: 10.1038/s41598-023-47385-1

Moors, A., and Fischer, M. (2019). Demystifying the role of emotion in behaviour: toward a goal-directed account. Cogn. Emot. 33, 94–100. doi: 10.1080/02699931.2018.1510381

Morey, R. D., and Rouder, J. N. (2018). BayesFactor: Computation of Bayes Factors for common designs. R package version 0.9.12-4.2. Available at: https://CRAN.R-project.org/package=BayesFactor

Morris, J. S., de Bonis, M., and Dolan, R. J. (2002). Human amygdala responses to fearful eyes. NeuroImage 17, 214–222. doi: 10.1006/nimg.2002.1220

Oldfield, R. C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113. doi: 10.1016/0028-3932(71)90067-4

Proverbio, A. M., and Cerri, A. (2022). The recognition of facial expressions under surgical masks: the primacy of anger. Front. Neurosci. 16:864490. doi: 10.3389/fnins.2022.864490

Proverbio, A. M., Cerri, A., and Gallotta, C. (2023). Facemasks selectively impair the recognition of facial expressions that stimulate empathy: an ERP study. Psychophysiology 60:e14280. doi: 10.1111/psyp.14280

R Core Team (2020). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. Available at: http://www.R-project.org/

Rasch, D., and Guiard, V. (2004). The robustness of parametric statistical methods. Psychol. Sci. 46, 175–208.

Rinck, M., Primbs, M. A., Verpaalen, I. A. M., and Bijlstra, G. (2022). Face masks impair facial emotion recognition and induce specific emotion confusions. Cogn. Res. Princ. Implic. 7:83. doi: 10.1186/s41235-022-00430-5

Scherer, K. R., and Moors, A. (2019). The emotion process: event appraisal and component differentiation. Annu. Rev. Psychol. 70, 719–745. doi: 10.1146/annurev-psych-122216-011854

Schurgin, M. W., Nelson, J., Iida, S., Ohira, H., Chiao, J. Y., and Franconeri, S. L. (2014). Eye movements during emotion recognition in faces. J. Vis. 14:14. doi: 10.1167/14.13.14

Smith, M. L., Cottrell, G. W., Gosselin, F., and Schyns, P. G. (2005). Transmitting and decoding facial expressions. Psychol. Sci. 16, 184–189. doi: 10.1111/j.0956-7976.2005.00801.x

Ventura, M., Palmisano, A., Innamorato, F., Tedesco, G., Manippa, V., Caffo, A. O., et al. (2023). Face memory and facial expression recognition are both affected by wearing disposable surgical face masks. Cogn. Process. 24, 43–57. doi: 10.1007/s10339-022-01112-2

Vuilleumier, P. (2005). How brains beware: neural mechanisms of emotional attention. Trends Cogn. Sci. 9, 585–594. doi: 10.1016/j.tics.2005.10.011

Keywords: behavioral control, task-relevance, facial expressions, emotion, surgical mask, COVID-19, Go/No-go

Citation: Montalti M and Mirabella G (2024) Investigating the impact of surgical masks on behavioral reactions to facial emotions in the COVID-19 era. Front. Psychol. 15:1359075. doi: 10.3389/fpsyg.2024.1359075

Edited by:

Melissa T. Buelow, The Ohio State University, United States

Reviewed by:

Sandrine Gil, University of Poitiers, France

Viola Oldrati, Eugenio Medea (IRCCS), Italy

Copyright © 2024 Montalti and Mirabella. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Giovanni Mirabella, giovanni.mirabella@unibs.it
