- ¹Centre for Neurolinguistics and Psycholinguistics (CNPL), Faculty of Psychology, Vita-Salute San Raffaele University, Milan, Italy

- ²The Arctic University of Norway, Tromsø, Norway

- ³Centre for Cognition and Decision Making, Institute for Cognitive Neuroscience, Higher School of Economics, National Research University, Moscow, Russia

- ⁴Department of Neuroradiology, Fondazione IRCCS Istituto Neurologico Carlo Besta, Milan, Italy

- ⁵Neuropsychology Service, Department of Rehabilitation and Functional Recovery, San Raffaele Scientific Institute, Vita-Salute San Raffaele University, Milan, Italy

- ⁶Department of Neurosurgery and Gamma Knife Radiosurgery, San Raffaele Hospital, Milan, Italy

Introduction: The COVID-19 pandemic affected public health and our lifestyles, leading to new social adaptations such as quarantine, social distancing, and face masks. By covering large parts of the face, face masks hamper our ability to extract relevant socio-emotional information from others’ faces. In this fMRI study, we investigated how face masks interfere with facial emotion recognition, focusing on brain responses and connectivity patterns as a function of the presence of a face mask.

Methods: A total of 25 healthy participants (13F; mean age: 32.64 ± 7.24 y; mean education: 18.28 ± 1.31 y) were included. Participants underwent task-related fMRI while viewing images of faces expressing basic emotions (joy or fear) or a neutral expression. Half of the faces were covered by a face mask. Participants had to identify the emotion expressed by each face, whether masked or unmasked. We performed fMRI whole-brain and region-of-interest (ROI) analyses, as well as psychophysiological interaction (PPI) analysis.

Results: Participants recognized emotions more accurately and faster on unmasked faces. Whole-brain fMRI analyses showed that masked faces induced stronger activation of a right occipito-temporal cluster, including the fusiform gyrus; ROI analyses further showed stronger responses in the right fusiform face area and in the occipital face area bilaterally. The same activation pattern was found for the neutral masked > neutral unmasked contrast. PPI analyses of the masked > unmasked contrast showed a stronger correlation between the right occipital face area and the left superior frontal gyrus, left precentral gyrus, left superior parietal lobe, and right supramarginal gyrus.

Discussion: Our study shows that the brain struggles to recognize basic emotions on masked faces and recruits additional neural resources to correctly categorize these incomplete facial expressions.

1 Introduction

Since its spread in 2020, the COVID-19 pandemic has revolutionized our lives, and its impact is far from disappearing completely, even with the vaccination campaign ongoing (The Lancet Microbe, 2021). Apart from social distancing, one of the most effective containment measures has been the adoption of face masks, which hide about 60–70% of the face (Carbon, 2020). The face is one of the most important means of social communication, through both verbal and non-verbal channels (Adolphs, 2002). In particular, the social relevance of the face has been related to its ability to express emotions through specific facial configurations. Facial emotion recognition is indeed crucial for inferring our interlocutors’ emotional state (Adolphs, 2002). The adoption of face masks has raised concerns about how these protective devices could interfere with facial emotion recognition during social interactions (Carbon, 2020; Ruba and Pollak, 2020; Marini et al., 2021). Successful facial emotion recognition requires many individual facial features to be extracted and then integrated into a single percept (Ellison and Massaro, 1997). At the neural level, face perception is supported by a selective ventral occipito-temporal network that comprises the occipital face area (OFA) in the inferior occipital gyrus (Pitcher et al., 2007) and the fusiform face area (FFA) in the fusiform gyrus (Kanwisher et al., 1997). The ventral stream is also more involved in facial expression processing than the dorsal stream; it includes the right inferior occipital gyrus (containing the right OFA), the left middle occipital gyrus, the left FFA, and the right inferior frontal gyrus (Liu et al., 2021). The OFA is generally considered the first stage of face processing, as it responds selectively to single facial features (Pitcher et al., 2011b), which are then integrated into a unified facial representation in the FFA (Pitcher et al., 2007).
Thus, these two brain areas are considered to be involved in processing the invariant aspects of a face, such as its canonical T-shaped configuration, which allows each individual to be recognized as unique (Haxby et al., 2002). However, a face also displays dynamic features, such as eye gaze, mouth movements, and facial expressions. These aspects are processed separately in different subdivisions of the superior temporal sulcus (STS) (Schobert et al., 2018) and then transmitted to other brain regions (e.g., the amygdala), which extract their socio-emotional relevance during interactions with others (Haxby et al., 2002; Gan et al., 2022).

Covering important facial features with face masks may hamper face processing, leading to misinterpretations of the emotion expressed and to misunderstandings in social contexts. Previous studies have shown that masking substantially impairs emotion recognition, reducing both the recognizability of facial expressions (Proverbio and Cerri, 2022; Rinck et al., 2022) and emotion recognition accuracy (Carbon, 2020; Ruba and Pollak, 2020; Calbi et al., 2021; Grundmann et al., 2021; Marini et al., 2021). Previous EEG studies investigated the cerebral activity related to the recognition of six masked and unmasked facial expressions using event-related potential (ERP) analyses (Żochowska et al., 2022; Proverbio et al., 2023). The results indicated increased neuronal activity during the recognition of emotions on masked faces. They also showed that face masking was more detrimental to the recognition of sadness, fear, and disgust than to that of positive emotions, such as happiness. The authors suggested that processing faces with surgical-like masks might require amplified attentional processing. Hiding crucial facial features with face masks may thus induce a reorganization of the neural resources involved in face processing, and a better understanding of such mask-induced neural plasticity is still needed. In this study, we aimed to further investigate how face masks may influence brain responses and connectivity during facial emotion recognition. Given the novelty of mask use in everyday life, little is known about how the brain accommodates the recognition of facial expressions covered by masks. We used functional magnetic resonance imaging (fMRI) to determine whether this process differs from normal, “uncovered” facial emotion recognition (Wegrzyn et al., 2015).

2 Materials and methods

2.1 Participants

A total of 25 right-handed healthy volunteers (13F; mean age: 32.64 ± 7.24 y; mean education: 18.28 ± 1.31 y) were included in this study. The study was approved by the Ethics Committee of the Ospedale San Raffaele (Milan), and all participants gave their oral and written informed consent in accordance with the Declaration of Helsinki.

2.2 Facial emotion recognition task and analyses

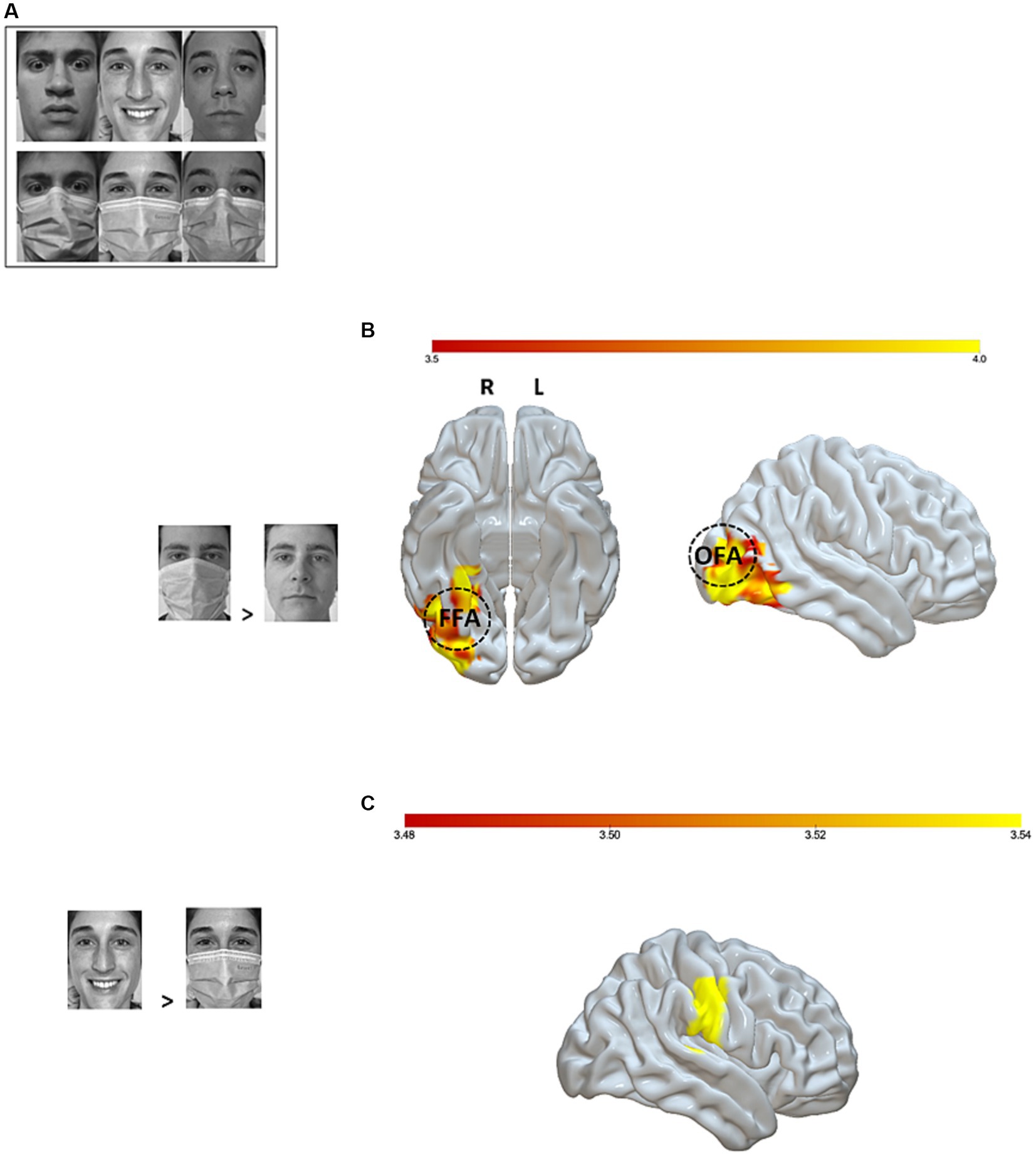

During the fMRI session, participants were shown black-and-white pictures of faces expressing a basic emotion (joy or fear), along with a neutral control condition. The 36 pictures were each presented twice per block across three blocks, so that each participant viewed a total of 216 pictures. Half of the target faces in each condition were covered with a surgical mask (Figure 1A). For each face, whether masked or unmasked, participants were instructed to press the button on an MRI-compatible response box corresponding to the emotion expressed, so that brain responses to masked and unmasked faces could be compared.

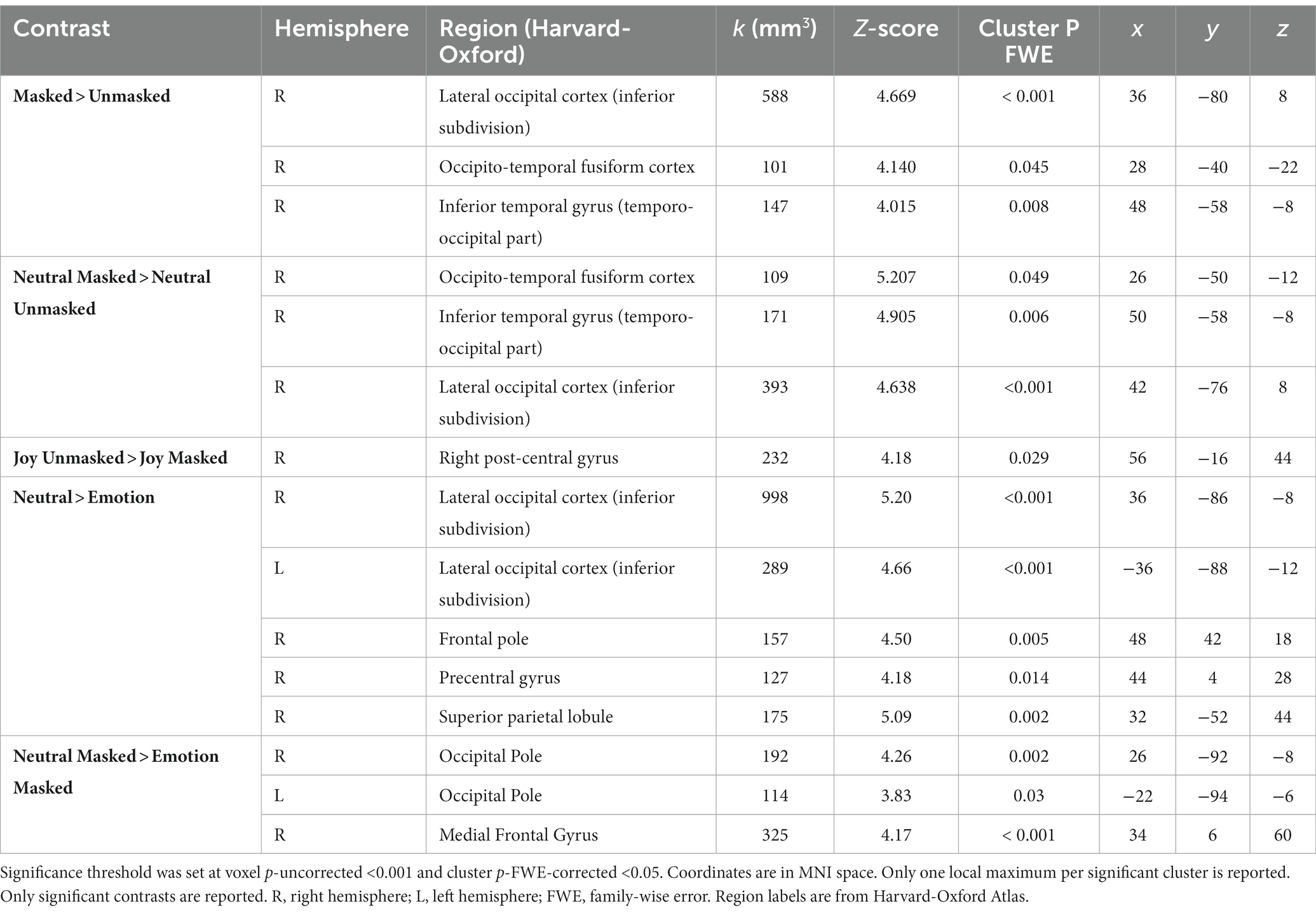

Figure 1. fMRI setup and analyses. (A) Examples of experimental stimuli; (B) Significant brain activations (p-uncorrected < 0.001 voxel level and p-FWE < 0.05 cluster level) for the masked > unmasked contrast. The right fusiform face area (rFFA) is shown at MNI coordinates x = 40, y = −55, z = −12 and the right occipital face area (rOFA) at MNI coordinates x = 39, y = −79, z = −6 (10 mm diameter). (C) Significant brain activation (p-uncorrected < 0.001 voxel level and p-FWE < 0.05 cluster level) for the joy unmasked > joy masked contrast. Color bar represents t-values. OFA, Occipital Face Area; FFA, Fusiform Face Area; L, Left; R, Right.

The stimuli were created by the experimenters, who took close-up photographs of 5 young Caucasian male adults and 6 young Caucasian female adults expressing six emotions (fear, joy, disgust, surprise, sadness, anger) or a neutral pose, each with and without a surgical mask. This picture database was rated by 25 independent participants, who assigned each masked and unmasked picture to one of the seven categories. Based on these ratings, we selected as experimental stimuli the negative (i.e., fear) and the positive (i.e., joy) emotion with the highest rates of correct assignment, together with the neutral pose, and the 3 male and 3 female models whose sets of facial expressions received the highest rates of correct assignment. This procedure resulted in a set of 36 experimental stimuli: 6 individuals displaying three expressions (joy, fear, neutral) in two mask conditions (masked, unmasked). Faces were projected onto a panel and reflected by a mirror inside the scanner. The 36 images were shown to all participants in black and white on a white background, with a 4:3 aspect ratio. During a debriefing session, all participants were asked to identify the emotion associated with each experimental picture via an online questionnaire. No picture had to be removed due to poor ratings (< 60%).

Each picture was presented for 2000 ms. When the stimulus disappeared, a question mark was displayed on the screen for 500 ms, prompting participants to choose the appropriate emotion (joy, fear, or neutral). The question mark was followed by a blank screen that lasted for the remaining duration of the inter-stimulus interval (ISI, approximately 2398 ms). Participants therefore had almost 3 s (500 + ~2398 ms) to respond to each picture before the next trial began. Stimuli were presented and responses collected with the Presentation software¹.
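As a cross-check of the design arithmetic above (6 models × 3 expressions × 2 mask conditions = 36 pictures; 36 pictures × 2 presentations × 3 blocks = 216 trials; a response window of about 500 + 2398 ms), the Python sketch below builds a trial list. The model labels are hypothetical placeholders, the random order within each block is an assumption (the text does not describe how the sequence was generated), and the trial duration assumes that each trial contains only the face, the prompt, and the blank screen.

```python
import random
from itertools import product

# Hypothetical labels for the 6 retained models (3 female, 3 male);
# the actual stimulus identifiers are not reported.
MODELS = ["F1", "F2", "F3", "M1", "M2", "M3"]
EXPRESSIONS = ["joy", "fear", "neutral"]
MASK_CONDITIONS = ["masked", "unmasked"]

# Trial timing reported in the text, in milliseconds.
FACE_MS = 2000
PROMPT_MS = 500
BLANK_MS = 2398  # approximate remainder of the ISI


def stimulus_set():
    """Full factorial set: 6 models x 3 expressions x 2 mask conditions."""
    return list(product(MODELS, EXPRESSIONS, MASK_CONDITIONS))


def build_block(rng, repetitions=2):
    """One block in which every picture appears `repetitions` times.
    Random ordering is an assumption, not the documented procedure."""
    trials = stimulus_set() * repetitions
    rng.shuffle(trials)
    return trials


def build_session(n_blocks=3, seed=0):
    rng = random.Random(seed)
    return [build_block(rng) for _ in range(n_blocks)]


session = build_session()
n_trials = sum(len(block) for block in session)
response_window_ms = PROMPT_MS + BLANK_MS  # from prompt onset to the next trial
trial_ms = FACE_MS + response_window_ms    # assumes no other events per trial

print(len(stimulus_set()), n_trials)  # 36 216
print(response_window_ms, trial_ms)   # 2898 4898
```

Seeding the generator makes the sequence reproducible across participants; drawing a different seed per participant would instead give each one an independent order.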

To test the main effects of mask condition (masked, unmasked) and emotion category (joy, fear, neutral), as well as their interaction, on participants’ accuracy and reaction times (RTs), we fitted generalized linear mixed models with the glmer function of the “lme4” package (Bates et al., 2015). Response correctness (for accuracy analyses) or RTs of correct responses (hits) were entered as dependent variables, and participants were modeled as a random intercept. Mask condition and emotion category were modeled as fixed effects, and the significance of each was tested by comparing a model including the fixed term of interest against a model without it (likelihood ratio test). A predictor was retained only when its inclusion significantly improved model fit. In case of a significant interaction, all lower-order terms involved were retained. Post hoc comparisons were run with the emmeans package. Effects were considered significant at p < 0.05. Statistical analyses were run in R (version 4.3.0) (R Development Core Team, 2015).
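The likelihood ratio test compares two nested models through the statistic 2(ℓ_full − ℓ_reduced), referred to a χ² distribution whose degrees of freedom equal the number of added parameters. Assuming standard treatment coding, the mask × emotion interaction adds (2 − 1)(3 − 1) = 2 parameters, so df = 2; the text does not state df explicitly, so this is our inference. In R, `anova()` applied to two nested lme4 fits performs this comparison; the standard-library Python sketch below only illustrates the arithmetic, computing the χ² upper tail in closed form for integer df, and it reproduces the interaction p-values reported in the Results.

```python
import math


def lrt_statistic(loglik_full, loglik_reduced):
    """Likelihood ratio statistic for two nested models."""
    return 2.0 * (loglik_full - loglik_reduced)


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + exp(-x/2) * sum_{i=1}^{(df-1)/2} (x/2)^(i-1/2) / Gamma(i+1/2)
    total = math.erfc(math.sqrt(half))
    for i in range(1, (df - 1) // 2 + 1):
        total += math.exp(-half) * half ** (i - 0.5) / math.gamma(i + 0.5)
    return total


# A 2-level factor (mask) crossed with a 3-level factor (emotion).
df_interaction = (2 - 1) * (3 - 1)
print(round(chi2_sf(17.498, df_interaction), 4))  # accuracy: 0.0002
print(round(chi2_sf(10.238, df_interaction), 3))  # reaction times: 0.006
```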

2.3 fMRI recording and analyses

MR images were acquired with a 3 Tesla Philips Ingenia CX MR system (Philips HealthCare, Best, Netherlands) equipped with a 32-channel SENSE head coil. Functional scans were acquired with a fast echo-planar imaging (EPI) sequence (echo time [TE] = 33 ms; repetition time [TR] = 2000 ms; flip angle [FA] = 85°; number of volumes per run = 199; field of view [FOV] = 240 mm; matrix size = 80 × 80; 35 axial slices per volume; slice thickness = 3 mm; interslice gap = 0.75 mm; voxel size = 3 × 3 × 3.75 mm³; phase-encoding direction [PE] = A/P; whole-brain coverage). Ten dummy scans preceded each run to allow the EPI signal to reach a steady state. Pre-processing was run using the default pre-processing batch available in SPM12. Before pre-processing, the origin of each T1-weighted (T1w) image was manually aligned to the anterior commissure–posterior commissure (AC–PC) line. MR images then underwent both temporal and spatial pre-processing steps. First, functional images were slice-time corrected to the first slice to account for differences in slice acquisition times, realigned to the first volume, and unwarped to remove movement artifacts. For each participant, functional volumes were checked for excessive head motion (> 2 mm); no participant was excluded for this reason. T1w images were segmented into different tissue classes (i.e., grey matter, white matter, cerebrospinal fluid, bone, soft tissue, and air), bias-corrected for intensity inhomogeneities, and spatially normalized. The bias-corrected T1w images were then skull-stripped using the SPM “Image Calculator” function and used as the reference image for co-registering each participant’s mean realigned functional image, which was then normalized to the standard Montréal Neurological Institute (MNI) template.
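The head-motion screen (> 2 mm) can be sketched as follows. SPM’s realignment writes one rp_*.txt file per run, with one row per volume and six columns: three translations (mm) followed by three rotations (radians), expressed relative to the reference volume. Applying the 2 mm criterion to the absolute translations only is our assumption; the text does not say whether rotations or frame-wise displacement were also considered.

```python
def read_rp_file(path):
    """Read an SPM realignment-parameter file (rp_*.txt): one row per volume,
    columns = x, y, z translations (mm), then pitch, roll, yaw rotations (rad)."""
    with open(path) as fh:
        return [[float(v) for v in line.split()] for line in fh if line.strip()]


def max_translation_mm(params):
    """Largest absolute translation (mm) over all volumes and the x/y/z axes,
    relative to the reference volume used for realignment."""
    return max(abs(v) for row in params for v in row[:3])


def exceeds_motion_threshold(params, threshold_mm=2.0):
    """True if any translation exceeds the threshold (rotations are ignored here)."""
    return max_translation_mm(params) > threshold_mm
```

A call such as `exceeds_motion_threshold(read_rp_file("rp_run1.txt"))`, with a hypothetical file name, would flag a run for exclusion.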
After normalization, functional volumes were resampled to 2 × 2 × 2 mm³ voxels and smoothed with a 6 mm full width at half-maximum (FWHM) Gaussian kernel to reduce residual inter-subject anatomical variability. The pre-processed functional data were analyzed at the whole-brain level using SPM12 (v6906; Wellcome Department of Cognitive Neurology, London, UK) with a random-effects model implemented through a two-level summary statistic approach. In the first-level analysis, evoked responses for the six experimental conditions were entered into a general linear model (GLM) and modeled with the canonical hemodynamic response function (HRF). Realignment parameters were entered as nuisance covariates. Temporal autocorrelation was accounted for with a first-order autoregressive (AR(1)) model, and a high-pass filter with a 128 s cutoff was applied to remove slow signal drifts. Linear t-contrasts were defined at the first level, and the resulting contrast images were entered into the second level, where one-sample t-tests assessed their significance at the group level. Voxel-wise whole-brain analyses were corrected for multiple comparisons at the cluster level: the statistical threshold was set at p < 0.001 (uncorrected) at the voxel level and at p < 0.05 family-wise error (FWE)-corrected at the cluster level.
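To illustrate what “modeled with the canonical HRF” means for one condition, the sketch below builds a single first-level regressor: a boxcar for the 2 s face presentations is convolved with a double-gamma HRF that uses SPM’s default shape parameters (6 s and 16 s gamma shapes with 1 s scale, undershoot ratio 1/6, 32 s kernel) and is sampled once per TR. It is a simplified, unnormalized stand-in for SPM’s internal routine, and sampling at the start of each scan is an assumption; the onsets in the usage note are hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def canonical_hrf(t):
    """Double-gamma HRF with SPM's default shapes (6 and 16) and a 1/6
    undershoot ratio; not normalized."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def event_regressor(onsets_s, duration_s, n_scans, tr_s=2.0, dt=0.1, hrf_len_s=32.0):
    """Convolve a boxcar of events with the HRF on a fine time grid and
    sample it at the start of each scan (a simplification of SPM's
    microtime-resolution scheme)."""
    n_fine = int(round(n_scans * tr_s / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration_s) / dt)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    kernel = [canonical_hrf(k * dt) for k in range(int(round(hrf_len_s / dt)) + 1)]
    convolved = [0.0] * n_fine
    for i, value in enumerate(boxcar):
        if value == 0.0:
            continue
        for k, h in enumerate(kernel):
            if i + k >= n_fine:
                break
            convolved[i + k] += value * h * dt
    step = int(round(tr_s / dt))
    return [convolved[s * step] for s in range(n_scans)]
```

For example, `event_regressor([10.0], duration_s=2.0, n_scans=30)` yields a response that stays at zero until the face appears and peaks about 6 s after onset, i.e., at scan 8 (16 s). A full design would repeat this for each of the six conditions and append the realignment parameters as nuisance columns.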

We then narrowed the focus of our analyses to regions of interest (ROIs) known to play a key role in facial emotion recognition and in face recognition per se. Using the MarsBaR toolbox for SPM (Brett et al., 2002), we defined as ROIs the OFA and the FFA, known to underpin face processing (Kanwisher et al., 1997; Grill-Spector et al., 2017). The WFU PickAtlas toolbox was used to generate anatomical ROIs of the bilateral amygdala, widely considered critical for facial emotion recognition (Adolphs et al., 1994; Geissberger et al., 2020), from the Automated Anatomical Labeling atlas (AAL2) (Tzourio-Mazoyer et al., 2002). Only contrasts that were significant at the whole-brain level underwent ROI-based analysis. The statistical threshold was set at p < 0.05.
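The figure captions report the rOFA and rFFA at specific MNI coordinates with a 10 mm diameter, which suggests spherical ROIs. Assuming that, and the 2 × 2 × 2 mm grid produced by resampling, the sketch below lists the voxel centres falling inside each sphere. MarsBaR builds such ROIs itself; this only illustrates the geometry, and placing voxel centres on multiples of 2 mm is an assumption (the true grid comes from the image header).

```python
import math

# Peak coordinates (MNI, mm) reported in the figure captions.
ROI_CENTRES_MNI = {"rFFA": (40, -55, -12), "rOFA": (39, -79, -6)}


def sphere_voxels(centre_mm, diameter_mm=10.0, voxel_mm=2.0):
    """Centres (mm) of grid voxels lying within a sphere around `centre_mm`.

    Assumes an isotropic grid whose voxel centres sit on multiples of
    `voxel_mm`; the real image grid should be read from the NIfTI header."""
    radius = diameter_mm / 2.0
    axes = []
    for c in centre_mm:
        lo = math.floor((c - radius) / voxel_mm)
        hi = math.ceil((c + radius) / voxel_mm)
        axes.append([i * voxel_mm for i in range(lo, hi + 1)])
    return [
        (x, y, z)
        for x in axes[0]
        for y in axes[1]
        for z in axes[2]
        if math.dist((x, y, z), centre_mm) <= radius
    ]


for name, centre in ROI_CENTRES_MNI.items():
    print(name, len(sphere_voxels(centre)), "voxels")
```

Because some reported centres fall between grid points, the exact voxel count depends on how the grid is anchored; a grid-aligned centre such as (0, 0, 0) yields 81 voxels.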

Finally, we performed a psychophysiological interaction (PPI) analysis with the gPPI toolbox for SPM8² to explore whether the presence of a face mask changed the connectivity between these regions and other brain areas during facial emotion recognition. Generalized PPI (gPPI) analysis makes it possible to examine how brain regions interact in a task-dependent manner in block or event-related designs with two or more experimental conditions. As seed ROIs, we again selected the bilateral OFA, FFA, and amygdala. For each ROI, a GLM was estimated that included as regressors the BOLD signal extracted from that ROI (the physiological variable), the six experimental conditions (the psychological variables), and the element-by-element product of these two variables (the psychophysiological interaction). The statistical threshold was set at p < 0.001 (uncorrected) at the voxel level and at p < 0.05 FWE-corrected at the cluster level.
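The structure of the gPPI model just described (one physiological regressor, one psychological regressor per condition, and one interaction term per condition) can be sketched as below, together with contrast weights for masked > unmasked on the interaction terms. This is a deliberate simplification: standard PPI implementations, including the gPPI toolbox, typically deconvolve the seed BOLD signal to estimate neural activity, form the products at that level, and re-convolve them with the HRF, and the psychological regressors are HRF-convolved. The sketch skips those steps, and the column names are hypothetical.

```python
CONDITIONS = [f"{emotion}_{mask}"
              for emotion in ("joy", "fear", "neutral")
              for mask in ("masked", "unmasked")]


def gppi_columns(seed, psych):
    """Regressors of a simplified gPPI model for one seed ROI.

    seed  : seed time course, one value per scan (physiological variable)
    psych : dict condition -> per-scan task regressor (psychological variables)
    Returns an insertion-ordered dict: seed, one column per condition, then one
    interaction column per condition (element-wise product seed x condition)."""
    columns = {"seed": list(seed)}
    for name in psych:
        columns[name] = list(psych[name])
    for name in psych:
        columns[f"PPI_{name}"] = [s * p for s, p in zip(seed, psych[name])]
    return columns


def masked_vs_unmasked_weights(column_names):
    """Contrast weights: +1 on masked interaction terms, -1 on unmasked ones."""
    weights = []
    for name in column_names:
        if name.startswith("PPI_") and name.endswith("_unmasked"):
            weights.append(-1)
        elif name.startswith("PPI_") and name.endswith("_masked"):
            weights.append(1)
        else:
            weights.append(0)
    return weights
```

With six conditions, each seed model has 13 task-related columns (plus nuisance regressors and a constant in practice), and the masked > unmasked weights sum to zero.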

3 Results

3.1 Behavioral results

A significant mask condition × emotion category interaction was observed on participants’ accuracy (χ² = 17.498, p = 0.0002). Post hoc comparisons revealed that the odds of a correct response were lower for fear masked than fear unmasked faces (odds ratio = 0.264, SE = 0.054, z = −6.567, p < 0.0001), for joy masked than joy unmasked faces (odds ratio = 0.169, SE = 0.032, z = −9.279, p < 0.0001), and for neutral masked than neutral unmasked faces (odds ratio = 0.545, SE = 0.111, z = −2.979, p = 0.034). Moreover, the odds of a correct response were lower for both fear masked (odds ratio = 0.587, SE = 0.098, z = −3.203, p = 0.017) and joy masked (odds ratio = 0.345, SE = 0.055, z = −6.721, p < 0.0001) than for neutral masked faces. Lastly, the odds of a correct response were higher for fear masked than joy masked faces (odds ratio = 1.699, SE = 0.242, z = 3.732, p = 0.0026). No such differences between emotion categories were observed in the unmasked condition (p > 0.1).
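To read the post hoc accuracy statistics, note that the comparisons are computed on the log-odds scale and then back-transformed to odds ratios. Assuming, as emmeans does when contrasts are back-transformed to the response scale, that the reported SE is on the odds-ratio scale via the delta method, the Wald z can be recovered as log(OR) / (SE / OR). The short Python check below applies this to the masked vs. unmasked comparisons; small discrepancies are expected because the reported SEs are rounded to three decimals.

```python
import math


def z_from_odds_ratio(odds_ratio, se_odds_ratio):
    """Wald z on the log-odds scale, recovering SE(log OR) = SE(OR) / OR
    (delta method, as used when contrasts are back-transformed to odds ratios)."""
    se_log = se_odds_ratio / odds_ratio
    return math.log(odds_ratio) / se_log


# (odds ratio, SE, reported z) for the masked vs. unmasked comparisons.
REPORTED = {
    "fear": (0.264, 0.054, -6.567),
    "joy": (0.169, 0.032, -9.279),
    "neutral": (0.545, 0.111, -2.979),
}

for label, (odds_ratio, se, z_reported) in REPORTED.items():
    z = z_from_odds_ratio(odds_ratio, se)
    print(f"{label}: recomputed z = {z:.2f}, reported z = {z_reported}")

# Odds ratios sharing a reference combine multiplicatively:
# (fear masked / neutral masked) / (joy masked / neutral masked) = fear masked / joy masked.
print(round(0.587 / 0.345, 3))  # close to the reported 1.699
```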

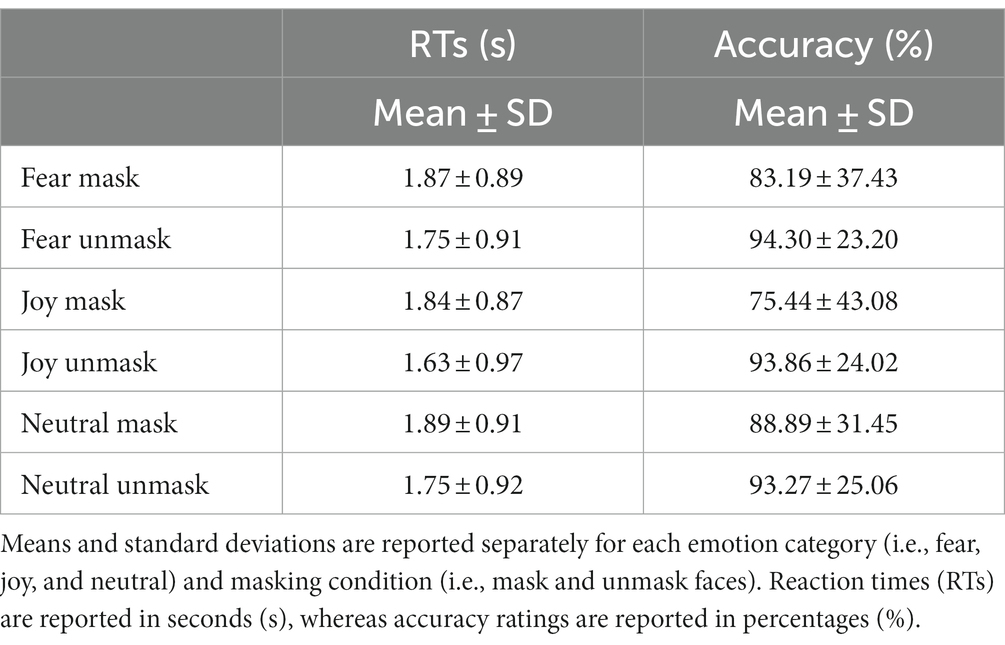

For reaction times, a significant mask condition × emotion category interaction was also observed (χ² = 10.238, p = 0.006). Post hoc comparisons showed that participants took longer to respond to fear masked vs. fear unmasked faces (beta = 0.104, SE = 0.025, t = 4.149, p = 0.0005), to joy masked vs. joy unmasked faces (beta = 0.213, SE = 0.026, t = 8.221, p < 0.0001), and to neutral masked vs. neutral unmasked faces (beta = 0.123, SE = 0.025, t = 4.977, p < 0.0001). Moreover, reaction times were longer for fear unmasked vs. joy unmasked faces (beta = 0.127, SE = 0.024, t = 5.228, p < 0.0001) and for neutral unmasked vs. joy unmasked faces (beta = 0.116, SE = 0.025, t = 4.278, p < 0.0001). No such differences between emotion categories were found for masked faces (p > 0.1). All behavioral results are reported in Table 1.

3.2 fMRI results

3.2.1 Whole-brain analysis

At the neural level, whole-brain fMRI analyses revealed significantly stronger activation of a right occipito-temporal cluster, including the fusiform gyrus, known to support face recognition (Rossion et al., 2000; Gerrits et al., 2019; Rigon et al., 2019), when participants viewed masked versus unmasked faces (Figure 1B). The same contrast restricted to the neutral condition yielded a consistent result. Conversely, unmasked joyful expressions evoked stronger activity in the right post-central gyrus than their masked counterparts (Figure 1C). The neutral > emotional comparison showed a bilateral response in the inferior subdivision of the lateral occipital cortex, along with increased activation of right fronto-parietal regions. The same contrast in the masked condition revealed pronounced activity in occipital and frontal areas. These significant contrasts are shown in Table 2. Given the exploratory nature of this study (n = 25 participants), we also re-ran the whole-brain analyses at a less stringent threshold (p < 0.05, FDR-corrected) and report these results in Supplementary Table S1.
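The less stringent threshold above controls the false discovery rate (FDR) rather than the family-wise error rate. The sketch below shows the generic Benjamini–Hochberg step-up procedure for readers unfamiliar with it; which FDR variant SPM applied here (voxel-wise or topological, peak- or cluster-level) is not stated in the text, so this is an illustration of the principle, not a reproduction of the analysis.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns a list of booleans (True = significant at FDR level q) in the
    input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k (1-based) such that p_(k) <= k / m * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            rejected[idx] = True
    return rejected
```

Because the procedure is step-up, a p-value can be declared significant even if it fails its own rank threshold, as long as a larger p-value passes its threshold: with `[0.04, 0.04]` and q = 0.05, both tests are rejected.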

3.2.2 ROI-based analysis

ROI-based analyses of the ventral occipito-temporal regions supported the whole-brain results: for the masked > unmasked contrast, significantly stronger activation emerged in the right FFA (t = 2.67, p = 0.006) and in the OFA bilaterally (rOFA: t = 4.72, p < 0.0001; lOFA: t = 3.16, p = 0.002). The same activation pattern was found for the neutral masked > neutral unmasked contrast (rFFA: t = 3.32, p = 0.0014; rOFA: t = 3.22, p = 0.0018; lOFA: t = 1.90, p = 0.034). An increased bilateral response of these regions also emerged in the neutral > emotional contrast, regardless of the presence of a face mask (rFFA: t = 3.43, p = 0.001; lFFA: t = 2.10, p = 0.023; rOFA: t = 5.00, p < 0.0001; lOFA: t = 2.91, p = 0.003). Conversely, the unmasked > masked contrast revealed a greater response of the right amygdala (t = 1.97, p = 0.03). Interestingly, the right amygdala also showed increased activity for the joy unmasked > joy masked contrast (t = 1.87, p = 0.037). Lastly, emotional faces (i.e., both joy and fear expressions), as compared to neutral ones, were associated with an increased response in the left amygdala (t = 2.06, p = 0.025), irrespective of the mask condition.
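The ROI statistics above are presumably group-level one-sample t-tests across the 25 participants, i.e., with 24 degrees of freedom. The reported p-values appear consistent with one-tailed tests: for example, t = 1.90 gives a one-tailed p between 0.025 and 0.05, in line with the reported 0.034, whereas a two-tailed p would be twice as large. This is our reading of the numbers, not something the text states. The standard-library sketch below computes the one-tailed p by integrating the Student t density with Simpson’s rule.

```python
import math


def t_pdf(x, df):
    """Student t probability density with `df` degrees of freedom."""
    log_norm = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def t_sf(t, df, n=2000):
    """One-tailed P(T > t), integrating the density on [0, |t|] with
    Simpson's rule (n must be even)."""
    if t < 0:
        return 1.0 - t_sf(-t, df, n)
    h = t / n
    s = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - s * h / 3


DF = 25 - 1  # one-sample test across 25 participants
for label, t in [("rFFA masked > unmasked", 2.67), ("lOFA neutral masked > unmasked", 1.90)]:
    print(label, round(t_sf(t, DF), 4))
```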

3.2.3 Psychophysiological interaction analysis

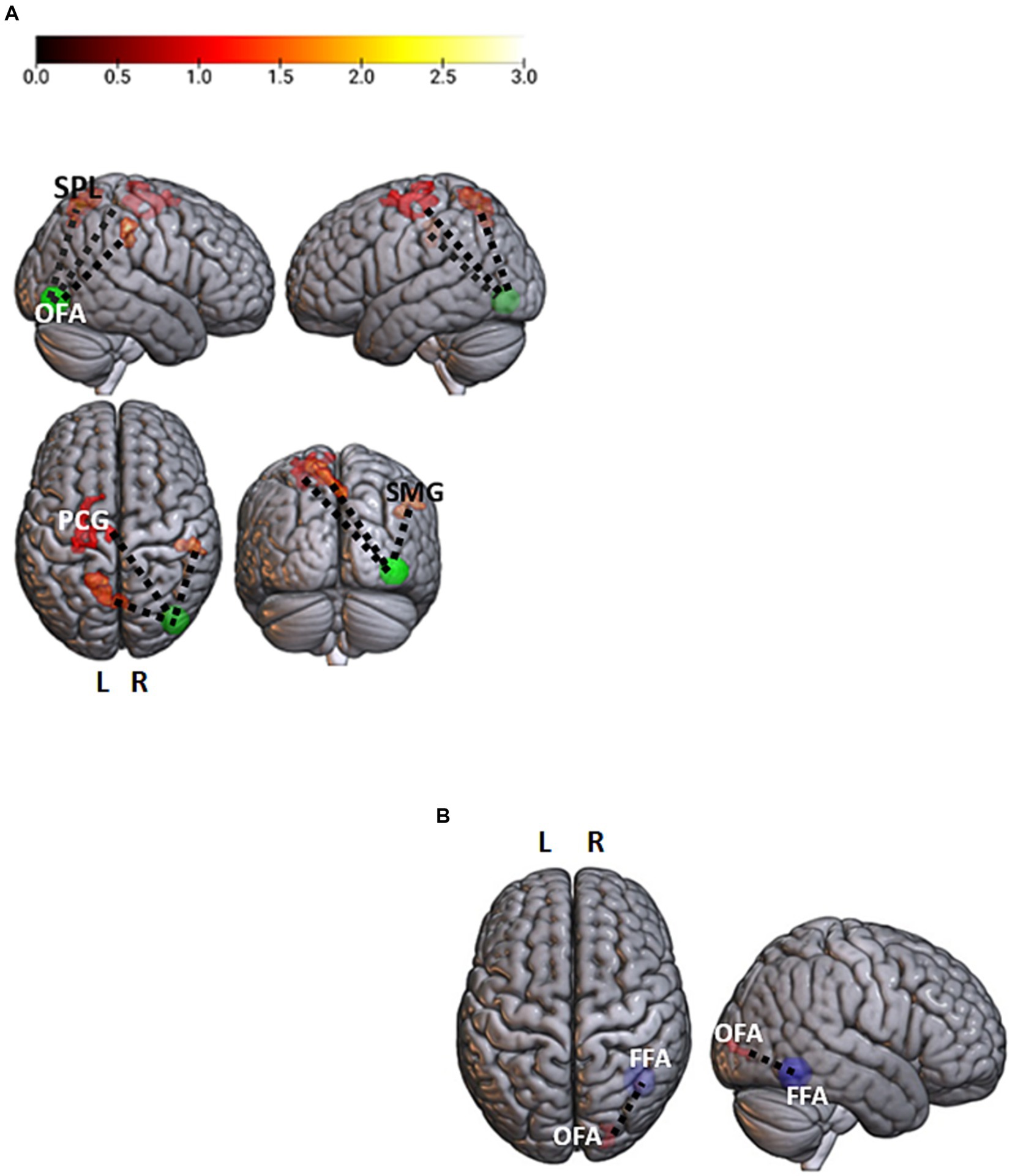

In the masked > unmasked contrast, the right OFA showed a stronger correlation with a set of fronto-parietal regions in both hemispheres: the left superior frontal gyrus (t = 4.9; p < 0.001), the left precentral gyrus (t = 4.41; p < 0.001), the left superior parietal lobe (t = 5.89; p < 0.001), and the right supramarginal gyrus (t = 6.2; p < 0.001) (Figure 2A). In the neutral condition, the unmasked > masked contrast revealed a stronger correlation between the right FFA and the right inferior occipital gyrus (t = 6.11; p < 0.001) (Figure 2B).

Figure 2. Facial emotion and neutral contrast analyses. (A) Brain regions showing a significantly stronger association with the right occipital face area (rOFA) for the masked > unmasked contrast (p-uncorrected < 0.001 voxel level and p-FWE < 0.05 cluster level). The rOFA is shown at MNI coordinates x = 39, y = −79, z = −6 (10 mm diameter). Color bar represents t-values. (B) Significantly stronger correlation between the right fusiform face area (rFFA; x = 40, y = −55, z = −12) and the ipsilateral OFA (rOFA; x = 39, y = −79, z = −6) for the neutral unmasked > neutral masked contrast (p-uncorrected < 0.001 voxel level and p-FWE < 0.05 cluster level). OFA, Occipital Face Area; FFA, Fusiform Face Area; PCG, PreCentral Gyrus; SPL, Superior Parietal Lobe; SMG, Supramarginal Gyrus; L, Left; R, Right.

4 Discussion

The behavioral and fMRI results reported in this study suggest that face masks may reduce the accuracy and speed of facial emotion recognition and increase the neural effort it requires.

Our behavioral results, in line with recent literature, suggest that masks reduce our ability to recognize facial expressions, in terms of both accuracy and response times, regardless of the emotion expressed (Carbon, 2020; Ruba and Pollak, 2020; Marini et al., 2021; Wong and Estudillo, 2022). These data suggest that the area of the face between the chin and the cheekbones, hidden by the surgical mask, is equally essential for recognizing emotional and neutral expressions (Beaudry et al., 2013). However, the accuracy data seem to indicate that the mouth is more important for identifying joy than for identifying fear or neutral expressions (Wegrzyn et al., 2017).

fMRI analyses showed that the ventral occipito-temporal face recognition areas were more active when a mask covered the target faces. This result might have been expected, since masks hide key features of a face, forcing the observer to interpret the underlying facial expression only on the basis of the visible characteristics. As the OFA responds preferentially to single features, in particular to those conveyed by the eye and eyebrow regions (Arcurio et al., 2012), which are not covered by face masks, its greater activation for masked faces might indicate a greater reliance on the upper face during facial emotion recognition. Consistent with this interpretation, highly relevant facial features have been shown to elicit greater FFA activation (Nestor et al., 2008). These data suggest that the eye and forehead regions play a crucial role in interpreting masked facial expressions. Thus, the greater activation of both occipito-temporal areas may reflect a compensatory strategy of the brain when crucial facial features are missing.

Additionally, as revealed by the PPI analysis, the presence of a face mask was associated with a stronger correlation between the right OFA and fronto-parietal regions that support top–down processes relevant to the regulation of emotional stimuli, such as episodic memory (Fletcher et al., 1995), visual working memory (Haxby et al., 2000), and attention (Wojciulik and Kanwisher, 1999). This result suggests that, for a masked face, the OFA (where a visual structural analysis of the face is performed) requires stronger communication with higher-order brain areas to correctly categorize and interpret the emotion conveyed. Conversely, unmasked neutral faces elicited stronger communication between the right FFA and the ipsilateral OFA.

These findings indicate that the right OFA and the right FFA each respond more strongly to masked than to unmasked neutral faces, pointing to the need for more neural resources to correctly categorize and interpret these stimuli. Similarly, previous EEG studies showed a larger N170 during emotion recognition on masked (vs. unmasked) faces, suggesting that processing faces with surgical-like masks might require amplified attentional processing (Prete et al., 2022; Żochowska et al., 2022; Proverbio et al., 2023). One might hypothesize that the higher activation of the two ventral occipito-temporal regions supports, in a compensatory manner, a feature-based processing of the face that identifies its underlying expression from the configuration of the eyes and the forehead. On the other hand, the PPI results for neutral unmasked, as compared to neutral masked, faces are consistent with the view of the OFA as the first stage of face processing, in which single facial features are extracted and then transferred to the FFA, which computes a facial Gestalt essential to individual identity recognition (Pitcher et al., 2011a). Indeed, the processing of a neutral face might stop at this stage, as there is no emotional meaning to extract. However, neutral expressions, relative to emotional ones, evoke greater involvement of fronto-parietal regions, which are responsible for higher-order facial processing (Atkinson and Adolphs, 2011). Our results are consistent with those of Carvajal et al. (2013), who observed that neutral expressions, relative to emotional ones, activated a more complex representation requiring more effortful cognitive processing.

At the behavioral level, we observed that neutral masked faces were recognized more accurately than joy or fear masked faces. Numerous behavioral studies have shown that recognition accuracy for masked neutral expressions is less strongly affected by a face mask than that for other emotional expressions (e.g., fear, happiness, sadness) (Carbon, 2020; Carbon et al., 2022; Kim et al., 2022; Huc et al., 2023), paralleling our findings. This could be because the area between the cheeks and the chin, i.e., the facial region typically covered by a face mask, may not be as necessary for recognizing a neutral expression as it is for other expressions, such as joy and fear. This is perhaps best exemplified by how we instantly recognize joyful faces from the contour of the mouth and lips forming a “smile.” This may also corroborate recent findings that face masks lead to more emotional expressions being confused with neutral ones (Carbon, 2020; Kim et al., 2022). Although masked neutral expressions were better recognized at the behavioral level, they led to greater activity in occipito-temporal and frontal brain areas than masked emotional expressions. This increased fronto-occipito-temporal activation might reflect more elaborate cognitive processing (Carvajal et al., 2013), not required for “basic” emotional expressions such as fear or joy. As mentioned above, we suggest that, since a neutral expression is not associated with a particular change in the whole facial configuration, its correct recognition requires greater visual and cognitive effort than that of joyful and fearful expressions, whose corresponding mouth and eye movements are highly specific.
These observations from previous literature may explain the apparently contradictory pattern of results, in which masked neutral faces were better recognized at the behavioral level but led to greater activity in circuits involved in more effortful cognitive processing at the neural level. Behaviorally, the greater recruitment of neural resources for the recognition of masked faces was paralleled by less accurate and slower performance as participants struggled to classify emotions on masked faces. This observation is consistent with our neural findings, suggesting that the face area between the chin and the cheekbones, covered by a face mask, is highly important for the recognition of both emotional expressions.

Interestingly, emotional faces (i.e., both joy and fear expressions), as compared to neutral ones, were associated with an increased response in the left amygdala, irrespective of the mask condition. As reported by many studies (Kensinger and Schacter, 2006; Lewis et al., 2007; Colibazzi et al., 2010; Tamietto and de Gelder, 2010), the amygdala plays a crucial role in regulating the processing of emotionally arousing stimuli. Gläscher and Adolphs (2003) reported a predominant role of the left amygdala in detecting stimulus arousal. Our results thus suggest that the emotional faces were more arousing than the neutral expressions, even when half-covered by a surgical-like mask. We also observed decreased amygdala activation for masked joyful expressions as compared to unmasked ones, whereas no such decrease was evident in the fear condition. This could be because the key facial feature of the joy expression is the smile (Leppänen and Hietanen, 2007; Bombari et al., 2013), which is covered by face masks, while fear is mostly expressed through the eyes and forehead (Wegrzyn et al., 2017), which masks leave visible. Consistent with this interpretation, at the whole-brain level, unmasked joyful expressions, relative to masked ones, elicited greater involvement of the mouth area in the somatotopic representation of the secondary somatosensory cortex (SII), specifically enhancing activity in the dorso-caudal subdivision of the right post-central gyrus, which is devoted to the sensorimotor processing of lip movements (Penfield and Rasmussen, 1950; Grabski et al., 2012). Thus, the greater response of this region to expressions of joy might reflect the processing of the structural changes that occur in the other’s face when the mouth widens into a smile.

To conclude, our results highlight the adaptability of the human neural systems underlying facial expression recognition under the conditions brought about by the spread of COVID-19. One limitation of this study was the use of static faces: in daily life and social interactions, we are usually exposed to dynamic faces. Further studies should therefore test whether these results hold with dynamic faces. Although face masks hamper our ability to interpret facial expressions of emotion and make face processing more effortful at the neural level, in daily life we can rely on other important cues to correctly identify our interlocutors’ emotional states, thus compensating for the facial information hidden by face masks.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material. Further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Comitato etico Ospedale San Raffaele, Milan, Italy. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any identifiable images or data included in this article.

Author contributions

JA: Conceptualization, Methodology, Writing – review & editing, Investigation, Writing – original draft. FG: Investigation, Writing – original draft, Data curation, Formal analysis. DF: Data curation, Investigation, Methodology, Writing – original draft. EH: Data curation, Writing – review & editing. FZ: Writing – review & editing. DE: Writing – review & editing. AS: Formal analysis, Writing – review & editing. CB: Formal analysis, Investigation, Writing – original draft. ND: Investigation, Supervision, Writing – original draft. SI: Funding acquisition, Resources, Supervision, Visualization, Writing – review & editing. FA: Conceptualization, Methodology, Resources, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

We would like to thank S.D.L. for significant contribution in enrolling participants.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2024.1339592/full#supplementary-material

References

Adolphs, R. (2002). Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav. Cogn. Neurosci. Rev. 1, 21–62. doi: 10.1177/1534582302001001003

Adolphs, R., Tranel, D., Damasio, H., and Damasio, A. (1994). Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372, 669–672. doi: 10.1038/372669a0

Arcurio, L. R., Gold, J. M., and James, T. W. (2012). The response of face-selective cortex with single face parts and part combinations. Neuropsychologia 50, 2454–2459. doi: 10.1016/j.neuropsychologia.2012.06.016

Atkinson, A. P., and Adolphs, R. (2011). The neuropsychology of face perception: beyond simple dissociations and functional selectivity. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 366, 1726–1738. doi: 10.1098/rstb.2010.0349

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Beaudry, O., Roy-Charland, A., Perron, M., Cormier, I., and Tapp, R. (2013). Featural processing in recognition of emotional facial expressions. Cogn. Emot. 28, 416–432. doi: 10.1080/02699931.2013.833500

Bombari, D., Schmid, P. C., Schmid Mast, M., Birri, S., Mast, F. W., and Lobmaier, J. S. (2013). Emotion recognition: the role of featural and configural face information. Q. J. Exp. Psychol. 66, 2426–2442. doi: 10.1080/17470218.2013.789065

Brett, M., Anton, J.-L., Valabregue, R., and Poline, J.-B. (2002). Region of interest analysis using an SPM toolbox [abstract]. Presented at the 8th International Conference on Functional Mapping of the Human Brain, June 2-6, 2002, Sendai, Japan. NeuroImage 16:497. doi: 10.1016/S1053-8119(02)90013-3

Calbi, M., Langiulli, N., Ferroni, F., Montalti, M., Kolesnikov, A., Gallese, V., et al. (2021). The consequences of COVID-19 on social interactions: an online study on face covering. Sci. Rep. 11:2601. doi: 10.1038/s41598-021-81780-w

Carbon, C.-C. (2020). Wearing face masks strongly confuses counterparts in reading emotions. Front. Psychol. 11:566886. doi: 10.3389/fpsyg.2020.566886

Carbon, C.-C., Held, M. J., and Schütz, A. (2022). Reading emotions in faces with and without masks is relatively independent of extended exposure and individual difference variables. Front. Psychol. 13:856971. doi: 10.3389/fpsyg.2022.856971

Carvajal, F., Rubio, S., Serrano, J. M., Ríos-Lago, M., Alvarez-Linera, J., Pacheco, L., et al. (2013). Is a neutral expression also a neutral stimulus? A study with functional magnetic resonance. Exp. Brain Res. 228, 467–479. doi: 10.1007/s00221-013-3578-1

Colibazzi, T., Posner, J., Wang, Z., Gorman, D., Gerber, A., Yu, S., et al. (2010). Neural systems subserving valence and arousal during the experience of induced emotions. Emotion 10, 377–389. doi: 10.1037/a0018484

Ellison, J. W., and Massaro, D. W. (1997). Featural evaluation, integration, and judgment of facial affect. J. Exp. Psychol. Hum. Percept. Perform. 23, 213–226. doi: 10.1037/0096-1523.23.1.213

Fletcher, P. C., Frith, C. D., Baker, S. C., Shallice, T., Frackowiak, R. S., and Dolan, R. J. (1995). The mind’s eye: precuneus activation in memory-related imagery. NeuroImage 2, 195–200. doi: 10.1006/nimg.1995.1025

Gan, X., Zhou, X., Li, J., Jiao, G., Jiang, X., Biswal, B., et al. (2022). Common and distinct neurofunctional representations of core and social disgust in the brain: coordinate-based and network meta-analyses. Neurosci. Biobehav. Rev. 135:104553. doi: 10.1016/j.neubiorev.2022.104553

Geissberger, N., Tik, M., Sladky, R., Woletz, M., Schuler, A.-L., Willinger, D., et al. (2020). Reproducibility of amygdala activation in facial emotion processing at 7T. NeuroImage 211:116585. doi: 10.1016/j.neuroimage.2020.116585

Gerrits, R., Van der Haegen, L., Brysbaert, M., and Vingerhoets, G. (2019). Laterality for recognizing written words and faces in the fusiform gyrus covaries with language dominance. Cortex 117, 196–204. doi: 10.1016/j.cortex.2019.03.010

Gläscher, J., and Adolphs, R. (2003). Processing of the arousal of subliminal and supraliminal emotional stimuli by the human amygdala. J. Neurosci. 23, 10274–10282. doi: 10.1523/JNEUROSCI.23-32-10274.2003

Grabski, K., Lamalle, L., Vilain, C., Schwartz, J.-L., Vallée, N., Tropres, I., et al. (2012). Functional MRI assessment of orofacial articulators: neural correlates of lip, jaw, larynx, and tongue movements. Hum. Brain Mapp. 33, 2306–2321. doi: 10.1002/hbm.21363

Grill-Spector, K., Weiner, K. S., Kay, K., and Gomez, J. (2017). The functional neuroanatomy of human face perception. Annu. Rev. Vis. Sci. 3, 167–196. doi: 10.1146/annurev-vision-102016-061214

Grundmann, F., Epstude, K., and Scheibe, S. (2021). Face masks reduce emotion-recognition accuracy and perceived closeness. PLoS One 16:e0249792. doi: 10.1371/journal.pone.0249792

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2002). Human neural systems for face recognition and social communication. Biol. Psychiatry 51, 59–67. doi: 10.1016/S0006-3223(01)01330-0

Haxby, J. V., Petit, L., Ungerleider, L. G., and Courtney, S. M. (2000). Distinguishing the functional roles of multiple regions in distributed neural systems for visual working memory. NeuroImage 11, 145–156. doi: 10.1006/nimg.1999.0527

Huc, M., Bush, K., Atias, G., Berrigan, L., Cox, S., and Jaworska, N. (2023). Recognition of masked and unmasked facial expressions in males and females and relations with mental wellness. Front. Psychol. 14:1217736. doi: 10.3389/fpsyg.2023.1217736

Kanwisher, N., McDermott, J., and Chun, M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997

Kensinger, E. A., and Schacter, D. L. (2006). Processing emotional pictures and words: effects of valence and arousal. Cogn. Affect. Behav. Neurosci. 6, 110–126. doi: 10.3758/CABN.6.2.110

Kim, G., Seong, S. H., Hong, S.-S., and Choi, E. (2022). Impact of face masks and sunglasses on emotion recognition in south Koreans. PLoS One 17:e0263466. doi: 10.1371/journal.pone.0263466

Leppänen, J., and Hietanen, J. (2007). Is there more in a happy face than just a big smile? Vis. Cogn. 15, 468–490. doi: 10.1080/13506280600765333

Lewis, P. A., Critchley, H. D., Rotshtein, P., and Dolan, R. J. (2007). Neural correlates of processing valence and arousal in affective words. Cereb. Cortex 17, 742–748. doi: 10.1093/cercor/bhk024

Liu, M., Liu, C. H., Zheng, S., Zhao, K., and Fu, X. (2021). Reexamining the neural network involved in perception of facial expression: a meta-analysis. Neurosci. Biobehav. Rev. 131, 179–191. doi: 10.1016/j.neubiorev.2021.09.024

Marini, M., Ansani, A., Paglieri, F., Caruana, F., and Viola, M. (2021). The impact of facemasks on emotion recognition, trust attribution and re-identification. Sci. Rep. 11:5577. doi: 10.1038/s41598-021-84806-5

Nestor, A., Vettel, J. M., and Tarr, M. J. (2008). Task-specific codes for face recognition: how they shape the neural representation of features for detection and individuation. PLoS One 3:e3978. doi: 10.1371/journal.pone.0003978

Penfield, W., and Rasmussen, T. (1950). The cerebral cortex of man; a clinical study of localization of function. Oxford, England: Macmillan.

Pitcher, D., Dilks, D. D., Saxe, R. R., Triantafyllou, C., and Kanwisher, N. (2011a). Differential selectivity for dynamic versus static information in face-selective cortical regions. NeuroImage 56, 2356–2363. doi: 10.1016/j.neuroimage.2011.03.067

Pitcher, D., Walsh, V., and Duchaine, B. (2011b). The role of the occipital face area in the cortical face perception network. Exp. Brain Res. 209, 481–493. doi: 10.1007/s00221-011-2579-1

Pitcher, D., Walsh, V., Yovel, G., and Duchaine, B. (2007). TMS evidence for the involvement of the right occipital face area in early face processing. Curr. Biol. 17, 1568–1573. doi: 10.1016/j.cub.2007.07.063

Prete, G., D’Anselmo, A., and Tommasi, L. (2022). A neural signature of exposure to masked faces after 18 months of COVID-19. Neuropsychologia 174:108334. doi: 10.1016/j.neuropsychologia.2022.108334

Proverbio, A. M., and Cerri, A. (2022). The recognition of facial expressions under surgical masks: the primacy of anger. Front. Neurosci. 16:864490. doi: 10.3389/fnins.2022.864490

Proverbio, A. M., Cerri, A., and Gallotta, C. (2023). Facemasks selectively impair the recognition of facial expressions that stimulate empathy: an ERP study. Psychophysiology 60:e14280. doi: 10.1111/psyp.14280

R Development Core Team (2015). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

Rigon, A., Voss, M. W., Turkstra, L. S., Mutlu, B., and Duff, M. C. (2019). Functional neural correlates of facial affect recognition impairment following TBI. Brain Imaging Behav. 13, 526–540. doi: 10.1007/s11682-018-9889-x

Rinck, M., Primbs, M. A., Verpaalen, I. A. M., and Bijlstra, G. (2022). Face masks impair facial emotion recognition and induce specific emotion confusions. Cogn. Res. Princ. Implic. 7:83. doi: 10.1186/s41235-022-00430-5

Rossion, B., Dricot, L., Devolder, A., Bodart, J. M., Crommelinck, M., De Gelder, B., et al. (2000). Hemispheric asymmetries for whole-based and part-based face processing in the human fusiform gyrus. J. Cogn. Neurosci. 12, 793–802. doi: 10.1162/089892900562606

Ruba, A. L., and Pollak, S. D. (2020). Children’s emotion inferences from masked faces: implications for social interactions during COVID-19. PLoS One 15:e0243708. doi: 10.1371/journal.pone.0243708

Schobert, A.-K., Corradi-Dell’Acqua, C., Frühholz, S., van der Zwaag, W., and Vuilleumier, P. (2018). Functional organization of face processing in the human superior temporal sulcus: a 7T high-resolution fMRI study. Soc. Cogn. Affect. Neurosci. 13, 102–113. doi: 10.1093/scan/nsx119

Tamietto, M., and de Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709. doi: 10.1038/nrn2889

The Lancet Microbe (2021). COVID-19 vaccines: the pandemic will not end overnight. Lancet Microbe 2:e1. doi: 10.1016/S2666-5247(20)30226-3

Tzourio-Mazoyer, N., Landeau, B., Papathanassiou, D., Crivello, F., Etard, O., Delcroix, N., et al. (2002). Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage 15, 273–289. doi: 10.1006/nimg.2001.0978

Wegrzyn, M., Riehle, M., Labudda, K., Woermann, F., Baumgartner, F., Pollmann, S., et al. (2015). Investigating the brain basis of facial expression perception using multi-voxel pattern analysis. Cortex 69, 131–140. doi: 10.1016/j.cortex.2015.05.003

Wegrzyn, M., Vogt, M., Kireclioglu, B., Schneider, J., and Kissler, J. (2017). Mapping the emotional face. How individual face parts contribute to successful emotion recognition. PLoS One 12:e0177239. doi: 10.1371/journal.pone.0177239

Wojciulik, E., and Kanwisher, N. (1999). The generality of parietal involvement in visual attention. Neuron 23, 747–764. doi: 10.1016/S0896-6273(01)80033-7

Wong, H. K., and Estudillo, A. J. (2022). Face masks affect emotion categorisation, age estimation, recognition, and gender classification from faces. Cogn. Res. Princ. Implic. 7:91. doi: 10.1186/s41235-022-00438-x

Keywords: facial emotion recognition, COVID-19, face mask, SARS-CoV-2, psychology, fMRI

Citation: Abutalebi J, Gallo F, Fedeli D, Houdayer E, Zangrillo F, Emedoli D, Spina A, Bellini C, Del Maschio N, Iannaccone S and Alemanno F (2024) On the brain struggles to recognize basic facial emotions with face masks: an fMRI study. Front. Psychol. 15:1339592. doi: 10.3389/fpsyg.2024.1339592

Edited by:

Alan J. Pegna, The University of Queensland, Australia

Reviewed by:

Xianyang Gan, University of Electronic Science and Technology of China, China

Luca Tommasi, University of Studies G. d'Annunzio Chieti and Pescara, Italy

Copyright © 2024 Abutalebi, Gallo, Fedeli, Houdayer, Zangrillo, Emedoli, Spina, Bellini, Del Maschio, Iannaccone and Alemanno. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Federica Alemanno, alemanno.federica@hsr.it
