- 1Department of Humanities, University of Trieste, Trieste, Italy

- 2Department of Cultures and Civilizations, University of Verona, Verona, Italy

Syncopation – the occurrence of a musical event on a metrically weak position preceding a rest on a metrically strong position – represents an important challenge in the study of the mapping between rhythm and meter. In this contribution, we present the hypothesis that syncopation is an effective strategy to elicit the bootstrapping of a multi-layered, hierarchically organized metric structure from a linear rhythmic surface. The hypothesis is inspired by a parallel with the problem of linearization in natural language syntax, which is the problem of how hierarchically organized phrase-structure markers are mapped onto linear sequences of words. The hypothesis has important consequences for the role of meter in music perception and cognition and, more particularly, for its role in the relationship between rhythm and bodily entrainment.

1 Introduction

Syncopation is generally defined as the occurrence of a musical event on a metrically weak position preceding a rest on a metrically strong position [Huron, 2006; grounded on seminal work by Longuet-Higgins and Lee (1984)]. It represents an especially complex challenge in the study of the mapping between rhythm, intended as the linear temporal sequence in which musical events occur (Toussaint, 2013), and meter, the underlying cognitive structure within which musical events are perceived and interpreted according to influential models of rhythm perception such as the Generative Theory of Tonal Music (Lerdahl and Jackendoff, 1983), Dynamic Attending Theory (Large, 1994; Large and Kolen, 1994), and Predictive Coding (Friston, 2009). The challenge is made even more arduous by the observation that syncopation appears to be, among all forms of rhythmic complexity (Thul and Toussaint, 2008), the most effective in eliciting “the most desire to move and the most pleasure” (Witek et al., 2014, p. 1). Furthermore, we find syncopated rhythms among the most popular rhythms among different musical cultures and idioms (Toussaint, 2013). Syncopation is, thus, a powerful musical tool for making music that listeners can move to and find pleasure in listening to. The question we address here is why this is so – that is, what makes a deviation from temporal expectations such as syncopation so musically productive.

We first review some of the most prominent existing frameworks of meter (Section 2) and syncopation (Section 3) and consider how our research question is approached in other frameworks. We then suggest a novel hypothesis whereby syncopation is an especially effective strategy in eliciting the bootstrapping of hierarchically organized metric structures from linear sequences of musical events. Syncopated rhythms, by presenting an asymmetric distribution of musical events, are especially effective in suggesting a richer hierarchical organization in the underlying metric framework. We formulate this hypothesis by considering some recent empirical results and theoretical ideas concerning a parallel problem in natural language syntax: the problem of linearization. This is the problem of how hierarchically organized phrase-structure markers are mapped onto linear sequences of terminal nodes (Section 4). The hypothesis we propose has important consequences for the notion of musical meter and the role it plays in music perception and cognition, which we discuss in Section 5. Typically, meter is regarded as a governing framework, marking expectations concerning the temporal distribution of musical events. Conversely, our hypothesis suggests an approach whereby it is the linear form of rhythm that informs meter and the role of meter is that of offering a cyclic temporal framework of bodily entrainment, rather than perception.

2 Rhythm, meter, and rhythm-meter mapping

2.1 Rhythm and metric rhythm

In the most general sense, rhythm is “a patterned configuration of attacks that may or may not be constrained overall by a meter” (Powers, 2003, p. 723). In what follows, we will restrict our attention to rhythms that are constrained by a meter as it is only in the context of metric rhythm that syncopation occurs.

Minimally, a metric rhythm is a pulsation, a string of evenly spaced pulses, some of which are sounded (attacks) while other are silent (rests) (Toussaint, 2013, p. 5). The notations in (1) offer two different ways of representing a metric rhythm of eight pulsations of which the first, the second, the third and the fifth are attacks and all others are rests. The notation in (1a) represents the metric rhythm as a sequence of 1’s and 0’s; each number in the sequence corresponds to a pulse; a pulse marked by the number 1 is an attack; a pulse marked by the number 0 is a rest. The notation in (1b) (Breslauer, 1988) represents the same metric rhythm based on its durational patterns, that is, by listing the distance in number of pulses between (the onsets of) successive attacks, also called inter-onset interval (IOI).

(1)a.11101000

b.[1-1-2-4]

This view of rhythm obviously simplifies the reality of actual musical practice, where rhythms are produced by relying on a larger vocabulary of musical events than just attacks and rests. Modulations in amplitude, frequency, and timbre are powerful means in the hands of the instrumentalist to produce engaging musical rhythms. Still, as recognized since as early as Simon (1968), the listener’s ability to interpret the metric organization of a musical piece must also include the capacity to do so solely on the basis of the relative durations of IOI’s.

The restriction to metric rhythm also excludes a variety of phenomena that are nonetheless typically classified under the more general notion of rhythm. These range from Gregorian chants to melo-recitation in opera. As anticipated, the reason for this restriction is that syncopation, the topic of our study, only occurs in the context of metric rhythms.

2.2 Meter

According to the definition adopted, metric rhythm is characterized by an underlying pulsation – a sense of the temporal distance that regularly occurs between the pulses, irrespectively of whether these are materialized in the rhythm as rests or attacks (Honing, 2012). A common claim among musicologists is that the cyclic pulsation underlying metric rhythms encompasses a richer, possibly hierarchical, organization (Yeston, 1976; Lerdahl and Jackendoff, 1983; London, 2012) whereby some pulses are more salient (stronger) than others. This organization provides the listener with a relatively rich expectancy structure that assigns different degrees of expectation to different pulses (Jones, 1990; Large and Kolen, 1994; Ladinig et al., 2009; Rohrmeier and Koelsch, 2012).

While keeping in mind the limitations mentioned in the previous section, from this point on we will use the term “rhythm” to refer to the linear form of a metric rhythm. This specific notion of rhythm belongs to what Lerdahl and Jackendoff (1983, p. 48) refer to as “musical surface” and, for our purposes, can be described as a sequence of successive IOI’s.1 In contrast, we will use the term “meter” to refer to the, possibly hierarchical, organization of the underlying pulsation within which a rhythm unfolds.

Although the notion of meter finds broad support in the literature, the degree of complexity and rigidity of its inner organization remains debated. Lerdahl and Jackendoff (1983; see also Longuet-Higgins and Lee, 1984) propose a model of meter that is rigidly hierarchical and encompasses one or more intermediate levels between the pulsation and the downbeat. Yeston (1976) – a pioneer in applying the Schenkerian analytic methodology to the rhythmic domain – regarded meter as the result of the interaction between different “strata” of flat musical rhythms that are generally organized in a hierarchical fashion but can also stand in a more dynamic structural relationship with each other. A similar view is found in more recent models of meter such as those based on Dynamic Attending Theory (Jones, 1976). There is also discussion concerning the psychological reality of the inner structural organization of meter. It has been especially difficult to test intermediate levels of metric salience experimentally (Parncutt, 1994; Ladinig et al., 2009; Song et al., 2013; but see Katz et al., 2015) and sensible differences in sensitivity to intermediate metric levels have been observed when comparing trained musicians to non-musicians (Palmer and Krumhansl, 1990).

2.3 Rhythm-meter mapping

To be of explanatory value, the distinction between rhythm (intended as a cyclic, linear sequence of IOI’s) and meter (a hierarchically organized pulsation) must be supplemented with an explicit formulation of the principles that govern the mapping between the two. In the reality of musical practice, listeners infer the meter of a metric musical performance with no conscious effort or volition and based on a large variety of factors. These may range from properties internal to the musical performance (such as its amplitude envelops, its melodic and harmonic organization, and its timbral modulations) to culturally established metric templates characteristic of the music idiom of the performance at stake (Jones, 1990; London, 1990). This said, our focus in this contribution will be restricted, in the spirit of Simon (1968), to how the relative durations of the IOI’s of a metric rhythm relate to its underlying meter. In what follows, we will briefly review three of the most influential theoretical models of the mapping between meter and rhythm.

2.3.1 Well-formedness and preference rules

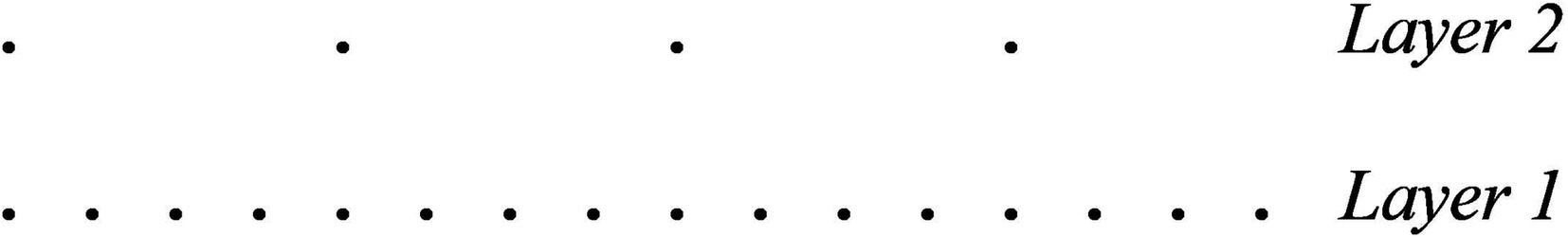

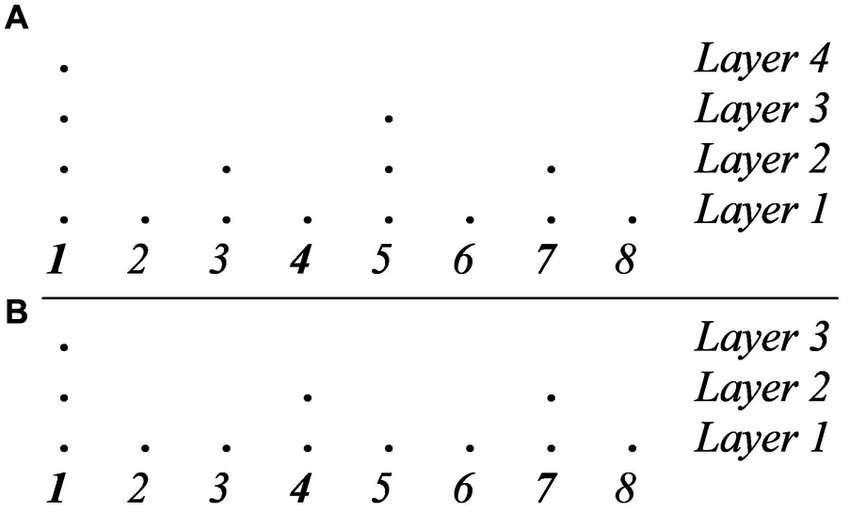

Figure 1 offers an analysis of a canonical 4/4 meter in western tonal music in the style of Lerdahl and Jackendoff’s (1983) Generative Theory of Tonal Music (GTTM). The dot-notation in the figure comprises five hierarchically organized layers of metric saliency. At the lowest level (Layer 1) we find the pulsation and at the highest level (Layer 5) the downbeat. The highest a level, the highest the saliency of its pulses and, accordingly, the expectation that an attack would occur in their position.

Figure 1. Analysis of a canonical 4/4 meter in western tonal music in the style of Lerdahl and Jackendoff’s (1983). GTTM, Generative Theory of Tonal Music.

GTTM provides two sets of rules constraining, respectively, the well-formedness of a meter and the possible associations between rhythm and meter.

Below we present the meter well-formedness rules (MWFR) that are relevant to the present discussion. Compared to their original formulation, the rules presented below are slightly simplified in those aspects that are not directly relevant to our goals and some of the terms are adapted to the terminology we have adopted so far (for example, we use the term pulse whenever GTTM talks of beat).

(MWFR 1) Every attack point must be associated with a pulse at the smallest level of metrical structure.

(MWFR 2) Every pulse at a given level must also be a pulse at the smaller level.

(MWFR 3) At each metrical level, strong pulses must be spaced either two or three pulses apart.

(MWFR 4) Each metrical level must consist of equally spaced pulses.

MWFR 1 can be regarded as a quantization constraint, aligning the actual musical events (attacks and rests) to the pulses of the meter. MWFR 2 is a constraint on the hierarchical organization of the levels in the meter. MWFR 3 and 4 are constraints on IOI’s between pulses belonging to the same level.2 These last rules are idiom specific and explicitly conceived for classical western tonal music and its preference for isochronous (binary or ternary) meters. The possibility is left open that different musical idioms may differ in this respect.

Rules of the second group are called meter preference rules (MPR). GTTM offers a number of such rules, also related to how factors such as stress, pitch, harmony and length are mapped to metrical structure. Here, we will focus only on those rules that are relevant to the present discussion.

(MPR 1) When two or more groups or parts of groups can be constructed as parallel, they preferably receive parallel metrical structure.

(MPR 3) Prefer a metrical structure in which attack onsets coincide with strong pulses.

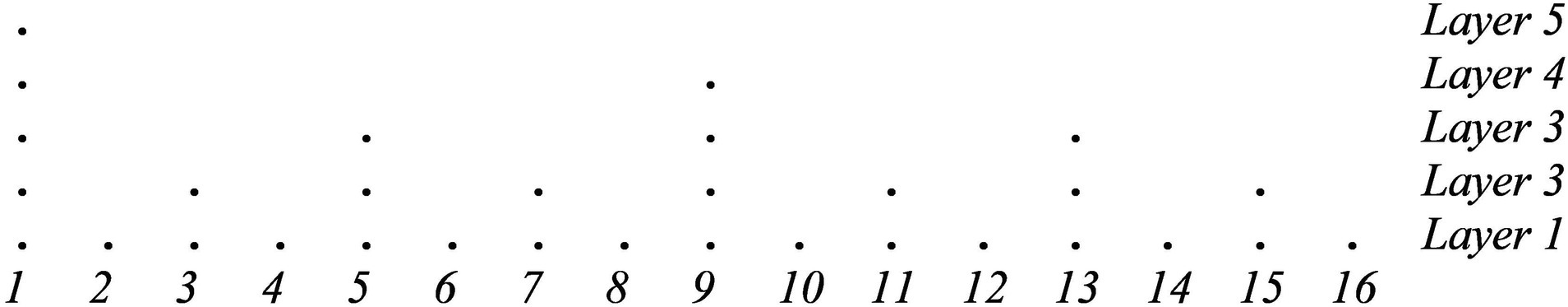

MPR 1 relies on the notion of group, which is central to the GTTM’s framework. The rule captures the intuition that a rhythm such as (2) is preferably interpreted within a metrical structure that repeats every four pulses, such as the one in Figure 2.

(2)1110111011101110

MPR 3 expresses a preference for attacks to coincide with strong pulses and rests with weak pulses, so that, for example, it is preferable to interpret (3) within the metrical structure in Figure 3A, rather than that in Figure 3B.

Figure 3. (A) Preferred metrical analysis of the rhythm 10101010 according to MPR3. (B) Less preferable metrical analysis of the rhythm 10101010 according to MPR3.

(3)10101010

An important aspect of the system of GTTM is that preference rules are not hard constraints whose violation implies some form of ungrammaticality. Some musical forms may be such that they cannot satisfy all the rules. In that case, the interpreter still relies on the rules to uncover the underlying meter by looking for the interpretation that satisfies the most rules and minimizes their violations. To see an example, the metric analysis of (4) in Figure 4A satisfies MPR 3 but violates MWFR 4. To satisfy MWFR 4 we must violate MPR 3. In this case, there are two possible analyses, reproduced in Figures 4B,C. Ultimately, the analysis in Figure 4B is preferable because it is the metric structure that produces less violations.

Figure 4. (A) Analysis of the rhythm 1010100101001010 that satisfies MPR 3 but violates MWFR 4. (B) Most optimal analysis of the rhythm 1010100101001010 that satisfies MWFR 4 but violates MPR 3. (C) Least optimal analysis of the rhythm 1010100101001010 that satisfies MWFR 4 but violates MPR 3.

(4) 1010100101001010

The question arises of why there should exist rhythms, such as (4), that violate the preference rules. We will return to this question in Section 3.

2.3.2 Dynamic attending theory

Dynamic Attending Theory (DAT) is a neurocognitive model of how temporal expectations are generated in real-time (Large and Kolen, 1994; McAuley, 1995; Large and Jones, 1999; Barnes and Jones, 2000; Jones, 2016). Central to it, is the notion of entrainment, intended, in the context of DAT, as a biological process of synchronization between external events and internal attending mechanisms. These mechanisms are assumed to have an oscillatory nature (arguably related to electrophysiological oscillations at the neural level), to have a tendency toward stability, but also to be able to adapt to expectancy violations. Another important assumption of DAT is that the attending system is able to entrain simultaneously to multiple oscillations of different temporal resolutions. Furthermore, when multiple oscillations occur together, they can resonate with each other and mutually reinforce when their peaks coincide. In this framework, meter emerges from the interaction between multiple attentional periodicities of different temporal resolutions.

To see an example, when listening to a rhythm of period t in what would be signed in western tonal notation as a 4/4 meter, the listener’s attention would be captured by periodicities of time t as well as periodicities of time t/2, t/4, t/8, and so on. The compound of these periodicities is a meter that reaches its maximal attention peak at t, a lower attention peak at t/2, an even lower attention peak at t/4, and so on, ultimately expressing an expectancy framework that is not dissimilar to that of the GTTM model (as expressed by the dot-notation in Figure 1).

In fact, the model of meter provided by DAT is not entirely incompatible with that of GTTM although there are some significant differences between the two models. GTTM focuses on the analytic properties of the final representation of meter and, therefore, does not provide a model of the actual cognitive mechanisms that support the construction of meter in real-time music perception or the real-time adaptation of a meter to a rhythm that violates it. In contrast, DAT shifts its focus to the bottom-up attentional processes that support the real-time bootstrapping of meter from the rhythmic surface and is also able to capture top-down processes such as the mechanisms of real-time detection of and adaptation to expectancy violations. Therefore, compared to GTTM, the DAT model offers a more flexible framework for explaining the dynamic interplay between rhythm and meter (Honing, 2012).

2.3.3 Predictive coding

Predictive Coding (PC) is a theory of human (and, in some of its generalizations, mammal) neurocognition grounded on Helmholz’s insight that the brain is a predictive machine whose task is generating predictive models of forthcoming events and testing them against the information gathered by the senses [see Clark (2013) for an overview]. In its most recent incarnations (Friston, 2009), PC maintains that brain networks are organized hierarchically and that the flow of information between the lower and higher layers is bidirectional. The lower layers collect sensorial information and test it against the current internal model. The prediction error that is detected is then sent upward to the higher layers, which encode the prior probabilities of the model. Depending on the magnitude of the prediction error detected, the higher levels either confirm or correct the existing model. The resulting model is then sent downward to the lower layers to be tested against the sensorial input, generating a feedback loop whose goal is that of minimizing prediction error.

As a predictive system that is hierarchically organized and allows for information about prediction errors to flow bidirectionally, PC appears particularly well suited to explain the multi-layered predictive processes of meter perception: “For meter perception, PC can explain how lower levels, e.g., events at the eighth-note level, provide metric information about the whole-note level and the salience of the downbeat (feed forward). At the same time, the whole-note level, as marked by the most salient beat, the downbeat, provides a metric framework according to which the eighth-notes at the lower level are heard (feedback). This PC way of understanding metric hierarchies emphasizes the mutual relationship between bottom-up and top-down processes.” (Vuust and Witek, 2014, p. 5; see also Vuust et al., 2009, 2018; Vuust and Witek, 2014; Gebauer et al., 2015; Lumaca et al., 2019).

The PC model of meter has obvious commonalities with both the GTTM model and the DAT model, in particular, its reliance on a multi-layered, hierarchical organization of the predictions made at different temporal resolutions. Similarly to DAT, it differs from GTTM in that it offers a dynamic model of both the bottom-up processes that support the bootstrapping of meter in real-time music perception and the top-down process of real-time adaptation to expectancy violations.

2.3.4 Linearization, antisymmetry, and structure bootstrapping in natural language syntax

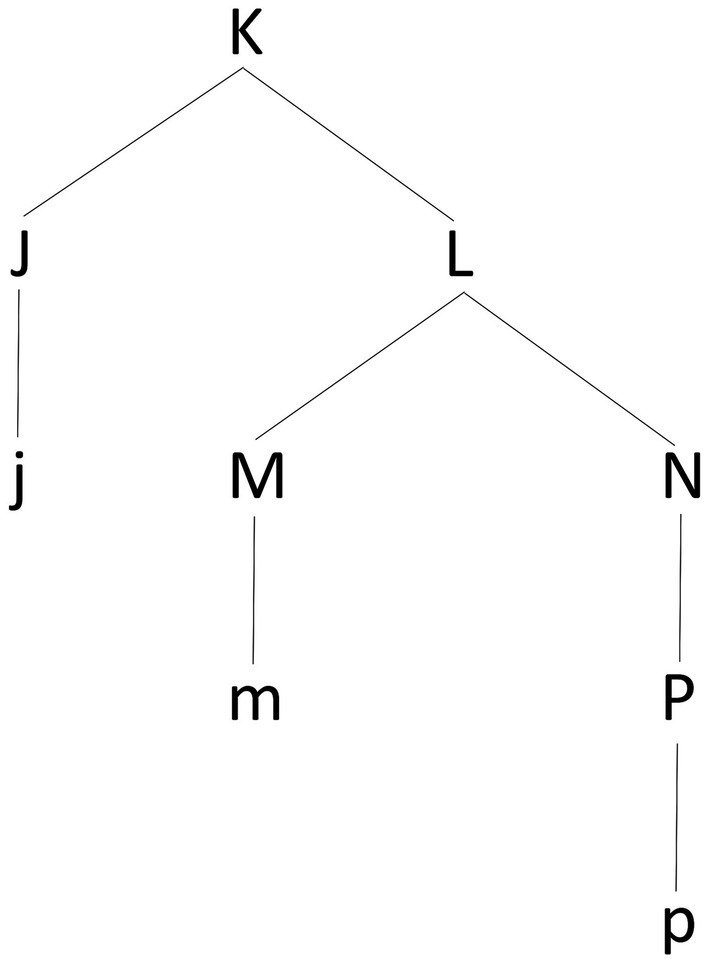

The problem of understanding the mapping between rhythm –a linear sequence of musical events – and meter – an abstract, hierarchically organized framework for the interpretation of rhythm – finds a parallel in the domain of natural language syntax with the problem of linearization – how, that is, a two-dimensional phrase-structure marker such as the one in Figure 5 is mapped into a spellable linear order. In Figure 5, the terminal nodes j, m, and p stand in a hierarchical relation of dominance. The problem of linearization concerns how these terminal nodes are ordered according to a relation of precedence/subsequence so that they can be spelled out as a linear sequence.

Figure 5. An abstract representation of a phrase-structure marker in the form of a syntactic tree. The tree has terminal nodes j, m, and p. These corresponds to the phonological exponents (terminals) of the tree structure, that is, the elements (typically words) that must be spelled-out. Conversely, the capital letters corresponds to categorial projections (non-terminals, or ‘invisible’ nodes) of these terminal nodes, expressing either their syntactic label (for example, J would be the syntactic label of j; a determiner such as “the,” for example, would be labeled as D, meaning “Determiner”) or the syntactic category resulting from the combination of two nodes (for example, a combination of a determiner and a noun, would be labeled as DP, meaning “Determiner Phrase”). According to the LCA, the linear order of the terminal nodes in a syntactic structure is read off inferred from the syntactic structure itself by translating all relations of asymmetric c-command between the nodes in the tree into relations of linear order (most typically, precedence). Applied to the phrase-structure in the figure, the LCA provides the following result. The pairs of non-terminals in a relation of asymmetric c-command are the following: <J, M>, <J, N>, <J, P>, <M, P>. This induces the following relations of precedence on the corresponding terminals: j<m, j<n, j<p, and m<p. In turn, these relations determine the string /jmp/.

The most influential hypothesis addressing this issue in the tradition of generative linguistics is Kayne’s (1994) theory of Antisymmetry and its Linear Correspondence Axiom (LCA).

Given a tree-diagram (phrase-marker), be X the set of non-terminals and d(X) the corresponding set of terminals (the members of d(X) will be the terminals dominated by each member of X; d(X) will be the image of X under d). Be now A the set of non-terminals of a particular phrase-marker such that A contains all the pairs <Xj,Yj> where Xj asymmetrically c-commands Yj (Xj commands Yj but Yj does not c-command Xj); the image under d of A will be the set d(A) of ordered pairs <aj,bj> such that aj belongs to d(Xj) and bj belongs to d(Yj): {<aj,bj>}. LCA is then defined as below:

(LCA) d(A) is a linear ordering of T (T = the set of terminals)

(DOMINANCE) Node α dominates node β iff α is in a higher position in the tree than β such that you can trace a line from α to β going only downwards.

(IMMEDIATE DOMINANCE) Node α immediately dominates node β iff α dominates β and there is no node γ such γ dominates β and α dominates γ.

(SISTERHOOD) Two nodes α and β are sisters if there is a node γ (their mother) which immediately dominates both α and β.

(C-COMMAND) α c-commands β iff

a. α and β are categories

b. no segment of α dominates β

c. every category that dominates α dominates β (May, 1985)

(ASYMMETRIC C-COMMAND) α asymmetrically c-commands β iff

a. α c-commands β and

b. β does not c-command α

Given (LCA), Antisymmetry corresponds to the requirement that the ordering of the set of terminals T in a phrase-marker P be induced by the pairwise ordering d(A). The crucial insight is that <a,b> belongs to d(A) (terminal a is ordered with respect to terminal b) if and only if the non-terminal dominating a asymmetrically c-commands the non-terminal dominating b. In this way, linear ordering is made dependent on the satisfaction of a specific structural requirement. Applied to the phrase-structure marker in Figure 5, LCA predicts the linear order j < m < p (where < symbolizes linear precedence), given that J asymmetrically c-commands both M and P and that M asymmetrically c-commands P.

Antisymmetry has a number of important consequences, such as predicting, under some additional assumptions, a restricted number of possible world orders across world languages, and has undergone a number of refinements since its original formulation (see especially Moro, 2000).

One important aspect of approaches to linearization such as Kayne’s LCA and its derivates is that they are top-down in the sense that they apply their algorithms to already existing syntactic structures in order to provide corresponding linear orders. These approaches are in line with the general wisdom that linear order does not concern syntactic structures themselves but is an interface issue, which comes into play only at the point when abstract syntactic structures must be translated into spell-out instructions that are compatible with the physiological properties of the articulatory-perceptual system, in particular the fact that we humans can only pronounce one word at a time (there are different declinations of this view, applying with different degrees of strength; for an overview see Kayne, 2022).

An alternative to this approach is the Bootstrapping Principle (BP)3 by Vender et al. (2023) and Delfitto (2023). This route from order to structure capitalizes on the main insight of so-called neo-constructionist approaches, according to which “we might characterize the bare bones of syntax in terms of the hierarchical arrangement of morphemes, the minimal building blocks of grammar” (Marantz, 2013), and more particularly on the existence of a Universal Extended Projection (UEP) of lexical categories in syntax (Grimshaw, J. 1991. Extended Projection. Ms, Brandeis University, Waltham, MA and much subsequent literature). To exemplify, consider the sequence seqN, consisting of all functional elements in the extended projection of a nominal root (as for instance the sequence Determiner-Number-Gender-Adjective-n). Each element in the sequence selects (or is selected) by the adjacent elements (for instance, Number selects Gender, and n is selected by Adjective). Intuitively, if xj selects xi, we want xj to project (for instance, if Number selects Gender within seqN, we want Number to project): xj is a new (invisible) node branching into xi and xj. This result is achieved by applying bootstrapping as defined in (BP) below, which ensures the transition from a linear array of symbols to a hierarchically organized array of symbols). More precisely, if selection has been interpreted as precedence (the selectee precedes the selector, giving rise to the sequence n-Adjective-Gender-Number-Determiner), it follows that the binary subsequence xi-xj of seqN gets mapped into the constituent xj; whereas if selection has been interpreted as subsequence (the selector precedes the selectee, giving rise to the sequence Determiner-Number-Gender-Adjective-n), it follows that the substring xj-xi should correspond to the constituent xj.

(BP)i.Given the chunk [α, β], if α precedes β, then α is contained in β (precedence)

ii.Given the chunk [α, β], if α precedes β, then α contains β (subsequence)

BP approaches linearization from the opposite perspective of Antisymmetry theory as it regards linear order as a constitutive feature for the construction of a hierarchical syntactic space. BP is in fact intended as the algorithm that maps linear order into a two-dimensional syntactic space based on the relation of dominance/containment. It provides a bottom-up bootstrapping algorithm in the sense that it builds relations of containment from relations of precedence/subsequence.4

BP is supported by behavioral experimental results obtained using an artificial grammar learning paradigm where subjects are tested with respect to their capacity to predict elements – within an abstract sequence of 0’s and 1’s produced by a Fibonacci-grammar – that cannot be predicted, demonstrably, by means of statistically-based linear computations (Vender et al., 2019, 2020, 2023). The results suggest that subjects’ anticipatory skills are based on the capacity of labeling constituents such as [01], in which 0 < 1, as 1’s, in agreement with (BP): if 0 < 1, then 1 must dominate 0, which is the case if it is 1, and not 0, that labels [01] (Vender et al., 2023).

3 Syncopation

Syncopation offers an especially interesting case-study for understanding the relationship between rhythm and meter because it is a case in which the rhythmic surface explicitly deviates from metric expectations.

There are two main facts about syncopation that, in our opinion, should be accounted for by a theory of meter and its mapping with rhythm. Firstly, although syncopation is one of many forms of rhythmic complexity (Longuet-Higgins and Lee, 1984; Fitch and Rosenfeld, 2007), when compared to the other measures of rhythmic complexity, it appears to be a most appropriate predictor of perceived rhythmic complexity; Thul and Toussaint (2008) “found that measures of syncopation outperformed other measures in explaining the behavioral data from four separate studies. The data comprised of judgments regarding perceptual, metric and performance complexity of rhythmic patterns. It was found that models of syncopation better explained the variability in these judgments, compared to for example standard deviation and entropy (i.e., the degree of uncertainty in a random sample, from an information theory perspective)” (Witek et al., 2014, p. 1). Furthermore, syncopation also appears to be the most “important structural factor in embodied and affective responses to groove” (Witek et al., 2014, p. 1).5 Syncopation is, in other words, what makes listeners experience the urge to move to the music and with other listeners and feel good. In particular, Witek et al. (2014, p. 1) report that “[w]hile entropy was found to be a poor predictor of wanting to move and pleasure, […] medium degrees of syncopation elicited the most desire to move and the most pleasure, particularly for participants who enjoy dancing to music.” In fact, the study reports an inverted U-shape relationship between the degree of syncopation of a rhythm and (ratings of) the urge to move and pleasure experienced when listening to the rhythm.6

The second, potentially related factor, is that syncopated rhythms enjoy extensive popularity across different musical idioms and cultures (Toussaint, 2013; Witek et al., 2020). The rhythm in (5), most commonly known by its Cuban name tresillo, offers a paradigmatic example (Floyd, 1999; Acquista, 2009; Biamonte, 2018; Jajoria et al., 2021).

(5)a.10010010

b.[3-3-2]

The tresillo has played an important role in research on musical rhythm, both in ethnography and musical theorizing, as is found in a large variety of musical cultures: “The durational patter [3–3-2], popular in Central Africa, is most famously known as the Cuban tresillo (tres in Spanish means three), and is widely used in the circum-Carribean. However, it forms part of almost every music tradition throughout the world, and dates back to at least thirteenth-century Baghdad, where it was called al-thakil al-thani. It is a traditional rhythm played on the banjo in bluegrass music. It was used extensively in the American rockabilly music of the 1950’s for bass, saxophone, or piano. It is sometimes referred to as the habanera rhythm or tumbao rhythm, although the term habanera usually refers to the four-onset rhythm [3-1-2-2], which is less syncopated than the tresillo since it inserts an additional attack in the middle of the cycle. The habanera rhythm is also known as the tuba francesa. If to the habanera a fifth onset is inserted at pulse five, then the resultant rhythm [3–1–1-1-2] is the bomb from Puerto Rico. On the other hand, if the third onset of the tresillo pattern is deleted, one obtains the prototypical Charleston rhythm [x.x….]” (Toussaint, 2013, p. 14–15; notice that in the quote the term onset is used to refer to what we have so far referred to as attack).

The tresillo is also at the basis of a number of other more complex rhythms such as the Bossa nova and samba rhythms. It is also the first-half of the 3:2 clave or the second of the 2:3 clave. It is also the most common rhythm shape of bass line in Latin music (called tumbao) although it is typically played while omitting the first attack. It is also a frequent rhythmic element of jazz, rock, R&B, and dance music. It is an Euclidean rhythm (Toussaint, 2005) as it represents the most even distribution of three attacks over eight pulses. It has an important counterpart in the rhythm called cinquillo (6), which is also a Euclidean rhythm representing the most even distribution of five attacks over eight pulses and which is specular to the tresillo.

(6)a.10110110

b.[2-1-2-1-2]

What is important to observe for the purposes of the present discussion is that the tresillo is commonly interpreted, in all of its various incarnations, in the context of a 4/4 meter, a fact already recognized by Jones (1959; see also Kolinski, 1973; Agawu, 2003). This means that the tresillo is a syncopated rhythm, given that its second attack occurs on a metrically weak pulse immediately preceding a rest on a metrically strong pulse.

Indeed, the tresillo is not the only syncopated rhythm. What is relevant to observe here is that one of the most popular rhythms among a vast spectrum of musical idioms is, in fact, a syncopated rhythm.

Taken together, these two observations call for a theory of syncopation that, on top of unfolding from an explanation of the structural relationship between rhythm and meter, also explains why syncopation is so effective in eliciting desire to move and pleasure and so popular across different musical cultures.

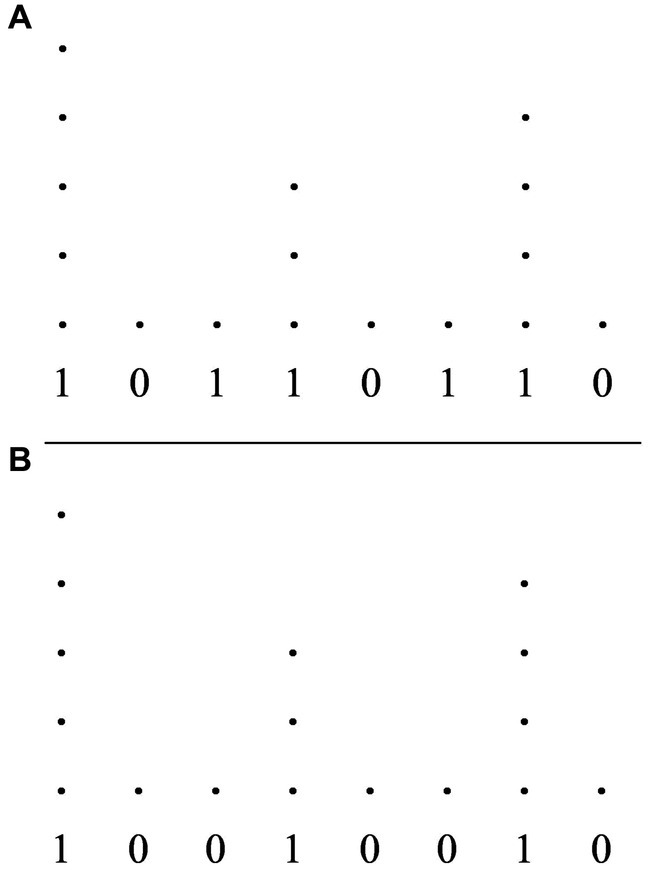

3.1 Theories of syncopation

3.1.1 Syncopation in GTTM

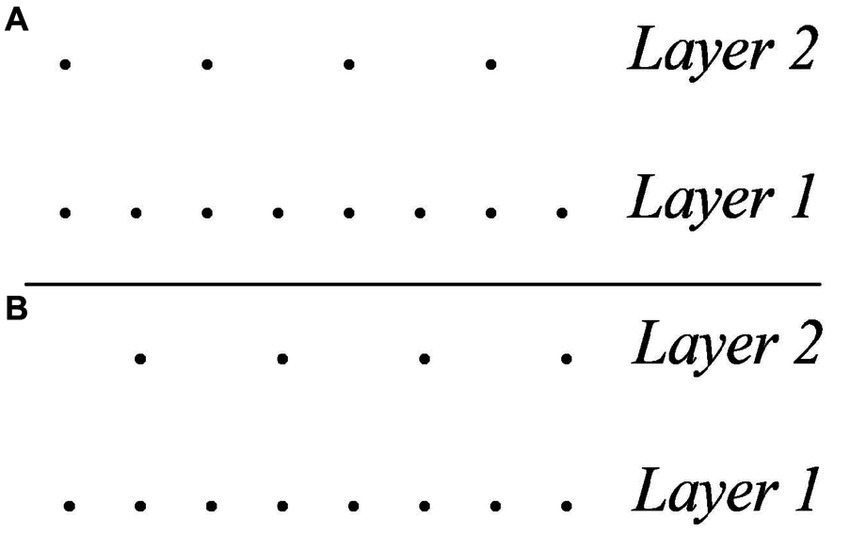

Syncopation poses a challenge to GTTM’s theory of meter. Consider again, as a paradigmatic example, the tresillo rhythm discussed above and compare the two metrical analyses in Figure 6. The analysis in Figure 6A produces a violation of MPR 3 (the second attack does not coincide with a strong pulse) as well as a violation of MPR 1 (the two parallel groups 100-100 do not receive parallel metrical structures). Conversely, the analysis in Figure 6B satisfies both MPR 1 and MPR 3 but produces a violation of MWFR 4 as now there is a metrical level that does not consists of equally spaced pulses. To be sure, both analyses are attested in actual musical practice. The meter in Figure 6B is that of a traditional Bulgarian rhythm (see, for example, Béla Bartók, Mikrokosmos, 153, Six dances in Bulgarian rhythm, n. 6). Yet, the most common metric analysis of the tresillo is the one in Figure 6A. The question, then, is why this is so and why a rhythm such as the tresillo is so popular and successful when it violates two MPR’s and is not trumped by a more optimal rhythm that does not violate them.

Figure 6. (A) Metric analysis of the tresillo rhythm that satisfies WFR 4 but violates both MPR 3 and MPR 1. (B) Metric analysis of the tresillo rhythm that satisfies MPR 1 and MPR 3 but violates WFR 4.

There is an intuition that violating the metric rules is a means to introduce tension and that doing so helps capturing and maintaining the listener’s attention (Spiech et al., 2022). This seems to be Lerdahl and Jackendoff’s view, for example, on the use of syncopation in Jazz: “The stylistic norm [of Jazz] is not simply stresses on weak beats; it consists rather of a number of strategies aimed at increasing local metrical tension. The normal preference rules do not fail to apply; in fact they are exploited as a means of creating the desired metrical tension, which results from a conflict among rules” (Lerdahl and Jackendoff, 1983, p. 279). According to Temperley (1999), the argument is implausible. In his study of syncopation in rock music, Temperely considers a number of melodies of popular rock songs featuring syncopation and observes that these melodies have very little sense of rhythmic instability or metric ambiguity, as opposed to other possible settings of the same melodies that would be more obviously compatible with the underlying meter (Temperley, 1999, p. 26). There is, in fact, some empirical evidence against the view that relates syncopation to metric tension. In a study by Keller and Schubert (2011), musicians rated pairs of unsyncopated and syncopated musical phrases for perceived complexity, enjoyment, happiness, arousal, and tension. The authors report that “overall, syncopated patterns were more enjoyed, and rated as happier, than unsyncopated patterns, while differences in perceived tension were unreliable. Complexity and arousal ratings were asymmetric by serial order, increasing when patterns moved from unsyncopated to syncopated, but not significantly changing when order was reversed. These results suggest that syncopation influences emotional valence (positively), and that while syncopated rhythms are objectively more complex than unsyncopated rhythms, this difference is more salient when complexity increases than when it decreases.” (p. 142).

In order to provide a more formal theory of the mapping between syncopated rhythmic surfaces and their underlying metric analysis within the framework of GTTMs, Temperley (1999) proposes an intermediate level of analysis between rhythm and meter. In GTTM, the musical surface of a piece represents the input of the metrical analysis:

MUSICAL SURFACE > METRICAL ANALYSIS

Temperley proposes that two levels must be distinguished within the musical surface: a ‘surface’ musical surface, henceforth simply referred to as surface structure, and a ‘deep’ musical surface, henceforth simply referred to as deep structure.

SURFACE STR > DEEP STR > METRICAL ANALYSIS

The deep structure differs from the surface structure in that all syncopated events are ‘de-syncopated’ according to the Syncopation Shifting Rule (SSR; we have adapted the rule, originally designed for melodies, to apply to rhythms)7:

(SSR)

In inferring the deep structure of a rhythm, any attack may be shifted forward by one beat at a low metrical level. The order of attacks in a line in the deep structure must be the same as their order in the surface structure.

According to this view, syncopated rhythms are surface deviations of rhythms that are not syncopated at a deeper level of representation. The idea that syncopated rhythms are the result of processes of transformation finds its roots in Schenkerian approaches to rhythm, such as Komar (1971) and Schachter (1980) and has recently found experimental and computational support in the work of Sioros and Guedes (2014).

The question remains, however, why syncopation should exist at all. Temperley (1999, p. 34–37) suggests three relevant observations: (a) syncopation allows for a variety of rhythmic surface structures from a single deep structure without endangering the stability of the underlying rhythm; (b) in the context of sung music, syncopation allows some degree of useful flexibility in fitting the melody to the rhythm of the lyrics; (c) syncopation may be exploited to suggest a latent meter that is different from the actual meter – for example, providing a three-over-two feeling – or anticipating a forthcoming change of meter – for example, from a duple to a triple meter.8

These observations are relevant in the context of syncopation in sung rock music but it is not straightforward that they extend to rhythmic syncopation more generally. To begin with, it is questionable whether Temperley’s model applies correctly to syncopation as it is featured, for example, in popular syncopated rhythms such as the tresillo. According to Temperley’s model, the surface structure of the tresillo should be the surface outcome of the deep structure in (7).

(7)a.10001010

b.[4-2-2]

This is questionable. The tresillo, as mentioned above, is a widely attested musical rhythm whose popularity extends across musical cultures and idioms. Conversely, the rhythm in (7), although a perfectly plausible and certainly attested rhythmic sequence (for example, as the second half of the cha-cha rhythm), does not enjoy the same wide-spread significance across musical cultures. This puts into question the idea that the tresillo is but a syncopated variation of an un-syncopated deep structure such as (7).

A further issue concerns the so-called habanera rhythm (8), a common variant of the tresillo which differs from it only in that it has an extra attack on pulse (8)

(8)a.10011010

b.[3-1-2-2]

The co-occurrence of an attack in pulse 4 (the syncopated position) and pulse 5 (the un-syncopated position) puts into question the idea that the syncopated attack of the tresillo is the result of the SSR rule. It is also true that the habanera has been analyzed, at least in some of its uses, as the combination of the tresillo with the so-called backbeat of the underlying 4/4 meter (Peñalosa, 2010, p. 42). This would support the view of syncopation as a strategy to enrich the surface structure of a rhythm while maintaining it grounded on a regular metric structure. We should notice, however, that it is not obvious that the notion of backbeat is entirely equivalent to that of a rhythm deep structure.

One aspect of the tresillo that confirms Temperley’s observations is its three-over-two feeling. As observed, the tresillo corresponds to the most even distribution of three attacks over eight pulses [in the terms of Toussaint (2005) it is a Euclidean rhythm] and can be regarded as a triplet quantized to fit a binary meter.9 The question remains, however, why a syncopated rhythm such as the tresillo should enjoy such popularity, as attested by its frequency across musical idioms and cultures, in contrast to its unsyncopated version. The question also remains why syncopated rhythms are so effective in eliciting bodily entrainment.

3.1.2 Syncopation in DAT

From the perspective of DAT (Large et al., 2015; Tal et al., 2017; Large et al., 2023), what renders syncopation especially interesting is that, despite being a deviation from metric expectations, it is a deviation that listeners chose not to endorse as evidence for revising their expectations (see also Velasco and Large, 2011): “Syncopation depends not only on listeners holding fast to the previously established metric context but also on their selectively construing what they hear, not as new invariants, but rather as subordinate to an established meter. Composers have long counted on (and exploited) our proclivity to maintain an established metric framework, and the force with which we will impose metric order on an uncooperative musical surface” (London, 2012, p. 16–17). London suggests that, to capture and maintain the attention of the listeners, music must challenge their metric expectations, at least to some extent, and that syncopation is one of the instruments to achieve such goal: “Music often depends on our making an effort to project and maintain an established meter in passages that involve things like syncopation and hemiola” (London, 2012, p. 25). In the framework of DAT, this effect is strictly related to the listeners’ sense of ownership and awareness of their own metric expectations: “local perturbations […] often make the listener more aware of their role in creating meter, as they must ‘feel a beat that is not there’ or otherwise have a palpable sense of the conflict between the music’s rhythms and their own metrical entrainment” (London, 2012, p. 88).

DAT, therefore, provides a framework for capturing the connection between syncopation and entrainment in the measure that syncopation affects the oscillatory nature of the attending mechanism that, according to DAT, are at the foundation of the notion of meter. The role of syncopation, according to this view, is that of awakening in the listeners a sense of awareness of their metric expectations and a sense of agency in establishing such expectations.

3.1.3 Syncopation in PC

Similarly to DAT, PC regards syncopation as the occurrence of a musical event that contradicts the meter – conceived in PC, as we saw, as a set of predictions of different, hierarchically organized temporal resolutions – although not strongly enough to undermine the meter and force a revision of its predictions (Koelsch et al., 2019; Senn et al., 2019; Sioros et al., 2022; Stupacher et al., 2022). This view is supported by significant neurological evidence (Vuust and Witek, 2014, p. 6–7). Even more interesting for the goals of the present contribution is how the PC model of meter is able to model the inverted U-shape relationship between syncopation and affective and bodily entrainment as a function of its hierarchical organization and the bidirectional flow of information across its layers: “The inverted U-shape found between degree of syncopation in groove, wanting to move, and feelings of pleasure can be seen as complying with a hierarchical perceptual system at its higher and more subjectively manifested levels. At this higher level, prediction in perception and action facilitates affective and embodied experiences. At low degrees of syncopation, there is little incongruence between the rhythm of the groove (the input) and the meter (the predicted metrical model). Thus, little prediction error is being fed forward from the lower to the higher levels, and the experiential effect is weak – there is little pleasure, and little desire to move. At high degrees of syncopation, the degree of complexity is so high, and the rhythmic input deviates from the metric framework to such an extent, that the predicted model breaks down. Affective and embodied responses are decreased since the system is in the process of ‘learning’ and adjusting its internal models. Also here there is little prediction error since the brain is unable to provide an appropriate prediction model to compare the incoming input with” (Vuust and Witek, 2014, p. 9).

Indeed, this view calls for an explanation of the correlation between the predictive nature of meter on the one hand and the mechanisms of bodily entrainment and pleasure on the other (Meyer, 1956; Huron, 2006). As for what concerns pleasure, a possible explanation contemplated by Vuust and Witek (2014, p. 10) is based on Clark’s (2013) take on PC whereby the goal of the brain is not that of minimizing prediction error but, rather, that of maximizing prediction success. In the context of this assumption, it is entirely plausible that the brain may in fact reward prediction error, particularly at lower levels, because it leads to learning – that is, to the production of more successful predictive models at higher levels. Preliminary evidence from research in rodents suggests that this effect is plausibly mediated by dopamine in the mesolimbic pathway (Gebauer et al., 2012). In particular, Schultz (2007) and Schultz et al. (2008) report dopamine release in rodents to both expected and unexpected stimuli and suggest that dopamine may play a role in ensuring “a balance between ‘explaining away’ prediction error in the short term, and maintaining an incentive to engage in novel activities (of potential high risk) leading to adaptive learning in the long term” (Vuust and Witek, 2014, p. 10).

As for what concerns desire to move that is typically associated with syncopated rhythms, a plausible hypothesis10 is that it is a consequence of the fact that syncopated rhythms present prediction violations that cannot be resolved on the basis of auditory information alone. Studies have demonstrated that in conditions of auditory ambiguity, the motor system may help disambiguating the perceptual input. In PC models, it may well be plausible to interpret the motor involvement as internal simulation (Skipper et al., 2017; Proksch et al., 2020). Bodily entrainment would operate, therefore, as a mean to disambiguate an otherwise ambiguous auditory stimulus (we will discuss this hypothesis further in Section 5).

4 Syncopation as structure bootstrapping

In this section, we present an alternative approach to syncopation whereby syncopation is a strategy of structure bootstrapping – that is, a strategy that exploits the linear order of musical events to express the presence of an underlying hierarchical organization. This view is not meant to be incompatible with the theories reviewed in the previous section. Rather, in our intentions, it discloses an aspect of syncopation that may further explain its musical function and value.

4.1 Bootstrapping temporal predictions from IOI’s

We take inspiration from the Bootstrapping Principle (BP) discussed in Section 2.3.4 to propose a cognitive model of syncopation whereby syncopation represents a strategy to express the containment of lower level temporal predictions within higher level temporal predictions on the sole basis of IOI’s.

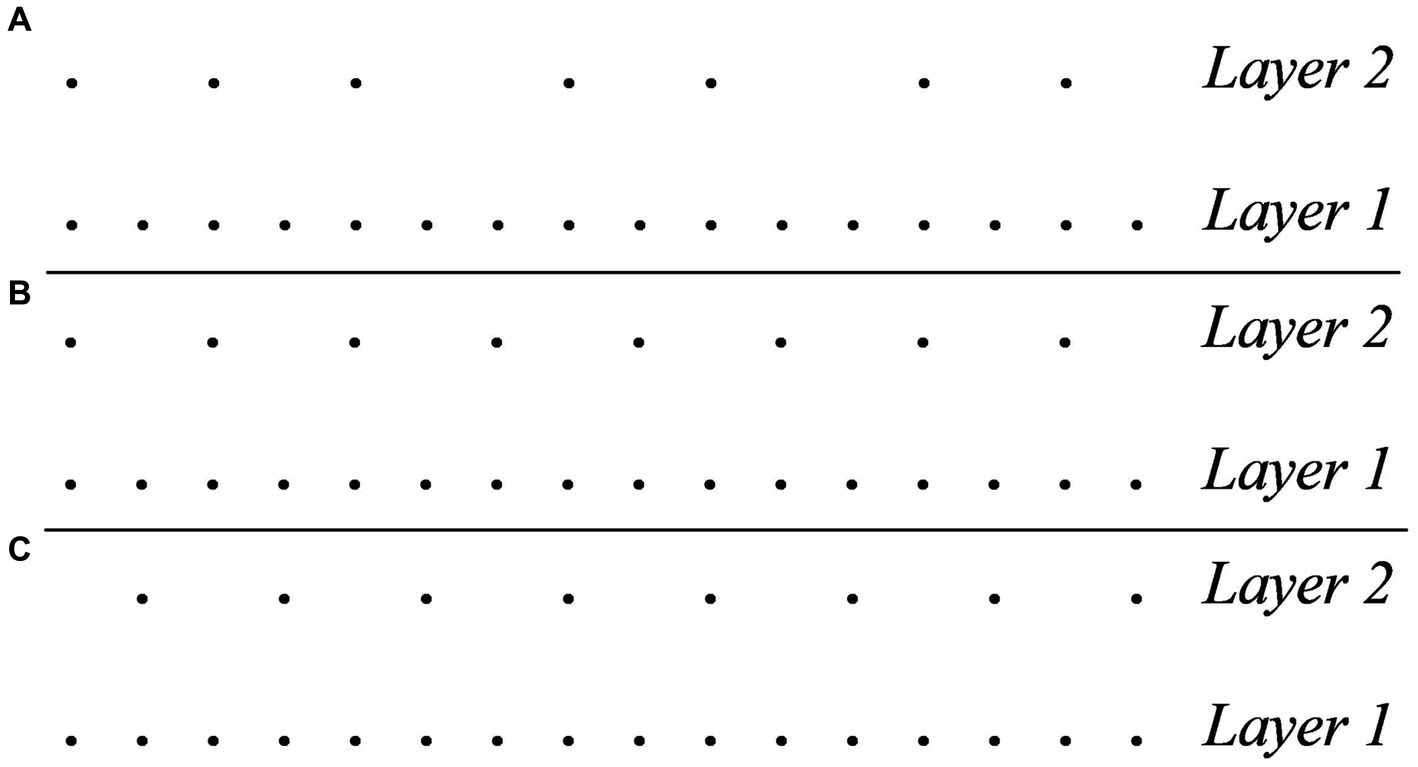

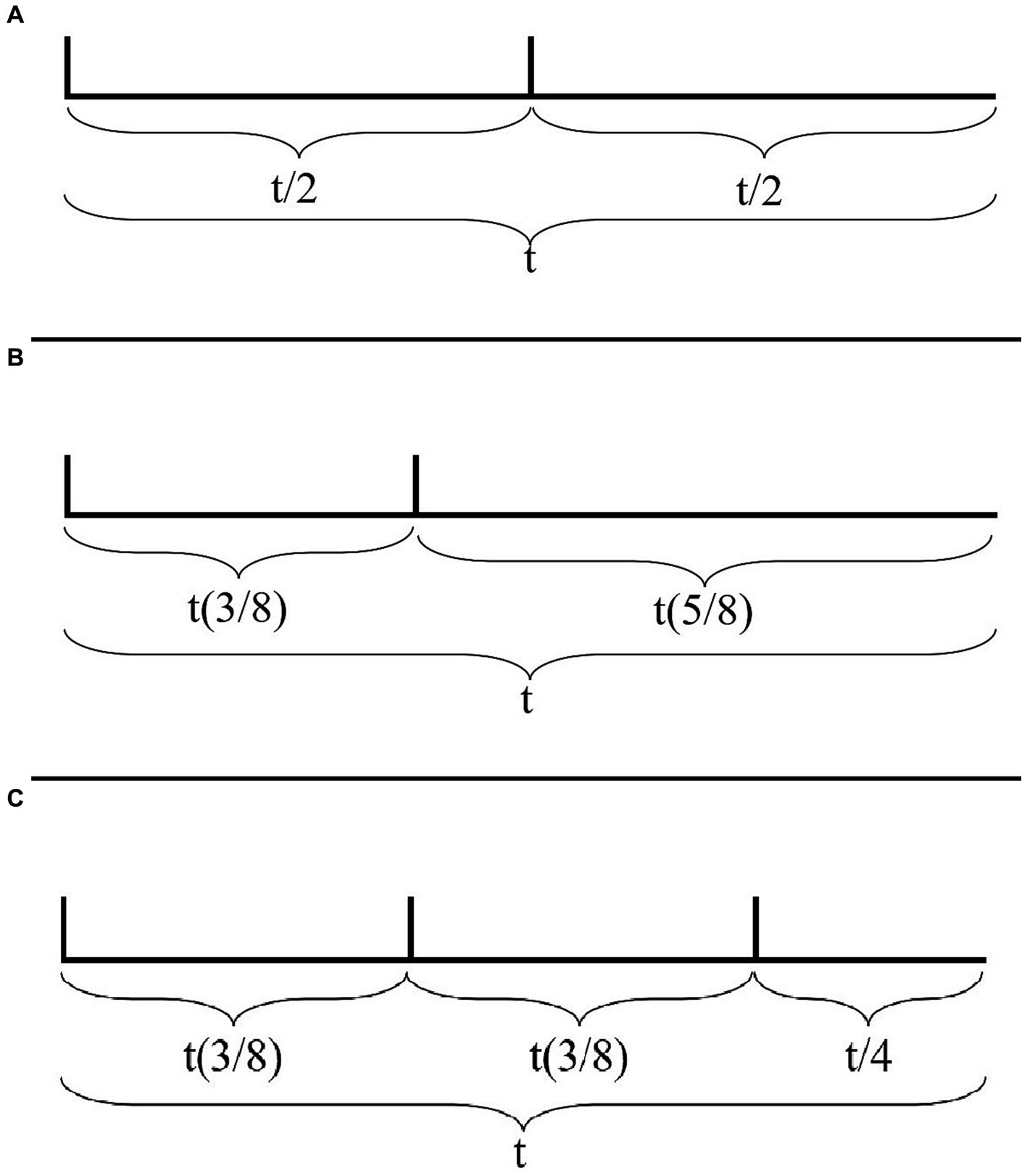

Consider the two rhythms in Figures 7A,B. These rhythms are represented solely as (onsets of) attacks on a cyclic timeline of duration t, with their IOI’s relative to t. The rhythm in Figure 7A consists of the cyclic repetition of two attacks evenly distributed within t, henceforth with IOI’s of duration t/2. The rhythm in Figure 7B (which corresponds to the Charleston rhythm) differs from that in Figure 7A in that the second attack has been anticipated by t/8.

Figure 7. (A) A rhythm represented solely as (onsets of) attacks on a cyclic timeline of duration t, with its IOI’s relative to t. The rhythm consists of the cyclic repetition of two attacks evenly distributed within t, therefore with IOI’s of duration t/2. (B) A rhythm represented solely as (onsets of) attacks on a cyclic timeline of duration t, with its IOI’s relative to t. The rhythm consists of two attacks with IOI’s of t(3/8) and t(5/8). (C) The tresillo rhythm represented solely as (onsets of) attacks on a cyclic timeline of duration t, with its IOI’s relative to t. The rhythm consists of three attacks with IOI’s of t(3/8), t(3/8), and t/4.

Consider, then, what the simplest predictive frameworks are that can be bootstrapped by the listener for the two rhythms on the sole basis of the IOI’s between the attacks. Indeed, other factors, such as timbre, pitch, or other contextual factors, may play a role in suggesting that the repetition has to be interpreted as a cycle of two attacks one of which is more prominent than the other in the intended metric organization. Furthermore, it has been demonstrated that there is a natural cognitive tendency to group isochronous consequent attacks in binary structures (Brochard et al., 2003). Once again, our goal is to evaluate how IOI’s alone contribute metric information, irrespectively of other factors.

In the case of the rhythm of Figure 7A, the listener is confronted with the regular repetition of an attack every t/2. IOI’s alone provide no evidence for any hierarchical relation between the two attacks in the sequence. Notice furthermore that the surface rhythm suggests an underlying pulsation that has a pulse every t/2 and does not suggest a pulsation that is more fine-grained than that (also in this case, a more fine-grained pulsation may be suggested by other factors but, crucially, not IOI’s alone).

Conversely, the predictive framework required by the rhythm in Figure 7B is significantly richer. To begin with, the ratio between the two attacks occurring within the cycle t demands an underlying isochronous pulsation that divides t in (at least) eight pulses.11 Furthermore, the asymmetric relation between the two attacks makes it so that any potential prediction that can be made at the lowest temporal resolution (from one attack to the next) must be discarded in favor of a prediction of a larger temporal resolution (each attack will occur every eight pulses). In this case, therefore, IOI’s alone provide sufficient information to project a metric structure that recurs every eight pulses.

Consider, then, an equivalent representation of the tresillo rhythm (Figure 7C). As the figure demonstrates, the IOI ratios of the tresillo demand an underlying isochronous pulsation of (at least) eight pulses. Notice, furthermore, that the first two IOI’s suggest a low level prediction (from one attack to the next) of t(3/8). That is, the IOI’s detected between the first three attacks provide evidence for predicting that a fourth attack will occur again after t(3/8). This low level prediction, however, is falsified by the IOI between the third and the first attacks. A coherent predictive framework can be maintained, on the face of this failure, by bootstrapping a higher level prediction that the entire cycle will repeat after an interval t.

To see this point more concretely, consider how a rhythm such as the tresillo could be implemented mechanically by using a stack of two step-sequencers, that is a mechanism (as is found in the sequencing component of many synthesizers) that sends trigger signals at regular time intervals. Suppose a step-sequencer that runs at steps of duration t/8 is made to cycle through three steps and to send a trigger signal on the first step that triggers an attack. Suppose then that a second step-sequencer, also running at steps of duration t/8, is made to cycle through eight steps and to send a trigger signal on the first step that triggers an immediate reset of the first step-sequencer. The result will be the tresillo rhythm.

As the example demonstrates, the tresillo can be conceived as a low-level prediction that an attack will occur every three pulses that is contained within a higher-level prediction of eight pulses, where containment means that the lowerlevel prediction is overruled by the higherlevel prediction.

This strategy can be pushed even further with a rhythm such as the cinquillo, which can be conceived as a low-level prediction that an attack will recur every two pulses that is contained within a higher-level prediction of three pulses that is in turn contained within an even higher prediction of eight pulses.

Importantly, both in the case of the tresillo and the cinquillo, the asymmetric distribution of their attacks within the repeating cycle provides a sufficient condition for bootstrapping not only predictive layers that go beyond the low-level predictions from one attack to the next but also relations of prominence between the attacks. In the case of the tresillo, for example, the relation of containment between the levels allows the listener to identify the first attack as the most prominent – the one, that is, where the onsets of both predictive layers coincide.

Both examples demonstrate how, by its ability to create asymmetric distributions of attacks within a rhythmic cycle, syncopation is in fact a powerful tool for informing the bootstrapping of a higher-level predictive structure on the basis of the sole IOI’s between the successive attacks occurring in the cycle.

More technically, in the context of rhythm perception, we can think of the Bootstrapping Principle (BP) as a strategy that attempts at resolving prediction errors by adopting larger chunks of IOI’s as the relevant predictor. The parser first attempts at making predictions considering single IOI’s as the relevant prior. Whenever the evidence provided by the stimulus is inconsistent with the prediction, the parser tries to make predictions by considering sequences of two IOI’s as the relevant prior. The process proceeds to larger and larger sequences of IOI’s until the prediction error is eliminated.12

To see this methodology at work, consider the simple un-syncopated rhythm in (8) and compare it with its syncopated version (9).

(8)a.1010

b.[2-2]

(9)a.1100

b.[1-3]

In the case of (8), the first evidence provided by the stimulus to the parser is an IOI of time t = 2. The prediction made is, therefore, that the following IOI will also be of time t = 2. This prediction is met and the parser has no reason to apply BP.

In the case of (9), the first evidence provided by the stimulus to the parser is an IOI of time t = 1. The prediction made is, therefore, that the following IOI will also be of time t = 1. This prediction is not met as the following IOI is of time t = 3. Given BP, the parser replaces its prior with the sequence of the preceding IOI’s, hence a sequence of one IOI of time t = 1 and a second IOI of time t = 3.

As it can be seen, the asymmetric distribution of attacks in the syncopated rhythm (9) triggers the implementation of BP and, with it, the formulation of predictions of a larger temporal resolution than that of the individual attacks and their IOI’s.

It should be observed that the strategy devised in this section has a broader application than that of syncopation as we have defined it so far. As we saw, syncopation is generally defined as the occurrence of a musical event on a metrically weak position preceding a rest on a metrically strong position (Huron, 2006). However, the strategy we have presented in this section extend also to cases of so-called fourth-position syncopation or anticipation (Temperley, 2021) such as (10).

(10)Fourth-position syncopation (anticipation)

a.1001

b.[3-1]

4.2 Accounting for the musical properties of syncopation: meter as a model of bodily entrainment

Based on the observations above, in this section we advance the proposal that syncopation is such a powerful rhythmic device across musical cultures (Toussaint, 2013; Witek et al., 2020) and so successful in eliciting the urge to move precisely because of its ability to inform the bootstrapping of hierarchically richer metric structures.

This proposal involves a revision of the more traditional understanding of the notions of syncopation and, more generally, meter. As we saw, syncopation is canonically defined as the occurrence of a musical event on a metrically weak pulse preceding (or following, if we also consider fourth-position syncopation) a rest on a metrically strong pulse. This definition offers an understanding of syncopation that is top-down and negative. Top-down in the sense that it defines syncopation in terms of the distribution of a musical event within an established metric framework, whose role is constraining (or predicting) the occurrence of musical events. Negative in as much as it defines syncopation in terms of a violation of the constraints (or predictions) of an established metric framework. Once syncopation is defined in such a top-down negative manner, it becomes inevitably difficult to account for its productive role in eliciting bodily entrainment and popularity across musical idioms. In fact, it becomes difficult even to explain why syncopation should exist at all.

In contrast, we propose a view of syncopation that emphasizes its bottom-up and constructive dimensions: Bottom-up in the sense that it understands syncopation in terms of how the linear distribution of IOI’s in a rhythm supports the inference of metric information, rather than the other way around; constructive because it regards syncopation as a source of metric information rather than a violation of it.

A major implication of this perspective is that it is incompatible with the view that meter is an model of rhythm – that is, a set of constraints or predictions governing the occurrence in time of musical events. Rather, if we are correct, meter is a model of temporal regularities that is informed by musical rhythm. That is, it is not meter that governs the linear shape of rhythm but the linear shape of rhythm that informs meter.

Indeed, this raises the question of what meter is a model of if it is not a model of musical rhythm. We propose that meter is, in fact, a model of bodily entrainment – a model of recurring regularities that listeners extrapolate from the musical surface in order to entrain motorically with it. Syncopation is, henceforth, a trigger for the extrapolation of such regularities because of its capacity to elicit the application of BP.

The significance of the connection between rhythm and movement in music perception has been studied since Fraisse (1963, 1978, 1981, 1984; see Clarke (1999) for an overview) and has represented an important source of evidence for embodied theories of musical cognition – such as Borgo (2005), Clarke (2005), Cox (2016), De Souza (2017), and Leman (2007). In recent years, a number of neuro-functional studies have demonstrated the association between meter perception and the activation of motor areas even in the absence of any overt movement (Schubotz et al., 2000; Chen et al., 2006; Grahn and Brett, 2007; Zatorre et al., 2007; Chen et al., 2008; Bengtsson et al., 2009; Grahn, 2009; Grahn and Rowe, 2009, 2012). In a study of the neural response to complex rhythms, Chapin et al. (2010) found that selective attention to syncopated rhythms led to increased BOLD responses in “motor areas including left SMA, right basal ganglia (caudate, globus pallidus, extending into nucleus accumbens), and left postcentral gyrus (BA 3)” (p. 7) – although such activity was observed only after three repetitions of the rhythms. These results find an immediate explanation within the framework we propose: The activation of motor areas is expected given the hypothesis that meter is a model of bodily entrainment, rather than a model of rhythm perception; the fact that three repetitions of a syncopated rhythm are necessary for the activation of motor areas is expected given the proposed bootstrapping mechanism, which requires the detection of cyclicities of a higher structural level, and the fact that a model of bodily entrainment is available only once these higher-level cyclicities have been detected and sufficiently confirmed.

Fitch (2016) reviews a number of biological reasons, both neurological and ecological, that movement and dance are central factors of musical rhythm and argues that “important rhythmic aspects of […] music, especially meter and syncopation, cannot be properly understood without reference to movement and dance, and that the persistent tendency of ‘art music’ to divorce itself from motion and dance is a regrettable phenomenon to be resisted by both audiences and theorists” (p. 1–2). In our framework, the connection between rhythm and movement follows from the hypothesis that meter is a model of bodily entrainment informed by the musical surface. The special role played by syncopation in this relationship has to do with its ability to inform structurally richer meters – that is, structurally richer models of bodily entrainment. In a sense, if the role of rhythm is to induce cyclic movement, the role of syncopation is to induce more complex cyclic movement – movement, that is, that has a richer inner organization.

Especially informative, in this respect, is a study by Naveda and Leman (2009) of samba music and dance. The study is grounded on the hypothesis that music and dance ought to be regarded a holistic phenomenon in samba culture (Sodré, 1979; Browning, 1995) and the observation that the distinctly syncopated rhythms of samba music, which characteristically include rhythmic forms such as the tresillo and the cinquillo (Sandroni, 2001), are pivotal to eliciting (a desire for) movement in the listeners – what Browning (1995, p.9) calls the ‘hunger’ for movement. To test the hypothesis, Nevada and Leman develop a method to investigate periodicities in both samba music and dance and apply it to a set of samba dance performances and the music over which they were performed. Their cross-modal approach is based on the Periodicity Transform (PT) method developed by Sethares and Staley (1999, 2001), which is an analytic method that searches for periodic events in a signal on the basis of a predefined heuristic. An innovative aspect of Nevada and Leman’s approach is that they use, as heuristic for the PT analysis of both music and dance, the musical meter of samba music. This is a binary meter, with accentuation on the second beat (Salazar, 1991; Chasteen, 1996; Mariani, 1998; Sandroni, 2001). The surprising result of their analysis is that “the binary metre of samba music, which is strongly recognized within musicology, is much more evident in the results of metrical content of dance than in the apparent ambiguous metre of the auditory stream” (p. 271). In fact, the analysis demonstrates how the highly syncopated rhythmic forms of the music fail to a significant extent to meet the periodicities of the assumed meter whereas, in contrast, the same metric periodicities are evident in the dancers’ movements. The authors take these results to suggest that “perception of samba may be movement-based in the sense that through self-movement (of the dancer in response to music) musical patterns get rhythmically disambiguated” (p. 272) and that this process of disambiguation “may well be the effect of the biomechanics of the human body, which imposes a certain motor structure onto an ambiguous auditory stimulus” (279). This line of reasoning can be taken even further under the view proposed here that meter is not a model of rhythm but a model of bodily entrainment. If we abandon the view that the role of meter is that of governing/predicting the occurrence of musical events and, instead, maintain that it is the role of musical events to induce meter as a model of bodily entrainment, then it is less surprising to find that meter in a highly syncopated musical form such as samba is more evident in its embodiment than it is in its musical surface.

The view of syncopation proposed here makes it legitimate to ground meter on principles belonging to the biomechanics of the human body. Consider again the example of the tresillo. As pointed out, the tresillo is typically understood in the context of a binary meter. To explain this in our model, it is sufficient to supplement the bootstrapping strategy suggested in the previous section with a tendency, belonging to the realm of motor entrainment rather than that of musical rhythm, toward entraining motorically to isochronous cyclicities. From the perspective of the bottom-up bootstrapping procedure adopted, realizing a cyclicity that is divided isochronously requires first identifying a predictive cycle that is not contradicted by a higher-level prediction – what London (2012) calls the N-cycle. In the case of the tresillo the lowest predictive level that is not contradicted by a higher-level prediction is the cycle of duration t in Figure 7C. As we saw, the syncopated distribution of the attacks in this cycle demands a division of the cycle of length t into eight pulses. Hence, if a principle of isochronous bodily entrainment is to apply successfully, it must divide the cycle binarily (a pulsation of eight pulses can be divided by two but not by three). According to this view, the meter associated to the tresillo is a model of how to entrain with it that is further optimized for isochronous bodily entrainment.13

Ultimately, according to our proposal, syncopated rhythms do not violate metric expectations. The mismatch between rhythm and meter is rather a consequence of the fact that the processes that determine the model of bodily entrainment within the N-cycle of a rhythm, which have to do with the biomechanics of human movement, may be distinct from the process that supports the bootstrapping of the relevant N-cycle from the musical surface of the rhythm.

5 Discussion

As anticipated, our proposal is not to be regarded as incompatible with existing accounts of syncopation. On the contrary, we believe that it provides further support to frameworks such as PC and DAT and, in fact, may further substantiate some of their insights. In particular, if our hypothesis is correct, it supports the view that syncopation, while causing the breakdown of low-level predictions, is nonetheless appreciated by the listeners because of its capacity to provide evidence for the bootstrapping of predictions of higher temporal resolution. It is indeed a type of low-level prediction error that promotes the learning of higher-level generalizations and, with it, the induction of richer predictive models.14

Our framework differs from the other models of meter and syncopation, particularly GTTM, in that it places its emphasis on the bottom-up relation between rhythm and meter and rejects the view of meter as governing (or making predictions about) rhythm. As we saw, according to our proposal, meter does not constraint rhythm but guides bodily entrainment. Importantly, this view does not exclude the occurrence of top-down processes that limit the range of the possible rhythms that can occur in the context of an established meter. However, it does substantially limit the scope of what is to be regarded as a rhythm that violates an established meter. More precisely, according to our proposal, a rhythm can be said to violate an established meter only when it contradicts the bootstrapping process that has produced the established meter and, as a consequence, requires a revision of the model of motor entrainment adopted.15 This flexibility may be of help in capturing Temperley’s (1999, p. 34–35) observation that, in the context of rock music, syncopation is often exploited by performers and composers as a tool to provide rhythmic variety within a stable metric context. It is also important to observe that, although our proposal rejects the view of meter as making predictions about the occurrence of musical event, it does not mean that meter is not a predictive model in other respects. It is, as we saw, a model of bodily entrainment. Hence, it makes predictions about how to entrain motorically with a rhythm. This view is confirmed by phenomena such as the negative mean asynchrony (NMA), a phenomena observed by a number of studies whereby listeners who are asked to tap their finger to a rhythm tend to anticipate musical events with their movements (see Repp and Su, 2013, for an overview).

Our framework also differs from the other accounts in the way it explains why syncopated rhythms are so popular and effective in eliciting bodily entrainment. It differs from GTTM in that it does not regard syncopation as a source of tension but, rather, as a source of metric information and it differs from DAT in that it does not rely on the sense of agency of interpreter. As we saw, a plausible hypothesis in the context of PC is that bodily entrainment is a strategy to disambiguate otherwise ambiguous syncopated rhythms. In our view, this hypothesis encounters three limitations.

The first is that the activation of motor areas while attending to rhythmic sequences is not an exclusive effect of syncopated rhythms. Listening to all rhythms, including non-syncopated ones, activates motor areas. For example, Grahn and Rowe (2012) find that even “perception of a regular pulse, or beat, within a sequence of temporal intervals is associated with basal ganglia activity.” What is characteristic of syncopated rhythms is that they have a significantly more pronounced effect on motor areas. The hypothesis that bodily entrainment is a disambiguation strategy for ambiguous rhythms would not explain why bodily entrainment is detected, at least neurologically, with non-ambiguous rhythms.

A second issue concerns the status of syncopated rhythms as ambiguous rhythms. In particular, it is not clear that the distinction between metrically ambiguous and metrically unambiguous rhythms overlaps with that between syncopated and non-syncopated rhythms. On the one hand, there are non-syncopated rhythms that are decisively ambiguous. A simple isochronous sequence of identical attacks is compatible with a number of metric analyses. On the other hand, there are syncopated rhythms that are not ambiguous. As we saw, when we focus on the exact process of how predictions are bootstrapped from the surface of the tresillo rhythm, we find that the asymmetric distribution of attacks in the rhythm lends itself to an optimal analysis whereby a prediction that an attack will occur every three pulses is overruled by a prediction, of larger temporal resolution, that the embedded sequence will repeat every eight pulses. While it is possible to conceive of alternative analyses (based for example on different rotations of the rhythm), they are not as optimal and simple as this one. From this perspective, an isochronous sequence of identical attacks is, in fact, more ambiguous than a syncopated one in the sense that it imposes less of a constraint on the set of possible metric analyses. Conversely, syncopated rhythms are more restrictive in delimiting their analytic space precisely in virtue of the asymmetric distribution of their attacks. Also relevant to this issue, are the arguments presented by Temperley (1999) against the view suggested by Lerdahl and Jackendoff’s (1983) that syncopation is a means for introducing metric ambiguity and, with it, tension. As discussed in Section 3.1.1, Temperley presents evidence that syncopated phrases in rock music are not perceived as ambiguous or as inducing a sense of tension (see also Keller and Schubert, 2011).

The third issue concerns the study of Chapin et al. (2010) who found an increased BOLD responses in the basal ganglia only after three repetitions of a syncopated rhythm. If bodily entrainment was a disambiguating strategy, we would expect that motor areas would engage when the ambiguity was detected, that is, as soon as an event would contradict the current model, and disengage when the ambiguity was resolved, that, is as soon as the model would be updated as to encompass the unaccounted event. Conversely, our framework predicts that motor areas engage as soon as a meter has been bootstrapped from the rhythm.

The main difference between our framework and PC is thus that for PC bodily entrainment is an instrument to compensate a metric ambiguity whereas, according to our proposal, it is syncopation that is an instrument to enhance bodily entrainment by lending itself to providing a richer cyclic temporal organization. As said, we find our view to be otherwise entirely compatible, and, in fact, reliant upon PC’s notion of a bidirectional predictive system that has a hierarchical structure and its analysis of syncopation as a low-level prediction error that informs higher levels. In this respect, our model specifies some inherent features of the PC model that have so far remained underspecified – more particularly, the mechanisms that mediate the exchange of predictive potential between the hierarchical levels and the role that syncopation plays in them.

It is finally important to stress once more that the framework we have proposed offers a decisively limited account of syncopation as a broader musical phenomenon. As already mentioned, our approach focuses exclusively on rhythm in terms of IOI’s and disregards at least three classes of factors that are central to a broader understanding of syncopation. The first concerns factors of musical context. In the reality of musical practice, rhythms are not only IOI’s but are produced by instruments with all sorts of timbral, melodic, and harmonic properties. These factors play a decisive role in syncopation, as attested, for example, by Temperley’s (1999) discussion of how syncopation in the lyrics of rock music is significantly affected by the phonological and prosodic properties of the words that are sang. Furthermore, rhythms are rarely performed in isolation. In poly-instrumental performances, different instruments play different, interrelated rhythms and provide together a rhythmic texture rather than a single rhythmic figure. In cases such as these, one instrument may use the support of the metric context provided by other instruments to play highly syncopated rhythmic figures.

The second class of factors has to do with cultural context. As stressed in the literature (Jones, 1990; London, 1990), factors related to the specificity of a musical idiom play a crucial role in guiding the listener’s perception of the meter underlying a rhythmic surface. Huron and Ommen (2006), for example, detect an increase in the amount of syncopation in American popular music between 1890 and 1939. Similarly, Kirlin (2020) demonstrates that syncopated patterns in rag-time music increment significantly from the early days of the musical style (1890’s) to its later days (1920’s). Arguably, as the metric conventions of these styles became more culturally ingrained, composers were able to enjoy more rhythmic freedom.

The third factor concerns the phenomenon of subjective rhythmization (Bolton, 1894; Fraisse, 1982), “whereby sounds of a monotone metronome sequence are experienced as having different intensity and that these intensity differences follow a regular pattern. In other words, despite the sounds having objectively equal amplitude, they are perceived as subjectively different” (Bååth, 2015, p. 244). One possibility to integrate these factors into the proposed framework is to admit that the bottom-up strategy based on BP co-exist with top-down constraints of either contextual, cultural, or cognitive origin. A more appealing possibility from the perspective we have been advocating for consists in reformulating these factors as themselves bottom-up strategies of structure projection that may co-exist and possibly compete with BP.16 More technically, this would amount to expressing these factors as strategies affecting the predictive priors of the parsing mechanism.

While we leave a full-fledged development of this hypothesis to future research, we briefly speculate on how this perspective may be applied to the phenomenon of subjective rhythmization. The phenomenon can be accounted for by a propensity on the part of listeners, whose source may be intrinsically cognitive or cultural, to project hierarchical structure at all costs, that is, even in absence of evidence in the surface rhythmic structure for doing so. In turn, this propensity, may be formalized in terms of a bootstrapping mechanism that allows assuming larger sequences of IOI’s as a predictive prior provided doing so does not produce prediction errors.

5.1 Some considerations on the relationship between music and language

We conclude by discussing our proposal in the broader context of the relationship between language and music.