ORIGINAL RESEARCH article

Front. Psychol., 17 August 2023

Sec. Personality and Social Psychology

Volume 14 - 2023 | https://doi.org/10.3389/fpsyg.2023.1221817

Michelle R. Persich Durham1‡, Ryan Smith1,2†, Sara Cloonan1, Lindsey L. Hildebrand1, Rebecca Woods-Lubert1, Jeff Skalamera3, Sarah M. Berryhill1, Karen L. Weihs1, Richard D. Lane1, John J. B. Allen4, Natalie S. Dailey1, Anna Alkozei1, John R. Vanuk1, William D. S. Killgore1*

Introduction: Emotional intelligence (EI) is associated with a range of positive health, wellbeing, and behavioral outcomes. The present article describes the development and validation of an online training program for increasing EI abilities in adults. The training program was based on theoretical models of emotional functioning and empirical literature on successful approaches for training socioemotional skills and resilience.

Methods: After an initial design, programming, and refinement process, the completed online program was tested for efficacy in a sample of 326 participants (72% female) from the general population. Participants were randomly assigned to complete either the EI training program (n = 168) or a matched placebo control training program (n = 158). Each program involved 10-12 hours of engaging online content and was completed during either a 1-week (n = 175) or 3-week (n = 151) period.

Results: Participants who completed the EI training program showed increased scores from pre- to post-training on standard self-report (i.e., trait) measures of EI (relative to placebo), indicating self-perceived improvements in recognizing emotions, understanding emotions, and managing the emotions of others. Moreover, those in the EI training also showed increased scores in standard performance-based (i.e., ability) EI measures, demonstrating an increased ability to strategically use and manage emotions relative to placebo. Improvements to performance measures also remained significantly higher than baseline when measured six months after completing the training. The training was also well-received and described as helpful and engaging.

Discussion: Following a rigorous iterative development process, we created a comprehensive and empirically based online training program that is well-received and engaging. The program reliably improves both trait and ability EI outcomes and gains are sustained up to six months post-training. This program could provide an easy and scalable method for building emotional intelligence in a variety of settings.

Emotions figure prominently in everyday life, yet people differ in their ability to perceive, process, regulate, and utilize emotions in an adaptive manner (Mayer et al., 2000). This individual difference in emotional abilities is captured by the construct of emotional intelligence (EI). There is strong evidence to suggest that EI plays a critical role in desirable social–emotional skills (e.g., leadership, resilience), and is beneficial in several major life domains (Grewal and Salovey, 2006). Due to the positive outcomes associated with EI and the popularity of the construct, much attention has been given to the question of whether EI can be increased through training (Schutte et al., 2013). This question has led to the creation of many EI interventions, particularly in educational and organizational settings. Overall, the evidence from such interventions suggests that EI can be trained, with several meta-analyses reporting moderate increases in EI following intervention (Kotsou et al., 2019; Mattingly and Kraiger, 2019) that also tend to persist across time (Hozdic et al., 2018).

Despite the relative success of previous intervention work, there remains a need for strong, empirically based EI interventions, and open questions about the content, design, and administration of such interventions still need to be addressed (Schutte et al., 2013). For instance, differences in theoretical approaches to EI and the particular skills targeted in interventions may impact training outcomes (Mattingly and Kraiger, 2019). In addition, there are various components of EI interventions that require further research, such as online vs. in-person training. Finally, there have been concerns regarding the research design of previous EI intervention studies, including the pervasiveness of small sample sizes and the lack of randomized control designs, which make intervention effects difficult to interpret (Kotsou et al., 2019). Here, we describe the development and validation of an empirically based EI intervention that follows recommended practices (Hozdic et al., 2018) and addresses understudied areas in the EI intervention literature.

A major source of debate and confusion in the EI literature has revolved around how to best conceptualize and measure EI (Roberts et al., 2010). The fundamental contrast is between models that conceptualize EI as an ability and models that conceptualize EI as a trait. The ability-based model defines EI in terms of specific cognitive-emotional skills and measures EI using performance-based metrics that are compared to normative responses (Mayer et al., 2001). The most widely utilized ability-based approach is Mayer & Salovey’s four-branch model of EI (Mayer and Salovey, 1997), which views EI as the ability to (a) accurately perceive emotion, (b) use emotion to facilitate thought, (c) understand emotion, and (d) manage one’s own and others’ emotions. In contrast, the trait-based model defines EI more broadly as a collection of dispositional tendencies and self-perceived abilities (Petrides and Furnham, 2001). The trait-based approach typically utilizes self-report assessments and often includes personality-like characteristics (e.g., empathy, self-esteem, self-motivation) that may fall outside of traditional definitions of performance ability or intelligence (Roberts et al., 2010).

Despite the theoretical concerns and differences between the approaches, meta-analyses show that both trait EI and ability EI tend to increase following focused training interventions (Hozdic et al., 2018; Kotsou et al., 2019; Mattingly and Kraiger, 2019). However, many intervention studies include only ability-based or only trait-based EI measures as outcomes, making it difficult to make systematic comparisons between the different conceptualizations of EI. This is important because some have noted that the two approaches may be distinct and complementary (Petrides et al., 2007) and that the interpretation of an intervention’s success may depend on the measures used (Mattingly and Kraiger, 2019). Accordingly, it would be useful to systematically assess changes to both ability- and trait-based EI following an EI training intervention (Schutte et al., 2013). Therefore, the present study was designed to assess the effectiveness of a novel EI training program with respect to both theoretical models.

When creating an EI intervention, careful consideration should be given as to what emotional skills should be targeted and what content should be included in the training. There is a large EI literature from which to draw content when developing an intervention. In addition, there are largely independent but conceptually related traditions such as emotion regulation (Peña-Sarrionandia et al., 2015), mindfulness (Brown and Ryan, 2003), social competence (Halberstadt et al., 2001), and clinical intervention (Barlow et al., 2011) that can supplement and reinforce EI abilities.

Previous training programs have differed widely in the EI content included, and the information chosen likely depends on the target population, purpose, and theoretical approach of the intervention. These previous interventions have taken different approaches to designing intervention content, including comprehensively training a collection of emotional skills (Nelis et al., 2009), focusing solely on single emotional abilities (Herpertz et al., 2016), and targeting areas tangentially related to EI (e.g., leadership; Kruml and Yockey, 2011). Evidence from social–emotional skill interventions suggests that multimodal trainings tend to produce better short-term and long-term outcomes compared to interventions that focus on training a single skill (Beelman et al., 1994). In addition, training programs that are specifically developed to comprehensively target EI appear to be more effective at increasing overall EI than those that only include elements of EI (Kotsou et al., 2019).

The application of computer technology and online training approaches continues to evolve rapidly and online training is becoming widely accepted and utilized in many settings. To date, the effectiveness of online EI interventions is fairly understudied, although one investigation found that a hybrid EI intervention was just as effective as traditional face-to-face administration (Kruml and Yockey, 2011). Our own preliminary work suggested that it was possible to improve EI ability using an online program to train specific emotional skills (Alkozei et al., 2019). The ability to provide online alternatives to in-person training should make such interventions more widely accessible and feasible to conduct and would be particularly useful for large-scale training, such as for the military. In addition, an online training program may provide opportunities to include components that increase learning and engagement, such as customized tailoring based on an individual’s responses, interactive scenarios and activities, immediate and personalized feedback to help guide an individual toward achieving program goals, and personal summaries of progress to assist with self-awareness and self-reflection.

A second understudied area in the EI intervention literature is the role of the timing of the training content. Research on learning and retention shows that distributing practice over a longer period tends to be more effective for the long-term retention of information than compressing practice into a shorter timeframe (Cepeda et al., 2006). However, only one study has compared different training schedules of an EI intervention. Kruml and Yockey (2011) compared a 7- and a 16-week leadership class and found no differences in the effect of the scheduling of content distribution on EI skills. If there are no significant benefits related to different training distribution, interventions may be more appropriately designed according to timeframes that maximize recruitment and retention (Coday et al., 2005).

Finally, it is worth noting some of the limitations of previously developed interventions. Although a considerable number of EI interventions exist, many have critical flaws (Kotsou et al., 2019). EI interventions often use small sample sizes that are likely underpowered to detect the effect sizes typically reported in meta-analyses, and may artificially inflate the effects (Sterne et al., 2000). Many interventions also do not include an active control group for comparison, raising potential concerns about placebo, group, and/or test–retest effects driving improvements to EI (Kotsou et al., 2019). There is, therefore, a need for adequately powered EI interventions in which participants are randomly assigned to the intervention or an active-control condition.

In light of the conceptual questions still to be answered and the limitations of previous EI intervention work, the objective of the present study was to develop, refine, and validate a comprehensive online training program to enhance EI. Critically, the lesson content was thoroughly grounded in empirically based research on EI and related emotional skills (e.g., emotion regulation), and the training modules were developed and refined through an extensive multiple-iteration process. Once finalized, the intervention was tested against a closely matched placebo control program using a randomized control group design in a large sample of healthy adults. Our primary hypothesis was that individuals assigned to the EI training program would show improvements to both ability- and trait-based EI scores relative to those completing a similarly engaging active control training program. As a secondary hypothesis, we tested whether administering the program in a distributed manner (over 3 weeks) would increase the learning and retention of the material relative to a compressed administration of the same content (over 1 week).

In the subsequent sections, we describe the development and refinement process and the methods for program validation.

Conceptual Development. The goal of this phase of the project was to develop a training intervention that was grounded in prior research on EI, emotion theory, and skill development. To that end, a panel of experts on emotion theory and clinical psychology (WK, KW, RL, and JA) was assembled to provide input regarding potential ways to improve upon an early pilot version of the program that was described elsewhere (Alkozei et al., 2019). This re-conceptualization and refinement process resulted in a comprehensive program design that covered seven major training domains: (1) Foundational Knowledge of Emotions, (2) Knowing One’s Own Emotions, (3) Motivation, (4) Managing Emotions, (5) Knowing Others’ Emotions, (6) Managing Others’ Emotions, and (7) Empathy. Detailed descriptions of the goals and objectives of the emotional intelligence training (EIT) program are provided in Table 1. As shown in the Supplementary material, the refined program was extensively based on the model underlying the Mayer-Salovey-Caruso Emotional Intelligence Test (MSCEIT), but this revised and significantly expanded version also incorporated additional concepts from contemporary emotion theory and research, such as emotion regulation, mindfulness, social intelligence, and countering cognitive distortions. It also provided an overarching evidence-based framework for understanding the causes and effects of emotions, with accompanying graphics and interactive activities.

Content Development and Programming. The training intervention was designed to be self-paced and involved a wide variety of interactive game-like activities and simulations to develop a broad range of emotional and social abilities that addressed the seven conceptual domains described above. Working closely with a professional educational software development company, we designed and programmed 13 training modules comprising 4 introductory lessons, 5 “core skills” training modules, and 4 extended practice and integration modules. The Supplementary material provides detailed descriptions and example screenshots of each module. The web-based interface allowed for the inclusion of tailored feedback, interactive scenarios, game-like activities, writing prompts, and other elements to foster active involvement and improved retention of material (Ryan and Lauver, 2002; Chi and Wylie, 2014). All materials in the programs were screened by a doctoral-level certified clinical speech-language pathologist (NSD) to ensure that all information was presented at no higher than an eighth-grade reading level. The final program interface was organized into three progressive tiers of training comprising approximately 10–12 h of training content and activities to be completed at a self-directed pace over several days or weeks. The first tier focused on introducing the concept of emotional intelligence and the basics of emotional processes, as informed by leading theories of emotion such as constructivist (Barrett, 2017), functionalist (Lench et al., 2015), and appraisal theories (Moors et al., 2013). The second tier focused on teaching specific skills related to emotional intelligence, as also informed by evidence-based psychotherapeutic approaches for training emotional awareness and emotion regulation skills (Barlow et al., 2011). Finally, the third tier focused on providing opportunities for practice and self-exploration. 
Detailed descriptions of the individual modules are presented in the Supplementary material.

In conjunction with the development of the primary EIT program, we also developed a matched placebo condition to ensure that control participants received a training program with equal duration, engagement, and difficulty. The control condition, known as the placebo awareness training (PAT) program, included multiple lessons with no emotional content (e.g., introductory-level lessons on science and the environment) but was otherwise comparable to the EIT program. The PAT was similarly organized into a three-tier structure: an introduction to the basics of the scientific process (tier 1), learning about scientific practices and how they can be applied to better understand the external world (tier 2), and opportunities to apply knowledge (tier 3).

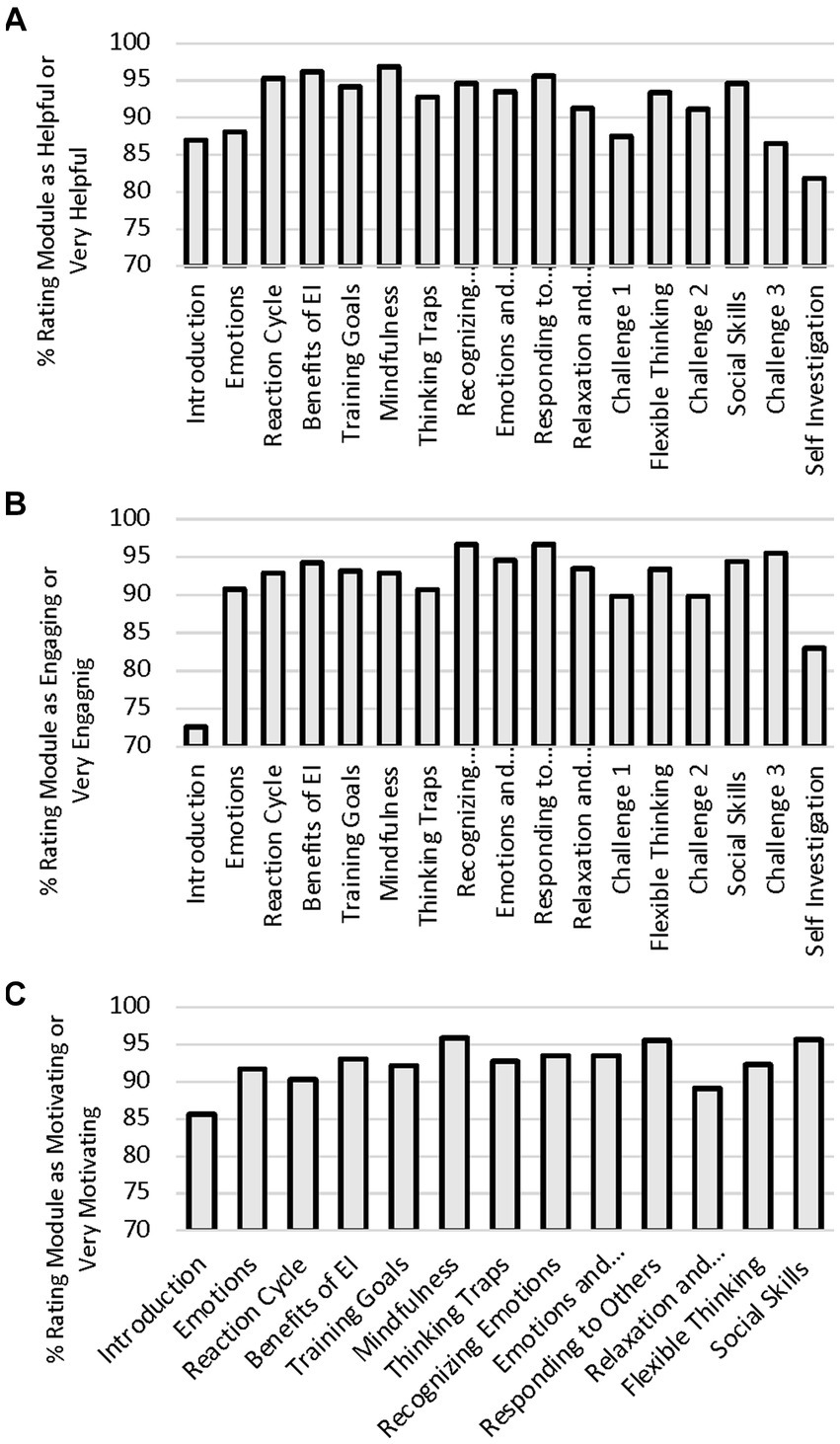

Iterative Refinement. After the modules were fully designed, completed, and implemented, we tested and refined the programs in an iterative manner with small groups of healthy participants. Participants were enrolled in either the EIT or PAT programs and were instructed to record experiences with glitches and errors, issues with comprehension, and thoughts about the appearance and design of the program. Once a sample of n = 40 was achieved, data collection was paused in order to examine and correct any issues that were identified. This process was repeated four times for a total sample of n = 159, Mage = 22.69, 64% women. During program testing, we were also able to ask the participants to rate their perceptions of each module. Using a 7-point Likert scale, participants were asked to rate how helpful (1 = extremely unhelpful; 7 = extremely helpful), how engaging (1 = extremely unengaging; 7 = extremely engaging), and their motivation to improve their emotional intelligence (1 = very unmotivated; 7 = very motivated) after completing each module.

To test our hypotheses that EI training would result in greater increases in ability-based and self-reported (trait) EI scores relative to individuals in the PAT program, and whether administering the program in a distributed manner (over 3 weeks) would increase the learning and retention of the material relative to a compressed administration (over 1 week), we conducted a large randomized trial comparing the EIT program versus the PAT program.

Participants. Participants were recruited from a large southwestern university in the United States and the surrounding metropolitan area. The study was generically advertised as an “awareness” training study to avoid expectancy effects associated with emotional skills training. To ensure that our study was adequately powered, we conducted an a priori power analysis. Using the effect sizes found for the MSCEIT in the initial pilot study for the EIT program (Alkozei et al., 2019), we found that a sample of n = 40 would be sufficient to detect a similar effect size for the interaction of interest (f = 0.28). However, for the critical between-group differences between the EIT and PAT training, we would need n = 148 per training condition for high power (1−β = 0.95) to detect similar effect sizes to the ones found in the pilot study (f = 0.21). Given the dynamic and longitudinal nature of the study, we also assumed that we would need to account for levels of attrition in our sample size estimate. Therefore, we aimed to recruit a large sample of n = 450 that would easily allow us to achieve this sample size, even after accounting for possible large levels of attrition (e.g., 30%). A total of n = 448 participants took part in the baseline assessment session, Mage = 23.72, 72% women, 6.5% Asian, 3.3% Black or African American, 21.0% Latino or Hispanic, 60.7% Caucasian, and 5.8% multiracial. Participants provided written informed consent and were compensated for their participation. The protocol for this study was reviewed and approved by the Institutional Review Board of the University of Arizona and the U.S. Army Human Research Protections Office.
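The recruitment arithmetic described above can be checked with a short sketch. This is illustrative only: the helper name `required_enrollment` is hypothetical, and it assumes that attrition removes a fixed fraction of enrolled participants. The inputs (148 per condition, two conditions, 30% attrition, a goal of 450) are taken from the text.

```python
import math

def required_enrollment(n_per_condition: int, n_conditions: int, attrition: float) -> int:
    """Smallest enrollment that still leaves the target sample after attrition."""
    target = n_per_condition * n_conditions
    return math.ceil(target / (1.0 - attrition))

# Values from the text: 148 per condition, two conditions, 30% attrition.
needed = required_enrollment(148, 2, 0.30)
print(needed)          # enrollment needed to retain 296 completers
print(450 >= needed)   # whether the stated recruitment goal covers that number
```

Under these assumptions, the stated goal of 450 leaves some margin beyond the minimum enrollment.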

The following measures were administered:

Ability-Based EI. The MSCEIT (Mayer et al., 2002) was used to assess changes in ability-based EI as a result of the EIT program. The MSCEIT is a 141-item test that measures four branches of EI: perceiving emotions, using emotions to facilitate thought, understanding emotions, and managing emotions (Mayer et al., 2003). The MSCEIT yields raw scores for each of the four branches, as well as an overall EI score. The test also provides standardized scores on each outcome, scaled to a mean of 100 and SD of 15 and derived from a normative sample of age- and sex-matched individuals. MSCEIT scores are quantified based on the match between participants’ answers and the consensus of an independent norming sample. MSCEIT scores were calculated using the general consensus scoring option with adjustments for age and sex (Mayer et al., 2003).
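To make the standardized metric concrete, the sketch below shows the generic rescaling of a raw score onto a scale with a mean of 100 and an SD of 15. It does not reproduce the MSCEIT consensus scoring itself. The function name and the normative values in the example are hypothetical and serve only as illustration.

```python
def standard_score(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Rescale a raw score to a metric with mean 100 and SD 15."""
    z = (raw - norm_mean) / norm_sd   # distance from the normative mean, in SD units
    return 100.0 + 15.0 * z

# Hypothetical normative mean and SD, for illustration only (not MSCEIT norms).
print(standard_score(raw=60, norm_mean=50, norm_sd=10))  # one SD above the norm
```

On this metric, a 5-point change corresponds to one third of a normative standard deviation.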

Self-Reported EI Abilities. The self-reported emotional intelligence scale (SREIS) was used to assess self-reports of EI abilities. The SREIS is a 19-item scale that was specifically designed to map onto the skills assessed by the MSCEIT (Brackett et al., 2006), which allows for systematic comparisons between ability-based and self-reported EI. The SREIS is rated on a 5-point scale (1 = very inaccurate; 5 = very accurate) and includes an overall EI score as well as five subscales: perceiving emotions, using emotions, understanding emotions, managing one’s own emotions, and managing the emotions of others.

Trait Emotional Intelligence. The trait emotional intelligence questionnaire (TEIQue) is a 153-item scale that comprehensively assesses domains related to trait EI (Petrides, 2009). The TEIQue captures 15 facets that are grouped into four factors: well-being, self-control, emotionality, and sociability. The TEIQue also provides a global EI score, which includes the previously described factors, plus the additional facets of adaptability and self-motivation. The TEIQue is rated on a 7-point scale ranging from 1 = completely disagree to 7 = completely agree.

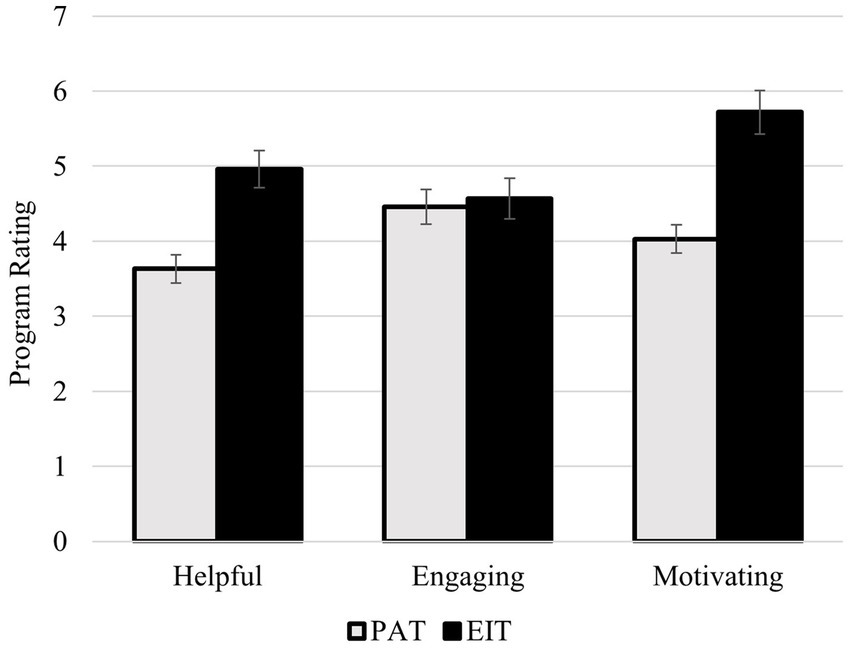

Program Perceptions. During the initial program development and iterative refinement stage, we asked participants to rate how helpful, engaging, and motivating they perceived each of the training modules to be. This provided useful information about how participants subjectively experienced the program, and we, therefore, assessed these same subjective perceptions in the validation phase of the study as well. During the post-training assessment session, participants rated how helpful (1 = extremely unhelpful; 7 = extremely helpful), how engaging (1 = extremely unengaging; 7 = extremely engaging), and how motivated they were to improve their emotional intelligence (1 = very unmotivated; 7 = very motivated). In this case, however, participants rated the program as a whole, rather than each individual module.

Procedure. Participants first reported to a laboratory where they completed demographics and baseline EI assessments and were randomly assigned to either the PAT or EIT program, and either the compressed (1 week) or the distributed (3 week) training schedule. After completing the program according to the assigned schedule, participants reported back to the laboratory to complete post-training EI assessments. All EI assessments were administered at both time points (pre- and post-training). We also conducted a six-month follow-up assessment to investigate whether the skills developed in the EIT program would demonstrate long-term persistence. It should be noted, however, that the scheduled follow-up assessments happened to align with the onset of the COVID-19 pandemic in March 2020. As the result of a university shutdown and moratorium on in-person research, several modifications were made to the study protocol, including administering all assessments online, adding flexibility in the expected timing for completing the follow-up, and collecting a smaller sample size than desired. A total of n = 91 participants completed the 6-month follow-up.
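The 2 × 2 assignment described above (program by schedule) can be sketched as follows. The text does not describe the randomization procedure, so simple randomization with independent draws is an assumption here, and the function name, labels, and seed are hypothetical.

```python
import random
from collections import Counter

PROGRAMS = ("EIT", "PAT")
SCHEDULES = ("compressed_1wk", "distributed_3wk")

def assign(participant_ids, seed=0):
    """Independently draw a program and a schedule for each participant (assumed scheme)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return {pid: (rng.choice(PROGRAMS), rng.choice(SCHEDULES)) for pid in participant_ids}

assignments = assign(range(1, 449))  # 448 baseline participants, per the text
print(Counter(assignments.values()))  # cell sizes under simple randomization
```

Simple randomization does not guarantee equal cell sizes. Blocked or stratified schemes are common alternatives when balance matters.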

Through the iterative data collection, we were able to identify and address a number of minor issues (e.g., typos), major issues (e.g., glitches that prevented a person from interacting with the program), problems with clarity (e.g., confusing instructions), and negative personal perceptions (e.g., feedback perceived as condescending). We were also able to determine that participants in the EIT program tended to have positive subjective experiences with the training. As shown in Figure 1, each of the modules tended to be viewed quite favorably. Averaged across all modules, 91.8% of participants perceived the lessons to be helpful or extremely helpful, 91.8% considered lessons to be engaging or very engaging, and 92.4% reported that they felt motivated or very motivated to improve their EI as a result of the lessons.

Figure 1. Percentage of participants in the iterative refinement portion of the study rating each module as helpful or very helpful (A), engaging or very engaging (B), and motivating or very motivating (C).

Baseline Descriptive Statistics and Comparison. Of the initial 448 participants, 122 did not have usable data from the post-training assessments due to scheduling issues, withdrawing from the study, or lack of compliance with the study protocol (27% attrition rate). In total, we were able to produce a high-quality dataset of n = 326, with n = 168 in the EIT condition (93 compressed; 75 distributed), and n = 158 in the PAT condition (82 compressed; 76 distributed). Means, standard deviations, and internal reliability of the assessments at baseline, as well as correlations between all measures, are presented in Table 2. Correlations among the EI measures provided further support for the notion that self-report assessments of EI tend to correlate with other self-reported assessments, but are largely unrelated to performance-based EI measures (Joseph and Newman, 2010). These correlations also revealed that there were some relationships between age, gender, and EI, and that these relationships were mixed. We therefore included both age and gender as covariates in the main analyses.
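As a quick consistency check, the sample sizes reported in this paragraph can be tallied with a few lines of Python. All numbers come from the text, and the variable names are hypothetical.

```python
enrolled = 448
excluded = 122

# Cell counts reported in the text: (condition, schedule) -> n
cells = {
    ("EIT", "compressed"): 93,
    ("EIT", "distributed"): 75,
    ("PAT", "compressed"): 82,
    ("PAT", "distributed"): 76,
}

analyzed = enrolled - excluded
attrition_pct = round(100 * excluded / enrolled)

by_condition = {}
by_schedule = {}
for (condition, schedule), n in cells.items():
    by_condition[condition] = by_condition.get(condition, 0) + n
    by_schedule[schedule] = by_schedule.get(schedule, 0) + n

print(analyzed, attrition_pct)  # 326 27
print(by_condition)             # {'EIT': 168, 'PAT': 158}
print(by_schedule)              # {'compressed': 175, 'distributed': 151}
```

The marginal totals agree with the group sizes given in the Methods summary (168 vs. 158 by program; 175 vs. 151 by schedule).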

Independent sample t-tests showed that participants in the EIT and PAT programs did not differ in terms of age, sex, or EI scores at baseline (see Table 3). We conducted comparisons between the 122 participants who were excluded from the dataset and the 326 participants with complete datasets. Excluded participants did not differ in terms of whether they had been assigned to the EIT or PAT program, χ² = 0.23, p = 0.628, nor did they differ in terms of demographic variables, ps > 0.602. Excluded participants did tend to score lower on the MSCEIT, t(440) = −3.58, p < 0.001, and TEIQue, t(445) = −2.98, p = 0.003, and were more likely to have been assigned to the 3-week training schedule, χ² = 14.33, p < 0.001.

Program Effects. Table 4 shows the means and standard deviations of EI scores at baseline and post-training for both the EIT and PAT groups. To evaluate changes in EI, we used linear mixed effects models that could account for both between-group and within-person changes to EI scores. We conducted a series of linear mixed models in R (version 4.2.1), using the “lme4” package, that accounted for differences in age, sex, time, and the interaction between program condition and time. Table 5 shows the fixed effects of the key program condition and time interactions.
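One plausible way to write the model described above is given below. The notation is illustrative rather than the authors' own. The participant-level random intercept $u_i$ is an assumption, since the random-effects structure is not stated in the text. Whether a separate main effect of condition was entered is also an assumption.

```latex
\mathrm{EI}_{it} = \beta_0 + \beta_1\,\mathrm{age}_i + \beta_2\,\mathrm{sex}_i
  + \beta_3\,\mathrm{time}_t + \beta_4\,\mathrm{condition}_i
  + \beta_5\,(\mathrm{condition}_i \times \mathrm{time}_t) + u_i + \varepsilon_{it},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),\quad \varepsilon_{it} \sim \mathcal{N}(0, \sigma^2)
```

Here $i$ indexes participants and $t$ indexes assessment time, and $\beta_5$ is the condition × time interaction of interest. In lme4 formula syntax this would correspond roughly to `score ~ age + sex + condition * time + (1 | id)`, where the variable names are hypothetical.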

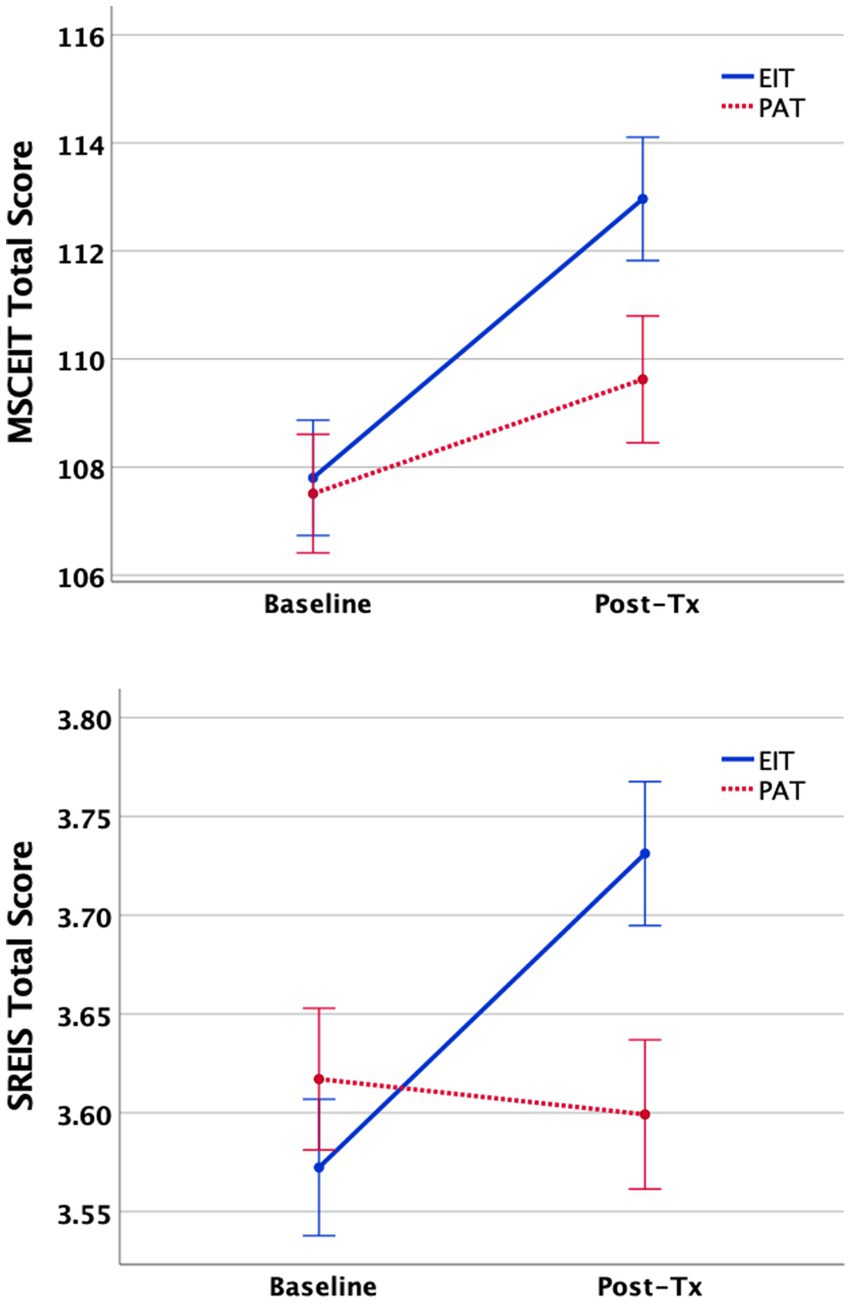

Overall, participants in the EI training program tended to show small improvements to their EI scores relative to participants in the placebo condition [f² = 0.02 is considered small, f² = 0.15 is considered medium, f² = 0.35 is considered large (Cohen, 1992)]. Participants in the EIT program increased their total MSCEIT score by 5.16 points (see Figure 2), p < 0.001, moving from what would be considered “competent” (>90 to <110) to “skilled” (>110 to <130) (Mayer et al., 2003), reflecting a medium effect size improvement (d = 0.47). Comparatively, the PAT condition improved by 2.11 points (p = 0.013), reflecting a small effect size (d = 0.21). Although there was no difference at baseline, the EIT group demonstrated significantly higher MSCEIT Total scores at the post-training assessment (p = 0.042). Participants also showed improvements in each of the branches of the MSCEIT, but these changes were not statistically significant. Participants in the EIT program showed an increase in their total SREIS scores relative to those in the PAT program (p < 0.001), as well as improvements in the subscales related to perceiving (p < 0.002), understanding (p < 0.001), and managing the emotions of others (p = 0.043). Moreover, the increase in SREIS within the EIT group reflected a medium effect size (d = 0.46), while the PAT group was associated with a very small decrease in self-reported EI (d = −0.05). Finally, the overall TEIQue score showed a marginal trend toward improvement in self-perceived emotional intelligence in the EIT relative to the PAT condition.
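The f-squared benchmarks quoted above can be expressed as a small lookup. This is a sketch under one assumption: each benchmark is treated as the lower bound of its band, which is a common reading but is not stated in the text. The function name is hypothetical.

```python
def label_f2(f2: float) -> str:
    """Label Cohen's f-squared using the benchmarks quoted in the text as lower bounds."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "below small"

for value in (0.01, 0.02, 0.15, 0.35):
    print(value, label_f2(value))
```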

Figure 2. Changes in MSCEIT (top) and SREIS (bottom). Total scores from baseline (T1) to post-training (T2) assessments as a function of training program (mean ± 1SE).

For those in the EIT program, we examined relationships among improvements on each of the EI measures (quantified as the within-person difference between post-training and baseline scores for each measure). Changes in the SREIS and TEIQue total scores were correlated, r = 0.46, p < 0.001, indicating that participants who showed an increase in their SREIS scores also tended to show an increase in the TEIQue scores. However, the level of improvement for MSCEIT was unrelated to changes in SREIS, r = 0.07, p = 0.349, and the TEIQue, r = 0.08, p = 0.314. Further examination of the parallel MSCEIT and SREIS subscales also did not find significant correlations between performance-based and self-reported improvements in perceiving, r = 0.001, p = 0.993, using, r = 0.12, p = 0.165, understanding, r = 0.09, p = 0.231, and managing emotion, r = 0.12, p = 0.130 (SREIS managing self) and r = 0.01, p = 0.902 (SREIS managing others).
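The change-score analysis described above amounts to subtracting baseline from post-training scores within each person and then correlating those differences across measures. A minimal sketch follows. The numbers are made-up values for five hypothetical participants, not study data, and the helper `pearson_r` is written out only to keep the example self-contained.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Made-up scores for five hypothetical participants; NOT study data.
pre_a, post_a = [100, 95, 110, 105, 90], [104, 97, 118, 106, 96]
pre_b, post_b = [3.0, 3.2, 3.5, 2.8, 3.1], [3.4, 3.3, 4.1, 2.9, 3.6]

# Within-person improvement on each measure (post-training minus baseline).
change_a = [post - pre for pre, post in zip(pre_a, post_a)]
change_b = [post - pre for pre, post in zip(pre_b, post_b)]

r = pearson_r(change_a, change_b)
print(round(r, 2))
```

A correlation near zero between change scores, as reported for the MSCEIT and the self-report measures, would indicate that gains on one measure say little about gains on the other.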

Effects of Training Distribution Schedule. One of the study aims was to investigate whether distributing the training across 3 weeks would result in better learning and retention when compared to a compressed 1-week training. Linear mixed effects models with an added condition × time × distribution term revealed that only the SREIS perceiving subscale showed a significant difference, t = 2.69, p = 0.008, such that the effect of the EIT program was stronger in the distributed (i.e., 3 week) training schedule versus the compressed (i.e., 1 week) schedule only for that outcome variable. No other significant effects were observed, with p-values ranging from p = 0.073–0.915. We therefore conclude that the distribution of training did not affect the post-training outcomes.

Program Perceptions. During the post-training assessment session, we asked participants to report on how helpful, engaging, and motivating the programs were. As shown in Figure 3, the overall EIT program was rated positively, with averages above the midpoint of the 7-point scale for each of the questions. Participants tended to view both the EIT and the placebo programs as similarly engaging, t(300) = 0.59, p = 0.553. However, participants in the EIT program perceived the training to be more helpful, t(300) = 8.33, p < 0.001, and were more motivated to improve their emotional intelligence, t(300) = 9.69, p < 0.001, relative to participants in the PAT program. These ratings were also highly correlated, such that participants who found their program to be helpful also tended to find it engaging (r = 0.56, p < 0.001) and were motivated to improve (r = 0.72, p < 0.001); and participants who found their program engaging were also motivated to improve (r = 0.41, p < 0.001).

Figure 3. Average program ratings for the placebo awareness training (PAT) and the emotional intelligence training (EIT) programs.

Long-Term Persistence of EI Improvements. As initially designed, the six-month follow-up was intended to be a key component of the main analyses. Unfortunately, the COVID-19 pandemic emerged contemporaneously with the timing of the 6-month follow-ups, potentially affecting the emotional and mental health functioning of the participants and leading to a disruption in data collection. Therefore, due to COVID-related changes to the study protocol and the general climate in which participants completed the assessments, we cannot be fully confident that the following results represent an accurate evaluation of the long-term effectiveness of the EIT program. We therefore present the 6-month follow-up analyses separately and interpret these findings cautiously.

In total, n = 91 participants completed the 6-month follow-up, with n = 37 in the PAT condition (22 compressed, 15 distributed) and n = 54 in the EIT condition (25 compressed, 29 distributed). There were no baseline differences between the 91 participants who completed the 6-month follow-up assessments and the 357 participants who did not in terms of age, gender, training distribution condition, or SREIS and TEIQue total scores (all ps > 0.522). The number of participants in each condition did not differ significantly, χ² = 2.86, p = 0.099, but participants who completed the 6-month follow-up had higher MSCEIT total scores at baseline (M = 109.88, SD = 16.21) than the participants who did not complete the 6-month follow-up (M = 105.25, SD = 13.14), t = 2.86, p = 0.004.
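The baseline MSCEIT comparison between completers and non-completers can be approximately reconstructed from the reported summary statistics. The sketch below assumes a Student (equal-variance) independent-samples t-test and assumes that all 91 completers and all 357 non-completers contributed MSCEIT scores; the source states neither the test variant nor the degrees of freedom, so both are assumptions.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's t for two independent groups, computed from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Means and SDs as reported in the text; group sizes are assumed to equal
# the stated completer (91) and non-completer (357) counts.
t, df = pooled_t(109.88, 16.21, 91, 105.25, 13.14, 357)
print(f"t({df}) = {t:.2f}")
```

Under these assumptions the statistic comes out at roughly 2.85, close to the reported t = 2.86; a small discrepancy would be expected if the original analysis used rounded inputs, a different test variant, or slightly different group sizes.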

To analyze the long-term effects of training, we ran linear mixed effects models that accounted for differences in age, sex, time, and the interaction between program condition and time. There was a significant group × time interaction for the MSCEIT total score, t = 2.57, p = 0.011, f² = 0.03. Post hoc analyses revealed that, relative to baseline, the MSCEIT scores of participants in the EIT program were significantly higher at Time 2, t = 5.99, p < 0.001, d = 0.47, and marginally higher at Time 3, t = 1.76, p = 0.083, d = 0.24, suggesting that the skills trained in the program were modestly retained across an extended period, despite the emergence of the worldwide COVID-19 pandemic. There was also a significant group × time interaction for the SREIS, t = 2.40, p = 0.017, f² = 0.01. However, post hoc analyses revealed that this effect was driven by increases in SREIS scores for EIT participants at Time 2, and their SREIS total scores were no longer significantly different from baseline at Time 3, t = 0.95, p = 0.349, d = 0.13. Finally, there was a marginally significant group × time interaction for the TEIQue, t = 1.95, p = 0.052, f² = 0.01, but TEIQue scores did not significantly differ from baseline at Time 3, t = 1.57, p = 0.123, d = 0.21. There was no effect of training distribution on any of these scores, ps > 0.320.
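For readers unfamiliar with the f² values reported above, the sketch below shows the common definition of Cohen's local f²: the variance explained by adding a term (here, the interaction), relative to the variance left unexplained by the full model. The source does not state exactly how f² was derived from the mixed models, so this formula, the R² inputs, and the function names are illustrative assumptions. The benchmark cutoffs (0.02, 0.15, 0.35) are Cohen's conventional small, medium, and large values.

```python
def cohens_f2(r2_full, r2_reduced):
    """Local effect size: (R2_full - R2_reduced) / (1 - R2_full)."""
    if not 0 <= r2_reduced <= r2_full < 1:
        raise ValueError("expected 0 <= r2_reduced <= r2_full < 1")
    return (r2_full - r2_reduced) / (1 - r2_full)


def f2_label(f2):
    """Cohen's conventional benchmarks for f2."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# Hypothetical R2 values for models with and without the interaction term
# (assumed for illustration, not taken from the study).
f2 = cohens_f2(r2_full=0.130, r2_reduced=0.104)
print(f"f2 = {f2:.2f} ({f2_label(f2)})")
```

By these benchmarks, an f² of 0.03 falls in the small range and an f² of 0.01 falls below the conventional threshold for a small effect.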

The goal of this study was to develop an empirically based, online training program and investigate its efficacy in building and sustaining emotional intelligence. We created a roughly 10-hour web-based training program based on the MSCEIT model of EI and theoretically related traditions such as emotion regulation, mindfulness, social skills, and clinical practice, and then investigated the efficacy of the program using a large sample and a randomized controlled trial design. As hypothesized, participants who completed the EI training program improved their total ability and self-reported EI scores relative to those in the placebo condition. Overall, participants in the EIT program demonstrated an increase of more than five points in their ability-based EI, elevating mean performance from what the MSCEIT manual describes as the “competent” range to the “skilled” range (Mayer et al., 2003), consistent with a medium effect size improvement following training. Participants in the EIT program also showed increases in their self-reported EI abilities, including specific improvements in perceiving emotions, understanding emotions, and managing the emotions of others, again reflecting a medium effect size improvement. The EIT program content was rated as engaging and helpful in improving EI skills, and, relative to the PAT group, participants reported that they were motivated to continue their emotional growth upon completion. These data suggest that the presently reported online EIT program is effective at developing a range of emotional intelligence capacities and skills and that these improvements show trend-level retention over time, even during the emergence of a worldwide pandemic crisis. The present study extends the field of research on EI interventions in a number of ways. To our knowledge, this is the first placebo-controlled study to show that a fully online EI training program can reliably increase EI scores in a large study of adults. 
In addition, the study design considered criticisms of previous EI interventions and incorporated numerous enhancements to counter those prior limitations. The study was adequately powered to detect small to medium effects and represents the only known large-scale, randomized, placebo-controlled, empirically-based intervention study that demonstrates the efficacy and validity of a comprehensive online EI training program (Kotsou et al., 2019).

An important advancement provided by the present study was our inclusion of multiple assessments that measured both ability-based and trait EI to allow for systematic comparisons between the two theoretical approaches to EI. We found that the EI training resulted in overall improvements to both ability-based EI and self-reported EI. Overall, the EIT program was associated with increases in Total EI scores on the MSCEIT, the most well-established and widely used ability metric of EI. The total score represents a composite index of EI abilities that is broadly representative of an individual’s ability to detect emotional information from people and situations, understand how this information relates to contexts and goals, use that information to enhance thought processes, and effectively modulate emotions in oneself and others appropriately. In particular, the EIT program was most effective at improving the ability of participants to manage and control emotional responses based on consideration of the situational context, a key component of social–emotional success (Mayer et al., 2002). The EIT program also enhanced Total EI scores on the SREIS (Brackett et al., 2006), a self-report measure of EI that is designed to measure the same content domains as the MSCEIT. Participants showed significant increases in their self-reported skills in perceiving their own emotions and the emotions of others, their perceived ability to understand the complexities of emotions (e.g., emotional antecedents; how emotions emerge over time), and their self-described ability to modulate the emotions of other people. Finally, the EIT program showed a marginally significant improvement in the TEIQue Total score, which measures trait EI. Overall, the program was effective at significantly improving the trait of Emotionality, which includes an individual’s self-perceptions of their emotional empathy, perception, and expression, and quality of relationships. 
The TEIQue also showed a marginally reliable improvement among those who completed the EIT on the factor of Sociability, which includes the self-perceived ability to successfully manage emotions, be assertive, and be aware of social cues and situations. Together, these findings suggest that the EIT program was successful at improving EI as defined by several conceptual models.

Interestingly, while the EIT program was effective at improving both ability and self-reported EI, within individuals, overall improvements on the MSCEIT and SREIS were uncorrelated. This is consistent with prior work suggesting that trait and ability EI are only weakly correlated with one another, if at all (Webb et al., 2013). Essentially, improvements in a person’s measured EI abilities did not necessarily translate into self-reported EI, and a person could self-report increases in EI without necessarily showing improvements in EI performance in the associated domain. Future research may want to investigate whether improvements to ability-based versus self-reported EI systematically produce differential effects or interact to predict consequential outcomes.

We also found differences in how improvements to the different EI metrics were maintained over time (although the influence of COVID-19 on this follow-up assessment makes it difficult to confidently interpret these findings). We found that increases in the MSCEIT score were marginally higher than the baseline 6 months following the EI training, whereas scores on the two self-reported EI assessments had returned to baseline levels. Overall, emotional traits should be fairly stable, but trait-related behaviors and self-perceptions can momentarily fluctuate based on context (Fleeson, 2001). Participants may have experienced an increase in their perceptions of emotional competence immediately after completing a training program specifically designed to increase emotional skills, but then ultimately returned to their typical self-perceptions once that situational influence had been removed. Conversely, ability-based EI is more strongly related to standard cognitive intelligence (Webb et al., 2013). Accordingly, emotional abilities that were learned during the training program should be more likely to persist, even after the contextual influence of the training had faded. These findings are consistent with other data from this project that showed that the EIT program significantly improved clinical scores of depression, anxiety, and suicidal ideation compared to the PAT program 6 months after training, just as the COVID-19 pandemic and associated nationwide lockdowns had emerged (Persich et al., 2021). Such findings suggest that not only is the program effective at sustaining EI skills, but that these skills were protective of mental health in a time of real-life crisis.

We also tested a second hypothesis related to the distribution schedule for completing the training content. Because previous research on learning models raised the possibility that distributing training over longer periods of time could help participants learn and retain the intervention material more effectively than compressing the content into a shorter timeframe (Cepeda et al., 2006), we had hypothesized that an extended period for completing the training would enhance learning and retention of the EI skills. However, we did not find differences in EI improvement between the compressed (1 week) and distributed (3 week) training conditions. These findings are similar to those of Kruml and Yockey (2011), who also did not find differences between a 7- and a 16-week intervention. An important implication of this outcome is that the program can apparently be used flexibly over time (i.e., either distributed or compressed) with similar outcomes overall. However, another important outcome relating to the training duration was that participants in the distributed condition demonstrated a significantly greater attrition rate between pre- and post-training assessments than those in the compressed condition. It is possible that the extended timeframe increased the perceived burden on participants or that they lost interest when the training schedule was too long. Given that we did not find a benefit in extending the timeframe, future studies and clinical applications may want to consider choosing timeframes that maximize recruitment and retention efforts, as well as testing the efficacy of flexible administration strategies.

Overall, the present study clearly shows that the EIT program produced improvements in several relevant EI metrics. However, we did not find significant results for all subscales and branches of the outcome measures, and the effects were smaller than what has typically been reported in published research using in-person training approaches (Hozdic et al., 2018; Mattingly and Kraiger, 2019). This may be a limitation of the study. These small effects could be attributable to the EIT program’s online format, the program content, or the relatively healthy and emotionally intelligent sample, and more research should be done to investigate whether these effects can be strengthened. However, it is also possible that the smaller effects were attributable to the more stringent and highly controlled study design, and the effects of EI interventions may actually be smaller than typically reported. For instance, participants in the EIT program showed improvements across all MSCEIT branches, but the PAT participants often showed increases as well. This may indicate that other factors (e.g., practice effects, attention effects, regression to the mean) could have contributed to observed improvements in EI following intervention efforts. Importantly, many EI intervention studies have not included an active control group to account for these factors, and it is therefore possible that the effects of EI interventions in published literature have been artificially inflated (Kotsou et al., 2019) due to the lack of appropriate control training conditions. We encourage those interested in developing EI interventions to adopt more rigorous tests and controls, as done here, in order to determine the true effect size of these interventions. Nonetheless, small to medium effect sizes could have meaningful effects when training interventions are implemented at a large scale. 
Even a 5-point increase in EI could have a tangible and meaningful effect across a large population, potentially influencing relationship quality, wellbeing, and mental health in meaningful ways.

The present study was designed as an initial validation of our novel EI intervention to show that teaching EI skills can result in an increase in quantified levels of EI. With evidence to suggest that the program can increase EI, it would be useful to continue investigating the generalizability of the program in relation to specific populations and external outcomes. This initial validation study tested the effectiveness of the EI intervention in a relatively healthy sample who tended to have above-average scores on each of the three EI measures assessed. It would be useful to extend the present research by investigating the intervention’s effectiveness in specialized samples, such as individuals with poor social–emotional functioning, individuals with clinical disorders characterized by emotional difficulties, or individuals in emotionally demanding roles such as the military or first responders (Joseph and Newman, 2010). In addition, although it is important to establish the effect of training on EI scores, the ultimate goal of an intervention is to show that it improves meaningful intrapersonal and interpersonal outcomes. Future research should test the effect of the program on outcomes such as adaptive behavior in emotionally demanding tasks, successful functioning in work, school, and relationships, and long-term well-being and mental health.

We have successfully developed and validated a novel online EI training program based on well-established theoretical models of emotional intelligence that incorporates empirically supported training content and methods. Our study design addressed several methodological limitations of prior studies of EI training programs. This large, randomized placebo-controlled validation study demonstrated that the program is well accepted and positively received by participants, significantly improves EI scores on both trait and ability measures, is robust to variations in the training schedule, and shows a trend toward sustaining EI skills up to 6 months post-training, even during the emotional challenges posed by the start of a worldwide pandemic crisis and nationwide lockdown orders. With further validation, this approach could provide a standardized and scalable training method for building critical emotional intelligence skills.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by University of Arizona Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

MD carried out the primary data organization and statistical analysis, wrote the initial draft of the manuscript, and contributed to revisions. RS contributed equally to the initial drafting of the manuscript and contributed to the initial study design and development of the EIT materials. SC, RW-L, JS, and SB executed data collection, organized the study database, and also reviewed and edited sections of the manuscript. LH reviewed and edited versions of the manuscript. KW, RL, and JA contributed to the initial conceptualization and redesign of the EIT materials and edited and reviewed drafts of the manuscript. ND, AA, and JV contributed to data collection and statistical analysis, and reviewed drafts of the manuscript. WK was responsible for the initial conceptualization and design of the project and for obtaining the research funding, provided oversight of the EIT program design, data collection, statistical analysis, and interpretation of the findings, and contributed to the initial drafting and revisions of the manuscript. All authors contributed to the article and approved the submitted version.

The study was supported by funding from the U.S. Army Medical Research and Development Command (W81XWH-16-1-0062) to WK.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2023.1221817/full#supplementary-material

Alkozei, A., Smith, R., Demers, L. A., Weber, M., Berryhill, S. M., and Killgore, W. D. S. (2019). Increases in emotional intelligence after an online training program are associated with better decision-making on the Iowa gambling task. Psychol. Rep. 122, 853–879. doi: 10.1177/0033294118771705

Barlow, D. H., Farchione, T. J., Fairholme, C. P., Ellard, K. K., Boisseau, C. L., Allen, L. B., et al. (2011). The unified protocol for transdiagnostic treatment of emotional disorders: therapist guide. New York, NY: Oxford University Press.

Barrett, L. F. (2017). How emotions are made: the secret life of the brain. New York, NY: Houghton-Mifflin-Harcourt.

Beelmann, A., Pfingsten, U., and Lösel, F. (1994). Effects of training social competence in children: a meta-analysis of recent evaluation studies. J. Clin. Child Psychol. 23, 260–271. doi: 10.1207/s15374424jccp2303_4

Brackett, M. A., Rivers, S. E., Shiffman, S., Lerner, N., and Salovey, P. (2006). Relating emotional abilities to social functioning: a comparison of self-report and performance measures of emotional intelligence. J. Pers. Soc. Psychol. 91, 780–795. doi: 10.1037/0022-3514.91.4.780

Brown, K., and Ryan, R. (2003). The benefits of being present: mindfulness and its role in psychological well-being. J. Pers. Soc. Psychol. 84, 822–848. doi: 10.1037/0022-3514.84.4.822

Cepeda, N. J., Pashler, H., Vul, E., Wixted, J., and Rohrer, D. (2006). Distributed practice in verbal recall tasks: a review and quantitative synthesis. Psychol. Bull. 132, 354–380. doi: 10.1037/0033-2909.132.3.354

Chi, M. T., and Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educ. Psychol. 49, 219–243. doi: 10.1080/00461520.2014.965823

Coday, M., Boutin-Foster, C., Sher, T. G., Tennant, J., Greaney, M. L., Saunders, S. D., et al. (2005). Strategies for retaining study participants in behavioral intervention trials: retention experiences of the NIH behavior change consortium. Ann. Behav. Med. 29, 55–65. doi: 10.1207/s15324796abm2902s_9

Cohen, J. (1992). Statistical power analysis. Curr. Dir. Psychol. Sci. 1, 98–101. doi: 10.1111/1467-8721.ep10768783

Fleeson, W. (2001). Toward a structure-and process-integrated view of personality: traits as density distribution of states. J. Pers. Soc. Psychol. 80, 1011–1027. doi: 10.1037/0022-3514.80.6.1011

Grewal, D. D., and Salovey, P. (2006). “Benefits of emotional intelligence” in A life worth living: contributions to positive psychology. eds. M. Csikszentmihalyi and I. S. Csikszentmihalyi (New York: Oxford University Press), 104–119.

Halberstadt, A. G., Denham, S. A., and Dunsmore, J. C. (2001). Affective social competence. Soc. Dev. 10, 79–119. doi: 10.1111/1467-9507.00150

Herpertz, S., Schütz, A., and Nezlek, J. (2016). Enhancing emotion perception, a fundamental component of emotional intelligence: using multiple-group SEM to evaluate a training program. Personal. Individ. Differ. 95, 11–19. doi: 10.1016/j.paid.2016.02.015

Hozdic, S., Scharfen, J., Ripoll, P., Holling, H., and Zenasni, F. (2018). How efficient are emotional intelligence trainings: a meta-analysis. Emot. Rev. 10, 138–148. doi: 10.1177/1754073917708613

Joseph, D. L., and Newman, D. A. (2010). Emotional intelligence: an integrative meta-analysis and cascading model. J. Appl. Psychol. 95, 54–78. doi: 10.1037/a0017286

Kotsou, I., Mikolajczak, M., Heeren, A., Grégoire, J., and Leys, C. (2019). Improving emotional intelligence: a systematic review of existing work and future challenges. Emot. Rev. 11, 151–165. doi: 10.1177/1754073917735902

Kruml, S. M., and Yockey, M. D. (2011). Developing the emotionally intelligent leader: instructional issues. J. Leadersh. Org. Stud. 18, 207–215. doi: 10.1177/1548051810372220

Lench, H., Bench, S., Darbor, K., and Moore, M. (2015). A functionalist manifesto: goal-related emotions from an evolutionary perspective. Emot. Rev. 7, 90–98. doi: 10.1177/1754073914553001

Lorah, J. (2018). Effect size measures for multilevel models: Definition, interpretation, and TIMSS example. Large-Scale Assess. Educ. 6, 1–11. doi: 10.1186/s40536-018-0061-2

Mattingly, V., and Kraiger, K. (2019). Can emotional intelligence be trained? A meta-analytical investigation. Hum. Resour. Manag. Rev. 29, 140–155. doi: 10.1016/j.hrmr.2018.03.002

Mayer, J. D., Salovey, P., and Caruso, D. R. (2002). Mayer-Salovey-Caruso emotional intelligence test (MSCEIT) user's manual. North Tonawanda, NY: MHS.

Mayer, J. D., Salovey, P., and Caruso, D. R. (2000). “Models of emotional intelligence” in Handbook of intelligence. ed. R. J. Sternberg (Cambridge, England: Cambridge University Press), 396–420.

Mayer, J. D., Salovey, P., Caruso, D. R., and Sitarenios, G. (2003). Measuring emotional intelligence with the MSCEIT V2.0. Emotion 3, 97–105. doi: 10.1037/1528-3542.3.1.97

Mayer, J. D., Salovey, P., Caruso, D. R., and Sitarenios, G. (2001). Emotional intelligence as a standard intelligence. Emotion 1, 232–242. doi: 10.1037/1528-3542.1.3.232

Mayer, J. D., and Salovey, P. (1997). “What is emotional intelligence?” in Emotional development and emotional intelligence: educational implications. eds. P. Salovey and D. Sluyter (New York: Basic Books), 3–31.

Moors, A., Ellsworth, P. C., Scherer, K. R., and Frijda, N. H. (2013). Appraisal theories of emotion: state of the art and future development. Emot. Rev. 5, 119–124. doi: 10.1177/1754073912468165

Nelis, D., Quoidbach, J., Mikolajczak, M., and Hansenne, M. (2009). Increasing emotional intelligence: (how) is it possible? Personal. Individ. Differ. 47, 36–41. doi: 10.1016/j.paid.2009.01.046

Peña-Sarrionandia, A., Mikolajczak, M., and Gross, J. J. (2015). Integrating emotion regulation and emotional intelligence traditions: a meta-analysis. Front. Psychol. 6, 160–186. doi: 10.3389/fpsyg.2015.00160

Persich, M. R., Smith, R., Cloonan, S. A., Woods-Lubbert, R., Strong, M., and Killgore, W. D. S. (2021). Emotional intelligence training as a protective factor for mental health during the COVID-19 pandemic. Depress. Anxiety 38, 1018–1025. doi: 10.1002/da.23202

Petrides, K. V. (2009). “Psychometric properties of the trait emotional intelligence questionnaire (TEIQue)” in Advances in the measurement of emotional intelligence. eds. C. Stough, D. H. Saklofske, and J. D. A. Parker (New York: Springer).

Petrides, K. V., and Furnham, A. (2001). Trait emotional intelligence: psychometric investigation with reference to established trait taxonomies. Eur. J. Personal. 15, 425–448. doi: 10.1002/per.416

Petrides, K. V., Furnham, A., and Mavroveli, S. (2007). “Trait emotional intelligence: moving forward in the field of EI” in Emotional intelligence: knowns and unknowns. eds. G. Matthews, M. Zeidner, and R. Roberts (Oxford: Oxford University Press).

Roberts, R. D., MacCann, C., Matthews, G., and Zeidner, M. (2010). Emotional intelligence: toward a consensus of models and measures. Soc. Personal. Psychol. Compass 4, 821–840. doi: 10.1111/j.1751-9004.2010.00277.x

Ryan, P., and Lauver, D. R. (2002). The efficacy of tailored interventions. J. Nurs. Scholarsh. 34, 331–337. doi: 10.1111/j.1547-5069.2002.00331.x

Schutte, N. S., Malouff, J. M., and Thorsteinsson, E. B. (2013). Increasing emotional intelligence through training: current status and future directions. Int. J. Emot. Educ. 5, 56–72.

Sterne, J. A. C., Gavaghan, D., and Egger, M. (2000). Publication and related bias in meta-analysis: power of statistical tests and prevalence in the literature. J. Clin. Epidemiol. 53, 1119–1129. doi: 10.1016/S0895-4356(00)00242-0

Keywords: emotional intelligence, emotional skills, emotional resilience, training program, online resource, MSCEIT, SREIS, TEIQue

Citation: Durham MRP, Smith R, Cloonan S, Hildebrand LL, Woods-Lubert R, Skalamera J, Berryhill SM, Weihs KL, Lane RD, Allen JJB, Dailey NS, Alkozei A, Vanuk JR and Killgore WDS (2023) Development and validation of an online emotional intelligence training program. Front. Psychol. 14:1221817. doi: 10.3389/fpsyg.2023.1221817

Received: 15 May 2023; Accepted: 26 July 2023;

Published: 17 August 2023.

Edited by:

María del Mar Molero Jurado, University of Almeria, Spain

Reviewed by:

Chara Papoutsi, National Centre of Scientific Research Demokritos, Greece

Copyright © 2023 Durham, Smith, Cloonan, Hildebrand, Woods-Lubert, Skalamera, Berryhill, Weihs, Lane, Allen, Dailey, Alkozei, Vanuk and Killgore. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: William D. S. Killgore, killgore@arizona.edu

†These authors have contributed equally to this work

‡Present address: Michelle R. Persich Durham, Department of Psychology, Western Kentucky University, Bowling Green, KY, United States
