- Department of Applied Computer Science, University of Winnipeg, Winnipeg, MB, Canada

The left and right hemispheres of the brain process emotion differently. Neuroscientists have proposed two models to explain this difference. The first model states that the right hemisphere is dominant over the left in processing all emotions. In contrast, the second model states that the left hemisphere processes positive emotions, whereas the right hemisphere processes negative emotions. Previous studies have used these asymmetry models to enhance the classification of emotions in machine learning models. However, little research has been conducted to explore how machine learning models can help identify associations between hemisphere asymmetries and emotion processing. To address this gap, we conducted two experiments using a subject-independent approach to explore how the asymmetry of the brain hemispheres is involved in processing happiness, sadness, fear, and neutral emotions. We analyzed electroencephalogram (EEG) signals from 15 subjects collected while they watched video clips evoking these four emotions. We derived asymmetry features from the recorded EEG signals by calculating the log ratio between the relative energy of symmetrical left and right nodes. Using the asymmetry features, we trained four binary logistic regressions, one for each emotion, to identify which features were more relevant to the predictions. The average AUC-ROC across the 15 subjects was 56.2, 54.6, 51.6, and 58.4% for neutral, sad, fear, and happy, respectively. We validated these results with an independent dataset, achieving comparable AUC-ROC values. Our results showed that brain lateralization was observed primarily in the alpha frequency bands, whereas for the other frequency bands, both hemispheres were involved in emotion processing. Furthermore, the logistic regression analysis indicated that the gamma and alpha bands were the most relevant for predicting emotional states, particularly for the lateral frontal, parietal, and temporal EEG pairs, such as FT7-FT8, T7-T8, and TP7-TP8. These findings provide valuable insights into which brain areas and frequency bands need to be considered when developing predictive models for emotion recognition.

1. Introduction

Emotion recognition is an active research area in affective computing, neuroscience, and psychology. As emotions elicit specific behavioral responses, researchers often employ techniques to monitor bodily reactions and expressions to recognize different emotional states (Kop et al., 2011). One reliable method for identifying these reactions is through the use of neuroimaging techniques, such as Electroencephalography (EEG) and functional Magnetic Resonance Imaging (fMRI), which can help monitor brain activity (Rangayyan, 2002).

Brain activity reflects the magnetic and electrical activity experienced by neurons during emotional perception and regulation (Gunes and Pantic, 2010; Valderrama and Ulloa, 2012). However, research has demonstrated that this electrical activation is not uniform throughout the brain, and each hemisphere is associated with different behavior. Specifically, neuroscientists have proposed two models to explain this brain asymmetry (Alves et al., 2008). The first model, known as ‘the right-hemisphere dominance of emotions’, suggests that the right hemisphere is more involved in processing emotions than the left hemisphere (Borod et al., 1998; Demaree et al., 2005). In contrast, the second model, known as ‘the valence lateralization’, assumes lateralization based on the type of emotions, where the right hemisphere processes negative emotions, and the left hemisphere processes positive emotions (Ahern and Schwartz, 1985; Davidson, 1995). Nonetheless, recent neuroscience studies have challenged these asymmetrical models by reporting that the brain exhibits dynamic behavior and a bilateral activation (Morawetz et al., 2020; Stanković and Nešić, 2020; Palomero-Gallagher and Amunts, 2022). For instance, Stanković and Nešić (2020) reported that, initially, the brain displays a right-biased pattern for emotional perception, but after experiencing psychological conditions, such as stress or demanding emotional tasks, the distribution of brain activity is altered and redistributed across both hemispheres.

In addition to the distinction between hemispheres in emotion processing, neural frequency bands (delta, theta, alpha, beta, and gamma) also play distinct roles (Park et al., 2011). Indeed, researchers have focused on the alpha band (8–12 Hz) to analyze hemispheric asymmetries for different emotions using a methodology known as frontal alpha asymmetry (FAA) (Briesemeister et al., 2013). Specifically, FAA compares the electrical activity in the alpha band of the right and left hemispheres in the frontal and prefrontal areas, detecting more cortical activity in one hemisphere when its electrical activity is lower than in the other hemisphere (Gevins et al., 1997; Allen et al., 2004). The foundation of FAA relies on the fact that EEG power is inversely related to activity, indicating that lower power means more cortical activity (Lindsley and Wicke, 1974). For example, Zhao et al. (2018) used the FAA methodology to analyze EEG signals collected from subjects watching videos evoking tenderness and anger, reporting that the left hemisphere had more cortical activity when the subjects perceived tenderness, whereas the right hemisphere had more cortical activity when watching angry videos. Unlike the alpha band, beta and gamma frequency bands do not show asymmetrical behavior during emotion processing (Davidson et al., 1990). These bands exhibit similar patterns when processing emotions, such as reduced power in beta and gamma bands across the cortex during happiness and increased power in frontal regions of both hemispheres during anger (Aftanas et al., 2006).

Inspired by the brain asymmetry for processing emotion, previous researchers have attempted to improve the accuracy of machine learning models to predict emotional states (Huang et al., 2012; Ahmed and Loo, 2014; Li et al., 2022; Sajno et al., 2022). To that aim, researchers have proposed features that capture the brain's asymmetrical behavior in emotion processing. These features aim to measure differences in EEG signals extracted from symmetrical positions in both hemispheres. The most commonly employed asymmetry feature type is based on the power spectrum, where the difference or ratio of electrical activity between two EEG nodes is computed (Huang et al., 2012; Duan et al., 2013; Aris et al., 2018). Although nonlinear features, such as fractal dimension, correlation dimension, or RQA analysis, have been extensively used for emotion recognition (Liu and Sourina, 2014; Yu et al., 2016; Li et al., 2018; Soroush et al., 2018, 2019, 2020; Yang et al., 2018), only one previous study has used fractal dimension to derive asymmetry indexes to classify emotions (Liu et al., 2010).

Among the studies using spectral features to reflect brain asymmetry, Pane et al. (2019) used a random forest to classify four emotions (happy, sad, relaxed, and angry) from five EEG pairs (F7-F8, T7-T8, C3-C4, O1-O2, and CP5-CP6) under four different scenarios. The first scenario used the brain activity of both hemispheres to train the random forest. In contrast, the second scenario used only the information from the right hemisphere to train the random forest, whereas the third scenario used only the information from the left hemisphere. The fourth scenario used information from the right hemisphere to predict negative emotions (sad and angry) and information from the left hemisphere to predict positive emotions (happy and relaxed). The authors reported that the fourth scenario using the T7-T8, C3-C4, and O1-O2 electrode pairs obtained the best performance for predicting emotions, thus suggesting that the inclusion of emotional lateralization is beneficial for emotion recognition approaches. Similarly, Zheng and Lu (2015) used asymmetry features calculated by taking the difference and ratio of EEG electrodes from the left and right hemispheres to train a support vector machine and a deep belief network (DBN) model to recognize positive, neutral, and negative emotions. The authors found that the performance of asymmetry features varied across the frequency bands, achieving the best performance for the beta and gamma bands. Li et al. (2021) further explored the discrepancy between the brain hemispheres for processing emotions by developing a deep learning model that used the horizontal and vertical streams from the left and right EEG electrodes as input. Their model yielded an accuracy between 58.13 and 74.43% for detecting four emotional states across 15 subjects. Moreover, the authors reported that the EEG nodes located in the frontal region (FP1-FP2, AF3-AF4, F7-F8, F5-F6, F3-F4, F1-F2, FT7-FT8, FC5-FC6, FC3-FC4, and FC1-FC2) were the most relevant for the classification of emotions.

Recent studies using deep learning models have also leveraged brain asymmetry to extract features reflecting asymmetric hemisphere patterns to classify emotional states. Yan et al. (2022) proposed a hemispheric asymmetry measurement network (HAMNet), which is an end-to-end network capable of automatically learning discriminant features for emotion classification tasks. Ding et al. (2022) employed a multi-scale convolutional neural network to extract both temporal and spatial features for emotion recognition. Their approach involved applying multi-scale one-dimensional convolutional kernels to the EEG channels of each hemisphere, thereby extracting comprehensive global spatial representations. Luan et al. (2022) introduced a long short-term memory (LSTM) layer to extract asymmetrical patterns from both hemispheres across different frequency bands, improving the performance of cross-subject emotion classification. Ahmed et al. (2022) expanded the exploration of asymmetry between the left and right hemispheres to encompass the entire cortex. In detail, the authors generated a 62-square matrix by taking the difference between the differential entropy of each pair of EEG nodes. This matrix was fed into a CNN model to automatically extract features. The results showed that the automated features obtained from the CNN model outperformed manually extracted features, such as the difference and ratio of differential entropy between left and right EEG nodes, in accurately classifying positive, negative, and neutral emotions.

Until now, previous research studies have mostly focused on using the asymmetry of emotion processing to build more accurate predictive models. However, little research has been conducted to explore how machine learning models can help to identify associations between brain asymmetry, frequency bands, and emotional states. The complex models used in these studies, such as deep neural networks or support vector machines, have limited interpretability because they are unable to explain their predictions (Arrieta et al., 2020). Moreover, most of these studies have used a subject-dependent approach (Li et al., 2022), in which the predictive models are trained and tested using EEG signals of the same subject, thus limiting the capacity for generalizing neuronal patterns among different subjects. Although we note that brain activity varies among human beings due to factors such as age, sex, and health status (Kudielka et al., 2009; Heimann et al., 2016), using a subject-independent approach may provide insights into which EEG channel pairs are more relevant for processing positive or negative emotions.

In this study, we explore how brain asymmetry is involved in processing happiness, sadness, fear, and neutrality using a subject-independent approach. We conducted two experiments on a dataset containing electroencephalogram (EEG) signals from 15 subjects collected while watching video clips evoking the four emotions. We used statistical hypothesis tests and logistic regression, an interpretable predictive model, to identify which ratios between the left and right EEG nodes and which frequency bands are more relevant to predict each type of emotion. Our approach was able to identify which EEG channels and frequency bands are more relevant to predict happiness, sadness, fear, and neutrality across subjects. Our results reveal that the pairs T7-T8, FT7-FT8, and TP7-TP8 in the gamma band and the pair FT7-FT8 in the alpha band were relevant to discriminate between happiness, sadness, and fear. The findings provide valuable insights into the specific areas of the brain that contain essential information for recognizing different emotional states, thus helping to design emotion recognition approaches.

2. Materials and methods

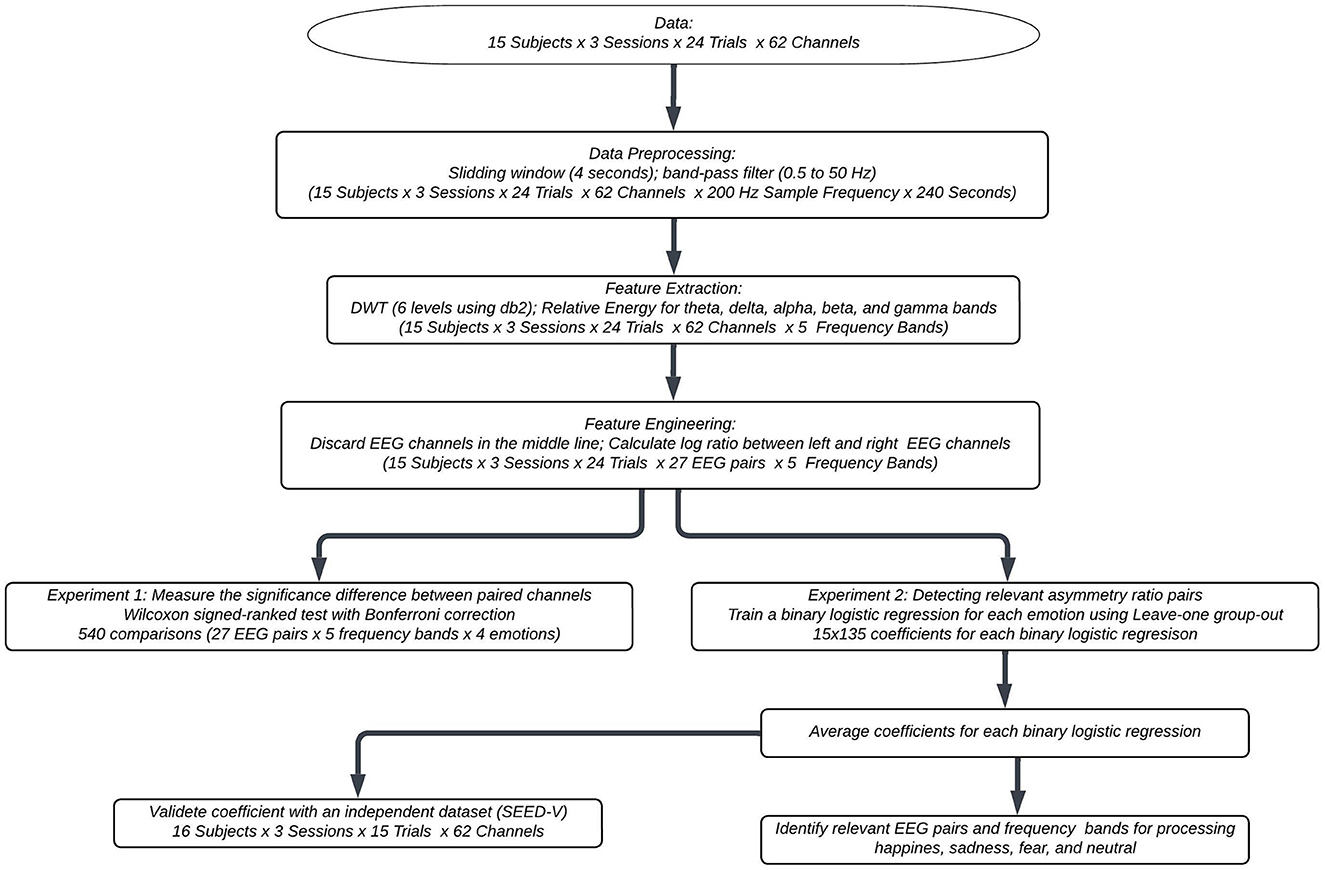

Figure 1 shows the flowchart of the proposed methodology. The subsequent subsections elaborate on the specific details of each component.

2.1. Data set

This work used the SEED-IV database (Zheng et al., 2018). The dataset consists of EEG signals recorded from 15 healthy, right-handed subjects aged between 20 and 24, with eight female participants. Each subject participated in three distinct sessions during which they watched a total of 72 videos. These videos were carefully selected based on a consensus among 44 raters, ensuring that they were capable of eliciting emotions such as happiness, fear, sadness, and neutrality. The subjects viewed the videos across three separate sessions, with each session consisting of 24 video clips. In every session, the number of video clips for each emotion was six. While watching the video clips, the subject's EEG signals were recorded using 62 EEG channels at a sampling rate of 1,000 Hz. After recording, the EEG signals were downsampled to 200 Hz. In total, 72 EEG signals (one per video clip) were recorded for each subject.

2.2. Preprocessing

The collected EEG signals were initially resampled at 200 Hz to ensure a Nyquist frequency of 100 Hz. As EEG signals are susceptible to artifacts and noise stemming from eye blinking and various physiological and non-physiological processes, it is advisable to employ signal filtering techniques to mitigate their impact on classification. Several methods have been proposed for filtering EEG signals. Advanced methods include the use of supervised machine learning to classify noise segments and applying recurrent neural networks to remove such noises (Ghosh et al., 2023). However, although this method has shown to be promising, it necessitates the availability of class labels (noisy vs. non-noisy) for each EEG segment. Alternative methods based on the spectrum domain involve using EEG signals collected in a relaxed state to adjust the spectrum of EEG responses evoked by emotional stimuli, thereby removing inter-subject differences in EEG patterns (Ahmed et al., 2023). Simpler methods for removing artifacts and noises include the use of band-pass filters (Li et al., 2022). However, it is crucial to note that band-pass filtering may not eliminate all artifacts, as eyeblink frequencies fall below 4 Hz, while muscular artifacts exhibit frequencies higher than 13 Hz (Boudet et al., 2008).

Since noise labels or EEG data collected in a relaxed state were unavailable in this study, we employed a Butterworth bandpass filter with a frequency range of 0.5–50 Hz to process the EEG signals. This filter retained the frequency range associated with delta, theta, alpha, beta, and gamma brainwaves. The filtered EEG signals were divided into segments using a non-overlapping window of 4 seconds. The window duration of 4 seconds was chosen to achieve a frequency resolution of 0.5 Hz, allowing the detection of the delta frequency band (0.5–4 Hz).
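The segmentation step can be illustrated with a minimal sketch. It assumes a single-channel signal stored as a plain Python list and assumes that an incomplete trailing window is discarded, a detail the text does not specify. The function name `segment_signal` is hypothetical.

```python
def segment_signal(samples, fs=200, window_s=4):
    """Split a 1-D sequence into non-overlapping windows of window_s seconds.

    Assumption: samples left over after the last full window are dropped.
    """
    size = int(fs * window_s)            # 200 Hz * 4 s = 800 samples per window
    n_windows = len(samples) // size
    return [samples[i * size:(i + 1) * size] for i in range(n_windows)]


# Hypothetical 10-second recording at 200 Hz (2,000 samples of zeros).
signal = [0.0] * 2000
windows = segment_signal(signal)
print(len(windows), len(windows[0]))
```

With these settings, each window holds 800 samples, and the last 400 samples of the hypothetical recording are not used.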

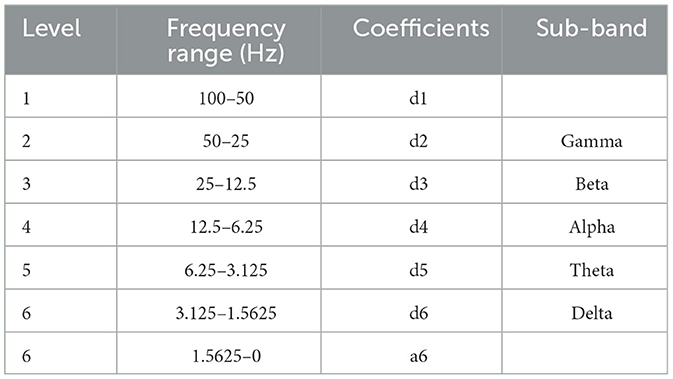

2.3. Feature extraction

We used the discrete wavelet transform (DWT) to extract spectral features from each filtered 4-second window. Because previous studies have indicated that the Daubechies 2 (db2) wavelet function is suitable for processing EEG signals for classification purposes due to its scaling properties and resemblance to EEG's spike-wave patterns (Güler and Übeyli, 2005; Subasi, 2007; Gandhi et al., 2011; Tong et al., 2018), we used the db2 function to decompose EEG signals into six levels. The decomposition process using db2 as the mother wavelet is presented in Table 1. The detail coefficients from the second to the sixth level corresponded to the gamma, beta, alpha, theta, and delta bands, respectively.
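To make the level-to-band mapping concrete, the following sketch computes the nominal frequency range of the detail coefficients at each level of a dyadic decomposition, assuming the 200 Hz sampling rate stated above. These are the idealized ranges of a dyadic filter bank and only approximate the conventional band edges; the exact ranges reported in Table 1 may differ. The function name `dwt_detail_bands` is hypothetical.

```python
def dwt_detail_bands(fs, levels):
    """Nominal frequency range (Hz) of the detail coefficients at each DWT level.

    Level j covers fs / 2**(j + 1) up to fs / 2**j in an ideal dyadic filter bank.
    """
    return {j: (fs / 2 ** (j + 1), fs / 2 ** j) for j in range(1, levels + 1)}


bands = dwt_detail_bands(fs=200, levels=6)
# Band names assigned to levels 2-6, following the mapping described in the text.
names = {2: "gamma", 3: "beta", 4: "alpha", 5: "theta", 6: "delta"}
for level, name in names.items():
    low, high = bands[level]
    print(f"D{level} ({name}): {low:g}-{high:g} Hz")
```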

We used the detail coefficients of the gamma, beta, alpha, theta, and delta bands to calculate the percentage of energy content at each frequency band, a feature that has shown potential for emotion recognition approaches (Valderrama and Ulloa, 2014; Krisnandhika et al., 2017; Valderrama, 2021). In detail, the percentage of energy for each band was calculated as:

$$E_{ch,b} = \frac{\sum_{i=1}^{N_c} d_{i,ch,b}^{2}}{E_{Total}} \times 100 \tag{1}$$

where $d_{i,ch,b}$ is the ith detail coefficient of the chth channel (e.g., Fp1, O2, T1) and the bth band (delta, theta, alpha, beta, or gamma), $N_c$ denotes the number of coefficients at the band b, and $E_{Total}$ is the sum of the squared coefficients over all levels.
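A small sketch of the energy percentage (Equation 1) for one channel and one window is given below. It assumes the detail coefficients are already available as lists keyed by band name, and it assumes that the total energy is taken over the supplied coefficient sets only; whether the original total also includes other decomposition levels is not stated here. The coefficient values are made up for illustration.

```python
def relative_energy(coeffs_by_band):
    """Percentage of wavelet energy per band for one channel and one window."""
    # Energy of a band = sum of its squared detail coefficients.
    energy = {band: sum(c * c for c in coeffs)
              for band, coeffs in coeffs_by_band.items()}
    total = sum(energy.values())         # assumption: total over the given bands
    return {band: 100.0 * e / total for band, e in energy.items()}


# Made-up detail coefficients (illustration only, not real EEG data).
coeffs = {
    "gamma": [1.0, -1.0],
    "beta": [2.0],
    "alpha": [1.0, 1.0],
    "theta": [2.0],
    "delta": [2.0, 2.0],
}
pct = relative_energy(coeffs)
print(pct)
```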

2.4. Feature engineering for the asymmetry analysis

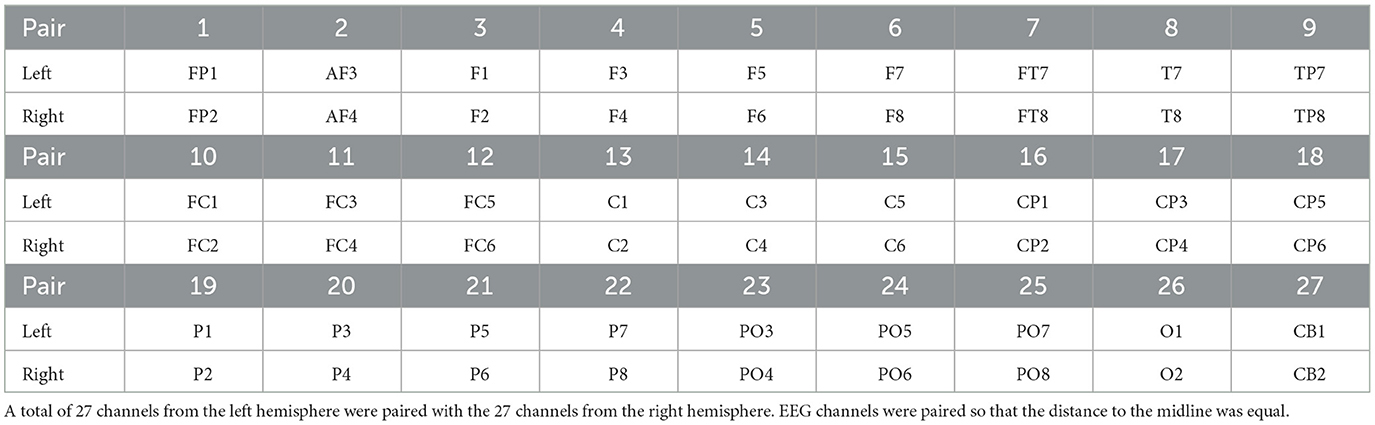

Since our focus in this study was to explore the involvement of the hemisphere asymmetry in processing emotions, we only considered the 54 EEG channels located on the right and left hemispheres, discarding the 8 EEG channels on the center line. We then paired the 54 EEG channels by mapping those channels positioned equidistantly from the center line. Table 2 shows the 27 mapped EEG pairs.
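One way to sketch the pairing step is to rely on the 10-20 naming convention, in which odd-numbered electrodes lie over the left hemisphere, even-numbered electrodes over the right, and midline electrodes end in "z". This rule is an assumption about how the pairs in Table 2 could be reproduced, not a description of the procedure actually used, and the channel list below is a small illustrative subset.

```python
import re


def mirror_channel(name):
    """Return the right-hemisphere counterpart of a left channel, or None."""
    match = re.fullmatch(r"([A-Z]+)(\d+)", name.upper())
    if match is None:                    # midline channels (e.g., CZ) have no number
        return None
    prefix, number = match.group(1), int(match.group(2))
    if number % 2 == 0:                  # even numbers are already on the right
        return None
    return f"{prefix}{number + 1}"       # e.g., FT7 -> FT8


def pair_channels(channels):
    """List (left, right) pairs for every left channel whose mirror is present."""
    present = {c.upper() for c in channels}
    pairs = []
    for c in channels:
        right = mirror_channel(c)
        if right in present:
            pairs.append((c.upper(), right))
    return pairs


# Illustrative subset of channel names, including two midline channels.
subset = ["FP1", "FPZ", "FP2", "FT7", "FT8", "T7", "T8", "CZ", "TP7", "TP8"]
pairs = pair_channels(subset)
print(pairs)
```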

To compute an asymmetry feature, we adapted the rational asymmetry index based on power spectrum density (Huang et al., 2012; Duan et al., 2013; Aris et al., 2018) for the wavelet energy percentage. Specifically, we calculated the asymmetry feature by taking the natural logarithm of the ratio between the energy in the left and right hemispheres, as follows:

$$f_{ch_x,ch_y,b} = \ln\left(\frac{E_{ch_x,b}}{E_{ch_y,b}}\right) \tag{2}$$

where $E_{ch_x,b}$ and $E_{ch_y,b}$ were the energy of the left EEG channel x and the right EEG channel y for the frequency band b.
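As a brief illustration of the asymmetry feature (Equation 2), the sketch below computes the log ratio for made-up energy percentages. The function name `asymmetry_feature` is hypothetical, and both energies are assumed to be strictly positive so that the logarithm is defined.

```python
import math


def asymmetry_feature(e_left, e_right):
    """Natural log of the left/right energy ratio for one pair and one band."""
    return math.log(e_left / e_right)


# Made-up relative energies (%) for a left/right pair in one band.
print(asymmetry_feature(12.0, 8.0))   # positive: more relative energy on the left
print(asymmetry_feature(8.0, 12.0))   # negative: more relative energy on the right
print(asymmetry_feature(5.0, 5.0))    # zero: no asymmetry
```

The feature is antisymmetric: swapping the two channels only flips its sign, which is what allows the sign of a model coefficient to be read as a left or right association later on.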

2.5. Asymmetry analysis

We conducted two experiments to explore which bands and channel pairs were more involved in emotion processing. In the first experiment, we performed statistical hypothesis tests to determine whether there were significant differences between the energy of the 27 EEG pairs. In the second experiment, we trained a logistic regression model for each emotion (neutral, sadness, happiness, and fear) using the asymmetry-ratio features (Equation 2) to identify which bands and channel pairs were more important for predicting each type of emotion.

2.5.1. Experiment 1: comparing electrical activity in the right and left hemispheres

To compare the left and right hemispheres when processing emotions, we applied the quotient rule of logarithms to Equation 2 to express the log ratio as a difference:

$$\Delta_{ch_x,ch_y,b} = \ln\left(E_{ch_x,b}\right) - \ln\left(E_{ch_y,b}\right) \tag{3}$$

where $E_{ch_x,b}$ and $E_{ch_y,b}$ were the energy of the left EEG channel x and the right EEG channel y for the frequency band b.

We then used the Wilcoxon signed-rank test to assess whether the distribution of the logarithmic difference between the EEG channel pairs (see Table 2) was symmetric about zero. The null and alternative hypotheses were defined as follows:

$$H_0: \eta_{\Delta_{ch_x,ch_y,b}} = 0 \qquad H_1: \eta_{\Delta_{ch_x,ch_y,b}} \neq 0 \tag{4}$$

where $\eta_{\Delta_{ch_x,ch_y,b}}$ was the median of the logarithmic energy difference in the frequency band b between the left EEG electrode x and the right EEG electrode y.

Considering that we performed 540 hypothesis tests for the 27 pairs, four emotions, and five frequency bands, we corrected the significance value for the hypothesis tests using Bonferroni correction (α = 0.05/540 = 0.00009) to reduce the probability of type I error (false positives).
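The test and the corrected threshold can be sketched as follows. This is a simplified, standard-library version of the two-sided Wilcoxon signed-rank test that uses the normal approximation without tie or continuity corrections, so its p-values may differ slightly from those of a statistics package (for example, `scipy.stats.wilcoxon`). The input differences are made up, and at least one non-zero difference is assumed.

```python
import math


def wilcoxon_signed_rank(diffs):
    """Two-sided Wilcoxon signed-rank test (normal approximation, no corrections)."""
    d = [x for x in diffs if x != 0]           # zero differences are discarded
    n = len(d)
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:                               # assign average ranks to ties in |d|
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, x in zip(ranks, d) if x > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w_plus, p_value


# Bonferroni-corrected threshold: 27 pairs x 4 emotions x 5 bands.
n_tests = 27 * 4 * 5
alpha = 0.05 / n_tests

# Made-up log differences (Equation 3) for one pair, band, and emotion.
w, p = wilcoxon_signed_rank([0.1 * k for k in range(1, 21)])
print(n_tests, alpha, w, p, p < alpha)
```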

2.5.2. Experiment 2: detecting relevant asymmetry ratio pairs

To further investigate which asymmetry ratios between the EEG channel pairs were most relevant for predicting each type of emotion, we used a one-vs.-all approach to train a logistic regression model for each emotion. This approach involved training a separate logistic regression model for each emotion, with the corresponding emotion class labeled as “1” and the other emotions labeled as “0”. The reason for using a one-vs.-all approach instead of a multinomial logistic regression was that the individual binary logistic regressions allowed us to obtain a vector of coefficients that associated which ratios between the left and right EEG channels and which frequency bands were most relevant for predicting each specific emotion of interest. In contrast, a multinomial logistic regression would select an emotion as a reference and provide three coefficient vectors comparing the odds of a sample being of each emotion relative to the reference emotion.

We used the “leave-one-group-out” (LOGO) cross-validation technique to train each binary logistic regression, ensuring that our models were subject-independent. This technique guaranteed that EEG signals from the same subject never appeared in both the training and test sets during an iteration. As we had data from 15 subjects, we performed 15 iterations, with each iteration using the EEG samples from one subject as the test set, and the samples from the remaining 14 subjects forming the training set.
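The splitting logic can be sketched in a few lines, assuming each sample carries a subject identifier. The generator below is a hypothetical helper written for illustration; libraries such as scikit-learn provide an equivalent splitter.

```python
def leave_one_group_out(groups):
    """Yield (held_out_group, train_indices, test_indices) for each distinct group."""
    for held_out in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test


# Made-up subject identifier for each of six samples (three subjects).
subject_ids = [1, 1, 2, 2, 3, 3]
folds = list(leave_one_group_out(subject_ids))
for held_out, train, test in folds:
    print(f"test subject {held_out}: train={train} test={test}")
```

With 15 subjects, the same generator would yield 15 folds, each holding out every sample of one subject.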

At each iteration of the LOGO cross-validation, we trained the logistic regression models using the training set and evaluated the performance of our models using eight different metrics on the test set. These metrics included sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), F1-score, geometric mean (G-mean), the area under the Receiver Operating Characteristics (AUC-ROC) curve, and the area under the precision-recall curve (AUC-PR). Sensitivity measures the proportion of true positives (samples correctly classified as belonging to the target emotion) out of all the actual target emotion samples. Specificity measures the proportion of true negatives (samples correctly classified as not belonging to the target emotion) out of all the actual non-target emotion samples. PPV measures the proportion of true positives out of all the samples classified as belonging to the target emotion, while NPV measures the proportion of true negatives out of all the samples classified as not belonging to the target emotion. The F1-score is the harmonic mean of precision and recall, while the G-mean is the square root of the product of sensitivity and specificity. The AUC-ROC measures the overall ability of the model to distinguish between target and non-target emotion samples, while the AUC-PR measures the trade-off between precision and recall for different classification thresholds. Table 3 shows a mathematical representation of the metrics used to assess the performance of binary logistic regression models.
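The threshold-based metrics described above can be computed directly from the four confusion-matrix counts, as in this minimal sketch. The counts are made up and only mimic a one-vs.-all setting in which the target emotion is the minority class. The function name `binary_metrics` is hypothetical.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Threshold-based metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # recall for the target emotion
    specificity = tn / (tn + fp)
    ppv = tp / (tp + fp)                  # precision
    npv = tn / (tn + fn)
    f1 = 2 * ppv * sensitivity / (ppv + sensitivity)
    g_mean = math.sqrt(sensitivity * specificity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        "f1": f1,
        "g_mean": g_mean,
    }


# Made-up counts: 10 target-emotion samples and 30 non-target samples.
metrics = binary_metrics(tp=6, fp=6, tn=24, fn=4)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this toy case the NPV (about 0.857) exceeds the PPV (0.5) even though sensitivity is modest, which shows how class imbalance alone can inflate the NPV.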

In total, we had 135 asymmetry features (Equation 2), which were derived from the 27 EEG pairs multiplied by five frequency bands. Since developing subject-independent emotion recognition approaches is challenging due to the poor generalizability of features across subjects, we applied two feature pre-processing steps before training the logistic regression model.

First, in order to determine which features were more relevant for the emotion of interest, we conducted an ANOVA F-value analysis. This analysis allowed us to assess whether the means of each feature were significantly different across the two classes. A higher F-value indicated a larger difference in means between classes compared to the variation within each class. Using the p-values associated with the F-value of each feature, we selected those features that were statistically significant (p-value < 0.05).
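For a single feature, the F-value can be sketched as the ratio of between-class to within-class mean squares. The example below uses made-up feature values for the target and non-target classes and stops at the F statistic; obtaining the p-value requires the F distribution, which is left to a statistics library. The function name `anova_f` is hypothetical.

```python
def anova_f(*groups):
    """One-way ANOVA F statistic for one feature split into two or more groups."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the samples around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Made-up asymmetry values of one feature for the two classes.
target = [1.0, 2.0, 3.0]
non_target = [4.0, 5.0, 6.0]
f_value = anova_f(target, non_target)
print(f_value)
```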

Second, to further remove irrelevant asymmetry features and to avoid overfitting, we used regularization, a technique that has been shown to be effective for subject-independent emotion recognition approaches (Li et al., 2018). Thus, we applied elastic net regularization (Zou and Hastie, 2005) to train each logistic regression at each iteration of the LOGO cross-validation. The hyperparameters, namely the l1-ratio (ϕ) and the regularization strength (C), were optimized using nested cross-validation on the training set. The grid searched in the nested cross-validation was defined by $\phi \in \{0, 0.1, 0.2, \ldots, 0.8, 0.9, 1\}$ and $C \in \{10^{-3}, 10^{-2}, \ldots, 10^{2}\}$. All logistic regression models were fitted without an intercept (i.e., setting $w_0 = 0$) to ensure that the predictions depended only on the features.

At each of the 15 iterations of the LOGO cross-validation, the logistic regression generated a coefficient for each of the 135 asymmetry features. We stored these 135 logistic regression coefficients at each iteration. Once the LOGO cross-validation was complete, we averaged the logistic regression coefficients across the iterations, thus obtaining a set of coefficients for each type of emotion. These sets of coefficients indicated which features (i.e., asymmetry log-ratios between EEG pairs and frequency bands) were most relevant for predicting each type of emotion.

2.5.3. Finding relevant EEG channels for predicting emotions

To identify relevant EEG channels in processing emotions, we conducted two analyses with the logistic regression coefficients. First, we identified the most relevant features for the binary prediction of the four logistic regressions by identifying the five highest and the five lowest average coefficients.
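The averaging and ranking steps can be sketched as follows, assuming the coefficients of each fold are stored as a list in a fixed feature order. The feature names and coefficient values are made up, and only three features and two folds are used instead of 135 features and 15 folds. Both function names are hypothetical.

```python
def average_coefficients(fold_coefs):
    """Average each feature's coefficient across cross-validation folds."""
    n_folds = len(fold_coefs)
    return [sum(column) / n_folds for column in zip(*fold_coefs)]


def extreme_features(names, avg_coefs, k=5):
    """Return the k highest and the k lowest average coefficients with their names."""
    ranked = sorted(zip(names, avg_coefs), key=lambda item: item[1])
    highest = ranked[-k:][::-1]          # largest coefficient first
    lowest = ranked[:k]                  # most negative coefficient first
    return highest, lowest


# Made-up coefficients: one row per fold, one column per feature.
names = ["FT7-FT8/gamma", "T7-T8/gamma", "TP7-TP8/alpha"]
fold_coefs = [
    [0.2, -0.4, 0.0],
    [0.4, -0.2, 0.1],
]
avg = average_coefficients(fold_coefs)
highest, lowest = extreme_features(names, avg, k=1)
print(avg, highest, lowest)
```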

For our second analysis, we used the logistic regression coefficients to generate brain topography maps. To that aim, we leveraged the fact that the trained logistic regression models predict the class of a sample using the following equation:

$$\hat{y} = \frac{1}{1 + e^{-\sum_{i=1}^{135} \omega_i f_i}} \tag{5}$$

where ŷ is the probability that the sample belongs to the emotion of interest, and $\omega_i$ is the logistic regression coefficient associated with the ith feature ($f_i$), which corresponds to the log-ratio of the relative energy between the left and right EEG channels (Equation 2).

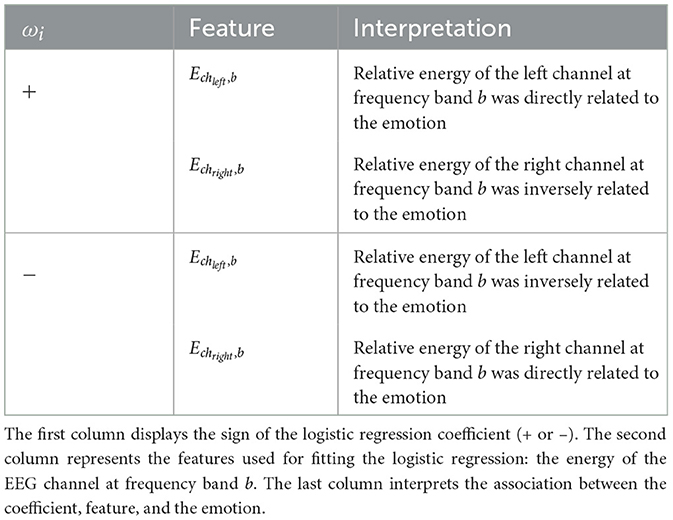

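The 135 coefficients correspond to the combination of the 27 EEG channel pairs (Table 2) and the five frequency bands (delta, theta, alpha, beta, and gamma). By expanding the log-ratio of the features, the summation in the exponent of Equation 5 can be rewritten as:

$$\sum_{i=1}^{135} \omega_i f_i = \sum_{j=1}^{27} \sum_{k=1}^{5} \omega_{j,k} \left[ \ln\left(E_{ch_{x_j},b_k}\right) - \ln\left(E_{ch_{y_j},b_k}\right) \right] \tag{6}$$

where $\omega_{j,k}$ is the coefficient $\omega_i$ of the feature built from the jth pair and the kth band, and $E_{ch_{x_j},b_k}$ and $E_{ch_{y_j},b_k}$ were the energy of the left EEG channel x and the right EEG channel y for the jth log-ratio at the frequency band $b_k$.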

The prediction of the emotion of interest can be determined based on the sign of the summation in Equation 6. If the summation is positive, the sample is predicted to belong to the emotion of interest. Conversely, if the summation is negative, the sample is predicted not to belong to the emotion of interest. Therefore, when the logistic coefficient ωi is positive, the energy of the left EEG channel x at the frequency band bk (Echxj, bk) is directly associated with the emotion of interest. In contrast, in such cases, the energy of the right EEG channel y at the frequency band bk (Echyj, bk) is inversely related to the emotion prediction. On the other hand, when the logistic coefficient ωi is negative, the opposite relationship holds: the energy of the left EEG channel is inversely related to the emotion, whereas the energy of the right EEG channel is directly related to the emotion. Table 4 summarizes the four cases we used to establish the relationship between the emotions, the features f, and the logistic regression coefficients, ω.
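The sign rule can be checked numerically with a small sketch of the intercept-free logistic model (Equation 5). The weights and energies below are made up, and a decision threshold of 0.5 is assumed. The function name `predict_proba` is hypothetical.

```python
import math


def predict_proba(weights, features):
    """Probability of the emotion of interest for a logistic model without intercept."""
    linear_sum = sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-linear_sum))


# Made-up coefficients for two asymmetry features.
weights = [0.8, -0.5]
# Feature 1: left energy above right (positive log ratio), paired with a positive weight.
# Feature 2: left energy below right (negative log ratio), paired with a negative weight.
features = [math.log(12.0 / 8.0), math.log(9.0 / 10.0)]

p = predict_proba(weights, features)
p_mirrored = predict_proba(weights, [-f for f in features])   # hemispheres swapped
print(p, p_mirrored)
```

Both terms of the summation are positive here, so the probability exceeds 0.5. Swapping the left and right energies flips the sign of every feature and pushes the probability below 0.5 by the same margin.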

2.6. Validation with external dataset

To evaluate the generalization capacity of the average coefficients in predicting emotions, we conducted a performance test using an independent dataset, specifically the SEED-V dataset (Liu et al., 2021). The SEED-V dataset comprises EEG data collected from subjects while they watched video clips representing five different emotions: happy, sad, fear, disgust, and neutral. The study involved 16 participants (6 male and 10 female) who each watched 15 movie clips (3 clips per emotion) across 3 sessions. EEG signals were recorded from 62 EEG channels at a sampling rate of 1,000 Hz.

To maintain consistency with the dataset used to train the logistic regression models, we excluded the EEG samples corresponding to disgust and focused solely on samples associated with happiness, sadness, neutral, and fear. We processed the EEG signals following the same methodology used for SEED-IV signals (refer to subsection 2.2), and computed the asymmetry features by calculating the logarithmic difference between the left and right EEG nodes, as described in subsections 2.3 and 2.4.

For each of the four emotions, we built a logistic regression model whose coefficients were set to the average coefficients obtained across the 15 subjects of the SEED-IV dataset. We then calculated the AUC-ROC value for each of the 16 subjects of the SEED-V dataset.
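A minimal sketch of this validation step is shown below. It scores new samples with a fixed coefficient vector and computes the AUC-ROC through its rank interpretation, that is, the probability that a randomly chosen target sample scores higher than a randomly chosen non-target sample, with ties counted as one half. All numbers are made up, and the function names are hypothetical.

```python
def linear_score(weights, features):
    """Linear score of the intercept-free model; the AUC only depends on its ranking."""
    return sum(w * f for w, f in zip(weights, features))


def auc_roc(labels, scores):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up fixed coefficients and asymmetry features for four samples of one subject.
weights = [1.0, -1.0]
samples = [[0.9, 0.0], [0.5, -0.3], [0.2, -0.1], [0.0, -0.1]]
labels = [1, 0, 1, 0]

scores = [linear_score(weights, x) for x in samples]
auc = auc_roc(labels, scores)
print(scores, auc)
```

Because the logistic function is monotonic, ranking samples by the linear score gives the same AUC-ROC as ranking them by the predicted probability.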

3. Results

3.1. Comparing electrical activity in the right and left hemispheres

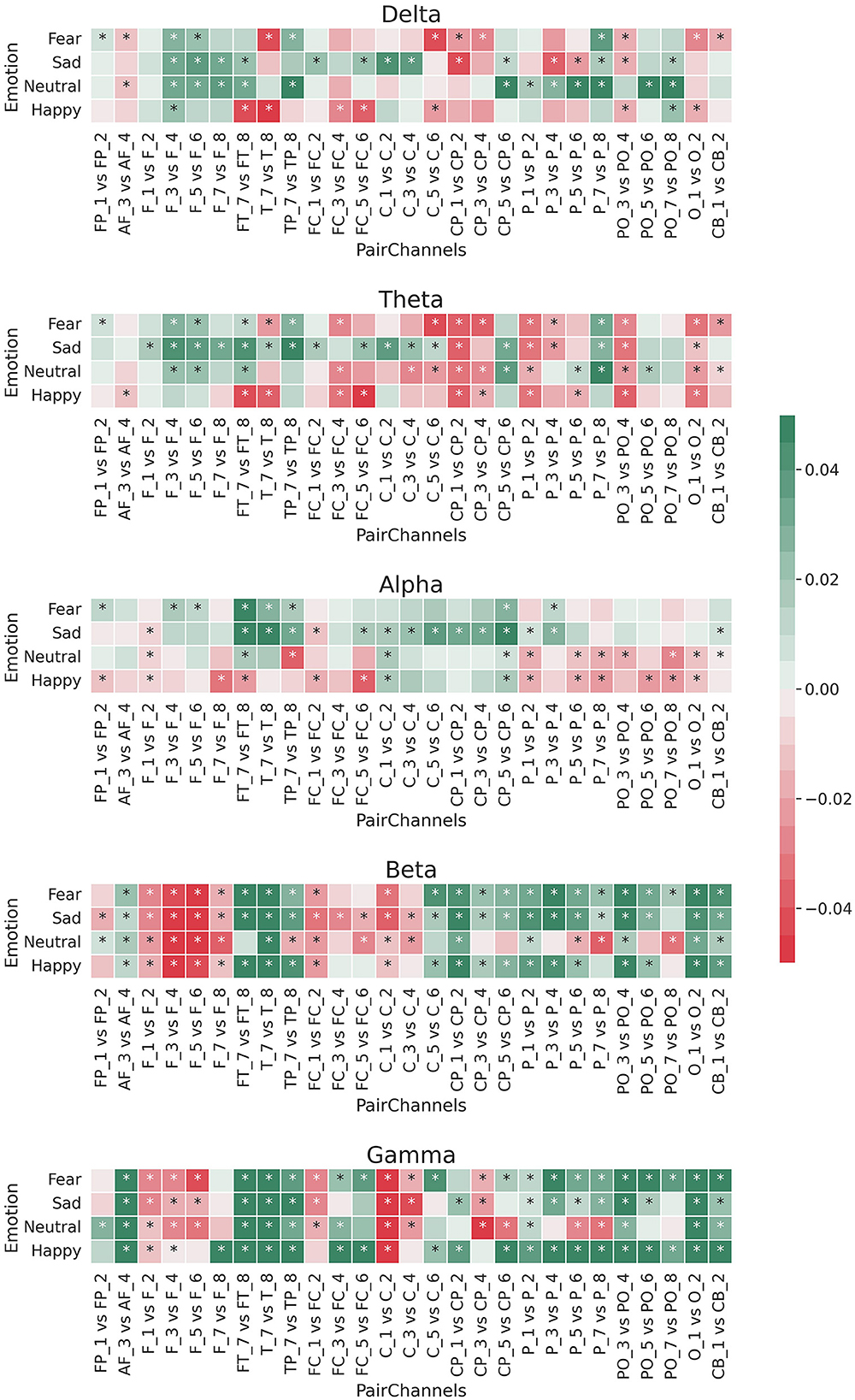

Figure 2 shows the logarithmic difference (Equation 3) between the medians of the energy of the 27 paired EEG channels for each of the five frequency bands. Most of these differences were significant [Wilcoxon signed-rank test with Bonferroni correction; p-value < 0.00009 (0.05/540)], with most occurring in the beta and gamma frequency bands.

Figure 2. Median of logarithmic wavelet energy difference (Equation 3) between the paired EEG electrodes for the five frequency bands: delta, theta, alpha, beta, and gamma. * means that the difference was statistically significant [Bonferroni-corrected p-value < 0.00009 (0.05/540); Wilcoxon signed-rank test].

For the beta and gamma bands, the left channels had more relative energy than the right channels for each brain region except the frontal area (F1/F2, F3/F4, F5/F6, and F7/F8). This pattern was the opposite for the theta and delta bands, in which the right channels had more relative energy for EEG channels outside the frontal area. In the case of the alpha frequency band, there was a difference among the emotions. Specifically, for sad and fear, the log differences of the relative energy between the left and right channels were mostly positive (green colors), while for happy and neutral emotions, those log differences were mostly negative (red colors).

3.2. Predicting emotions using relevant asymmetry ratio pairs

Appendix Tables 5–8 present the performance metrics for the binary logistic regression models across the 15 subjects. The sensitivity (the accuracy for correctly detecting samples from the emotion of interest) and the specificity (the accuracy for correctly detecting samples not from the emotion of interest) exhibited variability across the subjects, with a mean sensitivity between 51.0 and 52.4%, and a mean specificity between 51.0 and 59.2%. The G-mean, a metric that combines these two metrics while considering data imbalance, obtained a mean value of 52.7, 51.7, 50.1, and 52.6% for the neutral, sad, fear, and happy models, respectively. The AUC-ROC score showed a similar classification capacity for the models, with a mean value of 56.2% for neutral, 54.6% for sad, 51.6% for fear, and 58.4% for happy. The NPV, which indicates the precision in detecting samples that were not from the emotion of interest, exhibited the best performance across the four models, with an average value between 75.1 and 82.1%. This result suggests that the models were accurate in detecting samples that were not from the emotion of interest. The mean performance across the eight metrics was similar for the training and testing sets, indicating low overfitting during the training process.

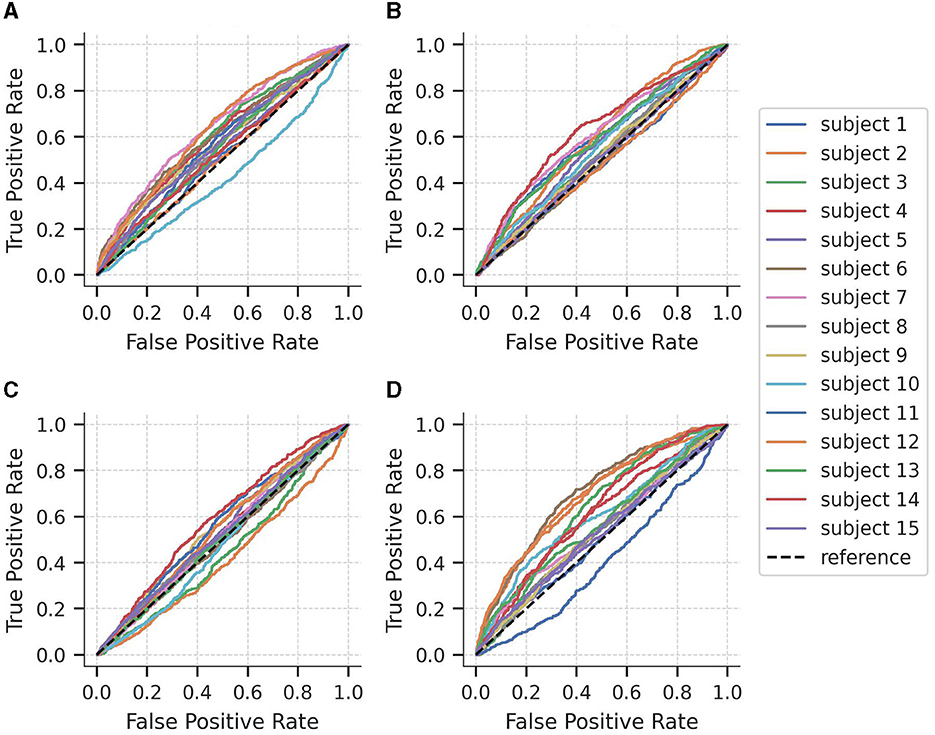

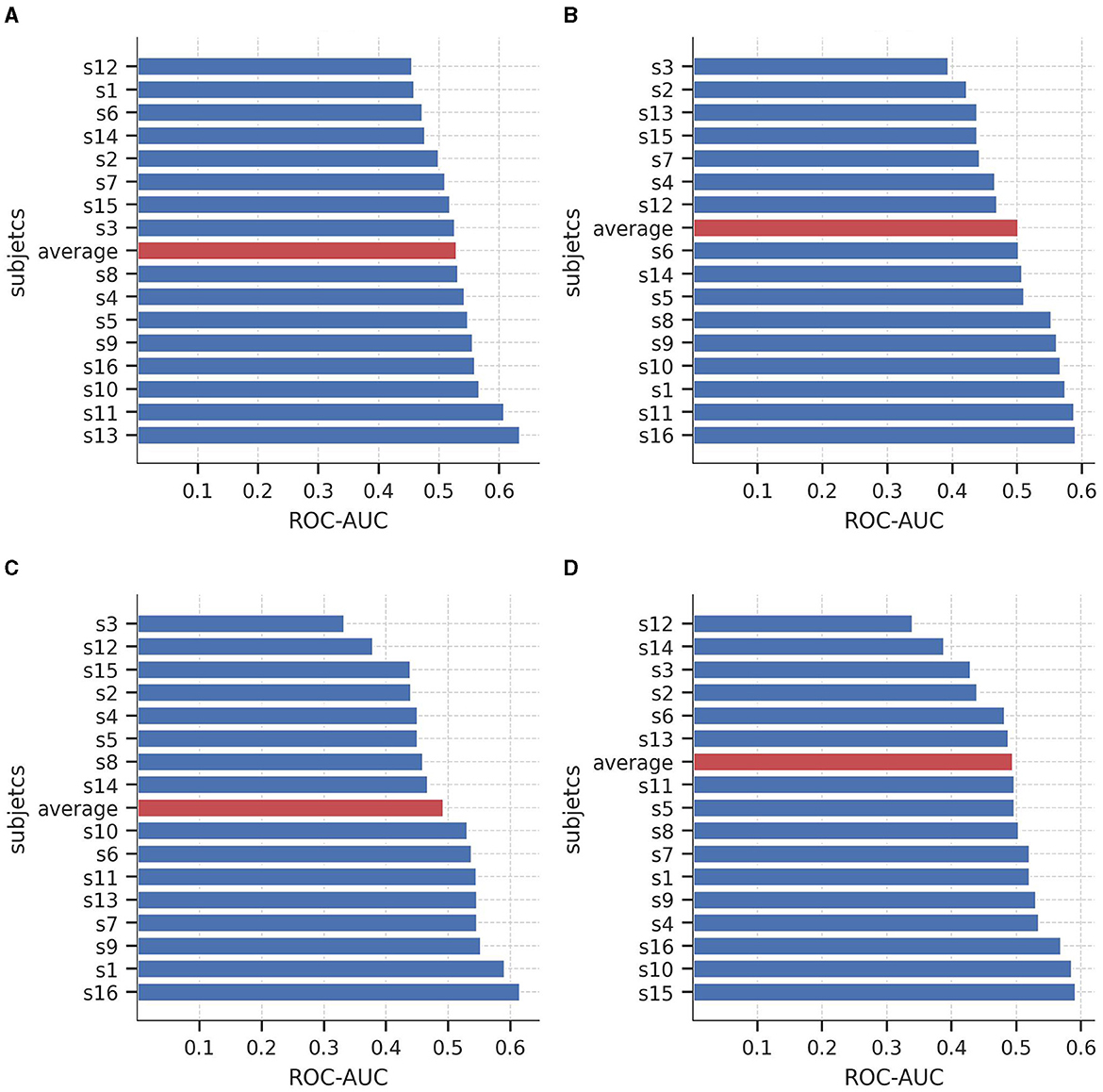

To further explore the performance of the models at different classification thresholds, Figure 3 displays the AUC-ROC curves for each subject of the SEED-IV dataset across the four binary logistic regression models. The results exhibit considerable variability in performance across subjects, with AUC-ROC values ranging from approximately 50 to 60%. Among the models, the classification of happy vs. non-happy demonstrates the highest discriminative capacity, whereas the fear vs. non-fear model exhibits the lowest ability to differentiate between samples belonging to the class and non-class categories.

Figure 3. Area under the curve of the Receiver Operating Characteristics (AUC-ROC) curves for each subject of the SEED-IV dataset for predicting (A) neutral vs. non-neutral, (B) sad vs. non-sad, (C) fear vs. non-fear, and (D) happy vs. non-happy.
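For readers who want to reproduce this kind of curve, the sketch below shows one way to obtain ROC points by sweeping the decision threshold and to integrate them with the trapezoidal rule. The function names and the toy scores are hypothetical; the study's own implementation is not described in this section.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) points obtained by sweeping the decision threshold."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]
    # Use each distinct score, from highest to lowest, as a threshold.
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if s >= thr and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= thr and t == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc_trapezoid(points):
    """Integrate the ROC curve with the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Toy example (made up): 1 = emotion of interest, scores = predicted probability.
y_true = [1, 0, 1, 0, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
points = roc_points(y_true, scores)
auc = auc_trapezoid(points)
print(round(auc, 4))
```

An AUC-ROC of 0.5 corresponds to the diagonal of the plot, that is, a model that ranks samples no better than chance.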

3.3. Relevant EEG channels for predicting emotions

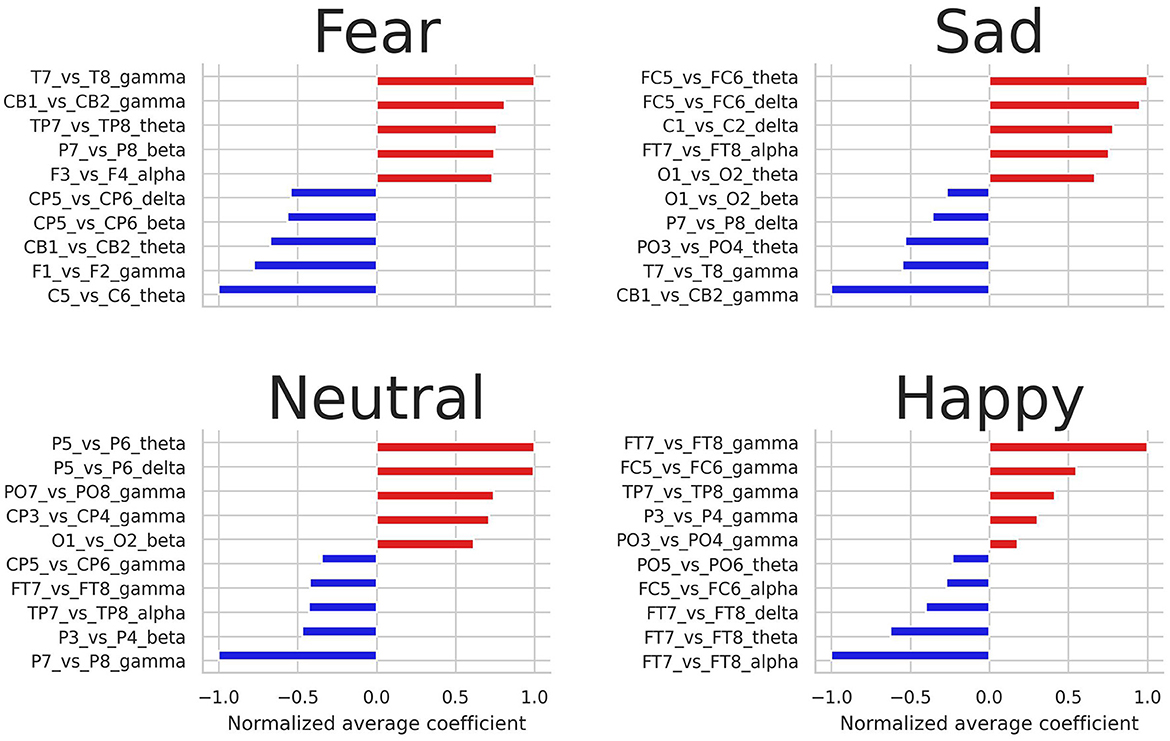

Figure 4 shows the highest and lowest normalized average coefficients for predicting fear, sadness, neutral, and happiness. Notably, the gamma frequency band emerges as the most influential, with energy log-ratios from this band serving as crucial features for distinguishing between the four emotional states. Asymmetry features extracted from the theta frequency band were also relevant. Regarding EEG pairs, FT7-FT8, TP7-TP8, and FC5-FC6 were among the most important for predicting the four emotions.

Figure 4. Highest and lowest five average coefficients for predicting fear, sadness, neutral, and happiness.
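The ranking shown in Figure 4 can be sketched as follows: coefficients from the subject-wise models are averaged per feature, normalized, and sorted. The normalization used here (division by the largest absolute value) is an assumption, and the feature names and coefficient values are invented for illustration only.

```python
def average_coefficients(per_subject):
    """Average each feature's coefficient over the subject-wise models."""
    names = list(per_subject[0].keys())
    n = len(per_subject)
    return {name: sum(model[name] for model in per_subject) / n for name in names}


def normalize(coefs):
    """Scale coefficients to [-1, 1] by the largest absolute value (assumed scheme)."""
    scale = max(abs(v) for v in coefs.values())
    return {name: v / scale for name, v in coefs.items()}


def extremes(coefs, k):
    """Return the k highest and the k lowest coefficients, sorted descending."""
    ranked = sorted(coefs.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k], ranked[-k:]


# Made-up coefficients from two hypothetical subject-wise models.
per_subject = [
    {"gamma:T7-T8": 0.8, "gamma:FT7-FT8": -0.4, "alpha:FT7-FT8": 0.2, "theta:TP7-TP8": -0.1},
    {"gamma:T7-T8": 0.4, "gamma:FT7-FT8": -0.8, "alpha:FT7-FT8": 0.0, "theta:TP7-TP8": 0.1},
]
normalized = normalize(average_coefficients(per_subject))
top, bottom = extremes(normalized, k=1)
print(top, bottom)
```

Features at both ends of the ranking matter: large positive and large negative coefficients each indicate a strong association, in opposite directions.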

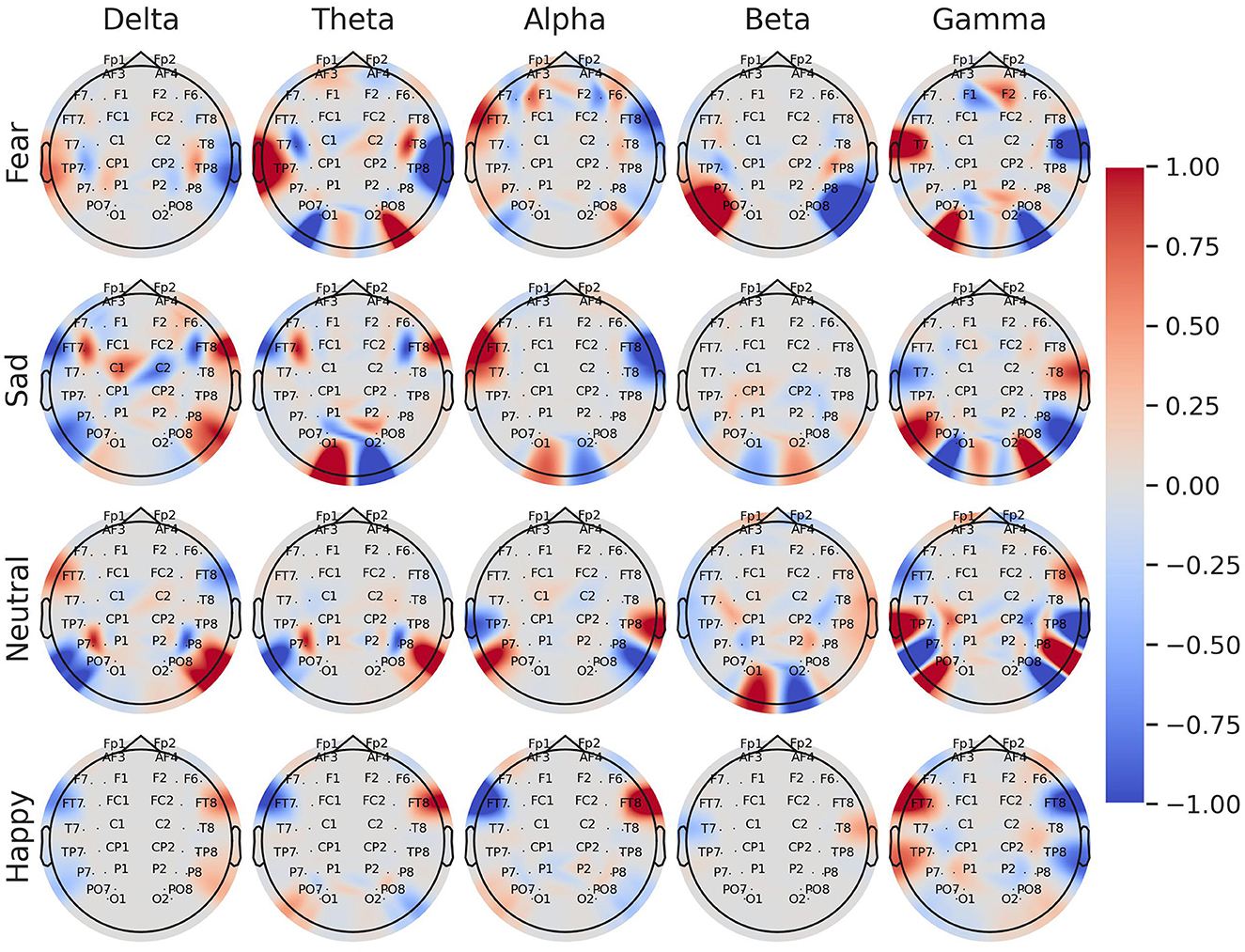

Figure 5 shows a brain topography with the average coefficients of the logistic regression models for each emotion and frequency band. Most of the associations between the emotions and the energy of EEG channels were located in the lateral frontal, temporal, and parietal areas. Specifically, in the gamma band, greater relative energy values of T7 were directly related to fear and inversely related to happiness and sadness. In contrast, greater relative energy values of the left EEG channels FT7, TP7, and PO3 were directly related to happiness and inversely related to fear. Notably, in the alpha band, higher energy values in channel FT7 were directly related to sadness and fear and inversely related to happiness. The lower frequency bands, theta and delta, mostly showed direct and inverse relationships for the negative emotions (sad and fear).

Figure 5. Brain topography of the normalized average coefficients for logistic regression models trained with asymmetry log-ratio features for each individual class: fear, sadness, neutrality, and happiness. The color tones represent the associations between the energy of the EEG channel and the emotion. Red tones indicate a direct association between the energy of the EEG channel and the emotion, whereas blue tones indicate an inverse association.

3.4. Validation with external dataset

Figure 6 illustrates the AUC-ROC obtained with the average coefficients when evaluated on subjects from an independent, unseen dataset, SEED-V. Consistent with the findings from SEED-IV, the AUC-ROC averaged around 0.5, with variable performance across subjects. Specifically, for the sad and happy classes, nine of the 16 subjects achieved an AUC-ROC of 0.5 or higher. For the neutral and fear classes, eight subjects attained an AUC-ROC of 0.5 or higher. These results emphasize the individual differences in classification performance among subjects when detecting emotions from EEG signals.

Figure 6. Area under the receiver operating characteristic curve (AUC-ROC) for the 16 subjects of the SEED-V dataset for predicting (A) neutral vs. non-neutral, (B) sad vs. non-sad, (C) fear vs. non-fear, and (D) happy vs. non-happy. Red bars show the average AUC-ROC across the subjects.
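The external validation step can be pictured with the following minimal sketch, which applies one fixed coefficient vector to each unseen subject and computes a rank-based AUC-ROC per subject. The weights, subject identifiers, and feature values are all invented; only the overall procedure (fixed averaged coefficients, per-subject AUC-ROC, count of subjects at or above 0.5) mirrors the text.

```python
import math


def predict_proba(weights, bias, x):
    """Logistic regression probability for one feature vector."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


def auc_rank(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical averaged coefficients for two asymmetry features.
weights, bias = [1.0, -1.0], 0.0

# Made-up data for two unseen subjects: (feature vectors, binary labels).
subjects = {
    "s1": ([[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2], [0.3, 0.1]], [1, 0, 0, 1]),
    "s2": ([[-0.3, 0.1], [0.2, -0.1], [0.4, 0.0], [-0.1, 0.2]], [1, 0, 0, 1]),
}

aucs = {}
for name, (features, labels) in subjects.items():
    scores = [predict_proba(weights, bias, x) for x in features]
    aucs[name] = auc_rank(labels, scores)

n_at_or_above_chance = sum(1 for value in aucs.values() if value >= 0.5)
print(aucs, n_at_or_above_chance)
```

Because the coefficients are not refitted on the new subjects, per-subject AUC-ROC values both above and below 0.5 are possible, which is the pattern summarized in Figure 6.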

4. Discussion

Brain complexity and high variance across subjects challenge the mapping between EEG electrical activity and emotions (Li et al., 2022). These mappings could benefit from examining the asymmetry in emotional processing between the left and right hemispheres of the brain (Alves et al., 2008). In this study, we explored the utilization of asymmetry features to identify the EEG channels and frequency bands that are more relevant for predicting happiness, sadness, fear, and neutrality across subjects. To that aim, we employed the DWT to extract the relative energy of each frequency band, and then we calculated the log-ratio of electrodes located in symmetrical positions. To our knowledge, this is the first study to utilize DWT-extracted relative energy to describe the brain's asymmetry in processing happiness, sadness, fear, and neutrality.
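The feature construction summarized above can be sketched in a few lines, starting from wavelet coefficients that have already been grouped by frequency band. This is only an illustration under stated assumptions: the coefficient values are invented, the ratio is taken as left over right, and the natural logarithm is used; the wavelet decomposition itself is not shown.

```python
import math


def relative_energy(band_coeffs):
    """Energy of each band divided by the channel's total energy over all bands."""
    energy = {band: sum(c * c for c in coeffs) for band, coeffs in band_coeffs.items()}
    total = sum(energy.values())
    return {band: e / total for band, e in energy.items()}


def asymmetry_log_ratio(left, right):
    """Log of left/right relative energy per band (direction is an assumption)."""
    return {band: math.log(left[band] / right[band]) for band in left}


# Made-up band coefficients for a symmetrical pair of channels.
ft7_coeffs = {"alpha": [1.0, 1.0], "gamma": [1.0, -1.0, 1.0, 1.0, 1.0, 1.0]}
ft8_coeffs = {"alpha": [2.0, 0.0], "gamma": [2.0, 0.0]}

ft7 = relative_energy(ft7_coeffs)
ft8 = relative_energy(ft8_coeffs)
ratios = asymmetry_log_ratio(ft7, ft8)
print(ratios)
```

With this convention, a positive value means the left channel carries a larger share of its energy in that band than the right channel does, a negative value means the opposite, and zero means the pair is symmetrical in that band.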

Our results indicate that brain hemispheres and frequency bands participated differently in processing happiness, sadness, fear, and neutrality. Unlike the “right-dominance” (Borod et al., 1998; Demaree et al., 2005) and the “valence lateralization” (Ahern and Schwartz, 1985; Davidson, 1995) models, we found that the involvement of each hemisphere is more closely tied to the frequency band. Only in the alpha frequency band (8–12 Hz) did we find lateralization when comparing a positive emotion (happiness) with negative emotions (sadness and fear). The distinct behavior shown in the alpha band between the positive (happy) and negative (sad and fear) emotions is consistent with the FAA analysis (Briesemeister et al., 2013). Because alpha activity is generally interpreted as inversely related to cortical activation, higher alpha-band activity on the right frontal channels (FP2, F4, and F6) during happiness indicates a larger allocation of cortical resources in the left hemisphere. Therefore, the left hemisphere is more involved in processing positive emotions. Similarly, higher alpha-band activity on the left frontal channels (FP1, F3, and F5) for sadness and fear indicates more involvement of the right hemisphere in processing negative emotions. This consistency is explained by the fact that the FAA is the logarithmic difference between the power of the two hemispheres, while our asymmetry feature is the logarithmic difference between the energy of the two hemispheres. Therefore, given that power is the amount of energy divided by time, there is a natural correlation between FAA and our asymmetry feature for the alpha band.
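To make the link between the two measures explicit, let $E_L$ and $E_R$ denote the alpha-band energy at a left channel and at its symmetrical right channel, and assume both are measured over the same window of duration $T$, so that power is $P = E/T$. The symbols and the left-over-right sign convention are notational assumptions introduced here for illustration.

$$
\ln\frac{P_L}{P_R} = \ln\frac{E_L/T}{E_R/T} = \ln\frac{E_L}{E_R} = \ln E_L - \ln E_R
$$

Under this assumption the window length cancels, so a log-difference in power and a log-difference in energy coincide. If relative energy $\rho = E/E^{\mathrm{tot}}$ is used instead, where $E^{\mathrm{tot}}$ is the total energy of the channel across all bands, one extra term appears:

$$
\ln\frac{\rho_L}{\rho_R} = \ln\frac{E_L}{E_R} - \ln\frac{E_L^{\mathrm{tot}}}{E_R^{\mathrm{tot}}}
$$

This is why the two measures are expected to correlate rather than to match exactly.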

For the other frequency bands, both hemispheres were involved in processing emotions. The left hemisphere played a more significant role in processing emotions at higher frequency bands (gamma and beta), whereas the right hemisphere was more involved at lower frequency bands (theta and delta). This bilateral activation aligns with recent findings in neuroscience, indicating dynamic behavior and balanced activation in both hemispheres (Morawetz et al., 2020; Stanković and Nešić, 2020; Palomero-Gallagher and Amunts, 2022). As highlighted by Stanković and Nešić (2020), the brain initially demonstrates a right bias, but when emotional tasks are introduced, the electrical activity becomes distributed between both hemispheres. Considering that the data used in this study involved video clips lasting approximately two minutes each, it is expected that the activation of both hemispheres would increase throughout the recording session, as emotional stress was experienced.

The binary logistic regression models yielded valuable insights into the significance of frequency bands and EEG nodes in predicting emotions. The average coefficients obtained from the four trained models indicated the involvement of all frequency bands in emotion prediction, with the gamma band being particularly relevant. In particular, asymmetry features extracted from the gamma band in the temporal, frontal, and parietal areas (FT7-FT8, T7-T8, and TP7-TP8) emerged as relevant features for predicting emotions. The significance of this frequency band and its association with cortex areas can be seen in the brain topography (see Figure 5), where an inverse relationship between fear and happiness is observed for the EEG pairs FT7-FT8, T7-T8, and TP7-TP8 within the gamma band. Additionally, the topography reveals that EEG nodes located at the sides of the scalp hold the greatest relevance across all frequency bands in predicting emotional states. These findings offer valuable insights into the brain regions involved in emotional processing and may have important implications for developing more accurate and efficient models for emotion recognition using EEG signals.

Regarding the comparison between negative and positive emotions, our findings suggest that happiness contrasts more strongly with fear than with sadness. In detail, our logistic regression analysis showed opposing behavior between the coefficients for the EEG pairs in the gamma band for predicting happiness and fear. Specifically, our results suggest that higher relative energy at the PO3 node in the gamma band increases the likelihood of a subject experiencing happiness and decreases the likelihood of experiencing fear. In contrast, higher values of the EEG node T7 in the gamma band increase the probability of a subject experiencing fear and decrease the probability of experiencing happiness. Similarly, for the alpha band, higher positive values of the EEG node FT7 are directly related to identifying fear and inversely related to identifying happiness. Given that happiness and fear are emotions with high arousal, whereas sadness is an emotion with low arousal, it seems that the asymmetry lateralization is more pronounced for emotions that have opposite valence but higher arousal levels.
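The direction of these relationships follows from how a logistic regression coefficient acts on the odds. As a sketch, write $x_j$ for one asymmetry feature and $\beta_j$ for its coefficient in the model of a given emotion, with $p$ the predicted probability of that emotion (this notation is assumed here and is not taken from the original text):

$$
\ln\frac{p}{1-p} = \beta_0 + \sum_j \beta_j x_j , \qquad \frac{\mathrm{odds}(x_j + \Delta)}{\mathrm{odds}(x_j)} = e^{\beta_j \Delta}
$$

A positive $\beta_j$ therefore multiplies the odds of the emotion by a factor greater than one when the feature increases by $\Delta > 0$, and a negative $\beta_j$ by a factor smaller than one. A feature whose coefficient is positive in the happiness model and negative in the fear model thus shifts the two predictions in opposite directions.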

In regard to previous work, our findings also support the importance of the gamma and beta bands in processing emotions (see Figure 4), as previously reported by Zheng and Lu (2015). Additionally, our logistic regression analysis identified the ratios T7/T8, FT7/FT8, and FC5/FC6 as relevant EEG pairs to discriminate between positive and negative emotions, which is consistent with Pane et al. (2019), who found that brain activity extracted from the pairs T7-T8, C3-C4, and O1-O2 was important for classifying different emotional states. Finally, our results are in line with Zheng et al. (2018), who also reported that neural information from the EEG nodes located in the frontal region, such as FP1-FP2, FT7-FT8, and FC5-FC6, is one of the most relevant for predicting different emotional states.

It is important to acknowledge that the performance of the logistic regression models varied across subjects, with AUC-ROC values ranging from 41 to 70% for all 15 subjects across the four emotions. This notable inter-subject variability in the metrics highlights the difficulty of subject-independent approaches, which are inherently challenging due to the substantial EEG variability observed between individuals (Arevalillo-Herráez et al., 2019; Chen et al., 2021). Nonetheless, the limited overfitting observed between the training and test sets indicates that the logistic regression models were able to capture emotional processing patterns to some extent. Interestingly, when the average coefficients were used to predict emotions in an independent dataset (SEED-V), a similar performance in terms of AUC-ROC values was achieved, suggesting that the extracted patterns may generalize when applied to EEG signals obtained from new subjects.

We also note that our accuracy rates were lower than those obtained by deep learning models (Li et al., 2021). However, as reported in Li et al. (2021), non-deep learning models are expected to achieve lower performance for subject-independent predictions on the SEED-IV dataset, with an accuracy between 31 and 37% for regression models and SVM. Although we cannot directly compare our results with previous studies using regression models and SVM because we trained individual binary models rather than a multi-class model, our performance is within the expected range for non-deep learning models attempting subject-independent approaches (Takahashi, 2004; Chanel et al., 2011).

One notable advantage of our approach over more accurate but complex deep learning models (Zheng and Lu, 2015; Li et al., 2021) is the interpretability provided by logistic regression models. This interpretability enabled us to successfully identify significant asymmetry relationships between EEG pairs and frequency bands associated with the processing of happiness, sadness, fear, and neutrality. Furthermore, the use of a subject-independent approach, combined with the interpretability of our model, facilitated the identification of shared patterns among the 15 subjects included in our dataset.

The lower performance might be a consequence of using a logistic regression model, a predictive model with high interpretability but lower performance rates than deep learning models (Arrieta et al., 2020). Future studies could attempt to address this issue by using predictive models that balance accuracy and interpretability, such as fuzzy modeling. This might improve the performance of emotion recognition while preserving interpretability.

One limitation of our study is that although we used an independent dataset to assess our findings, both datasets are from similar populations. In particular, both datasets (SEED-IV and SEED-V) were collected from students of Shanghai Jiao Tong University. As EEG data exhibit different brain patterns among individuals due to factors such as gender, culture, and genetics (Hamann and Canli, 2004), our results may not apply to individuals of different cultures. For instance, previous studies have reported that differences between Western and Asian cultures can affect the performance of emotion recognition approaches (Bradley et al., 2001). Nevertheless, despite the fact that both SEED-IV and SEED-V were collected at the same location, individuals of both datasets are mutually exclusive, allowing a fair validation of the results presented in our study.

Another area for improvement of our study is the absence of exploration into advanced artifact removal methods, such as recurrent networks (Ghosh et al., 2023) and spectrum adjustment (Ahmed et al., 2023). EEG signals are commonly contaminated by various artifacts, including muscle and eye-blinking artifacts, which can significantly impact the accuracy of emotion classification. To address this limitation, future improvements could involve integrating pre-processing techniques that outperform traditional frequency filters in eliminating artifacts caused by eye blinking and muscle movement. By incorporating these advanced artifact removal techniques, it is possible to reduce the effect of confounding factors on emotion recognition. Further research should focus on exploring these methods to improve the robustness and reliability of our findings.

5. Conclusion

This study explored the association between asymmetry EEG pairs and frequency bands in the processing of happiness, sadness, fear, and neutrality. The findings revealed that the gamma and alpha bands in the lateral frontal, temporal, and parietal regions (T7-T8, FT7, FT7-FT8, and TP7-TP8) played a crucial role in distinguishing and predicting positive and negative emotions. Regarding the neuroscience models, we observed valence lateralization in the alpha band, with positive emotions predominantly processed in the left hemisphere and negative emotions in the right hemisphere. However, for the other frequency bands, both hemispheres were found to be involved in the processing of emotions. These outcomes provide valuable insights into the specific brain regions and frequency bands that should be considered when developing predictive models for emotion recognition. Future research can leverage these relevant features to enhance the accuracy and robustness of emotion classification models.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://bcmi.sjtu.edu.cn/~seed/seed-iv.html#.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the patients/participants or patients/participants legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

CV and SC are the supervisors of FM. FM and CV performed the experiments and contributed to the writing of the manuscript. SC acquired the data used for the experiments. All authors reviewed and approved the final manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2023.1217178/full#supplementary-material

References

Aftanas, L., Reva, N., Savotina, L., and Makhnev, V. (2006). Neurophysiological correlates of induced discrete emotions in humans: an individually oriented analysis. Neurosci. Behav. Physiol. 36, 119–130. doi: 10.1007/s11055-005-0170-6

Ahern, G. L., and Schwartz, G. E. (1985). Differential lateralization for positive and negative emotion in the human brain: EEG spectral analysis. Neuropsychologia 23, 745–755. doi: 10.1016/0028-3932(85)90081-8

Ahmed, M. A., and Loo, C. K. (2014). “Emotion recognition based on correlation between left and right frontal EEG assymetry,” in 2014 10th France-Japan/8th Europe-Asia Congress on Mecatronics (MECATRONICS2014-Tokyo) (IEEE), 99–103. doi: 10.1109/MECATRONICS.2014.7018585

Ahmed, M. Z. I., Sinha, N., Ghaderpour, E., Phadikar, S., and Ghosh, R. (2023). A novel baseline removal paradigm for subject-independent features in emotion classification using EEG. Bioengineering 10, 54. doi: 10.3390/bioengineering10010054

Ahmed, M. Z. I., Sinha, N., Phadikar, S., and Ghaderpour, E. (2022). Automated feature extraction on asmap for emotion classification using eeg. Sensors 22, 2346. doi: 10.3390/s22062346

Allen, J. J., Coan, J. A., and Nazarian, M. (2004). Issues and assumptions on the road from raw signals to metrics of frontal EEG asymmetry in emotion. Biol. Psychol. 67, 183–218. doi: 10.1016/j.biopsycho.2004.03.007

Alves, N. T., Fukusima, S. S., and Aznar-Casanova, J. A. (2008). Models of brain asymmetry in emotional processing. Psychol. Neurosci. 1, 63–66. doi: 10.3922/j.psns.2008.1.0010

Arevalillo-Herráez, M., Cobos, M., Roger, S., and García-Pineda, M. (2019). Combining inter-subject modeling with a subject-based data transformation to improve affect recognition from EEG signals. Sensors 19, 2999. doi: 10.3390/s19132999

Aris, S. A. M., Jalil, S. Z. A., Bani, N. A., Kaidi, H. M., and Muhtazaruddin, M. N. (2018). Statistical feature analysis of EEG signals for calmness index establishment. Int. J. Integr. Eng. 10, 3471. doi: 10.30880/ijie.2018.10.07.003

Arrieta, A. B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., et al. (2020). Explainable artificial intelligence (xai): Concepts, taxonomies, opportunities and challenges toward responsible ai. Inf. Fusion 58, 82–115. doi: 10.1016/j.inffus.2019.12.012

Borod, J. C., Cicero, B. A., Obler, L. K., Welkowitz, J., Erhan, H. M., Santschi, C., et al. (1998). Right hemisphere emotional perception: evidence across multiple channels. Neuropsychology 12, 446. doi: 10.1037/0894-4105.12.3.446

Boudet, S., Peyrodie, L., Gallois, P., and Vasseur, C. (2008). “A robust method to filter various types of artifacts on long duration EEG recordings,” in 2008 2nd International Conference on Bioinformatics and Biomedical Engineering (IEEE), 2357–2360. doi: 10.1109/ICBBE.2008.922

Bradley, M. M., Codispoti, M., Sabatinelli, D., and Lang, P. J. (2001). Emotion and motivation II: sex differences in picture processing. Emotion 1, 300. doi: 10.1037/1528-3542.1.3.300

Briesemeister, B. B., Tamm, S., Heine, A., Jacobs, A. M., et al. (2013). Approach the good, withdraw from the bad—a review on frontal alpha asymmetry measures in applied psychological research. Psychology 4, 261. doi: 10.4236/psych.2013.43A039

Chanel, G., Rebetez, C., Bétrancourt, M., and Pun, T. (2011). Emotion assessment from physiological signals for adaptation of game difficulty. IEEE Trans. Syst. Man Cyber. Part A. 41, 1052–1063. doi: 10.1109/TSMCA.2011.2116000

Chen, H., Sun, S., Li, J., Yu, R., Li, N., Li, X., et al. (2021). “Personal-zscore: Eliminating individual difference for EEG-based cross-subject emotion recognition,” in IEEE Transactions on Affective Computing. doi: 10.1109/TAFFC.2021.3137857

Davidson, R. J. (1995). “Cerebral asymmetry, emotion, and affective style,” in Brain asymmetry, eds. R. J., Davidson, and K., Hugdahl (London: The MIT Press), 361–387.

Davidson, R. J., Ekman, P., Saron, C. D., Senulis, J. A., and Friesen, W. V. (1990). Approach-withdrawal and cerebral asymmetry: emotional expression and brain physiology: I. J. Person. Soc. Psychol. 58, 330. doi: 10.1037/0022-3514.58.2.330

Demaree, H. A., Everhart, D. E., Youngstrom, E. A., and Harrison, D. W. (2005). Brain lateralization of emotional processing: historical roots and a future incorporating “dominance”. Behav. Cogn. Neurosci. Rev. 4, 3–20. doi: 10.1177/1534582305276837

Ding, Y., Robinson, N., Zhang, S., Zeng, Q., and Guan, C. (2022). “Tsception: Capturing temporal dynamics and spatial asymmetry from EEG for emotion recognition,” in IEEE Transactions on Affective Computing. doi: 10.1109/TAFFC.2022.3169001

Duan, R.-N., Zhu, J.-Y., and Lu, B.-L. (2013). “Differential entropy feature for EEG-based emotion classification,” in 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER) (IEEE), 81–84. doi: 10.1109/NER.2013.6695876

Gandhi, T., Panigrahi, B. K., and Anand, S. (2011). A comparative study of wavelet families for EEG signal classification. Neurocomputing 74, 3051–3057. doi: 10.1016/j.neucom.2011.04.029

Gevins, A., Smith, M. E., McEvoy, L., and Yu, D. (1997). High-resolution EEG mapping of cortical activation related to working memory: effects of task difficulty, type of processing, and practice. Cerebral Cortex 7, 374–385. doi: 10.1093/cercor/7.4.374

Ghosh, R., Phadikar, S., Deb, N., Sinha, N., Das, P., and Ghaderpour, E. (2023). Automatic eyeblink and muscular artifact detection and removal from EEG signals using k-nearest neighbor classifier and long short-term memory networks. IEEE Sensors J. 23, 5422–5436. doi: 10.1109/JSEN.2023.3237383

Güler, I., and Übeyli, E. D. (2005). Adaptive neuro-fuzzy inference system for classification of EEG signals using wavelet coefficients. J. Neurosci. Methods 148, 113–121. doi: 10.1016/j.jneumeth.2005.04.013

Gunes, H., and Pantic, M. (2010). Automatic, dimensional and continuous emotion recognition. Int. J. Synth. Emot. 1, 68–99. doi: 10.4018/jse.2010101605

Hamann, S., and Canli, T. (2004). Individual differences in emotion processing. Curr. Opin. Neurobiol. 14, 233–238. doi: 10.1016/j.conb.2004.03.010

Heimann, M., Nordqvist, E., Strid, K., Connant Almrot, J., and Tjus, T. (2016). Children with autism respond differently to spontaneous, elicited and deferred imitation. J. Intell. Disab. Res. 60, 491–501. doi: 10.1111/jir.12272

Huang, D., Guan, C., Ang, K. K., Zhang, H., and Pan, Y. (2012). “Asymmetric spatial pattern for EEG-based emotion detection,” in The 2012 International Joint Conference on Neural Networks (IJCNN) (IEEE), 1–7. doi: 10.1109/IJCNN.2012.6252390

Kop, W. J., Synowski, S. J., Newell, M. E., Schmidt, L. A., Waldstein, S. R., and Fox, N. A. (2011). Autonomic nervous system reactivity to positive and negative mood induction: The role of acute psychological responses and frontal electrocortical activity. Biol. Psychol. 86, 230–238. doi: 10.1016/j.biopsycho.2010.12.003

Krisnandhika, B., Faqih, A., Pumamasari, P. D., and Kusumoputro, B. (2017). “Emotion recognition system based on EEG signals using relative wavelet energy features and a modified radial basis function neural networks,” in 2017 International Conference on Consumer Electronics and Devices (ICCED) (IEEE), 50–54. doi: 10.1109/ICCED.2017.8019990

Kudielka, B. M., Hellhammer, D. H., and Wüst, S. (2009). Why do we respond so differently? Reviewing determinants of human salivary cortisol responses to challenge. Psychoneuroendocrinology 34, 2–18. doi: 10.1016/j.psyneuen.2008.10.004

Li, X., Song, D., Zhang, P., Zhang, Y., Hou, Y., and Hu, B. (2018). Exploring EEG features in cross-subject emotion recognition. Front. Neurosci. 12, 162. doi: 10.3389/fnins.2018.00162

Li, X., Zhang, Y., Tiwari, P., Song, D., Hu, B., Yang, M., et al. (2022). EEG based emotion recognition: A tutorial and review. ACM Comput. Surv. 55, 1–57. doi: 10.1145/3524499

Li, Y., Wang, L., Zheng, W., Zong, Y., Qi, L., Cui, Z., et al. (2021). A novel bi-hemispheric discrepancy model for EEG emotion recognition. IEEE Trans. Cogn. Dev. Syst. 13, 354–367. doi: 10.1109/TCDS.2020.2999337

Lindsley, D. B., and Wicke, J. (1974). “Chapter 1 - the Electroencephalogram: Autonomous electrical activity in man and animals,” in Bioelectric Recording Techniques, eds. R. F., Thompson, and M. M., Patterson (New York, NY, USA: Academic Press), 3–83. doi: 10.1016/B978-0-12-689402-8.50008-0

Liu, W., Qiu, J.-L., Zheng, W.-L., and Lu, B.-L. (2021). Comparing recognition performance and robustness of multimodal deep learning models for multimodal emotion recognition. IEEE Trans. Cogn. Dev. Syst. 14, 715–729. doi: 10.1109/TCDS.2021.3071170

Liu, Y., and Sourina, O. (2014). “EEG-based subject-dependent emotion recognition algorithm using fractal dimension,” in 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC) 3166–3171. doi: 10.1109/SMC.2014.6974415

Liu, Y., Sourina, O., and Nguyen, M. K. (2010). “Real-time EEG-based human emotion recognition and visualization,” in 2010 International Conference on Cyberworlds 262–269. doi: 10.1109/CW.2010.37

Luan, X., Zhang, G., and Yang, K. (2022). “A bi-hemisphere capsule network model for cross-subject EEG emotion recognition,” in International Conference on Neural Information Processing (Springer), 325–336. doi: 10.1007/978-981-99-1645-0_27

Morawetz, C., Riedel, M. C., Salo, T., Berboth, S., Eickhoff, S. B., Laird, A. R., et al. (2020). Multiple large-scale neural networks underlying emotion regulation. Neurosci. Biobehav. Rev. 116, 382–395. doi: 10.1016/j.neubiorev.2020.07.001

Palomero-Gallagher, N., and Amunts, K. (2022). A short review on emotion processing: A lateralized network of neuronal networks. Brain Struct. Funct. 227, 673–684. doi: 10.1007/s00429-021-02331-7

Pane, E. S., Wibawa, A. D., and Purnomo, M. H. (2019). Improving the accuracy of EEG emotion recognition by combining valence lateralization and ensemble learning with tuning parameters. Cogn. Proc. 20, 405–417. doi: 10.1007/s10339-019-00924-z

Park, K. S., Choi, H., Lee, K. J., Lee, J. Y., An, K. O., and Kim, E. J. (2011). Emotion recognition based on the asymmetric left and right activation. Int. J. Med. Med. Sci. 3, 201–209. doi: 10.5897/IJMMS.9000091

Rangayyan, R. M. (2002). Biomedical Signal Analysis. Piscataway, NJ, USA: IEEE Press. doi: 10.1109/9780470544204

Sajno, E., Bartolotta, S., Tuena, C., Cipresso, P., Pedroli, E., and Riva, G. (2022). Machine learning in biosignals processing for mental health: A narrative review. Front. Psychol. 13, 1066317. doi: 10.3389/fpsyg.2022.1066317

Soroush, M. Z., Maghooli, K., Kamaledin Setarehdan, S., and Motie Nasrabadi, A. (2018). Emotion classification through nonlinear EEG analysis using machine learning methods. Int. Clin. Neurosci. J. 5, 135–149. doi: 10.15171/icnj.2018.26

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2019). Emotion recognition through EEG phase space dynamics and dempster-shafer theory. Med. Hypoth. 127, 34–45. doi: 10.1016/j.mehy.2019.03.025

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2020). Emotion recognition using EEG phase space dynamics and poincare intersections. Biomed. Signal Proc. Control 59, 101918. doi: 10.1016/j.bspc.2020.101918

Stanković, M., and Nešić, M. (2020). Functional brain asymmetry for emotions: Psychological stress-induced reversed hemispheric asymmetry in emotional face perception. Exper. Brain Res. 238, 2641–2651. doi: 10.1007/s00221-020-05920-w

Subasi, A. (2007). EEG signal classification using wavelet feature extraction and a mixture of expert model. Expert Syst. Applic. 32, 1084–1093. doi: 10.1016/j.eswa.2006.02.005

Takahashi, K. (2004). “Remarks on emotion recognition from bio-potential signals,” in 2nd International conference on Autonomous Robots and Agents (Citeseer) 1148–1153. doi: 10.1109/ICIT.2004.1490720

Tong, Y., Aliyu, I., and Lim, C.-G. (2018). Analysis of dimensionality reduction methods through epileptic EEG feature selection for machine learning in BCI. J. Korea Inst. Electr. Commun. Sci. 13, 1333–1342.

Valderrama, C. E. (2021). “A comparison between the hilbert-huang and discrete wavelet transforms to recognize emotions from electroencephalographic signals,” in 2021 43rd Annual International Conference of the IEEE Engineering in Medicine &Biology Society (EMBC) (IEEE), 496–499. doi: 10.1109/EMBC46164.2021.9630188

Valderrama, C. E., and Ulloa, G. (2012). “Spectral analysis of physiological parameters for emotion detection,” in 2012 XVII Symposium of Image, Signal Processing, and Artificial Vision (STSIVA) (IEEE), 275–280. doi: 10.1109/STSIVA.2012.6340595

Valderrama, C. E., and Ulloa, G. (2014). Combining spectral and fractal features for emotion recognition on electroencephalographic signals. WSEAS Trans. Signal Proc. 10, 481–496. Available online at: https://wseas.com/journals/articles.php?id=5213

Yan, R., Lu, N., Niu, X., and Yan, Y. (2022). “Hemispheric asymmetry measurement network for emotion classification,” in Chinese Conference on Biometric Recognition (Springer), 307–314. doi: 10.1007/978-3-031-20233-9_31

Yang, Y.-X., Gao, Z.-K., Wang, X.-M., Li, Y.-L., Han, J.-W., Marwan, N., et al. (2018). A recurrence quantification analysis-based channel-frequency convolutional neural network for emotion recognition from EEG. Chaos: Interdisc. J. Nonl. Sci. 28, 085724. doi: 10.1063/1.5023857

Yu, G., Li, X., Song, D., Zhao, X., Zhang, P., Hou, Y., et al. (2016). “Encoding physiological signals as images for affective state recognition using convolutional neural networks,” in 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 812–815. doi: 10.1109/EMBC.2016.7590825

Zhao, G., Zhang, Y., Ge, Y., Zheng, Y., Sun, X., and Zhang, K. (2018). Asymmetric hemisphere activation in tenderness: evidence from EEG signals. Scient. Rep. 8, 8029. doi: 10.1038/s41598-018-26133-w

Zheng, W., Liu, W., Lu, Y., Lu, B., and Cichocki, A. (2018). Emotionmeter: A multimodal framework for recognizing human emotions. IEEE Trans. Cybern. 49, 1110–1122. doi: 10.1109/TCYB.2018.2797176

Zheng, W.-L., and Lu, B.-L. (2015). Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Mental Dev. 7, 162–175. doi: 10.1109/TAMD.2015.2431497

Keywords: emotion recognition, electroencephalogram, affective computing, brain hemisphere asymmetry, logistic regression, interpretable predictive models

Citation: Mouri FI, Valderrama CE and Camorlinga SG (2023) Identifying relevant asymmetry features of EEG for emotion processing. Front. Psychol. 14:1217178. doi: 10.3389/fpsyg.2023.1217178

Received: 04 May 2023; Accepted: 21 July 2023; Published: 17 August 2023.

Edited by:

Tjeerd Jellema, University of Hull, United Kingdom

Reviewed by:

Ebrahim Ghaderpour, Sapienza University of Rome, Italy

Sara Bagherzadeh, Islamic Azad University, Iran

Copyright © 2023 Mouri, Valderrama and Camorlinga. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fatima Islam Mouri, mouri-f@webmail.uwinnipeg.ca; Camilo E. Valderrama, c.valderrama@uwinnipeg.ca

†These authors share first authorship
