ORIGINAL RESEARCH article

Front. Psychol., 24 July 2023

Sec. Media Psychology

Volume 14 - 2023 | https://doi.org/10.3389/fpsyg.2023.1191628

This article is part of the Research Topic: Coping with an AI-Saturated World: Psychological Dynamics and Outcomes of AI-Mediated Communication

Simone Grassini1,2*

The rapid advancement of artificial intelligence (AI) has generated an increasing demand for tools that can assess public attitudes toward AI. This study proposes the development and validation of the AI Attitude Scale (AIAS), a concise self-report instrument designed to evaluate public perceptions of AI technology. The first version of the AIAS proposed in the present manuscript comprises five items, including one reverse-scored item, and aims to gauge individuals’ beliefs about AI’s influence on their lives, careers, and humanity overall. The scale is designed to capture attitudes toward AI, focusing on the perceived utility and potential impact of technology on society and humanity. The psychometric properties of the scale were investigated using diverse samples in two separate studies. An exploratory factor analysis was initially conducted on a preliminary 5-item version of the scale. This exploratory validation study revealed the need to divide the scale into two factors. While the results demonstrated satisfactory internal consistency for the overall scale and its correlation with related psychometric measures, separate analyses for each factor showed robust internal consistency for Factor 1 but insufficient internal consistency for Factor 2. As a result, a second version of the scale was developed and validated, omitting the item that displayed weak correlation with the remaining items in the questionnaire. The refined final 1-factor, 4-item AIAS demonstrated superior overall internal consistency compared to the initial 5-item scale and the proposed factors. Further confirmatory factor analyses, performed on a different sample of participants, confirmed that the 1-factor model (4 items) of the AIAS exhibited an adequate fit to the data, providing additional evidence for the scale’s structural validity and generalizability across diverse populations.
In conclusion, the analyses reported in this article suggest that the developed and validated 4-item AIAS can be a valuable instrument for researchers and professionals working on AI development who seek to understand and study users’ general attitudes toward AI.

Artificial Intelligence (AI) is a terminology that refers to a technology that enables software and machines to emulate human intelligence (see, e.g., Fetzer and Fetzer, 1990). The potential uses of AI systems span various disciplines, including computer science, engineering, biology, neuroscience, and psychology. AI is rapidly transforming various aspects of modern society, including healthcare, transportation, finance, and education (Harari, 2017; Makridakis, 2017). As AI technologies become more integrated into daily life, understanding public attitudes and perceptions toward AI is crucial for guiding their development, adoption, and regulation (Zhang and Dafoe, 2019; Araujo et al., 2020; O’Shaughnessy et al., 2022).

The ongoing progress in AI has led to the development of potentially groundbreaking technological developments such as self-driving cars (Hong et al., 2021). Additionally, AI-based products like Apple’s Siri and Amazon’s Alexa have gained widespread adoption in everyday life (Brill et al., 2019), providing voice-command services for tasks like weather forecasts and navigation. Moreover, AI research has given rise to humanoid robots, such as social robots (Sandoval et al., 2014; Glas et al., 2016; for a review see Hentout et al., 2019). AI’s integration into daily life offers numerous benefits, such as safer driving (Manoharan, 2019), and improved healthcare (Johnson et al., 2021).

This expansion of artificial intelligence presents both exciting opportunities and potential challenges. While AI has the potential to revolutionize numerous industries, it also carries risks such as job displacement due to automation (Tschang and Almirall, 2021). Although AI can generate new employment opportunities, these roles may be markedly different from those that AI replaces (Wilson et al., 2017). Public opinion regarding AI is diverse, with some embracing its benefits, while others exhibit ambivalence or even anxiety (Fast and Horvitz, 2017). Prominent figures such as Stephen Hawking have cautioned that the progression of AI could ultimately endanger human existence (Cellan-Jones, 2014; Kumar and Choudhury, 2022). Similarly, Tesla CEO and tech entrepreneur Elon Musk has expressed concerns about AI development on multiple occasions (Cellan-Jones, 2014). He, along with other experts, has advocated for a ban on military robots (Gibbs, 2017). In March 2023, Musk and several key figures in the AI field penned an open letter calling for a temporary halt to AI development (Pause Giant AI Experiments: An Open Letter, 2023), though this proposal has received mixed reactions from other experts, who instead emphasize that AI can be an opportunity for the future of humankind (Samuel, 2023; see also earlier works, e.g., Archer, 2021; Samuel, 2021).

Considering the ongoing debate and the numerous perspectives held by various interested parties, stakeholders, and ordinary citizens, it is of paramount importance for psychologists and cognitive scientists interested in human-computer interaction, and more generally in the acceptability and adoption of emerging technologies, to develop a concise, reliable, and valid instrument for assessing attitudes toward AI that can be easily implemented and scored. Investigating this area can yield invaluable insights into the determinants influencing individuals’ acceptance of or resistance to AI, as well as the potential benefits and risks they associate with its deployment (Nadimpalli, 2017; Eitel-Porter, 2021). Moreover, understanding these factors can aid in the development of educational initiatives and public awareness campaigns that address concerns and misconceptions about AI while emphasizing its potential for positive impact and providing rational information about possible dangers. By fostering a deeper understanding of public sentiment and expectations surrounding AI, researchers and policymakers can work together to ensure that AI is developed and implemented responsibly and ethically. Such collaboration will serve to maximize the benefits of AI while mitigating its potential risks, ultimately leading to a more harmonious integration of AI technologies into our daily lives and society.

To develop the scale items, recent literature on the topic of AI perception and attitude was examined to identify major themes and previous efforts to create similar scales (see, e.g., Schepman and Rodway, 2020; Sindermann et al., 2021). It was found that experts, the public, and the media all expressed both positive and negative views about AI (Fast and Horvitz, 2017; Anderson et al., 2018; Cave et al., 2019). Large-scale surveys confirmed these mixed perspectives and reflected similar key themes (Zhang and Dafoe, 2019). Public concerns included job displacement, ethical issues, and non-transparent decision-making. In contrast, positive attitudes centered on AI’s potential to improve efficiency and provide innovative solutions in various domains. Interestingly, a phenomenon called “algorithm appreciation” has been reported, wherein AI was preferred over humans in certain contexts (Logg et al., 2019).

Some studies delved deeper into specific aspects of attitude toward AI. For instance, potential job loss emerged as a significant concern due to the high computerizability of numerous occupations (Chui et al., 2016; Frey and Osborne, 2017). Ethical concerns were also prominent, particularly in medical settings (Morley et al., 2020). Public opinion was divided on using AI for tasks traditionally performed by medical staff, and many people felt uncomfortable with personal medical information being used in AI applications. Vayena et al. (2018) suggested that trust in AI could be promoted through employing measures for personal data protection, unbiased decision-making, appropriate regulation, and transparency, a view supported by other recent discussions on trust in AI (Schaefer et al., 2016; Barnes et al., 2019; Sheridan, 2019; Middleton et al., 2022).

Although there are existing instruments that evaluate people’s acceptance of technology (e.g., Davis, 1989; Parasuraman and Colby, 2015), most of them do not specifically address AI, which may differ in key aspects regarding acceptance. The Technology Acceptance construct (Davis, 1989) primarily emphasizes a user’s willingness to adopt technology through consumer choice. However, AI adoption frequently does not involve consumer choice, as large organizations and governments often implement AI without seeking input from end users, who are then left with no option but to engage with it. Consequently, traditional technology acceptance measures may not be ideally suited for gauging attitudes toward AI. Other existing measures tend to be lengthy, context-specific, or lack empirical validation. Additionally, most questionnaires designed for evaluating technological attitudes and acceptance (e.g., The Media and Technology Usage and Attitudes Scale - MTUAS, see Rosen et al., 2013) have not been specifically developed and validated considering modern consumer-oriented AI systems, such as OpenAI ChatGPT or text-to-image AI services.

In recent years, a few scales have been developed specifically to assess attitudes toward AI. Schepman and Rodway (2020) introduced the General Attitudes toward Artificial Intelligence Scale (GAISS), a 20-item scale with a two-factor structure (positive and negative attitudes toward AI). Sindermann et al. (2021) proposed a concise 5-item scale, the Attitude Toward Artificial Intelligence scale (ATAI), featuring a two-factor structure (acceptance and fear). Another scale (Threats of Artificial Intelligence Scale, TAI; Kieslich et al., 2021) has also been developed to specifically assess fear of AI technology. However, these scales face challenges concerning their practicality and possible use in the context of assessing general attitude.

The GAISS, with its many items, can be time-consuming to administer, making it less suitable for large-scale surveys or situations with limited participant time. In contrast, the ATAI scale is easier to administer but emphasizes extreme outcomes with items like “Artificial intelligence will destroy humankind” or “Artificial intelligence will benefit humankind.” This approach may not capture the nuanced attitudes people may have, which could include a mix of optimism, skepticism, and concerns about specific issues. The scale also appears to focus more on negative AI aspects (fear, destruction, and job losses) than on positive or factual aspects, potentially leading to an overemphasis on negative attitudes and a less accurate representation of people’s overall AI perceptions. The ATAI scale also highlights emotions, with three out of five items loading on the “fear” factor and only two on the “acceptance” factor in the two-factor model proposed by Sindermann et al. (2021).

Moreover, both scales were developed and tested in a context where large language models (LLMs) like ChatGPT were not yet widely available and the public debates surrounding these AI systems had not yet emerged (for a review see Leiter et al., 2023). The rapid implementation of these AI systems and increased exposure to information about them may have significantly altered public attitudes toward AI technology and how these systems are perceived as useful and impactful for the future. Developing a concise, reliable, and valid scale for assessing AI attitudes would facilitate research and practice in understanding and addressing public perceptions of AI. A short and usable scale would also support non-psychologists, such as AI professionals and the technology sector, in gathering critical individual variables when testing products for the general population.

This study aims to address the existing gap in scientific understanding by creating and validating the AI Attitude Scale (AIAS), a concise instrument designed to assess public attitudes toward AI. The AIAS strives to be a brief yet dependable tool that is easy and quick to administer for both psychological research and non-psychologists interested in gauging users’ or citizens’ attitudes toward AI, such as software developers, businesses, organizations, and stakeholders.

The scale draws on established theoretical frameworks, such as the Technology Acceptance Model (TAM) (Davis, 1989) and the Unified Theory of Acceptance and Use of Technology (UTAUT) (Venkatesh et al., 2003), as well as empirical research on AI-related risks (Cheatham et al., 2019; Kaur et al., 2022) and societal implications (Ernst et al., 2019; Tschang and Almirall, 2021). It considers various aspects of AI’s potential influence on individuals’ lives, work, and the broader human experience, while also addressing the likelihood of future AI adoption. The AIAS is designed to capture attitudes toward AI by focusing on the perceived utility and potential impact of technology on society and humanity, including perceived benefits, potential risks, and intentions to use AI technologies. In line with items (and theoretical standpoints) discussed in similar scales (GAISS and ATAI), the AIAS also includes some items that can be related to emotional statements; however, it does not focus on evaluating AI-elicited emotions but attempts to provide a balanced assessment of attitudes toward AI.

The present article, based on 2 studies from distinct populations, outlines the development and validation of the AIAS, starting with an examination of the theoretical underpinnings of the scale items and the methods employed in scale development. Subsequently, the results of an exploratory factor analysis (EFA) and an assessment of the scale’s internal consistency and convergent validity are presented. Finally, the scale factor structure was evaluated using confirmatory factor analysis (CFA) with a different sample of participants.

The initial AIAS consisted of 5 items. One of the items was reverse scored to reduce response bias (DeVellis and Thorpe, 2021). Participants rated their agreement with each item using a 10-point Likert scale (1 = Not at all, 10 = Completely Agree). A 10-point scale was chosen for its high test–retest reliability and ease of use (Preston and Colman, 2000), as well as its good level of granularity. The selection of these specific items was based on several considerations. Primarily, the intent was to provide comprehensive coverage of key dimensions of attitudes toward AI, derived from prior theoretical and empirical work in the technology acceptance and risk perception literature. This included factors such as perceived usefulness, potential societal impact, adoption intention, risk perception, and overall evaluation of AI’s impact on humanity. Furthermore, the scale sought to incorporate the breadth of public opinion, acknowledging that attitudes toward AI can range from optimistic enthusiasm to concern and even fear of potential risks. The items originally included in the 5-item scale were the following:

1) I believe that AI will improve my life.

This item was derived from the Technology Acceptance Model (TAM) (Davis, 1989), which posits that perceived usefulness (i.e., the degree to which an individual believes that using a technology will enhance their performance) is a primary determinant of technology acceptance. In the context of AI, this item assesses individuals’ beliefs about the potential benefits of AI in improving their quality of life. Such theory has been applied recently in the field of AI (see, e.g., Sohn and Kwon, 2020).

2) I believe that AI will improve my work.

Like the first item, this item is also grounded in the TAM (Davis, 1989) and reflects individuals’ beliefs about the potential benefits of AI in the work domain. This item addresses the role of AI in generally improving human work condition, in line with expectations of AI technology discussed in the current scientific literature (Wijayati et al., 2022).

3) I think I will use AI technology in the future.

This item is based on the concept of behavioral intention, which is central to several theories of technology acceptance, including the TAM (Davis, 1989) and the Unified Theory of Acceptance and Use of Technology (UTAUT) (Venkatesh et al., 2003), including its current discussion in relationship with AI (Venkatesh, 2022). Behavioral intention is considered a proximal determinant of actual technology use, and this item measures individuals’ intentions to adopt and use AI technologies in the future.

4) I think AI technology is a threat to humans (reverse item).

This reverse-scored item assesses individuals’ concerns about the potential risks and negative consequences of AI technology. The item is grounded in the literature on the risk of super intelligent AI (Barrett and Baum, 2017) and risk perception associated with technology (Slovic, 1987; Frey and Osborne, 2017). These theoretical standpoints highlight the importance of understanding individuals’ concerns about the potential dangers posed by AI, such as job displacement, loss of privacy, or even existential threats.

5) I think AI technology is positive for humanity.

This item captures individuals’ overall evaluation of the impact of AI technology on society and humanity as a whole. The item is grounded in the literature on the social implications of AI (Brynjolfsson and McAfee, 2014) and reflects the belief that AI can contribute to societal progress and well-being by addressing complex global challenges, improving healthcare (Loh et al., 2022), enhancing education (Xia et al., 2022; Yang, 2022), and fostering economic growth (Jones, 2022; Matytsin et al., 2023).

In conclusion, the selection of these items was aimed at achieving a balance between theoretical grounding and coverage of the range of public attitudes toward AI. It is also noteworthy that these items may not be exhaustive and may not cover all aspects related to AI attitude, and that future iterations of the scale may incorporate additional items to further enrich the measurement of attitudes toward AI.
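The scoring logic implied by the item list above can be sketched in a few lines. This is a minimal illustration, not the author's scoring script: the function name `score_aias`, the dictionary layout, and the example ratings are hypothetical, and taking the mean of the items (rather than the sum) is an assumption of this sketch.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 10  # 10-point response format described above
REVERSED_ITEMS = {4}          # item 4 ("threat to humans") is reverse-scored


def score_aias(responses, items=(1, 2, 3, 4, 5)):
    """Return the mean score over the chosen items after reverse-scoring.

    `responses` maps item number -> raw rating on the 1-10 scale.
    Averaging (rather than summing) is an assumption of this sketch.
    """
    scored = []
    for item in items:
        raw = responses[item]
        if not SCALE_MIN <= raw <= SCALE_MAX:
            raise ValueError(f"item {item}: rating {raw} outside 1-10")
        # Reverse-scoring mirrors the rating around the scale midpoint.
        if item in REVERSED_ITEMS:
            scored.append(SCALE_MIN + SCALE_MAX - raw)
        else:
            scored.append(raw)
    return mean(scored)


# Hypothetical respondent, for illustration only.
example = {1: 8, 2: 7, 3: 9, 4: 3, 5: 6}
five_item = score_aias(example)                      # item 4 becomes 11 - 3 = 8
four_item = score_aias(example, items=(1, 2, 3, 5))  # version without item 4
```

On a 1 to 10 scale, reverse-scoring maps a raw rating x to 11 - x, so a low "threat" rating contributes a high attitude score.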

Prior to the experiments, participants were given an overview of what AI is and how it is used. This included information on current AI developments like virtual assistants, content recommendation algorithms for media streaming services, and AI-powered communication tools such as grammar checkers and chatbots. These uses of AI systems were explicitly mentioned in the information document prior to the presentation of the questionnaires. The aim was to engage the participants in thinking about the essence of AI and its ongoing advancements, thus minimizing potential sources of misinterpretation.

In the first study (EFA), a gender-balanced convenience sample of 230 UK adults was assembled using Prolific, an online platform for participant recruitment. A sample size of >200 for factor analysis is generally accepted as fair according to the literature (Comrey and Lee, 2013). All participants indicated they used a computer or laptop with a physical keyboard. The sample size was determined by the number of variables analyzed in a more comprehensive survey on AI-generated data perception, which included the questions investigated in this article. EFA is typically used for exploratory purposes, where the goal is to uncover the underlying factor structure of a set of observed variables, and it is a data-driven technique that allows for flexibility in model specification. While the scale items emerged from theoretical standpoints, it was preferred to first establish their underlying factor structure through EFA to allow for an unbiased exploration of the data, and then to perform a CFA with a different participant sample.

For the second study (CFA), a separate convenience sample of 300 US adults, with characteristics like the UK sample, was recruited through the same platform. The sample size was based on the number of variables examined in an extensive survey focusing on human-computer and human-AI interaction, incorporating the questions examined in this article.

In both studies, participants were required to be fluent in English and over 18 years old. All participants in both studies were asked to read and explicitly accept an informed consent form before participating. They were informed about the tasks they would be performing and reminded of their right to withdraw from the study at any time. Both studies adhered to the Declaration of Helsinki for scientific studies involving human participants and complied with local and national regulations. No personally identifiable information was collected from study participants.

Participants in both studies completed an online survey that included the AIAS items, along with other measures of attitudes toward technology, as well as demographic questions. The attitude dimension of the Media and Technology Usage and Attitudes Scale (MTUAS; Rosen et al., 2013) was measured and used to explore convergent validity in both studies. The MTUAS is a comprehensive psychometric instrument designed to assess individuals’ usage patterns and attitudes toward various forms of media and technology. It has been shown to have good validity as a psychometric measure (e.g., Sigerson and Cheng, 2018). This scale encompasses multiple dimensions; the ones considered in the present study are positive and negative attitudes toward technology and anxiety related to technology use. The MTUAS has been extensively used by researchers and practitioners to gain insights into how people interact with and perceive technology in their daily lives or in professional environments (e.g., Rashid and Asghar, 2016; Becker et al., 2022; Srivastava et al., 2022). The MTUAS factors of positive attitudes, negative attitudes, multi-tasking, and anxiety were included in the original survey (as they are presented together in the original scale); however, after preliminary analyses it was decided not to report the analyses for the multi-tasking factor, as it is not directly related to technology attitude.

For both studies, the surveys were developed using PsyToolkit (Stoet, 2010, 2017). The surveys included a questionnaire battery containing attention check questions, such as “select the highest value for this item” or “select the lowest value for this item.” Participants who failed one or more attention checks were excluded from the sample and replaced until the pre-determined final samples were reached. In the first study, six participants needed replacement, while in the second study, 18 participants required replacement due to failing one or more attention checks.

In the survey, the sample characteristics were examined, including age, gender, and level of education. In study 1, the sample featured a balanced distribution of males and females (114 each), with two participants choosing not to answer the question or selecting a third gender option. The ages of the participants ranged from 18 to 76 years old (M = 40.2, SD = 14.6). A majority held a university degree (59.6%), followed by high school (38.7%). A smaller portion of the sample had completed middle school (0.9%) or elementary school (0.9%), and none reported having no formal schooling.

In study 2, the sample also featured a balanced distribution of males (N = 142) and females (N = 150), with eight participants choosing not to answer the question or selecting a third gender option. The ages of the participants ranged from 18 to 81 years old (M = 40.2, SD = 14.6). A majority held a university degree (59.6%), followed by high school (39%), and only one participant reported completing only middle school (0.3%). None of the participants reported having completed only elementary school or not having any formal schooling.

EFAs were performed on data from Study 1 to assess the factor structure of the AIAS (Fabrigar and Wegener, 2011). The factor structure (1 factor, 4 items) identified in study 1 was further validated using a CFA with the data collected in study 2. Internal consistency and convergent validity with related measures were also examined for the samples in both studies (Cronbach, 1951; Shrout and Fleiss, 1979). Correlation matrices were computed to evaluate convergent validity of the developed scale with the MTUAS scale (positive, negative, and anxiety factors). Multiple linear regressions were computed to understand the relationship between the background information collected for the samples and AIAS score. Data analysis was performed using the statistical software Jamovi 2.3.21 (The Jamovi Project, 2022).

First, descriptive statistics were obtained for each item of the scale. The descriptive statistics for the AI attitude scale items are presented in Table 1. The skewness values for the items indicated that the data is roughly symmetric. The kurtosis values for the items indicated that the data is platykurtic.
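To make the terms concrete, the sketch below computes moment-based skewness and excess kurtosis for a set of ratings. It is illustrative only: the function name and the example ratings are hypothetical, and statistical packages typically apply small-sample corrections, so their output can differ slightly from these plain moment estimates.

```python
def skewness_and_excess_kurtosis(values):
    """Moment-based skewness and excess kurtosis (no small-sample correction)."""
    n = len(values)
    centre = sum(values) / n
    m2 = sum((v - centre) ** 2 for v in values) / n  # second central moment
    m3 = sum((v - centre) ** 3 for v in values) / n  # third central moment
    m4 = sum((v - centre) ** 4 for v in values) / n  # fourth central moment
    skew = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0  # 0 for a normal distribution
    return skew, excess_kurtosis


# Hypothetical ratings spread evenly over the 1-10 response scale.
ratings = list(range(1, 11))
skew, kurt = skewness_and_excess_kurtosis(ratings)
is_platykurtic = kurt < 0  # flatter than normal, with lighter tails
```

A skewness near zero corresponds to the "roughly symmetric" description, and a negative excess kurtosis corresponds to "platykurtic"; evenly spread ratings such as those in the example show both properties.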

The data was analyzed to assess whether the assumptions necessary for performing an EFA were satisfied. The Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy, a critical index for determining the suitability of the data for factor analysis, was computed. With an overall KMO value of 0.827, the data demonstrated meritorious sampling adequacy, thereby confirming its appropriateness for exploratory factor analysis (Williams et al., 2010).

Furthermore, Bartlett’s test of sphericity was conducted to evaluate the presence of significant correlations between the items, a prerequisite for factor analysis. The test yielded a significant result [χ2(10) = 612, p < 0.001], providing evidence of substantial correlations among the items. Consequently, these findings support the validity of proceeding with the EFA.
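As a rough illustration of how such a statistic arises, the sketch below implements the standard form of Bartlett's test of sphericity from a correlation matrix. The correlation matrix used here is invented for the example (it is not the study's data), and the helper names are hypothetical. The degrees of freedom depend only on the number of items p, as p(p - 1)/2, which gives 10 for five items and 6 for four items.

```python
from math import log


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_sphericity(corr, n_obs):
    """Chi-square statistic and degrees of freedom for Bartlett's test."""
    p = len(corr)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * log(determinant(corr))
    df = p * (p - 1) // 2
    return chi2, df


# Invented 4-item correlation matrix with all inter-item correlations at 0.5.
example_corr = [[1.0 if i == j else 0.5 for j in range(4)] for i in range(4)]
chi2, df = bartlett_sphericity(example_corr, n_obs=230)
```

The statistic grows as the determinant of the correlation matrix moves away from 1, that is, as the items become more strongly intercorrelated.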

In the EFA, the maximum likelihood extraction method and oblimin rotation were used. Oblimin rotation was preferred because it was assumed that the extracted factors may correlate with each other. Two factors were extracted based on parallel analysis.

As shown in Table 2, the factor loadings suggest that Items 1, 2, and 3 loaded moderately to highly on Factor 1, while Items 4 and 5 loaded highly on Factor 2. The uniqueness values indicate the proportion of variance in each item that is not explained by the factors. Inter-factor correlation was shown to be high (0.749), confirming the adequacy of the use of Oblimin rotation method. Reliability of the scale was analyzed using Cronbach alpha and McDonald’s omega.

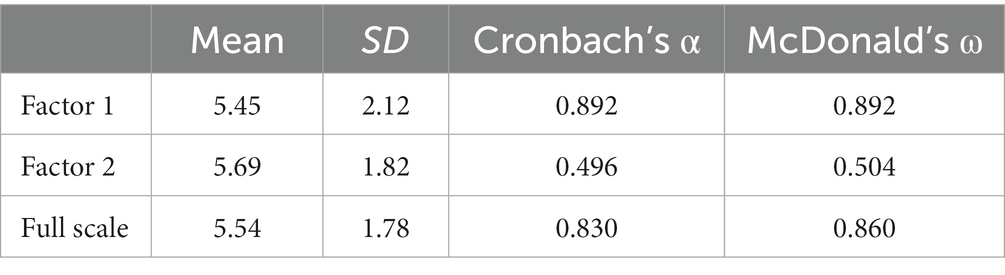

Table 3 shows the mean scores, standard deviations, Cronbach’s alpha, and McDonald’s omega values for each factor and for the entire scale. Factor 1, with a Cronbach’s alpha of 0.892 and McDonald’s omega of 0.892, showed good internal consistency. Factor 2 with a Cronbach’s alpha of 0.496 and McDonald’s omega of 0.504, showed an overall internal consistency lower than Factor 1. The entire scale had a Cronbach’s alpha of 0.830 and McDonald’s omega of 0.860, indicating good internal consistency.

Table 3. Mean, SD, Cronbach’s alpha, and McDonald’s omega values for each factor and for the entire scale.
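For readers less familiar with the coefficient, Cronbach's alpha can be computed directly from item variances and the variance of the total score. The sketch below uses the standard formula; the function name and the small data set are hypothetical and serve only as illustration (McDonald's omega requires a fitted factor model and is not covered here).

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents.

    Each row holds one respondent's item scores, with any reverse-scored
    item already recoded.
    """
    k = len(rows[0])
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses of five participants to four items (1-10 scale).
responses = [
    [8, 7, 9, 6],
    [3, 4, 2, 3],
    [6, 6, 7, 5],
    [9, 8, 9, 9],
    [2, 3, 3, 2],
]
alpha = cronbach_alpha(responses)
```

Alpha approaches 1 when items rise and fall together across respondents, and drops when the items share little variance, as observed for Factor 2.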

A correlation matrix was computed to visualize the correlations between the questionnaire items. Table 4 shows a matrix of the 5 items included in the AI attitude scale.

These analyses showed relatively poor loading of item 4 in the established factors. Therefore, the item was removed from the analyses and the scale analyzed again without the item.

Due to its relatively poor loading on Factor 2 and its poor correlation with the other items, item 4 of the questionnaire was eliminated from the analyses in an attempt to establish a scale with better psychometric characteristics than the 2-factor structure previously proposed. For the 4-item questionnaire, the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy obtained a value of 0.83, again demonstrating meritorious sampling adequacy (Williams et al., 2010). Bartlett’s test of sphericity yielded a significant result [χ2(6) = 583, p < 0.001], providing evidence of substantial correlations among the items. For the EFA of the 4-item version of the questionnaire, the same extraction and rotation methods were used as before (maximum likelihood and oblimin rotation). Table 5 shows that all the items load highly on just one factor.

The 4-items scale shows a mean score of 5.48 (SD = 1.99) and a Cronbach’s alpha of 0.902 and McDonald’s omega of 0.904, indicating good internal consistency, and exceeding the internal consistency reported for both the total 5-items scale and the 3-items factor 1 identified in the previous analyses.

Correlation analyses were computed between the 4-item AIAS and the attitude factors of the Media and Technology Usage and Attitudes Scale (MTUAS), as done previously. The correlation matrix is presented in Table 6. The analyses for the 4-item AIAS do not show a significantly different relationship with the attitude dimensions of the MTUAS compared to what was observed when Factor 1 of the 2-factor, 5-item AIAS was included in the same analyses, confirming the interpretation of the relationship between AIAS and MTUAS proposed earlier.

A multiple linear regression was computed to understand how the background information collected for the sample can explain the total score of the 4-items AIAS. The analysis was conducted to examine the relationships between age, gender, and education levels with the total score of the 4-items AIAS. The overall model was statistically significant.

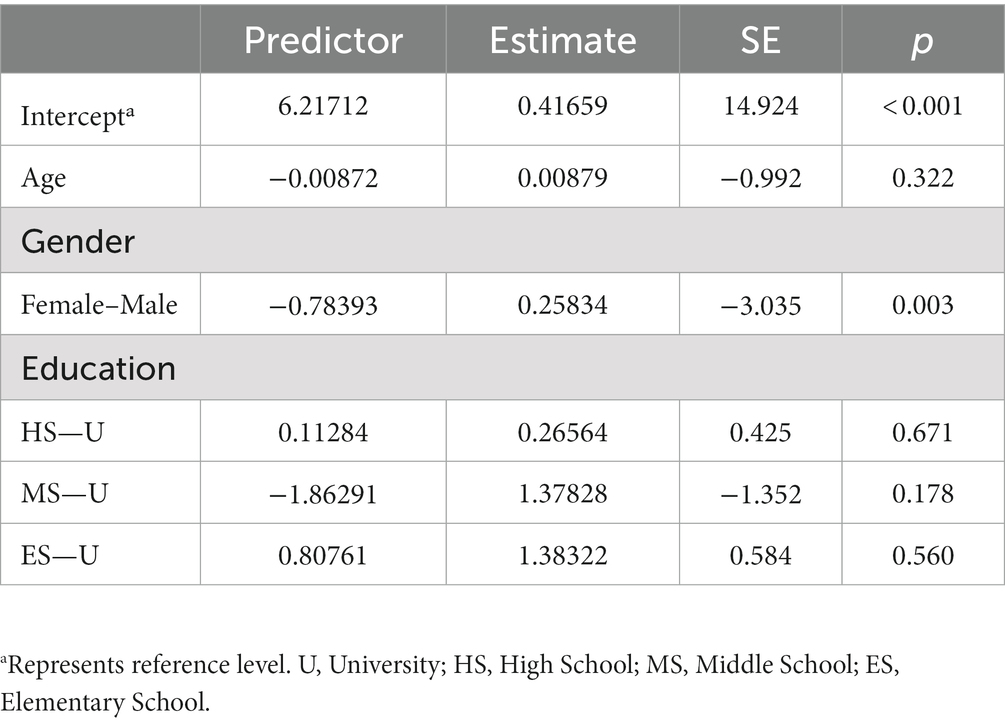

Gender emerged as the only statistically significant predictor of the 4-item AIAS total score among those used in the model, with female participants showing lower scores compared to male participants. Age and education levels (p > 0.05 for all comparisons) were not found to be significant predictors of the 4-item AIAS total score. Table 7 reports the results of the multiple linear regression model. Estimates were obtained with ordinary least squares, minimizing the sum of squared deviations between observed and predicted values.

Table 7. Multiple linear regression model summarizing the relationships between age, gender, and education levels as predictors of 4-items AIAS total score.

In study 2, the 4-items version of the AIAS validated from study 1 was tested, and CFA was conducted. Descriptive statistics were calculated for each of the four items in the scale. Table 8 presents the descriptive statistics for the AI attitude scale items.

A Confirmatory Factor Analysis was conducted to examine the factor structure of the 4-item questionnaire with the sample from Study 2. The factor loadings for all items were statistically significant, suggesting that the items were strong indicators of the underlying factor. Results including standardized estimate (Rosseel, 2012) are reported in Table 9.

The model fit was assessed using several fit indices. The chi-square test for exact fit was not significant, indicating a good model fit [χ2(2) = 2.49, p = 0.289] (but see DiStefano and Hess, 2005). Additionally, the Comparative Fit Index (CFI) and the Tucker-Lewis Index (TLI) were both above the recommended threshold (see Byrne, 2016) of 0.95 (CFI = 0.999, TLI = 0.998), suggesting a good fit between the hypothesized model and the observed data. The Root Mean Square Error of Approximation (RMSEA) also indicates a good model fit, as the value is below the suggested cutoff (MacCallum et al., 1996) of 0.05 (RMSEA = 0.0285, 90% CI [0, 0.122]). Overall, the Confirmatory Factor Analysis results support the proposed single-factor structure of the 4-item questionnaire, with strong factor loadings and a good model fit (see guidelines as in Sun, 2005). Reliability analysis was then computed as in Study 1. The analysis for the 4-items scale indicated good internal consistency, similar to what was observed for Study 1. A correlation table was computed to visualize the correlations between the questionnaire items. Table 10 shows a correlation matrix of the 4 items included in the second version of the AI attitude scale.
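The reported RMSEA and p value can be approximately recovered from the chi-square statistic. The sketch below uses the common point-estimate formula RMSEA = sqrt(max(χ2 − df, 0) / (df · (N − 1))) and, for the p value, the closed form exp(−χ2/2) that holds for a chi-square distribution with 2 degrees of freedom. It assumes N = 300, the Study 2 sample size stated in the discussion; the exact N entering the model, the rounding of χ2, and the software's conventions (some programs divide by N instead of N − 1) may differ. The function names are hypothetical.

```python
import math


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from a model chi-square, its degrees of freedom, and sample size."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def chi2_p_value_df2(chi2):
    """Upper-tail p value of a chi-square statistic; this closed form holds only for df = 2."""
    return math.exp(-chi2 / 2.0)


chi2, df = 2.49, 2   # values reported in the text
n = 300              # assumption: Study 2 sample size as stated in the discussion

print(round(rmsea(chi2, df, n), 4))
print(round(chi2_p_value_df2(chi2), 3))
```

Under these assumptions the sketch gives values close to the reported RMSEA = 0.0285 and p = 0.289; small discrepancies are expected because the inputs are rounded.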

Similarly to what was done for Study 1, an additional statistical analysis was conducted to assess the convergent validity between the Artificial Intelligence Attitude Scale (AIAS) and the attitude components of the Media and Technology Usage and Attitudes Scale (MTUAS). Pearson’s correlation coefficients were calculated between the attitude components of MTUAS and the two-factor components of AIAS. The correlation matrix is shown in Table 11. The results of the correlation matrix confirm the results already reported for the 4-items AIAS shown in Study 1, with the AIAS being moderately associated with MTUAS-p and MTUAS-n, and weakly associated with MTUAS-a. Results are shown in Table 12.
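For readers less familiar with the statistic, a Pearson correlation between a scale total and a subscale score can be sketched as follows. The scores are made up for six hypothetical respondents and are not data from either study; the variable names and the function name `pearson_r` are illustrative assumptions.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length with at least two values")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Made-up scores for six hypothetical respondents (not data from the study).
aias_total = [32, 25, 18, 36, 22, 29]
mtuas_positive = [4.0, 3.8, 2.5, 4.1, 3.6, 3.0]

print(round(pearson_r(aias_total, mtuas_positive), 3))
```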

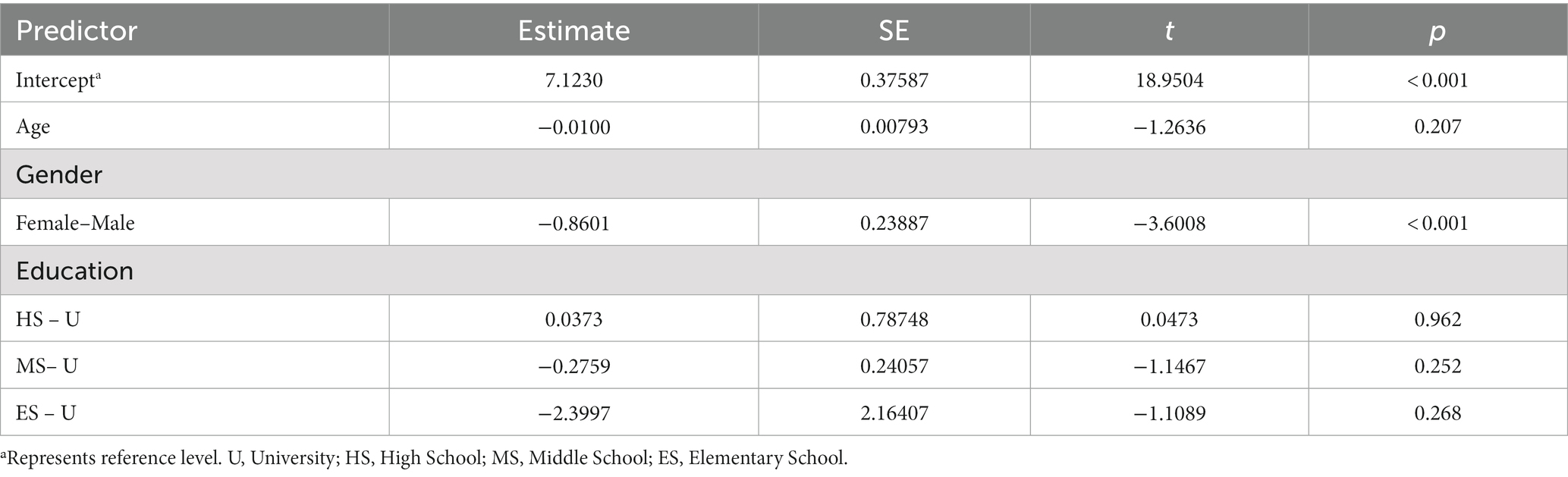

Table 12. Multiple linear regression model summarizing the relationships between age, gender, and education levels as predictors of 4-items AIAS total score for Study 2.

A further multiple linear regression was computed to understand how the background information collected for the sample can explain the total score of the 4-items AIAS for the sample of Study 2. These analyses follow the same steps as the multiple linear regression performed for the sample of Study 1. The overall model was statistically significant.

As for the analysis reported for Study 1, gender emerged as the only statistically significant predictor of the 4-items AIAS score among those included in the model, with female participants showing lower scores than male participants. Age and education levels were not found to be significant predictors of the 4-items AIAS total score. Table 12 reports the results of the multiple linear regression model. Estimates were obtained as described earlier.

In this article, the AI Attitude Scale (AIAS) is presented, and its psychometric properties are evaluated. The AIAS items were developed based on established theoretical frameworks and empirical research in technology acceptance, AI, and risk perception (Davis, 1989; Venkatesh et al., 2003; Barrett and Baum, 2017). The scale captures key dimensions of AI attitudes, including perceived benefits, potential risks, and intentions to use AI technologies (Brynjolfsson and McAfee, 2014; Bostrom, 2016). Drawing from the Technology Acceptance Model (TAM), the Unified Theory of Acceptance and Use of Technology (UTAUT), and literature on AI-related risks and societal implications, the AIAS provides a quick and psychometrically validated approach to understanding general attitude toward AI (e.g., dos Santos et al., 2019; Persson et al., 2021; Scott et al., 2021; Vasiljeva et al., 2021; Young et al., 2021). Furthermore, some of the items included in the AIAS are similar to items included in scales previously developed to understand attitudes toward AI. Item 4 of the preliminary 5-item AIAS is similar to item 3 of the ATAI scale (“Artificial intelligence will destroy humankind”); however, it has a softer and more nuanced tone, not directly suggesting that AI will annihilate humankind. Item 5 of the preliminary version of the scale is also similar to item 4 of the ATAI scale (“Artificial intelligence will benefit humankind”).

An exploratory factor analysis (EFA) was conducted to investigate the factor structure of the original 5-item AIAS scale. The preliminary EFA indicated that the scale could be divided into two factors with moderate-to-high correlations between them. Factor 1, consisting of items related to individual attitudes toward AI (AIAS-IA), comprises the following items: “I believe that AI will improve my life,” “I believe that AI will improve my work,” and “I think I will use AI technology in the future.” Factor 2, which can be interpreted as a social attitude toward AI and its risks, includes the items “I think AI technology is a threat to humans” and “I think AI technology is positive for humanity.” Factor 1 demonstrated high internal consistency and reliability, as evidenced by the high values of Cronbach’s alpha coefficient and McDonald’s omega coefficient (>0.8) (Venkatesh, 2022). This suggests that the items in Factor 1 are closely related and measure the same underlying construct of attitudes toward the potential benefits of AI. The high reliability of Factor 1 indicates that it is a suitable and trustworthy measure for assessing attitudes toward the positive aspects of AI. In contrast, Factor 2 exhibited lower internal consistency and reliability compared to Factor 1, with a Cronbach’s alpha coefficient value of 0.496 and a McDonald’s omega coefficient value of 0.504 (Rosen et al., 2013). These findings imply that the items in Factor 2 may not be as strongly related or measure the same underlying construct as effectively as Factor 1. While Factor 2 may still be useful in assessing attitudes toward the potential risks of AI, further development of this factor may be needed in future studies.
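To make the internal-consistency comparison concrete, the sketch below computes Cronbach's alpha from its standard definition, α = k/(k − 1) · (1 − Σ item variances / variance of the total score), where k is the number of items. The response matrices are made up for illustration and are not data from the study; the function name `cronbach_alpha` is hypothetical, and McDonald's omega, which requires factor loadings, is not covered.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*responses))  # one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(person) for person in responses])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Made-up responses (rows = respondents, columns = three items); not data from the study.
consistent = [[8, 9, 8], [3, 4, 3], [6, 6, 7], [9, 9, 10], [2, 3, 2]]
# Items that do not move together; alpha can even be negative in such cases.
inconsistent = [[8, 2, 5], [3, 9, 4], [6, 5, 9], [9, 4, 1], [2, 7, 6]]

print(round(cronbach_alpha(consistent), 3))
print(round(cronbach_alpha(inconsistent), 3))
```

Items that rise and fall together across respondents make the variance of the total score large relative to the summed item variances, which pushes alpha toward 1; weakly or negatively related items, as in the second matrix, pull it down.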

The 4-items AIAS scale, developed by excluding item 4 from the original 5-item scale, demonstrated higher levels of internal consistency compared to the previously analyzed 2-factor scale. This suggests that the 4-item scale may provide a more reliable and cohesive measure of AI attitudes, thereby enhancing the usefulness of the AIAS in research and future applications within the information systems domain (Collins et al., 2021). The excluded item 4 may have been poorly understood by participants or might have measured a different psychological construct. Upon examination, this item could potentially be measuring fear toward AI (or a perceived direct threat posed by AI technology), rather than general positive or negative attitudes toward the technology (this is in line with the fact that such an item is reported in the “fear” factor in Sindermann et al., 2021).

In the survey, sample background variables were tested as predictors of the 4-items AIAS total score. Among the independent variables, gender emerged as the only statistically significant predictor of the 4-items AIAS total score. Female participants scored lower compared to males, suggesting that men may have more positive attitudes toward AI technology. This finding aligns with existing research indicating that men generally have more positive attitudes and higher levels of acceptance toward technology than women (e.g., Schumacher and Morahan-Martin, 2001; Ong and Lai, 2006; Ziefle and Wilkowska, 2010). However, the effect could depend on the specific technology (e.g., da Silva and Moura, 2020).

A confirmatory factor analysis (CFA) was conducted in a second study with 300 participants from the USA, who had similar characteristics to the first study’s sample. The analysis confirmed that the 1-factor model (4-items) of the AIAS demonstrated an adequate fit to the data, providing further evidence for the scale’s structural validity and generalizability across different populations. Follow-up analyses in Study 2 confirmed that the AIAS exhibited a good level of internal consistency, comparable to the findings in Study 1. Additionally, further analyses on convergent validity (with the analyzed factors of MTUAS) and individual differences of participants revealed similar trends to those reported in Study 1, strengthening the generalizability of the reported findings.

In summary, the reported analyses support the use of the one-factor 4-items scale. Future studies may want to further investigate how to efficiently measure social attitude toward AI, reworking and modifying items 4 and 5 from the originally proposed 5-items AIAS scale, or developing multi-factorial scales that specialize in particular aspects of the attitude toward AI technology.

The present research has some limitations that should be considered by researchers and professionals interested in using the proposed scale. First, the samples used in this research are not representative of the general population. They should be considered convenience samples from the UK (Study 1) and USA (Study 2) populations, and the findings may not generalize to other populations with different demographic characteristics or cultural backgrounds (Bryman, 2016). As the samples were recruited online among people offering to perform research tasks using the online platform Prolific, they may be composed of people who are generally more IT-savvy than the general population. Although participants recruited through Prolific have generally been found to deliver high data quality (see, e.g., Peer et al., 2017), participants in online studies can be less motivated than, e.g., students filling in surveys on campus. However, the use of control questions as attention checks should have limited the number of participants who responded randomly or without reading all the items (or led to their exclusion). Future research could validate the AIAS in more diverse samples, including those from different countries and selected age groups, to enhance the scale’s external validity and its possible impact.

Furthermore, this study only utilized self-report measures, which may be subject to social desirability bias or response biases (Podsakoff et al., 2003). Future research could benefit from incorporating behavioral, physiological, and implicit measures to complement self-report data, enhancing the understanding of AI attitudes as both a phenomenon and a psychological construct (Rosen et al., 2013). Physiological measures, in particular, could be employed in lab-based experiments where participants are exposed to information about AI or engage with AI directly. These measures may help researchers to better comprehend automatic reactions to AI-related stress or negative dispositions toward the technology.

For example, researchers could track heart rate variability, skin conductance, and cortisol levels as indicators of stress responses or arousal when individuals interact with AI technologies or are presented with AI-related stimuli. Similarly, facial expressions or eye-tracking data could provide valuable information on attention, cognitive processing, and emotional responses to AI. By incorporating these physiological measures, researchers can gain a more comprehensive and nuanced understanding of the factors influencing individuals’ attitudes toward AI and their subsequent behavior (as has been done in the related research field on technostress, Riedl, 2012; La Torre et al., 2019, 2020).

Moreover, combining physiological measures with other data collection methods, such as implicit measures or behavioral observations (e.g., choice tasks or response time), could provide valuable insights into the complex interplay of cognitive, affective, and behavioral components that shape AI attitudes. Integrating these complementary methods would enable researchers to not only identify potential biases in self-report data but also to uncover the underlying psychological processes that drive individuals’ reactions to AI and related technologies.

Future research may benefit from exploring the impact of various factors on AI attitudes, such as personality traits, cognitive abilities, and exposure to AI technology. This would contribute to a more comprehensive understanding of the factors that influence AI attitudes and help identify potential intervention areas to enhance AI acceptance and adoption. Future studies might also investigate the relationship between AI attitudes and actual AI technology usage, examining whether 4-items AIAS scores predict technology adoption and user behavior. This would provide evidence of the scale’s criterion validity and practical utility for understanding and predicting AI technology adoption in real-world contexts.

The 4-items AIAS scale could also be employed to longitudinally evaluate changes in societal attitudes toward AI during periods of rapid development and implementation of AI technologies. This would provide an index to track the status of AI technology acceptability and contribute to a better understanding of the evolving public perception of AI.

While the AIAS has shown effectiveness in gaining insights into people’s attitudes toward AI, it presents a somewhat simplified and general perspective of these attitudes. AI, as a technology, is becoming more nuanced, sophisticated, and human-like in its abilities and characteristics. This advancement and complexity of AI technology inherently make people’s attitudes and perceptions toward it more complex.

For instance, the recent theoretical developments of AI’s self-prolongation and autonomous minds are elements that go beyond the bounds of what AIAS can directly assess. The expansion of AI’s roles in society and its increasing sophistication add several layers of complexity that the human mind must contend with when thinking about AI agents. Recent research based on the information-processing-based Bayesian Mindsponge Framework (BMF, see “Mindsponge Theory,” Vuong, 2023) found that people’s perceptions of an AI’s autonomous mind were influenced by their beliefs about the AI’s desire for self-prolongation (Vuong et al., 2023). This suggests a directional pattern of value reinforcement in perceptions of AI and indicates that as AI becomes more sophisticated in the future, it will be harder to set clear boundaries about what it means to have an autonomous mind, therefore changing the perception, evaluation, and the core attitude toward AI agents.

The value of the AIAS primarily lies in its convenience and its ability to capture a snapshot of basic attitudes toward AI. However, its simplicity also implies a limitation in capturing the full complexity and range of attitudes toward increasingly human-like AI. Therefore, it is imperative to acknowledge this lack of complexity in the scale as a limitation of the current study.

In conclusion, the AIAS offers a valuable tool for researchers and practitioners to assess attitudes toward AI technology. The scale can be used to explore factors influencing AI acceptance and adoption, inform the development of AI applications that are better aligned with user needs and expectations, evaluate how the perception of AI develops as the technology evolves, and contribute to a more comprehensive understanding of the complex interplay between AI technology and society.

The 4-items AIAS scale that was validated in this article is available in pdf format in the supplementary material of the present article (CC BY 4.0) as well as on the open research data repository Zenodo. The file also contains instructions for scoring. The validated 4-items AIAS can also be retrieved from the following DOI and used freely.1 Please refer to this article when using the scale.

The raw data supporting the conclusions of this article will be made available by the author, without undue reservation.

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

SG was responsible for the development of the main survey, funding acquisition, the analysis, and article writing.

I thank Aleksandra Sevic (Department of Public Health, University of Stavanger) for giving valuable feedback on the draft of the manuscript.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2023.1191628/full#supplementary-material

Anderson, J., Rainie, L., and Luchsinger, A. (2018). Artificial intelligence and the future of humans. Pew Res. Center 10.

Araujo, T., Helberger, N., Kruikemeier, S., and De Vreese, C. H. (2020). In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI & Soc. 35, 611–623. doi: 10.1007/s00146-019-00931-w

Archer, M. S. (2021). “Can humans and AI robots be friends?” in Post-human futures (Routledge), 132–152.

Barnes, M., Elliott, L. R., Wright, J. L., Scharine, A., and Chen, J. (2019). Human-robot interaction design research: from teleoperations to human-agent teaming US Army Combat Capabilities Development Command, Army Research Laboratory.

Barrett, A. M., and Baum, S. D. (2017). A model of pathways to artificial superintelligence catastrophe for risk and decision analysis. J. Exp. Theor. Artif. Intell. 29, 397–414. doi: 10.1080/0952813X.2016.1186228

Becker, L., Kaltenegger, H. C., Nowak, D., Weigl, M., and Rohleder, N. (2022). Physiological stress in response to multitasking and work interruptions: study protocol. PLoS One 17:e0263785. doi: 10.1371/journal.pone.0263785

Brill, T. M., Munoz, L., and Miller, R. J. (2019). Siri, Alexa, and other digital assistants: a study of customer satisfaction with artificial intelligence applications. J. Mark. Manag. 35, 1401–1436. doi: 10.1080/0267257X.2019.1687571

Brynjolfsson, E., and McAfee, A. (2014). The second machine age: work, progress, and prosperity in a time of brilliant technologies. New York, USA: W. W. Norton & Company.

Byrne, B. M. (2016). Structural equation modeling with AMOS: basic concepts, applications, and programming. New York, USA: Psychology Press.

Cave, S., Coughlan, K., and Dihal, K. (2019). “Scary robots” examining public responses to AI. in Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society. Honolulu, HI, USA, 331–337.

Cellan-Jones, R. (2014). Stephen Hawking warns artificial intelligence could end mankind. BBC News 2:2014.

Cheatham, B., Javanmardian, K., and Samandari, H. (2019). Confronting the risks of artificial intelligence. McKinsey Q. 2, 1–9.

Chui, M., Manyika, J., and Miremadi, M. (2016). Where machines could replace humans-and where they can’t (yet). Available at: www.mckinsey.com

Collins, C., Dennehy, D., Conboy, K., and Mikalef, P. (2021). Artificial intelligence in information systems research: a systematic literature review and research agenda. Int. J. Inf. Manag. 60:102383. doi: 10.1016/j.ijinfomgt.2021.102383

Comrey, A. L., and Lee, H. B. (2013). A first course in factor analysis. Hillsdale, New Jersey, USA: Lawrence Erlbaum Associates.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika 16, 297–334. doi: 10.1007/BF02310555

da Silva, H. A., and Moura, A. S. (2020). Teaching introductory statistical classes in medical schools using RStudio and R statistical language: evaluating technology acceptance and change in attitude toward statistics. J. Stat. Educ. 28, 212–219. doi: 10.1080/10691898.2020.1773354

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. doi: 10.2307/249008

DeVellis, R. F., and Thorpe, C. T. (2021). Scale development: Theory and applications. Sage publications.

DiStefano, C., and Hess, B. (2005). Using confirmatory factor analysis for construct validation: an empirical review. J. Psychoeduc. Assess. 23, 225–241. doi: 10.1177/073428290502300303

dos Santos, D. P., Giese, D., Brodehl, S., Chon, S. H., Staab, W., Kleinert, R., et al. (2019). Medical students' attitude towards artificial intelligence: a multicentre survey. Eur. Radiol. 29, 1640–1646. doi: 10.1007/s00330-018-5601-1

Eitel-Porter, R. (2021). Beyond the promise: implementing ethical AI. AI Ethics 1, 73–80. doi: 10.1007/s43681-020-00011-6

Ernst, E., Merola, R., and Samaan, D. (2019). Economics of artificial intelligence: Implications for the future of work. IZA J. Labor Policy 9.

Fast, E., and Horvitz, E. (2017). “Long-term trends in the public perception of artificial intelligence” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 31. San Francisco, California, USA.

Frey, C. B., and Osborne, M. A. (2017). The future of employment: how susceptible are jobs to computerisation? Technol. Forecast. Soc. Chang. 114, 254–280. doi: 10.1016/j.techfore.2016.08.019

Gibbs, S. (2017). Elon Musk leads 116 experts calling for outright ban of killer robots. The Guardian 20.

Glas, D. F., Minato, T., Ishi, C. T., Kawahara, T., and Ishiguro, H. (2016). “Erica: the erato intelligent conversational android” in 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (New York, NY, USA: IEEE), 22–29.

Hentout, A., Aouache, M., Maoudj, A., and Akli, I. (2019). Human–robot interaction in industrial collaborative robotics: a literature review of the decade 2008–2017. Adv. Robot. 33, 764–799. doi: 10.1080/01691864.2019.1636714

Hong, J. W., Cruz, I., and Williams, D. (2021). AI, you can drive my car: how we evaluate human drivers vs. self-driving cars. Comput. Hum. Behav. 125:106944. doi: 10.1016/j.chb.2021.106944

Johnson, K. B., Wei, W. Q., Weeraratne, D., Frisse, M. E., Misulis, K., Rhee, K., et al. (2021). Precision medicine, AI, and the future of personalized health care. Clin. Transl. Sci. 14, 86–93. doi: 10.1111/cts.12884

Jones, C. I. (2022). The past and future of economic growth: a semi-endogenous perspective. Annu. Rev. Econ. 14, 125–152. doi: 10.1146/annurev-economics-080521-012458

Kaur, D., Uslu, S., Rittichier, K. J., and Durresi, A. (2022). Trustworthy artificial intelligence: a review. ACM Comput. Surv. 55, 1–38. doi: 10.1145/3491209

Kieslich, K., Lünich, M., and Marcinkowski, F. (2021). The threats of artificial intelligence scale (TAI) development, measurement and test over three application domains. Int. J. Soc. Robot. 13, 1563–1577. doi: 10.1007/s12369-020-00734-w

Kumar, S., and Choudhury, S. (2022). Humans, super humans, and super humanoids: debating Stephen Hawking’s doomsday AI forecast. AI Ethics, 1–10. doi: 10.1007/s43681-022-00213-0

La Torre, G., De Leonardis, V., and Chiappetta, M. (2020). Technostress: how does it affect the productivity and life of an individual? Results of an observational study. Public Health 189, 60–65. doi: 10.1016/j.puhe.2020.09.013

La Torre, G., Esposito, A., Sciarra, I., and Chiappetta, M. (2019). Definition, symptoms and risk of techno-stress: a systematic review. Int. Arch. Occup. Environ. Health 92, 13–35. doi: 10.1007/s00420-018-1352-1

Leiter, C., Zhang, R., Chen, Y., Belouadi, J., Larionov, D., Fresen, V., et al. (2023). ChatGPT: a meta-analysis after 2.5 months. arXiv. arXiv:2302.13795.

Logg, J. M., Minson, J. A., and Moore, D. A. (2019). Algorithm appreciation: people prefer algorithmic to human judgment. Organ. Behav. Hum. Decis. Process. 151, 90–103. doi: 10.1016/j.obhdp.2018.12.005

Loh, H. W., Ooi, C. P., Seoni, S., Barua, P. D., Molinari, F., and Acharya, U. R. (2022). Application of explainable artificial intelligence for healthcare: a systematic review of the last decade (2011–2022). Comput. Methods Prog. Biomed. 226:107161. doi: 10.1016/j.cmpb.2022.107161

MacCallum, R. C., Browne, M. W., and Sugawara, H. M. (1996). Power analysis and determination of sample size for covariance structure modeling. Psychol. Methods 1, 130–149. doi: 10.1037/1082-989X.1.2.130

Makridakis, S. (2017). The forthcoming artificial intelligence (AI) revolution: its impact on society and firms. Futures 90, 46–60. doi: 10.1016/j.futures.2017.03.006

Manoharan, S. (2019). An improved safety algorithm for artificial intelligence enabled processors in self driving cars. J. Artif. Intell. 1, 95–104. doi: 10.36548/jaicn.2019.2.005

Matytsin, D. E., Dzedik, V. A., Makeeva, G. A., and Boldyreva, S. B. (2023). “Smart” outsourcing in support of the humanization of entrepreneurship in the artificial intelligence economy. Humanit. Soc. Sci. Commun. 10, 1–8. doi: 10.1057/s41599-022-01493-x

Middleton, S. E., Letouzé, E., Hossaini, A., and Chapman, A. (2022). Trust, regulation, and human-in-the-loop AI: within the European region. Commun. ACM 65, 64–68. doi: 10.1145/3511597

Morley, J., Machado, C. C., Burr, C., Cowls, J., Joshi, I., Taddeo, M., et al. (2020). The ethics of AI in health care: a mapping review. Soc. Sci. Med. 260:113172. doi: 10.1016/j.socscimed.2020.113172

Nadimpalli, M. (2017). Artificial intelligence risks and benefits. Int. J. Innov. Res. Sci. Eng. Technol. 6

O’Shaughnessy, M. R., Schiff, D. S., Varshney, L. R., Rozell, C. J., and Davenport, M. A. (2022). What governs attitudes toward artificial intelligence adoption and governance? Sci. Public Policy 50, 161–176. doi: 10.1093/scipol/scac056

Ong, C. S., and Lai, J. Y. (2006). Gender differences in perceptions and relationships among dominants of e-learning acceptance. Comput. Hum. Behav. 22, 816–829. doi: 10.1016/j.chb.2004.03.006

Parasuraman, A., and Colby, C. L. (2015). An updated and streamlined technology readiness index: TRI 2.0. J. Serv. Res. 18, 59–74. doi: 10.1177/1094670514539730

Pause Giant AI Experiments: An Open Letter. (2023). Available at: https://futureoflife.org/openletter/pause-giant-ai-experiments/

Peer, E., Brandimarte, L., Samat, S., and Acquisti, A. (2017). Beyond the Turk: alternative platforms for crowdsourcing behavioral research. J. Exp. Soc. Psychol. 70, 153–163. doi: 10.1016/j.jesp.2017.01.006

Persson, A., Laaksoharju, M., and Koga, H. (2021). We mostly think alike: individual differences in attitude towards AI in Sweden and Japan. Rev. Socionetw. Strat. 15, 123–142. doi: 10.1007/s12626-021-00071-y

Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., and Podsakoff, N. P. (2003). Common method biases in behavioral research: a critical review of the literature and recommended remedies. J. Appl. Psychol. 88, 879–903. doi: 10.1037/0021-9010.88.5.879

Preston, C. C., and Colman, A. M. (2000). Optimal number of response categories in rating scales: reliability, validity, discriminating power, and respondent preferences. Acta Psychol. 104, 1–15. doi: 10.1016/S0001-6918(99)00050-5

Rashid, T., and Asghar, H. M. (2016). Technology use, self-directed learning, student engagement and academic performance: examining the interrelations. Comput. Hum. Behav. 63, 604–612. doi: 10.1016/j.chb.2016.05.084

Riedl, R. (2012). On the biology of technostress: literature review and research agenda. ACM SIGMIS Database 44, 18–55. doi: 10.1145/2436239.2436242

Rosen, L. D., Whaling, K., Carrier, L. M., Cheever, N. A., and Rokkum, J. (2013). The media and technology usage and attitudes scale: an empirical investigation. Comput. Hum. Behav. 29, 2501–2511. doi: 10.1016/j.chb.2013.06.006

Rosseel, Y. (2012). Lavaan: an R package for structural equation modeling. J. Stat. Softw. 48, 1–36. doi: 10.18637/jss.v048.i02

Samuel, J. (2021). A quick-draft response to the March 2023 “pause Giant AI experiments: an open letter” by Yoshua Bengio, signed by Stuart Russell, Elon Musk, Steve Wozniak, Yuval Noah Harari and others. Available at: https://ssrn.com/abstract=4412516

Samuel, J. (2023). Two keys for surviving the inevitable AI invasion. Available at: https://aboveai.substack.com/p/two-keys-for-surviving-the-inevitable

Sandoval, E. B., Mubin, O., and Obaid, M. (2014). Human robot interaction and fiction: a contradiction. in Proceedings of Social Robotics: 6th International Conference, ICSR 2014, Sydney, NSW, October 27–29, 2014. 6. New York, NY, USA: Springer International Publishing, 54–63.

Schaefer, K. E., Chen, J. Y., Szalma, J. L., and Hancock, P. A. (2016). A meta-analysis of factors influencing the development of trust in automation: implications for understanding autonomy in future systems. Hum. Factors 58, 377–400. doi: 10.1177/0018720816634228

Schepman, A., and Rodway, P. (2020). Initial validation of the general attitudes towards Artificial Intelligence Scale. Comp. Hum. Behav. Rep. 1:100014.

Schumacher, P., and Morahan-Martin, J. (2001). Gender, internet and computer attitudes and experiences. Comput. Hum. Behav. 17, 95–110. doi: 10.1016/S0747-5632(00)00032-7

Scott, I. A., Carter, S. M., and Coiera, E. (2021). Exploring stakeholder attitudes towards AI in clinical practice. BMJ Health Care Informatics 28. doi: 10.1136/bmjhci-2021-100450

Sheridan, T. B. (2019). Individual differences in attributes of trust in automation: measurement and application to system design. Front. Psychol. 10:1117. doi: 10.3389/fpsyg.2019.01117

Shrout, P. E., and Fleiss, J. L. (1979). Intraclass correlations: uses in assessing rater reliability. Psychol. Bull. 86, 420–428. doi: 10.1037/0033-2909.86.2.420

Sigerson, L., and Cheng, C. (2018). Scales for measuring user engagement with social network sites: a systematic review of psychometric properties. Comput. Hum. Behav. 83, 87–105. doi: 10.1016/j.chb.2018.01.023

Sindermann, C., Sha, P., Zhou, M., Wernicke, J., Schmitt, H. S., Li, M., et al. (2021). Assessing the attitude towards artificial intelligence: introduction of a short measure in German, Chinese, and English language. KI-Künstliche Intelligenz 35, 109–118. doi: 10.1007/s13218-020-00689-0

Sohn, K., and Kwon, O. (2020). Technology acceptance theories and factors influencing artificial intelligence-based intelligent products. Telematics Inform. 47:101324. doi: 10.1016/j.tele.2019.101324

Srivastava, T., Shen, A. K., Browne, S., Michel, J. J., Tan, A. S., and Kornides, M. L. (2022). Comparing COVID-19 vaccination outcomes with parental values, beliefs, attitudes, and hesitancy status, 2021–2022. Vaccine 10:1632. doi: 10.3390/vaccines10101632

Stoet, G. (2010). PsyToolkit - a software package for programming psychological experiments using Linux. Behav. Res. Methods 42, 1096–1104. doi: 10.3758/BRM.42.4.1096

Stoet, G. (2017). PsyToolkit: a novel web-based method for running online questionnaires and reaction-time experiments. Teach. Psychol. 44, 24–31. doi: 10.1177/0098628316677643

Sun, J. (2005). Assessing goodness of fit in confirmatory factor analysis. Meas. Eval. Couns. Dev. 37, 240–256. doi: 10.1080/07481756.2005.11909764

The Jamovi Project (2022). Jamovi (version 2.3) [computer software]. Available at: https://www.jamovi.org (Accessed March 20, 2023).

Tschang, F. T., and Almirall, E. (2021). Artificial intelligence as augmenting automation: implications for employment. Acad. Manag. Perspect. 35, 642–659. doi: 10.5465/amp.2019.0062

Vasiljeva, T., Kreituss, I., and Lulle, I. (2021). Artificial intelligence: the attitude of the public and representatives of various industries. J. Risk Finan. Manag. 14:339. doi: 10.3390/jrfm14080339

Vayena, E., Blasimme, A., and Cohen, I. G. (2018). Machine learning in medicine: addressing ethical challenges. PLoS Med. 15:e1002689. doi: 10.1371/journal.pmed.1002689

Venkatesh, V. (2022). Adoption and use of AI tools: a research agenda grounded in UTAUT. Annals Oper. Res. 1–12.

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D. (2003). User acceptance of information technology: toward a unified view. MIS Q. 27, 425–478. doi: 10.2307/30036540

Vuong, Q. H., La, V. P., Nguyen, M. H., Jin, R., La, M. K., and Le, T. T. (2023). How AI’s self-prolongation influences people’s perceptions of its autonomous mind: the case of US residents. Behav. Sci. 13:470. doi: 10.3390/bs13060470

Wijayati, D. T., Rahman, Z., Rahman, M. F. W., Arifah, I. D. C., and Kautsar, A. (2022). A study of artificial intelligence on employee performance and work engagement: the moderating role of change leadership. Int. J. Manpow. 43, 486–512. doi: 10.1108/IJM-07-2021-0423

Williams, B., Onsman, A., and Brown, T. (2010). Exploratory factor analysis: a five-step guide for novices. Aust. J. Paramed. 8, 1–13. doi: 10.33151/ajp.8.3.93

Wilson, H. J., Daugherty, P., and Bianzino, N. (2017). The jobs that artificial intelligence will create. MIT Sloan Manag. Rev. 58:14.

Xia, Q., Chiu, T. K., Lee, M., Sanusi, I. T., Dai, Y., and Chai, C. S. (2022). A self-determination theory (SDT) design approach for inclusive and diverse artificial intelligence (AI) education. Comput. Educ. 189:104582. doi: 10.1016/j.compedu.2022.104582

Yang, W. (2022). Artificial intelligence education for young children: why, what, and how in curriculum design and implementation. Comput. Educ. Artif. Intell. 3:100061. doi: 10.1016/j.caeai.2022.100061

Young, A. T., Amara, D., Bhattacharya, A., and Wei, M. L. (2021). Patient and general public attitudes towards clinical artificial intelligence: a mixed methods systematic review. Lancet Digit. Health 3, e599–e611. doi: 10.1016/S2589-7500(21)00132-1

Zhang, B., and Dafoe, A. (2019). Artificial intelligence: American attitudes and trends. Available at SSRN 3312874.

Keywords: artificial intelligence, questionnaire, factor analysis, psychology, human-computer interaction (HCI)

Citation: Grassini S (2023) Development and validation of the AI attitude scale (AIAS-4): a brief measure of general attitude toward artificial intelligence. Front. Psychol. 14:1191628. doi: 10.3389/fpsyg.2023.1191628

Received: 22 March 2023; Accepted: 16 June 2023; Published: 24 July 2023.

Edited by:

Runxi Zeng, Chongqing University, China

Reviewed by:

Verena Nitsch, RWTH Aachen University, Germany

Copyright © 2023 Grassini. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Simone Grassini, simone.grassini@uib.no

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
