METHODS article

Front. Psychol., 31 May 2023

Sec. Organizational Psychology

Volume 14 - 2023 | https://doi.org/10.3389/fpsyg.2023.1169940

This article is part of the Research Topic: Promoting Teamwork in Healthcare

Teamwork is critical for safe patient care. Healthcare teams typically train teamwork in simulated clinical situations, which requires the ability to measure teamwork via behavior observation. However, the required observations are prone to human biases and impose significant cognitive load even on trained instructors. In this observational study we explored how eye tracking and pose estimation, two minimally invasive video-based technologies, may measure teamwork during simulation-based teamwork training in healthcare. Mobile eye tracking, which measures where participants look, and multi-person pose estimation, which measures 3D human body and joint position, were used to record 64 third-year medical students who completed a simulated handover case in teams of four. From the eye tracking data we derived the eye contact metric, which is relevant for situational awareness and communication patterns. From the multi-person pose estimation data we derived the distance to patient metric, which is relevant for team positioning and coordination. After recording, we successfully processed the raw videos into these teamwork metrics. The average eye contact time was 6.46 s [min 0 s – max 28.01 s], while the average distance to the patient was 1.01 m [min 0.32 m – max 1.6 m]. Both metrics varied significantly between teams and simulated roles of participants (p < 0.001). With the objective, continuous, and reliable metrics we created visualizations illustrating the teams’ interactions. Future research is necessary to generalize our findings and to examine how they may complement existing methods, support instructors, and contribute to the quality of teamwork training in healthcare.

Teamwork is critical for safe patient care. Professionals from different “tribes” must team up — oftentimes on the spot — and work together to achieve excellent patient care (Rosen et al., 2018). Poor teamwork is a considerable risk for patient safety; great teamwork is an enormous asset, particularly for highly specialized care and precision medicine (Pronovost, 2013). Teaming up across professions, specialties, and across the authority gradient does not come naturally (Edmondson, 2012). Healthcare providers, universities, and training institutions include teamwork and the ability to collaborate in any healthcare team in their learning objectives. In particular, simulation-based teamwork training allows both students and professionals to practice and reflect on teamwork skills in meaningful settings without putting patients at risk (Weaver et al., 2014; Hughes et al., 2016). To be effective, training should be guided, and teamwork performance should be measured (Salas et al., 2009). Without measuring teamwork, feedback and debriefing conversations—and ultimately learning—will be limited (Rosen et al., 2008; Rudolph et al., 2008; Fey et al., 2022). However, identifying relevant teamwork behaviors and tracking them in complex, dynamic, and fast-paced simulated clinical situations is challenging (Halgas et al., 2022). Observing and measuring teamwork in action is prone to bias and constitutes a significant cognitive load even for trained instructors (Caverni et al., 1990; Greig et al., 2014; Uher and Visalberghi, 2016; Fraser et al., 2018). Additionally, simulation educators vary in individual expertise, and feedback might differ between them (Shrivastava et al., 2010; Bosse et al., 2015). We aim to support educators by contributing to the sophisticated collection of dynamic teamwork data (Petrosoniak et al., 2019; Marcelino et al., 2020; Shuffler et al., 2020; Wiltshire et al., 2020; Abegglen et al., 2022).

The choice of how to measure teamwork impacts the possibilities of further data use. For example, while using behaviorally anchored rating scales (BARS) is relatively easy, it rarely provides enough variance in the acquired data and offers only limited information on temporal matters (Kolbe et al., 2013; Dietz et al., 2014; Brauner et al., 2018). On the other hand, timed, event-based coding of teamwork behavior provides more information on the time and duration of behaviors but is complex and time-consuming (Brauner et al., 2018). Although event-based behavior coding allows for reliably capturing many explicit and verbal teamwork behaviors (e.g., giving instructions or providing information on request) and allows for capturing more implicit teamwork behaviors as well (e.g., team member monitoring), it usually suffers from low interrater reliability (Kolbe et al., 2013; Uher and Visalberghi, 2016; Brauner et al., 2018). Low-quality data on team performance impair correct conclusions about team processes and performance, enhance the risk of negative learning, and limit training capacities (Salas et al., 2009).

We propose that using technology to objectively, continuously, and reliably measure teamwork dynamics will improve the quality of teamwork performance data in simulation-based training in healthcare. Technology-based measurement is a promising and fast-developing field of team science that offers quantitative, scalable, objective, and repeatable ways of recording data and can inform feedback conversations based on video data (Kozlowski, 2015; Klonek et al., 2019; Kolbe and Boos, 2019; Halgas et al., 2022). Teamwork metrics derived from technology can measure multiple behaviors simultaneously and allow for continuous observation of all team members over the duration of the simulation. They could be especially helpful for observing more implicit behaviors and team interactions that are not detectable by human observers (Uher and Visalberghi, 2016). Once established, technology-based metrics are reproducible and could be used for measuring teamwork dynamics during training and research.

Sensor-based measurement and wearable technologies have the ability to capture team dynamics (Rosen et al., 2014; Halgas et al., 2022). For example, Radio-Frequency Identification Devices (RFID) have been successfully used to measure the proximity between team members (Isella et al., 2011) and distance traveled during nursing shifts (Hendrich et al., 2008). In another study heart rate sensors allowed assumptions regarding the physiological synchronization of surgical teams (Dias et al., 2019). In this observational study, we explored the use of video-based technologies for continuously measuring teamwork behavior during simulation-based training in healthcare. We investigated two minimally invasive, video-based technologies: eye tracking and pose estimation.

Mobile eye tracking, an established wearable and minimally invasive technology in the field of healthcare devices and training (Henneman et al., 2017; Weiss et al., 2021), measures what a team member wearing the glasses is looking at (Figure 1A). We used eye tracking and its resulting data to precisely calculate the occurrence and length of eye contact between team members. Eye contact occurs naturally in conversation and is especially relevant during listening communication patterns (Ruth, 1992; Bohannon et al., 2013). Therefore, we considered eye contact a valuable metric for teamwork (Vertegaal et al., 2001; Fasold et al., 2021).

The second video-based technology we investigated was multi-person pose estimation, a newer, non-wearable technology (Cao et al., 2021; Weiss et al., 2023). It measures human pose by calculating the position of human joints (Figure 1B). Combining two simultaneously recorded video data sets of each team allows for calculating the 3D position of all team members and, thus, their positioning relative to each other. We calculated each individual’s distance to the patient and to the other team members. The distance to the patient influences the healthcare providers’ relationship with them (Schnittker, 2004) and is relevant during the workflows of teams (Petrosoniak et al., 2019) and movement coordination (Alderisio et al., 2017). Distance and movement may allow educators to make assumptions regarding the quality of team coordination (Petrosoniak et al., 2019; Marcelino et al., 2020; Shuffler et al., 2020; Tolg and Lorenz, 2020; Wiltshire et al., 2020) and are therefore a relevant measure for teamwork. In summary, the ability to precisely measure and visualize eye contact and team member pose over time is highly relevant for simulation-based training providers. It allows an automated and dynamic capturing of visual attention, eye contact, team member positioning, and distance. Being aware of our own and our team’s attention and positioning enables learning.

This study aims to explore the use of mobile eye tracking and multi-person pose estimation to continuously collect data and measure teamwork during simulation-based training in healthcare. This is an essential step that will enable further studies validating eye tracking and multi-person pose estimation metrics. These technology-based metrics are intended to complement existing methods of teamwork assessment, support simulation faculty, improve the quality of simulation-based training, and serve as examples of new, technology-based methods of measuring teamwork.

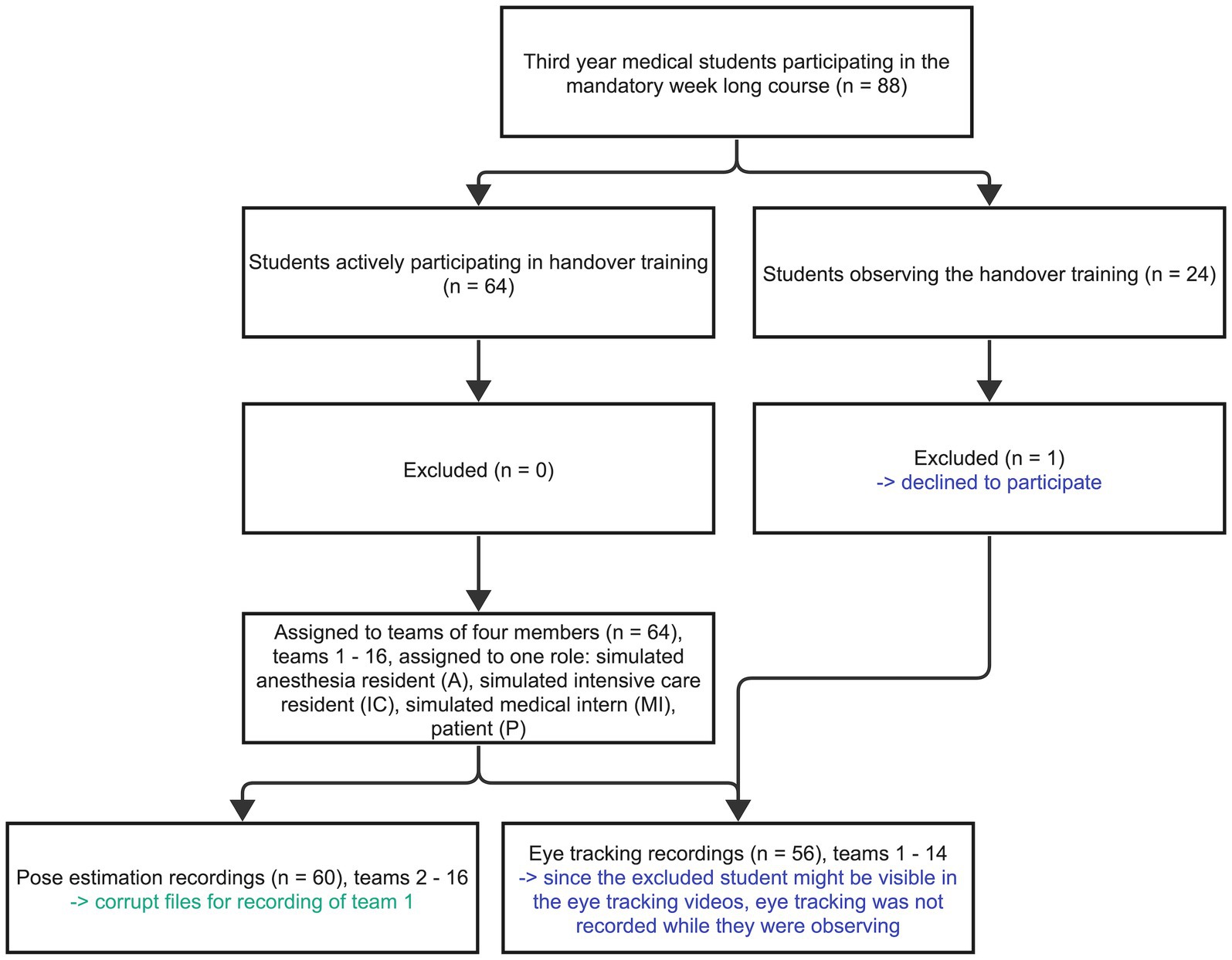

We conducted this observational study during a week-long, simulation-based training in March 2022 with a convenience sample. Third-year medical students of ETH Zurich (Zurich, Switzerland) participated in this training, which was conducted at the simulation center of the University Hospital Zurich (Zurich, Switzerland). The training included eight four-hour simulation exercises on clinical teamwork situations. The 88 eligible students rotated through the course in teams of 10–12 students. We conducted this study in one of the eight clinical teamwork simulations, which covered patient handover. The inclusion criteria were being a third-year medical student, having trackable eyes, and giving consent. Of the 88 eligible students, 64 actively participated in the simulation scenarios, while the remaining 24 students observed the scenarios and participated in the subsequent debriefings (Figure 2).

Figure 2. Participant flow diagram visualizing the participants, including their enrollment, consent, distribution, and data sets. The excluded participant is highlighted in blue and the corrupt file in green.

This study was granted exemption from the ethics committee of canton Zurich, Switzerland (BASEC number: Req-2020-00200). No patients were involved, study participation was voluntary, and participants’ written informed consent was obtained.

We used a handover simulation scenario for data collection to explore the applicability of eye tracking and multi-person pose estimation. During patient handover, healthcare providers communicate information and responsibility about patients to ensure their continued, safe care during transfers among units or shift changes (Foster and Manser, 2012). Teamwork is critical during handover (Bogenstätter et al., 2009; Desmedt et al., 2021). The training’s learning objectives included the ability to describe pitfalls and risk management strategies such as iSBAR, a communication rubric to standardize team communication during handover (Müller et al., 2018). Two formally trained, experienced simulation educators with a nursing background in intensive care led the handover training. They introduced students to the course, aimed to establish and maintain a psychologically safe learning space, allowed students to familiarize themselves with the particular setting, and oriented them toward the learning objectives (Rudolph et al., 2014; Kolbe et al., 2020). After the introduction, a member of the study team and two master students explained the study goals and recording technologies, invited students to participate, and asked for informed, written consent.

The simulated case involved a patient who had undergone trauma surgery after a bicycle accident and was to be handed over from surgery to the intensive care unit (ICU). A room in the simulation center was prepared with a bed and pictures of ICU settings. One member of the student team assumed the role of the patient (P) lying in bed. The other three students assumed the roles of anesthesia resident physician (A), intensive care resident (IC), and medical intern (MI). The scenario started with A and IC distancing themselves from P while MI took care of P. The student playing the patient was instructed to act nauseous and in pain, challenging the team members to continue a structured handover. Team members had to take care of the patient while engaging in a structured handover. After the scenario, the two simulation instructors led debriefings based on the Debriefing with Good Judgment approach (Rudolph et al., 2007), which lasted approximately 45 min.

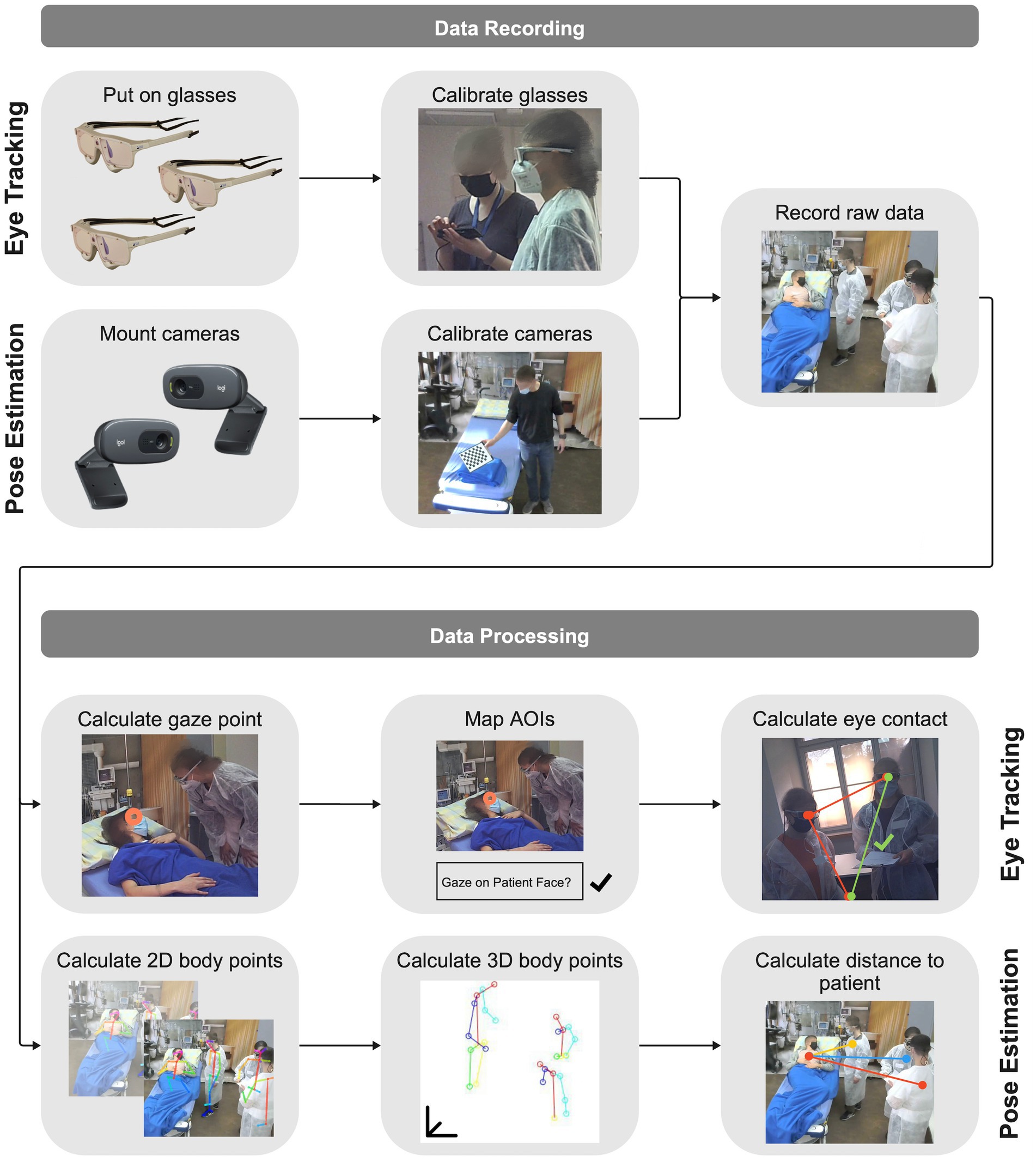

For mobile eye tracking, we used three SMI ETG 2 Wireless mobile eye tracking glasses (Figure 3; SensoMotoric Instruments, Teltow, Germany). We calibrated the eye tracking glasses for every participant with a three-point calibration technique. The glasses recorded the participants’ eyes and their point of view, including audio, which allowed us to calculate the gaze point. After each use, we disinfected the glasses.

Figure 3. Process of data recording with measuring technologies to raw data and data processing from raw data to teamwork metrics for mobile eye tracking and multi-person 3D pose estimation.

For multi-person pose estimation, we used two Logitech C270 webcams (Logitech, Lausanne, Switzerland) to record videos of the simulated cases (Figure 3). The cameras were neither invasive nor wearable, therefore not limiting the immersion of the participants or taking time to mount on their bodies. We mounted two pose estimation cameras on the ceiling and calibrated them once using a checkerboard.

We used SMI BeGaze 3.6 (SensoMotoric Instruments, Teltow, Germany) to process the mobile eye tracking data (Figure 3). This software calculated the gaze point, i.e., what each person was focusing on at each point in time, for every individual frame. Afterward, we defined the areas of interest (AOIs): the relevant and visible objects, people, and backgrounds on which we wanted to base our analysis. The AOIs were: face MI, body (excluding the face) MI, face A, body A, face IC, body IC, face P, body P, room, and patient sheet. We manually mapped the gaze point to the AOIs for each frame; for example, if the gaze point was on the patient’s face, we mapped it to the face P AOI. Finally, we exported the mapped AOI data and further processed it using MATLAB (MathWorks, Natick, Massachusetts, USA): using the face AOIs, we calculated and visualized the eye contact time between the team members. Additionally, we visualized the complete visual attention of the team members by plotting which AOI each team member was focusing on over time.
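The eye contact calculation described above can be sketched in a few lines. This is an illustrative Python sketch, not the authors' MATLAB code. It assumes that eye contact means both team members' gaze points fall on each other's face AOI in the same frame, that the exported AOI sequences of the two members are time-synchronized, and that the frame rate is known. The function name, the frame rate, and the sample sequences are hypothetical; only the AOI labels are taken from the text.

```python
from typing import Sequence


def eye_contact_seconds(
    gaze_a: Sequence[str],
    gaze_b: Sequence[str],
    role_a: str,
    role_b: str,
    frame_rate_hz: float,
) -> float:
    """Time in seconds during which both members look at each other's face AOI.

    Assumption: gaze_a[i] and gaze_b[i] are the AOI labels for the same instant.
    """
    if frame_rate_hz <= 0:
        raise ValueError("frame_rate_hz must be positive")
    target_of_a = f"face {role_b}"  # AOI that member a must look at
    target_of_b = f"face {role_a}"  # AOI that member b must look at
    mutual_frames = sum(
        1
        for aoi_a, aoi_b in zip(gaze_a, gaze_b)
        if aoi_a == target_of_a and aoi_b == target_of_b
    )
    return mutual_frames / frame_rate_hz


# Made-up AOI sequences at a hypothetical 2 frames per second.
gaze_A = ["face IC", "face IC", "room", "face IC", "patient sheet", "face IC"]
gaze_IC = ["face A", "body A", "face A", "face A", "face A", "face A"]
contact = eye_contact_seconds(gaze_A, gaze_IC, "A", "IC", frame_rate_hz=2.0)
print(f"A & IC eye contact: {contact:.1f} s")
```

Requiring both gaze points to hit a face AOI in the same frame is the strictest reading of eye contact. A tolerance window of a few frames would be a reasonable variation if the recordings are not perfectly synchronized.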

We used the open-source software OpenPose (Cao et al., 2021) to process the recorded pose estimation videos (Figure 3). OpenPose allows for detecting 2D human body skeleton points, e.g., chest, shoulder, and hand, for all team members. No body markers were needed, which makes this method completely non-invasive, and, despite its limitations, its accuracy is sufficiently high to warrant its use. We exported the resulting two 2D pose estimation data sets (one for each camera) and used MATLAB (MathWorks, Natick, Massachusetts, USA) for triangulation, resulting in 3D human body skeletal points. With the 3D representation of the team members and the patient, using their chest points, we calculated the distance from each team member to the patient, as well as the distances between the team members, for every frame. Subsequently, we obtained and visualized the average distances. For both technologies, we calculated the statistics (Kruskal-Wallis tests) using SPSS Statistics 28 (IBM, Armonk, New York, USA).
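The two steps above, triangulation and distance calculation, can be illustrated with a minimal Python sketch. The source does not state which triangulation algorithm was used in MATLAB, so the midpoint method below is a stand-in chosen for brevity, not the authors' method. It assumes that each 2D chest point has already been converted into a viewing ray (camera center plus direction in a shared world frame) using the checkerboard calibration. All function names and sample values are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Optional[Vec3]:
    """Midpoint of the shortest segment between two viewing rays.

    c1, c2 are camera centers; d1, d2 are ray directions (assumed inputs).
    Returns None when the rays are (nearly) parallel.
    """
    w = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        return None
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))


def mean_distance(
    track_a: Sequence[Optional[Vec3]], track_b: Sequence[Optional[Vec3]]
) -> Optional[float]:
    """Average per-frame Euclidean distance between two chest-point tracks.

    Frames in which either point is missing (e.g., occluded) are skipped.
    """
    dists: List[float] = [
        math.dist(p, q)
        for p, q in zip(track_a, track_b)
        if p is not None and q is not None
    ]
    return sum(dists) / len(dists) if dists else None


# Made-up example: two cameras 1 m apart observing a point at (0.5, 0.5, 2.0).
chest = triangulate_midpoint(
    (0.0, 0.0, 0.0), (0.5, 0.5, 2.0), (1.0, 0.0, 0.0), (-0.5, 0.5, 2.0)
)
member = [(0.0, 0.0, 0.0), None, (0.0, 0.0, 0.0)]
patient = [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 3.0, 4.0)]
print(chest, mean_distance(member, patient))
```

Skipping frames with missing points keeps occlusions from distorting the average, at the cost of basing the average on fewer frames.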

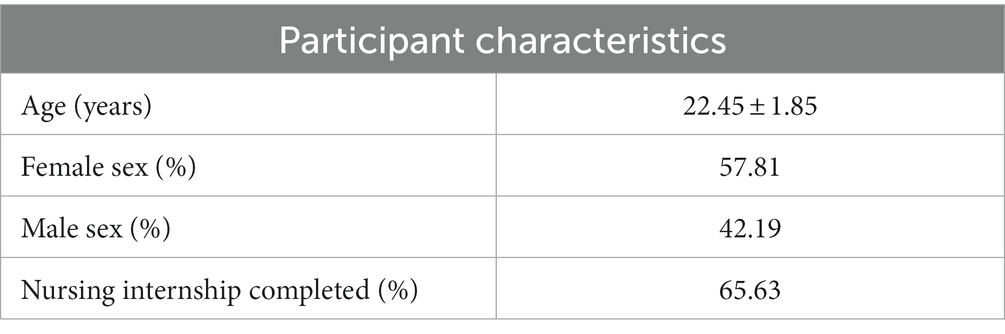

Sixty-four students organized in 16 teams of four assumed an active role during the simulated cases of this study. The simulated roles were anesthesia resident physician (A), intensive care resident (IC), medical intern (MI), and patient (P). The students’ demographics are shown in Table 1. Because one student observing teams 15 and 16 chose not to participate in the study and might have been visible in the recordings, no eye tracking data were recorded for these two teams. Therefore, we collected eye tracking data from 14 teams (teams 1–14), with three mobile eye tracking glasses per team resulting in 42 eye tracking data sets. Since the pose estimation cameras were fixed and only recorded the team itself, we did not have to exclude the videos of teams 15 and 16. Thus, we recorded all 16 teams with pose estimation. The data set of team 1 was not usable, leaving 15 usable pose estimation team data sets, which allowed us to calculate 60 individual human pose estimation data sets (see Figure 2). The average simulation case length was 6.72 min [min 4.08 min – max 9.57 min], and the combined length of all cases was 107.57 min.

Table 1. Participant characteristics (n = 64), including average age in years (± SD), the female and male sex ratio in percent, and the percent of participants having completed their obligatory nursing internship.

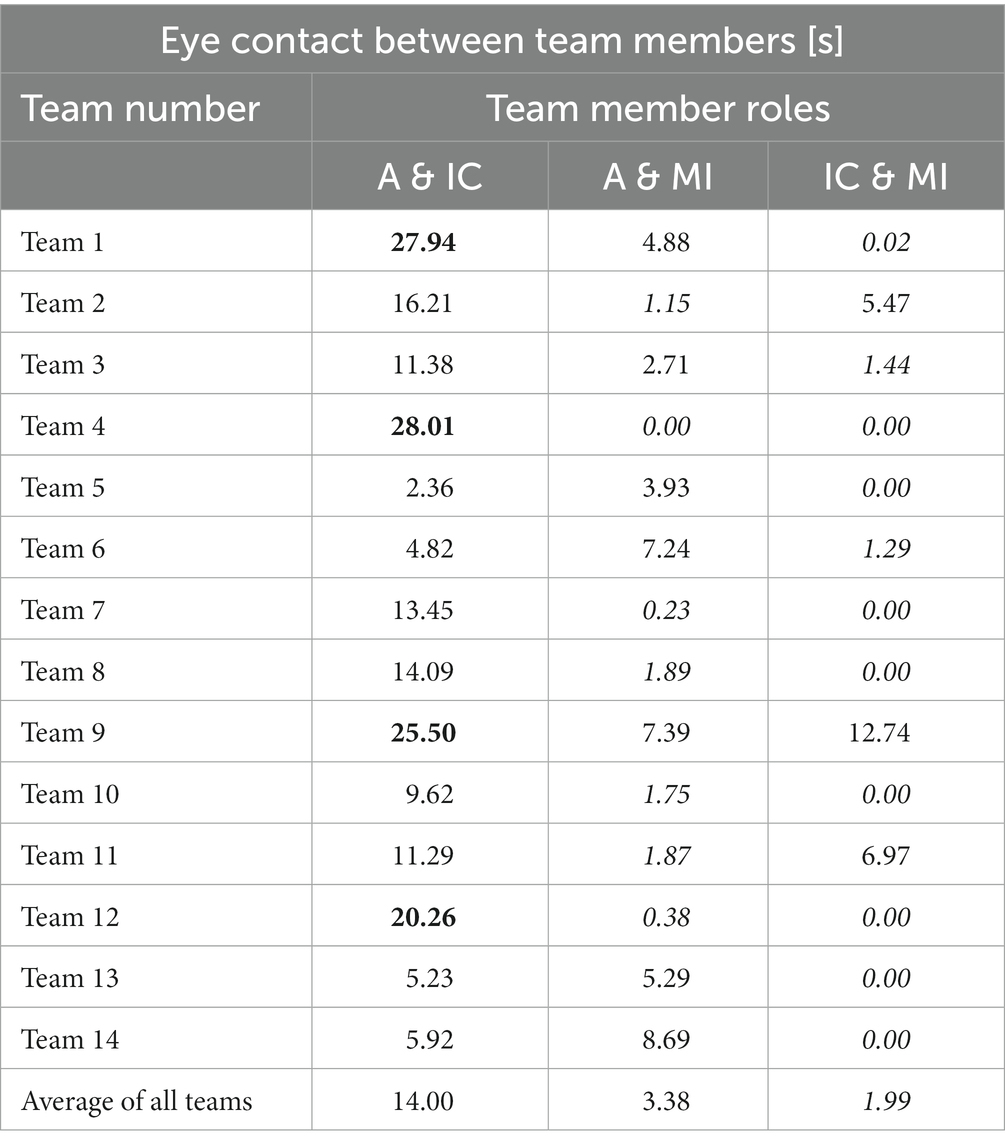

The measured eye contact times, i.e., the times during which team members looked each other in the eye, are shown for all teams and their members in Table 2 and Figure 4. The average eye contact times across all teams were 14 s for A & IC, 3.38 s for A & MI, and 1.99 s for IC & MI (H(2) = 19.029, p < 0.001), with an average eye contact time of 6.46 s for all teams and roles. Eye contact times varied extensively between teams.

Table 2. Eye contact in seconds for all teams, including the average of all teams, between the different team members depending on their roles (A, Simulated Anesthesia Resident; IC, Simulated Intensive Care Resident; MI, Simulated Medical Intern). High eye contact times (over 20 s) are highlighted in bold, while low eye contact times (below 2 s) are highlighted in italics.

Figure 4. Eye contact between A & IC (blue), A & MI (red), and IC & MI (yellow) of all teams over training time in minutes. A, Simulated Anesthesia Resident; IC, Simulated Intensive Care Resident; MI, Simulated Medical Intern.
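For readers who want to see how a Kruskal-Wallis statistic such as the H(2) values in this section is formed, the following Python sketch computes H with the usual tie correction. It is an illustration only: the authors used SPSS, the sample values below are made up and are not the study data, and the function names are hypothetical. With three groups the statistic has two degrees of freedom, for which the chi-square approximation of the p-value has the closed form exp(-H/2).

```python
import math
from typing import List, Sequence


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Kruskal-Wallis H statistic with tie correction (all groups non-empty)."""
    pooled = sorted(
        (value, gi) for gi, group in enumerate(groups) for value in group
    )
    n_total = len(pooled)
    rank_sums: List[float] = [0.0] * len(groups)
    tie_term = 0.0
    i = 0
    while i < n_total:
        j = i
        while j < n_total and pooled[j][0] == pooled[i][0]:
            j += 1
        # Tied values occupy ranks i+1 .. j and all receive the average rank.
        avg_rank = (i + 1 + j) / 2.0
        for k in range(i, j):
            rank_sums[pooled[k][1]] += avg_rank
        t = j - i
        tie_term += t**3 - t
        i = j
    h = 12.0 / (n_total * (n_total + 1)) * sum(
        r * r / len(g) for r, g in zip(rank_sums, groups)
    ) - 3.0 * (n_total + 1)
    correction = 1.0 - tie_term / (n_total**3 - n_total)
    if correction == 0.0:
        raise ValueError("all values are identical; H is undefined")
    return h / correction


def p_value_df2(h: float) -> float:
    """Chi-square upper tail probability for 2 degrees of freedom."""
    return math.exp(-h / 2.0)


# Made-up eye contact times in seconds for three role pairs (not study data).
pair_a_ic = [12.0, 20.5, 9.8, 15.1]
pair_a_mi = [3.0, 4.2, 1.1, 5.0]
pair_ic_mi = [0.0, 2.5, 1.9, 0.7]
h_stat = kruskal_wallis_h([pair_a_ic, pair_a_mi, pair_ic_mi])
print(f"H(2) = {h_stat:.3f}, p = {p_value_df2(h_stat):.4f}")
```

Applied to the reported H(2) = 19.029, the closed form gives a p-value of roughly 0.00007, which is consistent with the reported p < 0.001.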

An additional measure based on the eye tracking data is the visualization of all team members’ gaze points over the whole duration of the simulation. The AOI on which each team member focused during the simulation is visualized for two example teams in Figure 5.

Figure 5. AOIs the team members (A blue, IC red, MI yellow) focused on during the training time, for example teams 3 and 7. A, Simulated Anesthesia Resident; IC, Simulated Intensive Care Resident; MI, Simulated Medical Intern.
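A timeline like the one in Figure 5 can be drawn once the frame-wise AOI labels are collapsed into continuous segments. The Python sketch below shows one way to do this. It is not the authors' MATLAB code, and the function name, frame rate, and sample sequence are hypothetical.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def aoi_segments(
    gaze: Sequence[str], frame_rate_hz: float
) -> List[Tuple[str, float, float]]:
    """Collapse per-frame AOI labels into (aoi, start_s, end_s) segments."""
    if frame_rate_hz <= 0:
        raise ValueError("frame_rate_hz must be positive")
    segments: List[Tuple[str, float, float]] = []
    frame = 0
    for aoi, run in groupby(gaze):
        n_frames = len(list(run))  # length of this uninterrupted run
        segments.append(
            (aoi, frame / frame_rate_hz, (frame + n_frames) / frame_rate_hz)
        )
        frame += n_frames
    return segments


# Made-up AOI sequence for one team member at a hypothetical 2 frames per second.
timeline = aoi_segments(["room", "room", "face P", "room"], frame_rate_hz=2.0)
for aoi, start, end in timeline:
    print(f"{start:4.1f} s to {end:4.1f} s: {aoi}")
```

Each segment can then be plotted as a horizontal bar on the row of its AOI, one color per team member.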

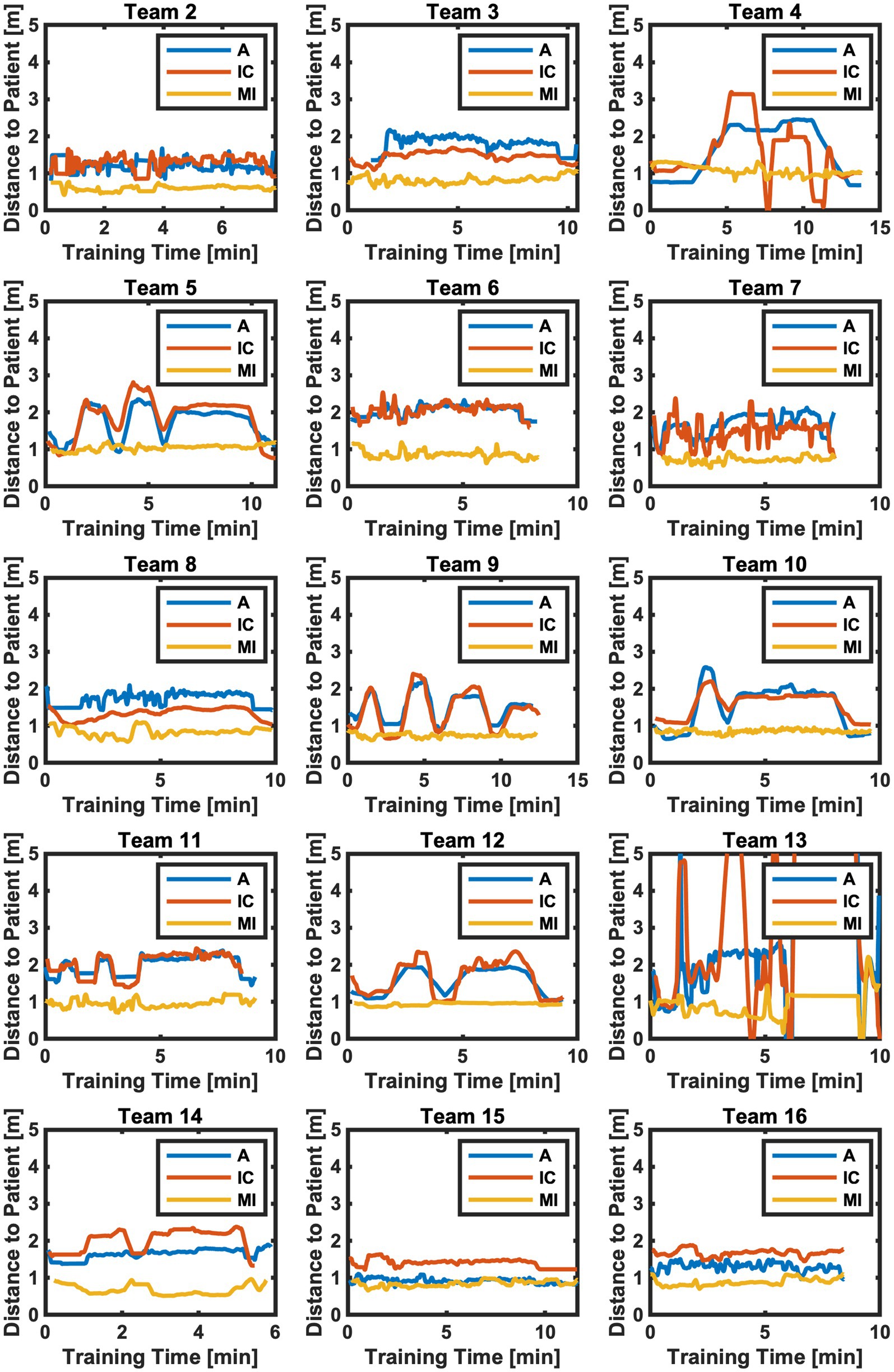

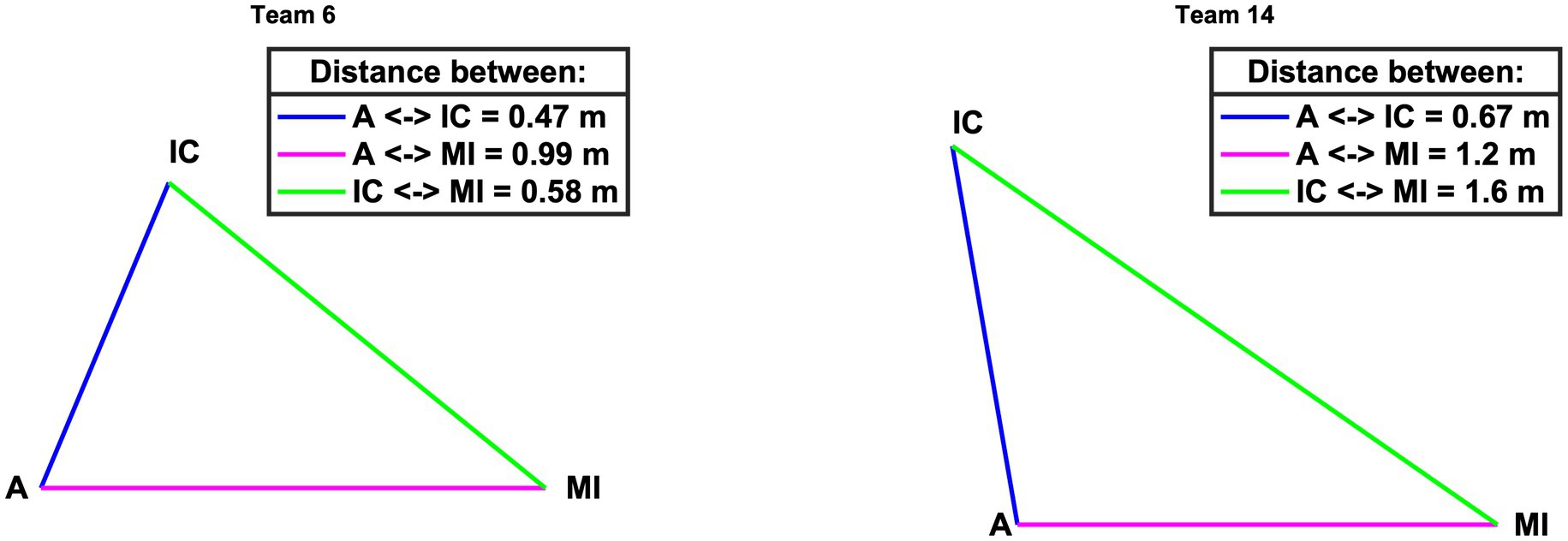

The calculated distance to the patient from team members A, IC, and MI is visualized in Figure 6 over the time of the simulated case. The average values for each team are presented in Table 3. The average distance over all teams was 1.15 m for A, 1.11 m for IC, and 0.78 m for MI (H(2) = 16.642, p < 0.001), with an average distance of 1.01 m to the patient for all teams and roles. The average distance between team members based on calculated 3D pose estimation data is visualized for two teams as an example in Figure 7.

Figure 6. Distance to patient for all teams and team members (A blue, IC red, MI yellow) over the training time. A, Simulated Anesthesia Resident; IC, Simulated Intensive Care Resident; MI, Simulated Medical Intern.

Table 3. Distance to patient in meters for all teams and team members, including the average of all teams (A, Simulated Anesthesia Resident; IC, Simulated Intensive Care Resident; MI, Simulated Medical Intern). High distances to patient (over 1.5 m) are highlighted in bold, while low distances (below 0.5 m) are highlighted in italics.

Figure 7. Average distance between team members (A & IC blue, A & MI magenta, IC & MI green) for example teams 6 and 14. A, Simulated Anesthesia Resident; IC, Simulated Intensive Care Resident; MI, Simulated Medical Intern.

This study explored the use of video-based, minimally invasive technologies to collect data to measure teamwork in simulation-based training in healthcare. We found that both technologies reliably recorded and analyzed data; only one pose estimation data set was unusable. In what follows, we discuss the feasibility, contribution, and limitations of this study.

Mobile eye tracking allowed for precise measurement of visual attention while being minimally invasive. While removing the mobile eye tracking glasses, some participants spontaneously reported that they had forgotten they were wearing them. However, completely non-invasive eye tracking would be beneficial. Although remote eye tracking is common, it currently cannot be used for moving participants (Ferhat and Vilariño, 2016). Handling the mobile eye tracking glasses was time-intensive during the recording since the glasses needed to be calibrated for every team member. However, the collected data yielded valuable, complex details on teamwork. We were able to track three team members simultaneously without losing a single data set. During data processing, we had to manually map the AOIs, which was time-consuming. Automation of this processing step is being developed (Wolf et al., 2018).

Recording for multi-person pose estimation required less effort. The one-time calibration for all recordings took little time. The method was entirely non-invasive, neither distracting participants nor hindering their immersion in the simulation. Unfortunately, one data set was unusable. We assume that the video files were corrupted while being saved to the hard drive. During data processing, having multiple participants in the same camera frame was challenging. When occlusions occurred and a person’s body was not visible, no data could be extracted for that person. We manually checked the indexes of the team members to ensure that the algorithm did not mix up the team members. A promising solution to this problem might be using depth cameras or more webcams to record data from multiple points of view.

The teamwork metrics that were calculated and visualized in this paper show the applicability of eye tracking and pose estimation to measure teamwork. Both mobile eye tracking and multi-person pose estimation allowed for collecting numerous, continuous data. The challenge, as with any technology-driven teamwork measure, is to identify parameters that matter and serve to discriminate among teams (Klonek et al., 2019). In our view, using both eye tracking and pose estimation allowed not only for precisely measuring and visualizing eye contact (Figure 4) and distance among patient and team members (Figure 6) but also for discriminating between teams: eye contact among team members and distance to patient (and among team members) varied extensively from team to team. For example, all members of Team 9 had eye contact with each other numerous times (Figure 4). In contrast, in Team 5, only A made eye contact with MI and IC a few times, while MI and IC had no eye contact at all. In Team 4, A and IC had much eye contact, exclusively with each other, while A and MI as well as IC and MI did not look at each other (Figure 4). The visualization of every team member’s visual attention during the whole scenario (Figure 5) might be a valuable basis for investigating teamwork.

Regarding distance to patient, all members of Teams 15 and 16 stayed close to the patient and showed only slight variance in distance over time (Figure 6). In contrast, members of Team 13 varied considerably in their distance to the patient, both among each other and over time (Figure 6). That is, both metrics indicate sensitivity to differences in team processes. Neither eye contact tracking nor pose tracking is possible with the naked eye. Yet, for teamwork in healthcare, certain interaction patterns may make all the difference for patient care (Kolbe et al., 2014; Su et al., 2017; Schmutz et al., 2019). The ability to precisely measure and visualize eye contact and team member pose over time is highly relevant for simulation-based training providers: it allows for more automated and dynamic capturing of visual attention, eye contact, team member positioning, and distance. Simulation educators can access these data and use them to discuss matches and mismatches with desired team performance during debriefing. For example, visual attention and eye contact data can inform discussions of situation awareness, power, and speaking up (Dovidio and Ellyson, 1982). Distance measures may provide essential details in discussing team coordination and task management. For example, in our simulated handover case, A and IC were instructed to distance themselves from P and MI to discuss the patient information, while MI was to stay close to P to take care of them. If metrics depending on the teams’ position and movement are developed and validated, pose estimation allows measuring them continuously, which in turn allows for testing hypotheses and performance matches.

These technology-based metrics may complement behavior observation without replacing traditional methods. Medical competence assessment, especially of teamwork, needs both analytic and holistic approaches (Rotthoff et al., 2021), and mobile eye tracking and multi-person pose estimation allow drawing analytical conclusions in a more complex setting than before. An example of combining multiple methods could include self-reports of participants, supporting reflective practice (Liaw et al., 2012), technology-based metrics providing analytical observations for specific behaviors, and expert assessors observing the general behavior based on their extensive knowledge. The vision of using technology to measure teamwork is a static and fully automated recording set-up based in a simulation center. With this set-up, new teamwork metrics can be easily co-created and validated with experts and subsequently used to support training. When experts find a new competence metric based on visual attention or body position, we can analyze it with our recorded data set if the participants’ consent allows it. The practical application today is to provide educators with visualizations of existing metrics after the simulated case to use during debriefing. For example, learners may watch their parts of the recorded simulation, including the metrics, which may increase learning (Farooq et al., 2017; Gordon et al., 2017). Recordings of expert teams performing challenging teamwork tasks may be used in teamwork training to set mastery learning goals and provide specific guidance during rapid cycle deliberate practice (Barsuk et al., 2016; Salvetti et al., 2019; Ng et al., 2021). Our study focused on teams of three simulated healthcare professionals and one patient so that conclusions about the use of wearable technology in team contexts do not rely solely on research with dyads (Kazi et al., 2021; Halgas et al., 2022). Although the metrics were developed and visualized for a handover scenario, they can easily be transferred to other training scenarios.

Our study has limitations. First, although eye tracking and multi-person pose estimation showed promising opportunities and relevance, they require more validation research. In particular, indicators for criterion validity were not included in our study and are highly needed. That is, we cannot conclude if teams with a certain degree of eye contact or distance to patient performed better or worse. This is important, though, and should be studied with experienced healthcare teams rather than with a student sample.

Second, although the AOIs provided a rich set of dynamic details, their information density is high: they provided details about what each team member was looking at and how that changed over time (Figure 5). This level of detail and complexity might be too overwhelming to support simulation educators during debriefings. Simpler indices and/or visualizations will be needed to enhance the applicability of results. However, researchers might find it interesting to discover teamwork patterns in visual behavior. For example, seeing patients enhances learning (Larsen et al., 2013), which can be measured by focusing on the two patient-related AOIs.

Third, the pose estimation teamwork metric of the team’s distance to the patient and between the team members may have been influenced by the COVID-19 situation. During data collection in March 2022, people were required to observe social distancing (Jarvis et al., 2020).

Fourth, the process of calculating the first metric for both measures was complicated and time-intensive. Fortunately, every iteration and further metric was faster because the data processing framework was already established. Therefore, processing newly recorded data using existing metrics, or developing new metrics and analyzing existing data, takes less effort and is faster. Additionally, the recorded and calculated data sets can be analyzed using other methods even years later, such as behavior coding or emerging machine learning techniques.

Fifth, we only studied one particular simulated case; the resulting metrics reflect only the interaction during simulated handover. Sixth, future studies may include the investigation of simulation educators’ cognitive load and overall training quality when using technology-based teamwork metrics (Fraser et al., 2018). Furthermore, the degree of acceptance of the methodologies by the participants may be quantified in future studies.

Finally, conducting this study required an interdisciplinary research team consisting of mechanical engineers and a team of healthcare simulation scientists. Currently, for using technology-based metrics to measure teamwork, interdisciplinary skills are essential: Technical knowledge is needed to program metrics and automate the process, while healthcare and teamwork knowledge is required to define relevant behaviors and metrics. However, once the technology is set up for data collection and the metrics are implemented, they will reduce the cognitive load of researchers and educators because complex team dynamics can be feasibly assessed during simulation-based teamwork training.

In this study, two minimally invasive video-based technologies, mobile eye tracking and multi-person pose estimation, were integrated into simulation-based healthcare training to measure teamwork. Both allowed the recording of objective, continuous, and reliable data that could be processed into multiple teamwork metrics. Future research is necessary to generalize our findings and to examine how they may complement existing methods, support instructors, and contribute to the quality of teamwork training in healthcare.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Ethics Committee of Canton Zurich, Switzerland. The patients/participants provided their written informed consent to participate in this study.

KW collected and analyzed the data and wrote the first draft of the manuscript. MK wrote sections of the manuscript. All authors contributed to the conception and design of the study and to manuscript revision, and read and approved the submitted version.

Open access funding by ETH Zurich.

The authors thank Dominique Motzny and Stefan Schöne for supporting this study as simulation faculty. They are very grateful to all students participating in our study. Furthermore, they thank Stefan Rau, Marco von Salis, and Andrina Nef for their technical and operational support.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

A, Simulated Anesthesia Resident; IC, Simulated Intensive Care Resident; ICU, Intensive Care Unit; MI, Simulated Medical Intern; P, Patient; AOI, Area of Interest.

Abegglen, S., Greif, R., Balmer, Y., Znoj, H. J., and Nabecker, S. (2022). Debriefing interaction patterns and learning outcomes in simulation: an observational mixed-methods network study. Adv. Simul. 7, 28–10. doi: 10.1186/s41077-022-00222-3

Alderisio, F., Lombardi, M., Fiore, G., and di Bernardo, M. (2017). A novel computer-based set-up to study movement coordination in human ensembles. Front. Psychol. 8:967. doi: 10.3389/fpsyg.2017.00967

Barsuk, J. H., Cohen, E. R., Wayne, D. B., Siddall, V. J., and McGaghie, W. C. (2016). Developing a simulation-based mastery learning curriculum: lessons from 11 years of advanced cardiac life support. Simul. Healthc. 11, 52–59. doi: 10.1097/SIH.0000000000000120

Bogenstätter, Y., Tschan, F., Semmer, N. K., Spychiger, M., Breuer, M., and Marsch, S. (2009). How accurate is information transmitted to medical professionals joining a medical emergency? A simulator study. Hum. Factors 51, 115–125. doi: 10.1177/0018720809336734

Bohannon, L. S., Herbert, A. M., Pelz, J. B., and Rantanen, E. M. (2013). Eye contact and video-mediated communication: a review. Displays 34, 177–185. doi: 10.1016/j.displa.2012.10.009

Bosse, H. M., Mohr, J., Buss, B., Krautter, M., Weyrich, P., Herzog, W., et al. (2015). The benefit of repetitive skills training and frequency of expert feedback in the early acquisition of procedural skills. BMC Med. Educ. 15, 1–10. doi: 10.1186/s12909-015-0286-5

Brauner, E., Boos, M., and Kolbe, M. (2018). The Cambridge handbook of group interaction analysis. Cambridge: Cambridge University Press.

Cao, Z., Hidalgo, G., Simon, T., Wei, S. E., and Sheikh, Y. (2021). OpenPose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 43, 172–186. doi: 10.1109/TPAMI.2019.2929257

Desmedt, M., Ulenaers, D., Grosemans, J., Hellings, J., and Bergs, J. (2021). Clinical handover and handoff in healthcare: a systematic review of systematic reviews. Int. J. Qual. Heal. Care 33:170. doi: 10.1093/INTQHC/MZAA170

Dias, R. D., Zenati, M. A., Stevens, R., Gabany, J. M., and Yule, S. J. (2019). Physiological synchronization and entropy as measures of team cognitive load. J. Biomed. Inform. 96:103250. doi: 10.1016/j.jbi.2019.103250

Dietz, A. S., Pronovost, P. J., Benson, K. N., Mendez-Tellez, P. A., Dwyer, C., Wyskiel, R., et al. (2014). A systematic review of behavioural marker systems in healthcare: what do we know about their attributes, validity and application? BMJ Qual. Saf. 23:1031. doi: 10.1136/bmjqs-2013-002457

Dovidio, J. F., and Ellyson, S. L. (1982). Decoding visual dominance: attributions of power based on relative percentages of looking while speaking and looking while listening. Soc. Psychol. Q. 45:106. doi: 10.2307/3033933

Edmondson, A. (2012). Teaming: how organizations learn, innovate, and compete in the knowledge economy. John Wiley & Sons Available at: https://books.google.ch/books?hl=en&lr=&id=5dZYEAAAQBAJ&oi=fnd&pg=PR1&dq=Edmondson,+A.+C.+(2012).+Teaming:+How+organizations+learn,+innovate,+and+compete+in+the+knowledge+economy.+San+Francisco,+CA:+Jossey-Bass.&ots=DNWfucoX5r&sig=2U7mBA0sAdoO1pwqU1w8CpZ5

Farooq, O., Thorley-Dickinson, V. A., Dieckmann, P., Kasfiki, E. V., Omer, R. M. I. A., and Purva, M. (2017). Comparison of oral and video debriefing and its effect on knowledge acquisition following simulation-based learning. BMJ Simul. Technol. Enhanc. Learn 3, 48–53. doi: 10.1136/bmjstel-2015-000070

Fasold, F., Nicklas, A., Seifriz, F., Schul, K., Noël, B., Aschendorf, P., et al. (2021). Gaze coordination of groups in dynamic events – a tool to facilitate analyses of simultaneous gazes within a team. Front. Psychol. 12, 1–7. doi: 10.3389/fpsyg.2021.656388

Ferhat, O., and Vilariño, F. (2016). Low cost eye tracking: the current panorama. Comput. Intell. Neurosci. 2016, 1–14. doi: 10.1155/2016/8680541

Fey, M. K., Roussin, C. J., Rudolph, J. W., Morse, K. J., Palaganas, J. C., and Szyld, D. (2022). Teaching, coaching, or debriefing With Good Judgment: a roadmap for implementing “With Good Judgment” across the SimZones. Adv. Simul. 7:235. doi: 10.1186/s41077-022-00235-y

Foster, S., and Manser, T. (2012). The effects of patient handoff characteristics on subsequent care: a systematic review and areas for future research. Acad. Med. 87, 1105–1124. doi: 10.1097/ACM.0b013e31825cfa69

Fraser, K. L., Meguerdichian, M. J., Haws, J. T., Grant, V. J., Bajaj, K., and Cheng, A. (2018). Cognitive load theory for debriefing simulations: implications for faculty development. Adv. Simul. 3:28. doi: 10.1186/s41077-018-0086-1

Gordon, L., Rees, C., Ker, J., and Cleland, J. (2017). Using video-reflexive ethnography to capture the complexity of leadership enactment in the healthcare workplace. Adv. Heal. Sci. Educ. 22, 1101–1121. doi: 10.1007/s10459-016-9744-z

Greig, P. R., Higham, H., and Nobre, A. C. (2014). Failure to perceive clinical events: an under-recognised source of error. Resuscitation 85, 952–956. doi: 10.1016/j.resuscitation.2014.03.316

Halgas, E., van Eijndhoven, K., Gevers, J., Wiltshire, T. J., Westerink, J., and Sonja, R. (2022). Team coordination dynamics: a review on using wearable technology to assess team functioning and team performance. Small Gr. Res. 1–36. doi: 10.1177/10464964221125717

Hendrich, A., Chow, M. P., Skierczynski, B. A., and Lu, Z. (2008). A 36-hospital time and motion study: how do medical-surgical nurses spend their time? Perm. J. 12, 25–34. doi: 10.7812/tpp/08-021

Henneman, E. A., Marquard, J. L., Fisher, D. L., and Gawlinski, A. (2017). Eye tracking: a novel approach for evaluating and improving the safety of healthcare processes in the simulated setting. Simul. Healthc. 12, 51–56. doi: 10.1097/SIH.0000000000000192

Hughes, A. M., Gregory, M. E., Joseph, D. L., Sonesh, S. C., Marlow, S. L., Lacerenza, C. N., et al. (2016). Saving lives: a meta-analysis of team training in healthcare. J. Appl. Psychol. 101, 1266–1304. doi: 10.1037/apl0000120

Isella, L., Romano, M., Barrat, A., Cattuto, C., Colizza, V., van den Broeck, W., et al. (2011). Close encounters in a pediatric ward: measuring face-to-face proximity and mixing patterns with wearable sensors. PLoS One 6:e17144. doi: 10.1371/journal.pone.0017144

Jarvis, C. I., van Zandvoort, K., Gimma, A., Prem, K., Klepac, P., Rubin, G. J., et al. (2020). Quantifying the impact of physical distance measures on the transmission of COVID-19 in the UK. BMC Med. 18:124. doi: 10.1101/2020.03.31.20049023

Kazi, S., Khaleghzadegan, S., Dinh, J. V., Shelhamer, M. J., Sapirstein, A., Goeddel, L. A., et al. (2021). Team physiological dynamics: a critical review. Hum. Factors 63, 32–65. doi: 10.1177/0018720819874160

Klonek, F., Gerpott, F. H., Lehmann-Willenbrock, N., and Parker, S. K. (2019). Time to go wild: how to conceptualize and measure process dynamics in real teams with high-resolution. Organ. Psychol. Rev. 9, 245–275. doi: 10.1177/2041386619886674

Kolbe, M., and Boos, M. (2019). Laborious but elaborate: the benefits of really studying team dynamics. Front. Psychol. 10:1478. doi: 10.3389/fpsyg.2019.01478

Kolbe, M., Burtscher, M. J., and Manser, T. (2013). Co-ACT—a framework for observing coordination behaviour in acute care teams. BMJ Qual. Saf. 22:596. doi: 10.1136/bmjqs-2012-001319

Kolbe, M., Eppich, W., Rudolph, J., Meguerdichian, M., Catena, H., Cripps, A., et al. (2020). Managing psychological safety in debriefings: a dynamic balancing act. BMJ Simul. Technol. Enhanc. Learn. 6, 164–171. doi: 10.1136/BMJSTEL-2019-000470

Kolbe, M., Grote, G., Waller, M. J., and Wacker, J. (2014). Monitoring and talking to the room: autochthonous coordination patterns in team interaction and performance. J. Appl. Psychol. 99, 1254–1267. doi: 10.1037/a0037877

Kozlowski, S. W. J. (2015). Advancing research on team process dynamics: theoretical, methodological, and measurement considerations. Organ. Psychol. Rev. 5, 270–299. doi: 10.1177/2041386614533586

Larsen, D. P., Butler, A. C., Lawson, A. L., and Roediger, H. L. (2013). The importance of seeing the patient: test-enhanced learning with standardized patients and written tests improves clinical application of knowledge. Adv. Heal. Sci. Educ. 18, 409–425. doi: 10.1007/s10459-012-9379-7

Liaw, S. Y., Scherpbier, A., Rethans, J. J., and Klainin-Yobas, P. (2012). Assessment for simulation learning outcomes: a comparison of knowledge and self-reported confidence with observed clinical performance. Nurse Educ. Today 32, e35–e39. doi: 10.1016/J.NEDT.2011.10.006

Marcelino, R., Sampaio, J., Amichay, G., Gonçalves, B., Couzin, I. D., and Nagy, M. (2020). Collective movement analysis reveals coordination tactics of team players in football matches. Chaos Solitons Fractals 138:109831. doi: 10.1016/j.chaos.2020.109831

Müller, M., Jürgens, J., Redaèlli, M., Klingberg, K., Hautz, W. E., and Stock, S. (2018). Impact of the communication and patient hand-off tool SBAR on patient safety: a systematic review. BMJ Open 8:e022202. doi: 10.1136/bmjopen-2018-022202

Ng, C., Primiani, N., and Orchanian-Cheff, A. (2021). Rapid cycle deliberate practice in healthcare simulation: a scoping review. Med. Sci. Educ. 31, 2105–2120. doi: 10.1007/S40670-021-01446-0

Petrosoniak, A., Almeida, R., Pozzobon, L. D., Hicks, C., Fan, M., White, K., et al. (2019). Tracking workflow during high-stakes resuscitation: the application of a novel clinician movement tracing tool during in situ trauma simulation. BMJ Simul. Technol. Enhanc. Learn. 5, 78–84. doi: 10.1136/bmjstel-2017-000300

Pronovost, P. (2013). “Teamwork matters” in Developing and enhancing teamwork in organizations: Evidence-based best practices and guidelines. eds. E. Salas, S. I. Tannenbaum, D. Cohen, and G. Latham (San Francisco, CA: Jossey-Bass), 11–12.

Rosen, M. A., DiazGranados, D., Dietz, A. S., Benishek, L. E., Thompson, D., Pronovost, P. J., et al. (2018). Teamwork in healthcare: key discoveries enabling safer, high-quality care. Am. Psychol. 73, 433–450. doi: 10.1037/amp0000298

Rosen, M. A., Dietz, A. S., Yang, T., Priebe, C. E., and Pronovost, P. J. (2014). An integrative framework for sensor-based measurement of teamwork in healthcare. J. Am. Med. Informatics Assoc. 22, 11–18. doi: 10.1136/amiajnl-2013-002606

Rosen, M. A., Salas, E., Wilson, K. A., King, H. B., Salisbury, M., Augenstein, J. S., et al. (2008). Measuring team performance in simulation-based training: adopting best practices for healthcare. Simul. Healthc. 3, 33–41. doi: 10.1097/SIH.0b013e3181626276

Rotthoff, T., Kadmon, M., and Harendza, S. (2021). It does not have to be either or! Assessing competence in medicine should be a continuum between an analytic and a holistic approach. Adv. Heal. Sci. Educ. 26, 1659–1673. doi: 10.1007/s10459-021-10043-0

Rudolph, J. W., Raemer, D. B., and Simon, R. (2014). Establishing a safe container for learning in simulation the role of the presimulation briefing. Simul. Healthc. 9, 339–349. doi: 10.1097/SIH.0000000000000047

Rudolph, J. W., Simon, R., Raemer, D. B., and Eppich, W. J. (2008). Debriefing as formative assessment: closing performance gaps in medical education. Acad. Emerg. Med. 15, 1010–1016. doi: 10.1111/j.1553-2712.2008.00248.x

Rudolph, J., Simon, R., Rivard, P., Dufresne, R. L., and Raemer, D. B. (2007). Debriefing with good judgment: combining rigorous feedback with genuine inquiry. Anesthesiol. Clin. 25, 361–376. doi: 10.1016/j.anclin.2007.03.007

Ruth, D. (1992). Interpersonal communication: a review of eye contact. Infect. Control Hosp. Epidemiol. 13, 222–225. doi: 10.2307/30147101

Salas, E., Rosen, M. A., Held, J. D., and Weissmuller, J. J. (2009). Performance measurement in simulation-based training. Simul. Gaming 40, 328–376. doi: 10.1177/1046878108326734

Salvetti, F., Gardner, R., Minehart, R., and Bertagni, B. (2019). Advanced medical simulation: interactive videos and rapid cycle deliberate practice to enhance teamwork and event management: effective event. Int. J. Adv. Corp. Learn. 12, 70–81. doi: 10.3991/ijac.v12i3.11270

Schmutz, J. B., Meier, L. L., and Manser, T. (2019). How effective is teamwork really? The relationship between teamwork and performance in healthcare teams: a systematic review and meta-analysis. BMJ Open 9, 1–16. doi: 10.1136/bmjopen-2018-028280

Schnittker, J. (2004). Social distance in the clinical encounter: interactional and Sociodemographic foundations for mistrust in physicians. Soc. Psychol. Q. 67, 217–235. doi: 10.1177/019027250406700301

Shrivastava, S., Shrivastava, P., and Ramasamy, J. (2010). Effective feedback: an indispensable tool for improvement in quality of medical education. J. Pedagog. Dev. 3, 12–20.

Shuffler, M. L., Salas, E., and Rosen, M. A. (2020). The evolution and maturation of teams in organizations: convergent trends in the new dynamic science of teams. Front. Psychol. 11, 1–6. doi: 10.3389/fpsyg.2020.02128

Su, L., Kaplan, S., Burd, R., Winslow, C., Hargrove, A., and Waller, M. (2017). Trauma resuscitation: can team behaviours in the prearrival period predict resuscitation performance? BMJ Simul. Technol. Enhanc. Learn. 3, 106–110. doi: 10.1136/bmjstel-2016-000143

Tolg, B., and Lorenz, J. (2020). An analysis of movement patterns in mass casualty incident simulations. Adv. Simul. 5, 27–10. doi: 10.1186/s41077-020-00147-9

Uher, J., and Visalberghi, E. (2016). Observations versus assessments of personality: a five-method multi-species study reveals numerous biases in ratings and methodological limitations of standardised assessments. J. Res. Pers. 61, 61–79. doi: 10.1016/j.jrp.2016.02.003

Vertegaal, R., Slagter, R., Van Der Veer, G., and Nijholt, A. (2001). Eye gaze patterns in conversations: there is more to conversational agents than meets the eyes. Human Fact. Comput. Syst. 3, 301–308. doi: 10.1145/365024.365119

Weaver, S. J., Dy, S. M., and Rosen, M. A. (2014). Team-training in healthcare: a narrative synthesis of the literature. BMJ Qual. Saf. 23, 359–372. doi: 10.1136/BMJQS-2013-001848

Weiss, K. E., Hoermandinger, C., Mueller, M., Schmid Daners, M., Potapov, E. V., Falk, V., et al. (2021). Eye tracking supported human factors testing improving patient training. J. Med. Syst. 45, 55–57. doi: 10.1007/s10916-021-01729-4

Weiss, K. E., Kolbe, M., Nef, A., Grande, B., Kalirajan, B., Meboldt, M., et al. (2023). Data-driven resuscitation training using pose estimation. Adv. Simul. 81, 1–9. doi: 10.1186/S41077-023-00251-6

Wiltshire, T. J., Hudson, D., Lijdsman, P., Wever, S., and Atzmueller, M. (2020). Social analytics of team interaction using dynamic complexity heat maps and network visualizations. Available at: http://arxiv.org/abs/2009.04445

Keywords: teamwork, training, eye tracking, pose estimation, simulation, feedback, technology, behavior measurement

Citation: Weiss KE, Kolbe M, Lohmeyer Q and Meboldt M (2023) Measuring teamwork for training in healthcare using eye tracking and pose estimation. Front. Psychol. 14:1169940. doi: 10.3389/fpsyg.2023.1169940

Received: 20 February 2023; Accepted: 02 May 2023;

Published: 31 May 2023.

Edited by:

Margarete Boos, University of Göttingen, Germany

Reviewed by:

Eleonora Orena, IRCCS Carlo Besta Neurological Institute Foundation, Italy

Copyright © 2023 Weiss, Kolbe, Lohmeyer and Meboldt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kerrin Elisabeth Weiss
