- 1 School of Education, Jianghan University, Wuhan, China

- 2 National Engineering Laboratory for Educational Big Data, Central China Normal University, Wuhan, China

- 3 School of Education, State University of New York at Oswego, Oswego, NY, United States

- 4 School of Education, Central China Normal University, Wuhan, China

To help optimize online learning platforms for in-service teachers’ professional development, this study aims to develop an instrument to assess the quality of such platforms from the perspective of teacher satisfaction. After reliability and validity tests and expert weighting, a 27-item instrument was finalized. Based on the information systems (IS) success model, the instrument measures teachers’ perceptions of the quality of online learning platforms along three dimensions: content quality, technical quality, and service quality. The instrument was then used to analyze the effects of optimizing the National Teacher Training Platform amid the COVID-19 outbreak in China. The findings revealed that improving the platform’s style, tool functions, operating efficiency, and teaching methods could enhance teachers’ experience of online training.

Introduction

Over the years, education reform and teacher training initiatives have worked hard to build and promote scalable, sustainable online communities for education professionals (Schlager and Fusco, 2003). One such type of community, online learning platforms for teacher professional development (TPD), has garnered widespread attention and grown rapidly owing to its capacity and flexibility to support teachers in continuously reflecting, learning, and acting to improve their practice throughout their teaching careers. Online learning platforms make teacher training and self-development feasible and more convenient in terms of time and space. A high-quality online teacher learning platform is essential for positive teacher learning outcomes (Pengxi and Guili, 2018). Online technology can help deliver high-quality TPD when it is suitably integrated into the learning platform. However, using online technology as a “quick fix” or integrating it into a platform without a clear purpose will not produce the desired changes in teaching and learning outcomes (Cobo Romani et al., 2022). Schlager and Fusco (2003) contended that focusing solely on online technology as a means of delivering training and/or creating online networks puts the cart before the horse, because it ignores the Internet’s even greater potential to support and strengthen the local communities of practice in which teachers work. Designing and delivering high-quality TPD requires an understanding of the applicable technology, the resources required, and teachers’ needs. Previous research suggested that, to exert the greatest impact, professional development must be designed, implemented, and evaluated to meet the needs of particular teachers in particular settings (Guskey, 1994). Thus, teachers’ perceptions of online learning platforms for TPD, especially of the quality of these platforms, have become an issue of great importance.
Obtaining and analyzing teachers’ perceptions can help trainers, administrators, platform designers, and technologists better use and improve online learning platforms for TPD, thereby helping teachers acquire knowledge and skills more effectively. As Greenberg (2009, p. 2) pointed out, “without a programmatic understanding of best practices and methods of anticipating potential roadblocks, far too many initiatives may falter or fail.”

So far, although some instruments measure users’ perceptions of online platforms or websites in general, few instruments specifically measure teachers’ perceptions of the quality of online training platforms. Without appropriate measurement, some online learning platforms might not be used effectively to support TPD. To address this research gap, this study aims to: (i) develop a scale of teachers’ perceptions of the quality of online learning platforms for TPD; and (ii) apply the developed scale to evaluate the National Online Teacher Training Platform in Central China.

Study design

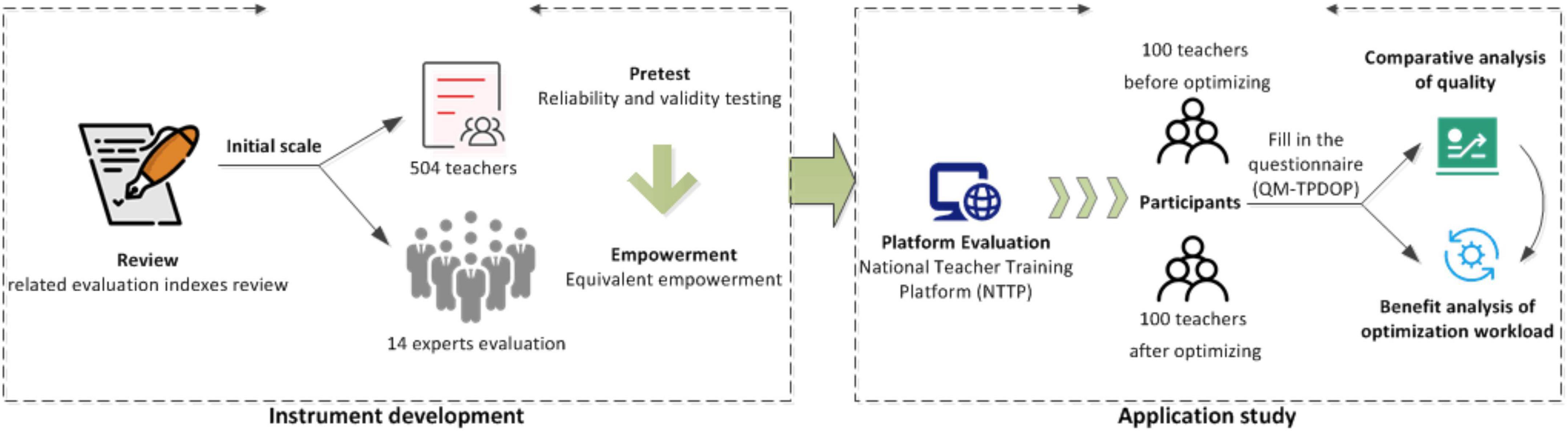

Overall, this study comprised two parts: instrument development and an application study. As shown in Figure 1, the first part included four steps: conceptual framework and related works, initial scale, preliminary test, and expert evaluation empowerment (i.e., expert-based weighting of the indicators). The developed instrument was then used to validate and evaluate the effect of optimizing the online learning platform for teachers. The second part included the following steps: data pre-analysis, platform quality comparison, and benefit analysis of platform optimization.

Instrument development

Conceptual framework and related works

One of the leading models for measuring the success of information systems (IS) is the IS success model, which aims to provide a thorough understanding of IS success by elucidating the relationships among the critical success factors frequently considered when assessing IS. The model was initially developed and later revised by DeLone and McLean (1992, 2002, 2003) in response to input from other researchers. As shown in Figure 2, in the IS success model, the three quality dimensions (information or content quality, system quality, and service quality) directly affect usage intentions and user satisfaction and, consequently, net system benefits. Information quality, also known as content quality, denotes the quality of the information or content that a system can store, deliver, or produce in terms of completeness, relevance, and consistency. System quality, also known as technical quality, denotes the quality of the system in terms of functionality, usability, efficiency, and portability. Service quality usually denotes the quality of support provided to users, including reliability, responsiveness, assurance, and empathy. The IS success model has been widely adopted to measure different types of information systems, including online learning systems and websites (Lin, 2007). Table 1 presents the main relevant instruments based on one or all of the quality dimensions. Building on the IS success model and previous related research, this study develops an instrument specifically for online platforms for teachers’ professional development. Specifically, it attempts to identify key indicators of technical quality, content quality, and service quality to measure the effects of online learning platforms for TPD on teachers’ experience.

Content quality

Content is the core of online learning platforms, including information and resources for learning and practice. Yang and Chan (2008) focused on content quality in evaluating English learning websites and conducted a differentiated evaluation of the general content, professional learning content, and exclusive training content of each website, highlighting the authority and practicality of the content. In addition, Devi and Verma (2018) investigated library websites, covering general information, service information, and different types of professional books, and stated that attention should be paid to the design of learning methods and strategies as well as to the quality of the content itself. Desjardins and Bullock (2019) argued that unless teachers experience problem-based learning in online training, their learning remains at the theoretical level, without encountering conflicts and contradictions. Fuentes and Martínez (2018) analyzed different evaluation frameworks for English learning platforms and proposed an assessment checklist covering multimedia, interactive and educational content, and communication items, which assesses teaching content, teaching methods, and strategies.

As teachers’ online activity has grown, many online learning platforms now support both teacher instruction and practice, providing teachers with curriculum research, materials, and resources for their teaching practice. Thus, in this study, content quality denotes the quality of online learning courses and teaching-related professional resources. For the sub-dimensions, we integrated previous research on evaluating teaching content and method strategies with the teaching effects suggested by experts. Content quality indicators were then constructed from three aspects: resource, method, and effectiveness. Resource evaluation highlights the authority and practicality of the content, method evaluation focuses on diversity and individual motivation, and effectiveness evaluation emphasizes the impact of learning on teachers’ teaching practices.

Technical quality

In evaluating a platform’s technical quality, most studies examined two general aspects of online platforms or websites, functional effectiveness and technical aesthetics, and then proposed improvements in operation technology and interface design. Improving online platforms reportedly helps increase user satisfaction and continued use (Ng, 2014; Laperuta et al., 2017; Liu et al., 2017; Manzoor et al., 2018; Mousavilou and Oskouei, 2018). Santos et al. (2016, 2019) followed five principles, namely multimedia quality, content, navigation, access speed, and interaction in graphic design, to measure user satisfaction with online learning websites. They found that usability and navigation were the two evaluation subindicators most preferred by users.

Beyond basic usability, the technical quality of an online platform also involves operating efficiency and user stickiness during use. For example, in response to the rapid growth of resource retrieval, Özkan et al. (2020) assessed the retrieval quality of academic online learning platforms in terms of performance, design content, meta-tags, backlinks, and other indicators. Pant (2015) examined the quality of the Central Science Library’s website mainly in terms of efficiency, satisfaction, and accessibility; the evaluation items included the smooth and fast operation of web pages, the ease of use of functions, and users’ reliance on and trust in the website’s services after use. Hassanzadeh et al. (2012) investigated the success factors of digital learning systems and proposed that user loyalty, meaning users’ dependence on the platform and their willingness to recommend it, is a factor affecting system success. This study considered that teachers differ in their sensitivity to technical efficiency, interface design, and overall design. Thus, in assessing the rationality and effectiveness of online technology, we combined users’ perceptions of the platform’s development speed with their willingness to recommend the platform. Overall, the technical quality of online teacher learning platforms is evaluated in terms of effectiveness, style, and development.

Service quality

Service quality is one of the key factors influencing learner satisfaction. A study examining the quality of digital learning services, user satisfaction, and loyalty reported that organizational management, learning support, and course quality could affect learners’ satisfaction. Online learning services include human services, resource services, and tool services. Velasquez and Evans (2018) focused on the response time of public library website staff to users and found that refining staff service could increase user satisfaction with the website. Devi and Verma (2018) included webpage emergency response and tools among the quality evaluation items for library websites. Fuentes and Martínez (2018) included learning support tools and learning resources when evaluating the quality of English learning websites. Moreover, the evaluation of learning services should reflect life-oriented characteristics. Using a design-based research method to develop evaluation criteria for English learning websites, Liu et al. (2011) analyzed such websites in terms of network availability and learning materials, as well as functions that assist language learning, technology integration, and learner preferences. In this study, the evaluation indicator system emphasizes not only the platform’s provision of systematic course content for teachers but also its provision of extensive resources and functional applications. For resources and functions, the indicators focus on richness and diversity; for learning services, they focus on personalization.

With the diversification of teachers’ online learning methods and the trend toward data-driven personalized online learning, the service quality indicators consider manual services, such as answering questions and providing guidance. They also include data analysis and diagnosis services, as well as function modules and apps that support teachers’ teaching and behaviors such as research, discussion, and reflection. For service items, this study focused on the effectiveness of individualized assistance and on the relevance and availability of related tool functions and services.

Initial scale

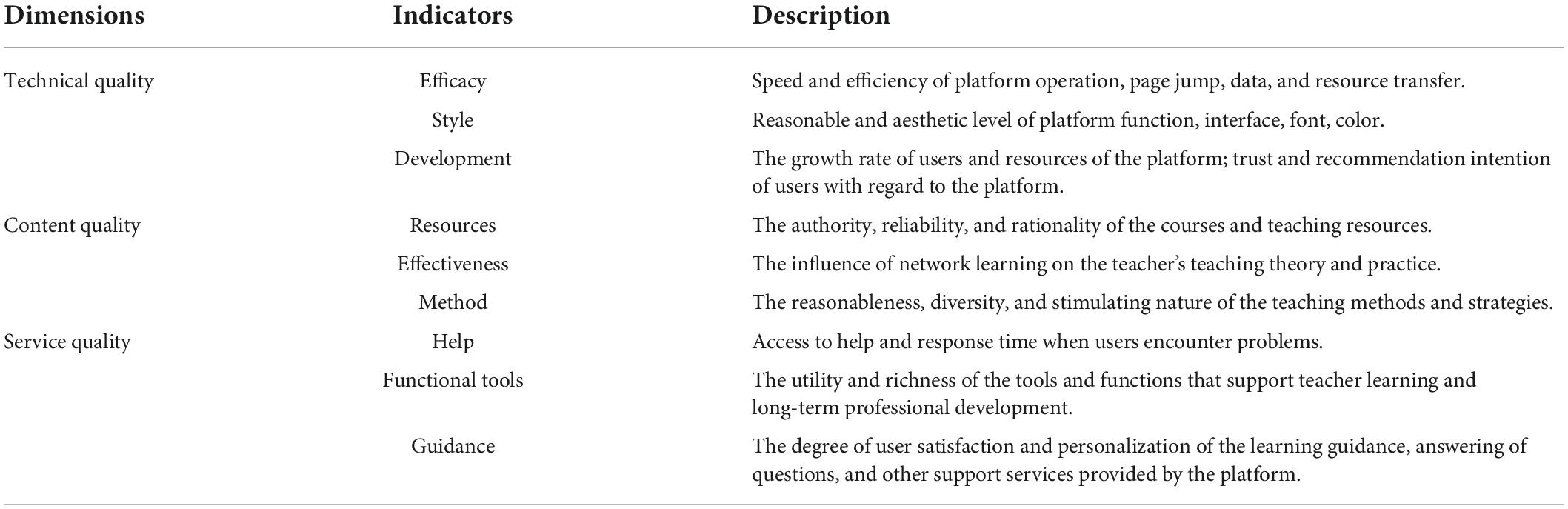

We constructed the teacher perception scale of the quality of online learning platforms for TPD (TPS-Online-TPD), comprising 27 items (Table 2). The scheme also describes the meaning of each indicator.

Technical quality measured platform performance and the rationality of the functional interface design. Its evaluation also covered users’ dependence on the platform and their perception of its development, including efficacy (3 items), style (4 items), and development (4 items). An example item is “I think the overall layout of the platform is reasonable.”

Content quality measured the quality of all content, including online courses and teaching resources. The evaluation covered not only the authority, rationality, and reliability of the resources themselves but also the learning process and results, including resources (4 items), effectiveness (2 items), and method (3 items). An example item is “The teaching content and resources provided on the platform are authoritative.”

Service quality measured the rationality and effectiveness of platform support services. It focused on the service quality that a platform is able to deliver, including help (2 items), functional tools (3 items), and guidance (2 items). An example item is “The analysis of learning data provided by the platform is very good for my learning.”
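To make the item allocation concrete, the three dimensions and their sub-dimensions can be written down as a simple mapping. This is a minimal Python sketch: the names `SCALE_STRUCTURE`, `items_per_dimension`, and `total_items` are hypothetical, and only the item counts come from the text. Summing them shows that the sub-dimensions account for the 27-item scale (11 technical, 9 content, and 7 service items).

```python
# Hypothetical encoding of the TPS-Online-TPD structure described above:
# dimension -> {sub-dimension: number of items}. Only the counts come from the text.
SCALE_STRUCTURE = {
    "technical quality": {"efficacy": 3, "style": 4, "development": 4},
    "content quality": {"resources": 4, "effectiveness": 2, "method": 3},
    "service quality": {"help": 2, "functional tools": 3, "guidance": 2},
}


def items_per_dimension(structure):
    """Return the total number of items in each dimension."""
    return {dim: sum(subs.values()) for dim, subs in structure.items()}


def total_items(structure):
    """Return the total number of items in the whole scale."""
    return sum(items_per_dimension(structure).values())


print(items_per_dimension(SCALE_STRUCTURE))
print(total_items(SCALE_STRUCTURE))  # 27
```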

Preliminary test

A preliminary version of TPS-Online-TPD containing 27 items was distributed online. The target population was in-service primary and secondary school teachers who had just completed a period of online training. They were asked to evaluate the learning platform they had used based on their actual experience. An exploratory factor analysis was performed on the returned questionnaires using SPSS 22, and the reliability and validity of the results were verified.

Participants

Participants were in-service teachers who had completed professional development training on different online learning platforms hosted by the National Teacher Training Center at Central China Normal University in 2018. A total of 567 questionnaires containing the TPS-Online-TPD items were collected online. After excluding questionnaires with a high rate of identical answers or with incomplete or blank answers, 504 valid questionnaires remained, a valid response rate of 88.89%. Of the 504 participants, 77.4% taught in primary schools and 22.6% in middle schools, and 41.2% held senior titles. Regarding gender, 71.2% were women and 28.8% were men. Regarding teaching experience, 3.9% of respondents had been teaching for <3 years, 16.3% for 3–10 years, and 73.8% for >10 years. Regarding age, 14.3% were aged <30 years, 43.3% were 30–39 years, 34.9% were 40–49 years, and 7.5% were >50 years. Finally, 11.3% of the responding teachers had no previous experience with online training.
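The valid response rate reported above is simply the share of collected questionnaires that remained after screening. A minimal Python sketch of that arithmetic follows; the helper names are hypothetical, and the second helper shows how a set of reported category shares (here, the age groups) can be checked to sum to 100%.

```python
def valid_response_rate(collected: int, valid: int) -> float:
    """Return the percentage of collected questionnaires kept after screening."""
    if collected <= 0:
        raise ValueError("collected must be positive")
    return 100.0 * valid / collected


def shares_total(shares: list[float]) -> float:
    """Sum percentage shares, rounded to one decimal to absorb float error."""
    return round(sum(shares), 1)


rate = valid_response_rate(collected=567, valid=504)
print(f"valid response rate: {rate:.2f}%")  # 88.89%

age_shares = [14.3, 43.3, 34.9, 7.5]  # <30, 30-39, 40-49, >50 years
print(shares_total(age_shares))  # 100.0
```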

Validity and reliability

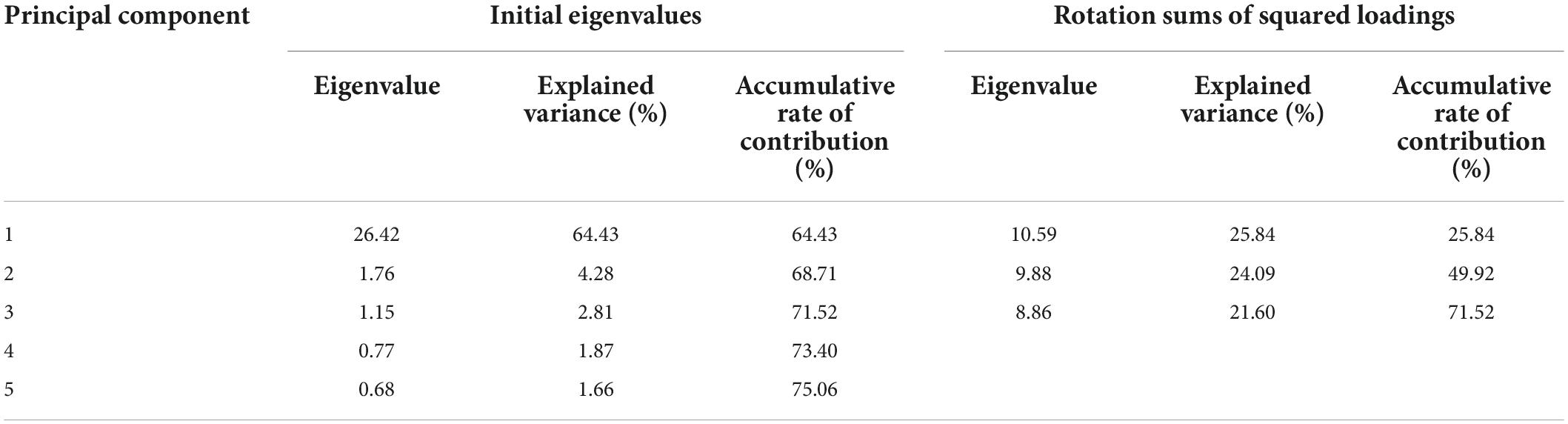

First, exploratory factor analysis was used to examine the factor structure of the questionnaire. We used principal factor analysis with Varimax rotation. The cumulative variance explanation rate is the proportion of the total variance of the items that is explained by all extracted factors, indicating the combined influence of the factors on the observed variables. Typically, the cumulative variance explanation rate should exceed 50%; >70% is better, and >85% is excellent. Factors with eigenvalues >1 were extracted. Table 3 shows that three common factors had eigenvalues >1 and together explained 71.523% of the total variance. Hence, the extracted factors were considered to cover most of the information in the items, and the original three-dimensional structure was retained.
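The eigenvalue-greater-than-one rule (Kaiser criterion) and the cumulative variance explanation rate can both be read off the initial eigenvalues of the item correlation matrix. The NumPy sketch below illustrates this on simulated data, assuming 27 items split 11/9/7 across three independent latent factors with a common loading of 0.85; the data, loading, and function names are hypothetical and are not the study’s responses. It covers factor retention only; the Varimax rotation used in the study is not shown.

```python
import numpy as np


def kaiser_summary(data: np.ndarray):
    """Return (number of eigenvalues > 1, share of total variance they explain).

    data: respondents x items matrix of scores. Eigenvalues come from the item
    correlation matrix (the "initial eigenvalues" used for the Kaiser criterion).
    """
    corr = np.corrcoef(data, rowvar=False)
    eigvals = np.sort(np.linalg.eigvalsh(corr))[::-1]  # descending order
    retained = eigvals[eigvals > 1.0]
    # The eigenvalues of a correlation matrix sum to the number of items.
    cumulative = retained.sum() / eigvals.sum()
    return len(retained), float(cumulative)


def simulate_three_factor_data(n=504, loading=0.85, seed=0):
    """Hypothetical data: 27 items split 11/9/7 across three independent factors."""
    rng = np.random.default_rng(seed)
    sizes = [11, 9, 7]
    factors = rng.standard_normal((n, 3))
    noise_sd = np.sqrt(1 - loading**2)
    columns = []
    for f, size in enumerate(sizes):
        for _ in range(size):
            columns.append(loading * factors[:, f] + noise_sd * rng.standard_normal(n))
    return np.column_stack(columns)


n_factors, cum = kaiser_summary(simulate_three_factor_data())
print(n_factors, round(cum, 3))
```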

A factor loading is the loading of a variable on a common factor and reflects the relative importance of the variable to that factor. Typically, a rotated loading >0.71 is considered excellent, >0.63 very good, and >0.55 good. As shown in Table 3, only one item had a loading <0.63, and all items had loadings >0.55, indicating that the items corresponded well to their dimensions; thus, all 27 items were retained. Supplementary Appendix 1 lists all items.
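The loading cut-offs quoted above translate directly into a small classification rule. In this Python sketch, the function name and the sample loadings are hypothetical; the loading magnitude (absolute value) is compared with the thresholds, since the sign of a loading only reflects the direction of an item.

```python
def rate_loading(loading: float) -> str:
    """Label a rotated factor loading using the cut-offs quoted in the text."""
    magnitude = abs(loading)  # the sign only reflects item direction
    if magnitude > 0.71:
        return "excellent"
    if magnitude > 0.63:
        return "very good"
    if magnitude > 0.55:
        return "good"
    return "below threshold"


# Hypothetical loadings, including values inside the reported 0.61-0.80 range.
for value in (0.80, 0.70, 0.61, 0.50):
    print(value, rate_loading(value))
```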

Then, we tested the reliability of the questionnaire using Cronbach’s alpha for the 27 selected items. Usually, an alpha of 0.70 or above is considered acceptable, and values between 0.70 and 0.98 indicate high reliability. Table 4 shows that the reliability coefficients of the three dimensions were all >0.70, with some even >0.95, and the overall reliability coefficient of the questionnaire reached 0.98, indicating excellent internal consistency. Next, we computed the Kaiser–Meyer–Olkin (KMO) statistic for the screened questionnaire. The KMO statistic compares the simple correlation coefficients between variables with their partial correlation coefficients. The closer the KMO value is to 1, the smaller the partial correlations are relative to the simple correlations, that is, the more common variance the variables share and the more suitable they are for factor analysis. A KMO value of 0.90 or above is considered excellent (marvelous), 0.80 good (meritorious), and 0.70 middling. The KMO value of this questionnaire was 0.98, indicating that it was highly suitable for factor analysis. Finally, we performed a factor analysis on the screened questionnaire. The factor loadings of each item are listed in the table; loadings on the corresponding factors ranged from 0.61 to 0.80, indicating that the questionnaire had excellent structural validity.
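Cronbach’s alpha and the overall KMO statistic can both be computed from a respondents-by-items score matrix. The NumPy sketch below uses the standard formulas: alpha = k/(k − 1) × (1 − sum of item variances / variance of the total score), and KMO = Σr² / (Σr² + Σp²) over off-diagonal item pairs, where the partial correlations p are obtained from the inverse of the correlation matrix. The simulated nine-item, one-factor data and the function names are hypothetical and serve only to illustrate the calculation.

```python
import numpy as np


def cronbach_alpha(data: np.ndarray) -> float:
    """Cronbach's alpha for a respondents x items score matrix."""
    k = data.shape[1]
    item_variances = data.var(axis=0, ddof=1)
    total_variance = data.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - item_variances.sum() / total_variance))


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    # Partial correlation of each item pair, controlling for all other items.
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diag = ~np.eye(corr.shape[0], dtype=bool)  # exclude the diagonal
    r2 = np.sum(corr[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + p2))


# Hypothetical data: 504 respondents, 9 items driven by one latent factor.
rng = np.random.default_rng(1)
factor = rng.standard_normal((504, 1))
scores = 0.85 * factor + np.sqrt(1 - 0.85**2) * rng.standard_normal((504, 9))

print(round(cronbach_alpha(scores), 3))
print(round(kmo(scores), 3))
```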

Expert evaluation empowerment

Because the indicators differ in importance, we used the expert assessment method to assign weights to the indicators at all levels. The data were collected through an online questionnaire and calculated with an equal-weight evaluation method. First, we collected the experts’ rankings of the indicators through a questionnaire survey. Then, the average overall score of each indicator was calculated from the rankings of all options, reflecting the indicator’s overall rank. The calculation method is:

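$$S_i = \frac{\sum_{j} F_{ij} W_{ij}}{F} \tag{1}$$

where $S_i$ is the average overall score of option $i$; $F_{ij}$ denotes the number of times option $i$ was ranked in position $j$; $W_{ij}$ denotes the weight of option $i$ in position $j$; and $F$ denotes the number of respondents who answered the question.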

The average overall scores of the options were then normalized within each dimension to obtain the platform indicator weights. The calculation formula is as follows:

$$k_i = \frac{S_i}{S_n} \tag{2}$$

where $k_i$ denotes the weight of option $i$; $S_i$ denotes the average overall score of option $i$; and $S_n$ denotes the sum of the average overall scores of all options in the dimension.
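The two calculations described above, the average overall score S_i and its normalization into the weight k_i, can be sketched in Python as follows. This assumes the common ranking-question convention in which a position’s weight depends only on the position, with the first of m positions weighted m, the second m − 1, and so on; the source does not state how W_ij was set, so that convention, the function names, and the expert ranking counts below are hypothetical illustrations, not the study’s data.

```python
def average_overall_scores(freq, position_weights, respondents):
    """S_i = sum_j F_ij * W_j / F for each option i.

    freq[i][j]: number of experts who ranked option i in position j (F_ij).
    position_weights[j]: weight of position j (assumed identical for all options).
    respondents: number of experts who answered the question (F).
    """
    return [
        sum(f * w for f, w in zip(row, position_weights)) / respondents
        for row in freq
    ]


def indicator_weights(scores):
    """k_i = S_i / S_n, where S_n is the sum of the scores in the dimension."""
    total = sum(scores)
    return [s / total for s in scores]


# Hypothetical rankings of three indicators by 14 experts.
freq = [
    [8, 4, 2],  # indicator A: ranked 1st by 8 experts, 2nd by 4, 3rd by 2
    [4, 6, 4],  # indicator B
    [2, 4, 8],  # indicator C
]
m = len(freq[0])
position_weights = [m - j for j in range(m)]  # assumed convention: [3, 2, 1]

scores = average_overall_scores(freq, position_weights, respondents=14)
weights = indicator_weights(scores)
print([round(s, 4) for s in scores])   # [2.4286, 2.0, 1.5714]
print([round(k, 4) for k in weights])  # [0.4048, 0.3333, 0.2619]
```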

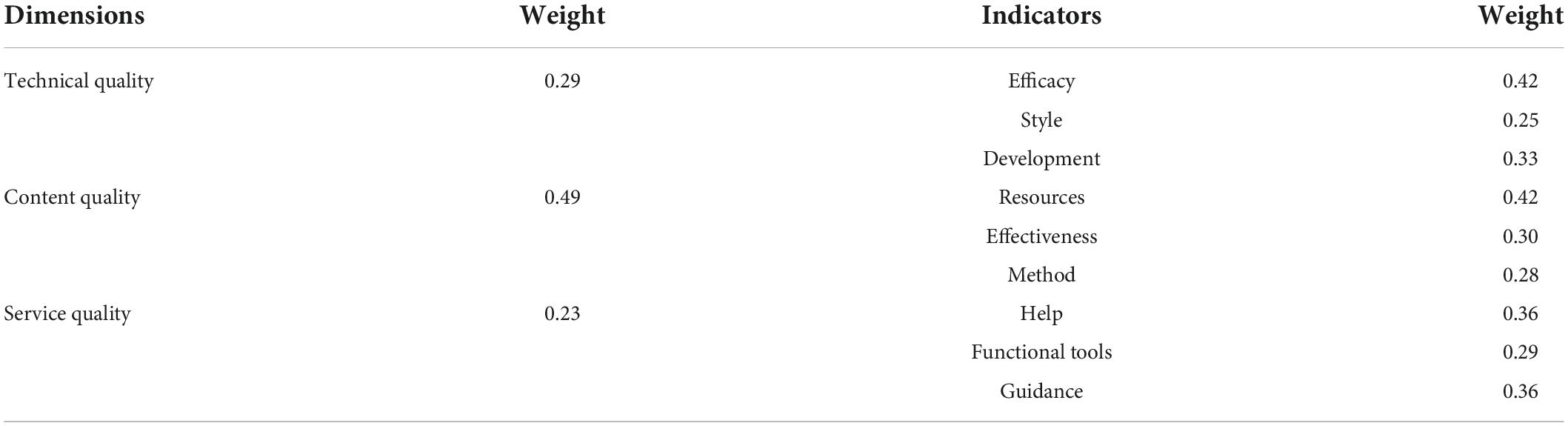

The ranking questionnaires were distributed to 14 experts, including teachers and subject supervisors from primary and secondary schools and eight instructors and advisors from universities, all of whom specialized in teacher mentorship, teacher preparation, and online learning programs. On average, they had about 23 years of work experience. Supplementary Appendix 2 provides the experts’ background information. The weights of the indicators at all levels of the platform were calculated using the formulas above, as shown in Table 5.

Application study

Data analysis

Study process

To validate the practicability of TPS-Online-TPD, we applied it to the annual evaluation of the National Online Teacher Training Platform in Central China in November 2019 and July 2020, that is, before and after the Wuhan lockdown. Before the comparative analysis, Pearson correlation tests and an independent-sample t-test were conducted to confirm that the data from the two different groups of teachers were comparable. The difference between the two evaluations was taken to represent platform improvement. To further investigate the efficiency of each part of the improvement work, we calculated the ratio of quality improvement to workload, to identify which improvements raised user satisfaction the most with the least workload.

Participants

We commissioned the platform’s development agency, which, through school administrative staff, sent formal invitation letters asking 200 teachers (100 in November 2019 and 100 in July 2020) to complete the questionnaire carefully. All invited teachers were in-service primary and secondary school teachers in urban areas with previous online learning experience. We checked the collected questionnaires for time spent answering and for repetition rates of identical answers and found that all of them were valid.

Data preprocessing

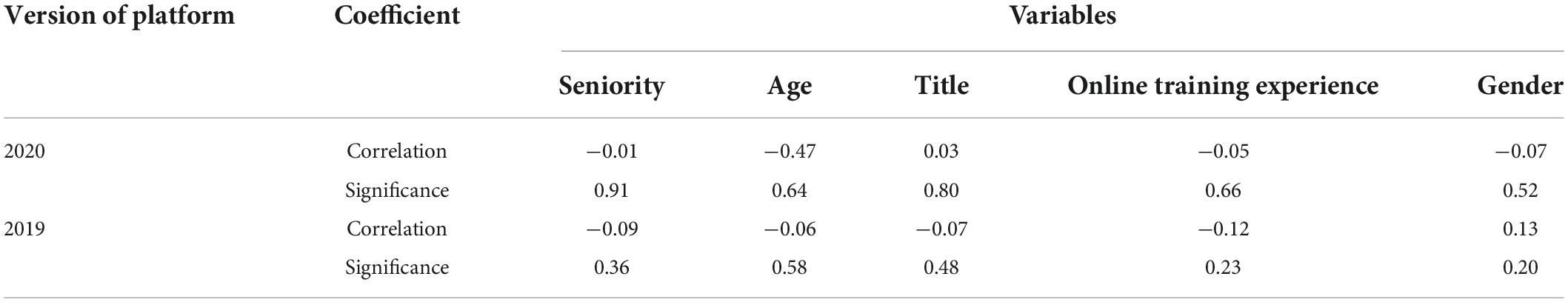

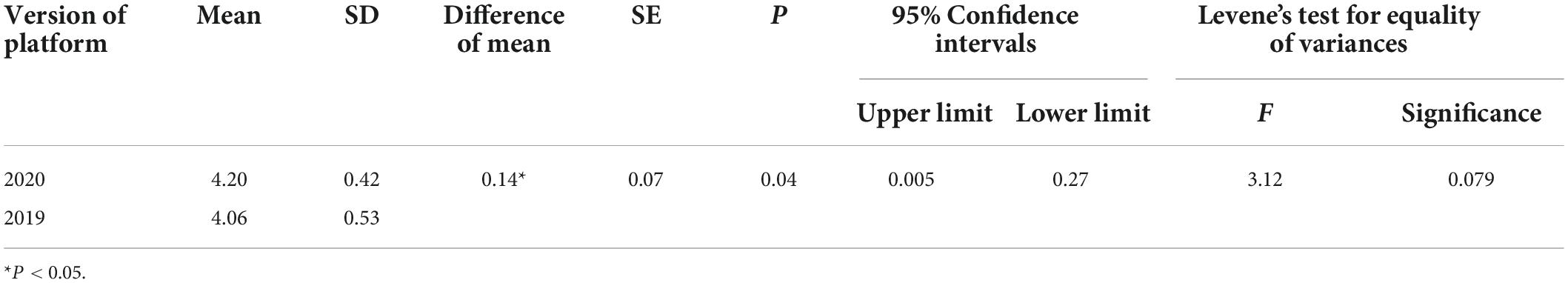

We performed the following analyses to ensure that the data collected from the two evaluation surveys were suitable for comparative analysis. First, Pearson correlation tests were conducted between the scores measured in 2019 and 2020 and the background factors of the samples. The P-values obtained were >0.05 (Table 6), indicating no significant correlation; thus, participants’ background factors did not appear to affect the scoring results, and the data were usable. Then, we conducted an independent-sample t-test. Levene’s test for equality of variances was not significant, so equal variances were assumed; the t-test P-value was <0.05 (Table 7), indicating a significant difference between the two measurements and that the results could be compared.
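The two-step comparison above (Levene’s test first, then an independent-samples t-test whose variance assumption depends on it) can be sketched with SciPy. This is a minimal sketch, assuming each wave’s scores are available as plain lists of per-teacher scores; the function name `compare_waves`, the 0.05 cut-off used to choose between the pooled and Welch t-tests, and the sample values are hypothetical illustrations, not the study’s data or analysis code. `center="mean"` is passed to obtain the classic Levene test, since SciPy centers on the median by default.

```python
from scipy import stats


def compare_waves(before, after, alpha=0.05):
    """Levene's test for equal variances, then an independent-samples t-test.

    If Levene's test is not significant (p >= alpha), the pooled-variance
    t-test is used; otherwise Welch's t-test (equal_var=False) is used.
    """
    levene = stats.levene(before, after, center="mean")  # classic Levene test
    equal_var = bool(levene.pvalue >= alpha)
    ttest = stats.ttest_ind(before, after, equal_var=equal_var)
    return {
        "levene_p": float(levene.pvalue),
        "equal_var": equal_var,
        "t": float(ttest.statistic),
        "p": float(ttest.pvalue),
    }


# Hypothetical scores for two independent groups of teachers.
wave_2019 = [3.8, 4.0, 4.2, 3.9, 4.1, 4.0, 3.7, 4.3]
wave_2020 = [x + 0.8 for x in wave_2019]  # same spread, higher mean

result = compare_waves(wave_2019, wave_2020)
print(result)
```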

Results

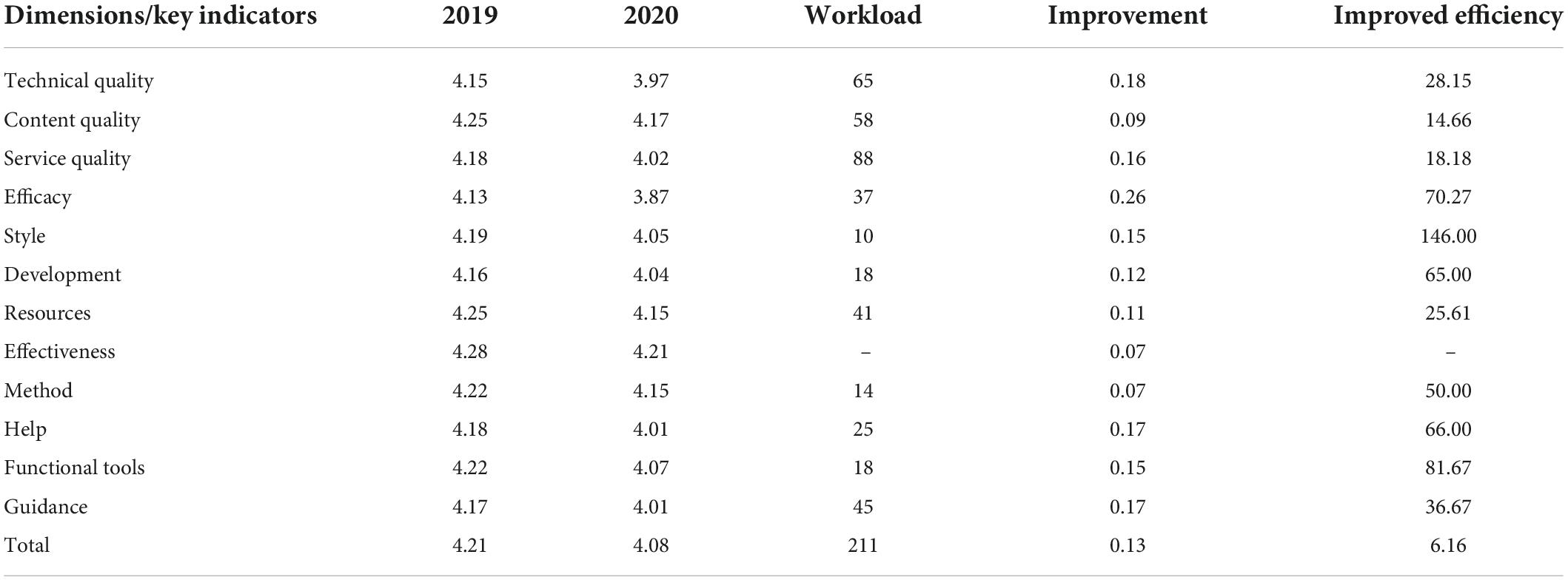

Table 8 presents the results of the two weighted measurements. All indicator items scored higher in 2020 than in 2019, suggesting that optimizing the online teacher learning platform amid COVID-19 improved user experience in various aspects. During the COVID-19 pandemic, when these data were collected, online learning was teachers’ only means of professional development. Thus, the influence of other forms of professional learning can be excluded for the data collected during this period.

The larger the measured difference, the greater the improvement. The improvements in technical and service quality were the largest, followed by that in content quality. Within technical quality, the improvement in webpage operating efficiency was the largest. Within content quality, the improvement in resource quality was relatively high; within service quality, the improvements in assistance and guidance were likewise relatively high and exceeded that in functional tools.

After comparing the weighted average scores of the two measurements, we combined them with the National Online Teacher Training Platform Operation Company’s annual improvement workload in each module to elucidate the benefits of platform improvement. The calculation formula is shown in Eq. 3, and Table 7 presents the calculation results. The larger this input–output ratio, the greater the benefit of the improvement.

$$P_n = \frac{A_n - A_{nm}}{L_n} \times C \tag{3}$$

where $P_n$ denotes the efficiency improvement in dimension $n$; $A_n$ denotes the weighted average score of dimension $n$ this year; $A_{nm}$ denotes the weighted average score of dimension $n$ last year; $L_n$ is the workload of dimension $n$; and $C$ is a fixed rounding factor with a value of 10,000.
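Following the definitions of P_n, A_n, A_nm, L_n, and C above, and assuming that P_n is the score gain divided by the workload and scaled by C, the efficiency ranking of the three dimensions can be sketched in Python. The function name, the scores, and the workload values (in an unspecified unit such as person-days) are hypothetical illustrations, not the study’s figures.

```python
ROUNDING_FACTOR = 10_000  # C in the text


def efficiency_improvement(score_now, score_last, workload, c=ROUNDING_FACTOR):
    """P_n = C * (A_n - A_nm) / L_n: score gain per unit of workload, scaled by C."""
    if workload <= 0:
        raise ValueError("workload must be positive")
    return c * (score_now - score_last) / workload


# Hypothetical (this year's score, last year's score, workload) per dimension.
dimensions = {
    "technical quality": (4.50, 4.25, 50),
    "content quality": (4.40, 4.30, 80),
    "service quality": (4.45, 4.30, 60),
}

# Rank dimensions from most to least efficient improvement.
ranked = sorted(
    ((name, efficiency_improvement(*values)) for name, values in dimensions.items()),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, p_n in ranked:
    print(f"{name}: {p_n:.1f}")
```

Scaling by C only makes the small score-per-workload ratios easier to read; it does not change the ranking.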

As shown in Table 8, both the degree and the efficiency of improvement were higher for technical quality than for service and content quality. Among the key indicators, improvement in interface style was the most efficient. Within content quality, improvement in teaching methods yielded high efficiency. Improvements across the dimensions of service quality were relatively balanced, with improvement of the platform’s functional tools being the most efficient.

Discussion and conclusion

Based on the IS success model and previous relevant research, this study developed TPS-Online-TPD, which provides a set of key indicators for assessing the quality of online learning platforms for teacher professional development from three aspects: technical quality, content quality, and service quality. TPS-Online-TPD was further used to analyze the optimization of the National Teacher Training Platform amid the COVID-19 outbreak in China by comparing quality evaluations of the platform before and after the Wuhan lockdown. The findings revealed two main points. First, optimizing the platform’s technical quality and service quality improved teachers’ learning experience more than optimizing its content. Second, larger improvements in teachers’ learning experience were associated with optimizing the platform’s design style, tool functions, operating efficiency, and teaching methods. These findings are consistent with previous studies that examined key factors in continuance intention in technology-assisted learning (Yang et al., 2022) and technological barriers to learning outcomes (He and Yang, 2021).

The findings of this study have important practical implications for developing online learning platforms for teacher professional development; however, their generalizability is limited. Notably, TPS-Online-TPD was designed primarily for primary and secondary school teachers in China and does not cover other cultural backgrounds or college instructors. In addition, technology integration in teacher professional development is a long and dynamic process (Cai et al., 2019; Zhou et al., 2022), whereas this study measured only teachers’ current perceptions of the quality of online learning platforms. Thus, future research should consider other cultures and college instructors and periodically assess the quality of online learning platforms for teacher professional development to obtain more accurate information and improve platform use.

Data availability statement

The original contributions presented in this study are included in the article/Supplementary material; further inquiries can be directed to the corresponding authors.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

JZ and HY: conceptualization and writing–review and editing. JZ and ZC: methodology. JZ and WG: formal analysis. WG and ZL: investigation. BW, ZC, and HY: resources. JZ: writing–original draft preparation. BW: project administration. BW, ZC, and ZL: funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Project Nos. 62077022 and 62077017).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.998196/full#supplementary-material

References

Cai, J., Yang, H. H., Gong, D., MacLeod, J., and Zhu, S. (2019). Understanding the continued use of flipped classroom instruction: A personal beliefs model in Chinese higher education. J. Comput. High. Educ. 31, 137–155. doi: 10.1007/s12528-018-9196-y

Cobo Romani, J. C., Mata-Sandoval, J. C., Quota, M. B. N., Patil, A. S., and Bhatia, J. (2022). Technology for teacher professional development navigation guide: A summary of methods. Washington, DC: World Bank Group.

DeLone, W. H., and McLean, E. R. (1992). Information systems success: The quest for the dependent variable. Inform. Syst. Res. 3, 60–95.

DeLone, W. H., and McLean, E. R. (2002). “Information systems success revisited,” in Proceedings of the 35th annual Hawaii international conference on system sciences, (Big Island, HI: IEEE), 2966–2976. doi: 10.1109/HICSS.2002.994345

DeLone, W. H., and McLean, E. R. (2003). The DeLone and McLean model of information systems success: A ten-year update. J. Manage Inform. Syst. 19, 9–30. doi: 10.1080/07421222.2003.11045748

Desjardins, F., and Bullock, S. (2019). Professional development learning environments (PDLEs) embedded in a collaborative online learning environment (COLE): Moving towards a new conception of online professional learning. Educ. Inf. Technol. 24, 1863–1900. doi: 10.1007/s10639-018-9686-6

Devi, K. K., and Verma, M. K. (2018). Web content and design trends of Indian Institute of Technology (IITs) libraries’ website: An evaluation. Collnet. J. Scientomet. 12, 165–181. doi: 10.1080/09737766.2018.1433100

Fuentes, E. M., and Martínez, J. J. R. (2018). Design of a checklist for evaluating language learning websites. Porta Linguarum 30, 23–41.

Greenberg, A. D. (2009). Critical success factors for deploying distance education technologies. Duxbury, MA: Wainhouse Research.

Guskey, T. R. (1994). Results-oriented professional development: In search of an optimal mix of effective practices. J. Staff Dev. 15, 42–50.

Hassanzadeh, A., Kanaani, F., and Elahi, S. (2012). A model for measuring e-learning systems success in universities. Expert Syst. Appl. 39, 10959–10966. doi: 10.1016/j.eswa.2012.03.028

He, X., and Yang, H. H. (2021). “Technological barriers and learning outcomes in online courses during the Covid-19 pandemic,” in Proceedings of the international conference on blended learning, (Cham: Springer), 92–102. doi: 10.1007/978-3-030-80504-3_8

Laperuta, D. G. P., Pessa, S. L. R., Santos, I. R. D., Sieminkoski, T., and Luz, R. P. D. (2017). Ergonomic adjustments on a website from the usability of functions: Can deficits impair functionalities? Acta Sci. 39, 477–485. doi: 10.4025/actascitechnol.v39i4.30511

Lin, H. F. (2007). Measuring online learning systems success: Applying the updated DeLone and McLean model. Cyberpsychol. Behav. 10, 817–820. doi: 10.1089/cpb.2007.9948

Liu, G. Z., Liu, Z. H., and Hwang, G. J. (2011). Developing multi-dimensional evaluation criteria for English learning websites with university students and professors. Comput. Educ. 56, 65–79. doi: 10.1016/j.compedu.2010.08.019

Liu, Y. Q., Bielefield, A., and Mckay, P. (2017). Are urban public libraries websites accessible to Americans with disabilities? Univ. Access Inf. Soc. 18, 191–206. doi: 10.1007/s10209-017-0571-7

Manzoor, M., Hussain, W., Sohaib, O., Hussain, F. K., and Alkhalaf, S. (2018). Methodological investigation for enhancing the usability of university websites. J. Amb. Intel. Hum. Comp. 10, 531–549. doi: 10.1007/s12652-018-0686-6

Mousavilou, Z., and Oskouei, R. J. (2018). An optimal method for URL design of webpage journals. Soc. Netw. Anal. Min. 8:53. doi: 10.1007/s13278-018-0532-z

Ng, W. S. (2014). Critical design factors of developing a high-quality educational website: Perspectives of pre-service teachers. Issues Informing Sci. Inf. Technol. 11, 101–113. doi: 10.28945/1983

Özkan, B., Özceylan, E., Kabak, M., and Dağdeviren, M. (2020). Evaluating the websites of academic departments through SEO criteria: A hesitant fuzzy linguistic MCDM approach. Artif. Intell. Rev. 53, 875–905. doi: 10.1007/s10462-019-09681-z

Pant, A. (2015). Usability evaluation of an academic library website: Experience with the Central Science Library, University of Delhi. Electron. Library 33, 896–915. doi: 10.1108/EL-04-2014-0067

Pengxi, L., and Guili, Z. (2018). “Design of training platform of young teachers in engineering colleges based on TPCK theory,” in Proceedings of the 2018 4th international conference on information management (ICIM), (Oxford: IEEE), 290–295. doi: 10.1109/INFOMAN.2018.8392852

Santos, A. M., Cordón-García, J. A., and Díaz, R. G. (2016). “Websites of learning support in primary and high school in Portugal: A performance and usability study,” in Proceedings of the international conference on technological ecosystems for enhancing multiculturality, (New York, NY: ACM), 1121–1125. doi: 10.1145/3012430.3012657

Santos, A. M., Cordón-García, J. A., and Díaz, R. G. (2019). Evaluation of high school websites based on users: A perspective of usability and performance study. J. Inf. Technol. Res. 12, 72–90. doi: 10.4018/JITR.2019040105

Schlager, M. S., and Fusco, J. (2003). Teacher professional development, technology, and communities of practice: Are we putting the cart before the horse? Inform. Soc. 19, 203–220. doi: 10.1080/01972240309464

Velasquez, D., and Evans, N. (2018). Public library websites as electronic branches: A multi-country quantitative evaluation. Inf. Res. 23:25.

Yang, H., Cai, J., Yang, H. H., and Wang, X. (2022). Examining key factors of beginner’s continuance intention in blended learning in higher education. J. Comput. High. Educ. 1–18. doi: 10.1007/s12528-022-09322-5 [Epub ahead of print].

Yang, Y. T. C., and Chan, C. Y. (2008). Comprehensive evaluation criteria for English learning websites using expert validity surveys. Comput. Educ. 51, 403–422. doi: 10.1016/j.compedu.2007.05.011

Zhou, C., Wu, D., Li, Y., Yang, H. H., Man, S., and Chen, M. (2022). The role of student engagement in promoting teachers’ continuous learning of TPACK: Based on a stimulus-organism-response framework and an integrative model of behavior prediction. Educ. Inf. Technol. 1–21. doi: 10.1007/s10639-022-11237-8 [Epub ahead of print].

Keywords: teacher education, online learning, quality evaluation, user experience, training platform

Citation: Zhang J, Wang B, Yang HH, Chen Z, Gao W and Liu Z (2022) Assessing quality of online learning platforms for in-service teachers’ professional development: The development and application of an instrument. Front. Psychol. 13:998196. doi: 10.3389/fpsyg.2022.998196

Received: 19 July 2022; Accepted: 15 September 2022;

Published: 07 October 2022.

Edited by:

Zhongling Pi, Shaanxi Normal University, China

Copyright © 2022 Zhang, Wang, Yang, Chen, Gao and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bing Wang, sxwangbing@mail.ccnu.edu.cn; Harrison Hao Yang, Harrison.yang@oswego.edu

Jing Zhang, Bing Wang, Harrison Hao Yang, Zengzhao Chen, Wei Gao and Zhi Liu